
ORIGINAL RESEARCH article

Front. Psychol., 16 September 2022

Sec. Perception Science

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.959124

This article is part of the Research Topic Deep Learning Techniques Applied to Affective Computing.

Micro-expression (ME) is an extremely quick and uncontrollable facial movement that lasts for 40–200 ms and reveals thoughts and feelings that an individual attempts to conceal. Although MEs are much more difficult to detect and recognize, ME recognition resembles macro-expression recognition in that it is influenced by facial features. Previous studies suggested that facial attractiveness can influence the processing of facial expressions; however, it remains unclear whether facial attractiveness also influences ME recognition. To address this issue, we tested 38 participants on two ME recognition tasks, one with static and one with dynamic stimuli, in which MEs of different valences (positive, neutral, and negative) were presented on faces at two attractiveness levels (attractive, unattractive). Participants recognized MEs on attractive faces more accurately than on unattractive ones, and there was a significant interaction between ME and facial attractiveness. Furthermore, happy MEs on attractive faces were recognized more accurately in both the static and the dynamic conditions, and faster in the dynamic condition, highlighting the happiness superiority effect. Our results therefore provide the first evidence that facial attractiveness can influence ME recognition under both static and dynamic conditions.

Micro-expression (ME) is an instinctive facial movement that expresses emotion and cognition. MEs are difficult to identify because they are rapid (usually lasting 40–200 ms), local, low-intensity facial responses (Liang et al., 2013). By contrast, macro-expressions are easily identifiable and last between 500 ms and 4 s (Takalkar et al., 2021). Ekman and Friesen (1969) indicated that the only difference between MEs and macro-expressions is their duration. According to Shen et al. (2012), expression duration influences the accuracy of ME recognition, and the appropriate upper limit for ME duration may be 200 ms or less. Using electroencephalography (EEG) and event-related potentials (ERPs), Shen et al. (2016) found that the neural mechanisms for recognizing MEs differ from those for recognizing macro-expressions. In their study, the vertex positive potential (VPP) at electrodes Cz and CPz differed significantly between MEs (durations shorter than 200 ms) and macro-expressions (durations longer than 200 ms). For durations shorter than 200 ms, the VPP amplitude for negative expressions was larger than that for positive and neutral expressions, whereas for durations longer than 200 ms, VPP amplitude did not differ across emotional expressions. Previous studies have also found that emotional contexts influence ME processing at an early stage. Zhang et al. (2018) reported early ERP effects of emotional context on ME processing: target MEs that followed negative and positive contexts, rather than neutral contexts, elicited more positive P1 (an early component related to the visual processing of faces, peaking at approximately 100 ms) and N170 (peaking at around 160 ms) amplitudes.
Previous functional magnetic resonance imaging (fMRI) research found that emotional contexts reduce the accuracy of ME recognition while increasing context-related activation in several emotional and attentional regions (Zhang et al., 2020). Because inhibiting an emotional context requires additional monitoring and attention, the increased perceptual load of negative and positive contexts results in greater brain activation as well as poorer behavioral performance (Siciliano et al., 2017). Studies of emotion perception have demonstrated that ME recognition, like macro-expression recognition, is affected by a variety of factors, such as gender (Abbruzzese et al., 2019), age (Abbruzzese et al., 2019), occupation (Hurley, 2012), culture (Iria et al., 2019), and individual psychological characteristics (Zhang et al., 2017). ME recognition is widely used in national security, judicial interrogation, and clinical settings as an effective cue for detecting deception (Ekman, 2009). Because MEs occur very quickly and are very difficult to detect, researchers have long sought to understand and improve individuals' ability to recognize them. Previous studies have typically focused on how facial attractiveness moderates macro-expression recognition. To the best of our knowledge, none of these studies has used facial expressions lasting 200 ms or less as stimuli, so it remains unclear whether the duration of facial expressions modulates the effects of facial attractiveness on facial emotion recognition (FER).

Facial attractiveness is the extent to which a face makes an observer feel good and happy, and how much it makes them want to approach it (Rhodes, 2006). Attractiveness is a strong signal in social interaction that reflects all facial features (Rhodes, 2006; Li et al., 2019). Attractive faces are commonly associated with positive characteristics, such as desirable personal attributes (Eagly et al., 1991; Lindeberg et al., 2019) and higher intelligence (Jackson et al., 1995; Mertens et al., 2021). Abundant evidence shows that facial attractiveness affects the ability to recognize facial expressions (e.g., Dion et al., 1972; Cunningham, 1986; Otta et al., 1996; Hugenberg and Sczesny, 2006; Krumhuber et al., 2007; Zhang et al., 2016). For example, Lindeberg et al. (2019) asked participants to recognize happy or angry expressions and to rate the attractiveness of the faces; the results showed that attractiveness strongly influences emotion perception. According to Lindeberg et al. (2019), facial attractiveness moderates expression recognition: participants showed the happiness superiority effect for more attractive faces but not for unattractive ones. That is, people tend to recognize happiness faster in attractive than in unattractive faces, whereas no such effect occurs for other emotions (namely anger, sadness, and surprise; Leppänen and Hietanen, 2004). Li et al. (2019) also observed that facial attractiveness moderates the happiness superiority effect: participants identified happy expressions faster on more attractive faces, consistent with the findings of Lindeberg et al. (2019). Furthermore, Golle et al. (2014) used two-alternative forced-choice paradigms, which require participants to choose one of two stimuli, and found that facial attractiveness affects the recognition of happy expressions.
Happy faces were easier to identify when they were also more attractive. Mertens et al. (2021) used the mood-of-the-crowd task to compare attractive and unattractive crowds. Participants were faster and more accurate when rating happy crowds, and attractive crowds were perceived as happier than unattractive crowds; that is, crowds of unattractive faces were perceived as being in a more negative mood, which supports the assumption that attractiveness can moderate emotion perception.

However, a few studies failed to demonstrate that facial attractiveness influences facial emotion recognition (e.g., Jaensch et al., 2014). For example, Taylor and Bryant (2016) asked participants to classify happy, neutral, or angry expressions at two attractiveness levels (attractive, unattractive) and found that the detection of happiness or anger was not significantly influenced by facial attractiveness. Note, however, that Taylor and Bryant (2016) used anger as the negative expression. Anger is often mistaken for other emotions (Taylor and Jose, 2014) and may masculinize attractive female faces, making them seem less attractive (Jaensch et al., 2014), which could have led to unreliable results. The present study therefore used disgust as the negative expression, which extends existing research. Furthermore, previous research on facial expression recognition has employed static stimuli, whereas human faces in real life are not static. Because people use dynamic facial expressions in everyday conversation, the ability to accurately recognize dynamic expressions is more relevant (Li et al., 2019). Compared with static facial expressions, dynamic facial expressions are more ecologically valid and induce more pronounced behavioral responses in emotion perception (Recio et al., 2011), emotion elicitation (Scherer et al., 2019), and imitation of facial expressions (Sato and Yoshikawa, 2007). Consistent with this evidence, the face-processing literature suggests that dynamic stimuli are identified better than static ones (Zhang et al., 2015). In the present study, we therefore asked participants to recognize MEs from both static and dynamic stimuli.

To this end, we aimed to explore whether facial attractiveness moderates ME recognition. In Experiment 1, static disgusted, neutral, and happy expressions were presented. Experiment 2 replicated and extended Experiment 1 by using dynamic stimuli (happiness and disgust). We hypothesized that attractive faces would be judged faster overall under both static and dynamic conditions and that participants would recognize happiness more accurately in attractive faces than in unattractive faces.

We adopted a recognition task modified from the Brief Affect Recognition Test (BART) to simulate MEs (Shen et al., 2012). In the BART paradigm (Ekman and Friesen, 1974), one of six emotions (happiness, disgust, anger, fear, surprise, and sadness) is presented for 10–250 ms. In Experiment 1, we presented static stimuli for 200 ms (happiness as the positive ME, disgust as the negative ME, and neutral as a control condition) to investigate the effects of facial attractiveness on ME processing. We hypothesized that participants would judge attractive static faces faster and that facial attractiveness would moderate the happiness superiority effect, such that participants would identify happy expressions faster on more attractive faces but not on unattractive ones.

The number of participants was similar to or larger than that in previous research examining the effect of facial attractiveness on expression recognition (e.g., Taylor and Bryant, 2016; Li et al., 2019). A post hoc power analysis in G*Power 3.1 (Faul et al., 2007) for the main effects of ME (partial η² = 0.349, α = 0.05, total N = 38) and attractiveness (partial η² = 0.535, α = 0.05, total N = 38) indicated that this sample size yielded high power (1 − β = 0.978 and 0.999, respectively). Thirty-eight right-handed participants from Beijing Normal University, Zhuhai (M = 20.24 years, SD = 0.675 years; 20 women) were recruited and paid for completing the experiment. All participants had normal or corrected-to-normal vision and no psychiatric history. This study adhered to the Declaration of Helsinki and was approved by the Institutional Review Board of the Institute of Psychology, Chinese Academy of Sciences.
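For readers who want to check power values like those above without G*Power, the sketch below converts partial η² to Cohen's f² = η²p / (1 − η²p) and estimates the power of an F test by Monte Carlo simulation of the noncentral F distribution. It is a minimal sketch that assumes a noncentrality parameter λ = f²·N and the degrees of freedom of the corresponding ANOVA effect; G*Power's repeated-measures procedures take further inputs (number of measurements, correlation among repeated measures, nonsphericity correction), so the helper names and settings here are illustrative and are not guaranteed to reproduce the reported values exactly.

```python
import random


def cohens_f2(partial_eta_sq: float) -> float:
    """Convert partial eta squared to Cohen's f squared."""
    return partial_eta_sq / (1.0 - partial_eta_sq)


def draw_f(df1: int, df2: int, noncentrality: float, rng: random.Random) -> float:
    """Draw one F variate: (numerator chi-square / df1) / (denominator chi-square / df2)."""
    # Noncentral chi-square with df1 degrees of freedom: shift one of the
    # df1 squared standard normals by sqrt(noncentrality).
    numerator = (rng.gauss(0.0, 1.0) + noncentrality ** 0.5) ** 2
    numerator += sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(df1 - 1))
    # Central chi-square with df2 degrees of freedom = Gamma(shape=df2/2, scale=2).
    denominator = rng.gammavariate(df2 / 2.0, 2.0)
    return (numerator / df1) / (denominator / df2)


def monte_carlo_power(partial_eta_sq: float, n: int, df1: int, df2: int,
                      alpha: float = 0.05, draws: int = 20000, seed: int = 1) -> float:
    """Estimate F-test power for a given partial eta squared (assumes lambda = f^2 * N)."""
    rng = random.Random(seed)
    # Critical value: empirical (1 - alpha) quantile of the simulated central F distribution.
    null_draws = sorted(draw_f(df1, df2, 0.0, rng) for _ in range(draws))
    f_crit = null_draws[int((1.0 - alpha) * draws)]
    lam = cohens_f2(partial_eta_sq) * n
    hits = sum(draw_f(df1, df2, lam, rng) > f_crit for _ in range(draws))
    return hits / draws


if __name__ == "__main__":
    # Inputs taken from the text: main effects of ME and attractiveness, N = 38.
    print("ME:", monte_carlo_power(0.349, n=38, df1=2, df2=74))
    print("Attractiveness:", monte_carlo_power(0.535, n=38, df1=1, df2=37))
```

Simulation keeps the sketch dependent only on the standard library; with a statistics package, the same quantity could be computed exactly from the noncentral F survival function.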

Experiment 1 used a 3 (ME: happy, neutral, disgust) × 2 (Attractiveness: attractive, unattractive) within-subject design. The dependent variables were mean accuracy (%) and mean reaction time (ms) for correctly identified MEs.

Face images were selected from the Extended Cohn-Kanade (CK+) database (Lucey et al., 2010). CK+ is the most frequently used laboratory-controlled facial expression classification database and conforms to the Facial Action Coding System (Ekman and Friesen, 1978). At the individual (within-culture) level, Matsumoto et al. (2007) observed consistent and reliable positive associations among the response systems across all seven emotions (happiness, disgust, sadness, contempt, fear, anger, and surprise), indicating that the response systems were coherent with one another. According to Ekman (1992), the response systems for anger, fear, happiness, sadness, and disgust are coherent across cultures. This conclusion rests not only on high agreement in labeling what these expressions signal across literate and preliterate cultures, but also on studies of the actual expression of emotions, both deliberate and spontaneous, and on the association of expressions with social interactive contexts. Therefore, Caucasian faces can be used to test Chinese college students (Zhang et al., 2017). From the CK+ database, we selected 120 pictures of 40 models, each showing disgusted, happy, and neutral expressions. Twenty-two additional Chinese participants rated the attractiveness of each neutral expression on a 7-point Likert scale (1 = very unattractive, 7 = very attractive). The five faces with the highest and the five with the lowest average attractiveness ratings were chosen for the study, resulting in a total of 60 trials. A paired-samples t-test confirmed that the attractive faces (M = 4.18, SD = 0.152) were rated significantly higher than the unattractive faces (M = 2.23, SD = 0.148), t(4) = 15.764, p < 0.001.
In these trials, ten different models were used for each emotion: five attractive models displaying each of the three emotions (happiness, neutral, and disgust) and five unattractive models displaying the same emotions. All photos were 350 × 418 pixels in size and shown on a white background. A Lenovo computer (23.8-inch CRT monitor, resolution 1,920 × 1,080 pixels) running E-Prime (version 2.0) was used to present the stimuli and collect the data.
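The selection rule above (rank models by their mean attractiveness rating and keep the five highest and the five lowest) can be sketched as follows. The model IDs and ratings in the example are hypothetical placeholders, not the study's data, and ties are assumed to be broken by the original order of the models.

```python
from statistics import mean, stdev


def select_extremes(ratings: dict[str, list[int]], k: int = 5) -> tuple[list[str], list[str]]:
    """Return (attractive, unattractive): the k models with the highest and lowest mean ratings."""
    # sorted() is stable (also with reverse=True), so models with equal means keep their input order.
    ranked = sorted(ratings, key=lambda model: mean(ratings[model]), reverse=True)
    return ranked[:k], ranked[-k:]


def describe(ratings: dict[str, list[int]], models: list[str]) -> tuple[float, float]:
    """Mean and SD of the per-model mean ratings for a set of models."""
    model_means = [mean(ratings[m]) for m in models]
    return mean(model_means), stdev(model_means)


# Hypothetical example: 12 models rated by 3 raters on a 1-7 scale.
example = {f"M{i:02d}": [1 + (i * 3 + r) % 7 for r in range(3)] for i in range(12)}
attractive, unattractive = select_extremes(example)
print(attractive, describe(example, attractive))
print(unattractive, describe(example, unattractive))
```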

Participants were tested individually in a quiet room. They first completed a practice block of nine trials to become familiar with the task. On each trial, participants fixated a central cross shown for 500 ms, after which a face displaying one of the three basic expressions appeared at the center of the screen for 200 ms. Participants pressed the key corresponding to the micro-expression they believed the face revealed (the “J” key for happy, the “K” key for neutral, or the “L” key for disgust) and rated the face's attractiveness on a 7-point Likert scale (1 = very unattractive, 7 = very attractive); each trial displayed a single image. The response screen disappeared automatically after 2,000 ms. Participants were instructed to respond as quickly as possible while maintaining the highest possible accuracy. Images from the practice block were not used in the experimental blocks. Each experimental block included all 30 photographs, each shown twice in random order. Testing took about 15 min (refer to Figure 1).
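The block structure described above (10 models × 3 expressions = 30 images, each shown twice in random order, for 60 trials) can be sketched as a randomized trial list. This is a Python illustration, not the authors' E-Prime script: the model IDs, the `Trial` record, and the seeding are hypothetical, and the assumption that the 2,000-ms response window starts at face offset is ours. The key mapping and durations come from the text.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Durations (ms) and response keys as described in the procedure.
FIXATION_MS = 500
FACE_MS = 200
RESPONSE_WINDOW_MS = 2000
KEYS = {"happy": "J", "neutral": "K", "disgust": "L"}


@dataclass(frozen=True)
class Trial:
    model: str
    attractiveness: str
    expression: str
    correct_key: str


def build_block(attractive: list[str], unattractive: list[str],
                repetitions: int = 2, seed: Optional[int] = None) -> list[Trial]:
    """Every model x expression image, repeated `repetitions` times, in random order."""
    images = [
        Trial(model, level, expression, key)
        for level, models in (("attractive", attractive), ("unattractive", unattractive))
        for model in models
        for expression, key in KEYS.items()
    ]
    trials = images * repetitions
    random.Random(seed).shuffle(trials)
    return trials


def trial_segments(trial: Trial) -> list[tuple[str, int]]:
    """Screen sequence for one trial (assumes the response window starts at face offset)."""
    return [("fixation", FIXATION_MS),
            (f"{trial.expression} face", FACE_MS),
            ("response", RESPONSE_WINDOW_MS)]


block = build_block([f"A{i}" for i in range(1, 6)], [f"U{i}" for i in range(1, 6)], seed=42)
print(len(block), block[0])
print(trial_segments(block[0]))
```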

Mean accuracy and mean reaction time were calculated for each condition in both experiments. To handle reaction time outliers, we followed the approach suggested by Ratcliff (1993) and set a cut-off of 1.5 SDs above the mean. The trimmed reaction times were then analyzed in the same way as accuracy. The Greenhouse-Geisser correction was applied when sphericity could not be assumed, and the Bonferroni correction was used for post hoc pairwise comparisons. Data were analyzed with SPSS 26.0.
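The trimming rule can be sketched as below: each participant's mean and SD are computed from their own reaction times, and trials more than 1.5 SDs above that mean are dropped, while the lower tail is left untouched, following the "above the mean" wording. The function name and the dictionary input format are assumptions for illustration, and the sketch assumes that only correct trials are passed in, since reaction times were analyzed for correct identifications.

```python
from statistics import mean, stdev


def trim_upper_rts(rts_by_participant: dict[str, list[float]],
                   cutoff_sd: float = 1.5) -> dict[str, list[float]]:
    """Remove RTs more than `cutoff_sd` sample SDs above each participant's own mean."""
    trimmed = {}
    for participant, rts in rts_by_participant.items():
        if len(rts) < 2:
            # The sample SD is undefined for fewer than two trials; keep them unchanged.
            trimmed[participant] = list(rts)
            continue
        limit = mean(rts) + cutoff_sd * stdev(rts)
        trimmed[participant] = [rt for rt in rts if rt <= limit]
    return trimmed


# Hypothetical correct-trial RTs (ms) for one participant.
example = {"P01": [500, 520, 540, 560, 2000]}
print(trim_upper_rts(example))  # {'P01': [500, 520, 540, 560]}
```

Here the mean is 824 ms and the sample SD is about 658 ms, so the cut-off is roughly 1,811 ms and only the 2,000-ms trial is removed.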

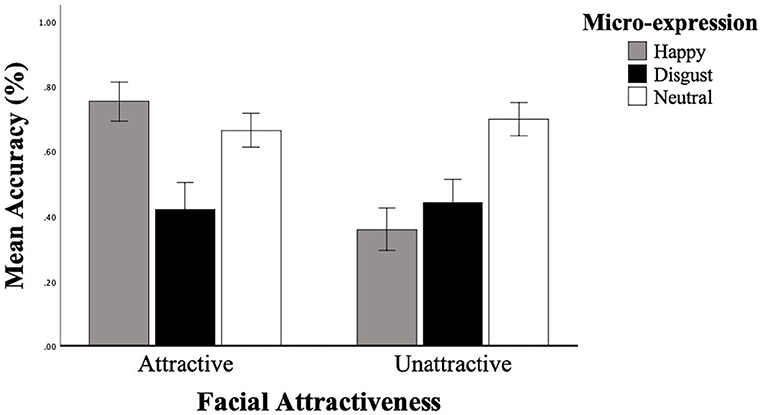

We conducted a 3 × 2 repeated measures ANOVA with ME (happy, neutral, disgust) and Attractiveness (attractive, unattractive) as within-subject factors and mean accuracy as the dependent variable. Mean accuracy for the three MEs is shown in Figure 2. The results revealed a significant main effect of ME [F(2, 74) = 19.823, p < 0.001, ηp² = 0.349] and a significant main effect of attractiveness [F(1, 37) = 42.519, p < 0.001, ηp² = 0.535]. The interaction between ME and attractiveness was significant [F(1.580, 2.019) = 41.447, p < 0.001, ηp² = 0.528]. Bonferroni-corrected pairwise comparisons for ME showed that mean accuracy was significantly higher for happiness than for disgust (p = 0.011, 95% CI [0.024, 0.228]), and that neutral expressions were recognized more accurately than both happiness (p = 0.002, 95% CI [0.041, 0.209]) and disgust (p < 0.001, 95% CI [0.139, 0.364]). To examine the interaction, we analyzed the simple main effect of ME at each attractiveness level. The simple main effect of ME was significant for both attractive faces [F(2, 36) = 27.777, p < 0.001, ηp² = 0.607] and unattractive faces [F(2, 36) = 38.731, p < 0.001, ηp² = 0.683]. For attractive faces, happiness (M = 0.755, SD = 0.030) was recognized more accurately than disgust [M = 0.666, SD = 0.026, t(36) = 2.34, p = 0.023, d = 0.780, 95% CI [0.013, 0.166]] and neutral [M = 0.442, SD = 0.036, t(36) = 7.45, p < 0.001, d = 2.48, 95% CI [0.229, 0.397]], and disgust was recognized more accurately than neutral [t(36) = 4.571, p < 0.001, d = 1.524, 95% CI [0.125, 0.322]].
For unattractive faces, in contrast, neutral (M = 0.700, SD = 0.025) was recognized more accurately than happiness [M = 0.421, SD = 0.042, t(36) = 5.167, p < 0.001, d = 1.722, 95% CI [0.169, 0.389]] and disgust [M = 0.361, SD = 0.032, t(36) = 8.692, p < 0.001, d = 2.897, 95% CI [0.261, 0.418]], whereas happiness and disgust did not differ significantly (p = 0.242, 95% CI [−0.043, 0.164]) (refer to Table 1).
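The effect sizes in these analyses can be recomputed from the test statistics. The main-effect and simple-effect ηp² values reported above match ηp² = F·df_effect / (F·df_effect + df_error), and the reported d values match d = 2t/√df. The helpers below are a minimal sketch of both conversions; the function names are illustrative. Note that 2t/√df is the conversion usually applied to independent-groups t tests; for paired comparisons, d_z = t/√n is a common alternative, so the choice of formula affects the magnitude of d.

```python
import math


def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_d_from_t(t_value: float, df: float) -> float:
    """Cohen's d from a t statistic using d = 2t / sqrt(df)."""
    return 2.0 * t_value / math.sqrt(df)


def cohens_dz_from_t(t_value: float, n: int) -> float:
    """Alternative paired-samples effect size d_z = t / sqrt(n)."""
    return t_value / math.sqrt(n)


# Values reported for the static accuracy analysis (Experiment 1).
print(round(partial_eta_squared(19.823, 2, 74), 3))  # main effect of ME; reported 0.349
print(round(partial_eta_squared(27.777, 2, 36), 3))  # simple effect, attractive faces; reported 0.607
print(round(cohens_d_from_t(2.34, 36), 3))           # happiness vs. disgust, attractive; reported 0.780
```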

Figure 2. Participants' mean accuracy in the static micro-expression recognition task at two facial attractiveness levels (attractive, unattractive). Error bars represent 95% CIs for mean accuracy.

Mean reaction times were submitted to a second repeated measures ANOVA with the same factors; outliers (reaction times exceeding each participant's mean by more than 1.5 SD) were excluded. There was no significant main effect of ME [F(2, 56) = 1.661, p = 0.199] or of attractiveness [F(1, 28) = 0.453, p = 0.507], and no significant interaction between ME and attractiveness [F(2, 56) = 1.363, p = 0.264].

Attractiveness ratings were submitted to a third repeated measures ANOVA with the same factors. The results revealed a significant main effect of ME [F(2, 74) = 62.595, p < 0.001, ηp² = 0.628] and a significant main effect of attractiveness [F(1, 37) = 64.526, p < 0.001, ηp² = 0.636], indicating that the attractiveness manipulation of the stimuli was effective. The interaction between ME and attractiveness was also significant [F(2, 74) = 7.786, p = 0.001, ηp² = 0.174]. Bonferroni-corrected pairwise comparisons for ME showed that happy faces received significantly higher attractiveness ratings than disgusted faces (p < 0.001, 95% CI [0.500, 0.939]) and neutral faces (p < 0.001, 95% CI [0.427, 0.737]), and that neutral faces were rated as more attractive than disgusted faces (p = 0.027, 95% CI [0.013, 0.264]). Further analysis revealed a significant simple main effect of ME for attractive faces [F(2, 36) = 30.378, p < 0.001, ηp² = 0.628] and for unattractive faces [F(2, 36) = 23.264, p < 0.001, ηp² = 0.564]. For attractive faces, happy faces (M = 4.337, SD = 0.164) were rated higher than disgusted faces [M = 3.421, SD = 0.135, t(36) = 7.508, p < 0.001, d = 2.503, 95% CI [0.668, 1.164]] and neutral faces [M = 3.582, SD = 0.123, t(36) = 7.704, p < 0.001, d = 2.568, 95% CI [0.556, 0.954]], and disgusted faces were rated lower than neutral faces [t(36) = 2.439, p = 0.020, d = 0.813, 95% CI [−0.294, −0.027]]. For unattractive faces, happy faces (M = 3.361, SD = 0.163) were rated higher than disgusted faces [M = 2.837, SD = 0.143, t(36) = 6.39, p < 0.001, d = 2.13, 95% CI [0.358, 0.690]] and neutral faces [M = 2.953, SD = 0.163, t(36) = 5.826, p < 0.001, d = 1.942, 95% CI [0.266, 0.550]], whereas disgusted and neutral faces did not differ significantly [t(36) = 1.634, p = 0.112, d = 0.545, 95% CI [−0.260, 0.029]].
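With three levels of ME there are three pairwise comparisons (happy vs. disgust, happy vs. neutral, neutral vs. disgust), so the Bonferroni correction multiplies each uncorrected p value by 3 and caps the result at 1, which is equivalent to testing each comparison at α/3. The sketch below shows this adjustment; the comparison labels and the uncorrected p values in the example are hypothetical.

```python
from itertools import combinations


def pairwise_labels(levels: list[str]) -> list[str]:
    """All unordered pairs of factor levels, e.g. 'happy vs disgust'."""
    return [f"{a} vs {b}" for a, b in combinations(levels, 2)]


def bonferroni_adjust(p_values: dict[str, float]) -> dict[str, float]:
    """Bonferroni-adjusted p values: p * m, capped at 1, where m is the number of comparisons."""
    m = len(p_values)
    return {label: min(1.0, p * m) for label, p in p_values.items()}


labels = pairwise_labels(["happy", "neutral", "disgust"])
# Hypothetical uncorrected p values, one per comparison.
raw = dict(zip(labels, [0.004, 0.009, 0.40]))
print(bonferroni_adjust(raw))
```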

Experiment 1 examined how facial attractiveness influences ME recognition under static conditions. The accuracy analysis indicated that ME recognition is influenced by attractiveness: participants categorized MEs on attractive faces more accurately than on unattractive faces. Specifically, participants showed the happiness superiority effect for more attractive faces but not for unattractive ones; on attractive faces, happiness was the easiest expression to recognize, followed by disgust and then neutral.

In Experiment 2, we presented dynamic stimuli to investigate the effects of facial attractiveness on ME processing. We hypothesized that participants would judge attractive faces faster overall in a dynamic context and would show the happiness superiority effect for more attractive faces but not for unattractive ones.

Experiment 2 used a 2 (ME: happy, disgust) × 2 (Attractiveness: attractive, unattractive) within-subject design. The dependent variables were mean accuracy (%) and mean reaction time (ms) for correctly identified MEs. Participants and procedure were the same as in Experiment 1. A post hoc power analysis in G*Power 3.1 (Faul et al., 2007) for the main effect of attractiveness (partial η² = 0.436, α = 0.05, total N = 38) indicated that this sample size yielded high power (1 − β = 0.999). To control for practice effects, we counterbalanced the order of Experiments 1 and 2 across participants: the 38 participants were randomly divided into two groups of 19 (Groups A and B); Group A completed Experiment 1 followed by Experiment 2, and Group B completed them in the reverse order. We used the materials from Experiment 1 to create short video clips. Shen et al. (2012) found that recognition accuracy differed significantly between durations of 40 ms and 120 ms under the METT (Micro Expression Training Tool) paradigm, but not for durations longer than 120 ms. We therefore used an intermediate duration of 80 ms for the target stimulus. Following the neutral-emotional-neutral paradigm (Zhang et al., 2014), we used neutral faces as the context expression. Zhang et al. (2014) indicated that MEs are contained in a flow of expressions that includes both neutral and other emotional expressions. Because an ME occurs very quickly and is typically embedded in other expressions, neutral faces were presented for 60 ms before and after the target ME to simulate the real situation in which an ME occurs, with happiness or disgust flashed briefly for 80 ms, giving a total of 200 ms.
Thus, the dynamic stimuli consisted of 20 clips, each lasting 200 ms and showing a single model: for each of the 10 models (five attractive and five unattractive), one neutral-happiness-neutral clip and one neutral-disgust-neutral clip. Each clip was shown twice in random order. E-Prime (version 3.0) was used to present the stimuli and collect the data.
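The neutral-emotional-neutral clip structure and the counterbalanced experiment order can be sketched as follows. The 60/80/60-ms segment durations, the 10 models × 2 target expressions, the two presentations per clip, and the 19/19 group split come from the text; the function names, model IDs, and random seeding are hypothetical, and rendering the segments into video frames (which depends on the display's refresh rate) is not shown.

```python
import random

NEUTRAL_MS = 60  # neutral context before and after the target
TARGET_MS = 80   # target ME duration, between the 40- and 120-ms durations of Shen et al. (2012)


def clip_timeline(target: str) -> list[tuple[str, int, int]]:
    """(expression, onset_ms, duration_ms) for one neutral-target-neutral clip."""
    segments = [("neutral", NEUTRAL_MS), (target, TARGET_MS), ("neutral", NEUTRAL_MS)]
    timeline, onset = [], 0
    for expression, duration in segments:
        timeline.append((expression, onset, duration))
        onset += duration
    return timeline


def build_clip_trials(models: list[str], repetitions: int = 2, seed: int = 0) -> list[tuple[str, str]]:
    """One clip per model x target expression, each repeated, in shuffled order."""
    clips = [(model, target) for model in models for target in ("happy", "disgust")]
    trials = clips * repetitions
    random.Random(seed).shuffle(trials)
    return trials


def assign_order(participants: list[str], seed: int = 0) -> dict[str, list[str]]:
    """Randomly split participants into Group A (Exp 1 then Exp 2) and Group B (reverse order)."""
    shuffled = list(participants)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return {p: (["Exp1", "Exp2"] if i < half else ["Exp2", "Exp1"]) for i, p in enumerate(shuffled)}


models = [f"A{i}" for i in range(1, 6)] + [f"U{i}" for i in range(1, 6)]
trials = build_clip_trials(models)
order = assign_order([f"P{i:02d}" for i in range(1, 39)])
print(clip_timeline("happy"))
print(len(trials), sum(o[0] == "Exp1" for o in order.values()))
```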

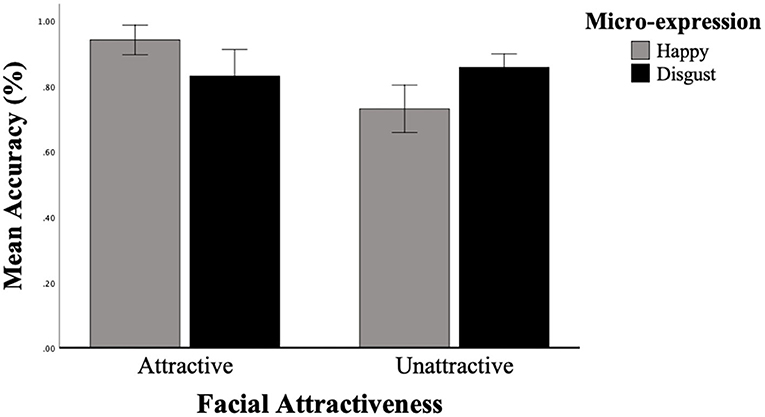

We conducted a 2 × 2 repeated measures ANOVA with ME (happy, disgust) and Attractiveness (attractive, unattractive) as within-subject factors and mean accuracy as the dependent variable. Mean accuracy for the two MEs is shown in Figure 3. The results revealed a significant main effect of attractiveness [F(1, 37) = 28.560, p < 0.001, ηp² = 0.436], but the main effect of ME was not significant [F(1, 37) = 0.062, p = 0.805]. The interaction between ME and attractiveness was significant [F(1, 37) = 14.637, p < 0.001, ηp² = 0.283]. To examine the interaction, we analyzed the simple main effect of ME at each attractiveness level. The simple main effect of ME was significant for both attractive faces [F(1, 37) = 5.512, p = 0.024, ηp² = 0.130] and unattractive faces [F(1, 37) = 9.294, p = 0.004, ηp² = 0.201]. For attractive faces, happiness (M = 0.942, SD = 0.022) was recognized more accurately than disgust [M = 0.732, SD = 0.036, t(37) = 2.362, p = 0.024, d = 0.777, 95% CI [0.015, 0.206]], whereas for unattractive faces, happiness (M = 0.832, SD = 0.040) was recognized less accurately than disgust [M = 0.858, SD = 0.021, t(37) = 3.073, p = 0.004, d = 1.010, 95% CI [−0.210, −0.042]] (refer to Table 2).

Figure 3. Participants' mean accuracy in the dynamic micro-expression recognition task at two facial attractiveness levels (attractive, unattractive). Error bars represent 95% CIs for mean accuracy.

Mean reaction times were submitted to a second repeated measures ANOVA with the same factors; outliers (reaction times exceeding each participant's mean by more than 1.5 SD) were excluded. There was no significant main effect of ME [F(1, 35) = 0.218, p = 0.644] or of attractiveness [F(1, 35) = 2.492, p = 0.123]. However, the ME × Attractiveness interaction was significant [F(1, 35) = 21.245, p < 0.001, ηp² = 0.378]. A follow-up simple effect analysis examined the effect of ME at each attractiveness level. The simple main effect of ME was significant for both attractive faces [F(1, 37) = 9.267, p = 0.004, ηp² = 0.200] and unattractive faces [F(1, 37) = 21.773, p < 0.001, ηp² = 0.370]. For attractive faces, happiness (M = 758.280, SD = 55.873) was identified faster than disgust (M = 919.013, SD = 79.390) [t(37) = 3.044, p = 0.004, d = 1.001, 95% CI [−267.715, −53.752]], whereas for unattractive faces, disgust (M = 821.605, SD = 66.602) was identified faster than happiness (M = 982.400, SD = 76.192) [t(37) = 4.666, p < 0.001, d = 1.534, 95% CI [−230.616, −90.973]].
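In a 2 × 2 within-subject design, the ME × Attractiveness interaction can also be expressed as a single contrast score per participant, (happy − disgust on attractive faces) − (happy − disgust on unattractive faces); a one-sample t test of these scores against zero gives t² = F(1, n − 1) for the interaction. The sketch below illustrates this standard equivalence with hypothetical reaction times for three participants; it is not the SPSS procedure used in the study, and the cell keys are an assumed data layout.

```python
from math import sqrt
from statistics import mean, stdev

Cell = tuple[str, str]  # (expression, attractiveness)


def interaction_test(participants: list[dict[Cell, float]]) -> tuple[float, float, int]:
    """Return (t, F, df_error) for the 2 x 2 within-subject interaction contrast."""
    scores = [
        (c[("happy", "attractive")] - c[("disgust", "attractive")])
        - (c[("happy", "unattractive")] - c[("disgust", "unattractive")])
        for c in participants
    ]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / sqrt(n))
    return t, t * t, n - 1


# Hypothetical reaction times (ms); the contrast scores are -100, -200, and -300.
example = [
    {("happy", "attractive"): 700, ("disgust", "attractive"): 800,
     ("happy", "unattractive"): 900, ("disgust", "unattractive"): 900},
    {("happy", "attractive"): 700, ("disgust", "attractive"): 850,
     ("happy", "unattractive"): 900, ("disgust", "unattractive"): 850},
    {("happy", "attractive"): 650, ("disgust", "attractive"): 850,
     ("happy", "unattractive"): 950, ("disgust", "unattractive"): 850},
]
t, f, df = interaction_test(example)
print(f"t({df}) = {t:.3f}, F(1, {df}) = {f:.3f}")
```

A negative contrast here means that the happy-minus-disgust reaction time difference is smaller (happiness relatively faster) on attractive than on unattractive faces, the pattern described above.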

Attractiveness ratings were submitted to a third repeated measures ANOVA with the same factors. The results revealed a significant main effect of ME [F(1, 37) = 62.947, p < 0.001, ηp² = 0.630] and a significant main effect of attractiveness [F(1, 37) = 101.369, p < 0.001, ηp² = 0.733], indicating that the attractiveness manipulation of the stimuli was effective. The interaction between ME and attractiveness was also significant [F(1, 37) = 20.428, p < 0.001, ηp² = 0.356]. Further analysis revealed a significant simple main effect of ME for attractive faces [F(1, 37) = 143.607, p < 0.001, ηp² = 0.795] and for unattractive faces [F(1, 37) = 29.711, p < 0.001, ηp² = 0.445]. For attractive faces, happy faces (M = 4.471, SD = 0.173) were rated higher than disgusted faces [M = 3.195, SD = 0.167, t(37) = 11.925, p < 0.001, d = 3.921, 95% CI [1.061, 1.492]]. For unattractive faces, happy faces (M = 3.374, SD = 0.132) were also rated higher than disgusted faces [M = 2.682, SD = 0.146, t(37) = 5.449, p < 0.001, d = 1.792, 95% CI [0.435, 0.949]].

Experiment 2 examined how facial attractiveness influences ME recognition under dynamic conditions. The accuracy analysis indicated that attractiveness affects ME recognition: participants recognized MEs on attractive faces more accurately. Specifically, happiness was recognized more accurately than disgust on attractive faces, which supports the assumption that attractiveness moderates the happiness superiority effect. For response times, the Attractiveness × ME interaction was significant: happiness was categorized faster than disgust on attractive faces, whereas this happiness superiority effect did not hold for unattractive faces. The attractiveness ratings suggest that the advantage of happy faces may be driven by their attractiveness. Overall, participants identified happy expressions faster and more accurately on more attractive faces, suggesting that they are better at identifying dynamic expressions on highly attractive faces.

Across two experiments, participants recognized MEs from static and dynamic faces, and we found evidence that attractiveness affects ME recognition under both static and dynamic conditions. The results suggest that these two attributes (attractiveness and ME) are closely interconnected. In both experiments, participants showed the happiness superiority effect for more attractive faces but not for unattractive ones. These findings are in line with the attractiveness stereotype, the tendency to associate physical attractiveness with a variety of positive qualities (Eagly et al., 1991). For instance, attractiveness can improve one's chances in job interviews (Watkins and Johnston, 2000). According to the attractiveness stereotype, attractive appearance and positive qualities are strongly associated in people's minds. Attractive faces and positive emotions may therefore enjoy a processing advantage that speeds their recognition (Golle et al., 2014).

The happiness superiority effect is supported by neuroimaging evidence indicating that the medial frontal cortex plays an important role in recognizing happy faces (Kesler et al., 2001). Ihme et al. (2013) were the first to use fMRI to explore the neural basis of performance on the Japanese and Caucasian Brief Affect Recognition Test (JACBART) and found that higher performance was associated with increased activation in the basal ganglia for negative faces and in orbitofrontal areas for happiness and anger. Furthermore, previous research has implicated both the basal ganglia and the orbitofrontal cortex in the processing of emotional facial expressions. According to O'Doherty et al. (2003), the medial orbitofrontal cortex (OFC), a region known to be involved in representing stimulus reward value, was more active when an attractive face was paired with a happy expression rather than a neutral one. Future studies should examine whether facial attractiveness, insofar as it correlates with ME detection performance, predicts activation in the basal ganglia and orbitofrontal cortex.

In general, this study explored the effects of facial attractiveness on ME processing under static and dynamic experimental conditions. Our findings confirm and extend previous research. On the one hand, participants identified happy expressions more quickly on more attractive faces, which provides further support for the happiness superiority effect. On the other hand, this research suggests that the moderation of ME recognition is not limited to invariant facial attributes (such as gender and race) but also extends to variable facial features such as attractiveness. Furthermore, previous studies suggest that ME recognition training significantly improves ME recognition (Matsumoto and Hwang, 2011). However, the stimulus materials in prior research may not have accounted for variations in the attractiveness of the faces representing the various groups. The current findings demonstrate that facial attractiveness is processed quickly enough to influence ME recognition; hence, facial attractiveness should be considered when selecting faces as stimuli for ME recognition training. Moreover, because individuals can be trained to recognize MEs more accurately and quickly in as little as a few hours, the effects of facial attractiveness on ME recognition may be reduced in individuals who have received ME training.

The present experiments have several limitations. First, this research used only two basic expressions as experimental materials. It remains unclear whether facial attractiveness affects other MEs (such as sadness) to the same degree, so a wider range of facial expressions should be examined in future research. Second, we used synthetic MEs in the experiments. Natural MEs may be shorter, more asymmetrical, and weaker than synthetic ones, so future research could use natural MEs as materials for greater ecological validity. However, this would require an ME database with a rich sample. Third, we used Caucasian faces as experimental materials, and these were outgroup faces for the participants of the current study. Evidence from cross-cultural studies suggests that expression recognition may differ between ingroup and outgroup members. For example, Elfenbein and Ambady (2002) suggested that individuals are more accurate at identifying emotions expressed by members of their own group, because they are more familiar with the faces and expressions of their own race. Future studies could therefore use a wider variety of face types, with stimuli equated across groups, to evaluate whether an ingroup advantage interacts with the effect of facial attractiveness on ME recognition. Finally, an ME is often embedded in a flow of other facial expressions. To simulate the real-world situation in which MEs occur, we presented each target ME for 80 ms, with a neutral expression presented for 60 ms before it and another for 60 ms after it. As a result, the neutral expressions and the target ME were combined, and only the entire 200 ms sequence (60 + 80 + 60 ms) was examined. Future ERP studies using natural MEs could investigate how early visual processing (e.g., P1 and N170) is modulated, which would clarify the neural mechanism underlying the effect of facial attractiveness on ME recognition. Moreover, this research examined only a single ME presentation time of 200 ms. Shen et al. (2012) showed that ME recognition accuracy depends on expression duration. Accuracy rises until a turning point at 200 ms, or possibly even earlier, and then levels off. This suggests that the critical time point distinguishing MEs may be about one-fifth of a second. Whether facial attractiveness affects ME recognition differently at longer or shorter presentation times remains to be explored.

In conclusion, the current research provides empirical evidence that facial attractiveness influences the processing of MEs. Specifically, attractive happy faces were recognized faster and more accurately, consistent with the happiness superiority effect in both static and dynamic conditions. These new results also support the assumption that facial attractiveness can moderate emotion perception. Future studies could use eye tracking to examine the visual attention mechanisms through which facial attractiveness influences ME processing.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Institute of Psychology, Chinese Academy of Sciences, Beijing, China. The patients/participants provided their written informed consent to participate in this study.

QL contributed the main body of the text and the main ideas. ZD contributed to the structure of the text and the refinement of ideas, and provided extensive feedback and commentary. QZ was responsible for constructing part of the research framework. S-JW led the project and acquired the funding support. All authors contributed to the article and approved the submitted version.

This article was supported by grants from the National Natural Science Foundation of China (U19B2032).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbruzzese, L., Magnani, N., Robertson, I. H., and Mancuso, M. (2019). Age and gender differences in emotion recognition. Front. Psychol. 10, 2371. doi: 10.3389/fpsyg.2019.02371

Cunningham, M. R. (1986). Measuring the physical in physical attractiveness: quasi-experiments on the sociobiology of female facial beauty. J. Pers. Soc. Psychol. 50, 925. doi: 10.1037/0022-3514.50.5.925

Dion, K., Berscheid, E., and Walster, E. (1972). What is beautiful is good. J. Pers. Soc. Psychol. 24, 285. doi: 10.1037/h0033731

Eagly, A. H., Ashmore, R. D., Makhijani, M. G., and Longo, L. C. (1991). What is beautiful is good, but…: A meta-analytic review of research on the physical attractiveness stereotype. Psychol. Bull. 110, 109. doi: 10.1037/0033-2909.110.1.109

Ekman, P. (1992). An argument for basic emotions. Cogn. Emot. 6, 169–200. doi: 10.1080/02699939208411068

Ekman, P. (2009). Telling lies: Clues to Deceit in the Marketplace, Politics, and Marriage (Revised Edition). New York, NY: WW Norton & Company.

Ekman, P., and Friesen, W. V. (1969). Nonverbal behavior and clues to deception. Psychiatry 32, 88–106. doi: 10.1080/00332747.1969.11023575

Ekman, P., and Friesen, W. V. (1974). “Nonverbal behavior and psychopathology,” in The Psychology of Depression: Contemporary Theory and Research (Washington, DC), 3–31.

Ekman, P., and Friesen, W. V. (1978). “Facial action coding system,” in Environmental Psychology and Nonverbal Behavior (Salt Lake City).

Elfenbein, H. A., and Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 128, 203–235. doi: 10.1037/0033-2909.128.2.203

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Golle, J., Mast, F. W., and Lobmaier, J. S. (2014). Something to smile about: The interrelationship between attractiveness and emotional expression. Cogn. Emot. 28, 298–310. doi: 10.1080/02699931.2013.817383

Hugenberg, K., and Sczesny, S. (2006). On wonderful women and seeing smiles: social categorization moderates the happy face response latency advantage. Soc. Cogn. 24, 516–539. doi: 10.1521/soco.2006.24.5.516

Hurley, C. M. (2012). Do you see what I see? Learning to detect micro expressions of emotion. Mot. Emot. 36, 371–381. doi: 10.1007/s11031-011-9257-2

Ihme, K., Lichev, V., Rosenberg, N., Sacher, J., and Villringer, A. (2013). P 59. Which brain regions are involved in the correct detection of microexpressions? Preliminary results from a functional magnetic resonance imaging study. Clin. Neurophysiol. 124, e92–e93. doi: 10.1016/j.clinph.2013.04.137

Iria, C., Paixão, R., and Barbosa, F. (2019). Facial expression recognition of Portuguese using American data as a reference. J. Psychol. Res. 2, 700. doi: 10.30564/jpr.v2i1.700

Jackson, L. A., Hunter, J. E., and Hodge, C. N. (1995). Physical attractiveness and intellectual competence: a meta-analytic review. Soc. Psychol. Q. 58, 108–122. doi: 10.2307/2787149

Jaensch, M., van den Hurk, W., Dzhelyova, M., Hahn, A. C., Perrett, D. I., Richards, A., et al. (2014). Don't look back in anger: the rewarding value of a female face is discounted by an angry expression. J. Exp. Psychol. Hum. Percept. Perform. 40, 2101. doi: 10.1037/a0038078

Kesler, M. L., Andersen, A. H., Smith, C. D., Avison, M. J., Davis, C. E., Kryscio, R. J., et al. (2001). Neural substrates of facial emotion processing using fMRI. Cogn. Brain Res. 11, 213–226. doi: 10.1016/S0926-6410(00)00073-2

Krumhuber, E., Manstead, A. S., and Kappas, A. (2007). Temporal aspects of facial displays in person and expression perception: the effects of smile dynamics, head-tilt, and gender. J. Nonverbal. Behav. 31, 39–56. doi: 10.1007/s10919-006-0019-x

Leppänen, J. M., and Hietanen, J. K. (2004). Positive facial expressions are recognized faster than negative facial expressions, but why? Psychol. Res. 69, 22–29. doi: 10.1007/s00426-003-0157-2

Li, J., He, D., Zhou, L., Zhao, X., Zhao, T., Zhang, W., et al. (2019). The effects of facial attractiveness and familiarity on facial expression recognition. Front. Psychol. 10, 2496. doi: 10.3389/fpsyg.2019.02496

Liang, J., Yan, W., Wu, Q., Shen, X., Wang, S., and Fu, X. (2013). Recent advances and future trends in micro-expression research. Bull. Natl. Natural Sci. Foundat. China. 27, 75–78. doi: 10.16262/j.cnki.1000-8217.2013.02.003

Lindeberg, S., Craig, B. M., and Lipp, O. V. (2019). You look pretty happy: attractiveness moderates emotion perception. Emotion 19, 1070. doi: 10.1037/emo0000513

Lucey, P., Cohn, J. F., Kanade, T., Saragih, J., Ambadar, Z., and Matthews, I. (2010). "The extended Cohn-Kanade dataset (CK+): a complete dataset for action unit and emotion-specified expression," in 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops (San Francisco, CA: IEEE), 94–101.

Matsumoto, D., and Hwang, H. S. (2011). Evidence for training the ability to read microexpressions of emotion. Mot. Emot. 35, 181–191. doi: 10.1007/s11031-011-9212-2

Matsumoto, D., Nezlek, J. B., and Koopmann, B. (2007). Evidence for universality in phenomenological emotion response system coherence. Emotion 7, 57. doi: 10.1037/1528-3542.7.1.57

Mertens, A., Hepp, J., Voss, A., and Hische, A. (2021). Pretty crowds are happy crowds: the influence of attractiveness on mood perception. Psychol. Res. 85, 1823–1836. doi: 10.1007/s00426-020-01360-x

O'Doherty, J., Winston, J., Critchley, H., Perrett, D., Burt, D. M., and Dolan, R. J. (2003). Beauty in a smile: the role of medial orbitofrontal cortex in facial attractiveness. Neuropsychologia 41, 147–155. doi: 10.1016/S0028-3932(02)00145-8

Otta, E., Abrosio, F. F. E., and Hoshino, R. L. (1996). Reading a smiling face: messages conveyed by various forms of smiling. Percept. Motor Skills 82(3_Suppl.), 1111–1121. doi: 10.2466/pms.1996.82.3c.1111

Ratcliff, R. (1993). Methods for dealing with reaction time outliers. Psychol. Bull. 114, 510. doi: 10.1037/0033-2909.114.3.510

Recio, G., Sommer, W., and Schacht, A. (2011). Electrophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Res. 1376, 66–75. doi: 10.1016/j.brainres.2010.12.041

Rhodes, G. (2006). The evolutionary psychology of facial beauty. Annu. Rev. Psychol. 57, 199–226. doi: 10.1146/annurev.psych.57.102904.190208

Sato, W., and Yoshikawa, S. (2007). Spontaneous facial mimicry in response to dynamic facial expressions. Cognition 104, 1–18. doi: 10.1016/j.cognition.2006.05.001

Scherer, K. R., Ellgring, H., Dieckmann, A., Unfried, M., and Mortillaro, M. (2019). Dynamic facial expression of emotion and observer inference. Front. Psychol. 10, 508. doi: 10.3389/fpsyg.2019.00508

Shen, X., Wu, Q., Zhao, K., and Fu, X. (2016). Electrophysiological evidence reveals differences between the recognition of microexpressions and macroexpressions. Front. Psychol. 7, 1346. doi: 10.3389/fpsyg.2016.01346

Shen, X.-B., Wu, Q., and Fu, X.-L. (2012). Effects of the duration of expressions on the recognition of microexpressions. J. Zhejiang Univ. Sci. B 13, 221–230. doi: 10.1631/jzus.B1100063

Siciliano, R. E., Madden, D. J., Tallman, C. W., Boylan, M. A., Kirste, I., Monge, Z. A., et al. (2017). Task difficulty modulates brain activation in the emotional oddball task. Brain Res. 1664, 74–86. doi: 10.1016/j.brainres.2017.03.028

Takalkar, M. A., Thuseethan, S., Rajasegarar, S., Chaczko, Z., Xu, M., and Yearwood, J. (2021). LGAttNet: automatic micro-expression detection using dual-stream local and global attentions. Knowl. Based Syst. 212, 106566. doi: 10.1016/j.knosys.2020.106566

Taylor, A. J., and Bryant, L. (2016). The effect of facial attractiveness on facial expression identification. Swiss J. Psychol. 75, 175–181. doi: 10.1024/1421-0185/a000183

Taylor, A. J. G., and Jose, M. (2014). Physical aggression and facial expression identification. Eur. J. Psychol. 10, 816. doi: 10.5964/ejop.v10i4.816

Watkins, L. M., and Johnston, L. (2000). Screening job applicants: the impact of physical attractiveness and application quality. Int. J. Select. Assess. 8, 76–84. doi: 10.1111/1468-2389.00135

Zhang, J., Li, L. U., Ming, Y., Zhu, C., and Liu, D. (2017). The establishment of ecological microexpressions recognition test (EMERT): an improvement on JACBART microexpressions recognition test. Acta Psychol. Sin. 49, 886. doi: 10.3724/SP.J.1041.2017.00886

Zhang, L. L., Wei, B., and Zhang, Y. (2016). Smile modulates the effect of facial attractiveness: an eye movement study. Psychol. Explor. 36, 13.

Zhang, M., Fu, Q., Chen, Y.-H., and Fu, X. (2014). Emotional context influences micro-expression recognition. PLoS ONE 9, e95018. doi: 10.1371/journal.pone.0095018

Zhang, M., Fu, Q., Chen, Y.-H., and Fu, X. (2018). Emotional context modulates micro-expression processing as reflected in event-related potentials. Psych J. 7, 13–24. doi: 10.1002/pchj.196

Zhang, M., Zhao, K., Qu, F., Li, K., and Fu, X. (2020). Brain activation in contrasts of microexpression following emotional contexts. Front. Neurosci. 14, 329. doi: 10.3389/fnins.2020.00329

Keywords: facial attractiveness, micro-expression, micro-expression recognition, emotion recognition, happy-face-advantage

Citation: Lin Q, Dong Z, Zheng Q and Wang S-J (2022) The effect of facial attractiveness on micro-expression recognition. Front. Psychol. 13:959124. doi: 10.3389/fpsyg.2022.959124

Received: 01 July 2022; Accepted: 11 August 2022;

Published: 16 September 2022.

Edited by:

Xiaopeng Hong, Harbin Institute of Technology, China

Copyright © 2022 Lin, Dong, Zheng and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Su-Jing Wang, wangsujing@psych.ac.cn

