- School of New Media, Peking University, Beijing, China

Algorithms embedded in media applications increasingly influence individuals’ media practice and behavioral decisions. However, it is also important to consider how the influence of such algorithms can be resisted. Few studies have explored the resistant outcomes of interactions with algorithms. Based on an affordance perspective, this study constructed a formation framework of algorithmic resistance in the context of short videos in China. Survey responses from 2,000 short video users were collected to test the model. Exploratory factor analysis, confirmatory factor analysis, and structural equation modeling were used for data analysis. The findings reveal two types of “moderate” resistance: avoidance and obfuscation. Specific needs, such as the motivations of peeking and escapism, are significantly related to perceived algorithmic affordance, which, in turn, encourages the tactics of avoidant and obfuscated resistance. The results provide new insights into the potential formation mechanisms of algorithmic resistance. The forms of resistance highlighted in the paper evolve alongside algorithms and have significant practical implications for users and platforms.

Introduction

Algorithms are an essential part of Internet infrastructure and have reshaped reality, especially media life (Deuze, 2011). Algorithms have transformed the presentation of information and interaction by aggregating, filtering, recommending, and rating (Kitchin, 2017; Dogruel et al., 2020). Because the interactions between algorithms and users can be considered a socio-technological system with recursive relationships (Gillespie, 2014; Willson, 2017), insights into how users perceive and interact with these systems are essential.

Much past research on algorithms has addressed the “transformative effects” (Kitchin, 2017) of algorithms as powerful information agents, as well as their potential risks and consequences (Neyland and Möllers, 2017; Dogruel et al., 2020; Gran et al., 2020). A few scholars have started to focus on users’ perceptions of, and behavior toward, recommendation algorithms. Previous studies found that users’ awareness of algorithms was low and that their level of understanding varied widely (Eslami et al., 2015; Rader and Gray, 2015). Because of the “black box” attributes of algorithms, choosing a research method has been a challenge for scholars in the social sciences. But without empirical evidence from the user perspective, it is impossible to develop effective policies on algorithms (Latzer and Festic, 2019). Prior studies on users’ perceptions of and behaviors toward algorithms mostly used qualitative methods. For example, Bucher (2017) studied users’ awareness and experience of algorithms in daily life through tweets and interviews. Bishop (2019) adopted an ethnographic approach to study the formation of beauty vloggers’ algorithmic knowledge and how it guides content production.

In terms of research content, previous studies focused on algorithms in social media News Feed. Scholars have proposed some concepts, such as algorithmic imaginary, algorithmic gossip, algorithmic skills, and algorithmic knowledge, to describe users’ perceptions and understanding of algorithms (Bucher, 2017; Bishop, 2019; Hargittai et al., 2020). Folk theories have also been developed to explain how users’ perceptions guide practice (DeVito et al., 2018). And in recent years, research has gradually expanded into more areas, such as video sites, product content, and advertising. Some papers analyzed user behaviors when confronted with algorithms and new types of advertising in videos (Belanche et al., 2017a,b). And several studies found that influencers, especially vloggers, have a good understanding of algorithms and prefer to use algorithms to increase their popularity and build and govern audiences (Cotter, 2019; Mac Donald, 2021).

While critical work is coming out in this area, there are also some gaps. These findings explained the actions of digital subjection when encountering algorithms yet lacked attention to resistance. The new information systems, like recommendation algorithms and skippable advertising, not only change the way information is presented, but also empower the user (Belanche et al., 2019, 2020). Users’ active roles are reflected both in the ability to use the algorithms to achieve their goals and in the ability to maintain critical thinking and resist. However, only a few studies have theoretically analyzed the possibilities of user resistance, while in-depth empirical studies are inadequate. Velkova and Kaun (2021) emphasized that as algorithms play a predominant role in media power, it is becoming increasingly critical to deliberate how and to what extent their power can be resisted. Furthermore, most research does not extend beyond users in the West, leaving issues for platforms and users in other cultural settings as a gap in the literature.

To fill these gaps, this paper studies the formation mechanism of algorithmic resistance in the context of short videos in China. Specifically, the research aims to resolve how people resist algorithms and what factors would trigger the tactics of resistance. This study contributes in three areas. First, the research provides new insights into the potential formation mechanisms of algorithmic resistance by introducing affordance perspectives. The paper constructed a structural equation model to explain how the specific needs of users trigger algorithmic resistance through the mediating role of perceived explainability of algorithms, which empirically supports the formation framework of algorithmic resistance. Second, the paper has extended the research on algorithmic awareness and behavior to the context of short video platforms. Past research mostly touched on Facebook, YouTube, and Instagram. However, short video platforms (like Douyin and Kuaishou), which are driven by algorithms, have become one of the most popular social media platforms, especially in China. The research fully considers the impact of users’ specific intention of using short videos on their perception and understanding of algorithms, as well as resistance behavior. Third, this study emphasizes the tactics of avoidance and obfuscation, which expands the range of possibilities for actions on algorithms. These findings could have important implications for enhancing the autonomy of user content curation, optimizing algorithms, and promoting the sustainable development of social media ecosystems.

Theoretical background and hypotheses

Affordance perspectives and algorithmic affordance

As complex socio-technical systems, algorithms have been embedded in all subsystems of society. Social science research on algorithms can be classified as research on the relationship between technology and society. Research in this field commonly follows one of two theoretical orientations: technological determinism or social constructivism. Technological determinism tends to be especially influential when the applications of a new technological revolution first appear. For example, in the early stage, due to the black box nature of algorithms (Pasquale, 2015), scholars mostly used the viewpoint of technological determinism to discuss the strong impact, risks, and challenges of algorithms for society. But technological determinism alone cannot explain how algorithms perform specific tasks in various social practices, which involves the sociality of algorithms. The viewpoint of social constructivism therefore began to appear. Social constructivism emphasizes the subjective role of human beings. However, some scholars have found that it is difficult to fully explain the use of algorithms from the perspective of constructivism: the role of people is also limited, and their behavior is affected by many factors. Thus, the concept of affordances is attractive for communication researchers because it suggests that neither materiality nor a constructivist view is sufficient to explain technology use (Leonardi and Barley, 2008), and advocates focusing on relational actions that occur among people and technologies (Faraj and Azad, 2012).

Gibson (1979), the ecological psychologist who first proposed the concept of “affordance,” held a relational view of animals and the environment, exploring what affordances and actionable possibilities the environment provides the animal. Subsequent scholars have since reinterpreted the concept prescriptively in human-computer interaction (Norman, 1988; Kuhn, 2012), to reflect a valuable approach in the design of software.

Norman (1988) defined affordances as “the perceived and actual properties of an object, primarily those fundamental properties that determine how the thing could be used.” Given the focus on interface design, Norman abstracted the concept of “perceived affordance,” referring to the range of possible actions perceived by the user of an object. Norman considered that a good designer should make an effort to reduce the gap between design affordances and perceived affordances, and focus on the users’ needs and cognitive models (Norman, 1993). His approach inspired later scholars to empirically research technology affordance from the perspective of users. From this viewpoint, affordance was related to the characteristics of technical functions and linked to the users’ intention, perception, and understanding. The affordance perspective provides a framework that describes the many-sided relational structure between a technology and the users that allows or restricts possible behavioral outcomes in a particular context. The framework helps interpret the mutuality between the users, the material features of the technologies, and the situational nature of use (Evans et al., 2016).

The affordance perspective is appealing to communication scholars because it suggests that any one-sided view (materialist or constructivist) is insufficient to explain the use of technologies (Leonardi and Barley, 2008). The approach advocates a focus on the relational actions occurring between humans and technologies. Thus, media scholars have introduced affordance perspectives into studies of human and artificial intelligence (AI) interaction to answer questions such as “What can AI offer to users?” and “How can AI allow users action, and how can we use AI for our particular needs?” (Jakesch et al., 2019; Flanagin, 2020; Sundar, 2020).

Shin and Park (2019) proposed an operational definition for the concept of “algorithmic affordance.” They referred to the possibilities of actions that people perceive concerning features in algorithms, based on four dimensions: fairness, accountability, transparency, and explainability (Shin, 2020). Users have limited direct evidence for the first three dimensions because few platforms officially disclose information about the training sets and models of their recommendation algorithms (Cotter, 2019). Explainability of an algorithm refers to the ability to explain how the algorithm works and what range of potential outcomes it offers (Barredo Arrieta et al., 2020). Users can understand and explain the outputs or procedures of algorithms through experimentation and adaptation to the interface (Leonardi et al., 2011). There are also many folk theories (Ytre-Arne and Moe, 2021) about how recommendation algorithms function, formed through recursive loops of interaction between users and algorithms (Gillespie, 2014). This means that the recommendation systems of a social media platform offer users the possibility of understanding them. Furthermore, Shin (2021) showed that the explainability of algorithms was the premise of perceived fairness, perceived transparency, and accountability. Thus, explainability is the most crucial element (Ehsan and Riedl, 2019; Barredo Arrieta et al., 2020).

Particular needs and perception: Trigger process of the sensing algorithm

A small number of studies have investigated Internet user awareness of algorithms (Bucher, 2017; Proferes, 2017; Klawitter and Hargittai, 2018). For example, a laboratory study recruited 40 Facebook users to examine perceptions of Facebook’s News Feed algorithms and found that the bulk of participants (62.5%) were not aware of the algorithms and did not understand them (Eslami et al., 2015). In contrast to Eslami’s results, other studies indicate that most survey respondents perceive that they are aware of algorithms, although the level of awareness varies (Rader and Gray, 2015; Gran et al., 2020). Despite contradictory findings, these studies raise an important question: in what usage scenarios do people become aware of and perceive algorithms? What are the predictors of this awareness?

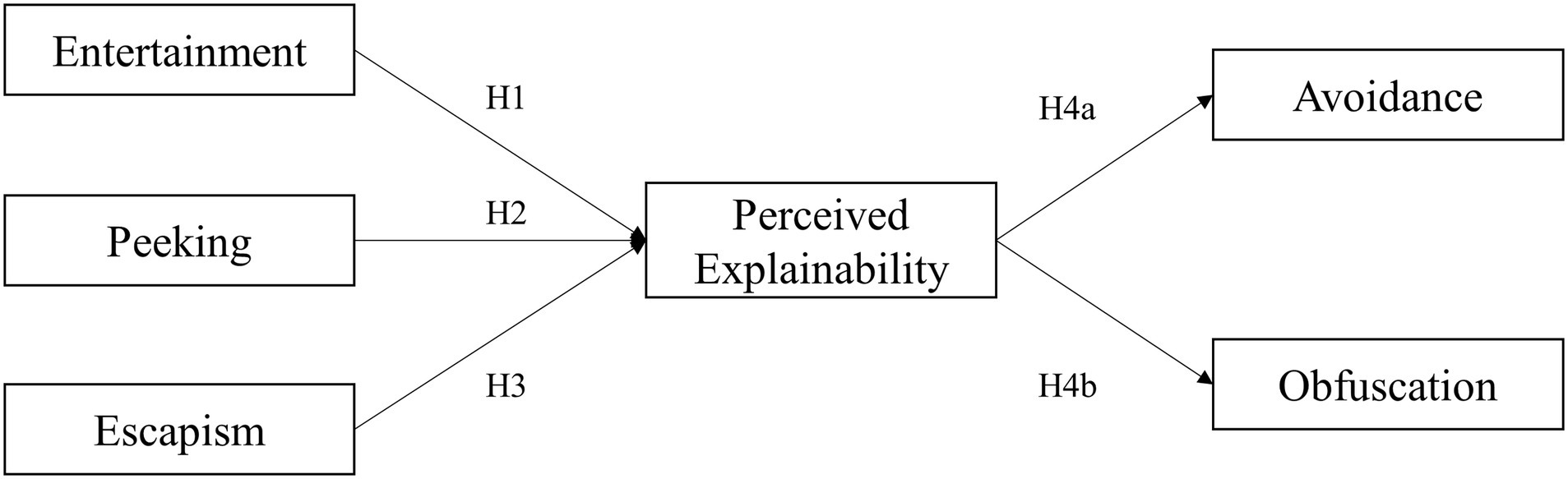

The affordance approach emphasizes the multifaceted relationship between individuals’ goals, the properties of technology, and the context in which the technology is used (Evans et al., 2016). Social media research has long proposed that social media use is goal-driven to meet individual needs (Papacharissi and Mendelson, 2011). Short video is an important algorithm-driven form of social media that has developed rapidly in recent years. Algorithms have transformed the presentation of information and interaction by aggregating, filtering, recommending, and rating (Kitchin, 2017; Dogruel et al., 2020). Therefore, compared with other social media platforms, the changes that short videos bring to information presentation and reception are more attractive to users. Ordinary users mainly use short videos for entertainment, escapism, and peeking (Fayard and Weeks, 2014; Omar and Dequan, 2020; Rach and Peter, 2021). Although self-presentation is also one of the motivations for people to use short videos, in this context, the goals of ordinary users and vloggers are not different. Thus, this study focuses on three goals in using short videos: entertainment, escapism, and peeking. For example, TikTok is regarded as the go-to app for escapism (Rach and Peter, 2021). Based on the affordance perspective, Norman (1988) believed that the perceived affordance was subject to the mental models of users. Users can perceive a range of action possibilities from technology, but the nature and level of the perceived affordance depend on the goals and usage scenarios of users (Fayard and Weeks, 2014). Thus, based on affordance theory, this study infers that these goals for short video use will facilitate the perceived explainability of recommendation algorithms. The study hypotheses were as follows:

H1: Entertainment is positively related to perceived explainability of algorithms.

H2: Peeking is positively related to perceived explainability of algorithms.

H3: Escapism is positively related to perceived explainability of algorithms.

Algorithmic resistance: User heuristics of algorithmic affordance

Many researchers have concentrated on the negative impacts of algorithms, such as ethical problems related to invisibility and accountability (Bucher, 2012; Kitchin, 2017; Ananny and Crawford, 2018). However, limited attention has been paid to the possibility of users resisting algorithmic power. Unlike the conventional understanding of resistance, which is often regarded as an organized collective action, algorithmic resistance is unorganized and individual. It implies accommodation with the systems rather than remaining mutually exclusive (Scott, 2008). Users are not passive observers in this process. Velkova and Kaun (2021) revealed a progressive role that users played in reshaping the operation of algorithms. People begin to develop tactics of resistance through alternative uses. Ettlinger (2018) offered a taxonomy of resistance, including productive resistance, avoidance, and disruption or obfuscation. For ordinary Internet platform users, the “threshold” of productive resistance, such as hacking, platform cooperatives, and “cloud protesting” (Ettlinger, 2018), is too high to be widely popular. Thus, this study focused on the two other tactics of resistance. Avoidance is a mode of resistance involving complete or partial withdrawal from digital platforms (Ettlinger, 2018). In this study, it refers specifically to the behavioral outcome in which users do not interact with the platforms. Another form of algorithmic resistance aims to obfuscate the processes of algorithms by “tricking” them, for example, by producing false behavioral data to confuse the algorithms so that user preferences remain unidentified (Brunton and Nissenbaum, 2015; Velkova and Kaun, 2021).

The theoretical framework of affordance suggests that users’ perception and understanding of technologies enable the possible actions in specific situations (Evans et al., 2016). This theory provides evidence to verify the heuristic process of a perceived affordance on outcomes. Thus, this study infers that the perceived explainability of algorithms may promote the possibilities for resistance against algorithms. Recent research also provides more empirical evidence about the view. Facebook users who understood the logic of algorithms trained the algorithms for better content curation, co-produced by both users and platforms (Eslami et al., 2016; Velkova and Kaun, 2021). One study found that co-production would enhance or constrain certain engagement practices (Gerlitz and Helmond, 2013). Understanding and explaining how algorithms work led to engagement with gearing algorithmic workings toward users’ benefits. Cotter (2019) found that content producers of Instagram, such as online celebrities, were trying to understand how recommendation algorithms work to stimulate or boost their own popularity. Another study suggested that perception and understanding of Twitter algorithms promoted users’ rights to achieve resistance goals (Treré et al., 2017). In some situations, perceived algorithms can promote tactical interventions to trick algorithms (Bucher, 2017). For ordinary users, privacy is an important right to be protected. In some cases, for example, when a privacy risk is perceived, user perception of explainability may constrain engagement practice and facilitate the tactic of obfuscation. Therefore, the present research hypothesizes:

H4: Perceived explainability of algorithms will have a positive effect on avoidant resistance (H4a); obfuscated resistance (H4b).

Affordance theory requires recognition of the role of affordances in mediating the link among objects (e.g., technology or some technological features), human goals, and outcomes (Parchoma, 2014; Evans et al., 2016). Ignoring this aspect of affordances implies that an object results in the behavioral outcome without any sign of the underlying process—a theoretical leap (Evans et al., 2016). Therefore, in the context of algorithms, the present study deduces that perceived algorithmic affordance mediates the relationship between motivations and resistance actions. These processes suggest the following hypotheses:

H5: Perceived explainability of algorithms mediates the relationship between entertainment and avoidant resistance (H5a); entertainment and obfuscated resistance (H5b).

H6: Perceived explainability of algorithms mediates the relationship between peeking and avoidant resistance (H6a); peeking and obfuscated resistance (H6b).

H7: Perceived explainability of algorithms mediates the relationship between escapism and avoidant resistance (H7a); escapism and obfuscated resistance (H7b).

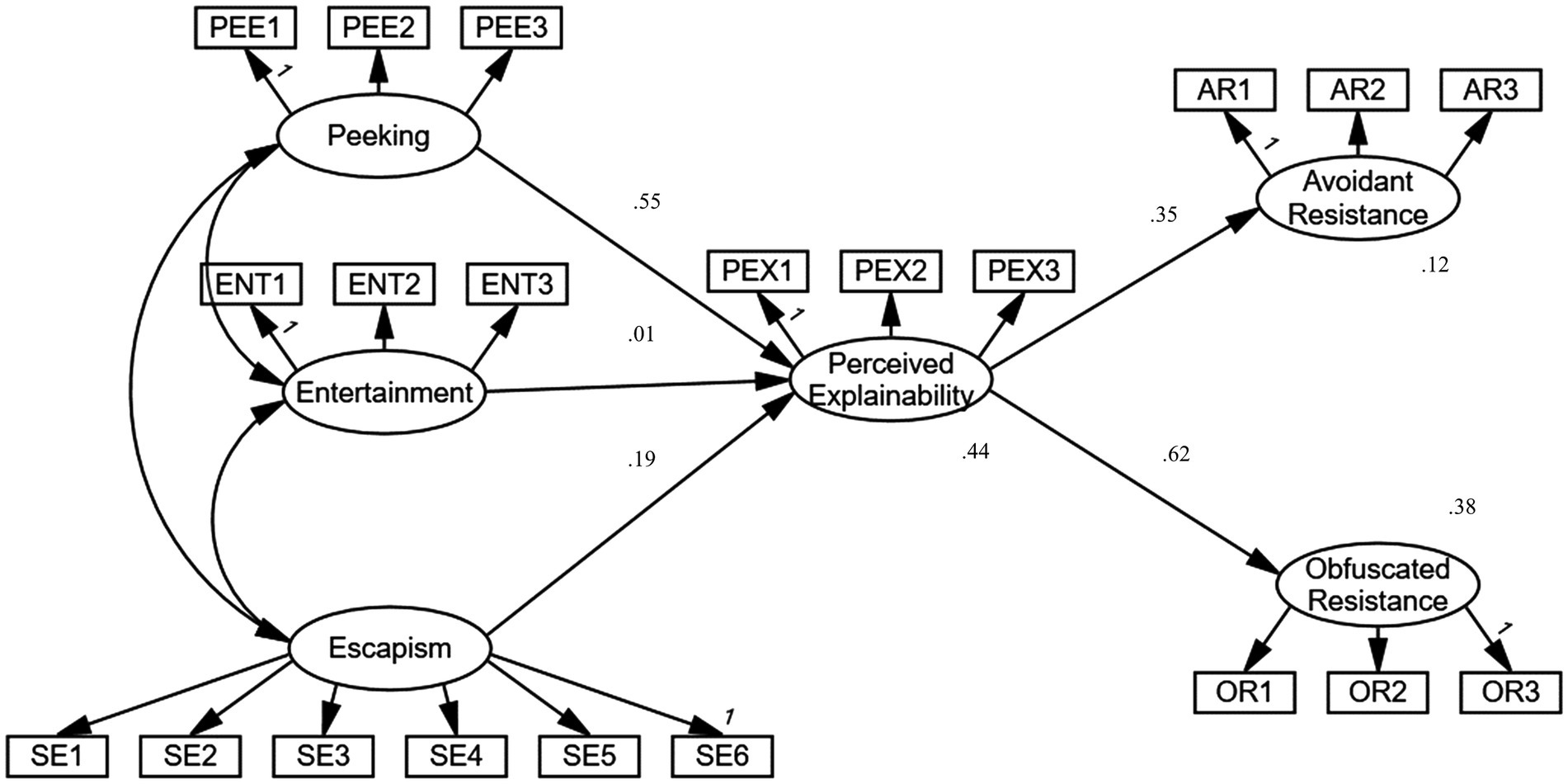

Figure 1 delineates the model used in the present study.

Materials and methods

Construction of the scale of algorithmic resistance

The study focused on the algorithmic recommender systems of short video platforms, a typical form of social media. The first reason is that algorithmic recommender systems are the most common type of algorithmic system. The second reason is that, compared with other platforms, the content presentation form of short video makes it easier for users to perceive their recursive relationship with the algorithm, which is conducive to in-depth research.

Based on a review of the previous literature, this study identified three subdimensions of resistance: productive resistance, avoidance, and obfuscation. For ordinary users, the “threshold” of productive resistance is too high to be widely popular. Thus, this study focused on the other two dimensions of resistance. Four focus group discussions (n = 16) were organized to construct effective items for the Algorithmic Resistance Scale (ARS). Participants were asked whether they would resist algorithmic recommendations in short video and what resistant behavior they would adopt. The participants were 16 doctoral students with professional knowledge of using algorithm services. From these discussions, the study collected a pool of 12 items relating to resistance. To improve the applicability of the scale among the public, 30 participants with no professional background (28 ≤ age ≤ 62, men = 14) were subsequently invited to test the items. They were asked to identify and exclude repetitive or puzzling items. The scale was distilled down to six items, which were reworded into expressions that are easy for the public to understand.

Procedure and participants

Data collection proceeded in three phases. First, to examine the structure of the ARS, determine the number of factors to retain, and eliminate substandard items, a pre-survey (n = 500) was conducted for exploratory factor analysis (EFA). Second, using a second pre-survey sample (n = 500), the study performed confirmatory factor analysis (CFA) to test the construct validity of the ARS and further decrease redundancy in the scale. Third, after the structural analyses were complete, the ARS was included in the formal questionnaire to investigate how people perceive algorithms and the behavioral outcomes.

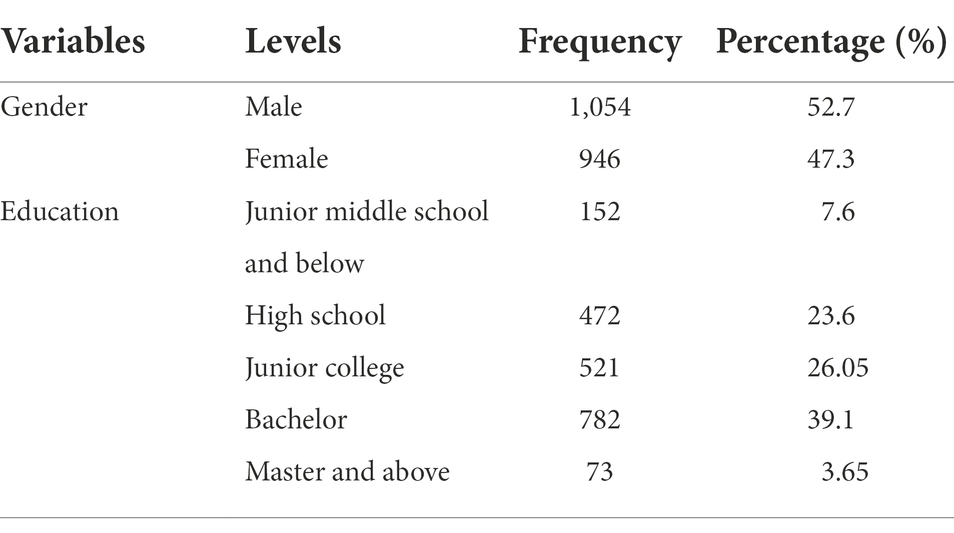

All surveys were conducted via online questionnaires, whose distribution was entrusted to Ipsos, a professional market research company. The agency used quota sampling, stratified by age, gender, and educational level of Chinese netizens. According to the 47th Statistical Report on China’s Internet Development, short video users account for 88.3% of all netizens in China, and their composition is basically the same as that of overall Internet users. The study refined the quota division using the user information disclosed in the data reports of the two main short video platforms (Douyin and Kuaishou). Data collection commenced in March 2021. In total, the agency collected 2,000 valid responses, meeting the study’s requirement of at least 2,000 valid responses. The analysis comprises three processes: EFA, CFA, and structural equation modeling. Previous studies with similar procedures used sample sizes of about 500 for EFA and CFA and about 2,000 for overall model validation (Bruun et al., 2016; Zarouali et al., 2021). Therefore, this study adopted a large sample size of 2,000. Participants were aged between 16 and 62 years (M = 31.36, SD = 9.67). The sample composition is presented in Table 1 and conforms with the basic composition of Chinese short video users.

Measurement

Short video goals pursuit: Entertainment, peeking, and escapism

Scale items for short video users’ goals were developed from the Internet-use-motivation literature. Items suggested by Papacharissi and Rubin (2000) were used as the basis for the operationalization of the entertainment construct. This scale, containing three items, was modified to fit short video scenarios: “Using short video is a way of entertainment,” “I use short video to relax,” and “Using short video is enjoyable.” The scale for peeking was adapted from Lee et al. (2015) and consisted of three items: “To browse daily lives of celebrities,” “To browse daily lives of people from all walks of life,” and “To browse the lives of people with similar experiences.” Items to measure escapism were initially taken from Stenseng et al. (2012). Considering the motivations of negative behavior, the study selected the self-suppression dimension of that scale, containing six items (e.g., “When I use short videos, I try to suppress my problems,” and “I try to prevent negative thoughts about myself.”). The Entertainment and Peeking constructs were measured on a seven-point scale (1 = completely disagree, 7 = strongly agree), while participants rated the Escapism Scale items on a five-point scale.

Perceived explainability of algorithms

Items to measure Perceived Explainability were adapted from Shin (2021). The scale measured the users’ awareness and understanding of algorithm recommender systems of short video, comprising three items (e.g., “I found algorithms are easily understandable,” “I think the algorithm services are interpretable,” and “I can figure out how the platforms recommend content to me.”). These items were rated by participants on a seven-point scale (1 = do not agree at all, and 7 = completely agree).

Algorithmic resistance

The six ARS items, developed and refined through the focus groups and two pretests, were applied in this survey. The items of algorithmic resistance broadly assess the “dark side” of online participation (Lutz and Hoffmann, 2017), such as not actively generating interactive data with algorithms or interacting consciously and critically with algorithms (Gran et al., 2020; see Table 2 for the final scale items). Responses were recorded on a seven-point scale.

Data analysis

Exploratory factor analysis of algorithmic resistance

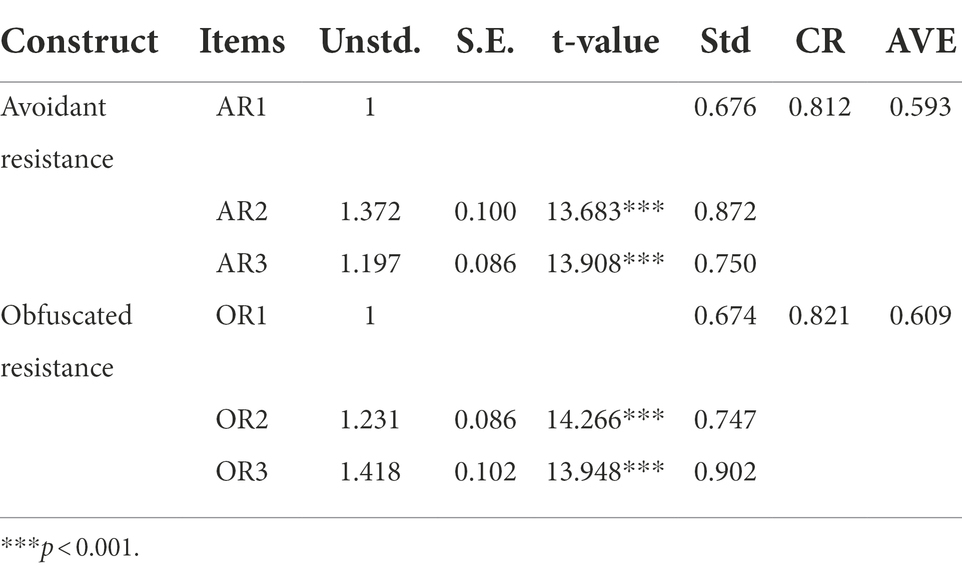

Using the exploratory sample (n = 500), the six resistant behavior items were subjected to statistical analyses to ensure item variance, determine the factor structure, and establish acceptable item-total correlations. Principal component analysis (PCA) with varimax rotation was performed to determine the underlying structure of resistant behavior. The PCA was assessed using the following standards: eigenvalue (> 1.0), variance explained by each component, factor loading of each item (≥ 0.60), and the meaningfulness of each dimension. EFA (using SPSS version 26) found two factors with eigenvalues greater than 1.0. The item loadings were all above 0.75 (see Table 2). Thus, all six items were retained. A meaningful two-component solution was obtained, with the two factors accounting for 72.268% of the total variance. The first factor, labeled “avoidant resistance,” explained 37.081% of the variance after rotation, and its three items formed a reliable scale, as evaluated by Cronbach’s alpha (α = 0.802). The second factor, “obfuscated resistance,” consisted of three items and accounted for 35.187% of the variance (α = 0.794).
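As an illustration of the two checks reported above (the eigenvalue > 1.0 retention rule and Cronbach’s alpha), the following is a minimal Python sketch. It is not the SPSS procedure used in the study: the data are synthetic stand-ins generated from two hypothetical latent factors, the function names are assumptions introduced here, and varimax rotation is omitted because rotation does not change how many eigenvalues exceed 1.0.

```python
import numpy as np

def kaiser_components(items):
    """Return eigenvalues of the item correlation matrix (largest first)
    and the number of components with eigenvalue > 1.0."""
    corr = np.corrcoef(items, rowvar=False)
    eigvals = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh sorts ascending
    return eigvals, int((eigvals > 1.0).sum())

def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) array."""
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Synthetic data (assumption): two independent latent factors,
# three noisy indicators each, n = 500 as in the exploratory sample.
rng = np.random.default_rng(0)
n = 500
factor_avoid = rng.normal(size=(n, 1))
factor_obfus = rng.normal(size=(n, 1))
avoid_items = factor_avoid + 0.6 * rng.normal(size=(n, 3))
obfus_items = factor_obfus + 0.6 * rng.normal(size=(n, 3))
all_items = np.hstack([avoid_items, obfus_items])

eigvals, n_keep = kaiser_components(all_items)
explained = eigvals[:n_keep].sum() / eigvals.sum()  # share of total variance
alpha_avoid = cronbach_alpha(avoid_items)
alpha_obfus = cronbach_alpha(obfus_items)
print(n_keep, round(explained, 3), round(alpha_avoid, 3), round(alpha_obfus, 3))
```

Because the eigenvalues of a correlation matrix sum to the number of items, the share of variance explained by the retained components is their eigenvalue sum divided by six here.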

Confirmatory factor analysis

In the second stage of analysis, another pretest sample (n = 500) was used to conduct confirmatory factor analysis (CFA) in Amos version 26 to validate the results of the EFA. The factor loadings of all items were acceptable and significant, indicating good internal consistency. To evaluate validity, correlation analyses were carried out to calculate the relationships among variables, with Pearson’s r used to assess the significance of the observed relationships. There were no indications of multicollinearity among the variables. Thus, discriminant validity was acceptable (see Table 3).

The factor loadings (unstandardized and standardized), composite reliability (CR), and average variance extracted (AVE) values for each construct suggest that the indicators account for a large portion of the variance of the corresponding latent construct and thus provide evidence for the measurement model.
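For readers unfamiliar with CR and AVE, the sketch below shows how both are commonly computed from standardized factor loadings, assuming uncorrelated measurement errors. The paper does not state which formulas were used, so this is the conventional textbook form rather than the authors’ exact procedure, and the loadings are hypothetical values chosen only for illustration.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    where each error variance is 1 - loading^2 for standardized loadings."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Hypothetical standardized loadings for a three-item construct (not from the paper).
example_loadings = [0.70, 0.80, 0.75]
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)
print(round(cr, 3), round(ave, 3))
```

Commonly cited rules of thumb treat CR of about 0.70 or higher and AVE of about 0.50 or higher as acceptable, although these cutoffs are conventions rather than strict tests.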

Results

Structural model testing

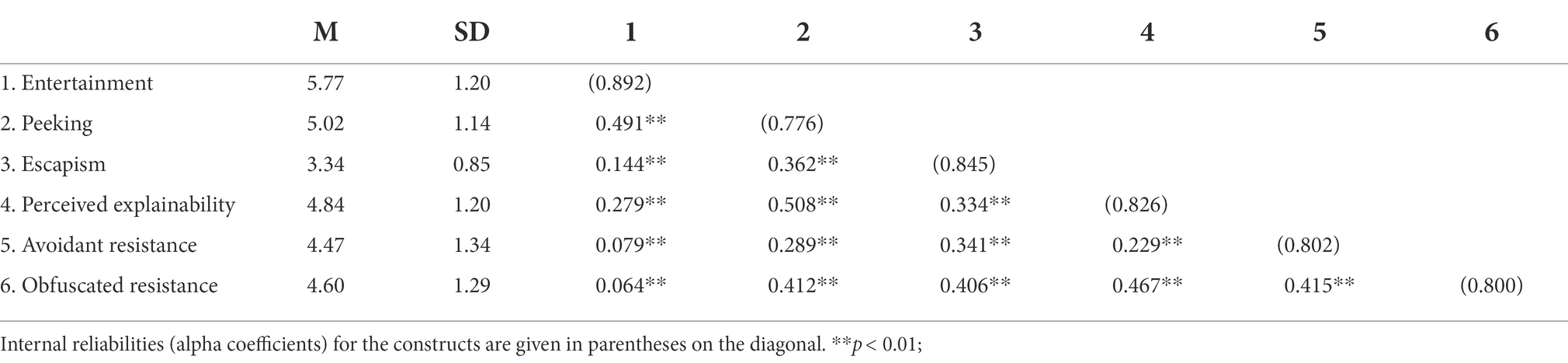

First, bivariate correlations were computed using the full formal survey sample (N = 2,000). Descriptive statistics and the zero-order correlations of all variables are displayed in Table 4. The measures were reliable, as all internal consistency alpha values exceeded 0.75. Users rated entertainment highly (M = 5.77, SD = 1.20), and entertainment correlated positively with perceived explainability (r = 0.279, p < 0.01). Peeking and escapism also correlated positively with perceived explainability (peeking: r = 0.508; escapism: r = 0.334; both p < 0.01). Perceived explainability was positively related to avoidant resistance (r = 0.229, p < 0.01) and obfuscated resistance (r = 0.467, p < 0.01).

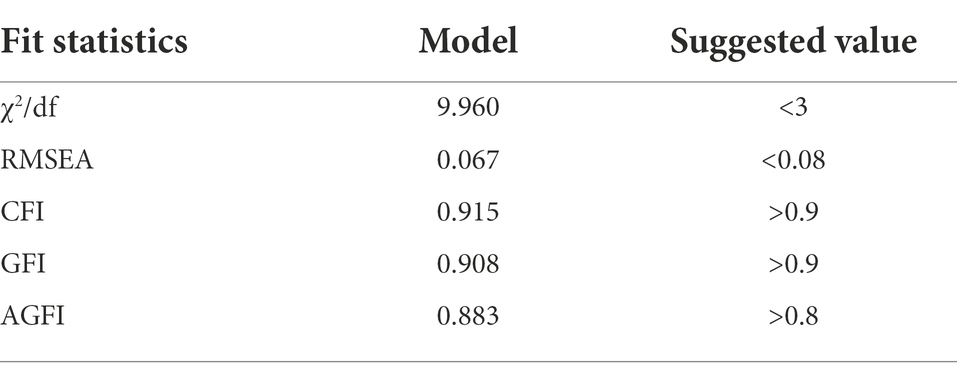

This study drew on the bootstrapping method (Preacher and Hayes, 2008), producing 95% bias-corrected confidence intervals from 5,000 resamples of the data. Goodness-of-fit indices were used to evaluate whether the model was acceptable. Following previous research, the chi-squared value per degree of freedom (χ2/df), comparative fit index (CFI), goodness-of-fit index (GFI), and root mean square error of approximation (RMSEA) were used to measure model fit (Jackson et al., 2009). In this study, most of the indices indicated a good fit (see Table 5).
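To make the fit indices named above concrete, the following sketch computes χ2/df, RMSEA, and CFI from model and baseline chi-squared values using their standard definitions. The input numbers are hypothetical and are not the values in Table 5; SEM packages differ in small details (for example, some use N rather than N − 1 in RMSEA), so this should be read as an approximation of what such software reports. GFI is omitted because it requires the fitted and sample covariance matrices.

```python
import math

def chi2_per_df(chi2, df):
    """Normed chi-square: model chi-squared divided by its degrees of freedom."""
    return chi2 / df

def rmsea(chi2, df, n):
    """Root mean square error of approximation, using the N - 1 convention."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to a baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model, 0.0)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base

# Hypothetical inputs (assumptions for illustration only).
chi2_m, df_m = 300.0, 100
chi2_b, df_b = 5000.0, 120
n_obs = 2000

ratio = chi2_per_df(chi2_m, df_m)
rmsea_value = rmsea(chi2_m, df_m, n_obs)
cfi_value = cfi(chi2_m, df_m, chi2_b, df_b)
print(ratio, round(rmsea_value, 4), round(cfi_value, 4))
```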

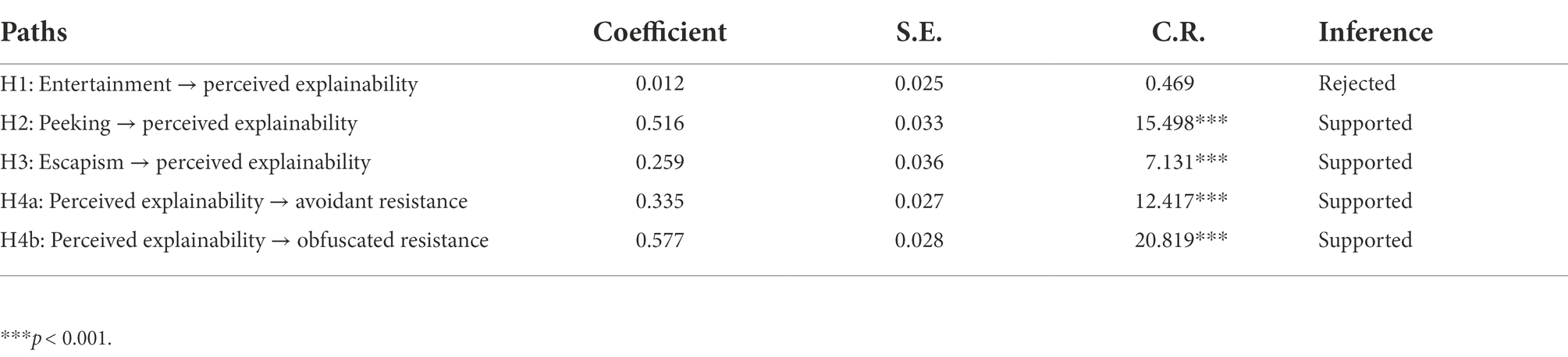

The results of structural path testing supported most of the hypotheses (Figure 2; Table 6). All the path coefficients were statistically significant (p < 0.001) except the path from entertainment to perceived explainability (p = 0.639), meaning H1 was not supported. Perceived explainability was significantly influenced by the motivations of peeking and escapism, and most strongly by peeking. Together, these factors account for 44% of the variance in perceived explainability (R2 = 0.44). Obfuscated resistance was strongly influenced by perceived explainability. The model explained a significant portion of the variance in each construct. The strong paths imply a fundamental connection between perceived algorithmic affordance and its antecedents. Given the significant effect of perceived algorithmic affordance on avoidant resistance and obfuscated resistance, it is desirable to examine the possible mediating effects of perceived algorithmic affordance.

Test for mediation

There was also evidence of a significant indirect effect of the motivations of peeking and escapism via perceived explainability on avoidant and obfuscated resistance. Specifically, the motivations of peeking and escapism were both indirectly associated with increased avoidant resistance via perceived explainability (peeking: β = 0.190, p < 0.001, 95% CI [0.145, 0.240]; escapism: β = 0.067, 95% CI [0.043, 0.100]). Therefore, H6a and H7a were supported. Regarding obfuscated resistance, there was evidence of significant, positive indirect effects of the motivations of peeking and escapism (peeking: β = 0.339, p < 0.001, 95% CI [0.280, 0.403]; escapism: β = 0.119, 95% CI [0.081, 0.164]), supporting H6b and H7b. The indirect effect of entertainment via perceived explainability was not significant. Therefore, H5a and H5b were not supported.
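The logic of a bootstrapped indirect effect with a bias-corrected confidence interval can be sketched as follows. This is a simplified, observed-variable illustration using ordinary least squares on synthetic data; the study itself estimated latent-variable paths in Amos, so the function names, the data-generating values, and the regression setup are all assumptions made for this example. An indirect effect is treated as significant when the interval excludes zero.

```python
import numpy as np
from statistics import NormalDist

def indirect_effect(x, m, y):
    """a * b, with a from m ~ x and b from y ~ m + x (ordinary least squares)."""
    a = np.polyfit(x, m, 1)[0]
    design = np.column_stack([np.ones_like(x), m, x])
    b = np.linalg.lstsq(design, y, rcond=None)[0][1]
    return a * b

def bc_bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=0):
    """Point estimate and bias-corrected bootstrap CI for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    est = indirect_effect(x, m, y)
    boots = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        boots[i] = indirect_effect(x[idx], m[idx], y[idx])
    nd = NormalDist()
    # Bias correction: shift percentiles by how far the estimate sits
    # from the median of the bootstrap distribution.
    prop = float(np.clip((boots < est).mean(), 1 / n_boot, 1 - 1 / n_boot))
    z0 = nd.inv_cdf(prop)
    z = nd.inv_cdf(1 - (1 - level) / 2)
    lo, hi = np.quantile(boots, [nd.cdf(2 * z0 - z), nd.cdf(2 * z0 + z)])
    return est, lo, hi

# Synthetic data (assumption): motive -> explainability -> resistance,
# with a true indirect effect of 0.5 * 0.4 = 0.2.
rng = np.random.default_rng(1)
n = 500
motive = rng.normal(size=n)
explainability = 0.5 * motive + rng.normal(size=n)
resistance = 0.4 * explainability + 0.1 * motive + rng.normal(size=n)

# Fewer resamples than the 5,000 used in the study, to keep the example quick.
est, lo, hi = bc_bootstrap_ci(motive, explainability, resistance, n_boot=2000)
print(round(est, 3), round(lo, 3), round(hi, 3))
```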

Discussion

This study explains the formation process of users’ algorithmic resistance with empirical evidence, a process that has rarely been explored in previous research. Specifically, the motivations of peeking and escapism positively correlate with the perceived explainability of algorithms, which, in turn, encourages avoidant and obfuscated resistance. These results supported H2–H4 and H6–H7. In line with previous research on algorithmic awareness and behavior, the findings strengthen the active role of users (Belanche et al., 2017b, 2019; Dogruel et al., 2020). The good use of algorithms is reflected not only in achieving personal or organizational goals but also in avoiding risks. In Taylor’s view, to ensure data fairness, users should have the freedom to access data technology and the freedom to refuse (Taylor, 2017). In addition, this study holds that users should have the ability to refuse.

Unlike productive resistance, the two tactics of algorithmic resistance examined in this paper are not destructive. The user achieves the goal of resistance by working within the algorithm’s rules. Like skipping advertisements (Belanche et al., 2019), deliberately not interacting with the platform is an avoidance behavior. Intentional non-participation reflects the subjectivity of the user. Algorithms evoke some sense of identity in the user (Karizat et al., 2021). When this awareness makes users feel uneasy, they may adjust their strategies.

Unlike previous studies, the findings imply that users’ subjectivity is also affected by the functions of technology, context, and personal goals. In pursuit of economic interests, bloggers obey algorithmic rules to improve their visibility, but they are also controlled by those rules (Mac Donald, 2021). In pursuit of peeking and escapism, users who realize that algorithms evaluate them accurately become more sensitive and vigilant about algorithms (Bucher, 2017).

Algorithms may ultimately define the scope of human knowledge and cognitive style (Gillespie, 2014). The algorithm determines the content people are exposed to and affects the way users perceive the world (Just and Latzer, 2017). Its power and prejudice may pose a threat to personal rights and publicity (Nguyen et al., 2014). The ability to develop algorithmic resistance may benefit people’s public life and democracy.

Theoretical implications

The present study offers several theoretical implications and complements existing research. First, this study offers a new theoretical perspective on the interaction between users and algorithms by employing the affordance framework. Technological determinism and constructivist views are limited in explaining technology use (Leonardi and Barley, 2008). Affordance perspectives overcome the limits of any single explanation by highlighting the multiple relations between technologies, users, and the context. A priori knowledge of algorithms is not necessarily required to perceive algorithmic affordance, which can emerge and accumulate through experimentation with, and adaptation to, algorithms. Thus, perceived algorithmic affordance is both a result of interaction and the cognitive basis for triggering subsequent interactions. The model in this study shows a cross-section of interactions between users and algorithms. The actual reciprocal actions can be regarded as repeated cycles through the model. Specifically, users’ needs promote resistance tactics through perceived algorithmic affordance. This interactive experience may change users’ prior understanding of how algorithms work, triggering a new interaction in a different context. Therefore, our findings provide a framework well suited to explaining the recursive loops between users and algorithms.

Second, our work highlights the significance of the specific context on perceived affordance. Most studies have mentioned outcomes of perceived affordance but ignored their predictors (Bucher, 2017; Shin, 2020, 2021). The present study provided an empirical context for interacting with algorithms to make affordance—a type of middle-range theorizing—concrete and contextual. Our findings contributed to developing the specific predictors of perceived affordance via discussion of contextual factors. In the present study, peeking, escapism, and entertainment reflect the needs and goals of users, forming different contexts combined with social media practice. The findings show how diverse motivations have different effects on perceived algorithmic affordance. The latter could also be regarded as an ability for increased awareness and understanding of how algorithms function, corresponding to a new digital divide (Gran et al., 2020; Hargittai et al., 2020). As algorithms increasingly influence content delivery and presentation, it is important to determine whether understanding algorithms impacts our perceptions of the world and our actions in a decisive way. This information may have implications for related issues of public life and democracy (Dogruel et al., 2020; Gran et al., 2020). Hence, to narrow the gap of perceived algorithmic affordance among users, more scholarly attention should be paid to the specific context.

Last, our study extends the possible outcomes of algorithmic affordance. Previous studies show that understanding how algorithms operate can promote algorithmic subjection, for instance, obeying the algorithmic logic to enhance the visibility of content (Bucher, 2017; Karakayali et al., 2018). However, few studies explore algorithmic resistance among ordinary users. Some scholars claim that variations in an affordance may relate to different outcomes, even contradictory behavior (Fayard and Weeks, 2014; Evans et al., 2016). Our findings thus add empirical evidence of how perceived algorithmic affordance might trigger the behavioral outcomes of resistance. Our work focuses on two types of resistance from the list of possible resistance tactics: avoidant resistance and obfuscated resistance.

The two outcomes are similar in that both are tactics of resistance rooted in the socio-cultural context of China. As stressed earlier by Gibson (1979), only considering users with specific needs and practices within a particular socio-cultural context can make the discussion on affordance more significant. Thus, this study set out to explore algorithmic affordance and its impact on resistant behavior in the socio-cultural context of China. Individuals in this context tend to attach importance to social harmony and implicit, indirect expressions (Hall and Hall, 1989). In this socio-cultural situation, users’ perception of algorithmic affordance might incline toward “moderate” resistance, such as avoidance and obfuscation, rather than antagonistic conflict (Hofstede et al., 2005). However, the difference between the two outcomes lies in whether data are actively produced. Avoidant resistance emphasizes minimizing the production of behavioral data, while obfuscation stresses creating misleading, false, or ambiguous data and feeding it back to the algorithms (Casemajor et al., 2015). Unlike features, affordances are not binary but variable. Scholars suggest this may relate to individual differences, such as demographics, motivational traits, goals, experience, and capabilities (Lai, 2019). The variability of affordances might lead individuals using the same technology to behave differently in pursuit of specific outcomes (Evans et al., 2016). Our findings merely show the two resistant outcomes of perceived algorithmic affordance, rather than deeply explaining the specific mechanisms leading to particular outcomes. Thus, we recommend that future studies explore a wider breadth of outcomes of perceived algorithmic affordance and elaborate how variations in affordance may lead to those outcomes.

Practical implications

The study outlines the game between ordinary users and algorithms embedded in social media platforms. The process of coevolution has remarkable practical implications for users, algorithms, and the industry. First, the findings imply that users will have greater autonomy in content curation as their understanding of algorithms grows. As an infrastructural technology embedded in our daily life, algorithms may influence users’ options, but they do not determine them. Although recommendation algorithms are designed to direct users toward particular modes of engagement with the platforms, it is impossible to fully predict the ways in which users might use algorithms. Users may find more meanings and functions than the designers intended. The algorithmic resistance illustrated in the study is precisely the strategy users select in their game with algorithms. Users who perceive algorithmic affordance can resist algorithms by reshaping the datasets on which the algorithm crafts its output. Through this process, users and algorithms can jointly shape content curation. Overall, recognizing and understanding the structural power that shapes the platforms is an online skill and a necessary condition for managing information as a citizen.

Second, the synergy between users and algorithms may optimize algorithms and related services. Based on the research model, we could infer that algorithmic resistance arises under restricted conditions, which may mean that it lacks sustainability. In other words, the interaction between users and algorithms suggests a dynamic interplay between resistance and subjection. However, for the platforms, avoidance and obfuscation are not conducive to platform owners’ development of new modes of profitable subjection to gain a competitive advantage. From the platforms’ perspective, the work of designers is to narrow the gap between perceived and actual affordance (Norman, 1993; Fayard and Weeks, 2014). Algorithmic “bugs” will eventually be modified by their designers, ensuring that algorithms will co-evolve with use and resistance. On the other hand, the platform can further improve users’ awareness and knowledge of algorithms by improving the visibility of information about, and functions of, algorithms, optimizing the interface, and promoting the harmonious coexistence and collaborative development of users and algorithms.

Limitations and future studies

To provide a reasonable understanding of the applications of this study, its limitations should be set out. First, the research design has inherent limitations, as self-report measures may be subject to bias and participants’ perceptions are subjective. Researchers do not necessarily know the ground truth of algorithms, making it difficult to develop measures of how the algorithms function (DeVito et al., 2018; Hargittai et al., 2020). Thus, the relevant empirical research is very limited. While algorithms certainly constitute a black box (Pasquale, 2015), we should not remain at the stage of theory and conjecture. The present study focused on a more general perception of algorithmic affordance, rather than a particular algorithmic operational rule. The study used a self-report scale to measure perceived algorithmic affordance, thereby sidestepping the challenge of using objective measures to understand how algorithms work. Although self-report scales have some limitations, they also provide the most direct and cost-effective method for measuring media-related concepts in large samples (Slater, 2004). Further studies should use objective assessments to cross-check the validity of self-report measures. Moreover, to avoid the limitations of any single method, studies can employ multiple methods such as natural experiments, interviews, and questionnaires to deepen the study of the interactions between users and algorithms.

Second, the cross-sectional, survey-based methodology does not lend itself to causal conclusions. To gain a more precise understanding of the recursive loops between users and algorithms, studies could adopt experimental designs with a longitudinal or panel approach that tracks repeated observations of users over long periods, and measure the interaction effects between users and AI algorithms.

Third, the study only considered recommendation algorithm systems on short video platforms, which represent just one kind of algorithmic situation. Another significant algorithmic context is algorithmic decision-making (Kitchin, 2017; Danaher, 2019), embedded in daily life as intelligent assistants. Our model suggests that users may decide to resist algorithms. Algorithmic decision-making is more complex than recommendation algorithms, depending more on the context, experience, and autonomy (Danaher, 2019; Dogruel et al., 2020). Thus, future studies could extend the model with other situational factors to examine how user experience and autonomy impact algorithmic tactics.

Finally, this study considered the socio-cultural context of China, but did not explore the impact of the cultural milieu on perceived algorithmic affordance and related actor strategies in the model. Further research could explore the interaction of users and algorithms in more detail from a comparative, cross-cultural perspective. For example, future studies could compare users’ perceptions of algorithms and behavioral outcomes on Douyin and TikTok (Chinese and international versions of the same application) to analyze the role of culture.

Conclusion

This study considered why and how people resist algorithms in the context of short videos. Based on the perspective of affordance, we uncovered the formation mechanism and heuristic process of algorithmic resistance. The findings show that different goals mobilize users’ perceptions of algorithms and trigger various strategies. To avoid being tracked by algorithms, users may choose tactics of algorithmic resistance. The present study indicates that motivations for using short videos are positively related to algorithmic resistance through the key mediating role of perceived algorithmic affordance. The study expands and enriches the theoretical framework of affordance by revealing new predictors of perceived algorithmic affordance and the possible outcomes of algorithmic resistance. By focusing on the possibilities of algorithmic resistance, the current research provides insights into how users and algorithms co-evolve and create reality together. As algorithms become more extensively embedded in all aspects of human social life, we need more empirical research representing the user perspective on the recursive loops between users and algorithms.

Data availability statement

The datasets presented in this article are not readily available because they are part of an ongoing research project. Requests to access the datasets should be directed to baiqiyu_pku@163.com.

Ethics statement

The studies involving human participants were reviewed and approved by New Media School, Peking University. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

XX and YD contributed to the design, conceptualization, methodology, data analysis, and writing. QB contributed to the design, revision, and supervision of the work. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by the National Social Science Fund of China (grant number 18ZDA317).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ananny, M., and Crawford, K. (2018). Seeing without knowing: limitations of the transparency ideal and its application to algorithmic accountability. New Media Soc. 20, 973–989. doi: 10.1177/1461444816676645

Barredo Arrieta, A., Díaz-Rodríguez, N., del Ser, J., Bennetot, A., Tabik, S., Barbado, A., et al. (2020). Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Inform. Fusion 58, 82–115. doi: 10.1016/j.inffus.2019.12.012

Belanche, D., Flavián, C., and Pérez-Rueda, A. (2017a). Understanding interactive online advertising: congruence and product involvement in highly and lowly arousing, Skippable video ads. J. Interact. Mark. 37, 75–88. doi: 10.1016/j.intmar.2016.06.004

Belanche, D., Flavián, C., and Pérez-Rueda, A. (2017b). User adaptation to interactive advertising formats: the effect of previous exposure, habit and time urgency on ad skipping behaviors. Telematics Inform. 34, 961–972. doi: 10.1016/j.tele.2017.04.006

Belanche, D., Flavián, C., and Pérez-Rueda, A. (2019). Consumer empowerment in interactive advertising and eWOM consequences: the PITRE model. J. Mark. Commun. 26, 1–20. doi: 10.1080/13527266.2019.1610028

Belanche, D., Flavián, C., and Pérez-Rueda, A. (2020). Brand recall of skippable vs non-skippable ads in YouTube. Online Inf. Rev. 44, 545–562. doi: 10.1108/OIR-01-2019-0035

Bishop, S. (2019). Managing visibility on YouTube through algorithmic gossip. New Media Soc. 21, 2589–2606. doi: 10.1177/1461444819854731

Brunton, F., and Nissenbaum, H. (2015). Obfuscation: A user's guide for Privacy and Protest. Cambridge, MA: MIT Press.

Bruun, A., Raptis, D., Kjeldskov, J., and Skov, M. B. (2016). Measuring the coolness of interactive products: the COOL questionnaire. Behav. Inform. Technol. 35, 233–249. doi: 10.1080/0144929X.2015.1125527

Bucher, T. (2012). Want to be on the top? Algorithmic power and the threat of invisibility on Facebook. New Media Soc. 14, 1164–1180. doi: 10.1177/1461444812440159

Bucher, T. (2017). The algorithmic imaginary: exploring the ordinary affects of Facebook algorithms. Inf. Commun. Soc. 20, 30–44. doi: 10.1080/1369118X.2016.1154086

Casemajor, N., Couture, S., Delfin, M., Goerzen, M., and Delfanti, A. (2015). Non-participation in digital media: toward a framework of mediated political action. Media Cult. Soc. 37, 850–866. doi: 10.1177/0163443715584098

Cotter, K. (2019). Playing the visibility game: how digital influencers and algorithms negotiate influence on Instagram. New Media Soc. 21, 895–913. doi: 10.1177/1461444818815684

Danaher, J. (2019). “The Ethics of algorithmic outsourcing in everyday life,” in Algorithmic regulation. eds. K. Yeung and M. Lodge (New York: Oxford University Press), 98–118.

DeVito, M. A., Birnholtz, J., Hancock, J. T., French, M., and Liu, S. (2018). “How people form folk theories of social media feeds and what it means for how we study self-presentation.” in Proceedings of the 2018 CHI conference on human factors in computing systems, Montreal, Canada. 21-26 April 2018; New York, NY: ACM, 1–12.

Dogruel, L., Facciorusso, D., and Stark, B. (2020). I’m still the master of the machine. Internet users’ awareness of algorithmic decision-making and their perception of its effect on their autonomy. Inf. Commun. Soc. 25, 1311–1332. doi: 10.1080/1369118X.2020.1863999

Ehsan, U., and Riedl, M. O. (2019). On design and Evaluation of human-Centered Explainable AI Systems, Glasgow’19, ACM, Scotland.

Eslami, M., Karahalios, K., Sandvig, C., Vaccaro, K., Rickman, A., and Hamilton, K. (2016). “First I ‘like’ it, then I hide it: folk theories of social feeds.” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA; 7-12 May; New York, NY: ACM, 2371–2382.

Eslami, M., Rickman, A., Vaccaro, K., Aleyasen, A., Vuong, A., Karahalios, K., et al. (2015). “I always assumed that I wasn’t really that close to [her]”: reasoning about invisible algorithms in news feeds.” in Proceedings of the 33rd Annual ACM Conference on human Factors in Computing Systems, Seoul, Republic of Korea: ACM, 153–162.

Ettlinger, N. (2018). Algorithmic affordances for productive resistance. Big Data Soc. 5:205395171877139. doi: 10.1177/2053951718771399

Evans, S. K., Pearce, K. E., Vitak, J., and Treem, J. W. (2016). Explicating affordances: a conceptual framework for understanding affordances in communication research. J. Comput.-Mediat. Commun. 22, 35–52. doi: 10.1111/jcc4.12180

Faraj, S., and Azad, B. (2012). “The materiality of technology: An affordance perspective,” in Materiality and organizing: Social interaction in a technological world, 237–258.

Fayard, A.-L., and Weeks, J. (2014). Affordances for practice. Inf. Organ. 24, 236–249. doi: 10.1016/j.infoandorg.2014.10.001

Flanagin, A. J. (2020). The conduct and consequence of research on digital communication. J. Comput. Mediat. Commun. 25, 23–31. doi: 10.1093/jcmc/zmz019

Gerlitz, C., and Helmond, A. (2013). The like economy: social buttons and the data-intensive web. New Media Soc. 15, 1348–1365. doi: 10.1177/1461444812472322

Gran, A.-B., Booth, P., and Bucher, T. (2020). To be or not to be algorithm aware: a question of a new digital divide? Inf. Commun. Soc. 24, 1779–1796. doi: 10.1080/1369118X.2020.1736124

Hall, E. T., and Hall, M. R. (1989). Understanding Cultural Differences. Yarmouth, ME: Intercultural Press.

Hargittai, E., Gruber, J., Djukaric, T., Fuchs, J., and Brombach, L. (2020). Black box measures? How to study people’s algorithm skills. Inf. Commun. Soc. 23, 764–775. doi: 10.1080/1369118X.2020.1713846

Hofstede, G., Hofstede, G. J., and Minkov, M. (2005). Cultures and Organizations: Software of the mind. Vol. 2. New York: Mcgraw-Hill Press.

Jackson, D. L., Gillaspy, J. A., and Purc-Stephenson, R. (2009). Reporting practices in confirmatory factor analysis: an overview and some recommendations. Psychol. Methods 14, 6–23. doi: 10.1037/a0014694

Jakesch, M., French, M., Ma, X., Hancock, J. T., and Naaman, M. (2019). “AI-mediated communication: How the perception that profile text was written by AI affects trustworthiness.” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems; May: New York: ACM, 1-13.

Just, N., and Latzer, M. (2017). Governance by algorithms: reality construction by algorithmic selection on the internet. Media Cult. Soc. 39, 238–258. doi: 10.1177/0163443716643157

Karakayali, N., Kostem, B., and Galip, I. (2018). Recommendation systems as Technologies of the Self: algorithmic control and the formation of music taste. Theory Cult. Soc. 35, 3–24. doi: 10.1177/0263276417722391

Karizat, N., Delmonaco, D., Eslami, M., and Andalibi, N. (2021). Algorithmic folk theories and identity: how TikTok users co-produce knowledge of identity and engage in algorithmic resistance. Proc. ACM Hum. Comput. Interact. 5, 1–44. doi: 10.1145/3476046

Kitchin, R. (2017). Thinking critically about and researching algorithms. Inf. Commun. Soc. 20, 14–29. doi: 10.1080/1369118X.2016.1154087

Klawitter, E., and Hargittai, E. (2018). “It’s like learning a whole other language”: the role of algorithmic skills in the curation of creative goods. Int. J. Commun. 12, 3490–3510. doi: 10.5167/uzh-168021

Lai, C.-H. (2019). Motivations, usage, and perceived social networks Within and Beyond social media. J. Comput.-Mediat. Commun. 24, 126–145. doi: 10.1093/jcmc/zmz004

Latzer, M., and Festic, N. (2019). A guideline for understanding and measuring algorithmic governance in everyday life. Internet Policy Rev. 8, 1–19. doi: 10.14763/2019.2.1415

Lee, E., Lee, J. A., Moon, J. H., and Sung, Y. (2015). Pictures speak louder than words: motivations for using Instagram. Cyberpsychol. Behav. Soc. Netw. 18, 552–556. doi: 10.1089/cyber.2015.0157

Leonardi, P. M., and Barley, S. R. (2008). Materiality and change: challenges to building better theory about technology and organizing. Inform. Organ. 18, 159–176. doi: 10.1016/j.infoandorg.2008.03.001

Leonardi, P. M. (2011). When flexible routines meet flexible technologies: affordance, constraint, and the imbrication of human and material agencies. MIS Q. 35, 147–167. doi: 10.2307/23043493

Lutz, C., and Hoffmann, C. P. (2017). The dark side of online participation: exploring non-, passive and negative participation. Inf. Commun. Soc. 20, 876–897. doi: 10.1080/1369118X.2017.1293129

Mac Donald, T. W. (2021). “How it actually works”: algorithmic lore videos as market devices. New Media Soc. 6:14614448211021404. doi: 10.1177/14614448211021404

Neyland, D., and Möllers, N. (2017). Algorithmic IF … THEN rules and the conditions and consequences of power. Inf. Commun. Soc. 20, 45–62. doi: 10.1080/1369118X.2016.1156141

Nguyen, T. T., Hui, P. K., Harper, F. M., Terveen, L., and Konstan, J. A. (2014). “Exploring the filter bubble: the effect of using recommender systems on content diversity.” in Proceedings of the 23rd International Conference on World Wide Web; April 2014; Seoul, Korea, 677-686.

Norman, D. A. (1993). Things that make us smart: Defending human Attributes in the age of the Machine. Cambridge, MA: Perseus Publishing.

Omar, B., and Dequan, W. (2020). Watch, share or create: The influence of personality traits and user motivation on TikTok mobile video usage. Int. J. Interact. Mob. Technol. 14, 121-137. doi: 10.3991/ijim.v14i04.12429

Papacharissi, Z., and Mendelson, A. (2011). “Toward a new (er) sociability: Uses, gratifications, and social capital on Facebook,” in Media Perspectives for the 21st Century. ed. S. Papathanassopoulos (London: Routledge), 225–243.

Papacharissi, Z., and Rubin, A. M. (2000). Predictors of internet use. J. Broadcast. Electron. Media 44, 175–196. doi: 10.1207/s15506878jobem4402_2

Parchoma, G. (2014). The contested ontology of affordances: implications for researching technological affordances for collaborative knowledge production. Comput. Hum. Behav. 37, 360–368. doi: 10.1016/j.chb.2012.05.028

Preacher, K. J., and Hayes, A. F. (2008). Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behav. Res. Methods 40, 879–891. doi: 10.3758/BRM.40.3.879

Proferes, N. (2017). Information flow solipsism in an exploratory study of beliefs about twitter. Soc. Media Soc. 3:205630511769849. doi: 10.1177/2056305117698493

Rach, M., and Peter, M. K. (2021). “How TikTok’s algorithm beats facebook & co. for attention under the theory of escapism: a network sample analysis of Austrian, German and Swiss users,” in Digital Marketing and eCommerce Conference; Jun 2021; Springer, Cham.

Rader, E., and Gray, R. (2015). “Understanding user Beliefs about Algorithmic Curation in the Facebook news Feed.” in Proceedings of the 33rd annual ACM Conference on Human Factors in Computing Systems, Seoul, South Korea; 18-23 April; New York: ACM, 173–182.

Shin, D. (2020). User perceptions of algorithmic decisions in the personalized AI system: perceptual evaluation of fairness, accountability, transparency, and explainability. J. Broadcast. Electron. Media 64, 541–565. doi: 10.1080/08838151.2020.1843357

Shin, D. (2021). The effects of explainability and causability on perception, trust, and acceptance: implications for explainable AI. Int. J. Hum. Comput. Stud. 146:102551. doi: 10.1016/j.ijhcs.2020.102551

Shin, D., and Park, Y. J. (2019). Role of fairness, accountability, and transparency in algorithmic affordance. Comput. Hum. Behav. 98, 277–284. doi: 10.1016/j.chb.2019.04.019

Slater, M. D. (2004). Operationalizing and analyzing exposure: The Foundation of Media Effects Research. J. Mass Commun. Quart. 81, 168–183. doi: 10.1177/107769900408100112

Stenseng, F., Rise, J., and Kraft, P. (2012). Activity engagement as escape from self: The role of self-suppression and self-expansion. Leis. Sci. 34, 19–38. doi: 10.1080/01490400.2012.633849

Sundar, S. S. (2020). Rise of machine agency: A framework for studying the psychology of human–AI interaction (HAII). J. Comput.-Mediat. Commun. 25, 74–88. doi: 10.1093/jcmc/zmz026

Taylor, L. (2017). What is data justice? The case for connecting digital rights and freedoms globally. Big Data Soc. 4:205395171773633. doi: 10.1177/2053951717736335

Treré, E., Jeppesen, S., and Mattoni, A. (2017). Comparing digital protest media imaginaries: anti-austerity movements in Greece, Italy & Spain. J. Glob. Sustain. Inform. Soc. 15, 404–422. doi: 10.31269/triplec.v15i2.772

Velkova, J., and Kaun, A. (2021). Algorithmic resistance: media practices and the politics of repair. Inf. Commun. Soc. 24, 523–540. doi: 10.1080/1369118X.2019.1657162

Willson, M. (2017). Algorithms (and the) everyday. Inf. Commun. Soc. 20, 137–150. doi: 10.1080/1369118X.2016.1200645

Ytre-Arne, B., and Moe, H. (2021). Folk theories of algorithms: understanding digital irritation. Media Cult. Soc. 43, 807–824. doi: 10.1177/0163443720972314

Keywords: algorithms, algorithmic resistance, affordance, short video, motivation

Citation: Xie X, Du Y and Bai Q (2022) Why do people resist algorithms? From the perspective of short video usage motivations. Front. Psychol. 13:941640. doi: 10.3389/fpsyg.2022.941640

Edited by:

Marko Tkalcic, University of Primorska, Slovenia

Reviewed by:

Carlos Flavián, University of Zaragoza, Spain

Chandan Kumar Behera, Ulster University, United Kingdom

Copyright © 2022 Xie, Du and Bai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qiyu Bai, baiqiyu_pku@163.com

†These authors have contributed equally to this work and share first authorship
