- ¹Smart Health Big Data Analysis and Location Services Engineering Lab of Jiangsu Province, Nanjing University of Posts and Telecommunications, Nanjing, China

- ²State Key Laboratory for Novel Software Technology, Nanjing University, Nanjing, China

- ³School of Computer Science and Technology, Nanjing Tech University, Nanjing, China

- ⁴Guangdong Provincial Key Laboratory of Computer Vision and Virtual Reality Technology, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Electroencephalography (EEG)-based emotion recognition, which enables machines to perceive users' affective states, has attracted increasing attention. However, most current emotion recognition methods neglect the structural information among different brain regions, which can lead to incorrect learning of high-level EEG feature representations. To mitigate the resulting performance degradation, we propose a novel nuclear norm regularized deep neural network framework (NRDNN) that captures the structural information among different brain regions in EEG decoding. NRDNN first uses deep neural networks to learn a high-level feature representation for each brain region. A region-attention layer then automatically learns a set of weights that indicate the contribution of each brain region. Subsequently, the weighted feature representations of the brain regions are stacked into a feature matrix, and nuclear norm regularization is adopted to learn the structural information within that matrix. NRDNN can thus learn high-level representations of EEG signals within multiple brain regions, automatically adjust the contribution of each region through the learned weights, and capture the structural information among regions during learning. Finally, NRDNN can be trained efficiently in an end-to-end manner. We conducted extensive experiments on a publicly available emotion EEG dataset to evaluate the effectiveness of the proposed NRDNN. The experimental results demonstrate that NRDNN can achieve state-of-the-art performance by leveraging the structural information.

Introduction

An affective brain-computer interface (aBCI) can establish an effective communication pathway between the brain and external devices (Mühl et al., 2014). Emotion recognition enables an aBCI to accurately perceive the affective state of the brain and has therefore attracted increasing attention (Fragopanagos and Taylor, 2005). Emotions are expressed through several modalities, such as voice signals (Ang et al., 2002), facial expressions (Xiaohua et al., 2017), body gestures (Yan et al., 2014), electromyogram (EMG) signals (Cheng and Liu, 2008), electrocardiogram (ECG) signals (Agrafioti et al., 2012), and electroencephalogram (EEG) signals (Zheng, 2017). Among these, EEG is the most extensively adopted for recording the brain electrical activity caused by emotional fluctuations because it is portable and non-invasive (Liu et al., 2017; Zheng, 2017).

As shown in Figure 1, a classical EEG-based aBCI system can be divided into four parts (Li J. et al., 2019), namely, signal acquisition, preprocessing, feature extraction, and emotion recognition. During acquisition, the electrical signal of brain activity is recorded by non-invasive electrodes placed along the scalp. Then, filters such as Butterworth and Chebyshev filters (Bustamante et al., 2015) are applied to the original EEG signals to remove noise. Subsequently, the affective EEG data are transformed into a suitable feature representation by domain-specific feature extractors. In general, the extracted features fall into four groups: (1) time features; (2) frequency features; (3) time-frequency features; and (4) spatial features. For example, Hjorth features (Petrantonakis and Hadjileontiadis, 2010) and independent component analysis (ICA) (Iacoviello et al., 2015) are widely used time domain feature extractors. Wavelet transform (WT) (Mazumder, 2019) and wavelet packet decomposition (WPD) (Ting et al., 2008) are commonly adopted as affective EEG feature extractors in the time-frequency domain. Besides, the fast Fourier transform (FFT) (Murugappan and Murugappan, 2013) and the autoregressive (AR) model (Atyabi et al., 2016) are two widely used frequency domain feature extractors. Common spatial pattern (CSP) is the typical spatial feature extractor for EEG data (Ramoser et al., 2000). The main principle of CSP is to learn spatial projections that maximize the variance of one class while minimizing that of the other, through simultaneous diagonalization of the two class covariance matrices. Many other feature extractors have also been developed for affective EEG, such as the differential entropy (DE) method (Duan et al., 2013), which is widely used for extracting EEG features in the time-spatial domain. After feature extraction, many classifiers can be used for emotion recognition, such as the support vector machine (SVM) (Zhang et al., 2018), k-nearest neighbor (KNN) (Tang et al., 2019), and linear discriminant analysis (LDA) (Zhang et al., 2013). The recognition results are finally fed back to the users.

Although the abovementioned methods have shown their efficacy in EEG-based aBCI systems, they are shallow learning methods and cannot exploit the deep EEG feature representations that deep learning frameworks provide. Therefore, many deep learning methods have been developed for EEG-based emotion recognition. For example, Zheng and Lu (2015) used a deep belief network to construct an emotion recognition model. Pandey and Seeja (2019) developed a multilayer perceptron-based neural network for EEG-based emotion recognition. Song et al. (2018) proposed using a graph convolutional neural network to extract EEG features. Besides, Li Y. et al. (2019) developed a spatial-temporal deep neural network model for emotion recognition. Many other deep models have also been exploited for decoding EEG, some of which greatly advanced the performance of feature representation and classification; readers can refer to the systematic review in Alarcao and Fonseca (2017) for details. Although existing deep learning methods exhibit powerful feature learning capability on EEG-based emotion data, they generally do not consider the different contributions of individual brain regions to EEG feature representation and pattern classification.

Recent neuroscience research has shown that human emotions are correlated with multiple cerebral cortex regions, such as the orbitofrontal cortex and the ventromedial prefrontal cortex (Lotfi et al., 2014). Hence, the EEG signals associated with different brain regions might contribute differently to emotion recognition (Lindquist et al., 2012). In view of this, Li Y. et al. (2019) assigned a set of weights to the EEG signals within different brain regions to strengthen or weaken their contributions to EEG decoding. Besides, Park and Chung (2019) selected informative local regions using the interquartile range and then adopted local CSP to extract their features. Despite this promising progress, most current methods do not take into account the structural information among different brain regions, which can lead to incorrect learning of high-level EEG feature representations.

Recently, several methods have been developed to capture the structural information within EEG features, such as the support matrix machine (SMM) (Luo et al., 2015), robust SMM (Zheng et al., 2018), and deep stacked SMM (Hang et al., 2020). In Luo et al. (2015), a spectral elastic net regularization was combined with the hinge loss to formulate a matrix classifier, named SMM, which uses the nuclear norm to exploit the structural information within EEG feature matrices. Based on SMM, robust SMM (Zheng et al., 2018) was proposed to eliminate outliers within EEG signals and construct a matrix classifier using the recovered clean data. Besides, Hang et al. (2020) adopted SMM as the basic building block of a deep stacked SMM, which inherits both the ability of SMM to learn the structural information of data and the powerful capability of deep representation learning. Although these methods have achieved promising EEG classification performance, they take pre-extracted EEG features as input and therefore rely heavily on expert knowledge.

In this study, a novel nuclear norm regularized deep neural network framework (NRDNN) is proposed to capture the structural information among different brain regions in affective EEG decoding. To learn high-level feature representations of multiple brain regions, NRDNN uses separate deep neural networks to decode the EEG signals within each region. Because different brain regions may play different roles in EEG emotion recognition, NRDNN introduces a region-attention layer that automatically learns weights for the brain regions to strengthen or weaken their contributions. To leverage the structural information among different brain regions, the weighted feature representations of the brain regions are stacked into a feature matrix, and nuclear norm regularization with the hinge loss is used for EEG-based emotion recognition. Besides, NRDNN can be optimized efficiently in a standard end-to-end manner. To validate the effectiveness of the proposed method, we conducted extensive experiments on a publicly available affective EEG dataset. The experimental results demonstrate that NRDNN outperforms the comparison methods.

The remainder of this study is organized as follows: the “Nuclear norm regularized deep neural network” section illustrates the proposed NRDNN model and its learning algorithm in detail. In the “Experiments” section, extensive experiments and result analyses are presented. Finally, the conclusions of the study can be found in the “Conclusion” section.

Nuclear Norm Regularized Deep Neural Network

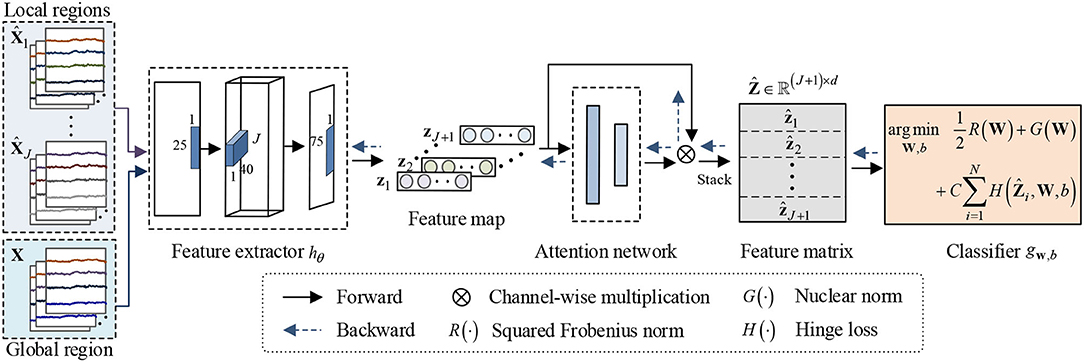

Neuroscience studies have shown that relevant information exists among different brain regions (Clark, 1994; Vecchio et al., 2013; Kurmukov et al., 2016). To a certain extent, the structural information among brain regions can reflect this relevant information. Because the nuclear norm of a matrix is a convex approximation of its rank, it can directly capture the structural information between the columns or rows of the matrix. Hence, this study develops an end-to-end nuclear norm regularized deep learning framework that uses this structural information in EEG decoding. The flowchart of the proposed framework is shown in Figure 2. NRDNN first uses deep neural networks to learn a high-level feature representation for each brain region. A region-attention layer then automatically learns a set of weights that indicate the contribution of each brain region. Subsequently, the weighted feature representations of the brain regions are stacked into a feature matrix, and nuclear norm regularization is adopted to capture the structural information within the feature matrix.

Figure 2. Framework of the proposed NRDNN for EEG-based emotion recognition. NRDNN first utilizes deep neural networks to learn high-level feature representations of multiple brain regions. Then, a set of weights indicating the contribution of each brain region is automatically learned using a region-attention layer. Subsequently, the weighted feature representations of multiple brain regions are stacked into a feature matrix, and nuclear norm regularization is adopted to capture the structural information within the feature matrix.
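As a small numerical illustration of why the nuclear norm relates to rank, the NumPy sketch below compares a rank-1 matrix with a full-rank matrix of the same Frobenius norm. The matrices, their sizes, and the helper name `nuclear_norm` are illustrative choices and are not part of the NRDNN implementation.

```python
import numpy as np

def nuclear_norm(M):
    """Sum of the singular values of M."""
    return np.linalg.svd(M, compute_uv=False).sum()

rng = np.random.default_rng(0)

# Rank-1 matrix: every row is a multiple of the same vector (fully correlated rows).
low_rank = np.outer(rng.standard_normal(6), rng.standard_normal(10))

# Generic full-rank matrix, rescaled to the same Frobenius norm for a fair comparison.
full_rank = rng.standard_normal((6, 10))
full_rank *= np.linalg.norm(low_rank) / np.linalg.norm(full_rank)

print(np.linalg.matrix_rank(low_rank), nuclear_norm(low_rank))
print(np.linalg.matrix_rank(full_rank), nuclear_norm(full_rank))
```

At equal Frobenius norm, the nuclear norm is smallest for the rank-1 matrix, so penalizing it favors matrices whose rows (here, brain-region features) are strongly correlated.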

Brain Region EEG Feature Learning

Given a trial of raw affective EEG signal, we aim to identify the emotional state by decoding this signal. Suppose the EEG signal has $m$ channels, each of which has $t$ time points. The EEG signal can then be represented as a two-dimensional matrix $X = [x_1, x_2, \cdots, x_m] \in \mathbb{R}^{m \times t}$. Here, $x_i = [x_{i1}, x_{i2}, \cdots, x_{it}] \in \mathbb{R}^{t}$ denotes the $i$-th channel of the EEG signal, and $x_{ij}$, $j = 1, 2, \cdots, t$, denotes the value of the $i$-th channel at time point $j$.

According to the principle of brain regions (Vecchio et al., 2013), we divide the entire EEG signal $X$ into $J$ parts located in different brain regions. Without loss of generality, the EEG signal located in the $j$-th brain region can be represented as follows:

$$\hat{X}_j = [x^j_1, x^j_2, \cdots, x^j_{m_j}] \in \mathbb{R}^{m_j \times t}. \tag{1}$$

Here, $x^j_k$ represents the EEG signal of the $k$-th channel located in the $j$-th brain region, and $m_j$ denotes the number of channels located in the $j$-th brain region. Besides, we have $m_1 + m_2 + \cdots + m_J = m$.
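The partition of $X$ into region-wise sub-matrices can be illustrated with a short NumPy sketch. The channel-to-region assignment below (`REGION_CHANNELS`) is a made-up example for an 8-channel signal with three regions; it is not the electrode grouping used in the study, and only the slicing logic is the point.

```python
import numpy as np

# Hypothetical assignment of channel indices to J = 3 regions (illustrative only).
REGION_CHANNELS = {
    "region_1": [0, 1, 2],
    "region_2": [3, 4],
    "region_3": [5, 6, 7],
}

def split_by_region(X, region_channels):
    """Return one (m_j x t) sub-matrix per region from an (m x t) EEG trial."""
    return {name: X[idx, :] for name, idx in region_channels.items()}

m, t = 8, 128                                   # channels, time points
X = np.random.default_rng(0).standard_normal((m, t))
regions = split_by_region(X, REGION_CHANNELS)

# The per-region channel counts m_j add up to the total channel count m.
total_channels = sum(r.shape[0] for r in regions.values())
```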

In general, a deep classification model $f$ can be decomposed as $f = g \circ h$. Here, $h_{\theta}: X \rightarrow \mathbb{R}^{d}$, parameterized by the network weights $\theta$, maps the input EEG signal $X$ to the high-level feature representation space, and $g_{W,b}: Z \rightarrow [0,1]^{K}$, parameterized by the weight $W$ and bias $b$, maps the feature representation to the final output.

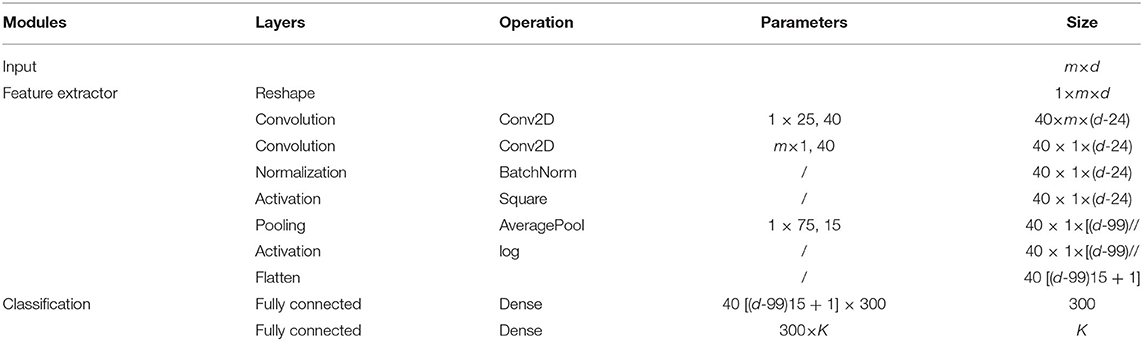

Currently, many deep neural networks can be used for EEG feature extraction, such as Shallow ConvNet (SConvNet) and Deep ConvNet (DConvNet) developed in Schirrmeister et al. (2017), and EEGNet developed in Lawhern et al. (2018). However, these widely used neural networks focus on motor imagery EEG classification. Hence, we slightly modify SConvNet to form the backbone network for affective EEG decoding (called AConvNet for simplicity); the detailed network architecture of AConvNet is given in Table 1. To learn the deep feature representation of the $j$-th brain region, a feature extractor $h_{\theta_j}: \hat{X}_j \rightarrow z_j$, parameterized by the network weights $\theta_j$, maps the EEG signal located in the $j$-th brain region to the feature $z_j$. Here, $z_j$, $j = 1, 2, \cdots, J$, denotes the deep EEG feature of the $j$-th brain region. In addition, we apply AConvNet to learn the high-level EEG feature representation of the global brain region, which can be represented as $h_{\theta}: X \rightarrow z_{J+1}$.

Discriminative Feature Identification

As pointed out earlier, human emotions are correlated with multiple cerebral cortex regions, such as the orbitofrontal cortex and the ventromedial prefrontal cortex (Lotfi et al., 2014). Hence, the EEG signals acquired from different brain regions contribute differently to emotion recognition (Lindquist et al., 2012). To identify the contributions of different brain regions, we first reshape all the local and global EEG feature representations into a feature map, which can be represented as follows:

$$Z = [z_1, z_2, \cdots, z_J, z_{J+1}], \tag{2}$$

in which each $z_j$ forms one channel of the feature map.

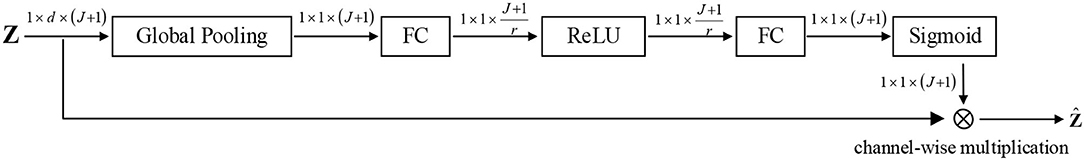

Thus, identifying the contributions of different brain regions amounts to assigning a set of appropriate weights to the $J + 1$ channels. To achieve this, we use a squeeze-and-excitation (SE) block (Hu et al., 2018) to adaptively emphasize informative channels and suppress less useful ones, as shown in Figure 3.

To abstract the information of different brain regions, global average pooling is used to produce the channel-wise statistics $s \in \mathbb{R}^{J+1}$, which can be obtained by

$$s_j = \frac{1}{d} \sum_{i=1}^{d} z^j_i, \tag{3}$$

where $z^j_i$ and $s_j$ denote the $i$-th element of $z_j$ and the $j$-th element of $s$, respectively. To capture the channel-wise dependencies, the following gating operator with two activations is utilized:

$$u = \sigma\left(W_2\, \delta(W_1 s)\right), \tag{4}$$

where $\delta(\cdot)$ and $\sigma(\cdot)$ denote the ReLU (Nair and Hinton, 2010) and sigmoid activation functions, respectively. Besides, $W_1 \in \mathbb{R}^{\frac{J+1}{r} \times (J+1)}$ and $W_2 \in \mathbb{R}^{(J+1) \times \frac{J+1}{r}}$, where $r$ is the reduction ratio. Here, $u \in \mathbb{R}^{J+1}$ represents the weights of the channels, which reflect the contributions of the brain regions.

Finally, the weighted feature representation of each brain region can be represented as the channel-wise multiplication between the scalar $u_j$ and the feature map $z_j$:

$$\hat{z}_j = u_j \cdot z_j, \tag{5}$$

where $u_j$ denotes the $j$-th element of $u$.
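The squeeze, excitation, and scaling steps described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the feature size `d = 16` is arbitrary, `W1` and `W2` are random stand-ins for learned weights, biases are omitted, and the features are stored one region per row.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def region_attention(Z, W1, W2):
    """Squeeze-and-excitation over regions.

    Z  : (J+1, d) array, one feature vector z_j per row.
    W1 : ((J+1)//r, J+1) reduction weights.
    W2 : (J+1, (J+1)//r) expansion weights.
    Returns the weights u (J+1,) and the re-weighted features (J+1, d).
    """
    s = Z.mean(axis=1)                 # squeeze: global average pooling per region
    u = sigmoid(W2 @ relu(W1 @ s))     # excitation: bottleneck gating, u in (0, 1)
    return u, u[:, None] * Z           # scale: channel-wise multiplication

J, d, r = 5, 16, 2                     # 5 regions + 1 global branch, reduction ratio 2
rng = np.random.default_rng(0)
Z = rng.standard_normal((J + 1, d))
W1 = rng.standard_normal(((J + 1) // r, J + 1))   # random stand-ins for learned weights
W2 = rng.standard_normal((J + 1, (J + 1) // r))

u, Z_hat = region_attention(Z, W1, W2)
```

Because the features are kept one region per row, `Z_hat` already has the shape of a stacked region-by-feature matrix.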

Learning Structural Information

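To capture the structural information among multiple brain regions, we first stack the weighted feature representations of the brain regions into a feature matrix:

$$\hat{Z} = [\hat{z}_1, \hat{z}_2, \cdots, \hat{z}_{J+1}]^{\top} \in \mathbb{R}^{(J+1) \times d}. \tag{6}$$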

Then, we construct a matrix classifier, i.e., $g_{W,b}: \hat{Z} \rightarrow y$, which can exploit the structural information to help emotion recognition. Hence, the classifier $g$ can be formulated as follows:

where $W \in \mathbb{R}^{(J+1) \times d}$ and $b$ represent the regression matrix and bias, respectively. $C > 0$ is the trade-off parameter. $\|W\|_F^2$ is the squared Frobenius norm of the regression matrix $W$, which is used to control the complexity of the model and avoid overfitting. $G(W) = \tau\|W\|_*$ denotes the nuclear norm penalty on $W$, where $\tau > 0$ is the penalty parameter. As a convex approximation of the rank of the regression matrix $W$, the nuclear norm can capture the structural information within the feature matrix $\hat{Z}$. Besides, we adopt the widely used hinge loss as the loss function because of its sparseness and robustness.
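For reference, one form of the objective that is consistent with the terms described above is sketched below. It follows the support matrix machine formulation of Luo et al. (2015); the factor $\tfrac{1}{2}$, the index $i$ over $n$ training trials, the labels $y_i \in \{-1, +1\}$, and the notation $\{\cdot\}_+ = \max(0, \cdot)$ are assumptions made for this sketch rather than details confirmed by the text.

```latex
\min_{W,\,b}\;\; \frac{1}{2}\,\|W\|_F^2 \;+\; \tau\,\|W\|_* \;+\; C \sum_{i=1}^{n} \Big\{ 1 - y_i \big[ \operatorname{tr}\!\big(W^{\top} \hat{Z}_i\big) + b \big] \Big\}_+
```

Under this form, $\operatorname{tr}(W^{\top}\hat{Z}_i)$ is the matrix inner product between the regression matrix and the feature matrix of the $i$-th trial, so the classifier remains linear in $\hat{Z}_i$ while the nuclear norm acts on the row and column structure of $W$.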

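Finally, the prediction for a test emotion sample $\hat{Z}$ using the classifier $g$ can be represented as follows:

$$\hat{y} = \operatorname{sign}\left(\operatorname{tr}(W^{\top}\hat{Z}) + b\right). \tag{8}$$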

Optimization

To optimize the parameters of the deep classification model $f$, we use the stochastic gradient descent (SGD) method to minimize the objective function in Equation (7), so that the end-to-end training of both the feature extractor $h$ and the classifier $g$ can be carried out via standard backpropagation. The partial derivatives of the objective function with respect to the regression matrix $W$ and the bias $b$ can be computed efficiently as follows:

where the gradient of the nuclear norm $\partial G / \partial W$ can be calculated according to Papadopoulo and Lourakis (2000).
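A minimal NumPy sketch of these derivatives is given below, assuming the objective has the support-matrix-machine form $\tfrac{1}{2}\|W\|_F^2 + \tau\|W\|_* + C\sum_i \max(0, 1 - y_i[\operatorname{tr}(W^{\top}\hat{Z}_i) + b])$ with labels in $\{-1, +1\}$. For the nuclear norm it uses the closed-form expression $UV^{\top}$ from the thin SVD $W = U\Sigma V^{\top}$ (the gradient when $W$ has full rank, a valid subgradient otherwise) instead of the SVD-Jacobian route cited in the text. All data, sizes, and hyperparameter values are synthetic.

```python
import numpy as np

def objective(W, b, Z, y, C, tau):
    """0.5*||W||_F^2 + tau*||W||_* + C * sum of hinge losses (assumed form)."""
    margins = y * (np.einsum("ij,nij->n", W, Z) + b)    # y_i [tr(W^T Z_i) + b]
    hinge = np.maximum(0.0, 1.0 - margins).sum()
    nuc = np.linalg.svd(W, compute_uv=False).sum()
    return 0.5 * np.sum(W ** 2) + tau * nuc + C * hinge

def subgradient(W, b, Z, y, C, tau):
    """One subgradient of the objective with respect to W and b."""
    margins = y * (np.einsum("ij,nij->n", W, Z) + b)
    active = margins < 1.0                               # trials with non-zero hinge loss
    U, _, Vt = np.linalg.svd(W, full_matrices=False)
    grad_nuc = U @ Vt                                    # (sub)gradient of ||W||_*
    grad_W = W + tau * grad_nuc - C * np.einsum("n,nij->ij", y * active, Z)
    grad_b = -C * np.sum(y * active)
    return grad_W, grad_b

rng = np.random.default_rng(0)
n, rows, d = 20, 6, 16                                   # trials, J+1 regions, feature size
Z = rng.standard_normal((n, rows, d))
y = rng.choice([-1.0, 1.0], size=n)
W = 0.1 * rng.standard_normal((rows, d))
b, C, tau = 0.0, 1.0, 0.01

grad_W, grad_b = subgradient(W, b, Z, y, C, tau)

# One plain gradient step (learning rate chosen arbitrarily for the demo).
lr = 1e-3
W_new, b_new = W - lr * grad_W, b - lr * grad_b
```

In an automatic-differentiation framework these quantities would be produced by backpropagation and passed further down to the feature extractors; the sketch only makes the classifier-level terms explicit.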

Experiments

In this section, the proposed NRDNN is evaluated on a publicly available affective EEG dataset, the DEAP dataset (Koelstra et al., 2011). The dataset is first preprocessed. Then, we introduce the comparison methods and their parameter settings. The experimental results are subsequently presented and discussed in detail. Finally, we conclude this study.

Affective EEG Data Preparation

The DEAP dataset contains multi-channel physiological signals for analyzing human emotional states. It comprises 32-channel EEG signals recorded from 32 subjects at a sampling rate of 512 Hz. Each subject watched 40 one-minute music videos chosen to elicit various emotions. Therefore, there are 40 trials per subject, each of which corresponds to the affective EEG data elicited by one music video. After each trial, the subject performed a self-assessment on five dimensions, i.e., valence (from sad to joyful), arousal (from calm to excited), dominance (from submissive to dominant), liking (related to the preference of the participant), and familiarity (related to the prior experience of the participant). Familiarity is rated from 1 (weakest) to 5 (strongest), whereas the remaining dimensions range from 1 to 9. Following Yang et al. (2018), we adopt 5 as the threshold of the valence dimension to divide the EEG trials into two categories: a trial is positive if its valence rating is greater than 5 and negative if it is smaller than 5. In this study, we downsampled the 32-channel affective EEG signals to 128 Hz and then bandpass filtered them between 4 and 45 Hz. Without loss of generality, we used only the first half of the subjects (16 subjects) to evaluate the effectiveness of the proposed NRDNN, in order to reduce the training time.
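The preprocessing and labeling steps described above might look as follows with NumPy and SciPy. This is a sketch on synthetic data: the filter family (Butterworth), its order, the zero-phase filtering, and the handling of ratings exactly equal to 5 are assumptions, since the text does not specify them.

```python
import numpy as np
from scipy import signal

FS_RAW, FS_TARGET = 512, 128          # sampling rates stated in the text (Hz)
BAND = (4.0, 45.0)                    # pass band stated in the text (Hz)

def preprocess_trial(x, order=4):
    """Downsample a (channels x samples) trial to 128 Hz, then band-pass 4-45 Hz.

    The Butterworth design and its order are assumptions of this sketch.
    """
    q = FS_RAW // FS_TARGET                                   # decimation factor 4
    x_ds = signal.decimate(x, q, axis=-1, zero_phase=True)    # anti-aliased downsampling
    sos = signal.butter(order, BAND, btype="bandpass", fs=FS_TARGET, output="sos")
    return signal.sosfiltfilt(sos, x_ds, axis=-1)             # zero-phase filtering

def valence_to_label(valence, threshold=5.0):
    """+1 for ratings above the threshold, -1 for ratings below it.

    Ratings exactly equal to the threshold are mapped to 0 so the caller can
    decide how to treat them; the text does not specify this case.
    """
    return np.sign(np.asarray(valence, dtype=float) - threshold).astype(int)

rng = np.random.default_rng(0)
trial = rng.standard_normal((32, FS_RAW * 4))   # 4 s of synthetic 32-channel data
clean = preprocess_trial(trial)
labels = valence_to_label([7.2, 3.1, 5.0])
```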

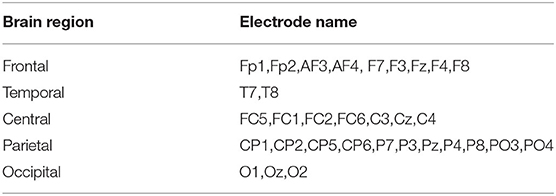

The EEG signals in DEAP database were recorded with 32 electrodes following the international 10/20 system. According to the spatial locations of electrodes, we grouped the 32 electrodes into 5 brain regions. Table 2 summarizes the EEG electrodes located in each brain region in detail (Li Y. et al., 2019).

Experimental Setup

Baseline Methods

In the experiments, the following methods were evaluated on the aforementioned affective EEG classification task: (1) support vector machine (SVM) (Zhang et al., 2018), (2) support matrix machine (SMM) (Luo et al., 2015), (3) EEGNet (Lawhern et al., 2018), (4) Shallow ConvNet for affective EEG (AConvNet) (Schirrmeister et al., 2017), (5) Fusion ConvNet (FConvNet) (Liang et al., 2020), (6) deep learning with SVM (DLSVM) (Tang, 2013), (7) NRDNN without the region-attention layer (DNN), and (8) our NRDNN.

Implementation Details

Because the input of SMM must be a matrix, principal component analysis (PCA) (Placidi et al., 2016) was adopted to reduce the dimension of the EEG data to 32 × 16 matrix features. The resulting two-dimensional EEG features were then reshaped into vectors and used as the input of SVM. AConvNet was used as the network backbone of FConvNet. Following Liang et al. (2020), we took the high-level feature representations of multiple brain regions as multiple views, which were then classified using the cross-entropy loss. For DLSVM, we also used AConvNet as the network backbone; the high-level feature representations of multiple brain regions were concatenated and then classified using SVM in an end-to-end manner. The trade-off parameter $C$ of SVM, SMM, DLSVM, and our NRDNN was selected from the set {1e-2, 1e-1, 1e0, 1e1, 1e2}. The parameter $\tau$ of SMM and our NRDNN was selected from the set {1e-3, 2e-3, 5e-3, 1e-2, 2e-2, 5e-2, 1e-1, 2e-1, 5e-1, 1e0}. For all comparison methods, the optimal parameters $C$ and $\tau$ were chosen by 5-fold cross-validation on the training dataset. For the deep learning methods EEGNet, AConvNet, FConvNet, DLSVM, and NRDNN, the batch size and the number of epochs were set to 40 and 1,000, respectively. The learning rate was dynamically changed during optimization using the following formula (Pei et al., 2018): $\eta_p = \eta_0 / (1 + \alpha p)^{\beta}$, in which $p$ changes linearly from 0 to 1, $\eta_0$ = 1e-3, $\alpha = 10$, and $\beta = 0.75$. Besides, the parameter $r$ is set to 2 in the region-attention network.
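The learning-rate annealing can be sketched in a few lines of Python, assuming the schedule has the form $\eta_p = \eta_0 / (1 + \alpha p)^{\beta}$ that is commonly used with these parameter names. Updating the rate once per epoch is also an assumption; the text only states that $p$ moves linearly from 0 to 1 over training.

```python
def learning_rate(p, eta0=1e-3, alpha=10.0, beta=0.75):
    """Annealed learning rate eta_p = eta0 / (1 + alpha * p) ** beta, with p in [0, 1]."""
    return eta0 / (1.0 + alpha * p) ** beta

num_epochs = 1000
# p moves linearly from 0 (first epoch) to 1 (last epoch).
schedule = [learning_rate(epoch / (num_epochs - 1)) for epoch in range(num_epochs)]
```

With these values the rate starts at `1e-3` and decays monotonically to `1e-3 / 11 ** 0.75` by the final epoch.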

Following the evaluation protocol of Lan et al. (2018), we used leave-one-subject-out cross-validation to evaluate the affective EEG classification performance on each subject. The following metrics (Chen et al., 2020) were computed on the test dataset: accuracy (ACC), F1 score (F1), and the area under the receiver operating characteristic curve (AUC). Here, ACC = (TP+TN)/(TP+FN+FP+TN) and F1 = 2 × PPV × SEN/(PPV+SEN), in which the positive predictive value PPV = TP/(TP+FP) and the sensitivity SEN = TP/(TP+FN); TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. In general, the higher the metric values, the better the affective EEG classification performance.
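The ACC, PPV, SEN, and F1 definitions above translate directly into code. The sketch below uses a small hand-made label vector; the function name `binary_metrics` and the convention of returning 0.0 when a denominator is zero are illustrative choices, not taken from the study.

```python
import numpy as np

def binary_metrics(y_true, y_pred, positive=1):
    """Compute ACC, PPV, SEN, and F1 from binary labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_pred == positive) & (y_true == positive)))
    tn = int(np.sum((y_pred != positive) & (y_true != positive)))
    fp = int(np.sum((y_pred == positive) & (y_true != positive)))
    fn = int(np.sum((y_pred != positive) & (y_true == positive)))

    acc = (tp + tn) / (tp + tn + fp + fn)
    ppv = tp / (tp + fp) if (tp + fp) else 0.0     # positive predictive value
    sen = tp / (tp + fn) if (tp + fn) else 0.0     # sensitivity
    f1 = 2 * ppv * sen / (ppv + sen) if (ppv + sen) else 0.0
    return {"ACC": acc, "PPV": ppv, "SEN": sen, "F1": f1}

# Toy example: 3 true positives, 3 true negatives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, -1, -1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1, 1, -1, 1]
scores = binary_metrics(y_true, y_pred)
```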

Experimental Results Analysis

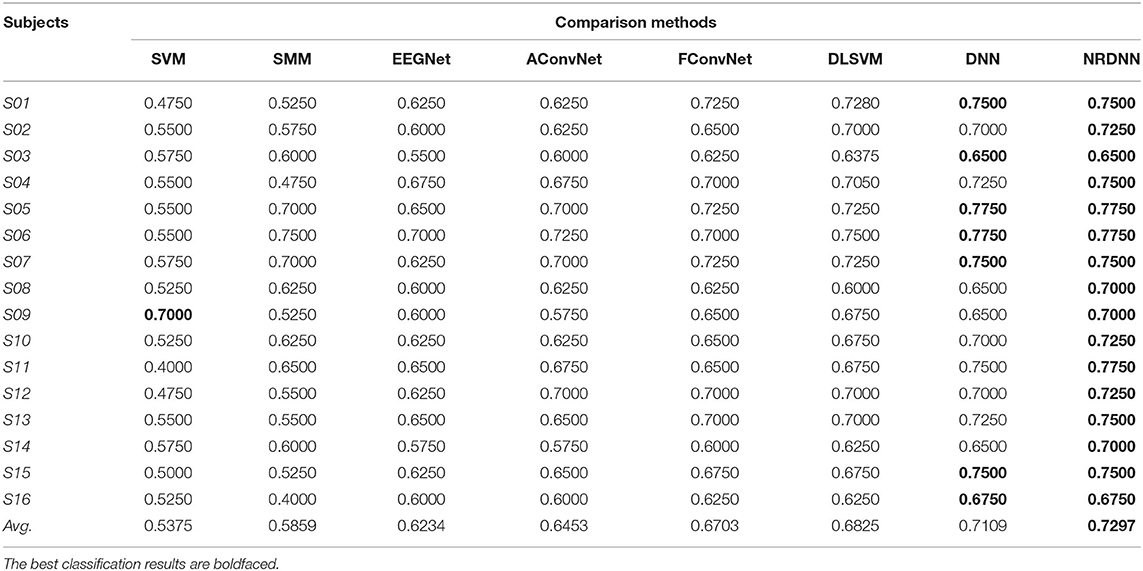

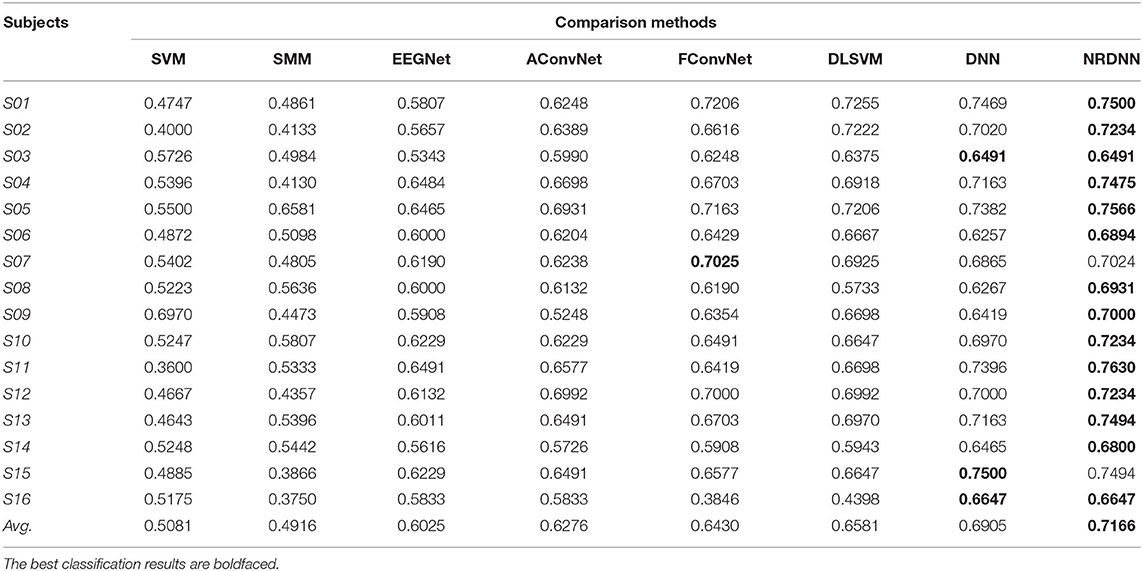

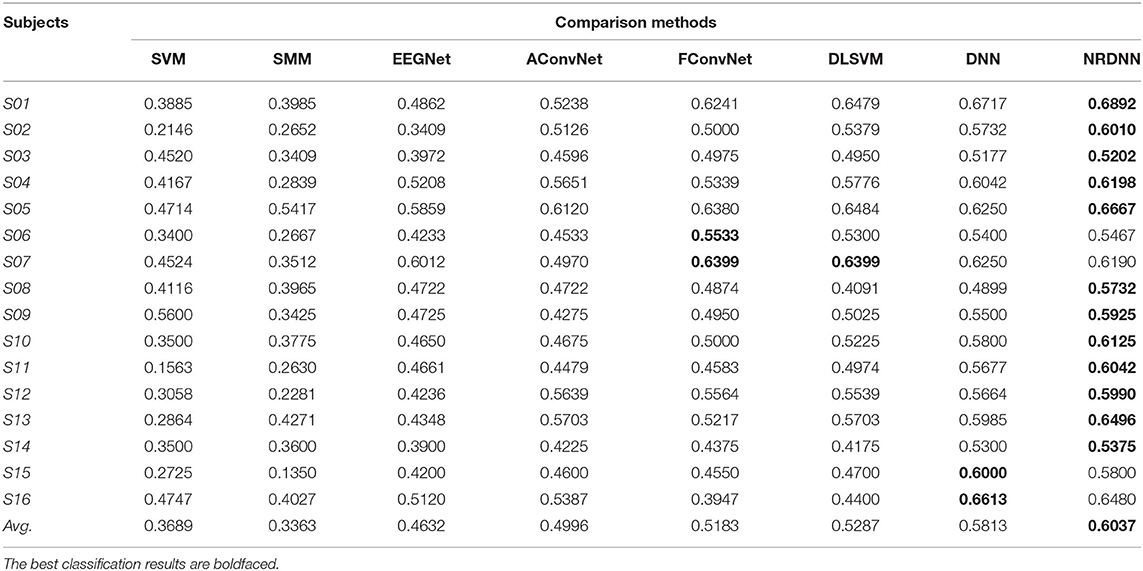

The classification accuracy (ACC), F1 score (F1), and AUC of all comparison methods on 16 subjects are presented in Tables 3–5. The best classification results are boldfaced. From these results, we can make the following observations. In terms of ACC, the matrix learning method SMM obtains better classification performance than the vector-based classifier SVM. This is because SMM can exploit the correlation within EEG feature matrices to improve the classification performance. In addition, deep learning methods, such as EEGNet, AConvNet, FConvNet, DLSVM, and NRDNN, yield better classification results than shallow methods, such as SVM and SMM, in almost all cases. Compared with shallow methods, deep neural networks can automatically learn high-level feature representations from the raw data, resulting in better EEG decoding performance. Notably, our NRDNN obtains better classification performance than the other deep learning methods. These promising results are mainly attributed to the fact that NRDNN can not only learn high-level feature representations of EEG signals located in multiple brain regions but also capture the structural information among different brain regions. The experimental results verify that the structural information among different brain regions is conducive to boosting the decoding performance of affective EEG signals.

As summarized in Tables 3–5, the proposed method obtains the highest average classification results. Specifically, the proposed NRDNN achieves average results of 72.97, 71.66, and 60.37% in terms of ACC, F1, and AUC. Compared with the baseline SVM, the average ACC, F1, and AUC increase in absolute terms by 19.22, 20.85, and 23.48%, respectively. NRDNN outperforms SMM by an average of 14.38, 22.5, and 26.74%, which validates the high-level feature learning capability of our NRDNN. Compared with DLSVM, which does not leverage the structural information among multiple brain regions, the average classification results of NRDNN are higher by 4.72, 5.85, and 7.5% in terms of ACC, F1, and AUC, respectively. Besides, NRDNN outperforms EEGNet by 10.63, 11.41, and 14.5%, and yields 8.44, 8.9, and 10.41% higher average classification results than AConvNet. NRDNN is superior to FConvNet by 5.94, 7.36, and 8.54% in terms of ACC, F1, and AUC, respectively. Furthermore, NRDNN outperforms DNN by an average of 1.88, 2.61, and 2.24%, which validates the benefit of automatically identifying the contributions of different brain regions. These experimental results demonstrate the effectiveness of the proposed NRDNN.

Discussion

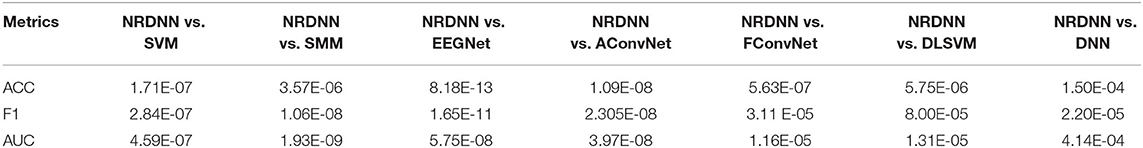

To evaluate the statistical significance of the experimental results, we further performed pairwise two-tailed t-tests (Zheng et al., 2018) to verify whether significant differences exist, at a confidence level of 95%, between the proposed NRDNN and the comparison methods. The statistical significance comparisons of ACC and F1 between NRDNN and the comparison methods are given in Table 6. A p-value below 0.05 indicates that a significant difference exists between the proposed NRDNN and the comparison method. The p-values that are less than 0.05 are highlighted in boldface. As summarized in Table 6, the null hypothesis can be rejected at the 95% confidence level in each case. The statistical results verify that the proposed NRDNN significantly outperforms the comparison methods. This further indicates the capability of NRDNN to exploit the structural information among multiple brain regions, as well as its powerful high-level affective EEG feature learning capability. The above experimental results illustrate that the proposed NRDNN is suitable for the classification of affective EEG data.

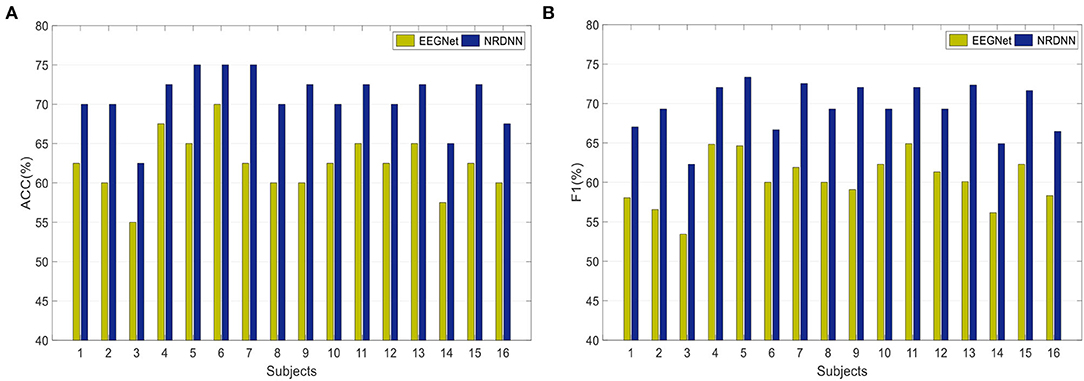

To obtain a better insight into the classification result of our nuclear norm regularized deep neural network framework, we further investigated the effects of different network backbones on the classification performance. Figure 4A presents the ACCs of both EEGNet and the proposed framework NRDNN using EEGNet as its network backbone. Figure 4B gives the F1s. It can be found that NRDNN yields better results than the baseline EEGNet in all cases. In terms of ACC, NRDNN is superior to EEGNet by 7.5, 10, 7.5, 5, 10, 5, 12.5, 10, 12.5, 7.5, 7.5, 7.5, 7.5, 7.5, 10, and 7.5% on 16 subjects, respectively. Compared to EEGNet that does not leverage the deep features of multiple brain regions and their structural information, the classification F1s of NRDNN are increased by 8.96, 12.74, 8.86, 7.22, 8.68, 6.67, 10.63, 9.31, 12.98, 7.02, 7.15, 7.99, 12.23, 8.75, 9.34, and 8.14%, respectively. The average ACC and F1 of NRDNN are 70.78 and 69.41%. The absolute values are increased by 8.44 and 9.16% compared with EEGNet.

Figure 4. Classification results of both EEGNet and the proposed framework NRDNN using EEGNet as its network backbone. (A) Classification performance (ACC), and (B) Classification performance (F1).
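The average gains quoted above can be checked directly from the per-subject values listed in the text. The short sketch below recomputes both means; the observation about the 2.5-point step assumes that each subject's test set consists of that subject's 40 trials, which is how leave-one-subject-out evaluation would typically be arranged.

```python
# Per-subject gains of NRDNN (EEGNet backbone) over EEGNet, copied from the text.
acc_gain = [7.5, 10, 7.5, 5, 10, 5, 12.5, 10, 12.5, 7.5, 7.5, 7.5, 7.5, 7.5, 10, 7.5]
f1_gain = [8.96, 12.74, 8.86, 7.22, 8.68, 6.67, 10.63, 9.31,
           12.98, 7.02, 7.15, 7.99, 12.23, 8.75, 9.34, 8.14]

mean_acc_gain = sum(acc_gain) / len(acc_gain)
mean_f1_gain = sum(f1_gain) / len(f1_gain)

# With 40 test trials per subject, one trial corresponds to 100 / 40 = 2.5
# accuracy points, so every per-subject ACC gain should be a multiple of 2.5.
trial_step = 100 / 40
all_multiples = all(g % trial_step == 0 for g in acc_gain)

print(round(mean_acc_gain, 2), round(mean_f1_gain, 2), all_multiples)
```

The mean ACC gain is 8.4375, matching the reported 8.44. The mean of the listed F1 gains is about 9.17, which agrees with the reported 9.16 up to the rounding of the per-subject values.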

Overall, the proposed NRDNN improves the affective EEG classification performance using different network backbones. The abovementioned results validate that NRDNN can effectively learn deep features of multiple brain regions and their corresponding structural information using the nuclear norm regularization. To summarize, NRDNN integrates the powerful deep feature learning capability and the structural information learning ability of matrix classifier. The experimental results demonstrate that the proposed NRDNN framework could achieve better classification performance than the comparison methods.

Conclusion

In this study, we first presented a deep neural network, named AConvNet, for affective EEG decoding. Based on AConvNet, we further proposed a novel nuclear norm regularized deep neural network framework called NRDNN. The proposed NRDNN can effectively learn high-level feature representations of EEG signals located in multiple brain regions using AConvNet, and can discriminate the contributions of the brain regions using a set of automatically learned weights. Besides, NRDNN can exploit the structural information among multiple brain regions through the introduced nuclear norm regularization. The proposed NRDNN can be trained in an efficient end-to-end fashion. Extensive experimental results on a publicly available emotion dataset demonstrate the superiority of our NRDNN.

Despite the promising classification performance of NRDNN, there is still room for further improvement. For example, more advanced attention mechanisms would be conducive to identifying the contributions of different brain regions. Besides, extending the proposed method to multi-class classification is another interesting direction. Furthermore, more powerful discriminative high-level features that carry both the spatial and the temporal information of EEG signals could further improve the performance of EEG-based emotion recognition. We will address these issues in future studies.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found at: http://www.eecs.qmul.ac.uk/mmv/datasets/deap/download.html.

Author Contributions

SL is responsible for study design and manuscript writing. MY and YH are responsible for data processing and data analysis. XD is responsible for manuscript editing. QW is responsible for experimental design. All authors contributed to the article and approved the submitted version.

Funding

This study was supported in part by the National Natural Science Foundation of China under Grants (61902197). Research on development and application of intelligent vehicle testing simulator based on augmented reality was funded by the Guangdong Provincial Basic and Applied Basic Research Fund-Regional Joint Fund (2020B1515130004), the Open Project of State Key Laboratory for Novel Software Technology at Nanjing University (KFKT2020B11), and the NUPTSF (NY219034).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agrafioti, F., Hatzinakos, D., and Anderson, A. K. (2012). ECG pattern analysis for emotion detection. IEEE Trans. Affect. Comput. 3, 102–115. doi: 10.1109/T-AFFC.2011.28

Alarcao, S., and Fonseca, M. (2017). Emotions recognition using EEG signals: a survey. IEEE Trans. Affect. Comput. 10, 374–393. doi: 10.1109/TAFFC.2017.2714671

Ang, J., Dhillon, R., Krupski, A., Shriberg, E., and Stolcke, A. (2002). “Prosody-based automatic detection of annoyance and frustration in human-computer dialog,” in INTERSPEECH (Denver, CO), 2037–2040.

Atyabi, A., Shic, F., and Naples, A. (2016). Mixture of autoregressive modeling orders and its implication on single trial EEG classification. Expert Syst. With Applic. 65, 164–180. doi: 10.1016/j.eswa.2016.08.044

Bustamante, P., Lopez Celani, N., Perez, M., and Quintero, M. O. (2015). “Recognition and regionalization of emotions in the arousal-valence plane,” in Proceedings of the 2015 Milano, Italy 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) p. 6042–6045. doi: 10.1109/EMBC.2015.7319769

Chen, Y., Hang, W., Liang, S., Liu, X., Li, G., and Wang, Q. (2020). A novel transfer support matrix machine for motor imagery-based brain computer interface. Front. Neurosci. 14, 606949. doi: 10.3389/fnins.2020.606949

Cheng, B., and Liu, G. (2008). “Emotion recognition from surface EMG signal using wavelet transform and neural network,” in Proceedings of the 2nd International Conference on Bioinformatics and Biomedical Engineering. (Shanghai), p. 1363–1366. doi: 10.1109/ICBBE.2008.670

Clark, J. M. (1994). Modularity, abstractness and the interactive brain. Behav. Brain Sci. 17, 67–68. doi: 10.1017/S0140525X00033380

Duan, R., Zhu, J., and Lu, B. (2013). “Differential entropy feature for EEG-based emotion classification,” in 6th International IEEE/EMBS Conference on Neural Engineering (NER). p. 81–84. doi: 10.1109/NER.2013.6695876

Fragopanagos, N., and Taylor, J. G. (2005). Emotion recognition in human–computer interaction. Neural Netw. 18, 389–405. doi: 10.1016/j.neunet.2005.03.006

Hang, W., Feng, W., Liang, S., Wang, Q., Liu, X., and Choi, K. S. (2020). Deep stacked support matrix machine based representation learning for motor imagery EEG classification. Comput. Methods Progr. Biomed. 193, 105466. doi: 10.1016/j.cmpb.2020.105466

Hu, J., Shen, L., and Sun, G. (2018). “Squeeze-and-excitation networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. (Salt Lake City, UT: IEEE), 7132–7141. doi: 10.1109/CVPR.2018.00745

Iacoviello, D., Petracca, A., Spezialetti, M., and Placidi, G. (2015). A real-time classification algorithm for EEG-based BCI driven by self-induced emotions. Comput. Methods Programs Biomed. 122, 293–303. doi: 10.1016/j.cmpb.2015.08.011

Koelstra, S., Muhl, C., and Soleymani, M. (2011). Deap: A database for emotion analysis using physiological signals. IEEE Transac. Affect. Comput. 3, 18–31. doi: 10.1109/T-AFFC.2011.15

Kurmukov, A., Dodonova, Y., Burova, M., Mussabayeva, A., Petrov, D., and Faskowitz, J. (2016). “Topological modules of human brain networks are anatomically embedded: evidence from modularity analysis at multiple scales,” in International Conference on Network Analysis, Cham: Springer. p. 299–308. doi: 10.1007/978-3-319-96247-4_22

Lan, Z., Sourina, O., Wang, L., Scherer, R., and Müller-Putz, G. R. (2018). Domain adaptation techniques for EEG-based emotion recognition: a comparative study on two public datasets. IEEE Transac. Cogn. Develop. Syst. 11, 85–94. doi: 10.1109/TCDS.2018.2826840

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 15, 056013. doi: 10.1088/1741-2552/aace8c

Li, J., Qiu, S., Shen, Y., Liu, C. L., and He, H. (2019). Multisource transfer learning for cross-subject EEG emotion recognition. IEEE Transac. Cybernet. 50, 3281–3293. doi: 10.1109/TCYB.2019.2904052

Li, Y., Zheng, W., Wang, L., Zong, Y., and Cui, Z. (2019). From regional to global brain: A novel hierarchical spatial-temporal neural network model for EEG emotion recognition. IEEE Transac. Affect Comput. 13, 568–578. doi: 10.1109/TAFFC.2019.2922912

Liang, E., Elazab, A., Liang, S., Wang, Q., and Lei, B. (2020). “Walking imagery evaluation based on multi-view features and stacked denoising auto-encoder network,” in 2020 IEEE 17th International Symposium on Biomedical Imaging. p. 1896–1899. doi: 10.1109/ISBI45749.2020.9098522

Lindquist, K. A., Wager, T. D., Kober, H., Bliss-Moreau, E., and Barrett, L. F. (2012). The brain basis of emotion: a meta-analytic review. Behav. Brain Sci. 35, 121–143. doi: 10.1017/S0140525X11000446

Liu, Y. J., Yu, M., Zhao, G., Song, J., Ge, Y., and Shi, Y. (2017). Real-time movie-induced discrete emotion recognition from EEG signals. IEEE Transac. Affect. Comput. 9, 550–562. doi: 10.1109/TAFFC.2017.2660485

Lotfi, E., and Akbarzadeh, -T M. R. (2014). Practical emotional neural networks. Neural Netw. 59, 61–72. doi: 10.1016/j.neunet.2014.06.012

Luo, L., Xie, Y., Zhang, Z., and Li, W. (2015). “Support matrix machines,” in International Conference on Machine Learning. (Lille), p. 938–947.

Mazumder, I. (2019). “An analytical approach of EEG analysis for emotion recognition,” in Proceedings of the 2019 Devices for Integrated Circuit (DevIC). p. 256–260. doi: 10.1109/DEVIC.2019.8783331

Mühl, C., Allison, B., Nijholt, A., and Chanel, G. (2014). A survey of affective brain computer interfaces: Principles, state-of-the-art, and challenges. Brain Comput. Interfaces 1, 66–84. doi: 10.1080/2326263X.2014.912881

Murugappan, M., and Murugappan, S. (2013). “Human emotion recognition through short time Electroencephalogram (EEG) signals using Fast Fourier Transform (FFT),” in Proceedings of the 2013 IEEE 9th International Colloquium on Signal Processing and its Applications. (Kuala Lumpur: IEEE), p. 289–294. doi: 10.1109/CSPA.2013.6530058

Nair, V., and Hinton, G. E. (2010). “Rectified linear units improve restricted boltzmann machines,” in International Conference on Machine Learning (Haifa), 807–814. doi: 10.5555/3104322.3104425

Pandey, P., and Seeja, K. (2019). “Emotional state recognition with eeg signals using subject independent approach,” in Data Science and Big Data Analytics. Singapore: Springer. p. 117–124. doi: 10.1007/978-981-10-7641-1_10

Papadopoulo, T., and Lourakis, M. I. A. (2000). “Estimating the jacobian of the singular value decomposition: Theory and applications,” in European Conference on Computer Vision. (Dublin), p. 554–570. doi: 10.1007/3-540-45054-8_36

Park, Y., and Chung, W. (2019). Frequency-optimized local region common spatial pattern approach for motor imagery classification. IEEE Transac. Neural Syst. Rehabilit. Eng. 27, 1378–1388. doi: 10.1109/TNSRE.2019.2922713

Pei, Z., Cao, Z., Long, M., and Wang, J. (2018). “Multi-adversarial domain adaptation,” in Thirty-second AAAI Conference on Artificial Intelligence.

Petrantonakis, P. C., and Hadjileontiadis, L. J. (2010). Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Transac. Affect. Comput. 1, 81–97. doi: 10.1109/T-AFFC.2010.7

Placidi, G., Giamberardino, P. D., Petracca, A., Spezialetti, M., and Iacoviello, D. (2016). “Classification of Emotional Signals from the DEAP dataset,” in International Congress on Neurotechnology, Electronics and Informatics. vol. 2, p. 15–21. doi: 10.5220/0006043400150021

Ramoser, H., Muller-Gerking, J., and Pfurtscheller, G. (2000). Optimal spatial filtering of single trial eeg during imagined hand movement. IEEE Transac. Rehabilit. Eng. 8, 441–446. doi: 10.1109/86.895946

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., and Tangermann, M. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Human Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Song, T., Zheng, W., Song, P., and Cui, Z. (2018). Eeg emotion recognition using dynamical graph convolutional neural networks. IEEE Transac. Affect Comput. 11, 532–41. doi: 10.1109/TAFFC.2018.2817622

Tang, X., Wang, T., Du, Y., and Dai, Y. (2019). Motor imagery EEG recognition with KNN-based smooth auto-encoder. Artif Intell. Med. 101, 101747. doi: 10.1016/j.artmed.2019.101747

Tang, Y. (2013). Deep learning using support vector machines. CoRR, abs/0239, 2.1. doi: 10.48550/arXiv.1306.0239

Ting, W., Guo-Zheng, Y., Bang-Hua, Y., and Hong, S. (2008). EEG feature extraction based on wavelet packet decomposition for brain computer interface. Meas. J. Int. Meas. Confed. 41, 618–625. doi: 10.1016/j.measurement.2007.07.007

Vecchio, F., Babiloni, C., Buffo, P., Rossini, P. M., and Bertini, M. (2013). Inter-hemispherical functional coupling of EEG rhythms during the perception of facial emotional expressions. Clin. Neurophysiol. 124, 263–272. doi: 10.1016/j.clinph.2012.03.083

Xiaohua, H., Wang, S. J., Liu, X., Zhao, G., Feng, X., and Pietikainen, M. (2017). Discriminative spatiotemporal local binary pattern with revisited integral projection for spontaneous facial micro-expression recognition. IEEE Trans. Affect. Comput. 10, 32–47. doi: 10.1109/TAFFC.2017.2713359

Yan, J., Zheng, W., Xin, M., and Yan, J. (2014). Integrating facial expression and body gesture in videos for emotion recognition. IEICE Transac. Inf. Syst. E97-D, 610–613. doi: 10.1587/transinf.E97.D.610

Yang, Y., Wu, Q., Qiu, M., Wang, Y., and Chen, X. (2018). “Emotion recognition from multi-channel eeg through parallel convolutional recurrent neural network,” in International Joint Conference on Neural Networks. (Rio de janeiro), p. 1–7. doi: 10.1109/IJCNN.2018.8489331

Zhang, Y., Nam, C. S., Zhou, G., Jin, J., Wang, X., and Cichocki, A. (2018). Temporally constrained sparse group spatial patterns for motor imagery BCI. IEEE Transac. Cybernet. 49, 3322–3332. doi: 10.1109/TCYB.2018.2841847

Zhang, Y., Zhou, G., Zhao, Q., Jin, J., Wang, X., and Cichocki, A. (2013). Spatial-temporal discriminant analysis for ERP-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 21, 233–243. doi: 10.1109/TNSRE.2013.2243471

Zheng, Q., Zhu, F., and Heng, P. A. (2018). Robust support matrix machine for single trial eeg classification. IEEE Transac. Neural Syst. Rehabil. Eng. 26, 551–562. doi: 10.1109/TNSRE.2018.2794534

Zheng, W. (2017). Multichannel EEG-based emotion recognition via group sparse canonical correlation analysis. IEEE Transac. Cogn. Develop. Syst. 9, 281–290. doi: 10.1109/TCDS.2016.2587290

Keywords: electroencephalography (EEG), emotion recognition, affective brain-computer interface (aBCI), structural information, nuclear norm regularization

Citation: Liang S, Yin M, Huang Y, Dai X and Wang Q (2022) Nuclear Norm Regularized Deep Neural Network for EEG-Based Emotion Recognition. Front. Psychol. 13:924793. doi: 10.3389/fpsyg.2022.924793

Received: 20 April 2022; Accepted: 24 May 2022; Published: 29 June 2022.

Edited by: Yizhang Jiang, Jiangnan University, China

Copyright © 2022 Liang, Yin, Huang, Dai and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qiong Wang, wangqiong@siat.ac.cn