- ¹College of Foreign Languages, Hunan University, Changsha, China

- ²School of Foreign Studies, Hunan University of Humanities, Science and Technology, Loudi, China

Previous studies that explored the impact of task-related variables on translation performance focused on task complexity but reported inconsistent findings. This study shows that, to understand the effect of task complexity on the translation process and its end product, performance in translation tasks of various complexity levels needs to be compared in a specific setting in which factors other than task complexity are also considered, especially students’ translating self-efficacy belief (TSEB). Data obtained from screen recording, subjective rating, semi-structured interviews, and quality evaluation were triangulated to measure how task complexity influenced the translation performance of Chinese students with high and low TSEB. We found that the complex task led to significantly longer task duration, greater self-reported cognitive effort, lower accuracy, and poorer fluency than the simple one among students, irrespective of their TSEB level. In addition, the high-TSEB group outperformed the low-TSEB group in translation accuracy and fluency in both tasks. However, the interaction effect of task complexity and TSEB was not significant, possibly due to weak problem awareness among students. Our study has implications for designing task complexity effectively, harnessing the benefits of TSEB, and improving research on translation performance.

Introduction

Translation process research, which has been ongoing for about 40 years, focuses on human cognition during translation (Alves and Hurtado Albir, 2010; Jääskeläinen, 2016). In translation process research, simultaneous analysis of the translation process and its end product is vitally important since “looking only at the process or the product…is looking at only one side of a coin” (O’Brien, 2013: 6). Translators, related both to the process and product of translation, have thus attracted great attention in translation process research (e.g., Muñoz Martín, 2014; Lehka-Paul and Whyatt, 2016; Araghian et al., 2018). Although several factors can affect translators’ mental process and product quality, previous studies have identified the task itself as a key factor (e.g., Kelly, 2005; O’Brien, 2013). First, there is a need for translation teachers to design appropriate tasks as per pedagogical objectives. However, traditional translator training is often criticized for the lack of appropriate criteria for source text selection and task design in general (Kelly, 2005). In addition, task design is also an important element in research design (O’Brien, 2013) as tasks adopted shall be appropriate for the research project. The past decades have seen a growing interest in investigating how task properties may influence translators’ performance. Researchers have explored task type (e.g., Jia et al., 2019), task modality (e.g., Chmiel et al., 2020), task condition (e.g., Weng et al., 2022), and task complexity (e.g., Feng, 2017; Sun et al., 2020). Among them, studies dealing with task complexity have remained a major focus and reported interesting, albeit inconsistent, findings.

Although a major line of task-based research investigated the impact of task complexity, it focused primarily on the translation process and largely did not relate its findings to specific translator factors (e.g., Feng, 2017; Liu et al., 2019). Translation has often been depicted as a cognitive task driven by problem-solving (Angelone, 2018). As translation problems are individual and arise from the interplay between task properties and characteristics of task performers (Muñoz Martín and Olalla-Soler, 2022), translators may deliver varied performance even on the same task. Studies that investigated the interplay between task properties and translator characteristics worked on such individual differences as L2 proficiency (Pokorn et al., 2019), working memory capacity (Wang, 2022), and emotional intelligence (Ghobadi et al., 2021), among others. However, to the best of our knowledge, no study has so far investigated how task complexity influences the translation performance of students with different levels of self-efficacy belief, an important affective variable influencing students’ motivation and learning (Bandura, 1997) and a construct recently introduced into translation studies (Yang et al., 2021a). This study attempts to contribute further empirical data to translation performance research by analyzing both the process and the product of written translation and by considering the interaction of task complexity and translating self-efficacy belief (TSEB).

Literature review

Tapping into the process and product data of translation performance

Literature regarding the nature of translation performance reveals two assessment approaches: product- and behavior-based assessment. Most studies perceived translation performance as the result of translation activities and assessed it by evaluating the quality of the products only (Jääskeläinen, 2016). While exploring the relationship between personality type and performance in translating expressive, appellative and informative texts, Shaki and Khoshsaligheh (2017) concluded that the sensing-type students delivered less successful performance than the intuitive-type students in all three tasks. Their performance was evaluated against Waddington’s (2001) rubric, which was also adopted by Ghobadi et al. (2021) to assess participants’ translation performance. Meanwhile, some studies considered translation performance as the sum of behaviors that participants controlled in the process of translation (Muñoz Martín, 2014). In these studies, participants’ translation performance was therefore understood by evaluating their behaviors in the translation process. For example, to better understand the mental processes involved in translation, Lörscher (1991) investigated the strategic translation performance of 56 secondary school and university students by utilizing think-aloud protocols. In addition, Rothe-Neves (2003) analyzed the process features of translation performance with processing time and writing effort measures.

To solve the riddles of translation as a process and a product, there is a need to combine quality assessment and process findings for performance evaluation. This is in line with the suggestion of Jääskeläinen (2016) and Angelone (2018) that translation performance research should be grounded in both process and product data. Combining the two data sources opens up new research avenues and increases the possibilities of finding explanations and generalizing results to real-life circumstances. It may help explain, for instance, why longer task duration sometimes leads to better performance, but sometimes to poorer performance among the same group of students (e.g., Seufert et al., 2017). Despite potential benefits of using the integrated approach, product quality assessment has been integrated with only a few process-oriented studies (Saldanha and O’Brien, 2014). This study aims to demonstrate the utility of this approach by collecting empirical evidence on both the translation process and its end product.

Task complexity and translation performance

Task complexity, defined as “the result of the attentional, memory, reasoning, and other information-processing demands imposed by the structure of the task” on task performers (Robinson, 2011: 106), can affect cognitive processing (Plass et al., 2010). Translation is a high-order cognitive task that imposes cognitive load on task performers and engages their cognitive effort (Liu et al., 2019). Therefore, we must first differentiate cognitive load from cognitive effort before discussing the relationship between task complexity and translation performance. In the present study, cognitive load is associated with the complexity of a task, as it refers to the demand for cognitive resources imposed on students by the task, whereas cognitive effort is associated with the actual response of a student, as it is the amount of cognitive resources that the student expends to accomplish the task. This is consistent with the constructs of cognitive load and cognitive effort developed in educational psychology (Sweller et al., 1998). While the cognitive load of a task can theoretically be identical for different students (Liu et al., 2019; Ehrensberger-Dow et al., 2020), the cognitive effort expended in a task is individual since students have certain freedom regarding how much effort to expend and how to expend it (Feng, 2017; Sun et al., 2020).

Previous research shows that the highest levels of cognitive effort and task performance occur when the task imposes moderate cognitive load (e.g., Plass et al., 2010; Chen et al., 2016). Therefore, translation tasks can optimize students’ opportunities for performance and development if they are of moderate complexity. Such a claim aligns with the social constructivist approach to translator education, where Kiraly emphasizes the use of scaffolded learning activities (Kiraly, 2000). To date, task complexity has been an issue central to curriculum and test development in translator education (Sun and Shreve, 2014). Myriad factors contribute to the complexity of translation tasks, such as source text complexity (Sun and Shreve, 2014), source text quality (Ehrensberger-Dow et al., 2020), the number of simultaneous tasks (Sun et al., 2020), task familiarity (Pokorn et al., 2019), and directionality (Whyatt, 2019). Among them, source text complexity has been a constant focus. To investigate the level of text complexity, researchers have resorted to various measures, including readability (Sun and Shreve, 2014; Whyatt, 2019), word frequency and non-literalness (Liu et al., 2019), degree of polysemy (Mishra et al., 2013), dependency distance (Liang et al., 2017), text structure (Yuan, 2022), and cohesion (Wu, 2019).

Although numerous studies have analyzed how task complexity affects translation performance, the research findings are conflicting. For example, by operationalizing task complexity as the number of simultaneous tasks, Sun et al. (2020) concluded that compared with translating silently, translating while thinking aloud resulted in a higher level of cognitive effort as indicated by task duration, fixation duration and self-ratings; however, the dual-task condition had no influence on translation quality when the source text was complex. Sun et al.’s (2020) findings were only partly consistent with the findings of Wu (2019). In Wu’s (2019) study, task complexity, operationalized as several text characteristics, was positively correlated with self-reported cognitive effort but negatively correlated with translation quality. Despite their discrepancy, Sun et al. (2020) and Wu (2019) revealed that students devoted a higher level of cognitive effort with an increase in task complexity. When task complexity was operationalized as directionality, according to Fonseca (2015), the consensus among translators and translation scholars was that translating into a non-native language (also known as L2 translation) was cognitively more demanding than translating into the native language (also known as L1 translation). However, empirical studies dealing with the effect of directionality on translation performance also reported inconsistent findings (Whyatt, 2019). One possible reason underlying such inconsistency is that existing research on the impact of task complexity does not consider its interplay with translator characteristics.

Self-efficacy belief in translation

How task performance is influenced by the interaction between task properties and learner characteristics has been consistently studied in second language acquisition (Robinson, 2011) and educational psychology (Sweller et al., 1998). Given that individual differences may influence how many cognitive resources to devote and how to expend them in task implementation (e.g., Hoffman and Schraw, 2009; Wang, 2022), there is a need to study translation performance by giving due consideration to translator characteristics. Among the various translator factors, it is critical to examine whether self-efficacy interacts with task complexity in influencing task performance—an observation made in prior research in other disciplines (e.g., Judge et al., 2007; Hoffman and Schraw, 2009; Rahimi and Zhang, 2019). Interestingly, while Judge et al. (2007) reported that the benefits of self-efficacy were difficult to realize in more complex tasks, some studies concluded that the role of self-efficacy was more manifest when task complexity was higher (e.g., Hoffman and Schraw, 2009; Rahimi and Zhang, 2019).

As an affective factor influencing cognitive and motivational processes (Bandura, 1997), self-efficacy can motivate learners and encourage them to put in more effort once an action has been initiated (Bandura, 1995). However, the construct has only recently begun to draw attention from researchers in the field of translation (e.g., Bolaños-Medina, 2014; Muñoz Martín, 2014; Bolaños-Medina and Núñez, 2018). Muñoz Martín (2014) attached importance to the correlation between self-efficacy and translation expertise by specifically including self-efficacy as one of the minimal sub-dimensions of self-concept, which constitutes translation expertise together with knowledge, adaptive psychophysiological traits, problem-solving skills, and regulatory skills. Bolaños-Medina (2014) also proposed that self-efficacy was a construct of relevance for translation process research, related particularly to proficient source language reading comprehension, tolerance of ambiguity, general text translation, and documentation abilities. Besides, Moores et al. (2006) pointed out that an understanding of self-efficacy was required if training programs were designed to develop expert performance level in complex tasks.

Notably, the one-measure-fits-all approach usually has limited explanatory and predictive value because “most of the items in an all-purpose test may have little or no relevance to the domain of functioning” (Bandura, 2006: 307). This idea aligns with Alves and Hurtado Albir’s (2010: 34) proposal that translation process research should “design its own instruments for data collection.” Therefore, empirical research on self-efficacy belief in translation should utilize measurement scales tailored for translation tasks. So far, several such scales have been developed (e.g., Bolaños-Medina and Núñez, 2018; Yang et al., 2021a). Since this study focused on the Chinese-English language pair, we adopted the Translating Self-Efficacy China scale developed by Yang et al. (2021a), which was specifically designed for students with Chinese as their mother tongue and English as a foreign language.

The present study

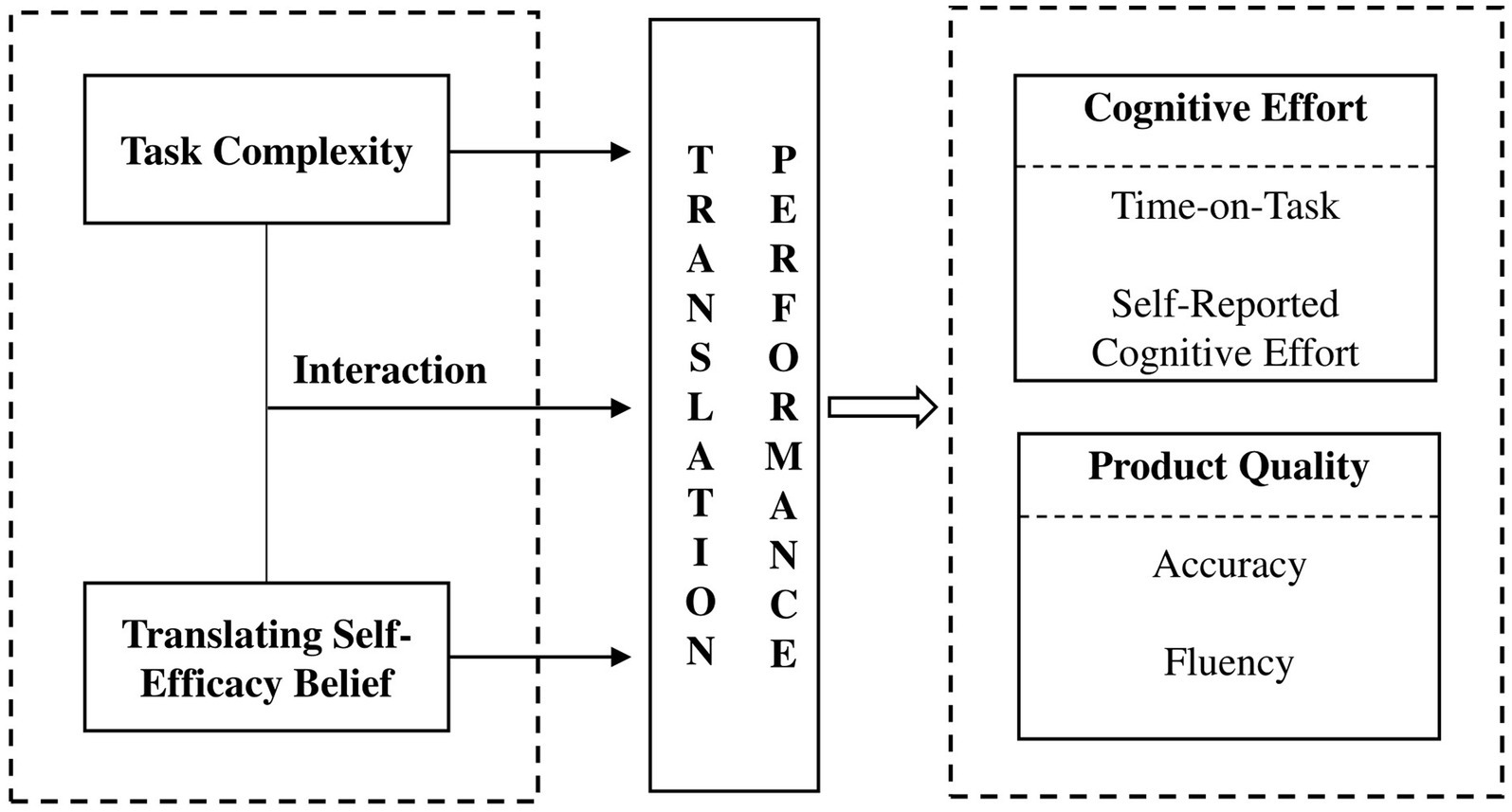

Considering the limitations in earlier studies, the current study attempts to provide further empirical evidence on translation performance. It collects data on both the process and product of two written translation tasks, which are of different complexity levels and performed by homogeneous groups of students with high and low TSEB. A framework is proposed to delineate variables contributing to and measures of translation performance in the current study (see Figure 1). We promote the idea that task complexity and TSEB influence both students’ mental process and product quality; moreover, task complexity might interact with TSEB in influencing their translation performance. For process features of translation performance, we adopt two measures of cognitive effort following previous research (Ehrensberger-Dow et al., 2020; Sun et al., 2020): time-on-task and self-reported cognitive effort. Regarding the quality of translation products, accuracy and fluency are discussed against assessment guidelines.

In brief, to investigate how task complexity and TSEB influence students’ cognitive effort and product quality, and whether there is an interaction effect between the two independent variables, the following research questions (RQs) are raised:

RQ1: What is the impact of task complexity on cognitive effort and product quality of students?

RQ2: How does TSEB influence students’ cognitive effort and product quality?

RQ3: Does task complexity interact with TSEB in influencing students’ translation performance? If yes, how?

Materials and methods

Participants

Brysbaert (2019) proposed that an experiment involving interaction required a minimum sample size of 100 in psychology research. Thus, 136 second-year translation students were recruited for the study on a voluntary basis. The students were from one comprehensive university in mainland China. They were all enrolled in the “Translation Theory and Practice” course, which constituted their first experience of intensive translation training after a foundation year with modules in their two working languages (Chinese as their mother tongue and English as a foreign language), an introduction to linguistics for translation, and instrumental skills such as documentary research and computer skills. When the experiment was conducted, the participants had learned English for about 10 years. Thus, they were generally equipped with the basic translation skills and language abilities needed to complete the translation tasks. The students were aged between 19 and 22, and most were female (N = 116, 85.3%).

Based on their TSEB scores, students were divided into two groups by median split (Malik et al., 2021). That is, the top 50% of students (N = 68), whose TSEB values were above the median, were assigned to the high-TSEB group, while the remaining 50% (N = 68) were assigned to the low-TSEB group. Participants’ scores on the CET4, a national test designed to measure the English proficiency of undergraduates in China, were used as a measure of their L2 proficiency. The results of the independent samples t-test showed that the two groups differed significantly in TSEB (t = −13.704, p < 0.001) and in L2 proficiency (t = −2.297, p < 0.05).
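The grouping step can be illustrated with a minimal sketch. It assumes each participant has a single numeric TSEB score; the function name, the participant identifiers, and the scores are hypothetical, and the handling of scores that fall exactly on the median is an assumption, since the study does not describe it.

```python
from statistics import median


def median_split(scores):
    """Label each participant 'high' or 'low' relative to the median score.

    `scores` maps a participant id to a numeric TSEB score. Scores strictly
    above the median are labelled 'high'; all others are labelled 'low'.
    Tie handling is an assumption, not something reported in the study.
    """
    cut = median(scores.values())
    return {pid: ("high" if s > cut else "low") for pid, s in scores.items()}


# Hypothetical scores for six participants (not data from the study).
demo = {"p1": 62, "p2": 75, "p3": 58, "p4": 81, "p5": 70, "p6": 66}
groups = median_split(demo)
print(groups)
```

With an even number of participants and no ties at the median, this yields two groups of equal size, as in the 68/68 split reported above.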

Students were involved for pedagogical considerations. The research outcome is expected to help translation teachers make informed decisions in translation task selection and help students benefit from high TSEB for performance improvement. Task selection is crucial for translator training, particularly at the initial training stage, as unrealistically complex tasks prove to be a source of frustration for students (Kelly, 2005; Wu, 2019).

Translation tasks

Translation direction

Demand for translation from Chinese into English has remained strong in China. According to Kelly (2000), translation into a non-mother tongue is a professional necessity in many local translation markets and a useful training exercise that contributes to students’ understanding of translation problems. However, despite the presence of L2 translation on the market, the performance of L2 translation remains under-researched (Pokorn et al., 2019). In the hope of contributing to research on L2 translation, we decided to implement Chinese-to-English as the translation direction.

Text selection

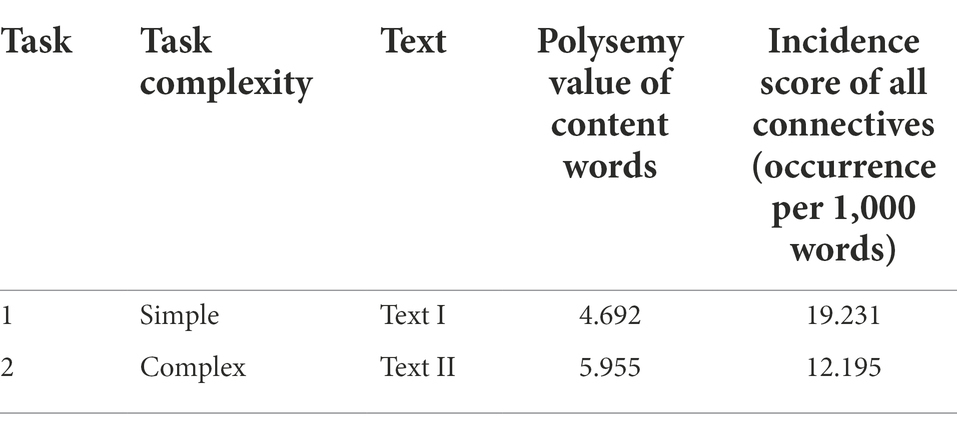

The current study operationalized task complexity as quantifiable measures of text characteristics following Wu’s (2019) suggestion. The two source texts are both about mobile phones and are between 160 and 170 Chinese characters long (see Appendix A for details). This precludes the possibility that unfamiliarity with the subject domain would skew task performance. The selection of quantifiable measures was guided by a literature review. First, lexical polysemy indicates translation ambiguity and task complexity (Mishra et al., 2013). As a word with more senses may be ambiguous and thus slow down processing for learners with a low level of skill and knowledge (McNamara et al., 2014), word polysemy value is positively correlated with task processing demands. Second, low cohesion may increase reading time and disrupt comprehension (McNamara et al., 2014). Connectives are very important in establishing cohesion (Graesser et al., 2011). In Chinese-to-English interpreting, professional translators added connectives to enhance cohesion, so as to make implicit information in the Chinese text explicit in the English text (Tang and Li, 2017). Therefore, the incidence score of connectives in the Chinese text is inversely related to task processing demands.

We utilized the Coh-Metrix Web Tool (Traditional Chinese version) to analyze properties of the two source texts. Coh-Metrix, a linguistic workbench that uses indices to scale texts on characteristics related to words, sentences, and connections between sentences, has been adopted to analyze text characteristics in academic research (Graesser et al., 2011). Coh-Metrix reports the average polysemy for content words in a text, and provides an incidence score for all connectives (occurrence per 1,000 words). Although the specific Coh-Metrix measures vary across versions and tools, the measures are quite similar (McNamara et al., 2014). According to the analysis results of the Coh-Metrix Web Tool (Traditional Chinese version), Text II has a larger polysemy value and a lower incidence score of connectives, which indicates a higher level of text complexity. Therefore, Task 2, which corresponds to Text II, is more complex than Task 1. The details are illustrated in Table 1. Moreover, as experts’ intuition is reasonably reliable when it comes to text complexity evaluation (Sun and Shreve, 2014), an expert panel was recruited to assess task complexity. The expert panel consisted of two translation teachers with over 5 years’ teaching experience and three professional translators with over 10 years’ translation experience. Their conclusion also indicates that Task 2 is more complex than Task 1.
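The incidence score mentioned above is a simple normalization: occurrences per 1,000 words. The sketch below only illustrates that arithmetic. It is not Coh-Metrix code, the function name is hypothetical, and the counts are invented for the example.

```python
def incidence_per_thousand(count, total_words):
    """Return occurrences per 1,000 words for a given raw count."""
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    return 1000 * count / total_words


# Hypothetical example: 6 connectives in a 120-word text.
score = incidence_per_thousand(6, 120)
print(score)
```

Normalizing in this way lets texts of slightly different lengths be compared on the same scale.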

Quality assessment metrics

The produced translation texts were evaluated by two Chinese translation teachers with over 5 years’ experience in Chinese–English translation teaching and assessment. Their assessment guidelines were adapted from Waddington’s (2001) rubric. The original rubric consists of three measures—accuracy of transfer of source text content (i.e., accuracy), quality of expression in the target language (i.e., fluency), and task completion degree. As translation quality was discussed in terms of accuracy and fluency in our study, we only considered the first two measures when developing the assessment guidelines (see Appendix B for details). Besides, for each measure, there are five levels, and each level corresponds to two possible marks; this is to comply with the marking system of 0–10, and to give raters freedom to award the mark according to whether the candidate fully meets the requirements of a particular level or falls between two levels but is closer to the upper one (Waddington, 2001).

The two raters were first invited to familiarize themselves with the assessment guidelines and then worked together to negotiate quality assessment in the marking process. Previous studies revealed that even when precise guidelines were given, cognitive bias and disagreement might still occur during the assessment process (Eickhoff, 2018; Islam et al., 2022). The negotiation approach has been widely adopted in writing assessment research and has proved to be an effective way to reduce raters’ bias (Trace et al., 2015). During the assessment process, the two raters analyzed product quality and reached a consensus through discussions and consultations (Yang et al., 2021b). That is, when there was a discrepancy between their scores, the raters negotiated by providing an explanation and justification for their score assignment, in the hope of reaching a consensus score. If there was still disagreement in marking, they resolved it by consulting a third person, a professional translator with over 10 years’ working experience.
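The order of the resolution steps can be summarized in a short sketch. The function and parameter names are hypothetical, and treating the third person's input as the final score is an assumption; the study says only that the raters consulted a third person when disagreement remained.

```python
def consensus_score(rater_a, rater_b, negotiated=None, third_party=None):
    """Resolve two raters' marks in the order described in the text.

    1. If the two marks agree, that mark stands.
    2. Otherwise a negotiated mark, if one was reached, is used.
    3. Otherwise the mark agreed after consulting a third person is used
       (assumed here to be final).
    """
    if rater_a == rater_b:
        return rater_a
    if negotiated is not None:
        return negotiated
    if third_party is not None:
        return third_party
    raise ValueError("disagreement is unresolved")


# Hypothetical marks on the 0-10 scale.
print(consensus_score(7, 7))
print(consensus_score(6, 8, negotiated=7))
print(consensus_score(6, 8, third_party=8))
```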

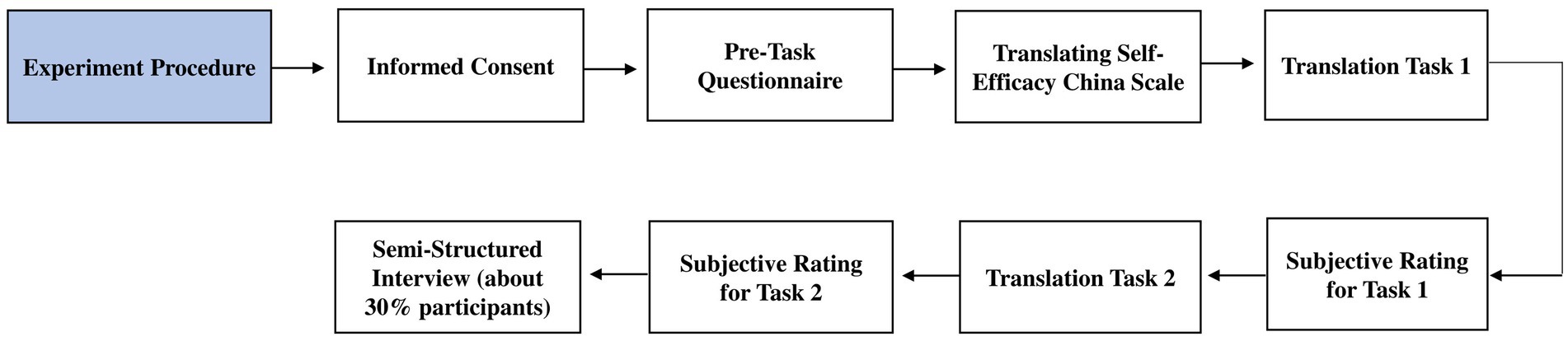

Procedure

The experiment took about two and a half hours. First, participants signed an Informed Consent Form approved by the university’s Ethics Committee, and completed a language background questionnaire and the Translating Self-Efficacy China scale. Then, all participants performed two translation tasks, during which screen capture software recorded their task duration and on-screen translation behaviors. Each translation task was followed by a subjective rating with a questionnaire adapted from the NASA-Task Load Index (NASA-TLX) (Hart and Staveland, 1988), which was intended to measure the cognitive effort invested in the preceding task. The revised NASA-TLX questionnaire, which comprises mental demand, effort, frustration, and performance subscales, has good reliability and has been applied in previous research to measure the amount of cognitive resources devoted to task implementation (e.g., Sun and Shreve, 2014; Sun et al., 2020; Yuan, 2022). For details, see Appendix C. To avoid sequencing effects, tasks were pseudo-randomly ordered so that participants would alternate between the two tasks. Participants were told that their translations would be assessed for external dissemination and that they could therefore review and revise their translations. This was intended to encourage participants to try their best in the experiment. This session had no time limits, and participants were allowed to access the Internet.
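One way to read the pseudo-random, alternating task order is as a counterbalancing scheme: participants are shuffled, and successive participants start with different tasks. The sketch below illustrates that reading only. The function name, the seed, and the participant identifiers are hypothetical, and the study does not report the exact assignment procedure.

```python
import random


def assign_task_orders(participant_ids, seed=0):
    """Shuffle participants, then alternate which task each one starts with."""
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    orders = {}
    for index, pid in enumerate(ids):
        if index % 2 == 0:
            orders[pid] = ("Task 1", "Task 2")
        else:
            orders[pid] = ("Task 2", "Task 1")
    return orders


# Hypothetical participant ids.
orders = assign_task_orders([f"s{i}" for i in range(10)], seed=1)
for pid, order in sorted(orders.items()):
    print(pid, order)
```

With an even number of participants, each task order is used equally often, which keeps order effects balanced across the sample.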

Upon completing the translation tasks, about 30% of the participants (N = 20) were randomly selected from each group to participate in a semi-structured interview, which was designed to understand students’ perceptions of task complexity and their translation performance, solution to uncertainties and ambiguities during translation, and willingness to invest cognitive effort in the process. Please refer to Figure 2 for the flow chart of the experiment procedure.

Data quality and statistical analysis

To measure how task complexity, TSEB and their interaction influence students’ cognitive effort and translation quality, a mixed-methods approach was adopted to collect and analyze data. Specifically, subjective rating and quality evaluation were used to collect quantitative data, semi-structured interviews were used to collect qualitative data, and screen recording was employed to collect both quantitative data (participants’ task duration) and qualitative data (participants’ translation behaviors observable on the screen). Data quality was ensured with two measures. First, EV Screen Recorder software was installed on each computer to monitor the translation process. Second, after gathering process data, we found some outliers in the task duration and translation quality datasets. The EV Screen Recorder recordings showed that seven students had failed to record the translation process completely or to submit their translation(s) due to technical issues with the computer. Their data were therefore excluded from the dataset. Consequently, there were 65 students in the low-TSEB group and 64 in the high-TSEB group in our data analysis.

In the quantitative analysis, linear mixed-effects models (LMEMs), which can compensate for weak control of variables in naturalistic translation tasks (Saldanha and O’Brien, 2014), were employed as one of the analytical techniques to account for high variability among participants and increase the power of tests (Mellinger and Hanson, 2018). We built four LMEMs altogether. The dependent variables of the four models were (1) time-on-task, (2) self-reported cognitive effort, (3) accuracy score, and (4) fluency score, respectively. Burnham and Anderson (2004) provided rules of thumb for assessing plausible models, under which the best model is the one with the lowest Bayesian information criterion (BIC) value. The results obtained in this study suggested that the models with interaction were better than the null ones.
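The lowest-BIC rule can be made concrete with the standard definition BIC = k·ln(n) − 2·ln(L), where k is the number of estimated parameters, n the number of observations, and L the maximized likelihood. The sketch below is illustrative only: the function name and the log-likelihood values are hypothetical, and statistical packages may count parameters or observations differently for mixed models.

```python
import math


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k * ln(n) - 2 * ln(L)."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


# Hypothetical comparison of a null model and a model with interaction.
null_bic = bic(log_likelihood=-950.0, n_params=3, n_obs=258)
full_bic = bic(log_likelihood=-920.0, n_params=7, n_obs=258)
best = "interaction model" if full_bic < null_bic else "null model"
print(round(null_bic, 2), round(full_bic, 2), best)
```

Because the penalty term grows with the number of parameters, a richer model is preferred only when its gain in likelihood outweighs the added complexity.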

For all four models, participants were always entered as the random effect, while the fixed effects were task complexity (simple and complex) and TSEB (low and high). As previous studies revealed a strong correlation between L2 proficiency and translation performance (e.g., Jiménez Ivars et al., 2014; Pokorn et al., 2019), the influence of L2 proficiency was controlled by adding it to the four LMEMs as a covariate. During data analysis, we first verified whether there was a significant main effect and then checked the interaction effect of task complexity and TSEB. All statistical analyses were run on IBM SPSS Statistics 26. The significance level was set at α = 0.05. Cohen’s f² was used to measure the effect size. The results of the four LMEMs are discussed in the following section.
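One specification consistent with this description can be written out as follows. It is a sketch under stated assumptions: a by-participant random intercept is assumed (the text says only that participants were the random effect), and the symbols are introduced here for illustration. Here i indexes participants and j indexes tasks.

```latex
y_{ij} = \beta_0
       + \beta_1\,\mathrm{Complexity}_{j}
       + \beta_2\,\mathrm{TSEB}_{i}
       + \beta_3\,(\mathrm{Complexity}_{j} \times \mathrm{TSEB}_{i})
       + \beta_4\,\mathrm{L2}_{i}
       + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

In Cohen's general definition, the effect size is

```latex
f^2 = \frac{R^2}{1 - R^2}
```

How R² was obtained for the mixed models is not stated in the text, so the second expression is given only as the standard definition.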

Results

Process feature: Time-on-task

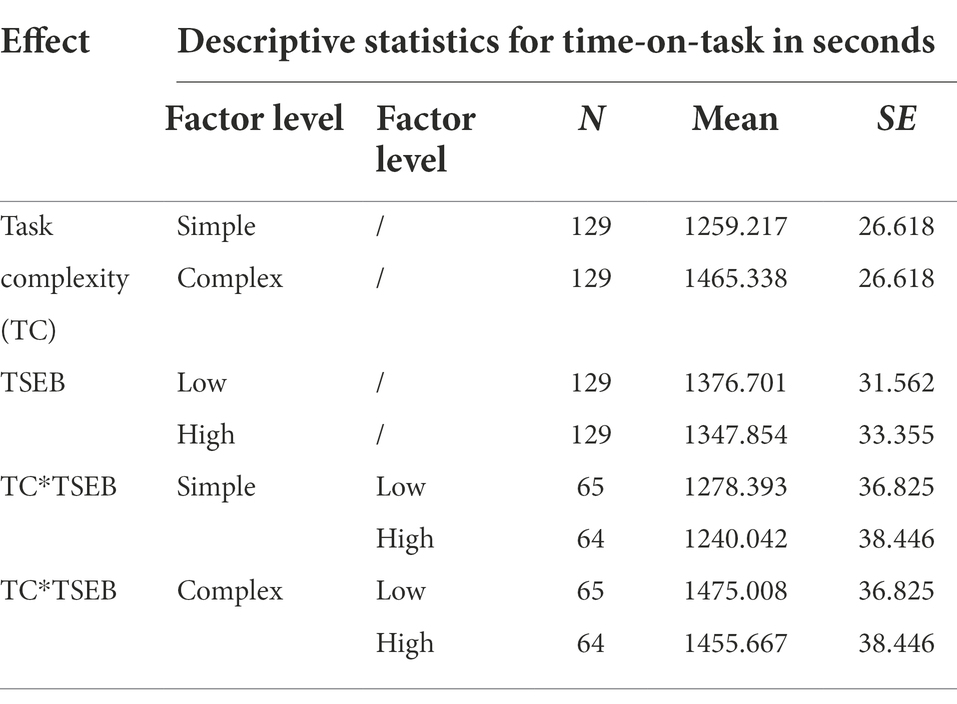

The first dependent variable in our LMEMs is time-on-task. Measured by the time from task onset to task completion, time-on-task has often been used as a measure of cognitive effort (Sweller et al., 2011). Overall, the first LMEM showed a significant main effect of task complexity (b = −215.625, SE = 38.239, t = −5.639, p < 0.001, 95% CI [−291.323, −139.927], f² = 0.112), but neither TSEB nor the interaction of the two independent variables proved significant (both p > 0.05). Table 2 shows the descriptive statistics for time-on-task in the simple and complex tasks for both groups, and the interaction between task complexity and TSEB. As indicated by task duration, the complex task engaged more cognitive effort than the simple one.
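The reported statistics for the task complexity effect are internally consistent, which a short check makes visible: the t value is the estimate divided by its standard error, and the confidence interval is the estimate plus or minus a critical value times the standard error. The sketch uses only the figures reported above. The function names are hypothetical, and the critical value is recovered from the interval rather than taken from the study.

```python
def wald_t(b, se):
    """t statistic as estimate divided by standard error."""
    return b / se


def implied_critical_value(ci_low, ci_high, se):
    """Critical value implied by a symmetric CI: half-width divided by SE."""
    return (ci_high - ci_low) / (2 * se)


# Figures reported for the main effect of task complexity on time-on-task.
b, se = -215.625, 38.239
ci_low, ci_high = -291.323, -139.927

t_value = wald_t(b, se)
crit = implied_critical_value(ci_low, ci_high, se)
print(round(t_value, 3), round(crit, 2))
```

The implied critical value of roughly 1.98 is what a two-sided 95% interval based on a t distribution with a little over 100 degrees of freedom would use.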

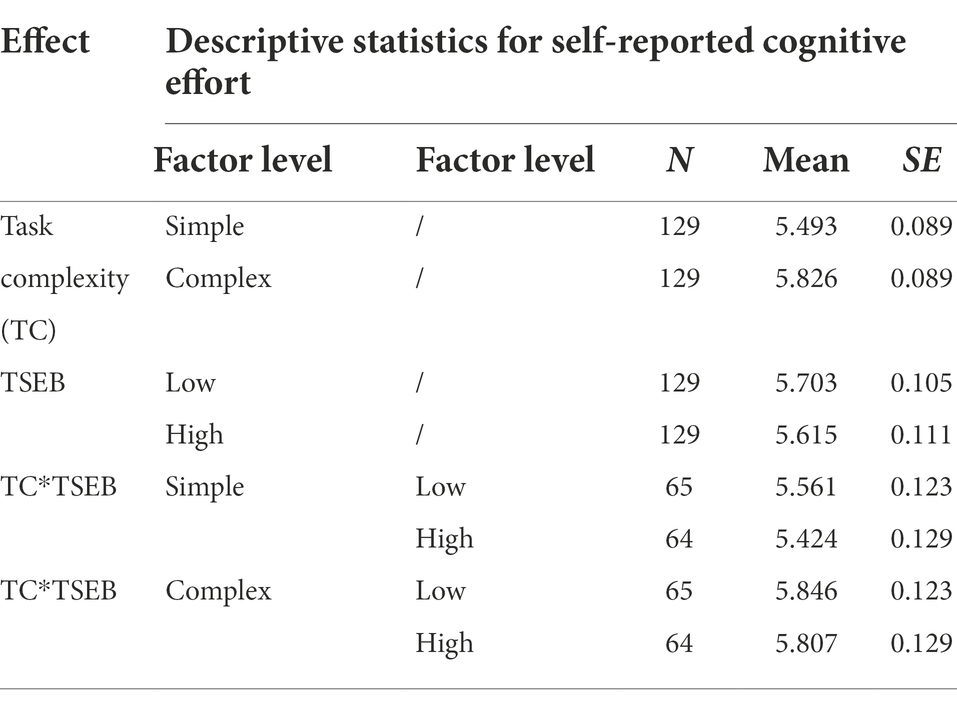

Process feature: Self-reported cognitive effort

The second dependent variable in our LMEMs is self-reported cognitive effort, which was measured with the revised NASA-TLX questionnaire mentioned above. Regarding the measurement of cognitive effort invested in task implementation, self-rating scales were more sensitive and far less intrusive (Sweller et al., 2011). The overall results showed a statistically significant effect of task complexity (b = −0.383, SE = 0.130, t = −2.938, p < 0.01, 95% CI [−0.641, −0.125], f² = 0.024); however, the main effect of TSEB and the interaction effect of task complexity and TSEB did not reach statistical significance (both p > 0.05). Table 3 summarizes the descriptive statistics for self-reported cognitive effort. According to self-ratings of cognitive effort, students put in more cognitive effort in the complex task than in the simple one.

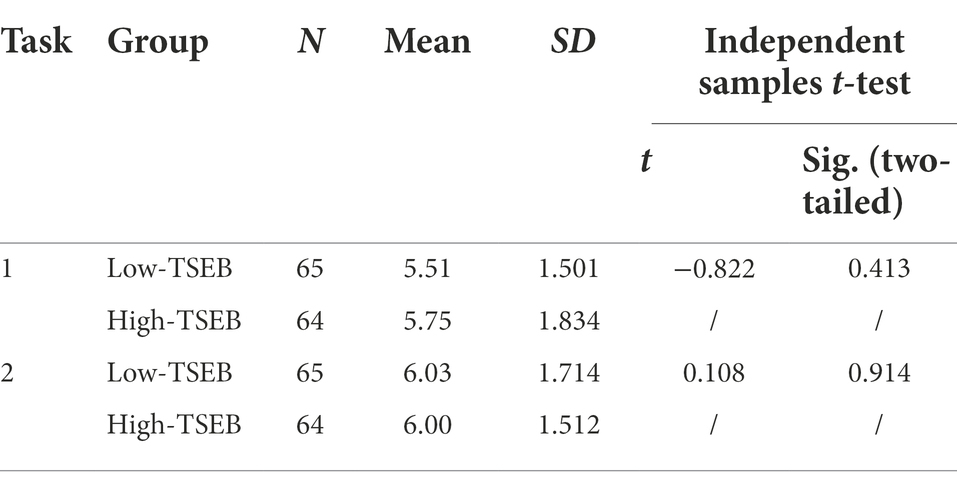

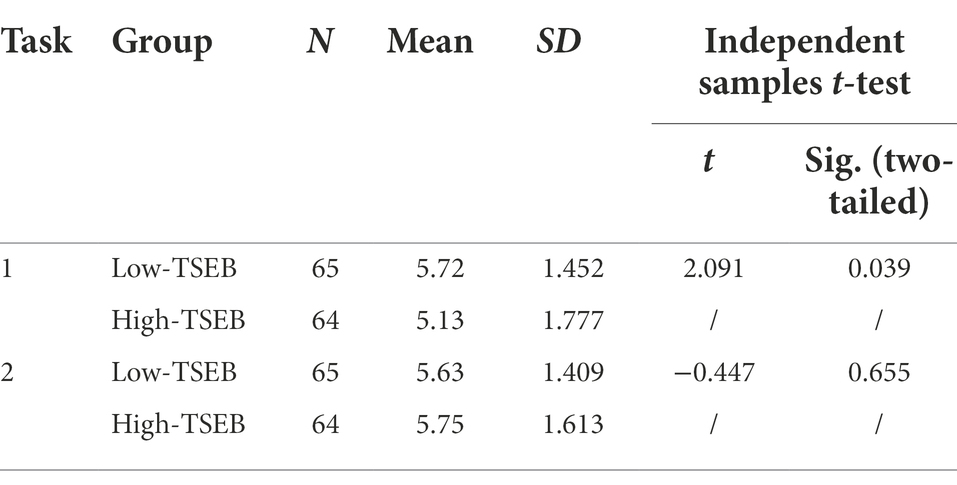

Table 4 provides details of an independent samples t-test for mental demand rating, a subscale of the revised NASA-TLX questionnaire. The table shows that the mean perceived mental demand ratings for Task 1 and Task 2 were 5.51 and 6.03 in the low-TSEB group, and 5.75 and 6.00 in the high-TSEB group, respectively. The two groups did not differ significantly in the perceived mental demand of either Task 1 or Task 2 (t = −0.822, p > 0.05; t = 0.108, p > 0.05). In addition, to assess whether the self-rated mental demand of Task 1 and Task 2 differed significantly within each group, a paired samples t-test was conducted for each group. The results showed that the perceived mental demand increased significantly from Task 1 to Task 2 for the low-TSEB group (t = −2.790, p < 0.01), but not for the high-TSEB group (t = −1.183, p > 0.05). In short, the two groups had similar perceptions of task demands within each task; however, only the low-TSEB group perceived a significant increase in task demands when task complexity changed from simple to complex.
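The within-group comparison can be illustrated with a minimal paired samples t statistic. The sketch takes the difference as Task 1 minus Task 2, so that an increase from Task 1 to Task 2 gives a negative t, matching the sign of the values reported above. The function name and the ratings are hypothetical and are not data from the study.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Paired samples t statistic and degrees of freedom.

    Differences are computed as first - second, so higher values in
    `second` produce a negative t.
    """
    if len(first) != len(second):
        raise ValueError("both samples must have the same length")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t_stat = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t_stat, n - 1


# Hypothetical mental demand ratings for five students.
task1 = [5, 6, 4, 5, 6]
task2 = [6, 7, 6, 5, 7]
t_stat, df = paired_t(task1, task2)
print(round(t_stat, 4), df)
```

The p value would then be read from a t distribution with the returned degrees of freedom, a step left out here to keep the sketch within the standard library.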

Table 5 summarizes the results of an independent samples t-test for performance rating, which is also a subscale of the revised NASA-TLX questionnaire and ranges from good (coded as one point) to poor (coded as 10 points). The mean perceived quality ratings for Task 1 and Task 2 were 5.72 and 5.63 in the low-TSEB group, and 5.13 and 5.75 in the high-TSEB group, respectively. The two groups differed significantly in the perceived quality of Task 1 (t = 2.091, p < 0.05), but not in that of Task 2 (t = −0.447, p > 0.05). In short, the high-TSEB group was significantly more confident in their translation quality than the low-TSEB group in the simple task, but not in the complex task.

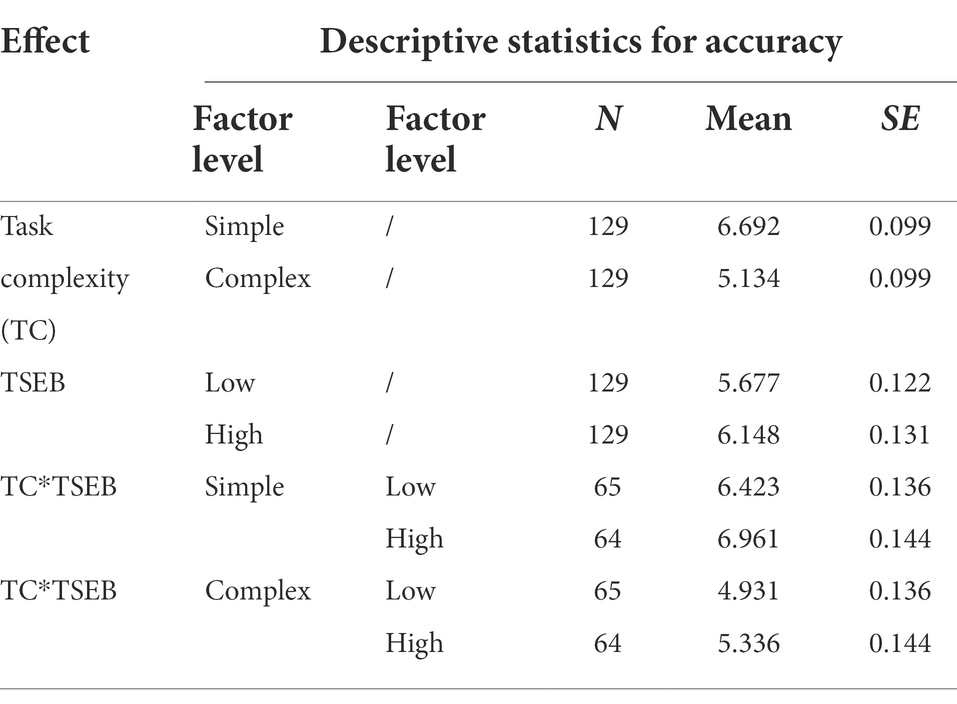

Product feature: Accuracy

The quality of translation products is analyzed in terms of accuracy and fluency. In this section, the dependent variable discussed is the accuracy score. The overall results revealed that both fixed factors significantly influenced translation accuracy (task complexity: b = 1.625, SE = 0.121, t = 13.457, p < 0.001, 95% CI 1.386 ~ 1.864, f2 = 0.456; TSEB: b = −0.405, SE = 0.198, t = −2.044, p < 0.05, 95% CI −0.796 ~ −0.014, f2 = 0.017). However, their interaction effect was not significant (p > 0.05). In other words, students produced a significantly less accurate translation in the complex task than in the simple one, regardless of their TSEB level. Besides, students with high TSEB significantly outperformed their counterparts with low TSEB in terms of accuracy in both tasks. The descriptive statistics for accuracy are provided in Table 6.

Product feature: Fluency

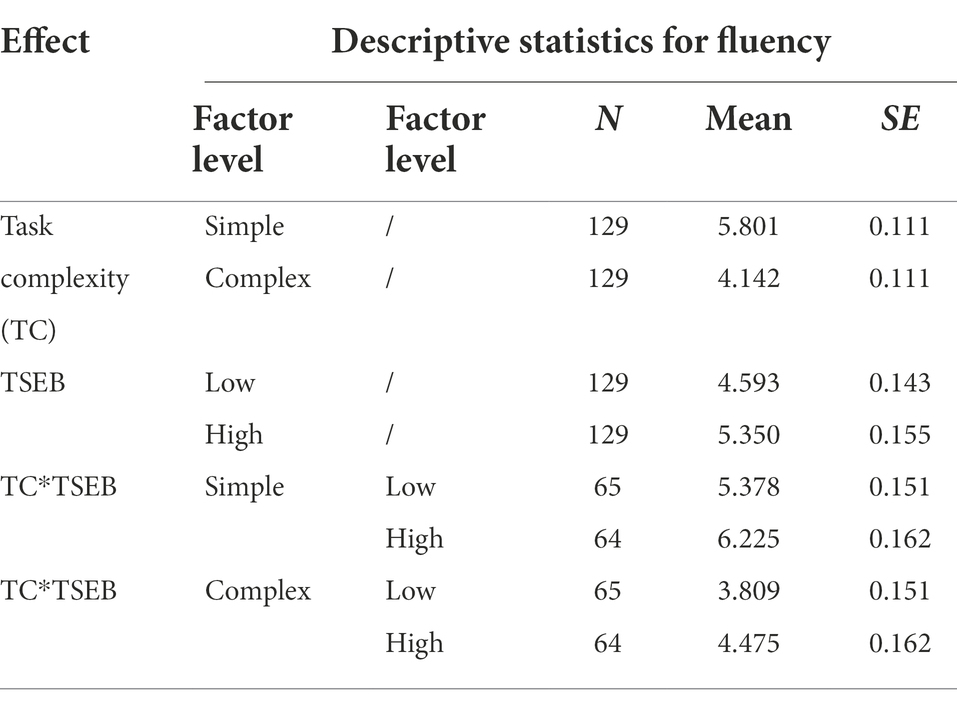

The fourth LMEM was built with the fluency score as the dependent variable. Statistically significant effects of task complexity and TSEB were found on translation fluency (task complexity: b = 1.750, SE = 0.099, t = 17.599, p < 0.001, 95% CI 1.553 ~ 1.947, f2 = 0.391; TSEB: b = −0.666, SE = 0.222, t = −3.003, p < 0.01, 95% CI −1.104 ~ −0.228, f2 = 0.042). However, the interaction effect of task complexity and TSEB did not reach statistical significance (p > 0.05). This means that both groups produced significantly less fluent translations in the complex task than in the simple one. In addition, high-TSEB students achieved significantly greater fluency than low-TSEB students. The descriptive statistics for fluency are provided in Table 7.
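As a reading aid, the fixed-effect coefficients reported for the cognitive effort, accuracy, and fluency models can be sanity-checked with a short sketch. It assumes a Wald-type approximation (t = b / SE and a 95% interval of b ± 1.96 × SE); the function name `wald_check` and the row labels are illustrative, and the software used in the study may rely on a degrees-of-freedom-based critical value, so small discrepancies from the reported figures are expected because the inputs are rounded.

```python
def wald_check(b, se, crit=1.96):
    """Return (t, ci_low, ci_high) for a coefficient and its standard error.

    crit=1.96 is the normal-approximation critical value for a 95% interval;
    this is an assumption, not necessarily what the original analysis used.
    """
    t = b / se
    return t, b - crit * se, b + crit * se


# (b, SE) pairs copied from the results reported in this section.
reported = {
    "effort, task complexity": (-0.383, 0.130),
    "accuracy, task complexity": (1.625, 0.121),
    "accuracy, TSEB": (-0.405, 0.198),
    "fluency, task complexity": (1.750, 0.099),
    "fluency, TSEB": (-0.666, 0.222),
}

for label, (b, se) in reported.items():
    t, lo, hi = wald_check(b, se)
    print(f"{label}: t = {t:.2f}, approx. 95% CI {lo:.3f} ~ {hi:.3f}")
```

Under this approximation, none of the five intervals includes zero, which agrees with the significant main effects reported above.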

Discussion

The Results section shows complex effects of task complexity and TSEB on students’ translation process and product quality, and underscores the importance of TSEB in investigating the impact of task complexity on translation performance. We found that the complex task led to significantly longer time-on-task, greater self-reported cognitive effort, lower accuracy, and poorer fluency than the simple one in both groups. Moreover, the high-TSEB group achieved significantly higher accuracy and greater fluency than the low-TSEB group in both tasks. However, the interaction effect of task complexity and TSEB was not statistically significant. The findings are further discussed in the following paragraphs.

Effects of task complexity on translation performance

Effect of task complexity on cognitive effort

Irrespective of their TSEB level, students put in a higher level of cognitive effort in the complex task as measured by the time-on-task and self-reported cognitive effort. Our finding corresponds to some previous findings that complex tasks engage greater cognitive effort. For example, Feng (2017) reported that L2 translation, which was cognitively more demanding than L1 translation, involved greater cognitive effort as indicated by longer task duration. Besides, Wang (2022) also concluded that translation students invested more cognitive effort in the complex task than in the simple one, which was indicated by their longer production time and longer pausing time.

The time-consuming effect resulting from task complexity may be explained by differences in participants’ strategic behaviors since cognitive load can impact mental processes (Muñoz Martín, 2014). Analysis of the screen recordings gave us some insights into students’ translation process. First, regarding cognitive resources allocated to the three phases of translation (Jakobsen, 2002), students’ time on initial orientation increased with task complexity, although they generally spent little time on initial orientation in both tasks. Participant 62 spent about 20 s on initial orientation in the simple task, compared to 105 s in the complex task. For Participant 111, orientation time on the simple and the complex tasks was 25 and 75 s, respectively. Second, when faced with higher task complexity, students tended to improve their output by monitoring the translation process during both the drafting and the revision stages. For example, Participants 42 and 80 exhibited a higher level of product monitoring (evaluation) in the complex task than in the simple one. Finally, students such as Participants 16 and 88 paused more frequently in the complex condition than in the simple condition. Angelone (2010) proposed that pauses or hesitations were a diagnostic sign of uncertainties in the problem-solving process, which could occur at any translation phase. Such uncertainties might cause students to doubt their comprehension of the source text, ability to work out a solution, or solution evaluation capacity.

Interestingly, however, no statistically significant correlation was observed between time-on-task and self-reported cognitive effort (Pearson’s r = 0.098, p > 0.05). This indicates that task complexity influenced the two measures of cognitive effort independently. Ogawa (2021) also revealed that task complexity may affect translators’ task duration and subjective rating differently. A possible explanation is that the students recruited in the current study were undergraduates with weak problem awareness, which led them to underrate their cognitive effort and, in turn, to the non-significant correlation between the two measures. This idea was corroborated by data from the semi-structured interview, which demonstrated that students on the whole had low problem awareness. Specifically, when asked how they assessed task complexity, 18 of the 20 high-TSEB interviewees stated that task complexity depended on the frequency of new words, and only one interviewee mentioned the number of connectives. In the low-TSEB group, 16 of the 20 interviewees responded that they evaluated task complexity based primarily on the number of new words; topic familiarity, lexical polysemy, and use of connectives were each mentioned by two interviewees. Such a finding highlights the importance of recruiting students with diverse education backgrounds in future studies so as to compare their performance.
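For readers less familiar with the statistic, the correlation reported above can be sketched as follows. The helper names `pearson_r` and `r_to_t` and the toy data are illustrative assumptions, not the study's data or code; the second function applies the standard conversion t = r·sqrt((n − 2) / (1 − r²)) that underlies the significance test for Pearson's r.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    return r * sqrt((n - 2) / (1 - r ** 2))


# Hypothetical per-student values, invented for illustration only.
time_on_task = [1, 2, 3, 4]      # e.g., ranked task durations
self_rated_effort = [1, 3, 2, 4]  # e.g., ranked effort ratings

r = pearson_r(time_on_task, self_rated_effort)
print(f"r = {r:.3f}, t = {r_to_t(r, len(time_on_task)):.3f}")
```

For a fixed sample size, a coefficient close to zero, such as the r = 0.098 reported above, yields a small t value, which is why it does not reach significance.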

Effect of task complexity on product quality

A significant main effect of task complexity was observed on the accuracy and fluency scores. Higher task complexity led to poorer translation quality. Our finding lends support to Michael et al. (2011), who claimed that ambiguous words, indicative of high complexity, were translated less accurately as compared to unambiguous words. Whyatt (2019) reported that participants made more grammar mistakes in L2 translation than in the less demanding L1 translation, indicating that higher task demands led to reduced fluency. Wu (2019) also reported that higher text complexity led to greater inaccuracy and dysfluency in students’ performance.

However, the finding conflicts with Sun et al. (2020), who found no significant change in translation quality when task demands increased. Their study used a complex text as the source material and operationalized task complexity as the number of simultaneous tasks (single-task versus dual-task). According to Cognitive Load Theory, students generally “increase cognitive effort to match increasing task demands up until they reach the limit of their mental capacities” (Chen et al., 2016: 3). As a result, with an increase in task complexity, students can adjust their level of cognitive effort to maintain the quality level achieved in the less complex task. This may explain why translation quality remained stable across conditions in their study.

Previous research showed that the relationship between cognitive effort and performance quality was not linear: Increased effort may lead to enhanced, unchanged, or reduced quality depending on whether task complexity is low, moderate, or high (Charlton, 2002; Seufert et al., 2017). In the current study, students produced poorer translation quality in the complex task despite investment of more cognitive effort, as they, with weak problem awareness, failed to adequately increase their cognitive effort to match increasing task demands and properly tackle the translation problems.

Effects of TSEB on translation performance

Effect of TSEB on cognitive effort

The low-TSEB and high-TSEB groups were similar in cognitive effort as per time-on-task and self-reported cognitive effort. Such a finding contradicts Araghian et al. (2018), who found that high self-efficacy led participants to spend less time on the translation task as highly efficacious students had greater confidence in dealing with larger translation units and reported fewer lexical and sentential problems. However, the comparison should be made with caution since the task involved in their study was translating an English text into Persian, a low-resource language (Fadaei and Faili, 2020). Translating from a high-resource language to a low-resource language poses challenges related to word ordering (Fadaei and Faili, 2020), semantic and sentence representations (Gu et al., 2018), and so on. It is different from Chinese-to-English translation implemented in the current study, as both Chinese and English are high-resource languages (Aysa et al., 2022).

According to Cognitive Load Theory, task performers devote greater cognitive effort only if they have consciously realized that task demands have increased and/or feel motivated to do so (Chen et al., 2016). Yet the two groups had similar mental demand ratings (i.e., perceived task demands) in Task 1 and Task 2, respectively (see section Process feature: Self-reported cognitive effort for more details). This was corroborated by the interview data. As mentioned in section Effect of task complexity on cognitive effort, although the low-TSEB group performed slightly better in assessing task complexity than their counterparts, students overall had weak problem awareness as they mainly referred to new words for complexity assessment. Task complexity in this study, however, was operationalized as word polysemy value and incidence score of connectives. Because students overlooked these translation problems, they failed to accurately assess the processing demands of the complex task and, in turn, to increase their cognitive effort enough to match the increased task demands. Our finding corresponds to the finding of Jääskeläinen (1996: 67) that students “translate quickly and effortlessly” because they problematized less than semi-professionals.

Besides, according to the semi-structured interview, high-TSEB students were more willing to put in greater cognitive effort than their counterparts with low TSEB, but largely on the condition that “the task becomes more demanding.” However, as previously mentioned, the high-TSEB group failed to realize that Task 2 was significantly more demanding than Task 1 (see section Process feature: Self-reported cognitive effort). In other words, the two groups did not vary significantly in cognitive effort due possibly to similar perceptions of task demands in each task and lack of strong motivation to invest more cognitive effort in the complex task.

Effect of TSEB on product quality

TSEB had a significant effect on students’ translation accuracy and fluency. This finding lends support to Jiménez Ivars et al. (2014) who concluded that self-efficacy could boost translation quality. Given that TSEB was a strong predictor of translation quality but not of cognitive effort, it was possible that self-efficacy enhanced product quality through resourceful use of strategies rather than changing task duration, which echoes the findings of Hoffman and Schraw (2009). Araghian et al. (2018) also concluded that self-efficacy might influence students’ strategy use. According to Bandura (1993), it required a strong sense of efficacy to remain task oriented in the face of pressing demands and to effectively process information that contained many ambiguities and uncertainties. Therefore, when faced with a translation problem, students with high TSEB might be more resourceful in the allocation and adaptation of alternative strategies than the low-TSEB students, which in turn led to higher translation quality.

An analysis of data collected via the semi-structured interview underpins such an explanation. For example, a low-TSEB participant mentioned in the interview that she mainly resorted to external resources to reach a definitive solution to translation problems, while a high-TSEB participant stated that depending on the nature of the translation problem, she alternated between relying on her own knowledge and using external resources to reach a solution. Both internal and external support can help address unfamiliar terms whose equivalent expression in the target language is available on the Internet. However, it might be futile to resort solely to external resources when it comes to translation uncertainties and ambiguities arising from polysemous words or text cohesion. Resourceful strategy use by the high-TSEB group is indicative of their better allocation of cognitive resources during the translation process.

Interaction effect of task complexity and TSEB on translation performance

No statistically significant interaction effect of task complexity and TSEB was found on students’ cognitive effort and product quality. Our finding is inconsistent with that of Rahimi and Zhang (2019), who identified an interaction effect between task complexity and self-efficacy. First, writing tasks were used in their study, which are different from translation, a complex cognitive task that comprises source text reading and target text production (Feng, 2017). Second, increased task complexity did not result in evident differences in the cognitive effort of the two groups because neither group put in significantly more effort in the complex task than in the simple one. These reasons could potentially explain the contradiction in the findings.

However, despite the non-significant interaction effect on cognitive effort and product quality, the two groups displayed obvious differences in other aspects with increased task complexity. First, although the two groups did not expend significantly more cognitive effort in the complex task, their main reasons differed: The high-TSEB group did not devote more effort because they failed to recognize a significant increase in task demands, whereas the low-TSEB group had low willingness to devote more effort. Second, the two groups had observable differences in quality perception in the simple task, but such differences diminished in the complex task (refer to section Process feature: Self-reported cognitive effort for details). This shows that high task complexity may reduce the effect of TSEB, which lends support to Judge et al. (2007), who believed that the role of self-efficacy was more evident in less complex tasks.

Conclusion

This study examined students with high and low TSEB when they performed written translation tasks across two complexity levels. To the best of our knowledge, this study is the first to examine the effects of task complexity and TSEB on both the process and the product of written translation. The research questions raised at the beginning of the paper are addressed based on qualitative and quantitative analysis of data from screen recording, subjective rating, semi-structured interview, and quality evaluation.

First, the impact of task complexity was found on both the translation process and the end product of students. Irrespective of their TSEB level, students had longer task duration, higher self-ratings of cognitive effort, lower accuracy, and poorer fluency in the complex task than in the simple one. The evidence seems to reveal that, when faced with a higher level of cognitive load, students would put in more cognitive effort. However, unrealistically high cognitive load would reduce their translation quality. Second, high TSEB was associated with higher accuracy and greater fluency, but did not cause significant differences in time-on-task and self-reported cognitive effort. The evidence seems to indicate that highly efficacious students produced higher translation quality through more flexible allocation of cognitive effort rather than expending more cognitive effort in the translation process. That may also explain why the interaction effect of task complexity and TSEB was not significant on cognitive effort.

Taken together with previous studies, our findings indicate that the relationship between cognitive effort and task performance is not linear but depends on the level of task complexity. This underscores the importance of quantifiable measures for categorizing task complexity; otherwise, a task considered simple in one study might not be defined as such in another. Quantifiable measures were adopted in the present study to categorize task complexity, which can provide a reference for future translation studies to compare research results. The study also highlights the necessity of cultivating problem awareness among students, since awareness of an increase in cognitive load is one prerequisite for students to put in more cognitive effort. With problem awareness in hand, students are in a better position to know what to look for in their performance so that their performance can be self-assessed, not just from the perspective of the end product, but also from the perspective of the translation process that contributes to its production.

First, the research findings can help translation teachers gear task complexity to students’ developmental levels of translation competence and to pedagogical objectives. For instance, simple tasks can be assigned to help students build self-efficacy, and moderately complex tasks can be assigned to facilitate their development (Graesser et al., 2011). If challenging tasks are assigned for a particular objective, scaffolds can be used to reduce the impact of task complexity. For example, Jia et al. (2019) found that neural machine translation can help students address terminology issues and reduce their cognitive effort when specialized texts, indicative of high complexity, were assigned to develop their background knowledge. Second, our findings highlight potential benefits of TSEB. To help students benefit from high TSEB, teachers can draw on existing research findings on self-efficacy development, which relies on enactive mastery experience, vicarious experience, verbal persuasion, and physiological and emotional states (Bandura, 1997). Lastly, from a methodological perspective, we believe that the integrated approach adopted in this study, namely combining process and product data for translation performance research, brings to light results that might have been more difficult to identify with either type of data alone. By observing participants’ translation process, and not only their products, future studies may develop a better understanding of translation performance.

Although a mixed-methods design was adopted to collect data from several sources for triangulation purposes, this study still has some limitations. First, key-logging and eye-tracking data were not collected; such data could be used to better observe students’ translation behaviors and to illustrate and explain their translation process more vividly. Second, the experiment involved only a limited number of source texts, a single text type and language pair, and students with similar education backgrounds. Third, the current study focuses on human translation. Considering the recent success of neural machine translation (Almansor and Al-Ani, 2018; Islam et al., 2021), translation performance research would benefit if different task types (i.e., human translation and post-editing of neural machine translation) were taken into account. Future studies could diversify the design of task features (e.g., task type) and select participants with different language pairs and diverse education backgrounds, so as to explore further the relationships between variables in task complexity, learner factors, and translation performance with larger samples.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethics Committee of Hunan University. The participants provided their written informed consent to participate in this study.

Author contributions

XZ and XW contributed to the conception of the study. XZ conducted the experiment and drafted the manuscript. XW and XL contributed to the revision of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by the National Social Science Foundation of China (grant no. 22BYY015), the Education Department of Hunan Province (grant no. 21C0791), and the Social Science Evaluation Committee of Hunan Province (grant no. XSP22YBZ035).

Acknowledgments

We would like to thank all the participants in this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.911850/full#supplementary-material

References

Almansor, E. H., and Al-Ani, A. (2018). “A hybrid neural machine translation technique for translating low resource languages,” in Proceedings of International Conference on Machine Learning and Data Mining in Pattern Recognition, ed. MLDM. Cham: Springer. 347–356.

Alves, F., and Hurtado Albir, A. (2010). “Cognitive approaches,” in Handbook of translation studies, eds. Y. Gambier and L. van Doorslaer (Amsterdam/Philadelphia: John Benjamins Publishing Company), 28–35.

Angelone, E. (2010). “Uncertainty, uncertainty management, and metacognitive problem solving in the translation task,” in Translation and cognition. eds. E. Angelone and G. M. Shreve (Amsterdam/Philadelphia: John Benjamins Publishing Company), 17–40.

Angelone, E. (2018). “Reconceptualizing problems in translation using triangulated process and product data,” in Innovation and expansion in translation process research. eds. I. Lacruz and R. Jääskeläinen (Amsterdam/Philadelphia: John Benjamins Publishing Company), 17–36.

Araghian, R., Ghonsooly, B., and Ghanizadeh, A. (2018). Investigating problem-solving strategies of translation trainees with high and low levels of self-efficacy. Transl. Cogn. Behav. 1, 74–97. doi: 10.1075/tcb.00004.ara

Aysa, A., Ablimit, M., Yilahun, H., and Hamdulla, A. (2022). Chinese-Uyghur bilingual lexicon extraction based on weak supervision. Information 13, 1–18. doi: 10.3390/info13040175

Bandura, A. (1993). Perceived self-efficacy in cognitive development and functioning. Educ. Psychol. 28, 117–148. doi: 10.1207/s15326985ep2802_3

Bandura, A. (2006). “Guide for constructing self-efficacy scales,” in Self-efficacy beliefs of adolescents. eds. F. Pajares and T. Urdan (Greenwich, CT: Information Age Publishing), 307–337.

Bolaños-Medina, A. (2014). Self-efficacy in translation. Transl. Interpreting Stud. 9, 197–218. doi: 10.1075/tis.9.2.03bol

Bolaños-Medina, A., and Núñez, J. L. (2018). A preliminary scale for assessing translators’ self-efficacy. Across Lang. Cult. 19, 53–78. doi: 10.1556/084.2018.19.1.3

Brysbaert, M. (2019). How many participants do we have to include in properly powered experiments? A tutorial of power analysis with reference tables. J. Cogn. 2, 1–38. doi: 10.5334/joc.72

Burnham, K. P., and Anderson, D. R. (2004). Multimodel inference: understanding AIC and BIC in model selection. Sociol. Methods Res. 33, 261–304. doi: 10.1177/0049124104268644

Charlton, S. G. (2002). “Measurement of cognitive states in test and evaluation,” in Handbook of human factors testing and evaluation. 2nd ed. eds. S. G. Charlton and T. G. O’Brien (Mahwah: Lawrence Erlbaum), 97–126.

Chen, F., Zhou, J. L., Wang, Y., Yu, K., Arshad, S. Z., Khawaji, A., et al. (2016). Robust multimodal cognitive load measurement. Cham: Springer Nature Switzerland AG.

Chmiel, A., Janikowski, P., and Cieślewicz, A. (2020). The eye or the ear? Source language interference in sight translation and simultaneous interpreting. Interpreting 22, 187–210. doi: 10.1075/intp.00043.chm

Ehrensberger-Dow, M., Albl-Mikasa, M., Andermatt, K., Hunziker Heeb, A., and Lehr, C. (2020). Cognitive load in processing ELF: translators, interpreters, and other multilinguals. J. Engl. Lingua Franca 9, 217–238. doi: 10.1515/jelf-2020-2039

Eickhoff, C. (2018). “Cognitive biases in crowdsourcing,” in Proceedings of WSDM 2018: The Eleventh ACM International Conference on Web Search and Data Mining. ed. ACM. New York: Association for Computing Machinery. 1–9.

Fadaei, H., and Faili, H. (2020). Using syntax for improving phrase-based SMT in low-resource languages. Digit. Scholarsh. Human. 35, 507–528. doi: 10.1093/llc/fqz033

Feng, J. (2017). Comparing cognitive load in L1 and L2 translation: evidence from eye-tracking. Foreign Lang. China 14, 79–91. doi: 10.13564/j.cnki.issn.1672-9382.2017.04.012

Fonseca, N. B. (2015). Directionality in translation: investigating prototypical patterns in editing procedures. Transl. Interpret. 7, 111–125. doi: 10.ti/106201.2015.a08

Ghobadi, M., Khosroshahi, S., and Giveh, F. (2021). Exploring predictors of translation performance. Transl. Interpret. 13, 65–78. doi: 10.12807/ti.113202.2021.a04

Graesser, A. C., McNamara, D. S., and Kulikowich, J. M. (2011). Coh-Metrix: providing multilevel analyses of text characteristics. Educ. Res. 40, 223–234. doi: 10.3102/0013189X11413260

Gu, J. T., Hassan, H., Devlin, J., and Li, V. O. K. (2018). “Universal neural machine translation for extremely low resource languages,” in Proceedings of NAACL-HLT 2018, ed. ACL. New Orleans: Association for Computational Linguistics. 344–354.

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (Task Load Index): results of empirical and theoretical research” in Human Mental Workload. eds. P. A. Hancock and N. Meshkati (Amsterdam: North-Holland), 139–183.

Hoffman, B., and Schraw, G. (2009). The influence of self-efficacy and working memory capacity on problem-solving efficiency. Learn. Individ. Differ. 19, 91–100. doi: 10.1016/j.lindif.2008.08.001

Islam, M. A., Anik, M. S. H., and Islam, A. B. M. A. A. (2021). Towards achieving a delicate blending between rule-based translator and neural machine translator. Neural Comput. Applic. 33, 12141–12167. doi: 10.1007/s00521-021-05895-x

Islam, M. A., Mukta, M. S. H., Olivier, P., and Rahman, M. M. (2022). “Comprehensive guidelines for emotion annotation,” in Proceedings of the 22nd ACM International Conference on Intelligent Virtual Agents, ed. ACM. New York: Association for Computing Machinery. 1–8.

Jääskeläinen, R. (1996). Hard work will bear beautiful fruit. A comparison of two think-aloud protocol studies. Meta 41, 60–74. doi: 10.7202/003235ar

Jääskeläinen, R. (2016). “Quality and translation process research,” in Reembedding translation process research. ed. R. Muñoz Martín (Amsterdam/Philadelphia: John Benjamins Publishing Company), 89–106.

Jakobsen, A. L. (2002). “Translation drafting by professional translators and by translation students,” in Empirical translation studies: Process and product. ed. G. Hansen (Copenhagen: Samfundslitteratur), 191–204.

Jia, Y. F., Carl, M., and Wang, X. L. (2019). How does the post-editing of neural machine translation compare with from-scratch translation? A product and process study. J. Spec. Transl. 31, 60–86.

Jiménez Ivars, A., Pinazo Calatayud, D., and Ruiz i Forés, M. (2014). Self-efficacy and language proficiency in interpreter trainees. Interpret. Transl. Trainer 8, 167–182. doi: 10.1080/1750399X.2014.908552

Judge, T. A., Jackson, C. L., Shaw, J. C., Scott, B. A., and Rich, B. L. (2007). Self-efficacy and work-related performance: the integral role of individual differences. J. Appl. Psychol. 92, 107–127. doi: 10.1037/0021-9010.92.1.107

Kelly, D. (2000). “Text selection for developing translator competence,” in Developing translation competence. eds. C. Schäffner and B. J. Adab (Amsterdam/Philadelphia: John Benjamins Publishing Company), 157–167.

Kiraly, D. (2000). A social constructivist approach to translator education. London and New York: Routledge.

Lehka-Paul, O., and Whyatt, B. (2016). Does personality matter in translation? Interdisciplinary research into the translation process and product. Poznań Stud. Contempor. Linguist. 52, 317–349. doi: 10.1515/psicl-2016-0012

Liang, J., Fang, Y., Lv, Q., and Liu, H. (2017). Dependency distance differences across interpreting types: implications for cognitive demand. Front. Psychol. 8:2132. doi: 10.3389/fpsyg.2017.02132

Liu, Y. M., Zheng, B. H., and Zhou, H. (2019). Measuring the difficulty of text translation: the combination of text-focused and translator-oriented approaches. Target 31, 125–149. doi: 10.1075/target.18036.zhe

Lörscher, W. (1991). Translation performance, translation process and translation strategies: A psycholinguistic investigation. Tübingen: Narr.

Malik, A. A., Williams, C. A., Weston, K. L., and Barker, A. R. (2021). Influence of personality and self-efficacy on perceptual responses during high-intensity interval exercise in adolescents. J. Appl. Sport Psychol. 33, 590–608. doi: 10.1080/10413200.2020.1718798

McNamara, D. S., Graesser, A. C., McCarthy, P. M., and Cai, Z. Q. (2014). Automated evaluation of text and discourse with Coh-Metrix. New York: Cambridge University Press.

Mellinger, C. D., and Hanson, T. A. (2018). Order effects in the translation process. Transl. Cogn. Behav. 1, 1–20. doi: 10.1075/tcb.00001.mel

Michael, E. B., Tokowicz, N., Degani, T., and Smith, C. J. (2011). Individual differences in the ability to resolve translation ambiguity across languages. Vigo Int. J. Appl. Linguist. 8, 79–97.

Mishra, A., Bhattacharyya, P., and Carl, M. (2013). “Automatically predicting sentence translation difficulty,” in Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), eds. H. Schuetze, P. Fung, and M. Poesio. Sofia: Association for Computational Linguistics. 346–351.

Moores, T. T., Chang, J. C. J., and Smith, D. K. (2006). Clarifying the role of self-efficacy and metacognition as predictors of performance: construct development and test. SIGMIS Database 37, 125–132. doi: 10.1145/1161345.1161360

Muñoz Martín, R. (2014). “Situating translation expertise: a review with a sketch of a construct,” in The development of translation competence: Theories and methodologies from psycholinguistics and cognitive science. eds. J. W. Schwieter and A. Ferreira (Newcastle upon Tyne: Cambridge Scholars Publishing), 2–56.

Muñoz Martín, R., and Olalla-Soler, C. (2022). Translating is not (only) problem solving. J. Spec. Transl. 38, 1–26.

O’Brien, S. (2013). The borrowers: researching the cognitive aspects of translation. Target 25, 5–17. doi: 10.1075/target.25.1.02obr

Ogawa, H. (2021). Difficulty in English-Japanese translation: Cognitive effort and text/translator characteristics. Dissertation. Ohio: Kent State University.

Plass, J. L., Moreno, R., and Brünken, R. (2010). Cognitive load theory. Cambridge: Cambridge University Press.

Pokorn, N. K., Blake, J., Reindl, D., and Peterlin, A. P. (2019). The influence of directionality on the quality of translation output in educational settings. Interpreter Transl. Trainer 14, 58–78. doi: 10.1080/1750399X.2019.1594563

Rahimi, M., and Zhang, L. J. (2019). Writing task complexity, students’ motivational beliefs, anxiety and their writing production in English as a second language. Read. Writ. 32, 761–786. doi: 10.1007/s11145-018-9887-9

Robinson, P. (2011). “Second language task complexity, the cognition hypothesis, language learning, and performance,” in Second language task complexity. ed. P. Robinson (Amsterdam/Philadelphia: John Benjamins Publishing Company), 3–38.

Rothe-Neves, R. (2003). “The influence of working memory features on some formal aspects of translation performance,” in Triangulating translation: Perspectives in process oriented research. ed. F. Alves (Amsterdam/Philadelphia: John Benjamins Publishing Company), 97–119.

Saldanha, G., and O’Brien, S. (2014). Research methodologies in translation studies. New York: Routledge.

Seufert, T., Wagner, F., and Westphal, J. (2017). The effects of different levels of disfluency on learning outcomes and cognitive load. Instr. Sci. 45, 221–238. doi: 10.1007/s11251-016-9387-8

Shaki, R., and Khoshsaligheh, M. (2017). Personality type and translation performance of Persian translator trainees. Indonesian J. Appl. Linguist. 7, 122–132. doi: 10.17509/ijal.v7i2.8348

Sun, S., Li, T., and Zhou, X. (2020). Effects of thinking aloud on cognitive effort in translation. LANS-TTS 19, 132–151. doi: 10.52034/lanstts.v19i0.556

Sun, S., and Shreve, G. M. (2014). Measuring translation difficulty: an empirical study. Target 26, 98–127. doi: 10.1075/target.26.1.04sun

Sweller, J., Ayres, P., and Kalyuga, S. (2011). Cognitive load theory. New York: Springer Science + Business Media.

Sweller, J., van Merrienboer, J. J. G., and Paas, F. G. W. C. (1998). Cognitive architecture and instructional design. Educ. Psychol. Rev. 10, 251–296. doi: 10.1023/a:1022193728205

Tang, F., and Li, D. C. (2017). A corpus-based investigation of explicitation patterns between professional and student interpreters in Chinese-English consecutive interpreting. Interpreter Transl. Trainer 11, 373–395. doi: 10.1080/1750399X.2017.1379647

Trace, J., Janssen, G., and Meier, V. (2015). Measuring the impact of rater negotiation in writing performance assessment. Lang. Test. 34, 3–22. doi: 10.1177/0265532215594830

Waddington, C. (2001). Different methods of evaluating student translations: the question of validity. Meta 46, 311–325. doi: 10.7202/004583ar

Wang, F. X. (2022). Impact of translation difficulty and working memory capacity on processing of translation units: evidence from Chinese-to-English translation. Perspectives 30, 306–322. doi: 10.1080/0907676X.2021.1920989

Weng, Y., Zheng, B. H., and Dong, Y. P. (2022). Time pressure in translation: psychological and physiological measures. Target 34, 601–626. doi: 10.1075/target.20148.wen

Whyatt, B. (2019). In search of directionality effects in the translation process and in the end product. Transl. Cogn. Behav. 2, 79–100. doi: 10.1075/tcb.00020.why

Wu, Z. W. (2019). Text characteristics, perceived difficulty and task performance in sight translation: an exploratory study of university-level students. Interpreting 21, 196–219. doi: 10.1075/intp.00027.wu

Yang, Y. X., Cao, X., and Huo, X. (2021a). The psychometric properties of translating self-efficacy belief: perspectives from Chinese learners of translation. Front. Psychol. 12:642566. doi: 10.3389/fpsyg.2021.642566

Yang, Y. X., Wang, X. L., and Yuan, Q. Q. (2021b). Measuring the usability of machine translation in the classroom context. Transl. Interpreting Stud. 16, 101–123. doi: 10.1075/tis.18047.yan

Keywords: task complexity, translating self-efficacy belief, interaction, translation process, product quality

Citation: Zhou X, Wang X and Liu X (2022) The impact of task complexity and translating self-efficacy belief on students’ translation performance: Evidence from process and product data. Front. Psychol. 13:911850. doi: 10.3389/fpsyg.2022.911850

Edited by:

Simone Aparecida Capellini, São Paulo State University, Brazil

Reviewed by:

Pierre Gander, University of Gothenburg, Sweden

Ghayth Kamel Shaker AlShaibani, UCSI University, Malaysia

Md Adnanul Islam, Monash University, Australia

Juan Antonio Prieto-Velasco, Universidad Pablo de Olavide, Spain

Lieve Macken, Ghent University, Belgium

Copyright © 2022 Zhou, Wang and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiangling Wang, xl_wang@hnu.edu.cn

Xiangyan Zhou, Xiangling Wang, Xiaodong Liu