- 1Centre for Psychiatry Research, Department of Clinical Neuroscience, Karolinska Institutet, and Stockholm Health Care Services, Stockholm, Sweden

- 2MIC Lab AB, Stockholm, Sweden

- 3Division of Psychology, Department of Clinical Neuroscience, Karolinska Institutet, Stockholm, Sweden

- 4Stockholm Centre for Eating Disorders, Stockholm County Council, Stockholm, Sweden

As evaluation of practitioners’ competence is largely based on self-report, accuracy in practitioners’ self-assessment is essential for ensuring high-quality treatment delivery. The aim of this study was to assess the relationship between independent observers’ ratings and practitioners’ self-reported treatment integrity ratings of Motivational interviewing (MI). Practitioners (N = 134) were randomized to two types of supervision [i.e., regular institutional group supervision, or individual telephone supervision based on the MI Treatment Integrity (MITI) code]. The mean age was 43.2 years (SD = 10.2), and 62.7 percent were female. All sessions were recorded and evaluated with the MITI, and the MI skills were self-assessed with a questionnaire over a period of 12 months. The associations between self-reported and objectively assessed MI skills were overall weak, but increased slightly from baseline to the 12-month assessment. However, the self-ratings from the group that received monthly objective feedback were not more accurate than those from the group participating in regular group supervision. These results expand findings from previous studies and have important implications for assessment of practitioners’ treatment fidelity: Practitioners may learn to improve the accuracy of self-assessment of competence, but to ensure that patients receive intended care, adherence and competence should be assessed objectively.

Introduction

Treatment fidelity is defined as the extent to which a treatment is delivered according to a given standard (Hogue et al., 2015). It includes two factors: (1) Adherence (i.e., the extent of proposed treatment components present in the session); and (2) Competence (i.e., practitioners’ skills) (Beidas et al., 2014b; Bearman et al., 2017). Assessment of treatment fidelity is a crucial part of understanding if and how a treatment works, and thus an important part of the development of all evidence-based treatments (EBT) (McLeod et al., 2013). Fidelity assessment is also key for the dissemination and implementation of EBT, as it is a prerequisite for both effective training of practitioners and quality assurance of clinical practice (McHugh et al., 2009; Hogue et al., 2015). Primary methods of measuring fidelity are direct (i.e., monitoring of sessions) or indirect (i.e., practitioners’ self-report) (Beidas et al., 2014a). However, results from a number of studies highlight the inaccuracy of practitioners’ self-report (Carroll et al., 1998, 2010; Brosan et al., 2008; Beidas and Kendall, 2010). The weak correlations between practitioners and observers are in large part due to practitioners overestimating their levels of adherence and competence, and this overestimation has been shown in both manualized research treatments and routine practice (Hogue et al., 2015). For example, in a summary of findings from trials with a comparatively large number of recorded sessions of addiction practitioners delivering standard treatment (e.g., CBT and the 12 Steps program), Carroll et al. (2010) found that the practitioners frequently overestimated their time spent on EBT. Moreover, EBT components occurred at low levels and in less than five percent of all sessions. Instead, clinician-initiated unrelated discourse (i.e., chat) was one of the more frequently observed interventions.

Motivational interviewing (MI) is a collaborative, client-centered and directional method for strengthening clients’ motivation to change (Miller and Rollnick, 2013), implemented in a variety of healthcare settings (Miller and Moyers, 2017). However, results from previous MI training research show that MI practitioners, too, often overestimate their levels of fidelity compared with objective observers (Miller and Mount, 2001; Miller et al., 2003, 2004; Martino et al., 2009; Decker et al., 2013; Wain et al., 2015). The most widely used instrument for measuring MI fidelity is the Motivational Interviewing Treatment Integrity code (MITI) (Moyers et al., 2016). MITI 3.1 comprises two parts: (1) The five global ratings (Empathy, Evocation, Collaboration, Autonomy, and Direction), which provide an overall assessment of the practitioner’s performance on a five-point scale; and (2) The behavior counts, which are the frequencies of the MI practitioner’s utterances coded in seven different categories (Giving information, MI adherent behaviors, MI non-adherent behaviors, Closed questions, Open questions, Simple reflections, and Complex reflections). The coding instrument also includes recommended indicators of MITI proficiency to aid the evaluation of clinicians’ skillfulness in MI (Moyers et al., 2010).
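As an illustration of how behavior counts are typically condensed into the summary measures reported later in this article (Reflection to questions, Percent complex reflections, Percent MI-adherent), the following is a minimal sketch. It assumes the commonly used MITI 3.1 definitions of these ratios; the `BehaviorCounts` container, the function names, and the example tallies are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class BehaviorCounts:
    """Tallies of coded practitioner utterances in one session (hypothetical container)."""
    open_questions: int
    closed_questions: int
    simple_reflections: int
    complex_reflections: int
    mi_adherent: int
    mi_non_adherent: int


def ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def percent(numerator, denominator):
    """Return numerator as a percentage of denominator, or None when undefined."""
    return 100 * numerator / denominator if denominator else None


def summary_measures(c):
    """Condense behavior counts into summary ratios (assumed MITI 3.1 definitions)."""
    reflections = c.simple_reflections + c.complex_reflections
    questions = c.open_questions + c.closed_questions
    return {
        "reflection_to_question": ratio(reflections, questions),
        "percent_open_questions": percent(c.open_questions, questions),
        "percent_complex_reflections": percent(c.complex_reflections, reflections),
        "percent_mi_adherent": percent(c.mi_adherent, c.mi_adherent + c.mi_non_adherent),
    }


# Made-up tallies for a single session, for illustration only.
example = BehaviorCounts(open_questions=6, closed_questions=4,
                         simple_reflections=9, complex_reflections=6,
                         mi_adherent=3, mi_non_adherent=1)
measures = summary_measures(example)
```

Returning `None` for an empty denominator is a design choice of this sketch: a session without, say, any reflections has no defined percentage of complex reflections, and treating it as zero would bias any downstream comparison.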

Within education, metacognition is a well-known concept. Defined as students’ thinking about their own learning process (Benassi et al., 2014), metacognition is a crucial factor in students’ ability to evaluate progress and decide on strategies for improvement (Cutting and Saks, 2012; Gooding et al., 2017). In this area of research too, repeated studies have shown that students’ ability to assess their own performance is limited (Rohrer and Pashler, 2010; Benassi et al., 2014). However, when repeatedly tested, students’ assessments improve (Rohrer and Pashler, 2010; Cutting and Saks, 2012; Benassi et al., 2014). Additionally, active-learning techniques have been shown to increase metacognition within education (Gooding et al., 2017). An active use of fidelity tools, such as the MITI, during supervision might thereby be an efficient way for practitioners to learn how to more accurately estimate their levels of adherence and competence following MI training.

The objective of this study was to expand previous research by examining the relationship between participants’ self-reported MI skills and objectively assessed MI skills over a period of 12 months that included six monthly supervision sessions. Two different types of supervision were included: regular group supervision, or individual telephone supervision based on objective MITI feedback. In line with previous findings, we hypothesized that the relationships between participants’ self-reported skills and objectively assessed skills would be overall weak. However, we also hypothesized that the associations would increase over time, and that the associations would be stronger in the group that received monthly individual supervision sessions based on the MITI.

Methods

Data were obtained from an MI implementation study (Beckman et al., 2021) conducted from September 2014 to January 2017 at the Swedish National Board of Institutional Care (SiS), a Swedish government agency for young people with psychosocial problems and adults with substance use disorders. The main aims of the study were to assess MI skills within the agency, and to evaluate the effects of two forms of MI supervision on the supervisor-supervisee working alliance, the supervisees’ feelings of discomfort/distress, and MI skill acquisition. The analyses did not show either form of supervision to be more effective, nor did they show that the MITI feedback evoked negative emotions or negatively affected the supervisor-supervisee working alliance or the supervisees’ skill acquisition (Beckman et al., 2021).

Participants

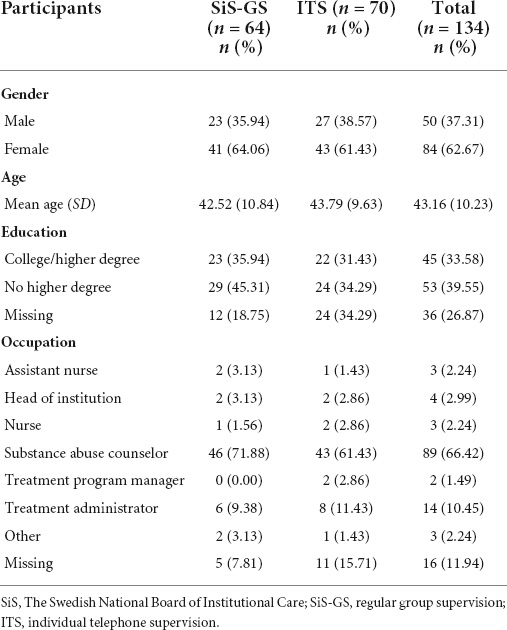

Participants were 134 employees from 12 SiS institutions who previously had received at least one MI workshop during their employment at SiS. Demographic variables are presented in Table 1.

Procedure

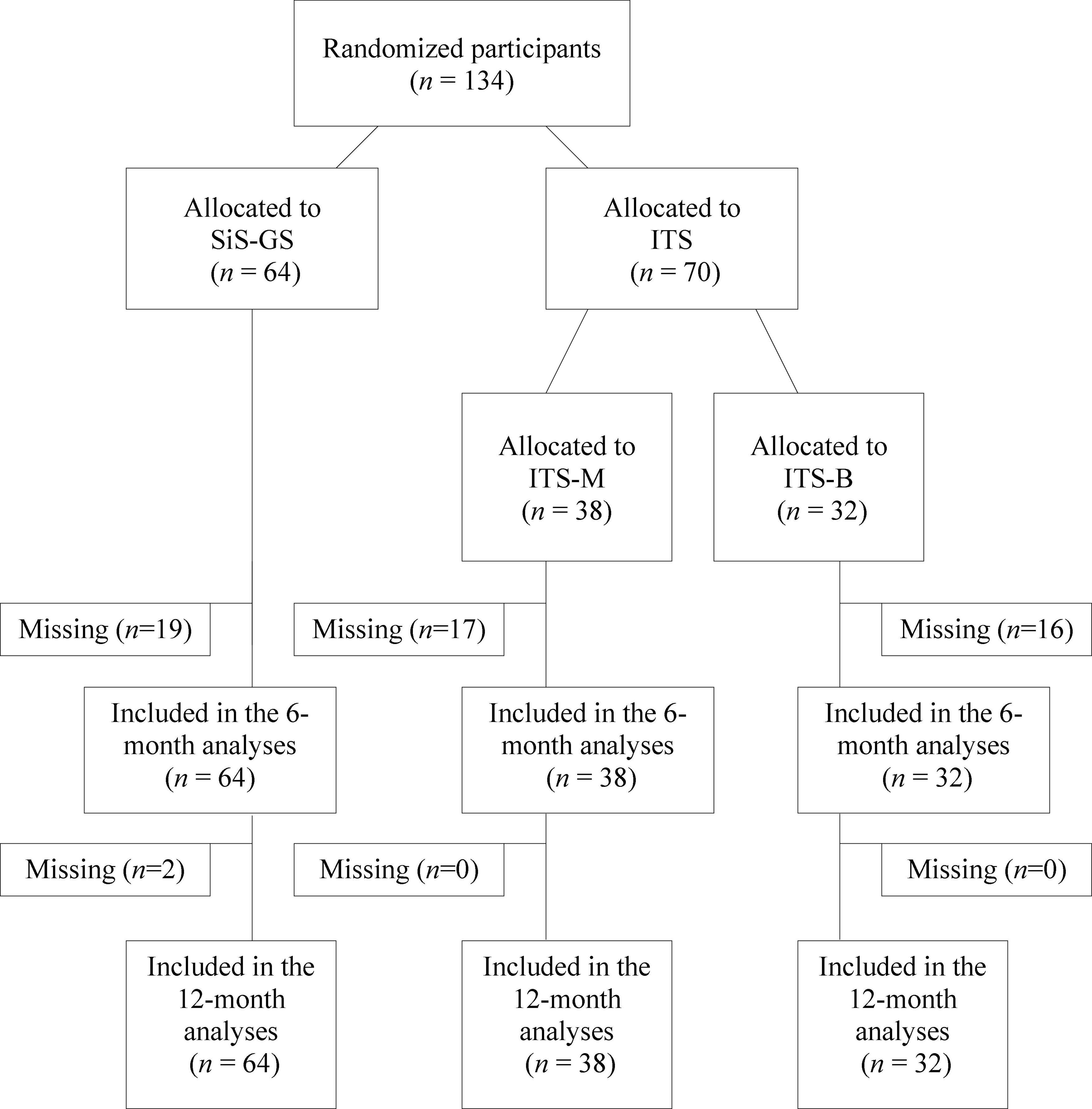

All participants signed informed consent before participating in the study (the Regional Ethical Review Board in Stockholm, Sweden; dnr. 2013/904-31). They were then randomized to: (1) Six months of regular group supervision at their SiS institutions (SiS-GS, n = 64), or (2) Six monthly individual telephone supervision sessions based on two types of MITI feedback (ITS, n = 70) (Figure 1). The randomization was conducted with a random number generator without stratification. Within SiS, a variety of substance abuse treatment options are available, including MI. In addition, all verbal interactions with clients must be performed in accordance with MI. SiS therefore offers all personnel who interact with clients a four- to five-day MI workshop with subsequent group supervision. This SiS regular group supervision is conducted at all institutions and is open to employees with at least one completed workshop in MI. The regular supervision session content varied slightly at the different SiS institutions during the course of the study (e.g., reports of/listening to self-selected parts of sessions from some of the participants, discussions, coaching, and role-plays). The monthly 30-min individual telephone supervision sessions were manual-based and are described in more detail elsewhere (Beckman et al., 2017, 2019). All participants recorded three 20-min MI sessions at their institution, either with a client or as a real play with a colleague (i.e., one practitioner recounts a personal experience to the other, who acts as a therapist in relation to that situation): at baseline, and 6 months (the 6-month assessment) and 12 months (the 12-month follow-up) after the baseline recording. The ITS group recorded four extra sessions between baseline and the 6-month assessment, and received individual telephone supervision after all recordings except the 12-month follow-up. Following each recording, all participants self-assessed their MI skills in a questionnaire mirroring the MITI.

Figure 1. Flow diagram. SiS, The Swedish National Board of Institutional Care; SiS-GS, SiS group supervision; ITS, individual telephone supervision; ITS-B, individual telephone supervision including systematic feedback based on only the behavior counts part of the objective protocol; ITS-M, individual telephone supervision including systematic feedback based on the entire objective protocol.

Assessment

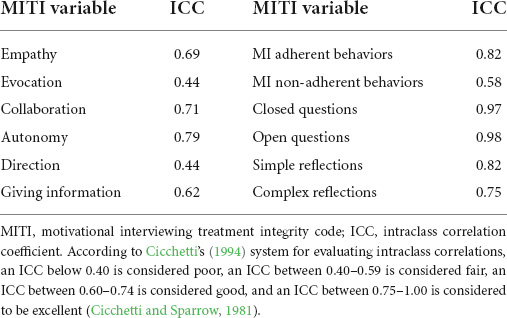

The recorded MI sessions were assessed for proficiency (i.e., adherence and competence) by coders at MIC Lab, now named the Motivational Interviewing Quality Assurance (MIQA) group at Karolinska Institutet (KI) in Stockholm, with a translated version of the MITI, version 3.1 (Forsberg et al., 2011a). All MIQA coders complete 120 h of training, take part in weekly group codings, and double-code 12 randomly selected recordings twice a year. At the middle of the study period, the ICC ranged from good to excellent for all MITI variables except Direction and MI non-adherent, for which the ICC was considered fair (Table 2). The Clinical Experience Questionnaire (CEQ) (Forsberg et al., 2011b) was used to self-assess MI skills. The CEQ contains nine items: The first five correspond to each of the five global variables of the MITI (i.e., Empathy, Evocation, Collaboration, Autonomy, and Direction). In the following four, participants are asked to estimate the proportion of the behavior counts using a three-point scale (i.e., more Reflections than Questions, roughly the same, or more Questions than Reflections; more Complex reflections than Simple reflections, roughly the same, or more Simple reflections than Complex reflections; more Open questions than Closed questions, roughly the same, or more Closed than Open questions; more MI adherent utterances than MI non-adherent utterances, roughly the same, or more MI non-adherent than MI adherent utterances).

Table 2. The MIQA coders’ inter-rater reliability, assessed with a two-way mixed model with absolute agreement, single measures, ICC.
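For readers unfamiliar with this ICC variant, the single-measures, absolute-agreement coefficient is commonly written as follows. This is a sketch assuming the McGraw and Wong formulation that statistical packages generally report for this model; the symbols are introduced here for illustration and do not appear in the original analysis. With $n$ double-coded recordings and $k$ coders, $MS_R$ is the mean square between recordings, $MS_C$ the mean square between coders, and $MS_E$ the residual mean square from the two-way ANOVA:

```latex
\mathrm{ICC}(A,1) \;=\;
\frac{MS_R - MS_E}
     {MS_R + (k-1)\,MS_E + \dfrac{k}{n}\,\bigl(MS_C - MS_E\bigr)}
```

Because the between-coder term $MS_C$ sits in the denominator, systematic differences in coder leniency lower the coefficient, which is what distinguishes absolute agreement from consistency.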

Data analyses

The inter-rater agreement of the MITI coding was assessed with a two-way mixed model with absolute agreement, single measures, ICC. Due to non-normally distributed data, Spearman’s rho was used to test the correlations between the nine MITI proficiency measures and the nine CEQ-scores for each supervision group at baseline, the 6-month assessment and the 12-month follow-up. The Bonferroni correction was applied for multiple comparisons with the significance cut-off at p < 0.05. To examine whether the correlation coefficients were significantly different, the Fisher r-to-z transformation was used. To test the effectiveness of the different types of supervision on self-rating of competence, new variables based on the difference between the participants’ self-reported MI skills and the objectively assessed MI skills were created, and a generalized linear mixed model (GLMM) was then used to analyze both main (i.e., group, and time) and interaction (group × time) effects. Descriptive statistics, such as QQ-plots, showed which distribution best represented the data (i.e., nesting, repeated measures within individuals and random intercept for individuals). For one variable where we found a significant baseline difference, we included the baseline assessment as a fixed covariate to adjust for the initial imbalance. The Bonferroni correction was used for multiple comparisons within each GLMM analysis, and the intervention effect was calculated using Cohen’s d. All data were analyzed with the Statistical Package for the Social Sciences (SPSS), version 22.0.
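The comparison of correlation coefficients between the two supervision groups can be sketched as below. This is an illustrative reimplementation in Python, not the SPSS procedure used in the study: it assumes the textbook Fisher r-to-z test for two independent samples and a plain Bonferroni division of alpha, and applying it to Spearman coefficients is an approximation. The function names and the input values in the usage lines are hypothetical.

```python
import math


def fisher_z(r):
    """Fisher r-to-z transformation, z = atanh(r)."""
    return math.atanh(r)


def compare_independent_correlations(r1, n1, r2, n2):
    """Two-sided z-test for the difference between two correlations
    estimated in independent groups of sizes n1 and n2."""
    standard_error = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (fisher_z(r1) - fisher_z(r2)) / standard_error
    # Two-sided p-value under the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


# Hypothetical coefficients and group sizes, for illustration only.
z_stat, p_value = compare_independent_correlations(0.6, 64, 0.2, 70)
threshold = bonferroni_threshold(0.05, 9)
is_significant = p_value < threshold
```

Whether a given difference counts as significant depends strongly on how many comparisons the threshold is divided by, so the choice of `n_tests` should match the family of tests actually being corrected.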

Results

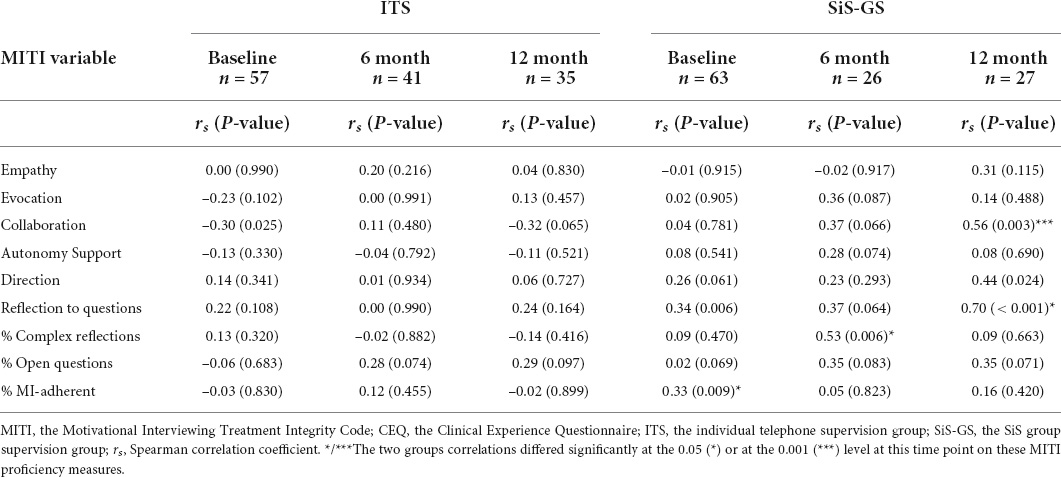

Table 3 shows the correlations between the objectively assessed MITI proficiency measures and the participants’ self-reported CEQ-scores for the two groups at the three assessment points. Consistent with the first hypothesis, the associations between participants’ self-reports and the objective assessments were overall weak. Consistent with the second hypothesis, the discrepancy between self-reports and objective assessments (i.e., the MITI) then decreased over time: The GLMM-analysis showed significant time effects for four of the seven MITI proficiency measures: Collaboration [F(2, 113) = 4.97, p = 0.009, d = 0.42], Reflection to questions [F(2, 111) = 3.67, p = 0.029, d = 0.36], Percent complex reflections [F(2, 113) = 5.91, p = 0.004, d = 0.46], and Percent MI-adherent [F(2, 113) = 4.31, p = 0.016, d = 0.39].

Table 3. Correlations between the MITI proficiency measures and the practitioners’ self-assessment (i.e., the CEQ-scores) at the three assessment points.

Although the correlations were persistently higher for the SiS-GS group, only one out of nine was significantly higher at the 6-month assessment (i.e., Percent complex reflections), and only two were significantly higher at the 12-month follow-up (i.e., Collaboration and Reflection to questions) (Table 3). Additionally, contrary to the third hypothesis, the two groups did not develop differently over time: The GLMM-analysis showed no significant interaction or group effects.

Discussion

This study examined the relationship between practitioners’ self-reported MI skills and objectively assessed MI skills. The participants received either 6 months of regular institutional group supervision, or six monthly individual telephone supervision sessions based on objective feedback. The associations between self-reported and objectively assessed MI skills were overall weak, but the correlations increased somewhat over time in both groups. However, the associations between self-reported and objectively assessed MI skills were not stronger in the group that received individual telephone supervision based on objective feedback.

Practitioners’ difficulties in assessing their own performance have repeatedly been shown in previous studies (Carroll et al., 1998, 2010; Brosan et al., 2008; Beidas and Kendall, 2010). However, within educational research, metacognition is well-known and numerous studies have shown that estimates become more accurate during recurrent testing (Rohrer and Pashler, 2010; Cutting and Saks, 2012; Benassi et al., 2014). In this study, the ITS group’s MI skills were tested in six monthly recordings followed by supervision based on the results of objective assessment of those recordings (i.e., the MITI). However, participants’ self-reported MI skills were never a part of these supervision sessions. The MITI results were a regular part of every session, but they were never compared with the participants’ self-reports. In fact, none of the participants got the chance to see their self-reports once they were handed in. The SiS-GS group was also tested during the course of the study, but only three times as opposed to seven for the ITS group. Thus, six monthly recordings and subsequent supervision sessions, including detailed compilations of the objectively assessed skills, do not seem sufficient for practitioners to develop better estimates of their actual MI performances. On the contrary, for the group that received supervision as usual (i.e., SiS-GS) we found generally higher correlations between objective and subjective ratings. Notably, only 3 of these 18 sets of correlations were significantly higher at the 6- and 12-month assessments (Table 3), and the two groups did not develop differently over time according to the GLMM analysis (no significant interaction). Nevertheless, the participants’ ability to self-assess increased somewhat in both groups over time (a time effect in the GLMM). The implementation study (Beckman et al., 2021) showed increased adherence and competence for the participants over time, and there may be a relationship between increased skills and the ability to self-assess.

Since previous research has highlighted metacognition as critical to learning and performance, and increasingly important as learners advance (Benassi et al., 2014), an additional way to use fidelity tools for enhanced learning during supervision might be to actively work with the supervisee’s abilities to self-assess to thereby promote metacognition. Fidelity-based self-assessment as a supervision tool may also inform practitioners on opportunities for development, and thereby contribute to more efficient training (Cutting and Saks, 2012). By comparing self-rated assessments with those of independent observers during supervision sessions, practitioners’ ability to self-assess may also be improved. Since evaluation of practitioners’ competence and adherence relies heavily on self-reports, low ability in this domain has serious implications for both effective supervision and practice. Discrepancy between perceived and actual behavior is especially problematic when practitioners overestimate their levels of adherence and competence, given the findings of better outcomes when treatments are conducted with fidelity (Beidas and Kendall, 2010).

This study has several limitations: The implementation study had recruitment difficulties, the participants were self-selected, and 40.3 percent of the participants dropped out, which limits the generalizability of the findings. Additionally, the coders were not blind to the group allocation, and the same coders both rated the sessions and conducted the supervision. This procedure may have affected the coding reliability (Moyers et al., 2016). Despite these limitations, the present study contributes to the knowledge of the relationship between self-reported and objectively assessed MI skills by being one of few studies that compare the accuracy of practitioners’ self-assessment in two different supervision groups using a relatively large sample in a naturalistic setting over a period of 12 months, and can therefore provide some direction for future MI training and implementation studies.

Conclusion

This study contributes to the field by confirming and expanding previous findings on the inaccuracy of practitioners’ self-reports. The ability to self-assess skills after MI-training seems to increase somewhat over time, but six monthly recordings and subsequent MI supervision sessions, including detailed compilations of the objectively assessed skills, were not sufficient for the SiS-practitioners in this study to develop better estimates of actual performances. An additional way to use fidelity tools, such as the MITI, for enhanced learning during supervision could be to promote metacognition. Fidelity based self-assessment as a supervision tool may also inform practitioners on opportunities for development and thereby contribute to a more efficient training, and might also enhance practitioners’ ability to self-assess.

Practice Implications

The results shed light on practitioners’ difficulties in assessing their own competence. Practitioners’ ability to self-assess may increase with specific training, but to ensure that patients receive intended care, adherence and competence should in any case be assessed objectively.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Regional Ethical Review Board in Stockholm, Sweden; dnr. 2013/904-31. The patients/participants provided their written informed consent to participate in this study.

Author contributions

MB: conceptualization, methodology, data curation, formal analysis, funding acquisition, and writing – original draft, review and editing. HL: conceptualization, methodology, formal analysis, and writing – review and editing. LÖ: project administration and writing – review and editing. LF: conceptualization, methodology, funding acquisition, and writing – review and editing. TL: resources, supervision and writing – review and editing. AG: conceptualization, methodology, data curation, formal analysis, supervision, and writing – review and editing. All authors contributed to the article and approved the submitted version.

Funding

The Swedish National Board of Institutional Care provided financial support for this work [Statens institutionsstyrelse (SiS) dnr 41–185-2012].

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.890579/full#supplementary-material

References

Bearman, S. K., Schneiderman, R. L., and Zoloth, E. (2017). Building an evidence base for effective supervision practices: an analogue experiment of supervision to increase EBT fidelity. ADM Policy Ment. Health 44, 293–307.

Beckman, M., Forsberg, L., Lindqvist, H., and Ghaderi, A. (2019). Providing objective feedback in supervision in motivational interviewing: results from a randomized controlled trial. Behav. Cogn. Psychother 2019, 1–12. doi: 10.1017/S1352465819000687

Beckman, M., Forsberg, L., Lindqvist, H., Diez, M., Eno Persson, J., and Ghaderi, A. (2017). The dissemination of motivational interviewing in Swedish county councils: results of a randomized controlled trial. PLoS One 12:e0181715. doi: 10.1371/journal.pone.0181715

Beckman, M., Lindqvist, H., Ohman, L., Forsberg, L., Lundgren, T., and Ghaderi, A. (2021). Ongoing supervision as a method to implement motivational interviewing: results of a randomized controlled trial. Patient Educ. Couns. 104, 2037–2044. doi: 10.1016/j.pec.2021.01.014

Beidas, R. S., and Kendall, P. C. (2010). Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin. Psychol. 17, 1–30. doi: 10.1111/j.1468-2850.2009.01187.x

Beidas, R. S., Cross, W., and Dorsey, S. (2014a). Show me, don’t tell me: behavioral rehearsal as a training and analogue fidelity tool. Cogn. Behav. Pract. 21, 1–11. doi: 10.1016/j.cbpra.2013.04.002

Beidas, R. S., Edmunds, J., Ditty, M., Watkins, J., Walsh, L., Marcus, S., et al. (2014b). Are inner context factors related to implementation outcomes in cognitive-behavioral therapy for youth anxiety? ADM Policy Ment. Health 41, 788–799. doi: 10.1007/s10488-013-0529-x

Benassi, V. A., Overson, C. E., and Hakala, C. M. (2014). “Applying science of learning in education: infusing psychological science into the curriculum,” in Retrieved from the Society for the Teaching of Psychology. Available online at: http://teachpsych.org/ebooks/asle2014/index.php (accessed August 23, 2021).

Brosan, L., Reynolds, S., and Moore, R. G. (2008). Self-evaluation of cognitive therapy performance: do therapists know how competent they are? Behav. Cogn. Psychother. 36:581. doi: 10.1017/S1352465808004438

Carroll, K., Martino, S., and Rounsaville, B. (2010). No train, no gain? Clin. Psychol. Sci. Pract. 17, 36–40. doi: 10.1111/j.1468-2850.2009.01190.x

Carroll, K., Nich, C., and Rounsaville, B. (1998). Utility of therapist session checklists to monitor delivery of coping skills treatment for cocaine abusers. Psychother. Res. 8, 307–320. doi: 10.1093/ptr/8.3.307

Cicchetti, D. V., and Sparrow, S. A. (1981). Developing criteria for establishing interrater reliability of specific items: applications to assessment of adaptive behavior. Am. J. Ment. Defic. 86, 127–137.

Cicchetti, D. (1994). Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol. Assess. 6, 284–290. doi: 10.1037/1040-3590.6.4.284

Cutting, M. F., and Saks, N. S. (2012). Twelve tips for utilizing principles of learning to support medical education. Med. Teach. 34, 20–24. doi: 10.3109/0142159X.2011.558143

Decker, S. E., Carroll, K. M., Nich, C., Canning-Ball, M., and Martino, S. (2013). Correspondence of motivational interviewing adherence and competence ratings in real and role-played client sessions. Psychol. Assess. 25, 306–312. doi: 10.1037/a0030815

Forsberg, L., Forsberg, L., Forsberg, K., Van Loo, T., and Rönnqvist, S. (2011a). Motivational Interviewing Treatment Integrity 3.1 (MITI 3.1): MITI Kodningsmanual 3.1. Available online at: https://www.miclab.org/sites/default/files/files/MITI%203_1%20SV.pdf (accessed August 23, 2021).

Forsberg, L., Lindqvist, H., and Forsberg, L. (2011b). The Clinical Experience Questionnaire (CEQ). Stockholm: Karolinska Institutet.

Gooding, H. C., Mann, K., and Armstrong, E. (2017). Twelve tips for applying the science of learning to health professions education. Med. Teach. 39, 26–31. doi: 10.1080/0142159X.2016.1231913

Hogue, A., Dauber, S., Lichvar, E., Bobek, M., and Henderson, C. E. (2015). Validity of therapist self-report ratings of fidelity to evidence-based practices for adolescent behavior problems: correspondence between therapists and observers. ADM Policy Ment. Health 42, 229–243. doi: 10.1007/s10488-014-0548-2

Martino, S., Ball, S., Nich, C., Frankforter, T. L., and Carroll, K. M. (2009). Correspondence of motivational enhancement treatment integrity ratings among therapists, supervisors, and observers. Psychother. Res. 19, 181–193. doi: 10.1080/10503300802688460

McHugh, R. K., Murray, H. W., and Barlow, D. H. (2009). Balancing fidelity and adaptation in the dissemination of empirically-supported treatments: the promise of transdiagnostic interventions. Behav Res Ther 47, 946–953. doi: 10.1016/j.brat.2009.07.005

McLeod, B. D., Southam-Gerow, M. A., Tully, C. B., Rodriguez, A., and Smith, M. M. (2013). Making a case for treatment integrity as a psychosocial treatment quality indicator for youth mental health care. Clin. Psychol. 20, 14–32. doi: 10.1111/cpsp.12020

Miller, W. R., and Moyers, T. B. (2017). Motivational interviewing and the clinical science of Carl Rogers. J. Consult. Clin. Psychol. 85, 757–766. doi: 10.1037/ccp0000179

Miller, W. R., and Rollnick, S. (2013). Motivational Interviewing: Helping People Change. New York: Guilford Press.

Miller, W. R., Yahne, C. E., and Tonigan, J. S. (2003). Motivational interviewing in drug abuse services: a randomized trial. J. Consult. Clin. Psychol. 71, 754–763. doi: 10.1037/0022-006X.71.4.754

Miller, W. R., Yahne, C. E., Moyers, T. B., Martinez, J., and Pirritano, M. (2004). A randomized trial of methods to help clinicians learn motivational interviewing. J. Consult. Clin. Psychol. 72, 1050–1062. doi: 10.1037/0022-006X.72.6.1050

Miller, W., and Mount, K. (2001). A small study of training in motivational interviewing: does one workshop change clinician and client behavior? Behav. Cognit. Psychother. 29, 457–471. doi: 10.1017/S1352465801004064

Moyers, T. B., Rowell, L. N., Manuel, J. K., Ernst, D., and Houck, J. M. (2016). The motivational interviewing treatment integrity code (MITI 4): rationale, preliminary reliability and validity. J. Subst. Abuse Treat. 65, 36–42. doi: 10.1016/j.jsat.2016.01.001

Moyers, T. B., Martin, T., Manuel, J. K., Miller, W. R., and Ernst, D. (2010). Revised Global Scales: Motivational Interviewing Treatment Integrity 3.1.1 (MITI 3.1.1) [Online]. Available online at: http://casaa.unm.edu/download/MITI3_1.pdf (accessed August 23, 2021).

Rohrer, D., and Pashler, H. (2010). Recent research on human learning challenges conventional instructional strategies. Educ. Res. 39, 406–412.

Keywords: Motivational interviewing (MI), treatment fidelity, evidence-based treatments, MITI, self-assessment, metacognition

Citation: Beckman M, Lindqvist H, Öhman L, Forsberg L, Lundgren T and Ghaderi A (2022) Correspondence between practitioners’ self-assessment and independent motivational interviewing treatment integrity ratings. Front. Psychol. 13:890579. doi: 10.3389/fpsyg.2022.890579

Received: 06 March 2022; Accepted: 26 July 2022;

Published: 26 July 2022.

Edited by:

Lisa A. Osborne, The Open University, United Kingdom

Reviewed by:

Paul Cook, University of Colorado, United States

Daniel Demetrio Faustino-Silva, Conceição Hospital Group, Brazil

Copyright © 2022 Beckman, Lindqvist, Öhman, Forsberg, Lundgren and Ghaderi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maria Beckman, maria.beckman@ki.se
