ORIGINAL RESEARCH article

Front. Psychol., 15 June 2022

Sec. Human-Media Interaction

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.883417

As technological development is driven by artificial intelligence, many automotive manufacturers have integrated intelligent agents into in-vehicle information systems (IVIS) to create more meaningful interactions. One of the most important decisions in developing agents is how to embody them, because different ways of embodying agents significantly affect user perception and performance. This study addressed the issue by investigating the influences of agent embodiments on users in driving contexts. Through a factorial experiment (N = 116), the effects of anthropomorphism level (low vs. high) and physicality (virtual vs. physical presence) on users' trust, perceived control, and driving performance were examined. Results revealed an interaction effect between anthropomorphism level and physicality on both users' perceived control and cognitive trust. Specifically, when encountering high-level anthropomorphized agents, users reported lower ratings of trust toward the physically present agent than toward the virtually present one, and this interaction effect was mediated by perceived control. Although no main effects of anthropomorphism level or physicality were found, additional analyses showed that anthropomorphism level significantly improved cognitive trust for users unfamiliar with IVIS. No significant differences were found in driving performance. These results indicate that the embodiment of in-vehicle agents influences drivers' experience.

Driven by developments in artificial intelligence, an increasing number of intelligent agents have been developed and integrated into various contexts in everyday life. For instance, the agent “Alexa” has been integrated into smart speakers to assist users with domestic tasks. Intelligent agents exhibit a certain degree of autonomy, and they can perceive and communicate with their surroundings (Ferber and Weiss, 1999). Automobile manufacturers have followed this trend by developing in-vehicle agents, which are also referred to as Driving Support Agents (DSA) (Tanaka et al., 2018a,b; Karatas et al., 2019; Lee et al., 2019; Miyamoto et al., 2021). Drivers can interact with in-vehicle agents, which are often integrated into in-vehicle information systems (IVIS), through voice commands in order to learn about driving-related information (e.g., traffic, weather conditions) and complete secondary tasks (e.g., turning the air conditioner on/off, opening/closing windows). Some in-vehicle agents can even provide assistance for safer driving (e.g., lane keeping, speed control, fuel management). Through sensing, listening, and taking active roles to give advice, in-vehicle agents can potentially transform traditional human-machine interaction into something closer to human-human interaction, facilitating natural and intuitive interaction.

Increasing research attention has been paid to developing intelligent agents in driving contexts to enrich the driving experience. Recent studies have explored the desirable personality of in-vehicle agents (Braun et al., 2019) and how the utterances of in-vehicle agents influence drivers' acceptance in self-driving (Miyamoto et al., 2021) and autonomous driving contexts (Lee et al., 2019). However, besides in-vehicle agents' conversational styles, agent embodiment, as another important factor, also deserves more research attention. Agent appearance has been found to significantly influence user experience (Shiban et al., 2015; Abubshait and Wiese, 2017; Ter Stal et al., 2020a). Agents can be created to resemble humans, animals, objects, robots, or mystical creatures (Straßmann and Krämer, 2017). They can also be virtual or physical, i.e., created as a virtual character that is only presented on digital screens, or built physically with tangible materials and structure (Li, 2015).

In fact, agents are supported by sophisticated algorithms. Designers and developers have the freedom to embody agents in various ways and thereby deliberately influence user experience. Different agent appearances can trigger users to interact with agents differently. Therefore, it is crucial to understand how different agent embodiments influence user responses in driving contexts. This study specifically focused on two dimensions of agent embodiments (Ziemke, 2001): anthropomorphism level (low vs. high) and physicality (virtual vs. physical).

Prior studies have demonstrated the significant influences of agents' anthropomorphism and physicality on user experience and performance. For instance, anthropomorphized agents were found to improve users' perceived enjoyment and trust of online shopping websites (Luo et al., 2006; Qiu and Benbasat, 2009), and student performance in online learning contexts (Li et al., 2016). Agent physicality could also improve user trust (Wainer et al., 2006; Kiesler et al., 2008), enjoyment (Wainer et al., 2007; Kose-Bagci et al., 2009), and attitudes (Kiesler et al., 2008). However, few studies have investigated the interaction effects between anthropomorphism level and physicality, even though interactions among different layers of agent embodiment have drawn growing research attention. Examples include studies on the interaction between agency and anthropomorphism (Nowak and Biocca, 2004; Kang and Kim, 2020), the interplay of bodily appearance and movement (Castro-González et al., 2016), and the interaction between anthropomorphism and realism (Li et al., 2016). This study aimed to extend this line of research by investigating the interaction effects between anthropomorphism level and physicality in driving contexts.

Furthermore, past research has pointed out that the influences of agent embodiments are specific to task and interaction characteristics (van Vugt et al., 2007; Hofmann et al., 2015; Schrader, 2019). Schrader (2019) found that people created virtual agents differently for learning and entertainment contexts. Thus, it is still questionable whether these findings on agents' anthropomorphism and physicality found in other contexts (e.g., online learning, online shopping, smart home contexts) can be applicable in driving contexts. Considering the differences between driving and other contexts, it is necessary to examine how agents' anthropomorphism level and physicality influence driving experience and performance, which can contribute to current literature by considering the influence of specific task contexts.

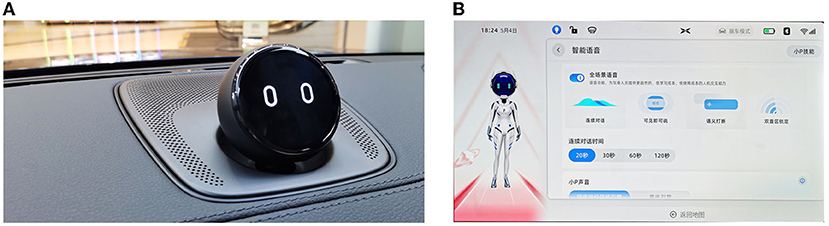

From a practical perspective, this topic is also worthy of investigation because anthropomorphism level and physicality are two important decisions that developers face when embodying agents. Different ways of embodying agents exist in the current practices of automobile manufacturers. For example, the Chinese manufacturer NIO developed the in-vehicle agent NOMI, which is physically located in the vehicle with a shape resembling the human head and can exhibit facial expressions (see Figure 1A). Another Chinese manufacturer, XiaoPeng Motors, developed an agent named XiaoP, which is virtually presented on the screen and highly resembles a person with multiple human-like characteristics, such as facial expressions, head, body, arms, and legs (see Figure 1B). Therefore, it is crucial to understand how agents' anthropomorphism and physicality influence user experience.

Figure 1. Examples of agents in different forms: (A) NOMI in the physical form; (B) XiaoP in the virtual form.

In-vehicle information systems are indispensable for driving, and they have become increasingly complex because of the integration of newly added functions (e.g., advice-giving function, voice interaction function). Complex and sophisticated IVIS can cause user resistance to effective usage (Kim and Lee, 2016), and therefore user trust of IVIS becomes crucial. In fact, prior research has shown that user trust is a precondition for the effective usage of complex and sophisticated systems (Lee and See, 2004). Trust largely alleviates users' anxiety triggered by increasingly complicated systems. Users are more likely to use systems that they trust while avoiding systems that they do not trust. Greater trust has been demonstrated to improve users' usage of voice interaction technology (Nguyen et al., 2019), mobile payment systems (Yan and Yang, 2015), and autonomous vehicles (Choi and Ji, 2015). Furthermore, trust is a multidimensional concept (Soh et al., 2009), which involves users' beliefs about the credibility of information given by a system and users' subjective feelings toward the system. To capture these different facets, cognitive and affective dimensions have been proposed. Cognitive trust relates to rational processing and focuses on users' evaluation of the credibility of given information, while affective trust refers to users' subjective feelings toward a system (Lewis and Weigert, 1985; Soh et al., 2009).

Perceived control is an important factor that influences user trust toward a system. Perceived control refers to one's subjective evaluation of his or her ability to exert influence over the environment or systems (Bowen and Johnston, 1999). It relates to a situational perceived ability to affect the outcome of a system (Pacherie, 2007; Pacheco et al., 2013). People favor situations that they can control while avoiding situations they cannot control (Klimmt et al., 2007). The positive influences of perceived control have been demonstrated in the context of online games (Wang, 2014) and mobile health service systems (Zhao et al., 2018). In driving contexts, perceived control closely relates to driving experience and driving performance (Murata et al., 2016; Wen et al., 2019). Users' perceived control is influenced by the functions provided by IVIS. Certain driving support functions (e.g., lane-keeping monitor) decrease drivers' sense of agency (Yun et al., 2018), which hinders drivers' sense of control, leads to drivers' disengagement (Navarro et al., 2016), and threatens driving safety (Oviedo-Trespalacios et al., 2019). The involvement of in-vehicle agents can also potentially influence users' perceived control, which further affects users' trust of IVIS. Depending on the agent embodiment (i.e., anthropomorphism level and physicality), users may respond to IVIS differently, with consequences for user experience and performance.

Anthropomorphism refers to the attribution of humanlike characteristics (e.g., human forms, voices, behaviors) to inanimate, artificial entities such as robots and agents (Bartneck et al., 2009; Waytz et al., 2014). While interacting with computers and systems, people mindlessly apply interpersonal social rules as if they were interacting with human beings (Nass et al., 1995; Reeves and Nass, 1996). Anthropomorphism reinforces the tendency to interact with computers and systems in social ways, which further brings natural interactions in various contexts. For instance, anthropomorphized agents have improved users' engagement and attitudes toward learning systems (Moundridou and Virvou, 2002; Chang et al., 2010), users' enjoyment and trust of e-commerce websites (Qiu and Benbasat, 2009), user trust in diabetes decision-support aid systems (Pak et al., 2012), and users' trust of autonomous vehicles (Lee et al., 2015).

With the acknowledgment of benefits of the anthropomorphism strategy, a large number of studies have been conducted to further explore the optimal level of anthropomorphism. Should an agent be highly similar to a human being? Or should limited anthropomorphic cues (e.g., head, facial expression, body) be sufficient? Thus far, the findings are inconsistent. On the one hand, prior research concluded that as users generally consider agents as independent social actors, the similarities between an agent and a user are positively correlated with user responses (Sundar and Nass, 2000). Following this, the increasing similarities will trigger users to respond to the agents more socially. For instance, prior research found that, in comparison to low-level anthropomorphized agents, high-level anthropomorphized agents improved consumer trust and attitudes to e-commerce websites (Luo et al., 2006). Gong (2008) further compared agents of four varying anthropomorphism levels progressing from low-, medium-, high-anthropomorphism to real human pictures, and found that users' trust improved along with the increase of anthropomorphism level.

On the other hand, the uncanny valley theory points out that high-level anthropomorphism can be detrimental. Specifically, users respond to robots more positively as the similarities between robots and a real person increase. However, users start to have negative responses when the robots become highly realistic (Mori et al., 2012). It is not easy to create agents so realistic that they reach this reversal point. Nevertheless, there is evidence that anthropomorphized agents have negative influences in certain contexts. In game-playing contexts, the presence of an anthropomorphized computer helper threatened users' perceived control. Consequently, users experienced less enjoyment from playing the games (Kim et al., 2016). Prior research further found that users' adoption of task-specific advice was not influenced by agents that exhibited different anthropomorphism levels (i.e., no, low-, high-level anthropomorphism agent), although users reported higher trust levels toward high-level anthropomorphism agents (Kulms and Kopp, 2019).

Furthermore, the influences of an agent's anthropomorphism level are likely to be moderated by users' individual differences. In fact, prior research has stated that users' own characteristics can moderate the influences of anthropomorphism level (Złotowski et al., 2015). People's tendency to anthropomorphize agents increases with their motivation to understand the agents (Epley et al., 2007). When users lack understanding of a system, they are more likely to anthropomorphize agents. When people have a comprehensive understanding of systems, the tendency to anthropomorphize agents can be largely reduced.

Agents can be presented to users physically or virtually, to which people will respond differently. In general, a physically present agent can provide better affordance, which is likely to trigger more social interactions with agents (Fong et al., 2003). In comparison to virtual presence, physical presence allows users to feel agents with richer senses, including vision, touch, and smell. As a result, users are more likely to consider the existence of a system as a real person and interact with a system as a real person (Lee et al., 2006, p. 10; Mann et al., 2015, p. 35).

Previous studies further found that physicality can improve user trust (Wainer et al., 2006; Kiesler et al., 2008), enjoyment (Wainer et al., 2007; Kose-Bagci et al., 2009), and attitudes (Kiesler et al., 2008). In driving contexts, similar findings were also reported. When encountering physical agents, users reported a stronger tendency to consider the agents as real persons (Lee et al., 2019) and formed higher trust levels (Kraus et al., 2016). In terms of the influence of physicality on user performance, Li (2015) suggested that the presence of a physical robot will draw more user attention, which could lead to lower driving performance. However, in a mathematical puzzle-solving task, no significant influences of physicality were found in terms of users' performance (Hoffmann and Krämer, 2013).

To summarize, previous studies have shown the respective influences of agent anthropomorphism level and physicality on user trust of agents. However, how agents' anthropomorphism level and physicality jointly influence user trust remains unclear. Moreover, most existing research on agents' anthropomorphism level and physicality was conducted in the contexts of healthcare (Mann et al., 2015) and online shopping (Luo et al., 2006). As interaction task characteristics largely influence user experience (van Vugt et al., 2007; Hofmann et al., 2015; Schrader, 2019), it is still questionable whether the findings on anthropomorphism level and physicality are directly applicable to driving contexts. The current study addressed these questions by examining the effects of agents' anthropomorphism level and physicality on user trust and performance in driving contexts. Because of the inconsistent findings in the current literature and the uniqueness of driving contexts, we did not pose directional hypotheses but proposed the following two research questions:

RQ1. How does agents' anthropomorphism level influence users' trust and driving performance?

RQ2. How does agents' physicality influence users' trust and driving performance?

Moreover, there is a possible interaction effect between anthropomorphism level and physicality. Specifically, as demonstrated in prior research, agents' anthropomorphism level increases similarities between agents and humans, which triggers users to consider agents as real social actors (Gong, 2008). In comparison to virtual presence, physical presence further enhances this tendency (Lee et al., 2006), which may deliver a stronger feeling of “another person” rather than a “system.” As a result, the combination of high-level anthropomorphism and physicality can reinforce users' perception of “another person.” The strong sense of “another person” can bring benefits in contexts where users seek help from systems, such as in online shopping contexts (Qiu and Benbasat, 2009) and decision-making assistance systems (Pak et al., 2012). However, users' strong sense of “another person” is not always beneficial. For instance, Bartneck et al. (2010) reported that a stronger feeling of “another person” made users feel embarrassed in a medical examination, where users were asked to take off clothes for examination. A stronger feeling of “another person” also reduced the enjoyment that people gained from game playing (Kim et al., 2016) and hindered users' performance in searching tasks (Rickenberg and Reeves, 2000).

However, in driving contexts, where users intend to have full control over the driving process (Murata et al., 2016; Wen et al., 2019), the stronger feeling of “another person being” may be detrimental because it may threaten users' perceived control. In other words, perceived control may serve as a mediator. Therefore, to understand how anthropomorphism level and physicality interact with each other as well as the role of perceived control, the following research question is proposed.

RQ3. How do agents' anthropomorphism level and physicality interactively influence users' perceived control, trust, and driving performance?

While investigating the influences of anthropomorphism level and physicality, we also consider the moderating role of users' familiarity with IVIS. As users' own characteristics can moderate the influences of anthropomorphism level (Złotowski et al., 2015), the influence of anthropomorphism level is likely to be significant for people who are unfamiliar with IVIS. Similarly, when users have limited understanding of a system, a physically present agent might be more desirable because of the stronger sense of “another person being.” Therefore, we proposed the following research question.

RQ4. How does users' familiarity with IVIS moderate the effects of agents' anthropomorphism level and physicality?

To address the above research questions, a 2 × 2 factorial between-subjects experiment was designed and conducted, with agent anthropomorphism level (low vs. high) and agent physicality (virtual vs. physical) as independent variables. Participants were randomly assigned to one of the four conditions and were asked to complete the driving tasks and interact with agents during driving. The driving task and interaction tasks were identical among four conditions.
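As a minimal sketch of how a 2 × 2 between-subjects assignment could be set up, the Python snippet below shuffles participant IDs and deals them into the four cells. The paper states only that assignment was random; the balanced round-robin dealing, the function name `assign_conditions`, and the seed are illustrative assumptions, not the authors' procedure.

```python
import itertools
import random

ANTHROPOMORPHISM = ("low", "high")
PHYSICALITY = ("virtual", "physical")


def assign_conditions(participant_ids, seed=0):
    """Randomly assign participants to the four cells of the 2 x 2 design.

    Assumption: cells are kept as equal in size as possible by shuffling
    the IDs and dealing them round-robin into the cells.
    """
    cells = list(itertools.product(ANTHROPOMORPHISM, PHYSICALITY))
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: cells[i % len(cells)] for i, pid in enumerate(ids)}


# Hypothetical participant IDs 1..116 (the sample size reported in the paper).
assignment = assign_conditions(range(1, 117))
print(assignment[1])
```

Each participant sees exactly one combination of anthropomorphism level and physicality, which is what makes the design between-subjects.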

Participants were recruited through online advertisements posted on a public university campus and took part voluntarily. One hundred sixteen participants were recruited (62.93% male, mean age = 22.88). All participants held valid driving licenses and had normal or corrected-to-normal visual acuity. They received a small amount of compensation for their participation in the experiment.

The experiment was conducted using a medium-fidelity driving simulator (see Figure 2). The simulator provides a 135° field of view of the driving environment with three 27-inch monitors. In addition, to simulate the visual interfaces of IVIS, a tablet with a 10.2-inch screen and a resolution of 2160 × 1620 was used. This tablet was positioned on the right side of the steering wheel. Participants were asked to drive along a route, which was created to resemble typical roads in China. The driving route was around 4 km long and consisted of a variety of scenarios, including stop-sign intersections (with/without crossing traffic), signalized intersections, car-following, and pedestrian crossings, to resemble a real driving experience as much as possible. Participants drove in the right lane of the road.

Figure 2. The driving simulator used in the experiment: the condition of a physically present agent with low-level anthropomorphism.

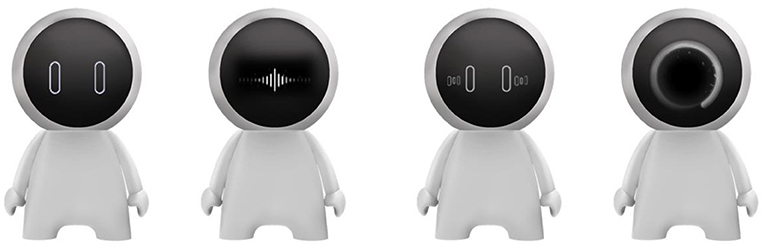

To implement the four experimental conditions, four types of agents were created: low- and high-anthropomorphism agents, each embodied both virtually and physically. To manipulate anthropomorphism level, we followed the morphological approach, which has been used in prior research (Goudey and Bonnin, 2016; Kang and Kim, 2020) and in current automobile manufacturers' practice (see Figure 1). Specifically, we created low-level anthropomorphized agents by including only one morphological characteristic, a head. High-level anthropomorphized agents were created by including more morphological characteristics: a head, arms, legs, and a body. Moreover, as user responses are influenced by agents' gender, age, and personality (Cafaro et al., 2012; Lee et al., 2018; Ter Stal et al., 2020b), these factors could confound the influences of anthropomorphism level and physicality. We therefore attempted to design the agents in a neutral way to avoid possible confounding influences. To do so, we first created line drawings of low-level and high-level anthropomorphized agents. Next, we created both virtual and physical agents (see Figure 3). For the virtual conditions, the virtual agents were integrated into the IVIS interfaces in the top-left part of the interface. For the physical conditions, the physical agents were positioned on the dashboard. The size of physical agents and virtual agents was kept as similar as possible.

To improve the realism of the created intelligent agents, we created different facial expressions according to system status, including waiting, listening, talking, and loading (see Figure 4). The agents support voice interaction with users, and the voice was created by a voice generator (www.xunjie.com) with a female voice version.

Figure 4. Four facial expressions exhibited by in-vehicle agents: waiting, listening, talking, and loading, in the condition of virtual high-level anthropomorphised agents.

The pre-test was conducted to examine the success of manipulations of anthropomorphism level. Specifically, a controlled experiment was conducted with anthropomorphism level as the between-subject factor and agents' physicality as the within-subject factor. Each participant was randomly assigned to one of the two conditions (low-level vs. high-level anthropomorphism). They were asked to evaluate two agents: virtual and physical agents. The order of presenting the virtual and physical agent was counterbalanced.

Forty participants were recruited (mean age = 24.3, 62.5% male). Participants were first invited to the laboratory. Next, they were asked to view the agent (either virtual or physical) and then fill in a questionnaire on the relatedness between the stimulus and a human. Specifically, as we manipulated anthropomorphism level by including a different number of morphological features, we measured anthropomorphism level by examining to what extent the agents led users to relate them to a person. Participants were asked to respond to the following three statements from 1 (strongly disagree) to 7 (strongly agree): “after seeing this profile, I am confident to draw the conclusion that it resembles the image of a human,” “after seeing this profile, I am able to relate to the image of a human,” and “after seeing this profile, I can think of the image of a human immediately” (α = 0.916–0.964) (adapted from the measure of visual relatedness in Cheng et al., 2019).

One-way ANOVA was conducted with anthropomorphism level as the independent variable and participants' ratings of visual relatedness in the virtual and physical conditions as dependent variables. Results showed that anthropomorphism level exerted a significant influence on participants' ratings of visual relatedness in both the virtual [F (1, 38) = 19.69, p < 0.01] and physical conditions [F (1, 38) = 15.92, p < 0.01]. Specifically, in the virtual condition, participants reported significantly higher ratings of visual relatedness for the high-level anthropomorphized agent than for the low-level one (M high-level = 5.82 vs. M low-level = 3.93). Similar results were found in the physical condition (M high-level = 5.18 vs. M low-level = 3.35). Taken together, these results confirm the success of the created stimuli.
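For readers who want to see what the F statistic in such a manipulation check measures, here is a minimal Python sketch of a one-way between-subjects ANOVA. The function name `one_way_anova` and the ratings are hypothetical; the paper's raw data are not available, so this does not reproduce the reported values.

```python
from statistics import fmean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = fmean(all_values)
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 7-point visual-relatedness scores (not the study's data).
low_level = [3.0, 4.0, 3.7, 4.3, 4.0]
high_level = [5.7, 6.0, 5.3, 6.3, 5.7]
f_stat, df1, df2 = one_way_anova(low_level, high_level)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

With two groups of 20 participants each, as in the pre-test, the degrees of freedom come out as (1, 38).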

The main experiment adopted a Wizard-of-Oz setup (Dahlbäck et al., 1993). Participants believed that the system interacted with them automatically, but the experimenter controlled the system in order to respond to participants in real time. While participants interacted with the system, the agent responded with conversational speech and different facial expressions. Meanwhile, the IVIS interfaces presented immediate information related to driving status. The voice and facial expressions were identical among the four conditions. The only difference among the four conditions lay in how the agents were embodied.

Upon arrival, we first welcomed participants and explained the aim and procedure of the experiment. Next, the driving simulator and the IVIS with in-vehicle agents were introduced to them. They were also instructed on how to use the driving simulator and how to interact with the IVIS by voice. They were given time for a trial on the simulator and an opportunity to interact with the agent until they confirmed that they had learned how to drive and interact with the agents. Next, before the main session, they were asked to view the agent carefully and respond to the questions on visual relatedness, which were identical to the measures in the pre-test. Their answers were further used for manipulation checks.

In the main session, all participants drove the car in the simulator along the set course. They were asked to drive steadily as they usually did in a real-life setting. During driving, participants were required to perform several tasks by interacting with the agents by voice. These interaction tasks included searching for music, re-navigating, and sending a message to a friend. The whole driving task took around 10 min. After completing the driving task, participants were asked to fill out a questionnaire to indicate their subjective perceptions of the IVIS and the driving experience.

We used questionnaires to measure participants' trust and their perceived control of the driving process. In order to improve reliability, multiple items were used to measure each construct. In the data analysis, the multiple items were combined by calculating the average score for each respondent. Following Soh et al. (2009), the multidimensional trust scale was used in this study to measure participants' cognitive and affective trust in the IVIS-integrated agent. Cognitive trust was measured with three 7-point Likert items by rating the following statements: “the system that I just used was credible/accurate/trustworthy” from 1 (strongly disagree) to 7 (strongly agree) (α = 0.937) (Lee et al., 2015; Kang and Kim, 2020). Affective trust was measured by asking participants to what extent they believed the following adjectives (i.e., positive, enjoyable, likable) described the IVIS, anchored from 1 (describes very poorly) to 7 (describes very well) (α = 0.878) (Lee et al., 2015). Perceived control was measured by asking participants to indicate to what extent they agreed with the following two items: “when I interacted with the IVIS, I feel that usage procedure is completely up to me” and “when I interacted with the IVIS, I feel more control over it” on a 7-point Likert scale from 1 (strongly disagree) to 7 (strongly agree) (r = 0.731, p < 0.01) (adapted from Wen et al., 2019; Anjum and Chai, 2020).
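The scoring described above (averaging the items of each construct and reporting Cronbach's α as a reliability index) can be sketched as follows. This is an illustrative Python sketch using the standard formula for α; the function names and the example responses are hypothetical and are not the study's data.

```python
from statistics import fmean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a tuple of item scores."""
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def composite_scores(rows):
    """Average the items of one construct for each respondent."""
    return [fmean(row) for row in rows]


# Hypothetical responses to the three cognitive-trust items (1-7 scale).
responses = [(6, 7, 6), (5, 5, 6), (3, 4, 3), (7, 6, 7), (4, 4, 5)]
print(f"alpha = {cronbach_alpha(responses):.3f}")
print(composite_scores(responses))
```

For the two-item perceived control measure, the paper reports an inter-item correlation rather than α, which is the common choice when a scale has only two items.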

To validate the success of created stimuli, we included measures of visual relatedness, which are identical to those measures used in pre-test (α = 0.914). Moreover, prior research shows that users respond to attractive agents more positively (Khan and Sutcliffe, 2014). To control the possible confounding effects brought by agent attractiveness, we measured attractiveness by asking participants to indicate their opinions based on a 7-point scale on the following two items: “this agent looks unattractive/attractive” and “this agent looks ugly/beautiful” (r = 0.809, p < 0.01).

We also considered the possible influence of participants' familiarity with IVIS, which was measured with the following three questions: “to what extent are you familiar with knowledge on IVIS” from 1 (not at all familiar) to 7 (very familiar), “to what extent do you consider yourself have a good level of knowledge of IVIS” from 1 (no knowledge) to 7 (a lot of knowledge), and “to what extent do you consider yourself about IVIS” from 1 (very well-informed) to 7 (uninformed) (α = 0.845) (adapted from Thompson et al., 2005).

In addition to the subjective measures, objective driving performance was assessed using mean lane deviation. Prior to the start of each main session, the simulator was reset, and the vehicle was centered in the lane without any deviation. During the main sessions, any movement toward the center line or edge line was recorded automatically by the simulator every second. The average deviation over the whole driving task was then calculated and used as the measure of driving performance.
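A minimal sketch of this driving-performance measure in Python is shown below. It assumes that each one-second sample is a signed lateral offset from the lane center and that deviations are averaged as absolute values; the paper does not specify the sign convention or units, so both are assumptions, as is the function name.

```python
from statistics import fmean


def mean_lane_deviation(offsets):
    """Average absolute lateral offset from the lane center.

    `offsets` holds one signed sample per second (assumed convention:
    negative toward the center line, positive toward the edge line).
    """
    if not offsets:
        raise ValueError("at least one sample is required")
    return fmean([abs(x) for x in offsets])


# Hypothetical samples from a short stretch of driving (arbitrary units).
samples = [0.0, 10.0, -20.0, 30.0]
print(mean_lane_deviation(samples))
```

Taking absolute values keeps drifts to the left and to the right from cancelling each other out; a lower score then indicates steadier lane keeping.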

To check the success of the manipulation of anthropomorphism level, one-way ANOVA was conducted with anthropomorphism level as independent variable and participants' ratings on visual relatedness as the dependent variable. Results showed that anthropomorphism level exerts a significantly positive influence on participants' rating on visual relatedness [F (1, 114) = 5.50, MSE = 2.015, p = 0.01, M low−anthropomorphism = 4.25 vs. M high−anthropomorphism = 4.87]. This result confirms that the manipulation of agents' anthropomorphism level is successful. To avoid confounding effects brought by attractiveness, two-way ANOVA was conducted with anthropomorphism level and physicality as independent variables, and participants' ratings on attractiveness as the dependent variable. No significant differences were detected in terms of the influences of anthropomorphism level (p > 0.10) or physicality (p > 0.10). These results ruled out the possibility of the confounding effect brought by attractiveness.

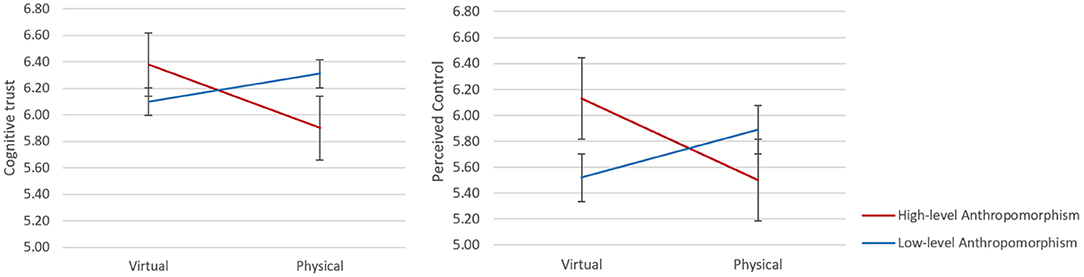

Research questions 1–3 aimed to investigate the influences of anthropomorphism level and physicality and their interaction. Multivariate ANOVA was conducted with anthropomorphism level and physicality as independent variables and user perceived control, affective trust, cognitive trust, and driving performance as dependent variables. No significant influences of anthropomorphism level or physicality were detected for users' perceived control (p > 0.10), cognitive trust (p > 0.10), affective trust (p > 0.10), and driving performance (p > 0.10). However, the result revealed a significant interaction effect between anthropomorphism level and physicality on participants' ratings of affective trust [F (1, 112) = 5.43, MSE = 0.836, p = 0.022, η2 = 0.046], cognitive trust [F (1, 112) = 5.20, MSE = 0.649, p = 0.024, η2 = 0.044], and perceived control [F (1, 112) = 6.66, MSE = 1.10, p = 0.011, η2 = 0.056]. The interaction effect was not significant for driving performance [F (1, 112) = 0.268, MSE = 514.513, p = 0.61, η2 = 0.002].

We conducted further analyses to interpret these interaction effects. Specifically, for agents with a high level of anthropomorphism, participants who interacted with virtual agents reported significantly higher ratings than those who interacted with physical agents in terms of cognitive trust [F (1, 58) = 5.77, MSE = 0.593, p = 0.020, η2 = 0.09] and perceived control [F (1, 58) = 6.85, MSE = 0.879, p = 0.011, η2 = 0.106] (see Table 1). For affective trust, a marginally significant difference was found between the virtual and physical conditions [F (1, 58) = 3.59, MSE = 0.668, p = 0.063, η2 = 0.058]. When interacting with low-level anthropomorphized agents, however, participants did not show significant differences between the virtual and physical conditions in terms of trust or perceived control (see Figure 5).

Figure 5. Interaction effects of anthropomorphism level and physicality on participants' cognitive trust and perceived control.

To further examine the mediating role of perceived control, we conducted a mediation analysis with MODMED model 8 (see Figure 6), following the methodology proposed by Preacher and Hayes (2004). Results revealed that perceived control mediated the interaction effects of agents' anthropomorphism level and physicality on users' cognitive trust (95% CI, −0.73– −0.08) and affective trust (95% CI, −0.92– −0.12), as neither confidence interval included zero (Preacher and Hayes, 2004; Zhao et al., 2010). To examine the moderated mediation, we further explored the indirect effects at each anthropomorphism level separately. For high-level anthropomorphism, the mediation through perceived control was significant for cognitive trust (B = −0.24, 95% CI, −0.44– −0.06) and affective trust (B = −0.31, 95% CI, −0.59– −0.08). In contrast, for low-level anthropomorphism, the mediation through perceived control was not significant for cognitive trust (B = 0.14, 95% CI, −0.07–0.40) or affective trust (B = 0.18, 95% CI, −0.11–0.48) (see Table 2 for results). Taken together, these results demonstrate that perceived control mediates the interaction effects of anthropomorphism level and physicality on users' cognitive and affective trust.
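The logic of testing an indirect effect with a bootstrap confidence interval can be illustrated with a minimal sketch. It covers only a simple mediation (X → M → Y) with a percentile bootstrap, not the moderated mediation (model 8) reported above, and it runs on synthetic data. The function names, sample size, and effect sizes are all assumptions made for illustration and do not reproduce the study's data or results.

```python
import numpy as np


def indirect_effect(x, m, y):
    """Indirect effect a*b: a from M ~ X, b from Y ~ X + M (ordinary least squares)."""
    a = np.polyfit(x, m, 1)[0]  # slope of the mediator on the predictor
    design = np.column_stack([np.ones_like(x), x, m])
    b = np.linalg.lstsq(design, y, rcond=None)[0][2]  # slope of the outcome on the mediator
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample cases with replacement
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    lower, upper = np.percentile(estimates, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return lower, upper


# Synthetic data (assumed for illustration): a binary condition lowers the mediator,
# and the mediator raises the outcome, giving a negative indirect effect.
rng = np.random.default_rng(42)
n = 300
x = rng.integers(0, 2, size=n).astype(float)
m = -1.0 * x + rng.normal(size=n)
y = 0.8 * m + rng.normal(size=n)

lower, upper = bootstrap_ci(x, m, y)
print(f"indirect effect = {indirect_effect(x, m, y):.2f}, 95% CI [{lower:.2f}, {upper:.2f}]")
```

As in the analysis above, the indirect effect is treated as significant when the bootstrap confidence interval does not include zero.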

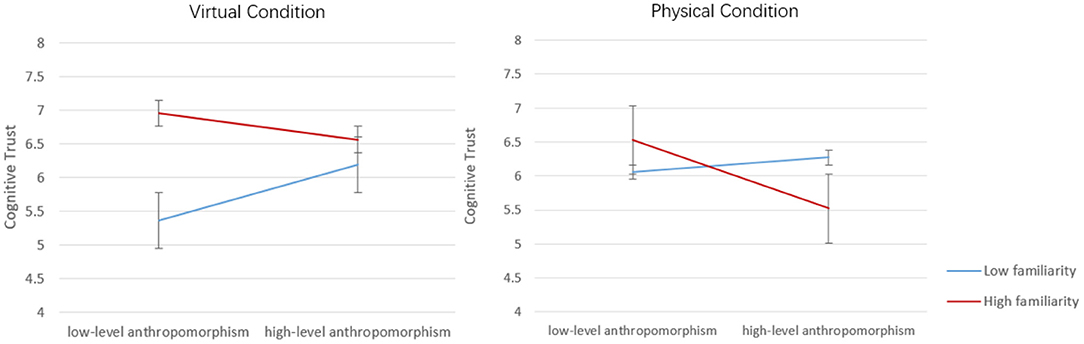

We conducted further analyses to examine the moderating role of users' familiarity. Participants varied in their familiarity with IVIS (M = 4.63; SD = 1.23). Because users' familiarity is a continuous variable, dichotomizing it can reduce statistical power and lead to misleading interpretations (Irwin and McClelland, 2001; Fitzsimons, 2008). Multiple regression analyses are therefore recommended over dichotomization for testing moderating effects. This method has been used to examine the moderating role of individual characteristics in the existing literature (e.g., Mugge and Schoormans, 2012; Cheng and Mugge, 2022). The analysis involved the following steps: (1) a moderated regression analysis to examine the influences of the IVs, the moderator, and their interactions; and (2) given significant interaction effects, spotlight analyses to identify the group for which the influences of the IVs are stronger. The spotlight analyses were conducted by repeating the regression analysis at low (−1 SD) and high (+1 SD) values of the moderator.
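The two steps can be sketched in a simplified form with one dummy-coded predictor and one standardized moderator. In such a model, the effect of the predictor at a chosen moderator value equals b1 + b3 × z, which is what re-running the regression with the moderator re-centered at ±1 SD estimates. The data below are synthetic, and the function names and coefficients are assumptions for illustration only; the study's actual model included additional predictors and interaction terms.

```python
import numpy as np


def fit_moderated_regression(x, z, y):
    """OLS fit of y = b0 + b1*x + b2*z + b3*x*z; returns [b0, b1, b2, b3]."""
    design = np.column_stack([np.ones_like(x), x, z, x * z])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coefs


def simple_slope(coefs, z_value):
    """Spotlight estimate: effect of x at a given moderator value, b1 + b3*z."""
    return coefs[1] + coefs[3] * z_value


# Synthetic data (assumed for illustration): the predictor helps at low moderator
# values, and the benefit shrinks as the moderator increases.
rng = np.random.default_rng(0)
n = 500
x = rng.integers(0, 2, size=n).astype(float)  # dummy-coded condition (0/1)
z = rng.normal(size=n)
z = (z - z.mean()) / z.std()                  # standardized moderator
y = 0.15 * x + 0.5 * z - 0.25 * x * z + rng.normal(scale=0.5, size=n)

coefs = fit_moderated_regression(x, z, y)
slope_low = simple_slope(coefs, -1.0)   # one SD below the mean
slope_high = simple_slope(coefs, 1.0)   # one SD above the mean
print(f"effect of x at -1 SD: {slope_low:.2f}; at +1 SD: {slope_high:.2f}")
```

Keeping the moderator continuous in this way uses all of the variation in familiarity instead of collapsing it into two groups.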

First, moderated multiple regression analyses were conducted to test the moderating effect of users' familiarity on the influence of anthropomorphism level and physicality. Specifically, we first created dummy variables for anthropomorphism level (0 = low-level anthropomorphism; 1 = high-level anthropomorphism) and physicality (0 = virtual; 1 = physical), standardized users' familiarity, and computed three interaction variables: anthropomorphism level × physicality, anthropomorphism level × users' familiarity, and physicality × users' familiarity. Next, these variables were entered as independent variables, with affective trust and cognitive trust as dependent variables. For cognitive trust, results revealed significant effects of users' familiarity (β = 0.485, p < 0.05), the interaction between anthropomorphism level and physicality (β = −0.332, p < 0.05), and the interaction between anthropomorphism level and users' familiarity (β = −0.249, p < 0.05) (see Table 3). These results replicate the interaction effect of anthropomorphism level and physicality on cognitive trust. Results also showed that the influences of anthropomorphism level and physicality on cognitive trust differ significantly depending on users' familiarity with IVIS.

Next, to further interpret these interactions, we conducted spotlight analyses for users who are familiar with IVIS (one standard deviation above the mean) and users who are unfamiliar with IVIS (one standard deviation below the mean). For users who are unfamiliar with IVIS, anthropomorphism level had a significant positive effect on user trust (b = 0.321, p < 0.05), but no significant effect of physicality was found (b = 0.258, p > 0.10) (see Table 4). For users who are familiar with IVIS, no significant effects of anthropomorphism level (b = −0.052, p > 0.10) or physicality (b = −0.086, p > 0.10) were detected (see Table 4). These results are visualized in Figure 7. Anthropomorphism level significantly improves trust for low-familiarity users (blue line) in both the virtual and physical conditions, whereas for high-familiarity users the influence of anthropomorphism is not significant (red line).

Figure 7. The moderating role of user familiarity on the influence of anthropomorphism level for virtual and physical conditions.

These findings suggest that the influence of anthropomorphism level on users' cognitive trust depends on users' familiarity with IVIS. For users who are less familiar with IVIS, anthropomorphism level has a significant influence on cognitive trust. In contrast, for users who are familiar with IVIS, neither anthropomorphism level nor physicality has a significant influence on cognitive trust. Taken together, these results demonstrate the moderating role of users' familiarity with IVIS.

This research focused on the different ways of embodying agents integrated into IVIS in driving contexts, specifically the influences of agents' anthropomorphism level and physicality on users' perceptions and driving performance. Through a factorial experiment, results revealed an interaction effect between anthropomorphism level and physicality on users' cognitive trust, but not on affective trust. This indicates that the two layers of agent embodiment (anthropomorphism level and physicality) promote users' rational and systematic processing (Kim and Sundar, 2016), which in turn influences users' perception of the credibility of information given by IVIS. Furthermore, the mediation analysis showed that the interaction effect was mediated by perceived control. The influence of anthropomorphism level on user trust was significant for users who were unfamiliar with IVIS. For users who were familiar with IVIS, no significant influence of anthropomorphism was found. In addition, no significant differences were detected in terms of driving performance.

The findings of this study extend the current literature in several ways. First, this study contributes to previous research on the influences of agent embodiment on user responses (Ziemke, 2001; Lee et al., 2006). How agent embodiments influence user responses has been explored in learning (Li et al., 2016) and e-commerce contexts (Luo et al., 2006). This study adds to this line of research by focusing on the context of driving. Moreover, extensive studies have explored the interaction effects of different agent embodiment layers on user responses in various contexts, such as the interaction of agency and anthropomorphism (Nowak and Biocca, 2004; Kang and Kim, 2020), the interplay of bodily appearance and movement (Castro-González et al., 2016), and the interaction of anthropomorphism and realism (Li et al., 2016). This study extends this work by investigating the interaction effects of anthropomorphism level and physicality in driving contexts.

By manipulating the two factors, we found interaction effects on users' cognitive trust and a mediating role of perceived control, which suggests that users' relationship with systems is an important factor to consider when embodying agents. Specifically, in contexts where agents play a secondary role (e.g., assistant), a stronger sense of the agent being “another person” seems to be beneficial, such as in online shopping (Qiu and Benbasat, 2009) and learning contexts (Moundridou and Virvou, 2002; Chang et al., 2010). However, when users' agency becomes prominent, a stronger sense of “another person” seems to have no significant influence or even a negative one, such as in decision making (Kulms and Kopp, 2019) and game-playing contexts (Kim et al., 2016). In driving contexts, a higher tendency to consider agents as social actors leads drivers to regard agents no longer as driving assistants, but as “another person” who intends to take control of driving. As a consequence, users perceive less control and place less trust in IVIS.

Furthermore, this study has demonstrated the mediating role of perceived control. The importance of perceived control in drivers' experience is well supported by the current literature, which shows that some driving assistance functions, such as lane keeping and speed control, reduce users' perceived control (Mulder et al., 2012; Navarro et al., 2016). Extending these studies, this research shows that users' perceived control is influenced not only by objective functions but also by agent embodiments. Consistent with a prior finding (Hoffmann and Krämer, 2013), users experience less control when they feel agents become more dominant. In addition to the presence of a smile (Kim et al., 2016) and the physical presence of a robot (Hoffmann and Krämer, 2013), a physically present high-level anthropomorphized agent can also create a feeling of being dominated, threatening drivers' sense of control.

In addition, this study reveals the moderating role of user familiarity on the effects of anthropomorphism level. Results showed that the positive influence of anthropomorphism is only significant for users who have less experience with IVIS. This finding is consistent with the notion that the effects of anthropomorphism are moderated by users' characteristics (Epley et al., 2007; Złotowski et al., 2015). Prior research demonstrated positive effects of anthropomorphism level on user trust, but these effects were found in the context of autonomous vehicles (Lee et al., 2015), where users have limited experience and knowledge. In contrast, in the driving contexts examined in this study, users are equipped with rich knowledge and experience with IVIS because of the extensive training for driving licenses. Consequently, the influence of anthropomorphism on user trust in IVIS is largely eliminated.

As for physicality, no significant effect on user trust was found. The effect of physicality on users' cognitive trust only reached marginal significance (p < 0.10) when the moderating role of users' familiarity with IVIS was considered, and the subsequent spotlight analysis revealed no significant results. These findings contrast with existing findings related to physicality (Li, 2015; Mann et al., 2015). The size of the physical agents is one possible explanation. In previous studies, the positive effects of physicality were found in the contexts of human-robot interaction (Lee et al., 2006; Mann et al., 2015), and the robots used in these studies were much larger. For instance, the robot used by Mann et al. (2015) was 450 mm tall and 320 mm wide, and the Sony Aibo used by Jung and Lee (2004) was 293 mm tall and 180 mm wide. In related studies of driving contexts (Kraus et al., 2016; Lee et al., 2019), Social NAO was used, which was 273 mm tall and 275 mm wide. However, in this study, the low-level anthropomorphized agent was 59 mm tall and 58 mm wide, and the high-level anthropomorphized agent was 114 mm tall and 95 mm wide. Therefore, future research could replicate this study with larger agents, which might reveal a significant influence of physicality. Another possible reason is that the interaction tasks in this study did not require users to touch the physical agents. As suggested in previous studies (Jung and Lee, 2004; Hoffmann and Krämer, 2013), touch is required in users' interaction with agents to maximize the potential of physicality. Future research could explore the influences of agent physicality by involving touch-based or gesture-based interactions.

The results of this research offer important implications for practice. With the advancement of artificial intelligence in automobile interaction, an increasing number of agents have been developed for integration into IVIS. How the agents should be embodied is an important decision for manufacturers, and the results of this study provide recommendations. Designers should carefully consider how different ways of embodying in-vehicle agents may influence user trust. Designers can embody agents with high-level anthropomorphism or create physical embodiments, but they should avoid combining high-level anthropomorphism with physical presence, which can threaten drivers' sense of control while driving and lead to lower trust. Moreover, because agents were created in a neutral way to avoid confounding effects, this study only detected an influence of agent embodiments on cognitive trust, not on affective trust. In practice, with alternative ways to create anthropomorphized agents, designers may be able to influence both cognitive and affective trust. As a result, users may feel the systems are both credible and favorable.

Moreover, this study reveals the mediating role of perceived control. Besides anthropomorphism and physicality, many other factors may affect users' perceived control. For example, speech can take different styles, such as dry speech, formal command, and informal conversation. A formal command style may make drivers feel a lack of control. Therefore, designers and engineers should carefully assess the possible influences of these factors on users' sense of control.

The implications of this study are not limited to driving contexts but also apply to agent embodiments in other contexts. One of the findings of this study is the importance of perceived control in users' driving experience. The level of perceived control that users require depends on the specific interaction context. As demonstrated by Kim and Mutlu (2014), users experienced robots differently when they had cooperative rather than competitive relationships with them. In smart home contexts, anthropomorphism largely alleviates users' feeling of losing control (Kang and Kim, 2020) because users demand a sense of connectedness in home contexts. Therefore, when embodying agents, developers and designers should first consider the relationships between users and agents in specific contexts, which determine the extent to which users need a sense of control. Designers can then explore optimal ways to embody agents to create the desired sense of control.

Another finding of this study is the moderating role of users' familiarity with IVIS on the effects of anthropomorphism. This finding suggests that an anthropomorphism strategy can be particularly effective for technologies that are unfamiliar to users, such as voice interaction technology. Thus far, the adoption rate of voice interaction technology is around 40% (DBS interactive, 2022), indicating that most people may lack experience and knowledge of voice interaction. For older adults in particular, the adoption of voice interaction can help reduce distraction in driving (Jonsson et al., 2005), but they show serious resistance (Heinz et al., 2013). In addition to improving users' trust, an anthropomorphism strategy can bring other benefits, such as fostering social connectedness (Kang and Kim, 2020; Yang et al., 2020), which can even contribute to older adults' well-being.

Although this research was carefully conducted, it has some limitations that could be addressed in future research. First, in manipulating anthropomorphism levels, we followed the morphological method, in which the high-level anthropomorphized agents were created by including more morphological features. This approach allowed us to raise anthropomorphism levels while controlling for potential confounds. However, there are alternative ways to manipulate anthropomorphism, such as improving realism (van Vugt et al., 2007) and including more anthropomorphic cues (e.g., intentions, empathy, emotions) (Lee et al., 2015). The involvement of these anthropomorphic cues can further raise anthropomorphism levels and make agents exhibit certain personalities (Hwang et al., 2013). Prior research suggested that people often respond to agents more positively when the agents exhibit personalities similar to their own (Isbister and Nass, 2000; Braun et al., 2019). People are more likely to consider agents with similar personalities as teammates than as competitors. Thus, similarities between agents and users might eliminate the feeling of lack of control found in this study, which could be an interesting direction for future research.

Moreover, this study was conducted in a laboratory setting with a driving simulator. We measured both users' subjective perceptions of IVIS and objective driving performance in terms of lane deviation. Although the results did not reveal significant influences of agent embodiments on objective driving performance, this does not mean that agent embodiments have no influence on driving performance. In fact, the non-significant influence on driving performance might be caused by the simplified driving contexts and the short driving period in the experiment. Users can easily perform well in a simplified and short driving task. In contrast, in real driving contexts, traffic conditions can be more complicated and drivers often drive for a longer time, so driving performance is more likely to be influenced by agents. In addition to lane deviation, many alternative indicators are also important for driving safety, such as off-road glances, task completion time, and distractions. Future research can explore the influences in real driving contexts and examine whether agent embodiments can influence these indicators.

Furthermore, perceived control has received increasing research attention in the field of human-computer interaction. In this context, perceived control relates to one's perceived ability to affect outcomes (Pacherie, 2007; Pacheco et al., 2013). This study reveals the mediating role of perceived control. It would be interesting for future research to manipulate perceived control directly to further reveal its influence on users' performance and experience. In addition, to measure perceived control, we used a two-item scale that has been developed and used in previous studies (Anjum and Chai, 2020; Zafari and Koeszegi, 2020). However, these two items mainly capture users' own feelings of control over the systems and do not consider the possible constraints imposed by the systems, which could also influence users' perceived control. Therefore, it would be worthwhile to develop perceived control scales that capture users' subjective feelings from both aspects.

Another limitation lies in the sample. This study recruited participants from a university. These participants were relatively young, and most of them majored in technology-related areas, such as computer science and electronic engineering. They are more likely to be familiar with IVIS and to accept in-vehicle agents. Moreover, although all the participants had driving licenses, they may still differ from people who drive every day to commute. Future research may replicate this study with the general population.

Furthermore, this study focused on a practical context in which users drive the vehicle by themselves. However, self-driving vehicles, which do not require drivers' intervention during driving, are becoming increasingly common. It is particularly interesting to consider the role of users' sense of control in this context. In this case, drivers need to share control with self-driving vehicles, and it is important for self-driving systems to assist drivers in fulfilling their goals without hindering their sense of control (Wen et al., 2019). In self-driving mode, agents may need to exhibit prominence to convince drivers that the self-driving system is sufficiently powerful and reliable to drive safely. In contrast, in situations where users need to drive, agents may play an assistant role to let users feel in complete control of driving. Future research could explore the dynamic role of agents, which could facilitate user trust, leading to the successful adoption of self-driving vehicles.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

PC and JY: conceptualization and hypotheses development. FM and YW: stimuli creation. FM: data collection. PC: data analysis and draft preparation. JY: revision and editing. All authors contributed to the article and approved the submitted version.

This work was supported by the National Natural Science Foundation of China (Grant No. 72002057), Humanities and Social Science Projects of the Ministry of Education in China (Grant No. 20YJC760009), National Social Science Fund of China (Grant No. 16BSH097), and Shenzhen Basic Research Program (Grant No. JCYJ20190806142401703).

YW was employed by the company Kuaishou Technology.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abubshait, A., and Wiese, E. (2017). You look human, but act like a machine: agent appearance and behavior modulate different aspects of human–robot interaction. Front. Psychol. 8, 1393. doi: 10.3389/fpsyg.2017.01393

Anjum, S., and Chai, J. (2020). Drivers of cash-on-delivery method of payment in E-commerce shopping: evidence from Pakistan. SAGE Open 10, 1–14. doi: 10.1177/2158244020917392

Bartneck, C., Bleeker, T., Bun, J., Fens, P., and Riet, L. (2010). The influence of robot anthropomorphism on the feelings of embarrassment when interacting with robots. Paladyn 1, 109–115. doi: 10.2478/s13230-010-0011-3

Bartneck, C., Kanda, T., Mubin, O., and Al Mahmud, A. (2009). Does the design of a robot influence its animacy and perceived intelligence? Int. J. Soc. Robot. 1, 195–204. doi: 10.1007/s12369-009-0013-7

Bowen, D. E., and Johnston, R. (1999). Internal service recovery: developing a new construct. Int. J. Serv. Ind. Manag. 10, 118–131. doi: 10.1108/09564239910264307

Braun, M., Mainz, A., Chadowitz, R., Pfleging, B., and Alt, F. (2019). “At your service: designing voice assistant personalities to improve automotive user interfaces,” in Paper Presented at the CHI Conference on Human Factors in Computing Systems. New York.

Cafaro, A., Vilhjálmsson, H. H., Bickmore, T., Heylen, D., Jóhannsdóttir, K. R., and Valgarðsson, G. S. (2012). “First impressions: users' judgments of virtual agents' personality and interpersonal attitude in first encounters,” in Paper Presented at the International Conference on Intelligent Virtual Agents (Berlin, Heidelberg).

Castro-González, A., Admoni, H., and Scassellati, B. (2016). The appearance effect: Influences of virtual agent features on performance and motivation. Int. J. Hum. Comput. Stud. 90, 27–38. doi: 10.1016/j.ijhcs.2016.02.004

Chang, C. W., Lee, J. H., Chao, P. Y., Wang, C. Y., and Chen, G. D. (2010). Exploring the possibility of using humanoid robots as instructional tools for teaching a second language in primary school. J. Educ. Techno. Soc. 13, 13–24. Available online at: http://www.jstor.org/stable/jeductechsoci.13.2.13

Cheng, P., and Mugge, R. (2022). Seeing is believing: investigating the influence of transparency on consumers' product perceptions and attitude. J. Engg. Des. 33, 284–304. doi: 10.1080/09544828.2022.2036332

Cheng, P., Mugge, R., and De Bont, C. (2019). “Smart home system is like a mother”: the potential and risks of product metaphors to influence consumers' comprehension of really new products (RNPs). Int. J. Des. 13, 1–19. doi: 10.33114/adim.2017.47

Choi, J. K., and Ji, Y. G. (2015). Investigating the importance of trust on adopting an autonomous vehicle. Int. J. Hum. Comput. Int. 31, 692–702. doi: 10.1080/10447318.2015.1070549

Dahlbäck, N., Jönsson, A., and Ahrenberg, L. (1993). Wizard of Oz studies—why and how. Knowl. Based Sys. 6, 258–266. doi: 10.1016/0950-7051(93)90017-N

DBS interactive. (n.d.). Voice Search Statistics Emerging Trends. Available online at: https://www.dbswebsite.com/blog/trends-in-voice-search/ (accessed May 25 2022).

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864. doi: 10.1037/0033-295X.114.4.864

Ferber, J., and Weiss, G. (1999). Multi-Agent Systems: An Introduction to Distributed Artificial Intelligence. Reading, MA: Addison-Wesley.

Fong, T., Nourbakhsh, I., and Dautenhahn, K. (2003). A survey of socially interactive robots. Robot. Auton. Syst. 42, 143–166. doi: 10.1016/S0921-8890(02)00372-X

Gong, L. (2008). How social is social responses to computers? The function of the degree of anthropomorphism in computer representations. Comput. Hum. Behav. 24, 1494–1509. doi: 10.1016/j.chb.2007.05.007

Goudey, A., and Bonnin, G. (2016). Must smart objects look human? Study of the impact of anthropomorphism on the acceptance of companion robots. Recherche et Applications en Marketing 31, 2–20. doi: 10.1177/2051570716643961

Heinz, M., Martin, P., Margrett, J. A., Yearns, M., Franke, W., Yang, H. I., et al. (2013). Perceptions of technology among older adults. J. Gerontol. Nurs. 39, 42–51. doi: 10.3928/00989134-20121204-04

Hoffmann, L., and Krämer, N. C. (2013). Investigating the effects of physical and virtual embodiment in task-oriented and conversational contexts. Int. J. Hum. Comput. Stud. 71, 763–774. doi: 10.1016/j.ijhcs.2013.04.007

Hofmann, H., Tobisch, V., Ehrlich, U., and Berton, A. (2015). Evaluation of speech-based HMI concepts for information exchange tasks: a driving simulator study. Comput. Speech Lang. 33, 109–135. doi: 10.1016/j.csl.2015.01.005

Hwang, J., Park, T., and Hwang, W. (2013). The effects of overall robot shape on the emotions invoked in users and the perceived personalities of robot. Appl. Ergon. 44, 459–471. doi: 10.1016/j.apergo.2012.10.010

Irwin, J. R., and McClelland, G. H. (2001). Misleading heuristics and moderated multiple regression models. J. Mark. Res. 38, 100–109. doi: 10.1509/jmkr.38.1.100.18835

Isbister, K., and Nass, C. (2000). Consistency of personality in interactive characters: verbal cues, non-verbal cues, and user characteristics. Int. J. Hum. Comput. Stud. 53, 251–267. doi: 10.1006/ijhc.2000.0368

Jonsson, I. M., Zajicek, M., Harris, H., and Nass, C. (2005). “Thank you, I did not see that: in-car speech based information systems for older adults,” in Paper Presented at the CHI'05 Extended Abstracts on Human Factors in Computing Systems. Portland, Oregon.

Jung, Y., and Lee, K. M. (2004). “Effects of physical embodiment on social presence of social robots,” in Paper Presented at the Presence. Amsterdam.

Kang, H., and Kim, K. J. (2020). Feeling connected to smart objects? a moderated mediation model of locus of agency, anthropomorphism, and sense of connectedness. Int. J. Hum. Comput. Stud. 133, 45–55. doi: 10.1016/j.ijhcs.2019.09.002

Karatas, N., Tamura, S., Fushiki, M., and Okada, M. (2019). Improving human-autonomous car interaction through gaze following behaviors of driving agents. Trans. Japan. Soc. Artif. Intell. 34, A-IA1_11. doi: 10.1527/tjsai.A-IA1

Khan, R. F., and Sutcliffe, A. (2014). Attractive agents are more persuasive. Int. J. Hum. Comput. Int. 30, 142–150. doi: 10.1080/10447318.2013.839904

Kiesler, S., Powers, A., Fussell, S. R., and Torrey, C. (2008). Anthropomorphic interactions with a robot and robot-like agent. Soc. Cogn. 26, 169–181. doi: 10.1521/soco.2008.26.2.169

Kim, D., and Lee, H. (2016). Effects of user experience on user resistance to change to the voice user interface of an in-vehicle infotainment system: Implications for platform and standards competition. Int. J. Inf. Manage 36, 653–667. doi: 10.1016/j.ijinfomgt.2016.04.011

Kim, K. J., and Sundar, S. S. (2016). Mobile persuasion: Can screen size and presentation mode make a difference to trust? Hum. Commun. Res. 42, 45–70. doi: 10.1111/hcre.12064

Kim, S., Chen, R. P., and Zhang, K. (2016). Anthropomorphized helpers undermine autonomy and enjoyment in computer games. J. Consum. Res. 43, 282–302. doi: 10.1093/jcr/ucw016

Kim, Y., and Mutlu, B. (2014). How social distance shapes human–robot interaction. Int. J. Hum. Comput. Stud. 72, 783–795. doi: 10.1016/j.ijhcs.2014.05.005

Klimmt, C., Hartmann, T., and Frey, A. (2007). Effectance and control as determinants of video game enjoyment. Cyberpsychol. Behav. 10, 845–848. doi: 10.1089/cpb.2007.9942

Kose-Bagci, H., Ferrari, E., Dautenhahn, K., Syrdal, D. S., and Nehaniv, C. L. (2009). Effects of embodiment and gestures on social interaction in drumming games with a humanoid robot. Adv. Robot. 23, 1951–1996. doi: 10.1163/016918609X12518783330360

Kraus, J. M., Nothdurft, F., Hock, P., Scholz, D., Minker, W., and Baumann, M. (2016). “Human after all: Effects of mere presence and social interaction of a humanoid robot as a co-driver in automated driving,” in Paper Presented at the Adjunct Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. Ann Arbor.

Kulms, P., and Kopp, S. (2019). “More human-likeness, more trust? The effect of anthropomorphism on self-reported and behavioral trust in continued and interdependent human-agent cooperation,” in Proceedings of Mensch Und Computer 2019. (New York, NY: ACM), 31–42.

Lee, J. D., and See, K. A. (2004). Trust in automation: Designing for appropriate reliance. Hum. Fact. 46, 50–80. doi: 10.1518/hfes.46.1.50_30392

Lee, J. G., Kim, K. J., Lee, S., and Shin, D. H. (2015). Can autonomous vehicles be safe and trustworthy? Effects of appearance and autonomy of unmanned driving systems. Int. J. Hum. Comput. Int. 31, 682–691. doi: 10.1080/10447318.2015.1070547

Lee, K. M., Jung, Y., Kim, J., and Kim, S. (2006). Are physically embodied social agents better than disembodied social agents? the effects of physical embodiment, tactile interaction, and people's loneliness in human–robot interaction. Int. J. Hum. Comput. Stud. 64, 962–973. doi: 10.1016/j.ijhcs.2006.05.002

Lee, S. C., Sanghavi, H., Ko, S., and Jeon, M. (2019). “Autonomous driving with an agent: Speech Style and Embodiment,” in Paper Presented at the In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings. Utrecht.

Lee, Y.-H., Xiao, M., and Wells, R. H. (2018). The effects of avatars' age on older adults' self-disclosure and trust. Cyberpsychol. Behav. Soc. Netw. 21, 173–178. doi: 10.1089/cyber.2017.0451

Lewis, J. D., and Weigert, A. (1985). Trust as a social reality. Soc. Forces 63, 967–985. doi: 10.2307/2578601

Li, J. (2015). The benefit of being physically present: a survey of experimental works comparing copresent robots, telepresent robots and virtual agents. Int. J. Hum. Comput. Stud. 77, 23–37. doi: 10.1016/j.ijhcs.2015.01.001

Li, J., Kizilcec, R., Bailenson, J., and Ju, W. (2016). Social robots and virtual agents as lecturers for video instruction. Comput. Hum. Behav. 55, 1222–1230. doi: 10.1016/j.chb.2015.04.005

Luo, J. T., McGoldrick, P., Beatty, S., and Keeling, K. A. (2006). On-screen characters: their design and influence on consumer trust. J. Serv. Mark. 20, 112–124. doi: 10.1108/08876040610657048

Mann, J. A., MacDonald, B. A., Kuo, I. H., Li, X., and Broadbent, E. (2015). People respond better to robots than computer tablets delivering healthcare instructions. Comput. Hum. Behav. 43, 112–117. doi: 10.1016/j.chb.2014.10.029

Miyamoto, T., Katagami, D., Shigemitsu, Y., Usami, M., Tanaka, T., Kanamori, H., et al. (2021). Influence of social distance expressed by driving support agent's utterance on psychological acceptability. Front. Psychol. 12, 254. doi: 10.3389/fpsyg.2021.526942

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robot. Autom. Mag. 19, 98–100. doi: 10.1109/MRA.2012.2192811

Moundridou, M., and Virvou, M. (2002). Evaluating the persona effect of an interface agent in a tutoring system. J. Comput. Assist. Learn 18, 253–261. doi: 10.1046/j.0266-4909.2001.00237.x

Mugge, R., and Schoormans, J. P. (2012). Product design and apparent usability. The influence of novelty in product appearance. Appl. Ergonom. 43, 1081–1088. doi: 10.1016/j.apergo.2012.03.009

Mulder, M., Abbink, D. A., and Boer, E. R. (2012). Sharing control with haptics: seamless driver support from manual to automatic control. Hum. Factors 54, 786–798. doi: 10.1177/0018720812443984

Murata, A., Wen, W., and Asama, H. (2016). The body and objects represented in the ventral stream of the parieto-premotor network. Neurosci. Res. 104, 4–15. doi: 10.1016/j.neures.2015.10.010

Nass, C., Moon, Y., Fogg, B. J., Reeves, B., and Dryer, D. C. (1995). Can computer personalities be human personalities? Int. J. Hum. Comp. Stud. 43, 223–239. doi: 10.1006/ijhc.1995.1042

Navarro, J., François, M., and Mars, F. (2016). Obstacle avoidance under automated steering: impact on driving and gaze behaviours. Transport. Res. F: Traffic Psychol. Behav. 43, 315–324. doi: 10.1016/j.trf.2016.09.007

Nguyen, Q. N., Ta, A., and Prybutok, V. (2019). An integrated model of voice-user interface continuance intention: the gender effect. Int. J. Hum. Comput. Int. 35, 1362–1377. doi: 10.1080/10447318.2018.1525023

Nowak, K. L., and Biocca, F. (2004). The effect of the agency and anthropomorphism on users' sense of telepresence, copresence, and social presence in virtual environments. Presence 12, 481–494. doi: 10.1162/105474603322761289

Oviedo-Trespalacios, O., Nandavar, S., and Haworth, N. (2019). How do perceptions of risk and other psychological factors influence the use of in-vehicle information systems (IVIS)? Transp. Res. Part F. 67, 113–122. doi: 10.1016/j.trf.2019.10.011

Pacheco, N. A., Lunardo, R., and Santos, C. P. D. (2013). A perceived-control based model to understanding the effects of co-production on satisfaction. BAR-Brazilian Admin. Rev. 10, 219–238. Available online at: https://www.scielo.br/j/bar/a/VZhG4dbkRHWKCP4C6QGSKpc/?lang=en

Pacherie, E. (2007). The sense of control and the sense of agency. Psyche 13, 1–30. Available online at: http://journalpsyche.org/files/0xab10.pdf

Pak, R., Fink, N., Price, M., Bass, B., and Sturre, L. (2012). Decision support aids with anthropomorphic characteristics influence trust and performance in younger and older adults. Ergonomics 55, 1059–1072. doi: 10.1080/00140139.2012.691554

Preacher, K. J., and Hayes, A. F. (2004). SPSS and SAS procedures for estimating indirect effects in simple mediation models. Behav. Res. Meth. Instrum. Comput. 36, 717–731. doi: 10.3758/BF03206553

Qiu, L., and Benbasat, I. (2009). Evaluating anthropomorphic product recommendation agents: a social relationship perspective to designing information systems. J. Manag. Inf. Syst. 25, 145–182. doi: 10.2753/MIS0742-1222250405

Reeves, B., and Nass, C. (1996). The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places. New York, NY: Cambridge University Press.

Rickenberg, R., and Reeves, B. (2000). “The effects of animated characters on anxiety, task performance, and evaluations of user interfaces,” in Paper Presented at the CHI Letters. The Hague, NL.

Schrader, C. (2019). Creating avatars for technology usage: context matters. Comput. Hum. Behav. 93, 219–225. doi: 10.1016/j.chb.2018.12.002

Shiban, Y., Schelhorn, I., Jobst, V., Hörnlein, A., Puppe, F., Pauli, P., et al. (2015). The appearance effect: influences of virtual agent features on performance and motivation. Comput. Hum. Behav. 49, 5–11. doi: 10.1016/j.chb.2015.01.077

Soh, H., Reid, L. N., and King, K. W. (2009). Measuring trust in advertising. J. Advert. 38, 83–104. doi: 10.2753/JOA0091-3367380206

Straßmann, C., and Krämer, N. C. (2017). “A categorization of virtual agent appearances and a qualitative study on age-related user preferences,” in Paper Presented at the International Conference on Intelligent Virtual Agents.

Sundar, S. S., and Nass, C. (2000). Source orientation in human-computer interaction: programmer, networker, or independent social actor. Commun. Res. 27, 683–703. doi: 10.1177/009365000027006001

Tanaka, T., Fujikake, K., Yonekawa, T., Inagami, M., Kinoshita, F., Aoki, H., et al. (2018b). Effect of difference in form of driving support agent to driver's acceptability-driver agent for encouraging safe driving behavior (2). J. Transport. Technol. 8, 194–208. doi: 10.4236/jtts.2018.83011

Tanaka, T., Fujikake, K., Yoshihara, Y., Yonekawa, T., Inagami, M., Aoki, H., et al. (2018a). “Driving behavior improvement through driving support and review support from driver agent,” in Proceedings of the 6th International Conference on Human-Agent Interaction. (Southampton), 36–44.

Ter Stal, S., Broekhuis, M., van Velsen, L., Hermens, H., and Tabak, M. (2020a). Embodied conversational agent appearance for health assessment of older adults: explorative study. JMIR Hum. Factors. 7, e19987. doi: 10.2196/preprints.19987

Ter Stal, S., Tabak, M., op den Akker, H., Beinema, T., and Hermens, H. (2020b). Who do you prefer? The effect of age, gender and role on users' first impressions of embodied conversational agents in eHealth. Int. J. Hum. Comput. Int. 36, 881–892. doi: 10.1080/10447318.2019.1699744

Thompson, D. V., Hamilton, R. W., and Rust, R. T. (2005). Feature fatigue: when product capabilities become too much of a good thing. J. Mark. Res. 42, 431–442. doi: 10.1509/jmkr.2005.42.4.431

van Vugt, H. C., Konijn, E. A., Hoorn, J. F., Keur, I., and Eliéns, A. (2007). Realism is not all! User engagement with task-related interface characters. Interact. Comput. 19, 267–280. doi: 10.1016/j.intcom.2006.08.005

Wainer, J., Feil-Seifer, D. J., Shell, D. A., and Mataric, M. J. (2006). “The role of physical embodiment in human–robot interaction,” in Proceedings of the 15th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN. IEEE, 117–122.

Wainer, J., Feil-Seifer, D. J., Shell, D. A., and Mataric, M. J. (2007). “Embodiment and human–robot interaction: a task-based perspective,” in Proceedings of the 16th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN. IEEE, 872–877.

Wang, E. S. T. (2014). Perceived control and gender difference on the relationship between trialability and intent to play new online games. Comput. Hum. Behav. 30, 315–320. doi: 10.1016/j.chb.2013.09.016

Waytz, A., Heafner, J., and Epley, N. (2014). The mind in the machine: anthropomorphism increases trust in an autonomous vehicle. J. Exp. Soc. Psychol. 52, 113–117. doi: 10.1016/j.jesp.2014.01.005

Wen, W., Kuroki, Y., and Asama, H. (2019). The sense of agency in driving automation. Front. Psychol. 10, 1–12. doi: 10.3389/fpsyg.2019.02691

Yan, H., and Yang, Z. (2015). Examining mobile payment user adoption from the perspective of trust. Int. J. u e Serv. Sci. Technol. 8, 117–130. doi: 10.14257/ijunesst.2015.8.1.11

Yang, L. W., Aggarwal, P., and McGill, A. L. (2020). The 3 C's of anthropomorphism: connection, comprehension, and competition. Consum. Psychol. Rev. 3, 3–19. doi: 10.1002/arcp.1054

Yun, S., Wen, W., An, Q., Hamasaki, S., Yamakawa, H., and Tamura, Y. (2018). “Investigating the relationship between assisted driver's SoA and EEG,” in Paper Presented at the International Conference on NeuroRehabilitation. Cham.

Zafari, S., and Koeszegi, S. T. (2020). Attitudes toward attributed agency: role of perceived control. Int. J. Soc. Robot. 1–10. doi: 10.1007/s12369-020-00672-7 [Epub ahead of print].

Zhao, X., Lynch, J. G. Jr, and Chen, Q. (2010). Reconsidering Baron and Kenny: Myths and truths about mediation analysis. J. Consum. Res. 37, 197–206. doi: 10.1086/651257

Zhao, Y., Ni, Q., and Zhou, R. (2018). What factors influence the mobile health service adoption? A meta-analysis and the moderating role of age. Int. J. Inf. Manag. 43, 342–350. doi: 10.1016/j.ijinfomgt.2017.08.006

Ziemke, T. (2001). “Disentangling notions of embodiment,” in Paper Presented at the Workshop on Developmental Embodied Cognition. (Edinburgh), 83.

Keywords: agents, anthropomorphism, driving, physicality, perceived control, trust

Citation: Cheng P, Meng F, Yao J and Wang Y (2022) Driving With Agents: Investigating the Influences of Anthropomorphism Level and Physicality of Agents on Drivers' Perceived Control, Trust, and Driving Performance. Front. Psychol. 13:883417. doi: 10.3389/fpsyg.2022.883417

Received: 25 February 2022; Accepted: 03 May 2022;

Published: 15 June 2022.

Edited by:

Lee Skrypchuk, Jaguar Land Rover, United Kingdom

Reviewed by:

Rachel M. Msetfi, University of Limerick, Ireland

Copyright © 2022 Cheng, Meng, Yao and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jie Yao

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
