ORIGINAL RESEARCH article

Front. Psychol., 09 May 2022

Sec. Emotion Science

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.877375

This article is part of the Research Topic The Interpersonal Effects of Emotions: The Influence of Facial Expressions on Social Interactions

Previous research has explored how emotional valence (positive or negative) affected face-context associative memory, while little is known about how arousing stimuli that share the same valence but differ in emotionality are bound together and retained in memory. In this study, we manipulated the emotional similarity between the target face and the face associated with the context emotion (i.e., congruent, high similarity, and low similarity), and examined the effect of emotional similarity of negative emotion (i.e., disgust, anger, and fear) on face-context associative memory. Our results showed that the greater the emotional similarity between the faces, the better the face memory and face-context associative memory were. These findings suggest that the processing of facial expression and its associated context may benefit from taking into account the emotional similarity between the faces.

In daily life, when we encounter strangers, we often decide whether to approach them based on their appearance, especially their facial expressions. Facial expressions do not appear in isolation, however; they are usually embedded in a rich and informative context (Wieser and Brosch, 2012). For instance, smiling faces are more frequent at weddings, whereas disgusted faces tend to be paired with places such as a garbage area. Previous studies have examined the effect of the emotionality of contexts on the judgment of facial expressions (Barrett and Kensinger, 2010; Aviezer et al., 2011a; Barrett et al., 2011). Researchers found that the judgment of facial expressions was facilitated in the emotionally congruent condition (i.e., when the emotionality of a facial expression matched that of its surrounding context), and that facial expressions embedded in emotionally congruent scenes were categorized faster than those embedded in incongruent scenes (Van den Stock et al., 2007, 2014; Righart and de Gelder, 2008a,b; Barrett and Kensinger, 2010; Aviezer et al., 2011a). Given the influence of emotional congruency between faces and contexts on the judgment of facial expressions, we were interested in how emotional congruency between faces and contexts would influence face memory and face-context associative memory.

Righi et al. (2015) were the first to use face-context composite pictures (happy or fearful faces superimposed on happy or fearful contexts) to examine item memory for emotional faces and face-context associative memory in congruent and incongruent conditions. They found that face memory benefited from the affective congruency between faces and contexts, with better memory for happy faces embedded in happy contexts. In contrast, although the associative memory of faces was not influenced by affective congruency between faces and contexts, the associative memory performance for happy faces was always higher than that for fearful faces. It is noteworthy, however, that Righi et al. (2015) conducted between-valence comparisons of faces (i.e., happy face vs. fearful face) rather than within-valence comparisons (i.e., fearful face vs. angry face). There are significant differences in facial configuration and perceptual similarity between happy faces and fearful faces (Aguado et al., 2018). Therefore, we speculated that when participants could easily distinguish the two kinds of facial expressions (e.g., happy face and fearful face), they might not rely on contexts to process faces, such that face-context associative memory did not benefit from the affectively congruent condition. This also raises the question of what role the context plays when facial expressions are hard to distinguish, especially when they are affectively congruent but emotionally incongruent (i.e., fearful face vs. angry face).

In fact, Aviezer et al. (2008) proposed that the magnitude of the contextual effect may be modulated by the degree of emotional similarity between facial expressions. For instance, disgusted faces are emotionally similar to angry faces but dissimilar to fearful faces. Therefore, disgusted faces will be strongly affected by angry contexts but weakly affected by fearful contexts. That is, people are more likely to perceive disgusted faces embedded in angry scenes as angry than to perceive disgusted faces embedded in fearful scenes as fearful, a pattern known as the similarity effect (Susskind et al., 2007; Aviezer et al., 2008, 2009). Further, Aviezer et al. (2011b) manipulated three levels of similarity between the faces actually presented and the faces typically associated with the context emotion (i.e., emotionally congruent, high similarity, low similarity), and examined the effect of emotional similarity on face-context integration. Specifically, in the congruent condition, angry, disgusted, fearful, and sad faces appeared in their respective emotional contexts; in the high similarity condition, angry faces appeared in disgusted contexts, disgusted faces appeared in angry contexts, and sad faces appeared in fearful contexts; in the low similarity condition, angry faces appeared in sad contexts, sad faces appeared in angry contexts, and disgusted faces appeared in fearful contexts. The researchers found that participants were most likely to categorize the emotion of a face as the emotion of its context in the congruent and high similarity conditions, and least likely to do so in the low similarity condition. Such findings suggest that the greater the emotional similarity between the target face and the context-associated face, the more participants rely on contexts to process faces.

Since the emotions of anger, disgust, and fear allow people to prioritize the detection and processing of important information and keep the individual away from a potentially threatening environment (Rozin and Fallon, 1987), we aimed to further explore how the emotional similarity of negative facial expressions would affect face memory and face-context associative memory. Our main interest was to test whether emotional congruency and high similarity enhanced face-context associative memory. Because it is difficult to distinguish between two highly similar faces, participants might attempt to use available context information to process the face. In particular, they might devote more processing resources to encoding and remembering the context when asked to perceive the emotion in a face (Barrett and Kensinger, 2010; Aviezer et al., 2011b). Therefore, we hypothesized that the higher the degree of emotional similarity between the target face and the face associated with the context emotion, the more likely participants would be to rely on context information to process the target face, and hence the better the associative memory between the target face and its paired context would be. In other words, we expected associative memory performance to be greatest in the congruent condition, intermediate in the high similarity condition, and worst in the low similarity condition. In addition, given that facial expression processing might benefit from the emotional congruency between faces and contexts (Righi et al., 2015), we expected better face memory in the emotionally congruent condition.

Thirty-eight healthy undergraduates (11 males), aged 18–22 (M = 20.03, SD = 0.81), participated in this experiment. Data from one participant were discarded because that participant's mean accuracy in most conditions was more than 3 standard deviations below the mean. The remaining 37 participants had normal or corrected-to-normal vision. All of them were volunteers and received gifts after the experiment. Taking the effect size for the interaction between expression valence and context valence (f = 0.59) in the study of Righi et al. (2015) as a reference, we set a medium effect size (f = 0.25). According to G*Power 3.1, the current sample size was adequate to achieve 95% power to detect an interaction between emotional face and emotional context (α = 0.05).

Following Aviezer et al. (2008), we used three kinds of negative facial expressions that were affectively congruent but emotionally incongruent (i.e., disgusted vs. angry vs. fearful) as experimental materials. We chose 60 disgusted faces, 50 angry faces, and 50 fearful faces from the Chinese Facial Affective Picture System (Gong et al., 2011), and 100 disgusted contexts, 70 angry contexts, and 70 fearful contexts from the International Affective Picture System (IAPS; Lang et al., 1999). Half of the faces were male and the other half were female. All face images were gray-scale and were adjusted to the same size (130 × 148 pixels), luminance, and contrast using Photoshop CS6. All context pictures were adjusted to the same size (700 × 525 pixels) using Photoshop CS6.

Ten participants who did not take part in the formal experiment rated the facial expressions and the emotional contexts on several dimensions. Valence was rated on a 9-point scale (1 = the most negative, 9 = the most positive), arousal was rated on a 9-point scale (1 = extremely calm, 9 = extremely arousing), and the degree to which each facial expression or context matched the label of one of the three basic emotions (disgusted, angry, and fearful) was rated on a 7-point scale (1 = not at all, 7 = very much) (Adolphs et al., 1994; Susskind et al., 2007). Finally, 45 disgusted faces (mean valence = 3.67, SD = 0.78; mean arousal = 5.89, SD = 0.43), 45 angry faces (mean valence = 3.08, SD = 0.48; mean arousal = 6.79, SD = 0.33), and 45 fearful faces (mean valence = 3.19, SD = 0.80; mean arousal = 6.90, SD = 0.68) were selected. The label of each of these facial expressions was considered a good match to its actual emotion, with a score above 6. Likewise, 45 disgusted contexts (mean valence = 3.33, SD = 0.82; mean arousal = 6.20, SD = 0.74), 45 angry contexts (mean valence = 3.15, SD = 0.41; mean arousal = 6.17, SD = 0.36), and 45 fearful contexts (mean valence = 3.29, SD = 0.97; mean arousal = 5.79, SD = 0.88) were selected. The label of each of these contexts was also considered a good match to its actual emotion, with a score above 6.

Ninety faces (30 disgusted faces, 30 angry faces, 30 fearful faces) and 90 contexts (30 disgusted contexts, 30 angry contexts, 30 fearful contexts) were combined into 90 composite pictures to serve as the study-phase materials. These composite pictures produced three levels of emotional similarity between faces and contexts: emotionally congruent, high similarity, and low similarity. Previous work has established that the facial expression of disgust shares a decreasing degree of emotional similarity with the facial expressions of anger, sadness, and fear, in that order (Susskind et al., 2007; Aviezer et al., 2008, 2011b). Hence, in the congruent condition, disgusted faces, angry faces, and fearful faces appeared in their respective emotional contexts (e.g., a fearful face was placed onto a fearful context). In the high similarity condition, disgusted faces appeared in angry contexts and angry faces appeared in disgusted contexts. In the low similarity condition, disgusted faces appeared in fearful contexts, fearful faces appeared in disgusted contexts, angry faces appeared in fearful contexts, and fearful faces appeared in angry contexts. To ensure that essential parts of the context were not concealed, we placed half of the emotional faces on the left of the context and the other half on the right.

Test materials consisted of 90 face images (45 studied face images and 45 new face images) and 135 context pictures (45 studied intact contexts that were presented with the 45 studied faces in the study phase, 45 studied rearranged contexts that were presented with the other 45 face images in the study phase, and 45 new ones that did not appear in the study phase).

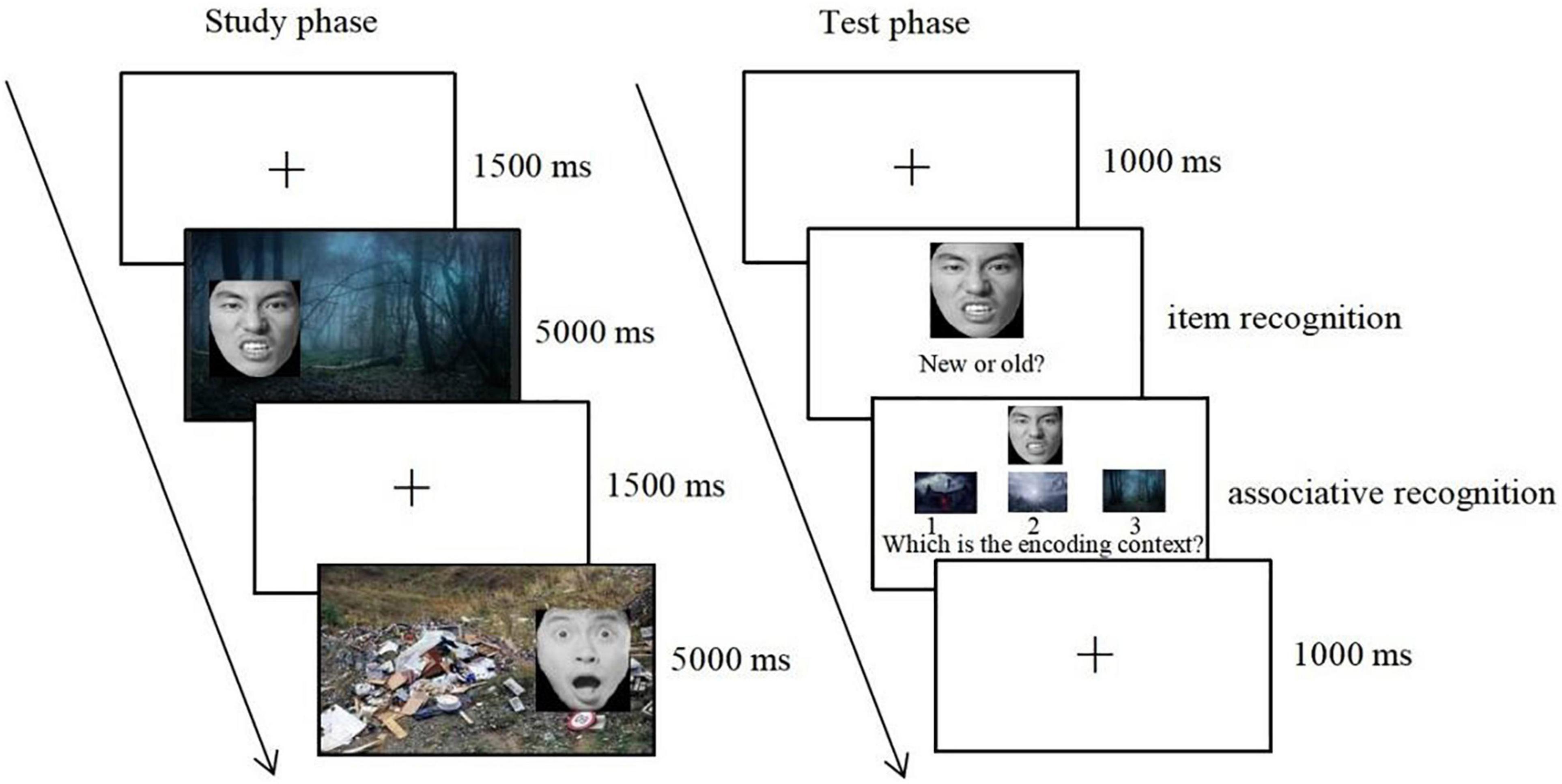

In the study phase, participants were informed that they would view a series of composite pictures on the computer screen and needed to indicate the emotion evoked by the facial expression from a list of emotion labels (disgust, anger, fear, sadness, and happiness) listed under each picture. Meanwhile, they were asked to try their best to remember these pictures for a later memory test. After a fixation cross of 1,500 ms, 90 face-context composite pictures were presented for 5,000 ms one by one, including 30 emotionally congruent face-context composites (10 disgusted faces-disgusted contexts, 10 angry faces-angry contexts, 10 fearful faces-fearful contexts), 20 high similarity face-context composites (10 disgusted faces-angry contexts, 10 angry faces-disgusted contexts), and 40 low similarity face-context composites (10 disgusted faces-fearful contexts, 10 fearful faces-disgusted contexts, 10 angry faces-fearful contexts, 10 fearful faces-angry contexts). These composite pictures were randomly presented. Meanwhile, the same face that appeared in different emotional contexts was counterbalanced across participants, such that the same face was embedded either in a disgusted context, in an angry context, or in a fearful context to different participants.
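The assignment of face-context pairings to similarity conditions described above can be sketched in a few lines of Python. This is only an illustration of the design, not the authors' stimulus-generation code; the names `EMOTIONS`, `HIGH_SIMILARITY_PAIRS`, `similarity_condition`, and `study_list` are hypothetical, and the sketch assumes 10 composites per face-context cell, as stated in the text.

```python
from collections import Counter
from itertools import product

# The three negative emotions used for both faces and contexts.
EMOTIONS = ("disgust", "anger", "fear")

# In this design only the disgust-anger pairing counts as high similarity.
HIGH_SIMILARITY_PAIRS = {frozenset(("disgust", "anger"))}


def similarity_condition(face, context):
    """Return the similarity condition for one face-context pairing."""
    if face == context:
        return "congruent"
    if frozenset((face, context)) in HIGH_SIMILARITY_PAIRS:
        return "high similarity"
    return "low similarity"


def study_list(per_cell=10):
    """Build the study list: per_cell composites for each of the 9 cells."""
    trials = []
    for face, context in product(EMOTIONS, repeat=2):
        condition = similarity_condition(face, context)
        trials.extend([(face, context, condition)] * per_cell)
    return trials


counts = Counter(condition for _, _, condition in study_list())
print(counts)
```

With 10 composites per cell, the tally reproduces the 30 congruent, 20 high similarity, and 40 low similarity composites reported for the study phase.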

After the study phase, participants completed a 5-min arithmetic task. They were asked to compute the sum of three-digit numbers.

In the test phase, after a fixation cross was presented for 1,000 ms, 45 studied face images mixed with 45 new face images were presented randomly in the center of the screen. Participants first performed a self-paced recognition task, indicating whether they had seen the face during the study phase by pressing “F” (yes) or “J” (no). If participants correctly judged a studied face as “old,” they were then shown three context pictures. One was an “intact” context that had been presented with the old face in the study phase, the second was a “recombined” context that had been presented with a different face in the study phase, and the third was a “new” context that had not appeared in the study phase. It should be noted that the “recombined” and “new” contexts were from the same emotional category as the “intact” context. Participants were required to decide which context had originally been presented with the old face. The spatial position of the three contexts was counterbalanced across participants (see Figure 1).

Figure 1. The procedure for the study phase and the test phase. Copyright: Chinese Facial Affective Picture System (Gong et al., 2011), republished with permission.

No trials were excluded from the analysis. Following Bisby and Burgess (2014) and Funk and Hupbach (2014), item memory and associative memory scores were corrected by subtracting false alarms from hits. Because normality was violated in most conditions, all data were analyzed using non-parametric tests (the Friedman test and the Wilcoxon signed-rank test). Bonferroni correction was applied to multiple pairwise comparisons.
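As a minimal sketch of the hits-minus-false-alarms correction, the score can be computed as the hit rate minus the false-alarm rate. The function name `corrected_score` and the counts in the usage line are hypothetical, and the sketch assumes that hits and false alarms are expressed as proportions of old and new items; the text does not state how false alarms were apportioned across conditions.

```python
def corrected_score(hits, n_old, false_alarms, n_new):
    """Corrected recognition: hit rate minus false-alarm rate.

    hits         -- old items correctly judged "old"
    n_old        -- number of old items tested
    false_alarms -- new items incorrectly judged "old"
    n_new        -- number of new items tested
    """
    if n_old <= 0 or n_new <= 0:
        raise ValueError("n_old and n_new must be positive")
    if not 0 <= hits <= n_old or not 0 <= false_alarms <= n_new:
        raise ValueError("counts must lie between 0 and the number of items")
    return hits / n_old - false_alarms / n_new


# Hypothetical counts for one participant in one condition.
example = corrected_score(hits=4, n_old=5, false_alarms=9, n_new=45)
print(round(example, 2))
```

A score of 0 means the participant called old and new items "old" equally often, so positive values indicate genuine discrimination rather than a bias toward responding "old".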

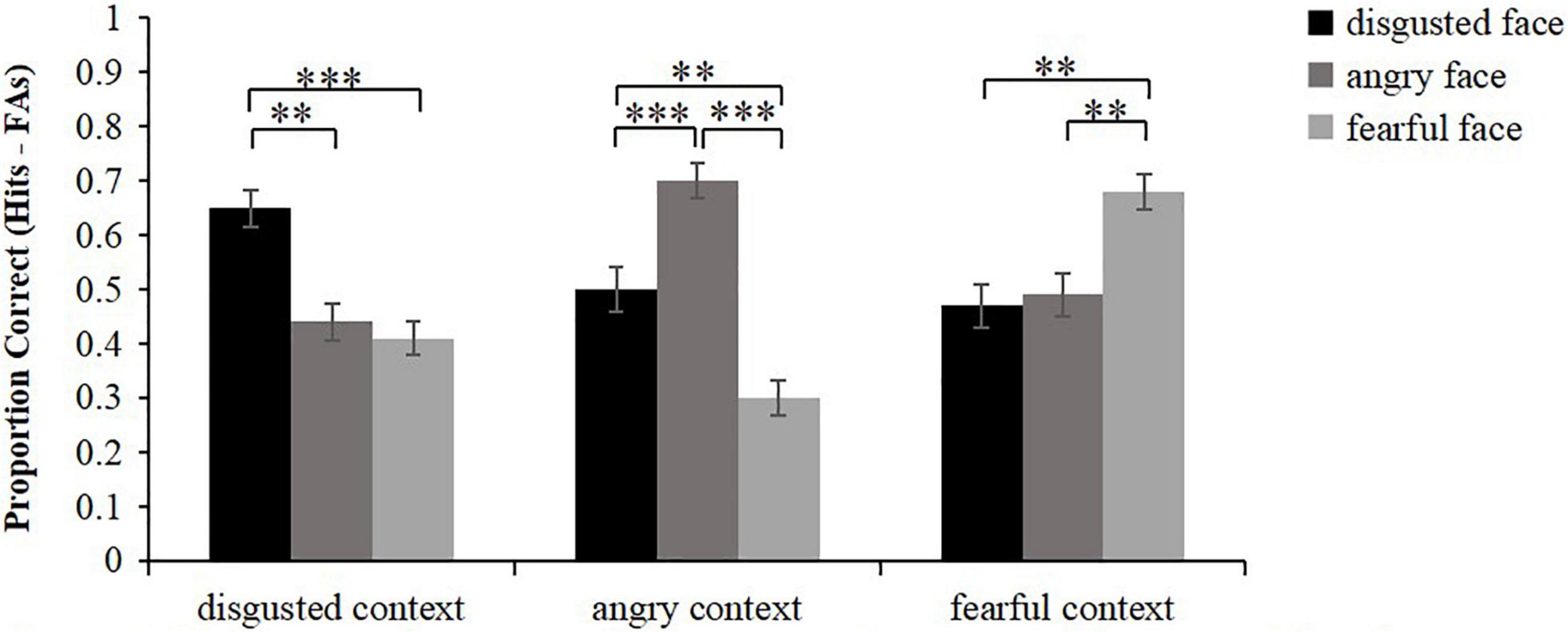

The corrected accuracy of face memory was calculated as hits (old faces correctly recognized as old) minus false alarms (new faces incorrectly recognized as old) (Figure 2). A Friedman test was conducted on the corrected recognition scores for faces. The results showed significant differences in item memory among conditions, χ²(8) = 86.14, p < 0.001. Wilcoxon signed-rank tests were then used for pairwise comparisons. For faces embedded in disgusted contexts, disgusted faces were remembered better than angry faces and fearful faces (z = 3.36, p = 0.003; z = 3.94, p < 0.001), but memory for angry faces did not differ from memory for fearful faces (z = 0.67, p = 1.000). For faces embedded in angry contexts, angry faces were remembered better than disgusted faces and fearful faces (z = 3.63, p < 0.001; z = 4.96, p < 0.001), and disgusted faces were remembered better than fearful faces (z = 3.10, p = 0.006). For faces embedded in fearful contexts, fearful faces were remembered better than disgusted faces and angry faces (z = 3.21, p = 0.003; z = 3.23, p = 0.003), while memory for disgusted faces did not differ from memory for angry faces (z = 0.12, p = 1.000). In brief, face memory was always best in the congruent condition. In addition, face memory was better in the high similarity condition than in the low similarity condition when faces were embedded in angry contexts.

Figure 2. Mean proportion correct for face recognition scores (hits minus false alarms) as a function of emotional face and emotional context. Error bars represent standard error. **p < 0.01, ***p < 0.001.
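To make the omnibus test more concrete, the following sketch computes the Friedman statistic from within-participant ranks. It is an assumption-laden illustration rather than the authors' analysis: the helper names `average_ranks` and `friedman_statistic` are hypothetical, the toy data are invented, and the sketch omits the tie correction that statistical packages usually apply. With nine face-context conditions, the degrees of freedom are 9 − 1 = 8, which matches the χ²(8) values reported here.

```python
def average_ranks(values):
    """Rank values from 1..k, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_statistic(data):
    """Return (chi-square, df) for rows = participants, columns = conditions.

    Uses the basic formula without a tie correction (an assumption of this
    sketch): 12 / (n k (k + 1)) * sum(R_j ** 2) - 3 n (k + 1).
    """
    n = len(data)
    k = len(data[0])
    rank_sums = [0.0] * k
    for row in data:
        if len(row) != k:
            raise ValueError("every participant needs one score per condition")
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    chi2 = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return chi2, k - 1


# Invented toy data: 4 participants who all order 3 conditions identically.
toy = [[0.1, 0.4, 0.9], [0.2, 0.5, 0.7], [0.0, 0.3, 0.8], [0.1, 0.6, 0.9]]
chi2, df = friedman_statistic(toy)
print(chi2, df)
```

Ranking within each participant is what makes the test robust to the normality violations mentioned in the analysis plan, since only the ordering of conditions for each person enters the statistic.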

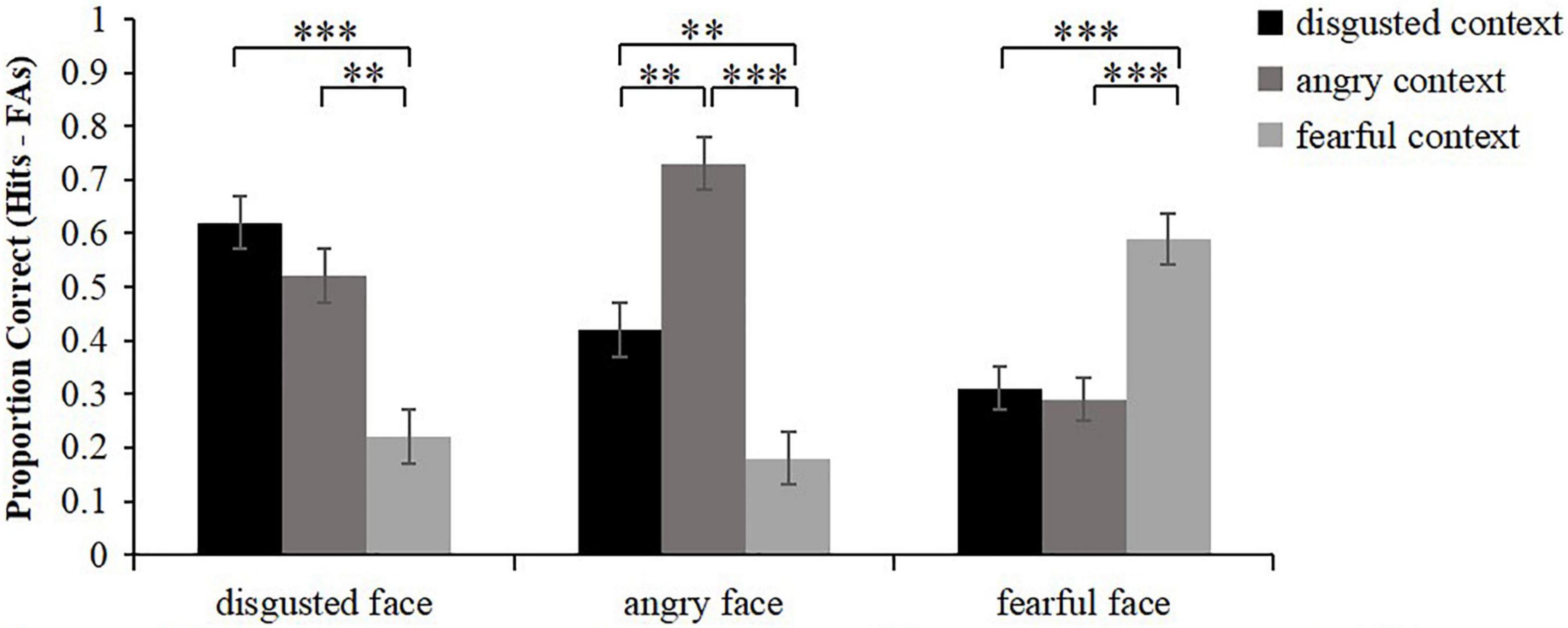

Similarly, the corrected accuracy for associative memory was calculated as hits (intact contexts correctly recognized as intact) minus false alarms (new contexts and recombined contexts incorrectly recognized as intact) (Figure 3). A Friedman test was conducted on the corrected recognition scores for intact contexts. The results showed significant differences in associative memory among conditions, χ²(8) = 86.36, p < 0.001. Wilcoxon signed-rank tests were then used for pairwise comparisons. For the intact contexts associated with disgusted faces, disgusted intact contexts and angry intact contexts were both remembered better than fearful intact contexts (z = 4.21, p < 0.001; z = 3.48, p = 0.003), while memory for disgusted intact contexts did not differ from memory for angry intact contexts (z = 1.32, p = 0.564). For the intact contexts associated with angry faces, angry intact contexts were remembered better than disgusted intact contexts and fearful intact contexts (z = 3.42, p = 0.003; z = 4.65, p < 0.001), and memory for disgusted intact contexts was better than memory for fearful intact contexts (z = 3.11, p = 0.006). For the intact contexts associated with fearful faces, fearful intact contexts were remembered better than disgusted intact contexts and angry intact contexts (z = 3.55, p < 0.001; z = 3.74, p < 0.001), while memory for disgusted intact contexts did not differ from memory for angry intact contexts (z = 0.22, p = 1.000). In sum, the associative memory of disgusted faces was better in the congruent and high similarity conditions than in the low similarity condition. The associative memory of angry faces was best in the congruent condition, intermediate in the high similarity condition, and worst in the low similarity condition. Meanwhile, the associative memory of fearful faces was better in the congruent condition than in the low similarity condition.

Figure 3. Mean proportion correct for recognition of the associated intact context (hits minus false alarms) as a function of emotional face and emotional context. Error bars represent standard error. **p < 0.01, ***p < 0.001.
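The Bonferroni-corrected p values for the pairwise comparisons can be approximated from the reported z statistics. The sketch below is a reading of the reported numbers, not a description of the authors' software: it assumes a two-sided normal approximation and a family of three comparisons per face or context type, neither of which is stated in the text, and the function names are hypothetical.

```python
import math


def two_sided_p_from_z(z):
    """Two-sided p value for a z statistic under the normal approximation."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def bonferroni(p, n_comparisons):
    """Bonferroni-adjusted p value, capped at 1."""
    return min(1.0, p * n_comparisons)


# Assumed family size: three pairwise comparisons within each family.
N_COMPARISONS = 3

for z in (0.67, 1.32, 3.10):
    adjusted = bonferroni(two_sided_p_from_z(z), N_COMPARISONS)
    print(f"z = {z:.2f}: adjusted p = {adjusted:.3f}")
```

Under these assumptions the adjusted values come out close to the reported ones (for example, about 0.56 for z = 1.32 and 1.000 for z = 0.67); small discrepancies are expected because the published z values are rounded and the original software may have used exact rather than asymptotic p values.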

On the basis of the prior work concerning the effect of emotional similarity on the judgment of facial expression (Susskind et al., 2007; Aviezer et al., 2008, 2011b), we established three kinds of emotional similarity conditions (i.e., emotionally congruent, high similarity, low similarity) to examine the effect of emotional similarity of negative facial expressions on face memory and face-context associative memory. As predicted, our results indicated that the face memory and the face-context associative memory were the greatest in the congruent condition, intermediate in the high similarity condition, and worst in the low similarity condition as a whole. These similarity patterns not only extended the findings obtained from previous studies, but also contributed to understanding face-context integration process.

First, face memory and face-context associative memory both benefited from emotional congruency and high similarity. The results from item memory showed face memory performance was the highest in the emotionally congruent context, intermediate in the high similarity context, and worst in the low similarity context, suggesting that the processing of facial expressions could be strongly influenced by contexts, especially when the target face was emotionally similar to the facial expression associated with the context (Aviezer et al., 2008, 2011b). Such results were also in line with previous studies that showed the advantage of the emotional congruency effect. For instance, facial expressions were recognized faster or were remembered better in emotionally congruent contexts than in incongruent contexts (Righart and de Gelder, 2008a,b; Diéguez-Risco et al., 2013, 2015; Righi et al., 2015).

Meanwhile, the results from associative memory were broadly consistent with the pattern observed in item memory, showing that face-context associative memory was best in the emotionally congruent context, intermediate in the high similarity context, and worst in the low similarity context. Barrett and Kensinger (2010) reported that perceivers might encode the context when they were required to make a more specific inference about a target person’s emotion. Therefore, we speculated that when two highly similar faces were presented, it was difficult for participants to perceive the emotion from the face alone. In such a case, participants might use whatever information was available, such as the context, to distinguish between highly similar faces. As a consequence, they might devote more attention to encoding and remembering the context, such that face-context associative memory was enhanced in the congruent and high similarity conditions.

Second, face-context associative memory benefited from the congruent contexts and high similarity contexts, which might be explained by unitization. A substantial body of research has shown that when two distinct items are processed as a single unitized item, such as the word pair “traffic-jam,” associative memory for the items is enhanced (Quamme et al., 2007; Parks and Yonelinas, 2009, 2015). Because the intact contexts associated with faces shared more emotional characteristics with the faces and were remembered better in the congruent and high similarity conditions, we speculated that faces and contexts were more likely to be unitized as a coherent whole in the congruent and high similarity conditions than in the low similarity condition. In other words, emotional congruency and high similarity might promote the level of unitization between faces and contexts, thereby increasing face-context associative memory. However, more research is needed to test whether high emotional similarity promotes unitization between items.

Third, it is important to note that face-context associative memory was enhanced when the target face and the face associated with the context emotion were highly emotionally similar. Righi et al. (2015) investigated how emotional valence (positive or negative) influenced face memory and face-context associative memory. They found that happy and fearful emotions might influence the binding process between faces and contexts in different ways. Namely, regardless of whether the context was positive or negative, positive faces might broaden attention and foster the integration of faces and surrounding contexts (Shimamura et al., 2006; Tsukiura and Cabeza, 2008), while negative faces might narrow attention and impair the encoding of surrounding contexts associated with faces (Mather, 2007; Mather and Sutherland, 2011; Murray and Kensinger, 2013). By contrast, in the present study, we manipulated the emotional similarity of negative faces and found that negative emotion could also promote face-context associative memory when two negative faces were highly emotionally similar. Given that the emotions of disgust, anger, and fear are likely to be processed by partially different neural systems (Calder et al., 2001, 2004; Anderson et al., 2003), it may be interesting to study whether these brain regions are involved in processing emotional faces and contexts, and whether neural activity is enhanced when the emotionality of facial expressions and surrounding contexts is congruent or highly similar.

It is important to point out that this study focused only on the emotional similarity of negative facial expressions. More research is needed to understand the relationship between emotional similarity (e.g., the emotional similarity of positive facial expressions) and memory. In addition, although the facial expressions we used were all negative, they differed somewhat in arousal. These differences in arousal might affect memory performance and should be controlled for in future studies.

Taken together, our findings suggest that the greater the emotional similarity between the faces, the better the face memory and face-context associative memory were. The present results not only enrich the theoretical knowledge about the effect of emotional similarity on associative memory, but may also have important implications for understanding how and to what extent the interplay between facial expression and emotional context can affect an individual’s memory in everyday social interactions.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Academic Board of Shandong Normal University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

WM conceived and designed the experiments, and wrote the manuscript with SA and MZ. FQ performed the experiments and collected the data. SA and HZ analyzed the data under the supervision of WM. All authors contributed to the article and approved the submitted version.

This research was supported by the National Natural Science Foundation of China (31571113) and Natural Science Foundation of Shandong Province (ZR2021MC081).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. R. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672. doi: 10.1038/372669a0

Aguado, L., Martínez-García, N., Solís-Olce, A., Dieguez-Risco, T., and Hinojosa, J. A. (2018). Effects of affective and emotional congruency on facial expression processing under different task demands. Acta Psychol. 187, 66–76. doi: 10.1016/j.actpsy.2018.04.013

Anderson, A. K., Christoff, K., Panitz, D., De Rosa, E., and Gabrieli, J. D. E. (2003). Neural correlates of the automatic processing of threat facial signals. J. Neurosci. 23, 5627–5633. doi: 10.1186/1471-2202-4-15

Aviezer, H., Bentin, S., Hassin, R. R., Meschino, W. S., Kennedy, J., Grewal, S., et al. (2009). Not on the face alone: perception of contextualized face expressions in Huntington’s disease. Brain 132, 1633–1644. doi: 10.1093/brain/awp067

Aviezer, H., Dudarev, V., Bentin, S., and Hassin, R. R. (2011a). The automaticity of emotional face-context integration. Emotion 11, 1406–1414. doi: 10.1037/a0023578

Aviezer, H., Hassin, R. R., and Bentin, S. (2011b). Impaired integration of emotional faces and affective body context in a rare case of developmental visual agnosia. Cortex 48, 689–700. doi: 10.1016/j.cortex.2011.03.005

Aviezer, H., Hassin, R. R., Ryan, J., Grady, C., Susskind, J., Anderson, A., et al. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19, 724–732. doi: 10.1111/j.1467-9280.2008.02148.x

Barrett, L. F., and Kensinger, E. A. (2010). Context is routinely encoded during emotion perception. Psychol. Sci. 21, 595–599. doi: 10.1177/0956797610363547

Barrett, L. F., Mesquita, B., and Gendron, M. (2011). Context in emotion perception. Curr. Dir. Psychol. Sci. 20, 286–290. doi: 10.1177/0963721411422522

Bisby, J. A., and Burgess, N. (2014). Negative affect impairs associative memory but not item memory. Learn. Memory 21, 760–766. doi: 10.1101/lm.032409.113

Calder, A. J., Keane, J., Lawrence, A. D., and Manes, F. (2004). Impaired recognition of anger following damage to the ventral striatum. Brain 127, 1958–1969. doi: 10.1093/brain/awh214

Calder, A. J., Lawrence, A. D., and Young, A. W. (2001). Neuropsychology of fear and loathing. Nat. Rev. Neurosci. 2, 352–363. doi: 10.1038/35072584

Diéguez-Risco, T., Aguado, L., Albert, J., and Hinojosa, J. A. (2013). Faces in context: modulation of expression processing by situational information. Soc. Neurosci. 8, 601–620. doi: 10.1080/17470919.2013.834842

Diéguez-Risco, T., Aguado, L., Albert, J., and Hinojosa, J. A. (2015). Judging emotional congruency: explicit attention to situational context modulates processing of facial expressions of emotion. Biol. Psychol. 112, 27–38. doi: 10.1016/j.biopsycho.2015.09.012

Funk, A. Y., and Hupbach, A. (2014). Memory for emotionally arousing items: context preexposure enhances subsequent context-item binding. Emotion 14, 611–614. doi: 10.1037/a0034017

Gong, X., Huang, Y. X., Wang, Y., and Luo, Y. J. (2011). Revision of the Chinese facial affective picture system. Chin. Ment. Health J. 25, 40–46. doi: 10.3969/j.issn.1000-6729.2011.01.011

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (1999). International Affective Picture System (IAPS): Instruction Manual and Affective Ratings. Technical report A-4. Gainesville, FL: The Center for Research in Psychophysiology.

Mather, M. (2007). Emotional arousal and memory binding: an object-based framework. Perspect. Psychol. Sci. 2, 33–52. doi: 10.1111/j.1745-6916.2007.00028.x

Mather, M., and Sutherland, M. R. (2011). Arousal-biased competition in perception and memory. Perspect. Psychol. Sci. 6, 114–133. doi: 10.1177/1745691611400234

Murray, B. D., and Kensinger, E. A. (2013). A review of the neural and behavioral consequences for unitizing emotional and neutral information. Front. Behav. Neurosci. 7:42. doi: 10.3389/fnbeh.2013.00042

Parks, C. M., and Yonelinas, A. P. (2009). Evidence for a memory threshold in second-choice recognition memory responses. Proc. Natl. Acad. Sci. U.S.A. 106, 11515–11519. doi: 10.1073/pnas.0905505106

Parks, C. M., and Yonelinas, A. P. (2015). The importance of unitization for familiarity-based learning. J. Exp. Psychol. Learn. Mem. Cogn. 41, 881–903. doi: 10.1037/xlm0000068

Quamme, J. R., Yonelinas, A. P., and Norman, K. A. (2007). Effect of unitization on associative recognition in amnesia. Hippocampus 17, 192–200. doi: 10.1002/hipo.20257

Righart, R., and de Gelder, B. (2008a). Recognition of facial expressions is influenced by emotional scene gist. Cogn. Affect. Behav. Neurosci. 8, 264–272. doi: 10.3758/CABN.8.3.264

Righart, R., and de Gelder, B. (2008b). Rapid influence of emotional scenes on encoding of facial expressions: An ERP study. Soc. Cogn. Affect. Neurosci. 3, 270–278. doi: 10.1093/scan/nsn021

Righi, S., Gronchi, G., Marzi, T., Rebai, M., and Viggiano, M. P. (2015). You are that smiling guy I met at the party! Socially positive signals foster memory for identities and contexts. Acta Psychol. 159, 1–7. doi: 10.1016/j.actpsy.2015.05.001

Rozin, P., and Fallon, A. E. (1987). A perspective on disgust. Psychol. Rev. 94, 23–41. doi: 10.1037//0033-295X.94.1.23

Shimamura, A. P., Ross, J. G., and Bennett, H. D. (2006). Memory for facial expressions: the power of a smile. Psychon. Bull. Rev. 13, 217–222. doi: 10.3758/BF03193833

Susskind, J. M., Littlewort, G., Bartlett, M. S., Movellan, J., and Anderson, A. K. (2007). Human and computer recognition of facial expressions of emotion. Neuropsychologia 45, 152–162. doi: 10.1016/j.neuropsychologia.2006.05.001

Tsukiura, T., and Cabeza, R. (2008). Orbitofrontal and hippocampal contributions to memory for face–name associations: the rewarding power of a smile. Neuropsychologia 46, 2310–2319. doi: 10.1016/j.neuropsychologia.2008.03.013

Van den Stock, J., Righart, R., and de Gelder, B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7, 487–494. doi: 10.1037/1528-3542.7.3.487

Van den Stock, J., Vandenbulcke, M., Sinke, C., and de Gelder, B. (2014). Affective scenes influence fear perception of individual body expressions. Hum. Brain Mapp. 35, 492–502. doi: 10.1002/hbm.22195

Keywords: facial expression, emotional similarity, emotional congruency, face memory, face-context associative memory

Citation: An S, Zhao M, Qin F, Zhang H and Mao W (2022) High Emotional Similarity Will Enhance the Face Memory and Face-Context Associative Memory. Front. Psychol. 13:877375. doi: 10.3389/fpsyg.2022.877375

Received: 16 February 2022; Accepted: 08 April 2022;

Published: 09 May 2022.

Edited by:

Laura Sagliano, University of Campania Luigi Vanvitelli, Italy

Reviewed by:

Markku Kilpeläinen, University of Helsinki, Finland

Copyright © 2022 An, Zhao, Qin, Zhang and Mao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Weibin Mao, d2JfbWFvQDE2My5jb20=

†These authors have contributed equally to this work and share first authorship
