- 1 The Graduate Institute of Design Science, Tatung University, Taipei, Taiwan

- 2 Department of Industrial Design, Tatung University, Taipei, Taiwan

- 3 The College of Fine Arts, Guangdong Polytechnic Normal University, Guangzhou, China

Since their development, social robots have been a popular topic of research, with numerous studies evaluating their functionality or task performance. In recent years, social robots have begun to be regarded as social actors at work, and their social attributes have been explored. Therefore, this study focused on four occupational fields (shopping reception, home companion, education, and security) where robots are widely used, exploring the influence of robot gestures on their perceived personality traits and comparing the gesture design guidelines required in specific occupational fields. The study was conducted in two stages. In the first stage, an interactive script was developed, and observation was employed to derive gestures related to the dialog in the fields of interest. The second stage involved robot experimentation based on human–robot interaction through video. Results show that metaphoric gestures appeared less frequently than did deictic, iconic, or beat gestures. Robots’ perceived personality traits were categorized into sociality, competence, and status. Introducing all types of gestures helped enhance perceived sociality. The addition of deictic and iconic gestures significantly improved perceived competence and perceived status. Regarding the shopping reception robot, after the inclusion of basic deictic and iconic gestures, sufficient beat gestures should be implemented to create a friendly and outgoing demeanor, thereby promoting user acceptance. In the home companion, education, and security contexts, the addition of beat gestures did not affect the overall acceptance level; the designs should instead focus on the integration of the other gesture types.

Introduction

In recent years, social robots have been widely applied in numerous occupations. They are suitable not only for unique, dangerous, or professional fields but also for people’s everyday lives. Social robots are used in various spaces and serve in a range of roles and tasks (e.g., in supermarkets, hospitals, schools, homes, and restaurants; Looije et al., 2010; Koceski and Koceska, 2016; Sabelli and Kanda, 2016; Benitti and Spolaôr, 2017; McGinn et al., 2017; Chang et al., 2018; Savela et al., 2018).

In human–human interaction, social perception refers to the process in which people automatically classify social groups (e.g., people of a particular gender, age group, or race) based on social cues, such as the tone of voice and gestures (Jussim, 1991). Psychologists have noted that according to role congruity theories, men are more likely to acquire leadership positions due to their assertiveness, strength, and independence, whereas women are more likely to fill communal roles due to traits of sensitivity and emotional expressiveness (Eagly et al., 2000; Ceci et al., 2009; Barth et al., 2015).

In the earliest research on human–computer interaction, some scholars reported that, beyond being technological products, computers possess certain social attributes. This idea was termed the computers as social actors (CASA) theory (Nass et al., 1994) and is widely acknowledged by scholars in the field of human–robot interaction (HRI). Researchers have observed that when people interact with robots, they exhibit behaviors and reactions similar to those displayed when they interact with other humans (Lee et al., 2006; Kim et al., 2013). This suggests that robots are socially perceived in a manner similar to humans (Hendriks et al., 2011; Stroessner and Benitez, 2019) and that people’s needs can no longer be met by the functionality of other non-robotic tools; a robot must have social and emotional value. The introduction of perceptual design has greatly increased the public’s acceptance of robots (Kirby et al., 2010; Hwang et al., 2013).

Japanese scholars proposed the Kansei evaluation method, which is used to quantify the characteristics of a target at the perceptual level such that the characteristics can be evaluated through mathematical analysis (Matsubara and Automation, 1996). This method has also been applied in several other HRI studies (Kanda et al., 2001; Takeuchi et al., 2006; Mitsunaga et al., 2008; Usui et al., 2008; Hendriks et al., 2011; Tay et al., 2014). These scholars have collected and analyzed the perceptual vocabulary used in HRI to evaluate the interaction process or the robot. One part of the vocabulary described the feeling involved with the interaction, whereas the other part described the personality of the robot. Other scholars examining robots’ personalities have directly applied the human psychology model to characterize the personalities of robots (Kim et al., 2008) or used the personality trait model of human psychology as a reference to increase or reduce the number of items (Eysenck, 1991; Tapus et al., 2008). A more common reference is the Big Five theory, which is widely used to evaluate human personality traits (Norman, 1963; Barrick and Mount, 1991; Cattell and Mead, 2008). With reference to this theory, Hwang et al. (2013) performed an analysis in which 13 personality trait adjectives were extracted and used to evaluate differences in perceptions of several robots’ personalities based on the robots’ appearances. These studies reveal a key method of evaluating perceptions of robots’ personalities.

The appearance, gestures, expressions, and voices of robots in social interactions are called social cues (Saygin et al., 2012; Hwang et al., 2013; Niculescu et al., 2013; Stanton and Stevens, 2017; Menne and Schwab, 2018). They can be classified as verbal or non-verbal (Tojo et al., 2000; Mavridis, 2015). In human interaction, non-verbal communication can explain and supplement verbal communication, improve communication efficiency, and make dialog more lively (Mehrabian and Williams, 1969; Argyle, 1972). In HRI, these social cues also affect people’s perception and opinion of robots (Fong et al., 2003; Hancock et al., 2011). Robots’ appearance has been gradually developed and perfected since the uncanny valley theory was advanced (Mori et al., 2012), and most robots currently on the market have a humanoid appearance. Regarding facial expressions, mechanical and humanoid robots do not have complete facial expression functionality; expressive light is typically used as a substitute for facial expressions (Collins et al., 2015; Baraka et al., 2015, 2016; Baraka and Veloso, 2018; Song and Yamada, 2018; Westhoven et al., 2019). Our previous works have comprehensively discussed the use of voice and expressive light in social robots, providing a functionality reference for applications in three occupational fields: shopping reception, home companion, and education (Dou et al., 2020a,b, 2021).

However, differences in gesture design across applications were not discussed in our previous studies. In non-verbal communication, gestures are pivotal because they both transmit information (Kendon, 1988; Gullberg and Holmqvist, 1999) and affect social cognition and persuasiveness (Maricchiolo et al., 2009). One study reported that in HRI, an instance of non-verbal communication is more effective than an instance of verbal communication (Chidambaram et al., 2012). A robot’s gestures also have the potential to shift cognitive framing, elicit specific emotional and behavioral responses, and enhance task performance (Ham et al., 2011; Lohse et al., 2014; Saunderson and Nejat, 2019). Currently, robot gestures are quite basic. For example, the robot Pepper often uses gestures such as waving, shaking hands, nodding, bowing, or swaying its arms (Hsieh et al., 2017; De Carolis et al., 2021). Some robots do not even have flexible, complete fingers with which to represent numbers (Kim et al., 2007; Chidambaram et al., 2012). In real industrial applications, the situation is even worse. Therefore, it is important to make robot gestures more comprehensive so that they meet various needs, thereby improving interaction in different applications.

Studies on robot gestures can be divided into two categories: (1) those exploring the influence of physical parameters, such as the amplitude, frequency, speed, smoothness, and duration of a single gesture on the user’s psychology (Kim et al., 2008; Riek et al., 2010) and (2) those exploring the effects of various types of gestures (Breazeal et al., 2005; Nehaniv et al., 2005; Chidambaram et al., 2012; Huang and Mutlu, 2013). Evidence on the second category of research is more abundant. This is because robots must complete a series of behaviors in HRIs; moreover, multiple types of gestures may be involved and undergo several changes. Therefore, investigating various gesture types and their impacts is preferable to studying a single type of gesture. The mainstream view is that gestures can be divided into four categories, as proposed by McNeill (1992): (1) deictics, which indicate concrete objects or space; (2) iconics, which relate to concrete objects or events; (3) beats, which include short, quick, and frequent hand and arm movements; and (4) metaphorics, which promote visualization of abstract concepts or objects through the presentation of concrete metaphors. This theory was applied to robot research by Huang and Mutlu (2013), who observed that all types of gestures affect user perceptions of the robot’s narrative performance.

We discovered a considerable knowledge gap in relation to the design of gestures in social robots, with other scholars also noting that robot gestures shift cognitive framing, elicit specific emotional and behavioral responses, and improve task performance (Ham et al., 2011; Lohse et al., 2014; Saunderson and Nejat, 2019). Two shortcomings in these studies are notable. First, environmental differences were not considered. One study reported that even for robots of the lowest level (e.g., cleaning robots), regarding the household as an environment is necessary, and the influence of factors, such as people, products, and activities, in that environment must be considered (Forlizzi and DiSalvo, 2006). Users exhibit varying attitudes toward, preferences for, and degrees of trust in robots depending on the environment (Huang and Mutlu, 2013; Savela et al., 2018). Scholars have also begun to study users’ preferences for and trust in robots according to specific occupational fields (Huang and Mutlu, 2013; Savela et al., 2018), such as shopping reception (Aaltonen et al., 2017; Bertacchini et al., 2017), education (Robins et al., 2005; Tanaka and Kimura, 2010; Cheng et al., 2018), home-based care (Broadbent et al., 2018), and security or healthcare (Tay et al., 2014). With the introduction of robots into numerous occupational fields, the interactive environment has also changed, which suggests that future robot gesture studies should consider occupational fields to promote design customization. Second, most robot gesture studies have centered on task performance, persuasiveness, and trust in association with distinct gestures (Li et al., 2010; Lee et al., 2013; Saunderson and Nejat, 2019). The effects of individual gestures on the sociality of robots (i.e., differences in perceptions of personality traits) have not been discussed in detail.

The purposes of this study are as follows: (1) to examine whether the four types of gestures appear frequently in the four applications (shopping reception, home companion, education, and security); (2) to determine whether different gestures significantly affect robots’ perceived personalities; and (3) to propose gesture design guidance for these four applications. In our previous study (Dou et al., 2021), we confirmed that the voices and expressive lights of social robots enable the perception of distinct personality traits. Moreover, the optimal configuration of voice and lighting design parameters for social robots applied in the four fields of interest has been determined (Dou et al., 2020a,b, 2021). New discoveries on robot gestures are also presented herein.

Materials and Methods

Stage 1: Gesture Extraction

Dialog Script

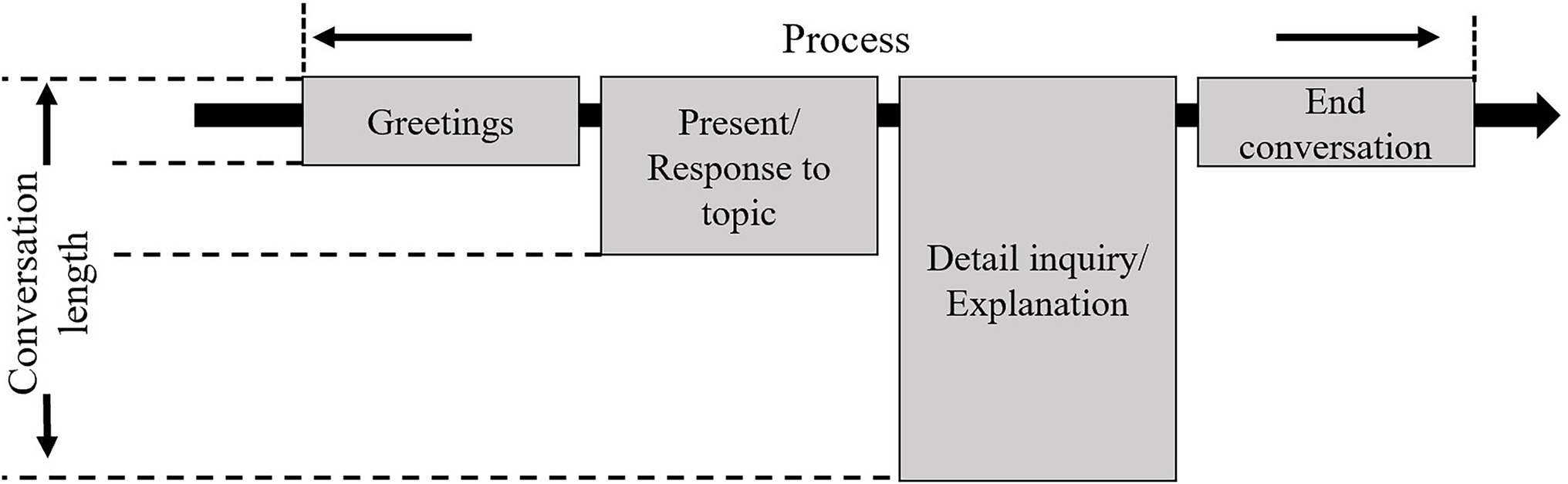

Some scholars have established scripted dialogs to plan robot behavior during HRI (Hinds et al., 2004; Louie et al., 2014; Hammer et al., 2016). In the present study, to examine the differences associated with dialog content, we defined the dialog structure and length consistently for the four applications (Figure 1).

The narration is typically the most critical part of communication (Huang and Mutlu, 2013); it is the stage with the greatest amount of information transfer and is often accompanied by non-verbal communication using various methods. Therefore, we mainly focused on recording and examining gestures displayed during this component. In accordance with the dialog structure, we devised a dialog script for each of the occupational fields (shopping reception, home companion, education, and security).

In the script of the shopping reception robot (Supplementary Table S1), the robot acts as a shopping receptionist who answers customers’ questions. The customers plan to buy a refrigerator, and the robot conveys information concerning product functions and features. In the dialog script of the home companion robot (Supplementary Table S2), the robot acts as a family caregiver communicating with an elderly person, relaying the details of a dinner recipe and the nutritional benefits of the food. In the dialog script of the educational robot (Supplementary Table S3), the robot acts as a teacher explaining the concept of the golden ratio to a user (student). In the security dialog script (Supplementary Table S4), the robot acts as a home security manager advising a homeowner on how to address fire hazards in the house.

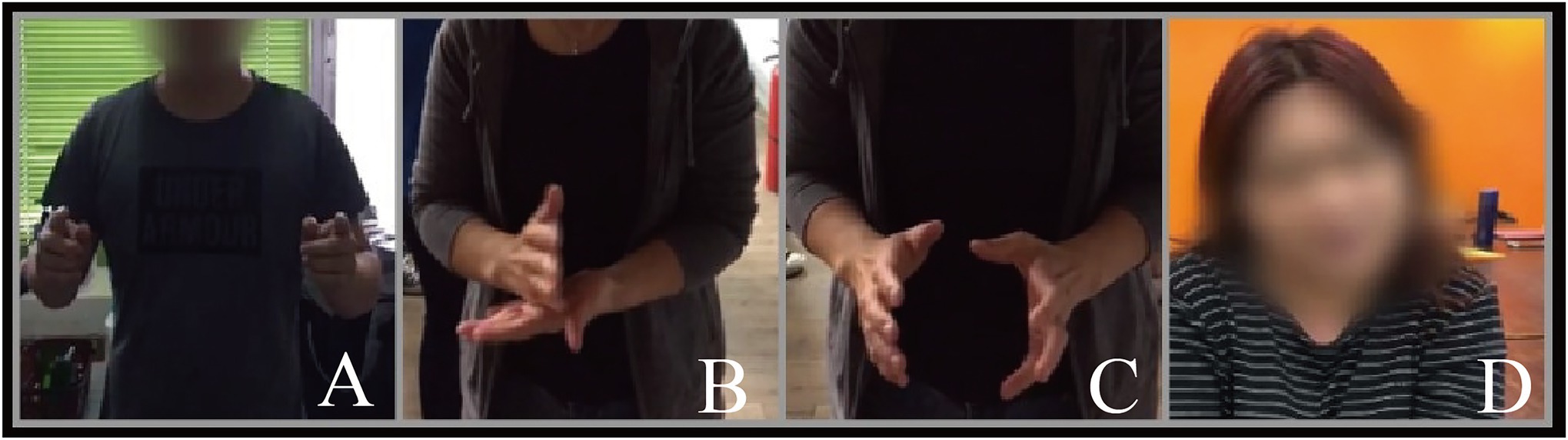

Observation and Extraction

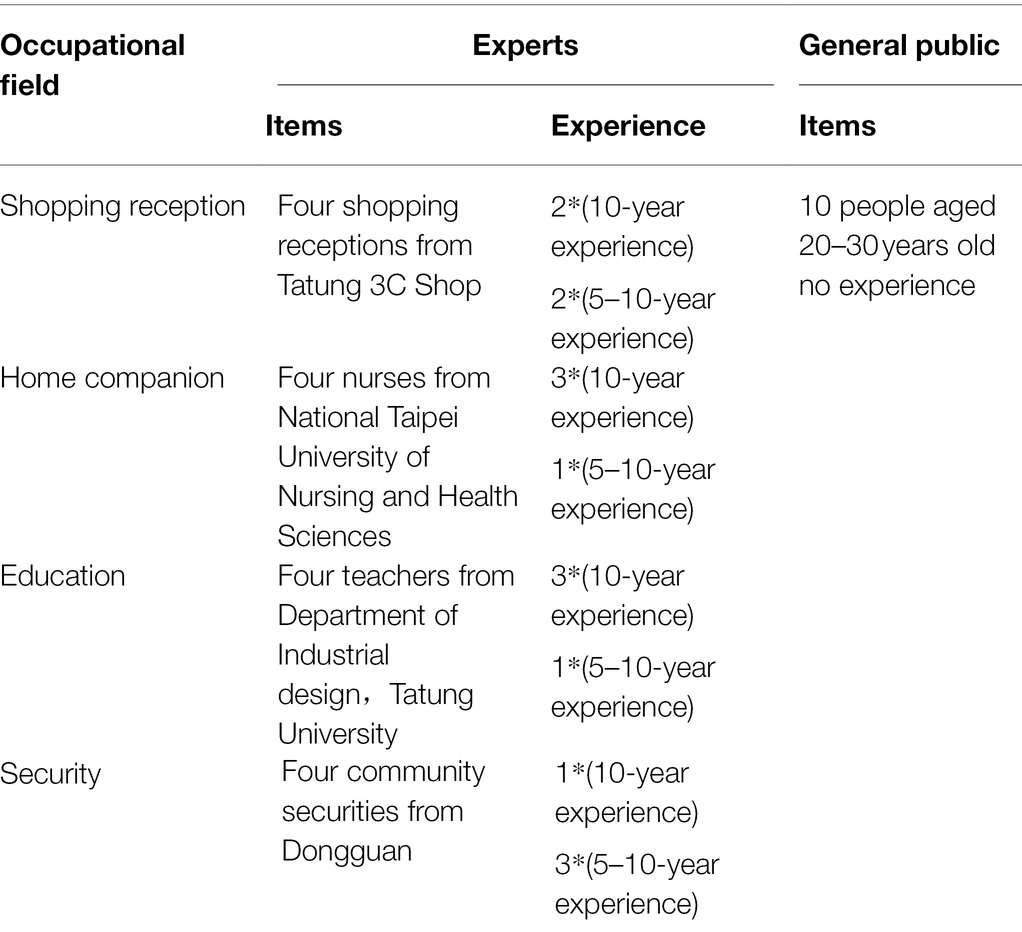

Observation is a method in which researchers use their senses and auxiliary tools to scrutinize a target for a certain research purpose, outline, or observation table (Baker, 2006). To capture gestures in real occupational environments, we developed dialog scenarios for the four occupations. We recruited 26 participants (four experts in each of the four fields and 10 members of the public; Table 1). After informed consent was obtained, video recording and observation were conducted (Figure 2). During the observation, the participants were involved in the dialog scenarios, with a staff member acting as the user. The participants were asked to express the content as clearly as possible.

Figure 2. Selected screenshots from the observation (A: longer segment, B: chop into pieces, C: washing, D: tilting head).

Each of the 16 experts participated in only one observation (in the relevant occupational field), whereas the 10 members of the general public participated in observations in all four fields. Because the experts had at least 5 years of work experience, we considered their behavior in the interaction to be professional. Therefore, they participated only in observations related to the application in which they had experience, and their gestures were assigned a high priority in the behavioral analysis. Participants from the public were not experienced practitioners in any of these fields, so professional experience did not influence their gestures. Therefore, we asked them to participate in the observations for all four applications and analyzed their behavior as supplementary content.

On the basis of our expected results and the actual observations, we categorized the gestures associated with the four occupational fields, correlating them with specific elements of the dialog (Supplementary Table S5). Some gestures only appeared when specific information that was highly relevant to the overall dialog content was discussed (Huang and Mutlu, 2013). These gestures were defined as Type 1 (T1) gestures and included gestures in the deictics, iconics, and metaphorics categories. Gestures were labeled as (A) shopping reception, (B) home companion, (C) education, and (D) security. Another type of gesture, denoted as Type 2 (T2) gestures, recurred randomly and was unrelated to any specific information. Because the mode of expressing such gestures did not vary among the four fields, we labeled them (F). Regarding the extraction criteria, a gesture was selected when it was used by at least 50% of the expert group (i.e., 2 out of 4) and by at least 50% of all participants in that field (7 out of 14). Such gestures were considered more likely to occur in the interaction than were other gestures. Because the gesture extraction items are too numerous to list in detail in the text, the complete extraction results, both selected and unselected, are recorded in Supplementary Table S5.
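The screening rule described above can be illustrated with a short sketch. It assumes that both thresholds must hold at once (at least 2 of the 4 experts and at least 7 of the 14 participants observed in a field). The function name, the gesture labels, and the tallies are hypothetical and are not taken from Supplementary Table S5.

```python
# Sketch of the gesture screening rule, assuming both thresholds must be met.
EXPERTS_PER_FIELD = 4        # experts observed in each occupational field
PARTICIPANTS_PER_FIELD = 14  # 4 experts + 10 members of the public


def meets_extraction_criteria(expert_count, total_count):
    """Return True when a gesture reaches 50% among experts and 50% overall."""
    # Integer comparison (2 * count >= group size) avoids floating-point issues.
    expert_ok = 2 * expert_count >= EXPERTS_PER_FIELD
    overall_ok = 2 * total_count >= PARTICIPANTS_PER_FIELD
    return expert_ok and overall_ok


# Hypothetical tallies: label -> (experts using the gesture, all participants using it)
example_tallies = {"X1": (3, 9), "X2": (1, 8), "X3": (2, 6)}

selected = [
    label
    for label, (experts, total) in example_tallies.items()
    if meets_extraction_criteria(experts, total)
]
print(selected)
```

In this illustration, only the gesture that passes both the expert threshold and the overall threshold is retained; a gesture that is common among the public but rare among experts is screened out.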

The T1 gestures extracted for the robots in the four occupational fields were as follows. In the shopping reception dialog, A1 (“this refrigerator”) (one hand, palm out, pointing to the refrigerator), A2 (“three, four people”) (holding up three or four fingers), A3 (“level 1”) (holding up one finger), A4 (“ice maker”) (two palms facing each other in front of the chest to represent a space), and A5 (“fruit and vegetable storeroom”) (the same as A4) all met the screening requirements. In the home companion dialog, B1 (“three to four spareribs”) (holding up three or four fingers), B3 (“add […] scallions and salt”) (fingers of one hand pinching and shaking), B6 (“one person”) (holding up one finger), and B7 (“chopped into small pieces”) (one hand placed horizontally while the other moves up and down) fulfilled our screening conditions. In the education dialog, C2 (“a line”) (holding up one finger), C3 (“two segments”) (holding up two fingers), C4 (“shorter segment”) (two index fingers facing each other in space), C5 (“longer segment”) (the same as C3 but with longer spacing), and C6 (“this is called”) (palms out, pointing to the screen) satisfied our screening requirements. In the security dialog, D1 (“the water heater”) (palms out, pointing to the water heater), D3 (“2.4 meters”) (holding up two fingers and then four fingers), D4 (“an exhaust fan”) (using the thumb and index finger of both hands to form a circle in space), D5 (“the window”) (pointing to the window), and D6 (“there are…”) (pointing to the sundries) were selected. These gestures are considered relatively common in the fields of interest. Some individuals expressed other information through gestures, such as A6 (“−18 degrees”) (crossing two hands for 10, then holding up one thumb and index finger for 8), A8 (“rapid refrigeration”) (one finger circling quickly in space), A11 (“keeps the meat fresh”) (palms together), B8 (“washing”) (two hands moving up and down relative to each other), and D2 (“the height”) (one hand placed horizontally by the side). However, these gestures did not meet the screening criteria and thus could not be used as a reference for the robot gesture design in the Stage 2 experiment.

For the T2 gestures that were unrelated to the dialog content, F1 (“beat gestures”) (one or two hands shake intermittently) met the extraction criteria. Only one person used F3 (“put up a finger”) (hold a finger in the air and remain still for a long period of time) in observation. Although F2 (“tilting head”) (tilt the head to one side and hold it for a longer period of time) did not meet the criteria, the occurrence in the public group was close to half. The reasons underlying the screening of gestures that occurred at this stage of the study are presented in the Discussion.

Stage 2: Robot Experimentation

We developed the robot gestures used in the experiment based on the results of the preliminary study. Experiments were designed to verify the impacts of the T1 and T2 gestures on perceptions of robots’ personality traits in the four occupational fields.

Participants

The study was approved by the Office of Research and Development, National Taiwan University. The participants were recruited online, and interested persons were invited to the school for assessment. To ensure that the participants could easily complete the experiment, the following inclusion criteria were applied: (1) possesses a basic understanding of social robots (has watched or personally interacted with such a robot) and (2) has no physical or cognitive impairment that could prevent completion of the experiment. Those who met the requirements were asked to sign an informed consent form, and only those who signed took part in the experiment. After the experiment, each participant was rewarded with NT$200. According to our assessment, 3 participants had high interactive experience, 24 medium, and 7 low. Of the 34 questionnaires returned, 6 were invalid and thus excluded from further analysis. A total of 28 valid responses belonging to 13 male and 15 female participants [M = 31.45, standard deviation (SD) = 8.85] were analyzed.

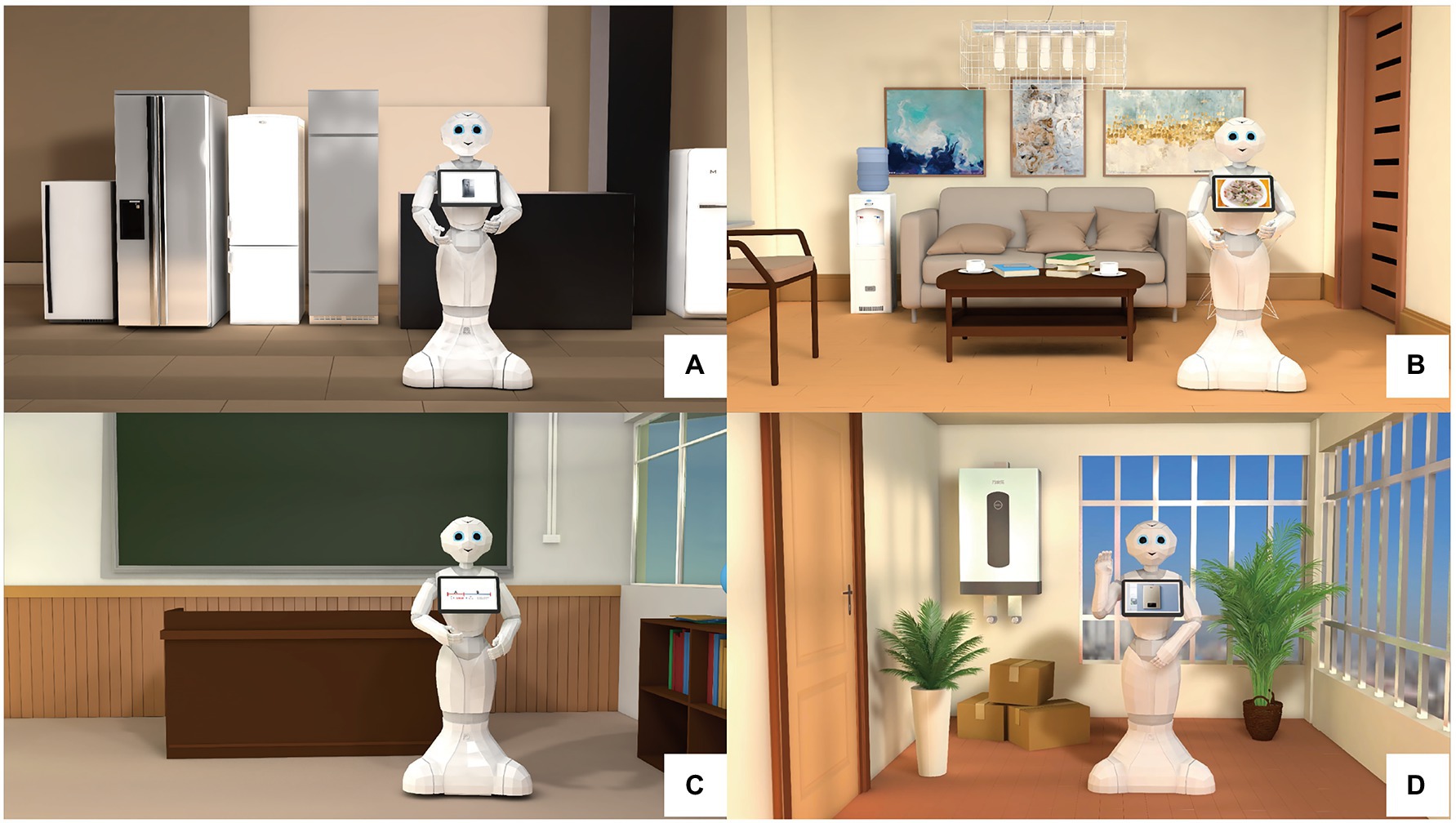

Experimental Design

Because this experiment involved the design of robot gestures, we selected Pepper, a semi-humanoid robot, as the sample model. This is because compared with other common robots (e.g., NAO and Alpha), Pepper has a complete hand structure that is relatively humanlike, enabling the accurate display of various gestures. We employed a video HRI method; that is, we produced robot-centered videos for the experiment. In recent years, this method of using real robots as models in animation has been acknowledged by scholars (Takayama et al., 2011; Walters et al., 2011; van den Brule et al., 2014). In robot-related experiments, computer-based simulations are typically easier to manipulate than are physical prototypes and exhibit greater flexibility (Figure 3; Bartneck et al., 2004).

Figure 3. Video captures from the video HRI. (A) Shopping reception; (B) home companion; (C) education; (D) security.

Each video contained three key design components: the robot’s gestures, sound, and background environment. To keep the study focused on the robots’ gestures and to ensure that the vocal pitch and dialog speed were the same across occupational fields, we eliminated vocal interference by using synthetic speech (iFLYTEK Voice) to provide uniform voice processing. According to the dialog scenarios for the four fields, we introduced background furniture that suited the respective situations. In the gesture observation, two types of gestures were defined: T1 (deictics, iconics, and metaphorics), which occurred when topic-specific information was conveyed, and T2 (beat gestures), which randomly recurred many times. To examine the impact of these two types of gestures on perceptions of the robots’ personality traits, we established three levels of gestures as follows: level 1 (no gestures), level 2 (T1 gestures only), and level 3 (T1 + T2 gestures). The independent variables of the experiment were gesture type (level 1, level 2, and level 3) and application field (shopping reception, education, home companion, and security). Given that three experimental variants corresponded to each of the four occupational scenarios, a total of 12 experimental scenarios were designed. The dependent variables of the experiment were 20 perceived personality adjectives and the overall acceptance of the robot.
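The factorial structure of the design can be enumerated in a few lines. The sketch below is illustrative only: the variable names are hypothetical, and the count of ratings per participant simply multiplies the 12 scenarios by the 20 adjectives plus the single acceptance item named above.

```python
from itertools import product

# Levels of the two independent variables, as described in the text.
GESTURE_LEVELS = ("level 1: no gestures", "level 2: T1 only", "level 3: T1 + T2")
FIELDS = ("shopping reception", "home companion", "education", "security")

N_ADJECTIVES = 20  # perceived personality adjectives
N_ACCEPTANCE = 1   # overall acceptance item

# Full crossing of field and gesture level gives the experimental scenarios.
scenarios = list(product(FIELDS, GESTURE_LEVELS))

# Each scenario is rated on every dependent-variable item.
ratings_per_participant = len(scenarios) * (N_ADJECTIVES + N_ACCEPTANCE)

for field, level in scenarios:
    print(f"{field:20s} | {level}")
print(len(scenarios), "scenarios,", ratings_per_participant, "ratings per participant")
```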

Experimental Steps

The experiment was set up in our laboratory. In accordance with the video HRI approach, the participant interacted with the robot in the video. Participants were asked to carry out the scripted tasks for the different applications, and the robot’s responses were controlled as per the Wizard of Oz experimental method (Dahlbäck et al., 1993). In this method, the robot is not fully automatic and instead relies on control by research staff. In HRI research, some scholars use this method to control and simulate a robot’s behavior when the robot is not sufficiently autonomous or intelligent (Riek, 2012; Marge et al., 2017). In our experiment, whenever the user finished speaking, a staff member used another computer to make the robot on the screen answer. After each animation interaction, the participants were required to complete a questionnaire before interacting with the next animation. The play order of the experimental animations and the order of the questionnaire items were randomized to prevent any bias in the experimental results. Each video interaction lasted no more than 2 min, after which the participant had 2 min to fill out the questionnaire; a 10-min break was provided in the middle of the experiment. The whole experiment therefore took approximately 1 h (12 × (2 + 2) + 10 = 58 min).
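The randomized play order and the session length can be sketched as follows. This is a minimal illustration, not the procedure actually used: seeding the shuffle per participant is an assumption made here for reproducibility, and the function names are hypothetical.

```python
import random

N_VIDEOS = 12          # 3 gesture levels x 4 occupational fields
VIDEO_MIN = 2          # upper bound on each video interaction, in minutes
QUESTIONNAIRE_MIN = 2  # time allowed for each questionnaire, in minutes
BREAK_MIN = 10         # single break in the middle of the experiment


def play_order(participant_seed):
    """Return a shuffled order of video indices (1..12) for one participant."""
    rng = random.Random(participant_seed)  # assumption: one seed per participant
    order = list(range(1, N_VIDEOS + 1))
    rng.shuffle(order)
    return order


def session_minutes():
    """Total session length: every video plus its questionnaire, plus the break."""
    return N_VIDEOS * (VIDEO_MIN + QUESTIONNAIRE_MIN) + BREAK_MIN


print(play_order(participant_seed=1))
print(session_minutes(), "minutes")
```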

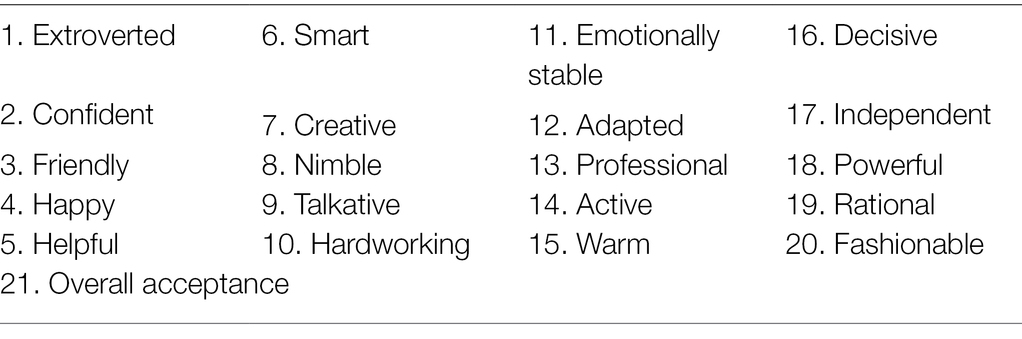

Questionnaire

In HRI research, the Godspeed questionnaires are an established evaluation method (Bartneck et al., 2009). However, some of their items do not evaluate perceived personality. For example, Machinelike–Humanlike in Anthropomorphism and Mechanical–Organic in Animacy are better suited to evaluating physical properties. Therefore, following the method of Hwang et al. (2013), we selected adjectives describing perceived personality traits. On the basis of the literature, we compiled an initial list of relevant adjectives describing perceptions of the robots’ personality traits (Norman, 1963; Barrick and Mount, 1991; Eysenck, 1991; Cattell and Mead, 2008; Kim et al., 2008; Tapus et al., 2008; Hwang et al., 2013). After expert discussion, adjectives with high similarity were merged, and finally, 20 items were generated (Table 2). To capture the user’s overall evaluation of the robot, an Acceptance item was added, defined as “I like this robot and am willing to accept its services.” All 21 items were used to evaluate the 12 (3 × 4) experimental samples. Participants were required to score all 21 items after each interactive video. The questionnaire used a five-point Likert scale, on which a score of 5 indicates that the robot fits the description of the item and a score of 1 indicates that it does not.

Results

Factor Analysis

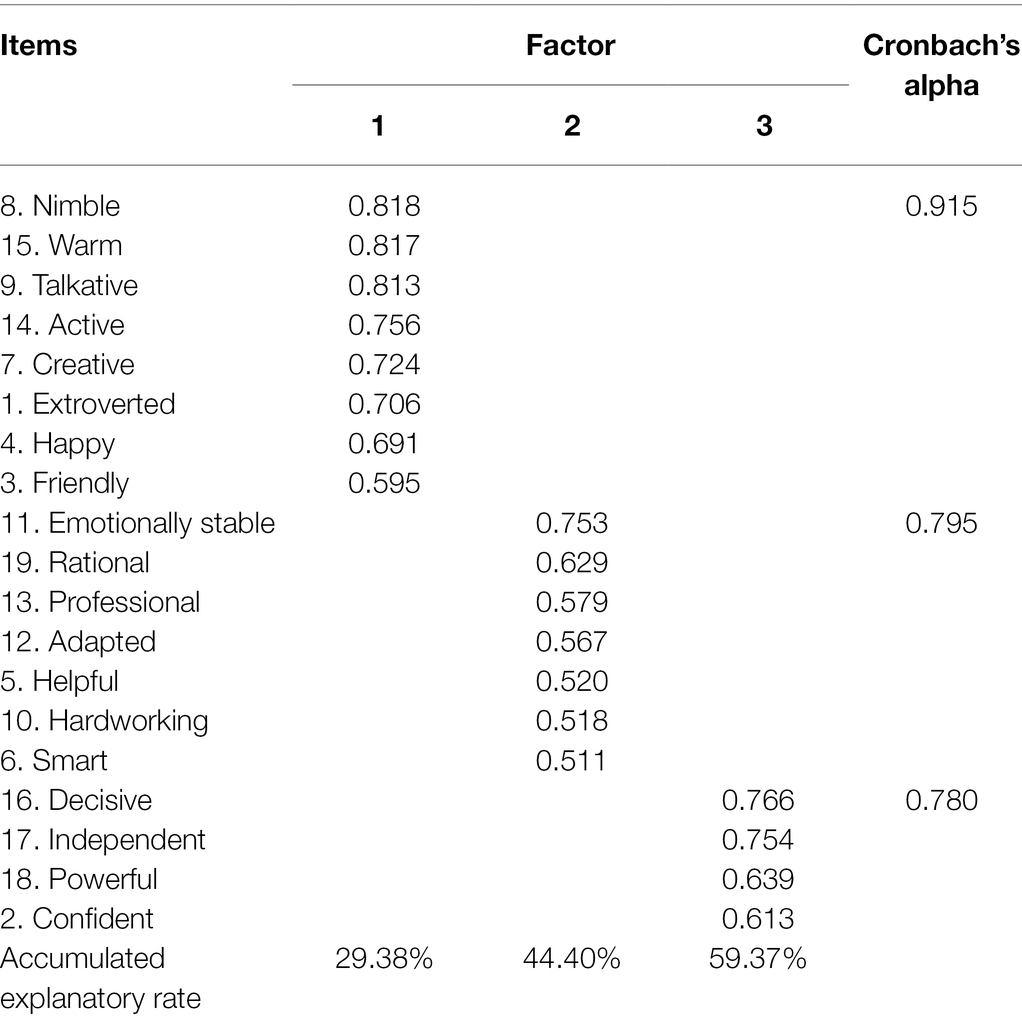

To assess the influence of gestures on user perceptions, we conducted factor analysis to reduce the dimensionality of the data (20 adjectives). The first factor analysis (Kaiser–Meyer–Olkin value = 0.937, p < 0.001) revealed that the loadings of “20 Fashionable” were lower than 0.5 on all three dimensions; hence, it could not be classified and was eliminated. The remaining 19 words were subjected to a second factor analysis (Kaiser–Meyer–Olkin value = 0.937, p < 0.001). These words were divided into three dimensions, as detailed in Table 3. According to the reliability analysis, the Cronbach’s alpha values of F1, F2, and F3 were 0.915, 0.795, and 0.780, respectively.
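For readers unfamiliar with the reliability statistic reported above, the following sketch computes Cronbach's alpha with the standard formula, alpha = k / (k − 1) × (1 − sum of item variances / variance of total scores). The response matrix is hypothetical and unrelated to the study data; the function name is also an assumption made for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows, one row of item scores per respondent."""
    k = len(responses[0])  # number of items in the scale
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical five-point Likert responses: 5 respondents x 3 items.
example = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 4],
    [2, 3, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(example), 3))
```

Values closer to 1 indicate that the items of a factor vary together, which is the sense in which the three factors above are described as reliable.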

Factor analysis revealed that “8 Nimble,” “15 Warm,” “9 Talkative,” “14 Active,” “7 Creative,” “1 Extroverted,” “4 Happy,” and “3 Friendly” were contained within F1. These words were likely to be used to describe the robot’s attitude toward the outside world (i.e., the robot’s sociality). Thus, we termed F1 the social factor. F2 included the “11 Emotionally stable,” “19 Rational,” “13 Professional,” “12 Adaptable,” “5 Helpful,” “10 Hardworking,” and “6 Smart,” items. These words tended to be used to express robots’ ability to work or perform tasks. Thus, F2 was named the competence factor. F3 comprised the items “16 Decisive,” “17 Independent,” “18 Powerful,” and “2 Confident,” which tended to be employed to describe status in an interaction; therefore, we named F3 the status factor. Our identification of status in addition to the two well-known dimensions of sociality and competence is notable. This is further explained in the Discussion.

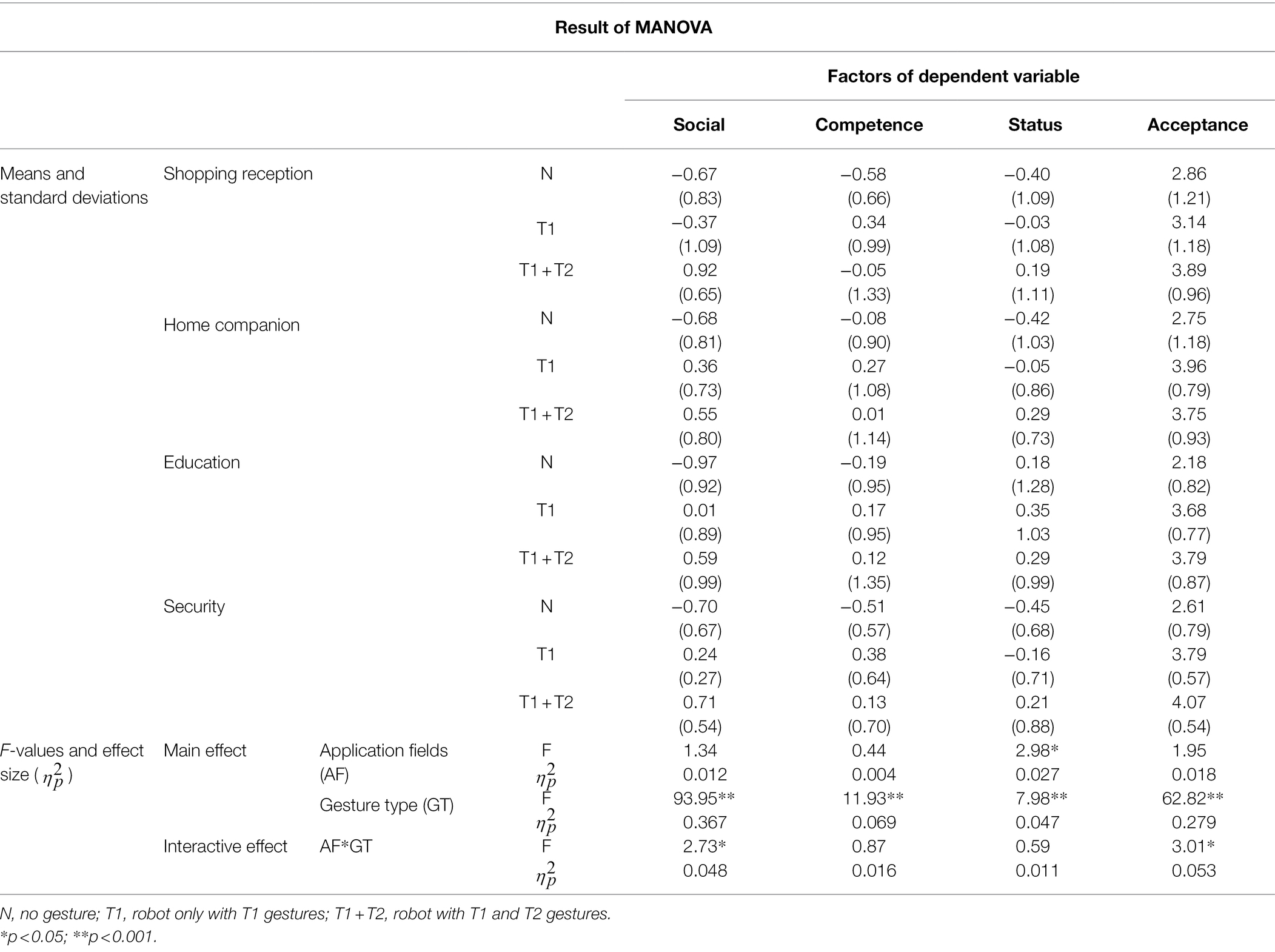

Multivariate Analysis of Variance

The independent variables, namely, gesture type (none, T1, or T1 + T2) and occupational field (shopping reception, home companion, education, and security), were subjected to a 3 × 4 multivariate analysis of variance. Dependent variables consisted of F1 (social), F2 (competence), F3 (status), and overall acceptance.

Main Effect

The multivariate analysis of variance results (Table 4) suggest that occupational field exerted a significant effect only on F3 (status), F (3, 6) = 2.98, p < 0.05. No significant difference was observed among shopping reception (M = −0.08, SD = 1.11), home companion (M = −0.06, SD = 0.92), and security (M = −0.13, SD = 0.80), indicating that these three fields had relatively similar results for the status factor (F3). The education score (M = 0.27, SD = 1.10) was significantly higher than those of the other three fields. Thus, the sense of status that the education robot conveyed in the interaction was notably greater than that of the other three robots.

Gesture type significantly affected F1 (social), F (2, 6) = 93.95, p < 0.001; F2 (competence), F (2, 6) = 11.93, p < 0.001; F3 (status), F (2, 6) = 7.98, p < 0.001; and acceptance, F (2, 6) = 62.82, p < 0.001. Regarding the social factor (F1), the no gestures category (M = −0.76, SD = 0.81) scored significantly lower than the T1 category (M = 0.06, SD = 0.83) and the T1 + T2 category (M = 0.69, SD = 0.77), and T1 also scored significantly lower than T1 + T2. This suggests that regardless of which type of gesture was added, the robot’s perceived sociality increased, and the robot appeared more outgoing. As for the competence factor (F2), the no gestures category (M = −0.34, SD = 0.80) was associated with significantly lower scores than was the T1 category (M = 0.29, SD = 0.92). This demonstrates that the addition of T1 gestures can improve perceptions of the robot’s competence. However, T1 and T1 + T2 (M = 0.05, SD = 1.15) exhibited no significant difference [M(I-J) = 0.24, SD = 0.13, p > 0.05, where M(I-J) is the difference between the two category means, Mean(T1) (I) minus Mean(T1 + T2) (J)]. This demonstrates that adding T2 gestures to T1 gestures does not further improve perceived competence. Regarding the status factor (F3), the no gestures category (M = −0.27, SD = 1.06) scored significantly lower than T1 (M = 0.03, SD = 0.94) and T1 + T2 (M = 0.25, SD = 0.93). However, no significant difference was observed between T1 and T1 + T2 [M(I-J) = −0.22, SD = 0.13, p > 0.05]. This indicates that the introduction of T1 gestures enhances perceptions of the robot’s status but that the T2 gestures exert no such effect. Acceptance exhibited the same pattern, with the no gestures category (M = 2.60, SD = 1.04) associated with significantly lower scores than the T1 category (M = 3.64, SD = 0.90) and the T1 + T2 category (M = 3.88, SD = 0.84). No significant difference between T1 and T1 + T2 was noted.
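The M(I-J) values quoted above can be reproduced directly from the reported category means, which serves as a quick consistency check. The sketch below uses only the means given in the text; the dictionary layout and function name are hypothetical.

```python
# Category means reported in the text for the two comparisons that quote M(I-J).
reported_means = {
    "F2 competence": {"T1": 0.29, "T1 + T2": 0.05},
    "F3 status": {"T1": 0.03, "T1 + T2": 0.25},
}


def mean_difference(mean_i, mean_j):
    """M(I-J): mean of category I minus mean of category J, to two decimals."""
    return round(mean_i - mean_j, 2)


differences = {
    factor: mean_difference(means["T1"], means["T1 + T2"])
    for factor, means in reported_means.items()
}
print(differences)
```

The sign of each difference shows the direction of the comparison: T1 scored higher than T1 + T2 on competence and lower on status, although neither difference was significant.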

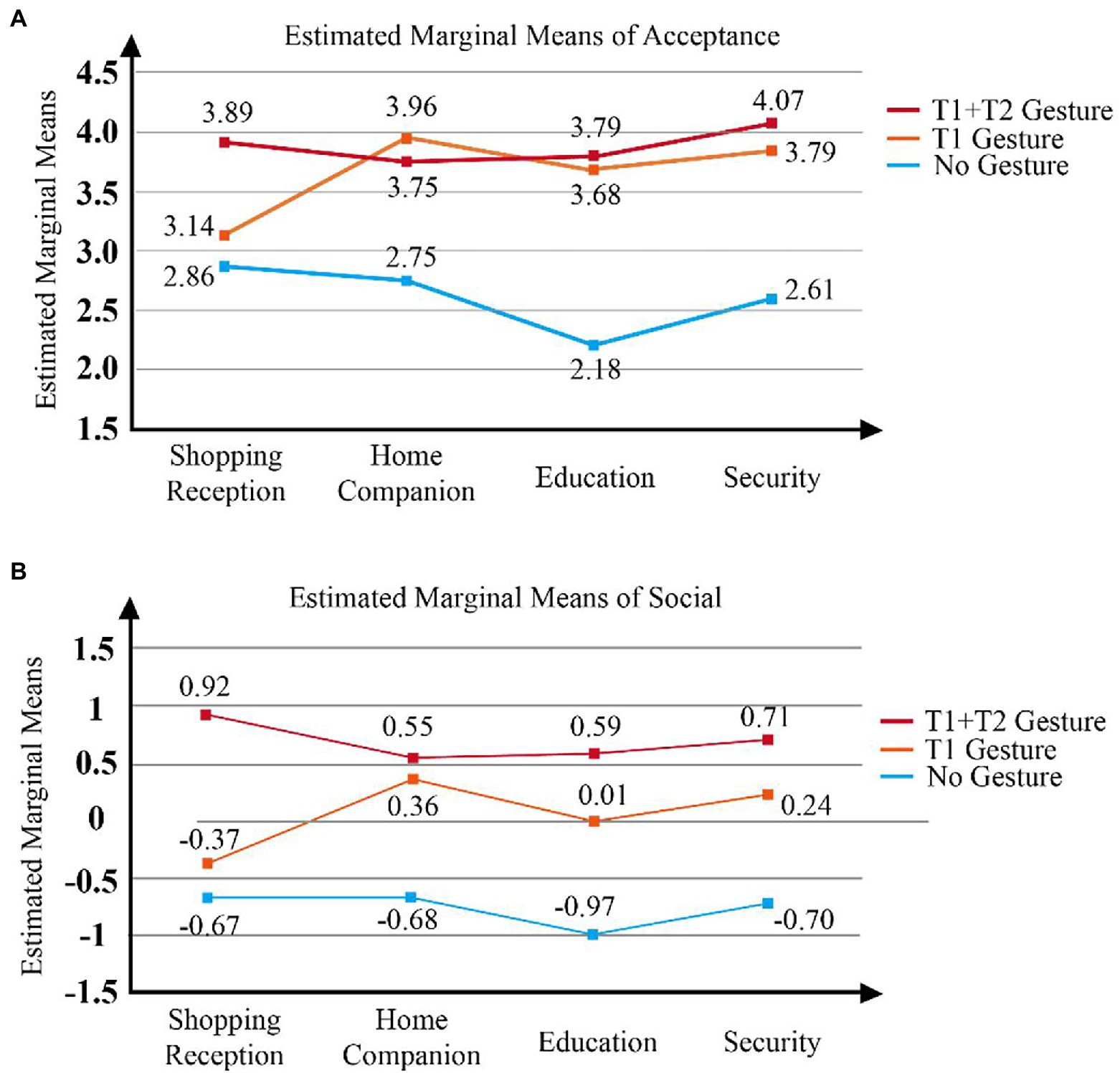

Interactive Effect

The results indicate a significant interaction between gesture type and occupational field for both F1, F (6, 330) = 2.73, p < 0.05, and acceptance, F (6, 330) = 3.01, p < 0.05. Figure 4 shows the interactive effect of gesture type and application field. The interaction plots reveal differences in the effects of gestures across the four application fields, which means that robot gestures should be designed differently depending on the application.

Figure 4. Interactive effect. (A) Estimated Marginal Means of Acceptance; (B) Estimated Marginal Means of Social.

The F1 results suggest no significant difference between the no gestures category (M = −0.67, SD = 0.83) and the T1 gesture category (M = −0.37, SD = 1.09) for the shopping reception robot. However, T1 + T2 (M = 0.92, SD = 0.65) substantially outperformed these two categories. This implies that in the shopping reception field, the addition of T1 gestures does not improve the robot’s sociality, but the introduction of T2 gestures significantly improves the sociality factor (F1). In the home companion context, by contrast, no significant difference between T1 (M = 0.36, SD = 0.73) and T1 + T2 (M = 0.55, SD = 0.80) was noted in terms of F1, but both significantly outperformed the no gestures category (M = −0.68, SD = 0.81). This suggests that adding T2 gestures to T1 gestures is less effective at promoting sociality in home companion applications than in shopping reception applications, whereas T1 gestures on their own augmented the robot’s perceived sociality. In the education context, the no gestures category (M = −0.97, SD = 0.92) registered a significantly lower F1 score than did the other two gesture categories, and the T1 category (M = 0.01, SD = 0.89) had a significantly lower score than the T1 + T2 category (M = 0.59, SD = 0.99). In the security field, the T1 + T2 score (M = 0.71, SD = 0.54) was likewise significantly higher than the other two, and the T1 score (M = 0.24, SD = 0.27) was higher than that of the no gestures category (M = −0.70, SD = 0.67). These results indicate that with regard to education and security, T1 and T2 gestures can both significantly improve robots’ perceived sociality. This also demonstrates the differences associated with applications in distinct occupational fields.

In terms of overall acceptance, in the shopping reception application, no significant difference was noted between the no gestures category (M = 2.86, SD = 1.21) and the T1 gestures category (M = 3.14, SD = 1.18), whereas T1 + T2 (M = 3.89, SD = 0.96) significantly outperformed both groups because T2 gestures considerably improve sociality and therefore acceptance. In the home companion context, by contrast, the no gestures category (M = 2.75, SD = 1.18) scored significantly lower than both T1 (M = 3.96, SD = 0.79) and T1 + T2 (M = 3.75, SD = 0.93), which did not differ significantly from each other. In the education context, the no gestures category (M = 2.18, SD = 0.82) also scored significantly lower than the T1 category (M = 3.68, SD = 0.77) and the T1 + T2 category (M = 3.79, SD = 0.87), and T1 did not differ significantly from T1 + T2. The security context followed the same pattern as education and home companion: the no gestures score (M = 2.61, SD = 0.79) was significantly lower than the other two, and the T1 category (M = 3.79, SD = 0.57) and the T1 + T2 category (M = 4.07, SD = 0.54) did not differ significantly. In sum, although the addition of T2 gestures improves the sociality of the robot in the education and security contexts, overall acceptance does not necessarily improve. This suggests that in home companion, education, and security applications, T1 gestures are more essential, whereas T2 gestures are more integral to shopping reception scenarios.
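To make the pattern in the acceptance scores easier to see, the following Python sketch tabulates the reported means and computes the raw change in mean acceptance at each step (no gestures to T1, then T1 to T1 + T2). The dictionary layout and the function name are illustrative assumptions. Raw differences in means do not by themselves indicate statistical significance, which rests on the tests reported above.

```python
# Acceptance means (M) as reported in the text, per field and gesture condition.
ACCEPTANCE_MEANS = {
    "shopping reception": {"none": 2.86, "T1": 3.14, "T1+T2": 3.89},
    "home companion": {"none": 2.75, "T1": 3.96, "T1+T2": 3.75},
    "education": {"none": 2.18, "T1": 3.68, "T1+T2": 3.79},
    "security": {"none": 2.61, "T1": 3.79, "T1+T2": 4.07},
}


def mean_gains(means):
    """Return (gain from adding T1, further gain from adding T2) per field."""
    gains = {}
    for field, m in means.items():
        t1_gain = round(m["T1"] - m["none"], 2)
        t2_gain = round(m["T1+T2"] - m["T1"], 2)
        gains[field] = (t1_gain, t2_gain)
    return gains


for field, (t1_gain, t2_gain) in mean_gains(ACCEPTANCE_MEANS).items():
    print(f"{field}: T1 {t1_gain:+.2f}, T2 {t2_gain:+.2f}")
```

In this tabulation, shopping reception is the only field where the step from T1 to T1 + T2 is larger than the step from no gestures to T1, which is consistent with the interpretation given in the text.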

Discussion

The purpose of this study was to explore the influence of robot gesture type on perceived personality traits in real applications and to provide gesture design guidance for the four applications. The main findings are as follows: (1) we summarized the occurrence of the four types of gestures in real industry interactions; (2) the results show that the perceived personality traits of robots can be divided into three dimensions, and we explored the influence of different gesture types on these three dimensions; and (3) we proposed optimal robot gesture design guidelines for the four applications. These findings are discussed in turn below.

First, the observation results indicated that deictic and simple iconic gestures occur frequently in the four applications. For example, most subjects produced deictic gestures for phrases such as “this refrigerator,” “this is called…,” and “the window,” and simple iconic gestures for phrases such as “level 1,” “3–4 spareribs,” and “2.4 meters.” This is because these two types of gesture can be performed quickly and easily with one hand. However, more complex quantities such as “−18°C” and “1.618 times” require the cooperation of both hands. This also shows that not all iconic gestures need to appear in robot design. In addition to specific numbers, iconic gestures are also used to express specific things, such as “ice making box,” “add...scallions and salt,” “chop into pieces,” and “an exhaust fan.” This type also occurs frequently in conversation and is usually used to describe specific objects or daily behaviors. The interval between gestures is also important: because “chop into pieces” and “washing” are very close in context, most of the participants selected the earlier-occurring phrase “chop into pieces” to avoid presenting both within a short time interval. This indicates that adequate time intervals between robot gestures should be ensured. Metaphoric gestures, however, occurred only a few times, for phrases such as “rapid refrigeration capacity,” “space allocation,” “nutritious and light,” “aesthetic feeling,” and “fire and gas poisoning.” Most of the expected metaphoric gestures did not reach the screening threshold and were therefore beyond the scope of this study. This is because, compared with iconic and deictic gestures, metaphoric gestures are subconscious and conceptual, and they cannot be expressed as intuitively and quickly as the other two. People prefer to express concrete objects rather than abstract concepts in these four applications. Nevertheless, metaphoric gestures play a key role when one individual describes an unfamiliar concept to another. In the future, we will compare professional and informal narrative content and further explore how to integrate metaphoric gestures into robot designs used in various fields.

In addition, in the video, we observed that the expert and public groups frequently displayed beat gestures in all three occupational contexts, suggesting that beat gestures are common in narrative communication. We also noted that although head tilting did not appear as frequently as hand gestures (thus failing to meet our screening requirements), this behavior occurred often in the public group (nearly 50%). In the phenomenon of movement synchrony in human interaction, when one person unconsciously moves their head, the other tends to imitate that movement (Kendon, 1970). In the present observation, the observer likely tilted their head to indicate that they were paying attention to the participants, and the participants then subconsciously mirrored that movement. Scholars have reported that when a robot tilts its head frequently, its behavior also appears significantly more natural (Liu et al., 2012). Regarding the unconscious behavior of raising a finger, only one participant made this gesture; we believe that this behavior is a personal habit of that participant.

Second, perceptions of robots’ personality traits can be divided into three categories. In our previous studies, sociality and competence were considered the key categories (Dou et al., 2020a,b, 2021). However, in the present study, the additional analysis of gestures led to the discovery of a third category, status. This demonstrates that gestures and voice affect perceptions of robots’ personalities differently. The reason is that deictic and iconic gestures enhance perceptions of robots’ characteristics such as self-confidence, status, and decisiveness, thereby strengthening their perceived status. Because no metaphoric gestures were included in the experiment, the effect of this type cannot be discussed. For sociality, increases in the frequency, speed, and amplitude of any type of gesture reinforce perceptions of the robot’s extroversion (Kim et al., 2008). For competence, the addition of deictic and iconic gestures improved perceived competence, whereas the addition of beat gestures produced no such effect. This is because iconic and deictic gestures are closely integrated with words and have specific functions. Such gestures help explain and supplement verbal expression, thus making the robot appear more competent.

Third, the best design guidelines for the four applications are as follows. In the shopping reception application, the optimal design is a combination of iconic, deictic, and beat gestures. Because iconic and deictic gestures alone do not exert a particularly favorable effect, beat gestures must be added to significantly improve sociality and user acceptance. This is attributable to the fact that beat gestures can significantly increase the sense of warmth, liveliness, and joy associated with a robot. These characteristics are critical for user acceptance of robots in shopping reception. Thus, for such robots, enhancing sociality through the programming of beat gestures should constitute the key design focus. In the home companion, education, and security contexts, the design recommendation is to focus on introducing iconic and deictic gestures and to avoid adding an excessive number of beat gestures. This is because for these three occupational fields, the competence and status conveyed by iconic and deictic gestures are more essential. This is reflected in the fact that although the addition of beat gestures reinforces sociality in the education and security contexts, acceptance does not tend to improve accordingly; in other words, sociality is less important than status and competence for educational and security robots. In the home companion context, beat gestures improved neither the robot’s sociality nor its acceptance. This may be because people are inclined to view these three fields as relatively quiet or serious, requiring only minimal sociality and little overt enthusiasm. Instead, robots are required to be highly competent at communicating or performing tasks. From a cost perspective, redundant gestures are a waste of funds. Hence, the industry should include or omit beat gestures in robot designs according to their field-specific importance.
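One way a designer might carry these recommendations into a gesture-planning tool is a simple lookup from occupational field to the gesture types to emphasize. The Python sketch below is a hypothetical encoding, not an artifact of the study: the names are invented for illustration, and the yes/no treatment of beat gestures is a simplification, since the guideline for home companion, education, and security robots is to avoid an excessive number of beat gestures rather than to exclude them entirely.

```python
# Gesture types discussed in the study.
DEICTIC, ICONIC, BEAT = "deictic", "iconic", "beat"

# Hypothetical encoding of the guidelines: gesture types to emphasize per field.
GESTURE_GUIDELINES = {
    "shopping reception": {DEICTIC, ICONIC, BEAT},
    "home companion": {DEICTIC, ICONIC},
    "education": {DEICTIC, ICONIC},
    "security": {DEICTIC, ICONIC},
}


def recommended_gestures(field: str) -> set:
    """Return a copy of the gesture types emphasized for the given field."""
    try:
        return set(GESTURE_GUIDELINES[field])
    except KeyError:
        raise ValueError(f"no guideline for field: {field!r}") from None


def should_use(field: str, gesture_type: str) -> bool:
    """Check whether a gesture type is emphasized for the given field."""
    return gesture_type in recommended_gestures(field)


print(sorted(recommended_gestures("shopping reception")))
print(should_use("education", BEAT))
```

Keeping the mapping as data rather than branching logic makes it easy to add a field or to replace the binary choice with a weighting once finer-grained evidence is available.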

Moreover, the occupational field determines the status factor of the robot. The status required in education contexts is considerably higher than that required in shopping reception, home companion, and security applications. This perception derives from social stereotypes; sales assistants, caregivers, housekeepers, and guards are typically seen as being in supportive and passive roles, whereas teachers are usually regarded as leaders. Therefore, an image of status is required, demonstrating once more that the inclusion of iconic and deictic gestures is integral to the design of robots deployed in the education field.

The contribution of this study lies in conducting research in combination with specific application fields (shopping reception, home companion, education, and security). Compared with research that discusses only the two parties in human–robot interaction, this study is more in line with actual application situations. The study of the perceived personality traits of robots also identified the three dimensions of “social,” “competence,” and “status,” which helps in understanding and designing the perceptual value of robots in more detail. Regarding robot gestures, the study analyzed the differences among deictic, iconic, metaphoric, and beat gestures in the four applications. Finally, guidelines for optimized gesture design in each application were provided to facilitate more customized robot design in the future.

Conclusion

In this study, we mainly discussed the effects of robot gestures on perceived personality traits in different applications. In the first stage (observation), we found that metaphoric gestures appear very rarely in real industry interactions, in which deictic, iconic, and beat gestures predominate. Therefore, in the second stage of the study, we explored the effect of these three types of gestures on the robot’s perceived personality traits. The results indicated that the perceived personalities of robots can be divided into three factors; iconic and deictic gestures significantly improved perceived competence and status, and all gestures significantly improved perceived sociality. Regarding gesture design for robots in the four applications, adding beat gestures to shopping reception robots can significantly improve overall user acceptance. In the other three applications, however, the addition of deictic and iconic gestures improved overall acceptance, whereas beat gestures did not. This reveals that the application field leads to differences in robot gesture design. The study also provides a direction for future robotics research, namely to comprehensively compare various applications in order to propose differentiated robot design guidance.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Research Ethics Office of National Taiwan University. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

JN worked on the investigation, conducted the experiment, analyzed the data, and wrote the article. C-FW guided and supervised the study and submitted the article. XD assisted in the investigation, the experiment, and the data analysis. K-CL assisted in the investigation and the experiment. All authors agree to be accountable for the content of the work, contributed to the article, and approved the submitted version.

Funding

This study was funded by the Ministry of Science and Technology, Taiwan (107-2221-E-036-014-MY3).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors are very grateful for the funding provided by the Ministry of Science and Technology, Taiwan. Tatung University also offered an excellent platform for our study. We thank all the teachers, students, and participants who took part.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.876972/full#supplementary-material

References

Aaltonen, I., Arvola, A., Heikkilä, P., and Lammi, H. (2017). “Hello Pepper, may I Tickle you? Children's and adults' Responses to an Entertainment robot at a Shopping mall.” in Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction ; March 06, 2017; New York, USA.

Argyle, M. (1972). “Non-verbal communication in human social interaction,” in Non-Verbal Communication. ed. R. A. Hinde (Cambridge: Cambridge University Press).

Baker, L. J. (2006). Observation: A complex research method. Libr. Trends 55, 171–189. doi: 10.1353/lib.2006.0045

Baraka, K., Paiva, A., and Veloso, M. (2015). “Expressive lights for revealing mobile service robot state.” in Robot 2015: Second Iberian Robotics Conference ; December 02, 2015; Lisbon, Portugal.

Baraka, K., Rosenthal, S., and Veloso, M. (2016). “Enhancing human understanding of a mobile robot's state and actions using expressive lights.” in 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) ; August 26-31, 2016; New York, USA.

Baraka, K., and Veloso, M. M. (2018). Mobile service robot state revealing through expressive lights: formalism, design, and evaluation. Int. J. Soc. Rob. 10, 65–92. doi: 10.1007/s12369-017-0431-x

Barrick, M. R., and Mount, M. K. (1991). The big five personality dimensions and job performance: a meta-analysis. Pers. Psychol. 44, 1–26. doi: 10.1111/j.1744-6570.1991.tb00688.x

Barth, J. M., Guadagno, R. E., Rice, L., Eno, C. A., and Minney, J. A. (2015). Untangling life goals and occupational stereotypes in men’s and women’s career interest. Sex Roles 73, 502–518. doi: 10.1007/s11199-015-0537-2

Bartneck, C., Kulić, D., Croft, E., and Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 1, 71–81. doi: 10.1007/s12369-008-0001-3

Bartneck, C., Reichenbach, J., and Breemen, V. A. (2004). “In your face, robot! The influence of a character’s embodiment on how users perceive its emotional expressions.” in Proceedings of the Design and Emotion Conference ; July 12-14, 2004; Ankara, Turkey, 19.

Benitti, F. B. V., and Spolaôr, N. (2017). How Have Robots Supported STEM Teaching? In Robotics in STEM Education. Springer, Cham, 103–129.

Bertacchini, F., Bilotta, E., and Pantano, P. (2017). Shopping with a robotic companion. Comput. Hum. Behav. 77, 382–395. doi: 10.1016/j.chb.2017.02.064

Breazeal, C., Kidd, C. D., Thomaz, A. L., Hoffman, G., and Berlin, M. (2005). “Effects of nonverbal communication on efficiency and robustness in human-robot teamwork.” in 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems; August 2-6, 2005; Edmonton, AB, Canada.

Broadbent, E., Feerst, D. A., Lee, S. H., Robinson, H., Albo-Canals, J., Ahn, H. S., et al. (2018). How could companion robots be useful in rural schools? Int. J. Soc. Rob. 10, 295–307. doi: 10.1007/s12369-017-0460-5

Cattell, H. E., and Mead, A. D. (2008). “The sixteen personality factor questionnaire (16PF), Personality measurement and testing,” in The SAGE Handbook of Personality Theory and Assessment. Vol. 2. eds. G. J. Boyle, G. Matthews, and D. H. Saklofske (Sage Publications, Inc.), 135–159.

Ceci, S. J., Williams, W. M., and Barnett, S. M. (2009). Women's underrepresentation in science: sociocultural and biological considerations. Psychol. Bull. 135, 218–261. doi: 10.1037/a0014412

Chang, R. C. S., Lu, H. P., and Yang, P. J. (2018). Stereotypes or golden rules? Exploring likable voice traits of social robots as active aging companions for tech-savvy baby boomers in Taiwan. Comput. Hum. Behav. 84, 194–210. doi: 10.1016/j.chb.2018.02.025

Cheng, Y. W., Sun, P. C., and Chen, N. S. (2018). The essential applications of educational robot: requirement analysis from the perspectives of experts, researchers and instructors. Comput. Educ. 126, 399–416. doi: 10.1016/j.compedu.2018.07.020

Chidambaram, V., Chiang, Y. H., and Mutlu, B. (2012). “Designing Persuasive Robots: How Robots Might Persuade People using vocal and Nonverbal cues.” in Proceedings Of The Seventh Annual Acm/Ieee International Conference On Human-Robot Interaction; March 05, 2012; Boston MA, USA.

Collins, E. C., Prescott, T. J., and Mitchinson, B. (2015). “Saying it with light: a pilot study of affective communication using the MIRO robot.” in Conference on Biomimetic and Biohybrid Systems; July 24, 2015; Barcelona, Spain.

Dahlbäck, N., Jönsson, A., and Ahrenberg, L. J. (1993). Wizard of Oz studies—why and how. Knowl. Based Syst. 6, 258–266. doi: 10.1016/0950-7051(93)90017-N

De Carolis, B., D’Errico, F., Macchiarulo, N., and Palestra, G. (2021). “Socially inclusive robots: learning culture-related gestures by playing with pepper,” in proceedings of the ACM IUI 2021 Workshops; April 13-17, 2021; College Station, USA.

Dou, X., Wu, C. F., Lin, K. C., Gan, S., and Tseng, T. M. (2020a). Effects of different types of social robot voices on affective evaluations in different application fields. Int. J. Soc. Rob. 13, 615–628. doi: 10.1007/s12369-020-00654-9

Dou, X., Wu, C. F., Wang, X., and Niu, J. (2020b). “User expectations of social robots in different applications: an online user study.” in International Conference on Human–Computer Interaction; October 17, 2020; Copenhagen, Denmark.

Dou, X., Wu, C. F., Niu, J., and Pan, K. R. (2021). Effect of voice type and head-light color in social robots for different applications. Int. J. Soc. Rob. 14, 229–244. doi: 10.1007/s12369-021-00782-w

Eagly, A. H., Wood, W., and Diekman, A. B. (2000). Social role theory of sex differences and similarities: a current appraisal. The Developmental Social Psychology of Gender. eds. T. Eckes and H. M. Trautner (New York: Psychology Press), 174.

Eysenck, H. J. (1991). Dimensions of personality: 16, 5 or 3?—Criteria for a taxonomic paradigm. Pers. Individ. Diff. 12, 773–790. doi: 10.1016/0191-8869(91)90144-Z

Fong, T., Nourbakhsh, I., and Dautenhahn, K. (2003). A survey of socially interactive robots. Rob. Auton. Syst. 42, 143–166. doi: 10.1016/S0921-8890(02)00372-X

Forlizzi, J., and DiSalvo, C. (2006). “Service robots in the domestic environment: a study of the roomba vacuum in the home.” in Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human–Robot Interaction; March 02, 2006; Salt Lake City Utah, USA.

Gullberg, M., and Holmqvist, K. (1999). Keeping an eye on gestures: visual perception of gestures in face-to-face communication. Pragmat. Cogn. 7, 35–63. doi: 10.1075/pc.7.1.04gul

Ham, J., Bokhorst, R., Cuijpers, R., van der Pol, D., and Cabibihan, J. J. (2011). “Making robots persuasive: the influence of combining persuasive strategies (gazing and gestures) by a storytelling robot on its persuasive power.” in International Conference on Social Robotics; November 24-25, 2011; Amsterdam, The Netherlands.

Hammer, S., Lugrin, B., Bogomolov, S., Janowski, K., and André, E. (2016). “Investigating politeness strategies and their persuasiveness for a robotic elderly assistant.” in International Conference on Persuasive Technology; March 11, 2016; Salzburg, Austria.

Hancock, P. A., Billings, D. R., Schaefer, K. E., Chen, J. Y. C., de Visser, E. J., and Parasuraman, R. (2011). A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 53, 517–527. doi: 10.1177/0018720811417254

Hendriks, B., Meerbeek, B., Boess, S., Pauws, S., and Sonneveld, M. H. (2011). Robot vacuum cleaner personality and behavior. Int. J. Soc. Rob. 3, 187–195. doi: 10.1007/s12369-010-0084-5

Hinds, P. J., Roberts, T. L., and Jones, H. J. (2004). Whose job is it anyway? A study of human-robot interaction in a collaborative task. Hum. Comput. Interact. 19, 151–181. doi: 10.1207/s15327051hci1901&2_7

Hsieh, W. F., Sato-Shimokawara, E., and Yamaguchi, T. (2017). “Enhancing the familiarity for humanoid robot pepper by adopting customizable motion.” In IECON 2017-43rd Annual Conference of the IEEE Industrial Electronics Society; October 29-November 1, 2017; (IEEE), 8497–8502.

Huang, C. M., and Mutlu, B. (2013). “Modeling and evaluating narrative gestures for humanlike robots” in Robotics: Science and Systems. Online Proceedings IX; June 24-28, 2013; Technische Universität Berlin, Berlin, Germany, 57–64.

Hwang, J., Park, T., and Hwang, W. J. (2013). The effects of overall robot shape on the emotions invoked in users and the perceived personalities of robot. Appl. Ergonom. 44, 459–471. doi: 10.1016/j.apergo.2012.10.010

Jussim, L. J. (1991). Social perception and social reality: a reflection-construction model. Psychol. Rev. 98, 54–73. doi: 10.1037/0033-295X.98.1.54

Kanda, T., Ishiguro, H., and Ishida, T. (2001). “Psychological analysis on human-robot interaction.” in Proceedings 2001 ICRA. IEEE International Conference on Robotics and Automation (Cat. No. 01CH37164); May 21-26, 2001; Seoul, Korea (South).

Kendon, A. (1970). Movement coordination in social interaction: some examples described. Acta Psychol. 32, 101–125. doi: 10.1016/0001-6918(70)90094-6

Kendon, A. (1988). “How gestures can become like words,” in Cross-Cultural Perspectives in Nonverbal Communication. ed. F. Poyatos (Chicago: Hogrefe & Huber Publishers), 131–141.

Kim, H. H., Lee, H. E., Kim, Y. H., Park, K. H., and Bien, Z. Z. (2007). “Automatic generation of conversational robot gestures for human-friendly steward robot.” In RO-MAN 2007-The 16th IEEE International Symposium on Robot and Human Interactive Communication; August 26-29, 2007; IEEE, 1155–1160.

Kim, H., Kwak, S. S., and Kim, M. (2008). “Personality design of sociable robots by control of gesture design factors.” in RO-MAN 2008-The 17th IEEE International Symposium on Robot and Human Interactive Communication; August 1-3, 2008; Munich.

Kim, Y., Kwak, S. S., and Kim, M. (2013). Am I acceptable to you? Effect of a robot’s verbal language forms on people’s social distance from robots. Comput. Hum. Behav. 29, 1091–1101. doi: 10.1016/j.chb.2012.10.001

Kirby, R., Forlizzi, J., and Simmons, R. (2010). Affective social robots. Rob. Auton. Syst. 58, 322–332. doi: 10.1016/j.robot.2009.09.015

Koceski, S., and Koceska, N. (2016). Evaluation of an assistive telepresence robot for elderly healthcare. J. Med. Syst. 40:121. doi: 10.1007/s10916-016-0481-x

Lee, J. J., Knox, B., and Breazeal, C. (2013). “Modeling the dynamics of nonverbal behavior on interpersonal trust for human-robot interactions.” in 2013 AAAI Spring Symposium Series; March 15, 2013; San Francisco, USA.

Lee, K. M., Jung, Y., Kim, J., and Kim, S. R. (2006). Are physically embodied social agents better than disembodied social agents? The effects of physical embodiment, tactile interaction, and people's loneliness in human–robot interaction. Int. J. Hum. Comput. Stud. 64, 962–973. doi: 10.1016/j.ijhcs.2006.05.002

Li, D., Rau, P. P., and Li, Y. J. (2010). A cross-cultural study: effect of robot appearance and task. Int. J. Soc. Rob. 2, 175–186. doi: 10.1007/s12369-010-0056-9

Liu, C., Ishi, C. T., Ishiguro, H., and Hagita, N. (2012). “Generation of nodding, head tilting and eye gazing for human-robot dialogue interaction.” in 2012 7th ACM/IEEE International Conference on Human-Robot Interaction (HRI); March 5-8, 2012; Boston, MA, USA (IEEE), 285–292.

Lohse, M., Rothuis, R., Gallego-Pérez, J., Karreman, D. E., and Evers, V. (2014). “Robot gestures make difficult tasks easier: the impact of gestures on perceived workload and task performance.” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; April 26, 2014; New York, USA.

Looije, R., Neerincx, M. A., and Cnossen, F. J. (2010). Persuasive robotic assistant for health self-management of older adults: design and evaluation of social behaviors. Int. J. Hum. Comput. Stud. 68, 386–397. doi: 10.1016/j.ijhcs.2009.08.007

Louie, W. Y. G., McColl, D., and Nejat, G. (2014). Acceptance and attitudes toward a human-like socially assistive robot by older adults. Assist. Technol. 26, 140–150. doi: 10.1080/10400435.2013.869703

Maricchiolo, F., Gnisci, A., Bonaiuto, M., and Ficca, G. (2009). Effects of different types of hand gestures in persuasive speech on receivers’ evaluations. Lang. Cogn. Process. 24, 239–266. doi: 10.1080/01690960802159929

Marge, M., Bonial, C., Byrne, B., Cassidy, T., Evans, A. W., Hill, S. G., et al. (2017). Applying the wizard-of-Oz technique to multimodal human-robot dialogue. arXiv [Preprint] arXiv:1703.03714.

Matsubara, Y. J. M. A., and Automation, H. (1996). Kansei virtual reality technology and evaluation on kitchen design. Manufact. Agil. Hybrid Autom., 81–84.

Mavridis, N. (2015). A review of verbal and non-verbal human–robot interactive communication. Rob. Auton. Syst. 63, 22–35. doi: 10.1016/j.robot.2014.09.031

McGinn, C., Sena, A., and Kelly, K. J. (2017). Controlling robots in the home: factors that affect the performance of novice robot operators. Appl. Ergonom. 65, 23–32. doi: 10.1016/j.apergo.2017.05.005

Mehrabian, A., and Williams, M. (1969). Nonverbal concomitants of perceived and intended persuasiveness. J. Pers. Soc. Psychol. 13, 37–58. doi: 10.1037/h0027993

Menne, I. M., and Schwab, F. (2018). Faces of emotion: investigating emotional facial expressions towards a robot. Int. J. Soc. Rob. 10, 199–209. doi: 10.1007/s12369-017-0447-2

Mitsunaga, N., Miyashita, Z., Shinozawa, K., Miyashita, T., Ishiguro, H., and Hagita, N. (2008). “What makes people accept a robot in a social environment-discussion from six-week study in an office.” in 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems; September 22-26, 2008; France (Nice).

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley (1970). IEEE Rob. Autom. Magaz. 19, 98–100. doi: 10.1109/MRA.2012.2192811

Nass, C., Steuer, J., and Tauber, E. R. (1994). “Computers are social actors,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; April 24-28, 1994; Boston, MA, USA, 72–78.

Nehaniv, C. L., Dautenhahn, K., Kubacki, J., Haegele, M., Parlitz, C., and Alami, R. (2005). “A methodological approach relating the classification of gesture to identification of human intent in the context of human-robot interaction.” in ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, 2005; October 03, 2005; Nashville, TN, USA.

Niculescu, A., Van Dijk, B., Nijholt, A., and Li, H. (2013). Making social robots more attractive: The effects of voice pitch, humor and empathy. Int. J. Soc. Rob. 5, 171–191. doi: 10.1007/s12369-012-0171-x

Norman, W. T. (1963). Toward an adequate taxonomy of personality attributes: replicated factor structure in peer nomination personality ratings. J. Abnorm. Psychol. 66, 574–583. doi: 10.1037/h0040291

Riek, L. D., Rabinowitch, T. C., Bremner, P., Pipe, A. G., Fraser, M., and Robinson, P. (2010). “Cooperative gestures: effective signaling for humanoid robots.” in 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI); March 2-5, 2010; Osaka, Japan.

Riek, L. D. (2012). Wizard of oz studies in hri: a systematic review and new reporting guidelines. J. Hum. Robot Interact. 1, 119–136. doi: 10.5898/JHRI.1.1.Riek

Robins, B., Dautenhahn, K., Te Boekhorst, R., and Billard, A. (2005). Robotic assistants in therapy and education of children with autism: can a small humanoid robot help encourage social interaction skills? Univ. Access Inform. Soc. 4, 105–120. doi: 10.1007/s10209-005-0116-3

Sabelli, A. M., and Kanda, T. (2016). Robovie as a mascot: a qualitative study for long-term presence of robots in a shopping mall. Int. J. Soc. Rob. 8, 211–221. doi: 10.1007/s12369-015-0332-9

Saunderson, S., and Nejat, G. (2019). How robots influence humans: a survey of nonverbal communication in social human–robot interaction. Int. J. Soc. Rob. 11, 575–608. doi: 10.1007/s12369-019-00523-0

Savela, N., Turja, T., and Oksanen, A. (2018). Social acceptance of robots in different occupational fields: A systematic literature review. Int. J. Soc. Rob. 10, 493–502. doi: 10.1007/s12369-017-0452-5

Saygin, A. P., Chaminade, T., Ishiguro, H., Driver, J., and Frith, C. (2012). The thing that should not be: predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Soc. Cogn. Affect. Neurosci. 7, 413–422. doi: 10.1093/scan/nsr025

Song, S., and Yamada, S. (2018). “Bioluminescence-inspired human-robot interaction: Designing expressive lights that affect human's willingness to interact with a robot.” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction; March 5-8, 2018; Chicago, IL, USA.

Stanton, C. J., and Stevens, C. J. (2017). Don’t stare at me: the impact of a humanoid robot’s gaze upon trust during a cooperative human–robot visual task. Int. J. Soc. Rob. 9, 745–753. doi: 10.1007/s12369-017-0422-y

Stroessner, S. J., and Benitez, J. (2019). The social perception of humanoid and non-humanoid robots: effects of gendered and machinelike features. Int. J. Soc. Rob. 11, 305–315. doi: 10.1007/s12369-018-0502-7

Takayama, L., Dooley, D., and Ju, W. (2011). “Expressing thought: improving robot readability with animation principles.” in Proceedings of the 6th International Conference on Human-Robot Interaction; March 06, 2011; Lausanne, Switzerland.

Takeuchi, J., Kushida, K., Nishimura, Y., Dohi, H., Ishizuka, M., Nakano, M., et al. (2006). “Comparison of a humanoid robot and an on-screen agent as presenters to audiences.” in 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems; October 9-15, 2006; Beijing, China.

Tanaka, F., and Kimura, T. (2010). Care-receiving robot as a tool of teachers in child education. Interact. Stud. 11, 263–268. doi: 10.1075/is.11.2.14tan

Tapus, A., Ţăpuş, C., and Matarić, M. J. (2008). User—robot personality matching and assistive robot behavior adaptation for post-stroke rehabilitation therapy. Intell. Serv. Rob. 1, 169–183. doi: 10.1007/s11370-008-0017-4

Tay, B., Jung, Y., and Park, T. (2014). When stereotypes meet robots: The double-edge sword of robot gender and personality in human–robot interaction. Comput. Hum. Behav. 38, 75–84. doi: 10.1016/j.chb.2014.05.014

Tojo, T., Matsusaka, Y., Ishii, T., and Kobayashi, T. (2000). “A conversational robot utilizing facial and body expressions.” in SMC 2000 Conference Proceedings 2000 IEEE International Conference on Systems, Man and Cybernetics.'Cybernetics Evolving to Systems, Humans, Organizations, and Their Complex Interactions'(Cat. no. 0); October 8-11, 2000; Nashville, TN, USA.

Usui, T., Kume, K., Yamano, M., and Hashimoto, M. (2008). “A robotic KANSEI communication system based on emotional synchronization.” in 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems; September 22-26, 2008; Nice, France.

van den Brule, R., Bijlstra, G., Wigboldus, D., Haselager, P., and Dotsch, R. (2014). Do robot performance and behavioral style affect human trust? Int. J. Soc. Rob. 6, 519–531. doi: 10.1007/s12369-014-0231-5

Walters, M. L., Lohse, M., Hanheide, M., Wrede, B., Syrdal, D. S., Koay, K. L., et al. (2011). Evaluating the robot personality and verbal behavior of domestic robots using video-based studies. Adv. Rob. 25, 2233–2254. doi: 10.1163/016918611X603800

Keywords: social robot, occupational field, social cue, gesture, perceived personalities

Citation: Niu J, Wu C-F, Dou X and Lin K-C (2022) Designing Gestures of Robots in Specific Fields for Different Perceived Personality Traits. Front. Psychol. 13:876972. doi: 10.3389/fpsyg.2022.876972

Edited by:

Andrej Košir, University of Ljubljana, Slovenia

Reviewed by:

Iveta Eimontaite, Cranfield University, United Kingdom

Sandra Cano, Pontificia Universidad Católica de Valparaiso, Chile

Copyright © 2022 Niu, Wu, Dou and Lin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chih-Fu Wu, d2NmQGdtLnR0dS5lZHUudHc=
