- 1Department of Applied Psychology, Ningbo University, Ningbo, China

- 2Department of Psychological and Quantitative Foundations, University of Iowa, Iowa City, IA, United States

In the current paper, we propose a latent interdependence approach to modeling psychometric data in social networks. The idea of latent interdependence is adopted from social relations models (SRMs), which formulate a mutual-rating process by both dyad members’ characteristics. Under the framework of the latent interdependence approach, we introduce two psychometric models: The first model includes the main effects of both rating-sender and rating-receiver, and the second model includes a latent distance effect to assess the influence from the dissimilarity between the latent characteristics of both sides. The latent distance effect is quantified by the Euclidean distance between both sides’ trait scores. Both models use Bayesian estimation via Markov chain Monte Carlo. How accurately model parameters were estimated was evaluated in a simulation study. Parameter recovery results showed that all parameters were accurately recovered under most of the conditions investigated. As expected, the accuracy of model estimation was significantly improved as network size grew. Also, through analyzing empirical data, we showed how to use the estimates of model parameters to predict the latent weight of connections among group members and rebuild either a univariate or multivariate network at a latent trait level. Finally, we discuss issues regarding model comparison and offer suggestions for future studies.

Introduction

Social and behavioral scientists use the word relation to portray the way in which individuals or other social entities (e.g., organizations or countries) are connected. Conceptually, a relation reveals the relevance of one entity to another, and its fundamental unit is a dyadic interaction. Relations are sometimes simply defined as connections or links between dyad members, and at other times by various relational constructs (e.g., collaboration and attachment). In the latter condition, researchers assess these constructs by observing dyad members’ responses to a set of well-designed items, inferring each member’s construct scores via observed psychometric relational data. Within these measurement settings, relations are practically treated as individuals’ personal traits, defined as a person’s attitude toward or willingness and actions to develop a certain type of interpersonal interaction with others.

Modeling and analyzing relational data are the goals of two major statistical analysis paradigms: dyadic data analysis (DDA) and social network analysis (SNA). The focus of DDA (e.g., the social relations model, Kenny and La Voie, 1984; Kashy and Kenny, 1990; Nestler, 2018; Nestler et al., 2020) is on quantifying non-independence through a partitioning of the variance observed in dyadic data (Kenny et al., 2006). SNA, in contrast, attempts to explain the distributions of observed ties (or weighted ties) among social entities using a series of models (e.g., the p* models, the exponential random graph models, and the latent space models, Wasserman and Pattison, 1996; Pattison and Wasserman, 1999; Hoff et al., 2002; Snijders et al., 2006; Sewell and Chen, 2015). Although social and behavioral researchers treat both approaches as routine methodological choices for studying relations, both are limited in modeling psychometric data representing relational constructs (e.g., interpersonal trust) with potentially complex latent structures, because their formulations lack sufficient psychometric models (Snijders, 2009).

In recent years, the efforts to combine network analysis and psychometrics could be seen in attempts to apply the idea of network modeling in psychometrics (e.g., Schmittmann et al., 2013; Epskamp et al., 2017; Jin and Jeon, 2019; Jeon et al., 2021). For instance, Epskamp et al. (2017) introduced a framework to incorporate network modeling into structural equation modeling. The framework features latent network modeling and residual network modeling, in which the covariance structures of both latent variables and residuals are explained as the results of the interplay of each pair of latent or residual components. These efforts advanced the development and application of psychological networks (e.g., Cramer et al., 2010), in which nodes are variables. Also, in analyzing item response data, Jin and Jeon (2019) proposed a joint network modeling approach to detect the local dependence among items and among test-takers. By adapting a latent space approach (Hoff et al., 2002), they constructed multi-layer item networks and multi-layer person networks from item response data, which are modeled as a function of the latent positions of items or persons. Although researchers combined network and psychometric modeling, these approaches did not focus on modeling psychometric relational data within networks, as in the present study.

Recent work by Nestler and colleagues (e.g., Nestler, 2018; Nestler et al., 2020) under the framework of the social relations model (SRM) has made significant advances in modeling psychometric relational data. Specifically, they extended the classic SRM from single-item to multivariate settings and proposed social relations structural equation models (SR-SEM) for data coming from “multiple” round-robin designs (i.e., designs that require mutual ratings on multiple items). In SR-SEM, person-level SRM effects (or true SRM effects) function as latent factors in explaining item-level SRM effects. They represent a person’s general tendency to perceive others in a certain way (the true actor/perceiver effect), their general tendency to be perceived by others in a certain way (the true partner/target effect), and the true uniqueness of a given dyad’s mutual ratings. From a psychometric standpoint, SR-SEM produces estimates for each person’s two traits (i.e., their general tendencies to perceive others and to be perceived by others in a certain way) and for each dyad’s uniqueness. However, it does not directly provide estimates for the relational construct that the items are meant to measure. As noted above, when defined as a personal trait, a relational construct describes one’s attitude toward or willingness and actions to develop or maintain a certain type of interpersonal interaction with others. For instance, consider a scenario in which a researcher created a few items (e.g., “I can rely on my partner.”) to measure the relational construct of interpersonal trust and administered them to a group of individuals. Using the SR-SEM, the researcher could estimate each person’s general tendency to be trusted and to trust others, and every dyad’s relational uniqueness. However, these two tendencies (i.e., one’s trustfulness and trustworthiness) from SR-SEM are not the relational construct of interpersonal trust itself, which, as a personal trait, is defined as one’s attitude toward engaging in mutual trust with others. Those who score higher on this trait feel more positive about mutual trust and show a stronger tendency to develop or maintain mutual trust with others.

Moreover, in modeling dyadic data, traditional approaches (e.g., SRM and SNA) give less attention to the characteristics of an item. However, in a round-robin design, for example, dyadic data may be generated from both person-focused items (e.g., “I like my partner.”) and dyad-focused items (e.g., “My partner and I [or We] like each other”). In fact, it is common to see both types of items from the same instrument [e.g., McAllister’s (1995) Interpersonal Trust Measure; Horvath and Greenberg’s (1989) Working Alliance Inventory]. For interpersonal relationship researchers, understanding what these differences mean in terms of measuring a relational construct is of practical importance for the relationship assessment.

The goal of this paper is to present and evaluate a set of new psychometric models, latent interdependence models (LIDM), that describe continuous dyadic relational data within a social network. The models aim to score each person’s traits directly along each latent dimension of a relational construct while evaluating the properties of the items that measure each trait. We introduce two models under the framework of LIDM, in which the first model is nested within the second one. We establish the link between group members’ responses to an item and their latent relational traits based on an understanding that the responses come from a mutual-rating process and therefore reflect the characteristics of both members in a dyad. To formulate this fundamental process, in Model 1, we explain the dyadic responses using the main effects of both dyad members’ latent traits. Model 2 then builds on Model 1 to formulate the influence of the dissimilarity between dyad members as a penalty by including a testable model component for the distance between dyad members’ latent traits.

The influence of dyad members’ characteristics and the dissimilarity between dyad members on their responses to a given item is also conditional on the item’s properties—namely, its sensitivity to a change of trait scores and to a change in the distance between dyad members’ scores. In what follows, we define these properties as the item-specific rating-sender effect (i.e., the effect of rating-sender’s latent traits on all responses to a given item), the item-specific rating-receiver effect (i.e., the effect of rating-receiver’s trait on all responses to a given item), and the item-specific latent distance effect (i.e., the effect of the dissimilarity between latent traits on all responses to a given item).

The view that dyadic data come from a mutual-rating process and are therefore interdependent was initially formulated in the social relations model (SRM, e.g., Kenny and La Voie, 1984; Kashy and Kenny, 1990), which has been used to analyze social network data from a round-robin design (Back and Kenny, 2010). The SRM takes an approach similar to that of analysis of variance, assuming observed variances come from three sources (a.k.a. “three SRM effects”): the rating sender’s general rating tendency (the actor effect), the rating receiver’s general rating-reception tendency (the partner effect), and the uniqueness of a given sender-receiver dyad (the relational effect). In the LIDM proposed in the present study, the notion of interdependence is formulated by including the latent traits of both the rating sender and the rating receiver as explanatory components for dyad members’ responses to an item.

Another presumed mechanism underlying mutual-rating processes was described by Hoff et al. (2002) based on the view that the closer two group members’ latent positions are in a social space, the more likely it is that a tie exists between them. Accordingly, they presented latent space models (LSM), where social space is a hypothetical concept, defined as “a space of unobserved latent characteristics that represent potential transitive tendencies in network relations” (p. 1091), and latent positions are abstract points in the hypothetical space that indicate group members’ relative positions. Under the framework of LIDM, we assume the rule suggested by LSM also functions under the mutual-rating process, by which we build Model 2 (with between-trait interaction) on Model 1 (with main effects of traits only). In Model 2, we assume the relative spatial positions of rating senders and rating receivers along latent dimensions account for a unique proportion of variance of both sides’ responses; we term their contributions latent distance effects. As such, Model 1 (with latent distance effects constrained to be zero) is nested within Model 2. The latent distance is operationally defined as the dissimilarity between two members’ latent traits.

The remainder of this paper is organized as follows. We first introduce a scenario in which the proposed LIDM would apply. We then describe the development of the two proposed models in more detail, as well as the Bayesian estimation, using a Markov chain Monte Carlo (MCMC) algorithm, with which their parameters are estimated. Next, we present a simulation study to evaluate how accurately each proposed model can be estimated, followed by an illustrative example with real data that shows how to use each model variant. We conclude with recommendations for model selection and future research on LIDM development.

The Latent Interdependence Models

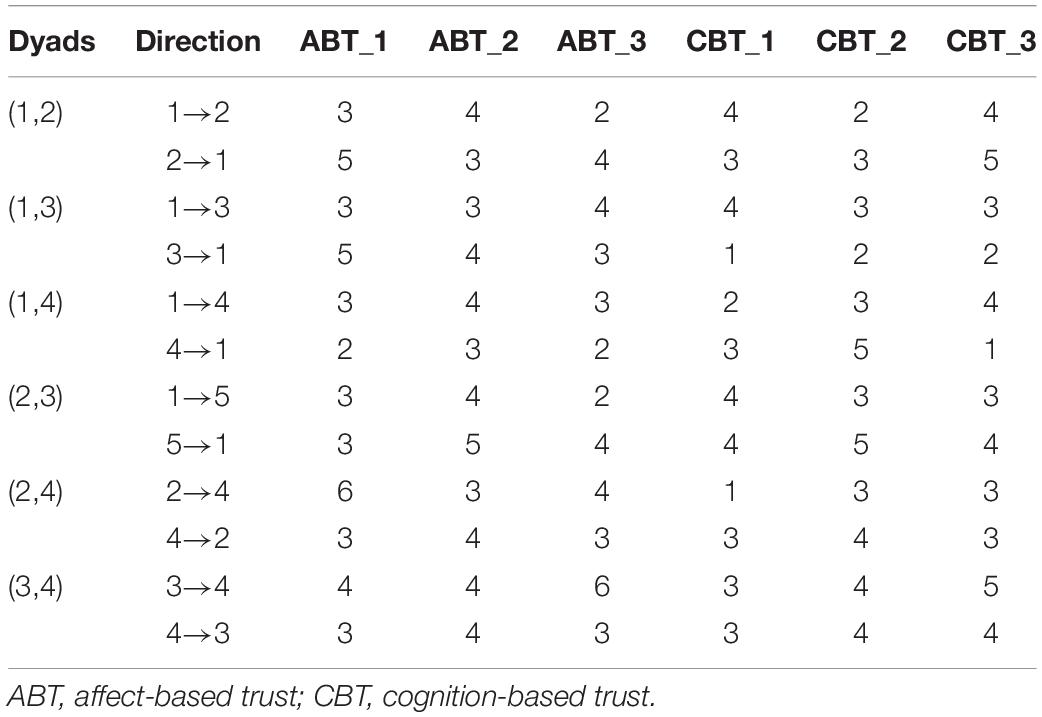

To illustrate the scenario to which the LIDM are intended to apply, we present a portion of the interpersonal trust survey data in Table 1, which were collected as part of a course evaluation protocol. Respondents were 15 graduate students (11 females and 4 males) from a master’s program in Applied Psychology who attended a group therapy course at a comprehensive university in central China. The trust survey was adapted from McAllister’s (1995) Interpersonal Trust Measure (ITM). Three items were selected from the ITM’s affect-based trust (ABT) subscale and were administered to students at the second to last meeting. A sample ABT item is “We have a sharing relationship. We can both freely share our ideas, feelings, and hopes.” The other three items were selected from its cognition-based trust (CBT) subscale and were completed by students at the last meeting. A sample CBT item is “I can rely on this person not to make my job more difficult by careless work.” Students rated their agreement with each statement on a 7-point Likert scale ranging from 1 (Strongly disagree) to 7 (Strongly agree) about each individual classmate in the class. Students’ responses were treated as continuous variables. In Table 1, the first two columns describe the structure of a network, and the remainder of the columns holds the psychometric responses indicating the two latent dimensions of interpersonal trust. In the following sections, we first introduce the format of a network and the format of psychometric data in a network.

Social Networks

For a social network that consists of n members, the number of dyads in the network is n(n − 1)/2. Let yA,B denote the relations between group members, A, B = 1,…, n, A ≠ B. Conventionally, the social network data are represented by an n × n sociomatrix Y,

$$
Y = \begin{bmatrix}
y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\
y_{2,1} & y_{2,2} & \cdots & y_{2,n} \\
\vdots & \vdots & \ddots & \vdots \\
y_{n,1} & y_{n,2} & \cdots & y_{n,n}
\end{bmatrix},
$$

where yA,B can be any type of observed variable, and yA,B = 0 by convention for A = B. The social network data can also be thought of as a graph in which the nodes are group members and the edge set is {(A,B): yA,B ≠ 0}. Of relevance for the present study, yA,B can also be latent variables representing the magnitude of members’ perceived connections with others along a given latent dimension of a relational construct. In the present case, yA,B needs to be inferred through observed psychometric relational data in social networks, as described next.
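As a small illustration of the sociomatrix and its edge set, the following sketch counts dyads and lists the directed ties of a toy network. The function names and the 3-member matrix are hypothetical and chosen only for illustration; they are not part of the study.

```python
import numpy as np

def n_dyads(n: int) -> int:
    """Number of unordered dyads among n members: n(n - 1) / 2."""
    return n * (n - 1) // 2

def edge_set(Y: np.ndarray) -> set:
    """Directed edges (A, B), A != B, whose entry y_{A,B} is nonzero."""
    n = Y.shape[0]
    return {(a, b) for a in range(n) for b in range(n)
            if a != b and Y[a, b] != 0}

# Hypothetical weighted, directed 3-member network (illustrative values only).
Y = np.array([
    [0.0, 2.0, 0.0],
    [1.5, 0.0, 3.0],
    [0.0, 0.5, 0.0],
])
np.fill_diagonal(Y, 0.0)  # y_{A,A} = 0 by convention

print(n_dyads(15))  # 105, matching the 15-student example
print(sorted(edge_set(Y)))
```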

Psychometric Relational Data in Social Networks

Let d = 1, 2,…, n(n − 1)/2 index the dth dyad of the network, l = 1, 2,…, L the lth subscale, and i = 1, 2,…, I the ith item in the subscale. Then, the responses from the lth subscale for the dth dyad of members A and B are contained in an I × 2 dyadic matrix U. The first column of U contains the responses of A, and the second column contains the responses of B. Considering a situation in which all subscales have an equal number of items, the dyadic responses of both members to all items can be expressed as a T × 2 matrix V, with T = I × L. The entries in the first column of V are member A’s responses relating to member B, and the entries in the second column are B’s responses relating to A, each given to the ith item that measures the lth latent dimension. Let v1 and v2 be the two column vectors of V. We assume both vectors follow the same T-variate Gaussian density, where ΣT is its T × T variance–covariance matrix.
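The layout of the dyadic matrix V can be sketched as follows. The storage convention (a three-way array indexed by sender, receiver, and item), the array sizes, and the random ratings are assumptions made for illustration only.

```python
import numpy as np

def dyad_matrix(responses: np.ndarray, a: int, b: int) -> np.ndarray:
    """Return the T x 2 matrix V for the dyad (a, b).

    Assumes responses[s, r, t] holds sender s's rating of receiver r on item t.
    Column 0 is a's ratings of b; column 1 is b's ratings of a.
    """
    return np.column_stack([responses[a, b, :], responses[b, a, :]])

# Hypothetical sizes: 4 members, I = 3 items per subscale, L = 2 subscales.
n, I, L = 4, 3, 2
T = I * L
rng = np.random.default_rng(0)
# Illustrative 7-point ratings treated as continuous values.
responses = rng.integers(1, 8, size=(n, n, T)).astype(float)

V = dyad_matrix(responses, 0, 1)
v1, v2 = V[:, 0], V[:, 1]  # the two column vectors of V
```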

Model Development

To present the latent interdependence models (LIDM), we first describe two general models that formulate the interdependence process within a network. We then introduce two latent interdependence models (named Model 1 and Model 2) as constrained forms of these two general models. Both the general models and their constrained forms (i.e., LIDM) assume that group members’ responses are conditionally independent given their latent trait scores, the dyads they are embedded in, and the items they respond to. In building the general models and LIDM, we consider situations in which each item only measures one latent trait, and we denote θA and θB as group member A’s and B’s latent trait scores on the lth latent dimension, respectively. For instance, in the interpersonal trust example, θA and θB could represent any pair of students’ trait scores on either the ABT or the CBT dimension.

To formulate the interdependence process, in the first general model, two dyad members’ observed responses are predicted using a linear function, in which a member’s response to an item depends on both members’ scores on the latent trait measured by the item, the shared effect of two members from the dyad they both belong to, as well as the item effect due to all group members repeatedly responding to and being rated on the same item in a round-robin setting.

In this model, β0 is the grand mean of all responses by all group members. The remaining terms are the dyad-specific effects of one dyad member’s and the other’s latent trait; the dyad-specific effect shared by both members on the given item; the item effect, which encapsulates two components (the effect of the ith item on all rating senders and its effect on all rating receivers); and the residuals for each pair of ratings.

The model is presented in a dyadic format, in which Function (1) explains dyad member A’s observed rating of member B, and Function (2) explains the process that generates B’s observed rating of A. Note that a member’s latent trait (θA, for instance) has different effects on the observed responses in Functions (1) and (2), because in a mutual-rating process a member plays two different roles, as both a rating sender and a rating receiver. For example, in Function (1), where member A is the rating sender, θA contributes to A’s observed response through a rating-sender effect. In contrast, in Function (2), where A is the rating receiver, θA contributes to B’s response through a rating-receiver effect. For this reason, to estimate θA, observed responses from both A and B are needed.

The rationale behind this formulation is that a relation reveals relevance of one to another, and therefore items designed to measure a relational construct in any dyadic (e.g., a round robin design) or network settings should be reflective of a dyadic combination, rather than the characteristics of an individual alone. In the interpersonal trust example, students’ responses to the item “We have a sharing relationship” [extracted from McAllister’s (1995) Interpersonal Trust Measure] designed to measure affect-based trust, should be reflective of both one’s own trait as the rating sender and that of the rating receiver.

The second general model formulates the interdependence process to include the dissimilarity between dyad members’ characteristics as a penalty, such that a dyad member’s response to an item is a function of both members’ latent trait scores, the Euclidean distance between their latent trait scores, and the item effect. In this model, Ed is the Euclidean distance between the two members of the dth dyad, and its coefficient is the dyad-specific effect of the distance metric, which may differ between the two members. For latent trait vectors θA and θB ∈ ℝ^L,

$$
E_d = \sqrt{\sum_{l=1}^{L} \left(\theta_{Al} - \theta_{Bl}\right)^2},
$$

and Ed = |θA − θB| for L = 1. A correlation between sender effects and receiver effects associated with the same trait is allowed across dyads, suggesting that the role a rating sender’s trait plays in their ratings of receivers may correlate with the role the same trait plays in the receivers’ ratings of the sender.
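The distance metric can be computed directly from two members’ trait vectors. The sketch below is a minimal illustration; the function name and the example trait values are hypothetical.

```python
import numpy as np

def latent_distance(theta_a, theta_b) -> float:
    """Euclidean distance between two members' latent trait vectors."""
    diff = np.asarray(theta_a, dtype=float) - np.asarray(theta_b, dtype=float)
    return float(np.sqrt(np.sum(diff ** 2)))

# Two latent dimensions (e.g., two trust traits); values are illustrative.
d_two = latent_distance([0.0, 0.0], [3.0, 4.0])

# With a single dimension (L = 1) the distance reduces to |theta_A - theta_B|.
d_one = latent_distance([1.0], [-0.5])
```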

The latent interdependence models (LIDM) are constrained versions of these more general models. To describe the constraints, we first define the effects of the rating sender’s latent trait in the two functions as sender effects and the effects of the rating receiver’s latent trait as receiver effects. We then constrain the receiver effects to be positive and the variance of the receiver effects to be one to identify the covariance matrix for the sender and receiver effects. Also, we constrain the effect of the distance metric to be negative, under the assumption that the distance metric always makes a negative contribution to the response, as also stated in Hoff et al.’s (2002, p. 1091) latent space models. Further, we assume that, within a dyad, the corresponding parameters in the two members’ functions are equal. Finally, sender effects, receiver effects, distance metric effects, and the variance of the residuals are assumed to be invariant across dyads and to vary only across items. Following these imposed restrictions, a correlation between item-specific sender effects and item-specific receiver effects associated with the same trait is allowed across items for LIDM.

Model 1 is the first constrained form of the LIDM. Its residual is an item-specific term formed as the sum of two components. Model 2 is the second constrained form of the LIDM; its item-specific residual is likewise a sum of two components, and it additionally includes the item-specific effect of the distance metric.

Let S represent the person providing the rating (the Sender) and R represent the person being rated (the Receiver). The observed response is then defined as the response of any rating sender to an item from the lth subscale regarding any rating receiver. Accordingly, θS and θR, respectively, represent a rating sender’s and a rating receiver’s trait, and each item has an item-specific sender effect and an item-specific receiver effect. With this notation, the dyadic format of Model 1 and Model 2 can each be simplified to a single function, given as Equations (10) and (11), respectively.

The item-specific parameters (the sender effect, the receiver effect, and the distance effect) describe the characteristics of a given item, namely its ability to differentiate rating senders’ traits, rating receivers’ traits, and sender–receiver trait dissimilarities.
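To make the roles of the item-specific effects concrete, the sketch below computes an expected response under one plausible reading of the simplified sender–receiver form: a grand mean, plus the sender and receiver main effects on the trait the item measures, plus (for Model 2) a negative distance effect. The functional form, the parameter names (`beta0`, `beta_s`, `beta_r`, `beta_d`), and all numeric values are assumptions for illustration, not the authors’ exact parameterization.

```python
import numpy as np

def expected_response(theta_s, theta_r, l, beta0, beta_s, beta_r, beta_d=0.0):
    """Expected rating from sender S about receiver R on an item measuring trait l.

    Assumed form: beta0 + beta_s * theta_S[l] + beta_r * theta_R[l] + beta_d * E,
    where E is the Euclidean distance between the two trait vectors.
    beta_d = 0 gives the Model 1 (main effects only) case.
    """
    theta_s = np.asarray(theta_s, dtype=float)
    theta_r = np.asarray(theta_r, dtype=float)
    distance = np.sqrt(np.sum((theta_s - theta_r) ** 2))
    return float(beta0 + beta_s * theta_s[l] + beta_r * theta_r[l]
                 + beta_d * distance)

# Hypothetical trait scores on two latent dimensions.
theta_s = [1.0, 0.0]
theta_r = [-2.0, 4.0]

# Model 1: main effects only.
m1 = expected_response(theta_s, theta_r, l=0, beta0=4.0, beta_s=1.0, beta_r=0.5)
# Model 2: same item with a negative distance effect (a penalty for dissimilarity).
m2 = expected_response(theta_s, theta_r, l=0, beta0=4.0, beta_s=1.0, beta_r=0.5,
                       beta_d=-0.2)
```

Setting `beta_d` to zero recovers the Model 1 value, which mirrors the nesting of Model 1 within Model 2.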

Equations (10) and (11) specify the relationships between the observed item responses and the latent traits, and therefore they are regarded as the measurement model portion of the LIDM. Another key component is the structural model, which specifies the relationships among all latent traits within a dyad. Let ΘSR be an L × 2 matrix containing the latent trait scores for a dyad. Then, the structural model can be expressed as:

$$
\Theta_{SR} = \mu_{SR} + \Xi_{SR},
$$

where μSR is an L × 2 matrix containing the means for all latent traits, and ΞSR is an L × 2 matrix containing the random errors of all latent traits. Let Ξ be either column vector of ΞSR; Ξ follows a multivariate normal distribution 𝒩(0, Σθ).
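The structural model can be illustrated by drawing trait vectors from a multivariate normal distribution and stacking two members’ vectors into an L × 2 matrix. The correlation value, the zero means, and the unit variances below are hypothetical choices for illustration only.

```python
import numpy as np

def draw_traits(n, mu, sigma_theta, seed=0):
    """Draw n members' L-dimensional trait vectors from N(mu, Sigma_theta)."""
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean=mu, cov=sigma_theta, size=n)

rho_theta = 0.5                      # hypothetical correlation between two traits
mu = np.zeros(2)                     # hypothetical trait means
sigma_theta = np.array([[1.0, rho_theta],
                        [rho_theta, 1.0]])

theta = draw_traits(15, mu, sigma_theta)          # one row per member
# L x 2 matrix for the dyad formed by members 0 and 1 (sender column, receiver column).
theta_sr = np.column_stack([theta[0], theta[1]])
```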

Under the LIDM, the probability of observing the relational data for a social network with n members generated from Model 1 is given by Equation (13), in which BS and BR are T × 1 matrices, T = I × L, containing all item-specific sender effects and receiver effects, respectively, and Θ is a W × 1 matrix, with W = n × L, containing the latent trait scores for all group members. The probability of observing relational data for a social network with n members generated from Model 2 is given by Equation (14), which additionally involves BD, a T × 1 matrix containing the distance effects associated with all items.

According to the LIDM, the prior example of interpersonal trust data can be modeled as follows. Under Model 1, the response of student A to an ABT item regarding student B, is determined by B’s ABT trait score and A’s own ABT trait score. Similarly, under Model 2, A’s response to an ABT item is determined by their own and B’s ABT scores, as well as their relative position (i.e., the distance) in a latent space defined by two given dimensions—the ABT trait and the CBT trait. The closer two students are in this latent “trust” space, the more likely they can be regarded as similar in considering their “trust” traits. Students of the same “trust” type may share some common values and/or other important common characteristics. In the LIDM, as a testable hypothesis in explaining one’s response to any items, it is possible these similarities may have a unique contribution to the responses (beyond that accounted for by both students’ traits being measured by any given “trust” item). The same pattern would hold for the students’ responses to the CBT items. The models produce the posterior distributions of all students’ ABT and CBT latent trait scores, as well as the covariance between these two latent traits; these distributions can be summarized with means (i.e., the estimates via expected a posteriori [EAP] estimation) or medians. Also, the weights of all directed connections among students along the ABT and CBT dimensions can be derived based on the estimates of the latent traits and their related effects and interpreted using the original metric of the survey.

Parameter Estimation

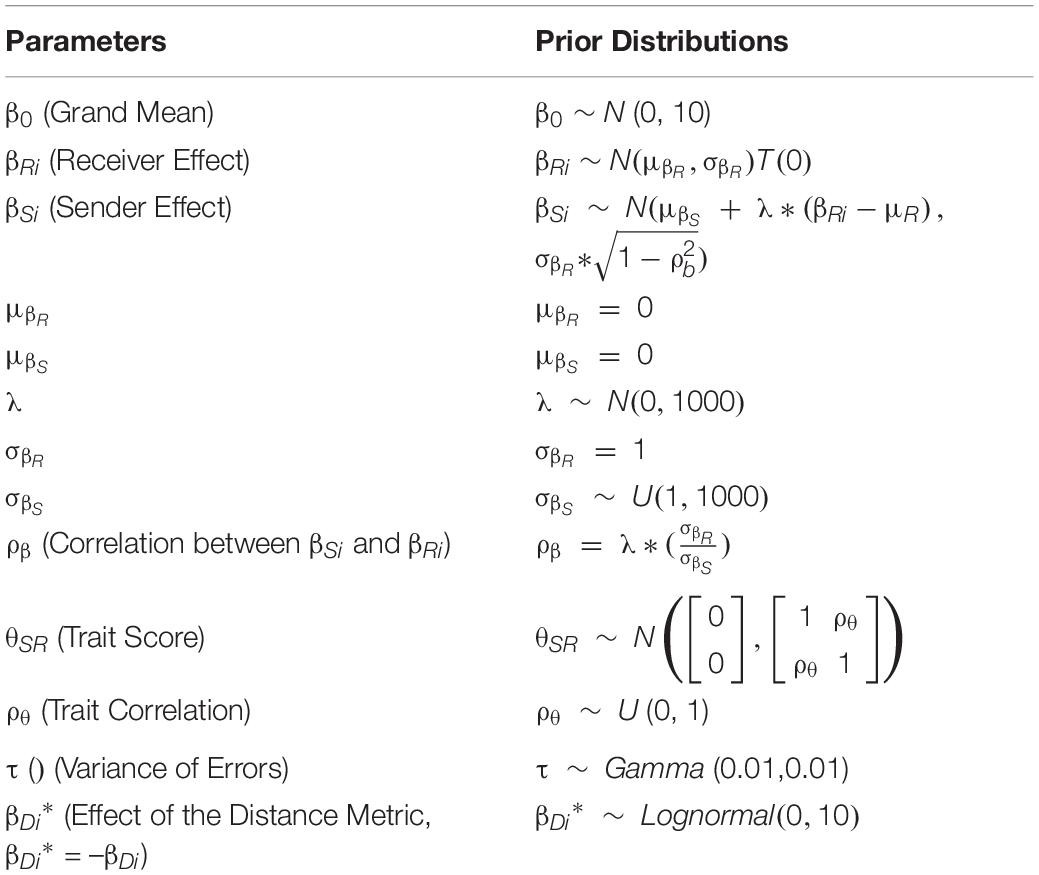

To estimate the LIDM, we take a Bayesian approach, in which the objective is to find the desired estimate using the posterior probability distribution of a given parameter. Prior distributions are assigned to all model parameters. In particular, the sender effect and receiver effect associated with the same item form a vector that follows a truncated multivariate normal distribution in which the receiver-effect dimension is bounded below at 0. The full posterior distributions of the parameters in Model 1 and Model 2 are then defined in Functions (15) and (16), respectively. The likelihood function in Functions (15) and (16) can be expanded as in Equations (13) and (14).
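The truncated prior on the sender–receiver effect vector can be illustrated with a simple rejection sampler: draw from a bivariate normal and keep only draws whose receiver effect is positive. This is a sketch of the truncation idea only; the mean vector and covariance matrix are hypothetical, and it does not reproduce how JAGS handles the prior internally.

```python
import numpy as np

def draw_effects(n_items, mean, cov, seed=0):
    """Draw (sender effect, receiver effect) pairs with receiver effect > 0.

    Uses rejection sampling from a bivariate normal; draws with a
    non-positive receiver effect are discarded and redrawn.
    """
    rng = np.random.default_rng(seed)
    kept = []
    while len(kept) < n_items:
        draw = rng.multivariate_normal(mean, cov)
        if draw[1] > 0:          # index 1 is the receiver-effect dimension
            kept.append(draw)
    return np.array(kept)

# Hypothetical prior mean and covariance for illustration.
mean = np.array([1.0, 1.0])
cov = np.array([[0.25, 0.10],
                [0.10, 0.25]])
effects = draw_effects(8, mean, cov)   # one row per item
```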

We used Markov chain Monte Carlo (MCMC) to sample multivariate random quantities from the full posterior distribution. In this study, sampling from the posterior distributions of parameters of interest was implemented by the computer program JAGS (Just Another Gibbs Sampler, Plummer, 2003) via a slice sampling algorithm (Neal, 2003). The point estimates of model parameters were summarized by the EAP estimates of posterior distributions.

A Simulation Study

Design

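The primary goal of the simulation study reported next was to evaluate how accurately the parameters of the two proposed LIDM can be recovered. To do so, psychometric relational data were simulated under Model 1 and Model 2 and the parameters of each model were estimated with its own data. The accuracy of parameter recovery was evaluated by root-mean-squared error (RMSE), normalized root-mean-squared error (NRMSE), bias, and coefficient of determination (R2), which are, respectively, calculated as follows:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{\varphi}_i - \varphi_i\right)^2}, \tag{17}
$$

$$
\mathrm{NRMSE} = \frac{\mathrm{RMSE}}{\sigma_{\varphi}}, \tag{18}
$$

$$
\mathrm{Bias} = \frac{1}{n}\sum_{i=1}^{n}\left(\hat{\varphi}_i - \varphi_i\right), \tag{19}
$$

and

$$
R^2 = 1 - \frac{\sum_{i=1}^{n}\left(\varphi_i - \hat{\varphi}_i\right)^2}{\sum_{i=1}^{n}\left(\varphi_i - \bar{\varphi}\right)^2}, \tag{20}
$$

where n denotes the number of simulated data sets, φi and φ̂i denote (respectively) the true value of a given parameter φ and its estimated value in each simulation and estimation trial, and φ̄ denotes the mean of the true value of φ across n simulations. In Function (18), σφ represents the standard deviation of the true values of φ.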
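The four recovery indices can be sketched as small functions. The definitions below follow the verbal descriptions in the text; the use of the population standard deviation of the true values for NRMSE and the 1 − SSE/SST form of R2 are assumptions, and the toy values are illustrative only.

```python
import numpy as np

def rmse(true, est):
    true, est = np.asarray(true, float), np.asarray(est, float)
    return float(np.sqrt(np.mean((est - true) ** 2)))

def nrmse(true, est):
    # Assumption: normalize by the standard deviation of the true values.
    return rmse(true, est) / float(np.std(np.asarray(true, float)))

def bias(true, est):
    true, est = np.asarray(true, float), np.asarray(est, float)
    return float(np.mean(est - true))

def r_squared(true, est):
    # Assumption: 1 - SSE / SST, with SST taken around the mean of the true values.
    true, est = np.asarray(true, float), np.asarray(est, float)
    sse = np.sum((true - est) ** 2)
    sst = np.sum((true - true.mean()) ** 2)
    return float(1.0 - sse / sst)

# Toy true values and estimates across four hypothetical replications.
true_vals = [1.0, 2.0, 3.0, 4.0]
est_vals = [1.1, 1.9, 3.2, 3.8]
```

Because NRMSE divides by the spread of the true values, a parameter simulated within a narrow range can show a large NRMSE even when its RMSE is small.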

The second goal of this study was to evaluate the impact of network size on the efficacy of model estimation. To that end, the performance of each model was evaluated with varying network sizes in terms of its accuracy in recovering its own model parameters with the change of network size. Lastly, we examined the accuracy of parameter recovery when each model was estimated with data generated under the other model—this was done to evaluate the robustness of parameter estimates to a particular mis-specified model under the framework of LIDM.

To evaluate the impact of network size on the efficacy of model estimation, five network sizes (n = 5, 10, 20, 30, and 100) were investigated. These network sizes were chosen to reflect a research context in which eight items were administered to measure a two-dimensional relational construct. In such a scenario, each group member rated all others on all eight items.
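To give a sense of how quickly the amount of data grows with network size in this design, the sketch below counts dyads and directed ratings when every member rates every other member on all eight items. The helper name is hypothetical.

```python
def n_ratings(n: int, n_items: int = 8) -> int:
    """Directed ratings in a round-robin design: n(n - 1) sender-receiver pairs per item."""
    return n * (n - 1) * n_items

# Network sizes investigated in the simulation study.
sizes = (5, 10, 20, 30, 100)
summary = {n: {"dyads": n * (n - 1) // 2, "ratings": n_ratings(n)} for n in sizes}

for n, counts in summary.items():
    print(n, counts["dyads"], counts["ratings"])
```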

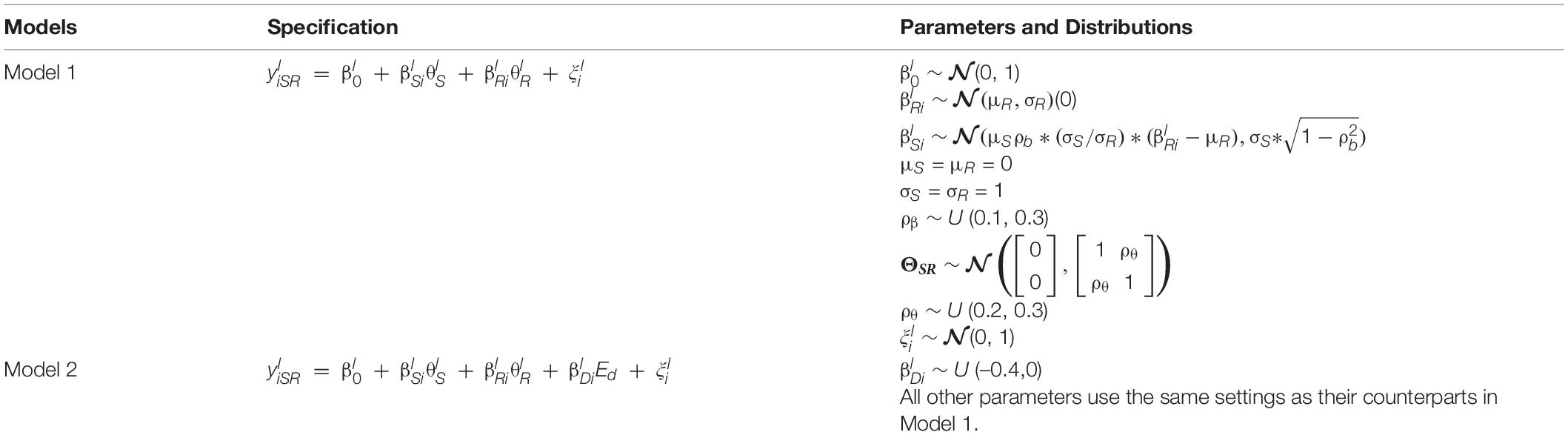

Data Generation and Evaluation

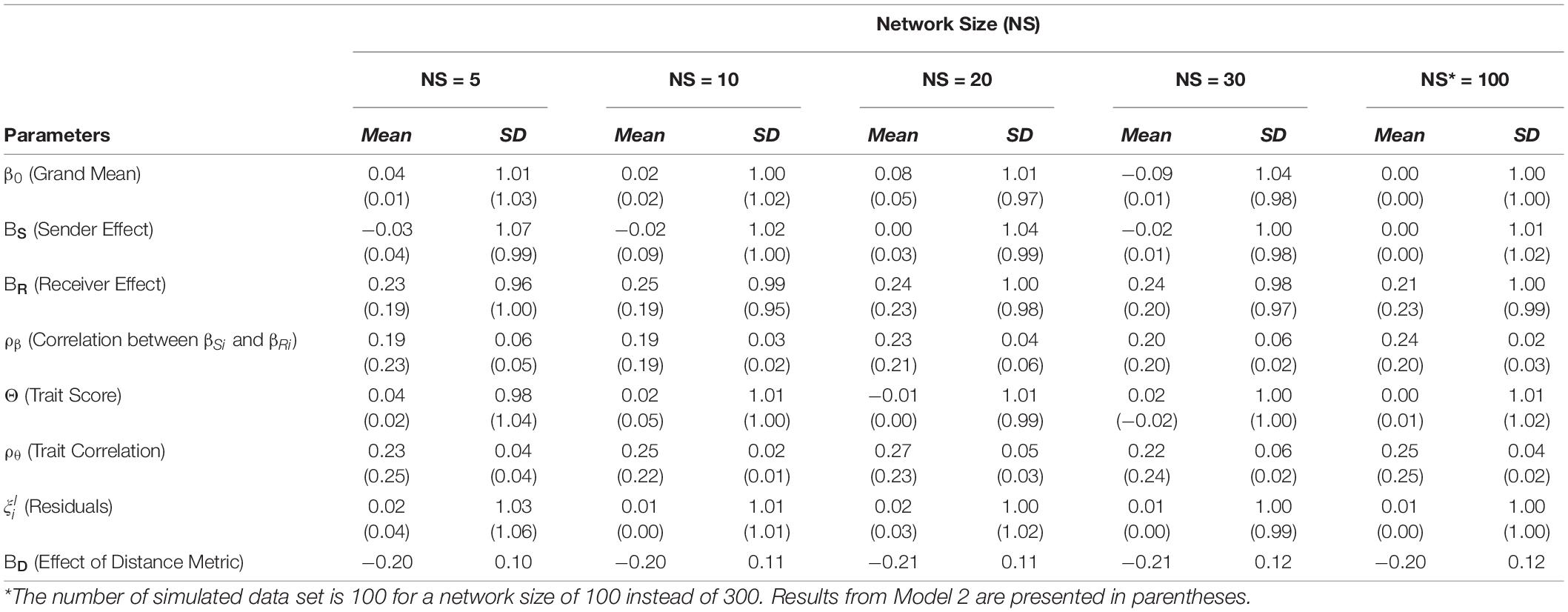

Using a simulated network size of 5, 10, 20, 30, or 100, dyadic response data were generated based on the parameterizations of the two proposed models from eight items; each of the two latent dimensions was measured by four items and each item only measured one dimension. Table 2 shows the model parameterizations and the probability distributions used to generate each model’s parameters. The probability distributions were chosen based on the properties of each parameter, but it was expected that, regardless of choice of prior distributions, the estimation procedures would recover all model parameters accurately. The means and standard deviations of the simulated (true) values for all parameters are shown in Table 3.

Accuracy of model parameter recovery was indicated by RMSE, NRMSE, bias, and R2. All of these indices were calculated from 300 simulated data sets for each investigated network size except size 100, for which 100 replications were used because of the computational cost of this condition.

Data Analysis

Bayesian estimation was performed using the program JAGS (Just Another Gibbs Sampler, Plummer, 2003). For all models, two Markov chains were generated with each chain including 100,000 samples (number of iterations = 100,000). To represent the posterior distribution of each parameter, the first 50,000 samples were discarded as a burn-in period. Also, to lower the autocorrelation in a single Markov chain, every 2nd simulated sample (rate of thinning = 2) was kept in the chain. In addition, given the complexity of model parameterization, 10,000 adaptive steps were allowed for the program to adjust its algorithms (i.e., change its step size for random walks) to different model parameters. Table 4 shows the prior distributions used for all parameters.
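The burn-in and thinning settings imply how many draws per chain remain for summarizing a posterior. The sketch below applies those settings to stand-in chains and computes an EAP (posterior mean) estimate; the simulated draws are placeholders, not JAGS output, and the helper name is hypothetical.

```python
import numpy as np

N_ITER, BURN_IN, THIN = 100_000, 50_000, 2   # settings described in the text

def retained(chain, burn_in=BURN_IN, thin=THIN):
    """Drop the burn-in draws, then keep every `thin`-th remaining draw."""
    return chain[burn_in::thin]

# Stand-in draws for two chains of a single parameter (illustrative only).
rng = np.random.default_rng(1)
chains = rng.normal(loc=0.8, scale=0.1, size=(2, N_ITER))

kept = np.stack([retained(c) for c in chains])
eap = float(kept.mean())   # EAP estimate: mean of the retained posterior draws
```

Under these settings each chain contributes 25,000 retained draws, for 50,000 in total across the two chains.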

Results

The Effectiveness of Model Parameterization and Model Estimation

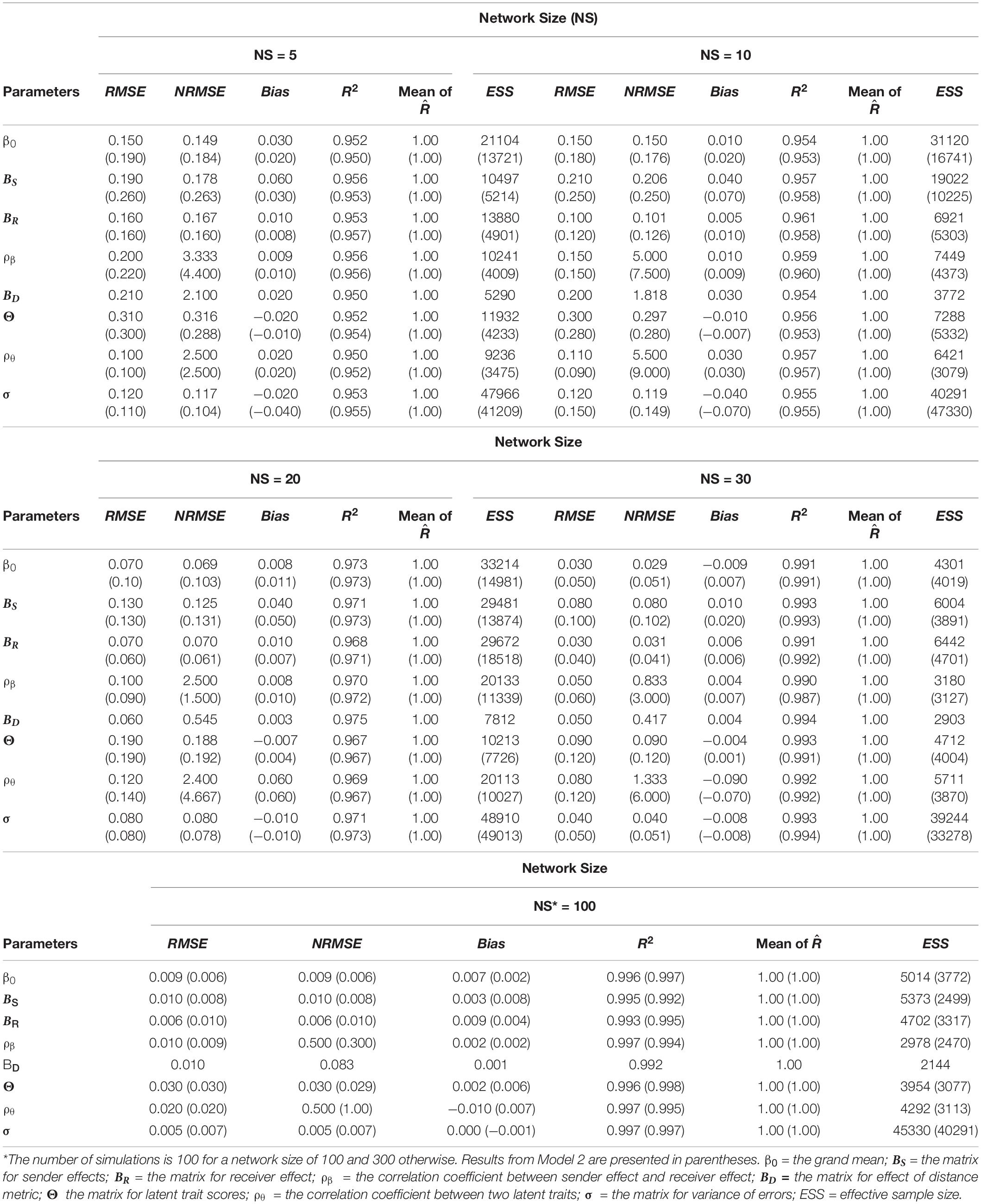

Table 5 presents RMSE, NRMSE, bias, R2, and the cross-sample average of the Gelman-Rubin statistic ($\hat{R}$) for each parameter. Overall, the chains for all samples converged successfully, yielding estimates for all parameters with small biases and high R2 values. These convergence and parameter recovery results support the parameterizations of the proposed LIDM. As shown in Table 5, RMSE, NRMSE, and bias under most conditions were small and all R2 values were above 0.95, suggesting the estimation procedures produced accurate estimates.
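For readers unfamiliar with the convergence index, the sketch below computes the classic Gelman-Rubin potential scale reduction factor from several chains of one parameter. It is a textbook version written for illustration and may differ in detail from the diagnostic reported by the software used in the study; the stand-in chains are simulated.

```python
import numpy as np

def gelman_rubin(chains: np.ndarray) -> float:
    """Classic potential scale reduction factor for chains of shape (m, n)."""
    m, n = chains.shape
    within = chains.var(axis=1, ddof=1).mean()        # W: mean within-chain variance
    between = n * chains.mean(axis=1).var(ddof=1)     # B: between-chain variance
    var_hat = (n - 1) / n * within + between / n      # pooled variance estimate
    return float(np.sqrt(var_hat / within))

rng = np.random.default_rng(2)
# Two chains sampling the same distribution: the statistic should be close to 1.
mixed = rng.normal(0.0, 1.0, size=(2, 5000))
# Two chains stuck in different regions: the statistic should be well above 1.
stuck = np.stack([rng.normal(0.0, 1.0, 5000), rng.normal(3.0, 1.0, 5000)])

rhat_mixed = gelman_rubin(mixed)
rhat_stuck = gelman_rubin(stuck)
```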

Table 5. Root-mean-squared error (RMSE), normalized root-mean-squared error (NRMSE), bias, and coefficient of determination (R2) of parameter recovery, convergence diagnosis index ($\hat{R}$), and effective sample size (ESS).

The accuracy of recovery varied across parameters and conditions. In estimating both models, the largest RMSE (0.31 for Model 1 and 0.30 for Model 2) was associated with the latent trait matrix (Θ) when the network size was 5, although the biases associated with Θ were small under all conditions. Also, in fitting Model 2, the RMSE and bias associated with the effect of the distance metric (BD) were small. In estimating both models, relatively large NRMSEs were observed for the parameters ρβ and ρθ under all conditions due to the relatively small standard deviations of their simulated values. Also, Model 2 produced larger NRMSEs under most conditions than did Model 1 for almost all parameters, suggesting Model 1 generally resulted in more accurate estimates than did Model 2.

The Effects of Network Size on Model Estimation

As shown in Table 5, for most parameters in both models, clearly smaller RMSE and bias values, as well as higher R2 values, were observed as network size grew, suggesting the overall accuracy of model estimation improved when estimating both models with a larger network. An exception was the correlation coefficient ρθ. The changes of RMSE and bias associated with ρθ as a function of network size were not as clear. Specifically, the largest RMSE for ρθ was associated with a network size of 20 in fitting both models. It should be noted that although the size of the matrix Θ was a function of network size, smaller RMSE and bias were observed for Θ as the network grew.

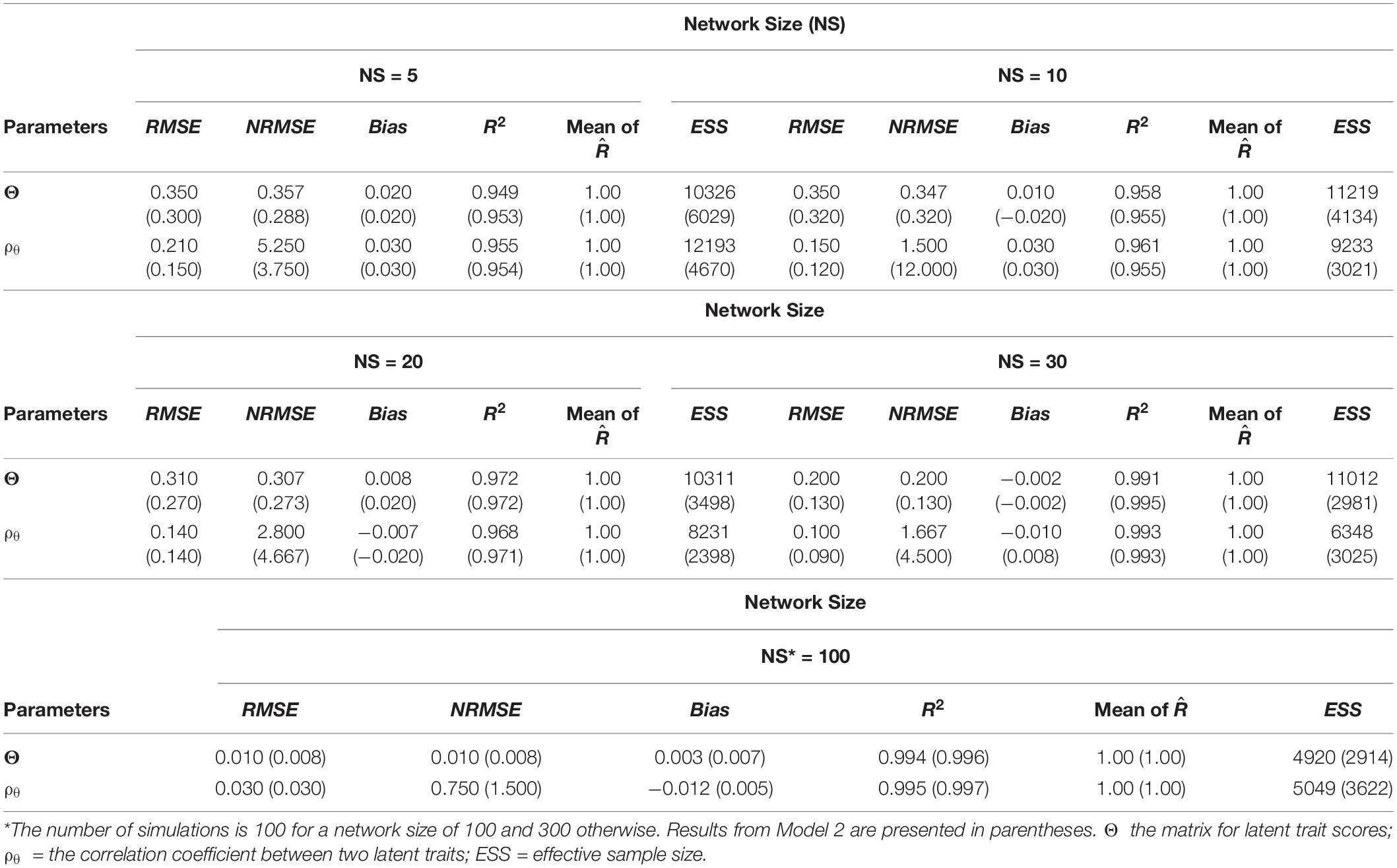

The Robustness of Model Estimation to the Violation of Model Parametrization

Table 6 shows the indices for parameter recovery accuracy and MCMC convergence when fitting one proposed model to data generated under the other model. In analyzing data that violate model parameterizations, both models converged successfully with all simulated samples. Moreover, both models produced small but widely ranging biases for the latent trait matrix (Θ), spanning from 0.002 to 0.020. RMSE ranged from 0.01 to 0.35, with larger errors arising from smaller networks. The recovery of the correlation coefficient (ρθ) yielded generally small RMSE (ranging from 0.03 to 0.21) and small but also widely ranging bias (spanning from 0.002 to 0.030). Moreover, high R² (above 0.95) was observed for all parameters under almost all conditions. These results support the robustness of both models to misspecification.

Table 6. Root-mean-squared error (RMSE), normalized root-mean-squared error (NRMSE), bias, and coefficient of determination (R²) of parameter recovery, convergence diagnosis index (R̂), and effective sample size (ESS) from model cross-estimation.

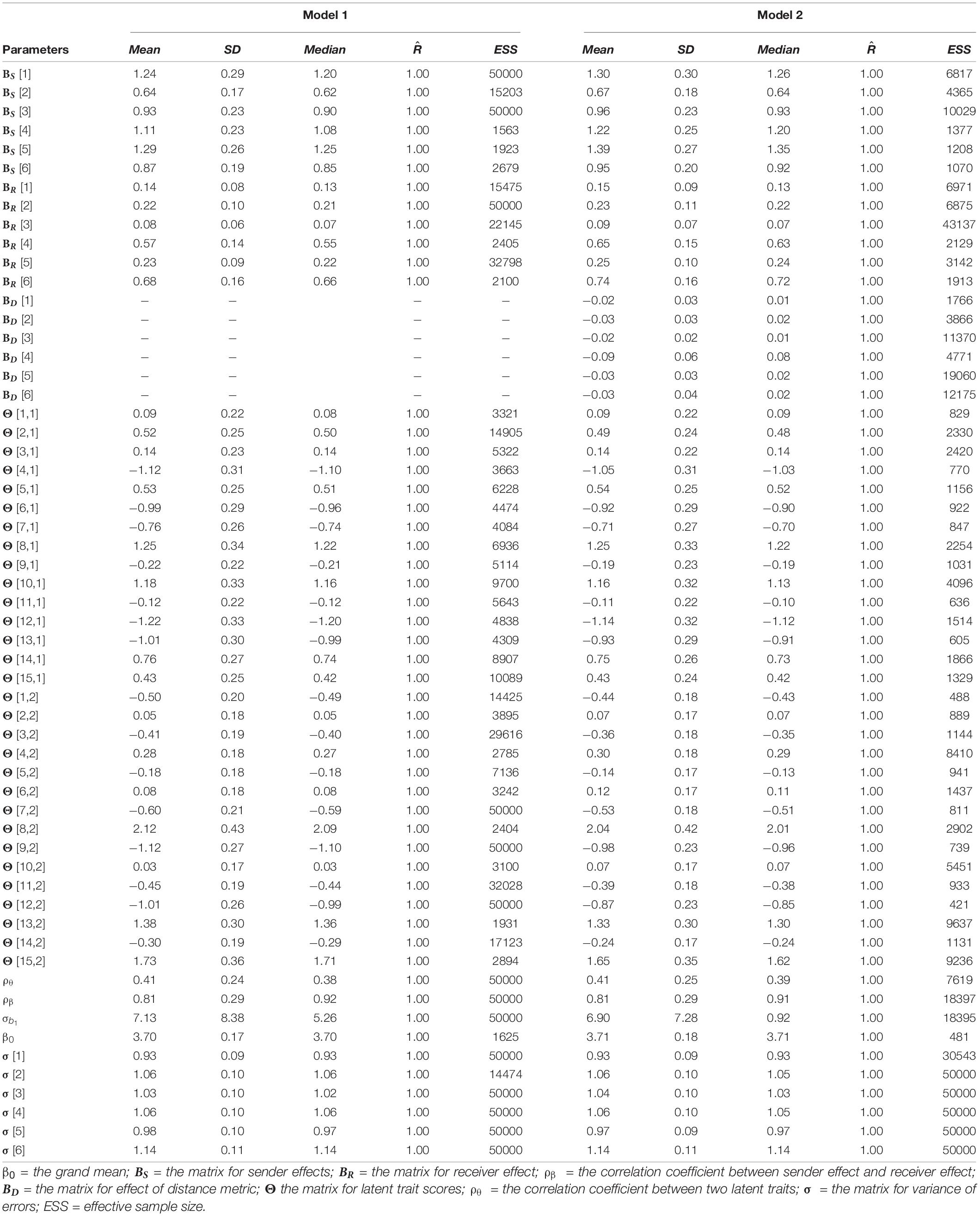

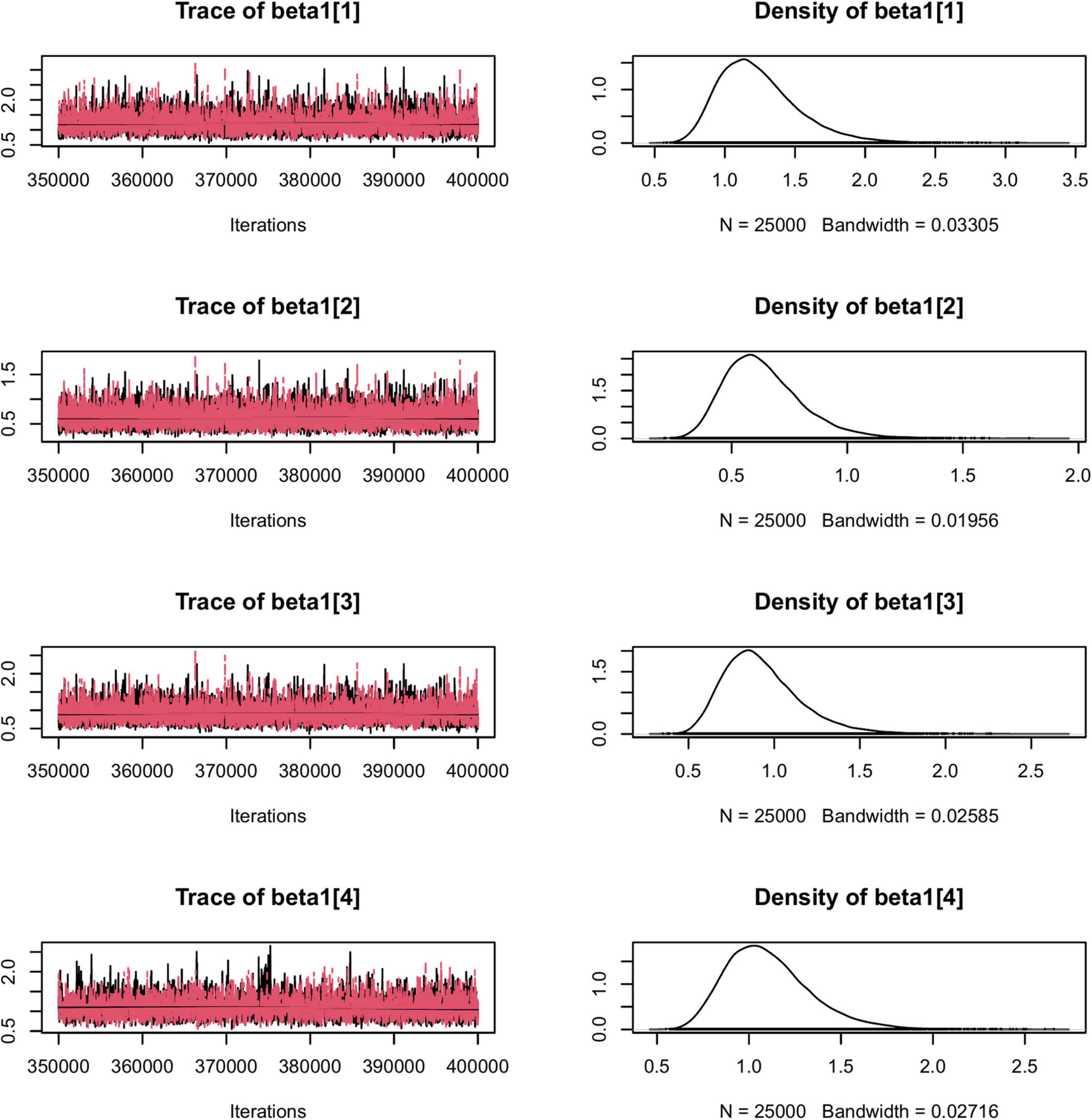

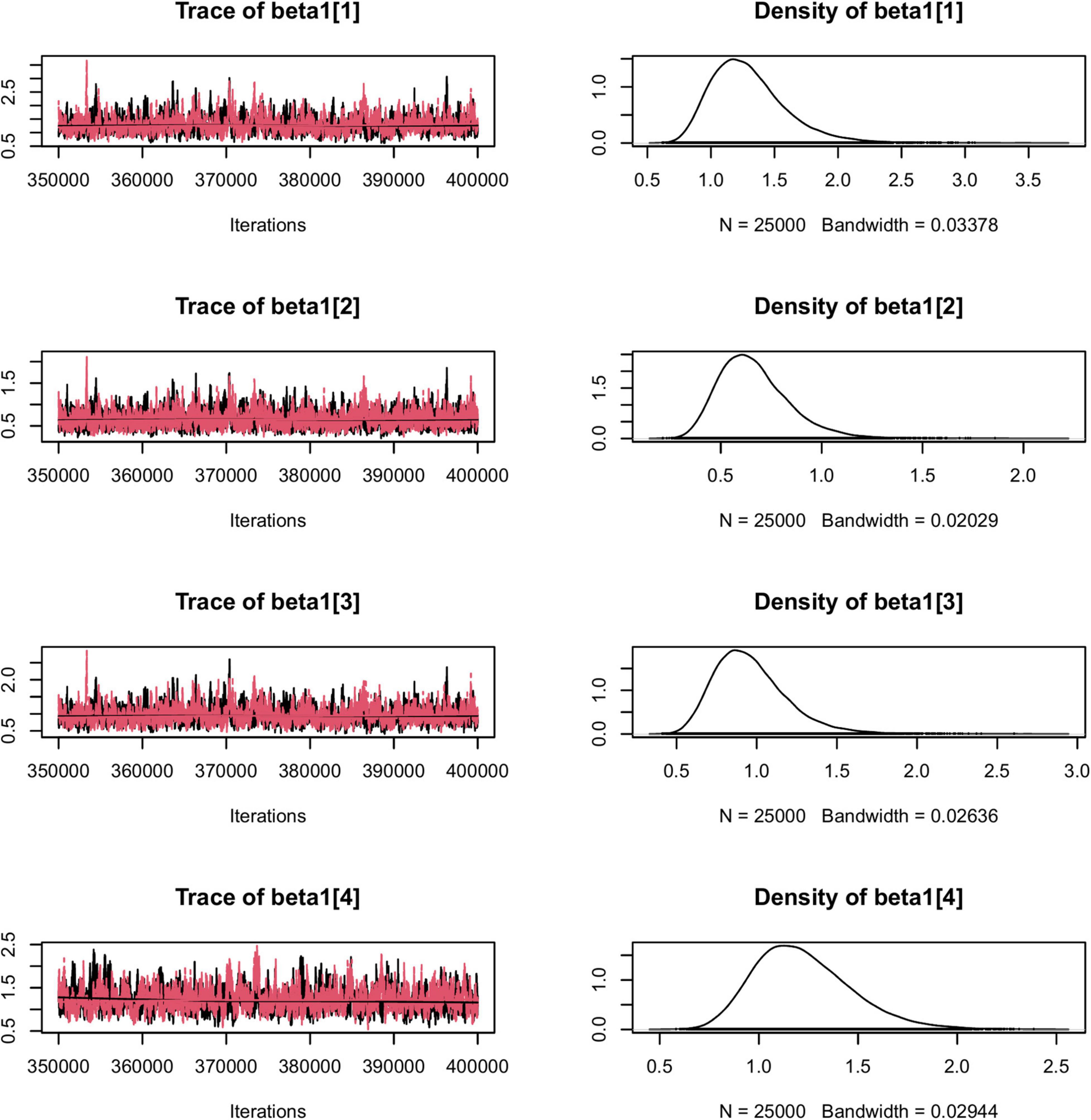

Fitting the Latent Interdependence Models on Empirical Data

To illustrate the usefulness of the LIDM, we estimated the two proposed models using the interpersonal trust survey data described previously. The data are complete item responses from a total of 105 dyads. Table 7 presents the results of the two proposed models with the interpersonal trust data. Due to limited space, in Figures 1 and 2 we present only some examples of the traces for model convergence and the posterior distributions of model parameters; a complete report of the parameter estimation results is included in the Supplementary Materials, which can be accessed via the attached FigShare link. Overall, the two models produced close estimates for most parameters. Besides producing the estimates for each student’s trait scores for affect-based trust (ABT) and cognition-based trust (CBT), the models also provided estimates for the items’ differentiation of dyad members’ traits and the dissimilarity between traits. Specifically, large positive item-specific sender effects (ranging from 0.64 to 1.29 under Model 1 and from 0.67 to 1.39 under Model 2) were found for all six items, indicating that all items were highly sensitive to changes in the rating sender’s trait scores. That is, students with different trust scores tend to respond quite differently to these items regarding the same rating target.

Table 7. Means and standard deviations of LIDM estimated parameters, convergence diagnosis index (R̂), and effective sample size (ESS) in empirical data analysis.

Figure 1. The traces for model convergence and the posterior distributions of a portion of Model 1 parameters.

Figure 2. The traces for model convergence and the posterior distributions of a portion of Model 2 parameters.

Large receiver effects (0.65 under Model 1 and 0.74 under Model 2) were found for Item 6 (“Other work associates who must interact with this individual consider him/her to be trustworthy.”). Also, significant receiver effects (0.57 under Model 1 and 0.68 under Model 2) were found for Item 2 (“If I shared my problems with this person, I know (s)he would respond constructively and caringly.”). These two items showed strong abilities in differentiating both rating receivers’ and rating senders’ trait scores. In other words, in measuring interpersonal trust, students’ responses to these two items were highly sensitive indicators of both sides’ trust. In contrast, the other four items responded strongly to changes in rating senders’ trait scores, but weakly to changes in rating receivers’ scores, indicating that they differentiate one member’s trait better than the other’s. Additionally, the effects of the distance metric produced under Model 2 were small (ranging from −0.09 to −0.02) for all six items, suggesting that no items were substantially influenced by the dissimilarity between students’ trust. Finally, a high correlation (0.81 under both models) between sender effects and receiver effects from the same trust trait was found, suggesting that one’s general attitude toward interpersonal trust may play a highly similar role in one’s own ratings of others as in the ratings received from others.

Overall, as with psychometric models, the LIDM can be used as a tool by which to score group members’ relational traits and to evaluate items’ characteristics in capturing different aspects of a dyadic interaction in measurement settings that feature mutual ratings within networks. These aspects include items’ abilities to differentiate rating-receivers’ and rating-senders’ traits for any given relational constructs, as well as the dissimilarities among dyad members’ traits. Researchers may need to decide for themselves which specific item characteristics ought to be preferred or valued in measuring a certain type of relationship. These decisions ought to be subject to the theories on the construct of interest as well as the purposes of measurement.

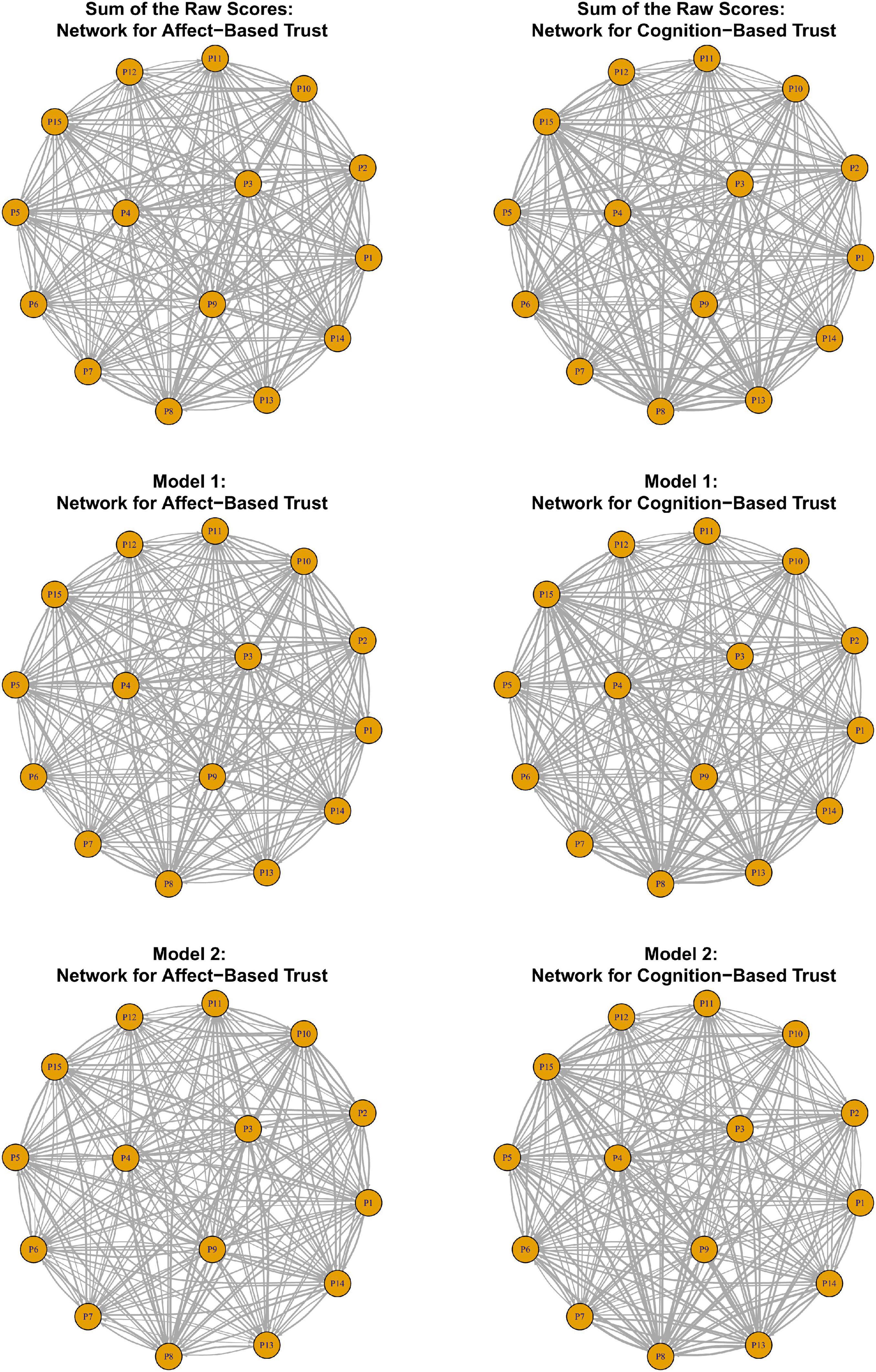

Further, using the estimates of students’ trait scores, we calculated the predicted score for each observed response (excluding measurement error). The predicted scores represent students’ “true” responses to a given item at their latent trait level. A student’s predicted responses from the same subscale are summed up to quantify the “true” magnitude of the trait-level ties they have to others in the network, which can be interpreted using the original metric of the trust measure. We present these “true” magnitudes of each directed trait-level tie in Supplementary Table 1, which has been included in the Supplementary Materials. Figure 3 presents the affect-based trust networks and the cognition-based trust networks constructed by the sum of the raw response scores and the trait-level weighted ties under Model 1 and Model 2.

Figure 3. Affect-based trust networks and cognition-based trust networks generated from the raw scores and the estimates from Model 1 and Model 2.
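The construction of trait-level ties described above can be sketched in a few lines. The model equations are not restated in this section, so the linear form used here (item intercept, plus sender effect times the sender's trait, plus receiver effect times the receiver's trait, plus an optional distance effect times the absolute trait difference) is an assumption, and every name and number in the example is hypothetical.

```python
import numpy as np

def predicted_ties(theta, intercept, sender, receiver, distance=None):
    """Sum of error-free predicted item responses for every directed pair.

    theta:     trait scores of the n group members on one trait
    intercept: item intercepts (one per item of the subscale)
    sender:    item-specific sender effects
    receiver:  item-specific receiver effects
    distance:  optional item-specific distance effects (Model 2 style)
    Returns an (n, n) matrix; entry [i, j] is the tie sent by i to j.
    """
    theta = np.asarray(theta, dtype=float)
    n = theta.size
    t_send = theta[:, None]   # rows index the rating sender
    t_recv = theta[None, :]   # columns index the rating receiver
    ties = np.zeros((n, n))
    for k in range(len(intercept)):
        pred = intercept[k] + sender[k] * t_send + receiver[k] * t_recv
        if distance is not None:
            # For a single trait the Euclidean distance is |theta_i - theta_j|
            pred = pred + distance[k] * np.abs(t_send - t_recv)
        ties += pred
    np.fill_diagonal(ties, np.nan)  # members do not rate themselves
    return ties

theta = [-1.0, 0.0, 1.0]                      # three hypothetical members
ties_m1 = predicted_ties(theta, [3.0, 3.0], [1.0, 0.8], [0.5, 0.2])
ties_m2 = predicted_ties(theta, [3.0, 3.0], [1.0, 0.8], [0.5, 0.2],
                         distance=[-0.05, -0.05])
print(np.round(ties_m1, 2))
print(np.round(ties_m2, 2))
```

Because the sender and receiver effects differ, the resulting matrix is asymmetric. This is what makes the reconstructed network directed.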

Discussion

On the Development of the Latent Interdependence Models

Psychometric relational data come from a variety of different research settings (e.g., “multiple” round-robin designs and block designs). The data we focused on in this paper are continuous psychometric data with a network structure (as illustrated by Table 1), which typically come from a setting in which each network member rates their relations with all others. As psychometric models, the goal of the LIDM is to score individuals’ relational traits and to evaluate the characteristics of the items. The estimates of model parameters can also be used to predict the magnitude of the connections among the group members along each latent dimension. These weighted connections construct univariate or multivariate social networks at a latent-trait level and can be interpreted using the metric of a given measure.

The LIDM adopt the idea of interdependence from the social relations model (SRM), in which a mutual-rating process is viewed as being influenced by both rating senders’ and receivers’ characteristics. However, they differ from SRMs in that they score everyone’s relational traits directly. Moreover, under the LIDM, the influence of one’s traits on dyadic responses is conditional on an item’s characteristics, namely its ability to differentiate rating-senders’ and rating-receivers’ traits and the dissimilarities between traits. These item characteristics provide researchers with some useful perspectives, enabling them to evaluate how to measure a given relational construct properly. For instance, in measuring friendship, researchers may have an opportunity to evaluate the differences between using an “individual-focused” item (e.g., “I like my friend.”) and using a “dyad-focused” item (e.g., “My friend and I like each other.”).

In the LIDM, the basic units of modeling are dyads, which makes the LIDM distinct from other psychometric models (e.g., univariate or multivariate item response models) whose modeling units are single persons. For instance, in multivariate item response models, multiple latent traits capture an examinee’s set of abilities, with no need to involve the abilities of other individuals, nor any need to involve a component for the dissimilarity between two individuals’ abilities in the measurement or structural model.

Moreover, unlike traditional social network models (e.g., p* models and latent space models) that evolved from data-mining methodologies, the LIDM can be applied to theory-driven studies using psychometric relational data to represent a latent structure of relational constructs. By yielding accurate estimates for the latent traits and their covariance, the LIDM prepare social network researchers for further examinations of the mechanisms that underlie the formation of ties and for visualizing multivariate social networks at a latent-trait level.

To make the models estimable, constraints were placed onto certain parameters. In both models, the loadings conveying the effects of receiver’s latent traits were restricted to be positive, to have a fixed variance of one, to be equal across dyads, and to be item-specific. The variances of the random errors were also held equal across dyads and varied only across items. In addition, in Model 2, the effects of the distance metric were constrained to be negative and to be item-specific. By specifying these constraints, the number of parameters to be estimated does not rise as the network size grows. We note that although these proposed constraints and estimation procedures have been shown to be effective for continuous outcomes, further investigation is needed to generalize these constraints to other types of outcome data. It should be noted that with receiver effects being constrained to be positive, group members’ responses need to be scored in a proper way before entering analysis. That is, reverse scoring may be needed for some items to make sure a rating-sender’s higher score implies a rating-receiver’s higher trait score.
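The reverse-scoring step mentioned above can be illustrated with a minimal sketch. The 1 to 7 response scale and the function name `reverse_score` are assumptions for illustration; the scale of the actual measure is not stated in this section.

```python
import numpy as np

def reverse_score(responses, low, high):
    """Flip responses on a bounded scale so that high becomes low and vice versa."""
    return (low + high) - np.asarray(responses, dtype=float)

# Hypothetical negatively worded item answered on a 1-7 scale
raw = np.array([1, 4, 7, 6])
flipped = reverse_score(raw, low=1, high=7)
print(flipped)
```

After this transformation, a higher response on every item points toward a higher trait score for the rating receiver, which is what the positivity constraint on the receiver effects presupposes.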

On the Simulation Study

The objectives of the simulation study were to evaluate the parameterizations of the two proposed models and to investigate the effectiveness of model estimation at different network sizes. The results showed that, using the proposed Bayesian estimation procedures with uninformative priors, both models converged successfully for all simulated samples and that model parameters were accurately recovered under most conditions. These findings support the effectiveness of the parameterizations of both models. Moreover, when estimated with data that were generated from a different model, both models yielded relatively accurate results for the latent trait scores, suggesting a tolerance of both models to some model misspecification.

As expected, the accuracy of parameter recovery improved as network size increased. Since an increase in network size would burden group members by necessitating more ratings, researchers may face a trade-off between the quality of model estimation and the practicability of data collection. Additional non-round-robin data collection designs (where not all raters rate all others) need further research, as implications for missing data are not considered in this paper. Although it produced small biases, estimation from a small network with five or ten members was not satisfactorily accurate in general, owing to relatively large RMSE for certain parameters. We suggest caution with respect to estimation accuracy when interpreting the results of analyses with small networks. In addition, given the substantial improvement in the accuracy of parameter recovery when the network size grew from 10 to 20, a network size over 20 may suffice for reaching satisfactory estimation accuracy under the parameter values studied here.
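The trade-off between network size and rating burden can be made concrete with simple counting, assuming a full round-robin design in which every member rates every other member on every item. The function name `rating_burden` is hypothetical; the network sizes (5, 10, 20) and the eight items follow the simulation design mentioned in the text.

```python
def rating_burden(n_members: int, n_items: int) -> dict:
    """Count dyads and item responses in a full round-robin design."""
    directed_pairs = n_members * (n_members - 1)   # ordered sender-receiver pairs
    return {
        "dyads": directed_pairs // 2,              # unordered pairs
        "ratings_per_member": (n_members - 1) * n_items,
        "total_responses": directed_pairs * n_items,
    }

burden = {n: rating_burden(n, n_items=8) for n in (5, 10, 20)}
for n, counts in burden.items():
    print(n, counts)
```

Under these assumptions the number of responses each member must give grows linearly with network size (32, 72, and 152 here), while the total number of responses grows roughly quadratically (160, 720, and 3,040). As a side check, the same formula gives 105 dyads for a 15-member network, the dyad count reported for the empirical data, although that network size is inferred here rather than quoted.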

In the Bayesian estimation procedures used in the simulation study, weakly informative prior distributions were chosen for all parameters except the latent traits so that the estimates would maximally reflect the information in the data. It should be noted that the parameter estimates in this study were obtained via expected a posteriori (EAP) estimation. EAP estimates and other Bayesian estimates (e.g., MAP estimates) have been noted to be inwardly biased, with a tendency toward the mean of a given parameter, when estimating traits under item response theory models (e.g., Wang, 2015; Feuerstahler, 2018). For future studies, a systematic evaluation of various estimation methods with the LIDM is needed.
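The inward bias of EAP estimates can be seen in a toy example that is much simpler than the LIDM: a single trait with a normal prior and normally distributed measurement errors, for which the posterior mean has a closed form. The model, the function name `eap_normal`, and all numbers are assumptions chosen purely for illustration.

```python
import numpy as np

def eap_normal(y_bar, n_obs, error_var, prior_mean=0.0, prior_var=1.0):
    """Posterior mean (EAP) of a trait under a normal prior and normal errors."""
    precision = 1.0 / prior_var + n_obs / error_var
    return (prior_mean / prior_var + n_obs * y_bar / error_var) / precision

# Same observed mean of 2.0, based on few versus many responses
eap_few = eap_normal(y_bar=2.0, n_obs=4, error_var=1.0)
eap_many = eap_normal(y_bar=2.0, n_obs=40, error_var=1.0)
print(eap_few, eap_many)

# With MCMC output, the EAP is simply the average of the posterior draws.
# Here the draws are simulated from the known posterior of the first case,
# whose variance is 1 / precision = 1 / 5.
rng = np.random.default_rng(3)
draws = rng.normal(loc=eap_few, scale=np.sqrt(1.0 / 5.0), size=20_000)
eap_from_draws = draws.mean()
print(eap_from_draws)
```

With four responses the estimate is pulled from 2.0 to 1.6, toward the prior mean of zero. With forty responses the pull is much weaker. The same kind of shrinkage is what the cited work describes for trait estimates under item response theory models.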

Limitations and Future Studies

Despite evidence that supports the parametrization of the proposed models, we note some considerations on model development and the design of the simulation study. First, in this study, it was assumed that the dyadic relational response data were continuous. In fact, psychometric relational data can have multiple sample spaces. For instance, data collected using a behavior checklist may be binary. In such cases, generalized latent interdependence models with appropriate distributions and link functions could be used. The models can also be generalized to other types of data (e.g., ordinal data and count data). In developing the LIDM, we only considered the situations where each item only measures one relational trait and constrained the form of distance metric as the Euclidean metric. Future studies are needed to explore a more general form of the models that would apply for items measuring multiple traits and/or that include a more general distance metric (e.g., the Minkowski distance).
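As a small illustration of the more general distance metric mentioned above, the Minkowski distance of order p reduces to the Euclidean distance when p = 2 and to the city-block distance when p = 1. The trait vectors below are hypothetical.

```python
import numpy as np

def minkowski(theta_i, theta_j, p=2.0):
    """Minkowski distance of order p between two members' trait vectors."""
    diff = np.abs(np.asarray(theta_i, dtype=float) - np.asarray(theta_j, dtype=float))
    return float(np.sum(diff ** p) ** (1.0 / p))

# Two hypothetical members scored on two latent traits
theta_i = [0.5, -1.0]
theta_j = [-0.5, 1.0]

d_euclid = minkowski(theta_i, theta_j, p=2)   # Euclidean special case
d_city = minkowski(theta_i, theta_j, p=1)     # city-block special case
print(d_euclid, d_city)
```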

Second, the evidence from the simulation study does not guarantee the usefulness of the proposed models in other settings. In the simulation study, data were generated under a particular design with known dimensionality, in which eight items measured two latent traits (and each item measured only one latent trait). Although such a design seems to be practical for social and behavioral sciences research, sometimes researchers must compromise their design to address practical concerns. For instance, because group members could be burdened by a heavy item load, researchers may consider using only a few items to measure each relation in a network. Unfortunately, small numbers of items may cause estimation issues, such that extra constraints may need to be put onto the models. Further studies are needed to address these issues.

Finally, it should be noted that Model 1 is nested within Model 2 (given a zero effect of the distance metric). In practice, researchers can build their models starting from Model 1, with which they can not only score latent traits and evaluate item characteristics in terms of their sensitivity to trait change, but also understand which member of a dyad dominates the rating process. Moving to Model 2, researchers have an opportunity to test the effect of the interplay between dyad members’ latent characteristics. Empirically comparing the two models would involve a consideration of the information obtained from statistics (such as Bayes factors) or an examination of the highest posterior density credible intervals for the parameters of Model 2 that are not part of Model 1. Future studies are needed to derive and test Bayes factors for the LIDM.
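The interval-based comparison mentioned above could be carried out along the following lines. This is a minimal sketch of a highest posterior density interval computed from posterior draws, taken as the shortest window that contains the requested share of the sorted draws; the function name `hpd_interval` and the simulated draws for a distance effect are hypothetical.

```python
import numpy as np

def hpd_interval(draws, prob=0.95):
    """Shortest interval containing `prob` of the posterior draws."""
    x = np.sort(np.asarray(draws, dtype=float))
    n = x.size
    k = int(np.ceil(prob * n))            # number of draws inside the interval
    # Width of every window of k consecutive sorted draws
    widths = x[k - 1:] - x[: n - k + 1]
    start = int(np.argmin(widths))
    return float(x[start]), float(x[start + k - 1])

rng = np.random.default_rng(4)
# Hypothetical, unconstrained posterior draws for one item's distance effect
distance_draws = rng.normal(loc=-0.05, scale=0.04, size=10_000)

lower, upper = hpd_interval(distance_draws, prob=0.95)
print(f"95% HPD interval: [{lower:.3f}, {upper:.3f}]")
print("interval contains zero:", lower < 0.0 < upper)
```

Note that if the distance effects are constrained to be negative, as in the estimation described earlier, the interval cannot cross zero by construction. One would then have to judge how close its upper bound lies to zero or relax the constraint, a choice this sketch leaves open.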

Concluding Remarks

Interpersonal relations can be studied at different levels. Researchers collect psychometric relational data within network settings to study those relational constructs that could be conceptualized as psychological processes with multiple latent dimensions. The development of the latent interdependence models (LIDM) is an effort to model psychometric data in social networks. The LIDM have the potential to be used in theory-driven studies to help explain the latent structure of any relational constructs. They produce estimates for group members’ relational traits and for the effects capturing items’ sensitivity to the change of traits and to the dissimilarity between a pair of members’ traits. Moreover, through deriving the magnitudes of the connections among group members, such an analytical strategy translates heavy-laden observed networks into practically analyzable multivariate networks at a latent-trait level. Further analyses (e.g., network properties calculation and multivariate networks visualization) could be done with the latent univariate or multivariate networks, opening the door to continued development.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: DOI: 10.6084/m9.figshare.14925474.v4, reference number https://figshare.com/s/1c5bac0b88596795c3c4.

Author Contributions

JT and LH contributed to the development of the proposed models, supervised the design of the study, and reviewed the manuscript. BH developed the models and estimation procedures, simulated the data, performed the model evaluations, and wrote the manuscript. All authors contributed to the article and approved the submitted version.

Funding

JT was supported by Grants DRL-1813760 from the National Science Foundation and R305A190079 from the Institute of Education Sciences.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.860837/full#supplementary-material

Footnotes

1. Both mean-based estimates and median-based estimates were obtained. The results of parameter recovery were reported based on mean-based estimates. The results based on median-based estimates have been included in the Supplementary Tables M1, M2.

References

Back, M. D., and Kenny, D. A. (2010). The social relations model: how to understand dyadic processes. Soc. Pers. Psychol. Compass 4, 855–870. doi: 10.1111/j.1751-9004.2010.00303.x

Cramer, A. O., Waldorp, L. J., van der Maas, H. L., and Borsboom, D. (2010). Comorbidity: a network perspective. Behav. Brain Sci. 33, 137–150. doi: 10.1017/S0140525X09991567

Epskamp, S., Rhemtulla, M., and Borsboom, D. (2017). Generalized network psychometrics: combining network and latent variable models. Psychometrika 82, 904–927. doi: 10.1007/s11336-017-9557-x

Feuerstahler, L. M. (2018). Sources of error in IRT trait estimation. Appl. Psychol. Meas. 42, 359–375. doi: 10.1177/0146621617733955

Hoff, P. D., Raftery, A. E., and Handcock, M. S. (2002). Latent space approaches to social network analysis. J. Am. Stat. Assoc. 97, 1090–1098. doi: 10.1198/016214502388618906

Horvath, A. O., and Greenberg, L. S. (1989). Development and validation of the Working Alliance Inventory. J. Couns. Psychol. 36:223. doi: 10.1037/0022-0167.36.2.223

Jeon, M., Jin, I. H., Schweinberger, M., and Baugh, S. (2021). Mapping unobserved item–respondent interactions: a latent space item response model with interaction map. Psychometrika 86, 378–403. doi: 10.1007/s11336-021-09762-5

Jin, I. H., and Jeon, M. (2019). A doubly latent space joint model for local item and person dependence in the analysis of item response data. Psychometrika 84, 236–260. doi: 10.1007/s11336-018-9630-0

Kashy, D. A., and Kenny, D. A. (1990). Analysis of family research designs: a model of interdependence. Commun. Res. 17, 462–482. doi: 10.1177/009365090017004004

Kenny, D. A., and La Voie, L. (1984). “The social relations model,” in Advances in Experimental Social Psychology, Vol. 18, ed. L. Berkowitz (Orlando, FL: Academic Press), 142–182.

Kenny, D. A., Kashy, D. A., Cook, W. L., and Simpson, J. A. (2006). Dyadic Data Analysis (Methodology in the Social Sciences). New York, NY: Guilford.

McAllister, D. (1995). Affect- and cognition-based trust as foundations for interpersonal cooperation in organizations. Acad. Manag. J. 38, 24–59. doi: 10.2307/256727

Nestler, S. (2018). Likelihood estimation of the multivariate social relations model. J. Educ. Behav. Stat. 43, 387–406. doi: 10.3102/1076998617741106

Nestler, S., Lüdtke, O., and Robitzsch, A. (2020). Maximum likelihood estimation of a social relations structural equation model. Psychometrika 85, 870–889. doi: 10.1007/s11336-020-09728-z

Pattison, P., and Wasserman, S. (1999). Logit models and logistic regressions for social networks: II. Multivariate relations. Br. J. Math. Stat. Psychol. 52, 169–193. doi: 10.1348/000711099159053

Plummer, M. (2003). JAGS: A Program for Analysis of Bayesian Graphical Models Using Gibbs Sampling. Available online at: http://citeseer.ist.psu.edu/plummer03jags.html (accessed October 12, 2018).

Schmittmann, V. D., Cramer, A. O. J., Waldorp, L. J., Epskamp, S., Kievit, R. A., and Borsboom, D. (2013). Deconstructing the construct: a network perspective on psychological phenomena. New Ideas Psychol. 31, 43–53. doi: 10.1016/j.newideapsych.2011.02.007

Sewell, D. K., and Chen, Y. (2015). Latent space models for dynamic networks. J. Am. Stat. Assoc. 110, 1646–1657. doi: 10.1080/01621459.2014.988214

Snijders, T. A. B. (2009). “Longitudinal methods of network analysis,” in Encyclopedia of Complexity and System Science, ed. B. Meyers (New York, NY: Springer Verlag), 5998–6013. doi: 10.1007/978-0-387-30440-3_353

Snijders, T. A., Pattison, P. E., Robins, G. L., and Handcock, M. S. (2006). New specifications for exponential random graph models. Sociol. Methodol. 36, 99–153. doi: 10.1111/j.1467-9531.2006.00176.x

Wang, C. (2015). On latent trait estimation in multidimensional compensatory item response models. Psychometrika 80, 428–449. doi: 10.1007/S11336-013-9399-0

Keywords: psychometric models, relationship measurement, social networks, latent inter-dependence models, Bayesian estimation

Citation: Hu B, Templin J and Hoffman L (2022) Modeling Psychometric Relational Data in Social Networks: Latent Interdependence Models. Front. Psychol. 13:860837. doi: 10.3389/fpsyg.2022.860837

Received: 23 January 2022; Accepted: 11 March 2022;

Published: 07 April 2022.

Edited by:

Alexander Robitzsch, IPN - Leibniz Institute for Science and Mathematics Education, Germany

Reviewed by:

Terrence D. Jorgensen, University of Amsterdam, Netherlands

David Jendryczko, University of Konstanz, Germany

Copyright © 2022 Hu, Templin and Hoffman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bo Hu, aHVib0BuYnUuZWR1LmNu
