- 1Department of Psychology, University of Bamberg, Bamberg, Germany

- 2Bamberg Graduate School of Affective and Cognitive Sciences (BaGrACS), Bamberg, Germany

The ability to read emotions in faces helps humans efficiently assess social situations. We tested how this ability is affected by aspects of familiarization with face masks and personality, with a focus on emotional intelligence (measured with an ability test, the MSCEIT, and a self-report scale, the SREIS). To address aspects of the current pandemic situation, we used photos of not only faces per se but also of faces that were partially covered with face masks. The sample (N = 49), the size of which was determined by an a priori power test, was recruited in Germany and consisted of healthy individuals of different ages [M = 24.8 (18–64) years]. Participants assessed the emotional expressions displayed by six different faces determined by a 2 (sex) × 3 (age group: young, medium, and old) design. Each person was presented with six different emotional displays (angry, disgusted, fearful, happy, neutral, and sad) with or without a face mask. Accuracy and confidence were lower with masks—in particular for the emotion disgust (very often misinterpreted as anger) but also for happiness, anger, and sadness. When comparing the present data collected in July 2021 with data from a different sample collected in May 2020, when people first started to familiarize themselves with face masks in Western countries during the first wave of the COVID-19 pandemic, we did not detect an improvement in performance. There were no effects of participants’ emotional intelligence, sex, or age regarding their accuracy in assessing emotional states in faces for unmasked or masked faces.

Significance Statement

The present study validates previous findings that the reading of emotions in faces is impaired when faces are partially covered with a mask (the emotional state of disgust was especially difficult to read)—even 1 year after wearing face masks became common. Although there was a wide range of performance levels, emotional intelligence, assessed with a performance test or with self-reports, did not affect the specific confusion of perceived emotions for faces with or without masks. During a pandemic, it seems necessary to provide and use additional information so that interaction partners’ emotions can be assessed accurately.

Introduction

A face probably conveys hundreds of dimensions of information, which people can typically read quickly and with little cognitive effort. Besides the socially important dimension of identification, even if we take only a single glance at a person’s face before executing any deeper exploration (Carbon, 2011), their face allows us to assess several other dimensions that are relevant for the raw assessment of a social situation, for example, attractiveness (Carbon et al., 2018), bodyweight (Schneider et al., 2012), and trustworthiness (Willis and Todorov, 2006). The perception of emotions is an additional highly complex ability (Herpertz et al., 2016) as not only basic emotions but even highly differentiated mental states can be inferred from faces, especially on the basis of the region around the eyes (Schmidtmann et al., 2020). All of these pieces of information are assumed to be processed in a rather parallel and highly efficient way (Bruce and Young, 1986), a theoretical claim that indeed has found support from brain research (Haxby et al., 2001). Emotion perception can be considered an aspect of emotional intelligence and is an ability that is related to the wellbeing of both actors and partners (Koydemir and Schütz, 2012) and can be increased through training (Gessler et al., 2021). Such a highly optimized and efficient way of processing facial information can easily be impaired.

During the COVID-19 pandemic, a substantial change in the opportunity to thoroughly perceive facial expressions occurred when the use of face masks became obligatory, which was the case in many countries during the first wave of COVID-19 in May 2020. This global change in the opportunity to perceive facial expressions provides an interesting setting for testing whether the ability to read faces can adapt to such an environmental change. The present study was aimed at analyzing indications of improvements in face reading after having been exposed to partially covered faces for 1 year. For interested readers, we would like to refer to an overview of all kinds of effects documented so far for the use of masks (Pavlova and Sokolov, 2021). However, in the following study, we focus on the effects on emotion reading. We were interested in not only such a possible adaptation but also in variables that could potentially affect the ability to read emotions in faces, foremost the personality variable of emotional intelligence (Mayer and Salovey, 1997).

We know from research during the COVID-19 pandemic that adults (Carbon, 2020) as well as children aged 9–11 years (Carbon and Serrano, 2021) are less effective in reading emotions when face masks cover a target’s mouth and nose region. These general findings were replicated several times in 2020 (e.g., Gori et al., 2021; Grundmann et al., 2021) and 2021 (Ramachandra and Longacre, 2022). Specific emotions are especially difficult to discern when face masks are worn. This is the case for all emotions that are strongly expressed by movements in the mouth area (e.g., disgust, anger, sadness, and happiness; see Bombari et al., 2013). For these emotions, recognition is heavily impaired when a face mask is worn (Carbon, 2020). Pre-COVID-19 studies had already shown this general finding, although the results had been inconsistent (see Bassili, 1979; Fischer et al., 2012; Kret and de Gelder, 2012), which calls for further investigation into the specific impairments of face covers for certain emotions.

Emotional intelligence (EI) plays a significant role in the decoding of facial expressions. More precisely, EI is the ability to perceive and regulate emotions in oneself and in others (Mayer and Salovey, 1997). Individuals with better emotion perception skills are especially sensitive to changes in facial expressions and thereby better able to recognize emotions in others (Karle et al., 2018). In their updated Four-Branch Model of Emotional Intelligence, Mayer et al. (2008b) not only make the assumption that individuals high in EI are more adept at recognizing verbal and non-verbal information in others, such as facial or vocal cues, but also differentiate reasoning skills for each of the four branches which range from basic to more complex cognitive processes. According to this model, high EI individuals possess enhanced cognitive abilities that allow them to recognize emotions even under difficult conditions, such as integrating contextual and cultural aspects when decoding emotional expressions. Thus, especially when face masks cover parts of the face, individuals with high emotional intelligence should be better at identifying emotions in others and more confident in their judgments.

Throughout life, individuals continue to develop their emotional intelligence, which includes the ability to perceive emotions (Mayer et al., 2008a), and previous studies have shown that emotional intelligence can be improved by traditional face-to-face training (e.g., Hodzic et al., 2018; Gessler et al., 2021) as well as online training (Köppe et al., 2019), thus highlighting the importance of experience and practice in developing and increasing emotion perception skills. With regard to the current context of a pandemic, individuals who regularly interact with others who wear face masks should be especially skilled at detecting emotions despite the use of face masks. In addition, it is reasonable to assume that individuals have improved their emotion perception skills after having been confronted with partially covered faces for a while. Thus, the respective abilities should be better now than they were at the onset of the COVID-19 pandemic.

When assessing the impact of emotional intelligence, it is important to differentiate between performance-based and self-report measures as performance-based measures are more strongly related to cognitive ability, whereas self-reports are more closely linked to other personality traits (Mayer et al., 2008b; Joseph and Newman, 2010). As a result, studies have revealed only weak correlations between performance-based and self-report measures (e.g., Brackett et al., 2006). For this reason, we employed both performance-based and self-report measures in the present study.

Numerous studies have indicated that individuals’ emotion perception also depends on their attitudes, beliefs, and stereotypes regarding other people. Individuals who did not adhere to wearing face masks in everyday life exhibited more negative attitudes toward face masks (Taylor and Asmundson, 2021) and in the context of the COVID-19 pandemic, individuals who object to the COVID-19 restrictions appear to be separating themselves from the “mainstream” and their previous in-group. As a consequence, individuals who do not wear face masks should be worse at detecting emotions in masked faces (i.e., out-group members).

Last but not least, we were also interested in potential gender differences in processing facial emotions—a topic that has largely been neglected but has piqued interest in recent years, probably influenced by a meta-analysis on this topic in 2013 (Herlitz and Lovén, 2013). The authors of this meta-analysis showed that women had better performance in facial recognition and memories for faces than men (Herlitz and Lovén, 2013) and suspected that this advantage was due to more efficient configural and holistic processing, which also reflects an expertise-based mode of processing (Carbon and Leder, 2005; Rhodes et al., 2006) when processing facial information (e.g., age, Hole and George, 2011). However, in a recent study with a large sample of 343 participants employing the Cambridge Face Memory Test (CFMT; Duchaine and Nakayama, 2006) and the Cambridge Face Perception Test (CFPT; Duchaine et al., 2007), the holistic processing hypothesis was not supported (Østergaard Knudsen et al., 2021). Still, specifically for emotion recognition, it has been shown that women regularly outperform men, for instance, in the acoustic domain (e.g., for the recognition of vocal emotions; Mishra et al., 2019) or the visual domain [e.g., for the recognition of facial emotions (Wingenbach et al., 2018)].

On the basis of these considerations, we tested the following hypotheses, which were previously documented in our preregistration available on the Open Science Framework (OSF; see footnote 1):

• (H1) Emotion recognition will be better and participants’ confidence in their judgments higher for faces without masks than for faces with masks.

• (H2) Participants’ EI (both performance-based and self-reported) will be positively related to their ability to recognize emotions in faces and to their confidence in doing so.

• (H3) Participants’ emotion recognition will be better and their confidence higher than in a different but comparable sample measured 1 year earlier.

• (H4) Familiarity with face masks will be positively related to emotion recognition and confidence.

• (H5) Positive attitudes toward face masks will be positively related to emotion recognition and confidence.

• (H6) For masked faces, emotion recognition will be worse for emotions in which the mouth area is important than for other emotions.

• (H7) Women will show better emotion recognition and higher confidence than men.

• (H8) Younger participants will show better emotion recognition and higher confidence than older adults.

Experimental Study

The major aims of the present study were to gain knowledge about whether the ability to process facial emotions is adaptive and can be affected by presentation (masks vs. no masks), time (during the COVID-19 pandemic), and participants’ sex and age. Further, we aimed to test whether performance in the processing of facial emotions can be affected by participants’ emotional intelligence (EI) or participants’ attitude toward face masks.

Materials and Methods

Participants

Calculation of the Sample Size

We calculated the required sample size N using a test model that included EI as a fixed factor (Model 2) compared with a baseline model without EI (Model 1). As we followed a test strategy based on linear mixed models (LMMs), we calculated the test power a priori using the R package simr (Green and MacLeod, 2016). To compare the two models, we set the intercept to 60% (the dependent variable was the performance level, which could range from 0 to 100%, so 60% indicates a medium-high performance level given a six-alternative forced-choice (AFC) design with a 16.7% base rate). The slopes of the fixed effects of emotions were set to −2, +5, +5, +5, and +10 for the different emotional states (disgust, anger, neutral, happiness, and fear, respectively) compared with the emotional state of sadness, based on typical findings for these emotions (e.g., Carbon, 2020). For Model 2, we set the slope of the fixed effect of EI to +2, the random intercept variance was set to 10, and the residual standard deviation was set to 20. With 1,000 simulations, we obtained a test power of 90% [95% CI (89.16, 92.79)] with a sample size of N = 46.
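As an illustration of the parameter values stated above, the following minimal sketch computes the expected mean performance per emotion that these fixed effects imply, together with the chance level of a six-alternative task. It is written in Python purely for illustration and does not reproduce the simr power simulation; the variable names are ours, not the authors'.

```python
# Expected mean performance (%) per emotion implied by the stated
# simulation parameters. Illustrative arithmetic only; this is not
# the simr power simulation itself.
INTERCEPT = 60.0  # reference level: sadness

# Fixed-effect slopes relative to sadness, as given in the text.
SLOPES = {
    "sadness": 0.0,
    "disgust": -2.0,
    "anger": 5.0,
    "neutral": 5.0,
    "happiness": 5.0,
    "fear": 10.0,
}

expected = {emotion: INTERCEPT + slope for emotion, slope in SLOPES.items()}

# Chance level in a six-alternative forced-choice task.
chance = 100.0 / len(SLOPES)

for emotion, value in expected.items():
    print(f"{emotion:10s} {value:5.1f}%")
print(f"{'chance':10s} {chance:5.1f}%")
```

All expected values lie far above the 16.7% chance level, which is what the text means by a medium-high performance level.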

We recruited the required N plus 5 additional individuals. Initially, we had planned to oversample up to N = 54, given that invalid data are typically expected from about 1/5 of participants. However, a preliminary inspection of the data (looking for the exclusion indicators documented in the preregistration, i.e., very low performance and many missing data points) indicated a much smaller amount of invalid data: only two participants were excluded, on the basis of the preregistered outlier criterion of having correctly identified the emotional states of faces without face masks in less than 50% of the cases.

Sample

The final sample consisted of 49 participants [Mage = 24.8 years (18–64 years), Nfemale = 39], yielding a post-hoc power of 92.10% [95% CI (90.25, 93.70)]. People had been recruited by different online advertisements; they were not directly incentivized but had the option to participate in a lottery with prizes ranging from 10 to 100 Euros (5 × 10 Euros, 1 × 50 Euros, and 1 × 100 Euros).

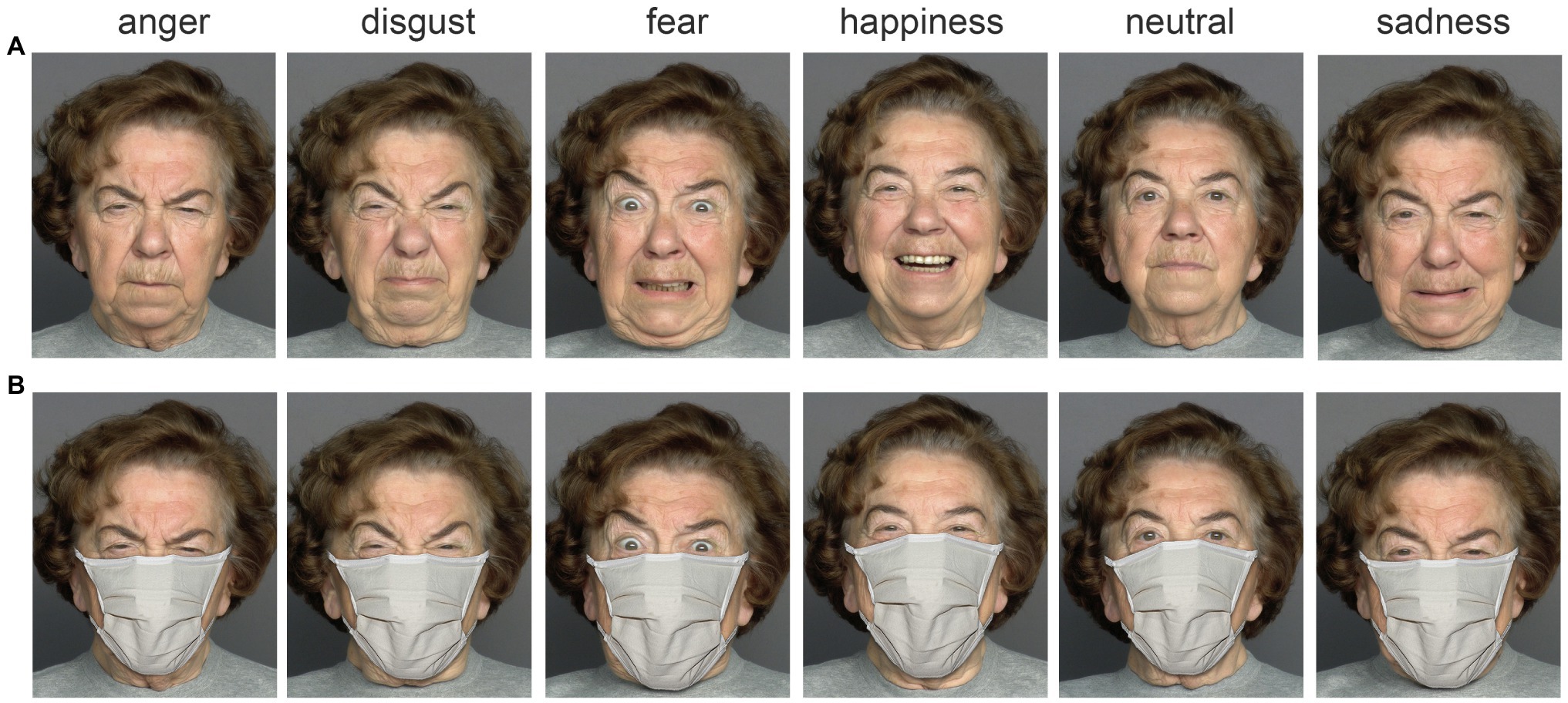

Material

The baseline face stimuli without masks were obtained from the MPI FACES database (Ebner et al., 2010) on the basis of a study-specific contract effective 19 April 2021. We used frontal photos of six Caucasians (three female and three male) who belonged to three different face age groups [young, medium = middle-aged, elderly with average perceived ages of 25.5, 41.5, and 67.0 years, respectively, as shown in a previous study by Ebner et al. (2010)] as baseline images to which we subsequently applied face masks with a graphics editor. Each person showed the emotional states anger, disgust, fear, happiness, and sadness, and one neutral expression. Each face sex × face age group cell was represented by one specific person. We doubled all of the 2 [face sex] × 3 [face age groups] × 6 [emotional states] = 36 baseline pictures to apply a typical face mask used during the COVID-19 pandemic (a so-called “community mask” colored beige). For each manipulated picture, the mask was individually adapted to fit the different faces perfectly; we added realistic shadow effects to further increase the realism of the pictures with face masks (Figure 1).

Figure 1. The figure illustrates the six emotional variations (anger, disgust, fear, happiness, neutral, and sadness) of one person without (A) and with (B) a face mask. This specific person was not part of our experimental material but is presented here for illustrative purposes. The authors would like to thank the Max Planck Institute for providing the baseline stimuli (without masks), which came from the MPI FACES database (Ebner et al., 2010).

Overall, the material consisted of 2 [no mask vs. mask] × 36 = 72 facial stimuli, half of the original material originally used by Carbon (2020). Specifically, we used only one of the two face age group representatives per sex from the original study. This was done to reduce the total duration of the present study, which was substantially extended by adding the personality variables.
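The factorial structure of the stimulus set can be checked by enumerating the design cells. The sketch below is only an illustration of the counts given above; the label strings are our own shorthand.

```python
from itertools import product

# Design factors as described in the Material section.
FACE_SEX = ["female", "male"]
FACE_AGE = ["young", "middle-aged", "old"]
EMOTIONS = ["anger", "disgust", "fear", "happiness", "neutral", "sadness"]
MASK = ["no mask", "mask"]

# One baseline picture per sex x age group x emotion cell.
baseline = list(product(FACE_SEX, FACE_AGE, EMOTIONS))

# Every baseline picture exists once without and once with a mask.
stimuli = list(product(MASK, FACE_SEX, FACE_AGE, EMOTIONS))

print(len(baseline), len(stimuli))  # prints "36 72"
```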

Apparatus

Study Platform

As the study platform, we used the online tool SoSci Survey (see footnote 2), which is freely available for non-profit-oriented scientific projects.

Measures

Ability-Based Emotional Intelligence

We used the faces and images subtasks from the German version of the Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT; Steinmayr et al., 2011) to assess ability-based emotion perception. Participants used a 5-point scale to indicate the degree to which each of five emotions was expressed in a photograph (faces subtask) or pictures of landscapes and abstract patterns (images subtask). In line with previous research, internal consistency analyses revealed a Cronbach’s alpha of α = 0.683 for the faces subtask, α = 0.833 for the images subtask, and α = 0.857 for the emotion perception scale in this study.

Self-Reported Emotional Intelligence

The Perceiving Emotion subscale from the German version of the Self-Rated Emotional Intelligence Questionnaire (SREIS, see Vöhringer et al., 2020) was employed to assess emotion perception skills via self-report. Participants rated their emotion perception skills on a 5-point scale ranging from 1 (very inaccurate) to 5 (very accurate). Again, internal consistency analyses were computed, and Cronbach’s alpha for the SREIS was α = 0.520.
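For readers unfamiliar with the internal consistency index reported for the MSCEIT subtasks and the SREIS, the following minimal sketch shows the standard formula for Cronbach's alpha. The function name and the toy ratings are hypothetical and are not taken from the study data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a set of items.

    items: list of item-score lists, one inner list per item, each
    holding one score per participant (same participant order).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Sum score of each participant across all items.
    totals = [sum(scores) for scores in zip(*items)]
    # Sum of the item variances (sample variances).
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Hypothetical ratings from five participants on three items.
toy = [
    [4, 3, 5, 2, 4],
    [4, 2, 5, 3, 4],
    [5, 3, 4, 2, 3],
]
print(round(cronbach_alpha(toy), 3))
```

Alpha rises when items covary strongly relative to their individual variances, which is why a short subscale with heterogeneous items can yield a low value.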

Attitudes Toward Face Masks

Participants’ overall attitude toward face masks was measured with a single item “What is your personal opinion toward the mandatory use of masks?” with the response options: “I do not consider the mandatory use of masks a problem,” “To me the mandatory use of masks is annoying but bearable,” and “I consider the mandatory use of masks unreasonable and burdensome.” Further, we employed the 12-item scale developed by Taylor and Asmundson (2021) with answers that were rated on a 7-point scale ranging from 1 (strongly disagree) to 7 (strongly agree) to allow for a more fine-grained analysis of face mask attitudes. Internal consistency was α = 0.906 for the 12-item scale.

Face Mask Use

Participants indicated their individual face mask use (FamiliarityOwnMask) by rating the item “On average, how many hours a day do you wear a face mask?” on a 10-point scale ranging from 1 (max. 30 min) to 10 (more than 8 h). In addition, we asked participants to rate their daily duration of interpersonal contact with face masks (FamiliarityOthersMasks) by answering the item “How many hours per day are you in face-to-face contact with others who wear a face mask?” on a 10-point scale ranging from 1 (max. 30 min) to 10 (more than 8 h).

Procedure

We conducted the study between 13 July 2021 (13:09 local time; CEST) and 19 July 2021 (11:52 local time; CEST) at the end of the third wave of the COVID-19 pandemic in Germany. Each participant gave written consent to participate in the study; data were collected anonymously. We first asked general demographic questions about participants’ age and sex. Then, the experimental part began. We fully randomized the order of the stimuli for each participant. The participant’s task was to assess the depicted person’s emotional state in a six-alternative forced-choice (AFC) task in which all six possible emotional states were shown as written labels (in German: anger, disgust, fear, happiness, neutral, and sadness), each accompanied by a confidence scale. By clicking on one point of the confidence scale belonging to an emotion label, participants indicated both the perceived emotional state and their confidence with a single click. The confidence scale was used to assess the participant’s confidence in their recognition of the respective emotion expression on a 7-point scale ranging from 1 (not confident at all) to 7 (very confident). We did not set a time limit for the assessment but asked participants to respond spontaneously. The next trial started after the participant pushed a response key and was preceded by a short pause with a blank screen presented for half a second. After the experimental part, we administered all questionnaires and single questions. Participants took 27.5 min on average to successfully complete the whole study. We obtained ethical approval for the general psychophysical study procedure from the local ethics committee of the University of Bamberg (Ethikrat).

Results

We employed R 4.1.2 (R Core Team, 2021) to process and analyze the empirical data, mainly by using linear mixed models (LMMs) in the package lme4 (Bates et al., 2015). The preregistered study as well as the (anonymized) data can be found on the OSF (see footnote 3).

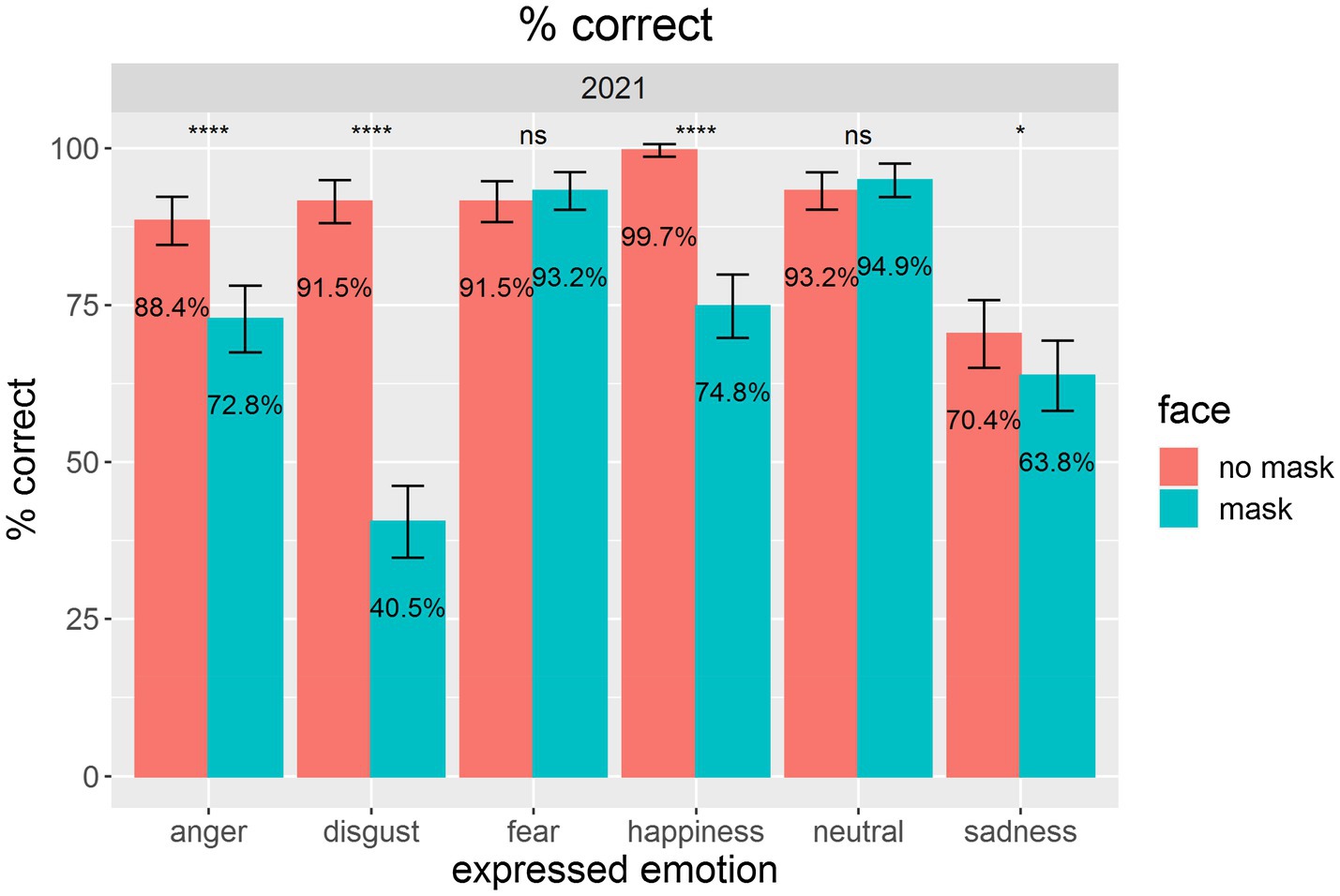

Performance was calculated as the percentage of correct responses. Confidence was converted to percentage ratings such that the minimum confidence rating of 1 corresponded to 0% and the maximum confidence rating of 7 corresponded to 100% confidence. We obtained a mean performance level for the baseline condition of faces without masks of M = 89.1%, which is remarkable given that the chance level for a six AFC task is 16.7%. For the faces with masks, the performance level dropped to 73.3%, which was still much higher than chance. A visual inspection of Figure 2 shows that the drop in performance was evident for four of the six emotional states: anger, disgust, happiness, and sadness.
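The two dependent variables described above can be sketched as follows. This is a minimal Python illustration of the stated scoring rules, with hypothetical function names; it is not the authors' R code.

```python
def confidence_to_percent(rating, low=1, high=7):
    """Map a confidence rating on the 1-7 scale linearly onto 0-100%."""
    if not low <= rating <= high:
        raise ValueError(f"rating must lie between {low} and {high}")
    return (rating - low) / (high - low) * 100.0


def percent_correct(responses):
    """Percentage of trials in which the chosen label matches the
    expressed emotion.

    responses: iterable of (expressed, chosen) label pairs.
    """
    responses = list(responses)
    hits = sum(1 for expressed, chosen in responses if expressed == chosen)
    return 100.0 * hits / len(responses)


print(confidence_to_percent(4))  # midpoint of the scale
print(percent_correct([("fear", "fear"), ("disgust", "anger")]))
```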

Figure 2. The figure demonstrates mean performance levels for assessments of emotional states for faces without masks (red) compared with faces with masks (blue). Error bars indicate 95% confidence intervals (CIs) according to Morey (2008). Pairwise comparisons of the presentation conditions were calculated via undirected paired t-tests. *p < 0.05. ****p < 0.0001. Nonsignificant results are marked with ns.
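The within-subject error bars attributed to Morey (2008) are commonly obtained by normalizing each participant's scores and then applying a correction factor. The sketch below shows that general procedure under our own assumptions: the function name is hypothetical, and which conditions the authors pooled for the normalization is not stated in the text.

```python
from math import sqrt
from statistics import mean, variance


def morey_standard_errors(data):
    """Corrected within-subject standard errors, one per condition.

    data: list of per-participant lists, one value per within-subject
    condition (same condition order for every participant).
    """
    n = len(data)        # number of participants
    j = len(data[0])     # number of within-subject conditions
    grand_mean = mean([v for row in data for v in row])
    # Normalization: remove each participant's overall offset.
    normalized = [[v - mean(row) + grand_mean for v in row] for row in data]
    # Morey (2008) correction for the bias introduced by normalizing.
    correction = j / (j - 1)
    return [sqrt(correction * variance(col) / n) for col in zip(*normalized)]


# Hypothetical scores of three participants in two conditions.
toy = [[10, 20], [20, 34], [30, 40]]
print(morey_standard_errors(toy))
```

A 95% CI would then be the condition mean plus or minus the corrected standard error multiplied by the critical t value with n − 1 degrees of freedom.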

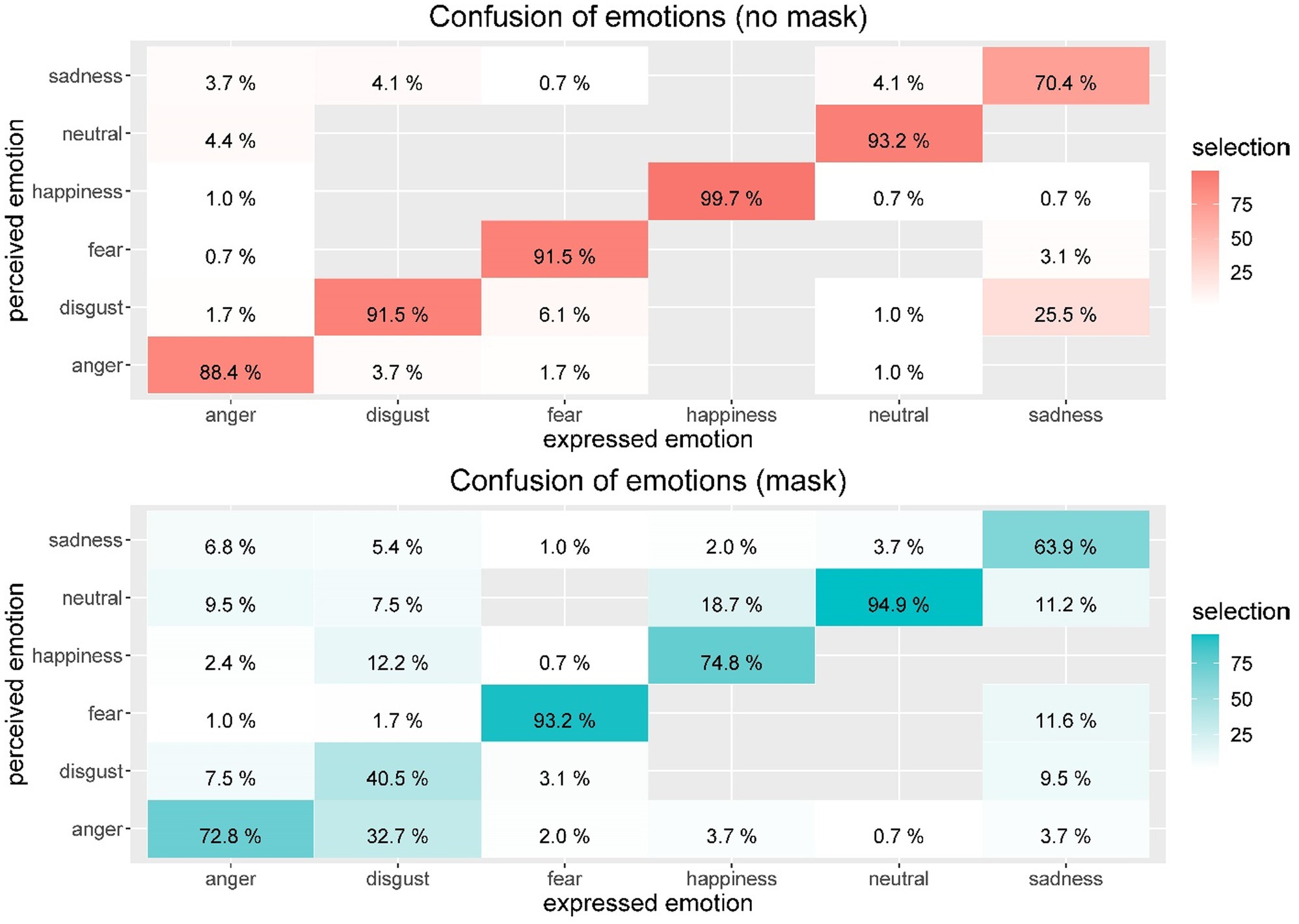

We examined the drop in performance for masked faces in more detail by means of confusion matrices for expressed versus assessed emotions. As Figure 3 indicates, there was confusion of emotions even when the entire face (without a mask) was shown. This was especially true for sadness, which was correctly identified in 70.4% of the cases and misinterpreted as disgust in about 25% of the cases. Recognition of the other emotions was quite good, with correctness levels of 88.4% (for anger) or higher (e.g., 99.7% for happiness).

Figure 3. This figure shows the confusion matrices for expressed versus perceived emotions for the original faces without face masks (top red) and faces with face masks (bottom blue). Mean performance levels in assessing the emotional states are given by percentage correctness rates (if >0.5%, otherwise data were suppressed for better readability of the matrices). The better the performance, the more saturated were the confusion matrix cells.

When faces were shown with masks, participants were more confused about which emotion was displayed. This was particularly the case for disgust, which participants very often misinterpreted as anger (32.7%). Sadness was diffusely assessed, with no clear misinterpretation for a single emotion, but with a broad spectrum of interpretations ranging across fear, neutral, disgust, or anger. The exceptions to the rule were neutral and fear, which were not negatively affected by adding a face mask.
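A confusion matrix of the kind shown in Figure 3 can be built by counting, for each expressed emotion, how often each label was chosen, and expressing the counts as row percentages. The sketch below uses hypothetical trials, not the study data, and the function name is ours.

```python
from collections import Counter, defaultdict


def confusion_matrix(trials):
    """Row-normalized confusion matrix in percent.

    trials: iterable of (expressed, perceived) label pairs.
    Returns {expressed: {perceived: percent of that expressed emotion}}.
    """
    counts = defaultdict(Counter)
    for expressed, perceived in trials:
        counts[expressed][perceived] += 1
    return {
        expressed: {
            perceived: 100.0 * n / sum(row.values())
            for perceived, n in row.items()
        }
        for expressed, row in counts.items()
    }


# Hypothetical trials, not the study data.
toy_trials = [
    ("disgust", "disgust"), ("disgust", "anger"),
    ("disgust", "disgust"), ("fear", "fear"),
]
matrix = confusion_matrix(toy_trials)
print(matrix["disgust"])
```

The diagonal entries correspond to the correctness rates, and off-diagonal entries such as disgust perceived as anger capture the specific misinterpretations discussed in the text.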

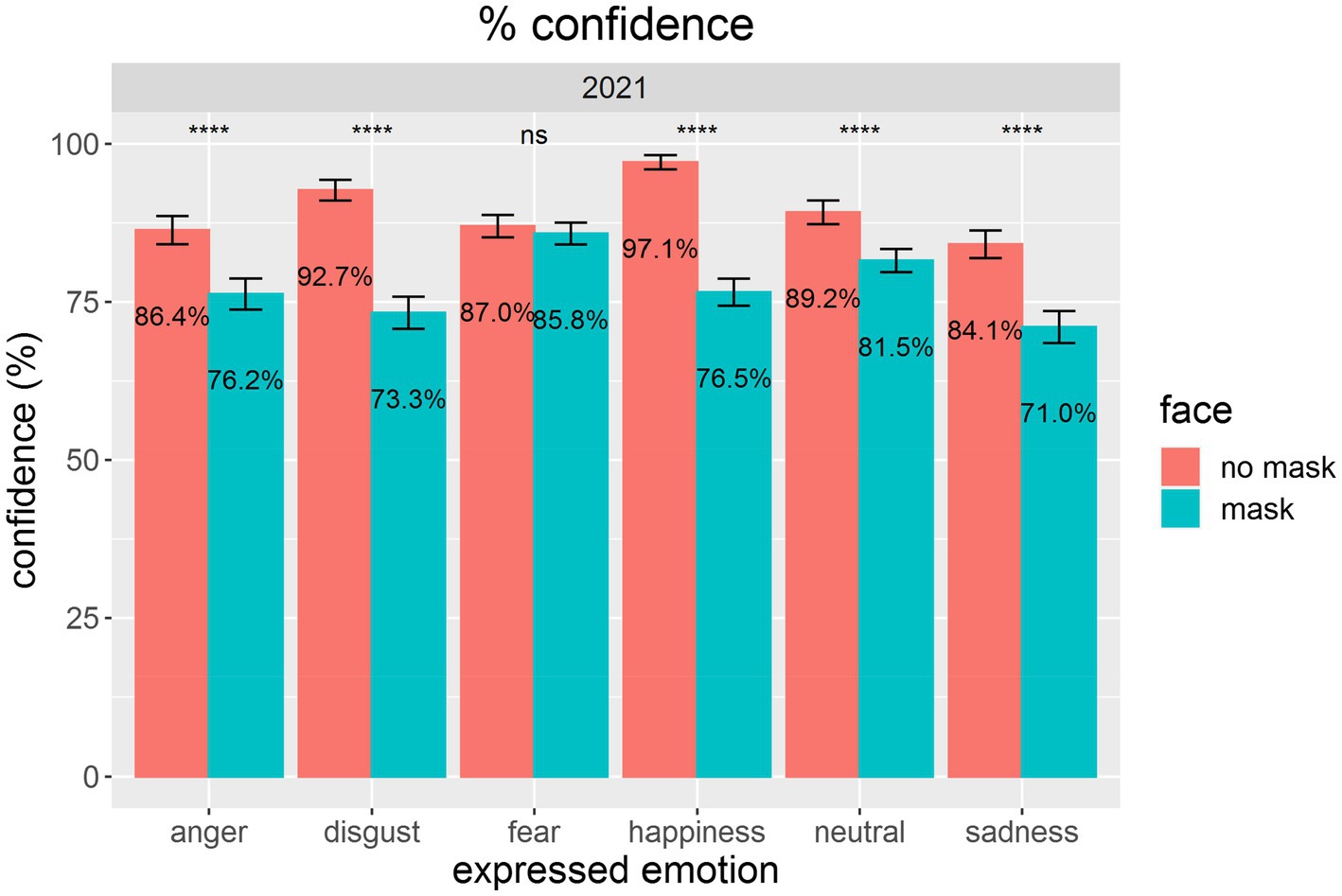

We also analyzed the data on participants’ confidence in choosing the respective emotional state. As Figure 4 indicates, participants showed numerically lower confidence when assessing masked faces. With five out of six emotions, we found statistically significant drops in confidence: for anger, disgust, happiness, neutral, and sadness.

Figure 4. This figure shows mean confidence levels for assessments of emotional states for faces without masks (red) compared with faces with masks (blue). Error bars indicate 95% CIs according to Morey (2008). Pairwise comparisons of the presentation conditions were calculated via paired t-tests. ****p < 0.0001. Nonsignificant results are marked with ns.

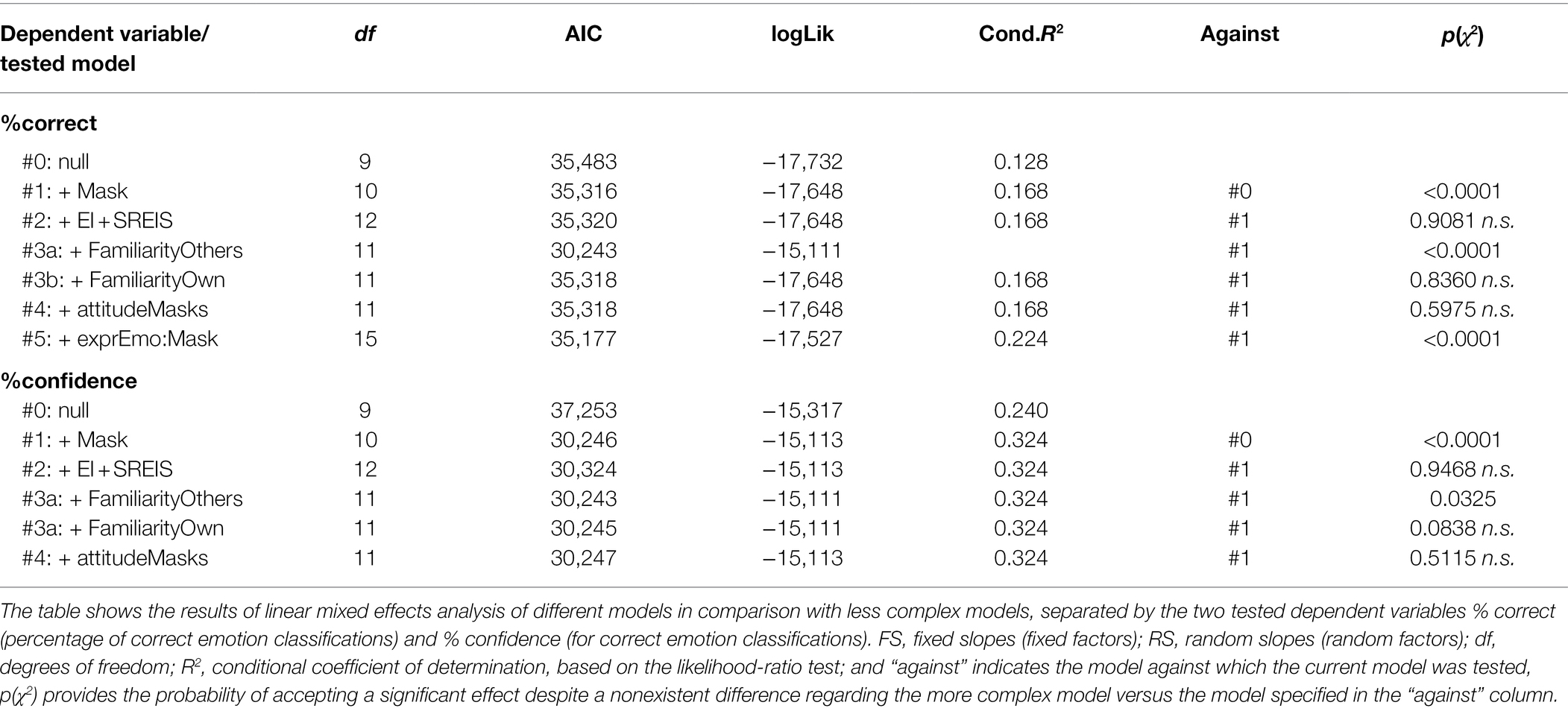

We tested the effect of wearing masks on performance and confidence with two separate linear mixed models (LMMs). As the null model (Model 0), we used a simple one containing the participants and baseline stimuli as random intercepts and facial emotion as a fixed effect. For Model 1, we added face mask (face with a mask vs. without a mask) as a fixed factor. A likelihood-ratio-based coefficient of determination was calculated for each model utilizing the R package MuMIn (Barton, 2019).
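The logic of comparing two nested models can be sketched from their log-likelihoods alone. The example below assumes that the models differ by exactly one parameter, as when a two-level mask factor is added, and uses one common likelihood-ratio-based pseudo-R² (the Cox-Snell form); whether this matches the exact MuMIn computation used by the authors is an assumption. The log-likelihood values are hypothetical, and fitting the mixed models themselves is not shown.

```python
from math import erfc, exp, sqrt


def likelihood_ratio_test(loglik_null, loglik_full):
    """Chi-square statistic and p value for two nested models that
    differ by exactly one parameter (df = 1)."""
    stat = 2.0 * (loglik_full - loglik_null)
    # Survival function of the chi-square distribution with df = 1.
    p_value = erfc(sqrt(max(stat, 0.0) / 2.0))
    return stat, p_value


def pseudo_r2_lr(loglik_null, loglik_full, n_obs):
    """Likelihood-ratio-based pseudo-R^2 (Cox-Snell form)."""
    return 1.0 - exp(-2.0 / n_obs * (loglik_full - loglik_null))


# Hypothetical log-likelihoods, not values from the study.
stat, p = likelihood_ratio_test(-1500.0, -1490.0)
print(stat, p)
```

For comparisons of fixed effects, both models are usually fitted with maximum likelihood rather than REML so that their log-likelihoods are comparable.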

For both dependent variables (i.e., performance and confidence), we obtained significant effects of face mask, ps < 0.0001, with a drop in performance of 15.8% and a drop in confidence of 11.9%. This result supported H1. For details, see Table 1.

We also tested H2, in which we focused on the relationship between participants’ ability-based or self-reported emotional intelligence (EI) and their performance and confidence in assessing emotional expressions in faces. We used an LMM approach with Model 2 including EI (ability-based emotional intelligence) and SREIS (self-reported emotional intelligence) as fixed factors compared with Model 1 where these EI-related scores were not included. We also analyzed the correlation between EI and SREIS, which turned out to be nonsignificant, r = 0.01, p = 0.93, ns. For both dependent variables, we did not explain more variance by including EI-related scores (see Table 1). Thus, H2 was not supported.

We also tested H3, which proposed that people in the present sample from 2021 would have higher scores (higher performance and higher confidence, respectively) than the original sample tested with the same experimental procedure in 2020. Note: As the 2020 study used twice as many stimuli, we analyzed only the material used in both studies. Again, we followed an LMM approach, this time with the merged data set, which comprised the 2020 sample consisting of 41 participants and the 2021 sample consisting of 49 participants, yielding a total of N = 90 participants. This time, as the null model, we used Model A0, which in fact reflected the previous Model 0 but was fed by the overall data set comprising the 2020 and 2021 data. Model A1, which included Study (2021 vs. 2020) as a fixed factor, was not able to explain additional variance for the performance or the confidence data. Thus, H3 was not supported, ps > 0.7638.
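The restriction to material used in both studies can be sketched as a simple filtering step before merging. The trial format (dictionaries with a "stimulus" key), the stimulus identifiers, and the function name are hypothetical and serve only to illustrate the idea.

```python
def merge_studies(trials_2020, trials_2021):
    """Merge two trial lists, keeping only stimuli used in both studies.

    Each trial is a dict with at least a 'stimulus' key. Every kept
    trial is tagged with the study it came from.
    """
    shared = ({t["stimulus"] for t in trials_2020}
              & {t["stimulus"] for t in trials_2021})
    merged = []
    for study, trials in (("2020", trials_2020), ("2021", trials_2021)):
        for trial in trials:
            if trial["stimulus"] in shared:
                merged.append({**trial, "study": study})
    return merged


# Hypothetical trials: the 2020 study contains a stimulus that the
# 2021 study did not use.
old = [{"stimulus": "f_young_fear", "correct": 1},
       {"stimulus": "f_young2_fear", "correct": 0}]
new = [{"stimulus": "f_young_fear", "correct": 1}]
merged = merge_studies(old, new)
print(len(merged))
```

The resulting "study" tag corresponds to the fixed factor Study (2021 vs. 2020) that distinguishes Model A1 from Model A0.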

Regarding H4, we tested whether greater familiarity with face masks would lead to better performance or confidence, respectively, in assessing facial emotions. This was done with Model 3 to which we added familiarity. We measured the familiarity with face masks in two ways: The first item asked about familiarity with face masks in terms of a person’s own use of face masks per day (FamiliarityOwnMask), whereas the second item asked about familiarity with face masks in terms of perceiving other people with face masks (FamiliarityOthersMasks). As the two aspects capture different perspectives of the aspect of familiarity, we decided to add them to Model 3 as two different fixed factors (Models 3a and 3b, respectively), which we tested against Model 1. We revealed that FamiliarityOthersMasks was significantly related to higher performance as well as higher confidence in the assessment of facial emotions, whereas FamiliarityOwnMask failed to reach significance with the given power.

We tested H5, which concerned the relationship between people’s attitudes toward face masks and the dependent variables performance and confidence, respectively, in assessing facial emotions. Again, we tested this against Model 1 with an LMM. Model 4, which included the additional fixed factor attitudeMasks, did not explain more variance than Model 1, so H5 was not supported.

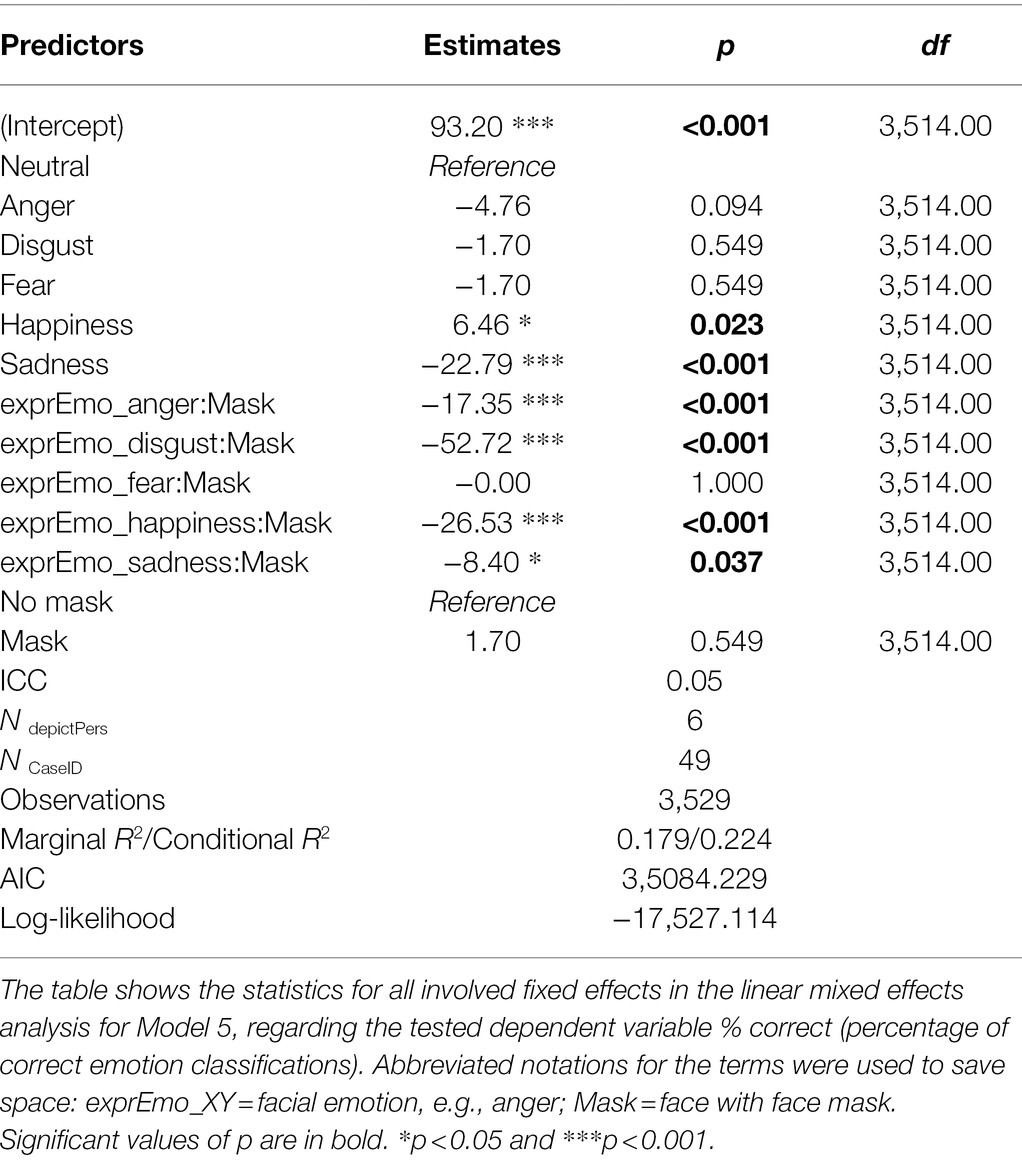

Regarding H6, we analyzed the selective decrease in performance in assessing certain facial emotions when faces were shown with masks, again utilizing an LMM approach. We did not use face mask as a fixed factor as in Model 1 but as an interactive effect with exprEmo and tested this Model 5 against Model 1. As expected, we found a stronger effect of face masks on performance in identifying facial emotions for which the mouth area was indicative (anger, disgust, happiness, and sadness) versus nonindicative (fear). As shown in Table 2, we obtained a nonsignificant effect of the interaction between face mask and the emotion fear, probably because fear is mainly expressed by the eyes. By contrast, we obtained clearly reduced performances in detecting anger, disgust, happiness, and sadness when a mask covered the mouth region. The largest effect was observed for disgust.

Table 2. Results of the linear mixed effects analysis for emotion recognition performance testing Model 5 against Model 1.

H7 addressed effects of participants’ sex on performance and confidence, respectively, of correctly assessing the emotional states depicted in faces. We tested both hypotheses with an LMM by adding the fixed factor of participants’ sex (Model 6) against Model 1. There was no significant effect of participants’ sex for performance or for confidence, ps > 0.5970.

H8 tested effects of participants’ age on performance and confidence. We tested both hypotheses with an LMM by adding the fixed factor of participants’ age (Model 7) against Model 1. There was no significant effect of participants’ age for performance or for confidence, ps > 0.1121.

Discussion

During the different waves of the COVID-19 pandemic, face masks have consistently been used as simple, cheap, and easy-to-apply methods to effectively reduce the transmission of SARS-CoV-2 (Howard et al., 2021). Having started with low acceptance in Western countries due to the lack of familiarity with its use in early 2020, the face mask became an ideogram of the pandemic, and with everyday experience, acceptance increased (Carbon, 2021).

In the present study, we tested how individual difference variables were related to the ability to assess emotions in faces with and without masks and whether exposure to masks has improved the ability to infer emotional states from the remaining facial area that is not covered by the mask. We know from the literature that such little facial information is sufficient for recognizing mental states, such as being confident, doubtful, upset, or uneasy (Schmidtmann et al., 2020). This is astonishing because, in typical everyday life situations, aside from a pandemic such as the COVID-19 pandemic, we are typically not exposed to such a reduction in facial information. We conducted the present study at a time when people in Germany had been obliged to wear face masks in public for more than 1 ¼ years. This led us to assume that people would be familiar with face masks and skilled in reading emotions in partly covered faces. Despite this high level of familiarity with face masks, we observed reduced performance and confidence when people interpreted masked faces. Moreover, people were not better than people had been a year earlier (in April 2020, see also Mitze et al., 2020), but we have to be very cautious about making this comparison because we neither tested the same people nor used a matched sample. Still, the key parameters were very similar (German sample, mean age differed by only 1.8 years, comparable relative number of female to male participants).

Confusion between emotions in 2021 was similar to the effects documented 1 year earlier: whereas neutral faces and fear were usually well detected even when a face mask was present, anger was often misinterpreted as neutral, disgust, or sadness. Furthermore, sadness was often misinterpreted as neutral, fear, or disgust. Most dramatically, disgust was misinterpreted in nearly 1/3 of the cases and was identified as anger, happiness, or a neutral expression. Interestingly, happiness was often misinterpreted as a neutral expression. Such misinterpretations could be socially relevant in everyday life. For instance, if our counterpart signals affirmation or gratitude by expressing a happy face, we might not see this positive feedback and could misinterpret this social situation. Familiarity with masks was not a relevant factor regarding the ability to read faces either: Only when people were very often exposed to masked faces was their confidence slightly higher.

When analyzing the specific drops in performance or confidence regarding the recognition of emotions in masked faces, we found the expected result—that all emotions that are strongly expressed by the mouth area (e.g., the “smiling mouth” for happy faces or drawing down the labial angles for sad faces) were particularly impaired when a mask covered the mouth area.

In the present study, we further addressed the question of whether emotional intelligence (EI) is linked to the ability to assess facial emotions (with and without masks). However, neither ability-based nor self-reported EI was significantly linked to performance or confidence ratings. Due to the low internal consistency of the SREIS in this sample, the respective results should be interpreted with caution. We also did not find a relationship between attitude toward wearing masks and ability or confidence—so even people who had negative attitudes about using face masks were not worse at or less sure about identifying emotions.

Most of our effects were based on confidence as the dependent variable. When analyzing who was specifically affected by face masks, we found that people who were high performers in the condition without masks were more affected than others. However, this was true only for the confidence ratings but not for actual performance. We did not find an effect of participants’ sex on performance or confidence. Similarly, age had no effect.

Taken together, we were able to detect clear impairments in the ability to read facial emotions as soon as a face was partly covered by a face mask. With the exception of disgust, where we found a dramatic reduction in performance and confidence, most people were less impaired than one might think, considering how much the faces were covered. With an average performance level of 73.3%, participants were much better than chance, a level that had similarly been observed in children only recently (Carbon and Serrano, 2021).
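To make the comparison with chance concrete, the sketch below assumes that participants chose among six response options, one per displayed emotion. This response format is an assumption here, as it is not restated in this section. Under that assumption, pure guessing would yield an accuracy of 1/6.

```python
# Assumption: one response option per displayed emotion (six in total).
N_OPTIONS = 6

# Average performance level reported in the text (73.3%).
observed_accuracy = 0.733

# Expected accuracy if participants guessed uniformly at random.
chance_level = 1 / N_OPTIONS

# How many times better than guessing the observed performance is.
ratio_to_chance = observed_accuracy / chance_level

print(f"chance level: {chance_level:.3f}")
print(f"observed / chance: {ratio_to_chance:.2f}")
```

Under this assumption, the reported average lies at more than four times the guessing rate.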

It is important to consider that we used high-quality face stimuli, which had been tested for clear emotional expressions and were characterized by perfect illumination and a frontal perspective. Moreover, participants were able to look at the pictures without time pressure and with the opportunity to fixate perfectly. Such ideal presentations are not available in everyday life, where faces have to be read in complex situations (Yang and Huang, 1994) and where time to inspect the counterpart is limited because of other task requirements or cultural factors, such as maximally accepted eye fixation durations (Haensel et al., 2021). In other words, in everyday life, the general performance of recognizing emotions is probably much lower, and face masks would be an additional burden. On the other hand, we do not really know how much we gain when encountering faces in reality, e.g., by using dynamic 3D information (see Dobs et al., 2018).

Still, does such reduced information jeopardize communication? Basing the understanding of our counterpart’s emotional or mental state on only facial expressions would be pretty inefficient. More than this, reliance on just one source of information would be reckless and improbable from an evolutionary point of view. Typically, highly developed social species such as humans use different channels of sensory inputs (Shi and Mueller, 2013) and build mental models to predict plausible outcomes (Johnson-Laird, 2010). Furthermore, humans can disambiguate difficult situations (e.g., the uncertain status of a counterpart) by verbally posing questions or simply by waiting for additional information.

On the basis of a comparison of data from 2020 and 2021, we showed that people apparently did not easily adapt their emotion-reading skills. However, people can use additional sources of information. We only tested the loss of information in one channel, but other researchers collected supplemental information (for a short list of ways to cope with the loss of information, see Mheidly et al., 2020).

In conclusion, we speculate that the first important step toward facilitating communication among people who wear face masks would be to raise awareness regarding the challenges to communication that stem from the loss of facial information. Additional steps are to utilize information on body language and gestures. Considering the social situation we are currently in, we can also use scripts and schemata typically employed in such situations, which help us predict what others will feel and how they will be affected by the current situation. Lastly, we can directly approach our counterparts and explicitly ask them whether pieces of information are missing.

The COVID-19 pandemic comes as a worldwide crisis with specific challenges. The impaired ability to read facial information is definitely one of these challenges. However, intelligent species adapt adequately to better cope with such a situation by developing new means of communication and social interaction. In the end, true social competence manifests itself in the ability to adapt to given task demands. If we use this ability flexibly, we will effectively cope with the communicative challenges inherent in the present pandemic.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://osf.io/rfmv7.

Ethics Statement

We obtained ethical approval for the general psychophysical study procedure from the local ethics committee of the University of Bamberg (Ethikrat). The participants provided their written informed consent to participate in this study.

Author Contributions

C-CC and AS had the initial idea for this research. C-CC conducted the statistical analyses, created the figures, and wrote the initial draft of the paper. MH enriched the statistics by adding consistency scores. AS and MH revised the manuscript. All authors contributed to the article and approved the submitted version.

Funding

The publication of this article was supported by the OpenAccess publication fund of the University of Bamberg.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We are indebted to the Max Planck Institute Berlin for providing the baseline stimuli (without masks) for the present study. We thank Jane Zagorski for language editing.

References

Barton, K. (2019). MuMIn: Multi-Model Inference. R package version 1.6. Available at: https://CRAN.R-project.org/package=MuMIn (Accessed January 27, 2022).

Bassili, J. N. (1979). Emotion recognition: the role of facial movement and the relative importance of upper and lower areas of the face. J. Pers. Soc. Psychol. 37, 2049–2058. doi: 10.1037/0022-3514.37.11.2049

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Bombari, D., Schmid, P. C., Schmid Mast, M., Birri, S., Mast, F. W., and Lobmaier, J. S. (2013). Emotion recognition: the role of featural and configural face information. Q. J. Exp. Psychol. 66, 2426–2442. doi: 10.1080/17470218.2013.789065

Brackett, M. A., Rivers, S. E., Shiffman, S., Lerner, N., and Salovey, P. (2006). Relating emotional abilities to social functioning: a comparison of self-report and performance measures of emotional intelligence. J. Pers. Soc. Psychol. 91, 780–795. doi: 10.1037/0022-3514.91.4.780

Bruce, V., and Young, A. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

Carbon, C. C. (2011). The first 100 milliseconds of a face: on the microgenesis of early face processing. Percept. Mot. Skills 113, 859–874. doi: 10.2466/07.17.22.PMS.113.6.859-874

Carbon, C. C. (2020). Wearing face masks strongly confuses counterparts in reading emotions. Front. Psychol. 11:566886. doi: 10.3389/fpsyg.2020.566886

Carbon, C. C. (2021). About the acceptance of wearing face masks in times of a pandemic. i-Perception 12, 1–14. doi: 10.1177/20416695211021114

Carbon, C. C., Faerber, S. J., Augustin, M. D., Mitterer, B., and Hutzler, F. (2018). First gender, then attractiveness: indications of gender-specific attractiveness processing via ERP onsets. Neurosci. Lett. 686, 186–192. doi: 10.1016/j.neulet.2018.09.009

Carbon, C. C., and Leder, H. (2005). When feature information comes first! Early processing of inverted faces. Perception 34, 1117–1134. doi: 10.1068/p5192

Carbon, C. C., and Serrano, M. (2021). The impact of face masks on the emotional reading abilities of children—A lesson from a joint school–university project. i-Perception 12, 1–17. doi: 10.1177/20416695211038265

Dobs, K., Bülthoff, I., and Schultz, J. (2018). Use and usefulness of dynamic face stimuli for face perception studies: a review of behavioral findings and methodology. [mini review]. Front. Psychol. 9:1355. doi: 10.3389/fpsyg.2018.01355

Duchaine, B. C., Germine, L., and Nakayama, K. (2007). Family resemblance: ten family members with prosopagnosia and within-class object agnosia. Cogn. Neuropsychol. 24, 419–430. doi: 10.1080/02643290701380491

Duchaine, B. C., and Nakayama, K. (2006). The Cambridge face memory test: results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia 44, 576–585. doi: 10.1016/j.neuropsychologia.2005.07.001

Ebner, N. C., Riediger, M., and Lindenberger, U. (2010). FACES-A database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362. doi: 10.3758/BRM.42.1.351

Fischer, A. H., Gillebaart, M., Rotteveel, M., Becker, D., and Vliek, M. (2012). Veiled emotions: the effect of covered faces on emotion perception and attitudes. Soc. Psychol. Personal. Sci. 3, 266–273. doi: 10.1177/1948550611418534

Gessler, S., Nezlek, J. B., and Schütz, A. (2021). Training emotional intelligence: does training in basic emotional abilities help people to improve higher emotional abilities? J. Posit. Psychol. 16, 455–464. doi: 10.1080/17439760.2020.1738537

Gori, M., Schiatti, L., and Amadeo, M. B. (2021). Masking emotions: face masks impair how we read emotions. Front. Psychol. 12:669432. doi: 10.3389/fpsyg.2021.669432

Green, P., and MacLeod, C. J. (2016). Simr: an R package for power analysis of generalised linear mixed models by simulation. Methods Ecol. Evol. 7, 493–498. doi: 10.1111/2041-210X.12504

Grundmann, F., Epstude, K., and Scheibe, S. (2021). Face masks reduce emotion-recognition accuracy and perceived closeness. PLoS One 16:e0249792. doi: 10.1371/journal.pone.0249792

Haensel, J. X., Smith, T. J., and Senju, A. (2021). Cultural differences in mutual gaze during face-to-face interactions: a dual head-mounted eye-tracking study. Vis. Cogn., 1–16. doi: 10.1080/13506285.2021.1928354

Haxby, J. V., Gobbini, M. I., Furey, M. L., Ishai, A., Schouten, J. L., and Pietrini, P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293, 2425–2430. doi: 10.1126/science.1063736

Herlitz, A., and Lovén, J. (2013). Sex differences and the own-gender bias in face recognition: a meta-analytic review. Vis. Cogn. 21, 1306–1336. doi: 10.1080/13506285.2013.823140

Herpertz, S., Schütz, A., and Nezlek, J. (2016). Enhancing emotion perception, a fundamental component of emotional intelligence: using multiple-group SEM to evaluate a training program. Personal. Individ. Differ. 95, 11–19. doi: 10.1016/j.paid.2016.02.015

Hodzic, S., Scharfen, J., Ripoll, P., Holling, H., and Zenasni, F. (2018). How efficient are emotional intelligence trainings: a meta-analysis. Emot. Rev. 10, 138–148. doi: 10.1177/1754073917708613

Hole, G., and George, P. (2011). Evidence for holistic processing of facial age. Vis. Cogn. 19, 585–615. doi: 10.1080/13506285.2011.562076

Howard, J., Huang, A., Li, Z., Tufekci, Z., Zdimal, V., van der Westhuizen, H.-M., et al. (2021). An evidence review of face masks against COVID-19. Proc. Natl. Acad. Sci. 118:e2014564118. doi: 10.1073/pnas.2014564118

Johnson-Laird, P. N. (2010). Mental models and human reasoning. Proc. Natl. Acad. Sci. U. S. A. 107, 18243–18250. doi: 10.1073/pnas.1012933107

Joseph, D. L., and Newman, D. A. (2010). Emotional intelligence: an integrative meta-analysis and cascading model. J. Appl. Psychol. 95, 54–78. doi: 10.1037/a0017286

Karle, K. N., Ethofer, T., Jacob, H., Bruck, C., Erb, M., Lotze, M., et al. (2018). Neurobiological correlates of emotional intelligence in voice and face perception networks. Soc. Cogn. Affect. Neurosci. 13, 233–244. doi: 10.1093/scan/nsy001

Köppe, C., Held, M. J., and Schütz, A. (2019). Improving emotion perception and emotion regulation through a web-based emotional intelligence training (WEIT) program for future leaders. Int. J. Emot. Educ. 11, 17–32. doi: 10.20378/irb-46523

Koydemir, S., and Schütz, A. (2012). Emotional intelligence predicts components of subjective well-being beyond personality: a two-country study using self- and informant reports. J. Posit. Psychol. 7, 107–118. doi: 10.1080/17439760.2011.647050

Kret, M. E., and de Gelder, B. (2012). Islamic headdress influences how emotion is recognized from the eyes. Front. Psychol. 3:110. doi: 10.3389/fpsyg.2012.00110

Mayer, J. D., Roberts, R. D., and Barsade, S. G. (2008a). Human abilities: emotional intelligence. Annu. Rev. Psychol. 59, 507–536. doi: 10.1146/annurev.psych.59.103006.093646

Mayer, J. D., and Salovey, P. (1997). “What is emotional intelligence?” in Emotional Development and Emotional Intelligence: Implications for Educators. eds. P. Salovey and D. Sluyter (New York, NY: Basic Books), 3–31.

Mayer, J. D., Salovey, P., and Caruso, D. R. (2008b). Emotional intelligence: new ability or eclectic traits? Am. Psychol. 63, 503–517. doi: 10.1037/0003-066X.63.6.503

Mheidly, N., Fares, M. Y., Zalzale, H., and Fares, J. (2020). Effect of face masks on interpersonal communication during the COVID-19 pandemic. Front. Public Health 8:582191. doi: 10.3389/fpubh.2020.582191

Mishra, M. V., Likitlersuang, J., Wilmer, J. B., Cohan, S., Germine, L., and DeGutis, J. M. (2019). Gender differences in familiar face recognition and the influence of sociocultural gender inequality. Sci. Rep. 9:17884. doi: 10.1038/s41598-019-54074-5

Mitze, T., Kosfeld, R., Rode, J., and Wälde, K. (2020). Face masks considerably reduce COVID-19 cases in Germany. Proc. Natl. Acad. Sci. 117, 32293–32301. doi: 10.1073/pnas.2015954117

Morey, R. D. (2008). Confidence intervals from normalized data: a correction to Cousineau (2005). Tutorials Quant. Methods Psychol. 4, 61–64. doi: 10.20982/tqmp.04.2.p061

Østergaard Knudsen, C., Winther Rasmussen, K., and Gerlach, C. (2021). Gender differences in face recognition: the role of holistic processing. Vis. Cogn. 29, 379–385. doi: 10.1080/13506285.2021.1930312

Pavlova, M. A., and Sokolov, A. A. (2021). Reading covered faces. Cereb. Cortex 32, 249–265. doi: 10.1093/cercor/bhab311

R Core Team (2021). R: A Language and Environment for Statistical Computing. Available at: http://www.R-project.org/ (Accessed January 27, 2022).

Ramachandra, V., and Longacre, H. (2022). Unmasking the psychology of recognizing emotions of people wearing masks: the role of empathizing, systemizing, and autistic traits. Personal. Individ. Differ. 185:111249. doi: 10.1016/j.paid.2021.111249

Rhodes, G., Hayward, W. G., and Winkler, C. (2006). Expert face coding: configural and component coding of own-race and other-race faces. Psychon. Bull. Rev. 13, 499–505. doi: 10.3758/BF03193876

Schmidtmann, G., Logan, A. J., Carbon, C. C., Loong, J. T., and Gold, I. (2020). In the blink of an eye: reading mental states from briefly presented eye regions. i-Perception 11:2041669520961116. doi: 10.1177/2041669520961116

Schneider, T. M., Hecht, H., and Carbon, C. C. (2012). Judging body weight from faces: the height-weight illusion. Perception 41, 121–124. doi: 10.1068/p7140

Shi, Z., and Mueller, H. (2013). Multisensory perception and action: development, decision-making, and neural mechanisms. Front. Integr. Neurosci. 7:81. doi: 10.3389/fnint.2013.00081

Steinmayr, R., Schütz, A., Hertel, J., and Schröder-Abé, M. (2011). “German adaptation of the Mayer-Salovey-Caruso emotional intelligence test (MSCEIT),” in Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT). eds. J. D. Mayer, P. Salovey, and D. R. Caruso (Huber).

Taylor, S., and Asmundson, G. J. G. (2021). Negative attitudes about facemasks during the COVID-19 pandemic: the dual importance of perceived ineffectiveness and psychological reactance. PLoS One 16:e0246317. doi: 10.1371/journal.pone.0246317

Vöhringer, M., Schütz, A., Geßler, S., and Schröder-Abé, M. (2020). SREIS-D: die deutschsprachige version der self-rated emotional intelligence scale. Diagnostica 66, 200–210. doi: 10.1026/0012-1924/a000248

Willis, J., and Todorov, A. (2006). First impressions: making up your mind after a 100-ms exposure to a face. Psychol. Sci. 17, 592–598. doi: 10.1111/j.1467-9280.2006.01750.x

Wingenbach, T. S. H., Ashwin, C., and Brosnan, M. (2018). Sex differences in facial emotion recognition across varying expression intensity levels from videos. PLoS One 13:e0190634. doi: 10.1371/journal.pone.0190634

Keywords: emotion perception, face mask, personality, emotional intelligence, accuracy, face perception, COVID-19 pandemic, cover

Citation: Carbon C-C, Held MJ and Schütz A (2022) Reading Emotions in Faces With and Without Masks Is Relatively Independent of Extended Exposure and Individual Difference Variables. Front. Psychol. 13:856971. doi: 10.3389/fpsyg.2022.856971

Edited by:

Birgitta Dresp-Langley, Centre National de la Recherche Scientifique (CNRS), France

Reviewed by:

Marco Viola, University of Turin, Italy

John Mwangi Wandeto, Dedan Kimathi University of Technology, Kenya

Copyright © 2022 Carbon, Held and Schütz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Claus-Christian Carbon, ccc@uni-bamberg.de
