ORIGINAL RESEARCH article

Front. Psychol., 10 May 2022

Sec. Auditory Cognitive Neuroscience

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.840541

Several studies have reported the better auditory performance of early-blind subjects over sighted subjects. However, few studies have compared the auditory functions of both hemispheres or evaluated interhemispheric transfer and binaural integration in blind individuals. Therefore, we evaluated whether there are differences in dichotic listening, auditory temporal sequencing ability, or speech perception in noise (all of which have been used to diagnose central auditory processing disorder) between early-blind subjects and sighted subjects. The study included 23 early-blind subjects and 22 age-matched sighted subjects. In the dichotic listening test (three-digit pair), the early-blind subjects achieved higher scores than the sighted subjects in the left ear (p = 0.003, Bonferroni’s corrected α = 0.05/6 = 0.008), but not in the right ear, indicating a right ear advantage in sighted subjects (p < 0.001) but not in early-blind subjects. In the frequency patterning test (five tones), the early-blind subjects performed better (both ears in the humming response, but the left ear only in the labeling response) than the sighted subjects (p < 0.008, Bonferroni’s corrected α = 0.05/6 = 0.008). Monosyllable perception in noise tended to be better in early-blind subjects than in sighted subjects at a signal-to-noise ratio of –8 dB (p = 0.054); the results at signal-to-noise ratios of –4, 0, +4, and +8 dB did not differ. Acoustic change complex responses to /ba/ in babble noise, recorded with electroencephalography, showed a greater N1 peak amplitude only at the FC5 electrode under signal-to-noise ratios of –8 and –4 dB in the early-blind subjects than in the sighted subjects (p = 0.004 and p = 0.003, respectively, Bonferroni’s corrected α = 0.05/5 = 0.01). The results of this study revealed that early-blind subjects exhibited some advantages in dichotic listening and temporal sequencing ability compared with sighted subjects. These advantages may be attributable to the enhanced activity of the central auditory nervous system, especially the right hemisphere function, and the transfer of auditory information between the two hemispheres.

Because congenitally blind or early-blind individuals depend exclusively upon auditory sensory cues, without visual cues when communicating, their auditory processing can develop differently from that of sighted subjects. Several studies have reported the better performance of early-blind subjects over sighted subjects in speech memory (Amedi et al., 2003), pitch discrimination in pure tone (Gougoux et al., 2004; Shim et al., 2019), temporal resolution (Stevens and Weaver, 2005; Shim et al., 2019), ultrafast speech comprehension (Hertrich et al., 2013), and dichotic listening (Hugdahl et al., 2004). Some studies found impaired sound localization abilities in early-blind subjects, especially in the sound localization task in the vertical plane (Zwiers et al., 2001; Lewald, 2002) or the performance of more complex tasks requiring a metric representation of the auditory space (Gori et al., 2010, 2014; Finocchietti et al., 2015; Vercillo et al., 2016). However, a recent study showed enhanced spatial hearing abilities in early-blind subjects and claimed that vision is not a prerequisite for developing an auditory sense of space (Battal et al., 2020). The difference in auditory performance in early-blind subjects compared to sighted subjects is presumed to be due to plastic changes in the central auditory system. Abundant neuroimaging evidence supports the theory that the cerebral cortex experiences compensatory plasticity after visual deprivation. The visual cortices of blind subjects have been shown to be recruited following auditory signals (Weeks et al., 2000; Voss et al., 2008; Gougoux et al., 2009). However, few studies have compared the auditory functions of both hemispheres or evaluated interhemispheric transfer and binaural integration in blind individuals (Hugdahl et al., 2004). 
Several behavioral tests have been developed to evaluate the central auditory function in each targeted process, and they have been used to diagnose central auditory processing disorder. In most listening environments, the two ears do not receive the same signal at the same time, so the brain must be able to integrate the potentially competing information from both ears. A dichotic listening test assesses the auditory function in the left and right hemispheres separately and evaluates the binaural integration that occurs through information transfer from the right hemisphere to the left hemisphere (Musiek, 1983; Jutras et al., 2012; Rajabpur et al., 2014; Fischer et al., 2017). The frequency pattern test measures frequency discrimination and temporal sequencing ability for sound and is known to be highly sensitive to and specific for lesions in the corpus callosum, which is responsible for connecting the information between both hemispheres (Musiek, 1994; Marshall and Jones, 2017). Here, we used the dichotic listening and frequency pattern tests to compare early-blind subjects and sighted subjects.

Moreover, the ability to process low-redundancy speech, such as speech perception in noise, can be one way to explore the central auditory system. It remains unclear whether there are differences in speech perception in noise between early-blind individuals and sighted individuals. Several studies that compared speech perception between early-blind and sighted subjects have shown varying results, depending on the experimental conditions (Gougoux et al., 2009; Menard et al., 2009; Hertrich et al., 2013; Arnaud et al., 2018). In a previous study, we confirmed that early-blind subjects showed greater frequency and temporal resolution insofar as they scored better on spectral discrimination and modulated detection thresholds than sighted subjects (Shim et al., 2019). Auditory spectral resolution and temporal resolution are fundamental aspects of speech perception. However, there was no difference in two-syllable speech perception in noise between the blind subjects and sighted subjects. When interpreting the results for the auditory performance of blind subjects in our previous study, together with other results in the literature, we wondered why there was no consistent result for speech perception in noise, even though frequency and temporal resolution were consistently better in blind subjects in previous studies. We speculated that the dichotic listening and frequency pattern tests may provide clues to resolving this question. We hypothesized that plastic changes in the central auditory system after visual deprivation affect the two cerebral hemispheres differently, and affect the interhemispheric transfer from the right to the left cortex in early-blind individuals.

In our previous study (Shim et al., 2019), two-syllable words were presented in background noise, and we assume that this condition involves the listener’s ability to achieve auditory closure with redundancy cues, in addition to his/her ability to discriminate speech cues (Aylett and Turk, 2004). To minimize the redundancy cues in speech perception and to force the listener to focus on the speech cues themselves, we used monosyllabic words consisting of consonant–vowel (CV) or consonant–vowel–consonant (CVC) sequences in the present study.

In addition to the monosyllabic speech perception test, we recorded the acoustic change complex (ACC) in response to /ba/ in babble noise. The ACC is an auditory evoked potential (P1-N1-P2) that can be elicited by the listener’s ability to detect a change in an ongoing sound in passive listening conditions (Martin and Boothroyd, 1999; Tremblay et al., 2003).

The purpose of this study was to evaluate whether there are differences in dichotic listening, auditory temporal sequencing ability, and speech perception in noise between early-blind subjects and sighted subjects. In the current study, the early-blind subjects were limited to those who were blind at birth or who became blind within 1 year of birth because late-blind subjects showed lower levels of plasticity in spectral resolution than early-blind subjects in our previous study (Shim et al., 2019).

The study population included a group of 23 early-blinded subjects aged 19–40 years (29.57 ± 5.03 years, male:female [M:F] = 12:11) and an age-matched control group of 22 sighted subjects (28.59 ± 4.66 years, M:F = 11:11). There were no significant differences between the two groups in age (p > 0.05). All the subjects were right-handed, aged < 40 years, with normal symmetric hearing thresholds (≤20 dB hearing level at 0.25, 0.5, 1, 2, 3, 4, and 8 kHz), and the pure tone averages did not differ significantly between the two groups (p > 0.05). They had no other neurological or otological problems. In the blind group, only those who were blind at birth or who became blind within 1 year of birth, who were classified in categories 4 and 5 according to the 2006 World Health Organization guidelines for the clinical diagnosis of visual impairment (category 4, “light perception” but no perception of “hand motion”; category 5, “no light perception”), were included. Table 1 shows the characteristics of the blind subjects. We confirmed the normal cognitive abilities of all the subjects using the Korean Mini-Mental State Examination. The Mini-Mental State Examination was used except for vision-related items, and no difference was detected between the two groups (p > 0.05). The study was conducted in accordance with the Declaration of Helsinki and the recommendations of the Institutional Review Board of Nowon Eulji Medical Center, with written informed consent from all subjects. Informed consent was obtained verbally from the blind subjects in the presence of a guardian or third party. The subjects then signed the consent form, and a copy was given to them.

Three behavioral tests were used to evaluate central auditory processing: the dichotic digit test, the frequency pattern test, and the monosyllable perception in noise test. The digit span test was also conducted to examine the effect of working memory on central auditory processing. All tests were conducted in a sound-proofed room with an audiometer (Madsen Astera 2; GN Otometrics, Taastrup, Denmark) and inserted earphones (ER-3A; Etymotic Research, Inc., Elk Grove Village, IL, United States). Behavior tests were presented in the same order: digit span test, dichotic digit test, frequency pattern test—humming, frequency pattern test—labeling, and monosyllable perception test. Each frequency pattern test was performed first on the right ear and then on the left ear. The monosyllable perception test was conducted randomly at five signal-to-noise ratios (SNRs).

The dichotic digit test, developed in Korean by Jeon and Jang (2009), was used to evaluate dichotic listening ability. The Korean dichotic digit test, consisting of one-, two-, and three- digit pairs, was standardized (Jang et al., 2014). In this study, two subtests were performed, the dichotic two-digit test and the dichotic three-digit test, which previous studies have suggested are appropriate for clinical use in young adults with normal hearing (Jeon and Jang, 2009; Jang et al., 2014). Each subtest consisted of 20 items with a 500 ms interdigit interval and a 5 s interstimulus interval. The digit stimuli were presented to the bilateral ears simultaneously at 60 dB HL, and the subjects responded verbally in the free-recall mode, in which they were asked to say all the digits they heard in both ears. The numbers of correct responses in the right and left ears were calculated individually in both subtests.

The frequency pattern test measures temporal sequencing ability and assesses the integrity of the hemispheric and interhemispheric transfer of neural information (Musiek, 1994). The frequency pattern test consists of sequences of tones that are either “high” (1,122 Hz) or “low” (880 Hz) in frequency. The test was performed in each ear, with the stimulus presented monaurally at 60 dB HL, and the subjects were instructed to respond to the given stimulus by humming or labeling (e.g., high–low–high–high–low). The humming condition, which imitates the pitch pattern of the tone heard, evaluates the function of the right hemisphere, which is mainly responsible for the recognition of acoustic contour and patterns. In contrast, the labeling condition, which is a verbal response to the pitch patterns, reflects the integrity of the left hemisphere, which dominates speech recognition (Kimura, 1961; Blumstein and Cooper, 1974). In this study, the test complexity was modified from three to five sequential tones, with reference to the study of Yoon et al. (2019), which reported the differences in the frequency pattern test performance of young adults with normal hearing. Each tone was 150 ms in duration, with a 10 ms rise–fall time and a 200 ms intertone interval. The frequency pattern test consisted of 60 items, which were divided into 30 items each for the humming and labeling responses. The order of the high–low tone sequence was controlled in such a way that no more than three identical tones were presented in a row. A total of 30 possible patterns were distributed equally across the four conditions (left-humming, left-labeling, right-humming, and right-labeling), each comprising 15 items. The numbers of correct responses (maximum score of 15 for each condition) in the right and left ears were calculated individually for both the humming and labeling responses.
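The stimulus timing described above can be illustrated with a minimal sketch that synthesizes one five-tone pattern from the stated parameters (1,122 Hz and 880 Hz tones, 150 ms duration, 10 ms rise–fall time, 200 ms intertone interval). The sampling rate, the linear shape of the ramps, and the function names `tone` and `pattern` are assumptions made for illustration; the article does not describe how the stimuli were generated.

```python
import math

SAMPLE_RATE = 44100                  # assumed sampling rate (Hz); not stated in the article
FREQS = {"H": 1122.0, "L": 880.0}    # "high" and "low" tone frequencies from the text
TONE_MS, RAMP_MS, GAP_MS = 150, 10, 200


def tone(freq_hz, dur_ms=TONE_MS, ramp_ms=RAMP_MS, rate=SAMPLE_RATE):
    """Return one sine tone with linear onset and offset ramps (ramp shape is assumed)."""
    n = int(rate * dur_ms / 1000)
    n_ramp = int(rate * ramp_ms / 1000)
    samples = []
    for i in range(n):
        # Envelope rises over the first n_ramp samples and falls over the last n_ramp.
        env = min(1.0, i / n_ramp, (n - 1 - i) / n_ramp)
        samples.append(env * math.sin(2 * math.pi * freq_hz * i / rate))
    return samples


def pattern(labels, gap_ms=GAP_MS, rate=SAMPLE_RATE):
    """Concatenate tones for a label string such as 'HLHHL', with silent gaps between tones."""
    silence = [0.0] * int(rate * gap_ms / 1000)
    out = []
    for k, label in enumerate(labels):
        if k > 0:
            out.extend(silence)
        out.extend(tone(FREQS[label]))
    return out


seq = pattern("HLHHL")               # high-low-high-high-low, the example from the text
print(f"{len(seq) / SAMPLE_RATE:.2f} s")
```

With these parameters, one five-tone item lasts 5 × 150 ms + 4 × 200 ms = 1.55 s.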

The monosyllable perception in noise test was performed at five SNRs (+8, +4, 0, –4, and –8 dB) using five lists, each containing 25 Korean monosyllabic words, which were spoken by a male speaker, and eight-talker babble noise. The mixture of the target word and the noise stimuli was presented monaurally to the test ear, and the subjects were asked to repeat the words while ignoring the noise. The noise level was fixed at 70 dB SPL and the level of the target words was varied. The number of correct responses for a total of 25 words under each of the SNR conditions was measured. For the sighted subjects, the test was performed under both auditory-only (AO) and audio–visual (AV) conditions. For the AV condition, video clips that included the speaker’s face and sound were presented through a monitor and a loudspeaker located 1 m in front in a sound-attenuating booth, and for the AO condition, only the sound signal was presented. The main comparison was between the performance of early-blind subjects and that of sighted subjects under the AO condition, so the monosyllable perception in noise was first measured under the AO condition and then under the AV condition in the sighted subjects.
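As a worked illustration of the level arithmetic, the sketch below derives the target-word level for each SNR from the fixed 70 dB SPL noise level. It assumes the usual definition of SNR as speech level minus noise level in dB; the function names are hypothetical and are not taken from the article.

```python
NOISE_LEVEL_DB_SPL = 70              # fixed babble-noise level from the text
SNRS_DB = [8, 4, 0, -4, -8]          # the five SNR conditions from the text


def target_level(snr_db, noise_db=NOISE_LEVEL_DB_SPL):
    """Target-word level in dB SPL, assuming SNR = speech level - noise level."""
    return noise_db + snr_db


def amplitude_ratio(snr_db):
    """Speech-to-noise RMS amplitude ratio that corresponds to an SNR given in dB."""
    return 10 ** (snr_db / 20)


for snr in SNRS_DB:
    print(f"SNR {snr:+d} dB: words at {target_level(snr)} dB SPL, "
          f"amplitude ratio {amplitude_ratio(snr):.3f}")
```

Under this assumption, the words range from 78 dB SPL at +8 dB SNR down to 62 dB SPL at –8 dB SNR.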

All digit span tests consisted of digits from 1 to 9, and the digit sets presented increased consecutively from three to 10 digits. Digit sets of the same numbers were presented twice. The threshold of the digit span test was determined to be at least two incorrect responses to the previous digit series. The sets of digits were presented to the bilateral ears simultaneously at 60 dB HL, with a 1 s interval between sets, and the subjects were asked to repeat the set of digits in a forward manner. The highest total score for the test was 16, and the maximum number of digits was 10 (Supplementary Material 1).

The first 400 ms of the stimulus consisted of babble noise and the last 400 ms contained babble noise plus the /ba/ sound in five SNR conditions (–8, –4, 0, +4, and +8 dB), followed by an inter-stimulus interval of 150 ms. Each SNR condition involved 100 random presentations of the babble noise–/ba/ stimuli with the same noise. The stimuli were presented to the bilateral ears simultaneously at 60 dB HL.

Acoustic change complex responses were recorded across 32 channels using the actiCHamp Brain Products recording system (Brain Products GmbH, Inc., Munich, Germany) during passive listening to the babble noise–/ba/ stimuli with the same noise. The blind subjects sat in a comfortable chair reading a braille book. During recording, we encouraged the participants to stay still, with their heads and elbows within a fixed range. We also instructed them not to move their wrists and fingers. The sighted subjects sat watching a muted, closed-captioned movie. A notch filter at 60 Hz was set to prevent powerline noise and the impedance of all scalp electrodes was kept <5 kΩ.

The collected data were analyzed using BrainVision Analyzer version 2.0 (Brain Products GmbH, Inc., Munich, Germany). The band-pass filter was set at 0.1–60 Hz after removing eye blinks and body movement artifacts. In addition, independent component analysis was used to adjust for eye blinks. The data for the babble noise–/ba/ stimuli with the same noise were segmented from 200 ms before stimulus presentation to 200 ms after stimulation, based on the presentation time of the babble-noise-only stimulus. Baseline correction was performed using the interval before stimulus presentation, and the potentials were averaged. Using a semi-automatic peak detection algorithm in the BrainVision Analyzer software, the largest negative deflection that occurred between 100 and 200 ms after stimulus onset was defined as the peak of N1. The peaks were visually inspected and manually adjusted if necessary.
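The N1 definition given here (the largest negative deflection between 100 and 200 ms after stimulus onset, following baseline correction) can be sketched as follows. This is only an illustration of the rule as stated, not the peak detection algorithm of the analysis software; the function names and the sample values are hypothetical.

```python
def baseline_correct(times_ms, amplitudes_uv):
    """Subtract the mean of the pre-stimulus samples (t < 0) from every sample."""
    pre = [a for t, a in zip(times_ms, amplitudes_uv) if t < 0]
    offset = sum(pre) / len(pre)
    return [a - offset for a in amplitudes_uv]


def find_n1(times_ms, amplitudes_uv, window=(100, 200)):
    """Return (latency_ms, amplitude_uv) of the most negative sample inside the window."""
    candidates = [(a, t) for t, a in zip(times_ms, amplitudes_uv)
                  if window[0] <= t <= window[1]]
    if not candidates:
        raise ValueError("no samples inside the search window")
    amp, lat = min(candidates)   # most negative amplitude; ties go to the earliest latency
    return lat, amp


# Hypothetical averaged waveform (times in ms relative to onset, amplitudes in microvolts).
times = [-100, -50, 0, 50, 100, 150, 200, 250]
amps = [1.0, 1.0, 1.0, -4.0, 0.0, -1.5, 0.5, -3.0]

corrected = baseline_correct(times, amps)
print(find_n1(times, corrected))   # deflections at 50 ms and 250 ms lie outside the window
```

In practice, a peak found this way would still be inspected visually and adjusted by hand where needed, as described above.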

To analyze the behavioral tests in this study, descriptive statistics, including the mean and standard deviation of each test, were determined for each group. Non-parametric tests were used after the Kolmogorov–Smirnov test confirmed that the data in each group did not follow a normal distribution. The statistical comparisons between groups were made for each test with multiple independent t-tests or the Mann–Whitney test with Bonferroni’s correction. Within-group comparisons were made with multiple Wilcoxon signed-rank tests with Bonferroni’s correction. Correlation analyses were based on Pearson’s correlation coefficient.
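The corrected thresholds reported in this article (for example, α = 0.05/6 = 0.008) follow from dividing the nominal α by the number of comparisons. A minimal sketch of that arithmetic is given below; the function names are hypothetical, and the comparison counts of 6, 5, and 15 are those stated alongside the reported thresholds.

```python
def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni's correction."""
    return alpha / n_comparisons


def is_significant(p, alpha=0.05, n_comparisons=1):
    """True if p falls below the Bonferroni-corrected threshold."""
    return p < bonferroni_alpha(alpha, n_comparisons)


for m in (6, 5, 15):
    print(f"0.05/{m} = {bonferroni_alpha(0.05, m):.3f}")

# p-values of 0.003 and 0.006 both fall below 0.05/6; a p-value of 0.010 would not.
print(is_significant(0.003, n_comparisons=6))
print(is_significant(0.006, n_comparisons=6))
print(is_significant(0.010, n_comparisons=6))
```

Note that 0.05/6 is approximately 0.0083, so a p-value such as 0.008 still falls below the unrounded threshold.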

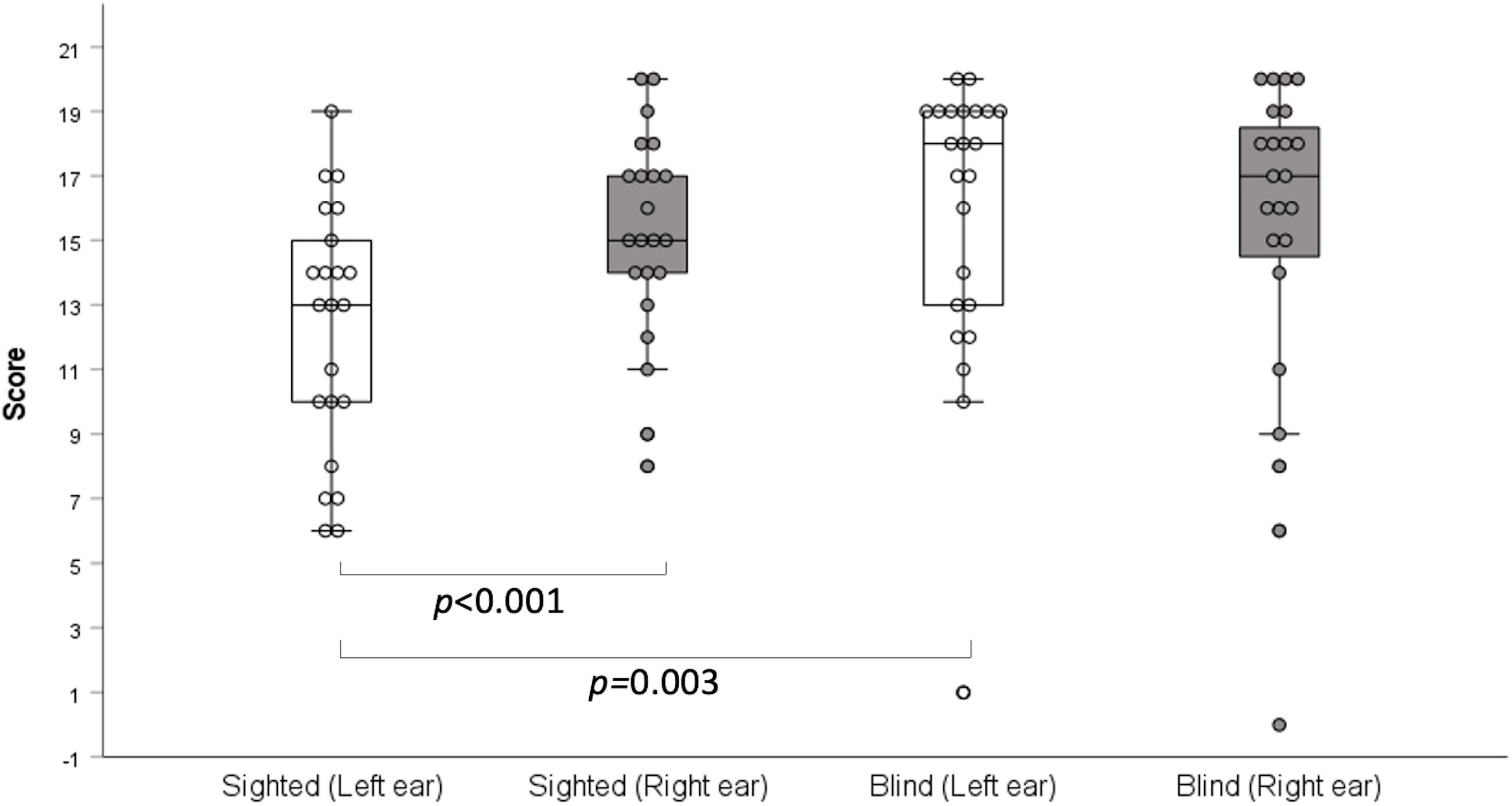

In the two-digit dichotic test, there was no significant difference between the two groups (right ear: z = –0.997, p = 0.319; left ear: z = –0.861, p = 0.389; Table 2). However, in the three-digit dichotic test, the early-blind subjects scored higher than the sighted subjects in the left ear (z = –2.979, p = 0.003, Bonferroni’s corrected α = 0.05/6 = 0.008; Table 2 and Figure 1) but not in the right ear. These results demonstrate that the right ear advantage was present in the sighted subjects (z = –3.715, p < 0.001, Bonferroni’s corrected α = 0.05/6 = 0.008), but not in the early-blind subjects (Figure 1).

Figure 1. Dichotic three-digit test. Early-blind subjects had higher scores than the sighted subjects in the left ears (p = 0.003), but not in the right ears. A right-ear advantage was detected in the sighted subjects (p < 0.001), but not the early-blind subjects.

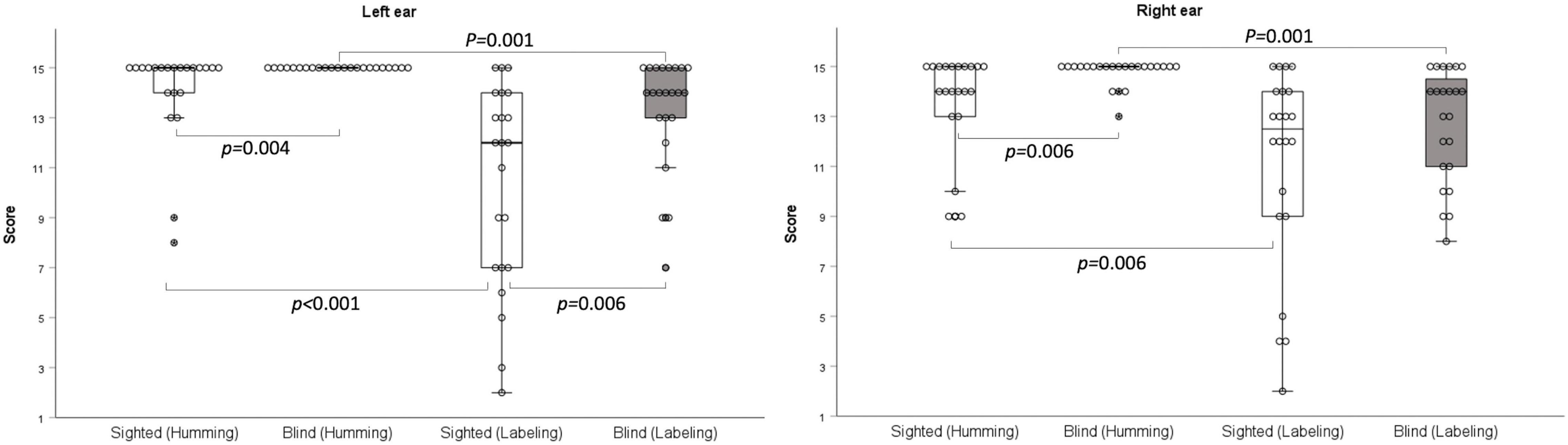

The scores on the humming frequency pattern test were significantly greater for the early-blind subjects than the sighted subjects (left ear: z = –2.899, p = 0.004; right ear: z = –2.722, p = 0.006; Bonferroni’s corrected α = 0.05/6 = 0.008; Table 2 and Figure 2). All 23 early-blind subjects achieved full marks in the left frequency pattern test (15.0 ± 0.0). The results of the labeling test in the right ear did not differ significantly between the two groups, but the left ear score was significantly higher in the early-blind subjects (z = –2.755, p = 0.006, Bonferroni’s corrected α = 0.05/6 = 0.008; Table 2 and Figure 2). The results for the humming response were always higher than those for the labeling response, regardless of the side or group (p < 0.006, Bonferroni’s corrected α = 0.05/6 = 0.008; Figure 2).

Figure 2. Frequency pattern test. With the humming response, early-blind subjects achieved significantly higher scores than sighted subjects (right: p = 0.006; left p = 0.004). With the labeling response, the scores were significantly higher in the early-blind subjects in the left ear only (p = 0.006).

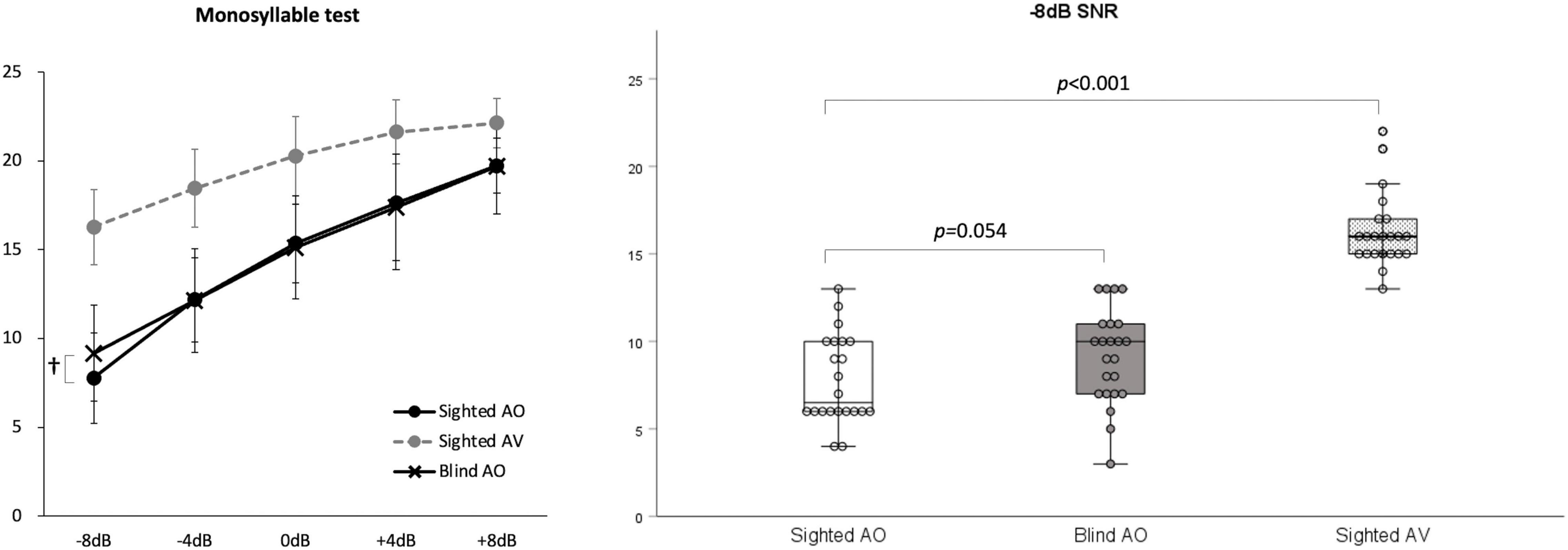

The results at SNRs of –8, –4, 0, +4, and +8 under AO conditions did not differ significantly between the early-blind and sighted subjects, but tended to be better in the former group at an SNR of –8 dB (z = –1.9, p = 0.054; Table 3 and Figure 3). At all five SNRs, the scores of the sighted subjects under the AV condition were much higher than those of the early-blind subjects and the sighted subjects under the AO condition (p < 0.003, Bonferroni’s corrected α = 0.05/15 = 0.003; Table 3).

Figure 3. Monosyllable perception in noise test. At signal-to-noise ratios (SNRs) of –4, 0, +4, and +8 dB under auditory-only (AO) conditions, there were no significant differences between the early-blind and sighted subjects, but the early-blind subjects tended to do better than the sighted subjects at an SNR of –8 dB (p = 0.054). The scores of the sighted subjects under audio–visual (AV) conditions were much higher than those of the early-blind subjects and sighted subjects under the AO conditions (p < 0.001). †0.05 < p < 0.06.

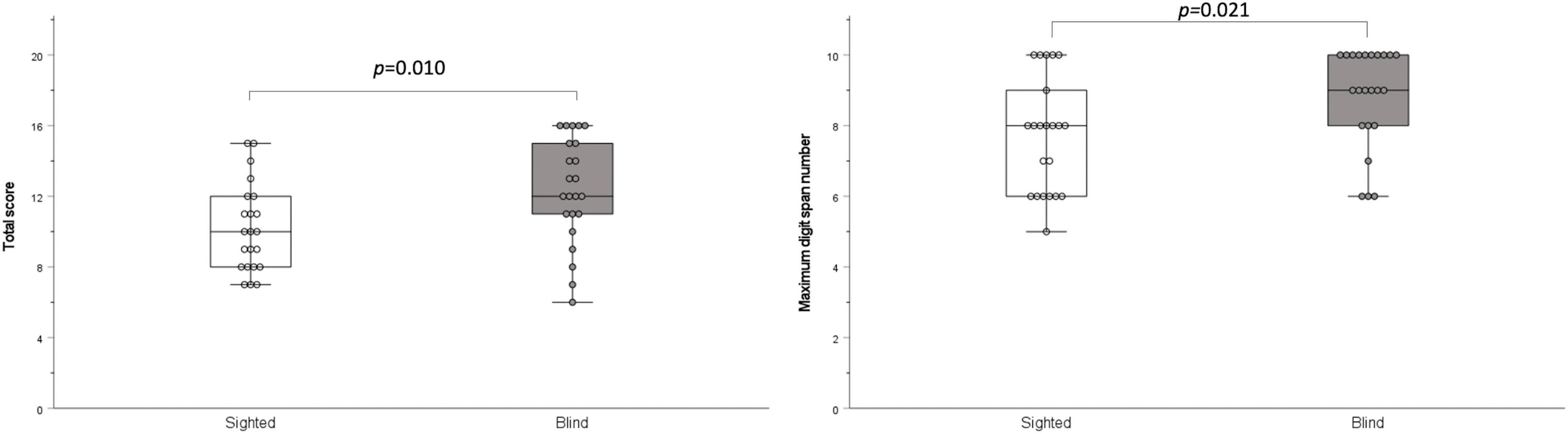

The results of the digit span test were significantly higher in the early-blind subjects than in the sighted subjects (total score: z = –2.579, p = 0.010; maximum digit number: z = –2.306, p = 0.021; Figure 4).

Figure 4. Digit span test. The total score and maximum digit number were significantly higher in the blind group than in the sighted group (p = 0.010 and p = 0.021, respectively).

In the early-blind subjects, the total score on the digit span test correlated significantly with the score on the three-digit dichotic test in the right ear (r = 0.698, p < 0.001) and the left ear (r = 0.531, p = 0.011), and the maximum digit number on the digit span test also correlated significantly with the score on the three-digit dichotic test in the right ear (r = 0.735, p < 0.001) and the left ear (r = 0.612, p = 0.002). In the early-blind subjects, the total scores on the digit span test correlated significantly with the score on the humming frequency pattern test in the right ear (r = 0.451, p = 0.035), and the maximum digit number on the digit span test also correlated significantly with the score on the humming frequency pattern test in the right ear (r = 0.445, p = 0.038). However, there was no correlation between the result of the digit span test and the scores on the three-digit dichotic or frequency pattern tests in sighted subjects.

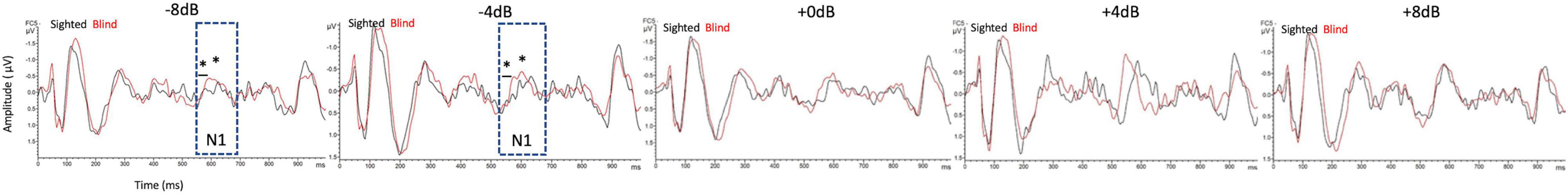

Figure 5 shows the grand mean ACC potentials in response to the signal change at the FC5 electrode. The N1 peak amplitude originating from /ba/ in babble noise was greater in the early-blind subjects than in the sighted subjects only at the FC5 electrode under SNRs of –8 dB and –4 dB [t(42) = –3.088, p = 0.004 and t(42) = –3.112, p = 0.003, respectively, Bonferroni’s corrected α = 0.05/5 = 0.01]. The latency of the N1 peak at the FC5 electrode was shorter in the early-blind subjects than in the sighted subjects under SNRs of –8 and –4 dB [t(42) = –2.827, p = 0.007 and t(42) = –4.911, p < 0.001, respectively, Bonferroni’s corrected α = 0.05/5 = 0.01].

Figure 5. Comparisons of acoustic change complex (ACC) at the FC5 electrode between early-blind subjects and sighted subjects. The N1 peak amplitude originating from /ba/ in babble noise was greater in early-blind subjects than in sighted subjects at SNRs of –8 dB and –4 dB (p = 0.004 and p = 0.003, respectively, Bonferroni’s corrected α = 0.05/5 = 0.01). The latency of the N1 peak at the FC5 electrode was shorter in the early-blind subjects than in the sighted subjects under SNRs of –8 and –4 dB (p = 0.007 and p < 0.001, respectively, Bonferroni’s corrected α = 0.05/5 = 0.01). *Significant in the N1 peak amplitude; * Significant in the peak latency.

In the dichotic three-digit test, the early-blind subjects showed an equally high performance in both ears, indicating no ear advantage. By contrast, the sighted subjects displayed the typical right-ear advantage, consistent with the findings of another study (Jang et al., 2014), which assumes that the right ear connects directly to the left hemisphere, which is dominant in right-handed subjects. Therefore, the dichotic three-digit test results revealed better right hemispheric function or interhemispheric function in early-blind subjects than in sighted subjects. In other words, the symmetric performances of both ears (i.e., no right-ear advantage) in early-blind subjects are attributed to the enhanced function of the right hemisphere, which is dominantly connected with the left ear. This finding suggests evidence of plastic functional changes in the brain after long-term visual deprivation. Another explanation is that the education of early-blind subjects to read Braille with a two-handed technique (Hermelin and O’Connor, 1971) may affect symmetric performance in the dichotic test because the dichotic-listening ear advantage is known to be associated with the subject’s handedness (Hu and Lau, 2019). Although all early-blind subjects in the present study were right-handed, two-hand usage in early-blind subjects could functionally enhance the right hemisphere. In a previous study using a dichotic test with the pairwise presentation of CV syllables, the overall correct scores were higher in the early-blind group than in the sighted subjects, consistent with our data using a three-digit dichotic test (Hugdahl et al., 2004). However, those authors documented a right-ear advantage in early-blind subjects as in sighted subjects, implying that this difference was caused by the test materials (digits vs CV syllables).

In the frequency pattern test, the early-blind subjects performed better in the humming response than the sighted subjects. With the labeling response in words, the early-blinded subjects achieved higher scores than the sighted subjects when the stimuli were presented to the left ear. The left hemisphere plays a dominant role in speech and language, whereas the right hemisphere recognizes acoustic contours and patterns (Blumstein and Cooper, 1974). Therefore, the results for the humming response reflect the better function of the right hemisphere in early-blind subjects, and the results for the linguistic labeling response in the left ear imply greater transfer of the acoustic signals from the right hemisphere to the left hemisphere in early-blind subjects.

If the results of the two tests are combined and interpreted, blind subjects might have (1) better right hemisphere function; (2) greater transfer of auditory information from the right to the left hemisphere; or (3) better binaural integration than sighted subjects. Previous studies that evaluated the auditory performance of blind subjects examined various psychoacoustic abilities, such as pitch discrimination (Gougoux et al., 2004; Shim et al., 2019), temporal resolution (Stevens and Weaver, 2005; Shim et al., 2019), ultrafast speech comprehension (Hertrich et al., 2013), sound localization (Zwiers et al., 2001; Lewald, 2002; Gori et al., 2010, 2014; Finocchietti et al., 2015; Vercillo et al., 2016; Battal et al., 2020), and speech perception (Gougoux et al., 2009; Guerreiro et al., 2015; Shim et al., 2019), and those abilities are also affected by the function of the peripheral auditory system. Both the dichotic digit test and the frequency pattern test reflect the central auditory processing of a subject, with no influence from any mild to moderate damage in the peripheral auditory system (Musiek, 1994; Mukari et al., 2006). The results of the present study suggest that long-term visual deprivation reduces the natural advantage of the left hemisphere for speech perception by enhancing the function of right hemisphere and enhancing the transfer of information from the right hemisphere to the left hemisphere. An early neuroimaging study showed preferential activation of right occipital cortex during sound localization in early-blind subjects implying the auditory recruitment of right visual cortex (Weeks et al., 2000). This plastic change in favor of the right brain is consistent with the results of the present study.

Many previous studies have compared the speech perception abilities of early-blind subjects with those of sighted subjects, but the results varied according to the experimental setting. Several studies showed better speech perception in the early-blind group (Menard et al., 2009; Arnaud et al., 2018), whereas other studies found no behavioral differences between early-blind and sighted subjects (Gougoux et al., 2009; Guerreiro et al., 2015; Shim et al., 2019). In a previous study, we used two-syllable words and white noise and found no difference between the blind and sighted groups. However, in the present study, the test words were changed to monosyllabic words, which allow fewer redundancy cues in speech perception. Furthermore, babble noise presents more challenging interference to speech perception than white noise because it has a high time-evolving structure and is more similar to the target speech (Cooke, 2006). Nevertheless, there were no differences between the early-blind subjects and sighted subjects under the four higher SNRs (–4 dB, 0 dB, +4 dB, and +8 dB). Only under the most severely degraded listening conditions (i.e., SNR of –8 dB) did the early-blind subjects tend to perform better than sighted subjects on monosyllabic perception in noise, although the difference was not significant. Therefore, it is still difficult to conclude whether there are differences in speech perception in noise between early-blind individuals and sighted individuals. The ACC responses to /ba/ in babble noise recorded at the FC5 electrode showed significantly greater neuronal power at –8 and –4 dB SNRs in the early-blind subjects than in the sighted subjects. Because the ACC stimuli were designed to detect speech perception, a significant difference might only be detected at the left-hemispheric electrode.
These findings suggest that early-blind subjects could have an advantage in speech perception when the original speech cue is severely degraded, despite the inconclusive result on the behavioral test. The inconsistent results for speech perception in many previous studies, including ours, might be attributable to plastic changes that occur in the central auditory system after long-term visual deprivation, mainly involving the right hemisphere rather than the left hemisphere, which plays a dominant role in speech and language (Kimura, 1961; Blumstein and Cooper, 1974). The plasticity of the left hemisphere, which is indirectly affected by the interhemispheric transfer from the right hemisphere, might be limited. The better performance of sighted subjects under AV conditions than the performance of early-blind subjects or sighted subjects under AO conditions was entirely predictable. The multisensory integration of auditory and visual stimuli facilitates speech recognition in noise better than under unimodal conditions (Giard and Peronnet, 1999).

The digit span test is traditionally used worldwide to evaluate memory span, especially verbal working memory (Harris et al., 2013). Our results for the digit span test show that the early-blind subjects had a significantly larger memory span than the sighted subjects. Previous research findings for working memory in early-blind subjects compared with those of sighted subjects have been inconclusive. Several studies found no differences between blind and sighted subjects (Cornoldi and Vecchi, 2000; Swanson and Luxenberg, 2009), whereas other studies have reported that blind subjects have an advantage over their sighted peers in working memory tasks, including the digit span test (Rokem and Ahissar, 2009; Withagen et al., 2012), and the word memory test (Raz et al., 2007). Raz et al. (2007) argued that early-blind subjects have trained themselves in serial strategies to compensate for the lack of visual information, and that their perception of space is likely to be highly dependent on memory. This superior ability could be attributed to actual brain reorganization in blind subjects, whose brains become more adapted to spatial, sequential, and verbal information (Cornoldi and Vecchi, 2000). In the present study, the results of the digit span test correlated with the score on the three-digit dichotic test and the score on part of the frequency pattern test in the early-blind subjects, but not in the sighted subjects. These results imply that the advantages of early-blind subjects in the dichotic digit test and frequency pattern test may be partly dependent on their superior working memory.

In conclusion, early-blind subjects have an advantage in dichotic listening and temporal sequencing ability. They also tend to have an advantage in monosyllable perception in very noisy backgrounds. These advantages may be attributable to enhanced activity of the central auditory nervous system, especially right-hemisphere function, and to the transfer of auditory information between hemispheres.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

The studies involving human participants were reviewed and approved by the Institutional Review Board of Nowon Eulji Medical Center. The patients/participants provided their written informed consent to participate in this study.

HS: conceptualization and funding acquisition. HS and EB: experiment design. EB: experiment performance and data preparation and analysis. HJ and EB: methodology. HJ and HS: critical review. All authors contributed to writing the manuscript and approved the submitted version.

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (NRF-2019R1H1A2039693 and 2020R1I1A3071587).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Ji-Hye Han and Jeong-Sug Kyong helped to set up the EEG and analyzed the EEG data. We thank Hye Young Han from Nowon Eulji Medical Center Library for technical editing of the manuscript.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.840541/full#supplementary-material

Amedi, A., Raz, N., Pianka, P., Malach, R., and Zohary, E. (2003). Early ‘visual’ cortex activation correlates with superior verbal memory performance in the blind. Nat. Neurosci. 6, 758–766. doi: 10.1038/nn1072

Arnaud, L., Gracco, V., and Menard, L. (2018). Enhanced perception of pitch changes in speech and music in early blind adults. Neuropsychologia 117, 261–270. doi: 10.1016/j.neuropsychologia.2018.06.009

Aylett, M., and Turk, A. (2004). The smooth signal redundancy hypothesis: a functional explanation for relationships between redundancy, prosodic prominence, and duration in spontaneous speech. Lang. Speech 47, 31–56. doi: 10.1177/00238309040470010201

Battal, C., Occelli, V., Bertonati, G., Falagiarda, F., and Collignon, O. (2020). General enhancement of spatial hearing in congenitally blind people. Psychol. Sci. 31, 1129–1139. doi: 10.1177/0956797620935584

Blumstein, S., and Cooper, W. E. (1974). Hemispheric processing of intonation contours. Cortex 10, 146–158. doi: 10.1016/s0010-9452(74)80005-5

Cooke, M. (2006). A glimpsing model of speech perception in noise. J. Acoust. Soc. Am. 119, 1562–1573. doi: 10.1121/1.2166600

Cornoldi, C., and Vecchi, T. (2000). “Mental imagery in blind people: the role of passive and active visuospatial processes,” in Touch, Representation and Blindness, ed. M. Heller (New York: Oxford University Press), 143–181.

Finocchietti, S., Cappagli, G., and Gori, M. (2015). Encoding audio motion: spatial impairment in early blind individuals. Front. Psychol. 6:1357. doi: 10.3389/fpsyg.2015.01357

Fischer, M. E., Cruickshanks, K. J., Nondahl, D. M., Klein, B. E., Klein, R., Pankow, J. S., et al. (2017). Dichotic digits test performance across the ages: results from two large epidemiologic cohort studies. Ear Hear. 38, 314–320. doi: 10.1097/AUD.0000000000000386

Giard, M. H., and Peronnet, F. (1999). Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J. Cogn. Neurosci. 11, 473–490. doi: 10.1162/089892999563544

Gori, M., Sandini, G., Martinoli, C., and Burr, D. (2010). Poor haptic orientation discrimination in nonsighted children may reflect disruption of cross-sensory calibration. Curr. Biol. 20, 223–225. doi: 10.1016/j.cub.2009.11.069

Gori, M., Sandini, G., Martinoli, C., and Burr, D. C. (2014). Impairment of auditory spatial localization in congenitally blind human subjects. Brain 137, 288–293. doi: 10.1093/brain/awt311

Gougoux, F., Belin, P., Voss, P., Lepore, F., Lassonde, M., and Zatorre, R. J. (2009). Voice perception in blind persons: a functional magnetic resonance imaging study. Neuropsychologia 47, 2967–2974. doi: 10.1016/j.neuropsychologia.2009.06.027

Gougoux, F., Lepore, F., Lassonde, M., Voss, P., Zatorre, R. J., and Belin, P. (2004). Neuropsychology: pitch discrimination in the early blind. Nature 430:309. doi: 10.1038/430309a

Guerreiro, M. J., Putzar, L., and Roder, B. (2015). The effect of early visual deprivation on the neural bases of multisensory processing. Brain 138, 1499–1504. doi: 10.1093/brain/awv076

Harris, M. S., Kronenberger, W. G., Gao, S., Hoen, H. M., Miyamoto, R. T., and Pisoni, D. B. (2013). Verbal short-term memory development and spoken language outcomes in deaf children with cochlear implants. Ear Hear. 34, 179–192. doi: 10.1097/AUD.0b013e318269ce50

Hermelin, B., and O’Connor, N. (1971). Right and left handed reading of Braille. Nature 231:470. doi: 10.1038/231470a0

Hertrich, I., Dietrich, S., and Ackermann, H. (2013). How can audiovisual pathways enhance the temporal resolution of time-compressed speech in blind subjects? Front. Psychol. 4:530. doi: 10.3389/fpsyg.2013.00530

Hu, X. J., and Lau, C. C. (2019). Factors affecting the Mandarin dichotic digits test. Int. J. Audiol. 58, 774–779. doi: 10.1080/14992027.2019.1639081

Hugdahl, K., Ek, M., Takio, F., Rintee, T., Tuomainen, J., Haarala, C., et al. (2004). Blind individuals show enhanced perceptual and attentional sensitivity for identification of speech sounds. Brain Res. Cogn. Brain Res. 19, 28–32. doi: 10.1016/j.cogbrainres.2003.10.015

Jang, H., Jeon, A., Yoo, J. H., and Kim, Y. (2014). Development and standardization of Korean dichotic digit test. J. Spec. Educ.: Theory Pract. 15, 489–506.

Jeon, A. R., and Jang, H. (2009). Effects of stimulus complexity, attention mode & age on dichotic digit tests. J. Spec. Educ. 10, 377–395.

Jutras, B., Mayer, D., Joannette, E., Carrier, M.-E., and Chenard, G. (2012). Assessing the development of binaural integration ability with the French dichotic digit test: Ecoute Dichotique de Chiffres. Am. J. Audiol. 21, 51–59. doi: 10.1044/1059-0889(2012/10-0040)

Kimura, D. (1961). Cerebral dominance and the perception of verbal stimuli. Can. J. Psychol. Revue Can. de Psychol. 15, 166–171. doi: 10.1037/h0083219

Lewald, J. (2002). Vertical sound localization in blind humans. Neuropsychologia 40, 1868–1872. doi: 10.1016/s0028-3932(02)00071-4

Marshall, E. K., and Jones, A. L. (2017). Evaluating test data for the duration pattern test and pitch pattern test. Speech Lang. Hear. 20, 241–246. doi: 10.1080/2050571x.2016.1275098

Martin, B. A., and Boothroyd, A. (1999). Cortical, auditory, event-related potentials in response to periodic and aperiodic stimuli with the same spectral envelope. Ear Hear. 20, 33–44. doi: 10.1097/00003446-199902000-00004

Menard, L., Dupont, S., Baum, S. R., and Aubin, J. (2009). Production and perception of French vowels by congenitally blind adults and sighted adults. J. Acoust. Soc. Am. 126, 1406–1414. doi: 10.1121/1.3158930

Mukari, S. Z., Keith, R. W., Tharpe, A. M., and Johnson, C. D. (2006). Development and standardization of single and double dichotic digit tests in the Malay language: Desarrollo y estandarización de pruebas de dígitos dicóticos sencillos y dobles en lengua malaya. Int. J. Audiol. 45, 344–352. doi: 10.1080/14992020600582174

Musiek, F. E. (1983). Assessment of central auditory dysfunction: the dichotic digit test revisited. Ear Hear. 4, 79–83. doi: 10.1097/00003446-198303000-00002

Musiek, F. E. (1994). Frequency (pitch) and duration pattern tests. J. Am. Acad. Audiol. 5, 265–268.

Rajabpur, E., Hajiablohasan, F., Tahai, S. A.-A., and Jalaie, S. (2014). Development of the Persian single dichotic digit test and its reliability in 7-9 year old male students. Audiology 23, 68–77.

Raz, N., Striem, E., Pundak, G., Orlov, T., and Zohary, E. (2007). Superior serial memory in the blind: a case of cognitive compensatory adjustment. Curr. Biol. 17, 1129–1133. doi: 10.1016/j.cub.2007.05.060

Rokem, A., and Ahissar, M. (2009). Interactions of cognitive and auditory abilities in congenitally blind individuals. Neuropsychologia 47, 843–848. doi: 10.1016/j.neuropsychologia.2008.12.017

Shim, H. J., Go, G., Lee, H., Choi, S. W., and Won, J. H. (2019). Influence of visual deprivation on auditory spectral resolution, temporal resolution, and speech perception. Front. Neurosci. 13:1200. doi: 10.3389/fnins.2019.01200

Stevens, A. A., and Weaver, K. (2005). Auditory perceptual consolidation in early-onset blindness. Neuropsychologia 43, 1901–1910. doi: 10.1016/j.neuropsychologia.2005.03.007

Swanson, H. L., and Luxenberg, D. (2009). Short-term memory and working memory in children with blindness: support for a domain general or domain specific system? Child Neuropsychol. 15, 280–294. doi: 10.1080/09297040802524206

Tremblay, K. L., Friesen, L., Martin, B. A., and Wright, R. (2003). Test-retest reliability of cortical evoked potentials using naturally produced speech sounds. Ear Hear. 24, 225–232. doi: 10.1097/01.Aud.0000069229.84883.03

Vercillo, T., Burr, D., and Gori, M. (2016). Early visual deprivation severely compromises the auditory sense of space in congenitally blind children. Dev. Psychol. 52, 847–853. doi: 10.1037/dev0000103

Voss, P., Gougoux, F., Zatorre, R. J., Lassonde, M., and Lepore, F. (2008). Differential occipital responses in early- and late-blind individuals during a sound-source discrimination task. Neuroimage 40, 746–758. doi: 10.1016/j.neuroimage.2007.12.020

Weeks, R., Horwitz, B., Aziz-Sultan, A., Tian, B., Wessinger, C. M., Cohen, L., et al. (2000). A positron emission tomographic study of auditory localization in the congenitally blind. J. Neurosci. 20, 2664–2672.

Withagen, A., Kappers, A. M., Vervloed, M. P., Knoors, H., and Verhoeven, L. (2012). Haptic object matching by blind and sighted adults and children. Acta Psychol. 139, 261–271. doi: 10.1016/j.actpsy.2011.11.012

Yoon, K., Alsabbagh, N., and Jang, H. (2019). Temporal processing abilities of adults with tune deafness. Audiol. Speech Res. 15, 196–204. doi: 10.21848/asr.2019.15.3.196

Keywords: early visual deprivation, dichotic listening, temporal sequencing ability, speech in noise, central auditory processing, visual cortex

Citation: Bae EB, Jang H and Shim HJ (2022) Enhanced Dichotic Listening and Temporal Sequencing Ability in Early-Blind Individuals. Front. Psychol. 13:840541. doi: 10.3389/fpsyg.2022.840541

Received: 21 December 2021; Accepted: 13 April 2022;

Published: 10 May 2022.

Edited by:

Josef P. Rauschecker, Georgetown University, United States

Reviewed by:

Malte Wöstmann, University of Lübeck, Germany

Copyright © 2022 Bae, Jang and Shim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hyun Joon Shim

†These authors have contributed equally to this work
