ORIGINAL RESEARCH article

Front. Psychol., 30 May 2022

Sec. Cognition

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.823700

Neurophysiological research on the bilingual activity of interpretation or interpreting has been very fruitful in understanding the bilingual brain and has gained increasing popularity recently. Issues like word interpreting and the directionality of interpreting have been attended to by many researchers, mainly with localizing techniques. Brain structures such as the dorsolateral prefrontal cortex have been repeatedly identified during interpreting. However, little is known about the oscillation and synchronization features of interpreting, especially sentence-level overt interpreting. In this study we implemented a Chinese-English sentence-level overt interpreting experiment with electroencephalography on 43 Chinese-English bilinguals and compared the oscillation and synchronization features of interpreting with those of listening, speaking and shadowing. We found significant time-frequency power differences in the delta-theta (1–7 Hz) and gamma band (above 30 Hz) between motor and silent tasks. Further theta-gamma coupling analysis revealed different synchronization networks among speaking, shadowing and interpreting, indicating an idea-formulation dependent mechanism. Moreover, interpreting incurred a robust right frontotemporal gamma coactivation network compared with speaking and shadowing, which we think may reflect the language conversion process inherent in interpreting.

Over half of the world’s population can speak two languages or more (Grosjean, 1994), and the practice of alternatively using two languages is referred to as bilingualism (Weinreich, 1953). It is believed that the brain’s ability to switch between two languages may also generalize to non-linguistic tasks requiring selective attention and inhibition (cf. Lehtonen et al., 2018). Among the many bilingual switching activities, interpreting is perhaps the most interesting. Interpreting refers to a process, in which a first and final rendition in another language is produced on the basis of a one-time presentation of an utterance in a source language (Pöchhacker, 2004, p. 11). Interpreting is one of the most cognitively demanding language tasks for the human brain, involving decoding the source language, storing the information in working memory, reformulating the information and articulating in the target language, all having to be completed within a tight time window measurable in seconds (Christoffels et al., 2006; Elmer et al., 2010; Klein et al., 2018).

Although it has been suggested that investigating the neural mechanisms of interpreting would benefit the area of neurolinguistics as a whole (García, 2013), these remain scarcely investigated. Of the existing neurolinguistic research on interpreting, the majority are localization studies using functional magnetic resonance imaging (Lehtonen et al., 2005; Elmer, 2016; Elmer et al., 2014; Hervais-Adelman et al., 2015a,b, 2017; Zheng et al., 2020), positron emission tomography (Klein et al., 1995; Price et al., 1999; Rinne et al., 2000; Tommola et al., 2000), functional near-infrared spectroscopy (Quaresima et al., 2002; Lin et al., 2018a,b; Ren et al., 2019), and diffusion tensor imaging (Van de Putte et al., 2018). Though findings vary, brain structures that have been consistently identified as being involved in interpreting include the dorsolateral prefrontal cortex, pars triangularis and supramarginal gyrus.

As such, although the cerebral “where” question of interpreting has been partly answered, the answers to the “how” question of interpreting remain elusive. For example, we still do not know why interpreting is difficult, what the functional features underlying interpreting are, and whether they are intrinsically different from those of other bilingual activities. To further demystify the neural workings of interpreting, some pioneering researchers conducted experiments with electroencephalography (EEG), which has the benefit of helping us understand the timing of neural events during language processing. Early EEG studies of interpreting mainly showed a temporal beta-band oscillation associated with word interpreting, larger centroparietal theta increase and frontal alpha decrease for interpreting low-frequency words relative to high-frequency words, and wider brain activations for first language (L1) to second language (L2) interpreting than for L2–L1 interpreting (Petsche et al., 1993; Kurz, 1995; Grabner et al., 2007). Note, however, that these early studies all adopted the “mental interpreting” or the “typing response” design paradigm (i.e., silently interpreting the words or typing down the answer while seeing the stimulus words, both without any form of overt speaking), which are not in line with real-life interpreting activities. This makes the validity of the early studies and their results problematic.

Experimental designs began to change soon after researchers recognized the limited ecological validity of the early studies. Janyan et al. (2009) made the first attempt to test overt spoken interpreting with EEG. They asked 22 Bulgarian-English bilinguals to interpret visually presented L2 words into L1, taking cognates/non-cognates and word concreteness as the independent variables. The results showed that interpreting cognates elicited a centrotemporal N400 component and that the word concreteness effect was only associated with cognates. Later, Christoffels et al. (2013) adapted this paradigm and measured the event-related potentials (ERPs) of 57 Dutch-English bilinguals during two-way overt word interpreting and naming. Their experiment revealed that participants began to differentiate the direction of interpreting at around 200 ms, and activation reflecting the meaning of words occurred at 300 ms. They also found that L1–L2 interpreting elicited a larger P2 component, while L2–L1 interpreting elicited a larger N400 component. Following this, Jost et al. (2018) examined the ERPs of 15 French-English bilinguals in overt tasks including two-way word interpreting, L1 word generation and L2 word generation. The results showed that the differences between word generation and word interpreting were manifested in the 424–630 ms time window. They also noted that backward interpreting (BI; L2 to L1) was easier than forward interpreting (L1 to L2), as the latter incurred wider brain activations. This finding has not gone unchallenged, however. Dottori et al. (2020) compared the neurophysiological signatures of word interpreting between professional Spanish-English simultaneous interpreters and bilingual Spanish-English non-interpreter controls. Their results showed that it was BI (L2–L1) that triggered the more widespread neural activation among professional interpreters. They also found consistently higher delta-theta power in professional interpreters than in controls. Pérez et al. (2022) implemented a similar EEG study on Spanish-English bilinguals and compared the oscillation differences in two-way word translation tasks. Their results mainly showed that, compared with backward translation, forward translation yielded higher frontal theta in an early window, lower central beta in a later window, and a positive early theta-behavioral data association. In short, testing overt spoken interpreting has proved not only feasible but also fruitful. The N400 component was identified as being closely related to interpreting, and more evidence indicated that BI (L2–L1) is easier than forward interpreting (L1–L2) than the reverse. In contrast to these ERP studies, to our knowledge there is no oscillation research on overt spoken interpreting.

Despite the progress made in the experiment paradigm, there are two other gaps in the existing literature on interpreting research: (1) there are very few sentence-level studies; and (2) most of the sentence-level studies were on the localization side (e.g., Lehtonen et al., 2005; Hervais-Adelman et al., 2015a,b; Zheng et al., 2020). Thus, there is a need for oscillation research on sentence-level overt interpreting.

Another important problem with previous research lies in the experiment mode. Nearly all prior studies chose to present their stimuli visually to the participants, which, strictly speaking, should be called “sight translation,” i.e., the immediate oral rendition of a written text, a specific mode of interpreting (He et al., 2017). As word interpreting and sentence interpreting do not share the same neural mechanisms (Klein et al., 1995; García, 2013; Muñoz et al., 2019), and as the human brain processes visual and acoustic information differently (Baddeley, 2007), it is an important next step to examine the EEG oscillation features of sentence-level interpreting in an auditory-spoken design.

Against this backdrop, we conducted an EEG study that aims to reveal the functional workings of sentence interpreting with an auditory-spoken design. Specifically, we implemented four language tasks in the EEG environment: (i) L2 listening (L2L); (ii) L1 speaking (L1S); (iii) L2 shadowing (L2SH); and (iv) BI. Our aim was to determine the dominant EEG frequency bands while participants are interpreting. We also addressed whether the frequency bands were unique to interpreting or general to other language tasks. Though our study was exploratory regarding hypotheses, particularly as to the language conversion process specific to interpreting, some related studies are noteworthy. For example, Grabner et al. (2007), in a silent experiment design, found a theta increase for BI; Dottori et al. (2020) partly corroborated this and further reported a delta-theta (1–8 Hz) increase in an overt interpreting task, indicating that the theta band (4–7 Hz) or even the broader delta-theta band (1–8 Hz) is closely related to the word interpreting process. On the other hand, as noted, sentence interpreting involves much more semantic and syntactic integration (García, 2013). We therefore predict that, apart from theta band activation, overt sentence-level BI may also incur stronger syntactic-related alpha/beta band (Davidson and Indefrey, 2007; Bastiaansen et al., 2010; Segaert et al., 2018) activation, as well as semantic-related gamma band (Hald et al., 2006; Wang et al., 2012; Rommers et al., 2013; Bastiaansen and Hagoort, 2015) activation than other language tasks.

Forty-six subjects were recruited for the current study. One participant did not finish the experiment and another two were excluded due to noisy data. The remaining forty-three subjects were used for the current data analysis.

Participants were bilingual Chinese (L1) – English (L2) non-interpreters with varying degrees of L2 proficiency, roughly gender-balanced (female = 21, male = 22), aged between 19 and 36 years (M = 26.05, SD = 4.55). They began learning English as a second language at a mean age of 8.12 years (SD = 3.16) and had been learning English for 17.6 years on average (SD = 4.59). Their average stay in English-speaking countries was 3.43 years (SD = 3.79). None had a history of brain injury, and all had normal hearing and speaking abilities according to their self-reported answers in the questionnaire.

All participants gave written, informed consent and were compensated with a 20-dollar grocery voucher for their participation. Experiments were implemented in accordance with the Declaration of Helsinki. The study was approved by The University of Auckland Human Participants Ethics Committee (Ref. 022991).

Before the EEG session, participants were given time to read through the Participant’s Information Sheet and the Consent Form. Then they were asked to complete the Oxford Placement Test (within 30 min) and a demographic questionnaire that mainly covered their language backgrounds.

The experiment consisted of four language tasks, as shown in Figure 1. Participants first listened to the general instructions for the whole experiment, which detailed what to do in each task. Next, participants listened to the instructions for the L2L task and subsequently listened to the auditory stimuli. During this task participants were required to comprehend the news without giving any other response. The L2L task was designed to test the brain oscillations in a sensory task. In the L1S task participants first heard instructions on a topic they were going to talk about. After that they had 1 min to prepare. A beep sounded at the end of the preparation period, prompting them to start speaking, and a second beep signaled them to stop. This task was aimed at examining the neural oscillation features of the L1 motor process. In the L2SH task participants listened to instructions first, and then they were required to listen to a paragraph in English and concurrently repeat every word of it. The goal of the L2SH task was to probe the oscillations underlying the L2 motor process. Each of the first three tasks consisted of a single long trial of 2 min. In the BI task participants were asked to listen to one or more short English sentences, after which they had several seconds for comprehension, and then they had to interpret the sentence(s) into Chinese upon hearing the first beep and stop upon the second. The BI procedure was repeated ten times for ten different sentence groups and was designed to test whether a language conversion mechanism was manifested in the oscillations. This experimental design was adapted from that used in Hervais-Adelman et al. (2015b), and here we added a speaking task to allow more comparisons. The whole experiment was conducted in an EEG setting using E-Prime 2.0 (Psychology Tools, Inc., Pittsburgh, PA, United States) and participants’ verbal outputs were recorded with a voice recorder.

The EEG recordings were conducted in an electrically shielded room (IAC Noise Lock Acoustic – Model 1375, Hampshire, United Kingdom) using 128-channel Ag/AgCl electrode nets (Tucker, 1993) from Electrical Geodesics Inc (Eugene, OR, United States). EEG was recorded continuously (1,000 Hz sample rate: 0.1–400 Hz analog bandpass) with Electrical Geodesics Inc. amplifiers (300-MΩ input impedance). Electrode impedances were kept below 40 kΩ, an acceptable level for this system (Ferree et al., 2001). Common vertex (Cz) was used as a reference. During the EEG, participants were comfortably seated in a chair 60 cm away from the screen. As there was no visual information involved, we asked the participants to close their eyes during the experiment, both to reduce noise in data from eye movements and to eliminate visual distractions (even if fixation has been widely used in previous studies to control eye movement, there is still a + sign in the middle of the screen that would potentially activate the brain’s visual system).

To familiarize participants with these tasks a practice session was administered before the real experiment. To avoid fatigue-induced performance deteriorations, the four tasks were designed to appear in a balanced sequence so that each task was used as the first condition for an equal number of times. Between the second and third task there was a break time (about 10 min). The whole experiment lasted for about 60 min.
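The balancing of task order described above can be illustrated with a cyclic rotation of the task list. This is only a sketch: the text does not state which counterbalancing scheme was used, so the rotation rule and the function name `task_order` are assumptions made for illustration. With a sample size that is not a multiple of four, such a scheme balances the first task only approximately.

```python
TASKS = ["L2L", "L1S", "L2SH", "BI"]


def task_order(participant_index, tasks=TASKS):
    """Rotate the task list so that consecutive participants start with different tasks."""
    shift = participant_index % len(tasks)
    return tasks[shift:] + tasks[:shift]


# Eight hypothetical participants: each task opens the session exactly twice.
orders = [task_order(i) for i in range(8)]
first_counts = {task: sum(order[0] == task for order in orders) for task in TASKS}
print(first_counts)
```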

A written version of the Oxford Placement Test was used to assess participants’ English proficiency before the EEG experiment. The experiment used three custom-made English audio clips. The first clip was a news passage that lasted 2 min (162 words per minute) and was used for the L2L condition. The second clip was also a 2-min news excerpt but was much slower (95 words per minute) and was used for the L2SH condition. The third clip was made up of ten groups of English sentences of varying length (11 to 33 words, mean = 20.5, SD = 8.18), played at a mean speed of 153 words per minute, and was used for the BI condition. All sentences were in the simple declarative form (e.g., S1 for interpreting: The United Kingdom research councils are establishing their first overseas office in Beijing.) so that syntactic complexity could be ruled out as a confounding variable. The full list of sentences used for BI can be found in the Appendix.

EEGlab v2020.0 (Delorme and Makeig, 2004) was used for the preprocessing of the raw data. The raw data was first downsampled to 250 Hz and high-pass filtered at 1 Hz. Then the data was cleaned using EEGlab plugins Cleanline (v1.04) and Clean_rawdata (v.2.2). Next, bad channels were interpolated and data re-referenced to the average of all channels. After this, Independent Component Analysis (ICA) was performed based on the EEGlab default algorithm of “runica,” and the number of principal components (PCs) to decompose was set to be equal to the number of channels. Finally MARA (Winkler et al., 2011) was applied to remove artifactual components using default parameters. Considering the potential motor artifacts which the overt tasks may produce, we double-checked the remaining component map and removed those that looked suspicious. After this we again inspected the time domain signal and removed any portion of data that looked noisy. Specific artifact removal and noisy data exclusion criteria are as follows. Any signal portion with a flatline duration of 5 s or longer was removed, and a channel with less than 85% self-reconstruction based on other channels was considered abnormal and then removed. A channel was interpolated if the line noise was four standard deviations higher than its signal based on the whole channel population. Signal bursts whose variance was 10 standard deviations higher than the calibration data were considered missing and were removed. A maximum of 25% of contaminated channels in a certain time window for data repair was considered tolerable, otherwise that window was removed. On average across subjects, the bad channel interpolation rate was 5.84%, 57 independent components were computed, and 4% of the original signal (about 52 s) was removed.

Then we extracted epochs of 60 s (0–60 s) from the preprocessed data for the L2L, L1S, and L2SH conditions, respectively. Since the BI task was in the form of ten consecutive sentences, we concatenated the preprocessed sentence data and then extracted a 60-s epoch in line with the other three data segments. The epoch extraction of BI sentences was synchronized with the overt interpreting. Data exclusion was based on the type of blanks in the data segment, such that blank periods where the brain was not involved in language conversion (e.g., after the interpreting finishes but before the stopping beep sounds) were removed, while those during which language conversion happens (e.g., pauses in the middle of interpreting) were kept. We did not take individual participants’ interpreting speed into account, because once the types of blanks had been classified, speed was no longer a concern for data exclusion. A 2-s baseline was drawn from the resting state data for each individual.

The functional mechanism of the four bilingual processing tasks in which we were most interested was indexed by time-frequency power values. We analyzed the time-frequency power dynamics of all datasets using the method of complex Morlet wavelet convolution in Matlab R2019b (The MathWorks, Inc., Natick, MA, United States). The 2-s resting state dataset drawn from each individual subject’s preprocessed data was used as the baseline window for that particular subject. Then the convolution frequencies were created as a linearly spaced vector ranging from 1 to 40 Hz in 30 steps. The key parameter of the complex Morlet wavelet, i.e., the full width at half maximum, was defined as a vector from 300 ms to 600 ms logarithmically spaced in 30 steps, in order to achieve an ideal tradeoff between time and frequency precision. The task-induced time-frequency data was then decibel normalized by the baseline as a way to reveal the task-relevant dynamics. We then averaged all the data across 43 participants in one condition to obtain group results for that particular task. The raw time-frequency power data was downsampled to one sample every 200 ms, which had no impact on the time-frequency resolution but saved considerable storage space.
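The wavelet parameters and the decibel normalization described above can be sketched as follows. This is a standard-library Python illustration, not the authors' Matlab code. It assumes the Gaussian taper is parameterized directly by its full width at half maximum, uses a toy 10 Hz sine as data, and leaves open how the frequency and FWHM vectors are paired, since the text does not specify it. All function names are hypothetical.

```python
import cmath
import math


def linspace(start, stop, n):
    """n evenly spaced values from start to stop (inclusive)."""
    step = (stop - start) / (n - 1)
    return [start + i * step for i in range(n)]


def logspace(start, stop, n):
    """n values from start to stop, evenly spaced on a logarithmic scale."""
    return [math.exp(v) for v in linspace(math.log(start), math.log(stop), n)]


def morlet(freq_hz, fwhm_s, srate_hz, half_len_s=2.0):
    """Complex sine wave tapered by a Gaussian whose FWHM is fwhm_s seconds."""
    n = int(half_len_s * srate_hz)
    times = [k / srate_hz for k in range(-n, n + 1)]
    return [cmath.exp(2j * math.pi * freq_hz * t)
            * math.exp(-4 * math.log(2) * t * t / fwhm_s ** 2)
            for t in times]


def power_at(signal, wavelet, center):
    """Power at one sample: |sum(signal * conj(wavelet))|^2 around `center`.

    Because the wavelet satisfies w(-t) = conj(w(t)), this dot product equals
    one output sample of the convolution of signal and wavelet.
    """
    half = len(wavelet) // 2
    acc = 0j
    for k, w in enumerate(wavelet):
        idx = center + k - half
        if 0 <= idx < len(signal):
            acc += signal[idx] * w.conjugate()
    return abs(acc) ** 2


def to_decibel(task_power, baseline_power):
    """Decibel change of task power relative to baseline power."""
    return 10 * math.log10(task_power / baseline_power)


# Parameter vectors as described in the text.
freqs = linspace(1.0, 40.0, 30)   # Hz, linearly spaced
fwhms = logspace(0.3, 0.6, 30)    # seconds, logarithmically spaced

# Toy data (assumption): the "task" signal is the baseline with doubled amplitude.
srate = 250
baseline = [math.sin(2 * math.pi * 10 * k / srate) for k in range(1000)]
task = [2 * v for v in baseline]
wavelet = morlet(10.0, 0.4, srate)
db = to_decibel(power_at(task, wavelet, 500), power_at(baseline, wavelet, 500))
print(round(db, 2))  # doubling the amplitude quadruples power: about 6.02 dB
```

Decibel normalization puts all frequencies on a common scale, which matters because raw EEG power falls off steeply with frequency.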

We then performed condition-wise subtractions on the group-level time-frequency data in order to see if there were frequencies unique to a specific task. Such subtraction analysis has been adopted effectively by earlier research (Klein et al., 1995; Rinne et al., 2000; Perani et al., 2003; Wang et al., 2018; Muñoz et al., 2019). For statistical testing, we adopted the non-parametric cluster-based permutation test (Maris and Oostenveld, 2007). First, the power values of the frequencies of interest (1–40 Hz) in each channel and time point within the 0–60 s window were clustered depending on whether they exceeded the dependent t-test threshold. This was repeated 1,000 times through random partitions in which the labels of the two conditions were shuffled. Then the largest cluster size was recorded for each of the 1,000 partitions. After that the distribution of the 1,000 largest cluster sizes was calculated and its 95th percentile was taken as the threshold for rejecting the null hypothesis (corresponding to a Monte Carlo p-value of 0.05). Lastly the permuted threshold was applied to the original data, and clusters with sizes above this threshold were kept, while those below it were deemed chance results and thus non-significant.
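The logic of the cluster-based permutation test can be sketched on a one-dimensional toy problem. The example below is a simplified illustration under several assumptions: clusters are contiguous runs along a single axis rather than over channel, time and frequency; label shuffling is implemented as a random sign flip of each subject's paired difference; and the data, the critical t value (about 2.02 for 42 degrees of freedom) and all function names are hypothetical.

```python
import math
import random


def paired_t(diffs):
    """t-statistic of paired differences across subjects."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0:
        return math.copysign(math.inf, mean) if mean else 0.0
    return mean / math.sqrt(var / n)


def clusters(t_values, t_crit):
    """(start, end) index pairs of contiguous same-sign runs with |t| > t_crit."""
    runs, start, prev = [], None, 0
    for i, t in enumerate(list(t_values) + [0.0]):  # sentinel closes a trailing run
        sign = (t > t_crit) - (t < -t_crit)
        if sign != prev:
            if prev != 0:
                runs.append((start, i))
            start, prev = i, sign
    return runs


def find_clusters(diff_rows, t_crit):
    """Clusters of the point-wise paired t-tests over subjects."""
    n_points = len(diff_rows[0])
    t_values = [paired_t([row[j] for row in diff_rows]) for j in range(n_points)]
    return clusters(t_values, t_crit)


def cluster_permutation_test(cond_a, cond_b, t_crit=2.02, n_perm=1000, seed=0):
    """Return (significant clusters, cluster-size threshold from the null)."""
    rng = random.Random(seed)
    diffs = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(cond_a, cond_b)]
    observed = find_clusters(diffs, t_crit)
    null_sizes = []
    for _ in range(n_perm):
        # Swapping condition labels within a subject flips the sign of the difference.
        flipped = []
        for row in diffs:
            sign = rng.choice((1, -1))
            flipped.append([sign * d for d in row])
        runs = find_clusters(flipped, t_crit)
        null_sizes.append(max((e - s for s, e in runs), default=0))
    null_sizes.sort()
    threshold = null_sizes[(95 * n_perm + 99) // 100 - 1]  # 95th percentile
    significant = [(s, e) for s, e in observed if e - s > threshold]
    return significant, threshold


# Toy data (assumption): 43 subjects, 40 points, true effect at points 10-19.
rng = random.Random(1)
n_subj, n_points = 43, 40
cond_b = [[rng.gauss(0, 1) for _ in range(n_points)] for _ in range(n_subj)]
cond_a = [[rng.gauss(0, 1) + (1.5 if 10 <= j < 20 else 0.0) for j in range(n_points)]
          for _ in range(n_subj)]
significant, threshold = cluster_permutation_test(cond_a, cond_b, n_perm=300)
print(significant, threshold)
```

Comparing each observed cluster against the distribution of the largest null cluster is what controls the family-wise error rate across the many points tested.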

Note that the time-frequency analysis was implemented in a lobe-wise fashion, i.e., for a certain lobe the data was averaged across all electrodes in that lobe to obtain a grand result. For instance, the time-frequency result for the frontal lobe was obtained by averaging the individual results across channel F1 to F10. The condition-wise subtractions were conducted within the same lobe, while the more dynamic cross-region oscillation analysis was attended to in the later section for cross-frequency-coupling.

Demographic and behavioral data were analyzed using IBM SPSS version 26 (SPSS Inc, Chicago, IL, United States). We measured participants’ L2 proficiency and transcribed and marked their oral interpreting output as the main behavioral index. Interpreting performance was measured as the ratio of correctly interpreted content words to the total number of content words in the original sentence. For example, if a sentence contained 12 content words (i.e., excluding articles such as the) and the participant correctly interpreted four of them, then his/her interpreting accuracy was 33.33%. The average score of the 43 participants in the Oxford Quick English Placement test was 44.37 (full marks = 60), SD = 7.32. The mean accuracy of their interpreting performance was 54.59%, SD = 0.15.
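The accuracy measure amounts to a simple ratio, shown here with the worked example from the text. The function name is hypothetical, and how scores were aggregated across the ten sentence groups is not specified in the text, so only the per-sentence computation is sketched.

```python
def interpreting_accuracy(n_correct, n_content_words):
    """Percentage of content words in the source sentence that were rendered correctly."""
    if n_content_words <= 0:
        raise ValueError("sentence must contain at least one content word")
    return 100.0 * n_correct / n_content_words


print(round(interpreting_accuracy(4, 12), 2))  # 33.33, the worked example from the text
```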

Correlations were then computed between the behavioral index and participants’ language background. There was a significant correlation between participants’ L2 proficiency and their interpreting accuracy, r(41) = 0.67, p < 0.01. There was also a significant correlation between the length of time that participants had spent living in English-speaking countries and their interpreting performance, r(41) = 0.46, p < 0.01. There were no significant correlations between interpreting performance and age, age of L2 acquisition or L2 learning years, all ps > 0.05.
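The link between the reported coefficients and their degrees of freedom can be made explicit. The sketch below (hypothetical function names, standard library only) computes a Pearson coefficient and converts a given r and sample size into the equivalent t-statistic with n − 2 degrees of freedom. It uses only the r values reported above, not the underlying data, which are not available here.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t-statistic and degrees of freedom for testing r against zero."""
    df = n - 2
    return r * math.sqrt(df / (1 - r * r)), df


for r in (0.67, 0.46):
    t, df = t_from_r(r, 43)
    print(f"r({df}) = {r}: t = {t:.2f}")
```

With 43 participants the degrees of freedom are 41, and both t values exceed the two-tailed critical value of roughly 2.70 at p = 0.01, in line with the reported significance.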

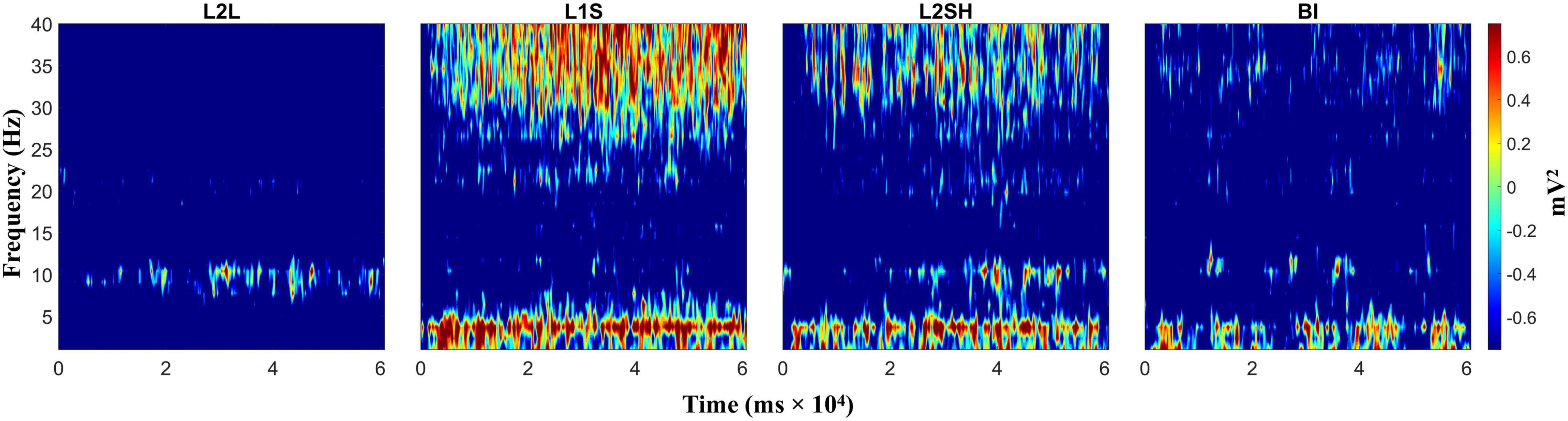

We first evaluated the raw time-frequency dynamics in each condition, with the intention to identify the dominant frequency bands in the four language tasks. We did not have a priori hypotheses on any regions of interest, so we did this for the frontal, occipital, parietal, and temporal regions, respectively, by averaging the TF dynamics across all electrodes in that specific region. The results are not qualitatively different for the four lobes, and one example is shown in Figure 2.

Figure 2. Raw time-frequency power in all conditions (language tasks). From left to right: L2 listening (L2L), L1 speaking (L1S), L2 shadowing (L2SH), and backward interpreting (BI), respectively. TF power values are obtained by task/baseline division.

Figure 2 shows a marked TF power difference between L2L and the other three conditions. The last three language tasks also showed slight TF variations among themselves, though the general activation pattern was similar. Specifically, L2L was dominated by alpha activation (around 10 Hz), while L1S, L2SH, and BI mainly elicited coactivation between the delta-theta and gamma bands. L1S had the highest power values, the most sustained activation and the widest frequency range. L2SH, in contrast, showed less activation in terms of frequency coverage and consistency. BI was lower still on all the activation indexes. Note, however, that both L2SH and BI elicited slightly stronger alpha activation than L1S. In BI in particular, the theta and alpha activations seemed to appear in roughly alternating order.

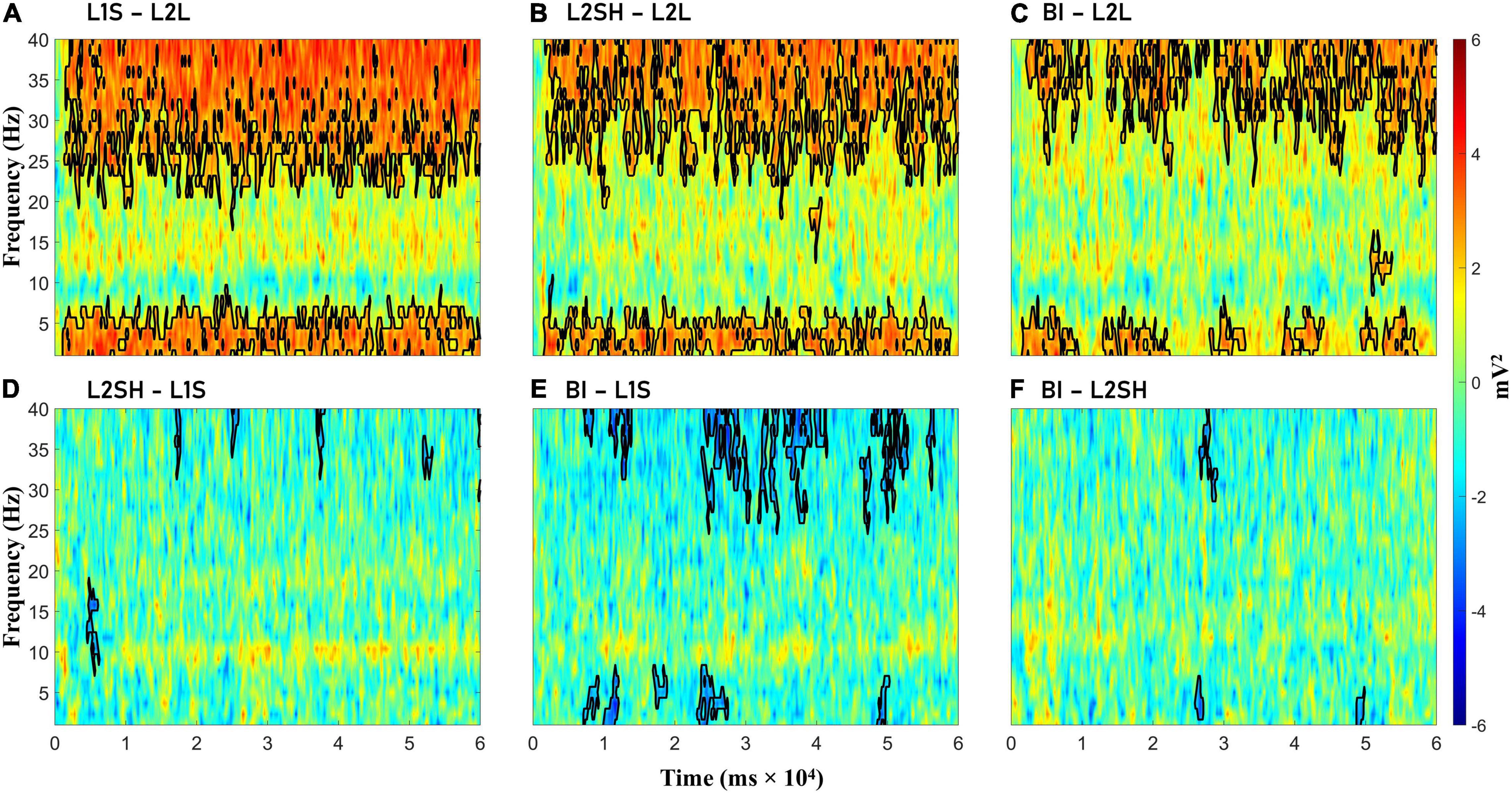

Figure 3 illustrates the condition-wise TF power subtractions. We observed significant gamma and delta-theta increases in the three overt tasks (i.e., L1S, L2SH, and BI) compared to L2L, but virtually no significant differences among the three tasks themselves, except in BI minus L1S, where BI showed some inconsistent but significant gamma and delta-theta power decreases relative to L1S. L2SH and BI also had consistently higher alpha power than L1S, but the differences were non-significant.

Figure 3. Condition-wise time-frequency power differences. (A) L1 speaking (L1S) minus L2 listening (L2L). (B) L2 shadowing (L2SH) minus L2L. (C) Backward interpreting (BI) minus L2L. (D) L2SH minus L1S. (E) BI minus L1S. (F) BI minus L2SH. Statistically significant differences are marked with black lines.

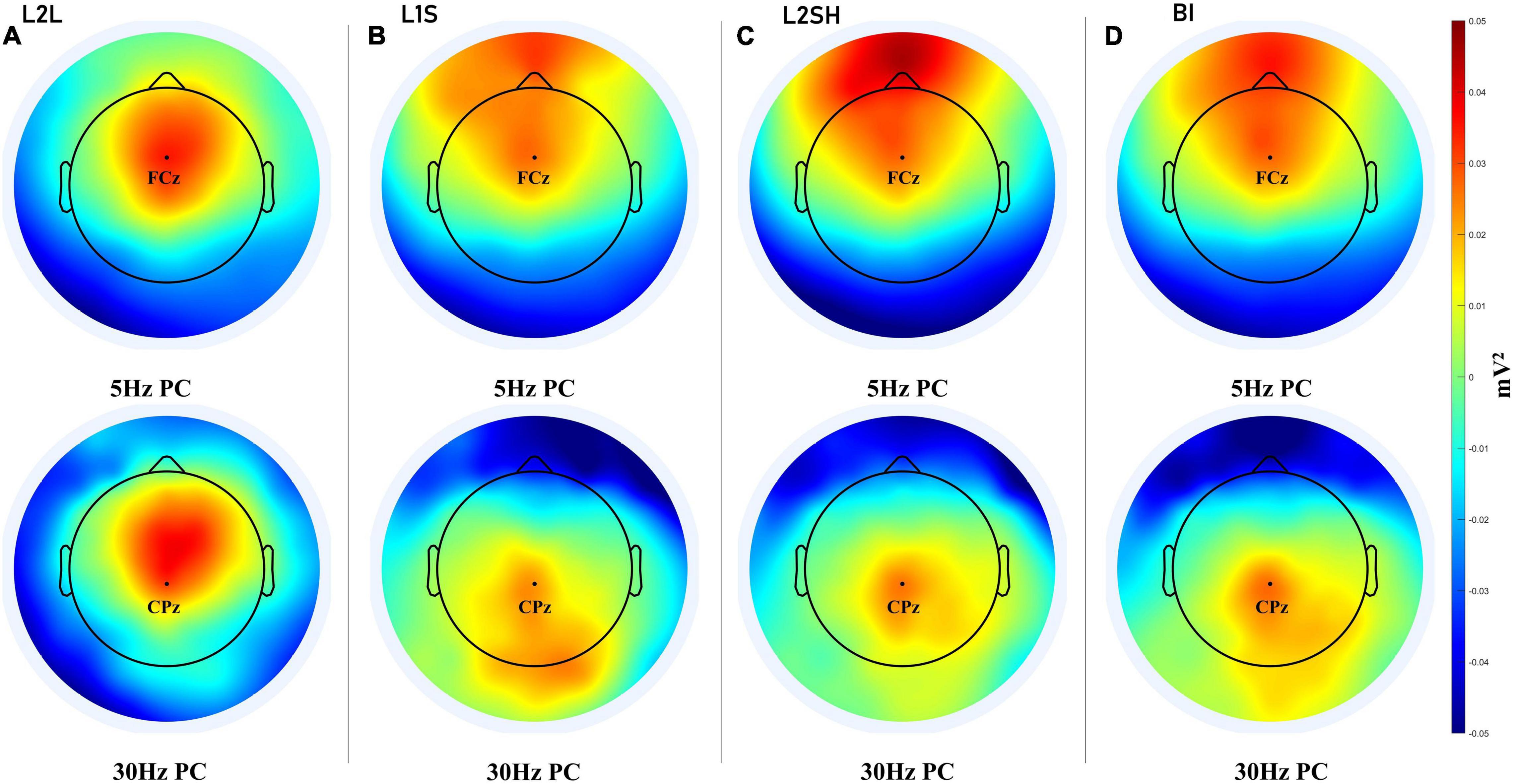

As L1S, L2SH, and BI all involved overt speaking, we could not tell whether the results in Figure 3 were real effects or muscle artifacts. We therefore performed a further principal component (PC) analysis to extract the main topography for the three overt tasks. If the three tasks share the same PCs, then the TF results are nothing but muscle artifacts; if they involve distinct PCs, then it is very likely that the results are real effects. For comparison we also analyzed the PCs in L2L.

We first narrowband filtered the data at 5 Hz and 30 Hz, respectively, with a full width at half maximum of 2 Hz, so that the filtered data fell into the delta-theta band (3–7 Hz) and the lower gamma band (28–32 Hz). Then we extracted the PCs of the filtered data using the method of eigendecomposition. Finally we plotted the first PCs in these tasks, which are presented in Figure 4.
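The eigendecomposition step can be illustrated on a small example. The sketch below assumes the data have already been narrowband filtered, builds a channel-by-channel covariance matrix, and recovers the first PC by power iteration, which stands in here for a full eigendecomposition routine. The three-channel toy signal and the function names are hypothetical.

```python
import math
import random


def covariance(data):
    """Channel-by-channel covariance of `data` (list of channels, each a list of samples)."""
    n_samples = len(data[0])
    centered = []
    for channel in data:
        mean = sum(channel) / n_samples
        centered.append([v - mean for v in channel])
    return [[sum(a * b for a, b in zip(ci, cj)) / (n_samples - 1) for cj in centered]
            for ci in centered]


def first_pc(cov, n_iter=200, seed=0):
    """Leading eigenvector and eigenvalue of a symmetric matrix via power iteration."""
    rng = random.Random(seed)
    n = len(cov)
    vec = [rng.random() + 0.1 for _ in range(n)]
    for _ in range(n_iter):
        nxt = [sum(cov[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
    eigval = sum(vec[i] * sum(cov[i][j] * vec[j] for j in range(n)) for i in range(n))
    return vec, eigval


# Toy data (assumption): a 5 Hz source projects onto channels 0 and 1 with
# weights 2 and 1; channel 2 carries a weak, uncorrelated 5 Hz cosine.
srate, n_samples = 250, 500
source = [math.sin(2 * math.pi * 5 * k / srate) for k in range(n_samples)]
other = [math.cos(2 * math.pi * 5 * k / srate) for k in range(n_samples)]
data = [[2 * s for s in source], list(source), [0.1 * c for c in other]]

cov = covariance(data)
weights, eigval = first_pc(cov)
print([round(w, 3) for w in weights])
```

The eigenvector entries are the per-channel weights of the component, so plotting them at the electrode positions gives the kind of topography shown in Figure 4.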

Figure 4. PCA results for all language tasks. (A) PC for 5 and 30 Hz in L2L. (B) PC for 5 and 30 Hz in L1S. (C) PC for 5 and 30 Hz in L2SH. (D) PC for 5 and 30 Hz in BI. For each panel channel FCz and channel CPz are marked for the convenience of comparison.

The principal component analysis (PCA) results first showed a clear-cut distinction between L2L and the other three tasks. L2L elicited the coactivation of frontal theta and frontal gamma, while the other three conditions involved either a frontal theta and a parietal gamma or a prefrontal theta and a parieto-occipital gamma. Specifically, L1S was characterized by the most extensive parietal and bilateral occipital gamma coupled with a frontal theta, while L2SH was marked by a strong prefrontal theta and a fairly limited centroparietal gamma. BI had more right-lateralized parietal, occipital and even temporal gamma, and a frontal theta that was not very strong. This result further distinguished the three overt tasks, but more analysis is needed to unveil the mechanisms of each task.

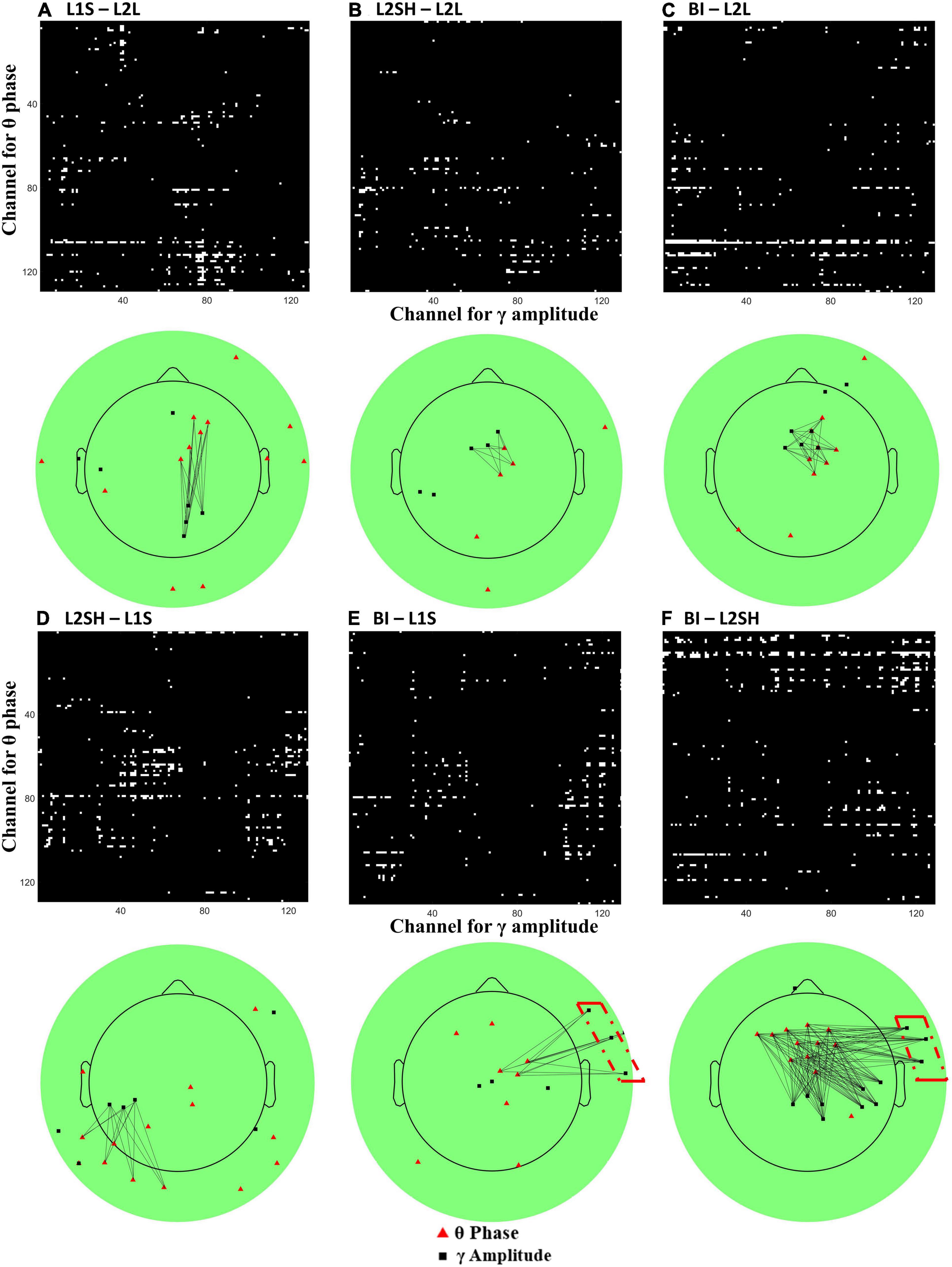

Since the time-frequency results did not differentiate BI from other overt spoken tasks, we sought to find BI-specific features in other ways. It has been proposed that, aside from the static activity of individual frequency bands, the interactions between different frequency bands, often known as cross-frequency coupling (CFC) or phase-amplitude coupling, may reflect the functional dynamics of the brain even better (Canolty and Knight, 2010). Given that theta and gamma bands have been identified as the frequencies of interest in the aforementioned time-frequency results, we further performed CFC analysis on these two bands across all electrodes, with the intention to find more nuanced synchronization mechanisms for each condition. We first selected one channel (beginning from number 1) and narrowband filtered its data at theta band (3–7 Hz) and gamma band (30–60 Hz). Then we extracted the theta band phase angles and the gamma band amplitude values using the Hilbert transform and combined the two via Euler’s formula to obtain a CFC value. After that we did a permutation test (number of iterations = 2,000) on the CFC value to eliminate chance results and assigned the permuted value to that particular channel pair. We moved on to the next channel and repeated this analysis until we finished the loop over all 128 electrodes. Following that we averaged the data across all participants and obtained a group-level synchronization map for each condition. Lastly we applied a stringent threshold (2 standard deviations above the median) to the map in order to reveal the robust hubs of synchronization. The results are presented in Figure 5.
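The coupling measure and its permutation normalization can be sketched as follows. Several assumptions apply: the theta phase and gamma amplitude series are taken as already extracted (the filtering and Hilbert steps are omitted), the CFC value is computed as the length of the mean amplitude-weighted phasor, and the null distribution is built by randomly shuffling the amplitude samples, which is only one of several possible schemes. The toy signals and function names are hypothetical.

```python
import cmath
import math
import random
import statistics


def pac_value(theta_phase, gamma_amp):
    """Length of the mean vector of amplitude-weighted unit phasors."""
    n = len(theta_phase)
    return abs(sum(a * cmath.exp(1j * p) for p, a in zip(theta_phase, gamma_amp)) / n)


def pac_zscore(theta_phase, gamma_amp, n_perm=2000, seed=0):
    """Observed coupling expressed as a z-score against a shuffled-amplitude null."""
    rng = random.Random(seed)
    phasors = [cmath.exp(1j * p) for p in theta_phase]
    n = len(phasors)

    def mvl(amps):
        return abs(sum(a * z for a, z in zip(amps, phasors)) / n)

    observed = mvl(gamma_amp)
    shuffled = list(gamma_amp)
    null = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # destroys the phase-amplitude relation
        null.append(mvl(shuffled))
    return (observed - statistics.mean(null)) / statistics.stdev(null)


def robust_hubs(values, n_sd=2.0):
    """Flag entries more than n_sd standard deviations above the median."""
    cutoff = statistics.median(values) + n_sd * statistics.stdev(values)
    return [v > cutoff for v in values]


# Toy data (assumption): gamma amplitude either follows theta phase or is random.
srate, n = 250, 1000
theta_phase = [(2 * math.pi * 5 * k / srate) % (2 * math.pi) for k in range(n)]
coupled_amp = [1 + math.cos(p) for p in theta_phase]
rng = random.Random(42)
uncoupled_amp = [rng.random() * 2 for _ in range(n)]

z_coupled = pac_zscore(theta_phase, coupled_amp, n_perm=500)
z_uncoupled = pac_zscore(theta_phase, uncoupled_amp, n_perm=500)
print(round(pac_value(theta_phase, coupled_amp), 3), z_coupled > z_uncoupled)
```

In an all-to-all analysis of the kind described above, the phase series would come from one channel and the amplitude series from another, and `robust_hubs` would be applied to the resulting map of channel pairs.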

Figure 5. All-to-all theta-gamma coupling (TGC) synchronization map and its topography. (A) L1 speaking minus L2 listening. (B) L2 shadowing minus L2 listening. (C) Backward interpreting minus L2 listening. (D) L2 shadowing minus L1 speaking. (E) Backward interpreting minus L1 speaking. (F) Backward interpreting minus L2 shadowing. On each panel the upper half is channel-to-channel theta (Y axis) and gamma (X axis) coupling map, where white pixels represent TGC values surviving a threshold of two standard deviations above the median, and the lower half is the projection of the upper map onto the 128-channel EEG scalp, where the red triangles sit in the position of electrodes for theta activation and the black squares gamma activation. The black lines mark the potential synchronizations between electrodes. Note that only clusters (neighboring electrode numbers > 3) are linked in the plot for illustration purposes. The red dash-dot boxes on (E,F) mark the unique synchronization of BI compared to L1S and L2SH.

Figure 5 shows two salient condition-wise differences regarding theta-gamma coupling (TGC) features. First, the three plots in the first row show a consistent right hemisphere central or frontocentral theta activation. Figures 5B,C even show TGC directions similar to those in Figure 5A. The second feature is that in the second row the TGC synchronization exhibits more disparities than in the first row. Specifically, L2SH elicited more extensive TGC synchronization than L1S (Figure 5D), while BI incurred more clustered TGC than L2SH (Figure 5F). Compared with L1S and L2SH, BI consistently triggered extra right temporal gamma synchronization (highlighted with red dash-dot boxes in Figures 5E,F).

To explore if there is a unique oscillation mechanism for the interpreting activity, we conducted an EEG experiment on 43 Chinese-English bilingual non-interpreters and analyzed their oscillation dynamics in 4 tasks: listening to an excerpt of English news (L2L); speaking on a given topic in Chinese (L1S); listening to an excerpt of English news and concurrently repeating it (L2SH); and interpreting English sentences into Chinese (BI). To our knowledge, the current study is the first EEG experiment on auditory-oral sentence-level interpreting. Such design is novel perhaps due to the sensitive nature of the EEG technique to motor artifacts, i.e., head and muscle movement caused by speaking can affect the EEG signal. We tried to eliminate the artifacts by removing the muscle components both with ICA and by handpicking the noisy parts of the data after preprocessing. The analysis of the data presented novel findings on both the behavioral side and the oscillatory side.

The behavioral results revealed that participants’ interpreting performance was significantly correlated with their L2 proficiency and L2 exposure, but not with their age, age of L2 acquisition or L2 learning years. On the one hand, our results corroborated previous findings on the positive relation between L2 proficiency and interpreting/translation performance (De Groot and Poot, 1997; Tzou et al., 2012; Mayor, 2015; Chen et al., 2020). On the other hand, they provided novel evidence on the lack of association between interpreting performance and age, age of L2 acquisition and L2 learning years, which had not been reported before. Since interpreting performance was not associated with age-related factors, we regard interpreting as an activity that relies more on intensive training than on biological aptitude.

With respect to oscillations, we expected to see delta-theta band activation in the BI condition (as has been found in previous word-level research), as well as stronger alpha/beta band activation for syntactic binding and stronger gamma band activation for semantic integration in BI relative to the other three language tasks. The results revealed different patterns in TF power and CFC.

First of all, condition-wise TF subtractions revealed significant differences between the three overt tasks (L1S, L2SH, and BI) and L2L in the gamma band and the delta-theta band. On the one hand, these results confirmed part of our hypothesis, namely the delta-theta band and gamma band activations for BI. On the other hand, such TF patterns were not unique to BI; instead, they looked quite similar across the three overt tasks. Hence we conducted a PCA on the theta and gamma band data, respectively, to extract the topography of the three overt tasks. The PCA results revealed a frontal delta-theta component and a parietal gamma component in the three tasks, with varying size and energy that were distinguishable for each task. Following this, we extracted all-to-all TGC synchronization difference maps in an attempt to locate robust hubs for task-related synchronization. The results showed consistent central/frontocentral theta synchronization for the three overt tasks (L1S, L2SH, and BI) as compared to L2L. We therefore speculate that frontocentral theta coactivation is an indispensable part of overt speaking-related tasks. Moreover, L2SH and BI incurred similar synchronization networks, i.e., between right frontocentral theta and midfrontal gamma, which differed from the pattern in L1S. Considering that in both L2SH and BI participants were fed with well-formed ideas from others, while L1S involved the stage of “message generation” on one’s own (Levelt, 1989), it is reasonable to infer that genuine idea forming is manifested in synchronization between right frontocentral theta and right parieto-occipital gamma, whereas reformulating others’ ideas relies on the network of right frontocentral theta and midfrontal gamma coupling.
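To make the notion of all-to-all TGC concrete, the sketch below estimates phase-amplitude coupling between the theta phase of every channel and the gamma amplitude of every channel using a normalized mean vector length. This is one common estimator and is not necessarily the one used in this study; the band limits (4–7 Hz and 30–50 Hz), the 250 Hz sampling rate, the function names and the two synthetic channels are all assumptions made for illustration.

```python
import numpy as np

def analytic_band(x, fs, lo, hi):
    """Band-limit x to [lo, hi] Hz and return its analytic signal (FFT-based)."""
    spectrum = np.fft.fft(x)
    freqs = np.fft.fftfreq(x.size, d=1.0 / fs)
    keep = (freqs >= lo) & (freqs <= hi)  # positive frequencies inside the band
    return np.fft.ifft(2.0 * spectrum * keep)

def all_to_all_tgc(data, fs, theta=(4.0, 7.0), gamma=(30.0, 50.0)):
    """Normalized mean vector length for every theta/gamma channel pair.

    data: array (n_channels, n_samples).
    Returns an (n_channels, n_channels) matrix; rows index the channel that
    provides the theta phase, columns the channel that provides the gamma amplitude.
    """
    phases = np.array([np.angle(analytic_band(ch, fs, *theta)) for ch in data])
    amps = np.array([np.abs(analytic_band(ch, fs, *gamma)) for ch in data])
    # Entry [i, j] = |mean_t(amps[j, t] * exp(1j * phases[i, t]))|
    raw = np.abs(np.exp(1j * phases) @ amps.T) / data.shape[1]
    return raw / amps.mean(axis=1)[np.newaxis, :]

# Synthetic two-channel demonstration (not real EEG).
fs = 250.0
t = np.arange(2500) / fs  # 10 s
theta6 = np.cos(2 * np.pi * 6 * t)
# Channel 0: 40 Hz gamma whose amplitude follows the phase of its own 6 Hz theta.
ch0 = theta6 + (1 + 0.8 * theta6) * np.sin(2 * np.pi * 40 * t)
# Channel 1: 5 Hz theta plus constant-amplitude gamma, i.e., no coupling.
ch1 = np.cos(2 * np.pi * 5 * t) + np.sin(2 * np.pi * 40 * t)

tgc = all_to_all_tgc(np.vstack([ch0, ch1]), fs)
print(np.round(tgc, 3))
```

Only the entry pairing channel 0 theta with channel 0 gamma should be clearly above zero in this example. A condition-wise difference map would then be the element-wise difference between two such matrices, one per condition.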

Another important finding of the current study is that, among the three overt tasks, BI consistently triggered more right frontotemporal gamma synchronization than the other two. This is in general agreement with the PCA result for gamma in BI, which was more right lateralized than in L1S and L2SH. The right frontotemporal gamma coactivation therefore seems quite robust. Since BI includes an extra language conversion stage that none of the other three tasks involves, it is very likely that the right frontotemporal gamma synchronization mirrors the underlying mechanism for BI, or at least part of that mechanism.
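The idea of reading a scalp topography from a PCA of band-limited data can be sketched as follows. This is a generic illustration based on the singular value decomposition, not the pipeline used in this study; the function name, the eight-channel layout and the synthetic "gamma power" matrix are hypothetical.

```python
import numpy as np

def first_pc_topography(band_power):
    """Return channel weights of the first principal component.

    band_power: array (n_channels, n_observations), e.g., band power per
    channel across time points. Also returns the fraction of variance
    explained by that component.
    """
    x = np.asarray(band_power, dtype=float)
    x = x - x.mean(axis=1, keepdims=True)  # center each channel
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    weights = u[:, 0]
    # The sign of a principal component is arbitrary; make the largest weight positive.
    if weights[np.argmax(np.abs(weights))] < 0:
        weights = -weights
    explained = s[0] ** 2 / np.sum(s ** 2)
    return weights, explained

# Synthetic example: 8 hypothetical channels, 200 observations (not real data).
rng = np.random.default_rng(1)
source = rng.normal(size=200)           # shared fluctuation
data = 0.1 * rng.normal(size=(8, 200))  # independent channel noise
data[5:8] += source                     # channels 5-7 stand in for one scalp region

weights, explained = first_pc_topography(data)
top_channels = set(np.argsort(np.abs(weights))[-3:].tolist())
print(top_channels, round(float(explained), 2))
```

Plotting the weights on the electrode layout would give a component topography; a component whose largest weights fall on right-hemisphere electrodes would be described as right lateralized.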

Although our experiment design is novel, we are not the first to observe TGC synchronization during a cognitive task. TGC has been reported in animals for over two decades (cf. Jensen and Colgin, 2007). In humans, TGC was later observed and linked to visual short-term memory (Sauseng et al., 2009), spatial memory (Park et al., 2011), working memory (Park et al., 2013), the binding of visual perceptual features (Köster et al., 2018), and verbal long-term memory formation (Lara et al., 2018). Our findings corroborated the mnemonic function of frontal/temporal TGC reported by previous research, in that all three overt tasks in our experiment involve demanding mnemonic processes, including long-term memory for L1S and working memory for L2SH and BI.

Note that the above-mentioned results by others were all based on visual stimuli. Wang et al. (2014) administered a speech perception task with EEG and analyzed the TGC. They presented the participants with pictures and auditory stimuli and asked them to judge whether the word matched the picture. Greater TGC was observed in the frontal and left-temporal areas in the match condition only, which the authors took as suggesting an integration of bottom-up and top-down information processing during speech perception. This result is more relevant to our study as it was based on an auditory paradigm. Results similar to ours were also reported by Köster et al. (2014). In that study, the researchers implemented a picture encoding and retrieval experiment and found that coupling between prefrontal theta and parietal gamma was related to the controlled retrieval of sequential information about a former event, or episodic retrieval, which is very similar to the TGC pattern for L1S in the current study.

On the other hand, the predicted alpha/beta activation turned out to be very weak in the raw TF dynamics and was even missing in the subtraction plots. As noted, there is some consensus on the role of alpha/beta in storing syntactic phrases in verbal working memory (cf. Meyer, 2018). The question is, therefore, how to explain the absence of alpha/beta in these results. One possibility is that in L1S and BI the participants already had sufficient linguistic units before they started to speak (both tasks were preceded by a preparation period), so there was little or no workload for structuring and restructuring syntactic units while they were performing the actual oral task. In addition, the output in both conditions was in L1, whose production is a highly automatic process for the brain. For L2SH, although participants were speaking in L2 and there was some working memory load, the auditory input was syntactically correct and well-organized. As such, they did not have to hold the material in working memory, since we told them not to memorize it but to keep pace with the audio. This was also manifested in the TF subtraction maps (Figure 3), where L2SH had higher alpha power than L1S and BI, albeit not significantly so. Another explanation is related to our experiment design. We asked our participants to close their eyes during the experiment in order to exclude visual confounds and reduce artifacts, but some studies have found that alpha power differs greatly between eyes-closed and eyes-open conditions (Barry et al., 2007; Putilov and Donskaya, 2014). Which explanation is more plausible remains to be determined by further research.

Several limitations of the current research warrant consideration, the first of which is the overt experiment mode. Although overt speaking tasks had never been adopted in EEG studies of interpreting, and can therefore be revealing, they inevitably introduced motor noise into the EEG data in the present research. Our preprocessed data could still contain a small amount of artifacts, even though the strictest data cleaning measures were applied. Secondly, the method of condition-wise subtraction may have overlooked other mechanisms involved in bilingual tasks. For example, though we asked participants only to comprehend the audio in the L2L task and not to give any response, there might still be a silent interpreting process involved in that task. Therefore BI minus L2L may reflect either the overt interpreting mechanism or merely the motor process of language production. In addition, our sample size was quite limited and we did not measure participants’ cognitive abilities such as working memory capacity. Finally, only one language pair was tested in this research.

Future studies can compare overt speaking designs with silent designs to further validate the results of the current study. The current paradigm should also be replicated with other language pairs, for instance, German-English. Future research should also explore the effects on oscillations of local features of the stimuli (such as the acoustic traits of the audio) and of the language backgrounds of the participants (e.g., how many languages they speak), which were not analyzed in the current study.

This is the first study showing the TF dynamics of BI relative to other sentence-level auditory-oral language activities. We found significantly higher gamma band and delta-theta band TF power values in overt motor language tasks, particularly in BI, relative to the auditory comprehension task. We also found right frontocentral theta and parieto-occipital gamma synchronization for articulating self-generated ideas, as compared to the frontocentral theta and midfrontal gamma network for non-self-generated ideas. Most importantly, we found, for the first time, a distinct TGC pattern in BI, i.e., a robust right frontotemporal gamma coactivation, which may indicate the fundamental neural workings of that unique, difficult language task. Our findings could help inform interpreter training and the treatment of interpreting/translation-related aphasia. For example, equipment could be employed to physically stimulate the right frontotemporal region at 40 Hz to strengthen the neuronal network of interpreting.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by The University of Auckland Human Participants Ethics Committee (Ref. 022991). The patients/participants provided their written informed consent to participate in this study.

YZ designed the protocol, implemented the experiment, collected and analyzed the data, and drafted the manuscript. IK helped with the analysis methods and revised the manuscript. TC helped with coding and plotting. MO’H revised the manuscript. KW coordinated the whole project and revised the manuscript. All authors contributed to the article and approved the submitted version.

Funding was provided by the Royal Society of New Zealand Marsden (Grant No. 3718586).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Barry, R. J., Clarke, A. R., Johnstone, S. J., Magee, C. A., and Rushby, J. A. (2007). EEG differences between eyes-closed and eyes-open resting conditions. Clin. Neurophysiol. 118, 2765–2773. doi: 10.1016/j.clinph.2007.07.028

Bastiaansen, M., and Hagoort, P. (2015). Frequency-based segregation of syntactic and semantic unification during online sentence level language comprehension. J. Cogn. Neurosci. 27, 2095–2107. doi: 10.1162/jocn_a_00829

Bastiaansen, M., Magyari, L., and Hagoort, P. (2010). Syntactic unification operations are reflected in oscillatory dynamics during on-line sentence comprehension. J. Cogn. Neurosci. 22, 1333–1347. doi: 10.1162/jocn.2009.21283

Canolty, R. T., and Knight, R. T. (2010). The functional role of cross-frequency coupling. Trends Cogn. Sci. 14, 506–515. doi: 10.1016/j.tics.2010.09.001

Chen, P., Hayakawa, S., and Marian, V. (2020). Cognitive and linguistic predictors of bilingual single-word translation. J. Cult. Cogn. Sci. 4, 145–164. doi: 10.1007/s41809-020-00061-6

Christoffels, I. K., de Groot, A. M. B., and Kroll, J. F. (2006). Memory and language skills in simultaneous interpreters: the role of expertise and language proficiency. J. Mem. Lang. 54, 324–345. doi: 10.1016/j.jml.2005.12.004

Christoffels, I. K., Ganushchak, L., and Koester, D. (2013). Language conflict in translation: an ERP study of translation production. J. Cogn. Psychol. 25, 646–664. doi: 10.1080/20445911.2013.821127

Davidson, D. J., and Indefrey, P. (2007). An inverse relation between event-related and time-frequency violation responses in sentence processing. Brain Res. 1158, 81–92. doi: 10.1016/j.brainres.2007.04.082

De Groot, A. M. B., and Poot, R. (1997). Word translation at three levels of proficiency in a second language: the ubiquitous involvement of conceptual memory. Lang. Learn. 47, 215–264. doi: 10.1111/0023-8333.71997007

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Dottori, M., Hesse, E., Santilli, M., Vilas, M. G., Martorell Caro, M., Fraiman, D., et al. (2020). Task-specific signatures in the expert brain: differential correlates of translation and reading in professional interpreters. NeuroImage 209:116519. doi: 10.1016/j.neuroimage.2020.116519

Elmer, S. (2016). Broca Pars Triangularis Constitutes a “Hub” of the language-control network during simultaneous language translation. Front. Human Neurosci. 10:491. doi: 10.3389/fnhum.2016.00491

Elmer, S., Hänggi, J., and Jäncke, L. (2014). Processing demands upon cognitive, linguistic, and articulatory functions promote grey matter plasticity in the adult multilingual brain: insights from simultaneous interpreters. Cortex 54, 179–189. doi: 10.1016/j.cortex.2014.02.014

Elmer, S., Meyer, M., and Jancke, L. (2010). Simultaneous interpreters as a model for neuronal adaptation in the domain of language processing. Brain Res. 1317, 147–156. doi: 10.1016/j.brainres.2009.12.052

Ferree, T. C., Luu, P., Russell, G. S., and Tucker, D. M. (2001). Scalp electrode impedance, infection risk, and EEG data quality. Clin. Neurophysiol. 112, 536–544. doi: 10.1016/S1388-2457(00)00533-2

García, A. M. (2013). Brain activity during translation: a review of the neuroimaging evidence as a testing ground for clinically-based hypotheses. J. Neurolinguistics 26, 370–383. doi: 10.1016/j.jneuroling.2012.12.002

Grabner, R. H., Brunner, C., Leeb, R., Neuper, C., and Pfurtscheller, G. (2007). Event-related EEG theta and alpha band oscillatory responses during language translation. Brain Res. Bull. 72, 57–65. doi: 10.1016/j.brainresbull.2007.01.001

Grosjean, F. (1994). Individual Bilingualism. In The Encyclopedia Of Language And Linguistics. Oxford: Pergamon Press, 1656–1660.

Hald, L. A., Bastiaansen, M. C. M., and Hagoort, P. (2006). EEG theta and gamma responses to semantic violations in online sentence processing. Brain Lang. 96, 90–105. doi: 10.1016/j.bandl.2005.06.007

He, Y., Wang, M.-Y., Li, D., and Yuan, Z. (2017). Optical mapping of brain activation during the English to Chinese and Chinese to English sight translation. Biomed. Opt. Express 8:5399. doi: 10.1364/boe.8.005399

Hervais-Adelman, A., Moser-Mercer, B., and Golestani, N. (2015a). Brain functional plasticity associated with the emergence of expertise in extreme language control. NeuroImage 114, 264–274. doi: 10.1016/j.neuroimage.2015.03.072

Hervais-Adelman, A., Moser-Mercer, B., Michel, C. M., and Golestani, N. (2015b). FMRI of simultaneous interpretation reveals the neural basis of extreme language control. Cereb. Cortex 25, 4727–4739. doi: 10.1093/cercor/bhu158

Hervais-Adelman, A., Moser-Mercer, B., Murray, M. M., and Golestani, N. (2017). Cortical thickness increases after simultaneous interpretation training. Neuropsychologia 98, 212–219. doi: 10.1016/j.neuropsychologia.2017.01.008

Janyan, A., Popivanov, I., and Andonova, E. (2009). “Concreteness effect and word cognate status: ERPs in single word translation,” in Brain Talk: Discourse With and In the Brain, eds K. Alter, M. Horne, M. Lindgren, M. Roll, and J. von Koss Torkildsen (Lund: Lunds Universitet), 21–30.

Jensen, O., and Colgin, L. L. (2007). Cross-frequency coupling between neuronal oscillations. Trends Cogn. Sci. 11, 267–269. doi: 10.1016/j.tics.2007.05.003

Jost, L. B., Radman, N., Buetler, K. A., and Annoni, J. M. (2018). Behavioral and electrophysiological signatures of word translation processes. Neuropsychologia 109, 245–254. doi: 10.1016/j.neuropsychologia.2017.12.034

Klein, C., Metz, S. I., Elmer, S., and Jäncke, L. (2018). The interpreter’s brain during rest–Hyperconnectivity in the frontal lobe. PLoS One 13:e0202600. doi: 10.1371/journal.pone.0202600

Klein, D., Milner, B., Zatorre, R. J., Meyer, E., and Evans, A. C. (1995). The neural substrates underlying word generation: a bilingual functional-imaging study. Proc. Natl. Acad. Sci. 92, 2899–2903. doi: 10.1073/pnas.92.7.2899

Köster, M., Finger, H., Graetz, S., Kater, M., and Gruber, T. (2018). Theta-gamma coupling binds visual perceptual features in an associative memory task. Sci. Rep. 8, 1–10. doi: 10.1038/s41598-018-35812-7

Köster, M., Friese, U., Schöne, B., Trujillo-Barreto, N., and Gruber, T. (2014). Theta-gamma coupling during episodic retrieval in the human EEG. Brain Res. 1577, 57–68. doi: 10.1016/j.brainres.2014.06.028

Kurz, I. (1995). Watching the brain at work–an exploratory study of EEG changes during simultaneous interpreting (SI). Int. Newsl. 6, 3–16.

Lara, G. A., Alekseichuk, I., Turi, Z., Lehr, A., Antal, A., and Paulus, W. (2018). Perturbation of theta-gamma coupling at the temporal lobe hinders verbal declarative memory. Brain Stimul. 11, 509–517. doi: 10.1016/j.brs.2017.12.007

Lehtonen, H., Laine, M., Niemi, J., Thomsen, T., Vorobyev, A., and Hugdahl, K. (2005). Brain correlates of sentence translation in Finnish-Norwegian bilinguals. NeuroReport 16, 607–610. doi: 10.1097/00001756-200504250-00018

Lehtonen, M., Soveri, A., Laine, A., Järvenpää, J., de Bruin, A., and Antfolk, J. (2018). Is bilingualism associated with enhanced executive functioning in adults? A meta-analytic review. Psychol. Bull. 144, 394–425. doi: 10.1037/bul0000142

Lin, X., Lei, V. L. C., Li, D., and Yuan, Z. (2018b). Which is more costly in Chinese to English simultaneous interpreting, “pairing” or “transphrasing”? Evidence from an fNIRS neuroimaging study. Neurophotonics 5:025010. doi: 10.1117/1.NPh.5.2.025010

Lin, X., Lei, V. L. C., Li, D., Hu, Z., Xiang, Y., and Yuan, Z. (2018a). Mapping the small-world properties of brain networks in Chinese to English simultaneous interpreting by using functional near-infrared spectroscopy. J. Innov. Opt. Health Sci. 11:1840001. doi: 10.1142/S1793545818400011

Maris, E., and Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190. doi: 10.1016/j.jneumeth.2007.03.024

Mayor, M. J. B. (2015). L2 proficiency as predictor of aptitude for interpreting: an empirical study. Transl. Interpret. Stud. 10, 108–132. doi: 10.1075/tis.10.1.06bla

Meyer, L. (2018). The neural oscillations of speech processing and language comprehension: state of the art and emerging mechanisms. Eur. J. Neurosci. 48, 2609–2621. doi: 10.1111/ejn.13748

Muñoz, E., Calvo, N., and García, A. M. (2019). Grounding translation and interpreting in the brain: what has been, can be, and must be done. Perspectives 27, 483–509. doi: 10.1080/0907676X.2018.1549575

Park, J. Y., Jhung, K., Lee, J., and An, S. K. (2013). Theta-gamma coupling during a working memory task as compared to a simple vigilance task. Neurosci. Lett. 532, 39–43. doi: 10.1016/j.neulet.2012.10.061

Park, J. Y., Lee, Y. R., and Lee, J. (2011). The relationship between theta-gamma coupling and spatial memory ability in older adults. Neurosci. Lett. 498, 37–41. doi: 10.1016/j.neulet.2011.04.056

Perani, D., Abutalebi, J., Paulesu, E., Brambati, S., Scifo, P., Cappa, S. F., et al. (2003). The role of age of acquisition and language usage in early, high-proficient bilinguals: an fMRI study during verbal fluency. Human Brain Mapp. 19, 170–182. doi: 10.1002/hbm.10110

Pérez, G., Hesse, E., Dottori, M., Birba, A., Amoruso, L., Martorell Caro, M., et al. (2022). The bilingual lexicon, back and forth: electrophysiological signatures of translation asymmetry. Neuroscience 481, 134–143. doi: 10.1016/j.neuroscience.2021.11.046

Petsche, H., Etlinger, S. C., and Filz, O. (1993). Brain electrical mechanisms of bilingual speech management: an initial investigation. Electroencephalogr. Clin. Neurophysiol. 86, 385–394. doi: 10.1016/0013-4694(93)90134-H

Price, C. J., Green, D. W., and Von Studnitz, R. (1999). A functional imaging study of translation and language switching. Brain 122, 2221–2235. doi: 10.1093/brain/122.12.2221

Putilov, A. A., and Donskaya, O. G. (2014). Alpha attenuation soon after closing the eyes as an objective indicator of sleepiness. Clin. Exp. Pharmacol. Physiol. 41, 956–964. doi: 10.1111/1440-1681.12311

Quaresima, V., Ferrari, M., Van Der Sluijs, M. C. P., Menssen, J., and Colier, W. N. J. M. (2002). Lateral frontal cortex oxygenation changes during translation and language switching revealed by non-invasive near-infrared multi-point measurements. Brain Res. Bull. 59, 235–243. doi: 10.1016/S0361-9230(02)00871-7

Ren, H., Wang, M., He, Y., Du, Z., Zhang, J., Zhang, J., et al. (2019). A novel phase analysis method for examining fNIRS neuroimaging data associated with Chinese/English sight translation. Behav. Brain Res. 361, 151–158. doi: 10.1016/j.bbr.2018.12.032

Rinne, J. O., Tommola, J., Laine, M., Krause, B. J., Schmidt, D., Kaasinen, V., et al. (2000). The translating brain: cerebral activation patterns during simultaneous interpreting. Neurosci. Lett. 294, 85–88. doi: 10.1016/S0304-3940(00)01540-8

Rommers, J., Dijkstra, T., and Bastiaansen, M. (2013). Context-dependent semantic processing in the human brain: evidence from idiom comprehension. J. Cogn. Neurosci. 25, 762–776.

Sauseng, P., Klimesch, W., Heise, K. F., Gruber, W. R., Holz, E., Karim, A. A., et al. (2009). Brain oscillatory substrates of visual short-term memory capacity. Curr. Biol. 19, 1846–1852. doi: 10.1016/j.cub.2009.08.062

Segaert, K., Mazaheri, A., and Hagoort, P. (2018). Binding language: structuring sentences through precisely timed oscillatory mechanisms. Eur. J. Neurosci. 48, 2651–2662. doi: 10.1111/ejn.13816

Tommola, J., Laine, M., Sunnari, M., and Rinne, J. O. (2000). Images of shadowing and interpreting. Interpreting 5, 147–169. doi: 10.1075/intp.5.2.06tom

Tucker, D. M. (1993). Spatial sampling of head electrical fields: the geodesic sensor net. Electroencephalogr. Clin. Neurophysiol. 87, 154–163. doi: 10.1016/0013-4694(93)90121-B

Tzou, Y. Z., Eslami, Z. R., Chen, H. C., and Vaid, J. (2012). Effect of language proficiency and degree of formal training in simultaneous interpreting on working memory and interpreting performance: evidence from Mandarin-English speakers. Int. J. Biling. 16, 213–227. doi: 10.1177/1367006911403197

Van de Putte, E., De Baene, W., García-Pentón, L., Woumans, E., Dijkgraaf, A., and Duyck, W. (2018). Anatomical and functional changes in the brain after simultaneous interpreting training: a longitudinal study. Cortex 99, 243–257. doi: 10.1016/j.cortex.2017.11.024

Wang, J., Gao, D., Li, D., Desroches, A. S., Liu, L., and Li, X. (2014). Theta-gamma coupling reflects the interaction of bottom-up and top-down processes in speech perception in children. NeuroImage 102, 637–645. doi: 10.1016/j.neuroimage.2014.08.030

Wang, L., Hagoort, P., and Jensen, O. (2018). Language prediction is reflected by coupling between frontal gamma and posterior alpha oscillations. J. Cogn. Neurosci. 30, 432–447.

Wang, L., Zhu, Z., and Bastiaansen, M. (2012). Integration or predictability? A further specification of the functional role of gamma oscillations in language comprehension. Front. Psychol. 3:187. doi: 10.3389/fpsyg.2012.00187

Winkler, I., Haufe, S., and Tangermann, M. (2011). Automatic classification of artifactual ICA-Components for artifact removal in EEG signals. Behav. Brain Funct. 7, 1–15. doi: 10.1186/1744-9081-7-30

Zheng, B., Báez, S., Su, L., Xiang, X., Weis, S., Ibáñez, A., et al. (2020). Semantic and attentional networks in bilingual processing: fMRI connectivity signatures of translation directionality. Brain Cogn. 143:105584. doi: 10.1016/j.bandc.2020.105584

Sentence stimuli used for the BI task:

S1: The United Kingdom research councils are establishing their first overseas office in Beijing.

S2: Over the next several months, let us do what Americans have always done, and build a better world for our children and our grandchildren.

S3: America’s economy is the fastest growing of any major industrialized nation.

S4: To make our economy stronger and more dynamic, we must prepare a rising generation to fill the jobs of the twenty first century.

S5: And I do not mistrust the future, I do not fear what is ahead, for our problems are large, but our heart is larger, our challenges are great, but our will is greater.

S6: To make our economy stronger and more productive, we must make healthcare more affordable.

S7: Baseball is the most popular sport in the United States. It is played throughout the spring and summer, and professional baseball teams play well into the fall.

S8: The year was 1982. The IBM personal computer had only been born the year before.

S9: In 1942, only one out of every 25 high schools in America offers science courses.

S10: With a healthy growing economy, with more Americans going back to work, with our nation an active force for good in the world, the state of our union is confident and strong.

Keywords: overt interpreting, EEG oscillations, theta-gamma coupling, time-frequency power, bilingualism

Citation: Zheng Y, Kirk I, Chen T, O’Hagan M and Waldie KE (2022) Task-Modulated Oscillation Differences in Auditory and Spoken Chinese-English Bilingual Processing: An Electroencephalography Study. Front. Psychol. 13:823700. doi: 10.3389/fpsyg.2022.823700

Received: 28 November 2021; Accepted: 26 April 2022;

Published: 30 May 2022.

Edited by:

Sharlene D. Newman, University of Alabama, United States

Reviewed by:

Yanyu Xiong, Indiana University, United States

Copyright © 2022 Zheng, Kirk, Chen, O’Hagan and Waldie. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Karen E. Waldie

