- 1Department of Nursing, The Second Hospital, Cheeloo College of Medicine, Shandong University, Jinan, China

- 2Department of Nursing, Qilu Hospital, Cheeloo College of Medicine, Shandong University, Jinan, China

- 3School of Nursing and Rehabilitation, Shandong University, Jinan, China

- 4Nursing Theory and Practice Innovation Research Center, Shandong University, Jinan, China

- 5Department of Nursing, Shandong Provincial Hospital Affiliated to Shandong First Medical University, Jinan, China

- 6The Vice-Chancellor’s Unit, University of Wollongong, Wollongong, NSW, Australia

Background: Mobile health (mHealth) apps have shown the advantages of improving medication compliance, saving time required for diagnosis and treatment, reducing medical expenses, etc. The World Health Organization (WHO) has recommended that mHealth apps should be evaluated prior to their implementation to ensure their accuracy in data analysis.

Objective: This study aimed to translate the patient version of the interactive mHealth app usability questionnaire (MAUQ) into Chinese, and to conduct cross-cultural adaptation and reliability and validity tests.

Methods: Brislin’s translation model was used in this study. Cross-cultural adaptation was performed according to experts’ comments and the results of a prediction test. Convenience sampling was used to survey 346 patients who used “Good Doctor,” the most popular mHealth app in China, and the reliability and validity of the questionnaire were then evaluated.

Results: After translation and cross-cultural adaptation, there were a total of 21 items and 3 dimensions: usability and satisfaction (8 items), system information arrangement (6 items), and efficiency (7 items). The content validity index was determined to be 0.952, indicating that the 21 items used to evaluate the usability of the Chinese version of the MAUQ were well correlated. The Cronbach’s α coefficient of the total questionnaire was 0.912, which revealed that the questionnaire had a high internal consistency. The values of test-retest reliability and split-half reliability of the Chinese version of the MAUQ were 0.869 and 0.701, respectively, representing that the questionnaire had a good stability.

Conclusion: The translated questionnaire has good reliability and validity in the context of Chinese culture, and it could be used as a usability testing tool for the patient version of interactive mHealth apps.

Introduction

The Global Observatory for eHealth of the World Health Organization (WHO) defined mHealth as “medical and public health practice supported by mobile devices, such as mobile phones, patient monitoring devices, personal digital assistants, and other wireless devices.” Numerous studies have shown that mHealth apps can improve medication compliance, save time required for diagnosis and treatment, and reduce medical expenses (Seto et al., 2012; Fairman et al., 2013; Parmanto et al., 2013; Pfammatter et al., 2016). With the aging of the population, demand for healthcare services has markedly increased, imposing huge pressure on medical institutions (Association of American Medical Colleges [AAMC], 2021). According to previous research, Internet-based treatment could noticeably reduce the number of outpatient visits (National Health Service [NHS], 2019). It could also decrease the burden on hospital resources, medical staff’s workload, and medical expenses (Shah et al., 2021). mHealth apps have thus shown the potential to greatly improve healthcare systems.

China has a large population and relatively few medical resources. Faced with the fact that the development of mHealth apps can relieve the tension of medical institutions, the government has put forward the development of the Internet-based healthcare services. The coronavirus disease 2019 (Covid-19) pandemic has caused the urgent need to redesign public health systems from reactive to proactive and to develop innovations that may provide real-time information for making proactive decisions. Owing to the limited healthcare resources, several national healthcare providers are turning to digital healthcare solutions, such as virtual ward, remote patient monitoring, and telemedicine, to minimize the risk of Covid-19, in which mHealth apps have shown a noticeable efficiency (Mann et al., 2020; Schinkothe et al., 2020).

According to published statistics, more than 325,000 mHealth apps have been developed worldwide in recent years (Maramba et al., 2019). However, nearly half of the million mHealth programs that have been developed are not extensively utilized. Because there has been no effective usability evaluation of mHealth apps, clinicians, scholars, and patients are skeptical about the reliability of mHealth programs (Vera et al., 2019). These limitations have negatively influenced the applicability of mHealth apps. Hence, the WHO has recommended that mHealth apps should be evaluated prior to their actual use in order to ensure the accuracy of data analysis (World Health Organization [WHO], 2020). At the initial stage of the use of an mHealth app, usability assessment is key, because it can motivate individuals to use the app more easily and efficiently.

The International Organization for Standardization (ISO) defines usability as the extent to which a system, product or service can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified circumstance (International Organization for Standardization [ISO], 2018). To evaluate the usability of apps, qualitative methods have previously been used, such as interviews (Stinson et al., 2006), cognitive walkthrough (Van Velsen et al., 2018), and heuristic evaluation (Walsh et al., 2017). However, these methods mainly serve to track usability problems. They do not calculate an absolute score for a system’s usability, which can be achieved via usability tests instead. A usability test is a clear indicator of whether the usability of an app is sufficient or insufficient (Broekhuis et al., 2021). Ideally, usability tests should be conducted at each step of program development, which includes an iterative cycle of system design and validation (Ivory and Marti, 2001). Testing the usability of an app is most frequently done by means of usability questionnaires (Peute et al., 2008).

The usability questionnaire is the most common tool for usability assessment, as it is user-friendly and the data analysis is intuitive (Sevilla-Gonzalez et al., 2020). There are currently many validated and reliable usability questionnaires, such as the Questionnaire for User Interface Satisfaction (Chin et al., 1988), the Post-Study System Usability Questionnaire (PSSUQ) (Lewis, 2002), Perceived Usefulness and Ease of Use (Davis, 1989), the System Usability Scale (SUS) (Brooke, 1996), and the Usefulness, Satisfaction, and Ease of Use Questionnaire (Lund, 2001). These questionnaires have been used widely in mHealth app usability studies. However, none of them was specifically designed for evaluating the usability of mHealth apps (Zhou et al., 2017). Despite the large number of mHealth apps released to the public, there is still a lack of targeted evaluation tools. Therefore, Zhou et al. (2019) developed and validated a new mHealth app usability questionnaire (MAUQ), which is a targeted, reliable usability testing tool in the mHealth domain. The MAUQ, based on the heterogeneity of definitions and methods, is used to evaluate the usability of mHealth apps. Three usability-based dimensions that were consistent with the definition were explored: ease of use and satisfaction (corresponding to satisfaction of usability); system information arrangement (corresponding to the efficiency of availability); and practicability (corresponding to the availability).

MHealth apps can be divided into two different versions for patients and healthcare providers depending on the target audience. According to the interactive status of mHealth apps, they can be divided into interactive mHealth apps and standalone mHealth apps. Zhou et al. (2019) developed the MAUQ based on a number of existing questionnaires used in previous mobile app usability studies. Then, they utilized MAUQ, SUS, and PSSUQ to investigate the usability of two mHealth apps: an interactive mHealth app and a standalone mHealth app. Four versions of the MAUQ were developed in association with the type of app (interactive or standalone) and target user of the app (patient or provider). A website was created to make it convenient for developers of mHealth apps to use the MAUQ to assess the usability of their mHealth apps (PITT Usability Questionnaire, 2021).

The MAUQ has been widely utilized in the United States (Sengupta et al., 2020; Sood et al., 2020; Cummins et al., 2021), Sweden (Anderberg et al., 2019), Australia (Menon et al., 2019), Spain (Soriano et al., 2020), South Africa (Gous et al., 2020), and other countries. It is mainly used to study the usability of digital healthcare systems. Its standalone patient version has been translated into a Malaysian version, which has shown acceptable practicability (Mustafa et al., 2021). However, no Chinese version of the MAUQ has yet been introduced. The present study therefore aimed to translate the patient version of the interactive MAUQ into Chinese and to verify it, in order to provide a usability testing tool for developers of mHealth apps in China.

Materials and Methods

Overview

Zhou et al. (2019) developed the MAUQ. It contains a total of 21 items and 3 dimensions: usability and satisfaction (8 items), system information arrangement (6 items), and efficiency (7 items). Cronbach’s alpha coefficients of the three dimensions of the original questionnaire were 0.895, 0.829, and 0.900, respectively, indicating strong internal consistency of the MAUQ. A 7-point Likert scoring system was adopted as follows: 1 (extremely strongly agree), 2 (strongly agree), 3 (agree), 4 (neutral), 5 (disagree), 6 (strongly disagree), and 7 (extremely strongly disagree). The questionnaire score is the sum of the item scores divided by the number of items. The closer the mean value is to 1, the higher the app’s usability.
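The scoring rule described above can be illustrated with a short Python sketch. It is only a minimal example under stated assumptions: the function name `mauq_score`, the sample responses, and the assumption that the three dimensions occupy consecutive item blocks (8, 6, and 7 items in questionnaire order) are introduced here for illustration and are not taken from the study.

```python
from statistics import mean

# Assumed item layout (hypothetical): items are stored in questionnaire order,
# with the three dimensions occupying consecutive blocks of 8, 6, and 7 items.
DIMENSIONS = {
    "usability_and_satisfaction": range(0, 8),
    "system_information_arrangement": range(8, 14),
    "efficiency": range(14, 21),
}


def mauq_score(responses):
    """Return the overall mean and the per-dimension means for one respondent."""
    if len(responses) != 21:
        raise ValueError("expected 21 item responses")
    if any(r not in range(1, 8) for r in responses):
        raise ValueError("each response must be an integer from 1 to 7")
    # Overall score: sum of item scores divided by the number of items.
    scores = {"total": mean(responses)}
    for name, idx in DIMENSIONS.items():
        scores[name] = mean(responses[i] for i in idx)
    return scores


# Hypothetical respondent, not study data.
example = [2] * 8 + [3] * 6 + [1] * 7
result = mauq_score(example)
```

Because 1 stands for the strongest agreement, a lower mean in `result` corresponds to higher perceived usability.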

Questionnaire Translation

A questionnaire’s developer(s) should be contacted to obtain permission before translation. The author of the original questionnaire was contacted, and permission for translation and use was obtained. Brislin’s translation model (Brislin, 1976) was adopted as follows. Step 1, forward translation (from English into Chinese): the questionnaire was translated by two translators with high English proficiency, and the research group and the two translators then jointly formed the Chinese version of the MAUQ (A). Step 2, back translation: two translators who had not seen the original questionnaire translated the Chinese version of the MAUQ (A) back into English, and the research group finalized the English version. After that, the research group and all the translators compared the back-translated version (from Chinese into English) with the original questionnaire, and the Chinese version of the MAUQ (B) was produced after discussion and the required amendments.

Cross-Cultural Adaptation

Literal translation is not sufficient to produce an equivalent questionnaire. Cultural sensitivities or cultural influences may not cause obvious problems, yet they may lead to a misunderstanding of the question being asked, which makes cross-cultural adaptation necessary (Epstein et al., 2015; Mohamad Marzuki et al., 2018). Therefore, we conducted cross-cultural adaptation according to experts’ comments and a prediction test.

Experts’ Comments

Seven experts familiar with the field of mHealth were invited: two associate professors of medicine, one senior engineer of software design, one doctoral assistant researcher, one associate professor of nursing, and two master’s degree nurses. Two researchers of the group collected the expert opinions on site. Drawing on their theoretical knowledge and practical experience, each expert evaluated the accuracy of translation, content comprehension, language expression habits, and consistency with the cultural background of each item one by one. For suggestions that were not easy to understand, the researchers had an in-depth discussion with the experts and kept detailed records. Finally, the group summarized all the opinions from the experts.

Prediction Test

A preliminary survey was conducted on 30 patients who used the “Good Doctor” mHealth app (the most popular mHealth app in China, with a high utilization rate). The patients were recruited by convenience sampling among outpatients of a third-class hospital, with the aim of assessing respondents’ understanding of the questionnaire and their feelings about its items. The researcher explained the purpose of the survey to the respondents, administered the translated questionnaire, and asked the following questions: (1) Do you understand the content of this item, or is there any ambiguity? (2) Do you know how to answer? If you don’t, what might be the difficulty? (3) Does the item conform to Chinese language expression habits? If not, how do you think it should be expressed? After the investigation and communication with the respondents, the items in the questionnaire that could be confusing or difficult to understand or answer were marked. The questions and suggestions raised by the respondents were recorded.

The Chinese version of MAUQ (B) was revised to form the final Chinese version of the MAUQ based on experts’ suggestions and respondents’ feedback.

Research Objects and Research Tools

This cross-sectional, descriptive study was conducted in four large hospitals of Jinan, Shandong Province, China, from October 2020 to February 2021. The convenience sampling method was adopted to select users of the patient version of the “Good Doctor” mHealth app as research objects. Inclusion criteria were as follows: owning a smart phone and being proficient in mobile phone apps; being aged 18 to 65 years, with good verbal and written communication skills; and having used this app for diagnosis/treatment twice or more in the past month (Sevilla-Gonzalez et al., 2020). Exclusion criteria were as follows: language barriers or communication difficulties; and concomitant psychiatric or neurological disorders. All participants gave informed consent and voluntarily participated in the study.

After obtaining the study approval from relevant departments, the research team members, who had received unified training, conducted the investigation. The research tools included users’ general information, involving users’ age, gender, place of residence, marital status, educational level, annual income, and the frequency of using “Good Doctor” mHealth app in the last 2 months. The purpose and significance of the questionnaire, as well as the confidentiality principle were explained to subjects before commencing the survey.

Two well-trained and eligible research investigators collected the data. The collected questionnaires were numbered uniformly, and the data were entered by two people. Excel was used for data entry, SPSS 21 for statistical analysis, and AMOS 23 for confirmatory factor analysis to verify the appropriateness and stability of the construct validity of the scale.

Verification of the Questionnaire

Item Analysis

The central tendency of the answers to each item was examined first: if more than 80% of respondents chose the same option for an item, the discrimination ability of that item was considered weak and the item was deleted. Respondents were then ranked by the total score of the questionnaire and divided into high-score and low-score groups. The t-test was used to examine the mean difference, and items with no significant difference between the high-score and low-score groups were deleted. Finally, Pearson’s correlation coefficient between each item score and the total score of the questionnaire was calculated, and items with a very poor correlation with the total score (r < 0.30) were deleted.
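The three screening steps above can be sketched in Python as follows. This is an illustrative sketch rather than the analysis actually run in SPSS: the 27% cut-off used to form the high-score and low-score groups, the Welch form of the t statistic, the function names, and the toy data are all assumptions made here. The sketch reports only the t statistic; the corresponding P value would come from statistical software.

```python
from collections import Counter
from math import sqrt
from statistics import mean, variance


def max_category_share(item):
    """Largest share of respondents choosing the same option for one item."""
    return max(Counter(item).values()) / len(item)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def welch_t(high, low):
    """t statistic for the mean difference between two independent groups."""
    se = sqrt(variance(high) / len(high) + variance(low) / len(low))
    return (mean(high) - mean(low)) / se


def item_analysis(data, tail=0.27):
    """data: list of respondents, each a list of item scores.

    tail is the assumed share of respondents in each extreme group.
    """
    totals = [sum(row) for row in data]
    order = sorted(range(len(data)), key=lambda i: totals[i])
    k = max(2, round(len(data) * tail))
    low_idx, high_idx = order[:k], order[-k:]
    report = []
    for j in range(len(data[0])):
        item = [row[j] for row in data]
        report.append({
            "item": j + 1,
            "max_share": max_category_share(item),   # flag if > 0.80
            "t": welch_t([item[i] for i in high_idx],
                         [item[i] for i in low_idx]),
            "r_item_total": pearson_r(item, totals),  # flag if < 0.30
        })
    return report


# Toy data: 10 hypothetical respondents, 3 items (not study data).
toy = [
    [1, 1, 2], [1, 2, 1], [2, 1, 2], [2, 2, 3], [3, 3, 2],
    [3, 4, 4], [4, 3, 4], [5, 5, 4], [6, 5, 6], [6, 7, 5],
]
report = item_analysis(toy)
```

Each entry of `report` carries the three quantities used for screening, so an item would be a deletion candidate if `max_share` exceeds 0.80, if the group difference is not significant, or if `r_item_total` falls below 0.30.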

Reliability and Validity Testing of the Questionnaire

Seven experts in mHealth were invited to evaluate the relevance of the questionnaire contents. A 4-point Likert scoring system was used to evaluate the correlation between each item and the questionnaire topic [1 (no correlation), 2 (poor correlation), 3 (strong correlation), and 4 (extremely strong correlation)]. The content validity index (CVI) of each item and the CVI of the total questionnaire were calculated. Principal component analysis with varimax (maximum variance) orthogonal rotation was utilized for exploratory factor analysis (EFA). Items with a factor loading of < 0.40 were deleted. The correlation matrix between each dimension and the total score of the questionnaire was tested, and the internal correlation analysis was carried out. Confirmatory factor analysis (CFA) was undertaken to verify whether the structure of the questionnaire was consistent with the theoretical structure of the original questionnaire. The reliability of the questionnaire was evaluated by the Cronbach’s α coefficient, test-retest reliability, and split-half reliability.
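As an illustration of the content validity calculation, the sketch below computes an item-level CVI as the share of experts rating an item 3 or 4, and a scale-level CVI as the mean of the item-level values. These are the commonly used definitions and are assumed here; the text does not spell out the exact formula used, and the ratings shown are hypothetical.

```python
def item_cvi(ratings):
    """I-CVI: share of experts who rated the item 3 or 4 on the 4-point scale."""
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def scale_cvi_ave(rating_matrix):
    """S-CVI/Ave: mean of the item-level CVIs (one row of ratings per item)."""
    i_cvis = [item_cvi(row) for row in rating_matrix]
    return sum(i_cvis) / len(i_cvis)


# Hypothetical ratings from 7 experts for 3 items (not the study data).
ratings = [
    [4, 4, 3, 4, 3, 4, 4],  # every expert rates 3 or 4
    [4, 3, 4, 2, 4, 3, 4],  # one expert rates 2
    [3, 4, 4, 4, 4, 3, 3],
]
i_cvis = [item_cvi(row) for row in ratings]
s_cvi = scale_cvi_ave(ratings)
```

With seven raters, a single rating below 3 gives an item-level CVI of 6/7, or about 0.857.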

This study was approved by the Ethics Committee of Qilu Hospital of Shandong University (Jinan, China; Approval No. KYLL-2020-460).

Results

Cross-Cultural Adaptation

According to the experts’ comments and the respondents’ feedback on content understanding, language expression habits, and cultural background of the questionnaire, together with the results of the prediction test, the research group modified the contents as follows: (1) The term “various social environments” in item 5 was not consistent with language expression habits in China. Therefore, item 5, “I feel comfortable using this app in various social environments,” was changed to “I feel comfortable using this app in various public places.” (2) Item 6, “The time required to use the app is appropriate for me,” was changed to “Using this app will not take up much of my time.” (3) Item 12 was not easy to understand after literal translation; thus, “navigation is consistent when switching pages” was changed to “the way and process of switching pages are consistent.”

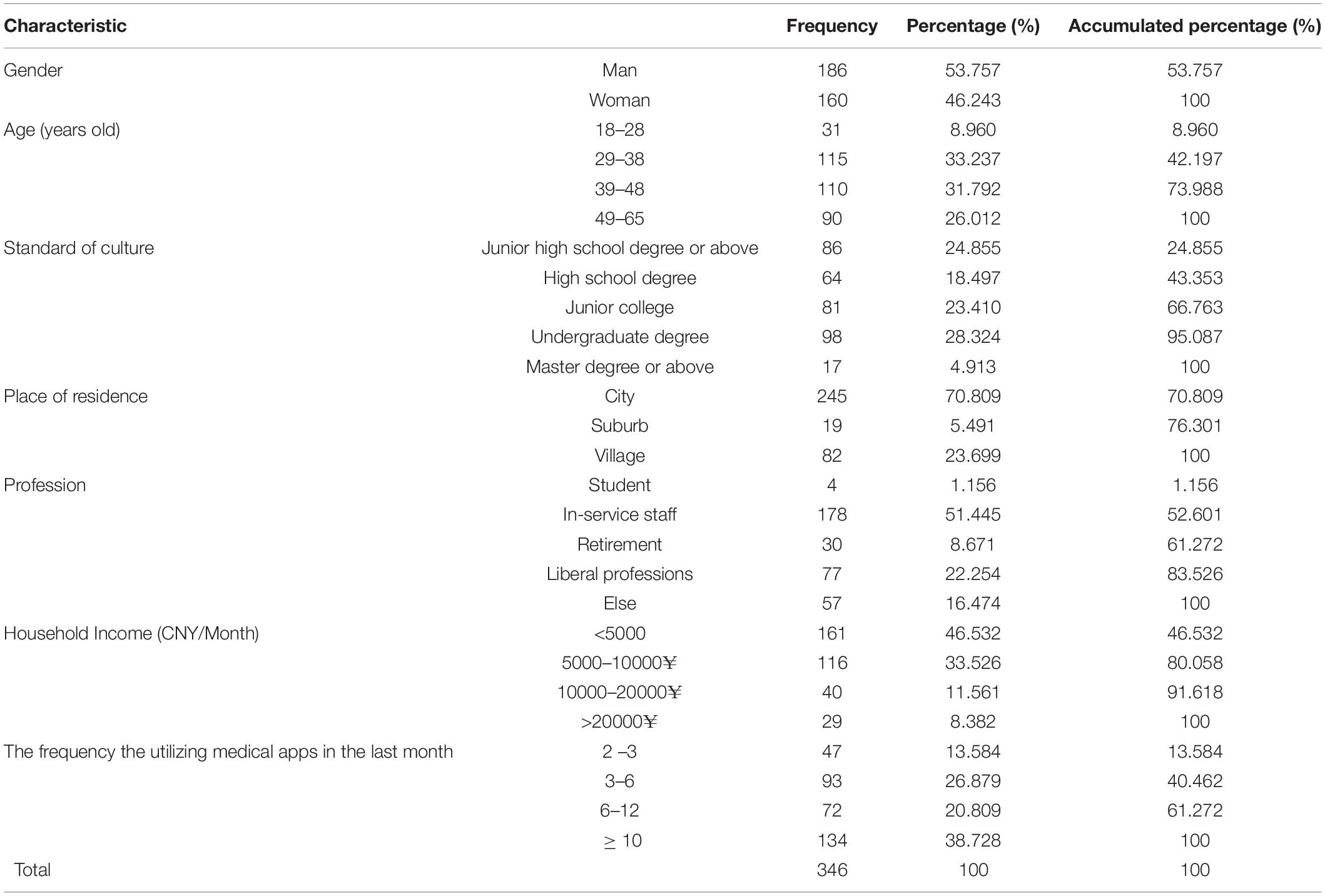

General Information of the Subjects

A total of 444 electronic questionnaires were forwarded to users of the patient version of the “Good Doctor” mHealth app, and 346 valid questionnaires were received, an effective recovery rate of 77.93%. There were 186 male and 160 female respondents, accounting for 53.757% and 46.243% of the total sample, respectively. The majority of users were aged between 29 and 48 years. Most users held a bachelor’s degree and lived in urban areas. The proportion of “in-service” personnel was the highest. Table 1 summarizes further details.

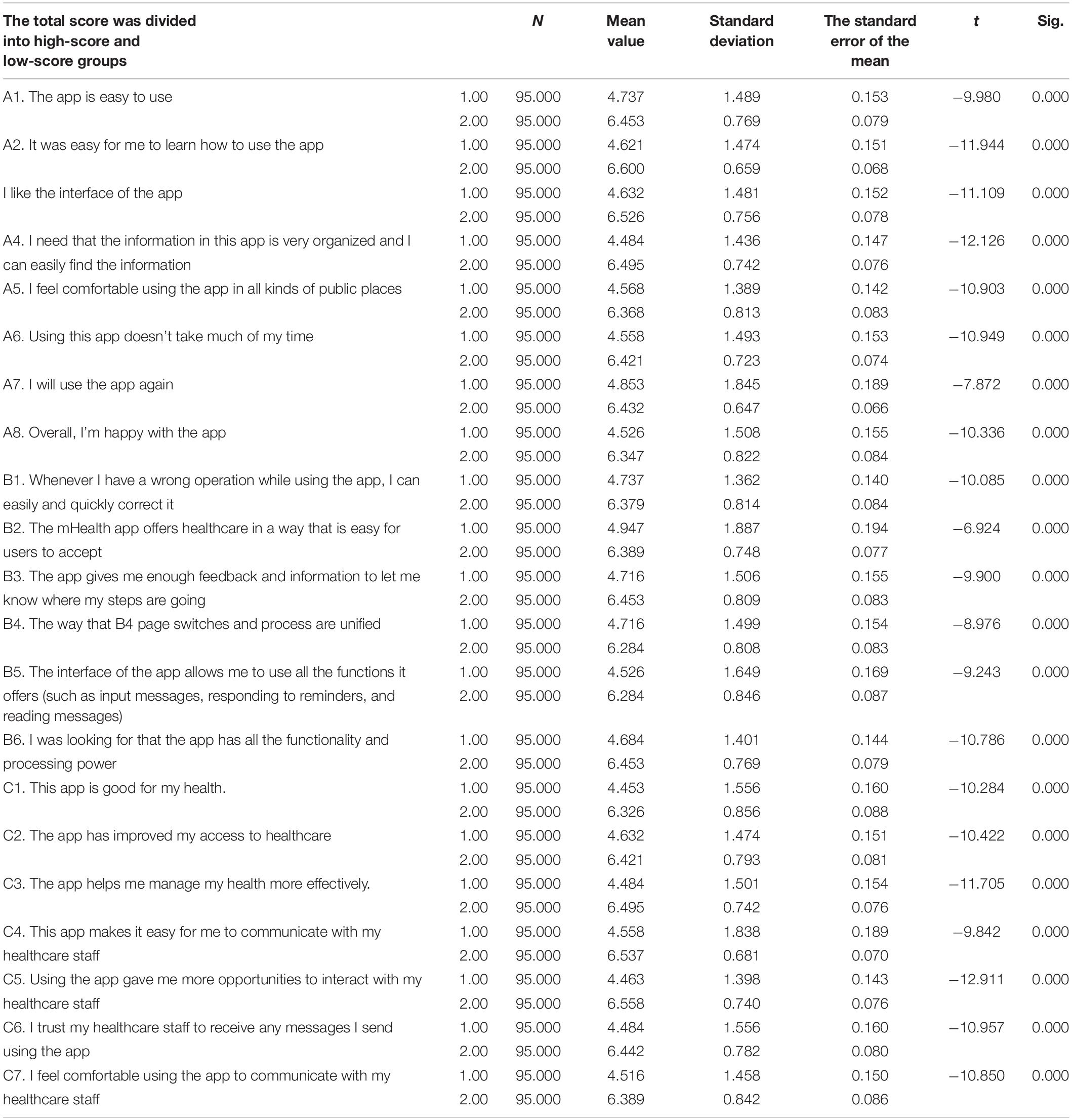

Item Analysis

Table 2 shows the results of the independent-samples t-test comparing each item between the high-score and low-score groups. Items with no statistically significant difference between the two groups were to be deleted (Jones et al., 2001). The results showed significant differences in every item between the high-score and low-score groups; thus, no item was deleted.

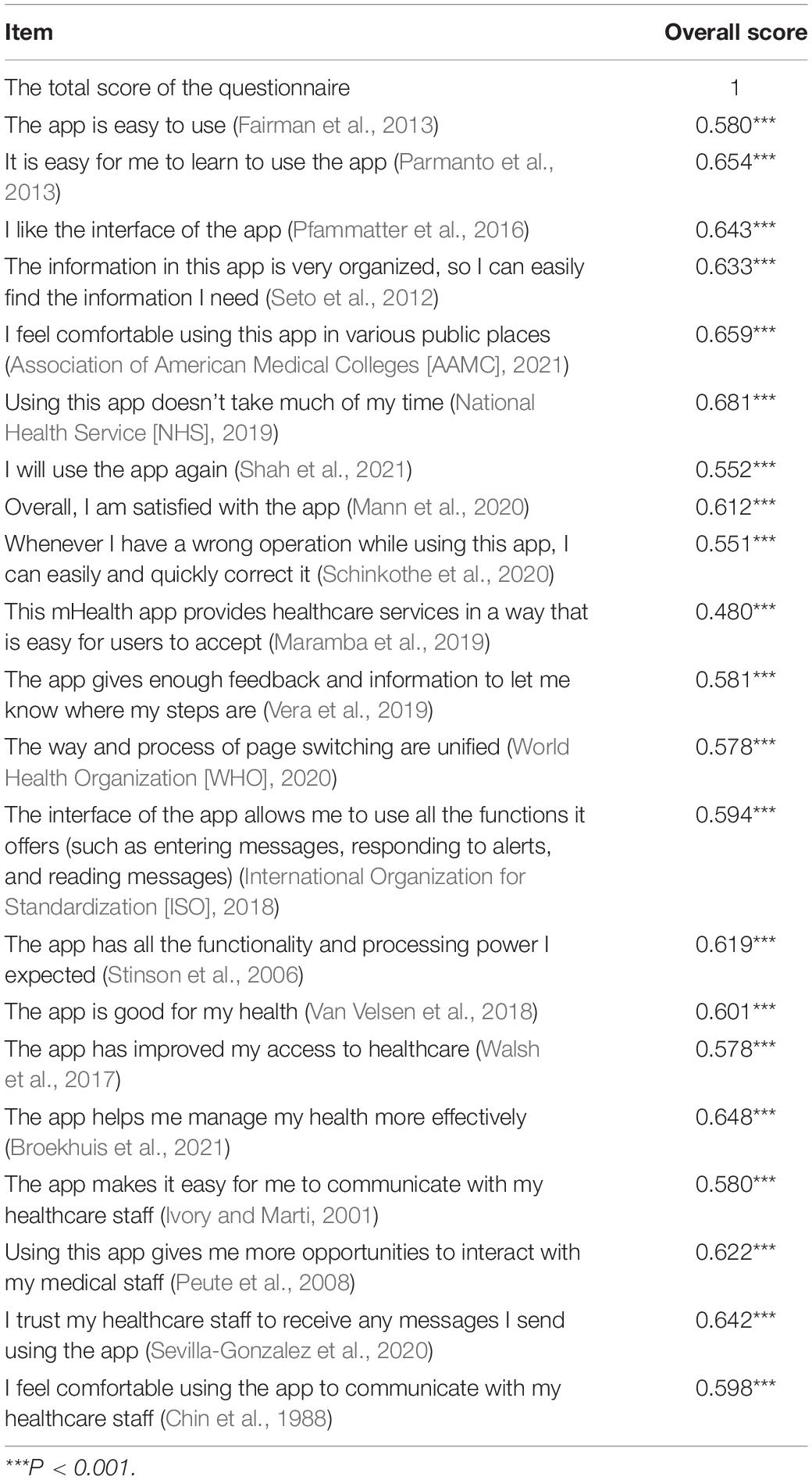

Pearson’s correlation coefficient was used to assess the correlation between each of the 21 items and the total score of the questionnaire. As shown in Table 3, all correlations were significant and positive, with coefficients ranging from 0.480 to 0.681 (P < 0.001); thus, all items were retained.

Table 3. The Pearson’s correlation coefficient between each item and the total score of the questionnaire.

Validity Testing of the Questionnaire

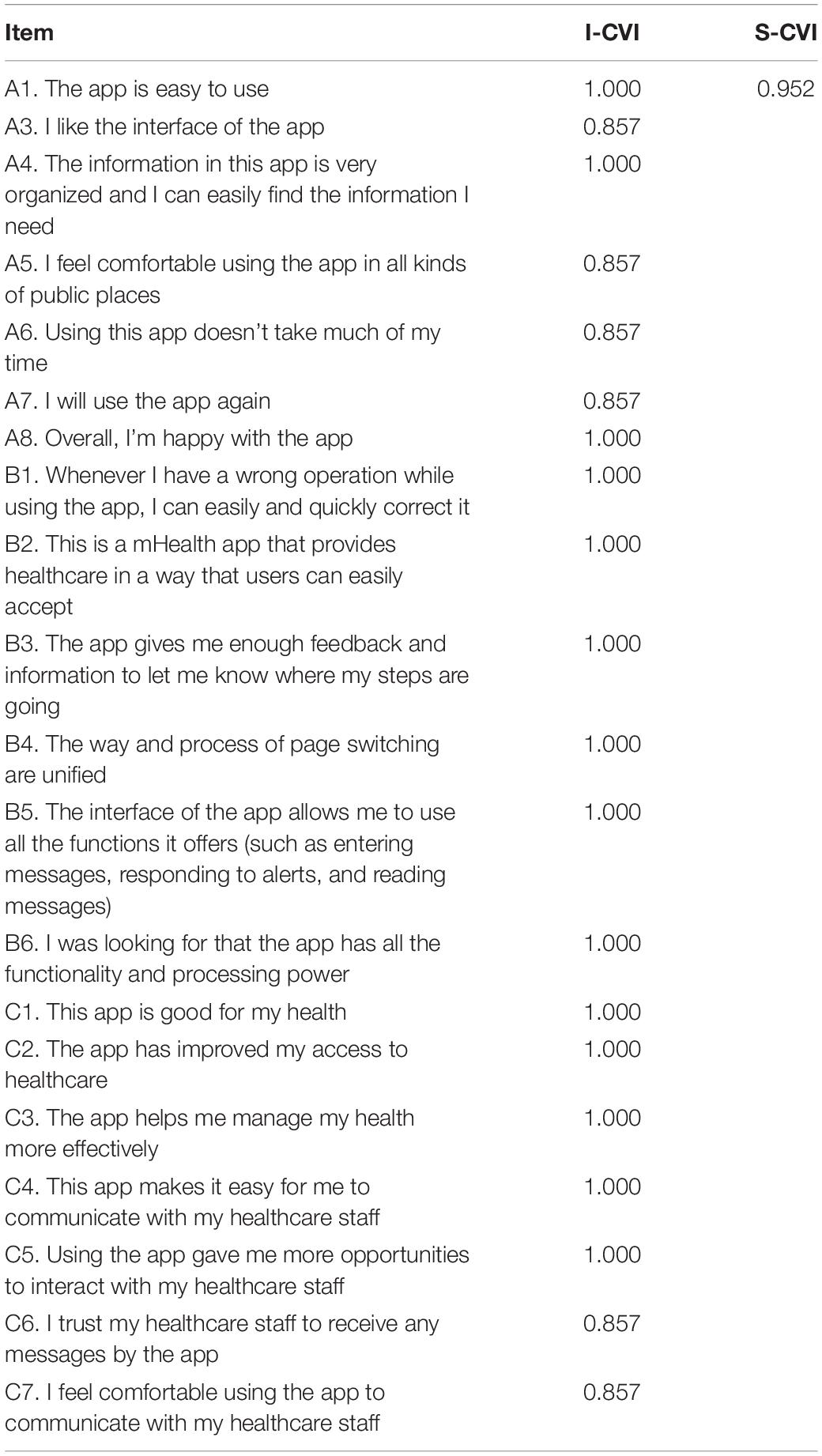

Content Validity

As shown in Table 4, the CVI of each item in the questionnaire was > 0.790, indicating that the items were comprehensible to the target users (Zamanzadeh et al., 2015), and the CVI of the total questionnaire was 0.952.

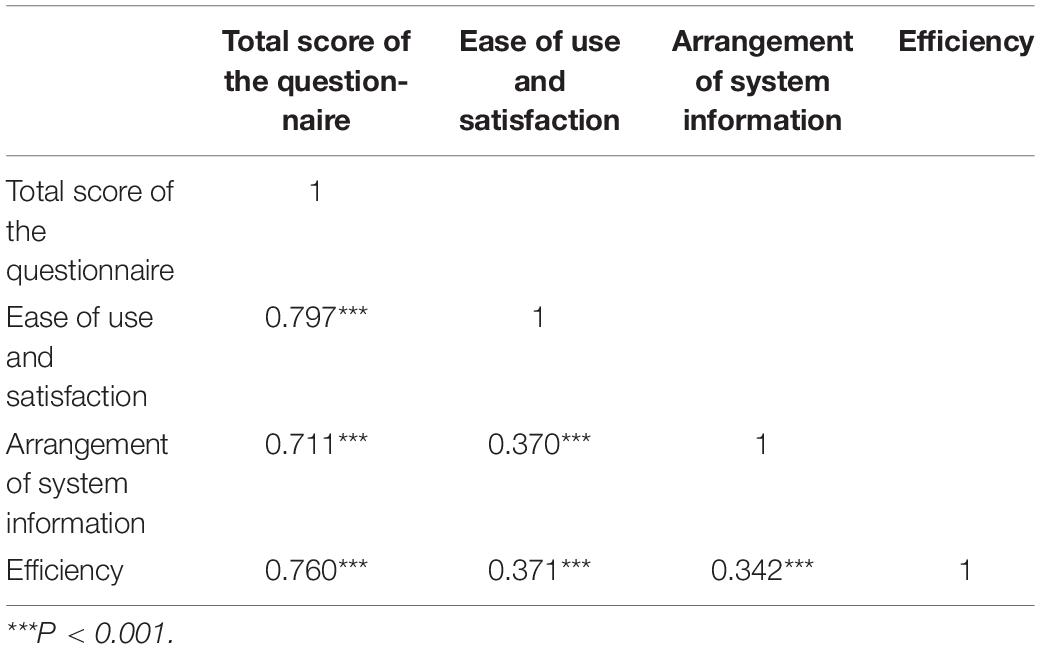

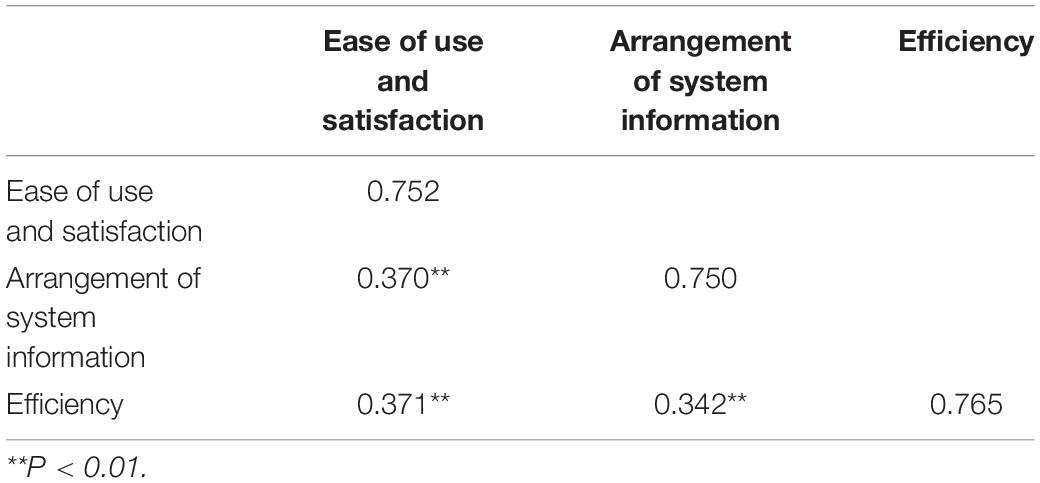

Correlation Analysis

Correlation analysis was used to study the relationship between each of the three dimensions (usability and satisfaction, system information arrangement, and efficiency) and the total score of the questionnaire. Pearson’s correlation coefficient was used to indicate the strength of the correlation. All correlations were significant, and the correlation coefficients between each dimension and the total score were 0.797, 0.711, and 0.760, respectively, indicating that usability and satisfaction, system information arrangement, and efficiency were each positively correlated with the total score (Table 5).

Structural Validity

Exploratory Factor Analysis

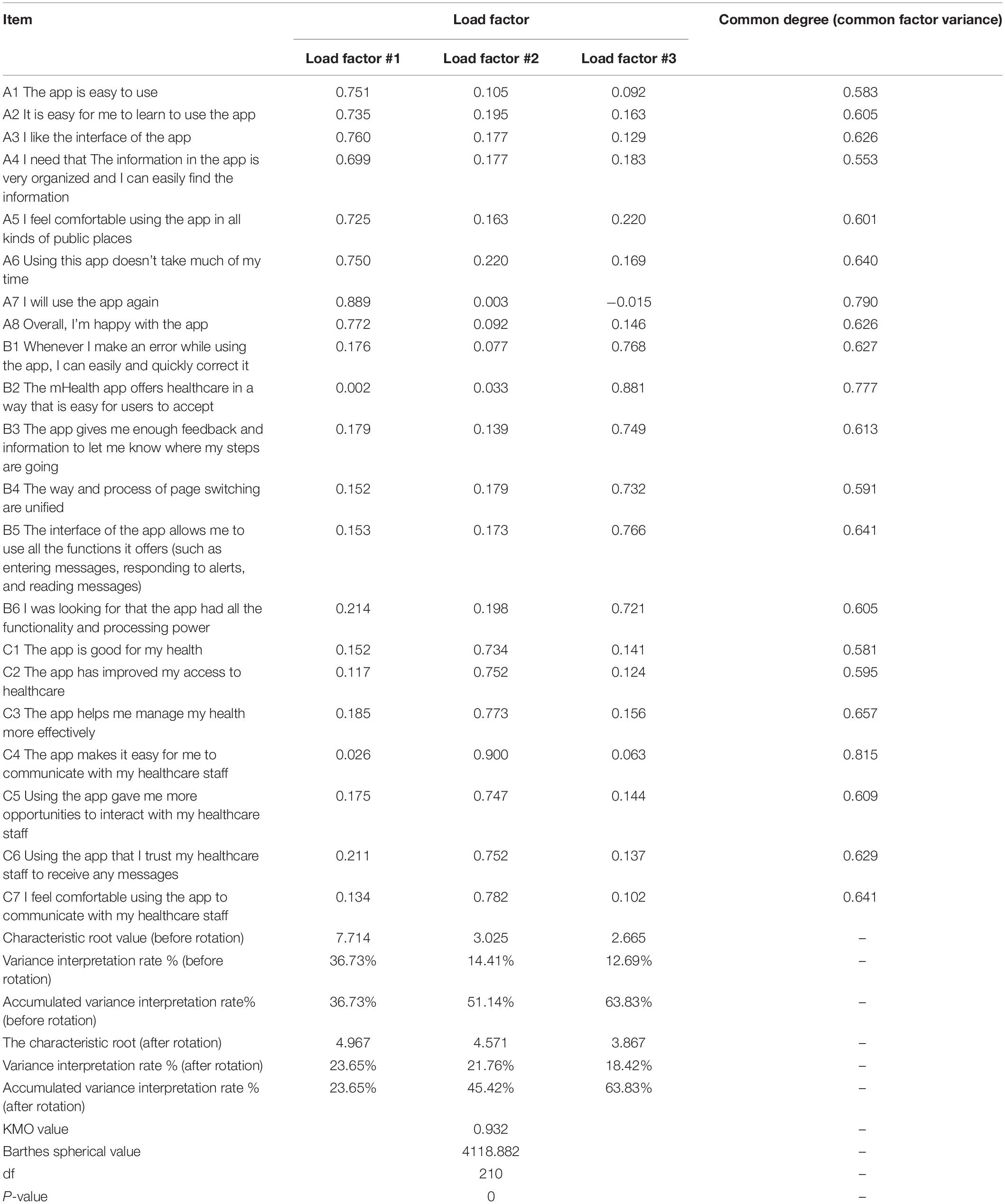

The validity of the questionnaire was assessed through EFA using the Kaiser-Meyer-Olkin (KMO) value, the communalities (common factor variance), the variance explained, the factor loadings, and other indicators. The communalities of all items were higher than 0.400. According to the commonly used standard given by Kaiser, a KMO value above 0.9 means the data are very suitable for factor analysis; 0.8 means suitable; 0.7 means general; and 0.6 or below means not suitable. The KMO value in this study was 0.932 (> 0.800), indicating that the data were suitable for factor analysis. The three factors explained 23.65%, 21.76%, and 18.42% of the variance, respectively, and the cumulative variance explained after rotation was 63.830% (> 60%), indicating that the information in the items was extracted effectively. After rotation of the component matrix, the loading of each item on its dimension was > 0.500. Therefore, the questionnaire showed good structural validity (Table 6).

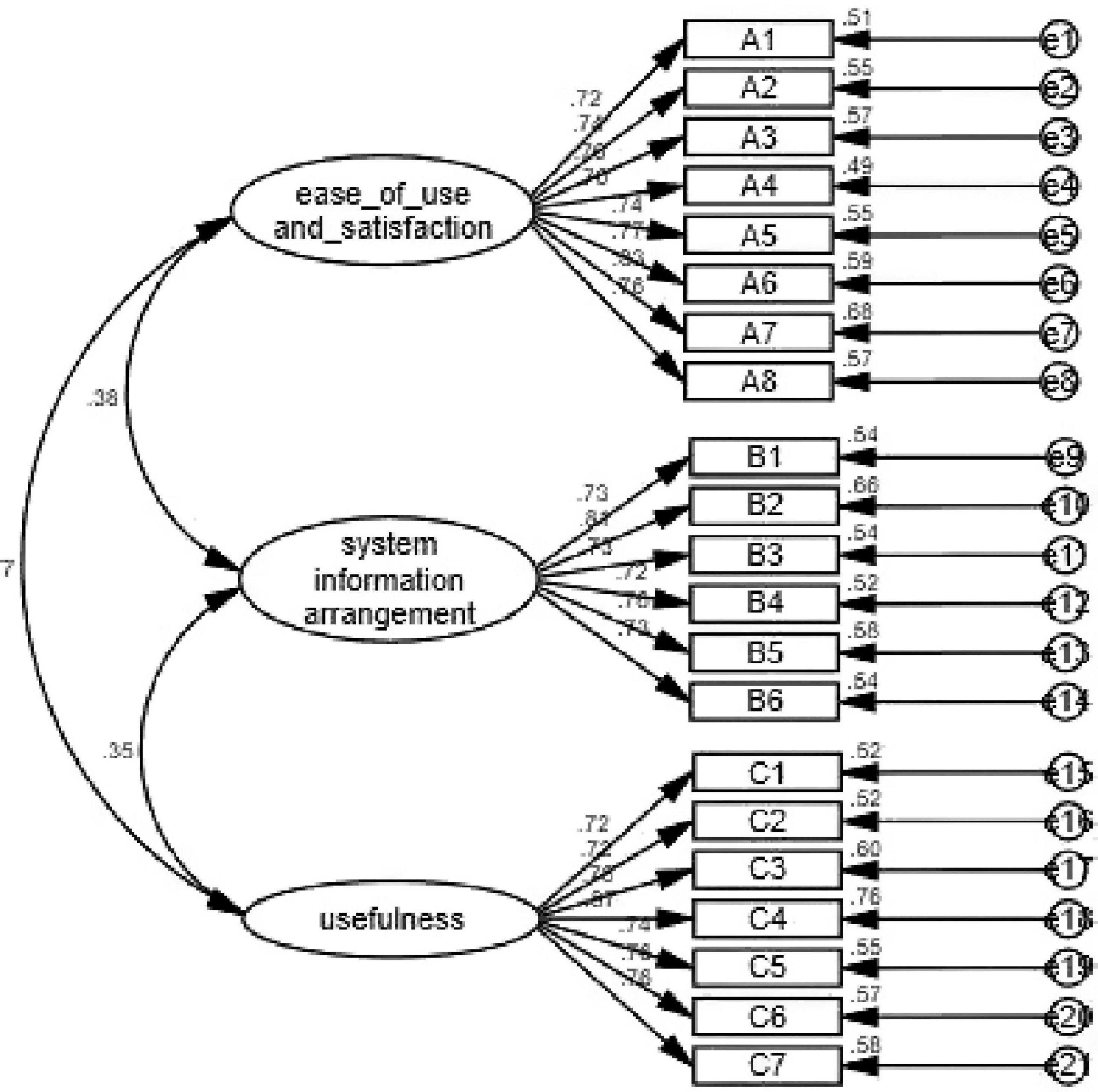

Confirmatory Factor Analysis

Confirmatory factor analysis was used in this study to examine whether the structure of the questionnaire was consistent with the theoretical structure of the original questionnaire. As shown in Figure 1, once the measurement model was established, the maximum likelihood estimation method was used to estimate the parameters of the model by maximizing the likelihood function. The estimated parameters were the factor loadings, equation errors, and error covariances, which are the most direct parameters used to evaluate the goodness of fit of the model. The evaluation of the model’s goodness of fit included tests of the degree of fit and tests of the parameters. In this study, the minimum discrepancy/degrees of freedom (CMIN/DF), normed fit index (NFI), incremental fit index (IFI), Tucker-Lewis index (TLI), comparative fit index (CFI), goodness-of-fit index (GFI), adjusted GFI (AGFI), root mean square error of approximation (RMSEA), and other model fit indices all met the requirements, indicating that the fit of the model was good (Table 7).

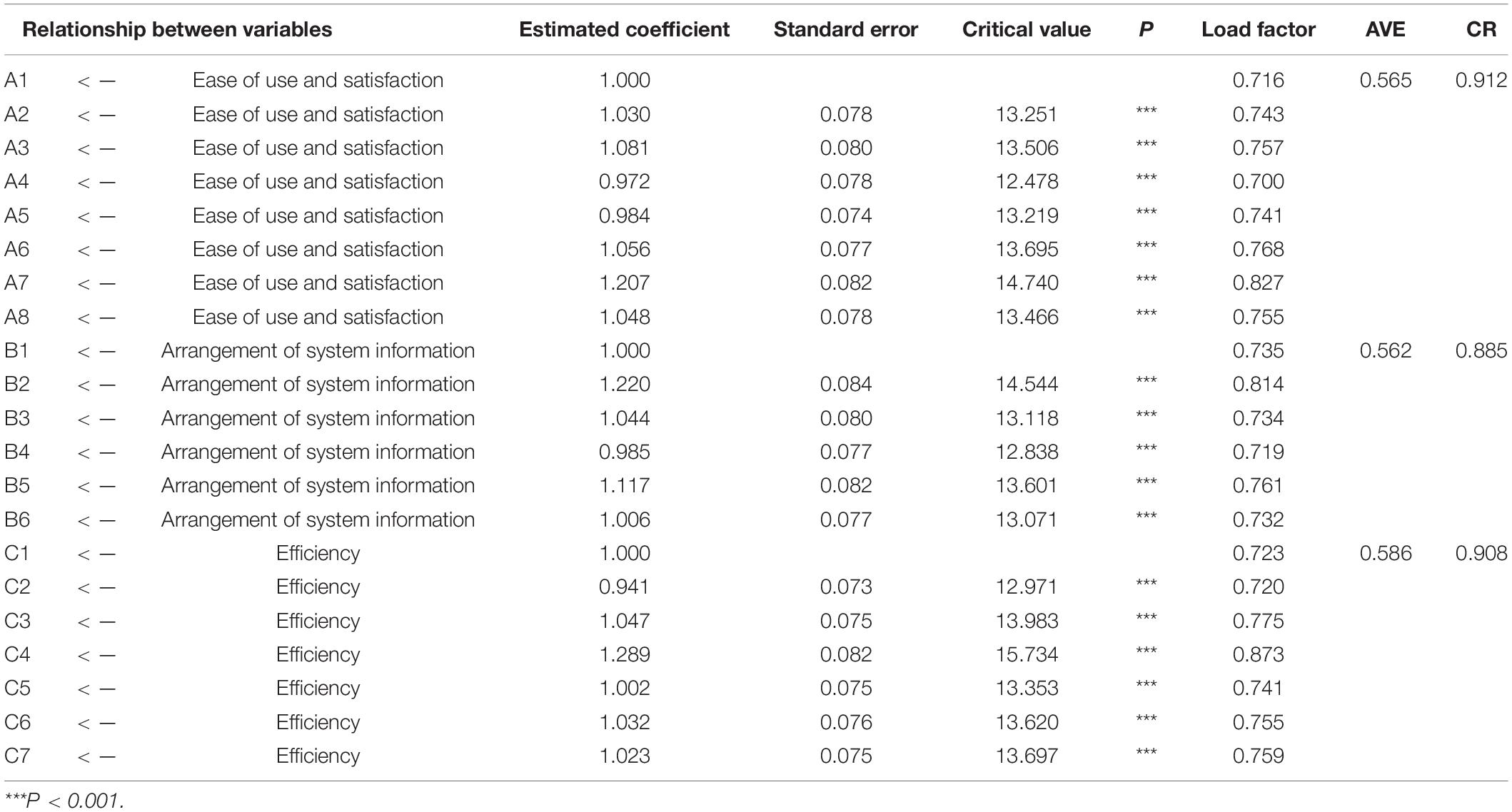

Analysis of Convergent Validity and Discriminant Validity

In this study, the composite reliability (CR) and average variance extracted (AVE) values were used as the evaluation criteria for convergent validity. When the CR value of each factor is > 0.700 and the AVE value is > 0.500, convergent validity is generally considered good. In addition, when the square root of the AVE of each factor is higher than the correlation coefficients between this factor and the other factors, discriminant validity is considered high. The results for convergent validity are presented in Table 8. The AVE value of each dimension was greater than 0.500, and the CR value was greater than 0.700, indicating good convergent validity of the questionnaire.

According to the results of the discriminant validity analysis (Table 9), the square root of the AVE of each factor was greater than its correlations with the other factors; thus, the discriminant validity among the factors was good.

Table 9. The results of the discriminant validity analysis using the Pearson’s correlation coefficient.

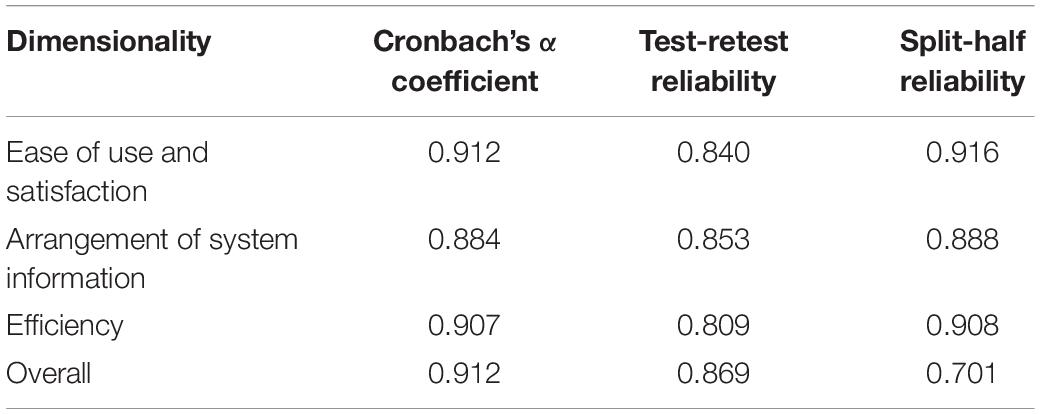
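The convergent and discriminant validity criteria described in this section can be sketched as follows. The formulas are the standard ones based on standardized factor loadings and are assumed here, since the text does not give them explicitly; the loadings, the factor names, and the inter-factor correlation are hypothetical.

```python
from math import sqrt


def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    err = sum(1 - l ** 2 for l in loadings)
    return s ** 2 / (s ** 2 + err)


def fornell_larcker_ok(aves, corr):
    """True if sqrt(AVE) of every factor exceeds its correlations with the others."""
    names = list(aves)
    return all(
        sqrt(aves[a]) > abs(corr[frozenset((a, b))])
        for a in names for b in names if a != b
    )


# Hypothetical standardized loadings for two factors (not study data).
loadings = {"A": [0.80, 0.70, 0.75], "B": [0.90, 0.80]}
aves = {name: ave(vals) for name, vals in loadings.items()}
crs = {name: composite_reliability(vals) for name, vals in loadings.items()}

# Hypothetical inter-factor correlation.
corr = {frozenset(("A", "B")): 0.60}
discriminant_ok = fornell_larcker_ok(aves, corr)
```

In this toy example both factors pass the thresholds of AVE > 0.500 and CR > 0.700, and the square root of each AVE exceeds the inter-factor correlation of 0.60.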

Reliability Testing of the Questionnaire

The Cronbach’s α coefficient of the Chinese version of the MAUQ was 0.912. A higher α value suggests greater internal reliability, and a value above 0.700 is regarded as good internal reliability (Allen et al., 2014). The test-retest reliability was 0.869, and the split-half reliability was 0.701. The reliability of each dimension of the questionnaire is presented in Table 10.
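For readers who wish to reproduce such reliability coefficients, the following sketch computes Cronbach’s α and a split-half coefficient. The odd-even split and the Spearman-Brown correction are assumptions made for this example, as the text does not state how the halves were formed; the data are hypothetical. Test-retest reliability could be obtained in the same way by correlating total scores from two administrations with `pearson_r`.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(data):
    """data: list of respondents, each a list of k item scores."""
    k = len(data[0])
    item_vars = [variance([row[j] for row in data]) for j in range(k)]
    total_var = variance([sum(row) for row in data])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def split_half(data):
    """Odd-even split-half reliability with the Spearman-Brown correction."""
    odd = [sum(row[0::2]) for row in data]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in data]  # items 2, 4, 6, ...
    r = pearson_r(odd, even)
    return 2 * r / (1 + r)


# Toy data: 4 hypothetical respondents, 3 items (not study data).
toy = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 5]]
alpha = cronbach_alpha(toy)
half = split_half(toy)
```

For the toy data, α is 14/15 and the corrected split-half coefficient is 8/9; real data with 21 items and several hundred respondents would be passed in the same row-per-respondent layout.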

Discussion

This study described the process of cross-cultural translation and adaptation of the MAUQ. Semantic and cultural equivalence was achieved between the two versions, and the adapted version showed excellent internal consistency and good validity.

The development of new questionnaires requires the joint efforts of members of professional research teams, and it is costly and time-consuming. Therefore, it is recommended to adapt established, reliable, and available questionnaires and to document their validity in the language used (Mohamad Marzuki et al., 2018). However, due to the linguistic and cultural differences between Chinese and English, literal translation may cause ambiguity in the meaning of part of the content, hindering the production of an equivalent questionnaire. Therefore, cross-cultural adaptation of the questionnaire is necessary (Epstein et al., 2015). The present study used Brislin’s translation model (Brislin, 1976) for translation, and the translation results showed that the majority of items were easy to understand, while a few items were adjusted after translation according to Chinese expression habits.

The verification analysis of the questionnaire is an important step to ensure that the composition of the translated version of the questionnaire is the same as that of the original version. The CVI and reliability indices were used in the current research. The CVI is used to measure the content validity of the questionnaire (Maramba et al., 2019). It is easy to measure and understand, and the content of each item can be modified or deleted according to the detailed information of each item (Polit et al., 2007; Zamanzadeh et al., 2015). The results of this study showed that the CVI value of each item ranged from 0.857 to 1.000, and the total CVI was 0.952, above the benchmark value of 0.790, indicating that the Chinese version of the MAUQ could properly represent each item. Reliability is the degree to which an assessment tool produces stable and consistent results. In the present study, the Cronbach’s α coefficient, split-half reliability, and test-retest reliability were used to evaluate the reliability of the questionnaire. The results showed that the values of Cronbach’s α coefficient, test-retest reliability, and split-half reliability were 0.912 (> 0.800), 0.869 (> 0.800), and 0.701 (> 0.700), respectively. The results confirmed that the questionnaire had good internal consistency and stability.

In the present study, the reliability and validity of the MAUQ were tested to provide an evaluation tool for mobile medical services in China. However, there are some limitations in this study. Firstly, the 346 patients recruited in this study were outpatients from a third-class hospital, particularly young people who were familiar with smart phones. The results of this study therefore may not generalize to a wider population, especially the elderly. Secondly, this study concentrated only on the evaluation of the patient version of the “Good Doctor” app; thus, the scope of our research was limited, and the results may not apply to other versions (provider version or medical version) or to different mHealth apps. In future research, we will concentrate on usability testing tools for mHealth apps in all aspects, so as to further promote the development of mHealth apps.

Conclusion

The Chinese version of the MAUQ for the patient version of interactive mHealth apps contains 21 items and 3 dimensions, which is consistent with the theoretical structure of the original questionnaire, and it has high reliability and validity. All indicators of the Chinese version of the MAUQ met the measurement requirements, so it can be used to evaluate the usability of the patient version of interactive mHealth apps effectively and scientifically.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Qilu Hospital of Shandong University. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

SZ and YC contributed to the study design, implementation, analysis, and manuscript writing. HC and KL contributed to the statistical design and analysis. XL, JZ, and YL contributed to the analysis of the data. PD contributed to the translation of the scale. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank Leming Zhou for granting permission to convert the MAUQ into Chinese. We highly appreciate the author PD for his guidance during the translation of the MAUQ. We also acknowledge Chongzhong Liu, Yu Han, and Qianqian Xu for providing significant technical comments on the questionnaire content.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.813309/full#supplementary-material

Abbreviations

CVI, content validity index; KMO, Kaiser-Meyer-Olkin; MAUQ, mHealth app usability questionnaire; mHealth, mobile health.

References

Allen, P., Bennett, K., and Heritage, B. (2014). SPSS Statistics version 22: A Practical Guide. Sydney: Cengage Learning Australia Pty Limited.

Anderberg, P., Eivazzadeh, S., and Berglund, J. S. (2019). A novel instrument for measuring older people’s attitudes toward technology (TechPH): development and validation. J. Med. Internet Res. 21:e13951. doi: 10.2196/13951

Association of American Medical Colleges [AAMC] (2021). The Complexities of Physician Supply and Demand: Projections from 2018 to 2033. Washington, DC: Association of American Medical Colleges.

Brislin, R. W. (1976). Comparative research methodology: cross-cultural studies. Int. J. Psychol. 113, 215–229. doi: 10.1080/00207597608247359

Broekhuis, M., van Velsen, L., Peute, L., Halim, M., and Hermens, H. (2021). Conceptualizing usability for the eHealth context: content analysis of usability problems of eHealth applications. JMIR Form. Res. 5:e18198. doi: 10.2196/18198

Chin, J. P., Diehl, V. A., and Norman, K. L. (1988). “Development of an instrument measuring user satisfaction of the human-computer interface,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, (Gaithersburg, MD: ACM Press).

Cummins, K. M., Brumback, T., Chung, T., Moore, R. C., Henthorn, T., Eberson, S., et al. (2021). Acceptability, validity, and engagement with a mobile app for frequent, continuous multiyear assessment of youth health behaviors (mNCANDA): mixed methods study. JMIR Mhealth Uhealth 9:e24472. doi: 10.2196/24472

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

Epstein, J., Santo, R. M., and Guillemin, F. (2015). A review of guidelines for cross-cultural adaptation of questionnaires could not bring out a consensus. J. Clin. Epidemiol. 68, 435–441. doi: 10.1016/j.jclinepi.2014.11.021

Fairman, A. D., Dicianno, B. E., Datt, N., Garver, A., Parmanto, B., and McCue, M. (2013). Outcomes of clinicians, caregivers, family members and adults with Spina bifida regarding receptivity to use of the iMHere mHealth solution to promote wellness. Int. J. Telerehabil. 5, 3–16. doi: 10.5195/ijt.2013.6116

Gous, N., Fischer, A. E., Rhagnath, N., Phatsoane, M., Majam, M., and Lalla-Edward, S. T. (2020). Evaluation of a mobile application to support HIV self-testing in Johannesburg, South Africa. South. Afr. J. HIV Med. 21:1088. doi: 10.4102/sajhivmed.v21i1.1088

International Organization for Standardization [ISO] (2018). ISO 9241. International Organization for Standardization. Available Online at: https://www.iso.org/obp/ui/#iso:std:iso:9241:-11:ed-2:v1:en (accessed November 11, 2019).

Ivory, M. Y., and Hearst, M. A. (2001). The state of the art in automating usability evaluation of user interfaces. ACM Comput. Surv. 33, 470–516. doi: 10.1145/503112.503114

Jones, P. S., Lee, J. W., Phillips, L. R., Zhang, X. E., and Jaceldo, K. B. (2001). An adaptation of Brislin’s translation model for cross-cultural research. Nurs. Res. 50, 300–304. doi: 10.1097/00006199-200109000-00008

Lewis, J. R. (2002). Psychometric evaluation of the PSSUQ using data from five years of usability studies. Int. J. Hum. Comput. Interact. 14, 463–488. doi: 10.1207/s15327590ijhc143&4_11

Lund, A. (2001). STC Usability SIG Newsletter. Measuring Usability with the USE Questionnaire. Available Online at: https://www.researchgate.net/publication/230786746_Measuring_usability_with_the_USE_questionnaire (accessed July 28, 2017).

Mann, D. M., Chen, J., Chunara, R., Testa, P. A., and Nov, O. (2020). COVID-19 transforms health care through telemedicine: evidence from the field. J. Am. Med. Inform. Assoc. 27, 1132–1135. doi: 10.1093/jamia/ocaa072

Maramba, I., Chatterjee, A., and Newman, C. (2019). Methods of usability testing in the development of eHealth applications: a scoping review. Int. J. Med. Inform. 126, 95–104. doi: 10.1016/j.ijmedinf.2019.03.018

Menon, A., Fatehi, F., Ding, H., Bird, D., Karunanithi, M., Gray, L., et al. (2019). Outcomes of a feasibility trial using an innovative mobile health programme to assist in insulin dose adjustment. BMJ Health Care Inform. 26:e100068. doi: 10.1136/bmjhci-2019-100068

Mohamad Marzuki, M. F., Yaacob, N. A., and Yaacob, N. M. (2018). Translation, cross-cultural adaptation, and validation of the Malay version of the system usability scale questionnaire for the assessment of mobile apps. JMIR Hum. Factors 5:e10308. doi: 10.2196/10308

Mustafa, N., Safii, N. S., Jaffar, A., Sani, N. S., Mohamad, M. I., Rahman, A. H. A., et al. (2021). Malay version of the mHealth app usability questionnaire (M-MAUQ): translation, adaptation, and validation study. JMIR Mhealth Uhealth 9:e24457. doi: 10.2196/24457

Parmanto, B., Pramana, G., Yu, D. X., Fairman, A. D., Dicianno, B. E., and McCue, M. P. (2013). iMHere: a novel mHealth system for supporting self-care in management of complex and chronic conditions. JMIR Mhealth Uhealth 1:e10. doi: 10.2196/mhealth.2391

Peute, L. W. P., Spithoven, R., Bakker, P. J. M., and Jaspers, M. W. M. (2008). Usability studies on interactive health information systems; where do we stand? Stud. Health Technol. Inform. 136, 327–332.

Pfammatter, A., Spring, B., Saligram, N., Davé, R., Gowda, A., Blais, L., et al. (2016). mHealth intervention to improve diabetes risk behaviors in India: a prospective, parallel group cohort study. J. Med. Internet Res. 18:e207. doi: 10.2196/jmir.5712

PITT Usability Questionnaire (2021). For Telehealth Systems (TUQ) and Mobile Health Apps (MAUQ). Available Online at: https://ux.hari.pitt.edu/app/about (accessed July 11, 2021).

Polit, D. F., Beck, C. T., and Owen, S. V. (2007). Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res. Nurs. Health 30, 459–467. doi: 10.1002/nur.20199

Schinkothe, T., Gabri, M. R., Mitterer, M., Gouveia, P., Heinemann, V., Harbeck, N., et al. (2020). A web- and app-based connected care solution for COVID-19 in- and outpatient care: qualitative study and application development. JMIR Public Health Surveill. 6:e19033. doi: 10.2196/19033

Sengupta, A., Beckie, T., Dutta, K., Dey, A., and Chellappan, S. (2020). A mobile health intervention system for women with coronary heart disease: usability study. JMIR Form. Res. 4:e16420. doi: 10.2196/16420

Seto, E., Leonard, K. J., Cafazzo, J. A., Barnsley, J., Masino, C., and Ross, H. J. (2012). Perceptions and experiences of heart failure patients and clinicians on the use of mobile phone-based telemonitoring. J. Med. Internet Res. 14:e25. doi: 10.2196/jmir.1912

Sevilla-Gonzalez, M. D. R., Moreno Loaeza, L., Lazaro-Carrera, L. S., Ramirez, B. B., Rodríguez, A. V., Peralta-Pedrero, M. L., et al. (2020). Spanish version of the system usability scale for the assessment of electronic tools: development and validation. JMIR Hum. Factors 7:e21161. doi: 10.2196/21161

Shah, S. S., Gvozdanovic, A., Knight, M., and Gagnon, J. (2021). Mobile app-based remote patient monitoring in acute medical conditions: prospective feasibility study exploring digital health solutions on clinical workload during the COVID crisis. JMIR Form. Res. 5:e23190. doi: 10.2196/23190

Sood, R., Stoehr, J. R., Janes, L. E., Ko, J. H., Dumanian, G. A., and Jordan, S. W. (2020). Cell phone application to monitor pain and quality of life in neurogenic pain patients. Plast. Reconstr. Surg. Glob. Open 8:e2732. doi: 10.1097/GOX.0000000000002732

Soriano, J. B., Fernandez, E., de Astorza, A., de Llano, L. A. P., Fernández-Villar, A., Carnicer-Pont, D., et al. (2020). Hospital epidemics tracker (HEpiTracker): description and pilot study of a mobile app to track COVID-19 in hospital workers. JMIR Public Health Surveill. 6:e21653. doi: 10.2196/21653

Stinson, J. N., Petroz, G. C., Tait, G., Feldman, B. M., Streiner, D., McGrath, P. J., et al. (2006). e-Ouch: usability testing of an electronic chronic pain diary for adolescents with arthritis. Clin. J. Pain 22, 295–305. doi: 10.1097/01.ajp.0000173371.54579.31

Van Velsen, L., Evers, M., Bara, C.-D., Den Akker, H. O., Boerema, S., and Hermens, H. (2018). Understanding the acceptance of an eHealth technology in the early stages of development: an end-user walkthrough approach and two case studies. JMIR Form. Res. 2:e10474. doi: 10.2196/10474

Vera, F., Noel, R., and Taramasco, C. (2019). Standards, processes and instruments for assessing usability of health mobile apps: a systematic literature review. Stud. Health Technol. Inform. 264, 1797–1798. doi: 10.3233/SHTI190653

Walsh, L., Hemsley, B., Allan, M., Adams, N., Balandin, S., Georgiou, A., et al. (2017). The e-health literacy demands of Australia’s my health record: a heuristic evaluation of usability. Perspect. Health Inf. Manag. 14:1f.

World Health Organization [WHO] (2020). Process of Translation and Adaptation of Instruments. Geneva: World Health Organization.

Zamanzadeh, V., Ghahramanian, A., Rassouli, M., Abbaszadeh, A., Alavi-Majd, H., and Nikanfar, A. R. (2015). Design and implementation content validity study: development of an instrument for measuring patient-centered communication. J. Caring Sci. 4, 165–178. doi: 10.15171/jcs.2015.017

Zhou, L., Bao, J., and Parmanto, B. (2017). Systematic review protocol to assess the effectiveness of usability questionnaires in mHealth app studies. JMIR Res. Protoc. 6:e151. doi: 10.2196/resprot.7826

Keywords: mHealth apps, content validity index, cross-cultural adaptation, questionnaire translation, usability testing tools

Citation: Zhao SQ, Cao YJ, Cao H, Liu K, Lv XY, Zhang JX, Li YX and Davidson PM (2022) Chinese Version of the mHealth App Usability Questionnaire: Cross-Cultural Adaptation and Validation. Front. Psychol. 13:813309. doi: 10.3389/fpsyg.2022.813309

Received: 03 December 2021; Accepted: 10 January 2022; Published: 02 February 2022.

Edited by:

Sina Hafizi, University of Manitoba, Canada

Reviewed by:

Donna Slovensky, University of Alabama at Birmingham, United States

Shuo Zhou, University of Colorado, Denver, United States

Copyright © 2022 Zhao, Cao, Cao, Liu, Lv, Zhang, Li and Davidson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yingjuan Cao, caoyingjuan@hotmail.com; caoyj@sdu.edu.cn

Shuqing Zhao
