- ¹Department of Public Health, Policy, and Social Sciences, Swansea University, Swansea, United Kingdom

- ²Department of Experimental Clinical and Health Psychology, Ghent University, Ghent, Belgium

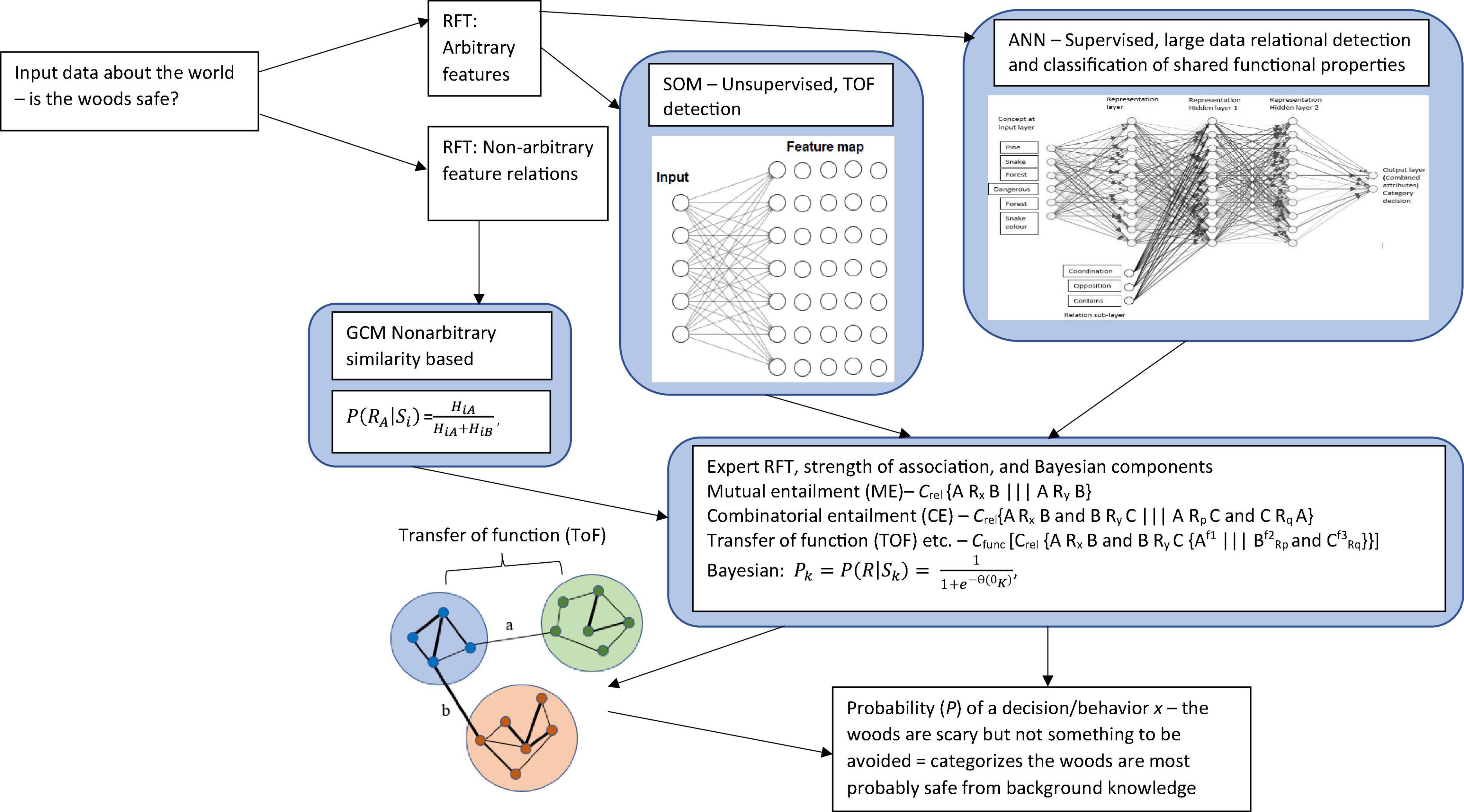

Psychology has benefited from an enormous wealth of knowledge about processes of cognition in relation to how the brain organizes information. Within the categorization literature, categorization behavior is often explained through theories of memory construction called exemplar theory and prototype theory, which are typically based on similarity or rule functions as explanations of how categories emerge. Although these theories work well at modeling highly controlled stimuli in laboratory settings, they often perform less well outside of these settings, such as when explaining the emergence of background knowledge processes. In order to explain background knowledge, we present a non-similarity-based post-Skinnerian theory of human language called Relational Frame Theory (RFT), which is rooted in a philosophical world view called functional contextualism (FC). This theory offers a very different interpretation of how categories emerge through the functions of behavior and through contextual cues, which may be of some benefit to existing categorization theories. Specifically, RFT may be able to offer a novel explanation of how background knowledge arises, and we provide some mathematical considerations in order to identify a formal model. Finally, we discuss much of this work within the broader context of general semantic knowledge and artificial intelligence research.

Introduction

Category learning, the process of organizing sensory experience into groups, has been described as fundamental to all aspects of decision-making and appears to be key to understanding the world (Margolis and Laurence, 1999; Lakoff, 2008). The main purpose of the human cognitive system for developing concepts and categories is cognitive economy, which allows individuals to process complex information in a manageable way in spite of finite memory storage (Goldstone et al., 2018). Categorization researchers use models to describe the process of categorization in a formal (and sometimes mathematical), principled, and lawful way, in an attempt to predict behavior in particular categorization tasks (Pothos and Wills, 2011).

Categorization processes can be separated into four distinct areas of research. The first two are supervised categorization (Nosofsky, 1988; Minda and Smith, 2001; Hampton, 2007; Kurtz, 2007; Vanpaemel and Storms, 2008) and unsupervised categorization (Fleming and Cottrell, 1990; Ashby et al., 1999; Pothos and Chater, 2002; Pothos and Close, 2008; Pothos et al., 2011), both of which can utilize a similarity- or rule-based function. There is also the emerging area of relational representation (Stewart et al., 2005; Edwards et al., 2012), which explores inference learning in categorization, and an area known as background knowledge (Heit, 2001), which attempts to incorporate many of the above approaches to understand how background knowledge emerges and affects category decision making.

Background knowledge in categorization refers to the beliefs that individuals may have about the interrelations and causal connections among features and concepts, which emerge through prior learning, and to how these beliefs affect category decision making (Keil, 1989; Murphy, 1993). This differs in some ways from other approaches which attempt to model knowledge, such as theories in semantics, for example semantic memory. Semantic memory can be regarded as a category of long-term memory which involves the recollection of ideas, concepts, and facts commonly regarded as general knowledge (Zesch et al., 2008; Paradis, 2012; Russo et al., 2021). However, the focus within semantics has primarily been on natural language, such as the logical relations within sentence structures which give meaning to the language being expressed (Löbner et al., 2021), although there is modest overlap (see the section on “The limited success of semantics” for a more in-depth overview). Crucially, background knowledge in categorization does not specifically and primarily explore logical relations within linguistics (as semantics does), but instead focuses primarily on the category structure developed through prior learning, which helps form general knowledge about a concept and facilitates a category decision in the present moment.

As an example of background knowledge, if you were to ask a lay person (a non-animal specialist) to describe the categorical features of a bird and a bat, they may respond by saying: “Birds and bats fly by using their wings. A bat prefers to fly at night, whilst a bird prefers to fly in the day. Birds have feathers whilst bats do not.” This relies on prior knowledge learned perhaps in early school, through parents, and through general books the person may have read out of interest. Causal connections and interrelations are then drawn by the participant from this knowledge in order to describe how wings are used to allow the bird and bat to fly. Specific differences are also identified by the participant, such as when birds and bats prefer to fly, but few causal connections are drawn by the lay person, as they lack the essential background knowledge of why this may be the case. However, if you were to ask an animal specialist, they may add to this by saying: “A bat uses echolocation to fly at night, to identify food, and to navigate, whilst a bird requires light to fly and does not use echolocation. A bat is a mammal, and feeds on milk from its mother while growing, whilst a bird is a member of the Aves, and is not a mammal.” This specialist background knowledge may have been learned during higher education and allows the specialist to draw stronger and more accurate causal interrelations and connections amongst the features of birds and bats. The aim of the study of background knowledge is thus to identify how these causal connections and interrelations between concepts develop, in what context they emerge, and the different ways in which this knowledge can influence categorization decisions in a given categorization task (Heit, 2001).

In this current review and conceptual development paper, we (1) first broadly explain why existing categorization models fail to account for background knowledge. We also briefly highlight why the problem of background knowledge is also a problem in artificial intelligence (AI) research for those who seek to develop artificial general intelligence (AGI), where progress may depend on a model of background knowledge. We therefore search this literature for clues as to how we may develop mathematical models to solve the problem of background knowledge which may not have been considered previously. (2) We offer a functional contextual approach to understanding background knowledge, which has not yet been considered in background knowledge research. (3) Finally, as part of this exploration, we offer a formal mathematical model of this functional contextual approach for use with background knowledge experiments, which is consistent with the approach taken by many other researchers who offer mathematical accounts of their categorization models, and which may be of interest to categorization as well as AI mathematical modelers. This takes into consideration the modeling of both similarity and functional contextual properties within an RFT framework. We then make suggestions for future work which specifically tests the descriptive and predictive power of this RFT approach for background knowledge in categorization.

The Problem of Background Knowledge and Why Existing Modeling Efforts Are So Far Incomplete

The area of background knowledge has been the most difficult for categorization researchers to formalize a specific model, and most attempts have only provided an intuitive account of this thus far (Heit, 1997; Dreyfus et al., 2000; Pothos and Wills, 2011) with most efforts in this area having now been redirected to less difficult problems such as simple induction (inference) modeling (B. K. Hayes and Heit, 2013; Hawkins et al., 2016). Indeed, approaches based on similarity (i.e., identifying similar features when categorizing) which have been used to explain behavior in unsupervised and supervised tasks have not provided an effective means of explaining the emergence of background knowledge though some promising progress has been made in relation to understanding background knowledge effects on categorization decisions.

Supervised learning models are often explained by exemplar and prototype theories which involve matching through similarity (magnitudes of length, height, color, etc.), either the individual memory trace of exemplars for the to-be categorized novel stimuli, or matching a prototype (e.g., a prototypical average representation of all chairs represented in memory) to the to-be categorized novel stimuli. This typically involves modeling how stimuli correspond to points in multidimensional space (usually Euclidean), and some similarity function is defined based on some mathematical axioms about how the stimuli should be categorized on this similarity basis (Lamberts and Shapiro, 2002; Pothos and Wills, 2011).

Similarity approaches are one of the earliest and most successful explanations of how people categorize (Posner and Keele, 1968; Reed, 1972; Rosch and Mervis, 1975; Pothos and Wills, 2011). In this account, the more similar item X is to what is known about category A (e.g., the magnitude of size), the more likely X will be categorized as belonging to A. Consider, for example, the classification of a novel bird. According to one similarity model called the prototype account (Rosch and Mervis, 1975), the reasoner would take notice of typical features such as color, wing-span, type of beak, and where the bird lives. They would then categorize the bird on these bases as belonging to a particular bird category if this bird had similar features to the prototypical list of features identified in that category (e.g., similar shaped beak, sized wing, etc.).
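The prototype account described above can be illustrated with a minimal sketch. It assumes that each bird is reduced to a short numeric feature vector and that the prototype is simply the per-feature average of known category members; the feature choices, values, and category names are hypothetical and are not taken from Rosch and Mervis (1975).

```python
import math

def prototype(exemplars):
    """Average each feature dimension across a category's known members."""
    n = len(exemplars)
    return [sum(dim) / n for dim in zip(*exemplars)]

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def classify_by_prototype(item, categories):
    """Return the label whose prototype lies closest to the item."""
    prototypes = {label: prototype(ex) for label, ex in categories.items()}
    return min(prototypes, key=lambda label: distance(item, prototypes[label]))

# Hypothetical features: (wing span in cm, beak length in cm)
categories = {
    "finch": [(22.0, 1.0), (24.0, 1.2), (23.0, 1.1)],
    "heron": [(180.0, 14.0), (190.0, 15.0), (170.0, 13.0)],
}
novel_bird = (30.0, 1.5)
label = classify_by_prototype(novel_bird, categories)
```

Here the novel bird is assigned to whichever category prototype it most resembles. Nothing in the sketch draws on knowledge beyond the measured features, which is the limitation discussed in the remainder of this section.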

In typical similarity tasks, such as unsupervised categorization, participants are asked to put several items into sets of categories that they feel to be most intuitive. Crucially, the participant is not informed of any category structure, thus cannot infer from pre-existing knowledge about category structure. These unsupervised tasks are thought to involve category coherence (Rosch and Mervis, 1975; Murphy and Medin, 1985), which allow researchers to hypothesize about how intuitive the structures being categorized are, and how participants process this information without any background knowledge of what the category structure should look like, in order to form distinct categories. So, in this case, unsupervised categorization may be less helpful in facilitating researchers to identify a model of background knowledge, as these tasks typically do not require the use of it.

In supervised categorization tasks, which are also explained by similarity-based accounts (Nosofsky, 1988; Hampton, 2007), participants are shown the exemplars of each category (such as 20 pictures of two novel creatures) in addition to a category label (such as Blib or Chomp), and thus learn the category structure. They are then asked to decide which category a set of new items (pictures of new creatures) belongs to (either the Blib or Chomp creature category). Hence, unlike unsupervised tasks, supervised tasks involve some aspect of learning about the category structure before the categorization decisions are made, and the categorization process relies on these previous memory traces of the category structure when making category decisions. At a conceptual level, this may therefore be more helpful in facilitating researchers to identify a model of background knowledge, as these tasks require the use of memory traces, and background knowledge presumably would need to be remembered via such a memory trace. For instance, if a categorization task asks the participants, ‘are a bat and a bird in the same category?’, their answer should depend on some memory trace of background knowledge that a “bat is a mammal, whilst a bird is not,” leading the participants to conclude that they are not in the same category. Without such a memory trace, the participant may determine that a bat and bird are in the same category on the basis of some more general similarity function based on the shape and size similarity of the creatures (i.e., similar to an unsupervised approach which does not require a strong memory trace).

In both supervised and unsupervised categorization tasks, many models have been formalized mathematically, and are typically based on a similarity axiom. For example, the Generalized Context Model (GCM; Nosofsky, 1986) is a generalization of the context model by Medin and Schaffer (1978), integrated with classical theories of similarity and choice (Garner, 1974). It is also one of the most heavily cited and influential models in categorization research (over 3200 citations). The GCM incorporates multidimensional scaling (MDS) to model similarity, whereby a multidimensional space is used to represent the exemplars and similarity is a decreasing function of their distance in this space. There are, of course, many other similarity-based models, and a detailed examination of these is beyond the scope of this current article. One example of an unsupervised categorization model is the simplicity model, which predicts the “optimal” categories through an information reductionism perspective (Pothos and Chater, 2002; Nikolopoulos and Pothos, 2009). This model assumes that information theory applies to cognition through a simplicity principle, which states that we tend to prefer simpler rather than more complex perceptual organizations. There are also models, such as the rational model (Anderson, 1990), which use features in their description of how categories should emerge. This takes the dimensional features (e.g., has four legs, barks, and has a tail) and identifies the probability of the item belonging to each category, based on how similar its features are to the features within each of the categories (where the item is assigned to the category with the greatest similarity).
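To make the idea of similarity as a decreasing function of distance concrete, the following is a minimal sketch in the spirit of the GCM. It assumes an exponential decay of similarity with weighted distance and a choice rule that normalizes summed similarity across categories; the parameter names (`c`, `weights`, `r`), the omission of response bias terms, and the stimulus coordinates are simplifying assumptions of this sketch rather than a full statement of the published model.

```python
import math

def gcm_similarity(x, y, c=1.0, weights=None, r=2):
    """Similarity decays exponentially with weighted Minkowski distance."""
    if weights is None:
        weights = [1.0 / len(x)] * len(x)  # equal attention to each dimension
    d = sum(w * abs(a - b) ** r for w, a, b in zip(weights, x, y)) ** (1.0 / r)
    return math.exp(-c * d)

def gcm_choice_probabilities(item, exemplars_by_category, c=1.0, weights=None, r=2):
    """Summed similarity to each category's stored exemplars, normalized."""
    summed = {
        label: sum(gcm_similarity(item, ex, c, weights, r) for ex in exemplars)
        for label, exemplars in exemplars_by_category.items()
    }
    total = sum(summed.values())
    return {label: s / total for label, s in summed.items()}

# Hypothetical two-dimensional coordinates for stored training exemplars
exemplars_by_category = {
    "Blib": [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0)],
    "Chomp": [(5.0, 5.0), (5.0, 6.0), (6.0, 5.0)],
}
probs = gcm_choice_probabilities((2.0, 2.0), exemplars_by_category)
```

Because every stored exemplar contributes to the decision, the sketch behaves as an exemplar model rather than a prototype model: a new creature near the Blib exemplars receives a higher Blib probability without any category average being computed.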

However, criticisms of these similarity models as a basis for natural concepts date back as far as Murphy and Medin (1985), a seminal paper concerning knowledge effects on concept learning. Rips (1989) then extended this criticism by reporting a number of cases in which categorization behavior was better explained by a rule-based account than by a similarity-based account. For example, he highlighted the pizza-coin experiment, where participants were asked to imagine an item that was halfway between two categories (a pizza and a coin), where one of the categories had fixed magnitudes of properties (such as the fixed size of a coin) and the other had variable magnitudes of properties (such as the variable size of a pizza). Participants were then asked: (1) whether the item was more similar to one of the categories than the other, and (2) whether the item was more likely to be a member of one category rather than the other. Rips found that participants were more likely to categorize the imagined item as belonging to the variable category (pizza) but judged it more similar to the fixed category (coin). Rips concluded that there was sometimes a dissociation between similarity and categorization, i.e., individuals’ categorization behavior was not always consistent with how similar they felt items were to category members.

Perhaps in an even more convincing example, Rips (1989) asked participants to imagine a creature which accidentally metamorphosed from one category (bird) to another (insect). When asked whether the creature was more similar to a bird or an insect, most participants selected insect, and yet they more readily categorized the creature as a bird. In a separate condition, some participants were asked to judge the similarity of the creature before it transformed and to assume that metamorphosis happened naturally. In this condition, the participants deemed the creature more similar to a bird, and this finding suggested that some background knowledge about essential qualities (e.g., DNA) was being inferred from the detail of metamorphosis and the extent to which this was a natural process. Again, Rips proposed that this was evidence that participants were using formal rules of inference from background knowledge to inform their categorization decisions, and not merely similarity.

So, in spite of the success of similarity models in predicting categorization behavior based on essential category structure in many supervised and unsupervised tasks, they appear less effective in predicting behavior in tasks which involve a rules option and involve inferences made through background knowledge. The view that, in addition to perceptual similarity, rule and background knowledge information pertaining to the stimulus context may also be relevant represented a shift away from similarity-based explanations and toward the inclusion of critical features (Katz, 1972; Komatsu, 1992). In this classic view of critical features, necessary or sufficient features were deemed important in categorization that involved generalizable background knowledge.

In an example of critical features, consider the statement “not coming from Mars” as a necessary feature of the concept “human,” which can only be derived through background knowledge context (i.e., humans do not come from Mars). However, this classical theory may also be limited, as many non-humans also do not come from Mars, so the classification of “necessary” may be overly simplistic. In another example, the concept “man” is a necessary feature of “bachelor”; however, some men are not bachelors. So, again, utilizing the simple idea of a “necessary” feature is clearly limited. Indeed, one of the main problems with the naïve theory approach is that the formalization of background knowledge into a specific model has been shown to be very difficult. As a result, only an intuitive account of background knowledge has been achieved through the cognitive categorization approach (Heit, 1997; Dreyfus et al., 2000; Pothos and Wills, 2011). The difficulties of tackling the very complex problem of background knowledge as a formal model have led categorization researchers to return to similarity models and rule-based explanations to explain some of the simpler elements of knowledge effects on the categorization process.

Neural (connectionist) networks have also been used to model how people categorize stimuli, which utilize learned weighted associations between features in order to make classifications. One example of this is the competitive learning feature detector neural network (Rumelhart and Zipser, 1985) for unsupervised categorization. Another example is the adaptive resonance theory (ART) model, which is based on the stability-plasticity dilemma (Carpenter and Grossberg, 1988). The dilemma refers to the problem of how a learning system can remain adaptive or plastic in response to significant events and can remain stable against irrelevant events. The mathematical model uses a self-organizing neural network which organizes arbitrary input patterns in real time.
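As a rough illustration of how such a network can sort stimuli without category labels, the following sketch implements a winner-take-all update in the general form of competitive learning: only the unit that responds most strongly to an input moves its weights toward that input. The binary feature patterns, hand-picked starting weights, learning rate, and number of epochs are all assumptions made for illustration, and the sketch omits details of the published architectures.

```python
def winner(weights, pattern):
    """Index of the unit whose weighted input is largest (winner-take-all)."""
    activations = [sum(w * x for w, x in zip(unit, pattern)) for unit in weights]
    return activations.index(max(activations))

def train(weights, patterns, rate=0.5, epochs=10):
    """Move only the winning unit's weights toward each input pattern."""
    for _ in range(epochs):
        for pattern in patterns:
            j = winner(weights, pattern)
            n_active = sum(pattern)  # number of active input features
            weights[j] = [
                w + rate * (x / n_active - w)
                for w, x in zip(weights[j], pattern)
            ]
    return weights

# Hypothetical binary feature patterns forming two clusters
patterns = [[1, 1, 0, 0], [0, 0, 1, 1]]
# Hand-picked starting weights; each unit's weights sum to 1
weights = [[0.3, 0.3, 0.2, 0.2], [0.2, 0.2, 0.3, 0.3]]
weights = train(weights, patterns)
```

After training, each unit has specialized on one cluster of features, so the units act as unlabeled category detectors. As with the similarity models above, the grouping is driven entirely by feature overlap in the input.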

Though all of these models provide some basis for exploring how background knowledge can affect a categorization task, it is acknowledged that these models are incomplete, as they do not specify a framework which can be used to identify the relevant information (Heit, 1997; B. K. Hayes and Heit, 2013; Hawkins et al., 2016). This incompleteness stems from what Heit generally refers to as the knowledge selection problem: although the models discussed can address the processes by which background knowledge and new information are combined, they cannot address how a learner dynamically determines which background knowledge is most relevant, how this knowledge is generated, which contexts are important, and how causal connections and interrelations amongst concepts emerge within learning. As an example of this, consider Heit’s (1994) example of learning about joggers. In this study, and a follow-up study (Heit, 1995), Heit demonstrated that information at various time points was being inferred and integrated, whereby new knowledge was derived from simple combinations of background knowledge and observations. However, this was a simple observation about the integration of knowledge, without a precise formal model to specify exactly how such information was being integrated and within what specific context.

Heit (1994) made the specific observation that when participants were asked to categorize whether people in a new city were joggers, they were assumed to use background knowledge about joggers in a previous city. While this assumption may be straightforward in a laboratory context, real life contains an almost infinite number of possibilities for knowledge selection, such as cultural background, occupation, hobbies, prior city experience, etc. This makes the knowledge selection problem very difficult to circumvent.

One possible way forward which has been proposed is to explore knowledge inference and induction more formally, as Heit (1997) suggested, which comes from the reasoning and memory literature (Anderson, 1991; Ross and Murphy, 1996), and may give us some clues of how to progress theories in background knowledge for categorization, albeit still not a complete model. When utilizing reasoning in the form of induction, for example, you may expect that if you learn that a person belongs to a category of “salespersons” you may then infer that this person will try to sell you something. This of course is missing the importance of context, as a “salesperson” is only likely to sell you something in the context of the place where they work. So, any complete model would need both induction and sensitivity to context.

Induction tasks are designed to answer questions about how participants draw inferences from the information provided when using background knowledge. A simple example of this would be asking a participant: ‘Crows are likely to contract a disease, how likely is it that robins will contract a disease?’ If the participant answers that it is likely that the robin will also contract the disease, this indicates that the participant may have drawn on knowledge about the similarity between robins and crows.

Variations of this type of inductive reasoning task have shown that there are two kinds of inductive information which are important for understanding how background knowledge is generated and utilized within categorization tasks (Rips, 1975; Osherson et al., 1990). The first relates to the suggestion that when the premise category (e.g., crow) and the conclusion category (e.g., robin) are more similar, the induction inferences will have a stronger effect. The second is that lower rather than higher variability in the category leads to stronger inferences (Nisbett et al., 1983). In order to model these influences of inferences in category knowledge a category-based induction (CBI) model was developed (Osherson et al., 1990) which addresses some of these effects.

Another effect, called the selective weighting effect, has also been suggested as evidence of inductive reasoning. Here, focusing on certain features of the categories based on background knowledge has been suggested to be important (Heit and Rubinstein, 1994). For instance, consider this argument being presented to a participant: ‘Robins travel shorter distances in extreme heat. How likely is it that bats travel shorter distances in extreme heat?’ In this case, participants focused on the behavioral property “to travel” when comparing the categories of robins and bats. As bats and robins are similar in that they both fly, most participants inferred that bats and robins would be similar in the distance traveled under extreme heat conditions. However, when the question was asked a different way (‘Robins have livers with two chambers. How likely is it that bats have livers with two chambers?’), the comparison of bats with robins focused on “anatomy.” As bats are mammals and robins are birds, participants inferred that it was less likely that bats and robins would be similar in terms of anatomy (two chambers in their liver). This selective weighting implies that knowledge selection is based on context, in this case the functional properties of flying and anatomy.
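The selective weighting effect can be sketched as a similarity function whose feature weights depend on the kind of property being asked about. The example below is illustrative only: the binary features, the two sets of weights, and the exponential similarity function are assumptions of this sketch and are not the stimuli or the analysis of Heit and Rubinstein (1994).

```python
import math

# Hypothetical binary features, in order: flies, is_mammal, has_feathers
animals = {
    "robin": (1.0, 0.0, 1.0),
    "bat": (1.0, 1.0, 0.0),
}

# Hypothetical attention weights: the question context decides which
# features matter when comparing the two animals
context_weights = {
    "behavior": (0.8, 0.1, 0.1),   # travel question: flying dominates
    "anatomy": (0.1, 0.45, 0.45),  # liver question: taxonomic features dominate
}

def weighted_similarity(a, b, weights):
    """Similarity decays with the weighted feature mismatch between a and b."""
    mismatch = sum(w * abs(x - y) for w, x, y in zip(weights, a, b))
    return math.exp(-mismatch)

sim_behavior = weighted_similarity(
    animals["robin"], animals["bat"], context_weights["behavior"]
)
sim_anatomy = weighted_similarity(
    animals["robin"], animals["bat"], context_weights["anatomy"]
)
```

With these weights, the same pair of animals comes out as more similar in the behavioral context than in the anatomical context. Note that the sketch takes the weights as given; how a learner arrives at the appropriate weights for a context is the knowledge selection problem itself.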

Other, more applied areas to consider lie in the social domain (social categories), where induction relates to social psychology and relational memory, such as the influence of social stereotypes and schemas, which are social categories. Stangor and Ruble (1989) demonstrated that congruent commercials (girls playing with dolls) were recalled better than incongruent commercials (girls playing with trucks). This may represent some important areas for applied work in the future, where a background knowledge model could help to identify relevant functional processes within the background knowledge which need to be targeted by some social intervention in order to remediate these types of stereotypes.

However, although these models are very encouraging, and have demonstrated that background knowledge affects category learning, where inductive learning and memory have been identified as important in this process, these models have still been suggested to be incomplete. Heit (1997) suggested that their incompleteness relates to the need for more complex conceptions of representation outside of just exemplars, prototype, and rule based models. From this perspective, he suggests, a multi-modal representational scheme which accounts for both the relations among categories and the knowledge at multiple levels of abstraction, is needed, rather than overly focusing on whether one model (e.g., exemplar) provides a slightly better fit than another (e.g., prototype).

More recently in the last twenty years, specific modeling efforts for background knowledge categorization tasks have progressed very little. The more recent efforts of Heit and others have been to largely focus on similar problems of induction in categorization but more generally, and tweaking these types of models under different situations. For example, in one relatively recent study (B. K. Hayes and Heit, 2013), it was demonstrated that memory recognition shared some properties of induction. These category-based inductive inference models involve using the relations between categories to predict how individuals generalize novel properties of category exemplars, and reach conclusions that are likely but not certain given the available information. Jern and Kemp (2009) also showed that induction was involved in semantic repository, generalization, discovery, and identification, in relation to specific categorization tasks. The study identified, for example, that generalization was a problem for both supervised and unsupervised tasks, but unsupervised tasks also included the problem of relation discovery. Heit and colleagues have also explored how inferences are made over time, through the development of the Dynamic model for reasoning and memory (Hawkins et al., 2016). Through this model and corresponding data, they demonstrated that sequential sampling based on exemplar similarity and combined with hierarchical Bayesian analysis provided a more informative analysis in terms of the processes involved in inductive reasoning than the examination of choice data alone could provide.

So, induction and context seem to be important avenues for further developing models of background knowledge. Unfortunately, however, many of the studies have focused on easier and more solvable problems such as congruent vs. incongruent tasks, or simple inference tasks. This perhaps falls short of what Heit had previously suggested about the need for a multi-modal representational scheme which accounts for both the relations among categories and the knowledge at multiple levels of abstraction (Heit, 1997). Although these studies offer a useful starting point, they appear to tell us little about how or why knowledge is selected, i.e., they fail to solve the knowledge selection problem. A model which addresses this problem would need to explain in what context knowledge is selected, how the knowledge dynamically develops at a multi-modal representational level, and how it connects within a network of inference to allow for multiple levels of abstraction.

In order to achieve this goal, a more general and holistic approach may be preferred, perhaps with new and fresh perspectives outside of traditional cognitive science approaches, and with a different ontological approach altogether. This approach should also capture a multi-modal representational scheme which will allow it to model multiple levels of abstraction and context. This should allow for greater ability to model more complex scenarios outside of simple congruence and inference tasks, such as how we make complex category decisions in the real world. For this, we propose considering other perspectives (in line with Heit’s suggestion) outside of the usual memory-trace-based exemplar and prototype domain, and more in line with his recent work on category induction. To do this, we propose exploring a comprehensive functional contextual account which considers inference in the form of derived relations. This account will also include multiple modes of non-arbitrary similarity functions and arbitrary non-similarity learned contextual functions in order to account for categorization learning which uses background knowledge processes, and it may offer greater insights into solving the knowledge selection problem by modeling contextual learning more comprehensively.

As such, one approach may be to focus on functions and equivalence classes more concretely, and in a more formalized way, in the form of functional contextualism. Cognitivism is based on theorizing about mental representation, where memory trace, attention, inference between exemplar representations, etc., are specified and highlighted. In this way, cognitivism in categorization can be thought of as a philosophy of science consistent with the ontology of realism which is a phenomenological paradigm, and which assumes that much of our perceptual reality exists based on the language and concepts which our cognitive system produces (Zahidi, 2014). Functional contextualism, on the other hand, is based on the ontology of pragmatism and contextualism (Pepper, 1942; David and Mogoase, 2015), which, instead of mental representation, emphasizes the importance of the specific factors that predict and influence emotion, thoughts, and behavior (decision making), including in categorization tasks. Crucially, it identifies the context in which the function of concepts, stimuli, thoughts, etc., occur, and how these exert different control on behavior and decision-making, with an emphasis on how an organism interacts with historically and situationally defined contexts in order to explain how background knowledge emerges and influences category decisions.

Through this functional-analytic approach some of the very specific problems with the knowledge selection problem may be overcome. This is because ontologically the cognitive mechanism approaches largely try to define the form of the environment through similarity and rule based approaches. This contrasts with a functional contextual approach which tries to define the context in which stimuli exert some functional control over the behavior and decision processes of the individual – hence enabling a more structured way to model learning in context and therefore enabling greater predictive and descriptive power over subtle contextual dependencies in which inference learning arises. These specific conceptual and ontological differences (form vs. function) may be key to resolving the knowledge selection problem, as categorization decision making which is dependent on background knowledge may be largely defined by our contextualized learning histories which are specific to each individual given their experiences.

Functional contextualism, therefore, may be helpful in providing such a philosophical foundation for modeling and formalizing an account of background knowledge by incorporating functions and the context of environmental stimuli at a holistic level, and more concretely than previous modeling attempts through inference (derived relation) type processes. This approach focuses on functional properties (such as functional equivalence) and contextual cues. For example, in an illustration of functional equivalence by Sidman (1994), within the category of cutlery, forks and knives may be grouped together because of the background knowledge that these items share the functional property “to eat with.” However, in a different context, a knife may be used to peel paint off a wall (if you did not have a proper paint-stripping tool) or, in the most extreme setting, to defend yourself if you were attacked. Thus, the function is the purpose or use of that concept within a particular contextual setting. This early functional equivalence approach developed from Sidman (1994) has since matured into a more formal model. This approach is now embedded within the philosophical world view of functional contextualism, which holds that functions are dependent on context, and specifically constitutes a post-Skinnerian behavior-analytic account of language known as Relational Frame Theory (RFT) (Barnes-Holmes et al., 2001; Blackledge, 2003; Torneke, 2010; De Houwer, 2013).

This fits well within the cognitive literature, as researchers such as Oaksford (2008) suggest that stimulus equivalence can to some extent explain the origins of reasoning, memory, and language generation. An equivalence class refers to the shared functional properties of items within that class (or category) which can be assumed to be equivalent (Sidman, 1994). For example, fork and knife are within the same category (or class) of cutlery and share the function “to eat with.” Goldstone et al. (2018) accept that the items within a category can be broadly considered an equivalent class. If a functional-analytic approach can be adopted with regard to simple category similarity, then we propose that this approach can also be adopted to our understanding of the emergence of background knowledge.

The Limited Success of Linguistical Semantics and Semantic Logic of Artificial Intelligence in Accounting for Background Knowledge

The related field of semantics refers to the study of cognitive structures in the brain from a variety of perspectives including cognitive psychology, neuroscience, linguistics, philosophy, and AI (Löbner et al., 2021). There are several branches in semantics. Formal semantics is particularly relevant to the study of background knowledge in categorization, and can offer some useful perspectives. For example, it does not focus directly on cognition, and instead it focuses on natural language, such as the logical relations within sentence structures (e.g., equivalence) which give meaning to the language being expressed (Löbner et al., 2021). As such, one very important observation made in some seminal work of the formal semantics domain (Montague, 1970; Lewis, 1976) is that the meaning embedded in some linguistic content is context dependent. Influenced by this early work, most semantic theories now adopt some form of pluralism about linguistic content and its attributed meaning based on this context-dependency (Potts, 2004; Zimmermann, 2012; Ciardelli and Roelofsen, 2017). Context here can relate to the objective and subjective meaning of a given linguistic sentence, epistemic content, intentional content, etc., in order to assign truth-values to linguistic utterances (Löbner et al., 2021).

This work on context dependency has also highlighted cross-linguistic and cross-cultural differences that can alter and shape the meaning of concepts and sentences, which indicates that linguistic meaning is more complex than the words formed in a sentence (Machery et al., 2004; Smith et al., 2018). Perhaps one of the best-known theories to have emerged from this work is the Sapir-Whorf linguistic relativity hypothesis, which suggests that the structure of language affects the speaker’s cognitive worldview (e.g., Eskimos have many more words to describe and conceptualize snow than Europeans), and that the perception people develop about some concept is relative to the context of their spoken language (Koerner, 1992; Hussein, 2012; O’Neill, 2015).

Another branch of semantics which is relevant to studies of background knowledge in categorization theory is lexical semantics, which emphasizes linguistic concepts in the context of frames, which are attribute-value structures (Fillmore, 1976; Barsalou, 1992, 2014). Here, a cascade is a combination of frames in a tree, and category prototypes are structured within these knowledge trees. The frames mediate the input information and output behavior through a Bayesian inference model of category learning (Taylor and Sutton, 2021). Frame-theoretic representations in the form of recursive attribute-value structures organized around a central node are clearly an improvement compared to simple feature list models (Anderson, 1991; Sanborn et al., 2006; Goodman et al., 2008; Shafto et al., 2011). For example, a feature list would simply consist of a list of features, e.g., fur, black, and soft. A semantic cascade of frames, however, can add further information to the features, such as representing how these features are related by defining each feature as a value of some attribute, for example, by specifying through a frame that fur has two attributes: color (black) and texture (soft). These types of Bayesian models can allow frames to assign more or less weight to attribute values given their importance in the category structure.
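To make the contrast between a feature list and a frame concrete, the fur example can be sketched in a few lines of Python. This is only an illustrative sketch and not the representation used by any of the cited models; the names `feature_list`, `frame`, and `attribute_of` are hypothetical.

```python
# Hypothetical illustration: a flat feature list versus an attribute-value frame.

# Feature list: the features are unrelated tokens.
feature_list = ["fur", "black", "soft"]

# Frame: a central node ("fur") with attributes, each of which takes a value.
frame = {
    "node": "fur",
    "attributes": {
        "color": "black",
        "texture": "soft",
    },
}


def attribute_of(frame, value):
    """Return the attribute whose value matches `value`, or None if absent."""
    for attribute, attr_value in frame["attributes"].items():
        if attr_value == value:
            return attribute
    return None


# The frame records that "black" is a color; the feature list alone cannot.
print(attribute_of(frame, "black"))
print(attribute_of(frame, "soft"))
```

The feature list only records that "black" and "soft" are present, whereas the frame also records which attribute each value fills, which is the additional relational information described above.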

These semantic theories have not just influenced our understanding of cognition; they also have a long-standing bidirectional relation with work in computer science, specifically with attempts to formalize approaches of logic and linguistics for general knowledge in the discipline of AI through the development of knowledge tree attribute-value matrices of computational linguistics (Gärdenfors, 2004, 2014). Early AI approaches were developed in the 1950s and were largely based on mathematical logic programming such as propositional logic (assigning true and false values to statements), first order logic (formulas which specify some relation to objects) and second order logic (specifying relations between relations), as well as conditional logic (IF-THEN rules) (Luger, 2005; Stuart and Peter, 2020). In line with semantics, which seeks truth in statements, logical statements of truth can be interpreted as formal semantics, and drew from these early AI approaches. In an example of first order logic, the statement “there is a mother to all children,” can be stated as:

∀x (child(x) → ∃y mother(y, x))

Whereby ∀ expresses “for all instances,” x is a child, → is the implication connective between the two statements (the implication is false only when the first statement is true and the second is false), ∃ expresses “there exists,” y is a mother, and mother(y, x) denotes that y is the mother of instance x. This is constructed as a statement of truth through the use of the connective symbol (i.e., by explicitly stating that when the first statement is true, the second statement is also true).
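As a small illustration, the statement “for every x, if x is a child then there exists a y such that y is the mother of x” can be checked over a finite domain. The sketch below assumes a toy domain; the names `people`, `children`, `mother_of`, `implies`, and `every_child_has_mother` are hypothetical and serve only to show how the quantifiers and the connective behave.

```python
# Hypothetical finite domain, used only to illustrate the first order formula
# "for all x: child(x) -> exists y: mother(y, x)".
people = {"ann", "ben", "carol", "dana"}
children = {"ann", "ben"}
mother_of = {("carol", "ann"), ("dana", "ben")}  # (mother, child) pairs


def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def every_child_has_mother(people, children, mother_of):
    """Evaluate the formula by checking every x in the domain."""
    return all(
        implies(x in children, any((y, x) in mother_of for y in people))
        for x in people
    )


print(every_child_has_mother(people, children, mother_of))
```

Adding an individual to `children` without a corresponding pair in `mother_of` makes the statement false, because the implication then has a true antecedent and a false consequent for that individual.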

These semantic approaches developed further as cognitive semantics emerged in the late 1970s out of cognitive linguistics and mathematical logic, largely based on the initial work of the linguist Noam Chomsky on syntactic structures and the theory of generative grammars (Chomsky, 1957), and largely due to dissatisfaction with the ability of behavioral approaches (at the time) to provide a model for natural language (Andresen, 1991; Harris, 2010, 2021). Hence, this early work in AI and cognition was heavily influenced by formal approaches of cognitive semantics (Kuznetsov, 2013; Stuart and Peter, 2020).

However, these approaches have been entirely unsatisfactory: they have led only to very narrow approaches to AI, and have not enabled AI to develop broad and deep general knowledge about the use of language in the world (Floridi, 2020; Stuart and Peter, 2020; Toosi et al., 2021). Perhaps the biggest limitation of this approach is that, though context was highlighted early as important, these developments were based on narrow and overly simplistic forms of knowledge trees, which do not capture rich contextual structure in the real world. The problem of developing AGI has been suggested (Mitchell, 2021) to depend on some more generalized accounting of (background) knowledge that goes beyond simple statistical or similarity methods, which are limited in nature.

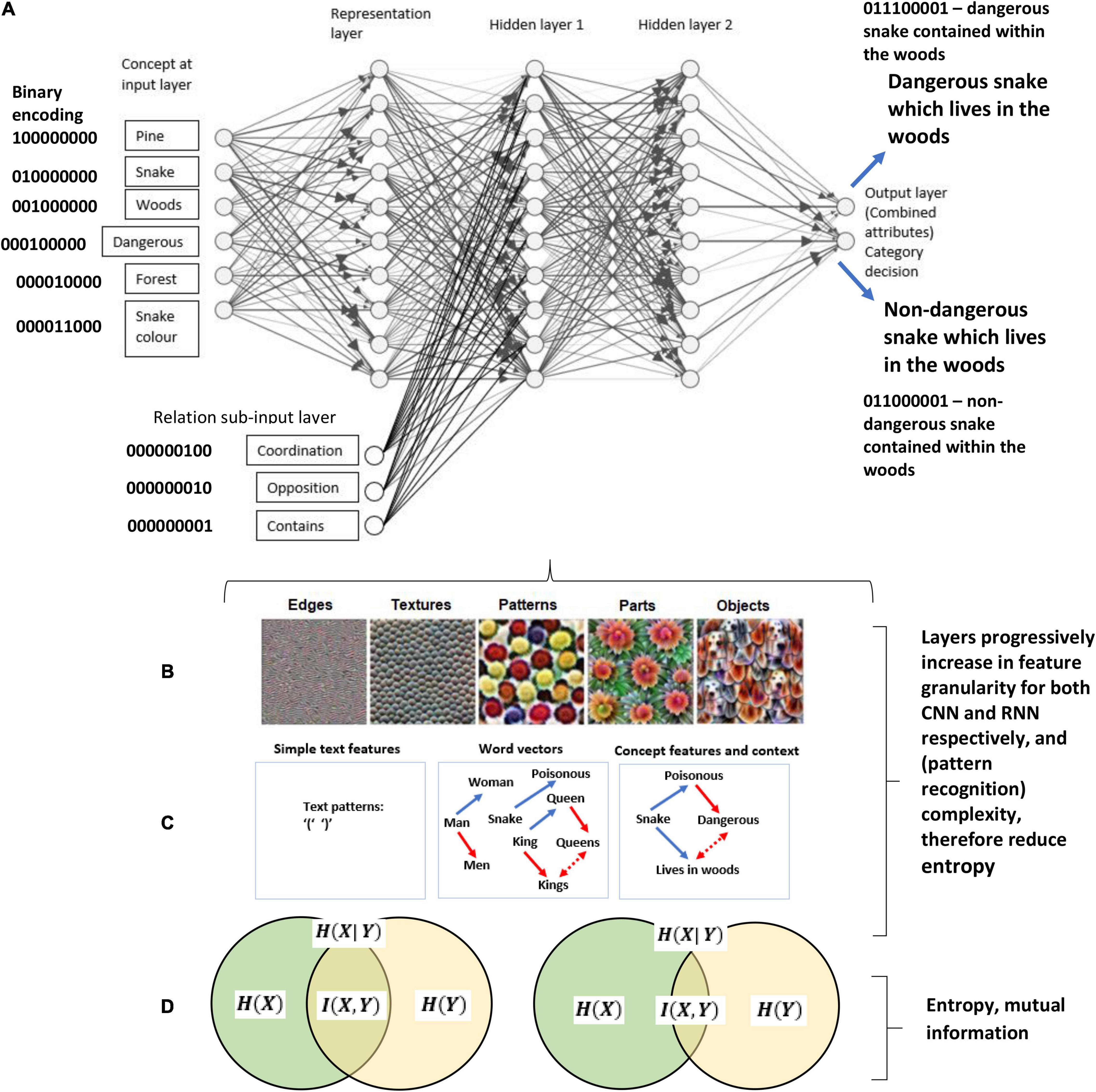

Since this early work on semantics there have been many breakthroughs with machine learning approaches, and mathematical models of reinforcement learning (behavioral approaches) are making a return (Silver et al., 2021), and may (with functional context) be an important part of the solution for AGI. Deep learning neural networks (DNN) have recently been developed with the advent of powerful computers (which was not the case decades ago), and researchers in the area of semantics have been able to exploit them (Rogers and McClelland, 2011), extending previous work on the distributed memory model (McClelland and Rumelhart, 1986) and the semantic memory models (Hinton, 1986; Rumelhart, 1990; Rumelhart and Todd, 1993; Hinton and Anderson, 2014). This allows the model to capture complex structure-based attributes as well as relational components, and is perhaps the most promising work within semantics (which is relevant to background knowledge) at this time. This, therefore, may represent a promising way forward for modeling background knowledge in a categorization setting, and general knowledge more widely. However, the relational attributes within this approach are still very limited (e.g., “can,” “is,” etc.), representing simple word structures, and may need to be further developed and updated from a model which specializes in deep contextual (relational) learning.

A Functional Contextual Account of Background Knowledge – A Potential Solution

One approach to which we may look to progress semantic models is Relational Frame Theory (RFT), a modern behavioral theory of human language and cognition which is rooted in functional contextualism (it represents the formal organizational model of functional contextualism) and offers a formal model within a functional-analytic approach to higher cognition (Barnes-Holmes et al., 2001; De Houwer, 2013). In doing so, the theory extends Skinner’s concept of verbal operants to include generativity (thus accounting for Chomsky’s criticism of the behavioral theory) to capture greater complexity within cognition. From an RFT perspective, language can be thought of as learned patterns of generalized, contextually controlled, derived relational responding (somewhat similar to inference), called relational frames (Barnes-Holmes et al., 2001). With its basis in functional contextualism, the theory focuses on the role of context (via contextual cues) in facilitating the emergence of specific patterns of relating and how (behavioral) functions come to be attached to these patterns.

Relational responding can be either arbitrarily applicable or non-arbitrary in nature. Non-arbitrary relational responding is based on physical features (such as magnitudes of size, shape, or color) of the stimuli involved (not unlike similarity theories in the categorization literature). By contrast, arbitrarily applicable relational responding is not based on formal stimulus properties but is instead largely controlled by historical contextual learning. The theory specifies several different patterns of arbitrarily applicable relational responding, including (but not exclusively): co-ordination (e.g., stimulus X is equivalent to stimulus Y); comparison (e.g., A is bigger than B); opposition (e.g., up is the opposite of down); distinction (e.g., C is not the same as D); hierarchy (e.g., an Alsatian is a type of dog); and perspective-taking (often referred to as deictic) which involves the interpersonal (I vs. YOU), spatial (HERE vs. THERE) and temporal relations (NOW vs. THEN).

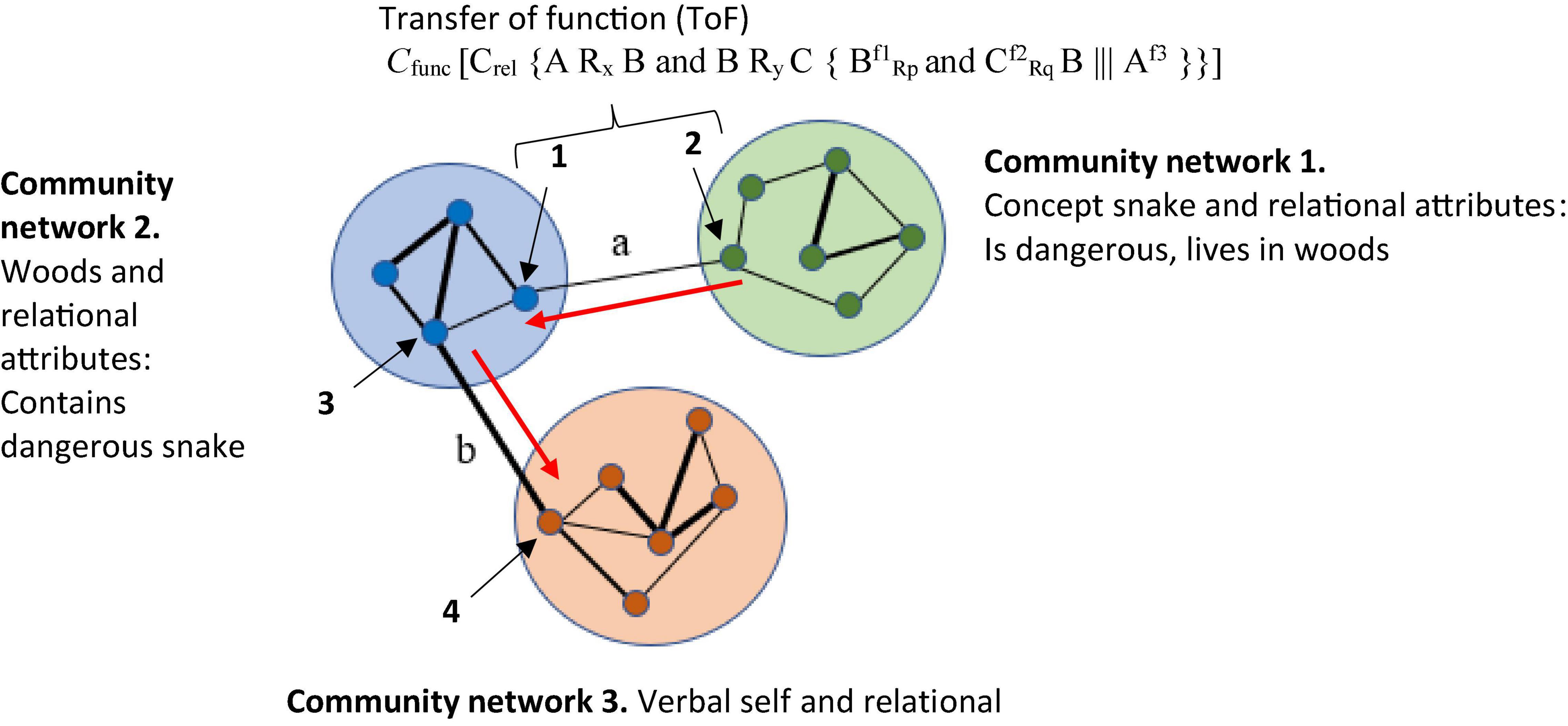

Relational Frame Theory appears to share some features with rule-based categorization but relies specifically on a history of operant conditioning across a wide range of situations in order for the early patterns of relating to be established. Indeed, the focus of a relational frame, and its definition, require the development of three properties: (1) In mutual entailment (ME), relating to one stimulus entails relating to a second stimulus. For example, if A = B, then B = A. Similarly, if you are told that X1 is smaller than X2, you can derive (entail) that X2 must be bigger than X1. (2) In combinatorial entailment (CE), relating a first stimulus to a second and relating the second to a third facilitates entailment between the first and the third stimuli. For example, if you are told that A is greater than B and B is greater than C, you will derive (combinatorially entail) that A is greater than C and C is less than A. (3) The third core property of a relational frame is known as the transfer (or transformation) of stimulus function (ToF), through which the functions of any stimulus that participates within a relational frame may be transferred or transformed in line with the relations that stimulus shares with other stimuli also participating in that frame. For example, consider a situation whereby a shock is delivered to your arm each time stimulus A appears on a screen. If you are then told that stimulus B is greater than A, fear in the presence of B can actually be stronger than fear in the presence of A, even though shock was directly paired with A and not B. This is because fear as a behavioral function that is established to A is transferred to B in greater magnitude because of the established relation that B is greater than A. In other words, the fear function is transformed (increased) because of the comparison relation between A and B (Dougher et al., 2007).
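The three properties can be illustrated with a deliberately simplified sketch for a single comparison frame. It is not an implementation of RFT or of any published model: the functions `derive` and `transfer`, the `INVERSE` table, and the multiplicative `factor` used to scale a function are all hypothetical choices made for illustration, and combinatorial entailment is only derived here when the same relation is chained.

```python
# Hypothetical sketch of derived relational responding for a comparison frame.
INVERSE = {
    "greater_than": "less_than",
    "less_than": "greater_than",
    "same_as": "same_as",
}


def derive(trained):
    """Close (a, relation, b) triples under mutual and combinatorial entailment."""
    relations = set(trained)
    while True:
        new = set()
        # Mutual entailment: a R b entails b R-inverse a.
        for a, rel, b in relations:
            new.add((b, INVERSE[rel], a))
        # Combinatorial entailment (same relation chained): a R b, b R c => a R c.
        for a, r1, b in relations:
            for b2, r2, c in relations:
                if b == b2 and r1 == r2 and a != c:
                    new.add((a, r1, c))
        if new <= relations:
            return relations
        relations |= new


def transfer(relations, source, strength, target, factor=2.0):
    """Transform a function trained on `source` according to target's relation to it.

    `factor` is an arbitrary illustrative scaling, not an empirical estimate.
    """
    for a, rel, b in relations:
        if a == target and b == source:
            if rel == "greater_than":
                return strength * factor
            if rel == "less_than":
                return strength / factor
            return strength
    return 0.0  # no derived relation, so nothing transfers in this sketch


network = derive({("A", "greater_than", "B"), ("B", "greater_than", "C")})
print(sorted(network))

# Shock is paired with A only; B is then stated to be greater than A.
fear_network = derive({("B", "greater_than", "A")})
print(transfer(fear_network, source="A", strength=1.0, target="B"))
```

In the second example the fear function trained on A is returned at a larger magnitude for B, mirroring the verbal description of the transformation of function above.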

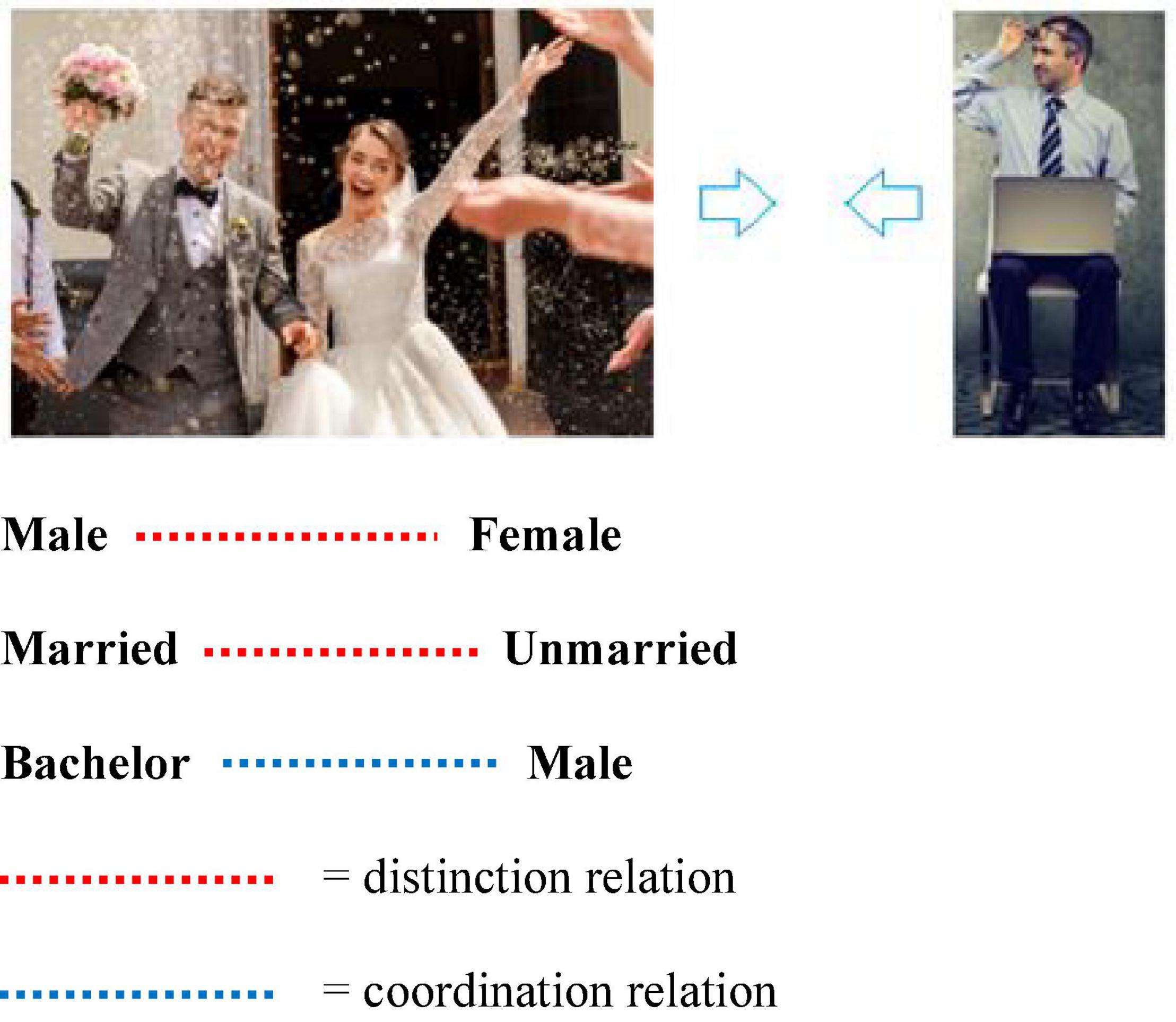

For RFT, complex social concepts or background knowledge are established as broad patterns of arbitrarily applicable relational responding (AARR) in the form of complex relational networks and adjoining behavioral functions. For example, the accepted social knowledge that “all bachelors are males” involves at least the following: a co-ordination relation between the concept bachelors and the concept male; a distinction relation between male and female; and a distinction relation between married and unmarried (see Figure 1). In other words, for RFT we acquire broad and complex knowledge through verbal operant conditioning, including how males and females differ, why a bachelor is a man, but why a man may not be a bachelor. Each, on the surface, appears to be a simple concept or a comparison of simple concepts, but for RFT the stimuli or concepts participate in much more complex patterns of related or integrated social knowledge. This, therefore, does away with any need to specify a necessary or sufficient feature, and instead prefers context dependent relational networks and associated behavioral functions, which may provide a more accurate account of how causal connections and interrelations within background knowledge about these concepts emerge within their context and influence categorization behavioral decisions about these and other related concepts. As the next section will demonstrate, this approach may help extend existing background knowledge categorization models in interesting ways to help resolve the knowledge selection problem. It will do this by offering some insights into how knowledge is selected in background knowledge categorization tasks, by specifying the context in which stimuli are presented (i.e., via contextual cues) and explaining how the relevant functions of stimuli emerge as they dynamically change through inference based (derived relational) networks. Crucially, this will offer some explanation as to how category decisions based on background knowledge are made.

Figure 1. An RFT interpretation of a simple relational frame of coordination between the concept bachelor and the concept male. This includes a distinction relation between male and female and a distinction relation between married and unmarried (Images from Adobe Stock with license and permission to use and modify given. Credit for image on right “pathdoc” and image on left “wedding photography”).

Specific Examples of Categorization Modeling for Background Knowledge and Why a Functional Contextual Model May Improve on These Accounts

In one attempt to use a similarity-based model to address background knowledge, Heit developed the integration model of categorization (Heit, 1994), which has been explored in a number of background knowledge studies (Heit, 1994, 1995, 1998). In this approach, Heit had modified the similarity based exemplar model (Medin and Schaffer, 1978) in a way which would take into consideration influences of background knowledge such as congruence in category learning (Heit, 1994, 1997).

Several studies have found supporting evidence for congruence in category learning. For example, if a question is presented to participants which is congruent with their existing background knowledge, this will more likely facilitate their memory (Greve et al., 2019), learning of relational properties (Ostreicher et al., 2010) and the classification of new items as consistent with the background knowledge they have (Heit, 1994). For example, in a study presented by Heit (1994), participants were asked a congruent question such as ‘how likely is it that someone with expensive trainers is a jogger?’, and this was compared with an incongruent question such as ‘how likely is it that someone who attends parties is shy?’ It was found that participants were more likely to judge a new person as being a jogger when asked a question which was congruent to the background knowledge of the participant rather than incongruent.

However, in the same study by Heit (1994) it was demonstrated that congruence with background knowledge was not always the strongest factor in influencing categorization behavior. Specifically, Heit found that the integration model outperformed the distortion model in predicting whether a group of individuals were “joggers” or not, given a set of categorization behaviors. During an experimental setting in this study, participants were shown a training set of joggers (including associated behavioral characteristics). The distortion model predicted that the learned exemplars about these joggers in this experimental setting would undergo a form of distortion in memory to fit better with the previous background knowledge about joggers that the participants had learned over the years (such as that joggers typically have expensive trainers), in order to be more congruent with their background knowledge. However, instead of undergoing distortion, the “new joggers” selected by the participants were more similar to the “jogger” training set shown than to features which were consistent with background knowledge (features typical of joggers).

There are two problems with the above approach. Firstly, and most importantly, congruence in category learning only explains why knowledge is selected in very specific cases and tasks. This is an incomplete model of background knowledge. Secondly, the model fails to predict or explain in what cases some similarity function, or some distortion of memory should be preferred in order to increase congruence with background knowledge. The ability to capture and predict cases of congruence with background knowledge may be captured more accurately utilizing the functional contextual (RFT) model. The RFT approach assumes that relational framing occurs amongst the properties of background knowledge of a typical jogger and some new instance of jogger, which allows for congruence.

So, in this case the non-arbitrary properties would be consistent with a more similarity-based situation, whilst arbitrary properties would be consistent with the background knowledge of the jogger. From there, the attributes of the concept “jogger” are related in the network according to the properties of ME, CE, and ToF, where information is derived within the network according to information which can be coordinated, opposed, or where a distinction is made (hence, for example, coordinated concepts would be more congruent than those which are opposed in the network). Hierarchical information can also be held, such as structuring joggers within the network as containers of their personal values such as “healthy living,” “fitness,” etc., through determining the function of their behavior (i.e., why do joggers jog?) which may give more clues about relevant background knowledge of a typical jogger, and the crucial knowledge which needs to be selected dependent on some experimental context. Crucially, RFT can specify how this knowledge is structured within a relational network, and how the context of a categorization task (what exactly it asks the participant to categorize) can help determine which knowledge is specifically selected (i.e., the context determines this). For example, if a participant in a study were asked to categorize the personal values of a jogger, then the hierarchical component of the network may be recalled to help the participant decide that joggers value “healthy living” and “fitness” based on the participant’s functional interpretation of why joggers jog (i.e., RFT holds that functions within context are crucial for understanding which knowledge is selected, and thus provides some insight into overcoming the knowledge selection problem).

The RFT model also goes beyond simple congruence by specifying under which conditions equivalence, opposition, mutual entailment, and combinatorial entailment occur more globally. Its ability to specify how congruence can emerge rests on the model’s ability to predict under what circumstances entailment is generated in the relational network, given very specific modeling of historical reinforcing contingencies. Some recent evidence has shown that the model was able to accurately predict relational framing of categories in three domains, which included non-arbitrary, arbitrary containment, and arbitrary hierarchical relations (Mulhern et al., 2017). In that study, language and cognition which are relevant to background knowledge were assumed to be patterns of generalized, contextually controlled relational responding. The researchers suggested that this approach offers a more global accounting of knowledge generation, in contrast with the more localized environment-behavior interaction predictions of congruence formation seen in many categorization approaches (Laurence and Margolis, 1999; Murphy, 2002; Palmer, 2002).

In another example, rule-based approaches of categorization describe how “if” and “then” logical rules are used to define a category (Trabasso and Bower, 1968; Smith and Sloman, 1994; E. E. Smith et al., 1998), for example, “if” X barks “then” X is a dog. Evidence has shown that in some situations rules do apply (Rouder and Ratcliff, 2006). It has been suggested that rules can be applied to many settings, such as when recognizing that 683 is an odd number (Armstrong et al., 1983) and why raccoons’ offspring look like skunks but are not skunks (Keil, 1989). However, there are some problems within the categorization literature, as current categorization models do not have any means to specify particular rules, to identify the context in which rules emerge from environmental stimuli, or to explain how they can be organized into complex background knowledge.

There have been some useful rule-based models such as the competition between verbal and implicit systems (COVIS) model (Ashby et al., 1998), which suggests that an explicit verbal (rule-based) system and a procedural (implicit) system, which integrates information at a pre-decisional stage, are adopted depending on the context of the situation. Some evidence has suggested that implicit procedural information is integrated when it is difficult to define the rules verbally, but when these can be defined verbally, they usually supersede the procedural system (E. E. Smith et al., 1998). However, others have acknowledged that the exact interplay between rules and procedural systems has yet to be discovered (Milton and Pothos, 2011).

Some more specific rule-based explanations of the effects of background knowledge on categorization have been applied in conceptual acquisition tasks. These tasks were developed in order to identify situations where the background knowledge may facilitate or hinder concept acquisition. In an example of this, Pazzani (1991) used conceptual acquisition tasks involving photos of people performing actions on objects. Each picture showed either an adult or child performing an action on an uninflated balloon (dipping it in water or stretching it) that varied in size and color (it was either large or small, and either yellow or purple).

Pazzani compared two types of categories, a disjunctive category and a conjunctive category, and the conditions under which each of these would emerge. In one experiment the participants were instructed to either learn about a category of balloons that inflate or learn something about an arbitrary category simply labeled Alpha. The assumption made by Pazzani was that participants in the inflate category would be influenced by their background knowledge about what would be needed to inflate the balloons, whereas no such influence from background knowledge would take place in the Alpha category.

In a pre-test study, Pazzani found that the action of stretching a balloon would facilitate participants’ expectation that a balloon would inflate, and that adults would be expected to be more successful at inflating the balloon than children. The stimuli used in the experiment were pictures of scenes which differed on four dimensions: (1) adult or child; (2) stretched balloon or balloon dipped in water; (3) yellow or purple balloon; and (4) small or large balloon. For the disjunctive condition, a disjunctive rule defined the inflate category, i.e., that these balloons must be stretched or inflated by an adult. As the pre-test study showed, this should be consistent with the background knowledge that the stretching of a balloon by an adult is more likely to lead to the balloon being inflated (i.e., adults are stronger and more capable of inflating a balloon than children, as well as the knowledge that balloons are stretched when they inflate). In the conjunctive condition, the target category (Alpha) was defined by the arbitrary rule that these must be small and yellow. This rule is not consistent with any background knowledge about inflating a balloon. As expected, Pazzani found that learning was faster for the disjunctive-inflate condition than the conjunctive-Alpha condition. It was concluded that as the disjunctive-inflate condition was consistent with the existing background knowledge, it was easier to learn than the inconsistent disjunctive-Alpha condition. Pazzani concluded that this was evidence that a simple similarity function in the form of feature selection does not connect category knowledge; rather, it is background knowledge which binds the category knowledge in these tasks.
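The two category rules described above can be written out explicitly over the four binary stimulus dimensions. The sketch below is only an illustration of the rule structure as described in this section, not a reconstruction of Pazzani's materials or model; the dimension labels and the function names `inflate_rule` and `alpha_rule` are hypothetical.

```python
from itertools import product

# Four binary stimulus dimensions, labeled here for illustration only.
AGES = ("adult", "child")
ACTIONS = ("stretch", "dip_in_water")
COLORS = ("yellow", "purple")
SIZES = ("small", "large")


def inflate_rule(age, action, color, size):
    """Disjunctive rule: the balloon is stretched OR the actor is an adult."""
    return age == "adult" or action == "stretch"


def alpha_rule(age, action, color, size):
    """Conjunctive rule: the balloon is small AND yellow."""
    return size == "small" and color == "yellow"


# Enumerate every possible scene and apply each rule.
stimuli = list(product(AGES, ACTIONS, COLORS, SIZES))
inflate_members = [s for s in stimuli if inflate_rule(*s)]
alpha_members = [s for s in stimuli if alpha_rule(*s)]

print(len(stimuli), len(inflate_members), len(alpha_members))
```

The disjunctive rule depends only on the two dimensions that background knowledge marks as relevant to inflation (actor and action), whereas the conjunctive rule depends on color and size, which carry no such relevance.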

The functional contextual (RFT) approach may be able to improve both the descriptive and predictive power of accounts of why the disjunctive rule was followed and not a simple similarity function. Here RFT can account for and explain the specific context in which background knowledge emerges based on some hierarchical organization of rules which supersede a similarity function. More specifically, RFT can explain the context in which the disjunctive rule was followed in the Pazzani (1991) study: relations are derived between the prediction of whether the balloon would inflate and the background knowledge, which provides relational clues about likely hypotheses such as the age of the person carrying out the action, and this (according to RFT) is structured within a complex hierarchical and relational network (i.e., inflating the balloon action “belongs to” a specific person who was stretching the balloon). Similar to the previous example about joggers, the context of the study provides clues as to what the relevant functions of the behavior are (i.e., someone inflating a balloon may be doing so to stretch it), and may, therefore, help determine which knowledge should be selected in the participant’s hierarchical relational network in order to make a category decision. The specification of an explicit rule which is consistent with the hierarchical background knowledge associated with stretching a balloon may supersede any similarity function, in a similar way as explicit rules supersede implicit integrated knowledge in procedural tasks. This RFT approach, again, may help provide greater context for solving the knowledge selection problem by specifying the functions relevant to a specific context, and outlining how this knowledge is connected dynamically within a participant’s relational network and utilized in a categorization task that draws on background knowledge.

To support this claim, there has been much empirical evidence for the way RFT structures knowledge within relational networks. For example, RFT has been able to accurately predict and specify complex rule-based organizations of hierarchical responding in categorization tasks (Greene, 1994; Slattery et al., 2011), which may be seen as a particular type of relational responding called hierarchical relational framing, and which may form via a non-arbitrary relational pattern such as containment. For hierarchical classification, the classes themselves are categorized into higher order classes (Greene, 1994; Slattery et al., 2011). An example of hierarchical classification could be ordering “Alsatian” into (contained within) the category “dog” and “dog” into (contained within) the category “Animal.”
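The containment example can be sketched as a small lookup structure in which each class points to the class that contains it. This is a minimal illustration under the assumption of a strict, cycle-free hierarchy; the names `CONTAINED_IN`, `ancestors`, and `belongs_to` are hypothetical and do not come from the cited studies.

```python
# Hypothetical containment network: each class maps to the class containing it.
CONTAINED_IN = {"Alsatian": "dog", "dog": "Animal"}


def ancestors(category, contained_in=CONTAINED_IN):
    """Return the chain of higher order classes containing `category`.

    Assumes the hierarchy has no cycles.
    """
    chain = []
    while category in contained_in:
        category = contained_in[category]
        chain.append(category)
    return chain


def belongs_to(lower, higher, contained_in=CONTAINED_IN):
    """True if `lower` is contained, directly or transitively, in `higher`."""
    return higher in ancestors(lower, contained_in)


print(ancestors("Alsatian"))
print(belongs_to("Alsatian", "Animal"), belongs_to("Animal", "Alsatian"))
```

Only “Alsatian is contained in dog” and “dog is contained in Animal” are stored directly; that an Alsatian is an Animal is derived, and the relation does not hold in the reverse direction.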

This notion of hierarchy seems similar to the cognitive interpretation of hierarchy, except that the RFT model provides a broader relational framework in which hierarchical processes occur. For example, in one study of hierarchical relational responding of categories (Gi et al., 2012), there were five phases to the study. In phase one, four arbitrary shaped stimuli were established as, and several contextual cues were given such as “includes,” “belongs to,” “same (similarity).” In the second phase, the arbitrarily shaped stimuli were trained and tested for derived arbitrary sameness (equivalence), i.e., between the arbitrary stimuli and some nonsense words. In the third phase, deriving relations of containment between lower (novel and additional) and higher levels (identified through the cues “includes,” and “belongs to”) induced responding in accordance with higher levels in the hierarchical network. In the fourth phase, particular functions were established in particular stimuli (i.e., some stimuli were directly trained to associate a function, e.g., the function of fear) at different levels of the hierarchical network. In the final phase, patterns of ToF were demonstrated where stimuli acquired novel untrained functions because of their position in the network. Therefore, again, RFT can specify complex hierarchical networks in which background knowledge can be stored, relationally structured with other concepts and knowledge, and recalled to help the participant reach a category decision based on clues about the functions of concepts, events, and behaviors given some specific context.

Connectionist models in the form of neural networks have also been used to explain the influence of background knowledge (Gluck and Bower, 1988; Shanks, 1991). In these models, category learning is thought to correspond to a set of weighted associations between nodes, which activate in response to an input pattern. Choi et al. (1993) used connectionist neural networks to model how learning disjunctively defined concepts is easier than learning conjunctively defined concepts. In connectionist neural networks these hypotheses can be simulated with negative (inhibitory) links between nodes for conjunctions and output nodes which correspond to category labels. After many variations, Choi and colleagues incorporated background knowledge into Kruschke’s (1992) attention learning covering map (ALCOVE) model, which represents a hybrid between an exemplar (similarity) and connectionist model. The original version of ALCOVE, a two-layer backpropagation model, did not fit rule-based data very well; however, when background knowledge biases were implemented in an adapted version which consisted of a neural network, whereby the biases were captured by the network weighting (through training), categorization performance improved dramatically. This demonstrates the usefulness and flexibility of neural networks for approximating background knowledge in category learning.

Other notable connectionist methods include the knowledge resonance model (KRES) (Rehder and Murphy, 2003) and the Baywatch model, which is both Bayesian and connectionist (Heit and Bott, 2000). The KRES model is a connectionist model that takes account of background knowledge but, unlike other approaches, uses a recurrent network with bidirectional symmetric connections whose weights are updated by a Hebbian learning rule, instead of a feedforward network that relies on a delta rule or backpropagation. Rehder and Murphy assume that knowledge is directly learned or attributed through an inferential process, and they have shown that some inferential properties can be captured by their network. The Baywatch model has had some success in tackling the knowledge selection problem. It is a neural network model that includes Bayesian probability and converts the network’s activation outputs into a probability of categorizing in a particular way, given some background knowledge. To do this, the model uses the logistic transformation outlined in Gluck and Bower (1988) (see the formal mathematical approach section below, where this is utilized and expanded for an RFT interpretation). The network was able to learn progressively which sources of background knowledge correspond to which target categories, and hence identified, in some instances, which knowledge is selected in these types of tasks.
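As an illustration of how an activation output can be turned into a categorization probability, the sketch below applies a standard logistic function to a single activation value. The scaling parameter `theta` and the function name are assumptions made here for illustration; the exact parameterization used in the cited models may differ.

```python
import math


def choice_probability(activation, theta=1.0):
    """Map a network activation onto a probability with a logistic function.

    theta is a hypothetical scaling parameter: larger values make the
    response more deterministic for the same activation.
    """
    return 1.0 / (1.0 + math.exp(-theta * activation))


# Zero activation expresses no preference between the two categories.
print(choice_probability(0.0))
# The same positive activation yields a more extreme probability as theta grows.
print(choice_probability(1.0, theta=1.0), choice_probability(1.0, theta=5.0))
```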

However, although these connectionist models are useful and are heading in the right direction, they are currently overly simplistic, with a single hidden layer and only three layers in total. Much of the AI literature suggests that deep (multi-layered) networks are better able to capture knowledge properties (Mitchell, 2021). Furthermore, RFT goes beyond the simple inference learning that the connectionist networks are trying to capture. For example, evidence has shown that, by using the RFT model, researchers are able to predict how relationally framed patterns of ToF emerge throughout the network, and this makes the framework unique when compared to the simple hierarchical or Bayesian inference frameworks of order that are typically described within the categorization literature (Murphy and Lassaline, 1997). The RFT approach, instead, precisely defines the controlling function of the stimuli and the context in which such inference occurs. For example, Slattery and Stewart (2014) demonstrated many knowledge-based properties that could be captured via the RFT approach, and argued that hierarchical relational framing involves two forms of hierarchical responding: hierarchical classification and hierarchical containment. They found multiple properties of unilateral property induction, transitive class containment, and asymmetrical class containment, which again shows that RFT can model complex relational networks within hierarchical organizations applicable to categorization, and can go beyond the simple inference and knowledge induction of previous connectionist models such as the Baywatch model (Heit and Bott, 2000).

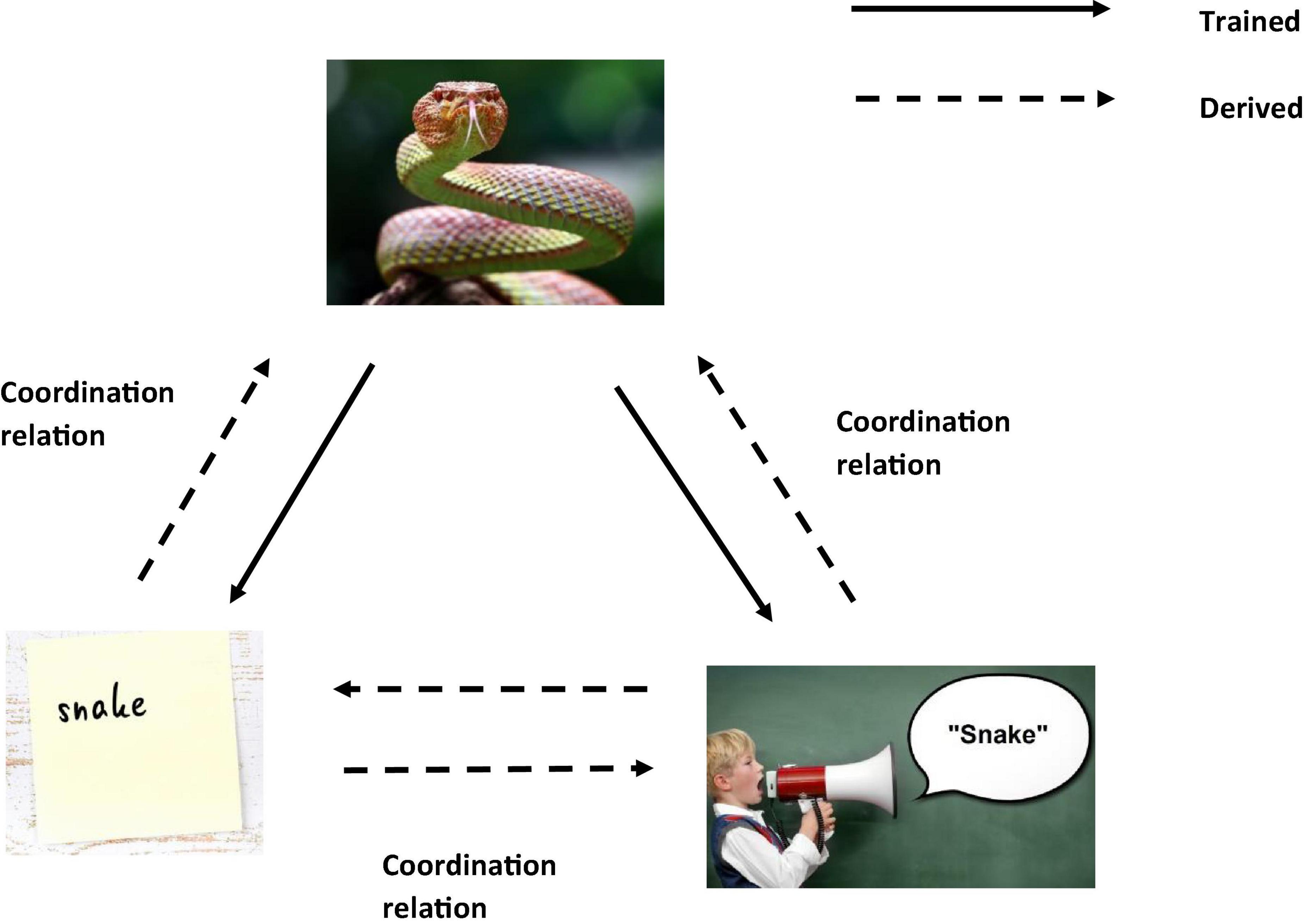

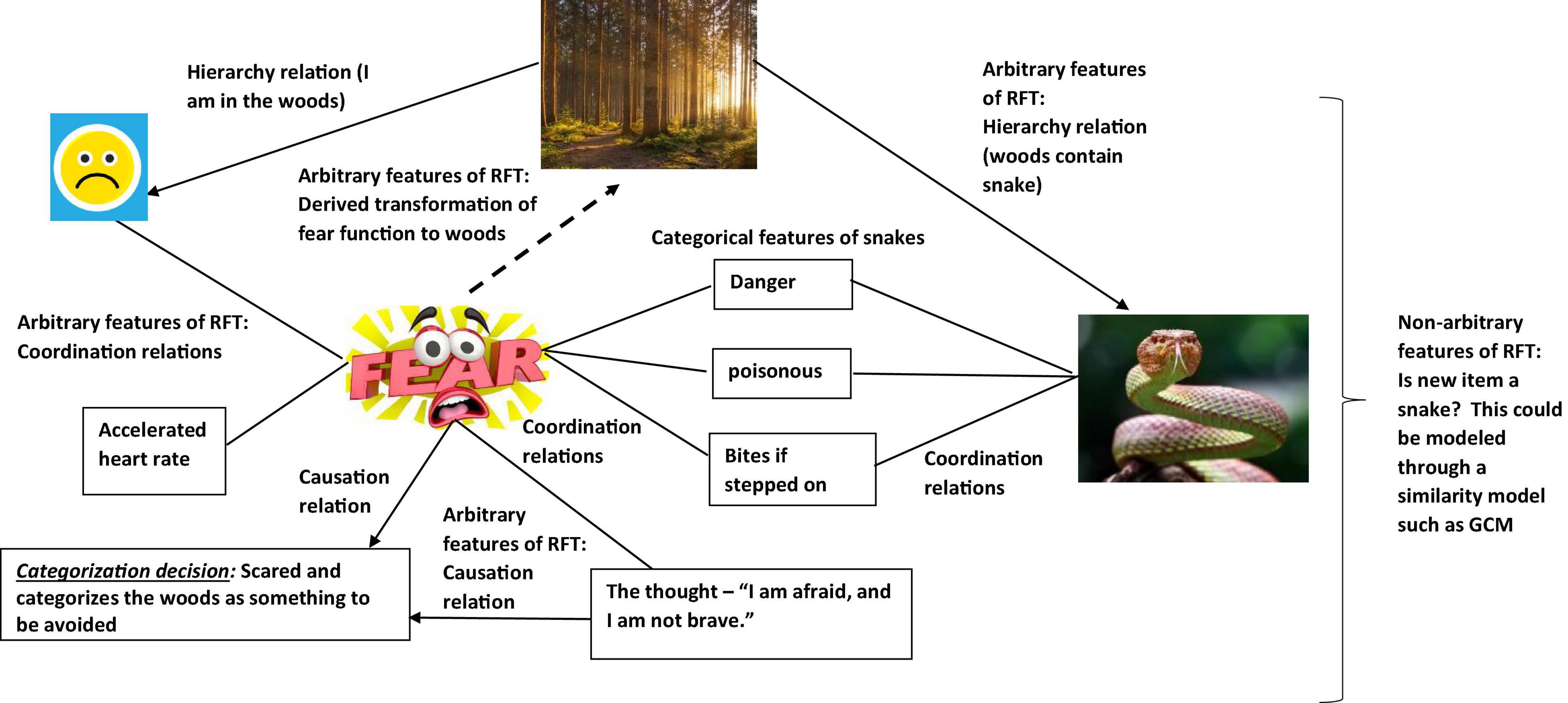

One of the core advantages of RFT’s approach to background knowledge over the categorization models mentioned above lies not only in its ability to account for high levels of complexity, inference, and context, but also in its ability to account for meaning and for the effect of a given stimulus, through ToF. Consider a real-world example in which participants in a laboratory experiment are asked (these would be the dependent measures) to categorize (1) whether the woods are safe, and (2) whether the woods are safe enough to walk through. In this experiment, the participants are told that poisonous snakes live in these woods. When deciding whether the woods are safe or not, the participant may draw on their background knowledge about snakes and about themselves. For example, when considering the concept of a snake, the relevant background knowledge can include the common rule “Stay away from snakes, they’re dangerous,” and the very real fear that likely emerges for some people when they are near a snake, even when it is in a terrarium. For RFT, fear is an established function of actual snakes and of the word “snake” (fear and snake will be coordinated even if you have never seen a real snake). This also applies to the written word “snake,” which is coordinated with the actual snake and the spoken word “snake” (see Figure 2).

Figure 2. An RFT interpretation of a simple example of trained vs. derived relations (Images from Adobe Stock with license and permission to use and modify. Credit for image on top: “kuritafsheen,” bottom right: “Coloures-Pic,” and bottom left: “iushakovsky”).

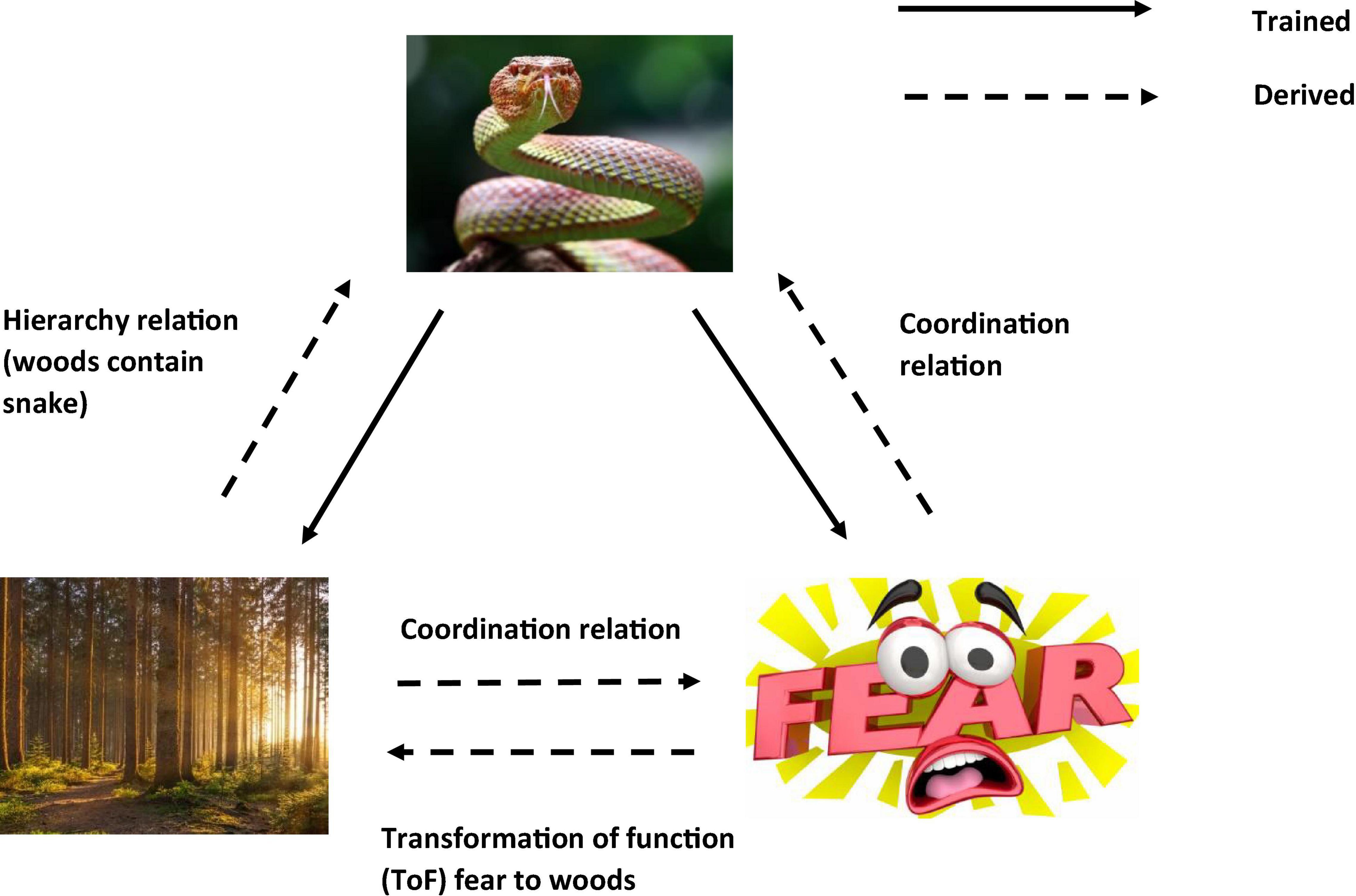

So, in a condition in which the participant is told that there may be snakes in the woods, the function of fear will be transferred to the woods as soon as you hear about the possibility of snakes living there, even without seeing a snake. This is because the concept of snakes (and the attached fear function) is contained (relationally) within the concept of woods, so that the fear of snakes transfers to the woods and you are now afraid of just entering the woods (see Figure 3). In other words, the woods automatically evoke the fear of snakes. It is even possible that, if you are very afraid of snakes, you would avoid woods altogether without consciously intending to do so (i.e., an avoidance function is established for woods).
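The snake and woods example can be sketched as a small relational network in which a directly trained function reaches other stimuli through derived relations. This is a deliberately simplified illustration and not a formal RFT model: the class `RelationalNetwork`, its method names, and the stimulus labels are hypothetical, functions are simply copied rather than transformed, and contextual control over which relations apply is ignored.

```python
from collections import defaultdict


class RelationalNetwork:
    """Toy relational network: coordination, containment, trained functions."""

    def __init__(self):
        self.coordinated = defaultdict(set)  # symmetric "same as" relations
        self.members = defaultdict(set)      # container -> stimuli it contains
        self.trained = defaultdict(set)      # directly trained functions

    def coordinate(self, a, b):
        # Mutual entailment: "a is the same as b" entails "b is the same as a".
        self.coordinated[a].add(b)
        self.coordinated[b].add(a)

    def contain(self, container, member):
        self.members[container].add(member)

    def train_function(self, stimulus, function):
        self.trained[stimulus].add(function)

    def functions_of(self, stimulus):
        """Trained functions plus those derived via coordination or containment."""
        seen, stack, found = {stimulus}, [stimulus], set()
        while stack:
            node = stack.pop()
            found |= self.trained.get(node, set())
            linked = self.coordinated.get(node, set()) | self.members.get(node, set())
            for other in linked - seen:
                seen.add(other)
                stack.append(other)
        return found


net = RelationalNetwork()
net.train_function("actual snake", "fear")      # directly trained function
net.coordinate("actual snake", "word 'snake'")  # word coordinated with referent
net.contain("woods", "word 'snake'")            # "snakes live in these woods"

print(net.functions_of("woods"))   # fear reaches the woods without direct training
print(net.functions_of("meadow"))  # an unrelated stimulus acquires nothing
```

The traversal only follows containment from container to member, so in this sketch the woods acquire the fear function from the snake, but nothing flows from the woods back to the snake.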

Figure 3. An RFT interpretation of a simple example of transformation of stimulus function, as the individual learns in this context the category “woods are dangerous” (Images from Adobe Stock with license and permission to use and modify. Credit for image on top: “kuritafsheen,” bottom right: “iQoncept,” and bottom left: “AA + W”).

As a result, the participant’s background knowledge about the concept of woods, as well as their behavior regarding woods, can be altered considerably, and RFT provides a model of how background knowledge develops and changes over time. This framework therefore makes clear how the background knowledge of snake and woods is derived. In a background knowledge categorization task in which an individual is asked to categorize whether the woods in this circumstance (which contain snakes) are safe, the knowledge selection clearly involves derived (induced) knowledge of fear functions about snakes and the woods. These derived relations can, of course, be scaled up without limit to include many other derived relations that the individual may network together in terms of the concepts of safe and woods. RFT allows for this continuous scaling up, because new relevant information can be continually added to the relational network model as it is identified. The framework can thus explain how these processes build in additional background knowledge, and how this will affect a category decision that depends on background knowledge.

It is also important to note that this background knowledge may not have been directly trained and is not based on any form of similarity function between woods and snakes, such as would be stipulated in exemplar models such as the GCM that try to model background knowledge. In contrast, RFT can explain complex, arbitrarily applied relationships among concepts and how these can activate complex behavioral functions in specific contexts. This approach thus goes some way toward explaining what types of background information are relevant given the specific learning history of the individual and the context of the categorization task, thereby providing greater context for further research to tackle the knowledge selection problem more generally.

In a background knowledge categorization task specifically, for example, one in which we are trying to predict how an individual will categorize whether particular woods are safe enough to walk through, the analysis can, as suggested, be scaled up beyond a simple fear of snakes. RFT’s framework for describing behavioral outcomes over a wide range of situations, through frames defined by ME, CE, and ToF, may be helpful here, because it can specify complex contextual histories that may help identify how background knowledge develops and changes over time, given different contextual settings.

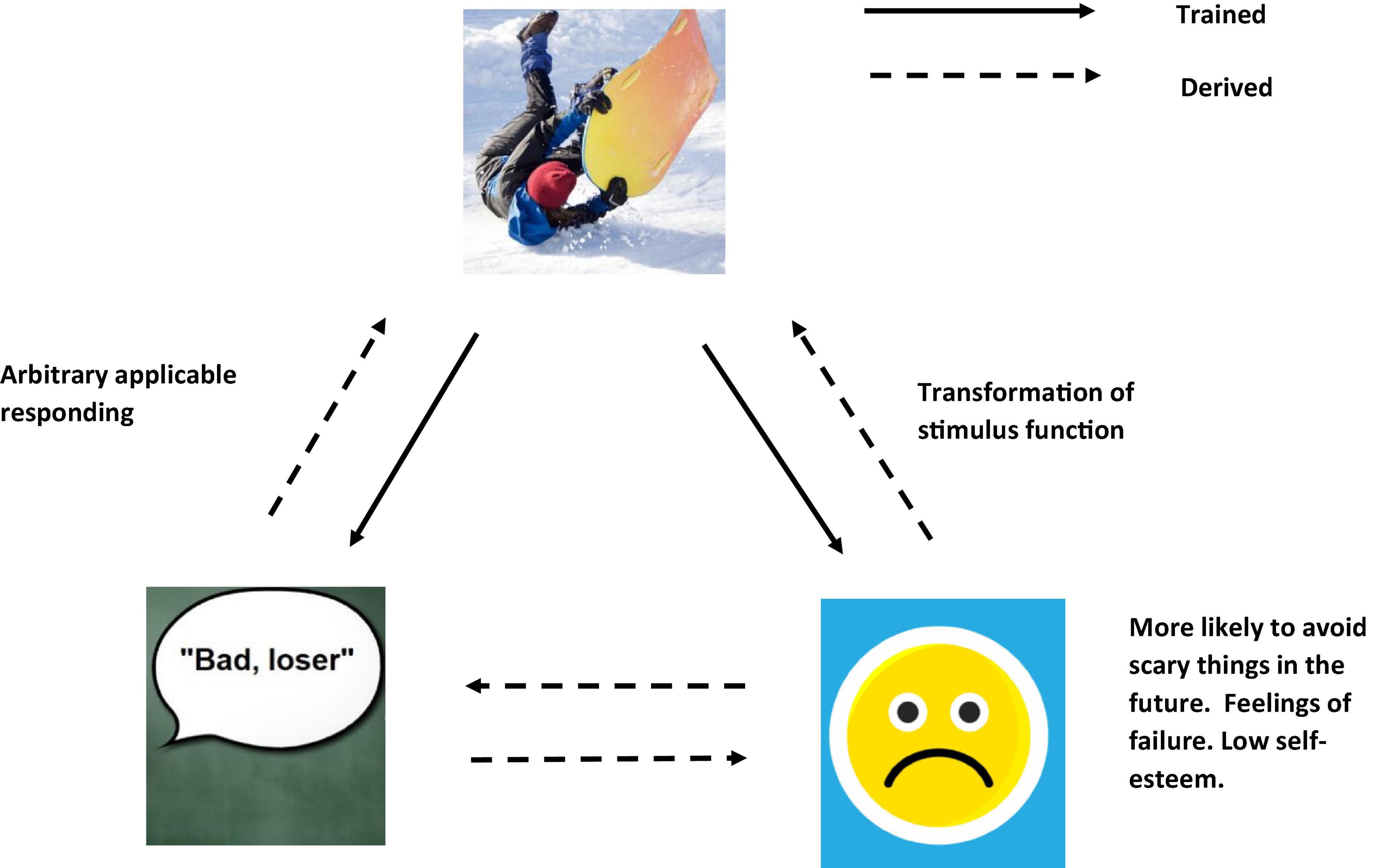

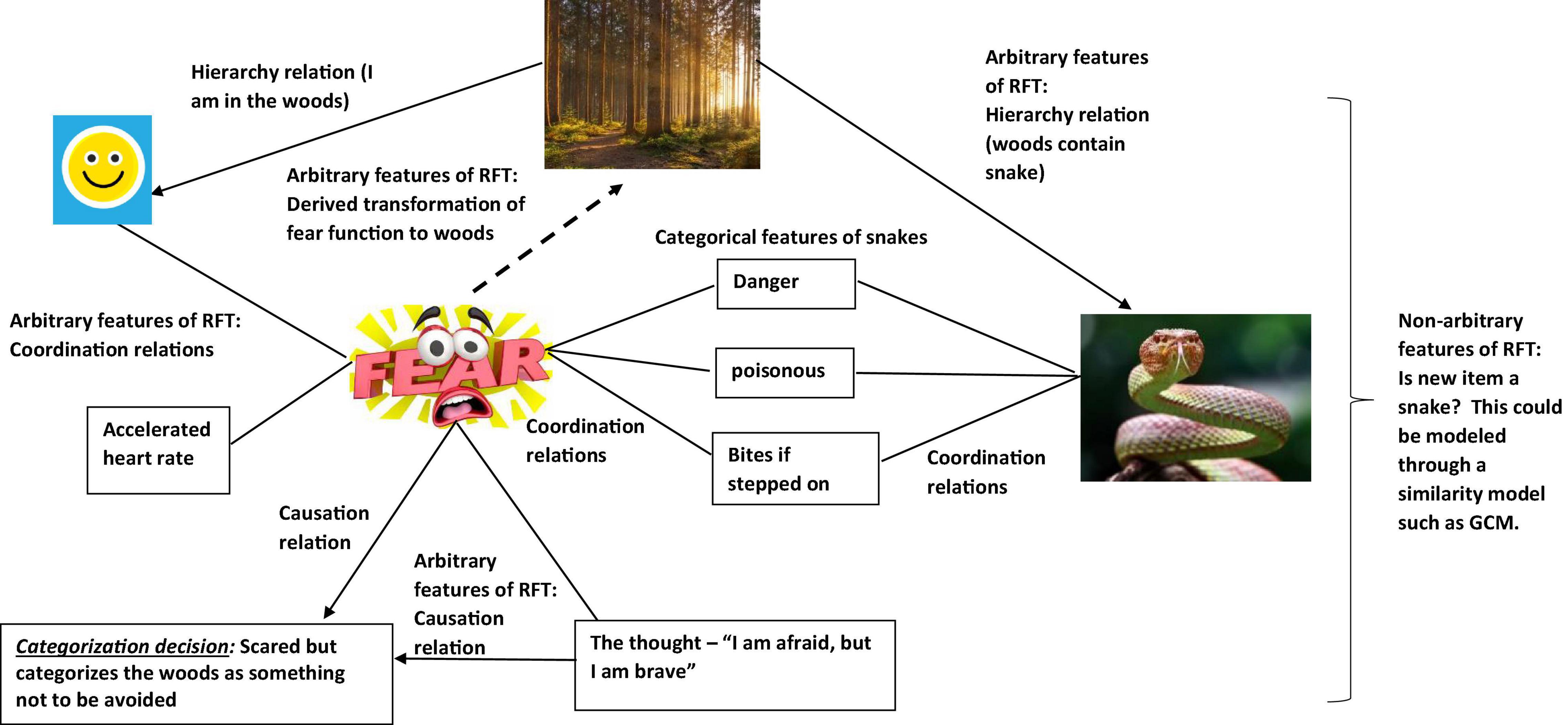

In scaling up, further relations can be added. For example, if you had experienced many accidents in your life and felt that something bad always happens to you, you may frame yourself in the context of “I” as “bad” and “a failure,” where “bad things always happen to me,” and thus as “likely to get bitten.” This may then have affected your self-esteem and confidence in a negative way (see Figure 4). With low self-esteem and confidence, and an expectation that something bad is always going to happen to you, you may show more avoidant behavior and be more certain to categorize the woods as “something unsafe and to be avoided” (see Figure 5).

Figure 4. An RFT interpretation of a simple example of transformation of stimulus function, as the individual learns and derives a relation of “self” (Images from Adobe Stock with license and permission to use and modify. Credit for image on top: “Tardigrade,” bottom right: “Riska,” and bottom left: “Coloures-Pic”).

Figure 5. An RFT interpretation illustrating complex frames of derived background knowledge, and how concepts can transfer functions in category learning to develop new functional categories, thus building up the complexity of existing background knowledge (Images from Adobe Stock with license and permission to use and modify. Credit for images, clockwise from left: “Tardigrade,” “AA + W,” “kuritafsheen,” and “iQoncept”).

Alternatively, if your contextual history contained many examples of success and praise from others, you may derive that your concept of self, “I,” is “good” and “successful,” where “only good things happen to me,” and this may lead to higher levels of self-esteem and confidence (see Figure 6). This may then lead you to conclude that, despite feeling fear of the woods, you are confident that you will not get bitten. As a result, you may still categorize the woods as “unsafe” but not as “something to be avoided” (see Figure 7).

Figure 6. An RFT interpretation of a simple example of transformation of stimulus function, as the individual learns and derives a relation of “self” (Images from Adobe Stock with license and permission to use and modify. Credit for image on top: “Maridav,” bottom right: “Riska,” and bottom left: “Coloures-Pic”).

Figure 7. An RFT interpretation illustrating complex frames of derived background knowledge, and how concepts can transfer functions in category learning to develop new functional categories, thus building up the complexity of existing background knowledge (Images from Adobe Stock with license and permission to use and modify. Credit for images, clockwise from left: “Tardigrade,” “AA + W,” “kuritafsheen,” and “iQoncept”).

Recent Hyperdimensional Relational Frame Theory Developments Which Expand the Dynamics of Relational Framing Within the Context of Background Knowledge

There have been recent developments of the RFT model worth noting, which may be additionally useful for studying background knowledge in categorization. The first is the recent development of an RFT framework called the multidimensional, multilevel (MDML) framework (Barnes-Holmes et al., 2017, 2020). According to this framework, AARR is explicitly assumed to account for much more complexity than suggested by the standard RFT model (Barnes-Holmes et al., 2001). MDML (RFT) assumes that AARR can develop not just from (1) mutual entailment and (2) simple networking involving frames (coordination, distinction, etc.), but also from (3) more complex networking involving rules; (4) the relating of relations, such as is involved in analogical reasoning; and (5) the relating of relational networks, which is involved in extended narratives and advanced problem solving (and which may be typical of complex background knowledge narratives). The framework also specifies that each of these five levels has multiple dimensions (coherence, complexity, derivation, and flexibility), and so offers a broader analytic framework, which again may be particularly useful in the study of background knowledge in categorization.
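One way to picture the structure of the framework is as a grid that crosses the five levels with the four dimensions. The sketch below simply enumerates that grid; the level labels paraphrase the list above, and the variable names are illustrative assumptions rather than terminology from the framework itself.

```python
from itertools import product

# Level labels paraphrase the five levels listed in the text.
LEVELS = (
    "mutual entailment",
    "simple networking involving frames",
    "complex networking involving rules",
    "relating relations",
    "relating relational networks",
)
DIMENSIONS = ("coherence", "complexity", "derivation", "flexibility")

# Every level can be analyzed along every dimension.
ANALYTIC_UNITS = list(product(LEVELS, DIMENSIONS))

for level, dimension in ANALYTIC_UNITS[:4]:
    print(f"{level} x {dimension}")
print(len(ANALYTIC_UNITS), "level-by-dimension combinations")
```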

Coherence refers to the extent to which patterns of AARR are consistent with other patterns of AARR. For example, stating “A motorbike is larger than a train” would lack coherence with the wider verbal community (who may state the reverse, i.e., “a train is larger than a motorbike”). However, in another context the statement may be coherent, for example if the person making the statement were playing a game in which the objective was to “state the opposite of how you believe concepts actually relate” (Barnes-Holmes et al., 2020). It is perhaps important to note that this extends the notion of coherence as expressed in the categorization literature (Rosch and Mervis, 1975; Murphy and Medin, 1985), which assumes that coherence relates to a similarity function of intuition; instead, the MDML (RFT) framework refers to the coherence of more specifically defined (contextual) derived learning.