ORIGINAL RESEARCH article

Front. Psychol., 16 January 2023

Sec. Human-Media Interaction

Volume 13 - 2022 | https://doi.org/10.3389/fpsyg.2022.1111003

This article is part of the Research Topic: Post-pandemic Digital Realities of Older Adults

Ioana Iancu1† Bogdan Iancu2*†

Introduction: Within the technological development path, chatbots are considered an important tool for economic and social entities to become more efficient and to develop customer-centric experiences that mimic human behavior. Although artificial intelligence is increasingly used, there is a lack of empirical studies that aim to understand consumers’ experience with chatbots. Moreover, in a context characterized by constant population aging and an increased life-expectancy, the way aging adults perceive technology becomes of great interest. However, based on the digital divide (unequal access to technology, knowledge, and resources), and since young adults (aged between 18 and 34 years old) are considered to have greater affinity for technology, most of the research is dedicated to their perception. The present paper investigates the way chatbots are perceived by middle-aged and aging adults in Romania.

Methods: An online opinion survey has been conducted. The age-range of the subjects is 40–78 years old, a convenience sampling technique being used (N = 235). The timeframe of the study is May–June 2021. Thus, the COVID-19 pandemic is the core context of the research. A covariance-based structural equation modelling (CB-SEM) has been used to test the theoretical assumptions as it is a procedure used for complex conceptual models and theory testing.

Results: The results show that while perceived ease of use is explained by the effort, the competence, and the perceived external control in interacting with chatbots, perceived usefulness is supported by the perceived ease of use and subjective norms. Furthermore, individuals are likely to further use chatbots (behavioral intention) if they consider this interaction useful and if the others’ opinion is in favor of using it. Gender and age seem to have no effect on behavioral intention. As studies on chatbots and aging adults are few and mainly investigate reactions in the healthcare domain, this research is one of the first attempts to better understand the way chatbots are perceived later in life in a context that is not domain-specific. Likewise, judging from a business perspective, the results can help economic and social organizations to improve and adapt AI-based interaction for aging customers.

The COVID-19 pandemic has transformed technology into a focal point. From work-from-home situations to remote education and remote communication, the pandemic forced individuals, regardless of their cognitive, affective, and behavioral profile, to adopt all types of technologies that have been rapidly developed and adapted. Although the technological solutions existed before the crisis, the rhythm of implementing them increased exponentially. Likewise, since the beginning of the pandemic, there has been increasing pressure on the healthcare system, especially on services dedicated to the aging population, and, thus, digital solutions are intensively searched for (Valtolina and Marchionna, 2021). Artificial Intelligence (AI) is considered one of the most important priorities when it comes to investment (Sheth et al., 2019). In this respect, the chatbot market is expected to grow to USD 24.98 billion by 2030, at a 24.2% Compound Annual Growth Rate (CAGR) (Market Research Report, 2022).

Within the technological development path, chatbots are an important tool for companies to become more efficient (Canhoto and Clear, 2020), to create a more personalized digital experience, and to develop “customer-centric” experiences that mimic human behavior (Toader et al., 2020). Moreover, chatbots are increasingly used for healthcare purposes (Tamamizu et al., 2017; Mesbah and Pumplun, 2020; Zhang and Zheng, 2021), as they support the independence of aging adults, reduce the burden on caregivers, have the capacity to make people talk more honestly (Miura et al., 2022), and are effective in increasing the conversation time (Ryu et al., 2020).

Being built on AI’s features, chatbots are considered intelligent entities that understand verbal, written, or multimodal communication, that are programmed to semantically respond, using natural conversational language, and that can learn from past experiences to improve themselves (Sheth et al., 2019; Toader et al., 2020). Although chatbots are already commonly found in the online retail domain, their presence is increasingly acknowledged in the healthcare field (Valtolina and Marchionna, 2021). Thus, the most common chatbot applications are in domains such as healthcare, e-commerce / customer services, education, financial and banking services (Bächle et al., 2018; Toader et al., 2020; Alt et al., 2021), or tourism (Melián-González et al., 2021).

The changes brought by the technological development have led to fundamental changes in the interaction between economic or social entities and consumers (Toader et al., 2020). Thus, studying chatbots’ impact on individuals’ perception becomes of great importance. Although AI technologies are increasingly used in interactions with customers, from pre-purchase to service support, there is a lack of empirical studies that aim to understand consumers’ experience with AI in general, and with chatbots in particular (Ameen et al., 2020; Nichifor et al., 2021). Likewise, most of the studies are in the computer science domain and are technically testing chatbots’ prototypes.

Moreover, in social sciences, chatbot studies on the Romanian context are limited. The existing ones are mainly focused on the relationship between chatbots’ error and gender, social presence, perceived competence, anthropomorphic design and trust in the digital marketing domain (Toader et al., 2020), on the acceptance of digital banking services (Alt et al., 2021; Schipor and Duhnea, 2021), on electronic commerce (Nichifor et al., 2021), or on marketing communication (Popescu, 2020). Yet, a not domain-specific approach that considers regular chatbots used in all types of daily online interactions might add value to the already existing research.

Simultaneously with the technological development, one of the most significant social transformations of the twenty-first century is population aging. Globally, persons aged 65 or above have outnumbered children under 5 years old and it is estimated that by 2050, there will be around 2.1 billion aging adults worldwide (United Nations (UN), 2022). In the case of Romania, the average age is already 42 years old, and the most numerous age-range is 50–54 years old (Dan, 2022). Furthermore, it is estimated that by 2050, 60% of the population will be over 65 years old (Coman, 2021) and, thus, loneliness and isolation are predicted to deepen (Da Paixão Pinto et al., 2021). This reality is believed to increase both the economic and social pressure, as there is a rise in public health expenditure (Chen and Schulz, 2016; Fang and Chang, 2016; Segercrantz and Forss, 2019), a permanent need for improved healthcare assistance and assistive living, and a scarcity of providers (Hofmann, 2013; Bassi et al., 2021). Low income and high workload generate a shortage of caregivers (Yang et al., 2015).

Thus, technology is believed to solve the gap between the needs of aging population and the potential of the society to overcome them, to prevent isolation, to communicate, to interact, and to monitor (Niehaves and Plattfaut, 2014; Petrie et al., 2014; Huh and Seo, 2015). In this context, an improved quality of life means smart medical care, virtual companion, mental health monitoring, open-ended conversations, emotional and knowledge-based responses, reminders, notifications, or financial duties (Bassi et al., 2021).

Baby boomers (people between 57 and 75 years old) and generation X (people between 42 and 56 years old) are considered “digital illiterates” (Vasilateanu and Turcus, 2019). As this dichotomy is too sharp, Lenhart and Horrigan (2003) consider that a digital spectrum approach is more correct. Since each generation has its technology laggards and aging adults are not a homogeneous cohort in terms of technology use, age should be correlated with other variables, such as education or frequency of use of a certain application or device (Loos, 2012). Based on the digital divide (unequal access to technology, knowledge, and resources; DiMaggio et al., 2001), since young people are considered to have a greater affinity for technology, most of the research is dedicated to the perception of Generation Z and Millennials (people between 18 and 34 years old; Nichifor et al., 2021; Schipor and Duhnea, 2021). Thus, unfairly, the views of middle-aged individuals and aging adults on technology are receiving less attention (Nikou, 2015). The digital divide should be understood as a set of relative rather than absolute inequalities that can be reduced (Van Dijk and Hacker, 2003). Furthermore, although the literature highlights a varied range of technologies that can improve aging adults’ lives, their perception of technology is rarely assessed (Pal et al., 2018). Since the technology developers are usually young people, a gap between what is invented and what is needed appears (Lee and Coughlin, 2014). Although chatbots are designed to be able to identify health problems based on the exposed symptoms, such applications are mainly restricted to young generations (Mesbah and Pumplun, 2020). Thus, the aging adults’ user experience should be better understood for more suitable innovations.

To the best of our knowledge, there is a lack of studies that focus on the general use of chatbots by the aging population, without particularly emphasizing the healthcare or assistive domains. One of the newest papers in this respect is a systematic literature review by Da Paixão Pinto et al. (2021), in which the authors are interested in the engagement strategies of the chatbots, in their computational environments, in the input data format accepted, in the different types of personalization offered, and in the evaluation techniques for conversational agents. Based on a systematic analysis of 53 papers, the main results of the study emphasize that personalization, context adaptation, a speech type input, and an intuitive system are at the core of an increased engagement and interaction with chatbots (Da Paixão Pinto et al., 2021). However, based on the existing findings, further empirical investigation is needed.

Based on the existing literature, we find that chatbots are mainly studied from a computer science perspective, on a young audience, or with a strong focus on the healthcare domain. Thus, the present paper aims to empirically deepen the social-science knowledge on chatbots both by understanding the middle-aged and aging adults’ perceptions of chatbots in Romania and by analyzing possible determinants of the behavioral intention to use chatbots from a perspective that is not domain-specific. An online opinion survey has been conducted on a convenience sample (N = 235), aged between 40 and 78 years old (M = 51.13, SD = 5.954). Although aging adults, or elderly, are persons aged 60 years old and above (United Nations (UN), 2017), due to the limitations given by the convenience sampling procedure, this study extends the analyzed age range and aims to comparatively investigate the differences, if any, between middle-aged adults and aging ones. The survey has been applied in the COVID-19 pandemic situation, between May and June 2021. Relying on the Technology Acceptance Model (Davis, 1989) and on its extended version (Venkatesh and Bala, 2008), the survey mainly asks respondents about the general perceived ease of use, perceived usefulness, enjoyment, competence, effort, pressure, satisfaction, perception of external control, subjective norms, and behavioral intention, all related to the use of chatbots.

Based on Digital Economy and Society Index (2022) report, Romania is ranked as the last European Union (EU) country on digital skills and with a poor performance regarding the integration of digital technologies and digital public services. Furthermore, among developing countries, Romania is considered to be a case in which aging adults are the latest technology adopters (Ivan and Cutler, 2021). This situation has been deepened by the COVID-19 pandemic (Motorga, 2022). Considering this poor digital literacy context, Romania becomes a significant case-study on which technological development and adoption is urgently needed.

The implications of the paper are at least threefold. First, the present study aims to enrich the existing literature with a technology acceptance overview on the way chatbots are perceived, regardless of the domain. Interestingly, in comparison with the results of other studies testing technology acceptance models, the present data show that some variables (e.g., enjoyment, satisfaction, etc) do not have the hypothesized effect in explaining behavioral intention in respect to chatbot interaction. Explanations might be related to the target group of the study (which is different than most of the samples used in similar context), or to their understanding and experience with chatbots. Thus, the results open new research perspectives for verifying the present model’s outcomes. Second, the research fulfills the existing gap on the target group. Since most of the studied samples are composed of young people, the present approach focuses on middle-aged and aging adults. Finally, judging from a business perspective, the results can help economic and social organizations to improve and adapt AI-based interaction for the aging customers.

Chatbots are also known as chatterbots (Miliani et al., 2021), smart bots or interactive agents (Adamopoulou and Moussiades, 2020), virtual assistants or conversational agents (Sheth et al., 2019). They are chatty software machines that, based on artificial intelligence features and natural language processing, interact with users using written text or spoken language (Bächle et al., 2018) and relying on image, video, and audio processing (Bala et al., 2017). Put differently, a chatbot is a computer program designed to interact through a natural dialog with users and to create the sensation of communicating with a human being (Hussain et al., 2019). Conversational agents appear either on-screen or as voice assistants (Gunathilaka et al., 2020).

When Alan Turing proposed the Turing Test [in which users are tested on whether they are capable of differentiating between an interaction with a human being and one with a machine (Chen et al., 2020)], starting from the question of whether machines can think, the idea of chatbots started to spread (Adamopoulou and Moussiades, 2020). The chatbot ELIZA, a simulation of a person-centric psychotherapist, is the first chatbot attempt. It was developed in 1966 by Joseph Weizenbaum and it used word and pattern matching techniques to conduct simple conversation (Bächle et al., 2018; Nichifor et al., 2021). The ELIZA program searched for keywords within the user’s input and transformed the sentence into a script (Hussain et al., 2019). In the 1980s, chatbots were mainly developed for the gaming industry and they were used for testing if individuals can recognize them as being machines or humans (Bächle et al., 2018). Although early chatbots lacked the ability to keep a conversation going (Hussain et al., 2019), as the conversational agents use more and more natural language processing, they pass the Turing Test (Justo et al., 2021). In 1995, the ALICE chatbot, a highly awarded software, was developed and was considered the “most human computer” until that moment (Adamopoulou and Moussiades, 2020). After the development of chatbots available through messenger applications, like SmarterChild, in 2001, or Wechat in 2009 (Mokmin and Ibrahim, 2021), the creation of virtual personal assistants began (e.g., Siri from Apple, Cortana from Microsoft, Alexa from Amazon, Google Assistant, or IBM Watson; Liu et al., 2018; Adamopoulou and Moussiades, 2020; Gunathilaka et al., 2020).

Chatbots can be either task-oriented or non-task-oriented (Hussain et al., 2019). Task-oriented chatbots are created for very specific tasks and domain-based conversations. Examples in this respect are booking accommodation, booking a flight, placing an order in online shopping, accessing specific information, etc. (Hussain et al., 2019). The drawback of a task-oriented system is that it cannot exceed the programmed topic scope (Su et al., 2017). Non-task-oriented chatbots are instead geared toward conversing for entertainment purposes in all kinds of domains and in an unstructured manner (Hussain et al., 2019; Justo et al., 2021).

As chatbots imitate human-to-human interaction, they are often perceived as anthropomorphic (Seeger et al., 2018). Moreover, they are one of the most used examples of intelligent human-computer interaction (Adamopoulou and Moussiades, 2020). The literature talks about the capability of chatbots to expand beyond repetitive tasks (mechanically intelligent AI) and conduct thinking tasks (analytical intelligent AI), creative tasks (intuitive intelligent AI), and feeling tasks (empathetic intelligent AI). While mechanical chatbots provide predefined responses, analytical chatbots analyze the given problems, intuitive chatbots contextually understand complaints, and empathetic chatbots recognize and understand users’ emotions (Youn and Jin, 2021).

While the aim of technological development is to create realistic human-like chatbots, in comparison with an employee, a chatbot is constantly updating, has unlimited memory, acts instantly, and is available all the time (Lo Presti et al., 2021). The most important features of chatbots are their capability of being self-contained, always active, and able to track users’ interest, preferences, and socio-demographics (Tascini, 2019). Chatbots can be used for customer services, allowing companies to target consumers in a very personalized way (Alt et al., 2021) and expanding satisfaction and engagement (Maniou and Veglis, 2020). They are considered a technological trend for companies as they can speed up and facilitate the customer service process by providing online information or placing orders in real time (Ashfaq et al., 2020; Nichifor et al., 2021). Chatbots allow users to interact online with different organizations, anytime and from any place, and offer quick and meaningful responses (Alt et al., 2021). A useful chatbot should provide assistance without interfering and should develop a sense of trust (Zamora, 2017).

The functionality of chatbots is either for entertainment or utilitarian (Zamora, 2017). Conversational systems are increasingly used and are useful both at home and for leisure (e.g., Alexa, Siri), or in our professional life (e.g., Siri, Cortana) for managing the schedule or for educational purposes (Justo et al., 2021; Valtolina and Hu, 2021). Chatbots are mainly used for obtaining information, for interacting needs and out of curiosity (Gunathilaka et al., 2020).

Studies have revealed that chatbots perform better if they are specifically created for a certain domain or group (De Arriba-Pérez et al., 2021). Chatbots can be used in domains such as e-commerce, business, marketing, communication, education, news, health, food, design, finance, entertainment, travel, or utilities, but not limited to them (Liu et al., 2018; Adamopoulou and Moussiades, 2020). Technology is undoubtedly perceived as being the solution for improving healthcare. Scholars that develop chatbots talk about the need to design empathetic virtual companions to alleviate isolation and loneliness (Da Paixão Pinto et al., 2021), to fulfill the emotional needs of aging adults, and to increase likeability and trustworthiness towards machines (Yang et al., 2015). As the main motivation to use chatbots is productivity, together with entertainment and socialization, chatbots should be equally built as a tool, a toy, and a friend (Brandtzaeg and Følstad, 2017).

The literature also emphasizes the downsides of chatbot use, especially the reluctance to interact with an impersonal machine instead of a human being (Nichifor et al., 2021). Although chatbots are increasingly resembling humans, this can be perceived as a disadvantage since privacy and security issues are associated with human hackers (Michiels, 2017). At the same time, although chatbots are programmed with natural language processing, the interaction with them is not intuitive enough and might imply errors. Thus, the lack of human feelings and emotions echoes in the lack of engagement and personality (Knol, 2019). For instance, in a shopping context, most of the users feel uncomfortable receiving personalized feedback from chatbots and consider them as being immature technology (Rese et al., 2020; Smutny and Schreiberova, 2020). Hildebrand and Bergner (2019) highlight the possibility that a chatbot service interaction provided to an already disappointed consumer might have a deep negative effect on both the service value and the brand or the organization. Furthermore, while users might have limited knowledge of chatbots and might consider them as inferior and unworthy entities to communicate with, the feeling of discomfort can lead to the refusal of interaction (Ivanov and Webster, 2017). When it comes to the elderly, chatbots are associated with privacy issues, with loss of autonomy, with technical fears, or with lack of usefulness that can increase their resistance and avoidance (Da Paixão Pinto et al., 2021). Thus, although useful for organizations, there are many variables that can hinder a good communication flow between chatbots and users. While, on one hand, there are the features of the chatbots (e.g., the way they are designed, their cognitive capabilities, etc.), on the other hand there are the variables affecting the perception of them (e.g., knowledge of and experience with technology, the need for a human-natural conversation, or usefulness).

Renaud and Van Biljon (2008) talk about three main reasons for technology adoption. First, there is the support given for certain activities, as information, communication, administrative, entertainment, or health monitoring. Second, there is the convenience reason, referring to reducing physical and mental endeavor. Finally, there are the technology features, namely the design of the device, specific actions, and options. The attitude toward technology, measured on the strength of how much an individual likes or dislikes it, is also believed to be a key factor in accepting and adopting a particular technology (Edison and Geissler, 2003).

The most referred to theory on technology acceptance is Technology Acceptance Model (TAM; Davis, 1989). Aiming to predict behavior, this theory is inspired from the Theory of Reasoned Action (TRA; Fishbein and Ajzen, 1975) and the Theory of Planned Behavior (TPB; Ajzen, 1985). TAM emphasizes that perceived ease of use and the perceived usefulness, together with other external variables, can predict the attitude towards using a certain technology, the intentional behavior, and, finally, the actual behavior (Davis, 1989; Renaud and Van Biljon, 2008; Minge et al., 2014; Alt et al., 2021). While perceived ease of use is defined as the degree to which using a particular device or system is free from effort, perceived usefulness is the degree to which using a certain device enhances one’s performance (Davis, 1989; Wang and Sun, 2016). Although perceived usefulness is considered as being a stronger predictor for the intentional behavior, perceived ease of use is a key variable for the initial acceptance (Lin et al., 2007; Renaud and Ramsay, 2007). Behavioral intention, as a strong predictor of the actual behavior, is defined as the strength of one’s aim to execute a specific behavior (Fishbein and Ajzen, 1975). Furthermore, as a certain device or application is easy to use, it is predicted that this perception is likely to influence the perceived usefulness (Venkatesh and Bala, 2008).

One of the most complex models that aims to explain technology acceptance is TAM3 (Venkatesh and Bala, 2008). Being developed in a managerial context and on a longitudinal perspective, the new model builds on the anchoring and adjustment framing of human decision and adds new variables and connections to the previous models. In the context of decision making, anchoring refers to relying on the available initial information, information that can further influence the decision but that will decline over time when adjustment knowledge will be accessible (Cohen and Reed, 2006; Qiu et al., 2016). Thus, the anchor variables, as device self-efficacy [perceived abilities to perform a specific task using a certain technology (Compeau and Higgins, 1995)], perception of external control [perceived control and resources on using a certain technology (Venkatesh et al., 2003)], and device anxiety (fear on using a certain technology) are considered as influencing the perceived ease of use (Venkatesh and Bala, 2008).

The same relationship is developed when it comes to adjustment variables such as perceived enjoyment in the use of a certain technology and objective usability (the effort required to interact with a certain technology; Venkatesh, 2000). The literature stresses some universal incentives that can motivate individuals and that can predict intention to use a certain technology. While on one hand, there are the utilitarian rewards, such as achievements or ease of use, on the other hand, there are the hedonic rewards, such as enjoyment and entertainment (Kim et al., 2018; Van Roy et al., 2018). Relying on Uses and gratification theory (Blumler and Katz, 1974), users tend to accept a device or an application if they feel rewarded (in terms of knowledge, relaxation, escapism, or social interaction) by using it (Kim et al., 2018; Wang et al., 2018). Likewise, an enjoyable experience with the technology is becoming increasingly infused into the acceptance decision (Deng, 2017; Tsoy, 2017). Strongly linked with enjoyment, satisfaction is also considered an important variable that can positively influence the perceived ease of use of a certain device or application (Zamora, 2017). At the same time, subjective norms, defined as the degree to which users consider that people in their trust circle should use a certain technology (Venkatesh and Davis, 2000), are believed to influence the perceived usefulness and behavioral intention.

Especially affected by the pandemic, but even beyond it, aging adults’ lives are usually characterized by loneliness and isolation. To avoid mental issues, like depression and anxiety, they need to be engaged into the daily routine, to be stimulated and entertained (Valtolina and Hu, 2021). Social interaction is considered a basic need for aging population in which solitude is one of the biggest issues (De Arriba-Pérez et al., 2021). Like in a vicious circle, social isolation, rejection, or loneliness seems to have a paramount negative effect on the general and mental health of an individual. Thus, assistive technology, by its social interaction capabilities and engagement, can help in preventing illnesses (Gunathilaka et al., 2020) and offers a more comfortable and cost-effective medical care (Mesbah and Pumplun, 2020). However, although technology can solve this problem, aging adults are resistant to change (Vichitvanichphong et al., 2017) and the digital divide is a barrier (De Arriba-Pérez et al., 2021). Considering that many devices and applications are created without considering users’ perception and are designed by young people, many aging adults are reluctant to products they do not understand (Lee and Coughlin, 2014; Pelizäus-Hoffmeister, 2016). In this respect, the experience with a certain technology is a key moderator variable for perceived ease of use and perceived usefulness relationship, for the technology anxiety and perceived ease of use, and for the perceived ease of use and behavioral intention (Venkatesh and Bala, 2008). In the present context, the previous use of chatbots (Luo and Remus, 2014), the previous knowledge on them (Cui and Wu, 2019), the initial attitude on them (Hall et al., 2017), and how it is liked might be important variables.

Some studies, by offering a generalized perspective, claim that aging adults perceive themselves as being too old to learn how to use technology (Feist et al., 2010). They are having less experience with devices, have fewer specific skills (Damant and Knapp, 2015), and the feelings of helplessness are being reinforced by failed previous experiences (Minge et al., 2014). In the case of aging population, some of the main largely accepted motivations to learn to use technology are social integration, usefulness, and security (Chou and Liu, 2016; Wang et al., 2018). Thus, being helpful and fulfilling needs and expectations become paramount variables (Gatti et al., 2017).

When it comes to chatbots, most of the studies are relying on young samples. Moreover, the literature review reveals that almost all papers on the use of chatbots for aging population are related to the healthcare or assistive domain. Almost all of them are written from a technical point of view by computer science specialists. Although AI developments in assistive technology are advanced, there is still work to be done for achieving a proper chatbot for end aging users (De Arriba-Pérez et al., 2021). Thus, the existing studies refer to presenting, designing, and testing prototypes of conversational agents with meaningful, empathetic emotional, and friendship capabilities (Yang et al., 2015; Bassi et al., 2021), with personalized entertainment content access (De Arriba-Pérez et al., 2021), with workplace environment facilities (Bächle et al., 2018), with capabilities to promote healthy habits through a coaching model (Justo et al., 2021), with public administration abilities (Miliani et al., 2021), with virtual caregiving attributions (Su et al., 2017; Ryu et al., 2020; Valtolina and Hu, 2021; Miura et al., 2022).

In a systematic literature review on general use of chatbots by aging adults, Da Paixão Pinto et al. (2021) have found that, considering the innovations in natural language processing, speech is the most used and preferred input format for aging adults to interact with chatbots. They also emphasize that assistive conversational agents still face acceptance problems, low involvement, and low user satisfaction mainly due to the loss of autonomy, privacy, and technical errors (Da Paixão Pinto et al., 2021).

Based on a set of interviews on assistive living with long term patients in Sri Lanka, Gunathilaka et al. (2020) have found that, due to poor sight, voice-based conversational agents can be helpful for long-term patient care if they are specialized for specific requirements, in accordance with individuals’ needs. However, although virtual agents can help aging adults to be more independent and better enjoy the lonely moments, the devices cannot substitute a human caregiver especially from an emotional point of view (Gunathilaka et al., 2020).

A comprehensive study on aging adults’ acceptance of health chatbots is using the extended Unified Theory of Acceptance and Use of Technology (UTAUT2) model (Venkatesh et al., 2012) to qualitatively test the factors that contribute to the adoption intention (Mesbah and Pumplun, 2020). Beside the interviews, the respondents have tested a health chatbot for a better understanding of the technology. The results show that behavioral intention to use a health chatbot depends not only on the performance expectancy, effort, social influence, facilitating conditions, as the initial model states, but also on variables as patience, resistance to change, need for emotional support, technology self-efficacy and anxiety, privacy risk expectancy, or trust in the technology and in the recommendation of a chatbot (Amato et al., 2017; Mesbah and Pumplun, 2020). As trust is especially considered an important factor in technology acceptance, it is predicted to positively impact the intention to use a certain technology, the perceived ease of use and the perceived usefulness when it comes to e-commerce and e-services (Gefen and Straub, 2003; Pavlou, 2003; Lankton et al., 2015; Cardona et al., 2021).

When it comes to gender, findings on technology acceptance are mixed. While some studies consider that there are no significant relationships between gender and computer attitude (Nash and Moroz, 1997), others find that men tend to score higher than women in affinity for technology (Edison and Geissler, 2003). Gender is usually considered as a moderator variable within the technology acceptance models (Bagana et al., 2021). Although the difference between men and women is narrow, men are believed to experience a lower level of technology anxiety (Damant and Knapp, 2015) and thus a higher behavioral intention.

Based on the above-described literature, the hypotheses of the paper are listed below:

H1: Perceived ease of use of chatbots is positively impacted by enjoyment (H1a), satisfaction (H1b), effort (H1c), competence (H1d), pressure (H1e), and perception of external control (H1f).

H2: Perceived usefulness of a chatbot is positively impacted by the perceived ease of use (H2a) and the subjective norms (H2b).

H3: Behavioral intention to use a chatbot in the future is positively impacted by the perceived usefulness (H3a), perceived ease of use (H3b), subjective norms (H3c), and previous experience with a chatbot [knowledge of chatbots (H3d), having heard about chatbots (H3e), use of chatbots (H3f), and liking chatbots (H3g)].

H4: Men in comparison with women (H4a), and middle-aged in comparison with aging adults (H4b) are more likely to report a higher level of behavioral intention to use chatbots in the future.

The schematic version of the structural model is presented in Figure 1.

To assess the above-mentioned relationships, an online opinion survey has been conducted. The questionnaire has been designed in Google Forms and it has been self-administered during the COVID-19 pandemic, namely between May and June 2021. Considering that the sample is formed of people over 40 years old and to avoid discrepancies due to communication difficulties (Cardona et al., 2021), the questionnaire has been developed in Romanian. For a clear understanding of the research scope, the questionnaire has an introductory part in which the aim of the survey is presented together with information about the anonymity and confidentiality of the data. Moreover, for a more accurate understanding of a chatbot interaction, a small example has been given at the beginning of the survey (Figure 2). Andrei is the chosen name for the chatbot, as it is one of the most familiar names in Romania.

The questionnaire is composed of three main sections. The first section evaluates the previous experience with chatbots (if the users have heard of chatbots, if they use them, if they have knowledge of them, and if they like interacting with them). At the same time, the situations in which chatbots have been used and their perceived benefits are inquired about. The second section covers the main variables of technology acceptance models: perceived ease of use, perceived usefulness, enjoyment, satisfaction, effort, competence, pressure, perception of external control, subjective norms, and behavioral intention. The last section is dedicated to the socio-demographic variables.

Covariance-based structural equation modelling (CB-SEM) has been used to test the theoretical assumptions, as it is a procedure suited to complex conceptual models (Nitzl, 2016; Hair J. et al., 2019) and theory testing (Hair J. et al., 2019; Hair J. F. et al., 2019). The data have been analyzed using IBM SPSS and Amos (version 26).

The analyzed sample (N = 235) is formed of middle-aged (57.4%) and aging adults (41.6%), out of which 59.1% are females. The average age of the respondents is M = 51.13, SD = 5.954. Age has been measured as a continuous variable (“Please state your age in full years”). While middle-aged people are considered adults between 36 and 55 years old (Petry, 2002), aging adults are people over 50 years old (Mitzner et al., 2008; Renaud and Van Biljon, 2008). In the case of the present paper, middle-aged respondents are considered the ones between 40 and 50, and the aging ones are over 50. The age range of the present sample is 40–78 and these age limits are due to the sample selection process. The sample has been selected using the convenience sampling technique (Parker et al., 2019). Undergraduate communication students, on a voluntary basis and coordinated by the authors, sent the online questionnaires to their aging relatives. The questionnaires have been filled in between May and June 2021. Most of the respondents have an urban residence, have university studies, and have an income higher than 500 euros per month. Thus, there is the need to emphasize, from the very beginning, an over-representation of some demographic groups in the sample.

The table below (Table 1) summarizes the main demographic variables of the respondents.

The measurements have been adapted from previously validated methodologies and developed based on the literature.

The previous experience with chatbots is measured by using the following variables. The respondents have been asked if they have heard about chatbots (HC) and if they have ever used them (UC; Luo and Remus, 2014) on a scale from 1 to 7, where 1 = never and 7 = very frequently. Likewise, on a 7-point scale (1 = nothing at all, 7 = a lot), they have been asked about their knowledge of chatbots (KC; Cui and Wu, 2019). The general attitude towards chatbots has been measured by using a simple question on liking this type of interaction (LKC; Edison and Geissler, 2003), on a 7-point scale, where 1 = not at all and 7 = very much.

The perceived ease of use (PEOU) scale, with seven items, is measured on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Van der Heijden et al., 2003; Venkatesh and Bala, 2008; Luo and Remus, 2014).

The perceived usefulness (PU) of interacting with chatbots uses nine items and it is measured on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Venkatesh and Bala, 2008; Luo and Remus, 2014; O’Brien et al., 2018).

The enjoyment (ENJ) produced by interacting with a chatbot is measured through eight items, on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Ryan, 1982; Ryan et al., 1983, 1990, 1991; Plant and Ryan, 1985; McAuley et al., 1987; Deci et al., 1994; Venkatesh and Bala, 2008).

The satisfaction (SA) with the interaction, or the output quality, is measured through six items on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Sherry et al., 2006; Venkatesh and Bala, 2008; Luo and Remus, 2014).

The effort (EFF) involved in chatbot interaction, or the objective usability, is measured using two items, on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Ryan, 1982; Ryan et al., 1983, 1990, 1991; Plant and Ryan, 1985; McAuley et al., 1987; Deci et al., 1994; Venkatesh and Bala, 2008).

The perceived competence (COMP) of using a chatbot, or technology self-efficacy, is measured based on four items, on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Ryan, 1982; Ryan et al., 1983, 1991; Plant and Ryan, 1985; McAuley et al., 1987; Ryan et al., 1990; Deci et al., 1994; Venkatesh and Bala, 2008).

The pressure (PRS) or anxiety generated by an interaction with a chatbot is measured by using five items on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Ryan, 1982; Ryan et al., 1983, 1990, 1991; Plant and Ryan, 1985; McAuley et al., 1987; Deci et al., 1994; Venkatesh and Bala, 2008).

The perception of external control (PEC), or how much control one has on interacting with chatbots, is measured through two items on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Venkatesh and Bala, 2008).

The subjective norms (SN) variable (the degree to which one perceives that people who are important to that person think he/she should use the system) is measured through two items on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Venkatesh and Bala, 2008).

The behavioral intention (BI), or the intention to use chatbots in the future, is measured through four items on a 7-point scale, where 1 = strongly disagree and 7 = strongly agree (Luo and Remus, 2014).

The table below (Table 2) summarizes all the variables and items used and provides descriptive data for each item. The internal consistency has been computed using Cronbach’s α. Overall, the results are satisfactory, as the α values of all the constructs are higher than the acceptable threshold value of 0.6 (Fornell and Larcker, 1981; Nam et al., 2018). Factor loading has been assessed for each item. Some items have been removed due to low factor loadings (<0.05; e.g., PRS1, PRS3, and BI3).
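As an illustration of the internal-consistency check, the following minimal sketch computes Cronbach’s α with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). The function name and the two columns of answers are hypothetical; they are not the study’s data, and the authors computed their values in SPSS.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns (one list of scores per item)."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Summed scale score of each respondent across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical answers of five respondents to two items on a 1-7 scale.
item_1 = [5, 3, 6, 2, 4]
item_2 = [4, 3, 7, 2, 5]
alpha = cronbach_alpha([item_1, item_2])
print(f"alpha = {alpha:.2f}")
```

A construct would pass the criterion used in the paper when the returned value is above 0.6.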

From the point of view of previous experience with chatbots, more than 75% of the respondents have heard at least once about chatbots (M = 3.64, SD = 1.64) and around 70% have used this interaction on at least one occasion (M = 2.89, SD = 1.710). Table 3 presents this information in a comparative manner between women and men and between middle-aged and aging adults, focusing on the upper part of the scale. In this respect, age has been transformed into a dummy variable ([40–50] and [51–78] intervals). Overall, men seem to have more experience with chatbots. However, when it comes to age, the differences between middle-aged and aging adults are small. Paradoxically, and probably due to social desirability, although a large majority of the respondents declare that they like chatbots (M = 4.12, SD = 2.006), only a small part of them have substantial knowledge of them (M = 3.19, SD = 1.598).
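The recoding of age into the two intervals can be sketched as follows. The 0/1 coding and the function name are assumptions made for illustration; the paper only states the two intervals.

```python
def age_group(age):
    """Return 0 for middle-aged (40-50) and 1 for aging (51-78) respondents."""
    if not 40 <= age <= 78:
        raise ValueError(f"age {age} is outside the sampled range 40-78")
    return 0 if age <= 50 else 1


# Hypothetical ages, including the boundaries of both intervals.
ages = [40, 47, 50, 51, 63, 78]
groups = [age_group(a) for a in ages]
print(groups)
```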

As presented in Figure 3, the situations in which chatbots have been used regularly (Luo and Remus, 2014) are related to customer services and online shopping. These chatbots are similar in functionality and interaction and they are only tailor-made for those domains. Furthermore, when it comes to benefits (State of Chatbot Report, 2018), as Figure 4 shows, the respondents strongly appreciate chatbots mainly due to their availability (e.g., 24 h a day) and capabilities to solve problems (e.g., quick answers, registering complaints, simplifying the communication process).

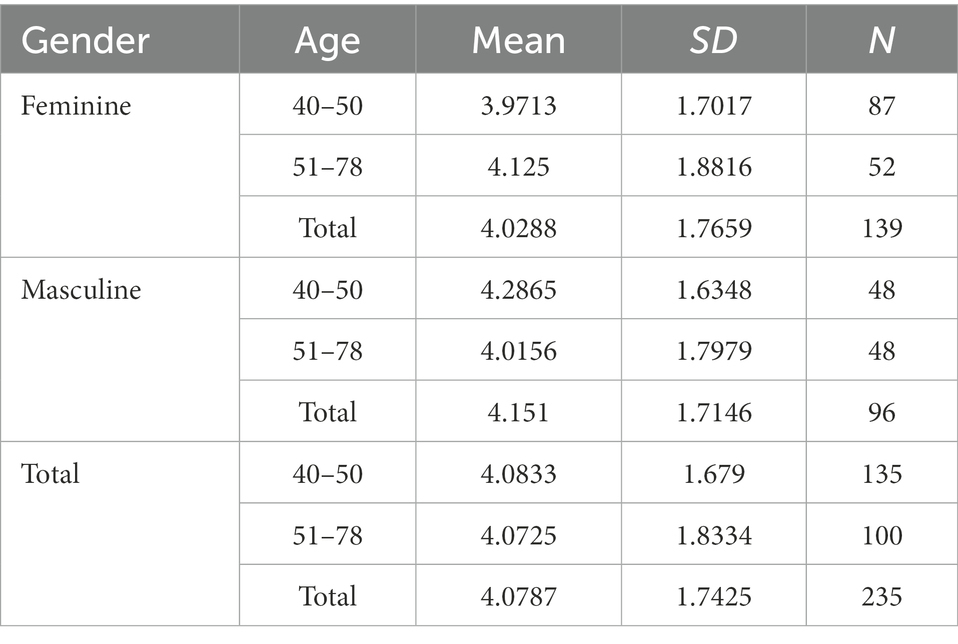

To better understand if there is an interaction between gender and age on the behavioral intention to use chatbots, a two-way ANOVA has been performed. The table below (Table 4) summarizes the descriptive statistics.

Table 4. The descriptive statistics for Two-way ANOVA [the dependent variable is Behavioral intention (BI)].

The test of between-subjects effects shows no significant difference in mean behavioral intention between males and females [F(1, 231) = 0.191, p = 0.662] or between middle-aged and aging adults [F(1, 231) = 0.62, p = 0.804].
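For reference, a two-way ANOVA of this kind is usually written in the textbook form below; the notation is an assumption made for illustration and is not quoted from the study. Here α_i is the gender effect, β_j the age-group effect, (αβ)_ij their interaction, and ε_ijk the error of respondent k in cell (i, j). With N = 235 respondents and four gender-by-age cells, the error term has 235 − 4 = 231 degrees of freedom, which matches the reported F(1, 231).

```latex
BI_{ijk} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk},
\qquad
F_{\text{effect}} = \frac{MS_{\text{effect}}}{MS_{\text{error}}},
\qquad
df_{\text{error}} = N - 4 = 235 - 4 = 231
```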

Table 5 presents the correlation matrix between the main variables of the study. The strongest relationships are highlighted below. While perceived ease of use is strongly, positively, and significantly correlated with perceived usefulness, competence, and pressure, perceived usefulness is strongly linked with enjoyment, pressure, and satisfaction. Finally, the more one likes chatbots, perceives them as being useful, enjoys them, and feels satisfaction while using them, the higher the intention to use chatbots in the future. It is important to notice that gender is significantly correlated only with having heard about chatbots, using them, and having knowledge of them, men being more prone to that. However, the relationship is a weak one. Age is significantly and negatively correlated with perceived ease of use, perceived usefulness, effort, and pressure. Although the relationships are weak, further analyses should investigate whether middle-aged adults perceive chatbots as being easier to use and more useful, and whether they indeed invest less effort and feel less pressure when using chatbots.

A structural model assessment has been used to test the initial hypothesized relationships. The model-fit measurements have been used to evaluate the overall goodness of fit. In this respect, the following table (Table 6) summarizes the main indicators for the model and the standard values for a good fit. The standard values for a good fit are taken from Schumacker and Lomax (2004), Schreiber et al. (2006), and Shi et al. (2019). Overall, the data show that these indicators meet the recommended values for an acceptable fit. Thus, no modifications to the model have been made.
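As an example of how one common fit index is obtained, the sketch below computes the point estimate of the root mean square error of approximation (RMSEA) from a model chi-square, using one widely used form of the formula. Whether RMSEA is among the indicators of Table 6 is an assumption, and the chi-square and degrees-of-freedom values are hypothetical; only N = 235 comes from the study.

```python
import math


def rmsea(chi_square, df, n):
    """Point estimate of RMSEA from the model chi-square, its df, and sample size n."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n greater than 1")
    # The numerator is floored at zero when the chi-square is below its df.
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# chi_square and df are hypothetical; n = 235 is the sample size of the study.
value = rmsea(chi_square=300.0, df=200, n=235)
print(f"RMSEA = {value:.3f}")
```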

The study assesses the impact of different independent variables related to chatbot use on perceived ease of use, perceived usefulness, and behavioral intention. The following table (Table 7) summarizes the results.

The impacts of enjoyment, satisfaction, and pressure on perceived ease of use of a chatbot are not significant (p > 0.05). Thus, hypotheses H1a, H1b, and H1e are not supported. However, the data show that effort (β = −0.138, t = −3.197, p = 0.001), competence (β = 0.569, t = 9.923, p < 0.001), and perceived external control (β = 0.124, t = 2.710, p = 0.007) impact the perceived ease of use of chatbots in a significant manner. Hence, H1c, H1d, and H1f are supported.
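The reported p-values can be related to the t values with a short check. The sketch assumes that each t value is a critical ratio (estimate divided by its standard error) evaluated against a standard normal distribution, which is how SEM software commonly reports significance; the paper does not state this explicitly, and the function name is hypothetical.

```python
import math


def two_sided_p(critical_ratio):
    """Two-sided p-value of a critical ratio under a standard normal reference."""
    return math.erfc(abs(critical_ratio) / math.sqrt(2))


# t values reported for the three significant predictors of perceived ease of use.
reported = {
    "effort": -3.197,
    "competence": 9.923,
    "perceived external control": 2.710,
}
p_values = {name: two_sided_p(cr) for name, cr in reported.items()}
for name, p in p_values.items():
    print(f"{name}: p = {p:.3f}")
```

Under this assumption the computed values round to the p-values given in the text for effort and perceived external control, and the value for competence falls below 0.001.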

Perceived usefulness is positively and significantly impacted by both perceived ease of use (β = 0.951, t = 9.541, p < 0.001) and subjective norms (β = 0.806, t = 4.434, p < 0.001). Thus, H2a and H2b are supported by the data.

Behavioral intention to use a chatbot is not significantly impacted by perceived ease of use, age, gender, or the previous experience with the interaction (knowledge of chatbots, having heard about chatbots, use of chatbots, or liking chatbots). Hence, hypotheses H3b, H3d, H3e, H3f, H3g, H4a, and H4b are not supported. However, perceived usefulness (β = 0.113, t = 7.397, p < 0.001) of a chatbot and subjective norms (β = 0.255, t = 3.581, p < 0.001) positively and significantly impact the behavioral intention of using this interaction in the future. Consequently, H3a and H3c are supported.

The squared multiple correlation is R² = 0.644 for perceived ease of use. It means that 64% of the variance in the perceived ease of use is accounted for by enjoyment, satisfaction, effort, competence, pressure, and perception of external control (however, only effort, competence, and perceived external control are significant). For the perceived usefulness, the squared multiple correlation is R² = 0.142, which means that 14% of the variance in the perceived usefulness is explained by perceived ease of use and subjective norms. Finally, for the behavioral intention, the squared multiple correlation is R² = 0.571. It means that 57% of the variance in the behavioral intention of using a chatbot is accounted for, with perceived usefulness and subjective norms as the only significant predictors (perceived ease of use, age, gender, and experience variables not being significantly linked with behavioral intention).

The results of the structural model are summarized in the conceptual schema below (Figure 5).

In a context in which the technological development is increasingly impacting the socio-economic environment and in which the aging population is already an acknowledged phenomenon, the present paper aims to better understand the way chatbots are perceived by middle-aged and aging adults in Romania. Since the existing literature on chatbots is mostly written in the computer science domain and/or with a strong focus on healthcare and assistive perspective, one of the original contributions of this paper resides in assessing the general view, not domain-specific, on chatbots later in life and from a social science standpoint. Moreover, since most devices and applications are designed by young specialists, the aging adults’ inputs are mandatory.

Starting from the COVID-19 pandemic situation, the need for digital solutions is emphasized (Valtolina and Marchionna, 2021). However, as older individuals are more reluctant to adopt technology than younger people (Edison and Geissler, 2003), investigating perceptions of technology later in life is paramount, not only with regard to the need for smart healthcare, but also considering daily routine activities, such as paying a bill or shopping online.

By relying on complex theoretical models of technology acceptance, the present paper highlights the role of perceived ease of use of chatbots, their perceived usefulness, previous experience with chatbots, and demographics on the behavioral intention to further use this type of interaction. A structural model has been used for hypotheses testing. The first assumption of the paper (H1) introduces a wide range of variables as possible explanations for the perceived ease of use of chatbots. Chatbots are perceived as easy to use if the effort implied is low and if the users feel competent for this type of interaction. However, contrary to expectations, enjoyment, satisfaction, and pressure, although significantly correlated with perceived ease of use, do not directly influence it. These results contrast with a large set of findings on technology acceptance (Venkatesh, 2000; Zamora, 2017; Kim et al., 2018). Possible explanations might be related to the limits of the sample (in terms of size or over-representation of certain socio-demographic features, e.g., education), to the lack of knowledge of chatbots, or to limited exposure to this type of technology. Thus, associating chatbots with different degrees of enjoyment, satisfaction, or pressure might be accomplished only after an adjustment time frame and an increased experience. Consequently, further investigation is needed on the way the aging population perceives the ease of use of chatbots, a topic that is scarcely studied.

The second assumption (H2) of the paper implies that perceived usefulness of chatbots is predicted by the perceived ease of use of this technology and by the subjective norms. Data show that this hypothesis is supported. Thus, middle-aged and aging users consider that chatbots are useful mainly if they find them easy to use, if people around them consider they should use this interaction, and if they are helped in this process. This conclusion is in line with the results of Venkatesh and Davis (2000) and Venkatesh and Bala (2008).

The third assumption (H3) hypothesizes that behavioral intention is impacted by the perceived ease of use, perceived usefulness, subjective norms, and previous experience with chatbots. This assumption is based on the results of Venkatesh and Davis (2000), Lin et al. (2007), Renaud and Ramsay (2007), and Venkatesh and Bala (2008). The data show that, although there are significant correlations between all these variables, behavioral intention is only explained by the perceived usefulness of chatbots and by the subjective norms. In this respect, later in life, a more utilitarian perspective of technology and the role of peers seem to be more important.

Finally, the last hypothesis (H4) refers to the role of age and gender in the intention to further use chatbots. The data show that there are no differences between women and men, or between middle-aged and aging adults, in the behavioral intention to use chatbots. This lack of difference is in line with Modahl (1999), who concludes that, mainly when it comes to internet use, the role of age and gender is reduced. In terms of age, the results can be explained by the low average age (M = 51.13) and by the fact that people above 60 years old are under-represented (8.2%) within the sample.

As studies on chatbots and aging adults are few and are mainly investigating reactions in the healthcare domain, this research is one of the first attempts to better understand the way chatbots in a not domain-specific context are perceived later in life. However, as some of the results contradict the existing theoretical models that explain technology acceptance, further inquiries are needed. One of the limits of the present paper is the small sample size and the convenience sampling method. Convenience samples are valuable for assessing attitudes and identifying new possible hypotheses that need further rigorous investigation (Galloway, 2005). In this respect, larger samples and a more in-depth approach should be considered. Considering that age alone is not a sufficient socio-demographic variable to explain technology use (Loos, 2012), one important limit of the paper refers to the fact that aging adults who have a rural residence, are less educated, and have a low income are underrepresented in this study. For the results to be generalizable, future investigations should consider a better representation of the population within the sample for all the important socio-demographic variables. Likewise, as older people are not that comfortable with online questionnaires (Kelfve et al., 2022), and as a large number of statements has been investigated, the method might have introduced bias, including social desirability bias. It is very likely that an experimental setting would better fit the issue of chatbot testing. At the same time, a future comparative approach between the way not domain-specific chatbots and domain-specific ones are perceived becomes of great interest. Another limit of the paper is its single-country design. As it emphasizes the case of Romania and its specific digital literacy characteristics, the data cannot be generalized to any other socio-economic or cultural context. However, Romania, being ranked last in digital skills among EU countries, can serve as a valuable case study for different techniques to overcome the digital literacy gap. For a more comprehensive and global perspective, a comparative analysis with other countries is needed. Since assistive technologies are already largely used in developed countries, a best practice guide to reduce the economic and social gaps might be of great value.

The present paper’s contributions are twofold. On one hand, it is one of the first attempts to explore the middle-aged and aging adults’ perceptions of chatbots in a non-healthcare context in Romania. Considering that technology is increasingly present in our daily routine, this type of investigation is of great use. Furthermore, some of the variables included in other studies analyzing technology perception in different setups seem not that important in the case of chatbots’ use later in life and in the context of the least digitally educated country in the EU. In this particular case, a lower degree of effort, an increased feeling of competence, external control and subjective norms, and a high utilitarian role of technology seem to be the most important factors in chatbots’ use. Finally, it is important to notice the absence of differences at the age and gender levels. Thus, stereotypical perceptions should be overcome.

On the other hand, the implications of the present investigation echo at the managerial and business level. As training might be uncomfortable for aging individuals, developing chatbots that are intuitive and that do not need much preparation to be used might be a winning solution (Da Paixão Pinto et al., 2021). The practitioners that develop technological interactive systems should be aware of the needs of the aging adults. Thus, the takeaways imply designing useful technologies that do not require effort in use and that provide feelings of competence for the user.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical approval was not provided for this study on human participants because informed implicit consent was obtained when the survey was conducted and the data were anonymized. The survey was conducted online and the respondents opted in to participate. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adamopoulou, E., and Moussiades, L. (2020). “An overview of chatbot technology” in Artificial intelligence applications and innovations. AIAI 2020. IFIP advances in information and communication technology. eds. I. Maglogiannis, L. Iliadis, and E. Pimenidis, vol. 584 (Cham: Springer). doi: 10.1007/978-3-030-49186-4_31

Ajzen, I. (1985). “From intentions to actions: a theory of planned behavior” in Action control: From cognition to behavior. eds. J. Kuhl and J. Beckmann (Berlin, Heidelberg, New York: Springer-Verlag), 11–39. doi: 10.1007/978-3-642-69746-3_2

Alt, M. A., Vizeli, I., and Săplăcan, Z. (2021). Banking with a chatbot – a study on technology acceptance. Studia Universitatis Babeș-Bolyai Oeconomica 66, 13–35. doi: 10.2478/subboec-2021-0002

Amato, F., Marrone, S., Moscato, V., Piantadosi, G., Picariello, A., and Sansone, C. (2017). Chatbots meet Ehealth: Automatizing healthcare. Workshop on Artificial Intelligence with Application in Health.

Ameen, N., Tarhini, A., Reppel, A., and Anand, A. (2020). Customer experiences in the age of artificial intelligence. Comput. Hum. Behav. 114:106548. doi: 10.1016/j.chb.2020.106548

Ashfaq, M., Yun, J., Yu, S., and Loureiro, S. (2020). I, chatbot: modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telematics Inform. 54:101473. doi: 10.1016/j.tele.2020.101473

Bächle, M., Daurer, S., Judt, A., and Mettler, T. (2018). Chatbots as a user Interface for assistive technology in the workplace conference: Usability day XVI. Dornbirn, Austria: Zentrum für Digitale Innovationen (ZDI). Available at: https://serval.unil.ch/resource/serval:BIB_C2614A9FE3FC.P001/REF

Bagana, B. D., Irsad, M., and Santoso, I. H. (2021). Artificial intelligence as a human substitution? Customer's perception of the conversational user interface in banking industry based on UTAUT concept. Rev. Manag. Entrepr. 5, 33–44. doi: 10.37715/RME.V5I1.1632

Bala, K., Kumar, M., Hulawale, S., and Pandita, S. (2017). Chatbot for college management system using AI. Int. Res. J. Eng. Technol. 4, 2030–2033.

Bassi, A., Chan, J., and Mongkolnam, P. (2021). Virtual companion for the elderly: conceptual framework. SSRN Electron. J. doi: 10.2139/ssrn.3953063

Blumler, J., and Katz, E. (1974). The uses of mass communications: Current perspectives on gratifications research. Beverly Hills, California, Sage Publications.

Brandtzaeg, P.B., and Følstad, A. (2017). “Why people use chatbots”, in Internet science. eds. I. I. Kompatsiaris, J. Cave, A. Satsiou, G. Carle, A. Passani, E. Kontopoulos, S. Diplaris, and D. McMillan (Cham: Springer), 377–392.

Canhoto, A. I., and Clear, F. (2020). Artificial intelligence and machine learning as business tools: a framework for diagnosing value destruction potential. Bus. Horiz. 63, 183–193. doi: 10.1016/j.bushor.2019.11.003

Cardona, D. R., Janssen, A. H., Guhr, N., Breitner, M. H., and Milde, J. (2021). A matter of trust? Examination of Chatbot Usage in Insurance Business. Hawaii International Conference on System Sciences (HICSS).

Chen, R., Dannenberg, R., Raj, B., and Singh, R. (2020). Artificial creative intelligence: breaking the imitation barrier. Proceedings of the 11th International Conference on Computational Creativity, Association for Computational Creativity. 319–325.

Chen, Y., and Schulz, P. (2016). The effect of information communication technology interventions on reducing social isolation in the elderly: a systematic review. J. Med. Internet Res. 18:e18. doi: 10.2196/jmir.4596

Chou, M., and Liu, C. (2016). Mobile instant messengers and middle-aged and elderly adults in Taiwan: uses and gratifications. Int. J. Hum. Comp. Inter. 32, 835–846. doi: 10.1080/10447318.2016.1201892

Cohen, J. B., and Reed, A. II (2006). A multiple pathway anchoring and adjustment (MPAA) model of attitude generation and recruitment. J. Consum. Res. 33, 1–15. doi: 10.1086/504121

Coman, I. (2021). INS: Îmbătrânirea demografică a populației României continuă să se accentueze. Digi24. Available at: https://www.digi24.ro/stiri/actualitate/social/ins-imbatranirea-demografica-a-populatiei-romaniei-continua-sa-se-accentueze-1649915

Compeau, D. R., and Higgins, C. A. (1995). Computer self-efficacy: development of a measure and initial test. MIS Q. 19, 189–211. doi: 10.2307/249688

Cui, D., and Wu, F. (2019). The influence of media use on public perceptions of artificial intelligence in China: evidence from an online survey. Inf. Dev. 37, 45–57. doi: 10.1177/0266666919893411

Da Paixão Pinto, N., Dos Santos França, J., De Sá Sousa, H., Vivacqua, A., and Garcia, A. (2021). “Conversational agents for elderly interaction” in 2021 IEEE 24th international conference on computer supported cooperative work in design (CSCWD), 1–6. doi: 10.1109/CSCWD49262.2021.9437883

Damant, J., and Knapp, M. (2015). What are the likely changes in society and technology which will impact upon the ability of older adults to maintain social (extra-familial) networks of support now, in 2025 and in 2040? Future of aging: Evidence review (London, UK: Government Office for Science).

Dan, C. (2022). România continuă să îmbătrânească, iar vârsta medie a ajuns la 42,1 ani. Forbes Romania. Available at: https://www.forbes.ro/romania-continua-sa-imbatraneasca-iar-varsta-medie-a-ajuns-la-421-ani-295789

Davis, F. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

De Arriba-Pérez, F., García-Méndez, S., González-Castaño, F., and Costa-Montenegro, E. (2021). Evaluation of abstraction capabilities and detection of discomfort with a newscaster chatbot for entertaining elderly users. Sensors 21:5515. doi: 10.3390/s21165515

Deci, E. L., Eghrari, H., Patrick, B. C., and Leone, D. (1994). Facilitating internalization: the self-determination theory perspective. J. Pers. 62, 119–142. doi: 10.1111/j.1467-6494.1994.tb00797.x

Digital Economy and Society Index (2022). Romania. Available at: https://digital-strategy.ec.europa.eu/en/policies/desi-romania

DiMaggio, P., Hargittai, E., Neuman, W., and Robinson, J. (2001). Social implication of the internet. Annu. Rev. Sociol. 27, 307–336. doi: 10.1146/annurev.soc.27.1.307

Edison, S., and Geissler, G. (2003). Measuring attitudes towards general technology: antecedents, hypotheses and scale development. J. Target. Meas. Anal. Mark. 12, 137–156. doi: 10.1057/palgrave.jt.5740104

Fang, Y., and Chang, C. (2016). Users' psychological perception and perceived readability of wearable devices for elderly people. Behav. Inform. Technol. 35, 225–232. doi: 10.1080/0144929X.2015.1114145

Feist, H., Parker, K., Howard, N., and Hugo, G. (2010). New technologies: their potential role in linking rural older people to community. Int. J. Emerg. Technol. 8, 68–84.

Fishbein, M., and Ajzen, I. (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Reading, MA: Addison-Wesley.

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.1177/002224378101800104

Galloway, A. (2005). Non-probability sampling. Encyc. Soc. Meas., 859–864. doi: 10.1016/B0-12-369398-5/00382-0

Gatti, F., Brivio, E., and Galimberti, C. (2017). “The future is ours too”: a training process to enable the learning perception and increase self-efficacy in the use of tablets in the elderly. Educ. Gerontol. 43, 209–224. doi: 10.1080/03601277.2017.1279952

Gefen, D., and Straub, D. (2003). Managing user trust in B2C E-services. e-Service 2, 7–24. doi: 10.2979/esj.2003.2.2.7

Gunathilaka, L., Weerasinghe, W., Wickramasinghe, I., Welgama, V., and Weerasinghe, A. (2020). “The use of conversational interfaces in long term patient care” in 20th international conference on advances in ICT for emerging regions (ICTer), 131–136. doi: 10.1109/ICTer51097.2020.9325473

Hair, J. F., Risher, J. J., Sarstedt, M., and Ringle, C. (2019). When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 31, 2–24. doi: 10.1108/EBR-11-2018-0203

Hair, J., Sarstedt, M., and Ringle, C. (2019). Rethinking some of the rethinking of partial least squares. Eur. J. Mark. 53, 566–584. doi: 10.1108/EJM-10-2018-0665

Hall, M., Elliott, K., and Meng, J. (2017). Using the PAD (pleasure, arousal, and dominance) model to explain Facebook attitudes and use intentions. J. Soc. Media Soc. 6, 144–169.

Hildebrand, C., and Bergner, A. (2019). AI-driven sales automation: using Chatbots to boost sales. NIM Market. Intellig. Rev. 11, 36–41. doi: 10.2478/nimmir-2019-0014

Hofmann, B. (2013). Ethical challenges with welfare technology: a review of the literature. Sci. Eng. Ethics 19, 389–406. doi: 10.1007/s11948-011-9348-1

Huh, J., and Seo, K. (2015). Design and implementation of the basic Technology for Solitary Senior Citizen's lonely death monitoring system using PLC. J. Korea Multimed. Soc. 18, 742–752. doi: 10.9717/kmms.2015.18.6.742

Hussain, S., Ameri Sianaki, O., and Ababneh, N. (2019). “A survey on conversational agents/Chatbots classification and design techniques” in Web, artificial intelligence and network applications. WAINA 2019. Advances in intelligent systems and computing. eds. L. Barolli, M. Takizawa, F. Xhafa, and T. Enokido, vol. 927 (Cham: Springer) doi: 10.1007/978-3-030-15035-8_93

Ivan, L., and Cutler, S. J. (2021). Older adults and the digital divide in Romania: implications for the COVID-19 pandemic. J. Elder Policy 1. doi: 10.18278/jep.1.3.5

Ivanov, S., and Webster, C. (2017). Adoption of robots, artificial intelligence and service automation by travel, tourism and hospitality companies – a cost-benefit analysis. International Scientific Conference “Contemporary tourism – traditions and innovations”. Sofia University.

Justo, R., Letaifa, L., Olaso, J., López-Zorrilla, A., Develasco, M., Vázquez, A., et al. (2021). “A Spanish corpus for talking to the elderly” in Conversational dialogue Systems for the Next Decade. Lecture notes in electrical engineering. eds. L. F. D'Haro, Z. Callejas, and S. Nakamura, vol. 704 (Singapore: Springer) doi: 10.1007/978-981-15-8395-7_13

Kelfve, S., Kivi, M., Johansson, B., and Lindwall, M. (2022). Going web or staying paper? The use of web-surveys among older people. BMC Med. Res. Methodol. 20:252. doi: 10.1186/s12874-020-01138-0

Kim, M., Lee, C., and Contractor, N. (2018). Seniors’ usage of mobile social network sites: applying theories of innovation diffusion and uses and gratifications. Comput. Hum. Behav. 90, 60–73. doi: 10.1016/j.chb.2018.08.046

Knol, C. (2019). Chatbots — an organisation’s friend or foe? Res. Hosp. Manag. 9, 113–116. doi: 10.1080/22243534.2019.1689700

Lankton, N. K., McKnight, D. H., and Tripp, J. (2015). Technology, humanness, and trust: rethinking trust in technology. J. Assoc. Inf. Syst. 16, 880–918. doi: 10.17705/1jais.00411

Lee, C., and Coughlin, J. (2014). Older adults’ adoption of technology: an integrated approach to identifying determinants and barriers. J. Prod. Innov. Manag. 32, 747–759. doi: 10.1111/jpim.12176

Lenhart, A., and Horrigan, J. B. (2003). Re-visualizing the digital divide as a digital Spectrum. IT Soc. 5, 23–39.

Lin, C., Shih, H., and Sher, P. (2007). Integrating technology readiness into technology acceptance: the TRAM model. Psychol. Mark. 24, 641–657. doi: 10.1002/mar.20177

Liu, W., Chuang, K., and Chen, K. (2018). The design and implementation of a Chatbot's character for elderly care. ICSSE 2018, 1–5. doi: 10.1109/ICSSE.2018.8520008

Lo Presti, L., Maggiore, G., and Marino, V. (2021). The role of the chatbot on customer purchase intention: towards digital relational sales. Ital. J. Mark. 2021, 165–188. doi: 10.1007/s43039-021-00029-6

Loos, E. (2012). Senior citizens: digital immigrants in their own country? Observatorio 6, 1–23. Available at: http://obs.obercom.pt/index.php/obs/article/view/513/477

Luo, M. M., and Remus, W. (2014). Uses and gratifications and acceptance of web-based information services: an integrated model. Comput. Hum. Behav. 38, 281–295. doi: 10.1016/j.chb.2014.05.042

Maniou, T. A., and Veglis, A. (2020). Employing a chatbot for news dissemination during crisis: design, implementation and evaluation. Future Internet 12:109. doi: 10.3390/fi12070109

McAuley, E., Duncan, T., and Tammen, V. V. (1987). Psychometric properties of the intrinsic motivation inventory in a competitive sport setting: a confirmatory factor analysis. Res. Q. Exerc. Sport 60, 48–58.

Melián-González, S., Gutiérrez-Taño, D., and Bulchand-Gidumal, J. (2021). Predicting the intentions to use chatbots for travel and tourism. Curr. Issue Tour. 24, 192–210. doi: 10.1080/13683500.2019.1706457

Mesbah, N., and Pumplun, L. (2020). “Hello, I'm here to help you” – medical care where it is needed most: seniors’ acceptance of health chatbots. Proceedings of the 28th European Conference on Information Systems (ECIS), an online AIS Conference, June 15–17, 2020. Available at: https://aisel.aisnet.org/ecis2020_rp/209

Michiels, E. (2017). Modelling chatbots with a cognitive system allows for a differentiating user experience. Doct. Consort. Ind. Track Pap. 2027, 70–78.

Miliani, M., Benedetti, M., and Lenci, A. (2021). Language disparity in the interaction with Chatbots for the administrative domain. AIUCD. 545–549. Available at: https://colinglab.fileli.unipi.it/wp-content/uploads/2021/03/miliani_etal_AIUCD2021.pdf [accessed October 2022]

Minge, M., Bürglen, J., and Cymek, D. H. (2014). “Exploring the potential of Gameful interaction design of ICT for the elderly” in Posters’ extended abstracts international conference, HCI international 2014 Heraklion, Crete, Greece, June 22–27, 2014 proceedings, part II. ed. C. Stephanidis (Cham: Springer), 304–309. doi: 10.1007/978-3-319-07854-0

Mitzner, T., Fausset, C., Boron, J., Adams, A., Dijkstra, K., Lee, C., et al. (2008). Older adults’ training preferences for learning to use technology. Proceedings of the Human Factors and Ergonomics Society 52nd Annual Meeting, Santa Monica, CA: Human Factors and Ergonomics Society. 52, 2047–2051. doi: 10.1177/154193120805202603

Miura, C., Chen, S., Saiki, S., Nakamura, M., and Yasuda, K. (2022). Assisting personalized healthcare of elderly people: developing a rule-based virtual caregiver system using Mobile chatbot. Sensors 22:3829. doi: 10.3390/s22103829

Modahl, M. (1999). Now or never: How companies must change today to win the battle for internet consumers. Harper Business, New York, NY.

Mokmin, M., and Ibrahim, N. (2021). The evaluation of chatbot as a tool for health literacy education among undergraduate students. Educ. Inf. Technol. 26, 6033–6049. doi: 10.1007/s10639-021-10542-y

Motorga, E. (2022). Public policy and digital government public framework in Romania during the pandemic. The case of aging adults. Pol. Stud. For. 3, 93–124.

Nam, S., Kim, D., and Jin, C. (2018). A comparison analysis among structural equation modeling (AMOS, LISREL and PLS) using the same data. J. Korea Inst. Inform. Commun. Eng. 22, 978–984. doi: 10.6109/JKIICE.2018.22.7.978

Nash, J. B., and Moroz, P. A. (1997). An examination of the factor structures of the computer attitude scale. J. Educ. Comput. Res. 17, 341–356. doi: 10.2190/NGDU-H73E-XMR3-TG5J

Nichifor, E., Trifan, A., and Nechifor, E. M. (2021). Artificial intelligence in electronic commerce: basic Chatbots and the consumer journey. Amfiteatru Econ. 23, 87–101. doi: 10.24818/EA/2021/56/87

Niehaves, B., and Plattfaut, R. (2014). Internet adoption by the elderly: employing IS technology acceptance theories for understanding the age-related digital divide. Eur. J. Inf. Syst. 23, 708–726. doi: 10.1057/ejis.2013.19

Nikou, S. (2015). Mobile technology and forgotten consumers: the young-elderly. Int. J. Consum. Stud. 39, 294–304. doi: 10.1111/ijcs.12187

Nitzl, C. (2016). The use of partial least squares structural equation modelling (PLS-SEM) in management accounting research: directions for future theory development. J. Account. Lit. 37, 19–35. doi: 10.1016/j.acclit.2016.09.003

O’Brien, H. L., Cairns, P. A., and Hall, M. (2018). A practical approach to measuring user engagement with the refined user engagement scale (UES) and new UES short form. Int. J. Hum. Com. Stud. 112, 28–39. doi: 10.1016/j.ijhcs.2018.01.004

Pal, D., Funilkul, S., Charoenkitkarn, N., and Kanthamanon, P. (2018). Internet-of-things and smart homes for elderly healthcare: an end user perspective. IEEE Access 6, 10483–10496. doi: 10.1109/ACCESS.2018.2808472

Parker, C., Scott, S., and Geddes, A. (2019). Snowball sampling. UK: SAGE Research Methods Foundations.

Pavlou, P. (2003). Consumer acceptance of electronic commerce: integrating trust and risk with the technology acceptance model. Int. J. Electron. Commer. 7, 101–134. doi: 10.1080/10864415.2003.11044275

Pelizäus-Hoffmeister, H. (2016). “Motives of the elderly for the use of technology in their daily lives” in Aging and technology perspectives from the social sciences. eds. E. Domínguez-Rué and L. Nierling (transcript Verlag: Bielefeld), 27–46.

Petrie, H., Gallagher, B., and Darzentas, J. (2014). “Technology for Older People: a critical review” in Posters’ extended abstracts international conference, HCI international 2014 Heraklion, Crete, Greece, June 22–27, 2014 proceedings, part II. ed. C. Stephanidis (Cham: Springer), 310–315. doi: 10.1007/978-3-319-07854-0

Petry, N. (2002). A comparison of young, middle-aged, and older adult treatment-seeking pathological gamblers. The Gerontologist 42, 92–99. doi: 10.1093/geront/42.1.92

Plant, R. W., and Ryan, R. M. (1985). Intrinsic motivation and the effects of self-consciousness, self-awareness, and ego-involvement: an investigation of internally-controlling styles. J. Pers. 53, 435–449. doi: 10.1111/j.1467-6494.1985.tb00375.x

Qiu, L., Cranage, D., and Mattila, A. (2016). How anchoring and self-confidence level influence perceived saving on tensile price claim framing. J. Rev. Pric. Manag. 15, 138–152. doi: 10.1057/rpm.2015.49

Renaud, K., and Ramsay, J. (2007). Now what was that password again? A more flexible way of identifying and authenticating our seniors. Behav. Inform. Technol. 26, 309–322. doi: 10.1080/01449290601173770

Renaud, K., and Van Biljon, J. (2008). Predicting technology acceptance and adoption by the elderly: a qualitative study. SAICSIT 2008, Proceedings of the 2008 annual research conference of the South African Institute of Computer Scientists and Information Technologists on IT research in developing countries: riding the wave of technology, ACM, New York, 210–219.

Report, Market Research. (2022). Available at: https://www.marketresearchfuture.com/reports/chatbots-market-2981

Rese, A., Ganster, L., and Baier, D. (2020). Chatbots in retailers’ customer communication: how to measure their acceptance? J. Retail. Consum. Serv. 56:102176. doi: 10.1016/j.jretconser.2020.102176

Ryan, R. M. (1982). Control and information in the intrapersonal sphere: an extension of cognitive evaluation theory. J. Pers. Soc. Psychol. 43, 450–461. doi: 10.1037/0022-3514.43.3.450

Ryan, R. M., Connell, J. P., and Plant, R. W. (1990). Emotions in non-directed text learning. Learn. Individ. Differ. 2, 1–17. doi: 10.1016/1041-6080(90)90014-8

Ryan, R. M., Koestner, R., and Deci, E. L. (1991). Varied forms of persistence: when free-choice behavior is not intrinsically motivated. Motiv. Emot. 15, 185–205. doi: 10.1007/BF00995170

Ryan, R. M., Mims, V., and Koestner, R. (1983). Relation of reward contingency and interpersonal context to intrinsic motivation: a review and test using cognitive evaluation theory. J. Pers. Soc. Psychol. 45, 736–750. doi: 10.1037/0022-3514.45.4.736

Ryu, H., Kim, S., Kim, D., Han, S., Lee, K., and Kang, Y. (2020). Simple and steady interactions win healthy mentality: designing a chatbot Service for the Elderly. Proceedings of the ACM on human-computer interaction, Vol. 4, CSCW2, article 152, 1–25. doi: 10.1145/3415223

Schipor, G. L., and Duhnea, C. (2021). The consumer acceptance of the digital banking Services in Romania: an empirical investigation. Balkan Near Eastern J. Soc. Sci. 7, 57–62.

Schreiber, J., Nora, A., Stage, F., Barlow, E., and King, J. (2006). Reporting structural equation modeling and confirmatory factor analysis results: a review. J. Educ. Res. 99, 323–338. doi: 10.3200/JOER.99.6.323-338

Schumacker, R. E., and Lomax, R. G. (2004). A beginner's guide to structural equation modeling. Second edition. Mahwah, NJ: Lawrence Erlbaum Associates. doi: 10.4324/9781410610904

Seeger, A.-M., Pfeiffer, J., and Heinzl, A. (2018). Designing anthropomorphic conversational agents: development and empirical evaluation of a design framework. Proceedings of the 39th International Conference on Information Systems. San Francisco, USA. 1–17.

Segercrantz, B., and Forss, M. (2019). Technology implementation in elderly care: subject positioning in times of transformation. J. Lang. Soc. Psychol. 38, 628–649. doi: 10.1177/0261927X19830445

Sherry, J. L., Lucas, K., Greenberg, B. S., and Lachlan, K. (2006). “Video game uses and gratifications as predictors of use and game preference” in Playing video games. Motives, responses, and consequences. eds. P. Vorderer and J. Bryant (Lawrence Erlbaum Associates Publishers), 213–224.

Sheth, A., Yip, H., Iyengar, A., and Tepper, P. (2019). Cognitive services and intelligent Chatbots: current perspectives and special issue introduction. IEEE Internet Comput. 23, 6–12. doi: 10.1109/MIC.2018.2889231