- 1 English Department, College of Education for Human Sciences, University of Diyala, Diyala, Iraq

- 2 Department of English Language and Literature, Faculty of Humanities and Social Sciences, Golestan University, Gorgan, Iran

- 3 Department of Information Technology, Al Buraimi University College, Al Buraimi, Oman

- 4 Faculty of Informatics and Management, University of Hradec Kralove, Hradec Kralove, Czechia

- 5 Department of Islamic Studies in English, Al-Imam Al-Adhdham University College, Baghdad, Iraq

Given the spread of the COVID-19 pandemic, online classes have received special attention worldwide. Since teachers have a lasting effect on their students, the teacher–student relationship is a pivotal factor in language learning classes. Students will not engage in class activities if they do not find them sufficiently challenging or interesting, especially in online classes. From this point of view, techniques for motivating, engaging, and assessing students in online classes are highly important. The present study investigates the correlation between structured feedback and three types of engagement in online classes: cognitive, behavioral, and emotional. Structured feedback, used at the end of each lesson, lets students express what they already know, what they want to know, and what they have learned. The sample consisted of 114 third-year EFL college students. The findings reveal positive and significant correlations between the use of structured feedback in online classes and each of the three types of engagement (cognitive, behavioral, and emotional). Finally, some academic implications and recommendations are provided.

Introduction

Technology has had an inevitable effect on many aspects of human life, particularly education (Aghaei et al., 2020; Derakhshan and Malmir, 2021). With the advancement of technology, there has been a shift from traditional classes to online learning during the last decade. In online courses, engagement can be defined simply as how active students are. More generally, engagement refers to the investment of resources (time and effort) by students or instructors to improve the learning experience and learning outcomes (Trowler, 2010). Academic engagement among students is a prerequisite for L2 learning (Dotterer and Lowe, 2011; Nejati et al., 2014; Derakhshan, 2021, 2022c; Shakki, 2022). It is related to “the quality of how students connect or involve themselves in educational activities” (Skinner et al., 2009, p. 495). According to Amerstorfer and Freiin von Münster-Kistner (2021), students' academic engagement depends on many factors related to the learner, the teacher, teaching methods, peers, and features of the learning environment.

As stated by many studies, such as Carini et al. (2006), Trowler (2010), Wang J. et al. (2022), and Wang et al. (2022a,b), students' satisfaction, persistence, and academic achievement are closely related to their engagement, which is where an individual's attention is allocated in active response to the environment; engagement is thus a growth-producing activity (Csikszentmihalyi, 1990). School engagement (student involvement) has emerged as a critical notion linked to a variety of educational outcomes, such as achievement, behavior, attendance, conduct, and dropout/completion (Jimerson et al., 2003, 2009). Fredricks et al. (2016) stated that engagement has three components (cognitive, behavioral, and emotional), which are interrelated in the process of engagement (Wang et al., 2016).

Nowadays, engagement in online teaching and learning has become crucial, especially since the COVID-19 pandemic. Such engagement should be characterized by effort, concentration, active participation, and emotional responsiveness (Philp and Duchesne, 2016; Aghaei et al., 2022). Because of the widespread use of online learning, it is more vital than ever to understand how engagement relates to students' performance (i.e., measured outcomes) and to specific characteristics of the online classroom (i.e., the types of educational practices). Every responsible English language teacher makes every effort to meet students' needs in order to improve their performance (Derakhshan and Shakki, 2020). To accomplish this, teachers employ a variety of approaches and strategies, which work most effectively when students respond in the form of feedback. The use of feedback in EFL classes of all types can promote an effective, interactive class environment.

This viewpoint was highlighted by Hyland (2006), who mentioned that one of a teacher's most crucial jobs is to provide feedback to students, as it allows for a kind of individual attention that is otherwise difficult to achieve in a classroom setting. Reinforcement and interpersonal attraction theories, which bear on the role of feedback, may also help explain students' engagement (Derakhshan et al., 2019). These theories propose that once individuals find interaction with another person satisfying, they will want to communicate with that person again. In educational settings, teachers' non-verbal behavior toward their students, if perceived as rewarding, may contribute to improved classroom engagement (Witt et al., 2004). Accordingly, the more regular and constructive the feedback in online education, the greater the potential for performance improvement (Walther and Burgoon, 1992; Flahery and Pearce, 1998; Eslami and Derakhshan, 2020). In this regard, the present study focuses on the use of structured feedback.

Recently, with the current wave of online teaching in colleges and schools, the challenge of motivating students to engage in online classes has become urgent. If the right pedagogy is not used, the haste to add online classes to the calendar may result in a loss of connection with the students. As mentioned by Shu-Fang and Aust (2008), online learning has two distinctive pedagogical features that were poorly supported in earlier generations of distance education: interaction and collaboration.

The nature of human–computer interaction (HCI) has changed dramatically in recent decades, moving from simple user interfaces to interactive and engaging experiences (Shankar et al., 2016). This study focuses on collecting structured feedback from students at the end of each online session. As described by Larsen-Freeman and Anderson (2011, p. 67), structured feedback occurs when “the students are invited to make observations about the day's lesson and what they have learned. The teacher accepts the students' comments in a non-defensive manner, hearing things that will help give him direction for where he should work when the class meets again.” In the present study, the teacher asked the students to state what they already knew about the lesson material (their prior knowledge), what they specifically wanted to know, and what they actually learned in class. This technique represents structured feedback in online classes. The study thus examines which types of student engagement (cognitive, behavioral, and emotional) may be developed through, and are correlated with, structured feedback in online classes. To address this gap, the following research questions were posed:

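• Is there a correlation between EFL college students' cognitive engagement and their attitudes toward structured feedback in online classes?

• Is there a correlation between EFL college students' behavioral engagement and their attitudes toward structured feedback in online classes?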

• Is there a correlation between EFL college students' emotional engagement and their attitudes toward structured feedback in online classes?

Literature review

Engagement

Engagement has become a popular psychological construct used to explain human behavior and decision-making in a variety of areas, including education, employment, leisure, and marketing. Kuh (2009) explained students' engagement as “…the more students study a subject, the more they know about it, and the more students practice and get feedback from faculty and staff members on their writing and collaborative problem solving, the deeper they come to understand what they are learning” (p. 5). According to student involvement theory, the more involved students are in college, the more learning and personal growth they experience (Astin, 1984; Wang et al., 2021).

In the literature, most previous studies have focused on students' engagement in traditional, face-to-face university settings (Robinson and Hullinger, 2008). Following the outbreak of the COVID-19 pandemic, universities worldwide moved to online learning. This shift was unfamiliar to most students, especially those who had not previously studied online, although most studies have demonstrated its positive role in many aspects of language teaching. Because of its virtues and benefits for English students, researchers consider EFL online classes an innovative approach that can address students' concerns in English lessons (Tawafak et al., 2019; Alahmadi and Alraddadi, 2020; Hamouda, 2020; Pikhart and Klimova, 2020).

Empirical studies

In this regard, Rad et al. (2022) recommend flipped learning as a form of online education, as it has a positive impact on instructor support, team support, and positive subjective feelings about the course material. Moreover, Çakmak et al. (2021) reported the essential role of online education, specifically in vocabulary learning and retention. In contrast, Pikhart et al. (2022) found some dissatisfaction with online education among students in the EFL context, as they much preferred traditional face-to-face classes and printed textbooks.

As far as students' engagement is concerned, it can be divided into three interrelated components: cognitive, behavioral, and emotional engagement (Fredricks et al., 2016; Al-Bahadli, 2020). First, through cognitive engagement, students apply mental energy during the learning process. According to Nguyen et al. (2016), cognitive engagement concerns the student's involvement in the learning process, that is, the students' progress in understanding, studying, and acquiring the knowledge reflected in their academic work. Cognitive engagement is therefore important for identifying students' psychological motivations, which are directly connected with their engagement. Second, in behavioral engagement, students perform specific behaviors while learning. According to Nguyen et al. (2016), behavioral engagement relates to students' participation and activities in the classroom that motivate them to be part of the school learning environment. Third, emotional engagement requires students to experience positive emotions while they are learning (Derakhshan, 2022a).

Students' engagement can also be analyzed in terms of their positive behaviors, such as participation, attendance, and attention (Derakhshan, 2022b), as well as their psychological experience and feelings at school. Through the chain mediation of autonomous motivation and positive academic emotions (such as satisfaction and relief), teacher engagement has been shown to affect students' English achievement (Derakhshan et al., 2022; Wang et al., 2022a,b; Wang J. et al., 2022). Another dimension, social engagement, was also added by Fredricks et al. (2016). This recognizes that learning opportunities are embedded in a social environment (Wang and Hofkens, 2019), as evidenced by students' participation in social contact or collaboration during the learning process. In this regard, Latipah et al. (2020) found that students were positively engaged in behavioral, emotional, and cognitive terms. In terms of behavioral engagement, students made a significant effort to study English before class by watching a video, and they performed admirably. They were more engaged in learning activities, and most students responded positively in terms of emotional engagement, as they were enthusiastic about learning English.

Pilotti et al. (2017) reported that the richness of the discussion prompts in classes had a favorable relationship with students' cognitive engagement and instructors' behavioral engagement. As class size increased, both cognitive and behavioral measures of student involvement decreased (Derakhshan and Shakki, 2019). Thus, the type of engagement varies from context to context, depending on the techniques used by teachers and the class environment. On this basis, the present study seeks to identify the correlations that may exist between the three types of engagement and the structured feedback used by teachers in online English classes.

Methods

Participants

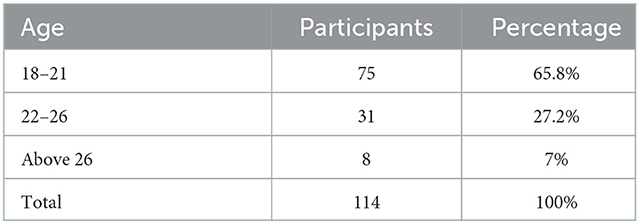

There were 114 participants in total. All were third-year EFL college students in the English department of the University of Diyala, Iraq, who were exposed to structured feedback in their daily lessons for 8 weeks. The participants' ages ranged from 18 to over 26 years, although most of them were 18–21 years old, as shown in Table 1.

According to Table 1, the largest group of participants was aged 18–21 years (65.8%), and the smallest group was older than 26 years (7%). Regarding specialization, all 114 students belonged to the English department and were in their third year.

Instruments

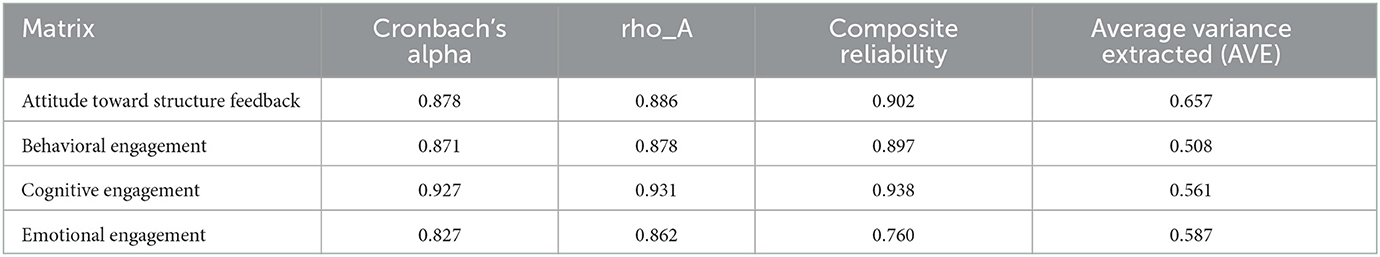

The instrument used to collect data and to test the hypotheses was an online questionnaire constructed by the researchers (see Appendix 1). It drew on the Students' Engagement in Schools Questionnaire (SESQ), which was developed by a team of researchers (see Lam and Jimerson, 2008). The SESQ consists of 109 items aimed at a comprehensive assessment of the construct of student engagement; the researchers reduced the number of items to suit the study's aim and context. The students' attitudes section was adapted from a questionnaire developed by Barmby et al. (2008), following the same adaptation process (see Lam and Jimerson, 2008). After a short introduction and a consent statement for taking part in the survey, the questionnaire contained a few demographic questions on age and specialization. The validity and reliability of the items were confirmed statistically, as shown in Table 4; the results indicate that the sample is acceptable and the test is statistically valid. Face validity was also established by presenting the instruments and the idea of the study to several specialists in the English language domain and taking their comments into account.

In each lesson, the students were invited to record what they noticed in the lecture in three domains: what they already knew about the lecture material (their prior knowledge), what they specifically wanted to know, and what they actually learned in that lecture. At the end of each lecture, the teacher listened to the students, discussed some points with them, and tried to respond to all their questions. The course material, on methods of English language teaching, was Larsen-Freeman and Anderson's (2011) book “Techniques and Principles in Language Teaching.” The questionnaire was administered to the participants online via Google Forms. Data collection took place in January and February 2022, at the beginning of the second semester.

Once the questionnaire was finalized, the next step was to test its reliability using Cronbach's alpha. If Cronbach's alpha exceeded 0.7, the questionnaire would be distributed to the study sample. However, it is always advantageous to pilot a questionnaire first, in line with the recommendation of Sekaran and Bougie (2016), who suggested that, before data collection, useful statistics from the original study should be calculated to ascertain reliability. This section describes how the acceptance model was piloted in this study.

The pilot test was conducted by one of the researchers with 58 undergraduate students from two of her sections. A copy of the questionnaire was distributed during class time to check whether students could answer it without difficulty. The participants selected for the pilot study received a preliminary statement declaring that their participation was voluntary and that their anonymity would be guaranteed if they chose to complete the questionnaire.

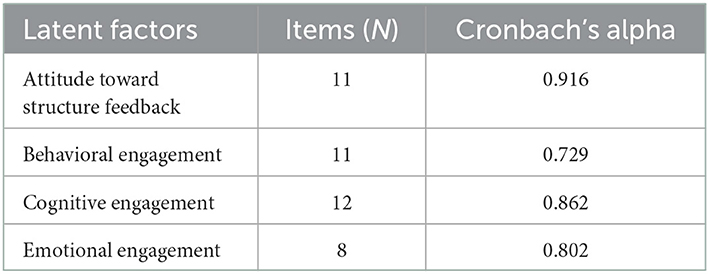

As shown in Table 2, the internal consistency of the items was measured with Cronbach's alpha in the Statistical Package for the Social Sciences (SPSS). Since all Cronbach's alpha values (0.729–0.916) exceeded 0.7, the reliability of the scale was confirmed (Tawafak et al., 2018). This indicates that the scales are suitable for the acceptance model and that the measures reflect the research goal. The questionnaire was then distributed online using Google Forms, a free online survey service for collecting responses. As a first step, once the 114 responses had been received, the researchers checked the initial results to confirm that the survey was working properly.
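As an illustration of the reliability check described above, the following is a minimal Python sketch of Cronbach's alpha, α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ the variance of item i, and σ²ₜ the variance of the respondents' total scores. It is not the SPSS routine used in the study; the response matrix is hypothetical, and only the 0.7 cutoff comes from the text.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix (a list of rows)."""
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

def passes_reliability(alpha, threshold=0.7):
    """Decision rule stated in the text: accept the scale if alpha exceeds 0.7."""
    return alpha > threshold

# Hypothetical 5-point Likert responses: 6 respondents x 4 items (not study data)
demo = [
    [4, 5, 4, 4],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 2],
    [4, 4, 3, 4],
    [3, 2, 3, 3],
]
a = cronbach_alpha(demo)
print(round(a, 3), passes_reliability(a))
```

Using the same variance estimator (here the sample variance) for items and totals matters more than which estimator is chosen, since the ratio is what enters the formula.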

Reliability

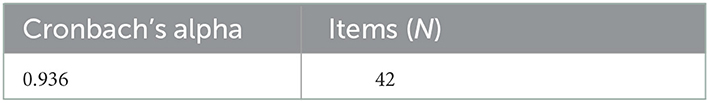

Table 3 shows high overall reliability. The conventional acceptance threshold is 0.7, and the reliability coefficient for the whole survey was 0.936, well above that threshold.

Conceptual research model

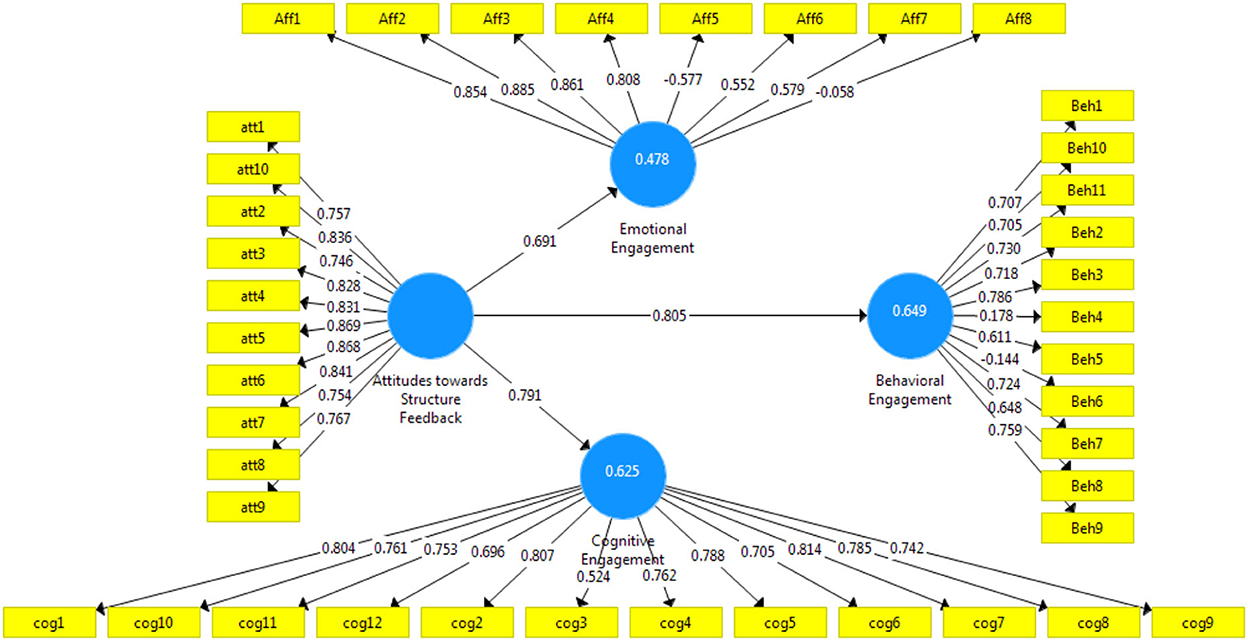

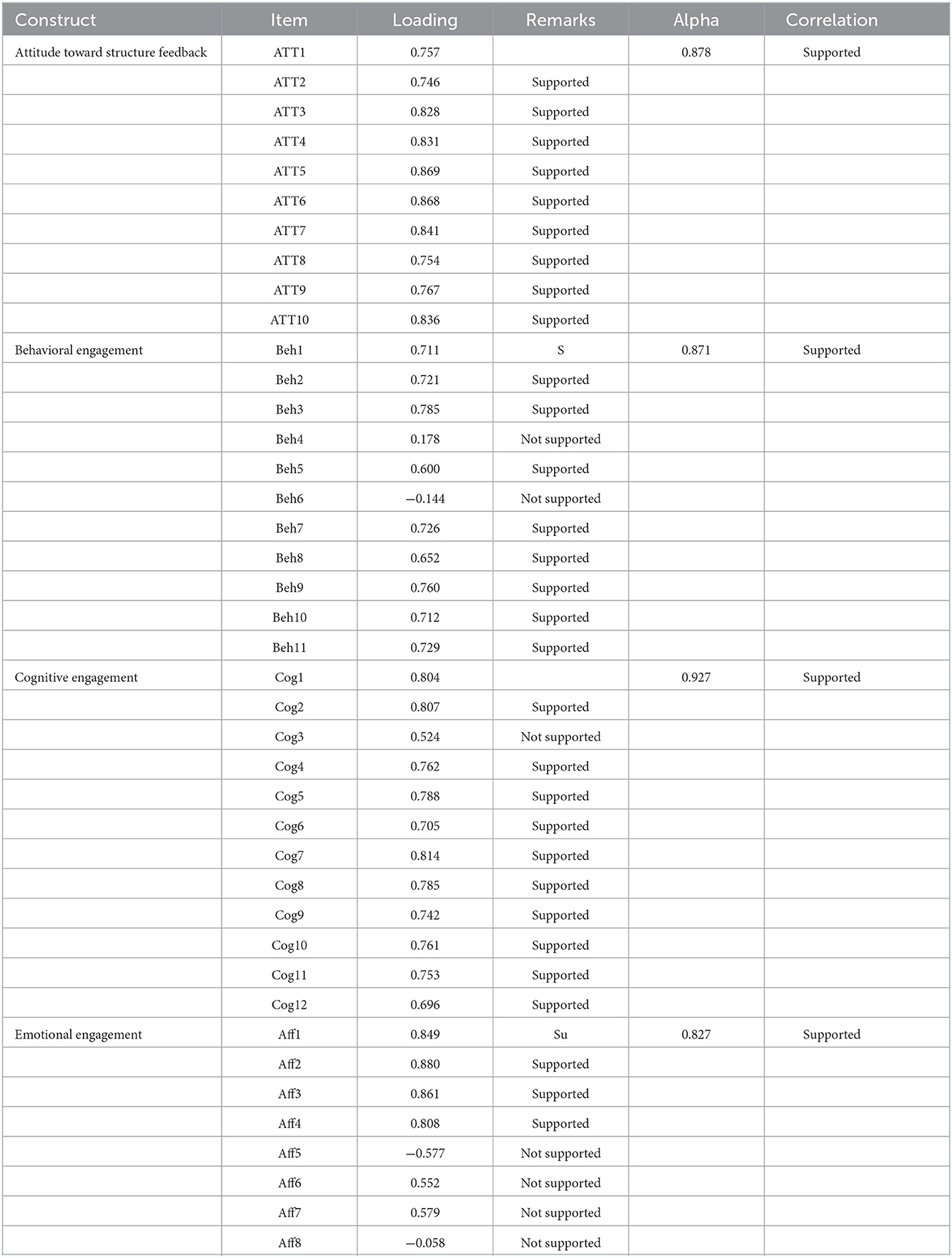

Construct validity concerns whether the factors actually measure what they are intended to measure (Mohajan, 2017). This study addresses several aspects of construct validity, including the evaluation of reliability and convergent validity, as well as data screening and the measurement model. The validation of the structural model and hypothesis testing are also described. The survey comprised 42 questions covering four factors, as shown in Figure 1.

The model consists of four factors, as shown in Figure 1. Attitude toward structured feedback is measured by 11 items, emotional engagement by eight items, behavioral engagement by 11 items, and cognitive engagement by 12 items. Attitude toward structured feedback is linked directly to each of the other three factors.
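The model structure can be written down as a small Python data structure, which also makes the item count easy to check: 11 + 8 + 11 + 12 = 42, matching the 42 survey questions. This is only a descriptive sketch, not the PLS-SEM software's model syntax; the prefixes Beh, Cog, and Aff follow the item labels used in the Results, while "Att" for the attitude items is a hypothetical label.

```python
# Item prefixes and counts per factor, as reported in the text.
# "Att" is a hypothetical prefix; Beh, Cog and Aff follow the Results section.
FACTORS = {
    "attitude toward structured feedback": ("Att", 11),
    "emotional engagement": ("Aff", 8),
    "behavioral engagement": ("Beh", 11),
    "cognitive engagement": ("Cog", 12),
}
EXOGENOUS = "attitude toward structured feedback"

# Structural paths: attitude points directly at each engagement factor
PATHS = [(EXOGENOUS, factor) for factor in FACTORS if factor != EXOGENOUS]

def item_labels(factor):
    """Generate item labels such as Beh1 ... Beh11 for a factor."""
    prefix, count = FACTORS[factor]
    return [f"{prefix}{i}" for i in range(1, count + 1)]

total_items = sum(count for _, count in FACTORS.values())
print(total_items)  # 42
for source, target in PATHS:
    print(f"{source} -> {target}")
```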

Results

Once the acceptance model had passed the reliability test, data could be collected. Because it is rarely feasible to collect information from every individual in a population, sampling means collecting sufficient information from particular participants in order to generalize the findings to the entire population (Hair et al., 2014). The data used to validate the model were collected from students at four different higher education institutions (HEIs), all of which use an online learning system. The next main criterion, therefore, was that the research sites must be using e-learning systems. According to Cone and Foster (1993), a few university departments were already using e-learning or had participated in earlier research, as teachers were allowed to combine e-learning with their subject knowledge at that point in time.

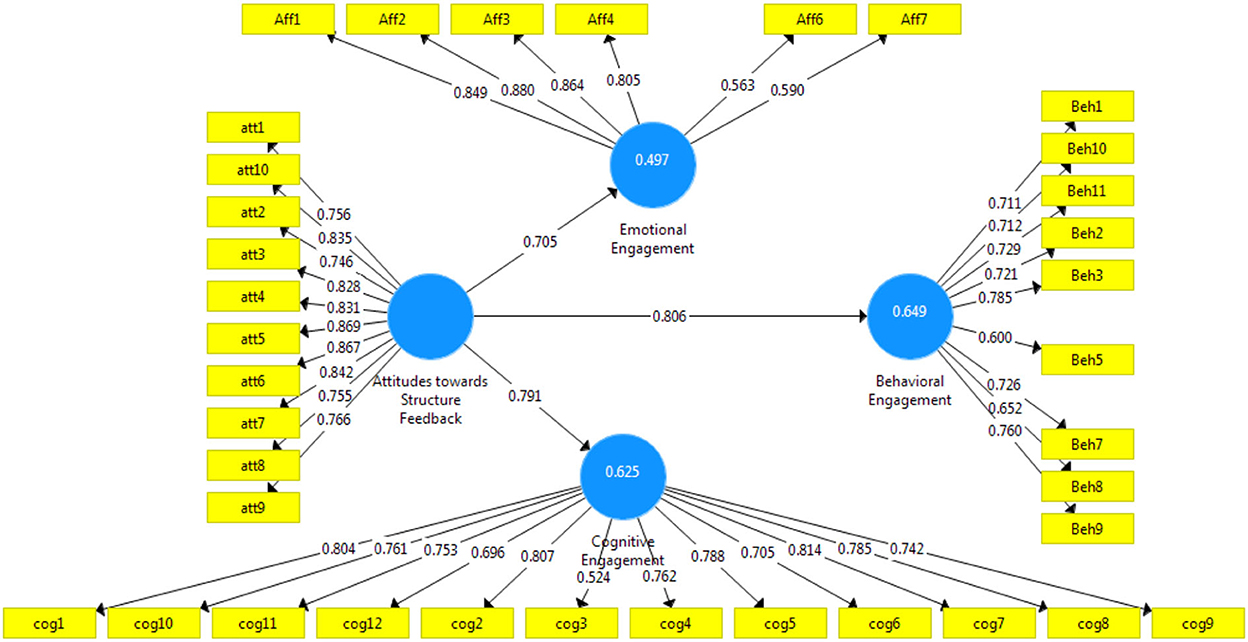

Others were still in the early stages of the innovation-decision process or were moving from a period of investigation into a phase in which e-learning was considered part of the institutional agenda (Tawafak et al., 2018). The data were entered into an Excel file, saved with a .csv extension, and analyzed with PLS-SEM software to evaluate all the questions across the different factors. The total number of respondents was 114, which was considered a representative sample. In this research, the students were the key participants for evaluating the factors and the acceptance of the conceptual research model. Figure 2 shows the results of the PLS-SEM estimation, including the validity of the items and the strength of their links.

As shown in Figure 2, some items showed low loadings on their respective factors. Such items threaten the internal validity of the model and had to be excluded. Table 4 shows the loading of each item used in the model and indicates which items load most strongly on their factors.

Table 4 shows the item loadings and Cronbach's alpha values for all constructs/factors in the measurement model, which exceeded the recommended thresholds. In summary, the adequacy of the measurement model indicates that the retained items were reliable indicators of the hypothesized constructs. According to Table 4, for the factor “attitude toward structured feedback,” the items with the highest loadings are items 3 to 6 and 10, with 0.828, 0.831, 0.869, 0.868, 0.841, and 0.836, respectively. In the behavioral engagement factor, all 11 items are significant and supported except Beh4 (loading 0.178) and Beh6 (loading −0.144), which are not supported. In the cognitive engagement factor, all 12 items are supported except Cog3, whose loading of 0.524 is below 0.6, so it is marked as not supported. It should be noted, however, that Hair et al. (2006) explained that an item with a loading above 0.5 can still be retained if it does not endanger the construct's overall AVE.
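The retention rule described above (keep loadings of at least 0.6, reconsider loadings between 0.5 and 0.6 only if the construct's AVE stays at or above 0.5, and drop the rest, including negative loadings) can be sketched in Python as follows. The three loadings are the ones reported in the text; the construct AVE passed in is a hypothetical placeholder, since AVEs are not given in this section, and `screen_item` is an illustrative helper, not part of any PLS package. In practice, the AVE would be recomputed with and without the borderline item rather than supplied as a fixed number.

```python
def screen_item(loading, construct_ave, strong=0.6, weak=0.5, ave_min=0.5):
    """Classify an indicator by its outer loading.

    - loading >= strong: keep
    - weak <= loading < strong: keep only if the construct's AVE is >= ave_min
    - otherwise (including negative loadings): drop
    """
    if loading >= strong:
        return "keep"
    if loading >= weak:
        return "keep" if construct_ave >= ave_min else "drop"
    return "drop"

# Loadings reported in the text; the AVE of 0.55 is hypothetical.
reported_loadings = {"Beh4": 0.178, "Beh6": -0.144, "Cog3": 0.524}
HYPOTHETICAL_AVE = 0.55
for item, loading in reported_loadings.items():
    print(item, loading, screen_item(loading, HYPOTHETICAL_AVE))
```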

Regarding the affective section of the survey (the emotional engagement factor), this factor is supported by all calculations. The item loadings fall into two groups: the first four items are strongly supported, while the second four (items 5 to 8) are not supported because their negative loadings affect the model. Two of these items (items 6 and 7) had loadings above 0.5 and could be included in the model if they do not endanger the factor's total AVE. The students' responses also did not support these last four items. After all PLS calculations, emotional engagement was found to be supported, with significant results. Based on the results explained above, the final model was run after excluding the problematic items (Beh4, Beh6, Aff5, and Aff7). The final model is shown in Figure 3.

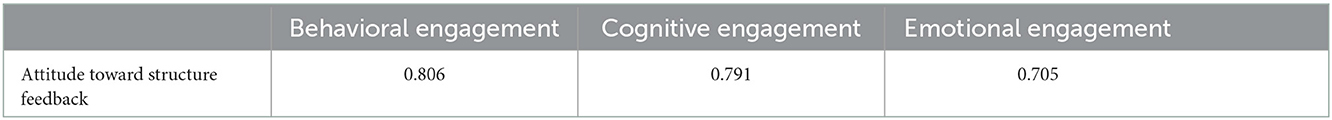

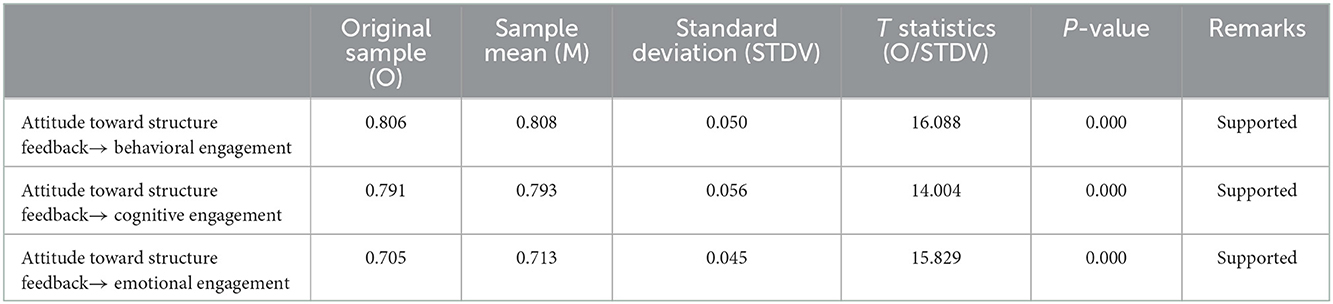

The path coefficients for the three dependent factors are all acceptable, exceeding 0.5, the standard condition for acceptance (Table 5).

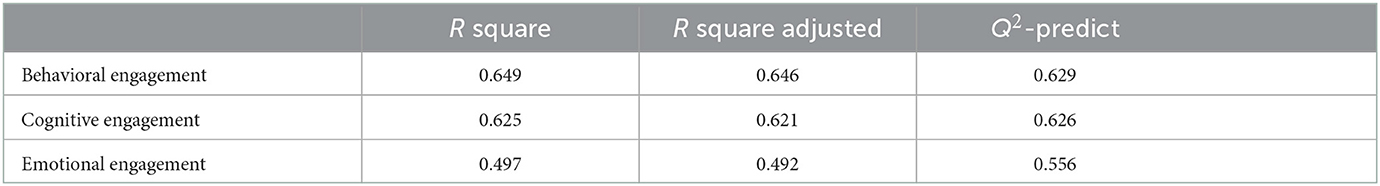

Table 6 shows the R² results. According to Hair et al. (2006), R² values fall into three categories: 0 to 0.29 indicates a weak and mostly rejected model, 0.3–0.45 an acceptable one, and 0.46 to 0.99 a highly accepted and significant model. By this standard, the model is fully accepted: the R² values of behavioral engagement, cognitive engagement, and emotional engagement, each predicted by attitude toward structured feedback, are 0.649, 0.625, and 0.478, respectively. For the predictive relevance measure Q², any value greater than 0.4 is accepted. As shown in Table 5, the Q² values are 0.631, 0.613, and 0.434, respectively, which indicates a significant value for all factors.
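The R² bands and the Q² cutoff quoted above can be applied mechanically to the reported values. The following Python sketch does only that: it encodes the bands exactly as stated in the text, treating them as half-open intervals to close the small gaps between the quoted ranges (an assumption), and it does not compute R² or Q² itself.

```python
def classify_r2(r2):
    """Label an R^2 value with the bands quoted from Hair et al. (2006) in the text.

    Bands are treated as half-open intervals (an assumption, since the
    quoted ranges 0-0.29, 0.3-0.45 and 0.46-0.99 leave small gaps).
    """
    if not 0 <= r2 <= 1:
        raise ValueError("R^2 must lie in [0, 1]")
    if r2 < 0.30:
        return "weak"
    if r2 < 0.46:
        return "acceptable"
    return "highly accepted"

def q2_acceptable(q2, cutoff=0.4):
    """Q^2 cutoff as stated in the text."""
    return q2 > cutoff

# (R^2, Q^2) values reported in the text for each dependent factor
reported = {
    "behavioral engagement": (0.649, 0.631),
    "cognitive engagement": (0.625, 0.613),
    "emotional engagement": (0.478, 0.434),
}
for factor, (r2, q2) in reported.items():
    print(f"{factor}: R^2={r2} -> {classify_r2(r2)}, Q^2 ok: {q2_acceptable(q2)}")
```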

The characteristics of the structural model are assessed through the coefficients of determination (R²), regression estimates, and statistical significance. The R² value indicates the model's predictive power, that is, the proportion of variance in a factor explained by its antecedent factors. The model's R² values should be high enough to reach a minimum level of explanatory power (Urbach and Ahlemann, 2010); R² values of 0.67, 0.33, and 0.19 are considered substantial, moderate, and weak, respectively. Another measure used in assessing the structural model is the path coefficient, which measures the strength of the link between the independent and dependent factors. For a path coefficient to be considered significant, its value should be higher than 0.100 and it should be significant at least at the 0.05 level. Figure 3 shows the estimated values linking the factors and their items.

Figure 3 also shows the R² values reported in Table 6, together with the path coefficients from attitude toward structured feedback to the other three factors of the conceptual model, which match the values in Table 5. A standardized path coefficient should exceed 0.5 to confirm a link between factors in the model.

Convergent validity assesses the extent to which the measures of a construct are correlated with one another, that is, whether they converge on the same construct. It is evaluated on the basis of the proportion of variance the items share. Several techniques are used to measure the degree of convergent validity among the measured items. Hair et al. (2006) suggested using factor loadings, composite reliability, and average variance extracted (AVE), where factor loadings ≥0.5, and preferably ≥0.70, indicate high convergent validity. Moreover, composite reliability estimates ≥0.70 indicate adequate convergence or internal consistency. The AVE represents the average amount of indicator variance accounted for by the latent construct, and AVE values should be ≥0.5. Thus, when the values exceed the minimum recommended scores for factor loadings, composite reliability, and AVE, the instrument items can be considered valid and reliable.
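The convergent validity criteria above can be computed directly from standardized loadings. A minimal Python sketch, assuming standardized indicators: AVE is the mean of the squared loadings, and composite reliability is CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), where 1 − λ² is each indicator's error variance. The loadings in the example are hypothetical, not values from Table 4.

```python
def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)

def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - lam * lam for lam in loadings)  # error variance of each standardized indicator
    return total ** 2 / (total ** 2 + error)

def convergent_validity_ok(loadings, min_loading=0.5, min_cr=0.70, min_ave=0.5):
    """Apply the thresholds quoted from Hair et al. (2006) in the text."""
    return (
        all(lam >= min_loading for lam in loadings)
        and composite_reliability(loadings) >= min_cr
        and ave(loadings) >= min_ave
    )

hypothetical = [0.82, 0.78, 0.74, 0.69]
print(round(ave(hypothetical), 3), round(composite_reliability(hypothetical), 3),
      convergent_validity_ok(hypothetical))
```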

As can be seen in Table 7, discriminant validity is another test, carried out to measure the extent to which a construct is truly distinct from other constructs. High discriminant validity shows that a construct is unique and captures some phenomena that other measures overlook. To assess discriminant validity, the latent construct correlation matrix is used, with the square roots of the AVEs on the diagonal. The correlations between constructs are shown in the lower-left off-diagonal elements of the matrix. Discriminant validity is established when the diagonal elements (square roots of the AVEs) exceed the off-diagonal elements (correlations between constructs) in the same row and column, as suggested by Fornell and Larcker (1981). Table 8 shows the discriminant validity results.
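The Fornell and Larcker comparison described above reduces to a simple check: for every construct, the square root of its AVE must exceed its correlation with every other construct. The Python sketch below implements that check; the construct abbreviations, AVEs, and correlations are hypothetical, not the values in Tables 7 and 8.

```python
from math import sqrt

def fornell_larcker(aves, correlations):
    """Check the Fornell-Larcker criterion.

    aves: construct -> AVE
    correlations: frozenset({a, b}) -> correlation between constructs a and b
    Passes when sqrt(AVE) of every construct exceeds the absolute value of
    each of its correlations with the other constructs.
    """
    names = list(aves)
    for a in names:
        root = sqrt(aves[a])
        for b in names:
            if a != b and abs(correlations[frozenset((a, b))]) >= root:
                return False
    return True

# Hypothetical AVEs and inter-construct correlations (not values from Tables 7-8)
aves = {"ATT": 0.65, "BEH": 0.60, "COG": 0.58, "EMO": 0.62}
corr = {
    frozenset(("ATT", "BEH")): 0.70,
    frozenset(("ATT", "COG")): 0.68,
    frozenset(("ATT", "EMO")): 0.60,
    frozenset(("BEH", "COG")): 0.55,
    frozenset(("BEH", "EMO")): 0.50,
    frozenset(("COG", "EMO")): 0.52,
}
print(fornell_larcker(aves, corr))
```

Keying the correlations by `frozenset` means each pair is stored once, mirroring the lower-triangular layout of the correlation matrix.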

In testing the validity of the model constructs, two measures were considered: convergent validity, which assesses whether items within the same construct are highly correlated with each other, and discriminant validity, which assesses whether items load more on their intended construct than on other constructs (Lai and Chen, 2011). In addition, construct validity was tested using principal component factor analysis with varimax rotation. Loadings between 0.45 and 0.54 are generally considered fair, between 0.55 and 0.62 good, between 0.63 and 0.70 very good, and above 0.71 excellent (Comrey and Lee, 2013). The modified factor loading analysis indicated that all constructs in the model have excellent convergent and discriminant validity, with each AVE value greater than the threshold value, as shown in Table 8.
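The Comrey and Lee bands quoted above can be expressed as a small lookup. This Python sketch labels a loading using the bands as stated in the text; closing the small gaps between the quoted ranges with half-open intervals, and labeling anything under 0.45 as "below fair", are assumptions of the sketch. The example loadings are the ones reported in the Results.

```python
def loading_quality(loading):
    """Label a loading with the Comrey and Lee bands quoted in the text.

    Half-open intervals close the small gaps between the quoted ranges
    (an assumption); values below 0.45 are labeled "below fair".
    """
    if loading >= 0.71:
        return "excellent"
    if loading >= 0.63:
        return "very good"
    if loading >= 0.55:
        return "good"
    if loading >= 0.45:
        return "fair"
    return "below fair"

# Loadings reported in the Results section
for item, value in {"Beh4": 0.178, "Cog3": 0.524}.items():
    print(item, loading_quality(value))
print(loading_quality(0.869))
```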

According to Table 9, all factors used in this study are significant and supported in the PLS-SEM program. The model therefore shows a high correlation between these factors.

Discussion

Teaching can be extremely rewarding when students are engaged, deeply interested in the subject matter, and participating thoughtfully. However, strong student engagement is difficult to create, and nurturing it frequently requires research and preparation. This study proposes structured feedback in online education as one way to foster it. Student engagement is crucial in every class, but it is essential in the online learning environment, where students must be disciplined enough to resist distractions and other obligations competing for their time while being cut off from their instructor and classmates. According to extensive research on class engagement, students' engagement differs depending on the environment established by the school and instructor and the learning opportunities provided in the classroom (Watanabe, 2008; Kelly and Turner, 2009; Nasir et al., 2011), which is in notable agreement with this study, in which the environment changed to an online one.

According to the results of this study, all three types of engagement (cognitive, behavioral, and emotional) are positively correlated with the structured feedback used in online classes, in line with studies that used other types of feedback in online classes (Flahery and Pearce, 1998; Dixson, 2010; Chakraborty and Nafukho, 2014; Martin and Bolliger, 2018). Thus, all three types of engagement were achieved during class time, in agreement with Latipah et al. (2020). The structured feedback used in this study led the students to ask themselves, in each lesson, what they knew, what they wanted to know, and what they had learned, which yielded positive to very positive results for student engagement. Wenger (1998) and Vonderwell and Zachariah (2005) stated that participating in an online class involves more than just joining the class or commenting on a message board; they concluded that taking part in discussion and being active are crucial components of engagement.

Although students can post to a discussion board, real engagement occurs in the dialogue that develops after the first post. An online class environment that makes learners passive receivers of knowledge, mere listeners, particularly in human science lectures, calls for rethinking how the material is presented. This is in agreement with Garrison et al. (2000), who pointed to the “potential for creating an educational community” through the use of subject material. Hence, teachers need to put the principles of student-centered education into practice through some form of class discussion (Mandernach et al., 2006) to ensure wide student participation, which in turn develops class engagement. The results of the study in relation to each research question can be stated as follows:

First research question

Is there a correlation between EFL college students' cognitive engagement and their attitudes toward structured feedback in online education?

Based on the results, cognitive engagement, alongside the other two types, showed very positive results in relation to structured feedback. This suggests that leading students to think in an online class can help increase their engagement, specifically their cognitive engagement (Nguyen et al., 2016; Pilotti et al., 2017). It is useful first to clarify how cognitive psychology relates to student engagement and active learning. According to cognitive psychology, active learning entails the growth of cognition, which is achieved by accumulating systematic knowledge structures and methods for understanding, remembering, and solving problems; these processes are related to applying structured feedback. Active learning also involves a process of interpretation, whereby new information is connected to previously learned information and retained in a way that emphasizes the broader significance of these links, which may explain the positive results for cognitive engagement in the current study.

At the start of a new unit or lesson, online teachers routinely provide context and meaning to students, which promotes better retention and mastery. Cognitively speaking, because memory is associative, the environment can influence the information and vice versa: when new memories are generated, neurons wire together. A teaching technique that uses questions to guide lesson ideas may pique students' curiosity and enhance their learning capacity, which provides another explanation for the positive results of cognitive engagement in relation to structured feedback in online education.

The results of the present study are in agreement with Mandernach (2009), who claims that an online course encourages the highest level of student cognitive involvement if it:

• Incorporates authentic learning tasks and active learning environments.

• Encourages personal connections between students and teachers in the class.

• Helps learning to take place in a virtual setting.

All three points mentioned by Mandernach (2009) are present in the current study, which may explain the notably positive results regarding cognitive engagement in the online environment.

Second research question

Is there a correlation between EFL college students' behavioral engagement and their attitudes toward structured feedback in online education?

According to the results, behavioral engagement is positively correlated with structured feedback in online education. The results suggest that students who interact actively in class show higher behavioral engagement, which is in line with Nguyen et al. (2016). The behavioral engagement domain concerns how students behave in class, how they participate in extracurricular activities, and how interested they are in their academic assignments. All three of these aspects are addressed in the present study, as they all relate to how EFL college students engage behaviorally in online education using structured feedback. The varied activities used in structured feedback lead the students to ask what they know, what they want to know, and what they learned, and to try to answer all three questions. This encourages them to engage indirectly with their class activities and to show interest in applying them.

Research on school participation, focusing on the support students receive during activities (such as attendance and pleasant interactions), has shed light on students' motivation to participate in school and thereby gain positive class engagement (Jones et al., 2008; Wang and Holcombe, 2010). This suggests that the right choice of class activity plays a crucial role in activating students' engagement by raising their motivation. Students' interest in their academic assignments, which refers to the concrete behavioral acts students display to demonstrate their desire to participate in classroom activities and their willingness to tackle difficult material, is also a pivotal component of behavioral engagement. Research in this area sheds light on the classroom exercises that lead students to display concrete behavioral engagement, such as perseverance, concentration, asking questions, and participating in different class discussions (Yazzie-Mintz and McCormick, 2012; Cooper, 2014).

Engaging students as autonomous learners in online contexts without the presence of a teacher remains complex, which has prompted further research on the variables affecting students' engagement in this situation. Student time-on-task behavior refers to engagement with the assigned activities. Throughout the class, students were required to participate in a variety of activities, specifically in online education, to demonstrate their active presence in class and to become gradually engaged.

Another point is that the students showed strong engagement with the feedback on various activities. Consistent with the present study, this feature has been found to be valuable for learning, specifically online learning. For instance, after receiving feedback that revealed their presumed understanding to be false, students revisited the simulation model and further investigated the concepts, which helped them better comprehend what was happening at the molecular level. Research on such interactions can generally be divided into three primary categories: interactions between students and the teacher, interactions between students and their peers, and interactions between students and the subject matter. All these categories are part of the structured feedback used in the current study, which suggests its effectiveness in activating all types of student engagement.

Third research question

Is there a correlation between EFL college students' emotional engagement and their attitudes toward structured feedback in online education?

According to the results, emotional engagement was positively correlated with the use of structured feedback in online education. Because online learning is a distance learning experience, there are obstacles to student engagement and learning, and these impediments are observed to be a primary cause of low student engagement and higher dropout rates in online courses. Emotions are a potent weapon in the struggle for online students' attention, engagement, and persistence (Deng, 2021). The difficulty of forming social and emotional connections with and between students is the biggest obstacle to the success of online students (Gallien and Oomen-Early, 2008).

However, combining technology and emotion in the classroom might have an even greater impact. Creating an emotional connection to the material inside the classroom can be a vital tool for student retention. Through powerful teaching techniques such as structured feedback, students will be better able to comprehend, relate to, and recognize the significance of the course subject. Rather than perceiving the course material as meaningless facts, they may have an emotional reaction to it that makes it easier to retain for the duration of the course (Wang et al., 2022a,b; Wang J. et al., 2022). Hence, the teacher's role in nurturing emotional intelligence in an online class is essential (Al-Obaydi et al., 2022).

Online classes can also be used to address students' emotions. One way to do this is to discuss hot-button issues that concern students; another is to design discussion platforms, such as structured feedback, that allow students to express their own emotions and thereby encourage engagement (Deng, 2021). This viewpoint is also expressed by Niedenthal et al. (2006), who noted that learning is simultaneously cognitive and emotional. To ensure that students treat one another with respect despite any disagreements, it is crucial to set ground rules before these discussions. Overall, rather than having students study content within a monotonous framework, it is critical to use technology to evoke emotion and keep content exciting.

Online social and emotional support for students will boost their engagement, perseverance, learning, and success. Helping students overcome the obstacles that arise from the distance involved in taking online classes is, in fact, one of the best things online instructors can do (Xie and Derakhshan, 2021). In this regard, Rodríguez-Ardura and Meseguer-Artola (2016, p. 100) stated that many learners “feel individually placed within a true humanized education environment” in which they feel that they are taking part “in a true teaching-learning process, interacting with their lecturers and peer students.” Finally, the more alternatives online students are given, the more likely they are to show all three types of engagement, the more control they will have over their education, and the better able they will be to choose activities that matter to their personal, academic, and professional goals.

Conclusion

What makes this study significant is its focus on students and, from their point of view, on how tactics used in online classes affect their participation and learning. The use of structured feedback in an online class for EFL college students, which gave the students a chance to think, speak, participate, express themselves, and compete at the same time, suggests that it can be used successfully in a college context. This study may therefore be useful to higher education institutions and to professors teaching distance learning programs, for the following reasons. First, its results may help administrators and teachers in online education make decisions about engagement tactics for current online courses. Second, whereas numerous prior studies on online education of all types have been conducted from the perspective of the faculty, this study enables faculty to learn about successful engagement techniques by hearing from the students.

In addition, improved learner engagement does not automatically result from the use of online platforms. When using online education, it is essential to plan educational tactics carefully to boost and maintain learner engagement. A possible drawback is that giving this type of feedback may unintentionally decrease students' learning autonomy, whereas making the student an independent learner is the major goal of the online education environment. Therefore, when teaching methods are applied in this type of learning, a balance between individualized learning and reduced teacher involvement should be sought. Further research is thus recommended on the negative and positive sides of using feedback in online education.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by Golestan University. The participants provided their written informed consent to participate in this study.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.1083673/full#supplementary-material

References

Aghaei, K., Ghoorchaei, B., Rajabi, M., and Ayatollahi, M. A. (2022). Iranian EFL learners' narratives in a pandemic pedagogy: appreciative inquiry-based approach. Lang. Relat. Res. 13, 285–314. doi: 10.52547/LRR.13.3.12

Aghaei, K., Rajabi, M., Lie, K. Y., and Ajam, F. (2020). Flipped learning as situated practice: a contrastive narrative inquiry in an EFL classroom. Educ. Inform. Technol. 25, 1607–1623. doi: 10.1007/s10639-019-10039-9

Alahmadi, N. S., and Alraddadi, B. M. (2020). The impact of virtual classes on second language interaction in the Saudi EFL context: a case study of Saudi undergraduate students. Arab. World Eng. J. 11, 56–72. doi: 10.31235/osf.io/mscdk

Al-Bahadli, K. H. (2020). The correlation between Iraqi college students' engagement and their academic achievement. Int. J. Res. Soc. Sci. Hum. 10, 247–257. doi: 10.37648/ijrssh.v10i02.021

Al-Obaydi, L. H., Pikhart, M., and Derakhshan, A. (2022). A qualitative exploration of emotional intelligence in English as foreign language learning and teaching: evidence from Iraq and the Czech Republic. Appl. Res. Eng. Lang. 11, 93–124. doi: 10.22108/ARE.2022.132551.1850

Amerstorfer, C. M., and Freiin von Münster-Kistner, C. (2021). Student perceptions of academic engagement and student-teacher relationships in problem-based learning. Front. Psychol. 12, 713057. doi: 10.3389/fpsyg.2021.713057

Astin, A. (1984). Student involvement: a developmental theory for higher education. J. Coll. Stud. Dev. 40, 518–529.

Barmby, P., Kind, P., and Jones, K. (2008). Examining changing attitudes in secondary school science. Int. J. Sci. Educ. 30, 1075–1093. doi: 10.1080/09500690701344966

Çakmak, F., Namaziandost, E., and Kumar, T. (2021). CALL-enhanced L2 vocabulary learning: using spaced exposure through CALL to enhance L2 vocabulary retention. Educ. Res. Int. 2021, 5848525. doi: 10.1155/2021/5848525

Carini, R. M., Kuh, G. D., and Klein, S. P. (2006). Student engagement and student learning: testing the linkages. Res. High. Educ. 47, 1–32. doi: 10.1007/s11162-005-8150-9

Chakraborty, M., and Nafukho, F. M. (2014). Strengthening student engagement: what do students want in online courses? Eur. J. Train. Dev. 38, 782–802. doi: 10.1108/EJTD-11-2013-0123

Comrey, A. L., and Lee, H. B. (2013). A First Course in Factor Analysis. London: Psychology Press. doi: 10.4324/9781315827506

Cone, J. D., and Foster, S. L. (1993). Dissertations and Theses From Start to Finish: Psychology and Related Fields. Washington, DC: American Psychological Association.

Cooper, K. S. (2014). Eliciting engagement in the high school classroom: a mixed-methods examination of teaching practices. Am. Educ. Res. J. 51, 363–402. doi: 10.3102/0002831213507973

Csikszentmihalyi, M. (1990). Flow: The Psychology of Optimal Experience. New York, NY: Harper & Row Publishers.

Deng, R. (2021). Emotionally engaged learners are more satisfied with online courses. Sustainability 13, 11169. doi: 10.3390/su132011169

Derakhshan, A. (2021). The predictability of Turkman students' academic engagement through Persian language teachers' non-verbal immediacy and credibility. J. Teach. Persian Speakers Other Lang. 10, 3–26. doi: 10.30479/jtpsol.2021.14654.1506

Derakhshan, A. (2022a). The “5Cs” Positive Teacher Interpersonal Behaviors: Implications for Learner Empowerment and Learning in an L2 Context. Cham: Springer. Available online at: https://link.springer.com/book/9783031165276 (accessed October, 2022).

Derakhshan, A. (2022b). [Review of the book Positive psychology in second and foreign language education, by Katarzyna Budzińska, and Olga Majchrzak (eds).]. ELT J. 76, 304–306. doi: 10.1093/elt/ccac002

Derakhshan, A. (2022c). Revisiting research on positive psychology in second and foreign language education: trends and directions. Lang. Relat. Res. 13, 1–43. doi: 10.52547/LRR.13.5.1

Derakhshan, A., Fathi, J., Pawlak, M., and Kruk, M. (2022). Classroom social climate, growth language mindset, and student engagement: the mediating role of boredom in learning English as a foreign language. J. Multiling. Multicult. Dev. doi: 10.1080/01434632.2022.2099407

Derakhshan, A., and Malmir, A. (2021). The role of language aptitude in the development of L2 pragmatic competence. TESL-EJ 25.

Derakhshan, A., Saeidi, M., and Beheshti, F. (2019). The interplay between Iranian EFL teachers' conceptions of intelligence, care, feedback, and students' stroke. IUP J. Eng. Stud. 14, 81–98.

Derakhshan, A., and Shakki, F. (2019). A critical review of language teacher education for a global society: A modular model for knowing, analyzing, recognizing, doing, and seeing. Crit. Stud. Texts Pgm. Human Sci. 19, 109–127.

Derakhshan, A., and Shakki, F. (2020). [Review of the book Worldwide English Language Education Today: ideologies, policies, and practices, by A. Al-Issa and S. A. Mirhosseini]. System. 90, 102224. doi: 10.1016/j.system.2020.102224

Dixson, M. D. (2010). Creating effective student engagement in online courses: what do students find engaging? J. Scholarship Teach. Learn. 10, 1–13.

Dotterer, A. M., and Lowe, K. (2011). Classroom context, school engagement, and academic achievement in early adolescence. J. Youth Adolesc. 40, 1649–1660. doi: 10.1007/s10964-011-9647-5

Eslami, Z. R., and Derakhshan, A. (2020). Promoting advantageous ways of corrective feedback in EFL/ESL classroom (special issue: in honor of Andrew Cohen's contributions to L2 teaching and learning research). Lang. Teach. Res. Quart. 19, 48–65. doi: 10.32038/ltrq.2020.19.04

Flaherty, L., and Pearce, K. (1998). Internet and face to face communication: not functional alternatives. Commun. Q. 46, 250–268.

Fornell, C., and Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement error: algebra and statistics. J. Market. Res. 18, 382–388. doi: 10.1177/002224378101800313

Fredricks, J. A., Filsecker, M., and Lawson, M. A. (2016). Student engagement, context, and adjustment: addressing definitional, measurement, and methodological issues. Learn. Instruct. 43, 1–4. doi: 10.1016/j.learninstruc.2016.02.002

Gallien, T., and Oomen-Early, J. (2008). Personalized versus collective instructor feedback in the online courseroom: does type of feedback affect student satisfaction, academic performance and perceived connectedness with the instructor? Int. J. E-Learn. 7.

Garrison, D. R., Anderson, T., and Archer, W. (2000). Critical inquiry in a text-based environment: computer conferencing in higher education. Internet Higher Educ. 2, 87–105. doi: 10.1016/S1096-7516(00)00016-6

Hair, E., Halle, T., Terry-Humen, E., Lavelle, B., and Calkins, J. (2006). Children's school readiness in the ECLS-K: predictions to academic, health, and social outcomes in first grade. Early Child. Res. Q. 21, 431–454. doi: 10.1016/j.ecresq.2006.09.005

Hair, J. F. Jr., Sarstedt, M., Hopkins, L., and Kuppelwieser, V. J. (2014). Partial least squares structural equation modeling (PLS-SEM) An emerging tool in business research. Eur. Business Rev. 26, 106–121. doi: 10.1108/EBR-10-2013-0128

Hamouda, A. (2020). The effect of virtual classes on Saudi EFL students' speaking skills. Int. J. Ling. Liter. Transl. 3, 174–204. doi: 10.32996/ijllt.2020.3.4.18

Hyland, K. (2006). English for Academic Purposes. An Advanced Resource Book. London: Routledge. doi: 10.4324/9780203006603

Jimerson, S. R., Campos, E., and Greif, J. L. (2003). Toward an understanding of definitions and measures of school engagement and related terms. Calif. Sch. Psychol. 8, 7–27. doi: 10.1007/BF03340893

Jimerson, S. R., Renshaw, T. L., Stewart, K., Hart, S., and O'Malley, M. (2009). Promoting school completion through understanding school failure: a multi-factorial model of dropping out as a developmental process. Romanian J. Schl. Psychol. 2, 12–29.

Jones, R. D., Marrazo, M. J., and Love, C. J. (2008). Student Engagement—Creating a Culture of Academic Achievement. Rexford, NY: International Center for Leadership in Education.

Kelly, S., and Turner, J. (2009). Rethinking the effects of classroom activity structure on the engagement of low-achieving students. Teach. Coll. Rec. 111, 1665–1692. doi: 10.1177/016146810911100706

Kuh, G. D. (2009). What student affairs professionals need to know about student engagement. J. Coll. Stud. Dev. 50, 683–706. doi: 10.1353/csd.0.0099

Lai, W. T., and Chen, C. F. (2011). Behavioral intentions of public transit passengers-the roles of service quality, perceived value, satisfaction and involvement. Transport Policy 18, 318–325. doi: 10.1016/j.tranpol.2010.09.003

Lam, S. F., and Jimerson, S. R. (2008). Exploring Student Engagement in Schools Internationally: Consultation Paper. Chicago, IL: International School Psychologist Association.

Larsen-Freeman, D., and Anderson, M. (2011). Techniques and Principles in Language Teaching. Oxford: Oxford University Press.

Latipah, I., Saefullah, H., and Rahmawati, M. (2020). Students' behavioral, emotional, and cognitive engagement in learning vocabulary through flipped classroom. Eng. Ideas J. Eng. Lang. Educ. 1, 93–100.

Mandernach, B. J. (2009). Effect of instructor-personalized multimedia in the online classroom. Int. Rev. Res. Open Dist. Learn. 10, 1–19. doi: 10.19173/irrodl.v10i3.606

Mandernach, B. J., Gonzales, R. M., and Garrett, A. L. (2006). An examination of online instructor presence via threaded discussion participation. J. Online Learn. Teach. 2, 1–19.

Martin, F., and Bolliger, D. U. (2018). Engagement matters: student perceptions on the importance of engagement strategies in the online learning environment. Online Learn. 22, 205–222. doi: 10.24059/olj.v22i1.1092

Mohajan, H. K. (2017). Two criteria for good measurements in research: validity and reliability. Ann. Spiru Haret Univ. Econ. Ser. 17, 59–82. doi: 10.26458/1746

Nasir, N. S., Jones, A., and McLaughlin, M. W. (2011). School connectedness for students in low-income urban high schools. Teach. Coll. Rec. 113, 1755–1793. doi: 10.1177/016146811111300805

Nejati, R., Hassani, M. T., and Sahrapour, H. A. (2014). The relationship between gender and student engagement, instructional strategies, and classroom management of Iranian EFL teachers. Theory Pract. Lang. Stud. 4, 1219–1226. doi: 10.4304/tpls.4.6.1219-1226

Nguyen, T. D., Cannata, M., and Miller, J. (2016). Understanding student behavioral engagement: importance of student interaction with peers and teachers. J. Educ. Res. doi: 10.1080/00220671.2016.1220359

Niedenthal, P., Krauth-Gruber, S., and Ric, F. (2006). Psychology of Emotion: Interpersonal, Experiential and Cognitive Approaches. Psychology Press.

Philp, J., and Duchesne, S. (2016). Exploring engagement in tasks in the language classroom. Annu. Rev. Appl. Linguist. 36, 50–72. doi: 10.1017/S0267190515000094

Pikhart, M., Al-Obaydi, L. H., and Abdur Rehman, M. (2022). A quantitative analysis of the students' experience with digital media in L2 acquisition. Psycholinguistics 31, 118–140. doi: 10.31470/2309-1797-2022-31-1-118-140

Pikhart, M., and Klimova, B. (2020). ELearning 4.0 as a sustainability strategy for generation Z language learners: applied linguistics of second language acquisition in younger adults. Societies 10, 1–10. doi: 10.3390/soc10020038

Pilotti, M., Anderson, S., Hardy, P., Murphy, P., and Vincent, P. (2017). Factors related to cognitive, emotional, and behavioral engagement in the online asynchronous classroom. Int. J. Teach. Learn. High. Educ. 29, 145–153.

Rad, H. S., Namaziandost, E., and Razmi, M. H. (2022). Integrating STAD and flipped learning in expository writing skills: impacts on students' achievement and perceptions. J. Res. Technol. Educ. doi: 10.1080/15391523.2022.2030265

Robinson, C. C., and Hullinger, H. (2008). New benchmarks in higher education: student engagement in online learning. J. Educ. Business 84, 101–109. doi: 10.3200/JOEB.84.2.101-109

Rodríguez-Ardura, I., and Meseguer-Artola, A. (2016). Presence in personalised eLearning—the impact of cognitive and emotional factors and the moderating role of gender. Behav. Inform. Technol. 35, 1008–1018. doi: 10.1080/0144929X.2016.1212093

Sekaran, U., and Bougie, R. (2016). Research Methods for Business: A Skill Building Approach. New York, NY: John Wiley and Sons.

Shakki, F. (2022). Iranian EFL students' L2 engagement: the effects of teacher-student rapport and teacher support. Lang. Relat. Res. 13, 175–198. doi: 10.52547/LRR.13.3.8

Shankar, V., Kleijnen, M., Ramanathan, S., Rizley, R., Holland, S., and Morrissey, S. (2016). Mobile shopper marketing: key issues, current insights, and future research avenues. J. Interact. Market. 34, 37–48. doi: 10.1016/j.intmar.2016.03.002

Shu-Fang, N., and Aust, R. (2008). Examining teacher verbal immediacy and sense of classroom community in online classes. Int. J. E-Learn. 7, 477–498.

Skinner, E. A., Kindermann, T. A., and Furrer, C. J. (2009). A motivational perspective on engagement and disaffection: conceptualization and assessment of children's behavioral and emotional participation in academic activities in the classroom. Educ. Psychol. Meas. 69, 493–525. doi: 10.1177/0013164408323233

Tawafak, R. M., Romli, A. B., and Alsinani, M. (2019). E-learning system of UCOM for improving student assessment feedback in Oman higher education. Educ. Inform. Technol. 24, 1311–1335. doi: 10.1007/s10639-018-9833-0

Tawafak, R. M., Romli, A. B., and Arshah, R. B. A. (2018). Continued intention to use UCOM: four factors for integrating with a technology acceptance model to moderate the satisfaction of learning. IEEE Access 6, 66481–66498. doi: 10.1109/ACCESS.2018.2877760

Urbach, N., and Ahlemann, F. (2010). Structural equation modeling in information systems research using partial least squares. J. Inform. Technol. Theory Applic. 11, 5–40.

Vonderwell, S., and Zachariah, S. (2005). Factors that influence participation in online learning. J. Res. Technol. Educ. 38, 213–230. doi: 10.1080/15391523.2005.10782457

Walther, J., and Burgoon, J. (1992). Relational communication in computer-mediated interaction. Hum. Commun. Res. 19, 50–88. doi: 10.2333/jbhmk.19.2_50

Wang, J., Zhang, X., and Zhang, L. J. (2022). Effects of teacher engagement on students' achievement in an online English as a foreign language classroom: the mediating role of autonomous motivation and positive emotions. Front. Psychol. 13, 950652. doi: 10.3389/fpsyg.2022.950652

Wang, M.-T., Fredricks, J. A., Ye, F., Hofkens, T. L., and Linn, J. S. (2016). The math and science engagement scales: scale development, validation, and psychometric properties. Learn. Instruct. 43, 16–26. doi: 10.1016/j.learninstruc.2016.01.008

Wang, M. T., and Hofkens, T. L. (2019). Beyond classroom academics: a school-wide and multi-contextual perspective on student engagement in school. Adolesc. Res. Rev. 1, 1–15.

Wang, M. T., and Holcombe, R. (2010). Adolescents' perceptions of school environment, engagement, and academic achievement in middle school. Am. Educ. Res. J. 47, 633–662. doi: 10.3102/0002831209361209

Wang, Y., Derakhshan, A., and Azari Noughabi, M. (2022a). The interplay of EFL teachers' immunity, work engagement, and psychological well-being: evidence from four Asian countries. J. Multiling. Multicult. Dev. doi: 10.1080/01434632.2022.2092625

Wang, Y., Derakhshan, A., and Pan, Z. (2022b). Positioning an agenda on a loving pedagogy in second language acquisition: conceptualization, practice, and research. Front. Psychol. 13, 894190. doi: 10.3389/fpsyg.2022.894190

Wang, Y. L., Derakhshan, A., and Zhang, L. J. (2021). Researching and practicing positive psychology in second/foreign language learning and teaching: the past, current status and future directions. Front. Psychol. 12, 731721. doi: 10.3389/fpsyg.2021.731721

Watanabe, M. (2008). Tracking in the era of high stakes state accountability reform: case studies of classroom instruction in North Carolina. Teach. Coll. Rec. 110, 489–534. doi: 10.1177/016146810811000307

Wenger, E. (1998). Communities of Practice: Learning, Meaning, and Identity. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511803932

Witt, P. L., Wheeless, L. R., and Allen, M. (2004). A meta-analytical review of the relationship between teacher immediacy and student learning. Commun. Monogr. 71, 184–207. doi: 10.1080/036452042000228054

Xie, F., and Derakhshan, A. (2021). A conceptual review of positive teacher interpersonal communication behaviors in the instructional context. Front. Psychol. 12, 708490. doi: 10.3389/fpsyg.2021.708490

Keywords: cognitive engagement, behavioral engagement, emotional engagement, structured feedback, online education

Citation: Al-Obaydi LH, Shakki F, Tawafak RM, Pikhart M and Ugla RL (2023) What I know, what I want to know, what I learned: Activating EFL college students' cognitive, behavioral, and emotional engagement through structured feedback in an online environment. Front. Psychol. 13:1083673. doi: 10.3389/fpsyg.2022.1083673

Received: 29 October 2022; Accepted: 01 December 2022;

Published: 04 January 2023.

Edited by:

Jian-Hong Ye, Beijing Normal University, China

Reviewed by:

Le Pham Hoai Huong, Hue University, Vietnam

Mojtaba Rajabi, Gonbad Kavous University, Iran

Copyright © 2023 Al-Obaydi, Shakki, Tawafak, Pikhart and Ugla. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Farzaneh Shakki, f.shakki@gu.ac.ir

†ORCID: Liqaa Habeb Al-Obaydi orcid.org/0000-0003-3991-6035

Farzaneh Shakki orcid.org/0000-0002-2253-0876

Ragad M. Tawafak orcid.org/0000-0001-8969-1642

Marcel Pikhart orcid.org/0000-0002-5633-9332

Raed Latif Ugla orcid.org/0000-0002-8468-3747
