- 1School of Foreign Languages and Culture, Chongqing University, Chongqing, China

- 2Faculty of Education and Social Work, University of Auckland, Auckland, New Zealand

Classroom-based assessment (CBA) is an approach for learning improvement that has been advocated as having strong potential in enhancing learner autonomy of young language learners (YLLs). This study investigated Chinese primary school English as a foreign language (EFL) teachers’ beliefs about CBA, their assessment practices, and the relationship between their CBA beliefs and practices. Drawing on data from a survey of 195 Chinese primary school EFL teachers, results showed that the teachers positively believed in the value of various CBA processes, including planning assessment, collecting learning evidence, making professional judgments and providing appropriate feedback, and they also attempted to enact these assessment practices; belief-practice alignment was also identified, showing that teachers’ beliefs about CBA were significant predictors of their assessment practices. Implications are provided for promoting the implementation of CBA for YLLs in similar contexts.

Introduction

Classroom-based assessment (CBA) emphasizes the integration of assessment in the instructional process in order to facilitate student learning (Cizek, 2010). Ever since Black and Wiliam’s (1998) landmark work on the role of teachers’ classroom assessment in maximizing students’ learning gains, CBA has been drawing increasing research attention. CBA is considered particularly critical for young language learners (YLLs), defined as children aged approximately 6–12 learning a foreign or second language (Britton, 2021). This is because YLLs bring to their language learning their own unique characteristics of cognitive, social, emotional and physical growth, literacy development, and vulnerability (McKay, 2006). Characteristics such as these warrant special attention to assessment of YLLs. Thus, CBA has been strongly promoted for YLLs given its potential to foster children’s development of self-regulation (Butler, 2021).

However, despite a growing research interest in assessment of YLLs, little empirical evidence has been reported on how language teachers implement CBA in young learner contexts. In their critical review of the most important publications on assessing YLLs’ language abilities over the past two decades, Nikolov and Timpe-Laughlin (2021) noted that CBA for YLLs is an area in need of more consideration and research. Specifically, the limited body of research on CBA for YLLs has examined the accuracy of CBA as a tool for measuring the language abilities of YLLs, effectiveness of CBA as a means of stimulating language learning of YLLs, and teachers’ CBA practices for YLLs as well as influencing factors (e.g., Butler and Lee, 2010; Liu and Brantmeier, 2019; Kaur, 2021). These studies yielded mixed results about the accuracy of CBA for YLLs, unpacked the effects of CBA on YLLs’ language learning performance and attitudes, and offered insights into the current status of the implementation of CBA for YLLs. Nevertheless, much less attention has been paid to teachers’ beliefs and practices pertinent to CBA for YLLs. This is an important gap because teachers’ beliefs can have a powerful impact on their instructional practices (Pajares, 1992; Borg, 2003; Dixon et al., 2011; Sun and Zhang, 2021). The extent to which CBA is effectively implemented in young learner classrooms will be affected by teachers’ beliefs about the purposes and nature of CBA. Examining the interaction between teachers’ CBA beliefs and practices, therefore, can help us better understand how CBA can be integrated into language instruction to young learners. Understanding teachers’ beliefs is also an essential part of teacher education programs that aim to promote change in teachers’ classroom behaviors (Borg, 2011; Wu et al., 2021a). Studying teachers’ beliefs about CBA, therefore, may shed light on how to design teacher professional development activities aimed at promoting the enactment of CBA for YLLs.

To fill this void, this study aims to investigate primary school English-as-a-foreign-language (EFL) teachers’ beliefs and practices related to CBA in the Chinese context. The study contributes to the literature by uncovering teacher belief-practice relationships in CBA for YLLs. It also seeks to offer practical implications for teacher educators, school administrators and policy makers so as to help advance the implementation of CBA in young EFL learner contexts.

Literature review

CBA

CBA refers to any assessment embedded in classroom instruction, either explicit or implicit, regarded as in opposition to traditional large-scale tests external to the classroom (Turner, 2012). Technically, it is viewed as a process that “teachers and students use in collecting, evaluating, and using evidence of student learning” (McMillan, 2013, p. 1). In this sense, CBA is a process-based practice rather than a simple assessment instrument. CBA is broadly conceived as serving two purposes: summative assessment (SA) and formative assessment (FA), often referred to as assessment of learning and assessment for learning, respectively (Brookhart, 2004; Wu et al., 2021c). SA is primarily designed to ‘elicit evidence regarding the amount or level of knowledge, expertise or ability’ (Wiliam, 2001, p. 169) for administrative or reporting purposes, with a focus on scores. FA, on the other hand, focuses on using assessment to promote student learning (Black and Wiliam, 2009). It emphasizes the provision of descriptive feedback (rather than scores) as evidence about student learning, through which students are informed about their strengths and weaknesses, and scaffolded to close the gap between their current and desired performance (Sadler, 1989). Students, in particular, play a vital role in the assessment process, whereby they have an awareness of learning goals and assessment criteria, and actively engage themselves in self- and peer assessment (Pryor and Crossouard, 2008). Assessment conducted in this way is seen to have great potential in developing students’ capacity to self-regulate their learning (Assessment Reform Group, 2002; Panadero et al., 2018). Notwithstanding the two different purposes for assessment, the boundary between SA and FA is not as clear cut as usually represented because the same assessment information can be used for different purposes at different times (Rea-Dickins, 2007). It is how the information is used which provides the key distinction. In some cases, SA can be used formatively and FA can be used to serve a summative function (Dixson and Worrell, 2016; Dolin et al., 2018). Summative tests, for example, which typically take place at the end of a unit or term to record learning attainments, can be used to adjust teaching and improve students’ learning in the future. FA practices, such as self- and peer assessment, have the potential to generate a lot of data about students’ progress, which can be recorded and further used for summative reporting purposes. More recently, scholars have pointed out that, in an assessment for learning culture, all assessments, including those for administrative and reporting purposes, can and need to be implemented with a central aim of facilitating learning (Black and Wiliam, 2018; Davison, 2019).

Researchers have put forward a number of frameworks casting light on how to translate CBA for learning principles into practice. These include Black and Wiliam’s (2009) five strategies of formative assessment, Hill and McNamara’s (2011) framework for CBA processes, Davison and Leung’s (2009) framework for teacher-based assessment, and, more recently, frameworks related to learning-oriented assessment (Carless, 2011; Turner and Purpura, 2016). Despite their differences, all the frameworks emphasize three instructional processes that are critical to student learning improvement, i.e., where learners are going, where they are in their learning, and how to get there (Wiliam and Thompson, 2008). Davison and Leung’s (2009) framework is particularly operational as it conceptualizes CBA into a cycle of four steps, addressing the process-based feature of CBA. It provides a working approach for the analysis of teachers’ classroom-embedded assessment practice, and thus was selected as the analytical framework for the present study.

The first step of Davison and Leung’s (2009) framework, planning assessment (PA), focuses on clarifying learning goals and assessment criteria to ensure that students have an awareness of where they are going. Selecting assessment methods that suit the needs of students is also an important element of this step. The second step, collecting learning evidence, is to collect instructionally tractable evidence of student learning, which can be achieved through various methods, such as spontaneous assessment opportunities (SAO), planned assessment opportunities, and formal assessment tasks (FATs; Hill and McNamara, 2011; Turner and Purpura, 2016). In the next step, making professional judgments (MPJ), collected learning evidence is interpreted in relation to established standards (i.e., criterion-referenced assessment) or students’ progress made over time (i.e., pupil-referenced assessment). The final step, providing appropriate feedback, moves students forward through descriptive feedback that enables students to recognize their learning gap and monitor their own learning to close that gap. These four steps of CBA are interrelated with one another, rather than separated, and need to be implemented in a holistic way to fulfil the overriding aim of supporting student learning.

Assessing YLLs

Assessing YLLs warrants a great deal of attention because of their unique age-related characteristics, which are generally categorized into three types: growth, literacy and vulnerability (McKay, 2006). First, YLLs are undergoing cognitive, social and emotional, as well as physical growth, which is nonlinear and dynamic (Berk, 2017). For example, they have short attention spans, usually love physical activities, and are developing social awareness and a sense of self-esteem, which generates a strong demand for teachers to select or conduct appropriate assessment tasks (Patekar, 2021). Second, compared with older or adult learners, YLLs are still developing literacy skills in their first language when they are learning a foreign language, and this can have both conflicting and constructive influences on their literacy skills in the foreign language (Butler, 2016). Third, YLLs are particularly vulnerable to adults’ praise and criticism concerning their assessment performance. Their experience with assessments can have a long-lasting impact on their learning motivation, self-confidence, and learning outcome (Butler, 2019).

Against the backdrop of the above unique characteristics of YLLs, researchers have proposed principles for effective assessment of YLLs (Edelenbos and Vinjé, 2000; Hasselgreen, 2000; Cameron, 2001). Some key principles are summarized as follows: assessment tasks should fit with YLLs’ learning experience, reflecting those activities conducted in class; traditional achievement tests should not be viewed as the only form of assessment, and alternative forms of assessment, such as student portfolios and self- and peer assessment, need to be promoted for YLLs; assessment processes should help YLLs to monitor their language learning and develop their self-regulation abilities. CBA has been recognized as a practical solution to address such principles. It incorporates clear clarification of learning goals, multiple assessment methods, and quality feedback toward learning goals. Through CBA, YLLs can become aware of goals, develop positive learning attitudes, and gradually develop a sense of control over their own learning (Butler, 2021).

Given that assessment of YLLs is a newly emerging field (Hasselgreen, 2012), many areas have been underexplored, among which is CBA for YLLs, in particular. Limited research on this area has, however, highlighted that the implementation of CBA in young learner contexts is less than straightforward. For example, teachers of young learners fail to make assessment criteria explicit (Hild and Nikolov, 2011), frequently apply traditional assessment methods (e.g., objective tests; Prošić-Santovac et al., 2019; Yan et al., 2021), provide mostly evaluative feedback (e.g., marks) (Brumen et al., 2009), and mainly use assessment for summative purposes (Rixon, 2016). Moreover, research suggests that much can constrain teachers’ CBA enactment, such as teachers’ low assessment literacy (Vogt and Tsagari, 2014), lack of training in assessment (Patekar, 2021), limited opportunities for professional development (Lee et al., 2019), tight curriculum content (Mak and Lee, 2014), and an examination-oriented culture (Kaur, 2021). It could thus be said that although CBA is regarded as an effective approach to the improvement of YLLs’ learning, the implementation of CBA in local contexts is a challenging endeavor.

L2 teachers’ beliefs and practices regarding CBA

Despite the aforementioned research efforts into the enactment of CBA in young learner contexts, teachers’ beliefs and practices about CBA for YLLs, as well as their relationship, remain largely under-investigated. Teacher beliefs, used interchangeably with teacher conceptions and teacher cognition, generally refer to “the unobservable cognitive dimension of teaching—what teachers know, believe and think” (Borg, 2003, p. 81). Teachers hold beliefs about various aspects of their work like teaching, learning, teachers, students, curriculum and materials (Borg, 2001), and their beliefs exist as a system wherein some core beliefs are stable and hard to change, while others are peripheral (Pajares, 1992). Studies have shown that teachers’ beliefs can exert a powerful influence on their instructional decisions (Burns et al., 2015; Li, 2020), though such beliefs may not always be reflected in their practices (Johnson, 1992; Dixon et al., 2011). Multiple factors, such as contextual complexities (e.g., class size, time constraints, authority’s influence), teachers’ teaching experience, and students’ needs, can determine the extent to which teachers can act according to their beliefs (Phipps and Borg, 2009; Roothooft, 2014). In this study, teachers’ CBA beliefs refer to teachers’ views toward the processes of CBA, including PA, collecting evidence, making professional judgments, and providing feedback, and teachers’ CBA practices are described as the enactment of these processes.

Research on L2 teachers’ beliefs and practices related to CBA has produced mixed results. There is evidence of a powerful effect that teachers’ beliefs have on the way they implement CBA practices (e.g., Mui So and Hoi Lee, 2011; Wang, 2017; Prošić-Santovac et al., 2019; Wu et al., 2021b). Mui So and Hoi Lee (2011), for instance, investigated English-as-a-second-language (ESL) secondary school teachers’ beliefs and practices of CBA for learning and found that their assessment practices consistently reflected their beliefs about the purpose of CBA. Similar congruence between teachers’ CBA beliefs and practices was identified in EFL contexts (Zhou and Deneen, 2016; Wu et al., 2021c). However, more studies have reported discrepancies between teachers’ CBA beliefs and practices (e.g., Xu and Liu, 2009; Chen et al., 2014; Gan et al., 2018; Nasr et al., 2018; Vattøy, 2020; Wang et al., 2020; Mäkipää, 2021). For example, in their study of two university EFL teachers’ enactment of CBA, Chen et al. (2014) found that the teachers expressed positive attitudes toward students’ involvement in the assessment process, whereas in their actual practice, they seldom engaged students in self- and peer assessment. More recently, Vattøy (2020) interviewed ten secondary EFL teachers in Norway and found misalignment between teachers’ beliefs and practices regarding formative teacher feedback. These studies have also shown that L2 teachers’ CBA beliefs and practices are affected by individual factors (e.g., students’ needs, core beliefs held by teachers and their teaching experience) and sociocultural factors (e.g., policy support, class size, time constraints, prescribed curriculum and assessment culture).

One significant gap emerging from the existing studies on L2 teachers’ CBA beliefs and practices is that a majority of the previous studies were concerned mainly with secondary and university teachers, and less space has been devoted to teachers of YLLs. More research is needed regarding teachers’ beliefs and practices pertinent to CBA in young learner contexts. As discussed earlier, YLLs set themselves apart from older learners due to their special age-related characteristics. While previous research has offered insights into how secondary and university language teachers perceive and enact CBA for older learners, whether teachers of YLLs manifest similar beliefs and practices about CBA remains unknown. Given that teachers’ beliefs are context-dependent (Yu et al., 2020), further investigation into teachers’ beliefs and practices about CBA in young learner contexts is essential. Another important gap is that previous studies on L2 teachers’ CBA beliefs and practices merely focused on one or two aspects of CBA, with an intensive discussion on self- and peer assessment and teacher feedback (e.g., Chen et al., 2014; Vattøy, 2020; Mäkipää, 2021). To address this gap, two recent studies, conducted by Wang et al. (2020) and Wu et al. (2021c) respectively, have attempted to investigate language teachers’ beliefs about CBA in a more comprehensive way. Nonetheless, both studies were contextualized within university EFL classrooms. Thus, the current study, situated in the Chinese context, aims to investigate primary school EFL teachers’ beliefs and practices relating to the holistic process of CBA, including PA, collecting evidence, MPJ, and providing feedback.

Research questions

Informed by the research gaps discussed above, this study seeks to address the following three questions:

RQ1: What beliefs do primary school EFL teachers hold about the processes of CBA?

RQ2: How do primary school EFL teachers implement CBA practices?

RQ3: To what extent do primary school EFL teachers’ beliefs about CBA align with their practices?

Methodology

A questionnaire-based survey study was conducted to answer the research questions. Descriptions of the instrument, data collection, participants, and data analysis are provided in the ensuing sections.

Instrument

Our research instrument, the Primary School English Teachers’ CBA questionnaire, was developed in a larger study on the implementation of CBA for young learners in China (Yan, 2020). The questionnaire comprised four sections: Perceived-purpose Scale, Perceived-process Scale, Practice Scale, and Demographic Information. The present study focused on the Perceived-process Scale and the Practice Scale, which examined teachers’ beliefs about the processes of CBA and their self-reported CBA practices, respectively.

Following Dörnyei and Taguchi’s (2010) guidelines, we first identified potential constructs of the Perceived-process Scale and the Practice Scale with reference to Davison and Leung’s (2009) framework of CBA. As discussed earlier, four dimensions are included in this framework: PA, collecting learning evidence, making professional judgments and providing appropriate feedback. The dimension collecting learning evidence was designed in this study to emphasize three types of assessment methods: SAO, planned assessment opportunities and FATs. Given the importance of distinguishing descriptive feedback from evaluative feedback (Wiliam, 2010), both types of feedback were included in the dimension providing appropriate feedback. Thus, seven potential constructs were included in both the Perceived-process Scale and the Practice Scale: PA, using SAO, using planned assessment opportunities, using FATs, MPJ, providing descriptive feedback (PDF), and providing evaluative feedback (PEF).

Forty-four items were initially generated for both scales, most of which were drawn from the Beliefs about Assessment and Evaluation questionnaire (Rogers et al., 2007), the Classroom Assessment questionnaire (Cheng et al., 2004), the “Learning How to Learn” project’s questionnaire (James and Pedder, 2006), and the Assessment Practices Inventory (Zhang and Burry-Stock, 2003). Some items were generated on the basis of the semi-structured interviews conducted with three primary school EFL teachers who did not participate in the main study. The first researcher interviewed the three teachers individually, during which an open, comprehensive topic was discussed: What do you think about the purposes of teachers’ classroom assessment and what assessment practices do you employ when assessing your students? The teachers’ responses were used as a source for the item pool. For example, when asked about assessment practices, one teacher explained that “I frequently check whether students have mastered what they learned in class through classroom tests, dictation and recitation.” This guided the writing of questionnaire items such as “Teachers collect evidence of learning through classroom tests” and “Teachers collect evidence of learning through dictation.” Two 6-point Likert scales were established to study teachers’ beliefs about CBA in the Perceived-process Scale (1 = Not important at all, 2 = Not important, 3 = Somewhat important, 4 = Important, 5 = Very important, 6 = Completely important), and their self-reported CBA practices in the Practice Scale (1 = Never, 2 = Very rarely, 3 = Rarely, 4 = Occasionally, 5 = Frequently, 6 = Always), respectively.

Finally, to examine the content validity, two experts and a group of postgraduates were invited to provide feedback on the content relevance, clarity and comprehensiveness of the two scales, based on which two items were dropped, retaining 42 items, and some items were revised. The items were originally generated in English, and then translated from English to Chinese by the first author and a doctoral student, using a back-translation method (Nunan and Bailey, 2009). The questionnaire was then piloted with 26 primary school English teachers, who did not participate in the main study and provided comments on questionnaire clarity and administration procedures. Modifications were made and a finalized questionnaire was obtained (Appendix A).

Data collection

The first author collected the questionnaire data. A convenience sampling strategy that is commonly used in L2 research (Dörnyei, 2007) was used to collect questionnaire responses from teachers who were teaching EFL at primary schools in China. Specifically, the target participants were primary school EFL teachers from one municipality and one province in China, to whom the first researcher had access. To approach these participants, an information sheet was first sent to six primary school EFL teaching advisors in the two places, who had the responsibility of providing guidance to teachers’ daily teaching and were in charge of all teachers in their own districts. An online questionnaire invitation was then sent out to primary school teachers through the advisors. A total number of 312 questionnaires were received. Of those, 117 questionnaires with an obvious response set (i.e., almost the same answers for all the items) were excluded, leaving 195 valid responses.
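The screening step described above can be sketched as follows. This is only an illustration: the source says that questionnaires with "almost the same answers for all the items" were excluded but does not give a numerical rule, so the 0.9 threshold, the function name `is_response_set`, and the three sample responses are all hypothetical.

```python
from collections import Counter


def is_response_set(answers, threshold=0.9):
    """Flag a questionnaire whose single most frequent answer covers
    at least `threshold` of its items (hypothetical screening rule)."""
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers) >= threshold


# Hypothetical 6-point Likert responses from three teachers.
responses = {
    "t01": [4, 5, 4, 6, 3, 5, 4, 5, 6, 4],  # varied answers
    "t02": [5, 5, 5, 5, 5, 5, 5, 5, 5, 5],  # identical answers
    "t03": [6, 6, 6, 6, 6, 6, 6, 6, 6, 5],  # almost identical answers
}

# Keep only the questionnaires that are not flagged.
valid = {tid: ans for tid, ans in responses.items() if not is_response_set(ans)}

# Sample size bookkeeping reported in the text: 312 received, 117 excluded.
received, excluded = 312, 117
remaining = received - excluded
```

A stricter or looser threshold would change how many questionnaires are removed, so any such rule would need to be reported alongside the final sample size.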

Participants

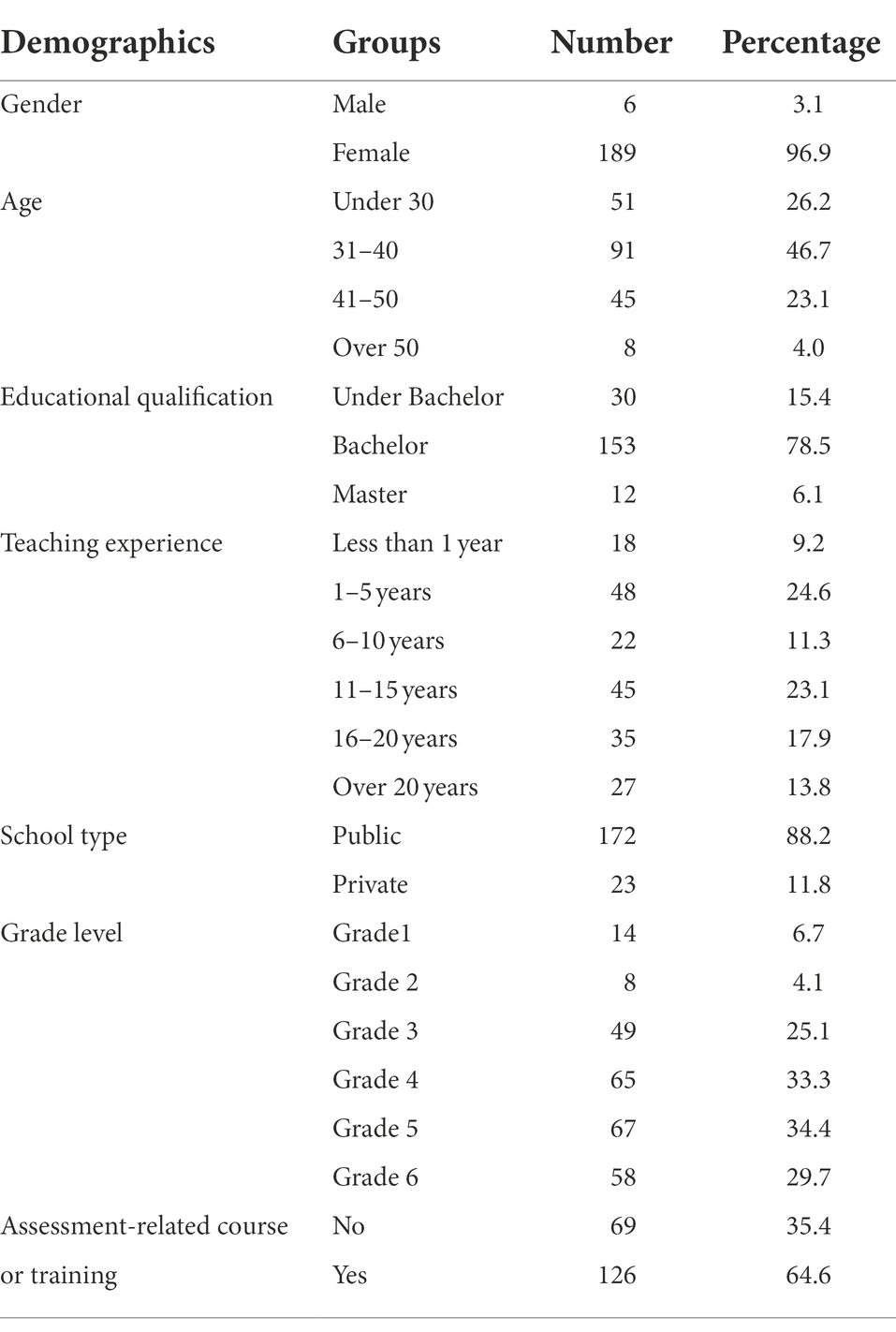

As Table 1 shows, a majority of the teacher participants were female (96.9%, n = 189), and most teachers were aged under 40 years (72.9%, n = 142). Over three quarters of the teachers held a bachelor’s degree (78.5%, n = 153), with a small minority holding a master’s degree or a degree lower than a bachelor’s (21.5%, n = 42). About half of the participants had 6 to 20 years’ experience of teaching EFL to primary school students (52.3%, n = 102); 33.8% (n = 66) had less than 5 years and 13.5% (n = 27) had more than 20 years. The number of teachers from public schools (88.2%, n = 172) was larger than that from private schools (11.8%, n = 23). Of the participants, 69.2% (n = 136) taught lower grade levels (Grades 1, 2, 3 and 4), and a similar number taught higher grade levels (Grades 5 and 6) (64.1%, n = 125). Most participants had completed a course on assessment or received training in assessment (64.6%, n = 126).

Data analysis

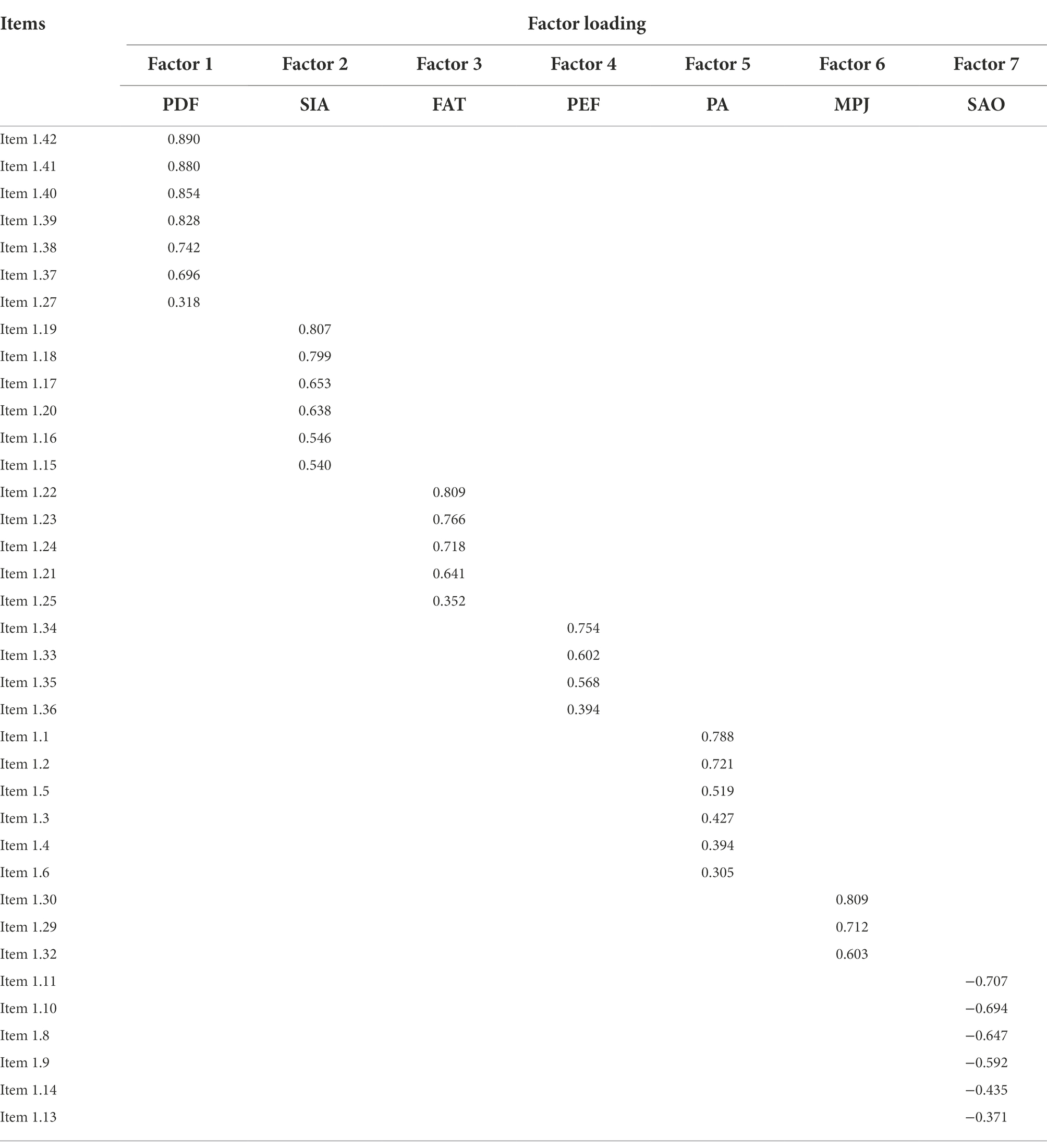

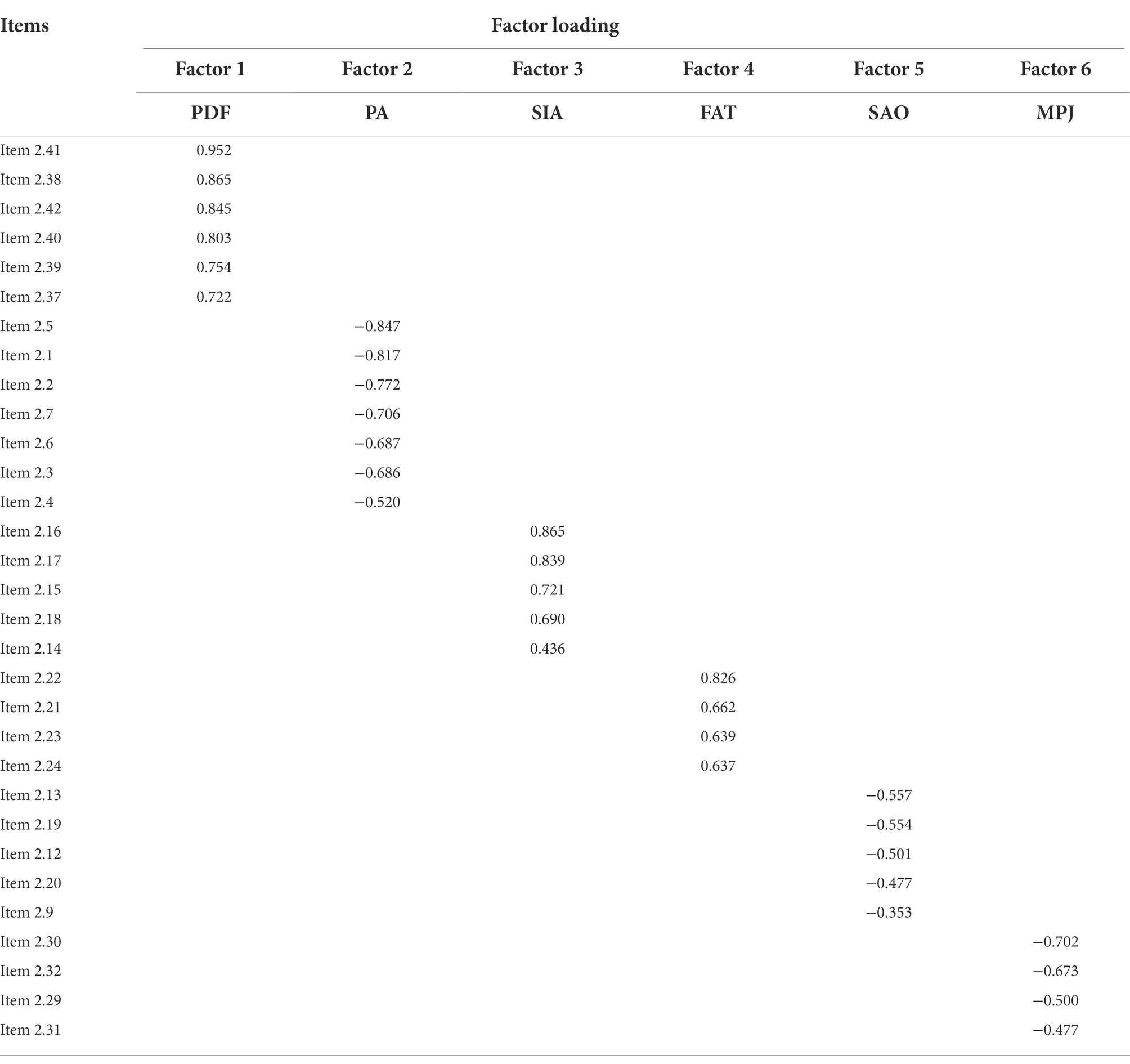

Questionnaire data were analyzed using SPSS 24. Missing data, outliers, and normality of distribution were checked first. Exploratory factor analysis (EFA) was then conducted to explore the underlying constructs of the Perceived-process Scale and the Practice Scale. Assumptions were checked through the Kaiser-Meyer-Olkin (KMO) Test of Sampling Adequacy and Bartlett’s Test of Sphericity. Factors were extracted using principal axis factoring with a non-orthogonal rotation (Direct Oblimin, δ = 0). A factor loading with a minimum absolute value of 0.30 was required. The results indicated a 37-item seven-factor solution and a 31-item six-factor solution for the Perceived-process Scale and the Practice Scale, respectively (see the section Underlying constructs of the perceived-process scale and practice scale).
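As a rough illustration of one of the assumption checks named above, the sketch below computes Bartlett's test of sphericity from a correlation matrix using the standard formula χ² = −(n − 1 − (2p + 5)/6) · ln|R| with p(p − 1)/2 degrees of freedom. The study itself used SPSS; the function names and the small 3 × 3 correlation matrix are assumptions made for this example only.

```python
import math


def log_determinant(matrix):
    """Log-determinant of a positive-definite matrix via Gaussian elimination."""
    a = [row[:] for row in matrix]  # work on a copy
    size = len(a)
    log_det = 0.0
    for i in range(size):
        pivot = a[i][i]
        if pivot <= 0:
            raise ValueError("matrix is not positive definite")
        log_det += math.log(pivot)
        for r in range(i + 1, size):
            factor = a[r][i] / pivot
            for c in range(i, size):
                a[r][c] -= factor * a[i][c]
    return log_det


def bartlett_df(n_items):
    """Degrees of freedom of Bartlett's test for n_items variables."""
    return n_items * (n_items - 1) // 2


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square, df) for a correlation matrix and sample size."""
    p = len(corr)
    chi_square = -(n_obs - 1 - (2 * p + 5) / 6) * log_determinant(corr)
    return chi_square, bartlett_df(p)


# Hypothetical correlation matrix for three items, with the study's n = 195.
example_corr = [
    [1.0, 0.5, 0.5],
    [0.5, 1.0, 0.5],
    [0.5, 0.5, 1.0],
]
chi_square, df = bartlett_sphericity(example_corr, n_obs=195)
```

The degrees-of-freedom formula is consistent with the values reported in the Results: `bartlett_df(37)` gives 666 for the 37-item Perceived-process Scale and `bartlett_df(31)` gives 465 for the 31-item Practice Scale.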

To answer RQ1 regarding teachers’ beliefs about CBA, descriptive analysis was conducted to obtain a general understanding of teachers’ beliefs about the seven factors. Repeated-measures ANOVA was then conducted to examine the differences in teachers’ beliefs among the factors. The same analyses were run to answer RQ2 regarding teachers’ self-reported CBA practices. To answer RQ3 regarding teachers’ belief-practice relationship, correlation analysis and multiple regression analysis were carried out. Correlation analysis was performed to examine whether there existed a direct relationship, and multiple regression analysis was performed to explore how well teachers’ CBA beliefs could predict their practices.
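The logic of the RQ3 analysis can be illustrated with a deliberately simplified sketch: a Pearson correlation between a belief score and a practice score, followed by a least-squares regression with a single predictor. The study used multiple regression in SPSS; the one-predictor version, the function names, and the five pairs of scores below are assumptions chosen to keep the example short.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simple_regression(x, y):
    """Least-squares slope and intercept for predicting y from x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sum(
        (a - mean_x) ** 2 for a in x
    )
    return slope, mean_y - slope * mean_x


def r_squared(x, y):
    """Proportion of variance in y explained by the fitted line."""
    slope, intercept = simple_regression(x, y)
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1 - ss_res / ss_tot


# Hypothetical mean factor scores (6-point scales) for five teachers.
beliefs = [4.0, 4.5, 5.0, 5.5, 6.0]
practices = [3.5, 4.0, 4.2, 5.0, 5.3]

r = pearson_r(beliefs, practices)
slope, intercept = simple_regression(beliefs, practices)
explained = r_squared(beliefs, practices)
```

With one predictor, the explained variance equals the squared correlation; with several belief factors entered together, as in the study, each coefficient would instead reflect a factor's contribution with the others held constant.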

Results

Underlying constructs of the perceived-process scale and practice scale

Underlying constructs of the perceived-process scale

In the process of EFA of 42 items on the Perceived-process Scale, five items yielded cross-loadings over 0.30 on more than one factor and thus were discarded. The cross-loading of four items (Item 1.12, Item 1.26, Item 1.28 and Item 1.31) may be explained by the fact that the four key steps of CBA are interrelated with one another. For instance, Item 1.12 ‘Teachers collect evidence of learning through classroom observation’, which was initially designed as a potential item for the construct using SAO, cross-loaded onto the construct PDF. The potential construct using SAO emphasizes that incidental assessment opportunities like teacher observation can be generated by teachers to timely obtain learning evidence and provide immediate feedback (Turner and Purpura, 2016). From a conceptual perspective, this construct not only addresses the method of evidence collection but also the formative purpose of using such method, and thus is related to the potential construct PDF that has a focus on evidence use. Therefore, it can be deduced that the cross-loading of Item 1.12 was justified. Besides, the cross-loading of another item, Item 1.7, may be associated with construct clarity.

According to Davison and Leung (2009), selecting appropriate assessments is an important element of the construct PA, based on which Item 1.7 was generated as “Teachers select appropriate assessment methods according to students’ needs when PA.” This potential item, however, cross-loaded onto the construct PA and the construct using SAO. This finding indicates that the construct PA appears to require clarification and offers a possibility of considering selecting appropriate assessment methods as an element of collecting learning evidence. After the five cross-loading items were removed, seven factors were extracted, with a cumulative contribution of 72.54% (KMO = 0.934, df = 666, p < 0.001). Cronbach’s alpha coefficients (α) of the seven factors ranged from 0.834 to 0.953, indicating good internal consistency.
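For readers unfamiliar with the internal-consistency index reported above, the following sketch computes Cronbach's alpha from raw item ratings using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The ratings are hypothetical and the function name is an assumption; the coefficients in the study were obtained with SPSS.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k ratings."""
    k = len(item_scores[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of respondents' total scores.
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings from five teachers on a three-item factor.
ratings = [
    [5, 5, 6],
    [4, 4, 5],
    [6, 5, 6],
    [3, 4, 4],
    [5, 6, 6],
]
alpha = cronbach_alpha(ratings)
```

Alpha rises when items vary together across respondents, which is why values such as those reported for the seven factors are read as evidence that the items of each factor measure a common construct.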

Table 2 shows that Factor 1, PDF, contained seven items (α = 0.953) regarding using feedback to improve student learning, such as identifying strengths and weaknesses in relation to learning goals and finding solutions to help students improve their learning. Factor 2 included six items (α = 0.911), revealing how regular instructional activities like oral presentations and role plays can be conducted as assessment opportunities, where students often play an active role in the assessment process, for instance, being involved in self- and peer assessment. These six items were originally designed to be covered by the construct planned assessment opportunities, which addresses that teachers can design or plan instruction-embedded activities to elicit student learning. However, in order to highlight students’ active role, this factor was relabeled student-involving assessment opportunities (SIA). Factor 3, using FAT, included five items (α = 0.886) showing the use of more formal types of assessments (e.g., classroom tests, dictation, oral reading and reciting). Factor 4, PEF, included four items (α = 0.834) focusing on providing feedback to students and parents about their achievement through scores and grades. Factor 5, PA, covered six items (α = 0.847) in relation to establishing and sharing instructional objectives and assessment criteria with students. Factor 6, MPJ, contained three items (α = 0.889) showing how judgments of students’ performance could be made by comparing their performance to pre-set learning goals or their previous performance. Factor 7, using SAO, included six items (α = 0.881) revealing how informal and unplanned assessments (e.g., teacher questioning, teacher-student conversations) could be embedded in daily instruction to modify teaching and provide immediate feedback to students. It can be seen that the original seven constructs of the Perceived-process Scale were retained with EFA.

Underlying constructs of the practice scale

In the process of EFA of the 42 items on the Practice Scale, four items had loadings lower than 0.30, four items loaded on more than one factor, and another three items were identified as outlying ones as they were unrelated to other items on the same factor. The cross-loading of the items on the Practice Scale (Item 2.10, Item 2.11, Item 2.25, Item 2.28) is likely to be explained by the precision of wording. For example, Item 2.25 ‘I take account of students’ language knowledge (e.g., vocabulary, grammar) when interpreting assessment data’, as a potential item for the construct making professional judgments, cross-loaded onto the construct using FAT. A careful examination of this item showed a qualitative difference in wording as compared to some other potential items for the same construct. The use of “take account of” seemed to indicate that this item focused on what was to be assessed. However, other items like Item 2.29 and Item 2.30 used “compared students’ current performance against” to suggest a clear focus on how to make sense of assessment data. The wording difference, as a result, might have affected participants’ interpretation of the items. In the final solution, 11 items were discarded and six factors were extracted, explaining 71.48% of variance (KMO = 0.925, df = 465, p < 0.001). Cronbach’s alpha coefficients (α) of the six factors ranged from 0.848 to 0.954.

As Table 3 shows, Factor 1, PDF, contained six items (α = 0.954) reflecting teachers’ use of detailed feedback to help students recognize their strengths and weaknesses in learning and move their learning forward. Factor 2, PA, with seven items (α = 0.885), reflected teachers’ practices of establishing and sharing instructional objectives and assessment criteria with students as well as selecting appropriate assessment methods. Factor 3, SIA, with five items (α = 0.871), reflected teachers’ use of instruction-embedded assessment opportunities where students played a major role (e.g., self-assessment, peer assessment). Factor 4, FAT, with four items (α = 0.848), showed teachers’ use of FATs to collect learning evidence (e.g., classroom tests, dictation). Factor 5, SAO, with five items (α = 0.859), revealed teachers’ use of incidental instruction-embedded assessments (e.g., observation, oral questioning). Factor 6, MPJ, with four items (α = 0.879), showed how teachers made professional interpretation of assessment information. The results show that only six constructs were retained with EFA, with the original construct PEF being dismissed. The reason is that two initial items (Item 3.35 and Item 3.36) of PEF had loadings lower than 0.30 during the process of factoring. Another two items (Item 3.33 and Item 3.34) of PEF loaded onto the factor FAT and were regarded as outlying variables because these two items focused on how teachers reported students’ performance while the four items of the factor FAT were related to teachers’ use of formal tasks to evaluate students’ performance. This was confirmed by the reliability coefficient for the factor FAT, showing that Cronbach’s alpha became higher if Item 3.33 and Item 3.34 were deleted.

Teachers’ CBA beliefs

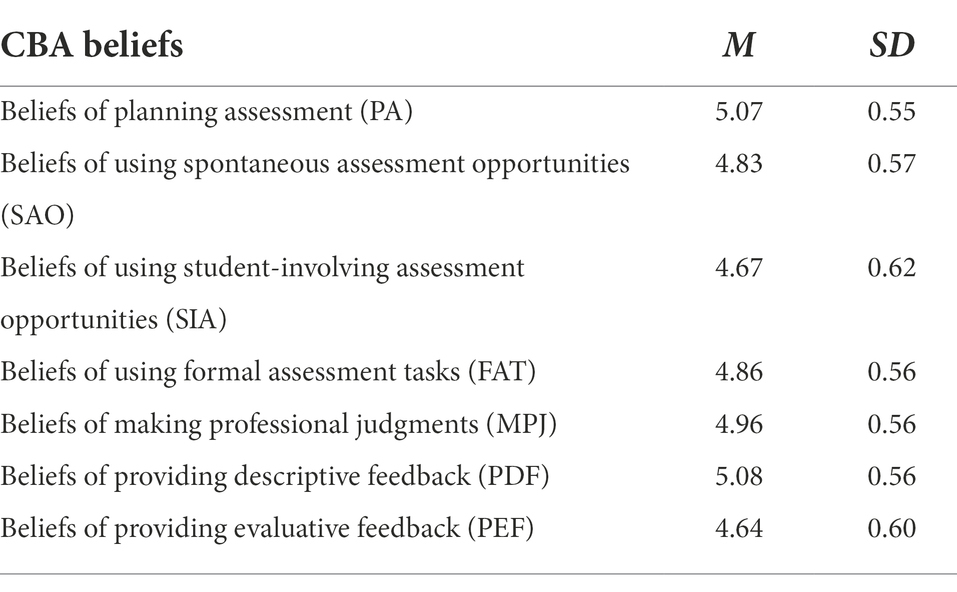

Descriptive data on teachers’ beliefs about the CBA processes are displayed in Table 4. The seven factors derived from the Perceived-process Scale all had a mean score higher than 4.0 (“Important”), indicating that the teachers believed that the various processes of CBA were important for the enhancement of student learning. Specifically, they agreed that PA and PDF were especially valuable, as their mean scores exceeded 5.0 (“Very important”).

One-way repeated-measures ANOVA was conducted to examine differences among teachers’ beliefs regarding the seven factors. The assumption of normal distribution was satisfied given that the values of skewness (from −0.421 to 0.209) and kurtosis (from −0.251 to 1.037) fell within the cut-off values of |3.0| and |8.0|, respectively (Kline, 2011). Mauchly’s Test showed that the assumption of sphericity had been violated, χ² (20) = 68.888, p < 0.001, so Greenhouse–Geisser of Huynh-Feldt (ε = 0.909) was applied to adjust degrees of freedom. The results of ANOVA showed that there were significant differences in the importance attached to the CBA processes, F (5.289, 1026.070) = 42.694, p < 0.001, η² = 0.18. Follow-up pairwise comparisons with Bonferroni corrections indicated that all pairwise differences were significant (p < 0.05) except for four paired comparisons, namely PA and PDF (p > 0.05), SAO and FAT (p > 0.05), SIA and PEF (p > 0.05), and FAT and MPJ (p > 0.05). This suggested that the teachers considered PA and PDF to be the most important CBA processes in promoting student learning. As for the specific assessment methods for collecting learning evidence, they placed greater emphasis on SAO (e.g., teacher questioning, observations) and FAT (e.g., classroom tests, textbook exercises, recitation), whereas a relatively lower preference was shown for SIA (e.g., self-assessment and peer assessment). They also believed strongly in the value of MPJ (e.g., comparing learning evidence against pre-set goals). Comparatively, from the teachers’ perspective, SIA and PEF were the least important CBA processes.
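
The reported test statistics can be cross-checked with a short sketch. It assumes that the reported η² is partial eta squared, recoverable from F and its degrees of freedom, and that the degrees of freedom of Mauchly’s test follow k(k − 1)/2 − 1 for k repeated measures; the function names are hypothetical.

```python
def mauchly_df(k):
    """Degrees of freedom of Mauchly's sphericity test for k repeated measures."""
    return k * (k - 1) // 2 - 1


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Seven belief factors (values as reported in the text).
beliefs_df = mauchly_df(7)
beliefs_eta = partial_eta_squared(42.694, 5.289, 1026.070)

# Six practice factors (values as reported in the text).
practice_df = mauchly_df(6)
practice_eta = partial_eta_squared(66.582, 4.277, 829.715)

print(beliefs_df, round(beliefs_eta, 2))     # 20 0.18
print(practice_df, round(practice_eta, 3))   # 14 0.256
```

Under these assumptions, the sketch reproduces the degrees of freedom of Mauchly’s test and the effect sizes reported for both the belief and the practice factors.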

Teachers’ CBA practices

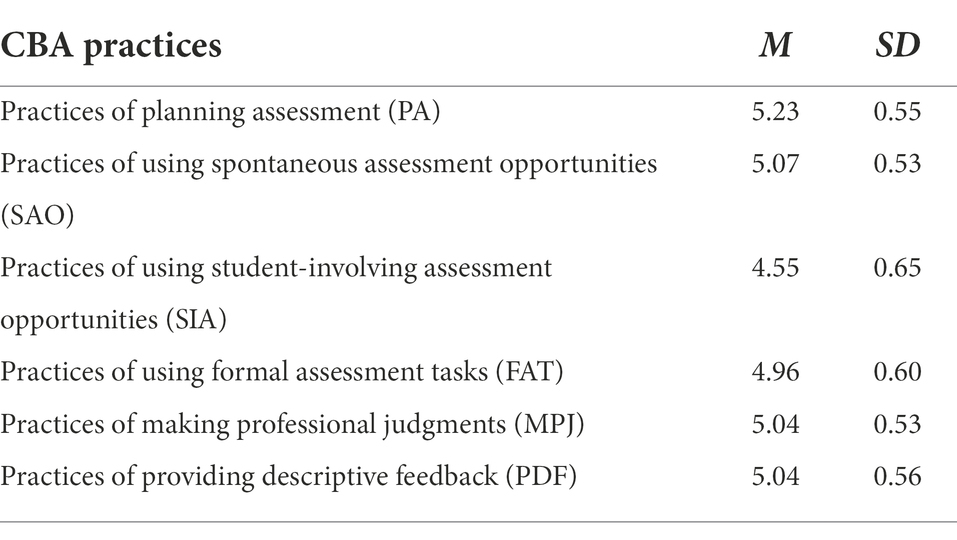

Table 5 presents the descriptive data of teachers’ self-reported CBA practices. The mean scores for PA, SAO, MPJ and PDF exceeded 5.0 (“Frequently”) and those for SIA and FAT exceeded 4.0 (“Occasionally”). The results revealed that, on average, the teachers reported frequent use of PA, SAO, MPJ and PDF and occasional use of SIA and FAT.

One-way repeated-measures ANOVA was applied to examine the differences among the six factors. The data met the assumption of normal distribution (the values of skewness ranging from −0.285 to 0.150, and the values of kurtosis from −0.704 to 0.467), but the assumption of sphericity was violated, χ² (14) = 79.825, p < 0.001. Therefore, Greenhouse–Geisser of Huynh-Feldt (ε = 0.877) was applied. Significant differences were identified in the frequency of different CBA practices, F (4.277, 829.715) = 66.582, p < 0.001, η² = 0.256. Follow-up pairwise comparisons with Bonferroni corrections showed that all pairwise differences were significant (p < 0.05) except for six paired comparisons, namely, SAO and FAT (p > 0.05), SAO and MPJ (p > 0.05), SAO and PDF (p > 0.05), FAT and MPJ (p > 0.05), FAT and PDF (p > 0.05), and MPJ and PDF (p > 0.05). The results revealed that, according to the teachers’ self-reports, PA was the most frequently used CBA practice. As for collecting learning evidence, the teachers frequently used the assessment methods of SAO (e.g., oral questioning and observations) and FAT (e.g., classroom tests and recitation tasks), while SIA (e.g., self-assessment and peer assessment) was less frequently used. Meanwhile, they reported frequent use of MPJ (e.g., making judgments of students’ performance against learning objectives or their previous performance) and PDF (e.g., providing feedback to students to identify learning strengths and weaknesses). In general, SIA was the least frequently used CBA practice by the teachers.
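
The logic of the Bonferroni-corrected follow-up comparisons can be sketched as follows. The factor labels and the six non-significant pairs are taken from the text; the per-comparison threshold shown (0.05 divided by the number of pairs) is one common way of applying the correction and is an assumption about how it was applied here.

```python
from itertools import combinations

factors = ["PA", "SAO", "SIA", "FAT", "MPJ", "PDF"]

# All unordered pairs of the six practice factors.
all_pairs = {frozenset(pair) for pair in combinations(factors, 2)}

# Pairs reported as non-significant (p > 0.05) in the text.
non_significant = {
    frozenset(pair)
    for pair in [("SAO", "FAT"), ("SAO", "MPJ"), ("SAO", "PDF"),
                 ("FAT", "MPJ"), ("FAT", "PDF"), ("MPJ", "PDF")]
}

significant = all_pairs - non_significant

# Bonferroni: divide the family-wise alpha by the number of comparisons.
per_comparison_alpha = 0.05 / len(all_pairs)

# Every significant pair involves either PA or SIA.
only_pa_or_sia = all(pair & {"PA", "SIA"} for pair in significant)

print(len(all_pairs), len(significant), only_pa_or_sia)
```

All remaining significant pairs involve either PA or SIA, which is consistent with PA being reported as the most frequent practice and SIA as the least frequent, with the other four practices not differing significantly from one another.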

In summary, Chinese primary school EFL teachers in this study showed strong positive attitudes toward CBA, perceiving PA and PDF as the most important assessment processes. While they emphasized the value of using multiple assessment methods, they placed more importance on SAO and FATs than on student-involving assessment opportunities. MPJ was also considered imperative for improving student learning. PEF, as opposed to PDF, was the least valued by the teachers. A similar pattern was identified in their self-reported CBA practices, as they reported the most frequent practice of PA, use of multiple assessment methods to collect students’ learning evidence (with a heavy reliance on SAO and FAT), as well as frequent practice of MPJ and PDF. This similarity seemed to indicate alignment between teachers’ beliefs about CBA and their assessment practices.

Relationship between teachers’ CBA beliefs and practices

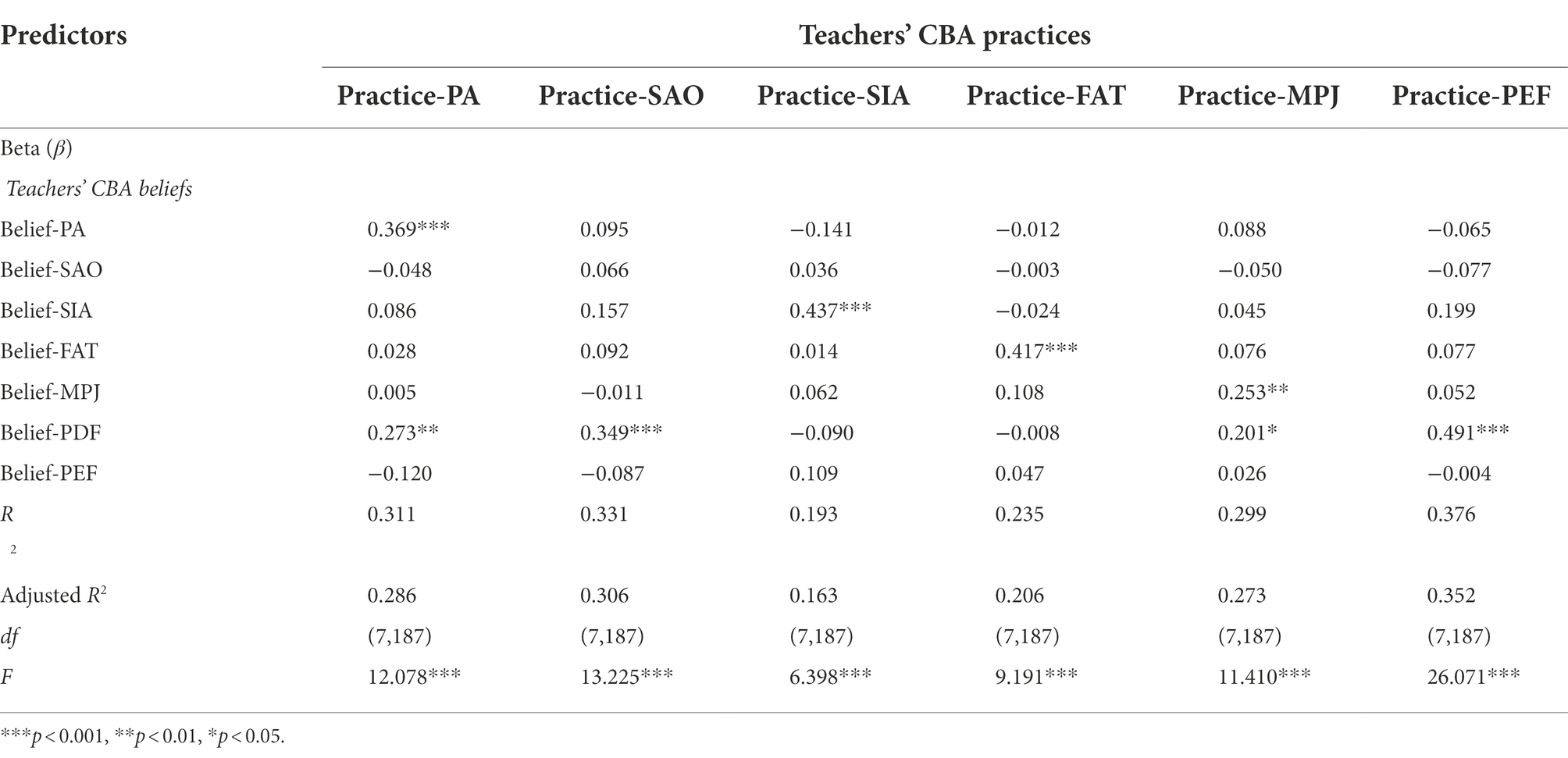

To examine the relationship between teachers’ beliefs and their self-reported practices related to CBA, correlation analysis was first conducted. As seen in Appendix B, each of the seven factors of teachers’ beliefs about CBA was positively and significantly correlated with the six factors of teachers’ self-reported CBA practices. Thus, six multiple regression analyses were conducted, each using one of the six self-reported CBA practices as the dependent variable and the seven factors of teachers’ beliefs about CBA as predictors (see Table 6). The seven factors of teachers’ beliefs were found to have a significant effect on teachers’ CBA practices.

First, the results of multiple regression showed a significant model for the practice of PA, R² = 0.311, adjusted R² = 0.286, df = (7,187), F = 12.078, p < 0.001. The PA practice was predicted by teachers’ beliefs about PA (β = 0.369, p < 0.001) as well as PDF (β = 0.273, p < 0.01). Second, the results showed a significant model for the practice of SAO, R² = 0.331, adjusted R² = 0.306, df = (7,187), F = 13.225, p < 0.001. This practice was predicted by teachers’ beliefs about SAO (β = 0.349, p < 0.001). Third, a significant model was identified for the practice of SIA, R² = 0.232, adjusted R² = 0.193, df = (7,187), F = 6.398, p < 0.001. Likewise, this practice was predicted by teachers’ beliefs about SIA. Fourth, a significant model was found for the practice of FAT, R² = 0.235, adjusted R² = 0.206, df = (7,187), F = 9.191, p < 0.001. In the same vein, the practice was predicted by teachers’ beliefs about FAT (β = 0.417, p < 0.001). Fifth, a significant model was also identified for the practice of MPJ, R² = 0.299, adjusted R² = 0.273, df = (7,187), F = 11.410, p < 0.001. This practice was predicted by teachers’ beliefs about MPJ (β = 0.253, p < 0.01) and PDF (β = 0.201, p < 0.05). Finally, a significant model was identified for the practice of PDF, R² = 0.376, adjusted R² = 0.352, df = (7,187), F = 23.044, p < 0.001, showing that teachers’ beliefs about PDF were a significant predictor (β = 0.491, p < 0.001).
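
The relationship between R², adjusted R² and the model degrees of freedom can be sketched as follows. The sketch assumes the standard adjustment formula with n = 195 respondents and k = 7 predictors, as stated in the study; the function name is hypothetical, and only two of the six models are shown as examples.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R-squared for a model with k predictors fitted to n cases."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


n_teachers = 195    # survey sample size reported in the study
n_predictors = 7    # seven belief factors entered as predictors

# Residual degrees of freedom, matching df = (7, 187) in the text.
df_residual = n_teachers - n_predictors - 1

sao_adj = adjusted_r2(0.331, n_teachers, n_predictors)   # SAO model
mpj_adj = adjusted_r2(0.299, n_teachers, n_predictors)   # MPJ model

print(df_residual, round(sao_adj, 3), round(mpj_adj, 3))  # 187 0.306 0.273
```

Because adjusted R² penalizes the number of predictors, it is slightly lower than R² in each model, which matches the pattern of the values reported above.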

Overall, the above results revealed strong relationships between Chinese primary school EFL teachers’ beliefs about CBA and their assessment practices. Their beliefs regarding PA, SAO, SIA, FAT, MPJ and PDF were found to be important predictors of the related assessment practices.

Discussion

Results of this study indicated that Chinese primary school EFL teachers generally held positive attitudes toward the various processes of CBA, and also reported that they attempted to enact CBA practices in their classrooms to support learning of YLLs. Their beliefs about CBA seemed to have a powerful influence on their assessment practices. Overall, this study contributes to the literature by comprehensively examining teachers’ self-reported beliefs and practices regarding CBA for young EFL learners in the Chinese context. Each research question is addressed in light of the findings.

Regarding the first research question, the findings revealed that Chinese primary school EFL teachers agreed on the importance of various processes of CBA (e.g., PA, collecting learning evidence, MPJ, providing appropriate feedback). Similar findings have been reported in the literature (e.g., Wang et al., 2020; Golzar et al., 2022). For example, in the recent study by Golzar et al. (2022), Afghan university EFL teachers perceived that student-involving assessment methods (e.g., self- and peer assessment) and quality feedback were of great value in improving student learning. However, Indonesian EFL teachers in Puad and Ashton’s (2021) study mainly viewed CBA from a summative perspective, showing negative attitudes toward self- and peer assessment and believing in the value of scores and grades in making students accountable. This differs from the finding of the current study. It seems that the teaching and learning context is an important factor shaping teachers’ beliefs about CBA.

As for the specific processes of CBA, the teachers in this study placed the greatest importance on PA, stressing the value of establishing and sharing clear instructional objectives and success criteria with students. This finding is close to that of Wu et al.’s (2021b) investigation in the Chinese university EFL context, where the teachers attached importance to communicating learning goals and success criteria to students. The significance of articulating learning goals and success criteria, as suggested by previous research (Timperley and Parr, 2009; Balloo et al., 2018), is that it enables students to be truly engaged in deep learning rather than surface-level learning focused on task completion, which is important for students’ self-regulatory capacity. The teachers in this study also believed that, when making judgments, it was important to compare students’ learning performance against pre-set learning objectives or students’ previous learning progress. Such beliefs are shared by researchers such as Airasian and Abrams (2003) and Jacobs and Renandya (2019), who emphasize the value of criterion-referenced and pupil-referenced assessments to mitigate the negative impact of competition, diagnose students’ learning needs, and identify strategies for learning improvement. In addition, the teachers in this study placed the greatest emphasis on the value of descriptive feedback to help students understand what was necessary for achievement and how to overcome difficulties in learning. In comparison, they did not place a high value on evaluative feedback. The findings here are similar to those of Brumen and Cagran’s (2011) research, where teachers from three European countries (the Czech Republic, Slovenia and Croatia) believed that YLLs should be provided with more descriptive and individual feedback rather than numerical grades. As Brumen and Cagran (2011) suggested, young learners would benefit more directly from descriptive feedback on their learning progress and language development.

However, when it comes to teachers’ beliefs about collecting learning evidence, complex findings were identified in this study. The teachers placed considerable value on the use of multiple assessment methods, including SAO embedded in daily instruction (e.g., teacher questioning), FATs (e.g., classroom tests), and student-involving assessment opportunities (e.g., self- and peer assessment). This finding has also been seen in other studies (Shohamy et al., 2008; Troudi et al., 2009). Multiple assessment methods have the potential to respond to a wide range of L2 students’ learning needs (Leung, 2005). In primary schools in China, teachers usually teach large classes with up to 50 students (Wang, 2009; Wu et al., 2021b), leading them to use different forms of assessment to address students’ learning needs. Despite their positive attitudes toward multiple assessment methods, the teachers in this study perceived student-involving assessments as less important than other assessment types. The relatively conservative beliefs about student-involving assessments such as self- and peer assessment might reflect the teachers’ concern over the subjectivity and validity of these assessment methods. Indeed, although some empirical evidence has indicated the effectiveness of such assessment methods in promoting YLLs’ autonomy (e.g., Butler and Lee, 2010; Liu and Brantmeier, 2019), there is a widespread belief among teachers that YLLs are too immature to evaluate and self-regulate their own learning, as Butler (2019) noted. Similar traditional beliefs about student-involving assessments have been reported in previous empirical studies with YLLs (Tsagari, 2016). Even for older learners, L2 teachers tend to believe that learners do not have sufficient knowledge to accurately assess their own learning (Puad and Ashton, 2021).

Regarding the second research question, the findings of the present study are generally different from those of Rixon’s (2016) international survey study, in which teachers from over 100 countries were investigated regarding their assessment practices in young learner classrooms. In Rixon’s study, the teachers mainly used assessment for summative purposes, failing to make full use of assessment information to support YLLs’ English language learning. By contrast, in the present study, Chinese primary school EFL teachers attempted to enact various CBA practices to enhance YLLs’ language learning, such as PA, using multiple assessment methods, and PDF. Such findings are closer to those of Lee et al.’s (2019) study, which showed primary school English teachers’ attempts to implement CBA in writing classrooms to benefit student learning in the Hong Kong context.

The findings about Chinese primary school EFL teachers’ attempts to implement CBA practices, despite being self-reported, are also encouraging for Chinese educational policy makers, as they suggest that teachers have tried to translate the principles of CBA for learning into action. In China, CBA has attracted the attention of language assessment experts and researchers since the beginning of the new century, and it became a policy-supported practice in the Chinese educational system at almost the same time (Gu, 2012). At the primary school level, CBA, as an assessment initiative, was incorporated into the English Curriculum Standards for Compulsory Education (ECSCE) (2011 version; MOE, 2011), aimed at promoting learner autonomy through the integration of CBA into regular instruction. This initiative has been reaffirmed in the newly published ECSCE (2022 version; MOE, 2022). In this study, a series of CBA practices (e.g., clarifying learning goals and success criteria, using multiple assessment methods, MPJ and PDF) had been implemented in young learner classrooms, as reported by the teachers. These findings suggested that Chinese primary school EFL teachers had provided CBA opportunities for young learners to improve their learning. However, it has to be borne in mind that these findings were based solely on what the teachers reported.

Nevertheless, this study found that, among the various CBA practices, the teachers reported the lowest frequency of adopting student-involving assessment opportunities such as self- and peer assessments; by contrast, teacher-centered FATs were more frequently conducted with YLLs. This is in accordance with research showing that student-involving assessment opportunities do not have much of a presence in L2 classroom practice (Saito and Inoi, 2017). Based on teachers’ self-reports, it appeared that Chinese primary school EFL teachers did not provide genuine opportunities for young learners to take responsibility for their own learning. This may in part be due to the traditional Chinese culture of teaching and learning, where teachers are conceptualized as the authoritative figure, and students are regarded as passive recipients of knowledge (Carless, 2011). A Chinese saying, ‘being a teacher for only one day entitles one to lifelong respect from the student that befits his/her father’ (yiri weishi zhongshen weifu), expresses this hierarchical teacher-student relationship. Having been influenced by this culture throughout their own student life, Chinese EFL teachers are likely to develop firm beliefs regarding the teacher and student roles (Cheng et al., 2021; Sun and Zhang, 2021; Zhang and Sun, 2022). As such, teachers still tend to take a dominant role in assessing and monitoring students’ learning despite their beliefs about the beneficial impact of student-involving assessments.

The less frequent use of student-involving assessments might also be attributed to teachers’ lack of CBA literacy. Although the majority of teachers in this study reported that they had received assessment-related training or attended related courses, it seems possible that insufficient CBA content had been provided. As Xu and Brown’s (2017) study showed, Chinese university EFL teachers had insufficient CBA training in both pre-service and in-service courses. Hence, the lack of CBA training might hinder teachers from developing the assessment literacy needed to translate CBA principles into practice. Previous literature has indicated that CBA-literate teachers will be committed to embedding student-involving assessment opportunities into instruction in an ongoing manner (Dixon et al., 2020).

Regarding the third research question, the findings from the descriptive analyses revealed that teachers’ stated beliefs and practices regarding CBA were generally aligned in certain aspects. For example, the teachers highly valued the clarification of learning objectives and success criteria, professional judgments of student learning and the provision of descriptive feedback, which were reflected in their frequent practices of these CBA processes. A similar finding was reported in Wang et al.’s (2020) study, which showed that the teachers’ practices of making learning explicit matched their beliefs about creating a supportive learning environment and clarifying success criteria. In addition, in the present study, the teachers’ beliefs about the use of different assessment methods were largely consistent with their practices. The teachers placed the least value on student-involving assessment opportunities, which was correspondingly reflected in their least frequent practice of SIA. This finding is similar to that of Wu et al.’s (2021b) study, where the teachers’ beliefs and practices were aligned regarding empowering students in the assessment process. Furthermore, the findings of the multiple regression analyses demonstrated that Chinese primary school EFL teachers’ beliefs about CBA were important predictors of their assessment practices, echoing the powerful influence of teachers’ beliefs on their instructional behaviors (Borg, 2019). This finding is generally consistent with that of Brown et al.’s (2015) study, which reported alignment between teachers’ assessment beliefs and their self-reported assessment practices in the Indian context. Nonetheless, it needs to be kept in mind that this study provides no evidence as to teachers’ actual classroom assessment practices. It could be that, in actual classroom settings, teachers have not implemented CBA practices as fully as they reported in the survey, because teachers’ self-reported teaching practices do not necessarily reflect their actual classroom behavior (Chen et al., 2012). Further research is needed to explore the belief-practice nexus.

Conclusion

Chinese primary school EFL teachers were found to place considerable value on the various processes of CBA for YLLs and reported that they had made attempts to implement CBA practices to facilitate the learning of YLLs. However, it would seem that their CBA practices need to be expanded to incorporate student-involving assessment opportunities, which was also reflected in their beliefs that such assessment opportunities were less important than FATs. In general, the teachers’ stated CBA beliefs and practices were in alignment.

Three pedagogical implications are drawn. First, it is recommended that teachers design student-involving assessment opportunities contextualized to the learning objectives of a particular lesson or unit. To maximize the benefits for building young learner autonomy, it is advisable for teachers to guide students to understand the learning objectives and success criteria using learner-friendly language, and to work with students to reflect on and monitor the language knowledge and skills that they have mastered. Second, given the powerful impact of teachers’ beliefs on their CBA practices, it is suggested that teacher educators provide student teachers with sufficient experience of CBA, and that school administrators establish professional learning communities where meetings are organized to convey CBA principles and teachers can share their assessment experiences. This helps teachers to form positive beliefs about CBA, which, in turn, could motivate them to make good use of CBA as a pedagogical practice. Third, quality pre-service and in-service professional training programs are needed to help teachers master CBA-related knowledge and skills, especially those related to student-involving assessment opportunities. In this way, teachers will become CBA literate and can implement CBA practices more effectively.

Future studies that collect data from multiple sources, such as classroom observations, teacher interviews and teaching documents, are needed to validate teachers’ self-reported CBA practices. More research is needed to examine students’ beliefs about CBA and the consistency between students’ and teachers’ perspectives. Research on teachers’ experience with CBA-related training and its effect on their CBA practices is also warranted.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by The University of Auckland Human Ethics Committee. Written informed consent to participate in this study was provided by the participants' legal guardian/next of kin.

Author contributions

QY: conceived the initial idea, designed the study, collected and analyzed the data, and drafted the manuscript. LZ and HD: revised and proofread the manuscript. All authors agreed to the final version before LZ, as the corresponding author, prepared it for submission.

Funding

This study is funded by a research grant from the Chongqing Social Sciences Planning Office for the Foreign Language Project (grant no. 2021WYZX43) and a research grant from the Fundamental Research Funds for the Central Universities in China (grant no. 2022CDJSKJC03).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.1051728/full#supplementary-material

References

Airasian, P. W., and Abrams, L. (2003). “Classroom student evaluation,” in International Handbook of Educational Evaluation. eds. T. Kellaghan, D. L. Stufflebeam, and L. A. Wingate (Dordrecht, the Netherlands: Springer), 533–548.

Assessment Reform Group. (2002). Assessment for Learning: 10 Principles. Cambridge: Cambridge University Faculty of Education.

Balloo, K., Evans, C., Hughes, A., Zhu, X., and Winstone, N. (2018). Transparency isn’t spoon-feeding: how a transformative approach to the use of explicit assessment criteria can support student self-regulation. Front. Educ. 3:e00069. doi: 10.3389/feduc.2018.00069

Black, P., and Wiliam, D. (1998). Assessment and classroom learning. Assess. Educ. Princ. Policy Pract. 5, 7–74. doi: 10.1080/0969595980050102

Black, P., and Wiliam, D. (2009). Developing the theory of formative assessment. Educ. Assess. Eval. Account. 21, 5–31. doi: 10.1007/s11092-008-9068-5

Black, P., and Wiliam, D. (2018). Classroom assessment and pedagogy. Assess. Educ. Princ. Policy Pract. 25, 551–575. doi: 10.1080/0969594X.2018.1441807

Borg, S. (2003). Teacher cognition in language teaching: a review of research on what language teachers think, know, believe, and do. Lang. Teach. 36, 81–109. doi: 10.1017/S0261444803001903

Borg, S. (2011). The impact of in-service teacher education on language teachers’ beliefs. System 39, 370–380. doi: 10.1016/j.system.2011.07.009

Borg, S. (2019). “Language teacher cognition: perspectives and debates,” in Second Handbook of English Language Teaching. ed. X. Gao (Cham, Switzerland: Springer), 1149–1169.

Britton, M. (2021). Assessment for Learning in Primary Language Learning and Teaching. Bristol, UK: Multilingual Matters.

Brookhart, S. M. (2004). Classroom assessment: tensions and intersections in theory and practice. Teach. Coll. Rec. 106, 429–458. doi: 10.1111/j.1467-9620.2004.00346.x

Brown, G. T., Chaudhry, H., and Dhamija, R. (2015). The impact of an assessment policy upon teachers’ self-reported assessment beliefs and practices: a quasi-experimental study of Indian teachers in private schools. Int. J. Educ. Res. 71, 50–64. doi: 10.1016/j.ijer.2015.03.001

Brumen, M., and Cagran, B. (2011). Teachers’ perspectives and practices in assessing young foreign language learners in three eastern European countries. Education 39, 541–559. doi: 10.1080/03004279.2010.488243

Brumen, M., Cagran, B., and Rixon, S. (2009). Comparative assessment of young learners’ foreign language competence in three eastern European countries. Educ. Stud. 35, 269–295. doi: 10.1080/03055690802648531

Burns, A., Freeman, D., and Edwards, E. (2015). Theorizing and studying the language-teaching mind: mapping research on language teacher cognition. Mod. Lang. J. 99, 585–601. doi: 10.1111/modl.12245

Butler, Y. G. (2016). “Assessing young learners,” in The Handbook of Second Language Assessment. eds. D. Tsagari and J. Banerjee (Boston, MA: De Gruyter Mouton), 359–375.

Butler, Y. G. (2019). “Assessment of young English learners in instructional settings,” in Second Handbook of English Language Teaching. ed. X. Gao (Cham, Switzerland: Springer), 477–496.

Butler, Y. G. (2021). “Assessing young learners,” in The Routledge Handbook of Language Testing. 2nd Edn. eds. G. Fulcher and L. Harding (New York: Routledge), 153–170.

Butler, Y. G., and Lee, J. (2010). The effects of self assessment among young learners of English. Lang. Test. 27, 5–31. doi: 10.1177/0265532209346370

Cameron, L. (2001). Teaching Languages to Young Learners. Cambridge, UK: Cambridge University Press.

Carless, D. (2011). From Testing to Productive Student Learning: Implementing Formative Assessment in Confucian-Heritage Settings. London, UK: Routledge.

Chen, J., Brown, G. T., Hattie, J. A., and Millward, P. (2012). Teachers' conceptions of excellent teaching and its relationships to self-reported teaching practices. Teach. Teach. Educ. 28, 936–947. doi: 10.1016/j.tate.2012.04.006

Chen, Q., May, L., Klenowski, V., and Kettle, M. (2014). The enactment of formative assessment in English language classrooms in two Chinese universities: teacher and student responses. Assess. Educ. Princ. Policy Pract. 21, 271–285. doi: 10.1080/0969594X.2013.790308

Cheng, L., Rogers, T., and Hu, H. (2004). ESL/EFL instructors’ classroom assessment practices: purposes, methods, and procedures. Lang. Test. 21, 360–389. doi: 10.1191/0265532204lt288oa

Cheng, X., Zhang, L. J., and Yan, Q. (2021). Exploring teacher written feedback in EFL writing classrooms: beliefs and practices in interaction. Lang. Teach. Res. doi: 10.1177/13621688211057665

Cizek, G. J. (2010). “An introduction to formative assessment: history, characteristics, and challenges,” in The Handbook of Formative Assessment. eds. H. L. Andrade and G. J. Cizek (New York, NY: Routledge), 3–17.

Davison, C. (2019). “Using assessment to enhance learning in English language education,” in Second Handbook of English Language Teaching. ed. X. Gao (Cham, Switzerland: Springer International), 433–454.

Davison, C., and Leung, C. (2009). Current issues in English language teacher-based assessment. TESOL Q. 43, 393–415. doi: 10.1002/j.1545-7249.2009.tb00242.x

Dixon, H. R., Hawe, E., and Parr, J. (2011). Enacting assessment for learning: the beliefs practice nexus. Assess. Educ. Princ. Policy Pract. 18, 365–379.

Dixson, D. D., and Worrell, F. C. (2016). Formative and summative assessment in the classroom. Theory Pract. 55, 153–159. doi: 10.1080/00405841.2016.1148989

Dixon, H., Hill, M., and Hawe, E. (2020). Noticing and recognising AfL practice: challenges and their solution. Assess. Matters 14, 42–62. doi: 10.18296/am.0044

Dolin, J., Black, P., Harlen, W., and Tiberghien, A. (2018). “Exploring relations between formative and summative assessment,” in Transforming Assessment. eds. J. Dolin and R. Evans (Cham, Switzerland: Springer), 53–80.

Dörnyei, Z., and Taguchi, T. (2010). Questionnaires in Second Language Research: Construction, Administration, and Processing (2nd Edn.). New York, NY: Routledge.

Edelenbos, P., and Vinjé, M. P. (2000). The assessment of a foreign language at the end of primary (elementary) education. Lang. Test. 17, 144–162. doi: 10.1177/026553220001700203

Gan, Z., Leung, C., He, J., and Nang, H. (2018). Classroom assessment practices and learning motivation: a case study of Chinese EFL students. TESOL Q. 53, 514–529. doi: 10.1002/tesq.476

Golzar, J., Momenzadeh, S. E., and Miri, M. A. (2022). Afghan English teachers’ and students’ perceptions of formative assessment: a comparative analysis. Cogent Educ. 9:2107297. doi: 10.1080/2331186X.2022.2107297

Gu, Y. (2012). “English curriculum and assessment for basic education in China,” in Perspectives on Teaching and Learning English Literacy in China. eds. J. Ruan and C. B. Leung (Dordrecht, the Netherlands: Springer), 35–50.

Hasselgreen, A. (2000). The assessment of the English ability of young learners in Norwegian schools: an innovative approach. Lang. Test. 17, 261–277. doi: 10.1177/026553220001700209

Hasselgreen, A. (2012). “Assessing young learners,” in The Routledge Handbook of Language Testing. eds. G. Fulcher and F. Davidson (New York, NY: Routledge), 93–105.

Hild, G., and Nikolov, M. (2011). “Teachers’ views on tasks that work with primary school EFL learners,” in UPRT2010: Empirical Studies in English Applied Linguistics. eds. M. Lehmann, R. Lugossy, and J. Horváth (Pécs: Lingua Franca Csoport), 47–62.

Hill, K., and McNamara, T. (2011). Developing a comprehensive, empirically based research framework for classroom-based assessment. Lang. Test. 29, 395–420. doi: 10.1177/0265532211428317

Jacobs, G. M., and Renandya, W. A. (2019). Student Centered Cooperative Learning: Linking Concepts in Education to Promote Student Learning. Basingstoke, UK: Springer.

James, M., and Pedder, D. (2006). Beyond method: assessment and learning practices and values. Curric. J. 17, 109–138. doi: 10.1080/09585170600792712

Johnson, K. E. (1992). Learning to teach: instructional actions and decisions of preservice ESL teachers. TESOL Q. 26, 507–534. doi: 10.2307/3587176

Kaur, K. (2021). Formative assessment in English language teaching: exploring the enactment practices of teachers within three primary schools in Singapore. Asia Pac. J. Educ. 41, 695–710. doi: 10.1080/02188791.2021.1997707

Kline, R. B. (2011). Principles and Practice of Structural Equation Modeling (3rd Edn.). New York, NY: Guilford.

Lee, I., Mak, P., and Yuan, R. E. (2019). Assessment as learning in primary writing classrooms: an exploratory study. Stud. Educ. Eval. 62, 72–81. doi: 10.1016/j.stueduc.2019.04.012

Leung, C. (2005). “Classroom teacher assessment of second language development: construct as practice,” in The Handbook of Research in Second Language Teaching and Learning. ed. E. Hinkel (Mahwah, NJ: Erlbaum), 526–537.

Liu, H., and Brantmeier, C. (2019). “I know English”: self-assessment of foreign language reading and writing abilities among young Chinese learners of English. System 80, 60–72. doi: 10.1016/j.system.2018.10.013

Mak, P., and Lee, I. (2014). Implementing assessment for learning in L2 writing: an activity theory perspective. System 47, 73–87. doi: 10.1016/j.system.2014.09.018

Mäkipää, T. (2021). Students’ and teachers’ perceptions of self-assessment and teacher feedback in foreign language teaching in general upper secondary education–a case study in Finland. Cogent Educ. 8:1978622. doi: 10.1080/2331186X.2021.1978622

McMillan, J. H. (2013). “Why we need research on classroom assessment,” in The Sage Handbook of Research on Classroom Assessment. ed. J. H. McMillan (Thousand Oaks, CA: Sage), 2–17.

MOE (2011). English Curriculum Standards for Compulsory Education (2011 Version). Beijing, China: Beijing Normal University Press.

MOE (2022). English Curriculum Standards for Compulsory Education (2022 Version). Beijing, China: Beijing Normal University Press.

Mui So, W. W., and Hoi Lee, T. T. (2011). Influence of teachers’ perceptions of teaching and learning on the implementation of assessment for learning in inquiry study. Assess. Educ. Princ. Policy Pract. 18, 417–432. doi: 10.1080/0969594X.2011.577409

Nasr, M., Bagheri, M. S., Sadighi, F., and Rassaei, E. (2018). Iranian EFL teachers’ perceptions of assessment for learning regarding monitoring and scaffolding practices as a function of their demographics. Cogent Educ. 5:1558916. doi: 10.1080/2331186X.2018.1558916

Nikolov, M., and Timpe-Laughlin, V. (2021). Assessing young learners’ foreign language abilities. Lang. Teach. 54, 1–37. doi: 10.1017/S0261444820000294

Nunan, D., and Bailey, K. M. (2009). Exploring Second Language Classroom Research: A Comprehensive Guide. Boston, MA: Heinle/Cengage.

Pajares, F. M. (1992). Teachers’ beliefs and educational research: cleaning up a messy construct. Rev. Educ. Res. 62, 307–332. doi: 10.3102/00346543062003307

Panadero, E., Andrade, H., and Brookhart, S. M. (2018). Fusing self-regulated learning and formative assessment: a roadmap of where we are, how we got here, and where we are going. Aust. Educ. Res. 45, 13–31. doi: 10.1007/s13384-018-0258-y

Patekar, J. (2021). A look into the practices and challenges of assessing young EFL learners’ writing in Croatia. Lang. Test. 38, 456–479. doi: 10.1177/0265532221990657

Phipps, S., and Borg, S. (2009). Exploring tensions between teachers’ grammar teaching beliefs and practices. System 37, 380–390. doi: 10.1016/j.system.2009.03.002

Prošić-Santovac, D., Savić, V., and Rixon, S. (2019). “Assessing young English language learners in Serbia: Teachers’ attitudes and practices,” in Integrating Assessment into Early Language Learning and Teaching. eds. D. Prošić-Santovac and S. Rixon (Bristol, UK: Multilingual Matters), 251–266.

Pryor, J., and Crossouard, B. (2008). A socio-cultural theorisation of formative assessment. Oxf. Rev. Educ. 34, 1–20. doi: 10.1080/03054980701476386

Puad, L. M. A. Z., and Ashton, K. (2021). Teachers’ views on classroom-based assessment: an exploratory study at an Islamic boarding school in Indonesia. Asia Pac. J. Educ. 41, 253–265. doi: 10.1080/02188791.2020.1761775

Rea-Dickins, P. (2007). “Classroom-based assessment: possibilities and pitfalls,” in International Handbook of English Language Teaching. eds. J. Cummins and C. Davison (New York, NY: Springer), 505–520.

Rixon, S. (2016). “Do developments in assessment represent the ‘coming of age’ of young learners English language teaching initiatives?: the international picture,” in Assessing Young Learners of English: Global and Local Perspectives. ed. M. Nikolov (New York: Springer), 19–41.

Rogers, T., Cheng, L., and Hu, H. (2007). ESL/EFL instructors’ beliefs about assessment and evaluation. Canadian Int. Educ. 36, 39–61. doi: 10.5206/cie-eci.v36i1.9088

Roothooft, H. (2014). The relationship between adult EFL teachers’ oral feedback practices and their beliefs. System 46, 65–79. doi: 10.1016/j.system.2014.07.012

Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instr. Sci. 18, 119–144. doi: 10.1007/BF00117714

Saito, H., and Inoi, S. (2017). Junior and senior high school EFL teachers’ use of formative assessment: a mixed-methods study. Lang. Assess. Q. 14, 213–233. doi: 10.1080/15434303.2017.1351975

Shohamy, E., Inbar-Lourie, O., and Poehner, M. E. (2008). Investigating Assessment Perceptions and Practices in the Advanced Foreign Language Classroom (Report No. 1108). University Park, PA: Center for Advanced Language Proficiency Education and Research.

Sun, Q., and Zhang, L. J. (2021). A sociocultural perspective on English-as-a-foreign-language (EFL) teachers’ cognitions about form-focused instruction. Front. Psychol. 12:593172. doi: 10.3389/fpsyg.2021.593172

Timperley, H. S., and Parr, J. M. (2009). What is this lesson about? Instructional processes and student understandings in writing classrooms. Curric. J. 20, 43–60. doi: 10.1080/09585170902763999

Troudi, S., Coombe, C., and Al-Hamly, M. (2009). EFL teachers’ views of English language assessment in higher education in the United Arab Emirates and Kuwait. TESOL Q. 43, 546–555. doi: 10.1002/j.1545-7249.2009.tb00252.x

Tsagari, D. (2016). Assessment orientations of state primary EFL teachers in two Mediterranean countries. Center Educ. Policy Stud. J. 6, 9–30. doi: 10.26529/cepsj.102

Turner, C. E. (2012). “Classroom assessment,” in The Routledge Handbook of Language Testing. eds. G. Fulcher and F. Davidson (London, England: Routledge), 65–79.

Turner, C. E., and Purpura, J. E. (2016). “Learning-oriented assessment in second and foreign language classrooms,” in The Handbook of Second Language Assessment. eds. D. Tsagari and J. Banerjee (Berlin, Germany: De Gruyter Mouton), 1–19.

Vattøy, K. D. (2020). Teachers’ beliefs about feedback practice as related to student self-regulation, self-efficacy, and language skills in teaching English as a foreign language. Stud. Educ. Eval. 64:100828. doi: 10.1016/j.stueduc.2019.100828

Vogt, K., and Tsagari, D. (2014). Assessment literacy of foreign language teachers: findings of a European study. Lang. Assess. Q. 11, 374–402. doi: 10.1080/15434303.2014.960046

Wang, Q. (2009). “Primary English in China: policy, curriculum and implementation,” in The Age Factor and Early Language Learning. ed. M. Nikolov (Berlin, Germany: Mouton de Gruyter), 277–309.

Wang, X. (2017). A Chinese EFL teacher’s classroom assessment practices. Lang. Assess. Q. 14, 312–327. doi: 10.1080/15434303.2017.1393819

Wang, L., Lee, I., and Park, M. (2020). Chinese university EFL teachers’ beliefs and practices of classroom writing assessment. Stud. Educ. Eval. 66:100890. doi: 10.1016/j.stueduc.2020.100890

Wiliam, D. (2001). “An overview of the relationship between assessment and the curriculum,” in Curriculum and Assessment. ed. D. Scott (Westport, Conn: Ablex Publishing), 165–181.

Wiliam, D. (2010). “An integrative summary of the research literature and implications for a new theory of formative assessment,” in Handbook of Formative Assessment. eds. H. L. Andrade and G. J. Cizek (New York, NY: Routledge), 18–40.

Wiliam, D., and Thompson, M. (2008). “Integrating assessment with instruction: what will it take to make it work?” in The Future of Assessment: Shaping Teaching and Learning. ed. C. A. Dwyer (Mahwah, NJ: Lawrence Erlbaum), 53–82.

Wu, X. M., Dixon, H. R., and Zhang, L. J. (2021a). Sustainable development of students’ learning capabilities: the case of university students’ attitudes towards teachers, peers, and themselves as oral feedback sources in learning English. Sustainability 13:5211. doi: 10.3390/su13095211

Wu, X. M., Zhang, L. J., and Dixon, H. R. (2021b). Implementing assessment for learning (AfL) in Chinese university EFL classes: teachers’ values and practices. System 101:102589. doi: 10.1016/j.system.2021.102589

Wu, X. M., Zhang, L. J., and Liu, Q. (2021c). Using assessment for learning (AfL): multi-case studies of three Chinese university English-as-a-foreign-language (EFL) teachers engaging students in learning and assessment. Front. Psychol. 12:725132. doi: 10.3389/fpsyg.2021.725132

Xu, Y., and Brown, G. T. (2017). University English teacher assessment literacy: a survey-test report from China. Pap. Lang. Test. Assess. 6, 133–158.

Xu, Y., and Liu, Y. (2009). Teacher assessment knowledge and practice: a narrative inquiry of a Chinese college EFL teacher’s experience. TESOL Q. 43, 492–513. doi: 10.1002/j.1545-7249.2009.tb00246.x

Yan, Q. (2020). Classroom-Based Assessment of Young EFL Learners in the Chinese Context: Teachers’ Conceptions and Practices. Unpublished PhD Thesis. The University of Auckland, Auckland, New Zealand.

Yan, Q., Zhang, L. J., and Cheng, X. (2021). Implementing classroom-based assessment for young EFL learners in the Chinese context: a case study. Asia Pac. Educ. Res. 30, 541–552. doi: 10.1007/s40299-021-00602-9

Yu, S., Xu, H., Jiang, L., and Chan, I. K. I. (2020). Understanding Macau novice secondary teachers’ beliefs and practices of EFL writing instruction: a complexity theory perspective. J. Second. Lang. Writ. 48:100728. doi: 10.1016/j.jslw.2020.100728

Zhang, Z., and Burry-Stock, J. A. (2003). Classroom assessment practices and teachers’ self-perceived assessment skills. Appl. Meas. Educ. 16, 323–342. doi: 10.1207/S15324818AME1604_4

Zhang, L. J., and Sun, Q. B. (2022). Developing and validating the English teachers’ cognitions about grammar teaching questionnaire (TCAGTQ) to uncover teacher thinking. Front. Psychol. 13:880408. doi: 10.3389/fpsyg.2022.880408

Keywords: classroom-based assessment, young language learners, teachers’ beliefs, Chinese English-as-a-foreign-language learners, teacher education, China

Citation: Yan Q, Zhang LJ and Dixon HR (2022) Exploring classroom-based assessment for young EFL learners in the Chinese context: Teachers’ beliefs and practices. Front. Psychol. 13:1051728. doi: 10.3389/fpsyg.2022.1051728

Edited by:

Elena Mirela Samfira, Banat University of Agricultural Sciences and Veterinary Medicine, Romania

Reviewed by:

Constant Leung, King’s College London, United Kingdom

Danijela Prošić-Santovac, University of Novi Sad, Serbia

Copyright © 2022 Yan, Zhang and Dixon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lawrence Jun Zhang, lj.zhang@auckland.ac.nz
