- 1 McGill University, Montreal, QC, Canada

- 2 Montreal Neurological Institute, Montreal, QC, Canada

- 3 Escola Superior de Ciências da Santa Casa de Misericórdia de Vitória, Vitória, Brazil

- 4 McGill University Health Centre (MUHC), Montreal, QC, Canada

- 5 McGill University Health Centre-Research Institute (MUHC-RI), Montreal, QC, Canada

Importance: Given the importance of apathy in stroke, we felt it was time to scrutinize the psychometric properties of the commonly used Starkstein Apathy Scale (SAS) for use in people with stroke.

Objectives: The objectives were to: (i) estimate the extent to which the SAS items fit a hierarchical continuum of the Rasch Model; and (ii) estimate the strength of the relationships between the Rasch analyzed SAS and converging constructs related to stroke outcomes.

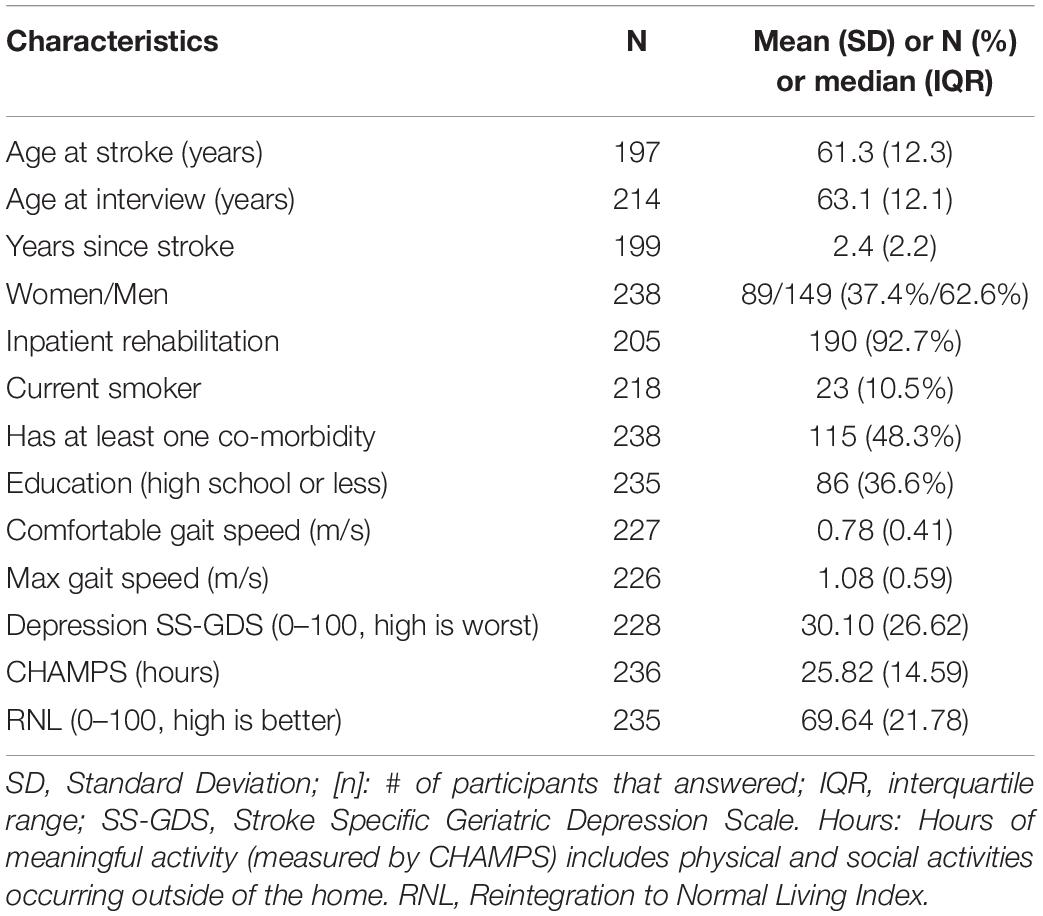

Methods: Data were from a clinical trial of a community-based intervention targeting participation. A total of 857 SAS questionnaires were completed by 238 people with stroke at up to 5 time points. The SAS has 14 items rated on a 4-point scale, with higher values indicating more apathy. Psychometric properties were tested using the Rasch partial credit model, correlation, and regression. Items were rescored so that higher scores indicate lower levels of apathy.

Results: Rasch analysis indicated that the response options were disordered for 8/14 items, pointing to unreliability in how respondents interpreted the response options; the options were consequently reduced from 4 to 3. Only 9/14 items fit the Rasch model and were therefore suitable for creating a total score. The new rSAS was deemed unidimensional (residual correlations < 0.3) and reasonably reliable (person separation index: 0.74), with item locations invariant across time, age, sex, and education. However, 30% of scores were > 2 SD above the standardized mean, but only 2/9 items covered this range (construct mistargeting). Apathy (rSAS/SAS) was weakly correlated with anxiety/depression and uncorrelated with physical capacity. Regression showed that the effect of apathy on participation and health perception was similar for the rSAS and SAS: R² for participation measures ranged from 0.11 to 0.29, and R² for health perception was ∼0.25. When placed on the same scale (0–42), the rSAS value was 6.5 units lower than the SAS value, with minimal floor/ceiling effects. Estimated change over time was identical (0.12 units/month); this was not substantial (1.44 units/year) but was greater than expected assuming no change (t: 3.6 and 2.4).

Conclusion: The retained items of the rSAS targeted behaviors more than beliefs, and the results support the rSAS as a robust measure of apathy in people with chronic stroke.

Introduction

Apathy is a defining feature of many common neurological conditions, including Parkinson’s Disease, Alzheimer’s Disease, and stroke (Robert et al., 2018; Le Heron et al., 2019). A meta-analysis of 24 studies found that apathy occurred in 30–40% of stroke patients (van Dalen et al., 2013). Mayo et al. (2009) estimated from an inception cohort that 20% of patients had apathy, as reported by a close companion, at some point in the first year post-stroke. They also found that apathy strongly affected recovery. Apathy has also been shown to affect health-related quality of life (HRQL) in patients with stroke (Tang et al., 2014).

Although apathy is recognized as common and clinically important, there have been challenges in defining and measuring this construct. Marin originally described apathy as the “lack of motivation not attributable to disturbance of intellect, emotion, or level of consciousness” (Marin, 1991) and operationalized the definition as “a state characterized by simultaneous diminution in the overt behavioral, cognitive, and emotional concomitants of goal-directed behavior” (Marin et al., 1991). Medical diagnostic criteria were developed for apathy in 2009 and revised in 2018. The 2018 consensus group largely echoed Marin’s description, defining apathy as a quantitative reduction of goal-directed activity either in behavioral, cognitive, emotional, or social dimensions in comparison to the patient’s previous level of functioning in these areas. They also indicated that these changes may be reported by patients themselves or be based on the observations of others.

Practically, apathy can be considered both as a diagnosis (i.e., a binary outcome) and as a state that can be measured along a continuum. Measures that include a series of questions (items) or structured interview components about apathy behaviors are typically used for both diagnosis and measurement.

Such questionnaires assess apathy from the perspectives of: (i) the patient using a patient-reported outcome (PRO) or a self-reported outcome (SRO); (ii) an observer who is usually a significant other (ObsRO); or (iii) a health care professional using a clinician reported outcome (ClinRO) (Mayo et al., 2017). There is an important distinction between PRO and SRO. The answer to the questions in a PRO can only be provided by the person without interpretation from anyone else (U.S. Department of Health and Human Services Food and Drug Administration, 2009). In SRO, the response given by the person can be amended based on other information that may not have been provided by the patient (Mayo et al., 2017).

A continuous measurement scale is constructed by assigning numerical labels to the ordinal item-responses (e.g., not at all, slightly, some, and a lot) and summing these labels, assuming they have mathematical properties, which they may not. The continuous scale can be used to quantify severity and change over time. A specific cut-point on this continuum is used for diagnostic classification.
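A minimal sketch of this conventional scoring, assuming 14 items coded 0–3 as in the original SAS. The cut-point `CUT_POINT` is a hypothetical value chosen only to illustrate classification, not a validated threshold, and the function names are hypothetical; the `sum` step is where ordinal labels are treated as if they had interval properties.

```python
from typing import Sequence

N_ITEMS = 14      # original SAS length
MAX_CATEGORY = 3  # 0 = not at all, 1 = slightly, 2 = some, 3 = a lot
CUT_POINT = 14    # hypothetical cut-point on the 0-42 summed scale (illustration only)


def summed_score(responses: Sequence[int]) -> int:
    """Classical total score: the ordinal labels are simply added together."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    if any(r not in range(MAX_CATEGORY + 1) for r in responses):
        raise ValueError(f"responses must be integers between 0 and {MAX_CATEGORY}")
    return sum(responses)


def classify(total: int, cut_point: int = CUT_POINT) -> bool:
    """Binary classification: True if the total reaches the (hypothetical) cut-point."""
    return total >= cut_point


# Usage: one respondent answering "slightly" (1) to every item
total = summed_score([1] * N_ITEMS)
print(total, classify(total))  # 14 True
```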

Notwithstanding the consensus on defining apathy, there is a plethora of apathy questionnaires and no gold standard (Clarke et al., 2011). For example, a recent systematic review by Carrozzino (2019) identified 13 different apathy measures used in Parkinson’s Disease research. Most of these have also been used in other neurological conditions, including stroke, without the due diligence required to ensure that the interpretation of the values applies to these other populations (Carrozzino, 2019).

As for many neuropsychiatric constructs, existing questionnaires were generally developed based on expert clinical input, without a strong emphasis on psychometrics or on patient experience to inform content. Classical approaches to psychometrics were applied after the items and the response options were set. The focus was on the statistical homogeneity of the items (internal consistency, expressed by Cronbach’s alpha) and on factor structure. Multiple dimensions were considered necessary to cover the construct, but this was not always reflected in the scoring. Further, the psychometrics underlying the total score were not scrutinized; measures were formed as a simple sum of the ordinally labeled response options. These approaches have well-known drawbacks: a high internal consistency can arise from redundant items, and a change on one item will yield similar changes in the redundant items, resulting in an overestimate of the amount of change. These legacy measures are now being scrutinized in light of modern psychometric methods such as Item Response Theory (IRT) and Rasch Measurement Theory (RMT) (Hobart and Cano, 2009; Petrillo et al., 2015).
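The redundancy problem can be illustrated with Cronbach’s alpha computed from its textbook formula. In this sketch the three item columns are hypothetical data and the function name is hypothetical; appending an exact copy of one item raises alpha even though no new information about the construct is added.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores).

    `items` holds one column per item; each column lists the scores of the
    same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(person) for person in zip(*items)]
    item_variance = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


# Hypothetical responses of six people to three items (0-3 labels)
a = [0, 1, 2, 3, 1, 2]
b = [1, 1, 2, 2, 0, 3]
c = [0, 2, 1, 3, 2, 1]

alpha_three = cronbach_alpha([a, b, c])
alpha_with_copy = cronbach_alpha([a, b, c, list(a)])  # item `a` duplicated
print(round(alpha_three, 3), round(alpha_with_copy, 3))  # 0.661 0.844
```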

RMT estimates the extent to which a set of items fits an underlying linear hierarchy (Rasch Model); items that do not fit this hypothesized model should not be included in the total score until revised (Mayo, 2015). Thus, psychometrics come into play before the items and response options are set. RMT tests whether response options are functioning as expected in terms of representing more (or less) of the construct and if not, modifications are made. Rasch analysis is also used to transform the ordinal scores of the response options to have mathematical interval-like properties, rather than just numerical labels, allowing a legitimate total score to be derived. This approach also allows testing whether the items reflect more than one dimension and, therefore, whether the construct is best reflected by multiple measures.

Another key feature of these modern psychometric methods is the ability to test whether the items have the same mathematical interpretation in different subpopulations, such as those defined by sex or gender, language, or by different disease groups (Pallant and Tennant, 2007; Tennant and Conaghan, 2007). The additional information on how the items respond is useful in creating a measure with strong properties, needed particularly for evaluating change over time.

The most commonly used self-reported questionnaires for assessing apathy in stroke are the 18-item Apathy Evaluation Scale (AES), developed by Marin et al. (1991), and the Starkstein Apathy Scale (SAS) (Starkstein et al., 1992), which is based on a preliminary version of the AES. The SAS consists of 14 items (a combination of PRO and SRO items) rated on a four-point ordinal scale (0 = not at all; 1 = slightly; 2 = some; and 3 = a lot), with a higher summed total score indicating more apathy. By summing the 14 items to generate a total score, the original developer conceptualized the SAS as measuring a single apathy construct.

We were unable to find any studies of the psychometric properties of the SAS in stroke, although it has been used in that population (Starkstein et al., 1993). Given the importance of apathy in stroke, we felt it was time to scrutinize and, if needed, improve the SAS using modern psychometric approaches. The global aim of this study was to contribute evidence supporting the use of the SAS in people with stroke. The specific objectives were: (i) to estimate the extent to which the items of the SAS fit a hierarchical continuum based on the Rasch Model; and (ii) to estimate the strength of the relationship between the Rasch-analyzed SAS and converging constructs related to stroke outcomes.

Materials and Methods

This study is a secondary analysis of an existing dataset from a clinical trial assessing a community-based, structured program targeting participation in individuals post-stroke (Mayo et al., 2015). A sample of 238 English-speaking participants living in the community who had experienced a stroke within the previous 5 years responded to the SAS at study entry (baseline = 0 months) and up to 4 more times (at 3, 6, 12, and > 12 months). A total of 857 SAS questionnaires were completed by the cohort over these time points and used in the Rasch analysis.

Measurement

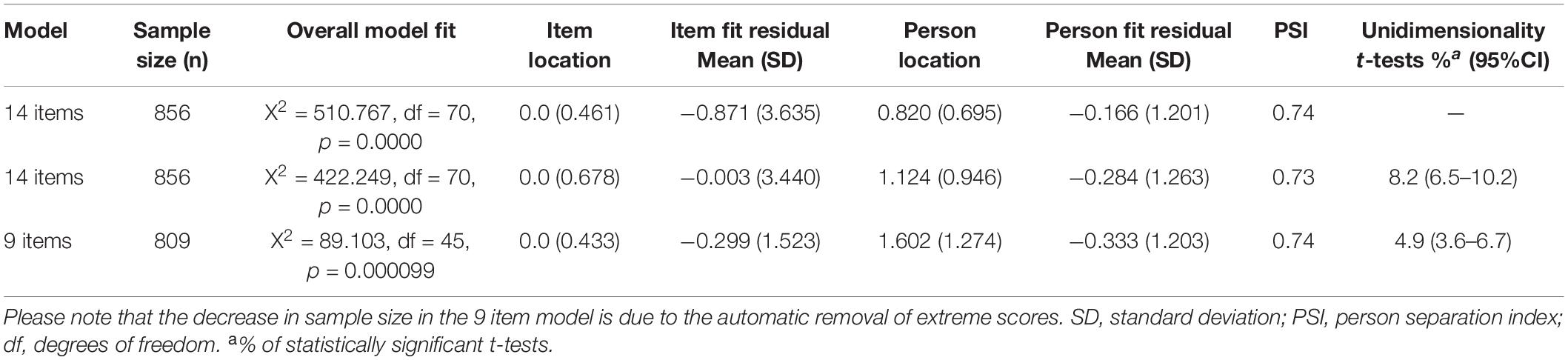

The World Health Organization’s (WHO) International Classification of Functioning, Disability and Health (ICF) model was used as the measurement framework and to structure the analyses, as shown in Figure 1. Apathy is not part of the ICF, but motivation is listed as an impairment (b1301: mental functions that produce the incentive to act; the conscious or unconscious driving force for action). Apathy is part of the WHO International Classification of Diseases (ICD-10), where it is listed as a diagnostic category rather than as a function (R45, symptoms and signs involving emotional state, specifically R45.3, demoralization and apathy). Apathy was represented by the original SAS, with 14 items measured on a 4-point severity scale.

Figure 1. WHO international classification of functioning, disability and health (ICF) for the measurement framework.

The literature supports a relationship between apathy and depression (both diagnostic categories; depression is ICD-10 F32-F34). Here, we included two measures of depressive symptoms (ICF b1265: impairment of optimism, defined as mental functions that produce a personal disposition that is cheerful, buoyant and hopeful, as contrasted to being downhearted, gloomy and despairing): the Stroke-Specific Geriatric Depression Scale (SS-GDS) (Cinamon et al., 2011) and the anxiety/depression item of the EuroQol EQ-5D (EuroQol Group, 2016).

There is a very strong effect of impairment of apathy/motivation on physical function, participation and self-rated health (Mayo et al., 2014). Physical function was assessed here with measured gait speed (comfortable and maximum), hypothesized to be less affected by motivation as it is a measure of capacity to walk a short distance (5 m). Two measures of participation were available: Community Healthy Activities Model Program for Seniors (CHAMPS) (Stewart et al., 2001) using hours of meaningful activity in and outside the home as the metric and the Reintegration to Normal Living Index (RNL) (Wood-Dauphinee and Williams, 1987). The EQ-5D VAS (visual analog scale: 0—death to 100—perfect health) was the measure of health perception. Personal factors under consideration were gender, time (0; 3; 6; 12; > 12 months), age (< 50; 50–60; 61–70; 71–80; > 80 years), and education (≤ 12 years; > 12 years).

Statistical Methods

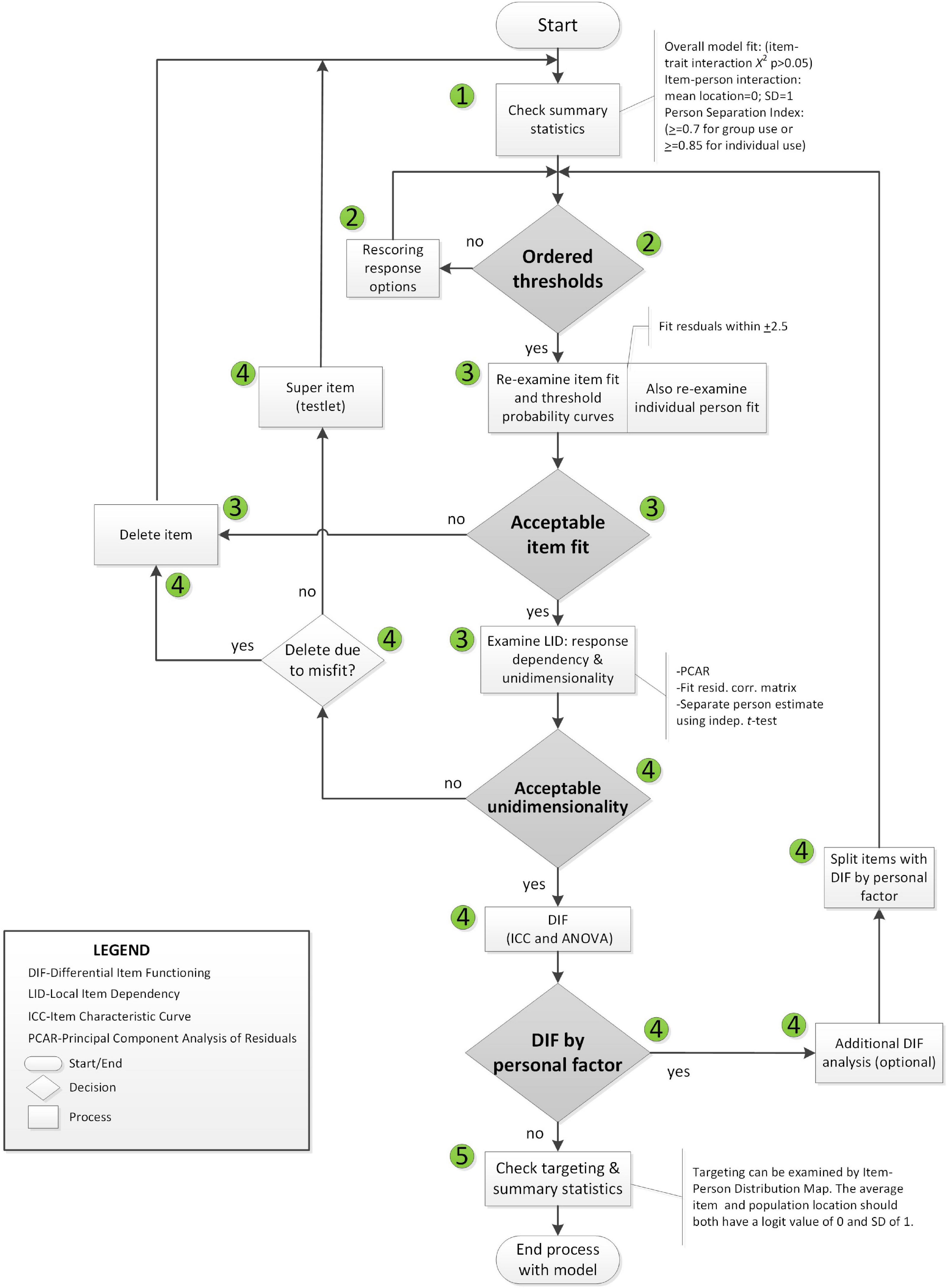

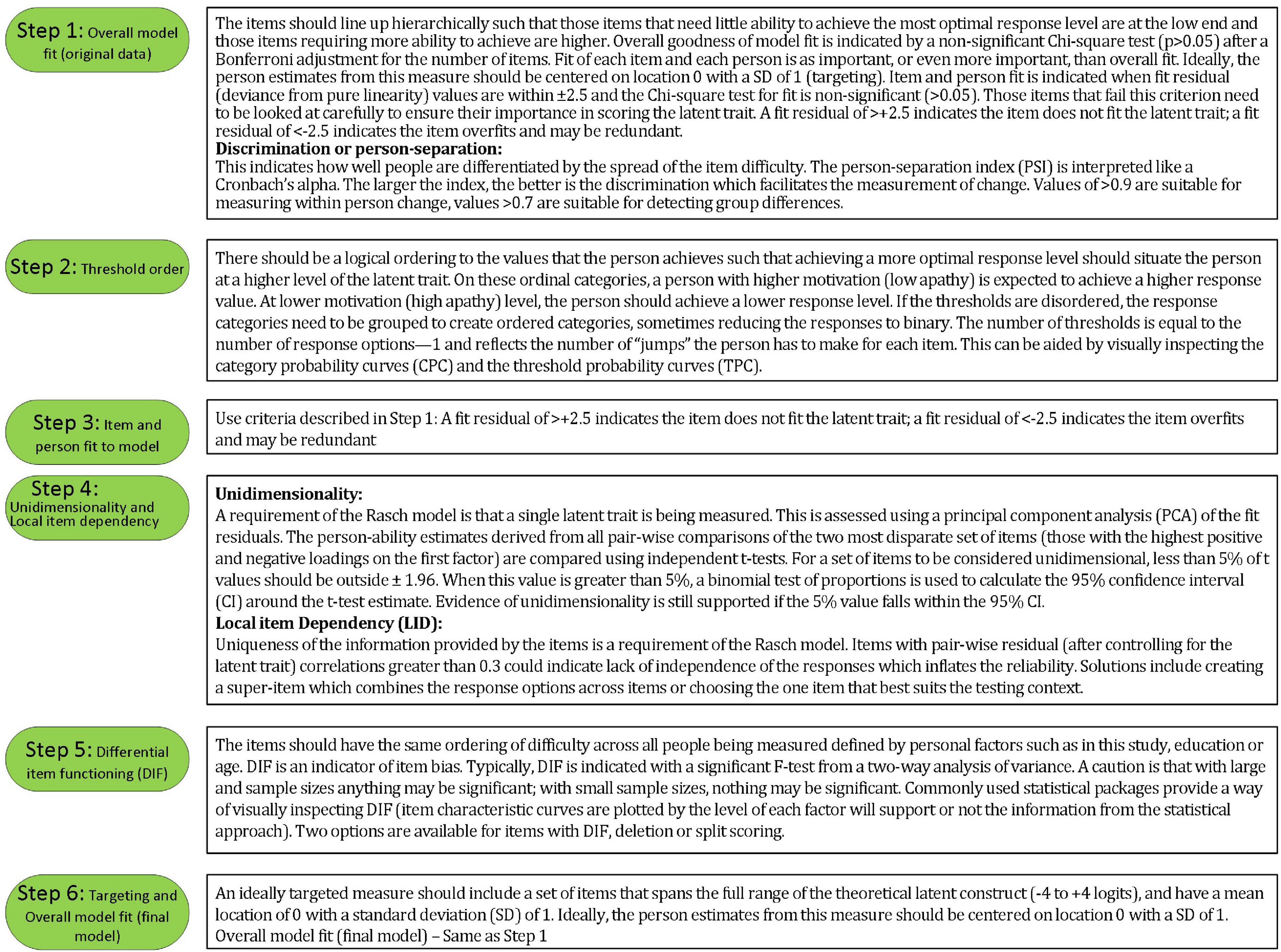

Rasch analysis was used to test whether the items on the SAS fit the Rasch model (Rasch, 1960). The typical steps and requirements for Rasch analysis were adopted from published guidelines (Pallant and Tennant, 2007; Tennant and Conaghan, 2007) and are detailed in Figures 2 and 3. RUMM2020 version 4.1 software was used. The process is iterative: items that do not fit are removed one at a time until all remaining items fit the model.

The likelihood ratio test performed in RUMM2020 (p < 0.0000001) supported the partial credit model. The threshold distances also varied across items, supporting the use of a partial credit model for this analysis (see the item threshold map in Supplementary Appendix Figure 1; Pallant et al., 2006).
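Under the partial credit model, each item has its own set of thresholds, and the probability of responding in category x depends on the cumulative distance between the person location θ and the item thresholds up to x. The sketch below computes these category probabilities for a single item; the threshold values are illustrative, not estimates from this study, and the function name is hypothetical.

```python
import math
from typing import List, Sequence


def pcm_probabilities(theta: float, thresholds: Sequence[float]) -> List[float]:
    """Category probabilities for one item under the partial credit model.

    P(X = x) is proportional to exp(sum_{j=1..x} (theta - delta_j)); for
    category 0 the sum is empty, so its numerator is exp(0) = 1.
    """
    cumulative = [0.0]
    for delta in thresholds:
        cumulative.append(cumulative[-1] + (theta - delta))
    top = max(cumulative)  # subtract the maximum before exponentiating, for numerical stability
    weights = [math.exp(c - top) for c in cumulative]
    total = sum(weights)
    return [w / total for w in weights]


# Illustrative item with ordered thresholds (logits) and 4 categories (0-3)
item_thresholds = [-1.0, 0.0, 1.0]
for theta in (-2.0, -1.0, 0.0, 2.0):
    print(theta, [round(p, 3) for p in pcm_probabilities(theta, item_thresholds)])
```

At θ equal to a threshold δ_j, categories j−1 and j are equally probable; this is what defines a threshold, and it is the property that breaks down when thresholds are disordered.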

Rasch analysis was initially conducted on all 14 items. The number of observations available for Rasch analysis was 857, arising from 238 people with stroke; this is more than adequate to estimate item and person locations with 99% confidence within a precision of ± 0.5 logits (Linacre, 1994). Although 857 data points were entered into RUMM2020, the usable sample size was 856, because one data point had all extreme scores and was automatically removed. Bonferroni correction and/or post hoc downward sample size adjustment (features in RUMM2020) were used as appropriate (Hagell and Westergren, 2016; Hansen and Kjaersgaard, 2020). Five random samples of N = 300 each were drawn (with replacement), a size intended to provide at least 10 observations per response category (Linacre, 1999).
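The subsampling step and the Bonferroni adjustment can be sketched as follows, assuming each record is one questionnaire's list of rescored item responses. The seed, the synthetic records, and the function names are hypothetical; the helper also checks the criterion of at least 10 observations per response category.

```python
import random
from collections import Counter
from typing import List, Sequence


def draw_subsamples(records: Sequence[Sequence[int]], n: int = 300, k: int = 5,
                    seed: int = 2020) -> List[List[Sequence[int]]]:
    """Draw k random samples of size n, with replacement, from the pooled records."""
    rng = random.Random(seed)  # hypothetical seed, fixed for reproducibility
    return [rng.choices(records, k=n) for _ in range(k)]


def min_category_count(sample: Sequence[Sequence[int]], categories: Sequence[int]) -> int:
    """Smallest count in any (item, category) cell; categories[i] = number of categories of item i."""
    counts = [Counter(row[i] for row in sample) for i in range(len(categories))]
    # Counter returns 0 for categories that never occur in the sample
    return min(counts[i][c] for i, n_cat in enumerate(categories) for c in range(n_cat))


def bonferroni_alpha(alpha: float, n_tests: int) -> float:
    """Per-test significance level after Bonferroni correction."""
    return alpha / n_tests


# Synthetic pooled data: 856 questionnaires x 9 items scored 0-2 (illustration only)
pooled = [[(r + i) % 3 for i in range(9)] for r in range(856)]
samples = draw_subsamples(pooled)
print([min_category_count(s, [3] * 9) for s in samples])
print(bonferroni_alpha(0.05, 9))
```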

To assess the extent to which using multiple time points introduced a degree of local dependency that would alter the conclusions, we also repeated all analyses on the baseline data (the time point with the largest sample size) and on the 5 random samples. Local dependency introduced by repeated measures is often detected in the item residual correlation matrix as item pairs with correlations > 0.3 (Pallant and Tennant, 2007; Tennant and Conaghan, 2007; Marais, 2009; Andrich and Marais, 2019). Local dependency can also affect reliability, either increasing or decreasing it (Marais, 2009): dependency leading to increased misfit to the Rasch model would reduce sample reliability and separation, whereas decreased misfit would increase them (Marais, 2009). Finally, the Wilcoxon rank sum test was used to compare the item locations estimated from the full dataset and from the baseline time point.
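The residual-correlation screen can be reproduced outside RUMM2020 once standardized person-item residuals have been exported. This sketch assumes a dictionary mapping each item to its residuals (same persons, same order) and applies the > 0.3 rule described above; the residual values and function names are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def dependent_pairs(residuals: Dict[str, Sequence[float]],
                    cutoff: float = 0.3) -> List[Tuple[str, str, float]]:
    """Item pairs whose residual correlation exceeds the cutoff (possible local dependency)."""
    flagged = []
    for a, b in combinations(residuals, 2):
        r = pearson(residuals[a], residuals[b])
        if r > cutoff:
            flagged.append((a, b, round(r, 3)))
    return flagged


example = {
    "item_1": [1.0, 2.0, 3.0, 4.0],
    "item_2": [2.0, 4.0, 6.0, 8.5],
    "item_3": [1.0, -1.0, 1.0, -1.0],
}
print(dependent_pairs(example))
```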

Correlation coefficients were calculated between the revised SAS total score (rSAS) derived from Rasch analysis and measures of constructs at the same ICF level, i.e., measures of depressive symptoms (polyserial correlation for the EQ-5D anxiety/depression item and Pearson correlation for the SS-GDS). As these measures are theorized to relate to the same latent construct, the following criteria were used to qualify the strength of correlation: strong ≥ 0.8; moderate 0.50–0.80; weak < 0.50 (de Vet et al., 2011). Correlation coefficients were also calculated between the rSAS (apathy) and gait speed (comfortable and maximum), which was theorized to be unrelated to apathy.
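The strength criteria above can be encoded directly. This sketch applies them to the absolute value of a coefficient (an assumption, since the criteria are stated without a sign) and uses as input the correlations with the depression measures and gait speed reported in the Results; the function name is hypothetical.

```python
def correlation_strength(r: float) -> str:
    """Label a correlation using the criteria for measures of the same latent construct
    (de Vet et al., 2011): strong >= 0.8; moderate 0.50-0.80; weak < 0.50."""
    magnitude = abs(r)  # the criteria are applied to the size of r, ignoring its sign
    if magnitude >= 0.8:
        return "strong"
    if magnitude >= 0.5:
        return "moderate"
    return "weak"


for r in (-0.37, -0.41, 0.13):
    print(r, correlation_strength(r))
```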

For downstream outcomes (participation and health perception), linear regression was used with adjustment for age, sex, and gait speed. Here, strength was interpreted using the criterion for novel relationships (strong: ≥ 0.5).

Linear regression was also used to estimate the extent to which the response of the stroke participants to the community-based, participation-targeted intervention (from the original study) differed when measured using the SAS and the rSAS. The regression parameter for slope (β) quantified linear growth over time, and the t-statistic, the ratio of β to its standard error (SE), was used as the effect size. Because the regression models used explanatory variables with different measurement scales, standardized regression coefficients were used to facilitate comparison.
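For a single predictor, the slope, its SE, the t-statistic, and the standardized coefficient follow from closed-form least-squares formulas. This is a simplified sketch: the models in this study also included adjustment variables, and the time points, apathy values, and function names below are hypothetical.

```python
from math import sqrt
from statistics import pstdev
from typing import Sequence, Tuple


def slope_se_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least-squares slope of y on x, its standard error, and t = slope / SE."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    se = sqrt(sse / (n - 2) / sxx)  # residual variance uses n - 2 degrees of freedom
    return slope, se, slope / se


def standardized(slope: float, x: Sequence[float], y: Sequence[float]) -> float:
    """Standardized coefficient: the slope expressed in SD units of x and y."""
    return slope * pstdev(x) / pstdev(y)


months = [0, 3, 6, 12, 18]               # hypothetical assessment times
apathy = [10.0, 10.5, 10.6, 11.5, 12.1]  # hypothetical apathy scores
b, se, t = slope_se_t(months, apathy)
print(round(b, 3), round(se, 3), round(t, 1), round(standardized(b, months, apathy), 3))
```

With a single predictor the standardized coefficient equals the Pearson correlation; with several predictors it is a partial effect in SD units.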

Generalized estimating equations (GEE) were used to make a direct comparison of change over time between the two measures. Here, the apathy value was the outcome, and the explanatory variables were the version of the measure used to derive the score (original SAS or rSAS) and time, with age and sex as adjustment variables. GEE accounts for the clustering (non-independence) of the apathy values and adjusts the error variance accordingly.

Results

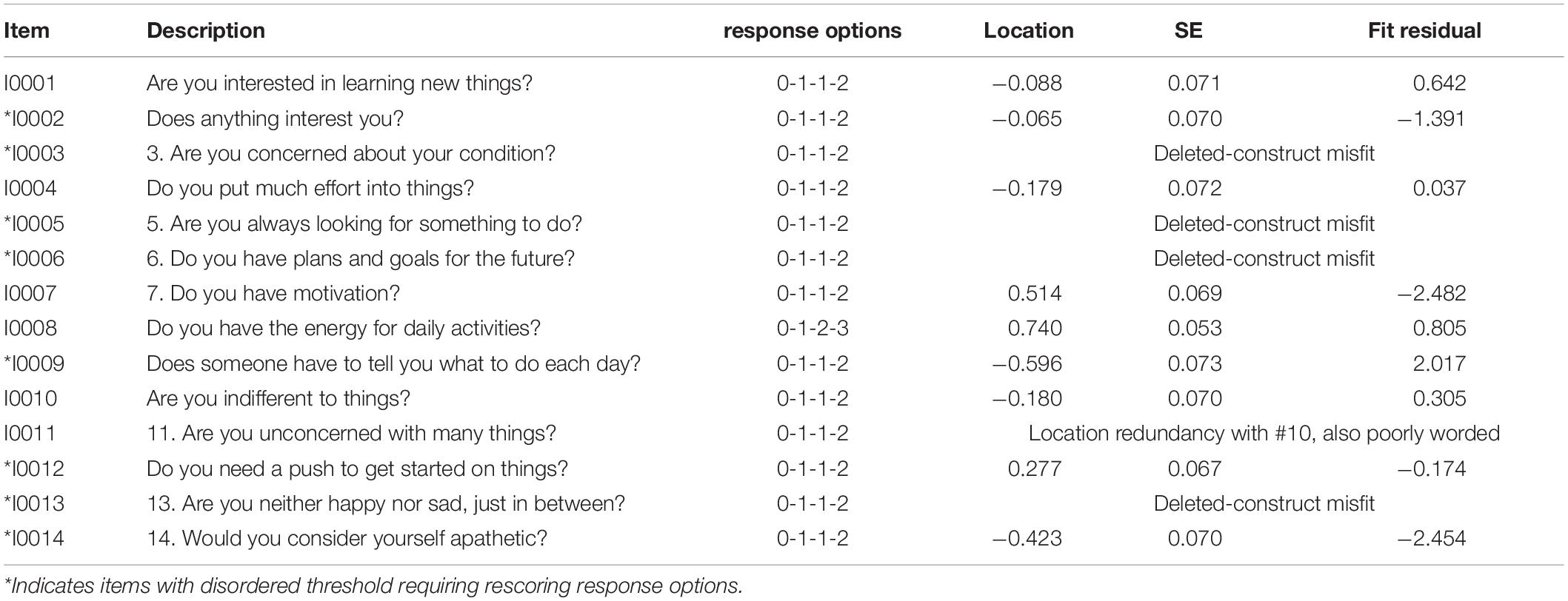

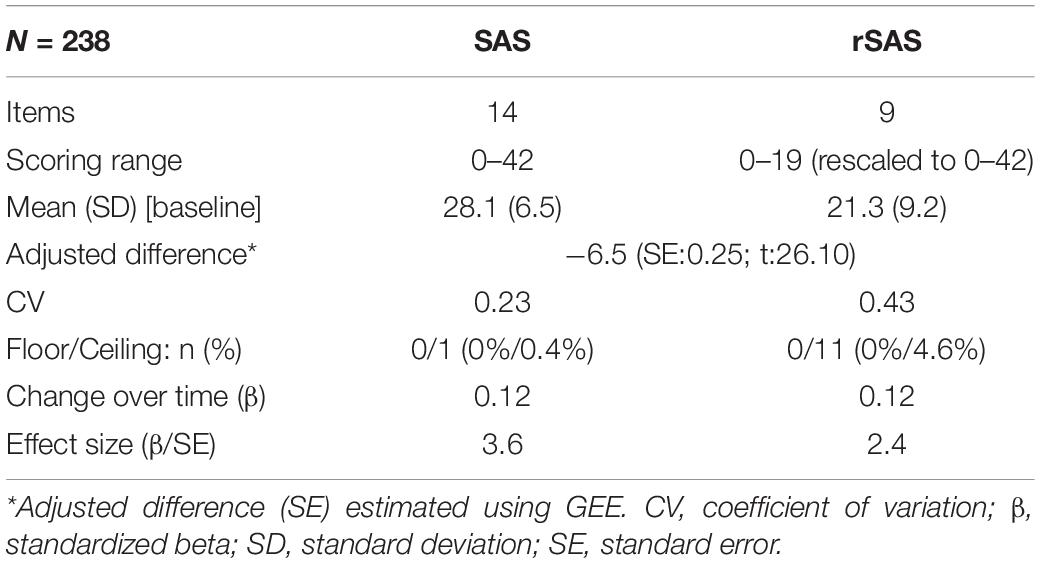

Participant characteristics of the sample at baseline are summarized in Table 1. On average, participants had had their stroke 2.4 years before study entry. Table 2 summarizes the results of the Rasch analysis using data from all time points. Disordered thresholds were observed in 8 of the 14 items. They consistently involved the options “slightly” and “some,” requiring these response options to be collapsed. Only item #8 retained the original response options. The remaining items were rescored because of poor spacing between Threshold Probability Curves (TPC) or poor fit between the observed values and the expected TPC. Iterations of the Rasch analysis are summarized in Table 3. After rescoring, the 14-item SAS still did not fit the Rasch model: the overall chi-square value for item-trait interaction was statistically significant [χ²(70) = 510.767, p < 0.001] (Table 3). Items #3 and #5 had initial fit residuals of + 10.8 and + 5.9, respectively, which remained > + 2.5 even after iterative adjustments, and were deleted because of lack of fit to the hierarchical construct. Items #13 (fit residual + 3.490) and #6 (fit residual + 2.633) were iteratively deleted for the same reason. Item #11 (Are you unconcerned with many things?) did fit the Rasch model but had a location (difficulty) similar to that of item #10 (Are you indifferent to things?), with < 0.2 logit difference between the items, and was deleted because it was poorly worded.
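Checking for disordered thresholds and collapsing categories amount to two small operations, sketched below. The threshold values and function names are hypothetical, and the mapping assumes the 0–3 coding with “slightly” (1) and “some” (2) merged, as described above.

```python
from typing import Dict, List, Sequence

# Merge "slightly" (1) and "some" (2): 4 categories become 3 (0, 1, 2)
COLLAPSE_MIDDLE: Dict[int, int] = {0: 0, 1: 1, 2: 1, 3: 2}


def is_disordered(thresholds: Sequence[float]) -> bool:
    """True if the estimated thresholds do not increase strictly from one boundary to the next."""
    return any(later <= earlier for earlier, later in zip(thresholds, thresholds[1:]))


def rescore(responses: Sequence[int], mapping: Dict[int, int] = COLLAPSE_MIDDLE) -> List[int]:
    """Apply a category-collapsing map to one item's responses."""
    return [mapping[r] for r in responses]


print(is_disordered([-1.2, 0.6, 0.3]))  # True: the third threshold falls below the second
print(is_disordered([-1.2, 0.3, 0.6]))  # False
print(rescore([0, 1, 2, 3]))            # [0, 1, 1, 2]
```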

The remaining nine items met the requirements of the Rasch model, with fit residuals between ± 2.5, as shown in Table 2. During iterative analysis, items #7 and #14 had fit residuals < −2.5, indicating that these items over-discriminated and may be redundant, but in the final model their values were just within the cut-off (∼−2.4). Item #14’s fit residual moved within the set limit when item #11 was removed from the model.
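The fit-residual rule and the one-at-a-time deletion can be sketched as follows. Only the values for items #3 and #5 (initial fit residuals) and the approximate −2.4 for item #7 (final model) come from the text, mixed here for illustration; the function names are hypothetical. In a real analysis the fit residuals must be re-estimated after every deletion, which this sketch does not do.

```python
from typing import Dict, Optional

FIT_LIMIT = 2.5


def fit_status(fit_residual: float, limit: float = FIT_LIMIT) -> str:
    """Classify an item's fit residual against the +/- limit."""
    if fit_residual > limit:
        return "misfit (under-discriminating)"
    if fit_residual < -limit:
        return "over-fit (over-discriminating, possibly redundant)"
    return "fits"


def next_item_to_delete(fit_residuals: Dict[str, float],
                        limit: float = FIT_LIMIT) -> Optional[str]:
    """Item with the largest positive misfit above the limit, or None if no item exceeds it.

    Over-fitting items are flagged by fit_status but not deleted here,
    mirroring the retention of items #7 and #14 in the text.
    """
    misfitting = {item: fr for item, fr in fit_residuals.items() if fr > limit}
    if not misfitting:
        return None
    return max(misfitting, key=misfitting.get)


residuals = {"#3": 10.8, "#5": 5.9, "#7": -2.4}
print(next_item_to_delete(residuals))  # #3
print({item: fit_status(fr) for item, fr in residuals.items()})
```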

Finally, the misfitting items (#3, #5, #6, #13) did not form a second factor based on the principal component (PC) loadings when all 14 items were included. Additionally, these 4 items alone did not form a unidimensional construct (see Supplementary Appendix).

Local dependency was investigated using the correlation matrix of the residuals to examine response dependencies between items. All item-pair correlations were less than 0.3. A single construct, “apathy,” was also supported, with fewer than 5% of t-tests significant (or the lower bound of the 95% binomial confidence interval below 5%). Reliability based on the person separation index (PSI) was 0.74.
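The PSI can be read as the share of the observed variance in person locations that is not attributable to measurement error. The sketch below follows that commonly used formulation; RUMM2020’s exact computation may differ in detail, and the person estimates, standard errors, and function name are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def person_separation_index(locations: Sequence[float], std_errors: Sequence[float]) -> float:
    """(observed variance of person locations - mean error variance) / observed variance."""
    observed = pvariance(locations)
    error = sum(se ** 2 for se in std_errors) / len(std_errors)
    return (observed - error) / observed


# Hypothetical person locations (logits) and their standard errors
print(person_separation_index([-1.0, 0.0, 1.0, 2.0], [0.5, 0.5, 0.5, 0.5]))  # 0.8
```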

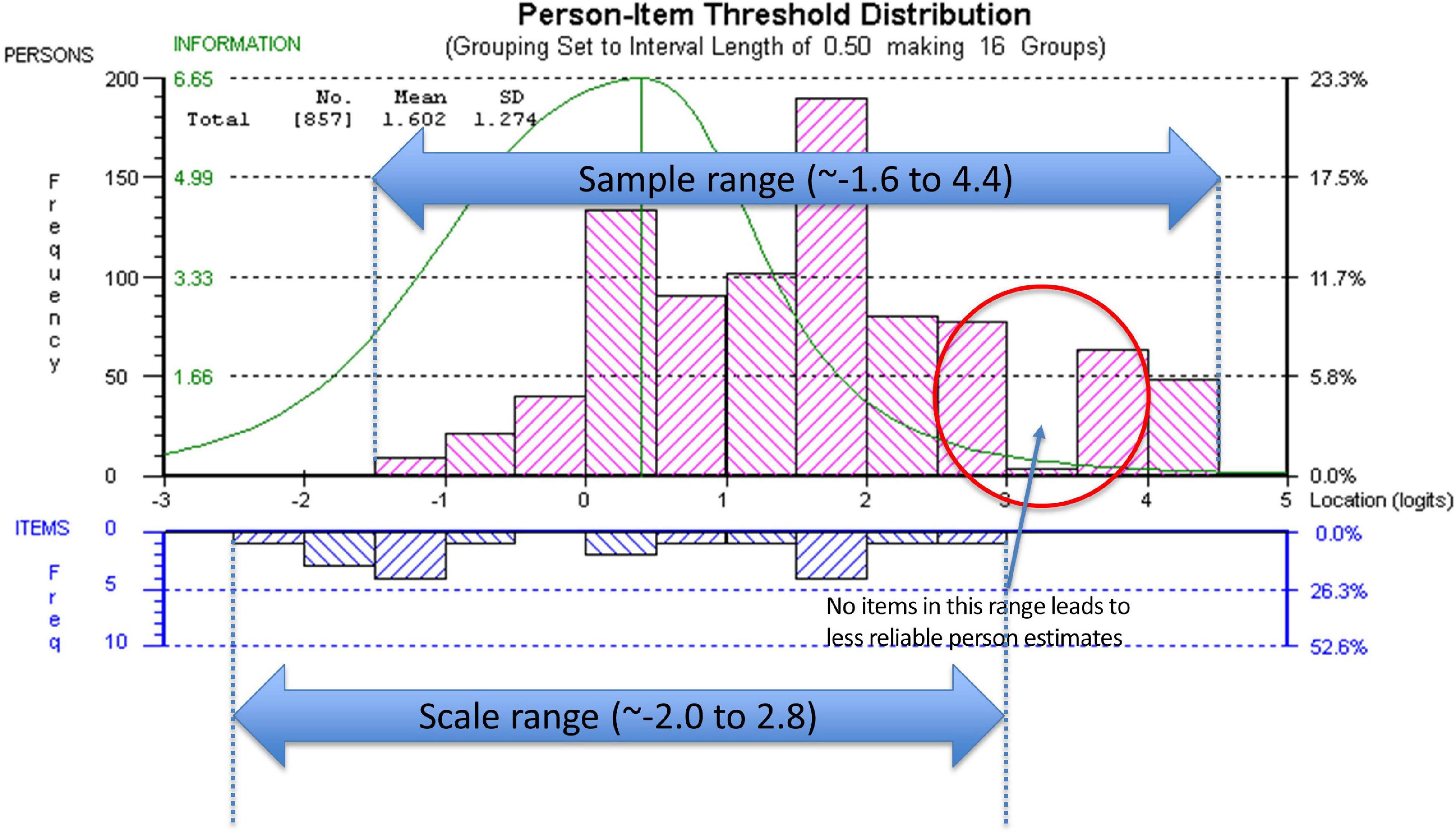

Targeting was assessed using the person-item threshold distribution map for the nine SAS items, shown in Figure 4. Item thresholds were reasonably well distributed over ∼5 logits with a near-normal distribution (logit range ∼−2.0 to 2.8). The sample had a mean location of 1.60 logits (SD: 1.3) and ranged from ∼−1.6 to 4.4 logits, showing that some individuals at the low-apathy end of the scale were not measured as reliably, because no items extended into that range. As shown in Figure 4, ∼13% of respondents’ scores were higher than any item available for the assessment. Also, 30% of scores were > 2 SD above the standardized mean, but only 2/9 items covered this range.
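The share of respondents located beyond the items is a simple count against the item threshold range. In the sketch below the threshold endpoints follow the approximate range given above (∼−2.0 to 2.8 logits) and the first and last person locations echo the reported range, while the remaining values and the function name are hypothetical.

```python
from typing import Sequence, Tuple


def share_outside(persons: Sequence[float], thresholds: Sequence[float]) -> Tuple[float, float]:
    """Proportions of persons located below the lowest and above the highest item threshold."""
    low, high = min(thresholds), max(thresholds)
    n = len(persons)
    below = sum(1 for p in persons if p < low) / n
    above = sum(1 for p in persons if p > high) / n
    return below, above


thresholds = [-2.0, 0.4, 2.8]         # endpoints from the text; middle value hypothetical
persons = [-1.6, 0.2, 1.6, 3.1, 4.4]  # person locations (logits), mostly hypothetical
print(share_outside(persons, thresholds))  # (0.0, 0.4)
```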

The summary statistics based on the final 9-item model had an overall chi-square value for item-trait interaction that was still statistically significant [χ²(45) = 89.103, p < 0.0001] (Table 3).

Because the large number of observations (n = 809) makes the analysis overly sensitive to even trivial misfit, the analysis was repeated with adjusted sample sizes, using the 5 random samples and the baseline time point. Each of the random samples and the baseline time point had non-significant overall chi-square p-values, indicating global fit to the Rasch model. Across the 5 random samples, the average p-value for fit was 0.28 and the average PSI was 0.73. The analysis of the baseline time point had a p-value for fit of 0.27 and a PSI of 0.75 (see Supplementary Appendix Table 1).
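The sensitivity of the item-trait chi-square to sample size can be illustrated by rescaling the statistic proportionally to a smaller nominal sample and approximating its p-value with the Wilson–Hilferty transformation. Both the proportional rescaling and the approximation are assumptions of this sketch, and the function names are hypothetical; it is not the random-subsample analysis reported here, and its p-values will not match those results.

```python
from statistics import NormalDist


def chi2_upper_p(statistic: float, df: int) -> float:
    """Approximate upper-tail p-value of a chi-square statistic (Wilson-Hilferty cube-root transform)."""
    z = ((statistic / df) ** (1 / 3) - (1 - 2 / (9 * df))) / (2 / (9 * df)) ** 0.5
    return 1 - NormalDist().cdf(z)


def scale_chi2(statistic: float, n_observed: int, n_nominal: int) -> float:
    """Rescale a total chi-square fit statistic proportionally to a smaller nominal sample size."""
    return statistic * n_nominal / n_observed


chi2, df = 89.103, 45  # final 9-item model, n = 809 (values from the text)
adjusted = scale_chi2(chi2, 809, 300)
print(round(chi2_upper_p(chi2, df), 5), round(adjusted, 2), round(chi2_upper_p(adjusted, df), 2))
```

The same degree of misfit per observation yields a much smaller total statistic at n = 300, which is why large samples can flag misfit that is trivial in practice.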

Rasch analysis on each random sample and on the baseline time point showed that the fit residuals of the 9 items were within the set limit of ± 2.5. Item residual correlations were all below the 0.3 cutoff, indicating minimal local dependency in each of the random samples. PCA within RUMM2020 did not indicate a second factor. The overall proportion of t-values falling outside the ± 1.96 range was less than 5% (or at least the lower bounds of the 95% binomial confidence intervals were less than 5%).
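The t-test criterion for unidimensionality reduces to a proportion and its confidence interval. This sketch assumes a normal-approximation binomial interval and hypothetical per-person t-values (from comparing each person’s estimates on two item subsets); the function names are hypothetical.

```python
from math import sqrt
from typing import Sequence, Tuple


def proportion_significant(t_values: Sequence[float], critical: float = 1.96) -> float:
    """Share of persons whose two subset-based estimates differ significantly (|t| > critical)."""
    return sum(abs(t) > critical for t in t_values) / len(t_values)


def binomial_ci(p: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """95% confidence interval for a proportion (normal approximation), clipped to [0, 1]."""
    half_width = z * sqrt(p * (1 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


def supports_unidimensionality(t_values: Sequence[float], threshold: float = 0.05) -> bool:
    """Accept if fewer than 5% are significant, or if the CI's lower bound is below 5%."""
    p = proportion_significant(t_values)
    lower, _ = binomial_ci(p, len(t_values))
    return p < threshold or lower < threshold


six_percent = [2.5] * 6 + [0.5] * 94  # hypothetical: 6 of 100 significant
twenty_percent = [2.5] * 20 + [0.5] * 80
print(supports_unidimensionality(six_percent), supports_unidimensionality(twenty_percent))  # True False
```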

There was no substantial DIF by time, age, sex, or education in the 5 random samples or the baseline time point, and no discernible pattern of item DIF across the 5 random samples (see Supplementary Appendix Table 1). Graphically, there was no substantial deviation in the Item Characteristic Curves (ICC) for the personal factors (results not shown). Additionally, the Wilcoxon rank sum test showed that the full sample and the baseline time point did not differ in ranked item locations (p = 0.678).
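A Wilcoxon rank sum test on two sets of item locations can be sketched with average ranks for ties and a normal approximation (without a tie correction to the variance). With only nine items per set the normal approximation is rough, so treat this as illustrative; the item locations and function names are hypothetical.

```python
from statistics import NormalDist
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank sum test (normal approximation); returns (z, p)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = (n1 * n2 * (n1 + n2 + 1) / 12) ** 0.5
    z = (w - mean_w) / sd_w
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


full_data = [-1.9, -1.1, -0.6, -0.2, 0.1, 0.5, 0.9, 1.4, 2.1]  # hypothetical item locations
baseline = [-1.8, -1.2, -0.5, -0.3, 0.2, 0.4, 1.0, 1.3, 2.2]
print(rank_sum_test(full_data, baseline))
```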

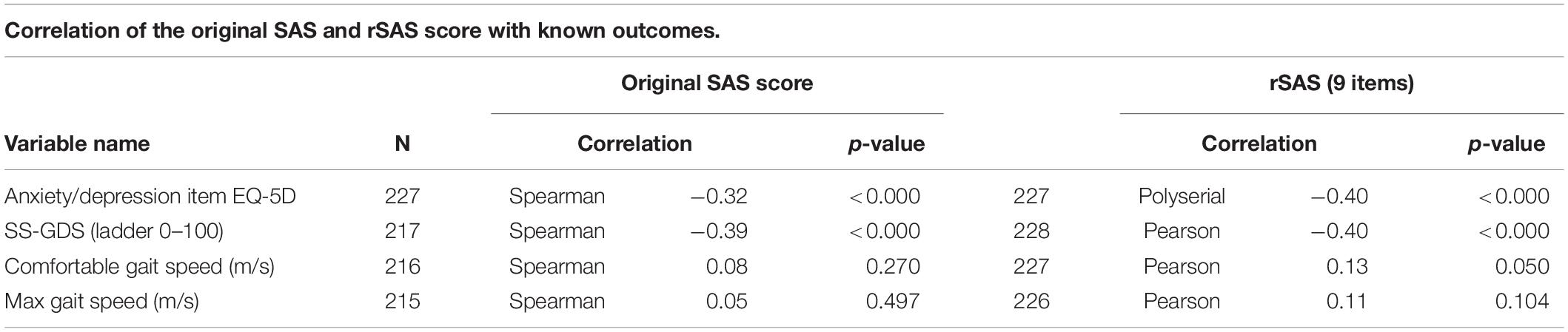

The correlations between the original SAS and rSAS apathy total scores and the measures used to support interpretability are shown in Table 4. The strongest correlations (range −0.37 to −0.41) were observed with the convergent construct of depressed mood, measured using the EQ-5D anxiety/depression item and the SS-GDS. Neither version of the apathy scale was correlated with comfortable or maximum gait speed (m/s); estimates ranged from 0.07 to 0.13.

Table 4. Relationship between rSAS apathy total score and measures used to support interpretability.

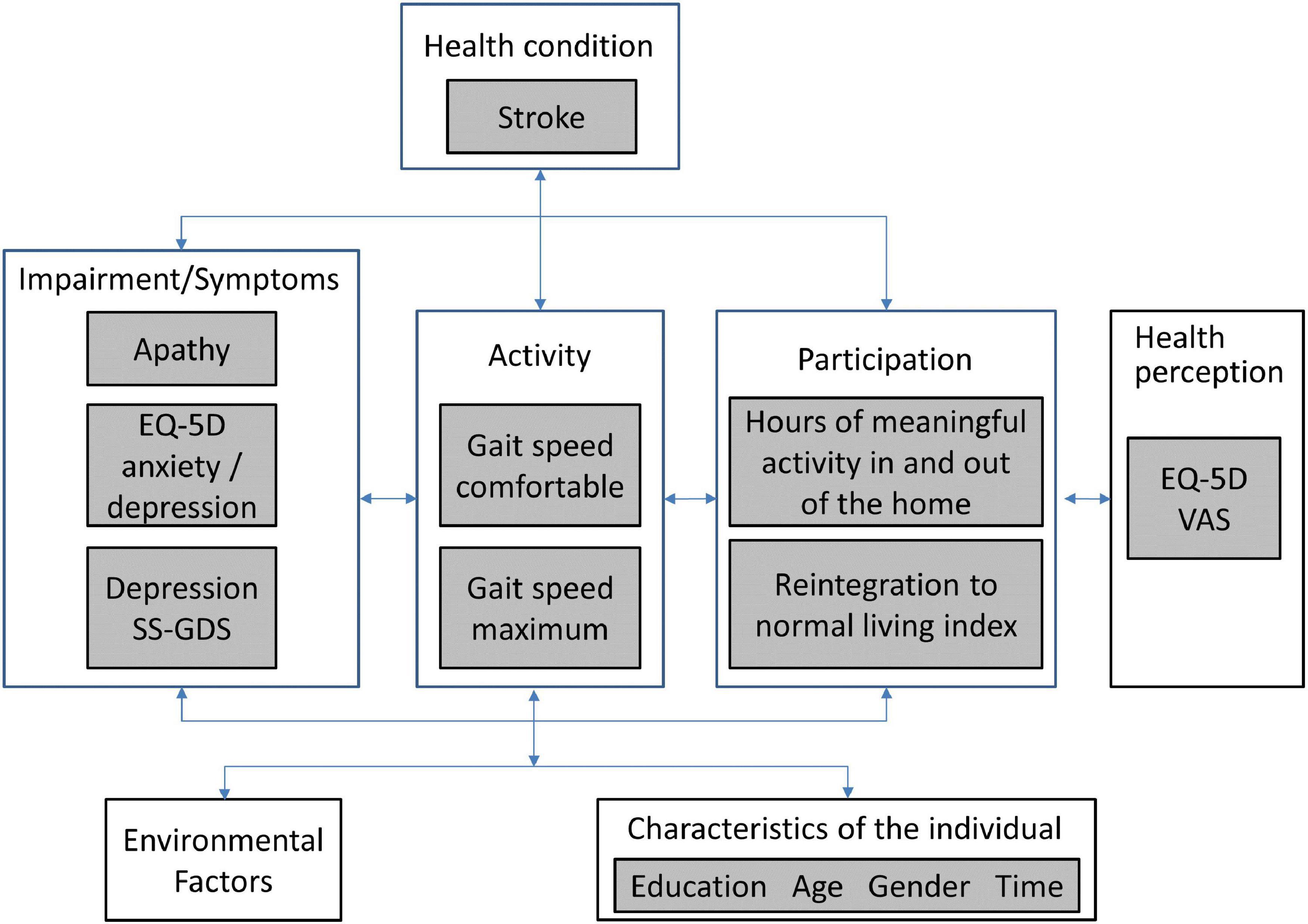

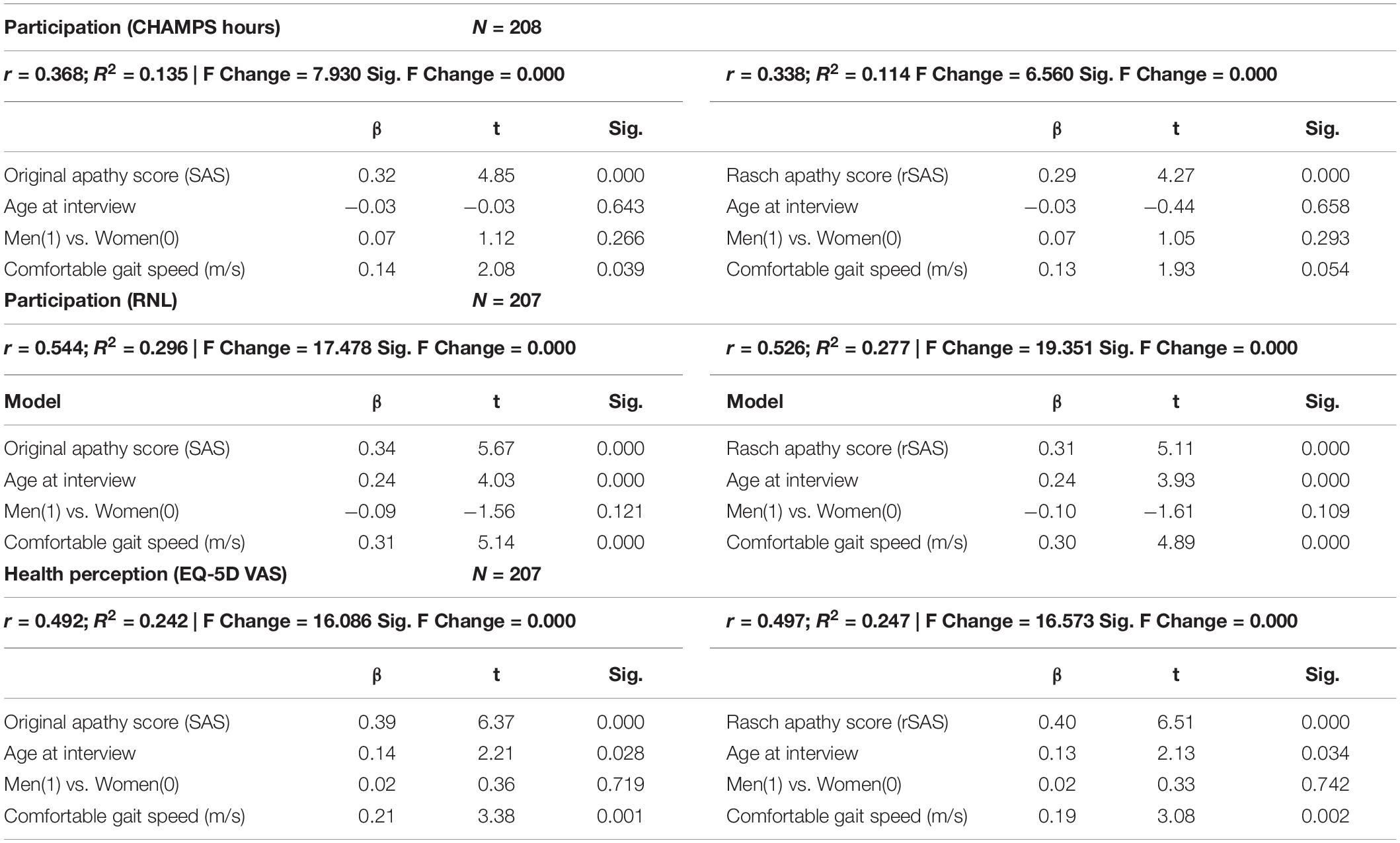

Table 5 shows the extent to which the two versions of the SAS (original and rSAS) explain the downstream outcomes of participation and perceived health, adjusted for age, sex, and gait speed. For CHAMPS hours of meaningful activity, the effect of apathy was similar for the two versions (SAS: β = 0.32, t = 4.85; rSAS: β = 0.29, t = 4.27). This similarity held for the other downstream outcomes. R² for the participation measures ranged from 0.11 to 0.29 for both the SAS and rSAS, equivalent to correlations of 0.34 to 0.54 (considered moderate to strong); R² for health perception was approximately 0.25 (correlation 0.5, strong).
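The conversion from R² to a correlation is a square root. Because these models also contain age, sex, and gait speed, the square root of the model R² is the multiple correlation R for all predictors together rather than the correlation of apathy alone. The inputs below are the reported values (0.114, 0.296, and ∼0.25); the function name is hypothetical.

```python
from math import sqrt


def multiple_r(r_squared: float) -> float:
    """Multiple correlation R implied by a model's coefficient of determination."""
    if not 0.0 <= r_squared <= 1.0:
        raise ValueError("R^2 must lie in [0, 1]")
    return sqrt(r_squared)


for r2 in (0.114, 0.296, 0.25):
    r = multiple_r(r2)
    label = "strong" if r >= 0.5 else "below the 'strong' criterion"
    print(r2, round(r, 2), label)
```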

Table 5. Regression of apathy (original total score or Rasch apathy score [rSAS]) as a predictor of downstream outcomes (participation/HRQL).

Table 6 shows key measurement properties of the two versions. GEE showed that the 9-item rSAS (rescaled to a maximum of 42) produced a value 6.5 units lower than the full 14-item version, with more variability. Floor and ceiling effects were minimal for both versions. Estimated change over time was identical (0.12 units per month); this was not substantial (1.44 units per year) but was greater than expected assuming no change (t: 3.6 and 2.4).
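Two of the quantities above are simple arithmetic. The conversion from a monthly slope to an annual change is exact. The rescaling of the 9-item score onto the 0–42 range is sketched as a linear transformation, which is an assumption because the rescaling method is not specified here; `raw_max` and both function names are hypothetical.

```python
def annual_change(per_month: float) -> float:
    """Convert a monthly slope to change per year."""
    return per_month * 12


def rescale_to_42(raw: float, raw_max: float, target_max: float = 42.0) -> float:
    """Hypothetical linear rescaling of a raw total (0..raw_max) onto 0..target_max."""
    if raw_max <= 0:
        raise ValueError("raw_max must be positive")
    return raw * target_max / raw_max


print(round(annual_change(0.12), 2))  # 1.44 units per year
print(rescale_to_42(9, 18))           # 21.0 with a hypothetical raw maximum of 18
```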

Discussion

This study found that 9 of the original 14 items of the SAS fit a linear hierarchy (the Rasch model) suitable for measuring apathy in people with stroke. Rasch analysis of the original four-point ordinal scale showed that the response options were not used in a manner consistent with endorsing a more positive response option as apathy decreases (motivation increases), i.e., the thresholds were disordered. On 8 of the 14 items, participants consistently had difficulty differentiating reliably between the middle two response options, “slightly” and “some.” The rationale for having more response options is to make the assessment more precise, but the disordered thresholds indicate that participants could not reliably distinguish between some choices, so this design choice was counter-productive. Not taking disordered thresholds into account can provide a false sense of reliability and increase measurement error.

According to Pedersen et al. (2012), the original SAS items measure several aspects of apathy: (1) diminished motivation (#7 and #12); (2) behavioral (#4, #5, #8, and #9); (3) cognitive (#1, #2, #6, and #11); (4) emotional (#10 and #13); and (5) insight (#3 and #14). That study identified two factors using exploratory factor analysis (EFA), a result that must be interpreted with caution because other authors have concluded that EFA is not appropriate for testing unidimensionality (Ziegler and Hagemann, 2015; Morita and Kannari, 2016).

Our analysis showed four items (#3, #5, #6, and #13) did not fit the latent construct and so were removed from the rSAS. The poor fit of item #3 “Are you concerned about your condition?” has also been described in three other Parkinson’s studies as unreliable or ambiguous (Kirsch-Darrow et al., 2011; Pedersen et al., 2012; Morita and Kannari, 2016).

Using structural equation modeling (confirmatory factor analysis), Morita and Kannari (2016) showed a single apathy construct but found that items #11 and #13 were not reflective of apathy in a Parkinson’s population. Our analysis showed that item #13 “Are you neither happy nor sad, just in between?” did not fit the apathy construct and so was deleted from the measure. On the other hand, item #11 “Are you unconcerned with many things?” did fit our model but was poorly worded: in using the SAS, we had already noted that patients found the negative-positive phrasing “unconcerned/many” difficult to interpret. Removing item #11 allowed item #14 “Would you consider yourself apathetic?” to remain, anchoring the rSAS in the apathy domain. Additionally, item #10 had a location similar to that of item #11 (< 0.2 logit difference). Item #5 “Are you always looking for something to do?” and item #6 “Do you have plans and goals for the future?” did not fit the apathy construct in the stroke population, possibly because these reflect features of stroke rather than of apathy.

Fit residuals for items #7 and #14 were just within the cut-off of −2.5, indicating marginal over-fit to the Rasch model, and these items were retained in the rSAS. These two items are “reverse worded items,” such that they might be considered essentially the same question, with one positively worded and the other negatively worded. Using words with opposite meaning has been discussed as a means to control for acquiescence and social desirability bias (van Sonderen et al., 2013), but may also have the effect of artificially increasing the reliability of the SAS.

Of the 9 items fitting the Rasch model, 7 are classified as self-reported (SRO) items, as they query observable behaviors for which the response provided by the patient could be amended based on information independent of the patient. The only PRO items in the rSAS are items #7 and #14, which ask the person directly whether they think they are motivated or apathetic, respectively.

Our results did not show a correlation between apathy and tests of physical capacity (comfortable or maximum gait speed). A systematic review did report an association between apathy and increased disability post-stroke in most studies; however, the disability outcomes were mostly related to activities of daily living, which require effort. The authors also noted that they could not perform a quantitative meta-analysis because of the heterogeneity in the outcomes and analyses used among the studies (van Dalen et al., 2013).

The literature supports depression as being distinct from apathy, but with some degree of overlap (Mayo et al., 2009; Clarke et al., 2011). Previous work also suggests that apathy can coexist with depression to varying degrees in stroke populations specifically (Mayo et al., 2009). A review of several different apathy measures showed low correlations with depression (Clarke et al., 2011). Our hypothesis was that depression constructs would correlate weakly with the rSAS. The weak correlations observed between the rSAS and depression outcomes (SS-GDS and EQ-5D anxiety/depression) provide further evidence that apathy is a construct distinct from depression. The modest correlation (∼0.4) between the SS-GDS and rSAS may still be due to overlapping items, contained in both questionnaires, that ask about loss of interest, doing new things, and energy level. It would be prudent to select apathy and depression scales without items querying these common features, to avoid misclassification, given that apathy and depression can coexist.

In our study, the amount of variance in participation measures explained by either the SAS or rSAS was small and very similar (R² range 0.114–0.296, Table 5). However, both versions explained approximately 25% of the variance in health perception. This suggests that apathy has more to do with how people feel than with what they do; what they do may be influenced by family activities.

Our result is largely consistent with estimates in the literature showing some association between apathy and HRQL in autoimmune, inherited, and neurodegenerative disorders, although apathy was treated as an outcome in some studies and as an exposure variable in others (Benito-León et al., 2012; Tang et al., 2014; Kamat et al., 2016; Fritz et al., 2018).

The distribution of the SAS items along the latent trait of apathy does not match the distribution of values on the latent trait observed in the sample. We conceived of the apathy construct as ranging from apathy (low end of the scale) to motivation (high end of the scale), with the latent trait standardized to have a mean of 0 and an SD of 1. The mean location along the latent trait with all 14 items, after rescoring for disordered thresholds, was 1.0 (SD: 0.9); with the 9-item rSAS, the mean location was 1.6 (SD: 1.2). This means that people had more motivation than the items were able to measure, suggesting that other items would be needed to adequately measure the full range of the construct. Until a stroke-specific measure of the apathy-motivation construct can be developed, researchers could use the nine rSAS items.

Sample Size Considerations

As this was a secondary analysis of an existing dataset, we chose to use all the available data instead of selecting only one time point, and we tested for DIF by time (of which there was no evidence). We used Bonferroni correction and/or post hoc downward random sample size adjustment when appropriate. Sample sizes between 250 and 500 are considered a good size for Rasch analysis of a well-targeted scale (Linacre, 1994; Chen et al., 2014; Hagell and Westergren, 2016); however, Type 1 error can occur with N as small as 200 (Müller, 2020).

Including the additional time points in the dataset raises the question of dependency between observations. Wright argues that any such dependency is probably small, explaining that “The patients are not identical patients. They have changed” (Wright, 2003). A patient answering the questionnaire will not have an identical level of ability at different time points.

Additionally, the lack of independence among observations owing to repeated measures does not affect item locations on the hierarchy. In fact, it is an advantage, in that the effect of repeated measures (time) can be tested using differential item functioning (DIF), where the hypothesis is that the ordering of the items is unaffected by time. This is a valuable psychometric property for estimating change; it is used to distinguish true change from response shift and to identify whether there is a learning effect (Guilleux et al., 2015).
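A simplified version of the DIF-by-time check can be illustrated in Python: for one item, the standardized person-item residuals are grouped by time point and a one-way ANOVA F statistic tests whether their means differ. This is a sketch under stated assumptions; Rasch software typically uses a two-way ANOVA that also includes class intervals along the trait, and the residual values below are hypothetical.

```python
def one_way_anova_f(groups):
    """One-way ANOVA F statistic for equality of group means.

    groups: list of lists, e.g. one item's standardized residuals split by time point.
    Returns (F, df_between, df_within).
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups and more observations than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation")
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# HYPOTHETICAL standardized residuals for one item at three assessment times
residuals_by_time = [
    [0.3, -0.8, 1.1, -0.2, 0.4],
    [-0.5, 0.9, -0.1, 0.2, -0.6],
    [0.1, -0.3, 0.7, -0.9, 0.5],
]
f_stat, df1, df2 = one_way_anova_f(residuals_by_time)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

The F value would then be compared against an F distribution with the returned degrees of freedom (for example with `scipy.stats.f.sf`), at a Bonferroni-adjusted alpha; a non-significant result is consistent with item ordering being stable over time.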

Limitations

The data for this study came from an existing dataset generated from participants who were living with the long-term sequelae of stroke and were enrolled in a study of a community-based program to develop skills to enhance community participation. As such, the measures available were fixed, and additional measures of apathy were not available. However, we have no evidence that the rSAS, which is a subset of the items on the original SAS, fails to reflect the apathy construct, as its correlations with measures of related constructs were very similar to those of the original SAS.

We believe the data generated here indicate that more work on the apathy construct is needed, including qualitative work to generate items reflecting content from the person’s perspective, development of a robust scoring structure, and testing of interpretability in diverse samples with respect to convergent and divergent constructs.

The rSAS was shown to be unidimensional according to the principal component analysis (PCA) of the residuals and the t-tests comparing person estimates derived from disparate item subsets. Several approaches have been suggested for testing the dimensionality of existing or new measures, including factor analysis and Rasch analysis, the latter being used here. Because factor analysis assumes that the ordinal measurement scale is continuous, whereas Rasch analysis converts ordinal scales to continuous ones, the two approaches can lead to different conclusions about dimensionality (Wright, 1996). Ideally, measures should be constructed along strong theoretical lines of an underlying unidimensional hierarchy (Waugh and Chapman, 2005). Future work on the apathy construct is warranted.
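The t-test approach to unidimensionality mentioned above is commonly implemented as follows: items are split into two subsets (typically those loading most positively and most negatively on the first residual principal component), each person is estimated separately from each subset, and the two estimates are compared. The Python sketch below assumes those person estimates and standard errors are already available; the function names and example values are hypothetical.

```python
import math


def proportion_significant(theta_a, se_a, theta_b, se_b, critical=1.96):
    """Share of persons whose estimates from item subsets A and B differ at |t| > critical."""
    n = len(theta_a)
    if n == 0 or not (n == len(se_a) == len(theta_b) == len(se_b)):
        raise ValueError("inputs must be non-empty and of equal length")
    significant = 0
    for ta, sa, tb, sb in zip(theta_a, se_a, theta_b, se_b):
        t = (ta - tb) / math.sqrt(sa ** 2 + sb ** 2)  # t statistic for two independent estimates
        if abs(t) > critical:
            significant += 1
    return significant / n


def lower_ci_bound(p, n, z=1.96):
    """Lower limit of a normal-approximation 95% CI for a proportion."""
    return max(0.0, p - z * math.sqrt(p * (1 - p) / n))


# HYPOTHETICAL person estimates (logits) from two item subsets
theta_a = [1.2, 0.4, 2.1, -0.3, 1.7, 0.9]
se_a = [0.6, 0.5, 0.8, 0.5, 0.7, 0.6]
theta_b = [1.0, 0.8, 1.5, -0.1, 1.9, 0.7]
se_b = [0.7, 0.6, 0.9, 0.6, 0.8, 0.6]

p = proportion_significant(theta_a, se_a, theta_b, se_b)
print(f"proportion significant = {p:.3f}, lower 95% CI = {lower_ci_bound(p, len(theta_a)):.3f}")
```

A proportion of about 5% or less (or a confidence interval whose lower bound falls at or below 5%) is usually taken as consistent with unidimensionality.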

Usefulness of the Rasch Model

“Validity” is not a property of the measure; it is a property of the data with respect to how they can be interpreted. Rasch analysis tests the extent to which a set of items fits an underlying hierarchical model, so item and global fit support this hypothesis. Items that do not fit the Rasch model need to be investigated for sources of misfit, which often include poor wording, tapping a different construct, or negative vs. positive wording. Misfit indicates that something is wrong with an item; conversely, fit indicates that the items collectively form a latent construct.
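Item fit can be illustrated with one common statistic, the outfit mean square, computed here for a single polytomous item under the partial credit model: each response is compared with its model-expected score, and the squared standardized residuals are averaged. This is a sketch with hypothetical person locations, thresholds, and responses; other software reports different fit statistics (for example, fit residuals and item-trait chi-square), so it is not presented as the authors' computation.

```python
import math


def pcm_probs(theta, thresholds):
    """Partial credit model probabilities P(X = 0..m | theta) for one item."""
    log_num = [0.0]  # category 0 has an empty sum
    for delta in thresholds:
        log_num.append(log_num[-1] + (theta - delta))
    top = max(log_num)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in log_num]
    total = sum(exps)
    return [e / total for e in exps]


def outfit_msq(responses, thetas, thresholds):
    """Outfit mean square for one item: the mean of squared standardized residuals."""
    if not responses or len(responses) != len(thetas):
        raise ValueError("responses and thetas must be non-empty and of equal length")
    total = 0.0
    for x, theta in zip(responses, thetas):
        probs = pcm_probs(theta, thresholds)
        expected = sum(k * p for k, p in enumerate(probs))
        variance = sum((k - expected) ** 2 * p for k, p in enumerate(probs))
        z = (x - expected) / math.sqrt(variance)  # standardized residual
        total += z * z
    return total / len(responses)


# HYPOTHETICAL item with thresholds at -0.5 and 0.5 logits, scored 0-2
thresholds = [-0.5, 0.5]
thetas = [-2.0, -1.0, 0.0, 1.0, 2.0]
consistent = [0, 0, 1, 2, 2]  # higher locations give higher scores
reversed_pattern = [2, 2, 1, 0, 0]  # e.g., what a misworded or reverse-keyed item might produce

print(f"consistent: {outfit_msq(consistent, thetas, thresholds):.2f}")
print(f"reversed:   {outfit_msq(reversed_pattern, thetas, thresholds):.2f}")
```

Values near 1 are consistent with model expectations; values well above 1 flag unexpected responses that warrant the kind of investigation described above, while values well below 1 indicate responses more deterministic than the model predicts.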

Future Directions

This study showed that a 9-item version of the original 14-item SAS could be used to assess apathy in people with chronic stroke without loss of content coverage and with improved measurement properties. The poor targeting of the items to the range of motivation shown in this sample indicates that additional items are needed. In addition, since the original SAS was developed, recommendations for developing patient-reported outcome measures (PROs) have been published (U.S. Department of Health and Human Services Food and Drug Administration, 2009). This process requires input from patients, caregivers, and clinicians, as well as verification that all requirements for a robust, mathematically sound measure are met. Many disciplines are revisiting their legacy measures to assess the extent to which they meet these new standards, and are also adopting new measures that meet them.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the McGill University Health Centre: Centre for Applied Ethics. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

SH analyzed the data. SH and NM wrote the draft of the manuscript. All authors contributed to the interpretation of the results and writing the final version of the article.

Funding

SH was funded by the Canada First Research Excellence Fund, awarded to McGill University for the Healthy Brains for Healthy Lives initiative.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.754103/full#supplementary-material

References

Andrich, D., and Marais, I. (2019). A Course in Rasch Measurement Theory: Measuring in the Educational, Social and Health Sciences. Singapore: Springer Singapore, 482.

Benito-León, J., Cubo, E., Coronell, C., and ANIMO Study Group. (2012). Impact of apathy on health-related quality of life in recently diagnosed Parkinson’s disease: The ANIMO study. Mov. Disord. 27, 211–218. doi: 10.1002/mds.23872

Carrozzino, D. (2019). Clinimetric approach to rating scales for the assessment of apathy in Parkinson’s disease: A systematic review. Prog. Neuro Psychopharmacol. Biol. Psychiatry 94:109641. doi: 10.1016/j.pnpbp.2019.109641

Chen, W.-H., Lenderking, W., Jin, Y., Wyrwich, K. W., Gelhorn, H., and Revicki, D. A. (2014). Is Rasch model analysis applicable in small sample size pilot studies for assessing item characteristics? An example using PROMIS pain behavior item bank data. Qual. Life Res. 23, 485–493. doi: 10.1007/s11136-013-0487-5

Cinamon, J. S., Finch, L., Miller, S., Higgins, J., and Mayo, N. E. (2011). Preliminary evidence for the development of a stroke specific geriatric depression scale. Int. J. Geriatr. Psychiatry 26, 188–198. doi: 10.1002/gps.2513

Clarke, D. E., Ko, J. Y., Kuhl, E. A., van Reekum, R., Salvador, R., and Marin, R. S. (2011). Are the available apathy measures reliable and valid? A review of the psychometric evidence. J. Psychosomat. Res. 70, 73–97.

de Vet, H. C. W., Terwee, C. B., Mokkink, L. B., and Knol, D. L. (2011). Measurement in Medicine: A Practical Guide. Cambridge: Cambridge University Press.

Fritz, N. E., Boileau, N. R., Stout, J. C., Ready, R., Perlmutter, J. S., Paulsen, J. S., et al. (2018). Relationships Among Apathy, Health-Related Quality of Life, and Function in Huntington’s Disease. J. Neuropsychiatry Clin. Neurosci. 30, 194–201.

Guilleux, A., Blanchin, M., Vanier, A., Guillemin, F., Falissard, B., Schwartz, C. E., et al. (2015). RespOnse Shift ALgorithm in Item response theory (ROSALI) for response shift detection with missing data in longitudinal patient-reported outcome studies. Qual. Life Res. 24, 553–564. doi: 10.1007/s11136-014-0876-4

Hagell, P., and Westergren, A. (2016). Sample Size and Statistical Conclusions from Tests of Fit to the Rasch Model According to the Rasch Unidimensional Measurement Model (RUMM) Program in Health Outcome Measurement. J. Appl. Meas. 17, 416–431.

Hansen, T., and Kjaersgaard, A. (2020). Item analysis of the Eating Assessment Tool (EAT-10) by the Rasch model: a secondary analysis of cross-sectional survey data obtained among community-dwelling elders. Health Qual. Life Outcomes 18:139. doi: 10.1186/s12955-020-01384-2

Hobart, J., and Cano, S. (2009). Improving the evaluation of therapeutic interventions in multiple sclerosis: the role of new psychometric methods. Health Technol. Assess. 13, 1–177.

Kamat, R., Woods, S. P., Cameron, M. V., Iudicello, J. E., and HIV Neurobehavioral Research Program (HNRP) Group. (2016). Apathy is associated with lower mental and physical quality of life in persons infected with HIV. Psychol. Health Med. 21, 890–901. doi: 10.1080/13548506.2015.1131998

Kirsch-Darrow, L., Marsiske, M., Okun, M. S., Bauer, R., and Bowers, D. (2011). Apathy and Depression: Separate Factors in Parkinson’s Disease. J. Int. Neuropsychol. Soc. 17, 1058–1066. doi: 10.1017/s1355617711001068

Le Heron, C., Holroyd, C. B., Salamone, J., and Husain, M. (2019). Brain mechanisms underlying apathy. J. Neurol. Neurosurg. Psychiatry 90, 302–312. doi: 10.1136/jnnp-2018-318265

Linacre, J. M. (1994). Sample Size and Item Calibration [or Person Measure] Stability. Rasch Measure. Transact. 7:328.

Marin, R. S. (1991). Apathy: a neuropsychiatric syndrome. J. Neuropsychiatry Clin. Neurosci. 3, 243–254. doi: 10.1176/jnp.3.3.243

Marin, R. S., Biedrzycki, R. C., and Firinciogullari, S. (1991). Reliability and validity of the apathy evaluation scale. Psychiatry Res. 38, 143–162. doi: 10.1016/0165-1781(91)90040-v

Mayo, N. E. (2015). Dictionary of Quality of Life and Health Outcomes Measurement, Version 1, 1 Edn. Milwaukee, WI: ISQOL.

Mayo, N. E., Anderson, S., Barclay, R., Cameron, J. I., Desrosiers, J., Eng, J. J., et al. (2015). Getting on with the rest of your life following stroke: a randomized trial of a complex intervention aimed at enhancing life participation post stroke. Clin. Rehabil. 29, 1198–1211. doi: 10.1177/0269215514565396

Mayo, N. E., Bronstein, D., Scott, S. C., Finch, L. E., and Miller, S. (2014). Necessary and sufficient causes of participation post-stroke: practical and philosophical perspectives. Qual. Life Res. 23, 39–47. doi: 10.1007/s11136-013-0441-6

Mayo, N. E., Fellows, L. K., Scott, S. C., Cameron, J., and Wood-Dauphinee, S. (2009). A Longitudinal View of Apathy and Its Impact After Stroke. Stroke 40, 3299–3307. doi: 10.1161/STROKEAHA.109.554410

Mayo, N. E., Figueiredo, S., Ahmed, S., and Bartlett, S. J. (2017). Montreal Accord on Patient-Reported Outcomes (PROs) use series – Paper 2: terminology proposed to measure what matters in health. J. Clin. Epidemiol. 89, 119–124. doi: 10.1016/j.jclinepi.2017.04.013

Morita, H., and Kannari, K. (2016). Reliability and validity assessment of an apathy scale for home-care patients with Parkinson’s disease: a structural equation modeling analysis. J. Phys. Ther. Sci. 28, 1724–1727. doi: 10.1589/jpts.28.1724

Müller, M. (2020). Item fit statistics for Rasch analysis: can we trust them? J. Statist. Distribut. Applicat. 7:5.

Pallant, J. F., and Tennant, A. (2007). An introduction to the Rasch measurement model: An example using the Hospital Anxiety and Depression Scale (HADS). Br. J. Clin. Psychol. 46, 1–18. doi: 10.1348/014466506x96931

Pallant, J., Miller, R., and Tennant, A. (2006). Evaluation of the Edinburgh Post Natal Depression Scale using Rasch analysis. BMC Psychiatry 6:28. doi: 10.1186/1471-244X-6-28

Pedersen, K. F., Alves, G., Larsen, J. P., Tysnes, O.-B., Møller, S. G., and Brønnick, K. (2012). Psychometric Properties of the Starkstein Apathy Scale in Patients With Early Untreated Parkinson Disease. Am. J. Geriatr. Psychiatry 20, 142–148. doi: 10.1097/JGP.0b013e31823038f2

Petrillo, J., Cano, S. J., McLeod, L. D., and Coon, C. D. (2015). Using Classical Test Theory, Item Response Theory, and Rasch Measurement Theory to Evaluate Patient-Reported Outcome Measures: A Comparison of Worked Examples. Value Health 18, 25–34. doi: 10.1016/j.jval.2014.10.005

Rasch, G. (1960). Probabilistic models for some intelligence and attainment tests. Copenhagen: Danish Institution for Educational Research.

Robert, P., Lanctot, K. L., Aguera-Ortiz, L., Aalten, P., Bremond, F., Defrancesco, M., et al. (2018). Is it time to revise the diagnostic criteria for apathy in brain disorders? The 2018 international consensus group. Eur. Psychiatry 54, 71–76. doi: 10.1016/j.eurpsy.2018.07.008

Starkstein, S. E., Fedoroff, J. P., Price, T. R., Leiguarda, R., and Robinson, R. G. (1993). Apathy following cerebrovascular lesions. Stroke 24, 1625–1630. doi: 10.1161/01.str.24.11.1625

Starkstein, S. E., Mayberg, H. S., Preziosi, T. J., Andrezejewski, P., Leiguarda, R., and Robinson, R. G. (1992). Reliability, validity, and clinical correlates of apathy in Parkinson’s disease. J. Neuropsychiatry Clin. Neurosci. 4, 134–139. doi: 10.1176/jnp.4.2.134

Stewart, A. L., Mills, K. M., King, A. C., Haskell, W. L., Gillis, D., and Ritter, P. L. (2001). CHAMPS Physical Activity Questionnaire for Older Adults: outcomes for interventions. Med. Sci. Sports Exerc. 33, 1126–1141. doi: 10.1097/00005768-200107000-00010

Tang, W. K., Lau, C. G., Mok, V., Ungvari, G. S., and Wong, K. S. (2014). Apathy and health-related quality of life in stroke. Arch. Phys. Med. Rehabil. 95, 857–861.

Tennant, A., and Conaghan, P. G. (2007). The Rasch measurement model in rheumatology: What is it and why use it? When should it be applied, and what should one look for in a Rasch paper? Arthritis Care Res. 57, 1358–1362. doi: 10.1002/art.23108

U.S. Department of Health and Human Services Food and Drug Administration (2009). Guidance for Industry: Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. Silver Spring, MD: U.S. Department of Health and Human Services Food and Drug Administration.

van Dalen, J. W., Moll van Charante, E. P., Nederkoorn, P. J., van Gool, W. A., and Richard, E. (2013). Poststroke apathy. Stroke 44, 851–860. doi: 10.1161/strokeaha.112.674614

van Sonderen, E., Sanderman, R., and Coyne, J. C. (2013). Ineffectiveness of reverse wording of questionnaire items: let’s learn from cows in the rain. PLoS One 8:e68967. doi: 10.1371/journal.pone.0068967

Waugh, R. F., and Chapman, E. S. (2005). An analysis of dimensionality using factor analysis (true-score theory) and Rasch measurement: what is the difference? Which method is better? J. Appl. Meas. 6, 80–99.

Wood-Dauphinee, S., and Williams, J. I. (1987). Reintegration to normal living as a proxy to quality of life. J. Chronic Dis. 40, 491–499. doi: 10.1016/0021-9681(87)90005-1

Wright, B. D. (1996). Comparing Rasch measurement and factor analysis. Struct. Equat. Model. Multidiscipl. J. 3, 3–24. doi: 10.1080/10705519609540026

Keywords: Rasch analysis, stroke, apathy, measurement, patient-reported outcome, modern psychometrics

Citation: Hum S, Fellows LK, Lourenco C and Mayo NE (2021) Are the Items of the Starkstein Apathy Scale Fit for the Purpose of Measuring Apathy Post-stroke? Front. Psychol. 12:754103. doi: 10.3389/fpsyg.2021.754103

Received: 05 August 2021; Accepted: 25 October 2021;

Published: 07 December 2021.

Edited by:

Victor Zaia, Faculdade de Medicina do ABC, Brazil

Reviewed by:

Purya Baghaei, Islamic Azad University of Mashhad, Iran

Theodoros Kyriazos, Panteion University, Greece

Copyright © 2021 Hum, Fellows, Lourenco and Mayo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stanley Hum, Stanley.hum@mcgill.ca
