- ¹Faculty of Languages and Linguistics, University of Malaya, Kuala Lumpur, Malaysia

- ²Faculty of Foreign Studies, North China University of Water Resources and Electric Power, Zhengzhou, China

- ³School of International Studies, Communication University of China, Beijing, China

Students experience different levels of autonomy depending on how self-regulated learning (SRL) is mediated, but little is known about how different mediating technologies affect students' perceived SRL strategies. This explanatory mixed-methods study compared two technology-mediation models, Icourse (a learning management system) and Icourse+Pigai (Icourse combined with Pigai, an automated writing evaluation system), with a control group that did not use technology. A quasi-experimental design involving pre- and post-intervention academic writing tests, an SRL questionnaire, and one-to-one semi-structured student interviews was used to investigate 280 Chinese undergraduate English as a foreign language (EFL) students' academic writing performance, lexical complexity, and perceptions of self-regulated strategies in academic writing. One-way ANCOVA of writing performance, Kruskal-Wallis tests of lexical complexity, one-way ANOVA of the SRL questionnaire, and grounded thematic content analysis yielded three findings. First, both Icourse and Icourse+Pigai significantly supported the development of SRL strategies compared with the control group, although the two experimental groups did not differ significantly from each other. Second, Icourse+Pigai-supported SRL was more helpful for improving students' academic writing performance because it enabled more writing practice and corrective feedback. Third, Icourse+Pigai-supported SRL did not significantly improve students' lexical complexity. We conclude that the technological tools used in this study were positively associated with the use of SRL strategies, and that both learning management systems and automated writing evaluation (AWE) platforms may be used to support students' SRL and thereby enhance their writing performance. More effort should be directed toward developing technological tools that increase both lexical accuracy and lexical complexity. However, students' psychological study preferences should be considered when designing technology-mediated SRL activities.

Introduction

It is well established that technology-mediated self-regulated learning (SRL) plays an increasingly prominent role in language learning (Zhu et al., 2016). Previous research has indicated that students experience different levels of autonomy depending on the mediation they are provided for SRL (Bouwmeester et al., 2019; van Alten et al., 2020). However, not enough is known about the effects of different technologies on students' perceived SRL strategies. Technology-mediated SRL supports students by enabling more personalized pre-class preparation or classroom study, after-class practice, or discussion via online platforms and tools that offer numerous resources and analyze individual learner data (Tan, 2019). As technology advances, instructors may need to adjust their teaching strategies or modify their classroom practices (Golonka et al., 2014). Learners, in turn, need to adapt their self-learning processes and practices to the demands of different types of technological tools (Cancino and Panes, 2021). Students may also experience different cognitive loads depending on the devices they use to complete an assignment (Ko, 2017). For example, Ko (2017) indicated that learners' working memory load may be influenced by their physical learning environment, including how learning resources and technologies are allocated. Therefore, it is vital to understand the effects of different technologies on students' SRL processes and practices in order to better address students' learning needs.

According to a previous review (Broadbent and Poon, 2015), insufficient attention has been paid to the effects of technology-supported SRL on academic achievement in English academic writing programs in blended learning settings in higher education. Academic writing, which predominantly involves developing a thesis, demands complex cognitive processes that require effective SRL strategies (Lam et al., 2018). Technology shifts the EFL writing process from paper-based to online composition and thereby influences the development of cognitive strategies in writing (Cancino and Panes, 2021). Understanding technology mediation in SRL is therefore crucial for supporting students with effective SRL strategies. However, it remains unclear whether changes in technology use ultimately change learning outcomes.

In Chinese higher education, poor academic English writing quality remains an issue among undergraduate students, despite their having received at least 10 years of English instruction since primary school. For instance, students are reported to sacrifice complexity for accuracy in their writing: they tend to overuse basic vocabulary, such as public verbs (e.g., say, stay, talk) and vague nouns (e.g., people, things, society), and to avoid or misuse advanced words in their academic writing (Hinkel, 2003; Zuo and Feng, 2015). Furthermore, the overemphasis on accuracy in Chinese national English writing tests for non-English majors in higher education, such as the College English Test Band 4 (CET-4) and College English Test Band 6 (CET-6), reinforces this behavior (Zhang, 2019). However, linguistic complexity is an essential parameter for assessing the quality of English writing (Treffers-Daller et al., 2018; Xu et al., 2019). Among the various aspects of linguistic complexity, lexical complexity is crucial: Csomay and Prades (2018) found that the higher-quality essays among their participants displayed a wider vocabulary range. However, whether technology-mediated SRL effectively enhances lexical complexity in students' academic writing has seldom been examined in previous research (Broadbent and Poon, 2015). Effort should therefore be directed toward determining how technology-mediated SRL may help students produce high-quality academic writing.

To address these issues, this study compared the effects of Icourse- and Icourse+Pigai-supported SRL on writing performance, lexical complexity, and perceived SRL strategies. Icourse, a Small Private Online Course (SPOC) learning management platform, enables enriched exposure to authentic materials and provides online quizzes and discussion boards to support various learning subjects (Qin, 2019). However, improving EFL writing requires both enriched exposure to learning input and repeated writing practice with corrective feedback (Gilliland et al., 2018). Pigai provides automated writing evaluation (AWE) with instant feedback and revision suggestions, which may meet individual learners' need for immediate feedback while reducing teachers' workload. Nevertheless, combining Icourse and Pigai does not necessarily improve students' writing performance and writing quality or enhance SRL. Because using multiple technologies imposes an extra burden and a higher cognitive load on students, such a blend may reduce students' satisfaction with learning (Xu et al., 2019). An investigation is therefore required to determine the effects of different forms of technology-mediated SRL on EFL learners' writing performance and quality.

Literature Review

Self-Regulated Learning

SRL refers to self-generated thoughts, feelings, and actions that help individuals achieve their goals (Zimmerman and Schunk, 2001; Seifert and Har-Paz, 2020). Technology-mediated SRL offers learners flexible learning models and improves their language learning outcomes and motivation (An et al., 2020). Prior studies primarily focused on the effectiveness of technology-enhanced language learning within classroom instruction (An et al., 2020), and empirical investigation of the effect of technology-mediated SRL on improving language skills is lacking. Of the limited number of studies that addressed technology-enhanced SRL, most reported positive relationships with language learning outcomes. For instance, Öztürk and Çakiroglu (2021) compared two groups of university students in flipped learning, one with and one without SRL strategies, and found that SRL facilitated the learning of English speaking, reading, writing, and grammar. Similarly, students with SRL capabilities exhibited enhanced learning outcomes in blended learning settings (Zhu et al., 2016). In contrast, Sun and Wang (2020) found low-frequency use of SRL strategies among 319 Chinese sophomore EFL students learning to write, although SRL strategies significantly predicted writing proficiency. These students were reported to lack writing practice in class because of large class sizes and limited class time (Sun and Wang, 2020).

The Motivated Strategies for Learning Questionnaire (MSLQ) is frequently used to measure SRL (Pintrich et al., 1993) and has been shown to be valid and reliable for use with undergraduate students. The original MSLQ assesses cognitive, metacognitive, and resource management strategies (Broadbent, 2017). Cognitive strategies involve rehearsal, elaboration, and organization of study material; metacognitive strategies primarily refer to self-control; and resource management includes time management, effort regulation, and peer learning (Broadbent, 2017). According to Broadbent's (2017) review of 12 studies of SRL in online settings, metacognitive and resource management strategies positively influence learning outcomes, whereas cognitive strategies have the least empirical evidence supporting their utility. As SRL theory shifted from a focus on metacognition to recognizing the multifaceted nature of SRL, it came to include the motivational factors that influence learning (An et al., 2020). Pintrich (2004) noted that the MSLQ does not include the motivational and affective factors that underlie essential emotional strategies (Pintrich, 2004; An et al., 2020). Therefore, this study adopted a revised version of the MSLQ that covers four SRL aspects: cognitive, metacognitive, resource management, and emotional strategies (Supplementary Appendix 1).

Lexical Complexity

The ultimate goal of technology-supported SRL in this context is to improve students' writing performance and writing quality. More specifically, lexical complexity is an essential indicator of EFL writing quality (Lemmouh, 2008; Zhu and Wang, 2013), but few studies have addressed the effect of SRL on linguistic complexity in EFL programs. O'Dell et al. (2000) suggested that lexical complexity primarily involves lexical diversity, lexical sophistication, and lexical density (the ratio of content words to total tokens). Lexical diversity measures lexical variability, while lexical sophistication is the ratio of advanced words to total tokens. Treffers-Daller et al. (2018) highlighted the importance of combining lexical diversity (the range of words used) and lexical sophistication (the use of less frequent words, as defined by various standards) when measuring lexical complexity. Previous findings on lexical complexity development are inconclusive: some studies found growth after training, while others did not (Knoch et al., 2014; Kalantari and Gholami, 2017). Bulté and Housen (2014) affirmed that changes in linguistic complexity in L2 writing can be captured over a short period. Inquiry into the effects of various technologies on lexical complexity is necessary so that language teachers can support students with suitable technology-mediated SRL strategies and thus help them achieve better learning outcomes, such as higher writing quality.
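
To make the two ratio-based indices concrete, the following minimal Python sketch computes lexical density (content words divided by total tokens) and lexical sophistication (advanced words divided by total tokens) for a tokenized essay. The word sets `FUNCTION_WORDS` and `ADVANCED_WORDS` are tiny hypothetical stand-ins, not the lists used by Range 32 or any other tool, and tokenization is simplified to whitespace splitting.

```python
from typing import Iterable, Set

# Hypothetical, deliberately tiny word lists used only for illustration.
FUNCTION_WORDS: Set[str] = {"the", "a", "of", "is", "and", "to", "in", "it"}
ADVANCED_WORDS: Set[str] = {"consequently", "substantial", "analysis", "derive"}


def lexical_density(tokens: Iterable[str], function_words: Set[str] = FUNCTION_WORDS) -> float:
    """Share of tokens that are content words, i.e. not in the function-word set."""
    toks = [t.lower() for t in tokens]
    if not toks:
        return 0.0
    content = [t for t in toks if t not in function_words]
    return len(content) / len(toks)


def lexical_sophistication(tokens: Iterable[str], advanced_words: Set[str] = ADVANCED_WORDS) -> float:
    """Share of tokens that belong to the advanced-word set."""
    toks = [t.lower() for t in tokens]
    if not toks:
        return 0.0
    return sum(t in advanced_words for t in toks) / len(toks)


# 9 tokens: 3 function words (the, a, in) and 3 advanced words.
essay = "Consequently the analysis reveals a substantial change in society".split()
print(lexical_density(essay), lexical_sophistication(essay))
```

Swapping in full function-word and frequency-band lists would turn these two ratios into the kind of measures described in the Methods; the arithmetic itself does not change.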

Icourse and Pigai

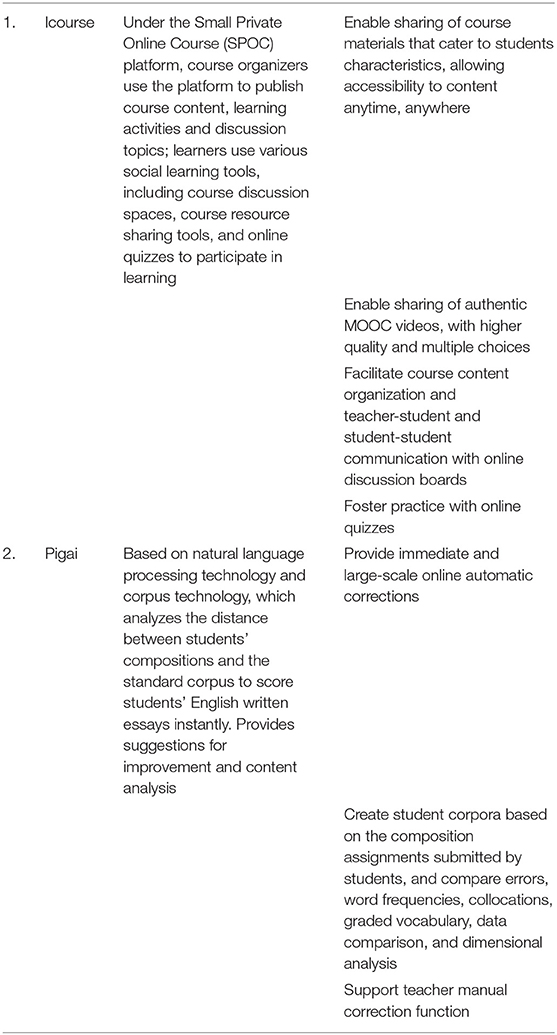

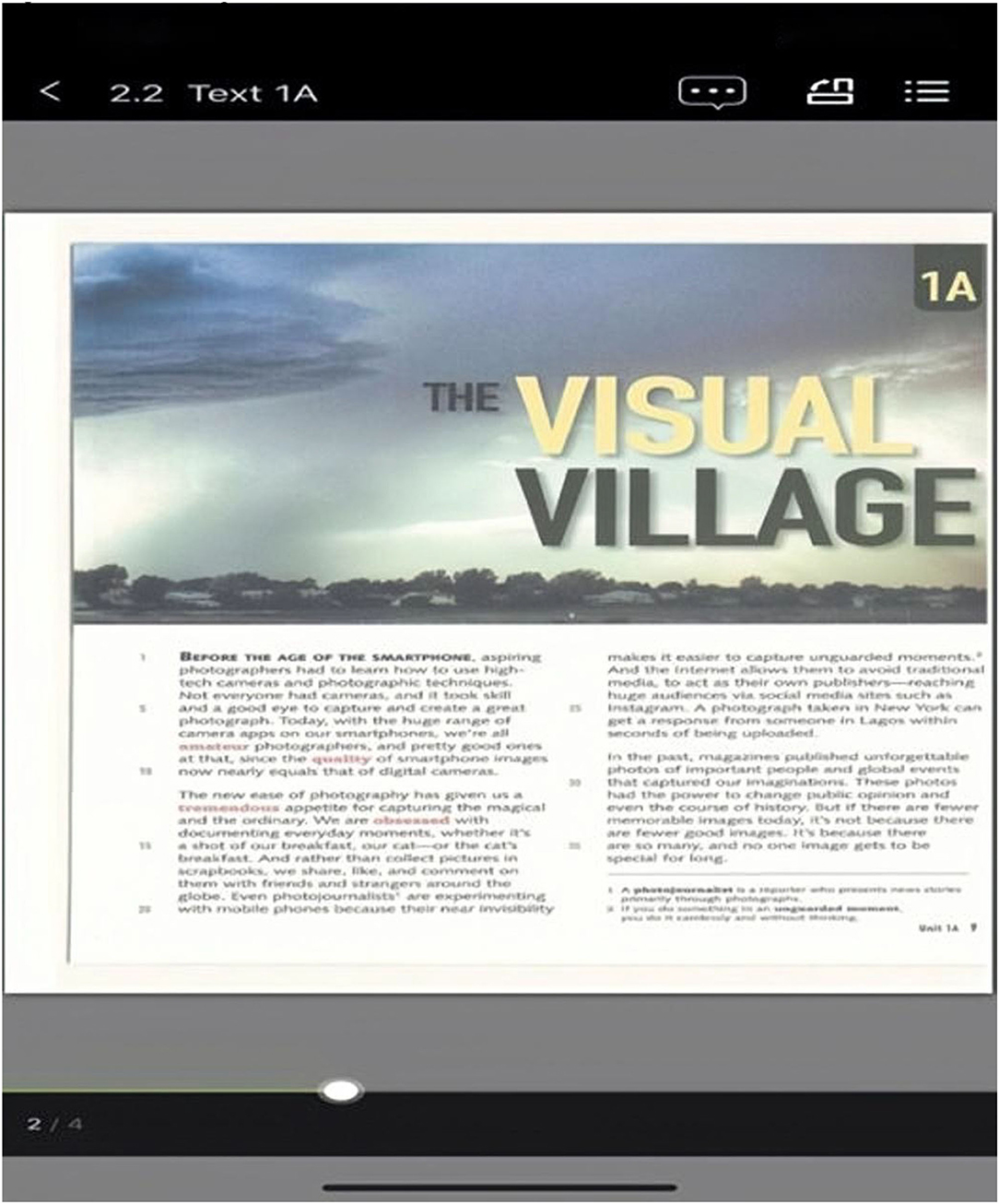

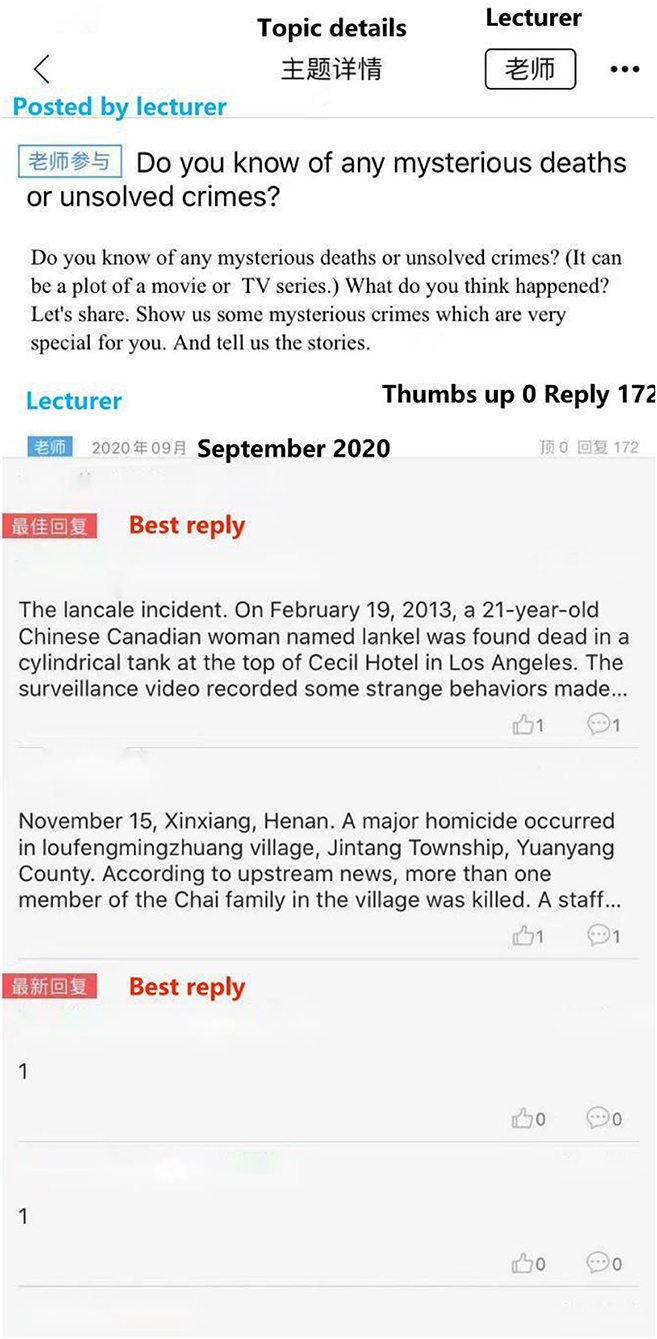

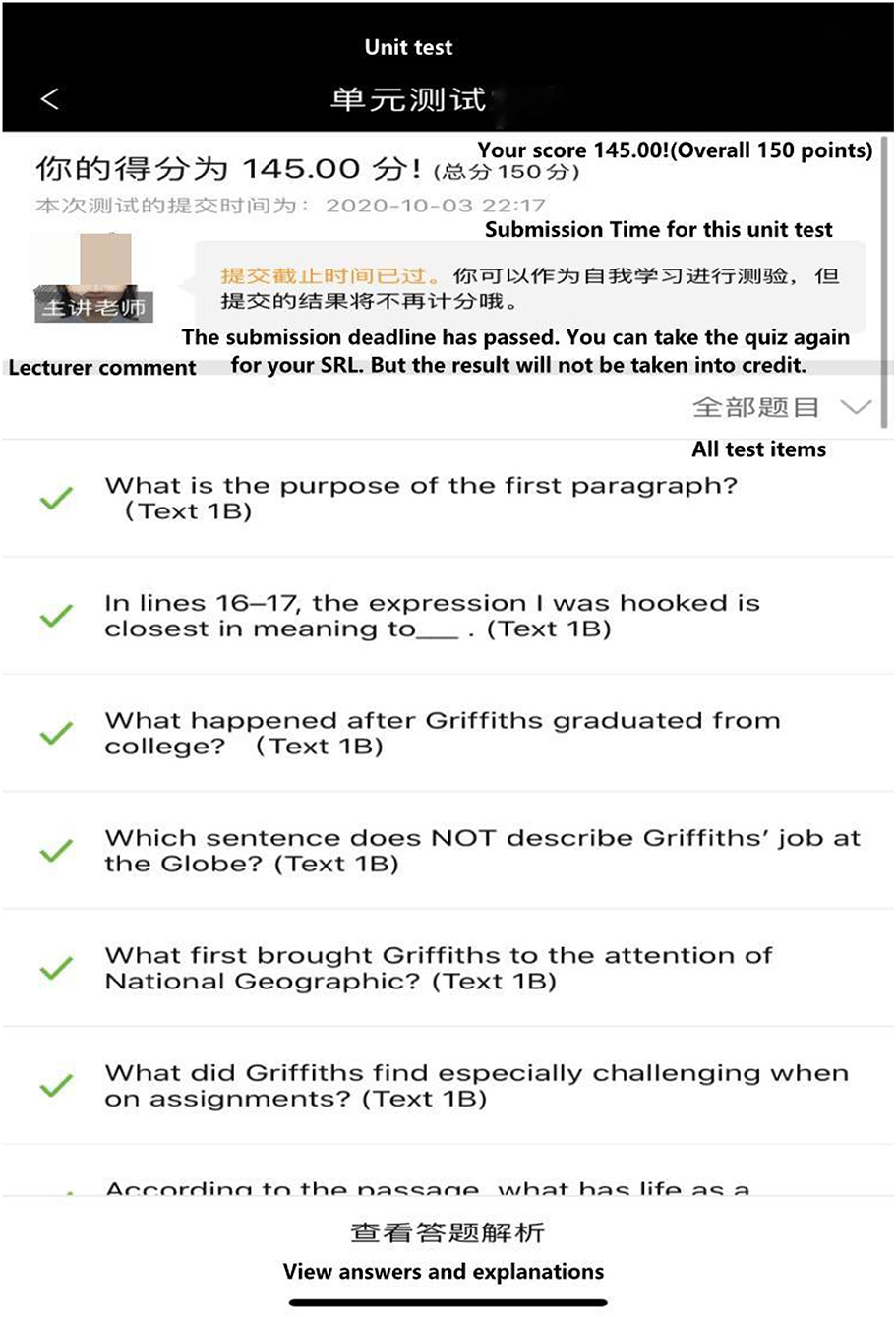

The technological tools adopted for technology-mediated SRL in this research are Icourse and Pigai. Based on previous studies (Golonka et al., 2014; Yang and Dai, 2015; Zhai, 2017), definitions of Icourse and Pigai are presented in Table 1. Massive open online courses (MOOCs) are often criticized for high dropout rates and low student engagement (Gilliland et al., 2018; de Moura et al., 2021). Icourse, as a SPOC platform, is claimed to be a viable alternative because course designers, usually the course lecturers, can make the syllabus flexible in difficulty and adapt it to different student characteristics (Ruiz-Palmero et al., 2020). Guo et al. (2021) quantitatively assessed the impact of a SPOC-based blended learning model embedded in an undergraduate International English Language Testing System (IELTS) writing course at a Chinese university in Beijing. IELTS is an international standardized English proficiency test for non-native speakers of English. Writing performance was assessed through classroom observation, questionnaires, and achievement tests at the beginning, middle, and end of the term. The experimental group outperformed the control group on the final test, but there were no significant differences on the pre- and mid-term tests. However, the study did not include linguistic parameters for evaluating the effects of SPOC platforms on EFL writing. Of the few studies that did include linguistic measures, Cheng et al. (2017) examined the impact of a SPOC learning management system on 35 Chinese undergraduate EFL learners' writing performance in terms of essay length, accuracy, and lexical complexity. The findings revealed that, compared with the pre-test, the learners wrote with greater accuracy and fluency and used a higher ratio of advanced academic vocabulary in the post-test. However, that study did not include a control group that did not use the SPOC platform.

Overall, prior studies highlighted the positive role SPOC platforms play in supporting EFL writing, in terms of improving writing test scores, accuracy, and fluency. Figures 1–3 illustrate how Icourse functions as a SPOC learning management system to support browsing course materials, answering online quizzes, and interacting via discussion boards.

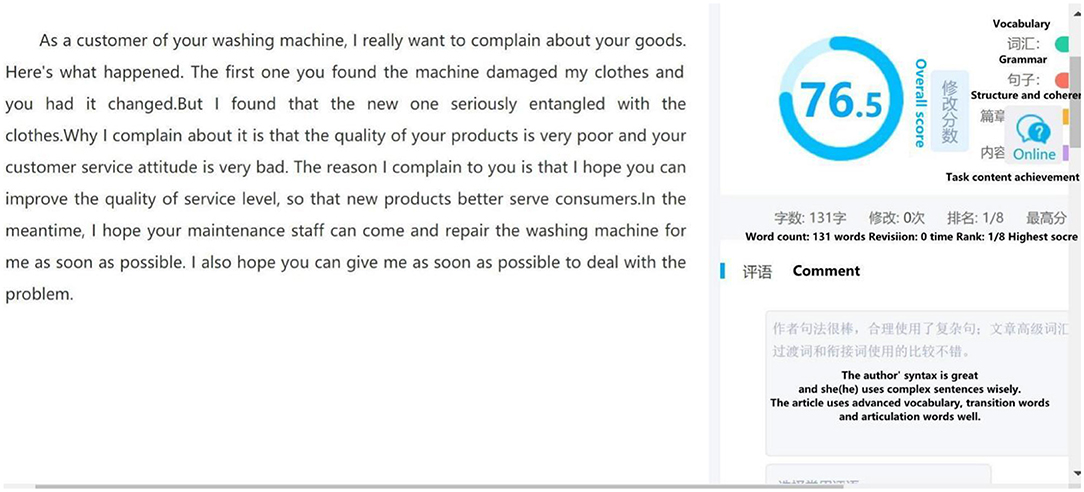

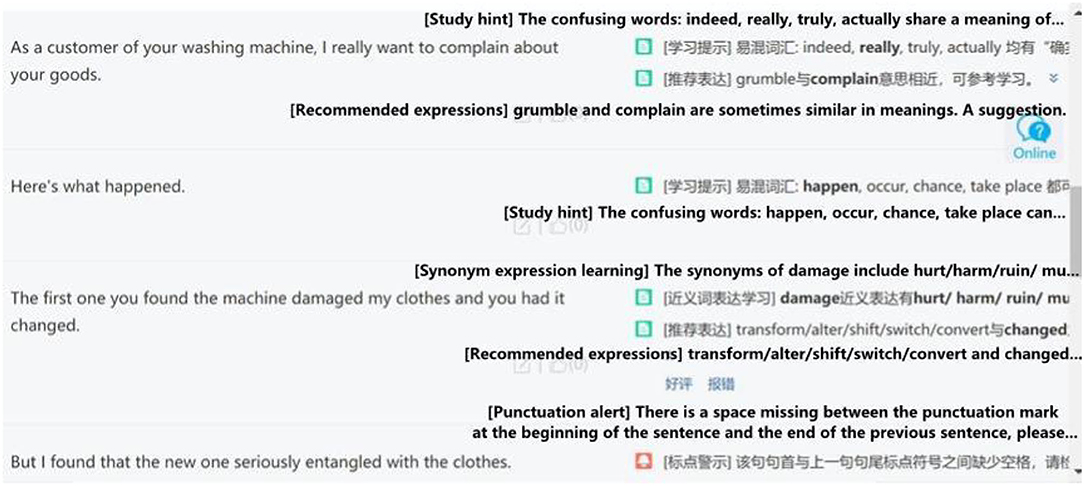

Besides the learning management system, Pigai is used as an AWE tool in this research. AWE aims to provide learners with prompt feedback for revising their writing (Liao, 2016). The major difference between AWE and teacher feedback is that AWE calculates the gap between the EFL learner's language use and that of native speakers (Li et al., 2019), whereas teacher feedback relies mainly on teachers' knowledge and teaching experience. Figures 4 and 5 illustrate how Pigai works as an AWE tool to support learning to write proficiently in English. Figure 4 shows how Pigai assigns an overall mark to students' essays based on lexical, syntactic, semantic, and content parameters; a general remark on vocabulary and sentence use is displayed in the lower right corner of the screen. Figure 5 illustrates how Pigai provides detailed feedback on confusable words, synonyms, and convertible sentence patterns to expand students' vocabulary and sentence use.

Overall, positive findings support the applicability and efficiency of Pigai (Lin et al., 2020). For instance, Li and Zhang (2020) reported that Pigai improved Chinese EFL learners' writing performance and writing self-efficacy by flagging errors in real time, thereby enabling learners to acquire vocabulary and sentence-construction knowledge. In contrast, some researchers have argued that Pigai has limitations (Wu, 2017). For example, Pigai is less effective at providing feedback on logical thinking and content organization, which, in addition to vocabulary and grammar, are crucial for successful compositions (Wu, 2017). Because Pigai is constantly updated to meet emerging pedagogical needs, Hou (2020) called for further studies of Pigai that keep pace with its technological advances.

Determining effective ways to support EFL learning is complex. Careful consideration should therefore be given to combining various technologies, rather than favoring one specific technology over another (Lam et al., 2018).

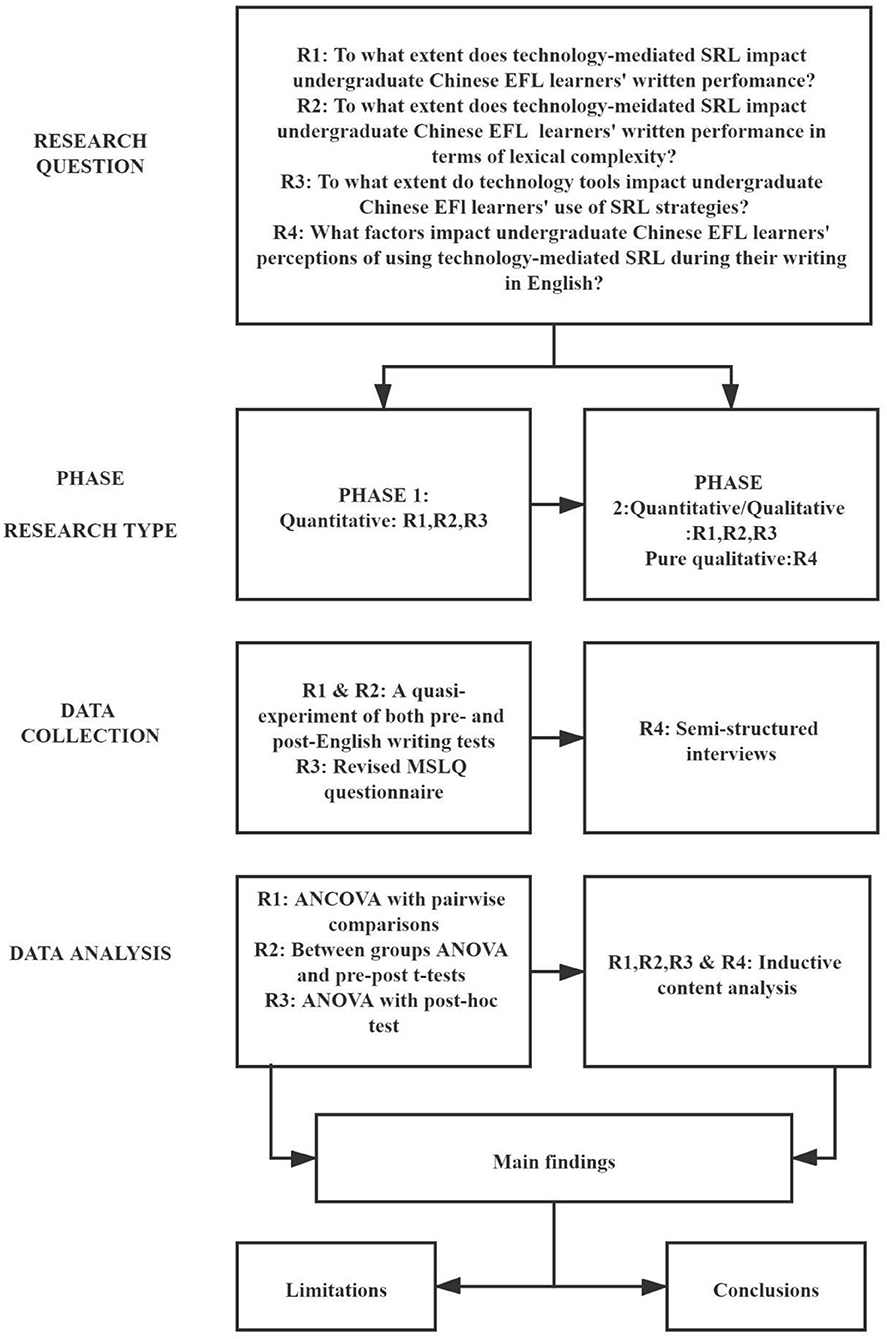

Based on the research aim, the current study addressed the following research questions:

1. To what extent does technology-mediated SRL impact undergraduate Chinese EFL learners' writing performance?

2. To what extent does technology-mediated SRL impact undergraduate Chinese EFL learners' writing performance in terms of lexical complexity?

3. To what extent do technology tools impact undergraduate Chinese EFL learners' use of SRL strategies?

4. What factors impact undergraduate Chinese EFL learners' perceptions of using technology-mediated SRL when writing in English?

Materials and Methods

Participants

Purposive sampling was used to recruit the participants. The initial plan was to recruit 300 sophomore students majoring in water conservancy engineering, mechanical engineering, electronic engineering, and allied subjects, with 100 students in each group; however, only 280 students agreed to participate. Of these, 99 were assigned to the control group, 90 to the Icourse group, and 91 to the Icourse+Pigai group. All participants were from Henan Province in the People's Republic of China, to ensure that they shared a similar EFL learning background. Their mean age was 19 years (SD = 1.169), and each had at least 10 years of EFL learning experience since primary school.

The research complied with all ethical requirements of the ethics committee at the University of Malaya. Before the research began, the relevant university administrators were fully informed. All students participated voluntarily and signed an online consent form beforehand, and all participants remained anonymous throughout the research process.

Research Design

An explanatory sequential mixed-methods approach was used to address the research questions (Figure 6). First, a quasi-experimental study was conducted to obtain quantitative comparative data; follow-up qualitative data were then derived from student interviews. The study involved three groups: a control group that received no technology-mediated SRL, an Icourse-assisted SRL group, and an Icourse+Pigai-supported SRL group. Icourse and Pigai supported self-regulated learning of academic writing both in and outside the classroom. The academic writing course outline is presented in Table 2. The supported activities included pre-class online vocabulary learning, watching online instructional videos on writing skills, lead-in quizzes, sentence paraphrasing practice, online forum discussion, after-class review, online essay submission, and receiving feedback and making revisions. The same academic writing course was delivered to all three groups, using the same textbook in classroom instruction. The same five units were covered in one academic term, and class frequency was identical: three 90-min classes per week. Each group was recruited from a different polytechnic-focused university, and all three universities were ranked at the same level. The three senior lecturers who taught the groups shared a similar educational background: each held a master's degree and had taught 10–12 courses. The lecturers monitored students' SRL processes in the same way, setting up tasks, quizzes, and activities with deadlines and answering students' questions online when needed.

Writing Performance

Instrument

The two composition topics were adapted from the Academic International English Language Testing System (IELTS) Writing Task 2 (Esol, 2007). The first was “What is your opinion on consumer complaints?” and the second was “What is your opinion on distance education?” These tasks were selected for two reasons. First, IELTS Writing Task 2 focuses on academic writing. Second, the IELTS grading rubrics better suit the research purpose of measuring students' combined lexical accuracy and complexity, rather than lexical accuracy alone. Supplementary Appendix 2 presents the revised writing grading rubrics (Esol, 2007). A pilot study with 30 students was conducted to check validity and reliability: the Kaiser-Meyer-Olkin (KMO) value was 0.6, the p value of Bartlett's test was 0.00, and Cronbach's alpha was 0.79. These values were considered acceptable for the study.
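
For readers less familiar with the KMO statistic, the sketch below shows one common way to compute the overall Kaiser-Meyer-Olkin measure from a respondents-by-items score matrix: squared off-diagonal correlations are compared with squared partial correlations obtained from the inverse correlation matrix. It is an illustrative NumPy implementation, not necessarily the routine used in this study, and the simulated item scores are hypothetical.

```python
import numpy as np


def kmo(scores: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy.

    scores: 2-D array with one row per respondent and one column per item.
    """
    corr = np.corrcoef(scores, rowvar=False)
    inv_corr = np.linalg.inv(corr)
    # Partial (anti-image) correlations derived from the inverse correlation matrix.
    scale = np.sqrt(np.outer(np.diag(inv_corr), np.diag(inv_corr)))
    partial = -inv_corr / scale
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + p2))


# Hypothetical data: 200 respondents, four items driven by one shared latent factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 1))
items = latent + 0.5 * rng.normal(size=(200, 4))
print(round(kmo(items), 3))
```

Values close to 1 indicate that the items share enough common variance for factor analysis. With only two items the measure is always 0.5, because the partial correlation then equals the ordinary correlation.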

Data Collection

Both the pre- and post-tests were delivered online via Quick Response (QR) codes. Before taking the tests, the participants signed an informed consent form electronically by scanning a QR code. The pre-test was administered at the beginning of the academic term in September 2020, and the post-test at the end of the term in January 2021. Anti-cheating measures and a 30-min time limit were enforced to prevent plagiarism. A submission that was blank or highly suspected of plagiarism was considered invalid. The numbers of valid cases were 73 (of 99) in the control group, 70 (of 90) in the Icourse group, and 72 (of 91) in the Icourse+Pigai group.

Data Analysis

The tests were graded by Pigai's AWE, by the researcher, and by another experienced teacher, and the mean of the three grades was taken as each participant's final score. After grading, the pre-test scores were used as the covariate in a one-way ANCOVA of academic achievement, and the effect size was calculated.

Lexical Complexity

Instrument

The student texts in each group were assessed in terms of lexical diversity, lexical density, and lexical sophistication. Lexical diversity was measured as the standardized type-token ratio (STTR) computed by WordSmith Tools 8.0, developed by Mike Scott; the WordSmith software was originally developed at the University of Liverpool, UK, and published by Oxford University Press. STTR is more accurate than the plain type-token ratio (TTR) because it depends less on text length (Treffers-Daller et al., 2018). Lexical density and lexical sophistication were calculated using Range 32, designed by P. Nation and A. Coxhead. To obtain lexical density, Range 32 first filters out function words using its built-in function-word list and then calculates the ratio of content words to total tokens (Zhu and Wang, 2013). Range 32 uses Laufer and Nation's base word list for the most frequent words, the second 1,000-word list (hereinafter baseword 2) for the next most frequent words, and a third list (hereinafter baseword 3) for advanced academic vocabulary (Laufer and Nation, 1995; Zhu and Wang, 2013). Lexical sophistication was measured as the proportion of words beyond the first, most frequent list, that is, the ratio of correctly spelled baseword 2 and baseword 3 words to total tokens (Gong et al., 2019).
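
The length dependence of TTR can be illustrated with a small sketch. Here STTR is computed, as it is commonly described, as the mean TTR of consecutive fixed-size chunks of a text. The default chunk size of 1,000 tokens, ignoring a trailing partial chunk, and falling back to plain TTR for texts shorter than one chunk are assumptions of this sketch rather than a description of WordSmith's exact behavior; the functions return proportions rather than percentages.

```python
from typing import List, Sequence


def ttr(tokens: Sequence[str]) -> float:
    """Plain type-token ratio: distinct word forms divided by total tokens."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def sttr(tokens: Sequence[str], chunk_size: int = 1000) -> float:
    """Standardized TTR: mean TTR over consecutive, non-overlapping chunks.

    Assumptions: a trailing chunk shorter than chunk_size is ignored, and a text
    shorter than one chunk falls back to its plain TTR.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks: List[Sequence[str]] = [
        tokens[i:i + chunk_size]
        for i in range(0, len(tokens) - chunk_size + 1, chunk_size)
    ]
    if not chunks:
        return ttr(tokens)
    return sum(ttr(c) for c in chunks) / len(chunks)


# A repetitive 200-token text with only 4 distinct word forms.
text = ["economy", "growth", "policy", "market"] * 50
print(ttr(text), sttr(text, chunk_size=4))
```

On this deliberately repetitive text, plain TTR drops to 0.02 simply because the text is long, while the chunked STTR stays at 1.0; this is the sense in which STTR is less sensitive to text length.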

Data Collection and Analysis

The texts were first screened for spelling errors and homographs. Misused words were removed before the texts were entered for analysis, so that only correctly used words were counted. Where a word was chosen correctly but misspelled, or was a homograph (e.g., bat the animal vs. bat used in baseball), the researchers corrected it and added a marker. The pre-test data were analyzed to examine whether the three groups differed in lexical complexity before the intervention. Both between-group and pre-to-post comparisons were then conducted to determine whether technology-supported SRL had any significant effect on lexical complexity. Because the assumption of homogeneity of variances (Levene's test) was violated, the post-test lexical complexity ratios were analyzed with Kruskal-Wallis tests in SPSS 26.

SRL Strategies

Instrument

Based on the literature review, a revised version of the MSLQ was used to measure technology-mediated SRL strategies in this study (Supplementary Appendix 1). The MSLQ was initially developed by the National Center for Research in the USA following extensive correlational research on SRL and motivation (Pintrich, 2003). The revised tool consists of four sections: emotional (including motivational and affective factors), cognitive (including elaboration, rehearsal, and organization), metacognitive (including self-control), and resource management (including time management and peer learning). The self-report questionnaire used a five-point Likert-type scale, with responses ranging from 1 (strongly disagree) to 5 (strongly agree), and was administered in Mandarin to ensure that the participants could fully understand all items. A pilot test with 30 undergraduate students who were not among the participants yielded a Cronbach's alpha of 0.899 and a KMO value of 0.917.
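
The reliability coefficients reported for the pilot tests are Cronbach's alpha values. As a reminder of what that coefficient measures, the sketch below implements the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), for a respondents-by-items matrix of Likert responses. The toy responses are hypothetical, and this is not presented as the authors' computation.

```python
import numpy as np


def cronbach_alpha(scores) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of Likert scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)),
    using sample variances (ddof=1).
    """
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]
    if k < 2:
        raise ValueError("need at least two items")
    item_vars = scores.var(axis=0, ddof=1)
    total_var = scores.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - item_vars.sum() / total_var))


# Hypothetical 5-point responses: three students answering two items.
print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))
```

Two items that always receive identical answers give alpha = 1; weaker agreement between items, as in the toy data (inter-item r = 0.5), pulls alpha down to about 0.67.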

Data Collection and Analysis

Participants accessed the questionnaire by scanning a QR code. The questionnaire was administered only after the intervention. A one-way ANOVA was conducted to determine whether there were any statistically significant differences among the three groups.

Technology Use Factors Toward Technology-Supported SRL

Instrument

One-to-one interviews (Supplementary Appendix 3) were conducted with 10 participants (Icourse: 5, Icourse+Pigai: 5) to explore in depth the reasons behind the quantitative results. The interviews were semi-structured, and follow-up questions such as “how” or “why” were added based on the interviewees' answers. The interviews were designed and conducted in Mandarin Chinese to ensure that the interviewees could understand all interview questions.

Data Collection and Analysis

Interviewees were randomly chosen from the experimental groups. Each interview lasted up to 30 min and was conducted via WeChat video chat. WeChat is a free instant messaging application that Tencent launched on January 21, 2011. All interviews were recorded with the interviewees' permission, transcribed verbatim, and translated into English by a licensed professional translator. The transcripts were then coded and analyzed using NVivo 12. Inductive content analysis was used because no predetermined codes were applied. Based on preliminary analyses, the researcher established relationships between the nodes and checked them against the data.

Results

Writing Performance

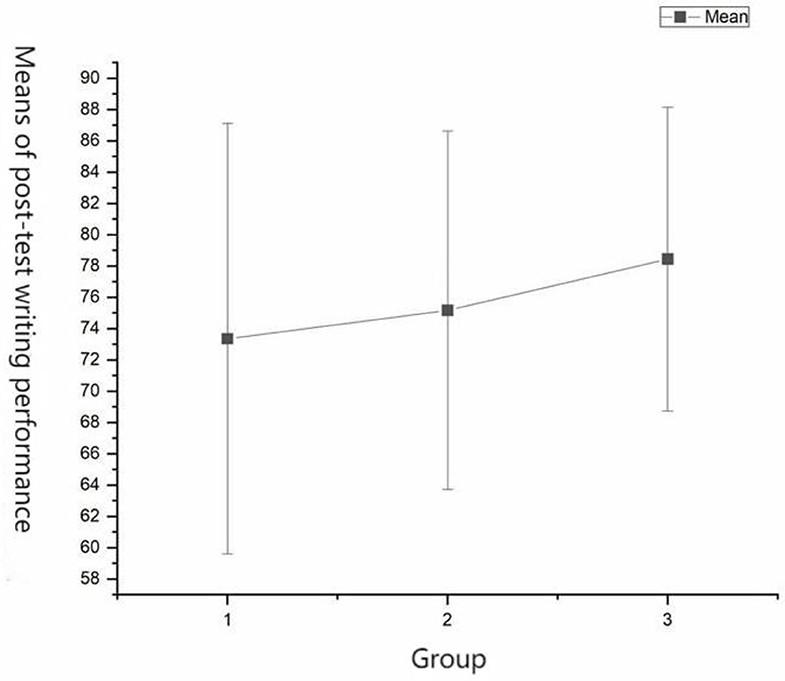

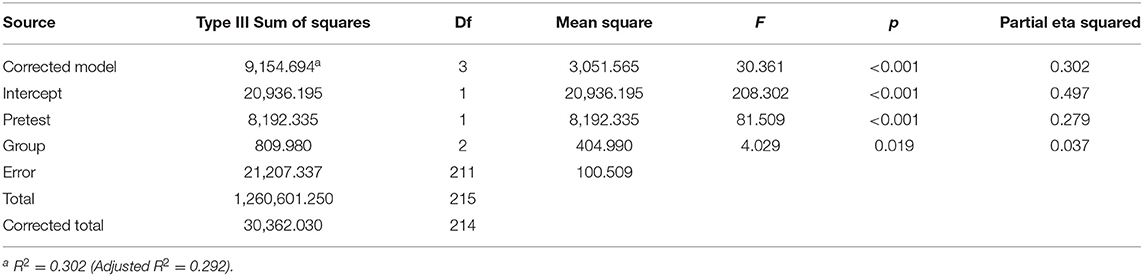

A one-way between-groups ANCOVA was conducted to compare the effectiveness of Icourse-supported (group 2) and Icourse+Pigai-supported (group 3) self-regulated learning on the participants' writing performance with that of the control group (group 1) after one academic term. The independent variable was the technology condition, and the dependent variable was the post-test score; participants' pre-test scores served as the covariate. Preliminary checks confirmed that the assumptions of normality, linearity, homogeneity of variances, homogeneity of regression slopes, and reliable measurement of the covariate were met. After adjusting for pre-test scores, there was a significant difference in mean scores among the three groups (Figure 7) [F(2, 211) = 4.03, p = 0.019, partial η² = 0.04; Table 3]. According to the pairwise comparisons shown in Table 4, the Icourse+Pigai group (M = 78.44, SD = 9.71) significantly outperformed the control group (M = 73.35, SD = 13.76; p = 0.01), whereas the difference between the Icourse group (M = 75.16, SD = 11.46) and the control group (p = 0.23) was not statistically significant.
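
The degrees of freedom in this ANCOVA follow from the design: with three groups, one covariate, and 215 valid cases (73 + 70 + 72), the group effect has 2 and 215 - 3 - 1 = 211 degrees of freedom, and partial η² can be recovered from F as F·df₁/(F·df₁ + df₂) = 8.06/219.06 ≈ 0.04, consistent with the reported value. The minimal sketch below computes a one-way ANCOVA by comparing nested least-squares models (post-test on pre-test alone versus pre-test plus group dummies), which, for a model without interaction terms, matches the usual Type III test for the group effect. The simulated scores and group labels are hypothetical.

```python
import numpy as np


def one_way_ancova(post, pre, group):
    """One-way ANCOVA for the group effect, via nested least-squares fits.

    Full model:    post ~ intercept + pre + (k - 1) group dummy columns
    Reduced model: post ~ intercept + pre
    Returns (F, df_effect, df_error, partial_eta_squared).
    """
    post = np.asarray(post, dtype=float)
    pre = np.asarray(pre, dtype=float)
    group = np.asarray(group)
    levels = np.unique(group)
    n, k = post.size, levels.size

    reduced = np.column_stack([np.ones(n), pre])
    dummies = np.column_stack([(group == g).astype(float) for g in levels[1:]])
    full = np.column_stack([reduced, dummies])

    def sse(design):
        # Residual sum of squares of an ordinary least-squares fit.
        beta, *_ = np.linalg.lstsq(design, post, rcond=None)
        resid = post - design @ beta
        return float(resid @ resid)

    sse_full = sse(full)
    ss_effect = sse(reduced) - sse_full
    df_effect, df_error = k - 1, n - k - 1
    f_stat = (ss_effect / df_effect) / (sse_full / df_error)
    partial_eta_sq = ss_effect / (ss_effect + sse_full)
    return f_stat, df_effect, df_error, partial_eta_sq


def partial_eta_sq_from_f(f_stat, df_effect, df_error):
    """Partial eta squared recovered from a reported F and its degrees of freedom."""
    return f_stat * df_effect / (f_stat * df_effect + df_error)


# Hypothetical scores for groups of the sizes reported in the study.
rng = np.random.default_rng(1)
sizes = {"control": 73, "icourse": 70, "icourse_pigai": 72}
group = np.repeat(list(sizes), list(sizes.values()))
pre = rng.normal(70, 10, size=group.size)
post = 0.8 * pre + 15 + rng.normal(0, 8, size=group.size)
print(one_way_ancova(post, pre, group))
print(round(partial_eta_sq_from_f(4.03, 2, 211), 3))
```

Homogeneity of regression slopes, which the study checked, could be probed in the same way by adding pre × group interaction columns to the full model and testing their contribution.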

Lexical Complexity

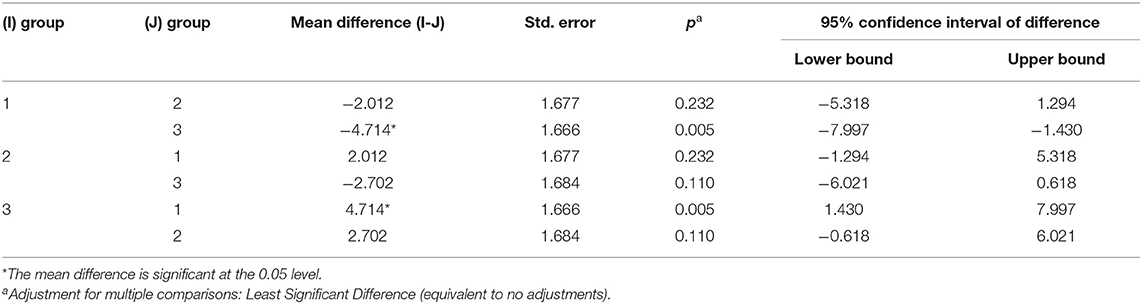

As shown in Table 5, the pre-test comparison revealed no statistically significant differences in lexical diversity, lexical density, or lexical sophistication among the three groups. From pre-test to post-test, the Icourse group showed significant changes in all three lexical complexity indicators, whereas the Icourse+Pigai group showed significant changes only in lexical diversity and density, with no significant change in lexical sophistication. In contrast, the control group showed no statistically significant pre-to-post difference in any of the three indicators.

The post-test Kruskal-Wallis tests revealed no statistically significant differences across the three groups (control group, n = 73; Icourse group, n = 70; Icourse+Pigai group, n = 72) in lexical diversity, H(2) = 5.53, p = 0.063, or lexical sophistication, H(2) = 0.06, p = 0.970, but did reveal a significant difference in lexical density, H(2) = 6.02, p = 0.049. The null hypothesis that the distribution of lexical density is the same across the three groups was therefore rejected; the significant difference in lexical density after the intervention lay between the Icourse group and the Icourse+Pigai group.
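
The p values above can be checked against the chi-square approximation commonly used for Kruskal-Wallis tests: with three groups the statistic is referred to a chi-square distribution with 2 degrees of freedom, whose survival function is simply exp(-H/2). The sketch below implements the H statistic with average ranks for ties and the usual tie correction, plus that two-degree-of-freedom p value. It is a plain reimplementation for illustration, not SPSS's code, and the toy groups are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j, shifted to 1-based ranks
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with the standard correction for ties."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over tied blocks.
    counts: Dict[float, int] = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    if ties == n ** 3 - n:
        raise ValueError("all observations are tied")
    return h / (1 - ties / (n ** 3 - n))


def p_value_three_groups(h: float) -> float:
    """Chi-square approximation with df = 2, whose survival function is exp(-h / 2)."""
    return math.exp(-h / 2)


print(kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
print([round(p_value_three_groups(h), 3) for h in (5.53, 6.02, 0.06)])
```

Applied to the reported statistics, `p_value_three_groups` gives p ≈ 0.063, 0.049, and 0.970 for H = 5.53, 6.02, and 0.06, matching the values above.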

SRL Strategies

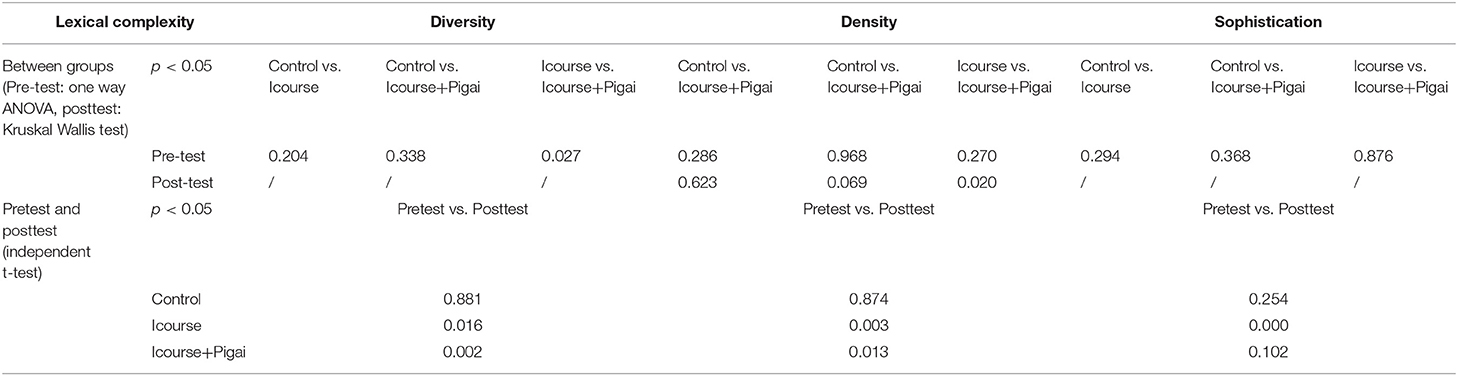

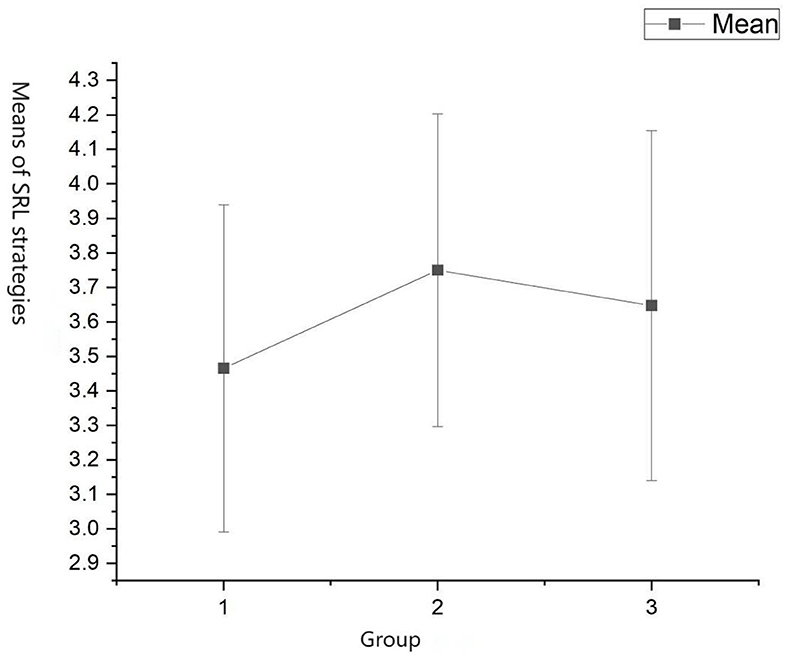

A one-way between-groups ANOVA was conducted to explore the impact of technology tools on SRL strategies. The assumptions of ANOVA were met, including homogeneity of variances (p = 0.36), so the three groups (control group, Icourse group, Icourse+Pigai group) were compared (Figure 8). There was a statistically significant difference in SRL strategies among the three groups, F = 8.59, df = 2, p < 0.01 (Table 6). Despite reaching statistical significance, the actual differences in mean scores among the three groups were small; the effect size, calculated as η², was 0.06. Post-hoc comparisons using the least significant difference (LSD) test indicated that the mean score of the control group (M = 3.47, SD = 0.47) differed significantly from those of the Icourse group (M = 3.75, SD = 0.45) and the Icourse+Pigai group (M = 3.65, SD = 0.51), whereas the Icourse+Pigai group did not differ significantly from the Icourse group (p = 0.15).
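
For a one-way ANOVA, η² is the between-groups sum of squares divided by the total sum of squares, which can also be written as F·df_between/(F·df_between + df_within). The sketch below computes F and η² from raw group scores. Because the within-groups degrees of freedom for the questionnaire ANOVA are not reported in this section, the example uses hypothetical toy scores rather than attempting to reproduce η² = 0.06.

```python
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int, float]:
    """Return (F, df_between, df_within, eta_squared) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    n, k = len(all_values), len(groups)
    grand_mean = sum(all_values) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-groups SS: group size times squared distance of group mean from grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups SS: squared distance of each score from its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_sq


# Hypothetical scores for three small groups.
print(one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```

Here SS_between = 54 and SS_within = 6, so F = (54/2)/(6/6) = 27 and η² = 54/60 = 0.9; the same η² follows from 27·2/(27·2 + 6).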

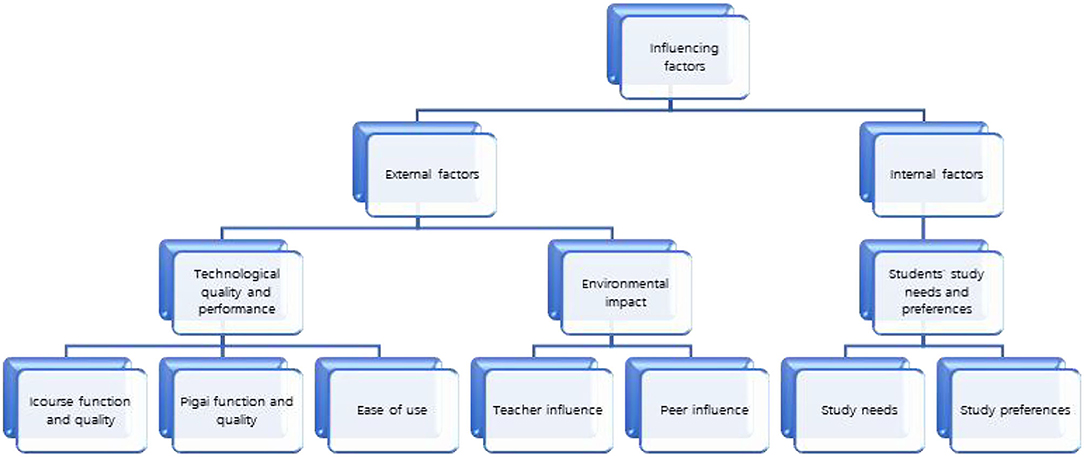

Technology Use Factors Toward Technology-Supported SRL

Initially, 308 frequently occurring codes were identified in the student transcripts; these were then reorganized into 46 third-tier code families. Many of the themes identified in the initial coding concerned the qualities of Icourse and Pigai and the advantages and disadvantages of using these tools in academic writing. Other factors related to student needs and preferences also emerged, such as the desire for more essay practice and reluctance toward online peer review. As the codes were grouped and sorted, 15 second-tier code families were identified, such as Icourse function, Icourse quality, Pigai function, Pigai quality, study needs, teacher influence, and peer influence. These 15 categories were then grouped into seven broader themes, then into three main themes, and finally into two overarching categories: internal and external factors (Figure 9). For example, internal factors included study needs and study preferences, and external factors included teacher influence and peer influence.

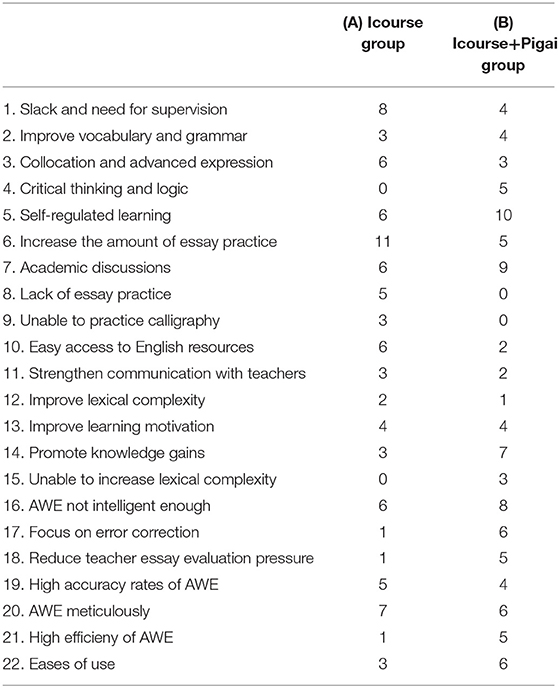

Table 7 presents 22 of the 46 third-tier code families that most clearly show the differences and similarities between the two experimental groups' perceptions. The five participants in the Icourse group expressed a stronger desire for more writing practice (Icourse group: 11 citations; Icourse+Pigai group: 5 citations). Whereas the Icourse+Pigai group made no complaints about Icourse, students in the Icourse group cited drawbacks such as a lack of essay practice (5 citations) and the inability to produce calligraphy (3 citations). Compared with the Icourse group, the most distinctive feature of the Icourse+Pigai group was that students referred to self-regulated learning more frequently (Icourse group: 6 citations; Icourse+Pigai group: 10 citations). The Icourse+Pigai group also referred more often to two Pigai benefits that were seldom mentioned in the Icourse group: the high efficiency of AWE (5 citations) and reduced pressure on teachers to evaluate essays (5 citations).

Discussion

Writing Performance

The Icourse+Pigai group significantly outperformed the control group in writing performance, whereas the Icourse group did not differ significantly from the control group. These results suggest that Icourse+Pigai-mediated SRL is more conducive to improving writing performance than Icourse-mediated SRL. One possible reason is that Icourse-supported SRL does not meet students' need for more writing practice. As the interviews revealed, students in the Icourse group expressed a stronger desire for frequent, technology-supported writing practice.

If there is an online system, it can be better than the current one because we are a little weaker in English writing, and then the system can give feedback and give some suggestions. (Interviewee 1)

I also feel that I need to practice my composition, I do feel that I don't have much practice now. (Interviewee 2)

This finding is consistent with Rüth et al. (2021), who found that testing and quizzes were more effective for learning than repeated exposure to learning materials. Writing practice imposes germane (learning-relevant) cognitive load. This is the load that arises from the knowledge construction processes that lead to learning (Sweller et al., 1998; Nückles et al., 2020). Pigai supports online essay submission and provides AWE services, so it enables students to learn through self-regulated writing practice. This might partially explain the higher writing scores in the Icourse+Pigai group. Nevertheless, participants perceived Icourse, the learning management system, as essential to their SRL. Pigai does not provide access to learning materials, online discussion, or MOOC learning. The participants felt that neither tool could replace the other, because each plays its own role in SRL.

I think it is better to use two of them. Because Icourse supports online discussion, and then you can preview the lessons. Pigai, on the other hand, allows you to submit your essays and give feedback about your writing timely. I don't think the two conflicts with each other. (Interviewee 9)

The participants' psychological study preferences may also have shaped how they used technology for SRL.

Since it is a writing course, I tend to have Icourse and Pigai together. I am not used to relying on only one software to study the subject. I think the two have one focus for me, so I think both of them are necessary. (Interviewee 10)

Lexical Complexity

There were no statistically significant differences in lexical complexity among the three groups in the pre-test. The post-test results showed that technology-supported SRL did not significantly affect students' lexical diversity or sophistication compared with the control group. This result is consistent with the interview data: participants were skeptical that Icourse and Pigai could substantially improve their lexical complexity. They noted that Pigai's error correction focuses more on lexical accuracy than on lexical complexity.

It will tell you which word is misspelled, and if you misspell it, you can correct the word in your composition. In a sense, it also provides a learning opportunity. However, it does not significantly improve my lexical complexity because it cannot replace your words with more advanced words after all, and its AI technology has not yet developed to this level. (Interviewee 7)

I think it is more focused on picking mistakes than lexical complexity. It does not require advanced vocabulary, and it will only say that your balance of structure is relatively simple. (Interviewee 6)

These negative perceptions are inconsistent with Jia's (2016) findings. In that study, students reported greater satisfaction with the improvement in the lexical complexity of their writing under Pigai mediation. According to Range 32 software analysis, they also used Basewords 2 and 3 (less frequent words) more often. She also reported that lexical diversity and lexical density improved after a 12-week intervention. Our participants' perceptions are, however, consistent with Zuo and Feng's (2015) finding that Pigai's scoring criteria focus more on lexical accuracy than on lexical complexity. To obtain high scores, students tend to adjust their writing strategies to the scoring criteria that Pigai applies. Our results indicate that the SRL supported in both the Icourse and Icourse+Pigai groups may have influenced the lexical complexity of students' writing. However, at the current stage of technological development, it did not significantly improve it.
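Lexical diversity, density, and sophistication are simple ratios over the words in an essay. The abstract reports that these lexical complexity scores were compared across the three groups with a Kruskal-Wallis test. A minimal sketch may help make both steps concrete. It rests on stated assumptions and is not the study's actual procedure:

- Tokenization is a plain regular expression.
- Diversity is the raw type-token ratio (TTR).
- Density is the share of words outside a function-word list.
- Sophistication is the share of words outside a basic word list, loosely analogous to Range's Baseword 1 list.

The word lists (`FUNCTION_WORDS`, `BASIC_WORDS`) and the group scores are hypothetical placeholders. They are not the study's data or Range's actual lists.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny word lists for illustration only; a real
# analysis would use full function-word and frequency-band lists.
FUNCTION_WORDS = {"the", "a", "an", "is", "are", "was", "to", "of", "and",
                  "in", "it", "this", "that", "i", "my", "for", "on", "with"}
BASIC_WORDS = FUNCTION_WORDS | {"good", "people", "think", "make", "use",
                                "many", "very", "important", "students"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep alphabetic word forms."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def lexical_profile(text: str) -> dict[str, float]:
    """Diversity (TTR), density, and sophistication of one essay."""
    tokens = tokenize(text)
    n = len(tokens)
    if n == 0:
        return {"ttr": 0.0, "density": 0.0, "sophistication": 0.0}
    content = sum(t not in FUNCTION_WORDS for t in tokens)
    advanced = sum(t not in BASIC_WORDS for t in tokens)
    return {
        "ttr": len(set(tokens)) / n,     # distinct words / all words
        "density": content / n,          # content words / all words
        "sophistication": advanced / n,  # words outside the basic list
    }


def average_ranks(values: list[float]) -> list[float]:
    """Rank values 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based ranks
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups: list[float]) -> float:
    """Kruskal-Wallis H statistic with the standard correction for ties."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    if n < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need non-empty groups and at least two observations")
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    # Divide by 1 - sum(t^3 - t) / (N^3 - N), where t is each tie-group size.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0


if __name__ == "__main__":
    print(lexical_profile("Students think technology is important. "
                          "Technology facilitates autonomous learning."))
    # Hypothetical per-essay TTR scores for three groups (not study data).
    control = [0.52, 0.55, 0.49, 0.58]
    icourse = [0.54, 0.50, 0.57, 0.53]
    icourse_pigai = [0.56, 0.51, 0.59, 0.55]
    h = kruskal_wallis_h(control, icourse, icourse_pigai)
    # With 3 groups (df = 2), H above about 5.991 is significant at .05.
    print(f"H = {h:.3f}, significant at .05: {h > 5.991}")
```

In practice, `scipy.stats.kruskal` computes the same tie-corrected statistic and also returns a p-value. The raw TTR falls as texts get longer, so length-robust diversity indices are generally preferable when essays differ in length.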

SRL Strategies

Finally, ANOVA of SRL strategies revealed significant differences between the control group and the technology-supported groups. The results indicated that both Icourse and Icourse+Pigai were positively related to the participants' use of SRL strategies. This aligns with van Alten et al.'s (2020) study, which found that providing students with technological SRL prompts is an effective way to improve SRL. In that study, SRL support embedded in flipped-learning videos was positively related to students' learning outcomes. Likewise, Öztürk and Çakiroglu (2021) demonstrated that technology-mediated SRL enhanced students' writing skills in a flipped learning environment. Broadbent and Poon's (2015) review further suggests that SRL strategies can improve learning outcomes. Although cognitive strategies had relatively little influence on outcomes, metacognition, time management, and critical thinking were positively related to them.

However, the Icourse and Icourse+Pigai groups did not differ significantly in their use of SRL strategies. This suggests that the difference in technology tools did not significantly affect participants' SRL strategy use. Students' psychological study preferences may partially explain this finding. Here, psychological study preferences are distinguished from physical study preferences, such as visual, aural, or kinesthetic preferences. In previous studies, study preference referred primarily to sensory modality preferences, that is, study choices made through sensory channels such as vision or hearing (Hu et al., 2018). In this research, by contrast, the term refers to psychological factors that may lead students to choose some educational modes over others. For instance, the interviews showed that participants tended not to use the peer evaluation function in Pigai, even when told that it could help their writing. They expressed feelings of “distrust” and “embarrassment” about showing their essays to classmates.

I think that sometimes it is challenging to evaluate others' work because of face issues. I just said that I still don't feel confident about my evaluation ability. I think this is a bit embarrassing. (Interviewee 8)

I don't think it's necessary because I think my classmates have poor writing and everyone is quite clueless. (Interviewee 5)

Our results are consistent with van Alten et al. (2020), who found that some students disliked the SRL prompts even though the SRL support encouraged them to be more conscious of their learning. Likewise, Yot-Domínguez and Marcelo (2017) found that students tended not to use mobile technology tools for SRL and used their mobile devices mainly for social communication instead. Students' psychological study preferences may therefore affect their choices regarding technology prompts. These choices, in turn, may influence their SRL strategy use.

Factors Influencing Technology-Supported SRL

This research sheds light on factors to consider when improving students' technology-enhanced SRL experience. The findings indicate that students' perceptions of technology-supported SRL in academic writing vary, to some degree, with both internal and external factors (Figure 9). State-of-the-art technological innovations alone do not guarantee an effective learning process and outcome (Hao et al., 2021). Chew and Ng (2021) emphasized the role of students' personality and language proficiency in their word contribution to online forums. Different personality traits, such as introversion and extroversion, may lead to different amounts of word production in online discussion with the same technological tool (Chew and Ng, 2021). Similarly, Lai et al. (2018) reported that various external and internal factors influence students' perceived study engagement. They proposed viewing technology use as diversified, meaning that a single technology can generate multiple forms of technology-supported learning experience. Rather than treating technology as a self-contained entity, they argued that students' psycho-social factors are also essential to their technology-supported learning experience (Lai et al., 2018). Our findings support this view by highlighting the importance of integrating students' internal needs and psychological factors with external factors. In the design and implementation of technology-supported SRL for students, technological quality and performance and environmental impact are as important as technological advances.

Conclusion

This study shows that the Icourse+Pigai group yielded significantly better writing performance compared with the control and Icourse groups. This is likely due in part to the Icourse+Pigai combination, which provided exposure to learning materials as well as more opportunities for writing practice and corrective feedback. Regarding lexical complexity, technology-supported SRL did not significantly improve lexical diversity or sophistication. This is possibly because current AWE feedback focuses more on lexical accuracy than on lexical complexity. Finally, the SRL strategy results indicated that the technology-supported groups differed significantly from the control group. However, the difference in technological tools between the two experimental groups did not significantly change SRL strategy use. Our findings suggest that, all else being equal, students' psychological study preferences may play a role in their choice of technological mediation for SRL. The interviews identified two kinds of factors that students perceived as influential. External factors were technological quality and performance and environmental impact; internal factors were study needs and preferences. We therefore conclude that both learning management systems and AWE platforms can feasibly be used to support students' SRL and improve their writing performance. We also conclude that the technological tools applied in this research are positively related to SRL strategy use. We call for more effort to design technology tools that improve both lexical accuracy and lexical complexity. In addition, students' psychological study preferences should be considered when designing technologically mediated SRL activities.

One limitation of this research is its heavy reliance on students' self-report questionnaires for data collection. Self-reports can be biased, which reduces their validity. Future studies could add instruments such as observation or eye-tracking to triangulate the data. Another limitation is the possible influence of different lecturers on the groups, owing to individual differences among lecturers. Future studies could have one lecturer teach all three groups to minimize such effects. A further limitation concerns study time. All participants spent the same fixed time on SRL during lectures, but the time they spent on SRL for preview and assignments after class may have differed. Future studies could record students' SRL study time or ask students to report the time they spend on SRL in their residences. Moreover, future studies could examine other technology combinations or types, given the wide range of available tools, such as AI and mobile technologies. Further investigation is also needed into the effects of psychological study preferences on technologically supported SRL strategies. Overall, a fruitful avenue for future research appears to be exploring the effects of various technological prompts on students' SRL.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the University of Malaya Ethics Committee. The participants provided their written informed consent to participate in this study.

Author Contributions

YH and SZ conceived the idea and drafted the research. YH carried out the data collection and analysis. SZ and L-LN supervised the research and provided critical feedback. All authors read and approved the final manuscript.

Funding

This research was supported by a team project of the Research Promotion Program (No. SIS21T01), which is partially funded by the School of International Studies, Communication University of China.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.752793/full#supplementary-material

References

An, Z., Gan, Z., and Wang, C. (2020). Profiling Chinese EFL students' technology-based self-regulated English learning strategies. PLoS ONE 15:e0240094. doi: 10.1371/journal.pone.0240094

Bouwmeester, R. A., de Kleijn, R. A., van den Berg, I. E., ten Cate, O. T. J., van Rijen, H. V., and Westerveld, H. E. (2019). Flipping the medical classroom: effect on workload, interactivity, motivation, and retention of knowledge. Comput. Educ. 139, 118–128. doi: 10.1016/j.compedu.2019.05.002

Broadbent, J. (2017). Comparing online and blended learner's self-regulated learning strategies and academic performance. Internet High. Educ. 33, 24–32. doi: 10.1016/j.iheduc.2017.01.004

Broadbent, J., and Poon, W. L. (2015). Self-regulated learning strategies and academic achievement in online higher education learning environments: a systematic review. Internet High. Educ. 27, 1–13. doi: 10.1016/j.iheduc.2015.04.007

Bulté, B., and Housen, A. (2014). Conceptualizing and measuring short-term changes in L2 writing complexity. J. Second Lang. Writ. 26, 42–65. doi: 10.1016/j.jslw.2014.09.005

Cancino, M., and Panes, J. (2021). The impact of Google Translate on L2 writing quality measures: evidence from Chilean EFL high school learners. System 98:102464. doi: 10.1016/j.system.2021.102464

Cheng, L. L., Sui, X. B., Guo, M., and Lu, M. (2017). The empirical study of College English writing MOOC teaching mode on the basis of SPOC. J. Weinan Normal Univ. 32, 61–67.

Chew, S. Y., and Ng, L. L. (2021). The influence of personality and language proficiency on ESL learners' word contribution in face-to-face and synchronous online forums. J. Nusantara Stud. (JONUS) 6, 199–221. doi: 10.24200/jonus.vol6iss1pp199-221

Csomay, E., and Prades, A. (2018). Academic vocabulary in ESL student papers: a corpus-based study. J. Eng. Acad. Purposes 33, 100–118. doi: 10.1016/j.jeap.2018.02.003

de Moura, V. F., de Souza, C. A., and Viana, A. B. N. (2021). The use of Massive Open Online Courses (MOOCs) in blended learning courses and the functional value perceived by students. Comput. Educ. 161:104077. doi: 10.1016/j.compedu.2020.104077

Esol, C. (2007). Cambridge IELTS 6: Examination Papers From University of Cambridge ESOL Examinations. Cambridge: Cambridge University Press.

Gilliland, B., Oyama, A., and Stacey, P. (2018). Second language writing in a MOOC: affordances and missed opportunities. TESL-EJ 22:n1.

Golonka, E. M., Bowles, A. R., Frank, V. M., Richardson, D. L., and Freynik, S. (2014). Technologies for foreign language learning: a review of technology types and their effectiveness. Comput. Assist. Lang. Learn. 27, 70–105. doi: 10.1080/09588221.2012.700315

Gong, W., Zhou, J., and Hu, S. (2019). The effects of automated writing evaluation provided by Pigai website on language complexity of non-English majors. FLLTP 4, 45–54.

Guo, S., Lv, Y. L., and Lu, X. X. (2021). The construction and empirical research of IELTS Writing blended teaching model based on SPOC. J. Beijing City Univ. 1, 29–34. doi: 10.16132/j.cnki.cn11-5388/z.2021.01.006

Hao, T., Wang, Z., and Ardasheva, Y. (2021). Technology-assisted vocabulary learning for EFL learners: a meta-analysis. J. Res. Educ. Effect. 14, 1–23. doi: 10.1080/19345747.2021.1917028

Hinkel, E. (2003). Simplicity without elegance: features of sentences in L1 and L2 academic texts. TESOL Q. 37, 275–301. doi: 10.2307/3588505

Hou, Y. (2020). Implications of AES system of Pigai for self-regulated learning. Theory Pract. Lang. Stud. 10, 261–268. doi: 10.17507/tpls.1003.01

Hu, Y., Gao, H., Wofford, M. M., and Violato, C. (2018). A longitudinal study in learning preferences and academic performance in first year medical school. Anatom. Sci. Educ. 11, 488–495. doi: 10.1002/ase.1757

Jia, J. (2016). The Effect of Automatic Essay Scoring Feedback on Lexical Richness and Syntactic Complexity in College English Writing. Taiyuan: North University of China.

Kalantari, R., and Gholami, J. (2017). Lexical complexity development from dynamic systems theory perspective: lexical density, diversity, and sophistication. Int. J. Instruct. 10, 1–18. doi: 10.12973/iji.2017.1041a

Knoch, U., Rouhshad, A., and Storch, N. (2014). Does the writing of undergraduate ESL students develop after one year of study in an English-medium university? Assess. Writ. 21, 1–17. doi: 10.1016/j.asw.2014.01.001

Ko, M.-H. (2017). Learner perspectives regarding device type in technology-assisted language learning. Comput. Assist. Lang. Learn. 30, 844–863. doi: 10.1080/09588221.2017.1367310

Lai, C., Hu, X., and Lyu, B. (2018). Understanding the nature of learners' out-of-class language learning experience with technology. Comput. Assist. Lang. Learn. 31, 114–143. doi: 10.1080/09588221.2017.1391293

Lam, Y. W., Hew, K. F., and Chiu, K. F. (2018). Improving argumentative writing: effects of a blended learning approach and gamification. Lang. Learn. Technol. 22, 97–118. Available online at: http://hdl.handle.net/10125/44583

Laufer, B., and Nation, P. (1995). Vocabulary size: lexical richness in L2 written production. Appl. Linguist. 16, 307–322. doi: 10.1093/applin/16.3.307

Lemmouh, Z. (2008). The relationship between grades and the lexical richness of student essays. Nordic J. English Stud. 7, 163–180. doi: 10.35360/njes.106

Li, D., and Zhang, L. (2020). Exploring teacher scaffolding in a CLIL-framed EFL intensive reading class: a classroom discourse analysis approach. Lang. Teach. Res. 2020:1362168820903340. doi: 10.1177/1362168820903340

Li, R., Meng, Z., Tian, M., Zhang, Z., Ni, C., and Xiao, W. (2019). Examining EFL learners' individual antecedents on the adoption of automated writing evaluation in China. Comput. Assist. Lang. Learn. 32, 784–804. doi: 10.1080/09588221.2018.1540433

Liao, H.-C. (2016). Using automated writing evaluation to reduce grammar errors in writing. ELT J. 70, 308–319. doi: 10.1093/elt/ccv058

Lin, C.-A., Lin, Y.-L., and Tsai, P.-S. (2020). “Assessing foreign language narrative writing through automated writing evaluation: a case for the web-based Pigai system,” in ICT-Based Assessment, Methods, and Programs in Tertiary Education (Hershey, PA: IGI Global), 100–119. doi: 10.4018/978-1-7998-3062-7.ch006

Nückles, M., Roelle, J., Glogger-Frey, I., Waldeyer, J., and Renkl, A. (2020). The self-regulation-view in writing-to-learn: using journal writing to optimize cognitive load in self-regulated learning. Educ. Psychol. Rev. 31, 1–38. doi: 10.1007/s10648-020-09541-1

O'Dell, F., Read, J., and McCarthy, M. (2000). Assessing Vocabulary. Cambridge: Cambridge University Press.

Öztürk, M., and Çakiroglu, Ü. (2021). Flipped learning design in EFL classrooms: implementing self-regulated learning strategies to develop language skills. Smart Learn. Environ. 8, 1–20. doi: 10.1186/s40561-021-00146-x

Pintrich, P. R. (2003). A motivational science perspective on the role of student motivation in learning and teaching contexts. J. Educ. Psychol. 95:667. doi: 10.1037/0022-0663.95.4.667

Pintrich, P. R. (2004). A conceptual framework for assessing motivation and self-regulated learning in college students. Educ. Psychol. Rev. 16, 385–407. doi: 10.1007/s10648-004-0006-x

Pintrich, P. R., Smith, D. A., Garcia, T., and McKeachie, W. J. (1993). Reliability and predictive validity of the Motivated Strategies for Learning Questionnaire (MSLQ). Educ. Psychol. Meas. 53, 801–813. doi: 10.1177/0013164493053003024

Qin, H. B. (2019). Cognition of learning management system based on the Icourse platform. Chin. J. Multimed. Netw. Educ. 1, 10–11.

Ruiz-Palmero, J., Fernández-Lacorte, J.-M., Sánchez-Rivas, E., and Colomo-Magaña, E. (2020). The implementation of Small Private Online Courses (SPOC) as a new approach to education. Int. J. Educ. Technol. Higher Educ. 17, 1–12. doi: 10.1186/s41239-020-00206-1

Rüth, M., Breuer, J., Zimmermann, D., and Kaspar, K. (2021). The effects of different feedback types on learning with mobile quiz apps. Front. Psychol. 12:665144. doi: 10.3389/fpsyg.2021.665144

Seifert, T., and Har-Paz, C. (2020). The effects of mobile learning in an EFL class on self-regulated learning and school achievement. Int. J. Mobile Blended Learn. (IJMBL) 12, 49–65. doi: 10.4018/IJMBL.2020070104

Sun, T., and Wang, C. (2020). College students' writing self-efficacy and writing self-regulated learning strategies in learning English as a foreign language. System 90:102221. doi: 10.1016/j.system.2020.102221

Sweller, J., Van Merrienboer, J. J., and Paas, F. G. (1998). Cognitive architecture and instructional design. Educ. Psychol. Rev. 10, 251–296. doi: 10.1023/A:1022193728205

Tan, Y. (2019). Research on smart education-oriented effective teaching model for College English. J. Beijing City Univ. 2, 84–90.

Treffers-Daller, J., Parslow, P., and Williams, S. (2018). Back to basics: how measures of lexical diversity can help discriminate between CEFR levels. Appl. Linguist. 39, 302–327. doi: 10.1093/applin/amw009

van Alten, D. C., Phielix, C., Janssen, J., and Kester, L. (2020). Self-regulated learning support in flipped learning videos enhances learning outcomes. Comput. Educ. 158:104000. doi: 10.1016/j.compedu.2020.104000

Wu, D. (2017). Research on the application of online writing platform in College English writing teaching: take Pigai as an example. J. Huanggang Normal Univ. 37, 24–28. doi: 10.3969/j.issn.1003-8078.2017.01.06

Xu, Z., Banerjee, M., Ramirez, G., Zhu, G., and Wijekumar, K. (2019). The effectiveness of educational technology applications on adult English language learners' writing quality: a meta-analysis. Comput. Assist. Lang. Learn. 32, 132–162. doi: 10.1080/09588221.2018.1501069

Yang, X. Q., and Dai, Y. C. (2015). An empirical study on College English autonomous writing teaching model based on www.pigai.org. TEFLE 162, 17–23. doi: 10.3969/j.issn.1001-5795.2015.02.003

Yot-Domínguez, C., and Marcelo, C. (2017). University students' self-regulated learning using digital technologies. Int. J. Educ. Technol. Higher Educ. 14:38. doi: 10.1186/s41239-017-0076-8

Zhai, N. (2017). Construction and implementation of network course platform for foreign language teaching based on MOOC of Chinese Universities. Electron. Design Eng. 25, 101–104.

Zhang, S. S. X. (2019). The effect of online automated feedback on English writing across proficiency levels: from the perspective of the ZPD. For. Lang. Their Teach. 5, 30–39.

Zhu, H., and Wang, J. (2013). The developmental characteristics of vocabulary richness in English writing: a longitudinal study based on self-built corpus. For. Lang. World 000, 77–86.

Zhu, Y., Au, W., and Yates, G. (2016). University students' self-control and self-regulated learning in a blended course. Internet High. Educ. 30, 54–62. doi: 10.1016/j.iheduc.2016.04.001

Zimmerman, B. J., and Schunk, D. H. (2001). Self-Regulated Learning and Academic Achievement: Theoretical Perspectives. England: Routledge.

Zuo, Y. Y., and Feng, L. (2015). A study on the dimensions of the marking standard of College English writing: based on the comparison of the ratings of WritingRoadmap and Pigai.com. Modern Educ. Technol. 25, 60–66. doi: 10.3969/j.issn.1009-8097.2015.08.009

Keywords: academic writing performance, lexical complexity, psychological study preferences, self-regulated learning, study needs, technology-mediated SRL

Citation: Han Y, Zhao S and Ng L-L (2021) How Technology Tools Impact Writing Performance, Lexical Complexity, and Perceived Self-Regulated Learning Strategies in EFL Academic Writing: A Comparative Study. Front. Psychol. 12:752793. doi: 10.3389/fpsyg.2021.752793

Received: 03 August 2021; Accepted: 05 October 2021;

Published: 03 November 2021.

Edited by:

Erin Peters-Burton, George Mason University, United States

Reviewed by:

Ying Wang, Georgia Institute of Technology, United States
Stephanie Stehle, George Mason University, United States

Copyright © 2021 Han, Zhao and Ng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shuo Zhao, zhaoshuo@nwpu.edu.cn; Lee-Luan Ng, ngleeluan@um.edu.my
