- ¹Department of Psychology, College of Humanities and Management, Guizhou University of Traditional Chinese Medicine, Guiyang, China

- ²University Science Park Management Center, Guiyang University, Guiyang, China

- ³Department of Light and Chemical Engineering, Guizhou Light Industry Technical College, Guiyang, China

- ⁴Department of Psychology, Faculty of Education, Hubei University, Wuhan, China

Previous studies have confirmed that each person's cognitive resources are limited and that perceptual load strongly affects stimulus detection; however, how visual perceptual load influences audiovisual integration (AVI) remains unclear. Here, 20 older and 20 younger adults performed an auditory/visual discrimination task under various visual perceptual-load conditions. Analysis of response times revealed significantly faster responses to audiovisual stimuli than to visual or auditory stimuli (all p < 0.001) and significantly slower responses by older than by younger adults to all targets (all p ≤ 0.024). The race-model analysis revealed a larger AV facilitation effect for older (12.54%) than for younger (7.08%) adults under low visual perceptual-load conditions, whereas no obvious difference was found between younger (2.92%) and older (3.06%) adults under medium visual perceptual-load conditions. Under high visual perceptual-load conditions, only an AV depression effect was found for both younger and older adults. Additionally, the peak latencies of AVI were significantly delayed in older adults under all visual perceptual-load conditions. These results suggest that visual perceptual load decreases AVI (i.e., produces depression effects) and that AVI is enhanced but delayed in older adults.

Introduction

Individuals simultaneously receive many types of sensory information from the outside world, including visual, auditory, tactile, olfactory, and gustatory information, and the brain selects and merges the available information to facilitate perception of the outside world. For example, when driving a car, one must simultaneously watch the road (visual information), listen to voice broadcasts (auditory information), and operate the steering wheel (tactile information). The interactive processing of information from multiple sensory modalities is called multisensory integration. Individuals acquire most information from the environment through the visual and auditory modalities, and numerous studies have found that responses to audiovisual stimuli are faster and more accurate than those to auditory-only or visual-only stimuli (Meredith et al., 1987; Stein and Meredith, 1993; Stein, 2012). The process that merges visual and auditory information is defined as audiovisual integration (AVI); it helps individuals identify objects more easily and is a central topic in multisensory integration research (Raab, 1962; Miller, 1982). Everyday life is complex, and AVI might be disturbed by various distractors in the surrounding environment. Furthermore, each person's cognitive resources are limited: the more cognitive resources one task demands, the fewer remain for processing other tasks, as described by "perceptual load theory" (Kahneman, 1973; Lavie and Tsal, 1994; Lavie, 1995). How individuals efficiently integrate visual and auditory information under different perceptual loads has therefore become an active topic in multisensory research in recent years (Alsius et al., 2005, 2007; Baseler et al., 2014; Wahn and König, 2015; Ren et al., 2020a).

Alsius et al. (2005, 2014) and Ren et al. (2020a) investigated the effect of an additional perceptual load imposed by visual transients on AVI. Alsius et al. (2005, 2014) used McGurk stimuli in audiovisual redundant tasks to assess AVI, whereas Ren et al. (2020a) used meaningless checkerboard images and white noise. In the low perceptual-load condition, participants responded only to the audiovisual redundant task, whereas in the high perceptual-load condition they also had to respond to the visual distractor at the same time. These studies consistently showed that AVI was lower under a high perceptual load of visual transients (dual task) than under a low perceptual load of visual transients (single task). In these studies, the visual distractors were presented transiently, alongside the stimuli of the audiovisual redundant task. To investigate the influence of sustained visual distractors on AVI, Wahn and König (2015) instructed participants to continuously track moving balls while performing the audiovisual redundant task; AVI was comparable under high and low sustained visual perceptual-load conditions, indicating that sustained visual perceptual load did not disrupt AVI. All of the abovementioned studies used dual tasks, in which one task assessed AVI and the other manipulated visual perceptual load through an additional distractor, so that the low perceptual-load condition involved focused attention whereas the high perceptual-load condition involved divided attention. It is therefore difficult to determine whether AVI was influenced by perceptual load or by attention.
To clarify this question, the current study investigated how the visual perceptual load (demand) of the visual stimulus itself influences AVI within a single task.

Macdonald and Lavie (2011) found that visual perceptual load severely affected auditory perception and could even induce “inattentional deafness”: under high visual perceptual load, participants failed to notice a tone (∼79%) presented during a visual detection task. Diederich et al. (2008) proposed that AVI occurs only when the processing of auditory and visual information is completed within a specific time frame. Given the limited “nerve-center energy” described by Kahneman (1973) in “Attention and Effort,” increasing visual perceptual load should cause visual stimuli to occupy more perceptual resources, which in turn limits the processing of auditory stimuli. Colonius and Diederich (2004) reported that the AVI effect was greater when the intensities of the visual and auditory stimuli were matched and relatively smaller when they were not. We therefore hypothesized that the AVI effect would be larger for matched pairs (low visual perceptual-load conditions) than for unmatched pairs (high visual perceptual-load conditions). This hypothesis was tested using a visual/auditory discrimination task with stimuli of different visual perceptual loads. If AVI were lower under high visual perceptual-load conditions than under low or medium visual perceptual-load conditions, we would conclude that visual perceptual load strongly affects AVI and reduces the AVI effect.

Additionally, aging is an important factor influencing AVI, and several studies have reported that AVI is higher in older adults than in younger adults (Laurienti et al., 2006; Peiffer et al., 2007; Stephen et al., 2010; Ren et al., 2020b); however, how AVI changes with visual perceptual load in older adults remains unclear. Some researchers have proposed that higher AVI in older adults is an adaptive mechanism that compensates for unimodal sensory decline (Laurienti et al., 2006; Peiffer et al., 2007; Freiherr et al., 2013; Ren et al., 2020c), and audiovisual perceptual training can improve cognitive function in healthy older adults (Anguera et al., 2013; Yang et al., 2018; O’Brien et al., 2020) and in patients with mild cognitive impairment (Lee et al., 2020). Investigating the effect of the perceptual load of visual stimuli on AVI is therefore necessary to clarify the interaction between perceptual load and AVI, and it might also offer a reference for selecting experimental materials in cognitive training tasks. Accordingly, another aim of the present study was to investigate the effect of aging on the interaction between AVI and visual perceptual load. Because auditory and visual stimulus processing is generally slower in older adults (Grady, 2008; Anderson, 2019), yet they are still able to perceive the outside world correctly, we hypothesized that a compensatory mechanism also operates in older adults during AVI, even under visual perceptual load. However, one well-documented effect of aging is a reduction in the total amount of resources available for information processing (Cabeza et al., 2006; Paige and Gutchess, 2015); thus, older adults' performance might be even worse under high visual perceptual-load conditions. In the current study, this hypothesis was tested by comparing AVI between older and younger adults under all visual perceptual-load conditions.
If older adults showed a larger AVI effect than younger adults under any visual perceptual-load condition, we would conclude that older adults might have established a compensatory mechanism.

Materials and Methods

Subjects

The sample size was calculated using G*Power 3.1.9.2. The required total sample size was 36, assuming an effect size f of 0.4 and a power (1 − β) of 0.8 (Lakens, 2021). Accordingly, 20 healthy older adults (59–73 years; mean age ± SD, 63 ± 4 years) and 20 younger college students (20–23 years; mean age ± SD, 22 ± 1 years) were recruited to participate in this study. All older adults were recruited from Guiyang City, and all younger adults were undergraduates. All participants had normal hearing, normal or corrected-to-normal vision, and no color blindness or color weakness, and they were not informed of the aim of the study. Participants whose Mini-Mental State Examination (MMSE) scores fell outside the range of 25–30 were excluded from the experiment (Bleecker et al., 1988). All participants signed an informed consent form approved by the Ethics Committee of the Second Affiliated Hospital of Guizhou University of Traditional Chinese Medicine and were paid for their time.

Stimuli

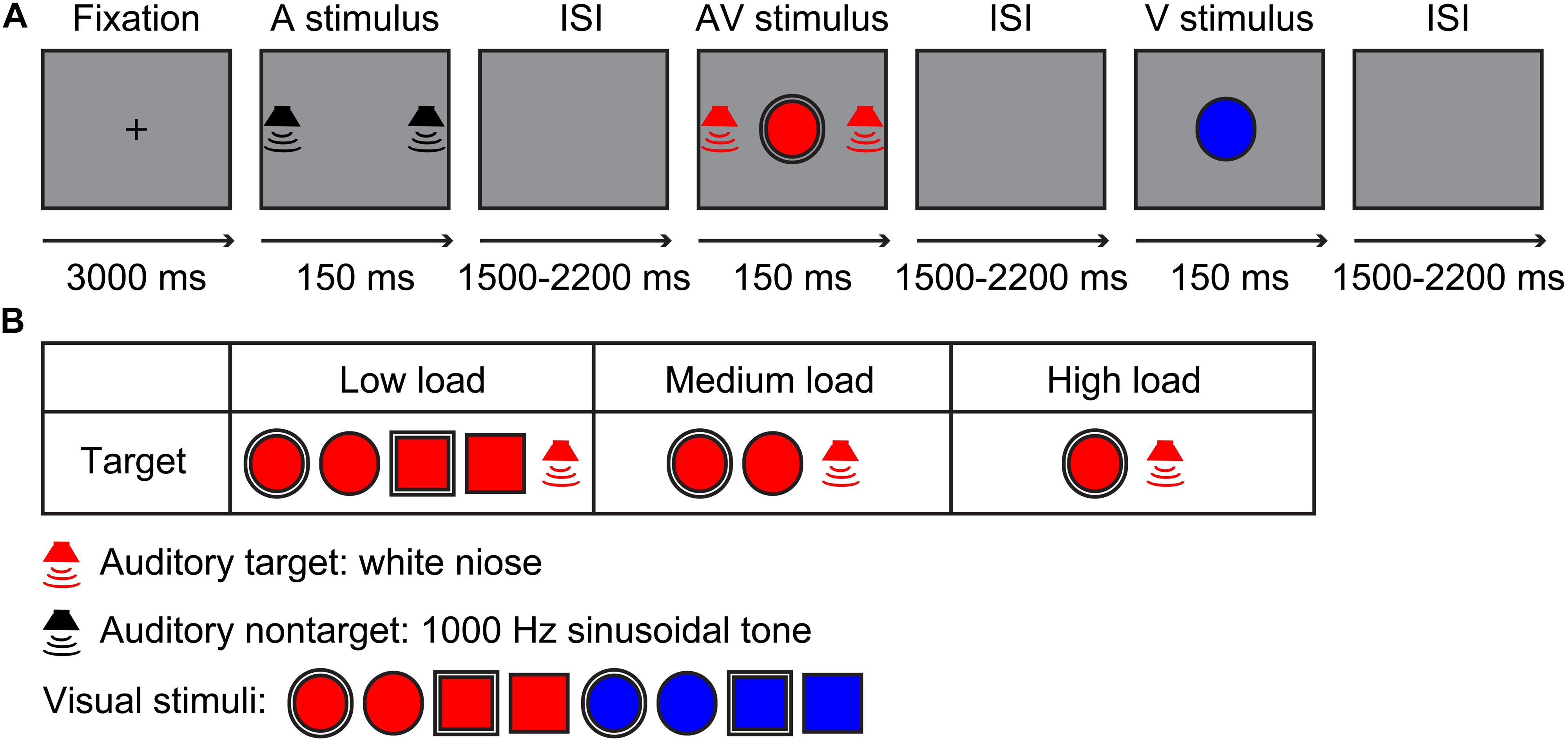

The visual stimuli were red or blue circles and squares (2.6 cm in diameter) with single or dual borders (Bruner et al., 1956; Figure 1B). The auditory stimuli were a 60-dB white noise (auditory target) and a 60-dB, 1,000-Hz sinusoidal tone (auditory non-target), each edited in Audacity 2.3.0 to a duration of 150 ms with 10-ms cosine rise/fall ramps (Ren et al., 2016, 2018). The experiment comprised three sessions: a low, a medium, and a high visual perceptual-load session. In the low visual perceptual-load session, the visual targets were red circles and squares with single or dual borders, and the visual non-targets were blue circles and squares with single or dual borders (Figure 1B). In the medium visual perceptual-load session, the visual targets were red circles with single or dual borders, and the visual non-targets were red squares with single or dual borders and blue circles and squares with single or dual borders (Figure 1B). In the high visual perceptual-load session, the visual targets were red circles with dual borders, and the visual non-targets were red circles with single borders, red squares with single or dual borders, and blue circles and squares with single or dual borders (Figure 1B). Under all visual perceptual-load conditions, an audiovisual target consisted of the simultaneous presentation of an auditory target and a visual target, and an audiovisual non-target consisted of the simultaneous presentation of an auditory non-target and a visual non-target. Two mixed combinations were not included: a visual non-target accompanied by an auditory target, and a visual target accompanied by an auditory non-target.
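The load manipulation described above amounts to a feature-conjunction rule: the visual target is defined by one feature (color) under low load, two features (color and shape) under medium load, and three features (color, shape, and border) under high load. The following is a minimal Python sketch that enumerates the eight visual stimuli and classifies them under each rule; `TARGET_RULES`, `all_stimuli`, and `is_target` are hypothetical names for illustration and are not taken from the authors' E-prime materials.

```python
from itertools import product

# Hypothetical encoding of the visual stimuli: 2 colors x 2 shapes x 2 border types.
COLORS = ("red", "blue")
SHAPES = ("circle", "square")
BORDERS = ("single", "dual")

# Features a stimulus must match to count as a visual target in each session.
TARGET_RULES = {
    "low": {"color": "red"},
    "medium": {"color": "red", "shape": "circle"},
    "high": {"color": "red", "shape": "circle", "border": "dual"},
}


def all_stimuli():
    """Enumerate every visual stimulus as a dict of features."""
    return [
        {"color": c, "shape": s, "border": b}
        for c, s, b in product(COLORS, SHAPES, BORDERS)
    ]


def is_target(stimulus, load):
    """A stimulus is a target only if it matches every feature in the load's rule."""
    rule = TARGET_RULES[load]
    return all(stimulus[feature] == value for feature, value in rule.items())


stimuli = all_stimuli()
for load in ("low", "medium", "high"):
    n_targets = sum(is_target(s, load) for s in stimuli)
    print(f"{load}: {n_targets} of {len(stimuli)} visual stimuli are targets")
```

Running it prints 4, 2, and 1 targets out of 8 for the low, medium, and high sessions, which makes explicit how the number of features that must be checked grows as the target set shrinks.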

Figure 1. Experimental design. (A) A possible sequence for the auditory non-target, audiovisual target, and visual non-target stimuli in the experiment. (B) Stimuli types. Low load, low visual perceptual-load condition; Medium load, medium visual perceptual-load condition; High load, high visual perceptual-load condition.

Procedure

The experiment was conducted in a dimly lit, sound-attenuated room (Cognitive Psychology Laboratory, Guizhou University of Traditional Chinese Medicine, China). Stimulus presentation and behavioral response collection were controlled by E-prime 2.0 (Psychology Software Tools, Inc., Pittsburgh, PA, United States). Visual stimuli (V) were presented on a computer monitor (Dell E2213c) with a gray background, placed 60 cm in front of the participant. Auditory stimuli (A) were presented through two speakers (Edifier R19U) located to the left and right of the screen. Each session began with a 3,000-ms fixation, after which all stimuli (A, V, and AV) were presented in random order for 150 ms each, with a randomized interstimulus interval (ISI) of 1,500–2,200 ms (Figure 1A). Participants were instructed to respond only to target stimuli, by pressing the right mouse button as accurately and rapidly as possible, and to withhold responses to non-target stimuli. The three sessions were conducted in random order; each lasted 10 min, with suitable rest periods adjusted to each participant's physical condition. Each session contained 240 trials (120 target and 120 non-target trials), with 40 trials for each stimulus type.
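The trial structure above (six stimulus types of 40 trials each, 150-ms stimuli, a randomized ISI of 1,500–2,200 ms, and three sessions in random order) can be sketched as follows. This is a minimal Python illustration, not the authors' E-prime script; the helper names are hypothetical, and drawing the ISI uniformly in whole milliseconds is an assumption, since the text gives only the range.

```python
import random

MODALITIES = ("A", "V", "AV")
ROLES = ("target", "non-target")
TRIALS_PER_TYPE = 40          # 6 stimulus types x 40 = 240 trials per session
STIM_DURATION_MS = 150
ISI_RANGE_MS = (1500, 2200)   # inclusive bounds
LOADS = ("low", "medium", "high")


def build_session(rng):
    """Return a shuffled list of 240 trial dicts for one session."""
    trials = [
        {"modality": m, "role": r}
        for m in MODALITIES
        for r in ROLES
        for _ in range(TRIALS_PER_TYPE)
    ]
    rng.shuffle(trials)
    for trial in trials:
        trial["duration_ms"] = STIM_DURATION_MS
        # Assumption: ISI drawn uniformly in whole milliseconds.
        trial["isi_ms"] = rng.randint(*ISI_RANGE_MS)
    return trials


def build_experiment(seed=None):
    """Randomize the order of the three load sessions, then build each one."""
    rng = random.Random(seed)
    order = rng.sample(LOADS, k=len(LOADS))
    # Dicts keep insertion order, so iteration follows the session order.
    return {load: build_session(rng) for load in order}


experiment = build_experiment(seed=1)
for load, trials in experiment.items():
    n_targets = sum(t["role"] == "target" for t in trials)
    print(f"{load}: {len(trials)} trials, {n_targets} targets")
```

Seeding a dedicated `random.Random` instance keeps each participant's sequence reproducible; whether E-prime applied further constraints, such as limits on consecutive repeats of a trial type, is not stated in the text.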

Data Analysis

Accuracy was defined as the percentage of correct responses (responses whose RTs fell within the mean ± 2.5 SD) relative to the total number of target stimuli. Response times (RTs) and accuracy were calculated separately for each participant under each condition and then submitted to a 2 (group: older, younger) × 3 (load: low, medium, high) × 3 (stimulus: A, V, AV) ANOVA with Greenhouse–Geisser corrections, followed by post hoc analyses.
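The accuracy definition above can be made concrete with a short sketch. It assumes that the mean ± 2.5 SD window is computed per participant and condition from the RTs of responses to targets, that the sample SD (n − 1 denominator) is used, and that missed targets count against accuracy because the denominator is the number of target trials presented; `trimmed_accuracy` is a hypothetical helper, not the authors' code.

```python
from statistics import mean, stdev


def trimmed_accuracy(target_rts_ms, n_target_trials, k=2.5):
    """Accuracy (%) counting only responses within mean +/- k*SD as correct.

    target_rts_ms: RTs (ms) of responses given on target trials; missed
                   targets simply have no entry.
    n_target_trials: number of target trials presented in this condition.
    Returns (accuracy_percent, kept_rts) so the kept RTs can feed the RT analysis.
    """
    if n_target_trials <= 0:
        raise ValueError("n_target_trials must be positive")
    if len(target_rts_ms) < 2:
        raise ValueError("need at least two RTs to estimate an SD")
    m = mean(target_rts_ms)
    sd = stdev(target_rts_ms)  # sample SD (n - 1 denominator)
    lower, upper = m - k * sd, m + k * sd
    kept = [rt for rt in target_rts_ms if lower <= rt <= upper]
    return 100.0 * len(kept) / n_target_trials, kept
```

For example, `trimmed_accuracy([400] * 19 + [2000], n_target_trials=40)` drops the 2,000-ms outlier and returns an accuracy of 47.5% (19 of 40 targets).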

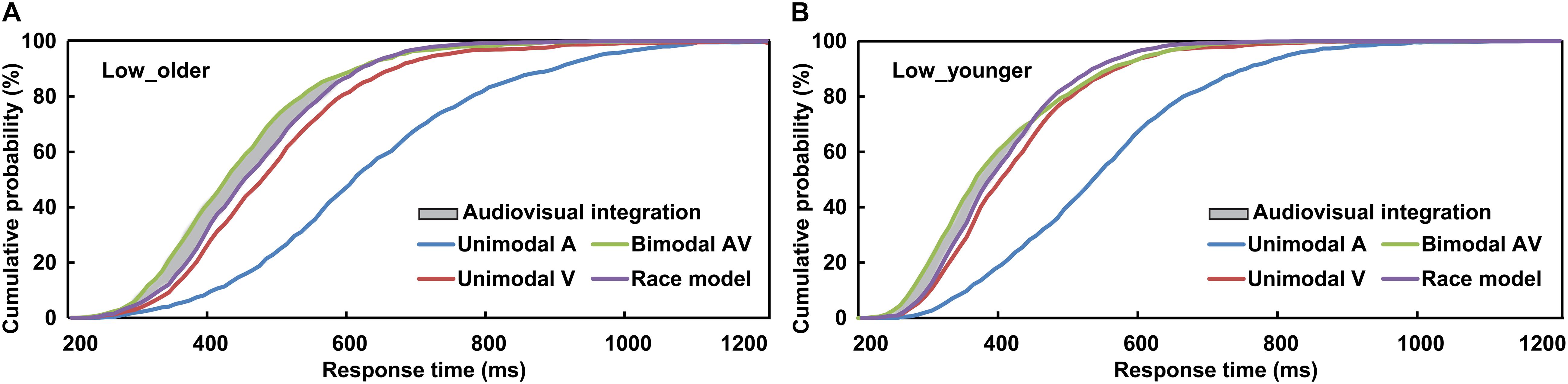

To explain why responses to AV stimuli are markedly faster than responses to A or V stimuli, two accounts have been proposed: the separate-activation model and the coactivation model (Meredith et al., 1987; Stein and Meredith, 1993; Stein, 2012). The separate-activation model holds that auditory and visual information are never combined and that the response is triggered by whichever signal wins the race; it is therefore also called the “race model.” In contrast, the coactivation model holds that auditory and visual activations are combined and jointly trigger the response. The race model was calculated from the cumulative distribution functions (CDFs) of the visual-only and auditory-only responses in 10-ms time bins as P(RM) = P(V) + P(A) − P(V) × P(A) (Miller, 1982, 1986; Laurienti et al., 2006), where P(A) and P(V) are the probabilities of responding to an auditory-only or a visual-only trial, respectively, within a given time frame, and P(RM) is the predicted response probability for an AV trial based on P(A) and P(V) (Figures 2A,B). If P(AV) differed significantly from P(RM) (t-test, p ≤ 0.05), the AV response was taken to violate the race model in favor of the coactivation model, and AVI was assumed to have occurred. If P(AV) was significantly greater than P(RM), the effect was defined as AV facilitation; if it was significantly smaller, it was defined as AV depression (Meredith et al., 1987; Stein and Meredith, 1993). As in previous studies, the AVI effect was assessed by applying the race model to the RT data (Laurienti et al., 2006; Peiffer et al., 2007; Stevenson et al., 2014; Ren et al., 2016, 2018). For each participant, a probability-difference curve was generated by subtracting P(RM) from the audiovisual CDF [P(AV)] in each 10-ms time bin, and the peak value of the curve (peak benefit) was used to assess AVI (Xu et al., 2020).
The time from stimulus onset to the peak benefit was defined as the peak latency, an important index of when AVI occurs (Laurienti et al., 2006; Peiffer et al., 2007; Ren et al., 2016, 2018). All statistical analyses were performed with SPSS version 19.0 (SPSS, Tokyo, Japan).
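The race-model procedure described above (CDFs in 10-ms bins, P(RM) = P(V) + P(A) − P(V) × P(A), the probability-difference curve, and its peak benefit and peak latency) can be sketched for one participant as follows. This is a minimal Python illustration under stated assumptions: each CDF is normalized by the number of responses of that type, the bins run from 0 to 1,500 ms, and the function names are hypothetical; it is not the authors' analysis script.

```python
from bisect import bisect_right


def cdf_curve(rts_ms, bins_ms):
    """Empirical CDF, P(RT <= t), evaluated at each time bin t (ms)."""
    ordered = sorted(rts_ms)
    n = len(ordered)
    return [bisect_right(ordered, t) / n for t in bins_ms]


def race_model(p_a, p_v):
    """Race-model prediction P(RM) = P(V) + P(A) - P(V) * P(A), bin by bin."""
    return [pa + pv - pa * pv for pa, pv in zip(p_a, p_v)]


def probability_difference(rts_a, rts_v, rts_av, start_ms=0, stop_ms=1500, step_ms=10):
    """Time bins and the probability-difference curve P(AV) - P(RM) for one participant."""
    bins = list(range(start_ms, stop_ms + 1, step_ms))
    p_rm = race_model(cdf_curve(rts_a, bins), cdf_curve(rts_v, bins))
    p_av = cdf_curve(rts_av, bins)
    return bins, [av - rm for av, rm in zip(p_av, p_rm)]


def peak_and_latency(bins, diff):
    """Peak benefit (maximum of the curve) and its latency (first bin reaching it)."""
    i = max(range(len(diff)), key=diff.__getitem__)
    return diff[i], bins[i]


# Toy data (ms), not from the study: AV responses faster than either unimodal one.
bins, diff = probability_difference([300, 350, 400], [300, 350, 400], [250, 260, 270])
peak, latency = peak_and_latency(bins, diff)
print(f"peak benefit = {peak:.2f} at {latency} ms")  # peak benefit = 1.00 at 270 ms
```

Where a condition shows only depression, as under high load here, the most negative point of the curve (`min` instead of `max`) would be the natural analogue; the text does not specify exactly how that value was extracted.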

Figure 2. Cumulative distribution functions of auditory stimuli, visual stimuli, race model, and audiovisual stimuli for older (A) and younger (B) adults under low visual perceptual-load conditions. Low_older, low visual perceptual-load condition for older adults; Low_younger, low visual perceptual-load condition for younger adults.

Results

Accuracy

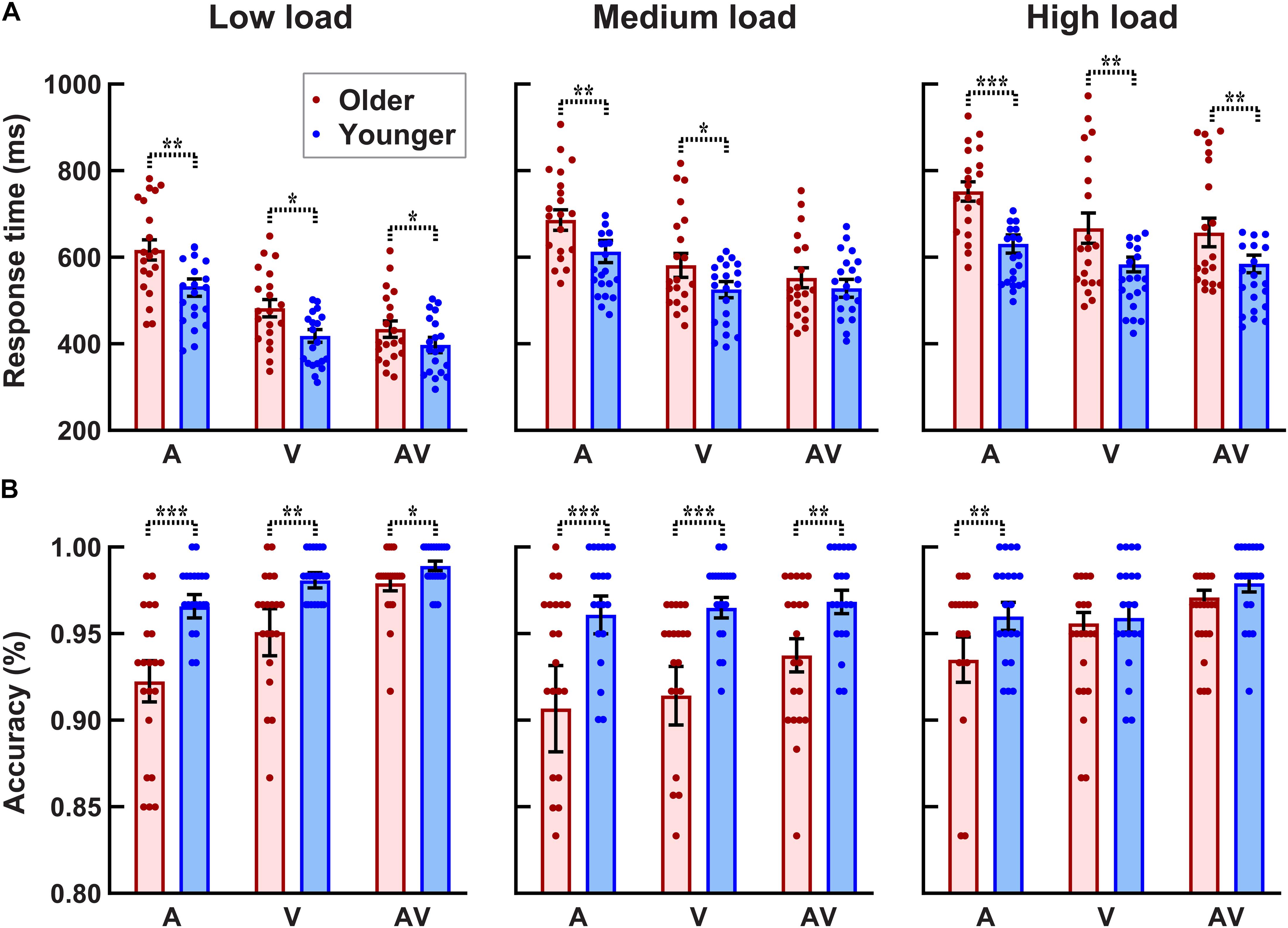

As shown in Figure 3B, accuracy exceeded 80% for every participant under every condition. The 2 (group: older, younger) × 3 (load: low, medium, high) × 3 (stimulus: A, V, AV) ANOVA revealed a significant main effect of group [F(1, 38) = 9.289, p = 0.004, ηp² = 0.196], with lower accuracy for older than for younger adults. There was a significant main effect of perceptual load [F(2, 76) = 7.026, p = 0.003, ηp² = 0.156], with higher accuracy under low and medium visual perceptual-load conditions than under high visual perceptual-load conditions. A significant main effect of stimulus type was also found [F(2, 76) = 13.038, p < 0.001, ηp² = 0.255], with higher accuracy for bimodal AV stimuli than for unimodal V or A stimuli (AV > V > A). In addition, the perceptual load × group interaction was significant, albeit narrowly [F(2, 76) = 3.368, p = 0.049, ηp² = 0.081]. Bonferroni-corrected pairwise comparisons across perceptual loads showed that, for older adults, accuracy was lower under high visual perceptual-load conditions than under low (p = 0.004) and medium (p = 0.018) visual perceptual-load conditions, with no significant difference between the low and medium conditions (p = 1.000). Bonferroni-corrected pairwise comparisons between groups showed that accuracy was lower for older than for younger adults under both low (p = 0.003) and medium (p = 0.009) visual perceptual-load conditions, but not under high visual perceptual-load conditions (p = 0.168).
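Partial eta squared can be checked directly against each reported F ratio, which is a quick way to catch transcription errors in results like these. A minimal Python sketch; `partial_eta_squared` is a hypothetical helper name. The identity follows from the standard ANOVA definitions, and a Greenhouse–Geisser correction multiplies both degrees of freedom by the same ε, so it cancels out of the ratio.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom.

    Because F = (SS_effect / df_effect) / (SS_error / df_error),
    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = F * df_effect / (F * df_effect + df_error).
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Accuracy results reported above: group, load, and stimulus main effects.
for f, df1, df2 in [(9.289, 1, 38), (7.026, 2, 76), (13.038, 2, 76)]:
    print(f"F({df1}, {df2}) = {f}: partial eta^2 = {partial_eta_squared(f, df1, df2):.3f}")
```

For these three main effects the sketch reproduces the reported values of 0.196, 0.156, and 0.255.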

Figure 3. Longer response times (A) and lower accuracy (B) for older adults than for younger adults under all visual perceptual-load conditions. *p < 0.05, **p < 0.01, ***p < 0.001. Low load, low visual perceptual-load condition; Medium load, medium visual perceptual-load condition; High load, high visual perceptual-load condition.

Response Times

The 2 (group: older, younger) × 3 (load: low, medium, high) × 3 (stimulus: A, V, AV) ANOVA for RTs (Figure 3A) revealed a significant main effect of group [F(1, 38) = 5.528, p = 0.024, ηp² = 0.127], with slower responses by older than by younger adults. There was a significant main effect of perceptual load [F(2, 76) = 166.072, p < 0.001, ηp² = 0.814]: responses were fastest under low visual perceptual-load conditions, intermediate under medium conditions, and slowest under high conditions. A significant main effect of stimulus type was also found [F(2, 76) = 105.521, p < 0.001, ηp² = 0.735], with faster responses to AV stimuli than to V stimuli, and faster responses to V stimuli than to A stimuli. The perceptual load × group interaction was significant, albeit narrowly [F(2, 76) = 3.324, p = 0.048, ηp² = 0.080]. Bonferroni-corrected pairwise comparisons showed that older adults responded significantly more slowly than younger adults under low (p = 0.028) and high (p = 0.009) visual perceptual-load conditions, but not under medium visual perceptual-load conditions (p = 0.125). Additionally, under all perceptual-load conditions, the response was slower by older adults than by younger adults (all p ≥ 0.002). The perceptual load × stimulus interaction was also significant [F(4, 152) = 18.927, p < 0.001, ηp² = 0.332]. Bonferroni-corrected pairwise comparisons showed that under low and medium visual perceptual-load conditions, responses differed significantly among all three stimulus types (AV faster than V, and V faster than A; all p ≤ 0.004). Under high visual perceptual-load conditions, however, responses to A stimuli were slower than those to AV (p < 0.001) or V (p < 0.001) stimuli, whereas responses to AV and V stimuli did not differ significantly (p = 1.000).
Additionally, for every stimulus type, responses differed significantly among all visual perceptual-load conditions (fastest under low load and slowest under high load; all p < 0.001).

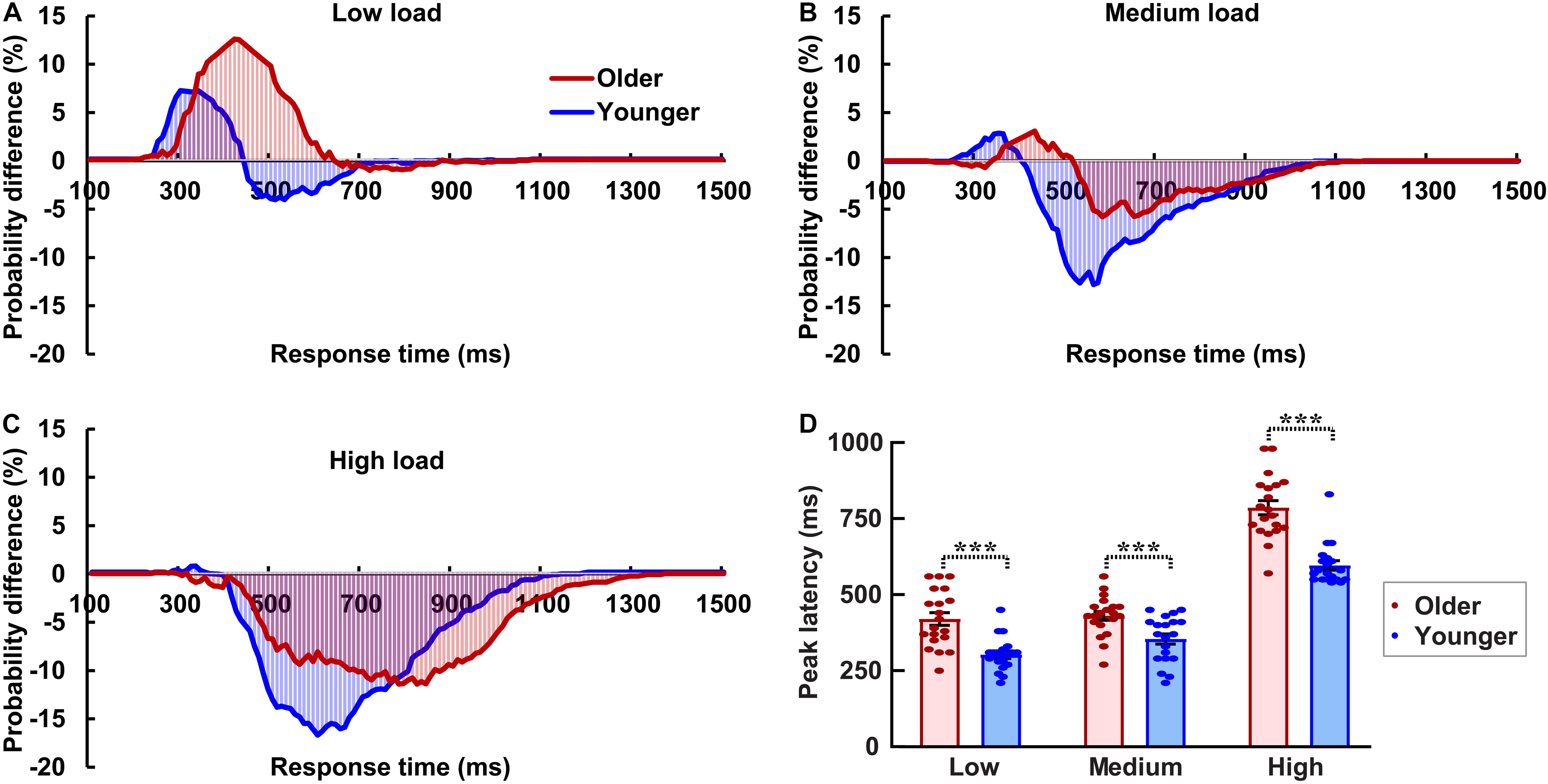

Race Model Analysis

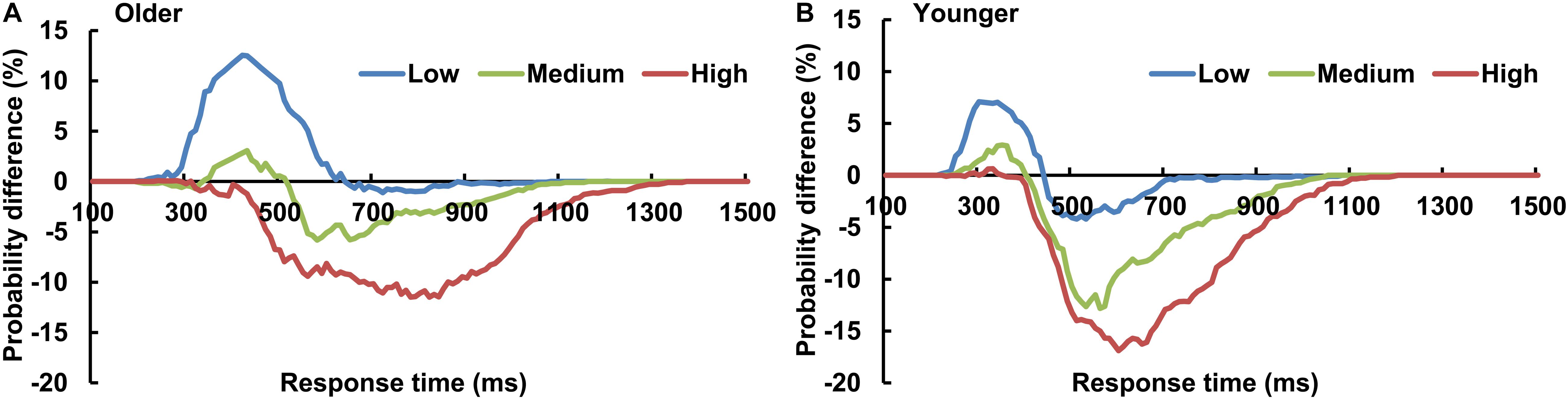

AVI was assessed using the race model under all visual perceptual-load conditions, as illustrated in Figure 2A for older adults and Figure 2B for younger adults under low perceptual-load conditions. Significant AVI was found under all visual perceptual-load conditions for both older (two-tailed t-test, all p ≤ 0.047) and younger (two-tailed t-test, all p ≤ 0.05) adults. Under low visual perceptual-load conditions, the AV facilitation effect was larger for older (12.54%) than for younger (7.08%) adults (Figure 4A), whereas under medium visual perceptual-load conditions no significant difference was found between older (3.06%) and younger (2.92%) adults (Figure 4B). Under high visual perceptual-load conditions, only an AV depression effect was found, in both older (−11.47%) and younger (−16.89%) adults (Figure 4C). These results indicate that AVI was higher in older adults and that, as visual perceptual load increased, the facilitation effect disappeared and a depression effect emerged for both older (Figure 5A) and younger (Figure 5B) adults. Additionally, peak latencies were longer for older than for younger adults under all visual perceptual-load conditions (420 vs. 300 ms, 430 vs. 350 ms, and 850 vs. 600 ms for the low, medium, and high visual perceptual-load conditions, respectively; Figure 4D), indicating that AVI was delayed in older adults relative to younger adults.
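The significance tests reported above compare P(AV) with P(RM) across participants at a given time bin. A minimal sketch of that paired comparison in Python, assuming one value of each probability per participant for the bin; `paired_t` is a hypothetical helper. The p-value would come from a t distribution with n − 1 degrees of freedom (for example via SciPy's `scipy.stats.ttest_rel`), which this standard-library-only sketch does not compute, and the text does not state which bins were tested.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(p_av, p_rm):
    """Paired t statistic for P(AV) vs P(RM) at one time bin across participants.

    p_av, p_rm: one value per participant, listed in the same participant order.
    Returns (t, df, label), where label gives the direction of the effect:
    positive t -> facilitation, negative t -> depression.
    """
    if len(p_av) != len(p_rm) or len(p_av) < 2:
        raise ValueError("need two equal-length samples with at least two participants")
    diffs = [a - r for a, r in zip(p_av, p_rm)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    label = "facilitation" if t > 0 else "depression" if t < 0 else "none"
    return t, len(diffs) - 1, label
```

For example, `paired_t([0.5, 0.6, 0.7], [0.4, 0.4, 0.5])` gives t ≈ 5.0 with 2 degrees of freedom, labeled as facilitation; the label only indicates direction, and significance still depends on the p-value.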

Figure 4. A significant audiovisual facilitation effect was found mainly under low visual perceptual-load conditions (A), whereas depression effects were found under medium (B) and high (C) visual perceptual-load conditions. (D) AVI was delayed in older adults relative to younger adults under all visual perceptual-load conditions. Low, low visual perceptual-load condition; Medium, medium visual perceptual-load condition; High, high visual perceptual-load condition. ***p < 0.001.

Figure 5. The AVI effect changed from facilitation to depression with increasing visual perceptual load for both older (A) and younger (B) adults. Low, low visual perceptual-load condition; Medium, medium visual perceptual-load condition; High, high visual perceptual-load condition.

Discussion

The current study aimed to investigate the influence of visual perceptual load on AVI and how this influence changes with aging. The results showed that the AV facilitation effect was larger for older than for younger adults under low visual perceptual-load conditions, with no obvious difference between younger and older adults under medium visual perceptual-load conditions; under high visual perceptual-load conditions, only an AV depression effect was found for both younger and older adults. Additionally, AVI was delayed in older adults compared with younger adults under all visual perceptual-load conditions.

Unexpectedly, the AVI effect changed from facilitation to depression with increasing visual perceptual load. According to the nerve-center energy theory proposed by Kahneman (1973) in “Attention and Effort,” complex stimuli consume more energy than simple stimuli. Under low visual perceptual-load conditions, the visual task required simple “feature identification” (a single feature), whereas under medium (two features) and high (three features) visual perceptual-load conditions it required more complex “object identification” (a conjunction of features). Therefore, more energy was allocated to the visual stimulus under medium than under low visual perceptual-load conditions, and more still under high visual perceptual-load conditions. Additionally, a recent study reported that AVI is sensitive to the amount of available cognitive resources (Michail et al., 2021). In the current study, memory load also differed among the perceptual-load conditions, being highest under high perceptual-load conditions (three attributes) and lowest under low perceptual-load conditions (one attribute). Thus, more cognitive resources were allocated to the visual stimulus under medium than under low visual perceptual-load conditions, and more still under high visual perceptual-load conditions. Multisensory integration has been shown to occur in the human primary visual cortex (Murray et al., 2016), so increased visual demands could, in principle, limit the resources available for integrative processing. With increasing perceptual load, more resources were shifted to visual stimuli, which reduced auditory weighting and therefore limited the benefits of integration (Deloss et al., 2013).
It is therefore reasonable that the AV facilitation effect was found mainly under low visual perceptual-load conditions and decreased with increasing visual perceptual load. However, the role of memory load could not be ruled out, and more precise experimental designs are needed in future studies. The biased competition model assumes that nerve-center energy enhances neural responses to the selected sensory input and suppresses responses to irrelevant sensory input. With additional visual perceptual load, more energy was diverted to the visual stimulus, which led to AV suppression in addition to facilitation (Kamijo et al., 2007). Therefore, the AV depression effect under medium and high visual perceptual-load conditions might be attributable mainly to biased competition.

In addition, responses slowed with increasing visual perceptual load. Only one attribute (red color) had to be identified under low visual perceptual-load conditions, whereas two attributes (red color and circle) had to be identified under medium visual perceptual-load conditions and three (red color, circle, and dual border) under high visual perceptual-load conditions. The capacity of the visual system to process information is limited, and performance is worse when two attributes must be identified than when only one must be identified (Duncan, 1984; Kastner and Ungerleider, 2001). Therefore, the slower responses might be attributable mainly to limited processing resources. Additionally, under low visual perceptual-load conditions, the red color is detected quickly and effortlessly because of its salience, whereas under medium and high visual perceptual-load conditions the target cannot be identified from this single salient feature, which slows responses. Another possible reason for the slower responses with increasing visual perceptual load is therefore that object identification based on a conjunction of features is less salient than detection of a simple feature.

Additionally, consistent with our original hypothesis, the AVI effect was higher in older adults than in younger adults under low visual perceptual-load conditions, which is also consistent with previous studies reporting a higher AVI effect in older adults (Laurienti et al., 2006; Peiffer et al., 2007; Diederich et al., 2008; Deloss et al., 2013; Sekiyama et al., 2014). Although the primary brain regions for AVI are the same in older and younger adults, Grady (2009) reported that older adults recruit additional brain areas during AVI. Diaconescu et al. (2013) used MEG to record the responses of 15 younger and 16 older adults to semantically related bimodal (V + A) and unimodal (V or A) stimuli in order to identify the brain areas involved in AVI. They reported that older adults activated a specific brain network (the medial prefrontal cortex or posterior parietal cortex) when responding to audiovisual information. Ren et al. (2018) also compared older and younger adults using an auditory/visual discrimination task and found activity in the visual cortex during AV processing in older but not in younger adults, further indicating that older adults recruit the primary visual cortex during AVI. Additionally, reduced hemispheric asymmetry in older adults has been found in many cognitive tasks, including episodic memory retrieval (Grady et al., 2002), perception (Grady et al., 1994, 2000), and working memory (Cabeza, 2002; Methqal et al., 2017).
Therefore, the higher AVI effect in older adults, as a possible adaptive mechanism, might result mainly from reduced brain specialization and the activation of a distinct brain network that compensates for dysfunction in visual-only or auditory-only stimulus processing relative to younger adults (Gazzaniga et al., 2008; Koelewijn et al., 2010; Diaconescu et al., 2013; Baseler et al., 2014; Gutchess, 2014; Setti et al., 2014; Ren et al., 2018). However, the current study could not determine whether higher AVI is an adaptive mechanism or an indicator of unisensory decline, and future neuroimaging studies are needed to clarify this matter. In addition, AVI was significantly delayed in older adults compared with younger adults under all visual perceptual-load conditions, which is consistent with previous studies (Ren et al., 2018, 2020b). According to the “time-window-of-integration model” proposed by Diederich et al. (2008), early auditory and visual processing, assumed to be independent (first stage), must be completed before auditory and visual information can be integrated (second stage). Older adults show significant sensory decline, with slowed auditory and visual processing in the first stage (Salthouse, 1996; Hirst et al., 2019); therefore, the delayed AVI in older adults might be attributable mainly to age-related sensory decline.
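The two-stage account above can be illustrated with a small Monte Carlo simulation. This is a simplified sketch in the spirit of the time-window-of-integration idea, not the model as specified by Diederich et al. (2008): it assumes exponentially distributed first-stage processing times, treats integration as possible whenever the auditory and visual first-stage processes finish within a fixed window of each other, and takes the finishing time of the slower process as the earliest onset of integration. The function name and all parameter values (means of 50 and 80 ms, a 1.4× slowing for the older-adult setting, a 200-ms window) are hypothetical and were not estimated from the present data.

```python
import random


def simulate_integration(mean_a_ms, mean_v_ms, window_ms, n_trials=20000, seed=0):
    """Simplified two-stage simulation of when integration can begin.

    Stage 1: auditory and visual peripheral processing times are drawn
    independently from exponential distributions (an illustrative assumption).
    Stage 2: integration is possible only if both stage-1 processes finish
    within window_ms of each other; it can start once the slower one finishes.
    Returns (proportion of trials with integration, mean integration onset in ms).
    """
    rng = random.Random(seed)
    onsets = []
    for _ in range(n_trials):
        a = rng.expovariate(1.0 / mean_a_ms)
        v = rng.expovariate(1.0 / mean_v_ms)
        if abs(a - v) <= window_ms:
            onsets.append(max(a, v))
    if not onsets:
        return 0.0, float("nan")
    return len(onsets) / n_trials, sum(onsets) / len(onsets)


# Hypothetical settings: the "older" setting slows both stage-1 processes by 1.4x.
young = simulate_integration(mean_a_ms=50, mean_v_ms=80, window_ms=200)
older = simulate_integration(mean_a_ms=70, mean_v_ms=112, window_ms=200)
print(f"younger: p(integration) = {young[0]:.2f}, mean onset = {young[1]:.0f} ms")
print(f"older:   p(integration) = {older[0]:.2f}, mean onset = {older[1]:.0f} ms")
```

With these settings, slowing the first stage shifts the mean onset of integration later and, because the window is fixed, also lowers the proportion of trials in which integration can occur; this is the qualitative pattern the aging account predicts, not a fit to the reported peak latencies.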

Conclusion

In conclusion, the AVI effect decreased with increasing visual perceptual load for both older and younger adults, most likely as a result of biased competition. It is possible that, as a compensatory mechanism for unimodal perceptual decline, AVI was higher in older adults than in younger adults, but their AVI was delayed as a result of age-related sensory decline.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committee of the Second Affiliated Hospital of Guizhou University of Traditional Chinese Medicine. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

YR and WY conceived and designed the experiments. YL and TW collected the data. YR analyzed the data and wrote the draft manuscript. HL revised the manuscript and responded to the reviewers' comments on behalf of WY. All authors contributed to the article and approved the submitted version.

Funding

This study was partially supported by the Science and Technology Planning Project of Guizhou Province (QianKeHeJiChu-ZK [2021] General 120), the National Natural Science Foundation of China (Nos. 31800932 and 31700973), the Innovation and Entrepreneurship Project for High-level Overseas Talent of Guizhou Province [(2019)04], and the Humanity and Social Science Youth Foundation of the Ministry of Education of China (Nos. 18XJC190003 and 16YJC190025).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank the individuals who participated in this study, as well as the reviewers for their helpful comments and suggestions on revised versions of this manuscript.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.740221/full#supplementary-material

References

Alsius, A., Möttönen, R., Sams, M. E., Soto-Faraco, S., and Tiippana, K. (2014). Effect of attentional load on audiovisual speech perception: evidence from ERPs. Front. Psychol. 5:727.

Alsius, A., Navarra, J., Campbell, R., and Soto-Faraco, S. (2005). Audiovisual integration of speech falters under high attention demands. Curr. Biol. 15, 839–843. doi: 10.1016/j.cub.2005.03.046

Alsius, A., Navarra, J., and Soto-Faraco, S. (2007). Attention to touch weakens audiovisual speech integration. Exp. Brain Res. 183, 399–404. doi: 10.1007/s00221-007-1110-1

Anguera, J. A., Boccanfuso, J., Rintoul, J. L., Al-Hashimi, O., Faraji, F., Janowich, J., et al. (2013). Video game training enhances cognitive control in older adults. Nature 501, 97–101. doi: 10.1038/nature12486

Baseler, H. A., Harris, R. J., Young, A. W., and Andrews, T. J. (2014). Neural responses to expression and gaze in the posterior superior temporal sulcus interact with facial identity. Cereb. Cortex 24, 737–744. doi: 10.1093/cercor/bhs360

Bleecker, M. L., Bolla-Wilson, K., Kawas, C., and Agnew, J. (1988). Age-specific norms for the mini-mental state exam. Neurology 38, 1565–1568. doi: 10.1212/wnl.38.10.1565

Bruner, J. S., Goodnow, J. J., and Austin, G. A. (1956). A study of thinking. Philos. Phenomenol. Res. 7, 215–221.

Cabeza, R. (2002). Hemispheric asymmetry reduction in older adults: the HAROLD model. Psychol. Aging 17, 85–100. doi: 10.1037/0882-7974.17.1.85

Cabeza, R., Nyberg, L., and Park, D. (2006). Cognitive neuroscience of aging: linking cognitive and cerebral aging. Psychologist 163, 560–561.

Colonius, H., and Diederich, A. (2004). Multisensory interaction in saccadic reaction time: a time-window-of-integration model. J. Cogn. Neurosci. 16, 1000–1009. doi: 10.1162/0898929041502733

Deloss, D. J., Pierce, R. S., and Andersen, G. J. (2013). Multisensory integration, aging, and the sound-induced flash illusion. Psychol. Aging 28, 802–812. doi: 10.1037/a0033289

Diaconescu, A. O., Hasher, L., and McIntosh, A. R. (2013). Visual dominance and multisensory integration changes with age. Neuroimage 65, 152–166. doi: 10.1016/j.neuroimage.2012.09.057

Diederich, A., Colonius, H., and Schomburg, A. (2008). Assessing age-related multisensory enhancement with the time-window-of-integration model. Neuropsychologia 46, 2556–2562. doi: 10.1016/j.neuropsychologia.2008.03.026

Duncan, J. (1984). Selective attention and the organization of visual information. J. Exp. Psychol. Gen. 113, 501–517. doi: 10.1037/0096-3445.113.4.501

Freiherr, J., Lundström, J. N., Habel, U., and Reetz, K. (2013). Multisensory integration mechanisms during aging. Front. Hum. Neurosci. 7:863.

Gazzaniga, M. S., Ivry, R. B., and Mangun, G. R. (2008). Cognitive Neuroscience: The Biology of the Mind. New York, NY: W. W. Norton.

Grady, C. L. (2009). Functional Neuroimaging Studies of Aging. Encyclopedia of Neuroscience. Pittsburgh: Academic Press.

Grady, C. L., Bernstein, L. J., Beig, S., and Siegenthaler, A. L. (2002). The effects of encoding task on age-related differences in the functional neuroanatomy of face memory. Psychol. Aging 17, 7–23. doi: 10.1037/0882-7974.17.1.7

Grady, C. L., Maisog, J. M., Horwitz, B., Ungerleider, L. G., Mentis, M. J., Salerno, J. A., et al. (1994). Age-related changes in cortical blood flow activation during visual processing of faces and location. J. Neurosci. 14(3 Pt 2), 1450–1462. doi: 10.1523/jneurosci.14-03-01450.1994

Grady, C. L., McIntosh, A. R., Horwitz, B., and Rapoport, S. I. (2000). Age-related changes in the neural correlates of degraded and nondegraded face processing. Cogn. Neuropsychol. 17, 165–186. doi: 10.1080/026432900380553

Gutchess, A. (2014). Plasticity of the aging brain: new directions in cognitive neuroscience. Science 346, 579–582. doi: 10.1126/science.1254604

Hirst, R. J., Setti, A., Kenny, R. A., and Newell, F. N. (2019). Age-related sensory decline mediates the sound-induced flash illusion: evidence for reliability weighting models of multisensory perception. Sci. Rep. 9:19347.

Kamijo, K., Nishihira, Y., Higashiura, T., and Kuroiwa, K. (2007). The interactive effect of exercise intensity and task difficulty on human cognitive processing. Int. J. Psychophysiol. 65, 114–121. doi: 10.1016/j.ijpsycho.2007.04.001

Kastner, S., and Ungerleider, L. G. (2001). The neural basis of biased competition in human visual cortex. Neuropsychologia 39, 1263–1276. doi: 10.1016/s0028-3932(01)00116-6

Koelewijn, T., Bronkhorst, A., and Theeuwes, J. (2010). Attention and the multiple stages of multisensory integration: a review of audiovisual studies. Acta Psychol. 134, 372–384. doi: 10.1016/j.actpsy.2010.03.010

Lakens, D. (2021). Sample Size Justification. Available Online at: https://psyarxiv.com/9d3yf/ (accessed January 4, 2021).

Laurienti, P. J., Burdette, J. H., Maldjian, J. A., and Wallace, M. T. (2006). Enhanced multisensory integration in older adults. Neurobiol. Aging 27, 1155–1163. doi: 10.1016/j.neurobiolaging.2005.05.024

Lavie, N. (1995). Perceptual load as a necessary condition for selective attention. J. Exp. Psychol. Hum. Percept. Perform. 21, 451–468. doi: 10.1037/0096-1523.21.3.451

Lavie, N., and Tsal, Y. (1994). Perceptual load as a major determinant of the locus of selection in visual attention. Percept. Psychophys. 56, 183–197. doi: 10.3758/bf03213897

Lee, L. P., Har, A. W., Ngai, C. H., Lai, D. W. L., Lam, B. Y., and Chan, C. C. (2020). Audiovisual integrative training for augmenting cognitive-motor functions in older adults with mild cognitive impairment. BMC Geriatr. 20:64.

Macdonald, J. S. P., and Lavie, N. (2011). Visual perceptual load induces inattentional deafness. Atten. Percept. Psychophys. 73, 1780–1789. doi: 10.3758/s13414-011-0144-4

Meredith, M. A., Nemitz, J. W., and Stein, B. E. (1987). Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J. Neurosci. 7, 3215–3229. doi: 10.1523/jneurosci.07-10-03215.1987

Methqal, I., Provost, J.-S., Wilson, M. A., Monchi, O., Amiri, M., Pinsard, B., et al. (2017). Age-related shift in neuro-activation during a word-matching task. Front. Aging Neurosci. 9:265.

Michail, G., Senkowski, D., Niedeggen, M., and Keil, J. (2021). Memory load alters perception-related neural oscillations during multisensory integration. J. Neurosci. 41, 1505–1515. doi: 10.1523/jneurosci.1397-20.2020

Miller, J. (1982). Divided attention: evidence for coactivation with redundant signals. Cogn. Psychol. 14, 247–279. doi: 10.1016/0010-0285(82)90010-x

Miller, J. (1986). Timecourse of coactivation in bimodal divided attention. Percept. Psychophys. 40, 331–343. doi: 10.3758/bf03203025

Murray, M. M., Thelen, A., Thut, G., Romei, V., Martuzzi, R., and Matusz, P. J. (2016). The multisensory function of the human primary visual cortex. Neuropsychologia 83, 161–169. doi: 10.1016/j.neuropsychologia.2015.08.011

O’Brien, J. M., Chan, J. S., and Setti, A. (2020). Audio-visual training in older adults: 2-interval-forced choice task improves performance. Front. Neurosci. 14:569212.

Paige, L. E., and Gutchess, A. H. (2015). “Cognitive neuroscience of aging,” in Encyclopedia of Geropsychology, ed. N. A. Pachana (Berlin: Springer).

Peiffer, A. M., Mozolic, J. L., Hugenschmidt, C. E., and Laurienti, P. J. (2007). Age-related multisensory enhancement in a simple audiovisual detection task. Neuroreport 18, 1077–1081. doi: 10.1097/wnr.0b013e3281e72ae7

Raab, D. H. (1962). Statistical facilitation of simple reaction times. Trans. N. Y. Acad. Sci. 24, 574–590. doi: 10.1111/j.2164-0947.1962.tb01433.x

Ren, Y., Li, S., Wang, T., and Yang, W. (2020a). Age-related shifts in theta oscillatory activity during audio-visual integration regardless of visual attentional load. Front. Aging Neurosci. 12:571950.

Ren, Y., Ren, Y., Yang, W., Tang, X., Wu, F., Wu, Q., et al. (2018). Comparison for younger and older adults: stimulus temporal asynchrony modulates audiovisual integration. Int. J. Psychophysiol. 124, 1–11. doi: 10.1016/j.ijpsycho.2017.12.004

Ren, Y., Xu, Z., Lu, S., Wang, T., and Yang, W. (2020b). Stimulus specific to age-related audio-visual integration in discrimination tasks. i-Perception 11, 1–14.

Ren, Y., Xu, Z., Wang, T., and Yang, W. (2020c). Age-related alterations in audiovisual integration: a brief overview. Psychologia 62, 233–252. doi: 10.2117/psysoc.2020-a002

Ren, Y., Yang, W., Nakahashi, K., Takahashi, S., and Wu, J. (2016). Audiovisual integration delayed by stimulus onset asynchrony between auditory and visual stimuli in older adults. Perception 46, 205–218. doi: 10.1177/0301006616673850

Salthouse, T. A. (1996). The processing-speed theory of adult age differences in cognition. Psychol. Rev. 103, 403–428. doi: 10.1037/0033-295x.103.3.403

Sekiyama, K., Soshi, T., and Sakamoto, S. (2014). Enhanced audiovisual integration with aging in speech perception: a heightened McGurk effect in older adults. Front. Psychol. 5:323.

Setti, A., Stapleton, J., Leahy, D., Walsh, C., Kenny, R. A., and Newell, F. N. (2014). Improving the efficiency of multisensory integration in older adults: audio-visual temporal discrimination training reduces susceptibility to the sound-induced flash illusion. Neuropsychologia 61, 259–268. doi: 10.1016/j.neuropsychologia.2014.06.027

Stephen, J. M., Knoefel, J. E., Adair, J., Hart, B., and Aine, C. J. (2010). Aging-related changes in auditory and visual integration measured with MEG. Neurosci. Lett. 484, 76–80. doi: 10.1016/j.neulet.2010.08.023

Stevenson, R. A., Ghose, D., Fister, J. K., Sarko, D. K., Altieri, N. A., Nidiffer, A. R., et al. (2014). Identifying and quantifying multisensory integration: a tutorial review. Brain Topogr. 27, 707–730. doi: 10.1007/s10548-014-0365-7

Wahn, B., and König, P. (2015). Audition and vision share spatial attentional resources, yet attentional load does not disrupt audiovisual integration. Front. Psychol. 6:1084.

Xu, Z., Yang, W., Zhou, Z., and Ren, Y. (2020). Cue–target onset asynchrony modulates interaction between exogenous attention and audiovisual integration. Cogn. Process. 21, 261–270. doi: 10.1007/s10339-020-00950-2

Keywords: audiovisual integration, perceptual load, older adults, race model, discrimination task

Citation: Ren Y, Li H, Li Y, Wang T and Yang W (2021) Visual Perceptual Load Attenuates Age-Related Audiovisual Integration in an Audiovisual Discrimination Task. Front. Psychol. 12:740221. doi: 10.3389/fpsyg.2021.740221

Received: 13 July 2021; Accepted: 23 August 2021;

Published: 29 September 2021.

Edited by:

Julian Keil, University of Kiel, Germany

Reviewed by:

Rebecca Hirst, Trinity College Dublin, Ireland
Jonathan Wilbiks, University of New Brunswick Fredericton, Canada

Copyright © 2021 Ren, Li, Li, Wang and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Weiping Yang, swywp@163.com

†These authors have contributed equally to this work and share first authorship

Yanna Ren, Hannan Li2†, Weiping Yang