
ORIGINAL RESEARCH article

Front. Psychol., 25 November 2021

Sec. Educational Psychology

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.716639

This article is part of the Research Topic: Theory and Empirical Practice in Research on Social and Emotional Skills

Responding to the need for school-based, broadly applicable, low-cost, and brief assessments of socio-emotional skills, we describe the conceptual background and empirical development of the SENNA inventory and provide new psychometric information on its internal structure. Data were obtained through a computerized survey from 50,000 Brazilian students enrolled in public school grades 6 to 12, spread across the entire State of São Paulo. The SENNA inventory was designed to assess 18 particular skills (e.g., empathy, responsibility, tolerance of frustration, and social initiative), each operationalized by nine items that represent three types of items: three positively keyed trait-identity items, three negatively keyed identity items, and three (always positively keyed) self-efficacy items, totaling a set of 162 items. Results show that the 18 skill constructs empirically defined a higher-order structure that we interpret as the social-emotional Big Five, labeled as Engaging with Others, Amity, Self-Management, Emotional Regulation, and Open-Mindedness. The same five factors emerged whether we assessed the 18 skills with items representing (a) a trait-identity approach that emphasizes lived skills (what do I typically do?) or (b) a self-efficacy approach that emphasizes capability (how well can I do that?). Given that its target youth group is as young as 11 years old (grade 6), a population particularly prone to the response bias of acquiescence, SENNA is also equipped to correct for individual differences in acquiescence, which are shown to systematically bias results when not corrected.

Over the past two decades, education scientists and policy-makers have paid increasing attention to the assessment and learning of Social-Emotional Skills, also called 21st century skills (from here onward abbreviated as SEMS) (Abrahams et al., 2019). This shift in interest built on the notion that more traditional indicators of scholastic achievement, such as scores on math and reading, are not sufficient for a successful and happy life and for dealing with the challenges of today’s volatile, uncertain, complex, and ambiguous (VUCA) world1. In addition to content-specific knowledge and skills, students nowadays also need transferable skills such as collaboration and dealing with diversity, presentation skills, skills to regulate emotions, and managerial and implementation skills, and they need to be open-minded, creative, and innovative, among others (De Fruyt, 2019). Hence, schools today pay considerable attention to the development of SEMS, which have been shown to affect a range of consequential outcomes, both in the short term and the long term (Taylor et al., 2017; Bertling and Alegre, 2018).

The increased attention to SEMS raised questions on their conceptual status, their underlying structure, and how SEMS develop normatively, driven by a complex interaction between biological factors and formal and informal learning experiences, including scholastic intervention (Kyllonen et al., 2014; Lipnevich et al., 2016; Abrahams et al., 2019). The reviews by Abrahams et al. (2019) further clarified that, in order to advance this field, we first need to converge on a taxonomy structuring SEMS, so we can design assessment instruments to examine pertinent research questions and support educators and teachers with formative and summative tools for assessing SEMS.

The present paper will review the conceptual background and construction of SENNA, an inventory designed to assess SEMS in Brazilian public education that is also useful for scientific and applied research. SENNA’s psychometric features will be examined in a sample of more than 50,000 students enrolled in grades 6 to 12 in public schools in the State of São Paulo. Little is known about the structure of socio-emotional variables in South America, especially in the public schools in Brazil that serve large numbers of underprivileged youth from poor and uneducated family backgrounds. Thus, the present research also provides important information about the applicability and generalizability of research from the Western, educated, rich, and industrialized countries (Henrich et al., 2010) that have so far dominated the SEMS research agenda.

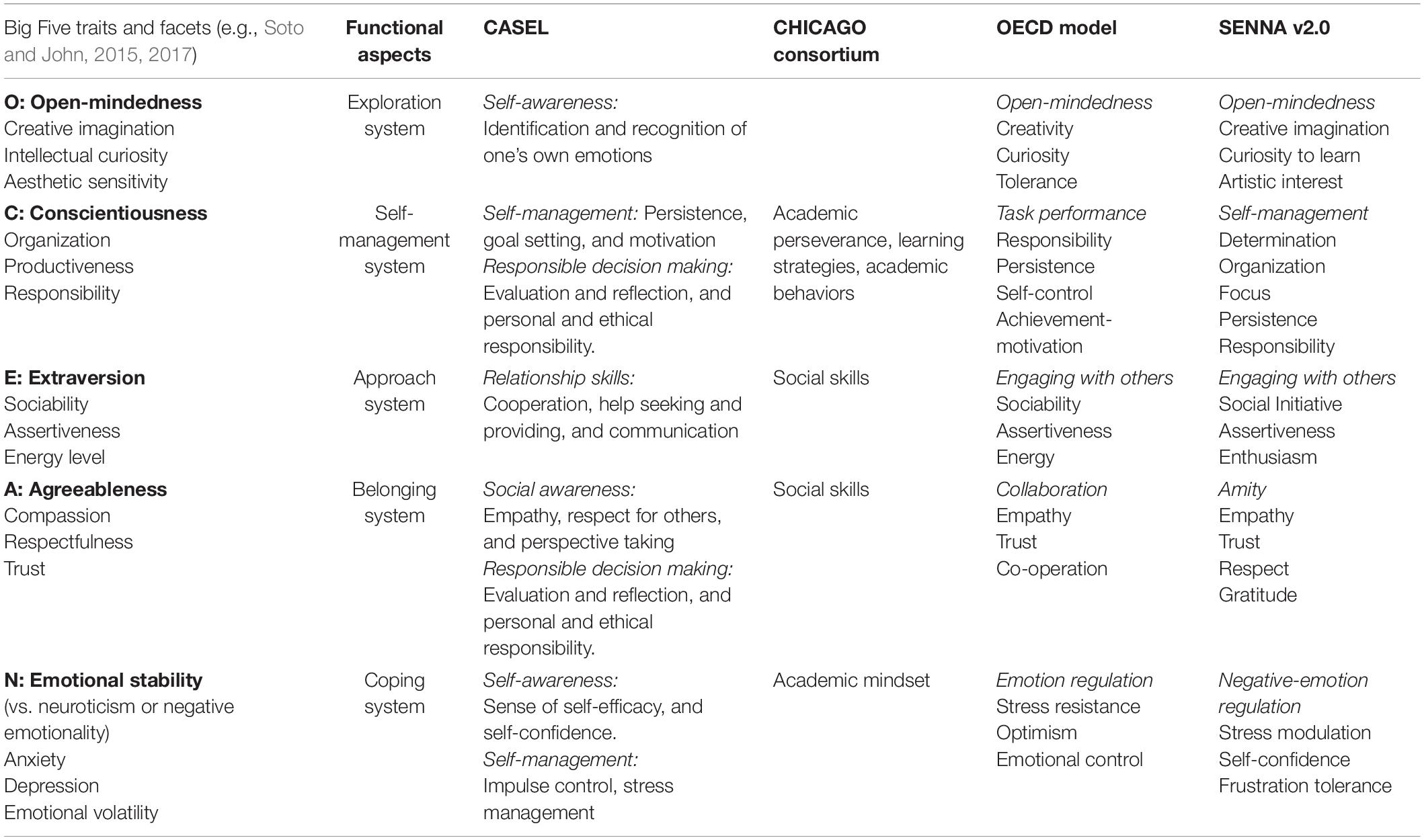

A Google search using the terms ‘social-emotional skills,’ ‘transferable skills’ or ‘21st century skills’ results in hundreds of hits referring to a plethora of different SEMS frameworks and taxonomies, with some advocating only a few and others proposing a hundred or more skills2 (Abrahams et al., 2019). Table 1 provides an overview of some of the more comprehensive frameworks and their associated skills. Although there may be notable reasons why these frameworks differ in terms of the number and nature of specified SEMS, this mixture of models and diversity of vocabularies hampers an integrative and in-depth discussion on how to organize evidence-based learning of SEMS in education. The field further suffers from the jingle-jangle fallacy, where similarly named constructs across frameworks indicate different skills (jingle), whereas nearly identical skills are labeled differently by different researchers (jangle) (Olderbak and Wilhelm, 2020).

Table 1. Relationship of Big Five traits, functional aspects, and dimensions in major socio-emotional skills frameworks.

The challenge to bring order in the chaos of (often overlapping) skill terms closely resembles personality psychologists’ struggles to structure the hundreds of personality descriptive adjectives in the natural language into the Big Five personality taxonomy (John et al., 2008). Today, personality psychologists agree that five broad dimensions, namely Extraversion, Agreeableness, Conscientiousness, Emotional Stability (vs. Negative Emotionality), and Openness to Experiences, form the largest common denominator to describe personality differences observable in various age and cultural groups (McCrae and Terracciano, 2005; De Fruyt and Van Leeuwen, 2014; John, 2021). This encompassing empirical framework helped to solve the issues of overlap among constructs, and significantly advanced knowledge on how personality develops. A parallel trimming and structuring exercise is required for the field of SEMS.

During the past decade, the Organisation for Economic Co-operation and Development (OECD) started an explicit study on the assessment of SEMS in a number of large cities around the world, as a complement to its Programme for International Student Assessment (PISA)3. John and De Fruyt (2015), serving in the technical advisory committee of this project, reviewed the SEMS literature at that time and concluded that there was an emerging consensus that the multitude of SEMS could be conceptually grouped in five broad skills categories, namely Engaging with Others, Collaboration, Task Performance, Emotion Regulation, and Open-Mindedness. Table 1 also includes more specific skills, such as persistence and empathy, and illustrates how they can be conceptually grouped into these broad dimensions. These five SEMS dimensions show strong conceptual similarities with the Big Five personality dimensions; hence we refer to them as the ‘Social-Emotional Big Five.’ This resemblance is not surprising, given that traits and cognitive abilities form constituting building blocks of competencies or SEMS (Hoekstra and Van Sluijs, 2003; Bartram, 2005).

The OECD (John and De Fruyt, 2015; Kankaraš and Suárez-Álvarez, 2019) and De Fruyt et al. (2015, p. 279) defined SEMS as “individual characteristics that: (a) originate in the reciprocal interaction between biological predispositions and environmental factors, (b) are manifested in consistent patterns of thoughts, feelings and behaviors, (c) continue to develop through formal and informal learning experiences, and (d) influence important socio-economic outcomes throughout the individual’s life.” This definition is broad enough to capture skills and their building blocks, underscoring their malleability across life and their consequential impact on outcomes that matter for the individual and society.

In Brazil, interest in the development and assessment of SEMS has been steadily growing over the past 15 years to improve general welfare and prepare youth for upcoming challenges via public education and intervention programs. Challenges for the Brazilian public education systems have been numerous and urgent, with large and early school drop-out rates, poor results in PISA, complex social inequality challenges, high violence and crime rates, and considerable (youth) unemployment, amongst others (Miyamoto et al., 2014).

The Ayrton Senna Institute has played a pioneering role in raising awareness about the importance of SEMS in education and initiated and conducted a series of meetings and research activities to learn about SEMS assessment and their development. In 2013, the Ayrton Senna Institute collaborated with the OECD4 and the Education Secretariat of Rio de Janeiro to investigate the measurement of SEMS and describe their associations with various outcomes (Miyamoto et al., 2014; Santos and Primi, 2014). Leading the first empirical OECD study on SEMS in a developing country, Santos and Primi (2014) identified eight SEMS instruments with constructs that predicted consequential outcomes of education, were feasible to administer, assessed malleable constructs, and showed robust psychometric characteristics. In a large sample of middle school and high school students, Primi et al. (2016, 2019c) examined the items and scales of the Strengths and Difficulties Questionnaire (Goodman, 1997), the Self-Efficacy Questionnaire for Children (Muris, 2001), the Core Self-Evaluations (Judge et al., 2003), the Big Five Inventory (John et al., 2008), the Rosenberg Self-Esteem Scale (Rosenberg, 1965), the Nowicki-Strickland Locus of Control (Nowicki and Strickland, 1973) scale, the Big Five for Children (Barbaranelli et al., 2003), and the Grit Scale (Duckworth and Quinn, 2009) and found that they could all be mapped under the umbrella of the ‘Social-Emotional Big Five.’ Also, a sixth factor resulted from the factor analysis, reflecting beliefs about personal control versus low self-esteem, hopelessness, and feeling defeated, that connects with low Emotional Stability. This study provided the first empirical support for the conceptual classification proposed by John and De Fruyt (2015) and the OECD (2015) (Primi et al., 2016, 2019c).

Resulting from this work, a first set with 92 items was compiled (tentatively called SENNA 1.0) that was used in the OECD’s first pilot study on SEMS in Rio de Janeiro (Miyamoto et al., 2014; Santos and Primi, 2014; Primi et al., 2016). This first item set and data collection (N = 27,628) provided information at the broad Social-Emotional Big Five level and further helped to delineate requirements for a new broad SEMS measure that could be generally implemented in Brazilian education.

First, it quickly became clear that a feasible large-scale assessment of SEMS in Brazilian public schools needed to be a broadly applicable survey, available at a low cost, requiring little time to administer (no more than 50 min of class time), and preferably useful for self-description without assistance. The assessment further had to result in feedback for individual students, but also reports at the level of classes (for teachers), schools (for directors) and educational districts/states (for policy makers). Besides these more formal necessities, there were also some critical content and technical requirements.

Second, to avoid a WEIRD-culture bias (Henrich et al., 2010) and to acknowledge the unique features of Brazilian culture and education, it was recommended to use an emic and bottom-up approach to questionnaire construction; the goal was to write and select items that would be age and culture appropriate for students in Brazilian public schools, covering the age range of grades 6 to 12 (i.e., typically age 11 to 18). These requirements implied that a large new item set would need to be developed and assessment procedures checked, always in close collaboration with students (through focus groups and pretesting), teachers, and various educational stakeholders.

Third, although there was some first evidence that the Social-Emotional Big Five was a useful framework (Primi et al., 2016, 2019c), educational stakeholders were interested to get information on students’ mastery of various more specific skills, such as responsibility, respect, or creativity, that could eventually be organized under the umbrella of the social-emotional five, as shown in Table 1. Information at the broad skill level is probably useful for directors and policy makers, but individual students and their teachers want to know the student’s standing on one or more specific skills, a level that is more actionable in the classroom.

Fourth, the review by Santos and Primi (2014) made clear that SEMS can be assessed following two conceptually different approaches. One is the trait or identity approach (Wiggins and Pincus, 1992; see John, 2021, for a review), which focuses on typical skilled behavior and asks “How do you typically or generally behave (or think or feel)?” We can think of this approach as measuring “lived skills,” that is the skill level at which an individual operates most of the time.

In contrast, other researchers have advocated an approach focused on capabilities or maximal behavior; here the interest is not on typical but on peak performance. In self-report form, students are asked “How well can you act (or think or feel) in a particular domain?”—in other words, self-efficacy. Bandura (1977) defined self-efficacy as students’ belief that they have the capability to organize and execute the actions required to manage future situations. Bandura (2006, p. 308) emphasized that self-efficacy items “should be phrased in terms of can do rather than will do. Can is a judgment of capability” (emphases in the original).

As so often, most researchers have adopted only one of these two approaches. Thus, we know little about how measures based on one approach correlate with measures based on the other. Bandura (2006) has argued that perceived self-efficacy is a major determinant of what people typically do (suggesting a substantial correlation) yet also emphasized that “the two constructs are conceptually and empirically separable” (p. 309).

It is entirely conceivable that some SEMS, like ‘trust’ or ‘responsibility,’ may be better conceptualized and measured from the typical performance (trait) approach, whereas others, like ‘presentation skills,’ may be better understood from a maximal behavior (self-efficacy) approach. How much students trust others on a day-to-day basis may well be a better predictor of their citizenship behavior, whereas how well they can present may be a better predictor of a student’s impact on an audience when they have to speak to a group. These kinds of hypotheses, however, can be examined only if the two approaches to measuring these skills (trait/identity versus self-efficacy) are represented in the same inventory.

Although we do not know how closely trait identity and self-efficacy measures are linked, the initial evidence is promising (Santos and Primi, 2014; Primi et al., 2016, 2019c): The three self-efficacy domains measured in children by Muris (2001) were substantially and differentially correlated with three of the trait identity measures included in the Socio-emotional Five: Social Self-efficacy with Extraversion (Engaging with Others); Emotional Self-efficacy with Emotional Stability (Negative-Emotion Regulation); and Academic Self-efficacy with Conscientiousness (Self-Management). Moreover, the item set initially developed for SENNA 1.0 included some self-efficacy items but only for three of the SEMS domains. Given that we wanted to take SEMS assessment seriously, we decided to represent both trait/identity and self-efficacy scales for all skills in the new measure. Thus, we will be able to test (a) whether the self-efficacy based SEMS scales analyzed separately show the expected Socio-emotional Five structure and (b) whether self-efficacy and trait-identity based scales will jointly define the same underlying factor structure.

Finally, given the anticipated heterogeneous and young respondent samples it is critically important to think about ways to reduce systematic error due to response styles that can compromise structural and predictive validity (Primi et al., 2019a,b,d, 2020). Soto et al. (2008) observed that psychometric and structural analyses of personality descriptive items of younger and less-educated samples rarely resemble the better structural validities found in adults and well-educated samples. These effects, discovered first in the United States, should be even more pronounced in the less educated youth in developing countries. Examining a large sample of youth aged 10 to 20 years, Soto et al. (2008) found that the underlying cause is large variability in how children and adolescents use the numerical rating scale to indicate how well a particular item describes themselves. Specifically, on a 1–5 rating scale, some youth will show high acquiescence and preferentially use the upper or right-hand side of the scale (i.e., use 4 and 5 far more often than 1 and 2) regardless of the content of the item. In contrast, others will show low acquiescence and preferentially use the lower or left-hand side of the scale (i.e., use 1 and 2 more often than 4 and 5). The first response bias is called high acquiescence because these youngsters agree more with items (yeah-saying), whereas the second response bias is named low acquiescence (nay-saying). Soto et al. (2008) showed that individual differences in acquiescence bias were most pronounced at age 10 to 12 and then decreased substantially (by 50%!) all the way to age 18, at which point they reached stable adult levels. Primi et al. (2019a) replicated these findings with public school students in Brazil and found that the acquiescence corrections substantially improved criterion validity. Having high-quality reverse (or false) keyed items is critical to assessing and controlling the effect of acquiescence. 
We therefore need carefully developed items that can be arranged like antonym pairs to measure not only high but also low levels of each of the SEMS dimensions in the new inventory.

Relying on our previous work for SENNA 1.0, a succinct review of the literature (e.g., John et al., 2008; Soto and John, 2017), and consultation of various educational stakeholders, we developed a blueprint of 18 SEMS, with tentative labels and short definitional descriptions, as shown in Table 2. SEMS are conceptually grouped under the Social-Emotional Big Five headers. These definitions formed the starting point to write and compile a large pool of 527 candidate items; 92 items came from the original SENNA 1.0 and 435 new items. This was an iterative emic process, involving multiple item writing and revision sessions, with input from research psychologists, education experts, and economists as well as former and current teachers. The item set included both positively and negatively keyed trait items, and also included items written specifically to reflect a self-efficacy rating perspective. Our goal was to construct short and homogeneous scales through item factor analysis with nine items each, including three positively keyed SEMS identity items, three negatively keyed identity items, and three SEMS self-efficacy statements—note that the self-efficacy items are, by definition, positively keyed (i.e., it doesn’t make sense to ask students how well they can not do something). The selection of items was done in a two-phase process relying on data from two different studies.

Our first study was conducted in 2014 and aimed to obtain a full inter-item covariance matrix across the 527 candidate items. Because we could not administer so many items to the same student, a Balanced Incomplete Block Design (BIB; van der Linden et al., 2004) was used to administer different subsets of items to different subsets of students, thus allowing us to estimate the full inter-item covariance matrix for every pair of items. Each student answered a booklet with a subset of 90 items on average, with a total of 24 paper-and-pencil booklets. The sample included 33,766 students from grades 5, 9, and 10 enrolled in public education in the State of Ceará, in the Northeast of Brazil. Students were randomly assigned to the 24 booklets. An average of 1,406 students answered each of the 24 booklets (min 1,268, max 1,427). We ran a series of exploratory factor and bi-factor analyses for the groups of items that were designed to measure each of the intended 18 SEMS constructs. Our goal was to identify items that (a) measured the intended lower (or facet) level construct (e.g., Empathy) well and (b) showed good discrimination against both the other facets in the same domain (e.g., Respect) and the facets in the four other domains (e.g., Persistence in the Self-Management domain). After selecting preliminary candidate items for each facet, we ran exploratory factor analyses of candidate facet scales and inspected item-total correlations to further investigate convergent and discriminant validity. We selected those items that demonstrated a high loading on the general factor (from exploratory bi-factor analysis) and correlated more strongly with their intended facet scale than with other facets.
As others have noticed in Western countries (e.g., Soto and John, 2017), results showed that it was particularly difficult to write items to measure low levels of Open-mindedness (e.g., lack of Intellectual Curiosity) and high levels of Negative-Emotion Regulation; for example, items describing high levels of Self-confidence also tended to correlate with facets like Enthusiasm and Assertiveness (from Engaging with Others) and Persistence and Determination (from Self-Management). We retained the most promising items and wrote some additional candidate items for facets that were less well measured, resulting in a total of 306 items.
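To make the logic of the incomplete block design concrete, the following is a minimal sketch of how a covariance can be estimated for each item pair from only those students whose booklet contained both items. It is an illustration under stated assumptions, not the authors' procedure: the function name `pairwise_covariance`, the dictionary-based data layout, and the three-item toy design are all hypothetical.

```python
from itertools import combinations


def pairwise_covariance(responses, items):
    """Estimate each item-pair covariance from students who saw both items.

    responses: list of dicts mapping item id -> score; each dict holds only
               the items in that student's booklet (hypothetical layout).
    Returns {(item_a, item_b): sample covariance, or None if fewer than
    two students answered both items}.
    """
    cov = {}
    for a, b in combinations(items, 2):
        pairs = [(r[a], r[b]) for r in responses if a in r and b in r]
        n = len(pairs)
        if n < 2:
            cov[(a, b)] = None  # pair was not co-administered often enough
            continue
        mean_a = sum(x for x, _ in pairs) / n
        mean_b = sum(y for _, y in pairs) / n
        cov[(a, b)] = sum((x - mean_a) * (y - mean_b) for x, y in pairs) / (n - 1)
    return cov


# Toy design (assumption): three items, three booklets, and every pair of
# items appears together in exactly one booklet.
toy_responses = [
    {"A": 1, "B": 2}, {"A": 3, "B": 4}, {"A": 5, "B": 3},  # booklet 1
    {"A": 2, "C": 4}, {"A": 4, "C": 2},                    # booklet 2
    {"B": 1, "C": 1}, {"B": 5, "C": 5},                    # booklet 3
]
toy_cov = pairwise_covariance(toy_responses, ["A", "B", "C"])
```

Because each covariance rests on a different subsample, the number of students per booklet determines how precisely each entry of the matrix is estimated.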

In a second study conducted in 2015, we analyzed these 306 items to select the final item set for SENNA. For this occasion, we developed a computerized web application that administered items in seven booklets to students using another BIB design. A sample of 5,485 high school students enrolled in public schools in the State of Ceará was randomly assigned to one out of the seven booklets (an average of 783 students answered each booklet), and each student answered a subset of 132 items. We followed the same method and rationale of the first study (internal structure analysis) to select the final set of 162 candidate items that composed SENNA. A more detailed description and materials of these two studies can be found in the SENNA manual (Primi et al., 2021).

To balance content across true keyed and false keyed items, and thus control acquiescence more systematically, we arranged the items on the trait identity scales into approximate antonym pairs. For example, a true-keyed identity item on the Curiosity to Learn facet scale (Open-mindedness domain) was “A lot of subjects awake my curiosity” (identity +), whereas “I don’t have much interest in finding out how things work” (identity −) was a reverse-keyed item (see Table 2 for more examples). A self-efficacy item example was “How well can you learn new things?” Thus, the self-efficacy items were all true keyed5 and their item content was developed separately from the identity items to highlight specific skill components associated with each of the 18 SEMS to be measured. The final SENNA measure thus included nine items per SEMS facet, specifically (a) six identity items (three positively and three negatively keyed items, forming three opposite pairs to permit us to compute and correct for acquiescence) and (b) three true-keyed self-efficacy items. Example items can be found in Table 2.

One limitation of our initial instrument development studies was that participants had always completed only subsets of our item pools. Here, we administered the full inventory to all participants at their schools, thus testing the feasibility of our assessment approach in the field. The present work first reports a joint factor analysis of the 18 positive identity, 18 negative identity, and 18 self-efficacy SEMS cluster scales to identify how these unfold into the Social-Emotional Big Five framework. We hypothesized that (a) the trait-identity clusters and self-efficacy clusters will load together on the expected SEMS factor, and (b) that acquiescence variance will affect the clarity of that structure, such that the structure obtained for the raw-score, uncorrected scales will be less clear, and adhere less well to the expected structure, than when the clusters are all corrected for individual differences in acquiescence. Soto et al. (2008) had found in Western youth aged 10–20 that the acquiescence effect was most pronounced for Agreeableness, and we examine whether that is also the case in our Brazilian public school students.

In addition, we examine whether separate analyses of (a) the 6-item identity clusters and (b) the 3-item self-efficacy clusters each show the expected five-factor structure, testing our hypothesis that both approaches lead to measures that conform to the same underlying structure when acquiescence is controlled. Finally, we present the results of a joint principal component analysis of the acquiescence-corrected identity and self-efficacy clusters to test the hypothesis that the five-factor spaces for the two approaches to SEMS measurement converge on a common model.

The sample included students from 234 cities and 501 public schools distributed across the entire State of São Paulo, and is the most comprehensive school-based assessment of SEMS attempted in Brazil so far. In total, 50,209 students completed the full 162-item SENNA item set via a dedicated web platform. Students were enrolled in grades 6 to 12, 52.7% were girls, and the average age was 14.9 years (SD = 2.1). All data were collected while students were participating in a reading program at their school.

The present study aimed to test whether a five-factor solution can account for the covariance matrix formed by the three sets of 18 SENNA cluster scales described above (see examples for the three kinds of items in Table 2). For example, would the newly developed self-efficacy item clusters load along with the identity item clusters or form separate factors? We expected convergence across the trait-identity and self-efficacy approaches but not a perfectly simple structure because several of the SEMS facet scales fall at the boundaries between the standard Big Five factors, like Self-Confidence (between Negative-Emotion Regulation and Engaging with Others) and Respect (between Amity and Self-Management). Finally, we wanted to investigate how acquiescence would affect the internal structure and whether these effects could be controlled by estimating each respondent’s acquiescence tendency only from the (true and false keyed) trait identity items (as the self-efficacy items do not include any of the reverse-keyed items needed to compute acquiescence).

For each SEMS facet, three indicator variables were computed by averaging scores on (a) three positively keyed identity items (‘identity +’), (b) three negatively (or false) keyed identity items (after reversing items so that high scores always reflect a high skill level; ‘identity −’), and (c) three positively keyed self-efficacy items. The 18 SEMS constructs were thus each represented by three indicators of three items each, for a total of 54 short cluster scales. We then fitted a five-factor model with target rotation using Exploratory Structural Equation Modeling (ESEM) in Mplus. We ran this analysis twice, once with the raw item scores and once with acquiescence-corrected scores.
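The scoring of the three indicators for one facet can be sketched as follows. This is a minimal illustration for raw 1–5 item scores only; the function names `reverse_score` and `facet_indicators` and the example responses are hypothetical and are not taken from the SENNA scoring materials.

```python
def reverse_score(score, low=1, high=5):
    """Reverse a rating so that a high value reflects a high skill level."""
    return low + high - score


def facet_indicators(identity_pos, identity_neg, self_efficacy):
    """Return the three cluster scores for one facet (three items each).

    identity_neg holds the responses as given by the student; they are
    reversed here before averaging.
    """
    def mean(values):
        return sum(values) / len(values)

    return {
        "identity_plus": mean(identity_pos),
        "identity_minus": mean([reverse_score(x) for x in identity_neg]),
        "self_efficacy": mean(self_efficacy),
    }


# Hypothetical responses of one student to the nine items of one facet.
example = facet_indicators(
    identity_pos=[4, 5, 3],
    identity_neg=[2, 1, 3],   # becomes [4, 5, 3] after reversal
    self_efficacy=[3, 4, 5],
)
```

Applying the same function to all 18 facets yields the 54 cluster scores per student that enter the factor model.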

The identity scales of SENNA include 108 items (6 × 18) forming a balanced scale given that each facet has three positively and three negatively keyed items, or a total of 54 “antonym” pairs. We calculated an acquiescence index (ACQ) for each student by computing, per individual, the average score across all 108 items before reversing the negatively keyed items (see Soto et al., 2008, and Soto and John, 2017, for details on this procedure; Primi et al., 2020, for psychometric details; and https://github.com/rprimi/noisecanceling for an R package that implements this method). If a student used the response scale in a fully symmetrical way, they would tend to have answer profiles such as 1–5, 2–4, 3–3, 4–2, or 5–1 to the two items in each semantic antonym pair, resulting in an ACQ score of 3, exactly at the mid-point of the 1–5 response scale labeled as ‘1’ (not at all like me), ‘2’ (little like me), ‘3’ (moderately like me), ‘4’ (a lot like me) and ‘5’ (completely like me)6.

If a student is more likely to agree regardless of the content of the items, the average across antonym pairs will be ACQ > 3, indicating elevated levels of agreement (acquiescence bias). If the student is more likely to disagree regardless of the content, ACQ < 3, indicating disacquiescence bias. This ACQ index was used to correct all item responses, including the responses to the self-efficacy items, which do not include reverse-keyed items and for which ACQ thus cannot be estimated directly. We did so by subtracting the individual’s ACQ index from each of their item scores. This procedure removes acquiescence variance from item scores (see more details in Primi et al., 2020).
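The index and the correction described above amount to a within-person mean and a subtraction, which can be sketched in a few lines. This is a simplified illustration, not the implementation in the R package cited above; the function names and the three-pair example responses are hypothetical (the real index averages all 108 identity items).

```python
def acquiescence_index(identity_responses):
    """Mean of one student's identity item responses, taken BEFORE
    reversing the negatively keyed items."""
    return sum(identity_responses) / len(identity_responses)


def correct_for_acquiescence(item_scores, acq):
    """Subtract the student's ACQ index from each of their item scores."""
    return [score - acq for score in item_scores]


# A symmetric responder: antonym pairs answered 1-5, 2-4, 3-3.
symmetric = [1, 5, 2, 4, 3, 3]
acq_symmetric = acquiescence_index(symmetric)      # scale mid-point

# A yea-sayer: agrees with both items of each antonym pair.
yea_sayer = [5, 3, 4, 4, 5, 3]
acq_yea = acquiescence_index(yea_sayer)            # above the mid-point

# The same index corrects the self-efficacy items, which have no
# reverse-keyed partners of their own.
corrected_self_efficacy = correct_for_acquiescence([5, 4, 4], acq_yea)
```

In this toy case the yea-sayer's self-efficacy ratings of 5, 4, and 4 become 1, 0, and 0 after correction, that is, scores relative to that student's own typical use of the scale.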

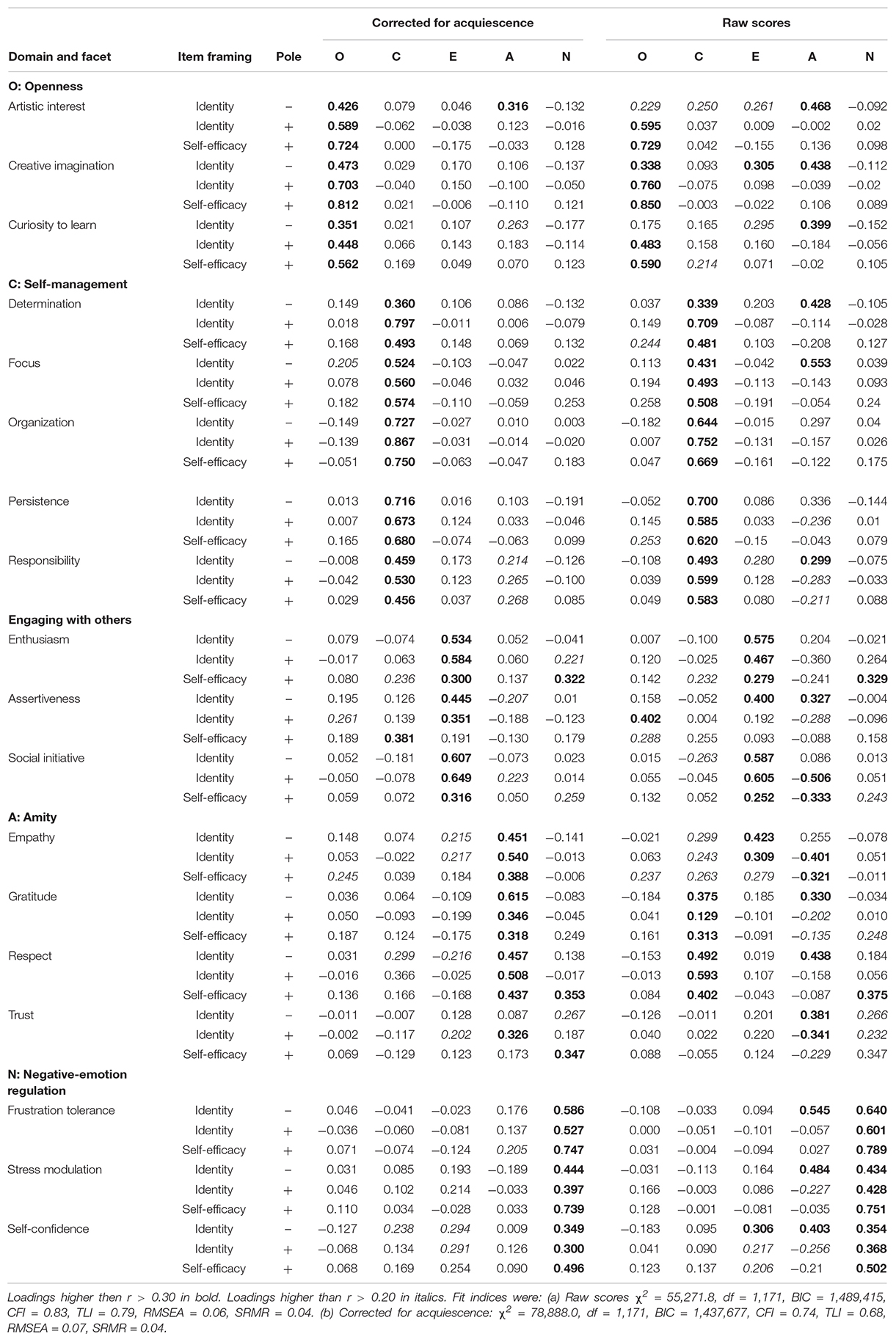

Table 3 shows the results of the ESEM internal structure analysis of the 54 indicators for the five-factor solution. The loadings on the left side of the table (columns four to eight) represent the final model when acquiescence variance was removed at the level of the individual respondent. The results were surprisingly clear: For 16 (out of 18) SEMS, all three indicators (positive identity, negative identity, and positive self-efficacy) had their highest loading on their intended social-emotional five-factor domain. Thus, quite consistently, positively and negatively keyed identity item clusters loaded together with their respective self-efficacy counterparts on the expected factors. There were two exceptions: the self-efficacy cluster for Assertiveness loaded more strongly on the Self-Management factor, whereas the self-efficacy cluster for Trust loaded more strongly on the Emotion Regulation factor. In fact, the other indicators of Trust did not clearly emerge as a facet of Amity, and were poorly represented in the overall factor solution.

Table 3. Factor loadings of Exploratory Structural Equation Modeling (ESEM) of 54 SEMS indicators, measured with either positively keyed or negatively keyed trait-identity items or self-efficacy items.

As expected, some secondary loadings were observed: (a) Responsibility, a facet of Self-Management, also had secondary loadings on Amity across all indicators. This skill is conceptualized as the most interpersonal aspect of Self-Management and implies a commitment to others, thus associating it also with Amity; (b) The self-efficacy indicator of Enthusiasm, a facet of Engagement with Others, also loaded on Emotion Regulation because its items refer to experiencing energy and positive emotions in stressful situations; (c) The self-efficacy indicator of Respect, a facet of Amity, had a secondary loading on Emotion Regulation because its items refer to the regulation of negative behavior and impulses (e.g., suspicion) in interpersonal situations; (d) Frustration Tolerance, a facet of Emotion Regulation, also had a secondary loading on Amity because its items refer to the regulation of anger and irritability in social situations, which connects to caring for others; (e) Finally, all indicators of Self-Confidence, a facet of Negative-Emotion Regulation, had secondary loadings on Engagement with Others. These items refer to positive confidence in the self that is a necessary condition to reach out to others.

The second focus of our analyses was examining the effect of acquiescence correction on the internal structure. The acquiescence index had a mean of M = 2.95 (close to the expected 3.0, the mid-point of the rating scale); however, the standard deviation (SD) was 0.37, indicating substantial individual differences in scale usage across the students and thus deviation from the expected score of 3.0. Thus, we expected acquiescence would have a salient effect.
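To make the acquiescence index concrete, the following is a minimal sketch rather than the authors' actual scoring code. It assumes the index is each respondent's mean raw rating over a balanced set of positively and negatively keyed items (before any reverse-scoring) on a 1 to 5 scale, and that the correction subtracts this index from every raw response. The function names and the toy data are hypothetical.

```python
import numpy as np

def acquiescence_index(pos_items, neg_items):
    """Mean raw rating per respondent over a balanced item set (no reverse-scoring)."""
    pos = np.asarray(pos_items, dtype=float)
    neg = np.asarray(neg_items, dtype=float)
    if pos.shape != neg.shape:
        raise ValueError("balanced set required: same number of positive and negative items")
    # Averaging the two halves gives equal weight to both keying directions.
    return (pos.mean(axis=1) + neg.mean(axis=1)) / 2.0

def correct_for_acquiescence(responses, index):
    """Assumed correction: subtract each respondent's index from all of their responses."""
    responses = np.asarray(responses, dtype=float)
    return responses - np.asarray(index, dtype=float)[:, None]

# Toy data: 3 respondents, 2 positively and 2 negatively keyed items (1-5 scale).
pos = np.array([[5, 4], [5, 5], [2, 1]])
neg = np.array([[1, 2], [4, 5], [2, 1]])

acq = acquiescence_index(pos, neg)  # respondent 1 is consistent, 2 agrees with everything, 3 disagrees
corrected = correct_for_acquiescence(np.hstack([pos, neg]), acq)
print(acq)
```

In this toy example only the first respondent sits at the scale midpoint of 3.0; the other two show the kind of yes-saying and no-saying that produces a non-trivial standard deviation of the index.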

We computed a similar ESEM model, but now with the uncorrected raw scores; the loadings of the indicators are reported on the right-hand side of Table 3 (columns 9 to 13). An inspection of these columns shows that in every broad SEMS domain, a substantially higher number of indicator scales had their primary loading on a factor other than the intended one. This was mainly due to the negatively keyed identity indicators of several SEMS, which had their primary loading on the fourth factor. Overall, in this analysis, the Amity domain was not recovered, due to a strong influence of acquiescence in the covariance matrix. The fourth factor extracted in this analysis seems to reflect acquiescence variance because it contrasts negative items with positive loadings against positive items with negative loadings.

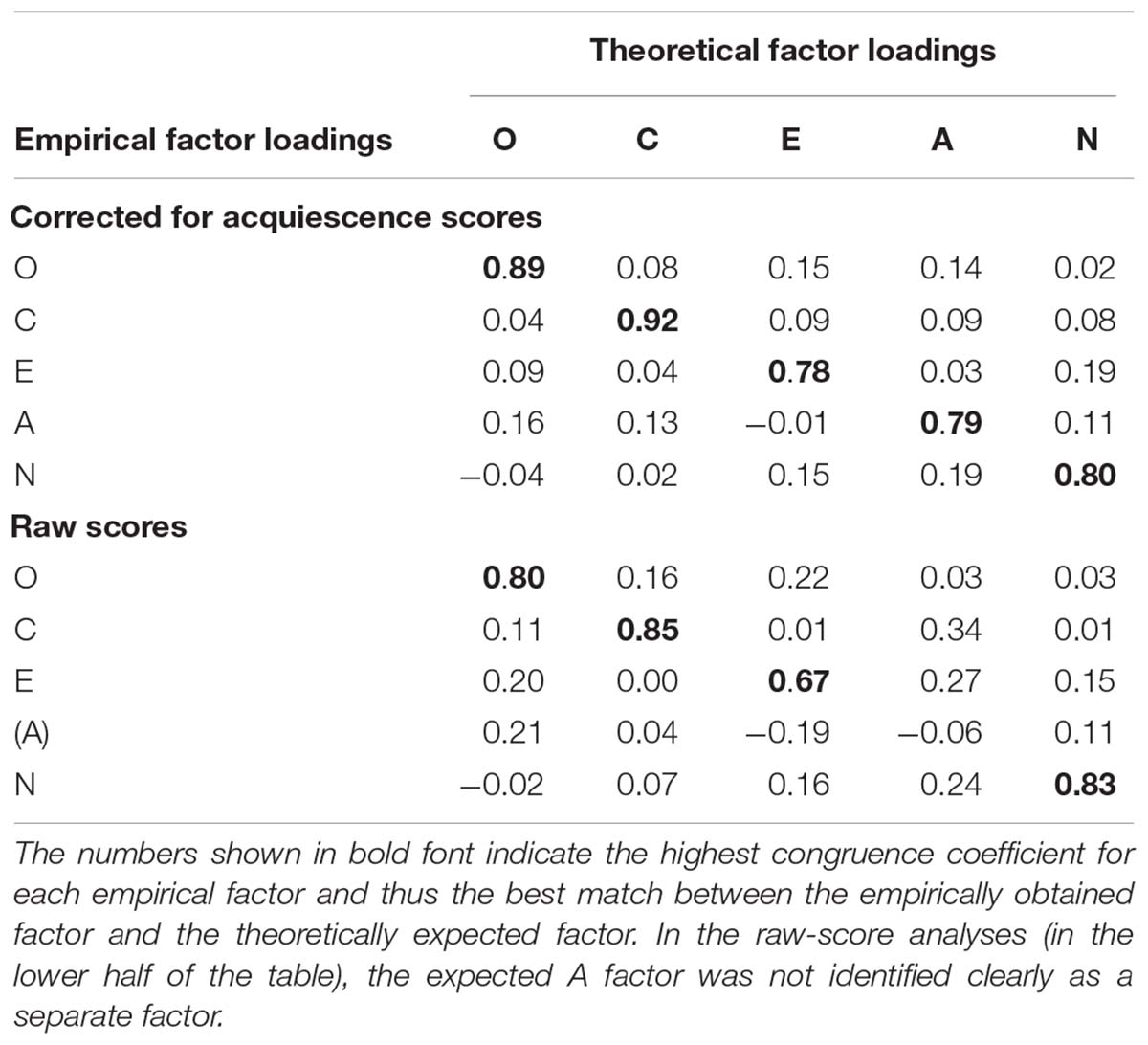

To quantify these observations, we further compared the ESEM results from the raw and the acquiescence-corrected SEMS indicators using a novel variant of factor congruence analysis. To define a theory-based, idealized target matrix of loadings, we created a vector of 54 numbers for each of the five expected domains, one for each indicator variable, with perfect theoretical loadings of 1.0 only on the one intended domain and zero on all the other four domains (thus, somewhat unrealistically, not allowing any of the known secondary loadings). We then correlated this idealized target matrix with the observed loadings of the 54 indicators estimated by the ESEM model, separately for the raw and for the acquiescence-corrected scores. These congruence coefficients are presented in Table 4.

Table 4. Conceptual congruency coefficients: Correlations of the empirically observed loadings on the five factors with a priori theoretical (ideal) loadings (1 and 0) for (a) scores corrected for acquiescence (upper half) and (b) uncorrected raw scores (lower half).

The upper half of Table 4 shows the congruence coefficients for the acquiescence-corrected ESEM model, whereas the lower half shows the congruence coefficients for the raw-score model. Each cell shows the congruence coefficient for comparing the empirical loadings (rows) to the idealized theoretical loadings (columns). We calculated these coefficients for all pairwise combinations. If structures were identical, then the values on the diagonal would all be 1.0 and the off-diagonal values would be zero.

When we consider the data corrected for acquiescence, the loadings were much closer to this ideal. The five congruence coefficients on the diagonal averaged 0.85 and even the lowest was 0.78. In contrast, for the raw scores, the diagonal congruence coefficients averaged only 0.62. Moreover, the off-diagonal coefficients were much closer to zero for the acquiescence-corrected ESEM model. In other words, the loading pattern for the raw scores was a far less clear representation of the social-emotional five-factor domains than the acquiescence-corrected one.
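The congruence analysis described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: it takes "correlated" to mean Pearson correlations between each empirical loading column and each idealized 1/0 target column, and it uses a hypothetical toy example with six indicators and three domains instead of the 54 indicators and five domains of the study.

```python
import numpy as np

def target_matrix(intended, n_factors):
    """Idealized loadings: 1.0 on each indicator's intended factor, 0.0 elsewhere."""
    intended = np.asarray(intended)
    target = np.zeros((intended.size, n_factors))
    target[np.arange(intended.size), intended] = 1.0
    return target

def congruence_table(observed, target):
    """Correlate empirical loading columns (rows of the result) with target columns (columns)."""
    observed = np.asarray(observed, dtype=float)
    k_emp = observed.shape[1]
    # np.corrcoef treats rows as variables, so pass the transposed matrices.
    r = np.corrcoef(observed.T, target.T)
    return r[:k_emp, k_emp:]

# Hypothetical toy example: indicators 0-1 belong to factor 0, 2-3 to factor 1, 4-5 to factor 2.
intended = [0, 0, 1, 1, 2, 2]
target = target_matrix(intended, n_factors=3)

observed = np.array([
    [0.7, 0.1, 0.0],
    [0.6, 0.0, 0.1],
    [0.1, 0.8, 0.0],
    [0.0, 0.5, 0.2],
    [0.1, 0.0, 0.6],
    [0.0, 0.1, 0.7],
])

table = congruence_table(observed, target)
perfect = congruence_table(target, target)  # a structure identical to the target
print(np.round(table, 2))
```

One caveat of the Pearson assumption: because the 1/0 target columns are themselves negatively correlated, a perfectly recovered structure gives diagonal values of 1.0 but slightly negative off-diagonal values, about −1/(k − 1) for k equally sized domains (−0.5 in this toy case, −0.25 for five domains). An uncentered coefficient such as Tucker's congruence coefficient would give exactly zero off the diagonal instead.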

As expected on the basis of Western research, acquiescence affected the SEMS indicators in the Amity domain most strongly. Looking at the congruency of the empirical factor in row A for the raw scores (lower half of Table 4), there was no similarity of empirical loadings with the theoretically expected values for A (i.e., column A: r = −0.06) or with any other domain (O: r = 0.21, C: r = 0.04, E: r = −0.19, N: r = 0.11). When we look at column A (which represents the vector of theoretically expected perfect loadings of 1s and 0s), we see only small correlations with empirical loadings for factors representing C (r = 0.34), E (r = 0.27), and N (r = 0.25).

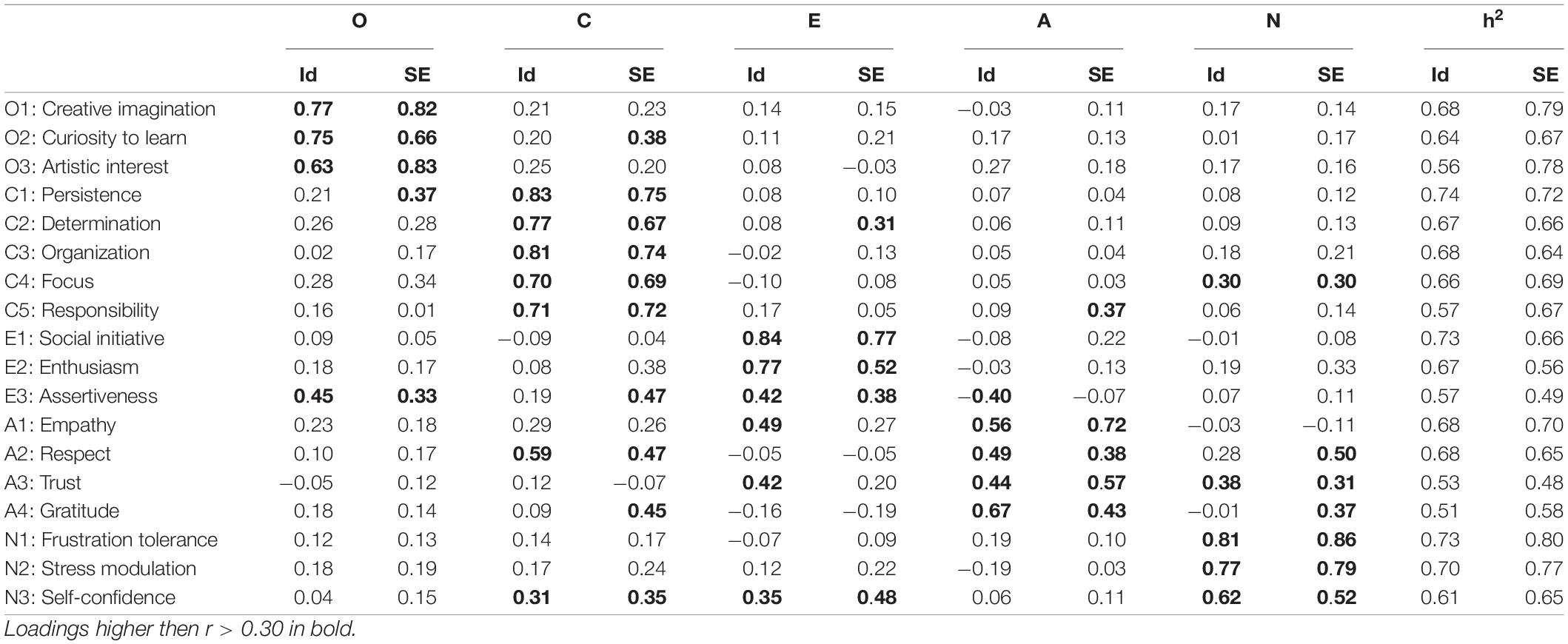

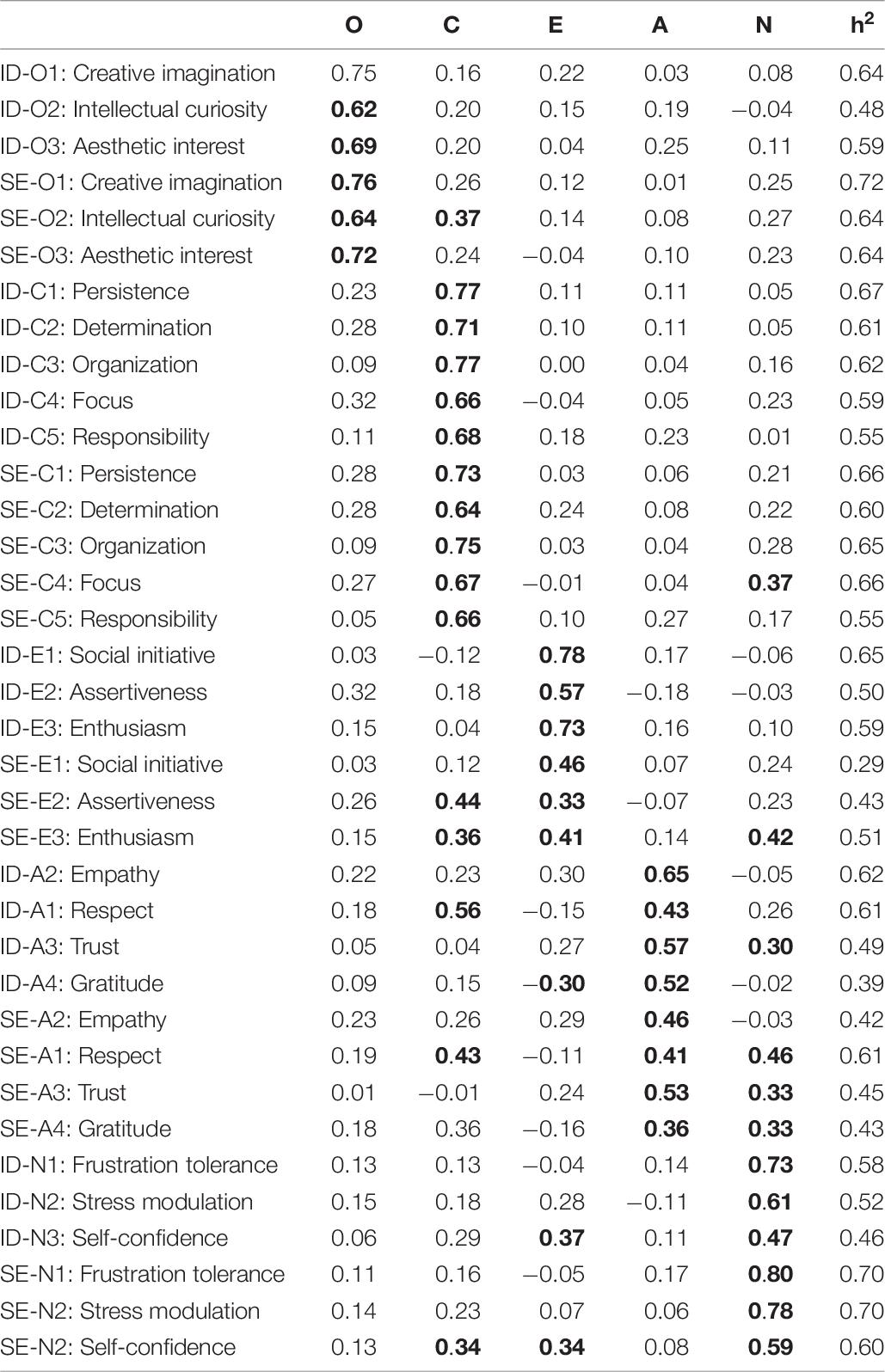

Finally, we tested whether the newly devised self-efficacy scales would be well represented within the now familiar five-factor structure. We conducted separate principal component analyses of (a) the 18 SENNA identity indicators (now combining their true and false-keyed items into one 6-item scale) and (b) the self-efficacy indicators (three items each) after all indicators had been corrected for acquiescence. We used principal components because we wanted a model with no constraints in loadings. As can be observed in Table 5, the self-efficacy scales grouped as expected according to the five domains, and so did the identity scales. Some of the self-efficacy scales, however, showed secondary loadings on the Self-management factor: Curiosity to learn (O), Assertiveness (E), Gratitude (A), and Self-Confidence (N) had substantive loadings on Self-Management.
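As an illustration of the quantities involved in such an analysis, the sketch below computes principal component loadings and communalities (h2) from a correlation matrix. It is a simplified, unrotated version on simulated data. The function name, the two-factor toy pattern, and the sample size are hypothetical, and any rotation step used for the published loadings is omitted here.

```python
import numpy as np

def pca_loadings(scores, n_components):
    """Unrotated principal component loadings and communalities from the correlation matrix."""
    scores = np.asarray(scores, dtype=float)
    corr = np.corrcoef(scores, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)           # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]  # keep the largest components
    loadings = eigvecs[:, order] * np.sqrt(eigvals[order])
    communality = (loadings ** 2).sum(axis=1)         # h2: variance explained per variable
    return loadings, communality

# Simulated scale scores: 500 respondents, 6 scales driven by 2 latent dimensions.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))
pattern = np.array([[0.8, 0.0], [0.7, 0.0], [0.6, 0.0],
                    [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]])
scores = latent @ pattern.T + 0.5 * rng.normal(size=(500, 6))

loadings, h2 = pca_loadings(scores, n_components=2)
_, h2_full = pca_loadings(scores, n_components=6)
print(np.round(h2, 2))
```

Communalities are unchanged by an orthogonal rotation of the retained components, so h2 values computed this way stay comparable even though the loadings themselves are unrotated. When all components are retained, every communality equals 1.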

Table 5. Separate factor structures for the trait-identity item clusters (Id) and for the self-efficacy (SE) item clusters: Standardized loadings from a principal component analysis after controlling for acquiescence and communality (h2) to indicate total variance explained.

When analyzed together with the identity items, self-efficacy scales still had their highest loadings on the five factors along with their corresponding identity scales (see Table 6). SEMS identity and self-efficacy items hence seem to function as indicators of the same underlying latent social-emotional five factors.

Table 6. Joint principal component analysis of person-centered identity and self-efficacy scales: Standardized loadings and communality (h2) to indicate total variance explained.

This paper described the developmental history of SENNA and provided new psychometric information on its internal structure obtained from a large sample of 50,000 Brazilian students enrolled in public school grades 6 to 12, spread across the entire State of São Paulo. The SENNA inventory was designed as an instrument assessing 18 different skills, each operationalized by nine items that represent three types of items: three positively keyed identity items and three negatively keyed identity items, complemented with three (always positively keyed) self-efficacy items, totaling a set of 162 items. Individual skills were assumed to group into the higher-order structure of the social-emotional Big Five, labeled as Engaging with Others, Amity, Self-Management, Emotional Regulation, and Open-Mindedness. Given that its target youth group is as young as 11 years old (grade 6), SENNA was also equipped to correct systematically for individual differences in acquiescence, which are known to have particularly strong biasing effects from ages 10 to 13 (Soto et al., 2008).

Our results showed convincing evidence that the 18 SEMS measured here aligned within the social-emotional Big Five structure, both when the identity and self-efficacy scales were analyzed together and when they were analyzed separately, with one critical condition: in samples of youth like ours, with children as young as 11 years old, individual differences in acquiescence have to be corrected. There were some expected secondary loadings that have also been observed in Western samples (e.g., Soto et al., 2008; Soto and John, 2017). The results for the Trust skill in this study were less clear, and this scale was more difficult to position uniquely in the social-emotional skill space. Overall, these findings underscore that the social-emotional Big Five is also a useful framework to organize SEMS in a non-WEIRD culture like Brazil (John et al., 2008; Kyllonen et al., 2014; Lipnevich et al., 2016; Abrahams et al., 2019; Primi et al., 2019c).

This work further provided strong evidence that students from grades 6 to 12 are able to provide reliable and valid descriptions on identity and self-efficacy scales to assess a broad variety of SEMS, even when data are collected in the course of large-scale assessments. The instrument hence meets all requirements that were previously listed, including being broadly applicable, being low in cost (in time and financially), and being completable independently by students. The SENNA inventory in its present form is a significant step forward compared to its predecessor (SENNA 1.0; Primi et al., 2016), including (a) scales to assess five domains and 18 specific SEMS, (b) both identity and self-efficacy items to represent the constructs, and (c) an effective method to deal with acquiescence bias in responding.

Furthermore, this study demonstrated the importance of having a proper method to control for acquiescence variance, which is a major problem in large-scale assessment, where participants are often younger, represent various backgrounds, are not necessarily motivated or paying attention during the entire assessment, and where some participants may demonstrate answering tendencies such as yes- or no-saying. Low motivation to complete assessments may produce inconsistent agreement and disagreement. In the literature, this is called Insufficient Effort Responding (Niessen et al., 2016). Since acquiescence varies across students, it may influence inter-item correlations, confounding the correlations that we expect to be caused by the latent dimensions we are trying to measure. This systematic confounding suppresses correlations between items of opposite poles and inflates correlations between items assessing the same pole (++ and −−, Maydeu-Olivares and Steenkamp, 2018; Mirowsky and Ross, 1991; Primi et al., 2020; Primi et al., 2019b).
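The confounding mechanism can be illustrated with a small simulation. This is a toy sketch, not an analysis of the SENNA data: it assumes each response is the sum of a keyed trait contribution, a person-specific acquiescence shift, and random noise, and all parameter values and function names are hypothetical.

```python
import numpy as np

def simulate_items(n, acq_sd, seed=0):
    """Two positively and two negatively keyed items sharing one trait and one acquiescence shift."""
    rng = np.random.default_rng(seed)
    trait = rng.normal(size=n)
    acq = rng.normal(scale=acq_sd, size=n)    # same shift added to every item of a person
    noise = rng.normal(size=(n, 4))
    keys = np.array([1.0, 1.0, -1.0, -1.0])   # items 0-1 positively keyed, items 2-3 negatively keyed
    return trait[:, None] * keys + acq[:, None] + noise

def mean_correlations(items):
    """Average correlation among same-keyed item pairs and among opposite-keyed item pairs."""
    r = np.corrcoef(items, rowvar=False)
    same = (r[0, 1] + r[2, 3]) / 2
    opposite = (r[0, 2] + r[0, 3] + r[1, 2] + r[1, 3]) / 4
    return same, opposite

same_clean, opp_clean = mean_correlations(simulate_items(20000, acq_sd=0.0))
same_acq, opp_acq = mean_correlations(simulate_items(20000, acq_sd=0.7))

print(f"no acquiescence:   same-keyed {same_clean:.2f}, opposite-keyed {opp_clean:.2f}")
print(f"with acquiescence: same-keyed {same_acq:.2f}, opposite-keyed {opp_acq:.2f}")
```

Under this model the acquiescence variance is added to every inter-item covariance, so it pushes same-keyed correlations up and pulls the negative opposite-keyed correlations toward zero, which is the pattern described above.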

In this sample, as well as in our previous work (Primi et al., 2016), we observe sizable acquiescence variance. This influence usually produces two significant factors grouping positive and negative items. As Ten Berge (1999) points out, sometimes we can find an acquiescence factor having all items, positive and negative before reversing, loading positively on a factor (or reversed negative scales with positive loadings conflated with positive facet scales with positive loadings on a factor, as was the case in our study) that can be interpreted as an acquiescence factor. In sum, acquiescence is a “ghost” confounder that needs to be controlled before we can run item factor analysis. In our study, the Amity factor was difficult to recover, except when we controlled for acquiescence.

While developing SENNA, we systematically included both trait-identity and self-efficacy items to assess all SEMS, to be in a position to examine their relative contributions to assessing SEMS and investigate their validities to predict education outcomes of interest. Contributions and validities could be systematically different for methods (identity versus self-efficacy), but could also depend on the SEMS considered (see our example of trust and presentation skills in the introduction). As it stands now, and relying on the findings of the current study, we aggregate scores on identity and self-efficacy scales and take these aggregates as an index of a particular SEMS. This practice may have to be amended in the future if new evidence becomes available that demonstrates differential predictive validities favoring one measurement perspective over the other (identity relative to self-efficacy). Although both assessment perspectives can be distinguished conceptually, it is not clear at the moment whether identity and self-efficacy items can be empirically distinguished well.

Moreover, the combination of positively- and negatively keyed identity items helped to correct for acquiescence. Scales composed exclusively of self-efficacy items miss negatively keyed items (low-skill items) and hence do not allow for the identification and correction for acquiescence bias. The combination of identity items (both positively and negatively keyed) together with self-efficacy items also enables the researcher to correct the self-efficacy items for acquiescence bias.

SENNA was primarily designed for implementation in education practice and policy making, but it should also serve fundamental and applied research purposes. We provided new evidence in this paper supporting its use and highlighting some of its research opportunities. Use of the inventory, its scales and its reports will help education in Brazil to use a common vocabulary to talk about SEMS development among students, teachers, parents, directors and policy makers. In addition, it creates an opportunity to develop evidence-based actions to be implemented in the classroom but also for policy-making. Intensive policy debates have started in many countries, including Brazil, to represent SEMS learning in the educational curriculum (OECD, 2015). One significant initiative in Brazil is the Brazilian Common Core for Teaching Fundamentals, referring to global competencies that tap into various combinations of more foundational SEMS skills. SENNA results can provide input for this debate.

From a research perspective, the demonstration of the relationships between SEMS at school and outcomes across life will be important. Further examining the conceptual distinction between identity versus self-efficacy measurement perspectives will be an additional avenue of research. Finally, the supplementary confirmation of the social-emotional Big Five framework as a model to structure SEMS also opens new perspectives to think about the construction of more formative assessment tools that can be directly used within classrooms to inform the learning of SEMS in education (Pancorbo et al., 2020).

The present work has a number of strengths, such as relying on a large sample systematically sampled from public schools in a culture for which the instrument was designed, and providing a nuanced approach to represent diverse content and multiple measurement angles to assess SEMS. There are, however, also a number of limitations that readers and potential users need to take into account. First, the present data are self-reports susceptible to a range of biases beyond acquiescence. Second, at the present stage, SENNA is not designed to be used in summative assessment contexts, where the result of an assessment has important consequential outcomes, such as investing in new programs or providing extra support for teachers.

Finally, external and criterion validity evidence in scholastic contexts is needed. One paper (Primi et al., 2019a) has already shown that the broad SENNA domain scales are related to students’ objective test scores, with the Self-Management and Open-Mindedness domain scores showing the expected strongest validity coefficients. Future research needs to test the reasonable hypothesis that going from this broad level to the lower level of specific SEMS will further improve the prediction of important scholastic and life outcomes in public school students.

This article is part of a research program on socio-emotional skills sponsored by the Ayrton Senna Foundation. RP also receives a scholarship from the National Council on Scientific and Technological Development (CNPq) and funding from São Paulo Research Foundation (FAPESP). Coordenação de Aperfeiçoamento de Pessoal de Nível Superior – Brasil (CAPES) PVEX – 88881.337381/2019-01.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Studies involving human participants were reviewed and approved by Universidade São Francisco. Written informed consent for participation was not required in accordance with the local legislation and institutional requirements. Data collected for this study were part of students’ regular school activities involving minimal risk. We received approval from the educational secretariat to collect data as part of these activities.

RP, DS, OJ, and FD contributed to the conception and design of the study. RP analyzed the data and wrote the first draft of the manuscript. OJ, FD, and DS revised and wrote substantial sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abrahams, L., Pancorbo, G., Primi, R., Santos, D., Kyllonen, P., John, O. P., et al. (2019). Social-emotional skill assessment in children and adolescents: advances and challenges in personality, clinical, and educational contexts. Psychol. Assess. 31, 460–473. doi: 10.1037/pas0000591

Bandura, A. (1977). Self-efficacy: toward a unifying theory of behavioral change. Psychol. Rev. 84, 191–215. doi: 10.1037/0033-295X.84.2.191

Bandura, A. (2006). “Guide for constructing self-efficacy scales,” in Self-efficacy Beliefs of Adolescents, Vol. 5, eds F. Pajares and T. Urdan (Greenwich, CT: Information Age Publishing), 307–337.

Barbaranelli, C., Caprara, G. V., Rabasca, A., and Pastorelli, C. (2003). A questionnaire for measuring the big five in late childhood. Personality Individual Differ. 34, 645–664. doi: 10.1016/S0191-8869(02)00051-X

Bartram, D. (2005). The great eight competencies: a criterion-centric approach to validation. J. Appl. Psychol. 90, 1185–1203.

Bertling, J., and Alegre, J. (2018). PISA 2021 Context Questionnaire Framework. Princeton NJ: Educational Testing Service (ETS), 80.

De Fruyt, F., and Van Leeuwen, K. (2014). Advancements in the field of personality development. J. Adolesc. 37, 763–769. doi: 10.1016/j.adolescence.2014.04.009

De Fruyt, F., Wille, B., and John, O. P. (2015). Employability in the 21st century: complex (Interactive) problem solving and other essential skills. [Editorial Material]. Indus. Organ. Psychology-Perspect. Sci. Practice 8, 276–281.

De Fruyt, F. D. (2019). Towards an evidence-based recruitment and selection process. Pertsonak Eta Antolakunde Publikoak Kudeatzeko Euskal Aldizkaria Revista Vasca de Gestión de Personas y Organizaciones Públicas 16, 8–15.

Duckworth, A. L., and Quinn, P. D. (2009). Development and validation of the short grit scale (grit-s). J. Pers. Assess. 91, 166–174. doi: 10.1080/00223890802634290

Goodman, R. (1997). The strengths and difficulties questionnaire: a research note. J. Child Psychol. Psychiatry 38, 581–586.

Henrich, J., Heine, S. J., and Norenzayan, A. (2010). The weirdest people in the world? Behav. Brain Sci. 33, 61–83. doi: 10.1017/S0140525X0999152X

Hoekstra, H. A., and Van Sluijs, E. (eds) (2003). Managing Competencies: Implementing Human Resource Management. Nijmegen: Royal Van Gorcum.

John, O. P. (2021). “The integrative Big Five trait taxonomy: the paradigm matures,” in Handbook of Personality: Theory and Research, 4th Edn, eds O. P. John and R. W. Robins (New York, NY: Guilford).

John, O. P., and De Fruyt, F. (2015). Framework for the OECD Longitudinal Study of Social and Emotional Skills in Cities. Paris: OECD Publishing.

John, O. P., Naumann, L. P., and Soto, C. J. (2008). “Paradigm shift to the integrative Big Five trait taxonomy: history, measurement, and conceptual issues,” in Handbook of Personality: Theory and Research, 3rd Edn, eds O. P. John, R. W. Robins, and L. A. Pervin (New York, NY: Guilford), 114–158.

Judge, T. A., Erez, A., Bono, J. E., and Thoresen, C. J. (2003). The core self-evaluations scale: development of a measure. Personnel Psychol. 56, 303–331. doi: 10.1111/j.1744-6570.2003.tb00152.x

Kankaraš, M., and Suárez-Álvarez, J. (2019). Assessment Framework of the OECD Study on Social and Emotional Skills. Paris: OECD.

Kyllonen, P. C., Lipnevich, A. A., Burrus, J., and Roberts, R. D. (2014). Personality, motivation, and college readiness: a prospectus for assessment and development: personality, motivation, and college readiness. ETS Res. Report Series 2014, 1–48. doi: 10.1002/ets2.12004

Lipnevich, A. A., Preckel, F., and Roberts, R. D. (eds) (2016). Psychosocial Skills and School Systems in the 21st Century: Theory, Research, and Practice. Berlin: Springer International Publishing.

Maydeu-Olivares, A., and Steenkamp, J.-B. E. M. (2018). An Integrated Procedure to Control for Common Method Variance in Survey Data using Random Intercept Factor Analysis Models. Available online at: https://www.academia.edu/36641946/An_integrated_procedure_to_control_for_common_method_variance_in_survey_data_using_random_intercept_factor_analysis_models (accessed January 23, 2019).

McCrae, R. R., and Terracciano, A. (2005). Personality profiles of cultures: aggregate personality traits. J. Pers. Soc. Psychol. 89, 407–425. doi: 10.1037/0022-3514.89.3.407

Mirowsky, J., and Ross, C. E. (1991). Eliminating defense and agreement bias from measures of the sense of control: a 2 X 2 Index. Soc. Psychol. Quar. 54, 127–145. doi: 10.2307/2786931

Miyamoto, K., Kubacka, K., and Oliveira, E. (2014). Promoting Social and Emotional Skills for Societal Progress in Rio de Janeiro. Paris: Organisation for Economic Co-operation and Development.

Muris, P. (2001). A brief questionnaire for measuring self-efficacy in youths. J. Psychopathol. Behav. Assess. 23, 145–149. doi: 10.1023/A:1010961119608

Niessen, A. S. M., Meijer, R. R., and Tendeiro, J. N. (2016). Detecting careless respondents in web-based questionnaires: which method to use? J. Res. Personal. 63, 1–11. doi: 10.1016/j.jrp.2016.04.010

Nowicki, S., and Strickland, B. R. (1973). A locus of control scale for children. J. Consult. Clin. Psychol. 40, 148–154. doi: 10.1037/h0033978

Olderbak, S., and Wilhelm, O. (2020). Overarching principles for the organization of socioemotional constructs. Curr. Direct. Psychol. Sci. 29, 63–70. doi: 10.1177/0963721419884317

Pancorbo, G., Primi, R., John, O. P., Santos, D., Abrahams, L., and De Fruyt, F. (2020). Development and psychometric properties of rubrics for assessing social-emotional skills in youth. Stud. Educ. Eval. 67:14.

Primi, R., Santos, D., De Fruyt, F., Hauck-Filho, N., and John, O. P. (2019c). Mapping self-report questionnaires for socio-emotional characteristics: what do they measure? Estudos de Psicologia (Campinas) 36:e180138. doi: 10.1590/1982-0275201936e180138

Primi, R., De Fruyt, F. D., Santos, D., Antonoplis, S., and John, O. P. (2019a). True or false? keying direction and acquiescence influence the validity of socio-emotional skills items in predicting high school achievement. Int. J. Testing 20, 1–25. doi: 10.1080/15305058.2019.1673398

Primi, R., Santos, D., De Fruyt, F., and John, O. P. (2019d). Comparison of classical and modern methods for measuring and correcting for acquiescence. Br. J. Mathematical Statistical Psychol. 72, 447–465. doi: 10.1111/bmsp.12168

Primi, R., Hauck-Filho, N., Valentini, F., Santos, D., and Falk, C. F. (2019b). “Controlling acquiescence bias with multidimensional IRT modeling,” in Quantitative Psychology. IMPS 2017. Springer Proceedings in Mathematics & Statistics, eds M. Wiberg, S. Culpepper, R. Janssen, J. González, and D. Molenaar (Cham: Springer), doi: 10.1007/978-3-030-01310-3_4

Primi, R., Hauck-Filho, N., Valentini, F., and Santos, D. (2020). “Classical perspectives of controlling acquiescence with balanced scales,” in Quantitative Psychology, eds M. Wiberg, D. Molenaar, J. González, U. Böckenholt, and J.-S. Kim (Berlin: Springer International Publishing), 333–345. doi: 10.1007/978-3-030-43469-4_25

Primi, R., Santos, D., John, O. P., and De Fruyt, F. (2021). SENNA Inventory for the Assessment of Social and Emotional Skills: Technical Manual. São Paulo: Instituto Ayrton Senna. doi: 10.31234/osf.io/byvpr

Primi, R., Santos, D., John, O. P., and Fruyt, F. D. (2016). Development of an inventory assessing social and emotional skills in Brazilian youth. Eur. J. Psychol. Assess. 32, 5–16. doi: 10.1027/1015-5759/a000343

Rosenberg, M. (1965). Society and the Adolescent Self-Image. Princeton, NJ: Princeton University Press. doi: 10.1515/9781400876136/html

Santos, D., and Primi, R. (2014). Social and Emotional Development and School Learning: A Measurement Proposal in Support of Public Policy. São Paulo: EduLab21, Instituto Ayrton Senna. Available online at: https://globaled.gse.harvard.edu/files/geii/files/social-and-emotional-developmente-and-school-learning1.pdf (accessed November 23, 2016).

Soto, C. J., and John, O. P. (2015). “Conceptualization, development, and initial validation of the Big Five Inventory–2,” in Paper Presented at the Biennial Meeting of the Association for Research in Personality (St. Louis, MO). Available online at: http://www.colby.edu/psych/personality-lab/

Soto, C. J., and John, O. P. (2017). The next Big Five Inventory (BFI-2): developing and assessing a hierarchical model with 15 facets to enhance bandwidth, fidelity, and predictive power. J. Pers. Soc. Psychol. 113, 117–143. doi: 10.1037/pspp0000096

Soto, C. J., John, O. P., Gosling, S. D., and Potter, J. (2008). The developmental psychometrics of big five self-reports: acquiescence, factor structure, coherence, and differentiation from ages 10 to 20. J. Pers. Soc. Psychol. 94, 718–737. doi: 10.1037/0022-3514.94.4.718

Taylor, R. D., Oberle, E., Durlak, J. A., and Weissberg, R. P. (2017). Promoting positive youth development through school-based social and emotional learning interventions: a meta-analysis of follow-up effects. Child Dev. 88, 1156–1171. doi: 10.1111/cdev.12864

Ten Berge, J. M. (1999). A legitimate case of component analysis of ipsative measures, and partialling the mean as an alternative to ipsatization. Multivariate Behav. Res. 34, 89–102. doi: 10.1207/s15327906mbr3401_4

van der Linden, W. J., Veldkamp, B. P., and Carlson, J. E. (2004). Optimizing balanced incomplete block designs for educational assessments. Appl. Psychol. Measurement 28, 317–331. doi: 10.1177/0146621604264870

Keywords: 21st century skills, social-emotional skills, instrument development, Big Five, five-factor model, measurement invariance, exploratory structural equation modeling

Citation: Primi R, Santos D, John OP and De Fruyt F (2021) SENNA Inventory for the Assessment of Social and Emotional Skills in Public School Students in Brazil: Measuring Both Identity and Self-Efficacy. Front. Psychol. 12:716639. doi: 10.3389/fpsyg.2021.716639

Received: 28 May 2021; Accepted: 05 October 2021;

Published: 25 November 2021.

Edited by:

Jesus de la Fuente, University of Navarra, Spain

Reviewed by:

Mercedes Gómez-López, University of Córdoba, Spain

Copyright © 2021 Primi, Santos, John and De Fruyt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ricardo Primi, cnByaW1pQG1hYy5jb20=
