ORIGINAL RESEARCH article

Front. Psychol. , 29 November 2021

Sec. Cognition

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.716433

This article is part of the Research Topic The Mechanisms Underlying the Human Minimal Self

Carlotta Langer1,2* Nihat Ay1,2,3,4

The Integrated Information Theory provides a quantitative approach to consciousness and can be applied to neural networks. An embodied agent controlled by such a network influences and is influenced by its environment. This involves, on the one hand, morphological computation within goal directed action and, on the other hand, integrated information within the controller, the agent's brain. In this article, we combine different methods in order to examine the information flows among and within the body, the brain and the environment of an agent. This allows us to relate various information flows to each other. We test this framework in a simple experimental setup. There, we calculate the optimal policy for goal-directed behavior based on the “planning as inference” method, in which the information-geometric em-algorithm is used to optimize the likelihood of the goal. Morphological computation and integrated information are then calculated with respect to the optimal policies. Comparing the dynamics of these measures under changing morphological circumstances highlights the antagonistic relationship between these two concepts. The more morphological computation is involved, the less information integration within the brain is required. In order to determine the influence of the brain on the behavior of the agent it is necessary to additionally measure the information flow to and from the brain.

An agent that is faced with a task can solve it using solely its brain, its body's interaction with the world, or a combination of both. This article presents a framework to analyze the importance of these different interactions for an embodied agent and therefore aims at advancing the understanding of how embodiment influences the brain and the behavior of an agent. To illustrate the idea we discuss the following scenario:

Consider a sailor at sea without any navigational equipment. The sailor has to rely on the information given by the sun or the visible stars in order to determine in which direction to steer. The more complex part of the task is solved by the information processed in the brain of the sailor. On the other hand, a bird equipped with magneto-reception, meaning one that is able to use the magnetic field of the earth to perceive its direction, can rely on this sense and does not need to integrate different sources of information. Here, the body of the bird interacts with the environment for the bird to orientate itself. The complexity of the task is met by the morphology of the bird. Taking this example further we consider a modern boat with a highly developed navigation system. The sailor now only needs to know how to interpret the machines and will therefore have less complex calculations to do. The complexity of the task shifts from the brain and background knowledge of the sailor toward the construction of the navigation system, which receives and integrates different information sources for the sailor to use.

Our objective is to analyze these shifts of complexity. We will do that by quantifying the importance of the information flow in an embodied agent performing a task under different morphological circumstances.

The importance of the human body for perception of the environment and ourselves is a core idea of the embodied cognition theory; see, for example, Wilson (2002) or Gallagher (2005). In Gallagher (2000) the author develops a definition of a human minimal self in the following way:

“Phenomenologically, that is, in terms of how one experiences it, a consciousness of oneself as an immediate subject of experience, unextended in time. The minimal self almost certainly depends on brain processes and an ecologically embedded body, but one does not have to know or be aware of this to have an experience that still counts as a self-experience.”

Therefore, it is important to understand the influence the ecologically embedded body has on the brain. Hence, here we aim at quantifying both the interaction of the body with the environment and the information flows inside the body and the brain, using the same framework and thereby relating them to each other. As a first step in that direction we will analyze simulated artificial agents in a toy example. These agents have a control architecture, the brain of the agent, consisting of a neural network. This will provide the basis for future analysis of more complex agents such as humanoid robots. Ultimately, we hope to gain insights about human agency, and in particular the representation of the self.

The setting of our experiment will be presented in section 2.1. The question we ask is: How is the complexity of solving the task distributed among the different parts of the body, brain and environment?

The main statements that we will support by our experiments are:

1. The more the agent can rely on the interaction of its body with the environment to solve a task, the less integrated information in the brain is required.

This antagonistic relationship between integrated information and morphological computation can be observed even in cases in which the controller has no influence on the behavior of the agent. Hence it is necessary to analyze further information flows in order to fully understand the impact of the controller on the behavior.

2. The importance of integrated information in the controller for the behavior of an embodied agent depends additionally on the information flowing to and from the controller. Therefore, it is not sufficient to only calculate an integrated information measure for understanding its behavioral implications.

In order to test these statements, we need to develop a theoretical background.

We will model the different interactions using the sensori-motor loop, which depicts the connections among the world W, the controller C, the sensors S and actuators A. This will be discussed further in section 2.1.2.

Using the sensori-motor loop we are able to define a set of probability distributions reflecting the structure of the information flow of an agent interacting with the world. Now we need to find the probability distributions that describe a behavior that optimizes the likelihood of success. It would be possible to use a learning or evolutionary algorithm on the agents to find this optimal behavior, but instead we will apply a method called “planning as inference.”

Planning as inference is a technique proposed in Attias (2003), in which a goal directed planning task under uncertainty is solved by probabilistic inference tools. This method models the actions an agent can perform as latent variables. These variables are then optimized with respect to a goal variable using the em-algorithm, an information geometric algorithm that is guaranteed to converge, as proven in Amari (1995). The algorithm may converge to a local optimum depending on the initial distribution, which allows us to analyze different kinds of agents and strategies that lead to a similar probability of success. This course of action has the advantage that we can directly calculate the optimal policies without having to first train the agents. We will describe this method in the context of our experimental setup in further detail in section 2.2.

Having calculated the distributions that describe the optimal behavior, we apply various information theoretic measures to quantify the strength of the different connections. The measures we are going to discuss are defined by minimizing the KL-divergence between the original distribution and the set of split distributions. The split distributions lack the information flow that we want to measure. Following this concept we are able to quantify the strength of the different information flows, which leads to measures that can be interpreted as integrated information and morphological computation, respectively. We will further define four additional measures that together quantify all the connections among the controller, sensors and actuators. These are defined in section 2.3.

Using information theoretic measures to quantify the information flow in an embodied agent is a natural approach, since we could perceive the different parts of the system as communicating with each other. Surely the world does not actively send information to the controller, but the controller still receives information about the world through the sensors. There have been various studies analyzing acting agents by using information theoretic measures. In Klyubin et al. (2007) maximizing the information flow through the whole system is used as a learning objective. Furthermore, in Touchette and Lloyd (2004) the authors use the concepts of information and entropy to define conditions under which a system is perfectly controllable or observable. Emphasizing the importance of the sensory input, entropy and mutual information are utilized in Sporns and Pegors (2004) to analyze how an agent actively structures its sensory input. Moreover, the authors of Lungarella et al. (2005) also include the structure of the motor data in their analysis. The last two cited articles additionally discuss two measures regarding the amount of information and the complexity of its integration. These concepts are also important in the context of Integrated Information Theory.

Integrated Information Theory (IIT), proposed by Tononi, aims at measuring the amount and quality of consciousness. This theory went through multiple phases of development, starting as a measure for brain complexity (Tononi et al., 1994) and then evolving through different iterations (Tononi and Edelman, 1998; Tononi, 2008) toward a broad theory of consciousness (Oizumi et al., 2014). The two key concepts that are present in all versions of IIT are “Information” and “Integration.” Information refers to the number of states a system can be in, and Integration describes the extent to which the information is integrated among its different parts. Measures for integrated information differ depending on the version of the theory they are referring to and on the framework they are defined in. We discussed a branch of these measures building on information geometry in Langer and Ay (2020). In this article we will use the measure that we proposed in Langer and Ay (2020) for the case of a known environment, as defined in section 2.3.1. As advocated by the authors of Mediano et al. (2021), we will treat the integrated information measure as a complexity measure and therefore as a way to quantify the relevant information flow in the controller.

Another general feature of all IIT measures so far is that they focus solely on the brain, meaning on the controller in the case of an artificial agent. Therefore, we want to embed these measures into the sensori-motor loop and analyze their behavior in relation to the dynamics of the body and environment. Although the measures are only focusing on the controller, there have been simulated experiments with evolving embodied agents, interacting with their environment, in the context of IIT. In Edlund et al. (2011) the authors measure the integrated information values for simulated evolving artificial agents in a maze and conclude that integrated information grows with the fitness of the agents. Increasing the complexity of the environment leads in Albantakis et al. (2014) to the conclusion that integrated information needs to increase in order to capture a more complex environment. In Albantakis and Tononi (2015) the authors go one step further and conclude from experiments with elementary cellular automata and adaptive logic-gate networks that a high integrated information value increases the likelihood of a rich dynamical behavior. All of these examples focus on the measures in the controller in order to analyze what kind of cause-effect structure makes a difference intrinsically. Since we are interested in an embodied agent solving a task, we want to emphasize the importance of the interaction of the agent's body with the world and additionally measure this interaction explicitly. This leads us to the concept of morphological computation.

Morphological computation is the reduction of computational complexity for the controller resulting from the interaction between the body and the world, as described in Ghazi-Zahedi (2019). There are different ways in which the body can lift the burden of the brain, as discussed in Müller and Hoffmann (2017). An example of morphological computation is the bird using its magneto-reception, mentioned earlier in the introduction. Another case of morphological computation would be a human hand grabbing a fragile object, compared to a robotic metal hand. The soft tissue of the human hand allows us to be less precise in the calculation of the pressure that we apply. The robot needs to perform more difficult computations and will therefore most likely have a higher integrated information. Does this mean that our experience of this task is less conscious than the experience of the robot? Here we want to take a step back from the abstract concept of consciousness and instead examine the complexity of the tasks. Even though the interactions are not fully controlled by the human brain, the soft skin of the human hand interacts with the object in a more complicated manner than the robot's hand. In this article we want to analyze how the complexity of solving a task is met by the different information flows among the brain, body and environment. In Lungarella and Sporns (2006), the authors find that the information flow in the agent can be affected by changes in the body's morphology. Examining this phenomenon further we will observe shifts in the importance of the information flows depending on the morphology of the body, which directly changes the complexity of the environment for the agent.

Furthermore, we will define two additional groups of agents. For the agents of the first group all the information has to go through the controller, while the controller has no impact on the action for the agents in the second group. These cases demonstrate once more that the antagonistic behavior of morphological computation and integrated information exists regardless of the behavior of the agents. The results of our experiments are presented in section 3.

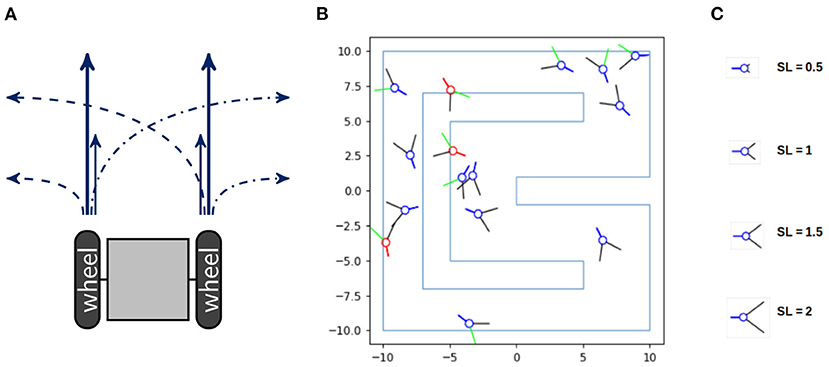

In order to analyze the information flow of an acting agent, we examine the following simple setting. The agents are idealized models of a two-wheeled robot depicted in Figure 1A. Each wheel can spin either fast or slow, hence the agents have four different movements and are unable to stop. If both wheels spin fast, then the agent moves 0.6 units of length and if they both spin slow, then the agent moves 0.2. In case of one fast and one slow wheel the agent makes a turn of approximately 10° with a speed of 0.4. The code of the movement of the agents and a video of 5 agents performing random movements can be found in Langer (2021). The agent's body consists of a blue circle and a blue line marking the back of the agent, depicted in Figure 1B. The two black lines are binary sensors that only detect whether they touch an obstacle or not, without reporting the exact distance to it. If a sensor touches a wall it turns green and if the body of the agent touches a wall it turns red.
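The movement rules in the paragraph above can be sketched in a few lines of Python. This is an illustration only, not the implementation referenced in Langer (2021): the function name `step`, the encoding of wheel speeds, the turning direction, the choice to turn before moving, and the reading of "speed" as distance covered per movement are all assumptions.

```python
import math

FAST, SLOW = 1, 0   # hypothetical encoding of the two wheel speeds
TURN_DEG = 10.0     # approximate turn angle stated in the text


def step(x, y, heading_deg, left, right):
    """Advance the agent by one movement and return (x, y, heading_deg)."""
    if left == FAST and right == FAST:
        dist, turn = 0.6, 0.0
    elif left == SLOW and right == SLOW:
        dist, turn = 0.2, 0.0
    else:
        dist = 0.4
        # Assumption: a faster left wheel turns the agent clockwise.
        turn = -TURN_DEG if left == FAST else TURN_DEG
    # Assumption: the agent turns first and then moves along the new heading.
    heading_deg = (heading_deg + turn) % 360.0
    rad = math.radians(heading_deg)
    return x + dist * math.cos(rad), y + dist * math.sin(rad), heading_deg
```

Because there is no rule with zero distance, the sketch reproduces the property that the agents are unable to stop.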

Figure 1. (A) A sketch of a two-wheeled robot and its four different types of movement. (B) The racetrack the agents have to survive in and (C) the different sensor lengths, named SL, on the right.

Consider a racetrack as shown in Figure 1B. The agents die as soon as their bodies touch a wall. Hence the goal for the agents is to stay alive. The design and implementation of the agents and the racetrack are due to Nathaniel Virgo. Although we depicted more than one agent in the environment, these agents do not influence each other.

Additionally, we want to manipulate the amount of potential morphological computation for the agents. There exist different concepts referred to as morphological computation, as thoroughly examined in Müller and Hoffmann (2017), where the authors distinguish between three different categories. These are (1) Morphology facilitating control, (2) Morphology facilitating perception and (3) proper Morphological computation. The notion we will use belongs to the second category and is called “pre-processing” in Ghazi-Zahedi (2019). How well agents perceive their environment can heavily influence the complexity of the task they are facing. One example is the design of the compound eyes of flies, which has been analyzed and used for building an obstacle avoiding robot in Franceschini et al. (1992). Manipulating the qualities of the sensors therefore directly influences the agent's perception and consequently the amount of necessary computation in the controller. In particular, changing the length of the sensors influences the agent's ability to interact with the environment. We will therefore vary the length of the sensors from 0.5 to 2.75. Four different sensor lengths are depicted in Figure 1C.

The strategies the agents should use will be calculated by applying the concept of planning as inference as discussed in section 2.2. Utilizing this method we are able to directly determine the optimal behaviors without having to train any agents.

Before we discuss this further, we will first present the control architecture of the agents in the next section.

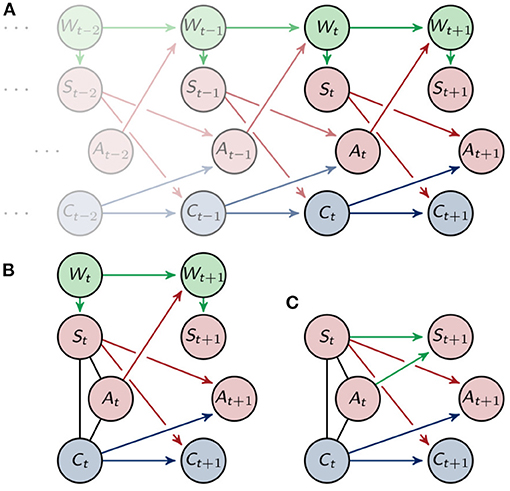

We model the whole system by using the sensori-motor loop as depicted in Figure 2A. There the information about the world is received by the sensors, which send their information to the controller and directly to the actuators. This direct connection between the sensors and the actuators enables the agent to respond to certain stimuli without the need for integrating the information in the controller. The controller processes the information from the sensors and also influences the actuators, which in turn have an effect on the world. The sensori-motor loop, also called action-perception circle, has been analyzed and discussed in, for example, Klyubin et al. (2004), Ay and Zahedi (2014), and Ay and Löhr (2015).

Unfolding the connections among the different parts of the agent and its environment for one timestep leads to the depiction in Figure 2B. The agents have two sensor, two controller and two actuator nodes. The sensors and controllers send their information to the actuators and controllers in the next point in time. The sensors are only influenced by the world W and the world is only affected by the actuators and the last world state.

To simplify we only draw one node for each S, A and C in the following graphs.

The behavior of the agents is governed by a probabilistic law, which can be modeled as the following discrete multivariate time-homogeneous Markov process

with the state space and the distribution

The corresponding directed acyclic graph is depicted in Figure 3A. See Lauritzen (1996) for more information on the relationship between graphs and graphical models. Throughout this article we will assume that the distributions on are strictly positive.

Figure 3. (A) Graphical representation of the Markov process . (B) Graphical representation of one timestep and (C) the marginalized graph.

In the next section we will take a closer look at the role of the environment.

The Markov process defined above describes the interactions between the agent and its environment in terms of a joint distribution. Note that the distributions discussed in this section determine the information flow in the system. The optimization of this flow will require a planning process which we are going to address in the next section. Since the agent has only access to the world through the sensors, we replace

using information intrinsically known to the agent. In order to do that, we will look closer at one step in time P(xt, xt+1) = P(xt)P(xt+1|xt). Reducing the focus to one step in time means that we need to define an initial distribution that takes into account the past of the agent. In Figure 3A we see that the sensors St, actuators At and controller nodes Ct are conditionally independent given the past, but marginalizing to the point in time t leads to additional connections. More precisely marginalizing to one timestep results in undirected edges between St, At and Ct. Here we will assume that the environment only influences the sensors, even in the graph marginalized to one timestep as depicted in Figure 3B. We will then sum over in order to get a Markov process that only depends on the variables known to the agent.

Proposition 1. Marginalizing the distribution, that corresponds to the graph (B) in Figure 3, that is

over leads to the following Markov process

The proof can be found in the Supplementary Material.

The new process describes the behavior of the environment with information known to the agent and is shown in Figure 3C. A similar distribution is also used in Ghazi-Zahedi and Ay (2013) in section 3.3.1. and in Ghazi-Zahedi (2019). There it is derived by taking P(St+1|St) as the intrinsically available information of P(Wt+1|Wt).

We sample this distribution for every sensor length, by storing 20,000,000 sensor and motor values for agents starting in a random place in the arena, performing arbitrary movements. We denote all the sampled and therefore fixed distributions by .
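As a rough illustration of such a sampling step, the following Python sketch estimates a conditional of the form P(S_{t+1} | S_t, A_t), the way the text later writes the sensor transition, from recorded triples. Everything here is an assumption made for illustration: the function name, the integer coding of the joint state of two binary sensors and two binary wheels (four values each), the pseudo-count used to keep every entry strictly positive, and the random placeholder data standing in for the simulation record.

```python
import numpy as np


def estimate_sensor_kernel(s_t, a_t, s_next, n_s=4, n_a=4, pseudo_count=1.0):
    """Estimate P(s_{t+1} | s_t, a_t) from aligned integer-coded sample arrays."""
    # Assumption: a small pseudo-count keeps all probabilities strictly positive.
    counts = np.full((n_s, n_a, n_s), pseudo_count)
    np.add.at(counts, (s_t, a_t, s_next), 1.0)  # accumulate repeated index triples
    return counts / counts.sum(axis=2, keepdims=True)


# Placeholder data only; a real run would use the recorded sensor and motor values.
rng = np.random.default_rng(0)
n = 10_000
s_t = rng.integers(0, 4, size=n)
a_t = rng.integers(0, 4, size=n)
s_next = rng.integers(0, 4, size=n)
kernel = estimate_sensor_kernel(s_t, a_t, s_next)  # kernel[s, a, s'] = P(s' | s, a)
```

`np.add.at` is used instead of fancy-index assignment because the same (s, a, s') triple occurs many times in the sample and each occurrence has to be counted.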

Since we are now able to define a set of distributions that describe the interaction between the agent and the world according to the sensori-motor loop, we will present the method to find the optimal behavior in the next section.

In order to calculate the optimal behavior of the agents, we will use the concept of planning as inference. This was originally proposed in Attias (2003) and further developed by Toussaint and colleagues in Toussaint et al. (2006), Toussaint (2009), and Toussaint et al. (2008) as a theory of planning under uncertainty. There the conditional distribution describing the action of the agent is considered to be a hidden variable that has to be optimized. This is done by using the EM-algorithm, which is equivalent to the information theoretic em-algorithm in this case. We use the em-algorithm because of its intuitive geometric nature. More details can be found in the Supplementary Material. The em-algorithm is well known and was proposed in Csiszár and Tusnády (1984), further discussed in Amari (1995) and Amari et al. (1992). The resulting distribution maximizes the likelihood of achieving the predefined goal, but might be a local optimum depending on the initial distribution. Normally this is a disadvantage, but in our setting it allows us to analyze various strategies by using different initial distributions.
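To make the idea concrete, here is a deliberately simplified, one-step Python sketch of an EM-style policy update. It is not the two-step optimization used in this article, which also optimizes the controller kernel and parts of the initial distribution; the function name, array layout and stopping rule are assumptions. For a single action, conditioning on the goal and reading off the new policy gives policy(a|s) ∝ policy(a|s) · P(g=1|s,a), and the goal likelihood never decreases under this update.

```python
import numpy as np


def plan_one_step(p_s, p_goal, policy, tol=1e-5, max_iter=1000):
    """Repeat policy(a|s) <- policy(a|s) * P(g=1|s,a) / Z(s) until progress stalls.

    p_s:    shape (S,),   fixed distribution over sensor states
    p_goal: shape (S, A), P(g=1 | s, a), strictly positive
    policy: shape (S, A), strictly positive initial policy, rows sum to 1
    """
    likelihood = float(np.sum(p_s[:, None] * policy * p_goal))
    for _ in range(max_iter):
        # E-step and M-step combined: posterior over actions given g = 1.
        weighted = policy * p_goal
        policy = weighted / weighted.sum(axis=1, keepdims=True)
        new_likelihood = float(np.sum(p_s[:, None] * policy * p_goal))
        improvement = new_likelihood - likelihood
        likelihood = new_likelihood
        if improvement < tol:
            break
    return policy, likelihood


# Hypothetical toy numbers: two sensor states, two actions.
p_s = np.array([0.5, 0.5])
p_goal = np.array([[0.9, 0.5], [0.2, 0.8]])
policy, likelihood = plan_one_step(p_s, p_goal, np.full((2, 2), 0.5))
```

In this one-step toy problem the iteration simply concentrates on the best action per state. The dependence on the initial distribution discussed above only becomes relevant in richer settings with several coupled latent distributions.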

The goal of the agents in our example is to maximize the probability of being alive after the next two movements. At least two steps are necessary, since we want the connection between Ct and Ct+1 to have an impact on the outcome. This can be seen in Figure 4.

We will denote the goal variable by G with the state space {0, 1}, where P(g1) := P(g = 1) refers to the probability that the agent is alive. Since the agent moves twice, this distribution depends on the last three sensor and motor states

The variable G depends on the nodes that are marked with a golden circle in Figure 4. We sampled this distribution for every sensor length, as described in the previous section in the context of .

The architecture of the agents considered in this article was discussed in the last sections. There we outlined how we sample the distribution that describes the influence the agent has on itself through the world. The distributions influencing the behavior of the agents are

Hence we will treat (At+1, Ct+1) as hidden variables and optimize their distributions with respect to the goal. We denote these distributions by α, β and γ in order to emphasize that the process is time-homogeneous, meaning that P(At+1|St, Ct) = P(At+2|St+1, Ct+1), P(St+1|St, At) = P(St+2|St+1, At+1) and P(Ct+1|St, Ct) = P(Ct+2|St+1, Ct+1) as indicated in Figure 4. Note that the above mentioned homogeneity does not imply stationarity.

It remains to define the initial distribution P(St, Ct, At). In the original planning as inference framework an action sequence is selected conditioned on the final goal state and an initial observation, as described in Attias (2003). Here, we do not want to restrict the agents to an initial observation St. Instead we first write the initial distribution in the following form

Using the sampled distribution , we are able to calculate and set . The remaining distributions P(ct|at, st) and P(at) are also treated as variables and optimized using the em-algorithm. This approach leads to the optimal starting conditions for the agents. The details of the optimization are described in the Supplementary Material.

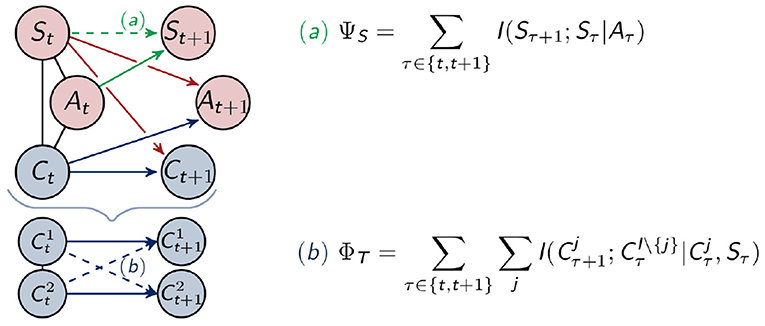

In this section we will define the different measures. These are information theoretic measures that use the KL-divergence to calculate the difference between the original distribution and a split distribution. This split distribution is the one that is closest to the original distribution without having the connection that we want to measure.

Definition 1 (Measure Ψ). Let M be a set of probability distributions corresponding to a split system. Then we define the measure Ψ by minimizing the KL-divergence between M and the full distribution P, to quantify the strength of the connections missing in the split system:

$$\Psi = \inf_{Q \in M} D(P \,\|\, Q), \qquad D(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}.$$

Note that this measure depends on M, the set of split distributions.

Every discussed measure has a closed form solution and can be written in the form of sums of conditional mutual information terms.

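Definition 2 (Conditional Mutual Information). Let (Z1, Z2, Z3) be a random vector on a finite state space with the distribution P. The conditional mutual information of the random variables Z1 and Z2 given Z3 is defined as

$$I(Z_1; Z_2 \mid Z_3) = \sum_{z_1, z_2, z_3} P(z_1, z_2, z_3) \log \frac{P(z_1, z_2 \mid z_3)}{P(z_1 \mid z_3)\, P(z_2 \mid z_3)}.$$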

If I(Z1; Z2|Z3) = 0, then Z1 is independent of Z2 given Z3. Therefore, this quantifies the connection between Z1 and Z2, while Z3 is fixed. Additionally, we emphasize this by marking the respective connection quantified by the measure in a graph as a dashed connection. To simplify the figures we only depict one timestep, but the connections between (Yt+1, Yt+2) are the same as the connections between (Yt, Yt+1).

The base of the logarithms in the definitions above is 2, hence the unit of all the measures defined below is bits.
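The link between the two definitions can be checked numerically. The sketch below computes the conditional mutual information in bits for a joint array and, separately, the KL-divergence from P to the split distribution P(z3) P(z1|z3) P(z2|z3), which is the closest distribution in which Z1 and Z2 are conditionally independent given Z3. The function names and the array layout `p[z1, z2, z3]` are assumptions made for this illustration.

```python
import numpy as np


def conditional_mutual_information(p):
    """I(Z1; Z2 | Z3) in bits for a strictly positive joint array p[z1, z2, z3]."""
    p3 = p.sum(axis=(0, 1), keepdims=True)   # P(z3)
    p13 = p.sum(axis=1, keepdims=True)       # P(z1, z3)
    p23 = p.sum(axis=0, keepdims=True)       # P(z2, z3)
    return float(np.sum(p * np.log2(p * p3 / (p13 * p23))))


def kl_to_split(p):
    """KL-divergence in bits from p to the split P(z3) P(z1|z3) P(z2|z3)."""
    p3 = p.sum(axis=(0, 1), keepdims=True)
    p13 = p.sum(axis=1, keepdims=True)
    p23 = p.sum(axis=0, keepdims=True)
    q = p13 * p23 / p3                       # split distribution, same shape as p
    return float(np.sum(p * np.log2(p / q)))


rng = np.random.default_rng(0)
p = rng.dirichlet(np.ones(8)).reshape(2, 2, 2)  # random strictly positive joint
print(conditional_mutual_information(p), kl_to_split(p))  # the two values agree
```

Both functions assume strictly positive entries, in line with the positivity assumption made earlier for the distributions in this article.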

Although these measures were originally defined for only one timestep, we will introduce them directly tailored to our setting with two timesteps.

The two measures discussed in this section each quantify the information flow among the same type of node in different points in time. Integrated information only considers the nodes inside the controller and therefore measures the information flow inside the agent, while morphological computation is concerned with the exterior perspective and measures the information flow between the sensors.

The measure ΦT restricts itself to the controller nodes and can be seen in the context of the Integrated Information Theory of consciousness (Tononi, 2004). This theory was discussed in the introduction. It aims at measuring the strength of the connections among different nodes across different points in time, in other words, the connections that integrate the information. Since every influence on Ct+1 is known in our setting, we are able to use the measure ΦT proposed in Langer and Ay (2020). This measure is defined in the following way

and depicted as (b) in Figure 5. In the definition above, denotes the conditional mutual information, described in Definition 2, and I\{j} is the set of indices of controller nodes without j. For two controller nodes and j = 2 this would be {1, 2}\{2} = {1}. Hence ΦT measures the connections between and with i, j ∈ {1, 2} and i ≠ j.

Figure 5. Calculation of the measures for morphological computation (a) and integrated information (b).

A proof of the closed form solution can be found in Langer and Ay (2020). All the following measures can be proven in a similar way.

In Ghazi-Zahedi (2019) morphological computation was referred to as morphological intelligence and characterized in Definition 1.1. as follows

“Morphological Intelligence is the reduction of computational cost for the brain (or controller) resulting from the exploitation of the morphology and its interaction with the environment.”

There exists a variety of measures for morphological computation, described for example in Ghazi-Zahedi (2019) and Ghazi-Zahedi et al. (2017). The distribution describes the influence the agent has on itself through the environment. Hence this distribution is dependent on the environment and the morphology of the agent. The interplay between environment and body is influenced by the length of the sensors.

In Ghazi-Zahedi and Ay (2013) the authors define the following measure for morphological computation, which depends on . It quantifies the strength of the influence of the past sensory input on the next sensory input given the last action as

which corresponds to ASOCW defined in Ghazi-Zahedi (2019) in Definition 3.1.3. There the author compares the different measures numerically and concludes in section 4.9 that the measure following the approach of ΨS, but defined directly on the world states, has advantages over other formulations and is therefore the recommended one. We will follow this reasoning and consider ΨS to be the measure of morphological computation.

We will observe that the measures for integrated information and morphological computation behave antagonistically. This, however, does not lead to a definitive conclusion about how much of the behavior of the agent is determined by the controller. Intuitively, it might be the case that the agent acts regardless of all the information integrated in the controller. In order to understand the influences leading to the actions of the agents, we will present four additional measures for the four remaining connections in the graph and a measure quantifying the total information flow. These are depicted in Figure 6.

Figure 6. Calculation of the measures for (c) reactive control, (d) action effect, (e) sensory information, (f) control and (g) total information flow.

Reactive control describes a direct stimulus response, meaning that the sensors send their unprocessed information directly to the actuators. We are measuring this by the value ΨR. The corresponding split distribution results from removing the connection between St and At+1

We are able to quantify the effect of the action on the next sensory state by calculating

This measures the amount of control an agent has. Hence in Ghazi-Zahedi and Ay (2013) this measure was normalized and inverted in order to quantify morphological computation. The differences between this approach and ΨS are further discussed in section 4.9 in Ghazi-Zahedi (2019).

The commands the controller sends to the actuators should be based on the information received from the sensors. Therefore, we will additionally calculate the strength of the information flow from the sensor to the controller nodes. The smaller this value is, the more likely it is that the controller converged to a general strategy and performs this blindly without including the information from the sensors. We will call this “sensory information,” ΨSI.

Since we are looking at an embodied agent, we additionally want to measure how much of the information processed in the controller has an actual impact on the behavior of the agent. We will term the measure quantifying the strength of the impact of the controller on the actuators “control,” ΨC.

The last measure quantifies the total information flow, ΨTIF. In this case two points in time are independent of each other in the split system, as depicted in Figure 6.

The total information flow is an upper bound for all the other measures defined in the previous sections.
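The edge-removal measures described in this section can be illustrated numerically. The following is a minimal sketch, assuming that removing a single connection yields a conditional mutual information, so that for instance the action effect ΨA would read I(St+1; At | St). That reading, the function name `conditional_mutual_information`, and the two toy distributions are assumptions made for illustration and are not taken from the authors' repository.

```python
import numpy as np

def conditional_mutual_information(p_xyz):
    """Return I(X; Y | Z) in nats for a joint array indexed as p_xyz[x, y, z]."""
    p = np.asarray(p_xyz, dtype=float)
    p = p / p.sum()                           # normalise defensively
    p_z = p.sum(axis=(0, 1), keepdims=True)   # P(z)
    p_xz = p.sum(axis=1, keepdims=True)       # P(x, z)
    p_yz = p.sum(axis=0, keepdims=True)       # P(y, z)
    mask = p > 0                              # skip zero-probability events
    ratio = (p * p_z)[mask] / (p_xz * p_yz)[mask]
    return float(np.sum(p[mask] * np.log(ratio)))

# Hypothetical toy world 1: the next sensor state copies the last sensor
# state, whatever the action is. Axes: [s_next, a, s].
copy_sensor = np.zeros((2, 2, 2))
for s in range(2):
    for a in range(2):
        copy_sensor[s, a, s] = 0.25

# Hypothetical toy world 2: the next sensor state copies the action.
follow_action = np.zeros((2, 2, 2))
for s in range(2):
    for a in range(2):
        follow_action[a, a, s] = 0.25

psi_a_copy = conditional_mutual_information(copy_sensor)
psi_a_follow = conditional_mutual_information(follow_action)
print(psi_a_copy, psi_a_follow)
```

In the first toy world the action carries no additional information about the next sensor state, so the value vanishes; in the second the action determines it completely, so the value is log 2 nats. The same helper could be reused for the other single-edge measures by choosing the three axes accordingly.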

In this section we will present the results of our experiments. The length of the sensors is varied from 0.5 to 2.75 in steps of 0.25. We took 100 random input distributions. Each time the algorithm takes at least 1,000 iteration steps and stops when the change in the likelihood of the goal is smaller than 1 × 10⁻⁵.
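The experimental protocol above can be written down as a small configuration sketch. The constant names and the helper `should_stop` are hypothetical, and the stopping rule assumes that the "difference" refers to the likelihood of the goal in two successive iterations; the authors' code may organise this differently.

```python
import numpy as np

# Sensor lengths 0.5, 0.75, ..., 2.75 (the small offset keeps the endpoint).
SENSOR_LENGTHS = np.arange(0.5, 2.75 + 1e-9, 0.25)
N_RANDOM_STARTS = 100      # random input distributions per sensor length
MIN_ITERATIONS = 1000      # minimum number of iteration steps per run
TOLERANCE = 1e-5           # threshold on the change in goal likelihood

def should_stop(iteration, previous_likelihood, current_likelihood):
    """True once enough steps were taken and the likelihood has stalled."""
    if iteration < MIN_ITERATIONS:
        return False
    return abs(current_likelihood - previous_likelihood) < TOLERANCE

# Total number of optimisation runs implied by the protocol.
n_runs = len(SENSOR_LENGTHS) * N_RANDOM_STARTS
print(SENSOR_LENGTHS, n_runs)
```

Under these assumptions the sweep covers ten sensor lengths, each with 100 starting distributions.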

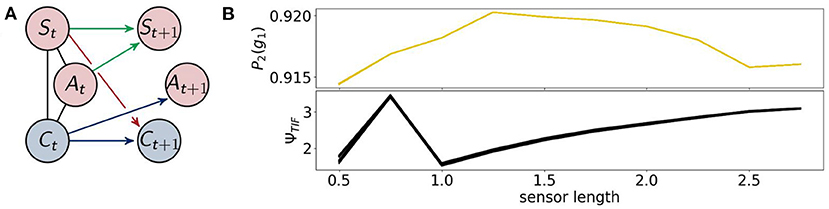

The architecture of the fully coupled agents is the one described in section 2.1.1, as shown in Figure 7 on the left. We will refer to the optimized distribution of a fully coupled agent by P1, hence P1(g1) is the probability with which the agents survive. This value is depicted in Figure 7 on the top right. The agents perform best between a sensor length of 1.25 and 2.25. If the sensors are too long or too short, their information is not useful to ensure the survival of the agents.

Figure 7. (A) The architecture of the fully coupled agents and (B) the probability of survival (top) and the total information flow ΨTIF (bottom).

The total information flow ΨTIF in Figure 7 on the bottom right exhibits an almost monotonic increase, except for a local maximum at a sensor length of 1. We will discuss this sensor length below in the context of ΨR and ΨA.

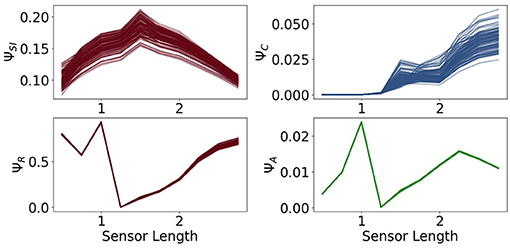

Now we are going to present the results for integrated information ΦT and morphological computation ΨS, depicted in Figure 8.

We observe that ΦT monotonically decreases as the sensors become longer. Directly to the right, ΨS exhibits the opposite dynamic. It quantifies the influence of the past sensory input on the next sensory input given the action. Hence, taking the perspective of the agent, ΨS describes the extrinsic information flow, whereas ΦT only depends on the controller nodes and therefore quantifies the most intrinsic information flow. So these measures exhibit an antagonistic relationship between the outside and the inside, that is, between morphological computation and integrated information.

Note that the total information flow, ΨTIF, is a sum of three mutual information terms and that the first term I(St+1; St, At) is an upper bound of ΨS, the measure for morphological computation. Since ΨS is particularly high compared to the other measures, the dynamics of I(St+1; St, At) are dominating ΨTIF, leading to the monotonic increase in Figure 7.
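The upper bound mentioned above can be made explicit with the chain rule for mutual information. This is a short derivation under the assumption that ΨS can be read as the conditional mutual information I(St+1; St | At), which matches its description as the influence of the past sensory input on the next sensory input given the action:

```latex
\begin{align}
I(S_{t+1}; S_t, A_t)
  &= I(S_{t+1}; A_t) + I(S_{t+1}; S_t \mid A_t) \\
  &\geq I(S_{t+1}; S_t \mid A_t) = \Psi_S ,
\end{align}
```

where the inequality holds because mutual information is non-negative. The gap between the two sides is exactly I(St+1; At), so the bound is tight whenever the action alone carries no information about the next sensor state.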

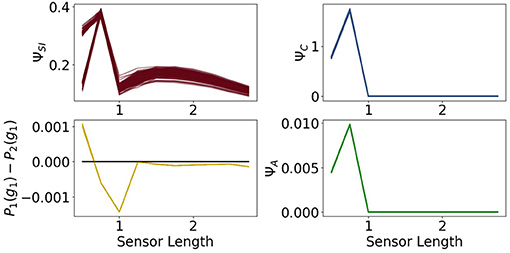

In the first row of Figure 9 we see the measures ΨSI and ΨC. The measure ΨSI quantifies how important the information flow from the sensors to the controller is. For lengths below 1 the sensors are too short, and above approximately 2 they are too long, to carry information that is valuable for the controller. The importance of the commands sent from the controller to the actuators is measured by ΨC. Between 0.5 and 1.25 this value is very close to 0, which means that the controller has next to no influence on the behavior of the agent. In this case the sensors are so short that the agents need to react directly to their input.

Figure 9. The measures for control ΨC, sensory information ΨSI, reactive control ΨR and action effect ΨA for the fully coupled agents.

Hence, although ΦT attains its maximum value at a sensor length of 0.5, the integrated information does not have a significant impact on the behavior of the agents. Therefore the importance of the information flow in the controller of an embodied agent depends additionally on the information flowing to and from the controller.

The measure for reactive control is shown in the second row. In the case of short sensors, the information needs to get passed directly to the actuators. Now we will compare ΨR to ΨA, depicted on the bottom right in Figure 9. The latter is defined as the action effect, meaning the higher ΨA is, the more influence the actuators have on the next sensor state. The maxima of ΨR and ΨA are at a sensor length of 1, which results in the local maximum of ΨTIF in Figure 7. Both graphs show similar dynamics for sensor lengths from 1 to 2.25. If the sensors are too short, the information needs to pass directly to the actuators, but the actuators might not be able to ensure survival and therefore ΨR is high, while ΨA is low. In the case of very long sensors, they detect a wall with a high probability, so that the next sensory state will again detect a wall regardless of the action taken. This leads to a high ΨR and a low ΨA.

At a sensor length of 1.25, ΨR is close to 0, as well as ΨC and ΨA, which suggests that the algorithm converged to an optimum in which the next sensor state is not dependent on the action and the action is not dependent on the last sensor state.

At first glance the values of ΨA and ΨC seem to be insignificant compared to the other measures, but note that the relatively small amount is an expected result in these experiments. The last sensor state has a very high influence on the next sensor state and on the next action, since an agent that is not touching a wall will most likely not touch a wall in the next step and move slowly, whereas an agent touching a wall will steer away and, depending on the length of the sensors, probably touch a wall in the next step. Nevertheless, if ΨA and ΨC are not zero, then there exists an information flow and therefore an influence from the actuators to the sensors and from the controller nodes to the actuators. Hence observing the dynamics and relating them to the other measures does lead to insights into the interplay of the different information flows.

In order to further substantiate the results of our analysis, we will now examine two subclasses of agents. We will directly manipulate the architecture of the agents so that the influence on the actuators is limited. Hence we will gain insights into the importance of reactive control and the controller for the behavior of the agent. The first subclass contains agents that are incapable of reactive control and therefore all the information has to flow through the controller. We call them controller driven agents and discuss them in section 3.2. The second class consists of agents in which the controller has no impact on the actuators. These will be called reactive control agents and discussed in section 3.3.

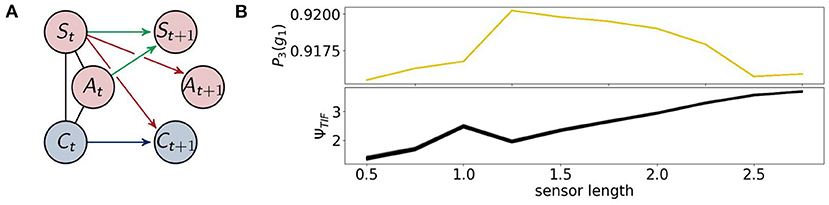

Now, we will discuss the results for the agents that are not able to use reactive control. These are displayed in Figure 10 on the left. We will refer to the optimized distributions by P2.

Figure 10. (A) The architecture of the controller driven agents and (B) the probability of survival (top) and the total information flow ΨTIF (bottom).

Note that these agents are a subclass of the fully coupled ones. Hence optimizing the likelihood of success for these agents should not lead to a higher value for success than for the fully coupled agents. Since we are using the em-algorithm, which converges to local optima, however, we observe that controller driven agents have a higher probability of success around a sensor length of 1, as depicted on the right in Figure 10.

The results for the total information flow are similar to those of the fully coupled agents beyond a sensor length of 1. In this case ΨTIF has a global maximum at 0.75, which we will discuss in the context of ΨSI and ΨA.

The measures ΦT and ΨS show in Figure 11 approximately the same values as in Figure 8. There is no change in the dynamics of ΨS, but ΦT is lower than before at a sensor length of 0.5. Note that ΨC in Figure 12 is significantly higher in this case, so that the integrated information makes an impact on the actuators.

Figure 12. The measures for control ΨC, sensory information ΨSI, action effect ΨA for the controller driven agents and the performance difference between the fully coupled agents and the reactive ones.

All of the measures corresponding to the controller have a spike at 0.75, at which point these agents perform better than the ones with the ability for reactive control, as can be seen in the graph on the bottom left of Figure 12. There the total information flow ΨTIF, depicted in Figure 10, reaches its maximum. This spike can also be observed in ΨA, meaning that the influence of the actuators on the next sensory input given the last sensory input is high.

Additionally, looking at the goal difference depicted on the bottom left in Figure 12, we see that these agents perform better than the fully coupled agents for sensors longer than 0.5. The black line marks the value 0. After a sensor length of 1 the measures ΨC and ΨA show that the information flows from the controller to the actuators and from the actuators to the sensors are barely existent. Therefore, we conclude that the agents converged to an optimum in which the actuators do not depend on the sensory input and have no influence on the next sensory state. Note that ΦT still shows the decreasing behavior, even though it has no impact on the actions of the agent.

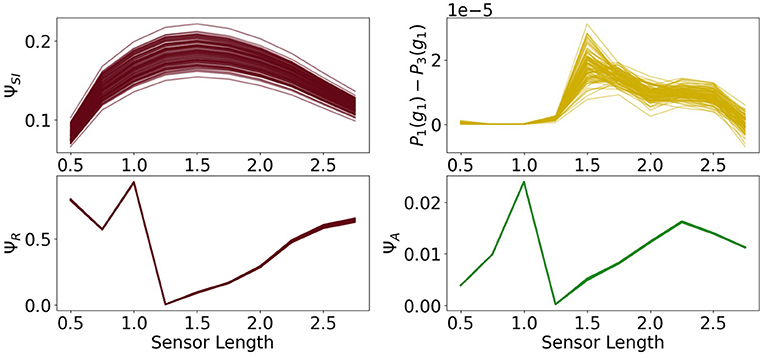

The architecture of the reactive control agents is shown in Figure 13 on the left. Here the controller has no influence on the actuators. On the right we see the probability of survival P3(g1).

Figure 13. (A) The architecture of the reactive control agents, (B) the probability of survival (top) and the total information flow ΨTIF (bottom).

There is no significant difference between the total information flow of the fully coupled agents and the total information flow in this case.

The measures ΦT and ΨS show in Figure 14 the same antagonistic behavior as in the fully coupled case. This demonstrates once more that only using integrated information as a measure in the case of embodied agents does not suffice if we want to understand the agent's behavior.

A closer examination of the difference in performance, depicted on the top right in Figure 15, reveals that the agents connected to a controller perform better for sensors between 1.25 and 2.5. Looking back at Figure 9, we see that this is approximately the region in which ΨC and ΨSI are both high. This supports the idea that integrated information has an impact on the behavior when, at the same time, the information flows to and from the controller are high.

Figure 15. The measures for morphological computation ΨS, reactive control ΨR and action effect ΨA for the reactive control agents and the performance difference between the fully coupled agents and the reactive ones.

The other measures show the same dynamics as the corresponding measures for the fully coupled agents.

In this article we combine different techniques in order to create a framework to analyze the information flow among an agent's body, its controller and the environment. The main question we want to approach is how the complexity of solving a task is distributed among these different interacting parts.

We demonstrate the steps in the analysis with the example of small simulated agents that are not allowed to touch the walls of a racetrack. These agents have a sufficiently simple architecture such that we are able to rigorously analyze the different information flows. Additionally, we can examine the dynamics of the information theoretic measures of an agent under changing morphological circumstances by modifying the length of the sensors.

We calculate the optimal behavior by using the concept of planning as inference, which allows us to model the conditional distributions determining the actions of the agents as latent variables. Using the information geometric em-algorithm, we are able to optimize the latent variables such that the probability of success is maximal. Here, the expectation-maximization (EM) algorithm used in statistics is equivalent to the em-algorithm, but we chose to present the em-algorithm because it has an intuitive geometric interpretation. This algorithm is guaranteed to converge, but converges to different (local) optima depending on the starting distribution. Hence this allows us to analyze various kinds of strategies that lead to a reasonably successful agent.
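The dependence on the starting distribution can be illustrated with a toy example that is unrelated to the racetrack setup. The sketch below runs the statistical EM algorithm on a hypothetical two-coin mixture from several random initialisations and records the log-likelihood after every step; the data, the function names and the number of restarts are illustrative assumptions, not the authors' planning-as-inference implementation.

```python
import numpy as np

def log_likelihood(heads, n_flips, weight, theta_a, theta_b):
    """Log-likelihood of a two-coin mixture (binomial coefficients omitted)."""
    like_a = weight * theta_a**heads * (1 - theta_a)**(n_flips - heads)
    like_b = (1 - weight) * theta_b**heads * (1 - theta_b)**(n_flips - heads)
    return float(np.sum(np.log(like_a + like_b)))

def run_em(heads, n_flips, weight, theta_a, theta_b, n_steps=50):
    """Run EM and return the final parameters and the log-likelihood trace."""
    trace = [log_likelihood(heads, n_flips, weight, theta_a, theta_b)]
    for _ in range(n_steps):
        # E-step: posterior probability that each trial used coin A.
        like_a = weight * theta_a**heads * (1 - theta_a)**(n_flips - heads)
        like_b = (1 - weight) * theta_b**heads * (1 - theta_b)**(n_flips - heads)
        resp_a = like_a / (like_a + like_b)
        # M-step: re-estimate the mixing weight and both coin biases.
        weight = resp_a.mean()
        theta_a = np.sum(resp_a * heads) / np.sum(resp_a * n_flips)
        theta_b = np.sum((1 - resp_a) * heads) / np.sum((1 - resp_a) * n_flips)
        trace.append(log_likelihood(heads, n_flips, weight, theta_a, theta_b))
    return (weight, theta_a, theta_b), trace

# Hypothetical data: number of heads in eight trials of ten flips each.
heads = np.array([9, 8, 2, 1, 7, 3, 9, 2])
n_flips = 10

rng = np.random.default_rng(0)
runs = []
for _ in range(5):
    start = rng.uniform(0.1, 0.9, size=3)   # random weight, theta_a, theta_b
    runs.append(run_em(heads, n_flips, *start))

for params, trace in runs:
    print(params, trace[-1])
```

Each trace is non-decreasing, which reflects the convergence guarantee, while the final parameters of the individual runs can be compared to see which optimum a given starting point reaches.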

The distributions that are optimal regarding reaching a goal are then analyzed by applying seven information theoretic measures. We use the measure ΦT to calculate the integrated information in the controller and we demonstrate that, although the agents have goal optimized policies, this value can be high even in cases in which it has no behavioral relevance. Therefore, the importance of the information flow in the controller of an embodied agent additionally depends on the information flow to and from the controller, measured by ΨSI and ΨC. Hence, if we want to fully understand the impact integrated information has on the behavior of an agent, it is not sufficient to only calculate an integrated information measure. This is supported by the comparison of the fully coupled agents to the reactive ones, the agents in which the controller has no impact on the actuators. It shows that the controller has a positive influence on the performance of the agents exactly in the cases in which ΨSI and ΨC are both high.

Comparing the morphological computation, measured by ΨS, to the integrated information reveals an antagonistic relationship between them. The more the agent's body interacts with its environment, the less information is integrated.

The measure for reactive control ΨR displays a dynamic similar to the action effect ΨA. Removing the ability to send information from the sensors directly to the actuators, in the controller driven agents, leads to agents that perform an action regardless of the sensor input for a sensor length greater than 1.

Finally, the total information flow is an upper bound for the other measures. Therefore ΨTIF combined with the measures above gives us a notion of which information flow has the most influence on the system.

All in all, we present a method to completely examine the information flow among the controller, body and environment of an agent. This gives us insights into how the complexity of the task is met by the different interacting components. We observe how the morphology of the body and the architecture of the agents influence the internal information flows. The example discussed in this article is limited by its simplicity, but even in this scenario, we were able to demonstrate the value of examining the different measures.

We will continue to develop these concepts further to be able to efficiently analyze more complicated agents and tasks and test them on humanoid robots. A humanoid robot can perform, for example, a reaching movement, which is a goal directed task that allows for more degrees of freedom and requires the integration of different information sources such as visual information and the angle of the joints.

Furthermore, we have seen in the examples presented in this paper that some tasks can be performed without involvement of the controller. In contrast to the agents in this article, which are optimized directly using planning as inference, natural agents learn to control their body and to interact with their environment gradually. It is intuitive to assume that learning a new task requires much more computation in the controller than executing an already acquired skill. Hence, it is important to analyze the temporal dynamics of the integrated information and morphological computation measures during the learning process to gain insights into potential learning phases. These different learning phases may lead us one step closer to understanding the emergence of the senses of agency and body ownership, two concepts closely related to the human minimal self (Gallagher, 2000).

Using an agent with a more complicated morphology can lead to the opportunity to study the “degrees of freedom” problem, formulated in motor control theory. In his influential work, Bernstein (1967) addresses the difficulties resulting from the many degrees of freedom within a human body, namely the problem of choosing a particular motor action out of a number of options that lead to the same outcome. In the chapter “Conclusions toward the study of motor co-ordination,” he makes the following observation:

“All these many sources of indeterminacy lead to the same end result; which is that the motor effect of a central impulse cannot be decided at the centre but is decided entirely at the periphery: at the last spinal and myoneural synapse, at the muscle, in the mechanical and anatomical change of forces in the limb being moved, etc.”

He thus emphasizes the importance of the morphology of the body for the actual movement.

There have been a number of theories further discussing this topic. In Todorov and Jordan (2002), for example, the authors propose a computational level theory based on stochastic optimal feedback control. The resulting “minimum intervention principle” highlights the importance of variability in task-irrelevant dimensions. It would be interesting to analyze whether we observe spikes in the control value and the integrated information that indicate a correctional motor action only for the task-relevant dimensions.

Another theory approaching the degrees of freedom problem is the “equilibrium point hypothesis” by Feldman and colleagues, Asatrian and Feldman (1965) and Feldman (1986). There the control is modeled by shifting equilibrium points in opposing muscles. The usage of the properties of the body in order to achieve co-ordination is directly related to the concept of morphological computation. The authors of Montúfar et al. (2015) study how relatively simple controllers can achieve a set of desired movements through embodiment constraints and call this concept “cheap control.”

By applying our framework to more complex tasks, we would expect results agreeing with the observations in Montúfar et al. (2015). Fewer degrees of freedom, which are associated with strong embodiment constraints, should lead to high morphological computation and therefore, following the reasoning of this paper, to a small integrated information value.

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://github.com/CarlottaLanger/MorphologyShapesIntegratedInformation.

NA and CL: conceptualization and methodology. CL: software, investigation, and writing. NA: supervision, project administration, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

The authors acknowledge funding by Deutsche Forschungsgemeinschaft Priority Programme “The Active Self” (SPP 2134). Publishing fees supported by Funding Programme *Open Access Publishing* of Hamburg University of Technology (TUHH).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank Nathaniel Virgo for the design and implementation of the racetrack and for the introduction to the theory of Planning as Inference.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.716433/full#supplementary-material

Albantakis, L., Hintze, A., Koch, C., Adami, C., and Tononi, G. (2014). Evolution of integrated causal structures in animats exposed to environments of increasing complexity. PLoS Comput. Biol. 10:e1003966. doi: 10.1371/journal.pcbi.1003966

Albantakis, L., and Tononi, G. (2015). The intrinsic cause-effect power of discrete dynamical systems—from elementary cellular automata to adapting animats. Entropy 17, 5472–5502. doi: 10.3390/e17085472

Amari, S.-I. (1995). Information geometry of the EM and em algorithms for neural networks. Neural Netw. 8, 1379–1408. doi: 10.1016/0893-6080(95)00003-8

Amari, S.-I., Kurata, K., and Nagaoka, H. (1992). Information geometry of boltzmann machines. IEEE Trans. Neural Netw. 3, 260–271. doi: 10.1109/72.125867

Asatrian, D., and Feldman, A. (1965). On the functional structure of the nervous system during movement control or preservation of a stationary posture. i. mechanographic analysis of the action of a joint during the performance of a postural task. Biofizika 10, 837–846.

Attias, H. (2003). “Planning by probabilistic inference,” in Proceeding of the 9th International Workshop on Artificial Intelligence and Statistics, Volume R4 of Proceedings of Machine Learning Research, eds C. M. Bishop and B. J. Frey (Key West: PMLR), 9–16.

Ay, N., and Löhr, W. (2015). The umwelt of an embodied agent-a measure-theoretic definition. Theory Biosci. 134, 105–116. doi: 10.1007/s12064-015-0217-3

Ay, N., and Zahedi, K. (2014). On the Causal Structure of the Sensorimotor Loop, chapter 9. Berlin; Heidelberg: Springer Berlin Heidelberg.

Csiszár, I., and Tusnády, G. (1984). “Information geometry and alternating minimization procedures,” in Statistics and Decisions (Supplementary Issue, No.1), ed E. F. Dudewicz (Munich: Oldenbourg Verlag), 205–237.

Edlund, J., Chaumont, N., Hintze, A., Koch, C., Tononi, G., and Adami, C. (2011). Integrated information increases with fitness in the evolution of animats. PLoS Comput. Biol. 7:e1002236. doi: 10.1371/journal.pcbi.1002236

Feldman, A. (1986). Once more on the equilibrium-point hypothesis (λ model) for motor control. J. Mot. Behav. 18, 17–54. doi: 10.1080/00222895.1986.10735369

Franceschini, N., Pichon, J.-M., and Blanes, C. (1992). From insect vision to robot vision. Philos. Trans. R. Soc. B Biol. Sci. 337, 283–294. doi: 10.1098/rstb.1992.0106

Gallagher, S. (2000). Philosophical conceptions of the self: implications for cognitive science. Trends Cogn. Sci. 4, 14–21. doi: 10.1016/S1364-6613(99)01417-5

Ghazi-Zahedi, K., and Ay, N. (2013). Quantifying morphological computation. Entropy 15, 1887–1915. doi: 10.3390/e15051887

Ghazi-Zahedi, K., Langer, C., and Ay, N. (2017). Morphological computation: synergy of body and brain. Entropy 19:456. doi: 10.3390/e19090456

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2004). “Tracking information flow through the environment: simple cases of stigmergy,” in Artificial Life IX: Proceedings of the Ninth International Conference on the Simulation and Synthesis of Living Systems, ed J. Pollack (Boston, MA: MIT Press), 563–568.

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2007). Representations of space and time in the maximization of information flow in the perception-action loop. Neural Comput. 19, 2387–2432. doi: 10.1162/neco.2007.19.9.2387

Langer, C. (2021). Morphology Shapes Integrated Information Github Repository. Available online at: https://github.com/CarlottaLanger/MorphologyShapesIntegratedInformation.

Langer, C., and Ay, N. (2020). Complexity as causal information integration. Entropy 22:1107. doi: 10.3390/e22101107

Lungarella, M., Pegors, T., Bulwinkle, D., and Sporns, O. (2005). Methods for quantifying the informational structure of sensory and motor data. Neuroinformatics 3, 243–262. doi: 10.1385/NI:3,3:243

Lungarella, M., and Sporns, O. (2006). Mapping information flow in sensorimotor networks. PLoS Comput. Biol. 2:e144. doi: 10.1371/journal.pcbi.0020144

Mediano, P. A. M., Rosas, F. E., Farah, J. C., Shanahan, M., Bor, D., and Barrett, A. B. (2021). Integrated information as a common signature of dynamical and information-processing complexity. arXiv:2106.10211 [q-bio.NC].

Montúfar, G., Ghazi-Zahedi, K., and Ay, N. (2015). A theory of cheap control in embodied systems. PLoS Comput. Biol. 11:e1004427. doi: 10.1371/journal.pcbi.1004427

Müller, V., and Hoffmann, M. (2017). What is morphological computation? On how the body contributes to cognition and control. Artif. Life 23, 1–24. doi: 10.1162/ARTL_a_00219

Oizumi, M., Albantakis, L., and Tononi, G. (2014). From the phenomenology to the mechanisms of consciousness: Integrated information theory 3.0. PLoS Comput. Biol. 10:e1003588. doi: 10.1371/journal.pcbi.1003588

Sporns, O., and Pegors, T. K. (2004). “Information-theoretical aspects of embodied artificial intelligence,” in Embodied Artificial Intelligence: International Seminar, Dagstuhl Castle, Germany, July 7-11, 2003. Revised Papers, eds F. Iida, R. Pfeifer, L. Steels and Y. Kuniyoshi (Berlin; Heidelberg: Springer Berlin Heidelberg), 74–85.

Todorov, E., and Jordan, M. (2002). Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235. doi: 10.1038/nn963

Tononi, G. (2004). An information integration theory of consciousness. BMC Neurosci. 5:42. doi: 10.1186/1471-2202-5-42

Tononi, G. (2008). Consciousness as integrated information: a provisional manifesto. Biol. Bull. 215, 216–242. doi: 10.2307/25470707

Tononi, G., and Edelman, G. M. (1998). Consciousness and complexity. Science 282, 1846–1851. doi: 10.1126/science.282.5395.1846

Tononi, G., Sporns, O., and Edelman, G. M. (1994). A measure for brain complexity: relating functional segregation and integration in the nervous system. Proc. Natl. Acad. Sci. U.S.A. 91, 5033–5037. doi: 10.1073/pnas.91.11.5033

Touchette, H., and Lloyd, S. (2004). Information-theoretic approach to the study of control systems. Physica A 331, 140–172. doi: 10.1016/j.physa.2003.09.007

Toussaint, M. (2009). Probabilistic inference as a model of planned behavior. Künstliche Intell. 23, 23–29. Available online at: https://ipvs.informatik.uni-stuttgart.de/mlr/papers/09-toussaint-KI.pdf

Toussaint, M., Charlin, L., and Poupart, P. (2008). “Hierarchical pomdp controller optimization by likelihood maximization,” in Proceedings of the Twenty-Fourth Conference Annual Conference on Uncertainty in Artificial Intelligence (Helsinki), 562–570.

Toussaint, M., Harmeling, S., and Storkey, A. (2006). Probabilistic inference for solving (po)mdps. Technical Report 934, School of Informatics, University of Edinburgh.

Keywords: information theory, information geometry, planning as inference, morphological computation, integrated information, embodied artificial intelligence

Citation: Langer C and Ay N (2021) How Morphological Computation Shapes Integrated Information in Embodied Agents. Front. Psychol. 12:716433. doi: 10.3389/fpsyg.2021.716433

Received: 01 June 2021; Accepted: 27 October 2021;

Published: 29 November 2021.

Edited by:

Verena V. Hafner, Humboldt University of Berlin, Germany

Copyright © 2021 Langer and Ay. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Carlotta Langer, carlotta.langer@tuhh.de
