REVIEW article

Front. Psychol., 26 August 2021

Sec. Cognitive Science

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.715696

Our aim in this paper is to contribute toward acknowledging the general role of opposites as an organizing principle in the human mind. We support this claim in relation to human reasoning by collecting evidence from various studies which shows that “thinking in opposites” is not only involved in formal logical thinking, but can also be applied in both deductive and inductive reasoning, as well as in problem solving. We also describe the results of a series of studies which, although they have been developed within a number of different theoretical frameworks based on various methodologies, all demonstrate that giving hints or training reasoners to think in terms of opposites improves their performance in tasks in which spontaneous thinking may lead to classic biases and impasses. Since we all possess an intuitive idea of what opposites are, prompting people to “think in opposites” is undoubtedly within everyone's reach. In the final section, we discuss the potential of this strategy and suggest possible directions for future research aimed at systematically testing the benefits that might arise from the use of this technique in contexts beyond those tested thus far. Ascertaining the conditions in which reasoners might benefit will also help to clarify the underlying mechanisms from the point of view, for instance, of analytical, conscious processing vs. automatic, unconscious processing.

Humans have an intuitive idea of opposites in addition to an intuitive idea of what constitutes similarity, diversity, and sameness. Same-different tasks have been extensively used in Psychology to study perception and categorization without the need to explain to participants what the terms “same” or “different” mean. We are all familiar with the experience of needing to change an item we have just bought when we notice a defect and we expect the salesperson in the shop to exchange it with an identical item (that is, the same size, color, and design etc.). If the assistant tries to give us a different item or even a similar one, he/she will need to convince us to accept it as a replacement since we see it is not the same as the original item. In the same way, we immediately recognize whether two sounds are identical, similar or different in terms of a series of opposite features, for example, high-low, increasing-decreasing, regular-irregular or pleasant-unpleasant. These are all understood by us as being opposite attributes without the need for any formal definition. These might seem to be quite trivial examples, but their obviousness shows us that we readily acknowledge how basic and pervasive these relationships are in the structure of our everyday lives.

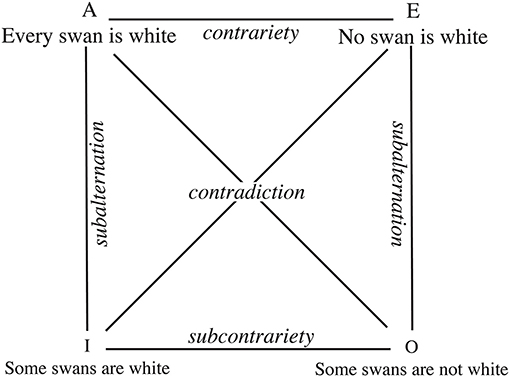

The idea that similarity is transversal to many different cognitive functions has been accepted in Psychology at least since Tversky's seminal work (Tversky, 1977). “Similarity is a central construct in cognitive science. It is involved in explanations of cognitive processes as diverse as memory retrieval, categorization, visual search, problem solving, induction, language processing, and social judgment (see Hahn et al., 2003, and references therein). Consequently, the theoretical understanding of similarity affects research in all of these areas” (Hahn et al., 2009). Any cognitive scientist engaged in describing how human perception, categorization, language or reasoning works will continuously come across similarity as a key concept in the organizing principle by means of which individuals classify objects, form concepts, and make generalizations (for a review, see Goldstone, 1994). We much less frequently hear cognitive scientists making references to opposites when discussing the same processes. Our aim with the present paper is to persuade cognitive scientists that the role of opposites in cognition should not be relegated to formal logical matters such as those traditionally exemplified by “the square of opposition” (Figure 1; see Beziau and Basti, 2016 for an update on the developments of the square of opposition within modern logics).

Figure 1. The square of opposition refers to a diagram which was introduced in traditional logics. It represents four types of logical relationships holding between four basic forms of propositions: universal affirmatives (A), universal negations (E), particular affirmatives (I), and particular negations (O).

In the following sections of the present paper (sections Thinking in Terms of Opposites in Order to Figure Out Alternatives in Everyday Life, Opposites and Deductive Reasoning, Opposites and Inductive Reasoning, and Opposites and Insight Problem Solving) we draw attention to the role that opposites play in reasoning processes such as everyday counterfactual thinking, classic deductive and inductive reasoning tasks and the representational changes required in certain reasoning tasks. An inspection of the contexts in which opposites are implied in spontaneous human thinking and of others in which they are crucially needed (even though not spontaneously applied) suggests that the notion of opposites supports human thinking in a number of ways. If we acknowledge this, it follows that opposites can be regarded as a general organizing principle for the human mind rather than simply a specific relationship (however respectable) merely related to logics. This broader perspective on opposites which sees them as useful in a variety of reasoning processes leads to new questions and suggests new directions for research, as we propose in the final section of the paper (section Discussion). Before addressing all these points, we refer to three different approaches which have been developed within the field of Cognitive science in the last 20 years that—although in different ways—all deem opposites to be a general and basic phenomenon. What is fundamental to these approaches is the idea that opposites are a primal organizing principle for the human mind which applies to language, perception and relational reasoning.

(i) Cognitive linguists have noted and discussed the pervasiveness of antonyms in natural human languages (see, for instance, Jones, 2002, 2007; Paradis et al., 2009). It has been acknowledged that opposites represent a special semantic relationship which is more easily learned and more intuitively understood than any other semantic relationship and indeed non-experts can easily identify opposites, despite being unable to formulate a clear definition of the requisite for two meanings to be opposites (e.g., Kagan, 1984; Miller and Fellbaum, 1991; Fellbaum, 1995; Jones, 2002; Murphy, 2003; Croft and Cruse, 2004). It has been shown that this primary intuition has its roots in infants' pre-verbal categorization (e.g., Casasola et al., 2003; Casasola, 2008).

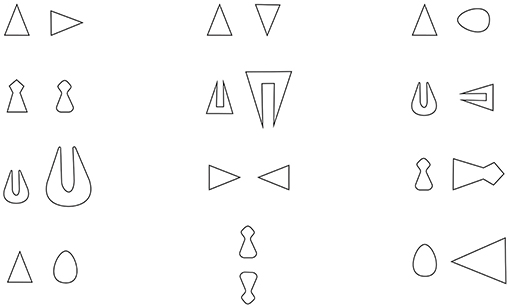

(ii) A second approach involves the hypothesis that opposites are a specific, basic, perceived relationship. This approach (the main theoretical and methodological framework of which is summarized in Bianchi and Savardi, 2008a) has led to various experimental explorations of the abilities of adults and children to recognize whether certain configurations are “opposite” as compared to “different” or “similar” and to also produce opposite configurations. These explorations referred to various perceptual domains and contents and involved, for example, simple visual configurations (e.g., Bianchi and Savardi, 2006; Schepis et al., 2009; Savardi et al., 2010; Bianchi et al., 2017a), everyday objects and/or environments (e.g., Bianchi et al., 2011, 2013, 2017c), human body postures (e.g., Bianchi and Savardi, 2008b; Bianchi et al., 2014), and acoustic stimuli (e.g., Biassoni, 2009; Bianchi et al., 2017b). The results emerging from these studies indicated that (a) the participants were consistent in defining the point along a dimension at which a property stops being perceived as pertaining to one extreme of the dimension (e.g., near on the dimension near/far) and starts to be perceived as an instance of the opposite property (e.g., in this case far) or of an intermediate region with variations that are not perceived as pertaining to either one pole or the other (e.g., neither near nor far); (b) two configurations are perceived to be opposites when they show a maximum contrast in terms of a perceptually salient property (usually regarding the orientation or direction of the configuration) within a condition of otherwise overall invariance (some examples are provided in Figure 2); and (c) due evidently to this close connection between the perception of opposition and the perception of opposite orientation in an overall invariant configuration, opposition is also perceived in configurations involving mirror symmetry, including people's perception of their own body reflection in a plane mirror.

Figure 2. Some examples of pairs of configurations formed of two simple bidimensional figures which are perceived as similar (1st column), opposite (2nd column), and different (3rd column)—Bianchi and Savardi (2008a).

(iii) A third approach focuses on people's ability to discern three different types of oppositional relationships between objects, concepts and ideas, that is anomaly, antinomy, and antithesis. The recognition of deviance (anomaly), incompatibility (antinomy), and opposition (antithesis) has been shown to be critical not only for complex thought (Chinn and Brewer, 1993; Holyoak, 2012), but also with regard to professional abilities in medicine (Dumas et al., 2014), chemistry (Bellocchi and Ritchie, 2011), and engineering (Dumas and Schmidt, 2015; Dumas et al., 2016). This approach was developed over the course of a decade in relation to a number of domains (see Alexander, 2012; Dumas et al., 2013; Alexander et al., 2016) and a Test of Relational Reasoning (TORR) was developed to measure the aforementioned forms of relational reasoning, and also analogy which refers to thinking in terms of similarity rather than opposition (Hesse, 1959; Goswami and Mead, 1992; Alexander et al., 1997; Dunbar, 2001; Hofstadter, 2001; Gentner et al., 2003; Braasch and Goldman, 2010). The anomalous reasoning scale of the TORR gauges respondents' ability to discriminate a deviance or a discrepancy by recognizing an object that does not fit a given or typical pattern. The antinomous reasoning scale measures the ability to reason with mutual exclusivity between categories and to recognize a paradoxical situation (i.e., a situation in which two conditions cannot both be true). The antithetical reasoning scale measures the ability to recognize a direct oppositional relation between situations. The TORR measures the ability to recognize these relationships both with regard to visual stimuli and to written sentences.

This study focuses on the role of opposites in relation to various types of reasoning tasks. In this sense, we come closer to the third approach described in the previous section (point iii above). However, the phenomenon is observed from a standpoint which is less technical than that presupposed by that approach and is more similar to the intuitive idea of opposition relating to the other two approaches (that is points i and ii above). Indeed, when we refer to “thinking in opposites,” we do not refer to any technical or logical definition which, conversely, constructs such as antithesis, contradiction, and counterfactuality necessarily imply. We start, more simply, from the consideration that when humans conceive of a variation from a given state, object, action or situation, they necessarily do it with reference to opposites. For the sake of intellectual honesty, it is to be noted that the roots of this idea were already present in the work of Aristotle (1984, Cat. 5, 4a 30–34), but have been explicitly applied to the human cognitive system in more recent times (e.g., Gärdenfors, 2000, 2014; Paradis, 2005; Bianchi and Savardi, 2008a). By saying that every kind of perceptually identifiable variation between two objects relates in some way to opposition, we do not mean that the final outcome is necessarily globally perceived as opposite; from a global perspective, two objects might simply appear diverse or similar. This depends on the number of features which are transformed and on which features are transformed. Indeed, in the case of similarity, diversity and opposition, both the number of common, distinctive features and the salience of those features are critical factors (the literature supporting this statement is reviewed in Bianchi and Savardi, 2008a, p. 129–130). 
However, in order to stand up to an analytical (i.e., local) inspection, any variation must necessarily consist of a change from one property or state to another property or state along a dimension which is cognitively defined by two opposite properties. This can involve varying degrees of alteration, for example, something small can be transformed either into something big (i.e., its opposite) or into something which is still perceived as small but is less small than before. The transformation may also comprise a variation which shifts toward an intermediate property (e.g., something small becomes something which is perceived as neither small nor big).

The fact that there is a close link between non-identity and opposition has also emerged in research investigating the extent to which the negation of one property (e.g., not good) implies affirming the opposite property (e.g., bad). For instance, “The water is not hot” makes us think of water that might be warm, lukewarm or cool. It has been empirically shown that inferring one of these options in preference to the others depends in part on rhetorical aspects (e.g., Colston, 1999; Horn and Kato, 2000) and in part on semantic (e.g., Paradis and Willners, 2006) and perceptual aspects (e.g., Bianchi et al., 2011) relating to both the nature of the negated property itself and the opposite pole. In any case, understanding negation seems to entail a mental construction of a model implying a variation from the negated situation identified within a dimension with two opposite poles (Kaup et al., 2006, 2007).

This idea that every variation (including negation) occurs along a dimension with opposite poles applies to the present paper in the sense that “thinking in opposites” becomes the natural background for creating mental alternatives. These may be alternatives to reality which relate to how past events could have been different (section Thinking in Terms of Opposites in Order to Figure Out Alternatives in Everyday Life); alternative models in deductive reasoning (section Opposites and Deductive Reasoning); alternative outcomes in hypothesis testing (section Opposites and Inductive Reasoning); or alternative representations of a problem in insight problem solving (section Opposites and Insight Problem Solving). The goal of this paper is to provide evidence of the plausibility of this idea and to stimulate new directions for future research.

As psychologists have shown, the range of applications for counterfactual thinking is extensive. It supports thinking processes in various reasoning tasks (Byrne, 2016, 2017, 2018) and it is often activated when one is engaged in justifying or defending past events (Markman et al., 2008) or negative performances (Markman and Tetlock, 2000; McCrea, 2008; Tyser et al., 2012), as well as when people formulate intents or take decisions regarding future events (Markman et al., 1993; Epstude and Roese, 2008; Ferrante et al., 2013).

Although the number of counterfactual alternatives to a given event is potentially infinite, people only tend to create a limited number of alternatives. They transform exceptional events into normal events rather than vice versa (Kahneman and Tversky, 1982a), uncontrollable events become controllable (Girotto et al., 1991; Davis et al., 1995), inaction is replaced by action (Kahneman and Tversky, 1982b; Ritov and Baron, 1990), changes regarding the first events in a causal chain are prioritized over the last events (Wells et al., 1987; Segura et al., 2002) and, in contrast, the last events are changed rather than the first events in a sequence of causally independent events (Miller and Gunasegaram, 1990).

What is especially relevant to the present analysis is that no matter the content of the alternative scenario, counterfactual thinking involves imagining the opposite of what really occurred (Fillenbaum, 1974; Santamaria et al., 2005; Byrne, 2018). An example of counterfactual thinking such as “If he had caught the plane, he would have arrived on time” makes us think about both missing the plane and arriving late as opposed to catching the plane and arriving on time. “If I hadn't forgotten my umbrella, I would not be soaked to the skin now” makes us think about remembering to take the umbrella as opposed to forgetting it, and about being dry as opposed to being drenched.

Literature on the development of children has shown that by the age of 7 they are able to compare what actually occurred with alternatives to reality (e.g., German, 1999; Beck et al., 2006; Rafetseder et al., 2010) and to understand emotions based on counterfactual reasoning. That is to say that they understand how “thinking about how things could have been better” can make one feel regret, and that “thinking about how things could have been worse” can make one feel relief (see Kuczaj and Daly, 1979; Harris et al., 1996; Amsel and Smalley, 2000; Guttentag and Ferrell, 2004). Curiosity about alternative outcomes is already present in children of 4 and 5 years (e.g., Fitzgibbon et al., 2019), and can therefore be said to predate counterfactual reasoning in its strict sense. At this age, children also understand that saying something “almost happened” (e.g., “it almost fell”) involves thinking not only about what really happened but also about the event that did not occur (e.g., Beck and Guthrie, 2011).

In this section, we focus on three phenomena which give an idea of the pervasiveness of opposites in deductive reasoning. Let us start with a classic example, Wason's four-card selection task (Wason, 1966, 1968). This type of task requires the ability to produce valid inferences from the information expressed in the premises. A set of four cards is placed on a table and the participants taking part in the experiment are told that each of these cards has a number printed on one side and a letter on the other side. They can, of course, only see one side of the cards, some of which are number side up and some of which are letter side up. Their task is to determine which cards need to be turned over in order to test the proposition “if there is a vowel on one side of the card then there is an even number on the other side.” In order to solve the syllogism (as required in all deductive reasoning tasks), counterexamples to the initial hypothesis need to be found. Indeed, if one single counterexample can be found, then the hypothesis has to be considered invalid, and therefore also the entire inference leading to the hypothesis is wrong (Geiger and Oberauer, 2007; Markovits et al., 2010). The first mental model that comes to mind confirms the association “vowel-even number” and people turn over the card with a vowel on it (let's call it the p-property card) and the one with an even number on it (let's call it the q-property card). However, only when the search for counterexamples fails can the initial hypothesis be confirmed. The correct procedure to follow involves testing the combination of the antecedent with the negation of the consequent (i.e., p and not-q). 
The frequency with which this strategy is spontaneously activated depends on the contents of the syllogism (Cheng and Holyoak, 1985; Manktelow and Over, 1991; Sperber et al., 1995; Girotto et al., 2001) and on whether the participants are given a prompt that in order to prove that a rule is true, they need to prove there is no case in which the rule is false (Augustinova, 2008). Independently of whether counterfactual thinking is spontaneously activated or prompted by a hint, in Wason's classic task we clearly see this in action since thinking “not-q” implies thinking in opposites, and “not even” numbers immediately make one think of odd numbers.
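The search for counterexamples described above can be made concrete with a minimal sketch. It is only an illustration under stated assumptions: the four visible faces, the stand-in letters and numbers, and the function names are hypothetical and are not taken from Wason's materials. A card is worth turning over only if some possible hidden side would yield the combination p and not-q, that is, a vowel paired with a "not even" (odd) number.

```python
# Hypothetical stand-ins: one vowel and one consonant represent all letters,
# one even and one odd number represent all numbers.
LETTERS = ["A", "K"]
NUMBERS = [4, 7]


def violates_rule(letter, number):
    """Counterexample to 'if vowel then even': a vowel (p) with an odd number (not-q)."""
    return letter in "AEIOU" and number % 2 == 1


def could_falsify(visible):
    """True if some hidden side of this card would produce a counterexample."""
    if isinstance(visible, str):  # a letter is showing, a number is hidden
        return any(violates_rule(visible, n) for n in NUMBERS)
    return any(violates_rule(l, visible) for l in LETTERS)  # a number is showing


def cards_to_turn(visible_faces):
    """Select only the cards whose hidden side could falsify the rule."""
    return [face for face in visible_faces if could_falsify(face)]


print(cards_to_turn(["E", "K", 4, 7]))
```

Under these assumptions the sketch selects the vowel card and the odd-number card. The even-number card, which many reasoners choose, cannot produce a counterexample whatever is on its other side.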

A second phenomenon in which opposites are in effect implied concerns deductive reasoning involving scalar implicatures. Scalar implicatures underlie the interpretation of certain categorical syllogisms. Reasoners attribute an implicit meaning to an utterance beyond the literal meaning and also beyond its strict, logical meaning, based on implicit conversational pragmatic assumptions (Papafragou and Musolino, 2003; Chierchia, 2004; Papafragou and Skordos, 2016). Scalar implicatures usually involve quantifiers, but in any case entail an interpretation along a scale of possibilities. The listener will assume that the speaker has a reason for not choosing a stronger term on the scale concerned. This can be clearly seen in the use of “some,” as in (1), which suggests the meaning “not all,” even though by saying “some,” logically speaking “all” cannot be excluded. Assuming that the speaker is trying to be helpful and say what he/she genuinely considers to be relevant to the conversation, the fact that he/she chooses “some” prompts the listener to think that the other person is not in a position to make an informationally stronger statement (e.g., Mary ate all of the cakes). The listener thus infers that the second statement (i.e., Mary did not eat all of the cakes) is true.

What we want to emphasize in this paper is that scalar implicatures necessarily require one to think along a scale which has opposites at each extreme. The underlying dimension in (1) goes from “all” to “none.” In the second example, the scale implied refers to the number of bears. The scalar implicature involved (“three-more than three”) is based on the contrast between “the same quantity” and “a different quantity,” which might be more than three or less than three. In this case too (assuming the speaker is trying to be helpful and say what he/she really saw and considers to be relevant), the listener infers that they saw exactly three bears and not more or less than three. The third example works in a similar way, but it plays on the contrast between “and-or,” that is, between “both and only one of the two” (Horn, 1984).

(1) a. Mary ate some of the cakes.

b. Mary did not eat all of the cakes.

(2) a. We saw three bears.

b. We did not see more than three bears.

(3) a. Elmo will buy a car or a boat.

b. Elmo will not buy both a car and a boat.
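The inference pattern shared by examples (1)-(3) can be sketched as a lookup along an ordered scale. This is a deliberately simplified illustration, not a model from the literature cited here: the scale contents, their names, and the function `negated_alternatives` are assumptions introduced for the example. Each scale is ordered from the weaker to the stronger term, and uttering a term invites the listener to negate every stronger term on the same scale.

```python
# Hypothetical scales, each ordered from the weaker to the stronger term.
SCALES = {
    "quantity": ["some", "all"],
    "numeral": ["three", "more than three"],
    "connective": ["or", "and"],
}


def negated_alternatives(term):
    """Return the stronger scale-mates that a listener would infer to be false."""
    for scale in SCALES.values():
        if term in scale:
            return scale[scale.index(term) + 1:]
    return []  # the term does not belong to any known scale


for uttered in ["some", "three", "or", "all"]:
    print(uttered, "->", negated_alternatives(uttered))
```

In this sketch "some" yields "all" as the negated alternative, matching (1b), whereas "all" already sits at the strong pole of its scale and so triggers no implicature.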

Some recent studies have focused on the role played by the cognitive structure of the scale in question in terms of pragmatic strengthening and the computation of an implicature. This idea is that the semantic structure of an adjective, for example, will systematically encourage or block certain inferences (e.g., van Tiel et al., 2016; Gotzner et al., 2018; Leffel et al., 2019). The structure of a scale is operationalized with reference to boundedness, the extremeness of the stronger pole, the nature of the weaker pole (i.e., whether it is minimum, relative, or a zero-point indicating the absence of the property), the distance between the two poles and their polarity (i.e., positive or negative). This puts constraints on the range of potential values and thereby determines the alternatives which can be used in the computation of an implicature (see also van Tiel and Schaeken, 2017).

The third phenomenon we refer to concerns reasoning in relation to logical connectives such as inclusive disjunctions (i.e., “P or Q or both”) and exclusive disjunctions (i.e., “either P or Q”). The truth table of any binary connective relating to two propositions (P, Q) holds four truth-value outcomes, in a defined order (see Table 1). The first row refers to the condition in which P is true and Q is true; the second to when P is true and Q is false; the third row to when P is false and Q is true and the fourth row to when P and Q are both false. The conditional “if P, then Q” implied in Wason's 4 card problem is only false in the second condition, that is, when P is true and Q false, which, in effect, is the condition which will solve the task.
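The four-row ordering described above can be reproduced with a short sketch that prints the truth values of the conditional and of the two disjunctions. The function names are illustrative assumptions. The rows are generated in the order given in the text: P and Q both true, P true and Q false, P false and Q true, both false.

```python
from itertools import product


def conditional(p, q):
    """'If P, then Q': false only when P is true and Q is false."""
    return (not p) or q


def inclusive_or(p, q):
    """'P or Q or both'."""
    return p or q


def exclusive_or(p, q):
    """'Either P or Q' (but not both)."""
    return p != q


# Rows in the order used in the text: (T, T), (T, F), (F, T), (F, F).
ROWS = list(product([True, False], repeat=2))

print("P     Q     if-then  incl-or  excl-or")
for p, q in ROWS:
    print(f"{p!s:<5} {q!s:<5} {conditional(p, q)!s:<8} "
          f"{inclusive_or(p, q)!s:<8} {exclusive_or(p, q)!s:<8}")
```

The only row in which the conditional comes out false is the second one, which is the "p and not-q" case that solves the selection task.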

Many logical fallacies derive from an inappropriate interpretation of disjunctive connectives. The exclusive disjunction (“either P or Q”) is true only when the conditions described by the second and third row of the truth table hold, that is, P is true and Q is false or vice versa. Conversely, the inclusive disjunction (“P or Q or both”) is true in the first three conditions and false only in the fourth one (i.e., when P and Q are both false). In the case of a basic inference in which the major premise is an inclusive disjunction and the minor premise is affirmative (modus ponendo tollens), given the affirmation of P, reasoners tend to conclude not-Q, and given the affirmation of Q they tend to conclude not-P (e.g., Evans et al., 1993). This would be valid in the case of an exclusive disjunction, whereas we are dealing here with an inclusive disjunction. According to Robert and Brisson (2016, p. 383 ff.), and assuming the explanation of the fallacies in conditional reasoning provided by the mental model theory in terms of incomplete representations of the premises is correct (Johnson-Laird, 1983, 2010; Johnson-Laird and Khemlani, 2014), conditional fallacies all depend on the counterexample to the fallacious conclusion not being taken into account. As Table 1 demonstrates, the second, third and fourth models all imply negation, that is they refer to something which is not-P or not-Q. As stated in the introduction to this paper (section Introduction), from a cognitive point of view negation presupposes opposition. In the examples in Table 1, not-even means odd, not-vowel means a consonant. However, not all domains are conceptualized as being mutually exclusive. The binary or graded structure of a dimension (e.g., Kennedy and McNally, 2005) substantially influences the amount of shift which spontaneously comes to mind. 
The semantic meaning of the sentence John is not handsome merely entails that John is something less than handsome, that is, he might be attractive, average looking or even ugly, depending also on other contextual and pragmatic factors (e.g., Paradis, 2008). Despite the fact that this is a matter of modulation, there is in any case a presupposed reference to opposition.
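Why the inference from a disjunction plus the affirmation of P to not-Q is valid for the exclusive reading but fallacious for the inclusive one can be checked mechanically. The sketch below is an illustration only, and its function names are assumptions. It searches the four possible cases for a counterexample, that is, a case in which both premises are true while the conclusion not-Q is false.

```python
from itertools import product


def inclusive_or(p, q):
    return p or q


def exclusive_or(p, q):
    return p != q


def counterexamples(disjunction):
    """Cases where 'P <or> Q' and 'P' are true but the conclusion 'not-Q' is false."""
    return [
        (p, q)
        for p, q in product([True, False], repeat=2)
        if disjunction(p, q) and p and not (not q)
    ]


print("inclusive:", counterexamples(inclusive_or))
print("exclusive:", counterexamples(exclusive_or))
```

The inclusive reading leaves one counterexample, the case in which P and Q are both true, which is precisely the model that reasoners fail to take into account. The exclusive reading leaves none.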

Hypothesis testing epitomizes the process of inductive reasoning (Oaksford and Chater, 1994; Vartanian et al., 2003). It underlies not only scientific reasoning (Mahoney and DeMonbreun, 1977; Langley et al., 1987; Klahr and Dunbar, 1988), but also many classes of human judgments, including social inferences regarding individual or group behaviors (e.g., Nisbett and Ross, 1980; DiDonato et al., 2011). The process of hypothesis testing involves forming hypotheses, and then gathering evidence in order to test and revise these hypotheses (e.g., Klayman and Ha, 1987; Heit, 2000; Evans, 2007).

Wason's rule discovery task (Wason, 1960) represents the standard paradigm employed to study hypothesis testing behavior. In this task, the participants are asked to discover the arithmetic rule devised by the experimenter that applies to a series of three number sequences. They are given an initial sequence (2-4-6) that fits in with the rule and are invited to construct their own series of three number sequences in order to test any hypotheses they formulate about the rule. The experimenter provides feedback and once the participants feel confident that they have found the rule, they announce their finding. Generally, the process goes on until the participants come up with the right rule or it is stopped after a fixed amount of time. This task requires the reasoners to create not only series of numbers that confirm their idea regarding the rule, but also series of numbers which disconfirm the rule (Rossi et al., 2001; Evans, 2007). Most people do not proceed in this way, and this task illustrates how people generally tend to follow a confirmation bias in hypothesis testing tasks. In fact, only around 20% of participants find the correct rule at the first attempt (Wason, 1960; Farris and Revlin, 1989b). Analyses of the reasoning process used by those participants who find the correct rule at the first attempt revealed that they applied a counterfactual strategy both when generating hypotheses and when testing them (Klayman and Ha, 1987; Farris and Revlin, 1989a,b; Oaksford and Chater, 1994; Gale and Ball, 2012). Counterfactual hypotheses were formed by varying one thing at a time; for instance, if the initial hypothesis was “any series of three even numbers in increasing order”, participants created a counterfactual hypothesis such as “any series of three odd numbers in increasing order” or “any series of three even numbers in decreasing order” or “any series of three numbers which are the same” (Tschirgi, 1980). 
The examples not only make it clear that each of the hypotheses transforms and tests the effect of making one transformation at a time, but also show that the transformations are based on opposites (i.e., even is transformed into odd, increasing is transformed into decreasing and different numbers are transformed into the same numbers).
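The difference between confirmatory tests and tests built on opposites can be illustrated with a small simulation. It rests on stated assumptions: the experimenter's rule is taken to be "any three numbers in increasing order" (the rule used in Wason's original task, which is not spelled out in the passage above), the reasoner's hypothesis is the "three even numbers in increasing order" example from the text, and the specific triples and function names are hypothetical.

```python
def experimenter_rule(triple):
    """Assumed target rule: any three numbers in increasing order."""
    a, b, c = triple
    return a < b < c


def hypothesis(triple):
    """Reasoner's initial hypothesis: three even numbers in increasing order."""
    a, b, c = triple
    return a < b < c and all(n % 2 == 0 for n in triple)


def falsifies_hypothesis(triple):
    """Feedback contradicts the hypothesis when rule and hypothesis disagree."""
    return experimenter_rule(triple) != hypothesis(triple)


# Confirmatory tests: triples chosen because they fit the hypothesis.
confirmatory = [(8, 10, 12), (20, 22, 24)]

# Tests obtained by switching one property to its opposite at a time.
opposite_based = {
    "odd instead of even": (1, 3, 5),
    "decreasing instead of increasing": (6, 4, 2),
    "same instead of different": (4, 4, 4),
}

for t in confirmatory:
    print(t, "falsifies:", falsifies_hypothesis(t))
for label, t in opposite_based.items():
    print(label, t, "fits rule:", experimenter_rule(t),
          "falsifies:", falsifies_hypothesis(t))
```

In this sketch no confirmatory triple can ever reveal that the hypothesis is too narrow, whereas switching "even" to "odd" does so immediately. The other two variations fail to fit the rule, which tells the reasoner that increasing order is a property worth keeping.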

There has been a great deal of debate, in some way inspired by Popper's (2005) falsification model, on the exclusive need to adopt disconfirmatory strategies in inductive inference tasks (e.g., Farris and Revlin, 1989a,b, 1991; Gorman, 1991) and various attempts have been made to train participants to use disconfirmatory strategies on the 2-4-6 rule discovery task or in other similar tasks. One of these is the “Eleusis” card game (invented by Robert Abbott in 1956). The problem is essentially very similar to Wason's triples task. The game involves one player (the dealer) dealing a row of cards in sequence and this person then chooses a secret rule to determine which card or cards can be played subsequently. The other players take turns to try and guess the rule for the sequence by placing one or more cards on the table. The dealer says whether these cards fit in with the rule or not. Gorman et al. (1984) trained participants to apply either a confirmatory strategy (“test your guesses by concentrating on playing cards that will be correct”) or a disconfirmatory strategy (“test your guesses by deliberately playing cards that you think will be wrong”), or a mixed strategy (“first, concentrate on getting right answers until you have a guess; then test your guess by deliberately playing cards you are sure will be wrong”). Similar strategies were tested by Tweney et al. (1980), but using the 2-4-6 problem. Gorman et al. (1984) found that groups using a disconfirmatory strategy found the correct rule 72% of the time, those applying a combined strategy were correct 50% of the time and those using a confirmatory strategy were only right in 25% of cases. Tweney et al. (1980) found that a disconfirmatory strategy was easily induced, but it did not lead to greater efficiency and neither did a mixed strategy. 
A comparison of the two studies suggests that a critical element is whether participants are given feedback on whether their guesses are consistent with the rule or not. Indeed, in Gorman and Gorman's (1984) study, the participants were allowed to make as many guesses as they liked within a half-hour time limit, playing a maximum of 60 cards. They were requested to write down their guesses, the number of the card and the time at which they made the guess, but received no feedback until the end of the experiment.

Improvements in performance were generally found when the participants were prompted to work on the discovery of two interrelated rules (this condition is known as dual goal instruction). Success rates at the first attempt typically rise to over 60% in the dual goal instruction condition, when complementary rules are considered (e.g., Tweney et al., 1980; Tukey, 1986; Gorman et al., 1987; Wharton et al., 1993). Why does this type of manipulation make a difference? Gale and Ball (2006, 2009) hypothesized and subsequently verified that a critical element is whether the two rules stimulate participants to think in terms of contrast classes, that is, in terms of relevant oppositional contrasts. As confirmed in a further study on finding rules for triple sequences (Gale and Ball, 2012), the facilitatory effect of the dual goal instruction was greater when the participants were given the 6-4-2 triple sequence as an exemplar of the second rule (with a success rate of around 75%) than when the exemplar was 9-8-1 (with only a 36% success rate) or the 4-4-4 sequence (with a 20% success rate). The 6-4-2 triple makes the relevant contrast immediately evident; the 9-8-1 triple contrasts in more than one dimension and thus only to some extent shifts the participants' attention toward the critical characteristics, while the 4-4-4 triple suggests a contrast class (same vs. different numbers) which is not useful in any way.
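
To make the difference between the three exemplars concrete, the following minimal Python sketch codes each triple on four dimensions and lists those on which it differs from the seed triple 2-4-6. The coding scheme and the names `describe` and `contrasts` are illustrative assumptions introduced here; they are not taken from Gale and Ball's materials.

```python
from typing import Dict, List, Tuple

Triple = Tuple[int, int, int]


def describe(triple: Triple) -> Dict[str, str]:
    """Code a triple on four dimensions (an assumed, illustrative coding scheme)."""
    a, b, c = triple
    if a < b < c:
        direction = "ascending"
    elif a > b > c:
        direction = "descending"
    else:
        direction = "none"
    parities = {n % 2 for n in triple}
    if parities == {0}:
        parity = "even"
    elif parities == {1}:
        parity = "odd"
    else:
        parity = "mixed"
    interval = "regular" if b - a == c - b else "irregular"
    numbers = "same" if a == b == c else "different"
    return {
        "direction": direction,
        "parity": parity,
        "interval": interval,
        "numbers": numbers,
    }


def contrasts(seed: Triple, exemplar: Triple) -> List[str]:
    """Return the dimensions on which the exemplar differs from the seed."""
    s, e = describe(seed), describe(exemplar)
    return [dim for dim in s if s[dim] != e[dim]]


SEED = (2, 4, 6)
for exemplar in [(6, 4, 2), (9, 8, 1), (4, 4, 4)]:
    print(exemplar, contrasts(SEED, exemplar))
```

Under this coding, 6-4-2 differs from the seed in direction alone, 9-8-1 differs on three dimensions at once, and 4-4-4 introduces the same vs. different contrast while having no direction at all. This is in keeping with the ordering of the success rates reported above, although the coding itself plays no part in the original studies.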

The idea of a “contrast class” refers here to a psychological rather than logical concept (Oaksford, 2002, p. 140). It does not merely identify the logical complement set. In Oaksford's example, the sentence “Person X is not drinking coffee” entails a logical complement set which includes all beverages except coffee (e.g., whisky, cola, or sparkling water), but the immediate hypothesis that comes to people's minds is that the person must be drinking some other hot beverage such as tea. Thus, we see that the identification of a contrasting set is driven by cognitive “relevance” (Sperber and Wilson, 1986/1995), which means that reasoners focus on one or more relevant contrasts out of a range of alternatives. These in turn might depend on various aspects such as perceptual relevance, semantic factors, and also contextual aspects (e.g., situational, socio-linguistic, stylistic, or prosodic factors). These influence what the reasoner may decide constitutes a contrast. The identification of a contrast tends to be more straightforward if there is clearly a binary opposite, for example, even-odd, ascending-descending, or vowel-consonant (for a discussion of the concept of relevance in the identification of contrast sets, see Tenbrink and Freksa, 2009, but also Paradis et al., 2009 for an analysis of the more general issue of opposites and canonicity). We return to this point in the final discussion.

In line with the main claim of this paper, we suggest that an explanation for why stimulating reasoners to think in opposites by means of facilitatory hints involving contrast classes seems to be more effective than prompting them to apply disconfirmatory strategies (e.g., Tweney et al., 1980) might depend on the fact that this strategy does not ask people to focus on testing hypotheses they expect to be wrong. In contrast, it allows them to make a positive search using opposites to identify potential falsifiers of the rule. This is not very different from Gale and Ball's suggestion (Gale and Ball, 2012, pp. 416–417) which is, however, based on Oaksford and Chater's (1994) iterative counterfactual model.

Another domain in which thinking in terms of opposites is crucial is problem solving. The process of problem solving starts off from an initial state, such as a given situation or problem statement; the solver works toward the goal state (i.e., the solved problem) passing through various intermediate states along the way. Insight problems cannot be solved by means of the mere application of predefined rules and they are typically characterized by a moment when the solution arrives suddenly and unexpectedly (often known as the “Aha!” moment) after an impasse, usually as part of a stadial process (Ohlsson, 1992; Öllinger et al., 2014; Fedor et al., 2015). It is crucial that before this revelatory moment there has been some form of representational change which has allowed the person to overcome a blockage deriving from a former (unproductive) representation of the problem (Knoblich et al., 1999; Öllinger et al., 2008; Ohlsson, 2011) and various studies have focused on the mechanisms underlying this change and on how to facilitate it (see Gilhooly et al., 2015).

The relevance of opposites in this representational change was first theorized by the Gestalt psychologists Duncker (1945) and Wertheimer (1945/1959). According to Wertheimer, solving a problem means creating new groupings within the overall structure of the problem, by unifying the elements of the problem that were initially separated and dividing elements that were initially unified, thereby creating a new mental organization of the problem (Wertheimer, 1945/1959). Duncker (1945) pointed out that what is needed in this restructuring is a shift of function in the elements within a system, and he explicitly defined this shift in terms of opposites. In the last 10 years, a number of studies have been conducted that offer direct or indirect evidence that thinking in opposites supports a relaxation of the constraints that prevent the reasoners from seeing the solution. For example, in the ping-pong ball problem (Ansburg and Dominowski, 2000), reasoners are asked to work out how to throw a ping-pong ball (without bouncing it off any surface or tying it to anything) so that it will travel a short distance, come to a dead stop and then roll back on its tracks. In order to reach the solution, it is necessary to imagine the ball following a vertical trajectory, but this contrasts with the mental model that initially comes to people's minds, which contains the implicit assumption of a horizontal trajectory (Murray and Byrne, 2013). This bias toward a horizontal trajectory is the cause of the impasse. The problem solver needs to stop focusing on a horizontal trajectory and start thinking of a vertical trajectory in order to resolve the impasse.

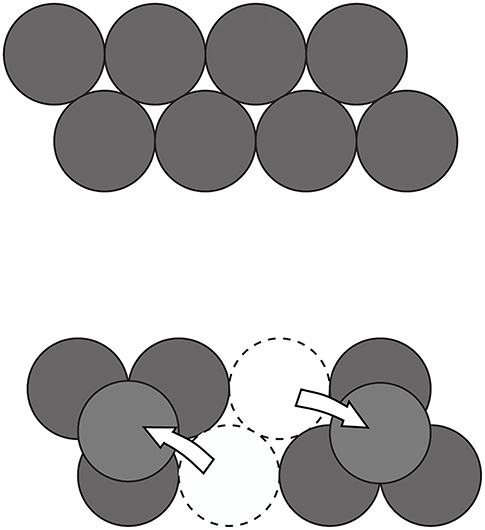

Explicitly stimulating participants (either by means of training or using hints) to explore the initial structure of a problem in terms of its salient spatial features and to systematically transform them into their opposites has proved to be effective in a series of studies (e.g., Branchini et al., 2015a,b, 2016; Bianchi et al., 2020). To exemplify the point, let's consider the eight coin problem in which the participants are asked to start from a given configuration of eight disks (see the top image in Figure 3), and to move only two coins in such a way that the new arrangement will respect the condition that each coin only touches three other coins. The moves which need to be made and the final solution are represented in the bottom image in Figure 3. If one looks at the initial configuration, one notices various aspects: that the configuration is oriented horizontally; that there is the same number of coins in each of the two rows; that they are misaligned; that they are united to form a single group of coins and that they lie on the same plane (i.e., it is a bidimensional configuration). Encouraging the participants to focus on the properties they identify while exploring the structure of the problem and then to think of these in terms of their opposites (i.e., horizontal-vertical, equal-different number, aligned-misaligned, united-separated and bidimensional-three dimensional) helped the participants to ascertain which aspect they needed to transform, increased the number of attempts made and led to a better performance. In the eight coin problem, the two pairs of opposites which are functional are united-separated (since it is necessary to split the group of coins into two separate subgroups) and bidimensional-three dimensional (since one needs to position two of the coins over the other three so that they are then superimposed and no longer coplanar).

Figure 3. Initial configuration (Top image) and final configuration (Bottom image) in the eight coin problem (Ormerod et al., 2002). The arrow represents the moves which need to be made.

In this paper, we have reviewed studies carried out in the last 20 years to explore the idea that opposition is a general organizing principle for the human mind. In addition to the research reviewed in the introduction (section Introduction), which emphasized the importance of opposites as a basic structure for human perception, human language, and relational reasoning, in this paper we put forward the idea that they are also a pervasive structure which is implied in various reasoning tasks. We have shown that “thinking in opposites” is fundamental to how people naturally think when they imagine alternatives to reality in everyday counterfactual reasoning (see section Thinking in Terms of Opposites in Order to Figure Out Alternatives in Everyday Life), that it is presupposed in various deductive reasoning phenomena, from understanding scalar implicatures to counterfactual reasoning in basic conditional reasoning, as well as in reasoning about disjunctive connectives (see section Opposites and Deductive Reasoning), that it supports inductive thinking in that it is involved in the identification of contrast class sets which are crucial to hypothesis testing (see section Opposites and Inductive Reasoning) and that it upholds representational change in insight problem solving, thereby paving the way to the resolution of the problem (see section Opposites and Insight Problem Solving). In this sense, this overview might lead to the impression that we do, in fact, use opposites more than we think.

On the other hand, as part of the picture which emerged from the review of the literature discussed in this paper, there is the consideration that counterfactual thinking is spontaneously used in some circumstances in everyday life, but is not so often spontaneously activated in inductive and deductive reasoning, and neither is it used as a purposeful strategy in problem solving. However, various studies investigating possible ways to stimulate reasoners to overcome typical reasoning biases in all of these domains suggest that giving them a hint to “think in opposites” points them in the right direction and improves their performance. In this sense opposites are used less than they could be. The facilitatory effect of using opposites as a strategy has been found in some studies on people's performance in classic deductive tasks (e.g., Augustinova, 2008), in Wason's triple inductive task (e.g., Gale and Ball, 2009, 2012), and in visuo-spatial insight problem solving (e.g., Murray and Byrne, 2013; Branchini et al., 2015a,b, 2016; Bianchi et al., 2020).

The tasks and prompts used in the abovementioned studies are very different from each other and are also very specific. Further experimentation is therefore needed before any conclusions suggesting that encouraging people to think in terms of opposites kick starts an intuitive strategy that enhances their performance can be generalized beyond the conditions of validity tested thus far. For instance, Branchini et al. (2015a, 2016) tested the effects of implicit hints and explicit training programs based on thinking in opposites in classic visuo-spatial insight problems. They found that this was effective both in terms of modifying the contents of the attempts made (i.e., the choice of properties to focus on), and in terms of the success rate. This has, however, not been tested with verbal insight problems (such as those studied, for instance, by Dow and Mayer, 2004; Macchi and Bagassi, 2015; Patrick et al., 2015), and neither has an adaptation of the training been tested in a hypothesis testing condition, such as for example Wason's triplets condition. If the seed triple 2-4-6 in Wason's problem leads to the hypothesis that the rule involves an “ascending series of even numbers, regularly increasing by two,” an explicit prompt to think in opposites would immediately suggest the precise direction in which to search, that is, testing whether the series is descending rather than ascending, whether it is made up of odd rather than even numbers, or whether it has irregular rather than regular intervals between the numbers, and so on.
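
The search procedure suggested in the paragraph above can be sketched in a few lines of Python. The sketch assumes that the hidden rule is the classic one for Wason's task (any ascending sequence) and that each test triple reverses exactly one feature of the hypothesis formed from the seed 2-4-6. The particular test triples and the names `hidden_rule`, `OPPOSITE_TESTS`, `run_opposite_tests`, and `relevant_dimensions` are hypothetical and chosen purely for illustration.

```python
from typing import Callable, Dict, List, Tuple

Triple = Tuple[int, int, int]


def hidden_rule(triple: Triple) -> bool:
    """Experimenter's rule, assumed here to be 'any ascending sequence'."""
    a, b, c = triple
    return a < b < c


# Each triple reverses one feature of the hypothesis
# "ascending series of even numbers, regularly increasing by two".
OPPOSITE_TESTS: Dict[str, Triple] = {
    "ascending vs. descending": (6, 4, 2),
    "even vs. odd": (1, 3, 5),
    "regular vs. irregular intervals": (2, 4, 10),
}


def run_opposite_tests(rule: Callable[[Triple], bool]) -> Dict[str, bool]:
    """Submit each opposite-based triple and record the feedback received."""
    return {label: rule(triple) for label, triple in OPPOSITE_TESTS.items()}


def relevant_dimensions(feedback: Dict[str, bool]) -> List[str]:
    """A dimension matters only if reversing it makes the triple break the rule."""
    return [label for label, fits in feedback.items() if not fits]


feedback = run_opposite_tests(hidden_rule)
for label, fits in feedback.items():
    print(f"{label}: {'fits the rule' if fits else 'does not fit the rule'}")
print("Dimensions that matter:", relevant_dimensions(feedback))
```

In this sketch the odd and irregular triples still fit the rule, which falsifies the over-specific initial hypothesis, whereas the descending triple does not, which points to direction as the only dimension that matters. Whether human reasoners benefit from such a prompt in this task is, as stated above, still to be tested.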

Another aspect that future research might help to clarify concerns the relation between thinking in opposites and Type 1 and Type 2 processes as defined by Evans and Stanovich (2013a,b). Type 1 processes refer to fast, unconscious, automatic processing, Type 2 to slow, conscious, controlled processing (see also Sloman, 1996; Kahneman, 2011; Stanovich, 2011). The various different types of prompts used in the studies reviewed in this paper involved, in fact, both implicit and explicit suggestions. In the studies carried out by Augustinova (2008), Gale and Ball (2012), Murray and Byrne (2013), and Branchini et al. (2015a), implicit hints were enough to improve the participants' performance. These consisted of, respectively, a falsification cue, a contrast class cue, a counterexample, and an invitation to list the features of the problem and their opposites. There was no explanation as to why this would help. In these studies, the facilitating factors are implicit processes (which hinge on Type 1 processes) since the participants were exposed to the hints without any awareness of how they could be useful. In other studies from which a facilitatory effect emerged (see the studies done by Branchini et al., 2016; Bianchi et al., 2020), the participants were explicitly trained to use thinking in opposites as a strategy. This represents an analytically conscious suggestion which hinges on Type 2 processes. Based on these results, a provisional hypothesis that a prompt might be effective on both levels, within specific limits, seems to emerge, but further studies are of course needed to consolidate or disprove this.

Another aspect that deserves further investigation concerns whether this strategy is effective both when individuals are working alone and when they are working in groups. Content related hints to use opposites turned out to be effective in individual settings in the studies done by Gale and Ball (2012) and Murray and Byrne (2013), whereas positive effects resulting from general training to think in opposites were found in small group settings in the studies carried out by Bianchi et al. (2020) and Branchini et al. (2015a, 2016). Augustinova also worked with groups (Augustinova et al., 2005; Augustinova, 2008). Does this mean that more generic prompts suffice in group contexts whereas a content specific prompt is needed in individual contexts? Whether this relates to the processing dynamics which are natural in groups but are not present in the case of individual reasoners (Tindale and Kameda, 2000; Tindale et al., 2001) is an aspect which is worth investigating further, taking into account, however, the fact that it is always easier to contradict another person's best guess than it is to question one's own best guess (Poletiek, 1996).

Notably, as mentioned in the introduction to this paper, the strength of a prompt to generically “think in opposites” is based on the intuitive nature of opposition that it presupposes, as compared to more complex technical or logical definitions of opposition (i.e., counterfactuality, antonymy, and logical opposition). This means that it can easily become a deliberate strategy to produce systematic manipulations of an initial problem in order to resolve it. Moreover, since opposites, by definition, consist of pairs of properties, they offer a method of opening up the space within which a search is carried out, while at the same time giving precise directions. This combination of openness and boundary shifting fits in with the requisites of an effective cognitive heuristic according to, for instance, Öllinger et al. (2013). Therefore, opposites allow one to think not only in terms of not-x, but also in terms of alternatives which are clearly identifiable since they lie along well-defined dimensions. Contrasts are not set in stone. What makes a contrast relevant depends on contextual aspects, the mental framework, and various linguistic and pragmatic conditions (e.g., Paradis et al., 2009; Tenbrink and Freksa, 2009). This strategy, therefore, is one which is adaptive and flexible, and the variability of alternatives that come up is extremely wide, if not infinite.

The dual nature of opposition also conforms well with the duality that is inherent to the type of thinking implied in scientific discoveries, as discussed by Platt (1964) in relation to paramount discoveries in Molecular Biology and Physics in a short but extremely rich article published in the journal Science. He bases his claim on a variety of remarkable examples which demonstrate that the most efficient way for humans to use their minds when solving scientific questions consists of, at each step, explicitly setting down the question and the alternative hypotheses before conducting crucial experiments in order to exclude some alternatives and then adopt what remains. This procedure is known as strong inference and it is clearly modeled in terms of a decision tree (a conditional inductive tree or a logical tree) in which every time the branches fork, we can choose to go right or left. Thus, we can reasonably say that opposites, as conceptualized in this paper, seem to be the cognitive mechanism underlying the identification of these forks in the branches. “Thinking in opposites” is a way of thinking which is within everyone's reach and can easily become a deliberate thinking strategy. Due to the fact that the identification of opposites is sensitive to situations, this strategy can potentially lead to the creation of an extremely complex, rich set of alternatives for reasoners to test systematically.
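
The elimination procedure that Platt describes can be illustrated with a small Python sketch in which alternative hypotheses are set down explicitly and each crucial experiment removes those whose prediction does not match the observed outcome. The candidate hypotheses, the experiments, and the function name `strong_inference` are assumptions made for the sake of illustration (they reuse the 2-4-6 task discussed earlier) and are not drawn from Platt's article.

```python
from typing import Callable, Dict, List, Tuple

Triple = Tuple[int, int, int]
Hypothesis = Callable[[Triple], bool]


def even_by_two(t: Triple) -> bool:
    return all(n % 2 == 0 for n in t) and t[1] - t[0] == 2 and t[2] - t[1] == 2


def any_by_two(t: Triple) -> bool:
    return t[1] - t[0] == 2 and t[2] - t[1] == 2


def any_ascending(t: Triple) -> bool:
    return t[0] < t[1] < t[2]


def any_different(t: Triple) -> bool:
    return len(set(t)) == 3


# Alternative hypotheses, explicitly set down before testing (illustrative).
HYPOTHESES: Dict[str, Hypothesis] = {
    "even numbers increasing by two": even_by_two,
    "any numbers increasing by two": any_by_two,
    "any ascending numbers": any_ascending,
    "any three different numbers": any_different,
}


def strong_inference(
    hypotheses: Dict[str, Hypothesis],
    experiments: List[Tuple[Triple, bool]],
) -> List[str]:
    """Keep only the hypotheses whose predictions match every observed outcome."""
    surviving = dict(hypotheses)
    for triple, observed in experiments:
        surviving = {
            name: h for name, h in surviving.items() if h(triple) == observed
        }
    return list(surviving)


# Each experiment pairs a test triple with the feedback assumed to be received.
EXPERIMENTS = [((1, 3, 5), True), ((2, 4, 10), True), ((6, 4, 2), False)]
print(strong_inference(HYPOTHESES, EXPERIMENTS))
```

Each experiment in this sketch corresponds to one fork in the tree: the outcome excludes at least one alternative, and what remains after the last fork (here, "any ascending numbers") is adopted.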

EB, EC, RB, US, and IB contributed to conception of the review and wrote sections of the manuscript. EB and IB wrote the first draft of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

This research was supported by the Department of Human Sciences, University of Verona (Italy).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Alexander, P. A. (2012). The Test of Relational Reasoning. College Park, MD: Disciplined Reading and Learning Research Laboratory.

Alexander, P. A., Dumas, D., Grossnickle, E. M., List, A., and Firetto, C. M. (2016). Measuring relational reasoning. J. Exp. Educ. 84, 119–151. doi: 10.1080/00220973.2014.963216

Alexander, P. A., White, C. S., and Daugherty, M. (1997). “Analogical reasoning and early mathematics learning,” in Mathematical Reasoning: Analogies, Metaphors, and Images: Studies in Mathematical Thinking and Learning, ed L. D. English (Mahwah, NJ: Lawrence Erlbaum Associates), 117–147.

Amsel, E., and Smalley, D. (2000). “Beyond really and truly: children's counterfactual thinking about pretend and possible worlds,” in Children's Reasoning and Mind, eds K. Riggs and P. Mitchell (Brighton: Psychology Press), 99–134.

Ansburg, P. I., and Dominowski, R. I. (2000). Promoting insightful problem solving. J. Creat. Behav. 34, 30–60. doi: 10.1002/j.2162-6057.2000.tb01201.x

Aristotle, (ed.) (1984). “Categories,” in The Complete Works of Aristotle: The Revised Oxford Translation, Vol. 1, ed J. Barnes (Princeton, NJ: Princeton University Press), 15–42.

Augustinova, M. (2008). Falsification cueing in collective reasoning: example of Wason selection task. Eur. J. Soc. Psychol. 38, 770–785. doi: 10.1002/ejsp.532

Augustinova, M., Oberlé, D., and Stasser, G. L. (2005). Differential access to information and anticipated group interaction: impact on individual reasoning. J. Pers. Soc. Psychol. 88, 619–631. doi: 10.1037/0022-3514.88.4.619

Beck, S. R., and Guthrie, C. (2011). Almost thinking counterfactually: children's understanding of close counterfactuals. Child Dev. 82, 1189–1198. doi: 10.1111/j.1467-8624.2011.01590.x

Beck, S. R., Robinson, E. J., Carroll, D. J., and Apperly, I. A. (2006). Children's thinking about counterfactuals and future hypotheticals as possibilities. Child Dev. 77, 413–426. doi: 10.1111/j.1467-8624.2006.00879.x

Bellocchi, A., and Ritchie, S. M. (2011). Investigating and theorizing discourse during analogy writing in chemistry. J. Res. Sci. Teach. 48, 771–792. doi: 10.1002/tea.20428

Beziau, J. Y., and Basti, G. (Eds.) (2016). The Square of Opposition: A Cornerstone of Thought. Basel: Birkhäuser.

Bianchi, I., Bertamini, M., Burro, R., and Savardi, U. (2017a). Opposition and identicalness: two basic components of adults' perception and mental representation of symmetry. Symmetry 9:128. doi: 10.3390/sym9080128

Bianchi, I., Branchini, E., Burro, R., Capitani, E., and Savardi, U. (2020). Overtly prompting people to “think in opposites” supports insight problem solving. Think. Reas. 26, 31–67. doi: 10.1080/13546783.2018.1553738

Bianchi, I., Burro, R., Pezzola, R., and Savardi, U. (2017b). Matching visual and acoustic mirror forms. Symmetry 9, 39–60. doi: 10.3390/sym9030039

Bianchi, I., Burro, R., Torquati, S., and Savardi, U. (2013). The middle of the road. Acta Psychol. 144, 121–135. doi: 10.1016/j.actpsy.2013.05.005

Bianchi, I., Paradis, C., Burro, R., van de Weijer, J., Nyström, M., and Savardi, U. (2017c). Identification of opposites and intermediates by eye and by hand. Acta Psychol. 180, 175–189. doi: 10.1016/j.actpsy.2017.08.011

Bianchi, I., and Savardi, U. (2006). Oppositeness in visually perceived forms. Gestalt Theory 4, 354–374.

Bianchi, I., and Savardi, U. (2008b). The relationship perceived between the real body and the mirror image. Perception 5, 666–687. doi: 10.1068/p5744

Bianchi, I., Savardi, U., Burro, R., and Martelli, M. F. (2014). Doing the opposite to what another person is doing. Acta Psychol. 151, 117–133. doi: 10.1016/j.actpsy.2014.06.003

Bianchi, I., Savardi, U., and Kubovy, M. (2011). Dimensions and their poles: a metric and topological theory of opposites. Lang. Cogn. Process. 26, 1232–1265. doi: 10.1080/01690965.2010.520943

Biassoni, F. (2009). “Basic qualities in naïve subjects' perception of voice. Are they based on contrary properties?,” in The Perception and Cognition of Contraries, ed U. Savardi (Milano: McGraw Hill), 131–152.

Braasch, J. L. G., and Goldman, S. R. (2010). The role of prior knowledge in learning from analogies in science texts. Discourse Process. 47, 447–479. doi: 10.1080/01638530903420960

Branchini, E., Bianchi, I., Burro, R., Capitani, E., and Savardi, U. (2016). Can contraries prompt intuition in insight problem solving?. Front. Psychol. 7:1962. doi: 10.3389/fpsyg.2016.01962

Branchini, E., Burro, R., Bianchi, I., and Savardi, U. (2015a). Contraries as an effective strategy in geometrical problem solving. Think. Reas. 21, 397–430. doi: 10.1080/13546783.2014

Branchini, E., Savardi, U., and Bianchi, I. (2015b). Productive thinking: the role of perception and perceiving opposition. Gestalt Theory 37, 7–24.

Byrne, R. M. J. (2016). Counterfactual thought. Annu. Rev. Psychol. 67, 135–157. doi: 10.1146/annurev-psych-122414-033249

Byrne, R. M. J. (2017). Counterfactual thinking: from logic to morality. Curr. Dir. Psychol. Sci. 26, 314–322. doi: 10.1177/0963721417695617

Byrne, R. M. J. (2018). “Counterfactual reasoning and Imagination,” in The Routledge Handbook of Thinking and Reasoning, eds L. J. Ball and V. A. Thompson (London: Routledge), 71–87.

Casasola, M. (2008). The development of infants' spatial categories. Curr. Dir. Psychol. Sci. 7, 21–25. doi: 10.1111/j.1467-8721.2008.00541.x

Casasola, M., Cohen, L. B., and Chiarello, E. (2003). Six-month-old infants' categorization of containment spatial relations. Child Dev. 74, 1–15. doi: 10.1111/1467-8624.00562

Cheng, P. W., and Holyoak, K. J. (1985). Pragmatic reasoning schemas. Cognit. Psychol. 17, 391–416. doi: 10.1016/0010-0285(85)90014-3

Chierchia, G. (2004). “Scalar implicatures, polarity phenomena, and the syntax/pragmatic interface,” in Structures and Beyond: Volume 3: The Cartography of Syntactic Structures, ed A. Belletti (Oxford: Oxford University Press), 39–103.

Chinn, C. A., and Brewer, W. F. (1993). The role of anomalous data in knowledge acquisition: a theoretical framework and implications for science instruction. Rev. Educ. Res. 63, 1–49. doi: 10.2307/1170558

Colston, H. (1999). “Not good” is “bad” but “not bad” is not “good”: an analysis of three accounts of negation asymmetries. Discourse Process. 28, 237–256.

Davis, C. G., Lehman, D. R., Wortman, C. B., Silver, R. C., and Thompson, S. C. (1995). The undoing of traumatic life events. Pers. Soc. Psychol. B 21, 109–124. doi: 10.1177/0146167295212002

DiDonato, T. E., Ullrich, J., and Krueger, J. I. (2011). Social perception as induction and inference: an integrative model of intergroup differentiation, ingroup favoritism, and differential accuracy. J. Pers. Soc. Psychol. 100, 66–83. doi: 10.1037/a0021051

Dow, G. T., and Mayer, R. E. (2004). Teaching students to solve insight problems: evidence for domain specificity in creativity training. Creat. Res. J. 16, 389–402. doi: 10.1080/10400410409534550

Dumas, D., Alexander, P. A., Baker, L. M., Jablansky, S., and Dunbar, K. N. (2014). Relational reasoning in medical education: patterns in discourse and diagnosis. J. Educ. Psychol. 106, 1021–1035. doi: 10.1037/a0036777

Dumas, D., Alexander, P. A., and Grossnickle, E. M. (2013). Relational reasoning and its manifestations in the educational context: a systematic review of the literature. Educ. Psychol. Rev. 25, 391–427. doi: 10.1007/s10648-013-9224-4

Dumas, D., and Schmidt, L. (2015). Relational reasoning as predictor for engineering ideation success using TRIZ. J. Eng. Design 26, 74–88. doi: 10.1080/09544828.2015.1020287

Dumas, D., Schmidt, L. C., and Alexander, P. A. (2016). Predicting creative problem solving in engineering design. Think. Skills Creat. 21, 50–66. doi: 10.1016/j.tsc.2016.05.002

Dunbar, K. (2001). “The analogical paradox: why analogy is so easy in naturalistic settings yet so difficult in the psychological laboratory,” in The Analogical Mind: Perspectives from Cognitive Science, eds D. Gentner, K. J. Holyoak, and B. N. Kokinov (Cambridge: MIT), 313–334.

Epstude, K., and Roese, N. J. (2008). The functional theory of counterfactual thinking. Pers. Soc. Psychol. Rev. 12, 168–192. doi: 10.1177/1088868308316091

Evans, J. S. B. T. (2007). Hypothetical Thinking: Dual Processes in Reasoning and Judgment. Hove: Psychology Press.

Evans, J. S. B. T., Newstead, S. E., and Byrne, R. M. J. (1993). Human Reasoning: The Psychology of Deduction. Mahwah, NJ: Lawrence Erlbaum Associates.

Evans, J. S. B. T., and Stanovich, K. E. (2013a). Dual-process theories of higher cognition: advancing the debate. Perspect. Psychol. Sci. 8, 223–241. doi: 10.1177/1745691612460685

Evans, J. S. B. T., and Stanovich, K. E. (2013b). Theory and metatheory in the study of dual processing: reply to comments. Perspect. Psychol. Sci. 8, 263–271. doi: 10.1177/1745691613483774

Farris, H., and Revlin, R. (1989a). The discovery process: a counterfactual strategy. Soc. Stud. Sci. 19, 497–513. doi: 10.1177/030631289019003005

Farris, H., and Revlin, R. (1989b). Sensible reasoning in two tasks: rule discovery and hypothesis evaluation. Mem. Cogn. 17, 221–232. doi: 10.3758/BF03197071

Farris, H., and Revlin, R. (1991). Rule discovery strategies: falsification without disconfirmation (reply to Gorman). Soc. Stud. Sci. 21, 565–567. doi: 10.1177/030631291021003008

Fedor, A., Szathmáry, E., and Öllinger, M. (2015). Problem solving stages in the five square problem. Front. Psychol. 6:1050. doi: 10.3389/fpsyg.2015.01050

Fellbaum, C. (1995). Co-occurrence and antonymy. Int. J. Lexicogr. 8, 281–303. doi: 10.1093/ijl/8.4.281

Ferrante, D., Girotto, V., Stragà, M., and Walsh, C. (2013). Improving the past and the future: a temporal asymmetry in hypothetical thinking. J. Exp. Psychol. 142, 23–27. doi: 10.1037/a0027947

Fillenbaum, D. (1974). Information amplified: memory for counterfactual conditionals. J. Exp. Psychol. 102, 44–49. doi: 10.1037/h0035693

Fitzgibbon, L., Moll, H., Carboni, J., Lee, R., and Dehghani, M. (2019). Counterfactual curiosity in preschool children. J. Exp. Child Psychol. 183, 146–157. doi: 10.1016/j.jecp.2018.11.022

Gale, M., and Ball, L. J. (2006). Dual-goal facilitation in Wason's 2-4-6 task: what mediates successful rule discovery? Q. J. Exp. Psychol. 59, 873–885. doi: 10.1080/02724980543000051

Gale, M., and Ball, L. J. (2009). Exploring the determinants of dual goal facilitation in a rule discovery task. Think. Reas. 15, 294–315. doi: 10.1080/13546780903040666

Gale, M., and Ball, L. J. (2012). Contrast class cues and performance facilitation in a hypothesis testing task: Evidence for an iterative counterfactual model. Mem. Cogn. 40, 408–419. doi: 10.3758/s13421-011-0159-z

Gärdenfors, P. (2014). The Geometry of Meaning: Semantics Based on Conceptual Spaces. Cambridge, MA: The MIT Press.

Geiger, S. M., and Oberauer, K. (2007). Reasoning with conditionals: does every counterexample count? It's frequency that counts. Mem. Cogn. 35, 2060–2074. doi: 10.3758/BF03192938

Gentner, D., Loewenstein, J., and Thompson, L. (2003). Learning and transfer: a general role for analogical encoding. J. Educ. Psychol. 95, 393–405. doi: 10.1037/0022-0663.95.2.393

German, T. P. (1999). Children's causal reasoning: counterfactual thinking occurs for “negative” outcomes only. Dev. Sci. 2, 442–447. doi: 10.1111/1467-7687.00088

Gilhooly, K., Ball, L., and Macchi, L. (2015). Insight and creative thinking processes: routine and special. Think. Reas. 21, 1–4. doi: 10.1080/13546783.2014.966758

Girotto, V., Kemmelmeier, M., Sperber, D., and van der Henst, J. B. (2001). Inept reasoners or pragmatic virtuosos? Relevance and the deontic selection task. Cognition 81, 69–76. doi: 10.1016/s0010-0277(01)00124-x

Girotto, V., Legrenzi, P., and Rizzo, A. (1991). Event controllability in counterfactual thinking. Acta Psychol. 78, 111–133. doi: 10.1016/0001-6918(91)90007-M

Goldstone, R. L. (1994). The role of similarity in categorization: providing a groundwork. Cognition 52, 125–157. doi: 10.1016/0010-0277(94)90065-5

Gorman, M. (1991). Counterfactual simulations of science: a response to Farris and Revlin. Soc. Stud. Sci. 21, 561–564. doi: 10.1177/030631291021003007

Gorman, M. E., and Gorman, M. E. (1984). A comparison of disconfirmatory, confirmatory and control strategies on Wason's 2–4–6 task. Q. J. Exp. Psychol. 36, 629–648. doi: 10.1080/14640748408402183

Gorman, M. E., Gorman, M. E., Latta, R. M., and Cunningham, G. (1984). How disconfirmatory, confirmatory and combined strategies affect group problem-solving. Br. J. Psychol. 75, 65–79. doi: 10.1111/j.2044-8295.1984.tb02790.x

Gorman, M. E., Stafford, A., and Gorman, M. E. (1987). Disconfirmation and dual hypotheses on a more difficult version of Wason's 2–4–6 task. Q. J. Exp. Psychol. 1, 1–28. doi: 10.1080/02724988743000006

Goswami, U., and Mead, F. (1992). Onset and rime awareness and analogies in reading. Read. Res. Q. 27, 152–162. doi: 10.2307/747684

Gotzner, N., Solt, S., and Benz, A. (2018). Scalar diversity, negative strengthening, and adjectival semantics. Front. Psychol. 9:1659. doi: 10.3389/fpsyg.2018.01659

Guttentag, R., and Ferrell, J. (2004). Reality compared with its alternatives: age differences in judgments of regret and relief. Dev. Psychol. 40, 764–775. doi: 10.1037/0012-1649.40.5.764

Hahn, U., Chater, N., and Richardson, L. B. (2003). Similarity as transformation. Cognition 87, 1–32. doi: 10.1016/S0010-0277(02)00184-1

Hahn, U., Close, J., and Graf, M. (2009). Transformation direction influences shape-similarity judgments. Psychol. Sci. 20, 447–454. doi: 10.1111/j.1467-9280.2009.02310.x

Harris, P. L., German, T., and Mills, P. (1996). Children's use of counterfactual thinking in causal reasoning. Cognition 61, 233–259. doi: 10.1016/S0010-0277(96)00715-9

Heit, E. (2000). Properties of inductive reasoning. Psychon. Bull. Rev. 7, 569–592. doi: 10.3758/BF03212996

Hofstadter, D. R. (2001). “Epilogue: Analogy as the core of cognition,” in The Analogical Mind: Perspectives from Cognitive Science, eds D. Gentner, K. J. Holyoak, and B. N. Kokinov (Cambridge, MA: MIT Press), 499–538.

Holyoak, K. J. (2012). “Analogy and relational reasoning,” in The Oxford Handbook of Thinking and Reasoning, eds K. J. Holyoak and R. G. Morrison (New York, NY: Oxford University Press), 234–259.

Horn, L. R. (1984). “Toward a new taxonomy for pragmatic inference: Q-based and R-based implicature,” in Georgetown University Round Table on Languages and Linguistics, ed D. Schiffrin (Washington, DC: Georgetown University Press), 11–42.

Horn, L. R., and Kato, Y. (Eds.) (2000). Negation and Polarity. Syntactic and Semantic Perspectives. Oxford: Oxford University Press.

Johnson-Laird, P. N. (2010). Mental models and human reasoning. Proc. Natl. Acad. Sci. U.S.A. 107, 18243–18250. doi: 10.1073/pnas.1012933107

Johnson-Laird, P. N., and Khemlani, S. S. (2014). Toward a unified theory of reasoning. Psychol. Learn. Motiv. 59, 1–42. doi: 10.1016/B978-0-12-407187-2.00001-0

Jones, S. (2007). ‘Opposites’ in discourse: a comparison of antonym use across four domains. J. Pragmat. 39, 1105–1119. doi: 10.1016/j.pragma.2006.11.019

Kahneman, D., and Tversky, A. (1982a). “The simulation heuristic,” in Judgment Under Uncertainty: Heuristics and Biases, eds D. Kahneman, P. Slovic, and A. Tversky (New York, NY: Cambridge University Press), 201–208.

Kahneman, D., and Tversky, A. (1982b). The psychology of preferences. Sci. Am. 246, 160–173. doi: 10.1038/scientificamerican0182-160

Kaup, B., Lüdtke, J., and Zwaan, R. (2006). Processing negated sentences with contradictory predicates: is a door that is not open mentally closed? J. Pragmat. 38, 1033–1050. doi: 10.1016/j.pragma.2005.09.012

Kaup, B., Yaxley, R. H., Madden, C. J., Zwaan, R. A., and Lüdtke, J. (2007). Experiential simulations of negated text information. Q. J. Exp. Psychol. 60, 976–990. doi: 10.1080/17470210600823512

Kennedy, C., and McNally, L. (2005). Scale structure, degree modification, and the semantics of gradable predicates. Language 81, 345–381. doi: 10.1353/lan.2005.0071

Klahr, D., and Dunbar, K. (1988). Dual space search during scientific reasoning. Cogn. Sci. 12, 1–48. doi: 10.1016/0364-0213(88)90007-9

Klayman, J., and Ha, Y. W. (1987). Confirmation, disconfirmation, and information in hypothesis testing. Psychol. Rev. 94, 211–228. doi: 10.1037/0033-295X.94.2.211

Knoblich, G., Ohlsson, S., Haider, H., and Rhenius, D. (1999). Constraint relaxation and chunk decomposition in insight problem solving. J. Exp. Psychol. Learn. Mem. Cogn. 25, 1534–1555. doi: 10.1037/0278-7393.25.6.1534

Kuczaj, S. A., and Daly, M. J. (1979). The development of hypothetical reference in the speech of young children. J. Child Lang. 6, 563–579. doi: 10.1017/S0305000900002543

Langley, P., Simon, H. A., Bradshaw, G. L., and Zytkow, J. M. (1987). Scientific Discovery. Computational Explorations of the Creative Processes. Cambridge, MA: MIT Press.

Leffel, T., Cremers, A., Gotzner, N., and Romoli, J. (2019). Vagueness in implicature: the case of modified adjectives. J. Semant. 36, 317–348. doi: 10.1093/jos/ffy020

Macchi, L., and Bagassi, M. (2015). When analytic thought is challenged by a misunderstanding. Think. Reas. 21, 147–164. doi: 10.1080/13546783.2014.964315

Mahoney, M. J., and DeMonbreun, B. G. (1977). Psychology of the scientist: an analysis of problem solving bias. Cognit. Ther. Res. 1, 229–238. doi: 10.1007/BF01186796

Manktelow, K. I., and Over, D. E. (1991). Social roles and utilities in reasoning with deontic conditionals. Cognition 39, 85–105. doi: 10.1016/0010-0277(91)90039-7

Markman, K. D., Gavanski, I., Sherman, S. J., and McMullen, M. N. (1993). The mental simulation of better and worse possible worlds. J. Exp. Soc. Psychol. 29, 87–109. doi: 10.1006/jesp.1993.1005

Markman, K. D., McMullen, M. N., and Elizaga, R. A. (2008). Counterfactual thinking, persistence, and performance: a test of the reflection and evaluation model. J. Exp. Soc. Psychol. 44, 421–428. doi: 10.1016/j.jesp.2007.01.001

Markman, K. D., and Tetlock, P. E. (2000). I couldn't have known: accountability, foreseeability, and counterfactual denials of responsibility. Br. J. Soc. Psychol. 39, 313–325. doi: 10.1348/014466600164499

Markovits, H., Forgues, H. L., and Brunet, M. L. (2010). Conditional reasoning, frequency of counterexamples, and the effect of response modality. Mem. Cogn. 38, 485–492. doi: 10.3758/MC.38.4.485

McCrea, S. M. (2008). Self-handicapping, excuse making, and counterfactual thinking: consequences for self-esteem and future motivation. J. Pers. Soc. Psychol. 95, 274–292. doi: 10.1037/0022-3514.95.2.274

Miller, D. T., and Gunasegaram, S. (1990). Temporal order and the perceived mutability of events: implications for blame assignment. J. Pers. Soc. Psychol. 59, 1111–1118. doi: 10.1037/0022-3514.59.6.1111

Miller, G. A., and Fellbaum, C. (1991). Semantic networks of English. Cognition 41, 197–229. doi: 10.1016/0010-0277(91)90036-4

Murphy, L. (2003). Semantic Relations and the Lexicon: Antonyms, Synonyms and Other Semantic Paradigms. Cambridge: Cambridge University Press.

Murray, M. A., and Byrne, R. M. J. (2013). Cognitive change in insight problem solving: initial model errors and counterexamples. J. Cogn. Psychol. 25, 210–219. doi: 10.1080/20445911.2012.743986

Nisbett, R., and Ross, L. (1980). Human Inference: Strategies and Shortcomings of Social Judgment. Englewood Cliffs, NJ: Prentice-Hall.

Oaksford, M. (2002). Contrast classes and matching bias as explanations of the effects of negation on conditional reasoning. Think. Reas. 8, 135–151. doi: 10.1080/13546780143000170

Oaksford, M., and Chater, N. (1994). Another look at eliminative and enumerative behavior in a conceptual task. Eur. J. Cogn. Psychol. 6, 149–169. doi: 10.1080/09541449408520141

Ohlsson, S. (1992). “Information-processing explanations of insight and related phenomena,” in Advances in the Psychology of Thinking, Vol. 1, eds M. T. Keane and K. J. Gilhooly (New York, NY: Harvester Wheatsheaf), 1–44.

Ohlsson, S. (2011). Deep Learning: How the Mind Overrides Experience. Cambridge: Cambridge University Press.

Öllinger, M., Jones, G., Faber, A. H., and Knoblich, G. (2013). Cognitive mechanisms of insight: the role of heuristics and representational change in solving the eight-coin problem. J. Exp. Psychol. Learn. Mem. Cogn. 39, 931–939. doi: 10.1037/a0029194

Öllinger, M., Jones, G., and Knoblich, G. (2008). Investigating the effect of mental set on insight problem solving. Exp. Psychol. 55, 269–282. doi: 10.1027/1618-3169.55.4.269

Öllinger, M., Jones, G., and Knoblich, G. (2014). The dynamics of search, impasse, and representational change provide a coherent explanation of difficulty in the nine-dot problem. Psychol. Res. 78, 266–275. doi: 10.1007/s00426-013-0494-8

Ormerod, T. C., MacGregor, J. N., and Chronicle, E. P. (2002). Dynamics and constraints in insight problem solving. J. Exp. Psychol. Learn. Mem. Cogn. 28, 791–799. doi: 10.1037//0278-7393.28.4.791

Papafragou, A., and Musolino, J. (2003). Scalar implicatures: experiments at the semantics-pragmatics interface. Cognition 86, 253–282. doi: 10.1016/S0010-0277(02)00179-8

Papafragou, A., and Skordos, D. (2016). “Scalar Implicature,” in The Oxford Handbook of Developmental Linguistics, eds J. Lidz, W. Snyder, and J. Pater (Oxford: Oxford University Press), 611–629.

Paradis, C. (2005). Ontologies and construals in lexical semantics. Axiomathes 15, 541–573. doi: 10.1007/s10516-004-7680-7

Paradis, C. (2008). Configurations, construals and change: expressions of DEGREE. English Lang. Linguist. 12, 317–343. doi: 10.1017/S1360674308002645

Paradis, C., and Willners, C. (2006). Antonymy and negation. The boundedness hypothesis. J. Pragmat. 38, 1051–1080. doi: 10.1016/j.pragma.2005.11.009

Paradis, C., Willners, C., and Jones, S. (2009). Good and bad opposites: using textual and experimental techniques to measure antonym canonicity. Mental Lexicon 4, 380–429. doi: 10.1075/ml.4.3.04par

Patrick, J., Ahmed, A., Smy, V., Seeby, H., and Sambrooks, K. (2015). A cognitive procedure for representation change in verbal insight problems. J. Exp. Psychol. Learn. Mem. Cogn. 41, 746–759. doi: 10.1037/xlm0000045

Poletiek, F. H. (1996). Paradoxes of falsification. Q. J. Exp. Psychol. 49A, 447–462. doi: 10.1080/713755628

Rafetseder, E., Cristi-Vargas, R., and Perner, J. (2010). Counterfactual reasoning: developing a sense of “nearest possible world”. Child Dev. 81, 376–389. doi: 10.1111/j.1467-8624.2009.01401.x

Ritov, I., and Baron, J. (1990). Reluctance to vaccinate: omission bias and ambiguity. J. Behav. Decis. Mak. 3, 263–277. doi: 10.1002/bdm.3960030404

Robert, S., and Brisson, J. (2016). The Klein group, squares of opposition and the explanation of fallacies in reasoning. Log. Universalis 10, 377–392. doi: 10.1007/s11787-016-0150-3

Rossi, S., Caverni, J. P., and Girotto, V. (2001). Hypothesis testing in a rule discovery problem: when a focused procedure is effective. Q. J. Exp. Psychol. 54A, 263–267. doi: 10.1080/02724980042000101

Santamaria, C., Espino, O., and Byrne, R. M. J. (2005). Counterfactual and semifactual conditionals prime alternative possibilities. J. Exp. Psychol. Learn. Mem. Cogn. 31, 1149–1154. doi: 10.1037/0278-7393.31.5.1149

Savardi, U., Bianchi, I., and Bertamini, M. (2010). Naive prediction of orientation and motion in mirrors: from what we see to what we expect reflections to do. Acta Psychol. 134, 1–15.

Schepis, A., Zuczkowski, A., and Bianchi, I. (2009). “Are drag and push contraries?,” in The Perception and Cognition of Contraries, ed U. Savardi (Milano: McGraw Hill), 153–174.

Segura, S., Fernandez-Berrocal, P., and Byrne, R. M. J. (2002). Temporal and causal order effects in counterfactual thinking. Q. J. Exp. Psychol. 55, 1295–1305. doi: 10.1080/02724980244000125

Sperber, D., Cara, F., and Girotto, V. (1995). Relevance theory explains the selection task. Cognition 57, 31–95. doi: 10.1016/0010-0277(95)00666-m

Sperber, D., and Wilson, D. (1986/1995). Relevance: Communication and Cognition. Cambridge, MA: Harvard University Press.

Stanovich, K. E. (2011). Rationality and the Reflective Mind. New York, NY: Oxford University Press.

Tenbrink, T., and Freksa, C. (2009). Contrast sets in spatial and temporal language. Cogn. Process. 10, S322–S324. doi: 10.1007/s10339-009-0309-4

Tindale, R. S., and Kameda, T. (2000). ‘Social sharedness’ as a unifying theme for information processing in groups. Group Process. Intergroup Relat. 3, 123–140. doi: 10.1177/1368430200003002002

Tindale, R. S., Meisenhelder, H. M., Dykema-Engblade, A. A., and Hogg, M. A. (2001). “Shared cognitions in small groups,” in Blackwell Handbook in Social Psychology: Group Processes, eds M. A. Hogg and R. S. Tindale (Oxford: Blackwell Publishers), 1–30.

Tschirgi, J. E. (1980). Sensible reasoning: a hypothesis about hypotheses. Child Dev. 51, 1–10. doi: 10.2307/1129583

Tukey, D. D. (1986). A philosophical and empirical analysis of subjects' modes of inquiry in Wason's 2–4–6 task. Q. J. Exp. Psychol. 38A, 5–33. doi: 10.1080/14640748608401583

Tweney, R. D., Doherty, M. E., Worner, W. J., Pliske, D. B., Mynatt, C. R., Gross, K. A., et al. (1980). Strategies of rule discovery in an inference task. Q. J. Exp. Psychol. 32, 109–123. doi: 10.1080/00335558008248237

Tyser, M. P., McCrea, S. M., and Knuepfer, K. (2012). Pursuing perfection or pursuing protection? Self-evaluation concerns and the motivational consequences of counterfactual thinking. Eur. J. Soc. Psychol. 42, 372–382. doi: 10.1002/ejsp.1864

van Tiel, B., and Schaeken, W. (2017). Processing conversational implicatures: alternatives and counterfactual reasoning. Cogn. Sci. 41, 1119–1154. doi: 10.1111/cogs.12362

van Tiel, B., van Miltenburg, E., Zevakhina, N., and Geurts, B. (2016). Scalar diversity. J. Semant. 33, 107–135. doi: 10.1093/jos/ffu017

Vartanian, O., Martindale, C., and Kwiatkowski, J. (2003). Creativity and inductive reasoning: The relationship between divergent thinking and performance on Wason's 2-4-6 task. Q. J. Exp. Psychol. A 56, 641–655. doi: 10.1080/02724980244000567

Wason, P. C. (1960). On the failure to eliminate hypotheses in a conceptual task. Q. J. Exp. Psychol. 12, 129–140. doi: 10.1080/17470216008416717

Wason, P. C. (1966). “Reasoning,” in New Horizons in Psychology: I, ed B. M. Foss (Harmondsworth: Penguin), 106–137.

Wason, P. C. (1968). Reasoning about a rule. Q. J. Exp. Psychol. 20, 273–281. doi: 10.1080/14640746808400161

Wells, G. L., Taylor, B. R., and Turtle, J. W. (1987). The undoing of scenarios. J. Pers. Soc. Psychol. 53, 421–430. doi: 10.1037/0022-3514.53.3.421

Keywords: opposites, contrast class, inductive and deductive reasoning, insight problem solving, counterfactual thinking

Citation: Branchini E, Capitani E, Burro R, Savardi U and Bianchi I (2021) Opposites in Reasoning Processes: Do We Use Them More Than We Think, but Less Than We Could? Front. Psychol. 12:715696. doi: 10.3389/fpsyg.2021.715696

Received: 27 May 2021; Accepted: 03 August 2021;

Published: 26 August 2021.

Edited by:

Thora Tenbrink, Bangor University, United Kingdom

Reviewed by:

Michele Vicovaro, University of Padua, Italy

Copyright © 2021 Branchini, Capitani, Burro, Savardi and Bianchi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Erika Branchini, erika.branchini@univr.it