- 1School of Computer Science and Artificial Intelligence, Changzhou University, Changzhou, China

- 2Viterbi School of Engineering, University of Southern California, Los Angeles, CA, United States

- 3School of Electrical and Mechanical Engineering, Shaoxing University, Shaoxing, China

Electroencephalogram (EEG)-based emotion recognition (ER) has drawn increasing attention in the brain–computer interface (BCI) field owing to its great potential in human–machine interaction applications. According to the characteristics of their rhythms, EEG signals can usually be divided into several frequency bands. Most existing methods concatenate the features of multiple frequency bands and treat them as a single feature vector. However, it is often difficult to utilize band-specific information in this way. In this study, an optimized projection and Fisher discriminative dictionary learning (OPFDDL) model is proposed to efficiently exploit the specific discriminative information of each frequency band. Using subspace projection, the EEG signals of all frequency bands are projected into a subspace. The shared dictionary is learned in the projection subspace such that the specific discriminative information of each frequency band can be utilized efficiently and, simultaneously, the discriminative information shared among multiple bands can be preserved. In particular, the Fisher discrimination criterion is imposed on the atoms to minimize within-class sparse reconstruction error and maximize between-class sparse reconstruction error. Then, an alternating optimization algorithm is developed to obtain the optimal solution for the projection matrix and the dictionary. Experimental results on two EEG-based ER datasets demonstrate the effectiveness of the model.

Introduction

The brain–computer interface (BCI) has been one of the research hotspots in health monitoring and biomedicine in recent years (Edgar et al., 2020; Ni et al., 2020b). A BCI does not rely on muscles or the peripheral nervous system; it establishes a direct information transmission channel between the brain and the outside world. The electroencephalography (EEG) signals captured by a BCI system are a powerful tool for analyzing neural activity and brain conditions. EEG has the advantages of convenience (i.e., non-invasive, non-destructive, and simple) and validity (i.e., sensitivity, validity, and compatibility) (Sreeja and Himanshu, 2020). The EEG signal is also an important tool for revealing the emotional state of human beings. It has been shown that when people are in different thinking and emotional states, the rhythm components and waveforms of their EEG signals differ. In BCI, emotion recognition (ER) starts with external stimuli presented to subjects, which induce specific emotions such as happiness, sadness, and anger. These stimuli may be videos, images, music, and so on. During the session, EEG data are recorded by EEG devices. Subsequently, the first step is to preprocess the recorded EEG and extract useful features from it. The next step is to train the classifier and optimize its parameters. The final step is to test the trained model with new EEG data that were not used in the training process.

Machine learning methods have been widely used in EEG-based ER, such as support vector machine (SVM) (Zheng et al., 2019), deep learning (Hwang et al., 2020; Song et al., 2020), nearest neighbor classifier (Li et al., 2019), random forest (Fraiwan et al., 2012), and probabilistic neural networks (Nakisa et al., 2018). In recent years, dictionary learning-based methods have achieved great success in EEG-based recognition tasks for BCI (Ameri et al., 2016; Gu et al., 2020; Ni et al., 2020a). In general, dictionary learning-based classification methods learn discriminative and robust dictionaries from training samples. The test sample is represented as a sparse linear combination of atoms from the learned dictionary, and the classification task can then be carried out according to the reconstruction error and/or the sparse coefficients. Dictionary learning works well even with noisy EEG signals. Barthélemy et al. (2013) developed an efficient method to represent EEG signals based on the adapted Gabor dictionary and demonstrated on real data that the learned multivariate model is flexible and the learned representation is informative and interpretable. Abolghasemi and Ferdowsi (2015) developed a dictionary learning framework to remove ballistocardiogram (BCG) artifacts from EEG. Given the advantage of the noise-robust sparse dictionary, a new cost function was proposed, which can model BCG artifacts and then remove them from the original EEG signals. Kashefpoor et al. (2019) developed a correlational label consistent K-SVD dictionary learning method applied to an EEG-based screening tool. This method was applied to speckle extraction of EEG signals and extracted spectral features in both time and frequency domains. To address the artifacts caused by eye movement and blinking, Kanoga et al. (2019) proposed a multi-scale dictionary learning method to eliminate eye artifacts from single-channel measurements. Specifically, the time-domain waveforms related to repetitive phase events in EEG signals were learned within the framework of dictionary learning, and the proposed multi-scale dictionary learning method was used to represent the signal components on different timescales. To achieve highly accurate classification of EEG in BCI, Huang et al. (2020) developed a signal identification model using sparse representation and fast compressed residual convolutional neural networks (CNNs). The authors used common spatial patterns to extract EEG signal features and built a redundant dictionary using these features. The proposed deep model then served as a classifier to recognize the input EEG signals.

Although machine learning has achieved good classification performance in some application scenarios, its accuracy and applicability still leave room for improvement. Since EEG data provide comprehensive information across different frequency bands to characterize emotions, it is desirable to design an ER method that utilizes the specific discriminative information of each frequency band and preserves the common discriminative information shared by the signals of multiple bands. Motivated by the success of dictionary learning, in this study, we propose optimized projection and Fisher discriminative dictionary learning (DDL) for EEG-based ER. According to the Fisher discrimination criterion of minimum within-class sparse reconstruction error and maximum between-class sparse reconstruction error, we learn a discriminative projection to map the signals of multiple bands into a shared subspace and simultaneously build a shared dictionary that establishes the connection between different bands and represents the characteristics of the signals well. Therefore, the joint learning of projection and dictionary ensures that the common internal structure of the multiple frequency bands can be mined in the subspace.

The main contributions of this study are as follows:

(1) Collaborative learning across multiple frequency bands is introduced into dictionary learning for EEG-based ER. This learning mechanism can efficiently integrate the band-independent information and inter-band correlation information.

(2) Through the feature projection matrix, the data of multiple frequency bands are projected into a common projection subspace to keep the latent manifold of EEG signals. Meanwhile, the discriminative dictionary is learned by enforcing the classification criterion so that the learned sparse code has a strong representation and discrimination ability.

(3) This joint optimization method has some benefits. Learning independent projection matrices makes this model easily extensible; meanwhile, learning a dictionary in a subspace allows abandoning extraneous information in the original features. In addition, the alternating optimization procedure ensures the dictionary and projection are optimized at the same time.

(4) Extensive experiments on the SEED and DREAMER datasets demonstrate that multiple band collaborative learning is effective and that this method can improve the discrimination ability of sparse coding in EEG-based ER.

Background

Datasets

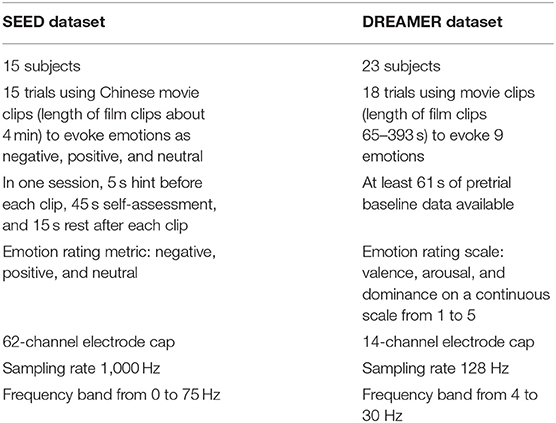

The experimental data in this study are taken from two public EEG emotion datasets: SEED (Zheng and Lu, 2015) and DREAMER (Katsigiannis and Ramzan, 2018). Table 1 briefly describes the two datasets. Both the SEED and DREAMER datasets were collected while subjects watched emotion-eliciting movies. In the SEED dataset, each subject participated in three experiments, which were separated into three time periods, corresponding to three sessions, and each session corresponds to 15 EEG data trials. Thus, a total of 15 × 3 = 45 trials are formed per subject. SEED provides five frequency bands: δ band (1–3 Hz), θ band (4–7 Hz), α band (8–13 Hz), β band (14–30 Hz), and γ band (31–50 Hz). For the DREAMER dataset, the data recorded for each subject contain three parts: 18 experimental signal segments, 18 baseline signal segments corresponding to the relaxation state, and 18 corresponding labels. DREAMER provides EEG features for the frequency bands θ, α, and β.
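As a rough illustration of the band definitions above, the following sketch splits a single-channel signal into the five SEED bands with an FFT mask. The sampling rate of 200 Hz, the function name `split_bands`, and the brick-wall FFT filter are assumptions made for illustration only; the preprocessing shipped with the datasets may differ.

```python
import numpy as np

# Band edges in Hz, as listed for SEED in the text.
BANDS = {"delta": (1, 3), "theta": (4, 7), "alpha": (8, 13),
         "beta": (14, 30), "gamma": (31, 50)}

def split_bands(signal, fs):
    """Return {band name: band-limited signal} using a brick-wall FFT mask."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    out = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs <= hi)  # keep only bins inside the band
        out[name] = np.fft.irfft(spectrum * mask, n=len(signal))
    return out

fs = 200                      # assumed sampling rate in Hz
t = np.arange(2 * fs) / fs    # two seconds of samples
# Toy signal: a 10 Hz (alpha) component plus a weaker 40 Hz (gamma) component.
x = np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 40 * t)
bands = split_bands(x, fs)
```

In this toy case the 10 Hz component ends up in `bands["alpha"]` and the 40 Hz component in `bands["gamma"]`, while the other bands are numerically empty. A practical pipeline would more likely use a proper band-pass filter design than a hard spectral mask.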

Machine Learning-Based EEG Signal Processing Program

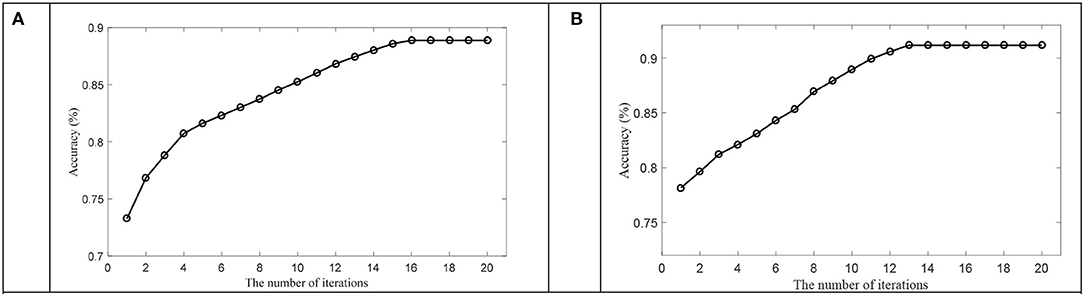

For machine learning-based EEG ER, feature extraction and emotion classification are the critical procedures. Taking the SEED dataset as an example, the process of constructing five frequency band sequences is shown in Figure 1. First, EEG signals collected by the BCI are preprocessed by filtering. Then, according to the characteristics of their rhythms, the EEG signals are divided into several rhythmic components ranging from 0 to 50 Hz. Second, EEG features can be extracted by various strategies. Time-domain, frequency-domain, and non-linear analysis methods are the three most commonly used types of EEG feature extraction methods. The time-domain features aim to capture the temporal information of EEG signals, such as higher-order crossings (HOC) (Petrantonakis and Hadjileontiadis, 2010), Hjorth features (Petrantonakis and Hadjileontiadis, 2010), and event-related potential (ERP) (Brouwer et al., 2015). The frequency-domain features aim primarily to capture EEG emotion information from a frequency perspective and can be extracted by various methods, such as rhythm (Bhatti et al., 2016), wavelet packet decomposition (WPD) (Wu et al., 2008), and approximate entropy (AE) (Ko et al., 2009). Non-linear features are extracted from the transformed phase space. They contain quantitative measures that represent the complex dynamic characteristics of the EEG signals, such as the Lyapunov exponent (Lyap) (Kutepov et al., 2020) and the correlation dimension (CorrDim) (Geng et al., 2011). Finally, machine learning methods are applied to the extracted feature sets to perform EEG emotion classification.

Figure 1. The process of constructing multiple frequency band sequences (Wei et al., 2020).

A common approach to deal with multiple bands of EEG data using traditional dictionary learning methods is to directly concatenate features of multiple bands together in the high-dimensional space and treat this single feature vector as the input to the model. However, dictionary learning may not perform well because different band features usually carry different characteristics of EEG emotion.

Dictionary Learning

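Let $X = [x_1, x_2, \dots, x_n] \in \mathbb{R}^{m \times n}$ be a set of $n$ training signals of dimension $m$. To minimize the reconstruction error and satisfy the sparsity constraints, the sparse representation and dictionary learning of $X$ can be accomplished by

$$\min_{D,S} \|X - DS\|_F^2 \quad \text{s.t.} \quad \|s_i\|_0 \le T,\ i = 1, 2, \dots, n, \tag{1}$$

where $D = [d_1, d_2, \dots, d_K] \in \mathbb{R}^{m \times K}$ is a dictionary with $K$ atoms, $S = [s_1, s_2, \dots, s_n] \in \mathbb{R}^{K \times n}$ is the sparse coefficient matrix of the signals $X$, and $s_i$ is the sparse coefficient vector of $x_i$ over $D$. $T$ is the sparsity constraint factor. The constraint $\|s_i\|_0 \le T$ requires the signal $x_i$ to have at most $T$ non-zero items in its decomposition.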

It is not easy to find the optimal sparse solution using the ℓ0-norm regularization term; thus, an alternative formulation of Equation (1) replaces it with ℓ1-norm regularization:

$$\min_{D,S} \|X - DS\|_F^2 + \lambda \|S\|_1, \tag{2}$$

where $\lambda > 0$ is the regularization parameter that balances the reconstruction error against sparsity.

Equation (2) can be optimized by many efficient ℓ1 optimization methods, such as the well-known K-SVD algorithm (Aharon et al., 2006; Jiang et al., 2013). However, Equation (2) is an unsupervised learning framework. To learn a discriminative dictionary for classification tasks, different kinds of loss functions or the Fisher discrimination criterion are incorporated into dictionary learning. Fisher discrimination constraints on the atoms of the dictionary (Peng et al., 2020), on the sparse coefficients S (Li et al., 2013), or on the reconstruction error of X (Zheng and Sun, 2019; Zhang et al., 2021) strive to preserve the class distribution and geometric structure of the data.
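To make the ℓ1-regularized coding step concrete, the sketch below solves the single-signal problem $\min_s \tfrac{1}{2}\|x - Ds\|_2^2 + \lambda\|s\|_1$ for a fixed dictionary using the iterative shrinkage-thresholding algorithm (ISTA). ISTA is a generic solver chosen here for brevity and is not claimed to be the solver used in this study; the factor 1/2, the function names, and the toy data are likewise assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def objective(D, x, s, lam):
    """0.5 * ||x - D s||^2 + lam * ||s||_1."""
    return 0.5 * np.sum((x - D @ s) ** 2) + lam * np.sum(np.abs(s))

def ista(D, x, lam, n_iter=500):
    """Sparse code of x over a fixed dictionary D via ISTA."""
    L = np.linalg.norm(D, 2) ** 2      # Lipschitz constant of the gradient
    s = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ s - x)       # gradient of the quadratic term
        s = soft_threshold(s - grad / L, lam / L)
    return s

# Toy data: a signal built from three atoms of a random unit-norm dictionary.
rng = np.random.default_rng(0)
D = rng.standard_normal((20, 50))
D /= np.linalg.norm(D, axis=0)
s_true = np.zeros(50)
s_true[[3, 17, 41]] = [1.5, -2.0, 1.0]
x = D @ s_true
s_hat = ista(D, x, lam=0.01)
```

With step size 1/L each ISTA iteration does not increase the objective, so `s_hat` always attains a lower objective value than the all-zero code. How closely it matches `s_true` depends on the dictionary and on λ.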

Suppose the data matrix $X$ consists of samples from $C$ different classes. From $X$, both the sub-dictionary $D_i$ and the sub-sparse coefficient matrix $S_i$ are learned for the $i$-th class data ($i = 1, 2, \dots, C$). The whole dictionary is represented as $D = [D_1, D_2, \dots, D_C]$. Let $S_W(X)$ and $S_B(X)$ denote the within-class and between-class reconstruction errors of $X$, respectively; then

$$S_W(X) = \sum_{j=1}^{n} \left\| x_j - D\,\delta_{l_j}(s_j) \right\|_2^2 \tag{3}$$

and

$$S_B(X) = \sum_{j=1}^{n} \left\| x_j - D\,\zeta_{l_j}(s_j) \right\|_2^2, \tag{4}$$

where $l_j$ is the class label of $x_j$ ($j = 1, 2, \dots, n$), the function $\delta_{l_j}(\cdot)$ returns the sparse codes consistent with the class of $x_j$, and the function $\zeta_{l_j}(\cdot)$ returns the sparse codes not consistent with the class of $x_j$.

Then, a discriminative dictionary can be learned by reducing the within-class diversity and increasing the between-class separation using Equations (3) and (4) (Zheng and Sun, 2019; Zhang et al., 2021).
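The two class-wise reconstruction errors can be illustrated with a small sketch. It assumes that every atom carries the label of the sub-dictionary it belongs to, that the δ selection keeps the coefficients on atoms of the sample's own class, and that the ζ selection keeps the remaining coefficients. The function name, argument layout, and toy data are hypothetical.

```python
import numpy as np

def class_reconstruction_errors(X, D, S, sample_labels, atom_labels):
    """Within-/between-class reconstruction errors.

    X: (m, n) signals, D: (m, K) dictionary, S: (K, n) sparse codes,
    sample_labels: (n,) class of each signal, atom_labels: (K,) class of each atom.
    """
    # same[k, j] is True when atom k belongs to the class of sample j.
    same = atom_labels[:, None] == sample_labels[None, :]
    S_within = np.where(same, S, 0.0)    # codes on same-class atoms
    S_between = np.where(same, 0.0, S)   # codes on other-class atoms
    within = np.sum((X - D @ S_within) ** 2)
    between = np.sum((X - D @ S_between) ** 2)
    return within, between

# Toy check: signals built only from atoms of their own class.
rng = np.random.default_rng(0)
D = rng.standard_normal((6, 4))
atom_labels = np.array([0, 0, 1, 1])
sample_labels = np.array([0, 1, 0])
S = np.zeros((4, 3))
S[:2, 0] = [1.0, -0.5]   # sample 0 uses class-0 atoms
S[2:, 1] = [0.7, 0.3]    # sample 1 uses class-1 atoms
S[:2, 2] = [0.2, 2.0]    # sample 2 uses class-0 atoms
X = D @ S
within, between = class_reconstruction_errors(X, D, S, sample_labels, atom_labels)
```

For this ideal toy case the within-class error vanishes and the between-class error equals the total signal energy, which is the situation a Fisher-type criterion tries to approach.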

Optimized Projection and Fisher Discriminative Dictionary Learning

Objective Function

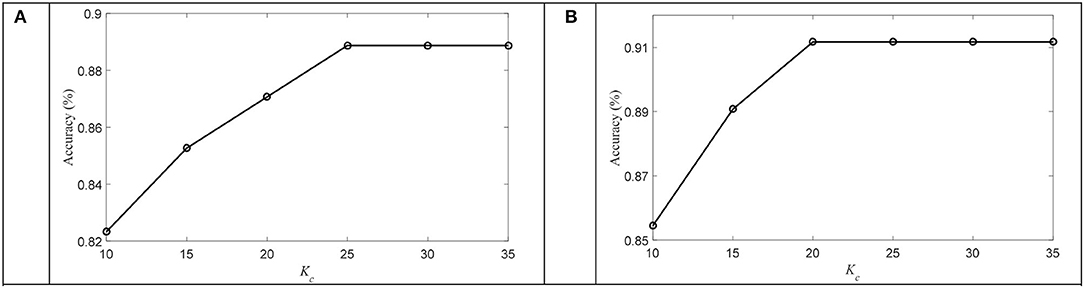

Here, we describe in detail the optimized projection and Fisher DDL model for collaborative learning of multiple frequency band EEG signals. The training framework of the OPFDDL model is illustrated in Figure 2. We learn a discriminative projection to map multiple frequency band EEG signals into a common subspace; simultaneously, we learn a common discriminative dictionary to encode the band-invariant information of multiple frequency bands. In particular, to promote the discrimination ability of the model, we formulate it according to the Fisher discrimination criterion (Gong et al., 2019) under the structure of dictionary learning.

Figure 2. The training framework of optimized projection and Fisher discriminative dictionary learning (OPFDDL) model.

Let denote the signal set X of the frequency band r, where R is the number of frequency bands (r =1,., R). is the jth sample in Xr. To build the connection between different frequency bands and exploit the specific characteristic of each representation, we project into a feature subspace as by using a transformation matrix Qr ∈ Rm × dr. Therefore, we obtain by as the feature representations for R frequency bands. Then, we denote the within-class reconstruction error and between-class reconstruction error of the rth frequency band in the projection subspace

and

where is the within-class scatter matrix for sparse coding of the rth frequency band, and is the between-class scatter matrix for sparse coding of the rth frequency band.

From the classification point of view, minimizing within-class scatter and maximizing between-class scatter in the dictionary learning-based classifier can be represented as

With definition as Q~ = [Q1, Q2, ..., QR], , and

Equation (7) can be written as follows:

The projection matrix is limited to be orthogonal, which is highly effective in the optimization process. The solution of Equation (8) refers to the complex inverse operation and is computationally intensive. Thus, we translate it into the following quadratic weighted optimization (QWO) problem and obtain the objective function of OPFDDL

Note that the parameter μ is an adaptive weight obtained by a closed-form solution rather than a manually tuned parameter.

Optimization

In the following, the alternating optimization approach is used to update the parameters in Equation (9).

(1) Update step for . With D and μ fixed, and with the known and , the optimization of can be solved by

The projection matrix is formed from the eigenvectors corresponding to the d smallest eigenvalues in Equation (10).
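Selecting the eigenvectors of the smallest eigenvalues can be sketched as follows. Here `M` is a hypothetical symmetric matrix standing in for the matrix whose eigen-decomposition Equation (10) calls for; its construction from the scatter terms and μ is not shown, and the function name is an assumption.

```python
import numpy as np

def smallest_eigenvectors(M, d):
    """Orthonormal eigenvectors of a symmetric M for its d smallest eigenvalues."""
    M = (M + M.T) / 2.0                   # guard against round-off asymmetry
    eigvals, eigvecs = np.linalg.eigh(M)  # eigh returns eigenvalues in ascending order
    return eigvecs[:, :d], eigvals[:d]

# Stand-in symmetric (indefinite) matrix, for illustration only.
rng = np.random.default_rng(0)
A = rng.standard_normal((8, 8))
M = A @ A.T - 2.0 * np.eye(8)
Q, vals = smallest_eigenvectors(M, d=3)
```

Among all matrices with orthonormal columns, this choice of `Q` minimizes the trace of `Q.T @ M @ Q`, and the minimum equals the sum of the selected eigenvalues. This is why an orthogonality constraint on the projection leads to an eigenvalue problem.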

(2) Update step for D. With the definition of , , , , and , and with the known and μ, Equation (9) can be written as

For each column of , i.e., , the optimization of D can be solved by the following problem:

Then, D can be updated by

where λD is the step size.

(3) Update step for S. When D, , and μ are learned, the sparse code s for each signal x in the subspace can be obtained by

(4) Update step for μ. By recalling that the matrixes, and can be built according to the obtained and S. When and are learned, the solution of μ is , and it can be obtained by a closed-form solution

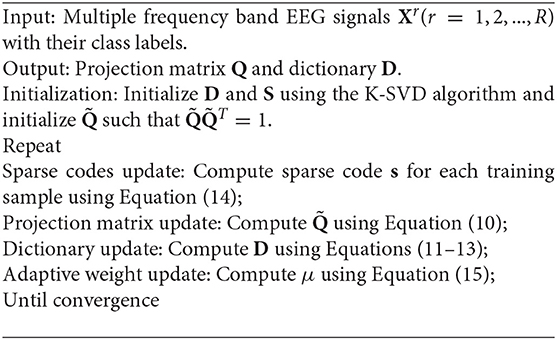

Based on the above analysis, the implementation process of OPFDDL is described in Algorithm 1. We initialize the sub-dictionary for each class by the K-SVD algorithm, and then, we integrate them to form the initialization dictionary D.

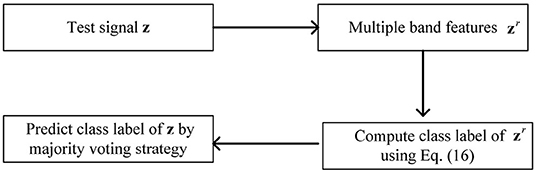

Once the projection matrix Q and dictionary D are learned, testing proceeds as follows. The testing procedure of OPFDDL is illustrated in Figure 3. For each testing EEG signal z, its rth frequency band feature is denoted as zr. We map zr into the projection subspace using Qr and assign it the class label with the smallest class-wise reconstruction error as follows:

where is the pseudo-inverse of dictionary of class j.

Finally, we use the majority voting to identify the class label of signal z, i.e.,

where Δj is the number of votes for class j.
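The testing procedure can be sketched as below: each band is labeled by the class whose sub-dictionary reconstructs the projected feature best, and the band-level labels are then combined by majority voting. The projection direction `Q_r.T @ z_r`, the function names, and the toy data are assumptions for illustration and are not taken from the study.

```python
import numpy as np

def classify_band(z_r, Q_r, class_dicts):
    """Label one band feature by the smallest class-wise reconstruction error."""
    y = Q_r.T @ z_r                       # assumed projection into the subspace
    errors = []
    for D_j in class_dicts:
        code = np.linalg.pinv(D_j) @ y    # least-squares code over class-j atoms
        errors.append(np.linalg.norm(y - D_j @ code))
    return int(np.argmin(errors))

def majority_vote(band_labels, n_classes):
    """Return the most frequent label (lowest index wins ties) and the vote counts."""
    votes = np.bincount(band_labels, minlength=n_classes)
    return int(np.argmax(votes)), votes

# Toy setup: 3 bands, 2 classes, features of dimension 6 projected to dimension 4.
rng = np.random.default_rng(0)
m, d, n_bands = 6, 4, 3
class_dicts = [rng.standard_normal((d, 2)) for _ in range(2)]
band_labels = []
for r in range(n_bands):
    Q_r, _ = np.linalg.qr(rng.standard_normal((m, d)))  # orthonormal columns
    y = class_dicts[1] @ rng.standard_normal(2)          # lies in the span of class 1
    z_r = Q_r @ y                                        # so that Q_r.T @ z_r equals y
    band_labels.append(classify_band(z_r, Q_r, class_dicts))
label, votes = majority_vote(band_labels, n_classes=2)
```

Because every toy band feature lies exactly in the span of the class-1 atoms, all three bands vote for class 1. With real data the bands can disagree, which is where the vote matters.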

Experiment

Experimental Settings

Following the study of Li Y. et al. (2019), we used three feature extraction methods on the SEED dataset: differential entropy (DE), power spectral density (PSD), and fractal dimension (FD). We extracted the EEG features over all frequency bands per second with no overlap in each channel. For each subject, we used 10 randomly selected trials for model training and the remaining 5 trials for testing. The classification performance corresponding to each period is recorded for each subject. For the DREAMER dataset, to balance the number and length of the segments, we divided the 60-s EEG signals into 59 blocks with an overlap rate of 50%. The DE feature extraction method was applied, and 14-dimensional features were obtained for each frequency band. For each subject, we trained our model using 12 randomly selected trials and used the remaining 6 trials for testing.
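The block count follows from the overlap: 59 blocks with 50% overlap over 60 s implies 2-s windows advanced in 1-s steps, since (60 − 2)/1 + 1 = 59. The sketch below reproduces this segmentation and computes DE per block under the common Gaussian assumption, DE = ½ ln(2πeσ²). The sampling rate of 128 Hz, the function names, and the random test signal are assumptions; in practice DE would be computed on each band-filtered channel.

```python
import numpy as np

def segment(signal, fs, win_s=2.0, overlap=0.5):
    """Split a 1-D signal into overlapping blocks of win_s seconds."""
    win = int(round(win_s * fs))
    hop = int(round(win * (1.0 - overlap)))
    n_blocks = (len(signal) - win) // hop + 1
    return np.stack([signal[i * hop: i * hop + win] for i in range(n_blocks)])

def differential_entropy(block):
    """DE of a block under a Gaussian assumption: 0.5 * ln(2 * pi * e * variance)."""
    return 0.5 * np.log(2.0 * np.pi * np.e * np.var(block))

fs = 128                              # assumed sampling rate in Hz
rng = np.random.default_rng(0)
x = rng.standard_normal(60 * fs)      # stand-in for one 60-s EEG channel
blocks = segment(x, fs)               # 2-s windows with 50% overlap
de = np.array([differential_entropy(b) for b in blocks])
```

With 14 channels, stacking one DE value per channel for a given band and block would give a 14-dimensional feature vector per band, matching the dimensionality reported for DREAMER.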

We compared our proposed model with five machine learning methods: SVM (Cortes and Vapnik, 1995), K-SVD (Aharon et al., 2006), PCB-ICL-TSK (Ni et al., 2020b), DDL (Zhou et al., 2012), and dictionary pair learning (DPL) (Ameri et al., 2016). The Gaussian kernel and Gaussian fuzzy membership were used in SVM and PCB-ICL-TSK, respectively. The parameters of the comparison methods were set according to the default settings of the corresponding methods. In OPFDDL, the dimension of the projection subspace was set to 90% of the dimension of the EEG signal features. The number of atoms in each class was selected from {10, 15, 20, 25, 30, 35}. The λ parameter in Equation (2) was set to 0.01. We used 5-fold cross-validation to select the optimal parameters, and we performed five independent runs to evaluate the classification accuracy of all methods.

Experiment Results on the SEED Dataset

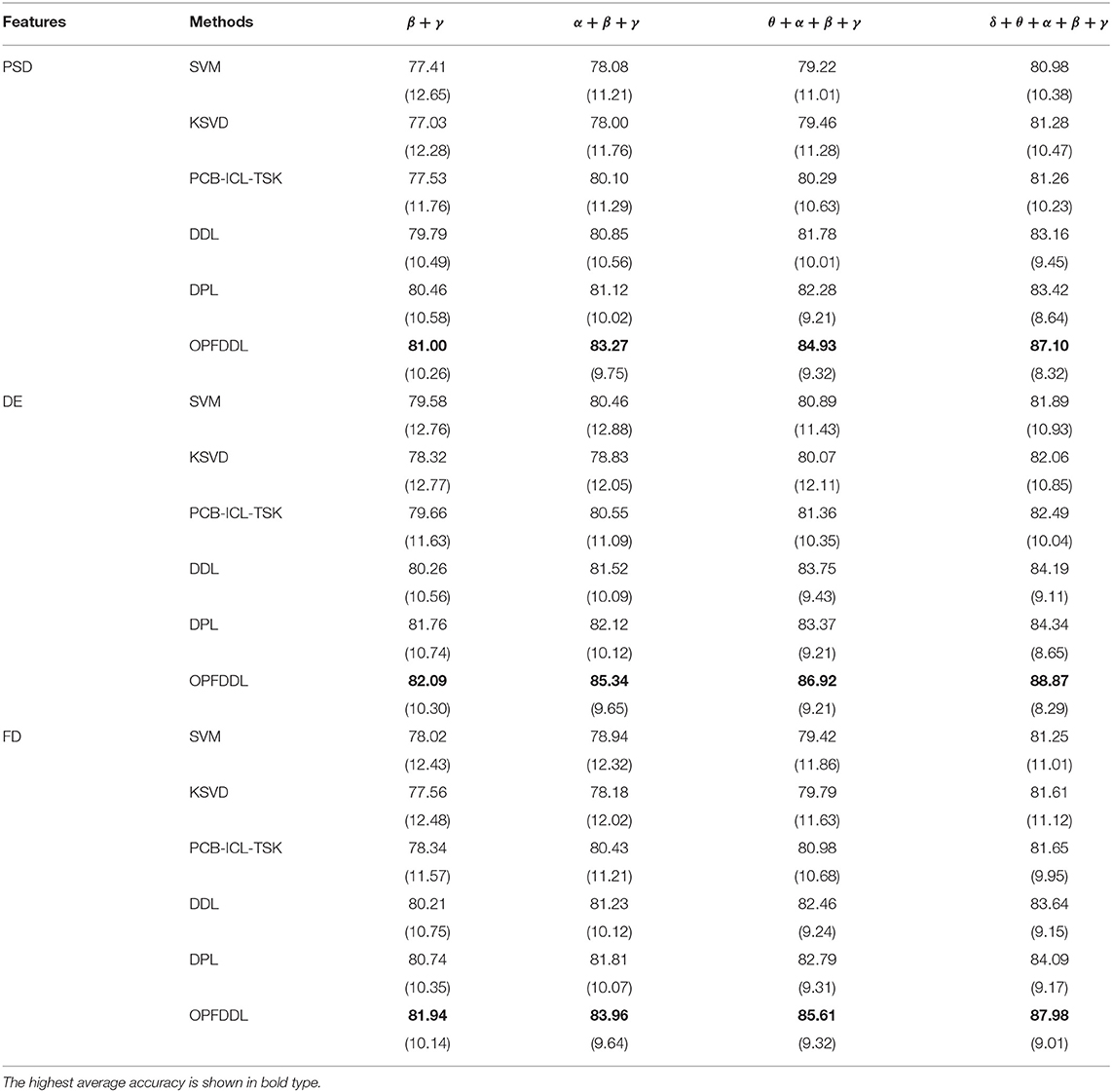

In this subsection, we performed the comparison experiments on the SEED dataset using various combinations of frequency bands and various features. The average accuracies of all methods with the three feature methods are summarized in Table 2. From these results, we have the following observations: (1) Across the different frequency band combinations, all methods perform best when all frequency bands are used. For example, the classification accuracy of OPFDDL using all frequency bands is 6.70, 3.83, and 2.17% higher than that using frequency bands β + γ, α + β + γ, and θ + α + β + γ, respectively. The classification accuracy of SVM using all frequency bands is 3.57, 2.90, and 1.76% higher than that using frequency bands β + γ, α + β + γ, and θ + α + β + γ, respectively. In addition, in most cases, the SDs of all methods are small when all five bands are used. This demonstrates that multiple bands are helpful for EEG-based ER, because the features of each band have discrimination ability and the five bands are complementary for distinguishing EEG emotions. (2) The classification performance of the three features is comparable. The DE feature performs slightly better and shows an advantage in most cases. The classification accuracy of OPFDDL using the DE feature is 88.87%, which indicates that the DE feature is suitable for EEG emotion signals. (3) OPFDDL outperforms all comparison methods, especially when all five bands are used. This is because OPFDDL can effectively integrate band-independent information and inter-band correlation information. These results indicate that direct concatenation of the five frequency bands of EEG data cannot fully exploit the inherent distinguishing characteristics of the data. Given both the information common to multiple bands and the information specific to each band, it is important to jointly learn the representations of multiple bands. (4) Compared with K-SVD and ODFDL, OPFDDL generates the shared common dictionary on all frequency bands in the projected subspace, which can maintain the data structure of multiple frequency bands. In addition, based on the Fisher discrimination criterion of maximizing within-class compactness and between-class separation, OPFDDL can learn the discriminative dictionary well from the cooperation of multiple frequency bands.

Table 2. The average accuracies (SDs) of all methods under four combinations of frequency bands and three feature methods.

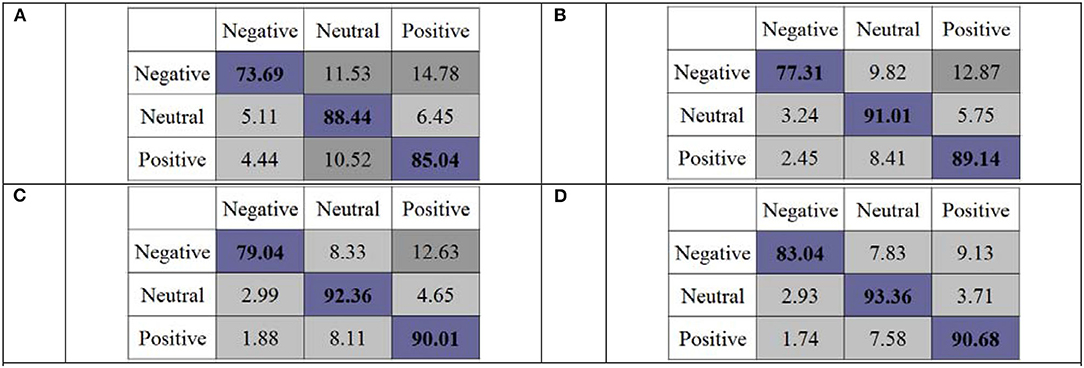

To further validate the discrimination ability of OPFDDL, Figure 4 reports the confusion matrices of the OPFDDL model with the DE feature. As shown in Figure 4, OPFDDL achieves better classification performance on positive and neutral emotions than on negative emotions in all cases. This result is similar to those reported by Zheng and Lu (2015) and Li Y. et al. (2019). It suggests that subjects may have different EEG signals when experiencing negative emotions and similar EEG signals when experiencing positive and neutral emotions. In addition, OPFDDL achieves its best classification performance when all five frequency bands are used (see Figure 4D).

Figure 4. Confusion matrices of OPFDDL of frequency bands using differential entropy (DE) features. (A) β + γ, (B) α + β + γ, (C) θ + α + β + γ, and (D) δ + θ + α + β + γ.

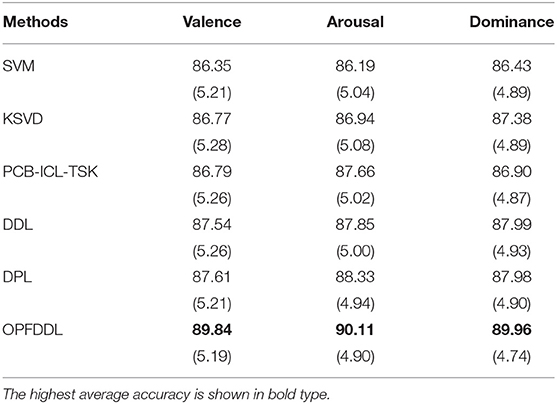

Experiment Results on the DREAMER Dataset

In this subsection, we performed the comparison experiments on the DREAMER dataset using the DE feature. As with the SEED dataset, our model is compared with the five abovementioned methods. In the experiment, we verified the performance of OPFDDL in terms of valence, arousal, and dominance. The accuracies of all methods under the frequency band combination θ + α + β are shown in Table 3. From the results, we can see that OPFDDL obtained the highest accuracies of 89.84, 90.11, and 89.96% in terms of arousal, valence, and dominance, respectively. Similar to the results on the SEED dataset, OPFDDL performed best among all comparison methods. Based on the Fisher discrimination criterion, OPFDDL can learn the intrinsic relationships of the EEG bands and can obtain the discriminative dictionary from the cooperation of multiple frequency bands in the projection subspace. In addition, the joint optimization strategy, which addresses the shared projection subspace and dictionary learning together, can further enhance the recognition performance of our proposed model. Thus, our proposed model can utilize more distinctive representations of multiple frequency bands of EEG signals.

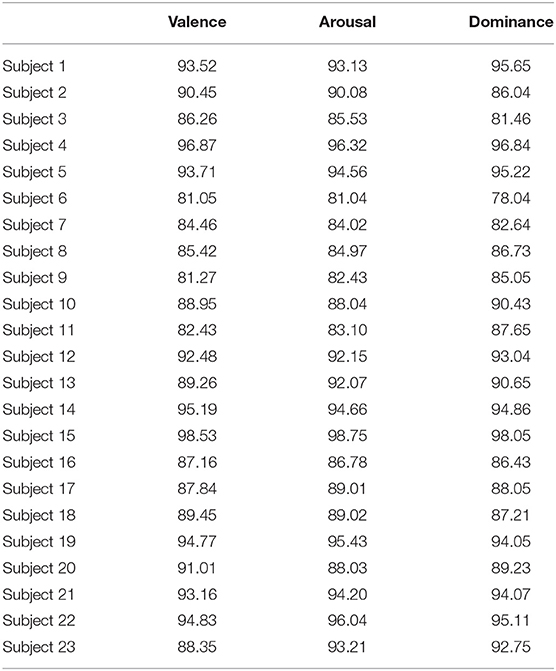

Then, we recorded the average accuracies of each subject for the OPFDDL model using the DE feature in terms of arousal, valence, and dominance. The experimental results are shown in Table 4. The proposed OPFDDL model achieved satisfactory recognition performance for all three dimensions of arousal, valence, and dominance. Based on the structure of dictionary learning and the principles of projection and the Fisher discrimination criterion, OPFDDL can make better use of the discriminative information of different frequency bands and has stronger generalization ability, so it can be used effectively in EEG emotion classification tasks.

Table 4. Average accuracies of each subject for OPFDDL model using differential entropy (DE) feature on DREAMER dataset.

Parameter Variations

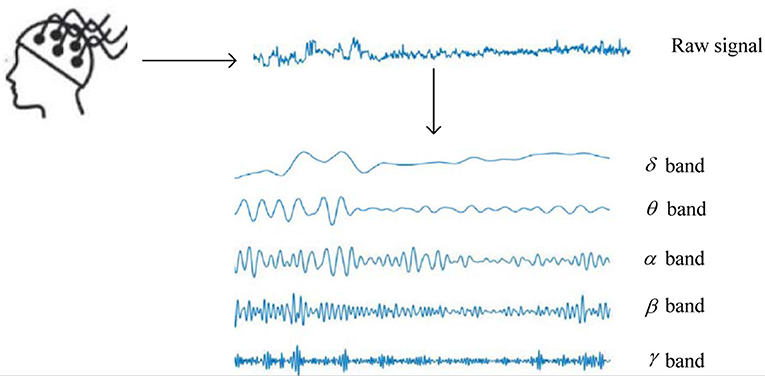

In this subsection, we first discuss the convergence of OPFDDL using the DE feature of all frequency bands on the SEED and DREAMER datasets. The stopping threshold for the iterations was set to 10⁻³. Figure 5 plots the accuracy against the number of iterations for one subject in each of the two datasets. The results verify the convergence of OPFDDL: the model converges within 20 iterations.

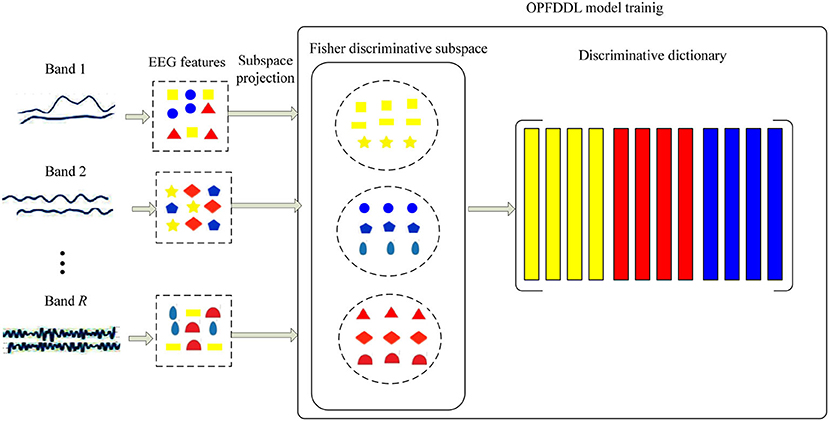

Then, we discussed the number of atoms used in OPFDDL. The number of atoms in each class Kc was increased from 10 to 35 in increments of 5. Figure 6 plots the accuracy that varies with the parameter Kc. The results show that after an initial dramatic increase, the classification accuracy of OPFDDL becomes stable after Kc = 20. In addition, the variation trend of accuracy is consistent on two datasets. Thus, the classification performance of OPFDDL is acceptable for small dictionary sizes.

Conclusions

Most previous machine learning methods focus on extracting feature representations from all frequency bands together without considering the specific discriminative information of the different frequency bands. In this study, we propose collaborative learning of multiple frequency bands for EEG-based ER. In particular, our model integrates projection and dictionary learning based on the Fisher discrimination criterion. For subspace projection optimization, a shared subspace is employed for each frequency band such that the band-specific representations and shared band-invariant information can be utilized simultaneously. For dictionary learning optimization, a shared dictionary is learned from the projected subspace, where the Fisher discrimination criterion is used to minimize within-class sparse reconstruction error and maximize between-class sparse reconstruction error. The joint learning strategy allows the model to be extended easily. Consequently, we obtain a discriminative dictionary of small size. Our experiments demonstrate the performance of OPFDDL on two real-world EEG emotion datasets, i.e., SEED and DREAMER. In future studies, we will utilize and test more discriminative sparse representation criteria in our model. In addition, we consider only subject-dependent classification in EEG emotion identification; applying this model to subject-independent classification remains a challenging task.

Data Availability Statement

The SEED dataset analyzed for this study can be found in this link (http://bcmi.sjtu.edu.cn/~seed/seed.html). The DREAMER dataset analyzed for this study can be found in this link (https://zenodo.org/record/546113).

Author Contributions

XG, JZho, and JZhu conceived and developed the theoretical framework of the study. All authors carried out the experiments and data processing and drafted the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant No. 61806026 and by the Natural Science Foundation of Jiangsu Province under Grant No. BK20180956.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abolghasemi, V., and Ferdowsi, S. (2015). EEG-fMRI: dictionary learning for removal of ballistocardiogram artifact from EEG. Biomed. Signal Process. Control 18, 186–194. doi: 10.1016/j.bspc.2015.01.001

Aharon, M., Elad, M., and Bruckstein, A. (2006). K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54, 4311–4322. doi: 10.1109/TSP.2006.881199

Ameri, R., Pouyan, A., and Abolghasemi, V. (2016). Projective dictionary pair learning for EEG signal classification in brain computer interface applications. Neurocomputing 218, 382–389. doi: 10.1016/j.neucom.2016.08.082

Barthélemy, Q., Gouy-Pailler, C., Isaac, Y., Souloumiac, A., Larue, A., and Mars, J. (2013). Multivariate temporal dictionary learning for EEG. J. Neurosci. Methods 215, 19–28. doi: 10.1016/j.jneumeth.2013.02.001

Bhatti, A., Majid, M., Anwar, S., and Khan, B. (2016). Human emotion recognition and analysis in response to audio music using brain signals. Comput. Hum. Behav. 65, 267–275. doi: 10.1016/j.chb.2016.08.029

Brouwer, A., Zander, T., van Erp, J., Korteling, J., and Bronkhorst, A. (2015). Using neurophysiological signals that reflect cognitive or affective state: six recommendations to avoid common pitfalls. Front. Neurosci. 9:136. doi: 10.3389/978-2-88919-613-5

Cortes, C., and Vapnik, V. (1995). Support-vector networks. Mach. Learn. 20, 273–297. doi: 10.1007/BF00994018

Edgar, P., Torres, E. A., Hernández-Álvarez, M., and Sang, G. Y. (2020). EEG-based BCI emotion recognition: a survey. Sensors 20:5083. doi: 10.3390/s20185083

Fraiwan, L., Lweesy, K., Khasawneh, N., Wenz, H., and Dickhaus, H. (2012). Automated sleep stage identification system based on time-frequency analysis of a single EEG channel and random forest classifier. Computer Methods Prog. Biomed. 108, 10–19. doi: 10.1016/j.cmpb.2011.11.005

Geng, S., Zhou, W., Yuan, Q., Cai, D., and Zeng, Y. (2011). EEG non-linear feature extraction using correlation dimension and hurst exponent. Neurol. Res. 33, 908–912. doi: 10.1179/1743132811Y.0000000041

Gong, C., Zhou, P., Han, J., and Xu, D. (2019). Learning rotation-invariant and fisher discriminative convolutional neural networks for object detection. IEEE Trans. Image Process. 28, 265–278. doi: 10.1109/TIP.2018.2867198

Gu, X., Zhang, C., and Ni, T. (2020). A hierarchical discriminative sparse representation classifier for EEG signal detection. IEEE/ACM Trans. Comput. Biol. Bioinformat. doi: 10.1109/TCBB.2020.3006699. [Epub ahead of print].

Huang, J., Li, Y., Chen, B., Lin, C., and Yao, B. (2020). An intelligent EEG classification methodology based on sparse representation enhanced deep learning networks. Front. Neurosci. 14:808. doi: 10.3389/fnins.2020.00808

Hwang, S., Hong, K., Son, G., and Byun, H. (2020). Learning CNN features from DE features for EEG-based emotion recognition. Pattern Anal. Applicat. 23, 1323–1335. doi: 10.1007/s10044-019-00860-w

Jiang, Z., Lin, Z., and Davis, L. (2013). Label consistent K-SVD: learning a discriminative dictionary for recognition. IEEE Trans. Pattern Anal. Mach. Intell. 35, 2651–2664. doi: 10.1109/TPAMI.2013.88

Kanoga, S., Kanemura, A., and Asoh, H. (2019). Multi-scale dictionary learning for ocular artifact reduction from single-channel electroencephalograms. Neurocomputing 347, 240–250. doi: 10.1016/j.neucom.2019.02.060

Kashefpoor, M., Rabbani, H., and Barekatain, M. (2019). Supervised dictionary learning of EEG signals for mild cognitive impairment diagnosis. Biomed. Signal Process. Control 53:101559. doi: 10.1016/j.bspc.2019.101559

Katsigiannis, S., and Ramzan, N. (2018). DREAMER: a database for emotion recognition through EEG and ECG signals from wireless low-cost off-the-shelf devices. IEEE J. Biomed. Health Informat. 22, 98–107. doi: 10.1109/JBHI.2017.2688239

Ko, K., Yang, H., and Sim, K. (2009). Emotion recognition using EEG signals with relative power values and Bayesian network. Int. J. Control Automat. Syst. 7:865. doi: 10.1007/s12555-009-0521-0

Kutepov, I., Dobriyan, V., Zhigalov, M., Stepanov, M., Krysko, A., Yakovleva, T., et al. (2020). EEG analysis in patients with schizophrenia based on Lyapunov exponents. Informat. Med. Unlock. 18:100289. doi: 10.1016/j.imu.2020.100289

Li, L., Li, S., and Fu, Y. (2013). “Discriminative dictionary learning with low-rank regularization for face recognition,” in 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (Shanghai: IEEE).

Li, X., Fan, H., Wang, H., and Wang, L. (2019). Common spatial patterns combined with phase synchronization information for classification of EEG signals. Biomed. Signal Process. Control 52, 248–256. doi: 10.1016/j.bspc.2019.04.034

Li, Y., Zheng, W., Cui, Z., Zong, Y., and Ge, S. (2019). EEG emotion recognition based on graph regularized sparse linear regression. Neural Process. Lett. 49, 555–571. doi: 10.1007/s11063-018-9829-1

Nakisa, B., Rastgoo, M., Tjondronegoro, D., and Chandrana, V. (2018). Evolutionary computation algorithms for feature selection of EEG-based emotion recognition using mobile sensors. Expert Syst. Applicat. 93, 143–155. doi: 10.1016/j.eswa.2017.09.062

Ni, T., Gu, X., and Jiang, Y. (2020a). Transfer discriminative dictionary learning with label consistency for classification of EEG signals of epilepsy. J. Ambient Intell. Hum. Comput. doi: 10.1007/s12652-020-02620-9. [Epub ahead of print].

Ni, T., Gu, X., and Zhang, C. (2020b). An intelligence EEG signal recognition method via noise insensitive TSK fuzzy system based on interclass competitive learning. Front. Neurosci. 14:837. doi: 10.3389/fnins.2020.00837

Peng, Y., Liu, S., Wang, X., and Wu, X. (2020). Joint local constraint and Fisher discrimination based dictionary learning for image classification. Neurocomputing 398, 505–519. doi: 10.1016/j.neucom.2019.05.103

Petrantonakis, P., and Hadjileontiadis, L. (2010). Emotion recognition from EEG using higher order crossings. IEEE Trans. Informat. Technol. Biomed. 14, 186–197. doi: 10.1109/TITB.2009.2034649

Song, T., Zheng, W., Song, P., and Cui, Z. (2020). EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 11, 532–541. doi: 10.1109/TAFFC.2018.2817622

Sreeja, S., and Himanshu, D. (2020). Distance-based weighted sparse representation to classify motor imagery EEG signals for BCI applications. Multimed. Tools Applicat. 79, 13775–13793. doi: 10.1007/s11042-019-08602-0

Wei, C., Chen, L., Song, Z., Lou, X., and Li, D. (2020). EEG-based emotion recognition using simple recurrent units network and ensemble learning. Biomed. Signal Process. Control 58:101756. doi: 10.1016/j.bspc.2019.101756

Wu, T., Yan, G., Yang, B., and Sun, H. (2008). EEG feature extraction based on wavelet packet decomposition for brain computer interface. Measurement 41, 618–625. doi: 10.1016/j.measurement.2007.07.007

Zhang, G., Yang, J., Zheng, Y., Luo, Z., and Zhang, J. (2021). Optimal discriminative feature and dictionary learning for image set classification. Informat. Sci. 547, 498–513. doi: 10.1016/j.ins.2020.08.066

Zheng, W., Liu, W., Lu, Y., Lu, B., and Cichocki, A. (2019). Emotion meter: a multimodal framework for recognizing human emotions. IEEE Trans. Cybernet. 49, 1110–1122. doi: 10.1109/TCYB.2018.2797176

Zheng, W. L., and Lu, B. L. (2015). Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Autonom. Ment. Dev. 7, 162–175. doi: 10.1109/TAMD.2015.2431497

Zheng, Z., and Sun, H. (2019). Jointly discriminative projection and dictionary learning for domain adaptive collaborative representation-based classification. Patt. Recognit. 90, 325–336. doi: 10.1016/j.patcog.2019.01.004

Keywords: EEG signal, emotion recognition, dictionary learning, Fisher discrimination criterion, brain–computer interface

Citation: Gu X, Fan Y, Zhou J and Zhu J (2021) Optimized Projection and Fisher Discriminative Dictionary Learning for EEG Emotion Recognition. Front. Psychol. 12:705528. doi: 10.3389/fpsyg.2021.705528

Received: 05 May 2021; Accepted: 31 May 2021; Published: 28 June 2021.

Edited by: Yaoru Sun, Tongji University, China

Reviewed by: Yanhui Zhang, Hebei University of Chinese Medicine, China; Yufeng Yao, Changshu Institute of Technology, China

Copyright © 2021 Gu, Fan, Zhou and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jie Zhou, sxuj_zhou@163.com; Jiaqun Zhu, angelsoar@cczu.edu.cn
