
ORIGINAL RESEARCH article

Front. Psychol., 03 August 2021

Sec. Psychology of Language

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.686485

This article is part of the Research Topic Fuzzy Lexical Representations in the Nonnative Mental Lexicon

Previous work on placement expressions (e.g., “she put the cup on the table”) has demonstrated cross-linguistic differences in the specificity of placement expressions in the native language (L1), with some languages preferring more general, widely applicable expressions and others preferring more specific expressions based on more fine-grained distinctions. Research on second language (L2) acquisition of an additional spoken language has shown that learning the appropriate L2 placement distinctions poses a challenge for adult learners, whose L2 semantic representations can be non-target-like and have fuzzy boundaries. It is unknown whether similar effects apply to learners acquiring an L2 in a different sensory-motor modality, e.g., hearing learners of a sign language. Placement verbs in signed languages tend to be highly iconic and to exhibit transparent semantic boundaries, which may facilitate the acquisition of signed placement verbs. In addition, little is known about how exposure to different semantic boundaries for placement events in a typologically different language affects lexical semantics in the L1. In this study, we examined placement event descriptions in American Sign Language (ASL) and English by hearing L2 learners of ASL who were native speakers of English. L2 signers' ASL placement descriptions looked similar to those of two Deaf, native ASL signer controls, suggesting that the iconicity and transparency of placement distinctions in the visual modality may facilitate L2 acquisition. Nevertheless, L2 signers used a wider range of handshapes in ASL and used them less appropriately, indicating that fuzzy semantic boundaries occur in cross-modal L2 acquisition as well.
In addition, while the L2 signers' English verbal expressions were not different from those of a non-signing control group, placement distinctions expressed in co-speech gesture were marginally more ASL-like for L2 signers, suggesting that exposure to different semantic boundaries can cause changes to how placement is conceptualized in the L1 as well.

In learning how to say the equivalent of “the woman put the cup on the table” in a second language, many learners face the challenge of semantic reconstruction. A speaker whose first language (L1) is English and whose second language (L2) is Dutch must learn that Dutch does not have one verb that corresponds to “put” in English. Instead, when describing an event of putting in Dutch, the speaker must choose between different verbs, a choice that requires attention to the shape and orientation of the object being placed. Thus, the learner must not only learn the appropriate vocabulary in the target language but may also need to reorganize their conceptualization of placement events. This is a challenge for many learners, whose tendency to transfer semantic boundaries from the L1 onto the L2 can result in non-target-like use of placement verbs, indicating fuzzy placement semantics. An open question is whether differences in semantic transparency in the target language may help acquisition. Like spoken languages, sign languages use different verbal distinctions in descriptions of placement of different objects. Unlike placement verbs in many spoken languages, however, placement verbs in sign languages are often highly iconic. They involve handshapes reflecting visual properties of their referents and/or kinesthetic properties of how an entity is handled. Placement descriptions in sign languages therefore offer a transparent link between elements of the world and their linguistic encoding. It is unknown whether such transparency facilitates the acquisition of placement expressions by hearing second language learners of a sign language, or whether these learners experience the same difficulties in acquiring novel semantic distinctions as hearing second language learners of a spoken language.

Also poorly understood are the consequences for L1 placement semantics of learning a typologically different L2. Acquiring target-like semantic boundaries may require the learner to engage in semantic reconstruction. As the L2 is fully or partially acquired, this process may come to influence the L1, creating a system in which the semantic boundaries of the L1 are (temporarily) fuzzy and unstable and may consequently differ from those of monolinguals and of bilinguals with a different L2.

The present study aims to address these gaps in our knowledge by investigating placement descriptions by native English speakers learning American Sign Language (ASL) as an L2. Placement expressions are highly transparent in ASL and, at the same time, show some overlap in form with the co-speech gestures used in placement descriptions. We take advantage of these facts to ask (1) whether acquiring target-like placement verbs is as challenging for different-modality L2 learners as it has been shown to be for same-modality learners, or whether the transparency of ASL placement verbs makes this task easier, and (2) whether the learners' English placement descriptions (speech and gesture) show evidence of influence from ASL.

Languages show considerable differences in the expression of placement events (Kopecka and Narasimhan, 2012). A placement event is a type of caused motion event in which an agent moves something somewhere, e.g., putting a book on a bookshelf. Studies have shown that placement is a typologically diverse domain, not least in terms of verb semantics (see Bohnemeyer and Pederson, 2011; Gullberg, 2011a; Slobin et al., 2011; Kopecka and Narasimhan, 2012). This is perhaps surprising. Given that speakers from different cultures share similar visual and motor experiences of placing objects, we might expect them to describe those experiences in similar ways. However, studies from recent decades have shown that this is far from the case (Ameka and Levinson, 2007; Bohnemeyer and Pederson, 2011; Kopecka and Narasimhan, 2012). Narasimhan et al. (2012) note that languages such as Hungarian, Kalasha, Hindi, and Tamil use a semantically general verb for “put” (as do languages like English and French). This type of single-term, or general placement verb, language contrasts with multi-term, or specific placement verb, languages such as Tzeltal, which requires selecting one of numerous verb roots to describe a placement event. Languages such as Dutch, Swedish, Polish, and Yeli Dnye are a slightly different kind of multi-term language. They select a verb from a small set of so-called posture verbs, depending on several factors, including the orientation of the object being placed. For example, German, also a posture verb language, distinguishes between the verbs “stellen” (“to put upright”), “setzen” (“to set”), “legen” (“to lay”), “stecken” (“to stick”), and “hängen” (“to hang”) (De Knop, 2016).

Relevant semantic distinctions show up in the co-speech gestures of a language as well as in speech (Hoetjes, 2008; Gullberg, 2009a, 2011a). Speakers frequently accompany their words with co-speech gesture (McNeill, 1992; Kendon, 2004). Many co-speech gestures are iconic representations of some part of the speech content; that is, they involve handshapes that share form properties with the represented entity or action (Kendon, 1980; McNeill and Levy, 1982; McNeill, 1992). Because placement descriptions denote placement actions, which are similar across languages, we might expect speakers to accompany their descriptions with similar co-speech gestures irrespective of the variation in semantic distinctions across languages. For example, gesturers across languages might use handshapes resembling the motor actions used to place different items, e.g., a “cup” handshape when talking about the placement of a cup or a glass, but a pincer handshape for small objects such as beads or coins. However, research has shown that cross-linguistic differences in placement descriptions extend beyond the verbal component of language, and that co-speech gestures instead exhibit patterns specific to a language or language type (Hoetjes, 2008; Gullberg, 2009a, 2011a). In particular, speakers of general placement verb languages like French tend to use gestures that reflect the focus of their verbal expressions on the act of moving something. This means that they gesture mainly about the direction or path of the object being moved. Conversely, speakers of multi-term, specific placement verb languages like Dutch, who must select verbs partly on the basis of properties of the object being placed, typically use gestures that represent form properties of the figure object (Gullberg, 2011a).
Figure 1 contrasts a path gesture, typical of a general placement verb system, with a gesture typical of a specific placement verb system, which includes information about both the figure object and the path of the placement event.

Figure 1. Path gesture in description of placing a clothes hanger (left) and figure + path gesture in description of placing a speaker (right).

English tends to encode placement with the verb “put.” Although English has specific placement verbs based on posture (“set” and “lay”), they are infrequent in placement descriptions (Pauwels, 2000) and English is categorized as a general placement verb language. Hoetjes (2008) examined English speakers' placement verbs and placement gestures. She found that English speakers as a group used “put” in 59% of their placement descriptions, and “place,” another general placement verb, in 10% of their descriptions. In another experiment using the same stimuli, Gullberg (2009a) similarly found the mean proportion of “put” to be 61% for native English speakers. In both studies, the mean percentage of gestures incorporating information about the figure object was below 40% for native English speakers, and correspondingly, the proportion of path-only gestures was over 60%.

To date, no studies have specifically examined placement verbs in a sign language. American Sign Language (ASL) is the primary language of most Deaf individuals in the U.S. and parts of Canada. ASL is produced with the hands and body and perceived with the eyes. Expressing language in the visual-manual modality appears to afford a high degree of iconicity (Perniss et al., 2010). For example, the ASL sign “CUP” involves a sideways C-handshape, similar to the handshape one would use to hold a cup (Figure 2). In this paper, we use capitalized letters to indicate sign glosses and letters/numbers to indicate handshapes (the relevant handshapes are pictured in the Supplementary Images). Following convention, ASL signs are glossed with English words, but note that sign and gloss are not always translation equivalents.

While no studies have looked specifically at how placement is expressed in ASL, some aspects of ASL and other sign languages that are relevant for understanding signed placement verbs have been investigated in previous work. Specifically, most sign languages have a system of classifiers (Aronoff et al., 2005; Zwitserlood, 2012) that play an important role in this domain. Classifiers are handshapes that represent something about the object being described, e.g., shape and size, semantic class, or how an agent would handle the object. There are two broad categories of classifiers (Zwitserlood, 2012): handling (or handle) classifiers, where the hand(s) represent(s) how the entity is held by an agent (Figure 3), and entity classifiers, where the hand(s) represent(s) the entity (Figure 4).

Although classifiers represent information about the figure object, they do not encode unlimited gradient distinctions; rather, there is a set of conventionalized handshapes, each of which is conventionally used for specific types of objects. For example, the C handshape handling classifier is used for tall cylinder-like objects like vases, cups, bottles, etc., and the flattened O handshape handling classifier is used for thin flat objects like books, papers, blankets, etc. (Zwitserlood, 2012). Both handling and entity classifiers can be incorporated into verbs of motion and location, sometimes called classifier verbs or classifier predicates (Supalla, 1982; Aronoff et al., 2003, 2005). Verbs like “PUT” and “MOVE” can be classifier verbs (e.g., Slobin et al., 2003; Slobin, 2013) and can be used in placement descriptions1. When describing how objects are used or manipulated, ASL signers tend to incorporate handling classifiers rather than entity classifiers into verbs (Padden et al., 2015). Thus, ASL appears to express placement events prototypically with verbs like “MOVE” and “PUT” with incorporated handling classifiers. Although ASL resembles languages like English and French in using general placement verbs such as “MOVE” and “PUT” as the basic verb, it differs from these languages in frequently incorporating into the verb an additional morpheme (the classifier) that specifies the shape and orientation of the figure object. Because of this, ASL can be considered a specific placement verb language2. We confirmed this with data from two Deaf, native ASL signers, described in more detail below.

Expressing placement in a second language (L2) not only requires learning placement verbs, locative expressions and appropriate syntax. It also requires learning the semantic boundaries of placement words, which can differ, even between words that are cognates across languages. Learning new semantic boundaries requires first detecting the relevant difference and then mentally rearranging concepts and shifting boundaries accordingly. Rearranging concepts resulting from the semantics of the first language (L1) poses a challenge for the learner (Ijaz, 1986; Kellerman, 1995). This is because native language learning habituates the individual to thinking in ways that are compatible with available means of expression, i.e., to what Slobin (1996) calls “thinking for speaking.” To become target-like in placement descriptions, many L2 learners must therefore learn a new way of categorizing semantically. This can cause a variety of issues in the L2, including L1 transfer, that is, mapping semantic boundaries from the native language onto the L2, and fuzzy semantic boundaries. Non-native patterns can arise both when going from a more general to a more complex placement verb system and vice versa (Cadierno et al., 2016). When the L1 uses a general placement verb (e.g., “put” in English) and the L2 distinguishes between several specific placement verbs (e.g., “zetten” vs. “leggen” in Dutch), learners overuse one verb and do not maintain obligatory semantic distinctions (Viberg, 1998; Gullberg, 2009a). In cases where the L1 has several, specific placement verbs, and the L2 has one or more general placement verbs, learners' use of placement verbs in speech may show native-like overall distinctions relatively quickly (Gullberg, 2009a, 2011b; Lewandowski and Özçalişkan, 2021) but can nevertheless include non-native verb forms (Cadierno et al., 2016) and overuse of more peripheral verbs in an attempt to re-create placement distinctions from the L1 (Gullberg, 2009b).

Co-speech gestures have been used as a means to probe L2 speakers' underlying representations in the placement domain (Gullberg, 2009a,b, 2011a,b). While L2 learners of a more general placement verb system (e.g., Dutch L1-French L2) may be able to acquire the verbal system with relatively little difficulty, studying their gestures reveals a somewhat different picture. Using a French native-like pattern in speech does not necessarily mean that the learners have abandoned the conceptualization of placement events from their L1. Specifically, native French speakers primarily accompany their placement verbs with path-only gestures. In contrast, many L1 Dutch learners of L2 French use significantly more figure gestures, maintaining the distinctions from their native Dutch (Gullberg, 2009b), even as they are using a target-like system in their spoken French (see also the study by Özçalişkan, 2016 showing persistent L1 co-speech gesture in L2 expression of voluntary motion). Conversely, L1 speakers of a general placement verb language (e.g., English) learning a specific placement verb L2 (e.g., Dutch) produce mainly English-like path-only gestures in their L2, even when they begin to use the appropriate verb distinctions in speech (Gullberg, 2009a). Importantly, learners of a specific system do not gesture about figure-objects unless they apply the relevant distinctions in speech (Gullberg, 2009b). A general observation is that although it is difficult, and the progress is gradual, semantic reconstruction away from fuzziness and into alignment with the L2 seems possible (Gullberg, 2009b).

While evidence suggests that acquiring semantic distinctions in an L2 is challenging, it is important to note that this evidence is based exclusively on research with same-modality L2 learners, that is, individuals with a spoken L1 learning a spoken L2. However, not all second language learning happens within the same modality. Many hearing individuals acquire a sign language as an L2. Researchers are increasingly asking to what extent the principles of L2 acquisition apply when the source language is spoken and the target language is signed (Chen Pichler and Koulidobrova, 2016). While many challenges are similar for hearing learners of signed and spoken second languages, additional issues arise when acquiring a new language in a new modality (McKee and McKee, 1992; Wilcox and Wilcox, 1997), including learning to manage visual-manual phonology (Bochner et al., 2011; Chen Pichler, 2011; Ortega, 2013; Ortega and Ozyurek, 2013; Ortega and Morgan, 2015), multiple articulators (Gulamani et al., 2020), spatial grammar and depicting referents with the body (Bel et al., 2014; Ferrara and Nilsson, 2017; Frederiksen and Mayberry, 2019; Kurz et al., 2019; Gulamani et al., 2020), using the face to display grammatical information (McIntire and Reilly, 1988), and the high degree of iconicity in sign languages (Lieberth and Gamble, 1991; Campbell et al., 1992; Baus et al., 2013; Ortega and Morgan, 2015). At the same time, it is possible that hearing learners' experience with co-speech gesture in their L1 affects their acquisition of a sign language (McIntire and Reilly, 1988; Taub et al., 2008; Chen Pichler and Koulidobrova, 2016). Hearing individuals produce spontaneous co-speech gestures when they speak. 
However, it is not yet clear whether co-speech gesture helps or hinders sign acquisition, and previous work suggests that the answer to this question may vary by linguistic domain (Schembri et al., 2005; Ortega and Morgan, 2010; Chen Pichler, 2011; Ortega, 2013; Marshall and Morgan, 2015; Janke and Marshall, 2017; Kurz et al., 2019).

To date, it is unknown whether the acquisition of placement expressions is similarly difficult when acquiring a signed compared to a spoken L2. Many researchers have noted similarities between the classifier handshapes used by Deaf signers and the handshapes used in co-speech gesture and pantomime by hearing individuals (Singleton et al., 1993; Schembri et al., 2005; Sevcikova, 2014; Marshall and Morgan, 2015; Quinto-Pozos and Parrill, 2015), despite obvious differences, such as signers drawing on a much more conventionalized system and gesturers creating these handshapes on the fly. Other studies show similarities in how signers and gesturers alternate between different (classifier) handshapes in their descriptions of objects and of humans handling them (Brentari et al., 2012; Padden et al., 2015; Masson-Carro et al., 2016; Hwang et al., 2017; van Nispen, 2017; Ortega, 2020). Thus, English speakers may be able to build on their gestural distinctions to acquire ASL semantic boundaries in placement verbs relatively quickly. Moreover, the high degree of transparency in ASL placement distinctions may also be an advantage for L2 learners, lowering the proficiency level required to use target-like placement distinctions in ASL compared with learners acquiring a less transparent system.

Research has shown that language influence can happen in both directions, from L1 to L2 but also from L2 to L1. In bilinguals, the two languages are activated at the same time and compete for selection (e.g., Jared and Kroll, 2001; Marian and Spivey, 2003; Dijkstra, 2005; Kroll et al., 2008). This not only results in influence from the first on the second language; even at the very beginning stages, learning a second language affects the first language (see Kroll et al., 2015). Further, this effect is not only observed with respect to language processing, but also in how events are conceptualized, e.g., “conceptual transfer” (Bylund and Jarvis, 2011; Daller et al., 2011). Bi-directional effects have been observed in the context of cross-modality language learning as well. Work by Morford et al. has shown that ASL signs are activated during English print word recognition in highly proficient ASL-English bilinguals, irrespective of language dominance (Morford et al., 2011, 2014). Similar effects have been reported for DGS (Deutsche Gebärdensprache, German Sign Language)-German bimodal bilinguals (Kubus et al., 2015; Hosemann et al., 2020).

L2 influence on placement verb semantic boundaries in L1 has not been researched specifically for the case when the L1 is clearly dominant and the L2 is weaker. However, Alferink and Gullberg (2014) investigated placement verbs in individuals who grew up with and continued to use both French and Dutch in their daily lives. These early bilinguals showed evidence of having blurred obligatory placement verb distinctions in Dutch, effectively using the same distinctions for both Dutch and French despite the former being a specific, multi-term language and the latter being a general, single-term language.

Thus, there is reason to expect L2 learners' placement expressions in English to be influenced by ASL. Such an influence could be evident in speech or in gesture. Co-speech gesture research has found evidence of gestural transfer from the L2 to the L1 (Brown and Gullberg, 2008, 2011; see also the overview in Gullberg, 2009c). Specifically, in L1 descriptions of voluntary motion, some L2 learners show evidence of aligning with the L2 in gesture while maintaining L1 patterns in speech. Pertinent to the present study, learning a signed as opposed to a spoken L2 appears to have an additional effect on L1 co-speech gesture. Iconic gesture rates increase with sign language proficiency, something that does not happen when learning spoken second languages, even languages known for frequent gesture, such as French or Italian (Casey et al., 2012; Weisberg et al., 2020). For hearing English-ASL bilinguals, there is the additional factor that classifiers, and particularly the handling classifiers that predominate in placement descriptions, have iconic properties reflecting visuo-spatial properties of their referents (Zwitserlood, 2012). It has been shown that, in some domains, co-speech gesturers and signers tend to use their hands in similar ways (Sevcikova, 2014; Quinto-Pozos and Parrill, 2015). As such, placement descriptions in sign languages offer a visual correspondence between elements of the world and their linguistic encoding, which may be easy to adopt either because it already overlaps with the distinctions learners use in co-speech gesture, or because of the high degree of transparency of the distinctions employed in ASL.

The present study investigates what semantic reorganization in the domain of placement events looks like when the source and target languages do not share a sensory-motor modality. We ask two major questions: First, whether second language learners of ASL face similar challenges with placement verbs as do same-modality L2 learners, especially in the light of the high degree of transparency in ASL placement verb distinctions. Second, we ask whether there is evidence that learning new semantic boundaries for placement events affects L1 semantics. If modality and transparency do not matter, then we would expect English L1-ASL L2 language users to use general placement verbs such as “PUT” and “MOVE,” and specifically, L2 signers should use classifiers at a lower rate than native signers and exhibit fuzzy semantic boundaries for the classifiers they do use. Further, if L1 semantics is affected by learning a signed L2, then we would expect L2 signers' placement descriptions in English speech and/or co-speech gesture to include ASL-like distinctions that do not occur in non-signing English speakers. Specifically, L2 signers would be expected to use comparatively more verbs with a specific rather than general placement meaning, and/or to use more co-speech gestures reflecting properties of the figure object.

We recruited eight hearing L2 signers (five female) to take part in the task. These individuals were native speakers of English who learned ASL as young adults. All were intermediate learners who had completed at least 1 year of ASL instruction (six weekly contact hours). At the time of participation, seven of the learners used ASL daily, and one used ASL once a month. Seven of the L2 learners had exposure to either Spanish or French starting between the ages of nine and fourteen. Table 1 summarizes their demographic information. We additionally tested eight non-signing English speakers (five female, mean age: 19; SD: 1) and two Deaf, native ASL signers (both female, aged 21 and 62) on the same task. Seven of the non-signers had exposure to a language other than English (Spanish, French, Farsi, German); three were exposed to the non-English language after the age of 11, two at the age of eight, and two were exposed to Spanish from birth3. All non-signing participants reported that they were English dominant.

We used a director-matcher task (e.g., Clark and Wilkes-Gibbs, 1986) to elicit placement descriptions. The stimuli consisted of a video of a man repositioning objects in a room. The video was split into six parts, each containing the placement of four or five stimulus objects (e.g., a cup, a lamp, plates, a scarf), for a total of 25 events (see Appendix in Supplementary Material for a full list of stimulus items). In our version of the director-matcher task, the participant was the director, whose task was to watch the video clips (Figure 5a) one at a time and, after each clip, explain to the matcher (a confederate who was a native user of the language) what had happened. The matcher in turn drew this information on a picture of the empty room (Figure 5b), specifically where the described objects were placed. The video clips were not visible to the matcher, and the drawing was not visible to the director.

After the participants provided written informed consent, the director and matcher were seated across from each other, and the experimenter explained their tasks in the language of the experiment. As a memory aid, the director was given a list of pictures of the objects to be described4. The matcher was instructed to ask questions whenever clarification was needed. Two cameras recorded the communication between the director and the matcher during the task. Only data from the director are analyzed in this study. The L2 signers completed the task twice, once in English and once in ASL, with different interlocutors. We counterbalanced the order of languages, and participants performed another task before completing the task the second time. Participants were either paid a small amount or given course credit for their participation.

Speech and sign transcriptions were done in the ELAN software (Wittenburg et al., 2006) by transcribers who were native users of the respective language. For each stimulus object, they identified the first complete, spontaneous, and minimal placement description. Such descriptions included mention of the figure object (generally in the form of a lexical noun phrase), the verb (or an intransitive construction, e.g., “the cup is on the table”), and often the final location of the object. Repetitions, elaborations, and answers to the interlocutor's questions were not included. For each included description, the transcriber provided a verbatim transcription of the placement event itself, shown outside the square brackets in (1) for English and (2) for ASL.

(1) [then he grabbed the paper towels] and placed them on the kitchen counter

(2) [BOOK TAKE-handshape: C] CABINET PUT-handshape: C
‘[(he) took the book] (and) put it in the cabinet’
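The selection rule described above can be sketched as a filter over a director's annotated utterances in temporal order. This is a minimal illustration under stated assumptions, not the transcribers' actual procedure: the `Utterance` record, its field names, and the function `first_minimal_description` are hypothetical, and the boolean judgements (figure mention, placement verb or intransitive construction, repetition, elaboration, answer to the matcher) are assumed to have been made by a human transcriber beforehand.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Utterance:
    """Hypothetical annotation record for one utterance by the director."""
    target_object: str          # stimulus object the utterance is about
    text: str                   # verbatim transcription
    mentions_figure: bool       # figure object is named (e.g., a lexical NP)
    has_placement_verb: bool    # placement verb or intransitive construction
    is_repetition: bool = False
    is_elaboration: bool = False
    answers_matcher: bool = False


def first_minimal_description(utterances: List[Utterance],
                              target: str) -> Optional[Utterance]:
    """Return the first complete, spontaneous description of `target`.

    `utterances` must be in temporal order. Repetitions, elaborations and
    answers to the matcher's questions are never selected. Returns None when
    no qualifying description exists, i.e., the object counts as skipped.
    """
    for u in utterances:
        if u.target_object != target:
            continue
        if u.is_repetition or u.is_elaboration or u.answers_matcher:
            continue  # not a spontaneous first description
        if u.mentions_figure and u.has_placement_verb:
            return u
    return None


if __name__ == "__main__":
    log = [
        Utterance("cup", "then the cup", True, False),
        Utterance("cup", "he put the cup on the table", True, True),
        Utterance("cup", "yes, on the table", False, True, answers_matcher=True),
    ]
    print(first_minimal_description(log, "cup").text)  # he put the cup on the table
    print(first_minimal_description(log, "lamp"))      # None
```

Returning `None` for an object with no qualifying description ties this step to the count of skipped target objects reported in the analysis.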

Finally, the placement verb used in each placement description was noted (“placed” in (1) and “PUT” in (2)). For ASL trials, two trained coders, a Deaf native signer and a hearing proficient signer, noted whether the placement verb was used in its citation form or with an incorporated classifier. The coders were instructed to be conservative when encountering handshapes that were ambiguous between the citation form and an incorporated classifier. For example, holding a thin flat object in a horizontal position would use the same flat O handshape as the citation form of “PUT.” In such cases, the verb was coded as occurring in citation form unless the hand orientation or movement differed from the citation form. Flat and open/round versions of the same handshape were grouped together. Where applicable, the coders also noted the type of classifier (handling vs. entity) used in the placement verb. This resulted in three categories of verbs and intransitive constructions with possible classifier incorporation: (1) Handling classifier, (2) Entity classifier, and (3) Citation form. Across the entire data set, the two coders agreed on 92% of categorizations.
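The conservative coding rule and the agreement figure can be made concrete as follows. This sketch is illustrative only: the function names and string labels are hypothetical, the classifier judgement itself is assumed to come from a trained coder, and agreement is computed as simple percent agreement (the proportion of tokens given the same code by both coders), which is our reading of the reported 92%; no chance-corrected statistic is implied.

```python
from typing import Optional, Sequence


def code_asl_verb(classifier_type: Optional[str],
                  same_handshape_as_citation: bool,
                  orientation_differs: bool,
                  movement_differs: bool) -> str:
    """Assign 'handling', 'entity' or 'citation' to one placement verb token.

    classifier_type is the coder's judgement ('handling' or 'entity'), or
    None when no classifier is perceived. Conservative rule: a handshape
    identical to the citation form counts as a classifier only if hand
    orientation or movement departs from the citation form.
    """
    if classifier_type is None:
        return "citation"
    if classifier_type not in ("handling", "entity"):
        raise ValueError(f"unknown classifier type: {classifier_type!r}")
    if same_handshape_as_citation and not (orientation_differs or movement_differs):
        return "citation"  # ambiguous token defaults to citation form
    return classifier_type


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Proportion of tokens to which both coders assigned the same code."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must code the same, non-empty set of tokens")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


# A flat O handshape for a horizontally held thin flat object, with the
# citation-form orientation and movement, is coded as citation-form PUT.
print(code_asl_verb("handling", True, False, False))  # citation
print(percent_agreement(["handling", "citation", "entity", "handling"],
                        ["handling", "citation", "handling", "handling"]))  # 0.75
```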

For gesture transcriptions, we focused on tokens occurring during the minimal placement descriptions identified for speech. We marked gesture strokes, that is, the most effortful and expressive part of the gesture, and post-stroke holds, that is, periods of maintaining the stroke handshape after the stroke itself (Kita et al., 1998; Kendon, 2004). For each gesture identified, two coders separately noted whether the handshape and hand orientation (a) expressed figure information by reflecting properties of the stimulus object in question, (b) did not reflect figure object properties but only indicated the direction or path of movement, (c) reflected only properties of the ground object on which the figure object was placed, or (d) had no relationship to the placement event proper, that is, the gesture was a beat gesture serving to emphasize speech rhythm, a thinking gesture indicating word-finding difficulty, or a gesture whose referent was unclear. This resulted in the following gesture categories: (1) Figure inclusion (a), (2) Path only (b), and (3) Everything else (c and d). To minimize the influence of speech content on gesture coding, this coding was undertaken without access to the video's sound. Across the entire data set, the two coders agreed on 90% of form categorizations. In cases of disagreement, the judgement of the first coder was retained.
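The mapping from the four form judgements to the three analysis categories, and the rule of retaining the first coder's judgement, can be sketched as below. The names are hypothetical, and one detail is an assumption: the text does not say whether the 90% agreement was computed over the raw (a) to (d) judgements or over the collapsed categories, so this sketch computes it over the raw judgements.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

# The four form judgements (a)-(d) collapsed into the three analysis categories.
FORM_TO_CATEGORY = {
    "a": "figure_inclusion",  # handshape/orientation reflects the figure object
    "b": "path_only",         # only direction or path of movement
    "c": "everything_else",   # only properties of the ground object
    "d": "everything_else",   # beats, thinking gestures, unclear gestures
}
CATEGORIES = ("figure_inclusion", "path_only", "everything_else")


def resolve_gesture_codes(coder1: Sequence[str],
                          coder2: Sequence[str]) -> Tuple[List[str], float]:
    """Return final categories and raw agreement between two coders.

    Disagreements are resolved in favour of the first coder, so the final
    categories are simply coder 1's judgements mapped to the three
    categories. Agreement is computed on the raw (a)-(d) judgements.
    """
    if len(coder1) != len(coder2) or not coder1:
        raise ValueError("both coders must code the same, non-empty set of gestures")
    for judgement in list(coder1) + list(coder2):
        if judgement not in FORM_TO_CATEGORY:
            raise ValueError(f"unknown judgement: {judgement!r}")
    agreement = sum(x == y for x, y in zip(coder1, coder2)) / len(coder1)
    final = [FORM_TO_CATEGORY[j] for j in coder1]
    return final, agreement


def category_proportions(categories: Sequence[str]) -> Dict[str, float]:
    """Proportion of gestures falling in each analysis category."""
    if not categories:
        raise ValueError("no gestures to summarize")
    counts = Counter(categories)
    return {cat: counts[cat] / len(categories) for cat in CATEGORIES}


final, agreement = resolve_gesture_codes(["a", "b", "c", "d"], ["a", "b", "d", "d"])
print(final)      # ['figure_inclusion', 'path_only', 'everything_else', 'everything_else']
print(agreement)  # 0.75
print(category_proportions(final))
# {'figure_inclusion': 0.25, 'path_only': 0.25, 'everything_else': 0.5}
```

Computing agreement on the collapsed categories instead would count a (c) versus (d) disagreement as agreement, so the two choices can give different figures on the same data.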

The analysis focuses on the target objects for which the placement description was complete. Some trials were skipped, most likely because participants simply forgot to mention a target object. Non-signing participants skipped nine target objects (5%), native ASL signers skipped one target object (2%), and the L2 ASL learners skipped ten (5%) target objects in English and 15 (7.5%) in ASL.
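The reported percentages are consistent with dividing each group's number of skipped objects by the number of participants in the group times the 25 placement events. The sketch below reproduces that arithmetic; the denominator is our reading rather than something stated in the text, and on this reading the non-signers' 9 of 200 comes to 4.5%, reported above rounded to 5%. The helper name `skip_rate` is hypothetical.

```python
EVENTS_PER_PARTICIPANT = 25  # placement events in the stimulus video


def skip_rate(skipped: int, n_participants: int,
              events: int = EVENTS_PER_PARTICIPANT) -> float:
    """Skipped target objects as a proportion of all events the group saw."""
    return skipped / (n_participants * events)


rates = {
    "non-signers, English": skip_rate(9, 8),    # 9 / 200
    "native signers, ASL": skip_rate(1, 2),     # 1 / 50
    "L2 signers, English": skip_rate(10, 8),    # 10 / 200
    "L2 signers, ASL": skip_rate(15, 8),        # 15 / 200
}
for group, rate in rates.items():
    print(f"{group}: {rate:.1%}")
# non-signers, English: 4.5%
# native signers, ASL: 2.0%
# L2 signers, English: 5.0%
# L2 signers, ASL: 7.5%
```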

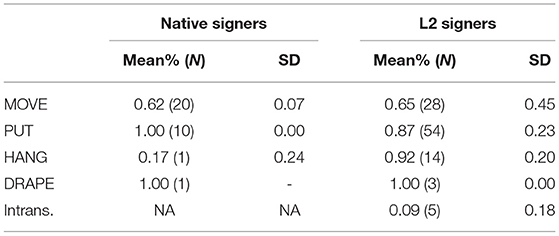

We first confirmed that the Deaf, native signer control data matched our expectation that ASL primarily uses general verb types like “PUT” and “MOVE,” modified with classifiers to reflect properties of the figure object (see verb illustrations in the Supplementary Images). As shown in Table 2, the verb types “MOVE” and “PUT” together accounted for 88% of verb tokens. “HANG” was the only additional verb used with any regularity. The native signers' mean proportion of verbs incorporating a classifier handshape was 66%. The vast majority of classifiers were handling classifiers (84%); only a small proportion were entity classifiers (16%). Table 3 shows how often different verbs were produced with classifiers. On average, “MOVE” was used with a classifier more than half the time, and 92% of those classifiers were handling classifiers. The verb “PUT” never occurred in citation form in these data; it was used with a handling classifier 94% of the time. Overall, seven different classifiers occurred: three handshapes occurred as handling classifiers (O, C, S) and four occurred as entity classifiers (Y, B, C, baby-C; see illustrations of classifier handshapes in the Supplementary Images). Thus, while a few non-specific placement verbs account for the vast majority of tokens in native ASL signers' placement descriptions, these verbs are modified with classifiers more often than not, which creates a complex system involving multiple distinctions based on properties of the figure object.

Table 3. Classifier incorporation by verb and group.⁵

We next asked whether the L2 signers similarly used general placement verbs modified with classifiers in their placement descriptions, which would suggest that they have reconstructed their conceptualization of placement events and are using semantic distinctions that are relevant in the target language.

An examination of how the L2 learners' use of broad ASL verb types compares with that of the two Deaf, native signers, irrespective of classifier use, shows that the L2 signers use “MOVE” and “PUT” most frequently, similarly to the native signers (Table 2). However, whereas the native signers use a greater proportion of “MOVE,” the L2 signers on average use the two verbs at a similar rate. The main differences between the groups' overall verb use are the L2 learners' large proportion of intransitive descriptions and their use of “SET,” both of which are rare or absent in the native data.⁶ Both groups use comparable proportions of “HANG” and “DRAPE.” Despite these differences in proportion, an analysis of variance on the proportion of tokens (arcsine transformed) as a function of verb type and group showed no significant difference between the L2 learners and the native signers in the use of broad ASL verb types [F(6, 48) = 1.656, p = 0.153].
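The analysis above applies an arcsine transformation to token proportions before the analysis of variance, but the exact formula is not given. A common choice for proportions is the arcsine square-root transform, asin(√p), which spreads out values near 0 and 1, where the sampling variance of a proportion is smallest. The Python sketch below assumes that variant; the function name `arcsine_sqrt` and the input proportions are illustrative only.

```python
import math


def arcsine_sqrt(p: float) -> float:
    """Arcsine square-root transform of a proportion p, returned in radians.

    Assumed variant: asin(sqrt(p)); the text only says "arcsine transformed".
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return math.asin(math.sqrt(p))


# Illustrative proportions (not the study's data).
for p in (0.0, 0.25, 0.5, 1.0):
    print(p, round(arcsine_sqrt(p), 4))
# 0.0 0.0
# 0.25 0.5236
# 0.5 0.7854
# 1.0 1.5708
```

On the transformed scale, a step from 0.95 to 1.0 counts for much more (about 0.23) than a step from 0.45 to 0.50 (about 0.05), which is the intended effect for proportions close to the boundaries.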

We next asked whether L2 and native signers incorporated classifiers into their verbs at similar rates. Aggregating across verbs, the mean rate of classifier incorporation was 56% (SD = 27) for L2 signers, compared with 66% (SD = 13) for native signers. However, a mixed-effects logistic regression (Jaeger, 2008) showed no significant effect of group (β = −0.329, p = 0.77).⁷ Table 3 shows how often classifiers were incorporated into the different verbs by native and L2 signers. Overall, the groups incorporated classifiers similarly. On average, L2 signers incorporated classifiers at a high rate for both “MOVE” and “PUT,” as did the native signers. The main difference was in the verb “HANG,” where L2 signers incorporated classifiers at a much higher rate than native signers. As for classifier type, the L2 signers resembled the native signers in using mostly handling classifiers (79%), with fewer entity classifiers (21%). The learners used both “MOVE” and “PUT” primarily with handling classifiers. Overall, nine classifiers occurred in the L2 data: four handshapes were used as entity classifiers (C, B, baby-C, F) and five were used as handling classifiers (A, 5, C, O, S). The native signers used the handshapes C, B, and baby-C, as well as Y, as entity classifiers. The two groups overlapped in their use of the handling classifier handshapes C, O, and S, and the L2 signers additionally used the A and 5 handshapes.

We finally asked whether the classifiers used by the L2 signers were appropriate for the described objects, as compared with the native signers. We grouped the described objects into five categories based on shared characteristics that were expected to influence how placement of the object would be expressed: (1) Tall cylindrical objects with a thin handle or neck (wine bottle, water bottle, paper towel holder, lamp, and candle), (2) Objects with a functional base (glass, potted plant, plates, speaker, basket, and bowl), (3) Thin rectangular objects without a clear functional base (picture frame, magazine, computer, and book), (4) Objects made from fabric (jacket, table cloth, pillow, scarf, throw, bag, and hat), and (5) Other (cables, silverware, clothes hanger).⁸ We then examined which handshapes were preferred by native and L2 signers for objects in each of the five categories (Table 4). Visual inspection of Table 4 shows that, except for the Fabric category, the L2 signers used more verbs without classifiers than the native signers in all categories.⁹ The most frequently occurring classifier was the same in both groups for the categories Tall Cylinder, Functional Base, and Thin Rectangle. At the same time, the L2 signers used the preferred classifier in each category at a numerically lower rate than the native signers. Moreover, the L2 signers' use of classifiers was more variable than the native signers' across all categories.

To assess appropriateness directly, a Deaf, native ASL signer rated the classifier handshapes produced by each signer for each target object on a scale from 1 to 5, where 1 corresponded to “very bad ASL” and 5 to “very good ASL.” Submitting these ratings to an ordinal mixed effects model¹⁰ revealed a significant difference between groups (L.R. χ² = 4.4711, df = 1, p < 0.05), with native signers receiving higher ratings (M = 4.78, SD = 0.04) than the L2 signers (M = 3.19, SD = 1.05).

To assess whether the L2 signers experience influence from ASL on their L1, English, we first asked whether they use English placement verbs similarly to non-signers. As shown in Table 5, the most frequently used English verb was the general verb “put” for both non-signers (69%) and L2 signers (63%). The general placement verbs “move” and “place” were also among the most frequently used, and two general verbs, “be” and “bring,” were used infrequently. Four specific verbs occurred: “hang,” “drape,” “spread” (used only by non-signers), and “stick” (used only by L2 signers). Two specific posture verbs, “set” and “lay,” also occurred in the data; “set” occurred in both groups, and “lay” occurred only in the L2 signer group. Thus, the specificity of verbal expression is very similar across non-signers and L2 signers. Overall, the native English speakers (non-signers and L2 signers) exhibited a pattern of verb use that is congruent with previous research, preferring non-specific placement verbs (“put,” “move,” and “place”) at a mean rate of 85% in both groups, and preferring “put” in particular. A mixed effects logistic regression analysis of verb type (general vs. specific) revealed no difference between groups (β = 0.085, p = 0.866).

We next analyzed the co-speech gestures in the English data from the non-signers and the L2 signers. First, we asked whether exposure to ASL led the L2 signers to produce more iconic gestures (including gestures representing the Figure object, Path only, and Ground only; see Methods) in English than the non-signers. As a group, the non-signers produced a total of 185 gestures (M = 24.71, SD = 5.84) during their placement descriptions, and the L2 signers produced a total of 121 gestures (M = 15.13, SD = 13.81). Thus, the L2 signers did not gesture more than the non-signers. A Poisson regression modeling the number of gestures as a function of group showed no significant difference between the groups (L.R. χ² = 1.915, df = 1, p = 0.1881).¹¹
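The gesture-count model above was fit as a quasi-Poisson regression because over-dispersion was indicated (footnote 11). A common check is the Pearson dispersion statistic: the sum of squared Pearson residuals divided by the residual degrees of freedom, with values well above 1 signaling over-dispersion. A quasi-Poisson fit keeps the Poisson coefficient estimates but scales their standard errors by the square root of this statistic. The Python sketch below assumes a model whose only predictor is group, so each participant's fitted count is simply the group mean; the function name `pearson_dispersion` and the counts are hypothetical, not the study's data or analysis code.

```python
from statistics import mean


def pearson_dispersion(groups: dict[str, list[int]]) -> float:
    """Pearson chi-square / residual df for a Poisson GLM with one categorical predictor.

    With group as the only predictor, the fitted mean for each observation is
    its group mean. Each group is assumed to have a positive mean count.
    """
    chi2 = 0.0
    n_obs = 0
    for counts in groups.values():
        mu = mean(counts)
        if mu <= 0:
            raise ValueError("each group needs a positive mean count")
        # Squared Pearson residual: (observed - fitted)^2 / fitted
        chi2 += sum((y - mu) ** 2 / mu for y in counts)
        n_obs += len(counts)
    n_params = len(groups)  # intercept plus one coefficient per non-reference group
    df_resid = n_obs - n_params
    if df_resid <= 0:
        raise ValueError("need more observations than parameters")
    return chi2 / df_resid


# Hypothetical gesture counts per participant (not the study's data).
counts = {"non-signers": [20, 30, 25], "L2 signers": [5, 25, 15]}
phi = pearson_dispersion(counts)
print(round(phi, 3))  # 3.833
```

With a dispersion of about 3.8 in this toy example, quasi-Poisson standard errors would be roughly √3.8 ≈ 1.96 times the Poisson ones, which makes a group difference correspondingly harder to detect.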

Next, we asked whether there is evidence of bidirectional transfer in the L2 signers' English co-speech gestures. It is possible that the L2 signers' semantic categories have realigned with ASL, because they have acquired from the ASL system the use of classifiers to make distinctions between different placement events (even if they do not always use the appropriate handshape). There was no evidence of realignment in speech. However, as discussed, English has only a few specific placement verbs. This, together with the low frequency of those specific verbs, makes it unlikely that L2 signers would use them as a prominent part of their inventory of placement expressions. For this reason, speech data alone may not accurately reflect the state of the L2 signers' semantic organization, whereas English co-speech gesture data may.

If there is bidirectional transfer, then the co-speech gestures of L2 signers should differ from those of non-signing English speakers. Specifically, we expected L2 signers to produce more gestures incorporating figure object information and fewer path-only gestures than non-signers. To assess whether this was the case, we focused on gestures that expressed information about Path only vs. about Figure. One L2 signer produced no Figure or Path gestures and was excluded from this analysis. We compared non-signers' and L2 signers' use of these gesture types (Figure 6). A mixed effects logistic regression analysis of gesture type as a function of group revealed a marginally significant effect of group (β = −1.256, p = 0.065), with L2 signers producing numerically more figure gestures than path-only gestures (67% vs. 33%, SD = 26), compared to the non-signers (46% vs. 54%, SD = 28).

Given that the L2 signers frequently used classifiers in their ASL descriptions, their distribution of gesture types could have been affected by ordering effects in the experiment. Specifically, half the L2 signers completed the task in ASL before completing it in English, so the L2 signers' higher rate of figure gestures could be a result of priming from ASL. We therefore compared the distribution of gesture types between the L2 signers who did the task in ASL first and those who did it in English first. If the higher rate of figure gestures in the bilingual group as a whole were driven by priming in the ASL-first participants, we should see higher rates of figure gestures in that subgroup than in the English-first subgroup. This was not the case; numerically, the pattern went in the opposite direction. The mean rate of figure gestures was 54% (SD = 24) for the ASL-first group (N = 4) and 85% (SD = 15) for the English-first group (N = 3). A mixed effects logistic regression analysis revealed no significant difference between those L2 signers who completed the task in English first and those who completed it in ASL first (β = −1.012, p = 0.184).

The present study examined placement descriptions by second language (L2) learners of American Sign Language (ASL) and asked two questions: (1) whether learning the semantics of placement descriptions in a typologically different L2 in a different modality presents a challenge similar to that of learning within the same modality, and (2) whether cross-modal L2 learners show evidence of semantic reorganization in placement descriptions in their first language (L1).

We found that the hearing L2 ASL signers used verb types similarly to Deaf, native signers and even incorporated classifiers at a comparable, if still somewhat lower, rate. Based on previous research, we predicted that the L2 signers in the present study would have problems acquiring the ASL placement verb system, given its complexity compared to English. Specifically, we expected non-target-like use of classifiers suggesting fuzzy semantic boundaries. It is therefore perhaps surprising that the hearing ASL learners were well on their way to acquiring native-like placement descriptions. This is not to say that L2 signers are fully target-like in their ASL use. Classifier handshape ratings from a Deaf, native signer were lower for the L2 signers than for native signers, suggesting that the L2 learners' handshape selection was less appropriate for ASL. Moreover, the L2 signers used a wider variety of handshapes than the native signers, which is in line with previous findings suggesting that L2 signers tend to struggle with selecting target-like handshapes for objects and make distinctions that are too fine-grained (Schembri et al., 2005; Brentari et al., 2012; Marshall and Morgan, 2015; Janke and Marshall, 2017). Finally, the present study focuses on the semantics of placement verbs. An investigation of the syntactic constructions and pragmatic contexts in which placement verbs participate would likely reveal additional differences between L2 learners and native signers.

Nevertheless, the L2 signers in the present study performed unexpectedly similarly to Deaf, native signers with respect to including figure information in placement verbs. This is especially striking in light of the learners' limited ASL experience, which was <3 years on average (mean 2.88 years, range 1–8 years). By comparison, English learners of Dutch who resided in the Netherlands, and were therefore immersed in the language and culture for several years on average (mean length of residence: 11 years, range: 4 months to 19 years), show substantial problems with Dutch placement verbs (Gullberg, 2009a). However, this difference is not necessarily attributable to the difference in modality per se. First, it is a limitation of the present study that the ASL control group included only two Deaf signers. With additional signers, clearer patterns in classifier preference for placement of different object types could emerge, and possibly a statistically significant difference in classifier rate between groups. Second, the ASL L2 learners' relative success could be due to the higher semantic transparency of ASL verbs compared to a language like Dutch. For example, in a study of placement verb acquisition in Tamil- and Dutch-speaking children, Narasimhan and Gullberg (2011) found that Tamil children acquire relatively infrequent placement verbs (specifically caused posture verbs) early. They attribute this to the semantic transparency of Tamil verbs, which consist of multi-morphemic units such as “make stand” that “individually label the causal and result subevents” (2011, p. 504). By comparison, Dutch caused posture verbs are highly frequent but monomorphemic and much less transparent. ASL placement verbs are highly transparent, consisting of a root verb movement (e.g., “MOVE”) combined with a classifier representing either the handling or the shape and size of an object. ASL learners may be capitalizing on this transparency.

Another possibility is that L2 signers benefit from some overlap between the distinctions used in English co-speech gesture and in ASL. Specifically, even though native English speakers predominantly gesture about path when speaking English, they also gesture about the figure object at a non-negligible rate (46% in the present study, and 40% in Hoetjes, 2008). This overlap may help L2 signers reach the 56% rate of classifier incorporation (expressing figure object information) observed in ASL in the present study sooner than their proficiency would predict.

Regardless of the underlying reason, the results of the present study suggest that ASL learners successfully begin to reorganize their placement verb semantics in the context of using ASL. An important question is then whether this reorganization happens independently of their English semantics, that is, whether their original system remains intact or whether the ability to express new and additional placement distinctions in ASL results in more far-reaching semantic changes. Previous work has found that same-modality learners can exhibit prolonged maintenance of their native placement distinctions, to the extent that L1-like patterns can be observed in L2 gesture even when speech has become target-like (Gullberg, 2009a; Hoetjes, 2018). This suggests a persistence of L1 semantic organization for the purposes of speaking in the L2. In the case of ASL L2 acquisition, it is not possible to directly examine L2 gestures co-occurring with a main (verbal) expressive channel to assess whether L1 semantics are maintained. However, the fact that L2 signers included figure information at a rate comparable to Deaf native signers, along with the (marginal) evidence of bidirectional transfer from the L2 to the L1 in the L2 signers' co-speech gesture pattern, suggests limited persistence of L1 semantic patterns.

Unlike previous work (Weisberg et al., 2020), we did not find the cross-modal L2 learners in this study to use more iconic gestures than non-signers. This result may reflect differences in proficiency and length of signing experience. In the present study, the L2 signers had around 3 years of sign experience on average, whereas the L2 signers in the study by Weisberg and colleagues had 10 or more years of exposure to ASL, suggesting that increased gesturing may occur with increased exposure to and proficiency in ASL. In support of this hypothesis, Casey et al. (2012) found no clear increase in co-speech gesture frequency in L2 learners after 1 year of academic ASL instruction. While they found a numerical increase in gesture rate in the different-modality learners that was absent from the control group of same-modality language learners, the learner groups did not differ statistically from each other in terms of gesture rate. In the present study, however, we found the non-signers to use numerically (although not significantly) more iconic gestures than the L2 learners. This difference could be due to the present paper's focus on analyzing only the subset of participants' utterances that pertained to how an object was placed. Such utterances occur in specific discourse contexts, where the figure object is typically known information. Previous work has shown discourse context to affect gesture rate in non-signers (Debreslioska and Gullberg, 2020). We leave it for future research to determine whether L2 signers' and non-signers' gesture rates vary in a similar or different manner as a function of discourse context.

However, gesture rate was not the only domain we examined for bidirectional language transfer. In assessing the type of gestures used, we found that L2 signers gestured about the figure object (numerically) more than native English-speaking non-signers, although statistically the difference was only a trend. Nevertheless, the results suggest that on average the L2 signers have begun diverging from the monolingual pattern of gesturing nearly equally about Figure and Path and are instead favoring gestures about the figure object. This suggests that the L2 learners' original system may no longer be fully intact, which is remarkable given their profile as adult L2 learners of intermediate proficiency, with an average of <3 years of experience. This finding therefore raises questions about how gesture patterns compare in the native language of monolinguals and bilinguals when L2 learning happens within a single modality. While previous work on placement descriptions has mainly focused on performance in the L2, one analysis of L2 learners' gesture patterns in the L1 replicated results for monolinguals in the same language (compare Hoetjes, 2008; Gullberg, 2009a). Although more work is needed to confirm these patterns, comparing the L1 results of the current study to those of previous work suggests that semantic boundaries may be differentially affected by the L2 early on in acquisition in same- vs. different-modality learning. In this context, it will be especially important to compare the present findings to situations in which both the L1 and the L2 are spoken and the L2 has specific placement verbs with transparent semantics, in order to disentangle the role of a high degree of semantic transparency from that of the modality of the second language.

This study examined object placement event descriptions by English L1–ASL L2 language users, asking whether cross-modal L2 learners face challenges similar to those of same-modality L2 learners in learning to talk about placement. Placement verbs in signed languages such as ASL tend to be highly iconic and to exhibit transparent semantic boundaries, which could facilitate their acquisition. We also asked how exposure to the different semantic boundaries of placement events in a typologically different language affects placement semantics in the L1. Overall, L2 signers produced ASL placement descriptions that looked similar to the Deaf, native signers', despite using a wider range of classifier handshapes and using them less appropriately, indicating somewhat fuzzy and less target-like boundaries in their placement semantics. Moreover, the L2 signers' English co-speech gesture patterns suggest that learning ASL may affect conceptualization of placement in the L1. Specifically, the placement distinctions expressed in co-speech gesture by the L2 signers were marginally more ASL-like than non-signers' gestures. Taken together, these results suggest that the iconicity and transparency of placement distinctions in the visual modality may facilitate semantic reconstruction in the placement domain, leading to increasingly target-like use of placement distinctions in the L2, as well as L1 placement distinctions that may differ from those of non-signers with the same first language.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Human Research Protections Program, UC San Diego. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

AF conceived and designed the study, conducted analyses, and wrote the manuscript. Data collection and coding were carried out with the help of research assistants.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

This manuscript would not have been possible without the signers and speakers who took part. Thank you to research assistants DeAnna Suitt, Samantha Moreno, Kalvin Morales, and Matthew Sampson for help with running the experiment, annotation, and coding. Thank you to Monica Keller, a native signer and linguist, for help with ASL judgments.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.686485/full#supplementary-material

Supplementary Image 1. MOVE.

Supplementary Image 2. PUT.

Supplementary Image 3. HANG.

Supplementary Image 4. DRAPE.

Supplementary Image 5. Intransitive construction without classifier: the bag is on the door.

Supplementary Image 6. Intransitive construction with classifier: the magazine is on the table.

Supplementary Image 7. SET.

Supplementary Image 8. 5 handshape (handling).

Supplementary Image 9. A handshape (handling).

Supplementary Image 10. C handshape (handling).

Supplementary Image 11. C handshape, flat (handling).

Supplementary Image 12. O handshape (handling).

Supplementary Image 13. S handshape (handling).

Supplementary Image 14. B handshape (entity).

Supplementary Image 15. Y handshape (entity).

Supplementary Image 16. F handshape (entity).

Supplementary Image 17. C handshape, flat (entity).

Supplementary Image 18. baby-C handshape (entity).

1. ^“MOVE” and “PUT” both use the same flattened O handshape in the citation form (see Supplementary Images). The two verbs differ in their movements: whereas “PUT” has only a defined ending point, “MOVE” has both a defined beginning point and a defined ending point.

2. ^Note, however, that it is currently an open empirical question whether classifier incorporation is generally obligatory in placement descriptions.

3. ^Spanish is similar to English in using general rather than specific placement verbs. Therefore, we do not expect the simultaneous speech-speech bilinguals to talk about placement differently than other English-speaking non-signers.

4. ^Pilot work showed that this list was necessary in order for the participants to remember and describe every placement event. Unlike previous studies (e.g., Gullberg, 2009a), we used a list of pictures rather than written words in an effort to stay consistent between languages, as ASL has no conventionally recognized written form.

5. ^“SET” is excluded from the table. Its citation form is made with an A-handshape that is sometimes considered a classifier and can be used to represent placement or location of objects, but this handshape does not alternate with other classifiers. We opted for a conservative analysis that does not treat instances of “SET” as containing a classifier that represents the figure object. However, an analysis that includes all instances of “SET” as having classifiers also does not result in any between-group differences.

6. ^ASL has no copula. Intransitives are descriptions such as “PLATE ON TABLE,” meaning “the plates are on the table.”

7. ^Mixed effects logistic regression models were fit in R (R Core Team, 2014) using the lme4 package (Bates et al., 2014). Models included random effects of subjects and items. Coefficient estimates and p-values based on the Wald Z statistic are reported.

8. ^Excepting the Fabric and Other categories, these categories largely correspond to the object shapes discussed by Zwitserlood (2012).

9. ^For the Fabric category, the native signers used a large number of handshapes that were appropriate for showing how fabric items are handled but these handshapes were indistinguishable from citation forms and were consequently not counted as classifiers, as explained in the methods.

10. ^Ordinal mixed effects models were fit in R (R Core Team, 2014) using the packages ordinal (Christensen, 2019), car (Fox and Weisberg, 2019), and RVAideMemoire (Hervé, 2021). Models included random effects of subjects and items.

11. ^We used a generalized linear model for this regression. Because over-dispersion was indicated, we used a quasi-Poisson regression model.

Alferink, I., and Gullberg, M. (2014). French–Dutch bilinguals do not maintain obligatory semantic distinctions: evidence from placement verbs. Bilingual. Langu. Cogn. 17, 22–37. doi: 10.1017/S136672891300028X

Ameka, F. K., and Levinson, S. C. (2007). Introduction: The typology and semantics of locative predicates: posturals, positionals, and other beasts. Linguistics 45, 847–872. doi: 10.1515/LING.2007.025

Aronoff, M., Meir, I., Padden, C., and Sandler, W. (2003). Classifier constructions and morphology in two sign languages, in Perspectives on Classifier Constructions in Sign Languages, ed K. Emmorey (Mahwah, NJ: Psychology Press), 53–84.

Aronoff, M., Meir, I., and Sandler, W. (2005). The paradox of sign language morphology. Language. 81:301. doi: 10.1353/LAN.2005.0043

Bates, D., Maechler, M., Bolker, B., and Walker, S. (2014). lme4: Linear mixed-effects models using Eigen and S4. (R package version 1.0-6). Available online at: http://CRAN.R-project.org/package=lme4 [Computer software].

Baus, C., Carreiras, M., and Emmorey, K. (2013). When does iconicity in sign language matter? Langu. Cogn. Proc. 28, 261–271. doi: 10.1080/01690965.2011.620374

Bel, A., Ortells, M., and Morgan, G. (2014). Reference control in the narratives of adult sign language learners. Int. J. Bilingu. 19, 608–624. doi: 10.1177/1367006914527186

Bochner, J. H., Christie, K., Hauser, P. C., and Searls, J. M. (2011). When is a difference really different? Learners' discrimination of linguistic contrasts in American Sign Language. Lang. Learn. 26, 1302–1327. doi: 10.1111/j.1467-9922.2011.00671.x

Bohnemeyer, J., and Pederson, E. (Eds.). (2011). Event Representation in Language and Cognition. Cambridge: Cambridge University Press.

Brentari, D., Coppola, M., Mazzoni, L., and Goldin-Meadow, S. (2012). When does a system become phonological? Handshape production in gesturers, signers, and homesigners. Natur. Lang. Lingu. Theory. 30, 1–31. doi: 10.1007/s11049-011-9145-1

Brown, A., and Gullberg, M. (2008). Bidirectional crosslinguistic influence in L1-L2 encoding of manner in speech and gesture: a study of Japanese speakers of English. Studies Sec. Lang. Acqu. 30, 225–251. doi: 10.1017/S0272263108080327

Brown, A., and Gullberg, M. (2011). Bidirectional cross-linguistic influence in event conceptualization? Expressions of Path among Japanese learners of English. Bilingualism 14, 79–94. doi: 10.1017/S1366728910000064

Bylund, E., and Jarvis, S. (2011). L2 effects on L1 event conceptualization. Bilingualism 14, 47–59. doi: 10.1017/S1366728910000180

Cadierno, T., Ibarretxe-Antuñano, I., and Hijazo-Gascón, A. (2016). Semantic categorization of placement verbs in L1 and L2 Danish and Spanish: placement verbs in L1 and L2 Danish. Lang. Learn. 66, 191–223. doi: 10.1111/lang.12153

Campbell, R., Martin, P., and White, T. (1992). Forced choice recognition of sign in novice learners of British Sign Language. Appl. Lingu. 13, 185–201.

Casey, S., Emmorey, K., and Larrabee, H. (2012). The effects of learning American Sign Language on co-speech gesture. Bilingualism 15, 677–686. doi: 10.1017/S1366728911000575

Chen Pichler, D. (2011). Sources of handshape error in first-time signers of ASL, in Deaf Around the World: The Impact of Language, eds G. Mathur and D. J. Napoli (Oxford: Oxford University Press), 96–121.

Chen Pichler, D., and Koulidobrova, E. (2016). Acquisition of sign language as a second language (L2), in The Oxford Handbook of Deaf Studies in Language: Research, Policy, and Practice, eds M. Marschark and P. E. Spencer (Oxford: Oxford University Press), 218–230.

Christensen, R. H. B. (2019). ordinal: Regression Models for Ordinal Data. R package version 2019.12-10. Available online at: https://CRAN.R-project.org/package=ordinal

Clark, H. H., and Wilkes-Gibbs, D. (1986). Referring as a collaborative process. Cognition 22, 1–39. doi: 10.1016/0010-0277(86)90010-7

Daller, M. H., Treffers-Daller, J., and Furman, R. (2011). Transfer of conceptualization patterns in bilinguals: the construal of motion events in Turkish and German. Bilingualism 14, 95–119. doi: 10.1017/S1366728910000106

De Knop, S. (2016). German causative events with placement verbs. Lege Artis. 1, 75–115. doi: 10.1515/lart-2016-0002

Debreslioska, S., and Gullberg, M. (2020). What's New? Gestures accompany inferable rather than brand-new referents in discourse. Front. Psych. 11:1935. doi: 10.3389/fpsyg.2020.01935

Dijkstra, T. (2005). Bilingual visual word recognition and lexical access, in Handbook of Bilingualism: Psycholinguistic Approaches, eds J. F. Kroll and A. M. B. de Groot (Oxford: Oxford University Press), 179–201.

Ferrara, L., and Nilsson, A.-L. (2017). Describing spatial layouts as an L2M2 signed language learner. Sign Langu. Lingu. 1, 1–26. doi: 10.1075/sll.20.1.01fer

Fox, J., and Weisberg, S. (2019). An R Companion to Applied Regression, Third Edition. Thousand Oaks, CA: Sage. Available online at: https://socialsciences.mcmaster.ca/jfox/Books/Companion/

Frederiksen, A. T., and Mayberry, R. I. (2019). Reference tracking in early stages of different modality L2 acquisition: limited over-explicitness in novice ASL signers' referring expressions. Second Lang. Res. 35, 253–283. doi: 10.1177/0267658317750220

Gulamani, S., Marshall, C., and Morgan, G. (2020). The challenges of viewpoint-taking when learning a sign language: data from the ‘frog story' in British Sign Language. Second Lang. Res. 1–33. doi: 10.1177/0267658320906855

Gullberg, M. (2009a). Reconstructing verb meaning in a second language: how English speakers of L2 Dutch talk and gesture about placement. Annu. Rev. Cogn. Lingu. 7, 221–244. doi: 10.1075/arcl.7.09gul

Gullberg, M. (2009b). Gestures and the development of semantic representations in first and second language acquisition. Acquis. Int. En Lang. Étrang. Aile. Lia 1, 117–139. doi: 10.4000/aile.4514

Gullberg, M. (2009c). Why gestures are relevant to the bilingual lexicon, in The Bilingual Mental Lexicon, ed A. Pavlenko (Bristol; Buffalo, NY: Multilingual Matters), 161–184.

Gullberg, M. (2011a). Language-specific encoding of placement events in gestures, in Event Representation in Language and Cognition, eds J. Bohnemeyer and E. Pederson (Cambridge: Cambridge University Press), 166–188.

Gullberg, M. (2011b). Thinking, speaking, and gesturing about motion in more than one language, in Thinking and Speaking in Two Languages, ed A. Pavlenko (Bristol; Buffalo, NY: Multilingual Matters), 143–169.

Hervé, M. (2021). RVAideMemoire: Testing and Plotting Procedures for Biostatistics. R package version 0.9-79. Available online at: https://CRAN.R-project.org/package=RVAideMemoire

Hoetjes, M. (2008). The use of gestures in placement events, in Leiden Working Papers in Linguistics 2.3, Vol. 5 (Leiden), 24–35.

Hoetjes, M. (2018). Transfer in gesture: L2 placement event descriptions, in Proceedings of the 40th Annual Conference of the Cognitive Science Society (Madison, WI), 1791–1796.

Hosemann, J., Mani, N., Herrmann, A., Steinbach, M., and Altvater-Mackensen, N. (2020). Signs activate their written word translation in deaf adults: an ERP study on cross-modal co-activation in German Sign Language. Glossa J. Gen. Lingu. 5:57. doi: 10.5334/gjgl.1014

Hwang, S.-O., Tomita, N., Morgan, H., Ergin, R., Ilkbaşaran, D., Seegers, S., et al. (2017). Of the body and the hands: patterned iconicity for semantic categories. Lang. Cogn. 9, 573–602. doi: 10.1017/langcog.2016.28

Ijaz, I. H. (1986). Linguistic and cognitive determinants of lexical acquisition in a second language. Lang. Learn. 36, 401–451. doi: 10.1111/j.1467-1770.1986.tb01034.x

Jaeger, T. F. (2008). Categorical data analysis: away from ANOVAs (transformation or not) and towards logit mixed models. J. Mem. Lang. 59, 434–446. doi: 10.1016/j.jml.2007.11.007

Janke, V., and Marshall, C. (2017). Using the hands to represent objects in space: gesture as a substrate for signed language acquisition. Front. Psychol. 8:2007. doi: 10.3389/fpsyg.2017.02007

Jared, D., and Kroll, J. F. (2001). Do bilinguals activate phonological representations in one or both of their languages when naming words? J. Mem. Lang. 44, 2–31. doi: 10.1006/jmla.2000.2747

Kellerman, E. (1995). Crosslinguistic influence: transfer to nowhere? Annu. Rev. Appl. Lingu. 15, 125–150. doi: 10.1017/S0267190500002658

Kendon, A. (1980). Gesticulation and speech: Two aspects of the process of utterance, in The Relationship of Verbal and Nonverbal Communication, ed M. R. Key (The Hague; New York, NY: Mouton), 207–227.

Kita, S., van Gijn, I., and van der Hulst, H. (1998). Movement phases in signs and co-speech gestures, and their transcription by human coders, in Gesture and Sign Language in Human-Computer Interaction, eds I. Wachsmuth and M. Fröhlich (Vol. 1371) (Berlin; Heidelberg: Springer), 23–35. doi: 10.1007/BFb0052986

Kopecka, A., and Narasimhan, B. (2012). Events of Putting and Taking: A Crosslinguistic Perspective. Amsterdam: John Benjamins Publishing.

Kroll, J. F., Bobb, S. C., Misra, M., and Guo, T. (2008). Language selection in bilingual speech: evidence for inhibitory processes. Acta Psychol. 128, 416–430. doi: 10.1016/j.actpsy.2008.02.001

Kroll, J. F., Dussias, P. E., Bice, K., and Perrotti, L. (2015). Bilingualism, mind, and brain. Annu. Rev. Lingu. 1, 377–394. doi: 10.1146/annurev-linguist-030514-124937

Kubus, O., Villwock, A., Morford, J. P., and Rathmann, C. (2015). Word recognition in deaf readers: cross-language activation of German Sign Language and German. Appl. Psycholinguist. 36, 831–854. doi: 10.1017/S0142716413000520

Kurz, K. B., Mullaney, K., and Occhino, C. (2019). Constructed action in American Sign Language: a look at second language learners in a second modality. Languages 4:90. doi: 10.3390/languages4040090

Lewandowski, W., and Özçalişkan, S. (2021). The specificity of event expression in first language influences expression of object placement events in second language. Stud. Second Lang. Acquis. 1–32. doi: 10.1017/S0272263121000048

Lieberth, A. K., and Gamble, M. E. B. (1991). The role of iconicity in sign language learning by hearing adults. J. Commun. Disord. 24, 89–99.

Marian, V., and Spivey, M. (2003). Bilingual and monolingual processing of competing lexical items. Appl. Psycholinguist. 24, 173–193. doi: 10.1017/S0142716403000092

Marshall, C. R., and Morgan, G. (2015). From gesture to sign language: conventionalization of classifier constructions by adult hearing learners of British Sign Language. Top. Cogn. Sci. 7, 61–80. doi: 10.1111/tops.12118

Masson-Carro, I., Goudbeek, M., and Krahmer, E. (2016). Can you handle this? The impact of object affordances on how co-speech gestures are produced. Lang. Cogn. Neurosci. 31, 430–440. doi: 10.1080/23273798.2015.1108448

McIntire, M. L., and Reilly, J. S. (1988). Nonmanual behaviors in L1 and L2 learners of American Sign Language. Sign Lang. Stud. 61, 351–375.

McKee, R. L., and McKee, D. (1992). What's so hard about learning ASL?: Students' & teachers' perceptions. Sign Lang. Stud. 75, 129–158.

McNeill, D. (1992). Hand and Mind: What Gestures Reveal About Thought. Chicago, IL: University of Chicago Press.

McNeill, D., and Levy, E. (1982). Conceptual representations in language activity and gesture, in Speech, Place, and Action, eds R. J. Jarvella and W. Klein (New York, NY: Wiley), 271–295.

Morford, J. P., Kroll, J. F., Piñar, P., and Wilkinson, E. (2014). Bilingual word recognition in deaf and hearing signers: effects of proficiency and language dominance on cross-language activation. Second Lang. Res. 30, 251–271. doi: 10.1177/0267658313503467

Morford, J. P., Wilkinson, E., Villwock, A., Piñar, P., and Kroll, J. F. (2011). When deaf signers read English: do written words activate their sign translations? Cognition 118, 286–292. doi: 10.1016/j.cognition.2010.11.006

Narasimhan, B., and Gullberg, M. (2011). The role of input frequency and semantic transparency in the acquisition of verb meaning: evidence from placement verbs in Tamil and Dutch. J. Child Lang. 38, 504–532. doi: 10.1017/S0305000910000164

Narasimhan, B., Kopecka, A., Bowerman, M., Gullberg, M., and Majid, A. (2012). Putting and taking events: a crosslinguistic perspective, in Events of Putting and Taking: A Crosslinguistic Perspective, eds A. Kopecka and B. Narasimhan (Amsterdam: John Benjamins Publishing), 1–18.

Ortega, G. (2013). Acquisition of a signed phonological system by hearing adults: The Role of sign structure and iconicity (Unpublished Doctoral Dissertation). University College London, United Kingdom.

Ortega, G. (2020). Systematic mappings between semantic categories and types of iconic representations in the manual modality: a normed database of silent gesture. Behav. Res. Methods 52, 51–67. doi: 10.3758/s13428-019-01204-6

Ortega, G., and Morgan, G. (2010). Comparing child and adult development of a visual phonological system. Lang. Interact. Acquis. 1, 67–81. doi: 10.1075/lia.1.1.05ort

Ortega, G., and Morgan, G. (2015). Input processing at first exposure to a sign language. Second Lang. Res. 31, 443–463. doi: 10.1177/0267658315576822

Ortega, G., and Özyürek, A. (2013). Gesture-sign interface in hearing non-signers' first exposure to sign, in The Tilburg Gesture Research Meeting [TiGeR 2013] (Tilburg).

Özçalişkan, S. (2016). Do gestures follow speech in bilinguals' description of motion? Bilingualism 19, 644–653. doi: 10.1017/S1366728915000796

Padden, C., Hwang, S.-O., Lepic, R., and Seegers, S. (2015). Tools for language: patterned iconicity in sign language nouns and verbs. Topics Cogn. Sci. 7, 81–94. doi: 10.1111/tops.12121

Pauwels, P. (2000). Put, Set, Lay and Place: A Cognitive Linguistic Approach to Verbal Meaning. München: Lincom Europa.

Perniss, P., Thompson, R. L., and Vigliocco, G. (2010). Iconicity as a general property of language: evidence from spoken and signed languages. Front. Psychol. 1:227. doi: 10.3389/fpsyg.2010.00227

Quinto-Pozos, D., and Parrill, F. (2015). Signers and co-speech gesturers adopt similar strategies for portraying viewpoint in narratives. Topics Cogn. Sci. 7, 12–35. doi: 10.1111/tops.12120

R Core Team (2014). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. Available online at: http://www.R-project.org/

Schembri, A., Jones, C., and Burnham, D. (2005). Comparing action gestures and classifier verbs of motion: evidence from Australian Sign Language, Taiwan Sign Language, and nonsigners' gestures without speech. J. Deaf Studies Deaf Educ. 10, 272–290. doi: 10.1093/deafed/eni029

Sevcikova, Z. (2014). Categorical versus gradient properties of handling handshapes in British Sign Language (BSL) (Unpublished Doctoral Dissertation). University College London, United Kingdom.

Singleton, J. L., Morford, J. P., and Goldin-Meadow, S. (1993). Once is not enough: standards of well-formedness in manual communication created over three different timespans. Language 69, 683–715. doi: 10.2307/416883

Slobin, D. I. (1996). From “thought and language” to “thinking for speaking,” in Rethinking Linguistic Relativity, eds J. J. Gumpertz and S. C. Levinson (Cambridge: Cambridge University Press), 70–96.

Slobin, D. I. (2013). Typology and channel of communication: where do sign languages fit in?, in Language Typology and Historical Contingency: In Honor of Johanna Nichols, eds B. Bickel, L. A. Grenoble, D. A. Peterson, and A. Timberlake (Amsterdam: John Benjamins Publishing Company), 48–68.

Slobin, D. I., Bowerman, M., Eisenbeiss, S., and Narasimhan, B. (2011). Putting things in places: developmental consequences of linguistic typology, in Event Representation in Language and Cognition, eds J. Bohnemeyer and E. Pederson (Cambridge: Cambridge University Press), 134–165.

Slobin, D. I., Hoiting, N., Kuntze, M., Lindert, R., Weinberg, A., Pyers, J., et al. (2003). A cognitive/functional perspective on the acquisition of ‘classifiers', in Perspectives on Classifier Constructions in Sign Languages, ed K. Emmorey (Mahwah, NJ: Lawrence Erlbaum Associates), 271–296.

Supalla, T. R. (1982). Structure and Acquisition of Verbs of Motion and Location in American Sign Language (Unpublished Doctoral Dissertation). University of California, San Diego.

Taub, S., Galvan, D., Piñar, P., and Mather, S. (2008). Gesture and ASL L2 Acquisition. Sign Languages: Spinning and Unravelling the Past, Present and Future. Petrópolis: Arara Azul.

van Nispen, K. (2017). Production and comprehension of pantomimes used to depict objects. Front. Psychol. 8:1095. doi: 10.3389/fpsyg.2017.01095

Viberg, A. (1998). Crosslinguistic perspectives on lexical acquisition: the case of language-specific semantic differentiation, in Perspectives on Lexical Acquisition in a Second Language, eds K. Haastrup and A. Viberg (Lund: Lund University Press), 175–208.

Weisberg, J., Casey, S., Sevcikova Sehyr, Z., and Emmorey, K. (2020). Second language acquisition of American Sign Language influences co-speech gesture production. Bilingu. Lang. Cogn. 23, 473–482. doi: 10.1017/S1366728919000208

Wilcox, S., and Wilcox, P. P. (1997). Learning To See: Teaching American Sign Language as a Second Language (1st edition). Washington, DC: Gallaudet University Press.

Wittenburg, P., Brugman, H., Russell, A., Klassmann, A., and Sloetjes, H. (2006). ELAN: a professional framework for multimodality research, in Proceedings of the 5th International Conference on Language Resources and Evaluation (Genoa), 1556–1559.

Keywords: speech-sign bilingualism, caused motion events, bidirectional language influences, sign language, co-speech gestures, iconicity, second language, fuzzy lexical representations

Citation: Frederiksen AT (2021) Emerging ASL Distinctions in Sign-Speech Bilinguals' Signs and Co-speech Gestures in Placement Descriptions. Front. Psychol. 12:686485. doi: 10.3389/fpsyg.2021.686485

Received: 26 March 2021; Accepted: 23 June 2021;

Published: 03 August 2021.

Edited by:

Andreas Opitz, Leipzig University, Germany

Reviewed by:

Karen Emmorey, San Diego State University, United States

Copyright © 2021 Frederiksen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anne Therese Frederiksen, a.t.frederiksen@gmail.com

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
