- 1Department of Psychology, Università Cattolica del Sacro Cuore, Brescia, Italy

- 2Department of Psychology, Università Cattolica del Sacro Cuore, Milan, Italy

- 3Department of Psychology, Paderborn University, Paderborn, Germany

Several methods are available to answer questions regarding similarity and accuracy, each of which has specific properties and limitations. This study focuses on the Latent Congruence Model (LCM; Cheung, 2009), because of its capacity to deal with cross-informant measurement invariance issues. Until now, no cross-national applications of LCM are present in the literature, perhaps because of the difficulty to deal with both cross-national and cross-informant measurement issues implied by those models. This study presents a step-by-step procedure to apply LCM to dyadic cross-national research designs controlling for both cross-national and cross-informant measurement invariance. An illustrative example on parent–child support exchanges in Italy and Germany is provided. Findings help to show the different possible scenarios of partial invariance, and a discussion related to how to deal with those scenarios is provided. Future perspectives in the study of parent–child similarity and accuracy in cross-national research will be discussed.

Introduction

More and more often, literature has suggested that, to conduct research on family relationships, it is necessary to collect data from more than one informant, although until now most family research focuses on one informant (Lanz et al., 2015). Multiple informants research allows studying interdependence among family members in many different ways, among which there is the analysis of the correspondence of perceptions of two different family members. In those cases, the level of analyses is always dyadic, but the meaning of that correspondence can be different (i.e., similarity or accuracy) depending on the position of informants in relation to the considered construct (Lanz et al., 2018).

There are many ways by which similarity or accuracy can be analyzed, some of them are able to investigate both the individual and the dyadic contribution to that correspondence (i.e., congruence), and some are also able to deal with measurement errors. This study presents in detail the Latent Congruence Model (LCM) (Cheung, 2009) as a way to test both similarity and accuracy, taking into account both cross-national and cross-informant measurement (un)equivalence. A cross-national family research on parent–child emotional support exchanges constitutes the example on which all the analyses were conducted, and Mplus syntaxes and output are provided.

Similarity and Accuracy in Family Dyads

The congruence between scores of two informants can be conceptualized in different ways according to the position of informants in the evaluation of the construct. Indeed, as Lanz et al. (2018) underlined, informants can be embedded in the evaluation of a construct (i.e., husband evaluates his own values, wife evaluates her own values), non-embedded (i.e., father and mother evaluate values of their child), or mixed (i.e., father and child evaluate values of child). When both informants are embedded or non-embedded, the congruence of their scores is conceptualized as similarity (i.e., how much similar are husband and wife in their values); when informants are mixed, the accuracy of the score of the non-embedded informant related to the score of the embedded informant is evaluated (i.e., how much accurate is the paternal perception of values reported by the child).

Most of the family research on similarity or accuracy focuses on parent–child dyads or on romantic dyads. The similarity is, for example, investigated in studies on value similarity (Hoellger et al., 2020), personality similarity (Wang et al., 2018), or similarity in the perception of relationship conflict in parent–adolescent child dyads (Mastrotheodoros et al., 2020). Accuracy is investigated especially regarding bias in perception of characteristics of the partner (Pusch et al., 2021). Fewer studies investigate similarity and accuracy on the same construct in the same study. One exception is Decuyper et al. (2012) who studied personality similarity and accuracy in dating and married dyads.

In most of those family studies, the considered dyads are distinguishable or nonexchangeable, which means that there is at least one variable that can distinguish the two partners of the dyad. For instance, gender differentiates the two partners in heterosexual dyads; generation differentiates the two partners in parent–child dyads. In this study, we focus on distinguishable dyads, because they are more usual in family research. However, a discussion of how to deal with similarity and accuracy in exchangeable dyads (e.g., homosexual dyads, friends, colleagues) can be found in Kenny et al. (2006).

LCM: A Way to Test Similarity and Accuracy Taking Into Account the Measurement Model

Among the most intuitive approaches to the study on similarity and accuracy there is the creation of dyadic indexes such as the mean-level difference scores (e.g., algebraic, absolute, and squared difference) and the profile similarity indexes (e.g., sum of absolute/squared difference, profile correlations) (Edwards, 1994; Lanz et al., 2018). However, research that computes dyadic indexes usually does not consider the measurement model that informs of the scores used to compute those dyadic indexes. Since most of the instruments chosen to evaluate family relationships and functioning are based on the classical test theory, computing congruence between two informants also considering the measurement model could increase the interpretability of findings related to dyadic congruence.

Some recent reviews of the literature (De Los Reyes et al., 2019a,b) supported the fact that discrepancies of informants are meaningful and related to criterion validity variables (De Los Reyes et al., 2015); thus, they are not mainly explained by measurement confounds because of the way informants are using instruments to evaluate targets. However, other researchers (Russell et al., 2016) still underline the importance of testing models based on equivalent cross-informant measurement models to have more precise estimates of congruence. Moreover, dyadic research underlines the importance of testing dyadic measurement invariance in dyadic research designs (Tagliabue and Lanz, 2014; Claxton et al., 2015; Sakaluk et al., 2021), and some research findings revealed that measurement models tested with CFA applied to dyads are not always fully invariant across dyad members. For instance, DeLuca et al. (2019) found partial metric invariance and/or partial scalar invariance for Adult Self Report (ASR) and the Adult Behavior Checklist's (ABCL) dimensions.

To deal with measurement model, SEM models that allow both to test the measurement model of scores of dyadic partners, and to model similarity and accuracy at a latent level have been proposed. The measurement model (often tested through CFA) is related to the way items are linked with latent variables, and such item-factor relationship is described by different parameters: factor loadings, intercepts, and residuals. Testing dyadic measurement invariance means to verify that each of those parameters is equivalent across the two informants (i.e., parent and child). In particular, measurement invariance levels are configural, metric, strong, and strict (Steenkamp and Baumgartner, 1998; Claxton et al., 2015). Configural invariance level means that the confirmatory measurement model fits well for both parents and children, although all the parameters (factor loadings, intercepts, residuals) are free to be different in parents and children. When metric invariance is found, it means that the meaning of the latent factor is equal for both partners; indeed, technically speaking, factor loadings are constrained to be equal for parents and children. Strong invariance means that the mean of the different items is the same for parents and children. Finally, strict invariance means that the measurement error amount is the same for both partners (and it also means that the shared variance of each item is the same for both parents and children). It is important to evaluate and keep measurement model and measurement invariance into consideration when investigating and interpreting similarity or accuracy (Edwards, 2009).

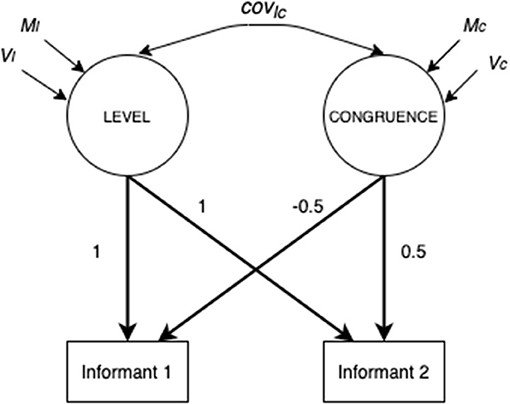

Among the SEM models able to test similarity and/or accuracy in dyadic perceptions, the Latent Congruence Model (LCM; Cheung, 2009) allows testing both similarity and accuracy within the same framework checking for cross-informant measurement invariance. LCM is a specific SEM model in which the unit of analysis is the dyad. The simplest LCM involves two observed variables on the same construct from two informants (i.e., perception of father and child of their quality of communication). Figure 1 shows the simplest LCM. The variance of the two observed variables is disentangled into two factors, the first is called LEVEL and the second is called CONGRUENCE. LEVEL is the mean of the two observed variables, and it is estimated fixing to 1 the factor loadings of the two observed variables on the LEVEL factor. CONGRUENCE is the difference between the two observed variables, and it is estimated by fixing to 0.5 or −0.5 the two factor loadings of the observed variables on the CONGRUENCE factor, so that difference is equally divided for the two observed variables and the direction of the difference (i.e., which is the higher or lower score) can be interpreted. The mean and variance of the LEVEL factor are the grand mean and the variance of the mean scores of all the dyads, whereas the mean and the variance of the CONGRUENCE factor are respectively the average difference between scores of the two dyadic members and its variability across dyads.

Figure 1. Simplest LCM on observed variables (Figure adapted from Cheung, 2009, p. 9).

The simplest LCM is not able to work on measurement model, thus a more complex LCM, called item-level LCM, was proposed by Cheung (2009). In that model, instead of the two observed variables, there are two latent variables, measured by items filled in by the two informants. Thus, LCM becomes a two-level SEM. First-level latent variables represent the perception of each partner, so it is possible to test cross-informant measurement invariance putting equivalence constraints across partners. Second-level variables are LEVEL and CONGRUENCE already explained above. It is important to note that the CONGRUENCE variable is a general label indicating the difference between the two perceptions, but, according to the variables and informants considered, it could be interpreted as lack of similarity (L_SIM), or lack of accuracy (L_ACC). LCM focused on lack of similarity and LCM focused on lack of accuracy are slightly different, and the details of those differences are presented in the data analysis section.

LCM was first proposed within organizational psychology (Cheung, 2009), an area of psychology that frequently uses congruence or discrepancy scores. However, since then, LCM has been often used in family research too. For instance, Schaffhuser et al. (2016) used LCM to investigate discrepancy in dyadic perceptions of personality traits within romantic couples, also analyzing actor and partner association with relationship satisfaction. Also, Reifman and Niehuis (2018) and Mastrotheodoros et al. (2020) used LCM within longitudinal designs, testing measurement invariance in both dyadic and longitudinal aspects and then evaluating how discrepancy in couple or parent–child relationships changes overtime.

Cross-Cultural Comparisons on Similarity and Accuracy

Recently, a meta-analysis (De Los Reyes et al., 2019b) revealed that congruence is related to cultural characteristics. Moreover, as Rescorla (2016) underlined, there is a need for cross-cultural direct comparisons regarding parent–child discrepancies. Very few studies directly compared parent–child discrepancy across nations, and the ones that did, as for example Rescorla et al. (2013), did not test cross-cultural measurement invariance. We also did not find applications of LCM to cross-cultural or cross-national studies. One possible explanation is the lack of discussion about how to keep into consideration at the same time cross-informant and cross-national (or cross-cultural) measurement invariance. Indeed, single sample applications of LCM show how to test cross-informant measurement invariance when estimating second-order level and congruence variables. However, in cross-national (or cross-cultural) designs, a preliminary step is needed, which is verifying the presence of cross-national (or cross-cultural) measurement invariance before testing the cross-informant one and applying the whole LCM. This study proposes a step-by-step guide to conduct those kinds of analyses and interpret cross-national differences in similarity and accuracy within parent–child dyads.

The Study

There are three aims that guided this study: (1) to investigate parent–adult child similarity and accuracy through LCM using illustrative data on given or received emotional support in German and Italian samples; (2) to discuss how to test cross-national and cross-informant measurement invariance within LCM framework; and (3) to compare findings controlling, or not, for cross-national and cross-informant measurement invariance, and discuss possible interpretations of findings.

Materials and Methods

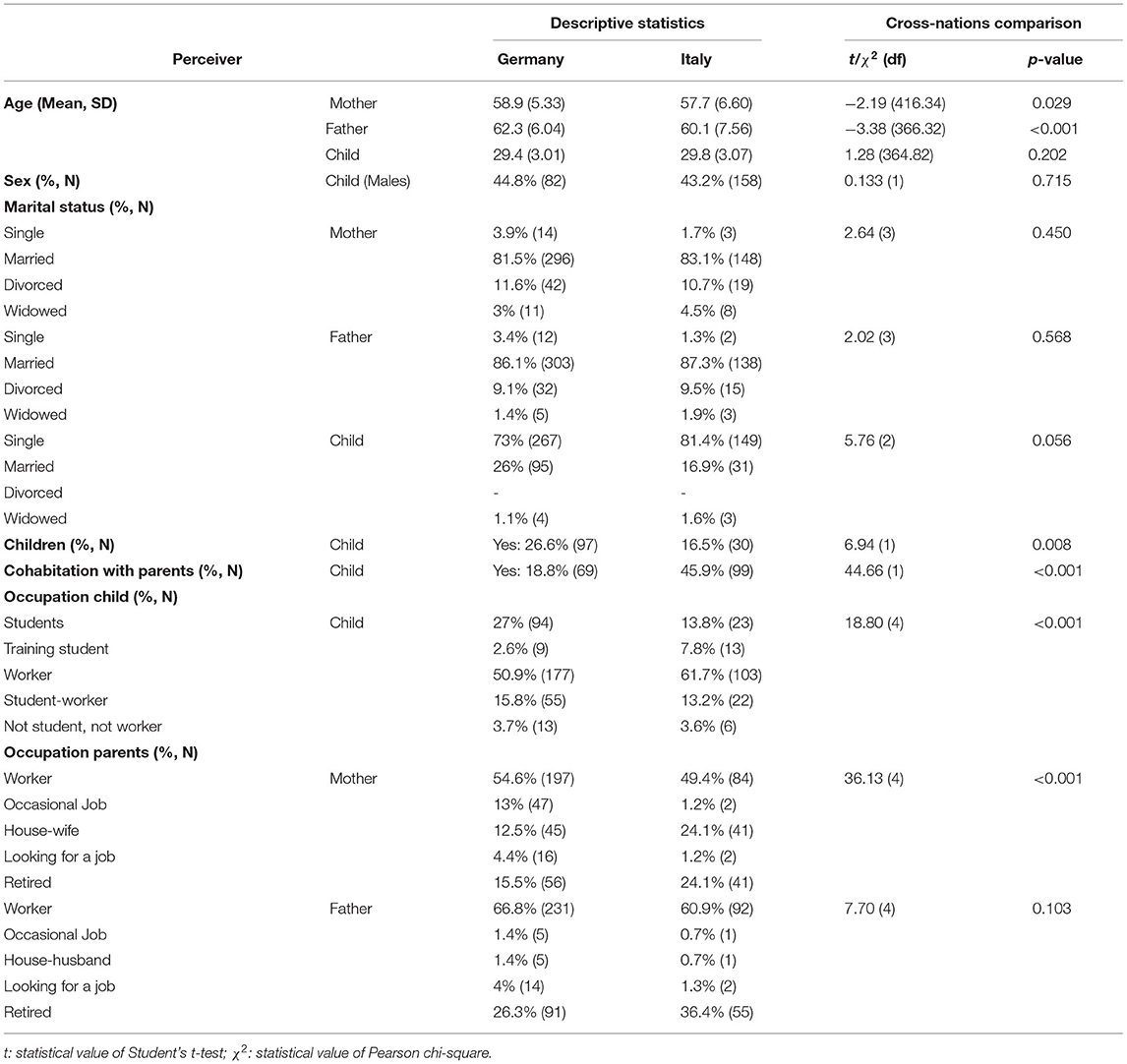

Illustrative data are composed of 195 Italian and 417 German family triads (father, mother, adult child). Young-adult children (43.7% males) were aged between 25 and 35 (M = 29.66; SD = 3.07), mothers were aged between 41 and 87 (M = 58.07; SD = 6.24) and fathers between 41 and 87 (M = 60.78; SD = 7.19).

The sample for this research derives from the “Interdependence in adult child-parent relationships” project funded by the German Research Foundation (DFG). The study was conducted between 2016 and 2017. In response to advertisements, the participants contacted the project team and got the questionnaires via mail (Germany) or directly by hand from the research team (Italy). The participation was voluntary, and the participants could quit the study at any time. Data were collected confidentially and anonymously. The data of adult children, fathers, and mothers were matched by personal codes, but the data were not attributable to individual participants.

Five hundred eighty-four mother–child dyads (395 German and 189 Italian) and 599 father–child dyads (406 German and 193 Italian) were included in data analyses for the estimation of similarity and accuracy LCMs.

The German and the Italian sub-samples were mostly comparable with respect to sociodemographic variables for each of the three perceivers (Table 1). However, some differences were found between the two. Specifically, German children seem to have reached some adulthood markers more than Italian ones (only 18.8% German children cohabitate with parents; and 26.6% of them are parents).

Instrument

Each family member (father, mother, adult child) filled in the nine-item emotional support subscale from the Given and received support scale (Sommer and Buhl, 2018). The instrument consisted of two items of instruments used in the Panel Analysis of Intimate Relationships and Family Dynamics (Pairfam; Thönnissen et al., unpublished1) and seven ad hoc-developed items. The instruction and items composing the instrument are presented in Appendix A in Supplementary Material. The items were translated from German to English and Italian and then back-translated for control by native speakers of German and Italian.

Each family member answered the nine items referring to the other two members, and emotional support was measured separately for given and received support. Specifically, in this study, young adult children provided their perception of giving support to mother (ω = 0.901) and father (ω = 0.906) and of receiving support from mother (ω = 0.931) and father (ω = 0.942) in the past 12 months, whereas mothers and fathers provided their perceptions of giving support to their child (respectively ω = 0.925 and 0.925) and of receiving support from him/her (respectively ω = 0.937 and 0.937) in the past 12 months. Each participant was asked for the given support “What kinds of help did you give to your child/father/mother in the last 12 months?” and for the received support “What kinds of help did you receive from your child/father/mother in the last 12 months?”; the participants rated items such as “Advice regarding personal problems (item 1)” or “Talk about my/their worries and troubles (item 8)” referring to each of the other family members. Items were rated on a 5-point Likert scale from 1 (never) to 5 (always). The scale we used was highly reliable even when the composite reliability (ω) was estimated separately for each country (see Supplementary Material).

Data Analyses

Missingness Analysis and Outliers

Missing data were handled in Mplus via the full information maximum likelihood (FIML) method. We evaluated missingness percentage at dyadic, respondent, and item level because of family data (Tagliabue and Donato, 2015). Then, the missingness mechanism was evaluated by Little's MCAR test. Multivariate outliers were analyzed by Mahalanobis Distance based on chi-square distribution significant for p < 0.001 (Tabachnick and Fidell, 2013).

Latent Congruence Model (Aim 1)

In the illustrative example, we asked parent–child dyads to evaluate given and received emotional support within their relationship. For instance, the mother evaluates how much emotional support she is giving to her adult child and how much emotional support she is receiving from that child. At the same time, the adult child evaluates how much emotional support he/she is giving to his/her mother and how much emotional support he/she is receiving from her.

When LCM is applied to perceptions of mother and adult child of given emotional support, dyadic similarity regarding the perception of given emotional support is evaluated; the same for perception of mother and adult child of received emotional support in their relationship. When LCM is applied, on one side, to perception of mothers of given emotional support to the adult child and perception of the adult child of received emotional support from the mother, and, on the other side, to perception of mothers of received emotional support from adult child and perception of adult child of given emotional support to mother, accuracy in exchanged emotional support is evaluated.

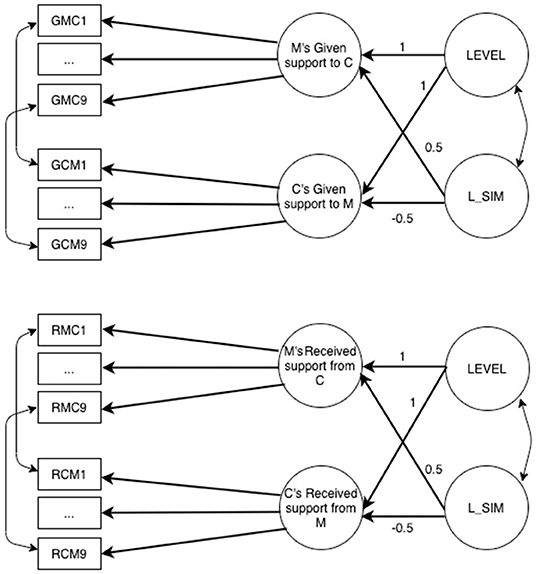

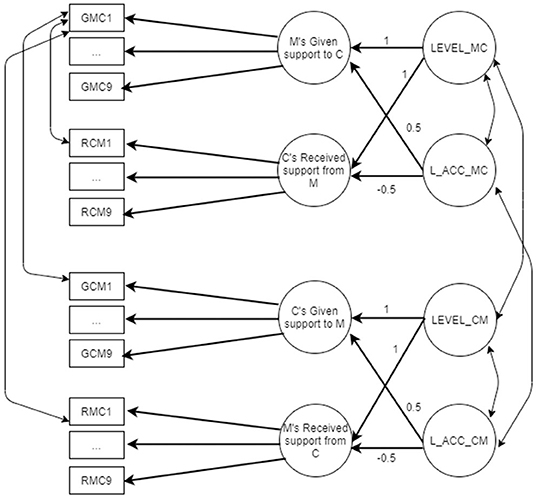

Two different latent congruence models (LCMs) were tested, one for similarity and the other for accuracy, separately for the German and Italian samples. In all LCMs, constraints related to full cross-informant measurement invariance were inserted. Figure 2 presents the similarity LCM, whereas Figure 3 presents the accuracy one. All the models were tested using Mplus (RRID:SCR_015578).

Figure 2. Similarity LCM referred to mother–child given support M, mother; C, child. GMC1–GMC9, items assessing the support given by the mother; GCM1–GCM9, items assessing the support given by the child; LEVEL, average (mean) level of the mother and child perceptions of given support (second-order factor); L_SIM, lack of similarity (second-order factor).

Figure 3. Accuracy LCM referred to mother–child relationship M, mother; C, child. GMC1–GMC9, items assessing the support given by the mother; GCM1–GCM9, items assessing the support given by the child; RMC1–RMC9, items assessing the support received by the mother; RCM1–RCM9, items assessing the support received by the child; LEVEL_MC, average (mean) level of mother and child perceptions for the downward support exchange (second-order factor); LEVEL_CM, average (mean) level of mother and child perceptions for the upward support exchange (second-order factor); L_ACC_MC, lack of accuracy for the downward support exchanges (second-order factor); L_ACC_CM, lack of accuracy for the upward support exchanges (second-order factor).

Similarity LCM is applied separately for given and received support (Figure 2), and for mother–child and father–child dyads (for a total of four models). In each similarity LCM, there are two second-order factors: LEVEL is estimated constraining to 1 factor loadings of first-order factors, whereas lack of similarity factor (L_SIM) is estimated constraining to 0.5 the first-order factor of parent and to the −0.5 the first-order factor of child. Item residuals were correlated across the two informants, so for instance, residual of item 1 referring to perception of mother of given support to child was correlated to residual of item 1 referring to perception of child of given support to mother, and the same was done for all residuals of the items. Figure 2 shows only covariances referred to item 1, but the same is done for all the nine items.

Figure 3 presents the accuracy LCM. Accuracy is evaluated separately for mother–child and father–child dyads. Items measuring given and received support both from mother and child are included in the same LCM. Each LCM includes four first-order factors (support given by the mother, support received by the child, support given by the child, and support received by the mother) and four second-order factors: LEVEL and lack of accuracy (L_ACC) factors for the support given by the mother and received by the child (also called downward support exchanges, Kim et al., 2014), as well as LEVEL and lack of accuracy (L_ACC) factors for the support given by the child and received by the mother (also called upward support exchanges, Kim et al., 2014). As happened for similarity LCM, LEVEL is estimated constraining to 1 factor loadings of first-order factors, whereas lack of accuracy factor (L_ACC) is estimated constraining to 0.5 the given support first-order factor and to −0.5 the received support first-order factor. Covariances among item residuals are estimated across items with the same wording. Figure 3 shows only covariances referred to item 1, but the same is done for all the nine items.

For all the LCMs, the goodness of model fit was evaluated using the following indices: chi-square test (χ2), which indicates good fit when it is not significant (p > 0.05); root mean squared error of approximation (RMSEA) and the standardized root mean square residual (SRMR), which indicate good fit when lower than 0.08; and the comparative fit index (CFI), which indicates good fit when higher than 0.9 (Marsh et al., 2004). At the following link https://osf.io/t54yf/?view_only=a10011bc93f24cc6ad8bb6ea4cbe6a0a Mplus LCM input and output referred to similarity and accuracy models of both relationships are available.

Cross-National and Cross-Informant Invariance (Aim 2)

A multigroup model was applied, first testing if a configural first-order model fits well in the two samples (German and Italian ones). This means that given and received support first-order latent variables were estimated, without adding second-order latent variables of LCM. Measurement invariance, testing if factor loadings of the items (metric invariance), intercepts (scalar invariance), and residuals (strict invariance) were equivalent across groups, was verified first across nations (Germany vs. Italy) and then across informants (parent vs. child). The reason for that choice is rooted in the construct we are measuring. Similarity (or accuracy) is measured starting from the individual perception of the relationship by each informant. When cross-national comparisons are done, we need to verify first that there is an equivalent measurement model of the same construct evaluated by the same informant in the two nations, and, in a second step, whether there is also cross-informant measurement invariance.

To compare nested models, we compared chi-square, RMSEA, and CFI values across models. A significant chi-square difference (p < 0.05), a decrease in the CFI higher than 0.1, and an increase in the RMSEA equal to or higher than 0.015 were considered indicating a substantial decrease in model fit (Chen, 2007). When the difference was detected only by the chi-square test, it was not considered relevant as it is well-known that the chi-square significance is also affected by other factors such as the sample size (Iacobucci, 2010). At the following link https://osf.io/t54yf/?view_only=a10011bc93f24cc6ad8bb6ea4cbe6a0a Mplus input and output files produced to test the cross-national and cross-informant measurement invariance referred to similarity and accuracy models of both relationships are available.

Cross-National and Cross-Informant Measurement Invariance Within LCM (Aim 3)

Multigroup LCM was tested first without applying cross-national and cross-informant constraints, and, second, adding the cross-national and cross-informant measurement invariance constraints tested in the aim 2 section. This was done to demonstrate whether differences in cross-national parent–child similarity and accuracy could be due to measurement non-invariance reasons. Equality constraints were put on LEVEL and lack of similarity or accuracy (L_SIM or L_ACC) second-order factors of Italian and German samples to compare parent–child congruence scores (variance and mean) in the two nations. At the following link https://osf.io/t54yf/?view_only=a10011bc93f24cc6ad8bb6ea4cbe6a0a Mplus input and output files produced to test similarity and accuracy LCM of both relationships are available. Moreover, a table with all the different steps of the analyses is presented in Appendix B in Supplementary Material.

Results

Missingness Analysis and Outliers

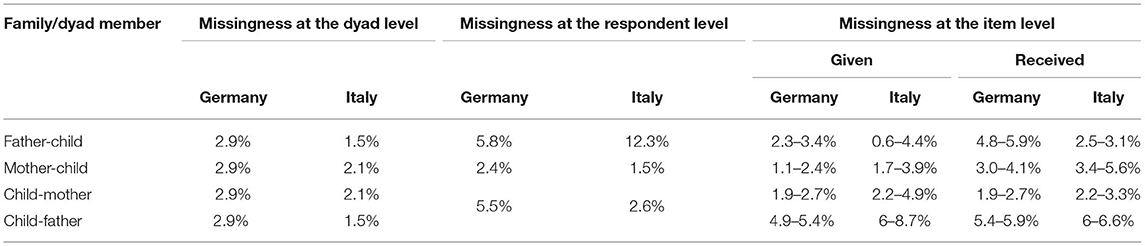

Missingness analysis was conducted on the complete dataset made of 612 families (Germany and Italy). The findings are presented in Table 2. Overall, the missingness rates were similar for Germany and Italy. Specifically, missing data at the dyad level ranged between 1.5 (Italian father–child dyad) and 2.9% (German mother–child and father–child dyad). Missing data at the respondent level ranged between 1.5 (Italian mothers) and 12.3% (Italian fathers). Missingness at the item level never exceeded 10% for each version of the received and given support scale. Regarding missingness mechanism, Little's MCAR test indicates that data were distributed randomly for the father–child relationship for both Germany [χ2(1,163) = 1224.02; p = 0.104] and Italy [χ2(645) = 609.71; p = 0.837]; while a different mechanism of missingness was revealed for the mother–child relationship, as missing data were distributed randomly for the Germany group [χ2(1,154) = 1,224.91; p = 0.072], and non-randomly for the Italian group [χ2(707) = 793.79; p = 0.013]. Multivariate outliers for the father–child dyad (11 German dyads, two Italian dyads) and for the mother–child dyad (22 German dyads, six Italian dyads) were excluded, and ML was used as estimator in subsequent analysis (similarity and accuracy models).

Table 2. Missingness rates at the dyad, respondent, and item level for the German and Italian samples.

Similarity and Accuracy

The findings will be presented separately for similarity and accuracy. Within each section, findings related to the three aims will be described, following a step-by-step structure. For the sake of brevity, we present only results referring to the mother–child relationship. Results referring to the father–child relationship are reported in Supplementary Material.

Similarity

LCM (Aim 1)

To realize aim 1, we run two LCMs separately for each nation. In particular, we tested two similarity models for the mother–child relationship, the given support similarity model, which allows comparing perception of the child of given support to the mother with perception of the mother of given support to her child, and the received support similarity model, which manages to compare perception of the child of received support from the mother with perception of the mother of received support from her child.

Each LCM included two first-order factors (support given/received by the child and support given/received by the mother), and two second-order factors (LEVEL factor and L_SIM factor for the support given/received by the child and the mother). In each model, we imposed cross-informant constraints, i.e., factor loadings of items, intercept, and residuals were imposed to be equivalent for mother and child reports when the two informants were evaluating the support they gave/received to each other (e.g., equivalence between the support given by the mother and given by the child). These models were run to estimate the amount of (lack of) similarity between the two informants. In other words, we estimated from these models the mean and the variance of the L_SIM second-order factor(s), whereas we constrained the mean of LEVEL to be 0, and we estimated its variance.

Both models (given and received support similarity models) run on the German sample had sufficient fit indices [given support: χ2(150) = 497.76; p < 0.001; RMSEA = 0.078 (0.071 0.086); CFI = 0.912; SRMR = 0.094; received support: χ2(150) = 509.20; p < 0.001; RMSEA = 0.079 (0.072 0.087); CFI = 0.925; SRMR = 0.068]. The lack of similarity for the given support between mother and child was 0.0962 (p = 0.022) [variance = 0.468 (p < 0.001)], and the variance of the LEVEL factor was 0.460 (p < 0.001); while for the received support, the lack of similarity between mother and child was −0.235 (p < 0.001) [variance = 0.580 (p < 0.001)], and the variance of the LEVEL factor was 0.546 (p < 0.001). The same models were run for the Italian sample obtaining worse fit indices for both models because of the smaller sample size [given support: χ2(150) = 444.81; p < 0.001; RMSEA = 0.104 (0.093, 0.115); CFI = 0.846; SRMR = 0.103; received support: χ2(150) = 445.26; p < 0.001; RMSEA = 0.104 (0.093, 0.115); CFI = 0.890; SRMR = 0.082]. In this nation, the lack of similarity was on average 0.261 (p < 0.001) [variance = 0.425 (p < 0.001)] for the given support, and the variance of the LEVEL factor for the given support was 0.430 (p < 0.001); while for the received support, the lack of similarity was −0.112 (p = 0.097) [variance = 0.676 (p < 0.001)] and the variance of the LEVEL factor was 0.522 (p < 0.001). In both nations, mother perceives to give more and receive less support than the child. Similarity models referred to father–child relationship showed that, only in Italy, father perceives to give more support than child. On the contrary, similarity in received support (both nations) and similarity in given support (only Germany) was found in father–child dyads (see Supplementary Material).

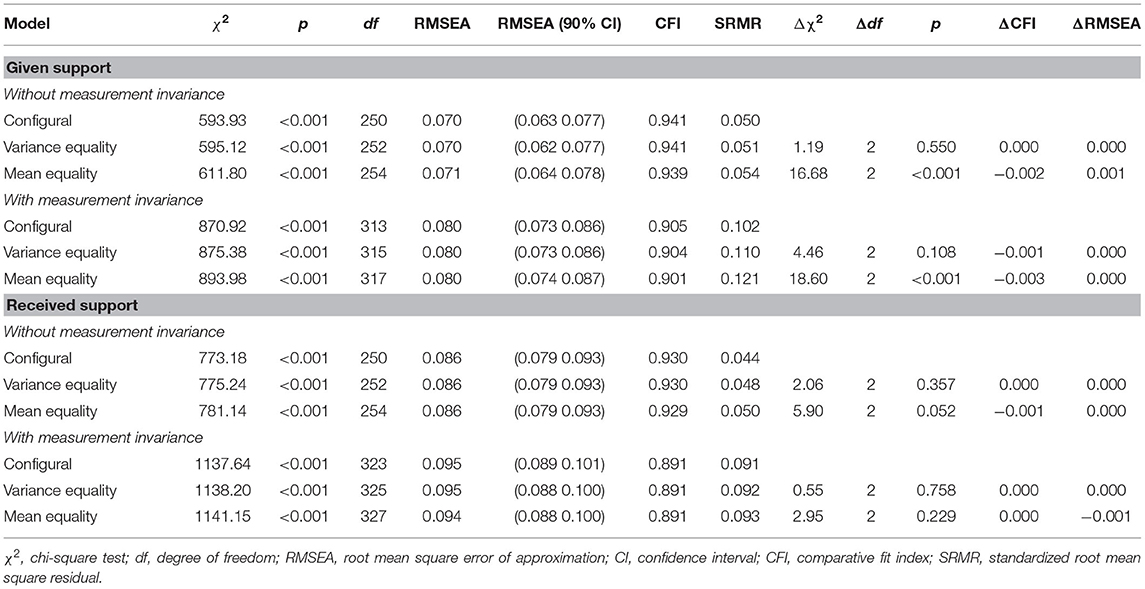

Cross-National and Cross-Informant Invariance (Aim 2)

As the measurement invariance issue concerns only the first-order factors, we run the two models (given support similarity model and received support similarity model) presented in LCM (aim 1) but the second-order factors were removed. Models were not applied separately to German and Italian samples, but we tested them within a multigroup model. Furthermore, as required to fully test the measurement invariance of each item composing the scale, we fixed to 0 the means of all first-order factors and to 1 all first-order factor variances of the first group (Germany), while they were freely estimated for the second group (Italy). All factor loadings of both groups were freely estimated.

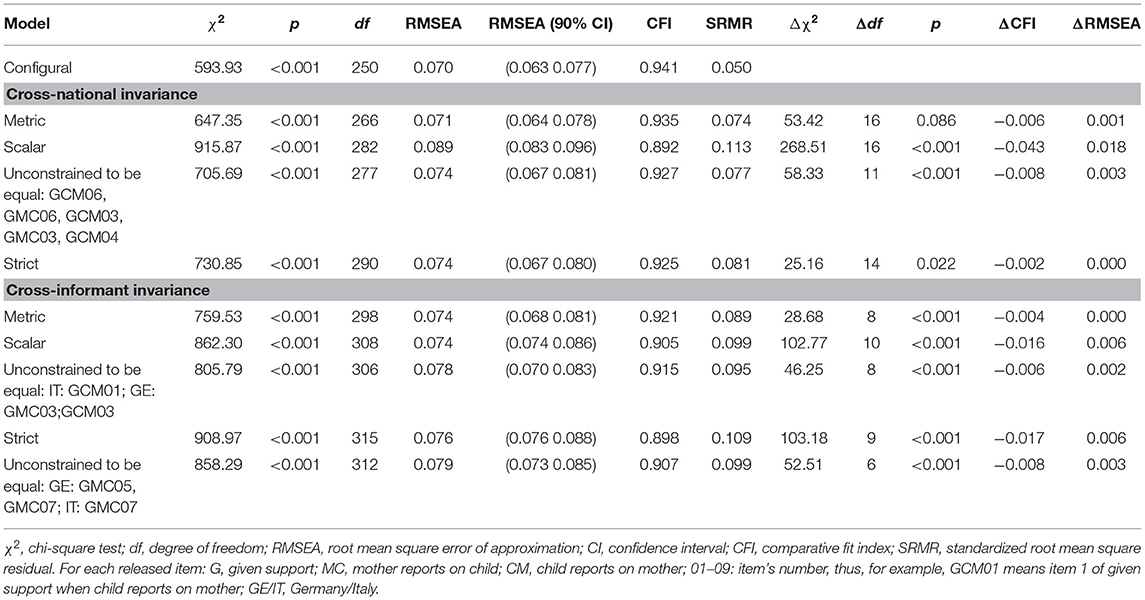

Regarding the given support similarity model, the configural model presented good model fit indices (see Table 3). We then proceeded testing cross-national measurement invariance by verifying if items reported by the same informant (e.g., child) thinking of giving support to the other informant (e.g., mother) had equivalent factor loadings (metric cross-national invariance), intercepts (scalar cross-national invariance), and residuals (strict cross-national invariance) across Germany and Italy.

Table 3. Cross-national and cross-informant invariance of the similarity model for the given support mother–child relationship.

For the two factors (e.g., support given by the child, support given by the mother) included in the model, we found that their items had equivalent factor loadings across nations (full metric invariance). Instead, regarding the scalar invariance, we found that item 6 (“Offered to conduct a conversation”) and item 3 (“Conducted conversations concerning my child/mother personal topics”) worked differently across nations for each of the two factors, while item 4 (“Conducted conversations concerning my mother daily issues”) worked differently across nations only when the child refers to the mother. In particular, item 3 had higher intercepts in Germany than in Italy both when the informant was the mother (3.72 vs. 3.38) as well as the child (3.82 vs. 3.41), the same for item 4 when the child was the informant (4.14 vs. 3.87). On the contrary, item 6 showed higher intercepts in Italy than in Germany both when the informant was the mother (3.76 vs. 3.43), as well as the child (4.04 vs. 3.28). In other words, German mothers and children tend to score item 3 higher than Italians, German children tend to score item 4 higher than Italians, while Italian mothers and children tend to score item 6 higher than Germans.

Keeping into account the three non-invariant intercepts, we proceeded testing the strict cross-national invariance. Tested residuals (all except residuals of non-invariant items from the scalar invariance) were equivalent across nations. We can conclude that given support factors of mother and child have the same meaning within the two nations (metric cross-national invariance), but their latent means are not fully comparable across groups because some intercepts differ across the two nations.

Maintaining in the model the cross-national invariance constraints, we proceeded testing the measurement invariance across informants. In other words, we verified if the instrument works equivalently across the two informants who are reporting about giving support to each other.

We imposed cross-informant constraints between items in which the child reported the given support to the mother, and items in which the mother reported the support she gave to her child. Constraints were imposed starting from factor loadings of items (metric cross-informant invariance) and proceeding with intercepts of items (scalar cross-informant invariance) and residuals (strict cross-informant invariance). We found equivalent factor loadings across the two informants (full metric invariance). Instead, we found that item 1 (“Advice regarding personal problems”) had different intercepts across informants for the Italian group, while item 3 (“Conducted conversations concerning my child/mother personal topics”) had different intercepts across informants for the Germany group. Specifically, Italian mothers tend to score higher in item 1 (3.19 vs. 2.84) than their children, while German children tend to score higher in item 3 (3.82 vs. 3.56) than their mothers.

We proceeded by testing the equivalence of residuals across informants, finding that residuals of items 5 and 7 were different across informants for the German group, while only the residual of item 7 was different across informants for the Italian group. Specifically, the residual variances were higher when the child was the informant both for item 7 in the Italian group (0.413 vs. 0.212), and for item 5 (0.311 vs. 0.180) and item 7 (0.413 vs. 0.252) in the German group. These findings revealed that, also from a cross-informant point of view, measurement model is not fully invariant, showing that if a lack of similarity will be found, it could be also because of cross-informant differences in intercepts.

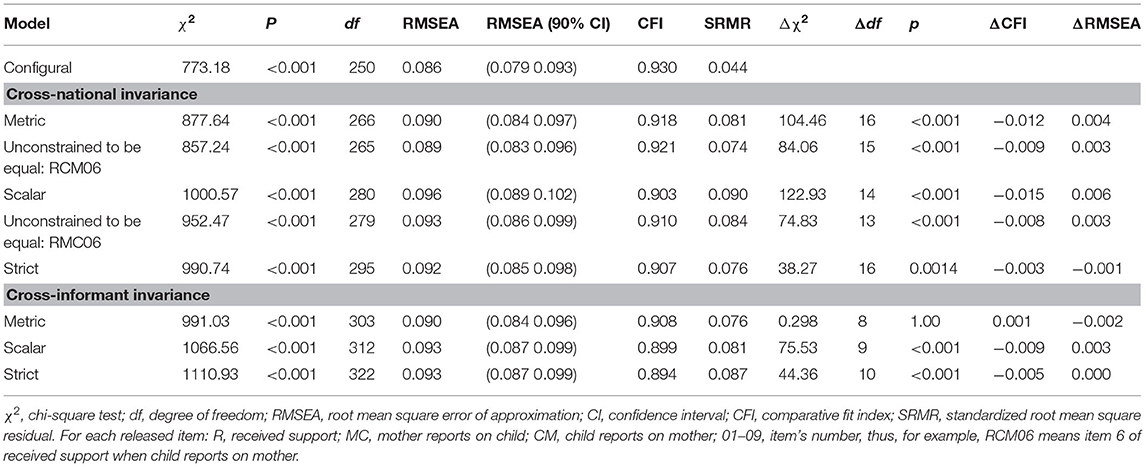

We followed exactly the same procedure for the received support similarity model (see Table 4). In this case, we found a partial metric invariance within the cross-national comparison, as the factor loading of item 6 of the child reporting the received support from the mother was higher for the German group (0.885 vs. 0.578). In other words, item 6 (“Offered to conduct a conversation”) is much more representative of the support received by mothers for German children compared with Italians. Intercepts and residuals were equivalent across nations except for the intercept of item 6 when reported by the mother, as it was higher for Italy (3.67 vs. 3.24). We can conclude that given support factors of mother and child have only partially the same meaning within the two nations (partial metric cross-national invariance), and also their latent means are not fully comparable across groups because some intercepts are different across the two nations. Additionally, we found full cross-informant invariance, which means that the instrument works invariantly across child and mother when they report about receiving support from each other.

Table 4. Cross-national and cross-informant invariance of the similarity model for the received support within the mother–child relationship.

Regarding the father–child relationship, we found cross-national partial scalar invariance for both given and received support, cross-informant partial scalar invariance for the given support, and full cross-informant invariance for the received support (see Supplementary Material).

Cross-National and Cross-Informant Measurement Invariance Within LCM (Aim 3)

We tested two multigroup LCMs separately for similarity in given support and similarity in received support, one without considering measurement invariant constraints, and the other adding the cross-national and cross-informant measurement invariance constraints that we found to be plausible in the aim 2 section. As we aimed to verify if LCM second-order factors (LEVEL and L_SIM) had equivalent variances and means across the two nations, we first run a multi-group model (configural) in which second-order factors were free to vary across the two nations. As reported in Table 5, all these parameters were freely estimated, except for LEVEL of the first group (Germany) and means of L_SIM, which were fixed to zero in order to make the model identified. The configural LCMs for both given and received support had sufficient fit indices (Table 6). We then proceeded testing variance equivalence of the factors across the nations. In other words, we constrained the variance of the second-order factors in the German group to be equivalent to the corresponding second-order factors in the Italian group. Fit indices of these models were not significantly different from those of the unconstrained models, thus indicating that the variances of the two second-order factors are equivalent across the two nations for both the given and the received support similarity models. Finally, we tested mean equivalence of the factors fixing the mean of the Italian second-order factors to zero (i.e., constraining them to be equivalent to that of the German). These models were good for both the similarity models, and they were sufficiently similar to the previous ones. Therefore, we can also conclude that the means of the second-order factors are equivalent across the two nations.

Table 5. Variances and means of second-order factors for the given and received support similarity models.

We then proceeded testing the same models but including the measurement invariant constraints that we found to be plausible in the aim 2 section (Table 6). As the constrained models were not relevantly different from the not constrained ones, we concluded that both the variances and the means of the two second-order factors for both the given support and the received support are equivalent across Germany and Italy (Table 5). However, it should be noted that the mean of L_SIM in given support could be affected by differences in cross-national and cross-informant intercepts, and the mean of L_SIM in received support could be affected by differences in cross-national intercepts (see aim 2 section). For those reasons, we should be cautious in concluding that mother and child similarly perceive given and received support and that there are no cross-national differences in those perceptions. The same conclusions can be drawn from the father–child relationship, since the means of the second-order factors are equivalent across the two nations for both given and received support (see Supplementary Material).

Accuracy

LCM (Aim 1)

Differently from the similarity, the accuracy of the reports of mother and child is evaluated including in the same model both the reports referring to the given support and the ones referring to the received support from both informants, because in this way it is possible to test accuracy in both downward and upward support at the same time. Each LCM was run separately for each nation, and we imposed cross-informant constraints for mother and child reports when the two informants were evaluating the same support exchange (e.g., equivalence between the support given by the mother and received by the child).

The model, run on the German sample, had good fit indices [χ2(586) = 1323.29; p < 0.001; RMSEA = 0.058 (0.053 0.062), p = 0.001; CFI = 0.93; SRMR = 0.076] and indicated that the lack of accuracy for the downward support exchange (support given by the mother and received by the child) was on average −0.045 (p = 0.216) and its variance was 0.379 (p < 0.001), while the variance of the LEVEL_MC factor was 0.457 (p < 0.001). Instead, the upward support exchange (support given by the child and received by the mother) was 0.087 (p = 0.055), and its variance was 0.574 (p < 0.001), while the variance of the LEVEL_CM factor was 0.528 (p < 0.001).

The same model for the Italian sample obtained worse fit indices because of the smaller sample size [χ2(586) = 1300.37; p < 0.001; RMSEA = 0.082 (0.076 0.088), p < 0.001; CFI = 0.871; SRMR = 0.094]. In this nation, the lack of accuracy was on average 0.128 (p = 0.066) [variance = 0.725 (p < 0.001)], and the variance of the LEVEL_MC factor was 0.528 (p < 0.001) for the downward support exchange; while the lack of accuracy was on average −0.037 (p = 0.474) [variance = 0.366 (p < 0.001)], and the variance of the LEVEL_MC factor was 0.353 (p < 0.001) for the upward support exchange.

We can conclude that in both nations there is high accuracy in the evaluation of upward and downward support exchanges between mother and child, as the lack of accuracy between the two informants always showed non-significant estimates. Accuracy models referred to the father–child relationship showed that, in both nations, father perceives to give more support than the support received by child (downward support exchange). Regarding the upward exchange, accuracy was found in Italy, while in Germany the child perceives to give less support than the father perceives to receive (see Supplementary Material).

Cross-National and Cross-Informant Invariance (Aim 2)

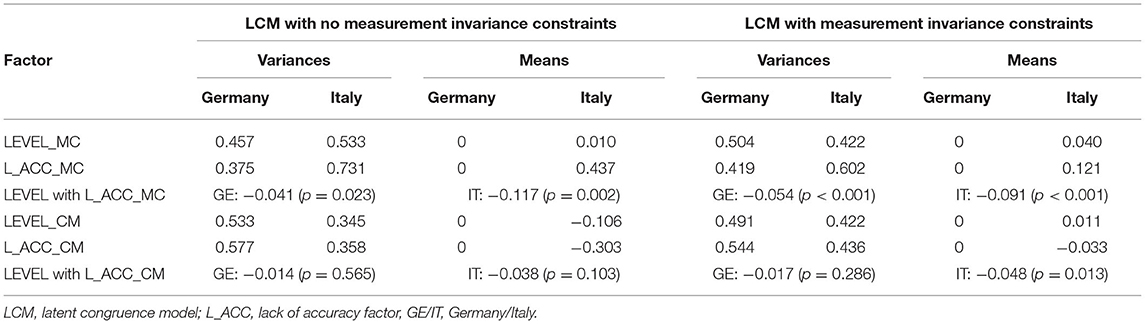

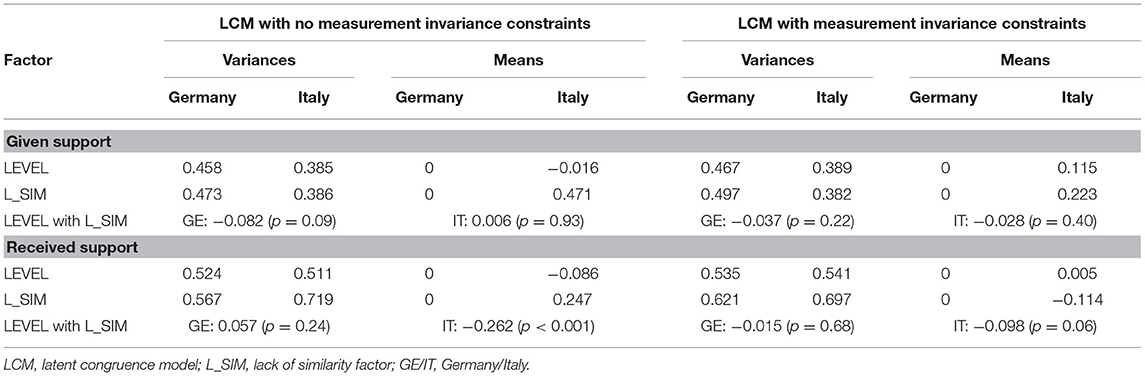

As for the similarity models, we run a multi-group model and fixed to 0 the means of all factors and to 1 the variances of all factors of the first group (Germany), while we freely estimated factor loadings of both groups and means and variances of factors of the second group (Italy). As this configural model presented good model fit indices (see Table 7), we proceeded testing cross-national measurement invariance. For all the four first-order factors (support given by the mother, received by the child, given by the child, received by the mother) included in the model, we found that their items had equivalent factor loadings across nations (full metric invariance). Instead, regarding scalar invariance, we found that item 6 (“Offered to conduct a conversation”) worked differently across nations for each of the four factors. In particular, these were intercepts of item 6 (Germany vs. Italy) when the item was reported by the child, referring to received (3.37 vs. 4.09) or given (3.28 vs. 4.10) support to the mother, and when this item was reported by the mother, referring to received (3.23 vs. 3.67) or given (3.43 vs. 3.80) support to the child. In other words, Italians tend to score higher than Germans. Tested residuals (all except residual of item 6) were equivalent across nations. As for each factor eight out of the nine items were fully invariant across nations, we concluded that each factor has the same meaning within the two nations (metric cross-national invariance) and that their latent (scalar cross-national invariance) and observed (strict cross-national invariance) total scores can be compared across groups.

Table 7. Cross-national and cross-informant invariance of the accuracy model for the mother–child relationship.

Maintaining in the model the cross-national invariance constraints, we proceed testing the measurement invariance across informant. In other words, we verified if the instrument works equivalently across the two informants that are reporting about both downward and upward support exchanges. We found that all the nine items were fully invariant across the two informants, concluding that mother and child interpret in the same way the items when referring to both downward and upward support exchanges, and that their latent and observed scores are comparable. Regarding the father–child relationship, we found the same results: cross-national partial scalar invariance and full cross-informant invariance for all the accuracy models (see Supplementary Material).

Cross-National and Cross-Informant Measurement Invariance Within LCM (Aim 3)

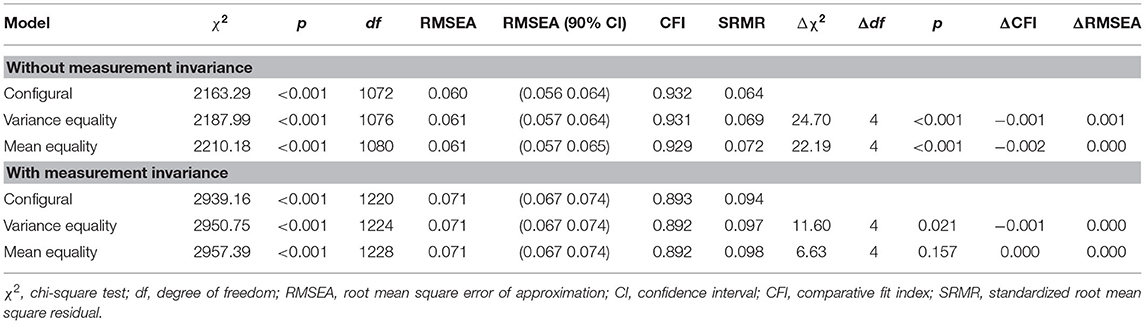

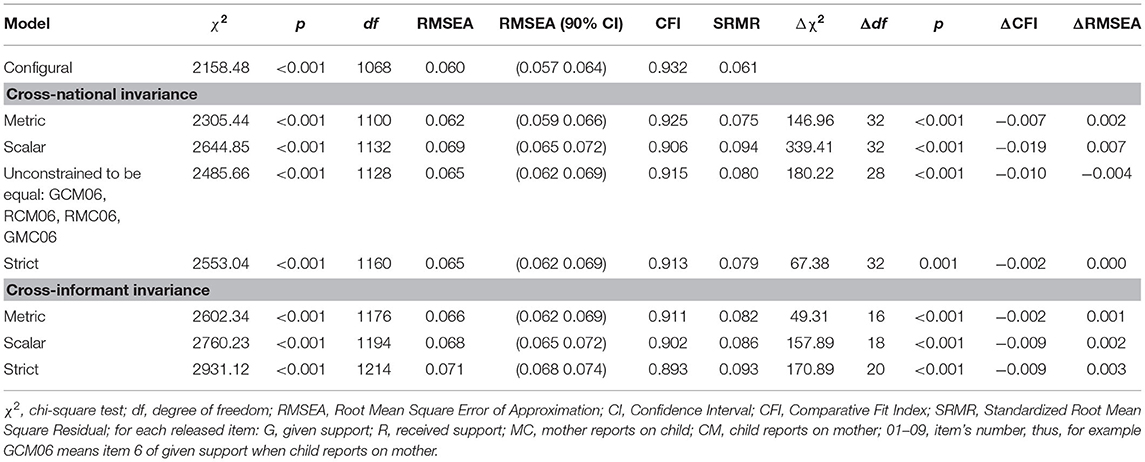

As happened with similarity models, we first run a multi-group model in which second-order factors were free to vary across the two nations, and in which no measurement invariance constraints were added. As reported in Table 8, all these parameters are freely estimated, except for the means of the first group (Germany), which were fixed to zero in order to make the model identified. This LCM had good fit indices (Table 9), so we proceeded testing the variance equivalence of the factors across nations. This model had good fit indices, which were not relevantly different from those of the unconstrained model, thus indicating that the variances of the four second-order factors are equivalent across the two nations. Finally, we tested the mean equivalence of the factors (Table 9), and the model was sufficiently similar to the previous one, to conclude that the means of the four second-order factors are equivalent across the two nations.

A second multi-group LCM was tested, following the same procedure just described, but including the measurement invariant constraints that we found to be plausible in the aim 2 section. Also, in this case, we concluded that both the variances and the means of the four second-order factors are equivalent across Germany and Italy (Table 9). The same conclusion can be drawn for the father–child relationship (see Supplementary Material).

Discussion

In this study, we illustrated how to conduct a cross-national comparison of parent–child similarity and accuracy in support exchanges perceptions applying LCM. The illustrative example shows that LCM is a useful model able to keep into account, at the same time, cross-informant and cross-national measurement invariance when investigating and interpreting parent–child similarity and accuracy. To the knowledge of the authors, no previous study compared parent–child similarity and accuracy between two or more nations. Thus, this is the first study that deals with those comparisons, accepting the challenge to test both cross-informant and cross-national measurement invariance in the same LCM.

An issue that we needed to keep into consideration was deciding the sequence of models tested. Usually, LCM is tested first verifying the configural cross-informant measurement invariant LCM, and then adding step-by-step constraints referred, respectively, to metric, scalar, and strict invariance (Cheung, 2009). That procedure is fundamental to correctly estimate and interpret the amount parent–child discrepancy found. When a cross-national or cross-cultural research design is used and LCM is applied, two different aspects of measurement invariance are involved. Indeed, data could be affected by differences in measurement models because of cross-national differences, cross-informant differences, or both. It highlights the importance of detecting the kind of measurement (non)invariance within the data, besides the level of measurement (non)invariance (metric, scalar, strict). For those reasons, it is important to first test cross-national measurement invariance and then to add the cross-informant one. To test cross-national measurement invariance, all the measurement invariance levels should be verified within a multigroup approach that compares the measurement models of two nations. After finding the best fitting cross-national measurement invariant LCM, it is possible to start the second step-by-step comparison, checking whether there is equivalence in the measurement models of the two informants.

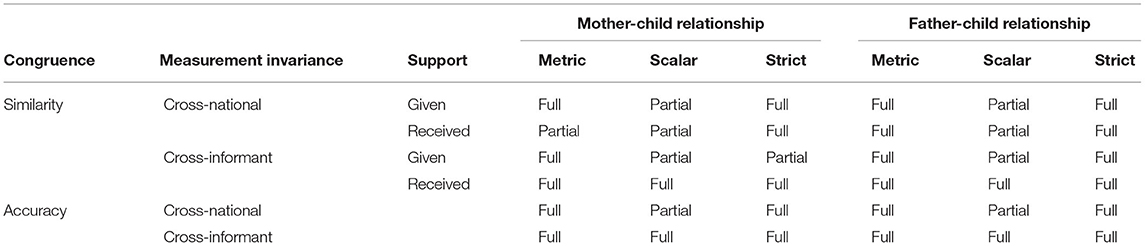

Findings and scenarios could be very different, we could have full cross-national and full cross-informant invariance, partial cross-national and full cross-informant invariance, full cross-national and partial cross-informant invariance, and partial cross-national and partial cross-informant invariance. Moreover, partial invariance could be at different levels: metric (for instance, we found partial cross-national metric invariance in mother–child similarity in received support), scalar (as we found in most of the models, especially in cross-national comparisons), or strict (for instance, we found partial cross-informant strict invariance in mother–child similarity in given support). Table 10 summarizes those scenarios and indicates full or partial invariance found in the illustrative example.

Table 10. Cross-national LCM measurement invariance scenarios and findings on the illustrative data.

The most desirable situation is the one in which both full cross-national and full cross-informant measurement invariance are found. In that case, researchers are sure that they can interpret the second order variable of the LCM (lack of similarity or lack of accuracy) as due to the level differences in the perception of the evaluated construct. However, very often, real data, as the ones presented in this study, reveal partial measurement invariance at some level.

Finding partial metric invariance in cross-national comparison questions the meaning of the construct in the different nations, and separately for each informant; thus, when similarity and accuracy are compared across nations, it is important to consider that the meaning of the construct on which similarity and accuracy are evaluated is slightly different in the two (or more) nations. Partial scalar invariance in cross-national comparison strongly affects the interpretation of similarity and accuracy and the cross-national comparison, and this is even more problematic if cross-national partial scalar invariance is found for only one informant. Similarly, when cross-informant partial metric invariance is found, the researcher is unsure about the interpretation of the similarity or accuracy variable, because he/she is not sure that the meaning of the construct is equivalent for the two informants. Also, cross-informant partial scalar invariance could affect the amount of similarity and accuracy, because one of the two informants partially overestimates (or underestimates) the level of the construct, so the discrepancy could be affected. Partial strict invariance is the less problematic situation, because the amount of residual is not directly involved in the estimation of similarity and accuracy latent variables. Generally speaking, if partial invariance is due to only one parameter, this reasonably does not have a great impact on the interpretation of results (Dimitrov, 2010), but if there are more items involved, researchers need to reflect on the meaning and amount of similarity and accuracy. For instance, in the analyses, many problems are related to item 6 (“Offered to conduct a conversation”): many cross-national measurement differences are due to the fact that support scores of Italian parents and children could be overestimated, because the intercept of that item is higher compared with the parameter for German parents and children. One possible reason for that could be the higher percentage of Italian young adults cohabiting with their parents than German ones. Since that finding is pervasive, researchers could evaluate to delete the item from analyses. In other cases, it could be sufficient to be aware of possible biases in the estimates.

LCM and the techniques we have used here can also be applied to other types of discrepancies, not only to cross-informant discrepancy, for instance, discrepancy between real and ideal personal characteristics (Liu et al., 2005), or between ideal and actual partner (Knee et al., 2001), or between received and desired support (Wang, 2019), and so on.

This study focused on the application of LCM to just one construct adopting a cross-national dyadic design. In the illustrative example, the construct was emotional support, and we found differences in similarity and accuracy (for instance, especially for given support, parent–child dyads are characterized by less similarity and more accuracy). Those findings revealed that, for parent–child emotional support exchanges literature, it would be important to consider, at the same time, similarity and accuracy processes especially when family triads are studied. Considering similarity and accuracy in the different family dyads allows to adopt a family research approach (Lanz et al., 2015). However, in order to understand the meaning of similarity and accuracy referred to a specific construct, it would be important to also consider other constructs as validity criterion (for some examples see Al Ghriwati et al., 2018; Makol et al., 2019).

Future research could use LCM as a more general framework to investigate complex models, in which validity criteria and predictors and outcomes of congruence (discrepancy, similarity or accuracy) are included (Cheung, 2009). However, Edwards (2009) criticized LCM because, although it constitutes advancement from a measurement point of view (i.e., LCM allows estimating measurement errors and measurement invariance), it presents the same problems as dyadic indexes. Dyadic indexes of discrepancy do not consider the contribution of the individual level (Rogers et al., 2018). That is not a problem per se, but it can undermine the interpretation of findings when dyadic indexes are used as predictors or outcomes in more complex models. Indeed, main effects are confounded with interaction/moderation effect and interpretation of findings can be misleading (Rogers et al., 2018; Laird, 2020). According to Edwards (2009), LCM focuses on level and congruence second-order factors defining them as different from the components, but it is not able to solve some problems of interpretations because of the fact that mean or different scores used as predictors or outcomes could hide the main effects of the components.

Literature presents many solutions to those problems. For instance, regression-based approaches, such as polynomial regression models (Edwards, 1994; Laird and Weems, 2011; Laird and De Los Reyes, 2013) or response surface analysis (Edwards, 1994; Barranti et al., 2017; RSA), allow to control the individual level (main effects) to test discrepancy (interaction effect) by including the component measures (individual level), their interaction (dyadic level), and higher-order terms as independent variables in the equation. Also, the Truth and Bias model (T and B; West and Kenny, 2011; Stern and West, 2018) considers both individual and dyadic processes, and allows testing, at the same time, two types of accuracy (i.e., mean-level bias and correlational accuracy). Finally, research questions dealing with interdependent outcomes (e.g., relationship satisfaction of both partners) can be answered by the Actor-Partner Interdependent Model (APIM) framework, which allows considering at the same time both predictors and outcomes measured by the two partners of the dyad; for instance, Schönbrodt et al. (2018) proposed the Dyadic Response Surface Analysis (DRSA), which integrates RSA with APIM and allows testing similarity effect on each of the outcome of two partners.

However, most of those solutions do not allow dealing with measurement model, that, as shown in this study, can be important to reflect on how the construct is measured and which items are more different across nations and/or informants. Recently, some models have been proposed, which allow keeping into consideration measurement models and also, potentially, to deal with measurement invariance issues. One of those models is Latent Moderated Structural Equations (Su et al., 2019), which extends the strengths of polynomial regression models to a SEM in which measurement invariance can be tested. Moreover, as happened for polynomial regression models, it allows testing not only linear relationships among predictors and outcomes but also curvilinear relationships that, for some theoretical frameworks, could be more interesting (Cheung, 2009; Edwards, 2009). However, Su et al. (2019) did not discuss the impact of measurement cross-informants (non)invariance on findings.

This study shows the importance of considering cross-informant and cross-national measurement (non)invariance in research on parent–child discrepancy, similarity, accuracy, and congruence. In that sense, LCM (both regarding similarity and accuracy) and the procedure we propose here are useful when the interest of research is to understand if parent–child discrepancy is similar or different across groups (nations, countries, age groups, and so on). On the opposite, when the research aim is to test predictive relationships between discrepancy and outcomes in different groups, other models, such as Latent Moderated Structural Equations, could be used, but they need to be adapted in order to consider both cross-informant and cross-group invariance. Overall, solid knowledge of conceptual and psychometric frameworks of methods used to measure dyadic similarity and accuracy is fundamental to choose the most appropriate technique to prevent researchers from erroneous or incomplete interpretations of their data.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

ST conceived the idea for the paper, contributed to the design of the study, was involved in all steps of the research process, and wrote a first set-up and draft of the manuscript. MZ and AS conducted and interpreted statistical analyses and drafted and edited the manuscript. SS, CH, HB, and ML contributed to the design of the study, data collection and adjustments, and wrote additions. ML made a substantial, direct and intellectual contribution to the work. All authors approved the manuscript and agreed to be accountable for all aspects of the work.

Funding

The present research had been funded by German Research Foundation and by Università Cattolica del Sacro Cuore (Department of Psychology, Linea D1 2018 La discrepanza percettiva: questioni teoriche e metodologiche). Open access publication fees will be payed by Linea D3.1 Università Cattolica del Sacro Cuore and by German Research Foundation.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank the Italian students who helped in data collection.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.672383/full#supplementary-material

Footnotes

1. ^Thönnissen, C., Wilhelm, B., Fiedrich, S., Alt, P., and Walper, S. (2015). Pairfam Scales Manual. Wave 1 to 6. Unpublished.

2. ^All the parameters reported in this paper are non-standardized estimates.

References

Al Ghriwati, N., Winter, M. A., Greenlee, J. L., and Thompson, E. L. (2018). Discrepancies between parent and self-reports of adolescent psychosocial symptoms: associations with family conflict and asthma outcomes. J. Fam. Psychol. 32, 992–997. doi: 10.1037/fam0000459

Barranti, M., Carlson, E. N., and Côt,é, S. (2017). How to test questions about similarity in personality and social psychology research: description and empirical demonstration of response surface analysis. Soc. Psychol. Personal. Sci. 8, 465–475. doi: 10.1177/1948550617698204

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Struct. Equ. Model. Multidiscip. J. 14, 464–504. doi: 10.1080/10705510701301834

Cheung, G. W. (2009). Introducing the latent congruence model for improving the assessment of similarity, agreement, and fit in organizational research. Organ. Res. Methods 12, 6–33. doi: 10.1177/1094428107308914

Claxton, S. E., Deluca, H. K., and Van Dulmen, M. H. (2015). Testing psychometric properties in dyadic data using confirmatory factor analysis: current practices and recommendations. Test. Psychometr. Methodol. Appl. Psychol. 22, 181–198. doi: 10.4473/TPM22.2.2

De Los Reyes, A., Augenstein, T. M., Wang, M., Thomas, S. A., Drabick, D. A. G., Burgers, D., et al. (2015). The validity of the multi-informant approach to assessing child and adolescent mental health. Psychol. Bull. 141, 858–900. doi: 10.1037/a0038498

De Los Reyes, A., Cook, C. R., Gresham, F. M., Makol, B. A., and Wang, M. (2019a). Informant discrepancies in assessments of psychosocial functioning in school-based services and research: Review and directions for future research. J. Sch. Psychol. 74, 74–89. doi: 10.1016/j.jsp.2019.05.005

De Los Reyes, A., Lerner, M. D., Keeley, L. M., Weber, R., Drabick, D. A. G., Rabinowitz, J., et al. (2019b). Improving interpretability of subjective assessments about psychological phenomena: a review and cross-cultural meta-analysis. Rev. Gen. Psychol. 23, 293–319. doi: 10.1177/108926801983764

Decuyper, M., De Bolle, M., and De Fruyt, F. (2012). Personality similarity, perceptual accuracy, and relationship satisfaction in dating and married couples. Pers. Relatsh. 19, 128–145. doi: 10.1111/j.1475-6811.2010.01344.x

DeLuca, H. K., Sorgente, A., and Van Dulmen, M. H. (2019). Dyadic invariance of the adult self-report and adult behavior checklist: evidence from young adult romantic couples. Psychol. Assess. 31, 192–209. doi: 10.1037/pas0000658

Dimitrov, D. M. (2010). Testing for factorial invariance in the context of construct validation. Meas. Eval. Couns. Dev. 43, 121–149. doi: 10.1177/0748175610373459

Edwards, J. R. (1994). The study of congruence in organizational behavior research: critique and a proposed alternative. Organ. Behav. Hum. Decis. Process. 58, 51–100. doi: 10.1006/obhd.1994.1029

Edwards, J. R. (2009). Latent variable modeling in congruence research: current problems and future directions. Organ. Res. Methods 12, 34–62. doi: 10.1177/1094428107308920

Hoellger, C., Sommer, S., Albert, I., and Buhl, H. M. (2020). Intergenerational value similarity in adulthood. J. Fam. Issues 42, 1234–1257. doi: 10.1177/0192513X20943914

Iacobucci, D. (2010). Structural equations modeling: fit indices, sample size, and advanced topics. J. Consum. Psychol. 20, 90–98. doi: 10.1016/j.jcps.2009.09.003

Kim, K., Zarit, S. H., Birditt, K. S., and Fingerman, K. L. (2014). Discrepancy in reports of support exchanges between parents and adult offspring: within-and between-family differences. J. Fam. Psychol. 28, 168–179. doi: 10.1037/a0035735

Knee, R. C., Nanayakkara, A., Vietor, N. A., Neighbors, C., and Patrick, H. (2001). Implicit theories of relationships: who cares if romantic partners are less than ideal? Pers. Soc. Psychol. Bull. 27, 808–819. doi: 10.1177/0146167201277004

Laird, R. D. (2020). Analytical challenges of testing hypotheses of agreement and discrepancy: comment on Campione-Barr, Lindell, and Giron (2020). Dev. Psychol. 56, 970–977. doi: 10.1037/dev0000763

Laird, R. D., and De Los Reyes, A. (2013). Testing informant discrepancies as predictors of early adolescent psychopathology: why difference scores cannot tell you what you want to know and how polynomial regression may. J. Abnorm. Child Psychol. 41, 1–14. doi: 10.1007/s10802-012-9659-y

Laird, R. D., and Weems, C. F. (2011). The equivalence of regression models using difference scores and models using separate scores for each informant: implications for the study of informant discrepancies. Psychol. Assess. 23, 388–397. doi: 10.1037/a0021926

Lanz, M., Scabini, E., Tagliabue, S., and Morgano, A. (2015). How should family interdependence be studied? the methodological issues of non-independence. Test. Psychometr. Methodol. Appl. Psychol. 22, 169–180. doi: 10.4473/TPM22.2.1

Lanz, M., Sorgente, A., and Tagliabue, S. (2018). Inter-rater agreement indices for multiple informant methodology. Marriage Fam. Rev. 54, 148–182. doi: 10.1080/01494929.2017.1340919

Liu, W. M., Rochlen, A., and Mohr, J. J. (2005). Real and ideal gender-role conflict: exploring psychological distress among men. Psychol. Men Masc. 6, 137–148. doi: 10.1037/1524-9220.6.2.137

Makol, B. A., De Los Reyes, A., Ostrander, R., and Reynolds, E. K. (2019). Parent-youth divergence (and convergence) in reports of youth internalizing problems in psychiatric inpatient care. J. Abnorm. Child Psychol. 47, 1677–1689. doi: 10.1007/s10802-019-00540-7

Marsh, H. W., Hau, K. T., and Wen, Z. (2004). In search of golden rules: comment on hypothesis-testing approaches to cutoff values for fit indexes and dangers in overgeneralizing Hu and Bentler (1999). Struct. Equ. Model. 11, 320–341. doi: 10.1207/s15328007sem1103_2

Mastrotheodoros, S., Van der Graaff, J., Deković, M., Meeus, W. H., and Branje, S. (2020). Parent–adolescent conflict across adolescence: trajectories of informant discrepancies and associations with personality types. J. Youth Adolesc. 49, 119–135. doi: 10.1007/s10964-019-01054-7

Pusch, S., Schönbrodt, F. D., Zygar-Hoffmann, C., and Hagemeyer, B. (2021). Perception of communal motives in couples: accuracy, bias, and their associations with relationship length. J. Res. Pers. 91:104060. doi: 10.1016/j.jrp.2020.104060

Reifman, A., and Niehuis, S. (2018). Over-and under-perceiving social support from one's partner and relationship quality over time. Marriage Fam. Rev. 54, 793–805. doi: 10.1080/01494929.2018.1501632

Rescorla, L. A. (2016). Cross-nationalperspectives on parent-adolescent discrepancies: existing findings and future directions. J. Youth Adolesc. 45, 2185–2196. doi: 10.1007/s10964-016-0554-7

Rescorla, L. A., Ginzburg, S., Achenbach, T. M., Ivanova, M. Y., Almqvist, F., Begovac, I., et al. (2013). Cross-informant agreement between parent-reported and adolescent self-reported problems in 25 societies. J. Clin. Child Adolesc. Psychol. 42, 262–273. doi: 10.1080/15374416.2012.717870

Rogers, K. H., Wood, D., and Furr, R. M. (2018). Assessment of similarity and self-other agreement in dyadic relationships: a guide to best practices. J. Soc. Pers. Relat. 35, 112–134. doi: 10.1177/0265407517712615

Russell, J. D., Graham, R. A., Neill, E. L., et al. (2016). Agreement in youth–parent perceptions of parenting behaviors: a case for testing measurement invariance in reporter discrepancy research. J. Youth Adolesc. 45, 2094–2107. doi: 10.1007/s10964-016-0495-1

Sakaluk, J. K., Fisher, A. N., and Kilshaw, R. E. (2021). Dyadic measurement invariance and its importance for replicability in romantic relationship science. Pers. Relatsh. 28, 190–226. doi: 10.1111/pere.12341

Schaffhuser, K., Allemand, M., Werner, C. S., and Martin, M. (2016). Discrepancy in personality perceptions is related to relationship satisfaction: findings from dyadic latent discrepancy analyses. J. Pers. 84, 658–670. doi: 10.1111/jopy.12189

Schönbrodt, F. D., Humberg, S., Nestler, S., and Carlson, E. (2018). Testing similarity effects with dyadic response surface analysis. Eur. J. Pers. 32, 627–641. doi: 10.1002/per.2169

Sommer, S., and Buhl, H. M. (2018). Intergenerational transfers: associations with adult children's emotional support of their parents. J. Adult Dev. 25, 286–296, doi: 10.1007/s10804-018-9296-y

Steenkamp, J. B., and Baumgartner, H. (1998). Assessing measurement invariance in cross-national research. J. Consum. Res. 25, 78–90. doi: 10.1086/209528

Stern, C., and West, T. V. (2018). Assessing accuracy in close relationships research: a truth and bias approach. J. Soc. Pers. Relat. 35, 89–111. doi: 10.1177/0265407517712901

Su, R., Zhang, Q., Liu, Y., and Tay, L. (2019). Modeling congruence in organizational research with latent moderated structural equations. J. Appl. Psychol. 104, 1404–1433. doi: 10.1037/apl0000411

Tabachnick, B. G., and Fidell, L. S. (2013). Using Multivariate Statistics: International Edition. Boston, MA: Pearson.

Tagliabue, S., and Donato, S. (2015). Missing data in family research: examining different levels of missingness. Test. Psychometr. Methodol. Appl. Psychol. 22, 199–217. doi: 10.4473/TPM22.2.3

Tagliabue, S., and Lanz, M. (2014). Exploring social and personal relationships: the issue of measurement invariance of non-independent observations. Eur. J. Soc. Psychol. 44, 683–690. doi: 10.1002/ejsp.2047

Wang, N. (2019). Support gaps in parent-emerging adult dyads: the role of support quality. Pers. Relatsh. 26, 232–261. doi: 10.1111/pere.12276

Wang, S., Kim, K., and Boerner, K. (2018). Personality similarity and marital quality among couples in later life. Pers. Relatsh. 25, 565–580. doi: 10.1111/pere.12260

Keywords: latent congruence model, measurement invariance, similarity, accuracy, cross-national, cross-informant, parent-child relationship, support exchanges

Citation: Tagliabue S, Zambelli M, Sorgente A, Sommer S, Hoellger C, Buhl HM and Lanz M (2021) Latent Congruence Model to Investigate Similarity and Accuracy in Family Members' Perception: The Challenge of Cross-National and Cross-Informant Measurement (Non)Invariance. Front. Psychol. 12:672383. doi: 10.3389/fpsyg.2021.672383

Received: 25 February 2021; Accepted: 29 June 2021;

Published: 11 August 2021.

Edited by:

Siwei Liu, University of California, Davis, United StatesReviewed by:

Thomas Ledermann, Florida State University, United StatesJoanna Teresa Mazur, University of Zielona Góra, Poland

Copyright © 2021 Tagliabue, Zambelli, Sorgente, Sommer, Hoellger, Buhl and Lanz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Semira Tagliabue, c2VtaXJhLnRhZ2xpYWJ1ZUB1bmljYXR0Lml0

Semira Tagliabue

Semira Tagliabue Michela Zambelli

Michela Zambelli Angela Sorgente

Angela Sorgente Sabrina Sommer3

Sabrina Sommer3 Margherita Lanz

Margherita Lanz