ORIGINAL RESEARCH article

Front. Psychol., 11 June 2021

Sec. Comparative Psychology

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.654314

This article is part of the Research Topic Songs and Signs: Interdisciplinary Perspectives on Cultural Transmission and Inheritance in Human and Nonhuman Animals

We describe an art–science project called “Feral Interactions—The Answer of the Humpback Whale” inspired by humpback whale songs and by interactions between individuals based on mutual influences, learning processes, or ranking in the dominance hierarchy. The aim was to build new sounds that can be used to initiate acoustic interactions with these whales, not in a one-way direction, as playbacks do, but in real interspecies exchanges. Thus, we investigated how humpback whales generate sounds in order to better understand their abilities and limits. By carefully listening to their vocalizations, we also describe their acoustic features and temporal structure, both scientifically and with a musical approach, as is done in musique concrète, in order to specify the types and the morphologies of whale sounds. The idea is to extract the most precise information possible in order to generate our own sounds, which will be suggested to the whales. Based on the approach developed in musique concrète, similarities with the sounds produced by the bassoon were identified, and these bassoon sounds were then processed to become “concrete sound elements.” This analysis also led us to design a new music interface that allows us to create adapted musical phrases in real time. With this approach, interactions will be possible in both directions, from and to whales.

Intra- and interspecies interactions are the effects that individuals of the same or of different species, respectively, have on one another, based on stimuli to at least one of their senses, including sight, smell, touch, taste, and hearing (Cheng, 1991). The durations of these interactions have different levels of consequences on their life and vital activities (Wootton and Emmerson, 2005), and the interactions range from simple to complex, with positive associations (predator–prey relationships, symbiotic relationships such as mutualism, commensalism, and parasitism) and negative associations (competition). Cetaceans are highly social species living in groups. Intraspecies interactions are strong during their activities and strengthen the structure of their societies (see, for example, MacLeod, 1998; Orbach, 2019). However, numerous interactions between different cetacean species have also been observed. The observations of interspecies interactions published in scientific journals mostly involve delphinids (Weller et al., 1996; Frantzis and Herzing, 2002; Herzing et al., 2003; Psarakos et al., 2003; Smultea et al., 2014). Interactions between odontoceti and mysticeti species seem rarer and sometimes take the form of a predator–prey relationship (Whitehead and Glass, 1985; Arnbom et al., 1987; Vidal and Pechter, 1989; Jefferson et al., 1991; Palacios and Mate, 1996; Rossi-Santos et al., 2009; Deakos et al., 2010; Cotter et al., 2012) or of competition for food (Wedekin et al., 2004).

Humans have always been interested in nature and its inhabitants, in spiritual and practical ways, even though marine wildlife was more difficult to observe and to be in contact with. In Western civilizations, cetaceans were depicted for centuries as scary creatures with deformed imaginary bodies. In other parts of the world, cetaceans were considered gods and were revered (Constantine, 2002). More recently, during the 19th and 20th centuries, whales were massively hunted for food and goods. After the international whaling moratorium in 1982, interactions with cetaceans remained negative because of numerous marine anthropogenic activities, such as fisheries, marine traffic, plastic, chemical, and sound pollution, and climate change. Nevertheless, the public progressively started to interact differently with cetaceans, especially because of a new intellectual understanding of the oceans (Alpers, 1963; Cousteau and Diolé, 1972; Constantine, 2002; Neimi, 2010; Allen, 2014). It is interesting to note that cetacean morphology, and not the sounds cetaceans emit, was one of the main criteria for the positive or negative perceptions that humans have of them. However, with wider public access to pleasure sailing and motor craft, and with new interest in marine life and, more broadly, in the ocean, encounters with cetaceans are no longer rare, and interactions have become possible and more frequent (Parsons and Brown, 2017). Thus, positive and negative interactions were described when cetacean species respectively approach or move away from humans (Allen, 2014; Butterworth and Simmonds, 2017). Films, documentaries, and marine parks have also brought about, in their own way, a radical change in public attitudes and in particular a renewed interest in these marine mammals (Lavigne et al., 1999; Samuels and Tyack, 2000).
Moreover, countries started a new management of the oceans; and international, regional, and national policies to protect cetaceans and their marine environment have been written and put in place, also contributing to higher respect for these species (Baur et al., 1999).

Research projects have been conducted on cetacean species to better understand their behaviors, their interactions, their habitats, and their migration routes and also to better describe the potential effects of anthropogenic activities on them and their marine environment. These programs involved scientists with different skills, such as biology, ecology, ethology, genetics, acoustics, signal processing, mathematics, and computer science. In 2007, we started an international project in collaboration with the Malagasy NGO Cetamada and the Department of Animal Biology of the University of Antananarivo, Madagascar. The main objective was to characterize the Southwestern Indian Ocean humpback whale population (individual identification, spatial distribution, and potential breeding hotspots). We also focused on the songs emitted by the male individuals with the idea of automatically classifying their vocalizations. Because these songs are mainly emitted by males during the breeding seasons, they could have specific roles in mating and reproduction, to attract females and/or to keep males away from each other (Winn and Winn, 1978; Tyack, 1981; Tyack and Whitehead, 1983; Helweg et al., 1992; Darling and Bérubé, 2001; Darling et al., 2006; see review in Cholewiak et al., 2013). After the detection and extraction of the vocalizations, the songs were analyzed in order to characterize the internal structures of the successive phrases. Payne and McVay (1971) introduced the concept of units (also called song units), defined as harmonic or pulsed vocalizations emitted between two silences. Manual and automatic classifications of units are usually based on acoustic time and frequency features, such as duration, relative acoustic intensity, fundamental frequency, presence of harmonics, and shape of the Fourier spectrogram (Dunlop et al., 2007; Cholewiak et al., 2013). These units are organized in time sequences and repeated to form the song themes (Payne and McVay, 1971).
During the last five decades, scientists have studied these songs. It was first shown that they evolve very slowly from one year to the next, through the transformation of existing units and the removal and insertion of a few new units, in a process of cultural evolution (Payne et al., 1983; Payne and Payne, 1985; Allen et al., 2019; see review in Garland and McGregor, 2020). None of these changes reveal the functions of songs. Second, whales from the same ocean interact with each other by sharing the same themes, considered as regional dialects. In a few cases, songs changed faster, with new units introduced by humpback whales from another area, leading to their characterization as cultural revolutions, although the mechanism is still being disentangled and may include song learning on migratory routes (Noad et al., 2000; Allen et al., 2019; Owen et al., 2019). However, different populations often emit different themes, as do whales within the same population across spans of several years.

In 1970, Payne published a vinyl album record with humpback whale song recordings (Payne, 1970). This album was played at the United Nations and at the Japanese House of Representatives to motivate governments and official authorities to stop whale hunting. Whale songs were also a source of inspiration for artistic works (for example, Collins, 1970; Hovhaness, 1970; Crumb, 1971; Bush, 1978; Cage, 1980; Nollman, 1982; Walker, 2001; Potter, 2003—see a review in Rothenberg, 2008a).

Another motivation was the goal of interacting with a nonhuman species. Some interactions are directly linked with vital activities, like territory defense or food search. Other interactions are based on mutual visual observations. With cetaceans, the first experiments focused on their abilities, their behaviors, and even their intelligence in managing such exchanges with humans. For example, a catalog of words was taught to dolphins (Lilly, 1967), showing that they can learn more than 30 words and can organize them in sentences (Herman et al., 1969; see review in Herman, 2010). Recently, this anthropomorphic approach was replaced by studies using what the cetaceans can do by themselves, such as their emitted sounds and behaviors (Dudzinski, 2010; Herzing, 2011; Reiss, 2011). For humpback whales, studies based on playback of their own emitted sounds helped to distinguish specific sounds like breeding or feeding sounds (Tyack, 1983; Mobley et al., 1988; Frankel and Clark, 1998; Darling et al., 2012; Dunlop et al., 2013).

Human music was also introduced during interactions; for example, composed and improvised musical pieces produced with flute, cello, violin, and even orchestra were played for dolphins, belugas, and humpback whales (for example, Video on YouTube, 2008, 2011a, 2011b, 2015). A small number of musical performances were also given while listening to the sounds emitted by cetaceans, in bidirectional interactions, and already showed that such interactions were possible even with wild cetaceans. For example, Rothenberg (2008b) played clarinet to humpback whales in Hawaii, United States, and was able to adjust his musical improvisation by taking into account the units emitted by the whale. Nollman (2008) played music with orcas in their marine environment. However, apart from the musicians’ perceptions, no scientific method has been used to objectively characterize these interactions with whales.

Our art–science project “Feral Interactions—The Answer of the Humpback Whale” began in this context, with the motivation to take into account the humpback whale units and also to objectively measure the interaction level. Our first step was to carefully listen to the humpback whale songs following the analytic listening invented by Schaeffer (1967). Analytic listening is the foundation of musique concrète, which is not based on music sheets or on relationships between music notes and chords, or on personal perception by instrumentalists. It consists of selecting musical objects and analyzing the contents as well as their positions within a musical theme. Then, audio processing can be applied on these raw sounds to transform them into sound objects, finally totally disconnected from the musical instruments that produced the sounds. The aim is to assemble these objects to produce an original musical composition, which is played on loudspeakers. Schaeffer’s fundamental discovery was that these sound objects taken out of their context and used as musical objects are freed from the connotation of their source and can thus reveal their inherent sonic qualities.

Then, we identified some acoustic features in common with sounds that can be obtained from a bassoon. We investigated the mechanical structure and the acoustic features of these two sound generators. In this paper, we explain how our recent scientific study of the humpback whale larynx anatomy brought new inputs showing why the bassoon is the musical instrument closest to the vocal system of the humpback whale, rather than tapped or strummed string instruments or drums, even for simulating pulsed sounds. We also present and analyze the results of a first experiment of human–animal interactions. Finally, we discuss the design of a gestural interface for more nuanced and adaptive human–whale interaction.

Schaeffer (1966) highlighted the language of things and considered listening as the important first step, far from our cultural referents, to be able to create sound objects (Dufour et al., 1999). This process started from the origin of the sounds, i.e., what produced them but also on the perception and sensation of the listeners. It goes through “écouter” (indicative listening), “ouïr” (to be able to listen), before “entendre” (selective listening), and finally “comprendre” (identity listening) (Schaeffer, 1966). In this current study, we strictly followed these successive steps, beginning with the awareness of musical similarities between vocalizations emitted by humpback whales and sounds produced from bassoons. This “trouvaille,” as defined by Schaeffer (1966), is based on several common mechanical and acoustic features between the body of this musical instrument and the humpback whale vocal generator. Therefore, we were able to make comparisons between vibrators and resonators based on the features of the bassoon and our recent knowledge about the anatomy of the humpback whale larynx.

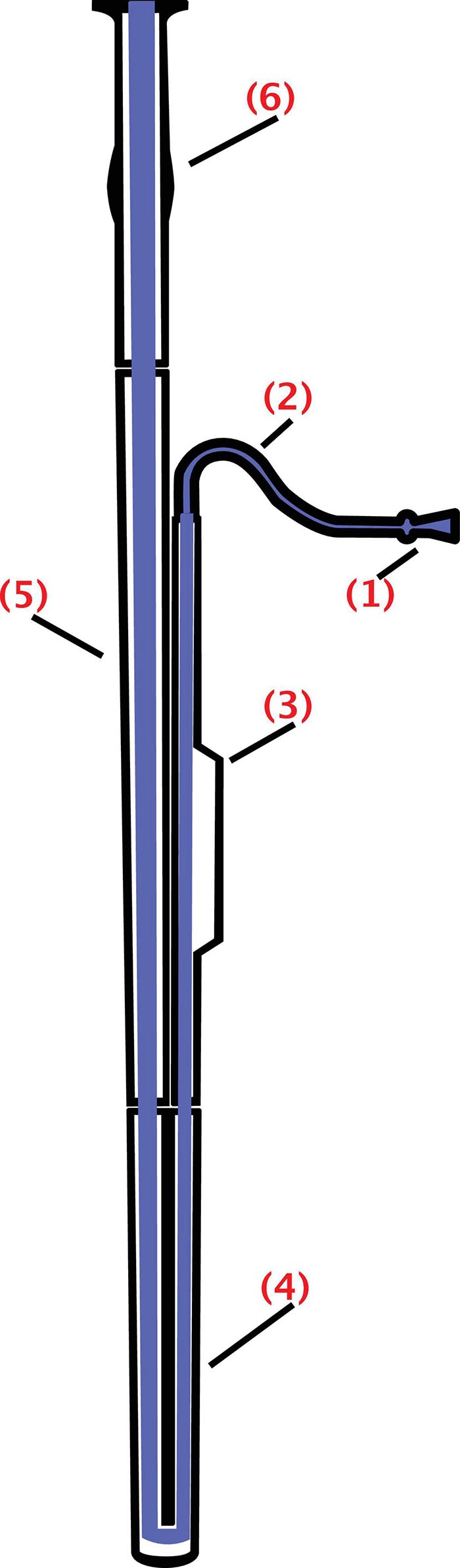

For the bassoon, the vibrator consists of two reeds tied together (Figure 1). The mouthpiece is thus made up of two parallel strips of delicately prepared and ligated reeds, unlike the clarinet and the saxophone, which have only one reed. Reeds are generally made from a plant called Arundo donax. The long fibers are positioned from the heel up to the tip. High-quality reeds are essential to create sustained sounds and to avoid unwanted whistles, generated by irregular facing (Brand, 1950; Giudici, 2019). These two reeds are flexible and mobile. Moistened and pinched between the lips of the instrumentalist, they vibrate with the airflow coming from the mouth. Periodic oscillations of the mouthpiece excite the air column (Nederveen, 1969). The fundamental frequency and the harmonics depend on different parameters, including the stiffness of the two reeds, the pressure of the lips, the power of the airflow, the length of the air column, and the mechanical resonances obtained by opening or closing tone-holes. The colors of the sounds emitted by the instrumentalists depend on the air attack of the reed, involving the lips as well as the pressure and the precision of the airflow. Musicians also have to press their lips on the reed blades to intentionally control the slit opening between the two reeds, in order to tune the color, the pitch, and the loudness of the notes that they want to play.

Figure 1. Schematics of the bassoon: The bell (6), extending upward; the bass joint (or long joint) (5), connecting the bell and the boot; the boot (or butt) (4), at the bottom of the instrument and folding over on itself; the wing joint (or tenor joint) (3), which extends from boot to bocal; and the bocal (or crook) (2), a crooked metal tube that attaches the wing joint to a reed (1) (from https://commons.wikimedia.org/wiki/File:Fagott-Bassoon.svg by users Mezzofortist, GMaxwell CC-BY-SA 3.0).

The humpback whale vibrator is made of two parallel identical 30-cm-long cartilages, called arytenoid cartilages, covered by a more or less thick membrane over their entire length (Reidenberg and Laitman, 2007; Figure 2). The arytenoids work as a valve. The whale unseals these cartilages by relaxing the muscles so that air can move inside the respiratory system. The whale can move the cartilages in three ways: (a) opening them longitudinally with a constant gap over the whole length, (b) opening them gradually in a triangular form that is larger at the apex, or (c) placing them in a V shape (Damien et al., 2018). By adjusting the slit opening of the cartilages, and also by adjusting the stiffness of the membranes and the pressure from the airflow, the whale can precisely control the acoustic features of the emitted vocalizations, which allows a great variety of sounds to be generated (Adam et al., 2013; Damien et al., 2018).

The humpback whale vibrator therefore has no common anatomical characteristic with the vocal folds of terrestrial mammals and monkey lips of the odontoceti species. The arytenoids cannot be stretched more or less to modulate the frequencies as terrestrial mammals do. As well, their vibrator can produce pulsed sounds but no clicks as odontoceti species do. The functional anatomies of their own sound generators explain the different time and frequency acoustic features: the clicks emitted by odontoceti species are transient broadband signals, and the humpback whale vocalizations are tonal signals.

Even if the vibrators of the bassoon and of the humpback whale vocal generator are far from each other in terms of the intrinsic features of the materials, including the size, the stiffness, and the thickness, the way to vibrate follows the same principle of fluid dynamics: the specific volume and speed of the airflow creates pressure and passes through the opening between the two constrained symmetrical rigid parts. These two systems can be both modeled by a harmonic oscillator as the mass (ms)–damper (rs)–spring (stiffness ks) controlled valve:

where pr and pm are the inner and outer air pressures, respectively. For the bassoon, pm and pr are the pressures before the reeds from the mouth and after the reeds, respectively. For the whale, these two pressures are, respectively, in the trachea before the arytenoids and right after the arytenoids at the entrance of the laryngeal sac.
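As a hedged sketch of the valve model described above, a generic single-mass form can be written as follows. The displacement y(t) of the vibrator from its rest position and the effective surface S on which the pressure difference acts are assumed symbols that do not appear in the text, and the sign of the right-hand side depends on whether the valve is treated as blown open or blown closed.

```latex
m_s \frac{d^2 y}{dt^2} + r_s \frac{dy}{dt} + k_s\, y = S \left( p_m - p_r \right)
```

In this generic form, the undamped natural frequency of the vibrator is \( f_0 = \frac{1}{2\pi}\sqrt{k_s / m_s} \), which illustrates why the stiffness of the reeds or of the arytenoid membranes affects the pitch of the emitted sound.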

In both cases, we considered the flows to be low enough to be laminar, which simplifies the equation and yields preliminary simulated results from physics models (Almeida et al., 2003). Note that non-linearities in vibrator oscillations, especially due to faster variations of the airflows, are usually smoothed by the mass damper in the physics models (Almeida et al., 2003; Guillemain, 2004). However, whether for the usual playing of the bassoon or for the loud vocalizations emitted by humpback whales, the airflows have to be mainly considered as turbulent because of the high volumes of air relative to the size of the duct and the resulting pressures on the vibrator walls (Wijnands and Hirschberg, 1995; Adam et al., 2013).

Feedback controls (from the instrumentalist and the whale) make it possible to adjust the resonance of the vibrators and to sustain the harmonic oscillations. Bassoon players press more or less firmly with their lips to slightly modify the pm values. For the whale, a fat membrane called the cushion is located directly above the arytenoids. Its role is to dramatically reduce the space just before the vibrating membranes covering the arytenoid cartilages (Damien et al., 2018). Basically, this cushion guides the airflow to the arytenoids and also presses the membranes around the cartilages, in the same way that bassoon players do with their lips on the reeds. An original mechanical model of the humpback whale vocal generator was designed and used to test the different pressures on the vibrator and also to characterize the airflow propagation, especially before and after the arytenoids (Lallemand et al., 2014; Cazau et al., 2016; Midforth et al., 2016; Figure 3).

Interestingly, geometrical similarities were also found in the structure and the size of both sound systems.

The bassoon is made of a 2.50-m-long conically bored tube of precious wood (Figure 1). The total length is 1.30 m when the tube is folded up. The internal pipe is conical, with a diameter that widens regularly from 4 to 40 mm. It is composed of four parts: the wing joint, the butt, the bass joint, and the bell. Depending on the construction, about 30 tone-holes are positioned on the instrument. A curved bocal, a 30-cm metal tube, connects the instrument to the mouthpiece. The original compass was 3 octaves upward from Bb1 (58 Hz). However, the modern version now allows players to reach the treble Eb5 (622 Hz). The sound timbre also changes by opening and closing the tone-holes.
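The note names and frequencies quoted for the bassoon compass can be checked with a short conversion. This is a minimal sketch that assumes scientific pitch notation and twelve-tone equal temperament with A4 = 440 Hz; neither convention is stated in the text, and the function name `note_to_hz` is illustrative. Under these assumptions, 58 Hz corresponds to Bb1 and 622 Hz to Eb5.

```python
# Equal-temperament pitch-to-frequency conversion.
# Assumptions (not from the article): scientific pitch notation, A4 = 440 Hz.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_hz(name: str, a4: float = 440.0) -> float:
    """Convert a note name such as 'Bb1' or 'Eb5' to its frequency in Hz."""
    letter, rest = name[0].upper(), name[1:]
    semitone = NOTE_OFFSETS[letter]
    # Consume accidentals: 'b' lowers by one semitone, '#' raises by one.
    while rest and rest[0] in "b#":
        semitone += 1 if rest[0] == "#" else -1
        rest = rest[1:]
    octave = int(rest)
    midi = 12 * (octave + 1) + semitone  # MIDI note number (C4 = 60, A4 = 69)
    return a4 * 2.0 ** ((midi - 69) / 12)


if __name__ == "__main__":
    for note in ("Bb1", "Eb5"):
        print(f"{note}: {note_to_hz(note):.2f} Hz")
```

Run as a script, it prints the two bounds of the compass, which can be compared with the rounded values of 58 Hz and 622 Hz given above.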

The humpback whale vocal generator is also made of two pipes linked by the arytenoids: the trachea and the nasal cavities (Figure 2; Reidenberg and Laitman, 2007; Adam et al., 2013; Adam et al., 2018). The trachea is the pipe between the lungs and the arytenoid cartilages. The nasal cavities and the laryngeal sac are the acoustic resonators. The features of these two pipes vary from one individual to another, with lengths from 0.60 to 1.20 m (Reidenberg and Laitman, 2007). This specific anatomy allows the whales to produce tonal sounds with a fundamental frequency of less than 100 Hz. When producing sounds, humpback whales can include the nasal cavities or not by opening or closing the epiglottis. When included, the nasal cavities focus more energy in specific harmonic frequencies, called formants, that can be up to 8 kHz (Adam et al., 2013).

Unit repertoires are not easy to create, especially because of the variability of the unit features, even for repetitive units emitted by the same singer. Manual annotations of acoustic datasets, even by experts trained to detect and classify units, therefore show inter-annotator variability for different reasons, in particular the subjectivity of the analysis (Leroy et al., 2018). Automatic approaches clearly help to better describe these songs. A wide range of methods has been proposed, for example, based on information entropy (Suzuki et al., 2005), on a threshold on the Fourier coefficients (Mellinger, 2005), on the extraction of the edge contour (Gillespie, 2004), on the analysis of the mel-frequency cepstral coefficients (MFCC) (Pace et al., 2010) or the wavelet coefficients (Kaplun et al., 2020), or on the use of artificial neural networks (Allen et al., 2017; Mohebbi-Kalkhoran, 2019). To go further with the perception of these vocalizations, new representations were suggested, especially based on colored pictograms, and proved very useful for extracting the similarities between units in these songs (Rothenberg and Deal, 2015). These representations, although less precise in their details, encouraged us to think differently about the vocalizations and the whole time structure of the songs. However, automatic classification remains a challenge because whale singers are not predictable like machines and constantly add changes and ornaments to their units, altering the duration, the fundamental frequency, some formants, or even the shape of the vocalizations.
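To make the idea of threshold-based detection concrete, the sketch below segments a signal into units, understood as vocalizations emitted between two silences, using a threshold on short-time RMS energy. It is a deliberately simple illustration and not the method of any of the studies cited above; the function names, the 20-ms frame length, and the threshold value are all illustrative assumptions that have not been tuned on whale recordings.

```python
import math


def frame_rms(signal, frame_len):
    """Root-mean-square amplitude of consecutive non-overlapping frames."""
    return [
        math.sqrt(sum(x * x for x in signal[i:i + frame_len]) / frame_len)
        for i in range(0, len(signal) - frame_len + 1, frame_len)
    ]


def segment_units(signal, sample_rate, frame_s=0.02, threshold=0.1):
    """Return (start_s, end_s) pairs where the frame RMS exceeds the threshold.

    frame_s and threshold are illustrative values, not tuned on whale data.
    """
    frame_len = int(round(frame_s * sample_rate))
    active = [r > threshold for r in frame_rms(signal, frame_len)]
    units, start = [], None
    for idx, is_on in enumerate(active + [False]):  # sentinel closes last unit
        if is_on and start is None:
            start = idx
        elif not is_on and start is not None:
            units.append((start * frame_s, idx * frame_s))
            start = None
    return units


def silences_between(units):
    """Durations (s) of the silences separating successive units."""
    return [nxt[0] - cur[1] for cur, nxt in zip(units, units[1:])]


def toy_tone(n_samples, sample_rate, freq_hz=100.0):
    """Synthetic 100-Hz tone standing in for a tonal unit."""
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate)
            for t in range(n_samples)]


if __name__ == "__main__":
    sr = 1000  # Hz, toy sampling rate
    # Silence 0.2 s, tone 0.4 s, silence 0.3 s, tone 0.2 s, silence 0.1 s
    sig = ([0.0] * 200 + toy_tone(400, sr) + [0.0] * 300
           + toy_tone(200, sr) + [0.0] * 100)
    units = segment_units(sig, sr)
    print(units, silences_between(units))
```

On real recordings, a fixed threshold is fragile in the presence of ambient noise, which is one reason the literature moved toward the richer features listed above.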

The units’ acoustic features are important, but some of them also seem to play a specific role in the syntactic structures (Mercado III, 2016). Durations of silences between units in the successive phrases give specific rhythms (Schneider and Mercado III, 2018). In this study, we also focused on the intonation and the prosody of the themes, including the duration, the melody, and the rhythm of the successive units (Picot et al., 2007).

Musical sounds can be described in three temporal phases, named attack, sustain, and release (ASR): the attack, when the vibration is sufficient to generate a sound; the sustain, when the mechanical control is adjusted to maintain the sound as a stationary process; and the release, when the energy progressively decreases. Schaeffer (1967) describes in more detail different types of sound morphologies and further characteristics like mass, timbre, pace, dynamics, and profiles. The attack part plays a specific role in music and in the human perception of sound events. It is one of the central questions of electroacoustic music and musique concrète. Schaeffer even suggested cutting off these attack parts so that the musical instrument from which the sound was emitted can no longer be identified. The objective is clearly to lose any reference to the origin of the sounds and then to consider them as sound objects.

For humpback whale vocalizations, it was also possible to identify these three classical ASR temporal envelope phases. Attacks are directly correlated with the minimum subglottal pressure needed to excite the arytenoid membranes and to set this vibrator into oscillation (Adam et al., 2013). Then, the sustain part appears when the whales control the vibrations propagated to the laryngeal sac, and to the nasal cavities when opened. They adjust the global shape of the vocalization with static or dynamic amplitude and frequency modulations by reducing the glottal flow intensity and/or by modifying the biomechanical properties of the vibrators, like the surface and the stiffness of the arytenoid membranes. Finally, the whales decrease the outer pressure, by reducing the airflow from the lungs, until reaching the static inner pressure of the fully inflated laryngeal sac. These release parts can be highly variable, with short or long durations.
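The three ASR phases can be located on an amplitude envelope with a simple rule. The sketch below is one possible convention among many, assumed here for illustration only: the attack ends when the envelope first reaches a fraction `level` of its peak, and the release starts after the last sample at or above that value. The function name and the default of 0.9 are hypothetical choices, not values from this study.

```python
def asr_phases(envelope, sample_rate, level=0.9):
    """Split an amplitude envelope into attack, sustain, release durations (s).

    The attack ends when the envelope first reaches `level` times its peak;
    the release starts after the last sample at or above that value.
    The 0.9 default is an illustrative choice, not a value from the study.
    """
    peak = max(envelope)
    if peak <= 0:
        raise ValueError("envelope must contain a positive peak")
    above = [i for i, v in enumerate(envelope) if v >= level * peak]
    first, last = above[0], above[-1]
    return {
        "attack": first / sample_rate,
        "sustain": (last - first + 1) / sample_rate,
        "release": (len(envelope) - last - 1) / sample_rate,
    }


if __name__ == "__main__":
    sr = 10  # envelope samples per second (toy value)
    env = [0.0, 0.25, 0.5, 0.75] + [1.0] * 10 + [0.75, 0.5, 0.25, 0.0]
    print(asr_phases(env, sr))
```

Removing the attack, as Schaeffer suggested, would then amount to discarding the first `attack` seconds of the corresponding sound before playback.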

Our method started from careful listening of the emitted whale songs, which is the first step in showing whales that they are being listened to. Then, our approach is to go further than standard playback studies: firstly, our study is not based on simple synthetic sounds or pre-recorded sounds but on sounds created adaptively and suggested from whale vocalizations in real-time. Secondly, the musical interface we used makes it possible to introduce specific features, as modified attacks of few units in the phrase, or playing with the duration of silences to highlight some units like the pulsed sounds, for example. The interface gave us the potentiality of real two-way interactions.

For wind instruments, the airflow is used to actively set the vibrator into oscillation. The air comes directly from the lungs, and the durations of the musical notes are directly linked to the specific apnea abilities of the musicians. Thus, aspects of an individual musician’s performance style are partially determined by their breath control. However, in order to hold a tonal sound over a longer time period, wind instrumentalists have developed a particular technique: circular breathing. This technique is based on the use of the air stored inside the mouth, especially in the cheek and jowl cavities. This air volume can be used as a second source of air after the lungs in order to maintain the sustain part or to modulate the current notes based on the unidirectional airflow. The contemporary bassoonists with whom Aline Pénitot works have all explored circular breathing.

Even if humpback whales do not use their mouth to perform the circular breathing described previously, it is interesting to note that they also move the airflow in two directions inside their respiratory system. The natural one is the way from the lungs to the laryngeal sac. The opposite way is back from the laryngeal sac to the lungs, in a circular breathing process (Reidenberg and Laitman, 2007). The main objective of this bidirectional airflow is to make the apnea longer, but whales could also use this technique to increase the duration of their vocalizations or to add new tonal shapes (Adam et al., 2013).

In both cases (bassoon and humpback whale), airflows are crucial to create the initial vibrations of the oscillators and then to maintain and control the sustain part of the sounds. These flows can be fed by air coming from the lungs or from a second reservoir, such as the laryngeal sac for the whale and the mouth cavity for the bassoon player. These two air sources could increase the acoustic diversity of the expected sounds under the mechanical and anatomical constraints of these generators.

Musicians also explore the full mechanical and musical capacities of the instrument, whether assembled or disassembled. For example, certain types of bassoon sounds are obtained by blowing directly into the bell (the upper part of the bassoon) or the boot (lower part), and pulsed sounds can be produced by blowing with a reed inserted in the boot. These musical sounds are patterned in time sequences of successive tonal or transient sounds, spaced enough to be distinguishable by human listeners, whose temporal resolution in hearing is around 50 ms and at most 100 ms (Auriol, 1986).

Humpback whales are also able to generate this type of sound. Pulsed sounds are emitted when they simultaneously release the arytenoid membrane stress and reduce the airflow through the vibrator but keep it strong enough to maintain the membrane in oscillation. It is interesting that similar pulsed sounds can be found in human speech, as glottalization, popularly known as vocal fry (Childers and Lee, 1991; Redi and Shattuck-Hufnagel, 2001).

For bassoons and humpback whales, pulsed sounds can thus be obtained under similar biomechanical conditions, by slowing down the movements of the vibrators. In both cases, these vibrators can produce two different types of sounds: tonal and pulsed sounds. The range of both vibrators extends to the production of chaotic sounds (Cazau and Adam, 2013; Cazau et al., 2016).

From our “trouvaille,” we were able to design sound objects. This process was motivated by decorrelating the sounds from their origin (in our case, from the bassoon that produced them), in accordance with Schaeffer’s approach. The use of the bassoon was needed to better work on the similarities described previously, but it should not be a limit to the perception of the sounds, as labeled as bassoon sounds. The way to envision musique concrète is to go beyond the instruments—or the things that create the original sounds—so as not to be limited by its mechanical constraints and also by the representation listeners can have from it (Schaeffer, 1966).

Considering the acoustic and morphologic similarities between the bassoon sounds and the humpback whale vocalizations, sessions in a professional music recording studio were planned to elaborate and to record a typology of sound morphologies with special attention to the attack, described previously (see section “Temporal Aspects of Sounds”), using Schaeffer’s methodology of analytic listening and characterization of sound objects. These recorded sounds are then classified and renamed by their morphological and typological characteristics. This approach is called reduced listening by Schaeffer. “The sound is listened to for itself, disregarding the real or supposed origin, and the meaning that it can convey” (Chion, 1983). We applied this approach to create a library of 47 sounds (available to readers by request to the corresponding author) originally produced with the bassoon and played taking into account the acoustic features of the units emitted by humpback whales. Following Schaeffer’s method, our sounds were classified and named in the rest of the article as concrete sound elements (CSEs). To name them, onomatopoeias were used (Waou, Oyé, and Ouin), characteristics of intention (accent and support), or a variation around the notion of pulsed sounds (Frot, iteration, and shuffling). The first word used to name the sound is the main characteristic, and then secondary characteristics are added depending on the quality of the sound. The purpose of these CSEs was to broaden the perception of the whale units. This unique sound library was used during human–machine–whale interactions.

In their study of humpback whale songs, Payne and McVay (1971) conclude that “the function of the songs is unknown.” However, because singers were recorded during breeding seasons, it was first assumed that songs could be linked with mating activities, as interactions between females and males and/or between males. To date, the role of these songs is not totally clear and is still under investigation. The same holds for the time structure of the songs and the meaning of its evolution over the years. Therefore, acoustic playbacks to humpback whales should be conducted with great caution. In any case, this kind of experiment has to be done by experts, with marine mammal observers (MMOs) on boats, following the rules of protection and respect for cetacean species.

Therefore, this project, carried out during the humpback whale breeding season in July–August 2018 off La Réunion Island (Indian Ocean), followed strict ethics rules, based in particular on the charter for a responsible approach to marine mammals (Region Réunion, 2017). During the three field missions, our boat was stopped 300 m away from the isolated singer, with the engine turned off. No swimmers or divers went into the water. An MMO was on board to observe the behavior of the humpback whales and to report any external signs that could be interpreted as disturbance or harassment, such as cessation of singing, a change in breathing rate, movement away from the boat, or any display that could be perceived as agonistic behavior.

After the experiments, humpback whales were not tracked. The boat went away at very slow speed (3 knots) up to 500 m before adopting a higher speed.

During the experiments, the sounds were played by the musician-experimenter seated on the boat deck, using a laptop and a MIDI controller. The hydrophone (Ambient ASF-1 MKII) and the underwater speaker (Lubell LL916) were deployed in the water at 10-m depth directly under the boat (Figure 4). The acoustic intensities were lower than 135 dB re 1 μPa at 1 m. Each experiment was limited to 15 min and was conducted only once with a given whale.

Before any sounds were played in the water, the humpback whales were visually observed and their songs were carefully listened to. Then, to initiate the whale–human interactions, the concrete sound elements were chosen from the sound library taking into account the vocalizations emitted by the humpback whale singer. Because the CSEs were recorded in a music studio before going to sea, the approach of the musician-experimenter was not to mimic the humpback whale units but rather to play CSEs that could match some acoustic features of the units, taking into account the musical structures of the phrases. These CSEs were played in real time, using the sampler connected to the underwater speaker, by following, anticipating, and synchronizing with the successive units in order to adjust the rhythm and intonation.

For this study, we analyzed the acoustic recording made during the last experimental day because (a) it corresponded to the longest interaction, (b) the recording was continuous with no interruption, and (c) the weather was sunny and the sea was calm.

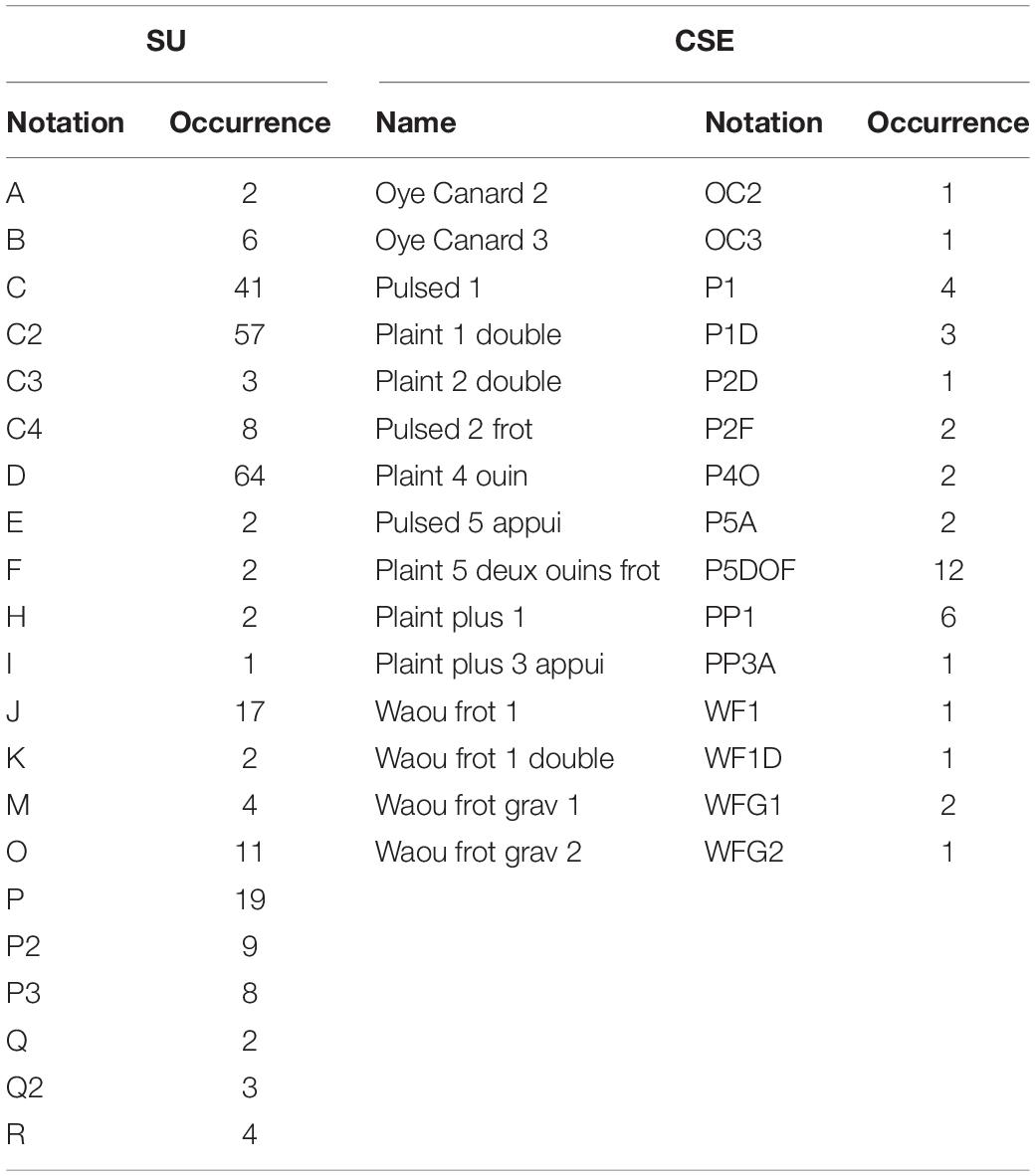

The duration of this acoustic recording (48 kHz, 24 bits) was 17 min 35 s. During the annotation phase, the bioacoustic expert manually detected all units and concrete sound elements by carefully listening to the acoustic recording and by visually examining the spectrograms. The types of the sounds (tonal and pulsed; Adam et al., 2013), the contour of the fundamental frequency (flat, upsweep, downsweep, convex, concave, and continually modulated shape; Hickey et al., 2009), and the presence of harmonic frequencies (Adam et al., 2013) were used to create new classes. When only the unit durations were significantly different but the other acoustic features were still close, sub-classes were created and denoted by the letter of the class and a number (for example, C2, C3, and C4 are subclasses of C). In the few cases of overlapping units and CSEs, they did not start at the same time, and it was easy to classify them manually. In total, 307 acoustic events were detected in this recording, including 40 concrete sound elements and 267 units. To name the units, we used letters of the Latin alphabet, assigned in alphabetic order to each new type of vocalization successively detected in the acoustic recording, as suggested by Payne and McVay (1971). The same letter followed by a number is used when the units are close enough not to warrant a new category. Overall, the humpback whale individual emitted 21 different units, and 13 different concrete sound elements were played during this experiment (Table 1). These units and CSEs are available to readers by request to the corresponding author.

Table 1. Names and occurrences of the humpback whale song units (SUs) and the played concrete sound elements (CSEs).

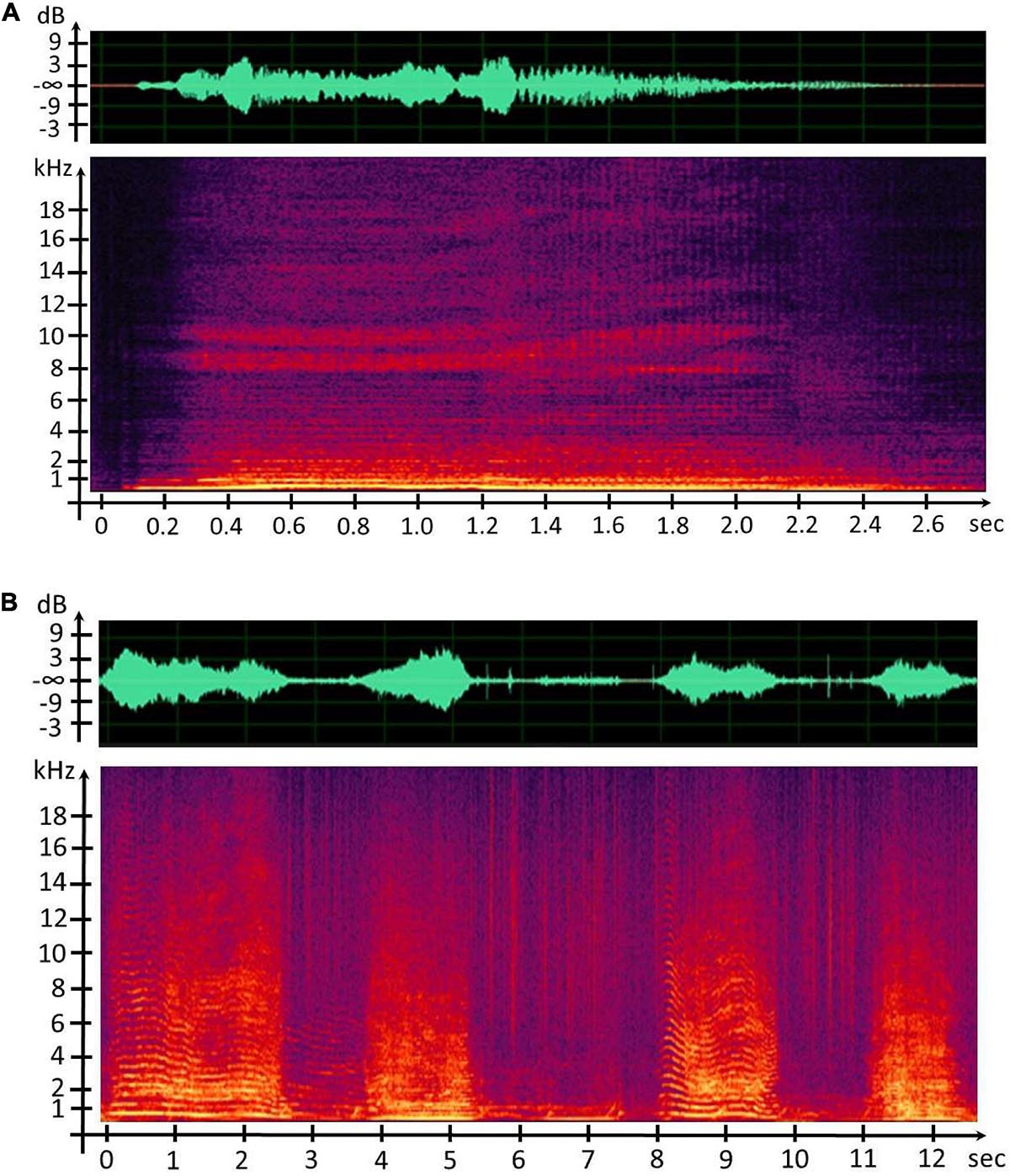

After mechanical and anatomical similarities between the sound generators were noticed, the underlying question was whether the CSEs and units share common acoustic features or are at least distributed close to each other. We first computed the spectrograms using a 512-sample fast Fourier transform and a Blackman–Harris weighting window with 75% overlap in order to detect all the acoustic events, CSEs, and units (Figure 5).

Figure 5. (A) Waveform and spectrogram representation of the concrete sound element (CSE) “Waou frot grav 2.” (B) Waveform and spectrogram representation of humpback whale song units.
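The spectrogram settings given above (512-sample FFT, Blackman–Harris window, 75% overlap, 48-kHz recording) can be illustrated with a minimal sketch. It assumes SciPy's `scipy.signal.spectrogram`; the function name `compute_spectrogram` and the synthetic upsweep are hypothetical and stand in for the real recordings, and this is not the authors' own code.

```python
import numpy as np
from scipy import signal


def compute_spectrogram(samples, sample_rate=48_000, n_fft=512, overlap=0.75):
    """Return (frequencies in Hz, frame times in s, power in dB) for a mono signal."""
    noverlap = int(n_fft * overlap)  # 384 samples for a 512-sample window
    freqs, times, power = signal.spectrogram(
        samples,
        fs=sample_rate,
        window="blackmanharris",
        nperseg=n_fft,
        noverlap=noverlap,
        scaling="spectrum",
    )
    power_db = 10.0 * np.log10(power + 1e-12)  # small offset avoids log(0)
    return freqs, times, power_db


# Hypothetical test signal: a 1-s linear upsweep from 200 Hz to 800 Hz.
sr = 48_000
t = np.arange(sr) / sr
sweep = signal.chirp(t, f0=200.0, f1=800.0, t1=1.0, method="linear")
freqs, times, power_db = compute_spectrogram(sweep, sample_rate=sr)

# Frequency of the strongest bin in each frame, a rough contour of the upsweep.
peak_per_frame = freqs[power_db.argmax(axis=0)]
```

With these settings the frequency resolution is 48,000/512 = 93.75 Hz per bin and successive frames are 128 samples apart, which is worth keeping in mind when reading low-frequency contours on such a spectrogram.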

To compare the CSEs and the units, two different sets of acoustic features were automatically computed in order to investigate two levels of complexity. First, five characteristics were computed: the duration and four spectral properties, namely the minimum, maximum, and peak frequencies and the bandwidth. For each feature, the means and standard deviations are given in Table 2.
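As an illustration of this first feature set, the sketch below derives the five characteristics from a single isolated sound. The text does not specify how the minimum and maximum frequencies were delimited, so the −20 dB threshold relative to the spectral peak, the Hann weighting, and the function name `basic_features` are assumptions made only for this example.

```python
import numpy as np


def basic_features(samples, sample_rate, threshold_db=-20.0):
    """Duration plus min, max, peak frequency and bandwidth of one sound.

    Assumption: a frequency bin is counted as part of the sound when its
    level is within `threshold_db` of the strongest bin.
    """
    duration = len(samples) / sample_rate
    window = np.hanning(len(samples))
    spectrum = np.abs(np.fft.rfft(samples * window)) ** 2
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    level_db = 10.0 * np.log10(spectrum / spectrum.max() + 1e-12)
    active = freqs[level_db >= threshold_db]
    f_min, f_max = float(active.min()), float(active.max())
    return {
        "duration_s": duration,
        "min_hz": f_min,
        "max_hz": f_max,
        "peak_hz": float(freqs[np.argmax(spectrum)]),
        "bandwidth_hz": f_max - f_min,
    }


# Hypothetical 2-s sound: a 300 Hz fundamental with a weaker 600 Hz harmonic.
sr = 8_000
t = np.arange(2 * sr) / sr
tone = np.sin(2 * np.pi * 300.0 * t) + 0.5 * np.sin(2 * np.pi * 600.0 * t)
features = basic_features(tone, sr)
```

Applying such a function to every annotated event and averaging per category would yield means and standard deviations of the kind reported in Table 2.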

The humpback whale units last from less than 1 s to 5 s, as do almost all the CSEs (Table 2B). Only the CSE “WFG1” lasts more than 5 s, making it the sound with the longest duration (Table 2A). Regarding spectral properties, the CSEs are also similar to the units. The minimal frequencies of the CSEs and the units were between 80 Hz (units “F” and CSEs “P4O”) and 500 Hz (units “D” and “A” and concrete sound element “WFG2”). Only for the unit “R” was this frequency higher than for the other sounds (740 Hz). The peak frequencies were also distributed in the same range, except for the CSE “OC3” (2 kHz), in which we can recognize the resonance property of the bassoon.

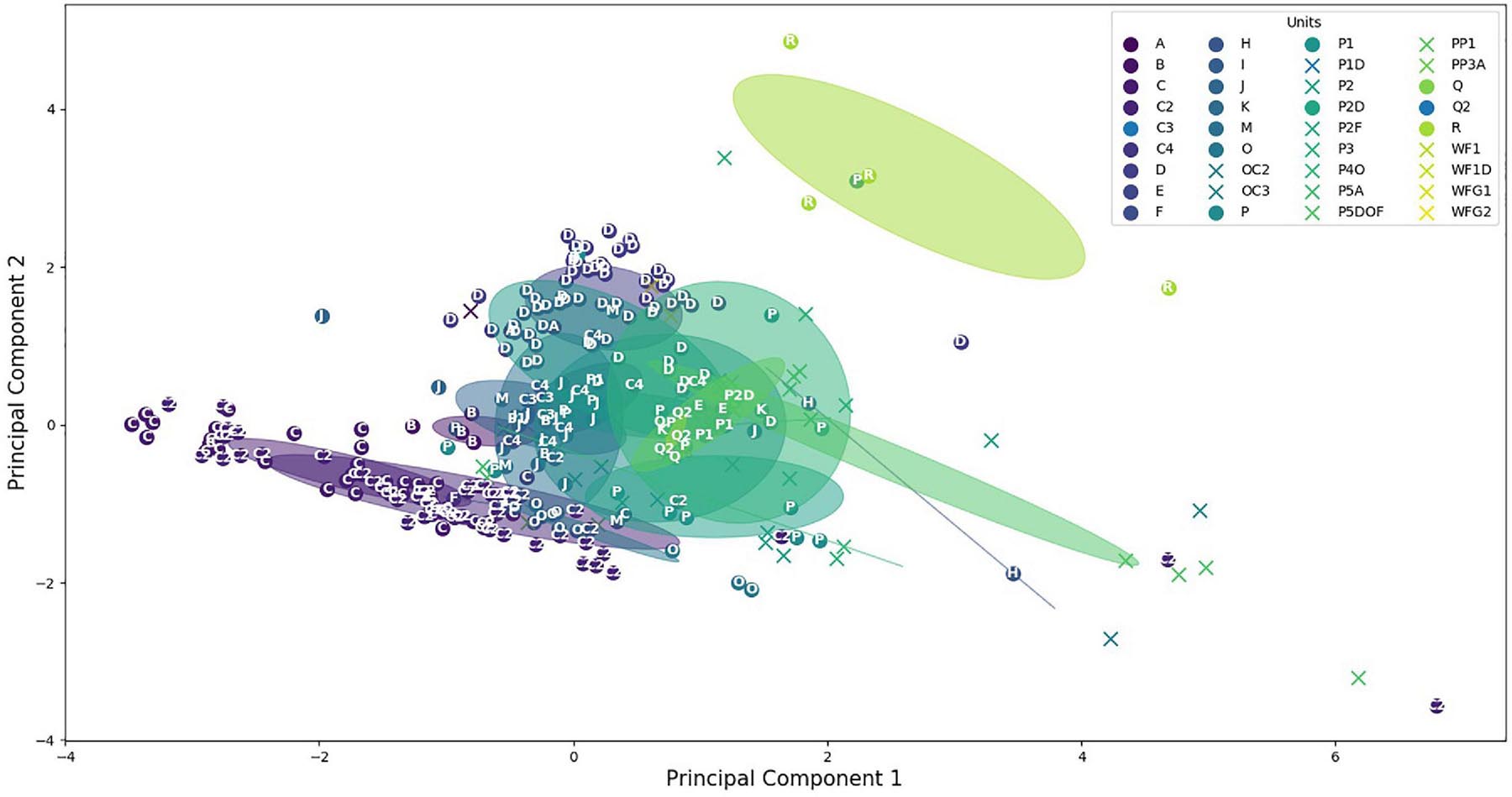

To obtain a clearer view, a principal component analysis (PCA) was computed. The first two principal components accounted for 46% and 29% of the variance of the data, respectively. This proportion increases to 94% when the third component is added. However, a 3D figure would have been hard to read, so we chose to keep only the first two components. Figure 6 displays all sounds of the same category in the same color, showing their dispersion inside their own category. We can see that the majority of the CSEs and the units are grouped between -2 and 2 on both axes, showing close values of their acoustic features. The unit dispersions were measured by computing the mean Euclidean distances between the sounds within each category (Tables 2A,B). As a result, only five sound categories have a mean distance higher than 2. The distances are lower than 1 for 64% of these categories (23 out of 36), showing that the CSEs and units are well clustered. The circles show the overlap between the different categories. This means, first, that some units had acoustic similarities. Furthermore, the reproducibility of the units seemed to depend on the type of sound, with lower standard deviations for tonal sounds than for pulsed sounds. Second, the units shared acoustic features close to those of the CSEs, as shown by the Euclidean distances and standard deviations in Figure 6.

Figure 6. Distribution of the concrete sound elements (stars) and the units (dots). Circles are centered on clusters with a radius corresponding to the standard deviation of 1.
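The PCA projection and the within-category dispersion can be sketched as follows. This is a minimal illustration on random data, not the authors' implementation: standardizing each feature before the decomposition is an assumption, and the names `pca_scores` and `mean_pairwise_distance` are hypothetical.

```python
import numpy as np


def pca_scores(features, n_components=2):
    """Project features onto their first principal components.

    Assumption: each feature is z-scored first (no feature is constant).
    Returns the scores and the explained-variance ratio of every component.
    """
    scaled = (features - features.mean(axis=0)) / features.std(axis=0)
    _, singular_values, components = np.linalg.svd(scaled, full_matrices=False)
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    scores = scaled @ components[:n_components].T
    return scores, explained


def mean_pairwise_distance(points):
    """Mean Euclidean distance between all pairs of points in one category."""
    if len(points) < 2:
        return 0.0
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    upper = np.triu_indices(len(points), k=1)  # count each pair once
    return float(dists[upper].mean())


# Hypothetical data: 30 sounds described by the five basic features.
rng = np.random.default_rng(0)
features = rng.normal(size=(30, 5))
labels = np.array(["A"] * 10 + ["B"] * 10 + ["C"] * 10)

scores, explained = pca_scores(features)
dispersion = {
    name: mean_pairwise_distance(scores[labels == name])
    for name in np.unique(labels)
}
```

Summing the first two entries of `explained` gives the share of variance retained by a two-component plot, which corresponds to the 46% and 29% quoted for the real data.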

However, the diversities were not equal for the different categories (Figure 6). The lower dispersion suggested that the different units of the same category have closer acoustic features than when the dispersion is higher. These diversities can be explained by the different numbers of the units and also because in the same category, the whale can slightly modify one of the parameters. For example, the time duration of units in one category could be longer or shorter, while the types of the sounds (tonal or pulsed) and the spectrogram shapes are still very similar. As a result, in Figure 6, we can see that the two units “A” and “B” are very close to the unit “C.” On the other hand, the C2 category showed a large dispersion, because these units were emitted with lower acoustic intensities and were shorter (meaning that the mean of the acoustic parameters gave less constant values) and also because the occurrence of these units was higher (Table 1).

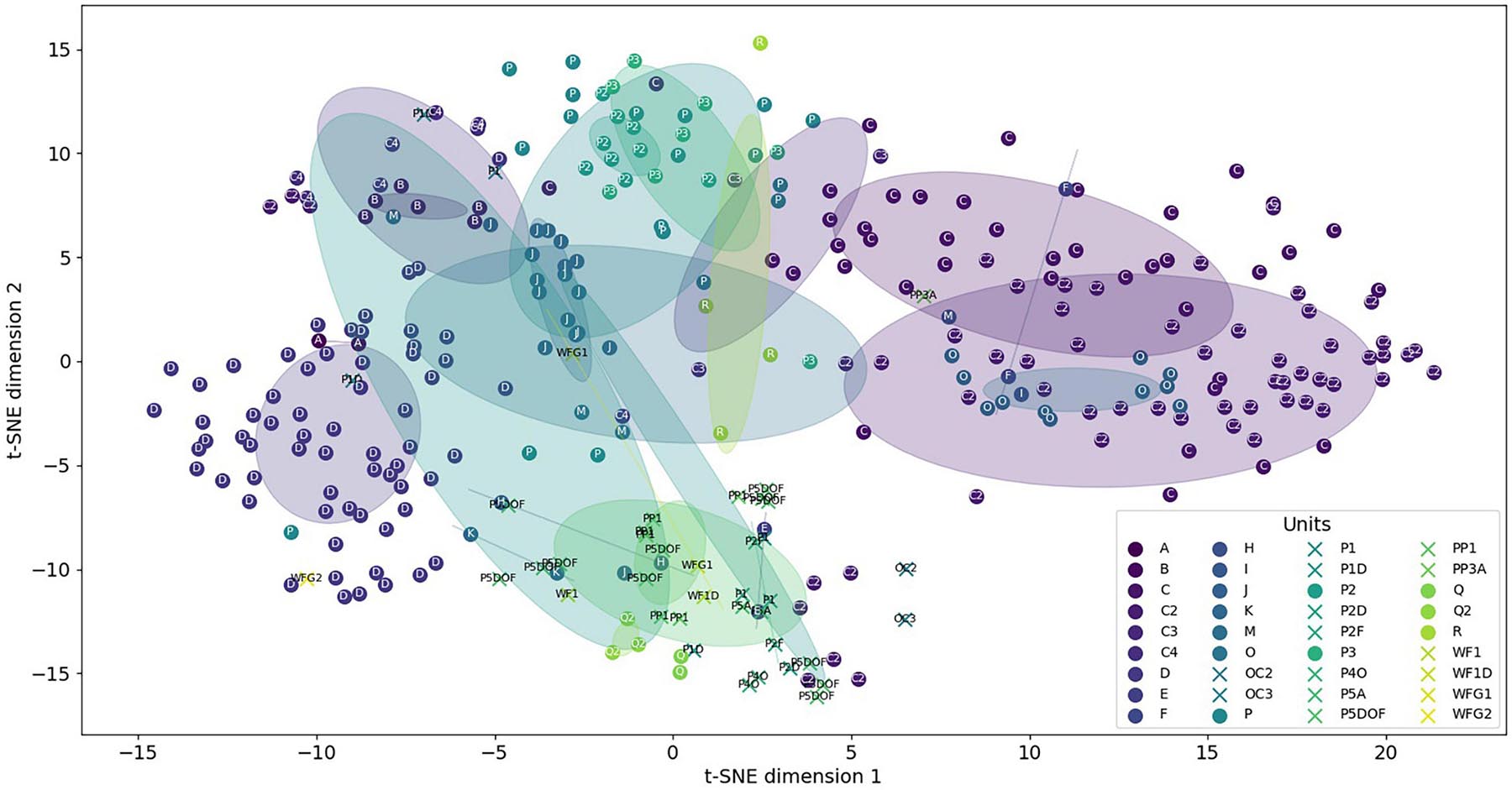

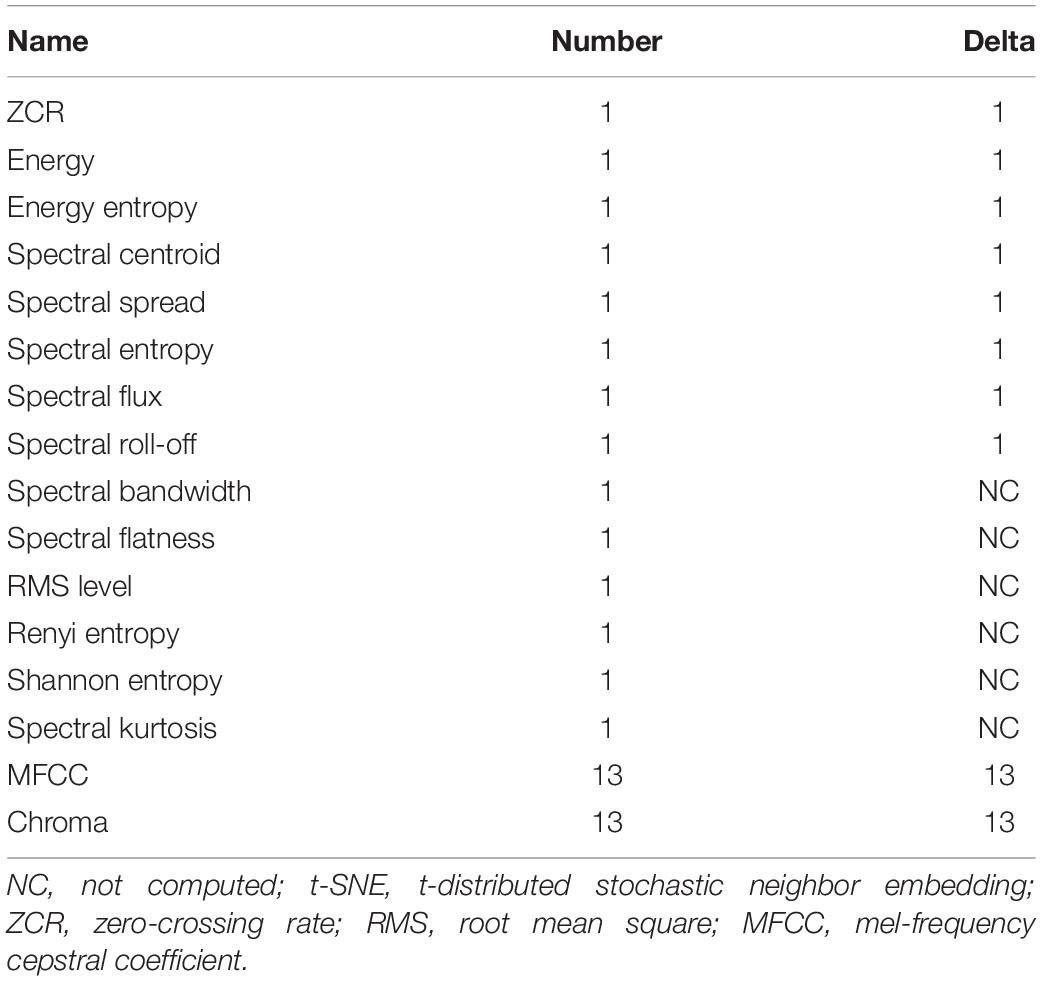

Second, we investigated these concrete sound elements and units at a higher level of complexity. From each detected unit, a total of 74 acoustic features were automatically computed from the time and spectral representations (see the mathematical definition of these features in Malfante et al., 2018) (Table 3). All implementations were taken from the Librosa toolbox (McFee et al., 2020), the audio_analysis software package (Lerch, 2012), and the pyAudioAnalysis toolbox (Giannakopoulos, 2015). The t-distributed stochastic neighbor embedding (t-SNE) was implemented to enable the representation and visualization of the feature space (van der Maaten and Hinton, 2012) using the Scikit-learn Python package (Pedregosa et al., 2011). The aim of using t-SNE was to explicitly show the similar and dissimilar sounds among the CSEs and the units based on these acoustic features (Table 2). With this approach, dissimilar points should be further from each other (Poupard et al., 2019). Conversely, if the CSEs and the units share similar acoustic properties, they should be close in the t-SNE map.

Note that default parameters were used to compute the features and to compute the t-SNE with the Scikit-learn implementation.
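A minimal sketch of this step with the Scikit-learn implementation is given below. The feature matrix is random and purely hypothetical (307 events by 74 features, matching the counts reported above); standardizing the features beforehand and fixing `random_state` for reproducibility are assumptions of this example and go beyond the default parameters mentioned in the text.

```python
import numpy as np
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

# Hypothetical feature matrix: one row per acoustic event, one column per feature.
rng = np.random.default_rng(0)
features = rng.normal(size=(307, 74))

# Assumption: features are z-scored so that no single unit of measure dominates.
scaled = StandardScaler().fit_transform(features)

# Default t-SNE settings, except for the fixed seed (assumption for repeatability).
tsne = TSNE(n_components=2, random_state=0)
embedding = tsne.fit_transform(scaled)  # shape: (n_events, 2)
```

Because t-SNE is stochastic and preserves local neighborhoods rather than global distances, the absolute axis values of such a map are not meaningful in themselves; only the relative proximity of points should be interpreted.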

From the 74 acoustic features, we also computed the t-SNE map. In Figure 7, we used the same color for all the CSEs and units inside the same category, making it easier to visualize the variability of these sounds. Circles are centered on the cluster of each category, with a radius corresponding to a standard deviation of 1.5.

Figure 7. t-Distributed stochastic neighbor embedding (t-SNE) map. Stars represent concrete sound elements, and dots represent humpback whale units. Circles are centered on clusters with a radius corresponding to the standard deviation of 1.5.

From this map, we can see that units and CSEs are well clustered, showing that the acoustic features make it possible to discriminate between all the different types of sounds. Furthermore, the mixed distribution of the stars, for CSEs, and the dots, for units, shows the close similarity of the different sequences emitted after a specific concrete sound element. For example, the CSEs are found inside the cloud of the units, with their t-SNE dimension 1 between −5 and 5, whereas the units are distributed between −15 and 20. For the t-SNE dimension 2, the CSEs and units share the same range.

We also computed the Euclidean distance between CSEs and units (Table 4). It appears that the mean distance between units is 2.08 and that the Euclidean distances between 2/3 of CSEs and the units are lower than 2.08.

Table 4. Mean Euclidean distance between the categories of concrete sound elements (columns) and of the units (rows).
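A table of this kind can be assembled as in the sketch below. The text does not state whether the distance between two categories was taken between centroids or averaged over all pairs of sounds; the second option is assumed here, and the coordinates, labels, and function names are hypothetical.

```python
import numpy as np


def mean_cross_distance(group_a, group_b):
    """Mean Euclidean distance over all pairs (one point from each group)."""
    diffs = group_a[:, None, :] - group_b[None, :, :]
    return float(np.sqrt((diffs ** 2).sum(axis=-1)).mean())


def distance_table(points, labels, row_names, column_names):
    """Rows are unit categories, columns are CSE categories."""
    labels = np.asarray(labels)
    table = np.empty((len(row_names), len(column_names)))
    for i, row in enumerate(row_names):
        for j, column in enumerate(column_names):
            table[i, j] = mean_cross_distance(
                points[labels == row], points[labels == column]
            )
    return table


# Hypothetical 2D coordinates: two "C" units and one sound for each of two CSEs.
points = np.array([[0.0, 0.0], [0.0, 2.0], [3.0, 0.0], [3.0, 4.0]])
labels = ["C", "C", "P1", "PP1"]
table = distance_table(points, labels, row_names=["C"], column_names=["P1", "PP1"])
```

Comparing each entry with the mean distance between units (2.08 in this study) then indicates which CSE categories lie closer to a unit category than the units lie to each other.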

Our second objective was to measure the similarities between the time sequences of vocalizations emitted by the whales after the display of a specific concrete sound element. With these comparisons, we would like to show if the whales emit the same sequences or not.

We applied the Levenshtein distance similarity index (LSI) (Levenshtein, 1966), previously used in different studies, especially to assess the changes in humpback whale songs across multiple sites and over years (Eriksen et al., 2005; Rekdahl et al., 2018; Owen et al., 2019). This index makes it possible to evaluate the number of changes needed to transform one song into another. A change can be an insertion, a deletion, or a substitution of one unit in the songs. Furthermore, the representative song (median string) was computed for each stimulus (Owen et al., 2019). In our study, we chose to compute the normalized version of the Levenshtein distance on the unit sequences emitted after each concrete sound element. Thus, we defined each concrete sound element as a stimulus (Levenshtein, 1966), and this approach estimated the changes in the sequences of successive vocalizations following the insertion of these stimuli (Table 5). Because we computed a distance (distance = 1 – similarity), the two compared sequences are identical when the normalized Levenshtein similarity index (NLSI) value is 0, and the sequences are totally different when the value is 1. All implementations were performed in Python with the python-string-similarity toolbox (Luo, 2020) and the python-Levenshtein modules (Necas et al., 2019).
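To make the measure concrete, the sketch below computes a normalized Levenshtein distance between two unit sequences in plain Python rather than with the toolboxes cited above. Sequences are treated as lists of unit labels so that a label such as “C2” counts as one symbol; dividing by the length of the longer sequence is an assumption about the normalization, and the function names are hypothetical.

```python
def levenshtein(seq_a, seq_b):
    """Minimum number of insertions, deletions and substitutions
    needed to turn seq_a into seq_b (dynamic programming, row by row)."""
    previous = list(range(len(seq_b) + 1))
    for i, unit_a in enumerate(seq_a, start=1):
        current = [i]
        for j, unit_b in enumerate(seq_b, start=1):
            cost = 0 if unit_a == unit_b else 1
            current.append(
                min(
                    previous[j] + 1,         # deletion
                    current[j - 1] + 1,      # insertion
                    previous[j - 1] + cost,  # substitution or match
                )
            )
        previous = current
    return previous[-1]


def normalized_distance(seq_a, seq_b):
    """0.0 for identical sequences, 1.0 for totally different ones.

    Assumption: the distance is divided by the longer sequence length.
    """
    longest = max(len(seq_a), len(seq_b))
    if longest == 0:
        return 0.0
    return levenshtein(seq_a, seq_b) / longest


# Unit sequences written as lists of labels, using patterns named in the text.
close = normalized_distance(["C2", "C2", "C2", "D"], ["C2", "C2", "D"])
far = normalized_distance(["B", "C", "C"], ["C2", "C2", "D"])
```

Here `close` is 0.25, because a single deletion over four units turns one sequence into the other, while `far` is 1.0, because no unit is shared and all three must be substituted.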

The low NLSI values showed that, after the same concrete sound element, the following emitted units have specific acoustic features or the unit sequences are close. After the concrete sound element PP1, six unit sequences were detected, with the common structure “C2C2C2D” (Table 4). The different NLSI values showed that specific unit sequences can be expected after emitting CSEs.

Two different patterns could be identified for the time unit sequences emitted after the stimulus CSEs “P1” (Table 4). Half of the sequences were similar, including the pattern “BCC,” while the second half always started with the unit “C2.” It included a “C2C2D” pattern and did not include any of the “B” or “C” units. Similar results could be identified in the time sequences emitted after the stimulus CSE “PP1,” which shared close acoustic features with the CSE “P1.” For that stimulus, two unit sequences contained the pattern “CCD,” while the others contained the “C2C2D” pattern instead. This “C2C2D” pattern was also identified in the sequences emitted after the stimuli “OC3,” “P1D,” “P4O,” “P5A,” “P5DOF,” and “WFG2.” The “CCD” pattern was identified after the CSEs “OC2,” “P2D,” “P2F,” “PP3A,” and “P5DOF.”

These results on the diversity of the unit sequences show interesting modifications after the stimuli, which could testify to the singer’s reactions to the CSEs. Nevertheless, this is not sufficient to strictly prove that the stimuli were the only explanation for these changes, because singers sometimes repeat phrases quite precisely and at other times do not, even in the absence of any playback. To investigate further, future experiments should definitely include acoustic recordings of the whole song before starting playbacks of CSEs, in order to analyze the level of repetitiveness of the unit sequences during the song.

It is possible to approach the acoustic features of humpback whale sounds using different types of musical instruments, including singing bowls, bowed, plucked, tapped string instruments, or even human voice. Among all of them, wind instruments are based on vibrators that are close to the vibrator of the humpback whale sound generator, and wind instruments with double reeds are the closest. It takes a high air pressure to obtain a sound with these wind instruments. Therefore, it is very difficult for bassoonists to produce a low-intensity sound. Interestingly, this was also noticed for humpback whale vocalizations (Au et al., 2006). This is explained by the specific anatomy of their vibrator: airflow has to be strong enough to put the membranes that cover the arytenoids in vibration. It depends on the distance between the two arytenoids, their respective positions, and the length and the thickness of the membranes involved in these vibrations.

However, Schaeffer suggested that generated sounds must be distinguished from the musical instrument. Following this approach, the concrete sound elements could be created by using a bassoon or any other instruments or tools. We could assume that acoustic similarities of these CSEs will still be essential to initiate interactions with the humpback whales. The concrete sound elements should not be considered as stimuli, as they were defined for the Levenshtein analysis, but have to be seen as part of the mutual music exchange between the whale and the musician-experimenter. The concrete sound elements have to be played leading or following the humpback whale units, taking into account different parameters including the musical timbre, the attacks, the rhythm, and the whole structure of the phrases. Used in this way, the concrete sound elements are not meant as random new sounds that disturb the whale during its song, but as suggested sounds that could be perceived as musical ornaments inside the original phrase.

Therefore, it will be interesting to renew these experiments and to compare the number of changes in the song phrases during and without the play of the CSEs. This further work could contribute to show whether the whale makes choices in the emission of each unit, of each sequence, or if the humpback singer is primarily following the population-wide song structure.

We are not a marine species. Our voice and our musical instruments are not designed to be directly used in the underwater environment. We proposed an interface to tackle this and to allow us to meet the whales in their own local context.

The interface was also the solution to go beyond human musical instruments, which have their own acoustic limits and constraints. The experience gained through our work playing concrete sound elements with a sampler prompted the design of an original interactive interface. It is motivated by the wish to explore instantaneous interactions between the musician-experimenters and the humpback whales. The objective is to finely adjust variations of sounds taking into account the variability of the humpback whale vocalizations, with the possibility of creating new sounds close to what the musician-experimenter listens to, and also to be at the same level as the whale in terms of acoustic intensities, frequencies, and variabilities. Thus, this interface is based on simultaneous acoustic recordings and sounds played in the water. First, the musician-experimenter is able to listen carefully to the humpback whale vocalizations in order to identify the units, their acoustic features, and also their time structure as sequences, sub-phrases, phrases, and themes. Second, the musician-experimenter chooses the adapted CSEs and plays them at the right time inside the humpback whale song, before, after, or simultaneously with the units. With this approach, we expected that the whale would not consider the produced CSEs as echoes of its own units but as sounds that refer to its own vocalizations. Our motivation is not to produce new sounds in the water for humpback whales but to signify to individuals that the musician-experimenter is also here to listen to them and to adjust the choice of the CSEs considering the global time structure of this acoustic exchange. The interface is designed taking into account the four aspects of sound morphology, song structure, intentionality, and gesture. It also makes it possible to signify that silences are listened to.

Up to now, sounds were emitted through a waterproof speaker deployed in the water under the boat, while the musician-experimenter was seated on the boat deck. We thought that the musician-experimenter could also be in the water, for the following reasons. First, in this position outside the water, the experimenter can feel the border between the marine and air worlds. This could limit the acoustic perception of the produced sounds and also of the vocalizations emitted by the whale. Second, the other motivation would be to consider the local soundscape. The acoustic environment in the water is not the same as on the boat, in particular when several people are also on the deck. Third, being in the water could be an opportunity to work on the gestures and to link them to how the different sounds are produced. Fourth, in case of visual contact between the experimenter and the whale, mutual visual observations could be complementary to acoustic perceptions. Even if humpback whale singers are known to be static while singing, the objective is to instantaneously have more information about their potential reactions, postures, and interests: any external signs could be complementary to their vocal production and could have a specific meaning that we need to take into account during this interaction. In the same way, humpback whales could see the experimenter during the performance, observe gestures, and gain information from them. It will be interesting to know how the humpback whales will behave because, up to now, studies were done only when humpback whale singers interacted over distances too long for visual contact (Cholewiak et al., 2018). Consequently, a singer that can see another agent making song-like sounds and movements at close range is likely to be encountering a radically different scenario from what it would naturally encounter in vocal communication contexts.

For all these reasons, we designed our new interface, named Gestural Underwater interactive Whale–Human interface (GUiWHi), to be used also in the water (Figure 8). The components will include the following:

- For underwater audio capture and diffusion, a hydrophone (Ambient, ASF-1 MKII/10 Hz–80 kHz), an underwater speaker (Lubell LL916/200 Hz–23 kHz, 78 W, 180 dB re 1 μPa at 1 m), a diffusion amplifier, an audio interface (RME BabyFace Pro, 24 bits, and 96 kHz with integrated microphone phantom power and pre-amplifier).

- For gesture analysis, sound synthesis and manipulation, and recording of the whole session, a laptop computer (Apple MacBookPro with hard disk, screen, and interactive audio programming environment Cycling’ 74 Max with a bespoke gesture-controlled sound synthesis program).

- For underwater gesture sensing, two wrist-worn inertial measurement units [9-degree-of-freedom (DoF) inertial measurement unit (IMU) Bitalino R-IoT with wired serial connection to the computer, 100 Hz] and one underwater tablet (Valtamer Alltab 4.0 with wired connection to the computer).

The two IMUs allow fine capture of continuous gestures via the built-in accelerometers, gyroscopes, and magnetometers. As such, arm posture (orientation angles), attacks (impulses), and slow arm or forearm movements can be used to initiate and continuously modify sounds, while the tablet with its touch-screen surface allows for preselection of sound classes.

This interface can be played underwater by a diver–musician–experimenter such that a gesture can be associated with a specific type and profile of sound that is produced by the sound synthesis software. In this way, a musical development can be elaborated based on careful listening, which is clearly addressed to the whales, going beyond a simple reaction to the type and morphology of their sounds. The musician will sometimes need to take the lead and sometimes let herself/himself be guided. This alternating leadership between the musician and the whale could potentially provide answers to specific questions about some aspects of these songs, such as why the whale repeats some units more often than others and what importance should be given to sounds.

Did we reach our goal to suggest a bidirectional interaction between human musicians and humpback whales? What can we expect from these interactions?

This project is not another attempt to expose one animal species to music or to force whales to listen to human music. The original motivation is totally different, with the idea of starting from their emitted vocal sounds. Our first work was to open our acoustic perception to their songs, based on the large body of knowledge accumulated in the scientific literature over the last five decades. Then, we sought to better understand the anatomy of their vocal generator, because we thought that coherence and possibilities have to be known before thinking about a complex musical proposal based on multiple unknown acoustic sources. Our goal was to predict whether this meeting can work and what kind of results we can expect in terms of musical exchange. It was very important for this project to stay close to the acoustic features of humpback whales but without imitating them. Finally, we had the opportunity to play our music to one humpback whale individual at La Réunion Island. We observed that the whale stayed around and was not afraid. Moreover, it was possible to start musical exchanges taking into account the answers from both sides. On the boat, we were aware of the interaction with this individual whale during this experiment.

This work was to submit a proposal based on musique concrète with the objective to create an exchange not based on a competition for vital activities, like protection of their territories, finding mates, defending harems, raising calves, and reactions to predators. The project was initiated to allow opportunities for one-to-one interactions at the same level. It means that our approach was definitely not to lead the music piece and to impose the music orientation. This preliminary study has limitations, especially about how the whale perceived our musical intention. The whale singer may not have been thrilled with this human interjection of concrete sound elements into its performance, even if it tolerated it. This singer was free to change or not the structure of its song and the intonation of its units and, by this way, suggested to the human musician-experimenter to follow these variations or to include new “musical” ornaments.

Perspectives could open inspirations to compose new music pieces in different styles. We also would like to take into account the acoustic characteristics of underwater soundscapes. Indeed, the music perception is influenced by the proportion of biophony, geophony, and anthrophony, and the context in the local geographic sites has to be taken into account during further interactions. It will be very interesting to test a large variety of areas, including shallow waters or underwater canyons. Our idea is also to organize a concert for the public and to observe behaviors in order to describe the perception of what happened and finally their real relationship to nature.

Our work is not a new reason to increase noise in the oceans, and we support the efforts of the many organizations and institutions involved in underwater noise mitigation. Underwater noise remains a major concern, and the effects of these anthropogenic sounds are now well described in the scientific literature. Taking this worldwide concern into account, this project could be seen as an alternative way to contribute to cetacean welfare, as music is used as a tool to decrease stress in some animal species (Alworth and Buerkle, 2013; Dhungana et al., 2018), for example, gorillas (Wells et al., 2006), horses (Eyraud et al., 2019), and domestic species (Hampton et al., 2020; Lindig et al., 2020). Of course, this beneficial effect on stress will have to be demonstrated in humpback whales, for example, through cortisol monitoring (Rolland et al., 2012; Mingramm et al., 2020).

We humans have been able to develop relationships between music from very different cultures and sometimes even with musicians who do not speak the same language and in whose culture music has a very different function. Perhaps, through the process of musique concrète and the development of this interface, we will find ways to interact more and more deeply with the whales. This will not happen without further questioning what over the centuries the conventional Western thought has called culture and what we have called nature. Beyond the questions around animal welfare, the acquisition of knowledge and the conservation, the protection, or even the repair of our misdeeds against the environment and animals, it is without doubt that this project must be anchored at the heart of the reflections about nature/culture in order to define peaceful relations between humans and whales.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The animal study was reviewed and approved by Institute Neurosciences Paris-Saclay.

AP conceived, managed, and developed this project, composed the music, participated in the field work, and did the field missions at La Réunion Island, Indian Ocean, in 2018. OA did the acoustic recordings of humpback whale songs in Ste Marie Madagascar, Indian Ocean, designed the scientific part, and contributed to the field logistics. About the sounds analyzed in this manuscript, OA did the manual annotation of the different acoustic recordings. DC and PN did the comparison of the acoustic features of the CSEs and the units emitted by the humpback whale. DC and PN developed the source code, which is freely accessible on https://osmose.xyz/. AP designed the GUiWHi device, and OA and DS were involved in the setup of the GUiWHi device. AP and OA wrote the initial manuscript. DS revised, extended, and proofread the manuscript. All the authors gave their final approval for publication.

This study was financially supported by SCAM, CNC Dicream, Why Note, Césaré, Centre National de Création Musicale, Université Paris-Saclay, Diagonale Paris Saclay, Région Réunion. Thanks for the partial financial support from the French associations Dirac Gualiba, Compagnie Ondas, Abyss.

AP was employed by the non-profit organization – Compagnie Ondas.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would like to thank bassoon players Brice Martin, Sophie Bernado, and Florian Gazagne, especially for their help with the creation of the CSEs.

Adam, O., Cazau, D., Damien, J., Gandilhon, N., White, P., Laitman, J. T., et al. (2018). “From anatomy to sounds: description of the sound generator,” in Proceedings of the 8th International Workshop on Detection, Classification, Localization, and Density Estimation of Marine Mammals Using Passive Acoustics, June 2018, Paris.

Adam, O., Cazau, D., Gandilhon, N., Fabre, B., Laitman, J. T., and Reidenberg, J. S. (2013). New acoustic model for humpback whale sound production. Appl. Acoust. 74, 1182–1190. doi: 10.1016/j.apacoust.2013.04.007

Allen, J. A., Garland, E. C., Dunlop, R. A., and Noad, M. J. (2019). Network analysis reveals underlying syntactic features in a vocally learnt mammalian display, humpback whale song. Proc. R. Soc. B 286:20192014. doi: 10.1098/rspb.2019.2014

Allen, J. A., Murray, A., Noad, M. J., Dunlop, R. A., and Garland, E. C. (2017). Using self-organizing maps to classify humpback whale song units and quantify their similarity. J. Acoust. Soc. Am. 142, 1943–1952. doi: 10.1121/1.4982040

Allen, S. J. (2014). “From exploitation to adoration: the historical and contemporary contexts of human-cetacean interactions,” in Whale-Watching: Sustainable Tourism and Ecological Management, eds J. Higham, L. Bejder, and R. Williams (Cambridge: Cambridge University Press), 31–47. doi: 10.1017/CBO9781139018166.004

Almeida, A., Vergez, C., Caussé, R., and Rodet, X. (2003). “Experimental research on double reed physical properties,” in Proceedings of the Stockholm Music Acoustics Conference, Stockholm, 6.

Alworth, L. C., and Buerkle, S. C. (2013). The effects of music on animal physiology, behavior and welfare. Lab Anim. 42, 54–61. doi: 10.1038/laban.162

Arnbom, T., Papastavrou, V., Weilgart, L. S., and Whitehead, H. (1987). Sperm whales react to an attack by killer whales. J. Mammal. 68, 450–453. doi: 10.2307/1381497

Au, W. W. L., Pack, A. A., Lammers, M. O., Herman, L. M., Deakos, M. H., and Andrews, K. (2006). Acoustic properties of humpback whale songs. J. Acoust. Soc. Am. 120, 1103–1110.

Auriol, B. (1986). Le test temporel de Leipp est-il pour le système nerveux un indicateur localisé ou global? Bull. Audiophonol. Ann. Sc. Univ. Franche Comté 2, 505–512.

Baur, D. C., Bean, M. J., and Gosliner, M. L. (1999). “The laws governing marine mammal conservation in the United States,” in Conservation and Management of Marine Mammals, eds J. R. Twiss and R. R. Reeves (Washington, DC: Smithsonian Institute Press), 48–86.

Butterworth, A., and Simmonds, M. P. (2017). People – Marine Mammal Interactions. Lausanne: Frontiers Media. doi: 10.3389/978-2-88945-231-6

Cazau, D., and Adam, O. (2013). “Classifying humpback whale sound units by their vocal physiology, including chaotic features,” in Proceedings of the Int. Symp. Neural Information Scaled for Bioacoustics, Joint to Neural Information Processing System (NIPS), Lake Tahoe, NV, 212–217.

Cazau, D., Adam, O., Aubin, T., Laitman, J. T., and Reidenberg, J. S. (2016). A study of vocal nonlinearities in humpback whale songs: from production mechanisms to acoustic analysis. Sci. Rep. 6:31660. doi: 10.1038/srep31660

Cheng, T. C. (1991). “Is parasitism symbiosis? A definition of terms and the evolution of concepts,” in Parasite—Host Associations: Coexistence or Conflict?, eds C. A. Toft, A. Aeschlimann, and L. Bolis (Oxford: Oxford University Press), 15–36.

Childers, D. G., and Lee, C. K. (1991). Vocal quality factors: analysis, synthesis, and perception. J. Acoust. Soc. Am. 90, 2394–2410. doi: 10.1121/1.402044

Chion, M. (1983). “Guide des objets sonores,” in Bibliothèque de Recherche Musicale, ed. B. Chastel (Paris: INA/GRM).

Cholewiak, D. M., Cerchio, S., Jacobsen, J. K., Urbán, R. J., and Clark, C. W. (2018). Songbird dynamics under the sea: acoustic interactions between humpback whales suggest song mediates male interactions. R. Soc. Open Sci. 5:171298. doi: 10.1098/rsos.171298

Cholewiak, D. M., Sousa-Lima, R. S., and Cerchio, S. (2013). Humpback whale song hierarchical structure: historical context and discussion of current classification issues. Mar. Mamm. Sci. 29, 312–332.

Collins, J. (1970). Farewell to Tarwathie, Vinyl Album Record Whales & Nightingales. New York, NY: Elektra Records.

Constantine, R. (2002). “Folklore and legends,” in The Encyclopedia of Marine Mammals, eds W. F. Perrin, B. Würsig, and J. G. M. Thewissen (San Diego, CA: Academic Press), 448–450.

Cotter, M. P., Maldini, D., and Jefferson, T. A. (2012). “Porpicide” in California: killing of harbor porpoises (Phocoena phocoena) by coastal bottlenose dolphins (Tursiops truncatus). Mar. Mamm. Sci. 28, E1–E15. doi: 10.1111/j.1748-7692.2011.00474.x

Cousteau, J.-Y., and Diolé, P. (1972). The Whale: Mighty Monarch of the Sea. New York, NY: Arrowood Press.

Damien, J., Adam, O., Cazau, D., White, P., Laitman, J. T., and Reidenberg, J. S. (2018). Anatomy and functional morphology of the mysticete rorqual whale larynx: phonation positions of the U-fold. Anat. Rec. 302, 703–717. doi: 10.1002/ar.24034

Darling, J. D., and Bérubé, M. (2001). Interactions of singing humpback whales with other males. Mar. Mamm. Sci. 17, 570–584. doi: 10.1111/j.1748-7692.2001.tb01005.x

Darling, J. D., Jones, M. E., and Nicklin, C. P. (2006). Humpback whale songs: do they organize males during the breeding season? Behaviour 143, 1051–1101. doi: 10.1163/156853906778607381

Darling, J. D., Jones, M. E., and Nicklin, C. P. (2012). Humpback whale (Megaptera novaeangliae) singers in Hawaii are attracted to playback of similar song (L). J. Acoust. Soc. Am. 132, 2955–2958. doi: 10.1121/1.4757739

Deakos, M. H., Branstetter, B. K., Mazzuca, L., Fertl, D., and Mobley, J. R. Jr. (2010). Two unusual interactions between a bottlenose dolphin (Tursiops truncatus) and a humpback whale (Megaptera novaeangliae) in Hawaiian waters. Aquat. Mamm. 36, 121–128. doi: 10.1578/AM.36.2.2010.121

Dhungana, S., Khanal, D. R., Sharma, M., Bhattarai, N., Tamang, D. T., Wasti, S., et al. (2018). Effect of music on animal behavior: a review. Nepal. Vet. J. 35, 142–149. doi: 10.3126/nvj.v35i0.25251

Dudzinski, K. M. (2010). Dolphin Mysteries: Unlocking the Secrets of Communication. London: Yale University Press.

Dufour, D., Thomas, J.-C., Solomos, M., Dufourt, H., Augoyard, J.-F., Renouard Larivière, R., et al. (1999). Ouïr, Entendre, Écouter, Comprendre Après Schaeffer, ed. B. Chastel (Paris: Institut National de l’Audiovisuel), 280.

Dunlop, R. A., Noad, M. J., Cato, D. H., Kniest, E., Miller, P. J. O., Smith, J. N., et al. (2013). Multivariate analysis of behavioural response experiments in humpback whales (Megaptera novaeangliae). J. Exp. Biol. 216, 759–770. doi: 10.1242/jeb.071498

Dunlop, R. A., Noad, M. J., Cato, D. H., and Stokes, D. (2007). The social vocalization repertoire of east Australian migrating humpback whales (Megaptera novaeangliae). J. Acoust. Soc. Am. 122, 2893–2905. doi: 10.1121/1.2783115

Eriksen, N., Miller, L. A., Tougaard, J., and Helweg, D. A. (2005). Cultural change in the songs of humpback whales (Megaptera novaeangliae) from Tonga. Behaviour 142, 305–325. doi: 10.1163/1568539053778283

Eyraud, C., Valenchon, M., Adam, O., and Petit, O. (2019). La Diffusion de Musique, un Outil de Gestion du Stress au Quotidien? Journées Sciences et Innovations Equines. Saumur: Institut français du cheval et de l’équitation, 10.

Frankel, A. S., and Clark, C. W. (1998). Results of low-frequency playback of M-sequence noise to humpback whales, Megaptera novaeangliae, in Hawaii. Can. J. Zool. 76, 521–535. doi: 10.1139/z97-223

Frantzis, A., and Herzing, D. L. (2002). Mixed-species associations of striped dolphins (Stenella coeruleoalba), short-beaked common dolphins (Delphinus delphis), and Risso’s dolphins (Grampus griseus) in the Gulf of Corinth (Greece, Mediterranean Sea). Aquat. Mamm. 28, 188–197.

Garland, E. C., and McGregor, P. K. (2020). Cultural transmission, evolution and revolution in vocal displays: insights from bird and whale song. Front. Psychol. 11:544929. doi: 10.3389/fpsyg.2020.544929

Giannakopoulos, T. (2015). pyAudioAnalysis: an open-source Python library for audio. PLoS One 10:e0144610. doi: 10.1371/journal.pone.0144610

Gillespie, D. (2004). Detection and classification of right whale calls using an edge detector operating on a smoothed spectrogram. J. Can. Acoust. 32, 39–47.

Giudici, P. (2019). The Yellow Folder. A Research on the Periphery of Life. Vol. 12. Helsinki, Finland: Ruukku Studies in Artistic Research. doi: 10.22501/ruu.92058

Guillemain, P. (2004). A digital synthesis model of double-reed wind instruments. EURASIP J. Appl. Signal Process. 7, 990–1000.

Hampton, A., Ford, A., Cox, R. E., Liu, C.-C., and Koh, R. (2020). Effects of music on behavior and physiological stress response of domestic cats in a veterinary clinic. J. Feline Med. Surg. 22, 122–128. doi: 10.1177/1098612X19828131

Helweg, D. A., Frankel, A. S., Mobley, J., and Herman, L. M. (1992). “Humpback whale song: our current understanding,” in Marine Mammal Sensory Systems, eds J. A. Thomas, R. A. Kastelein, and A. Y. Supin (Boston, MA: Springer), 459–483. doi: 10.1007/978-1-4615-3406-8_32

Herman, L. M. (2010). What laboratory research has told us about dolphin cognition. Int. J. Comp. Psychol. 23, 310–330.

Herman, L. M., Beach, F. A. III, Pepper, R. L., and Stalling, R. B. (1969). Learning-set formation in the bottlenose dolphin. Psychon. Sci. 14, 98–99. doi: 10.3758/bf03332723

Herzing, D. (2011). Dolphin Diaries: My 25 Years with Spotted Dolphins in the Bahamas. New York, NY: St. Martin’s Press, 63.

Herzing, D. L., Moewe, K., and Brunnick, B. J. (2003). Interspecies interactions between Atlantic spotted dolphins, Stenella frontalis and Bottlenose dolphins, Tursiops truncatus, on Great Bahama Bank, Bahamas. Aquat. Mamm. 29, 335–341. doi: 10.1578/01675420360736505

Hickey, R., Berrow, S., and Goold, J. (2009). Towards a bottlenose dolphin whistle ethogram from the Shannon Estuary, Ireland. Biol. Environ. Proc. R. Ir. Acad. 109, 89–94. doi: 10.3318/BIOE.2009.109.2.89

Hovhaness, A. (1970). And God Created Great Whales, Symphonic Poem for Orchestra and Recorded Whale Sounds, Commissioned by Andre Kostelanetz. New York, NY: New York Philharmonic.

Jefferson, T. A., Stacey, P. J., and Baird, R. W. (1991). A review of killer whale interactions with other marine mammals: predation to coexistence. Mamm. Rev. 21, 151–180. doi: 10.1111/j.1365-2907.1991.tb00291.x

Kaplun, D., Voznesensky, A., Romanov, S., Andreev, V., and Butusov, D. (2020). Classification of hydroacoustic signals based on harmonic wavelets and a deep learning artificial intelligence system. Appl. Sci. 10:3097. doi: 10.3390/app10093097

Lallemand, F.-X., Latham, J., Smith, J., Cazau, D., Adam, O., Barney, A., et al. (2014). Building a Singing Whale. M.Sc. Report. Southampton: Institute of Sound and Vibration Research, University of Southampton.

Lavigne, D. M., Scheffer, V. B., and Kellert, S. R. (1999). “The evolution of North American attitudes toward marine mammals,” in Conservation and Management of Marine Mammals, eds J. R. Twiss and R. R. Reeves (Washington, DC: Smithsonian Institute Press), 10–47.

Lerch, A. (2012). An Introduction to Audio Content Analysis: Applications in Signal Processing and Music Informatics. Hoboken, NJ: Wiley-IEEE Press, 272.

Leroy, E., Thomisch, K., Royer, J. Y., Boebel, O., and Van Opzeeland, I. (2018). On the reliability of acoustic annotations and automatic detections of antarctic blue whale calls under different acoustic conditions. J. Acoust. Soc. Am. 144, 740–754. doi: 10.1121/1.5049803

Levenshtein, V. I. (1966). Binary codes capable of correcting deletions, insertions, and reversals. Sov. Phys. Dokl. 10, 707–710.

Lilly, J. C. (1967). The Mind of the Dolphin: A Nonhuman Intelligence. Garden City, NY: Doubleday.

Lindig, A. M., McGreevy, P. D., and Crean, A. J. (2020). Musical dogs: a review of the influence of auditory enrichment on canine health and behavior. Animals 10:127. doi: 10.3390/ani10010127

Luo, Z. (2020). Python String Similarity Library v0.2.0. Available online at: https://github.com/luozhouyang/python-string-similarity (accessed December 05, 2020).

MacLeod, C. D. (1998). Intraspecific scarring in odontocete cetaceans: an indicator of male ‘quality’ in aggressive social interactions? J. Zool. 244, 71–77. doi: 10.1111/j.1469-7998.1998.tb00008.x

Malfante, M., Dalla Mura, M., Metaxian, J. P., Mars, J. I., Macedo, O., and Inza, A. (2018). Machine learning for volcano-seismic signals: challenges and perspectives. IEEE Signal Process. Mag. 35, 20–30. doi: 10.1109/msp.2017.2779166

McFee, B., Lostanlen, V., Metsai, A., McVicar, M., Balke, S., Thomé, C., et al. (2020). librosa/librosa:0.8.0, Zenodo. doi: 10.5281/zenodo.3955228

Mellinger, D. (2005). Automatic detection of regularly repeating vocalizations. J. Acoust. Soc. Am. 118:1940. doi: 10.1121/1.4781034

Mercado, E. III (2016). Acoustic signaling by singing humpback whales (Megaptera novaeangliae): what role does reverberation play? PLoS One 11:e0167277. doi: 10.1371/journal.pone.0167277

Midforth, F., Damien, J., Cazau, D., Adam, O., Barney, A., and White, P. (2016). Developing and Analysing A Numerical Model for Humpback Whale (Megaptera novaeangliae) Vocalisations. M.Sc. report. Southampton: Institute of Sound and Vibration Research, University of Southampton.

Mingramm, F. M. J., Keeley, T., Whitworth, D. J., and Dunlop, R. A. (2020). Blubber cortisol levels in humpback whales (Megaptera novaeangliae): a measure of physiological stress without effects from sampling. Gen. Comp. Endocrinol. 291:113436. doi: 10.1016/j.ygcen.2020.113436

Mobley, J. R. Jr., Herman, L. M., and Frankel, A. S. (1988). Responses of wintering humpback whales (Megaptera novaeangliae) to playback of recordings of winter and summer vocalizations and of synthetic sound. Behav. Ecol. Sociobiol. 23, 211–223. doi: 10.1007/bf00302944

Mohebbi-Kalkhoran, H. (2019). Automated machine learning approaches for humpback whale vocalization classification. J. Acoust. Soc. Am. 146:2964. doi: 10.1121/1.5137305

Necas, D., Haapala, A., and Ohtamaa, M. (2019). Python Levenshtein Module. Available online at: https://github.com/ztane/python-Levenshtein (accessed December 05, 2020).

Neimi, A. R. (2010). “Life by the shore. Maritime dimensions of the late stone age, Arctic Norway,” in Transference. Interdisciplinary Communications, ed. W. Ostreng (Oslo: CAS).

Noad, M. J., Cato, D. H., Bryden, M. M., Jenner, M. N., and Jenner, K. C. (2000). Cultural revolution in whale songs. Nature 408:537. doi: 10.1038/35046199

Orbach, D. N. (2019). “Sexual strategies: male and female mating tactics, ethology and behavioral ecology of odontocetes,” in Ethology and Behavioral Ecology of Odontocetes, ed. B. Würsig (Cham: Springer), 75–93. doi: 10.1007/978-3-030-16663-2_4

Owen, C., Rendell, L., Constantine, R., Noad, M. J., Allen, J., Andrews, O., et al. (2019). Migratory convergence facilitates cultural transmission of humpback whale song. R. Soc. Open Sci. 6:190337. doi: 10.1098/rsos.190337

Pace, F., Benard, F., Glotin, H., Adam, O., and White, P. (2010). Subunit definition for humpback whale call classification. Appl. Acoust. 71, 1107–1114. doi: 10.1016/j.apacoust.2010.05.016

Palacios, D. M., and Mate, B. (1996). Attack by false killer whales (Pseudorca crassidens) on sperm whales (Physeter macrocephalus) in the Galapagos Islands. Mar. Mamm. Sci. 12, 582–587. doi: 10.1111/j.1748-7692.1996.tb00070.x

Parsons, E. C. M., and Brown, D. M. (2017). “From hunting to watching: human interactions with cetaceans,” in Marine Mammal Welfare, ed. A. Butterworth (Cham: Springer International Publishing), 67–89. doi: 10.1007/978-3-319-46994-2_5

Payne, K., and Payne, R. (1985). Large scale changes over 19 years in songs of humpback whales in Bermuda. Ethology 68, 89–114. doi: 10.1111/j.1439-0310.1985.tb00118.x

Payne, K., Tyack, P., and Payne, R. (1983). “Progressive changes in the songs of humpback whales (Megaptera novaeangliae): a detailed analysis of two seasons in Hawaii,” in Communication and Behavior of Whales, ed. R. Payne (Boulder, CO: Westview Press), 9–57.

Payne, R., and McVay, S. (1971). Songs of humpback whales. Science 173, 585–597. doi: 10.1126/science.173.3997.585

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830.