- School of Educational Foundations & Inquiry, Bowling Green State University, Bowling Green, OH, United States

The American Educational Research Association and American Psychological Association published standards for reporting on research. The transparency of reporting measures and data collection is paramount for interpretability and replicability of research. We analyzed 57 articles that assessed alphabet knowledge (AK) using researcher-developed measures. The quality of reporting on different elements of AK measures and data collection was not related to the journal type nor to the impact factor or rank of the journal but rather seemed to depend on the individual author, reviewers, and journal editor. We propose various topics related to effective reporting of measures and data collection methods that we encourage the early childhood and literacy communities to discuss.

Introduction

The purpose of educational research is to contribute “to the building, refining, and general acceptance of a core body of knowledge within a collaborative research community” (Lin et al., 2010, p. 299). For this to occur, researchers need to explain their study in ways that enable readers to understand, interpret, and evaluate the quality of the research. “Ernest and McLean (1998) reason that conducting research is akin to detective work and that as researchers we need to provide readers with as many clues as reasonably possible regarding how we have reached our conclusions” (Zientek et al., 2008, p. 208).

Over the past decade or so, the effectiveness of reporting research studies has been scrutinized in various areas of research with many research communities developing standards, policies, or guidelines for their researchers to follow. For instance, in 2010, the medical community revised their Consolidated Standards of Reporting Trials (CONSORT) “to alleviate the problems arising from inadequate reporting of randomized controlled trials” (CONSORT group, 2010, para 1). The European Association of Science Editors (EASE) also developed guidelines in 2010, which they are continuously adapting, to help authors and translators communicate more efficiently in English (European Association of Science Editors, 2018).

Many authors have also investigated different aspects of effective reporting. The journal Perspectives on Psychological Science devoted a special issue (see Pashler and Wagenmakers, 2012) to the issues of replicability in psychology. Several researchers investigated the reporting of statistics in journals with different impact factors (Tressoldi et al., 2013) and submission guidelines (Giofrè et al., 2017) while others investigated experimental designs and the statistical methods used (Robinson et al., 2007; Zientek et al., 2008; Zientek and Thompson, 2009; Asendorpf et al., 2013). Still other researchers looked at the publication rates of replication studies (Makel and Plucker, 2014; Makel et al., 2016), or the quality of specific journals (Skidmore et al., 2014; Mohan et al., 2016; Zhao et al., 2017). Several researchers (e.g., Slavin, 2002, 2005; Asendorpf et al., 2013; Makel, 2014; Tressoldi and Giofrè, 2015) have made recommendations to authors, editors, and reviewers related to various elements of published documentation of research. While all of these aspects of reporting are important, the quality of the research rests on the adequacy of the measures and how the data is collected. That is, even if the researcher used appropriate research design, statistical methods, and published in journals with high impact factors, the study is useless if the measures are inappropriate, unreliable, or invalid. We have not found a published article investigating the quality of the measures used or how they are documented. Therefore, we extend the research of documentation of the quality of research by investigating the reporting of researcher-developed measures and data collection methods in published articles specifically relating to alphabet knowledge (AK). Although we are specifically investigating AK measures, our hope is to add to the broader examination of the quality of reporting on research about topics in early childhood and literacy. 
That is, the quality of reporting on AK measures can serve as a case study for the quality of reporting on measures and data collection methods in general.

In 2006, the American Educational Research Association (AERA) developed standards for reporting on empirical social science research (American Educational Research Association, 2006). The stated purpose for these standards was “to provide guidance about the kinds of information essential to understanding both the nature of the research and the importance of the results” (p. 33). The AERA standards have two overarching principles: (a) warranted and (b) transparent reporting of empirical research. Reporting that uses these principles as guidelines “permits scholars to understand one another's work, prepares that work for public scrutiny, and enables others to use that work” (American Educational Research Association, 2006, p. 33). Because we are interested specifically in the transparency of reporting, whether or not the research is warranted is beyond the scope of this article. Two important elements of transparency are interpretability and replicability. That is, is there enough detail in the published article for a reader to understand the results and conclusions and to duplicate the study?

In 2010, the manual of the American Psychological Association (APA) included journal article reporting standards (JARS): information recommended for inclusion in manuscripts that report new data collections regardless of research design (pp. 247–250). The important recommendations from APA JARS related to the topic of this inquiry are (1) definitions of all primary and secondary measures, (2) methods used to collect data, and (3) information on validated or ad hoc instruments created for individual studies. Even though recommendation (3) of APA JARS relates to both validated and ad hoc instruments, we have focused on ad hoc, researcher-developed instruments. We define researcher-developed AK instruments as those that are not published, commercially available measures.

The rationale for focusing on researcher-developed instruments was two-fold. First, the two types of measures have different transparency needs. Authors using validated, commercially available measures do not need to report as many details as those using their own ad hoc measures because other researchers can find specific details about these published instruments elsewhere, which allows them to interpret the results and use the same measure to replicate the study. Also, researcher-developed instruments can lead to an overestimation of the effects of the treatment, which could lead to inflated claims for the results. Therefore, there are more criteria for effective reporting of researcher-developed measures to ensure that the study is interpretable and replicable.

The second rationale is that many researchers of AK rely on their own measures. Approximately 40% of the articles we looked at that had an AK measure used a researcher-developed measure. To our knowledge, there is not, as yet, a validated commercially available assessment of AK only. There are many AK measures as sub-tests of assessments (e.g., Bracken, DIBELS, PALS, OSELA), which can be costly if the researcher needs just the AK subtest. Also, it is easy to develop your own alphabet measure because there is a finite amount of information to assess.

AK is the understanding of various elements of written letters. This includes saying the names of the letters, saying the sounds of the letters, writing the letters, and recognizing the names or sounds of the letters. Each of these types of AK applies to both uppercase and lowercase letters. Because there are different elements of AK, reporting exactly what was assessed and how it was assessed during a research study is highly important for interpreting the results and conclusions and for replicating the study.

Since Jeanne Chall's research in the 1960s (Chall, 1967, 1983), AK at entrance to kindergarten has been shown to be highly predictive of future reading ability (Bond and Dykstra, 1967/1997; Share et al., 1984; Adams, 1990; National Research Council, 1998; Lonigan et al., 2000; Storch and Whitehurst, 2002; National Early Literacy Panel, 2008). Therefore, for the past fifty years or so, studies of emergent literacy in pre-school and kindergarten have almost always included an assessment of AK. Many researchers have also used AK as a background variable or covariate in studies on literacy development during the elementary school years.

Starting about twenty years ago, literacy researchers have been developing a nuanced understanding of young children's AK. Research (Treiman et al., 1994; Treiman and Broderick, 1998; McBride-Chang, 1999; Lonigan et al., 2000; Evans et al., 2006; Justice et al., 2006; Ellefson et al., 2009; Piasta and Wagner, 2010; Drouin et al., 2012) has shown developmental trends in AK, such as most uppercase letters are typically learned before lowercase, some letters (e.g., O, A) are typically learned before others, and children in the United States (U.S.) typically learn letter names before letter sounds while the reverse is true of British children. Although the picture of alphabet learning is still unclear, there is a growing consensus that not all types of AK are equal in terms of young children's learning. “In practical terms, this means that an assessment of uppercase letter naming, for example, may reveal positively-skewed distributions for children who are 4 years old, normal distributions six months later, and negatively-skewed distributions six months after that” (Drouin et al., 2012, p. 544). For this example, an uppercase letter naming assessment would be useful for only a brief time in a child's development. Plus, AK is even more complicated than this example because it has multiple skills embedded within it (e.g., uppercase naming, lowercase naming, sounds), which have their own developmental trajectories. So, those same U.S. five-year-olds who show a negatively-skewed distribution for uppercase letter naming, may also show a normal distribution for lowercase letter naming, and a positively-skewed distribution for sounds. If researchers only report that they assessed AK, without giving details on what type of AK (e.g., naming, sounds) or type of alphabet letter (i.e., uppercase, lowercase), it is impossible for readers to know exactly what the research entailed. 
This, in turn, limits how the reader can interpret the data, replicate the study, and apply the results to practice.

Incomplete reporting of research methodology can influence the advancement of the literacy community's knowledge. Ehri et al. (2001), in their meta-analysis of phonics instruction programs, stated “one common weakness of the studies was failing to provide needed information” (p. 431). Piasta and Wagner (2010) conducted a meta-analysis of alphabet learning and instruction, in which they analyzed the effects of instruction on alphabet outcomes. Their original pool of 300 studies that provided alphabet training and assessed alphabet outcomes was reduced to 37 (12%) studies due to lack of “(1) measurement of discrete alphabet outcomes at post-test, (2) explicit acknowledgment of alphabet training as component of instruction, or (3) sufficient information to calculate an effect size” (p. 10). Therefore, a large number (88%) of the published studies providing alphabet training could not be further analyzed for this meta-analysis because of the non-transparency or lack of interpretability in the reporting. In 2019, Torgerson et al. conducted a tertiary review of 12 systematic reviews on the effectiveness of phonics interventions. Their review included a quality appraisal using the PRISMA checklist, and five of the 12 reviews did not report a quality appraisal of the studies they included. Based on this, Torgerson et al. (2019) stated, “This omission in these five SRs [systematic reviews] is critical, and therefore, the results from these SRs should carry lower weight of evidence in our conclusions” (p. 226). Although the inclusion issues raised by Ehri et al. (2001), Piasta and Wagner (2010), and Torgerson et al. (2019) were broader than our purposes, these studies highlight the importance of effective reporting in published research articles. A similar lack of transparency related to the reporting of AK measures could restrict the literacy community's ability to accept, build, and refine a core body of knowledge relating to AK development.

A description of the measures in a research study should be “precise and sufficiently complete to enable another researcher, where appropriate, to understand what was done and, where appropriate, to replicate or reproduce the methods of data collection under the same or altered research circumstances” (American Educational Research Association, 2006, p. 35). Therefore, the purpose of this article was to investigate if authors of published articles that use researcher-developed AK measures reported their study sufficiently so it can be interpreted and replicated. Because we are concerned with the interpretability and replicability of research assessing AK, our primary research interest was how authors reported what AK measures they used and how they used them. Our secondary research interest was to examine how authors reported other elements of their study, such as details about their participants and sampling procedures, that are important for interpretability and replicability of the study.

One potential reason for differences in reporting quality is the type of journal in which these articles were published. Perhaps the editors and reviewers for journals that are related specifically to literacy would expect more details and transparency, especially as related to the AK measures, than editors and reviewers for journals that publish on a variety of topics (e.g., early childhood, psychology). Another reason could be that top-tier journals have more rigorous requirements for acceptance and that their reviewers could be more concerned with transparency of reporting than lower-tier journals. Therefore, a tertiary research interest was to examine whether there were differences in the effectiveness of reporting based on type of journal (i.e., literacy and non-literacy) and ranking of journal (e.g., top tier, second tier).

The research questions were as follows:

(1) What percentage of AK elements do authors report in their published articles?

(2) What percentage of General elements do authors report in their published articles?

(3) Are there differences in these percentages based on

a) type of journal: Literacy journals could be expected to have a higher percentage of AK elements reported while the two journal types would have equal percentages of General elements reported.

b) ranking of journal: journals with higher impact scores and rankings could be expected to have a higher percentage of AK and General elements reported than journals with lower impact scores and rankings.

Methods

Identification of Established Evaluation Criteria

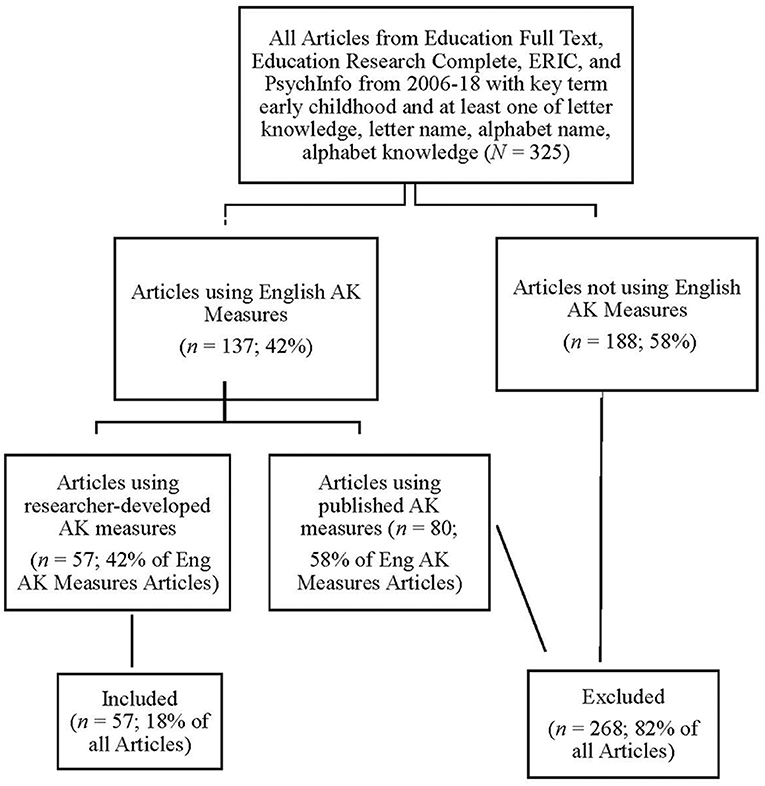

The initial inclusionary criteria (see Figure 1 for the data flow chart) were that the research had to be published between 2006 and 2018 in a journal indexed in at least one of four databases (Education Full Text, Education Research Complete, ERIC, and PsycINFO) and had to include the key term early childhood and at least one of four alphabet-related terms: letter name, letter knowledge, alphabet name, or alphabet knowledge. We chose this time frame because the AERA standards were published in 2006, and 2018 was the last full year of publications when we began this project. Three hundred and twenty-five articles fit these criteria.

The inclusionary criterion for these published articles was that the article assessed English AK using researcher-developed measures. This excluded all non-empirical articles (e.g., reviews, advice), plus those that did not include an AK measure (e.g., assessed adults, phonemic awareness), assessed languages other than English (e.g., Dutch, Hebrew), or used published AK measures (e.g., PALS, DIBELS). Fifty-seven articles fit these criteria.

The vast majority of these articles were quantitative (n = 51; 89%). The others were single case design (n = 3; 5%), case studies (n = 2; 4%), and mixed (n = 1; 2%) methodologies. Because some elements analyzed were not relevant to all types of methodology, we considered using quantitative methodology as an inclusionary criterion. However, we chose to include all articles using AK measures because many reviews exclude non-quantitative articles and, more importantly, regardless of methodology all articles should be interpretable and replicable.

In reviewing the included articles, we noticed multiple authors had published several journal articles that fit these inclusionary criteria. In a comparison of articles written by the same authors, there were enough differences in the reporting in multiple areas of interest (e.g., types of AK assessed, measures used) to consider these articles as separate entities so all included articles, regardless of authorship, were examined individually.

Instrumentation

Although we would have preferred to use an existing rubric or checklist (Gall et al., 2007), we were unable to find one that met our purpose for coding the reporting quality of published articles relating to alphabet knowledge measures. Therefore, we developed our own coding rubric based on the American Educational Research Association (2006) standards, American Psychological Association (2010) JARS, and logical deduction of criteria related to the elements of AK. However, to counteract some of the issues with researcher-developed measures, we have attempted to be as transparent as possible in reporting on this measure. The elements of transparency and replicability were divided into two categories, which are explained below.

AK Measure Reporting

Because of the multi-faceted nature of AK, articles that included the following elements of AK measures would be “precise and sufficiently complete” (American Educational Research Association, 2006, p. 35) for readers to interpret and replicate the study:

1. Definition of AK terms [e.g., alphabet knowledge, letter naming, letter identification; American Psychological Association JARS (2010), Table 1: Measures and covariates: methods used to collect data (p. 842; abbreviated hereafter as APA Table 1: Measures)];

2. Type of knowledge [e.g., names, sounds; American Educational Research Association (2006) standard 3.2 “collection of data or empirical materials” (p. 35; abbreviated hereafter as AERA 3.2)];

3. Type of alphabet letter [i.e., uppercase, lowercase; AERA 3.2];

4. Number of letters used [e.g., all 26, 10; AERA 3.2];

5. Order of letters [e.g., random, letter in participant's name first; AERA 3.2];

6. Format of measure [e.g., one sheet, individual cards; font type and size; AERA 3.2];

7. Directions [e.g., asked child to name letter the examiner pointed to; AERA 3.2];

8. How they used this measure in their analysis [e.g., separate or combined scores; AERA 4.3.c “appropriate use” (p. 36)];

9. Methods used to enhance the quality of measurements, including training and reliability of data collectors [AERA 4.2.c. “coding processes”; APA Table 1: Measures];

10. Psychometric properties of researcher-developed instruments [AERA 4.3.c “dependability (reliability)” (p. 36); APA Table 1: Measures].

General Reporting

The elements about the study, which are not specifically related to the measure and data collection methods but need to be disclosed for interpretability and replicability, are:

11. Statement of the purpose or problem under study (AERA 1.1; APA Table 1: Introduction);

12. Research hypotheses or questions (APA Table 1: Introduction);

13. Relevant characteristics of participants: (a) eligibility and exclusion criteria, (b) age/grades, (c) gender, (d) race/ethnicity, (e) SES/family income level, (f) language status (e.g., English as a second language), and (g) disability [AERA 3.1.a and APA Table 1: Method: Participant characteristics; although characteristics (b)–(g) are not mentioned specifically, they are relevant because they have been linked to literacy skills];

14. Sampling procedures (a) percentage of sample approached that participated, (b) settings and locations where data were collected, (c) payments made to participants (e.g., money or gifts), and (d) institutional review board agreements and ethical standards met (AERA 3.1.b and APA Table 1: Sampling procedures).

Coding

Similar to other studies of reporting quality (Zientek et al., 2008; Makel et al., 2016), a dichotomous scale (present, not present) was used and the quality of the information was not evaluated. If the authors mentioned the item anywhere in the article, it was marked as present. If coders could not locate anything related to the item, it was marked as not present except in the following circumstances. Because some elements analyzed were not relevant to all methodologies, a non-applicable (n/a) rather than a not present coding was denoted. Also, some articles investigated one element of AK exclusively so how they used their measure (i.e., individual, combined with other measures) was not applicable. Therefore, percentages, based on only those elements that apply to each study, are reported instead of total scores.
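The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the coding spreadsheet used in the study: the function name `percent_reported`, the use of `None` for n/a, and the example codes are all hypothetical.

```python
from typing import Optional, Sequence


def percent_reported(codes: Sequence[Optional[bool]]) -> float:
    """Percentage of applicable elements coded as present.

    True = present, False = not present, None = not applicable (n/a).
    Elements coded n/a are dropped from both numerator and denominator.
    """
    applicable = [c for c in codes if c is not None]
    if not applicable:
        raise ValueError("no applicable elements to score")
    return 100.0 * sum(applicable) / len(applicable)


# Hypothetical article: 10 AK elements, one of which is n/a.
example = [True, True, False, True, None, True, False, True, True, True]
print(round(percent_reported(example)))  # 7 of 9 applicable elements -> 78
```

Scoring against the applicable elements only keeps an article from being penalized for an element that could not apply to its design.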

Graduate Assistants (GAs) were trained by the first author. During the training, the coding rubric was explained, then the trainer and trainees coded articles into an Excel spreadsheet together until the GAs said they understood the process. The GAs then coded articles independently but were checked by the first author until the coded data were consistent with previously coded information. At least two trained people coded each article. These data sheets were then cross-checked for differences. Of the 1,323 coded items, there were 119 discrepancies (9%; κ = 0.82, an excellent rating; American Psychological Association, 2018). These were then discussed to reach a consensus on whether each item was present or not.
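As an illustration of the agreement statistics, the sketch below computes raw agreement from the reported counts and Cohen's kappa for two coders. It assumes that κ here refers to Cohen's kappa; the function name and the two short code lists are hypothetical, and the reported κ = 0.82 cannot be reproduced without the item-level codes.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders rating the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    n = len(coder_a)
    # Proportion of items on which the two coders agree.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's marginal frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1.0 - expected)


# Raw agreement implied by the reported counts (119 discrepancies in 1,323 items).
raw_agreement = 1 - 119 / 1323
print(f"raw agreement: {raw_agreement:.2f}")  # about 0.91

# Hypothetical codes for ten items (1 = present, 0 = not present).
a = [1, 1, 1, 0, 0, 1, 0, 1, 1, 0]
b = [1, 1, 0, 0, 0, 1, 0, 1, 1, 1]
print(f"kappa: {cohens_kappa(a, b):.2f}")
```

Kappa is lower than raw agreement because it discounts the agreement two coders would reach by chance given how often each uses every code.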

Results

Reporting Quality

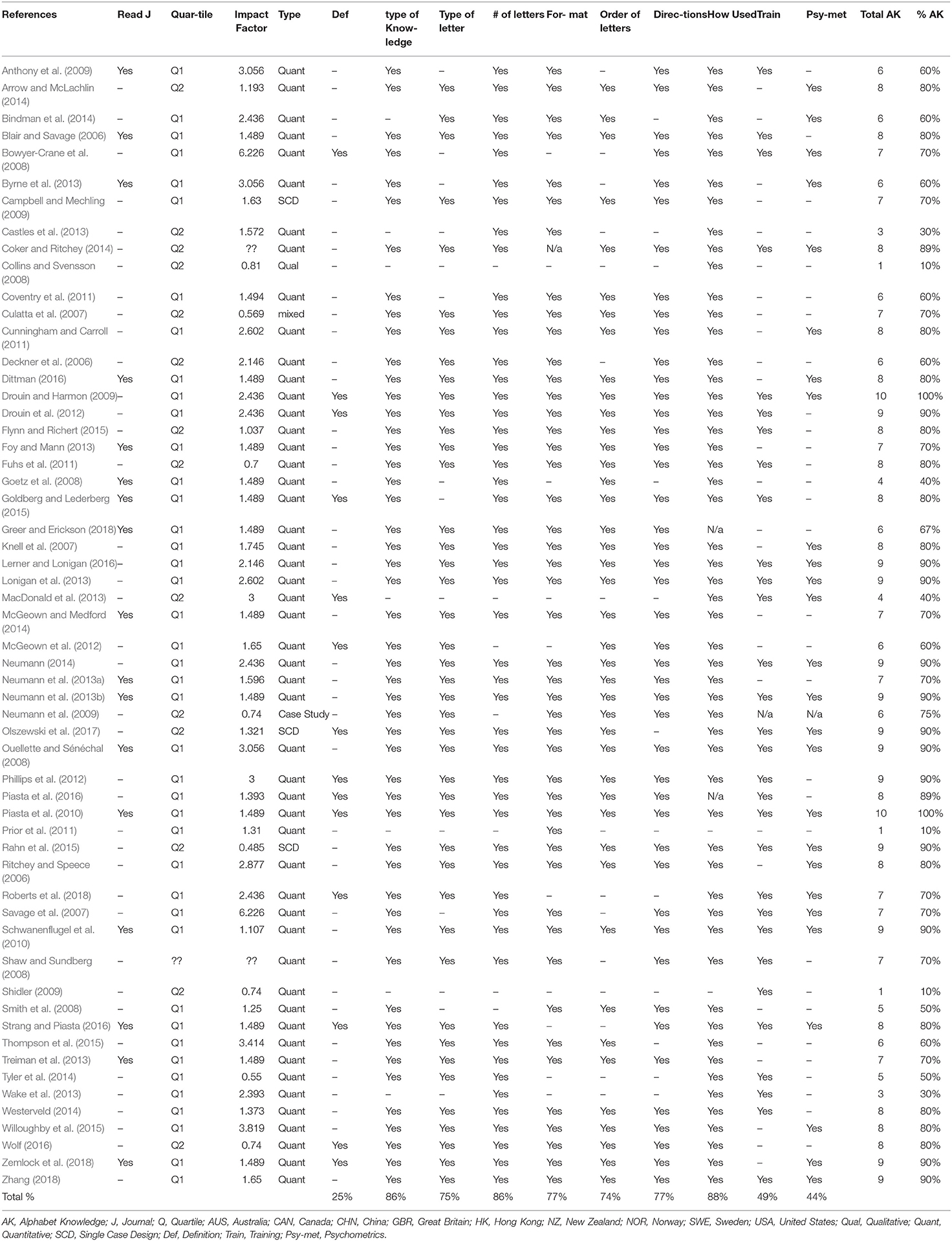

To investigate how authors reported what AK measures they used and how they used them, we looked at the quality of the reporting for the 10 elements of the measures related specifically to AK. See Table 1 for information about the reporting of each element of the AK measure for each article, percentage for each article, and the total percentage for each element. The range was from 10% to 100% of AK elements mentioned. The lowest number of studies (23%) defined the AK terms whereas the highest number of studies (88%, 2 n/a) denoted how they used the AK measure. The average for reporting all 10 AK elements was 71%, with a standard deviation of 22%.

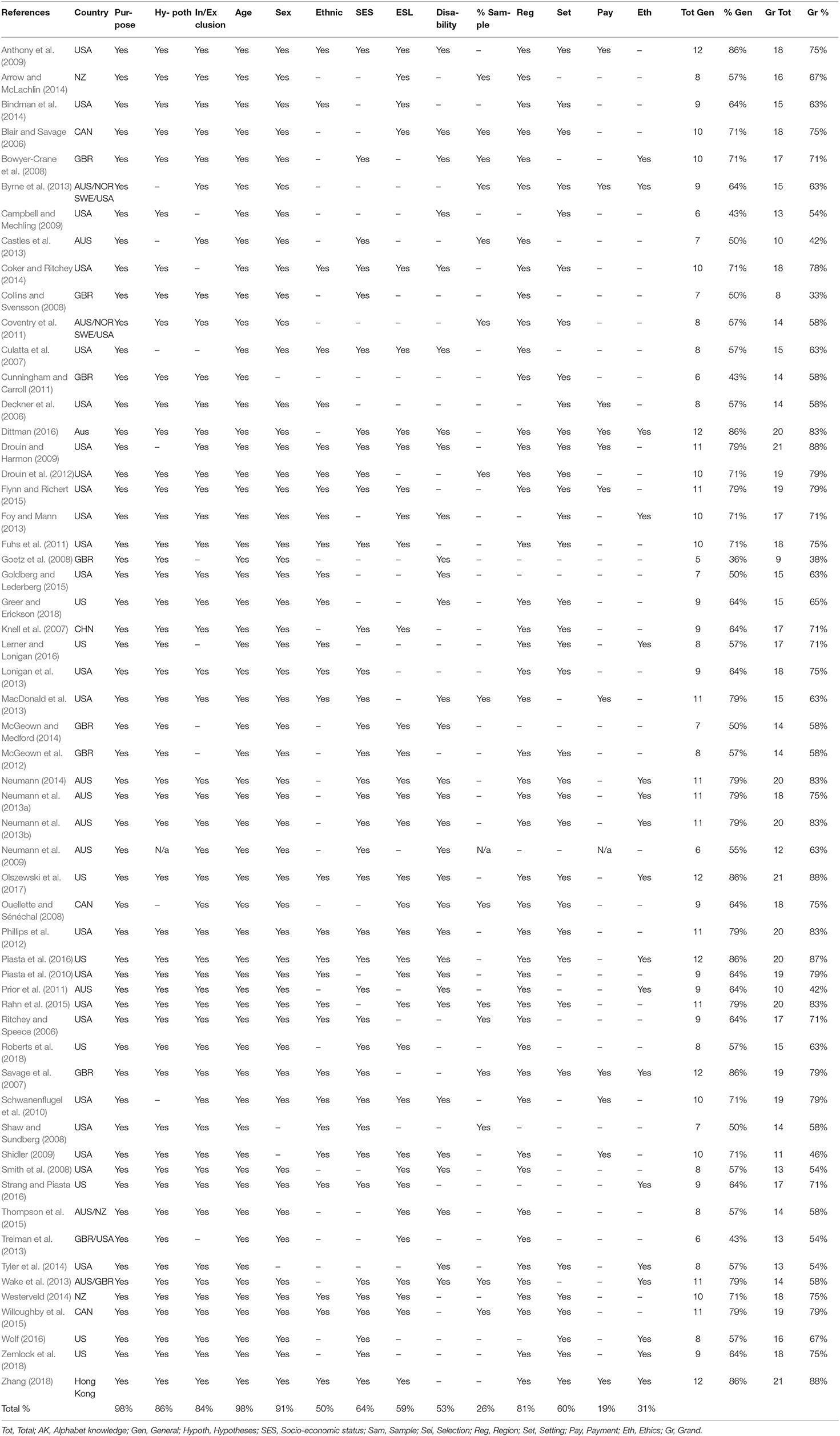

To investigate how authors reported other elements of their study that are important for interpretability and replicability, we looked at the quality of the reporting for the 14 general elements of the studies. See Table 2 for information about the reporting of each general element measure for each article, percentage for each article, and the total percentage for each element. The range was from 36% to 86% of general elements mentioned. The lowest number of studies (18%) mentioned whether any payments were made whereas all authors (100%) stated the purpose for their study and the age of their participants. The average for reporting all 14 general elements was 66%, with a standard deviation of 13%.

To investigate how authors reported all elements of their study, we looked at the overall quality by combining the 24 elements. The total percentage ranged from a low of 33% to a high of 88%, with an average of 68% and standard deviation of 13%.

Type of Journal

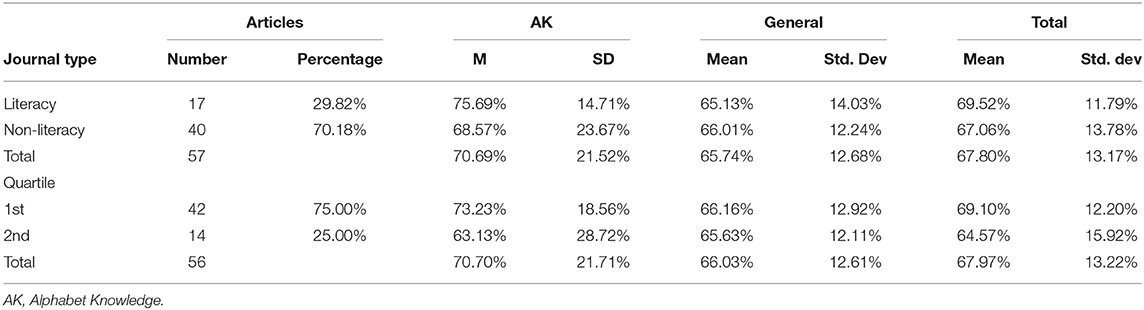

To investigate whether there were differences in the level of reporting based on type of journal, we divided the articles into literacy and non-literacy publications. There were four literacy journals: Journal of Literacy Research (n = 1 article), Reading and Writing: An Interdisciplinary Journal (n = 12), Reading Research Quarterly (n = 1), and Scientific Studies of Reading (n = 3), with 17 articles total. There were 30 non-literacy journals, with 40 articles total. The Early Childhood Research Quarterly had five articles, Early Childhood Education Journal and Journal of Experimental Child Psychology had three, Journal of Child Psychology and Psychiatry and Learning and Individual Differences had two, and 25 journals published only one article each from 2006–2018.

Independent t-tests were run for AK, General, and total percentages with a 95% confidence interval (CI) for the mean difference. We used Hedges' g to calculate the effect size because the sample sizes were different. As can be seen in Table 3, the literacy journals' average for AK measures (M = 76%) was higher than the non-literacy journals' (M = 69%); however, this difference was not statistically significant, t(55) = 1.15, p = 0.257, Hedges' g = 0.033. For the General elements, the literacy and non-literacy journals' averages were almost the same (M = 65% and 66%, respectively), so the difference was not statistically significant, t(55) = −0.24, p = 0.813, Hedges' g = 0.069. As would be expected from the previous results, the difference between the total averages (literacy M = 70%; non-literacy M = 67%) was also not statistically significant, t(55) = 0.64, p = 0.524, Hedges' g = 0.186. The results for the General elements agreed with our prediction that the differences would not be statistically significant. Our prediction that the literacy journals would be more precise in their reporting of AK elements was not supported. However, because of the small number of articles analyzed, the statistical power for these analyses was not high.
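An effect size of this kind can be approximated from a reported t statistic and the two group sizes. The sketch below assumes the common conversion d = t·sqrt(1/n1 + 1/n2) followed by the small-sample correction J = 1 − 3/(4df − 1). The article does not state which formula was used, so the function name and the choice of correction are assumptions, and recovered values can differ slightly from reported ones because the published t is rounded.

```python
import math


def hedges_g_from_t(t: float, n1: int, n2: int) -> float:
    """Approximate Hedges' g from an independent-samples t statistic.

    Assumes a pooled-variance t-test with df = n1 + n2 - 2.
    """
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    df = n1 + n2 - 2
    d = t * math.sqrt(1.0 / n1 + 1.0 / n2)   # Cohen's d recovered from t
    correction = 1.0 - 3.0 / (4.0 * df - 1.0)  # small-sample bias correction
    return d * correction


# General elements, literacy (n = 17) vs. non-literacy (n = 40) articles.
g_general = hedges_g_from_t(-0.24, 17, 40)
print(f"|g| = {abs(g_general):.3f}")  # about 0.069
```

Because only the rounded t statistic is used, this conversion is a consistency check on reported effect sizes rather than a substitute for computing g from the raw percentages.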

Journal Impact Factors and Ranking

To investigate whether there were differences in the level of reporting based on the ranking of the journal, we evaluated the journals in two common ways, which allowed two different statistical analyses. We used impact factor scores as reported on the individual journals' websites or researchgate.com. We could not locate impact factors for two journals (Assessment for Effective Interventions and Journal of At-Risk Issues), so 55 articles were in this analysis. Because impact factor scores are continuous, we ran correlations between this measure and the reporting percentages. Impact factor was not significantly correlated with AK, General, or Total scores.
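A correlation of this kind could be computed as sketched below. The article does not name the coefficient, so Pearson's r is an assumption, as are the function name and the small data set, which is invented purely for illustration. The t statistic returned alongside r would be compared against a t distribution with n − 2 degrees of freedom to judge significance.

```python
import math
from typing import Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return Pearson's r and its t statistic (df = n - 2)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 points")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    r = sxy / math.sqrt(sxx * syy)
    if abs(r) >= 1.0:
        # Perfect correlation: the t statistic is unbounded.
        return math.copysign(1.0, r), math.copysign(math.inf, r)
    t = r * math.sqrt((n - 2) / (1.0 - r * r))
    return r, t


# Hypothetical impact factors and total reporting percentages for five articles.
impact_factor = [0.8, 1.2, 1.9, 2.4, 3.1]
total_percent = [70.0, 55.0, 80.0, 62.0, 71.0]
r, t = pearson_r(impact_factor, total_percent)
print(f"r = {r:.2f}, t({len(impact_factor) - 2}) = {t:.2f}")
```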

We also used SCImago Journal Rank (SCImago, 2020), which “is a publicly available portal that includes the journals and country scientific indicators developed from the information contained in the Scopus® database (Elsevier B.V.).… Citation data is drawn from over 21,500 titles from more than 5,000 international publishers” (no page). We could not locate a quartile ranking for one journal, Assessment for Effective Interventions, so 56 articles were in this analysis. Forty-two (75%) articles were in journals ranked in Quartile 1 (Q1) while 14 (25%) were in journals ranked in Quartile 2 (Q2).

Independent t-tests were run for AK, General, and total percentages with a 95% confidence interval (CI) for the mean difference. We used Hedges' g to calculate the effect size because the sample sizes were different. As can be seen in Table 3, the Q1 journals' averages for all measures (AK M = 73%; General M = 66%; Total M = 69%) were higher than the Q2 journals' (AK M = 63%; General M = 66%; Total M = 65%); however, these differences were not statistically significant, t(54) = 1.52, p = 0.133, Hedges' g = 0.470; t(54) = 0.13, p = 0.894, Hedges' g = 0.042; and t(54) = 1.11, p = 0.270, Hedges' g = 0.343, respectively. Therefore, our prediction that higher-tier journals would be more precise in their reporting than lower-tier journals was not supported. However, because of the small number of articles analyzed, the statistical power for these analyses was not high.

Although interactions between type (i.e., literacy and non-literacy) and journal ranking (i.e., Q1 and Q2) could be another potential reason for differences in the level of reporting, we could not investigate this. All four literacy journals were ranked in the first quartile; therefore, we were unable to differentiate between ranking and type of journal.

Summary

Over the past decade, multiple organizations, including AERA and APA, have developed or revised standards for testing, evaluating, and reporting research. Although organizations have become more aware of these issues, the degree to which journal articles aligned with these standards fluctuated greatly, from a low of 33% of elements mentioned to a high of 88%, with an average of 68%. The contrast was even greater for just the AK measures, which ranged from 10 to 100%, with an average of 71%. There was no statistically significant relationship between the quality of reporting and the type (i.e., literacy or non-literacy) or evaluation (i.e., impact factor, ranking) of the journal.

Discussion

Effective reporting is an important but overlooked element of research dissemination. Even if the research itself is rigorous, if the authors omit crucial details in the reporting of their study, the published article is flawed, with decreased interpretability and replicability. This, in turn, can lead to problems with the literacy research community's ability to accept, build, and refine (Lin et al., 2010) a core body of knowledge relating to AK development.

In this study, we have shown that the quality of reporting details of the AK measure and general information, as recommended by the AERA and APA JARS standards, differs from article to article. These differences are not due to being published in a literacy-oriented journal rather than another type of journal, nor in a top-tier rather than a second-tier journal. Our finding is similar to that of Tressoldi et al. (2013), who found that “statistical practices vary extremely widely from journal to journal, whether IF [impact factor] is high or relatively low” (p. 6).

There are several possible reasons for these differences: the authors themselves, the reviewers of the manuscripts, the editors of the journals, or a combination of the three. The majority of articles (n = 40; 70%) were authored by researchers who published only once from 2006–2018. There were five sets of authors with more than one publication, with all except Drouin publishing in multiple journals. Co-authors Anthony, Lonigan, and Piasta, in various combinations of authorship, published seven articles with a range of 71–87%. Neumann (n = 4) ranged from 63–83%. McGeown's two articles were both 58%, Drouin's were 79 and 88%, and Bryne's were 58 and 63%. Therefore, there is some fluctuation in the level of effective reporting even within the same authorship, although it is not as wide as for the whole sample (33–88%). Only McGeown's two articles had the exact same level of effectiveness; therefore, at least some of the reason for this fluctuation lies with the authors themselves.

The majority of journals (n = 27; 79%) published only one article with researcher-developed AK measures from 2006 to 2018. Seven journals had more than one publication. Reading and Writing: An Interdisciplinary Journal published 12 articles, with a range of 38–83%. Early Childhood Research Quarterly (n = 5) ranged from 63 to 88%, Scientific Studies of Reading (n = 3) from 63 to 75%, Early Childhood Education Journal (n = 3) from 46 to 67%, and Journal of Experimental Child Psychology (n = 3) from 58 to 75%. Journal of Child Psychology and Psychiatry's two articles were 71 and 79% while Learning and Individual Differences' were 71 and 75%. Similar to authorship, there is some fluctuation in the level of effective reporting even within the same journal, although it is not as wide as for the whole sample (33–88%). However, Reading and Writing, the journal with the most publications, had the widest range and was most similar to the whole sample. Therefore, at least some of the reason for this fluctuation lies with the journal. Although we have no way of knowing what the interactions among authors, reviewers, and editors were, it seems that the fluctuations in reporting detail can be best accounted for by a combination of all three.

With the development of the standards, the AERA and APA have “provided guidance about the kinds of information essential to understanding both the nature of the research and the importance of the results” (American Educational Research Association, 2006, p. 33). However, even after 8–12 years, as can be seen from our analysis, many articles lack key elements of these standards. Perhaps these researchers are not aware of, or do not use, these standards. Or, because these standards are recommendations rather than imperatives, researchers might use some but not all of them. Therefore, the effectiveness of reporting still depends on individual authors, reviewers, and editors. The overall level of effective reporting on early childhood measures, including AK, and on general information could be enhanced if the early childhood research community developed guidelines specific to research with young participants. In the following paragraphs, we suggest some questions the research community could use to discuss ways in which we could develop specific guidelines to enhance the AERA and APA standards.

One question for this potential discussion is whether all elements within the AERA and APA standards should be treated as equally important or whether there are some elements that are more relevant to early childhood than others. In this study, we treated all elements as equally important, with each element worth one point. But are all these elements equally necessary for transparency, or are some more vital? For instance, prior research has shown that young children typically learn uppercase before lowercase letters, that U.S. children learn names before sounds while British children learn sounds first, and that some letters are typically learned before others (Treiman et al., 1994; Treiman and Broderick, 1998; McBride-Chang, 1999; Lonigan et al., 2000; Evans et al., 2006; Justice et al., 2006; Ellefson et al., 2009; Piasta and Wagner, 2010; Drouin et al., 2012); therefore, we think letter type, knowledge type, number of letters, and how they are used are all vital for interpretation. The other aspects (e.g., format, directions) could enhance replicability but probably are not necessary for understanding the results. However, this is our opinion, so we urge the research community to discuss what elements are necessary to report for transparency.
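To make the weighting question concrete, the sketch below contrasts equal-weight scoring (one point per reported element, as in this study) with a hypothetical weighted alternative. The element names, the example article, and the weights are illustrative assumptions only; they are not the study's coding scheme, and no particular weighting is being recommended.

```python
def reporting_score(reported, weights=None):
    """Return the percentage of (weighted) elements an article reported.

    reported: dict mapping element name -> True/False (mentioned or not).
    weights:  optional dict mapping element name -> positive weight;
              when omitted, every element counts as one point.
    """
    if weights is None:
        weights = {element: 1 for element in reported}
    total = sum(weights[element] for element in reported)
    earned = sum(weights[element] for element, present in reported.items() if present)
    return 100 * earned / total


# Hypothetical article: which elements it mentions (illustrative names).
article = {
    "letter_type": True,
    "knowledge_type": True,
    "number_of_letters": True,
    "format": False,
    "directions": False,
    "assessor_training": True,
}

# Hypothetical weights: elements argued to be vital for interpretation
# count double; elements that mainly aid replicability count once.
weights = {
    "letter_type": 2,
    "knowledge_type": 2,
    "number_of_letters": 2,
    "format": 1,
    "directions": 1,
    "assessor_training": 1,
}

equal_score = reporting_score(article)              # 4 of 6 points
weighted_score = reporting_score(article, weights)  # 7 of 9 weighted points

print(f"Equal weights: {equal_score:.1f}%")
print(f"Hypothetical weights: {weighted_score:.1f}%")
```

Under these assumed weights the same article scores higher than under equal weighting, which illustrates why the choice of weights would itself need to be agreed upon by the research community.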

What is necessary to know about participants? All studies (100%) reported age, 53 (93%) reported sex, and 49 (86%) mentioned who was included or excluded from the study. Ethnicity, SES, English status, and whether anyone had a disability were mentioned by 51–65%. Are all these elements necessary to report in all studies, or are they only necessary if they are an important aspect of the study? For instance, English status and disabilities were, of course, mentioned when they were an integral part of the research, such as when the participants were children with Down's syndrome or English Learners (EL). They were also mentioned frequently as exclusionary criteria (e.g., children with speech impediments were not tested). However, if studies do not mention these elements, does it substantially reduce readers' ability to interpret and replicate the study? If these aspects are not mentioned, do readers assume that the researchers did not record this information or that there were no participants with disabilities or ELs? Do these assumptions change readers' understanding or interpretation of the results?

Reporting of the ethnicity of participants brings up a unique and interesting issue for discussion. It was included in our study because previous research has linked it to reading achievement. We found that whether authors reported it depended, at least in part, on where they did their study. Of the 30 studies done exclusively in the United States, 81% mentioned ethnicity, whereas only four of the 23 (17%) studies done in other countries and none of the three studies done in both the United States and other countries did so. No other element had this division by country. This adds a potential twist to the discussion of guidelines: could the criteria for effective reporting depend on various non-research factors, like country?

Beyond what elements should be included in articles, another aspect of this potential discussion is the quality of the reporting. We did not assess the quality of the reporting; that is, any mention of an element was given a point. However, is there a level of detail that is ideal? For instance, should readers assume some basic level of ethics is met if the article is published, or does it always have to be explicitly stated? Especially when considering the page limitations of most journals, is it necessary for authors to include, for example, information about IRB approval or other ethical treatment of participants in all journal articles? Or, for research that does not include elements that could be harmful to participants, such as asking about alphabet letters, can the ethics of the research be monitored by the editor and assumed by the readers? Authors could mention any ethical considerations in a cover letter and thereby save space in the manuscript.

Another area of concern is training. Some authors gave detailed explanations of the training whereas others included only the word “trained.” Should readers trust that the assessors were adequately trained, or should we require more details? Should these requirements differ by measure? That is, training assessors for an alphabet knowledge measure would be easier than for analyzing poetry writing. Even within alphabet knowledge, letter naming is straightforward, with English-speaking adults knowing the names (except perhaps the American zee and the Queen's English zed) and how to score them, whereas scoring letter sounds is more complex, with multiple sounds for some letters, including long and short vowels. Again considering page limitations, how detailed does the description of training need to be?

Another major question, if a discussion takes place and guidelines are developed, is how the research community should disseminate this information. The articles using researcher-developed AK measures are widely dispersed. From 2006 to 2018, there were 57 articles published in 34 journals, spanning medicine, communication disorders, early childhood, psychology, and literacy. Because of this variety, reviewers might not be knowledgeable about AK and the specific requirements for interpretability and replicability. Therefore, ensuring that authors, reviewers, and even editors are knowledgeable about the necessary components to describe is both complicated and important.

These are just some of the areas that the early childhood research community could discuss and use to help formulate guidelines for publication specific to our field. Although we looked specifically at AK, with many of the details investigated distinctly related to AK (e.g., type of letter), we believe other literacy-related (e.g., phonemic awareness, vocabulary, fluency) and early childhood (e.g., social skills, executive functioning) knowledge and skills would also need details on researcher-developed measures to be transparent, interpretable, and replicable. Although we cannot determine this without doing a similar study for other bodies of research, we would not be surprised if other research areas had similar levels of fluctuation in the transparency of the reporting of their researcher-developed measures and data collection methods. Therefore, we encourage the literacy and early childhood research communities to engage in a rigorous discussion of these issues surrounding effective reporting of research. In this way, we can add our specific guidelines to the broader standards “to assist researchers in the preparation of manuscripts that report such work, editors and reviewers in the consideration of these manuscripts for publication, and readers in learning from and building upon such publications” (American Educational Research Association, 2006, p. 33).

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author Contributions

SH coded and input data, analyzed the data, did the statistical analysis, wrote some of the manuscript, proofread/edited the manuscript, and submitted it. SS did the literature searches, collected the articles, coded and input data, analyzed the data, wrote some of the manuscript, and proofread/edited the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adams, M. J. (1990). Beginning to Read: Thinking and Learning About Print. Cambridge, MA: MIT Press.

American Educational Research Association (2006). Standards for reporting on empirical social science research in AERA publications. Educ. Res. 35, 33–40. doi: 10.3102/0013189X035006033

American Psychological Association (2010). “Appendix: journal article reporting standards (JARS), meta-analysis reporting standards (MARS), and flow of participants through each stage of an experiment or quasi-experiment,” in Publication Manual of the American Psychological Association, 6th Ed. (Washington, DC: Author), 247–250.

American Psychological Association (2018). “Cohen's Kappa,” in APA Dictionary of Psychology. Available online at: https://dictionary.apa.org/cohens-kappa (accessed December 6, 2019).

*Anthony, J., Solari, E., Williams, J. M., Schoger, K. D., Zhang, Z., Branum-Martin, L., et al. (2009). Development of bilingual phonological awareness in Spanish-speaking English language learners: the roles of vocabulary, letter knowledge, and prior phonological awareness. Sci. Stud. Read. 13, 535–564. doi: 10.1080/10888430903034770

*Arrow, A., and McLachlan, C. (2014). The development of phonological awareness and letter knowledge in young New Zealand children. Speech Lang. Hear. 17, 49–57. doi: 10.1179/2050572813Y.0000000023

Asendorpf, J. B., Conner, M., De Fruyt, F., De Houwer, J., Denissen, J. J. A., Fiedler, K., et al. (2013). Recommendations for increasing replicability in psychology. Eur. J. Pers. 27, 108–119. doi: 10.1002/per.1919

*Bindman, S., Skibbe, L., Hindman, A., Aram, D., and Morrison, F. (2014). Parental writing support and preschoolers' early literacy, language, and fine motor skills. Early Child. Res. Q. 29, 614–624. doi: 10.1016/j.ecresq.2014.07.002

*Blair, R., and Savage, R. (2006). Name writing but not environmental print recognition is related to letter-sound knowledge and phonological awareness in pre-readers. Read. Writ. 19, 991–1016. doi: 10.1007/s11145-006-9027-9

Bond, G. L., and Dykstra, R. (1967/1997). The cooperative research program in first-grade reading instruction. Read. Res. Q. 32, 345–427. doi: 10.1598/RRQ.32.4.4

*Bowyer-Crane, C., Snowling, M., Duff, F., Fieldsend, E., Carroll, J., Miles, J., et al. (2008). Improving early language and literacy skills: differential effects of an oral language versus a phonology with reading intervention. J. Child Psychol. Psychiatry 49, 422–432. doi: 10.1111/j.1469-7610.2007.01849.x

*Byrne, B., Wadsworth, S., Boehme, K., Talk, A., Coventry, W., Olson, R., et al. (2013). Multivariate genetic analysis of learning and early reading development. Sci. Stud. Read. 17, 224–242. doi: 10.1080/10888438.2011.654298

*Campbell, M., and Mechling, L. (2009). Small group computer-assisted instruction with SMART board technology. Remedial Special Educ. 30, 47–57. doi: 10.1177/0741932508315048

*Castles, A., McLean, G., Bavin, E., Bretherton, E., Carlin, J., Prior, M., et al. (2013). Computer use and letter knowledge in pre-school children: a population-based study. J. Paediatr. Child Health 49, 193–198. doi: 10.1111/jpc.12126

Chall, J. S. (1967). Learning to Read: The Great Debate; An Inquiry into the Science, Art, and Ideology of Old and New Methods of Teaching Children to Read, 1910–1965. New York, NY: McGraw-Hill.

*Coker, D. L., and Ritchey, K. D. (2014). Universal screening for writing risk in kindergarten. Assess. Effect. Interv. 39, 245–256. doi: 10.1177/1534508413502389

*Collins, F. M., and Svensson, C. (2008). If I had a magic wand I'd magic her out of the book: the rich literacy practices of competent readers. Early Years 28, 81–91. doi: 10.1080/09575140701844599

CONSORT group (2010). Welcome to the CONSORT Website. Available online at: http://www.consort-statement.org/ (accessed March 26, 2021).

*Coventry, W., Byrne, B., Olson, R., Corley, R., and Samuelsson, S. (2011). Dynamic and static assessment of phonological awareness in preschool: a behavior-genetic study. J. Learn. Disabil. 44, 322–329. doi: 10.1177/0022219411407862

*Culatta, B., Hall, K., Kovarsky, D., and Theadore, G. (2007). Contextualized approach to language and literacy (Project CALL): capitalizing on varied activities and contexts to teach early literacy skills. Commun. Disord. Q. 28, 216–235. doi: 10.1177/1525740107311813

*Cunningham, A., and Carroll, J. (2011). Age and schooling effects on early literacy and phoneme awareness. J. Exp. Child Psychol. 109, 248–255. doi: 10.1016/j.jecp.2010.12.005

*Deckner, E., Adamson, L., and Bakeman, R. (2006). Child and maternal contributions to shared reading: effects on language and literacy development. Appl. Dev. Psychol. 27, 31–41. doi: 10.1016/j.appdev.2005.12.001

*Dittman, C. K. (2016). Associations between inattention, hyperactivity and pre-reading skills before and after formal reading instruction begins. Read. Writ. 29, 1771–1791. doi: 10.1007/s11145-016-9652-x

*Drouin, M. A., and Harmon, J. (2009). Name writing and letter knowledge in preschoolers: incongruities in skills and the usefulness of name writing as a developmental indicator. Early Child. Res. Q. 24, 263–270. doi: 10.1016/j.ecresq.2009.05.001

*Drouin, M. A., Horner, S. L., and Sondergeld, T. (2012). Alphabet knowledge in preschool: a Rasch model analysis. Early Child. Res. Q. 27, 543–554. doi: 10.1016/j.ecresq.2011.12.008

Ehri, L. C., Nunes, S. R., Stahl, S. A., and Willows, D. M. (2001). Systematic phonics instruction helps students learn to read: Evidence from the National Reading Panel's meta-analysis. Rev. Educ. Res. 71, 393–447. doi: 10.3102/00346543071003393

Ellefson, M. R., Treiman, R., and Kessler, B. (2009). Learning to label letters by sounds or names: a comparison of England and the United States. J. Exp. Child Psychol. 102, 323–341. doi: 10.1016/j.jecp.2008.05.008

Ernest, J. M., and McLean, J. E. (1998). Fight the good fight: A response to Thompson, Knapp, and Levin. Res. Schools 5, 59–62.

European Association of Science Editors (2018). EASE guidelines for authors and translators of scientific articles to be published in English. Eur. Sci. Edit. 44, e1–e16. doi: 10.20316/ESE.2018.44.e1

Evans, M. A., Bell, M., Shaw, D., Moretti, S., and Page, J. (2006). Letter names, letter sounds and phonological awareness: an examination of kindergarten children across letters and of letters across children. Read. Writ. 19, 959–989. doi: 10.1007/s11145-006-9026-x

*Flynn, R. M., and Richert, R. A. (2015). Parents support preschoolers' use of a novel interactive device. Infant Child Dev. 24, 624–642. doi: 10.1002/icd.1911

*Foy, J., and Mann, V. (2013). Executive function and early reading skills. Read. Writ. 26, 453–472. doi: 10.1007/s11145-012-9376-5

*Fuhs, M., Wyant, A., and Day, J. (2011). Unique contributions of impulsivity and inhibition to prereading skills in preschoolers at Head Start. J. Res. Child. Educ. 25, 145–159. doi: 10.1080/02568543.2011.555497

Gall, M. D., Gall, J. P., and Borg, W. R. (2007). Educational Research: An introduction, 8th Ed. New York, NY: Pearson.

Giofrè, D., Cumming, G., Fresc, L., Boedker, I., and Tressoldi, P. (2017). The influence of journal submission guidelines on authors' reporting of statistics and use of open research practices. PLoS ONE 12:e0175583. doi: 10.1371/journal.pone.0175583

*Goetz, K., Hulme, C., Brigstocke, S., Carroll, J., Nasir, L., and Snowling, M. (2008). Training of reading and phoneme awareness skills in children with Down Syndrome. Read. Writ. 21, 395–412. doi: 10.1007/s11145-007-9089-3

*Goldberg, H. R., and Lederberg, A. R. (2015). Acquisition of the alphabetic principle in deaf and hard-of-hearing preschoolers: the role of phonology in letter-sound learning. Read. Writ. 28, 509–525. doi: 10.1007/s11145-014-9535-y

*Greer, C. W., and Erickson, K. A. (2018). A preliminary exploration of uppercase letter-name knowledge among students with significant cognitive disabilities. Read. Writ. 31, 173–183. doi: 10.1007/s11145-017-9780-y

Justice, L. M., Pence, K., Bowles, R. B., and Wiggins, A. (2006). An investigation of four hypotheses concerning the order by which 4-year-old children learn the alphabet letters. Early Child. Res. Q. 21, 374–389. doi: 10.1016/j.ecresq.2006.07.010

*Knell, E., Haiyan, Q., Miao, P., Yanping, C., Siegel, L., Lin, Z., et al. (2007). Early English immersion and literacy in Xi'an, China. Modern Lang. J. 91, 395–417. doi: 10.1111/j.1540-4781.2007.00586.x

*Lerner, M. D., and Lonigan, C. J. (2016). Bidirectional relations between phonological awareness and letter knowledge in preschool revisited: A growth curve analysis of the relation between two code-related skills. J. Exp. Child Psychol. 144, 166–183. doi: 10.1016/j.jecp.2015.09.023

Lin, E., Wang, J., Klecka, C. L., Odell, S. J., and Spalding, E. (2010). Judging research in teacher education. J. Teach. Educ. 61, 295–301. doi: 10.1177/0022487110374013

*Lonigan, C., Purpura, D., Wilson, S., Walker, P., and Clancy-Menchetti, J. (2013). Evaluating the components of an emergent literacy intervention for preschool children at risk for reading difficulties. J. Exp. Child Psychol. 114, 111–130. doi: 10.1016/j.jecp.2012.08.010

Lonigan, C. J., Burgess, S. R., and Anthony, J. L. (2000). Development of emergent literacy and early reading skills in preschool children: evidence from a latent-variable longitudinal study. Dev. Psychol. 36, 596–613. doi: 10.1037/0012-1649.36.5.596

*MacDonald, H., Sullivan, A., and Watkins, M. (2013). Multivariate screening model for later word reading achievement: predictive utility of prereading skills and cognitive ability. J. Appl. School Psychol. 29, 52–71. doi: 10.1080/15377903.2013.751476

Makel, M. C. (2014). The empirical march: making science better at self-correction. Psychol. Aesthet. Creativity Arts 8, 2–7. doi: 10.1037/a0035803

Makel, M. C., and Plucker, J. A. (2014). Facts are more important than novelty: replication in the education sciences. Educ. Res. 20, 1–13. doi: 10.3102/0013189X14545513

Makel, M. C., Plucker, J. A., Freeman, J., Lombardi, A., Simonsen, B., and Coyne, M. (2016). Replication of special education research: necessary but far too rare. Remedial Special Educ. 37, 205–212. doi: 10.1177/0741932516646083

McBride-Chang, C. (1999). The ABCs of the ABCs: the development of letter name and letter sound knowledge. Merrill Palmer Q. 45, 285–308.

*McGeown, S., Johnston, R., and Medford, E. (2012). Reading instruction affects the cognitive skills supporting early reading development. Learn. Individ. Differ. 22, 360–364. doi: 10.1016/j.lindif.2012.01.012

*McGeown, S., and Medford, E. (2014). Using method of instruction to predict the skills supporting initial reading development: insight from a synthetic phonics approach. Read. Writ. 27, 591–608. doi: 10.1007/s11145-013-9460-5

Mohan, K. P., Peungposop, N., and Junprasert, T. (2016). State of the art behavioral science research: a review of the publications in the International Journal of Behavioral Science. Int. J. Behav. Sci. 11, 1–18. doi: 10.14456/ijbs.2016.1

National Early Literacy Panel (2008). Developing early literacy: Report of the National Early Literacy Panel. Washington, DC: National Institute for Literacy. Available online at: http://lincs.ed.gov/publications/pdf/NELPReport09.pdf

National Research Council (1998). Preventing Reading Difficulties in Young Children. Washington, DC: National Academy Press.

*Neumann, M., Hood, M., and Ford, R. (2013b). Using environmental print to enhance emergent literacy and print motivation. Read. Writ. 26, 771–793. doi: 10.1007/s11145-012-9390-7

*Neumann, M. M. (2014). Using environmental print to foster emergent literacy in children from a low-SES community. Early Child. Res. Q. 29, 310–318. doi: 10.1016/j.ecresq.2014.03.005

*Neumann, M. M., Acosta, A. C., and Neumann, D. L. (2013a). Young children's visual attention to environmental print as measured by eye tracker analysis. Read. Res. Q. 49, 157–167. doi: 10.1002/rrq.66

*Neumann, M. M., Hood, M., and Neumann, D. L. (2009). The scaffolding of emergent literacy skills in the home environment: a case study. Early Child. Educ. J. 36, 313–319. doi: 10.1007/s10643-008-0291-y

*Olszewski, A., Soto, X., and Goldstein, H. (2017). Modeling alphabet skills as instructive feedback within a phonological awareness intervention. Am. J. Speech Lang. Pathol. 26, 769–790. doi: 10.1044/2017_AJSLP-16-0042

*Ouellette, G., and Sénéchal, M. (2008). A window into early literacy: exploring the cognitive and linguistic underpinnings of invented spelling. Sci. Stud. Read. 12, 195–219. doi: 10.1080/10888430801917324

Pashler, H., and Wagenmakers, E-J. (2012). Editors' introduction to the special section on replicability in psychological science: A crisis of confidence? Perspect. Psychol. Sci. 7, 528–530. doi: 10.1177/1745691612465253

*Phillips, B., Piasta, S., Anthony, J., Lonigan, C., and Francis, D. (2012). IRTs of the ABCs: children's letter name acquisition. J. Sch. Psychol. 50, 461–481. doi: 10.1016/j.jsp.2012.05.002

*Piasta, S. B., Phillips, B. M., Williams, J. M., Bowles, R. P., and Anthony, J. L. (2016). Measuring young children's alphabet knowledge: development and validation of brief letter-sound knowledge assessments. Elem. Sch. J. 116, 523–548. doi: 10.1086/686222

*Piasta, S. B., Purpura, D. J., and Wagner, R. K. (2010). Fostering alphabet knowledge development: a comparison of two instructional approaches. Read. Writ. 23, 607–626. doi: 10.1007/s11145-009-9174-x

Piasta, S. B., and Wagner, R. K. (2010). Learning letter names and sounds: effects of instruction, letter type, and phonological processing skill. J. Exp. Child Psychol. 105, 324–344. doi: 10.1016/j.jecp.2009.12.008

*Prior, M., Bavin, E., and Ong, B. (2011). Predictors of school readiness in five- to six-year-old children from an Australian longitudinal community sample. Educ. Psychol. 31, 3–16. doi: 10.1080/01443410.2010.541048

*Rahn, N. L., Wilson, J., Egan, A., Brandes, D., Kunkel, A., Peterson, M., et al. (2015). Using incremental rehearsal to teach letter sounds to English language learners. Educ. Treat. Child. 38, 71–91. doi: 10.1353/etc.2015.0000

*Ritchey, K. D., and Speece, D. L. (2006). From letter names to word reading: The nascent role of sublexical fluency. Contemp. Educ. Psychol. 31, 301–327. doi: 10.1016/j.cedpsych.2005.10.001

*Roberts, T., Vadasy, P. F., and Sanders, E. A. (2018). Preschoolers' alphabet learning: letter name and sound instruction, cognitive processes, and English proficiency. Early Child. Res. Q. 44, 257–274. doi: 10.1016/j.ecresq.2018.04.011

Robinson, D. H., Levin, J. R., Thomas, G. D., Pituch, K. A., and Vaughn, S. (2007). The incidence of “causal” statements in teaching-and-learning research journals. Am. Educ. Res. J. 44, 400–413. doi: 10.3102/0002831207302174

*Savage, R., Carless, S., and Ferraro, V. (2007). Predicting curriculum and test performance at age 11 years from pupil background, baseline skills and phonological awareness at age 5 years. J. Child Psychol. Psychiatry 48, 732–739. doi: 10.1111/j.1469-7610.2007.01746.x

*Schwanenflugel, P. J., Hamilton, C. E., Neuharth-Pritchett, S., Restrepo, M. A., Bradley, B. A., and Webb, M. Y. (2010). PAVEd for success: An evaluation of a comprehensive preliteracy program for four-year-old children. J. Lit. Res. 42, 227–275. doi: 10.1080/1086296X.2010.503551

SCImago (2020). SJR - SCImago Journal and Country Rank [Portal]. Available online at: http://www.scimagojr.com (accessed August 3, 2019).

Share, D. L., Jorm, A. F., Maclean, R., and Matthews, R. (1984). Sources of individual differences in reading acquisition. J. Educ. Psychol. 76, 1309–1324. doi: 10.1037/0022-0663.76.6.1309

*Shaw, D. M., and Sundberg, M. L. (2008). How a neurologically integrated approach which teaches sound-symbol correspondence and legible letter formations impacts at-risk first graders. J. At Risk Issues 14, 13–21.

*Shidler, L. (2009). The impact of time spent coaching for teacher efficacy on student achievement. Early Child. Educ. J. 36, 453–460. doi: 10.1007/s10643-008-0298-4

Skidmore, S. T., Zientek, L. R., Combs, J. P., Fuller, M. B., Hirai, M., Price, D. P., et al. (2014). Empirical reporting practices in Community College Journal of Research and Practice and Journal of Developmental Education from 2002 to 2011: a systematic review. Commun. College J. Res. Pract. 38, 927–946. doi: 10.1080/10668926.2013.843478

Slavin, R. E. (2002). Evidence-based education policies: transforming educational practice and research. Educ. Res. 31, 15–21. doi: 10.3102/0013189X031007015

Slavin, R. E. (2005). Evidence-based reform in education: promise and pitfalls. Mid-Western Educ. Res. 18, 8–13.

*Smith, S., Scott, K., Roberts, J., and Locke, J. (2008). Disabled readers' performance on tasks of phonological processing, rapid naming and letter knowledge before and after kindergarten. Learn. Disabil. Res. Pract. 23, 113–124. doi: 10.1111/j.1540-5826.2008.00269.x

Storch, S. A., and Whitehurst, G. J. (2002). Oral language and code-related precursors to reading: evidence from a longitudinal structural model. Dev. Psychol. 38, 934–947. doi: 10.1037/0012-1649.38.6.934

*Strang, T. M., and Piasta, S. B. (2016). Socioeconomic differences in code-focused emergent literacy skills. Read. Writ. 29, 1337–1362. doi: 10.1007/s11145-016-9639-7

*Thompson, G. B., Fletcher-Flinn, C. M., Wilson, K. J., McKay, M. F., and Margrain, V. G. (2015). Learning with sublexical information from emerging reading vocabularies in exceptionally early and normal reading development. Cognition 136, 166–185. doi: 10.1016/j.cognition.2014.11.032

Torgerson, C., Brooks, G., Gascoine, L., and Higgins, S. (2019). Phonics: reading policy and the evidence from a systematic “tertiary” review. Res. Pap. Educ. 34, 208–238. doi: 10.1080/02671522.2017.1420816

Treiman, R., and Broderick, V. (1998). What's in a name? Children's knowledge about the letters in their own names. J. Exp. Child Psychol. 70, 97–116. doi: 10.1006/jecp.1998.2448

*Treiman, R., Stothard, S., and Snowling, M. (2013). Instruction matters: spelling of vowels by children in England and the US. Read. Writ. 26, 473–487. doi: 10.1007/s11145-012-9377-4

Treiman, R., Weatherston, S., and Berch, D. (1994). The role of letter names in children's learning of phoneme–grapheme relations. Appl. Psycholinguist. 15, 97–122. doi: 10.1017/S0142716400006998

Tressoldi, P. E., and Giofrè, D. (2015). The pervasive avoidance of prospective statistical power: major consequences and practical solutions. Front. Psychol. 6:726. doi: 10.3389/fpsyg.2015.00726

Tressoldi, P. E., Giofrè, D., Sella, F., and Cumming, G. (2013). High impact = high statistical standards? not necessarily so. PLoS ONE 8:e56180. doi: 10.1371/journal.pone.0056180

*Tyler, A. A., Osterhouse, H., Wickham, K., McNutt, R., and Shao, Y. (2014). Effects of explicit teacher-implemented phoneme awareness instruction in 4-year-olds. Clin. Linguist. Phonet. 28, 493–507. doi: 10.3109/02699206.2014.927004

*Wake, M., Tobin, S., Levickis, P., Gold, L., Ukoumunne, O., Zens, N., et al. (2013). Randomized trial of a population-based, home-delivered intervention for preschool language delay. Pediatrics 132, e885–e904. doi: 10.1542/peds.2012-3878

*Westerveld, M. F. (2014). Emergent literacy performance across two languages: assessing four-year-old bilingual children. Int. J. Biling. Educ. Bilingual. 17, 526–543. doi: 10.1080/13670050.2013.835302

*Willoughby, D., Evans, M. A., and Nowak, S. (2015). Do ABC eBooks boost engagement and learning in preschoolers? An experimental study comparing eBooks with paper ABC and storybook controls. Comput. Educ. 82, 107–117. doi: 10.1016/j.compedu.2014.11.008

*Wolf, G. M. (2016). Letter-sound reading: Teaching preschool children print-to-sound processing. Early Child. Educ. J. 44, 11–19. doi: 10.1007/s10643-014-0685-y

*Zemlock, D., Vinci-Booher, S., and James, K. H. (2018). Visual–motor symbol production facilitates letter recognition in young children. Read. Writ. 31, 1255–1271. doi: 10.1007/s11145-018-9831-z

*Zhang, X. (2018). Letter-name knowledge longitudinally predicts young Chinese children's Chinese word reading and number competencies in a multilingual context. Learn. Individ. Differ. 65, 176–186. doi: 10.1016/j.lindif.2018.06.004

Zhao, J., Beckett, G. H., and Wang, L. L. (2017). Evaluating the research quality of education journals in China: implications for increasing global impact in peripheral countries. Rev. Educ. Res. 87, 583–618. doi: 10.3102/0034654317690813

Zientek, L. R., Capraro, M. M., and Capraro, R. M. (2008). Reporting practices in quantitative teacher education research: one look at the evidence cited in the AERA panel report. Educ. Res. 37, 208–216. doi: 10.3102/0013189X08319762

Zientek, L. R., and Thompson, B. (2009). Matrix summaries improve research reports: secondary analyses using published literature. Educ. Res. 38, 343–352. doi: 10.3102/0013189X09339056

*References marked with an asterisk indicate studies included in the analysis.

Keywords: alphabet knowledge, letter knowledge, research methodology, evaluation, emergent literacy

Citation: Horner SL and Shaffer SA (2021) Evaluating the Reporting Quality of Researcher-Developed Alphabet Knowledge Measures: How Transparent and Replicable Is It? Front. Psychol. 12:601849. doi: 10.3389/fpsyg.2021.601849

Received: 06 October 2020; Accepted: 16 March 2021; Published: 16 April 2021.

Edited by:

Ann Dowker, University of Oxford, United Kingdom

Reviewed by:

David Giofrè, Liverpool John Moores University, United Kingdom

Greg Brooks, The University of Sheffield, United Kingdom

Copyright © 2021 Horner and Shaffer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sherri L. Horner, shorner@bgsu.edu
