ORIGINAL RESEARCH article

Front. Psychol., 26 February 2021

Sec. Quantitative Psychology and Measurement

Volume 12 - 2021 | https://doi.org/10.3389/fpsyg.2021.542257

Pedro Henrique Ribeiro Santiago1,2*, Adrian Quintero3, Dandara Haag1,2, Rachel Roberts4, Lisa Smithers2, Lisa Jamieson1

Aim: We aimed to investigate whether the 12-item Multidimensional Scale of Perceived Social Support (MSPSS) constitutes a valid and reliable measure of social support for the general adult Australian population.

Methods: Data were from Australia’s National Survey of Adult Oral Health 2004–2006 and included 3899 participants aged 18 years old and over. The psychometric properties were evaluated with Bayesian confirmatory factor analysis. One-, two-, and three-factor (Significant Other, Family and Friends) structures were tested. Model fit was assessed with the posterior predictive p-value (PPPχ2), Bayesian root mean square error of approximation (BRMSEA), and Bayesian comparative fit index (BCFI). Dimensionality was tested by comparing competing factorial structures with the Bayes factor (BF). Reliability was evaluated with the Bayesian ΩH. Convergent validity was investigated with the Perceived Stress Scale (PSS) and discriminant validity with the Perceived Dental Control scale (PDC-3).

Results: The theoretical three-factor model (Significant Other, Family, and Friends) provided a good fit to the data [PPPχ2 < 0.001; BRMSEA = 0.089, 95% credible interval (CrI) (0.088, 0.089); BCFI = 0.963, 95% CrI (0.963, 0.964)]. The BF provided decisive support for the three-factor structure in relation to the other structures. The SO [BΩH = 0.95, 95% CrI (0.90, 0.99)], FA [BΩH = 0.92, 95% CrI (0.87, 0.97)], and FR [BΩH = 0.92, 95% CrI (0.88, 0.97)] subscales displayed excellent reliability. The MSPSS displayed initial evidence of convergent and discriminant validity.

Conclusion: The MSPSS demonstrated good psychometric properties and excellent reliability in a large Australian sample. This instrument can be applied in national surveys and provide evidence of the role of social support in the Australian population.

Social support is a key social determinant of health (Wilkinson and Marmot, 2003). It works as a buffer of life adversities through multiple mechanisms, including supportive actions of others and the belief that support is available (Cohen et al., 2000). Substantial evidence has shown that increased social support is associated with a reduction in the effect of stressful events, higher self-regulation, and better physical and psychological health outcomes (Wilkinson and Marmot, 2003). Since social support is a complex and multidimensional construct (Lakey and Cohen, 2000), a number of instruments have been developed to measure different aspects of social support (van Dam et al., 2005). For instance, instruments were created to evaluate social support functions (e.g., emotional, tangible, positive interaction, companionship) (Sherbourne and Stewart, 1991), sources of social support (e.g., significant other, family, friends) (Zimet et al., 1988), received (e.g., a friend loaned money) (Barrera et al., 1981) or perceived (e.g., there are people I can depend on) social support (Cutrona and Russell, 1987), social support availability (Cohen and Hoberman, 1983) or adequacy, among others (Gottlieb and Bergen, 2010). Thus, although there is no single instrument that covers every aspect of social support, a review indicated five social support measures with strong psychometric properties (López and Cooper, 2011).

One of these five measures is the Multidimensional Scale of Perceived Social Support (MSPSS), a 12-item instrument originally developed by Zimet et al. (1988) to evaluate perception of social support adequacy from three different sources (significant other, family, and friends). Since its development, the MSPSS has been widely adopted, being translated to more than 20 languages, due to several reasons (Dambi et al., 2018). First, it measures the perception of social support (e.g., quality of relationships), in line with empirical findings that the quality of support is a better predictor of psychological status than support objectively measured (Wu et al., 2011). Second, the MSPSS evaluates distinct sources of social support, including “family,” “friends,” and “significant other,” which might elucidate the different mechanisms through which social support operates to improve health and other psychosocial outcomes (Bruwer et al., 2008). Taken together, the abovementioned characteristics of the MSPSS help inform interventions focused on distinct aspects of social support that are relevant to specific outcomes in the population.

In the original validation of the MSPSS, principal component analysis (PCA) indicated a three-factor structure, the Significant Other (SO), Family (FA), and Friends (FR) subscales, which displayed good internal consistency (αSO = 0.91, αFA = 0.87, αFR = 0.85) and adequate test–retest reliability (αSO = 0.72, αFA = 0.85, αFR = 0.75). Soon after the initial study, two additional validations were conducted by the instrument developers, Zimet et al. (1990) and Dahlem et al. (1991), in independent samples, again reporting the three-factor structure and good reliability.

Over the decades, the psychometric properties of the MSPSS have been evaluated worldwide, including countries such as Pakistan (Akhtar et al., 2010), China (Chou, 2000), Uganda (Nakigudde et al., 2009), Mexico (Edwards, 2004), Turkey (Başol, 2008), Thailand (Wongpakaran et al., 2011), Nepal (Tonsing et al., 2012), South Africa (Bruwer et al., 2008), Malaysia (Ng et al., 2010), Iran (Bagherian-Sararoudi et al., 2013), Sweden (Ekbäck et al., 2013), Russia (Pushkarev et al., 2018), and others (Denis et al., 2015; Theofilou, 2015). Despite being investigated in multiple countries, a recent systematic review by Dambi et al. (2018) indicated several limitations of previous MSPSS validations and cross-cultural adaptations. Previous MSPSS validations were conducted in small- or medium-sized samples of restricted populations, mostly clinical and university convenience samples. For example, the MSPSS properties were previously investigated in 275 undergraduate students (Zimet et al., 1988), 325 pregnant women (Akhtar et al., 2010), 475 high school students (Chou, 2000), 433 school administrators (Başol, 2008), 310 medical students and 152 psychiatric patients (Wongpakaran et al., 2011), 176 myocardial infarction (MI) patients (Bagherian-Sararoudi et al., 2013), 127 women with hirsutism and 154 nursing students (Ekbäck et al., 2013), among others.

Another point discussed by Dambi et al. (2018) was the MSPSS factorial structure. The majority of previous studies reported the three-factor structure, with a few notable exceptions: Lai et al. (1996) and Chou (2000) reported a two-factor structure, which combines the SO with the FR subscale, in Chinese samples. The reason for combining the subscales was that all SO items use the term “special person” (e.g., “There is a special person who is around when I am in need”), and respondents believed that a “special person” could be referring to a friend. The conceptual overlap happened because the “person we call ‘special’ might differ according to culture” (Başol, 2008) and the Chinese respondents did not distinguish between the two terms. For this reason, Eker et al. (2001) suggested that an explanation for the “special person” term (“a girlfriend/boyfriend, fiancé, relative, neighbour, or a doctor”) should be added to the items to provide clarification. Additionally, one-factor MSPSS structures were also reported in certain “collectivistic” societies, such as rural areas in Pakistan, in which the “sense of communal living dilutes the differences between family members, friends and significant others” (Akhtar et al., 2010).

Moreover, in the majority of previous MSPSS validations, the method employed to investigate the dimensionality was exploratory factor analysis (EFA). While the replication of the three-factor structure (Significant Other, Family, and Friends) by EFA in multiple independent studies provides support for the original MSPSS dimensionality, Dambi et al. (2018) pointed out limitations in how EFA was used. For instance, some studies employed EFA with orthogonal rotation (instead of oblique rotation) (Nakigudde et al., 2009; Ekbäck et al., 2013, 2014), which assumes uncorrelated factors. The problem is that moderate correlations are expected between receiving support from a Significant Other, Family, and/or Friends, and these three factors were shown to be correlated in previous MSPSS literature (Dambi et al., 2018).

Dambi et al. (2018) also discussed limitations in the investigation of model fit. Fit indices traditionally employed in factor models, such as the root mean square error of approximation (RMSEA) (Steiger, 1980), have been evaluated in the context of EFA (e.g., factor retention) (Preacher et al., 2013; Barendse et al., 2015) and are available for EFA in modern software, such as R package psych (Revelle, 2017). Despite their availability, the majority of MSPSS validations that employed EFA did not report fit indices. The problem is that, for the studies that reported the three-factor structure, it is not possible to know “the degree to which the data/translation fits into the original factor model” (Dambi et al., 2018). That is, it is not possible to know whether the three-factor structure was actually a good fit for the data. In case of poor fit, alternative MSPSS factorial structures, such as the two-factor structure (Friends and Family), or model respecifications, such as the inclusion of correlated uniqueness (Brown, 2014), would have to be considered.

For these reasons, Dambi et al. (2018) argued that, for the investigation of the MSPSS dimensionality, confirmatory factor analysis (CFA) should be preferred since it enables the evaluation of a priori MSPSS theoretical structures and “given that the MSPSS can yield one-, two- or three-factors, all the three models should be tested using CFA before a decision on the degree of fit can be made.” However, a minority of previous MSPSS validations performed CFA, and of these, only three studies adequately described fit indices. The authors concluded that “provision of multiple goodness-of-fit indices for all the three (MSPSS) models should be a ‘standard’ reporting practise as it provides the potential readership with all the essential information for them to critique the methodological quality and subsequent conclusions in keeping with the evidence supplied” (Dambi et al., 2018).

In summary, while the replication of the three-factor model in multiple independent studies indicates support for the original MSPSS factorial structure, a recent review recommended that future MSPSS validations should provide more robust evidence regarding the fit of the original three-factor model and its comparison to alternative MSPSS factorial structures (e.g., two-factor model, one-factor model).

Considering the shortcomings of previous MSPSS validations, there are three gaps in the literature that this study intends to address. First, the MSPSS validation studies were conducted in small- or medium-sized convenience samples of restricted populations. To the best of our knowledge, there are no studies that investigated the MSPSS validity for a general population using a large sample. While convenience samples do not necessarily lead to biased estimates (i.e., biased factor loadings) (Rothman et al., 2013), generalizability to a national population is unclear (Jager et al., 2017). For instance, validation studies of other instruments showed “slight differences in the strength of associations with other constructs in convenience and representative samples,” warranting further investigation (Leckelt et al., 2018). In Australia, one study validated an instrument (to measure perceived stress) in a restricted Indigenous subpopulation reporting good psychometric properties (Santiago et al., 2019) that have not been replicated at a national level (Santiago et al., 2020). In conclusion, while it is possible that psychometric properties of an instrument generalize from restricted subgroups to the national population, independent validation in national samples still seems to be required. In the case of the MSPSS, the examination in a general population can inform, for example, whether this instrument is suitable for application in large, population-level social support interventions.

Second, despite the MSPSS being previously used in epidemiological research in Australia (O’Dea and Campbell, 2011; Schuurmans-Stekhoven, 2017), there were no psychometric studies that evaluated its construct validity specifically for the Australian population. One important characteristic of the MSPSS compared to other social support instruments is that it provides information about distinct sources of social support, such as significant other, family, and friends. Previous research in Australia showed, for example, that managers receive support mostly outside of the workplace, from a spouse or partner, leading them to feel “lonely at the top” (Lindorff, 2001). In contrast, Australian nurses benefit primarily from the support of peers (work colleagues) when dealing with work stress (Joiner and Bartram, 2004). While sources of social support were investigated in restricted groups (managers, nurses, students) (Urquhart and Pooley, 2007), the validation of the MSPSS can provide a measure of sources of social support for the national Australian population. Future studies can employ the MSPSS to examine the impact of these sources (i.e., significant other, family, or friends) on psychosocial outcomes (e.g., diminishing stress) at a population level, leading to targeted interventions.

Moreover, perceptions of social support are influenced by cultural differences (Glazer, 2006), including between high-income countries (Davidson et al., 2008). Hence, it is important to evaluate whether questionnaires measuring social support have appropriate functioning in distinct cultures. The need for evidence-based assessment was the reason behind the specific validations of the MSPSS (originally developed in the United States) in multiple countries and cultures (Dambi et al., 2018). The countries in which the MSPSS was validated include low-, middle-, and high-income countries, such as France (Denis et al., 2015) and Canada (Clara et al., 2003). Considering that Australia has unique sociodemographic characteristics compared to other Western high-income countries, including low population density (Pong et al., 2009) and a third of the population being born overseas (Australia Bureau of Statistics, 2016), it is necessary to ensure that the MSPSS is also valid and reliable in the Australian context.

Third, we evaluated the MSPSS psychometric properties with Bayesian confirmatory factor analysis (BCFA). Since all previous validations were conducted within a frequentist framework, the application of BCFA can provide further insight into the MSPSS psychometric properties, such as an in-depth evaluation of model fit through the inspection of the fit indices’ posterior distribution. The current study aims to investigate whether the MSPSS constitutes a valid and reliable measure of social support for the general Australian population.

The sample comprised 3899 adult Australians in the population-based study Australia’s National Survey of Adult Oral Health (NSAOH) 2004–2006. The NSAOH sampling strategy was a three-stage (i.e., postcodes, households, people) stratified clustered design implemented to select a representative sample of the Australian population. Participants were interviewed by study staff via computer-assisted telephone interview (CATI). The participants who agreed to receive dental examinations were also mailed a questionnaire with several measures, including the MSPSS (Supplementary Table 1). Among the participants who received examination, the questionnaire response rate was 70.1% (Sanders and Slade, 2011). The NSAOH 2004–2006 was approved by the University of Adelaide’s Human Research Ethics Committee. All participants provided signed informed consent (Slade et al., 2004).

The Multidimensional Scale of Perceived Social Support (MSPSS) is a 12-item instrument assessed by a 5-point scale (1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree). The original validation by Zimet et al. (1988) indicated a three-factor structure comprising Significant Other (SO), Family (FA), and Friends (FR) subscales.

The Perceived Stress Scale (PSS-14) is a 14-item instrument assessed by a 5-point scale (1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree) with a two-factor structure of Perceived Stress (PS) and Perceived Coping (PC). A revised version has been recently validated for the Australian general population (Santiago et al., 2020).

The Perceived Dental Control (PDC-3) evaluates perceptions of control (“I don’t feel in control when I’m in the dental chair”), predictability (“I don’t feel like I know what’s going to happen next when I’m in the dental chair”), and likelihood of harm (“I believe I will be hurt when I’m in the dental chair”) when at the dentist (Armfield et al., 2008). Details of response options are as per above.

The statistical analysis was conducted with R software (R Core Team, 2013) and R package blavaan 0.3-6 (Merkle and Rosseel, 2015). The Markov Chain Monte Carlo (MCMC) estimation was performed with Stan (Gelman et al., 2015) within the RStan interface (Stan Development Team, 2018). Considering that estimation with sampling weights is still under development for BCFA, all analyses were conducted with unweighted data. The criterion validity analysis was conducted with JASP (JASP Team, 2018).

The factorial structure was evaluated through BCFA (Lee, 1981). Since missing values for individual items ranged from 0.02% to 0.18%, multiple imputation was not required (Graham, 2009) and complete case analysis was conducted (n = 3868). The first model evaluated was the one-factor model, since it is the most parsimonious, and if it is not possible to reject a one-factor model at first, there is no need to evaluate models with a more complex factorial structure (Kline, 2015). In case the one-factor model was rejected, the next model evaluated was the two-factor structure in which the SO subscale was combined with the FR subscale, a factorial structure that has been previously reported in Chinese samples (Lai et al., 1996; Chou, 2000). For the sake of completeness, we also evaluated the two other possible two-factor models, combining the FA subscale with the FR subscale and the FA subscale with the SO subscale. Finally, we evaluated the theoretical model comprising the SO, FA, and FR factors (Zimet et al., 1988).

Let yi be the p × 1 vector of observed variables (OV) (i.e., observed item responses) associated with participant i and m be the number of latent variables (LV). Then, the factor model estimated was:

$$y_i = \nu + \Lambda \eta_i + \varepsilon_i$$

where ν is the p × 1 vector of intercepts for the OV, Λ is the p × m matrix of factor loadings, ηi is the m × 1 vector containing the LV such that ηi ∼ N_m(0, ϕ), and εi is the p × 1 vector of residuals distributed as εi ∼ N_p(0, Σ). In addition, εi and ηi were assumed to be uncorrelated. The LV were assumed to covary, so ϕ is the m × m latent variable covariance matrix (Jöreskog and Sörbom, 1996; Merkle and Rosseel, 2015). The graphical representation of the structural equation models (SEMs) is displayed in Supplementary Figure 1.
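The covariance structure implied by this factor model can be sketched in a few lines. The example below is only an illustration under stated assumptions: the residual covariance matrix is taken to be diagonal, the helper names (`matmul`, `transpose`, `implied_covariance`) are hypothetical, and the loadings and variances are toy values for a 4-item, 2-factor model rather than MSPSS estimates.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]


def implied_covariance(loadings, factor_cov, residual_var):
    """Return Lambda * Phi * Lambda^T plus a diagonal residual matrix.

    Assumption: residuals are uncorrelated, so only their variances are needed.
    """
    common = matmul(matmul(loadings, factor_cov), transpose(loadings))
    size = len(common)
    return [[common[i][j] + (residual_var[i] if i == j else 0.0)
             for j in range(size)] for i in range(size)]


# Hypothetical toy values: the first item of each factor has a unit loading,
# mirroring a reference-variable identification.
LOADINGS = [[1.0, 0.0], [0.8, 0.0], [0.0, 1.0], [0.0, 0.9]]
FACTOR_COV = [[1.0, 0.5], [0.5, 1.0]]   # correlated latent variables
RESIDUAL_VAR = [0.3, 0.4, 0.2, 0.5]

sigma = implied_covariance(LOADINGS, FACTOR_COV, RESIDUAL_VAR)
for row in sigma:
    print([round(value, 3) for value in row])
```

Items that load on different factors still covary in the output because the latent variables are allowed to covary, which is the reason an orthogonal (uncorrelated-factor) solution would be a poor description of such data.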

The factor models were estimated with a mean structure (i.e., intercept parameters), originally developed for continuous items. Although factor models with a threshold structure can also be estimated in BCFA (Lee, 2007), there is one major limitation that withholds its implementation in the current study. The only fit index currently available for factor models with a threshold structure is the χ2 statistic in which the null hypothesis represents the exact correspondence between the model-implied covariance matrix and sample covariance matrix (Gelman et al., 1996; Sellbom and Tellegen, 2019; Taylor, 2019). Fit indices, such as the RMSEA and the comparative fit index (CFI) (Bentler, 1990), which were developed to complement the χ2 statistic and describe the degree of correspondence between the model and the data (Garnier-Villarreal and Jorgensen, 2019), have only been validated for factor models with a mean structure in BCFA (Garnier-Villarreal and Jorgensen, 2019; Hoofs et al., 2018). Although factor models with a threshold structure are potentially more aligned with the ordered-categorical nature of MSPSS items, interpretation of model fit would be restricted using these models. That is, under factor models with a threshold structure, we would only be able to evaluate the exact correspondence between the model and the data using the χ2 statistic, and it is unlikely that any hypothesized factorial structure can exactly reproduce the MSPSS item responses (MacCallum, 2003). For this reason, factor models with a mean structure were estimated. Finally, R package blavaan can fit only factor models with a mean structure (instead of a threshold structure) in its current version.

Model estimation was carried out with three independent MCMC chains with Hamiltonian Monte Carlo sampling (Duane et al., 1987). The estimation was performed with 1000 iterations for each chain after a burn-in period of 1000 iterations. Convergence of the MCMC chains to the posterior distribution was evaluated graphically with (a) trace plots (Gelfand and Smith, 1990) and formally with (b) the estimated potential scale reduction factor (PSRF) (Gelman and Rubin, 1992) and (c) the Monte Carlo standard error (MCSE) using batch means (Jones et al., 2006). Brooks and Gelman (1998) recommended that convergence to the posterior distribution can be considered reached when the PSRF values for each parameter are close to 1.0 and smaller than 1.1. Otherwise, MCMC chains with more iterations are necessary to improve convergence to the posterior distribution.
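The PSRF criterion can be illustrated with a minimal sketch. It assumes the basic Gelman and Rubin (1992) form of the statistic, without the degrees-of-freedom correction or the rank-normalized variants that current software may report, and it uses simulated draws for a hypothetical parameter instead of output from the fitted models. The function name `psrf` is an illustrative choice.

```python
import random
import statistics


def psrf(chains):
    """Basic potential scale reduction factor for one parameter.

    `chains` is a list of equally long lists of post-burn-in draws.
    """
    n = len(chains[0])
    chain_means = [statistics.fmean(chain) for chain in chains]
    # W: average within-chain variance; B/n: variance of the chain means.
    within = statistics.fmean([statistics.variance(chain) for chain in chains])
    between_over_n = statistics.variance(chain_means)
    pooled = (n - 1) / n * within + between_over_n
    return (pooled / within) ** 0.5


# Simulated draws for a hypothetical factor loading (not MSPSS output):
# three chains of 1000 retained iterations each.
rng = random.Random(2021)
mixed = [[rng.gauss(0.70, 0.05) for _ in range(1000)] for _ in range(3)]

# Same draws, except that one chain is shifted to a different region.
stuck = [list(chain) for chain in mixed]
stuck[2] = [draw + 1.0 for draw in stuck[2]]

psrf_mixed = psrf(mixed)
psrf_stuck = psrf(stuck)
print(f"agreeing chains: {psrf_mixed:.3f}; disagreeing chains: {psrf_stuck:.3f}")
```

When the chains agree, the between-chain variance is negligible and the ratio stays near 1.0; when one chain sits elsewhere, the pooled variance is inflated and the value exceeds the 1.1 threshold.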

Vague priors [default in blavaan (Merkle and Rosseel, 2015) for estimation using Stan] were specified for the factor loadings [λ∼ N(μ = 0, σ2 = 100)], OV intercepts [ν∼ N(μ = 0, σ2 = 1024)], OV residual standard deviations [ε∼ G(1,0.50)], and LV residual standard deviations [ε∼ G(1,0.50)]. Each factor correlation had a prior uniform distribution on the interval [−1, 1]. The parameters were, a priori, assumed to be mutually independent (Scheines et al., 1999). Recent simulation studies have shown this set of priors to be weakly informative for a variety of SEMs typically encountered in practice (Merkle et al., 2020). When the sample size is large (or very large) and vague priors are specified, the posterior distribution is predominantly informed by the likelihood function, and results become asymptotically equal to a maximum likelihood (ML) solution (Garnier-Villarreal and Jorgensen, 2019). To illustrate this equivalence, we reported results from maximum likelihood estimation in the Supplementary Material. The latent variables were scaled using the reference variable method, imposing a unit loading identification (ULI) constraint on the first item of each subscale (Kline, 2015). Completely standardized solutions of the factor analytical models were reported.

Model fit was investigated through posterior predictive model checking (PPMC) (Gelman et al., 1996). PPMC uses a discrepancy function to calculate whether the observed data are consistent with the expected values of the model at each iteration of the Markov chain that successfully converged to the posterior distribution. In our study, the discrepancy function selected was the χ2 statistic, which compares the sample covariance matrix (S) with the model-implied covariance matrix (Σ̂) (Gierl and Mulvenon, 1995). The χ2 statistic is displayed below:
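One standard form of this likelihood-ratio discrepancy for a model with a mean structure is sketched here under stated assumptions: $N$ denotes the sample size, $p$ the number of observed variables, $\bar{y}$ and $\hat{\mu}$ the sample and model-implied mean vectors, and the $(N - 1)$ scaling follows one common convention (others use $N$), so the constant may differ from the expression used by the authors.

$$\chi^2 = (N - 1)\left[\ln|\hat{\Sigma}| + \mathrm{tr}\left(S\hat{\Sigma}^{-1}\right) - \ln|S| - p + (\bar{y} - \hat{\mu})^{\top}\hat{\Sigma}^{-1}(\bar{y} - \hat{\mu})\right]$$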

where ȳ is the p × 1 vector of sample means, and μ̂ is the p × 1 vector of model-implied means. The fit of the SEM was then evaluated with the posterior predictive p-value (PPPχ2). The PPPχ2 estimates the proportion of posterior samples in which the discrepancy measure calculated with replicated data (Drep) under the model is higher than the discrepancy measure calculated with observed data (Dobs), so that values near zero indicate that the observed data are more discrepant from the model than data generated by the model itself. The rationale is that, if the observed data are perfectly explained by the model, occasions when Dobs > Drep (or Dobs < Drep, for that matter) are arbitrary and the PPPχ2 should approximate 50% (Garnier-Villarreal and Jorgensen, 2019).
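The computation of the posterior predictive p-value can be sketched as a simple proportion. The example assumes the convention in which the PPP counts iterations where the replicated discrepancy exceeds the observed one, which is the convention consistent with a PPPχ2 near zero signalling misfit. The function name and the discrepancy values are hypothetical toy inputs, not quantities from the fitted models.

```python
def posterior_predictive_p(d_obs, d_rep):
    """Proportion of retained iterations in which D_rep exceeds D_obs.

    Assumption: one observed and one replicated discrepancy per iteration.
    """
    if len(d_obs) != len(d_rep) or not d_obs:
        raise ValueError("need one observed and one replicated value per iteration")
    exceed = sum(1 for obs, rep in zip(d_obs, d_rep) if rep > obs)
    return exceed / len(d_obs)


# Hypothetical discrepancies for four posterior draws.
D_OBS = [10.0, 12.0, 9.0, 15.0]
D_REP = [11.0, 8.0, 9.5, 20.0]

ppp = posterior_predictive_p(D_OBS, D_REP)
print(ppp)
```

In this toy case three of the four replicated discrepancies exceed their observed counterparts. A well-fitting model would hover around 0.5, while a model that is consistently more discrepant on the observed data than on its own replicates would approach zero.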

The limitation of the PPPχ2 is that the χ2 statistic evaluates the null hypothesis of exact correspondence between the model-implied covariance matrix and sample covariance matrix. However, theoretical models, such as the MSPSS three-factor structure comprising SO, FA, and FR (Zimet et al., 1988), were created to be merely approximations of reality and were not expected to perfectly explain observed data from empirical research (MacCallum, 2003). Sellbom and Tellegen (2019) emphasize that, in psychological assessment research with factor analysis, “the null hypothesis is virtually always rejected, which means that there will always be significant discrepancies between the estimated model parameters and observed data.” Thus, as the sample size increases, the χ2 statistic becomes increasingly sensitive to trivial deviations from the model. This limitation of the χ2 statistic is present in both frequentist and Bayesian CFA and has been reiterated by several methodologists (Saris et al., 2009; Asparouhov and Muthén, 2010; West et al., 2012; Hayduk, 2014; Garnier-Villarreal and Jorgensen, 2019). Hence, when the study has enough power, the PPPχ2 will detect trivial model misspecifications, even when these misspecifications have no substantive or practical meaning. While more studies are needed, the sensitivity of the PPPχ2 to detect negligible differences within large samples seems to approach 1.0 (Hoofs et al., 2018), requiring other fit indices such as RMSEA and CFI to be used to evaluate model fit.

For this reason, we also evaluated the fit of the model with indices such as the RMSEA and the CFI, which complement the χ2 statistic by indicating the degree of correspondence between the model and the data (Garnier-Villarreal and Jorgensen, 2019). Based on previous work by Hoofs et al. (2018), Garnier-Villarreal and Jorgensen (2019) recently adapted fit indices to Bayesian structural equation modeling. The proposed Bayesian RMSEA (BRMSEA) and Bayesian CFI (BCFI) are displayed below:

$$\mathrm{BRMSEA}_i = \sqrt{\max\left(0, \frac{\chi^2_{\mathrm{obs},H,i} - p^*}{(p^* - p_D)\,N}\right)}$$

$$\mathrm{BCFI}_i = 1 - \frac{\chi^2_{\mathrm{obs},H,i} - p^*}{\chi^2_{\mathrm{obs},0,i} - p^*}$$

where p∗ is the number of unique elements within the sample variance–covariance matrix, i is the Markov chain iteration, N is the sample size, $\chi^2_{\mathrm{obs},H,i}$ is the χ2 statistic (previously described) calculated with observed data (Dobs) under the hypothesized model, $\chi^2_{\mathrm{obs},0,i}$ is the χ2 statistic calculated with observed data (Dobs) under the independence model, and pD is the effective number of parameters. Since the number of parameters in Bayesian inference cannot be expressed as integers (e.g., informative compared to noninformative priors further restrict the parameter space), we used the effective number of parameters (pD) based on the deviance information criterion (DIC) (Spiegelhalter et al., 2002). The pD was calculated through the marginalized DIC (mDIC) after latent variables were integrated out (Quintero and Lesaffre, 2018). The independence model was specified by constraining covariances among observed variables to zero and freely estimating intercepts and variances (Widaman and Thompson, 2003).
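The way these indices yield a posterior distribution, and hence a credible interval, can be sketched as follows. The example assumes the per-iteration definitions BRMSEA = sqrt(max(0, (χ2 − p∗) / ((p∗ − pD) N))) and BCFI = 1 − (χ2 hypothesized − p∗) / (χ2 independence − p∗), which is our reading of the Garnier-Villarreal and Jorgensen (2019) proposal. All numeric inputs are hypothetical, apart from p∗ = 78, which is the number of unique variances and covariances among 12 items; the function names are illustrative.

```python
import math
import statistics


def brmsea(chisq_h, p_star, p_d, n):
    """Per-iteration BRMSEA; a negative noncentrality is truncated at zero."""
    noncentrality = chisq_h - p_star
    return math.sqrt(max(0.0, noncentrality / ((p_star - p_d) * n)))


def bcfi(chisq_h, chisq_0, p_star):
    """Per-iteration BCFI relative to the independence (baseline) model."""
    return 1.0 - (chisq_h - p_star) / (chisq_0 - p_star)


def credible_interval_95(draws):
    """Equal-tailed 95% interval from the 2.5th and 97.5th percentiles."""
    cuts = statistics.quantiles(draws, n=40, method="inclusive")
    return cuts[0], cuts[-1]


P_STAR = 78        # 12 * 13 / 2 unique (co)variances for 12 items
P_D = 38.0         # hypothetical effective number of parameters
N = 1000           # hypothetical sample size

# Hypothetical chi-square values at five posterior draws.
CHISQ_H = [478.0, 470.0, 486.0, 474.0, 482.0]       # hypothesized model
CHISQ_0 = [8078.0, 8070.0, 8090.0, 8074.0, 8082.0]  # independence model

brmsea_draws = [brmsea(h, P_STAR, P_D, N) for h in CHISQ_H]
bcfi_draws = [bcfi(h, z, P_STAR) for h, z in zip(CHISQ_H, CHISQ_0)]

brmsea_lo, brmsea_hi = credible_interval_95(brmsea_draws)
print(f"BRMSEA mean {statistics.fmean(brmsea_draws):.3f}, "
      f"95% CrI ({brmsea_lo:.3f}, {brmsea_hi:.3f})")
print(f"BCFI mean {statistics.fmean(bcfi_draws):.3f}")
```

Because each index is computed once per retained iteration, its spread across iterations directly provides the interval, with no appeal to an asymptotic sampling distribution.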

In terms of interpretation, since widely used cutoff points derived from frequentist simulation studies (Hu and Bentler, 1999) do not provide the same type I and II error rates in BSEM, hypothesis testing using these cutoffs with BRMSEA and BCFI should not be conducted. Nonetheless, Garnier-Villarreal and Jorgensen (2019) explained that “traditional guidelines proposed for interpreting the magnitude of SEM fit indices based on intuition and experience would be no less valid.” For this reason, we evaluated the magnitude of fit indices such as BRMSEA and BCFI as descriptive measures of the degree to which the model failed to reproduce the data (Garnier-Villarreal and Jorgensen, 2019).

In addition to the evaluation of model fit of the one-, two-, and three-factor models, we used the Bayes factor (BF) (Jeffreys, 1961) to formally test which factorial structure has more support from the data. The BF compares the marginal likelihood of the data under model 2 (the alternative hypothesis) with the marginal likelihood of the data under model 1 (the null hypothesis), providing thus a continuous measure of the predictive accuracy of the two competing models (Wetzels and Wagenmakers, 2012). Since calculation of the BF can be difficult, the log-Bayes factor (logBF) was calculated using the Laplace approximation (Lewis and Raftery, 1997) and then converted to the BF.
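The conversion from the log scale to the BF can be sketched as below. The log marginal likelihoods are hypothetical placeholders (in practice they would come from an approximation such as the Laplace method), and the function names are illustrative. The guard against overflow is an added assumption: with large samples the logBF can be far too large to exponentiate in double precision.

```python
import math


def log_bayes_factor(log_marglik_alt, log_marglik_null):
    """logBF of model 2 (alternative) against model 1 (null)."""
    return log_marglik_alt - log_marglik_null


def bayes_factor(log_bf):
    """Convert a logBF to a BF, returning infinity instead of overflowing."""
    # math.exp overflows for arguments above roughly 709 in double precision.
    return math.inf if log_bf > 709.0 else math.exp(log_bf)


# Hypothetical log marginal likelihoods for two competing structures.
LOG_ML_THREE_FACTOR = -1000.0
LOG_ML_TWO_FACTOR = -1004.0

log_bf = log_bayes_factor(LOG_ML_THREE_FACTOR, LOG_ML_TWO_FACTOR)
bf = bayes_factor(log_bf)
print(f"logBF = {log_bf:.1f}, BF = {bf:.1f}")
```

A difference of 4 on the log scale already corresponds to a BF of about 55 in favour of the model in the numerator, which shows why modest differences in log marginal likelihood translate into strong relative support.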

Reliability. Similarly, we propose calculating reliability using a Bayesian version of McDonald’s ΩH (McDonald, 2013):

$$B\Omega_H = \frac{1}{s}\sum_{i=1}^{s}\frac{\left(\sum_{j=1}^{k}\lambda_{ij}\right)^{2}\psi_i}{\mathbf{1}^{\top}\Sigma\,\mathbf{1}}$$

where λij is the factor loading of item j at iteration i, i is the Markov chain iteration, s is the number of Markov chain iterations, ψ is the factor variance, Σ is the sample covariance matrix, and 1 is the k-dimensional vector of 1’s. McDonald’s ΩH was chosen over traditional reliability coefficients such as Cronbach’s α (Cronbach, 1951), since it does not assume (1) tau equivalence or (2) a congeneric model without correlated uniqueness (Dunn et al., 2014).
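A minimal sketch of this reliability computation follows. It assumes that, at each iteration, the coefficient is the squared sum of the subscale loadings times the factor variance, divided by the sum of all elements of the subscale's sample covariance matrix, and that the Bayesian estimate is the average over iterations. The function names and all numbers are hypothetical toy values, not MSPSS results.

```python
import statistics


def omega_draw(loadings, factor_var, sample_cov):
    """Omega for one posterior draw: (sum of loadings)^2 * psi / (1' Sigma 1)."""
    total_variance = sum(sum(row) for row in sample_cov)  # 1' Sigma 1
    return (sum(loadings) ** 2) * factor_var / total_variance


def bayesian_omega(loading_draws, factor_var_draws, sample_cov):
    """Return the posterior mean of omega and the per-draw values."""
    draws = [omega_draw(lam, psi, sample_cov)
             for lam, psi in zip(loading_draws, factor_var_draws)]
    return statistics.fmean(draws), draws


# Hypothetical 4-item subscale: unit variances and covariances of 0.64.
SAMPLE_COV = [[1.0 if i == j else 0.64 for j in range(4)] for i in range(4)]

# Hypothetical posterior draws of the loadings and of the factor variance.
LOADING_DRAWS = [[0.8, 0.8, 0.8, 0.8], [0.8, 0.8, 0.8, 0.8]]
FACTOR_VAR_DRAWS = [1.0, 1.0]

omega_mean, omega_draws = bayesian_omega(LOADING_DRAWS, FACTOR_VAR_DRAWS, SAMPLE_COV)
print(round(omega_mean, 3))
```

Keeping the per-draw values, and not only their mean, is what allows a credible interval for the reliability coefficient to be read off the same posterior sample.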

Nonparametric bivariate Kendall’s τ (Kendall, 1948) correlations were calculated between the MSPSS subscale total scores (SO, FA, and FR), the PSS subscale total scores (Perceived Stress and Perceived Coping), and the PDC-3 total scores. Since the PSS subscale total scores and the PDC-3 total scores had missing values, we employed pairwise exclusion to calculate the Kendall’s τ correlations with the MSPSS subscale total scores. The prior employed for the Kendall’s τ correlations was also vague: a uniform distribution on the interval [−1, 1] (Van Doorn et al., 2018; Wagenmakers et al., 2018a). Perceived stress was chosen for the evaluation of criterion validity since a large body of empirical research has provided evidence of the protective effects of social support on stress (Lakey and Cohen, 2000). Hence, a negative correlation of the MSPSS subscales with Perceived Stress (convergent validity) and a positive correlation with Perceived Coping (divergent validity) were expected. We also expected a weak and nonmeaningful correlation (discriminant validity) between social support and perceived control during dental examination (measured by the PDC-3). The appointment with the dentist occurs in the dental examination room, where individuals undergo examination alone (without a significant other, family, or friends). Hence, the PDC-3 evaluates perceptions of control limited to the experience of dental examination (e.g., “I don’t feel like I know what’s going to happen next when I’m in the dental chair”), and these perceptions were shown to be more associated with personality factors (neuroticism, trait anxiety) (Brunsman et al., 2003) than with the social support received in other domains of life.
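Pairwise exclusion combined with a rank correlation can be sketched as below. This is an illustration of the frequentist τ-b point estimate only, chosen here as an assumption because total scores contain many ties; it does not reproduce the Bayesian analysis with a uniform prior. Missing values are represented by `None`, and the function name and scores are hypothetical.

```python
import math


def kendall_tau_b(x, y):
    """Kendall's tau-b using only the pairs observed on both variables."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    concordant = discordant = tied_x_only = tied_y_only = 0
    for i in range(len(pairs)):
        for j in range(i + 1, len(pairs)):
            dx = pairs[i][0] - pairs[j][0]
            dy = pairs[i][1] - pairs[j][1]
            if dx == 0 and dy == 0:
                continue                      # tied on both: ignored
            if dx == 0:
                tied_x_only += 1
            elif dy == 0:
                tied_y_only += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    untied = concordant + discordant
    denominator = math.sqrt((untied + tied_x_only) * (untied + tied_y_only))
    if denominator == 0:
        raise ValueError("tau-b is undefined when a variable is constant")
    return (concordant - discordant) / denominator


# Hypothetical total scores; None marks a missing questionnaire score.
SUPPORT = [20, 18, 15, 12, None, 9]
STRESS = [5, 7, None, 11, 13, 14]

tau = kendall_tau_b(SUPPORT, STRESS)
print(tau)
```

Only the four participants with both scores contribute to the estimate in this toy example, so a respondent missing one questionnaire can still be used for correlations involving the others.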

In our study, CFA with Bayesian inference and evaluation of fit with BRMSEA and BCFI were chosen due to three main advantages over the frequentist approach. First, (1) fit indices such as CFI are complex functions of model parameters and have unknown sampling distributions, precluding the calculation of precision measures such as 95% confidence intervals (CI). Although the RMSEA is an exception since it has a known sampling distribution (Browne and Cudeck, 1992), the BCFI and BRMSEA provide an empirical approximation of the “realized values of the discrepancy measure” (Levy, 2011) for any sample size without the need to rely on asymptotic theory. Second, (2) the interpretation of credible intervals (CrI) (i.e., a 95% probability that the true parameter value lies within the interval) is more intuitive than the interpretation of CI since statistical inference is conducted by conditioning on the study data rather than depending on infinite repetitions of the estimator (Morey et al., 2016). Third, (3) hypothesis testing with p-values has received strong criticism over the decades (Cohen, 1994). P-values are considered a confounded measure since they depend upon both effect size and sample size (Lang et al., 1998). Moreover, the failure to reject the null hypothesis does not prove that the null is correct or preferable to an alternative hypothesis (Wagenmakers, 2007), so “absence of evidence is not evidence of absence” (Jaykaran et al., 2011). Finally, Amrhein et al. (2019) recently emphasized that the use of cutoff points and dichotomization of p-values into “significant” and “nonsignificant” should be abandoned, since similar effect sizes with p-values below and above thresholds (e.g., above and below 0.05) should not be interpreted differently. Therefore, all statistical hypothesis tests in the current study were conducted with the BF.

Although the current study had a large sample, another advantage of BCFA arises with small sample sizes, in which frequentist estimation often results in nonconvergence and inaccurate parameter estimates (Smid et al., 2020). The reason is that frequentist procedures rely on “asymptotic results that are typically not satisfied with psychometric data except in large-scale settings” (Rupp et al., 2004). However, in psychological research, many target populations can be naturally small or hard-to-access groups (e.g., burn survivor patients with posttraumatic stress symptoms) (Van De Schoot et al., 2015), so recruiting large samples is not feasible or even possible. In these cases, BCFA can also provide a powerful alternative to frequentist CFA. Thus, future validations of the MSPSS targeted at small, selected populations would also benefit from BCFA.

The age range of participants was 18–82 years (Mage = 50.2, SD = 14.8); almost two-thirds were women (62.1%), over two-thirds had received a tertiary education (67.4%), and almost 60% were employed (Table 1). There were no meaningful differences between the original sample (n = 3899) and the complete cases sample (n = 3868).

In all models evaluated in this study (the one-, two-, and three-factor models), the Markov chains converged to the posterior distribution with 1000 iterations after discarding the first 1000 iterations as a burn-in. The visual inspection of trace plots indicated convergence of the three Markov chains. Trace plots of the three-factor model are reported (Supplementary Figure 2). The PSRFs for individual parameters were very close to 1.00 and smaller than 1.10 in all models. The PSRFs of the three-factor model are displayed in Table 2, while the PSRFs of the one- and two-factor models are displayed in Supplementary Tables 2–5.
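For readers unfamiliar with the PSRF, the sketch below computes the basic Gelman and Rubin (1992) statistic for a single parameter from several chains of equal length. It is a simplified illustration: it omits refinements such as degrees-of-freedom corrections or split chains that software may apply, and the three chains are simulated stand-ins, not the study's chains.

```python
import math
import random
import statistics

def psrf(chains):
    """Basic potential scale reduction factor for one parameter."""
    n = len(chains[0])  # retained draws per chain
    chain_means = [statistics.fmean(chain) for chain in chains]
    within = statistics.fmean([statistics.variance(chain) for chain in chains])
    between = n * statistics.variance(chain_means)
    pooled = (n - 1) / n * within + between / n  # pooled variance estimate
    return math.sqrt(pooled / within)

# Three hypothetical chains of 1000 post-burn-in draws for one factor loading.
rng = random.Random(42)
chains = [[rng.gauss(0.85, 0.01) for _ in range(1000)] for _ in range(3)]
r_hat = psrf(chains)
print(round(r_hat, 3), r_hat < 1.10)
```

Values near 1.00 indicate that between-chain variability is negligible relative to within-chain variability, which is the pattern reported for all models.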

The MCSEs of the one-factor model ranged from 9.49 × 10^−5 to 9.63 × 10^−4, the MCSEs of the two-factor model ranged from 4.06 × 10^−19 to 9.63 × 10^−4, and the MCSEs of the three-factor model ranged from 4.23 × 10^−5 to 9.73 × 10^−4. In all cases, the MCSEs of the parameters were smaller than 5% of the posterior standard deviation.
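The 5% criterion can be checked with a short calculation. The sketch below estimates the MCSE of a posterior mean with the batch-means method, which is one common estimator and is assumed here for illustration; the estimator used by the software in this study may differ. The draws are simulated.

```python
import math
import random
import statistics

def mcse_batch_means(draws, n_batches=20):
    """Batch-means estimate of the Monte Carlo standard error of the mean."""
    size = len(draws) // n_batches
    batch_means = [
        statistics.fmean(draws[b * size:(b + 1) * size]) for b in range(n_batches)
    ]
    return math.sqrt(statistics.variance(batch_means) / n_batches)

# Hypothetical pooled posterior draws for one parameter (3 chains x 1000 draws).
rng = random.Random(7)
draws = [rng.gauss(0.85, 0.01) for _ in range(3000)]

mcse = mcse_batch_means(draws)
posterior_sd = statistics.stdev(draws)
passes = mcse < 0.05 * posterior_sd  # criterion: MCSE below 5% of posterior SD
print(passes)
```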

The evaluation of PPPχ2 across all models indicated that the χ2 statistic, which evaluates the null hypothesis of exact correspondence between the model-implied covariance matrix and the sample covariance matrix, was substantially higher when calculated with observed data (Dobs) than when calculated with replicated data (Drep) (PPPχ2 < 0.001). Considering that the PPPχ2 can be sensitive to trivial model misspecifications under large samples (such as the sample in our study), we then proceeded to inspect fit indices such as RMSEA and CFI to evaluate the degree of correspondence between the model and the data. The first model evaluated was the one-factor model, and it displayed a poor fit (Table 3).
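The logic of the posterior predictive p-value can be shown with a minimal Python sketch: at each iteration the discrepancy for replicated data is compared with the discrepancy for observed data, and the PPP is the proportion of iterations in which the replicated value is at least as large. The discrepancy values below are invented, and the function name is ours.

```python
def posterior_predictive_p(d_obs, d_rep):
    """Proportion of iterations in which D_rep is at least as large as D_obs."""
    exceed = sum(1 for obs, rep in zip(d_obs, d_rep) if rep >= obs)
    return exceed / len(d_obs)

# Hypothetical chi-square discrepancies across four iterations (not study data).
d_obs = [310.2, 298.7, 305.1, 301.4]  # observed data, consistently large
d_rep = [45.0, 52.3, 48.8, 50.1]      # replicated data under the model
ppp_misfit = posterior_predictive_p(d_obs, d_rep)

# For comparison, a case in which neither discrepancy dominates.
ppp_balanced = posterior_predictive_p([50.0, 47.0, 52.0, 49.0], d_rep)
print(ppp_misfit, ppp_balanced)
```

A PPP near 0.5 is what a well-fitting model would be expected to produce, whereas a value near 0 reflects observed discrepancies that the model almost never reproduces.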

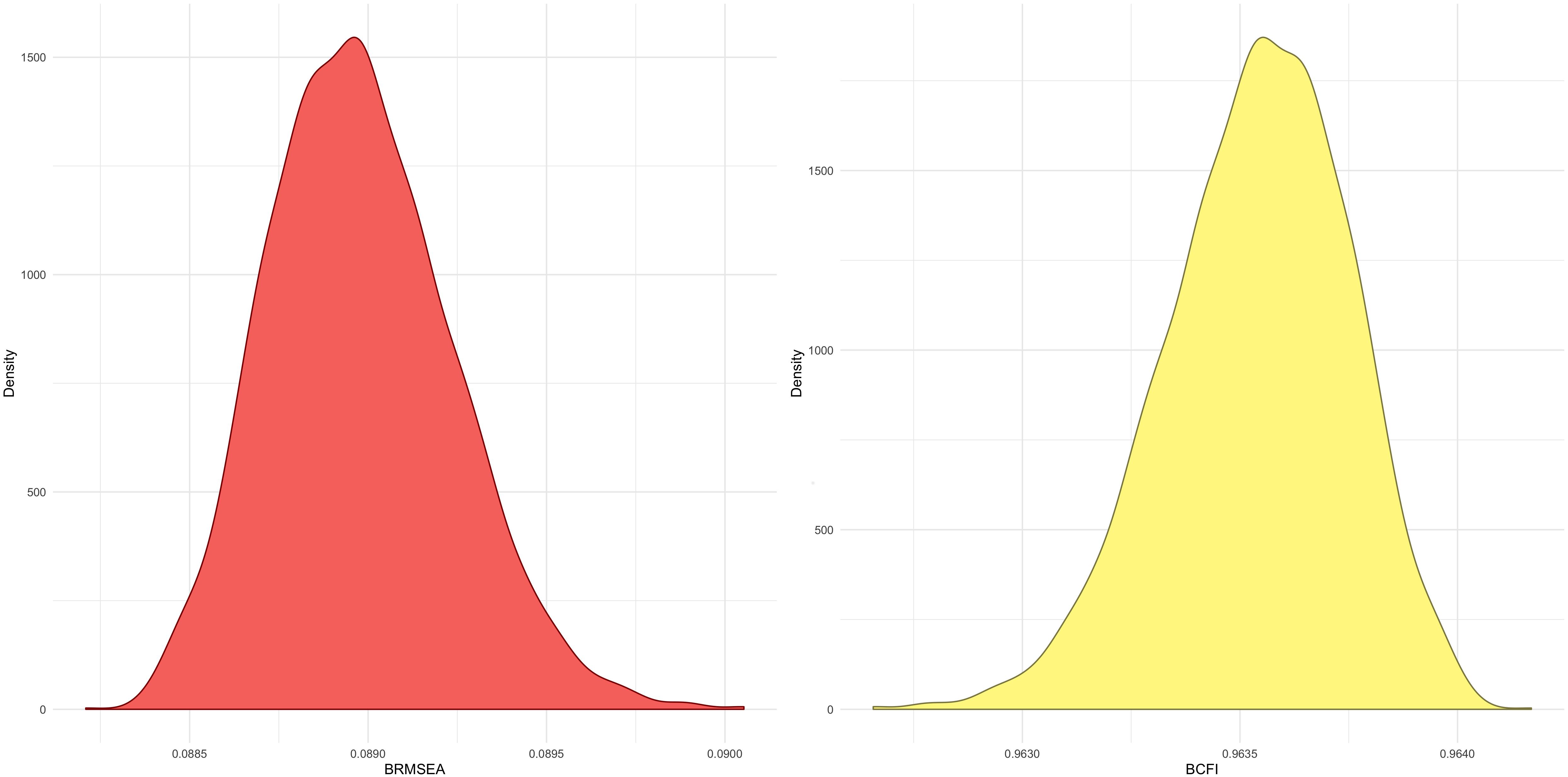

All the two-factor models, such as the two-factor model in which the SO and FR subscales were combined, also displayed poor fit. The theoretical three-factor model (SO, FA, and FR) was then evaluated, and the fit to the data was substantially improved. ML estimation results are shown in Supplementary Table 6 for comparison. The inspection of the three-factor model BRMSEA’s posterior distribution indicated values consistent with an adequate model fit, while the BCFI’s posterior distribution comprised values consistent with a good fit (Figure 1).

Figure 1. Kernel density plots of the realized posterior distributions of approximate fit indices. Note: The realized posterior distribution of the Bayesian root mean square error of approximation (BRMSEA) (left plot) and Bayesian comparative fit index (BCFI) (right plot) are displayed.

Furthermore, all factor loadings evaluated at the posterior mean were higher than 0.8, and high factor loadings (> 0.80) were observed across the entirety of the posterior distributions, as indicated by the 95% credible intervals (Table 2). For these reasons, the three-factor structure was confirmed as an adequate measurement model for the MSPSS.

The BF indicated decisive support for the three-factor model in comparison with the one-factor model (BF31 = 5.94 × 10^3409) and in comparison with all two-factor models. For instance, the BF indicated decisive support for the three-factor model in comparison with the two-factor model in which SO and FR subscales were combined (BF32 = 9.22 × 10^2005).
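Bayes factors of this magnitude cannot be stored as ordinary floating-point numbers, so they are usually handled on the logarithmic scale. The sketch below assumes that a log marginal likelihood is available for each model (how these are obtained is not covered here) and converts their difference to scientific notation. The input values are hypothetical and unrelated to the reported results.

```python
import math

def bayes_factor_sci(log_ml_a, log_ml_b):
    """Return (mantissa, exponent) such that BF_ab = mantissa x 10^exponent."""
    log10_bf = (log_ml_a - log_ml_b) / math.log(10)  # natural log to base 10
    exponent = math.floor(log10_bf)
    mantissa = 10 ** (log10_bf - exponent)
    return mantissa, exponent

# Hypothetical log marginal likelihoods for two competing models.
log_ml_a = -1000.0
log_ml_b = -1800.0
mantissa, exponent = bayes_factor_sci(log_ml_a, log_ml_b)
print(f"BF = {mantissa:.2f} x 10^{exponent}")

# Direct exponentiation of the difference overflows a double.
try:
    math.exp(log_ml_a - log_ml_b)
    overflowed = False
except OverflowError:
    overflowed = True
```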

The SO [BΩH = 0.95 - 95% CrI (0.90, 0.99)], FA [BΩH = 0.92 - 95% CrI (0.87, 0.97)], and FR [BΩH = 0.92 - 95% CrI (0.88, 0.97)] subscales displayed excellent reliability.

The Kendall’s τ correlations between the MSPSS subscales and the Perceived Stress subscale, Perceived Coping subscale, and PDC-3 are displayed in Table 4. The MSPSS subscales displayed weak negative correlations with the Perceived Stress subscale and weak positive correlations with the Perceived Coping subscale.

Furthermore, there was no meaningful association between the SO, FA, and FR subscales and the PDC-3. Although the Bayes factor provided strong support for the alternative hypothesis of nonzero correlation between SO and PDC-3 and decisive support for the alternative hypothesis of nonzero correlation between FA and PDC-3, the magnitudes of these correlations were close to zero, indicating that these correlations were negligible and had no practical meaning.

The present study aimed to evaluate whether the MSPSS constitutes a valid and reliable instrument to measure social support in a large sample of non-Aboriginal Australians. The findings confirmed the theoretical three-factor structure composed of SO, FA, and FR, and reliability was excellent. The implications for future use of the MSPSS in Australia are discussed.

The findings provided support for the theoretical three-factor model composed of SO, FA, and FR. While the fit of the one- and two-factor models was unacceptable, the three-factor model provided a good fit to the data.

In the three-factor model, the very small PPPχ2 (< 0.001) indicated that, compared to the replicated data under the model, the observed data consistently showed stronger discrepancies with respect to the model. Saris et al. (2009) and West et al. (2012) have argued that such discrepancies are expected and unavoidable in light of the large sample sizes needed for sufficient statistical power to estimate SEM model parameters (Sellbom and Tellegen, 2019). That is, the PPPχ2 can detect trivial discrepancies that have no substantive meaning, even when the theoretical model (for example, the MSPSS structure of SO, FA, and FR) constitutes a good approximation of reality (Garnier-Villarreal and Jorgensen, 2019). In Bayesian CFA, the PPPχ2 sensitivity to detect negligible differences increases with sample size and seems to approach 100% under large samples (Hoofs et al., 2018). Thus, the very small PPPχ2 (< 0.001) observed for the three-factor model does not by itself indicate poor fit and needs to be considered together with other fit indices such as the BRMSEA and BCFI.

For instance, the BRMSEA of the three-factor model was within “traditional guidelines proposed for interpreting the magnitude of SEM fit indices” (Garnier-Villarreal and Jorgensen, 2019), such as that “a value of about 0.08 or less for the RMSEA would indicate a reasonable error of approximation” (Browne and Cudeck, 1992). Similarly, the BCFI was above the usually recommended value of 0.95 (Yu, 2002). In the end, despite the small PPPχ2 (< 0.001), the BRMSEA and BCFI clearly indicated adequate model fit of the three-factor structure. When the models were compared, the BF provided decisive support for the three-factor structure in relation to the other two structures. For example, the BF suggested that the data are 3.90 × 10^1991 times more likely to occur under the three-factor structure compared to the two-factor structure in which the SO and FR subscales were combined.

The support for the three-factor structure is consistent with the literature and indicates that significant other, family, and friends are independent sources, which provide qualitatively distinct social support. For example, it is known that social support from a significant other, such as a romantic partner, is particularly relevant when an individual is facing unemployment. In this situation, social support from a partner can increase the perception that striving to pursue a job is a worthwhile endeavor (Vinokur and Caplan, 1987). Alternatively, support from friends can be especially relevant in the face of relationship stress. When an individual is experiencing problems within a relationship, a friend can become a confidant and provide advice due to not being directly involved in the relationship dynamics (Jackson, 1992). In the current study, the correlations between the SO, FA, and FR subscales ranged from 0.50 to 0.64, showing that these dimensions were moderately correlated but did not reach the magnitude that would raise concerns regarding discriminant validity (r > 0.80) (Brown, 2014). That is, the dimensions were correlated (e.g., individuals who received support from family also reported receiving support from friends and a significant other), but the sources of support were distinct (e.g., some individuals received more support from family than from other sources, such as friends and a significant other). For this reason, total scores should be computed for each subscale (SO, FA, and FR) independently instead of a total score computed based on all 12 items.
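The scoring recommendation can be illustrated with a brief Python sketch. The item-to-subscale assignment used below (SO: items 1, 2, 5, and 10; FA: items 3, 4, 8, and 11; FR: items 6, 7, 9, and 12) and the 1 to 7 rating scale are taken from the original instrument as we understand it and are not stated in this section, so they should be treated as assumptions to verify against Zimet et al. (1988). The responses are invented.

```python
# Assumed item assignment for the 12-item MSPSS (verify against the instrument).
SUBSCALE_ITEMS = {
    "SO": (1, 2, 5, 10),
    "FA": (3, 4, 8, 11),
    "FR": (6, 7, 9, 12),
}

def subscale_totals(responses):
    """Sum item ratings within each subscale; responses maps item number to rating."""
    return {
        name: sum(responses[item] for item in items)
        for name, items in SUBSCALE_ITEMS.items()
    }

# Hypothetical respondent with high partner, moderate family, low friend support.
responses = {1: 7, 2: 7, 5: 7, 10: 7, 3: 4, 4: 4, 8: 4, 11: 4, 6: 2, 7: 2, 9: 2, 12: 2}
totals = subscale_totals(responses)
print(totals)
```

A single 12-item total for this respondent would be 52, which hides the contrast between sources that the three subscale totals make visible.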

The two-factor model in which the SO and FR subscales were combined was not a good fit for the data, indicating that Australian respondents did discriminate between the terms “special person” and “friends.” In the current study, the MSPSS was applied in its original format (Zimet et al., 1988) without any additional explanations to the “special person” term such as “a girlfriend/boyfriend, fiancé, relative, neighbour, or a doctor” (Eker et al., 2001). Therefore, considering that the majority of Australians have English as their native language (McDonald et al., 2019), the original MSPSS can be applied in Australia without revisions. Moreover, the two other possible two-factor models, in which FA and FR or FA and SO were combined, also did not show good fit to the data, indicating that a three-factor structure better explained the item responses in the Australian population.

The reliability of the three subscales was excellent, consistent with previous MSPSS psychometric studies (Pushkarev et al., 2018). Although it has not yet been subjected to simulation studies, we proposed a Bayesian version of ΩH, obtained by calculating the ΩH formula at each iteration of the Markov chain, which creates a posterior distribution for the BΩH statistic. Since under uninformative priors the mean of the posterior distribution should approximate the maximum likelihood estimate, the BΩH expectedly resembled the ΩH. In our study, the BΩH and ML ΩH were equivalent to three-decimal precision. The approach we took was different from that of Yang and Xia (2019), who recently evaluated Bayesian estimation of the categorical ΩH by substituting “central tendency measures such as the medians of the posterior distributions” into the original categorical ΩH formula.

The SO, FA, and FR subscales displayed the expected patterns of convergent and divergent validity since they were negatively correlated with Perceived Stress and positively correlated with Perceived Coping. These associations were consistent with research evidence showing that social support is protective against stress (Lakey and Cohen, 2000) since it provides external resources to overcome stressful events and promotes individual coping by enhancing feelings of personal control (Lincoln et al., 2003). Although the magnitudes of these correlations were weak, a recent systematic review by Harandi et al. (2017) showed that social support is only moderately correlated with mental health outcomes. Moreover, since social support and perceived stress/coping are theoretically related but qualitatively distinct constructs, correlations with small magnitudes have also been previously reported (Hamdan-Mansour and Dawani, 2008; Santiago et al., 2019). In general, the observed correlations in our study provided initial support for the MSPSS convergent and divergent validity, but future studies should further investigate the MSPSS convergent and divergent validity in Australia.

Regarding discriminant validity, the SO, FA, and FR subscales displayed close to zero correlations with the PDC-3, in line with the expectation that social support would be only weakly associated with perceived control during dental examination. Although the presence of a significant other, family, or friends in the dental clinic is potentially beneficial (Bernson et al., 2011), previous research has emphasized individual characteristics, such as personality traits (Brunsman et al., 2003) and genetic vulnerability (Carter et al., 2014), as leading factors impacting perceived control during examination by the dentist.

Although good psychometric properties have previously been reported, to the best of our knowledge, this study was the first to evaluate the validity of the MSPSS for a large, general, heterogeneous population. The findings showed that the MSPSS can adequately measure perceptions of social support according to different sources of support (significant other, family, and friends) at a national level in Australia. Thus, the MSPSS can be included in national surveys and applied in future large studies conducted in the Australian context.

The current study had several strengths, such as the use of modern BSEM methodology to conduct the psychometric analysis. Although resources used in frequentist analysis, such as the modification index (Sörbom, 1989) and fit indices for multigroup CFA (Cheung and Rensvold, 2002), are still under development for BSEM, the inferences based on the entirety of the posterior distribution provided substantial advantages for understanding the MSPSS psychometric functioning. For example, BSEM enabled us to evaluate the (“realized”) posterior distribution of the CFI and verify that all values were congruent with a good-fitting model.

The same reasoning was possible regarding the strength of factor loadings and factor correlations. For instance, the 95% credible interval of an MSPSS factor loading indicates that there is a 95% probability that the true factor loading in the population lies between the lower and upper limits. Since this probabilistic interpretation is naturally intuitive for researchers, clinicians, and policymakers, it has also been commonly (and erroneously) attributed to frequentist 95% confidence intervals (Morey et al., 2016). However, even in circumstances where 95% credible intervals and 95% confidence intervals are numerically similar, “they are not mathematical equivalent and conceptually quite different” (Van de Schoot et al., 2014). In summary, the investigation of MSPSS parameters through the posterior distribution and 95% credible intervals provides researchers and policymakers with a more intuitive interpretation of the MSPSS psychometric properties, in the form of statements about precision and plausibility (rather than fixed long-term probabilities) (Morey et al., 2016).

Moreover, Bayesian estimation and hypothesis testing with the BF provide “a practical solution to the pervasive problems of p-value” (Wagenmakers, 2007) in psychometric research. In our study, the use of the BF was relevant for comparing the competing MSPSS factorial structures. In a recent systematic review conducted by Dambi et al. (2018) regarding MSPSS validations across multiple cultures, one main criticism was that the majority of studies used EFA and did not adequately describe fit indices or compare alternative structures (i.e., one-, two-, and three-factor models). Among the previous studies that did employ CFA to compare the MSPSS factorial structures, support for the three-factor model was provided (Clara et al., 2003; Stewart et al., 2014). While CFA fit indices (Golino et al., 2020) or information criteria such as the Akaike Information Criterion (AIC) (Akaike, 1987) or Bayesian Information Criterion (BIC) (Schwarz, 1978) can be used as relative measures of fit, the advantage of the BF is that it allows for a direct comparison between two competing models. That is, the BF provides a clear interpretation of how many times the evidence favors one model over the other (Wagenmakers et al., 2018b). In the case of the MSPSS, our findings concurred with previous studies that the three-factor model should be preferred (Clara et al., 2003; Stewart et al., 2014) but also provided new evidence on how many times the data favored the MSPSS three-factor structure over other models. The BF showed, for instance, that the evidence for the three-factor model compared to the two- and one-factor models in Australia was extreme (Jeffreys, 1961; Lee and Wagenmakers, 2014).

The study also had limitations. First, the data were from a survey conducted in 2004–2006, so over the last decade, the distribution of social support in the Australian population may have changed. Therefore, future studies should investigate whether the functioning of items remained stable or there was item parameter drift (Goldstein, 1983). Second, only two measures (the PSS and PDC-3) were used for the analysis of criterion validity, and we could not provide strong evidence of the MSPSS external validity. Future studies should investigate convergent, discriminant, and predictive validity of the MSPSS in Australia more broadly and using other selected measures. Third, estimation with sampling weights is under development for BCFA, so psychometric analyses were conducted in the unweighted sample, which, despite constituting a large sample of Australian adults, is not representative of the Australian population. Finally, fit indices such as RMSEA and CFI for factor models with a threshold structure, models originally developed for ordered-categorical items (Muthén, 1984), have not yet been validated for BCFA (Yu, 2002). Hence, the application of factor models with mean structure to MSPSS items limits the investigation of all possible parameters of interest, such as threshold parameters. Threshold parameters indicate the amount of a latent response variable that, when exceeded, predicts the preference for one response category (e.g., Strongly Agree) over another (e.g., Agree) (Kline, 2015). Once these fit indices are validated for threshold models in BCFA and their calculation is made available in state-of-the-art software, future studies should further investigate the MSPSS using these models.

The good psychometric properties and excellent reliability of the MSPSS were confirmed in a large sample of Australian adults. The MSPSS comprised three subscales: Significant Other, Family, and Friends. Total scores should be computed for each subscale independently. Furthermore, the MSPSS can be applied at a national level, including in national surveys. The MSPSS test scores can disclose important information regarding the sources of social support in Australia and provide evidence on the role of social support in the Australian population.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by University of Adelaide’s Human Research Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

PS, AQ, LS, RR, and LJ conceptualized the project. PS and AQ conducted the formal analysis. LJ provided resources and funding acquisition. PS, AQ, and DH wrote the initial version of the manuscript. PS, LS, RR, LJ, DH, and AQ interpreted the data. LS, RR, LJ, DH, and AQ provided theoretical and statistical supervision. All authors reviewed and edited the final draft.

This research was supported by a grant from Australia’s National Health and Medical Research Council (Project Grant Nos. 299060, 349514, and 349537).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.542257/full#supplementary-material

Akaike, H. (1987). Factor Analysis and AIC. Selected Papers of Hirotugu Akaike. Berlin: Springer, 371–386.

Akhtar, A., Rahman, A., Husain, M., Chaudhry, I. B., Duddu, V., and Husain, N. (2010). Multidimensional scale of perceived social support: psychometric properties in a South Asian population. J. Obstet. Gynaecol. Res. 36, 845–851. doi: 10.1111/j.1447-0756.2010.01204.x

Amrhein, V., Greenland, S., and McShane, B. (2019). Scientists Rise Up Against Statistical Significance. Berlin: Nature Publishing Group.

Armfield, J. M., Slade, G. D., and Spencer, A. J. (2008). Cognitive vulnerability and dental fear. BMC Oral Health 8:2. doi: 10.1186/1472-6831-8-2

Asparouhov, T., and Muthén, B. (2010). Bayesian Analysis of Latent Variable Models using Mplus. Available online at: www.statmodel.com/download/BayesAdvantages18.pdf (accessed September 29, 2010).

Australia Bureau of Statistics (2016). Census QuickStats–Australia 2016. Available online at: https://quickstats.censusdata.abs.gov.au/census_services/getproduct/census/2016/quickstat/036 (accessed March 03, 2020).

Bagherian-Sararoudi, R., Hajian, A., Ehsan, H. B., Sarafraz, M. R., and Zimet, G. D. (2013). Psychometric properties of the Persian version of the multidimensional scale of perceived social support in Iran. Int. J. Prev. Med. 4:1277.

Barendse, M., Oort, F., and Timmerman, M. (2015). Using exploratory factor analysis to determine the dimensionality of discrete responses. Struct. Equ. Modeling A Multidisciplinary J. 22, 87–101. doi: 10.1080/10705511.2014.934850

Barrera, M., Sandler, I. N., and Ramsay, T. B. (1981). Preliminary development of a scale of social support: studies on college students. Am. J. Community Psychol. 9, 435–447. doi: 10.1007/bf00918174

Başol, G. (2008). Validity and reliability of the multidimensional scale of perceived social support-revised, with a Turkish sample. Soc. Behav. Personality Int. J. 36, 1303–1313. doi: 10.2224/sbp.2008.36.10.1303

Bentler, P. M. (1990). Comparative fit indexes in structural models. Psychol. Bull. 107:238. doi: 10.1037/0033-2909.107.2.238

Bernson, J. M., Hallberg, L. R. M., Elfström, M. L., and Hakeberg, M. (2011). ‘Making dental care possible–a mutual affair’. A grounded theory relating to adult patients with dental fear and regular dental treatment. Eur. J. Oral Sci. 119, 373–380. doi: 10.1111/j.1600-0722.2011.00845.x

Brooks, S. P., and Gelman, A. (1998). General methods for monitoring convergence of iterative simulations. J. Comput. Graph. Stat. 7, 434–455. doi: 10.2307/1390675

Brown, T. A. (2014). Confirmatory Factor Analysis for Applied Research. New York, NY: Guilford Publications.

Browne, M. W., and Cudeck, R. (1992). Alternative ways of assessing model fit. Sociol. Methods Res. 21, 230–258. doi: 10.1177/0049124192021002005

Brunsman, B. A., Logan, H. L., Patil, R. R., and Baron, R. S. (2003). The development and validation of the revised Iowa Dental Control Index (IDCI). Pers. Individ. Dif. 34, 1113–1128. doi: 10.1016/s0191-8869(02)00102-2

Bruwer, B., Emsley, R., Kidd, M., Lochner, C., and Seedat, S. (2008). Psychometric properties of the multidimensional scale of perceived social support in youth. Compr. Psychiatry 49, 195–201. doi: 10.1016/j.comppsych.2007.09.002

Carter, A. E., Carter, G., Boschen, M., AlShwaimi, E., and George, R. (2014). Pathways of fear and anxiety in dentistry: a review. World J. Clin. Cases WJCC 2:642. doi: 10.12998/wjcc.v2.i11.642

Cheung, G. W., and Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Struct. Equ. Modeling 9, 233–255. doi: 10.1207/s15328007sem0902_5

Chou, K.-L. (2000). Assessing Chinese adolescents’ social support: the multidimensional scale of perceived social support. Pers. Individ. Dif. 28, 299–307. doi: 10.1016/s0191-8869(99)00098-7

Clara, I. P., Cox, B. J., Enns, M. W., Murray, L. T., and Torgrud, L. J. (2003). Confirmatory factor analysis of the multidimensional scale of perceived social support in clinically distressed and student samples. J. Pers. Assess. 81, 265–270. doi: 10.1207/s15327752jpa8103_09

Cohen, S., and Hoberman, H. M. (1983). Positive events and social supports as buffers of life change stress 1. J. Appl. Soc. Psychol. 13, 99–125. doi: 10.1111/j.1559-1816.1983.tb02325.x

Cohen, S., Underwood, L. G., and Gottlieb, B. H. (2000). Social Support Measurement and Intervention: A Guide for Health and Social Scientists. Oxford: Oxford University Press.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi: 10.1007/bf02310555

Cutrona, C. E., and Russell, D. W. (1987). The provisions of social relationships and adaptation to stress. Adv. Pers. Relat. 1, 37–67.

Dahlem, N. W., Zimet, G. D., and Walker, R. R. (1991). The multidimensional scale of perceived social support: a confirmation study. J. Clin. Psychol. 47, 756–761. doi: 10.1002/1097-4679(199111)47:6<756::aid-jclp2270470605>3.0.co;2-l

Dambi, J. M., Corten, L., Chiwaridzo, M., Jack, H., Mlambo, T., and Jelsma, J. (2018). A systematic review of the psychometric properties of the cross-cultural translations and adaptations of the Multidimensional Perceived Social Support Scale (MSPSS). Health Q. Life Outcomes 16:80.

Davidson, L. M., Demaray, M. K., Malecki, C. K., Ellonen, N., and Korkiamäki, R. (2008). United States and Finnish adolescents’ perceptions of social support: a cross-cultural analysis. Sch. Psychol. Int. 29, 363–375. doi: 10.1177/0143034308093675

Denis, A., Callahan, S., and Bouvard, M. (2015). Evaluation of the French version of the multidimensional scale of perceived social support during the postpartum period. Matern. Child Health J. 19, 1245–1251. doi: 10.1007/s10995-014-1630-9

Duane, S., Kennedy, A. D., Pendleton, B. J., and Roweth, D. (1987). Hybrid monte carlo. Physics Lett. B 195, 216–222.

Dunn, T. J., Baguley, T., and Brunsden, V. (2014). From alpha to omega: a practical solution to the pervasive problem of internal consistency estimation. Br. J. Psychol. 105, 399–412. doi: 10.1111/bjop.12046

Edwards, L. M. (2004). Measuring perceived social support in Mexican American youth: psychometric properties of the multidimensional scale of perceived social support. Hispanic J. Behav. Sci. 26, 187–194. doi: 10.1177/0739986304264374

Ekbäck, M., Benzein, E., Lindberg, M., and Årestedt, K. (2013). The Swedish version of the multidimensional scale of perceived social support (MSPSS)-a psychometric evaluation study in women with hirsutism and nursing students. Health Q. Life Outcomes 11:168. doi: 10.1186/1477-7525-11-168

Ekbäck, M. P., Lindberg, M., Benzein, E., and Årestedt, K. (2014). Social support: an important factor for quality of life in women with hirsutism. Health Q. Life Outcomes 12:183.

Eker, D., Arkar, H., and Yaldız, H. (2001). Factorial structure, validity, and reliability of revised form of the multidimensional scale of perceived social support. Turkish J. Psychiatry 12, 17–25.

Garnier-Villarreal, M., and Jorgensen, T. D. (2019). Adapting fit indices for Bayesian structural equation modeling: comparison to maximum likelihood. Psychol. Methods 25, 46–70. doi: 10.1037/met0000224

Gelfand, A. E., and Smith, A. F. (1990). Sampling-based approaches to calculating marginal densities. J. Am. Stat. Assoc. 85, 398–409. doi: 10.1080/01621459.1990.10476213

Gelman, A., Lee, D., and Guo, J. (2015). Stan: a probabilistic programming language for Bayesian inference and optimization. J. Educ. Behav. Stat. 40, 530–543. doi: 10.3102/1076998615606113

Gelman, A., Meng, X.-L., and Stern, H. (1996). Posterior predictive assessment of model fitness via realized discrepancies. Statistica Sinica 6, 733–760.

Gelman, A., and Rubin, D. B. (1992). Inference from iterative simulation using multiple sequences. Stat. Sci. 7, 457–472. doi: 10.1214/ss/1177011136

Gierl, M., and Mulvenon, S. (1995). “Evaluating the application of fit indices to structural equation models in educational research: a review of the literature from 1990 through 1994,” in Proceedings of the Annual Meeting of the American Educational Research Association, (San Francisco, CA).

Glazer, S. (2006). Social support across cultures. Int. J. Intercult. Relat. 30, 605–622. doi: 10.1016/j.ijintrel.2005.01.013

Goldstein, H. (1983). Measuring changes in educational attainment over time: problems and possibilities. J. Educ. Meas. 20, 369–377. doi: 10.1111/j.1745-3984.1983.tb00214.x

Golino, H., Moulder, R., Shi, D., Christensen, A. P., Garrido, L. E., Nieto, M. D., et al. (2020). Entropy fit indices: new fit measures for assessing the structure and dimensionality of multiple latent variables. Multivariate Behav. Res. 7, 1–29. doi: 10.1080/00273171.2020.1779642

Gottlieb, B. H., and Bergen, A. E. (2010). Social support concepts and measures. J. Psychosom. Res. 69, 511–520. doi: 10.1016/j.jpsychores.2009.10.001

Graham, J. W. (2009). Missing data analysis: making it work in the real world. Annu. Rev. Psychol. 60, 549–576. doi: 10.1146/annurev.psych.58.110405.085530

Hamdan-Mansour, A. M., and Dawani, H. A. (2008). Social support and stress among university students in Jordan. Int. J. Ment. Health Addict. 6, 442–450. doi: 10.1007/s11469-007-9112-6

Harandi, T. F., Taghinasab, M. M., and Nayeri, T. D. (2017). The correlation of social support with mental health: a meta-analysis. Electron. Physician 9:5212. doi: 10.19082/5212

Hayduk, L. A. (2014). Shame for disrespecting evidence: the personal consequences of insufficient respect for structural equation model testing. BMC Med. Res. Methodol. 14:124. doi: 10.1186/1471-2288-14-124

Hoofs, H., van de Schoot, R., Jansen, N. W., and Kant, I. (2018). Evaluating model fit in Bayesian confirmatory factor analysis with large samples: simulation study introducing the BRMSEA. Educ. Psychol. Meas. 78, 537–568. doi: 10.1177/0013164417709314

Hu, L.-T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Modeling A Multidisciplinary J. 6, 1–55. doi: 10.1080/10705519909540118

Jackson, P. B. (1992). Specifying the buffering hypothesis: support, strain, and depression. Soc. Psychol. Q. 55, 363–378. doi: 10.2307/2786953

Jager, J., Putnick, D. L., and Bornstein, M. H. (2017). II. More than just convenient: the scientific merits of homogeneous convenience samples. Monogr. Soc. Res. Child Dev. 82, 13–30. doi: 10.1111/mono.12296

Jaykaran, D. S., Yadav, P., and Kantharia, N. (2011). Nonsignificant P values cannot prove null hypothesis: absence of evidence is not evidence of absence. J. Pharm. Bioallied Sci. 3:465. doi: 10.4103/0975-7406.84470

Joiner, T. A., and Bartram, T. (2004). How empowerment and social support affect Australian nurses’ work stressors. Aust. Health Rev. 28, 56–64. doi: 10.1071/ah040056

Jones, G. L., Haran, M., Caffo, B. S., and Neath, R. (2006). Fixed-width output analysis for Markov chain Monte Carlo. J. Am. Stat. Assoc. 101, 1537–1547.

Jöreskog, K. G., and Sörbom, D. (1996). LISREL 8: User’s Reference Guide. Skokie, IL: Scientific Software International.

Kendall, S. M. (1948). Rank Correlation. Van Nostrand’s Scientific Encyclopedia. New York, NY: Springer.

Kline, R. B. (2015). Principles and Practice of Structural Equation Modeling. New York, NY: Guilford Publications.

Lai, J., Hamid, P., Lee, W., and Yu, H. (1996). “Optimism and social support as stress buffers among Hong Kong adolescents,” in Proceedings of the 26th International Congress of Psychology, Canada.

Lakey, B., and Cohen, S. (2000). “Social support and theory,” in Social Support Measurement and Intervention: A Guide for Health and Social Scientists, eds S. Cohen, L. G. Underwood, and B. H. Gottlieb (Oxford: Oxford University Press), 29. doi: 10.1093/med:psych/9780195126709.003.0002

Lang, J. M., Rothman, K. J., and Cann, C. I. (1998). That confounded P-value. Epidemiology 9, 7–8. doi: 10.1097/00001648-199801000-00004

Leckelt, M., Wetzel, E., Gerlach, T. M., Ackerman, R. A., Miller, J. D., Chopik, W. J., et al. (2018). Validation of the Narcissistic Admiration and Rivalry Questionnaire Short Scale (NARQ-S) in convenience and representative samples. Psychol. Assess. 30:86. doi: 10.1037/pas0000433

Lee, M. D., and Wagenmakers, E.-J. (2014). Bayesian Cognitive Modeling: A Practical Course. Cambridge: Cambridge University Press.

Lee, S.-Y. (2007). Structural Equation Modeling: A Bayesian Approach. Hoboken, NJ: John Wiley & Sons.

Lee, S.-Y. (1981). A Bayesian approach to confirmatory factor analysis. Psychometrika 46, 153–160. doi: 10.1007/bf02293896

Levy, R. (2011). Bayesian data-model fit assessment for structural equation modeling. Struct. Equ. Modeling A Multidisciplinary J. 18, 663–685. doi: 10.1080/10705511.2011.607723

Lewis, S. M., and Raftery, A. E. (1997). Estimating Bayes factors via posterior simulation with the Laplace-Metropolis estimator. J. Am. Stat. Assoc. 92, 648–655. doi: 10.2307/2965712

Lincoln, K. D., Chatters, L. M., and Taylor, R. J. (2003). Psychological distress among Black and White Americans: differential effects of social support, negative interaction and personal control. J. Health Soc. Behav. 44:390. doi: 10.2307/1519786

Lindorff, M. (2001). Research Note: are they lonely at the top? Social relationships and social support among Australian managers. Work Stress 15, 274–282. doi: 10.1080/02678370110066599

López, M., and Cooper, L. (2011). Social Support Measures Review. Laytonsville, MD: National Center for Latino Child & Family Research.

MacCallum, R. C. (2003). 2001 presidential address: working with imperfect models. Multivariate Behav. Res. 38, 113–139. doi: 10.1207/s15327906mbr3801_5

McDonald, P., Moyle, H., and Temple, J. (2019). English proficiency in Australia, 1981 to 2016. Aust. J. Soc. Issues 54, 112–134. doi: 10.1002/ajs4.67

Merkle, E. C., Fitzsimmons, E., Uanhoro, J., and Goodrich, B. (2020). Efficient Bayesian structural equation modeling in Stan. arXiv [Preprint]

Merkle, E. C., and Rosseel, Y. (2015). blavaan: Bayesian structural equation models via parameter expansion. arXiv [Preprint] doi: 10.18637/jss.v085.i04

Morey, R. D., Hoekstra, R., Rouder, J. N., Lee, M. D., and Wagenmakers, E.-J. (2016). The fallacy of placing confidence in confidence intervals. Psychonomic Bull. Rev. 23, 103–123. doi: 10.3758/s13423-015-0947-8

Muthén, B. (1984). A general structural equation model with dichotomous, ordered categorical, and continuous latent variable indicators. Psychometrika 49, 115–132. doi: 10.1007/bf02294210

Nakigudde, J., Musisi, S., Ehnvall, A., Airaksinen, E., and Agren, H. (2009). Adaptation of the multidimensional scale of perceived social support in a Ugandan setting. Afr. Health Sci. 9, S35–S41.

Ng, C., Siddiq, A. A., Aida, S., Zainal, N., and Koh, O. (2010). Validation of the Malay version of the Multidimensional Scale of Perceived Social Support (MSPSS-M) among a group of medical students in Faculty of Medicine, University Malaya. Asian J. Psychiatr. 3, 3–6. doi: 10.1016/j.ajp.2009.12.001

O’Dea, B., and Campbell, A. (2011). Online social networking amongst teens: friend or foe? Stud. Health Technol. Inform. 167, 133–138.

Pong, R. W., DesMeules, M., and Lagacé, C. (2009). Rural–urban disparities in health: how does Canada fare and how does Canada compare with Australia? Aust. J. Rural. Health 17, 58–64. doi: 10.1111/j.1440-1584.2008.01039.x

Preacher, K. J., Zhang, G., Kim, C., and Mels, G. (2013). Choosing the optimal number of factors in exploratory factor analysis: a model selection perspective. Multivariate Behav. Res. 48, 28–56. doi: 10.1080/00273171.2012.710386

Pushkarev, G., Zimet, G. D., Kuznetsov, V., and Yaroslavskaya, E. (2018). The multidimensional scale of perceived social support (MSPSS): reliability and validity of Russian version. Clin. Gerontol. 43, 331–339. doi: 10.1080/07317115.2018.1558325

Quintero, A., and Lesaffre, E. (2018). Comparing hierarchical models via the marginalized deviance information criterion. Stat. Med. 37, 2440–2454. doi: 10.1002/sim.7649

R Core Team (2013). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

Rothman, K. J., Gallacher, J. E., and Hatch, E. E. (2013). Why representativeness should be avoided. Int. J. Epidemiol. 42, 1012–1014. doi: 10.1093/ije/dys223

Rupp, A. A., Dey, D. K., and Zumbo, B. D. (2004). To Bayes or not to Bayes, from whether to when: applications of Bayesian methodology to modeling. Struct. Equ. Modeling 11, 424–451. doi: 10.1207/s15328007sem1103_7

Sanders, A. E., and Slade, G. D. (2011). Gender modifies effect of perceived stress on orofacial pain symptoms: National Survey of Adult Oral Health. J. Orofac. Pain 25, 317–326.

Santiago, P. H. R., Nielsen, T., Smithers, L. G., Roberts, R., and Jamieson, L. (2020). Measuring stress in Australia: validation of the perceived stress scale (PSS-14) in a nationally representative sample. Health Qual. Life Outcomes 18:100.

Santiago, P. H. R., Roberts, R., Smithers, L. G., and Jamieson, L. (2019). Stress beyond coping? A Rasch analysis of the Perceived Stress Scale (PSS-14) in an Aboriginal population. PLoS One 14:e0216333. doi: 10.1371/journal.pone.0216333

Saris, W. E., Satorra, A., and Van der Veld, W. M. (2009). Testing structural equation models or detection of misspecifications? Struct. Equ. Modeling 16, 561–582. doi: 10.1080/10705510903203433

Scheines, R., Hoijtink, H., and Boomsma, A. (1999). Bayesian estimation and testing of structural equation models. Psychometrika 64, 37–52. doi: 10.1007/bf02294318

Schuurmans-Stekhoven, J. B. (2017). Spirit or fleeting apparition? Why spirituality’s link with social support might be incrementally invalid. J. Relig. Health 56, 1248–1262. doi: 10.1007/s10943-013-9801-3

Schwarz, G. (1978). Estimating the dimension of a model. Ann. Stat. 6, 461–464. doi: 10.1214/aos/1176344136

Sellbom, M., and Tellegen, A. (2019). Factor analysis in psychological assessment research: common pitfalls and recommendations. Psychol. Assess. 31:1428. doi: 10.1037/pas0000623

Sherbourne, C. D., and Stewart, A. L. (1991). The MOS social support survey. Soc. Sci. Med. 32, 705–714. doi: 10.1016/0277-9536(91)90150-b

Slade, G. D., Spencer, A. J., and Roberts-Thomson, K. F. (2004). Australia’s dental generations. Natl. Survey Adult Oral Health 6:274.

Smid, S. C., McNeish, D., Miočević, M., and van de Schoot, R. (2020). Bayesian versus frequentist estimation for structural equation models in small sample contexts: a systematic review. Struct. Equ. Modeling A Multidisciplinary J. 27, 131–161. doi: 10.1080/10705511.2019.1577140

Spiegelhalter, D. J., Best, N. G., Carlin, B. P., and Van Der Linde, A. (2002). Bayesian measures of model complexity and fit. J. R. Stat. Soc. Series B (Stat. Methodol). 64, 583–639. doi: 10.1111/1467-9868.00353

Steiger, J. H. (ed.) (1980). “Statistically based tests for the number of common factors,” in Proceedings of the Annual meeting of the Psychometric Society, (Iowa City, IA).

Stewart, R. C., Umar, E., Tomenson, B., and Creed, F. (2014). Validation of the multi-dimensional scale of perceived social support (MSPSS) and the relationship between social support, intimate partner violence and antenatal depression in Malawi. BMC Psychiatry 14:180. doi: 10.1186/1471-244X-14-180

Taylor, J. M. (2019). Overview and illustration of Bayesian confirmatory factor analysis with ordinal indicators. Pract. Assess. Res. Eval. 24:4.

Theofilou, P. (2015). Translation and cultural adaptation of the Multidimensional Scale of Perceived Social Support for Greece. Health Psychol. Res. 3:1061.

Tonsing, K., Zimet, G. D., and Tse, S. (2012). Assessing social support among South Asians: the multidimensional scale of perceived social support. Asian J. Psychiatr. 5, 164–168. doi: 10.1016/j.ajp.2012.02.012

Urquhart, B., and Pooley, J. A. (2007). The transition experience of Australian students to university: the importance of social support. Aust. Commun. Psychol. 19, 78–91.

van Dam, H. A., van der Horst, F. G., Knoops, L., Ryckman, R. M., Crebolder, H. F. J. M., and van den Borne, B. H. W. (2005). Social support in diabetes: a systematic review of controlled intervention studies. Patient Educ. Couns. 59, 1–12. doi: 10.1016/j.pec.2004.11.001

Van De Schoot, R., Broere, J. J., Perryck, K. H., Zondervan-Zwijnenburg, M., and Van Loey, N. E. (2015). Analyzing small data sets using Bayesian estimation: the case of posttraumatic stress symptoms following mechanical ventilation in burn survivors. Eur. J. Psychotraumatol. 6:25216. doi: 10.3402/ejpt.v6.25216

Van de Schoot, R., Kaplan, D., Denissen, J., Asendorpf, J. B., Neyer, F. J., and Van Aken, M. A. (2014). A gentle introduction to Bayesian analysis: applications to developmental research. Child Dev. 85, 842–860. doi: 10.1111/cdev.12169

Van Doorn, J., Ly, A., Marsman, M., and Wagenmakers, E.-J. (2018). Bayesian inference for Kendall’s rank correlation coefficient. Am. Stat. 72, 303–308. doi: 10.1080/00031305.2016.1264998

Vinokur, A., and Caplan, R. D. (1987). Attitudes and social support: determinants of job-seeking behavior and well-being among the unemployed. J. Appl. Soc. Psychol. 17, 1007–1024. doi: 10.1111/j.1559-1816.1987.tb02345.x

Wagenmakers, E.-J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bull. Rev. 14, 779–804. doi: 10.3758/bf03194105

Wagenmakers, E.-J., Love, J., Marsman, M., Jamil, T., Ly, A., Verhagen, J., et al. (2018a). Bayesian inference for psychology. Part II: example applications with JASP. Psychonomic Bull. Rev. 25, 58–76. doi: 10.3758/s13423-017-1323-7

Wagenmakers, E.-J., Marsman, M., Jamil, T., Ly, A., Verhagen, J., Love, J., et al. (2018b). Bayesian inference for psychology. Part I: theoretical advantages and practical ramifications. Psychonomic Bull. Rev. 25, 35–57. doi: 10.3758/s13423-017-1343-3

West, S. G., Taylor, A. B., and Wu, W. (2012). Model fit and model selection in structural equation modeling. Handbook Struct. Equ. Modeling 1, 209–231.

Wetzels, R., and Wagenmakers, E.-J. (2012). A default Bayesian hypothesis test for correlations and partial correlations. Psychonomic Bull. Rev. 19, 1057–1064. doi: 10.3758/s13423-012-0295-x

Widaman, K. F., and Thompson, J. S. (2003). On specifying the null model for incremental fit indices in structural equation modeling. Psychol. Methods 8:16. doi: 10.1037/1082-989x.8.1.16

Wilkinson, R. G., and Marmot, M. (2003). Social Determinants of Health: The Solid Facts. Geneva: World Health Organization.

Wongpakaran, T., Wongpakaran, N., and Ruktrakul, R. (2011). Reliability and validity of the multidimensional scale of perceived social support (MSPSS): Thai version. Clin. Pract. Epidemiol. Ment. Health CP & EMH 7:161. doi: 10.2174/1745017901107010161

Wu, C.-Y., Stewart, R., Huang, H.-C., Prince, M., and Liu, S.-I. (2011). The impact of quality and quantity of social support on help-seeking behavior prior to deliberate self-harm. Gen. Hosp. Psychiatry 33, 37–44. doi: 10.1016/j.genhosppsych.2010.10.006

Yang, Y., and Xia, Y. (2019). Categorical omega with small sample sizes via Bayesian estimation: an alternative to frequentist estimators. Educ. Psychol. Meas. 79, 19–39. doi: 10.1177/0013164417752008

Yu, C.-Y. (2002). Evaluating Cutoff Criteria of Model Fit Indices for Latent Variable Models with Binary and Continuous Outcomes. Los Angeles, CA: University of California.

Zimet, G. D., Dahlem, N. W., Zimet, S. G., and Farley, G. K. (1988). The multidimensional scale of perceived social support. J. Pers. Assess. 52, 30–41.

Keywords: Bayesian confirmatory factor analysis, Bayesian inference, social support, Australia, psychometrics

Citation: Santiago PHR, Quintero A, Haag D, Roberts R, Smithers L and Jamieson L (2021) Drop-the-p: Bayesian CFA of the Multidimensional Scale of Perceived Social Support in Australia. Front. Psychol. 12:542257. doi: 10.3389/fpsyg.2021.542257

Received: 12 March 2020; Accepted: 22 January 2021; Published: 26 February 2021.

Edited by:

Martin Lages, University of Glasgow, United Kingdom

Reviewed by: