- 1 Work and Engineering Psychology Research Group, Department of Human Sciences, Technical University of Darmstadt, Darmstadt, Germany

- 2 Elastic Lightweight Robotics, Department of Electrical Engineering and Information Technology, Robotics Research Institute, Technische Universität Dortmund, Dortmund, Germany

- 3 Institute for Mechatronic Systems, Mechanical Engineering, Technical University of Darmstadt, Darmstadt, Germany

Cognitive modeling of human behavior has advanced the understanding of underlying processes in several domains of psychology and cognitive science. In this article, we outline how we expect cognitive modeling to improve comprehension of individual cognitive processes in human-agent interaction and, particularly, human-robot interaction (HRI). We argue that cognitive models offer advantages compared to data-analytical models, specifically for research questions with expressed interest in theories of cognitive functions. However, the implementation of cognitive models is arguably more complex than common statistical procedures. Additionally, cognitive modeling paradigms typically have an explicit commitment to an underlying computational theory. We propose a conceptual framework for designing cognitive models that aims to identify whether the use of cognitive modeling is applicable to a given research question. The framework consists of five external and internal aspects related to the modeling process: research question, level of analysis, modeling paradigms, computational properties, and iterative model development. In addition to deriving our framework from a concise literature analysis, we discuss challenges and potentials of cognitive modeling. We expect cognitive models to leverage personalized human behavior prediction, agent behavior generation, and interaction pretraining as well as adaptation, which we outline with application examples from personalized HRI.

Introduction

Contemporary approaches highlight the relevance of personalization in human-agent interaction (HAI). For example, e-commerce applications that use web personalization to create product deals and recommendations for users traditionally enjoy persistent research interest (Salonen and Karjaluoto, 2016). However, personalization has also long since branched out from e-commerce to further areas of human-computer interaction (HCI), such as activity recognition (Sztyler and Stuckenschmidt, 2017; Zunino et al., 2017; Siirtola et al., 2019), body part tracking (Tkach et al., 2017), assisted driving (Hasenjäger and Wersing, 2017), and human-robot interaction (HRI; Clabaugh and Matarić, 2018; Collins, 2019; Irfan et al., 2019). Although user experience of personalized services is positively influenced by overtness and transparency (Chen and Sundar, 2018; Dolin et al., 2018), personalization is not universally appreciated due to concerns over users’ loss of information privacy (Alatalo and Siponen, 2001; Chellappa and Sin, 2005; Awad and Krishnan, 2006; Schneider et al., 2017; Ku et al., 2018).

As Graus and Ferwerda (2019) argue, personalization is typically achieved by a system adapting to data-driven inference about users based on their previous behaviors. Their study posits that a theoretically motivated approach may lead to two benefits over a purely data-driven model: reducing the need for extensive data analysis and potentially generating new insight regarding the appropriateness of a given theory. The sentiment for more theory-driven approaches in data analysis is also shared by Plonsky et al. (2019) and Bourgin et al. (2019). Both articles highlight the improved prediction of human decisions by machine learning models after implementing variants of behaviorally relevant psychological theories. Bourgin et al. (2019) specifically make the case for pretraining machine learning models with data simulated by cognitive models. A cognitive model is the instantiation of a theory that relates to one or more cognitive functions and tries to replicate them computationally. For this reason, cognitive modeling is routinely used synonymously with computational modeling (Sun, 2008a). In previous research, the application of cognitive models has helped to explain or recontextualize several empirically established psychological phenomena (Adams, 2007; Körding et al., 2007; Vul et al., 2014; Srivastava and Vul, 2017). It is routinely argued that the advantage of cognitive models over, for example, verbal-conceptual or data-driven statistical models lies in the need to translate a theoretical framework into a computational system, leaving less freedom for interpretation (Sun, 2008a; Stafford, 2009; Murphy, 2011; Farkaš, 2012). In contrast to cognitive models, verbal-conceptual models define no formal relationship between concepts in a mathematical sense, and statistical models use mathematical equations to describe the relationship between concepts but do not require the translation into a computational system. Sun (2008a) notes that statistical models “may be viewed as a subset of computational models, as normally they can readily lead to computational implementations […].”

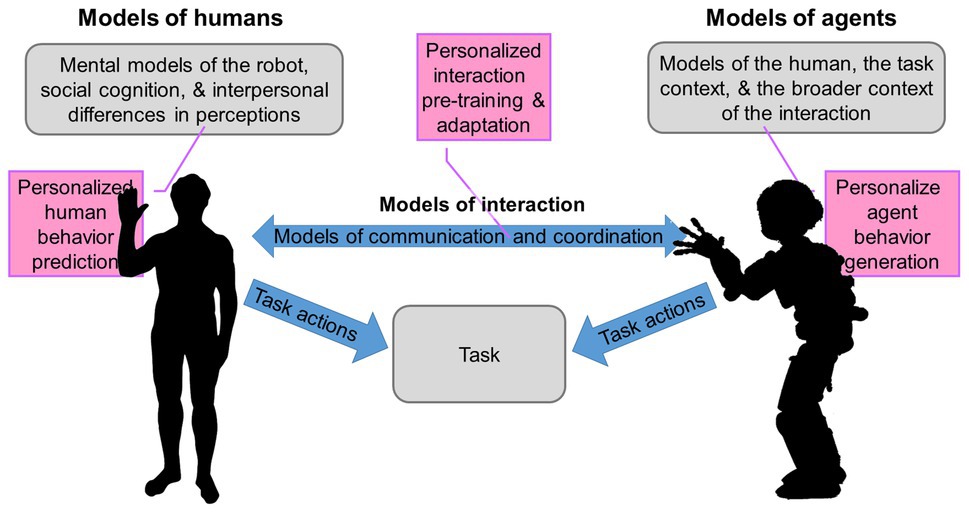

As Plonsky et al. (2019) and Bourgin et al. (2019) show, involving cognitive models in human behavior prediction as outlined in Figure 1 can increase predictive performance. It is reasonable to assume that a similar performance increase can be expected by incorporating cognitive models into the data-analytic inference required for personalization (Graus and Ferwerda, 2019) and in (personalized) HRI (Collins, 2019; Cross et al., 2019; Fischer and Demiris, 2019; Prescott et al., 2019). Accordingly, this article discusses challenges and potentials of cognitive models, focusing on user-specific effects, and proposes a conceptual framework for (personalized) model development in Section “A Conceptual Framework for Designing Cognitive Models.” Subsequently, we discuss the HRI application examples from Figure 1 in detail and analyze common pitfalls in Section “Application Examples and Pitfalls.” Section “Conclusion” concludes by discussing connections between personalization and cognitive modeling and outlining directions for future research.

Figure 1. Cognitive human-robot interaction (HRI) as presented and discussed by Mutlu et al. (2016) as an example of human-agent interaction (HAI) (blue and gray). Various interaction challenges might be tackled by applying cognitive models and exhibit strong potential for personalization (magenta), for instance, human behavior prediction (models of humans), interaction pretraining and adaptation (models of coordination), and generating agent behavior from human models (models of agents).

A Conceptual Framework for Designing Cognitive Models

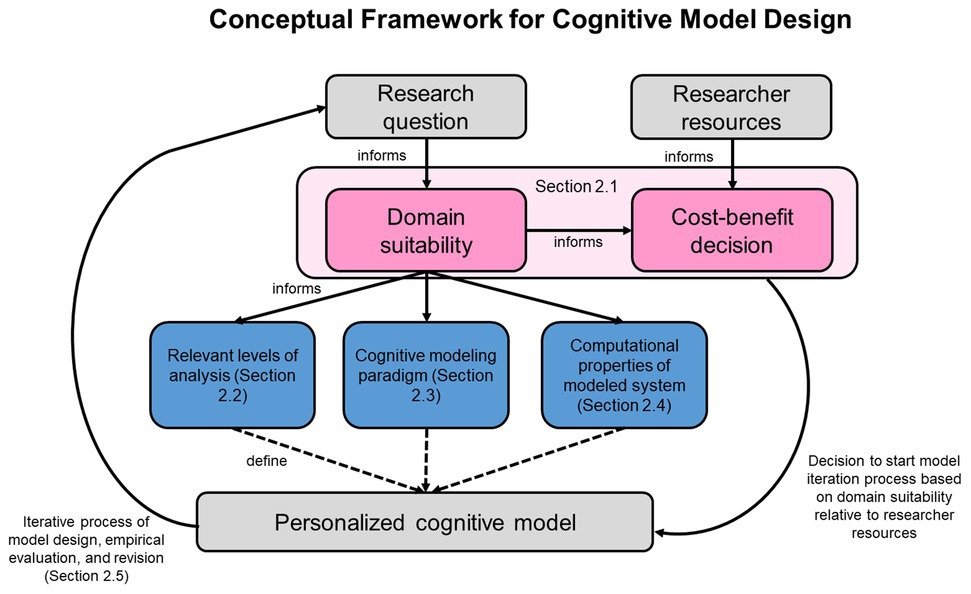

We present a conceptual framework to consider model-related and external aspects when designing cognitive models, following the definition of conceptual frameworks given by Imenda (2014). As an inductive synthesis of existing theoretical and empirical insights, the proposed framework highlights important considerations with regard to cognitive modeling, specifically for researchers new to the method. Figure 2 provides a schematic representation of the framework components and their interactions, which are presented and discussed in the remainder of the paper. Researchers applying the framework start by evaluating the domain suitability of the research question and make a cost-benefit decision based on this suitability and the available resources. Given a positive evaluation, they define the model-related aspects that constrain the actual cognitive model. Depending on the model’s performance in predicting empirical data, they either use the available model to investigate the research question or revise the model design and evaluate it again.

Figure 2. Conceptual framework for cognitive model design. External aspects are displayed in gray, and pink indicates initial considerations that inform the specific design decisions, which are themselves colored blue. The framework is used by evaluating domain suitability and available resources to reach a decision on whether to initiate the modeling process. Then, model-related aspects are defined and the resulting model is empirically evaluated. If necessary, an iterative process of model improvement is started.
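The decision flow summarized in Figure 2 can be sketched in code. The following Python outline is only an illustration under stated assumptions: the function `run_framework`, its callable arguments, the numeric threshold `good_enough`, and the cap `max_rounds` are hypothetical names and values introduced here, and the framework itself does not prescribe a numeric stopping criterion.

```python
def run_framework(is_suitable, has_resources, design,
                  build_model, evaluate, revise,
                  good_enough=0.8, max_rounds=5):
    """Hypothetical sketch of the decision flow in Figure 2."""
    # Cost-benefit decision: only start modeling if the research question
    # is suitable and the required resources are available.
    if not (is_suitable and has_resources):
        return None
    history = []
    for round_index in range(max_rounds):
        model = build_model(design)      # model-related aspects constrain the model
        score = evaluate(model)          # compare predictions with empirical data
        history.append((design, score))
        if score >= good_enough or round_index == max_rounds - 1:
            break
        design = revise(design, score)   # iterative model improvement
    return {"design": design, "score": score, "history": history}


# Toy stand-ins (assumptions for illustration, not part of the framework).
start = {"levels": ["computational"], "paradigm": "Bayesian"}


def build(design):
    return design  # here a "model" is simply its design


def toy_score(model):
    # Arbitrary toy score; it does NOT imply that more levels are better.
    return 0.5 + 0.2 * len(model["levels"])


def add_level(design, score):
    return {**design, "levels": design["levels"] + ["algorithmic"]}


result = run_framework(True, True, start, build, toy_score, add_level)
rejected = run_framework(False, True, start, build, toy_score, add_level)
```

In this toy run, the first design falls below the assumed threshold, is revised once, and is then accepted. A negative suitability evaluation ends the process before any model is built.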

Research Questions and Resources

An initial threshold for applying cognitive models is the research question under consideration, which should relate to cognitive functions. Sun (2008a) identifies several cognitive functions that can be approached by cognitive modeling: motivation, emotion, perception, categorization, memory, decision making, reasoning, planning, problem-solving, motor control, learning, metacognition, language, and communication. If included in a theory, these cognitive functions may suggest a computational view toward human behavior and may, therefore, benefit from cognitive modeling. Because many other types of models lack the precision derived from formalized model definition (Murphy, 2011), cognitive models can help to understand the functions suggested by Sun (2008a). The aforementioned cognitive functions develop very differently between individuals, and cognitive states in a given situation are difficult to generalize. Therefore, Lee (2011) highlights the importance of accounting for individual differences in the execution of cognitive functions and proposes hierarchical cognitive modeling as a way to do so. Using cognitive models to estimate and then maintain a representation of the motivational, emotional, or other cognitive states of individuals allows an interactive system to adjust its behavior and, accordingly, may help to personalize user experience with HAI systems (Schürmann et al., 2019). It is still debated whether statistical or verbal-conceptual models (Sun, 2008a; Çelikok et al., 2019; Guest and Martin, 2020) provide the required conceptual precision to shed light on the underlying theory. In our opinion, the application of cognitive modeling is less beneficial for research questions that do not directly deal with the cognitive functions mentioned above or research questions that lack established assumptions about how these cognitive functions work. As depicted in Figure 2, the suitability of cognitive modeling is determined based on the related cognitive functions.

A second important requirement for applying cognitive modeling relates to the resources available to the researcher, e.g., programming capabilities. To our knowledge, there is no software solution available that allows for designing cognitive models without programming expertise. As Addyman and French (2012) point out, even simulation environments such as ACT-R (Ritter et al., 2019) are often of little use to researchers without programming experience. Although most programming languages should be capable of the required mathematical operations, high-level languages that focus on statistics and provide function libraries, e.g., R, Python, or MATLAB, can strongly simplify cognitive modeling. In our framework, programming resources and the suitability of the research question inform a cost-benefit decision that indicates whether the development of a cognitive model should be started (see Figure 2).

Relevant Levels of Analysis

Marr (1982) defines three levels of analysis on which the study of cognitive systems is most commonly based. These levels do not fall into a strict hierarchy but can be understood as complementary descriptions of a cognitive system from equally important perspectives. The first model-related step when applying our framework is to clarify to which levels of analysis the cognitive model in question may be connected (left path in Figure 2). Answering this question provides the researcher with constraints for further modeling steps. The computational level includes the content of computations that a cognitive system, irrespective of being human or artificial, executes. This includes the logic and structure of the problem or task that a cognitive system attempts to solve. The algorithmic level contains information about the processes and representations that describe the computation. Last, the implementational level deals with the biological or artificial realization in physical hardware. Zednik and Jakel (2014) paraphrase this categorization of levels of analysis as follows: the computational level specifies what a system is doing and why it is doing it, the algorithmic level specifies the how, and the implementational level specifies the where. Over time, researchers have suggested adding layers to the levels of analysis (Griffiths et al., 2015) or adjusting models so that they are defined on more than one level of analysis (Griffiths et al., 2012; Vul et al., 2014).

Applying cognitive modeling to a given research question includes identifying the levels of analysis that are most relevant or applicable, i.e., which level of analysis is required to describe the given problem. For example, Griffiths et al. (2008) argue that Bayesian cognitive modeling is more suitable for problems of inductive inference than for predicting human behavior due to the mathematical structure of Bayes’ rule. Outlining the scope of the problem that the cognitive system is expected to solve leads, in the authors’ experience, to an intuitive restriction of applicable levels of analysis. If one can assume that all individuals solve the same cognitive problem, the level of analysis chosen is not something to be personalized but rather a modeling choice that determines the possible dimensions of personalization in subsequent steps.
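For reference, the structure of Bayes’ rule mentioned above can be written out in its standard form. The symbols are notation chosen here for illustration: $h$ denotes a hypothesis from a hypothesis space $\mathcal{H}$, and $d$ denotes the observed data.

```latex
P(h \mid d) = \frac{P(d \mid h)\, P(h)}{\sum_{h' \in \mathcal{H}} P(d \mid h')\, P(h')}
```

The rule returns a posterior distribution over hypotheses given data, which is the form of an inductive inference. Turning that posterior into an overt response requires additional assumptions, such as a response rule, that Bayes’ rule itself does not supply.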

Selection of Cognitive Modeling Paradigms

Considering the identified cognitive problem, several modeling paradigms may present themselves, each with its own potential for personalization. These candidate paradigms are routinely, but not necessarily, defined on the same level of analysis (Marr, 1982) as the cognitive problem they approach. One could argue that the more levels covered by a model’s predictions, the more complete the understanding of a phenomenon is. For example, instead of providing only a predicted response to a choice problem, a model can also provide an estimate of the reaction time required to respond to the choice problem. Although covering multiple levels has the potential to provide new insights, a research question may not yet include any reasonable assumptions about reaction times, so that the required additional specifications of a reaction time model could be theoretically under-constrained. Additionally, covering Marr’s levels completely may not be necessary for all research problems; e.g., cognitive algorithms might be powerful extensions to existing robotic platforms.

Depending on the relation to cognitive functions and levels of analysis (Marr, 1982), an appropriate cognitive modeling paradigm should be selected (middle path in Figure 2). Sun (2008b) identifies the following paradigms: connectionism, Bayesianism, dynamical systems approaches, declarative or logic-based models, and cognitive architectures. All these paradigms allow for free parameters that govern individual model behavior and, hence, allow for personalization by parameter fitting. Moreover, the paradigms have soft boundaries, and mathematical representations of specific cognitive processes overlap (Roe et al., 2001; Fard et al., 2017). The number of free parameters in cognitive models can, however, cause overfitting, as discussed in Section “Application Examples and Pitfalls.” Therefore, we advise readers to let the theory underlying their research question drive the selection of a cognitive modeling paradigm. For example, if the interest is in whether human choices satisfy criteria of rationality, juxtaposing a Bayesian model as a proxy for computational rationality with a heuristic model of violations of computational rationality is a suitable approach. As another example, a research question could concern specific neurological processes and, therefore, be compatible with modeling paradigms that extend to the implementational level of Marr (1982), i.e., the neural hardware.
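Personalization by parameter fitting can be illustrated with a minimal sketch. It assumes a logistic (two-option softmax) choice rule with a single free sensitivity parameter `beta`, fitted separately for each user by grid-search maximum likelihood. The choice rule, the grid, the function names, and the toy trial data are all illustrative assumptions and are not taken from any of the cited paradigms.

```python
import math


def choice_probability(beta, utility_difference):
    """Probability of choosing option A under a logistic choice rule."""
    return 1.0 / (1.0 + math.exp(-beta * utility_difference))


def log_likelihood(beta, trials):
    """Log-likelihood of observed choices; trials are (utility_difference, chose_a)."""
    total = 0.0
    for utility_difference, chose_a in trials:
        p = choice_probability(beta, utility_difference)
        total += math.log(p if chose_a else 1.0 - p)
    return total


def fit_beta(trials, grid=None):
    """Grid-search maximum-likelihood estimate of the free parameter beta."""
    if grid is None:
        grid = [i / 10 for i in range(0, 51)]  # 0.0, 0.1, ..., 5.0 (arbitrary range)
    return max(grid, key=lambda beta: log_likelihood(beta, trials))


# Invented toy data: utility difference (A minus B) and whether A was chosen.
user_a = [(1, 1), (1, 1), (1, 1), (1, 1), (-1, 0), (-1, 0), (-1, 0), (-1, 1)]
user_b = [(1, 1), (1, 1), (1, 1), (1, 0), (-1, 0), (-1, 0), (-1, 0), (-1, 1)]

beta_a = fit_beta(user_a)  # 7 of 8 choices follow the utility difference
beta_b = fit_beta(user_b)  # 6 of 8 choices follow the utility difference
```

For these toy data, the likelihood has analytic maxima at ln 7 (about 1.95) and ln 3 (about 1.10), so the grid estimates should land next to those values. The more consistent user receives the larger sensitivity parameter, which is the sense in which a fitted free parameter represents an individual difference.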

Computational Properties of the Modeled System

As previously outlined, there are no general rules for selecting modeling paradigms or for deciding which levels of analysis (Marr, 1982) to cover. Therefore, it appears suitable to consider the computational properties required to adequately account for the modeled behavior. This consideration is captured in the third path of our framework (right path in Figure 2). Calder et al. (2018) outline some computational properties: deterministic and nondeterministic (representing behavior by probabilities) models, static and dynamic (representing temporal effects) models, discrete and continuous models, and models based on individuals or populations. A deterministic model always produces the same behavior given the same input, whereas a nondeterministic model produces behavior based on an internal probability. A static model has no inherent concept of time, whereas a dynamic one does. Discrete models represent their components in steps or levels, whereas continuous models use smooth representations. We posit that, as different models can be used to describe the same human behavior, they likely share similar properties. For HAI research, we assume that individual-focused, nondeterministic models are beneficial for accurately predicting the target behavior because they can represent the probabilistic aspects of human choice and perception (Körding et al., 2007; Rieskamp, 2008). If the behavior of interest is human choice and perception, we consider the focus on individuals and nondeterminism to be necessary properties of a model. Whether a model operates discretely or continuously and whether it is static or dynamic may depend on the research question or cognitive function.
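The distinction between deterministic and nondeterministic models can be made concrete with a small sketch. The example below contrasts an argmax rule with a softmax sampling rule over the same utilities. Both rules, the function names, the `temperature` parameter, and the utility values are assumptions chosen for illustration and are not prescribed by Calder et al. (2018).

```python
import math
import random


def deterministic_choice(utilities):
    """Always returns the index of the highest utility for a given input."""
    return max(range(len(utilities)), key=lambda i: utilities[i])


def softmax(utilities, temperature=1.0):
    """Turns utilities into choice probabilities (numerically stabilized)."""
    highest = max(utilities)
    exps = [math.exp((u - highest) / temperature) for u in utilities]
    total = sum(exps)
    return [e / total for e in exps]


def nondeterministic_choice(utilities, rng, temperature=1.0):
    """Samples an option index according to the softmax probabilities."""
    probabilities = softmax(utilities, temperature)
    return rng.choices(range(len(utilities)), weights=probabilities, k=1)[0]


utilities = [1.0, 1.2]  # invented values for two options
rng = random.Random(0)

same_every_time = {deterministic_choice(utilities) for _ in range(1000)}
draws = [nondeterministic_choice(utilities, rng) for _ in range(1000)]
share_of_option_1 = draws.count(1) / len(draws)
```

The deterministic rule selects option 1 on every call, whereas the sampled choices cover both options, with option 1 chosen more often on average. A model of this second kind can express that a person facing the same situation does not always respond in the same way.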

A principled way of drawing inference about a cognitive model’s parameters on intra‐ and inter-individual levels comes in the form of hierarchical cognitive modeling (Lee, 2011). Once required computational properties have been defined, this hierarchical approach considers an individual’s model parameters to be sampled from a population-wide distribution of parameters. In this way, both inter‐ and intra-individual variations in the behavior of human users can be respected by HAI systems with hierarchical modeling levels, thus allowing for personalization of the cognitive model.
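The hierarchical idea can be written as a generic generative specification. This is a sketch under assumptions: the Gaussian population distribution, the unspecified hyperpriors, and the symbols $\theta_i$ (parameter of individual $i$), $\mu$ and $\sigma$ (population mean and standard deviation), $x_{ij}$ (input on trial $j$), and $y_{ij}$ (observed behavior) are chosen here for illustration and are not taken from Lee (2011).

```latex
\begin{align}
\mu &\sim p(\mu), \qquad \sigma \sim p(\sigma)
  && \text{(population level)} \\
\theta_i \mid \mu, \sigma &\sim \mathcal{N}(\mu, \sigma^2), \quad i = 1, \dots, N
  && \text{(individual level)} \\
y_{ij} \mid \theta_i &\sim p(y \mid \theta_i, x_{ij}), \quad j = 1, \dots, T_i
  && \text{(observed behavior)}
\end{align}
```

Here, the spread $\sigma$ captures inter-individual variation, while the trial-level likelihood $p(y \mid \theta_i, x_{ij})$ captures variation within an individual. Estimates of $\theta_i$ for users with few observations are informed by the population-level distribution.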

Iterative Model Development, Evaluation, and Revision

Once the external aspects considered by our framework have been evaluated and the specific decisions regarding model development have been settled, the result should be a functioning and testable cognitive model. Evaluating the resulting model against empirical evidence or competing models, however, may show a gap between model predictions and observed behavior, depending on the specific nature of the research question. This suggests an iterative process of model development, evaluation, and revision, which provides the opportunity to reassess whether a specific combination of levels of analysis, modeling paradigm, and computational properties suits the research question. Murphy (2011) highlights that certain aspects of human behavior might not be understood well enough to justify using a formalized theory and a cognitive model building on said theory. One indication of whether enough is known, however, is the repeated reference to formalized theories of cognitive functions in the literature. An applied example of this can be found in research about human user behavior in online services. Schürmann et al. (2020) conduct a secondary literature review in which they reanalyze existing review data concerning the frequency of references to computational-level theories, the frequency of interpretations of statistical model results as computational, and the frequency of actual computational implementations. References to formalized theories are found in 44.2% of the investigated literature, and results of statistical models are interpreted in a computational manner in 33.3% of cases. However, the prevalence of cognitive modeling implementations is low at 5% (Schürmann et al., 2020). Accordingly, it seems that the available information is sufficient to warrant statements about the cognitive functions of online users. An iterative model development process can then close in on suitable specifications such as the level(s) of analysis, modeling paradigm, and computational properties required to adequately describe a target behavior. To implement personalization, the formalization of inter-individual differences appears necessary. Although these could be represented as parameter differences in statistical models (Sun, 2008b) as well, cognitive models potentially lead to improved understanding and theories of cognitive functions.

Application Examples and Pitfalls

Before highlighting application examples and pitfalls of cognitive modeling in HAI with regard to personalization, it is necessary to define applications of cognitive models. We differentiate between three applications of cognitive models as outlined in Figure 1: (1) using models of human agents to understand decisional or perceptual processes to improve predictions of the agent’s behavior, (2) modeling human behavior to pretrain and adapt interaction, e.g., to monitor users’ preferences, and (3) generating behavior of an artificial agent based on a cognitive model of human behavior.

The agent of interest may be a humanoid robot, a chat bot, or any type of system that might benefit from generating its own behavior in a human-like manner. In the remainder of this section, we focus on interaction between humans and humanoid robots as shown in Figure 1 because we deem it a striking and easily graspable example. Here, robotic agents may use cognitive models to predict human interactions, but they may also control their own sensorimotor behavior by use of such a cognitive model. The benefit of applying cognitive approaches lies in the potentially realistic imitation of human behavior, which can foster both psychological research and the development of humanoid robots (Asada et al., 2009; Hoffmann et al., 2010; Schillaci et al., 2016; Prescott et al., 2019; Schürmann et al., 2019). By fitting free parameters to interindividual differences, behavior prediction and generation can be personalized rather straightforwardly. Combining the idea of human and robot models with the approach of Bourgin et al. (2019) to pretrain contemporary machine learning models with cognitive models, we argue that humanoid robots could produce more human-like sensorimotor behavior that fosters interaction and adapts to the human partner. Considering the example of a human-robot handshake, cognitive models could be used to predict a user’s movement selection (behavior prediction), to control the humanoid’s motion execution (behavior generation), and to align the robot’s actions to the human partner, spatially and temporally (interaction adaptation; Wang et al., 2013; Vogt et al., 2017).
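The pretraining idea can be sketched in a few lines. The sketch below is not the method of Bourgin et al. (2019): a plain logistic regression stands in for the machine learning model, a logistic choice rule with a hypothetical parameter `beta` stands in for the cognitive model, and the handful of user observations are invented toy values. It only illustrates the two-stage pattern of pretraining on simulated data and then adapting to one user.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def simulate_cognitive_model(beta, n_trials, rng):
    """Synthetic (utility_difference, choice) pairs from a logistic choice rule."""
    data = []
    for _ in range(n_trials):
        x = rng.uniform(-1.0, 1.0)
        y = 1 if rng.random() < sigmoid(beta * x) else 0
        data.append((x, y))
    return data


def mean_log_loss(params, data):
    """Average negative log-likelihood of a logistic regression (w, b)."""
    w, b = params
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def gradient_descent(params, data, learning_rate=0.5, steps=200):
    """Full-batch gradient descent on the mean log loss."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in data) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


rng = random.Random(0)

# Stage 1: pretrain on data simulated by the (assumed) cognitive model.
synthetic = simulate_cognitive_model(beta=3.0, n_trials=500, rng=rng)
untrained = (0.0, 0.0)
pretrained = gradient_descent(untrained, synthetic)

# Stage 2: adapt to a few observations from one user (invented toy values).
user_data = [(0.8, 1), (0.5, 1), (-0.6, 0), (0.2, 0), (-0.9, 0)]
personalized = gradient_descent(pretrained, user_data, learning_rate=0.1, steps=20)
```

The design choice illustrated here is that the second stage starts from the pretrained parameters rather than from scratch, so the few user observations only need to adjust a model that already encodes the behavior predicted by the cognitive model.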

Pitfalls of applying cognitive models to HAI are generally similar to other domains. The advantage of higher formalization and predictive precision comes at the price of having to communicate programming-related and mathematical concepts to audiences that may be used to verbal-conceptual theories. Additionally, development, maintenance, and publication of model code represent considerable challenges compared to less computationally sophisticated methods. When programming a model, researchers need to be aware of the relation between the number of free parameters in a model and the danger of overfitting (Farrell and Lewandowsky, 2018). Specifically, within the context of personalization, the danger of overfitting individual differences lies in the loss of generalization so that the prediction of new, previously unseen users would be initially poor. Recently, researchers have noted that cognitive models run the risk of having fundamental aspects adjusted after empirical data have been observed for the purpose of increasing the fit to the data (Lee et al., 2019). This pitfall should be given special consideration when applying cognitive models to scenarios of personalization.
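The relation between the number of free parameters and overfitting can be illustrated with a deliberately extreme toy comparison. The binary choice sequences below are invented for illustration, and both "models" are hypothetical: one has a single free parameter (a choice probability), whereas the other has one free parameter per trial and simply memorizes the training data.

```python
def mean_squared_error(predictions, outcomes):
    return sum((p - y) ** 2 for p, y in zip(predictions, outcomes)) / len(outcomes)


# Invented toy data: 1 = option A chosen, 0 = option B chosen.
train = [1, 1, 0, 1, 1, 0, 1, 1]  # trials used for fitting
test = [1, 0, 1, 1, 1, 1, 0, 1]   # held-out trials

# Simple model: a single free parameter, the overall choice probability.
simple_param = sum(train) / len(train)
simple_predictions = [simple_param] * len(train)

# Flexible model: one free parameter per trial, fitted by memorizing the data.
flexible_predictions = list(train)

errors = {
    "simple": {
        "train": mean_squared_error(simple_predictions, train),
        "test": mean_squared_error(simple_predictions, test),
    },
    "flexible": {
        "train": mean_squared_error(flexible_predictions, train),
        "test": mean_squared_error(flexible_predictions, test),
    },
}
# errors["simple"]   -> {"train": 0.1875, "test": 0.1875}
# errors["flexible"] -> {"train": 0.0, "test": 0.5}
```

The flexible model fits the training trials perfectly but predicts the held-out trials worse than the one-parameter model. Evaluating on held-out trials, or on held-out users in a personalization setting, is one common way to detect this loss of generalization.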

Conclusion

Cognitive modeling has strong potential in general and personalized HAI. We recommend considering the given conditions, especially whether the interactive task deals with the inter-individual aspects of cognitive functions. The conceptual framework proposed in this article helps to determine which cognitive function is relevant, whether formal theories of cognition exist, and which cognitive modeling paradigm satisfies the required computational properties and supports personalization. Moreover, using the framework in HAI systems may help to discern whether a cognitive model could be used to predict human behavior, to pretrain and adapt interaction, and/or to generate the behavior of an artificial agent in a personalized fashion (see Figure 1).

Although not too commonly used, personalized HAI can be realized with many contemporary modeling paradigms through fitting free parameters or even online adaptation of model structures. We outline conditions that, when met, put cognitive modeling in a strong position to provide insights that cannot be provided by otherwise prominent statistical models. As Graus and Ferwerda (2019) suggest, theory-driven models benefit from a reduced need for extensive data analysis. Whether this holds true for cognitive models, which are notorious for their quickly rising number of free parameters, remains to be seen. As a second benefit, the generation of new insights seems particularly important with respect to cognitive models. Aside from theory-driven personalization, an added value of cognitive modeling in HAI stems from its practical application, e.g., in humanoid robot development. Here, improvement of the interaction with particular human users would not only result directly from the representation of inter-individual differences, but also from a general approximation of human behavior. First, human-like, e.g., less precise but more versatile, robot movements have been shown to improve the perceived interaction quality (Pan et al., 2019). Second, cognitive modeling has been successfully used for pretraining machine learning models (Bourgin et al., 2019), which increases learning efficiency and has strong potential to foster distinct progress in personalizing interaction.

Applying the proposed framework can clarify the relation between external and internal aspects of cognitive modeling and, especially, support first-time users. Future research should elaborate the conceptual framework in empirical HAI studies; focusing on the purposes outlined in Figure 1 will help to improve personalized interaction.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Funding

We acknowledge financial support by Deutsche Forschungsgemeinschaft and Technische Universität Dortmund/TU Dortmund University within the funding programme Open Access Publishing.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adams, W. J. (2007). A common light-prior for visual search, shape, and reflectance judgments. J. Vis. 7:11. doi: 10.1167/7.11.11

Addyman, C., and French, R. M. (2012). Computational modeling in cognitive science: a manifesto for change. Top. Cogn. Sci. 4, 332–341. doi: 10.1111/j.1756-8765.2012.01206.x

Alatalo, T., and Siponen, M. T. (2001). “Addressing the personalization paradox in the development of electronic commerce systems.” in Post-Proceedings of the EBusiness Research Forum (EBRF), Tampere, Finland.

Asada, M., Hosoda, K., Kuniyoshi, Y., Ishiguro, H., Inui, T., Yoshikawa, Y., et al. (2009). Cognitive developmental robotics: a survey. IEEE Trans. Auton. Ment. Dev. 1, 12–34. doi: 10.1109/TAMD.2009.2021702

Awad, N. F., and Krishnan, M. S. (2006). The personalization privacy paradox: an empirical evaluation of information transparency and the willingness to be profiled online for personalization. MIS Q. 30:13. doi: 10.2307/25148715

Bourgin, D. D., Peterson, J. C., Reichman, D., Russell, S. J., and Griffiths, T. L. (2019). “Cognitive model priors for predicting human decisions.” in International Conference on Machine Learning, 5133–5141.

Calder, M., Craig, C., Culley, D., de Cani, R., Donnelly, C. A., Douglas, R., et al. (2018). Computational modelling for decision-making: where, why, what, who and how. R Soc. Open Sci. 5:172096. doi: 10.1098/rsos.172096

Çelikok, M. M., Peltola, T., Daee, P., and Kaski, S. (2019). Interactive AI with a theory of mind. ArXiv [Preprint]. Available at: https://arxiv.org/abs/1912.05284 (Accessed August 28, 2020).

Chellappa, R. K., and Sin, R. G. (2005). Personalization versus privacy: an empirical examination of the online consumer’s dilemma. Inf. Technol. Manag. 6, 181–202. doi: 10.1007/s10799-005-5879-y

Chen, T. -W., and Sundar, S. S. (2018). “This app would like to use your current location to better serve you: importance of user assent and system transparency in personalized mobile services.” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems; April 21–26, 2018; 1–13.

Clabaugh, C., and Matarić, M. (2018). Robots for the people, by the people: personalizing human-machine interaction. Sci. Robot. 3:eaat7451. doi: 10.1126/scirobotics.aat7451

Collins, E. C. (2019). Drawing parallels in human-other interactions: a trans-disciplinary approach to developing human-robot interaction methodologies. Philos. Trans. R Soc. Lond. Ser. B Biol. Sci. 374:20180433. doi: 10.1098/rstb.2018.0433

Cross, E. S., Hortensius, R., and Wykowska, A. (2019). From social brains to social robots: applying neurocognitive insights to human-robot interaction. Philos. Trans. R Soc. Lond. Ser. B Biol. Sci. 374:20180024. doi: 10.1098/rstb.2018.0024

Dolin, C., Weinshel, B., Shan, S., Hahn, C. M., Choi, E., Mazurek, M. L., et al. (2018). “Unpacking perceptions of data-driven inferences underlying online targeting and personalization.” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems; April 21–26, 2018; 1–12.

Fard, P. R., Park, H., Warkentin, A., Kiebel, S. J., and Bitzer, S. (2017). A Bayesian reformulation of the extended drift-diffusion model in perceptual decision making. Front. Comput. Neurosci. 11:29. doi: 10.3389/fncom.2017.00029

Farkaš, I. (2012). Indispensability of computational modeling in cognitive science. J. Cogn. Sci. 13, 401–435. doi: 10.17791/jcs.2012.13.4.401

Farrell, S., and Lewandowsky, S. (2018). Computational modeling of cognition and behavior. 1st Edn. Cambridge, UK: Cambridge University Press.

Fischer, T., and Demiris, Y. (2019). Computational modelling of embodied visual perspective-taking. IEEE Trans. Cogn. Develop. Syst. 1. doi: 10.1109/tcds.2019.2949861

Graus, M., and Ferwerda, B. (2019). “Theory-grounded user modeling for personalized HCI” in Personalized human-computer interaction. eds. M. Augstein, E. Herder, and W. Wörndl.

Griffiths, T. L., Kemp, C., and Tenenbaum, J. B. (2008). “Bayesian models of cognition” in Cambridge handbook of computational cognitive modeling. ed. R. Sun (New York, NY, US: Cambridge University Press), 59–100.

Griffiths, T. L., Lieder, F., and Goodman, N. D. (2015). Rational use of cognitive resources: levels of analysis between the computational and the algorithmic. Top. Cogn. Sci. 7, 217–229. doi: 10.1111/tops.12142

Griffiths, T. L., Vul, E., and Sanborn, A. N. (2012). Bridging levels of analysis for probabilistic models of cognition. Curr. Dir. Psychol. Sci. 21, 263–268. doi: 10.1177/0963721412447619

Guest, O., and Martin, A. E. (2020). How computational modeling can force theory building in psychological science. PsyArXiv [preprint]. doi: 10.31234/osf.io/rybh9

Hasenjäger, M., and Wersing, H. (2017). “Personalization in advanced driver assistance systems and autonomous vehicles: a review.” in 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC); October 16–19, 2017; 1–7.

Hoffmann, M., Marques, H., Arieta, A., Sumioka, H., Lungarella, M., and Pfeifer, R. (2010). Body schema in robotics: a review. IEEE Trans. Cogn. Develop. Syst. 2, 304–324. doi: 10.1109/TAMD.2010.2086454

Imenda, S. (2014). Is there a conceptual difference between theoretical and conceptual frameworks? J. Soc. Sci. 38, 185–195. doi: 10.1080/09718923.2014.11893249

Irfan, B., Ramachandran, A., Spaulding, S., Glas, D. F., Leite, I., and Koay, K. L. (2019). “Personalization in long-term human-robot interaction.” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI); March 11–14, 2019; 685–686.

Körding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., and Shams, L. (2007). Causal inference in multisensory perception. PLoS One 2:e943. doi: 10.1371/journal.pone.0000943

Ku, Y.-C., Li, P.-Y., and Lee, Y.-L. (2018). “Are you worried about personalized service? An empirical study of the personalization-privacy paradox” in HCI in business, government, and organizations. Vol. 10923. eds. F. F.-H. Nah and B. S. Xiao (Cham, Switzerland: Springer International Publishing), 351–360.

Lee, M. D. (2011). How cognitive modeling can benefit from hierarchical Bayesian models. J. Math. Psychol. 55, 1–7. doi: 10.1016/j.jmp.2010.08.013

Lee, M. D., Criss, A. H., Devezer, B., Donkin, C., Etz, A., Leite, F. P., et al. (2019). Robust modeling in cognitive science. Comput. Brain Behav. 2, 141–153. doi: 10.1007/s42113-019-00029-y

Marr, D. (1982). Vision: A computational investigation into the human representation and processing of visual information. Cambridge, MA, US: MIT Press.

Murphy, G. (2011). “The contribution (and drawbacks) of models to the study of concepts” in Formal approaches in categorization. eds. E. Pothos and A. Wills (Cambridge, UK: Cambridge University Press), 299–312.

Mutlu, B., Roy, N., and Šabanović, S. (2016). “Cognitive human-robot interaction” in Springer handbook of robotics. eds. B. Siciliano and O. Khatib (Springer), 1907–1934.

Pan, M. K., Knoop, E., Bächer, M., and Niemeyer, G. (2019). “Fast handovers with a robot character: small sensorimotor delays improve perceived qualities.” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); November 4–8, 2019.

Plonsky, O., Apel, R., Ert, E., Tennenholtz, M., Bourgin, D., Peterson, J. C., et al. (2019). Predicting human decisions with behavioral theories and machine learning. ArXiv [Preprint]. Available at: https://arxiv.org/abs/1904.06866 (Accessed August 28, 2020).

Prescott, T. J., Camilleri, D., Martinez-Hernandez, U., Damianou, A., and Lawrence, N. D. (2019). Memory and mental time travel in humans and social robots. Philos. Trans. R Soc. Lond. Ser. B Biol. Sci. 374:20180025. doi: 10.1098/rstb.2018.0025

Rieskamp, J. (2008). The probabilistic nature of preferential choice. J. Exp. Psychol. Learn. Mem. Cogn. 34, 1446–1465. doi: 10.1037/a0013646

Ritter, F. E., Tehranchi, F., and Oury, J. D. (2019). ACT-R: a cognitive architecture for modeling cognition. Wiley Interdiscip. Rev. Cogn. Sci. 10:e1488. doi: 10.1002/wcs.1488

Roe, R. M., Busemeyer, J. R., and Townsend, J. T. (2001). Multialternative decision field theory: a dynamic connectionist model of decision making. Psychol. Rev. 108, 370–392. doi: 10.1037/0033-295X.108.2.370

Salonen, V., and Karjaluoto, H. (2016). Web personalization: the state of the art and future avenues for research and practice. Telemat. Inform. 33, 1088–1104. doi: 10.1016/j.tele.2016.03.004

Schillaci, G., Hafner, V. V., and Lara, B. (2016). Exploration behaviors, body representations, and simulation processes for the development of cognition in artificial agents. Front. Robot. AI 3:39. doi: 10.3389/frobt.2016.00039

Schneider, H., George, C., Eiband, M., and Lachner, F. (2017). “Investigating perceptions of personalization and privacy in India” in Human-computer interaction – INTERACT 2017. Lecture notes in computer science. Vol. 10516. eds. R. Bernhaupt, G. Dalvi, A. Joshi, D. K. Balkrishan, J. O’Neill, and M. Winckler (Cham: Springer), 488–491.

Schürmann, T., Gerber, N., and Gerber, P. (2020). Benefits of formalized computational modeling for understanding user behavior in online privacy research. J. Intellect. Cap. 21, 431–458. doi: 10.1108/JIC-05-2019-0126 [Epub ahead of print]

Schürmann, T., Mohler, B. J., Peters, J., and Beckerle, P. (2019). How cognitive models of human body experience might push robotics. Front. Neurorobot. 13:14. doi: 10.3389/fnbot.2019.00014

Siirtola, P., Koskimäki, H., and Röning, J. (2019). Personalizing human activity recognition models using incremental learning. ArXiv [Preprint]. Available at: https://arxiv.org/abs/1905.12628 (Accessed August 28, 2020).

Srivastava, N., and Vul, E. (2017). Rationalizing subjective probability distortions. Proceedings of the 39th Annual Meeting of the Cognitive Science Society.

Stafford, T. (2009). “What use are computational models of cognitive processes?” in Connectionist models of behaviour and cognition II: Proceedings of the eleventh neural computation and psychology workshop. eds. J. Mayor, N. Ruh, and K. Plunkett (UK: University of Oxford), 265–274.

Sun, R. (2008a). “Introduction to computational cognitive modeling” in The Cambridge handbook of computational psychology. ed. R. Sun (New York, NY, US: Cambridge University Press), 3–20.

Sun, R. (2008b). The Cambridge handbook of computational psychology. New York, NY, US: Cambridge University Press.

Sztyler, T., and Stuckenschmidt, H. (2017). “Online personalization of cross-subjects based activity recognition models on wearable devices.” in 2017 IEEE International Conference on Pervasive Computing and Communications (PerCom); March 13–17, 2017; 180–189.

Tkach, A., Tagliasacchi, A., Remelli, E., Pauly, M., and Fitzgibbon, A. (2017). Online generative model personalization for hand tracking. ACM Trans. Graph. 36, 1–11. doi: 10.1145/3130800.3130830

Vogt, D., Stepputtis, S., Grehl, S., Jung, B., and Amor, H. B. (2017). “A system for learning continuous human-robot interactions from human-human demonstrations.” in 2017 IEEE International Conference on Robotics and Automation (ICRA); May 29–June 3, 2017; 2882–2889.

Vul, E., Goodman, N., Griffiths, T. L., and Tenenbaum, J. B. (2014). One and done? Optimal decisions from very few samples. Cogn. Sci. 38, 599–637. doi: 10.1111/cogs.12101

Wang, Z., Mülling, K., Deisenroth, M. P., Ben Amor, H., Vogt, D., Schölkopf, B., et al. (2013). Probabilistic movement modeling for intention inference in human-robot interaction. Int. J. Robot. Res. 32, 841–858. doi: 10.1177/0278364913478447

Zednik, C., and Jäkel, F. (2014). How does Bayesian reverse-engineering work? Proc. Annu. Conf. Cogn. Sci. Soc. 36, 666–671.

Keywords: personalization, cognitive modeling, human-agent interaction, behavior prediction/generation, interaction adaption

Citation: Schürmann T and Beckerle P (2020) Personalizing Human-Agent Interaction Through Cognitive Models. Front. Psychol. 11:561510. doi: 10.3389/fpsyg.2020.561510

Edited by: Bruce Ferwerda, Jönköping University, Sweden

Reviewed by: Panagiotis Germanakos, SAP SE, Germany; Benjamin Cowley, University of Helsinki, Finland

Copyright © 2020 Schürmann and Beckerle. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Philipp Beckerle, philipp.beckerle@tu-dortmund.de
