- Centre for Research in Human Development, Concordia University, Montréal, QC, Canada

Despite evidence of differential processing of personally relevant stimuli (PR), most studies investigating attentional biases in processing emotional content use generic stimuli. We sought to examine differences in the processing of PR, relative to generic, stimuli across information processing tasks and to validate their use in predicting concurrent interpersonal functioning. Fifty participants (25 female) viewed generic and PR (i.e., their intimate partner’s face) emotional stimuli during tasks assessing selective attention (using a modified version of the Spatial Cueing Task) and inhibition (using the Negative Affective Priming task) of emotional content. Ratings of relationship quality were also collected. Evidence of increased selective attention during controlled and greater avoidance during automatic stages of processing emerged when viewing PR, relative to generic, emotional faces. We also found greater inhibition of PR sad faces. Finally, male, but not female, participants who displayed greater difficulty disengaging from the sad face of their partner reported more conflict in their relationships. Taken together, findings from information processing studies using generic emotional stimuli may not be representative of how we process PR stimuli in naturalistic settings.

Introduction

Facial expressions of emotion are salient and evolutionarily significant visual stimuli when engaging in social interactions (Keltner and Gross, 1999). In fact, the accurate recognition of emotions in facial expressions is integral to meaningful social interactions by allowing individuals to infer the emotional states of others and to anticipate future responses (Russell et al., 2003). On the other hand, the presence of attentional biases or deficits in facial emotion processing is associated with high levels of anxiety (Mathews and MacLeod, 2005; Bar-Haim et al., 2007) and depressive symptoms (Gotlib et al., 2004; Ellenbogen and Schwartzman, 2009). For example, individuals with high trait anxiety or a diagnosis of an anxiety disorder are slower at disengaging their attention away from masked angry faces (presented with limited conscious awareness), relative to those with low trait anxiety or healthy controls, respectively (Fox et al., 2002; Ellenbogen and Schwartzman, 2009). Similarly, individuals with depression are slower to shift their attention from sad faces relative to controls and individuals with anxiety disorders (Gotlib et al., 2004; Ellenbogen and Schwartzman, 2009). In the above studies and the vast majority of research in this area (Mogg and Bradley, 1998; Hallion and Ruscio, 2011; Heeren et al., 2015), the experimental protocols used stimuli of emotional faces from validated databases, which are typically derived from trained actors portraying the extreme depiction of each emotion. Other studies use words associated with an emotional state, such as “death” or “happy.” Although these “generic” emotional stimuli are useful in identifying general attentional biases and the brain circuits associated with different emotions (Lang et al., 2000), this methodology may be limited in its generalizability to naturalistic situations.
Indeed, a growing body of literature suggests that generic stimuli may be processed differently than stimuli that are meaningful and relevant to the individual (Bartels and Zeki, 2000; Leibenluft et al., 2004; Wingenfeld et al., 2006; Claypool et al., 2007; Gobbini and Haxby, 2007; Fossati, 2012; Fridrici et al., 2013). In one study using the emotional Stroop task, participants displayed greater interference in reaction times when viewing personally relevant words, relative to generic negative or neutral words (Wingenfeld et al., 2006). Individuals with comorbid borderline personality disorder and post-traumatic stress disorder were slower to name the color of negative personally relevant words, relative to generic negative and neutral words (Wingenfeld et al., 2009). A related body of research has documented similar differences in the processing of self-vs. other-related stimuli (Ibáñez et al., 2011; Salehinejad et al., in press). For example, persons with elevated depressive symptoms, relative to those with no symptoms, exhibited reduced attention to positive words when the words were processed as being self-referential, but not when the words were meant to describe another person (Ji et al., 2017).

With regard to processing emotion in faces, researchers have shown that individuals may perceive facial expressions of familiar (i.e., faces to which individuals have previously been exposed) and unfamiliar faces differently. For example, participants perceived familiar faces as expressing more happiness than unfamiliar faces even when the facial expression of happiness was identical (Claypool et al., 2007). Furthermore, enhancing familiarity through mere exposure to stimuli might alter selective attention during computer tasks. In one study, individuals attended less to threatening and more to neutral stimuli following mere exposure, relative to novel stimuli of equal emotional valence (Young and Claypool, 2010). These findings are not surprising, given the existing research suggesting different neural networks are involved in the recognition of familiar and unfamiliar faces, and in particular the amygdala and the insula, two brain structures implicated in the processing of emotional stimuli (Gobbini and Haxby, 2007; Fossati, 2012).

Indeed, differential neural responding to familiar and unfamiliar stimuli is of great importance to social neuroscience research. For example, there is evidence of different neural activation, mostly in brain areas that mediate emotional responses (amygdala, medial insula, anterior paracingulate cortex, and posterior superior temporal sulcus), when processing the face of a romantic partner vs. that of a close friend (Bartels and Zeki, 2000), and between the way a mother processes the face of her own child vs. that of a familiar but unrelated child (Leibenluft et al., 2004). Researchers have also found greater activation in autobiographical memory circuits when viewing familiar stimuli. Specifically, Viskontas et al. (2009) found increased activation of the medial temporal lobe when participants viewed photographs of individuals with whom they had previous contact, such as family members and the experimenter, compared to familiar but not necessarily personally relevant (e.g., celebrities) and unfamiliar stimuli. The association between familiarity of visual stimuli and increased medial temporal lobe activation has been replicated across various studies (Elfgren et al., 2006; Weibert et al., 2016). These findings may be reflective of the greater number of memories and experiences attached to personally relevant stimuli, independent of the image itself (Viskontas et al., 2009).

Taken together, both stronger activation of neural circuits associated with emotion processing and brain areas involved in autobiographical memory may, in part, underlie differences in the processing of personally relevant stimuli relative to generic stimuli. Moreover, faces of different types of familiar persons may not be processed uniformly. Viewing one’s intimate partner’s face resulted in higher neural activation in the greatest number of cortical regions, especially in areas associated with emotional processing, than viewing the face of a parent or one’s own face (Taylor et al., 2009). Using event-related potentials and pupillometry, increased attention and arousal were found when participants viewed positive, negative or neutral words in sentences referring to their romantic partner or best friend compared to those referring to an unknown person (Bayer et al., 2017). Despite evidence suggesting substantive differences in how personally relevant stimuli are processed in the brain relative to generic stimuli, especially when viewing the face of a romantic partner (Taylor et al., 2009), personally relevant stimuli have rarely been used in tasks assessing attention and social information processing. Therefore, one goal of the current study was to compare the information processing of emotional faces using generic and personally relevant stimuli.

As a second study aim, we sought to examine whether information processing with personally relevant stimuli predicts interpersonal functioning, in an effort to show evidence of predictive validity. While the use of generic stimuli may be beneficial in studying basic behavioral responses, such as fear (Lundqvist et al., 2004), it is unclear whether these stimuli are useful when studying complex human social behavior, such as interpersonal functioning. Studies using generic stimuli have examined whether selective attention, as a form of emotion regulation, might serve to moderate affect during stressful circumstances. For example, participants who were slow to disengage their attention from supraliminal dysphoric stimuli exhibited a more pronounced negative mood response following an interpersonal stressor (Ellenbogen et al., 2006). Similar findings have linked attentional bias to aversive information with increased reactivity to a stressor (MacLeod et al., 2002). These data suggest that persons with strong attentional bias may have disruptions in their interpersonal functioning given the lack of flexibility to deal effectively with challenging circumstances. However, few studies have directly assessed the impact of biased attention on interpersonal relationships. There is some evidence that attentional avoidance of generic fearful, sad and neutral facial expressions mediates the relation between early life stressors and social problems later on (Humphreys et al., 2016; Mastorakos and Scott, 2019). We have reported that the inhibition of personally relevant angry faces moderated the relation between empathy and interpersonal functioning, so that persons with high empathy, but difficulties inhibiting highly relevant and salient stimuli, exhibited poor interpersonal functioning (Iacono et al., 2015). Taken together, comparing the use of generic vs. personally relevant stimuli when examining the link between attentional biases and interpersonal outcomes warrants further exploration.

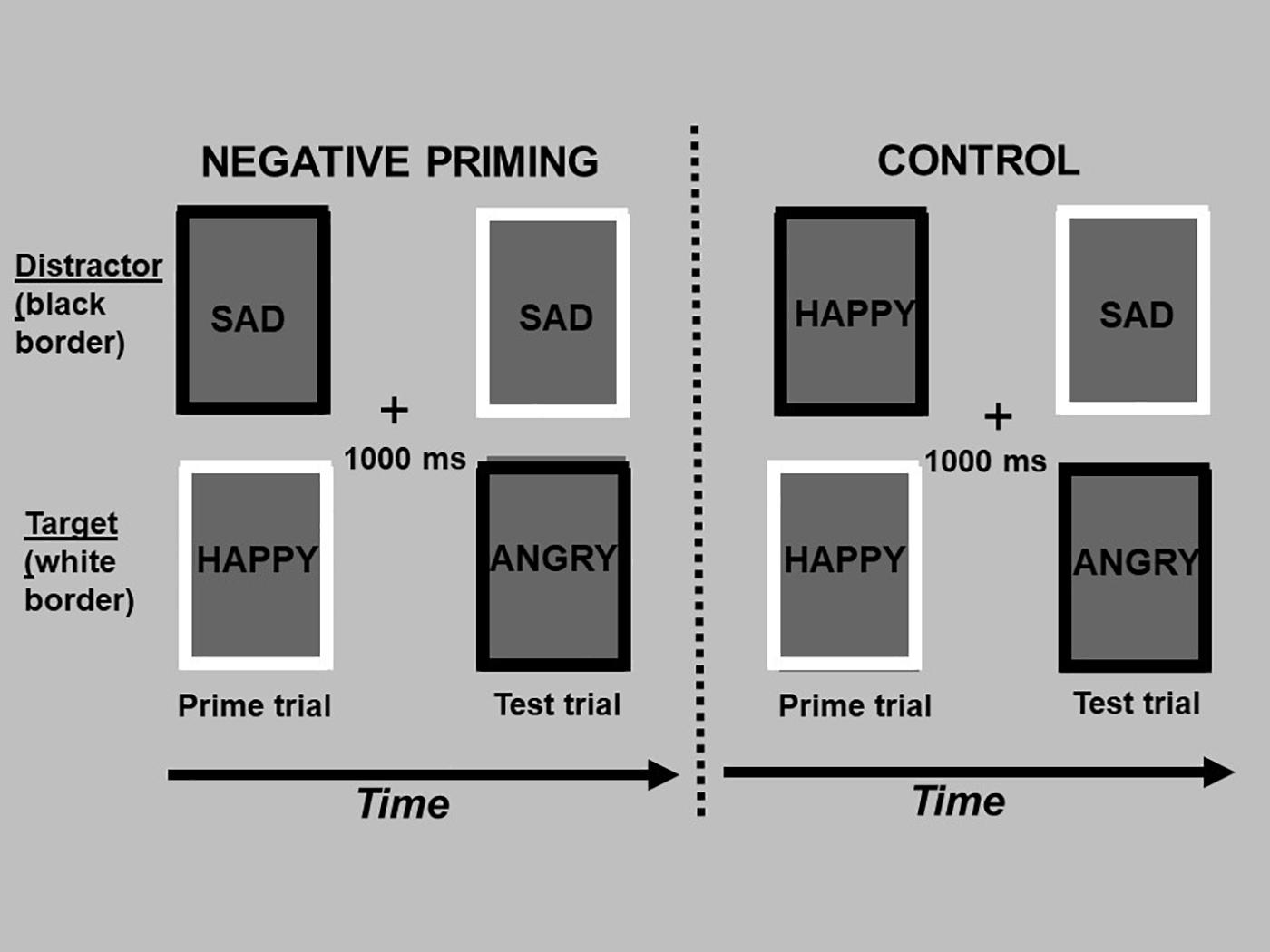

In summary, the aims of the current study were twofold. First, we compared how individuals process generic and personally relevant facial expressions of anger, sadness, and happiness relative to neutral facial expressions across measures of selective attention and cognitive inhibition. Differences in selective attention allocated to emotional stimuli can be estimated using a modified version of the spatial cueing task (Stormark et al., 1995; Ellenbogen et al., 2002) that measures the latency to respond to a neutral target following either a valid or invalid cue. Cues are valid when presented in the same hemifield as the target, and invalid when presented to the hemifield contralateral to the target. The valid vs. invalid manipulation permits the differentiation in reaction time between the engagement and disengagement of spatial attention (Posner, 1978). Importantly, the adapted modified procedure measures how efficiently one disengages their attention from emotional stimuli. There is increasing evidence that early automatic processing of emotional stimuli relates to different functions (i.e., fear processing) and brain circuits than slower conscious processing (Morris et al., 1999; Ellenbogen et al., 2006). Therefore, in the current study, generic and personally relevant stimuli were presented either briefly followed by a mask to assess automatic processing with limited conscious awareness, or at a long exposure duration allowing full conscious processing. Cognitive inhibition was assessed using the negative affective priming (NAP) task (Goeleven et al., 2006). Cognitive inhibition is defined as the ability to suppress interferences from distracting emotional information in one’s environment. Because working memory has a limited capacity, its efficient functioning depends on inhibitory processes that limit the access of irrelevant information into consciousness. 
If one’s cognitive inhibition capacity is weakened, too much irrelevant information enters into working memory, impeding our ability to respond flexibly and adapt our behavior and emotional responses to the environment (Hasher et al., 1999). Using the NAP task to measure cognitive inhibition, participants are instructed to respond on multiple trials to a target emotional stimulus (i.e., angry, sad, and happy faces) while simultaneously ignoring or inhibiting an emotional (task-irrelevant) distractor. The NAP effect refers to a participant’s trial response time for the target emotional stimulus (e.g., sad faces) when the same stimulus was the inhibited distractor on the previous trial (i.e., NAP trials; see Figure 1). Delays in responding on NAP trials represent increased cognitive inhibition capacity, while faster responding represents poorer inhibition of emotional distractors (Joormann, 2010).

Figure 1. The figure depicts the design of the negative affective priming task, consisting of “prime” and “test” trials. A prime trial (1st and 3rd column) consisted of a target picture with a white border and distractor picture (to be ignored) with a black border, and was always followed by a test trial (2nd and 4th column) 1,000 ms later (“+” fixation between trials). In negative priming test trials, the emotional expression of the target picture (sad face, top picture in the 2nd column) was the same as the emotional expression of the previously ignored picture in the prime trial (top picture in the 1st column). In the control test trials, the emotional expression of the target picture (sad face; top picture in the 4th column) was unrelated to the emotional expression of the previously ignored picture in the prime trial (top picture in the 3rd column). The test trials of the negative priming and control sequences are identical; they only differ in the type of emotion that was ignored in the previous prime trial. The words in each rectangle depict pictures of facial expressions of emotion, which were omitted to limit exposure of these photos beyond their use in research studies.

Second, we examined whether information processing of an intimate partner’s face is associated with relationship quality (i.e., conflict and support), as a means of providing evidence of the validity for this methodology. Given that the personally relevant pictures depicted participants’ intimate partners, we chose to examine whether attention and inhibition of these stimuli predicted the quality of the romantic relationship. Further, previous studies have demonstrated that conflict and support in a romantic relationship predict satisfaction with the relationship (Cramer, 2004; Campbell et al., 2005), depression symptoms (Mackinnon et al., 2012; Marchand-Reilly, 2012), and overall well-being (Gere and Schimmack, 2011). Therefore, given the importance of these constructs, the present study focused on conflict and support within the romantic relationship.

We hypothesized that the personally relevant stimuli, relative to the generic stimuli, would elicit greater selective attention and inhibition overall. Because threat is linked to fear systems associated with early automatic processing (Morris et al., 1999), we expected that differences in threat between generic and personally relevant stimuli would be minimal with perhaps a lesser effect for personally relevant than generic stimuli for early automatic processing. However, as sad affect may be more meaningful in a personally relevant context (i.e., empathy and potential signals of problems within the relationship) compared to generic stimuli (i.e., empathy alone), we expected the greatest difference in information processing for sad personally relevant faces relative to the sad faces of strangers. Lastly, we predicted that greater selective attention and less inhibition toward personally relevant sad faces, relative to generic sad faces, would be associated with more conflict and less support within romantic relationships. We also examined sex differences but had no a priori hypotheses, given that few studies in this research area have addressed the topic.

Materials and Methods

Participants

Fifty participants (25 females), aged between 18 and 29 years old (M = 22.9 years, SD = 3.1 years), were recruited in the Montréal, Québec area through the Concordia University participant pool website, as well as local and online advertisements. Participants and their partners were required to be in a romantic relationship for a minimum of 6 months at the time of testing. Twenty-eight percent of participants reported being in their relationship between 6 months and 1 year, 46% between 1 and 3 years, 20% between 3 and 6 years and 6% reported 6 years or more. Fifty-two percent of participants reported living with their partner. Exclusion criteria included any past or present diagnosis of schizophrenia, bipolar disorder, pervasive developmental disorder, or current substance abuse/dependence in either the participant or their partner, visual impairment, major medical illness within the past 3 weeks, or the use of a psychotropic medication at the time of the study.

Measures

The Structured Clinical Interview for DSM-IV (SCID-I; First et al., 2002)

The SCID-I, a valid and reliable diagnostic instrument (e.g., Zanarini and Frankenburg, 2001), was used to assess participants’ current and past mental health.

Quality of Relationships Inventory

The Quality of Relationships Inventory (QRI; Pierce et al., 1991) is a self-report measure used to assess the supportive (i.e., the extent to which the participant perceives availability of support) and conflictual (i.e., perceived conflict within the relationship) aspects within close relationships. The inventory includes 25 items rated on a 4-point Likert scale ranging from “not at all” to “always or very much.” In this study, we used the support and conflict scales, but not the depth scale (the importance of the relationship to the participant). Sums of each subscale were calculated with higher scores signifying greater perception of support and conflict. Internal consistency for the current sample was α = 0.91 (conflict) and α = 0.77 (support).

The Beck Depression Inventory-II

The Beck Depression Inventory–second edition (BDI-II; Beck et al., 1996) was used to assess the presence of depressive symptoms over the past 2 weeks. Higher scores on the 21-item inventory are indicative of greater depressive symptoms. The internal consistency (α) coefficient for the current sample was 0.80.

Materials

Personally Relevant and Generic Stimuli

The Facial Action Coding System (FACS; Ekman et al., 2002) was used to train the partner of the participant to make four facial expressions: neutral, angry, sad and happy. Each component of the facial expressions was shown and described in detail (e.g., baring of the teeth to portray anger). Partners were encouraged to use mental imagery to help produce the desired facial expressions and also had a mirror available for practice. If more guidance was needed, the experimenter would model the different facial expressions for each emotion. The pictures were taken using a Canon PowerShot A590IS 8.0 megapixel digital camera mounted on a tripod. Seven to ten pictures were taken for each emotion. Partners wore a gray t-shirt provided to them.

Using criteria from the FACS (Ekman et al., 2002), the five best pictures for each emotion were selected. These pictures were rated by five lab members on a global rating scale from 1 to 10. Pictures were judged on their intensity (degree of emotion conveyed) and their authenticity. The two pictures for each emotion type with the highest average ratings across all raters for both dimensions were selected as stimuli for the experiment. Mean picture ratings across all participants were as follows: neutral (M = 7.6, SD = 0.64), angry (M = 7.2, SD = 0.91), sad (M = 6.8, SD = 0.79), and happy (M = 8.1, SD = 0.80) stimuli. An intra-class correlation computed across all raters indicated high inter-rater reliability (ICC = 0.995).

Personally relevant pictures were processed using Adobe Photoshop CS4 editing software. Pictures were reduced to 60 by 90 pixels, the background was made uniform, and the brightness and hue were adjusted to match the generic stimuli. With regard to the generic pictures, a set of 12 (6 male) Caucasian faces each displaying a neutral, angry, sad and happy expression were selected from the MacArthur Network Face Stimuli Set (NimStim stimulus set; Tottenham et al., 2009).

Modified Spatial Cueing Task

A modified version (Ellenbogen et al., 2002) of the spatial cueing visual attention paradigm (Posner, 1980) was used to assess attentional engagement and disengagement toward generic and personally relevant stimuli. Participants fixated on a centrally located “+” sign on a black background, which was flanked on both sides by a gray rectangle (3.7 cm × 3.2 cm). The center of each rectangle was 2.2° of visual angle from the fixation point. A small black dot (i.e., target) appeared in either of the gray rectangles. Preceding each target presentation, a cue appeared in one of the rectangles. The cue was indicative of where the target would appear. The cues were pictures of faces displaying one of the four emotion types (neutral, angry, sad, or happy), and were either generic or personally relevant. Participants were asked to respond as quickly as possible once the target appeared.

There were 384 trials (192 personally relevant), divided into 16 blocks (4 blocks for each emotion type) of 24 trials, and 20 additional “catch” trials presented across blocks, where the target did not appear following the presentation of the cue. Catch trials (not included in statistical analyses) were used to prevent participants from developing an automatic response set due to the fixed cue-target intervals in this experiment. On valid or attentional engagement trials (288), the cue and target appeared in the same hemifield. On invalid or disengagement trials (96), the cue and target appeared in opposite hemifields. The stimulus onset asynchrony (the interval between the onset of the cue and onset of the target) for valid and invalid trials was 830 ms, and the interval between trials (from the offset of the target to next cue onset) was 2,200 ms. Cues were presented for 750 ms or 17 ms followed by a masking stimulus. For masked trials, the mask was presented for 183 ms immediately at the offset of the cue. At 80 ms following the offset of the cue or mask, targets were presented for 600 ms. The masks were made by digitally cutting pictures into small pieces and randomly reassembling them.

Within each block, the picture cues were of the same emotion type to avoid affective carryover effects, where the emotion type of the cue on one trial influences the response on the subsequent trial. The order of blocks and hemifield of stimulus presentation were varied randomly across subjects, except that two blocks of the same picture category were never consecutive. Validly and invalidly cued targets represented 75 and 25% of all trials, respectively, a ratio shown to be effective in cueing attention (Posner, 1978). Reaction times (RT) less than 150 ms and more than 850 ms were excluded from the analyses.
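The trial counts and timing described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the original task script: the variable names and the `keep_rt` helper are illustrative assumptions, and the cue-to-target interval is computed only for the 750 ms (unmasked) cue condition.

```python
# Bookkeeping for the modified spatial cueing task, using the counts and
# durations stated in the text. All names here are illustrative.

N_BLOCKS = 16
TRIALS_PER_BLOCK = 24
N_VALID = 288
N_INVALID = 96

total_trials = N_BLOCKS * TRIALS_PER_BLOCK
valid_share = N_VALID / total_trials
invalid_share = N_INVALID / total_trials

# Unmasked trials: a 750 ms cue, then an 80 ms gap before target onset.
CUE_MS = 750
GAP_MS = 80
soa_long_ms = CUE_MS + GAP_MS


def keep_rt(rt_ms, lower=150, upper=850):
    """Return True when a reaction time falls inside the analysis window."""
    return lower <= rt_ms <= upper


print(total_trials, valid_share, invalid_share, soa_long_ms)
```

Under these assumptions, the 16 blocks of 24 trials reproduce the 384 experimental trials, the 288/96 split reproduces the 75/25% validity ratio, and the 750 ms cue plus the 80 ms gap reproduces the 830 ms stimulus onset asynchrony.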

An index of disengagement score was computed by subtracting RTs of neutral picture cues from RTs of emotional picture cues for invalid trials.

An index of engagement was computed by subtracting RTs of emotional picture cues from RTs of neutral picture cues for valid trials. For both indices, positive scores indicate greater selective attention to the emotional cue (i.e., faster engagement to, or slower disengagement from, an emotional face) whereas negative scores signal attentional avoidance (i.e., slower engagement to, and faster disengagement from, an emotional face).
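To make the direction of the two subtractions explicit, the sketch below computes both indices for a single emotion type. It assumes that each index is formed from mean RTs per condition (the text does not state the aggregation), and the function names and RT values are hypothetical.

```python
from statistics import mean


def disengagement_index(rt_emotional_invalid, rt_neutral_invalid):
    """Invalid trials: mean emotional-cue RT minus mean neutral-cue RT."""
    return mean(rt_emotional_invalid) - mean(rt_neutral_invalid)


def engagement_index(rt_emotional_valid, rt_neutral_valid):
    """Valid trials: mean neutral-cue RT minus mean emotional-cue RT."""
    return mean(rt_neutral_valid) - mean(rt_emotional_valid)


# Hypothetical reaction times in ms, for illustration only.
sad_invalid = [330, 340, 350]
neutral_invalid = [310, 320, 330]
sad_valid = [290, 300, 310]
neutral_valid = [300, 310, 320]

dis = disengagement_index(sad_invalid, neutral_invalid)
eng = engagement_index(sad_valid, neutral_valid)
print(dis, eng)
```

With these illustrative values both indices are positive: the participant is slower to disengage from the sad cue on invalid trials and faster to respond after the sad cue on valid trials, which corresponds to greater selective attention to the emotional face.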

Negative Affective Priming Task

Derived from the original negative priming paradigm (Tipper and Cranston, 1985) and its later adaptation (Goeleven et al., 2006; Taylor et al., 2011), a computerized cognitive task was designed to assess participants’ ability to inhibit generic and personally relevant facial stimuli depicting angry, sad and happy emotional expressions. Participants were instructed to use a two-key response box to identify, as quickly as possible, whether the stimulus presented in a white frame (target) depicted a positive or a negative facial expression, while ignoring the stimulus presented in a black frame (distractor). Target and distractor stimuli were presented simultaneously at either the top or the bottom of the screen.

Specifically, the negative affective priming (NAP) task consisted of fixed consecutive pairs of “prime” and “test” trials (see Figure 1). Prime trials always preceded the test trials. In the negative priming condition, the emotional expression of the target picture during the test trial was the same as the emotional expression of the previously ignored distractor in the prime trial. In the control condition, the emotional expression of the target picture during the test trial was unrelated to the emotional expression of the previously ignored picture in the prime trial. Importantly, the pictures presented during the test trial of the negative priming and control conditions were identical; the conditions only differed in the pictures presented in the prime trials. In order to counterbalance the type of emotional stimulus used as targets in prime and test trials, as well as distractors in test trials, two sequences of negative priming and control manipulations were used. Trials were also counterbalanced for the spatial location of the pictures. The design of the NAP task was identical for both the personally relevant and generic stimuli, and differed only in the number of distinct actors conveying the emotional expressions.

One hundred and ninety-two stimulus presentations were paired (priming and test trials) into 96 trials (48 negative priming trials and 48 control trials), appearing in random sequence. Participants were presented with an equal number of paired trials for each emotional expression (32 angry, 32 sad, 32 happy), half of which consisted of personally relevant pictures. Trial sequences (priming followed by a test trial) used either personally relevant or generic pictures; they were never mixed. Pictures remained on the screen until a response was provided or for a maximum of 7,500 ms. Each trial was separated by an inter-stimulus interval of 1,000 ms during which time a centered fixation cross would appear.

An index of inhibition score was computed for each emotional expression by subtracting mean RT on matched control test trials from mean RT on matched negative priming test trials. A positive index value indicates strong inhibition, meaning that the emotional expression of the distractor presented during the prime trial led to a slower RT during the test trial of the same emotion. Conversely, a negative index value indicates reduced/poor inhibition because the distractor presented during the prime trial prompted a faster RT during the test trial. Reaction times below 150 ms and above 4,000 ms, as well as incorrect responses to test trials, were excluded from the analyses.
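The scoring rule can be sketched as follows for one emotional expression. This is an illustration under stated assumptions rather than the original analysis code: the trial representation (a dictionary with `rt` and `correct` keys), the function names, and the example values are all hypothetical.

```python
from statistics import mean


def clean_rts(trials, lower=150, upper=4000):
    """Keep RTs from correct test trials that fall within the stated window."""
    return [t["rt"] for t in trials if t["correct"] and lower <= t["rt"] <= upper]


def inhibition_index(negative_priming_trials, control_trials):
    """Mean RT on negative priming test trials minus mean RT on control test trials."""
    return mean(clean_rts(negative_priming_trials)) - mean(clean_rts(control_trials))


# Hypothetical test-trial data (RT in ms), for illustration only.
np_trials = [
    {"rt": 960, "correct": True},
    {"rt": 1000, "correct": True},
    {"rt": 5200, "correct": True},   # dropped: above 4,000 ms
    {"rt": 900, "correct": False},   # dropped: incorrect response
]
control_trials = [
    {"rt": 900, "correct": True},
    {"rt": 940, "correct": True},
    {"rt": 120, "correct": True},    # dropped: below 150 ms
]

index = inhibition_index(np_trials, control_trials)
print(index)
```

Here the positive value reflects slower responding on negative priming test trials than on control test trials, which the scoring rule interprets as stronger inhibition of the previously ignored expression.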

Procedure

Following completion of the screening protocol, including a structured interview assessing past and current mental health, participants and their intimate partners were scheduled for separate sessions. First, partners took part in a photography session to obtain the personally relevant stimuli (as described above). Approximately 1 week later, participants completed the aforementioned information processing tasks in random order, as well as an emotion-modulated acoustic startle task. The electromyography startle data were of questionable quality, and were thus excluded from the present study. Participants used a chin rest throughout the tasks to maintain a distance of 57 cm from the computer monitor. All tasks were programmed using the STIM Stimulus Presentation System software (version 7.584) developed by the James Long Company (Caroga Lake, NY) and were run on an IBM PC computer with a 17 in. NEC color monitor. Following completion of the tasks, the questionnaires were completed. Participants and their partners were debriefed and remunerated for the time spent in the laboratory. All procedures were approved by the Human Research Ethics Committee of Concordia University (Montréal, Québec), and participants and their partners provided written informed consent.

Data Analysis

Power analyses for the within-subject comparison of personally relevant and generic stimuli were calculated using G*Power 3.1 (Faul et al., 2007), using an effect size of 0.16 (Cohen’s f; small-medium effect size). The effect size of 0.16 was determined from a previous study comparing inhibition scores (happy, sad, angry) for personally relevant vs. generic stimuli (Iacono et al., 2015). The analysis indicated that 50 participants would provide sufficient power (0.80, p < 0.05) to test the study’s primary hypothesis. Data were screened for outliers (±3 standard deviations from the mean) and distributional anomalies. Prior to testing the hypotheses, tests of validity for each task were conducted. The data were then analyzed to determine the effect of personal relevance for each task. For the modified spatial cueing task, a Sex × Relevance (generic, personally relevant) × Emotion (neutral, happy, sad, angry) × Exposure (750 ms, masked) repeated measures ANOVA was performed on engagement and disengagement scores separately. For the NAP task, a Sex × Relevance × Emotion repeated measures ANOVA was conducted on inhibition scores.

Finally, a series of hierarchical multiple regressions predicting relationship quality (i.e., support and conflict) from indices of social information processing were conducted. For the spatial cueing data, the predictor variables were entered in three steps: (1) BDI scores (to control for depression) and participant’s sex, (2) centered disengagement scores from generic and personally relevant stimuli, and (3) a Sex × Centered Personally Relevant Stimuli interaction term. Models were run separately for each emotion type and repeated for both 750 ms and masked cue presentations. Similar hierarchical multiple regressions were performed on engagement scores from valid trials of the spatial cueing task and inhibition scores from the NAP. Significant interactions were followed up using a test of simple slopes (Aiken and West, 1991).
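The three entry steps can be illustrated by building the predictor sets for one model. This sketch only constructs the predictors and does not fit the regressions. The 0/1 coding of sex, the column names, and the data values are assumptions made for illustration and are not taken from the study.

```python
from statistics import mean


def center(values):
    """Subtract the sample mean from each score."""
    m = mean(values)
    return [v - m for v in values]


def build_steps(bdi, sex, generic, relevant):
    """Return the predictor columns entered at each of the three steps."""
    generic_c = center(generic)
    relevant_c = center(relevant)
    # Product term: sex by centered personally relevant score.
    interaction = [s * r for s, r in zip(sex, relevant_c)]
    step1 = {"bdi": bdi, "sex": sex}
    step2 = {**step1, "generic_c": generic_c, "relevant_c": relevant_c}
    step3 = {**step2, "sex_x_relevant_c": interaction}
    return step1, step2, step3


# Hypothetical data for four participants (sex coded 0/1 as an assumption).
bdi = [4, 10, 7, 3]
sex = [0, 1, 0, 1]
generic = [10, -10, 20, 0]      # disengagement index, generic faces (ms)
relevant = [-20, 40, 0, 20]     # disengagement index, partner faces (ms)

step1, step2, step3 = build_steps(bdi, sex, generic, relevant)
print(sorted(step3))
```

Centering the index scores before forming the product term follows the approach of Aiken and West (1991) cited above and keeps the lower-order coefficients interpretable at the sample mean.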

Results

Spatial Cueing Task

To test the validity of the spatial cueing task, RT data were analyzed with a Picture relevance × Cue Validity (valid, invalid) × Emotion × Exposure repeated measures ANOVA. As expected, a main effect of cue validity was found for trials with the 750 ms cue presentation, [F(1,49) = 17.790, p < 0.001, η2 = 0.266] and masked cues [F(1,46) = 119.550, p < 0.001, η2 = 0.709]. Specifically, participants had slower RT for invalid relative to valid trials for both 750 ms and masked presentations, supporting the validity of the spatial cueing paradigm. For the 750 ms presentation, mean RT was 320 ms (SD = 50 ms) for invalid trials and 306 ms (SD = 48 ms) for valid trials. For the masked presentation, mean RT was 318 ms (SD = 47 ms) for invalid trials and 284 ms (SD = 40 ms) for valid trials.
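For readers who wish to relate the reported F ratios to the effect sizes, the sketch below recovers an effect size from F and its degrees of freedom. It assumes that the reported η2 values are partial eta squared, which the text does not state explicitly. The function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Cue validity effect for the 750 ms presentation: F(1,49) = 17.790.
eta_750 = partial_eta_squared(17.790, 1, 49)
print(round(eta_750, 3))
```

Under that assumption, the value obtained for the 750 ms cue validity effect rounds to the reported 0.266.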

Disengagement (Invalid) Trials

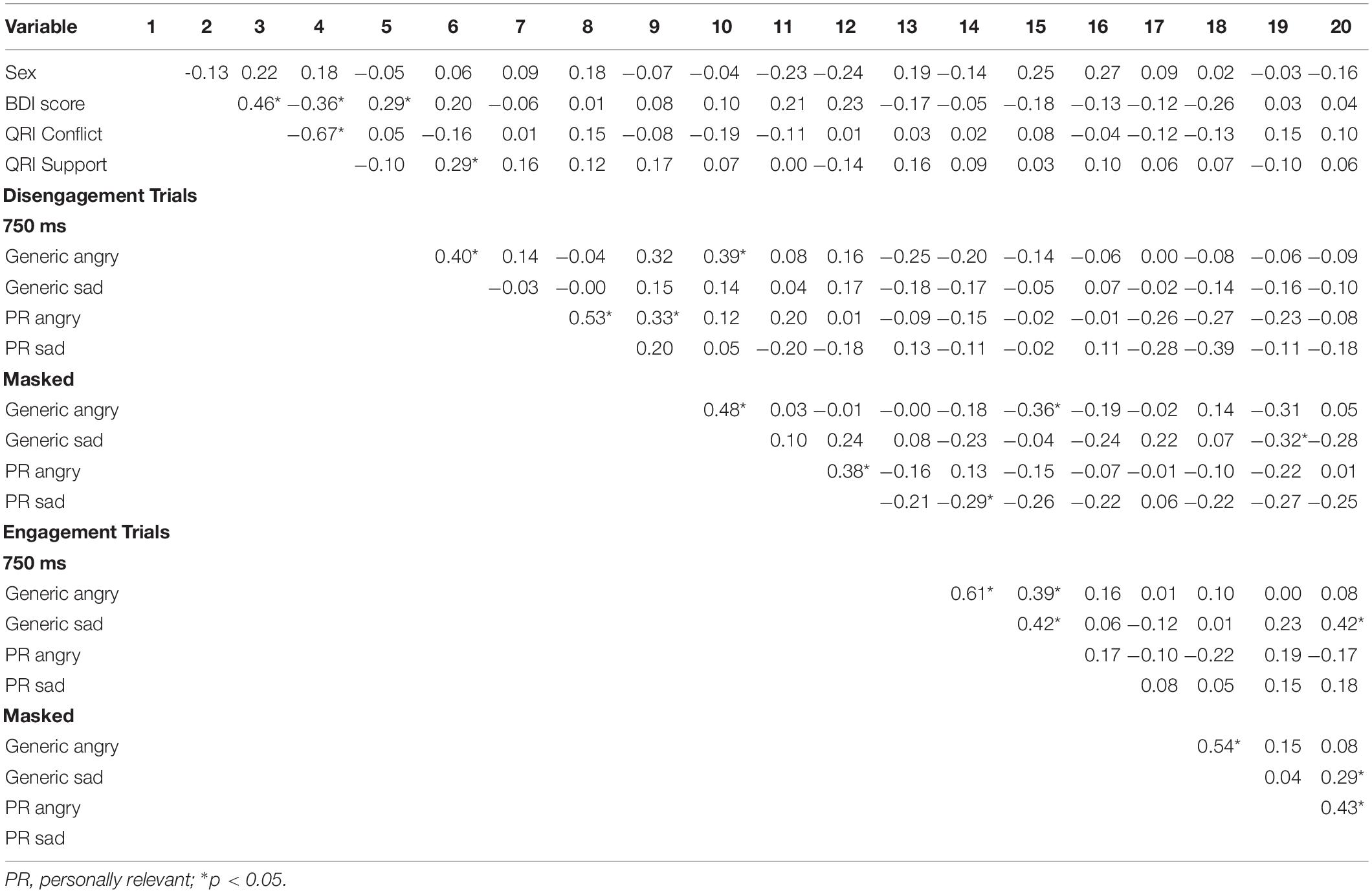

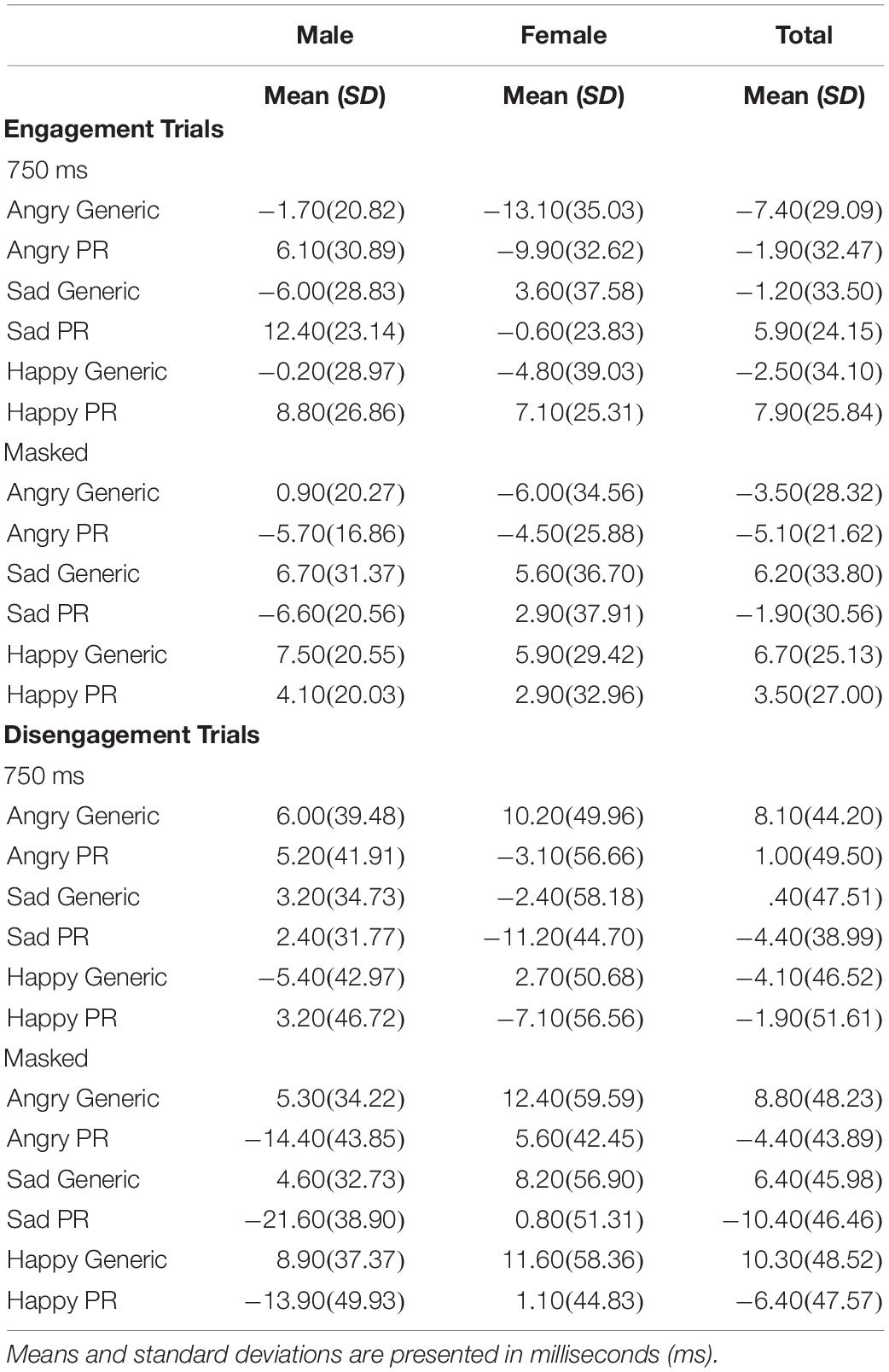

To examine the effect of picture relevance on attentional disengagement, a Sex × Picture Relevance × Emotion × Exposure repeated measures ANOVA was conducted using the disengagement index scores. A significant main effect of relevance was observed [F(1,48) = 4.257, p = 0.045, η2 = 0.081], with participants being faster to shift their attention away from personally relevant stimuli than generic stimuli (see Table 1).

Table 1. Mean index scores (emotion minus neutral) and standard deviations (SD) for the processing of generic and personally relevant stimuli during engagement and disengagement trials of the spatial cueing task across all emotion types.

Engagement (Valid) Trials

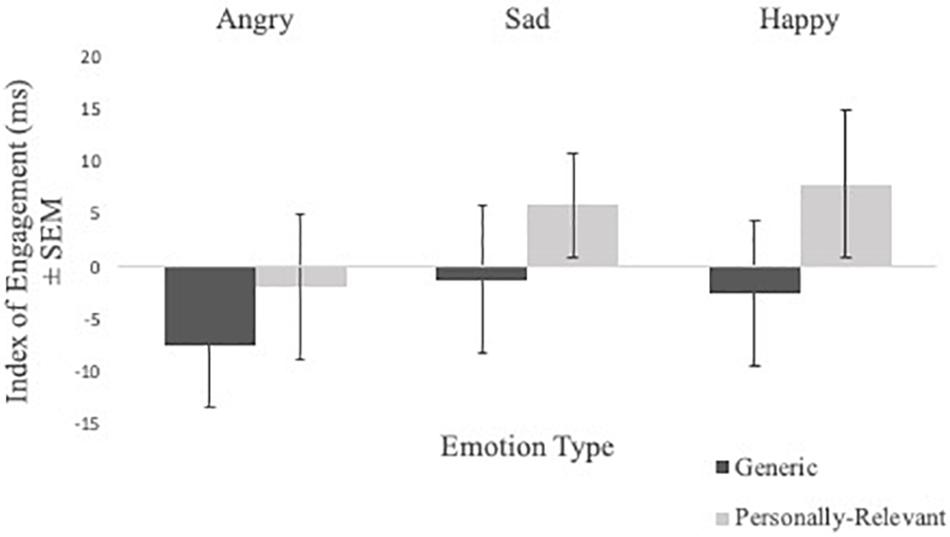

Engagement index scores from the valid trials of the spatial cueing task were subjected to a Sex × Picture Relevance × Emotion Type × Exposure repeated measures ANOVA. A significant Picture Relevance × Exposure interaction was observed [F(1,48) = 4.376, p = 0.042, η2 = 0.084]. To follow up, a Picture Relevance × Emotion Type repeated measures ANOVA was conducted separately for 750 ms exposure and masked trials. For 750 ms exposure trials, a main effect of relevance was observed [F(1,48) = 4.090, p = 0.049, η2 = 0.079], where participants were quicker to shift toward personally relevant faces, relative to generic stimuli (see Figure 2). No significant effects were found for masked trials.

Figure 2. Comparisons of mean index scores of engagement (i.e., RT valid trials with emotional stimuli − RT valid trials with neutral stimuli) of angry, sad, and happy facial expressions for generic and personally relevant stimuli for 750 ms presentation time. Relative to generic pictures of facial expressions of emotion, participants shifted attention more quickly toward facial expressions of their intimate partner when presented for 750 ms, but not masked presentations. Error bars represent standard errors.
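The engagement index defined in the caption can be sketched as a simple difference score. The reaction times below are hypothetical values for a single participant, chosen only to show the arithmetic; the function and variable names are likewise illustrative.

```python
def index_score(rt_emotion_ms, rt_neutral_ms):
    """Emotion-minus-neutral reaction time difference, in ms."""
    return rt_emotion_ms - rt_neutral_ms

# Hypothetical mean RTs (ms) on valid trials for one participant
rt_valid = {"angry": 301.0, "sad": 298.0, "happy": 305.0, "neutral": 310.0}

engagement = {
    emotion: index_score(rt, rt_valid["neutral"])
    for emotion, rt in rt_valid.items()
    if emotion != "neutral"
}
print(engagement)  # prints {'angry': -9.0, 'sad': -12.0, 'happy': -5.0}
```

Under this definition, a negative score means that responses were faster on valid trials cued by an emotional face than on valid trials cued by a neutral face.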

In sum, personally relevant stimuli, regardless of whether the facial expression was angry, sad, or happy, elicited attentional avoidance on disengagement trials (on both 750 ms and masked disengagement trials) and rapid shifts of attention toward stimuli (on 750 ms engagement trials) relative to trials with generic facial expressions of emotion. See Table 1 for means and standard deviations of the spatial cueing data.

Negative Affective Priming Task

To verify the validity of the NAP task, a Priming Condition (negative priming, control) × Emotion Type × Picture Relevance repeated measures ANOVA was conducted on RT data. A main effect for priming condition [F(1,56) = 13.294, p = 0.001, η2 = 0.192] was observed, with participants demonstrating overall significantly slower RTs on negative priming trials (M = 954 ms, SD = 272 ms) compared to control trials (M = 916 ms, SD = 268 ms), supporting the validity of the NAP paradigm.

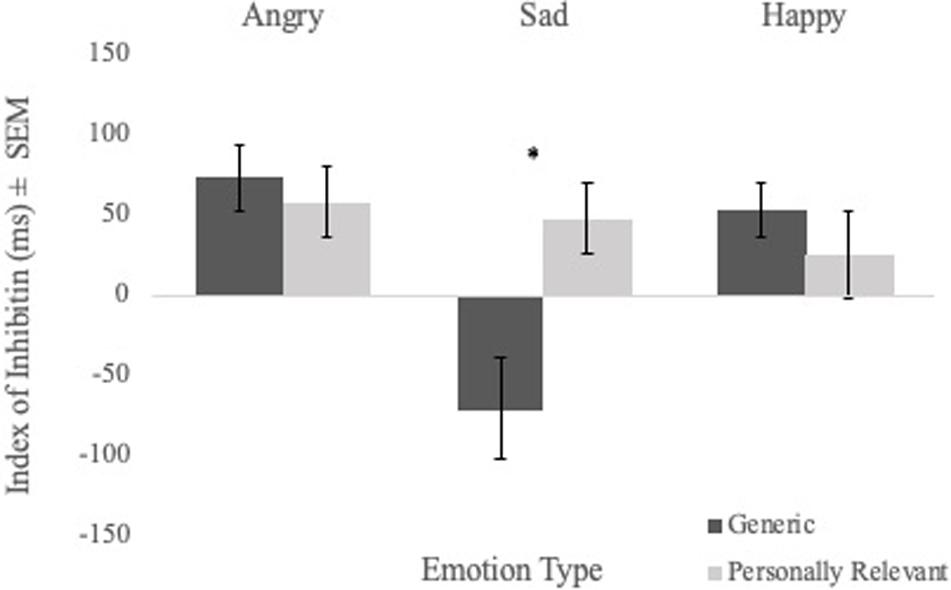

To examine the effect of picture relevance on inhibition, a Sex × Picture Relevance × Emotion Type repeated measures ANOVA of inhibition index scores was performed. No significant main effect of picture relevance was found. However, the Picture Relevance × Emotion Type interaction was significant [F(1,56) = 5.970, p = 0.003, η2 = 0.096]. Follow-up analyses revealed a main effect of relevance for sad faces [F(1,56) = 12.441, p = 0.001, η2 = 0.182], with participants demonstrating strong inhibition of personally relevant sad stimuli but poor inhibition of generic sad stimuli (see Figure 3). There were no main effects for angry or happy faces.

Figure 3. Comparisons of mean inhibition index scores (i.e., mean RT on control trials − mean RT on priming trials) of angry, sad, and happy facial expressions for generic and personally relevant stimuli. Relative to generic pictures of facial expressions of sadness, participants exhibited increased inhibition of the sad face of their intimate partner. Note. Error bars represent standard errors. *p < 0.05.
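To make the sign convention of the caption's definition concrete, the sketch below applies it to the overall mean RTs reported for the NAP validity check (916 ms on control trials, 954 ms on negative priming trials). These are grand means across conditions, not the per-condition index scores plotted in the figure, and the function name is an assumption for illustration.

```python
def inhibition_index(rt_control_ms, rt_priming_ms):
    """Control-minus-priming reaction time difference, in ms."""
    return rt_control_ms - rt_priming_ms

# Overall means from the validity check of the NAP task
overall = inhibition_index(916, 954)
print(overall)  # prints -38
```

With this subtraction order, slower responding on negative priming trials than on control trials yields a negative value.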

Social Information Processing of Personally Relevant Stimuli and Relationship Quality

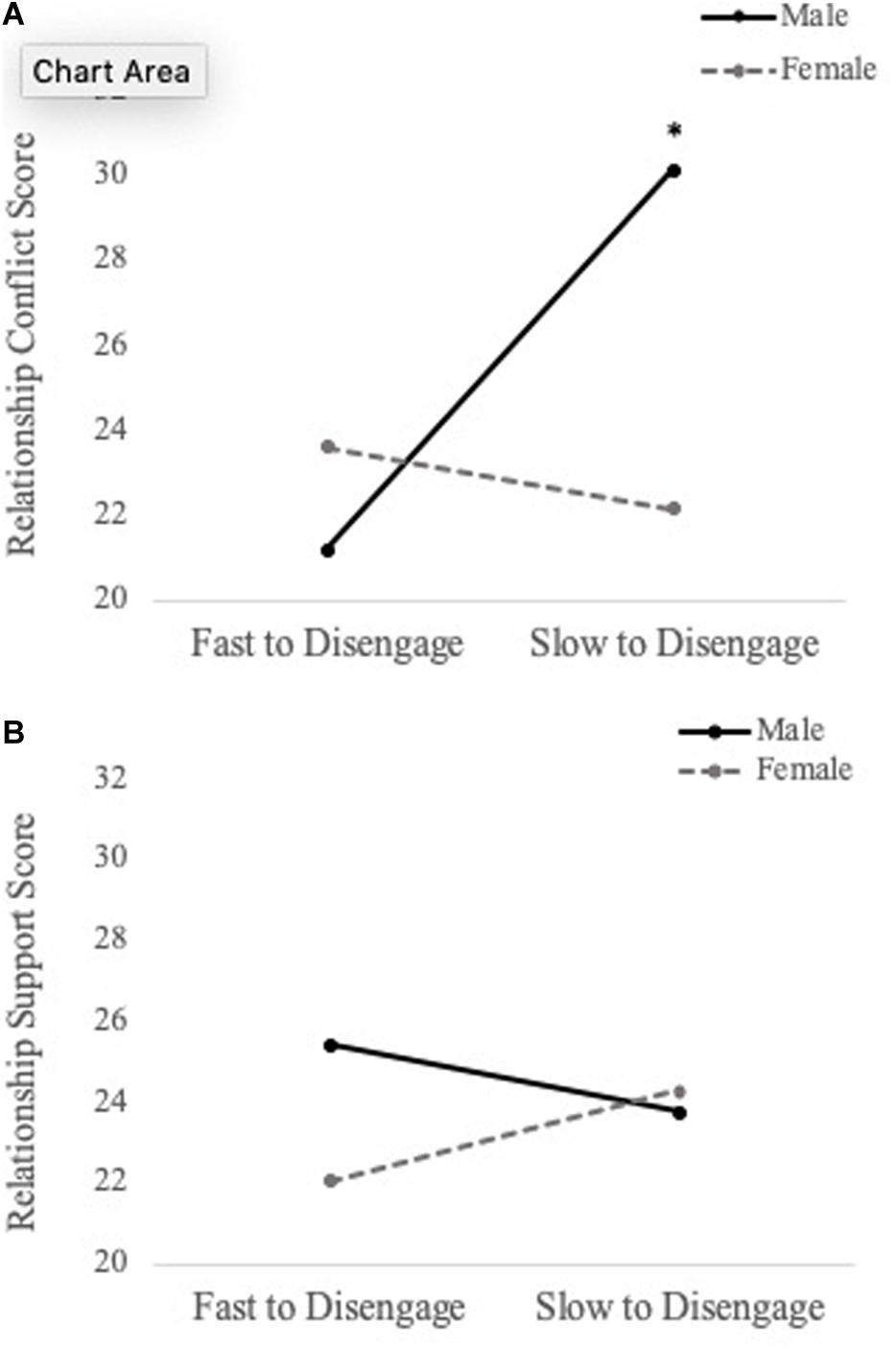

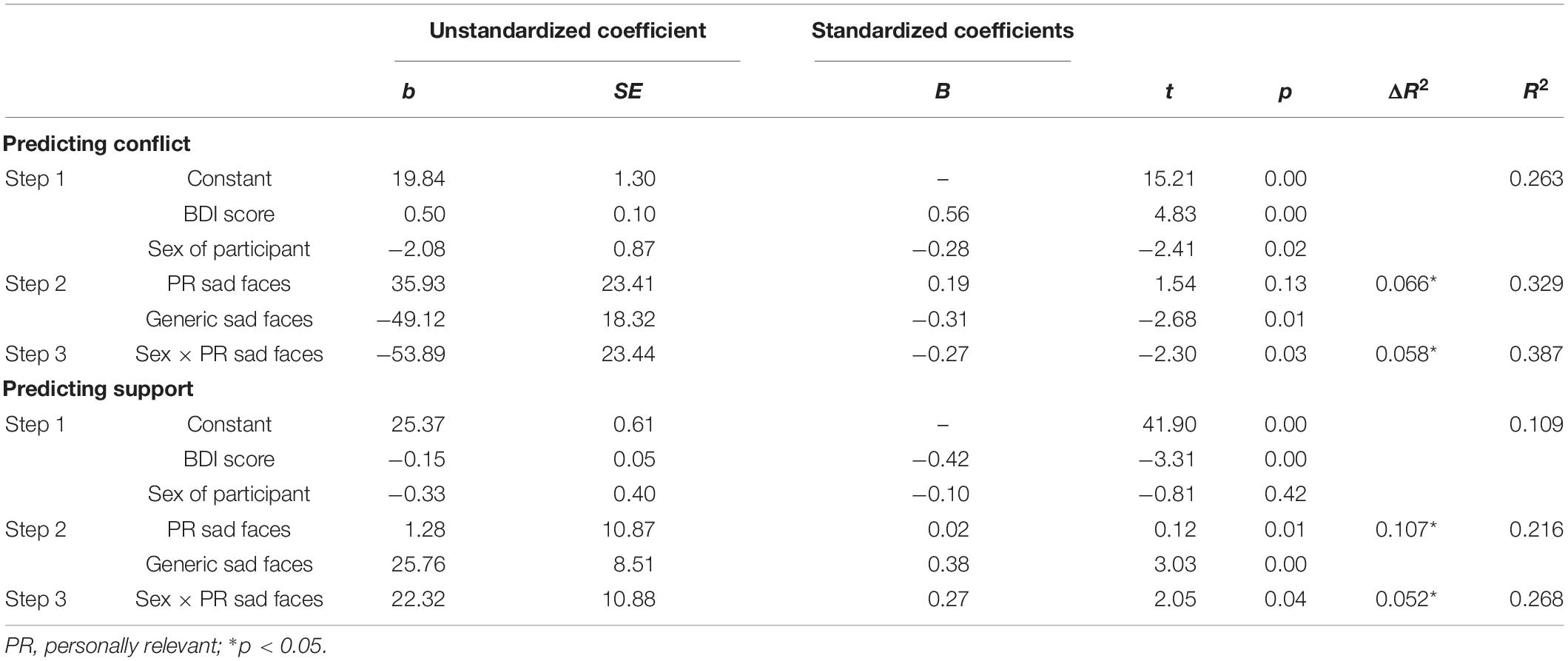

To examine whether social information processing of personally relevant stimuli predicts relationship quality (i.e., conflict and support), a series of hierarchical multiple regressions was performed. For 750 ms cue presentation time, we found a main effect of disengagement from generic sad faces when predicting levels of conflict (b = -49.116, SE = 18.324, t = -2.680, p = 0.010), such that fast disengagement from generic sad faces predicted increased conflict. Additionally, there was a significant Sex × Personally Relevant Sad Stimuli interaction (b = -53.890, SE = 23.439, t = -2.299, p = 0.026). With respect to the overall effect size, the model explained 38.7% of the variance in relationship conflict scores. Simple slopes analyses revealed that the slope for male participants was significantly different from zero [b = 85.715, t(45) = 2.107, p = 0.04]. Specifically, increased attention toward personally relevant sad faces was associated with greater levels of conflict for males, but not females (see Figure 4A).
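The t statistics reported for the conflict model follow from dividing each unstandardized coefficient by its standard error. The brief sketch below reproduces that arithmetic from the values given in the text; the function name is introduced here for illustration only.

```python
def t_statistic(b, se):
    """t value for a regression coefficient: estimate divided by its standard error."""
    return b / se

t_generic = t_statistic(-49.116, 18.324)      # generic sad faces, main effect
t_interaction = t_statistic(-53.890, 23.439)  # Sex x personally relevant sad faces

print(round(t_generic, 3), round(t_interaction, 3))  # prints -2.68 -2.299
```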

Figure 4. Simple slopes analyses demonstrating the relation between attentional disengagement of personally relevant sad faces (750 ms) and level of conflict (A) and support (B) in romantic relationship for males and females. Difficulty shifting attention away (increased selective attention) from the sad face of their intimate partner was associated with greater levels of conflict for male participants, but not female participants. Similar findings were observed for relationship support, but neither slope was significantly different from zero. *p < 0.05.

The same hierarchical regression models were conducted to predict relationship support. For 750 ms cue presentation time, there was a main effect of disengagement from generic sad faces when predicting levels of support (b = 22.324, SE = 10.883, t = 2.051, p = 0.046), such that fast disengagement from generic sad faces predicted less support. Moreover, the Sex × Personally Relevant Sad Faces interaction was a significant predictor of support in the relationship (b = 22.324, SE = 10.883, t = 2.051, p = 0.046). With respect to the overall effect size, the model explained 26.8% of the variance in relationship support scores. Despite the statistically significant interaction, simple slopes analyses revealed that neither the slope for male participants nor the slope for female participants was significantly different from zero (see Figure 4B). The above results are summarized in Table 2 and the Pearson correlations between these study variables are presented in Table 3.

Table 2. Multiple regression results for predictors of the level of conflict and support in intimate relationships for the invalid sad face trials of the spatial cueing task (750-ms presentation time).

We also examined whether disengagement from the other emotional stimuli predicted relationship quality. The results of these analyses were not statistically significant, suggesting the findings were specific to sad facial expressions (data not shown). In addition, there were no significant findings for masked presentation trials or when using engagement scores of the spatial cueing task (data not shown). Similar hierarchical regressions were performed for the negative affective priming task, but did not yield statistically significant results (data not shown).

Discussion

The purpose of the present study was twofold. First, we compared selective attention and inhibition of emotional faces using generic and personally relevant stimuli. Second, with the goal of providing evidence of the predictive validity of this methodology, we assessed whether the information processing of personally relevant stimuli predicted relationship quality. A number of key findings emerged. Consistent with predictions, the present study provides evidence that personally relevant facial expressions of emotion, using pictures of participants’ intimate partners, are processed differently from pictures of strangers’ facial expressions taken from generic databases. Relative to generic pictures, participants shifted attention more quickly toward facial expressions of their intimate partner, regardless of valence, when presented with full conscious awareness (750 ms). Attentional disengagement, in contrast, was faster (indicative of attentional avoidance) when shifting attention away from the facial expressions of their intimate partner relative to generic pictures for both the masked and 750 ms presentations. For cognitive inhibition, the findings were emotion-specific, as predicted. While participants were unable to inhibit the processing of sad generic faces, similar to previous studies using this paradigm (Taylor et al., 2011; Ellenbogen et al., 2013), they showed strong inhibition of personally relevant sad facial expressions. Finally, partly consistent with our hypothesis, difficulties disengaging attention from personally relevant sad faces predicted increased conflict in their relationship among male but not female participants. A similar pattern of results was found for relationship support, although the effect was weaker than that observed for ratings of conflict. Unexpectedly, the speed of attentional disengagement from generic sad faces also predicted relationship quality, such that fast disengagement (avoidance) from generic sad faces predicted more conflict and less support in participants’ current relationship.

Personally relevant stimuli elicited both increased selective attention and avoidance relative to generic stimuli depending on the type of trial and exposure duration. The selective attention effect, occurring only when shifting attention toward the emotional cue, supports the view that personally relevant stimuli attract attention and are more salient than generic stimuli (Wingenfeld et al., 2006; Fridrici et al., 2013). The fact that this effect was evident across all facial expressions of emotion suggests that it is likely due to familiarity and unrelated to the expression of a specific emotion (Bartels and Zeki, 2000; Leibenluft et al., 2004; Viskontas et al., 2009). In contrast to increased selective attention, the observed attentional avoidance of personally relevant stimuli might represent an attempt to regulate negative affect caused by their presentation (Ellenbogen et al., 2002; Cisler and Koster, 2010). In the present study, the fast disengagement from the face of one’s intimate partner might reflect enhanced processing of the familiar vs. generic faces, enabling participants to engage in fast task-consistent shifts away from emotional faces. Moreover, previous research has shown that threatening stimuli are less attended to following mere exposure (Young and Claypool, 2010). Therefore, it is also possible that previous exposure to personally relevant facial expressions may underlie the participants’ ability to quickly disengage from their intimate partner’s face compared to that of a stranger. On the other hand, generic stimuli might capture attention more readily, and thus slow down disengagement, via the activation of threat-related circuits associated with fast automatic processing (Morris et al., 1999). 
Given that the rapid shifts of attention toward and away from personally relevant faces were consistent with task instructions (i.e., to respond to a target that is correctly cued in engagement trials, and to shift attention away when the target is incorrectly cued in a disengagement trial), it is likely that these changes represent adaptive responses to highly salient cues. However, further research is needed to determine the correlates and functional relevance of this fast attentional avoidance to determine whether it is adaptive or maladaptive.

In addition to these general increases in selective attention and avoidance, we found evidence of increased valence-specific (sad) inhibition of personally relevant faces relative to generic ones. In past studies of NAP (Taylor et al., 2011; Ellenbogen et al., 2013), participants were unable to inhibit the processing of distracting generic sad faces. Although the cause of the inhibition failure is unknown, we speculate that difficulties suppressing sad faces may be associated with an automatic empathy response to a distressed stranger, which might impede inhibition. With respect to pictures of a distressed intimate partner, it appears that a different process might be active. Sad affect in an intimate partner would be expected to elicit more complex and personal responses, perhaps signaling relationship problems and eliciting strong emotion. Given the persistent nature of sadness, it might be a more salient indicator of threat to the relationship compared to other negative emotions, such as anger (Ellsworth and Smith, 1988). The effective use of cognitive inhibition is likely an important component in the regulation of sadness. For example, individuals with a history of depression as well as clinically depressed individuals display difficulties inhibiting irrelevant sad stimuli (Joormann, 2004; Goeleven et al., 2006; Joormann and Gotlib, 2010). Thus, the improvement in inhibitory control of personally relevant sad faces may represent an adaptive response to regulate emotions associated with partner distress. In addition, high inhibitory control of these salient stimuli may have positive effects on the regulation of physiological stress reactivity and secretion of the hormone cortisol. We recently found that high chronic stress was associated with poor inhibition of the sad face of one’s intimate partner, and that this change in inhibition predicted an elevated cortisol response at awakening 6 months later (Wong et al., 2020). 
Taken together, strong inhibitory control of personally relevant sad faces may be related to different indices of adaptive functioning. Future research, however, will be needed to test these predictions.

Importantly, we found evidence that information processing biases predict concurrent relationship quality, although these effects are, in part, related to the sex of the participant and the valence of the stimuli used. Male participants’ slow disengagement from their female partners’ sad face was associated with increased conflict in the relationship. Interestingly, this association was specific to depictions of sadness and was not found in female participants, perhaps indicating greater salience of female emotional facial expressions relative to male facial expressions. Indeed, women express a higher rate of facial emotions and report experiencing more fear, sadness, and joy than men (Allen and Haccoun, 1976; LaFrance and Banaji, 1992). In addition, women were also rated as more emotionally expressive than men in a study of couples using an objective behavioral coding system (Notarius and Johnson, 1982). Thus, facial expressions of emotion displayed by women are perhaps more meaningful and salient in the context of a romantic relationship than similar expressions displayed by men because they occur more frequently and might be more closely tied to problems in the relationship.

Unexpectedly, slow disengagement from generic sad faces presented for 750 ms predicted increased support and less conflict in the relationship, which is opposite to the findings reported for personally relevant stimuli. Again, differences between generic and personally relevant stimuli are striking, further highlighting the importance of distinguishing between the two types of processing. Perhaps the relationship between general selective attention, as assessed with generic stimuli, and positive relationship quality requires increased selective attention to emotional cues rather than avoidance or suppression, which is known to hinder relationship functioning (Gross and John, 2003; Ben-Naim et al., 2013; Velotti et al., 2016). It is possible that the relationship observed with generic stimuli is tapping into general links between attentional style and interpersonal functioning, whereas personally relevant processing may be tapping into more specific factors regarding the quality of participants’ current relationship.

A key strength of the present research is that it is among a small number of studies (Iacono et al., 2015; Wong et al., 2020) to assess information processing using emotional facial expressions of participants’ intimate partners. However, a number of limitations warrant consideration. First, participants were exposed to the face of their partner more frequently during the experiment than the generic stimuli, which used multiple actors. Habituation to the partner’s face may explain some of the differences in the processing of personally relevant and generic stimuli. Future studies may want to match the number of actors used for the generic stimuli with those used for the personally relevant stimuli (e.g., one actor expressing the different emotions). However, the present study intended to mimic how most studies use generic photographic databases and attempted to minimize familiarity effects that occur with multiple presentations of facial emotions from the same actor. Second, considering that the generic stimuli employ trained actors, it is possible that they display more salient or intense depictions of emotion than the personally relevant ones, despite our efforts to train intimate partners to express similar facial emotions. Third, we only measured relationship quality as perceived by the participant. Future studies may benefit from including an objective measure of relationship functioning (e.g., a semi-structured interview) and/or including partners’ reports. Finally, the cross-sectional nature of the assessment of information processing and relationship quality precludes interpretations of direction of effect.

Overall, the present study highlights robust differences in information processing when using generic vs. personally meaningful emotional stimuli, and demonstrates how attentional disengagement biases for sad faces using both types of stimuli relate to current relationship functioning. Future research should attempt to replicate these findings with a larger sample and test out directional hypotheses using a prospective study design. If reliability and validity are shown in future studies, personally relevant social information tasks could open new areas of social and neurobiological research. Moreover, the possibility of using low-cost information processing tasks to predict relationship quality could have important clinical implications, given the strong links between poor interpersonal functioning and health (Holt-Lunstad et al., 2008).

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the Human Research Ethics Committee of Concordia University. The participants provided their written informed consent to participate in this study.

Author Contributions

LS ran the statistical analyses. LS and VT produced the first draft of the manuscript. ME designed the study and edited the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding

This project was funded by the Social Sciences and Humanities Research Council of Canada (http://www.sshrc-crsh.gc.ca/home-accueil-eng.aspx). This funding was awarded to ME. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

Aiken, L. S., and West, S. G. (1991). Multiple Regression: Testing and Interpreting Interactions. Thousand Oaks, CA: Sage Publications, Inc.

Allen, J. G., and Haccoun, D. M. (1976). Sex differences in emotionality: a multidimensional approach. Hum. Relat. 29, 711–722. doi: 10.1177/001872677602900801

Bar-Haim, Y., Lamy, D., Pergamin, L., Bakermans-Kranenburg, M. J., and van IJzendoorn, M. H. (2007). Threat-related attentional bias in anxious and nonanxious individuals: a meta analytic study. Psychol. Bull. 133, 1–24. doi: 10.1037/0033-2909.133.1.1

Bartels, A., and Zeki, S. (2000). The neural basis of romantic love. Neuroreport 11, 3829–3834. doi: 10.1097/00001756-200011270-00046

Bayer, M., Ruthmann, K., and Schacht, A. (2017). The impact of personal relevance on emotion processing: evidence from event-related potentials and pupillary responses. Soc. Cogn. Affect. Neurosci. 12, 1470–1479. doi: 10.1093/scan/nsx075

Beck, A. T., Steer, R. A., and Brown, G. K. (1996). Beck depression inventory-II. San Antonio 78, 490–498.

Ben-Naim, S., Hirschberger, G., Ein-Dor, T., and Mikulincer, M. (2013). An experimental study of emotion regulation during relationship conflict interactions: the moderating role of attachment orientations. Emotion 13:506. doi: 10.1037/a0031473

Campbell, L., Simpson, J. A., Boldry, J., and Kashy, D. A. (2005). Perceptions of Conflict and Support in Romantic Relationships: The Role of Attachment Anxiety. J. Pers. Soc. Psychol. 88, 510–531. doi: 10.1037/0022-3514.88.3.510

Cisler, J. M., and Koster, E. H. W. (2010). Mechanisms of attentional biases towards threat in anxiety disorders: an integrative review. Clin. Psychol. Rev. 30, 203–216. doi: 10.1016/j.cpr.2009.11.003

Claypool, H. M., Hugenberg, K., Housley, M. K., and Mackie, D. M. (2007). Familiar eyes are smiling: on the role of familiarity in the perception of facial affect. Eur. J. Soc. Psychol. 37, 856–866. doi: 10.1002/ejsp.422

Cramer, D. (2004). Satisfaction with a romantic relationship, depression, support and conflict. Psychol. Psychother. 77, 449–461. doi: 10.1348/1476083042555389

Ekman, P., Friesen, W. V., and Hager, J. (2002). Emotional facial action coding system. Manual and investigator’s guide CD-ROM. Salt Lake City, UT: A Human Face.

Elfgren, C., van Westen, D., Passant, U., Larsson, E. M., Mannfolk, P., and Fransson, P. (2006). fMRI activity in the medial temporal lobe during famous face processing. Neuroimage 30, 609–616. doi: 10.1016/j.neuroimage.2005.09.060

Ellenbogen, M. A., Linnen, A. M., Cardoso, C., and Joober, R. (2013). Intranasal oxytocin impedes the ability to ignore task-irrelevant facial expressions of sadness in students with depressive symptoms. Psychoneuroendocrinology 38, 387–398. doi: 10.1016/j.psyneuen.2012.06.016

Ellenbogen, M. A., and Schwartzman, A. E. (2009). Selective attention and avoidance on a pictorial cueing task during stress in clinically anxious and depressed participants. Behav. Res. Ther. 47, 128–138. doi: 10.1016/j.brat.2008.10.021

Ellenbogen, M. A., Schwartzman, A. E., Stewart, J., and Walker, C.-D. (2002). Stress and selective attention: The interplay of mood, cortisol levels, and emotional information processing. Psychophysiology 39, 723–732. doi: 10.1017/S0048577202010739

Ellenbogen, M. A., Schwartzman, A. E., Stewart, J., and Walker, C.-D. (2006). Automatic and effortful emotional information processing regulates different aspects of the stress response. Psychoneuroendocrinology 31, 373–387. doi: 10.1016/j.psyneuen.2005.09.001

Ellsworth, P. C., and Smith, C. A. (1988). From appraisal to emotion: differences among unpleasant feelings. Motiv. Emot. 12, 271–302. doi: 10.1007/bf00993115

Faul, F., Erdfelder, E., Lang, A. G., and Buchner, A. (2007). G∗Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/bf03193146

First, M. B., Spitzer, R. L., Gibbon, M., and Williams, J. B. W. (2002). Structured Clinical Interview for DSM-IV-TR Axis I Disorders, Research Version, Non-Patient Edition (SCID-I/NP). New York, NY: New York State Psychiatric Institute.

Fossati, P. (2012). Neural correlates of emotion processing: from emotional to social brain. Eur. Neuropsychopharmacol. 22, S487–S491. doi: 10.1016/j.euroneuro.2012.07.008

Fox, E., Russo, R., and Dutton, K. (2002). Attentional bias for threat: evidence for delayed disengagement from emotional faces. Cogn. Emot. 16, 355–379. doi: 10.1080/02699930143000527

Fridrici, C., Leichsenring-Driessen, C., Driessen, M., Wingenfeld, K., Kremer, G., and Beblo, T. (2013). The individualized alcohol Stroop task: no attentional bias toward personalized stimuli in alcohol-dependents. Psychol. Addict. Behav. 27, 62–70. doi: 10.1037/a0029139

Gere, J., and Schimmack, U. (2011). When romantic partners’ goals conflict: effects on relationship quality and subjective well-being. J. Happiness Stud. 14, 37–49. doi: 10.1007/s10902-011-9314-2

Gobbini, M. I., and Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41. doi: 10.1016/j.neuropsychologia.2006.04.015

Goeleven, E., De Raedt, R., Baert, S., and Koster, E. H. (2006). Deficient inhibition of emotional information in depression. J. Affect. Disord. 93, 149–157. doi: 10.1016/j.jad.2006.03.007

Gotlib, I. H., Krasnoperova, E., Yue, D. N., and Joormann, J. (2004). Attentional biases for negative interpersonal stimuli in clinical depression. J. Abnorm. Psychol. 113, 121–135. doi: 10.1037/0021-843x.113.1.121

Gross, J. J., and John, O. P. (2003). Individual differences in two emotion regulation processes: implications for affect, relationships, and well-being. J. Pers. Soc. Psychol. 85:348. doi: 10.1037/0022-3514.85.2.348

Hallion, L. S., and Ruscio, A. M. (2011). A Meta-Analysis of the Effect of Cognitive Bias Modification on Anxiety and Depression. Psychological Bulletin 137, 940–958. doi: 10.1037/a0024355

Hasher, L., Zacks, R. T., and May, C. P. (1999). “Inhibitory control, circadian arousal, and age,” in Attention and Performance XVII, Cognitive Regulation of Performance: Interaction of Theory and Application, eds D. Gopher and A. Koriat (Cambridge: MIT Press), 653–675.

Heeren, A., Mogoaşe, C., Philippot, P., and McNally, R. J. (2015). Attention bias modification for social anxiety: a systematic review and meta-analysis. Clin. Psychol. Rev. 40, 76–90. doi: 10.1016/j.cpr.2015.06.001

Holt-Lunstad, J., Birmingham, W., and Jones, B. Q. (2008). Is there something unique about marriage? The relative impact of marital status, relationship quality, and network social support on ambulatory blood pressure and mental health. Ann. Behav. Med. 35, 239–244. doi: 10.1007/s12160-008-9018-y

Humphreys, K. L., Kircanski, K., Colich, N. L., and Gotlib, I. H. (2016). Attentional avoidance of fearful facial expressions following early life stress is associated with impaired social functioning. J. Child Psychol. Psychiatry 57, 1174–1182. doi: 10.1111/jcpp.12607

Iacono, V., Ellenbogen, M. A., Wilson, A. L., Desormeau, P., and Nijjar, R. (2015). Inhibition of personally-relevant angry faces moderates the effect of empathy on interpersonal functioning. PLoS One 10:e0112990. doi: 10.1371/journal.pone.0112990

Ibáñez, A., Hurtado, E., Lobos, A., Escobar, J., Trujillo, N., Baez, S., et al. (2011). Subliminal presentation of other faces (but not own face) primes behavioral and evoked cortical processing of empathy for pain. Brain Res. 1398, 72–85. doi: 10.1016/j.brainres.2011.05.014

Ji, J. L., Grafton, B., and MacLeod, C. (2017). Referential focus moderates depression-linked attentional avoidance of positive information. Behav. Res. Ther. 93, 47–54. doi: 10.1016/j.brat.2017.03.004

Joormann, J. (2004). Attentional bias in dysphoria: the role of inhibitory processes. Cogn. Emot. 18, 125–147. doi: 10.1080/02699930244000480

Joormann, J. (2010). Cognitive inhibition and emotion regulation in depression. Curr. Dir. Psychol. Sci. 19, 161–166. doi: 10.1177/0963721410370293

Joormann, J., and Gotlib, I. H. (2010). Emotion regulation in depression: relation to cognitive inhibition. Cogn. Emot. 24, 281–298. doi: 10.1080/02699930903407948

Keltner, D., and Gross, J. J. (1999). Functional accounts of emotions. Cogn. Emot. 13, 467–480. doi: 10.1080/026999399379140

LaFrance, M., and Banaji, M. (1992). “Toward a reconsideration of the gender–emotion relationship,” in Review of Personality and Social Psychology, Vol. 14. Emotion and Social Behavior, ed. M. S. Clark (Thousand Oaks, CA: Sage Publications), 178–201.

Lang, P. J., Davis, M., and Öhman, A. (2000). Fear and anxiety: animal models and human cognitive psychophysiology. J. Affect. Disord. 61, 137–159. doi: 10.1016/S0165-0327(00)00343-8

Leibenluft, E., Gobbini, M., Harrison, T., and Haxby, J. V. (2004). Mothers neural activation in response to pictures of their children and other children. Biol. Psychiatry 56:232. doi: 10.1016/j.biopsych.2004.05.017

Lundqvist, D., Esteves, F., and Öhman, A. (2004). The face of wrath: The role of features and configurations in conveying social threat. Cogn. Emot. 18, 161–182. doi: 10.1080/02699930244000453

Mackinnon, S. P., Sherry, S. B., Antony, M. M., Stewart, S. H., Sherry, D. L., and Hartling, N. (2012). Caught in a bad romance: perfectionism, conflict, and depression in romantic relationships. J. Fam. Psychol. 26, 215–225. doi: 10.1037/a0027402

MacLeod, C., Rutherford, E., Campbell, L., Ebsworthy, G., and Holker, L. (2002). Selective attention and emotional vulnerability: assessing the causal basis of their association through the experimental manipulation of attentional bias. J. Abnorm. Psychol. 111:107. doi: 10.1037/0021-843X.111.1.107

Marchand-Reilly, J. F. (2012). Attachment anxiety, conflict behaviors, and depressive symptoms in emerging adults’ romantic relationships. J. Adult Dev. 19, 170–176. doi: 10.1007/s10804-012-9144-4

Mastorakos, T., and Scott, K. L. (2019). Attention biases and social-emotional development in preschool-aged children who have been exposed to domestic violence. Child Abuse Neglect 89, 78–86. doi: 10.1016/j.chiabu.2019.01.001

Mathews, A., and MacLeod, C. (2005). Cognitive vulnerability to emotional disorders. Annu. Rev. Clin. Psychol. 1, 167–195. doi: 10.1146/annurev.clinpsy.1.102803.143916

Mogg, K., and Bradley, B. (1998). A cognitive-motivational analysis of anxiety. Behav. Res. Ther. 36, 809–848. doi: 10.1016/S0005-7967(98)00063-1

Morris, J. S., Öhman, A., and Dolan, R. J. (1999). A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl. Acad. Sci. U.S.A. 96, 1680–1685. doi: 10.1073/pnas.96.4.1680

Notarius, C. I., and Johnson, J. S. (1982). Emotional expression in husbands and wives. J. Marriage Fam. 44, 483–489. doi: 10.2307/351556

Pierce, G. R., Sarason, I. G., and Sarason, B. R. (1991). General and relationship-based perceptions of social support: Are two constructs better than one? J. Pers. Soc. Psychol. 61:1028. doi: 10.1037/a0020836

Posner, M. I. (1980). Orienting of attention. Q. J. Exp. Psychol. 32:25. doi: 10.1080/00335558008248231

Russell, J. A., Bachorowski, J.-A., and Fernández-Dols, J.-M. (2003). Facial and vocal expressions of emotion. Annu. Rev. Psychol. 54, 329–349. doi: 10.1146/annurev.psych.54.101601.145102

Salehinejad, M. A., Nejati, V., and Nitsche, M. A. (in press). Neurocognitive correlates of self-esteem: from self-related attentional bias to involvement of the ventromedial prefrontal cortex. Neurosci. Res. doi: 10.1016/j.neures.2019.12.008

Stormark, K. M., Nordby, H., and Hugdahl, K. (1995). Attentional shifts to emotionally charged cues: behavioural and ERP data. Cogn. Emot. 9, 507–523. doi: 10.1080/02699939508408978

Taylor, M. J., Arsalidou, M., Bayless, S. J., Morris, D., Evans, J. W., and Barbeau, E. J. (2009). Neural correlates of personally familiar faces: parents, partner and own faces. Hum. Brain Mapp. 30, 2008–2020. doi: 10.1002/hbm.20646

Taylor, V. A., Ellenbogen, M. A., Washburn, D., and Joober, R. (2011). The effects of glucocorticoids on the inhibition of emotional information: a dose–response study. Biol. Psychol. 86, 17–25. doi: 10.1016/j.biopsycho.2010.10.001

Tipper, S. P., and Cranston, M. (1985). Selective attention and priming: inhibitory and facilitatory effects of ignored primes. Q. J. Exp. Psychol. 37, 591–611. doi: 10.1080/14640748508400921

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., et al. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249. doi: 10.1016/j.psychres.2008.05.006

Velotti, P., Balzarotti, S., Tagliabue, S., English, T., Zavattini, G. C., and Gross, J. J. (2016). Emotional suppression in early marriage: actor, partner, and similarity effects on marital quality. J. Soc. Pers. Relat. 33, 277–302. doi: 10.1177/0265407515574466

Viskontas, I. V., Quiroga, R. Q., and Fried, I. (2009). Human medial temporal lobe neurons respond preferentially to personally relevant images. Proc. Natl. Acad. Sci. U.S.A. 106, 21329–21334. doi: 10.1073/pnas.0902319106

Weibert, K., Harris, R. J., Mitchell, A., Byrne, H., Young, A. W., and Andrews, T. J. (2016). An image-invariant neural response to familiar faces in the human medial temporal lobe. Cortex 84, 34–42. doi: 10.1016/j.cortex.2016.08.014

Wingenfeld, K., Mensebach, C., Driessen, M., Bullig, R., Hartje, W., and Beblo, T. (2006). Attention bias towards personally relevant stimuli: the individual emotional Stroop task. Psychol. Rep. 99, 781–793. doi: 10.2466/pr0.99.3.781-793

Wingenfeld, K., Mensebach, C., Rullkoetter, N., Schlosser, N., Schaffrath, C., Woermann, F. G., et al. (2009). Attentional bias to personally relevant words in borderline personality disorder is strongly related to comorbid posttraumatic stress disorder. J. Personal. Disord. 23, 141–155. doi: 10.1521/pedi.2009.23.2.141

Wong, S. F., Trespalacios, F., and Ellenbogen, M. A. (2020). Poor inhibition of personally-relevant facial expressions of sadness and anger predicts an elevated cortisol response following awakening six months later. Int. J. Psychophysiol. 150, 73–82. doi: 10.1016/j.ijpsycho.2020.02.005

Young, S. G., and Claypool, H. M. (2010). Mere exposure has differential effects on attention allocation to threatening and neutral stimuli. J. Exp. Soc. Psychol. 46, 424–427. doi: 10.1016/j.jesp.2009.10.015

Keywords: information processing, personally relevant stimuli, interpersonal outcomes, emotion, attentional biases

Citation: Serravalle L, Tsekova V and Ellenbogen MA (2020) Predicting Interpersonal Outcomes From Information Processing Tasks Using Personally Relevant and Generic Stimuli: A Methodology Study. Front. Psychol. 11:543596. doi: 10.3389/fpsyg.2020.543596

Received: 17 March 2020; Accepted: 31 August 2020; Published: 24 September 2020.

Edited by:

Wenfeng Chen, Renmin University of China, China

Copyright © 2020 Serravalle, Tsekova and Ellenbogen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mark A. Ellenbogen, mark.ellenbogen@concordia.ca
