
ORIGINAL RESEARCH article

Front. Psychol., 04 August 2020

Sec. Quantitative Psychology and Measurement

Volume 11 - 2020 | https://doi.org/10.3389/fpsyg.2020.01803

This article is part of the Research Topic From West to East: Recent Advances in Psychometrics and Psychological Instruments in Asia.

Valid measures of student motivation can inform the design of learning environments to engage students and maximize learning gains. This study validates a measure of student motivation, the Reduced Instructional Materials Motivation Survey (RIMMS), with a sample of Chinese middle school students using an adaptive learning system in math. Participants were 429 students from 21 provinces in China. Their ages ranged from 14 to 17 years old, and most were in 9th grade. A confirmatory factor analysis (CFA) validated the RIMMS in this context by demonstrating that RIMMS responses retained the intended four-factor structure: attention, relevance, confidence, and satisfaction. To illustrate the utility of measuring student motivation, this study identifies factors of motivation that are strongest for specific student subgroups. Students who expected to attend elite high schools rated the adaptive learning system higher on all four RIMMS motivation factors compared to students who did not expect to attend elite high schools. Lower parental education levels were associated with higher ratings on three RIMMS factors. This study contributes to the field’s understanding of student motivation in adaptive learning settings.

Adaptive learning systems use various learning algorithms, such as machine learning and item response theory, to personalize the learning sequence for each student. Personalization is based on system-generated student learning profiles, which are informed by students’ performance on an initial knowledge diagnostic and are continuously updated with student usage data and learning behaviors. As students spend more time in the system, their learning profiles become more accurate and allow for greater personalization (Hauger and Köck, 2007, 355–60; Van Seters et al., 2012, 942–52). Well-known adaptive learning systems include Knewton, ALEKS, i-Ready, Achieve3000, and various cognitive tutor programs.
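To make the idea of a “continuously updated” learning profile concrete, here is a minimal sketch that assumes a Rasch (one-parameter item response theory) model and an expected a posteriori (EAP) ability estimate with a standard normal prior. This is a generic textbook technique, not the algorithm used by Squirrel AI Learning or any other system named above; the item difficulties and function names are hypothetical.

```python
import math

def rasch_prob(theta: float, b: float) -> float:
    """Probability of a correct response under the Rasch (1PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def eap_ability(responses, grid_min=-4.0, grid_max=4.0, n_points=81):
    """EAP ability estimate with a standard normal prior, computed on a grid.

    responses: list of (item_difficulty, correct) pairs, correct in {0, 1}.
    Returns (posterior mean, posterior SD).
    """
    step = (grid_max - grid_min) / (n_points - 1)
    grid = [grid_min + i * step for i in range(n_points)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for b, correct in responses:
            p = rasch_prob(theta, b)
            w *= p if correct else (1.0 - p)  # multiply in each item's likelihood
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(grid, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(grid, weights)) / total
    return mean, math.sqrt(var)

# Update the (hypothetical) profile after each answered item.
history = []
for b, correct in [(0.0, 1), (0.5, 1), (1.0, 0)]:
    history.append((b, correct))
    theta, se = eap_ability(history)
    print(f"after {len(history)} items: theta={theta:.2f}, SE={se:.2f}")
```

Each answer adds information, so the posterior standard error typically shrinks as a student works through more items, which is one way a profile can become more accurate with use.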

Studies show that adaptive learning systems are often effective at promoting student learning. A review found that 32 of 37 (86%) studies on the effects of adaptive learning reported positive learning achievement outcomes (Xie et al., 2019, 11). A comparison of 6,400 courses found that courses with adaptive assignments produced greater learning gains than courses without them (Bomash and Kish, 2015, 8–15). A large-scale effectiveness study found that an adaptive learning system improved the average student’s performance on an algebra proficiency exam by approximately 8 percentile points (Pane et al., 2014, 127). Another study found that, over 2 years, personalized learning improved student mathematics performance by the equivalent of 3 percentile points on a standardized assessment (Pane et al., 2017, 3).
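Percentile-point figures like these express a standardized effect size as a shift within the comparison group’s score distribution. A minimal sketch of that conversion, assuming normally distributed scores (the same logic as the What Works Clearinghouse “improvement index”); the effect sizes below are illustrative inputs, not values reported by the cited studies:

```python
import math

def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def percentile_gain(effect_size: float) -> float:
    """Percentile-point change for the average student, given a standardized
    effect size in SD units and assuming normally distributed scores."""
    return 100.0 * (normal_cdf(effect_size) - 0.5)

for d in (0.08, 0.2):  # hypothetical effect sizes
    print(f"d = {d:.2f} -> {percentile_gain(d):.1f} percentile points")
```

Under this assumption, an effect of 0.2 SD moves the average student from the 50th to roughly the 58th percentile.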

The extent to which students benefit from adaptive learning systems may depend in part on their experiences of motivation while using such systems. Student motivation influences both the learning process and its outcomes (Pintrich and de Groot, 1990, 33–40; Zimmerman et al., 1992, 663–76; Carini et al., 2006, 1–32; Glanville and Wildhagen, 2007, 1019–41; Kuh et al., 2007, 1–5). Unfortunately, students’ motivation tends to decline as they progress through K-12 educational systems (Christidou, 2011). This decline has been linked to educational systems’ inadequate fulfillment of students’ needs for autonomy, self-efficacy, and relevance in their learning (Gnambs and Hanfstingl, 2016, 1698–1712). To prevent or reverse this trend, it is vital to understand where motivation is lost and for whom. Measuring student motivation may improve adaptive learning systems by enhancing the accuracy of student learning profiles, the basis for personalization.

One popular framework for measuring student motivation is the Attention Relevance Confidence Satisfaction (ARCS) model of student motivation. The ARCS model was developed in the context of face-to-face classroom instruction but has since been applied in computer- and Web-based instructional settings (e.g., Keller, 1999, 39–47; Astleitner and Hufnagl, 2003, 361–76). The ARCS Model posits that four factors must be present to motivate a student (Keller, 1987, 1–2):

• Attention: The learning experience must capture and hold the student’s attention, for example by stimulating curiosity and varying the presentation style.

• Relevance: The learning experience must feel personally relevant to the student. It might meet a specific need such as a credential, align with the student’s motives or values, or connect to familiar experiences.

• Confidence: The learning experience must elicit a sense of confidence in one’s ability to learn. It might do this by setting clear requirements for success and providing appropriately challenging opportunities.

• Satisfaction: The process or results of the learning experience must feel satisfying to the student. Learning experiences can promote satisfaction by providing meaningful opportunities to apply new skills, reinforcing students’ successes, and communicating that all students are evaluated using the same standards.

The ARCS model has implications both for measuring students’ motivation and for improving educational interventions to enhance motivation. Keller’s Motivational Tactics Checklist (Keller, 2010, 287–91) describes strategies for supporting each of the four factors of the ARCS Model. User instructions designed to support even one factor of the ARCS Model have been shown to improve performance over instructions that have not been manipulated to support motivation (Loorbach et al., 2007, 343–358).

The Instructional Materials Motivation Survey (IMMS) is the original measure of student motivation based on the ARCS model (Keller, 2010, 277–87). The IMMS is widely used to measure students’ needs prior to engaging with instructional materials, and to measure students’ reactions after engaging with instructional materials. It consists of 36 items arranged in four subscales corresponding to the ARCS model’s components of motivation. One validation study supported the validity of the IMMS, reporting Cronbach’s alpha ≥0.75 for all IMMS dimensions, interdimension correlations from 0.40 to 0.80, and statistically significant correlations with global satisfaction ratings (Cook et al., 2009, 1507–8). Meanwhile, three studies found that it is necessary to reduce the number of items on the IMMS to strengthen the measure’s psychometric properties (Huang et al., 2006, 250; Loorbach et al., 2015, 9; Hauze and Marshall, 2020, 49–64).

The 12-item Reduced IMMS (RIMMS) consists of three items for each of the four IMMS subscales. A validation study found that the RIMMS fits the four factors of the ARCS Model better than the IMMS (Loorbach et al., 2015, 204–218). The RIMMS is appropriate for measuring students’ responses to adaptive learning technology; it was validated in an individual learning setting (Loorbach et al., 2015, 204), and has been applied in a range of computer-based learning settings (Khacharem, 2017, 4; Linser and Kurtz, 2018, 1508; Nel and Nel, 2019, 178–80; Villena Taranilla et al., 2019, 6). These studies have generally reported acceptable reliabilities for each of the four subscales: attention (α = 0.73–0.90), relevance (α = 0.69–0.82), confidence (α = 0.59–0.89), and satisfaction (α = 0.82–0.88). These studies have also found evidence of measure sensitivity: they have detected change from pre-intervention to post-intervention.
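Because each RIMMS subscale has three items, subscale scores are commonly computed as item means. The sketch below shows that scoring step under two stated assumptions: a 1–5 Likert response scale and a hypothetical item-to-subscale order (items 1–3 attention, 4–6 relevance, and so on). The published RIMMS item order should be checked before using anything like this on real data.

```python
# Hypothetical item-to-subscale mapping: check the published RIMMS item order
# before scoring real responses.
SUBSCALES = {
    "attention": (1, 2, 3),
    "relevance": (4, 5, 6),
    "confidence": (7, 8, 9),
    "satisfaction": (10, 11, 12),
}

def score_rimms(responses: dict, scale_min: int = 1, scale_max: int = 5) -> dict:
    """Return the mean rating of each three-item subscale.

    responses maps item number (1-12) to a Likert rating; a 1-5 scale is assumed.
    """
    if set(responses) != set(range(1, 13)):
        raise ValueError("expected ratings for items 1-12")
    for item, rating in responses.items():
        if not scale_min <= rating <= scale_max:
            raise ValueError(f"item {item}: rating {rating} is out of range")
    return {name: sum(responses[i] for i in items) / len(items)
            for name, items in SUBSCALES.items()}

ratings = {item: 4 for item in range(1, 13)}
ratings[7] = 2  # one low rating on a (hypothetical) confidence item
print(score_rimms(ratings))
```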

The RIMMS was validated in the Netherlands (Loorbach et al., 2015, 1) and has since been applied in Spain, the United States, Holland, the United Kingdom, Canada, and South Africa (Linser and Kurtz, 2018, 1508; Nel and Nel, 2019, 178; Villena Taranilla et al., 2019, 4). Yet no studies to date have investigated its psychometric properties in Eastern cultures. Validation is essential when applying a measure in a new culture, as culture influences both behavior and cognition (Han, 2010, 279–88; Varnum et al., 2010, 9–13; Millar et al., 2013, 138–57). Consequently, instruments do not necessarily retain their psychometric properties when used in new cultural contexts (Hambleton et al., 2005; Dai et al., 2020, 3–5). For example, researchers who translated the IMMS into Turkish found that it required substantial modification to exhibit sound psychometric properties (Kutu and Sozbilir, 2011, 292).

The present study aims to (1) validate the RIMMS (Loorbach et al., 2015, 204–18) in a sample of Chinese middle school students using an adaptive learning system, and (2) examine associations among student motivation and student background characteristics in this setting. While China’s online education market is large, with 144 million online education users in 2017 (China Internet Network Information Center, 2017), adaptive learning is relatively new to this market. Accordingly, research on students’ motivation while using adaptive learning systems in China is nascent. Validating a measure of student motivation for this context is key to improving adaptive learning systems to support Chinese students’ motivation and learning.

In addition to validating the RIMMS in this sample, the present study aims to illustrate the utility of student motivation measures for identifying sub-groups of students who may require motivational support. For example, patterns of motivation or engagement may differ by educational aspirations (Roebken, 2007, 3–9; Gutman and Schoon, 2018, 114–15), socioeconomic status (Willms, 2003, 48), gender (Hsieh et al., 2015, 341–45), or familiarity with the learning format (Miller et al., 2011, 1431; Orfanou et al., 2015, 238). Detecting these differences may improve the precision with which adaptive learning systems can target motivational support.

Participants were 429 students from 21 provinces in China. Recruitment targeted a wide range of provinces and schools. The study included all schools and students that agreed to participate. Students’ ages ranged from 14 to 17. Most students were in 9th grade. They represented typical students of their age in their provinces. As incentives, students received school supplies (e.g., pens, rulers, and erasers).

The study took place in 21 provinces. All participants went to designated schools or learning centers in their provinces and received free transportation and boarding if necessary. Over two consecutive days in the summer, participants used Squirrel AI Learning for a total of 6 h and 40 min. Participants followed identical learning schedules, including times for studying, breaks, and lunch. The schedule was designed to maximize time spent in the Squirrel AI Learning system. Participants used Squirrel AI Learning individually in school computer labs; they did not receive human instruction or tutoring. A researcher monitored each computer lab to provide technical assistance and to ensure that students followed the schedule. An independent research organization monitored study implementation by proctoring exams and enforcing uniform learning schedules.

Before students used Squirrel AI Learning, they were provided with a basic-information questionnaire, which included student information (e.g., year of birth, gender, grade level) and family background information (e.g., parent education level).

After using Squirrel AI Learning, students completed a paper version of the RIMMS in Chinese. The RIMMS was administered after use to capture students’ experiences of motivation while using the system, consistent with prior studies using the RIMMS and IMMS (Loorbach et al., 2015, 204, 211). The research team translated the English version of the RIMMS into Chinese and back-translated it to verify the accuracy of the translation (see Table 1). Students were given a maximum of half an hour to complete the survey, but most finished within 10 min.

One of the first Chinese developers to release an adaptive learning system, Squirrel AI Learning has established over 2,000 learning centers in over 700 cities serving almost 2 million registered accounts. Users represent a range of socioeconomic status, urbanicity, and academic achievement. For a more detailed description of the Squirrel AI Learning System, see Li et al. (2018), 46–8.

Participants studied Squirrel AI Learning 9th grade math content. The content was considered review for the students, consistent with the predominant use of Squirrel AI Learning in China. Topics included quadratic equations, parallelograms, and linear functions.

Squirrel AI Learning’s product design is grounded in many of the same design principles specified in the ARCS Model as motivation supports (Keller, 1987, 2–7). Of the ARCS Model’s four motivational factors, the Squirrel AI Learning system is primarily designed to support student confidence and attention.

The Squirrel AI Learning system’s adaptive learning technology presents each student with problems targeted to their ability, so that problems are neither too challenging nor too simple. This is consistent with Keller’s recommendations for supporting student confidence, based on the ARCS Model: “The success experience will be meaningful and will stimulate continued motivation if there is enough challenge to require a degree of effort to succeed, but not so much that it creates serious anxieties or threatens failure” (Keller, 1987, 4).
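The source does not describe how Squirrel AI Learning chooses problems, so the following is only a generic adaptive-practice heuristic under stated assumptions: item difficulties are calibrated on a Rasch scale (hypothetical values), and the next problem is the unanswered one whose predicted success probability is closest to a target. A target of 0.5 maximizes Rasch item information; a higher target is one way to encode Keller’s advice that success should require effort without threatening failure.

```python
import math

def success_prob(theta: float, difficulty: float) -> float:
    """Rasch-model probability that a student of ability theta solves the item."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))

def pick_next_problem(theta, item_bank, answered, target=0.5):
    """Return the unanswered item whose predicted success probability is closest to target.

    item_bank: dict mapping a hypothetical item id to its difficulty.
    target=0.5 maximizes Rasch Fisher information p * (1 - p); a higher target
    (e.g. 0.7) trades some information for more frequent success.
    """
    candidates = {k: b for k, b in item_bank.items() if k not in answered}
    if not candidates:
        return None
    return min(candidates, key=lambda k: abs(success_prob(theta, candidates[k]) - target))

bank = {"q1": -1.5, "q2": -0.2, "q3": 0.4, "q4": 1.8}  # hypothetical difficulties
print(pick_next_problem(0.3, bank, answered=set()))
print(pick_next_problem(0.3, bank, answered=set(), target=0.7))
```

The target probability is a design parameter, not a value taken from the source: lowering it makes practice harder and more informative, raising it makes success more frequent.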

Keller (1987, 5) also specified that instructional materials can support confidence by providing corrective feedback. The Squirrel AI Learning system includes an intelligent, immediate feedback mechanism. After completing each problem, students are told whether they have answered correctly, with elaborated explanations and opportunities to correct their work. Research has demonstrated the benefits of such instant and frequent feedback (Hattie and Timperley, 2007, 81–112).

To support students’ attention, Keller recommends promoting an inquiry mindset by engaging students in problem solving (Keller, 1987, 2–3). The Squirrel AI Learning system aims to foster an inquiry mindset through its learn-by-doing approach, in which students learn by solving problems, with the option to use additional resources as needed. The Squirrel AI Learning system uses the student’s learning profile to select the most appropriate learning resources for the student, such as instructional videos, lecture notes, and worked examples. Drawing on a variety of instructional resources in this way further supports students’ attention (Keller, 1987, 2–3).

To answer RQ1, we used confirmatory factor analysis (CFA), widely considered a rigorous quantitative approach to validating instruments (Wang and Wang, 2012). We specified the four-factor model proposed for the RIMMS (Figure 1) and applied several criteria to determine whether the data fit this model. While 0.3 is a conventional threshold for factor loadings (Brown, 2006), to be conservative we considered an item acceptable only if its loading met a higher threshold of 0.4 (Ford et al., 1986). In addition to examining the size of the factor loadings, we tested whether each loading was statistically significant. We also examined indices of model fit: a Standardized Root Mean Square Residual (SRMSR) <0.05 indicates close approximate fit; a Comparative Fit Index (CFI) >0.9 represents reasonably good fit; and a Tucker Lewis Index (TLI) >0.9 indicates reasonably good fit (see Hu and Bentler, 1999). Finally, we examined Cronbach’s alpha to assess the reliability of each factor, applying a threshold of >0.7, which is widely used in educational studies (Nunnally, 1978).
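For reference, Cronbach’s alpha for a subscale of k items is α = k/(k − 1) × (1 − Σ item variances / variance of the total score). A minimal standard-library sketch of that formula follows; the responses in the usage line are made up, and in this study the computation would be applied separately to each three-item RIMMS subscale.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(item_scores[0])
    if any(len(item) != n for item in item_scores):
        raise ValueError("all items need the same number of respondents")
    # Each respondent's total score across the k items.
    totals = [sum(item[r] for item in item_scores) for r in range(n)]
    item_var_sum = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))

# Made-up ratings for one three-item subscale (four respondents).
print(round(cronbach_alpha([[2, 4, 4, 5], [1, 3, 4, 5], [2, 3, 5, 4]]), 3))
```

Sample and population variances give the same alpha, because the ratio cancels the denominator.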

Next, for RQ2, we used a multiple indicators multiple causes (MIMIC) model, a type of structural equation model that regresses the CFA latent factors on student and family background variables. Based on our review of the literature, as well as consultations with expert teachers, the background variables selected for the MIMIC model were student gender, student familiarity with computers, student educational aspiration, and parent education level. Specifically, gender was a binary variable (female or male; “male” was the reference level). Student familiarity with computers was a self-reported binary variable (very familiar or not; “not familiar with computers” was the reference level). Student educational aspiration was a binary variable (expecting to attend an elite high school or not; “not expecting to attend an elite high school” was the reference level). Parent education level was a binary variable (high = at least one parent’s highest degree was college or above; low = neither parent attended college; low was the reference level). Student grade level was excluded from the analysis because 96% of the sample were grade 9 students, leaving little variance in the variable.
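In a MIMIC model, the measurement part is the CFA, and the structural part regresses each latent factor on the covariates. With 0/1 coding, each path estimates the difference in a factor’s latent mean between the two groups, holding the other covariates constant. The sketch below shows only the coding step described above; the raw field names and answer formats are hypothetical, and the model itself was estimated in Mplus.

```python
from dataclasses import dataclass

@dataclass
class Background:
    """Hypothetical raw questionnaire answers for one student."""
    gender: str  # "female" or "male"
    very_familiar_with_computers: bool
    expects_elite_high_school: bool
    parent1_college_or_above: bool
    parent2_college_or_above: bool

def mimic_covariates(bg: Background) -> dict:
    """Code the four binary covariates so that each reference level is 0."""
    return {
        "female": int(bg.gender == "female"),                       # reference: male
        "computer_familiar": int(bg.very_familiar_with_computers),   # reference: not familiar
        "elite_aspiration": int(bg.expects_elite_high_school),       # reference: not expecting
        # high = at least one parent has a college degree or above; reference: low
        "parent_ed_high": int(bg.parent1_college_or_above or bg.parent2_college_or_above),
    }

print(mimic_covariates(Background("female", False, True, True, False)))
```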

The analysis team analyzed only a de-identified dataset. All analyses were completed in Mplus.

A total of 417 students completed the Chinese version of the RIMMS. For RQ1, the four-factor CFA demonstrated close fit with the data, with an RMSEA of 0.059 (Byrne, 1998). Item loadings were robust (all greater than 0.6; see Table 1), and all factor loadings were statistically significant (p < 0.001). The other indices of model fit were also strong: the final model showed an SRMSR of 0.024 (values <0.05 indicate close approximate fit), a CFI of 0.984 (values >0.9 represent reasonably good fit), and a TLI of 0.978 (values >0.9 indicate reasonably good fit). Cronbach’s alpha indicated high reliability for all four RIMMS factors: 0.89 for attention, 0.80 for relevance, 0.86 for confidence, and 0.89 for satisfaction (Nunnally, 1978). These results indicate that the four-factor RIMMS model is valid for making inferences about student motivation among Chinese 9th grade students in an adaptive learning setting.
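Mplus reports these indices directly. The sketch below only shows how CFI, TLI, and RMSEA are computed from the chi-square statistics of the fitted model and the baseline (independence) model. The article does not report chi-square values, so the inputs are hypothetical. The degrees-of-freedom helper assumes the standard RIMMS specification (12 items, four correlated factors, factor variances fixed to 1, no correlated residuals); the authors’ final model may differ.

```python
import math

def cfa_df(n_items: int, n_factors: int) -> int:
    """Degrees of freedom for a simple-structure CFA with correlated factors.

    Free parameters: one loading and one residual variance per item, plus the
    factor covariances (factor variances fixed to 1 for identification).
    """
    moments = n_items * (n_items + 1) // 2
    free = 2 * n_items + n_factors * (n_factors - 1) // 2
    return moments - free

def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """CFI, TLI, and RMSEA from model (m) and baseline (b) chi-square statistics."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)  # equals max(chi2_m - df_m, chi2_b - df_b, 0)
    cfi = 1.0 if d_b == 0 else 1.0 - d_m / d_b
    tli = (chi2_b / df_b - chi2_m / df_m) / (chi2_b / df_b - 1.0)
    rmsea = math.sqrt(d_m / (df_m * (n - 1)))  # some programs divide by n instead
    return {"CFI": cfi, "TLI": tli, "RMSEA": rmsea}

df_model = cfa_df(12, 4)     # 78 sample moments - 30 free parameters
df_base = 12 * 13 // 2 - 12  # independence model: only item variances free
print(df_model, df_base)
print(fit_indices(chi2_m=120.0, df_m=df_model, chi2_b=2500.0, df_b=df_base, n=417))
```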

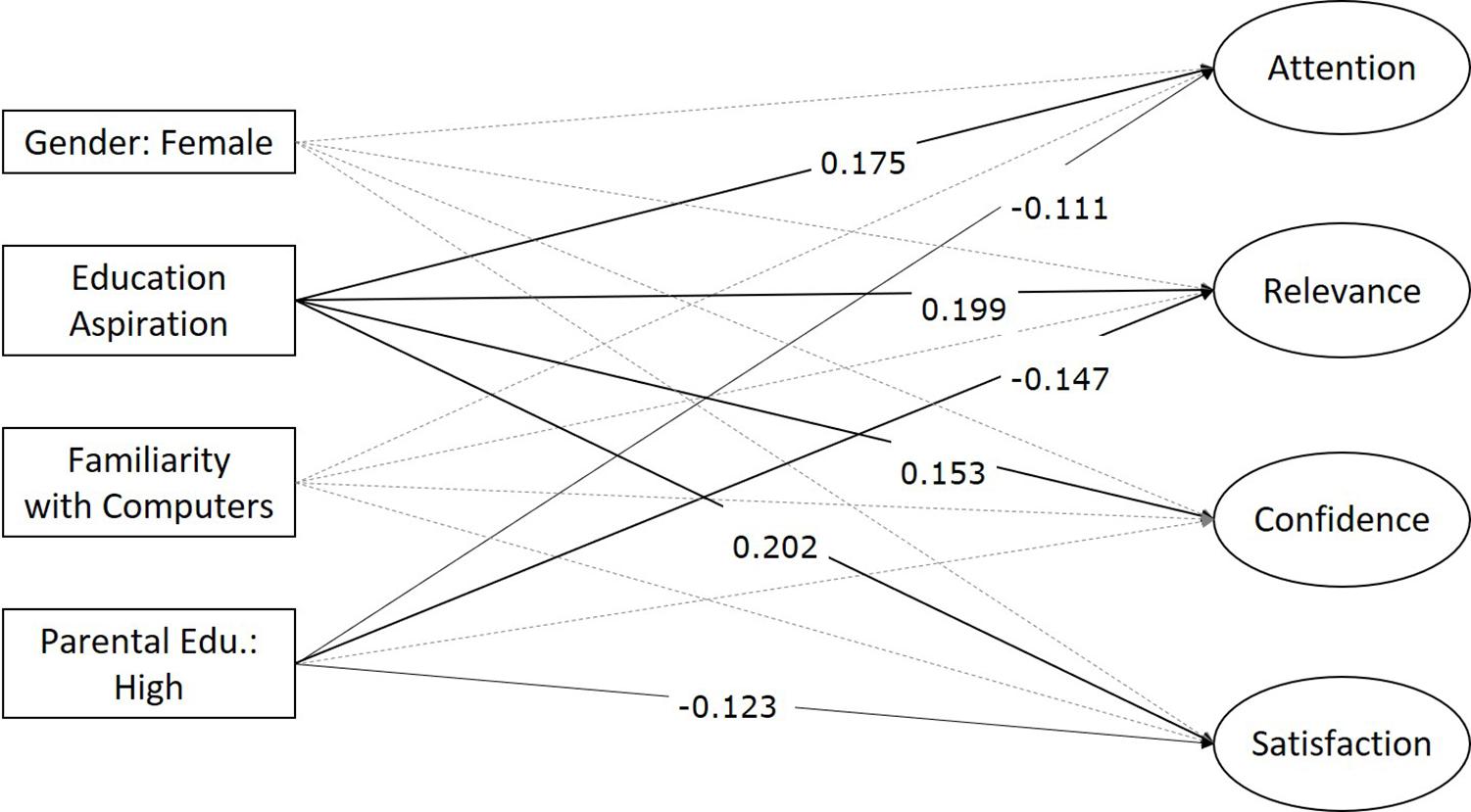

For RQ2, a total of 397 students provided complete information on both the RIMMS and the background survey. The MIMIC model demonstrated close fit with the data: the final model showed an SRMSR of 0.026, a CFI of 0.978, and a TLI of 0.969. In the MIMIC model, educational aspiration was positively related to all four RIMMS factors: attention (B = 0.380, p < 0.01), relevance (B = 0.432, p < 0.01), confidence (B = 0.333, p < 0.01), and satisfaction (B = 0.148, p < 0.01). Parental education level was negatively related to attention (B = −0.235, p < 0.05), relevance (B = −0.311, p < 0.01), and satisfaction (B = −0.260, p < 0.05), but not to confidence (p = 0.299). Neither student familiarity with computers nor student gender was significantly associated with the motivation factors. See Figure 2 for details.
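Because the MIMIC structural part is additive, the reported paths can be combined to read off implied group differences. A minimal sketch, assuming the linear structural equation η = Σ γⱼxⱼ + ζ with the 0/1 coding described in the Methods, and restricting attention to male students who are not very familiar with computers so that the gender and familiarity paths (not reported) contribute nothing. Confidence is omitted because its parental-education path was not reported. The shifts are in the same metric as the reported B values.

```python
# MIMIC paths (B) reported in the text for the two significant covariates.
PATHS = {
    "attention": {"elite_aspiration": 0.380, "parent_ed_high": -0.235},
    "relevance": {"elite_aspiration": 0.432, "parent_ed_high": -0.311},
    "satisfaction": {"elite_aspiration": 0.148, "parent_ed_high": -0.260},
}

def implied_shift(factor: str, covariates: dict) -> float:
    """Implied latent-mean difference from the reference group.

    Assumes eta = sum(gamma_j * x_j) + zeta and that gender and computer
    familiarity are at their reference levels, so those paths drop out.
    """
    return sum(gamma * covariates.get(name, 0) for name, gamma in PATHS[factor].items())

# A student who expects to attend an elite high school and has a college-educated parent.
student = {"elite_aspiration": 1, "parent_ed_high": 1}
for factor in PATHS:
    print(factor, round(implied_shift(factor, student), 3))
```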

Figure 2. Associations between student background and RIMMS factors. Non-significant paths were omitted from the figure.

Motivation is integral to academic success (Pintrich and de Groot, 1990, 33–40; Zimmerman et al., 1992, 663–76; Carini et al., 2006, 1–32; Glanville and Wildhagen, 2007, 1019–41; Kuh et al., 2007, 1–5). Measuring motivation is foundational to improving it. This study validated a measure of student motivation, the Reduced Instructional Materials Motivation Survey (RIMMS), in a sample of Chinese middle school students using an adaptive learning system for math content review. A CFA found evidence of validity: RIMMS responses retained the four-factor structure intended by the measure’s developers – attention, relevance, confidence, and satisfaction. This finding adds to the growing body of evidence suggesting the RIMMS can be used to draw valid inferences in a variety of cultures (Linser and Kurtz, 2018, 1508; Nel and Nel, 2019, 178; Villena Taranilla et al., 2019, 4). This is the first study, to our knowledge, that extends these findings to China. As adaptive learning systems become increasingly popular in China, such measures can be used to design systems that adapt not only to students’ learning progress, but also to their motivational needs.

This study illustrates how the RIMMS can be used to identify differences in students’ motivation profiles. Findings are consistent with prior research highlighting the relationship between self-efficacy and academic goal setting (Bandura and Schunk, 1981). Students with higher education aspirations (who expected to attend elite high schools) rated the Squirrel AI Learning system higher on all four RIMMS motivation factors: attention, relevance, confidence, and satisfaction. These students may have felt that Squirrel AI Learning math content was relevant to their educational aspirations. They may have had greater prior mathematical ability, resulting both in higher educational aspirations and confidence when using Squirrel AI Learning. They may also have foreseen or experienced satisfying opportunities to apply these skills in pursuit of admission to elite high schools.

Further, the RIMMS was able to detect differences in student motivation by parental education. Measuring socioeconomic differences in motivation is especially important given that students from families of low socioeconomic status are more likely to be disengaged in school, a pattern that has been reported in both Eastern and Western cultures (Willms, 2003, 48; Hannum and Park, 2004, 8–12). By contrast, the present study found that students who reported lower parental education rated the Squirrel AI Learning System higher on the attention, relevance, and satisfaction factors of motivation than participants who reported higher parental education. This novel finding illustrates the utility of measuring product- or situation-specific motivation to reveal nuances in broader trends.

In this study, gender did not predict students’ RIMMS ratings of an adaptive learning system. Given that gender stereotypes negatively affect girls’ math performance in both Eastern and Western cultures (Beilock et al., 2010, 1861; Song et al., 2016, 943–952), it is encouraging that male and female students reported similar motivational experiences using Squirrel AI Learning. More research is needed to clarify the conditions under which gender may affect students’ experiences with edtech. While some studies have found an association between gender and edtech engagement (Lowrie and Jorgensen, 2011, 2246–47; Hsieh et al., 2015, 341–45), others have found that males and females tend to perceive edtech similarly (Meiselwitz and Sadera, 2008, 238; Ituma, 2011, 61; Orfanou et al., 2015, 237). Validated measures of student motivation, such as the RIMMS, may be instrumental for identifying factors associated with gender differences in edtech engagement.

This study found that familiarity with computers did not predict students’ motivational experiences with an adaptive learning system. It may be that general computer familiarity is too low a bar to distinguish among 9th grade students. We included this variable because some participants were from rural areas with limited computer access, but most students reported they were familiar with computers. Other studies reporting associations between system familiarity and user experience have generally measured platform-specific experience, such as experience with educational games or learning management systems (Miller et al., 2011, 1428; Orfanou et al., 2015, 233).

Psychometrically sound measures of student motivation, such as the RIMMS, will enable researchers and developers to better understand students’ experiences with adaptive learning systems. This information could be used in efficacy studies to explore how outcomes vary by student engagement. It could also be useful for product development. In an adaptive learning system, motivation data could improve the accuracy of student learning profiles, facilitating a more personalized learning experience. For example, the system might learn that a student is low on a specific motivation factor, then boost that factor’s salience for that student by applying factor-specific strategies from Keller’s Motivational Tactics Checklist (Keller, 2010, 287–91). Adaptive learning systems will become increasingly powerful as they develop the capacity to personalize not only educational content but also motivational supports.
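The personalization loop described above could be prototyped as a simple rule: find the weakest motivation factor and, if it falls below a cut-off, trigger a support aimed at that factor. This is a hypothetical sketch, not a feature of Squirrel AI Learning. The threshold is arbitrary, scores are assumed to be subscale means on a 1–5 scale, and the tactic strings paraphrase this article’s descriptions of the ARCS factors; a real system would draw on Keller’s Motivational Tactics Checklist.

```python
# Illustrative tactics paraphrased from the ARCS factor descriptions above.
TACTICS = {
    "attention": "vary the presentation style of the next resource",
    "relevance": "connect the next problem to a familiar experience",
    "confidence": "state clear success criteria and pick a slightly easier problem",
    "satisfaction": "offer a chance to apply the new skill",
}

def choose_support(scores: dict, threshold: float = 3.0):
    """Return (factor, tactic) for the lowest-scoring factor below threshold, else None.

    scores: RIMMS subscale means on an assumed 1-5 scale; threshold is hypothetical.
    """
    factor = min(scores, key=scores.get)
    if scores[factor] >= threshold:
        return None
    return factor, TACTICS[factor]

print(choose_support({"attention": 4.0, "relevance": 3.7,
                      "confidence": 2.3, "satisfaction": 3.9}))
```

Targeting only the single weakest factor keeps the intervention simple; a system could instead address every factor below the cut-off.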

Some aspects of the study design limit the external generalizability of our findings. The current study focused only on 9th grade students and on a limited number of mathematics topics. Future studies should explore whether the RIMMS retains its four-factor structure, and whether associations between student characteristics and motivation hold, in other grades and subject areas. This study was conducted over a relatively short timeframe (2 days). Participants had not used the Squirrel AI Learning system before this study; it is possible that the study was too short for the novelty to wear off. The research team accepted these tradeoffs to arrange for a large sample of students from many regions of China to use the adaptive learning system at the same time. Finally, our findings regarding the association between parental education and motivation may not generalize to socioeconomic status more broadly. This study used student-reported parental education as a proxy for socioeconomic status because Chinese schools do not offer free or reduced-price lunch, and students may not be able to provide accurate information about parental income.

Adaptive learning systems are often effective learning tools. While they are common in the United States and elsewhere, they are relatively new and gaining popularity in China. To develop effective, engaging systems, developers need information about students’ experiences. While validated measures of student motivation exist, little is known about their applicability in a Chinese adaptive learning context. This study found that the RIMMS can be used to draw valid inferences about Chinese 9th grade students’ experiences of motivation when using an adaptive learning system intensively over a short timeframe for math content review.

The datasets generated for this study are not publicly available due to restrictions set by our Institutional Review Board agreement. Participants did not consent to have their data shared.

Written informed consent from the participants’ legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements. In addition, SRI International followed best ethical practice by analyzing de-identified data, and the SRI Institutional Review Board determined that SRI’s analysis of the de-identified data did not constitute human subjects research.

SW, CC, and YX contributed to conception and design of the study. SW and YX performed the statistical analysis. SW, CC, and LS wrote different sections of the manuscript and contributed to manuscript revision. All authors contributed to the article and approved the submitted version.

The authors declare that this study received funding from the Squirrel AI Learning by Yixue Education Group. The funder was involved in participant recruitment and data collection. The funder was not involved in the study design, analysis, interpretation of data, or the writing of this article.

SRI International (authors: SW, CC, and LS) was contracted by Squirrel AI Learning by Yixue Education Group to conduct this work. YX was a data analyst at SRI International when the data were analyzed, and she is currently an employee at Kidaptive. WC and RT are employees of Squirrel AI Learning by Yixue Education Group.

We would like to express our great appreciation to Dr. Marie Bienkowski and Dr. Mingyu Feng for their valuable contributions to efficacy studies of the Squirrel AI Learning System, which inspired this line of research.

Astleitner, H., and Hufnagl, M. (2003). The effects of situation-outcome-expectancies and of ARCS-strategies on self-regulated learning with web-lectures. J. Educ. Multimed. Hypermed. 12, 361–376.

Bandura, A., and Schunk, D. (1981). Cultivating competence, self-efficacy, and intrinsic interest through proximal self-motivation. J. Pers. Soc. Psychol. 41, 586–598. doi: 10.1037/0022-3514.41.3.586

Beilock, S. L., Gunderson, E. A., Ramirez, G., and Levine, S. C. (2010). Female teachers’ math anxiety affects girls’ math achievement. Proc. Natl. Acad. Sci. U.S.A. 107, 1860–1863. doi: 10.1073/pnas.0910967107

Bomash, I., and Kish, C. (2015). The Improvement Index: Evaluating Academic Gains in College Students Using Adaptive Lessons. New York, NY: Knewton.

Brown, T. A. (2006). Confirmatory Factor Analysis for Applied Research. New York, NY: The Guilford Press.

Byrne, B. M. (1998). Structural Equation Modeling with LISREL, PRELIS and SIMPLIS: Basic Concepts, Applications and Programming. Mahwah, NJ: Lawrence Erlbaum Associates.

Carini, R., Kuh, G., and Klein, S. (2006). Student engagement and student learning: testing the linkages. Res. High. Educ. 47, 1–32. doi: 10.1007/s11162-005-8150-9

China Internet Network Information Center (2017). The 40th China Statistical Report on Internet Development. Beijing: China Internet Network Information Center.

Christidou, V. (2011). Interest, attitudes and images related to science: combining students’ voices with the voices of school science, teachers, and popular science. Int. J. Environ. Sci. Educ. 6, 141–159.

Cook, D. A., Beckman, T. J., Thomas, K. G., and Thompson, W. G. (2009). Measuring motivational characteristics of courses: applying Keller’s instructional materials motivation survey to a web-based course. Acad. Med. 84, 1505–1509. doi: 10.1097/ACM.0b013e3181baf56d

Dai, B., Zhang, W., Wang, Y., and Jian, X. (2020). Comparison of three trust assessment scales based on item response theory. Front. Psychol. 11:10. doi: 10.3389/fpsyg.2020.00010

Ford, J. K., MacCallum, R. C., and Tait, M. (1986). The application of exploratory factor analysis in applied psychology: a critical review and analysis. Pers. Psychol. 39, 291–314. doi: 10.1111/j.1744-6570.1986.tb00583.x

Glanville, J. L., and Wildhagen, T. (2007). The measurement of school engagement: assessing dimensionality and measurement invariance across race and ethnicity. Educ. Psychol. Meas. 67, 1019–1041. doi: 10.1177/0013164406299126

Gnambs, T., and Hanfstingl, B. (2016). The decline of academic motivation during adolescence: an accelerated longitudinal cohort analysis on the effect of psychological need satisfaction. Educ. Psychol. 36, 1698–1712.

Gutman, L. M., and Schoon, I. (2018). Emotional engagement, educational aspirations, and their association during secondary school. J. Adolesc. 67, 109–119. doi: 10.1016/j.adolescence.2018.05.014

Hambleton, R. K., Merenda, P. F., and Spielberger, C. D. (2005). Adapting Educational and Psychological Tests for Cross-Cultural Assessment. Mahwah, NJ: Lawrence Erlbaum.

Han, S. (2010). “Cultural differences in thinking styles,” in Towards a Theory of Thinking, eds B. Glatzeder, V. Goel, and A. Müller (Berlin: Springer), 279–288. doi: 10.1007/978-3-642-03129-8_19

Hannum, E., and Park, A. (2004). Children’s Educational Engagement in Rural China. Available online at: https://www.researchgate.net/profile/Albert_Park/publication/228547973_Children%27s_Educational_Engagement_in_Rural_China/links/0deec519195b4cccdf000000/Childrens-Educational-Engagement-in-Rural-China.pdf (accessed May 29, 2014)

Hauger, D., and Köck, M. (2007). “State of the Art of adaptivity in E-learning platforms,” in Proceedings of the 15th Workshop on Adaptivity and User Modeling in Interactive Systems LWA, Halle, 355–360.

Hauze, S., and Marshall, J. (2020). Validation of the instructional materials motivation survey: measuring student motivation to learn via mixed reality nursing education simulation. Int. J. E Learn. 19, 49–64.

Hsieh, Y.-H., Lin, Y.-C., and Hou, H.-T. (2015). Exploring elementary-school students’ engagement patterns in a game-based learning environment. J. Educ. Technol. Soc. 18, 336–348.

Hu, L., and Bentler, P. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Huang, W., Huang, W., Diefes-Dux, H., and Imbrie, P. K. (2006). A preliminary validation of attention, relevance, confidence and satisfaction model-based instructional material motivational survey in a computer-based tutorial setting. Br. J. Educ. Technol. 37, 243–259. doi: 10.1111/j.1467-8535.2005.00582.x

Ituma, A. (2011). An evaluation of students’ perceptions and engagement with E-learning components in a campus based university. Active Learn. High. Educ. 12, 57–68. doi: 10.1177/1469787410387722

Keller, J. (2010). Motivational Design for Learning and Performance: The ARCS Model Approach. New York, NY: Springer.

Keller, J. M. (1987). Strategies for stimulating the motivation to learn. Perform. Instruct. 26, 1–7. doi: 10.1002/pfi.4160260802

Keller, J. M. (1999). Using the ARCS motivational process in computer-based instruction and distance education. New Dir. Teach. Learn. 1999, 37–47. doi: 10.1002/tl.7804

Khacharem, A. (2017). Top-down and bottom-up guidance in comprehension of schematic football diagrams. J. Sports Sci. 35, 1204–1210. doi: 10.1080/02640414.2016.1218034

Kuh, G. D., Cruce, T., Shoup, R., Kinzie, J., and Gonyea, R. M. (2007). Unmasking the Effects of Student Engagement on College Grades and Persistence. Chicago, IL: American Educational Research Association.

Kutu, H., and Sozbilir, M. (2011). Adaptation of instructional materials motivation survey to Turkish: a validity and reliability study. Necatibey Faculty Educ. Electron. J. Sci. Math. Educ. 5, 292–311.

Li, H., Cui, W., Xu, Z., Zhu, Z., and Feng, M. (2018). “Yixue adaptive learning system and its promise on improving student learning,” in Proceedings of the 10th International Conference on Computer Supported Education (CSEDU 2018), Funchal, 45–52.

Linser, R., and Kurtz, G. (2018). “Do anonymity and choice of role help to motivate and engage higher education students in multiplayer online role play simulation games?,” in Proceedings of EdMedia: World Conference on Educational Media and Technology, Amsterdam, Netherlands (Waynesville, NC: Association for the Advancement of Computing in Education (AACE)), 1506–1513.

Loorbach, N., Karreman, J., and Steehouder, M. (2007). Adding motivational elements to an instruction manual for seniors: effects on usability and motivation. Tech. Commun. 54, 343–358.

Loorbach, N., Peters, O., Karreman, J., and Steehouder, M. (2015). Validation of the instructional materials motivation survey (IMMS) in a self-directed instructional setting aimed at working with technology. Br. J. Educ. Technol. 46, 204–218. doi: 10.1111/bjet.12138

Lowrie, T., and Jorgensen, R. (2011). Gender differences in students’ mathematics game playing. Comput. Educ. 57, 2244–2248. doi: 10.1016/j.compedu.2011.06.010

Meiselwitz, G., and Sadera, W. A. (2008). Investigating the connection between usability and learning outcomes in online learning environments. JOUR 4:9.

Millar, P. R., Serbun, S. J., Vadalia, A., and Gutchess, A. H. (2013). Cross-cultural differences in memory specificity. Cult. Brain 1, 138–157. doi: 10.1007/s40167-013-0011-3

Miller, L. M., Chang, C.-I., Wang, S., Beier, M. E., and Klisch, Y. (2011). Learning and motivational impacts of a multimedia science game. Comput. Educ. 57, 1425–1433. doi: 10.1016/j.compedu.2011.01.016

Nel, G., and Nel, L. (2019). “Motivational value of Code.org’s Code Studio tutorials in an undergraduate programming course,” in ICT Education, Vol. 963, eds S. Kabanda, H. Suleman, and S. Gruner (Cham: Springer International Publishing), 173–188. doi: 10.1007/978-3-030-05813-5_12

Orfanou, K., Tselios, N., and Katsanos, C. (2015). Perceived usability evaluation of learning management systems: empirical evaluation of the system usability scale. Int. Rev. Res. Open Distribut. Learn. 16, 227–246.

Pane, J. F., Griffin, B. A., McCaffrey, D. F., and Karam, R. (2014). Effectiveness of Cognitive Tutor Algebra I at scale. Educ. Eval. Policy Anal. 36, 127–144. doi: 10.3102/0162373713507480

Pane, J. F., Steiner, E. D., Baird, M. D., Hamilton, L. S., and Pane, J. D. (2017). How Does Personalized Learning Affect Student Achievement? Santa Monica, CA: RAND Corporation.

Pintrich, P., and de Groot, E. (1990). Motivational and self-regulated learning components of classroom academic performance. J. Educ. Psychol. 82, 33–40. doi: 10.1037/0022-0663.82.1.33

Roebken, H. (2007). Multiple Goals, Satisfaction, and Achievement in University Undergraduate Education: A Student Experience in the Research University (SERU) Project Research Paper. Research & Occasional Paper Series: CSHE. 2.07. Berkeley, CA: Center for Studies in Higher Education.

Song, J., Zuo, B., and Yan, L. (2016). Effects of gender stereotypes on performance in mathematics: a serial multivariable mediation model. Soc. Behav. Pers. 44, 943–952. doi: 10.2224/sbp.2016.44.6.943

Van Seters, J. R., Ossevoort, M. A., Tramper, J., and Goedhart, M. J. (2012). The influence of student characteristics on the use of adaptive E-learning material. Comput. Educ. 58, 942–952. doi: 10.1016/j.compedu.2011.11.002

Varnum, M. E. W., Grossmann, I., Kitayama, S., and Nisbett, R. E. (2010). The origin of cultural differences in cognition: the social orientation hypothesis. Curr. Dir. Psychol. Sci. 19, 9–13. doi: 10.1177/0963721409359301

Villena Taranilla, R., Cózar-Gutiérrez, R., González-Calero, J. A., and Cirugeda, I. L. (2019). Strolling through a city of the Roman Empire: an analysis of the potential of virtual reality to teach history in primary education. Interact. Learn. Environ. 1–11. doi: 10.1080/10494820.2019.1674886

Wang, J., and Wang, X. (2012). Structural Equation Modeling: Applications Using Mplus. Hoboken, NJ: John Wiley & Sons, Inc.

Willms, J. D. (2003). Student Engagement at School: A Sense of Belonging and Participation. Paris: Organisation for Economic Co-Operation and Development.

Xie, H., Chu, H.-C., Hwang, G.-J., and Wang, C.-C. (2019). Trends and development in technology-enhanced adaptive/personalized learning: a systematic review of journal publications from 2007 to 2017. Comput. Educ. 140:103599. doi: 10.1016/j.compedu.2019.103599

Keywords: motivation and engagement, survey, education technology, adaptive learning systems, factor analysis

Citation: Wang S, Christensen C, Xu Y, Cui W, Tong R and Shear L (2020) Measuring Chinese Middle School Students’ Motivation Using the Reduced Instructional Materials Motivation Survey (RIMMS): A Validation Study in the Adaptive Learning Setting. Front. Psychol. 11:1803. doi: 10.3389/fpsyg.2020.01803

Received: 31 January 2020; Accepted: 30 June 2020;

Published: 04 August 2020.

Edited by:

Mengcheng Wang, Guangzhou University, China

Reviewed by:

Yonglin Huang, The City University of New York, United States

Copyright © 2020 Wang, Christensen, Xu, Cui, Tong and Shear. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shuai Wang, shuai.derek.wang@gmail.com

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
