- 1Department of General Psychology, University of Padova, Padua, Italy

- 2Department of Brain and Behavioral Sciences, University of Pavia, Pavia, Italy

The COVID-19 pandemic is a serious public health crisis that is causing major worldwide disruption. So far, the most widely deployed measures have been non-pharmacological interventions (NPIs): various forms of social distancing; pervasive use of personal protective equipment (PPE), such as facemasks, shields, or gloves; and hand washing and disinfection of fomites. These measures will very likely continue to be mandated in the medium or even long term until an effective treatment or vaccine is found (Leung et al., 2020). Even beyond that time frame, many of these public health recommendations will have become part of individual lifestyles and hence continue to be observed. Moreover, it is implausible that the disruption caused by COVID-19 will dissipate soon. Analysis of transmission dynamics suggests that the disease could persist into 2025, with prolonged or intermittent social distancing in place until 2022 (Kissler et al., 2020).

Human behavior research will be profoundly impacted beyond the stagnation resulting from the closure of laboratories during government-mandated lockdowns. In this viewpoint article, we argue that this disruption provides an important opportunity for accelerating structural reforms already underway to reduce waste in planning, conducting, and reporting research (Cristea and Naudet, 2019). We discuss three aspects relevant to human behavior research: (1) unavoidable, extensive changes in data collection and ensuing untoward consequences; (2) the possibility of shifting research priorities to aspects relevant to the pandemic; (3) recommendations to enhance adaptation to the disruption caused by the pandemic.

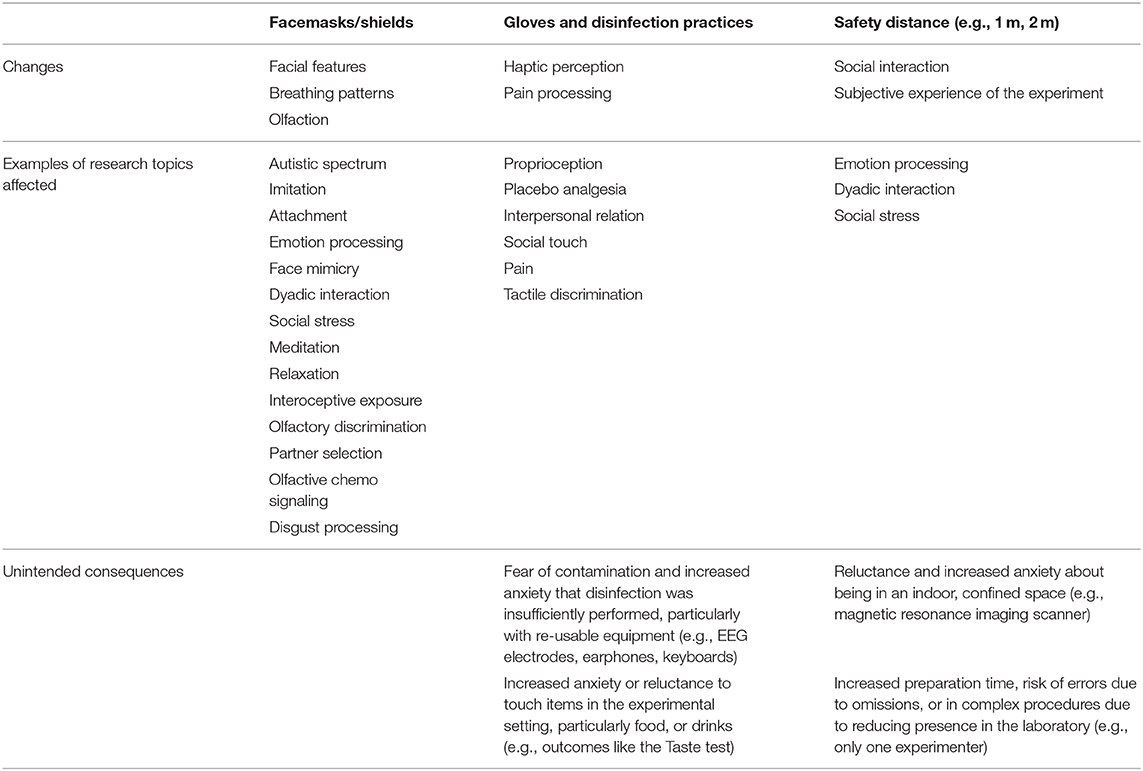

Data collection is very unlikely to return to the “old” normal for the foreseeable future. For example, neuroimaging studies usually involve placing participants in the confined space of a magnetic resonance imaging scanner. Studies measuring stress hormones, electroencephalography, or psychophysiology also involve close contact to collect saliva and blood samples or to place electrodes. Behavioral studies often involve interaction with persons who administer tasks or require that various surfaces and materials be touched. One immediate solution would be conducting “socially distant” experiments, for instance, by keeping a safe distance and making participants and research personnel wear PPE. Though data collection in this way would resemble pre-COVID times, it would come with a range of unintended consequences (Table 1). First, it would significantly increase costs in terms of resources, training of personnel, and time spent preparing experiments. For laboratories or researchers with scarce resources, these costs could amount to a drastic reduction in the experiments performed, with an ensuing decrease in publication output, which might further affect the capacity to attract new funding and retain researchers. Secondly, even with the use of PPE, some participants might be reluctant or anxious to expose themselves to close and unnecessary physical interaction. Participants with particular vulnerabilities, like neuroticism, social anxiety, or obsessive-compulsive traits, might find the trade-off between risks and gains unacceptable. Thirdly, some research topics (e.g., face processing, imitation, emotional expression, dyadic interaction) or study populations (e.g., autism spectrum, social anxiety, obsessive-compulsive) would become difficult to study with the current experimental paradigms (Table 1). New paradigms can be developed, but they will first need to be assessed for reliability and validated, which will undoubtedly take time. Finally, generalized use of PPE by participants and personnel could alter the “usual” experimental setting, introducing additional biases, similar to the experimenter effect (Rosenthal, 1976).

Table 1. Possible consequences of non-pharmacological interventions for COVID-19 on human behavior research.

Data collection could also adapt by leveraging technology, such as running experiments remotely via available platforms like Amazon's Mechanical Turk (MTurk), where any task that is programmable with standard browser technology can be used (Crump et al., 2013). Templates of already-programmed and easily customizable experimental tasks, such as the Stroop or Balloon Analog Risk Task, are also available on platforms like Pavlovia. Ecological momentary assessment is another feasible option, since it was conceived from the beginning for remote use, with participants logging in to fill in scales or activity journals in a naturalistic environment (Shiffman et al., 2008). Increasingly affordable wearables can be used for collecting physiological data (Javelot et al., 2014). Web-based research was already expanding before the pandemic, and the quality of the data collected in this way is comparable with that of laboratory studies (Germine et al., 2012). Still, there are lingering issues. For instance, for some MTurk experiments, disparities have been found between laboratory and online data collection (Crump et al., 2013). Further clarifications about quality, such as consistency or interpretability (Abdolkhani et al., 2020), are also needed for data collected using wearables.

Beyond updating data collection practices, a significant portion of human behavior research might change course to focus on the effects of the pandemic. For example, the incidence of mental disorders or of negative effects on psychological and physical well-being, particularly across populations of interest (e.g., recovered patients, caregivers, and healthcare workers), are crucial areas of inquiry. Many researchers might feel pressed not to miss out on studying this critical period and embark on hastily planned and conducted studies. Multiplication and fragmentation of efforts are likely, for instance, by conducting highly overlapping surveys in widely accessible and oversampled populations (e.g., university students). Moreover, rushed planning is bound to lead to taking shortcuts and cutting corners in study design and conduct, e.g., skipping pre-registration or even ethical committee approval or using unvalidated measurement tools, like ad hoc surveys. Surveys using non-probability and convenience samples, especially for social and mental health problems, frequently produce biased and misleading findings, particularly for estimates of prevalence (Pierce et al., 2020). A significant portion of human behavior research that re-oriented itself to study the pandemic could result in a heap of non-reproducible, unreliable, or overlapping findings.
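The point about convenience samples and prevalence estimates can be illustrated with a small simulation. The sketch below is purely illustrative and is not taken from Pierce et al. (2020): the function name `simulate_prevalence`, the population size, the true prevalence, and the response probabilities are all hypothetical values chosen for the example. It assumes that people with the condition are more likely to volunteer for a survey, which is one plausible mechanism among several.

```python
import random


def simulate_prevalence(pop_size=100_000, true_prev=0.10,
                        resp_if_case=0.30, resp_if_noncase=0.10,
                        sample_size=2_000, seed=0):
    """Compare prevalence estimates from a probability sample and a
    self-selected (convenience) sample. All parameters are hypothetical."""
    rng = random.Random(seed)

    # Hypothetical population: True marks a person with the condition.
    population = [rng.random() < true_prev for _ in range(pop_size)]

    # Probability sample: every person has the same chance of selection.
    prob_sample = rng.sample(population, sample_size)

    # Convenience sample: people self-select, and (by assumption) those
    # with the condition are more likely to respond than those without.
    volunteers = [
        case for case in population
        if rng.random() < (resp_if_case if case else resp_if_noncase)
    ]
    conv_sample = rng.sample(volunteers, sample_size)

    return {
        "true": sum(population) / pop_size,
        "probability": sum(prob_sample) / sample_size,
        "convenience": sum(conv_sample) / sample_size,
    }


if __name__ == "__main__":
    for name, value in simulate_prevalence().items():
        print(f"{name:>12}: {value:.3f}")
```

Under these assumed response rates, the expected share of cases among volunteers is 0.30 × 0.10 / (0.30 × 0.10 + 0.10 × 0.90) = 0.25, so the convenience estimate should sit well above the true prevalence of 0.10, while the probability sample should stay close to it. Increasing the sample size does not remove this bias, because it comes from who responds rather than from how many respond.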

Human behavior studies could also aim to inform the planning and enforcement of public health responses in the pandemic. Behavioral scientists might focus on finding and testing ways to increase adherence to NPIs or to lessen the negative effects of isolation, particularly in vulnerable groups, e.g., the elderly or the chronically ill and their caretakers. Studies could also attempt to elucidate factors that make individuals uncooperative with recommendations from public health authorities. Though all of these topics are important, several caveats must be considered. Psychology and neuroscience have been affected by a crisis in reproducibility and credibility, with several established findings proving unreliable and even non-reproducible (Button et al., 2013; Open Science Collaboration, 2015). It is crucial to ensure that only robust and reproducible results are applied or even proposed in the context of a serious public health crisis. For instance, the possible influence of psychological factors on susceptibility to infection and potential psychological interventions to address them could be interesting topics. However, the existing literature is marked by inconsistency, heterogeneity, reverse causality, or other biases (Falagas et al., 2010). Even for robust and reproducible findings, translation is doubtful, particularly when these are based on convenience samples or on simplified and largely artificial experimental contexts. For example, the scarcity of medical resources (e.g., N-95 masks, drugs, or ventilators) in a pandemic, with its unavoidable ethical conundrum about allocation principles and triage, might appeal to moral reasoning researchers. Even assuming, implausibly, that most of the existing research in this area is robust, translation to dramatic real-life situations and highly specialized contexts, such as intensive care, would be difficult and error-prone. Translation might not even be useful, given that comprehensive ethical guidance and decision rules to support medical professionals already exist (Emanuel et al., 2020).

The COVID-19 pandemic and the corresponding global public health response pose significant and lasting difficulties for human behavior research. In many contexts, such as laboratories with limited resources and uncertain funding, challenges will lead to a reduced research output, which might have further domino effects on securing funding and retaining researchers. As a remedy, modifying data collection practices is useful but insufficient. Instead, adaptation might require the implementation of radical changes: producing less research, but of higher quality and more utility (Cristea and Naudet, 2019). To this end, we advocate for the acceleration and generalization of proposed structural reforms (i.e., “open science”) in how research is planned, conducted, and reported (Munafò et al., 2017; Cristea and Naudet, 2019) and summarize six key recommendations.

First, a definitive move from atomized and fragmented experimental research to large-scale collaboration should be encouraged through incentives from funders and academic institutions alike. In the current status quo, interdisciplinary research has systematically lower odds of being funded (Bromham et al., 2016). Instead, funders could favor top-down funding on topics of prominent interest and encourage large consortia with international representativeness and interdisciplinarity over bottom-up funding for a select number of excellent individual investigators. Second, particularly for research focused on the pandemic, relevant priorities need to be identified before conducting studies. This can be achieved through assessing the concrete needs of the populations targeted (e.g., healthcare workers, families of victims, individuals suffering from isolation, disabilities, pre-existing physical and mental health issues, and the economically vulnerable) and subsequently conducting systematic reviews so as to avoid fragmentation and overlap. To this end, journals could require that some reports of primary research also include rapid reviews (Tricco et al., 2015), a simplified form of systematic reviews. For instance, The Lancet journals require a “Research in context” box, which needs to be based on a systematic search. Study formats like Registered Reports, in which a study is accepted in principle after peer review of its rationale and methods (Hardwicke and Ioannidis, 2018), are uniquely suited for this change. Third, methodological rigor and reproducibility in design, conduct, analysis, and reporting should move to the forefront of the human behavior research agenda (Cristea and Naudet, 2019). For example, preregistration of studies (Nosek et al., 2019) in a public repository should be widely employed to support transparent reporting.
Registered Reports (Hardwicke and Ioannidis, 2018) and study protocols are formats that ensure rigorous evaluation of the experimental design and statistical analysis plan before data collection begins, thus making sure shortcuts and methodological shortcomings are eliminated. Fourth, data and code sharing, along with the use of publicly available datasets (e.g., 1000 Functional Connectomes Project, Human Connectome Project), should become the norm. These practices allow the use of already-collected data to be maximized, including in terms of assessing reproducibility, conducting re-analyses using different methods, and exploring new hypotheses on large collections of data (Cristea and Naudet, 2019). Fifth, to reduce publication bias, submission of all unpublished studies, the so-called “file drawer,” should be encouraged and supported. Reporting findings in preprints can further this goal, but stronger incentives are necessary to ensure that preprints also transparently and completely report conducted research. The Preprint Review at eLife (Elife, 2020), in which the journal effectively takes into review manuscripts posted on the preprint server bioRxiv, is a promising initiative in this direction. Journals could also create study formats specifically designed for publishing studies that resulted in inconclusive findings, even when caused by procedural issues, e.g., unclear manipulation checks, insufficient stimulus presentation times, or other technical errors. This would both aid transparency and help other researchers better prepare their own experiments. Sixth, peer review of both articles and preprints should be regarded as on par with the production of new research. Platforms like Publons help track reviewing activity, which could be rewarded by funders and academic institutions involved in hiring, promotion, or tenure (Moher et al., 2018).
Researchers who publish less during the pandemic could still be rewarded for the onerous activity of peer review, to the benefit of the entire community.

Of course, individual researchers cannot implement such sweeping changes on their own, without decisive action from policymakers like funding bodies, academic institutions, and journals. For instance, decisions related to hiring, promotion, or tenure of academics could reward several of the behaviors described, such as complete and transparent publication regardless of the results, availability of data and code, or contributions to peer review (Moher et al., 2018). Academic institutions and funders should acknowledge the slowdown of experimental research during the pandemic and hence accelerate the move toward more “responsible indicators” that would incentivize best publication practices over productivity and citations (Moher et al., 2018). Funders could encourage submissions leveraging existing datasets or developing tools for data re-use, e.g., to track multiple uses of the same dataset. Journals could stimulate data sharing by assigning priority to manuscripts sharing or re-using data and code, like re-analyses, or individual participant data meta-analyses.

Author Contributions

CG and IC contributed equally to the conception and preparation of this manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was carried out within the scope of the project “use-inspired basic research”, for which the Department of General Psychology of the University of Padova has been recognized as “Dipartimento di eccellenza” by the Ministry of University and Research.

References

Abdolkhani, R., Gray, K., Borda, A., and Desouza, R. (2020). Quality assurance of health wearables data: participatory workshop on barriers, solutions, and expectations. JMIR mHealth uHealth 8:e15329. doi: 10.2196/15329

Bromham, L., Dinnage, R., and Hua, X. (2016). Interdisciplinary research has consistently lower funding success. Nature 534, 684–687. doi: 10.1038/nature18315

Button, K. S., Ioannidis, J. P. A., Mokrysz, C., Nosek, B. A., Flint, J., Robinson, E. S. J., et al. (2013). Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376. doi: 10.1038/nrn3475

Cristea, I. A., and Naudet, F. (2019). Increase value and reduce waste in research on psychological therapies. Behav. Res. Ther. 123:103479. doi: 10.1016/j.brat.2019.103479

Crump, M. J. C., McDonnell, J. V., and Gureckis, T. M. (2013). Evaluating Amazon's Mechanical Turk as a tool for experimental behavioral research. PLoS ONE 8:e57410. doi: 10.1371/journal.pone.0057410

Elife (2020). eLife Launches Service to Peer Review Preprints on bioRxiv. eLife. Available online at: https://elifesciences.org/for-the-press/a5a129f2/elife-launches-service-to-peer-review-preprints-on-biorxiv

Emanuel, E. J., Persad, G., Upshur, R., Thome, B., Parker, M., Glickman, A., et al. (2020). Fair allocation of scarce medical resources in the time of Covid-19. N. Engl. J. Med. 382, 2049–2055. doi: 10.1056/NEJMsb2005114

Falagas, M. E., Karamanidou, C., Kastoris, A. C., Karlis, G., and Rafailidis, P. I. (2010). Psychosocial factors and susceptibility to or outcome of acute respiratory tract infections. Int. J. Tuberc. Lung Dis. 14, 141–148. Available online at: https://www.ingentaconnect.com/content/iuatld/ijtld/2010/00000014/00000002/art00004#

Germine, L., Nakayama, K., Duchaine, B. C., Chabris, C. F., Chatterjee, G., and Wilmer, J. B. (2012). Is the Web as good as the lab? Comparable performance from web and lab in cognitive/perceptual experiments. Psychon. Bull. Rev. 19, 847–857. doi: 10.3758/s13423-012-0296-9

Hardwicke, T. E., and Ioannidis, J. P. A. (2018). Mapping the universe of registered reports. Nat. Hum. Behav. 2, 793–796. doi: 10.1038/s41562-018-0444-y

Javelot, H., Spadazzi, A., Weiner, L., Garcia, S., Gentili, C., Kosel, M., et al. (2014). Telemonitoring with respect to mood disorders and information and communication technologies: overview and presentation of the PSYCHE project. Biomed Res. Int. 2014:104658. doi: 10.1155/2014/104658

Kissler, S. M., Tedijanto, C., Goldstein, E., Grad, Y. H., and Lipsitch, M. (2020). Projecting the transmission dynamics of SARS-CoV-2 through the postpandemic period. Science 368, 860–868. doi: 10.1126/science.abb5793

Leung, K., Wu, J. T., Liu, D., and Leung, G. M. (2020). First-wave COVID-19 transmissibility and severity in China outside Hubei after control measures, and second-wave scenario planning: a modelling impact assessment. Lancet 395, 1382–1393. doi: 10.1016/S0140-6736(20)30746-7

Moher, D., Naudet, F., Cristea, I. A., Miedema, F., Ioannidis, J. P. A., and Goodman, S. N. (2018). Assessing scientists for hiring, promotion, and tenure. PLoS Biol. 16:e2004089. doi: 10.1371/journal.pbio.2004089

Munafò, M. R., Nosek, B. A., Bishop, D. V. M., Button, K. S., Chambers, C. D., Percie Du Sert, N., et al. (2017). A manifesto for reproducible science. Nat. Hum. Behav. 1:0021. doi: 10.1038/s41562-016-0021

Nosek, B. A., Beck, E. D., Campbell, L., Flake, J. K., Hardwicke, T. E., Mellor, D. T., et al. (2019). Preregistration is hard, and worthwhile. Trends Cogn. Sci. 23, 815–818. doi: 10.1016/j.tics.2019.07.009

Open Science Collaboration (2015). Estimating the reproducibility of psychological science. Science 349:aac4716. doi: 10.1126/science.aac4716

Pierce, M., McManus, S., Jessop, C., John, A., Hotopf, M., Ford, T., et al. (2020). Says who? The significance of sampling in mental health surveys during COVID-19. Lancet Psychiatry 7, 567–568. doi: 10.1016/S2215-0366(20)30237-6

Shiffman, S., Stone, A. A., and Hufford, M. R. (2008). Ecological momentary assessment. Annu. Rev. Clin. Psychol. 4, 1–32. doi: 10.1146/annurev.clinpsy.3.022806.091415

Keywords: open science, data sharing, social distancing, preprint, preregistration, coronavirus disease, neuroimaging, experimental psychology

Citation: Gentili C and Cristea IA (2020) Challenges and Opportunities for Human Behavior Research in the Coronavirus Disease (COVID-19) Pandemic. Front. Psychol. 11:1786. doi: 10.3389/fpsyg.2020.01786

Received: 29 April 2020; Accepted: 29 June 2020; Published: 10 July 2020.

Edited by:

Roberto Codella, University of Milan, Italy

Reviewed by:

Nicola Lovecchio, University of Milan, Italy

Federico Ranieri, University of Verona, Italy

Copyright © 2020 Gentili and Cristea. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Claudio Gentili, c.gentili@unipd.it

Claudio Gentili, Ioana A. Cristea