- 1Department of Psychology, Yonsei University, Seoul, South Korea

- 2Department of Psychology, Chungbuk National University, Cheongju, South Korea

- 3Department of Psychology, University of California, Berkeley, Berkeley, CA, United States

The purposes of this study were to develop the Yonsei Face Database (YFace DB), consisting of both static and dynamic face stimuli for six basic emotions (happiness, sadness, anger, surprise, fear, and disgust), and to test its validity. The database includes selected pictures (static stimuli) and film clips (dynamic stimuli) of 74 models (50% female) aged between 19 and 40. A total of 1,480 selected pictures and film clips were assessed for accuracy, intensity, and naturalness by 221 undergraduate students during the validation procedure. The overall accuracy of the pictures was 76%. Film clips had a higher accuracy of 83%; the highest accuracy was observed in happiness and the lowest in fear across all conditions (static with mouth open or closed, or dynamic). The accuracy was higher in film clips across all emotions except happiness and disgust, while the naturalness was higher in the pictures than in film clips except for sadness and anger. The intensity varied the most across conditions and emotions. Significant gender effects were found in perception accuracy for both the gender of models and raters. Male raters perceived surprise more accurately in static stimuli with mouth open and in dynamic stimuli, while female raters perceived fear more accurately in all conditions. Moreover, sadness and anger expressed in static stimuli with mouth open and fear expressed in dynamic stimuli were perceived more accurately when models were male. Disgust expressed in static stimuli with mouth open and dynamic stimuli, and fear expressed in static stimuli with mouth closed, were perceived more accurately when models were female. The YFace DB is the largest Asian face database by far and the first to include both static and dynamic facial expression stimuli, and the current study can provide researchers with a wealth of information about the validity of each stimulus through the validation procedure.

Introduction

Facial expression plays an important role in the formation and maintenance of social relationships between individuals (McKone and Robbins, 2011). Researchers have investigated various aspects of face perception, including the mechanisms behind facial recognition and discrimination, information processing of faces, development of face perception, and the relationship between mental disorders and face recognition (Tsao and Livingstone, 2008; Calder et al., 2011). For example, it was found that infants prefer face-like stimuli to non-facial stimuli (Johnson et al., 2008) and that their face recognition skills become more sophisticated as they age (Passarotti et al., 2003; Golarai et al., 2007; Scherf et al., 2007). A plethora of fMRI studies have identified specific brain regions and networks, such as the fusiform face area, superior temporal sulcus, and occipital lobe, that are responsible for processing face perception (Ishai, 2008; Pitcher et al., 2011). In addition, people generally perform holistic rather than local processing of faces, meaning that parts of faces are integrated into one meaningful entity rather than perceived independently as separate parts (Richler and Gauthier, 2014; Behrmann et al., 2015). Researchers have demonstrated that people develop a template for face perception and continuously modify it as they gain more experience (e.g., norm-based coding), rather than processing individual faces one by one (e.g., exemplar-based coding; Rhodes et al., 2005). Other studies have shown that face perception differs across age, gender, and race, based on the information at hand (Zebrowitz et al., 2003, 2007; Hess et al., 2004; Becker et al., 2007). Moreover, some studies have shown significant correlations between deficits in face perception and various disorders, such as autism spectrum disorder (Dawson et al., 2005; Harms et al., 2010) and schizophrenia (Kohler et al., 2009).

In the studies of face perception and recognition, actual pictures of people were used as stimuli in computerized tasks to measure individual decision-making behaviors or brain activities via fMRI or ERP methods (Calder et al., 2011). These pictures were sometimes modified, depending on the purpose of the study, where some were composited or manipulated (Steyvers, 1999; Leopold et al., 2001) and others were altered to leave only the outlines of the faces (Wilson et al., 2002; Kim and Kim, 2003). Despite the variations in study goals, since most studies using facial stimuli require variability in their stimuli, it is extremely difficult for individual researchers to develop their own stimuli. Consequently, several researchers have developed face stimuli databases and have distributed them.

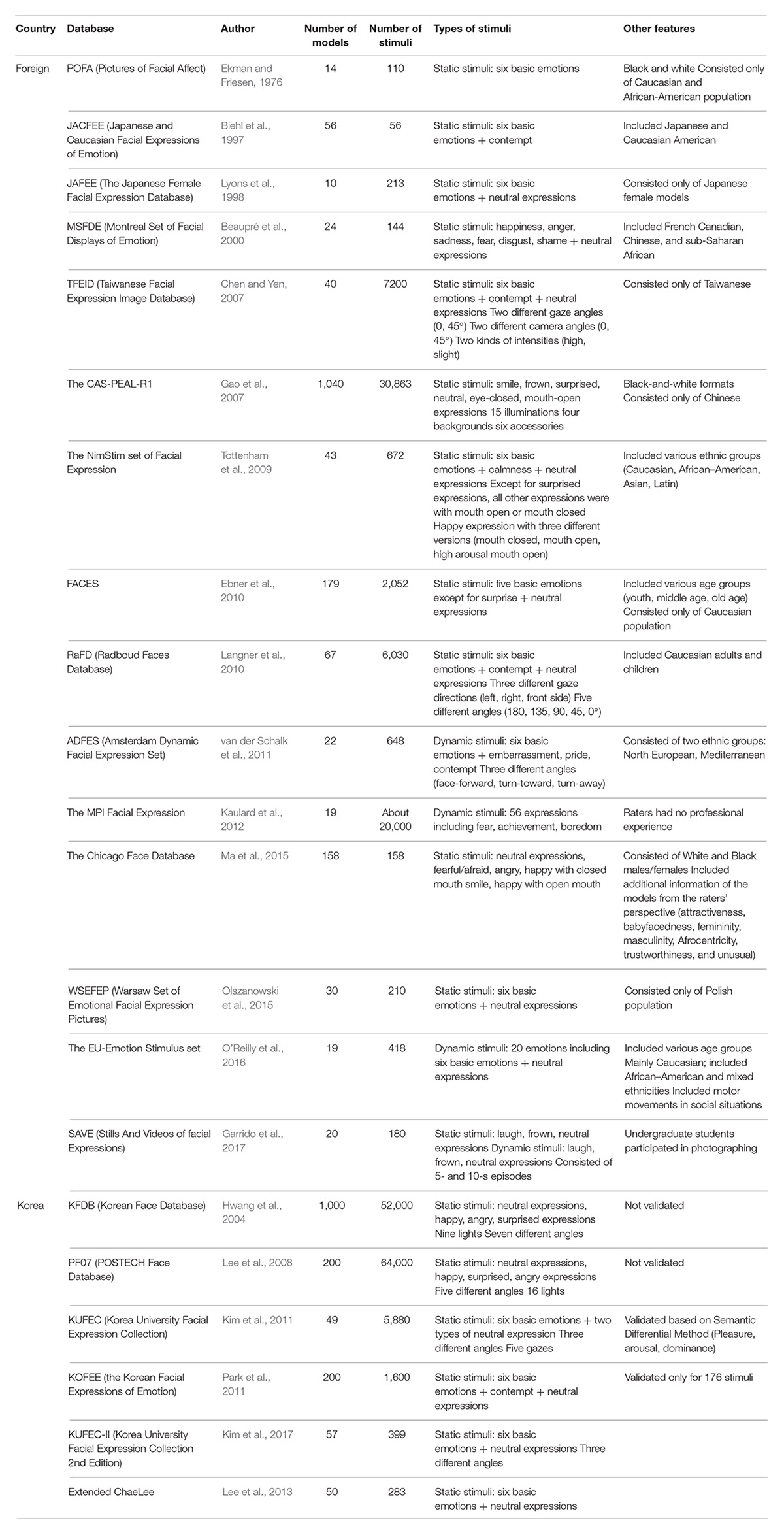

Table 1 summarizes the most widely used face databases. Each database typically consists of the six basic emotions defined by Ekman et al. (1972), which are happiness, sadness, anger, surprise, fear, and disgust, depicted by at least 20 models, although the databases vary in the number and types of stimuli. Upon permission, many researchers have conducted studies with selected stimuli from the databases. Pictures of Facial Affect (POFA), one of the earliest face stimuli databases, was developed by Ekman and Friesen (1976) and consists of 110 black-and-white pictures expressing the six basic emotions. The most widely used database in face perception studies is the NimStim set (Tottenham et al., 2009), with which many researchers have compared perceptual differences across diverse clinical populations (Norton et al., 2009; Hankin et al., 2010; Levens and Gotlib, 2010) or examined brain activities using an fMRI approach (Harris and Aguirre, 2010; Monk et al., 2010; Weng et al., 2011). Noted for the wide age range of its 179 models (ranging from their 20s to their 60s), the FACES database (Ebner et al., 2010) is mainly used in studies examining differences in face recognition ability across different age groups (Ebner and Johnson, 2009; Voelkle et al., 2012). The Chicago Face Database (Ma et al., 2015), which contains male and female, Black and White individuals in its initially published subset, was used in testing the effect of race in perceiving faces (Gwinn et al., 2015; Kleider-Offutt et al., 2017).

Although limited, face databases containing Asian faces have also been developed. The Japanese and Caucasian Facial Expressions of Emotion (JACFEE) database (Biehl et al., 1997) has been used in various studies examining brain activity patterns across emotionality (Whalen et al., 2001), differences in facial expression recognition across cultures (Matsumoto et al., 2002), and differences in facial recognition ability of individuals with clinical disorders such as schizophrenia or social anxiety disorder (Hooker and Park, 2002; Stein et al., 2002). A female-only database, the Japanese Female Facial Expression (JAFEE) Database (Lyons et al., 1998), is also available and includes pictures of Japanese female models expressing six basic emotions and neutral expressions. The CAS-PEAL-R1 database (Gao et al., 2007), a large-scale Chinese face database, consists of 30,863 pictures of 595 men and 445 women expressing six facial emotion expressions. The CAS-PEAL-R1 has been frequently used for face recognition studies in computer science (Zhang et al., 2009; Rivera et al., 2012). The Taiwanese Facial Expression Image Database (TFEID) (Chen and Yen, 2007) includes 7,800 stimuli of 40 Taiwanese models expressing six basic emotions with two levels of intensity and neutral expressions. The Montreal Set of Facial Displays of Emotion (MSFDE) (Beaupré et al., 2000) database is more diverse in terms of the ethnic background of its 24 models, including Chinese, French Canadian, and sub-Saharan African. Still, only a small proportion of the total 144 stimuli are Asian face stimuli.

Recently, databases including film clips as well as pictures have been developed to capture and deliver the dynamics of emotions. For example, the Stills and Videos of facial Expressions (SAVE) database (Garrido et al., 2017) included pictures and film clips of models laughing and frowning, as well as presenting neutral expressions. The Amsterdam Dynamic Facial Expression Set (ADFES; van der Schalk et al., 2011) recorded film clips of the six basic emotions plus three subtle expressions (embarrassment, pride, and contempt), taken from three different angles. In addition, there are databases with various facial emotion expressions, such as the EU-Emotion Stimulus set, containing 20 different facial emotion expressions (O’Reilly et al., 2016), and the Max Planck Institute (MPI) Facial Expression Database (Kaulard et al., 2012), with 56 sets of facial emotion expressions.

In Korea, various face databases consisting only of static face stimuli have been developed and used in a number of studies. For example, the Korea University Facial Expression Collection (KUFEC; Kim et al., 2011) was used to examine the facial recognition ability of clinical populations (Jung et al., 2015; Jang et al., 2016; Kim and Kim, 2016) and the differences in perceiving facial expressions in a non-clinical adult group (Kim et al., 2013). Recently, KUFEC-II, a revised version of KUFEC, has been developed to overcome the limitations of KUFEC in its shooting and selection of stimuli by adopting the Facial Action Coding System (FACS; Ekman et al., 2002). Some Korean databases, such as Extended ChaeLee (Lee et al., 2013) and the Korean Facial Expressions of Emotion (KOFEE) database (Park et al., 2011), have been used in many neuroscience studies with emphasis on the facial perception skills of clinical populations (Kim et al., 2014; Lee et al., 2015; Oh et al., 2016; Park et al., 2016). Furthermore, other databases, such as the Korean Face Database (KFDB; Hwang et al., 2004) and the POSTECH Face Database (PF07; Lee et al., 2008), have been employed in developing algorithms for face recognition.

However, due to a few limitations of the existing databases, foreign or Korean, their employment in Korean research has faced some challenges. First, foreign databases are limited in the number and types of Asian facial emotion expressions they contain. For example, the CAS-PEAL-R1 does not include all six basic emotions and its stimuli are in black-and-white, resulting in its limited usage. The NimStim set includes only six Asian models out of its 43 models, and MSFDE contains eight Asian models out of its 24 models. Although the JACFEE database includes 28 pictures of 14 Asian models, which is half of its total number of stimuli, the pictures are only presented in black-and-white, again limiting its utilization. In addition, JAFEE consists only of female models, which may not be suitable for use across genders (Thayer and Johnsen, 2000). Previous studies have shown that people are more likely to remember the faces of their own race than those of other races (O’Toole et al., 1994; Walker and Tanaka, 2003) and to recognize emotions of the same race with higher accuracy (Kilbride and Yarczower, 1983; Markham and Wang, 1996). These findings suggest that performance in face perception is influenced by the race of the models in the stimuli, and therefore that face perception research should use models of the same race as the raters.

Second, the existing Korean face databases are either less diverse in the numbers and types of stimuli than foreign databases, which restricts selection for studies, or have not been validated. Validation is an essential process in developing databases, which typically includes measuring the accuracy, intensity, and naturalness of the stimuli (Tottenham et al., 2009). Although the Extended ChaeLee is validated, it only includes frontal faces and has a relatively small number (50) of stimuli. Both KUFEC and KUFEC-II consist of a large number of stimuli taken from three different angles (45°, 0°, −45°) with five different viewpoints (front, up, down, left, and right) of two different neutral expressions and six basic emotions of 49 and 57 male and female Korean models. However, only 672 stimuli from KUFEC and 399 from KUFEC-II have been validated, and all are static stimuli, restricting their utilization for a range of studies. KOFEE is also validated for only 176 stimuli, which also limits selection for researchers. Moreover, although KFDB and PF07 include a large number of stimuli (52,000 for KFDB, 64,000 for PF07), they have not been validated either.

Third, despite the dynamic nature of emotional expressivity and its perception in daily social interactions (Sato and Yoshikawa, 2004; Kamachi et al., 2013), most of the existing databases, especially the Korean databases, consist only of static stimuli, which raises the issue of ecological validity (Rhodes et al., 2011; van der Schalk et al., 2011; Kościński, 2013). Indeed, recent studies revealed that study results are affected by the types of stimuli (static or dynamic). For example, some researchers found that facial expressions are perceived more accurately in dynamic than in static stimuli (Ambadar et al., 2005; Trautmann et al., 2009) and that the intensity and naturalness are rated higher in dynamic stimuli (Biele and Grabowska, 2006; Weyers et al., 2006; Cunningham and Wallraven, 2009). Furthermore, raters reported a higher arousal level when presented with dynamic rather than static stimuli (Sato and Yoshikawa, 2007a), and they imitated models’ facial expressions more accurately (Sato and Yoshikawa, 2007b; Sato et al., 2008). In addition, neuroimaging studies have noted that the range of neural networks activated when processing dynamic facial expressions is wider than that for static expressions. Thus, a database that includes both static and dynamic stimuli is called for, and, internationally, several facial stimuli databases that include dynamic stimuli have been developed. Yet, such a database suited to Asian populations has not been developed.

The purpose of this study is to develop a new face database that encompasses both static and dynamic stimuli to compensate for the limitations of the existing face databases for the Asian population.

Materials and Methods

Stimulus

Models

Models were recruited via advertisements posted on online meetup groups for models in both the general and college communities. A total of 74 models (37 males, 37 females, aged from 19 to 40 years) participated in the photo and film shoots. They were informed of the date, time, and location of shooting via text messages, email, or phone calls. On the day of the shooting, the models were provided with a brief description of the research study and procedure, and participated upon consenting.

Stimuli

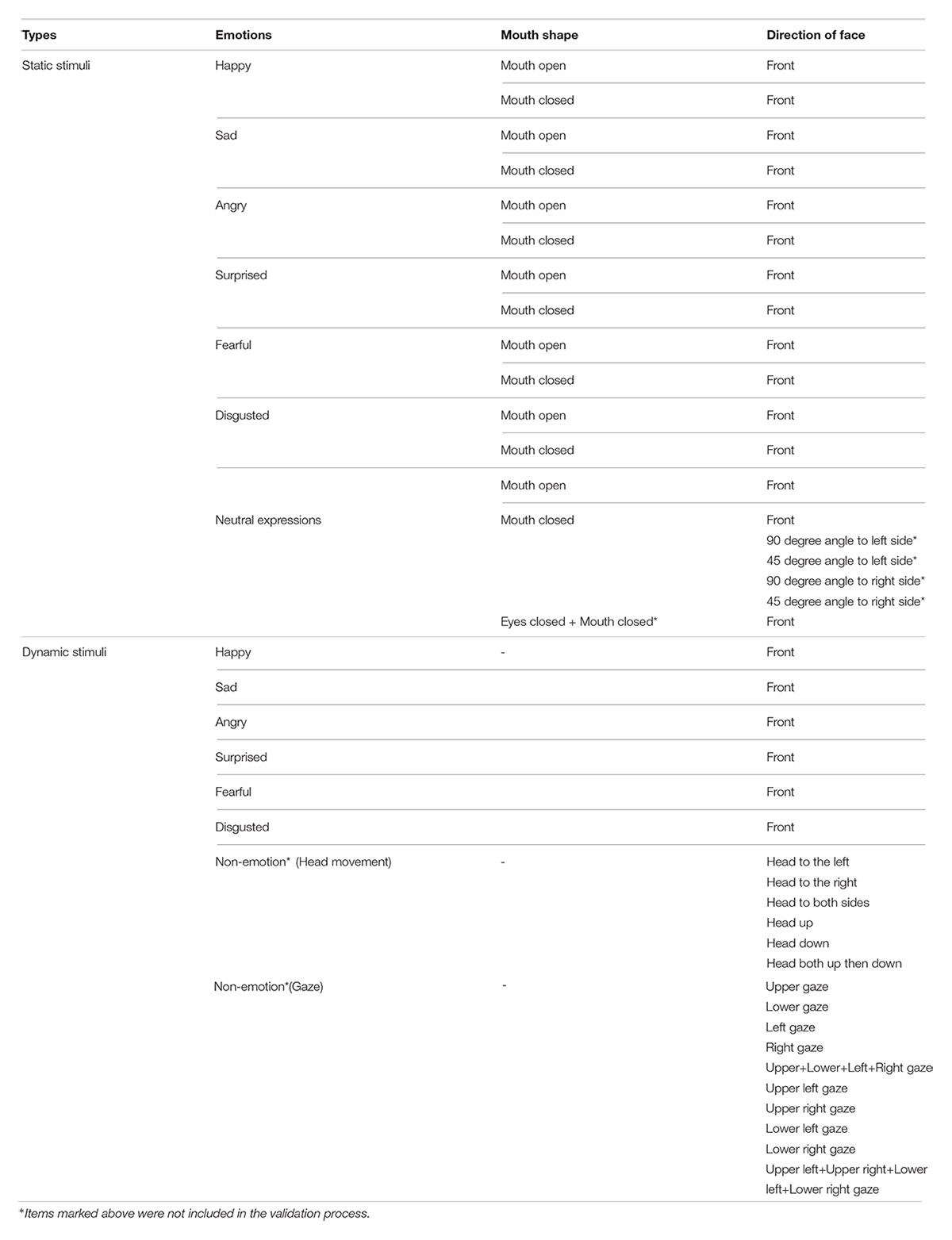

For the Yonsei Face Database (YFace DB), static and dynamic stimuli displaying emotional and neutral faces were obtained. Static stimuli consisted of seven facial emotion expressions in total, including the neutral expression and six basic emotions: happiness, sadness, anger, surprise, fear, and disgust (Ekman et al., 1972). In addition, since the six basic emotions are expressed differently when the mouth is open or closed, each expression was taken in two conditions (mouth open and mouth closed). Neutral expressions with mouth open, mouth closed, and mouth and eyes closed were captured. Moreover, to be compatible with face recognition studies that featured various angles (Royer et al., 2016), the mouth-closed neutral expressions were taken at 45° and 90° angles from both left and right sides in addition to the frontal images and films.

As with the static stimuli, dynamic stimuli (films) featured the six basic emotion expressions (happiness, sadness, anger, surprise, fear, and disgust). The models were instructed to change their facial emotion expression from a neutral state to peak states of each emotion in 4–5 s. Neutral dynamic stimuli included head and gaze movements. The head movements were captured as models moved their heads from the front to the left side at a 90° angle then back to the front, and to the right side at a 90° angle and then back to the front. Each 90° angle movement took approximately 4–5 s, constituting 15–20 s for the whole movement. Gaze movements were filmed as models moved their eyes in vertical, horizontal, and diagonal directions and then back to the front. It took approximately 1–2 s to move the eyes to one direction and then revert to the front. In total, it took approximately 5–10 s for each film clip. Afterward, the head and gaze movements were edited for each direction to create the final sets of stimuli.

Procedures

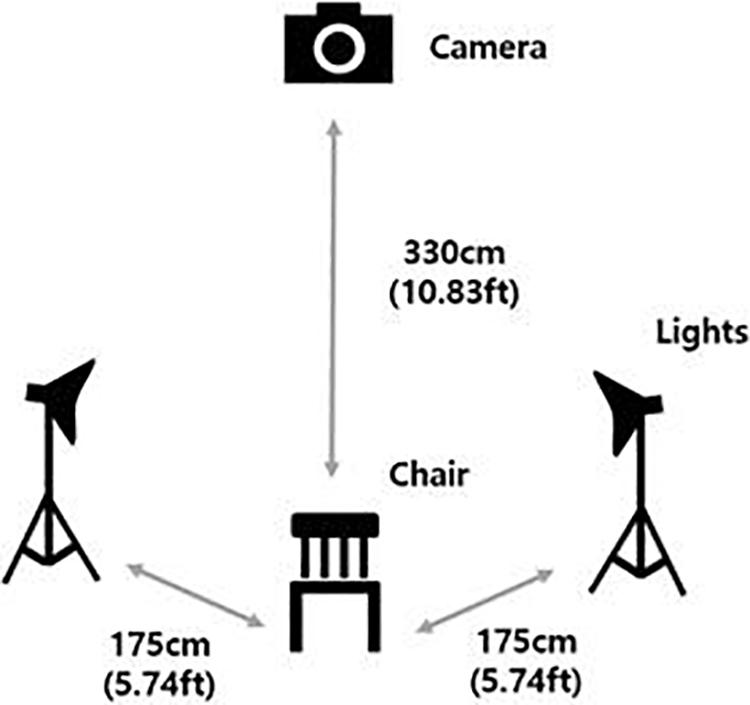

The photo and film shoots took place in a video production room at a university library located in Seoul. The models were instructed to sit in a chair in front of a white background screen. A Canon EOS 5D Mark II camera equipped with a Canon EF 70–200 mm lens was used, along with two PROSPOT DHL-1K standing lights. The distance between the chair and the camera was approximately 330 cm (10.83 ft), and the distance between the chair and each light was approximately 175 cm (5.74 ft). Figure 1 shows the setup of the photo shoot.

First, the models were provided with information about the purpose and details of the shooting. After they had consented to the procedure, models were asked to change into black T-shirts and remove any confounding features, such as beards, mustaches, necklaces, glasses, makeup, or bangs. Before the shooting, models were given a list of items to be pictured and filmed, with corresponding photo samples of each item to practice. Instructions were provided on the detailed facial muscle action units for the facial emotion expressions, as described in the Facial Action Coding System of Ekman et al. (2002). Then photos for each emotion expression were taken in the order on the list while models were instructed to make the facial emotion expression in front of the camera as naturally as possible. Models were also asked to make their expressions as intense as possible, because some researchers have reported that Koreans tend to express facial emotion with relatively weak intensity (Kim et al., 2011). A professional photographer directed the shooting with the help of a graduate student research assistant majoring in Psychology. They elicited the expressions from the models by describing situations most appropriate for each emotion. Static stimuli for each emotion were taken first, followed by neutral expressions with different head directions. Then, dynamic stimuli for each emotion were filmed, followed by neutral expressions with different head/gaze movements. The photographer took several pictures for each emotion until both the photographer and the research assistant agreed that the model had made the facial expression most congruent with the requested emotion. Table 2 shows a categorized list of all stimuli included in the database.

The Stimuli Selection Procedure

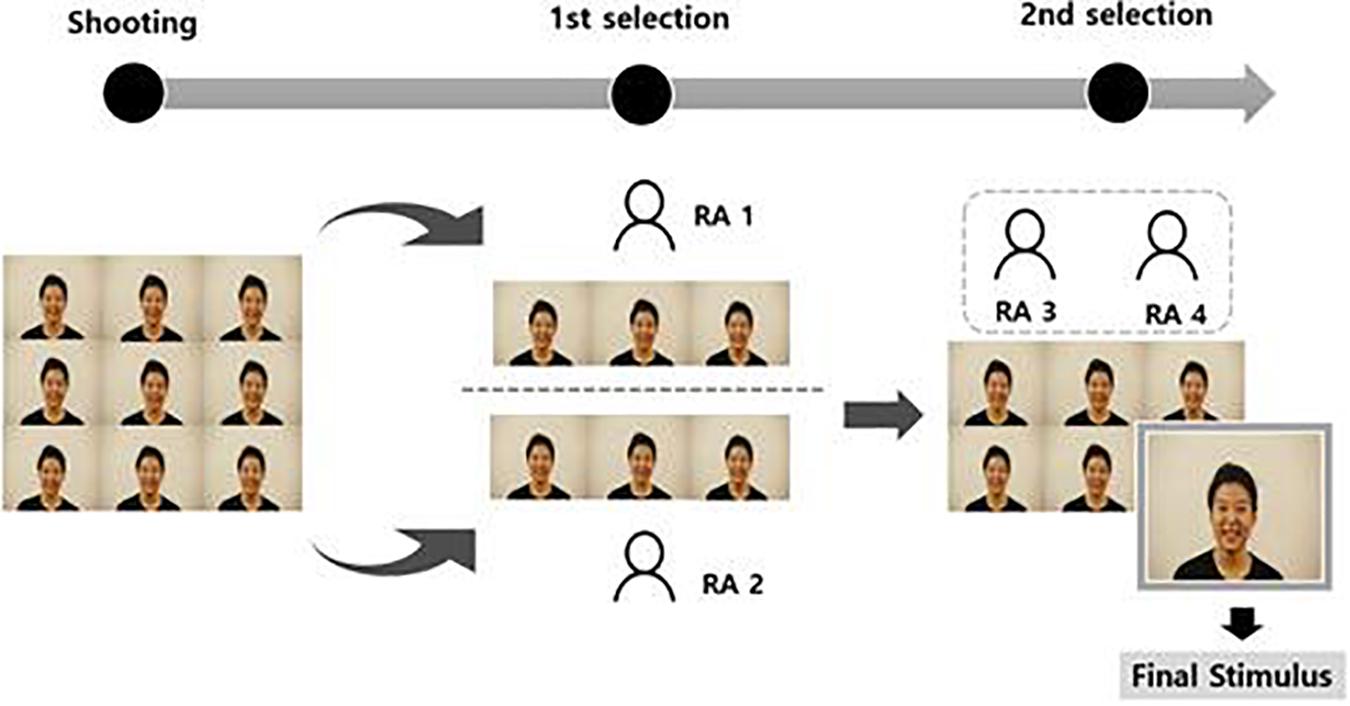

In order to select stimuli for the database, a two-step procedure was used (Figure 2). Two research assistants participated in the first step, and another two assistants participated in the second step. All research assistants received an hour of training on the basic facial emotion expressions (Ekman et al., 2002) from the graduate student who had participated in the shooting, and any questions raised were addressed to minimize misunderstandings.

In the first part of the static stimuli selection procedure, two research assistants selected three static stimuli per facial emotion expression based on the following criteria. First, the stimuli should express each emotion appropriately and with high intensity (Ebner et al., 2010). Second, the stimuli should involve a head or body leaning at a minimal level. Third, the forehead should be well revealed in the stimuli. In the second part of the procedure, another two research assistants selected one stimulus from the three static stimuli per facial expression chosen from the first selection process, using the same criteria.

In the first part of the dynamic stimuli selection procedure, two research assistants selected one or two dynamic stimuli with head/gaze movement per facial expression according to the following criteria. First, the stimuli should express each emotion appropriately with high intensity. Second, the stimuli should involve minimal forward or backward leaning of the head or body (close to a 90° angle). Third, the stimuli should be presented with little body movement except for face turning. In the second part of the procedure, one set of dynamic stimuli for each facial expression with the head/gaze movement was selected according to the following criteria. First, dynamic stimuli should involve as little eye blinking as possible. Second, the stimuli should include the face turning at a 90° angle to both the left and right sides. Third, the head/gaze movements should comply with the speed timeframe (4–5 s to move the head to a 90° angle and 1–2 s to move gaze direction to one side and revert to the front). For this second part, another two research assistants selected one stimulus out of the selected stimuli per facial expression, using the same criteria.

Editing the Stimuli

In the final stage of development, 1,480 stimuli (1,036 static stimuli and 444 dynamic stimuli) were selected from a total of 3,034 stimuli (1,406 static stimuli and 1,628 dynamic stimuli). The hue and size were edited for standardization using either Adobe Photoshop CS6 or Adobe Premiere CS. The static stimuli were adjusted to a resolution of 516 × 3444 and dynamic stimuli to 1920 × 1080. The neutral expressions of static stimuli with different head directions and dynamic stimuli with the head/gaze movements were separated according to the direction of head/gaze movement and saved separately.

Validation Procedure of Stimuli

Participants

A total of 230 undergraduate students recruited from a university in Seoul (101 males, 120 females, aged from 18 to 28) participated in the database validation procedure. The participants were recruited via a recruitment website for research participants at the university in return for two course credits. Nine participants (five females) were excluded from the analysis due to technical errors during the data collection; hence, 221 participants were included in the final analysis. This study was approved by the Institutional Review Board of Yonsei University, Korea (Approval No.: 7001988-201804-HR-140-09).

Materials

A sixth-generation i3 desktop with the Windows 10 operating system and a 22-inch monitor with a resolution of 1920 × 1080 was used in the experiment. PsychoPy 1.84.2 was used to create the computer-based experimental tasks and to record responses.

Stimuli

A total of 1,480 ratable stimuli from the YFace DB were evaluated (1,036 static stimuli of six basic emotions and neutral expressions with mouth open or closed and 444 dynamic stimuli of six basic emotions). Static stimuli with neutral expressions taken from side angles and dynamic stimuli with head/gaze movements, which were not suitable for assessing accuracy, intensity, and naturalness, were excluded from the validation process. The stimuli were resized using Adobe Photoshop CS6 or Adobe Premiere CS to avoid errors in storing data online. As a result, static stimuli of 657 × 438 pixels and dynamic stimuli of 640 × 360 pixels were used for the computerized tasks.
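The stimulus counts above follow from the design and can be checked with a few lines of arithmetic. The sketch below assumes, based on the description of the stimuli, that each of the 74 models contributes one static picture per expression and mouth condition and one film clip per basic emotion; the constant and function names are illustrative and are not part of the database.

```python
# Illustrative arithmetic check of the ratable-stimulus counts reported in the text.
N_MODELS = 74
BASIC_EMOTIONS = ["happiness", "sadness", "anger", "surprise", "fear", "disgust"]
MOUTH_CONDITIONS = ["open", "closed"]


def ratable_counts(n_models=N_MODELS):
    """Return (static, dynamic) counts under the assumed one-item-per-cell design."""
    static_expressions = BASIC_EMOTIONS + ["neutral"]  # neutral is rated in pictures only
    n_static = n_models * len(static_expressions) * len(MOUTH_CONDITIONS)
    n_dynamic = n_models * len(BASIC_EMOTIONS)
    return n_static, n_dynamic


n_static, n_dynamic = ratable_counts()
print(n_static, n_dynamic, n_static + n_dynamic)  # 1036 444 1480
```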

Validation Criteria

Items were evaluated for accuracy, intensity, and naturalness because these properties are the most widely measured criteria in validating a facial emotion expression database. Accuracy, which measures whether raters identify the emotion that a facial expression is intended to represent, is the most foundational indicator for selecting stimuli and has been widely used by researchers in the development and validation of face databases (Tottenham et al., 2009; Ebner et al., 2010). Intensity is the degree to which an individual is influenced by facial stimuli and is known to be one of the most salient aspects of emotion (Sonnemans and Frijda, 1994). Some studies have found a correlation between the intensity and accuracy of an emotion expressed, where high intensity of facial expression is associated with higher accuracy in face perception. This suggests that intensity is also an important factor to be considered when selecting a stimulus (Palermo and Coltheart, 2004; Adolph and Georg, 2010; Hoffmann et al., 2010). The face databases that measure intensity include the Warsaw Set of Emotional Facial Expression Pictures (WSEFEP) database (Olszanowski et al., 2015) and the EU-Emotion Stimulus set (O’Reilly et al., 2016). Naturalness refers to the degree to which a facial stimulus truly reflects the emotion experienced at the moment (Livingstone et al., 2014). In particular, naturalness is highly correlated with ecological validity (Carroll and Russell, 1997; Limbrecht et al., 2012). For example, people perceive natural smiles more positively than awkward smiles, indicating the dependence of facial expression perception on the naturalness of the expression (Miles and Johnston, 2007). One of the face databases that evaluated naturalness is the MPI Facial Expression Database (Kaulard et al., 2012).

To measure accuracy, the raters were asked to choose which label best represented the emotion displayed in each picture and film clip from the six basic emotion expressions and the neutral expression (only the six basic emotion expressions for the clips). To measure intensity and naturalness, a seven-point Likert scale was used, where 1 represented low intensity (or low naturalness/high awkwardness) and 7 represented high intensity (or high naturalness).
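The forced-choice accuracy measure can be illustrated as follows. This is a minimal sketch, assuming that each trial is stored as a pair of the intended emotion and the label chosen by the rater; the function names and the example trials are hypothetical and do not come from the validation data.

```python
from collections import Counter, defaultdict


def score_responses(trials):
    """Tabulate forced-choice responses.

    trials: iterable of (target_emotion, chosen_label) pairs.
    Returns {target: {label: percent of responses}}.
    """
    counts = defaultdict(Counter)
    for target, chosen in trials:
        counts[target][chosen] += 1
    table = {}
    for target, label_counts in counts.items():
        n = sum(label_counts.values())
        table[target] = {label: 100.0 * k / n for label, k in label_counts.items()}
    return table


def accuracy(table, target):
    """Accuracy is the percentage of responses matching the intended emotion."""
    return table[target].get(target, 0.0)


# Made-up trials for illustration only.
trials = [
    ("fear", "fear"), ("fear", "surprise"), ("fear", "surprise"), ("fear", "fear"),
    ("happiness", "happiness"),
]
table = score_responses(trials)
print(accuracy(table, "fear"))       # 50.0
print(table["fear"]["surprise"])     # 50.0
```

The same table also gives the share of each incorrect label per intended emotion, which is the kind of information a confusion table reports.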

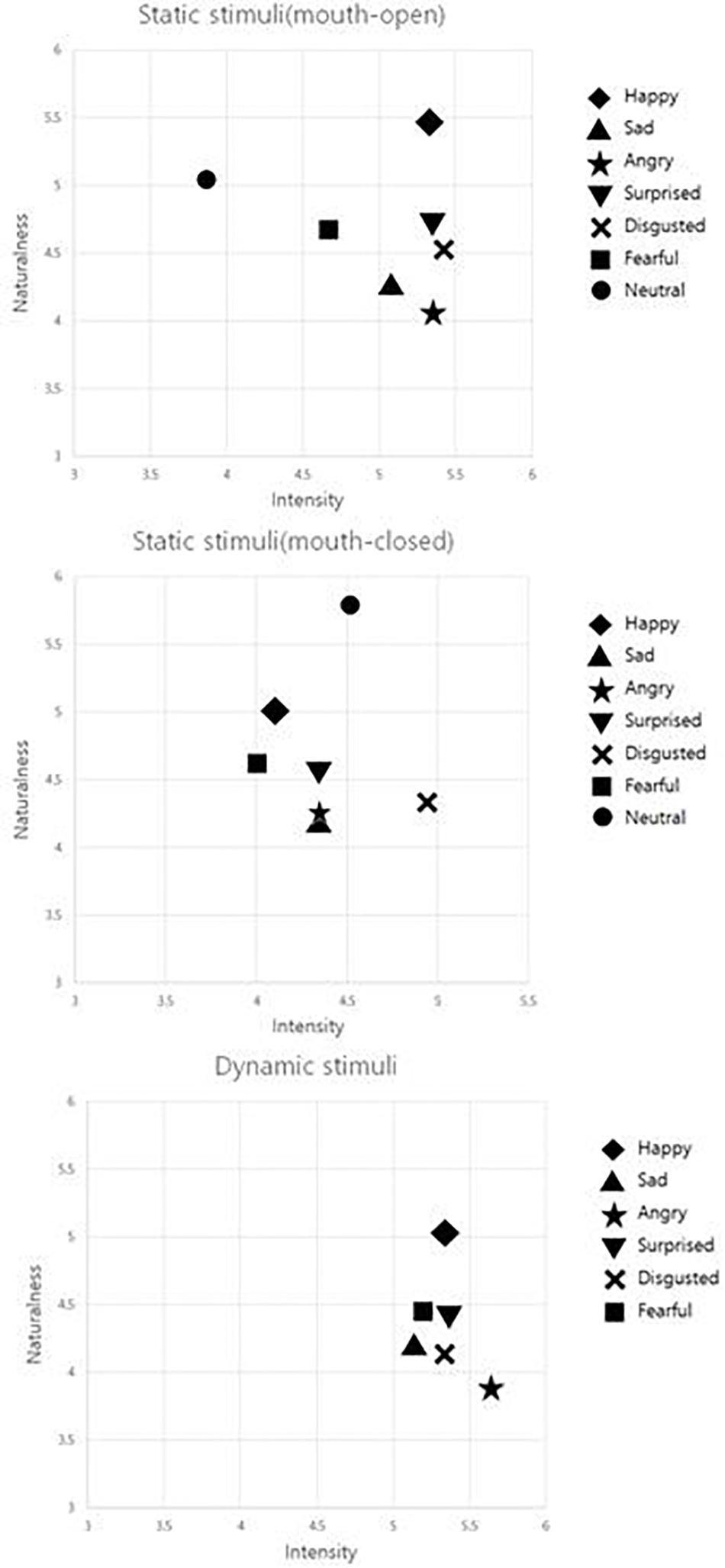

The Procedure

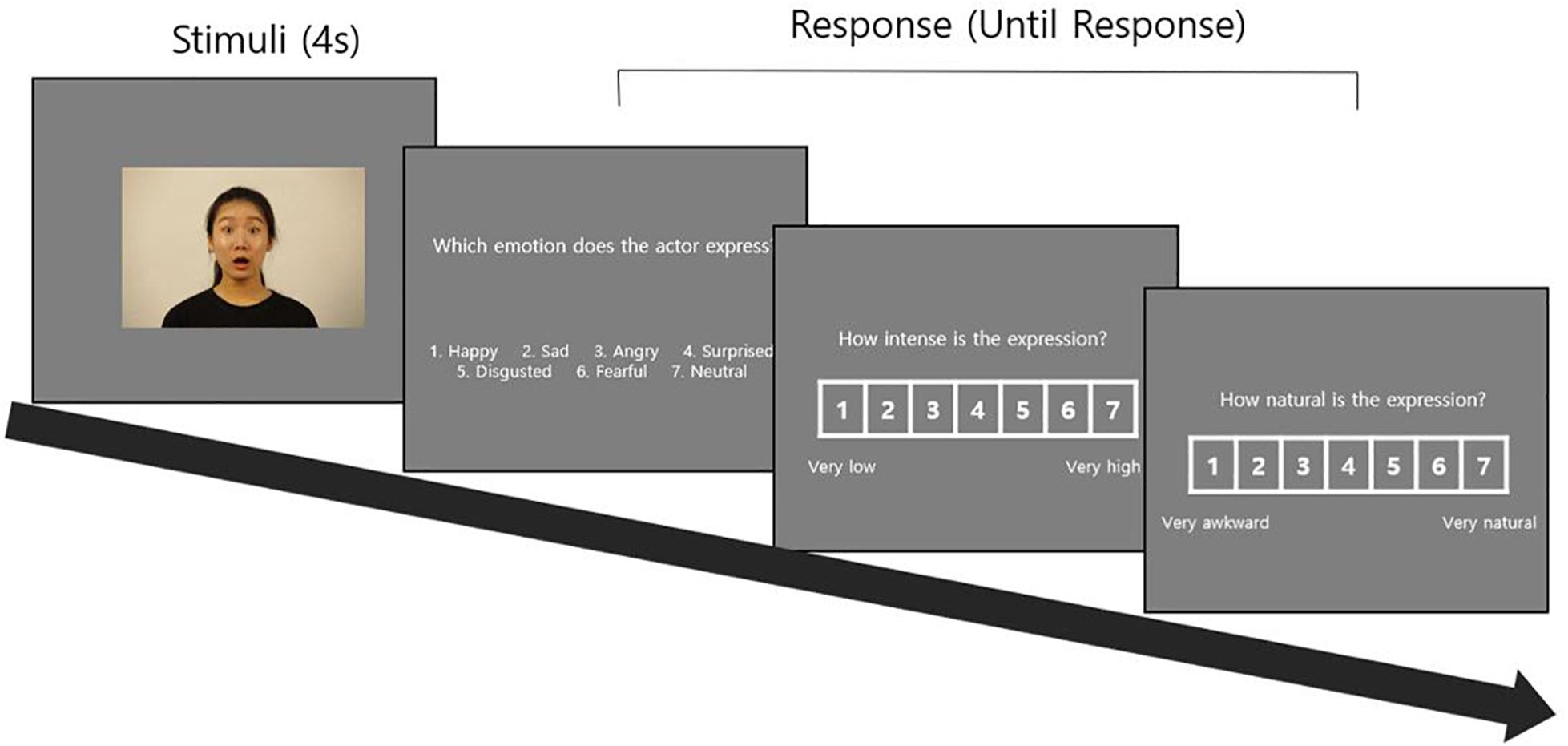

After being informed about the research study, the raters signed the consent form and participated in the procedure described below. To avoid the fatigue effect in raters, the number of stimuli evaluated per person was limited to 10% of the 1,480 stimuli, so that the assessment could be completed within 1 h. The stimuli for the evaluation were therefore separated into ten sets consisting of 148 stimuli per set (a combination of 103 static stimuli and 45 dynamic stimuli or 104 static stimuli and 44 dynamic stimuli). The evaluation process was performed in two blocks, one for static stimuli and the other for dynamic stimuli. Raters were encouraged to take a break between the blocks if desired. To account for the order effect in presenting stimuli, the order of the static and dynamic stimuli blocks was counterbalanced. Static stimuli were presented for 4 s each, followed by a question, “Which emotion does the actor express?” and choices for the six basic emotions and the neutral expression. Subsequently, questions regarding the level of intensity and naturalness were asked, and the raters responded to each question on the seven-point scale. For each question, the raters pressed a number from 1 to 7 on a keyboard to complete the response and then moved on to the next item (Figure 3). The same procedure was applied for the dynamic stimuli evaluation, but only the six basic emotion expressions were given as choices for assessing accuracy. Through this process, raters evaluated all 148 stimuli. Figure 4 shows relative positions of ratings in intensity and naturalness across emotions.
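The composition of the ten rating sets can be reproduced with simple integer arithmetic. The sketch below is one way to obtain sets of 148 items with the reported static/dynamic mixes; the function name is hypothetical, and how individual stimuli were actually assigned to sets is not described in the text.

```python
def plan_rating_sets(n_static=1036, n_dynamic=444, n_sets=10):
    """Return a list of (static, dynamic) sizes, one pair per rating set.

    Static items are spread as evenly as possible; each set is then filled
    with dynamic items up to the common set size.
    """
    total = n_static + n_dynamic
    if total % n_sets != 0:
        raise ValueError("stimuli cannot be split into equal-sized sets")
    per_set = total // n_sets                 # 148 for the reported design
    base, extra = divmod(n_static, n_sets)    # 103 per set, 6 sets get one more
    plan = []
    for i in range(n_sets):
        static = base + (1 if i < extra else 0)
        plan.append((static, per_set - static))
    return plan


plan = plan_rating_sets()
print(plan[0], plan[-1])  # (104, 44) (103, 45)
```

Under this plan six sets contain 104 static and 44 dynamic stimuli and four sets contain 103 static and 45 dynamic stimuli, which sums to 1,036 and 444 items.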

Figure 4. Relative positions of ratings in intensity and naturalness across emotions by stimuli type.

Data Analysis

Descriptive statistics were computed to obtain the means and standard deviations of accuracy, intensity, and naturalness for each stimulus.

A two-way ANOVA (3 × 6) was conducted with accuracy, intensity, and naturalness as dependent variables, and types of stimuli presented (three groups: static stimuli with mouth open or mouth closed, dynamic stimuli) and facial expressions (six emotions: happiness, sadness, anger, surprise, fear, and disgust) as independent variables. In addition, a Bonferroni post hoc analysis was performed for items with significant differences. In order to appraise the difference in the accuracy between the gender of models and the gender of raters, a two-way ANOVA (2 × 2) was conducted. In addition, a total of 14 independent t-tests were administered to examine the difference in the mean accuracy for six facial emotion expressions and neutral expression depending on the gender of models and raters. In this analysis, static stimuli with mouth open and mouth closed were combined.
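For readers unfamiliar with the independent t-tests mentioned above, the following sketch computes a pooled-variance (Student's) t statistic for two groups of binary accuracy scores. Whether equal variances were assumed in the analysis is not stated in the text, so the pooled form is an assumption here, and the function name and example data are illustrative.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(group_a, group_b):
    """Pooled-variance t statistic and degrees of freedom for two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error, df


# Made-up correct (1) / incorrect (0) responses for two rater groups.
t_value, df = independent_t([1, 1, 1, 0], [1, 0, 0, 0])
print(round(t_value, 3), df)  # 1.414 6
```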

Results

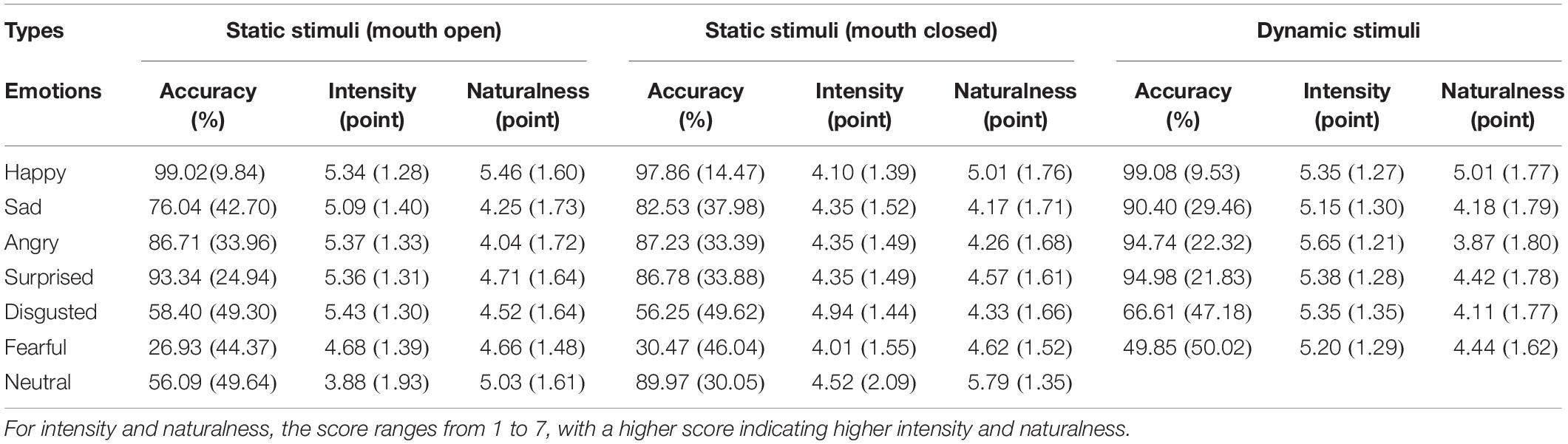

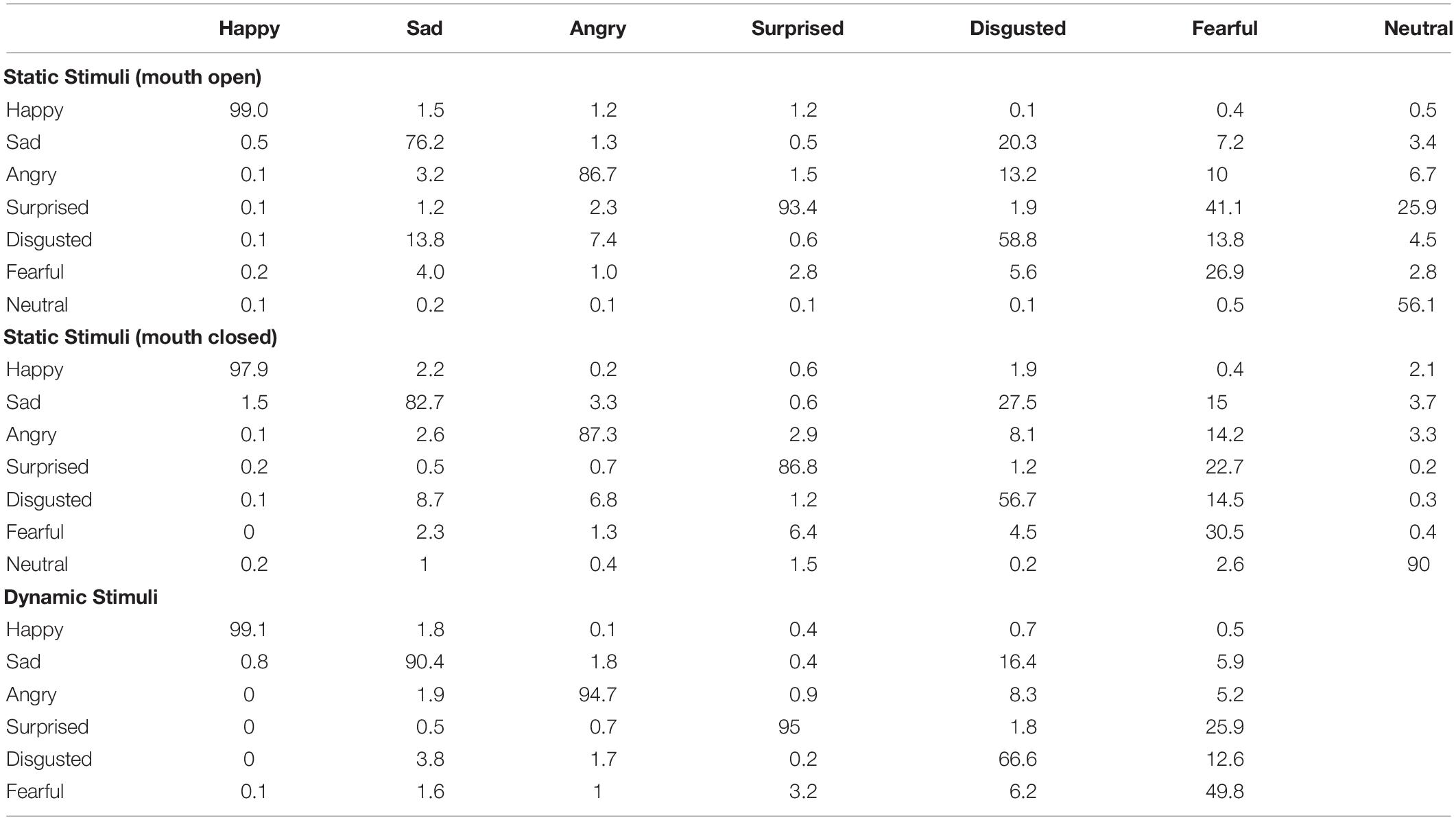

The mean accuracy of the total facial stimuli included in the YFace DB was approximately 76% (SD = 42.60). The mean accuracy was 71% (SD = 45.40) for static stimuli with mouth open, 76% (SD = 42.78) for static stimuli with mouth closed, and 83% (SD = 37.90) for dynamic stimuli. The mean intensity was 5.02 (SD = 1.53) for static stimuli with mouth open, 4.38 (SD = 1.61) for static stimuli with mouth closed, and 5.35 (SD = 1.29) for dynamic stimuli. The mean naturalness was 4.67 (SD = 1.69) for static stimuli with mouth open, 4.68 (SD = 1.70) for static stimuli with mouth closed, and 4.34 (SD = 1.79) for dynamic stimuli. Table 3 shows the average scores for accuracy, intensity, and naturalness according to the type and facial emotion presented. In all types, happiness was rated with the highest accuracy (static stimuli with mouth open: 99.02% [9.84], static stimuli with mouth closed: 97.86% [14.47], dynamic stimuli: 99.08% [9.53]), indicating that the raters clearly distinguished happy facial expressions from other types of facial emotion expression. By contrast, fear was rated with the lowest accuracy of all types (static stimuli with mouth open: 26.93% [44.37], static stimuli with mouth closed: 30.47% [46.04], dynamic stimuli: 49.85% [50.02]), suggesting that the raters were more likely to confuse fearful facial expressions with other facial emotion expressions. Table 4 shows the percentage of each response choice per emotion (%).
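The large standard deviations reported for accuracy are what one would expect if each response is scored as correct (100) or incorrect (0), because the standard deviation of a binary variable with proportion p is sqrt(p(1 - p)). The text does not state the scoring explicitly, so this is an assumption; the sketch below simply compares that formula with the reported values.

```python
from math import sqrt


def binary_sd_percent(accuracy_percent):
    """Population SD (in percentage points) of a 0/100 score with the given mean."""
    p = accuracy_percent / 100.0
    return 100.0 * sqrt(p * (1.0 - p))


# (mean accuracy %, reported SD) pairs taken from the text.
reported = [(99.02, 9.84), (97.86, 14.47), (99.08, 9.53),
            (26.93, 44.37), (30.47, 46.04), (49.85, 50.02)]
for acc, sd in reported:
    print(f"accuracy {acc:5.2f}%  reported SD {sd:5.2f}  binary SD {binary_sd_percent(acc):5.2f}")
```

Small discrepancies are to be expected, since the reported means are rounded and a sample (n - 1) standard deviation may have been used.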

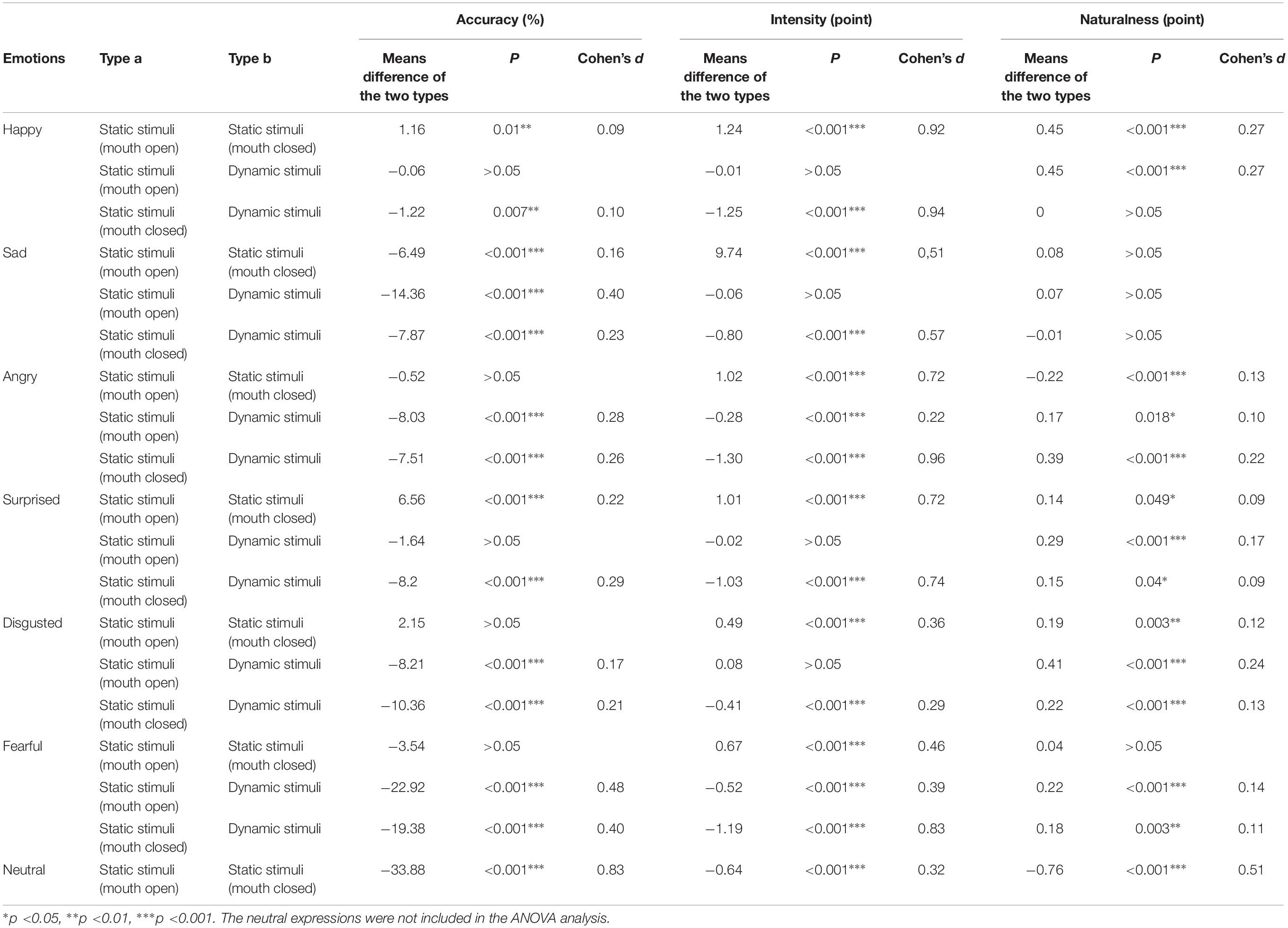

In order to compare accuracy, intensity, and naturalness according to the types of stimuli and emotions, a two-way ANOVA was performed. Results showed a significant interaction effect between the types of stimuli and facial expressions across accuracy, F(10,29420) = 26.80, p < 0.001; intensity, F(10,29420) = 35.47, p < 0.001; and naturalness, F(10,29420) = 8.67, p < 0.001.
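The degrees of freedom reported above can be related to the design with a short calculation. The sketch assumes a fully between-observations 3 × 6 ANOVA in which every individual rating is one observation and neutral stimuli are left out; the text does not describe how repeated ratings by the same rater were handled, so this is only a plausibility check, and the function name is illustrative.

```python
def two_way_anova_df(levels_a, levels_b, n_observations):
    """Degrees of freedom for a two-way between-observations ANOVA with interaction."""
    df_a = levels_a - 1
    df_b = levels_b - 1
    df_interaction = df_a * df_b
    df_error = n_observations - levels_a * levels_b
    return df_a, df_b, df_interaction, df_error


# Number of observations implied by the reported error df of 29,420.
n_implied = 29420 + 3 * 6
print(two_way_anova_df(3, 6, n_implied))  # (2, 5, 10, 29420)

# Rough expectation: 221 raters x 148 stimuli each, minus the neutral share
# (148 of the 1,480 stimuli are neutral pictures: 74 models x 2 mouth conditions).
n_expected = 221 * 148 * (1480 - 148) / 1480
print(n_implied, round(n_expected, 1))
```

The implied and expected counts differ by less than one observation, which is consistent with the interaction term having (3 - 1) × (6 - 1) = 10 degrees of freedom and each rating being treated as one case.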

Accuracy

The Bonferroni post hoc analysis (Table 5) showed that the accuracy of dynamic stimuli was significantly higher than that of the static stimuli, either with mouth open or closed, for all emotions except for the static stimuli of happiness with mouth closed and of surprise with mouth closed.

Of the two types of static stimuli (mouth open and mouth closed), differences in mean levels of accuracy were reported across different emotions. For happiness and surprise facial expressions, static stimuli with mouth open had a higher accuracy than the stimuli with mouth closed. In contrast, anger, disgust, and fear did not show significant differences in accuracy between mouth-open and mouth-closed conditions. Sadness and neutral expressions with mouth closed were more accurate than with mouth open.

Intensity

The Bonferroni post hoc analysis (Table 5) showed that the intensity of anger was significantly higher in the dynamic stimuli than in the static stimuli with mouth closed and mouth open. The intensity of happiness, sadness, surprise, and disgust was higher in the dynamic stimuli than in the static stimuli with mouth closed, but did not differ significantly from the intensity in the static stimuli with mouth open. The neutral facial expression with mouth closed had a significantly higher intensity than that with mouth open.

Naturalness

The Bonferroni post hoc analysis (Table 5) showed that the naturalness of the static stimuli with mouth open for all six emotions except sadness was significantly higher than that of dynamic stimuli. For anger, surprise, disgust, and fear, the static stimuli with mouth closed were rated as more natural than the dynamic stimuli were. There was no significant difference in the naturalness of the sadness expression between the dynamic stimuli and static stimuli. For anger and neutral expressions, static stimuli with mouth closed were rated as more natural than with mouth open.

Differences in Accuracy Depending on Model and Rater Gender

Accuracy differed significantly between male and female raters for some emotion expressions. In static stimuli with mouth open, male raters perceived surprised expressions more accurately than did female raters, t(1634) = 2.18, p = 0.03, Cohen’s d = 0.11, while female raters perceived fearful expressions more accurately than did male raters, t(1628) = −3.79, p < 0.001, Cohen’s d = 0.19. In static stimuli with mouth closed, the only difference was that female raters perceived the fearful expression more accurately than male raters did, t(1629) = −4.19, p < 0.001, Cohen’s d = 0.21. In dynamic stimuli, the surprised expression was perceived more accurately by the male raters, t(1633) = 3.30, p < 0.001, Cohen’s d = 0.16, whereas the fearful expression was perceived more accurately by the female raters, t(1633) = −3.83, p < 0.001, Cohen’s d = 0.19.

Likewise, some emotion expressions were perceived more or less accurately depending on the gender of models. In static stimuli with mouth open, the accuracy for sadness, t(1634) = 4.84, p < 0.001, Cohen’s d = 0.24, and anger, t(1631) = 2.00, p = 0.046, Cohen’s d = 0.10, was higher when the models were male, whereas the accuracy for the disgusted expression was higher when the models were female, t(1630) = −5.69, p < 0.001, Cohen’s d = 0.28. In the static stimuli with mouth closed, fearful expressions were perceived more accurately when the models were female, t(1629) = −3.05, p = 0.002, Cohen’s d = 0.15. In the dynamic stimuli, the fearful expression was perceived more accurately when the models were male, t(1626) = 3.68, p < 0.001, Cohen’s d = 0.18, whereas the accuracy for the disgusted expression was again higher when the models were female, t(1633) = −5.59, p < 0.001, Cohen’s d = 0.28.

However, there was no significant interaction effect between the gender of the models and the raters across all emotions: static stimuli with mouth open F(1,11443) = 1.55, p > 0.05; static stimuli with mouth closed F(1,11444) = 0.44, p > 0.05; dynamic stimuli F(1,9808) = 0.08, p > 0.05.
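
The effect sizes reported for the gender comparisons above are consistent with the common conversion from an independent-samples t statistic, d ≈ 2|t|/√df, which holds when the two groups are of roughly equal size. Whether the effect sizes were actually computed this way is an assumption; the sketch below, with a hypothetical helper `cohens_d_from_t`, only checks that the reported triples agree with that approximation.

```python
import math


def cohens_d_from_t(t: float, df: int) -> float:
    """Approximate Cohen's d from an independent-samples t, assuming equal group sizes."""
    return 2.0 * abs(t) / math.sqrt(df)


# (t, df, reported d) triples copied from the two paragraphs on rater and model gender.
REPORTED_TESTS = [
    (2.18, 1634, 0.11),
    (-3.79, 1628, 0.19),
    (-4.19, 1629, 0.21),
    (3.30, 1633, 0.16),
    (-3.83, 1633, 0.19),
    (4.84, 1634, 0.24),
    (2.00, 1631, 0.10),
    (-5.69, 1630, 0.28),
    (-3.05, 1629, 0.15),
    (3.68, 1626, 0.18),
    (-5.59, 1633, 0.28),
]

for t, df, reported_d in REPORTED_TESTS:
    approx_d = cohens_d_from_t(t, df)
    print(f"t({df}) = {t:+.2f}: approximate d = {approx_d:.2f}, reported d = {reported_d:.2f}")
```

By Cohen's conventional benchmarks, all of these values are small effects (d below 0.3), even though most are statistically significant at the sample sizes involved.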

Discussion

The purpose of this study was to develop a Korean face database comprising both static and dynamic facial emotion expressions to address the limitations of the existing face databases. Six basic emotions and neutral facial expressions were captured in 1480 sets of static and dynamic stimuli, taken from 74 models and rated by 221 participants for the accuracy, intensity, and naturalness of the expressions. Results showed a high level of accuracy, an average of 76%, which is similar to that of other existing databases, and the mean intensity and naturalness of the stimuli were reasonable, at 4.89 and 4.57 out of 7, respectively. This study suggests a wide applicability of the database in research because its diverse array of stimuli, including not only dynamic motion clips but also static stimuli with mouth open and mouth closed, was successfully validated. The implications of the study are as follows.
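
The overall figures quoted above can be approximately recovered from the per-condition means in the Results if each model contributed seven static mouth-open stimuli, seven static mouth-closed stimuli, and six film clips (74 × 20 = 1480). That 7/7/6 split is an assumption inferred from the totals, not a breakdown stated in this section, and the names in the sketch are hypothetical; the code only checks the arithmetic.

```python
# Assumed number of stimuli per model in each condition (7 + 7 + 6 = 20).
WEIGHTS = {"static_open": 7, "static_closed": 7, "dynamic": 6}

# Per-condition means as reported in the Results.
CONDITION_MEANS = {
    "accuracy": {"static_open": 71.0, "static_closed": 76.0, "dynamic": 83.0},
    "intensity": {"static_open": 5.02, "static_closed": 4.38, "dynamic": 5.35},
    "naturalness": {"static_open": 4.67, "static_closed": 4.68, "dynamic": 4.34},
}


def weighted_mean(means: dict, weights: dict) -> float:
    """Mean across conditions, weighted by the number of stimuli in each."""
    total_weight = sum(weights.values())
    return sum(means[cond] * weights[cond] for cond in weights) / total_weight


OVERALL = {
    measure: weighted_mean(means, WEIGHTS)
    for measure, means in CONDITION_MEANS.items()
}

for measure, value in OVERALL.items():
    print(f"overall {measure}: {value:.3f}")
```

Under this weighting, the three results fall within rounding distance of the reported 76%, 4.89, and 4.57.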

First, it appears that the accuracy of perceiving facial expression varies across emotions. For example, while the highest accuracy was reported in perceiving happy facial expressions, the lowest accuracy was observed in perceiving fearful facial expressions, regardless of the stimuli type presented. These results are similar to those of previous studies that used the existing domestic face databases (Extended ChaeLee: happy 95.5%, disgusted 69.1%, and fearful 49%; KUFEC-II: happy 97.11%, disgusted 63.46%, and fearful 49.76%). Although the validated foreign face databases reported a higher accuracy in perceiving disgust and fear than the domestic face databases did, the accuracy for these two facial expressions is still relatively lower than that for other facial emotion expressions (JACFEE: happy 98.13%, disgusted 76.15%, and fearful 66.73%; NimStim set: happy 92%, disgusted 80%, and fearful 60%; Radboud Faces Database (RaFD): happy 98%, disgusted 80%, and fearful 81%). Thus, it can be inferred that the low accuracy of disgust and fear may not be a unique feature of the current face database but a common issue arising from differences in face recognition in general. In particular, analyses of the incorrect responses for each emotion have shown that raters tend to confuse disgust with sadness and fear with surprise. In regard to this confusion, Biehl et al. (1997) offered several explanations. First, the morphological similarity between facial emotion expressions could influence the ability to distinguish fear from surprise expressions. For example, fearful and surprised expressions share some features, such as raised eyebrows, dilated pupils, and an opened mouth (Ekman et al., 2002). Second, individual differences in the frequency of exposure to each facial emotion expression in everyday life could affect the results.
For example, when interacting with other people in social settings, sad expressions are more frequently observed than disgusted expressions, and surprised expressions are more commonly encountered than fearful expressions. It could be assumed that, given the limited information available, people are more likely to confuse ambiguous facial emotion expressions with expressions that they are more familiar with. Third, since disgusted and fearful facial expressions use more facial muscles than other types of facial expressions do and require expressional elements that are more complicated, models may not have been able to express such emotions accurately, or raters may have had difficulty interpreting them correctly. In addition, cultural differences in face recognition may have an important impact on the results of this study. Previous studies have examined the cultural differences in perceiving facial expressions and found that Asians showed significantly lower recognition accuracy rates for fearful and disgusted expressions than did Western people when the same facial emotion expressions were presented (Ekman et al., 1987; Huang et al., 2001; Beaupré and Hess, 2005). Relatedly, given the relative difficulty that Asians have with facial recognition for fear and disgust, the validation of the current face database may have been undermined.

Second, significant differences in accuracy, intensity, and naturalness across emotions and types of stimuli suggest the need for careful selection of facial stimuli depending on the purpose of the study. For example, the accuracy of dynamic stimuli was higher than that of static stimuli for most facial emotion expressions. This is consistent with previous studies showing that dynamic stimuli are better tools for perceiving facial expressions accurately than static stimuli are (Ambadar et al., 2005; Trautmann et al., 2009). Unlike static stimuli, in which only one moment of an expression is presented, dynamic stimuli provide more information about how facial expressions gradually change over time (Krumhuber et al., 2013). In addition, even within the static stimuli, there was a difference in accuracy, depending on the shape of the mouth, in some facial emotion expressions. In the case of happy and surprised expressions, the accuracy was higher with the mouth open than with the mouth closed. For angry, disgusted, and fearful expressions, however, no difference was found in the accuracy between open mouth and closed mouth stimuli. These results are different from those found in the NimStim study (Tottenham et al., 2009), which showed higher accuracy for sad expressions with closed mouth stimuli and higher accuracy for happy, angry, and fearful expressions with open mouth stimuli. Happy and surprised facial expressions, which were rated more accurately with open mouth stimuli in the current study, were categorized as positive or neutral expressions, in contrast to angry, disgusted, and fearful facial expressions (Kim et al., 2011). One interpretation is that, for Asians, the effect of mouth shape is weaker on negative expressions than on positive or neutral expressions, since the intensity of static stimuli with mouth open is higher overall than that of those with mouth closed.
In previous studies, angry facial expressions with high intensity were perceived more accurately by Western people than by Asians, but there was no difference in accuracy between these two groups when an angry facial expression was presented with low intensity (Matsumoto, 1992; Biehl et al., 1997; Beaupré and Hess, 2005; Bourgeois et al., 2005). Further research should be conducted to test this assumption by examining differences in facial emotion expression recognition between Asian and Western cultures.

By contrast, there was no significant difference between static stimuli and dynamic stimuli in terms of intensity. However, it is notable that both accuracy and intensity were significantly higher for angry and fearful expressions in dynamic stimuli than in the static stimuli. Moreover, the degree of additional information provided by motion clips for recognizing emotion expression varies by the type of emotion (Nusseck et al., 2008; Cunningham and Wallraven, 2009). The current study suggests that dynamic stimuli provide relatively more information for recognizing angry and fearful expressions than for other types of facial emotion expression. For fearful facial expressions in particular, accuracy with static stimuli was only about 27–30%, whereas accuracy with dynamic stimuli was approximately 50%. Although an accuracy of 50% is not high, given the remarkably low accuracy for recognizing fearful facial expressions in general, using dynamic rather than static stimuli for fearful expressions is highly recommended in order to obtain reliable results in accordance with the research purposes.

As for naturalness, happy, surprised, disgusted, and fearful facial expressions were rated as more natural in static stimuli with mouth open than in dynamic stimuli. Databases containing dynamic facial stimuli tend to adopt one of two shooting methods (Scherer and Bänziger, 2010; Krumhuber et al., 2017). The first method is to provide specific instructions for creating facial emotion expressions. This method has the advantage of reducing variability in an actor’s facial emotion expression, making facial recognition easier, and enhancing the consistency across stimuli, but it has the disadvantage of reducing the naturalness of the stimuli. The second method involves shooting facial expressions that occur in a more natural situation, without giving any additional instructions. The advantage of this method is that the stimuli produced are perceived as more natural because they resemble the facial emotion expressions observed in daily life. However, the consistency among facial expressions is compromised due to the difficulty in controlling them. This study adopted the first method, specifying instructions for each emotion when models were asked to make facial emotion expressions that changed from neutral to peak states of an emotion and controlling for any variability other than facial expression. Thus, the dynamic stimuli comprised footage of unnatural emotionality that is not easily observed in everyday life. It may be necessary to find a better way of incorporating the naturalness of facial expressions that reflect everyday interaction when developing new face databases in the future.

For the neutral expression, scores on all three evaluative criteria (accuracy, intensity, and naturalness) were higher for static stimuli with mouth closed than with mouth open. This may be because the expression that is generally perceived as neutral is one with the mouth closed. In addition, analysis of the incorrect responses showed that the neutral expression with mouth open was confused with a surprised expression of weak intensity. Therefore, it is more appropriate to use static stimuli with mouth closed when the neutral expression is used as a stimulus in experiments.

Finally, although there was no significant interaction between the gender of the models and the gender of the raters on the accuracy of the total facial emotion expression in each stimulus, there was a significant difference in accuracy for some facial emotion expressions according to the gender of models or the raters. The significant gender difference for the models means that certain expressions were more accurately expressed by one gender of model. Considering these results, if stimuli of only one gender are used in an experiment, it may be appropriate to use the stimuli of the gender reported with higher accuracy according to each expression. Moreover, there was a difference in recognition accuracy according to the gender of the raters. Regardless of the type, the fearful expression was more accurately perceived by female raters, while the surprised expression was perceived more accurately by males. These results may be due to the tendency for females to be more aware of subtle and complex emotions than males are (Hoffmann et al., 2010). As we have seen above, fear is a more complex emotion to express than other types of facial emotion; thus, females may perceive fearful facial expressions better than males do. In a study comparing facial expressions between genders, it was shown that females perceived fearful facial expressions more accurately than males did (Mandal and Palchoudhury, 1985; Nowicki and Hartigan, 1988). On the other hand, the finding that females were less accurate in recognizing surprised expressions than males were suggests that females were biased toward fearful expressions in perceiving the emotion of surprise. However, there is limited research on the mechanism behind differences in perceiving surprised or fearful expressions between genders. 
Some neuropsychological studies have observed that females show a higher activation in the left amygdala than males when seeing fearful expressions (Thomas et al., 2001; Williams et al., 2006; Kempton et al., 2009). In this regard, it can be assumed that females are more sensitive in perceiving emotions associated with fear. On the basis of the results of this study, further research may shed light on the underlying mechanisms behind the gender differences observed in perceiving different types of emotion expressions.

The limitations of this study are as follows. First, because both models and raters in this study were in their 20s or 30s, the generalizability of the study results may be limited. Previous studies have noted an own-age bias, whereby people perform better in identity recognition (Wright and Stroud, 2002; Lamont et al., 2005; Anastasi and Rhodes, 2006) as well as emotion recognition (Ebner and Johnson, 2010; Ebner et al., 2011) for faces of their own age group than for those of other age groups, suggesting the need to match face stimuli to the age group of participants in experiments. For this reason, face databases including models from various age groups, ranging from children to the elderly, have been developed recently (Ebner et al., 2010; Egger et al., 2011; Dalrymple et al., 2013). In particular, the validation study of the FACES database (Ebner et al., 2010) showed that the stimuli of each age group were perceived differently across all age groups. However, since there are only a few databases in Korea that include a variety of age groups, it will be meaningful to recruit models and raters representing a wide range of age groups when developing a new face database in the future.

Second, in this study, the forced choice method was used to measure the accuracy of the stimuli in the validation procedure. This method seems to increase rates of incorrect responses for the facial recognition of the database overall by allowing the participant to select a response even when the stimulus is not clearly recognized. In some of the existing databases, a “none of above” or “other” option was added for the raters to choose if the presented facial expression was not clearly applicable to any of the options listed (Tottenham et al., 2009; Langner et al., 2010). In a recently developed database, several facial expressions were presented on a continuous scale so that the participants were freed from the pressure of selecting a single categorical response when perceiving facial expressions (Olszanowski et al., 2015). Alternatively, a free-labeling method (Widen and Russell, 2010), which increases raters’ self-reflection on emotional stimuli, could be considered as a possible approach for measuring facial emotion expressions.

Using the methods suggested above to evaluate stimuli in the future development and validation of new databases may provide more detailed and accurate information for each stimulus. Third, in this study, the confounding features of the models, such as bangs, beards, dyed hair, and makeup, were removed to improve the consistency of the stimuli. However, in everyday life, it is common and natural to encounter such features. This database may be useful for experiments that require consistent stimuli, but it may be less useful for experiments that prefer naturalistic stimuli. In order to increase the ecological validity of the stimuli, an alternative approach may be to shoot photos and films without removing these features. Finally, the YFace DB includes only Korean models, which could raise concerns regarding its use with other Asian ethnic groups or generalization of its results across cultures. Considering the possibility of subtle variation in codifying and expressing emotions in different cultures (Marsh et al., 2003; Elfenbein et al., 2007), further investigation of face recognition among other Asian ethnic groups using the YFace DB is warranted.

The Yonsei Face DB is the first Asian face database to include static and dynamic stimuli for facial emotion expressions, providing abundant information for validation to help researchers select appropriate stimuli. It is expected that this database will be utilized in various fields related to face research and contribute to the development of related fields.

Data Availability Statement

We confirmed that our data/materials are available upon request on our website (http://yonseipsye.dothome.co.kr/?p=758 or http://yonsei.au1.qualtrics.com/jfe/form/SV_a5WpsFtN4oaYPAh).

Ethics Statement

The studies involving human participants were reviewed and approved by Yonsei University Institutional Review Board (Approval No.: 7001988-201804-HR-140-09). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

K-MC and WJ developed the theoretical framework and designed the experiments. K-MC directed the project. SK performed the experiments and analyzed the data. YK aided in interpreting the results and worked on the manuscript. K-MC, SK, and YK wrote the article.

Funding

This study was supported by the Ministry of Science and ICT of the Republic of Korea and the National Research Foundation of Korea [NRF-2017M3C4A7083533].

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adolph, D., and Alpers, G. W. (2010). Valence and arousal: a comparison of two sets of emotional facial expressions. Am. J. Psychol. 123, 209–219.

Ambadar, Z., Schooler, J. W., and Cohn, J. F. (2005). Deciphering the enigmatic face: the importance of facial dynamics in interpreting subtle facial expressions. Psychol. Sci. 16, 403–410. doi: 10.1111/j.0956-7976.2005.01548.x

Anastasi, J. S., and Rhodes, M. G. (2006). Evidence for an own-age bias in face recognition. North Am. J. Psychol. 8, 237–252.

Beaupré, M. G., Cheung, N., and Hess, U. (2000). The Montreal Set of Facial Displays of Emotion [Slides]. Montreal: University of Quebec at Montreal.

Beaupré, M. G., and Hess, U. (2005). Cross-cultural emotion recognition among Canadian ethnic groups. J. Cross Cult. Psychol. 36, 355–370. doi: 10.1177/0022022104273656

Becker, D. V., Kenrick, D. T., Neuberg, S. L., Blackwell, K. C., and Smith, D. M. (2007). The confounded nature of angry men and happy women. J. Personal. Soc. Psychol. 92, 179–190. doi: 10.1037/0022-3514.92.2.179

Behrmann, M., Richler, J. J., Avidan, G., and Kimchi, R. (2015). “Holistic face perception,” in The Oxford Handbook of Perceptual Organization, ed. J. Wagemans, (Oxford: Oxford Library of Psychology), 758–774.

Biehl, M., Matsumoto, D., Ekman, P., Hearn, V., Heider, K., Kudoh, T., et al. (1997). Matsumoto and Ekman’s Japanese and Caucasian facial expressions of emotion (JACFEE): reliability data and cross-national differences. J. Nonverbal Behav. 21, 3–21.

Biele, C., and Grabowska, A. (2006). Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp. Brain Res. 171, 1–6. doi: 10.1007/s00221-005-0254-0

Bourgeois, P., Herrera, P., and Hess, U. (2005). Impact of Cultural Factors on the Judgements of Emotional Facial Expressions. Montreal: University of Quebec at Montreal.

Calder, A., Rhodes, G., Johnson, M., and Haxby, J. V. (2011). Oxford Handbook of Face Perception. Oxford: Oxford University Press.

Carroll, J. M., and Russell, J. A. (1997). Facial expressions in Hollywood’s portrayal of emotion. J. Personal. Soc. Psychol. 72, 164–176.

Chen, L. F., and Yen, Y. S. (2007). Taiwanese Facial Expression Image Database. Brain Mapping Laboratory, Institute of Brain Science. Taipei: National Yang-Ming University.

Cunningham, D. W., and Wallraven, C. (2009). Dynamic information for the recognition of conversational expressions. J. Vis. 9, 1–77. doi: 10.1167/9.13.7

Dalrymple, K. A., Gomez, J., and Duchaine, B. (2013). The Dartmouth database of children’s faces: acquisition and validation of a new face stimulus set. PloS One 8:e79131. doi: 10.1371/journal.pone.0079131

Dawson, G., Webb, S. J., and McPartland, J. (2005). Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Dev. Neuropsychol. 27, 403–424. doi: 10.1207/s15326942dn2703_6

Ebner, N. C., He, Y. I., and Johnson, M. K. (2011). Age and emotion affect how we look at a face: visual scan patterns differ for own-age versus other-age emotional faces. Cogn. Emot. 25, 983–997. doi: 10.1080/02699931.2010.540817

Ebner, N. C., and Johnson, M. K. (2009). Young and older emotional faces: are there age group differences in expression identification and memory? Emotion 9, 329–339. doi: 10.1037/a0015179

Ebner, N. C., and Johnson, M. K. (2010). Age-group differences in interference from young and older emotional faces. Cogn. Emot. 24, 1095–1116. doi: 10.1080/02699930903128395

Ebner, N. C., Riediger, M., and Lindenberger, U. (2010). FACES—A database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362. doi: 10.3758/BRM.42.1.351

Egger, H. L., Pine, D. S., Nelson, E., Leibenluft, E., Ernst, M., Towbin, K. E., et al. (2011). The NIMH child emotional faces picture set (NIMH−ChEFS): a new set of children’s facial emotion stimuli. Int. J. Methods Psychiatric Res. 20, 145–156. doi: 10.1002/mpr.343

Ekman, P., and Friesen, W. V. (1976). Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press.

Ekman, P., Friesen, W. V., and Ellsworth, P. (1972). Emotion in the Human Face: Guidelines for Research and an Integration of Findings. New York, NY: Pergamon.

Ekman, P., Friesen, W. V., and Hager, J. (2002). Facial Action Coding System. Manual and Investigators Guide. Salt Lake City, UT: Research Nexus.

Ekman, P., Friesen, W. V., O’Sullivan, M., Chan, A., Diacoyanni-Tarlatzis, I., Heider, K., et al. (1987). Universals and cultural differences in the judgments of facial expressions of emotion. J. Personal. Soc. Psychol. 53, 712–717. doi: 10.1037//0022-3514.53.4.712

Elfenbein, H. A., Beaupré, M., Lévesque, M., and Hess, U. (2007). Toward a dialect theory: cultural differences in the expression and recognition of posed facial expressions. Emotion 7, 131–146. doi: 10.1037/1528-3542.7.1.131

Gao, W., Cao, B., Shan, S., Chen, X., Zhou, D., Zhang, X., et al. (2007). The CAS-PEAL large-scale Chinese face database and baseline evaluations. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 38, 149–161. doi: 10.1109/tsmca.2007.909557

Garrido, M. V., Lopes, D., Prada, M., Rodrigues, D., Jerónimo, R., and Mourão, R. P. (2017). The many faces of a face: comparing stills and videos of facial expressions in eight dimensions (SAVE database). Behav. Res. Methods 49, 1343–1360. doi: 10.3758/s13428-016-0790-5

Golarai, G., Ghahremani, D. G., Whitfield-Gabrieli, S., Reiss, A., Eberhardt, J. L., Gabrieli, J. D., et al. (2007). Differential development of high-level visual cortex correlates with category-specific recognition memory. Nat. Neurosci. 10, 512–522. doi: 10.1038/nn1865

Gwinn, J. D., Barden, J., and Judd, C. M. (2015). Face recognition in the presence of angry expressions: a target-race effect rather than a cross-race effect. J. Exp. Soc. Psychol. 58, 1–10. doi: 10.1016/j.jesp.2014.12.001

Hankin, B. L., Gibb, B. E., Abela, J. R., and Flory, K. (2010). Selective attention to affective stimuli and clinical depression among youths: role of anxiety and specificity of emotion. J. Abnorm. Psychol. 119, 491–501. doi: 10.1037/a0019609

Harms, M. B., Martin, A., and Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol. Rev. 20, 290–322. doi: 10.1007/s11065-010-9138-6

Harris, A., and Aguirre, G. K. (2010). Neural tuning for face wholes and parts in human fusiform gyrus revealed by FMRI adaptation. J. Neurophysiol. 104, 336–345. doi: 10.1152/jn.00626.2009

Hess, U., Adams, R. B., and Kleck, R. E. (2004). Facial appearance, gender, and emotion expression. Emotion 4, 378–388. doi: 10.1037/1528-3542.4.4.378

Hoffmann, H., Kessler, H., Eppel, T., Rukavina, S., and Traue, H. C. (2010). Expression intensity, gender and facial emotion recognition: women recognize only subtle facial emotions better than men. Acta Psychol. 135, 278–283. doi: 10.1016/j.actpsy.2010.07.012

Hooker, C., and Park, S. (2002). Emotion processing and its relationship to social functioning in schizophrenia patients. Psychiatry Res. 112, 41–50. doi: 10.1016/s0165-1781(02)00177-4

Huang, Y., Tang, S., Helmeste, D., Shioiri, T., and Someya, T. (2001). Differential judgement of static facial expressions of emotions in three cultures. Psychiatry Clin. Neurosci. 55, 479–483. doi: 10.1046/j.1440-1819.2001.00893.x

Hwang, B. W., Roh, M. C., and Lee, S. W. (2004). “Performance evaluation of face recognition algorithms on Asian face database,” in Automatic Face and Gesture Recognition Proceedings on 6th IEEE International Conference, (Seoul: IEEE), 278–283.

Ishai, A. (2008). Let’s face it: it’s a cortical network. Neuroimage 40, 415–419. doi: 10.1016/j.neuroimage.2007.10.040

Jang, S. K., Park, S. C., Lee, S. H., Cho, Y. S., and Choi, K. H. (2016). Attention and memory bias to facial emotions underlying negative symptoms of schizophrenia. Cogn. Neuropsychiatry 21, 45–59. doi: 10.1080/13546805.2015.1127222

Johnson, M. H., Grossmann, T., and Farroni, T. (2008). The social cognitive neuroscience of infancy: illuminating the early development of social brain functions. Adv. Child Dev. Behav. 36, 331–372. doi: 10.1016/s0065-2407(08)00008-6

Jung, H. Y., Jin, Y. S., and Chang, M. S. (2015). The characteristics of emotion recognition in elderly with anxiety tendency. Korean J. Dev. Psychol. 28, 91–113.

Kamachi, M., Bruce, V., Mukaida, S., Gyoba, J., Yoshikawa, S., and Akamatsu, S. (2013). Dynamic properties influence the perception of facial expressions. Perception 42, 1266–1278. doi: 10.1068/p3131n

Kaulard, K., Cunningham, D. W., Bülthoff, H. H., and Wallraven, C. (2012). The MPI facial expression database—A validated database of emotional and conversational facial expressions. PloS One 7:e32321. doi: 10.1371/journal.pone.0032321

Kempton, M. J., Haldane, M., Jogia, J., Christodoulou, T., Powell, J., Collier, D., et al. (2009). The effects of gender and COMT Val158Met polymorphism on fearful facial affect recognition: a fMRI study. Int. J. Neuropsychopharmacol. 12, 371–381. doi: 10.1017/S1461145708009395

Kilbride, J. E., and Yarczower, M. (1983). Ethnic bias in the recognition of facial expressions. J. Nonverbal Behav. 8, 27–41. doi: 10.1007/bf00986328

Kim, J. H., and Kim, C. B. (2003). The radial frequency information in the underlying brain mechanisms of the face recognition. Korean J. Exp. Psychol. 15, 561–577.

Kim, J. M., Sul, S. H., and Moon, Y. H. (2013). The impact of perceiving angry and fearful facial expressions on decision making performance in threat situations. Korean J. Cogn. Biol. Psychol. 25, 445–461. doi: 10.22172/cogbio.2013.25.4.004

Kim, M. W., Choi, J. S., and Cho, Y. S. (2011). The Korea University Facial Expression Collection (KUFEC) and semantic differential ratings of emotion. Korean J. Psychol. Gen. 30, 1189–1211.

Kim, S. H., and Kim, M. S. (2016). Event-related potential study of facial affect recognition in college students with schizotypal traits. Korean J. Cogn. Biol. Psychol. 28, 67–97. doi: 10.22172/cogbio.2016.28.1.004

Kim, S. M., Kwon, Y. J., Jung, S. Y., Kim, M. J., Cho, Y. S., Kim, H. T., et al. (2017). Development of the Korean Facial Emotion Stimuli: Korea University facial expression collection 2nd Edition. Front. Psychol. 8:769. doi: 10.3389/fpsyg.2017.00769

Kim, Y. R., Kim, C. H., Park, J. H., Pyo, J., and Treasure, J. (2014). The impact of intranasal oxytocin on attention to social emotional stimuli in patients with anorexia nervosa: a double blind within-subject cross-over experiment. PloS One 9:e90721. doi: 10.1371/journal.pone.0090721

Kleider-Offutt, H. M., Bond, A. D., and Hegerty, S. E. (2017). Black stereotypical features: when a face type can get you in trouble. Curr. Dir. Psychol. Sci. 26, 28–33. doi: 10.1177/0963721416667916

Kohler, C. G., Walker, J. B., Martin, E. A., Healey, K. M., and Moberg, P. J. (2009). Facial emotion perception in schizophrenia: a meta-analytic review. Schizophr. Bull. 36, 1009–1019. doi: 10.1093/schbul/sbn192

Kościński, K. (2013). Perception of facial attractiveness from static and dynamic stimuli. Perception 42, 163–175. doi: 10.1068/p7378

Krumhuber, E. G., Kappas, A., and Manstead, A. S. (2013). Effects of dynamic aspects of facial expressions: a review. Emot. Rev. 5, 41–46. doi: 10.1177/1754073912451349

Krumhuber, E. G., Skora, L., Küster, D., and Fou, L. (2017). A review of dynamic datasets for facial expression research. Emot. Rev. 9, 280–292. doi: 10.1177/1754073916670022

Lamont, A. C., Stewart-Williams, S., and Podd, J. (2005). Face recognition and aging: effects of target age and memory load. Mem. Cogn. 33, 1017–1024. doi: 10.3758/bf03193209

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H., Hawk, S. T., and Van Knippenberg, A. D. (2010). Presentation and validation of the radboud faces database. Cogn. Emot. 24, 1377–1388. doi: 10.1080/02699930903485076

Lee, H. S., Park, S., Kang, B. N., Shin, J., Lee, J. Y., Je, H., et al. (2008). “The POSTECH face database (PF07) and performance evaluation,” in Proceedings of the 8th IEEE International Conference on Automatic Face & Gesture Recognition, (Amsterdam: IEEE), 1–6.

Lee, J., Lee, S., Chun, J. W., Cho, H., Kim, D. J., and Jung, Y. C. (2015). Compromised prefrontal cognitive control over emotional interference in adolescents with internet gaming disorder. Cyberpsychol. Behav. Soc. Netw. 18, 661–668. doi: 10.1089/cyber.2015.0231

Lee, K. U., Kim, J., Yeon, B., Kim, S. H., and Chae, J. H. (2013). Development and standardization of extended ChaeLee Korean facial expressions of emotions. Psychiatry Investig. 10, 155–163. doi: 10.4306/pi.2013.10.2.155

Leopold, D. A., O’Toole, A. J., Vetter, T., and Blanz, V. (2001). Prototype-referenced shape encoding revealed by high-level aftereffects. Nat. Neurosci. 4, 89–94. doi: 10.1038/82947

Levens, S. M., and Gotlib, I. H. (2010). Updating positive and negative stimuli in working memory in depression. J. Exp. Psychol. Gen. 139, 654–664. doi: 10.1037/a0020283

Limbrecht, K., Rukavina, S., Scheck, A., Walter, S., Hoffmann, H., and Traue, H. C. (2012). The influence of naturalness, attractiveness and intensity on facial emotion recognition. Psychol. Res. 2, 166–176.

Livingstone, S. R., Choi, D., and Russo, F. A. (2014). The influence of vocal training and acting experience on measures of voice quality and emotional genuineness. Front. Psychol. 5:156. doi: 10.3389/fpsyg.2014.00156

Lyons, M. J., Akamatsu, S., Kamachi, M., Gyoba, J., and Budynek, J. (1998). “The Japanese female facial expression (JAFFE) database,” in Proceedings of Third International Conference on Automatic Face and Gesture Recognition, (Nara: IEEE), 14–16.

Ma, D. S., Correll, J., and Wittenbrink, B. (2015). The Chicago face database: a free stimulus set of faces and norming data. Behav. Res. Methods 47, 1122–1135. doi: 10.3758/s13428-014-0532-5

Mandal, M. K., and Palchoudhury, S. (1985). Perceptual skill in decoding facial affect. Percept. Mot. Skills 60, 96–98. doi: 10.2466/pms.1985.60.1.96

Markham, R., and Wang, L. (1996). Recognition of emotion by Chinese and Australian children. J. Cross Cult. Psychol. 27, 616–643. doi: 10.1177/0022022196275008

Marsh, A. A., Elfenbein, H. A., and Ambady, N. (2003). Nonverbal “accents”: cultural differences in facial expressions of emotion. Psychol. Sci. 14, 373–376. doi: 10.1111/1467-9280.24461

Matsumoto, D. (1992). American-Japanese cultural differences in the recognition of universal facial expressions. J. Cross Cult. Psychol. 23, 72–84.

Matsumoto, D., Consolacion, T., Yamada, H., Suzuki, R., Franklin, B., Paul, S., et al. (2002). American-Japanese cultural differences in judgements of emotional expressions of different intensities. Cogn. Emot. 16, 721–747. doi: 10.1080/02699930143000608

McKone, E., and Robbins, R. (2011). “Are faces special,” in Oxford Handbook of Face Perception, eds A. Calder, G. Rhodes, M. Johnson, and J. Haxby, (Oxford: Oxford University Press), 149–176.

Miles, L., and Johnston, L. (2007). Detecting happiness: perceiver sensitivity to enjoyment and non-enjoyment smiles. J. Nonverbal Behav. 31, 259–275. doi: 10.1007/s10919-007-0036-4

Monk, C. S., Weng, S. J., Wiggins, J. L., Kurapati, N., Louro, H. M., Carrasco, M., et al. (2010). Neural circuitry of emotional face processing in autism spectrum disorders. J. Psychiatry Neurosci. 35, 105–114. doi: 10.1503/jpn.090085

Norton, D., McBain, R., Holt, D. J., Ongur, D., and Chen, Y. (2009). Association of impaired facial affect recognition with basic facial and visual processing deficits in schizophrenia. Biol. Psychiatry 65, 1094–1098. doi: 10.1016/j.biopsych.2009.01.026

Nowicki, S. Jr., and Hartigan, M. (1988). Accuracy of facial affect recognition as a function of locus of control orientation and anticipated interpersonal interaction. J. Soc. Psychol. 128, 363–372. doi: 10.1080/00224545.1988.9713753

Nusseck, M., Cunningham, D. W., Wallraven, C., and Bülthoff, H. H. (2008). The contribution of different facial regions to the recognition of conversational expressions. J. Vis. 8, 1–23. doi: 10.1167/8.8.1

Oh, S. I., Oh, K. W., Kim, H. J., Park, J. S., and Kim, S. H. (2016). Impaired perception of emotional expression in amyotrophic lateral sclerosis. J. Clin. Neurol. 12, 295–300. doi: 10.3988/jcn.2016.12.3.295

Olszanowski, M., Pochwatko, G., Kuklinski, K., Scibor-Rylski, M., Lewinski, P., and Ohme, R. K. (2015). Warsaw set of emotional facial expression pictures: a validation study of facial display photographs. Front. Psychol. 5:1516. doi: 10.3389/fpsyg.2014.01516

O’Reilly, H., Pigat, D., Fridenson, S., Berggren, S., Tal, S., Golan, O., et al. (2016). The EU-emotion stimulus set: a validation study. Behav. Res. Methods 48, 567–576. doi: 10.3758/s13428-015-0601-4

O’Toole, A. J., Deffenbacher, K. A., Valentin, D., and Abdi, H. (1994). Structural aspects of face recognition and the other-race effect. Mem. Cogn. 22, 208–224. doi: 10.3758/bf03208892

Palermo, R., and Coltheart, M. (2004). Photographs of facial expression: accuracy, response times, and ratings of intensity. Behav. Res. Methods Instrum. Comput. 36, 634–638. doi: 10.3758/bf03206544

Park, H. Y., Yun, J. Y., Shin, N. Y., Kim, S. Y., Jung, W. H., Shin, Y. S., et al. (2016). Decreased neural response for facial emotion processing in subjects with high genetic load for schizophrenia. Progr. Neuro Psychopharmacol. Biol. Psychiatry 71, 90–96. doi: 10.1016/j.pnpbp.2016.06.014

Park, J. Y., Oh, J. M., Kim, S. Y., Lee, M. K., Lee, C. R., Kim, B. R., et al. (2011). Korean facial expressions of emotion (KOFEE). Seoul: Yonsei University College of Medicine.

Passarotti, A. M., Paul, B. M., Bussiere, J. R., Buxton, R. B., Wong, E. C., and Stiles, J. (2003). The development of face and location processing: an fMRI study. Dev. Sci. 6, 100–117. doi: 10.3109/00048674.2010.534069

Pitcher, D., Walsh, V., and Duchaine, B. (2011). The role of the occipital face area in the cortical face perception network. Exp. Brain Res. 209, 481–493. doi: 10.1007/s00221-011-2579-1

Rhodes, G., Lie, H. C., Thevaraja, N., Taylor, L., Iredell, N., Curran, C., et al. (2011). Facial attractiveness ratings from video-clips and static images tell the same story. PLoS One 6:e26653. doi: 10.1371/journal.pone.0026653

Rhodes, G., Robbins, R., Jaquet, E., McKone, E., Jeffery, L., and Clifford, C. W. (2005). “Adaptation and face perception: how aftereffects implicate norm-based coding of faces,” in Fitting the Mind to the World: Adaptation and After-Effects in High-Level Vision, eds C. W. G. Clifford and G. Rhodes, (Oxford: Oxford University Press), 213–240. doi: 10.1093/acprof:oso/9780198529699.003.0009

Richler, J. J., and Gauthier, I. (2014). A meta-analysis and review of holistic face processing. Psychol. Bull. 140, 1281–1302. doi: 10.1037/a0037004

Rivera, A. R., Castillo, J. R., and Chae, O. O. (2012). Local directional number pattern for face analysis: face and expression recognition. IEEE Trans. Image Process. 22, 1740–1752. doi: 10.1109/TIP.2012.2235848

Royer, J., Blais, C., Barnabé-Lortie, V., Carré, M., Leclerc, J., and Fiset, D. (2016). Efficient visual information for unfamiliar face matching despite viewpoint variations: it’s not in the eyes! Vis. Res. 123, 33–40. doi: 10.1016/j.visres.2016.04.004

Sato, W., Fujimura, T., and Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. doi: 10.1016/j.ijpsycho.2008.06.001

Sato, W., and Yoshikawa, S. (2004). BRIEF REPORT: the dynamic aspects of emotional facial expressions. Cogn. Emot. 18, 701–710. doi: 10.1080/02699930341000176

Sato, W., and Yoshikawa, S. (2007a). Enhanced experience of emotional arousal in response to dynamic facial expressions. J. Nonverbal Behav. 31, 119–135. doi: 10.1007/s10919-007-0025-7

Sato, W., and Yoshikawa, S. (2007b). Spontaneous facial mimicry in response to dynamic facial expressions. Cognition 104, 1–18. doi: 10.1016/j.cognition.2006.05.001

Scherer, K. R., and Bänziger, T. (2010). “On the use of actor portrayals in research on emotional expression,” in Blueprint for Affective Computing: A Sourcebook, eds K. R. Scherer, T. Bänziger, and E. B. Roesch, (Oxford: Oxford University Press), 166–176.

Scherf, K. S., Behrmann, M., Humphreys, K., and Luna, B. (2007). Visual category-selectivity for faces, places and objects emerges along different developmental trajectories. Dev. Sci. 10, F15–F30.

Sonnemans, J., and Frijda, N. H. (1994). The structure of subjective emotional intensity. Cogn. Emot. 8, 329–350. doi: 10.1080/02699939408408945

Stein, M. B., Goldin, P. R., Sareen, J., Zorrilla, L. T. E., and Brown, G. G. (2002). Increased amygdala activation to angry and contemptuous faces in generalized social phobia. Arch. Gen. Psychiatry 59, 1027–1034.

Steyvers, M. (1999). Morphing techniques for manipulating face images. Behav. Res. Methods Instrum. Comput. 31, 359–369. doi: 10.3758/bf03207733

Thayer, J., and Johnsen, B. H. (2000). Sex differences in judgement of facial affect: a multivariate analysis of recognition errors. Scand. J. Psychol. 41, 243–246. doi: 10.1111/1467-9450.00193

Thomas, K. M., Drevets, W. C., Whalen, P. J., Eccard, C. H., Dahl, R. E., Ryan, N. D., et al. (2001). Amygdala response to facial expressions in children and adults. Biol. Psychiatry 49, 309–316. doi: 10.1016/s0006-3223(00)01066-0

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., et al. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249. doi: 10.1016/j.psychres.2008.05.006

Trautmann, S. A., Fehr, T., and Herrmann, M. (2009). Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res. 1284, 100–115. doi: 10.1016/j.brainres.2009.05.075

Tsao, D. Y., and Livingstone, M. S. (2008). Mechanisms of face perception. Annu. Rev. Neurosci. 31, 411–437. doi: 10.1146/annurev.neuro.30.051606.094238

van der Schalk, J., Hawk, S. T., Fischer, A. H., and Doosje, B. (2011). Moving faces, looking places: validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion 11, 907–920. doi: 10.1037/a0023853

Voelkle, M. C., Ebner, N. C., Lindenberger, U., and Riediger, M. (2012). Let me guess how old you are: effects of age, gender, and facial expression on perceptions of age. Psychol. Aging 27, 265–277. doi: 10.1037/a0025065

Walker, P. M., and Tanaka, J. W. (2003). An encoding advantage for own-race versus other-race faces. Perception 32, 1117–1125. doi: 10.1068/p5098

Weng, S. J., Carrasco, M., Swartz, J. R., Wiggins, J. L., Kurapati, N., Liberzon, I., et al. (2011). Neural activation to emotional faces in adolescents with autism spectrum disorders. J. Child Psychol. Psychiatry 52, 296–305. doi: 10.1111/j.1469-7610.2010.02317.x

Weyers, P., Mühlberger, A., Hefele, C., and Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology 43, 450–453. doi: 10.1111/j.1469-8986.2006.00451.x

Whalen, P. J., Shin, L. M., McInerney, S. C., Fischer, H., Wright, C. I., and Rauch, S. L. (2001). A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion 1, 70–83. doi: 10.1037//1528-3542.1.1.70

Widen, S. C., and Russell, J. A. (2010). Descriptive and prescriptive definitions of emotion. Emot. Rev. 2, 377–378. doi: 10.1016/j.applanim.2018.01.008

Williams, L. M., Das, P., Liddell, B. J., Kemp, A. H., Rennie, C. J., and Gordon, E. (2006). Mode of functional connectivity in amygdala pathways dissociates level of awareness for signals of fear. J. Neurosci. 26, 9264–9271. doi: 10.1523/jneurosci.1016-06.2006

Wilson, H. R., Loffler, G., and Wilkinson, F. (2002). Synthetic faces, face cubes, and the geometry of face space. Vis. Res. 42, 2909–2923. doi: 10.1016/s0042-6989(02)00362-0

Wright, D. B., and Stroud, J. N. (2002). Age differences in lineup identification accuracy: people are better with their own age. Law Hum. Behav. 26, 641–654. doi: 10.1023/a:1020981501383

Zebrowitz, L. A., Fellous, J. M., Mignault, A., and Andreoletti, C. (2003). Trait impressions as overgeneralized responses to adaptively significant facial qualities: evidence from connectionist modeling. Personal. Soc. Psychol. Rev. 7, 194–215. doi: 10.1207/s15327957pspr0703_01

Zebrowitz, L. A., Kikuchi, M., and Fellous, J. M. (2007). Are effects of emotion expression on trait impressions mediated by babyfaceness? Evidence from connectionist modeling. Personal. Soc. Psychol. Bull. 33, 648–662. doi: 10.1177/0146167206297399

Keywords: face database, picture stimuli, film clip, facial expression, validation

Citation: Chung K-M, Kim S, Jung WH and Kim Y (2019) Development and Validation of the Yonsei Face Database (YFace DB). Front. Psychol. 10:2626. doi: 10.3389/fpsyg.2019.02626

Received: 04 July 2019; Accepted: 07 November 2019; Published: 03 December 2019.

Edited by:

Maurizio Codispoti, University of Bologna, Italy

Reviewed by:

Ottmar Volker Lipp, Curtin University, Australia

Xunbing Shen, Jiangxi University of Traditional Chinese Medicine, China

Copyright © 2019 Chung, Kim, Jung and Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kyong-Mee Chung
