- ¹Center for Multisensory Marketing, Department of Marketing, BI Norwegian Business School, Oslo, Norway

- ²Sussex Computer Human Interaction Lab, Creative Technology Research Group, School of Engineering and Informatics, University of Sussex, Brighton, United Kingdom

- ³INSEEC Business School, Paris, France

- ⁴Crossmodal Research Laboratory, Department of Experimental Psychology, University of Oxford, Oxford, United Kingdom

There is growing interest in the development of new technologies that capitalize on our emerging understanding of the multisensory influences on flavor perception in order to enhance human–food interaction design. This review focuses on the role of (extrinsic) visual, auditory, and haptic/tactile elements in modulating flavor perception and, more generally, our food and drink experiences. We review some of the most exciting examples of recent multisensory technologies for augmenting such experiences, and we discuss applications for these technologies, for example, in food experience design, in the support of healthy eating, and in the rapidly growing world of sensory marketing. However, as the review makes clear, while there are many opportunities for novel human–food interaction design, there are also a number of challenges that will need to be tackled before new technologies can be meaningfully integrated into our everyday food and drink experiences.

Introduction

Interest in multisensory perception is growing rapidly in the fields of Human–Computer Interaction (HCI; Obrist et al., 2016), sensory marketing (e.g., Petit et al., 2015), and the arts (e.g., Haverkamp, 2013; Vi et al., 2017). This places knowledge concerning the human senses, and their interactions, at the center of design processes (Obrist et al., 2017). In the context of Human–Food Interaction (HFI; Choi et al., 2014; Comber et al., 2014), there has been increasing interest in how multisensory technologies can augment or modify multisensory flavor perception¹ and, more generally, food and drink experiences, and possibly also sensorially nudge people toward healthier food behaviors (Nijholt et al., 2016; Petit et al., 2016). The key idea here is that flavor is a multisensory construct (involving gustation, olfaction, and possibly also trigeminal components; see Kakutani et al., 2017) and that all the senses can potentially influence the way in which we experience it (Spence, 2015a). Hence, multisensory technologies, that is, technologies designed to stimulate the human senses, allow researchers to control the different inputs that accompany a given flavor or food experience.

Why, it can be asked, use multisensory technologies to augment our flavor experiences? Given that technology is already ubiquitous in our everyday lives, such technologies hold the potential, in the context of HFI, to transform how we will eat in the future (Spence and Piqueras-Fiszman, 2013). More specifically, we want to argue that a meaningful marriage of multisensory science (e.g., the guiding principles of multisensory flavor perception; Prescott, 2015; Spence, 2015a) and technology, in systems capable of augmenting flavor perception, can impact what people choose to eat and drink, how they perceive the ensuing flavor experience, and how much they ultimately end up consuming.

In this mini-review, we present an overview of multisensory technologies for flavor augmentation that have been developed recently. Importantly, we follow Prescott’s (2015) distinction between core intrinsic elements of the flavor experience (taste, smell, and some elements of touch) and extrinsic elements (e.g., color, shape, and atmospheric sound, which can modulate the experience of flavor but might not be constitutive of it), and we focus on the role of the latter in flavor augmentation². Our aim is to make researchers working in different fields aware of the various ways in which multisensory technologies that target extrinsic elements of flavor experiences are starting to transform how we interact with, and experience, what we consume. As such, we expect this manuscript to provide a first point of contact for those interested in multisensory technologies and flavor augmentation. Additionally, we hope that this review will help bridge the gap between researchers working in HCI/HFI, on the one hand, and those working in food science, marketing, and psychology, on the other. It is our view that the latter disciplines would benefit from an increased awareness of the different technologies currently available to those working in HCI/HFI. Those working in HCI/HFI, in turn, might come to recognize some of the potential uses of their technologies, as well as the financial gains that may derive from such applications. We conclude by presenting the challenges facing those who want to augment flavor perception and experiences.

Flavor Perception and Augmentation

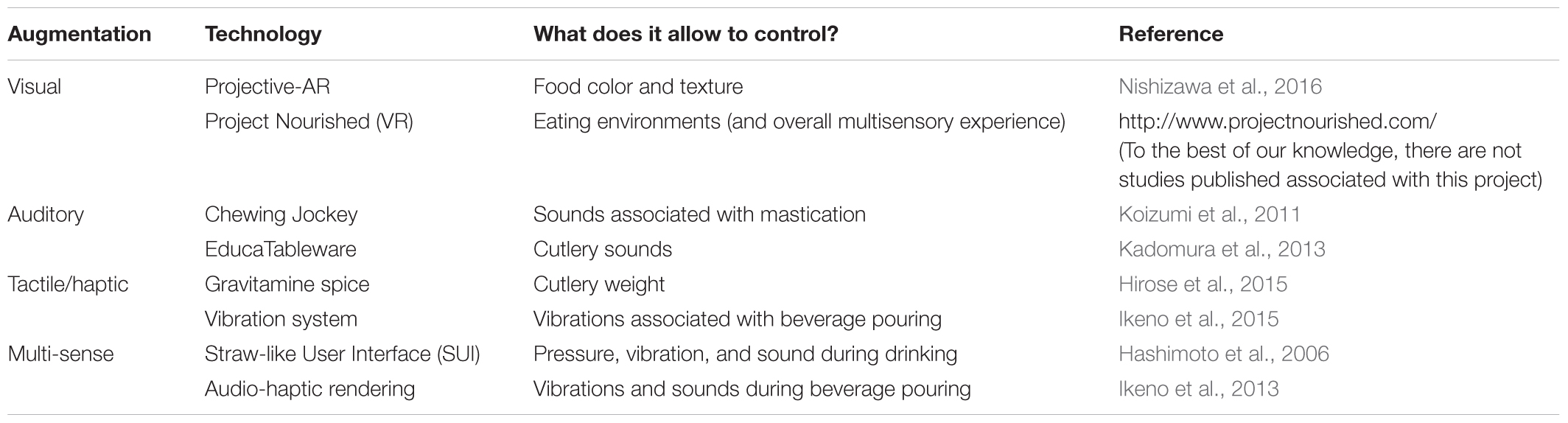

Here, we present some key concepts and technologies associated with flavor augmentation on the basis of extrinsic flavor cues (see Table 1 for a summary of some representative examples). People rarely put something in their mouth without first having made a prediction about what it will taste like. These expectations, set primarily by what we see and smell (orthonasally), but also sometimes by what we hear and feel/touch, anchor the experience when we come to taste something (see Verhagen and Engelen, 2006). For example, visual cues such as color or shape can be used to guide food and drink expectations, search, and augmentation on the basis of semantic knowledge (learned associations based on a shared identity or meaning, such as between the color red and tomato flavor) and crossmodal correspondences (compatibilities between features across the senses, such as between sweetness and curvature; e.g., Shermer and Levitan, 2014; Velasco et al., 2015, 2016b; Sawada et al., 2017).

TABLE 1. Examples of multisensory technologies for flavor augmentation based on extrinsic cues associated with the flavor and food/drink experiences.

Visual Augmentation

Vision is critical when it comes to setting our flavor expectations and hence to modifying our flavor experiences (Piqueras-Fiszman and Spence, 2015; Spence et al., 2016). Current technologies allow one to go beyond traditional vision-based means of food enhancement (e.g., simply matching or mismatching visual information with a given flavor) and to create novel HFIs that dynamically modulate our flavor experiences and, perhaps more broadly, our consumption behaviors.

For instance, Nishizawa et al. (2016) developed an augmented reality (AR) system that uses a projector and a camera to transform the visual characteristics (e.g., texture, color) of foods or plates digitally, in real time, building on evidence that these factors influence people’s perception of what they eat (e.g., Okajima et al., 2013). In a similar vein, and as a more specific example, Okajima and Spence (2011) modified the visual texture of tomato ketchup in a video feed by changing the skewness of its luminance histogram, while leaving its chromaticity unchanged. Modifying such visual features, among others, was found to influence sensory attributes such as the ketchup’s perceived consistency and taste, such that different skewness values led participants to think that they were tasting different ketchups (see also Narumi et al., 2011; Huisman et al., 2016).³
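
The luminance-skewness manipulation can be made more concrete with a short sketch. This is not Okajima and Spence’s (2011) procedure: it assumes linear RGB pixel values in [0, 1], Rec. 709 luminance weights, and a simple power-law remapping of luminance (Y to Y^γ, with γ ≥ 1) applied by scaling all three channels of a pixel by one common factor, so that each pixel’s chromaticity is preserved. The helper names (`luminance`, `skewness`, `remap_pixel`, `remap_frame`) are hypothetical.

```python
# Minimal sketch (assumed approach, not the authors' method): shift the skewness
# of a frame's luminance histogram while keeping every pixel's chromaticity.

REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)  # relative-luminance weights for linear RGB


def luminance(pixel):
    """Relative luminance Y of a linear-RGB pixel with channels in [0, 1]."""
    return sum(w * c for w, c in zip(REC709_WEIGHTS, pixel))


def skewness(values):
    """Population skewness: third central moment divided by sd cubed."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


def remap_pixel(pixel, gamma):
    """Map Y to Y**gamma by scaling R, G and B by the same factor.

    A common factor leaves each channel's share of R + G + B unchanged.
    With gamma >= 1 the factor is <= 1, so no channel exceeds 1 and
    no clipping (which would alter chromaticity) is needed.
    """
    if gamma < 1:
        raise ValueError("gamma < 1 could push channels above 1")
    y = luminance(pixel)
    if y == 0:
        return tuple(pixel)  # black stays black
    factor = y ** gamma / y
    return tuple(c * factor for c in pixel)


def remap_frame(pixels, gamma):
    """Apply the remapping to every pixel of a frame (a list of RGB tuples)."""
    return [remap_pixel(p, gamma) for p in pixels]


frame = [(v, v, v) for v in (0.2, 0.4, 0.6, 0.8)]  # toy grayscale "frame"
before = skewness([luminance(p) for p in frame])                      # about 0
after = skewness([luminance(p) for p in remap_frame(frame, gamma=2.0)])  # about 0.41
```

For a roughly symmetric luminance histogram, a convex remapping such as γ = 2 compresses dark values and stretches bright ones, which tends to push the histogram toward positive skew; other remappings could equally be used to shift skewness in the opposite direction.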

Augmented reality systems build on mixed reality (MR) interactions (i.e., those incorporating both virtual and real inputs; see Narumi, 2016). AR appears to have been adopted more rapidly than virtual reality (VR) in flavor- and food-related technology research and practice. For instance, Kabaq⁴ is an AR food program that offers restaurants the option of presenting their customers with 3D visualizations of the food that they serve before they order. As for VR, whilst some researchers are exploring the possibility of virtual flavors via digitally controlled electric and thermal taste sensations, such systems are currently of very limited use and potential (see Spence et al., 2017, for a critique). That being said, there is potential to design VR experiences that target the user’s flavor expectations (e.g., before going to a restaurant or buying a product). Many ongoing research initiatives aim to further our knowledge of the applications of VR systems to flavor/food experience design⁵ (e.g., Bruijnes et al., 2016). One such initiative involves using VR to expose people with food-related medical conditions to virtual obesogenic environments (Schroeder et al., 2016; Wiederhold et al., 2016).

Companies are now exploring product packaging that, together with a smartphone, can be turned into an inexpensive VR headset (e.g., some of Coca-Cola’s cardboard packaging). Such headsets might enable brands to deliver targeted experiences in VR. Whilst, at present, this approach appears to be more of a curiosity than anything else, we anticipate that it might one day become an extension of the total product experience, in that any given product might have its own customized multisensory experience(s) in VR (Lingle, 2017; Michail, 2017). Such experiences may be designed on the basis of research showing the influence of visual atmospheric cues (e.g., lighting, environment) on flavor perception (Stroebele and De Castro, 2004; Spence et al., 2014).

Auditory Augmentation

Audition is often described as the forgotten flavor sense, yet research on auditory contributions to the experience of eating and drinking has grown rapidly in recent years. The current evidence suggests that audition is critical to the perception of attributes such as crunchiness, crispiness, and crackliness (Spence, 2015b). What is more, the sounds associated with eating and drinking, such as chewing, gulping, or lip-smacking (Zampini and Spence, 2004; Youssef et al., 2017), environmental noise (Woods et al., 2011), and soundscapes/music (Crisinel et al., 2012; Kantono et al., 2016) can all influence food perception (e.g., tastes, odors, textures, flavors). For instance, noise can enhance the perception of umami and diminish perceived sweetness (Yan and Dando, 2015). Based on these kinds of findings, there is growing interest in developing technologies that capitalize on audition for flavor augmentation (Velasco et al., 2016a; see also the reference to the “EverCrisp app” by Kayac Inc. in Choi et al., 2014, which was designed to enhance food-biting sounds).

Systems that build on the role of mastication sounds in flavor perception constitute one example of audition-based flavor augmentation. The “Chewing Jockey,” for example, is a device that uses a bone-conduction speaker, a microphone, a jaw-movement tracking sensor, and a computer to monitor mastication and to use the resulting movements to synchronize and control sound delivery (Koizumi et al., 2011). Building on this concept, researchers are now exploring, by modifying or replacing the actual sounds of mastication, the modulation of texture perception (e.g., in elderly people who find it difficult to chew solid foods; see Endo et al., 2016), consumption monitoring (Elder and Mohr, 2016), and the creation of novel and fun food interactions (e.g., mapping unexpected sounds, such as screams, onto the chewing of gummy candies; Koizumi et al., 2011).
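
The sensor-to-sound synchronization described for the Chewing Jockey can be sketched as follows. Koizumi et al. (2011) combined a jaw-movement sensor with a microphone; this sketch assumes, purely for illustration, that the jaw sensor alone yields a normalized jaw-opening value in [0, 1] at a fixed sampling rate, and it counts a bite whenever the jaw closes after having opened. The function names, threshold values, and sound label are hypothetical.

```python
# Minimal sketch (hypothetical, not the Chewing Jockey implementation):
# detect bites from a jaw-opening signal and pair each with a sound cue.


def detect_bites(samples, open_threshold=0.6, close_threshold=0.3):
    """Return sample indices at which the jaw closes after being open.

    Two thresholds (hysteresis) keep jitter around a single threshold
    from being counted as several bites.
    """
    if close_threshold >= open_threshold:
        raise ValueError("close_threshold must be below open_threshold")
    bites = []
    jaw_open = False
    for i, value in enumerate(samples):
        if not jaw_open and value > open_threshold:
            jaw_open = True           # jaw has clearly opened
        elif jaw_open and value < close_threshold:
            jaw_open = False          # jaw has clearly closed: count a bite
            bites.append(i)
    return bites


def schedule_sounds(bite_indices, sample_rate_hz, sound_id):
    """Pair each detected bite with a sound cue, timestamped in seconds."""
    return [(i / sample_rate_hz, sound_id) for i in bite_indices]


jaw = [0.0, 0.2, 0.8, 0.9, 0.3, 0.1, 0.7, 0.9, 0.2, 0.0]  # toy sensor trace
bites = detect_bites(jaw)
cues = schedule_sounds(bites, sample_rate_hz=100, sound_id="crunch")
```

Swapping the `sound_id` for an unexpected sound would mimic the playful remappings mentioned above; a real system would also need to keep the delay between jaw closure and sound onset short enough for the two to be perceived as a single event.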

The role of audition goes beyond mastication sounds, though, as there are many other auditory cues that we may hear at more or less the same time as we eat (Velasco et al., 2016a). These include sounds directly associated with our interaction with the food, but also atmospheric sounds. In terms of the former, Kadomura et al. (2013) introduced “EducaTableware,” a fork and a cup that use the food’s electrical resistance, together with eating times and intervals, to emit sounds while a user consumes a given food (see also Kadomura et al., 2011). This device creates a novel interaction between the user and the food (e.g., for entertainment). In terms of atmospheric sounds, although music devices are ever-present, there is much room for development. For example, based on the idea that sounds can influence taste/flavor perception and enjoyment (i.e., hedonics; Spence, 2017), MR systems that combine real food with audiovisual virtual environments may be developed (e.g., what would it be like to eat a cheesecake, via VR, on Mars? See Project Nourished⁶).
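
The way EducaTableware turns a measurement of the food into sound can be illustrated with a hypothetical mapping. Kadomura et al.’s actual mapping is not described here; the sketch below simply assumes that a resistance reading is clamped to an assumed sensing range and mapped logarithmically onto a pitch range, so that equal resistance ratios produce equal musical intervals. All range values and names are placeholders.

```python
import math

# Hypothetical sensing and pitch ranges; not Kadomura et al.'s values.
R_MIN_OHMS, R_MAX_OHMS = 1e3, 1e6
F_MIN_HZ, F_MAX_HZ = 220.0, 880.0


def resistance_to_frequency(r_ohms, r_min=R_MIN_OHMS, r_max=R_MAX_OHMS,
                            f_min=F_MIN_HZ, f_max=F_MAX_HZ):
    """Map a resistance reading to a tone frequency, log-scaled at both ends."""
    r = min(max(r_ohms, r_min), r_max)                 # clamp to the sensing range
    t = math.log(r / r_min) / math.log(r_max / r_min)  # position in [0, 1]
    return f_min * (f_max / f_min) ** t                # geometric interpolation


tone_hz = resistance_to_frequency(math.sqrt(R_MIN_OHMS * R_MAX_OHMS))
```

A logarithmic mapping suits readings that span several orders of magnitude: with these defaults, each tenfold increase in resistance raises the pitch by the same interval, and the geometric mean of the range (about 31.6 kΩ) maps to 440 Hz.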

Tactile/Haptic Augmentation

What we feel/touch can also influence the perception of flavor while eating and drinking (e.g., Krishna and Morrin, 2008; Biggs et al., 2016; Slocombe et al., 2016). Researchers have demonstrated that properties such as the weight, size, and shape of cutlery and tableware can influence flavor expectations and perception (Spence, 2017; van Rompay et al., 2017). For example, Michel et al. (2015) reported that relatively heavy cutlery can lead to food being perceived as tastier. Notably, as with systems that build on vision and audition, most of the potential of touch-related devices for flavor augmentation has so far been realized in MR solutions.

For instance, Hirose et al. (2015) developed a fork-type device that incorporates an accelerometer, a photoreflector sensor, and a motorized slider to digitally control the center of gravity, and therefore the perceived weight, of the eating utensil. The intention behind this “Gravitamine spice” system is to modify the felt weight of the food/cutlery before eating. Another example comes from Ikeno et al. (2015), who showed that different patterns of vibration accompanying the action of pouring a beverage can influence how much is poured. These technologies might be used to nudge people to consume a little less, to create novel human–food interactions, and to augment flavor. Meanwhile, Iwata et al. (2004) developed a haptic device for biting known as the “Food simulator.” This interface generates a force on the user’s teeth, based on the force profile of people biting a given food, in order to simulate the sensation of biting that food.
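
Why moving a mass along a fork changes its apparent weight can be shown with a simple statics calculation. This is a simplified model, not Hirose et al.’s (2015) analysis: it treats the fork as a rigid rod held at the grip (x = 0), assumes that perceived heaviness tracks the gravitational torque the wrist must resist about the grip, and uses hypothetical masses and positions.

```python
# Simplified statics sketch (assumptions, not Hirose et al.'s model):
# the torque about the grip grows as a movable mass slides toward the tines.

G = 9.81  # gravitational acceleration, m/s^2


def center_of_gravity(masses_kg, positions_m):
    """Mass-weighted mean position, measured from the grip."""
    total = sum(masses_kg)
    return sum(m * x for m, x in zip(masses_kg, positions_m)) / total


def grip_torque(masses_kg, positions_m):
    """Torque about the grip: total weight times the lever arm of the CoG."""
    return sum(masses_kg) * G * center_of_gravity(masses_kg, positions_m)


FORK_MASS_KG, FORK_COG_M = 0.040, 0.08  # hypothetical fork body
SLIDER_MASS_KG = 0.020                  # hypothetical movable weight

near = grip_torque([FORK_MASS_KG, SLIDER_MASS_KG], [FORK_COG_M, 0.02])  # slider near grip
far = grip_torque([FORK_MASS_KG, SLIDER_MASS_KG], [FORK_COG_M, 0.15])   # slider near tines
```

The total mass is identical in both configurations; only the lever arm of the center of gravity changes, and the torque about the grip rises by roughly 72% (0.0062 / 0.0036 ≈ 1.72), which is the kind of change a sliding-mass utensil can exploit without altering what is actually on the fork.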

There are also multiple emerging haptic/tactile technologies that can be used for flavor augmentation or innovative HFI design. For instance, Tsutsui et al. (2016) developed a high-resolution tactile interface for the lips, a part of the body that is often stimulated while eating and drinking, thereby opening up a new interaction design space. There might also be opportunities in MR scenarios where people eat and receive haptic feedback on their body, either associated with the food they eat (e.g., Choi et al., 2014) or in the form of touch-related signals from co-diners during remote dining (e.g., Wei et al., 2011). Of course, in many cases, there may be no specific need for haptic/tactile interfaces to be technology-based. Nevertheless, what technology can potentially offer is a new way of stimulating the skin (e.g., contingently), and it therefore opens up a space for novel interactions and flavor experiences.

Combining Multiple Extrinsic Flavor Elements for Flavor Augmentation

Visual, auditory, and tactile/haptic flavor augmentation systems have, in general, focused on integrating one property (e.g., color), or a series of properties (e.g., color and shape), in a given sense (e.g., vision) with specific flavor experiences. Importantly, though, eating and drinking constitute some of life’s most multisensory experiences (e.g., involving color, shape, sound, vibration, texture roughness, etc.; Spence, 2015a). It is perhaps little wonder, then, that those trying to emulate more real-life experiences have focused on designing technologies that allow inputs from multiple sensory modalities to be integrated and controlled (e.g., Kita and Rekimoto, 2013). For example, the “Straw-like User Interface (SUI)” augments the user’s drinking experiences on the basis of multisensory inputs (e.g., pressure, vibration, and sound; see Hashimoto et al., 2006; see also Ranasinghe et al., 2014). Another example comes from Ikeno et al. (2013), who developed a system that combines vibrations and sounds (e.g., the auditory “glug” characteristic of a sake bottle when a drink is poured) to influence the subjective impression of a liquid. Whilst there is certainly no need to stimulate all of the senses for a given flavor augmentation, solutions that allow multiple cues to be delivered at the same time might broaden the scope for multisensory design.
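
Delivering several cues at once raises a practical timing question: the sound and the vibration accompanying a pour should reach the user together. The sketch below assumes, hypothetically, that each output channel has a fixed, known latency and that the times of the “glug” events are known in advance; it triggers each channel early by its own latency so that both cues arrive at the event time. The latency values, names, and cue labels are placeholders, not measurements from Ikeno et al. (2013).

```python
from dataclasses import dataclass

# Hypothetical output latencies in seconds; real values depend on the hardware.
LATENCY_S = {"audio": 0.030, "vibration": 0.010}


@dataclass(frozen=True)
class Cue:
    send_time_s: float  # when the command is sent to the output device
    channel: str        # "audio" or "vibration"
    payload: str        # which sound clip or vibration pattern to play


def schedule_paired_cues(event_times_s, latency_s=LATENCY_S):
    """Pair a sound and a vibration with each event so that both arrive together.

    Each channel is triggered early by its own latency. Events must be known
    at least max(latency) in advance, otherwise a send time would be negative.
    """
    lead = max(latency_s.values())
    cues = []
    for t in event_times_s:
        if t < lead:
            raise ValueError(f"event at {t} s is too early to schedule")
        cues.append(Cue(t - latency_s["audio"], "audio", "glug"))
        cues.append(Cue(t - latency_s["vibration"], "vibration", "pulse"))
    return sorted(cues, key=lambda c: c.send_time_s)


cues = schedule_paired_cues([0.50, 0.80])
```

Sorting by send time yields a single command queue that a playback loop could consume; under these assumed values the audio command is always sent first because it has the longer latency.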

Discussion and Conclusion

This review presents flavor augmentation, and food and drink augmentation more generally, in the context of multisensory experience design. In particular, we provide an overview of both older and more recent efforts around flavor augmentation in HFI. In addition to psychologists and sensory/food scientists, researchers working in HCI are increasingly exploring new ways of transforming our eating and drinking experiences. The proliferation of VR and MR systems provides the most promising platforms for new (multisensory) flavor experiences in the near future.

We have concentrated on exemplar systems that have capitalized on extrinsic flavor elements from vision, audition, and touch/haptics for flavor augmentation. Whilst such systems are still far from ubiquitous, they are nevertheless increasingly being considered by some of the key players/influencers in the food and drink industry, such as chefs, culinary artists, and experiential brand event managers (Spence, 2017).

Importantly, however, there are multiple challenges ahead for both researchers and practitioners who may be interested in using multisensory technologies for flavor augmentation. First, a vast gap often exists between technology as showcased in HCI research and what actually ends up in commercial settings relevant to those working in the food and drink industry (e.g., in a fancy modernist restaurant or at a branded experiential event). Second, there is a need for long-term follow-up investigations, as most of the research examples reported to date have been based on one-off, small-scale studies (e.g., small sample sizes with limited experimental designs; for example, Nishizawa et al., 2016, conducted two studies with four and six participants, respectively). Controlling for variables such as novelty and habituation will undoubtedly be required in order to know whether the brain adapts to multisensory flavor experiences designed with new technologies or whether, instead, the benefits last into the medium to longer term. In other words, flavor and food augmentation will need to deliver consistent added value if it is to become more than a one-time curiosity or gimmick.

The aforementioned challenges might be addressed, at least in part, by the meaningful integration of scientific insights concerning multisensory flavor perception with new technologies. Whilst research on the principles governing multisensory integration during flavor perception is ongoing (see Prescott, 2015; Spence, 2015a), design guidelines have nevertheless been suggested (Schifferstein and Desmet, 2008; Velasco et al., 2016a). Taking a full-scale, evidence-based approach to the design of technology-enabled multisensory flavor experiences is not an easy task and will therefore require both time and a fundamentally multidisciplinary approach.

Nevertheless, the hope is that multisensory technologies might inspire tomorrow’s practitioners to (1) modify flavor perception and experiences; (2) nudge people toward healthier food behaviors; (3) facilitate food choice before ordering/buying; and (4) make dining more entertaining. For example, TeamLab, an art collective, recently collaborated with the Sagaya restaurant in Tokyo⁷ to develop a dining experience described as follows: “when a dish is placed on the table, the scenic world contained within the dish is unleashed, unfolding onto the table and into the surrounding space. For example, a bird painted on a ceramic dish is released from the dish and can perch on the branch of a tree that has been unleashed from a different dish” (cited in Stewart, 2017, p. 17). Another example is the oft-mentioned Michelin-starred modernist restaurant Ultraviolet by Paul Pairet in Shanghai, where diners are guided through a multisensory dining experience accompanied by changing lights, projections, and soundscapes (Yap, 2016; Spence, 2017). In the context of multisensory flavor experience design, technology is a means of transforming sensory information into the ingredients, or raw materials, of our future flavor experiences. In that sense, we foresee more applications and novel design spaces being explored in the wider food and drink world.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Funding

MO’s contribution to this review is partially supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program under grant agreement no. 638605.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

- ^ By flavor augmentation, we refer to the process of modifying, boosting, or enhancing, a given flavor experience, be it perceived or imagined, using technology.

- ^ Whilst we do not focus on olfactory interfaces, devices based on orthonasal olfaction (and its interaction with the other senses) have also been proposed by researchers working on flavor augmentation (e.g., Nambu et al., 2010; Hashimoto and Nakamoto, 2016).

- ^ See also Okajima’s Laboratory website (https://goo.gl/kH1S9Q) for some examples.

- ^ http://www.kabaq.io/

- ^ See, for example, the ACM International Conference on Multimodal Interaction workshop on “Multisensory Human-Food Interaction” (https://goo.gl/HRRdVs) or the Special Issue on “Virtual reality and food: Applications in sensory and consumer science” in The Journal of Computers and Graphics (https://goo.gl/FKwWjF).

- ^ http://projectnourished.com/

- ^ See also a report in DesignBoom with visual documentation: https://www.designboom.com/design/teamlab-interactive-saga-beef-restaurant-sagaya-ginza-tokyo-04-13-2017/.

References

Biggs, L., Juravle, G., and Spence, C. (2016). Haptic exploration of plateware alters the perceived texture and taste of food. Food Qual. Prefer. 50, 129–134. doi: 10.1016/j.foodqual.2016.02.007

Bruijnes, M., Huisman, G., and Heylen, D. (2016). “Tasty tech: human-food interaction and multimodal interfaces,” in Proceedings of the 1st Workshop on Multi-Sensorial Approaches to Human-Food Interaction (MHFI ’16), eds A. Nijholt, C. Velasco, G. Huisman, and K. Karunanayaka (New York, NY: ACM). doi: 10.1145/3007577.3007581

Choi, J. H. J., Foth, M., and Hearn, G. (eds) (2014). Eat, Cook, Grow: Mixing Human-Computer Interactions with Human-Food Interactions. Cambridge, MA: MIT Press.

Comber, R., Choi, J. H. J., Hoonhout, J., and O’Hara, K. (2014). Designing for human–food interaction: an introduction to the special issue on ‘food and interaction design’. Int. J. Hum. Comput. Stud. 72, 181–184. doi: 10.1016/j.ijhcs.2013.09.001

Crisinel, A.-S., Cosser, S., King, S., Jones, R., Petrie, J., and Spence, C. (2012). A bittersweet symphony: systematically modulating the taste of food by changing the sonic properties of the soundtrack playing in the background. Food Qual. Prefer. 24, 201–204. doi: 10.1016/j.foodqual.2011.08.009

Elder, R. S., and Mohr, G. S. (2016). The crunch effect: food sound salience as a consumption monitoring cue. Food Qual. Prefer. 51, 39–46. doi: 10.1016/j.foodqual.2016.02.015

Endo, H., Ino, S., and Fujisaki, W. (2016). The effect of a crunchy pseudo-chewing sound on perceived texture of softened foods. Physiol. Behav. 167, 324–331. doi: 10.1016/j.physbeh.2016.10.001

Hashimoto, K., and Nakamoto, T. (2016). “Olfactory display using surface acoustic wave device and micropumps for wearable applications,” in Proceedings of the 2016 IEEE Virtual Reality (VR), Greenville, SC, 179–180. doi: 10.1109/VR.2016.7504712

Hashimoto, Y., Nagaya, N., Kojima, M., Miyajima, S., Ohtaki, J., Yamamoto, A., et al. (2006). “Straw-like user interface: virtual experience of the sensation of drinking using a straw,” in Proceedings of the 2006 ACM SIGCHI International Conference on Advances in Computer Entertainment Technology (ACE ’06), (New York, NY: ACM). doi: 10.1145/1178823.1178882

Haverkamp, M. (2013). Synesthetic Design: Handbook for a Multi-Sensory Approach. Basel: Birkhäuser Verlag.

Hirose, M., Iwazaki, K., Nojiri, K., Takeda, M., Sugiura, Y., and Inami, M. (2015). “Gravitamine spice: a system that changes the perception of eating through virtual weight sensation,” in Proceedings of the 6th Augmented Human International Conference (AH ’15), (New York, NY: ACM), 33–40. doi: 10.1145/2735711.2735795

Huisman, G., Bruijnes, M., and Heylen, D. K. J. (2016). “A moving feast: Effects of color, shape and animation on taste associations and taste perceptions,” in Proceedings of the 13th International Conference on Advances in Computer Entertainment Technology (ACE 2016), (New York, NY: ACM), 12. doi: 10.1145/3001773.3001776

Ikeno, S., Okazaki, R., Hachisu, T., Sato, M., and Kajimoto, H. (2013). “Audio-haptic rendering of water being poured from sake bottle,” in Proceedings of the 10th International Conference on Advances in Computer Entertainment - Volume 8253 (ACE 2013), eds D. Reidsma, H. Katayose, and A. Nijholt (New York, NY: Springer-Verlag), 548–551. doi: 10.1007/978-3-319-03161-3_50

Ikeno, S., Watanabe, R., Okazaki, R., Hachisu, T., Sato, M., and Kajimoto, H. (2015). “Change in the amount poured as a result of vibration when pouring a liquid,” in Haptic Interaction. Lecture Notes in Electrical Engineering, Vol. 277, eds H. Kajimoto, H. Ando, and K. U. Kyung (Tokyo: Springer), 7–11.

Iwata, H., Yano, H., Uemura, T., and Moriya, T. (2004). “Food simulator: a haptic interface for biting,” in Proceedings of the IEEE Virtual Reality (Washington, DC: IEEE Computer Society), 51–57.

Kadomura, A., Nakamori, R., Tsukada, K., and Siio, I. (2011). “EaTheremin,” in Proceedings of the SIGGRAPH Asia 2011 Emerging Technologies (SA ’11), (New York, NY: ACM). doi: 10.1145/2073370.2073376

Kadomura, A., Tsukada, K., and Siio, I. (2013). “EducaTableware: computer-augmented tableware to enhance the eating experiences,” in Proceedings of the CHI ’13 Extended Abstracts on Human Factors in Computing Systems (CHI EA ’13), (New York, NY: ACM), 3071–3074. doi: 10.1145/2468356.2479613

Kakutani, Y., Narumi, T., Kobayakawa, T., Kawai, T., Kusakabe, Y., Kunieda, S., et al. (2017). Taste of breath: the temporal order of taste and smell synchronized with breathing as a determinant for taste and olfactory integration. Sci. Rep. 7:8922. doi: 10.1038/s41598-017-07285-7

Kantono, K., Hamid, N., Shepherd, D., Yoo, M. J., Grazioli, G., and Carr, B. T. (2016). Listening to music can influence hedonic and sensory perceptions of gelati. Appetite 100, 244–255. doi: 10.1016/j.appet.2016.02.143

Kita, Y., and Rekimoto, J. (2013). “Spot-light: multimodal projection mapping on food,” in HCI International 2013 - Posters’ Extended Abstracts. HCI 2013. Communications in Computer and Information Science, Vol. 374, ed. C. Stephanidis (Berlin: Springer), 652–655.

Koizumi, N., Tanaka, H., Uema, Y., and Inami, M. (2011). “Chewing jockey: augmented food texture by using sound based on the cross-modal effect,” in Proceedings of the 8th International Conference on Advances in Computer Entertainment Technology (ACE ’11), eds T. Romão, N. Correia, M. Inami, H. Kato, R. Prada, T. Terada, et al. (New York, NY: ACM), 4. doi: 10.1145/2071423.2071449

Krishna, A., and Morrin, M. (2008). Does touch affect taste? The perceptual transfer of product container haptic cues. J. Consum. Res. 34, 807–818.

Lingle, R. (2017). AR and VR in packaging: Beyond the buzz. Available at: http://www.packagingdigest.com/packaging-design/arvr-packaging-beyond-the-buzz1707

Michail, N. (2017). From Marketing to Taste: How Virtual Reality will Change the Food Industry. Available at: www.foodnavigator.com/Market-Trends/From-marketing-to-taste-How-virtual-reality-will-change-the-food-industry

Michel, C., Velasco, C., and Spence, C. (2015). Cutlery matters: heavy cutlery enhances diners’ enjoyment of the food served in a realistic dining environment. Flavour 4, 1–8. doi: 10.1186/s13411-015-0036-y

Nambu, A., Narumi, T., Nishimura, K., Tanikawa, T., and Hirose, M. (2010). “Visual-olfactory display using olfactory sensory map,” in Proceedings of the 2010 IEEE Virtual Reality (VR), Waltham, MA, 39–42. doi: 10.1109/VR.2010.5444817

Narumi, T. (2016). “Multi-sensorial virtual reality and augmented human food interaction,” in Proceedings of the 1st Workshop on Multi-sensorial Approaches to Human-Food Interaction (MHFI ’16), eds A. Nijholt, C. Velasco, G. Huisman, and K. Karunanayaka (New York, NY: ACM), 6. doi: 10.1145/3007577.3007587

Narumi, T., Nishizaka, S., Kajinami, T., Tanikawa, T., and Hirose, M. (2011). “Meta Cookie+: an illusion-based gustatory display,” in Proceedings of the 2011 International Conference on Virtual and Mixed Reality: New Trends - Volume Part I, ed. R. Shumaker (Berlin: Springer-Verlag), 260–269. doi: 10.1007/978-3-642-22021-0_29

Nijholt, A., Velasco, C., Karunanayaka, K., and Huisman, G. (2016). “1st international workshop on multi-sensorial approaches to human-food interaction (workshop summary),” in Proceedings of the 18th ACM International Conference on Multimodal Interaction (ICMI 2016), (Tokyo: ACM), 601–603. doi: 10.1145/2993148.3007633

Nishizawa, M., Jiang, W., and Okajima, K. (2016). “Projective-AR system for customizing the appearance and taste of food,” in Proceedings of the 2016 Workshop on Multimodal Virtual and Augmented Reality (MVAR ’16), (New York, NY: ACM), 6. doi: 10.1145/3001959.3001966

Obrist, M., Velasco, C., Vi, C. T., Ranasinghe, N., Israr, A., Cheok, A. D., et al. (2016). Sensing the future of HCI: Touch, taste, & smell user interfaces. Interactions 23, 40–49. doi: 10.1145/2973568

Obrist, M., Gatti, E., Maggioni, E., Vi, C. T., and Velasco, C. (2017). Multisensory experiences in HCI. IEEE MultiMedia 24, 9–13. doi: 10.1109/MMUL.2017.33

Okajima, K., and Spence, C. (2011). Effects of visual food texture on taste perception. i-Perception 2:966. doi: 10.1068/ic966

Okajima, K., Ueda, J., and Spence, C. (2013). Effects of visual texture on food perception. J. Vis. 13:1078. doi: 10.1167/13.9.1078

Petit, O., Cheok, A. D., and Oullier, O. (2016). Can food porn make us slim? How brains of consumers react to food in digital environments. Integr. Food Nutr. Metab. 3, 251–255. doi: 10.15761/IFNM.1000138

Petit, O., Cheok, A. D., Spence, C., Velasco, C., and Karunanayaka, K. T. (2015). “Sensory marketing in light of new technologies,” in Proceedings of the 12th International Conference on Advances in Computer Entertainment Technology (ACE ’15), (New York, NY: ACM), 4. doi: 10.1145/2832932.2837006

Piqueras-Fiszman, B., and Spence, C. (2015). Sensory expectations based on product-extrinsic food cues: an interdisciplinary review of the empirical evidence and theoretical accounts. Food Qual. Prefer. 40, 165–179. doi: 10.1016/j.foodqual.2014.09.013

Prescott, J. (2015). Multisensory processes in flavour perception and their influence on food choice. Curr. Opin. Food Sci. 3, 47–52. doi: 10.1016/j.cofs.2015.02.007

Ranasinghe, N., Lee, K.-Y., and Do, E. Y. L. (2014). “FunRasa: an interactive drinking platform,” in Proceedings of the 8th International Conference on Tangible, Embedded and Embodied Interaction (TEI ’14), (New York, NY: ACM), 133–136.

Sawada, R., Sato, W., Toichi, M., and Fushiki, T. (2017). Fat content modulates rapid detection of food: a visual search study using fast food and Japanese diet. Front. Psychol. 8:1033. doi: 10.3389/fpsyg.2017.01033

Schifferstein, H. N., and Desmet, P. M. (2008). Tools facilitating multi-sensory product design. Des. J. 11, 137–158. doi: 10.2752/175630608X329226

Schroeder, P. A., Lohmann, J., Butz, M. V., and Plewnia, C. (2016). Behavioral bias for food reflected in hand movements: a preliminary study with healthy subjects. Cyberpsychol. Behav. Soc. Netw. 19, 120–126. doi: 10.1089/cyber.2015.0311

Shermer, D. Z., and Levitan, C. A. (2014). Red hot: the crossmodal effect of color intensity on perceived piquancy. Multisens. Res. 27, 207–223. doi: 10.1163/22134808-00002457

Slocombe, B. G., Carmichael, D. A., and Simner, J. (2016). Cross-modal tactile–taste interactions in food evaluations. Neuropsychologia 88, 58–64. doi: 10.1016/j.neuropsychologia.2015.07.011

Spence, C. (2015a). Multisensory flavor perception. Cell 161, 24–35. doi: 10.1016/j.cell.2015.03.007

Spence, C. (2015b). Eating with our ears: assessing the importance of the sounds of consumption on our perception and enjoyment of multisensory flavour experiences. Flavour 4:3. doi: 10.1186/2044-7248-4-3

Spence, C., Obrist, M., Velasco, C., and Ranasinghe, N. (2017). Digitizing the chemical senses: possibilities & pitfalls. Int. J. Hum. Comput. Stud. 107, 62–74. doi: 10.1016/j.ijhcs.2017.06.003

Spence, C., Okajima, K., Cheok, A. D., Petit, O., and Michel, C. (2016). Eating with our eyes: from visual hunger to digital satiation. Brain Cogn. 110, 53–63. doi: 10.1016/j.bandc.2015.08.006

Spence, C., and Piqueras-Fiszman, B. (2013). Technology at the dining table. Flavour 2:16. doi: 10.1186/2044-7248-2-16

Spence, C., Velasco, C., and Knoeferle, K. (2014). A large sample study on the influence of the multisensory environment on the wine drinking experience. Flavour 3:8. doi: 10.1186/2044-7248-3-8

Stewart, J. (2017). Digital Installation Transforms Restaurant Into Immersive Dining Experience. Available at: http://mymodernmet.com/teamlab-sagaya-interactive-restaurants/

Stroebele, N., and De Castro, J. M. (2004). Effect of ambience on food intake and food choice. Nutrition 20, 821–838. doi: 10.1016/j.nut.2004.05.012

Tsutsui, Y., Hirota, K., Nojima, T., and Ikei, Y. (2016). “High-resolution tactile display for lips,” in Human Interface and the Management of Information: Applications and Services. HIMI 2016. Lecture Notes in Computer Science, Vol. 9735, ed. S. Yamamoto (Berlin: Springer), 357–366.

van Rompay, T. J., Finger, F., Saakes, D., and Fenko, A. (2017). “See me, feel me”: effects of 3D-printed surface patterns on beverage evaluation. Food Qual. Prefer. 62, 332–339. doi: 10.1016/j.foodqual.2016.12.002

Velasco, C., Carvalho, F. R., Petit, O., and Nijholt, A. (2016a). “A multisensory approach for the design of food and drink enhancing sonic systems,” in Proceedings of the 1st Workshop on Multi-sensorial Approaches to Human-Food Interaction (MHFI ’16), eds A. Nijholt, C. Velasco, G. Huisman, and K. Karunanayaka (New York, NY: ACM), 7. doi: 10.1145/3007577.3007578

Velasco, C., Wan, C., Knoeferle, K., Zhou, X., Salgado-Montejo, A., and Spence, C. (2015). Searching for flavor labels in food products: the influence of color-flavor congruence and association strength. Front. Psychol. 6:301. doi: 10.3389/fpsyg.2015.00301

Velasco, C., Woods, A. T., Petit, O., Cheok, A. D., and Spence, C. (2016b). Crossmodal correspondences between taste and shape, and their implications for product packaging: a review. Food Qual. Prefer. 52, 17–26. doi: 10.1016/j.foodqual.2016.03.005

Verhagen, J. V., and Engelen, L. (2006). The neurocognitive bases of human multimodal food perception: sensory integration. Neurosci. Biobehav. Rev. 30, 613–650. doi: 10.1016/j.neubiorev.2005.11.003

Vi, C. T., Gatti, E., Ablart, D., Velasco, C., and Obrist, M. (2017). Not just see, but feel, smell, and taste the art: a case study on the creation and evaluation of multisensory art experiences in the museum. Int. J. Hum. Comput. Stud. 108, 1–14. doi: 10.1016/j.ijhcs.2017.06.004

Wei, J., Wang, X., Peiris, R. L., Choi, Y., Martinez, X. R., Tache, R., et al. (2011). “CoDine: an interactive multi-sensory system for remote dining,” in Proceedings of the 13th International Conference on Ubiquitous Computing (UbiComp ’11), (New York, NY: ACM), 21–30. doi: 10.1145/2030112.2030116

Wiederhold, B. K., Riva, G., and Gutiérrez-Maldonado, J. (2016). Virtual reality in the assessment and treatment of weight-related disorders. Cyberpsychol. Behav. Soc. Netw. 19, 67–73. doi: 10.1089/cyber.2016.0012

Woods, A. T., Poliakoff, E., Lloyd, D. M., Kuenzel, J., Hodson, R., Gonda, H., et al. (2011). Effect of background noise on food perception. Food Qual. Prefer. 22, 42–47. doi: 10.1016/j.foodqual.2010.07.003

Yan, K. S., and Dando, R. (2015). A crossmodal role for audition in taste perception. J. Exp. Psychol. Hum. Percept. Perform. 41, 590–596. doi: 10.1037/xhp0000044

Yap, S. (2016). Rise of the Machines: How Technology is Shaking up the Dining World. Available at: www.lifestyleasia.com/481919/rise-machines-technology-shaking-dining-world/

Youssef, J., Youssef, L., Juravle, G., and Spence, C. (2017). Plateware and slurping influence regular consumers’ sensory discriminative and hedonic responses to a hot soup. Int. J. Gastron. Food Sci. 9, 100–104. doi: 10.1016/j.ijgfs.2017.06.005

Keywords: food, multisensory, experience, augmentation, vision, audition, touch, haptics

Citation: Velasco C, Obrist M, Petit O and Spence C (2018) Multisensory Technology for Flavor Augmentation: A Mini Review. Front. Psychol. 9:26. doi: 10.3389/fpsyg.2018.00026

Received: 06 August 2017; Accepted: 09 January 2018;

Published: 30 January 2018.

Edited by:

Francesco Ferrise, Politecnico di Milano, Italy

Copyright © 2018 Velasco, Obrist, Petit and Spence. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Carlos Velasco, carlos.velasco@bi.no