
MINI REVIEW article

Front. Psychol., 27 October 2017

Sec. Perception Science

Volume 8 - 2017 | https://doi.org/10.3389/fpsyg.2017.01896

In daily life, humans are bombarded with visual input. Yet, their attentional capacities for processing this input are severely limited. Several studies have investigated factors that influence these attentional limitations and have identified methods to circumvent them. Here, we review these findings. We first review studies that have demonstrated limitations of visuospatial attention and investigated physiological correlates of these limitations. We then review studies in multisensory research that have explored whether limitations in visuospatial attention can be circumvented by distributing information processing across several sensory modalities. Finally, we discuss research from the field of joint action that has investigated how limitations of visuospatial attention can be circumvented by distributing task demands across people and providing them with multisensory input. We conclude that limitations of visuospatial attention can be circumvented by distributing attentional processing across sensory modalities when tasks involve spatial as well as object-based attentional processing. However, if only spatial attentional processing is required, limitations of visuospatial attention cannot be circumvented by distributing attentional processing. These findings from multisensory research are applicable to visuospatial tasks that are performed jointly by two individuals. That is, in a joint visuospatial task requiring object-based as well as spatial attentional processing, joint performance is facilitated when task demands are distributed across sensory modalities. Future research could further investigate how applying findings from multisensory research to joint action research may facilitate joint performance. More generally, these findings could be applied to real-world scenarios such as aviation or car driving to circumvent limitations of visuospatial attention.

In everyday life, humans continuously process information from several sensory modalities. However, the amount of information humans can process is limited (Marois and Ivanoff, 2005; Dux et al., 2006). In particular, attentional mechanisms allow humans to select only a limited amount of information while ignoring irrelevant sensory input (James, 1890; Chun et al., 2011). Researchers have explained these limitations in terms of a limited pool of attentional resources that can be depleted under high attentional demands (Kahneman, 1973; Wickens, 2002; Lavie, 2005). These limitations apply not only to sensory processing but also to motor processing (e.g., see Pashler, 1994; Dux et al., 2006; Sigman and Dehaene, 2008); in this review, however, we focus primarily on limitations in sensory processing.

With respect to the type of attentional demands, attention research commonly distinguishes between object-based attention and spatial attention (Fink et al., 1997; Serences et al., 2004; Soto and Blanco, 2004). Object-based attention refers to selectively attending to features of an object (e.g., its color or shape), whereas spatial attention refers to selectively attending to a location in space.

In the present review, we primarily focus on limitations of spatial attention in the visual sensory modality and on how they can be circumvented. We first review findings about these limitations, with a focus on visuospatial tasks, and briefly describe physiological correlates of attentional processing during visuospatial task performance. Next, we review multisensory research that has investigated whether limitations in visuospatial attention can be circumvented by distributing information processing across several sensory modalities. Subsequently, we review research in which findings from multisensory research are applied to joint tasks (i.e., tasks that are performed jointly by two individuals). Finally, we conclude with future directions for research on how findings from multisensory research could be used to circumvent limitations of visuospatial attention in joint tasks.

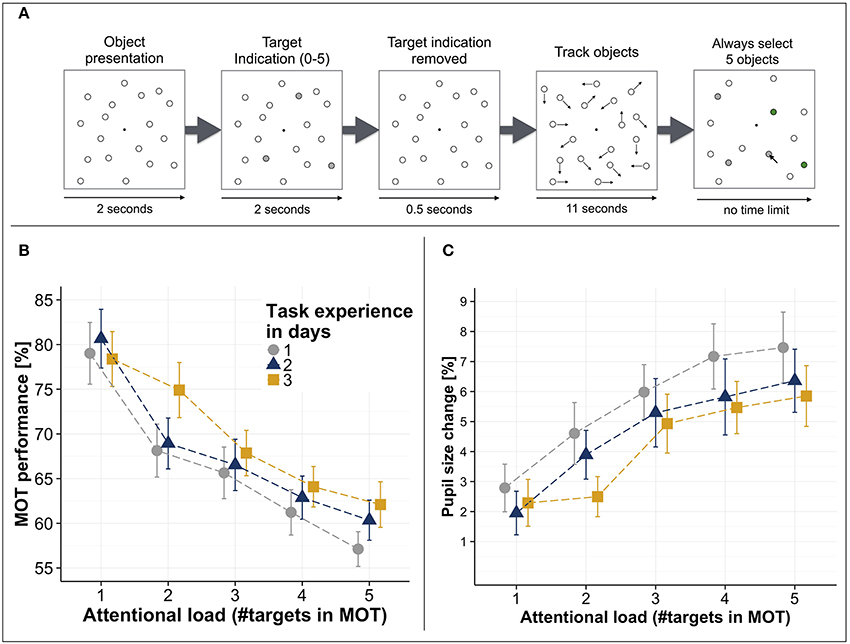

Limitations of visuospatial attention have been investigated in a wide variety of visuospatial tasks. One task that has been suggested to be highly suitable for investigating visuospatial attentional processing, alongside others such as response-competition tasks (Lavie, 2005, 2010; Matusz et al., 2015) and orthogonal cueing tasks (Spence and Driver, 2004; Spence, 2010), is the “Multiple Object Tracking” (MOT) task (Pylyshyn and Storm, 1988; Yantis, 1992; see Figure 1A for a typical trial logic). Its suitability stems from the fact that attentional load can be systematically varied (i.e., by varying the number of targets that need to be tracked) while perceptual load (i.e., the total number of displayed objects) is kept constant (Cavanagh and Alvarez, 2005; Arrighi et al., 2011; Wahn and König, 2015a,b). Notably, apart from spatial attentional demands, the MOT task also involves anticipatory processes (i.e., predicting the trajectories of the targets' movements) (Keane and Pylyshyn, 2006; Atsma et al., 2012). However, because in several MOT studies the targets' trajectories change randomly (e.g., in Wahn and König, 2015a,b), the MOT task, at least in these cases, primarily involves spatial attentional processing. The general finding across studies is that performance in the MOT task systematically decreases as the number of targets increases (see Figure 1B), suggesting a limit on visuospatial attentional resources (Alvarez and Franconeri, 2007; Wahn et al., 2016a). Moreover, these capacity limitations are stable across several repetitions of the experiment on consecutive days (Wahn et al., 2016a, see Figure 1B) and over considerably longer periods of time (Alnæs et al., 2014).

Figure 1. (A) Multiple object tracking (MOT) task trial logic. First, several stationary objects are shown on a computer screen. A subset of these objects is indicated as targets (here in gray). Then, the target indication is removed (i.e., targets become indistinguishable from the other objects), and all objects start moving randomly across the screen. After several seconds, the objects stop moving and participants are asked to select the previously indicated target objects. (B) MOT performance (i.e., percentage of correctly selected targets) as a function of attentional load (i.e., number of tracked objects) and day of measurement. (C) Pupil size increase relative to a passive viewing condition (i.e., tracking no targets) as a function of attentional load and day of measurement. Error bars in (B,C) represent the standard error of the mean. All panels have been adapted from Wahn et al. (2016a).
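
To make the load manipulation and scoring in Figure 1 concrete, the Python sketch below picks which objects are cued as targets on a trial and scores a response. It is a hypothetical illustration, not the procedure used by Wahn et al. (2016a): the function names, the display of 18 objects, and the assumption that participants select exactly as many objects as there were targets are chosen for illustration only, and object motion is omitted.

```python
import random


def make_mot_trial(n_targets, n_objects, rng):
    """Choose which displayed objects are cued as targets on one trial.

    Attentional load is n_targets; perceptual load is n_objects, which is
    held constant across load levels in this sketch.
    """
    if not 0 < n_targets <= n_objects:
        raise ValueError("need 0 < n_targets <= n_objects")
    return set(rng.sample(range(n_objects), n_targets))


def mot_percent_correct(targets, selected):
    """Percentage of cued targets that the participant re-selected.

    Assumes participants select as many objects as there were targets,
    so hits divided by the number of targets gives percent correct.
    """
    targets = set(targets)
    hits = len(targets & set(selected))
    return 100.0 * hits / len(targets)


# Hypothetical usage: load rises from 1 to 5 targets among 18 objects.
rng = random.Random(42)
for load in range(1, 6):
    cued = make_mot_trial(load, n_objects=18, rng=rng)
    print(load, sorted(cued))

print(mot_percent_correct({1, 4, 7}, [1, 4, 9]))
```

Selecting two of three targets scores roughly 66.7%; only the number of targets changes across load levels, which is what lets the task separate attentional load from perceptual load.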

The behavioral findings from the MOT task have been corroborated by studies of the physiological correlates of attentional processing. A prominent physiological correlate of attentional processing is pupil size (Heinrich, 1896; Kahneman and Beatty, 1966; Beatty, 1982; Hoeks and Levelt, 1993; Wierda et al., 2012; Mathôt et al., 2013; Alnæs et al., 2014; Lisi et al., 2015; Mathôt et al., 2016). Increases in pupil size have been shown to be associated with increases in attentional load in recent studies that used the MOT task (Alnæs et al., 2014; Wahn et al., 2016a). Specifically, when participants perform the MOT task at varying levels of attentional load, pupil size systematically increases with attentional load, and these increases are consistently found for measurements on consecutive days (Wahn et al., 2016a, see Figure 1C). Beyond pupil size, researchers have also investigated physiological correlates of attentional processing using fMRI and EEG. Parietal regions of the brain typically associated with attentional processing were found to be active when participants performed the MOT task (Jovicich et al., 2001; Howe et al., 2009; Jahn et al., 2012; Alnæs et al., 2014) and, notably, also during several other spatial tasks (Mishkin and Ungerleider, 1982; Livingstone and Hubel, 1988; Maeder et al., 2001; Reed et al., 2005; Ahveninen et al., 2006; Ungerleider and Pessoa, 2008), suggesting that performing the MOT task recruits brain regions typically associated with visuospatial attention. Moreover, several EEG studies have identified neural correlates whose activity rises with increasing attentional load in the MOT task (Sternshein et al., 2011; Drew et al., 2013).
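
The pupil measure in Figure 1C is expressed relative to passive viewing. A minimal Python sketch of that baseline comparison follows, assuming mean pupil size per load level and a percentage-change scale; the review does not state whether absolute or relative units were used, the function name is hypothetical, the example values are invented, and real pupillometry preprocessing (blink removal, baseline windows) is omitted.

```python
from statistics import mean


def pupil_increase(pupil_by_load):
    """Percent change in mean pupil size relative to passive viewing.

    pupil_by_load maps attentional load (number of tracked targets) to a
    list of pupil-size samples; load 0 is the passive-viewing baseline.
    """
    baseline = mean(pupil_by_load[0])
    return {
        load: 100.0 * (mean(samples) - baseline) / baseline
        for load, samples in sorted(pupil_by_load.items())
        if load != 0
    }


# Hypothetical pupil diameters in millimetres (not data from any study).
samples = {0: [4.0, 4.0], 1: [4.1, 4.1], 3: [4.4, 4.2]}
print(pupil_increase(samples))
```

With these invented values, the increase is about 2.5% at load 1 and about 7.5% at load 3, mirroring the qualitative pattern of pupil size growing with attentional load.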

In sum, the MOT task has served to assess limitations of visuospatial attentional resources in a number of studies (Alvarez and Franconeri, 2007; Alnæs et al., 2014; Wahn et al., 2016a), as well as their physiological correlates (Jovicich et al., 2001; Howe et al., 2009; Jahn et al., 2012; Alnæs et al., 2014; Wahn et al., 2016a). In the following, we discuss how the use of the MOT task and other spatial tasks has been extended to investigate spatial attentional resources across multiple sensory modalities.

A question that has been extensively investigated in multisensory research is whether there are distinct pools of attentional resources for each sensory modality or one shared pool of attentional resources for all sensory modalities. Studies have found empirical support for the hypothesis that there are distinct resources (Duncan et al., 1997; Potter et al., 1998; Soto-Faraco and Spence, 2002; Larsen et al., 2003; Alais et al., 2006; Hein et al., 2006; Sinnett et al., 2006; Talsma et al., 2006; Van der Burg et al., 2007; Keitel et al., 2013; Finoia et al., 2015) as well as for the hypothesis that there are shared resources (Jolicoeur, 1999; Arnell and Larson, 2002; Soto-Faraco et al., 2002; Arnell and Jenkins, 2004; Macdonald and Lavie, 2011; Raveh and Lavie, 2015). In principle, if there are separate pools of attentional resources, attentional limitations in one sensory modality can be circumvented by distributing attentional processing across several sensory modalities. Conversely, if there is only one shared pool of attentional resources for all sensory modalities, attentional limitations in one sensory modality cannot be circumvented by distributing attentional processing across several sensory modalities.

The question of whether there are shared or distinct attentional resources across the sensory modalities has often been investigated using dual task designs (Pashler, 1994). In a dual task design, participants perform two tasks separately (“single task condition”) or at the same time (“dual task condition”). The extent to which attentional resources are shared for two tasks is assessed by comparing performance in the single task condition with performance in the dual task condition. If the attentional resources required for the two tasks are shared, task performance should decrease in the dual task condition relative to the single task condition. If attentional resources required for the two tasks are distinct, performance in the single and dual task conditions should not differ. In multisensory research, the two tasks in a dual task design are performed either in the same sensory modality or in different sensory modalities. The rationale of the design is that two tasks performed in the same sensory modality should always share attentional resources while two tasks performed in separate sensory modalities may or may not rely on shared attentional resources. That is, if attentional resources are distinct across sensory modalities, tasks performed in two separate sensory modalities should interfere less than tasks performed in the same sensory modality.
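
The dual task logic above can be written down as a toy decision rule. The Python sketch below is a hypothetical illustration: it assumes higher scores mean better performance, and its `tolerance` margin stands in for the statistical tests that actual studies use to compare same-modality and cross-modality interference.

```python
def dual_task_cost(single, dual):
    """Performance drop when a task is done alongside a second task.

    Assumes higher scores mean better performance (e.g., percent correct).
    """
    return single - dual


def infer_resources(same_modality_cost, cross_modality_cost, tolerance):
    """Toy reading of the dual task rationale.

    If a cross-modality task pairing costs clearly less than a
    same-modality pairing, the pattern is read as distinct resources;
    otherwise it is read as shared resources. `tolerance` is a
    hypothetical margin, not a substitute for a statistical test.
    """
    if cross_modality_cost < same_modality_cost - tolerance:
        return "distinct"
    return "shared"


# Hypothetical percent-correct scores for a visual task.
same = dual_task_cost(single=85.0, dual=70.0)   # visual + visual task
cross = dual_task_cost(single=85.0, dual=83.0)  # visual + auditory task
print(infer_resources(same, cross, tolerance=5.0))
```

Here the cross-modality cost (2 points) is much smaller than the same-modality cost (15 points), so the rule returns "distinct"; equal costs in both pairings would instead point to shared resources.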

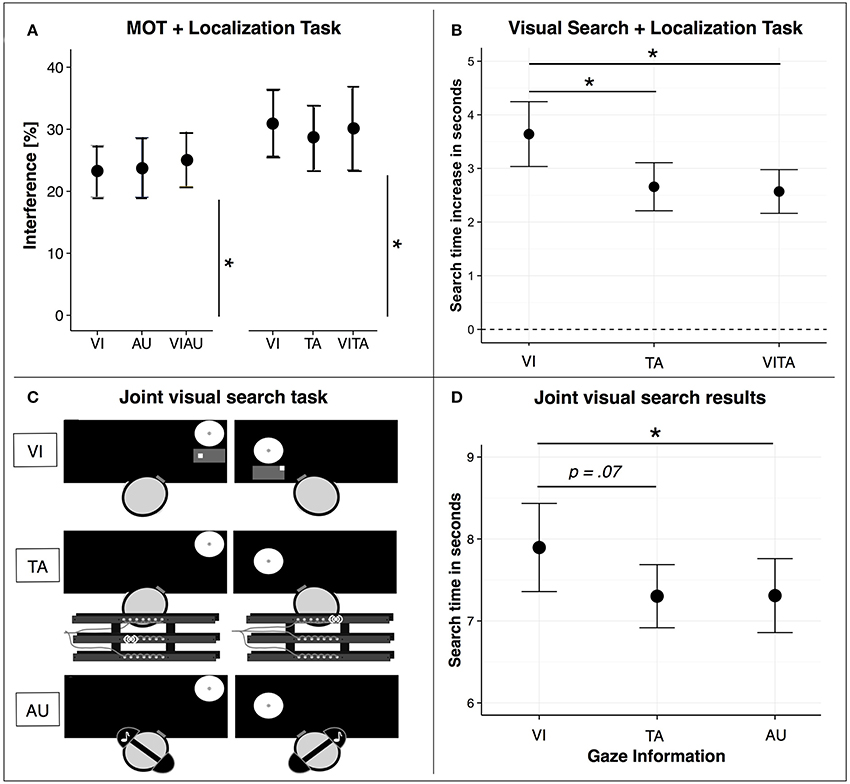

In the following, we focus on research that has used dual task designs to investigate how limitations in attentional resources for visuospatial attention can be circumvented by distributing information processing across sensory modalities. Several researchers have suggested that one factor influencing the allocation of attentional resources across sensory modalities is the task-specific type of attentional processing (Bonnel and Hafter, 1998; Chan and Newell, 2008; Arrighi et al., 2011; Wahn and König, 2016; Wahn et al., 2017c). That is, the allocation of attentional resources depends on whether tasks performed in separate sensory modalities require object-based attention or spatial attention (for a recent review, see Wahn and König, 2017). In recent studies (Arrighi et al., 2011; Wahn and König, 2015a,b), this task dependency in attentional resource allocation has been tested in a dual task design involving a visuospatial task (i.e., a MOT task). In particular, the MOT task was performed either alone or in combination with a secondary task performed in the visual, auditory, or tactile sensory modality. The secondary task required either object-based attention (i.e., a discrimination task) or spatial attention (i.e., a localization task). When participants performed the MOT task in combination with an object-based attention task in another sensory modality (i.e., an auditory pitch discrimination task), distinct attentional resources were found for the visual and auditory modalities (Arrighi et al., 2011). However, in studies in which participants performed the MOT task in combination with either a tactile (Wahn and König, 2015b) or an auditory localization task (Wahn and König, 2015a), findings suggest that attentional resources are shared across the visual, tactile, and auditory sensory modalities. In particular, the two spatial attention tasks interfered with each other to the same extent regardless of whether they were performed in two separate sensory modalities or in the same sensory modality (see Figure 2A).

Figure 2. (A) Dual task interference when participants perform the MOT task in combination with a visual (VI), auditory (AU), tactile (TA), audiovisual (VIAU), or visuotactile (VITA) localization task. Interference is measured as the reduction in performance between single and dual task conditions. In particular, the reductions in performance for both tasks (i.e., MOT and localization task) are combined by taking the Euclidean distance between the performances in the single and dual task conditions, separately for each combination of tasks (MOT+VI, MOT+AU, MOT+TA, MOT+VIAU, MOT+VITA). (B) Search time increase relative to performing the visual search task alone when participants perform the same task in combination with the VI, TA, or VITA localization task. (C) Joint visual search task conditions. Co-actors jointly searched for a target among distractors on two separate computer screens. A black mask covered the whole screen, and only the currently viewed location was visible to the co-actors. Co-actors received information about where their co-actor was looking either via a visual map (VI) displayed below their viewed location, via vibrations on a vibrotactile belt (TA), or via tones received through headphones (AU). (D) Joint visual search results. Search performance (i.e., search time of the co-actor who found the target first) as a function of the sensory modality (VI, TA, or AU) in which the gaze information was received. Error bars in (A,B,D) represent the standard error of the mean. Asterisks indicate significant comparisons at an alpha level of .05. (A) has been adapted from Wahn and König (2015a,b), (B) from Wahn and König (2016), and (C,D) from Wahn et al. (2016c).
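
The combined interference measure in Figure 2A treats the single-to-dual performance drops of the two tasks as coordinates and takes their Euclidean distance. The Python sketch below illustrates this under the assumption that both tasks are scored on a comparable scale (e.g., percent correct); the function name and example scores are hypothetical, and the original analysis may have scaled the tasks differently.

```python
import math


def combined_interference(mot_single, mot_dual, loc_single, loc_dual):
    """Euclidean distance between single- and dual-task performance.

    The MOT drop and the localization drop are treated as the two
    coordinates of a point and combined into one interference value.
    """
    mot_drop = mot_single - mot_dual
    loc_drop = loc_single - loc_dual
    return math.hypot(mot_drop, loc_drop)


# Hypothetical scores: MOT drops by 3 points, localization by 4 points.
print(combined_interference(90.0, 87.0, 80.0, 76.0))
```

This prints 5.0. Because the distance is unsigned, a dual-task improvement would also register as interference; the sketch does not treat that case specially.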

Further support for these conclusions was provided by another study (Wahn and König, 2016). In contrast to the earlier studies (Wahn and König, 2015a,b), this study combined an object-based attention task with a spatial attention task. In particular, participants performed a visual search task in combination with either a visual or a tactile localization task. In line with the findings above (Arrighi et al., 2011), participants performed the visual search task faster in combination with the tactile localization task than in combination with the visual localization task (see Figure 2B). These findings suggest that attentional resources for the sensory modalities are distinct when tasks involve different types of attentional processing, i.e., object-based and spatial attentional processing.

In sum, the findings discussed above suggest that the allocation of attentional resources across sensory modalities (i.e., whether they are shared or distinct) depends on what type of attentional processing is required in a task. In particular, if tasks only require spatial attentional processing, findings suggest that attentional resources are shared across sensory modalities (Wahn and König, 2015a,b). However, if tasks also require object-based attentional processing, findings suggest that attentional resources are distinct across the sensory modalities (Arrighi et al., 2011; Wahn and König, 2016). Importantly, limitations in visuospatial attention can be circumvented by distributing attentional processing across sensory modalities if tasks involve object-based as well as spatial attentional processing.

Apart from task dependency, several other factors influence attentional processing, such as motor demands (Marois and Ivanoff, 2005; Dux et al., 2006) and the sensory modality in which task load is increased (Rees et al., 2001; Macdonald and Lavie, 2011; Molloy et al., 2015; Raveh and Lavie, 2015) (for a detailed discussion, see Wahn and König, 2017). Another important factor is the age of participants: findings of a recent study (Matusz et al., 2015) suggest that conclusions about the distribution of attentional resources across the sensory modalities in adults do not necessarily generalize to children. In addition, another effective means of circumventing limitations in one sensory modality is to provide redundant information via several sensory modalities, thereby taking advantage of the behavioral benefits of multisensory integration (i.e., faster reaction times and higher accuracy) (Meredith and Stein, 1983; Ernst and Banks, 2002; Helbig and Ernst, 2008; Stein and Stanford, 2008; Gibney et al., 2017). The process of multisensory integration has been argued to be independent of top-down influences (Matusz and Eimer, 2011; De Meo et al., 2015; ten Oever et al., 2016) and to be robust against additional attentional demands (Wahn and König, 2015a,b) for low-level stimuli (for more general reviews on the topic, see van Atteveldt et al., 2014; Chen and Spence, 2016; Macaluso et al., 2016; Tang et al., 2016), making it highly suitable for circumventing limitations within one sensory modality.
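
The accuracy benefit of redundant multisensory input can be illustrated with the reliability-weighted (maximum-likelihood) cue-combination model that Ernst and Banks (2002) found visual-haptic judgments to approximate. The Python sketch below assumes independent, Gaussian-distributed noise on each cue; the function name and example numbers are hypothetical. The model speaks only to precision, not to the reaction-time benefits mentioned above.

```python
def integrate_cues(estimates, variances):
    """Reliability-weighted (maximum-likelihood) combination of cues.

    Each cue is weighted by its inverse variance (its reliability); the
    combined variance is the inverse of the summed reliabilities.
    """
    if len(estimates) != len(variances) or not estimates:
        raise ValueError("need one variance per estimate")
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    combined = sum(r * e for r, e in zip(reliabilities, estimates)) / total
    return combined, 1.0 / total


# Hypothetical visual and haptic size estimates (arbitrary units).
estimate, variance = integrate_cues([10.0, 12.0], [1.0, 4.0])
print(estimate, variance)
```

The combined estimate (about 10.4) lies closer to the more reliable cue, and the combined variance (about 0.8) is never larger than the smallest single-cue variance, which is one way of expressing the higher accuracy of integrated multisensory estimates.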

In the previous sections, we reviewed studies in which participants perform a task alone. However, in many situations in daily life, tasks are performed jointly by two or more humans with a shared goal (Sebanz et al., 2006; Vesper et al., 2017), for instance, when two humans carry a table together (Sebanz et al., 2006), search for a friend in a crowd (Brennan et al., 2008), or play team sports such as basketball or soccer. In such joint tasks, humans often achieve higher performance than the better individual would achieve alone (i.e., a collective benefit) (Bahrami et al., 2010). Collective benefits have been investigated in several task domains, such as visuomotor tasks (Knoblich and Jordan, 2003; Masumoto and Inui, 2013; Ganesh et al., 2014; Skewes et al., 2015; Rigoli et al., 2015; Wahn et al., 2016b), decision-making tasks (Bahrami et al., 2010, 2012a,b), and visuospatial tasks (Brennan et al., 2008; Neider et al., 2010; Brennan and Enns, 2015; Wahn et al., 2016c, 2017b).
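
A collective benefit, as defined above, means the pair outperforms its better member. The Python sketch below expresses this as a simple ratio; it is a simplification assumed for illustration, since Bahrami et al. (2010) quantified performance with psychophysical sensitivity rather than raw scores. The function name and example values are hypothetical.

```python
def collective_benefit(dyad_score, individual_scores, higher_is_better=True):
    """Dyad performance relative to the better individual.

    Values above 1 indicate a collective benefit. For measures where lower
    is better (e.g., search time), the ratio is inverted so that values
    above 1 still mean the dyad outperformed its better member.
    """
    if higher_is_better:
        return dyad_score / max(individual_scores)
    return min(individual_scores) / dyad_score


# Hypothetical accuracy (proportion correct) and search times (seconds).
print(collective_benefit(0.9, [0.8, 0.7]))
print(collective_benefit(10.0, [12.0, 15.0], higher_is_better=False))
```

Both invented examples exceed 1 (about 1.125 and 1.2), i.e., the dyad beats its better member; a ratio at or below 1 would mean no collective benefit.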

Regarding visuospatial tasks, several studies have investigated joint performance in visual search tasks (Brennan et al., 2008; Neider et al., 2010; Brennan and Enns, 2015; Wahn et al., 2016c). In a joint visual search task, two co-actors jointly search for a target stimulus among distractor stimuli. Brennan et al. (2008) investigated how performance in such a task depends on how information is exchanged between the two co-actors. They found that co-actors searched fastest and divided the task demands most effectively in the condition in which they received gaze information (i.e., a continuous display of the co-actor's gaze location), suggesting that co-actors benefit greatly from receiving spatial information about their co-actor's actions (also see Wahn et al., 2017b).

The task demands in the joint visual search task as employed by Brennan et al. (2008) involve a combination of object-based attention (i.e., discriminating targets from distractors in the visual search task) and spatial attention (i.e., localizing where the co-actor is looking using the gaze information). As reported above, findings in multisensory research suggest that limitations of visuospatial attention can be effectively circumvented by distributing information processing across sensory modalities if processing involves a combination of object-based attention and spatial attention (Arrighi et al., 2011; Wahn and König, 2016). In a recent study (Wahn et al., 2016c), these findings from multisensory research were applied to a joint visual search task similar to the one used by Brennan et al. (2008). In particular, the researchers investigated whether joint visual search is faster when co-actors receive information about their partner's viewed location via the auditory or tactile sensory modality rather than via the visual modality (see Figure 2C). Co-actors searched faster when they received the viewing information via the tactile or auditory sensory modality than via the visual sensory modality (see Figure 2D). These results suggest that the findings from multisensory research mentioned above (Arrighi et al., 2011; Wahn and König, 2016) can be successfully applied to a joint visuospatial task.
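
In Figure 2D, joint search performance is the search time of whichever co-actor found the target first, compared across the modality (VI, TA, or AU) that carried the gaze information. The Python sketch below computes that summary; the tuple layout, the use of None for a co-actor who did not find the target, the function names, and the example trials are all hypothetical and are not data from Wahn et al. (2016c).

```python
from statistics import mean


def joint_search_time(*times):
    """Joint search time: the time of the co-actor who found the target first.

    A co-actor who did not find the target is recorded as None.
    """
    found = [t for t in times if t is not None]
    if not found:
        raise ValueError("neither co-actor found the target")
    return min(found)


def mean_time_by_modality(trials):
    """Average joint search time per gaze-information modality.

    trials: iterable of (modality, time_a, time_b) tuples, with modality
    labels such as "VI", "TA", or "AU" as in Figure 2.
    """
    grouped = {}
    for modality, time_a, time_b in trials:
        grouped.setdefault(modality, []).append(joint_search_time(time_a, time_b))
    return {modality: mean(times) for modality, times in grouped.items()}


# Hypothetical trials in seconds.
trials = [("VI", 3.0, 4.0), ("VI", 5.0, None), ("TA", 2.0, 2.5), ("AU", None, 2.4)]
print(mean_time_by_modality(trials))
```

Taking the minimum per trial reflects that the pair's search ends as soon as either member finds the target; averaging per modality then gives one value per condition, as plotted in Figure 2D.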

In the present review, we examined recent studies investigating limitations in visuospatial attention. These studies have reliably found limitations of visuospatial attention, as well as physiological correlates whose activity rises with increasing visuospatial attentional demands (Sternshein et al., 2011; Drew et al., 2013; Alnæs et al., 2014; Wahn et al., 2016a). Findings from multisensory research have demonstrated that such limitations can be circumvented by distributing information processing across sensory modalities when tasks involve both object-based and spatial attentional processing (Arrighi et al., 2011; Wahn and König, 2015a,b, 2016), and these findings are applicable to joint tasks (Wahn et al., 2016c).

Apart from the study above (Wahn et al., 2016c), other studies on joint action have investigated how multisensory stimuli (e.g., visual and auditory) can facilitate joint performance (Knoblich and Jordan, 2003) and how the process of multisensory integration is affected by social settings (Heed et al., 2010; Wahn et al., 2017a). However, these studies have not investigated whether distributing information processing across sensory modalities could facilitate joint performance. We suggest that future studies further investigate to what extent findings from multisensory research are applicable to joint tasks. In particular, attentional limitations may be circumvented in any joint task that involves a combination of object-based and spatial attentional processing in the visual sensory modality, possibly facilitating joint performance.

The possibility of circumventing limitations of visuospatial attention is also relevant for many real-world tasks that require visuospatial attention, such as car driving (Spence and Read, 2003; Kunar et al., 2008; Spence and Ho, 2012), air traffic control (Giraudet et al., 2014), aviation (Nikolic et al., 1998; Sklar and Sarter, 1999), navigation (Nagel et al., 2005; Kaspar et al., 2014; König et al., 2016), or rehabilitation (Johansson, 2012; Maidenbaum et al., 2014). Notably, when applying these findings to real-world tasks, additional factors such as how much the task has been practiced (Ruthruff et al., 2001; Chirimuuta et al., 2007) or memorized (Matusz et al., 2017) should be taken into account, because real-world tasks are often highly practiced and memorized. More generally, in such scenarios, limitations of visuospatial attention could be effectively circumvented by distributing attentional processing across sensory modalities, thereby improving human performance and reducing the risk of accidents.

Drafted the manuscript: BW. Revised the manuscript: BW and PK.

We acknowledge the support by H2020 – H2020-FETPROACT-2014 641321 – socSMCs (for BW) and ERC-2010-AdG #269716 – MULTISENSE (for PK). Moreover, we acknowledge support from the Deutsche Forschungsgemeinschaft (DFG), Open Access Publishing Fund of Osnabrück University, and an open access publishing award by Osnabrück University (for BW).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This review is in large part a reproduction of Basil Wahn's Ph.D. thesis [“Limitations of visuospatial attention (and how to circumvent them)”], which can be found online in the following University repository: https://repositorium.ub.uni-osnabrueck.de/handle/urn:nbn:de:gbv:700-2017051515895

Note that the Ph.D. thesis is available only in the university repository. Moreover, submitting the thesis as a mini review to this journal is in line with the policies of the University of Osnabrück.

We also acknowledge that Figures 1 and 2 have appeared in part in our earlier publications. We referenced the original publications in the figure captions and obtained permission to reuse the figures.

Ahveninen, J., Jääskeläinen, I. P., Raij, T., Bonmassar, G., Devore, S., Hämäläinen, M., et al. (2006). Task-modulated “what” and “where” pathways in human auditory cortex. Proc. Natl. Acad. Sci. U.S.A. 103, 14608–14613. doi: 10.1073/pnas.0510480103

Alais, D., Morrone, C., and Burr, D. (2006). Separate attentional resources for vision and audition. Proc. R. Soc. B Biol. Sci. 273, 1339–1345. doi: 10.1098/rspb.2005.3420

Alnæs, D., Sneve, M. H., Espeseth, T., Endestad, T., van de Pavert, S. H. P., and Laeng, B. (2014). Pupil size signals mental effort deployed during multiple object tracking and predicts brain activity in the dorsal attention network and the locus coeruleus. J. Vis. 14, 1. doi: 10.1167/14.4.1

Alvarez, G. A., and Franconeri, S. L. (2007). How many objects can you track?: Evidence for a resource-limited attentive tracking mechanism. J. Vis. 7:14. doi: 10.1167/7.13.14

Arnell, K. M., and Jenkins, R. (2004). Revisiting within-modality and cross-modality attentional blinks: effects of target–distractor similarity. Percept. Psychophys. 66, 1147–1161. doi: 10.3758/BF03196842

Arnell, K. M., and Larson, J. M. (2002). Cross-modality attentional blinks without preparatory task-set switching. Psychon. Bull. Rev. 9, 497–506. doi: 10.3758/BF03196305

Arrighi, R., Lunardi, R., and Burr, D. (2011). Vision and audition do not share attentional resources in sustained tasks. Front. Psychol. 2:56. doi: 10.3389/fpsyg.2011.00056

Atsma, J., Koning, A., and van Lier, R. (2012). Multiple object tracking: anticipatory attention doesn't “bounce.” J. Vis. 12, 1–1. doi: 10.1167/12.13.1

Bahrami, B., Olsen, K., Bang, D., Roepstorff, A., Rees, G., and Frith, C. (2012a). Together, slowly but surely: the role of social interaction and feedback on the build-up of benefit in collective decision-making. J. Exp. Psychol. Hum. Percept. Perform. 38, 3–8. doi: 10.1037/a0025708

Bahrami, B., Olsen, K., Bang, D., Roepstorff, A., Rees, G., and Frith, C. (2012b). What failure in collective decision-making tells us about metacognition. Philos. Trans. R. Soc. B Biol. Sci. 367, 1350–1365. doi: 10.1098/rstb.2011.0420

Bahrami, B., Olsen, K., Latham, P. E., Roepstorff, A., Rees, G., and Frith, C. D. (2010). Optimally interacting minds. Science 329, 1081–1085. doi: 10.1126/science.1185718

Beatty, J. (1982). Task-evoked pupillary responses, processing load, and the structure of processing resources. Psychol. Bull. 91, 276. doi: 10.1037/0033-2909.91.2.276

Bonnel, A.-M., and Hafter, E. R. (1998). Divided attention between simultaneous auditory and visual signals. Percept. Psychophys. 60, 179–190. doi: 10.3758/BF03206027

Brennan, A. A., and Enns, J. T. (2015). When two heads are better than one: interactive versus independent benefits of collaborative cognition. Psychon. Bull. Rev. 22, 1076–1082. doi: 10.3758/s13423-014-0765-4

Brennan, S. E., Chen, X., Dickinson, C. A., Neider, M. B., and Zelinsky, G. J. (2008). Coordinating cognition: the costs and benefits of shared gaze during collaborative search. Cognition 106, 1465–1477. doi: 10.1016/j.cognition.2007.05.012

Cavanagh, P., and Alvarez, G. A. (2005). Tracking multiple targets with multifocal attention. Trends Cogn. Sci. 9, 349–354. doi: 10.1016/j.tics.2005.05.009

Chan, J. S., and Newell, F. N. (2008). Behavioral evidence for task-dependent “what” versus “where” processing within and across modalities. Percept. Psychophys. 70, 36–49. doi: 10.3758/PP.70.1.36

Chen, Y.-C., and Spence, C. (2016). Hemispheric asymmetry: looking for a novel signature of the modulation of spatial attention in multisensory processing. Psychon. Bull. Rev. 24, 690–707. doi: 10.3758/s13423-016-1154-y

Chirimuuta, M., Burr, D., and Morrone, M. C. (2007). The role of perceptual learning on modality-specific visual attentional effects. Vis. Res. 47, 60–70. doi: 10.1016/j.visres.2006.09.002

Chun, M. M., Golomb, J. D., and Turk-Browne, N. B. (2011). A taxonomy of external and internal attention. Annu. Rev. Psychol. 62, 73–101. doi: 10.1146/annurev.psych.093008.100427

De Meo, R., Murray, M. M., Clarke, S., and Matusz, P. J. (2015). Top-down control and early multisensory processes: chicken vs. egg. Front. Integr. Neurosci. 9:17. doi: 10.3389/fnint.2015.00017

Drew, T., Horowitz, T. S., and Vogel, E. K. (2013). Swapping or dropping? Electrophysiological measures of difficulty during multiple object tracking. Cognition 126, 213–223. doi: 10.1016/j.cognition.2012.10.003

Duncan, J., Martens, S., and Ward, R. (1997). Restricted attentional capacity within but not between sensory modalities. Nature 387, 808–810. doi: 10.1038/42947

Dux, P. E., Ivanoff, J., Asplund, C. L., and Marois, R. (2006). Isolation of a central bottleneck of information processing with time-resolved fMRI. Neuron 52, 1109–1120. doi: 10.1016/j.neuron.2006.11.009

Ernst, M. O., and Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433. doi: 10.1038/415429a

Fink, G., Dolan, R., Halligan, P., Marshall, J., and Frith, C. (1997). Space-based and object-based visual attention: shared and specific neural domains. Brain 120, 2013–2028. doi: 10.1093/brain/120.11.2013

Finoia, P., Mitchell, D. J., Hauk, O., Beste, C., Pizzella, V., and Duncan, J. (2015). Concurrent brain responses to separate auditory and visual targets. J. Neurophysiol. 114, 1239–1247. doi: 10.1152/jn.01050.2014

Ganesh, G., Takagi, A., Osu, R., Yoshioka, T., Kawato, M., and Burdet, E. (2014). Two is better than one: physical interactions improve motor performance in humans. Sci. Rep. 4:3824. doi: 10.1038/srep03824

Gibney, K. D., Aligbe, E., Eggleston, B. A., Nunes, S. R., Kerkhoff, W. G., Dean, C. L., et al. (2017). Visual distractors disrupt audiovisual integration regardless of stimulus complexity. Front. Integr. Neurosci. 11:1. doi: 10.3389/fnint.2017.00001

Giraudet, L., Berenger, M., Imbert, J.-P., Tremblay, S., and Causse, M. (2014). “Inattentional deafness in simulated air traffic control tasks: a behavioral and P300 analysis,” in 5th International Conference on Applied Human Factors and Ergonomics (Kraków).

Heed, T., Habets, B., Sebanz, N., and Knoblich, G. (2010). Others' actions reduce crossmodal integration in peripersonal space. Curr. Biol. 20, 1345–1349. doi: 10.1016/j.cub.2010.05.068

Hein, G., Parr, A., and Duncan, J. (2006). Within-modality and cross-modality attentional blinks in a simple discrimination task. Percept. Psychophys. 68, 54–61. doi: 10.3758/BF03193655

Heinrich, W. (1896). Die Aufmerksamkeit und die Funktion der Sinnesorgane. Zeitschrift für Psychologie und Physiologie der Sinnesorgane 11, 342–388.

Helbig, H. B., and Ernst, M. O. (2008). Visual-haptic cue weighting is independent of modality-specific attention. J. Vis. 8:21. doi: 10.1167/8.1.21

Hoeks, B., and Levelt, W. J. (1993). Pupillary dilation as a measure of attention: a quantitative system analysis. Behav. Res. Methods Instrum. Comput. 25, 16–26. doi: 10.3758/BF03204445

Howe, P. D., Horowitz, T. S., Morocz, I. A., Wolfe, J., and Livingstone, M. S. (2009). Using fMRI to distinguish components of the multiple object tracking task. J. Vis. 9:10. doi: 10.1167/9.4.10

Jahn, G., Wendt, J., Lotze, M., Papenmeier, F., and Huff, M. (2012). Brain activation during spatial updating and attentive tracking of moving targets. Brain Cogn. 78, 105–113. doi: 10.1016/j.bandc.2011.12.001

Johansson, B. B. (2012). Multisensory stimulation in stroke rehabilitation. Front. Hum. Neurosci. 6:60. doi: 10.3389/fnhum.2012.00060

Jolicoeur, P. (1999). Restricted attentional capacity between sensory modalities. Psychon. Bull. Rev. 6, 87–92. doi: 10.3758/BF03210813

Jovicich, J., Peters, R. J., Koch, C., Braun, J., Chang, L., and Ernst, T. (2001). Brain areas specific for attentional load in a motion-tracking task. J. Cogn. Neurosci. 13, 1048–1058. doi: 10.1162/089892901753294347

Kahneman, D., and Beatty, J. (1966). Pupil diameter and load on memory. Science 154, 1583–1585. doi: 10.1126/science.154.3756.1583

Kaspar, K., König, S., Schwandt, J., and König, P. (2014). The experience of new sensorimotor contingencies by sensory augmentation. Conscious. Cogn. 28, 47–63. doi: 10.1016/j.concog.2014.06.006

Keane, B. P., and Pylyshyn, Z. W. (2006). Is motion extrapolation employed in multiple object tracking? tracking as a low-level, non-predictive function. Cogn. Psychol. 52, 346–368. doi: 10.1016/j.cogpsych.2005.12.001

Keitel, C., Maess, B., Schröger, E., and Müller, M. M. (2013). Early visual and auditory processing rely on modality-specific attentional resources. Neuroimage 70, 240–249. doi: 10.1016/j.neuroimage.2012.12.046

Knoblich, G., and Jordan, J. S. (2003). Action coordination in groups and individuals: learning anticipatory control. J. Exp. Psychol. Learn. Mem. Cogn. 29, 1006–1016. doi: 10.1037/0278-7393.29.5.1006

König, S. U., Schumann, F., Keyser, J., Goeke, C., Krause, C., Wache, S., et al. (2016). Learning new sensorimotor contingencies: effects of long-term use of sensory augmentation on the brain and conscious perception. PLoS ONE 11:e0166647. doi: 10.1371/journal.pone.0166647

Kunar, M. A., Carter, R., Cohen, M., and Horowitz, T. S. (2008). Telephone conversation impairs sustained visual attention via a central bottleneck. Psychon. Bull. Rev. 15, 1135–1140. doi: 10.3758/PBR.15.6.1135

Larsen, A., McIlhagga, W., Baert, J., and Bundesen, C. (2003). Seeing or hearing? Perceptual independence, modality confusions, and crossmodal congruity effects with focused and divided attention. Percept. Psychophys. 65, 568–574. doi: 10.3758/BF03194583

Lavie, N. (2005). Distracted and confused?: Selective attention under load. Trends Cogn. Sci. 9, 75–82. doi: 10.1016/j.tics.2004.12.004

Lavie, N. (2010). Attention, distraction, and cognitive control under load. Curr. Dir. Psychol. Sci. 19, 143–148. doi: 10.1177/0963721410370295

Lisi, M., Bonato, M., and Zorzi, M. (2015). Pupil dilation reveals top–down attentional load during spatial monitoring. Biol. Psychol. 112, 39–45. doi: 10.1016/j.biopsycho.2015.10.002

Livingstone, M., and Hubel, D. (1988). Segregation of form, color, movement, and depth: anatomy, physiology, and perception. Science 240, 740–749. doi: 10.1126/science.3283936

Macaluso, E., Noppeney, U., Talsma, D., Vercillo, T., Hartcher-O'Brien, J., and Adam, R. (2016). The curious incident of attention in multisensory integration: bottom-up vs. top-down. Multisens. Res. 29, 557–583. doi: 10.1163/22134808-00002528

Macdonald, J. S., and Lavie, N. (2011). Visual perceptual load induces inattentional deafness. Atten. Percept. Psychophys. 73, 1780–1789. doi: 10.3758/s13414-011-0144-4

Maeder, P. P., Meuli, R. A., Adriani, M., Bellmann, A., Fornari, E., Thiran, J.-P., et al. (2001). Distinct pathways involved in sound recognition and localization: a human fMRI study. Neuroimage 14, 802–816. doi: 10.1006/nimg.2001.0888

Maidenbaum, S., Abboud, S., and Amedi, A. (2014). Sensory substitution: closing the gap between basic research and widespread practical visual rehabilitation. Neurosci. Biobehav. Rev. 41, 3–15. doi: 10.1016/j.neubiorev.2013.11.007

Marois, R., and Ivanoff, J. (2005). Capacity limits of information processing in the brain. Trends Cogn. Sci. 9, 296–305. doi: 10.1016/j.tics.2005.04.010

Masumoto, J., and Inui, N. (2013). Two heads are better than one: both complementary and synchronous strategies facilitate joint action. J. Neurophysiol. 109, 1307–1314. doi: 10.1152/jn.00776.2012

Mathôt, S., Melmi, J.-B., van der Linden, L., and Van der Stigchel, S. (2016). The mind-writing pupil: a human-computer interface based on decoding of covert attention through pupillometry. PLoS ONE 11:e0148805. doi: 10.1371/journal.pone.0148805

Mathôt, S., Van der Linden, L., Grainger, J., and Vitu, F. (2013). The pupillary light response reveals the focus of covert visual attention. PLoS ONE 8:e78168. doi: 10.1371/journal.pone.0078168

Matusz, P. J., Broadbent, H., Ferrari, J., Forrest, B., Merkley, R., and Scerif, G. (2015). Multi-modal distraction: insights from children's limited attention. Cognition 136, 156–165. doi: 10.1016/j.cognition.2014.11.031

Matusz, P. J., and Eimer, M. (2011). Multisensory enhancement of attentional capture in visual search. Psychon. Bull. Rev. 18, 904–909. doi: 10.3758/s13423-011-0131-8

Matusz, P. J., Wallace, M. T., and Murray, M. M. (2017). A multisensory perspective on object memory. Neuropsychologia. doi: 10.1016/j.neuropsychologia.2017.04.008. [Epub ahead of print].

Meredith, M. A., and Stein, B. E. (1983). Interactions among converging sensory inputs in the superior colliculus. Science 221, 389–391. doi: 10.1126/science.6867718

Mishkin, M., and Ungerleider, L. G. (1982). Contribution of striate inputs to the visuospatial functions of parieto-preoccipital cortex in monkeys. Behav. Brain Res. 6, 57–77. doi: 10.1016/0166-4328(82)90081-X

Molloy, K., Griffiths, T. D., Chait, M., and Lavie, N. (2015). Inattentional deafness: visual load leads to time-specific suppression of auditory evoked responses. J. Neurosci. 35, 16046–16054. doi: 10.1523/JNEUROSCI.2931-15.2015

Nagel, S. K., Carl, C., Kringe, T., Märtin, R., and König, P. (2005). Beyond sensory substitution—learning the sixth sense. J. Neural Eng. 2, R13–R26. doi: 10.1088/1741-2560/2/4/R02

Neider, M. B., Chen, X., Dickinson, C. A., Brennan, S. E., and Zelinsky, G. J. (2010). Coordinating spatial referencing using shared gaze. Psychon. Bull. Rev. 17, 718–724. doi: 10.3758/PBR.17.5.718

Nikolic, M. I., Sklar, A. E., and Sarter, N. B. (1998). “Multisensory feedback in support of pilot-automation coordination: the case of uncommanded mode transitions,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 42 (Chicago, IL: SAGE Publications), 239–243.

Pashler, H. (1994). Dual-task interference in simple tasks: data and theory. Psychol. Bull. 116:220. doi: 10.1037/0033-2909.116.2.220

Potter, M. C., Chun, M. M., Banks, B. S., and Muckenhoupt, M. (1998). Two attentional deficits in serial target search: the visual attentional blink and an amodal task-switch deficit. J. Exp. Psychol. Learn. Mem. Cogn. 24, 979–992. doi: 10.1037/0278-7393.24.4.979

Pylyshyn, Z. W., and Storm, R. W. (1988). Tracking multiple independent targets: evidence for a parallel tracking mechanism. Spat. Vis. 3, 179–197. doi: 10.1163/156856888X00122

Raveh, D., and Lavie, N. (2015). Load-induced inattentional deafness. Atten. Percept. Psychophys. 77, 483–492. doi: 10.3758/s13414-014-0776-2

Reed, C. L., Klatzky, R. L., and Halgren, E. (2005). What vs. where in touch: an fMRI study. Neuroimage 25, 718–726. doi: 10.1016/j.neuroimage.2004.11.044

Rees, G., Frith, C., and Lavie, N. (2001). Processing of irrelevant visual motion during performance of an auditory attention task. Neuropsychologia 39, 937–949. doi: 10.1016/S0028-3932(01)00016-1

Rigoli, L., Romero, V., Shockley, K., Funke, G. J., Strang, A. J., and Richardson, M. J. (2015). “Effects of complementary control on the coordination dynamics of joint-action,” in Proceedings of the 37th Annual Conference of the Cognitive Science Society (Pasadena, CA), 1997–2002.

Ruthruff, E., Johnston, J. C., and Van Selst, M. (2001). Why practice reduces dual-task interference. J. Exp. Psychol. Hum. Percept. Perform. 27:3. doi: 10.1037/0096-1523.27.1.3

Sebanz, N., Bekkering, H., and Knoblich, G. (2006). Joint action: bodies and minds moving together. Trends Cogn. Sci. 10, 70–76. doi: 10.1016/j.tics.2005.12.009

Serences, J. T., Schwarzbach, J., Courtney, S. M., Golay, X., and Yantis, S. (2004). Control of object-based attention in human cortex. Cereb. Cortex 14, 1346–1357. doi: 10.1093/cercor/bhh095

Sigman, M., and Dehaene, S. (2008). Brain mechanisms of serial and parallel processing during dual-task performance. J. Neurosci. 28, 7585–7598. doi: 10.1523/JNEUROSCI.0948-08.2008

Sinnett, S., Costa, A., and Soto-Faraco, S. (2006). Manipulating inattentional blindness within and across sensory modalities. Q. J. Exp. Psychol. 59, 1425–1442. doi: 10.1080/17470210500298948

Skewes, J. C., Skewes, L., Michael, J., and Konvalinka, I. (2015). Synchronised and complementary coordination mechanisms in an asymmetric joint aiming task. Exp. Brain Res. 233, 551–565. doi: 10.1007/s00221-014-4135-2

Sklar, A. E., and Sarter, N. B. (1999). Good vibrations: tactile feedback in support of attention allocation and human-automation coordination in event-driven domains. Hum. Factors 41, 543–552. doi: 10.1518/001872099779656716

Soto, D., and Blanco, M. J. (2004). Spatial attention and object-based attention: a comparison within a single task. Vis. Res. 44, 69–81. doi: 10.1016/j.visres.2003.08.013

Soto-Faraco, S., and Spence, C. (2002). Modality-specific auditory and visual temporal processing deficits. Q. J. Exp. Psychol. 55, 23–40. doi: 10.1080/02724980143000136

Soto-Faraco, S., Spence, C., Fairbank, K., Kingstone, A., Hillstrom, A. P., and Shapiro, K. (2002). A crossmodal attentional blink between vision and touch. Psychon. Bull. Rev. 9, 731–738. doi: 10.3758/BF03196328

Spence, C. (2010). Crossmodal spatial attention. Ann. N. Y. Acad. Sci. 1191, 182–200. doi: 10.1111/j.1749-6632.2010.05440.x

Spence, C., and Driver, J. (2004). Crossmodal Space and Crossmodal Attention. Oxford, UK: Oxford University Press.

Spence, C., and Ho, C. (2012). The Multisensory Driver: Implications for Ergonomic Car Interface Design. Hampshire: Ashgate Publishing, Ltd.

Spence, C., and Read, L. (2003). Speech shadowing while driving: on the difficulty of splitting attention between eye and ear. Psychol. Sci. 14, 251–256. doi: 10.1111/1467-9280.02439

Stein, B. E., and Stanford, T. R. (2008). Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266. doi: 10.1038/nrn2331

Sternshein, H., Agam, Y., and Sekuler, R. (2011). EEG correlates of attentional load during multiple object tracking. PLoS ONE 6:e22660. doi: 10.1371/journal.pone.0022660

Talsma, D., Doty, T. J., Strowd, R., and Woldorff, M. G. (2006). Attentional capacity for processing concurrent stimuli is larger across sensory modalities than within a modality. Psychophysiology 43, 541–549. doi: 10.1111/j.1469-8986.2006.00452.x

Tang, X., Wu, J., and Shen, Y. (2016). The interactions of multisensory integration with endogenous and exogenous attention. Neurosci. Biobehav. Rev. 61, 208–224. doi: 10.1016/j.neubiorev.2015.11.002

ten Oever, S., Romei, V., van Atteveldt, N., Soto-Faraco, S., Murray, M. M., and Matusz, P. J. (2016). The COGs (context, object, and goals) in multisensory processing. Exp. Brain Res. 234, 1307–1323. doi: 10.1007/s00221-016-4590-z

Ungerleider, L. G., and Pessoa, L. (2008). What and where pathways. Scholarpedia 3:5342. doi: 10.4249/scholarpedia.5342

van Atteveldt, N., Murray, M. M., Thut, G., and Schroeder, C. E. (2014). Multisensory integration: flexible use of general operations. Neuron 81, 1240–1253. doi: 10.1016/j.neuron.2014.02.044

Van der Burg, E., Olivers, C. N. L., Bronkhorst, A. W., Koelewijn, T., and Theeuwes, J. (2007). The absence of an auditory–visual attentional blink is not due to echoic memory. Percept. Psychophys. 69, 1230–1241. doi: 10.3758/BF03193958

Vesper, C., Abramova, E., Bütepage, J., Ciardo, F., Crossey, B., Effenberg, A., et al. (2017). Joint action: mental representations, shared information and general mechanisms for coordinating with others. Front. Psychol. 7:2039. doi: 10.3389/fpsyg.2016.02039

Wahn, B., Ferris, D. P., Hairston, W. D., and König, P. (2016a). Pupil sizes scale with attentional load and task experience in a multiple object tracking task. PLoS ONE 11:e0168087. doi: 10.1371/journal.pone.0168087

Wahn, B., Keshava, A., Sinnett, S., Kingstone, A., and König, P. (2017a). “Audiovisual integration is affected by performing a task jointly,” in Proceedings of the 39th Annual Conference of the Cognitive Science Society (Austin, TX), 1296–1301.

Wahn, B., Kingstone, A., and König, P. (2017b). Two trackers are better than one: information about the co-actor's actions and performance scores contribute to the collective benefit in a joint visuospatial task. Front. Psychol. 8:669. doi: 10.3389/fpsyg.2017.00669

Wahn, B., and König, P. (2015a). Audition and vision share spatial attentional resources, yet attentional load does not disrupt audiovisual integration. Front. Psychol. 6:1084. doi: 10.3389/fpsyg.2015.01084

Wahn, B., and König, P. (2015b). Vision and haptics share spatial attentional resources and visuotactile integration is not affected by high attentional load. Multisens. Res. 28, 371–392. doi: 10.1163/22134808-00002482

Wahn, B., and König, P. (2016). Attentional resource allocation in visuotactile processing depends on the task, but optimal visuotactile integration does not depend on attentional resources. Front. Integr. Neurosci. 10:13. doi: 10.3389/fnint.2016.00013

Wahn, B., and König, P. (2017). Is attentional resource allocation across sensory modalities task-dependent? Adv. Cogn. Psychol. 13, 83–96. doi: 10.5709/acp-0209-2

Wahn, B., Murali, S., Sinnett, S., and König, P. (2017c). Auditory stimulus detection partially depends on visuospatial attentional resources. i-Perception 8:2041669516688026. doi: 10.1177/2041669516688026

Wahn, B., Schmitz, L., König, P., and Knoblich, G. (2016b). “Benefiting from being alike: Interindividual skill differences predict collective benefit in joint object control,” in Proceedings of the 38th Annual Conference of the Cognitive Science Society (Austin, TX), 2747–2752.

Wahn, B., Schwandt, J., Krüger, M., Crafa, D., Nunnendorf, V., and König, P. (2016c). Multisensory teamwork: using a tactile or an auditory display to exchange gaze information improves performance in joint visual search. Ergonomics 59, 781–795. doi: 10.1080/00140139.2015.1099742

Wickens, C. D. (2002). Multiple resources and performance prediction. Theor. Issues Ergon. Sci. 3, 159–177. doi: 10.1080/14639220210123806

Wierda, S. M., van Rijn, H., Taatgen, N. A., and Martens, S. (2012). Pupil dilation deconvolution reveals the dynamics of attention at high temporal resolution. Proc. Natl. Acad. Sci. U.S.A. 109, 8456–8460. doi: 10.1073/pnas.1201858109

Keywords: multisensory processing, visuospatial attention, joint action, attentional resources, multiple object tracking

Citation: Wahn B and König P (2017) Can Limitations of Visuospatial Attention Be Circumvented? A Review. Front. Psychol. 8:1896. doi: 10.3389/fpsyg.2017.01896

Received: 17 May 2017; Accepted: 12 October 2017;

Published: 27 October 2017.

Edited by:

Kathrin Ohla, Medical School Berlin, Germany

Reviewed by:

Pawel J. Matusz, Centre Hospitalier Universitaire Vaudois (CHUV), Switzerland

Copyright © 2017 Wahn and König. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Basil Wahn, bwahn@uos.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.