- ¹College of Psychology and Sociology, Shenzhen University, Shenzhen, China

- ²Shenzhen Key Laboratory of Affective and Social Cognitive Science, Shenzhen University, Shenzhen, China

- ³Faculty of Humanities and Social Science, City University of Macau, Macau, China

Internet Gaming Disorder (IGD) is characterized by impairments in social communication and the avoidance of social contact. Facial expression processing is the basis of social communication. However, few studies have investigated how individuals with IGD process facial expressions, and whether they have deficits in emotional facial processing remains unclear. The aim of the present study was to explore these two issues by investigating the time course of emotional facial processing in individuals with IGD. A backward masking task was used to investigate the differences between individuals with IGD and normal controls (NC) in the processing of subliminally presented facial expressions (sad, happy, and neutral) with event-related potentials (ERPs). The behavioral results showed that individuals with IGD are slower than NC in response to both sad and neutral expressions in the sad–neutral context. The ERP results showed that individuals with IGD exhibit decreased amplitudes in ERP component N170 (an index of early face processing) in response to neutral expressions compared to happy expressions in the happy–neutral expressions context, which might be due to their expectancies for positive emotional content. The NC, on the other hand, exhibited comparable N170 amplitudes in response to both happy and neutral expressions in the happy–neutral expressions context, as well as sad and neutral expressions in the sad–neutral expressions context. Both individuals with IGD and NC showed comparable ERP amplitudes during the processing of sad expressions and neutral expressions. The present study revealed that individuals with IGD have different unconscious neutral facial processing patterns compared with normal individuals and suggested that individuals with IGD may expect more positive emotion in the happy–neutral expressions context.

Highlights:

• The present study investigated whether the unconscious processing of facial expressions is influenced by excessive online gaming. A validated backward masking paradigm was used to investigate whether individuals with Internet Gaming Disorder (IGD) and normal controls (NC) exhibit different patterns in facial expression processing.

• The results demonstrated that individuals with IGD respond differently to facial expressions compared with NC at a preattentive level. Behaviorally, individuals with IGD were slower than NC in response to both sad and neutral expressions in the sad–neutral context. The ERP results further showed (1) decreased amplitudes of the N170 component (an index of early face processing) in individuals with IGD when they processed neutral expressions compared with happy expressions in the happy–neutral expressions context, whereas the NC exhibited comparable N170 amplitudes in response to these two expressions; and (2) both the IGD and NC groups demonstrated similar N170 amplitudes in response to sad and neutral faces in the sad–neutral expressions context.

• The decreased N170 amplitudes to neutral faces relative to happy faces in individuals with IGD might be due to their lower expectancy for neutral content in the happy–neutral expressions context, whereas individuals with IGD may have similar expectancies for neutral and sad faces in the sad–neutral expressions context.

Introduction

Excessive computer game playing can be both addictive and pathological (D’Hondt et al., 2015; Lemmens et al., 2015). As a behavioral addiction, Internet Gaming Disorder (IGD) is characterized by compulsive gaming behaviors with harmful personal or social consequences, such as impairments in individuals’ academic, occupational, or social functioning (Brady, 1996; Young, 1998; DSM-V, American Psychiatric Association, 2013; Tam and Walter, 2013; Spada, 2014; Van Rooij and Prause, 2014; D’Hondt et al., 2015; Kuss and Lopez-Fernandez, 2016). Research has found that Internet addiction (including online gaming activities and other forms of Internet use) shares essential features with other addictions, including decreased executive control abilities and excessive emotional processing of addiction-related stimuli (Ng and Wiemer-Hastings, 2005; He et al., 2011; D’Hondt et al., 2015). Accordingly, previous studies of IGD focused predominantly on impairments in inhibitory control or executive control among individuals with IGD (Dong et al., 2010, 2011; Wang et al., 2013; D’Hondt et al., 2015; Zhang et al., 2016). The deficits of individuals with IGD in social interactions and social skills such as emotional and interpersonal communication have also received considerable attention (Young, 1998; Engelberg and Sjöberg, 2004; D’Hondt et al., 2015), but so far, there have been limited experimental studies on the processing of real-world socioemotional stimuli among individuals with IGD. Thus, the underlying mechanisms behind these deficits remain unclear.

Social communication has been suggested to depend largely on the capacity for expression recognition (Blair, 2005; He et al., 2011). Facial expressions are important socioemotional stimuli, as they convey information about the identities, emotions, and intentions of other people, and thus represent a primary element of non-verbal communication in everyday life (Batty and Taylor, 2003; Itier and Taylor, 2004). Previous studies indirectly related to facial processing in IGD found that action video game players or violent media users showed reduced attention to happy faces in emotion recognition tasks (Kirsh et al., 2006; Kirsh and Mounts, 2007; Bailey and West, 2013). For example, Kirsh et al. (2006) found that, compared with participants low in violent media consumption, participants high in violent media consumption were slower to identify happy expressions and faster to identify angry expressions. However, how individuals with IGD process facial expressions remains unclear. Furthermore, studies on normal participants have revealed that emotional cues can be extracted from facial expressions in the preattentive or unconscious stage of face processing (Öhman, 1999; Dimberg et al., 2000; Vuilleumier and Schwartz, 2001; Vuilleumier, 2002). However, although deficits in conscious neutral-face processing have been found in excessive Internet users (He et al., 2011), whether individuals with IGD have unique unconscious emotional facial processing patterns remains unclear. We therefore aimed to explore this issue in the present study.

To investigate unconscious facial processing in individuals with IGD, the present study employed a visual backward masking paradigm. Visual backward masking is an “empirically rich and theoretically interesting phenomenon” in which the visibility of a target stimulus is attenuated by a mask stimulus presented after the target (Breitmeyer, 1984; Breitmeyer and Ogmen, 2000, p. 1572). In this paradigm, a target stimulus is presented briefly (usually for 1–100 ms) and followed by a mask stimulus, which is a meaningless or scrambled picture that overlaps with the target stimulus spatially or structurally (Esteves and Öhman, 1993). The mask stimulus impairs explicit awareness or perception of the target stimulus (Morris et al., 1999; Breitmeyer and Ogmen, 2000). This paradigm has been widely used to investigate recognition thresholds and to examine emotional and visual information processing that is partially independent of awareness, in a variety of specific populations, such as people with affective disorders (Esteves and Öhman, 1993; Breitmeyer and Ogmen, 2000; Axelrod et al., 2015; Zhang et al., 2016). For example, Zhang et al. (2016) found deficits of unconscious facial processing in patients with major depression using the visual backward masking paradigm with event-related potentials (ERPs).

To gain a better understanding of unconscious facial processing, we used ERPs, which have high temporal resolution. To our knowledge, only one published ERP study has focused on facial processing in excessive Internet users (He et al., 2011). He et al. (2011) found deficits in early face processing among excessive Internet users by asking participants to passively view upright and inverted faces and non-face stimuli presented above the conscious threshold. Specifically, excessive Internet users were impaired in social stimulus processing but intact in holistic configural face processing, as reflected in a smaller N170 face effect (i.e., the difference in N170 amplitudes for neutral-face vs. non-face stimuli) and a similar N170 inversion effect (i.e., the difference in N170 amplitudes for upright vs. inverted neutral faces) compared with normal controls (NC; He et al., 2011). The N170 is widely acknowledged to be a face-sensitive ERP component that typically occurs 140–200 ms after stimulus onset, responds maximally to face stimuli, and reflects automatic processing in the early stage of face perception (Rossion et al., 2000; Itier and Taylor, 2004). The N170 has been found not only to be associated with the structural encoding of faces (e.g., Dimberg et al., 2000; Sato et al., 2001; Eimer et al., 2003; Holmes et al., 2003; Schupp et al., 2004), but also to be modulated by emotional facial expressions (e.g., Blau et al., 2007; Pegna et al., 2008; for review, see Rellecke et al., 2013). Moreover, the N170 has been associated with unconscious face processing in normal subjects (e.g., Pegna et al., 2008; Carlson and Reinke, 2010). For example, using the backward masking paradigm, Carlson and Reinke (2010) found that a masked fearful face enhanced the contralateral N170.
Thus, in the present study, the N170 amplitude was taken as the index of unconscious emotional facial perception in the early stage of face processing. Furthermore, expectancies for emotional content have been suggested to influence the recognition of facial expressions: processing is facilitated when stimuli are congruent with participants’ expectancies, and the opposite effect is observed when stimuli are incongruent with them (Leppänen et al., 2003; Hugenberg, 2005). In addition, according to a cognitive-behavioral model of problematic Internet use, pathological involvement in gaming results from problematic cognitions coupled with behaviors that maintain maladaptive responses (Davis, 2001). For example, individuals who have negative views of themselves may use gaming to achieve positive social interactions, social acceptance, or positive social feedback (King and Delfabbro, 2014). Moreover, a previous study found that individuals with Internet addiction had higher scores on the fun-seeking subscale of the Behavior Inhibition System and Behavior Approach System Scale (BIS/BAS scale), suggesting that these individuals are more sensitive to rewarding stimuli and more likely to engage in approach behavior toward them (Yen et al., 2009).
Based on these previous findings, namely the influence of expectancy on facial expression recognition (Leppänen et al., 2003; Hugenberg, 2005), the association between problematic gaming behavior in individuals with IGD and the social needs described above (King and Delfabbro, 2014), and the higher sensitivity to rewarding stimuli reported in Internet addiction (Yen et al., 2009), we speculated that, for individuals with IGD, neutral faces are less rewarding than happy faces. Accordingly, individuals with IGD may have less expectancy for neutral stimuli than for positive stimuli, and this incongruence would subsequently lead to lower activation for neutral expressions than for happy expressions. Thus, we expected that, in the happy–neutral context, the IGD group would show reduced N170 amplitudes in response to neutral expressions, whereas the NC group would show comparable N170 amplitudes to happy and neutral expressions, reflecting different patterns of emotional facial processing in individuals with IGD and NC. We expected no such effect in the sad–neutral context, since individuals in neither group should have a particular expectancy for sad or neutral expressions.

Materials and Methods

Participants

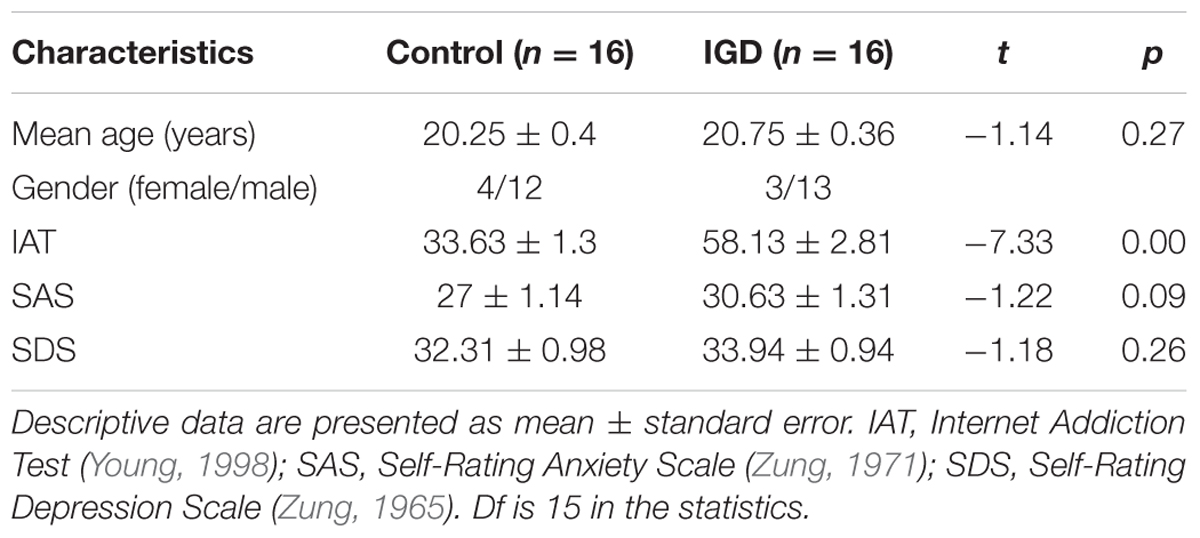

Sixteen participants with IGD and 16 NC were recruited from local universities in Shenzhen, China. Participants’ demographic characteristics are presented in Table 1. There were no significant differences between the two groups in age, handedness, or education. The diagnostic cutpoint proposed in the DSM-5 has been suggested to be conservative (e.g., Lemmens et al., 2015); thus, Young’s Internet Addiction Test (IAT) was used to screen for IGD in the present study. The IAT is a reliable and widely used instrument in studies investigating Internet addiction disorders, including IGD (e.g., Khazaal et al., 2008). Young (1998) suggested that a score between 40 and 69 signifies problems due to Internet use. However, the IAT relies on subjective ratings and is therefore susceptible to participants’ concealment or underestimation. Additionally, previous studies used “experience in playing video games of 10 or more hours a week” (Weinreich et al., 2015, p. 61) or “at least 4 years and for at least 2 h daily” (Szycik et al., 2017, p. 2) as the inclusion criterion for expert/excessive users of violent video games. Thus, the present study also used the time that participants spent on online gaming as a criterion. Individuals were asked to report the number of hours per day and per week they spent on online gaming. Individuals who scored ≥40 on the IAT and spent ≥4 h per day and ≥30 h per week on Internet gaming were included in the IGD group. Moreover, to control for comorbidities such as depression and anxiety (Sanders et al., 2000; Yen et al., 2007; Wei et al., 2012; Lai et al., 2015), we excluded individuals with IGD who scored more than 40 points on either the Zung Self-Rating Depression Scale (SDS) (Zung, 1965) or the Zung Self-Rating Anxiety Scale (SAS) (Zung, 1971). None of the participants had a history of head injury, neurological disorders, substance abuse or dependence over the past 6 months.
All research procedures were approved by the Medical Ethical Committee of Shenzhen University Medical School in accordance with the Declaration of Helsinki. All participants provided written informed consent indicating that they fully understood the study.
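The IGD screening rule described above combines an IAT cutoff, two gaming-time thresholds, and two comorbidity exclusions. A minimal Python sketch of that rule follows. The `Screening` record and its field names are hypothetical; the text does not say whether raw or index scores were used for the Zung scales, so the sketch simply compares whatever number is supplied against 40; and the history-based exclusions (head injury, neurological disorders, substance use) are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Screening:
    """Hypothetical screening record for one candidate (field names are illustrative)."""
    iat_score: float              # Young's Internet Addiction Test total
    gaming_hours_per_day: float   # self-reported online gaming time
    gaming_hours_per_week: float
    sds_score: float              # Zung Self-Rating Depression Scale (raw vs. index not specified)
    sas_score: float              # Zung Self-Rating Anxiety Scale (raw vs. index not specified)


def meets_igd_criteria(s: Screening) -> bool:
    """Apply the IGD-group inclusion and exclusion rules as stated in the text."""
    # Inclusion: IAT >= 40 AND >= 4 h/day AND >= 30 h/week of online gaming.
    included = (
        s.iat_score >= 40
        and s.gaming_hours_per_day >= 4
        and s.gaming_hours_per_week >= 30
    )
    # Exclusion: more than 40 points on either Zung scale.
    excluded = s.sds_score > 40 or s.sas_score > 40
    return included and not excluded


print(meets_igd_criteria(Screening(52, 5, 35, 38, 36)))  # True
print(meets_igd_criteria(Screening(52, 5, 35, 45, 36)))  # False: SDS above 40
```

Note that the thresholds are inclusive for the inclusion criteria (≥) but strict for the exclusion criteria (> 40), mirroring the wording of the Participants section.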

Stimuli

We used the backward masking task program (see Procedure) and stimuli employed in Zhang et al.’s (2016) study. The target face stimuli, including 20 happy expressions, 20 sad expressions, and 40 neutral expressions, were selected from the native Chinese Facial Affective Picture System (CFAPS), which includes pictures assessed by Chinese participants in a previous study (Gong et al., 2011). The above-mentioned study found significant differences in nine-point-scale ratings for both emotional valence and arousal among the three categories of expressions. The study reported the following for valence ratings: “F(2,77) = 143, p < 0.001, ηp² = 0.787, happy = 5.92 ± 0.13; sad = 2.78 ± 0.13; neutral = 4.22 ± 0.09; pairwise comparisons: ps < 0.001; for arousal ratings, F(2,77) = 30.2, p < 0.001, ηp² = 0.439, happy = 5.13 ± 0.22; sad = 5.83 ± 0.22; neutral = 3.82 ± 0.16; for pairwise comparisons, emotional vs. neutral: p < 0.001, happy vs. sad: p < 0.087” (Zhang et al., 2016, p. 15). The stimulus display and behavioral data acquisition were conducted using E-Prime software (version 2.0, Psychology Software Tools, Inc., Boston, MA, United States).

Procedure

The procedure consisted of a happy block and a sad block. At the beginning of each trial, a central fixation cross was presented for 500 ms, followed by a blank screen for 400–600 ms. Then, a target (happy/sad or neutral) face was presented for 17 ms, followed immediately by a scrambled face as a mask, which lasted for 150 ms (Zhang et al., 2016). Previous studies set the duration of the mask stimulus at 100–300 ms or other durations above the awareness threshold (e.g., Rolls and Tovee, 1994; Whalen et al., 1998; Fisch et al., 2009; for review, see Pessoa, 2005); here, we used 150 ms, following the parameter in Zhang et al.’s (2016) study. Participants were required to discriminate the target faces as quickly as possible by pressing one of two buttons on the computer keyboard with their left or right index finger (Zhang et al., 2016). Each block included 160 trials, 80 with emotional expressions and 80 with neutral expressions, presented in random order as target stimuli; that is, each of the 20 happy and 20 neutral faces was presented four times in the happy block, and each of the 20 sad and 20 neutral faces was presented four times in the sad block. The assignment of keys to expression valence and the order of blocks were counterbalanced across participants (Zhang et al., 2016).
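To make the trial structure concrete, the sketch below builds one randomized 160-trial block (20 emotional and 20 neutral faces, each shown four times) with the timing parameters given above. It is an illustration in Python, not the E-Prime program used in the study. The face identifiers are hypothetical, and the 60 Hz refresh rate used to convert durations into video frames is an assumption (the text does not report the monitor's refresh rate); under that assumption a 17-ms target corresponds to a single frame.

```python
import random
from dataclasses import dataclass

# Timing parameters from the Procedure section (ms).
FIXATION_MS = 500
BLANK_RANGE_MS = (400, 600)
TARGET_MS = 17
MASK_MS = 150


@dataclass
class Trial:
    face_id: str    # hypothetical stimulus identifier
    valence: str    # "happy", "sad", or "neutral"
    blank_ms: int   # jittered blank between fixation and target


def to_frames(duration_ms: float, refresh_hz: float = 60.0) -> int:
    """Convert a duration to whole video frames (the 60 Hz default is an assumption)."""
    return max(1, round(duration_ms * refresh_hz / 1000))


def build_block(emotion: str, n_faces: int = 20, repeats: int = 4,
                seed: int = 0) -> list[Trial]:
    """Return a shuffled block of n_faces emotional and n_faces neutral faces, each repeated."""
    rng = random.Random(seed)
    trials = [
        Trial(f"{valence}_{i:02d}", valence, rng.randint(*BLANK_RANGE_MS))
        for valence in (emotion, "neutral")
        for i in range(n_faces)
        for _ in range(repeats)
    ]
    rng.shuffle(trials)  # randomize presentation order within the block
    return trials


block = build_block("happy")
print(len(block), to_frames(TARGET_MS), to_frames(MASK_MS))  # 160 1 9
```

Counterbalancing of key assignment and block order would be handled per participant outside this function; the seed argument only makes the example reproducible.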

ERP Recording

Brain electrical activity was recorded from a 64-electrode scalp cap arranged according to the 10–20 system (Brain Products, Munich, Germany). The TP10 channel served as the reference during recording (Kaufmann et al., 2009; Ferrante et al., 2015; Cui et al., 2017). Two electrodes were used to record the electrooculogram (EOG). EEG and EOG signals were amplified with a 0.01–100 Hz passband and sampled at 500 Hz. All electrode impedances were kept below 5 kΩ during recording. The EEG data from each electrode were re-referenced to the average of the left and right mastoids prior to further analysis.
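Because the data were recorded against TP10 and later re-referenced to the mean of both mastoids, the re-referencing reduces to subtracting half of the left-mastoid channel from every channel: if each recorded channel is x_i = V_i − V_TP10 and the left mastoid is x_L = V_L − V_TP10, then V_i − (V_L + V_TP10)/2 = x_i − x_L/2. The NumPy sketch below shows only this arithmetic. It assumes the left mastoid was recorded as a channel named TP9 (the text names only TP10), and it is not BrainVision Analyzer's implementation.

```python
import numpy as np


def rereference_to_mastoid_mean(data: np.ndarray, ch_names: list[str],
                                left_mastoid: str = "TP9") -> np.ndarray:
    """Re-reference TP10-referenced EEG to the mean of both mastoids.

    data: (n_channels, n_samples) in µV, recorded against TP10, so TP10 itself
    has no row. The left mastoid is assumed to be the channel named TP9.
    """
    x_left = data[ch_names.index(left_mastoid)]
    # x_i = V_i - V_TP10 and x_L = V_L - V_TP10, hence
    # V_i - (V_L + V_TP10) / 2 = x_i - x_L / 2 for every channel.
    # (A reconstructed TP10 row would equal -x_L / 2.)
    return data - x_left / 2.0


# Toy check with known "true" potentials for Cz, P8, TP9 and TP10.
rng = np.random.default_rng(0)
true_v = rng.normal(size=(4, 100))
recorded = true_v[:3] - true_v[3]   # what a TP10-referenced amplifier records
reref = rereference_to_mastoid_mean(recorded, ["Cz", "P8", "TP9"])
expected = true_v[:3] - (true_v[2] + true_v[3]) / 2
print(np.allclose(reref, expected))  # True
```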

The EEG data were pre-processed and analyzed using BrainVision Analyzer 2.1 (Brain Products, Munich, Germany). Pre-processing included bad channel detection and removal, epoching, and eyeblink removal. Then, the signal was passed through a 0.01–30 Hz band-pass filter. Epochs extended from 200 ms before to 1000 ms after the onset of the target stimuli. EOG artifacts were corrected using independent component analysis (ICA) (Jung et al., 2001). Epochs with amplitude values exceeding ±80 μV at any electrode were excluded before averaging. ERPs were computed separately for each participant and each experimental condition.
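The epoching, rejection, and averaging steps described above can be sketched in NumPy as follows. The sketch assumes the continuous data have already been filtered and ICA-corrected and that target onsets are available as sample indices (both hypothetical inputs). It uses the 500 Hz sampling rate, the −200 to 1000 ms window, and the ±80 μV criterion from the text, and it applies no baseline correction because the text does not describe one.

```python
import numpy as np

FS_HZ = 500                # sampling rate from the text
PRE_MS, POST_MS = 200, 1000
REJECT_UV = 80.0           # ±80 µV rejection threshold


def epoch_reject_average(eeg: np.ndarray, onsets) -> tuple[np.ndarray, int]:
    """Cut epochs around target onsets, drop any exceeding ±80 µV, and average.

    eeg: (n_channels, n_samples) continuous data in µV (already filtered and
    ICA-corrected). onsets: sample indices of target onsets for one condition.
    Returns the averaged ERP (n_channels, n_times) and the number of kept epochs.
    """
    pre = PRE_MS * FS_HZ // 1000     # 100 samples before onset
    post = POST_MS * FS_HZ // 1000   # 500 samples after onset
    kept = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > eeg.shape[1]:
            continue                 # epoch would extend past the recording
        epoch = eeg[:, onset - pre:onset + post]
        if np.abs(epoch).max() > REJECT_UV:
            continue                 # amplitude criterion exceeded at some electrode
        kept.append(epoch)
    if not kept:
        raise ValueError("no epochs survived artifact rejection")
    return np.mean(kept, axis=0), len(kept)


# Toy data: two channels of zeros with one 100-µV artifact.
eeg = np.zeros((2, 5000))
eeg[0, 2000:2010] = 100.0
erp, n_kept = epoch_reject_average(eeg, [1000, 2000, 3000, 50, 4900])
print(erp.shape, n_kept)  # (2, 600) 2
```

In the toy run, the epoch at sample 2000 is rejected for the artifact, and the epochs at samples 50 and 4900 are skipped because they would run past the edges of the recording.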

The ERP was time-locked to the presentation of the target face. Based on previous research on face processing (Luo et al., 2010; Frühholz et al., 2011; Zhang et al., 2016) and the topographical distribution of the grand-averaged ERP activity in the current study, the average amplitudes at the P8 and PO8 electrode sites were selected for the statistical analysis of the N170 component (time window: 150–230 ms). Mean amplitudes were computed within this time window and averaged across the two electrodes.
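A minimal sketch of the N170 measure follows. It assumes an averaged ERP array whose first sample corresponds to −200 ms at 500 Hz (as in the epoching described above) and treats the 150–230 ms window as inclusive at both ends, which the text does not specify.

```python
import numpy as np

FS_HZ = 500
EPOCH_START_MS = -200


def n170_mean_amplitude(erp: np.ndarray, ch_names: list[str],
                        electrodes=("P8", "PO8"), window_ms=(150, 230)) -> float:
    """Mean amplitude in the time window, averaged over the chosen electrodes."""
    times_ms = EPOCH_START_MS + np.arange(erp.shape[1]) * 1000 / FS_HZ
    in_window = (times_ms >= window_ms[0]) & (times_ms <= window_ms[1])
    rows = [ch_names.index(name) for name in electrodes]
    return float(erp[rows][:, in_window].mean())


# Toy ERP: P8 flat at 3 µV, PO8 flat at 5 µV, another channel at 0.
erp = np.zeros((3, 600))
erp[1], erp[2] = 3.0, 5.0
print(n170_mean_amplitude(erp, ["O1", "P8", "PO8"]))  # 4.0
```

At 500 Hz the window spans 41 samples (150, 152, ..., 230 ms); with equal sample counts per electrode, averaging all samples together is the same as averaging the two electrodes' window means.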

Data Analysis

Further statistical analyses were conducted using IBM SPSS Statistics 22 (IBM Corp., Armonk, NY, United States). Because the happy and sad blocks constituted different emotional contexts, separate emotional valence × group analyses of variance (ANOVAs) were conducted on the behavioral data and on each ERP component for each comparison (happy vs. neutral, sad vs. neutral, and happy vs. sad). Both the behavioral data and the ERP amplitudes were analyzed with repeated-measures ANOVAs using Greenhouse–Geisser adjusted degrees of freedom, with study group (IGD vs. control) as the between-subject factor and emotional valence of expression (happy vs. neutral, sad vs. neutral, or happy vs. sad) as the within-subject factor. Post hoc analyses used Bonferroni corrections for multiple comparisons.
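With only two levels of the within-subject factor, sphericity holds trivially, so the Greenhouse–Geisser correction leaves the degrees of freedom unchanged, and each F(1,30) in a 2 × 2 mixed design can be expressed through difference scores. The sketch below (Python with NumPy, an illustration rather than the SPSS procedure) computes the three F values this way. The valence × group interaction is the squared pooled-variance independent-samples t statistic on the within-subject differences. The group effect is the same test applied to participant means. The valence effect tests the unweighted mean difference against the pooled error, which for equal group sizes (16 per group, as here) matches the standard sums-of-squares result. Function and argument names are hypothetical.

```python
import numpy as np


def mixed_2x2_f(cond_a, cond_b, group) -> dict:
    """F(1, N-2) values for a 2 (group) x 2 (within-subject condition) mixed ANOVA.

    cond_a, cond_b: one score per participant for each condition (e.g. N170
    mean amplitude to happy and to neutral faces); group: 0/1 labels.
    """
    a, b, g = (np.asarray(x, dtype=float) for x in (cond_a, cond_b, group))
    d = a - b              # within-subject difference scores
    s = (a + b) / 2        # participant means across conditions
    g0, g1 = g == 0, g == 1
    n0, n1 = int(g0.sum()), int(g1.sum())
    df_err = n0 + n1 - 2
    scale = 1 / n0 + 1 / n1

    def pooled_var(x):
        """Pooled within-group variance with n0 + n1 - 2 degrees of freedom."""
        ss = ((x[g0] - x[g0].mean()) ** 2).sum() + ((x[g1] - x[g1].mean()) ** 2).sum()
        return ss / df_err

    var_d, var_s = pooled_var(d), pooled_var(s)
    # Interaction: do the two groups differ in their condition difference?
    t_int = (d[g0].mean() - d[g1].mean()) / np.sqrt(var_d * scale)
    # Group main effect: do the groups differ in their overall level?
    t_grp = (s[g0].mean() - s[g1].mean()) / np.sqrt(var_s * scale)
    # Condition main effect: unweighted mean difference against the pooled error.
    d_bar = (d[g0].mean() + d[g1].mean()) / 2
    t_cond = d_bar / np.sqrt(var_d * scale / 4)
    return {"condition": t_cond ** 2, "group": t_grp ** 2,
            "interaction": t_int ** 2, "df": (1, df_err)}
```

With 16 participants per group, the returned degrees of freedom are (1, 30), matching the F(1,30) tests reported in the Results.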

Results

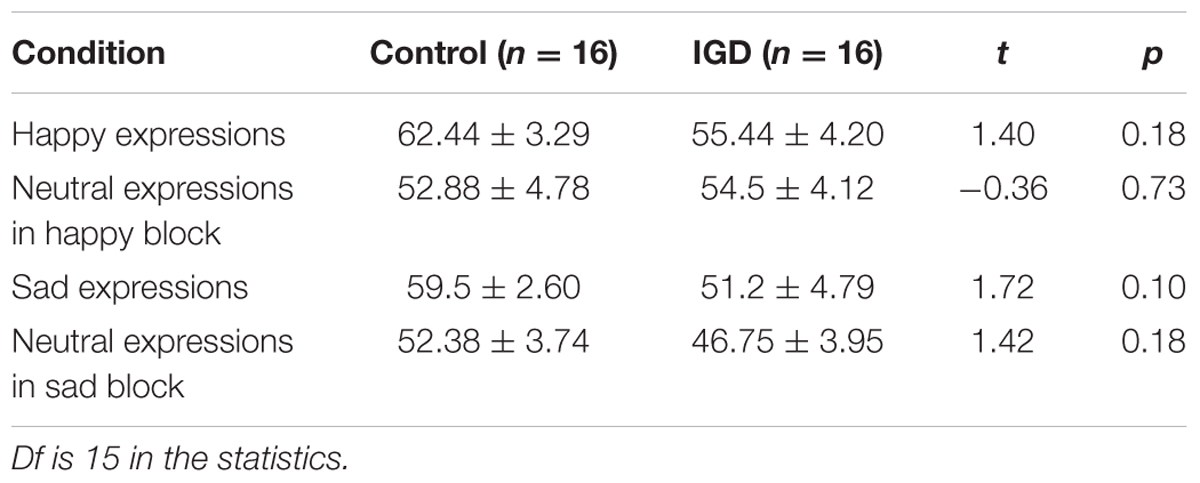

The numbers of trials included in the experimental conditions are listed in Table 2. For the following results, the descriptive data are presented as mean ± standard error unless noted otherwise.

Behavioral Data

Regarding reaction time, in the sad block, the main effect of valence was significant, F(1,30) = 4.86, p < 0.05, ηp² = 0.14, with shorter reaction times for sad expressions (618.87 ± 31.48 ms) than for neutral expressions (663.39 ± 34.77 ms). The main effect of group was also significant, F(1,30) = 5.09, p < 0.05, ηp² = 0.15, with shorter reaction times for the NC group (569.84 ± 44.68 ms) than for the IGD group (712.42 ± 44.68 ms). The interaction was not significant, p > 0.5. In the happy block, the main effect of valence was significant, F(1,30) = 6.63, p < 0.05, ηp² = 0.18, with shorter reaction times for happy expressions (583.97 ± 39.33 ms) than for neutral expressions (648.08 ± 36.6 ms); neither the main effect of group nor the interaction reached significance, all ps > 0.1, and reaction time for the NC group (577.25 ± 50.76 ms) was comparable with that for the IGD group (654.81 ± 50.76 ms). When the happy and sad trials were directly compared, neither the main effects nor the interaction were significant, all ps > 0.05.
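The effect sizes reported with each F test appear to be partial eta squared, which can be recovered from an F ratio and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The short Python check below reproduces several reported values. For example, F(1,30) = 4.86 gives 4.86 / 34.86 ≈ 0.14, and F(2,77) = 143 from the stimulus ratings gives ≈ 0.788, consistent with the quoted 0.787 once rounding of F is allowed for.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


reported = [  # (F, df_effect, df_error, reported effect size)
    (4.86, 1, 30, 0.14),   # reaction time, sad block, valence
    (5.09, 1, 30, 0.15),   # reaction time, sad block, group
    (6.63, 1, 30, 0.18),   # reaction time, happy block, valence
]
for f, df1, df2, es in reported:
    print(f"F({df1},{df2}) = {f}: {partial_eta_squared(f, df1, df2):.3f} (reported {es})")
```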

In terms of accuracy, no main effects or interactions reached significance in the sad–neutral block, in the happy–neutral block, or in the direct comparison of happy and sad trials.

ERP Data

N170

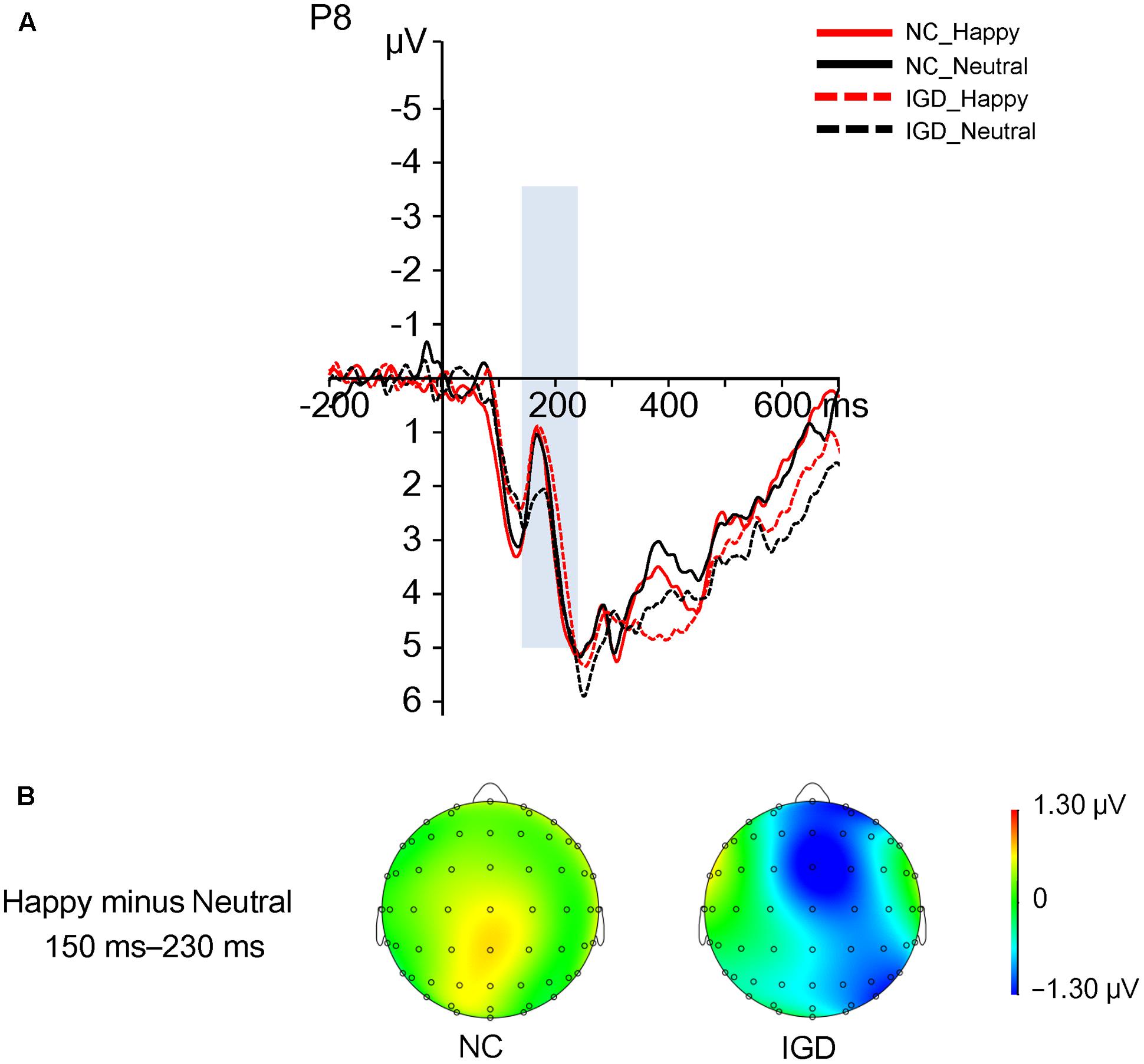

A 2 (group) × 2 (happy vs. neutral) ANOVA revealed that neither the main effect of valence, F(1,30) = 3.47, p = 0.07, ηp² = 0.10, nor the main effect of group, F(1,30) = 0.01, p = 0.92, ηp² < 0.001, was significant. However, the valence × group interaction was significant, F(1,30) = 4.25, p = 0.048, ηp² = 0.124 (Figure 1). Post hoc analysis revealed that in the IGD group, happy expressions elicited a more negative N170 (3.02 ± 1.12 μV) than neutral faces (4.18 ± 1.09 μV), F(1,30) = 7.70, p = 0.009, ηp² = 0.20, Bonferroni corrected. In the control group, by contrast, happy and neutral expressions elicited similar N170 amplitudes (happy: 3.79 ± 1.12 μV, neutral: 3.73 ± 1.09 μV), F(1,30) = 0.02, p = 0.89, ηp² = 0.001, Bonferroni corrected.

FIGURE 1. (A) Grand-average ERP waveforms of the N170 component (150–230 ms) for the four conditions at the representative P8 site. (B) Topographic distributions of the difference waves between happy and neutral expressions (happy condition minus neutral condition) in the IGD and NC groups in the 150–230 ms time window.

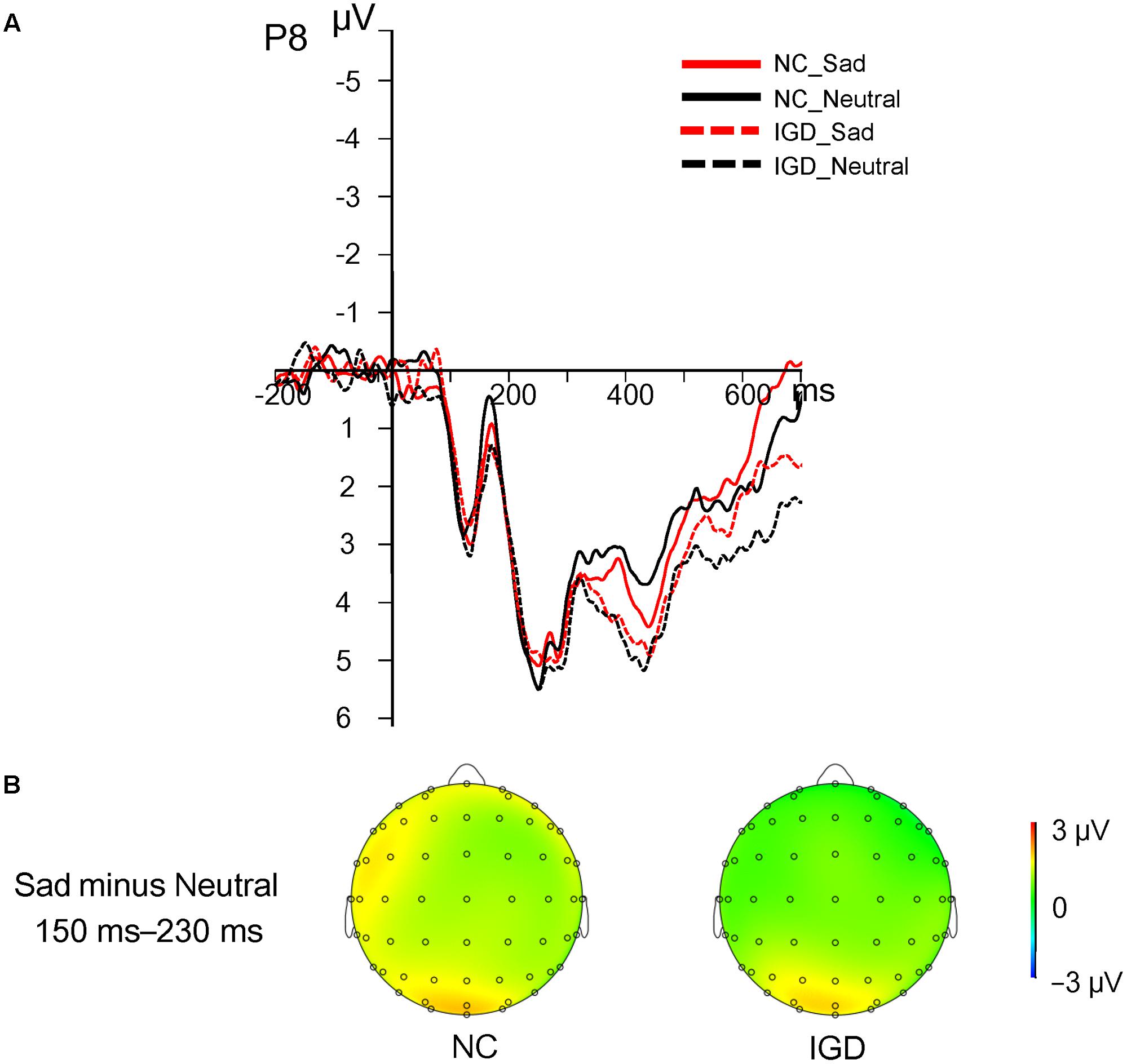

In the sad–neutral context, however, N170 amplitudes showed no significant main or interaction effects (Figure 2). A 2 (group) × 2 (sad vs. neutral) ANOVA revealed that the main effects of valence [F(1,30) = 0.39, p = 0.54, ηp² = 0.01] and group [F(1,30) = 0.02, p = 0.88, ηp² = 0.001] and the interaction [F(1,30) = 0.02, p = 0.88, ηp² = 0.001] were not significant; the N170 amplitudes elicited by sad and neutral expressions in the IGD group (sad: 3.79 ± 1.21 μV, neutral: 3.65 ± 1.15 μV) were similar to those elicited in the control group (sad: 3.57 ± 1.21 μV, neutral: 3.35 ± 1.15 μV).

FIGURE 2. (A) Grand-average ERP waveforms of the N170 component (150–230 ms) for the four conditions at the representative P8 site. (B) Topographic distributions of the difference waves between sad and neutral expressions (sad condition minus neutral condition) in the IGD and control groups in the 150–230 ms time window.

When directly comparing N170 amplitudes in response to sad and happy expressions, a 2 (IGD vs. NC group) × 2 (sad vs. happy) ANOVA demonstrated that the main effects of valence, group, and the interaction were not significant, all ps > 0.05.

Discussion

As a perceptual basis for social interaction, emotional expression processing is an important component of interpersonal communication. Although many studies have investigated executive functions in individuals with IGD, studies on emotional expression processing in IGD have been limited; in particular, to our knowledge, no published study has investigated the unconscious processing of emotional expressions in IGD. The behavioral data of the present study revealed that both the IGD and NC groups responded faster to unconscious emotional expressions (happy and sad expressions) than to neutral expressions, suggesting that individuals with IGD have a normal ability to extract emotional signals from facial expressions at the preattentive stage. This result is consistent with previous findings of shorter reaction times to emotional than to neutral expressions in normal participants (Calder et al., 1997; Eimer et al., 2003) and extends them to individuals with IGD. In addition, compared with the IGD group, the NC group showed shorter reaction times to both sad and neutral expressions in the sad block, whereas no such group difference was found for happy and neutral expressions in the happy block. Prototypical happy faces have been suggested to be more easily recognized and more distinguishable from neutral faces than sad faces are (Calder et al., 1997; Surguladze et al., 2003). On this account, the greater distinctiveness of happy expressions in the happy block might have facilitated the recognition task for both the NC and IGD groups, which may have obscured a group difference, whereas no comparable facilitation occurred in the sad block because sad expressions are less distinguishable from neutral expressions than happy expressions are.
These results suggest that, for reaction times, the sad–neutral context might be more sensitive than the happy–neutral context in distinguishing individuals with IGD from NC in unconscious facial recognition.

More importantly, the present study explored the time course of unconscious emotional facial processing in individuals with IGD. In the happy–neutral context, the ERP results showed reduced N170 amplitudes in individuals with IGD when they processed unconscious neutral faces compared with happy faces, whereas NC showed similar N170 amplitudes to neutral and happy faces. In the sad–neutral context, both individuals with IGD and NC showed similar N170 amplitudes to sad and neutral faces. The decreased N170 amplitude for neutral relative to happy expressions in the IGD group supports our hypothesis that participants’ different expectancies in processing positive and negative stimuli would influence their facial recognition and lead to different facial processing in IGD and NC. Participants’ expectancies were previously suggested to influence implicit evaluation by affecting the valence of the prime stimuli in the affective priming task (Leppänen et al., 2003; Hugenberg, 2005). In the present study, neutral expressions may have been less rewarding than happy expressions for individuals with IGD, who might therefore have had less expectancy for neutral than for happy expressions, resulting in decreased N170 amplitudes for neutral expressions. In the sad–neutral condition, however, individuals may have had neither a greater expectancy for sad faces nor a lower expectancy for neutral faces, leading to similar responses to sad and neutral faces. It should be noted that we cannot conclude that individuals with IGD have deficits in emotional facial recognition, since they showed N170 amplitudes similar to those of NC in response to happy and sad expressions. Rather, this result implies that individuals with IGD may have a normal ability to extract emotional information from emotional expressions.
Furthermore, the present ERP data showed differences between the IGD and NC groups in the happy block, whereas the behavioral data showed group differences in the sad block. We suggest that the N170 reflects the distinct unconscious face processing of individuals with IGD at an early stage, whereas reaction times might reflect facial expression recognition at a later stage. However, because behavioral and ERP data often do not align in ways that allow a simple explanation, more studies are needed on this issue.

In summary, the present results extended the previous findings on the face processing of excessive Internet users and demonstrated distinct mechanisms for facial expression processing in different facial contexts among individuals with IGD. Specifically, compared with NC, individuals with IGD have lower N170 amplitudes in response to neutral faces than in response to happy faces in the happy–neutral expression context, which may arise from their lower expectancy for neutral expressions. This effect was not observed in the sad–neutral expression context for either IGD or NC individuals.

Limitations and Future Studies

There are two limitations in the present study. First, more males than females were recruited because of the relative scarcity of females who play Internet games excessively. Second, although previous studies found that spending substantial amounts of time in the virtual world (e.g., playing video games) was associated with poorer interpersonal relationships in the real world, and suggested that less frequent social-emotional communication may alter how individuals with IGD process facial expressions in the real world (Lo et al., 2005; Weinreich et al., 2015), we cannot draw any conclusions about a causal relationship between the distinct facial expression processing pattern of individuals with IGD and their impairments in social communication. More studies are needed to investigate the emotional facial processing mechanisms of individuals with IGD.

Author Contributions

XP, FC, and CJ developed the concepts for the study. TW collected the data. XP and TW analyzed the data. XP, CJ, and FC wrote the manuscript. All authors contributed to the manuscript and approved the final version of the manuscript for submission.

Funding

This work was supported by The Ministry of Education of Humanities and Social Science Project (16YJCZH074), the National Natural Science Foundation of China (31500877, 31600889), Guangdong Natural Science Foundation (2016A030310039), the Project of Philosophy and Social Science for the 12th 5-Year Planning of Guangdong Province (GD15XXL06), and the Outstanding Young Faculty Award of Guangdong Province (YQ2014149).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Dr. Dandan Zhang for generously supplying us with her computer program for the experiment. We thank Mr. Junfeng Li for helping us to recruit participants with IGD. We are grateful to the reviewers for their suggestions and comments.

References

American Psychiatric Association (2013). Diagnostic and Statistical Manual of Mental Disorders: DSM-V. Washington, DC: American Psychiatric Publishing. doi: 10.1176/appi.books.9780890425596

Axelrod, V., Bar, M., and Rees, G. (2015). Exploring the unconscious using faces. Trends Cogn. Sci. 19, 35–45. doi: 10.1016/j.tics.2014.11.003

Bailey, K., and West, R. (2013). The effects of an action video game on visual and affective information processing. Brain Res. 1504, 35–46. doi: 10.1016/j.brainres.2013.02.019

Batty, M., and Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Cogn. Brain Res. 17, 613–620. doi: 10.1016/S0926-6410(03)00174-5

Blair, R. J. R. (2005). Responding to the emotions of others: dissociating forms of empathy through the study of typical and psychiatric populations. Conscious. Cogn. 14, 698–718. doi: 10.1016/j.concog.2005.06.004

Blau, V. C., Maurer, U., Tottenham, N., and McCandliss, B. D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3:7. doi: 10.1186/1744-9081-3-7

Breitmeyer, B. G., and Ogmen, H. (2000). Recent models and findings in visual backward masking: a comparison, review, and update. Percept. Psychophys. 62, 1572–1595. doi: 10.3758/BF03212157

Calder, A. J., Young, A. W., Rowland, D., and Perrett, D. I. (1997). Computer-enhanced emotion in facial expressions. Proc. Biol. Sci. 264, 919–925. doi: 10.1098/rspb.1997.0127

Carlson, J. M., and Reinke, K. S. (2010). Spatial attention-related modulation of the N170 by backward masked fearful faces. Brain Cogn. 73, 20–27. doi: 10.1016/j.bandc.2010.01.007

Cui, F., Zhu, X., Luo, Y., and Cheng, J. (2017). Working memory load modulates the neural response to other’s pain: evidence from an ERP study. Neurosci. Lett. 644, 24–29. doi: 10.1016/j.neulet.2017.02.026

Davis, R. A. (2001). A cognitive-behavioral model of pathological Internet use. Comput. Hum. Behav. 17, 187–195. doi: 10.1016/S0747-5632(00)00041-8

D’Hondt, F., Billieux, J., and Maurage, P. (2015). Electrophysiological correlates of problematic Internet use: critical review and perspectives for future research. Neurosci. Biobehav. Rev. 59, 64–82. doi: 10.1016/j.neubiorev.2015.10.005

Dimberg, U., Thunberg, M., and Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. doi: 10.1111/1467-9280.00221

Dong, G., Lu, Q., Zhou, H., and Zhao, X. (2010). Impulse inhibition in people with Internet addiction disorder: electrophysiological evidence from a Go/NoGo study. Neurosci. Lett. 485, 138–142. doi: 10.1016/j.neulet.2010.09.002

Dong, G., Lu, Q., Zhou, H., and Zhao, X. (2011). Precursor or sequela: pathological disorders in people with Internet addiction disorder. PLoS ONE 6:e14703. doi: 10.1371/journal.pone.0014703

Eimer, M., Holmes, A., and McGlone, F. P. (2003). The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn. Affect. Behav. Neurosci. 3, 97–110. doi: 10.3758/CABN.3.2.97

Engelberg, E., and Sjöberg, L. (2004). Internet use, social skills, and adjustment. Cyberpsychol. Behav. 7, 41–47. doi: 10.1089/109493104322820101

Esteves, F., and Öhman, A. (1993). Masking the face: recognition of emotional facial expressions as a function of the parameters of backward masking. Scand. J. Psychol. 34, 1–18. doi: 10.1111/j.1467-9450.1993.tb01096.x

Ferrante, A., Gavriel, C., and Faisal, A. (2015). “Towards a brain-derived neurofeedback framework for unsupervised personalisation of brain-computer interfaces,” in Proceedings of the 7th Annual International IEEE EMBS Conference on Neural Engineering (NER) (Washington, DC: IEEE). doi: 10.1109/ner.2015.7146585

Fisch, L., Privman, E., Ramot, M., Harel, M., Nir, Y., Kipervasser, S., et al. (2009). Neural “ignition”: enhanced activation linked to perceptual awareness in human ventral stream visual cortex. Neuron 64, 562–574. doi: 10.1016/j.neuron.2009.11.001

Frühholz, S., Jellinghaus, A., and Herrmann, M. (2011). Time course of implicit processing and explicit processing of emotional faces and emotional words. Biol. Psychol. 87, 265–274. doi: 10.1016/j.biopsycho.2011.03.008

Gong, X., Huang, Y. X., Wang, Y., and Luo, Y. J. (2011). Revision of the Chinese facial affective picture system. Chin. J. Ment. Health 25, 40–46.

He, J. B., Liu, C. J., Guo, Y. Y., and Zhao, L. (2011). Deficits in early-stage face perception in excessive internet users. Cyberpsychol. Behav. Soc. Netw. 14, 303–308. doi: 10.1089/cyber.2009.0333

Holmes, A., Vuilleumier, P., and Eimer, M. (2003). The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Cogn. Brain Res. 16, 174–184. doi: 10.1016/S0926-6410(02)00268-9

Hugenberg, K. (2005). Social categorization and the perception of facial affect: target race moderates the response latency advantage for happy faces. Emotion 5, 267–276. doi: 10.1037/1528-3542.5.3.267

Itier, R. J., and Taylor, M. J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 14, 132–142. doi: 10.1093/cercor/bhg111

Jung, T. P., Makeig, S., Westerfield, M., Townsend, J., Courchesne, E., and Sejnowski, T. J. (2001). Analysis and visualization of single-trial event-related potentials. Hum. Brain Mapp. 14, 166–185. doi: 10.1002/hbm.1050

Kaufmann, J. M., Schweinberger, S. R., and Burton, A. M. (2009). N250 ERP correlates of the acquisition of face representations across different images. J. Cogn. Neurosci. 21, 625–641. doi: 10.1162/jocn.2009.21080

Khazaal, Y., Billieux, J., Thorens, G., Khan, R., Louati, Y., Scarlatti, E., et al. (2008). French validation of the internet addiction test. Cyberpsychol. Behav. 11, 703–706. doi: 10.1089/cpb.2007.0249

King, D. L., and Delfabbro, P. H. (2014). The cognitive psychology of Internet gaming disorder. Clin. Psychol. Rev. 34, 298–308. doi: 10.1016/j.cpr.2014.03.006

Kirsh, S. J., Mounts, J. R., and Olczak, P. V. (2006). Violent media consumption and the recognition of dynamic facial expressions. J. Interpers. Violence 21, 571–584. doi: 10.1177/0886260506286840

Kirsh, S. J., and Mounts, J. R. (2007). Violent video game play impacts facial emotion recognition. Aggress. Behav. 33, 353–358. doi: 10.1002/ab.20191

Kuss, D. J., and Lopez-Fernandez, O. (2016). Internet addiction and problematic Internet use: a systematic review of clinical research. World J. Psychiatry 6, 143–176. doi: 10.5498/wjp.v6.i1.143

Lai, C. M., Mak, K. K., Watanabe, H., Jeong, J., Kim, D., Bahar, N., et al. (2015). The mediating role of Internet addiction in depression, social anxiety, and psychosocial well-being among adolescents in six Asian countries: a structural equation modelling approach. Public Health 129, 1224–1236. doi: 10.1016/j.puhe.2015.07.031

Lemmens, J. S., Valkenburg, P. M., and Gentile, D. A. (2015). The Internet gaming disorder scale. Psychol. Assess. 27, 567–582. doi: 10.1037/pas0000062

Leppänen, J. M., Tenhunen, M., and Hietanen, J. K. (2003). Faster choice-reaction times to positive than to negative facial expressions: the role of cognitive and motor processes. J. Psychophysiol. 17, 113–123. doi: 10.1027//0269-8803.17.3.113

Lo, S. K., Wang, C. C., and Fang, W. (2005). Physical interpersonal relationships and social anxiety among online game players. Cyberpsychol. Behav. 8, 15–20. doi: 10.1089/cpb.2005.8.15

Luo, W., Feng, W., He, W., Wang, N. Y., and Luo, Y. J. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage 49, 1857–1867. doi: 10.1016/j.neuroimage.2009.09.018

Morris, J. S., Öhman, A., and Dolan, R. J. (1999). A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl. Acad. Sci. U.S.A. 96, 1680–1685. doi: 10.1073/pnas.96.4.1680

Ng, B. D., and Wiemer-Hastings, P. (2005). Addiction to the internet and online gaming. Cyberpsychol. Behav. 8, 110–113. doi: 10.1089/cpb.2005.8.110

Öhman, A. (1999). “Distinguishing unconscious from conscious emotional processes: methodological considerations and theoretical implications,” in Handbook of Cognition and Emotion, eds T. Dalgleish and M. Power (Chichester: Wiley), 321–352. doi: 10.1002/0470013494.ch17

Pegna, A. J., Landis, T., and Khateb, A. (2008). Electrophysiological evidence for early non-conscious processing of fearful facial expressions. Int. J. Psychophysiol. 70, 127–136. doi: 10.1016/j.ijpsycho.2008.08.007

Pessoa, L. (2005). To what extent are emotional visual stimuli processed without attention and awareness? Curr. Opin. Neurobiol. 15, 188–196. doi: 10.1016/j.conb.2005.03.002

Rellecke, J., Sommer, W., and Schacht, A. (2013). Emotion effects on the N170: a question of reference? Brain Topogr. 26, 62–71. doi: 10.1007/s10548-012-0261-y

Rolls, E. T., and Tovee, M. J. (1994). Processing speed in the cerebral cortex and the neurophysiology of visual masking. Proc. Biol. Sci. 257, 9–16. doi: 10.1098/rspb.1994.0087

Rossion, B., Gauthier, I., Tarr, M. J., Despland, P., Bruyer, R., Linotte, S., et al. (2000). The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport 11, 69–72. doi: 10.1097/00001756-200001170-00014

Sanders, C. E., Field, T. M., Miguel, D., and Kaplan, M. (2000). The relationship of Internet use to depression and social isolation among adolescents. Adolescence 35, 237–242.

Sato, W., Kochiyama, T., Yoshikawa, S., and Matsumura, M. (2001). Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. Neuroreport 12, 709–714. doi: 10.1097/00001756-200103260-00019

Schupp, H. T., Öhman, A., Junghöfer, M., Weike, A. I., Stockburger, J., and Hamm, A. O. (2004). The facilitated processing of threatening faces: an ERP analysis. Emotion 4, 189–200. doi: 10.1037/1528-3542.4.2.189

Spada, M. M. (2014). An overview of problematic Internet use. Addict. Behav. 39, 3–6. doi: 10.1016/j.addbeh.2013.09.007

Surguladze, S. A., Brammer, M. J., Young, A. W., Andrew, C., Travis, M. J., Williams, S. C., et al. (2003). A preferential increase in the extrastriate response to signals of danger. Neuroimage 19, 1317–1328. doi: 10.1016/S1053-8119(03)00085-5

Szycik, G. R., Mohammadi, B., Münte, T. F., and Te Wildt, B. T. (2017). Lack of evidence that neural empathic responses are blunted in excessive users of violent video games: an fMRI study. Front. Psychol. 8:174. doi: 10.3389/fpsyg.2017.00174

Tam, P., and Walter, G. (2013). Problematic internet use in childhood and youth: evolution of a 21st century affliction. Australas. Psychiatry 21, 533–536. doi: 10.1177/1039856213509911

Van Rooij, A., and Prause, N. (2014). A critical review of “Internet addiction” criteria with suggestions for the future. J. Behav. Addict. 3, 203–213. doi: 10.1556/JBA.3.2014.4.1

Vuilleumier, P. (2002). Facial expression and selective attention. Curr. Opin. Psychiatry 15, 291–300. doi: 10.1097/00001504-200205000-00011

Vuilleumier, P., and Schwartz, S. (2001). Emotional facial expressions capture attention. Neurology 56, 153–158. doi: 10.1212/WNL.56.2.153

Wang, L., Luo, J., Bai, Y., Kong, J., Luo, J., Gao, W., et al. (2013). Internet addiction of adolescents in China: prevalence, predictors, and association with well-being. Addict. Res. Theory 21, 62–69. doi: 10.3109/16066359.2012.690053

Wei, H. T., Chen, M. H., Huang, P. C., and Bai, Y. M. (2012). The association between online gaming, social phobia, and depression: an internet survey. BMC Psychiatry 12:92. doi: 10.1186/1471-244X-12-92

Weinreich, A., Strobach, T., and Schubert, T. (2015). Expertise in video game playing is associated with reduced valence-concordant emotional expressivity. Psychophysiology 52, 59–66. doi: 10.1111/psyp.12298

Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., and Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 18, 411–418.

Yen, J. Y., Ko, C. H., Yen, C. F., Chen, C. S., and Chen, C. C. (2009). The association between harmful alcohol use and Internet addiction among college students: comparison of personality. Psychiatry Clin. Neurosci. 63, 218–224. doi: 10.1111/j.1440-1819.2009.01943.x

Yen, J. Y., Ko, C. H., Yen, C. F., Wu, H. Y., and Yang, M. J. (2007). The comorbid psychiatric symptoms of Internet addiction: attention deficit and hyperactivity disorder (ADHD), depression, social phobia, and hostility. J. Adolesc. Health 41, 93–98. doi: 10.1016/j.jadohealth.2007.02.002

Young, K. S. (1998). Internet addiction: the emergence of a new clinical disorder. Cyberpsychol. Behav. 1, 237–244. doi: 10.1089/cpb.1998.1.237

Zhang, D., He, Z., Chen, Y., and Wei, Z. (2016). Deficits of unconscious emotional processing in patients with major depression: an ERP study. J. Affect. Disord. 199, 13–20. doi: 10.1016/j.jad.2016.03.056

Zung, W. W. (1965). A self-rating depression scale. Arch. Gen. Psychiatry 12, 63–70. doi: 10.1001/archpsyc.1965.01720310065008

Keywords: Internet Gaming Disorder, backward masking, unconscious facial processing, ERPs, N170

Citation: Peng X, Cui F, Wang T and Jiao C (2017) Unconscious Processing of Facial Expressions in Individuals with Internet Gaming Disorder. Front. Psychol. 8:1059. doi: 10.3389/fpsyg.2017.01059

Received: 07 December 2016; Accepted: 08 June 2017; Published: 23 June 2017.

Edited by:

Jintao Zhang, Beijing Normal University, China

Reviewed by:

Wenhai Zhang, Chengdu University, China
Kira Bailey, University of Missouri, United States

Copyright © 2017 Peng, Cui, Wang and Jiao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Can Jiao, jiaocan@szu.edu.cn
