- 1 School of Psychology, University of East London, London, UK

- 2 Department of Economics (AE1), School of Business and Economics, Maastricht University, Maastricht, Netherlands

- 3 Aston Brain Centre, School of Life and Health Sciences, Aston University, Birmingham, UK

- 4 School of Architecture, Computing and Engineering, University of East London, London, UK

- 5 Institute of Psychiatry, Psychology and Neuroscience, King’s College London, London, UK

The peculiar ability of humans to recognize hundreds of faces at a glance has been attributed to face-specific perceptual mechanisms known as holistic processing. Holistic processing includes the ability to discriminate individual facial features (i.e., featural processing) and their spatial relationships (i.e., spacing processing). Here, we aimed to characterize the spatio-temporal dynamics of featural and spacing processing of faces and objects. Nineteen healthy volunteers completed a newly created perceptual discrimination task for faces and objects (i.e., the “University of East London Face Task”) while their brain activity was recorded with a high-density (128 electrodes) electroencephalogram. Our results showed that early event-related potentials at around 100 ms post-stimulus onset (i.e., P100) are sensitive to both facial features and the spacing between the features. Spacing and feature discriminability for objects occurred at circa 200 ms post-stimulus onset (P200). These findings indicate the existence of neurophysiological correlates of spacing vs. features processing in both faces and objects, and demonstrate faster brain processing for faces.

Introduction

Humans can typically recognize hundreds of faces with ease. It has been suggested that this extraordinary ability relies on face-specific perceptual processing that allows the recognition of (upright) faces as a gestalt or a global representation (Rossion, 2008). This perceptual processing has been referred to as “holistic” (Tanaka and Farah, 1993), “configural” (Maurer et al., 2002), or “second-order relational” (Diamond and Carey, 1986). Despite the different terminology adopted, holistic processing (which is the term we adopt here) refers to the simultaneous (i.e., parallel) processing of multiple facial features (e.g., eyes, mouth, and nose – featural processing), and their metric distance (e.g., inter-ocular distance or nose-mouth distance – spacing processing) (see McKone and Yovel, 2009; Piepers and Robbins, 2012 for reviews on the subject). Object perception (even for objects of expertise; Robbins and McKone, 2007), on the other hand, relies on featural processing only (Biederman, 1987; Tanaka and Simonyi, 2016).

Holistic face processing has been assessed using different behavioral paradigms. For instance, face perception is negatively affected by stimulus inversion (i.e., face-inversion effect), a manipulation believed to disrupt holistic processing (Yin, 1969). In the composite-face task (Young et al., 1987), identifying the top-halves of faces is harder when aligned with competing-identity bottom halves (forming the illusion of a new face) compared to when the halves are misaligned (i.e., composite-face effect). Despite widespread use of the “face-inversion” and “composite-face” tasks to probe holistic face processing in typical and atypical populations (Palermo et al., 2011; Rossion et al., 2011; Rivolta et al., 2012b), these tasks do not directly manipulate facial features and their spacing relationship. Experimental paradigms that allow the manipulation of facial features and their spacing relationship include, but are not limited to, the Jane Task (Mondloch et al., 2002) and the Albert Task (Yovel and Kanwisher, 2004). In these identity-matching tasks, participants are asked to decide whether two sequentially presented faces are the same or different, when the facial features (engaging featural processing) or the spacing between them (engaging spacing processing) differ. Previous results showed that performance in these identity-matching tasks is impaired after stimulus inversion, suggesting that holistic face processing integrates both featural shapes and their spacing (McKone and Yovel, 2009).

Neuroimaging and brain stimulation studies have shown that separate and dissociable brain regions mediate spacing and featural processing. For instance, Transcranial Magnetic Stimulation (TMS) studies have demonstrated the involvement of the right lateral occipital lobe (right occipital face area, OFA; Pitcher et al., 2007) and of the left middle frontal gyrus (MFG; Renzi et al., 2013) in featural-face processing. Spacing processing, on the other hand, has been related to the activity of the right inferior frontal gyrus (IFG; Renzi et al., 2013). Furthermore, functional magnetic resonance imaging (fMRI) studies have provided evidence of a correlation between the activity in the fusiform gyrus and spacing processing (Maurer et al., 2007; see Footnote 1). In summary, causal and correlational evidence suggests that different face-sensitive regions in the occipital, temporal, and frontal lobes are involved in different aspects of face perception (i.e., featural vs. spacing).

Although TMS and fMRI provide important evidence about the temporal and spatial features of face perception, they both have some limitations: TMS has restrictions in targeting regions that lie in the ventral surface of the temporal lobe, whereas fMRI has poor temporal resolution (Amaro and Barker, 2006). In contrast to these methods, Event-Related Potentials (ERPs) as measured with the electroencephalogram (EEG) reveal the timing of neuronal events underlying sensory and cognitive processes with millisecond precision across the whole scalp. EEG (along with Magnetoencephalography – MEG) studies suggest that the perception of visual stimuli (e.g., faces and objects) induces a sequence of evoked components within the first 200 ms after stimulus presentation (see Rossion, 2014 for a review). The most investigated component, N170 (M170 when tested with MEG), peaks at around 170 ms post-stimulus onset (Bentin et al., 1996; Liu et al., 2002). The N170 for faces is stronger than for any other visual category tested so far, and appears to be generated by activity in the occipital cortex and the fusiform gyrus (Itier et al., 2007; Rivolta et al., 2012b; Rossion, 2014). An earlier ERP component, P100, peaking at around 100 ms post-stimulus onset (P100 is a positive component, also known as M100 when recorded with MEG) (Linkenkaer-Hansen et al., 1998; Rivolta et al., 2012a), is believed to reflect low-level features of visual stimuli, such as size and luminosity. Evidence for the face-sensitivity of P/M100 is mixed, with some studies finding face-sensitivity (Rivolta et al., 2012a) and others not (Boutsen et al., 2006). Another positive component, P200, which peaks at around 200 ms post-stimulus onset with a topography similar to P100 (Mercure et al., 2008), has been suggested to reflect cortical visual feedback from high- to low-level visual areas (Kotsoni et al., 2006) and to be involved in emotional face perception (Dennis and Chen, 2007).

EEG/MEG research on the role of early-evoked (100–200 ms) potentials in different aspects of face processing is surprisingly limited. There is some indication of early P100 sensitivity to spacing processing (Halit et al., 2000). For example, Wang et al. (2015) showed that, under certain attentional conditions, P100 is larger for spacing- as compared to featural-face processing. These neurophysiological studies, along with behavioral evidence (Zinchenko et al., 2015), suggest that the human visual system can rapidly (∼100 ms) discriminate between featural and spacing facial manipulations. The involvement of N/M170 in holistic face processing has been shown with the face-inversion effect (i.e., N170 is larger and delayed for inverted faces, see Rossion et al., 2000), the composite-face effect (i.e., N170 is larger for aligned than misaligned faces) (Letourneau and Mitchell, 2008) and Mooney faces (Rivolta et al., 2014a). The N/M170, however, is not sensitive to featural vs. spacing modulations of face stimuli (Halit et al., 2000; Scott and Nelson, 2006; Wang et al., 2015). This suggests that holistic processing investigated by tasks tapping into spacing vs. featural differences and holistic processing assessed by face inversion and composite face may occur at different time-scales. Moreover, using featurally and spatially modified face stimuli, Mercure et al. (2008) showed a significant effect on the P200 amplitude for faces with a spatial/configural modification, where the amplitude of P200 was reduced by the “feature manipulation” compared to the “spacing manipulation.” Wang et al. (2015), however, reported a larger P200 for featural-face processing using steady-state visual evoked potentials (SSVEP) to differentiate spacing- vs. featural-face processing.

Overall, the current literature suggests that face-sensitive electrophysiological components may mediate spacing and featural mechanisms. However, it is still unclear whether these effects are face-specific or whether they also characterize the perception of non-face stimuli. In the current study, we investigated the spatio-temporal dynamics of spacing and featural detection in facial and non-facial stimuli with high-density EEG. In the experiment, we implemented a newly created identity-matching task called the “University of East London Face Task” (UEL-FT). This task tests feature and spacing perception for face and non-face (house) stimuli. Based on previous evidence, we expected to find three early face-sensitive components: P100, N170, and P200. Furthermore, we expected differences between featural and spacing effects for faces in early (i.e., P100 and N170) ERP components, especially in posterior electrodes. Since no previous EEG study specifically targeted featural and spacing processing in non-face stimuli, we did not advance a specific prediction on the spatio-temporal dynamics of house processing.

Materials and Methods

Participants

Nineteen participants (12 females) without any recorded history of psychiatric or neurological disorder and with a mean age of 28 years (range 21–41) took part in the experiment. All participants had normal or corrected-to-normal vision and did not report everyday life problems in face recognition. The study was performed according to the Declaration of Helsinki and approved by the ethical committees of the University of East London (UEL). After complete description of the study to the participants, written informed consent was obtained.

Stimuli

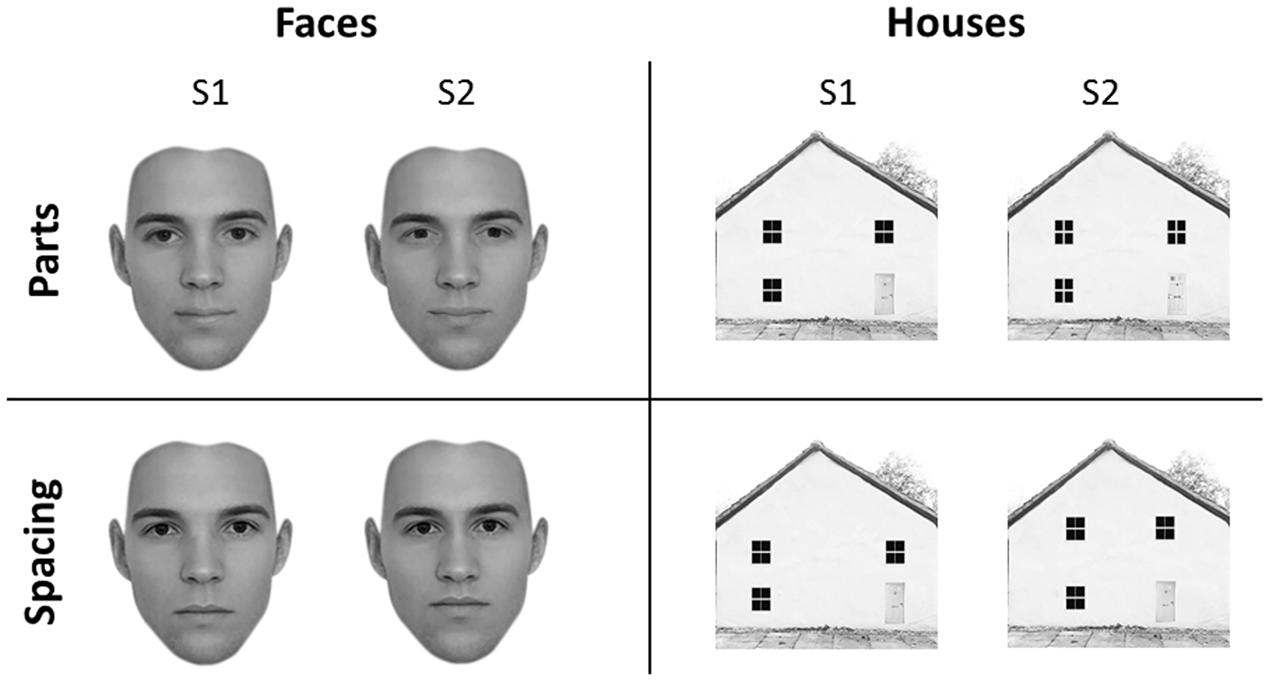

Forty-five faces and forty-five houses were created using five “original” faces and five “original” houses with a resolution of 300 × 300 pixels, in line with previous studies (Yovel and Kanwisher, 2004). Adobe Photoshop software (Adobe Systems, Inc., San Jose, CA, USA) was used to create the feature and spacing sets for the face and house stimuli. Starting from the original stimuli, which were downloaded from the internet, for both categories we created a feature set and a spacing set. Each set was made of four variations (Figure 1).

FIGURE 1. Experimental stimuli. Face (left) and house (right) stimuli adopted in the University of East London (UEL) face task. Parts (top) and spacing (bottom) manipulations are shown for both categories. Some of the face pictures have been obtained from www.beautycheck.de.

Face Stimuli

For the feature set, the two eyes and the mouth were replaced with eyes and mouth of similar shape taken from other stimuli (not belonging to the original set) to produce four variations of each of the five “original” faces. For the spacing set, the eyes were shifted inward or outward by 4–5 pixels and the mouth was shifted downward or upward by 4–5 pixels. All faces were cropped to exclude the hair.

House Stimuli

For the feature set, four variants of each of the five “original” houses were constructed by replacing windows and doors with windows and doors of similar shape but of different texture. For the spacing set, the location of the windows and doors was shifted so that they were closer together or farther apart and the two top windows were closer to or farther from the roof, on average by 15 pixels.

We did not adopt the original “Albert Task,” or “Jane Task” because they are characterized by fewer stimuli, which are repeated many times during the task. Previous studies suggest that stronger holistic processing is engaged with tasks that adopt many different stimuli (repeated few times), as compared to tasks adopting few stimuli (repeated many times) (McKone and Yovel, 2009).

Experimental Design

The task was divided into four blocks of 100 trials each. Each block included face-parts (FP), face-spacing (FS), house-parts (HP), or house-spacing (HS) stimuli. Block presentation was randomized with the constraint that the two face and the two house blocks were presented in sequence (and never alternated). Participants received instructions at the beginning of each block. In each trial a pair of stimuli belonging to the same category (face or house) and condition (feature or spacing) was presented. Each trial started with a fixation mark (500 ms), followed by the first stimulus (S1; 500 ms), followed by a fixation cross (500 ms) and the second stimulus (S2; 500 ms) (see Figure 2). Participants had to judge whether S1 and S2 were identical (i.e., “same” response) or different (i.e., “different” response) by pressing one of two different keys (i.e., left arrow for “same” and right arrow for “different”) on a computer keyboard. In both the spacing and feature conditions half of the trials were “identical” (i.e., S1 was equal to S2) and half were “different” (i.e., variations in features or spacing from S1 to S2). Participants were given 2000 ms to make a decision; responses given after this time were scored as incorrect. They were also instructed to minimize large movements of the head and shoulders, avoid contraction of face muscles, and try to blink and swallow in the period between trials.
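The block-ordering constraint and the trial timeline described above can be sketched in a few lines of Python. This is only an illustrative reconstruction, not the code used to run the experiment (which was delivered with Psych Toolbox in Matlab); the names `CATEGORY_BLOCKS`, `block_order`, and `event_onsets` are hypothetical.

```python
import random

# Block labels from the text, grouped by category (grouping structure is an assumption).
CATEGORY_BLOCKS = {"face": ["FP", "FS"], "house": ["HP", "HS"]}

# Trial events and durations in ms, as described in the text.
TRIAL_EVENTS = [("fixation", 500), ("S1", 500), ("fixation", 500), ("S2", 500)]


def block_order(rng):
    """Random order of the four blocks, keeping same-category blocks adjacent."""
    categories = list(CATEGORY_BLOCKS)
    rng.shuffle(categories)              # faces first or houses first
    order = []
    for category in categories:
        blocks = list(CATEGORY_BLOCKS[category])
        rng.shuffle(blocks)              # parts first or spacing first
        order.extend(blocks)
    return order


def event_onsets(events):
    """Onset (ms from trial start) of each event in the trial timeline."""
    onsets, t = [], 0
    for name, duration in events:
        onsets.append((name, t))
        t += duration
    return onsets


order = block_order(random.Random(0))
onsets = event_onsets(TRIAL_EVENTS)
print(order)
print(onsets)
```

Under this timeline, S2 begins 1000 ms after S1 onset, which is the offset needed when the same epoch is used to analyze both stimuli.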

All stimuli were shown in the center of a CRT monitor (30 cm diameter, 60 Hz refresh rate) installed inside an electrically shielded room, and placed at a distance of ∼100 cm from the participant’s head. Face and house stimuli were presented within a frame that covered a visual angle of 4.8° × 4.8°. The EEG experiment was programmed and delivered with Psych Toolbox (Matlab, MathWorks®). Stimuli did not differ in luminance across conditions [FP: M = 190 cd/cm2, SEM = 1.53; FS: M = 191 cd/cm2, SEM = 1.48; HP: M = 187 cd/cm2, SEM = 5.7; HS: M = 186 cd/cm2, SEM = 5.66; F(3,79) = 0.31, p = 0.82]; luminance values are reported in Supplementary Figure 1.
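As a worked check of the viewing geometry, the standard relation size = 2 · d · tan(θ/2) converts the reported visual angle into a physical frame size. The sketch below assumes the viewing distance is exactly 100 cm (the text gives ∼100 cm); the function names are illustrative.

```python
import math


def stimulus_size_cm(visual_angle_deg, distance_cm):
    """Physical extent subtending `visual_angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2.0)


def visual_angle_deg(size_cm, distance_cm):
    """Inverse relation: visual angle subtended by an extent of `size_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


side_cm = stimulus_size_cm(4.8, 100.0)
print(f"frame side: {side_cm:.2f} cm")
```

With these values the 4.8° frame corresponds to a side of roughly 8.4 cm on the screen.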

Behavioral Analysis

Behavioral data (accuracy and reaction times, RTs) were analyzed in SPSS by means of a repeated measures ANOVA with factors category (face vs. house) and condition (parts vs. spacing). “Identical” (i.e., S1 = S2) and “different” (i.e., S1 ≠ S2) trials were collapsed, and only correct trials were considered for the statistical analysis of RTs.

EEG Data Processing and Statistical Analysis

EEG data were recorded with a high-density 128-channel Hydrocel Geodesic Sensor Net (Electrical Geodesic Inc., EGI, Eugene, OR, USA) referenced to the vertex (Tucker, 1993). The EEG signal was amplified with EGI NetAmps 200, digitized at 500 Hz, band-pass filtered from 0.1 to 200 Hz and stored for offline analysis. Impedance was kept below 50 kΩ. EEG data processing was performed using the open source Matlab toolbox “FieldTrip” (Oostenveld et al., 2011; see Footnote 2). A band-pass filter (1–60 Hz) and a notch filter (50 Hz) were first applied to limit the signal to the frequency range of interest and to remove power line noise, respectively. Data were subsequently segmented into epochs (i.e., trials) of 2500 ms length, starting 500 ms before S1 and ending 500 ms after S2. Each trial was baseline-corrected with respect to a period of 400 ms (from 500 to 100 ms before S1), during which subjects were at rest between trials. Therefore, both S1 and S2 were referred to the same baseline. Eye-blinks and muscle artifacts were detected using the automatized FieldTrip routine. Noisy electrodes were excluded and their signal substituted by an interpolation of the activity of neighboring electrodes (thus, a total of 128 electrodes per participant were considered in the analyses). After linear interpolation, the EEG signal was re-referenced to the average activity of the 128 electrodes (Dien, 1998). Only correct trials were considered for all EEG analyses. The average numbers of correct and artifact-free trials for each condition were: FP = 70 (SD = 12); FS = 60 (SD = 8); HP = 68 (SD = 10); HS = 76 (SD = 9).
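Two of the steps above, baseline correction and average re-referencing, are simple array operations. The following NumPy sketch illustrates them on synthetic data; it is not the FieldTrip pipeline used in the study, and it assumes that baseline correction means subtracting the mean of the −500 to −100 ms window from each channel. The array layout and function names are hypothetical.

```python
import numpy as np

FS = 500               # sampling rate in Hz (from the text)
EPOCH_START_MS = -500  # epochs start 500 ms before S1 onset (from the text)


def ms_to_sample(t_ms):
    """Convert a latency relative to S1 onset into a sample index in the epoch."""
    return int(round((t_ms - EPOCH_START_MS) * FS / 1000))


def baseline_correct(epochs, start_ms=-500, end_ms=-100):
    """Subtract each channel's mean over the baseline window.

    epochs has shape (n_trials, n_channels, n_samples).
    """
    a, b = ms_to_sample(start_ms), ms_to_sample(end_ms)
    baseline = epochs[:, :, a:b].mean(axis=2, keepdims=True)
    return epochs - baseline


def average_reference(epochs):
    """Re-reference every sample to the mean across all channels."""
    return epochs - epochs.mean(axis=1, keepdims=True)


# Synthetic stand-in for real data: 4 trials, 128 channels, 2500 ms at 500 Hz.
rng = np.random.default_rng(0)
n_samples = int(2500 * FS / 1000)
epochs = rng.normal(size=(4, 128, n_samples)) + 5.0
clean = average_reference(baseline_correct(epochs))
```

Because both operations are linear, the baseline window still averages to zero in every channel after re-referencing.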

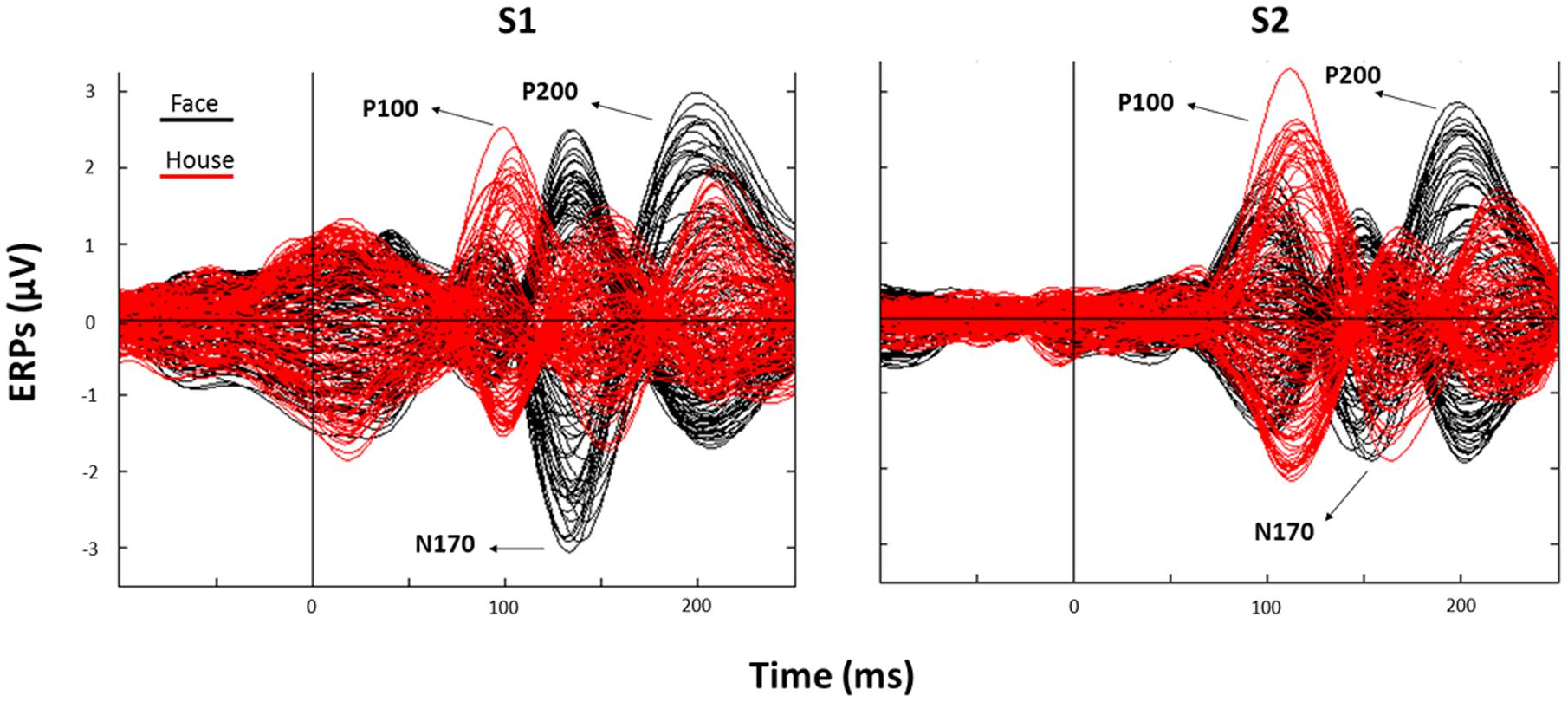

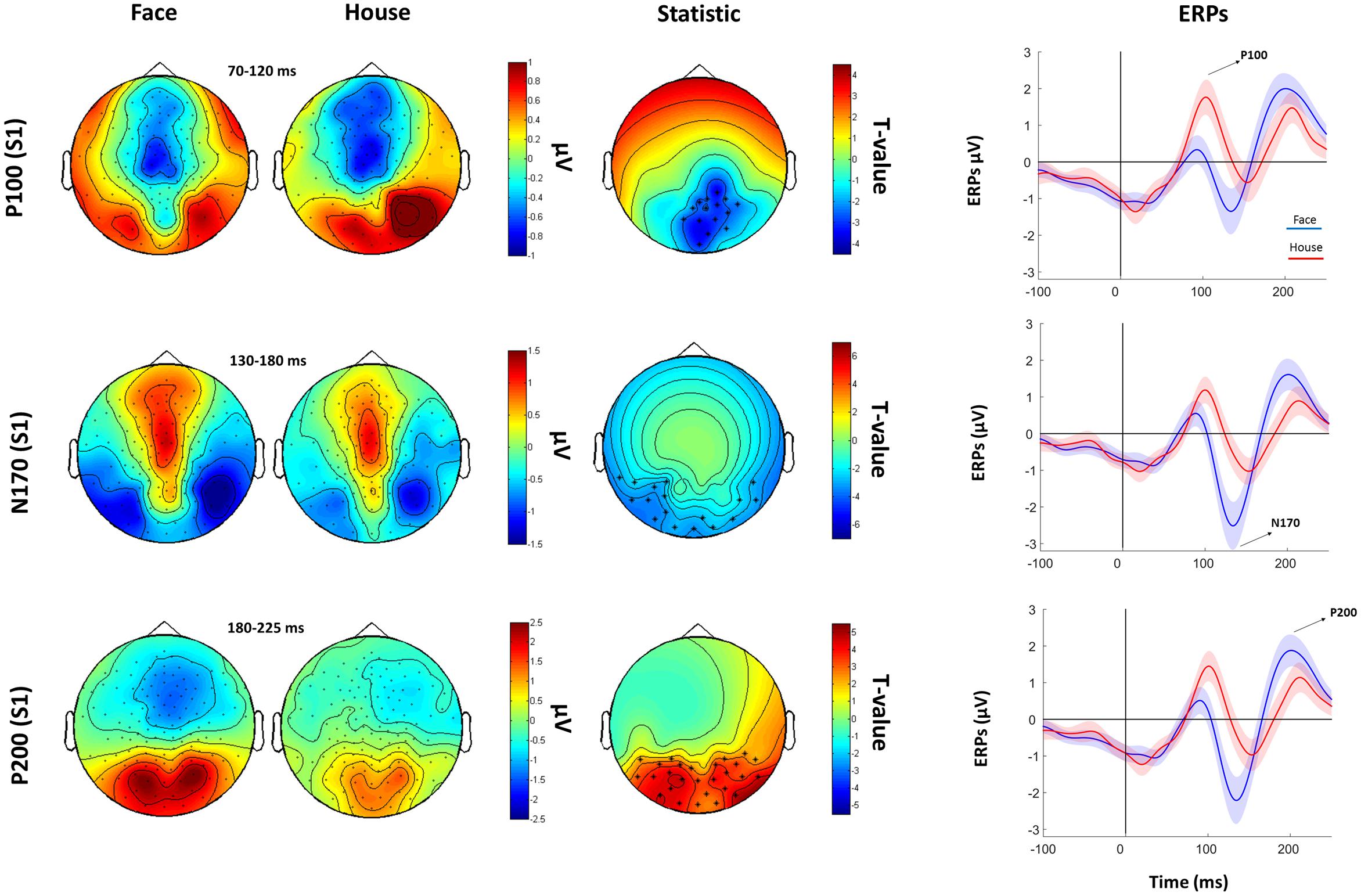

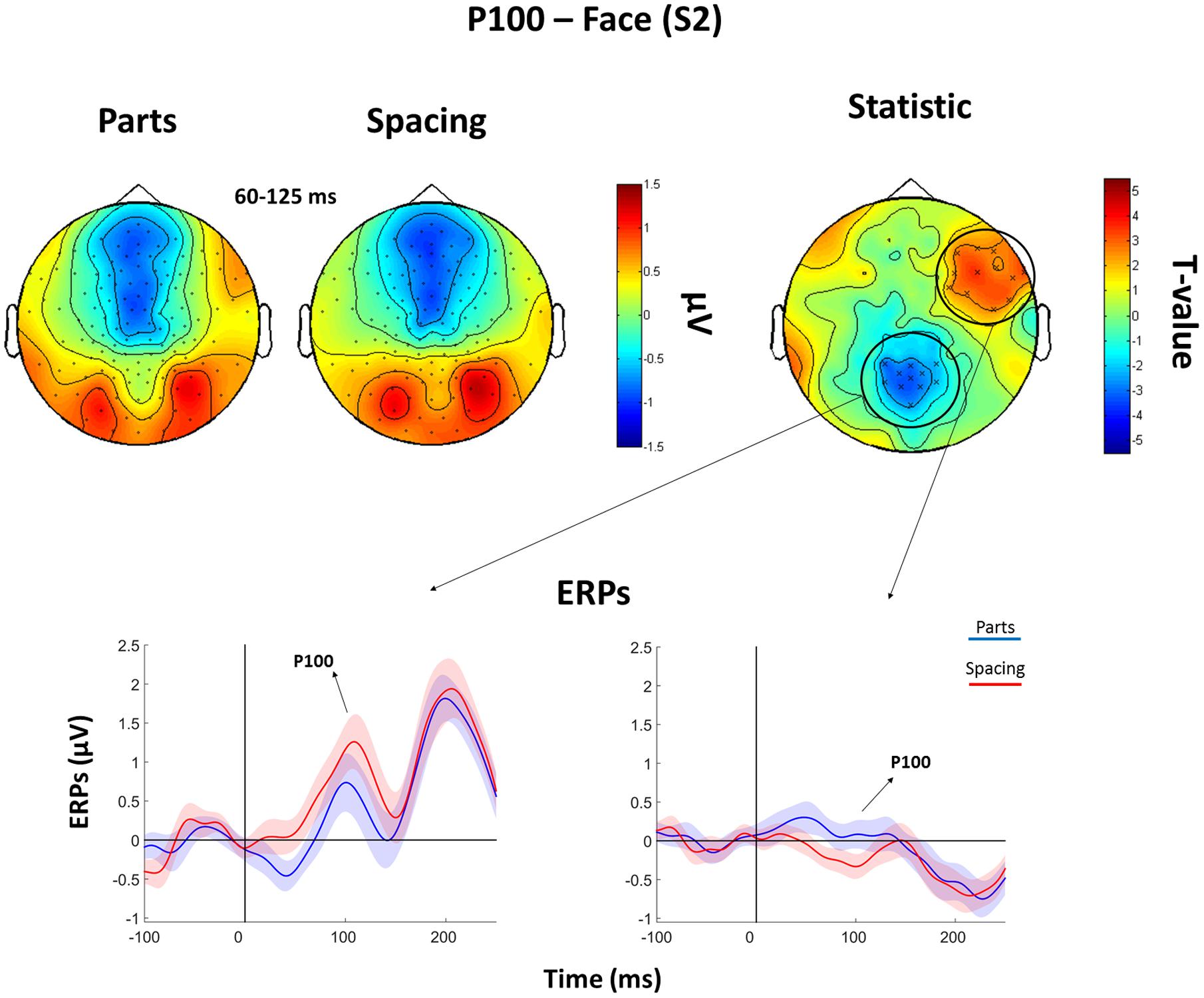

The subsequent analysis was divided into two parts. First, we aimed to verify the presence, in our data, of traditionally recorded early “face-sensitive” components, such as the P100, N170, and P200. To avoid potential adaptation effects (i.e., S2 amplitude reduction due to S1 perception), this was achieved by means of face-house contrasts on S1. After visual inspection of the grand-average ERP data (Figure 3) and looking at individual peaks, we defined S1 time-windows of interest as follows: P100 (70–120 ms), N170 (130–180 ms), and P200 (180–250 ms) (see Figure 4 for the topography of the three components). Second, to ascertain whether within-class part-based vs. spacing-based perceptual mechanisms were characterized by different ERP features, we compared features vs. spacing conditions separately for face and house stimuli (i.e., FP vs. FS and HP vs. HS). This analysis, in line with previous “match-to-sample” EEG studies (Mercure et al., 2008; Eimer et al., 2011), was carried out on S2. Time-windows of interest were determined by visual inspection of the data (Figure 3) as follows: P100 (Face: 60–125 ms; House: 65–140 ms), N170 (Face: 125–175 ms; House: 140–190 ms), P200 (Face: 175–250 ms; House: 190–250 ms). In both spacing and feature conditions half of the trials were “identical” (i.e., the stimulus was repeated) and half were “different” (i.e., variations in features or spacing). The EEG analysis, as in previous studies that adopted a similar task (Yovel and Kanwisher, 2004; Pitcher et al., 2007), was conducted collapsing same and different trials together and considering only correct trials.

FIGURE 3. Corresponding event-related potential (ERP) traces (butterfly plots) for all electrodes, averaged across all trials (black: Face; red: House), after S1 (left panel) and S2 (right panel) presentation (“0” indicates stimulus onset).

FIGURE 4. Face vs. House comparison. Left: Topographical plots for S1-evoked ERP components (P100, N170, and P200) for Face and House conditions. Middle: t-statistic maps of the Face vs. House ERP amplitude differences. Crosses indicate significant channels (∗: p < 0.01). Right: Grand-averaged ERP traces for faces and houses averaged across statistically significant electrodes (shades represent the SEM).
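To make the time-window definitions concrete, the sketch below extracts the mean amplitude per channel within the S1 windows given in the text. It is a hypothetical helper, not part of the authors' analysis (which tested every time bin within each window); it assumes an epoch that starts 500 ms before S1 onset and is sampled at 500 Hz. For S2, `onset_ms=1000` would follow from the 500 ms S1 plus 500 ms fixation timeline.

```python
import numpy as np

FS = 500               # sampling rate in Hz (from the text)
EPOCH_START_MS = -500  # epoch starts 500 ms before S1 onset (from the text)

# S1 time windows of interest in ms post-stimulus onset (from the text).
S1_WINDOWS_MS = {"P100": (70, 120), "N170": (130, 180), "P200": (180, 250)}


def window_mean(erp, window_ms, onset_ms=0):
    """Mean amplitude per channel within a window after a stimulus onset.

    erp has shape (n_channels, n_samples); onset_ms is relative to S1 onset.
    """
    start, end = window_ms
    a = int(round((onset_ms + start - EPOCH_START_MS) * FS / 1000))
    b = int(round((onset_ms + end - EPOCH_START_MS) * FS / 1000))
    return erp[:, a:b + 1].mean(axis=1)


# Synthetic ERP: a flat signal with a 2-unit deflection confined to 70-120 ms.
erp = np.zeros((128, 1250))
erp[:, 285:311] = 2.0
p100 = window_mean(erp, S1_WINDOWS_MS["P100"])
n170 = window_mean(erp, S1_WINDOWS_MS["N170"])
```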

In order to analyze sensor-level EEG data we adopted the approach from our previous M/EEG studies (Premoli et al., 2014; Rivolta et al., 2015), by using a non-parametric cluster-based permutation analysis (Maris and Oostenveld, 2007) on each electrode separately for the P100, N170, and P200 components. Specifically, a paired t-test was conducted for each electrode at each time bin within the P100, N170, and P200 time-windows. T-values exceeding an a priori threshold of p < 0.01 were clustered based on adjacent time bins and neighboring electrodes. Cluster-level statistics were calculated by taking the sum of the t-values within every cluster. The comparisons were done with respect to the maximum values of summed t-values. By means of a permutation test (i.e., randomizing data across conditions and rerunning the statistical test 1500 times), we obtained a reference distribution of the maximum of summed cluster t-values to evaluate the statistic of the actual data. Clusters in the original dataset were considered to be significant at an alpha level of 5% if <5% of the permutations (N = 1500) used to construct the reference distribution yielded a maximum cluster-level statistic larger than the cluster-level value observed in the original data.
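The logic of the cluster-based permutation procedure can be illustrated with a deliberately simplified NumPy sketch. It is not the FieldTrip implementation used in the study: it clusters over adjacent time bins of a single channel only (no electrode neighborhood), permutes conditions within subjects by random sign flips of the paired differences, and takes the critical t-value as an argument (2.878 corresponds to two-sided p < 0.01 with 18 degrees of freedom). All data below are synthetic and all names are hypothetical.

```python
import numpy as np


def paired_t(diff):
    """Paired t-value per time bin; diff has shape (n_subjects, n_times)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def max_cluster_stat(t_vals, t_crit):
    """Largest absolute summed t over runs of adjacent supra-threshold bins.

    Positive and negative clusters are formed separately.
    """
    best = 0.0
    for sign in (1, -1):
        run = 0.0
        for t in sign * t_vals:
            run = run + t if t > t_crit else 0.0
            best = max(best, run)
    return best


def cluster_permutation_test(cond_a, cond_b, t_crit, n_perm=1500, seed=0):
    """Return the observed maximum cluster statistic and its permutation p-value."""
    rng = np.random.default_rng(seed)
    diff = cond_a - cond_b
    observed = max_cluster_stat(paired_t(diff), t_crit)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Swapping condition labels within a subject flips the sign of the difference.
        flips = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        null[i] = max_cluster_stat(paired_t(diff * flips), t_crit)
    # ">=" is slightly more conservative than the "larger than" rule in the text.
    p_value = float(np.mean(null >= observed))
    return observed, p_value


# Synthetic example: 19 subjects, 30 time bins, an effect in bins 10-19.
rng = np.random.default_rng(1)
cond_b = rng.normal(0.0, 0.5, size=(19, 30))
cond_a = rng.normal(0.0, 0.5, size=(19, 30))
cond_a[:, 10:20] += 2.0
observed, p_value = cluster_permutation_test(cond_a, cond_b, t_crit=2.878)
```

Comparing against the maximum cluster statistic of each permutation is what controls the family-wise error rate across all time bins (and, in the full method, electrodes) without a per-bin correction.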

Since previous studies showed a prominent role of posterior (i.e., occipito-temporal) electrodes in detecting face-sensitive activity (Rossion, 2014), we ran the first analysis (S1) on posterior sensors only (N = 41) in order to increase the sensitivity of the statistics. However, due to the lack of any a priori predictions about the location from which potential condition effects could arise, and since previous fMRI and TMS research highlighted spacing vs. feature activity even in the frontal cortex (Maurer et al., 2007; Renzi et al., 2013), within-category features vs. spacing contrasts were performed on all 128 electrodes.

Results

Behavioral Results

Analysis of accuracy revealed a main effect of category [F(1,18) = 33.80, p < 0.001], with faces (M = 77.6%; SEM = 1.9) showing worse accuracy than houses (M = 86.2%; SEM = 1.3). There was no main effect of condition [F(1,18) = 2.3, p = 0.12], although there was a statistically significant category × condition interaction [F(1,18) = 65.25, p < 0.001]. Pairwise comparisons (Bonferroni corrected) showed that our participants were more accurate in the FP (M = 84.0%; SEM = 2.2) condition than in FS (M = 71.2%; SEM = 2.0) (p < 0.001), whereas they were less accurate in the HP (M = 82.0%; SEM = 1.7) than in the HS (M = 90%; SEM = 1.6) condition (p < 0.001).

Analysis of RTs (correct trials only) showed no main effect of condition [F(1,18) = 1.94, p = 0.18] and no main effect of category [F(1,18) = 1.17, p = 0.30]. There was, however, a category × condition interaction [F(1,18) = 5.54, p = 0.03]. Follow-up comparisons (Bonferroni corrected) showed that FS (M = 1124 ms, SEM = 107) was characterized by longer RTs than FP (M = 910 ms, SEM = 56) (p = 0.047).

ERPs Results

Face vs. House Contrasts

Cluster-based permutation analysis of the P100 showed that faces elicited a lower amplitude than houses in a cluster of 15 electrodes. The N170 was more negative for faces than for houses in a cluster of 13 electrodes. The P200 for faces was stronger than for houses in a cluster of 14 electrodes (Figure 4).

Within-Class Features vs. Spacing Contrasts

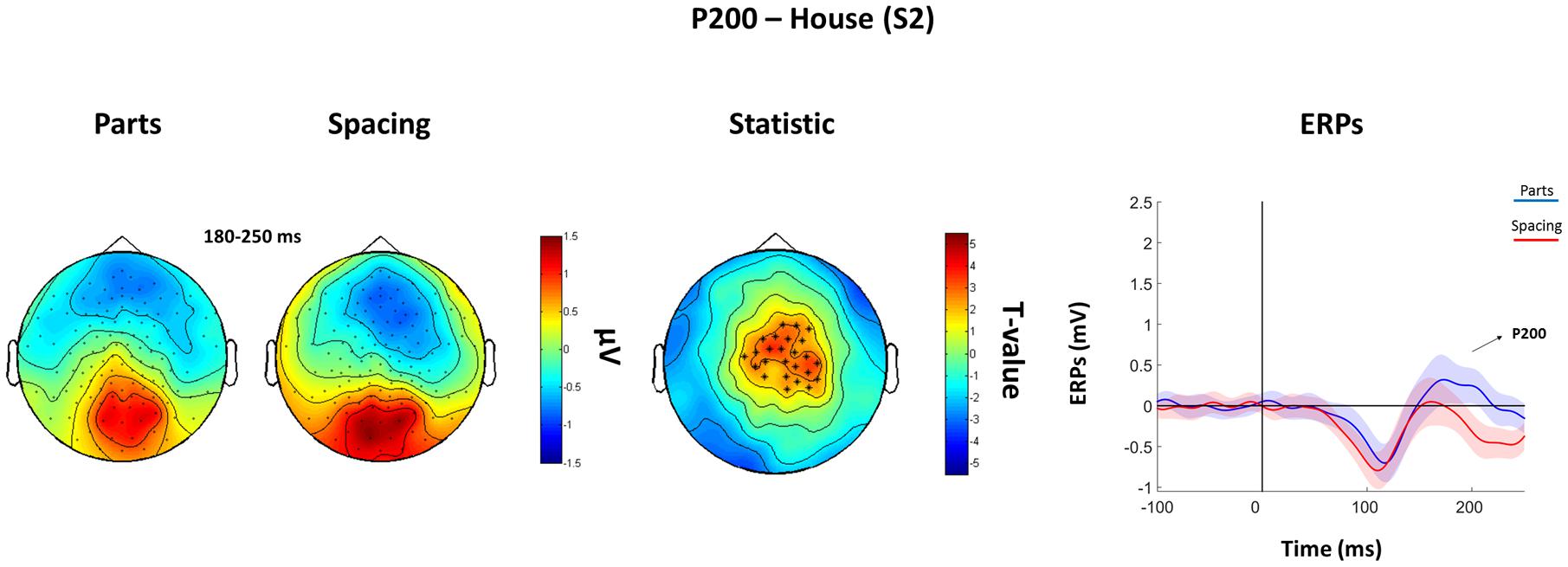

The analysis of the P100 for faces showed that FS led to higher P100 amplitude in a parietal-occipital cluster of 11 electrodes. Conversely, FP was characterized by higher P100 amplitude over a cluster of 12 right fronto-temporal electrodes (Figure 5). Given the dipole shape and location and taking into account the relatively poor spatial resolution of EEG, it is likely that this spatial dissociation is due to the same (occipital) dipole. No FP vs. FS differences were found in the N170 and P200 (all Ps > 0.05). The analysis of the P200 for houses showed higher amplitude for HP than HS over a fronto-parietal (i.e., central) cluster including 24 electrodes (Figure 6). No HP vs. HS differences were found for the P100 and N170 (all Ps > 0.05).

FIGURE 5. Features (FP) vs. spacing (FS) contrasts for the P100 as elicited by S2 faces. Top: Topographical plots of grand-averaged ERPs for the two Face conditions (Parts and Spacing), t-statistic map distribution (X: p < 0.05). Bottom: Grand-averaged ERPs traces as averaged across statistically significant electrodes (shades represent the SEM).

FIGURE 6. Features (HP) vs. spacing (HS) contrasts for the P200 as elicited by S2 houses. Topographical plots of grand-averaged ERPs for the two House conditions (Parts and Spacing) (left), t-statistic map distribution (∗: p < 0.01) (middle), and grand-averaged ERPs traces as averaged across statistically significant electrodes (right) (shades represent the SEM).

Discussion

The current study investigated the ERP markers (P100, N170, and P200) of featural and spacing processing in face and non-face visual stimuli. We implemented a newly developed perceptual discrimination task (i.e., UEL-FT) to demonstrate that facial and non-facial featural vs. spacing processing displays different spatio-temporal dynamics. The results, in line with our hypotheses, demonstrate that the human visual system can discriminate spacing vs. featural manipulations as early as 100 ms post-stimulus onset (P100) for faces, whereas it requires circa 200 ms (P200) to discriminate spacing vs. featural manipulations for house stimuli.

At the behavioral level, participants were faster and more accurate at recognizing featural than spacing manipulations of faces. This is in line with previous studies (Le Grand et al., 2006; Rivolta et al., 2012b; Tanaka et al., 2014; Wang et al., 2015) demonstrating that facial features are easier to process than manipulations of the distance between them. In the ERP analysis, we showed that the early component P100 is sensitive to manipulations of faces in the UEL-FT, which is in line with the literature (Mercure et al., 2008). Furthermore, our findings point to differences in the P100 amplitude distribution on the scalp, with parietal-occipital electrodes showing prominent spacing activity for faces, and fronto-temporal electrodes showing prominent featural activity for faces.

Face perception is mediated by a network of cortical and subcortical brain regions (Haxby et al., 2000; Rivolta et al., 2014b). It has been demonstrated that some face areas are mainly involved in specific aspects of face processing. For instance, TMS delivered at circa 100 ms post-stimulus onset showed that right-OFA and left-MFG are implicated in featural processing, whereas the right IFG is involved in spacing processing (Pitcher et al., 2007; Renzi et al., 2013). Previous EEG and MEG studies showed occipital and frontal face-sensitive activity at the same latency (P/M100) (Linkenkaer-Hansen et al., 1998; Halgren et al., 2000; Rivolta et al., 2012a, 2014a). These findings need to be interpreted with care, since the spatial (occipital vs. frontal) EEG difference may have its origin in the same (likely occipital) dipole. Importantly, our finding of P100 discriminability between spacing and feature face manipulations confirms previous evidence of early face-sensitive processing (Halit et al., 2000; Wang et al., 2015), suggesting that the visual system is sensitive to featural and spacing manipulations as early as 100 ms post-stimulus onset.

Critically, house stimuli that underwent the same manipulations as face stimuli did not show a P100 effect. This aligns with previous literature demonstrating that TMS over the OFA at ∼100 ms affected featural processing for faces, but not for houses (Pitcher et al., 2007), thus pointing toward face-specific perceptual mechanisms at 100 ms post-stimulus onset. Neurophysiological activity that discriminated between features and spacing processing for house stimuli was evident at the P200 level, suggesting that face processing occurs earlier than object processing (Farah et al., 1998; Crouzet et al., 2010).

In line with previous studies (Halit et al., 2000; Mercure et al., 2008; Wang et al., 2015), we did not find significant condition effects for faces at the level of the N170. Previous evidence suggests that the composite-face effect (Letourneau and Mitchell, 2008) and the inversion effect (Rossion et al., 2000) affect the N170 amplitude (but not, or to a lesser extent, the P100), indicating a critical involvement of this ERP component in holistic face processing (see Yovel, 2015 for a recent review). These differences between P100 and N170 indicate that holistic processing investigated by spacing vs. featural differences (McKone and Yovel, 2009), and holistic processing assessed by other types of tasks (i.e., face inversion or composite face) may occur at different time-scales. Our results, along with previous neuroimaging (fMRI, TMS) findings (Mercure et al., 2008; Wang et al., 2015; Yovel, 2015; Zachariou et al., 2016), contribute to defining the temporal dimension of early face processing. The visual system is able to discriminate facial features and their distance, or “spacing distribution,” as early as the P100, and this information might later (N170) be integrated into a holistic representation. Finally, we did not find features vs. spacing face differences in the P200, which is consistent with the idea that this component might reflect emotional salience processing (i.e., P200 is greater for negative emotional faces and pictures) (Cuthbert et al., 2000; Dien et al., 2004). Furthermore, confirming previous findings, we detected face-sensitive N170 (Bentin et al., 1996) and P200 (Boutsen et al., 2006) activity. The P100 was more positive for houses than faces, potentially indicating that low-level features may differentiate the face and house stimuli of our experiment (albeit luminance and size were similar between categories). Nevertheless, we believe that this effect does not undermine the validity of our main findings, which are within-category.

Conclusion

Our EEG and behavioral findings suggest that featural vs. spacing processing for faces occurs at ∼100 ms (P100), whereas it occurs at ∼200 ms (P200) post-stimulus onset for houses. These results have important implications for theories of holistic face processing and their neurophysiological correlates. Future studies should implement EEG source connectivity approaches to further describe the spatial dynamics of spacing and featural neural processing and characterize the topology of the evoked activity.

Author Contributions

MN, DB, and DR contributed to the design, recordings, analyses, and write-up. IP and SP contributed to analyses and write-up of the study.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to thank Ms. Shakiba Azizi, Ms. Kristine-Amalie Petersen, Ms. Agata Tarnawska, and Ms. Marica Barbieri for the help in EEG data acquisition. We also wish to thank Mr. Robert Bonemei for creating the UEL-FT, Ms. Sharon Noh, Mr. Nickolas Gagnon, Prof. Duncan Turner, and Mr. Robert Seymour for the insightful comments on early versions of the manuscript. Some face images have been taken from www.beautycheck.de.

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2017.00333/full#supplementary-material

Footnotes

- 1. Note that Maurer et al. (2007) report fMRI activity in the fusiform gyrus, but outside the functionally localized FFA.

- 2. http://fieldtrip.fcdonders.nl/

References

Amaro, E., and Barker, G. J. (2006). Study design in fMRI: basic principles. Brain Cogn. 60, 220–232. doi: 10.1016/j.bandc.2005.11.009

Bentin, S., McCarthy, G., Perez, E., Puce, A., and Allison, T. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1162/jocn.1996.8.6.551

Biederman, I. (1987). Recognition-by-components: a theory of human image understanding. Psychol. Rev. 94, 115–147. doi: 10.1037/0033-295X.94.2.115

Boutsen, L., Humphreys, G. W., Praamstra, P., and Warbrick, T. (2006). Comparing neural correlates of configural processing in faces and objects: an ERP study of the Thatcher illusion. Neuroimage 32, 352–367. doi: 10.1016/j.neuroimage.2006.03.023

Crouzet, S., Kirchner, H., and Thorpe, S. J. (2010). Fast saccades toward faces: face detection in just 100 ms. J. Vis. 10, 1–17. doi: 10.1167/10.4.16

Cuthbert, B. N., Schupp, H. T., Bradley, M. M., Birbaumer, N., and Lang, P. J. (2000). Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biol. Psychol. 52, 95–111. doi: 10.1016/S0301-0511(99)00044-7

Dennis, T. A., and Chen, C.-C. (2007). Neurophysiological mechanisms in the emotional modulation of attention: the interplay between threat sensitivity and attentional control. Biol. Psychol. 76, 1–10. doi: 10.1016/j.biopsycho.2007.05.001

Diamond, R., and Carey, S. (1986). Why faces are and are not special: an effect of expertise. J. Exp. Psychol. 115, 107–117. doi: 10.1037/0096-3445.115.2.107

Dien, J. (1998). Issues in the application of the average reference: review, critiques, and recommendations. Behav. Res. Methods Instrum. Comput. 30, 34–43. doi: 10.3758/BF03209414

Dien, J., Spencer, K. M., and Donchin, E. (2004). Parsing the late positive complex: mental chronometry and the ERP components that inhabit the neighborhood of the P300. Psychophysiology 41, 665–678. doi: 10.1111/j.1469-8986.2004.00193.x

Eimer, M., Gosling, A., Nicholas, S., and Kiss, M. (2011). The N170 component and its links to configural face processing: a rapid neural adaptation study. Brain Res. 1376, 76–87. doi: 10.1016/j.brainres.2010.12.046

Farah, M. J., Wilson, K. D., Drain, M., and Tanaka, J. N. (1998). What is “special” about face perception? Psychol. Rev. 105, 482–498. doi: 10.1037/0033-295X.105.3.482

Halgren, E., Raij, T., Marinkovic, K., Jousmaki, V., and Hari, R. (2000). Cognitive response profile of the human fusiform face area as determined by MEG. Cereb. Cortex 10, 69–81. doi: 10.1093/cercor/10.1.69

Halit, H., de Haan, M., and Johnson, M. H. (2000). Modulation of event-related potentials by prototypical and atypical faces. Neuroreport 11, 1871–1875. doi: 10.1097/00001756-200006260-00014

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Itier, R. J., Alain, C., Sedore, K., and McIntosh, A. (2007). Early face processing specificity: it’s in the eyes! J. Cogn. Neurosci. 19, 1815–1826. doi: 10.1162/jocn.2007.19.11.1815

Kotsoni, E., Mareschal, D., Csibra, G., and Johnson, M. H. (2006). Common-onset visual masking in infancy: behavioral and electrophysiological evidence. J. Cogn. Neurosci. 18, 966–973. doi: 10.1162/jocn.2006.18.6.966

Le Grand, R., Cooper, P. A., Mondloch, C. J., Lewis, T. L., Sagiv, N., de Gelder, B., et al. (2006). What aspects of face processing are impaired in developmental prosopagnosia? Brain Cogn. 61, 139–158.

Letourneau, S. M., and Mitchell, T. V. (2008). Behavioral and ERP measures of holistic face processing in a composite task. Brain Cogn. 67, 234–245. doi: 10.1016/j.bandc.2008.01.007

Linkenkaer-Hansen, K., Palva, J. M., Sams, M., Hietanen, J. K., Aronen, H. J., and Ilmoniemi, R. J. (1998). Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto- and electroencephalography. Neurosci. Lett. 253, 147–150. doi: 10.1016/S0304-3940(98)00586-2

Liu, J., Harris, A., and Kanwisher, N. (2002). Stages of processing in face perception: an MEG study. Nat. Neurosci. 5, 910–916. doi: 10.1038/nn909

Maris, E., and Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190. doi: 10.1016/j.jneumeth.2007.03.024

Maurer, D., Le Grand, R., and Mondloch, C. J. (2002). The many faces of configural processing. Trends Cogn. Sci. 6, 255–260. doi: 10.1016/S1364-6613(02)01903-4

Maurer, D., O’Craven, K. M., Le Grand, R., Mondloch, C. J., Springer, M. V., Lewis, T. L., et al. (2007). Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia 45, 1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016

McKone, E., and Yovel, G. (2009). Why does picture-plane inversion sometimes dissociate perception of features and spacing in faces, and sometimes not? Toward a new theory of holistic processing. Psychon. Bull. Rev. 16, 778–797. doi: 10.3758/PBR.16.5.778

Mercure, E., Dick, F., and Johnson, M. H. (2008). Featural and configural face processing differentially modulate ERP components. Brain Res. 1239, 162–170. doi: 10.1016/j.brainres.2008.07.098

Mondloch, C., Le Grand, R., and Maurer, D. (2002). Configural face processing develops more slowly than featural processing. Perception 31, 553–566. doi: 10.1068/p3339

Oostenveld, R., Fries, P., Maris, E., and Schoffelen, J.-M. (2011). FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011:156869. doi: 10.1155/2011/156869

Palermo, R., Willis, M. L., Rivolta, D., McKone, E., Wilson, C. E., and Calder, A. J. (2011). Impaired holistic coding of facial expression and facial identity in congenital prosopagnosia. Neuropsychologia 49, 1226–1235. doi: 10.1016/j.neuropsychologia.2011.02.021

Piepers, D. W., and Robbins, R. A. (2012). A review and clarification of the terms “holistic,” “configural,” and “relational” in the face perception literature. Front. Psychol. 3:559. doi: 10.3389/fpsyg.2012.00559

Pitcher, D., Walsh, V., Yovel, G., and Duchaine, B. (2007). TMS evidence for the involvement of the right occipital face area in early face processing. Curr. Biol. 17, 1568–1573. doi: 10.1016/j.cub.2007.07.063

Premoli, I., Castellanos, N., Rivolta, D., Belardinelli, P., Bajo, R., Zipser, C., et al. (2014). TMS-EEG signatures of GABAergic neurotransmission in the human cortex. J. Neurosci. 34, 5603–5612. doi: 10.1523/jneurosci.5089-13.2014

Renzi, C., Schiavi, S., Carbon, C.-C., Vecchi, T., Silvanto, J., and Cattaneo, Z. (2013). Processing of featural and configural aspects of faces is lateralized in dorsolateral prefrontal cortex: a TMS study. Neuroimage 74, 45–51. doi: 10.1016/j.neuroimage.2013.02.015

Rivolta, D., Castellanos, N. P., Stawowsky, C., Helbling, S., Wibral, M., Grutzner, C., et al. (2014a). Source-reconstruction of event-related fields reveals hyperfunction and hypofunction of cortical circuits in antipsychotic-naive, first-episode schizophrenia patients during Mooney face processing. J. Neurosci. 34, 5909–5917. doi: 10.1523/jneurosci.3752-13.2014

Rivolta, D., Heidegger, T., Scheller, B., Sauer, A., Schaum, M., Birkner, K., et al. (2015). Ketamine dysregulates the amplitude and connectivity of high-frequency oscillations in cortical-subcortical networks in humans: evidence from resting-state magnetoencephalography-recordings. Schizophr. Bull. 41, 1105–1114. doi: 10.1093/schbul/sbv051

Rivolta, D., Palermo, R., Schmalzl, L., and Williams, M. A. (2012a). An early category-specific neural response for the perception of both places and faces. Cogn. Neurosci. 3, 45–51. doi: 10.1080/17588928.2011.604726

Rivolta, D., Palermo, R., Schmalzl, L., and Williams, M. A. (2012b). Investigating the features of the M170 in congenital prosopagnosia. Front. Hum. Neurosci. 6:45. doi: 10.3389/fnhum.2012.00045

Rivolta, D., Woolgar, A., Palermo, R., Butko, M., Schmalzl, L., and Williams, M. A. (2014b). Multi-voxel pattern analysis (MVPA) reveals abnormal fMRI activity in both the “core” and “extended” face network in congenital prosopagnosia. Front. Hum. Neurosci. 8:925. doi: 10.3389/fnhum.2014.00925

Robbins, R., and McKone, E. (2007). No face-like processing for objects-of-expertise in three behavioural tasks. Cognition 103, 331–336. doi: 10.1016/j.cognition.2006.02.008

Rossion, B. (2008). Picture-plane inversion leads to qualitative changes of face perception. Acta Psychol. (Amst.) 128, 274–289. doi: 10.1016/j.actpsy.2008.02.003

Rossion, B. (2014). Understanding face perception by means of human electrophysiology. Trends Cogn. Sci. 18, 310–318. doi: 10.1016/j.tics.2014.02.013

Rossion, B., Dricot, L., Goebel, R., and Busigny, T. (2011). Holistic face categorization in higher order visual areas of the normal and prosopagnosic brain: toward a non-hierarchical view of face perception. Front. Hum. Neurosci. 4:225. doi: 10.3389/fnhum.2010.00225

Rossion, B., Gauthier, I., Tarr, M. J., Despland, P., Bruyer, R., Linotte, S., et al. (2000). The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport 11, 69–74. doi: 10.1097/00001756-200001170-00014

Scott, L. S., and Nelson, C. A. (2006). Featural and configural face processing in adults and infants: a behavioral and electrophysiological investigation. Perception 35, 1107–1128. doi: 10.1068/p5493

Tanaka, J. W., and Farah, M. J. (1993). Parts and wholes in face recognition. Q. J. Exp. Psychol. A 46, 225–245. doi: 10.1080/14640749308401045

Tanaka, J. W., Quinn, P. C., Xu, B., Maynard, K., Huxtable, N., Lee, K., et al. (2014). The effects of information type (features vs. configuration) and location (eyes vs. mouth) on the development of face perception. J. Exp. Child Psychol. 124, 36–49. doi: 10.1016/j.jecp.2014.01.001

Tanaka, J. W., and Simonyi, D. (2016). The “parts and the wholes” of face recognition: a review of the literature. Q. J. Exp. Psychol. 69, 1876–1889. doi: 10.1080/17470218.2016.1146780

Tucker, D. M. (1993). Spatial sampling of head electrical fields: the geodesic sensor net. Electroencephalogr. Clin. Neurophysiol. 87, 154–163. doi: 10.1016/0013-4694(93)90121-B

Wang, H., Sun, P., Ip, C., Zhao, X., and Fu, S. (2015). Configural and featural face processing are differently modulated by attentional resources at early stages: an event-related potential study with rapid serial visual presentation. Brain Res. 1602, 75–84. doi: 10.1016/j.brainres.2015.01.017

Yin, R. K. (1969). Looking at upside-down faces. J. Exp. Psychol. 81, 141–145. doi: 10.1037/h0027474

Young, A. W., Hellawell, D., and Hay, D. C. (1987). Configurational information in face perception. Perception 16, 747–759. doi: 10.1068/p160747

Yovel, G. (2015). Neural and cognitive face-selective markers: an integrative review. Neuropsychologia 83, 5–13. doi: 10.1016/j.neuropsychologia.2015.09.026

Yovel, G., and Kanwisher, N. (2004). Face perception: domain specific, not process specific. Neuron 44, 889–898. doi: 10.1016/j.neuron.2004.11.018

Zachariou, V., Nikas, C. V., Safiullah, Z. N., Gotts, S. J., and Ungerleider, L. G. (2016). Spatial mechanisms within the dorsal visual pathway contribute to the configural processing of faces. Cereb. Cortex doi: 10.1093/cercor/bhw224 [Epub ahead of print].

Keywords: face perception, object perception, holistic processing, configural processing, EEG, P100, N170

Citation: Negrini M, Brkić D, Pizzamiglio S, Premoli I and Rivolta D (2017) Neurophysiological Correlates of Featural and Spacing Processing for Face and Non-face Stimuli. Front. Psychol. 8:333. doi: 10.3389/fpsyg.2017.00333

Received: 18 October 2016; Accepted: 22 February 2017;

Published: 13 March 2017.

Edited by:

Mariska Esther Kret, Leiden University, Netherlands

Reviewed by:

Dragan Rangelov, University of Queensland, Australia

Angela Gosling, Bournemouth University, UK

Copyright © 2017 Negrini, Brkić, Pizzamiglio, Premoli and Rivolta. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Davide Rivolta, d.rivolta@uel.ac.uk

†These authors have contributed equally to this work.
