ORIGINAL RESEARCH article

Front. Psychol., 01 February 2017

Sec. Auditory Cognitive Neuroscience

Volume 8 - 2017 | https://doi.org/10.3389/fpsyg.2017.00093

This article is part of the Research Topic Music and the Functions of the Brain: Arousal, Emotions, and Pleasure

High-resolution audio has a higher sampling frequency and a greater bit depth than conventional low-resolution audio such as compact disks. The higher sampling frequency enables inaudible sound components (above 20 kHz) that are cut off in low-resolution audio to be reproduced. Previous studies of high-resolution audio have mainly focused on the effect of such high-frequency components. It is known that alpha-band power in a human electroencephalogram (EEG) is larger when the inaudible high-frequency components are present than when they are absent. Traditionally, alpha-band EEG activity has been associated with arousal level. However, no previous studies have explored whether sound sources with high-frequency components affect the arousal level of listeners. The present study examined this possibility by having 22 participants listen to two types of a 400-s musical excerpt of French Suite No. 5 by J. S. Bach (on cembalo, 24-bit quantization, 192 kHz A/D sampling), with or without inaudible high-frequency components, while performing a visual vigilance task. High-alpha (10.5–13 Hz) and low-beta (13–20 Hz) EEG powers were larger for the excerpt with high-frequency components than for the excerpt without them. Reaction times and error rates did not change during the task and were not different between the excerpts. The amplitude of the P3 component elicited by target stimuli in the vigilance task increased in the second half of the listening period for the excerpt with high-frequency components, whereas no such P3 amplitude change was observed for the other excerpt without them. The participants did not distinguish between these excerpts in terms of sound quality. Only a subjective rating of inactive pleasantness after listening was higher for the excerpt with high-frequency components than for the other excerpt. 
The present study shows that high-resolution audio that retains high-frequency components has an advantage over similar and indistinguishable digital sound sources in which such components are artificially cut off, suggesting that high-resolution audio with inaudible high-frequency components induces a relaxed attentional state without conscious awareness.

High-resolution audio has recently emerged in the digital music market owing to advances in information and communications technologies. Because of a higher sampling frequency and a greater bit depth than conventional low-resolution audio such as compact disks (CDs), it provides a closer replication of the real analog sound waves. Sampling frequency means the number of samples per second taken from a sound source through analog-to-digital conversion. Bit depth is the number of bits used to encode each sample, so the number of possible values per sample is a power of two. A higher sampling frequency makes the digitization of sound more accurate in the time-frequency domain, whereas a greater bit depth increases the resolution of the sound. What kind of advantage does the latest digital audio have for human beings? This question has not been sufficiently discussed. The present investigation used physiological, behavioral, and subjective measures to provide evidence that high-resolution audio affects human psychophysiological state without conscious awareness.

The higher sampling frequency enables higher frequency sound components to be reproduced, because one-half of the sampling frequency defines the upper limit of reproducible frequencies (as dictated by the Nyquist–Shannon sampling theorem). However, in conventional digital audio, sampling frequency is usually restrained so that sounds above 20 kHz are cut off in order to reduce file sizes for convenience. This reduction is based on the knowledge that sounds above 20 kHz do not influence sound quality ratings (Muraoka et al., 1981) and do not appear to produce evoked brain magnetic field responses (Fujioka et al., 2002).
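The relation between sampling frequency, reproducible bandwidth, and bit depth can be made concrete with a short calculation. The sketch below is illustrative only: the 192 kHz / 24-bit values come from the excerpt used in this study, whereas the CD values (44.1 kHz, 16 bit) are the standard CD-audio figures and are not stated in the article. The function names are hypothetical.

```python
def nyquist_khz(sampling_rate_khz: float) -> float:
    """Upper limit of reproducible frequencies: half the sampling rate."""
    return sampling_rate_khz / 2.0


def quantization_levels(bit_depth: int) -> int:
    """Number of distinct values one sample can take: 2 ** bit_depth."""
    return 2 ** bit_depth


formats = {
    # Standard CD-audio figures (assumption: not given in the article).
    "CD": (44.1, 16),
    # Recording format of the excerpt described in the article.
    "high-resolution": (192.0, 24),
}

for label, (rate_khz, bits) in formats.items():
    print(
        f"{label}: Nyquist limit = {nyquist_khz(rate_khz):.2f} kHz, "
        f"levels per sample = {quantization_levels(bits)}"
    )
```

With 192 kHz sampling the representable band extends to 96 kHz, well beyond the roughly 20 kHz limit of hearing, which is what allows the inaudible components to be retained.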

In contrast to this conventional digital recording process in which inaudible high-frequency components are cut off, high-resolution music that retains such components has been repeatedly shown to affect human electroencephalographic (EEG) activity (Oohashi et al., 2000, 2006; Yagi et al., 2003a; Fukushima et al., 2014; Kuribayashi et al., 2014; Ito et al., 2016). This effect is often called the “hypersonic” effect. In these studies, only the presence or absence of inaudible high-frequency components is manipulated while the sampling frequency and the bit depth are held constant. Interestingly, this effect appears with a considerable delay (i.e., 100–200 s after the onset of music). However, it remains unclear what kind of psychological and cognitive states are associated with this effect. These studies also suggest that it is difficult to consciously distinguish between sounds with and without inaudible high-frequency components (full-range vs. high-cut). Some studies have shown that full-range audio is rated as having better sound quality (e.g., a softer tone, more comfortable to the ears) than high-cut audio (Oohashi et al., 2000; Yagi et al., 2003a). Another study has shown that participants are not able to distinguish between the two types of digital audio, with no significant differences found for subjective ratings of sound qualities (Kuribayashi et al., 2014). The feasibility of discrimination seems to depend on the kinds of audio sources and individuals (Nishiguchi et al., 2009). Regarding behavioral aspects, it has been shown that people listen to full-range sounds at a higher level of sound volume than high-cut sounds (Yagi et al., 2003b, 2006; Oohashi et al., 2006).

Previous studies have examined the effect of inaudible high-frequency components on EEG activity while listening to music under resting conditions. It has been shown that EEG alpha-band (8–13 Hz) frequency power is greater for high-resolution music with high-frequency components than for the same sound sources without them (Oohashi et al., 2000, 2006; Yagi et al., 2003a). The effect appears more clearly in a higher part of the conventional alpha-band frequency of 8–13 Hz (10.5–13 Hz: Kuribayashi et al., 2014; 11–13 Hz: Ito et al., 2016). Ito et al. (2016) reported that low beta-band (14–20 Hz) EEG power also showed the same tendency to increase as high alpha-band EEG power.

A study using positron emission tomography (PET) revealed that the brainstem and thalamus areas were more activated when hearing full-range as compared with high-cut sounds (Oohashi et al., 2000). Because such activation may support a role of the thalamus in emotional experience (LeDoux, 1993; Vogt and Gabriel, 1993; Blood and Zatorre, 2001; Jeffries et al., 2003; Brown et al., 2004; Lupien et al., 2009) and also in filtering or gating sensory input (Andreasen et al., 1994), Oohashi et al. (2000) speculated that the presence of inaudible high-frequency components may affect the perception of sounds and some aspects of human behavior.

Another line of research suggests a link between cognitive function and alpha-band as well as beta-band EEG activities. Alpha-band EEG activity is thought to be associated not only with arousal and vigilance levels (Barry et al., 2007) but also with cognitive tasks involving perception, working memory, long-term memory, and attention (e.g., Basar, 1999; Klimesch, 1999; Ward, 2003; Klimesch et al., 2005). Higher alpha-band activity is considered to inhibit task-irrelevant brain regions so as to serve effective disengagement for optimal processing (Jensen and Mazaheri, 2010; Foxe and Snyder, 2011; Weisz et al., 2011; Klimesch, 2012; De Blasio et al., 2013).

Beta-band power is broadly thought to be associated with motor function when it is derived from motor areas (Hari and Salmelin, 1997; Crone et al., 1998; Pfurtscheller and Lopes da Silva, 1999; Pfurtscheller et al., 2003). Moreover, beta power has been shown to increase with corresponding increases in arousal and vigilance levels, which indicates that participants get engaged in a task (e.g., Sebastiani et al., 2003; Aftanas et al., 2006; Gola et al., 2012; Kamiñski et al., 2012).

What kind of advantage does high-resolution audio with inaudible high-frequency components have for human beings? What remains unclear is what kind of psychophysiological states high-resolution audio induces, along with the corresponding increase in alpha- and beta-band EEG activities. To monitor listeners’ arousal level, we asked participants to listen to a musical piece while performing a visual vigilance task that required sustained attention in order to continuously respond to specific stimuli. Two types of high-resolution audio of the same musical piece were presented using a double-blind method: With or without inaudible high-frequency components.

EEG was recorded along with other psychophysiological measures: Heart rate (HR), heart rate variability (HRV), and facial electromyograms (EMGs). The former two measures index autonomic nervous system activities. HRV contains two components with different frequency bands: High frequency (HF; 0.15-0.4 Hz), and low frequency (LF; 0.04-0.15 Hz). HF and LF activities are mediated by vagal and vagosympathetic activations, respectively (Malliani et al., 1991; Malliani and Montano, 2002). The LF/HF power ratio is sometimes used as an index parameter that shows the sympathetic activities. Facial EMGs in the regions of the corrugator supercilii and the zygomaticus major muscles have been used as indices of negative and positive affects, respectively (Larsen et al., 2003). Decrements in vigilance task performance such as longer reaction times (RTs) and higher error rates are interpreted as reflecting the decrease in arousal level, which is also reflected in the electrical activity of the brain (Fruhstorfer and Bergström, 1969). Besides ongoing EEG activity, event-related potentials (ERPs) are associated with vigilance task performance. When vigilance task performance decreases, the amplitude of P3, a positive ERP component observed dominantly at parietal recording sites between 300 and 600 ms after stimulus onset, decreases and its latency increases (Fruhstorfer and Bergström, 1969; Davies and Parasuraman, 1977; Parasuraman, 1983). The P3 outcomes are thought to be modulated not only by overall arousal level but also by attentional resource allocation (Polich, 2007). P3 amplitude has been shown to be larger when greater attentional resources are allocated to the eliciting stimulus. It is thus thought that P3 amplitude can serve as a measure of processing capacity and mental workload (Kok, 1997, 2001).

In the present study, physiological, behavioral, and subjective measures were recorded to examine what kind of advantage high-resolution audio with inaudible high-frequency components has. Specifically, we were interested in how the increase in alpha- and beta-band EEG activities is associated with listeners’ arousal and vigilance level. Using a double-blind method, two types of high-resolution audio of the same musical piece (with or without inaudible high-frequency components) were presented while participants performed a vigilance task in the visual modality.

Twenty-six student volunteers at Hiroshima University gave their informed consent and participated in the study. Four participants had to be excluded due to technical problems. The remaining 22 participants (14 women, 18–24 years, M = 20.6 years) did not report any known neurological dysfunction or hearing deficit. They were right-handed according to the Edinburgh Inventory (M = 84.1 ± 12.8). All reported to have correct or corrected-to-normal vision. Eight participants had the experience of learning musical instruments for a few years, but none of them were professional musicians. The Research Ethics Committee of the Graduate School of Integrated Arts and Sciences in Hiroshima University approved the experimental protocol.

The present study used the same materials that were used in Kuribayashi et al. (2014). The first 200-s portion of French Suite No. 5 by J. S. Bach (on cembalo, 24-bit quantization, 192 kHz A/D sampling) was selected. In the present study, this portion was played twice to produce a 400-s excerpt. The original (full-range) excerpt is rich in high-frequency components. A high-cut version of the excerpt was produced by removing such components using a low-pass finite impulse response digital filter with a very steep slope (cutoff = 20 kHz, slope = –1,673 dB/oct). This linear-phase filter does not cause any phase distortion. Although the filter produces very small ripples (1.04 × 10⁻² dB), they are negligible and unlikely to affect auditory perception. Sounds were amplified using AI-501DA (TEAC Corporation, Tokyo, Japan) controlled by dedicated software on a laptop PC. Two loudspeakers with high-frequency tweeters (PM1; Bowers & Wilkins, Worthing, England) were located 1.5 m diagonally forward from the listening position. The sound pressure level was set at approximately 70 dB (A). Calibration measurements at the listening position ensured that the full-range excerpt contained abundant high-frequency components and that the high-frequency power of the high-cut excerpt (i.e., components over 20 kHz) did not differ from that of background noise. The average power spectra of the excerpts are available at http://links.lww.com/WNR/A279 as Supplemental Digital Content of Kuribayashi et al. (2014).
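To illustrate why a linear-phase FIR low-pass filter introduces no phase distortion, the following sketch designs a generic windowed-sinc filter with a 20 kHz cutoff at 192 kHz sampling. It is not the filter used in the study: the tap count (511), the Hamming window, and the function names are assumptions chosen for illustration, and the resulting slope is far shallower than the –1,673 dB/oct reported above. The point is only that the coefficients are symmetric, which is the property that gives every frequency the same delay.

```python
import math


def lowpass_fir(num_taps: int, cutoff_hz: float, fs_hz: float) -> list:
    """Windowed-sinc low-pass FIR (Hamming window); num_taps must be odd."""
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd for a symmetric type-I filter")
    mid = (num_taps - 1) // 2
    fc = cutoff_hz / fs_hz  # normalized cutoff in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        # Ideal (infinite) low-pass impulse response, sampled at offset k.
        ideal = 2.0 * fc if k == 0 else math.sin(2.0 * math.pi * fc * k) / (math.pi * k)
        # Hamming window truncates the ideal response smoothly.
        window = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalize to unity gain at DC


def gain_db(taps: list, freq_hz: float, fs_hz: float) -> float:
    """Magnitude response of the filter at one frequency, in dB."""
    w = 2.0 * math.pi * freq_hz / fs_hz
    re = sum(t * math.cos(w * n) for n, t in enumerate(taps))
    im = -sum(t * math.sin(w * n) for n, t in enumerate(taps))
    return 20.0 * math.log10(max(math.hypot(re, im), 1e-12))


FS_HZ = 192_000.0
taps = lowpass_fir(511, 20_000.0, FS_HZ)

# Symmetric coefficients imply linear phase (constant group delay).
is_symmetric = all(abs(a - b) < 1e-12 for a, b in zip(taps, reversed(taps)))
print("symmetric taps:", is_symmetric)
print("gain at 10 kHz (dB):", round(gain_db(taps, 10_000.0, FS_HZ), 3))
print("gain at 30 kHz (dB):", round(gain_db(taps, 30_000.0, FS_HZ), 1))
```

A steeper transition, closer to the one described in the text, would require many more taps or a different design method.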

An equiprobable visual Go/NoGo task was conducted using a cathode ray tube (CRT) computer monitor (refresh rate = 100 Hz) in front of participants. A block consisted of 120 visual stimuli: 60 targets (either ‘T’ or ‘V’, 30 each) and 60 non-targets (‘O’) in a randomized order. The visual stimuli were 200 ms in duration and presented with a mean stimulus onset asynchrony (SOA) of 5 s (range = 3-7 s). Button-press responses with the left and right index fingers were required to ‘T’ and ‘V’ (or ‘V’ and ‘T’) respectively, as quickly and accurately as possible.
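The structure of one block can be sketched as a simple stimulus schedule. This is a hypothetical reconstruction, not the presentation software used in the study: the article gives only the mean (5 s) and range (3–7 s) of the SOA, so the uniform jitter below is an assumption, as are the function name and the seed.

```python
import random


def make_block(seed: int = 0) -> list:
    """Return a list of (onset_seconds, letter) pairs for one 120-trial block."""
    rng = random.Random(seed)
    # 60 Go targets (30 'T', 30 'V') and 60 NoGo non-targets ('O').
    letters = ["T"] * 30 + ["V"] * 30 + ["O"] * 60
    rng.shuffle(letters)

    schedule = []
    onset = 0.0
    for letter in letters:
        schedule.append((onset, letter))
        # Assumption: SOA drawn uniformly from 3-7 s (mean 5 s).
        onset += rng.uniform(3.0, 7.0)
    return schedule


block = make_block()
go_trials = [s for s in block if s[1] in ("T", "V")]
print(len(block), "stimuli,", len(go_trials), "Go trials")
print("approximate block duration (s):", round(block[-1][0]))
```

With 120 stimuli at a mean SOA of 5 s, one block lasts about 600 s, which matches the 100-s silent, 400-s music, and 100-s silent periods described in the procedure.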

The study was conducted using a double-blind method. Participants listened to two versions of the 400-s musical excerpt (with or without high-frequency components) while performing the Go/NoGo task. Participants also performed the task under silent conditions for 100 s before and after music presentation (pre- and post-music periods). The presentation order of the two excerpts was counterbalanced across the participants. EEG, HR, and facial EMGs were recorded during task performance. After listening to each excerpt, participants completed a sound quality questionnaire consisting of 10 pairs of adjectives and then reported their mood states on the Affect Grid (Russell et al., 1989) and multiple mood scales (Terasaki et al., 1992). At the end of the experiment, participants judged which excerpt contained high-frequency components by making a binary choice between them.

Psychophysiological measures were recorded with a sampling rate of 1000 Hz using QuickAmp (Brain Products, Gilching, Germany). Filter bandpass was DC to 200 Hz. EEG was recorded from 34 scalp electrodes (Fp1/2, Fz, F3/4, F7/8, FC1/2, FC5/6, FT9/10, Cz, T7/8, C3/4, CP1/2, CP5/6, TP9/10, Pz, P3/4, P7/8, PO9/10, Oz, O1/2) according to the extended 10–20 system. Four additional electrodes (supra-orbital and infra-orbital ridges of the right eye and outer canthi) were used to monitor eye movements and blinks. EEG data were recorded using the average reference online and re-referenced to the digitally linked earlobes (A1–A2) offline. EEG data were resampled at 250 Hz and were filtered offline (1–60 Hz band pass, 24 dB/oct for EEG analysis; 0.1–60 Hz band pass, 24 dB/oct for ERP analysis). Ocular artifacts were corrected using a semi-automatic independent component analysis method implemented on Brain Vision Analyzer 2.04 (Brain Products). The components that were easily identifiable as artifacts related to blinks and eye movements were removed.

Heart rate was measured by recording electrocardiograms from the left ankle and the right hand. The R–R intervals were calculated and converted into HR in bpm. For facial EMGs, electrical activities over the zygomaticus major and corrugator supercilii regions were recorded using bipolar electrodes affixed above the left brow and on the left cheek, respectively (Fridlund and Cacioppo, 1986). The EMG data were filtered offline (15 Hz high-pass, 12 dB/oct) and fully rectified (Larsen et al., 2003).
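The conversion from R–R intervals to heart rate is a one-line calculation, shown here as a minimal sketch. The function names and the example R-peak times are hypothetical; averaging the instantaneous rates within an epoch is one reasonable choice and is not necessarily the exact procedure used in the study.

```python
def rr_to_bpm(rr_ms: float) -> float:
    """Instantaneous heart rate (beats per minute) from one R-R interval in ms."""
    return 60_000.0 / rr_ms


def mean_heart_rate(r_peak_times_s: list) -> float:
    """Mean of instantaneous heart rates from successive R-peak times (seconds)."""
    intervals_ms = [
        (later - earlier) * 1000.0
        for earlier, later in zip(r_peak_times_s, r_peak_times_s[1:])
    ]
    rates = [rr_to_bpm(rr) for rr in intervals_ms]
    return sum(rates) / len(rates)


# Hypothetical R-peak times: a steady 800-ms rhythm gives 75 bpm.
example_peaks = [0.0, 0.8, 1.6, 2.4, 3.2]
print(round(mean_heart_rate(example_peaks), 1))
```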

A total of 600 s (including silent periods) was divided into six 100-s epochs. For EEG analysis, each 100-s epoch was divided into 97 2.048-s segments with 1.024 s overlap. The power spectrum was calculated by Fast Fourier Transform with a Hanning window. The total powers (μV²) of the following frequency bands were calculated: Delta (1–4 Hz), theta (4–8 Hz), low-alpha (8–10.5 Hz), high-alpha (10.5–13 Hz), low-beta (13–20 Hz), high-beta (20–30 Hz), and gamma (36–44 Hz). The square root of the total power (μV) was used for statistical analysis, following the procedure of previous studies (Oohashi et al., 2000, 2006; Kuribayashi et al., 2014). The scalp electrode sites were grouped into four regions: Anterior Left (AL: Fp1, F3, F7, FC1, FC5, FT9), Anterior Right (AR: Fp2, F4, F8, FC2, FC6, FT10), Posterior Left (PL: CP1, CP5, TP9, P3, P7, PO9, O1), and Posterior Right (PR: CP2, CP6, TP10, P4, P8, PO10, O2). For RT, EMG, and HR, the mean values of each 100-s epoch were calculated. Mean RT and EMG values were log-transformed before statistical analysis.
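The band-power computation for a single segment can be sketched as follows. At the 250 Hz resampled rate, a 2.048-s segment holds 512 samples, giving a frequency resolution of 250/512 ≈ 0.49 Hz. The code is a plain-Python illustration, not the analysis software used in the study: the power normalization, the treatment of band edges (lower edge inclusive, upper edge exclusive), the function names, and the synthetic 12 Hz test signal are all assumptions.

```python
import cmath
import math

FS_HZ = 250.0   # resampled EEG rate given in the article
SEG_LEN = 512   # 2.048 s * 250 Hz
BANDS_HZ = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "low_alpha": (8.0, 10.5),
    "high_alpha": (10.5, 13.0),
    "low_beta": (13.0, 20.0),
    "high_beta": (20.0, 30.0),
    "gamma": (36.0, 44.0),
}


def hann_window(n: int) -> list:
    """Symmetric Hann (Hanning) window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def power_spectrum(segment: list) -> list:
    """One-sided power spectrum of a Hann-windowed segment (direct DFT)."""
    n = len(segment)
    windowed = [s * w for s, w in zip(segment, hann_window(n))]
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            windowed[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        # Normalization is a convention; only relative band values matter here.
        spectrum.append(abs(coeff) ** 2 / n ** 2)
    return spectrum


def band_amplitudes(segment: list, fs_hz: float = FS_HZ) -> dict:
    """Square root of the summed power in each band, as in the text."""
    power = power_spectrum(segment)
    resolution = fs_hz / len(segment)
    result = {}
    for name, (low, high) in BANDS_HZ.items():
        total = sum(p for k, p in enumerate(power) if low <= k * resolution < high)
        result[name] = math.sqrt(total)
    return result


# Synthetic segment: a 12 Hz sine, which lies in the high-alpha band.
demo = [10.0 * math.sin(2.0 * math.pi * 12.0 * t / FS_HZ) for t in range(SEG_LEN)]
amps = band_amplitudes(demo)
print("frequency resolution (Hz):", FS_HZ / SEG_LEN)
print("dominant band:", max(amps, key=amps.get))
```

In practice the per-segment spectra would be averaged across the overlapping segments of each 100-s epoch before the band values are compared.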

Heart rate variability analysis was done by using Kubios HRV 2.2 (Tarvainen et al., 2014). The last 300-s (5-min) epoch of the 400-s listening period was selected according to previously established guidelines (Berntson et al., 1997). Prior to spectrum estimation, the R–R interval series was converted to an equidistantly sampled series via piecewise cubic spline interpolation. The spectrum was estimated using an autoregressive modeling-based method. The total powers (ms²) were calculated for LF (0.04–0.15 Hz) and HF (0.15–0.4 Hz) bands, and the LF/HF power ratio was obtained. The square roots of LF and HF (ms) were used for statistical analysis.

For ERP analysis, the total 400-s listening period was divided into two 200-s epochs, to secure a reasonable number of Go trials (around 20). Silent periods (pre- and post-music epoch) were not included in the calculation. Those trials found to have Go omissions, Go misses (incorrect hand response to ‘T’ or ‘V’), or NoGo responses (commission errors) were excluded from further processing steps. Go and NoGo responses were separately averaged to produce ERPs. Epochs (200 ms before stimulus presentation until 1000 ms after the presentation) were baseline corrected (-200 ms until 0 ms). The peak of a P3 wave was identified within a latency range of 350-500 ms at Pz where P3 amplitude is dominant topographically.
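The baseline correction and peak-picking steps can be sketched on a synthetic waveform. The 4-ms sample spacing follows from the 250 Hz resampled rate; everything else (function names, the Gaussian-shaped test waveform, the 5 μV offset) is hypothetical and serves only to show the two operations.

```python
import math


def baseline_correct(epoch_uv: list, times_ms: list,
                     start_ms: float = -200.0, end_ms: float = 0.0) -> list:
    """Subtract the mean of the pre-stimulus interval from every sample."""
    baseline = [v for v, t in zip(epoch_uv, times_ms) if start_ms <= t < end_ms]
    mean_baseline = sum(baseline) / len(baseline)
    return [v - mean_baseline for v in epoch_uv]


def p3_peak(erp_uv: list, times_ms: list,
            low_ms: float = 350.0, high_ms: float = 500.0) -> tuple:
    """Return (amplitude, latency) of the largest positive value in the window."""
    window = [(v, t) for v, t in zip(erp_uv, times_ms) if low_ms <= t <= high_ms]
    amplitude, latency = max(window)
    return amplitude, latency


# Epoch from -200 ms to just under 1000 ms in 4-ms steps (250 Hz).
times = list(range(-200, 1000, 4))
# Synthetic averaged waveform: 5 uV DC offset plus a 10 uV bump at 400 ms.
raw = [5.0 + 10.0 * math.exp(-((t - 400) ** 2) / (2 * 50.0 ** 2)) for t in times]

corrected = baseline_correct(raw, times)
amp, lat = p3_peak(corrected, times)
print(f"P3 peak: {amp:.2f} uV at {lat} ms")
```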

Each measure was subjected to a repeated measures analysis of variance (ANOVA) with sound type (full-range vs. high-cut) and epoch (pre-music, 0-100, 100-200, 200-300, 300-400 s, and post-music for EEG data; 0-200 and 200-400 s for ERP data) as factors. To compensate for possible type I error inflation by the violation of sphericity, multivariate ANOVA solutions are reported (Vasey and Thayer, 1987). The significance level was set at 0.05. For post hoc multiple comparisons of means, the comparison-wise level of significance was determined by the Bonferroni method.
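For readers unfamiliar with the Bonferroni method, the comparison-wise threshold is simply the family-wise significance level divided by the number of comparisons. The sketch below uses hypothetical p values; the function names are likewise illustrative.

```python
def bonferroni_threshold(alpha: float, n_comparisons: int) -> float:
    """Comparison-wise significance level under the Bonferroni method."""
    return alpha / n_comparisons


def significant_after_bonferroni(p_values: list, alpha: float = 0.05) -> list:
    """Flag each p value that survives the Bonferroni-corrected threshold."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p < threshold for p in p_values]


# Hypothetical p values from three post hoc comparisons.
example_p = [0.004, 0.030, 0.200]
print(bonferroni_threshold(0.05, len(example_p)))
print(significant_after_bonferroni(example_p))
```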

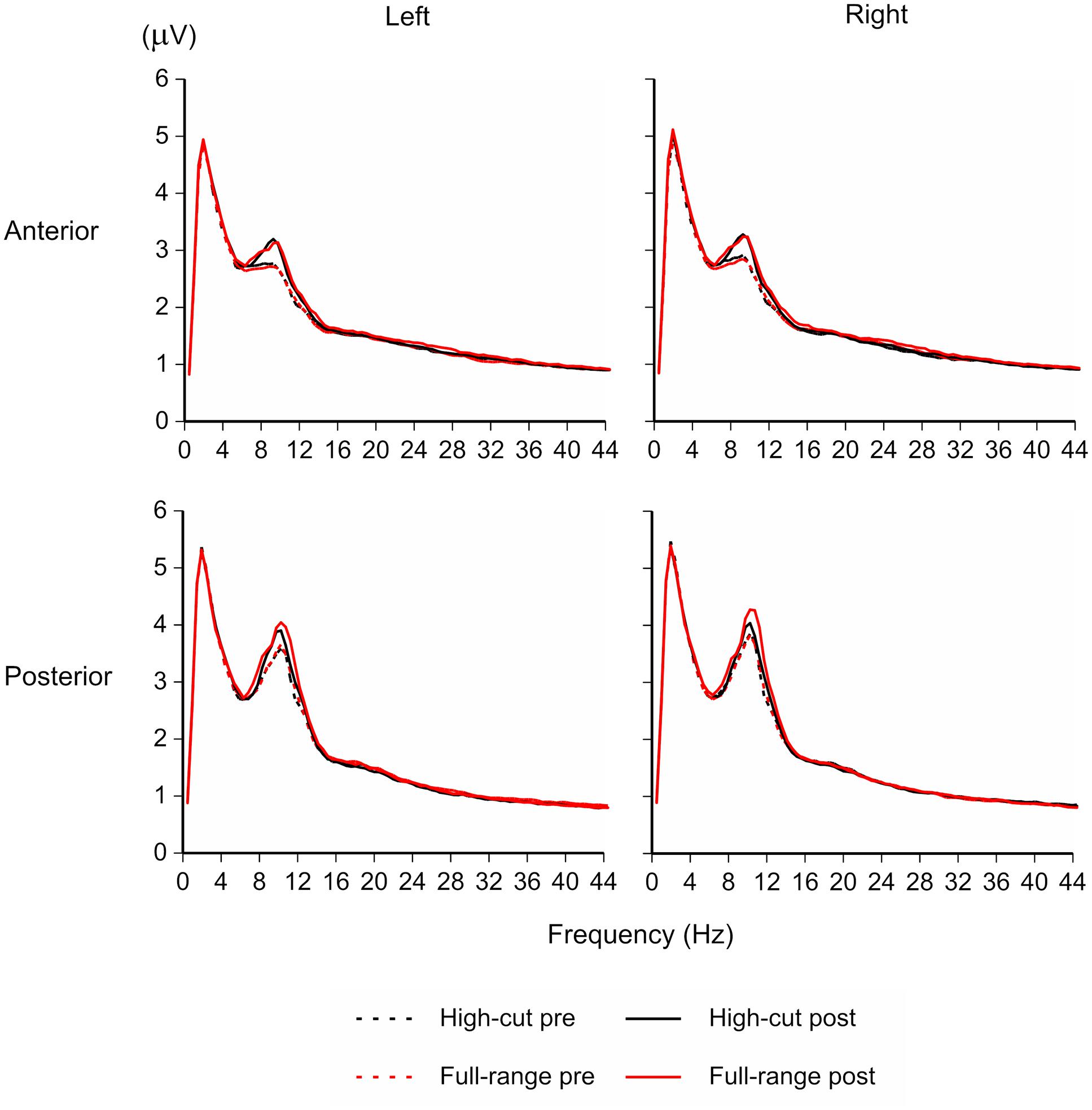

Figure 1 shows the EEG amplitude spectrogram for the four regions in the silent conditions (pre- and post-music periods). Although participants were performing a visual vigilance task with eyes opened, a peak around 10 Hz appears clearly. The amplitude of the peak appears to be increased after listening to music, in particular after listening to the full-range version of the musical piece.

FIGURE 1. EEG amplitude spectrogram for the full-range and high-cut conditions in the silent periods (100-s epochs before and after listening to music). The amplitude of the peak around 10 Hz was increased after listening to music, in particular after listening to the full-range version of the sound source that contains high-frequency components.

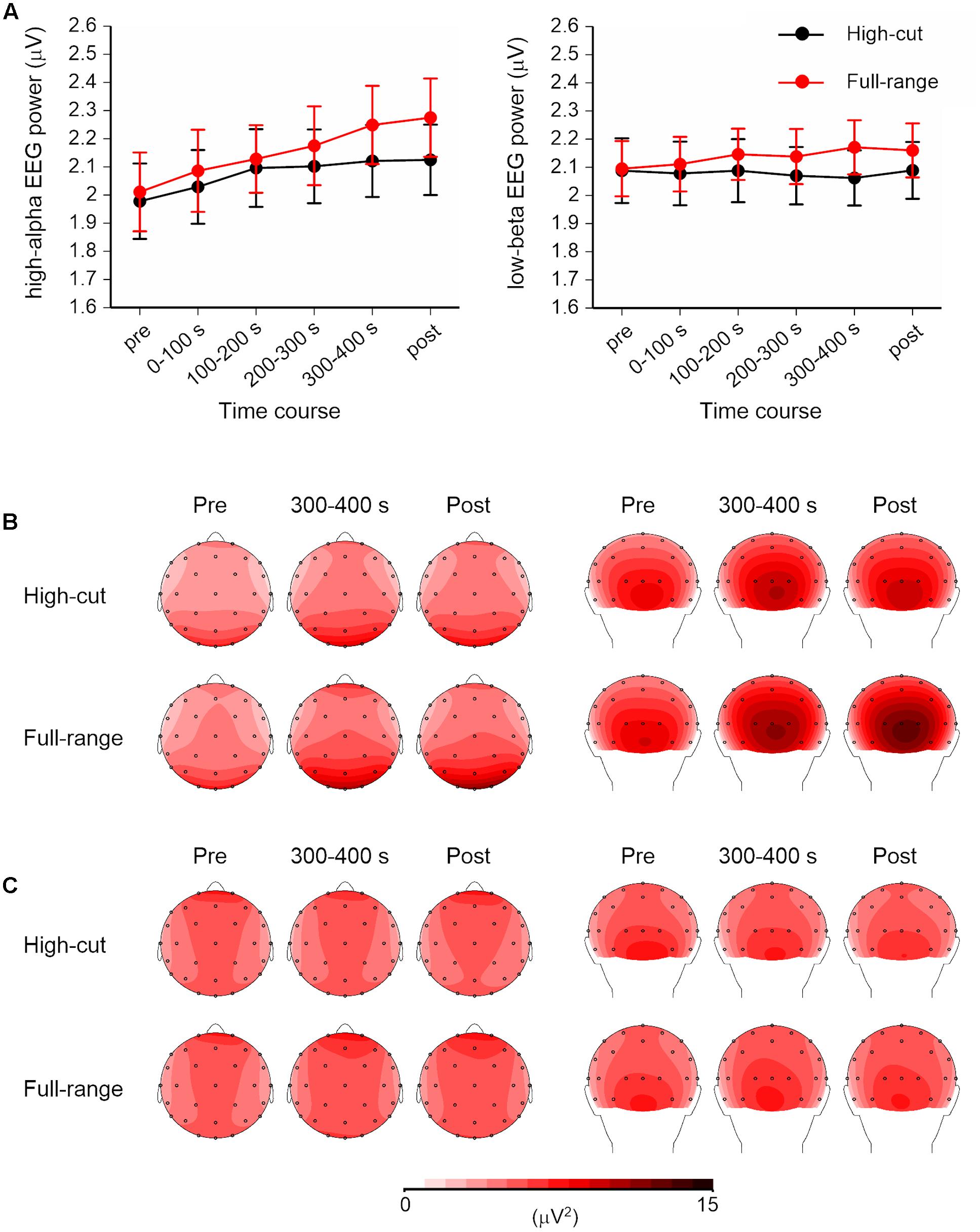

Figure 2 shows the time course and scalp topography of high-alpha EEG (10.5–13 Hz) and low-beta EEG (13–20 Hz) bands. For EEG measures, a Sound Type × Epoch × Anterior-Posterior × Hemisphere ANOVA was conducted for each frequency band. Significant effects of sound type were found for both bands. For other frequency bands, only the theta EEG band (4–8 Hz) power showed a significant Sound Type × Anterior-Posterior × Hemisphere interaction, F(1,21) = 5.37, p = 0.031, ηp² = 0.20. However, no significant simple main effects were found.

FIGURE 2. High-alpha (10.5–13 Hz) and low-beta (13–20 Hz) EEG powers for the full-range and high-cut conditions. (A) Time course of the square root of EEG powers over four scalp regions. Error bars show SEs. No music was played in the pre- and post-music epochs. (B,C) Scalp topography of the high-alpha and low-beta EEG powers in the pre-music, 300–400 s (i.e., last quarter of the listening period), and post-music epochs. Left: top view. Right: back view.

For the high-alpha EEG band, the Sound Type × Epoch × Hemisphere interaction was significant, F(5,17) = 7.06, p = 0.001, ηp² = 0.67. Separate ANOVAs for each epoch revealed a significant Sound Type × Hemisphere interaction at the 200–300-s epoch, F(1,21) = 12.63, p = 0.002, ηp² = 0.38, and a significant effect of sound type at the post-music period, F(1,21) = 6.99, p = 0.015, ηp² = 0.25. Post hoc tests revealed that high-alpha EEG power was greater for the full-range excerpt than for the high-cut excerpt and that the sound type effect was found for the left but not the right hemisphere at the 200–300-s epoch. No effects of sound type were obtained at the epochs before 200 s. The main effect of anterior-posterior was also significant, F(1,21) = 15.67, p = 0.001, ηp² = 0.43, showing that the high-alpha EEG was dominant over posterior scalp sites.
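The effect sizes reported next to each F statistic are numerically consistent with partial eta squared, which can be recovered from the F value and its degrees of freedom as F·df1 / (F·df1 + df2). The sketch below checks this relation against the values reported in the paragraph above; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its dfs."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# (F, df_effect, df_error, reported effect size) taken from the text above.
reported = [
    (7.06, 5, 17, 0.67),
    (12.63, 1, 21, 0.38),
    (6.99, 1, 21, 0.25),
    (15.67, 1, 21, 0.43),
]

for f_value, df1, df2, expected in reported:
    computed = partial_eta_squared(f_value, df1, df2)
    print(f"F({df1},{df2}) = {f_value}: computed {computed:.2f}, reported {expected}")
```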

For the low-beta EEG band, the Sound Type × Anterior-Posterior × Hemisphere interaction and the main effect of sound type were significant, F(1,21) = 4.49, p = 0.046, ηp² = 0.18; F(1,21) = 5.43, p = 0.030, ηp² = 0.21. Low-beta EEG power was greater in the full-range condition than in the high-cut condition. Separate ANOVAs for anterior-posterior and hemisphere also revealed significant effects of sound type, for posterior region: F(1,21) = 7.07, p = 0.015, ηp² = 0.25; for left hemisphere: F(1,21) = 5.26, p = 0.032, ηp² = 0.20; for right hemisphere: F(1,21) = 5.27, p = 0.032, ηp² = 0.20; except for anterior region: F(1,21) = 3.94, p = 0.060, ηp² = 0.16. Although there were no significant interaction effects including epoch, Figure 2 shows that the difference between the full-range and high-cut excerpts seems to be more prominent at later epochs. Two-tailed t-tests revealed significant differences between the two excerpts at the 200–300-s, 300–400-s, and post-music epochs, ts(21) > 2.37, ps < 0.027; ps > 0.114 at the epochs before 200 s.

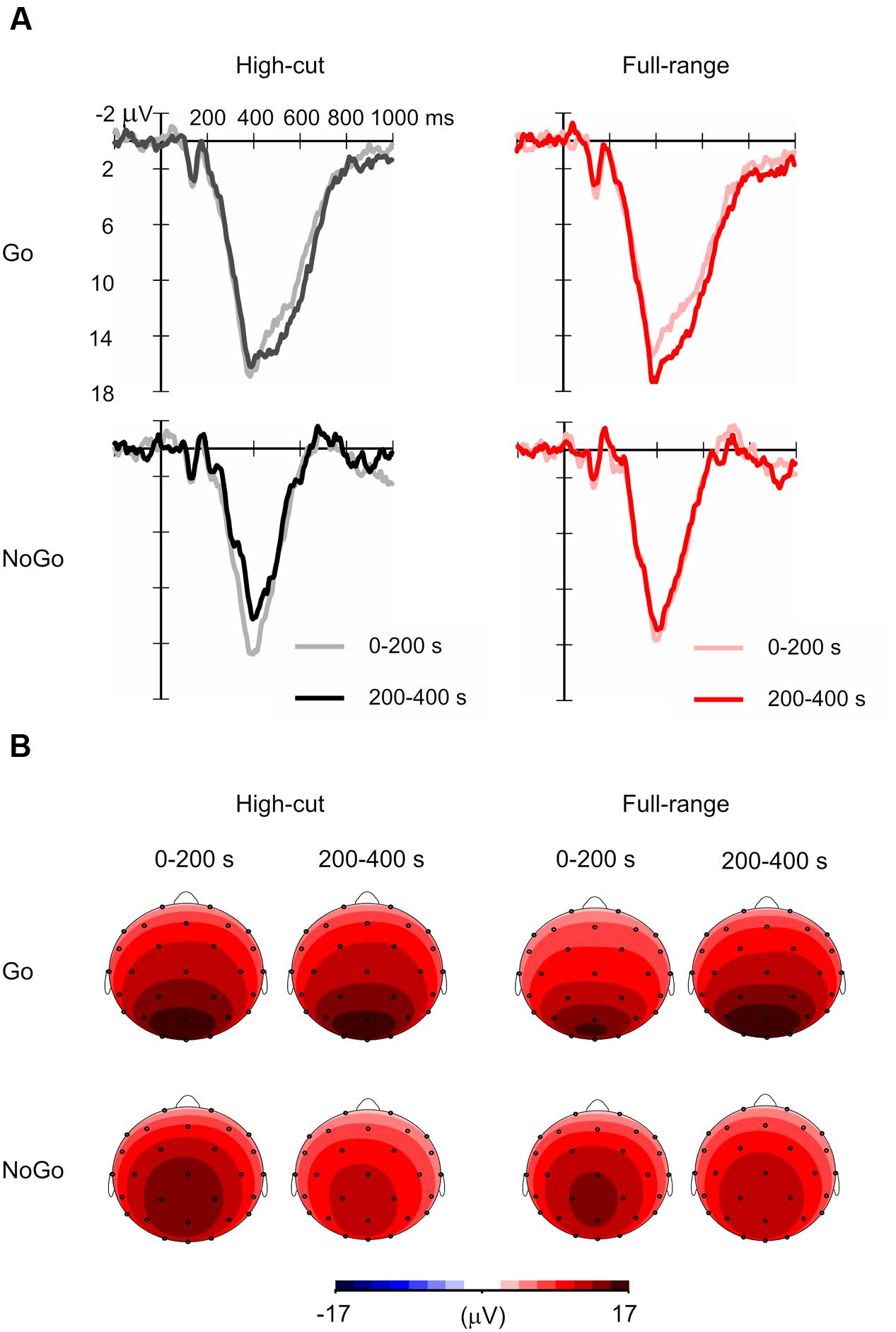

Figure 3 shows grand mean ERP waveforms and the scalp topography of the Go and NoGo P3 amplitudes. The mean number of averaged trials was 18.6 (range = 13-20). Although this is less than an optimal number of averages for P3 (Cohen and Polich, 1997), P3 peaks can be detected for all individual ERP waveforms. Table 1 shows the mean amplitudes and latencies of the P3 peaks.

FIGURE 3. Grand mean event-related potential (ERP) waveforms for Go and NoGo stimuli in the full-range and high-cut conditions. (A) Waveforms at Pz. (B) Scalp topography of P3 amplitudes in the 0–200 s (i.e., first half) and 200–400 s (i.e., second half) epochs of music listening. The mean amplitudes of 380–440 ms after stimulus onset in the grand mean ERP waveforms are shown.

For the P3 amplitude, a Sound Type × Epoch ANOVA was conducted for Go and NoGo stimulus conditions separately. A significant interaction was found for the Go condition, F(1,21) = 4.39, p = 0.049, ηp² = 0.17, but not for the NoGo condition, F(1,21) = 2.64, p = 0.119, ηp² = 0.11. Post hoc tests revealed that Go P3 amplitude increased from the 0–200 s to the 200–400 s epoch for the full-range excerpt, whereas Go P3 amplitude did not change for the high-cut excerpt. The main effect of epoch was significant for the NoGo condition, F(1,21) = 13.39, p = 0.001, ηp² = 0.39, showing that NoGo P3 amplitude decreased during the task for both musical excerpts.

Similar ANOVAs were conducted for latencies. No significant main or interaction effects of sound type were found. The main effect of epoch was significant for the Go stimulus condition, F(1,21) = 5.01, p = 0.036, ηp² = 0.19, showing that Go P3 latency increased through the task.

One of the reviewers asked about the effects of sound type on the NoGo N2 (Falkenstein et al., 1999). We conducted a Sound Type × Epoch ANOVA on the amplitude of the NoGo N2 (NoGo minus Go in the 200–300 ms period at Fz and Cz). No significant main or interaction effects were found.

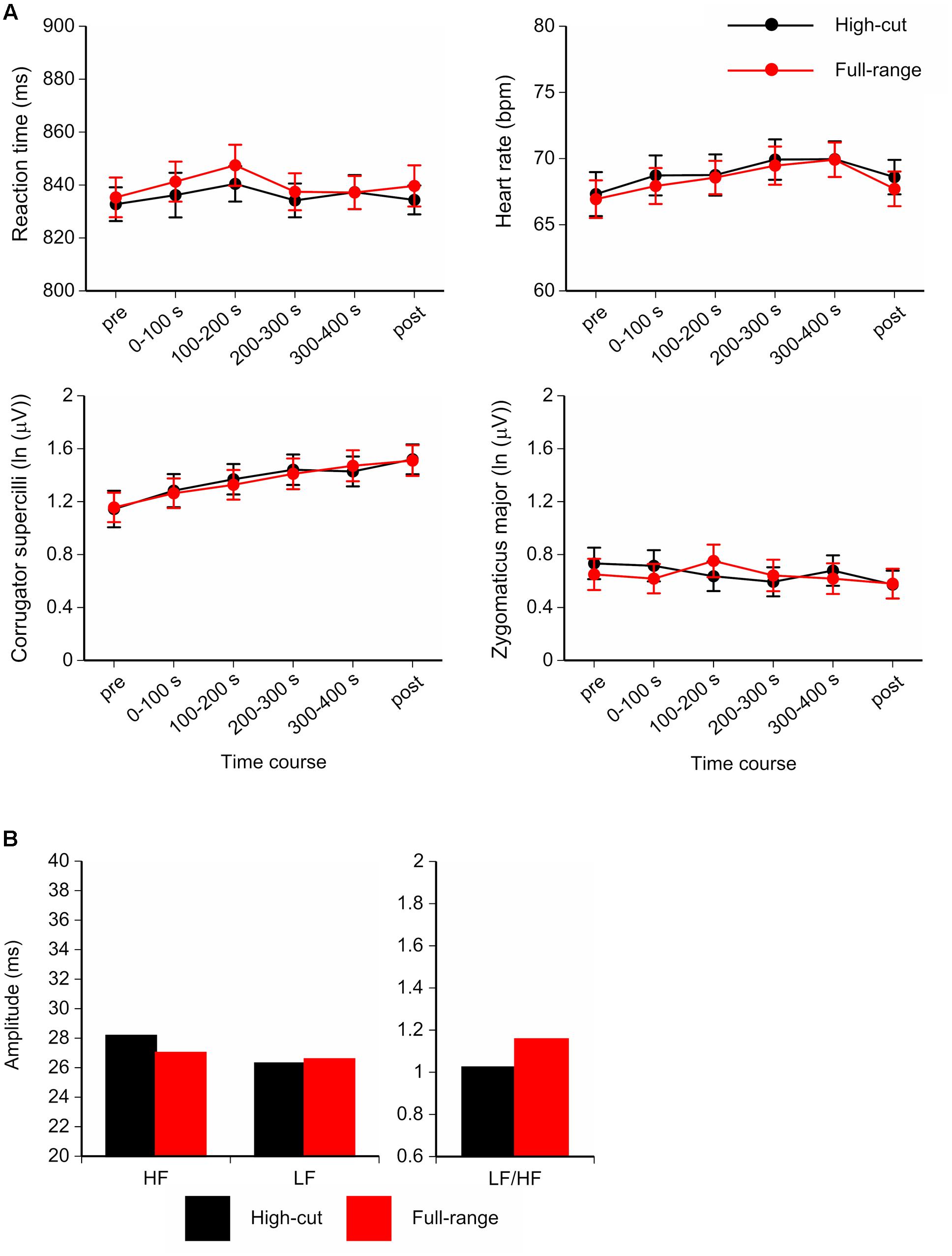

Participants performed the vigilance task with considerable accuracy (high-cut: M = 98.6%, 95.8–100%; full-range: M = 97.9%, 95.0–99.2%). Figure 4 shows the time course of mean Go reaction times, HR, and facial EMGs (corrugator supercilii, zygomaticus major), and the HRV components for the last 300-s epoch of the musical excerpts. For the corrugator supercilii, a Sound Type × Epoch ANOVA showed a significant main effect of epoch, F(5,17) = 5.69, p = 0.003, ηp² = 0.63. Corrugator activity increased over the course of the task. No significant main or interaction effects of sound type were found for RT or other physiological measures.

FIGURE 4. (A) Time course of the mean Go reaction times, Heart rate (HR), and facial electromyograms (EMGs; corrugator supercilii and zygomaticus major) for the full-range and high-cut conditions. (B) Amplitudes and ratio of heart rate variability (HRV) components for the last 300-s epoch of musical excerpts. Error bars show SEs.

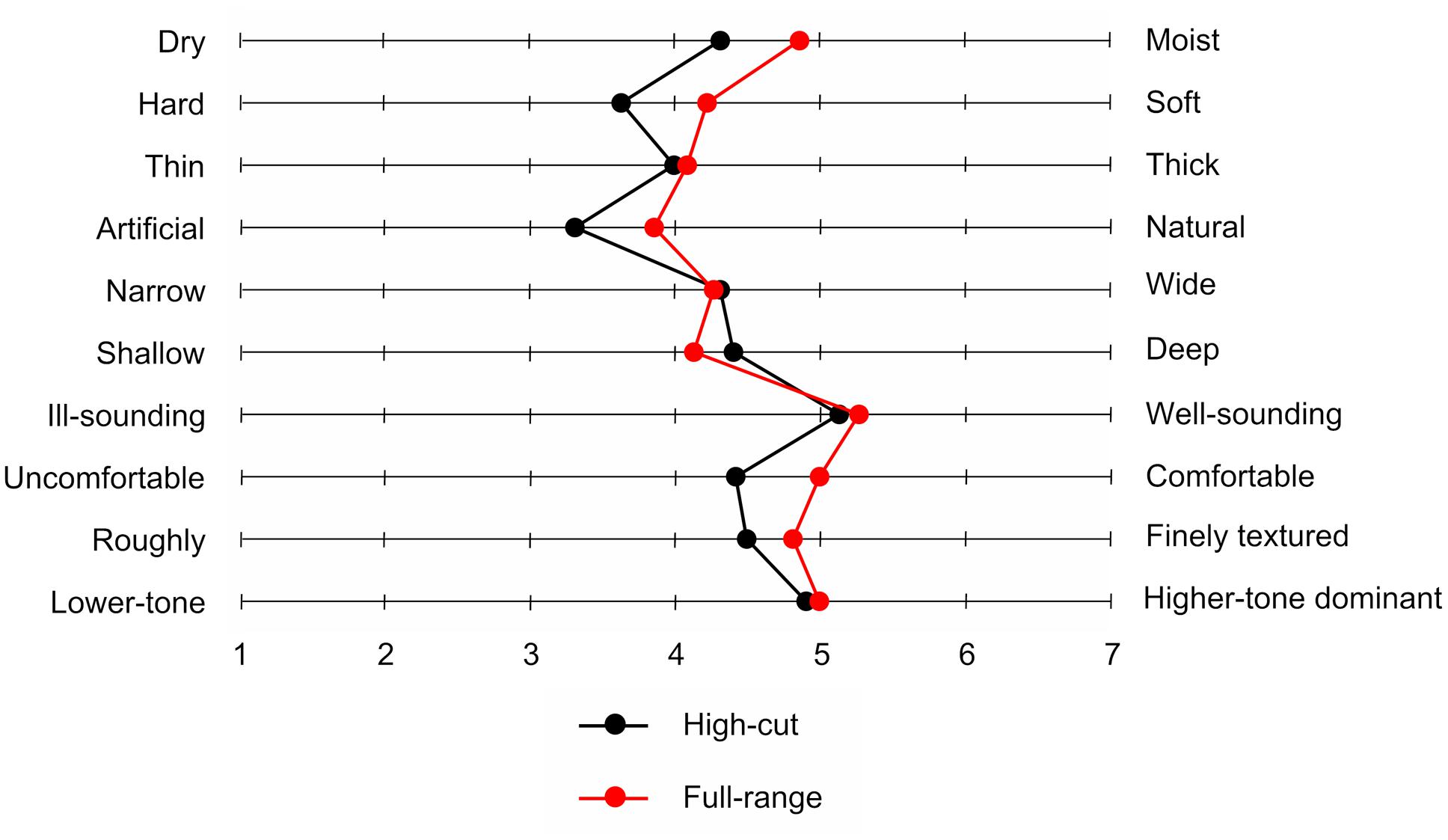

Table 2 shows mean scores for participants’ mood states. A significant difference between the two types of musical excerpt was found only for inactive pleasantness scores, t(21) = 3.13, p = 0.005. Participants provided higher inactive pleasantness scores under the full-range than under the high-cut excerpt. Figure 5 shows the mean sound quality ratings for the full-range and high-cut musical excerpts. No significant differences were found between the two types of audio source for any adjective pairs, ts(21) < 1.92, ps > 0.069. The correct rate of the forced choices was 41.0%, which did not exceed chance level (p = 0.523, binomial test).
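The forced-choice result can be checked with an exact binomial test against a chance level of 0.5. The count of correct choices is not stated in the text; the sketch below assumes 9 of 22 participants chose correctly (9/22 ≈ 40.9%), an inference from the reported 41.0% rate, and assumes a two-sided test. Under those assumptions the computation reproduces the reported p value. The function name is hypothetical.

```python
from math import comb


def binomial_two_sided_p(successes: int, trials: int) -> float:
    """Exact two-sided binomial p value against chance (p = 0.5).

    For p = 0.5 the distribution is symmetric, so the two-sided value is
    twice the smaller tail probability, capped at 1.
    """
    total = 2 ** trials
    lower_tail = sum(comb(trials, i) for i in range(0, successes + 1)) / total
    upper_tail = sum(comb(trials, i) for i in range(successes, trials + 1)) / total
    return min(1.0, 2.0 * min(lower_tail, upper_tail))


# Assumption: 9 correct choices out of 22 participants (inferred, not reported).
p_value = binomial_two_sided_p(9, 22)
print(round(p_value, 3))
```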

FIGURE 5. Mean sound quality ratings for two musical excerpts with or without inaudible high-frequency components.

High-resolution audio with inaudible high-frequency components is a closer replication of real sounds than similar and indistinguishable sounds in which these components are artificially cut off. It remains unclear what kind of advantages high-resolution audio might have for human beings. Previous research in which participants listened to high-resolution music under resting conditions has shown that alpha and low-beta EEG powers were larger for an excerpt with high-frequency components as compared with an excerpt without them (Oohashi et al., 2000, 2006; Yagi et al., 2003a; Fukushima et al., 2014; Kuribayashi et al., 2014; Ito et al., 2016). The present study asked participants to listen to two types of high-resolution audio of the same musical piece (with or without inaudible high-frequency components) while performing a vigilance task in the visual modality. Although the effect size is small, the overall results support the view that the effect of high-resolution audio with inaudible high-frequency components on brain activity reflects a relaxed attentional state without conscious awareness.

We found greater high-alpha (10.5–13 Hz) and low-beta (13–20 Hz) EEG powers for the excerpt with high-frequency components as compared with the excerpt without them. The effect appeared in the latter half of the listening period (200-400 s) and during the 100-s period after music presentation (post-music epoch). Furthermore, for full-range sounds compared with high-cut sounds, Go trial P3 amplitude increased, and subjective relaxation scores were greater. Because task performance did not change across musical excerpts, with no difference in self-reported arousal, the effects of high-resolution audio with inaudible high-frequency components on brain activities should not reflect a decrease of listeners’ arousal level. These findings show that listeners seem to experience a relaxed attentional state when listening to high-resolution audio with inaudible high-frequency components compared to similar sounds without these components.

It has been shown that listening to musical pieces increases EEG powers of theta, alpha, and beta bands (Pavlygina et al., 2004; Jäncke et al., 2015), and that the enhanced alpha-band power holds for approximately 100 s after listening (Sanyal et al., 2013). Therefore, high-resolution audio with inaudible high-frequency components would be advantageous compared to similar digital audio in which these components are removed, in terms of the enhanced brain activity. Kuribayashi and Nittono (2014) have localized the intracerebral sources of this alpha EEG effect using standardized low-resolution brain electromagnetic tomography (sLORETA). The analysis revealed that the difference between full-range and high-cut sounds appeared in the right inferior temporal cortex, whereas the main source of the alpha-band activity was located in the parietal-occipital region. The finding that the alpha-band activity difference was obtained in specific regions rather than across the whole scalp suggests that this increase may reflect an activity related to task performance rather than a global arousal effect (Barry et al., 2007).

The present study shows that not only high-alpha and low-beta EEG powers but also P3 amplitude increased in the last half of the listening period (200-400 s). Alpha-band EEG activity and P3 amplitude have been shown to be positively correlated, in such a way that prestimulus alpha directly modulates positive potential amplitude in an auditory equiprobable Go/NoGo task (Barry et al., 2000; De Blasio et al., 2013). P3 amplitude is larger when greater attentional resources are allocated to the eliciting stimulus (Kok, 1997, 2001; Polich, 2007). Alpha power is increased in tasks requiring a relaxed attentional state such as mindfulness and imagination of music (Cooper et al., 2006; Schaefer et al., 2011; Lomas et al., 2015). Increased alpha power is thought to be a signifier of enhanced processing, with attention focused on internally generated stimuli (Lomas et al., 2015). Beta power has been shown to increase when arousal and vigilance level increase (e.g., Sebastiani et al., 2003; Aftanas et al., 2006; Gola et al., 2012; Kamiñski et al., 2012). Taken together, the EEG and ERP results support the idea that listening to high-resolution audio with inaudible high-frequency components enhances the cortical activity related to the attention allocated to task-relevant stimuli. Although the effect was not observed in behavior, the gap between behavioral and EEG and ERP results is probably due to the ceiling effect of the vigilance task performance. Such a gap is often observed in other studies. For example, Okamoto and Nakagawa (2016) similarly reported that event-related synchronization in the alpha band during working memory task was increased 20–30 min after the onset of the exposure to blue (short-wavelength) light, as compared with green (middle-wavelength) light, while task performance was high irrespective of light colors.

As a mechanism underlying the effect of inaudible high-frequency sound components, we speculate that the brain may subconsciously recognize high-resolution audio that retains high-frequency components as being more natural, as compared with similar sounds in which such components are artificially removed. A link between alpha power and ratings of ‘naturalness’ of music has been reported. When listening to the same musical piece with different tempos, alpha-band EEG power increased for excerpts that were rated to be more natural, the ratings of which were not directly related to subjective arousal (Ma et al., 2012; Tian et al., 2013). As high-resolution audio replicates real sound waves more closely, it may sound more natural (at least on a subconscious level) and facilitate music-related psychophysiological responses.

Our findings have some limitations. First, because we used only a visual vigilance task, it is unclear whether high-resolution audio can improve performance on tasks that involve working memory and long-term memory. Because a vigilance task is relatively easy, our participants were able to sustain high performance. Other research using an n-back task requiring memory has shown that high-resolution audio also enhances task performance (Suzuki, 2013). Future research will benefit from using other tasks requiring various cognitive domains and processes.

Second, the current data cannot reveal the mechanism by which inaudible high-frequency components affect EEG activities. It is noteworthy that presenting high-frequency components above 20 kHz alone did not produce any change in EEG activities (Oohashi et al., 2000). Therefore, the combination of inaudible high-frequency components and audible low-frequency components should be a key factor in this phenomenon. A possible clue was obtained in a recent study by Kuribayashi and Nittono (2015). Recording sound spectra of various musical instruments, they found that high-frequency components above 20 kHz appear abundantly during the rising period of a sound wave (i.e., from silence to the maximal intensity, usually less than 0.1 s), but occur much less after that. Artificially cutting off the high-frequency components may cause a subtle distortion in this short period. It may take some time for these small, short-lasting differences to accumulate until they produce discernible psychophysiological effects. This explanation is consistent with the fact that the effect of high-frequency components on EEG activities appears only after a 100–200-s exposure to the music (Oohashi et al., 2000, 2006; Yagi et al., 2003a; Fukushima et al., 2014; Kuribayashi et al., 2014; Ito et al., 2016).

Third, it remains unclear why there was a time lag before the effects of high-resolution audio on brain activity appeared, and why this effect was maintained for 100 s after the music stopped. A possible reason is that, as mentioned above, sufficiently long exposure is needed for the effects of inaudible high-frequency components. Another possibility is that listening to music has a psychophysiological impact through the engagement of various neurochemical systems (Chanda and Levitin, 2013). Humoral effects are characterized by slow and durable responses, which might underlie the lagged effect of high-resolution audio with inaudible high-frequency components. Although the present study did not reveal this effect on autonomic nervous system (HR and HRV) indices during music listening, participants reported greater relaxation scores after listening to high-resolution music with inaudible high-frequency components. It is a task for future research to determine the time course of the effect more precisely.

Fourth, the present study did not manipulate the sampling frequency and the bit depth of digital audio. High-resolution audio is characterized not only by the capability of reproducing inaudible high-frequency components but also by more accurate sampling and quantization (i.e., a higher sampling frequency and a greater bit depth) as compared with low-resolution audio. If the naturalness derived from a closer replication of real sounds affects EEG activities, the sampling frequency and the bit depth would also do so, regardless of whether the real sounds feature high-frequency components. This idea would be worth examining in future research.
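As a rough numerical illustration of what a higher sampling frequency and a greater bit depth imply, the following minimal sketch compares the 192 kHz, 24-bit format used for the excerpt with the standard compact disc format (44.1 kHz, 16-bit). The compact disc figures are an assumption added here for comparison, and the function names are hypothetical. The dynamic range is the ideal theoretical value for linear quantization, not a measured property of any playback system.

```python
import math


def nyquist_hz(sampling_rate_hz: float) -> float:
    """Highest frequency representable at a given sampling rate (half the rate)."""
    return sampling_rate_hz / 2.0


def quantization_levels(bit_depth: int) -> int:
    """Number of discrete amplitude steps available with linear quantization."""
    return 2 ** bit_depth


def ideal_dynamic_range_db(bit_depth: int) -> float:
    """Theoretical ratio of full scale to one quantization step, in decibels."""
    return 20.0 * math.log10(2 ** bit_depth)


# Assumed formats for comparison: (sampling rate in Hz, bit depth).
FORMATS = {
    "compact disc (assumed 44.1 kHz, 16-bit)": (44_100, 16),
    "high-resolution excerpt (192 kHz, 24-bit)": (192_000, 24),
}

if __name__ == "__main__":
    for name, (rate_hz, bits) in FORMATS.items():
        print(
            f"{name}: Nyquist limit {nyquist_hz(rate_hz) / 1000:.2f} kHz, "
            f"{quantization_levels(bits)} levels, "
            f"ideal dynamic range {ideal_dynamic_range_db(bits):.1f} dB"
        )
```

Under these assumptions, only the higher sampling rate leaves room for components well above 20 kHz, whereas the bit depth affects amplitude resolution independently of frequency content, which is why the two factors could be manipulated separately in future work.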

In summary, high-resolution audio with inaudible high-frequency components has some advantages over similar and indistinguishable sounds in which these components are artificially cut off, such that the former type of digital audio induces a relaxed attentional state. Even without conscious awareness, a closer replication of real sounds in terms of frequency structure appears to bring out greater potential effects of music on human psychophysiological state and behavior.

This study was carried out in accordance with the recommendations of The Research Ethics Committee of the Graduate School of Integrated Arts and Sciences in Hiroshima University. All participants gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by The Research Ethics Committee of the Graduate School of Integrated Arts and Sciences in Hiroshima University.

RK and HN planned the experiment, interpreted the data, and wrote the paper. RK collected and analyzed the data.

This work was supported by JSPS KAKENHI Grant Number 15J06118.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors thank Ryuta Yamamoto, Katsuyuki Niyada, Kazushi Uemura, and Fujio Iwaki for their support as research coordinators. Hiroshima Innovation Center for Biomedical Engineering and Advanced Medicine offered the sound equipment.

Aftanas, L. I., Reva, N. V., Savotina, L. N., and Makhnev, V. P. (2006). Neurophysiological correlates of induced discrete emotions in humans: an individually oriented analysis. Neurosci. Behav. Physiol. 36, 119–130. doi: 10.1007/s11055-005-0170-6

Andreasen, N. C., Arndt, S., Swayze, V., Cizadlo, T., Flaum, M., O’Leary, D., et al. (1994). Thalamic abnormalities in schizophrenia visualized through magnetic resonance image averaging. Science 266, 294–298. doi: 10.1126/science.7939669

Barry, R. J., Clarke, A. R., Johnstone, S. J., Magee, C. A., and Rushby, J. A. (2007). EEG differences between eyes-closed and eyes-open resting conditions. Clin. Neurophysiol. 118, 2765–2773. doi: 10.1016/j.clinph.2007.07.028

Barry, R. J., Kirkaikul, S., and Hodder, D. (2000). EEG alpha activity and the ERP to target stimuli in an auditory oddball paradigm. Int. J. Psychophysiol. 39, 39–50. doi: 10.1016/S0167-8760(00)00114-8

Basar, E. (1999). Brain Function and Oscillations: Integrative Brain Function. Neurophysiology and Cognitive Processes, Vol. II. Berlin: Springer. doi: 10.1007/978-3-642-59893-7

Berntson, G. G., Bigger, J. T., Eckberg, D. L., Grossman, P., Kaufmann, P. G., Malik, M., et al. (1997). Heart rate variability: origins, methods, and interpretive caveats. Psychophysiology 34, 623–648. doi: 10.1111/j.1469-8986.1997.tb02140.x

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Brown, S., Martinez, M. J., and Parsons, L. M. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport 15, 2033–2037. doi: 10.1097/00001756-200409150-00008

Chanda, M. L., and Levitin, D. J. (2013). The neurochemistry of music. Trends Cogn. Sci. 17, 179–193. doi: 10.1016/j.tics.2013.02.007

Cohen, J., and Polich, J. (1997). On the number of trials needed for P300. Int. J. Psychophysiol. 25, 249–255. doi: 10.1016/S0167-8760(96)00743-X

Cooper, N. R., Burgess, A. P., Croft, R. J., and Gruzelier, J. H. (2006). Investigating evoked and induced electroencephalogram activity in task-related alpha power increases during an internally directed attention task. Neuroreport 17, 205–208. doi: 10.1097/01.wnr.0000198433.29389.54

Crone, N. E., Miglioretti, D. L., Gordon, B., Sieracki, J. M., Wilson, M. T., Uematsu, S., et al. (1998). Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. I. Alpha and beta event-related desynchronization. Brain 121, 2271–2299. doi: 10.1093/brain/121.12.2271

Davies, D. R., and Parasuraman, R. (1977). “Cortical evoked potentials and vigilance: a decision theory analysis,” in NATO Conference Series. Vigilance, Vol. 3, ed. R. Mackie (New York, NY: Plenum Press), 285–306. doi: 10.1007/978-1-4684-2529-1

De Blasio, F. M., Barry, R. J., and Steiner, G. Z. (2013). Prestimulus EEG amplitude determinants of ERP responses in a habituation paradigm. Int. J. Psychophysiol. 89, 444–450. doi: 10.1016/j.ijpsycho.2013.05.015

Falkenstein, M., Hoormann, J., and Hohnsbein, J. (1999). ERP components in Go/Nogo tasks and their relation to inhibition. Acta Psychol. (Amst.) 101, 267–291. doi: 10.1016/S0001-6918(99)00008-6

Foxe, J. J., and Snyder, A. C. (2011). The role of alpha-band brain oscillations as a sensory suppression mechanism during selective attention. Front. Psychol. 2:154. doi: 10.3389/fpsyg.2011.00154

Fridlund, A. J., and Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology 23, 567–589. doi: 10.1111/j.1469-8986.1986.tb00676.x

Fruhstorfer, H., and Bergström, R. M. (1969). Human vigilance and auditory evoked responses. Electroencephalogr. Clin. Neurophysiol. 27, 346–355. doi: 10.1016/0013-4694(69)91443-6

Fujioka, T., Kakigi, R., Gunji, A., and Takeshima, Y. (2002). The auditory evoked magnetic fields to very high frequency tones. Neuroscience 112, 367–381. doi: 10.1016/S0306-4522(02)00086-6

Fukushima, A., Yagi, R., Kawai, N., Honda, M., Nishina, E., and Oohashi, T. (2014). Frequencies of inaudible high-frequency sounds differentially affect brain activity: positive and negative hypersonic effects. PLoS ONE 9:e95464. doi: 10.1371/journal.pone.0095464

Gola, M., Kamiński, J., Brzezicka, A., and Wróbel, A. (2012). Beta band oscillations as a correlate of alertness — Changes in aging. Int. J. Psychophysiol. 85, 62–67. doi: 10.1016/j.ijpsycho.2011.09.00

Hari, R., and Salmelin, R. (1997). Human cortical oscillations: a neuromagnetic view through the skull. Trends. Neurosci. 20, 44–49. doi: 10.1016/S0166-2236(96)10065-5

Ito, S., Harada, T., Miyaguchi, M., Ishizaki, F., Chikamura, C., Kodama, Y., et al. (2016). Effect of high-resolution audio music box sound on EEG. Int. Med. J. 23, 1–3.

Jäncke, L., Kühnis, J., Rogenmoser, L., and Elmer, S. (2015). Time course of EEG oscillations during repeated listening of a well-known aria. Front. Hum. Neurosci. 9:401. doi: 10.3389/fnhum.2015.00401

Jeffries, K. J., Fritz, J. B., and Braun, A. R. (2003). Words in melody: an H2(15)O PET study of brain activation during singing and speaking. Neuroreport 14, 749–754. doi: 10.1097/01.wnr.0000066198.94941.a4

Jensen, O., and Mazaheri, A. (2010). Shaping functional architecture by oscillatory alpha activity: gating by inhibition. Front. Hum. Neurosci. 4:186. doi: 10.3389/fnhum.2010.00186

Kamiński, J., Brzezicka, A., Gola, M., and Wróbel, A. (2012). Beta band oscillations engagement in human alertness process. Int. J. Psychophysiol. 85, 125–128. doi: 10.1016/j.ijpsycho.2011.11.006

Klimesch, W. (1999). EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Res. Rev. 29, 169–195. doi: 10.1016/S0165-0173(98)00056-3

Klimesch, W. (2012). Alpha-band oscillations, attention, and controlled access to stored information. Trends Cogn. Sci. 16, 606–617. doi: 10.1016/j.tics.2012.10.007

Klimesch, W., Schack, B., and Sauseng, P. (2005). The functional significance of theta and upper alpha oscillations. Exp. Psychol. 52, 99–108. doi: 10.1027/1618-3169.52.2.99

Kok, A. (1997). Event-related-potential (ERP) reflections of mental resources: a review and synthesis. Biol. Psychol. 45, 19–56. doi: 10.1016/S0301-0511(96)05221-0

Kok, A. (2001). On the utility of P3 amplitude as a measure of processing capacity. Psychophysiology 38, 557–577. doi: 10.1017/S0048577201990559

Kuribayashi, R., and Nittono, H. (2014). Source localization of brain electrical activity while listening to high-resolution digital sounds with inaudible high-frequency components (Abstract). Int. J. Psychophysiol. 94:192. doi: 10.1016/j.ijpsycho.2014.08.796

Kuribayashi, R., and Nittono, H. (2015). Music instruments that produce sounds with inaudible high-frequency components (in Japanese). Stud. Hum. Sci. 10, 35–41. doi: 10.15027/39146

Kuribayashi, R., Yamamoto, R., and Nittono, H. (2014). High-resolution music with inaudible high-frequency components produces a lagged effect on human electroencephalographic activities. Neuroreport 25, 651–655. doi: 10.1097/wnr.0000000000000151

Larsen, J. T., Norris, C. J., and Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology 40, 776–785. doi: 10.1111/1469-8986.00078

LeDoux, J. E. (1993). Emotional memory systems in the brain. Behav. Brain Res. 58, 69–79. doi: 10.1016/0166-4328(93)90091-4

Lomas, T., Ivtzan, I., and Fu, C. H. (2015). A systematic review of the neurophysiology of mindfulness on EEG oscillations. Neurosci. Biobehav. Rev. 57, 401–410. doi: 10.1016/j.neubiorev.2015.09.018

Lupien, S. J., McEwen, B. S., Gunnar, M. R., and Heim, C. (2009). Effects of stress throughout the lifespan on the brain, behaviour and cognition. Nat. Rev. Neurosci. 10, 434–445. doi: 10.1038/nrn2639

Ma, W., Lai, Y., Yuan, Y., Wu, D., and Yao, D. (2012). Electroencephalogram variations in the α band during tempo-specific perception. Neuroreport 23, 125–128. doi: 10.1097/WNR.0b013e32834e7eac

Malliani, A., and Montano, N. (2002). Heart rate variability as a clinical tool. Ital. Heart J. 3, 439–445.

Malliani, A. I., Pagani, M., Lombardi, F., and Cerutti, S. (1991). Cardiovascular neural regulation explored in the frequency domain. Circulation 84, 482–489. doi: 10.1161/01.CIR.84.2.482

Muraoka, T., Iwahara, M., and Yamada, Y. (1981). Examination of audio-bandwidth requirements for optimum sound signal transmission. J. Audio Eng. Soc. 29, 2–9.

Nishiguchi, T., Hamasaki, K., Ono, K., Iwaki, M., and Ando, A. (2009). Perceptual discrimination of very high frequency components in wide frequency range musical sound. Appl. Acoust. 70, 921–934. doi: 10.1016/j.apacoust.2009.01.002

Okamoto, Y., and Nakagawa, S. (2016). Effects of light wavelength on MEG ERD/ERS during a working memory task. Int. J. Psychophysiol. 104, 10–16. doi: 10.1016/j.ijpsycho.2016.03.008

Oohashi, T., Kawai, N., Nishina, E., Honda, M., Yagi, R., Nakamura, S., et al. (2006). The role of biological system other than auditory air-conduction in the emergence of the hypersonic effect. Brain Res. 107, 339–347. doi: 10.1016/j.brainres.2005.12.096

Oohashi, T., Nishina, E., Honda, M., Yonekura, Y., Fuwamoto, Y., Kawai, N., et al. (2000). Inaudible high-frequency sounds affect brain activity: hypersonic effect. J. Neurophysiol. 83, 3548–3558.

Parasuraman, R. (1983). “Vigilance, arousal, and the brain,” in Physiological Correlates of Human Behavior, eds A. Gale and J. A. Edwards (London: Academic Press), 35–55.

Pavlygina, R. A., Sakharov, D. S., and Davydov, V. I. (2004). Spectral analysis of the human EEG during listening to musical compositions. Hum. Physiol. 30, 54–60. doi: 10.1023/B:HUMP.0000013765.64276.e6

Pfurtscheller, G., and Lopes da Silva, F. H. (1999). Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857. doi: 10.1016/S1388-2457(99)00141-8

Pfurtscheller, G., Woertz, M., Supp, G., and Lopes da Silva, F. H. (2003). Early onset of post-movement beta electroencephalogram synchronization in the supplementary motor area during self-paced finger movement in man. Neurosci. Lett. 339, 111–114. doi: 10.1016/S0304-3940(02)01479-9

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Russell, J. A., Weiss, A., and Mendelsohn, G. A. (1989). Affect grid – a single-item scale of pleasure and arousal. J. Pers. Soc. Psychol. 57, 493–502. doi: 10.1037/0022-3514.57.3.493

Sanyal, S., Banerjee, A., Guhathakurta, T., Sengupta, R., Ghosh, D., and Ghose, P. (2013). “EEG study on the neural patterns of brain with music stimuli: an evidence of Hysteresis?,” in Proceedings of the International Seminar on ‘Creating and Teaching Music Patterns’, (Kolkata: Rabindra Bharati University), 51–61.

Schaefer, R. S., Vlek, R. J., and Desain, P. (2011). Music perception and imagery in EEG: alpha band effects of task and stimulus. Int. J. Psychophysiol. 82, 254–259. doi: 10.1016/j.ijpsycho.2011.09.007

Sebastiani, L., Simoni, A., Gemignani, A., Ghelarducci, B., and Santarcangelo, E. L. (2003). Autonomic and EEG correlates of emotional imagery in subjects with different hypnotic susceptibility. Brain Res. Bull. 60, 151–160. doi: 10.1016/S0361-9230(03)00025-X

Suzuki, K. (2013). Hypersonic effect and performance of recognition tests (in Japanese). Kagaku 83, 343–345.

Tarvainen, M. P., Niskanen, J.-P., Lipponen, J. A., Ranta-Aho, P. O., and Karjalainen, P. A. (2014). Kubios HRV–heart rate variability analysis software. Comput. Methods Programs Biomed. 113, 210–220. doi: 10.1016/j.cmpb.2013.07.024

Terasaki, M., Kishimoto, Y., and Koga, A. (1992). Construction of a multiple mood scale (In Japanese). Shinrigaku Kenkyu 62, 350–356. doi: 10.4992/jjpsy.62.350

Tian, Y., Ma, W., Tian, C., Xu, P., and Yao, D. (2013). Brain oscillations and electroencephalography scalp networks during tempo perception. Neurosci. Bull. 29, 731–736. doi: 10.1007/s12264-013-1352-9

Vasey, M. W., and Thayer, J. F. (1987). The continuing problem of false positives in repeated measures ANOVA in psychophysiology: a multivariate solution. Psychophysiology 24, 479–486. doi: 10.1111/j.1469-8986.1987.tb00324.x

Vogt, B. A., and Gabriel, M. (1993). Neurobiology of Cingulate Cortex and Limbic Thalamus. A Comprehensive Handbook. Boston, MA: Birkhauser.

Ward, L. M. (2003). Synchronous neural oscillations and cognitive processes. Trends Cogn. Sci. 7, 553–559. doi: 10.1016/j.tics.2003.10.012

Weisz, N., Hartmann, T., Muller, N., Lorenz, I., and Obleser, J. (2011). Alpha rhythms in audition: cognitive and clinical perspectives. Front. Psychol. 2:73. doi: 10.3389/fpsyg.2011.00073

Yagi, R., Nishina, E., Honda, M., and Oohashi, T. (2003a). Modulatory effect of inaudible high-frequency sounds on human acoustic perception. Neurosci. Lett. 351, 191–195. doi: 10.1016/j.neulet.2003.07.020

Yagi, R., Nishina, E., and Oohashi, T. (2003b). A method for behavioral evaluation of the “hypersonic effect”. Acoust. Sci. Technol. 24, 197–200. doi: 10.1250/ast.24.197

Keywords: high-resolution audio, electroencephalogram, alpha power, event-related potential, vigilance task, attention, conscious awareness, hypersonic effect

Citation: Kuribayashi R and Nittono H (2017) High-Resolution Audio with Inaudible High-Frequency Components Induces a Relaxed Attentional State without Conscious Awareness. Front. Psychol. 8:93. doi: 10.3389/fpsyg.2017.00093

Received: 01 October 2016; Accepted: 13 January 2017;

Published: 01 February 2017.

Edited by: Mark Reybrouck, KU Leuven, Belgium

Reviewed by: Lutz Jäncke, University of Zurich, Switzerland

Copyright © 2017 Kuribayashi and Nittono. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hiroshi Nittono

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
