- Department of Psychology, Faculty of Psychology, Otemon Gakuin University, Ibaraki, Japan

Cosmetic makeup significantly influences facial perception. Because faces consist of similar physical structures, cosmetic makeup is typically used to highlight individual features, particularly the eyes (i.e., eye shadow) and mouth (i.e., lipstick). Although event-related potentials (ERPs) have been used to study various aspects of facial processing, the influence of cosmetics on specific ERP components remains unclear. The present study investigated the relationship between the application of cosmetic makeup and the amplitudes of the P1 and N170 ERP components during a facial perception task. P1 and N170 amplitudes were compared across three makeup conditions: Eye Shadow, Lipstick, and No Makeup. Electroencephalography was recorded from 17 participants who viewed face stimuli under each of these three conditions. The Lipstick condition elicited a significantly greater N170 amplitude than the No Makeup condition, whereas P1 amplitude was unaffected by condition. These findings suggest that the application of cosmetic makeup alters face-sensitive processing but does not measurably influence the processing of low-level visual features. Collectively, these results support the notion that makeup induces subtle alterations in the processing of facial stimuli, with a particular effect on the processing of specific facial components (i.e., the mouth), as reflected by changes in N170 amplitude.

Introduction

Women in a number of societies throughout the world have traditionally used cosmetic makeup to modify the visual perception of their facial beauty (Jones and Kramer, 2015), a process that elicits enhanced ratings of physical attractiveness from both men and women (Graham and Jouhar, 1981; Ueda and Koyama, 2011). Such results indicate that cosmetic makeup significantly influences the visual perception of facial stimuli.

While faces consist of similar physical structures, cosmetic makeup is typically used to highlight or emphasize individual features (e.g., the eyes, nose, and mouth). Indeed, research has indicated that such enhancements are particularly relevant for the eyes (i.e., eye shadow) and the mouth (i.e., lipstick) (Graham and Jouhar, 1981; Ueda and Koyama, 2011; Jones and Kramer, 2015). Application of cosmetics to female faces was found to increase facial contrast (Russell, 2009). According to Russell (2003), the “consistent luminance difference between the darker regions of the eyes and mouth and the lighter regions of the skin that surround them forms a pattern unique to faces.” Russell et al. (2016) also reported that female faces were rated as healthier and more attractive when this luminance difference was increased than when it was decreased, whereas the opposite was observed for male faces (Russell, 2003). In addition, previous studies have indicated that increasing facial contrast via the use of cosmetics plays a role in age perception, and that female faces with greater facial contrast appear younger (Porcheron et al., 2013; Jones et al., 2015). Furthermore, female faces to which cosmetics have been applied are considered more feminine and attractive than the same faces without cosmetics (Russell, 2009; Stephen and McKeegan, 2010; Jones et al., 2015). Because increased luminance contrast enhances femininity and attractiveness in female faces, but reduces masculinity and attractiveness in male faces (Russell, 2003, 2009; Stephen and McKeegan, 2010), only female faces were used in the present study.

Changes in facial perception can be detected by recording event-related brain potentials (ERPs), in which face-sensitive P1 and N170 components have been identified. Because the P1 and N170 components are typically regarded as markers of face processing, they are useful for examining the effect of cosmetics on face perception.

P1, an early positive component of the ERP, typically peaks approximately 100 ms after the presentation of facial stimuli. Reported to reflect the processing of low-level visual features such as contrast (Tarkiainen et al., 2002; Rossion and Caharel, 2011), P1 amplitude has also been linked to face-specific visual processing (Thierry et al., 2007; Susac et al., 2009; Dering et al., 2011; Luo et al., 2013). Previous studies indicate that P1 features a medial (O1, O2) or a lateral-occipital scalp distribution, or both (Eimer, 1998, 2000a; Liu et al., 2002; Goffaux et al., 2003; Itier and Taylor, 2004a,b; Herrmann et al., 2005; Okazaki et al., 2008; Sadeh et al., 2010; Luo et al., 2013).

Comparatively, N170 is a negative component evoked at the onset of facial perception that is characterized by a posterior-temporal scalp distribution (P7, PO7, PO8, P8) (Bentin et al., 1996; Rossion and Jacques, 2008; Chen et al., 2009; Peng et al., 2012; Ran et al., 2014) and peaks approximately 170 ms after the presentation of facial stimuli. N170 amplitude is significantly greater in response to human faces than other visual images, including cars, hands, houses, furniture, and scrambled faces (Bentin et al., 1996; George et al., 1996; Eimer, 1998, 2000b; Eimer and McCarthy, 1999; Jemel et al., 1999; Bentin and Deouell, 2000). The neural generators of N170 are reported to lie adjacent to the fusiform area, a region previously implicated in facial processing (Bentin et al., 1996). This is consistent with previous functional magnetic resonance imaging (fMRI), magnetoencephalography (MEG), and Brain Electrical Source Analysis (BESA) studies (Puce et al., 1995; Watanabe et al., 1999a,b; Sadeh et al., 2010; Luo et al., 2013).

While the P1 component of the ERP appears to reflect a response to lower-level visual features, the N170 component appears to be driven by face perception per se (Rossion and Caharel, 2011). In addition, Schweinberger (2011) suggested that the P1 component reflects the pictorial encoding of faces, while the N170 component reflects their structural encoding. Pictorial encoding is defined as the early top–down attentional processing of faces, while structural encoding precedes the processes involved in face identification. N170 is considered to be related to both domain-specific and domain-general processing of facial information (Eimer, 2011). However, whether P1 or N170 amplitude can detect alterations in facial perception induced by the application of cosmetics remains unclear. To clarify the influence of cosmetic makeup on the neural representation of facial stimuli, the present study used an ERP adaptation paradigm, in which each target stimulus is preceded by an adapting stimulus (e.g., a face presented in a different format) (Kovács et al., 2006; Eimer et al., 2010; Zimmer and Kovács, 2011; Caharel et al., 2015). Because previous studies of cosmetic makeup have compared faces with and without makeup (Graham and Jouhar, 1981; Jones and Kramer, 2015), the present study adopted a similar comparison.

The current literature indicates that the eyes play a central role in facial perception and representation. In particular, an MEG study demonstrated that participants require significantly longer to perceive eyes presented in isolation than eyes presented as part of a face (Watanabe et al., 1999b). Consistent with this, eye-tracking studies demonstrate that participants tend to fixate close to or directly on the eyes during facial perception (Janik et al., 1978; Barton et al., 2006; Arizpe et al., 2012), and ERP studies show that N170 amplitude is significantly greater in response to eyes presented in isolation than to the whole face, nose, or mouth (Bentin et al., 1996; Bentin and Deouell, 2000; Itier et al., 2006; Itier et al., 2007; Nemrodov and Itier, 2011). However, conflicting reports exist. While several studies have indicated that eyeless faces elicit N170 amplitudes similar to those elicited by normal or inverted faces (Eimer, 1998; Magnuski and Gola, 2013), a similar experiment demonstrated greater N170 amplitudes for normal faces than for eyeless faces (Nemrodov et al., 2014). Therefore, the relationship between N170 amplitude and the role of the eyes in facial perception remains uncertain.

In addition, daSilva et al. (2016) recently demonstrated the significance of the mouth in facial processing by presenting images of mouths depicting grimaces, smiles, and open-mouth expressions. Expressions featuring teeth elicited significantly larger N170 amplitudes than expressions without teeth (daSilva et al., 2016). However, daSilva et al. (2016) did not examine N170 amplitude in relation to the processing of both the eyes and the mouth, and few studies have evaluated N170 responsivity to the eyes and mouth in the context of facial processing. Pesciarelli et al. (2016) reported that the eyes elicited significantly larger N170 amplitudes in inverted than in upright faces, whereas this was not true for the mouth. Pesciarelli et al. (2016) also found significantly larger N170 amplitudes for the mouth than for the eyes in upright faces.

Therefore, it remains unclear whether the eyes or mouth exert a greater influence on N170 amplitude. As previously mentioned, cosmetic makeup is most frequently applied to the eyes (i.e., eye shadow) and the mouth (i.e., lipstick) (Graham and Jouhar, 1981; Ueda and Koyama, 2011; Jones and Kramer, 2015). Mulhern et al. (2003) examined the relative contribution of cosmetic application under five cosmetic conditions: no make-up, foundation only, eye make-up only, lip make-up only, and full-facial make-up. Women judged eye make-up as contributing most to attractiveness, while men rated both eye make-up and foundation as having a significant impact on attractiveness in the context of a full-facial makeover. However, lipstick did not appear to contribute to attractiveness independently (Mulhern et al., 2003). On the other hand, Stephen and McKeegan (2010) allowed participants to manipulate the color of the lips in color-calibrated face photographs along the red–green and blue–yellow axes. Participants increased redness contrast to enhance femininity and attractiveness in female faces, but reduced redness contrast to enhance masculinity in male faces (Stephen and McKeegan, 2010). In order to clarify whether the application of cosmetics to the eyes or mouth elicits a greater effect on N170 amplitude, the present study compared P1 and N170 amplitudes during facial perception under three makeup conditions: Eye shadow, Lipstick, and No Makeup.

The present study aimed to investigate the influence of cosmetic makeup on the perception of facial stimuli by evaluating N170 and P1 amplitudes in the recorded ERPs. If, following the application of cosmetics, N170 amplitude is more reflective of the processing of the eyes than of the mouth, N170 amplitude should differ significantly between the Eye Shadow condition and the Lipstick and No Makeup conditions. Alternatively, if N170 amplitude is more reflective of the processing of the mouth than of the eyes, N170 amplitude should differ significantly between the Lipstick condition and the Eye Shadow and No Makeup conditions. Moreover, because the application of cosmetics to female faces has been observed to increase facial contrast (Russell, 2009), the present study also investigated whether applying cosmetics to the eyes or mouth exerts a greater influence on P1 amplitude, which reflects the processing of lower-level visual features (Rossion and Caharel, 2011).

Materials and Methods

Participants

Seventeen healthy, right-handed, Japanese participants (5 men; 12 women; aged 18–24 years; mean age: 21.3 years) were selected for the present study. All participants exhibited normal or corrected-to-normal vision and had no history of psychiatric or neurological disorders.

All participants provided written informed consent prior to participation in the study, in accordance with the Declaration of Helsinki. The ethics committee of Otemon Gakuin University formally approved this experiment and the recruitment of participants from the Otemon Gakuin University student population.

Stimuli

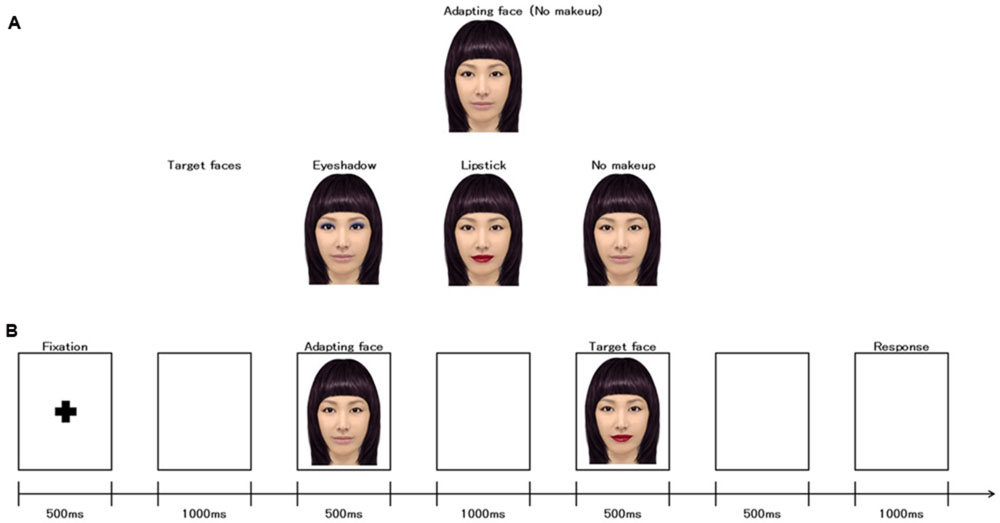

The images selected as visual stimuli were color photographs of the faces of 10 young adult Japanese women. The stimulus faces were unfamiliar to all participants. The photographs were obtained from websites (see Footnotes 1 and 2) and showed front-facing views of almost identical luminance. All images depicted neutral expressions. In total, 30 visual stimuli were used (each of the 10 model faces was presented in three conditions: Lipstick, wearing red lipstick only; Eye Shadow, wearing blue eye shadow only; and No Makeup, with no makeup applied). Each image was reconstructed from the original and digitally edited so that all faces wore cosmetics of the same color (the same red lipstick and the same blue eye shadow), using the iPad application YouCam Makeup (see Footnote 3) (Figure 1A). The No Makeup image was also used as the adapting image and was presented before each target stimulus for comparison. In addition, each image was edited on the iPad to feature the same hairstyle and hair color (black). All stimuli were airbrushed using Adobe Photoshop 12 to remove distinctive features or blemishes and were further processed in Photoshop to ensure a consistent background. All stimuli were presented in the same orientation on a white background. All faces were presented in a front-facing view and were equated for mean luminance (8.3 cd/m²) and size using Adobe Photoshop 12 (Figure 1A). Faces subtended a visual angle of 3.4° × 4.0° (horizontal × vertical). All faces were presented at the center of a 22-inch cathode ray tube monitor (Mitsubishi, Diamondtron M2, RDF223G, Chiyoda, Tokyo, Japan) placed 100 cm in front of the participants. The screen resolution was 1280 × 1024 pixels, with a refresh rate of 100 Hz.
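The Methods give the visual angle and viewing distance but not the physical size of the faces on screen. As a hedged illustration, the standard relation θ = 2·arctan(S / 2D) between object size S, viewing distance D, and visual angle θ can be inverted to estimate the on-screen size implied by 3.4° × 4.0° at 100 cm. The function names are hypothetical, and the result is an estimate, not a value reported in the study.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """On-screen size (cm) needed to subtend a given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

VIEWING_DISTANCE_CM = 100.0  # viewing distance stated in the Methods

width_cm = size_for_angle_cm(3.4, VIEWING_DISTANCE_CM)   # horizontal extent
height_cm = size_for_angle_cm(4.0, VIEWING_DISTANCE_CM)  # vertical extent
print(f"{width_cm:.2f} cm x {height_cm:.2f} cm")  # 5.94 cm x 6.98 cm
```

On this estimate, each face would be roughly 5.9 cm wide and 7.0 cm tall on screen; converting that to pixels would additionally require the visible width of the 22-inch display, which is not reported.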

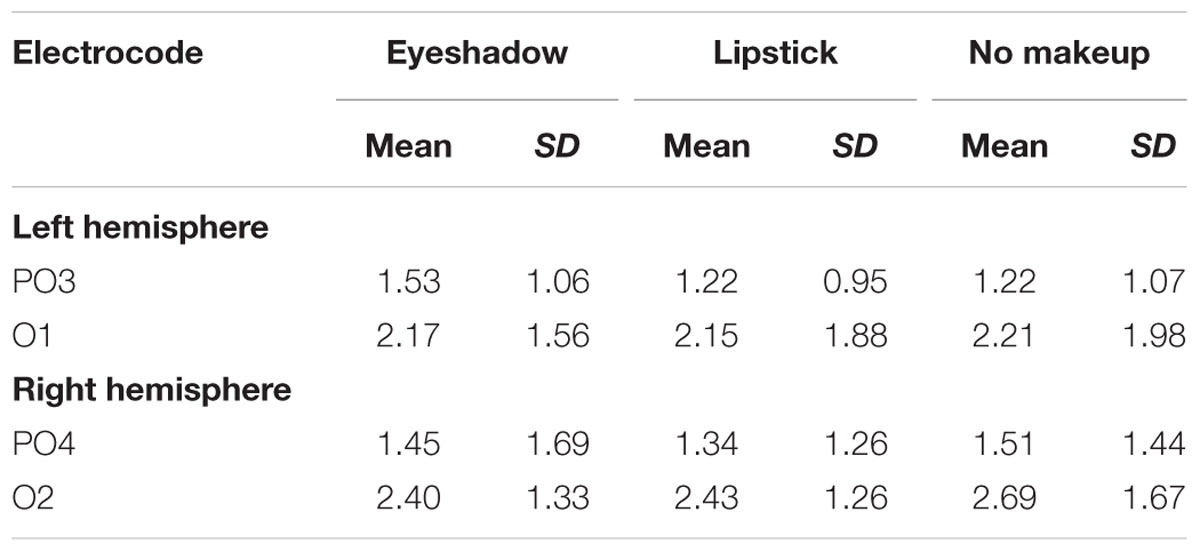

FIGURE 1. (A) Examples of adapting facial stimuli (No Makeup) and three target faces (Eye shadow, Lipstick, No Makeup). (B) Timeline of the single trial.

A previous study (Golby et al., 2001) reported differential activity in the fusiform region in response to own-race and other-race faces. With regard to ERPs, several reports indicate that N170 amplitude is unaffected by race (Vizioli et al., 2010a,b), while other studies report greater N170 amplitudes in response to other-race facial stimuli than to own-race stimuli (Stahl et al., 2010; Wiese et al., 2012). For this reason, the images used as visual stimuli in the present study were of Japanese women only, and all participants were Japanese. In addition, because N170 amplitude varies with the viewpoint of the face (Eimer, 2000b; Caharel et al., 2015), all faces were presented in a front-facing view. Furthermore, N170 has been shown in several studies to be sensitive to emotional expression (Batty and Taylor, 2003; Eger et al., 2003; Caharel et al., 2005; Blau et al., 2007; Leppänen et al., 2007). Therefore, the faces used as visual stimuli in the present study featured neutral expressions.

Procedure

Participants were seated comfortably 100 cm in front of a 22-inch cathode ray tube monitor on which stimuli were presented using a Multi Trigger System (Medical Try System, Kodaira, Tokyo, Japan). Each trial was completed as follows: (I) a fixation mark (+) was presented for 500 ms, followed by an inter-stimulus interval of 1000 ms; (II) an adapting facial stimulus (No Makeup) was presented for 500 ms, followed by an inter-stimulus interval of 1000 ms; (III) a target face stimulus (Lipstick, Eye Shadow, or No Makeup) was presented for 500 ms, followed by an inter-stimulus interval of 500 ms; and (IV) a judgment screen was presented for 1000 ms (Figure 1B). The inter-trial interval varied randomly between 500 and 1500 ms.
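The trial structure above can be summarized as a fixed event sequence followed by a jittered inter-trial interval. The sketch below is not the Multi Trigger System configuration used in the study; it only derives event onsets relative to fixation onset. The event names, the uniform 1 ms-resolution ITI distribution, and the frame counts at the 100 Hz refresh rate are illustrative assumptions.

```python
import random

# Fixed event sequence for one trial (name, duration in ms), from the Procedure.
TRIAL_EVENTS = [
    ("fixation", 500),
    ("isi_1", 1000),
    ("adapting_face", 500),    # always the No Makeup image
    ("isi_2", 1000),
    ("target_face", 500),      # Lipstick, Eye Shadow, or No Makeup
    ("isi_3", 500),
    ("judgment_screen", 1000),
]
REFRESH_MS = 10  # one frame at the 100 Hz refresh rate

def build_trial(rng):
    """Return [(event, onset_ms, duration_ms, frames), ...] and a random ITI in ms."""
    events, onset = [], 0
    for name, duration in TRIAL_EVENTS:
        events.append((name, onset, duration, duration // REFRESH_MS))
        onset += duration
    # Assumption: the ITI is drawn uniformly between 500 and 1500 ms (inclusive).
    iti = rng.randint(500, 1500)
    return events, iti

events, iti = build_trial(random.Random(0))
onsets = {name: onset for name, onset, _, _ in events}
print(onsets["target_face"], onsets["judgment_screen"])  # 3000 4000
```

Each fixed event lasts a whole number of 10 ms frames, so every duration can in principle be shown exactly at 100 Hz; the fixed part of a trial lasts 5000 ms, and a full trial lasts 5500–6500 ms once the ITI is added.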

On the judgment screen, the three target faces were assigned a number: 1 = Lipstick; 2 = Eye Shadow; 3 = No Makeup. Each participant was instructed to compare the adapting face (No Makeup) with a target face and to identify the type of target face as quickly and accurately as possible. Participants were required to respond by pressing one of three buttons that corresponded to 1, 2, and 3 with their right index finger to indicate whether the facial stimulus belonged to the Lipstick, Eye Shadow, or No Makeup condition, respectively. Reaction time was measured using a digital timer accurate to 1 ms, beginning with the onset of stimulus presentation and finishing once participants had responded to the stimulus. Participants performed 10 practice trials, followed by three blocks of 80 trials (240 trials total). The order of conditions was randomized within each block. In total, 30 stimuli were presented in random order, with equal probability. In each trial, the adapting face and target face were obtained from the same person.
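The Procedure states that 240 trials were run in three blocks of 80 and that the 30 stimuli appeared in random order with equal probability, but not exactly how the sequence was generated. One way to satisfy these constraints, assumed here, is to repeat each stimulus 8 times (30 × 8 = 240), shuffle the full list, and cut it into blocks. The key mapping follows the judgment screen (1 = Lipstick, 2 = Eye Shadow, 3 = No Makeup); `make_session` and `is_correct` are hypothetical helpers.

```python
import random
from collections import Counter

CONDITIONS = ("Lipstick", "Eye Shadow", "No Makeup")
RESPONSE_KEYS = {"Lipstick": 1, "Eye Shadow": 2, "No Makeup": 3}  # judgment-screen mapping
N_MODELS = 10  # model faces
N_BLOCKS = 3
TRIALS_PER_BLOCK = 80

def make_session(seed):
    """Shuffle 240 (model, target condition) trials and split them into 3 blocks of 80.

    Assumption: each of the 30 stimuli is repeated equally often (240 / 30 = 8 times).
    On every trial the adapting face is the No Makeup image of the same model.
    """
    rng = random.Random(seed)
    stimuli = [(model, cond) for model in range(N_MODELS) for cond in CONDITIONS]
    reps = N_BLOCKS * TRIALS_PER_BLOCK // len(stimuli)
    trials = stimuli * reps
    rng.shuffle(trials)
    return [trials[b * TRIALS_PER_BLOCK:(b + 1) * TRIALS_PER_BLOCK] for b in range(N_BLOCKS)]

def is_correct(target_condition, key_pressed):
    """True if the pressed key (1, 2, or 3) matches the target's condition."""
    return RESPONSE_KEYS[target_condition] == key_pressed

blocks = make_session(seed=1)
per_condition = Counter(cond for block in blocks for _, cond in block)
print(per_condition)  # 80 trials for each condition across the session
```

With this scheme each condition occurs exactly 80 times over the session, although the per-condition counts within any single block vary with the shuffle.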

Recording and Analysis

Electroencephalography (EEG) and electrooculography (EOG) data were acquired using a 128-channel Sensor Net (Electrical Geodesics, Inc., Eugene, OR, USA) and recorded with the standard EGI Net Station 5.2.01 package. EEG and EOG signals were recorded using Ag/AgCl electrodes positioned according to the 10-5 system (Oostenveld and Praamstra, 2001; Jurcak et al., 2007), with each electrode referenced to the vertex (Cz); all electrodes were subsequently re-referenced offline to the common average. Vertical and horizontal eye movements were recorded with EOG electrodes placed above and below the eyes and at the outer canthi of both eyes to detect movement artifacts. EEG and EOG were sampled at 500 Hz and band-pass filtered at 0.01–30 Hz. Electrode impedance was kept below 50 kΩ. For artifact rejection, all trials in which both the vertical and horizontal EOG voltages exceeded 140 µV during the recording epoch were excluded from further analysis.
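The two preprocessing steps described above, offline re-referencing to the common average and EOG-based trial rejection, can be sketched in plain Python as follows. This is an illustrative sketch, not the Net Station pipeline. It assumes voltages in microvolts (the usual unit for EOG thresholds), treats the criterion as the absolute voltage at any sample, and appends the original Cz reference as an all-zero channel before averaging, a common convention that the Methods do not state. The `require_both` flag is a hypothetical parameter contrasting the both-channels wording of the Methods with the more common either-channel rule.

```python
def average_reference(data):
    """Re-reference to the common average.

    data: list of channels, each a list of samples in µV. The original Cz
    reference may be included as an all-zero channel (assumed convention).
    """
    n_ch, n_t = len(data), len(data[0])
    means = [sum(ch[t] for ch in data) / n_ch for t in range(n_t)]
    return [[ch[t] - means[t] for t in range(n_t)] for ch in data]


def reject_epoch(veog, heog, threshold_uv=140.0, require_both=True):
    """Return True if an epoch should be excluded for ocular artifacts.

    With require_both=True, both EOG channels must exceed the threshold at
    some sample (as worded in the Methods); with False, either channel suffices.
    """
    veog_bad = max(abs(v) for v in veog) > threshold_uv
    heog_bad = max(abs(v) for v in heog) > threshold_uv
    return (veog_bad and heog_bad) if require_both else (veog_bad or heog_bad)


eeg = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]  # toy data; last row stands in for Cz
reref = average_reference(eeg)
blink = [0.0, 180.0, 20.0]   # large vertical deflection (µV)
saccade = [0.0, 30.0, 10.0]  # small horizontal deflection (µV)
print(reject_epoch(blink, saccade), reject_epoch(blink, saccade, require_both=False))  # False True
```

The toy blink shows how much the two wordings differ: a large vertical artifact alone is kept under the both-channels rule but rejected under the either-channel rule.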

Stimulus-locked ERPs were derived separately for each of the three target faces (Eye Shadow, Lipstick, and No Makeup) from 200 ms before to 1000 ms after stimulus onset and were baseline-corrected using the 200 ms pre-stimulus window. Based on previous studies (Luo et al., 2013; Ran et al., 2014), the P1 component was analyzed at four electrode sites: O1/O2 and PO3/PO4. P1 was quantified as the amplitude of the positive peak within 50–110 ms after stimulus onset. Similarly, following previous studies (Ran et al., 2014; Caharel et al., 2015), the N170 component was analyzed at 12 electrode sites of the 10-5 system (Oostenveld and Praamstra, 2001; Jurcak et al., 2007): P5/P6, P7/P8, PO7/PO8, PO9/PO10, POO9h/POO10h, and PPO9h/PPO10h. N170 was quantified as the amplitude of the negative peak within 120–180 ms after stimulus onset. Mean reaction times and ERP amplitudes were then calculated for each participant for each of the three target faces.
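The peak measures described above amount to baseline correction followed by a search for the most positive (P1, 50–110 ms) or most negative (N170, 120–180 ms) sample in each window. The sketch assumes a single-channel waveform sampled at 500 Hz and uses a synthetic waveform purely to exercise the functions; it is not the analysis code used in the study, and the function names are hypothetical.

```python
import math

SFREQ_HZ = 500         # sampling rate from the Methods
EPOCH_START_MS = -200  # epochs run from -200 to +1000 ms around target onset

def sample_index(t_ms):
    """Index of the sample at time t_ms (2 ms per sample at 500 Hz)."""
    return round((t_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)

def baseline_correct(erp):
    """Subtract the mean of the 200 ms pre-stimulus window."""
    base = erp[:sample_index(0)]
    offset = sum(base) / len(base)
    return [v - offset for v in erp]

def peak_in_window(erp, start_ms, end_ms, polarity):
    """(latency_ms, amplitude) of the most positive (+1) or most negative (-1) sample."""
    idx = range(sample_index(start_ms), sample_index(end_ms) + 1)
    best = max(idx, key=lambda i: polarity * erp[i])
    return EPOCH_START_MS + best * 1000 / SFREQ_HZ, erp[best]

# Synthetic waveform for illustration only: a +3 µV peak at 100 ms, a -4 µV peak
# at 160 ms, and a constant 1 µV offset that baseline correction should remove.
times = [EPOCH_START_MS + i * 1000 / SFREQ_HZ for i in range(sample_index(1000) + 1)]
raw = [1.0 + 3 * math.exp(-((t - 100) / 15) ** 2) - 4 * math.exp(-((t - 160) / 15) ** 2)
       for t in times]
erp = baseline_correct(raw)
p1 = peak_in_window(erp, 50, 110, polarity=+1)
n170 = peak_in_window(erp, 120, 180, polarity=-1)
print(p1, n170)
```

In the study, a measurement like this would be repeated for each participant, condition, and electrode before the values enter the ANOVA.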

Statistical Analysis

Reaction time was analyzed using a one-way repeated-measures analysis of variance (ANOVA) with condition (Eye Shadow, Lipstick, No Makeup) as the within-participant factor. P1 amplitude was analyzed using a three-way (3 × 2 × 2) repeated-measures ANOVA with the factors condition (Eye Shadow, Lipstick, No Makeup), hemisphere (left, right), and electrode placement (O1 vs. PO3 in the left hemisphere; O2 vs. PO4 in the right hemisphere); post hoc comparisons were performed using the Bonferroni test. Similarly, N170 amplitude was analyzed using a three-way (3 × 2 × 6) repeated-measures ANOVA with the factors condition, hemisphere, and electrode placement (P5, P7, PO7, PO9, POO9h, and PPO9h in the left hemisphere; P6, P8, PO8, PO10, POO10h, and PPO10h in the right hemisphere); post hoc comparisons were again performed using the Bonferroni test. For the ERP analyses, Greenhouse–Geisser corrections were applied to the p values of repeated-measures effects with more than one degree of freedom.
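For the one-way design used for reaction time, the repeated-measures F statistic and the Greenhouse–Geisser epsilon can be computed directly from a participants × conditions table. The sketch below uses only the standard library; the function name and toy data are hypothetical. Epsilon is obtained from the double-centred covariance matrix of the conditions, and a corrected p value would then be read from an F distribution with ε·df1 and ε·df2 degrees of freedom (for example with `scipy.stats.f.sf`). The three-way ERP ANOVAs apply the same correction effect by effect.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA with a Greenhouse-Geisser epsilon.

    data: one row per participant, each holding k condition means.
    Returns (F, df_effect, df_error, epsilon).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x participant residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_effect) / (ss_error / df_error)

    # Sample covariance matrix of the k conditions across participants.
    cov = [[sum((row[a] - cond_means[a]) * (row[b] - cond_means[b]) for row in data) / (n - 1)
            for b in range(k)] for a in range(k)]
    # Double-centre it (the matrix is symmetric, so row means equal column means).
    row_m = [sum(r) / k for r in cov]
    all_m = sum(row_m) / k
    dc = [[cov[a][b] - row_m[a] - row_m[b] + all_m for b in range(k)] for a in range(k)]
    trace = sum(dc[i][i] for i in range(k))
    frob2 = sum(v * v for r in dc for v in r)
    epsilon = trace ** 2 / ((k - 1) * frob2)  # lies between 1/(k-1) and 1
    return f_value, df_effect, df_error, epsilon

toy = [[1, 2, 4], [2, 3, 3], [3, 5, 7]]  # 3 hypothetical participants x 3 conditions
F, df1, df2, eps = rm_anova_oneway(toy)
print(F, df1, df2, round(eps, 3))
```

With 17 participants and three conditions, df_effect = 2 and df_error = 32, matching the F(2,32) values reported in the Results.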

Results

Effect of Condition on Reaction Time

No main effect was detected for condition on reaction time: Eye shadow, 408 ± 81 ms (mean ± SD); Lipstick, 412 ± 98 ms; No Makeup, 391 ± 73 ms [F(2,32) = 1.70, p = 0.20].

Effect of Condition on P1 Amplitude

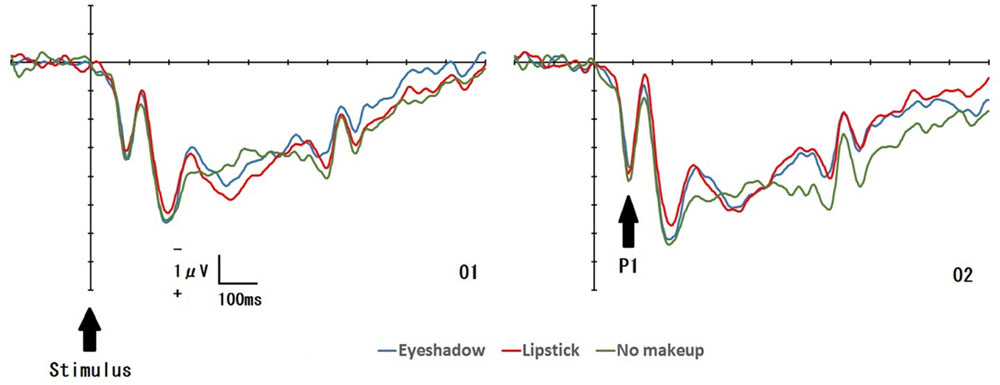

Figure 2 displays the grand-averaged ERP waveforms for all conditions (Eye Shadow, Lipstick, No Makeup) at two electrode sites (O1/O2). For each condition, a positive deflection was identified 50–110 ms after the onset of the target face; this component was identified as P1. Table 1 displays the mean P1 amplitude for all conditions at four electrode sites (O1/O2 and PO3/PO4). No significant main effect of condition [F(2,32) = 0.49, p = 0.77, ηp² = 0.02] or hemisphere [F(1,16) = 0.42, p = 0.53, ηp² = 0.03] was detected on P1 amplitude. However, electrode placement had a significant effect on P1 amplitude [F(1,16) = 11.95, p = 0.003, ηp² = 0.43], with greater P1 amplitude at O1 and O2 than at PO3 and PO4 (p < 0.05). No significant interactions were observed between the variables (all p > 0.05).

FIGURE 2. Stimulus-locked average ERP waveforms at O1 and O2 for each target face: Eye shadow, Lipstick, and No Makeup.
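The effect-size values reported after each F statistic are consistent with partial eta squared (ηp²), which can be recovered from F and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The helper below is a hypothetical convenience function for checking such values.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Electrode-placement effect on P1: F(1,16) = 11.95
print(round(partial_eta_squared(11.95, 1, 16), 2))  # 0.43
```

The same helper reproduces, for example, the N170 electrode-placement effect, F(5,80) = 7.07, as approximately 0.31.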

Effect of Condition on N170 Amplitude

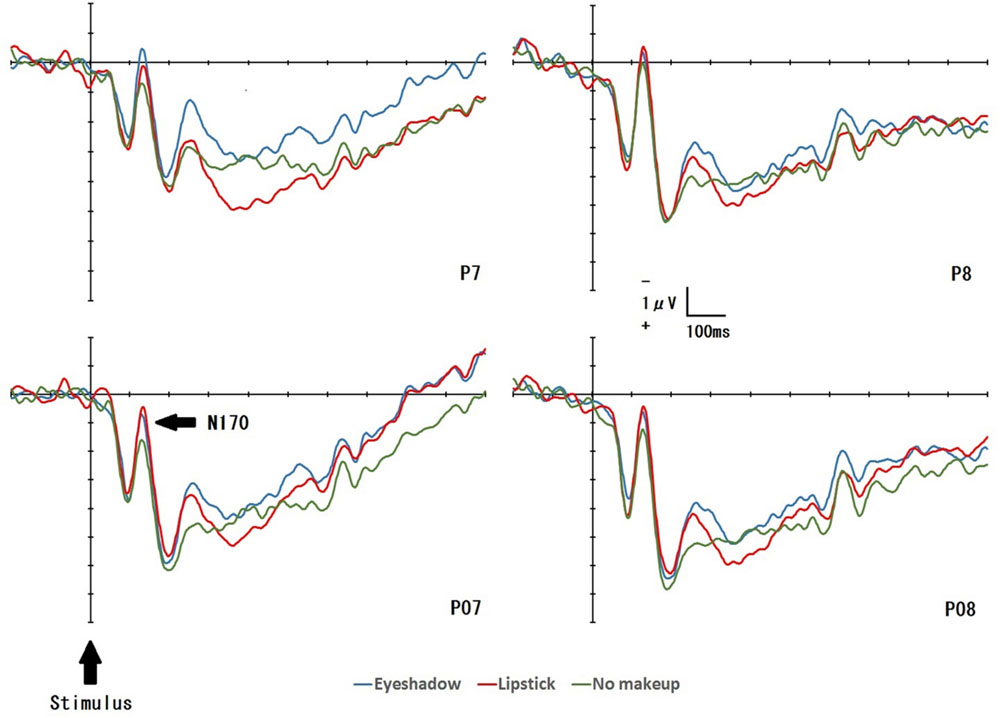

Figure 3 displays the grand-averaged ERP waveforms for all conditions (Eye Shadow, Lipstick, No Makeup) at four electrode sites (P7/P8 and PO7/PO8). For each condition, a negative deflection was identified 120–180 ms after the onset of the target face; this component was identified as N170. Table 2 displays the mean N170 amplitude for all conditions at 12 electrode sites (P5/P6, P7/P8, PO7/PO8, PO9/PO10, POO9h/POO10h, and PPO9h/PPO10h). Significant main effects on N170 amplitude were detected for condition [F(2,32) = 3.39, p = 0.05, ηp² = 0.18] and electrode placement [F(5,80) = 7.07, p = 0.002, ηp² = 0.31]. N170 amplitude in the Lipstick condition was significantly greater than in the No Makeup condition (p < 0.05). No significant main effect of hemisphere on N170 amplitude was detected [F(1,16) = 0.29, p = 0.60, ηp² = 0.02]. None of the two-way interactions (condition × hemisphere, condition × electrode, and hemisphere × electrode) was significant (all p > 0.05); however, the three-way interaction (condition × hemisphere × electrode) was significant [F(10,160) = 2.88, p = 0.04, ηp² = 0.15]. Simple effect analyses indicated that N170 amplitude in the Lipstick condition was significantly greater than in the No Makeup condition at PO7 in the left hemisphere and at PO10 in the right hemisphere (p < 0.05). Simple effect analyses also showed that, in the right hemisphere, N170 amplitude was significantly greater at P8 and PPO10h than at PO8 for all conditions (Eye Shadow, Lipstick, No Makeup) (p < 0.05). Moreover, in the left hemisphere, N170 amplitude in the No Makeup condition was significantly greater at P5 than at P7 (p < 0.05).

FIGURE 3. Stimulus-locked average ERP waveforms at P7, P8, PO7, and PO8 for each target face: Eye shadow, Lipstick, and No Makeup.
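Pairwise condition contrasts such as Lipstick vs. No Makeup were evaluated with Bonferroni-corrected post hoc tests. Assuming these correspond to paired comparisons across participants, as in common statistics packages, the sketch below computes a paired t statistic and Bonferroni-adjusted p values for the three pairwise comparisons among conditions. The unadjusted p values would come from a t distribution with n − 1 degrees of freedom (for example, `2 * scipy.stats.t.sf(abs(t), df)`); all function names and numbers here are hypothetical.

```python
import math
import statistics

def paired_t(a, b):
    """Paired-samples t statistic and df for two conditions measured on the same participants."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1

def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capping the result at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical amplitudes for four participants in two conditions.
t, df = paired_t([1, 2, 3, 4], [0, 0, 1, 1])
adjusted = bonferroni([0.01, 0.02, 0.5])  # three pairwise condition comparisons
print(round(t, 3), df, adjusted)
```

With three conditions there are three pairwise comparisons, so a contrast remains significant at α = 0.05 only if its unadjusted p value is below about 0.0167.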

Discussion

The present study investigated changes in P1 and N170 amplitudes in response to the application of cosmetics during a facial perception task. The experiment adopted an ERP adaptation paradigm in which participants compared a target face (Eye Shadow, Lipstick, or No Makeup) with a preceding adapting face of the same model without makeup (No Makeup). P1 and N170 amplitudes were measured from the EEG, with EOG used to reject ocular artifacts. N170 amplitudes were significantly greater in response to the Lipstick condition than to the No Makeup condition, whereas cosmetic makeup had no significant effect on P1 amplitude.

Previously, Rossion and Caharel (2011) reported a functional dissociation between P1 and N170 with regard to facial sensitivity, wherein P1 was driven by low-level visual features, while N170 reflected facial perception. The present results are consistent with this dissociation: in this task, the application of facial cosmetics did not measurably affect the processing of low-level visual features, as indexed by P1, but did affect face-sensitive processing, as indexed by N170. Although previous studies indicated that the application of cosmetics to female faces increases facial contrast (Russell, 2009), no significant effect of cosmetic makeup on P1 amplitude was detected in the present study. Because the present study used an ERP adaptation paradigm (Kovács et al., 2006; Eimer et al., 2010; Zimmer and Kovács, 2011; Caharel et al., 2015), attention was directed toward detecting changes between the faces with and without makeup. One possible explanation is that attention was thereby drawn away from the low-level visual processing of the faces, so that P1 did not differ by condition, whereas attention to the perception and structural encoding of the face resulted in a significantly greater N170 amplitude in the Lipstick than in the No Makeup condition.

Such findings support the notion that the application of cosmetics significantly influences facial perception (Graham and Jouhar, 1981; Ueda and Koyama, 2011; Jones and Kramer, 2015). In the present study, N170 amplitudes were significantly greater in response to the Lipstick condition than to the No Makeup condition, but did not differ significantly between the No Makeup and Eye Shadow conditions. These findings support the hypothesis that N170 amplitude reflects the processing of specific facial features, and that N170 was more sensitive to changes at the mouth than at the eyes. Thus, of the two regions tested, cosmetic makeup applied to the mouth influenced face processing, as reflected by changes in N170 amplitude. These findings are consistent with those of Pesciarelli et al. (2016), who reported larger N170 amplitudes for the mouth than for the eyes in upright faces, and suggest that lipstick drew attention to the mouth during facial perception.

However, because different amounts of makeup affect attractiveness (Jones et al., 2014), it is possible that the red lipstick was more vivid than the blue eye shadow in the present study. In addition, because longer viewing durations affect judgments of faces with different amounts of makeup in varied ways (Etcoff et al., 2011), the red lipstick may have simply been more eye-catching under the relatively short duration utilized in the present study. To clarify these issues, future studies should manipulate the amount of makeup and duration of stimulus presentation.

In addition, Jones et al. (2015) found that a typical application of cosmetics increases the luminance contrast of the eyes to a much greater extent than the redness contrast of the mouth. The present N170 results, however, do not follow this pattern. As previously mentioned, because the present study did not manipulate the amount of makeup or the duration of stimulus presentation, further research is required to examine the relative influence of various amounts of makeup on luminance contrast and ERP components. Furthermore, an eye-tracking study (Blais et al., 2008) revealed that Western Caucasian observers fixate more on the eye region, whereas East Asian observers fixate more on the central region of the face. Participants from a predominantly Western Caucasian culture might therefore not show the same pattern of results as the Japanese participants tested in the present study. Some previous studies indicate that N170 amplitude is unaffected by race (Vizioli et al., 2010a,b), while others report greater N170 amplitudes in response to other-race facial stimuli than to own-race stimuli (Stahl et al., 2010; Wiese et al., 2012). To clarify these issues, future studies should compare N170 amplitude across observers of several races presented with both own-race and other-race stimuli.

N170 amplitude is greater in response to inverted (upside-down) faces than to upright faces, a phenomenon known as the face inversion effect (Bentin et al., 1996; Rossion et al., 1999; Itier and Taylor, 2002; Nemrodov et al., 2014; Pesciarelli et al., 2016). However, Nemrodov et al. (2014) found that the N170 face inversion effect was strongly attenuated in eyeless faces when fixation was on the eyes, but was normal when fixation was on the mouth. Moreover, Pesciarelli et al. (2016) reported that the eyes elicited significantly larger N170 amplitudes in inverted than in upright faces, whereas this effect was not observed for the mouth, and that the mouth elicited significantly larger N170 amplitudes than the eyes, but only in upright faces. Further research is needed to examine the relationship between the N170 face inversion effect and the application of cosmetic makeup, with particular focus on the eye and mouth regions.

While N170 amplitudes did not differ significantly between the No Makeup and Eye Shadow conditions in the present study, the eyes nonetheless play a significant role in facial perception (Janik et al., 1978; Bentin et al., 1996; Bentin and Deouell, 2000; Barton et al., 2006; Itier et al., 2006, 2007; Nemrodov and Itier, 2011; Arizpe et al., 2012; Nemrodov et al., 2014). For example, Haxby et al. (2000) reported that the superior temporal sulcus processes individual facial features, including the changeable aspects of faces such as eye gaze. Moreover, Cecchini et al. (2013) demonstrated that the left middle temporal gyrus (BA21) shows greater activation in response to eyes in an intact face than to eyes in a scrambled face. Taken together with these findings, the present results indicate that the role of the eyes in facial perception should be investigated using other ERP components or additional neuroimaging techniques.

Conclusion

The present study found that N170 amplitude was significantly greater for faces with lipstick than for faces without makeup, whereas N170 amplitude for faces with eye shadow did not differ significantly from that for faces without makeup. In addition, no significant main effect of condition was identified for P1 amplitude. These results support the notion that cosmetic makeup alters facial perception, influencing the processing of specific facial features, particularly the mouth, as reflected by changes in N170 amplitude.

Author Contributions

HT designed and performed the experiments, recorded the EEG data, performed the EEG and statistical analyses, and wrote the manuscript.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. http://www.air-lights.com/recruit.html

2. http://ameblo.jp/studioaquarius/entry-11473277532.html

3. http://jp.perfectcorp.com/#ymk

References

Arizpe, J., Kravitz, D. J., Yovel, G., and Baker, C. I. (2012). Start position strongly influences fixation patterns during face processing: difficulties with eye movements as a measure of information use. PLoS ONE 7:e31106. doi: 10.1371/journal.pone.0031106

Barton, J. J., Radcliffe, N., Cherkasova, M. V., Edelman, J., and Intriligator, J. M. (2006). Information processing during face recognition: the effects of familiarity, inversion, and morphing on scanning fixations. Perception 35, 1089–1105. doi: 10.1068/p5547

Batty, M., and Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Cogn. Brain Res. 17, 613–620. doi: 10.1016/S0926-6410(03)00174-5

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1162/jocn.1996.8.6.551

Bentin, S., and Deouell, L. (2000). Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cogn. Neuropsychol. 17, 35–55. doi: 10.1080/026432900380472

Blais, C., Jack, R. E., Scheepers, C., Fiset, D., and Caldara, R. (2008). Culture shapes how we look at faces. PLoS ONE 3:e3022. doi: 10.1371/journal.pone.0003022

Blau, V. C., Maurer, U., Tottenham, N., and McCandliss, B. D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3:7. doi: 10.1186/1744-9081-3-7

Caharel, S., Collet, K., and Rossion, B. (2015). The early visual encoding of a face (N170) is viewpoint-dependent: a parametric ERP-adaptation study. Biol. Psychol. 106, 18–27. doi: 10.1016/j.biopsycho.2015.01.010

Caharel, S., Courtay, N., Bernard, C., Lalonde, R., and Rebai, M. (2005). Familiarity and emotional expression influence an early stage of face processing: an electrophysiological study. Brain Cogn. 59, 96–100. doi: 10.1016/j.bandc.2005.05.005

Cecchini, M., Aceto, P., Altavilla, D., Palumbo, L., and Lai, C. (2013). The role of the eyes in processing an intact face and its scrambled image: a dense array ERP and low-resolution electromagnetic tomography (sLORETA) study. Soc. Neurosci. 8, 314–325. doi: 10.1080/17470919.2013.797020

Chen, J., Liu, B., Chen, B., and Fang, F. (2009). Time course of amodal completion in face perception. Vision Res. 49, 752–758. doi: 10.1016/j.visres.2009.02.005

daSilva, E. B., Crager, K., Geisler, D., Newbern, P., Orem, B., and Puce, A. (2016). Something to sink your teeth into: the presence of teeth augments ERPs to mouth expressions. Neuroimage 127, 227–241. doi: 10.1016/j.neuroimage.2015.12.020

Dering, B., Martin, C. D., Moro, S., Pegna, A. J., and Thierry, G. (2011). Face-sensitive processes one hundred milliseconds after picture onset. Front. Hum. Neurosci. 5:93. doi: 10.3389/fnhum.2011.00093

Eger, E., Jedynak, A., Iwaki, T., and Skrandies, W. (2003). Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia 41, 808–817. doi: 10.1016/S0028-3932(02)00287-7

Eimer, M. (1998). Does the face-specific N170 component reflect the activity of a specialized eye processor? Neuroreport 9, 2945–2948. doi: 10.1097/00001756-199809140-00005

Eimer, M. (2000a). Effects of face inversion on the structural encoding and recognition of faces: evidence from event-related brain potentials. Cogn. Brain Res. 10, 145–158. doi: 10.1016/S0926-6410(00)00038-0

Eimer, M. (2000b). The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport 11, 2319–2324. doi: 10.1097/00001756-200007140-00050

Eimer, M. (2011). “The face-sensitive N170 component of the event-related brain potential,” in The Oxford Handbook of Face Perception, eds A. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (Oxford: Oxford University Press), 329–344.

Eimer, M., Kiss, M., and Nicholas, S. (2010). Response profile of the face-sensitive N170 component: a rapid adaptation study. Cereb. Cortex 20, 2442–2452. doi: 10.1093/cercor/bhp312

Eimer, M., and McCarthy, R. A. (1999). Prosopagnosia and structural encoding of faces: evidence from event-related potentials. Neuroreport 10, 255–259. doi: 10.1097/00001756-199902050-00010

Etcoff, N. L., Stock, S., Haley, L. E., Vickery, S. A., and House, D. M. (2011). Cosmetics as a feature of the extended human phenotype: modulation of the perception of biologically important facial signals. PLoS ONE 6:e25656. doi: 10.1371/journal.pone.0025656

George, N., Evans, J., Fiori, N., Davidoff, J., and Renault, B. (1996). Brain events related to normal and moderately scrambled faces. Cogn. Brain Res. 4, 65–76. doi: 10.1016/0926-6410(95)00045-3

Goffaux, V., Gauthier, I., and Rossion, B. (2003). Spatial scale contribution to early visual differences between face and object processing. Cogn. Brain Res. 16, 416–424. doi: 10.1016/S0926-6410(03)00056-9

Golby, A. J., Gabrieli, J. D., Chiao, J. Y., and Eberhardt, J. L. (2001). Differential responses in the fusiform region to same-race and other-race faces. Nat. Neurosci. 4, 845–850. doi: 10.1038/90565

Graham, J. A., and Jouhar, A. J. (1981). The effects of cosmetics on person perception. Int. J. Cosmet. Sci. 3, 199–210. doi: 10.1111/j.1467-2494.1981.tb00283.x

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Herrmann, M. J., Ehlis, A. C., Muehlberger, A., and Fallgatter, A. J. (2005). Source localization of early stages of face processing. Brain Topogr. 18, 77–85. doi: 10.1007/s10548-005-0277-7

Itier, R. J., Alain, C., Sedore, K., and McIntosh, A. R. (2007). Early face processing specificity: it’s in the eyes! J. Cogn. Neurosci. 19, 1815–1826. doi: 10.1162/jocn.2007.19.11.1815

Itier, R. J., Latinus, M., and Taylor, M. J. (2006). Face, eye and object early processing: what is the face specificity? Neuroimage 29, 667–676. doi: 10.1016/j.neuroimage.2005.07.041

Itier, R. J., and Taylor, M. J. (2002). Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage 15, 353–372. doi: 10.1006/nimg.2001.0982

Itier, R. J., and Taylor, M. J. (2004a). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 14, 132–142. doi: 10.1093/cercor/bhg111

Itier, R. J., and Taylor, M. J. (2004b). Source analysis of the N170 to faces and objects. Neuroreport 15, 1261–1265. doi: 10.1097/01.wnr.0000127827.3576.d8

Janik, S. W., Wellens, A. R., Goldberg, M. L., and Dell’Osso, L. F. (1978). Eyes as the center of focus in the visual examination of human faces. Percept. Mot. Skills 47, 857–858. doi: 10.2466/pms.1978.47.3.857

Jemel, B., George, N., Chaby, L., Fiori, N., and Renault, B. (1999). Differential processing of part-to-whole and part-to-part face priming: an ERP study. Neuroreport 10, 1069–1075. doi: 10.1097/00001756-199904060-00031

Jones, A. L., Kramer, R. S., and Ward, R. (2014). Miscalibrations in judgements of attractiveness with cosmetics. Q. J. Exp. Psychol. 67, 2060–2068. doi: 10.1080/17470218.2014.908932

Jones, A. L., and Kramer, R. S. S. (2015). Facial cosmetics have little effect on attractiveness judgments compared with identity. Perception 44, 79–86. doi: 10.1068/p7904

Jones, A. L., Russell, R., and Ward, R. (2015). Cosmetics alter biologically based factors of beauty: evidence from facial contrast. Evol. Psychol. 13, 210–229. doi: 10.1177/147470491501300113

Jurcak, V., Tsuzuki, D., and Dan, I. (2007). 10/20, 10/10, and 10/5 systems revisited: their validity as relative head-surface-based positioning systems. Neuroimage 34, 1600–1611. doi: 10.1016/j.neuroimage.2006.09.024

Kovács, G., Zimmer, M., Bankó, E., Harza, I., Antal, A., and Vidnyánszky, Z. (2006). Electrophysiological correlates of visual adaptation to faces and body parts in humans. Cereb. Cortex 16, 742–753. doi: 10.1093/cercor/bhj020

Leppänen, J. M., Kauppinen, P., Peltola, M. J., and Hietanen, J. K. (2007). Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Res. 1166, 103–109. doi: 10.1016/j.brainres.2007.06.060

Liu, J., Harris, A., and Kanwisher, N. (2002). Stages of processing in face perception: an MEG study. Nat. Neurosci. 5, 910–916. doi: 10.1038/nn909

Luo, S., Luo, W., He, W., Chen, X., and Luo, Y. (2013). P1 and N170 components distinguish human-like and animal-like makeup stimuli. Neuroreport 24, 482–486. doi: 10.1097/WNR.0b013e328361cf08

Magnuski, M., and Gola, M. (2013). It’s not only in the eyes: nonlinear relationship between face orientation and N170 amplitude irrespective of eye presence. Int. J. Psychophysiol. 89, 358–365. doi: 10.1016/j.ijpsycho.2013.04.016

Mulhern, R., Fieldman, G., Hussey, T., Lévêque, J. L., and Pineau, P. (2003). Do cosmetics enhance female Caucasian facial attractiveness? Int. J. Cosmet. Sci. 25, 199–205. doi: 10.1046/j.1467-2494.2003.00188.x

Nemrodov, D., Anderson, T., Preston, F. F., and Itier, R. J. (2014). Early sensitivity for eyes within faces: a new neuronal account of holistic and featural processing. Neuroimage 97, 81–94. doi: 10.1016/j.neuroimage.2014.04.042

Nemrodov, D., and Itier, R. J. (2011). The role of eyes in early face processing: a rapid adaptation study of the inversion effect. Br. J. Psychol. 102, 783–798. doi: 10.1111/j.2044-8295.2011.02033.x

Okazaki, Y., Abrahamyan, A., Stevens, C. J., and Ioannides, A. A. (2008). The timing of face selectivity and attentional modulation in visual processing. Neuroscience 152, 1130–1144. doi: 10.1016/j.neuroscience.2008.01.056

Oostenveld, R., and Praamstra, P. (2001). The five percent electrode system for high-resolution EEG and ERP measurements. Clin. Neurophysiol. 112, 713–719. doi: 10.1016/S1388-2457(00)00527-7

Peng, M., De Beuckelaer, A., Yuan, L., and Zhou, R. (2012). The processing of anticipated and unanticipated fearful faces: an ERP study. Neurosci. Lett. 526, 85–90. doi: 10.1016/j.neulet.2012.08.009

Pesciarelli, F., Leo, I., and Sarlo, M. (2016). Implicit processing of the eyes and mouth: evidence from human electrophysiology. PLoS ONE 11:e0147415. doi: 10.1371/journal.pone.0147415

Porcheron, A., Mauger, E., and Russell, R. (2013). Aspects of facial contrast decrease with age and are cues for age perception. PLoS ONE 8:e57985. doi: 10.1371/journal.pone.0057985

Puce, A., Allison, T., Gore, J. C., and McCarthy, G. (1995). Face-sensitive regions in human extrastriate cortex studied by functional MRI. J. Neurophysiol. 74, 1192–1199.

Ran, G., Zhang, Q., Chen, X., and Pan, Y. (2014). The effects of prediction on the perception for own-race and other-race faces. PLoS ONE 9:e114011. doi: 10.1371/journal.pone.0114011

Rossion, B., and Caharel, S. (2011). ERP evidence for the speed of face categorization in the human brain: disentangling the contribution of low-level visual cues from face perception. Vision Res. 51, 1297–1311. doi: 10.1016/j.visres.2011.04.003

Rossion, B., Delvenne, J. F., Debatisse, D., Goffaux, V., Bruyer, R., Crommelinck, M., et al. (1999). Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biol. Psychol. 50, 173–189. doi: 10.1016/S0301-0511(99)00013-7

Rossion, B., and Jacques, C. (2008). Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage 39, 1959–1979. doi: 10.1016/j.neuroimage.2007.10.011

Russell, R. (2003). Sex, beauty, and the relative luminance of facial features. Perception 32, 1093–1107. doi: 10.1068/p5101

Russell, R. (2009). A sex difference in facial pigmentation and its exaggeration by cosmetics. Perception 38, 1211–1219. doi: 10.1068/p6331

Russell, R., Porcheron, A., Sweda, J. R., Jones, A. L., Mauger, E., and Morizot, F. (2016). Facial contrast is a cue for perceiving health from the face. J. Exp. Psychol. Hum. Percept. Perform. doi: 10.1037/xhp0000219 [Epub ahead of print].

Sadeh, B., Podlipsky, I., Zhdanov, A., and Yovel, G. (2010). Event-related potential and functional MRI measures of face-selectivity are highly correlated: a simultaneous ERP-fMRI investigation. Hum. Brain Mapp. 31, 1490–1501. doi: 10.1002/hbm.20952

Schweinberger, S. R. (2011). “Neurophysiological correlates of face recognition,” in The Oxford Handbook of Face Perception, eds A. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (Oxford: Oxford University Press), 345–366.

Stahl, J., Wiese, H., and Schweinberger, S. R. (2010). Learning task affects ERP-correlates of the own-race bias, but not recognition memory performance. Neuropsychologia 48, 2027–2040. doi: 10.1016/j.neuropsychologia.2010.03.024

Stephen, I. D., and McKeegan, A. M. (2010). Lip colour affects perceived sex typicality and attractiveness of human faces. Perception 39, 1104–1110. doi: 10.1068/p6730

Susac, A., Ilmoniemi, R. J., Pihko, E., Nurminen, J., and Supek, S. (2009). Early dissociation of face and object processing: a magnetoencephalographic study. Hum. Brain Mapp. 30, 917–927. doi: 10.1002/hbm.20557

Tarkiainen, A., Cornelissen, P. L., and Salmelin, R. (2002). Dynamics of visual feature analysis and object-level processing in face versus letter-string perception. Brain 125, 1125–1136. doi: 10.1093/brain/awf112

Thierry, G., Martin, C. D., Downing, P., and Pegna, A. J. (2007). Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nat. Neurosci. 10, 505–511. doi: 10.1038/nn1864

Ueda, S., and Koyama, T. (2011). Influence of eye make-up on the perception of gaze direction. Int. J. Cosmet. Sci. 33, 514–518. doi: 10.1111/j.1468-2494.2011.00664.x

Vizioli, L., Foreman, K., Rousselet, G. A., and Caldara, R. (2010a). Inverting faces elicits sensitivity to race on the N170 component: a cross-cultural study. J. Vis. 10, 1–23. doi: 10.1167/10.1.15

Vizioli, L., Rousselet, G. A., and Caldara, R. (2010b). Neural repetition suppression to identity is abolished by other-race faces. Proc. Natl. Acad. Sci. U.S.A. 107, 20081–20086. doi: 10.1073/pnas.1005751107

Watanabe, S., Kakigi, R., Koyama, S., and Kirino, E. (1999a). Human face perception traced by magneto- and electro-encephalography. Cogn. Brain Res. 8, 125–142. doi: 10.1016/S0926-6410(99)00013-0

Watanabe, S., Kakigi, R., Koyama, S., and Kirino, E. (1999b). It takes longer to recognize the eyes than the whole face in humans. Neuroreport 10, 2193–2198. doi: 10.1097/00001756-199907130-00035

Wiese, H., Kaufmann, J. M., and Schweinberger, S. R. (2012). The neural signature of the own-race bias: evidence from event-related potentials. Cereb. Cortex 24, 826–835. doi: 10.1093/cercor/bhs369

Keywords: N170, event-related potential, cosmetic makeup, eyes, mouth, face perception

Citation: Tanaka H (2016) Facial Cosmetics Exert a Greater Influence on Processing of the Mouth Relative to the Eyes: Evidence from the N170 Event-Related Potential Component. Front. Psychol. 7:1359. doi: 10.3389/fpsyg.2016.01359

Received: 16 June 2016; Accepted: 25 August 2016;

Published: 05 September 2016.

Edited by:

Jim Grange, Keele University, UK

Reviewed by:

Alex L. Jones, Swansea University, UK

Lindsey A. Short, Redeemer University College, Canada

Copyright © 2016 Tanaka. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hideaki Tanaka, tanahide@otemon.ac.jp