- 1Department of Linguistics, Macquarie University, Sydney, NSW, Australia

- 2The HEARing CRC, Melbourne, VIC, Australia

- 3Department of Psychology, Macquarie University, Sydney, NSW, Australia

- 4Department of Statistics, Macquarie University, Sydney, NSW, Australia

Listening to degraded speech can be challenging and requires a continuous investment of cognitive resources, a demand that is greater for those with hearing loss. While alpha power (8–12 Hz) and pupil dilation have been suggested as objective correlates of listening effort, it is not clear whether they assess the same cognitive processes, or other sensory and/or neurophysiological mechanisms associated with the task. Therefore, the aim of this study was to compare alpha power and pupil dilation during a sentence recognition task in 15 randomized levels of noise (-7 to +7 dB SNR) using highly intelligible (16-channel vocoded) and moderately intelligible (6-channel vocoded) speech. Twenty young normal-hearing adults participated in the study; however, due to extraneous noise, data from only 16 (10 females, 6 males; aged 19–28 years) were used in the electroencephalography (EEG) analysis and from 10 in the pupil analysis. Perceived effort and speech performance were assessed behaviorally at 3 fixed SNRs per participant, and performance was comparable to the sentence recognition performance assessed in the physiological test session for both 16- and 6-channel vocoded sentences. Results showed a significant interaction between SNR and channel vocoding for both the alpha power and the pupil size changes. While both measures significantly decreased with more positive SNRs for the 16-channel vocoding, this was not observed with the 6-channel vocoding. The results of this study suggest that these measures may encode different processes involved in speech perception, which show similar trends for highly intelligible speech, but diverge for more spectrally degraded speech. The results to date suggest that these objective correlates of listening effort, and the cognitive processes involved in listening effort, are not yet sufficiently well understood to be used within a clinical setting.

Introduction

Listening to degraded speech, either in adverse acoustic environments or with hearing loss, is challenging (McCoy et al., 2005; Stenfelt and Rönnberg, 2009), and it is assumed that the increased cognitive load required to understand a conversation is associated with self-reported effort (Lunner et al., 2009; Rudner et al., 2012). Adults with hearing loss report listening to be greatly taxing (Kramer et al., 2006), which may cause increased stress and fatigue (Hétu et al., 1988), contribute to early retirement (Danermark and Gellerstedt, 2004), social withdrawal (Weinstein and Ventry, 1982), and negatively affect relationships (Hétu et al., 1993). Current speech perception tests, which measure performance on a word or sentence recognition task, provide only a gross indication of the activity limitations caused by hearing loss, and do not consider the top–down effects related to increased concentration and attention, as well as effort (Wingfield et al., 2005; Pichora-Fuller and Singh, 2006; Schneider et al., 2010). Therefore, concurrently measuring the cognitive load or listening effort needed to undertake a speech perception task could increase its sensitivity, enabling a more holistic understanding of the challenges faced by adults with hearing loss in communicative settings.

Listening effort, defined as “the mental exertion required to attend to, and understand, an auditory message” (McGarrigle et al., 2014), is influenced by both the clarity of the auditory signal and the cognitive resources available. As hearing loss and cognitive decline are highly associated with age (Salthouse, 2004; Lin et al., 2013), there is a recognized need to understand the contribution of cognition and effort to listening to everyday speech within a clinical environment to better direct rehabilitation strategies towards and/or improve device fitting, particularly for older adults. Certainly it has been shown that greater cognitive resources are required to perceive a speech signal that becomes more degraded and this is more challenging for older adults (Rabbitt, 1991; Rönnberg et al., 2010, 2013). However, importantly, several studies have also highlighted the advantages that individuals with greater cognitive resources have to understand speech in noise (Lunner, 2003), utilize fast signal processing strategies in hearing aids (Lunner and Sundewall-Thorén, 2007), and compensate when mismatches occur between what is heard and the brain’s phonological representations of speech (Avivi-Reich et al., 2014).

Recently, there has been an increased interest in understanding and measuring listening effort, so that future clinical measures may ensue. Many studies have attempted to estimate listening effort, using behavioral, subjective or objective approaches (see McGarrigle et al., 2014 for a review). While subjective measures have high face-validity, they have several inherent limitations; including whether participants are indeed rating perceived effort, or rating their ability to discriminate between different signal-to-noise ratios (SNRs; Rudner et al., 2012). Additionally, subjective measures poorly correlate with other behavioral and objective measures of listening effort (Zekveld et al., 2010; Gosselin and Gagné, 2011; Hornsby, 2013), possibly because these measures relate to specific components of the goal-directed cognitive processes underpinning mental effort (Sarter et al., 2006), therefore each should be investigated. An effective and consistent objective correlate of listening effort has not yet been found (Bernarding et al., 2013), although pupil dilation and oscillations in the alpha frequency band (8–12 Hz) have independently been shown to be associated with changes in speech intelligibility (Obleser et al., 2012; Becker et al., 2013; Zekveld and Kramer, 2014; Petersen et al., 2015) and seem to be sensitive to hearing loss during a speech recognition or digit recall task in noise (Kramer et al., 1997; Zekveld et al., 2011; Petersen et al., 2015). It is, however, not yet known whether these two objective measures assess the same processes, whether sensory (e.g., phonological mapping of degraded speech), cognitive (e.g., cognitive load, inhibition of task irrelevant activity, or working memory), or neurophysiological (e.g., acute stress associated with the investment of attentional resources). 
These physiological responses may also reflect the extent of brain regions that are recruited to achieve a specific performance (e.g., to increase cognitive processing or provide inhibitory control; see Radulescu et al., 2014). Further, while there is an extensive literature on the neurophysiological mechanisms governing pupil dilation (Laeng et al., 2012), less is understood about those which underpin oscillatory cortical activity or the neuromodulators which influence it (Klimesch et al., 2007).

There appear to be general trends between task difficulty and changes in pupil dilation or in alpha power; however, these are not consistent across all studies (see Zekveld and Kramer, 2014; Wöstmann et al., 2015). This may in part depend on the type of task (i.e., listening to randomized or fixed speech tokens), the period when the physiological response is measured (during listening to degraded speech or during the retention period of a memory recall task), or the population characteristics (younger versus older adults, or normal hearing versus those with hearing loss). Alternatively, cognitive load/listening effort may be inherently non-linear and a function of the availability of processing resources coupled with the intentional motivation to allocate such resources to the task (Sarter et al., 2006). That is, when the task is too difficult and the processing demands exceed the available cognitive resources, or when the task is too easy and requires minimal cognitive resources (i.e., is automatic or passive), then effort may not be required or allocated to the task (Granholm et al., 1996; Zekveld and Kramer, 2014). As such, the greatest change in objective measures related to effort may be observable at medium levels of performance, rather than at the extreme ends of performance. Similar non-linear associations between performance and stress (Anderson, 1976) and between performance and mental effort (Radulescu et al., 2014) have been previously reported.

The current study aims to compare both alpha activity and pupil dilation measured simultaneously over a complete performance-intensity function while listening to sentences with high intelligibility (16-channel vocoded) or moderate intelligibility (6-channel vocoded). Specifically, it aims to identify whether these measures show similar patterns of behavior across the 15 SNRs and with the two levels of vocoding, suggesting that they may encode similar sensory, cognitive or neurophysiological processes involved in listening effort (that currently remain unclear; McGarrigle et al., 2014). A further reason to manipulate both the SNRs and the channel vocoding to degrade speech was to investigate the behavior of these measures on what could be approximated to a simulation of listening with a cochlear implant (Friesen et al., 2001). If these measures are to be applicable in clinical settings, their pattern of behavior should be predictable in a clinical population.

Materials and Methods

Participants

Twenty young adults were recruited to participate in this study. Amongst this group, two did not attend all testing sessions. Invalid recordings led to the exclusion of two more participants for the Electroencephalography (EEG) measures and an additional six for the pupil measures. The main reason for excluding the data related to participants looking away from the visual target or closing their eyes when listening became difficult. Participants (10 females, 6 males) were aged from 19 to 28 years (mean = 23 years, SD = 2.6). All participants were native Australian English speakers and were right-handed. Participants’ hearing was screened using distortion product otoacoustic emissions. All participants had present emissions bilaterally between 1–4 kHz, which ruled out a moderate or greater hearing loss. All participants reported normal or corrected-to-normal vision. Informed consent was obtained from all participants.

Speech Perception Material

Recorded Bamford-Kowal-Bench/Australia (BKB/A) sentences spoken by a native Australian-English female were presented as targets in the presence of four-talker babble noise. The sentences and background noise were vocoded by dividing the frequency range from 50 to 6000 Hz into 6 or 16 logarithmically spaced channels. The amplitude envelope was then extracted from each channel and used to modulate noise within the same frequency band. The modulated noise bands were then recombined to produce the noise-vocoded sentences and background noise. See Shannon et al. (1995) for more information about speech recognition with vocoded material.
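The vocoding steps described above can be sketched as follows. This is a minimal illustration rather than the processing used in the study: the filter type is not reported, so brick-wall FFT filters and a Hilbert-style envelope are assumed here, and all function names are hypothetical.

```python
import numpy as np


def band_edges(f_lo=50.0, f_hi=6000.0, n_channels=6):
    """Return n_channels + 1 logarithmically spaced band edges in Hz."""
    return np.geomspace(f_lo, f_hi, n_channels + 1)


def fft_bandpass(x, fs, lo, hi):
    """Brick-wall band-pass via the FFT (an assumed, simplified filter)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs >= hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def envelope(x):
    """Amplitude envelope as the magnitude of the analytic signal."""
    n = len(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * weights))


def noise_vocode(x, fs, n_channels=6, seed=0):
    """Replace the fine structure in each band with envelope-modulated noise."""
    rng = np.random.default_rng(seed)
    carrier = rng.standard_normal(len(x))
    edges = band_edges(n_channels=n_channels)
    out = np.zeros(len(x))
    for lo, hi in zip(edges[:-1], edges[1:]):
        band_envelope = envelope(fft_bandpass(x, fs, lo, hi))
        out += band_envelope * fft_bandpass(carrier, fs, lo, hi)
    return out
```

The sampling rate must exceed 12 kHz for the 6000 Hz upper edge to be representable. Practical vocoders often smooth the envelope and re-filter each modulated band; both steps are omitted here for brevity.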

Physiological Measures

Electroencephalography activity and pupil dilation were measured simultaneously during the speech recognition task conducted in a sound-treated and magnetically shielded room. With their forehead resting on an eye-tracker support, participants were asked to maintain their gaze on a small cross presented in the middle of the computer screen. The following presentation protocol was used: 1 s of quiet, variable length of noise (>1 s), sentence in noise, 1 s of noise. Physiological testing was conducted across two sessions: session one used the 16-channel vocoded material and session two used the 6-channel vocoded material. Each session presented 240 target sentences at 65 dB with the noise randomized between 58 and 72 dB (-7 to +7 dB SNR, a total of 15 levels). Pilot data indicated these SNRs provided the full range (0–100%) of speech recognition scores (SRS). The randomization was programmed for sentences of the same BKB/A list to be presented at the same SNR to allow off-line scoring of performance as per the original lists.
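The arithmetic behind the trial structure can be made explicit with a short sketch. It assumes 1 dB noise steps (implied by 15 levels spanning 58 to 72 dB) and one 16-sentence BKB/A list per SNR (240 / 15 = 16); the function names and the exact shuffling scheme are illustrative assumptions, not the study's presentation software.

```python
import random

SPEECH_LEVEL_DB = 65
NOISE_LEVELS_DB = list(range(58, 73))  # 58..72 dB, assumed 1 dB steps
SENTENCES_PER_LIST = 16                # one BKB/A list per SNR


def snr_levels():
    """SNR in dB for each noise level, given the fixed speech level."""
    return [SPEECH_LEVEL_DB - noise for noise in NOISE_LEVELS_DB]


def build_trials(seed=0):
    """Assign one SNR to each list, then randomize the order of all trials."""
    rng = random.Random(seed)
    snrs = snr_levels()
    rng.shuffle(snrs)  # which list is presented at which SNR
    trials = [
        (list_id, sentence_id, snr)
        for list_id, snr in enumerate(snrs)
        for sentence_id in range(SENTENCES_PER_LIST)
    ]
    rng.shuffle(trials)  # SNR varies unpredictably from trial to trial
    return trials
```

Keeping every sentence of a list at the same SNR is what allows each list to be scored offline as a unit, even though consecutive trials differ in SNR.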

After each presentation, a response period of 4 s was given, and indicated by a starting and a finishing tone. Participants were asked to repeat the sentences they heard between the two tone signals, and to guess when unsure. Oral responses were recorded using a voice recorder and video-camera setup directly in front of them, to allow more accurate marking of their responses at a later time. The sentence recognition in noise task was scored at a word level (using the standard BKB/A scoring criteria) and performance was scored for each SNR condition.

EEG

A soft-cap was used to facilitate the spatial separation of the electrodes. EEG data were recorded from 32 Ag-AgCl sintered electrodes using the 10–20 montage with a Synamps II amplifier. The ground electrode was located between the Fz and FPz electrodes. Electrode impedances were kept below 5 kΩ. Ocular movement was recorded with bipolar electrodes placed at the outer canthi, and above and below the left eye. Data were recorded at a sampling rate of 1000 Hz, with an online band-pass filter of 0.01 to 100 Hz and a notch filter at 50 Hz.

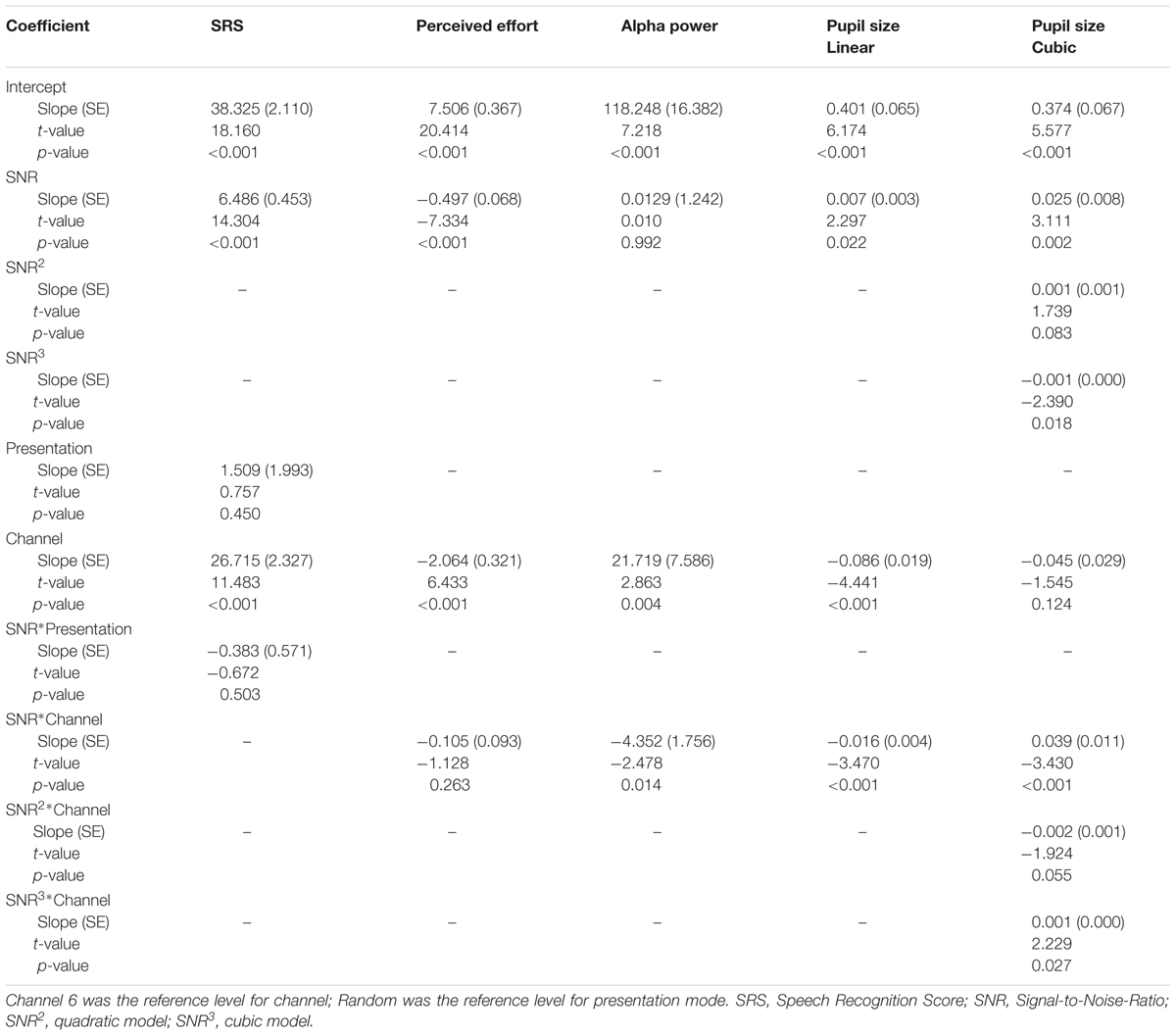

Post-acquisition, all cortical recordings were analyzed using Fieldtrip, an analysis toolbox in MATLAB developed by Oostenveld et al. (2011). The raw EEG data were first epoched between -2 and 6 s relative to the stimulus onset at 0 s, and the epochs were then re-referenced to the combined mastoids. The re-referenced epochs were then bandpass filtered with cut-off frequencies of 0.5 and 45 Hz. Eyeblink artifacts were removed by transforming the sensor-space data into independent-component space using independent component analysis (‘runica’). The components were visually inspected, and the data were transformed back into sensor space with the identified eyeblink component(s) excluded. Movement-related artifacts and noisy trials were rejected by visual inspection. The accepted trials were bandpass filtered again with cut-off frequencies of 8 and 12 Hz to extract alpha oscillations. Alpha band activity was extracted from the parietal electrodes (P3, P4, and Pz) during the encoding period (1 s duration finishing 200 ms before the end of the sentence) and expressed as a change relative to the baseline in noise (300–800 ms after the noise onset) on a trial-by-trial basis, then averaged to obtain mean alpha power for each SNR. As no significant time-frequency electrode clusters were identified across the scalp during the sentence processing time period, alpha power in the parietal region was used in the current study. A time-frequency representation of the average EEG data collapsed across all of the signal-to-noise levels (Figure 1) illustrated the increased activity occurring in the alpha frequency band during the sentence presentations for both 16-channel and 6-channel noise-vocoded sentences.
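The single-trial alpha measure can be sketched as below. The study used Fieldtrip in MATLAB; this Python version is only an illustration and makes several assumptions that are not stated in the text: power is taken as the mean squared amplitude of the 8–12 Hz signal, the change is expressed as a percentage of baseline, the filter is a brick-wall FFT filter, and times are measured in seconds from the first sample of the trial. All function names are hypothetical.

```python
import numpy as np


def fft_bandpass(x, fs, lo=8.0, hi=12.0):
    """Brick-wall band-pass via the FFT (assumed; the study's filter differs)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def mean_power(x, fs, t_start, t_end):
    """Mean squared amplitude between t_start and t_end (seconds)."""
    i0, i1 = int(round(t_start * fs)), int(round(t_end * fs))
    return float(np.mean(x[i0:i1] ** 2))


def alpha_change_percent(trial, fs, noise_onset, sentence_end):
    """Alpha power in the encoding window relative to the baseline in noise."""
    alpha = fft_bandpass(np.asarray(trial, dtype=float), fs)
    # Baseline: 300-800 ms after noise onset.
    baseline = mean_power(alpha, fs, noise_onset + 0.3, noise_onset + 0.8)
    # Encoding: 1 s window finishing 200 ms before the end of the sentence.
    encoding = mean_power(alpha, fs, sentence_end - 1.2, sentence_end - 0.2)
    return 100.0 * (encoding - baseline) / baseline
```

In practice the value would be computed per electrode (P3, P4, Pz) and trial, then averaged within each SNR.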

FIGURE 1. Time-frequency representation of the EEG activity averaged across all participants, in the frontal and parietal region, for 16- and 6-channel vocoding. The time-frequency representations are relative to the activity occurring during the 1 s of noise beginning at the 1 s time-point. On this graph, all sentences finished at the 4.5 s time-point.

Pupillometry

Pupil size was measured with a monocular (right eye) Eyelink 1000 eye-tracker sampling at 1000 Hz. Single-trial pupil data were processed through Dataviewer software (version 1.11.1) and compiled into single-trial pupil-diameter waveforms (0 s baseline to 6 s) for further offline processing and analysis in MATLAB. Data were smoothed using a 5-point moving average.

Blinks were identified in each trial as pupil samples that were more than three standard deviations below the mean pupil diameter. Trials in which more than 15% of the samples were classified as blinks (which also occurred when participants looked away from the target) were rejected. In accepted trials, samples within blinks were interpolated from 66 ms preceding the onset of a blink to 132 ms following the end of a blink. Accepted trials were averaged to form condition-specific pupil size waveforms representing the change in pupil dilation across the trial. To be included, each participant had to have 135 or more accepted trials in both the 6- and the 16-channel blocks, so that a meaningful condition average could be formed. The average number of accepted trials per participant was 193, or about 13 trials per SNR.
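The blink handling described above might look like the following sketch. The interpolation method is not reported, so linear interpolation is assumed, as is computing the mean and standard deviation within each trial; the function name and its parameters are hypothetical.

```python
import numpy as np


def clean_trial(pupil, fs=1000, n_sd=3.0, max_blink_fraction=0.15,
                pre_ms=66, post_ms=132):
    """Return the trial with blinks interpolated, or None if it is rejected."""
    pupil = np.asarray(pupil, dtype=float)
    # Blink samples: more than n_sd standard deviations below the trial mean.
    blink = pupil < pupil.mean() - n_sd * pupil.std()
    if blink.mean() > max_blink_fraction:
        return None  # too much of the trial is missing
    # Extend every blink by 66 ms before and 132 ms after.
    pre = int(round(pre_ms * fs / 1000))
    post = int(round(post_ms * fs / 1000))
    bad = blink.copy()
    for i in np.flatnonzero(blink):
        bad[max(0, i - pre): i + post + 1] = True
    good = ~bad
    if not good.any():
        return None
    # Assumed: linear interpolation across the extended blink span.
    index = np.arange(len(pupil))
    cleaned = pupil.copy()
    cleaned[bad] = np.interp(index[bad], index[good], pupil[good])
    return cleaned
```

Padding the blink on both sides removes the partially occluded samples recorded while the eyelid is closing and reopening, which would otherwise bias the interpolated segment.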

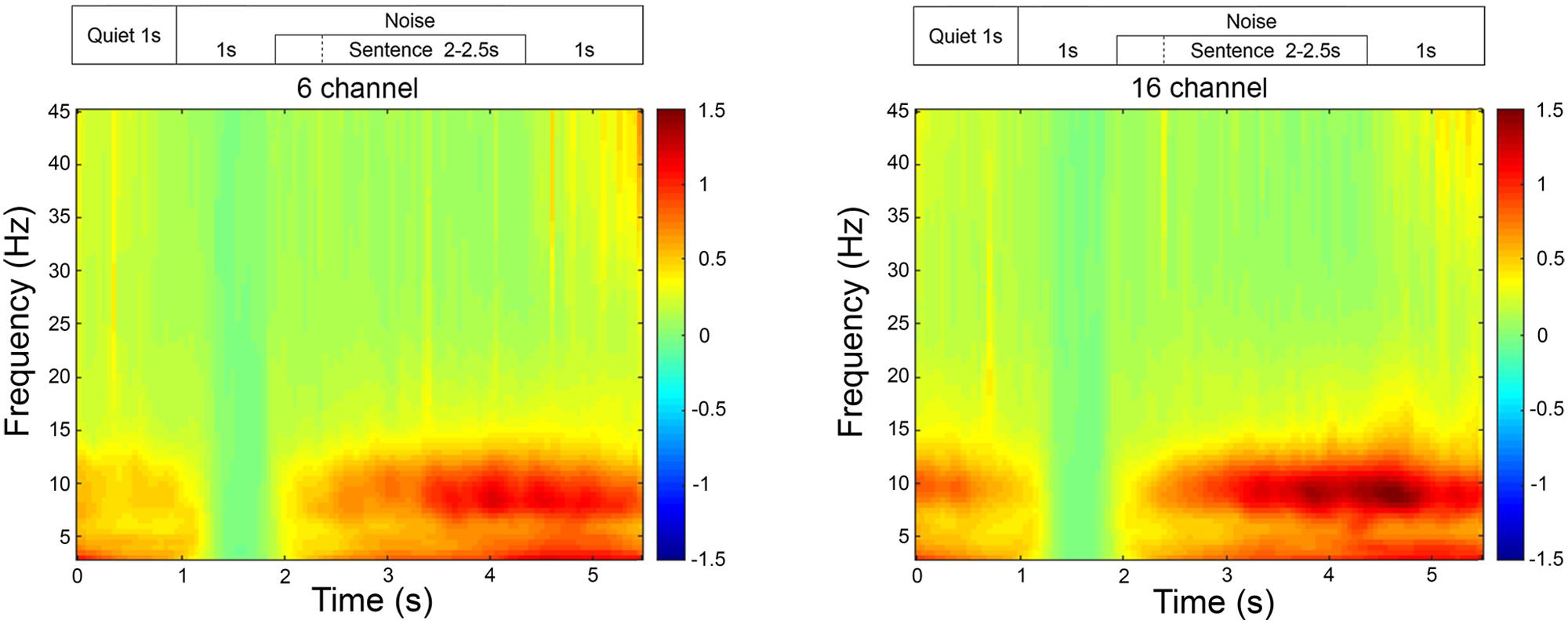

For each trial, the mean pupil size measured between 0 and 2 s was subtracted from the peak pupil size identified between 2 and 6 s (see Figure 2 for an example of the pupil response during the experiment).
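As a minimal sketch of this measure, together with the 5-point smoothing mentioned earlier, the following assumes a 1000 Hz trace that starts at 0 s and a centered moving average whose window shrinks at the edges. The edge handling and the function names are assumptions.

```python
def moving_average(x, width=5):
    """Centered moving average; the window shrinks at the edges (assumed)."""
    half = width // 2
    smoothed = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def peak_dilation(trace, fs=1000, baseline_end_s=2, trial_end_s=6):
    """Peak pupil size in 2-6 s minus the mean pupil size in 0-2 s."""
    split = baseline_end_s * fs
    baseline = sum(trace[:split]) / split
    peak = max(trace[split: trial_end_s * fs])
    return peak - baseline
```

Because the baseline window includes the first second of noise, the resulting value reflects dilation relative to listening in noise rather than relative to quiet.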

FIGURE 2. Averaged pupil size over time for all trials and participants, for 16- and 6-channel vocoding. The 1 s time-point refers to the beginning of noise. On this graph, all sentences finished at the 4.5 s time-point.

Behavioral Measures

A behavioral test session was conducted with each participant to obtain a self-reported measure of effort during the sentence recognition task, which could be later compared to the physiological measures. This measure could not readily be obtained during the physiological test session because of the randomization of SNRs at each trial. The behavioral testing was performed in an acoustically treated room, with the equipment calibrated prior to each participant’s session. The speaker was positioned one meter from the participant at 0° azimuth. An adaptive procedure was chosen to obtain effort ratings at three SNRs around the mid-range of each participant’s performance-intensity function. The speech-in-noise algorithm and software used were developed by the National Acoustic Laboratories to obtain speech reception thresholds (SRT, the signal to noise ratio at which 50% of words were correctly perceived; see Keidser et al., 2013 for a comprehensive review). Target sentences were presented at 65 dB and the background noise was modulated using an adaptive procedure. The participant’s SRT was calculated when the standard error was less than 0.8 dB. The noise was then presented at a fixed level based on the participant’s SRT with 1 list (16 sentences), to validate the accuracy of the initial SRT calculation. Finally, the noise was fixed at -3 and +3 dB relative to their SRT and two lists per condition were presented, so that performance could be measured in easier and more difficult conditions. Thus, the conditions presented were: 50%SRT, 50%SRT(-3 dB), and 50%SRT(+3 dB) in the 16- and 6-channel vocoded conditions. All presentations were counterbalanced across participants for level and vocoding. After each presentation, participants were asked to rate the perceived effort invested in each SRT condition on a Borg CR10 scale (Borg, 1998).

Statistical Methods

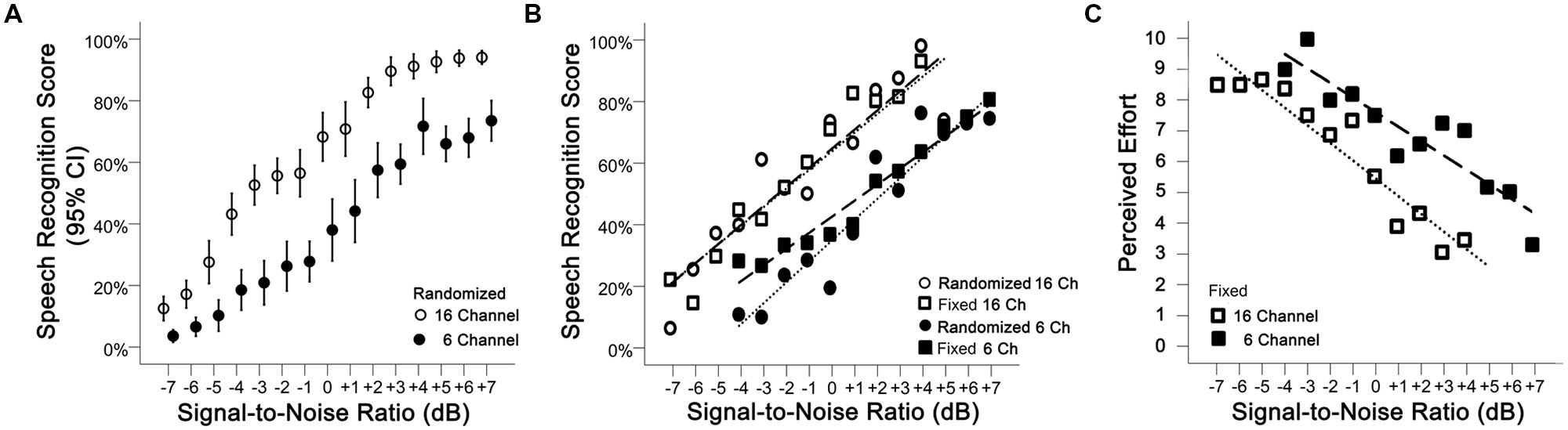

Linear mixed-effects models with a random intercept for individual were used for all analyses to control for repeated-measures over different levels of SNR on individuals. While random slopes were also of interest, these models failed to converge and were therefore not utilized.

Models for SRS were built by comparing a model with SNR, presentation mode and channel vocoding to a model containing SNR, presentation mode, channel vocoding and the interaction between SNR and presentation mode. The terms were fitted in the order described although no result difference was found if they were added to the model in a different order. Likelihood ratio tests were used to compare fixed effects of the simpler and more complex models after fitting the model using maximum likelihood. Where an interaction was not significant, the main effects model results were reported. All categorical variables used treatment contrasts (whereby all levels were compared with a reference level). P-values less than 0.05 were considered significant for all analyses.

Models for perceived effort, pupil size and alpha power were built by comparing a model with SNR and channel vocoding as main effects to a model with an interaction between SNR and channel vocoding. Because visual inspection of the change in pupil size and alpha power over SNRs suggested non-linear changes for one or both channels, models sequentially including a quadratic term for SNR (i.e., SNR²) and then a cubic term for SNR (i.e., SNR³) with an interaction between each term and vocoding channel were used to determine if the effects were similar for both channels. Again, likelihood ratio tests were used to compare models. These models are reported separately by channel vocoding (6 and 16) to aid interpretation. Models with a quadratic term are used to describe a simple curvilinear change while cubic terms are used to explain more complicated curvature with more than one change in the direction of the curve.
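For concreteness, the model sequence described above can be written out as follows. The notation is an assumption introduced here for illustration: $y_{ij}$ is the outcome for participant $i$ on observation $j$, $C_{ij}$ is an indicator for channel vocoding under treatment contrasts, and $b_i$ is the random intercept.

$$
\begin{aligned}
\text{Linear:}\quad y_{ij} &= \beta_0 + \beta_1\,\mathrm{SNR}_{ij} + \beta_2\,C_{ij} + \beta_3\,\mathrm{SNR}_{ij}C_{ij} + b_i + \varepsilon_{ij},\\
\text{Quadratic:}\quad y_{ij} &= \text{Linear} + \beta_4\,\mathrm{SNR}_{ij}^{2} + \beta_5\,\mathrm{SNR}_{ij}^{2}C_{ij},\\
\text{Cubic:}\quad y_{ij} &= \text{Quadratic} + \beta_6\,\mathrm{SNR}_{ij}^{3} + \beta_7\,\mathrm{SNR}_{ij}^{3}C_{ij},
\end{aligned}
\qquad b_i \sim \mathcal{N}(0,\sigma_b^{2}),\quad \varepsilon_{ij} \sim \mathcal{N}(0,\sigma^{2}).
$$

Nested models fitted by maximum likelihood are then compared with the likelihood ratio statistic

$$
\Lambda = 2\,(\ell_{\text{complex}} - \ell_{\text{simple}}) \;\sim\; \chi^{2}_{k},
$$

where $\ell$ denotes the maximized log likelihood and $k$ is the number of additional fixed-effect parameters. The reference distribution holds asymptotically.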

To account for the use of repeated measures on individuals, correlations presented in the results section are the average of the correlations calculated for each individual. Analyses were performed in R version using the nlme Package. This study was conducted under the ethical oversight of the Human Research Ethics Committee at Macquarie University (Ref: 5201100426).
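The averaging of within-participant correlations can be sketched as below. It takes the plain arithmetic mean of the per-participant Pearson coefficients, as the text describes; whether any transformation (for example Fisher's z) was applied before averaging is not stated, so none is assumed. The function names and the data layout are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def mean_within_subject_r(data):
    """Average the correlation computed separately for each participant.

    `data` maps a participant id to a pair (x_values, y_values).
    """
    coefficients = [pearson(x, y) for x, y in data.values()]
    return sum(coefficients) / len(coefficients)
```

Computing the correlation within each participant first avoids treating repeated observations from the same person as independent data points.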

Results

Performance-Intensity Functions and Effort Ratings

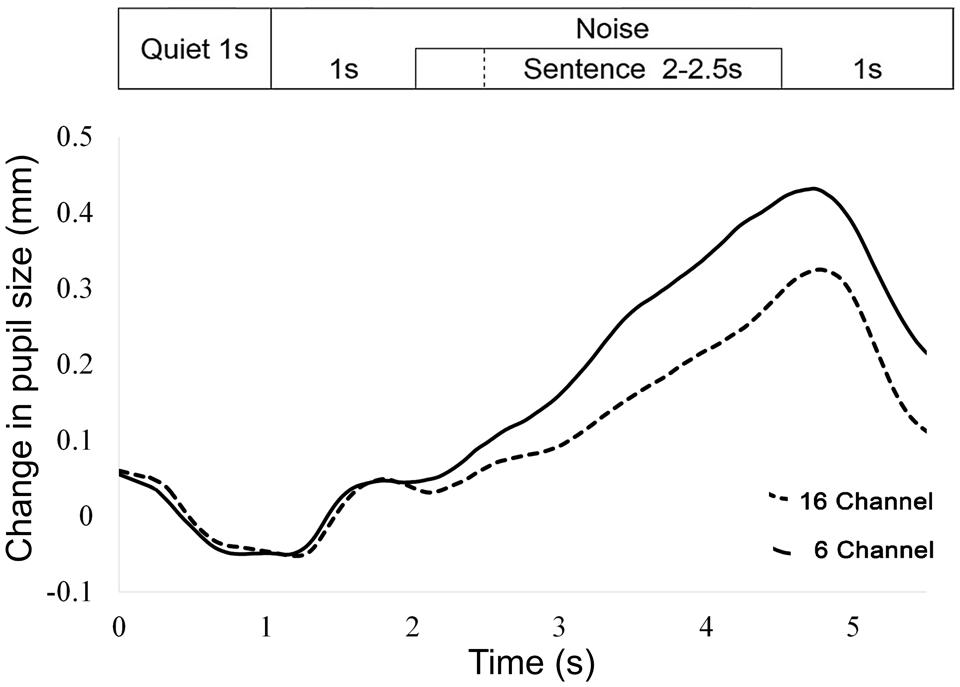

Performance-intensity functions were measured during the behavioral test session (using 3 fixed SNRs per participant) and the physiological test session (using randomized SNRs across the 15 levels of noise). As seen in Figure 3A, SRSs measured during the physiological test session increased with SNR (p < 0.001) for both vocoding levels [16 ch: r = 0.93 (95% CI: 0.92 to 0.94); 6 ch: r = 0.92 (95% CI: 0.91 to 0.94)]. As expected, SRSs were significantly greater with the 16-channel material compared to the 6-channel (mean difference 26.72%, 95% CI: 22.12 to 31.31%, p < 0.001, Table 1). Figure 3B displays the performance-intensity functions where the three SNR levels presented in the behavioral session (fixed presentation) were matched to the same three SNRs measured during the objective session (randomized presentation). There was no evidence for a difference in the pattern of change in SRS between the fixed and random modes of presentation across the SNR levels, after adjusting for channel vocoding (p = 0.50, Table 1). For the 16-channel vocoding, for every unit increase in SNR, SRS increased by 6.44% (95% CI: 5.07 to 7.82%) for the fixed versus 6.47% (95% CI: 5.12 to 7.82%) for the randomized presentation, showing that the slopes by mode of presentation overlap considerably. Similarly, for the 6-channel vocoding, for every unit increase in SNR, SRS increased by 5.47% (95% CI: 4.29 to 6.64%) for the fixed versus 6.65% (95% CI: 5.13 to 8.18%) for the randomized presentation.

FIGURE 3. (A) Performance-intensity functions (mean plus 95% confidence intervals) are shown for the 16-channel (open circles) and 6-channel (closed circles) vocoded sentences measured during the physiological test session where SNRs were randomized. (B) Performance-intensity functions across the behavioral (squares) and physiological (circles) test sessions are very similar. (C) Mean effort ratings for 16-channel and 6-channel vocoded material measured in the behavioral test session.

Figure 3C shows the mean effort ratings measured after each of the fixed SNR sentence blocks. There was no interaction between SNR and channel vocoding (p = 0.26, Table 1), indicating no evidence of a different pattern of effort over SNR between the two channels. Excluding the interaction term, LME regression confirmed that perceived effort averaged over channels significantly decreased (p < 0.001) with increasing SNR (-0.55, 95% CI: -0.65 to -0.45). Sentences with 6-channel vocoding required on average 2.10 units more effort than those with 16-channel vocoding (95% CI: 1.47 to 2.74; p < 0.001).

EEG Analyses

Effect of Vocoding on Baseline Alpha

An LME regression was used to examine the effect of vocoding (conducted during different test sessions) on alpha power during baseline. No significant difference was found between 16- and 6-channel vocoding (mean difference = 0.69 μV², 95% CI: -1.47 to 2.85, p = 0.53). This suggests that overall, participants had similar alpha power baselines in both test sessions.

Alpha Power Change and SNR

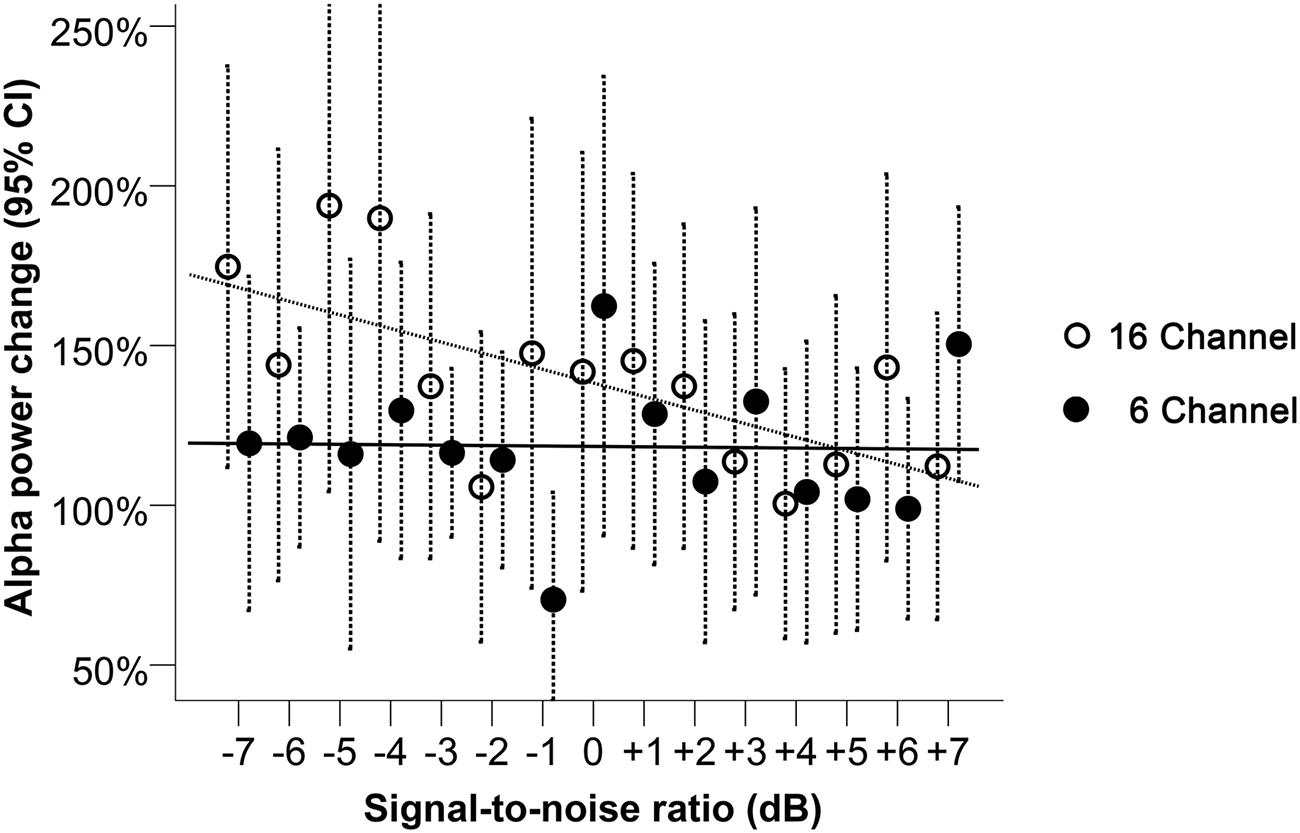

Alpha power was processed as a relative change from baseline in noise, for each trial. An LME regression model suggested a significant interaction effect between SNR and channel vocoding on alpha power change (p = 0.01, Table 1). Specifically, for the 6-channel vocoding, there was no evidence of a change in alpha power over the different SNRs (0.01%, 95% CI: -2.38 to 2.41%; p = 0.99), while for the 16-channel vocoding, for every unit increase in SNR, alpha power decreased by 4.34% (95% CI: 1.94 to 6.73% decrease; p < 0.001). Non-linear models using a quadratic or cubic term for both channel vocoding conditions did not improve model fit compared to a linear model (log likelihood -2632.18 vs. -2632.57, p = 0.68 and -2631.87 vs. -2632.57, p = 0.84, respectively). As seen in Figure 4, the largest separation between 16- and 6-channel vocoding was at the most challenging (lower) SNRs.
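The likelihood ratio comparisons above can be checked by hand from the reported log likelihoods. The sketch below assumes the degrees of freedom, which are not stated in the text: 2 for the quadratic model (SNR² and its interaction with channel) and 4 for the cubic model (adding SNR³ and its interaction). The chi-square survival function is written in closed form for even degrees of freedom so that only the standard library is needed; the function names are hypothetical.

```python
from math import exp, factorial


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square variable with even df."""
    if df <= 0 or df % 2:
        raise ValueError("closed form used here requires a positive even df")
    half = x / 2.0
    return exp(-half) * sum(half ** k / factorial(k) for k in range(df // 2))


def likelihood_ratio_test(loglik_simple, loglik_complex, df):
    """Return the LR statistic and its p-value for two nested models."""
    statistic = 2.0 * (loglik_complex - loglik_simple)
    return statistic, chi2_sf_even_df(statistic, df)


# Reported log likelihoods for alpha power change; df values are assumed.
_, p_quadratic = likelihood_ratio_test(-2632.57, -2632.18, df=2)
_, p_cubic = likelihood_ratio_test(-2632.57, -2631.87, df=4)
```

Under these assumed degrees of freedom, the two p-values round to 0.68 and 0.84, in agreement with the values reported above.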

FIGURE 4. Alpha power change relative to baseline during 16- and 6-channel vocoded sentence recognition at SNRs between -7 and +7 dB. Dashed bars indicate 95% CI. The trend lines shown correspond to the best model fit, respectively, for the 16- and the 6-channel conditions.

Pupil Analyses

Pupil Size Change from Baseline

For pupil size, an LME model was fitted to examine the effect of vocoding (conducted during different test sessions) on baseline, while controlling for repeated measures. The pupil size during baseline was found to be significantly larger during the second session [6-channel (harder condition); mean difference = 0.56 mm, 95% CI: 0.47 to 0.64 mm, p < 0.001].

Pupil Size Change and SNR

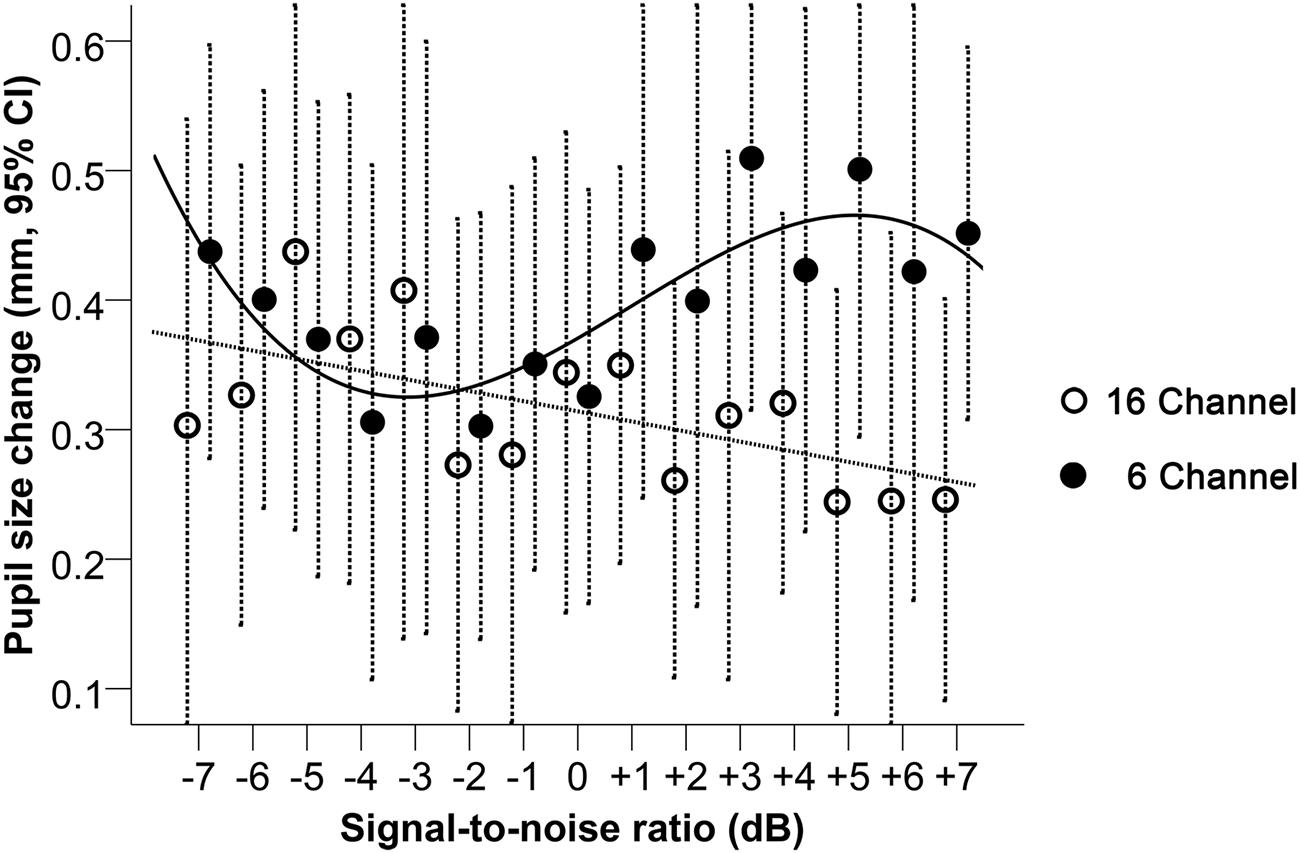

Looking at the pupil size change relative to baseline (Figure 5), an LME regression model with only a linear term in SNR indicated a significant interaction effect between vocoding and SNR (p < 0.001, Table 1). For every unit increase in SNR, pupil size significantly increased by 0.007 mm (95% CI: 0.001 to 0.014 mm; p = 0.02) for the 6-channel vocoding, while it significantly decreased for the 16-channel vocoding (mean change -0.008 mm, 95% CI: -0.015 to -0.002 mm; p = 0.01). Visual inspection of the relationship between pupil size and SNR indicated a potential non-linear relationship. Accordingly, a mixed-effects model for pupil diameter containing a cubic term for SNR (Table 1) was fitted, and it had a significantly better fit than a linear model (log likelihood 97.6 versus 92.4, p = 0.04) or a quadratic model (log likelihood 97.6 versus 94.4, p = 0.04). The interaction between the cubic term and channel was significant (p = 0.03). Examination of the relationship between pupil size and SNR within each channel indicated that with 16-channel vocoding, there was no significant effect of a quadratic term (p = 0.34) or cubic term in SNR (p = 0.46), while there was strong evidence of a cubic relationship (p = 0.01) for the 6-channel vocoding.

FIGURE 5. Pupil size change relative to baseline during 16- and 6-channel vocoded sentence recognition at SNRs between -7 and +7 dB. Dashed bars indicate 95% CI. The trend lines shown correspond to the best model fit, respectively, for the 16- and the 6-channel conditions.

Individual Alpha Power versus Pupil Size Change Comparisons

At the individual level, alpha power change was not found to be significantly correlated (p > 0.05) with pupil size change for either the 16-channel (mean r = 0.05, 95% CI: -0.16 to 0.26) or the 6-channel vocoding (mean r = -0.10, 95% CI: -0.35 to 0.16).

Discussion

The results of this study suggest that, while there was a significant and expected difference in speech recognition performance and effort rating between the 6- and 16-channel vocoded material across the 15 SNRs, the mean changes observed in the physiological measures (alpha power and pupil size) were less predictable. Significant relationships were found between mean pupil dilation and SNR, and mean alpha power and SNR for 16-channel vocoded sentences, showing a similar trajectory of change; i.e., larger pupil responses and larger alpha power change were measured for less intelligible speech. For the pupil response only, there was also a significant non-linear relationship with SNR with the 6-channel vocoded sentences, whereby pupil dilation was larger in the hardest and easier conditions. This is perhaps consistent with the non-linear change in pupil dilation with changes in task difficulty that have been shown previously (Granholm et al., 1996; Zekveld and Kramer, 2014). Further, significant interactions between SNR and vocoding were seen in both physiological measures, although the largest difference between alpha power change was observed in the least intelligible conditions (more negative SNRs) whereas the largest difference in the pupil dilation was observed in the most intelligible conditions (more positive SNRs).

The linear association between SNR and pupil dilation for the 16-channel vocoded sentences, and the comparatively larger pupil dilation for the 6-channel compared with the 16-channel vocoded sentences at more positive SNRs (≥+2 dB), is similar to that observed in previous studies, i.e., larger pupil size is observed with greater cognitive load (Kahneman and Beatty, 1966; Granholm et al., 1996; Winn et al., 2015). Larger pupil dilation relative to baseline is typically measured during more cognitively demanding speech processing tasks. For example, poorer SNRs (Zekveld et al., 2010), greater spectral degradation with channel vocoding (Winn et al., 2015), single-talker compared with noise maskers (Koelewijn et al., 2012), randomized SNRs compared with fixed SNRs (Zekveld and Kramer, 2014), grammatical complexity (Schluroff, 1982) or perceptual effort with hearing loss (Kramer et al., 1997). Certainly the results of the current study support an increase in pupil dilation for the most challenging SNRs with the 16-channel vocoded sentences. However, the relationship between pupil dilation and SNR for the 6-channel vocoded sentences in the current study was not simple, where the mean pupil dilation across subjects plateaued for moderately negative SNRs and showed an increase with increasing speech intelligibility. It is possible that the changes in the pupil size across the 15 SNRs for the 6-channel vocoded sentences could reflect the non-linear behavior of the pupil size that has been observed when task difficulty exceeds capacity (Peavler, 1974; Granholm et al., 1996; Zekveld and Kramer, 2014). 
For example, it has been demonstrated that pupil dilation systematically increases with task difficulty (such as with a digit recall task), until it reaches or exceeds the limits of available cognitive resources, whereby it either asymptotes (Peavler, 1974), declines (Granholm et al., 1996), or shows both a decline followed by an asymptote for the most challenging intelligibility conditions (Zekveld and Kramer, 2014). An alternative explanation is that the noise levels per se could have influenced pupil dilation at the more negative SNRs (noise levels reached a maximum of 72 dB), where mean pupil dilation for both 16- and 6-channel vocoded sentences was similar. While Zekveld and Kramer (2014) attempted to reduce the likelihood of noise affecting pupil dilation by controlling the overall signal level while changing the SNR, in the current study, a fixed signal level was used with modulated levels of noise. Pupil dilation has been shown to be modulated by acute stress (Valentino and Van Bockstaele, 2008; Laeng et al., 2012), and animal studies have demonstrated that long-term exposure to non-traumatic noise is associated with increased cortisol levels, hypertension and reduced cardiovascular function (see Gourévitch et al., 2014 for a review). A recent study looking at physiological measures of stress during listening in noise found that adults with hearing loss, who are constantly exposed to degraded speech, had higher autonomic system reactivity compared to adults with normal hearing, at similar performance levels (Mackersie et al., 2015). Therefore, while the noise exposure in the current study was short-term, it may have caused a phasic stress reaction which could have influenced pupil dilation. This hypothesis, however, is not supported by studies suggesting that the pupil dilates with negative affect (Partala and Surakka, 2003).

The change in mean alpha power, relative to baseline, showed an enhancement of alpha activity in both 16-channel and 6-channel vocoding conditions, consistent with the inhibition hypothesis, where activity that is not related to the goal-directed task is actively inhibited (Klimesch et al., 2007). Therefore, it has been suggested that alpha enhancement which occurs during a speech-in-noise task results from the enhancement of auditory attention through the active suppression of noise (Strauß et al., 2014). However, most studies assessing alpha power change with vocoded speech material (Obleser and Weisz, 2012; Becker et al., 2013; Strauß et al., 2014) or during the processing of semantic information (Klimesch et al., 1997) have shown a reduction of alpha power, which is consistent with active cognitive processing of speech information. Specifically, the results of the current study appear contradictory to those reported by Obleser and Weisz (2012) using noise vocoded (2-, 4-, 8-, and 16-channels) mono-, bi-, and tri-syllabic words. They showed less suppression of posterior-central alpha power with decreasing intelligibility, measured between 800 and 900 ms post word onset. However, the task across the two studies was not the same. In the current study, participants were required to repeat the vocoded sentences, whereas in the Obleser and Weisz (2012) study, participants were asked to rank the comprehension of vocoded words without attending to the linguistic or acoustic aspects of the speech materials. While previous studies have shown a very high correlation between SRSs and rating scores, it is unclear whether the pattern of event-related oscillatory cortical activity measured during these different tasks is the same. Further, the types of analyses conducted across studies are not the same. For example, while Becker et al. (2013) demonstrated that mean alpha power during the region of interest (ROI) between 480 and 620 ms is reduced as speech intelligibility is increased (using monosyllabic French words), this was an absolute measure of alpha power rather than a change relative to the baseline. Variability in whether alpha power increased or decreased was also observed within studies. For example, Becker et al. (2013) showed the mean trajectory of change in alpha power during noise-vocoded monosyllabic words and demonstrated that alpha power is enhanced in the less intelligible conditions (similar to our results) but is suppressed in the most intelligible conditions (similar to the results shown by Obleser and Weisz, 2012). Further, using an auditory lexical decision task, Strauß et al. (2014) demonstrated that mean increases of alpha power occurred for clear pseudo-words but a reduction was observed for ambiguous and real words, which parametrically changed as the clarity of the words increased. Finally, using 18 younger and 20 older healthy adults, Wöstmann et al. (2015) demonstrated that decreases in mean alpha power which occurred as speech intelligibility increased (using four-syllable digits masked by a single speaker) appeared to be driven by the older adults rather than an effect across the entire population. Given the differences in the types of speech stimuli used across the different studies, the task required, the ROI used to assess alpha power changes (i.e., during or after the speech tokens), and the different populations assessed (older versus younger adults), further investigation of alpha power is needed to better understand the changes observed and how this might be used as an objective measure of attentional effort and/or cognitive load for the individual.
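
The distinction drawn above between an absolute measure of alpha power and a change relative to baseline can be illustrated with a minimal sketch. The function name, the percent-change normalization, and the numeric values below are assumptions made purely for illustration; they do not represent the analysis pipeline of the current study or of the studies cited.

```python
def relative_change(task_power, baseline_power):
    """Percent change in power relative to a pre-stimulus baseline (assumed normalization)."""
    if baseline_power <= 0:
        raise ValueError("baseline power must be positive")
    return 100.0 * (task_power - baseline_power) / baseline_power


# Hypothetical alpha power values (arbitrary units) for two conditions.
# Condition A has the larger absolute task power, but only condition B
# shows an enhancement relative to its own baseline.
cond_a = {"baseline": 10.0, "task": 9.0}
cond_b = {"baseline": 5.0, "task": 6.0}

change_a = relative_change(cond_a["task"], cond_a["baseline"])  # negative: suppression
change_b = relative_change(cond_b["task"], cond_b["baseline"])  # positive: enhancement
```

In this hypothetical case, ranking the conditions by absolute task power and by baseline-relative change gives opposite orderings, which is one reason why results from absolute and baseline-corrected analyses may not be directly comparable.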

Within the current study, while a significant interaction was found between 6- and 16-channel vocoding for both alpha power and pupil size change, the trend patterns differed. The magnitude of the difference between both vocoding levels was greater in the most challenging SNRs for alpha power, but in the least challenging SNRs for the pupil size. This could suggest that these physiological responses are driven by different neurophysiological or attentional networks (Corbetta and Shulman, 2002; Corbetta et al., 2008; Petersen and Posner, 2012). There is a vast literature on attentional effort which suggests that discrete neuroanatomical areas encode specific cognitive operations (“processors”) that are involved in attention, which are modified by “controllers” depending on the type of attentional tasks required (see Power and Petersen, 2013). While the majority of the literature in this field focuses on the visual modality, there is evidence to suggest that similar processes should be evident when listening to degraded speech, such as listening in noise (Spagna et al., 2015). The main determinants of attentional allocation would then be the identification of the appropriate processing strategy needed to undertake the speech perception task, the maintenance of attention during the task, and the processing of errors to increase (or, at least, reduce declines in) performance. Further, these processes may work synergistically under less cognitively demanding conditions but diverge under more challenging conditions, or conditions which have different types of attentional requirements (Vossel et al., 2014). It is also possible that different processors and controllers are used by different individuals to undertake these cognitively demanding tasks, which may have led to a lack of correlation between alpha power change and pupil dilation change within individuals.
Corbetta and Shulman (2002) proposed the existence of two anatomically distinct attention networks: the dorsal fronto-parietal network, which is involved in the top–down voluntary or goal-directed allocation of attention (which includes preparatory attention and orienting within memory), and the ventral fronto-parietal network, which is involved in involuntary shifts in attention. It is proposed that under normal circumstances, the ventral network is suppressed but is activated by unexpected, novel, salient, or behaviorally relevant events. Where this occurs, it is assumed that a “circuit-breaking” signal is sent to the dorsal attention network, resulting in a reorienting, or shifting, of attention toward this new event (Corbetta et al., 2008). It has been proposed that the locus coeruleus-norepinephrine (LC-NE) system modulates the functional integration of the entire cortical attentional system (Corbetta et al., 2008; Sara, 2009), whereby NE released by the LC triggers the ventral network to interrupt the dorsal attention network (Bouret and Sara, 2005) and reset attention. This ensures a coordinated, rapid, and adaptive neurophysiological response to spontaneous or conditioned behavioral imperatives (Sara and Bouret, 2012).

Pupil dilation is under the control of the LC-NE system; therefore, it may be reasonable to assume that indirect attention tasks may be associated with the changes in pupil dilation observed in the current study. It has been proposed that pupil dilation is modulated by both staying on task and choosing between alternatives (exploration; Aston-Jones and Cohen, 2005). Therefore, a complex task, such as the perception and comprehension of a moderately intelligible (vocoded) speech signal, may result in changes in pupil dilation that reflect the interaction between different processing strategies. Alpha power changes have been associated with top–down inhibition of task-irrelevant brain regions, and it has been suggested that alpha power is under the control of the dorsal attention network (Zumer et al., 2014). Further, increases in alpha power may inhibit the ventral attention network, preventing reorienting to irrelevant stimuli during goal-directed cognitive behavior (Benedek et al., 2014). While other models of attention exist (Seeley et al., 2007; Petersen and Posner, 2012), it is clear that a simple association between a physiological measure of attentional effort and task difficulty (e.g., changes in speech intelligibility) fails to consider the multiple autonomic cognitive operations as well as the voluntary control of attention that reflects effortful cognitive control (see Sarter et al., 2006). It is recognized that there is a dynamic interplay between the bottom–up sensory information and the top–down cognitively controlled factors (which may be under either automatic or voluntary control), such as knowledge, expectations and goals, that can be modulated by motivational factors, such as payment for participation (Tomporowski and Tinsley, 1996), and genetic influences (Fan et al., 2003). Therefore, it is reasonable to assume that considerable variability in attentional allocation could exist between individuals undertaking a highly complex task.

An alternative explanation is that the within-subject variability of sustaining on-task attention toward sentences with unpredictable levels of intelligibility was greater under the more challenging noise vocoding conditions (6-channel), where the effort-reward balance was not as high as with the 16-channel vocoded materials. Sustaining attention on a complex task is challenging (Warm et al., 2008) and requires suppression of internal tendencies of mind-wandering, a default network activation that typically occurs during low task demands (Christoff et al., 2009; Gruberger et al., 2011), with concomitant activation of the goal-directed dorsal fronto-parietal attentional network (Corbetta and Shulman, 2002). Fluctuations in sustained attention can occur with stress, distraction with competing stimuli, fatigue, or lack of motivation toward the task, and are commonly associated with a decline in performance (Hancock, 1989; Esterman et al., 2012). As stated by Esterman et al. (2012), “as the neural systems supporting task performance appear to shift with one’s attentional state, failure to account for attentional fluctuations may obscure meaningful information about underlying mechanisms”. Certainly, some people have a propensity for mind-wandering (Mason et al., 2007). This may be a confounder to the results of the current study comparing physiological responses to a range of SNRs, despite the ecological validity that this may have to their ability to follow conversations within multi-talker environments. That is, the variability in the physiological measures may, in fact, provide important information about the individual’s processing of degraded speech that is not captured within more common behavioral measures of speech perception. For example, a recent study by Kuchinsky et al. (2016) suggests that individual differences in the pupillary response of older adults with hearing loss during a monosyllabic word recognition task were related to task vigilance (less variability in response time) and to the extent of primary auditory cortical activity. Therefore, pupil dilation may index the magnitude of the engagement between bottom–up sensory and top–down cortical processing, which is increased with greater degradation of the speech signal (influenced by poorer SNR, reduced spectral information, or hearing loss).

Significant differences in the baseline data were also observed between the 6- and 16-channel vocoding for pupil size, but not for alpha power. These two levels of vocoding were assessed during different sessions for all subjects; therefore, this could be due either to a session effect, or to a difference in the level of cognitive effort that was maintained throughout the session. Given that the results are consistent with an increase in cognitive load during the 6-channel vocoded session, it is likely that the difference in the tonic pupillary response across the two physiological measurement sessions (16- versus 6-channel vocoded-sentence tasks) resulted from differences in vigilance or the awareness of errors in performance during the more cognitively challenging task (Critchley, 2005; Ullsperger et al., 2010).

Limitations of the study include the relatively small number of participants included in the final data analysis (particularly for pupillary measures), and that only 16 sentences were presented for each SNR level (scored as 50 words across the set of 16 sentences) in each condition, reducing statistical power. Further, the test set-up restricted people from responding normally to an effortful task (i.e., a number of participants tended to close their eyes during the stimulus presentation but were instructed to keep their eyes open). Explicitly investing effort in trying to keep their eyes open despite the natural tendency to want to close them may have in itself created changes in pupil size and alpha oscillations. This may also have added an additional stressful component to the task.
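
To give a rough sense of how scoring 50 words per SNR level limits precision, the sketch below computes the binomial standard error of a proportion-correct score. It assumes that each scored word is an independent trial, which is a simplification (words within a sentence are unlikely to be independent), and the function name is hypothetical rather than part of the study's analysis.

```python
import math


def proportion_standard_error(p, n_words):
    """Binomial standard error of a proportion-correct score (independence assumed)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie between 0 and 1")
    if n_words <= 0:
        raise ValueError("n_words must be positive")
    return math.sqrt(p * (1.0 - p) / n_words)


# 50 scored words per SNR level, as in the current study.
se_mid = proportion_standard_error(0.5, 50)   # widest case: mid-range performance
se_high = proportion_standard_error(0.9, 50)  # narrower near ceiling

# Approximate 95% interval half-width (normal approximation).
half_width_mid = 1.96 * se_mid
```

Under these assumptions, a score near 50% correct carries an uncertainty of roughly ±14 percentage points at a single SNR level, which illustrates why differences between adjacent SNRs may be difficult to resolve with this number of scored words.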

Conclusion

The results of this study suggest that the relationship between task difficulty and both pupil dilation and alpha power change was similar for the 16-channel vocoded sentences (high intelligibility), which might suggest that the attentional networks are operating with high concordance, or in a consistent and predictable manner across the SNRs. However, further degradations in the speech intelligibility, using the 6-channel vocoded materials, could have produced a discordant relationship between the attention networks, or different processors (such as linguistic strategies) may have been used to comprehend the speech signal. Given the considerable interest in assessing listening effort within clinical settings (see McGarrigle et al., 2014), it is important to ensure that we have a solid understanding of what these physiological measures are assessing, and how to interpret the responses for the individual. Certainly, the results of this study do not currently support the clinical use of these physiological techniques as sufficiently sensitive to provide complementary information about listening effort to existing measures of speech perception performance. To be clinically viable in a hearing rehabilitation setting, such objective indices of effort should be more sensitive to changes in auditory input than existing measures of speech perception performance or subjective ratings of effort. The behavior of these indices should also be predictable across a range of performances and speech degradation to be applicable to the range of hearing loss and devices available, including hearing aids and cochlear implants.

Author Contributions

Original idea: PL, CM, IB. Protocol development: PL, IB, CM, RI. Data collection: CYL, LG, PL, IB. Writing of manuscript: CM, IB, LG, KM, CYL. Data processing and analyses: RI, PL, PG, IB, CM, KM.

Funding

This study was supported by the HEARing CRC, established and supported by the Cooperative Research Centres Programme – Business Australia.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank Jörg Buchholz for support with protocol development, Mike Jones for support with statistical analyses, and Gabrielle Martinez for help with data collection.

References

Anderson, C. R. (1976). Coping behaviors as intervening mechanisms in the inverted-U stress-performance relationship. J. Appl. Psychol. 61, 30. doi: 10.1037/0021-9010.61.1.30

Aston-Jones, G., and Cohen, J. D. (2005). An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu. Rev. Neurosci. 28, 403–450. doi: 10.1146/annurev.neuro.28.061604.135709

Avivi-Reich, M., Daneman, M., and Schneider, B. A. (2014). How age and linguistic competence alter the interplay of perceptual and cognitive factors when listening to conversations in a noisy environment. Front. Syst. Neurosci. 8:21. doi: 10.3389/fnsys.2014.00021

Becker, R., Pefkou, M., Michel, C. M., and Hervais-Adelman, A. G. (2013). Left temporal alpha-band activity reflects single word intelligibility. Front. Syst. Neurosci. 7:121. doi: 10.3389/fnsys.2013.00121

Benedek, M., Schickel, R. J., Jauk, E., Fink, A., and Neubauer, A. C. (2014). Alpha power increases in right parietal cortex reflects focused internal attention. Neuropsychologia 56, 393–400. doi: 10.1016/j.neuropsychologia.2014.02.010

Bernarding, C., Strauss, D. J., Hannemann, R., Seidler, H., and Corona-Strauss, F. I. (2013). Neural correlates of listening effort related factors: influence of age and hearing impairment. Brain Res. Bull. 91, 21–30. doi: 10.1016/j.brainresbull.2012.11.005

Bouret, S., and Sara, S. J. (2005). Network reset: a simplified overarching theory of locus coeruleus noradrenaline function. Trends Neurosci. 28, 574–582. doi: 10.1016/j.tins.2005.09.002

Christoff, K., Gordon, A. M., Smallwood, J., Smith, R., and Schooler, J. W. (2009). Experience sampling during fMRI reveals default network and executive system contributions to mind wandering. Proc. Natl. Acad. Sci. U.S.A. 106, 8719–8724. doi: 10.1073/pnas.0900234106

Corbetta, M., Patel, G., and Shulman, G. L. (2008). The reorienting system of the human brain: from environment to theory of mind. Neuron 58, 306–324. doi: 10.1016/j.neuron.2008.04.017

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Critchley, H. D. (2005). Neural mechanisms of autonomic, affective, and cognitive integration. J. Comp. Neurol. 493, 154–166. doi: 10.1002/cne.20749

Danermark, B., and Gellerstedt, L. C. (2004). Psychosocial work environment, hearing impairment and health. Int. J. Audiol. 43, 383–389. doi: 10.1080/14992020400050049

Esterman, M., Noonan, S. K., Rosenberg, M., and DeGutis, J. (2012). In the zone or zoning out? Tracking behavioral and neural fluctuations during sustained attention. Cereb. Cortex 23, 2712–2723. doi: 10.1093/cercor/bhs261

Fan, J., Fossella, J., Sommer, T., Wu, Y., and Posner, M. I. (2003). Mapping the genetic variation of executive attention onto brain activity. Proc. Natl. Acad. Sci. U.S.A. 100, 7406–7411. doi: 10.1073/pnas.0732088100

Friesen, L. M., Shannon, R. V., Baskent, D., and Wang, X. (2001). Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J. Acoust. Soc. Am. 110, 1150–1163. doi: 10.1121/1.1381538

Gosselin, P. A., and Gagné, J. P. (2011). Older adults expend more listening effort than young adults recognizing speech in noise. J. Speech Lang. Hear. Res. 54, 944–958. doi: 10.1044/1092-4388(2010/10-0069)

Gourévitch, B., Edeline, J.-M., Occelli, F., and Eggermont, J. J. (2014). Is the din really harmless? Long-term effects of non-traumatic noise on the adult auditory system. Nat. Rev. Neurosci. 15, 483–491. doi: 10.1038/nrn3744

Granholm, E., Asarnow, R. F., Sarkin, A. J., and Dykes, K. L. (1996). Pupillary responses index cognitive resource limitations. Psychophysiology 33, 457–461. doi: 10.1111/j.1469-8986.1996.tb01071.x

Gruberger, M., Simon, E. B., Levkovitz, Y., Zangen, A., and Hendler, T. (2011). Towards a neuroscience of mind-wandering. Front. Hum. Neurosci. 5:56. doi: 10.3389/fnhum.2011.00056

Hétu, R., Jones, L., and Getty, L. (1993). The impact of acquired hearing impairment on intimate relationships: implications for rehabilitation. Int. J. Audiol. 32, 363–380. doi: 10.3109/00206099309071867

Hétu, R., Riverin, L., Lalande, N., Getty, L., and St-Cyr, C. (1988). Qualitative analysis of the handicap associated with occupational hearing loss. Br. J. Audiol. 22, 251–264. doi: 10.3109/03005368809076462

Hornsby, B. W. (2013). The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear. Hear. 34, 523–534. doi: 10.1097/AUD.0b013e31828003d8

Kahneman, D., and Beatty, J. (1966). Pupil diameter and load on memory. Science 154, 1583–1585. doi: 10.1126/science.154.3756.1583

Keidser, G., Dillon, H., Mejia, J., and Nguyen, C.-V. (2013). An algorithm that administers adaptive speech-in-noise testing to a specified reliability at selectable points on the psychometric function. Int. J. Audiol. 52, 795–800. doi: 10.3109/14992027.2013.817688

Klimesch, W., Doppelmayr, M., Pachinger, T., and Russegger, H. (1997). Event-related desynchronization in the alpha band and the processing of semantic information. Cogn. Brain. Res. 6, 83–94. doi: 10.1016/S0926-6410(97)00018-9

Klimesch, W., Sauseng, P., and Hanslmayr, S. (2007). EEG alpha oscillations: the inhibition–timing hypothesis. Brain Res. Rev. 53, 63–88. doi: 10.1016/j.brainresrev.2006.06.003

Koelewijn, T., Zekveld, A. A., Festen, J. M., and Kramer, S. E. (2012). Pupil dilation uncovers extra listening effort in the presence of a single-talker masker. Ear. Hear. 33, 291–300. doi: 10.1097/AUD.0b013e3182310019

Kramer, S. E., Kapteyn, T. S., Festen, J. M., and Kuik, D. J. (1997). Assessing aspects of auditory handicap by means of pupil dilatation. Int. J. Audiol. 36, 155–164. doi: 10.3109/00206099709071969

Kramer, S. E., Kapteyn, T. S., and Houtgast, T. (2006). Occupational performance: comparing normally-hearing and hearing-impaired employees using the Amsterdam Checklist for Hearing and Work. Int. J. Audiol. 45, 503–512. doi: 10.1080/14992020600754583

Kuchinsky, S. E., Vaden, K. I. Jr., Ahlstrom, J. B., Cute, S. L., Humes, L. E., Dubno, J. R., et al. (2016). Task-related vigilance during word recognition in noise for older adults with hearing loss. Exp. Aging Res. 42, 64–85. doi: 10.1080/0361073X.2016.1108712

Laeng, B., Sirois, S., and Gredebäck, G. (2012). Pupillometry: a window to the preconscious? Perspect. Psychol. Sci. 7, 18–27. doi: 10.1177/1745691611427305

Lin, F. R., Yaffe, K., Xia, J., Xue, Q.-L., Harris, T. B., Purchase-Helzner, E., et al. (2013). Hearing loss and cognitive decline in older adults. JAMA Int. Med. 173, 293–299. doi: 10.1001/jamainternmed.2013.1868

Lunner, T. (2003). Cognitive function in relation to hearing aid use. Int. J. Audiol. 42, S49–S58. doi: 10.3109/14992020309074624

Lunner, T., Rudner, M., and Rönnberg, J. (2009). Cognition and hearing aids. Scand. J. Psychol. 50, 395–403. doi: 10.1111/j.1467-9450.2009.00742.x

Lunner, T., and Sundewall-Thorén, E. (2007). Interactions between cognition, compression, and listening conditions: effects on speech-in-noise performance in a two-channel hearing aid. J. Am. Acad. Audiol. 18, 604–617. doi: 10.3766/jaaa.18.7.7

Mackersie, C. L., MacPhee, I. X., and Heldt, E. W. (2015). Effects of hearing loss on heart rate variability and skin conductance measured during sentence recognition in noise. Ear. Hear. 36, 145–154. doi: 10.1097/AUD.0000000000000091

Mason, M. F., Norton, M. I., Van Horn, J. D., Wegner, D. M., Grafton, S. T., and Macrae, C. N. (2007). Wandering minds: the default network and stimulus-independent thought. Science 315, 393–395. doi: 10.1126/science.1131295

McCoy, S. L., Tun, P. A., Cox, L. C., Colangelo, M., Stewart, R. A., and Wingfield, A. (2005). Hearing loss and perceptual effort: downstream effects on older adults’ memory for speech. Q. J. Exp. Psychol. A. 58, 22–33. doi: 10.1080/02724980443000151

McGarrigle, R., Munro, K. J., Dawes, P., Stewart, A. J., Moore, D. R., Barry, J. G., et al. (2014). Listening effort and fatigue: what exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group ‘white paper’. Int. J. Audiol. 53, 433–440. doi: 10.3109/14992027.2014.890296

Obleser, J., and Weisz, N. (2012). Suppressed alpha oscillations predict intelligibility of speech and its acoustic details. Cereb. Cortex 22, 2466–2477. doi: 10.1093/cercor/bhr325

Obleser, J., Wöstmann, M., Hellbernd, N., Wilsch, A., and Maess, B. (2012). Adverse listening conditions and memory load drive a common alpha oscillatory network. J. Neurosci. 32, 12376–12383. doi: 10.1523/JNEUROSCI.4908-11.2012

Oostenveld, R., Fries, P., Maris, E., and Schoffelen, J.-M. (2011). FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intel. Neurosci. 2011:156869. doi: 10.1155/2011/156869

Partala, T., and Surakka, V. (2003). Pupil size variation as an indication of affective processing. Int. J. Hum. Comput. Stud. 59, 185–198. doi: 10.1016/S1071-5819(03)00017-X

Peavler, W. S. (1974). Pupil size, information overload, and performance differences. Psychophysiology 11, 559–566. doi: 10.1111/j.1469-8986.1974.tb01114.x

Petersen, E. B., Wöstmann, M., Obleser, J., Stenfelt, S., and Lunner, T. (2015). Hearing loss impacts neural alpha oscillations under adverse listening conditions. Front. Psychol. 6:177. doi: 10.3389/fpsyg.2015.00177

Petersen, S. E., and Posner, M. I. (2012). The attention system of the human brain: 20 years after. Annu. Rev. Neurosci. 35:73. doi: 10.1146/annurev-neuro-062111-150525

Pichora-Fuller, M. K., and Singh, G. (2006). Effects of age on auditory and cognitive processing: implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif. 10, 29–59. doi: 10.1177/108471380601000103

Power, J. D., and Petersen, S. E. (2013). Control-related systems in the human brain. Curr. Opin. Neurobiol. 23, 223–228. doi: 10.1016/j.conb.2012.12.009

Rabbitt, P. (1991). Mild hearing loss can cause apparent memory failures which increase with age and reduce with IQ. Acta Otolaryngol. 111, 167–176. doi: 10.3109/00016489109127274

Radulescu, E., Nagai, Y., and Critchley, H. (2014). “Mental Effort: brain and Autonomic Correlates,” in Handbook of Biobehavioral Approaches to Self-Regulation, eds G. H. E. Gendolla, M. Tops, and S. L. Koole (New York, NY: Springer), 237–253.

Rönnberg, J., Lunner, T., Zekveld, A., Sörqvist, P., Danielsson, H., Lyxell, B., et al. (2013). The Ease of Language Understanding (ELU) model: theoretical, empirical, and clinical advances. Front. Syst. Neurosci. 7:31. doi: 10.3389/fnsys.2013.00031

Rönnberg, J., Rudner, M., Lunner, T., and Zekveld, A. A. (2010). When cognition kicks in: working memory and speech understanding in noise. Noise Health 12, 263–269. doi: 10.4103/1463-1741.70505

Rudner, M., Lunner, T., Behrens, T., Thorén, E. S., and Rönnberg, J. (2012). Working memory capacity may influence perceived effort during aided speech recognition in noise. J. Am. Acad. Audiol. 23, 577–589. doi: 10.3766/jaaa.23.7.7

Salthouse, T. A. (2004). What and when of cognitive aging. Curr. Dir. Psychol. 13, 140–144. doi: 10.1111/j.0963-7214.2004.00293.x

Sara, S. J. (2009). The locus coeruleus and noradrenergic modulation of cognition. Nat. Rev. Neurosci. 10, 211–223. doi: 10.1038/nrn2573

Sara, S. J., and Bouret, S. (2012). Orienting and reorienting: the locus coeruleus mediates cognition through arousal. Neuron 76, 130–141. doi: 10.1016/j.neuron.2012.09.011

Sarter, M., Gehring, W. J., and Kozak, R. (2006). More attention must be paid: the neurobiology of attentional effort. Brain Res. Rev. 51, 145–160. doi: 10.1016/j.brainresrev.2005.11.002

Schluroff, M. (1982). Pupil responses to grammatical complexity of sentences. Brain Lang. 17, 133–145. doi: 10.1016/0093-934X(82)90010-4

Schneider, B., Pichora-Fuller, K., and Daneman, M. (2010). “Effects of senescent changes in audition and cognition on spoken language comprehension,” in The Aging Auditory System, eds S. Gordon-Salant, R. D. Frisina, R. R. Fay, A. N. Popper (New York, NY: Springer), 167–210.

Seeley, W. W., Menon, V., Schatzberg, A. F., Keller, J., Glover, G. H., Kenna, H., et al. (2007). Dissociable intrinsic connectivity networks for salience processing and executive control. J. Neurosci. 27, 2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007

Shannon, R. V., Zeng, F.-G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). Speech recognition with primarily temporal cues. Science 270, 303–304. doi: 10.1126/science.270.5234.303

Spagna, A., Mackie, M.-A., and Fan, J. (2015). Supramodal executive control of attention. Front. Psychol. 6:65. doi: 10.3389/fpsyg.2015.00065

Stenfelt, S., and Rönnberg, J. (2009). The Signal-Cognition interface: interactions between degraded auditory signals and cognitive processes. Scand. J. Psychol. 50, 385–393. doi: 10.1111/j.1467-9450.2009.00748.x

Strauß, A., Wöstmann, M., and Obleser, J. (2014). Cortical alpha oscillations as a tool for auditory selective inhibition. Front. Hum. Neurosci. 8:350. doi: 10.3389/fnhum.2014.00350

Tomporowski, P. D., and Tinsley, V. F. (1996). Effects of memory demand and motivation on sustained attention in young and older adults. Am. J. Psychol. 109, 187–204. doi: 10.2307/1423272

Ullsperger, M., Harsay, H. A., Wessel, J. R., and Ridderinkhof, K. R. (2010). Conscious perception of errors and its relation to the anterior insula. Brain Struct. Funct. 214, 629–643. doi: 10.1007/s00429-010-0261-1

Valentino, R. J., and Van Bockstaele, E. (2008). Convergent regulation of locus coeruleus activity as an adaptive response to stress. Eur. J. Pharmacol. 583, 194–203. doi: 10.1016/j.ejphar.2007.11.062

Vossel, S., Geng, J. J., and Fink, G. R. (2014). Dorsal and ventral attention systems: distinct neural circuits but collaborative roles. Neuroscientist 20, 150–159. doi: 10.1177/1073858413494269

Warm, J. S., Parasuraman, R., and Matthews, G. (2008). Vigilance requires hard mental work and is stressful. Hum. Factors 50, 433–441. doi: 10.1518/001872008X312152

Weinstein, B. E., and Ventry, I. M. (1982). Hearing impairment and social isolation in the elderly. J. Speech Lang. Hear. Res. 25, 593–599. doi: 10.1044/jshr.2504.593

Wingfield, A., Tun, P. A., and McCoy, S. L. (2005). Hearing loss in older adulthood: what it is and how it interacts with cognitive performance. Curr. Dir. Psychol. 14, 144–148. doi: 10.1111/j.0963-7214.2005.00356.x

Winn, M. B., Edwards, J. R., and Litovsky, R. Y. (2015). The impact of auditory spectral resolution on listening effort revealed by pupil dilation. Ear. Hear. 36, 153–165. doi: 10.1097/AUD.0000000000000145

Wöstmann, M., Herrmann, B., Wilsch, A., and Obleser, J. (2015). Neural alpha dynamics in younger and older listeners reflect acoustic challenges and predictive benefits. J. Neurosci. 35, 1458–1467. doi: 10.1523/JNEUROSCI.3250-14.2015

Zekveld, A. A., and Kramer, S. E. (2014). Cognitive processing load across a wide range of listening conditions: insights from pupillometry. Psychophysiology 51, 277–284. doi: 10.1111/psyp.12151

Zekveld, A. A., Kramer, S. E., and Festen, J. M. (2010). Pupil response as an indication of effortful listening: the influence of sentence intelligibility. Ear. Hear. 31, 480–490. doi: 10.1097/AUD.0b013e3181d4f251

Zekveld, A. A., Kramer, S. E., and Festen, J. M. (2011). Cognitive load during speech perception in noise: the influence of age, hearing loss, and cognition on the pupil response. Ear. Hear. 32, 498–510. doi: 10.1097/AUD.0b013e31820512bb

Keywords: alpha power, pupil dilation, listening effort, listening in noise, speech perception, perceived effort, mental exertion

Citation: McMahon CM, Boisvert I, de Lissa P, Granger L, Ibrahim R, Lo CY, Miles K and Graham PL (2016) Monitoring Alpha Oscillations and Pupil Dilation across a Performance-Intensity Function. Front. Psychol. 7:745. doi: 10.3389/fpsyg.2016.00745

Received: 11 December 2015; Accepted: 05 May 2016;

Published: 24 May 2016.

Edited by:

Adriana A. Zekveld, VU University Medical Center, Netherlands

Reviewed by:

Stefanie E. Kuchinsky, University of Maryland, USA

Antje Strauß, Centre National de la Recherche Scientifique, France

Copyright © 2016 McMahon, Boisvert, de Lissa, Granger, Ibrahim, Lo, Miles and Graham. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Catherine M. McMahon, cath.mcmahon@mq.edu.au
