ORIGINAL RESEARCH article

Front. Psychol., 03 May 2016

Sec. Performance Science

Volume 7 - 2016 | https://doi.org/10.3389/fpsyg.2016.00567

This paper presents a relatively unexplored area of expertise research which focuses on the solving of British-style cryptic crossword puzzles. Unlike its American “straight-definition” counterparts, which are primarily semantically-cued retrieval tasks, the British cryptic crossword is an exercise in code-cracking detection work. Solvers learn to ignore the superficial “surface reading” of the clue, which is phrased to be deliberately misleading, and look instead for a grammatical set of coded instructions which, if executed precisely, will lead to the correct (and only) answer. Sample clues are set out to illustrate the task requirements and demands. Hypothesized aptitudes for the field might include high fluid intelligence, skill at quasi-algebraic puzzles, pattern matching, visuospatial manipulation, divergent thinking and breaking frame abilities. These skills are additional to the crystallized knowledge and word-retrieval demands which are also a feature of American crossword puzzles. The authors present results from an exploratory survey intended to identify the characteristics of the cryptic crossword solving population, and outline the impact of these results on the direction of their subsequent research. Survey results were strongly supportive of a number of hypothesized skill-sets and guided the selection of appropriate test content and research paradigms which formed the basis of an extensive research program to be reported elsewhere. The paper concludes by arguing the case for a more grounded approach to expertise studies, termed the Grounded Expertise Components Approach. In this, the design and scope of the empirical program flows from a detailed and objectively-based characterization of the research population at the very onset of the program.

Research on expertise development has attempted to reveal the mechanisms through which some individuals are able to show levels of performance, skill-sets, or knowledge which are reproducibly superior to those of others active in that particular domain (Ericsson and Towne, 2010). The relative contributions of deliberate practice and innate cognitive aptitude have been hotly debated [e.g., the recent special issue on expertise development in Intelligence (Detterman, 2014), and the recent review by Hambrick et al. (2016)], and may reflect an ideological clash between the contrasting approaches of experimental and differential psychology, with the former focusing on the general processes of skill acquisition, and the latter upon the identification of key differentiating factors in individual performance (Hambrick et al., 2014b). Studies of expertise development on both sides of the argument have tended to remain focused upon a relatively restricted range of practice-intensive domains (primarily chess, music, sport and Scrabble) and to have followed well-worn investigative paths. These have included diary/retrospective studies of practice (Ericsson et al., 1993); the Expert-Performance Approach (EPA; Ericsson and Ward, 2007), including paradigms based on the original de Groot chess experiments (de Groot, 1946/1965; Tuffiash et al., 2007; Ericsson and Towne, 2010); and tests of either general intelligence (“g”) itself, or a restricted set of compartmentalized sub-skills believed on a priori grounds to be relevant to the domain (Bilalić et al., 2007; Grabner et al., 2007; Tuffiash et al., 2007). There is a danger that, in all of these approaches, research may be based more upon preconceived, theoretical assumptions concerning the demands of the domain, or upon strongly held ideological convictions about the nature of expertise, than on grounded empirical evidence. The time is therefore right for the exploration of new domains and for a fresh theoretical and methodological perspective (Hambrick et al., 2014b, 2016).

To this end, the current paper outlines a relatively unexplored domain of investigation—British cryptic crosswords—and proposes a novel methodology, termed the Grounded Expertise Components Approach (GECA). This places a far heavier emphasis upon the detailed understanding and characterization of the research population and upon a holistic and empirically argued view of the demands of the performance domain, rather than a small number of isolated elements, than has hitherto been the case.

A recent paper (Toma et al., 2014) contrasted two hypothesized cognitive drivers of proficiency (working memory (WM) capacity and strategy) in two “mind-game” domains: competitive Scrabble and national-level performance at US-style crosswords. In their introductory review, they characterized the skill-set necessary for US-style crossword solving as follows:

In contrast to competitive SCRABBLE proficiency, crossword proficiency relies on semantic aspects of language such as general word knowledge (Hambrick et al., 1999) and superior recognition for word meanings (Underwood et al., 1994). […] Unlike SCRABBLE, crossword puzzles do not require exceptional visuospatial strategies because the spatial layout of the game board is provided; therefore, visuospatial ability should not be as critical to crossword solving expertise as having an extensive understanding of word meanings. […] Semantic understanding is necessary for the process of creating a word while solving crossword puzzles; therefore, expert crossword players should primarily rely on superior knowledge of word definitions. (p. 728).

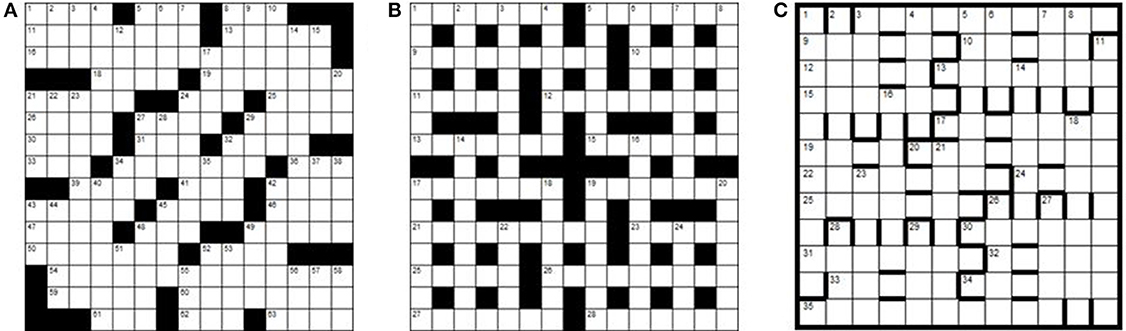

Toma's characterization of crossword expertise is certainly very plausible, so far as it relates to the American crossword puzzle. At the root of the challenge set by US-style crosswords is the nature of the puzzle layout which consists of a heavily interlocking grid with fully cross-checked letters (Figure 1A). Given the constraints of the US-style grid, the creator of the puzzle (“compiler”/“setter”) often has to resort to highly obscure words, slang, brand names, sections of phrases, acronyms and even word fragments in order to populate the squares, and it is primarily this quality which determines the difficulty of the crossword (Hambrick et al., 1999; Nickerson, 2011; Sutherland, 2012). Clues are almost entirely “straight-definition” with very few exceptions; these include puns, quiz-clues and obliquely referenced clues, such as “Present time?” (8 letters), where the answer is “YULETIDE” (Shortz, 2001; Nickerson, 2011). Essentially, therefore, US crosswords may be seen as semantically cued retrieval tasks (Nickerson, 1977, 2011), requiring considerable crystallized knowledge, much of it obscure “crosswordese” [words frequently found in crossword puzzles, but very rarely in conversation (Hambrick et al., 1999; Romano, 2006)]. Additionally, good reasoning ability is hypothesized to be necessary for the evaluation of candidate responses, the deduction of entries from cross-checking letters already present in the grid (pattern recognition/word fragment completion) and the re-examination of earlier interpretations of a recalcitrant clue in order to identify misreadings of punning or ambiguous phrasing (Nickerson, 1977, 2011; Hambrick et al., 1999).

Figure 1. US- and UK-style crossword grids compared. (A) US-style crossword grid. (B) UK-style blocked crossword grid. (C) UK-style barred (“advanced”) grid.

Failure to succeed in US-style crossword solving might therefore be hypothesized to arise from three causes (Hambrick et al., 1999):

1. insufficient knowledge to retrieve the target word from the preceding semantic cue;

2. inefficient retrieval strategies from long term memory;

3. weak reasoning abilities, leading to deficits in both clue interpretation and the use of ancillary information such as intersecting, cross-checking letters.

Consistent with this analysis, Toma's US-style crossword solvers were found to have significantly higher self-reported verbal SAT scores than Scrabble experts, and these scores were similar to those of undergraduates at a highly selective liberal arts college (average verbal SAT scores in the 95th percentile) acting as the control group (Toma et al., 2014). Additionally, in response to a free-text question (“What do you believe is the single most important skill you use during competition?”) participants reported their dominant cognitive abilities as “Good memory/recall” (29%) and “Mental flexibility” (26%); other key facets were “Pattern recognition” (16%), “General knowledge” (10%) and “Good vocabulary” (10%).

British-style cryptic crosswords are very popular within the UK and in other countries with historically close ties to Britain (e.g., Canada, Ireland, Australia, New Zealand, India, and Malta). In the UK, they appear in all the daily and weekend newspapers (both broadsheet and tabloid), and also in various literary, political and cultural magazines, on the web and in specialist puzzle collections.

Although superficially similar, British-style cryptic crosswords differ from their American counterparts in fundamentally critical ways. A typical layout (Figure 1B) comprises a 15 × 15 grid with half-turn rotational symmetry in which roughly half the letters are checked by intersecting clues. The remaining spaces are filled in with “blocks”. The implications of the blocked grid design are profound: with fewer cross-checking letters, the setter no longer has to resort to the level of obscurity seen in American puzzles in order to make the entries mesh. Indeed, a former editor advises would-be setters of the (London) Times daily crossword:

As far as vocabulary goes, obscure words are avoided. A high percentage of the vocabulary should be familiar to a person of reasonable education and knowledge […] mostly without recourse to reference books, while commuting to work on a train, for example. (Greer, 2001, p. 52).

Challenge is therefore no longer provided by the obscurity of the semantic retrieval task. Rather, the cryptic crossword is a tricky linguistic puzzle which “plays using language as a code”, exploiting the potential ambiguity of the English language, in multiple senses, levels and contexts (Aarons, 2012, p. 224). In a cryptic clue, the apparent meaning (“surface reading”) of the clue is phrased to be deliberately misleading. The solver learns to ignore this reading, and to look instead for a non-literal interpretation of the deconstructed clue components, comprising a grammatical set of coded instructions which, if executed precisely, will lead to the correct (and only) answer. The problem lies in recognizing and cracking the code: the task of the setter, like that of a magician, is to conceal the mechanism so subtly that the solution pathway is not easily detectable at first sight.

Solvers thus have to learn how to crack a cryptic crossword: the language is an artificial creation, meaning that “no-one is a native speaker of ‘Cryptic’” (Aarons, 2012, p. 229). Although there is general agreement that the clues have to be fairly constructed (i.e., solvable), there are no hard-and-fast guidelines as to what the rules of engagement are (Aarons, 2012), leading to an almost infinite number of innovative ways to exploit the “versatile and quirky English language” (Connor, 2013). Nevertheless, there is some consensus over a number of basic mechanism types, and a wealth of “Teach-Yourself” primers exist (Gilbert, 2001; Greer, 2001; Stephenson, 2007; Manley, 2014). The vocabulary of cryptography is often used in the titles of these works: solvers are described as “cracking the codes” and deciphering the setter's “hidden messages”. Indeed, in WWII, the British secret service MI6 recruited cryptographers to work on the Enigma Project at Bletchley Park by placing a discreet notice in the Daily Telegraph asking if anyone could solve the cryptic crossword in less than 12 minutes. This eventually resulted in the recruitment of six code-breakers with requisite skills from 25 applicants (Singh, 1999; Greer, 2001; Connor, 2014).

Cryptic crossword clues usually comprise two elements: a straight definition, plus the cryptic instructions for assembling the required solution—the “wordplay” (Box 1). It is not always straightforward to tell which clue element fulfills which purpose, and there is often no clear division between the two parts (Schulman, 1996; Greer, 2001; Aarons, 2012; Sutherland, 2012; Manley, 2014). Furthermore, the setter may frame the surface reading of the clue as an entirely plausible but misleading sentence, thus deliberately trapping the unwary solver in a “red herring” based on the inherent linguistic ambiguities of English (Aarons, 2012). The solver must therefore “overcome a lifetime's parsing habits” in order to avoid being sucked into the “deep structure” of the text: they must remain at the surface in order to explore other non-intuitive interpretations of the clue's components (Schulman, 1996, p. 309). In short, the cryptic is “the complicated, intellectually brooding cousin of the definitional—it had mystique and depth, it played hard to get with a capricious, whimsical air” (Brandreth, 2013).

Box 1. Illustration of cryptic clue mechanisms.

British-style cryptic crossword clues must be treated as a grammatical set of coded instructions. The following two clues illustrate the process:

Clue (a) Schulman (1996, p. 309)

Active women iron some skirts and shirts (9)

The definition is “Active women” = an obliquely phrased straight definition for FEMINISTS

The wordplay comprises: FE (iron, chemical symbol) + MINIS (plural form of a type of skirt, hence the word “some”) + TS (plural of “T,” an abbreviation for “T-Shirt”)

The surface meaning is highly misleading; the interpretation of iron relies on a linguistic ambiguity (homonym employing different part of speech—noun, not verb).

Clue (b) 2013 Times Championship clue (http://www.piemag.com/2013/01/23/marks-mind/)

Speciality of the Cornish side that's perfect with new wingers (5,4)

The definition is “Speciality of the Cornish” = CREAM TEAS

The wordplay comprises: DREAM TEAM (“side that's perfect”) with D and M replaced by new letters on either edge (“with new wingers”).

The surface meaning is misleadingly suggestive of football/rugby and contains some non-intuitive parsing of the components.

The algebraic/programming nature of the cryptic clue means that wordplay components may be flexibly recombined or anagrammed to form new units, e.g.:

• A+B = C (FAT+HER = FATHER)

• rev(A) = B (TRAMS → SMART)

• anag(A+B) = C (CAT+HAT = ATTACH)

• trunc(A) = B (CUTTER → UTTER)

• substring(A+B+C) = D (e.g., “Part of it 'it an iceberg” (7) = TITANIC; Moorey, 2009)
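The operations listed above can be written out as a short Python sketch, offered only as an illustration of the “algebraic” reading of wordplay. The function names (`concat`, `rev`, `is_anagram`, `trunc`, `hidden`, `new_wingers`) are hypothetical and do not come from the cited primers. `trunc` is assumed to drop the first letter, as in the CUTTER example, and the anagram step is implemented as a check on a candidate answer rather than as a search. The sketch verifies a proposed parsing; it does not model how a solver finds one.

```python
from collections import Counter


def concat(*parts: str) -> str:
    """A+B = C: join wordplay components in the order given."""
    return "".join(parts)


def rev(word: str) -> str:
    """rev(A) = B: read the component backwards."""
    return word[::-1]


def is_anagram(fodder: str, candidate: str) -> bool:
    """anag(A+B) = C: check that candidate uses exactly the letters of fodder."""
    return Counter(fodder) == Counter(candidate)


def trunc(word: str) -> str:
    """trunc(A) = B: assumed here to mean dropping the first letter."""
    return word[1:]


def hidden(phrase: str, length: int) -> list:
    """substring(A+B+C) = D: every run of `length` consecutive letters in the
    phrase, ignoring spaces and punctuation."""
    letters = "".join(ch for ch in phrase.upper() if ch.isalpha())
    return [letters[i:i + length] for i in range(len(letters) - length + 1)]


def new_wingers(phrase: str, first: str, last: str) -> str:
    """Clue (b): replace the letters on either edge of the phrase."""
    return first + phrase[1:-1] + last


# The examples from Box 1, checked mechanically.
print(concat("FAT", "HER"))                            # FATHER
print(rev("TRAMS"))                                    # SMART
print(is_anagram(concat("CAT", "HAT"), "ATTACH"))      # True
print(trunc("CUTTER"))                                 # UTTER
print("TITANIC" in hidden("it 'it an iceberg", 7))     # True
print(concat("FE", "MINIS", "TS"))                     # FEMINISTS, clue (a)
print(new_wingers("DREAM TEAM", "C", "S"))             # CREAM TEAS, clue (b)
```

Each call only confirms that a given decomposition produces the stated answer. The difficulty described in the text lies in recognizing which operation the setter has concealed, which the sketch leaves untouched.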

Of the UK daily cryptics, the most famous is probably the Times crossword. Not all crosswords are equally challenging, however, and there is a widely recognized hierarchy of challenge involved (Biddlecombe, 2011; Connor, 2012; Sutherland, 2012). Difficulty in solving a standard block-style cryptic crossword is largely commensurate with the degree of concealment used by the setter in the clue mechanics, although vocabulary and clueing style can also be a factor.

One way to demonstrate expertise in cryptics is by rapid solving. Cryptic experts may be defined as those who can routinely solve a daily cryptic at the more difficult end of the spectrum in less than 30 minutes (Friedlander and Fine, 2009). As a benchmark, the average solving time required to make the elite grand final of the Times National Crossword Championship (TNCC) is 9–15 minutes per puzzle (Biddlecombe, 2012). Conversely, many ordinary solvers tackle easier cryptics at the same level for decades, taking an hour or more to finish (if at all).

A second way to demonstrate expertise is by successfully solving advanced cryptics (Friedlander and Fine, 2009). Advanced cryptic crosswords are found in weekend newspapers and some magazines, and are generally “barred grid” (Figure 1C). Of these, the Listener Crossword is the most notoriously difficult, employing a high degree of clue mechanic concealment, obscure vocabulary, grids of startling originality and a thematic challenge, often involving a number of tricky lateral thinking steps on the basis of minimal guidance. Speed is not important: solvers have 12 days to submit their solution to each Listener puzzle. Very few entrants achieve an all-correct year (21 in 2010; 16 in 2011; 14 in 2012)¹ and those submitting 42+ correctly (out of 52) appear on an annual roll of honor. The Magpie², a monthly specialist magazine with 5 highly challenging advanced cryptic crosswords (and 1 mathematical puzzle) per issue, runs a similar all correct/roll of honor system, and is broadly of Listener standard.

Finally, for a few highly expert cryptic solvers, the ultimate challenge is to compose cryptics oneself. There are a number of clue-writing competitions [e.g., Azed monthly challenges in the Observer magazine and competitions run for Crossword Centre club members (Harrison, n.d.)] which attract entries from expert solvers; a few of these go on to become crossword professionals (editors or setters for local or national publications), though for most this is not their full-time occupation.

Given the above, we might therefore hypothesize that cryptic crossword solvers' skills depend less upon crystallized intelligence and cued vocabulary retrieval than those of their American counterparts, although these factors are still clearly relevant. Indeed, even if a word referenced by a cryptic clue is not known to the solver, it can often be deduced from the wordplay, and there are potentially two quite distinct avenues to the clue's solution (Coffey, 1998): the crystallized route, tapping general knowledge and vocabulary to intuit the response, perhaps using cross-checking letters; and fluid intelligence which taps the ability to “derive logical solutions to novel problems” (Hicks et al., 2015, p. 187—see also Cattell, 1963; Carroll, 1982; Kane et al., 2005) using clue components. Key cognitive abilities might therefore be hypothesized to include:

(i) The general capacity to analyze, reason, problem-solve and think “on one's feet,” which could reasonably be argued to draw heavily upon WM capacity and fluid intelligence (Kane et al., 2005; Shipstead et al., 2014); together with a liking for this type of cognitive challenge;

(ii) A specific aptitude for cryptographic or mathematical thinking. The similarity of cryptic crossword clues to algebra or computer programming has been noted in passing (Manley, 2014), but has not attracted much scholarly attention. An Australian conference paper (Simon, 2004) draws a number of close analogies between solving cryptic crossword puzzles and computer programming problems, and suggests that the cryptic crossword “could one day be harnessed as one of a set of predictors of computer aptitude” claiming that “while intuition can be extremely helpful in solving crossword puzzles, it cannot take the place of clear analytical thought” (p. 302). The hunch that computer programmers and mathematicians might be particularly adept at cryptic crosswords seems to be backed up by informal membership polls undertaken by two free-membership internet-based cryptic crossword clubs (de Cuevas, 2004; Lancaster, 2005): in both surveys those engaged in STEM-based employment (Science, Technology, Engineering, Mathematics), particularly IT, were strongly represented;

(iii) The visuospatial ability to mentally manipulate algebraic components of wordplay “fodder” (see Box 1). While many solvers use a physical jotting pad or electronic anagrammer to manipulate the letters, the ability to rapidly visualize and mentally process potentially promising combinations might be hypothesized to confer a speed advantage in solving cryptics (Minati and Sigala, 2013);

(iv) The ability to pattern-match and, most specifically, complete word fragments provided by cross-checking letters, as already discussed for US-style crosswords (Nickerson, 1977, 2011; Hambrick et al., 1999);

(v) The ability to break free from familiar patterns of thought (particularly red herrings deliberately supplied by the setter) using new and unusual interpretations of clue components. The concept of “breaking the frame” of context-induced fixedness has often been associated with traditional insight problems (Davidson, 2003; Pretz et al., 2003; DeYoung et al., 2008): it is the solver's perseveration with the erroneous approach to the problem which can render it unsolvable. The authors argue elsewhere (article in preparation) that cryptic crosswords are a form of insight problem, and that flexible, divergent, “breaking frame” thinking is critical to successful solving.

The above review suggests that cryptic crosswords make wide and complex demands on their solvers, who appear to require a good all-round blend of lexical aptitude, logical/analytical thinking skills and breaking frame/lateral thinking abilities. This combination of attributes implies a certain “entry level” of ability that might in other contexts readily translate into academic success.

Given the large disparity in cognitive demands and processes between the two forms of crossword (American definitional and British-style cryptic), it is essential to discriminate clearly between research undertaken in each field, since the findings from one area may not be applicable to the other, and cannot be cited uncritically as if the domains were congruent or interchangeable (Almond, 2010). Research into American-style crosswords has recently become more prolific (Nickerson, 1977, 2011; Hambrick et al., 1999; Toma et al., 2014; Moxley et al., 2015), while research into British-style cryptic crosswords has been comparatively sparse: this is mainly, one suspects, because of the separate crossword traditions of Britain and America, effectively making the subject unknown in America.

Previous research into cryptics has considered several discrete areas: exploration of the cognitive or linguistic challenges posed by cryptic clues (Forshaw, 1994; Schulman, 1996; Lewis, 2006; Aarons, 2012); the use of cryptic crosswords to preserve cognitive flexibility (“use-it-or-lose-it”) in aging populations (Winder, 1993; Forshaw, 1994; Almond, 2010); and finally a cluster of small-scale interrelated studies at the University of Nottingham, exploring individual differences in cryptic crossword solving (Underwood et al., 1988, 1994; Deihim-Aazami, 1999). Of all previous research, only the last three studies explored in any depth the question of what makes an expert solver excel in the cryptic crossword domain.

Starting from an interest in individual differences in reading ability and lexical memory, Underwood adopted the premise that cryptic crossword experts were likely to possess “particularly rich lexical networks” (Underwood et al., 1988, p. 302). In a comparison of 12 locally sourced cryptic crossword solvers with a convenience sample of 12 non-puzzlers on a battery of lexical tasks, the expectation was that the cryptic solvers, who were all of a good standard, would show particularly rapid, novel and accurate lexical data retrieval compared to the non-puzzlers. Unexpectedly, however, both groups showed very similar task performance, leading Underwood to conclude that cryptic crossword skills are “as much bound up in the cryptic puzzle codes as they are in lexical fluency” (1988, p. 306). In other words, in order to crack the cryptic challenge, solvers needed to apply problem-solving skills, in addition to possessing a good working vocabulary.

This conclusion was followed up in small-scale trials involving a convenience sample of 22 cryptic crossword enthusiasts (staff and students) from the University of Nottingham (Underwood et al., 1994; Deihim-Aazami, 1999). This population was split into “experts” (n = 14) and “intermediates” (n = 8) on the basis of their performance in solving 30 stand-alone cryptic crossword clues written by the researchers for the study. Participants again completed a battery of lexical tasks, and also took the AH4 test of fluid intelligence (Heim, 1970). In a reversal of the earlier findings, experts out-performed the intermediates in the lexical tasks. Additionally, there was no significant difference between experts and intermediates on the AH4. From this, the researchers reasoned that success in cryptics was bound up with lexical skill and that fluid intelligence was not, after all, a factor (Underwood et al., 1994). They further hypothesized that greater exposure to cryptic crosswords over a number of years had enabled the expert solvers to broaden their vocabulary and their familiarity with cryptic clue architecture (Deihim-Aazami, 1999).

There were a number of issues with this research, however. The less-skilled group were all of student age, and had only recently commenced crossword solving, making them novices of unquantifiable future potential—“initiates” or “apprentices” according to Chi's taxonomy of proficiency (Chi, 2006), rather than experienced intermediates (“journeymen”). Additionally, neither sub-group was externally benchmarked for their performance in solving a grid-based, professionally written, publication-quality crossword of known difficulty. Both of these factors lead to doubts over the real levels of expertise present, and the assignment of participants to the correct groups. Furthermore, the experts were considerably older than the intermediates, which may have facilitated their lexical performance, as crystallized knowledge increases naturally with age (Horn and Cattell, 1967; Underwood et al., 1994). Finally, both groups scored exceptionally highly on the AH4, which was designed to be used on general populations educated only to high-school level (Deary and Smith, 2004), strongly suggesting that there were ceiling effects, given the degree-level education of the trial sample. This might explain the apparent lack of group differences in fluid intelligence.

Deihim-Aazami's research (1999) contained many elements of the EPA methodological framework (Ericsson and Smith, 1991; Ericsson, 2000, 2006; Ericsson and Williams, 2007; Tuffiash et al., 2007). The aim of the EPA is to facilitate ecologically valid lab-based research in order to enable researchers to observe, analyze and capture the essence of the performance domain as the participants engage in a representative task. This task is intended to demonstrate a clearly superior performance by the expert, and elucidate potential mechanisms for this superiority (Ericsson, 2000).

The three key stages of the EPA comprise (Tuffiash et al., 2007):

(i) Identifying a representative task which captures the essence of expertise in the target domain. In de Groot's highly influential chess studies (de Groot, 1946/1965; Gobet et al., 2004) this consisted of two tasks: (a) identifying the best next move; (b) recalling the board layout of a briefly displayed game. The first task was intended to simulate game-play, and the second to investigate domain-specific perceptual and mnesic mechanisms. De Groot's two paradigms became classics in other “mind game” studies. For example, in the Nottingham studies, participants cold-solved 30 freestanding cryptic clues, without the aid of a grid structure or intersecting letters (Underwood et al., 1994; Deihim-Aazami, 1999). Similarly, in Scrabble, Tuffiash adapted de Groot's first paradigm, asking participants to identify the best-scoring play, when presented with a set of 12 diverse game positions that might be encountered during a highly competitive Scrabble game (Tuffiash et al., 2007).

(ii) Observing participants engaged in this task, while collecting “process-tracing data,” often by means of a think-aloud protocol (Gilhooly and Green, 1996; Green and Gilhooly, 1996; Ericsson and Williams, 2007). Again, for Scrabble, Tuffiash recorded talk-aloud data for their participants as they debated the best next move (Tuffiash et al., 2007); and in the Nottingham trials, participants were asked to solve a further 37 isolated clues, talking aloud to explain their understanding of each clue's architecture and solution (Deihim-Aazami, 1999). Superior performance displayed by experts is argued to be directly linked to complex representations of the current task, and to be derived from a deeper and more detailed understanding of the underlying domain (Chase and Simon, 1973; Chi et al., 1981; Ericsson and Williams, 2007; Campitelli and Gobet, 2010). A systematic analysis of the material gained from process-tracing might therefore give insights into areas of comparative strength which underpin experts' superior performance (Tuffiash et al., 2007). Such data are commonly extended and enriched by identifying and experimentally manipulating additional cognitive subskills hypothesized to be key to the domain (Tuffiash et al., 2007): Tuffiash's Scrabble participants underwent an additional battery of primarily lexical tasks (Tuffiash et al., 2007); as did Deihim-Aazami's cryptic crossword participants (Deihim-Aazami, 1999), together with the AH4 (Heim, 1970);

(iii) Accounting for the development of experts' superior performance by conducting interviews with participants, and teachers/parents where relevant, to establish key indicators of domain experience, such as starting age, key achievement milestones, and the quantity and type of practice undertaken (Tuffiash et al., 2007). This last step, and the content of the questionnaire administered, is driven by researcher conviction that the origin of high expertise in niche domains arises from extensive exposure to dedicated and structured practice regimes over at least 10 years (Ericsson et al., 1993; Ericsson, 2000). Collection of practice and experience data was therefore a feature of both the Scrabble (Tuffiash et al., 2007) and the cryptic crossword studies (Deihim-Aazami, 1999).

The current research program could have followed the classic EPA path, by selecting representative tasks and key cognitive components from a purely theoretical standpoint, based on a priori detailed knowledge of the solving process. After all, the above review of hypothesized cognitive demands of British cryptics identified several promising avenues, and the Nottingham research, although small scale and conflicting in results, led the way in this domain. The current authors were reluctant, however, to impose their preconceived ideas upon the direction of the present study in this way. This reluctance was based on the conviction that one cannot conduct objective research on a niche population without first carefully characterizing this population across a large number of dimensions, leading to a grounded understanding of the drivers for participation in the field, the levels of immersion in the activity and the potential skills which are brought to it. A new approach (GECA) was therefore conceived, with the intention of providing empirical support for the direction and design of future controlled studies.

The components of the GECA comprise:

1. A wide-ranging survey characterizing the domain-specific population across a large number of dimensions, ranging from demographics, levels of participation and experience to more indirectly related areas such as participants' education, occupation and hobbies, and motivational drivers. This differs from the EPA in that the survey is not limited to the collection of practice and experience data only, and is undertaken at the very outset of the research program.

2. Analysis of the survey data to identify characteristics of both the expert and non-expert population, leading to a grounded research rationale for the design of key elements in subsequent stages of the investigation. This avoids the potential trap of confounds arising from preconceived theoretical or ideological assumptions.

3. A lab-based recording (if appropriate—see Hambrick et al., 2016) of domain-relevant performance, to elucidate both the strategic and cognitive mechanisms involved. Although this stage draws heavily on the EPA, the GECA focuses on a more naturalistic task, fully reflecting the totality of the cognitive demands of the domain, rather than a series of isolated challenges (e.g., the de Groot paradigms: on this see further the Discussion section below).

4. The identification of supplementary sub-tests, to probe specific empirically-indicated cognitive or strategic processes believed to be instrumental in distinguishing experts from non-experts within the domain: again, this flows from the initial characterization of the population.

An exploratory survey was therefore designed to capture responses of cryptic crossword solvers to a broad range of 84 questions (many with extended sub-sections), as above. Analysis of this large body of data has led to a very detailed characterization of the solving population, many aspects of which will be reported elsewhere; in the interests of brevity we report here only the key findings relevant to the design of the subsequent research program and to the establishment of the GECA as a powerful research methodology.

Although the net was cast very wide, the questionnaire was specifically set up to address the research question: “What is the nature of the cryptic crossword population in terms of their cognitive skills, motivation and expertise development?” The hypotheses were as follows, largely flowing from the nature of cryptic solving and its cognitive demands identified earlier:

H1: cryptic crossword solvers would generally be academically able adults, given the cognitive complexity of the puzzle demands; this might imply that there is a cognitive ability threshold for entry into the domain;

H2: solvers' education and occupation would predominantly be in scientific or IT-related fields, rather than in language fields, implying that cryptics might particularly appeal to the logical and analytical thinker with an aptitude and liking for problem-solving;

H3: cryptic crossword solving regularly generates “Aha!” or insight moments, supporting the hypothesis that the cryptic clue is a classic type of insight problem through misdirection; and that this pleasurable experience is a salient driver of cryptic crossword participation;

H4: solvers would generally enjoy effortful cognitive activity in all spheres of life including work and hobbies, and that this would be an important driver of cryptic crossword participation;

H5: solving is essentially an intrinsically motivated activity, not generally undertaken for public acclaim or prize money³; practice/engagement levels for both expert and non-expert solvers would consequently be low and relatively unstructured compared to high profile competitive performance areas such as chess and music;

H6: cryptic solving would not normally begin in childhood, in view of the cognitive complexity of the task, but is more likely to commence in late teenage years (for US crosswords, see also Moxley et al., 2015).

An 84-item wide-ranging questionnaire was developed and piloted. Most of the survey material was devised specifically for the study, but the questionnaire also incorporated the short-form “Need for Cognition” scale (Cacioppo et al., 1984) and the “Work Preference Inventory” (Amabile et al., 1994): both scales are described more fully below (Results Section). The survey was approved by the School of Science Ethics Committee, University of Buckingham.

The questionnaire was made available both on the internet through SurveyMonkey® and on paper. Respondents were recruited in two phases:

• Survey 1 involved contacting advanced cryptic crossword solvers, speed solvers and compilers at the very high-expert end of the spectrum. Respondents were sought by means of adverts circulated (i) on the Crossword Centre website (Harrison, n.d., a UK-based advanced cryptic crossword forum, membership approx. 950) in May 2007 and May 2008; (ii) at the TNCC in Cheltenham, England in October 2007; and (iii) with the annual Listener Crossword statistics, in March 2007.

• Survey 2 took place in April 2010 and invited mainstream solvers of daily block-style cryptics to take the same survey in order to obtain comparative data from non-experts. Adverts were placed on a number of websites providing a daily analysis of (and answers to) a wide range of block-style puzzles from UK newspapers; the survey was also re-advertised on the Crossword Centre website.

As the two questionnaires used were identical, data from the two surveys were combined and analyzed together. Participants were assigned to expertise groupings on the basis of responses to key fields within the survey (see below, “Definition of Research Groups”), regardless of survey phase.

There were 935 responses to the surveys (S1 n = 257; S2 n = 678); however, post-consolidation reviews of the data identified a number of unworkably incomplete (n = 109), duplicated (n = 14) or spoof (n = 7) records, which were excluded from the analysis, leaving 805 responses in total (S1 n = 234; S2 n = 571). Participants were aged 18–84 (mean = 52.1; SD = 12.4), and males (n = 632, 78.5%) outnumbered females (n = 173, 21.5%). The majority of respondents were British (n = 709, 88%); the remainder were from the USA (n = 28, 3%), Australia (n = 26, 3%), Ireland (n = 14, 2%), and Canada, New Zealand, India, Holland, France and Spain (each 1% or less).
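The sample bookkeeping above can be re-derived with a few lines of arithmetic. The sketch below is purely illustrative: the variable names are ours and are not taken from the survey materials; only the counts come from the text.

```python
# Response counts as reported in the text (variable names are illustrative).
received = {"S1": 257, "S2": 678}
excluded = {"incomplete": 109, "duplicated": 14, "spoof": 7}
retained = {"S1": 234, "S2": 571}

total_received = sum(received.values())
total_excluded = sum(excluded.values())
total_retained = total_received - total_excluded

# Gender split of the retained sample, expressed as percentages.
males, females = 632, 173
pct_male = round(100 * males / total_retained, 1)
pct_female = round(100 * females / total_retained, 1)

print(total_received, total_excluded, total_retained, pct_male, pct_female)
```

The retained total computed from the exclusions agrees with the sum of the per-survey retained counts, which is a quick way to confirm that the reported figures are internally consistent.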

All 805 participants responded to the initial pages of the survey; however, there was some attrition toward the end, with the lowest level of response to any section being 764. Overall reported numbers in the results section therefore vary according to the position of the question in the survey.

A key challenge in expertise studies is that of establishing rigorous, objectively-based and externally benchmarked criteria for assigning participants to research categories (Tuffiash et al., 2007). Ranking systems such as the Elo rating in chess (Gobet and Charness, 2006) and official Scrabble tournament metrics (Tuffiash et al., 2007) are particularly valuable, and have tended to encourage research in these areas (Grabner, 2014; Hambrick et al., 2014b). No such mathematically based ranking system exists for cryptics, and alternative methods have therefore been developed (Friedlander and Fine, 2009; Fine and Friedlander, 2010) resulting in both a 2-way (Ordinary/Expert) and a 3-way (Ordinary/High/Super-Expert) categorization of participant expertise.

The Ordinary/Expert groups were designated as follows (Fine and Friedlander, 2010):

• Ordinary (O): solvers who (by self-report) normally take longer than 30 min to solve quality broadsheet cryptics. They do not usually tackle advanced cryptics, and are not successful at this form of puzzle;

• Experts (E): defined as those who can routinely solve one quality broadsheet cryptic in 30 min or less, who compile crosswords professionally, or who tackle advanced cryptics with regular success.

The above definition of “Expert” is quite broadly conceived, and does not identify world-class performance in the same way as, for example, a FIDE Elo rating of 2200 (“Candidate Master”) can do. The concept of “Super-Expert” cryptic crossword solver was therefore developed for earlier publications (Friedlander and Fine, 2009) in order to permit a more rigorous 3-way analysis of expertise, where required. In accordance with this, Super-Experts (S) were defined as those who fell into one or more of the following categories:

• Those who edit or compose cryptics professionally, on at least an occasional basis, for broadsheet or specialist publications (“Pro”);

• Those who regularly speed-solve a cryptic in < 15 min, and/or who had reached the final in the annual TNCC on at least one occasion (“Speed”);

• Those who had solved 42+ Listener (or 48+ Magpie) crosswords correctly in 1 year, and were thus named on the official roll of honor (“Advanced”).

Expert (E) participants not categorized as S were designated as “High Solvers” (H), enabling data to be analyzed using a 3-way structure (O/H/S). Conceptually, these three groups are similar to Chi's “Journeyman” (O), “Expert” (H) and “Master” (S) proficiency categories (Chi, 2006).
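The group definitions above amount to a simple decision rule, which can be sketched as follows. This is a minimal illustration only, not the coding procedure actually used: every field name is hypothetical, and the use of a single "usual solving time" value as a proxy for "regularly speed-solves in < 15 min" is our assumption.

```python
from dataclasses import dataclass


@dataclass
class Solver:
    # All field names are hypothetical; the survey's own variables are not given in the text.
    usual_solve_minutes: float                 # self-reported time for a quality broadsheet cryptic
    professional_setter_or_editor: bool = False  # "Pro" criterion
    tncc_finalist: bool = False                  # part of the "Speed" criterion
    roll_of_honor: bool = False                  # "Advanced": 42+ Listener or 48+ Magpie in 1 year
    regular_success_at_advanced: bool = False    # tackles advanced cryptics with regular success


def classify(s: Solver) -> str:
    """Return 'S', 'H' or 'O' under the 3-way scheme described in the text."""
    is_super = (
        s.professional_setter_or_editor
        or s.usual_solve_minutes < 15   # assumed proxy for regular speed-solving
        or s.tncc_finalist
        or s.roll_of_honor
    )
    if is_super:
        return "S"
    # Experts who are not Super-Experts are High solvers.
    is_expert = s.usual_solve_minutes <= 30 or s.regular_success_at_advanced
    return "H" if is_expert else "O"


print(classify(Solver(45)), classify(Solver(25)), classify(Solver(12)))
```

Under the 2-way scheme, the E group is simply the union of the H and S outcomes of this rule.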

Survey responses were strongly encouraged from those who had been tackling cryptics for at least 2 years, and preferably for 5+ years. Respondents could therefore be assigned to the appropriate research group on the basis of mature performance in the field, avoiding possible confounds which can arise when classifying inexperienced novices of unquantifiable potential. In the event, only 11 participants with less than 2 years' experience completed the survey (1.4% of the total); of these, 9 were classified as O and 2 as H.

Solvers (n = 805) fell almost equally between O (n = 401, 49.8%) and E (n = 404, 50.2%). This high proportion of experts was attributable to recruitment methodology (particularly for Survey 1) and is not believed representative of the general level of solver expertise within the cryptic crossword solving community. Within the E group, H solvers (n = 225, 28.0% of overall total) outnumbered S solvers (n = 179, 22.2%).

As in other expertise studies (Tuffiash et al., 2007; Toma et al., 2014), the qualifying bar for the S designation was set rigorously high. Inspection of individuals coded to S revealed that many were acknowledged experts in the field, including: 49 professional setters or editors; 27 TNCC finalists, including 5 outright winners; and 111 roll of honor Listener/Magpie crossword solvers, of whom 31 had achieved an all-correct year. Some individuals qualified as S by virtue of two or more Pro/Speed/Advanced criteria (n = 52, 29% of S group). S group members were all known by name/reputation to the authors, and their skill level may be objectively verified by reference to publicly available competition statistics and compiler listings. The S category thus indisputably represents an elite body of top-class performers in the field.

Demographic data were supplied by 805 respondents; key points for discussion are summarized in Table 1.

The proportion of males increased with expertise (O: M = 294, 73.3%; H: M = 178, 79.1%; S: M = 160, 89.4%): see Table 1. There was a highly significant association between expertise and gender [χ² = 19.01, p < 0.001, Cramer's V = 0.154]. Post-hoc tests using standardized residuals indicated that this was driven by female participation in the O (z = 2.2, p < 0.05) and S (z = −3.1, p < 0.01) groups, with female participation dropping from over 25% in O to around 10% in S; no other interactions were significant. The low proportion of female participants may be an artifact of sample selection (Crossword Centre⁴ members are predominantly male, and there is higher male participation in competitive events and professional crossword setting: Balfour, 2004); however, subscriptions to the Magpie⁵ at the time of the survey also showed low levels of actual female participation, with membership running at 178M/10F, plus 6 couples who solve and submit as a pair. This resulted in a female subscription level of 8% (16F out of 200, counting each member of a couple individually), comparable to our S data.
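The gender-by-expertise association reported above can be reproduced from the published counts. The sketch below is a hand-rolled Pearson chi-square using only the standard library; the female counts are derived by us as group size minus the reported male count, and the closed-form p-value used here holds only because this 3 × 2 table has 2 degrees of freedom.

```python
import math

# Observed (male, female) counts per group; female = group n minus reported males.
observed = {"O": (294, 401 - 294), "H": (178, 225 - 178), "S": (160, 179 - 160)}

n = sum(sum(row) for row in observed.values())
col_totals = [sum(row[j] for row in observed.values()) for j in range(2)]

chi2 = 0.0
std_resid = {}
for group, row in observed.items():
    row_total = sum(row)
    residuals = []
    for j, obs in enumerate(row):
        expected = row_total * col_totals[j] / n
        chi2 += (obs - expected) ** 2 / expected
        # Standardized (Pearson) residual for this cell.
        residuals.append((obs - expected) / math.sqrt(expected))
    std_resid[group] = tuple(residuals)

df = (len(observed) - 1) * (2 - 1)
cramers_v = math.sqrt(chi2 / (n * min(len(observed) - 1, 2 - 1)))
p_value = math.exp(-chi2 / 2)  # chi-square upper tail; exact only for df == 2

print(round(chi2, 2), df, round(cramers_v, 3), p_value)
print({g: round(r[1], 1) for g, r in std_resid.items()})  # female residuals
```

The female-cell residuals for O and S computed this way match the post-hoc z values quoted in the text, which suggests (though the paper does not state it) that unadjusted Pearson residuals were used.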

Respondents were asked to supply their handedness: responses were coded as right/non-right (i.e., including ambidexterity), but will be referred to as right/left for convenience. Solvers were predominantly right-handed, with overall levels of left-handedness running at 11.3% (see Table 1). Levels of left-handedness among O and H solvers fell between 8 and 12%, which would be considered low-normal within the general population (10 to 13.5%; e.g., Gobet and Campitelli, 2007). S solvers showed higher levels of left-handedness (14.0%); however, the overall association between handedness and expertise was not statistically significant [χ² = 3.893, p = 0.143, Cramer's V = 0.07]. Nevertheless, an analysis of the handedness of professional setters/editors (n = 49, all coded as S) showed a striking level (22.4%) of left-handedness; conversely, the other S group members (n = 130) showed a more conventional profile (10.8%). Reanalyzing the handedness statistics with professionals identified separately yielded a statistically significant association [χ² = 8.734, p = 0.033, Cramer's V = 0.104]. Post-hoc inspection of the standardized residuals indicated that this was driven solely by the level of left-handedness within the Pro group (z = 2.3, p < 0.05).

Expertise groups showed very similar age profiles (O: 18–84 years of age, Mdn = 54; H: 23–83 years of age, Mdn = 53; S: 21–81 years of age, Mdn = 54). A Kruskal-Wallis H analysis showed no statistical difference in age between the groups [H(2) = 0.045, p = 0.978].

Most cryptic crossword solvers began in their teens: 67.3% of respondents (n = 542) had started by the age of 20, and this rose to 88.2% by the age of 30 (n = 710). Starting age ranged from 6 to 65 (Mdn = 18), and the most popular age for commencing was 15 (n = 79; 9.8%) followed by 18 (n = 77; 9.6%). Only 21 respondents (2.6%; O: n = 2; H: n = 7; S: n = 12) claimed to have started solving cryptics before the age of 10; of these the majority (n = 20, 95%) had a parent or family member who also solved cryptics, and they learned the rules of solving from their parents (n = 19, 90%). There were significant group differences in starting age [H(2) = 122.70, p < 0.001]: S started earliest (Range = 6–40, Mdn = 15) followed by H (Range = 6–55, Mdn = 17) and then O (Range = 8–65, Mdn = 20). Pairwise comparisons, using Dunn's procedure (Dunn, 1964) with Bonferroni correction for multiple comparisons, revealed statistically significant differences in starting years between all groups, all with an adjusted significance of p < 0.001 (effect sizes O-H r = 0.21; H-S r = 0.27; O-S r = 0.45).

The number of years spent solving increased with expertise, and this was broadly in line with the 2–3 year group differences in starting age (O: Range = 1–62, Mdn = 30; H: Range = 1–64, Mdn = 34; S: Range = 5–66, Mdn = 37). Differences were statistically significant [H(2) = 42.81, p < 0.001]. Pairwise comparisons were performed, as previously. These revealed statistically significant differences in median years spent solving between O-H (p = 0.002, r = 0.14), H-S (p = 0.010, r = 0.15) and O-S (p < 0.001, r = 0.27) groups (adjusted p-values are presented). However, on average, members of each of the three groups had been tackling cryptics for approximately 30–40 years and were thus all highly experienced; and, regardless of group, 729 of the 805 respondents (90.6%) had been solving for 10 years or more. In common with findings in other expertise areas such as chess (Gobet and Ereku, 2014; Hambrick et al., 2014b; Campitelli, 2015), a small number of cryptic crossword solvers had achieved levels of high expertise within 5 years of starting (H = 9, S = 1). Conversely, many respondents (n = 57) had been solving for 45 years or more, but had remained as O.

The survey collected a wide range of data relating to practice, experience and the range and difficulty of puzzles undertaken. Full details will be discussed elsewhere; the current paper presents summarized data only for key fields.

Respondents (n = 802) estimated how many hours they spent each week solving cryptic crosswords. Results are summarized in Table 2A.

Hours spent solving cryptic crosswords increased with expertise (O: M = 7.02; H: M = 7.27; S: M = 7.85); however, the median was 6 hours across all groups. This equates to approximately 45 min–1 h per day, or 1–2 blocked grid crosswords at typical solving speeds. Analysis of the difference in distribution of hours spent solving crosswords across the three groups was not statistically significant [H(2) = 2.27, p = 0.321].

Participants were also asked to estimate the amount of time spent on other crossword activities such as cryptic crossword social gatherings, blogging, consulting on-line solution pages or message boards, composing or test-solving cryptic crosswords and entering competitions. Results (n = 803) are summarized in Table 2B. Crossword related activity increased with expertise (O: M = 1.31; H: M = 1.78; S: M = 3.97); analysis of the difference in time spent on crossword related activity across groups was statistically significant [H(2) = 47.01, p < 0.001]. Pairwise comparisons were performed, as previously. This revealed statistically significant differences in time spent between O-H (p = 0.009, r = 0.12), H-S (p = 0.001, r = 0.18) and O-S (p < 0.001, r = 0.28) groups (adjusted p-values are presented).

Much of this difference was driven by the inclusion of the 49 crossword setters/editors within the S category, who would be expected to spend considerable amounts of crossword related time each week to fulfill their professional obligations: see Table 2B. Excluding these individuals, all groups spent on average less than 2 h per week (around 20 min per day) on other crossword related activities. Differences between the groups were still statistically significant [H(2) = 16.76, p < 0.001]. Pairwise comparisons revealed statistically significant differences between O-H (p = 0.007, r = 0.12) and O-S (p = 0.001, r = 0.15) alone; however comparison of H-S was no longer significant.

Education data were supplied by 780 respondents (O: n = 383; H: n = 220; S: n = 177); key points for discussion are summarized in Table 3 with further discussion in the following text.

Respondents were asked to indicate their highest level of educational qualification either by radio button selection of standard UK options (e.g., O Level/GCSE, BA/BSc, PhD), or by free-text description, where these options were inappropriate. Data were reviewed independently by both authors, and assigned to the 8 bands of educational level (e.g., 0 = No Quals; 3 = A Level; 6 = BA/BSc; 8 = PhD) currently recognized by the UK Government (Gov.UK, 2015). An Independent Samples Mann-Whitney U test was conducted to determine whether there were differences in the distribution of educational qualification between expertise categories (O: n = 383; E: n = 397). The mean rank of E (403.76) was higher (more qualified) than for O respondents (376.76), although this trend was not statistically significant (U = 81,288.0, z = 1.77, p = 0.077).
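The relationship between the reported mean ranks and the U statistic can be checked by hand. The sketch below assumes, without confirmation from the text, that the reported U is the one computed for the E group; small discrepancies are expected because the mean ranks are rounded to two decimals.

```python
def u_from_mean_rank(mean_rank: float, n_group: int) -> float:
    """Mann-Whitney U for a group, from its mean rank and size: U = R - n(n + 1)/2."""
    rank_sum = mean_rank * n_group
    return rank_sum - n_group * (n_group + 1) / 2


n_o, n_e = 383, 397
u_e = u_from_mean_rank(403.76, n_e)  # close to the reported U of 81,288.0

# Sanity check: the ranks 1..N must sum to N(N + 1)/2 across both groups.
n_total = n_o + n_e
expected_rank_sum = n_total * (n_total + 1) / 2
reported_rank_sum = 376.76 * n_o + 403.76 * n_e

print(round(u_e, 1), expected_rank_sum, round(reported_rank_sum, 1))
```

The same relation can be applied to any other Mann-Whitney result that is reported with mean ranks and group sizes.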

Respondents as a whole were very highly educated (see Table 3A), with 81.4% (n = 635) having achieved a university qualification; this was higher for E solvers (83.6%, comprising H: 85.0%; S: 81.9%) than for O (79.1%), although this difference failed to achieve statistical significance [χ² = 2.626, p = 0.105, Cramer's V = 0.058]. Many solvers had postgraduate qualifications (37.3%, typically MSc/MA or PhD) and this was again higher for E solvers (39.3%, comprising H: 39.5%; S: 39.0%) than for O (35.2%). Again, these differences failed to achieve statistical significance [χ² = 1.365, p = 0.243, Cramer's V = 0.042]. PhDs accounted for 11.9% (n = 93) of all qualifications (O: 10.7%; H: 13.6%; S: 12.4%).

In view of the median age of the respondent sample (54 when completing the survey in 2007/2010, giving an approximate matriculation period of 1970–1975), these findings are exceptionally high: overall participation in UK higher education “increased from 3.4% in 1950, to 8.4% in 1970, 19.3% in 1990 and 33% in 2000” (Bolton, 2012, p. 13).

Survey participants were asked to indicate in a free-text field the main subject they studied in their education. This information was independently reviewed by both authors and coded to 43 subject areas; after resolution of differences these were then aggregated to 17 broad subject fields (see Appendix A in Supplementary Material) within 6 educational sectors: Arts and Design; Business; Human Studies; STEM; Wordsmiths; Other (unclassifiable/too many subjects identified). Most participants specified one subject which, given the generally high level of education achieved, was considered likely to be their degree/postgraduate specialism.

Data were provided by 780 participants (O: n = 383; H: n = 220; S: n = 177), and were first analyzed by the 6 educational sectors. The analysis revealed a pronounced bias toward STEM subjects amongst all participants (see Table 3B). STEM subjects accounted for 51.4% of the main subjects studied, Wordsmith specialisms for 25.9% and Human Studies for 11.2%, with all other sectors being < 5% each. The proportion of STEM subjects increased with expertise (O: 49.3%; H: 50.0%; S: 57.6%). Conversely, Wordsmith subjects were least studied by S solvers (23.7%), although H studied them more than O (O: 25.1%; H: 29.1%). Overall, however, the differences in educational sector by expertise groups failed to achieve statistical significance at this aggregated level [χ² = 12.642, p = 0.244, Cramer's V = 0.09].

A further chi-square analysis of expertise was carried out by the 17 broad subject fields. The results were highly significant [χ² = 63.316, p = 0.001; Cramer's V = 0.201]. Post-hoc tests using standardized residuals indicated that this was driven by two categories: Mathematics (see Table 3C), which increased strikingly with expertise (O: 13.8%, n = 53, z = −2.5, p < 0.05; H: 19.1%, n = 42, z = −0.1, ns; S: 31.6%, n = 56, z = 3.7, p < 0.001); and Engineering, which was primarily studied by non-experts (O: 6%, n = 23, z = 2.0, p < 0.05; H: 2.3%, n = 5, z = −1.3, ns; S: 1.7%, n = 3, z = −1.5, ns). No other items were significant.

Finally, educational subject data by participant (n = 757; O: n = 368; H: n = 214; S: n = 175) were assigned to “RIASEC” coding (“Holland codes”: Gottfredson and Holland, 1996; Nauta, 2010: see further Box 2) using the standard listing supplied in the “CIP to HOC” section (Classification of Instructional Program Titles to Holland Occupational Codes) of the coding manual (Gottfredson and Holland, 1996). Forty-one distinct Holland codes were applied. Twenty-three records were not coded: in most cases participants had ceased education at 14–16 years old and were unable to supply a main subject. The mapping of subject area to code was relatively straightforward, and data assignation was double-checked by a second reviewer.

Box 2. RIASEC (“Holland” Codes)

RIASEC (“Holland”) codes consist of a 3-letter code (e.g. “IRE,” “AES”) comprising the three RIASEC types (in order of significance) which the subject most resembles:

• “R” (Realistic: mechanical ability; works with objects, animals, plants; independent)

• “I” (Investigative: thinkers who investigate, analyze, research or problem-solve; scholarly)

• “A” (Artistic: creative, imaginative, unstructured, word-skills)

• “S” (Social: communicators, helpers, trainers; compassionate)

• “E” (Enterprising: persuaders, leaders, influencers; outgoing, energetic)

• “C” (Conventional: organizers; liking for detailed, orderly work following instructions; careful, conforming, clerical)

See further Gottfredson and Holland (1996).

The most common full Holland code assigned to participant education was “IRE” (n = 304, 40.2%), and S participants were particularly associated with this code (O: 38.0%; H: 35.5%; S: 50.3%). Typical educational programs associated with “IRE” are Computer Programming, Engineering, Mathematics, Physics and Analytical Chemistry. The codes “AIE” (n = 95, 12.5%; O: 11.1%; H: 11.7%; S: 16.6%; Modern Languages and Classics), “ASE” (n = 77, 10.2%; O: 11.1%; H: 13.1%; S: 4.6%; English Language and Literature) and “IRS” (n = 40, 5.3%; O: 5.2%; H: 4.7%; S: 6.3%; Biochemistry, Genetics, Medical Specialties) all featured prominently; the other 37 codes each accounted for < 5% of the responses. Analysis of the distribution of full Holland codes for education by Expertise groups (top 9 codes only, with the remaining codes aggregated to form a 10th group) was statistically significant [χ² = 31.19, p = 0.027, Cramer's V = 0.144]. Inspection of standardized residuals indicated that this was driven by two values: the significantly large proportion of S participants coded to “IRE” (z = 2.1, p < 0.05) and the low proportion of S coded to “ASE” (z = −2.3, p < 0.05).

Following assignation of educational subject data to 3-letter codes, responses were analyzed by the primary RIASEC letter (see Table 4).
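Aggregating 3-letter codes by their primary letter is a simple tally on the first character of each code. The sketch below illustrates the idea on a small invented list of codes; the function name and the example data are ours and do not reproduce the survey dataset.

```python
from collections import Counter


def primary_letter_shares(codes):
    """Percentage of records falling under each primary (first) RIASEC letter."""
    counts = Counter(code[0] for code in codes)
    total = sum(counts.values())
    return {letter: round(100 * count / total, 1) for letter, count in counts.items()}


# Invented example codes, for illustration only.
example_codes = ["IRE", "IRE", "AIE", "ASE", "IRS", "CSI", "IRE", "SEC"]
shares = primary_letter_shares(example_codes)
print(shares)
```

Because only the first letter is retained, codes such as “IRE” and “IRS” are pooled under “I”, which is why the aggregated analysis can show a different pattern of significance from the analysis of full codes.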

This aggregation revealed a very strong bias across all groups toward “I” subjects (57.2%) generally thought to be indicative of analytical, scholarly, scientific research. “A” was evident (26.9%), but was stronger for O and H (26.9%, 29.4%) than for S (24.0%); conventional and hands-on subjects (“C,” “R”) were particularly poorly represented across the board. A chi-square analysis was not, however, significant [χ² = 8.561, p = 0.574, Cramer's V = 0.075], although the proportion of S participants who took “I” subjects was noticeably higher (z = 1.2) than O (z = −0.5) or H (z = −0.4).

Respondents were asked to supply their main paid occupation during their working life; teachers and lecturers were asked to state their main specialism. 780 responses were received (O: n = 383; H: n = 220; S: n = 177). Independent analysis of these details by both authors allowed for the allocation of respondents to 40 occupation areas based on discipline/field of work. Following resolution of differences, these were then aggregated to 23 occupational fields and thence to 7 occupational sectors: STEM, Finance, Office/Business, Wordsmiths, Performance, Manual, Other. For details, see Appendix B in Supplementary Material.

Data were first examined by the 7 occupational sectors (see Table 5A). The analysis revealed a pronounced bias toward STEM occupations amongst all participants. STEM accounted for 45.4% of occupations (n = 354), accompanied by a further 10.4% (n = 81) in the Financial area, where numerical/data handling skills are also assumed to be of importance. Office/Business accounted for 19.4% (n = 151) and Wordsmiths for 14.4% (n = 112), with all other sectors being < 6% each. Differences in occupational sector by expertise groups were statistically significant at this aggregated level [χ² = 23.73, p = 0.022, Cramer's V = 0.123], and inspection of standardized residuals indicated that the key driver was Finance, where incidence increased with expertise (O: 7.8%, n = 30, z = −1.5, ns; H: 10.0%, n = 22, z = −0.2, ns; S: 16.4%, n = 29, z = 2.5, p < 0.05). Involvement in general Office/Business activities decreased with expertise (O: 22.5%, n = 86, z = 1.4, ns; H: 17.7%, n = 39, z = −0.6, ns; S: 14.7%, n = 26, z = −1.4, ns); and, as before, Wordsmith activity was seen predominantly in H (17.3%, n = 38, z = 1.1, ns) with S showing least involvement (S: 10.2%, n = 18, z = −1.5, ns; O: 14.6%, n = 56, z = 0.1, ns). However, these latter drivers failed to achieve statistical significance.

Data relating to key areas of interest (STEM and Finance) were explored in finer detail (see Table 5B). By far the largest category, accounting for 24.2% of responses (n = 189) across all groups, was Technology/IT. A chi-square analysis of Table 5B data was highly significant [χ² = 46.08, p < 0.001; Cramer's V = 0.172]. Post-hoc tests using standardized residuals indicated that this was driven by four areas: Banking/Accountancy, which increased with expertise (O: 6.3%, n = 24, z = −1.1, ns; H: 6.4%, n = 14, z = −0.8, ns; S: 13.0%, n = 23, z = 2.5, p < 0.05); Engineering, predominantly pursued by O solvers (O: 6.0%, n = 23, z = 2.7, p < 0.01; H: 0.9%, n = 2, z = −2.0, p < 0.05; S: 1.1%, n = 2, z = −1.7, ns); and Technology/IT, which increased with expertise (O: 20.6%, n = 79, z = −1.4, ns; H: 24.5%, n = 54, z = 0.1, ns; S: 31.6%, n = 56, z = 2.0, p < 0.05). Thus, nearly a third (31.6%) of S solvers pursued a career in the IT field, compared to about a fifth (20.6%) of O solvers. The aggregated category (Not STEM/Finance) was also significant, with S showing a significantly lower proportion of job activity outside these two areas (O: 47.0%, n = 180, z = 0.8, ns; H: 47.3%, n = 104, z = 0.7, ns; S: 34.5%, n = 61, z = −2.0, p < 0.05).

Participant occupation data (n = 769; O: n = 380; H: n = 217; S: n = 172) were assigned to Holland codes by an independent coder, using the standard listing supplied in the “DOT to HOC” section (Dictionary of Occupational Titles to Holland Occupational Codes) of the coding manual (Gottfredson and Holland, 1996). Sixty-one distinct Holland codes were assigned in this process. Eleven participant records were not coded: in these cases respondents had either not worked (e.g., through ill-health) or were unclassifiable.

Following double-checking by both authors and resolution of differences, the full Holland codes were scrutinized. The code “IRE” was again most commonly found, being assigned to 155 participants overall (20.2%), and this increased with expertise (O: 18.7%; H: 21.2%; S: 22.1%). Occupations typically assigned to this code included geologists, statisticians, mathematics teachers/lecturers, software engineers and science teachers/lecturers. The codes “IER” (n = 56, 7.3%; O: 7.6%; H: 7.8%; S: 5.8%; engineers, financial consultants, systems analysts), “AIE” (n = 51, 6.6%; O: 6.6%; H: 8.8%; S: 4.1%; English teachers, writers/editors), “IRC” (n = 45, 5.9%; O: 5.3%; H: 5.5%; S: 7.6%; computer programmers) and “CSI” (n = 30, 3.9%; O: 3.2%; H: 3.7%; S: 5.8%; accountants) were also prominent; the other 56 Holland codes each accounted for < 3.9% of the responses. Analysis of the distribution of full Holland codes by expertise (top 19 codes only, with the remaining codes aggregated to form a 20th group) failed, however, to achieve statistical significance [χ² = 34.72, p = 0.622, Cramer's V = 0.150].

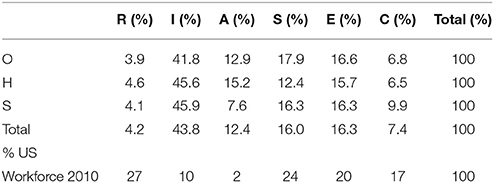

Responses were also analyzed by the primary RIASEC letter and compared with indicative norms for the 2010 US workforce (McClain and Reardon, 2015): see Table 6.

Table 6. RIASEC analysis of main occupational field by expertise group in comparison with US workforce norms.

Overall scores again showed a strong bias toward “I” activities, accounting for 43.8% of the responses (O: 41.8%; H: 45.6%; S: 45.9%), compared to 10% in the 2010 US population norms (McClain and Reardon, 2015). Given the broadly comparable economic and technological profiles of the US and the UK, this appears considerably higher than might be expected. Employment in “I” fields typically involves relatively small numbers of largely STEM-based occupations involving highly-qualified individuals (McClain and Reardon, 2015).

“A” occupations (12.4%; publishers, journalists, writers, English teachers) were also considerably more prominent than US population norms (2%), and this Wordsmith activity was once more higher for H participants, with S being particularly low. While a chi-square analysis of primary RIASEC codes by expertise failed to achieve overall significance [χ² = 10.05, p = 0.436, Cramer's V = 0.081], the comparatively low proportion of S occupied in “A” careers approached significance (S: 7.6%, n = 13, z = −1.8).

Code “S” was more prominent for survey occupation data than for education, and tended to reflect occupational team-building, committee and communications skills: for example, “SEC” (n = 29, 3.8%; e.g., civil servant) and “SER” (n = 22, 2.9%; e.g., company board director). Careers assigned to “R” tend to involve high levels of employment in relatively low-grade practical or mechanical tasks (McClain and Reardon, 2015), and were poorly represented among our survey population. Careers coded to “C” were more common for S (z = 1.2) than for O (z = −0.4) or H (z = −0.5), reflecting the larger proportion of accountants in this group (“CSI”).

As part of the Holland coding process, occupation complexity was also recorded, using the Cx rating score in the “DOT to HOC” section of the coding manual (Gottfredson and Holland, 1996). This rating reflects the cognitive complexity of work demands (for the calculation algorithm, see Gottfredson and Holland, 1996, p. 723). Holland Cx scores range from < 40 to >80; a Cx rating of 65 or higher is associated with a college degree and 4–10 years of “On-Job-Training” (Reardon et al., 2007).

Survey respondents were engaged in cognitively complex jobs, with the mean and median scores for all groups being close to 70: see Table 7. Over half the respondents (53.8%) fell into the 70–79 band. Typical career options in the 60–69 band include secondary school teachers, middle-ranking civil servants and journalists; and in the 70–79 band lawyers, physicians, software engineers, company directors and university faculty staff. There were 151 teachers: 102 were predominantly secondary school specialists (Cx = 66); 49 were university faculty members (Cx = 74), including 9 at UK professorial level (Cx = 78).

Job complexity increased slightly with expertise (O: M = 69.2; H: M = 69.4; S: M = 69.7) and there were fewer S participants in the two lower complexity bands; however, job complexity did not differ significantly across expertise groups [H(2) = 1.230, p = 0.541].
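As a reading aid for the Kruskal-Wallis results reported as H(2), the following minimal sketch shows how the p-value follows from the statistic. It assumes the usual chi-square approximation with 2 degrees of freedom (three expertise groups), for which the upper-tail probability has the closed form exp(−H/2). The function name `kruskal_p_df2` is illustrative only and is not taken from the authors' analysis.

```python
import math


def kruskal_p_df2(h: float) -> float:
    """Upper-tail p-value of a chi-square variate with 2 degrees of freedom.

    For df = 2 the chi-square survival function reduces to exp(-h / 2),
    so no statistics library is needed for this special case.
    """
    return math.exp(-h / 2.0)


# H statistic reported in the text for job complexity by expertise group.
p_job_complexity = kruskal_p_df2(1.230)
print(round(p_job_complexity, 3))
```

Under this approximation the job-complexity statistic gives a p-value of about 0.541, in line with the reported figure.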

The short-form “Need for Cognition” (NFC) scale seeks responses to 18 statements relating to a person's tendency to seek out, engage in and enjoy effortful thinking (Cacioppo et al., 1984; von Stumm and Ackerman, 2013). Sample questions include (both reverse coded): “I like tasks that require little thought once I've learned them” and “Thinking is not my idea of fun.” Respondents (n = 764; O: n = 377; H: n = 212; S: n = 175) rated each statement on a 5-point Likert scale (1 = “Completely Disagree”; 5 = “Completely Agree”). Full details of the results obtained will be discussed elsewhere; the current paper presents summarized data only.

Scores were corrected for reverse coding and averaged by participant to produce an individual NFC score. Overall, respondents showed mean NFC levels significantly in excess of the mid-point, 3: t(763) = 36.55, p < 0.001, d = 1.32. Indeed, no expertise group means fell below 3 on any of the individual 18 statements. NFC increased with expertise (O: M = 3.71, Mdn = 3.78; H: M = 3.77, Mdn = 3.78; S: M = 3.79, Mdn = 3.83) but differences were not statistically significant [H(2) = 3.319, p = 0.190]. A Mann-Whitney U test was also conducted to determine whether there were differences in the distribution of NFC scores between broad expertise categories (O: n = 377; E: n = 387). The mean rank of E (396.54) was higher (greater NFC) than for O respondents (368.09) and this approached statistical significance (U = 78,383.5, z = 1.783, p = 0.075).
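The scoring and test statistics in this paragraph can be sketched as follows. This is an illustration under stated assumptions rather than the authors' procedure: the positions of the reverse-coded items (`REVERSED_ITEMS`) are hypothetical, the effect size is recovered with the standard one-sample relation d = t/√n, and the Mann-Whitney z is computed from the reported mean rank without a correction for ties, so it should only approximate the published value.

```python
import math

# Hypothetical positions of reverse-coded items (assumption: the real
# scoring key comes from the published scale, not from this sketch).
REVERSED_ITEMS = {2, 3}


def nfc_score(responses, reversed_items=REVERSED_ITEMS, scale_max=5):
    """Mean item score after flipping reverse-coded items (1<->5, 2<->4)."""
    corrected = [
        (scale_max + 1 - r) if i in reversed_items else r
        for i, r in enumerate(responses)
    ]
    return sum(corrected) / len(corrected)


def cohens_d_from_t(t, n):
    """One-sample Cohen's d recovered from t and sample size: d = t / sqrt(n)."""
    return t / math.sqrt(n)


def mann_whitney_z(mean_rank_1, n1, n2):
    """U for group 1 from its mean rank, plus z without tie correction."""
    u1 = mean_rank_1 * n1 - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return u1, (u1 - mu) / sigma


toy_score = nfc_score([4, 5, 2, 1])            # toy 4-item respondent
d_nfc = cohens_d_from_t(36.55, 764)            # t and n as reported
u_e, z_e = mann_whitney_z(396.54, 387, 377)    # E group mean rank and sizes
print(toy_score, round(d_nfc, 2), round(u_e), round(z_e, 2))
```

With the reported inputs this gives d ≈ 1.32, U ≈ 78,383 and z ≈ 1.78, close to the published values; the small gap in z is what a tie correction would be expected to account for.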

In a separate question (reported later in Table 12), participants were asked to rate suggested motivators for their engagement with cryptic crossword solving. Full details of these motivators will be discussed elsewhere; however the wording of one suggested motivator is strongly reminiscent of the NFC: “My brain constantly demands to be engaged in intellectual pursuits in all I do.” Responses (n = 786; O: n = 388; H: n = 221; S: n = 177) rated this statement on a 5-point Likert scale, as above. Scores increased with expertise (O: M = 2.72, Mdn = 3; H: M = 3.2, Mdn = 3; S: M = 3.36, Mdn = 4) and differences were statistically significant [H(2) = 37.79, p < 0.001]. Pairwise comparisons were performed as above. These revealed statistically significant differences between O-H (p < 0.001, r = 0.18) and O-S (p < 0.001, r = 0.23) groups (adjusted p-values are presented). The difference between H-S was not statistically significant.

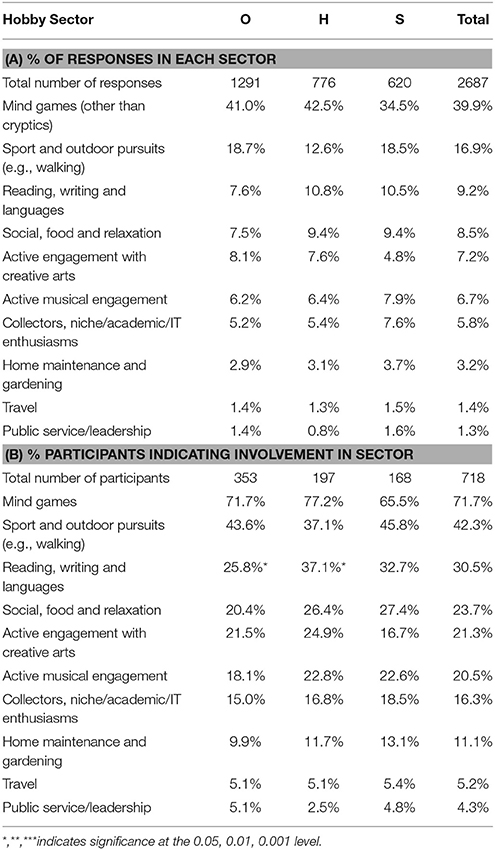

Participants were also asked about their hobbies outside cryptic crosswords. Data were collected in two parts: the first collected details of levels of engagement with other mind game activity (e.g., chess, Sudoku, Scrabble, non-cryptic crosswords); the second asked for free-text details of any other significant hobby activity. Data from these two sources were segmented, coded and combined to provide a rounded picture of cryptic crossword enthusiasts' leisure-time activities; mind game data were included only for those participants who engaged “regularly” with these activities. Final consolidated data related to 718 participants (O: n = 353; H: n = 197; S: n = 168) who participated in a total of 2687 hobbies (responses per participant, Range = 1–14; M = 3.74, Mdn = 3).

Sixty-two participants (8%) indicated that they had no important hobbies other than cryptic crosswords, or left this field blank (O: n = 31, 8%; H: n = 22, 10%; S: n = 9, 5%).

Hobbies were coded to hobby areas by an independent coder; these were then aggregated to 10 broad hobby sectors (see Table 8A), and three researchers (including the authors) then reviewed and agreed all codings. Overall, hobby replies (n = 2687) showed a pronounced bias toward cognitively challenging pastimes: 39.9% (n = 1073) related to other mind games (9.1% Sudoku, 4.9% Trivial Pursuits/Pub Quizzes; though only 2.8% non-cryptic crosswords and 2.3% Scrabble); 9.2% to reading/writing, and learning foreign languages (predominantly reading 6.8%); and 5.8% to academic or niche pursuits (e.g., astronomy, mycology, philately, philology/semiotics and transport enthusiasms). Responses also showed engagement with a wide range of sporting and outdoor activities, whether as participants or spectators (16.9%); and an active interest in the creative arts (7.2%) and music (6.7%).

Table 8. Hobby sectors by expertise, showing % of total responses and % of participants with at least one interest in the sector.

Data were then examined by expertise group to identify differences in respondent participation levels (n = 718) for each hobby sector (Table 8B). Findings will be reported in greater detail elsewhere. There was a particularly striking tendency for participants to pursue at least one mind game in addition to cryptic crosswords (71.7%), with H showing the highest levels (O: n = 253, 71.7%, z = 0.0; H: n = 152, 77.2%, z = 0.9; S: n = 110, 65.5%, z = −1.0). Overall, there were modest differences between the groups in a few sectors: e.g., H and S solvers were more likely to have a hobby involving active musical engagement (O: n = 64, 18.1%, z = −1.0; H: n = 45, 22.8%, z = 0.7; S: n = 38, 22.6%, z = 0.6), but S were less likely to engage in creative arts (O: n = 76, 21.5%, z = 0.1; H: n = 49, 24.9%, z = 1.1; S: n = 28, 16.7%, z = −1.3). However, only the comparison of participation in reading/writing/languages by expertise group was statistically significant [χ² = 8.103, p = 0.017, Cramer's V = 0.106]. Scores were higher among E solvers than O, with H showing particularly high levels of involvement (O: n = 91, 25.8%, z = −1.6; H: n = 73, 37.1%, z = 1.7; S: n = 55, 32.7%, z = 0.5).
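The reading/writing/languages comparison can be reproduced from the counts given in the text. The sketch below rests on assumptions that are inferences, not statements from the authors: the test is treated as a Pearson chi-square on a 3 × 2 table (expertise group by participation yes/no), and the z values are interpreted as standardized residuals, (observed − expected)/√expected, for the participating cell. Variable names are illustrative.

```python
import math

# Counts reported in the text for the reading/writing/languages sector:
# participants with at least one such hobby, and the size of each group.
with_hobby = {"O": 91, "H": 73, "S": 55}
group_size = {"O": 353, "H": 197, "S": 168}

total = sum(group_size.values())
share = sum(with_hobby.values()) / total  # overall participation rate

chi2 = 0.0
residuals = {}
for g, n in group_size.items():
    exp_yes = n * share          # expected participants under independence
    exp_no = n - exp_yes         # expected non-participants
    obs_yes = with_hobby[g]
    obs_no = n - obs_yes
    chi2 += (obs_yes - exp_yes) ** 2 / exp_yes + (obs_no - exp_no) ** 2 / exp_no
    residuals[g] = (obs_yes - exp_yes) / math.sqrt(exp_yes)

# Cramer's V for a 3 x 2 table: min(rows, cols) - 1 = 1.
cramers_v = math.sqrt(chi2 / (total * 1))
# df = (3 - 1) * (2 - 1) = 2, where the chi-square tail is exp(-x / 2).
p_value = math.exp(-chi2 / 2)

print(round(chi2, 2), round(p_value, 3), round(cramers_v, 3))
print({g: round(z, 1) for g, z in residuals.items()})
```

Under these assumptions the calculation gives a chi-square of about 8.10, p ≈ 0.017 and V ≈ 0.106, with residuals of roughly −1.6, 1.7 and 0.5 for O, H and S, which agrees with the figures in the paragraph above.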

In addition to the hobbies question, participants were asked to indicate their level of ability in music-making. Responses were given on a 6-point Likert scale (1 = “No interest/ability”; 6 = “Total immersion/professional level”). There were 767 responses to this question (O: n = 377; H: n = 215; S: n = 175). Overall, participants indicated that they had a modest competence in music (M = 3.02, Mdn = 3). Musical ratings were slightly higher for S participants (O: M = 3.00; H: M = 2.99; S: M = 3.13; all groups Mdn = 3). However, the overall comparison failed to achieve statistical significance [H(2) = 1.864, p = 0.394].

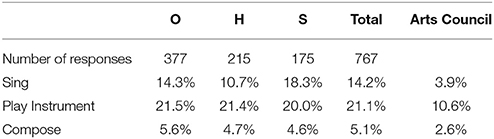

Participants were also asked whether they still participated in musical activities, and whether they sang, played or composed. Responses are summarized in Table 9.

Table 9. Active participation in musical activities at time of survey, by expertise group, in comparison with Arts Council figures for England 2013/14.

Responses were evenly matched across all groups, with the exception of singing, which was particularly high for S (18.3%, z = 1.4) and low for H (10.7%, z = −1.4). Even so, differences in the distribution of singing participation between groups failed to reach statistical significance [χ² = 4.564, p = 0.102, Cramer's V = 0.077]. Comparison with levels of singing, playing and composing in the general adult English population (DCMS, 2015) suggests that in all three areas the level of active music participation amongst cryptic crossword solvers is markedly above population norms.

Finally, hobbies (n = 2687) were analyzed by RIASEC coding using the 2-letter RIASEC code (“IR,” “AS” etc.) as supplied by the “Leisure Activities Finder” (Holmberg et al., 1997). Twenty-six of the 30 available codes were used. Over 70% of the hobbies fell into one of 7 categories: as Table 10 indicates, there were no marked differences in the distribution of responses between expertise groups; although H participants engaged least with both personal and team sport, and most with the arts; and S participants engaged least with word-based games and activities.

On a person-specific basis, primary RIASEC hobby codes were then aggregated and averaged to produce an individual primary RIASEC code profile. Results are shown in Table 11.
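One plausible reading of this person-specific aggregation is sketched below. It assumes that each hobby's primary code is the first letter of its 2-letter RIASEC code, that a participant's profile is the share of their hobbies falling under each letter, and that group figures are the mean of those individual profiles. The hobby codes in the example are invented for illustration and are not taken from the survey or from the Leisure Activities Finder.

```python
from collections import Counter

RIASEC = "RIASEC"  # Realistic, Investigative, Artistic, Social, Enterprising, Conventional


def primary_profile(hobby_codes):
    """Share of one participant's hobbies whose primary (first) letter is each type."""
    counts = Counter(code[0] for code in hobby_codes)
    n = len(hobby_codes)
    return {letter: counts.get(letter, 0) / n for letter in RIASEC}


def group_profile(participants):
    """Average the per-person profiles so that each participant counts equally."""
    profiles = [primary_profile(codes) for codes in participants]
    return {
        letter: sum(p[letter] for p in profiles) / len(profiles)
        for letter in RIASEC
    }


# Two hypothetical participants with made-up 2-letter hobby codes.
example = [["IR", "AS", "IA", "SE"], ["AS", "AI"]]
profile = group_profile(example)
print({letter: round(share, 3) for letter, share in profile.items()})
```

Averaging within each person first prevents participants who listed many hobbies from dominating the group percentages, which is one reason such a profile can differ from the raw response counts.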

Once again, the aggregation revealed a very strong tendency across all groups toward “I” activities (27.2%; O: 26.0%; H: 29.6%; S: 26.8%). “A” activities were even more important, and were higher among H solvers than O and S (30.7%; O: 30.2%; H: 32.8%; S: 29.3%): these involved musical, cultural, literary and word-based activities. “S” activities featured prominently for O and S participants, but not for H (22.8%; O: 24.1%; H: 17.3%; S: 26.3%): these tended to be socializing activities involving clubs, sports and family/friends.

Analysis of the distribution of RIASEC hobby scores by expertise was significant only for “S” activities [H(2) = 9.221, p = 0.010]. Pairwise comparisons were performed as above; adjusted p-values are presented. There were only statistically significant differences in scores between H-S, with S showing highest and H lowest scores (p = 0.009, r = 0.15).

Participants were asked to complete the “Work Preference Inventory” (WPI; Amabile et al., 1994), which was “designed as a direct, explicit assessment of individual differences in the degree to which adults perceive themselves to be intrinsically and extrinsically motivated toward what they do” (p. 952). Typical questions include “To me, success means doing better than other people” and “I have to feel that I'm earning something for what I do.” There were 766 responses (O: n = 377; H: n = 215; S: n = 174) rating each of 29 statements on a 5-point Likert scale (1 = “Completely Disagree”; 5 = “Completely Agree”). Full details of the results obtained will be discussed elsewhere; the current paper presents summarized data only.

Data were analyzed using Amabile et al.'s (1994) 2-factor breakdown, into Intrinsically (IM: n = 14) and Extrinsically (EM: n = 15) motivated statements, and averaged for each participant within these categories. Overall, respondents showed higher scores on IM items (M = 3.63, Mdn = 3.64) than EM items (M = 2.62, Mdn = 2.60), and mean WPI levels were significantly different from the mid-point (3) for both categories [IM: t(765) = 32.73, p < 0.001, d = 1.18; EM: t(765) = −18.97, p < 0.001, d = −0.69]. A Wilcoxon signed-rank test determined that there was a highly statistically significant difference (Mdn Diff = 1.04) between responses on IM and EM (z = 22.74, p < 0.001, r = 0.58).
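The effect sizes in this paragraph can be checked against the test statistics with two standard conversions. The sketch assumes d = t/√n for the one-sample tests and r = z/√N for the Wilcoxon test, with N taken as the total number of observations (two per respondent); the latter convention is an inference from the reported numbers rather than something the text states. Function names are illustrative.

```python
import math


def d_from_t(t, n):
    """One-sample Cohen's d from the t statistic: d = t / sqrt(n)."""
    return t / math.sqrt(n)


def r_from_z(z, n_pairs):
    """Effect size r = z / sqrt(N), with N counted as 2 observations per pair."""
    return z / math.sqrt(2 * n_pairs)


n = 766  # WPI respondents, as reported
d_im = d_from_t(32.73, n)     # intrinsic items vs. mid-point
d_em = d_from_t(-18.97, n)    # extrinsic items vs. mid-point
r_wpi = r_from_z(22.74, n)    # IM vs. EM, Wilcoxon signed-rank

print(round(d_im, 2), round(d_em, 2), round(r_wpi, 2))
```

These conversions give values of about 1.18, −0.69 and 0.58, consistent with the reported effect sizes. The same r convention also yields roughly 0.61 for the crossword-motivation comparison reported below (z = 24.23, 786 respondents).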

IM increased with expertise (O: M = 3.58; H: M = 3.67; S: M = 3.68) and differences were statistically significant [H(2) = 6.42, p = 0.040]. Pairwise comparisons were performed as above. These revealed differences in IM between O-S which approached statistical significance (p = 0.096, r = 0.09). The differences between H-O and H-S were not statistically significant. EM statements were rated most highly by H solvers and least highly by S (O: M = 2.60; H: M = 2.69; S: M = 2.57) but differences were not statistically significant [H(2) = 3.12, p = 0.21].

Participants were also asked to rate 26 statements relating to their motivation for solving cryptic crosswords on a 5-point Likert scale (1 = “Completely Disagree”; 5 = “Completely Agree”). The 26 statements were independently assigned to Intrinsic (IM: n = 19) and Extrinsic (EM: n = 7) motivational categories by the authors, following the methodology used in the WPI (Amabile et al., 1994). There were 786 responses (O: n = 388; H: n = 221; S: n = 177). Full details of the results obtained will be discussed elsewhere; the current paper presents summarized data only.

Data were analyzed into IM and EM statements (as above), and averaged for each participant within these categories. Overall, respondents again showed higher scores on IM items (M = 2.87, Mdn = 2.84) than on EM items (M = 1.53, Mdn = 1.43): see Table 12A. A Wilcoxon signed-rank test determined that there was a highly statistically significant difference (Mdn Diff = 1.41) between responses on IM and EM (z = 24.23, p < 0.001, r = 0.61).

IM increased with expertise (O: M = 2.73; H: M = 2.96; S: M = 3.04) and differences were statistically significant [H(2) = 39.28, p < 0.001]. Pairwise comparisons were performed as above. These revealed significant differences in IM between O-S (p < 0.001, r = 0.23) and O-H (p < 0.001, r = 0.19). The difference between H-S was not statistically significant.

EM increased with expertise (O: M = 1.32; H: M = 1.52; S: M = 2.00) and differences were statistically significant [H(2) = 166.85, p < 0.001]. Pairwise comparisons were performed as above. These revealed significant differences in EM between O-S, O-H and H-S (all p < 0.001; effect sizes O-H r = 0.20; O-S r = 0.54; H-S r = 0.38).

Table 12B shows the 5 highest and 5 lowest ranked responses to the 26 statements (with abbreviated descriptions). All groups rated the “Aha!” or “Penny-Drop Moment” (PDM) as a key motivational factor for solving cryptics; closely allied with this was the statement “Solving well-written clues gives me a buzz—it makes me smile or laugh out loud” which was ranked 4th in importance. In a separate question (“Is your enjoyment of the “penny-drop” moment enhanced if you have had to struggle with the clue?”) only 11 of the 797 respondents (1.4%) claimed not to have had a PDM when solving cryptics, whereas 634 (79.5%) agreed that it had been strengthened by a stiff challenge. All 5 top-rated statements related to intrinsically motivated reasons, primarily concerned with intellectual challenge and the joyful and satisfying feeling of cracking a clue. There was no statistically significant difference between the groups for any of these statements. The lowest rated statements were all concerned with extrinsically driven reasons such as competition, prestige or collaboration: the median score for all of these questions was 1 across all groups, indicating a rejection of these suggestions.

Respondents were not generally drawn to cryptic crossword solving in order to learn new words (see Table 12B, other items): this suggestion came 14th, and the average response score was significantly lower than the mid-point (3): t(785) = −12.02, p < 0.001, d = −0.43. H solvers agreed most strongly with this statement (O: M = 2.43; H: M = 2.56; S: M = 2.49), but the comparison between expertise groups was not statistically significant [H(2) = 1.641, p = 0.440].