- Faculty of Educational Sciences, Centre for Educational Measurement at the University of Oslo, University of Oslo, Oslo, Norway

Introduction

In an ever-changing society, in which scientific knowledge increases rapidly and workplace demands shift toward “twenty-first century skills,” the ability to adapt one's thinking, drive, and emotions to changing and novel problem situations has become essential (OECD, 2013). As a consequence, a number of researchers from different disciplines have developed assessment tools that allow for inferences about the level of cognitive adaptability an individual is able to achieve (Martin and Rubin, 1995; Ployhart and Bliese, 2006; Birney et al., 2012; Martin et al., 2013; Colé et al., 2014). However, existing assessments that do not provide interactive and dynamic performance tasks come with limited evidence of construct validity, which points to the need to consider alternative assessment methods (Bohle Carbonell et al., 2014). With the advancement of computer-based assessments of complex problem solving (CPS) in educational contexts, new opportunities for measuring adaptability arise, which may overcome these challenges (Wood et al., 2009; Greiff and Martin, 2014). Specifically, the rich data obtained from such assessments (which include, for instance, information on response times, sequences of actions, and the navigation through the assessment) allow researchers to study cognitive adaptability in more depth, as they go beyond mere performance measures (i.e., correct vs. incorrect). The main message of this article consequently reads: It is time to exploit the potential of computer-based assessments of CPS in order to measure cognitive adaptability as a twenty-first century skill.

Existing Conceptualizations of Cognitive Adaptability

VandenBos (2007) defined adaptability as the “capacity to make appropriate responses to changed or changing situations; the ability to modify or adjust one's behavior in meeting different circumstances or different people” (p. 17). This capacity involves not only cognitive and behavioral aspects but also affective adjustments to novelty and changes (Martin and Rubin, 1995; Martin et al., 2013). Existing research has pointed out that the abilities to solve complex and ill-structured problems, to deal with uncertainty, and to adapt emotionally and culturally are essential facets of the construct (Pulakos et al., 2000). Nevertheless, cognitive psychology often refers to adaptability as “cognitive flexibility,” describing the general ability to deal with novelty (Beckmann, 2014). When students face novelty or changes in a problem situation, a number of processes need to take place (Ployhart and Bliese, 2006): First, students have to recognize that there is novel information or that changes have occurred. Second, they have to decide whether or not the novel information or the changes are relevant for the problem situation. Third, they must evaluate the scientific credibility and validity of the information. Finally, they need to decide whether or not their strategies to solve the problem need to be adjusted. Although the problem situation may change drastically, such that the number of variables and their connections change, adaptability does not necessarily require students to change their strategies. By contrast, novel information about the problem structure, the goals to achieve, or the context of the problem may require different strategies, particularly when students have to extract or generate information from sources other than the ones provided (Beckmann, 2014). In such situations, adaptability may be indicated by adjustments in strategic actions.

In light of the current conceptualizations of cognitive adaptability and the processes involved, it seems as if computer-based assessments have the potential to capture the many aspects of the construct (Gonzalez et al., 2013).

Transferring Approaches of Measuring Complex Problem Solving to Adaptability

Because many real-life situations students face are complex and subject to novelty and change, studying adaptability in complex problem-solving situations provides a more realistic perspective on the construct than in simple problem situations (Jonassen, 2011). Moreover, many problems students are asked to solve in specific domains such as science comprise a number of variables that are connected in complex ways (Scherer, 2014). The ability to solve such complex problems by interacting with the problem environment, in which the information that is necessary in order to solve the problem is not given in the beginning of the problem solving process, refers to “complex problem solving” (CPS; Funke, 2010). In two recently published opinion papers, Funke (2014) and Greiff and Martin (2014) elaborated on the importance of CPS in educational and psychological contexts, and pointed out that the construct comprises two main dimensions (see also Greiff et al., 2013): First, students interact with the problem environment in order to acquire knowledge about the variables involved and their interconnections (knowledge acquisition). In this phase, a mental model about the problem situation is generated, which may be challenged by novel or changing information in the course of problem solving. As a consequence, students may revise and adapt their mental model. Second, the acquired knowledge is used to solve the problem, that is, to achieve a specific goal state (knowledge application).

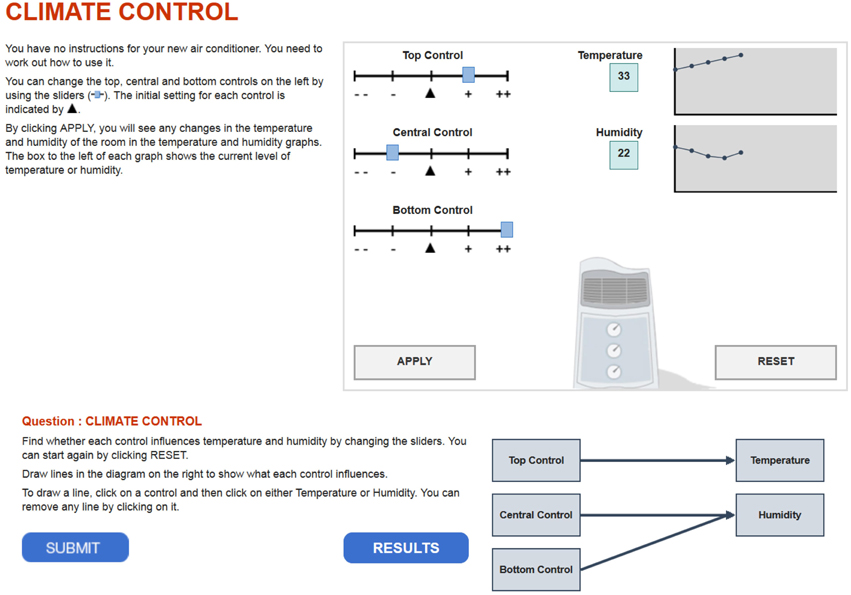

Although CPS is difficult to measure, and many discussions have emerged about the reliability and validity of CPS assessments (Danner et al., 2011; Scherer, 2015), Funke (2014) and Greiff and Martin (2014) pointed to two promising measurement approaches: Computer-Simulated Microworlds (CSMs) and Minimal Complex Systems (MCSs). The former present students with a complex environment that comprises such a large number of variables that the problem cannot be fully understood (Funke, 2010; Danner et al., 2011; Scherer et al., 2014). Given that CSMs allow for changes in the problem situation over time or as a function of students' interactions with the problem, and thus present novel information to students, cognitive adaptability may be captured by these kinds of CPS assessments. This potential has promoted the concept of dynamic testing as a way to evaluate cognitive adaptability (Beckmann, 2014). Given that dynamic testing requires adjustments to novelty and changes, I argue that this feature has the potential to track adaptability in a straightforward way. For instance, situations may occur that appear to be novel to the student, yet the knowledge and skills already acquired enable the student to handle them appropriately. Other situations may occur in which prior knowledge and skills are not sufficient and novel problem-solving approaches are needed. In such situations, adaptability can be evaluated by looking at students' reactions to dynamics and novelty. These reactions may manifest in changes of problem-solving behavior or, if adjustments are not necessary, in the stability of problem-solving behavior. For instance, changes in the number of variables in a problem situation may not require different problem-solving strategies, because varying one variable at a time may still be a reasonable and goal-driving approach (Kuhn et al., 2008). In this respect, the advantages of computer-based assessments come into play.
Specifically, it is generally possible to record students' actions in log-files, which contain not only information on performance but also process data such as response times and sequences of actions. I believe that evaluating these rich data sources obtained from CSMs will make the concept of adaptability accessible to educational measurement. However, because single tasks are time-intensive, the reliability and scalability of microworlds are severely threatened (Greiff et al., 2012; Scherer, 2015). In response to this challenge, Greiff et al. (2013) developed MCSs to assess CPS with multiple independent tasks. Recent research has indicated their scalability in measuring CPS and its dimensions, and their enormous potential was recognized in the Programme for International Student Assessment (PISA) in 2012 (OECD, 2014). In MCSs, students are presented with a system (i.e., the problem environment) that simulates a specific scientific concept (e.g., climate control; Figure 1). Their first task is to generate knowledge about this system of variables and their relations by testing how changes in the input variables (e.g., top, central, and bottom control; Figure 1) affect the output variables (e.g., temperature, humidity; Figure 1). Students represent their mental model of these relations in a path diagram. Their second task is to apply this knowledge in a problem situation, where they have to reach a specific goal state or outcome value (e.g., specific levels of temperature and humidity). This task concludes their work on a minimal complex system, and further MCSs may be administered subsequently. Given this design, MCSs allow for incorporating interactive, dynamic, and uncertain elements into the problem environment, but still provide sufficient psychometric characteristics in terms of reliability and validity (Greiff et al., 2013). As a consequence, the MCS approach qualifies for assessing students' cognitive adaptability.

Figure 1. Example of a CPS minimal complex system task administered in PISA 2012 (OECD, 2014). The figure has been retrieved from http://www.oecd.org/pisa/test/testquestions/question3/ [accessed 29/9/2015].
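
To make the structure of such a task more concrete, the following is a minimal sketch of a minimal complex system in Python. It rests on assumptions that are not taken from the PISA item: output variables are assumed to change as a weighted sum of the input settings, the weights are invented for illustration, and the names `MinimalComplexSystem`, `explore_one_at_a_time`, and `eigendynamics` are hypothetical. The sketch shows the knowledge acquisition phase, in which one input is varied at a time, and an optional per-round drift that stands in for system changes occurring without any input.

```python
from dataclasses import dataclass, field


@dataclass
class MinimalComplexSystem:
    """Hypothetical linear system: outputs change as a weighted sum of inputs."""

    # effects[output][input] = change in the output per unit of the input (invented values)
    effects: dict
    # eigendynamics[output] = change per round that occurs without any input (assumption)
    eigendynamics: dict = field(default_factory=dict)
    state: dict = field(default_factory=dict)

    def __post_init__(self):
        # Every output starts at zero unless a starting value was supplied.
        for output in self.effects:
            self.state.setdefault(output, 0.0)

    def step(self, inputs):
        """Apply one round of input settings and return the new output values."""
        for output, weights in self.effects.items():
            change = sum(weights.get(name, 0.0) * value for name, value in inputs.items())
            self.state[output] += change + self.eigendynamics.get(output, 0.0)
        return dict(self.state)


def explore_one_at_a_time(system, input_names):
    """Vary one input at a time and record how each output responds."""
    observed = {}
    for name in input_names:
        before = dict(system.state)
        after = system.step({name: 1.0})
        observed[name] = {out: after[out] - before[out] for out in after}
    return observed


# Invented relations between three controls and two outputs.
climate = MinimalComplexSystem(
    effects={
        "temperature": {"top": 2.0, "central": 1.0},
        "humidity": {"bottom": 3.0},
    }
)
model = explore_one_at_a_time(climate, ["top", "central", "bottom"])

# A second system in which temperature drifts upward even when nothing is set.
drifting = MinimalComplexSystem(
    effects={"temperature": {"top": 2.0}, "humidity": {"bottom": 3.0}},
    eigendynamics={"temperature": 0.5},
)
idle_round = drifting.step({})
```

In this sketch, `model` plays the role of the path diagram that students draw. In the drifting system, varying one input at a time would mix the input effect with the drift, so an idle round with no inputs set is one way a student (or an analyst) could separate the two.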

Surprisingly, a systematic investigation of adaptability has not yet been conducted with these promising assessments, although it would be straightforward to transfer elements of dynamics and novelty from CSMs to MCSs in order to evaluate students' reactions and adjustments. For instance, MCSs were used to assess students' complex problem-solving skills in PISA 2012. Students had to work on a sequence of MCSs, which differed in characteristics such as the number of input and output variables, the relations among these variables, and the situational contexts (e.g., climate control vs. ticket machine; OECD, 2014). But because too many of these characteristics were varied at the same time between two adjacent MCSs, a systematic investigation of adaptability may be compromised. In fact, adaptability is best examined when changes and novelty are systematically controlled in the test design. Moreover, the current MCSs in PISA 2012 do not contain a dynamic component, which manifests, for instance, in system changes over time without any interaction with the problem solver (so-called “eigendynamics”; Funke, 2010). Nevertheless, MCSs generally have the potential to assess cognitive adaptability if these design elements are incorporated. In this regard, I can think of a number of scenarios: For instance, after the completion of a complex problem-solving task, a new task can be presented that appears to be identical to the previous task but differs in the connections between the variables, the number of variables, and whether or not the variables change dynamically over time. In such a scenario, students would have to recognize the changes and adapt their problem-solving behavior. In another possible scenario, novel information about the problem structure or the problem goal is presented during the problem-solving process (Goode and Beckmann, 2010).
Since this information may originate from different sources, students have to evaluate the information according to its credibility and relevance for the problem in addition to recognizing the new situation. All of these possible scenarios demand computer-based assessments that (1) are highly interactive tools that track not only students' cognitive performance but also their specific behavior; (2) provide a variety of data from which to draw inferences about adaptability (e.g., time, actions, performance; Pool, 2013); (3) allow for the incorporation of novelty and dynamics; and (4) evaluate adaptability as change or stability across complex problem-solving situations. In my opinion, these features will address the current need for valid assessments of adaptability, which marry the advantages of different computer-based assessments of CPS (Beckmann, 2014).
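
As an illustration of feature (4), the sketch below shows one way in which log-file data could be summarized before and after a controlled change in the problem situation. It is a sketch under stated assumptions rather than a validated scoring procedure: the log format (a list of actions, each with input settings and a response time in seconds), the function names, and the example values are all hypothetical. The share of actions in which exactly one input was varied is used here as a simple indicator of strategy use.

```python
from statistics import mean


def is_votat(action):
    """True if exactly one input was set to a non-zero value in this action."""
    return sum(1 for value in action["inputs"].values() if value != 0) == 1


def summarize(actions):
    """Share of vary-one-thing-at-a-time actions and mean response time."""
    return {
        "votat_rate": mean(1.0 if is_votat(a) else 0.0 for a in actions),
        "mean_rt": mean(a["rt"] for a in actions),
    }


def change_profile(log, change_index):
    """Difference (after minus before) in behavior around a system change.

    `change_index` is the position of the first action after the change;
    both segments are assumed to contain at least one action.
    """
    before = summarize(log[:change_index])
    after = summarize(log[change_index:])
    return {key: after[key] - before[key] for key in before}


# Invented log: two actions before the change, two actions after it.
log = [
    {"inputs": {"top": 1, "central": 0, "bottom": 0}, "rt": 4.0},
    {"inputs": {"top": 0, "central": 1, "bottom": 0}, "rt": 6.0},
    {"inputs": {"top": 1, "central": 1, "bottom": 0}, "rt": 9.0},
    {"inputs": {"top": 0, "central": 0, "bottom": 1}, "rt": 11.0},
]
profile = change_profile(log, change_index=2)
```

Values close to zero in such a profile indicate stable behavior, whereas larger values indicate change. Whether stability or change reflects adaptability depends on whether the change in the problem situation actually required a different strategy, so these differences would have to be interpreted against the test design.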

Conclusion

On the basis of the conceptualizations of adaptability and existing CPS assessments, the following conclusion is drawn: Marrying the two CPS assessment traditions, namely computer-simulated microworlds and minimal complex systems, by transferring elements of dynamic changes and novelty from CSMs to MCSs provides a potential approach to evaluate cognitive adaptability. The design features outlined above may guide researchers through the process of developing valid assessments of the construct. Although adaptability is by no means a novel construct, there is hope that the current innovations in computer-based assessments of CPS provide new ways to evaluate this essential twenty-first century skill.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Beckmann, J. F. (2014). The umbrella that is too wide and yet too small: why dynamic testing has still not delivered on the promise that was never made. J. Cogn. Educ. Psychol. 13, 308–323. doi: 10.1891/1945-8959.13.3.308

Birney, D. P., Beckmann, J. F., and Wood, R. E. (2012). Precursors to the development of flexible expertise: metacognitive self-evaluations as antecedences and consequences in adult learning. Learn. Individ. Differ. 22, 563–574. doi: 10.1016/j.lindif.2012.07.001

Bohle Carbonell, K., Stalmeijer, R. E., Könings, K. D., Segers, M., and van Merriënboer, J. J. G. (2014). How experts deal with novel situations: a review of adaptive expertise. Educ. Res. Rev. 12, 14–29. doi: 10.1016/j.edurev.2014.03.001

Colé, P., Duncan, L. G., and Blaye, A. (2014). Cognitive flexibility predicts early reading skills. Front. Psychol. 5:565. doi: 10.3389/fpsyg.2014.00565

Danner, D., Hagemann, D., Holt, D. V., Hager, M., Schankin, A., Wüstenberg, S., et al. (2011). Measuring performance in dynamic decision making: reliability and validity of the tailorshop simulation. J. Indiv. Diff. 32, 225–233. doi: 10.1027/1614-0001/a000055

Funke, J. (2010). Complex problem solving: a case for complex cognition? Cogn. Process. 11, 133–142. doi: 10.1007/s10339-009-0345-0

Funke, J. (2014). Analysis of minimal complex systems and complex problem solving require different forms of causal cognition. Front. Psychol. 5:739. doi: 10.3389/fpsyg.2014.00739

Gonzalez, C., Figueroa, I., Bellows, B., Rhodes, D., and Youmans, R. (2013). “A new behavioral measure of cognitive flexibility,” in Engineering Psychology and Cognitive Ergonomics. Understanding Human Cognition, Vol. 8019, ed D. Harris (Berlin/Heidelberg: Springer), 297–306.

Goode, N., and Beckmann, J. F. (2010). You need to know: there is a causal relationship between structural knowledge and control performance in complex problem solving tasks. Intelligence 38, 345–352. doi: 10.1016/j.intell.2010.01.001

Greiff, S., and Martin, R. (2014). What you see is what you (don't) get: a comment on Funke's (2014) opinion paper. Front. Psychol. 5:1120. doi: 10.3389/fpsyg.2014.01120

Greiff, S., Wüstenberg, S., and Funke, J. (2012). Dynamic problem solving: a new assessment perspective. Appl. Psychol. Meas. 36, 189–213. doi: 10.1177/0146621612439620

Greiff, S., Wüstenberg, S., Molnar, G., Fischer, A., Funke, J., and Csapo, B. (2013). Complex problem solving in educational contexts – Something beyond g: concept, assessment, measurement invariance, and construct validity. J. Educ. Psychol. 105, 364–379. doi: 10.1037/a0031856

Jonassen, D. H. (2011). Learning to Solve Problems: A Handbook of Designing Problem-solving Learning Environments. New York, NY: Routledge.

Kuhn, D., Iordanou, K., Pease, M., and Wirkala, C. (2008). Beyond control of variables: what needs to develop to achieve skilled scientific thinking? Cogn. Dev. 23, 435–451. doi: 10.1016/j.cogdev.2008.09.006

Martin, A. J., Nejad, H. G., Colmar, S., and Liem, D. A. D. (2013). Adaptability: how students' responses to uncertainty and novelty predict their academic and non-academic outcomes. J. Educ. Psychol. 105, 728–746. doi: 10.1037/a0032794

Martin, M. M., and Rubin, R. B. (1995). A new measure of cognitive flexibility. Psychol. Rep. 76, 623–626. doi: 10.2466/pr0.1995.76.2.623

OECD (2013). OECD Skills Outlook 2013: First Results from the Survey of Adult Skills. Paris: OECD Publishing.

OECD (2014). PISA 2012 Results: Creative Problem Solving: Students' Skills in Tackling Real-Life Problems, Vol. V. Paris: OECD Publishing.

Ployhart, R., and Bliese, P. (2006). “Individual adaptability (I-ADAPT) theory: Conceptualizing the antecedents, consequences, and measurement of individual differences in adaptability,” in Understanding Adaptability: A Prerequisite for Effective Performance within Complex Environments, Vol. 6, eds C. S. Burke, L. G. Pierce and E. Salas (Amsterdam: Elsevier), 3–39.

Pool, R. (2013). New Directions in Assessing Performance of Individuals and Groups. Washington, DC: The National Academies Press.

Pulakos, E. D., Arad, S., Donovan, M. A., and Plamondon, K. E. (2000). Adaptability in the workplace: development of a taxonomy of adaptive performance. J. Appl. Psychol. 85, 612–624. doi: 10.1037/0021-9010.85.4.612

Scherer, R. (2014). Komplexes Problemlösen im Fach Chemie: ein domänenspezifischer Zugang [Complex problem solving in chemistry: a domain-specific approach]. Z. Pädagogische Psychol. 28, 181–192. doi: 10.1024/1010-0652/a000136

Scherer, R. (2015). “Psychometric challenges in modeling scientific problem-solving competency: an item response theory approach,” in Data Science, Learning by Latent Structures, and Knowledge Discovery, eds B. Lausen, S. Krolak-Schwerdt and M. Böhmer (Berlin/Heidelberg: Springer), 379–388.

Scherer, R., Meßinger-Koppelt, J., and Tiemann, R. (2014). Developing a computer-based assessment of complex problem solving in Chemistry. Int. J. STEM Educ. 1, 1–15. doi: 10.1186/2196-7822-1-2

VandenBos, G. R. (2007). American Psychological Association Dictionary of Psychology. Washington, DC: APA.

Keywords: adaptability, cognitive flexibility, complex problem solving, computer-based assessments, dynamic testing

Citation: Scherer R (2015) Is it time for a new measurement approach? A closer look at the assessment of cognitive adaptability in complex problem solving. Front. Psychol. 6:1664. doi: 10.3389/fpsyg.2015.01664

Received: 25 July 2015; Accepted: 14 October 2015; Published: 28 October 2015.

Edited by:

Howard Thomas Everson, City University of New York, USA

Reviewed by:

Patricia Heitmann, Institute for Educational Quality Improvement (IQB), Germany

Copyright © 2015 Scherer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ronny Scherer, ronny.scherer@cemo.uio.no

Ronny Scherer