REVIEW article

Front. Psychol., 02 November 2012

Sec. Cognitive Science

Volume 3 - 2012 | https://doi.org/10.3389/fpsyg.2012.00471

This article is part of the Research Topic Face Processing: perspectives from cognitive science and psychopathology.

Facial expressions are of eminent importance for social interaction as they convey information about other individuals’ emotions and social intentions. According to the predominant “basic emotion” approach, the perception of emotion in faces is based on the rapid, automatic categorization of prototypical, universal expressions. Consequently, the perception of facial expressions has typically been investigated using isolated, de-contextualized, static pictures of facial expressions that maximize the distinction between categories. However, in everyday life, an individual’s face is not perceived in isolation, but almost always appears within a situational context, which may arise from other people, the physical environment surrounding the face, as well as multichannel information from the sender. Furthermore, situational context may be provided by the perceiver, including already present social information gained from affective learning and implicit processing biases such as race bias. Thus, the perception of facial expressions is presumably always influenced by contextual variables. In this comprehensive review, we aim at (1) systematizing the contextual variables that may influence the perception of facial expressions and (2) summarizing experimental paradigms and findings that have been used to investigate these influences. The studies reviewed here demonstrate that perception and neural processing of facial expressions are substantially modified by contextual information, including verbal, visual, and auditory information presented together with the face as well as knowledge or processing biases already present in the observer. These findings further challenge the assumption of automatic, hardwired categorical emotion extraction mechanisms predicted by basic emotion theories. Taking into account a recent model on face processing, we discuss where and when these different contextual influences may take place, thus outlining potential avenues in future research.

Dating back to Darwin (1872) it has been proposed that emotions are universal biological states that are accompanied by distinct facial expressions (Ekman, 1992). This “basic emotion” approach assumes that the expressions of emotion in a face and their perception are unique, natural, and intrinsic phenomena (Smith et al., 2005) that are reliable markers of emotions, co-vary with the subjective experience, belong to a whole set of emotional responses, are readily judged as discrete categories, and as such are essential for successful and efficient social interaction (Matsumoto et al., 2008).

As a consequence, experimental work on the perception of emotional facial expressions has often relied on a set of isolated, de-contextualized, static photographs of actors posing facial expressions that maximize the distinction between categories (Barrett et al., 2011) and give researchers substantial control of the duration, appearance, and physical properties of the stimulus. This work has demonstrated that humans generally are able to identify facial expressions with high accuracy when these are presented as singletons (Matsumoto, 2001; Elfenbein and Ambady, 2002). With respect to the underlying neural mechanisms, cognitive neuroscience research has revealed that emotional face processing engages a widely distributed network of brain areas (Haxby et al., 2000; Haxby and Gobbini, 2011). Core brain regions of face processing are located in inferior occipital gyrus (occipital face area, OFA, Puce et al., 1996), lateral fusiform gyrus (fusiform face area, FFA, Kanwisher et al., 1997), and posterior superior temporal sulcus (pSTS, Hoffman and Haxby, 2000). According to an influential model of face perception, incoming visual information is first encoded structurally, based on the immediate perceptual input, and then transformed into a more abstract, perspective-independent model of the face that can be compared to other faces in memory (Bruce and Young, 1986). The structural encoding phase has been linked to computations in OFA, whereas the more abstract, identity-based encoding occurs in FFA (Rotshtein et al., 2005). The pSTS has been linked to the processing of dynamic information about faces including social signals such as eye gaze and emotional expression (Haxby and Gobbini, 2011). 
Other regions involved in face processing are amygdala and insula, implicated in the processing of emotion and facial expressions; regions of the reward circuitry such as caudate and orbitofrontal cortex, implicated in the processing of facial beauty and sexual relevance; inferior frontal gyrus, implicated in semantic aspects of face processing; and medial prefrontal cortex, implicated in theory of mind operations when viewing familiar faces of relatives and friends (Haxby et al., 2000; Ishai, 2008).

As mentioned above, the neurocognitive model of facial expression processing has been developed based on experiments using single, context-less faces, a situation that is rather artificial and low in ecological validity<sup>1</sup>. Outside the laboratory, however, faces are rarely perceived as single entities and most likely appear within a situational context, which may have a strong impact on how they are perceived.

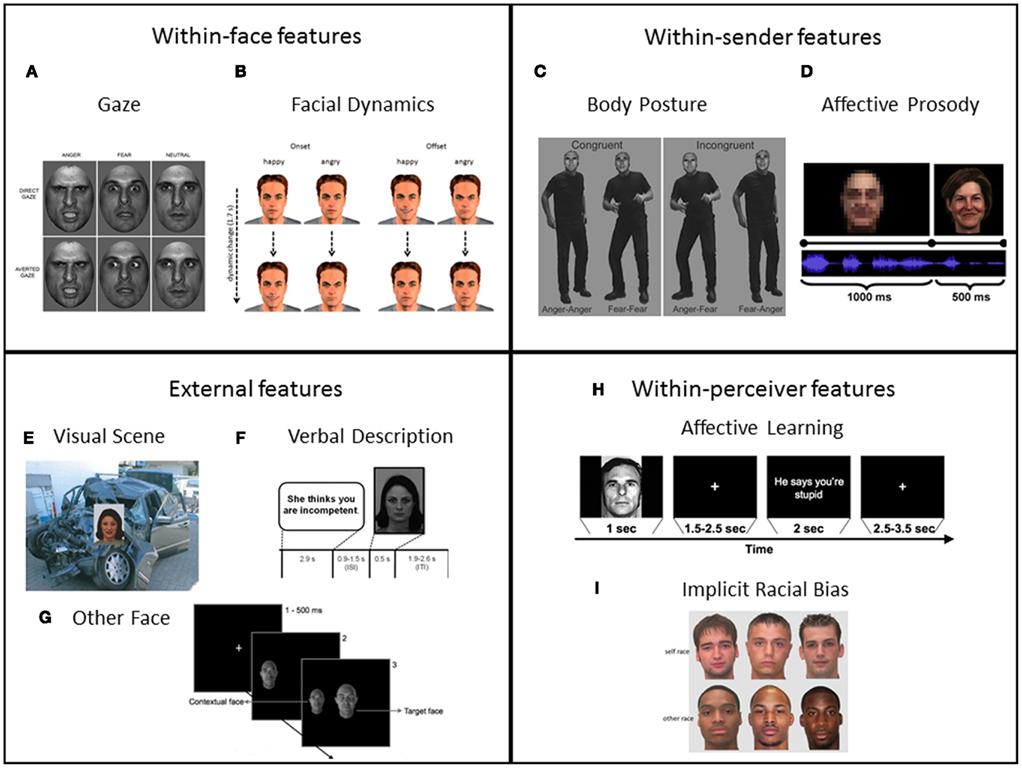

In this article, we review recent studies in which contextual influences on facial expression perception were investigated at the level of subjective perception and at the level of the underlying neural mechanisms. According to Brunswik’s functional lens model of perception (Brunswik, 1956; see also Scherer, 2003), the transmission of a percept (such as an emotional expression) can be broken down into different stages, including the encoding of a state into distal cues by the sender, the actual transmission of the cues, and the perception of the proximal cues by the receiver. Accordingly, we will structure our review following this sequence, beginning with contextual effects occurring mainly during the encoding of the expression, followed by effects occurring during the transmission from sender to receiver, and finally looking at effects occurring mainly during the decoding/perception by the receiver. We additionally introduce a distinction between distal context effects coming from the face of the sender and effects transmitted by other channels of the sender, which results in the following four types of contextual influences on face perception: (1) within-face features (i.e., characteristics that occur in the same face as the “core” facial expression such as eye gaze and facial dynamics), (2) within-sender features (i.e., concurrent context cues from the sender such as affective prosody and body posture), (3) external features from the environment surrounding the face (i.e., concurrent multisensory cues originating outside the sender such as visual scene, other faces, social situations), and (4) within-perceiver features (i.e., affective learning processes and implicit processing biases in the perceiver). Following this systematization of different types of context, we review and summarize experimental findings from studies where these four types of features served as context cues for the perception of facial expressions (see Figure 1).

Figure 1. Examples of the research paradigms established to investigate contextual influences on affective face processing along the nomenclature of within-face, within-sender, external, and within-perceiver features: (A) Eye gaze: direct versus averted eye gaze combined with angry, fearful, and neutral facial expressions. Reproduced with permission from Ewbank et al., 2010. (B) Facial dynamics: time course (start and end frame of video clips) of facial expressions (happy versus angry), which were used to investigate onset versus offset of facial expressions. Reproduced with permission from Mühlberger et al., 2011. (C) Compound facial expressions + body postures (congruent and incongruent; anger and fear are expressed in faces and body postures). Reproduced with permission from Meeren et al., 2005. (D) Concurrent affective prosody presented simultaneously with (happy) facial expression (after blurred baseline condition). Adapted with permission from Müller et al., 2011. (E) Visual affective background picture (negative, threat) presented together with facial expression (fear). Adapted with permission from Righart and de Gelder, 2008a. (F) Preceding verbal description (negative) used as situational context for neutral face. Adapted with permission from Schwarz et al., 2012. (G) Task-irrelevant context faces presented together with target face (center of the screen). Adapted with permission from Mumenthaler and Sander, 2012. (H) CS + trial (with social UCS consisting of verbal insult) used in a social conditioning paradigm. Reproduced with permission from Davis et al., 2010. (I) Self race (here White) versus other race (here Black) faces as typically used in studies on implicit racial bias. Adapted with permission from Lucas et al., 2011.

Within the face, eye gaze is probably the most powerful contextual cue. For example, expressions of joy and anger appear to be considerably more intense when combined with direct than with averted gaze (Adams and Kleck, 2003, 2005). In a series of studies it has been shown that the categorization of happy and sad expressions as well as angry and fearful expressions was impaired when eye gaze was averted, in comparison to direct gaze conditions (Bindemann et al., 2008). However, other studies suggest an interaction of gaze direction and facial expressions, in that angry faces appear more intense and are more easily recognized when paired with a direct gaze, whereas the opposite seems to be true for fearful faces (Adams et al., 2003, 2006; Adams and Kleck, 2005; Sander et al., 2007; Adams and Franklin, 2009; Benton, 2010; Ewbank et al., 2010). This has been explained in the context of the appraisal theory of emotion, which assumes that gaze and expression are always integrated by the observer during an appraisal of stimulus relevance (for a recent review, see Graham and LaBar, 2012). In line with this assumption, angry faces are evaluated as being angrier when showing direct gaze, as eye contact implies a potential threat in the form of an imminent attack by the sender, while fearful faces are perceived as more fearful when showing averted gaze, as this might indicate potential threat in the environment (Adams and Kleck, 2003; Sander et al., 2007). These behavioral results were replicated by N’Diaye et al. (2009) who additionally observed increased activity in the amygdala as well as in fusiform and medial prefrontal areas to angry faces with direct gaze and fearful faces with averted gaze. This effect was found to be absent or considerably reduced in patients with right and left amygdala damage (Cristinzio et al., 2010).
A recent study showed that amygdala responses to rapidly presented fear expressions are preferentially tuned to averted gaze, whereas more sustained presentations lead to preferential responses to fearful expressions with direct gaze (Adams et al., 2012a). This is in line with findings showing that gaze direction modulates expression processing only when the facial emotion is difficult to discriminate (Graham and LaBar, 2007). Moreover, it has been demonstrated that when gaze direction is rendered ambiguous and embedded in explicit, contextual information about intentions of angry and fearful faces, a similar pattern of amygdala activation is observed as in prior results to non-ambiguous gaze cues: angry faces, which were contextualized by explicit information (“The people bumped into you by accident. You are in a bad mood today and so you start to insult them. Thereupon, the people become very angry at/afraid of you”) targeting the observer elicited stronger amygdala responses than angry faces targeting another person, whereas the opposite pattern was observed for fearful faces (Boll et al., 2011). By showing that contextual information interacts with facial expression in the same manner as gaze direction, this study underlines the significance and meaning of certain gaze directions for human observers. Altogether, gaze interacts with facial expression, but this also depends on the relative timing and the nature of the stimuli used. The neural substrates seem to mainly involve the STS and the amygdala, suggesting that the amygdala is involved in processes going beyond basic emotion recognition or arousal processing, as an integral part of an appraisal system that is sensitive to expression and gaze direction, among other features (Graham and LaBar, 2012).

The temporal dynamics of facial movements are a further contextual cue expressed in the face. In particular, the time course of facial movements when expressing an emotion, as well as whether an expression starts developing (i.e., a face turns from neutral to angry) or ends (i.e., a face turns from angry to neutral), may constitute important non-emotional context cues. It has been demonstrated several times that dynamically evolving facial expressions are much better recognized, are rated as more arousing, elicit larger emotion-congruent facial muscle responses (Weyers et al., 2006; Sato and Yoshikawa, 2007a,b), and also elicit stronger amygdala activity than static faces (LaBar et al., 2003; Sato et al., 2004). Comparing dynamic to static faces, enhanced emotion-specific brain activation patterns have been found in the parahippocampal gyrus including the amygdala, fusiform gyrus, superior temporal gyrus, inferior frontal gyrus, and occipital and orbitofrontal cortex (Trautmann et al., 2009). Moreover, dynamic compared to static facial expressions are associated with enhanced emotion discrimination at early and late stages of electro-cortical face processing (Recio et al., 2011). Comparing onset and offset of facial expressions, it has been shown that perceived valence and threat of angry and happy facial expressions depend on their dynamics, that is, on the onset versus offset of the respective facial expression. While the onset of happy facial expressions was rated as highly positive and not threatening, the onset of angry facial expressions was rated as highly negative and highly threatening. Furthermore, the offset of angry compared to the onset of angry facial expressions was associated with activity in reward-related brain areas, whereas onset of angry as well as offset of happy facial expressions were associated with activations in threat-related brain areas (Mühlberger et al., 2011).

Besides concurrent visual information, acoustic information from the sender may also serve as context for the perception, recognition, and evaluation of facial expressions. Indeed, the identification of the emotion in a face is biased in the direction of simultaneously presented affective prosody (de Gelder and Vroomen, 2000). Furthermore, it was demonstrated that this effect occurred even under instructions to base the judgments exclusively on information in the face. Increased accuracy rates and shorter response latencies in emotion recognition tasks when facial expressions are combined with affective prosody have been found in several other studies (de Gelder et al., 1999; Dolan et al., 2001). In one of the first neuroimaging studies on face and affective prosody processing, Dolan et al. (2001) contrasted emotionally congruent with emotionally incongruent conditions in an audiovisual paradigm and found greater activation of the left amygdala and right fusiform gyrus in congruent as compared to incongruent conditions. Further fMRI studies on the audiovisual integration of non-verbal emotional information revealed that this perceptual gain during audiovisual compared to visual stimulation alone is accompanied by enhanced BOLD responses in pSTG, left middle temporal gyrus and thalamus when comparing the bi-modal condition to either unimodal condition (Pourtois et al., 2005; Ethofer et al., 2006; Kreifelts et al., 2007). In a recent neuroimaging study it has been found that subjects rated fearful and neutral faces as being more fearful when accompanied by sounds of human screams than when accompanied by neutral sounds (Müller et al., 2011). Moreover, the imaging data revealed that an incongruence of emotional valence between faces and sounds led to increased activation in areas involved in conflict monitoring such as the middle cingulate cortex and the right superior frontal cortex.
Further analyses showed that, independent of emotional valence congruency, the left amygdala was consistently activated when the information from both modalities was emotional. If a neutral stimulus was present in one modality and an emotional stimulus in the other, activation in the left amygdala was significantly attenuated compared to when an emotional stimulus was present in both modalities. This result points to additive effects in the amygdala when emotional information is present in both modalities. This congruency effect was also recently confirmed in a study where laughter played via headphones increased the perceived intensity of a concurrently shown happy facial expression (Sherman et al., 2012). Taken together, results from audiovisual paradigms point to massive influences of auditory context cues in terms of affective prosody on the processing of facial expressions. Again, this is reflected by congruency effects with better recognition rates and larger activations in face processing and emotion processing areas.

The most obvious within-sender context for a face is the body it belongs to, which also underlies non-verbal communication via postures and other body language (e.g., gestures). Meeren et al. (2005) found that observers judging a facial expression are strongly influenced by the concomitant emotional body language. In this study, pictures of fearful and angry faces and bodies were used to create face-body compound images, with either congruent or incongruent emotional expressions. When face and body conveyed conflicting emotional information, the recognition of the facial expression was attenuated. Also, the electro-cortical P1 component was enhanced in response to congruent face-body compounds, which points to the existence of a rapid neural mechanism assessing the degree of agreement between simultaneously presented facial and bodily emotional expressions already after 100 ms. Van den Stock et al. (2007) used facial expressions morphed on a continuum between happy and fearful, and combined these with happy or fearful body expressions. Ratings of facial expressions were influenced by body context (congruency) with the highest impact for the most ambiguous facial expressions. In another study, it was shown that identical facial expressions convey strikingly different emotions depending on the affective body postures in which they were embedded (Aviezer et al., 2008). Moreover, it was shown that eye movements, i.e., characteristic fixation patterns previously thought to be determined solely by the facial expression, were systematically modulated by this emotional context. These effects were even observed when participants were told to avoid using the context or were led to believe that the context was irrelevant (Aviezer et al., 2011). In addition, these effects were not influenced by working memory load. 
Overall, these results suggest that facial expressions and their body contexts are integrated automatically, with modulating effects on perception in both directions.

Emotion research frequently uses language in the form of emotion labels, both to instruct participants and to assess recognition performance. As the following examples demonstrate, language can guide and even bias participants on how to read facial expressions in this kind of research. For example, when participants were asked to repeat an emotion word such as “anger” aloud either three times (temporarily increasing its accessibility) or 30 times (temporarily reducing its accessibility), reduced accessibility of the meaning of the word led to slower and less accurate emotion recognition, even when participants were not required to verbally label the target faces (Lindquist et al., 2006). In a similar vein, morphed faces depicting an equal blend of happiness and anger were found to be perceived as angrier when those faces were paired with the word “angry” (Halberstadt and Niedenthal, 2001). It has furthermore been demonstrated that verbalizing words disrupts the ability to make correct perceptual judgments about faces, presumably because it interferes with access to language necessary for judgment (Roberson and Davidoff, 2000). In line with the latter findings, at the neural level it was demonstrated that emotional labeling of negative emotional faces produced a decreased response in limbic brain areas such as the amygdala, which might reflect that putting feelings into words has regulatory effects at least for negative emotions (Lieberman et al., 2007). Overall, these findings are in line with the language-as-a-context-hypothesis (Barrett et al., 2007), which proposes that language actively influences emotional perception by dynamically guiding the perceiver’s processing of structural information from the face.

Verbal descriptions of social situations also provide strong contextual cues which influence facial expression perception. Carroll and Russell (1996) had participants read six short stories describing situations that created a context; afterward, a facial expression was shown and had to be rated. Indeed, in each of the 22 cases that were examined, contextual information overrode the facial information (e.g., a person in a painful situation but displaying fear was judged as being in pain). These results are in line with an earlier study, where verbal descriptions of emotion-eliciting events were used as situational cues, which increased emotion recognition in faces (Trope, 1986). Using neuroimaging it was demonstrated that brain responses to ambiguous emotional faces (surprise) are modified by verbal descriptions of context conditions: stronger amygdala activation for surprised faces embedded in negative compared to positive contexts was found, thus demonstrating context-dependent neural processing of the very same emotional face (Kim et al., 2004). This effect has been recently extended to neutral faces, where context conditions of self-reference modulated perception and evaluation of neutral faces in terms of larger mPFC and fusiform gyrus activity to self- versus other-related neutral faces and more positive and negative ratings of the neutral faces when put in positive and negative contexts (Schwarz et al., 2012). The latter findings demonstrate that contextual influences might be most powerful when the information about the emotion from facial features is absent or ambiguous at best.

Probably the most frequent external context cue for a face is another face, as we often perceive persons surrounded by other persons. Not surprisingly, it was demonstrated that facial expressions have a strong influence on the perception of other facial expressions. Russell and Fehr (1987) observed that the read-out of an emotion from a facial expression clearly depends on previously encountered facial expressions: a first expression (the anchor) displaced the judgment of the subsequent facial expression. For instance, a neutral target face was categorized as sadder after a happy face was seen. In a series of three experiments it was shown that the implicit contextual information consisting of other facial expressions modulates valence assessments of surprised faces, such that they were interpreted as congruent with the valence of the contextual expressions (Neta et al., 2011). Recently, it was also demonstrated that congruent, but irrelevant faces in the periphery enhance recognition of target faces, whereas incongruent distractor faces reduced recognition of target faces (Mumenthaler and Sander, 2012). This contextual effect of concurrent faces was augmented when the peripheral face gazed at the target face, indicating social appraisal, where the appraisal of another person is integrated into the appraisal of the observer, thus facilitating emotion recognition. Social appraisal was furthermore demonstrated by facilitated recognition of fear in a centrally presented face when an angry peripheral face gazed at the central face. In addition to emotion recognition of the target, emotional expressions of context faces may also be used to inform the explicit social evaluations of the observer: male faces that were looked at by females with smiling faces were rated as more attractive by female participants than males looked at with neutral expressions.
Revealing an interesting gender difference, in the same experiment male participants preferred the male faces that were being looked at by female faces with neutral expressions (Jones et al., 2007).

Faces usually are perceived together with other visual stimuli, for example the surrounding visual scene which might be constituted by non-animated visual objects. Barrett and Kensinger (2010) found that visual context is routinely encoded when facial expressions are observed. Participants remembered the context more often when asked to label an emotion in a facial expression than when asked to judge the expression’s simple affective significance (which can be done on the basis of the structural features of the face alone). The authors conclude that the structural features of the face, when viewed in isolation, might be insufficient for perceiving emotion. In a series of studies, Righart and de Gelder (2006, 2008a,b) examined how the surrounding visual context affects facial expression recognition and its neural processing. Using event-related brain potentials to faces (fearful/neutral) embedded in visual scene contexts (fearful/neutral) while participants performed an orientation-decision task (face upright or inverted), they found that the presence of a face in a fearful context was associated with enhanced N170 amplitudes, and this effect was strongest for fearful faces on left-occipito-temporal sites (Righart and de Gelder, 2006). Interestingly, faces without any context showed the largest N170 amplitudes, indicating competition effects between scenes and faces. In a follow-up study, participants had to categorize facial expressions (disgust, fear, happiness) embedded in visual scenes with either congruent or incongruent emotional content (Righart and de Gelder, 2008b). A clear congruency effect was found such that categorization of facial expressions was speeded up by congruent scenes, and this advantage was not impaired by increasing task load.
In another study, they investigated how the early stages of face processing are affected by emotional scenes when explicit categorizations of fearful and happy facial expressions are made (Righart and de Gelder, 2008a). Again, emotion effects were found with larger N170 amplitudes for faces in fearful scenes as compared to faces in happy and neutral scenes. Critically, N170 amplitudes were significantly increased for fearful faces in fearful scenes as compared to fearful faces in happy scenes, and this was expressed in left-occipito-temporal scalp topography differences. Using videos as visual context, it was also demonstrated that both positive and negative contexts resulted in significantly different ratings of faces compared with those presented in neutral contexts (Mobbs et al., 2006). These effects were accompanied by alterations in several brain regions including the bilateral temporal pole, STS, insula, and ACC. Moreover, an interaction was observed in the right amygdala when subtle happy and fear faces were juxtaposed with positive and negative movies, respectively, which again points to additive effects of context and facial expression. Together, this series of experiments clearly indicates that the information provided by the facial expression is automatically combined with the scene context during face processing. Mostly, congruency effects were observed such that congruent visual contexts helped to identify facial expressions and led to larger N170 amplitudes<sup>2</sup> and alterations in face processing areas in the brain.

When we process a face, we compare it to previously encoded memory representations. Affective information stemming from previous encounters may thus guide our perception and evaluation. Affective or social conditioning studies have investigated this effect by pairing neutral faces with social cues (e.g., affective sounds, sentences) serving as unconditioned stimulus (UCS). For example, in one study, participants learned that faces predicted negative social outcomes (i.e., insults), positive social outcomes (i.e., compliments), or neutral social outcomes (Davis et al., 2010). Afterward, participants reported liking or disliking the faces in accordance with their learned social value. During acquisition, differential activation across the amygdaloid complex was observed. A region of the medial ventral amygdala and a region of the dorsal amygdala/substantia innominata showed signal increases to both negative and positive faces, whereas a lateral ventral region displayed a linear representation of the valence of faces such that activations in response to negatively associated faces were larger than those to positive ones, which in turn were larger compared to those elicited by faces associated with neutral sentences. In another social conditioning paradigm, Iidaka et al. (2010) found that a neutral face could be negatively conditioned by using a voice with negative emotional valence (male voice loudly saying “Stupid!”). Successful conditioning was indicated by elevated skin conductance responses as well as greater amygdala activation, demonstrating that the “perceptually” neutral face elicited different behavioral and neural responses after affective learning. Moreover, Morel et al. (2012) showed in an MEG study that even faces previously paired only once with negative or positive contextual information are rapidly processed differently in the brain, already between 30 and 60 ms post-face onset.
More precisely, the faces previously seen in a positive (happy) emotional context evoked a dissociated neural response as compared to those previously seen in either a negative (angry) or a neutral context. Source localization revealed two main brain regions involved in this very early effect: the bilateral ventral, occipito-temporal, extrastriate regions and the right anterior medial temporal regions. A recent study showed in two experiments that when neutral faces that had been paired with negative, positive, or neutral gossip were then presented alone in a binocular rivalry paradigm (faces were presented to one eye, houses to the other), only the faces previously paired with negative (but not positive or neutral) gossip dominated longer in visual consciousness (Anderson et al., 2011). These findings also demonstrate that social affective learning can influence vision in a completely top-down manner, independent of the basic structural features of a face. It is important to note that the contextual influences described here are based on previous encounters; the contextual information itself is not present at the time the face is seen again. All of these findings, however, were obtained with neutral faces only and have to be extended to emotional facial expressions as well.

In addition to the conditioning literature reviewed above, recognition memory studies employing old/new paradigms have also revealed massive effects of context during encoding on recognition memory. It has been shown, for example, that the N170 amplitude during recognition is diminished when the face was presented in a contextual frame compared to no contextual frame during the encoding phase, but heightened when the contextual frame was of positive compared to negative valence (Galli et al., 2006). Similar effects have been observed when fearful faces were shown at encoding, but neutral faces of the same identity at retrieval (Righi et al., 2012). This has also been shown in a recent fMRI study, where several brain regions involved in familiar face recognition, including fusiform gyrus, posterior cingulate gyrus, and amygdala, plus additional areas involved in motivational control such as caudate and anterior cingulate cortex, were differentially modulated as a function of a previous encounter (Vrticka et al., 2009). Also, attractiveness during encoding was found to alter ERP responses during retrieval (Marzi and Viggiano, 2010). Additionally, faces associated earlier with positively or negatively valenced behaviors elicited stronger activity in brain areas associated with social cognition such as paracingulate cortex and STS (Todorov et al., 2007). However, a recent study investigating the effect of concurrent visual context during encoding of faces showed that facial identity was less well recognized when the face was seen with an emotional body expression or against an emotional background scene, compared to a neutral body or a neutral background scene. Most likely, this is due to orienting responses triggered by the visual context, which may lead to less elaborate processing and in turn to decreased facial recognition memory (Van den Stock and de Gelder, 2012).
In general, context cues present at the encoding phase have a great impact on how faces are remembered.

An interesting example of an interaction of within-face and within-perceiver contextual features is illustrated by research investigating the impact of race bias and stereotypes on the perception of faces. Effects of implicit race bias on face perception have been demonstrated both at the neural level and at the perceptual level. For example, participants with high implicit race bias (measured using the implicit association test, IAT) showed higher increases in amygdala activation toward black faces compared to participants with lower bias (Phelps et al., 2000; Cunningham et al., 2004). Similarly, multi-voxel pattern decoding of the race of a perceived face based on BOLD signal in FFA has been shown to be restricted to participants with high race bias (Brosch et al., in press), suggesting that race bias may decrease the similarity of high-level representations of black and white faces. With regard to the face-sensitive N170 component of the ERP, it has been found that pro-white bias was associated with larger N170 responses to black versus white faces, which may indicate that people with stronger in-group preferences see out-group faces as less normative and, thus, require greater engagement of early facial encoding processes (Ofan et al., 2011). At the behavioral level, the mental templates of out-group faces possess less trustworthy features in participants with high implicit bias (Dotsch et al., 2008). Race bias furthermore has been shown to interact with the perception of emotion in a face: in a change detection task, participants observed a black or white face that slowly morphed from one expression to another (either from anger to happiness or from happiness to anger) and had to indicate the offset of the first expression. 
Higher implicit bias was associated with a greater readiness to perceive anger in Black as compared with White faces, as indicated by a later perceived offset (or earlier onset) of the anger expressions (Hugenberg and Bodenhausen, 2004). Similarly, positive expressions were recognized faster in White as compared with Black faces (Hugenberg, 2005).

Obviously, the personality traits of the perceiver are important when discussing within-perceiver features. As this vast literature is worth a review of its own, we only briefly summarize findings on some personality dimensions here (for extensive reviews, see Calder et al., 2011; Fox and Zougkou, 2011). Extraversion, for example, seems to be associated with prioritized processing of positive facial expressions at the neural (higher amygdala activation) and behavioral levels (Canli et al., 2002), whereas higher levels of trait-anxiety and neuroticism are associated with stronger reactions to negative facial expressions, as suggested by converging evidence from different paradigms. For example, it has been shown that the left amygdala of participants reporting higher levels of state-anxiety reacts more strongly to facial expressions of fear (Bishop et al., 2004b). Moreover, a lower recruitment of brain networks involved in attentional control including the dorsolateral and ventrolateral prefrontal cortex (dlPFC, vlPFC) was observed in participants reporting increased trait-anxiety (Bishop et al., 2004a). Another study found strong associations between amygdala activity in response to backward masked fearful faces and high levels of self-reported trait-anxiety (Etkin et al., 2004). Alterations in facial expression processing are, not surprisingly, most evident in traits related to disorders with social dysfunctions like social phobia/anxiety (e.g., Wieser et al., 2010, 2011, 2012b; McTeague et al., 2011) and autism-spectrum disorders (for a review, see Harms et al., 2010). Whereas in social anxiety biases and elevated neural responses to threatening faces (i.e., angry faces) are most commonly observed (Miskovic and Schmidt, 2012), persons with autism-spectrum disorders are often less able to recognize emotion in a face (e.g., Golan et al., 2006). 
Furthermore, people high on the autistic spectrum seem to process faces in a more feature-based manner when asked to identify facial expressions (Ashwin et al., 2006). In addition, emerging evidence suggests that processing of facial expressions also depends on genetic factors such as variations in the serotonin receptor gene HTR3A (Iidaka et al., 2005), the neuropeptide B/W receptor-1 gene (Watanabe et al., 2012), and the serotonin transporter (5-HTT) promoter gene (Hariri et al., 2002). Notably, interactions of personality traits and contextual influences are also likely to occur. A recent study demonstrated that the way in which visual contextual information affected the perception of facial expressions depended on the observer’s tendency to process positive or negative information as assessed with the BIS/BAS questionnaire (Lee et al., 2012).

The empirical findings reviewed in this paper clearly demonstrate that perception and evaluation of faces are influenced by the context in which these expressions occur. Basically, affective and social information gained from either within-face features (eye gaze, expression dynamics), within-sender features (body posture, prosody, affective learning), environmental features (visual scene, other faces, verbal descriptions of social situations), or within-perceiver features (affective learning, cognitive biases, personality traits) seems to dramatically influence how we perceive a facial expression. Taken together, the studies reviewed above point to the notion that efficient emotion perception is not solely driven by the structural features present in a face. In addition, as the studies on affective learning and verbal descriptions of contexts show, contextual influences seem to be especially strong when the facial expression is either ambiguous (e.g., surprised faces) or no facial expression is shown (i.e., neutral faces). The latter effects not only highlight the notion of contextual influences but also pinpoint the issues of using so-called neutral faces as a baseline condition in research on facial expression perception. Thus, when using neutral faces as a baseline, researchers have to be aware that these are probably influenced by the preceding faces (Russell and Fehr, 1987), which could be resolved or at least attenuated by using longer inter-trial intervals, for example. Moreover, as contextual impact may be particularly high for neutral faces, one has to try to minimize contextual information when mere comparisons of neutral and other expressions are the main interest. In line with this viewpoint, it has been recently demonstrated that emotion-resembling features of neutral faces can drive their evaluation, likely due to overgeneralizations of highly adaptive perceptual processes by structural resemblance to emotional expressions (Adams et al., 2012b). 
This assumption is supported by a study that used an objective emotion classifier to demonstrate the relationship between a set of trait inferences and subtle resemblance to emotions in neutral faces (Said et al., 2009). Although people can categorize faces as emotionally neutral, their evaluations of these faces also vary considerably with regard to trait dimensions such as trustworthiness (Engell et al., 2007). A possible explanation is that neutral faces may contain structural properties that cause them to resemble faces with more accurate and ecologically relevant information such as emotional expressions (Montepare and Dobish, 2003). Another finding suggests that prototypical “neutral” faces may be evaluated as negative in some circumstances, which suggests that the inclusion of neutral faces as a baseline condition might introduce an experimental confound (Lee et al., 2008).

A large share of the effects of context on facial expression processing seems to rely on congruency effects (i.e., facilitation of emotion perception when context information is congruent). Whereas some of the effects observed here can be explained by affective priming mechanisms (for example, when previously given verbal context influences the perception of the face presented afterward), other effects (for example, when concurrently available information from the auditory channels influences the perception of facial expressions) point to a supramodal emotion perception which rapidly integrates cues from the facial expression and affective contextual cues. Particularly the influences on surprised (ambiguous) and neutral faces show that contextual effects may play an even more important role when the emotional information is difficult to derive from the facial features alone. The latter is also in line with recent models of impression formation based on minimal information about other people (Todorov, 2011).

Beyond the scope of this paper, cultural influences obviously also define a major context for the perception of facial expressions (Viggiano and Marzi, 2010; Jack et al., 2012). In brief, facial expressions are most reliably identified by persons who share the same cultural background with senders (Ambady and Weisbuch, 2011), whereas, for instance, Eastern compared to Western observers use a culture-specific decoding strategy that is inadequate to reliably distinguish facial expressions of fear and disgust (Jack et al., 2009). Only recently it has also been shown that concurrent pain alters the processing particularly of happy facial expressions (Gerdes et al., 2012). The latter findings point to the notion that within-perceiver features also include alterations within the (central) nervous system of the perceiver.

Altogether, the studies reviewed above demonstrate that faces do not speak for themselves (Barrett et al., 2011), but are always, known or unbeknown to the perceiver, subject to contextual modulations lying within-face, within-sender, within-perceiver, or in the environment. Thus, the assumption of modular basic emotion recognition might only hold true for very rare cases, particularly when elicited emotions and resulting facial expressions are intense and exaggerated, and there is no reason to modify and manage the expression. These assumptions are also in line with the notion that even simple visual object perception is highly dependent on context (Bar, 2004).

The importance of context is congruent with several other theoretical approaches, such as Scherer’s (1992) assumption that facial expressions do not solely represent emotional, but also cognitive processes (for further discussion, see Brosch et al., 2010). In this view, facial expressions do not categorically represent a few basic emotions, but are the result of sequential and cumulative stimulus evaluations (which also take into account context variables as reviewed above). Thus, facial expressions are supposed to simultaneously and dynamically express cognition and emotion. The categorical approach is further challenged by social-ecological approaches, which assume social cognition and perception to proceed in an ecologically adaptive manner (McArthur and Baron, 1983). Here it is proposed that different modalities are always combined to inform social perception. Hence, identification of facial expressions is especially fast and accurate when other modalities impart the same information. In this line, facial expressions also are contingent on the broader context of the face (i.e., what we refer to as within-face features) and the unexpressive features of the face/sender (i.e., within-sender features). This context-dependency is assumed to reflect adaptation processes of humans to their ecological niche (Ambady and Weisbuch, 2011).

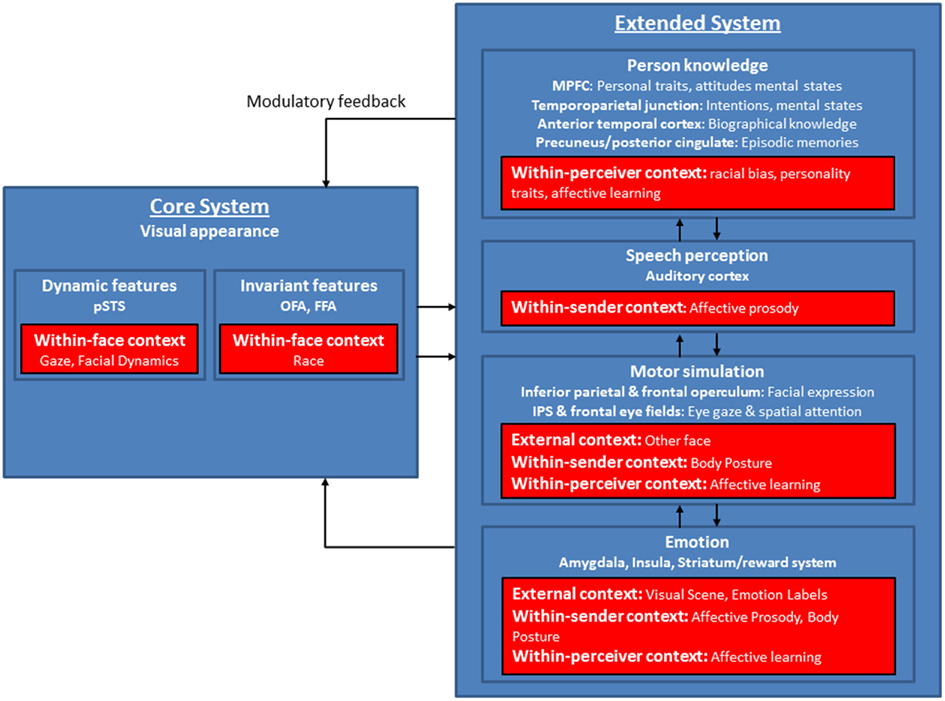

With regard to current neurocognitive models of face processing, we suggest that the model of distributed neural systems for face perception (Haxby and Gobbini, 2011) offers an avenue for integrating the findings of different sources of context as reviewed above, as it allows for multiple entry points of context into ongoing face processing (see Figure 2).

Figure 2. Modified version of the model of distributed neural systems for face processing (blue boxes). The red boxes indicate the contextual factors as outlined in the review and show distal cues (within-face, within-sender features), influences from the transmission phase (environmental features), and proximal perceptions (within-perceiver features) and their supposed target areas within the model. Modified after Haxby et al. (2000), Haxby and Gobbini (2011).

At different levels of face processing, within-face, within-sender, within-perceiver, and external features have been shown to strongly modulate the neurocognitive mechanisms involved in face perception. The neural substrates of these modulations mainly concern brain areas within the extended network of the model (see Figure 2), but also some additional areas related to self-referential processing, impression formation, and affective learning. Within-perceiver features such as learning mechanisms and personality traits influence areas involved in person knowledge and emotion processes (medial PFC, temporo-parietal junction, limbic system), which are also modulated by within-sender features like affective prosody and body posture. External features most likely modulate face processing via emotional circuits (amygdala, insula, striatum), which, through feedforward and feedback loops especially between the amygdala and primary visual cortex, OFA, FFA, and STS (Carmichael and Price, 1995; Morris et al., 1998; Iidaka et al., 2001; Amaral et al., 2003; Catani et al., 2003; Vuilleumier et al., 2004; Price, 2007), may foster the interaction between facial expressions and affective context and lead to a unified and conscious percept (Tamietto and de Gelder, 2010). Activations in the extended network in turn influence processing in the core face network via top-down modulatory feedback volleys (Haxby and Gobbini, 2011). It is important to note that the influences of context might be seen even in early stages of face processing taking place in the core system (OFA; FFA; STS). Particularly within-face features are key players here, as the STS is clearly involved in gaze processing and processing of facial dynamics.

Besides the involved structures of the brain (“where?”), it is also essential for our further understanding of contextual influences on face processing to identify at which temporal stage of the processing stream (“when?”) the integration of context and facial expression takes place. To this end, measures with high temporal resolution like EEG are much better suited than measures with high spatial, but poor temporal resolution like fMRI (Brosch and Wieser, 2011). Indeed, several ERP studies point to the notion that the integration of context and facial expression seems to be an automatic and mandatory process, which takes place very early in the processing stream, probably even before the structural encoding is completed. Looking at combinations of facial expressions and body postures, the P1 component of the ERP was enhanced when faces were presented together with an incongruent body posture (Meeren et al., 2005), which suggests a rapid neural mechanism of integration, or at least an early sensitivity for non-matching. This assumption is also supported by EEG studies on face-voice integration (de Gelder et al., 2006), where it has been found, for instance, that the auditory N1 component occurring about 110 ms after presentation of an affective voice is significantly enhanced by a congruent facial emotion (Pourtois et al., 2000). ERP studies investigating how emotionally positive or negative information at encoding influences later recognition of faces in an old/new task (Galli et al., 2006) or how concurrent visual context affects facial expression processing (Righart and de Gelder, 2006) also show effects at early encoding stages in the form of a contextual modulation of the N170 amplitude. 
Early modifications in face-related visual cortices (STS, fusiform gyrus) may be due to re-entrant projections from the amygdala (e.g., Vuilleumier et al., 2004), crosstalk between sensory areas (bi-modal stimulation), or direct modulations of STS activity as a hub for multisensory integration (Barraclough et al., 2005).

Altogether, the literature reviewed above fits distributed as opposed to categorical models of face processing (Haxby et al., 2000; de Gelder et al., 2003), and clearly points to the notion that the face itself might not tell us the full story about the underlying emotions. Moreover, as ERP studies reveal, the integration of context and facial expression may occur at early stages of stimulus processing and in an automatic fashion. The distributed network is the neural substrate of a much more complex and inferential process of everyday face-reading than previously conceptualized by categorical accounts, a process which includes not only the perceptual processing of facial features but also social knowledge concerning context.

As this review shows, different types of context influence face perception. However, many questions remain unresolved and need to be addressed in future research. It is still unknown, for example, how these various dimensions of contexts and facial expressions are coded and how they are integrated into a single representation. Moreover, given the complexity of such a process, the available models need to be refined with regard to the interconnections between the neuroanatomical structures underlying face perception as shown in Figure 2. In particular, the exact time course of these processes is unknown so far, and more data are needed to decide whether this is an automatic and mandatory process (de Gelder and Van den Stock, 2011). Furthermore, elucidating the interactions of different types of contexts and their influence on facial expression perception is an important research question for social neuroscience. Especially the interactions of within-perceiver and within-sender features may be of interest here, as they may enhance our understanding of non-verbal social communication. Additionally, interactions of facial expressions and contexts have so far mainly been investigated in one direction (namely the effect of the context on the face) at the perception stage. However, it would also be interesting to investigate the impact of facial expressions on how the surrounding environment is affectively toned. Moreover, the reviewed results and especially the finding of a highly flexible perception of neutral faces suggest that standard paradigms for studying facial expression perception may need some modifications. As a minimal requirement, experimenters in facial expression research need to consider the intrinsically involved contextual influences. This is all the more important as experiments on language and other faces as contexts show that even within experimentally sound studies contextual influences cannot be precluded.

Taken together, the work reviewed here demonstrates that the impact of contextual cues on facial expression recognition is remarkable. At the neural level, this interconnection is implemented in widespread interactions between distributed core and extended face processing regions. As outlined earlier, many questions about these interactions are still unsolved. All the more, the time has come for social affective neuroscience research to put faces back in context.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This publication was funded by the German Research Foundation (DFG) and the University of Wuerzburg in the funding programme Open Access Publishing.

Adams, R. B., Ambady, N., Macrae, C. N., and Kleck, R. E. (2006). Emotional expressions forecast approach-avoidance behavior. Motiv. Emot. 30, 177–186.

Adams, R. B., and Franklin, R. G. (2009). Influence of emotional expression on the processing of gaze direction. Motiv. Emot. 33, 106–112.

Adams, R. B., Franklin, R. G., Kveraga, K., Ambady, N., Kleck, R. E., Whalen, P. J., et al. (2012a). Amygdala responses to averted vs direct gaze fear vary as a function of presentation speed. Soc. Cogn. Affect. Neurosci. 7, 568–577.

Adams, R. B., Nelson, A. J., Soto, J. A., Hess, U., and Kleck, R. E. (2012b). Emotion in the neutral face: a mechanism for impression formation? Cogn. Emot. 26, 431–441.

Adams, R. B., Gordon, H. L., Baird, A. A., Ambady, N., and Kleck, R. E. (2003). Effects of gaze on amygdala sensitivity to anger and fear faces. Science 300, 1536.

Adams, R. B., and Kleck, R. E. (2003). Perceived gaze direction and the processing of facial displays of emotion. Psychol. Sci. 14, 644–647.

Adams, R. B., and Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion 5, 3–11.

Amaral, D. G., Behniea, H., and Kelly, J. L. (2003). Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience 118, 1099–1120.

Ambady, N., and Weisbuch, M. (2011). “On perceiving facial expressions: the role of culture and context,” in Oxford Handbook of Face Perception, eds A. J. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (New York: Oxford University Press), 479–488.

Anderson, E., Siegel, E. H., Bliss-Moreau, E., and Barrett, L. F. (2011). The visual impact of gossip. Science 332, 1446–1448.

Ashwin, C., Wheelwright, S., and Baron-Cohen, S. (2006). Finding a face in the crowd: testing the anger superiority effect in Asperger syndrome. Brain Cogn. 61, 78–95.

Aviezer, H., Bentin, S., Dudarev, V., and Hassin, R. R. (2011). The automaticity of emotional face-context integration. Emotion 11, 1406–1414.

Aviezer, H., Hassin, R. R., Ryan, J., Grady, C. L., Susskind, J., Anderson, A., et al. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19, 724–732.

Barraclough, N. E., Xiao, D. K., Baker, C. I., Oram, M. W., and Perrett, D. I. (2005). Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. J. Cogn. Neurosci. 17, 377–391.

Barrett, L. F., and Kensinger, E. A. (2010). Context is routinely encoded during emotion perception. Psychol. Sci. 21, 595–599.

Barrett, L. F., Lindquist, K. A., and Gendron, M. (2007). Language as context for the perception of emotion. Trends Cogn. Sci. (Regul. Ed.) 11, 327–332.

Barrett, L. F., Mesquita, B., and Gendron, M. (2011). Context in emotion perception. Curr. Dir. Psychol. Sci. 20, 286–290.

Batty, M., and Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Brain Res. Cogn. Brain Res. 17, 613–620.

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565.

Benton, C. P. (2010). Rapid reactions to direct and averted facial expressions of fear and anger. Vis. Cogn. 18, 1298–1319.

Bindemann, M., Burton, A. M., and Langton, S. R. H. (2008). How do eye gaze and facial expression interact? Vis. Cogn. 16, 708–733.

Bishop, S. J., Duncan, J., Brett, M., and Lawrence, A. D. (2004a). Prefrontal cortical function and anxiety: controlling attention to threat-related stimuli. Nat. Neurosci. 7, 184–188.

Bishop, S. J., Duncan, J., and Lawrence, A. D. (2004b). State anxiety modulation of the amygdala response to unattended threat-related stimuli. J. Neurosci. 24, 10364–10368.

Blau, V., Maurer, U., Tottenham, N., and McCandliss, B. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3, 7.

Boll, S., Gamer, M., Kalisch, R., and Buchel, C. (2011). Processing of facial expressions and their significance for the observer in subregions of the human amygdala. Neuroimage 56, 299–306.

Brosch, T., Bar-David, E., and Phelps, E. A. (in press). Implicit race bias decreases the similarity of neural representations of black and white faces. Psychol. Sci.

Brosch, T., Pourtois, G., and Sander, D. (2010). The perception and categorisation of emotional stimuli: a review. Cogn. Emot. 24, 377–400.

Brosch, T., and Wieser, M. J. (2011). The (non) automaticity of amygdala responses to threat: on the issue of fast signals and slow measures. J. Neurosci. 31, 14451.

Brunswik, E. (1956). Perception and the Representative Design of Psychological Experiments, 2nd Edn. Berkeley: University of California Press.

Calder, A. J., Ewbank, M., and Passamonti, L. (2011). Personality influences the neural responses to viewing facial expressions of emotion. Philos. Trans. R. Soc. Lond. B Biol. Sci. 366, 1684–1701.

Canli, T., Sivers, H., Whitfield, S. L., Gotlib, I. H., and Gabrieli, J. D. (2002). Amygdala response to happy faces as a function of extraversion. Science 296, 2191.

Carmichael, S. T., and Price, J. L. (1995). Limbic connections of the orbital and medial prefrontal cortex in macaque monkeys. J. Comp. Neurol. 363, 615–641.

Carroll, J. M., and Russell, J. A. (1996). Do facial expressions signal specific emotions? Judging emotion from the face in context. J. Pers. Soc. Psychol. 70, 205–218.

Catani, M., Jones, D. K., Donato, R., and Ffytche, D. H. (2003). Occipito-temporal connections in the human brain. Brain 126, 2093–2107.

Cristinzio, C., N’Diaye, K., Seeck, M., Vuilleumier, P., and Sander, D. (2010). Integration of gaze direction and facial expression in patients with unilateral amygdala damage. Brain 133, 248–261.

Cunningham, W. A., Johnson, M. K., Raye, C. L., Gatenby, J. C., Gore, J. C., and Banaji, M. R. (2004). Separable neural components in the processing of black and white faces. Psychol. Sci. 15, 806–813.

Darwin, C. (1872). The Expression of the Emotions in Man and Animals, 3rd Edn. New York, NY: Oxford University Press.

Davis, F. C., Johnstone, T., Mazzulla, E. C., Oler, J. A., and Whalen, P. J. (2010). Regional response differences across the human amygdaloid complex during social conditioning. Cereb. Cortex 20, 612.

de Gelder, B., Bocker, K. B. E., Tuomainen, J., Hensen, M., and Vroomen, J. (1999). The combined perception of emotion from voice and face: early interaction revealed by human electric brain responses. Neurosci. Lett. 260, 133–136.

de Gelder, B., Meeren, H. K., Righart, R., Van den Stock, J., van de Riet, W. A., and Tamietto, M. (2006). Beyond the face: exploring rapid influences of context on face processing. Prog. Brain Res. 155, 37–48.

de Gelder, B., and Van den Stock, J. (2011). “Real faces, real emotions: perceiving facial expressions in naturalistic contexts of voices, bodies and scenes,” in The Oxford Handbook of Face Perception, eds A. J. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (New York: Oxford University Press), 535–550.

de Gelder, B., and Vroomen, J. (2000). The perception of emotions by ear and by eye. Cogn. Emot. 14, 289–311.

de Gelder, B. C., Frissen, I., Barton, J., and Hadjikhani, N. (2003). A modulatory role for facial expressions in prosopagnosia. Proc. Natl. Acad. Sci. U.S.A. 100, 13105–13110.

Dolan, R. J., Morris, J. S., and de Gelder, B. (2001). Crossmodal binding of fear in voice and face. Proc. Natl. Acad. Sci. U.S.A. 98, 10006–10010.

Dotsch, R., Wigboldus, D. H. J., Langner, O., and van Knippenberg, A. (2008). Ethnic out-group faces are biased in the prejudiced mind. Psychol. Sci. 19, 978–980.

Eimer, M., and Holmes, A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13, 427–431.

Elfenbein, H. A., and Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 128, 203–235.

Engell, A. D., Haxby, J. V., and Todorov, A. (2007). Implicit trustworthiness decisions: automatic coding of face properties in the human amygdala. J. Cogn. Neurosci. 19, 1508–1519.

Ethofer, T., Pourtois, G., and Wildgruber, D. (2006). Investigating audiovisual integration of emotional signals in the human brain. Prog. Brain Res. 156, 345–361.

Etkin, A., Klemenhagen, K. C., Dudman, J. T., Rogan, M. T., Hen, R., Kandel, E. R., et al. (2004). Individual differences in trait anxiety predict the response of the basolateral amygdala to unconsciously processed fearful faces. Neuron 44, 1043–1055.

Ewbank, M. P., Fox, E., and Calder, A. J. (2010). The interaction between gaze and facial expression in the amygdala and extended amygdala is modulated by anxiety. Front. Hum. Neurosci. 4:56. doi:10.3389/fnhum.2010.00056

Fox, E., and Zougkou, K. (2011). “Influence of personality traits on processing of facial expressions,” in Oxford Handbook of Face Perception, eds A. J. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (New York: Oxford University Press), 515–534.

Galli, G., Feurra, M., and Viggiano, M. P. (2006). “Did you see him in the newspaper?” Electrophysiological correlates of context and valence in face processing. Brain Res. 1119, 190–202.

Gerdes, A., Wieser, M. J., Alpers, G. W., Strack, F., and Pauli, P. (2012). Why do you smile at me while I’m in pain? – Pain selectively modulates voluntary facial muscle responses to happy faces. Int. J. Psychophysiol. 85, 161–167.

Golan, O., Baron-Cohen, S., and Hill, J. (2006). The Cambridge mindreading (CAM) face-voice battery: testing complex emotion recognition in adults with and without Asperger syndrome. J. Autism Dev. Disord. 36, 169–183.

Graham, R., and LaBar, K. S. (2007). Garner interference reveals dependencies between emotional expression and gaze in face perception. Emotion 7, 296–313.

Graham, R., and LaBar, K. S. (2012). Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia 50, 553–566.

Halberstadt, J. B., and Niedenthal, P. M. (2001). Effects of emotion concepts on perceptual memory for emotional expressions. J. Pers. Soc. Psychol. 81, 587–598.

Hariri, A. R., Mattay, V. S., Tessitore, A., Kolachana, B., Fera, F., Goldman, D., et al. (2002). Serotonin transporter genetic variation and the response of the human amygdala. Science 297, 400–403.

Harms, M. B., Martin, A., and Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol. Rev. 20, 290–322.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. (Regul. Ed.) 4, 223–232.

Haxby, J. V., and Gobbini, M. I. (2011). “Distributed neural systems for face perception,” in The Oxford Handbook of Face Perception, eds A. J. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (New York: Oxford University Press), 93–110.

Hoffman, E. A., and Haxby, J. V. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3, 80–84.

Hugenberg, K. (2005). Social categorization and the perception of facial affect: target race moderates the response latency advantage for happy faces. Emotion 5, 267–276.

Hugenberg, K., and Bodenhausen, G. V. (2004). Ambiguity in social categorization: the role of prejudice and facial affect in race categorization. Psychol. Sci. 15, 342–345.

Iidaka, T., Omori, M., Murata, T., Kosaka, H., Yonekura, Y., Okada, T., et al. (2001). Neural interaction of the amygdala with the prefrontal and temporal cortices in the processing of facial expressions as revealed by fMRI. J. Cogn. Neurosci. 13, 1035–1047.

Iidaka, T., Ozaki, N., Matsumoto, A., Nogawa, J., Kinoshita, Y., Suzuki, T., et al. (2005). A variant C178T in the regulatory region of the serotonin receptor gene HTR3A modulates neural activation in the human amygdala. J. Neurosci. 25, 6460–6466.

Iidaka, T., Saito, D. N., Komeda, H., Mano, Y., Kanayama, N., Osumi, T., et al. (2010). Transient neural activation in human amygdala involved in aversive conditioning of face and voice. J. Cogn. Neurosci. 22, 2074–2085.

Jack, R. E., Blais, C., Scheepers, C., Schyns, P. G., and Caldara, R. (2009). Cultural confusions show that facial expressions are not universal. Curr. Biol. 19, 1543–1548.

Jack, R. E., Garrod, O. G. B., Yu, H., Caldara, R., and Schyns, P. G. (2012). Facial expressions of emotion are not culturally universal. Proc. Natl. Acad. Sci. U.S.A. 109, 7241–7244.

Jones, B. C., DeBruine, L. M., Little, A. C., Burriss, R. P., and Feinberg, D. R. (2007). Social transmission of face preferences among humans. Proc. R. Soc. Lond. B. Biol. Sci. 274, 899–903.

Kanwisher, N., McDermott, J., and Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

Kim, H., Somerville, L. H., Johnstone, T., Polis, S., Alexander, A. L., Shin, L. M., et al. (2004). Contextual modulation of amygdala responsivity to surprised faces. J. Cogn. Neurosci. 16, 1730–1745.

Kreifelts, B., Ethofer, T., Grodd, W., Erb, M., and Wildgruber, D. (2007). Audiovisual integration of emotional signals in voice and face: an event-related fMRI study. Neuroimage 37, 1445–1456.

Krolak-Salmon, P., Fischer, C., Vighetto, A., and Mauguiere, F. (2001). Processing of facial emotional expression: spatio-temporal data as assessed by scalp event related potentials. Eur. J. Neurosci. 13, 987–994.

LaBar, K. S., Crupain, M. J., Voyvodic, J. T., and McCarthy, G. (2003). Dynamic perception of facial affect and identity in the human brain. Cereb. Cortex 13, 1023–1033.

Lee, E., Kang, J. I., Park, I. H., Kim, J.-J., and An, S. K. (2008). Is a neutral face really evaluated as being emotionally neutral? Psychiatry Res. 157, 77–85.

Lee, T. H., Choi, J. S., and Cho, Y. S. (2012). Context modulation of facial emotion perception differed by individual difference. PLoS ONE 7, e32987. doi:10.1371/journal.pone.0032987

Lieberman, M. D., Eisenberger, N. I., Crockett, M. J., Tom, S. M., Pfeifer, J. H., and Way, B. M. (2007). Putting feelings into words – affect labeling disrupts amygdala activity in response to affective stimuli. Psychol. Sci. 18, 421–428.

Lindquist, K. A., Barrett, L. F., Bliss-Moreau, E., and Russell, J. A. (2006). Language and the perception of emotion. Emotion 6, 125–138.

Lucas, H. D., Chiao, J. Y., and Paller, K. A. (2011). Why some faces won’t be remembered: brain potentials illuminate successful versus unsuccessful encoding for same-race and other-race faces. Front. Hum. Neurosci. 5:20. doi:10.3389/fnhum.2011.00020

Marzi, T., and Viggiano, M. P. (2010). When memory meets beauty: insights from event-related potentials. Biol. Psychol. 84, 192–205.

Matsumoto, D. (2001). “Culture and emotion,” in The Handbook of Culture and Psychology, ed. D. Matsumoto (New York: Oxford University Press), 171–194.

Matsumoto, D., Keltner, D., Shiota, M. N., O’Sullivan, M., and Frank, M. (2008). “Facial expressions of emotion,” in Handbook of Emotions, eds M. Lewis, J. M. Haviland-Jones, and L. F. Barrett (New York: The Guilford Press), 211–234.

McArthur, L. Z., and Baron, R. M. (1983). Toward an ecological theory of social perception. Psychol. Rev. 90, 215–238.

McTeague, L. M., Shumen, J. R., Wieser, M. J., Lang, P. J., and Keil, A. (2011). Social vision: sustained perceptual enhancement of affective facial cues in social anxiety. Neuroimage 54, 1615–1624.

Meeren, H. K. M., van Heijnsbergen, C. C. R. J., and de Gelder, B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proc. Natl. Acad. Sci. U.S.A. 102, 16518–16523.

Miskovic, V., and Schmidt, L. A. (2012). Social fearfulness in the human brain. Neurosci. Biobehav. Rev. 36, 459–478.

Mobbs, D., Weiskopf, N., Lau, H. C., Featherstone, E., Dolan, R. J., and Frith, C. D. (2006). The Kuleshov effect: the influence of contextual framing on emotional attributions. Soc. Cogn. Affect. Neurosci. 1, 95–106.

Montepare, J. M., and Dobish, H. (2003). The contribution of emotion perceptions and their overgeneralizations to trait impressions. J. Nonverbal Behav. 27, 237–254.

Morel, S., Beaucousin, V., Perrin, M., and George, N. (2012). Very early modulation of brain responses to neutral faces by a single prior association with an emotional context: evidence from MEG. Neuroimage 61, 1461–1470.

Morris, J. S., Friston, K. J., Buechel, C., Frith, C. D., Young, A. W., Calder, A. J., et al. (1998). A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121, 47–57.

Mühlberger, A., Wieser, M. J., Gerdes, A. B. M., Frey, M. C. M., Weyers, P., and Pauli, P. (2011). Stop looking angry and smile, please: start and stop of the very same facial expression differentially activate threat- and reward-related brain networks. Soc. Cogn. Affect. Neurosci. 6, 321–329.

Mühlberger, A., Wieser, M. J., Herrmann, M. J., Weyers, P., Troger, C., and Pauli, P. (2009). Early cortical processing of natural and artificial emotional faces differs between lower and higher socially anxious persons. J. Neural Transm. 116, 735–746.

Müller, V. I., Habel, U., Derntl, B., Schneider, F., Zilles, K., Turetsky, B. I., et al. (2011). Incongruence effects in crossmodal emotional integration. Neuroimage 54, 2257–2266.

Mumenthaler, C., and Sander, D. (2012). Social appraisal influences recognition of emotions. J. Pers. Soc. Psychol. 102, 1118–1135.

N’Diaye, K., Sander, D., and Vuilleumier, P. (2009). Self-relevance processing in the human amygdala: gaze direction, facial expression, and emotion intensity. Emotion 9, 798–806.

Neta, M., Davis, F. C., and Whalen, P. J. (2011). Valence resolution of ambiguous facial expressions using an emotional oddball task. Emotion 11, 1425–1433.

Ofan, R. H., Rubin, N., and Amodio, D. M. (2011). Seeing race: N170 responses to race and their relation to automatic racial attitudes and controlled processing. J. Cogn. Neurosci. 23, 3153–3161.

Phelps, E. A., O’Connor, K. J., Cunningham, W. A., Funayama, E. S., Gatenby, J. C., Gore, J. C., et al. (2000). Performance on indirect measures of race evaluation predicts amygdala activation. J. Cogn. Neurosci. 12, 729–738.

Pourtois, G., de Gelder, B., Bol, A., and Crommelinck, M. (2005). Perception of facial expressions and voices and of their combination in the human brain. Cortex 41, 49–59.

Pourtois, G., de Gelder, B., Vroomen, J., Rossion, B., and Crommelinck, M. (2000). The time-course of intermodal binding between seeing and hearing affective information. Neuroreport 11, 1329–1333.

Price, J. L. (2007). Definition of the orbital cortex in relation to specific connections with limbic and visceral structures and other cortical regions. Ann. N. Y. Acad. Sci. 1121, 54–71.

Puce, A., Allison, T., Asgari, M., Gore, J. C., and McCarthy, G. (1996). Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J. Neurosci. 16, 5205–5215.

Recio, G., Sommer, W., and Schacht, A. (2011). Electrophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Res. 1376, 66–75.

Righart, R., and de Gelder, B. (2006). Context influences early perceptual analysis of faces – an electrophysiological study. Cereb. Cortex 16, 1249–1257.

Righart, R., and de Gelder, B. (2008a). Rapid influence of emotional scenes on encoding of facial expressions: an ERP study. Soc. Cogn. Affect. Neurosci. 3, 270–278.

Righart, R., and de Gelder, B. (2008b). Recognition of facial expressions is influenced by emotional scene gist. Cogn. Affect. Behav. Neurosci. 8, 264–272.

Righi, S., Marzi, T., Toscani, M., Baldassi, S., Ottonello, S., and Viggiano, M. P. (2012). Fearful expressions enhance recognition memory: electrophysiological evidence. Acta Psychol. (Amst.) 139, 7–18.

Roberson, D., and Davidoff, J. (2000). The categorical perception of colors and facial expressions: the effect of verbal interference. Mem. Cognit. 28, 977–986.

Rotshtein, P., Henson, R. N. A., Treves, A., Driver, J., and Dolan, R. J. (2005). Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113.

Russell, J. A., and Fehr, B. (1987). Relativity in the perception of emotion in facial expressions. J. Exp. Psychol. Gen. 116, 223–237.

Said, C. P., Sebe, N., and Todorov, A. (2009). Structural resemblance to emotional expressions predicts evaluation of emotionally neutral faces. Emotion 9, 260–264.

Sander, D., Grandjean, D., Kaiser, S., Wehrle, T., and Scherer, K. R. (2007). Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. Eur. J. Cogn. Psychol. 19, 470–480.

Sato, W., Kochiyama, T., Yoshikawa, S., Naito, E., and Matsumura, M. (2004). Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Brain Res. Cogn. Brain Res. 20, 81–91.

Sato, W., and Yoshikawa, S. (2007a). Enhanced experience of emotional arousal in response to dynamic facial expressions. J. Nonverbal Behav. 31, 119–135.

Sato, W., and Yoshikawa, S. (2007b). Spontaneous facial mimicry in response to dynamic facial expressions. Cognition 104, 1–18.

Scherer, K. R. (2003). Vocal communication of emotion: a review of research paradigms. Speech Commun. 40, 227–256.

Schupp, H. T., Öhman, A., Junghöfer, M., Weike, A. I., Stockburger, J., and Hamm, A. O. (2004). The facilitated processing of threatening faces: an ERP analysis. Emotion 4, 189–200.

Schwarz, K. A., Wieser, M. J., Gerdes, A. B. M., Mühlberger, A., and Pauli, P. (2012). Why are you looking like that? How the context influences evaluation and processing of human faces. Soc. Cogn. Affect. Neurosci. doi:10.1093/scan/nss013

Sherman, A., Sweeny, T. D., Grabowecky, M., and Suzuki, S. (2012). Laughter exaggerates happy and sad faces depending on visual context. Psychon. Bull. Rev. 19, 163–169.

Smith, M. L., Cottrell, G. W., Gosselin, F., and Schyns, P. G. (2005). Transmitting and decoding facial expressions. Psychol. Sci. 16, 184–189.

Tamietto, M., and de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709.

Todorov, A. (2011). “Evaluating faces on social dimensions,” in Social Neuroscience: Toward Understanding the Underpinnings of the Social Mind, eds A. Todorov, S. T. Fiske, and D. A. Prentice (New York: Oxford University Press), 54–76.

Todorov, A., Gobbini, M. I., Evans, K. K., and Haxby, J. V. (2007). Spontaneous retrieval of affective person knowledge in face perception. Neuropsychologia 45, 163–173.

Trautmann, S. A., Fehr, T., and Herrmann, M. (2009). Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res. 1284, 100–115.

Trope, Y. (1986). Identification and inferential processes in dispositional attribution. Psychol. Rev. 93, 239–257.

Van den Stock, J., and de Gelder, B. (2012). Emotional information in body and background hampers recognition memory for faces. Neurobiol. Learn. Mem. 97, 321–325.

Van den Stock, J., Righart, R., and de Gelder, B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7, 487–494.

Viggiano, M. P., and Marzi, T. (2010). “Context and social effects on face recognition,” in The Social Psychology of Visual Perception, eds E. Balcetis and G. D. Lassiter (New York: Psychology Press), 171–200.

Vrticka, P., Andersson, F., Sander, D., and Vuilleumier, P. (2009). Memory for friends or foes: the social context of past encounters with faces modulates their subsequent neural traces in the brain. Soc. Neurosci. 4, 384–401.

Vuilleumier, P., Richardson, M. P., Armony, J. L., Driver, J., and Dolan, R. J. (2004). Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat. Neurosci. 7, 1271–1278.

Watanabe, N., Wada, M., Irukayama-Tomobe, Y., Ogata, Y., Tsujino, N., Suzuki, M., et al. (2012). A single nucleotide polymorphism of the neuropeptide B/W receptor-1 gene influences the evaluation of facial expressions. PLoS ONE 7, e35390. doi:10.1371/journal.pone.0035390

Weyers, P., Mühlberger, A., Hefele, C., and Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology 43, 450–453.

Wieser, M. J., Gerdes, A. B. M., Greiner, R., Reicherts, P., and Pauli, P. (2012a). Tonic pain grabs attention, but leaves the processing of facial expressions intact – evidence from event-related brain potentials. Biol. Psychol. 90, 242–248.

Wieser, M. J., McTeague, L. M., and Keil, A. (2012b). Competition effects of threatening faces in social anxiety. Emotion 12, 1050–1060.

Wieser, M. J., McTeague, L. M., and Keil, A. (2011). Sustained preferential processing of social threat cues: bias without competition? J. Cogn. Neurosci. 23, 1973–1986.

Wieser, M. J., Pauli, P., Reicherts, P., and Mühlberger, A. (2010). Don’t look at me in anger! – Enhanced processing of angry faces in anticipation of public speaking. Psychophysiology 47, 271–280.

Keywords: facial expression, face perception, emotion, context, “basic emotion”

Citation: Wieser MJ and Brosch T (2012) Faces in context: a review and systematization of contextual influences on affective face processing. Front. Psychology 3:471. doi: 10.3389/fpsyg.2012.00471