REVIEW article

Front. Psychol., 12 May 2011

Sec. Auditory Cognitive Neuroscience

volume 2 - 2011 | https://doi.org/10.3389/fpsyg.2011.00094

This article is part of the Research Topic The relationship between music and language

After a brief historical perspective of the relationship between language and music, we review our work on transfer of training from music to speech that aimed at testing the general hypothesis that musicians should be more sensitive than non-musicians to speech sounds. In light of recent results in the literature, we argue that when long-term experience in one domain influences acoustic processing in the other domain, results can be interpreted as common acoustic processing. But when long-term experience in one domain influences the building-up of abstract and specific percepts in another domain, results are taken as evidence for transfer of training effects. Moreover, we also discuss the influence of attention and working memory on transfer effects and we highlight the usefulness of the event-related potentials method to disentangle the different processes that unfold in the course of music and speech perception. Finally, we give an overview of an on-going longitudinal project with children aimed at testing transfer effects from music to different levels and aspects of speech processing.

Over the centuries, many authors have been interested in the relationship between music and language and this rich and fruitful comparison has been examined from many different perspectives. Historically, one of the first was the question of the common or independent origin of music and language that was highlighted again more recently in the wonderful book “The origin of music” (Wallin et al., 2000).

Both Rousseau (1781/1993) and Darwin (1871/1981) were in favor of a common origin of music and language. In his book on the origin of language (1781/1993), Rousseau was a fervent advocate of the idea that the first languages were sung, not spoken, and Darwin considered that music evolved from the love calls produced during the reproduction period to charm persons of the opposite sex: “musical notes and rhythm were first acquired by the male or female progenitors of mankind for the sake of charming the opposite sex” (Darwin, 1871/1981, p. 336). Such a seduction function of the singing voice certainly persists nowadays, as can be seen from the cult devoted to la Callas or Michael Jackson… The philosopher Herbert Spencer (1820–1903) also favored a common origin of music and language, and proposed a physiological theory to explain their common primary function: expressing emotions (Spencer, 1857). To produce large intervals in the intonation of the voice or on a keyboard requires larger movements than to produce small intervals. There is thus a direct connection between emotion and movement: the more intense the feeling, the larger and faster the movement.

This very same idea was previously developed by Descartes (1618/1987), who, in the early part of his long career, wrote “L’abrégé de musique” (Compendium musicae, 1618), his principal contribution to music theory. In this book, Descartes divided music into three basic components: the physical aspect of sound, the nature of sensory perception, and the ultimate effect of this perception on the listener. Interestingly, the nature of perception results from the effects on the mind of the “animated spirits” through the pineal gland. Thus, listening to music activates the animated spirits in the brain, which in turn affect the mind. When music has a fast tempo, the animated spirits are highly excited: they rush into the nerves that in turn excite the muscles. And you end up beating the tempo with your feet… (see also Cross, 2011 for the history of the link between the auditory and motor systems in music). Cartesian dualism does not therefore imply that the mind and body are entirely separate. Rather, there are some instances, such as emotions, that cannot be attributed only to the mind or only to the body but that likely emerge from their tight union.

By contrast to these early views, musicologists from the nineteenth and twentieth centuries seemed to favor the hypothesis of a different origin of music and language, and often argued that both evolved independently. While it is generally considered that both music and language have a survival value1 in the evolution of the human species, through the production of sounds allowing individuals to locate themselves in space and to warn each other about potential dangers, for instance (Levman, 1992), they nevertheless evolved as different systems. For Wallaschek (1891), music emerged from a basic need to discharge surplus energy through rhythmic productions. As noted by Brown (2003), synchronized movements to music are found in all cultures. Newman (1905/1969) also considered that music developed before and independently from language: “man certainly expresses his feeling in pure indefinite sound long before he had learned to agree with his fellows to attach certain meanings to certain stereotypes sounds” (p. 210).

While music and language both play a fundamental role in the organization of human societies, their main functions differ. Music allows the expression of emotions and thereby ensures social bonding (Boucourechliev, 1993). Ethnomusicological research has illustrated the social function of music by showing that music is invested with natural and supernatural powers in all human societies (Nadel, 1930). By contrast, language makes it possible to communicate thoughts relevant to the current context, to tell stories that happened years ago, and to project into the future to plan upcoming events. Over the course of human evolution, language lost the isomorphism between sound and meaning (i.e., disappearance of onomatopoeias) to become symbolic (through the phenomenon of double articulation; Levman, 1992).

More recent years have witnessed a renewal of interest in the language–music comparison, largely due to the development of cognitive science and to the advent of brain imaging methods. From the end of the nineteenth century until the early seventies, knowledge of the brain’s anatomo-functional organization was mainly derived from neurology and neuropsychology. In short, the dominant view was that language was located in brain regions of the left hemisphere (i.e., Broca’s and Wernicke’s areas) that were specifically devoted to language processing. However, the use of positron emission tomography (PET), functional magnetic resonance imaging (fMRI), magnetoencephalography (MEG), electroencephalography (EEG), and event-related brain potentials (ERPs) led to two major discoveries. First, it became increasingly clear that language processing is largely distributed within the left hemisphere (see Vigneau et al., 2006 for a meta-analysis of fMRI data), involving more areas than Broca’s and Wernicke’s regions alone, and that the right hemisphere also plays an important role in language perception and comprehension (e.g., Federmeier et al., 2008 based on ERP data). Second, it was demonstrated that some brain regions that had long been considered as language specific (e.g., Broca’s and Wernicke’s areas) are also activated by music processing (e.g., Maess et al., 2001; Levitin and Menon, 2003; Vuust et al., 2006; Abrams et al., 2010). This may not be so surprising when considering the similarities between language and music processing.

Both language and music are complex processing systems that entertain intimate relationships with attention, memory, and motor abilities. Moreover, neither language nor music can be considered as a single entity; rather, each comprises several levels of processing: morphology, phonology, semantics, syntax, and pragmatics in language, and rhythm, melody, and harmony in music. Maybe most importantly, both speech and music are auditory signals that are sequential in nature (in contrast to visual information) and that unfold in time, according to the rules of syntax and harmony. Moreover, speech and musical sounds rely on the same acoustic parameters: frequency, duration, intensity, and timbre.

Results of many experiments in the neuroscience of music using both behavioral and electrophysiological methods have shown that musicians are particularly sensitive to the acoustic structure of sounds. For instance, musical expertise decreases pitch discrimination thresholds for pure and harmonic tones (e.g., Spiegel and Watson, 1984; Kishon-Rabin et al., 2001; Micheyl et al., 2006; Bidelman and Krishnan, 2010; Bidelman et al., 2010; Strait et al., 2010), and increases discrimination accuracy for frequency and duration (e.g., Koelsch et al., 1999; Micheyl et al., 2006; Tervaniemi et al., 2006). Using more musical materials, it has also been shown that musical expertise increases sensitivity to pitch changes in melodic contours (e.g., Trainor et al., 1999; Fujioka et al., 2004) and that musicians recognize familiar melodies, and detect subtle variations of pitch, rhythm, and harmony within musical phrases faster, and more accurately than non-musicians (e.g., Besson and Faïta, 1995; Koelsch et al., 2002; Bidelman et al., 2010). Moreover, seminal studies using MRI, fMRI, or MEG have demonstrated that the development of the perceptual, cognitive, and motor abilities, through years of intensive musical practice in the case of professional musicians, largely influences brain anatomy and brain function (e.g., Elbert et al., 1995; Schlaug et al., 1995a,b; Amunts et al., 1997; Pantev et al., 1998; Keenan et al., 2001; Schmithorst and Wilke, 2002; Schneider et al., 2002, 2005; Gaser and Schlaug, 2003; Hutchinson et al., 2003; Luders et al., 2004; Bengtsson et al., 2005; Bermudez et al., 2009; Imfeld et al., 2009). Finally, results of experiments using a longitudinal approach, with non-musician adults or children being trained with music, have shown that the differences between musicians and non-musicians are more likely to result from musical training rather than from genetic predispositions for music (e.g., Lahav et al., 2007; Hyde et al., 2009; Moreno et al., 2009).

Based on the functional overlap of brain structures involved in language and music processing, and based on the findings of musicians’ increased sensitivity to acoustic parameters that are similar for music and speech, we developed a research program aimed at studying transfer of training effects between music and speech. The general hypothesis is that musicians should be more sensitive than non-musicians to speech sounds. To test this hypothesis we used both behavioral measures [percentage of errors (%err) and reaction times (RTs)] and electrophysiological methods (ERPs). Overall, the results that we have obtained until now are in line with this hypothesis. We briefly summarize these results below and we discuss several possible interpretations of these findings.
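As an illustration of the two behavioral measures named above, the following minimal sketch computes the percentage of errors and the mean reaction time from a list of trials. The function name, the trial format, and the choice to average RTs over correct trials only are assumptions made for this example; the text does not specify how the measures were computed in the studies reviewed here.

```python
from statistics import mean


def summarize_behavior(trials):
    """Return (%err, mean RT in ms) for one participant and condition.

    `trials` is a list of (correct, rt_ms) pairs. This layout is a
    hypothetical one chosen for the illustration.
    """
    if not trials:
        raise ValueError("at least one trial is required")

    n_errors = sum(1 for correct, _ in trials if not correct)
    pct_err = 100.0 * n_errors / len(trials)

    # Assumption: RTs are averaged over correct responses only,
    # a common convention that the source does not confirm.
    correct_rts = [rt for correct, rt in trials if correct]
    mean_rt = mean(correct_rts) if correct_rts else None

    return pct_err, mean_rt


if __name__ == "__main__":
    example = [(True, 500.0), (True, 600.0), (False, 900.0), (True, 700.0)]
    print(summarize_behavior(example))
```

Group comparisons (musicians versus non-musicians) would then be run on these per-participant summaries.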

In the first two studies, we used natural speech and we parametrically manipulated the pitch of sentence-final words (supra-segmental changes) so that pitch variations were large (easy to detect) or subtle (difficult to detect). Sentences were spoken either in the native language of the listener (Schön et al., 2004) or in a foreign language, unknown to participants (Marques et al., 2007). In both studies, results showed that musicians outperformed non-musicians only when the pitch variation on the final word was difficult to detect. Analysis of the ERPs revealed an increased positivity (of the P3 family) to subtle pitch variations but only in musicians.

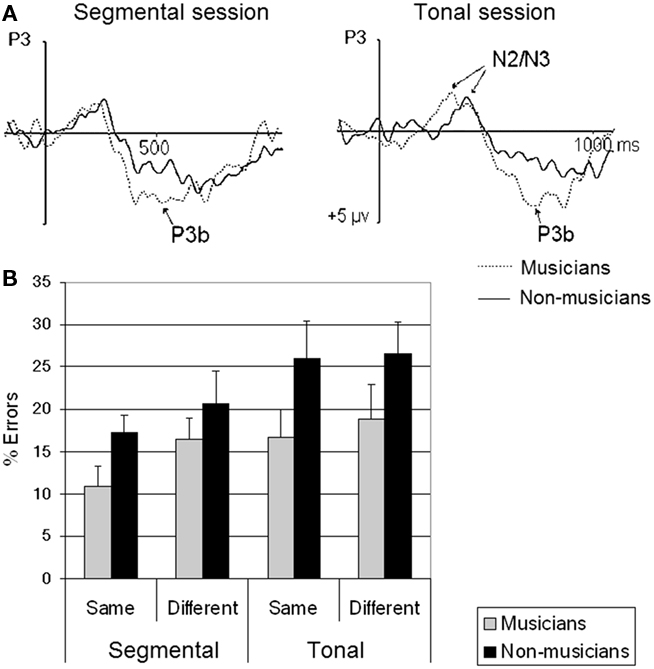

More recently, Marie et al. (in press a) examined the influence of musical expertise on lexical pitch (tone) and on segmental variations in a language, Mandarin Chinese, unfamiliar to the French participants. They listened to two sequences of four monosyllabic Mandarin words that were either same or different. When different, one word of the sequence varied in tone (e.g., qíng/qíng) or in segmental cues (consonant or vowel; e.g., bán/zán). In line with previous behavioral data (Gottfried et al., 2004; Delogu et al., 2006, 2010; Lee and Hung, 2008), musicians detected tone and segmental variations better than non-musicians. Analysis of the ERPs showed no influence of musical expertise on the N1 component (perceptual processing, e.g., Rugg and Coles, 1995; Eggermont and Ponton, 2002) and P3a component (automatic orienting of attention, e.g., Squires et al., 1975; Escera et al., 2000). By contrast, the latency of the N2/N3 component to tone variations (categorization process, e.g., Fujioka et al., 2006; Moreno et al., 2009) and the amplitude and latency of the P3b component (decision processes; e.g., Duncan-Johnson and Donchin, 1977; Picton, 1992) to tone and segmental variations were larger/shorter in musicians than in non-musicians (see Figure 1).

Figure 1. (A) Difference-wave ERPs (different minus same final words) for the segmental (left) and tonal (right) sessions in musicians (dashed line) and non-musicians (solid line). (B) Percentage of errors for musicians (gray) and non-musicians (black) in the segmental and the tonal sessions and in the two experimental conditions (same and different words, with error bars). Adapted from Marie et al. (in press a).
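The difference waves in Figure 1A (different minus same final words) rest on a simple operation: average the single-trial epochs of each condition, then subtract one average from the other. The sketch below illustrates this under stated assumptions. The function names, the list-of-lists epoch format, and the peak search are hypothetical choices for this example and are not taken from Marie et al. (in press a). All epochs are assumed to have the same length and to be baseline-corrected already.

```python
def average_waveform(epochs):
    """Average single-trial epochs (equal-length lists of amplitudes in microvolts)."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


def difference_wave(different_epochs, same_epochs):
    """Condition average for 'different' minus condition average for 'same'."""
    avg_different = average_waveform(different_epochs)
    avg_same = average_waveform(same_epochs)
    return [d - s for d, s in zip(avg_different, avg_same)]


def peak_in_window(wave, times_ms, start_ms, end_ms, polarity=1):
    """Return (latency_ms, amplitude) of the largest deflection in a time window.

    Use polarity=1 for positive components (e.g., P3b) and
    polarity=-1 for negative components (e.g., N2 or MMN).
    """
    candidates = [
        (polarity * value, t, value)
        for value, t in zip(wave, times_ms)
        if start_ms <= t <= end_ms
    ]
    _, latency, amplitude = max(candidates)
    return latency, amplitude


if __name__ == "__main__":
    # Toy data: two trials per condition, three time points each.
    different = [[0, 2, 4], [0, 4, 8]]
    same = [[0, 1, 1], [0, 1, 3]]
    wave = difference_wave(different, same)
    print(wave, peak_in_window(wave, [0, 100, 200], 0, 200))
```

The same subtraction logic applies to the mismatch negativity, which is conventionally obtained as the response to deviants minus the response to standards.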

Finally, we examined the influence of musical expertise on vowel duration and metric processing in natural speech (Magne et al., 2007; Marie et al., 2011). We used a specific time-stretching algorithm (Pallone et al., 1999) to create an unexpected lengthening of the penultimate syllable that disrupted the metric structure of words without modifying timbre or frequency. Sentence-final words were also semantically congruous or incongruous within the context. Participants performed two tasks in separate blocks of trials. In the metric task, they focused attention on the metric structure of final words to decide whether they were correctly pronounced or not. In the semantic task, they focused attention on the semantics of the sentence to decide whether the final word was expected within the context or not. In both tasks, musicians outperformed non-musicians (as measured by the percentage of errors). However, the pattern of ERP data differed between tasks. Independently of the direction of attention, the P2 component (perceptual processing) to syllabic lengthening was larger in musicians than in non-musicians. By contrast, the N400 effect (semantic processing) did not differ between the two groups (Marie et al., 2011).

Taken together, these different results showed that, compared to non-musicians, musicians were more sensitive to supra-segmental manipulations of pitch (i.e., intonation at the sentence level) in their own language (Schön et al., 2004) as well as in a foreign language (Marques et al., 2007), to segmental and to tone variations in a foreign language in which these variations are linguistically relevant (Marie et al., in press a) and to the metric structure of words (Marie et al., 2011). These differences were reflected in the pattern of brain waves that also differed between musicians and non-musicians. Based on these results we can argue that, through years of musical practice, musicians have developed an increased sensitivity to acoustic parameters that are important for music, such as frequency and duration. As argued above based upon the results of longitudinal studies with non-musicians, such an increased sensitivity is more likely to result from musical training than from genetic predispositions for music. Moreover, Musacchia et al. (2007, 2008) have reported positive correlations between the number of years of musical practice and the strength of subcortical pitch encoding as well as the amplitude of cortical ERP components. This sensitivity would extend from music to speech possibly because processing frequency and duration in music and speech draws upon the same pool of neural resources (e.g., Patel, 2003, 2008; Kraus and Chandrasekaran, 2010). In other words, common processes are involved in both cases. For instance, recent fMRI data coming from a direct comparison of temporal structure processing in music and speech suggested that similar anatomical resources are shared by the two domains (Abrams et al., in press). However, if the processes are common (i.e., domain-general) to music and speech, is it appropriate to take the results as evidence for transfer effects from music to speech?

At issue is how to reconcile an explanation in terms of common processing with the hypothesis of transfer of training effects from music to speech processing. We argue that enhanced sensitivity to acoustic features that are common to music and speech, and that imply domain-general processes, allows musicians to construct more elaborated percepts of the speech signal than non-musicians. This, in turn, facilitates stages of speech processing that are speech-specific (i.e., not common to music and speech). For example, acoustic processing of rapidly changing auditory patterns is a pre-requisite for speech processing that may be sub-served by the left planum temporale (Griffiths and Warren, 2002; Jancke et al., 2002; Hickok and Poeppel, 2007; Zaehle et al., 2008). This ability is necessary to hear formant transition (Bidelman and Krishnan, 2010) and to distinguish between phonemes (e.g., “b” and “p”). Correct phoneme discrimination is, in turn, a pre-requisite for correct word identification and for assessing word meaning, and children with language disorders often show temporal auditory processing difficulties (e.g., Overy, 2000; Tallal and Gaab, 2006; Gaab et al., 2007). In short, when long-term experience in one domain influences acoustic processing in the other domain, results can be interpreted as common acoustic processing. But when long-term experience in one domain influences the building-up of abstract and specific percepts in another domain, results are taken as evidence for transfer of training effects (see also Kraus and Chandrasekaran, 2010).

Similar conclusions were reached by Bidelman et al. (2009) from the results of an experiment specifically designed to directly compare the effects of linguistic and musical expertise on music and speech pitch processing. These authors presented homologs of musical intervals and of lexical tones to native Chinese speakers, English-speaking musicians, and English-speaking non-musicians and recorded the brainstem frequency following response. Results showed that both pitch-tracking accuracy and pitch strength were higher in Chinese participants and in English musicians than in English non-musicians. Thus, both linguistic and musical expertise similarly influenced the processing of pitch contour in music intervals and in Mandarin tones, possibly because both draw on the same pool of neural resources. However, some interesting differences also emerged between Chinese participants and English musicians. While English musicians were more sensitive than Chinese participants to the parts of the stimuli that were similar to the notes of the musical scale, Chinese participants were more sensitive to rapid changes of pitch that were similar to those occurring in Mandarin Chinese. In line with the discussion above, the authors concluded that the “auditory brainstem is domain-general insomuch as it mediates pitch encoding in both music and language” but that “pitch extraction mechanisms are not homogeneous for Chinese non-musicians and English musicians as they depend upon interactions between specific features of the input signal, their corresponding output representations and the domain of expertise of the listener” (p. 8).

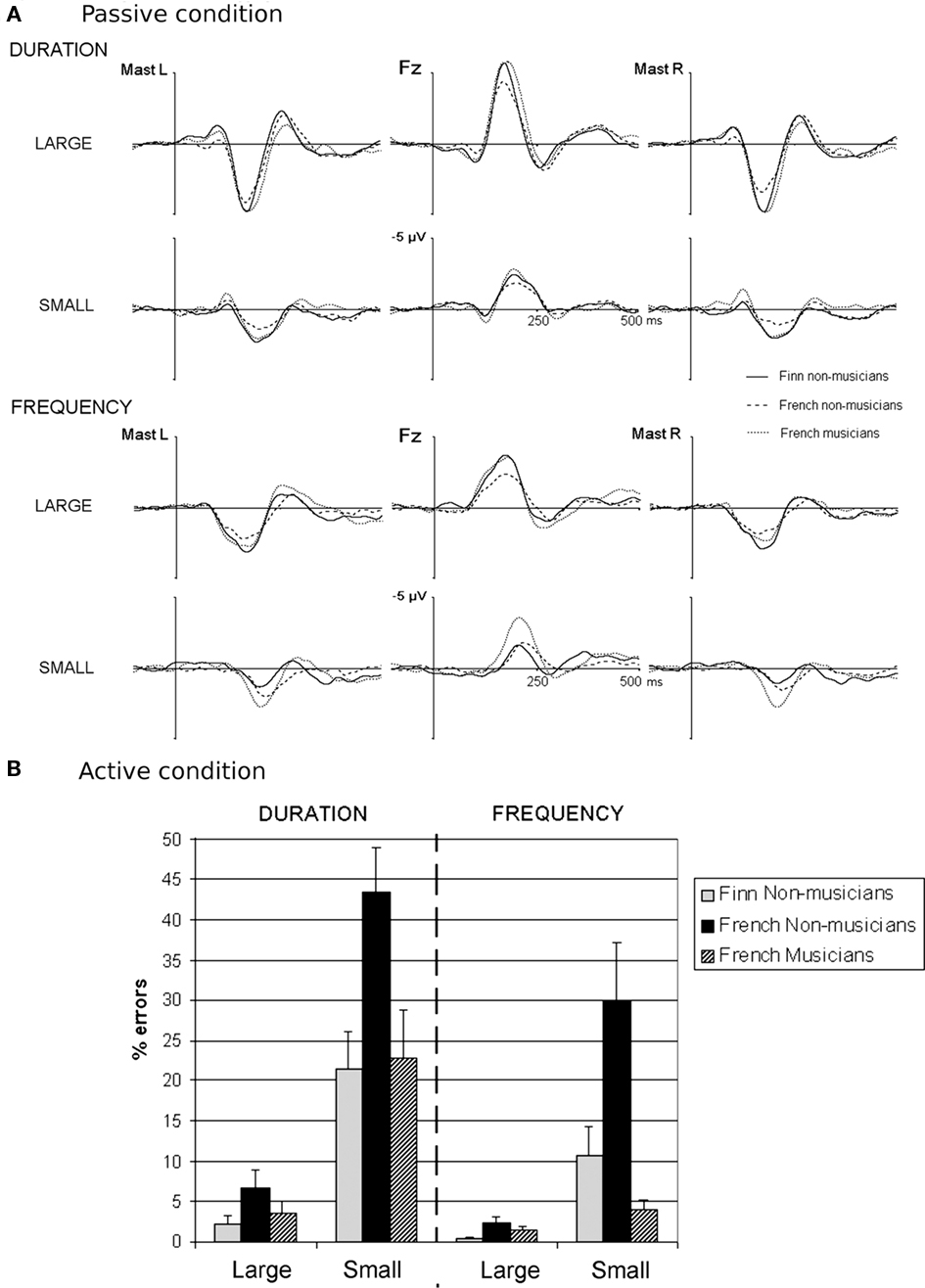

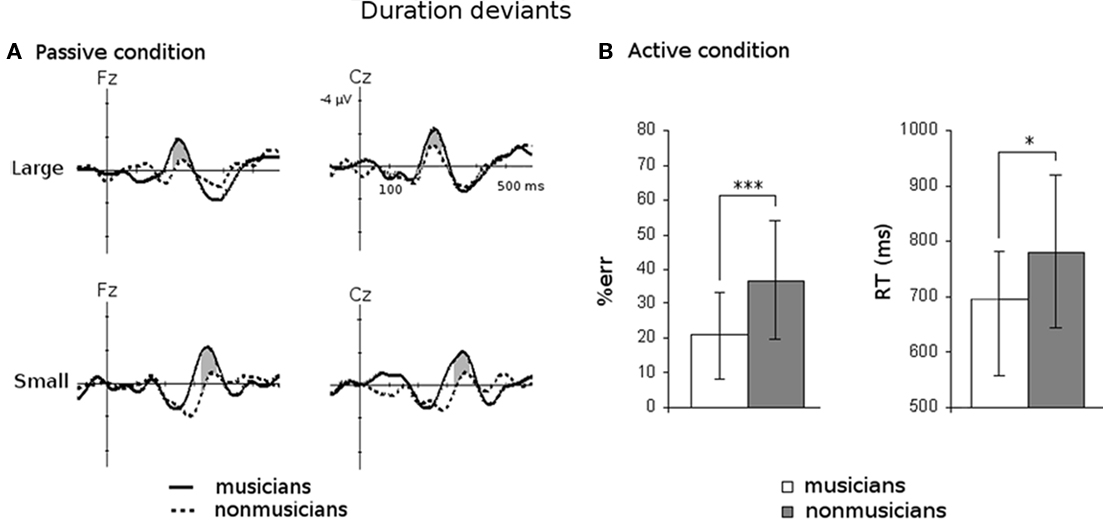

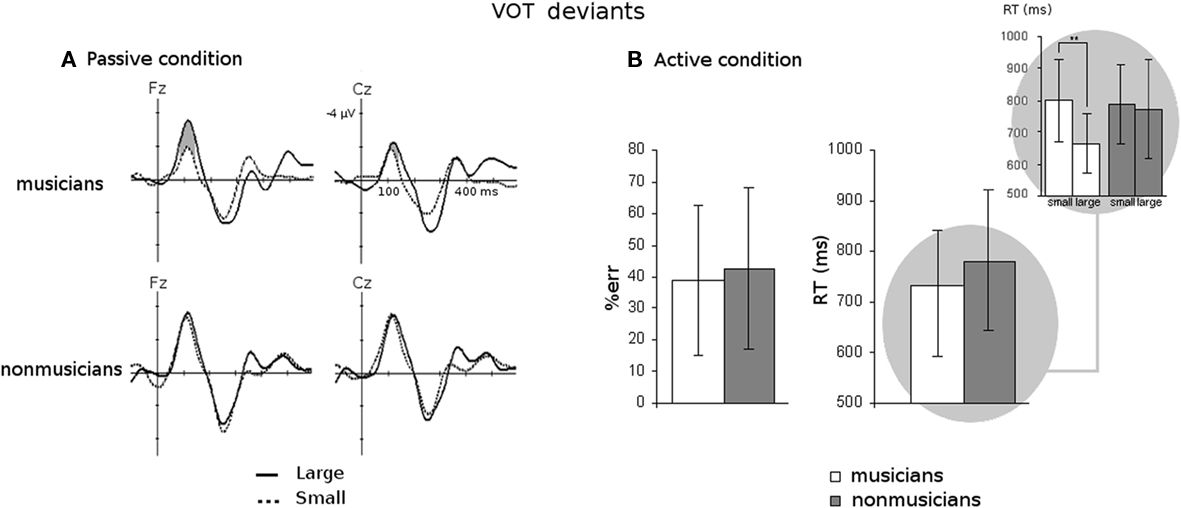

Supporting an interpretation in terms of common processes in music and speech, recent results showed that non-musician native speakers of a quantity language, Finnish, in which duration is a phonemically contrastive cue, pre-attentively and attentively processed the duration of harmonic sounds as efficiently as French musicians and better than French non-musicians (Marie et al., in press b; see Figure 2). Moreover, results of Marie et al. (in press a) also support an interpretation in terms of transfer effects by showing that musicians were more sensitive than non-musicians to segmental variations (consonant or vowel changes; e.g., bán/zán), that is, to abstract phonological representations derived from the processing of acoustic parameters. More generally, this interpretation is also in line with results showing a positive influence of musical skills on phonological processing (Anvari et al., 2002; Slevc and Miyake, 2006; Jones et al., 2009; Moreno et al., 2009). Along these lines, we recently found that musician children (with an average of 4 years of musical training) are more sensitive [larger mismatch negativities (MMNs), lower error rate, and shorter RTs; see Figure 3] than non-musician children to syllabic duration (acoustic processing). Moreover, musician children were also sensitive to small differences in voice onset time (VOT; larger MMNs and shorter RTs for large than for small VOT deviants; see Figure 4). VOT is a fast temporal cue that makes it possible to differentiate “ba” from “pa,” for instance, and that plays an important role in the development of phonological representations. By contrast, the MMNs and RTs recorded from non-musician children were not different for small and large differences in VOT, which, in line with previous results by Phillips et al. (2000) with non-musician adults, was taken to indicate that non-musician children process all changes (whether large or small) as across-phonemic category changes (see Figure 4; Chobert et al., accepted).
Finally, it is interesting to put these results in the perspective of previous findings by Musacchia et al. (2007) showing that Wave delta (∼8 ms post-stimulus onset) of the brainstem evoked response, which is related to the encoding of stimulus onset and is thereby necessary for the encoding of attack and rhythm in both music and speech, is larger in musicians than in non-musicians. Thus, increased sensitivity at low levels of sensory processing may have strong consequences at higher levels of perceptual and cognitive processing. In this respect, it becomes very interesting to simultaneously record brainstem and cortical evoked potentials, as recently done by Musacchia et al. (2008). They compared musicians and non-musicians and found strong correlations between brainstem measures (e.g., pitch encoding, F0), cortical measures (e.g., the slope of the P1–N1 components) and the level of performance on tests of tonal memory (Seashore and MAT-3), thereby showing clearer and stronger brain–behavior relationships in musicians than in non-musicians.

Figure 2. (A) Mismatch negativity (MMN) in Finn non-musicians, French musicians, and French non-musicians passively listening to large and small duration and frequency deviants in harmonic sounds. The MMN to duration deviants was larger in Finn and in French musicians than in French non-musicians. However, only the French musicians showed an enhanced MMN to small frequency deviants. Thus, linguistic and musical expertise similarly influenced the pre-attentive processing of duration but not of frequency deviants. (B) The percentage of errors to small duration and frequency deviants in the active discrimination task was lower for French musicians and Finn non-musicians than for French non-musicians. When attention is focused on the harmonic sounds, Finn non-musicians detected both Small Duration and Frequency deviants better than French non-musicians thereby showing a dissociation between the passive and active listening conditions for the frequency deviants. Adapted from Marie et al. (in press b).

Figure 3. (A) Mismatch negativity (MMN) elicited in musician and non-musician children (8 years old) passively listening to large and small duration deviants in speech sounds. In both cases, the MMN was larger in musician than in non-musician children thereby showing an influence of musical expertise on the pre-attentive processing of the duration of speech sounds. (B) Percentage of errors (%err) and reaction times (RTs) to duration deviants (collapsed across large and small deviants) in the active discrimination task. Percentage of errors was lower and RTs were shorter for musician than for non-musician children. Thus, the active processing of the duration of speech sound is also enhanced in musician compared to non-musician children. Adapted from Chobert et al., accepted.

Figure 4. (A) Mismatch negativity (MMN) elicited in musician and non-musician children (8 years old) passively listening to large and small deviants in voice onset time (VOT). The MMN was larger to large than to small VOT deviants only in musician children. The finding of no difference for large and for small VOT deviants in non-musician children suggests that they did not pre-attentively hear the difference between large and small VOT deviants. (B) Percentage of errors (%err) and reaction times (RTs) to VOT deviants (collapsed across large and small deviants) in the active discrimination task. No difference between musician and non-musician children was found on %err but, as found for MMN amplitude, the deviant size effect was significant on RTs for musician children, with shorter RTs to large than to small deviants, and with no difference for non-musician children. Adapted from Chobert et al., accepted.

In sum, the results reviewed above argue in favor of common processing of acoustic parameters such as frequency and duration in music and speech. Moreover, by showing that improved processing of these acoustic parameters has consequences at a higher level of speech processing (e.g., phonological level, lexical tone processing), they argue for positive transfer of training effects from musical expertise to speech processing. Of course, the challenge is then to try to disentangle the acoustic from the more abstract representations. This is not an easy task since these different aspects are strongly inter-mixed and interactive in speech perception and comprehension. Nevertheless, an interesting perspective for future research is to try to specify the upper limit for transfer effects, that is, whether musical expertise can influence phonological, semantic, syntactic, or pragmatic processing (e.g., Bigand et al., 2001; Patel, 2003, 2008; Koelsch et al., 2004, 2005; Poulin-Charronnat et al., 2005; Steinbeis and Koelsch, 2008; Fedorenko et al., 2009).

Because the results from our group reviewed above were most often obtained from designs in which participants were asked to focus attention on the sounds and because musicians often showed an overall facilitation compared to non-musicians, one could argue that these results reflect a general effect of focused attention (e.g., Fujioka et al., 2006; Moreno et al., 2009; Strait et al., 2010). For instance, in the Marie et al. (in press a) study, musicians detected tone and segmental variations better than non-musicians in all experimental conditions. Similarly, in the Marie et al. (2011) study that manipulated the orientation of attention toward the metric structure of words or toward the semantics of the sentence, musicians also outperformed non-musicians independently of the direction of attention. However, analysis of the ERPs is very informative regarding the influence of attention. In both studies, results showed no influence of musical expertise on the N1 component that is known to be particularly sensitive to selective attention (e.g., Hillyard et al., 1973). Moreover, in the Marie et al. (2011) experiment, the P2 component elicited by unexpected syllabic lengthening was larger in musicians than in non-musicians (see also Atienza et al., 2002; Shahin et al., 2003; Bosnyak et al., 2007) and this differential effect occurred independently of the direction of attention. These results are in line with previous findings from Baumann et al. (2008) showing that the effect of selective attention on the N1 and P2 components elicited by sine waves and harmonic sounds had a different time course and scalp distribution than the effect of musical expertise. Finally, when present (e.g., in Marie et al., in press a), the P3a component, which is taken to reflect the automatic orienting of attention (Courchesne et al., 1975; Squires et al., 1975; Escera et al., 2000a), was of similar amplitude for both musicians and non-musicians.

Taken together, these findings indicate that the effect of musical expertise cannot be reduced to an attention effect. This is not to say, however, that attention plays no role in the results. Rather, musicians may have developed increased abilities to focus attention on sounds and this ability may in turn help them to categorize the sounds and to make the relevant decision. Once again, analysis of the ERPs is revealing in this respect. The findings of shorter latency of the N2/N3 component (categorization process) to tone variations and of shorter latency and larger amplitude of the P3b component (decision process) to tone and segmental variations in musicians than in non-musicians in the Marie et al. (in press a) experiment were interpreted along these lines. Moreover, because metric and semantic incongruities were inter-mixed in both tasks used by Marie et al. (2011), the higher level of performance of musicians in the semantic task was interpreted as revealing less interference (increased ability to focus attention) between the two dimensions (metric and semantic) for musicians than for non-musicians.

Finally, it is also important to note that many results in the neuroscience of music literature have revealed effects of musical expertise on measures of brain activity that are typically considered as reflecting pre-attentive processing. Fascinating results from the groups of Nina Kraus and Jack Gandour have shown an early influence of both musical and linguistic expertise on subcortical activity, as measured by the brainstem evoked responses. For instance, musicians show more robust representations of pitch contour of Mandarin tones than non-musicians, even if none of the participants spoke Mandarin (e.g., Wong et al., 2007). Conversely, native speakers of Mandarin Chinese show more accurate and more robust brainstem encoding of musical intervals than English non-musicians (Bidelman et al., 2011). Finally, the frequency following response (generated primarily in the inferior colliculus) to both speech and music stimuli is larger in musicians than in non-musicians (Musacchia et al., 2007; Bidelman et al., 2009). Thus, subcortical activity related to the encoding of pitch, whether in music or in speech, seems to be modulated by top-down influences related to musical or to linguistic expertise rather than only attention (for the effect of attention on FFR see Galbraith et al., 1998; Fritz et al., 2007).

Similarly, many results have shown that the amplitude of the MMN (Näätänen et al., 1978), typically considered as being pre-attentively generated, is larger in musicians than in non-musicians passively listening to harmonic or speech sounds (e.g., Koelsch et al., 1999; Nager et al., 2003; Tervaniemi et al., 2006). For instance, Koelsch et al. (1999) and, more recently, Marie et al. (in press b) reported enhanced MMN to pitch changes in harmonic tones in musicians compared to non-musicians. There has been a long-lasting controversy about whether the MMN only reflects pre-attentive processing (e.g., Woldorff and Hillyard, 1991; Näätänen et al., 1993) and recent evidence suggests that while the MMN is pre-attentively generated, the amplitude of this component can be modulated by attention (Sussman et al., 2003; Loui et al., 2005). Moreover, dissociations between pre-attentive processing (as reflected by the MMN) and attentive processing (as reflected by ERP components such as the E(R)AN or the N2b) have been reported in several experiments (Sussman et al., 2004; Tervaniemi et al., 2009). For instance, in an experiment by Tervaniemi et al. (2009) designed to compare the processing of harmonic and speech sounds under ignore and attend conditions, the effect of musical expertise was significant on the N2b component but not on the MMN.

As mentioned above, language and music entertain strong relationships not only with attention but also with memory2. The involvement of a working memory (WM) network in musical tasks has been reported in several brain imaging studies (e.g., Janata et al., 2002; Gaab and Schlaug, 2003; Schulze et al., 2009), with larger activation in musicians than in non-musicians. Moreover, common brain regions have been found to be activated during verbal and music short-term memory tasks (Ohnishi et al., 2001; Hickok et al., 2003; Brown et al., 2004; Brown and Martinez, 2007; Koelsch et al., 2009; Gordon et al., 2010; Schön et al., 2010). However, few studies have aimed at directly testing whether musicians show enhanced verbal memory abilities compared to non-musicians. Using behavioral measures, Chan et al. (1998) have shown better verbal memory in musicians than in non-musicians. However, the level of education was a possible confound as it differed between the two groups. More recently, Tierney et al. (2008) reported that musicians can hold more information and/or for longer in auditory memory than non-musicians, and positive correlations have been found between the amount of musical training and verbal WM (Brandler and Rammsayer, 2003; Jakobson et al., 2003; but see Helmbold et al., 2005 for different results). Moreover, Franklin et al. (2008) also reported superior verbal WM performance (on reading span and operation span) when the criteria for selecting musicians and non-musicians were carefully controlled. Importantly, they showed an improvement in long-term verbal memory in musicians that disappeared when the task did not allow for articulatory rehearsal. That musicians and non-musicians can use different strategies to perform the task at hand was also suggested by very recent results from Williamson et al. (2010) in a study aimed at directly comparing short-term memory for verbal and musical pitch materials.
These authors used immediate serial-recall tasks with sequences of four to eight letters or tones and varied phonological and pitch proximity. First, and in line with previous results by Semal et al. (1996), they found that in both cases acoustic similarity was associated with decreased performance in non-musicians, which was taken as evidence for “shared processing or overlap in verbal and musical short-term memory” (p. 172). Second, they found no pitch proximity effect in musicians (i.e., no decrease in recall performance for tones with similar compared to dissimilar pitches), which was taken to result from the use of multimodal strategies (auditory, verbal, and tactile) in this group. Finally, and directly related to our concerns, results of Experiment 3 showed that the phonological similarity effect (i.e., impaired performance for phonologically similar compared to dissimilar letters) was not significantly different for musicians and non-musicians, thereby suggesting that the storage of verbal items in memory is not influenced by musical expertise.

By contrast, George and Coch (2011) used several subtests of the Test of Memory and Learning (TOMAL, Reynolds and Voress, 2007) and found that musicians scored higher than non-musicians across subtests (including digit forward and digit backward as well as letter forward and letter backward). However, the level of performance on each subtest was not detailed, and as mentioned above (and as acknowledged by the authors), the overall level of performance in the music group may be indicative of a general attention effect. Interestingly, the authors also reported that the P300 to deviant tones in an oddball paradigm was larger in amplitude and shorter in latency for musicians than for non-musicians, which they interpreted as more efficient and faster updating of WM (Donchin and Coles, 1988) with increased musical expertise.

The level of performance in same-different tasks, in which participants have to judge whether two sequences of sounds are the same or different, is also linked to the ability to maintain several sounds in WM. In this respect, results of Marie et al. (in press a) showing that musicians outperformed non-musicians in a same-different task on two successively presented sequences of four Mandarin monosyllabic words can also be interpreted as reflecting enhanced WM abilities in musicians compared to non-musicians. Moreover, the P300 component was also shorter in latency and larger in amplitude in musicians than in non-musicians which, as in the study of George and Coch (2011), can be taken as an index of faster and more efficient updating of WM in musicians than in non-musicians. However, even if the context updating hypothesis is a powerful and interesting account of the functional significance of the P300 component (Donchin and Coles, 1988), other interpretations, based on the finding of strong correlations between P300 and RTs, also link P300 to decision processes (e.g., Renault et al., 1982; Picton, 1992). Thus, musicians may make faster decisions and be more confident in their responses than non-musicians. Similarly, when a tonal variation was included in the sequence, the latency of the N2 component, which is typically related to categorization processes, was shorter in musicians than in non-musicians. Thus, while the same-different task certainly recruits WM, the different aspects of the results cannot be entirely explained by enhanced WM in musicians compared to non-musicians. Finally, musicians also outperformed non-musicians in the on-line detection of unusual syllabic lengthening, a task that did not specifically mobilize WM (Marie et al., 2011).

Taken together, these results give a somewhat mixed picture of whether musical expertise influences verbal short-term memory. Further work is clearly needed to clarify the intricate connections between general cognitive functions such as attention and memory and the sensory, perceptive, and cognitive abilities that are shaped by musical training. In this perspective, and to design well-controlled transfer experiments, it is necessary to include standardized tests of attention and memory with good reliability (e.g., Franklin et al., 2008; Strait et al., 2010) as well as perceptual tests (e.g., perceptual threshold and discrimination) and tests of general intelligence (e.g., Schellenberg and Peretz, 2008). Moreover, beyond the careful matching of participant samples, several tricky issues require careful consideration: controlling for the materials (e.g., physical features and familiarity; Tervaniemi et al., 2009), the level of difficulty of the tasks at hand, and the type of tasks to be used in a given experiment (e.g., Sadakata et al., 2010 for the use of identification tasks).

In the final section of this review, we will briefly present an overview of an on-going research project with children3 in which we tried to take these remarks into account to test different facets of transfer effects from music to speech processing and to determine whether musical training can, together with speech therapy interventions, help children with dyslexia (Dys) to compensate for their language deficits. As discussed above, the hope is that, by increasing the sensitivity to basic acoustic parameters such as pitch or duration, musical expertise will facilitate the building-up of higher-order phonological representations (e.g., phonemic categories) that are necessary for reading and that are known to be deficient in Dys (Swan and Goswami, 1997; Anvari et al., 2002; Foxton et al., 2003; Overy et al., 2003; Gaab et al., 2005; Tallal and Gaab, 2006; Santos et al., 2007). To this end, we used a longitudinal approach, as several authors have recently done (e.g., Hyde et al., 2009; Moreno et al., 2009; Herdener et al., 2010), to address the question of whether the effects of musical expertise result from intensive musical practice or from specific predispositions for music.

This longitudinal study started in September 2008 after we received all the agreements from the academy inspector, the local school authorities, the teachers, and the parents. Out of the 70 children who participated in the study, 37 were normal readers (NR) and 33 were children with Dys. All children had similar middle to low socioeconomic backgrounds (as determined from the profession of the parents) and none of the children, and none of their parents, had formal training in music or painting. All children were attending the third grade at the beginning of the experiment and the 37 children who remained at the end of the experiment (29 NR and 8 Dys) were attending the fifth grade. As can be expected from such a long-lasting experimental program (two school years from September 2008 until June 2010), the attrition rate was unfortunately very high for dyslexic children (76%; NR: 22%).
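The attrition figures quoted above can be re-derived from the group sizes given in the text (37 NR and 33 Dys at the start, 29 NR and 8 Dys at the end). The following is a minimal sketch of that arithmetic, not the authors' analysis code; the function name `attrition_rate` and the `groups` dictionary are introduced here purely for illustration.

```python
def attrition_rate(enrolled: int, remaining: int) -> float:
    """Return the percentage of enrolled participants who dropped out."""
    if enrolled <= 0 or not 0 <= remaining <= enrolled:
        raise ValueError("need enrolled > 0 and 0 <= remaining <= enrolled")
    return 100.0 * (enrolled - remaining) / enrolled


# Group sizes taken from the text: (enrolled at Test 1, remaining at Test 3).
groups = {"NR": (37, 29), "Dys": (33, 8)}

rates = {name: attrition_rate(enrolled, remaining)
         for name, (enrolled, remaining) in groups.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.0f}% attrition")
```

Rounded to the nearest percent, this gives 22% for normal readers (8 of 37 children lost) and 76% for children with dyslexia (25 of 33 lost), in agreement with the values reported in the text.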

We used a Test 1 – Training – Test 2 – Training – Test 3 procedure. During Test 1 (September–October 2008) each child participated in two testing sessions, each lasting 2 h. One included standardized neuropsychological assessments with subtests of the WISC IV (Wechsler, 2003; including Digit span (direct, reverse, total), similarities and symbols), the Raven matrices (Raven, 1962), the NEPSY (Korkman et al., 1998; including tests of visual and auditory attention, orientation, and visuo-motor abilities), the ODEDYS (Jacquier-Roux et al., 2005; with reading tests of regular and irregular words and of pseudo-words) and the Alouette test (Lefavrais, 1967), typically used to assess text reading abilities. Writing abilities were tested using a graphics tablet that measures several parameters related to writing (e.g., pressure, velocity). Two perceptual tests were specifically designed to assess frequency and rhythmic thresholds using a just noticeable difference (JND; Grassi and Soranzo, 2009) procedure, and one test was aimed at assessing the children’s singing abilities. Finally, children were also tested using a simple RT task to assess speed of information processing and motor processing.

The other session included four experiments specifically designed to test for the influence of musical training at different levels of speech perception and comprehension using behavioral and/or electrophysiological data. In the first experiment, aimed at testing pre-attentive speech processing, we recorded the MMN while children were presented with the same materials (deviant syllables that differed in duration, frequency and VOT from the standard syllable) as in the experiment by Chobert et al. (accepted) described above. The aim was to determine whether musical training would induce a pattern of results (i.e., increased sensitivity to duration and VOT deviants) similar to that found for musician children with an average of 4 years of musical training. In the second experiment, we examined the implicit learning of statistical regularities in a sung artificial language. Very recent results from our laboratory (François and Schön, in press) have shown that musical expertise in adults improves the learning of the musical and linguistic structure of a continuous artificial sung language. In children, we hypothesized that musical training should improve segmentation, as revealed by subsequent recognition of items, and that this enhancement should translate into a larger N400 to unfamiliar items. The third experiment aimed at testing the attentive perception of speech in noise. To this end we presented VCV patterns (ABA, APA, AVA, and ADA) from Ziegler et al. (2005) in an ABX design in silence or in noise. Based on previous results at the subcortical level (e.g., Parbery-Clark et al., 2009a,b) we expected musical training to facilitate the perception of these VCV patterns in noise. Finally, and in order to test for the upper limit of transfer effects, the last experiment4 aimed at determining whether musical training would influence speech comprehension.
Children listened to semantically congruous and incongruous sentences presented at normal or fast (accelerated) speech rates. Again, we expected musical training to increase comprehension (as seen from the N400 effect), specifically at the fast speech rate.

Based upon the results in some of the most relevant tests performed during the neuropsychological and electrophysiological sessions in Test 1, children were pseudo-randomly assigned to music or to painting training so as to ensure that the two groups of children were homogeneous and did not differ before training (Schellenberg, 2001). Moreover, painting training was used to control for between-group differences in motivation and cognitive stimulation (Schellenberg, 2001) and to test specific hypotheses related to the improvement of writing abilities. The first period of music or painting training5 started in November 2008 and ended in May 2009 (except for holiday periods) for the first school year. Right after the end of training, Test 2 was conducted using the very same procedure as in Test 1 and including both the neuropsychological and the electrophysiological sessions, each with the very same tests. The second period of training started in October 2009 and lasted until May 2010 for the second school year. Children were tested for the third time (Test 3) right after the end of the training, again using the very same procedure as in Tests 1 and 2. Test 3 took place in May–June 2010. Since then we have been processing this large amount of data and we look forward to presenting the results to the scientific community in the near future.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The experiments reviewed in this paper were conducted at the “Institut de Neurosciences Cognitives de la Méditerranée” (INCM), CNRS, and Université de la Méditerranée, Marseille, France. This research was supported by a grant from the ANR-Neuro (#024-01) to Mireille Besson. Julie Chobert is a PhD student also supported by the ANR-Neuro (#024-01).

Abrams, D. A., Bhatara, A., Ryali, S., Balaban, E., Levitin, D. J., and Menon, V. (2010). Decoding temporal structure in music and speech relies on shared brain resources but elicits different fine-scale spatial patterns. Cereb. Cortex. doi: 10.1093/cercor/bhq198. [Epub ahead of print].

Amunts, K., Schlaug, G., Jancke, L., Steinmetz, H., Schleicher, A., and Zilles, K. (1997). Hand skills covary with the size of motor cortex: a macrostructural adaptation. Hum. Brain Mapp. 5, 206–215.

Anvari, S. H., Trainor, L. J., Woodside, J., and Levy, B. A. (2002). Relations among musical skills, phonological processing, and early reading ability in preschool children. J. Exp. Child Psychol. 83, 111–130.

Atienza, M., Cantero, J. L., and Dominguez-Marin, E. (2002). The time course of neural changes underlying auditory perceptual learning. Learn. Mem. 9, 138–150.

Baumann, S., Meyer, M., and Jäncke, L. (2008). Enhancement of auditory-evoked potentials in musicians reflects an influence of expertise but not selective attention. J. Cogn. Neurosci. 20, 1–12.

Bengtsson, S. L., Nagy, Z., Skare, S., Forsman, L., Forssberg, H., and Ullén, F. (2005). Extensive piano practicing has regionally specific effects on white matter development. Nat. Neurosci. 8, 1148–1150.

Bermudez, P., Lerch, J. P., Evans, A. C., and Zatorre, R. J. (2009). Neuroanatomical correlates of musicianship as revealed by cortical thickness and voxel-based morphometry. Cereb. Cortex 19, 1583–1596.

Besson, M., and Faïta, F. (1995). An event-related potential (ERP) study of musical expectancy: comparison of musicians with non-musicians. J. Exp. Psychol. Hum. Percept. Perform. 21, 1278–1296.

Bidelman, G. M., Gandour, J. T., and Krishnan, A. (2009). Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem. J. Cogn. Neurosci. 29, 13165–13171.

Bidelman, G. M., Gandour, J. T., and Krishnan, A. (2011). Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem. J. Cogn. Neurosci. 23, 425–434.

Bidelman, G. M., and Krishnan, A. (2010). Effects of reverberation on brainstem representation of speech in musicians and non-musicians. Brain Res. 1355, 112–125.

Bidelman, G. M., Krishnan, A., and Gandour, J. T. (2010). Enhanced brainstem encoding predicts musicians’ perceptual advantages with pitch. Eur. J. Neurosci. 33, 530–538.

Bigand, E., Tillmann, B., Poulin, B., D’Adamo, D. A., and Madurell, F. (2001). The effect of harmonic context on phoneme monitoring in vocal music. Cognition 81, 11–20.

Bosnyak, D. J., Gander, P. E., and Roberts, L. E. (2007). Does auditory discrimination training modify representations in both primary and secondary auditory cortex? Int. Congr. Ser. 1300, 25–28.

Boucourechliev, A. (1993). Le Langage Musical. Collections Les Chemins de la Musique. Paris: Fayard.

Brandler, S., and Rammsayer, T. H. (2003). Differences in mental abilities between musicians and non-musicians. Psychol. Music 31, 123–138.

Brown, S. (2003). Biomusicology and three biological paradoxes about music. Bull. Psychol. Arts 4, 15–17.

Brown, S., and Martinez, M. J. (2007). Activation of premotor vocal areas during musical discrimination. Brain Cogn. 63, 59–69.

Brown, S., Martinez, M. J., and Parsons, L. M. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport 15, 2033–2037.

Chan, A. S., Ho, Y. C., and Cheung, M. C. (1998). Music training improves verbal memory. Nature 396, 128.

Courchesne, E., Hillyard, S. A., and Galambos, R. (1975). Stimulus novelty, task relevance and the visual evoked potential in man. Electroencephalogr. Clin. Neurophysiol. 39, 131–143.

Darwin, C. (1871/1981). “The descent of man and selection in relation to sex,” in Facsimile of the First Edition, Original Edn. (Princeton NJ: Princeton University Press). [John Murray, London].

Delogu, F., Lampis, G., and Olivetti Belardinelli, M. (2006). Music-to-language transfer effect: may melodic ability improve learning of tonal languages by native nontonal speakers? Cogn. Process. 7, 203–207.

Delogu, F., Lampis, G., and Olivetti Belardinelli, M. (2010). From melody to lexical tone: musical ability enhances specific aspects of foreign language perception. Eur. J. Cogn. Psychol. 22, 46–61.

Descartes. (1618/1987). Abrégé de Musique. Compendium musicae. Épiméthée. Paris: Presses Universitaires de France.

Donchin, E., and Coles, M. G. H. (1988). Is the P300 component a manifestation of context updating? Behav. Brain Sci. 11, 357–374.

Duncan-Johnson, C., and Donchin, E. (1977). On quantifying surprise, the variation of event-related potentials with subjective probability. Psychophysiology 14, 456–467.

Eggermont, J., and Ponton, C. (2002). The neurophysiology of auditory perception: from single-units to evoked potentials. Audiol. Neurootol. 7, 71–99.

Elbert, T., Pantev, C., Wienbruch, C., Rockstroh, B., and Taub, E. (1995). Increased cortical representation of the fingers of the left hand in string players. Science 270, 305–307.

Escera, C., Alho, K., Schröger, E., and Winkler, I. (2000). Involuntary attention and distractibility as evaluated with event-related brain potentials. Audiol. Neurootol. 5, 151–166.

Federmeier, K. D., Wlotko, E. W., and Meyer, A. M. (2008). What’s “right” in language comprehension: event-related potentials reveal right hemisphere language capabilities. Lang. Linguist. Compass 2, 1–17.

Fedorenko, E., Patel, A. D., Casasanto, D., Winawer, J., and Gibson, E. (2009). Structural integration in language and music: evidence for a shared system. Mem. Cogn. 37, 1–9.

Foxton, J. M., Talcott, J. B., Witton, C., Brace, H., McIntyre, F., and Griffiths, T. D. (2003). Reading skills are related to global, but not local, acoustic pattern perception. Brief communication. Nat. Neurosci. 6, 343–344.

François, C., and Schön, D. (in press). Musical expertise boosts implicit learning of both musical and linguistic structures. Cereb. Cortex. doi: 10.1093/cercor/bhr022 [Epub ahead of print].

Franklin, M. S., Rattray, K., Moore, K. S., Moher, J., Yip, C., and Jonides, J. (2008). The effects of musical training on verbal memory. Psychol. Music 36, 353–365.

Fritz, J. B., Elhilali, M., and Shamma, S. A. (2007). Adaptive changes in cortical receptive fields induced by attention to complex sounds. J. Neurophysiol. 98, 2337–2346.

Fujioka, T., Ross, B., Kakigi, R., Pantev, C., and Trainor, L. (2006). One year of musical training affects development of auditory cortical-evoked fields in young children. Brain 129, 2593–2608.

Fujioka, T., Trainor, L. J., Ross, B., Kakigi, R., and Pantev, C. (2004). Musical training enhances automatic encoding of melodic contour and interval structure. J. Cogn. Neurosci. 16, 1010–1021.

Gaab, N., Gabrieli, J. D. E., Deutsch, G. K., Tallal, P., and Temple, E. (2007). Neural correlates of rapid auditory processing are disrupted in children with developmental dyslexia and ameliorated with training: an fMRI study. Restor. Neurol. Neurosci. 25, 295–310.

Gaab, N., and Schlaug, G. (2003). The effect of musicianship on pitch memory in performance matched groups. Neuroreport 14, 2291–2295.

Gaab, N., Tallal, P., Kim, H., Lakshminarayanan, K., Archie, J. J., Glover, G. H., and Gabrieli, J. D. (2005). Neural correlates of rapid spectrotemporal processing in musicians and nonmusicians. Ann. N. Y. Acad. Sci. 1060, 82–88.

Galbraith, G. C., Bhuta, S. M., Choate, A. K., Kitahara, J. M., and Mullen, T. A. Jr. (1998). Brain stem frequency-following response to dichotic vowels during attention. Neuroreport 9, 1889–1893.

Gaser, C., and Schlaug, G. (2003). Brain structures differ between musicians and non-musicians. J. Neurosci. 23, 9240–9245.

George, E. M., and Coch, D. (2011). Music training and working memory: an ERP study. Neuropsychologia 49, 1083–1094.

Gordon, R., Schön, D., Magne, C., Astésano, C., and Besson, M. (2010). Words and melody are intertwined in perception of sung words: EEG and behavioral evidence. PLoS ONE 5, 9889. doi: 10.1371/journal.pone.0009889

Gottfried, T. L., Staby, A. M., and Ziemer, C. J. (2004). Musical experience and Mandarin tone discrimination and imitation. J. Acoust. Soc. Am. 115, 2545.

Grassi, M., and Soranzo, A. (2009). MLP: a MATLAB toolbox for rapid and reliable auditory threshold estimations. Behav. Res. Methods 41, 20–28.

Griffiths, T. D., and Warren, J. D. (2002). The planum temporale as a computational hub. Trends Neurosci. 25, 348–353.

Groussard, M., La Joie, R., Rauchs, G., Landeau, B., Chételat, G., Viader, F., Desgranges, B., Eustache, F., and Platel, H. (2010). When music and long-term memory interact: effects of musical expertise on functional and structural plasticity in the hippocampus. PLoS ONE 5, 10:e13225. doi:10.1371/journal.pone.0013225

Helmbold, N., Rammsayer, T., and Altenmüller, E. (2005). Differences in primary mental abilities between musicians and non-musicians. J. Individ. Diff. 26, 74–85.

Herdener, M., Esposito, F., di Salle, F., Boller, C., Hilti, C. C., Habermeyer, B., Scheffler, K., Wetzel, S., Seifritz, E., and Cattapan-Ludewig, K. (2010). Musical training induces functional plasticity in human hippocampus. J. Neurosci. 30, 1377–1384.

Hickok, G., Buchsbaum, B., Humphries, C., and Muftuler, T. (2003). Auditory–motor interaction revealed by fMRI: speech, music, and working memory in area Spt. J. Cogn. Neurosci. 15, 673–682.

Hickok, G., and Poeppel, D. (2007). The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402.

Hillyard, S. A., Hink, R. F., Schwent, V. L., and Picton, T. W. (1973). Electrical signs of selective attention in the human brain. Science 182, 177–180.

Hutchinson, S., Lee, L. H. L., Gaab, N., and Schlaug, G. (2003). Cerebellar volume: gender and musicianship effects. Cereb. Cortex 13, 943–949.

Hyde, K. L., Lerch, J., Norton, A., Forgeard, M., Winner, E., Evans, A. C., and Schlaug, G. (2009). Musical training shapes structural brain development. J. Neurosci. 29, 3019–3025.

Imfeld, A., Oechslin, M. S., Meyer, M., Loenneker, T., and Jancke, L. (2009). White matter plasticity in the corticospinal tract of musicians: a diffusion tensor imaging study. Neuroimage 46, 600–607.

Jacquier-Roux, M., Valdois, S., and Zorman, M. (2005). Odédys. Outil de Dépistage des Dyslexies. Grenoble: Cogni-Sciences.

Jakobson, L. S., Cuddy, L. L., and Kilgour, A. R. (2003). Time tagging: a key to musicians’ superior memory. Music Percept. 20, 307–313.

Janata, P., Tillmann, B., and Bharucha, J. J. (2002). Listening to polyphonic music recruits domain-general attention and working memory circuits. Cogn. Affect. Behav. Neurosci. 2, 121–140.

Jancke, L., Wustenberg, T., Scheich, H., and Heinze, H. J. (2002). Phonetic perception and the temporal cortex. Neuroimage 15, 733–746.

Jones, J. L., Lucker, J., Zalewski, C., Brewer, C., and Drayna, D. (2009). Phonological processing in adults with deficits in musical pitch recognition. J. Commun. Disord. 42, 226–234.

Keenan, J. P., Halpern, A. R., Thangaraj, V., Chen, C., Edelman, R. R., and Schlaug, G. (2001). Absolute pitch and planum temporale. Neuroimage 14, 1402–1408.

Kishon-Rabin, L., Amir, O., Vexler, Y., and Zaltz, Y. (2001). Pitch discrimination: are professional musicians better than non-musicians? J. Basic Clin. Physiol. Pharmacol. 12, 125–143.

Koelsch, S., Gunter, T. C., Wittfoth, M., and Sammler, D. (2005). Interaction between syntax processing in language and in music: an ERP study. J. Cogn. Neurosci. 17, 1565–1579.

Koelsch, S., Kasper, E., Sammler, D., Schulze, K., Gunter, T., and Friederici, A. D. (2004). Music, language and meaning: brain signatures of semantic processing. Nat. Neurosci. 7, 302–307.

Koelsch, S., Schmidt, B. H., and Kansok, J. (2002). Influences of musical expertise on the ERAN: an ERP-study. Psychophysiology 39, 657–663.

Koelsch, S., Schröger, E., and Tervaniemi, M. (1999). Superior pre-attentive auditory processing in musicians. Neuroreport 10, 1309–1313.

Koelsch, S., Schulze, K., Sammler, D., Fritz, T., Muller, K., and Gruber, O. (2009). Functional architecture of verbal and tonal working memory: an FMRI study. Hum. Brain Mapp. 30, 859–873.

Korkman, M., Kirk, U., and Kemp, S. (1998). Manual for the NEPSY: A Developmental Neuropsychological Assessment. San Antonio, TX: Psychological Testing Corporation.

Kraus, N., and Chandrasekaran, B. (2010). Music training for the development of auditory skills. Nat. Rev. Neurosci. 11, 599–605.

Lahav, A., Saltzman, E., and Schlaug, G. (2007). Action representation of sound: audiomotor recognition network while listening to newly acquired actions. J. Neurosci. 27, 308–314.

Lee, C. H., and Hung, T. H. (2008). Identification of Mandarin tones by English speaking musicians and non-musicians. J. Acoust. Soc. Am. 124, 3235–3248.

Levitin, D. J., and Menon, V. (2003). Musical structure is processed in “language” areas of the brain: a possible role for Brodmann area 47 in temporal coherence. Neuroimage 20, 2142–2152.

Loui, P., Grent-’t-Jong, T., Torpey, D., and Woldorff, M. (2005). Effects of attention on the neural processing of harmonic syntax in Western music. Cogn. Brain Res. 25, 678–687.

Luders, E., Gaser, C., Jancke, L., and Schlaug, G. (2004). A voxel-based approach to gray-matter asymmetries. Neuroimage 22, 656–664.

Maess, B., Koelsch, S., Gunter, T. C., and Friederici, A. D. (2001). Musical syntax is processed in Broca’s area: an MEG study. Nat. Neurosci. 4, 540–545.

Magne, C., Astésano, C., Aramaki, M., Ystad, S., Kronland-Martinet, R., and Besson, M. (2007). Influence of syllabic lengthening on semantic processing in spoken French: behavioral and electrophysiological evidence. Cereb. Cortex 17, 2659–2668.

Marie, C., Delogu, F., Lampis, G., Olivetti Belardinelli, M., and Besson, M. (in press a). Influence of musical expertise on segmental and tonal processing in Mandarin Chinese. J. Cogn. Neurosci. doi: 10.1162/jocn.2010.21585

Marie, C., Kujala, T., and Besson, M. (in press b). Musical and linguistic expertise influence preattentive and attentive processing of non-speech sounds. Cortex. doi: 10.1016/j.cortex.2010.11.006

Marie, C., Magne, C., and Besson, M. (2011). Musicians and the metric structure of words. J. Cogn. Neurosci. 23, 294–305.

Marques, C., Moreno, S., Castro, S. L., and Besson, M. (2007). Musicians detect pitch violation in a foreign language better than non-musicians: behavioural and electrophysiological evidence. J. Cogn. Neurosci. 19, 1453–1463.

Micheyl, C., Delhommeau, K., Perrot, X., and Oxenham, A. J. (2006). Influence of musical learning and psychoacoustical training on pitch discrimination. Hear. Res. 219, 36–47.

Moreno, S., Marques, C., Santos, A., Santos, M., Castro, S. L., and Besson, M. (2009). Musical training influences linguistic abilities in 8-year-old children: more evidence for brain plasticity. Cereb. Cortex 19, 712–723.

Musacchia, G., Sams, M., Skoe, E., and Kraus, N. (2007). Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc. Natl. Acad. Sci. 104, 15894–15898.

Musacchia, G., Strait, D., and Kraus, N. (2008). Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and nonmusicians. Hear. Res. 241, 34–42.

Näätänen, R., Gaillard, A. W. K., and Mäntysalo, S. (1978). Early selective attention effect on evoked potential reinterpreted. Acta Psychol. (Amst.) 42, 312–329.

Näätänen, R., Paavilainen, P., Tiitinen, H., Jiang, D., and Alho, K. (1993). Attention and mismatch negativity. Psychophysiology 30, 436–450.

Nager, W., Kohlmetz, C., Altenmüller, E., Rodriguez-Fornells, A., and Münte, T. F. (2003). The fate of sounds in conductor’s brains: an ERP study. Cogn. Brain Res. 17, 83–93.

Newman, E. (1905/1969). “Herbert Spencer and the origin of music,” in Musical Studies, ed. Newman (New York: Haskell House Publishers), 189–216.

Ohnishi, T., Matsuda, H., Asada, T., Aruga, M., Hirakata, M., Nishikawa, M., Katoh, A., and Imabayashi, E. (2001). Functional anatomy of musical perception in musicians. Cereb. Cortex 11, 754–760.

Overy, K. (2000). Dyslexia, temporal processing and music: the potential of music as an early learning aid for dyslexic children. Psychol. Music 28, 218–229.

Overy, K., Nicolson, R. I., Fawcett, A. J., and Clarke, E. F. (2003). Dyslexia and music: measuring musical timing skills. Dyslexia 9, 18–36.

Pallone, G., Boussard, P., Daudet, L., Guillemain, P., and Kronland-Martinet, R. (1999). “A wavelet based method for audio-video synchronization in broadcasting applications,” in Proceedings of the Conference on Digital Audio Effects DAFx-99 1999, Trondheim, 59–62.

Pantev, C., Oostenveld, R., Engelien, A., Ross, B., Roberts, L. E., and Hoke, M. (1998). Increased auditory cortical representation in musicians. Nature 392, 811–814.

Parbery-Clark, A., Skoe, E., Lam, C., and Kraus, N. (2009a). Musician enhancement for speech in noise. Ear Hear. 30, 653–661.

Parbery-Clark, A., Skoe, E., and Kraus, N. (2009b). Musical experience limits the degradative effects of background noise on the neural processing of sound. J. Neurosci. 29, 14100–14107.

Phillips, C., Pellathy, T., Marantz, A., Yellin, E., Wexler, K., Poeppel, D., McGinnis, M., and Roberts, T. (2000). Auditory cortex accesses phonological categories: an MEG mismatch study. J. Cogn. Neurosci. 12, 1038–1055.

Picton, T. W. (1992). The P300 wave of the human event-related potential. J. Clin. Neurophysiol. 9, 456–479.

Poulin-Charronnat, B., Bigand, E., Madurell, F., and Peereman, R. (2005). Musical structure modulates semantic priming in vocal music. Cognition 94, B67–B78.

Renault, B., Ragot, R., Lesevre, N., and Remond, A. (1982). Onset and offset of brain events as indices of mental chronometry. Science 215, 1413–1415.

Reynolds, C. R., and Voress, J. (2007). Test of Memory and Learning (TOMAL-2), 2nd Edn. Austin, TX: Pro-Ed.

Rugg, M. D., and Coles, M. G. H. (1995). “The ERP and cognitive psychology: conceptual issues,” in Electrophysiology of Mind: Event Related Brain Potentials and Cognition, eds M. D. Rugg, and M. G. H. Coles (Oxford: Oxford University Press), 27–39.

Sadakata, M., van der Zanden, L., and Sekiyama, K. (2010). “Influence of musical training on perception of L2 speech,” in 11th Annual Conference of the International Speech Communication Association, Makuhari, 118–121.

Santos, A., Joly-Pottuz, B., Moreno, S., Habib, M., and Besson, M. (2007). Behavioural and event-related potential evidence for pitch discrimination deficit in dyslexic children: improvement after intensive phonic intervention. Neuropsychologia 45, 1080–1090.

Schellenberg, E. G., and Peretz, I. (2008). Music, language and cognition: unresolved issues. Trends Cogn. Sci. 12, 45–46.

Schlaug, G., Jäncke, L., Huang, Y., Staiger, J. F., and Steinmetz, H. (1995a). Increased corpus callosum size in musicians. Neuropsychologia 33, 1047–1055.

Schlaug, G., Jäncke, L., Huang, Y., and Steinmetz, H. (1995b). In vivo evidence of structural brain asymmetry in musicians. Science 267, 699–701.

Schmithorst, V. J., and Wilke, M. (2002). Differences in white matter architecture between musicians and non-musicians: a diffusion tensor imaging study. Neurosci. Lett. 321, 57–60.

Schneider, P., Scherg, M., Dosch, H. G., Specht, H. J., Gutschalk, A., and Rupp, A. (2002). Morphology of Heschl’s gyrus reflects enhanced activation in the auditory cortex of musicians. Nat. Neurosci. 5, 688–694.

Schneider, P., Sluming, V., Roberts, N., Scherg, M., Goebel, R., Specht, H. J., Dosch, H. G., Bleeck, S., Stippich, C., and Rupp, A. (2005). Structural and functional asymmetry of lateral Heschl’s gyrus reflects pitch perception preference. Nat. Neurosci. 8, 1241–1247.

Schön, D., Gordon, R., Campagne, A., Magne, C., Astesano, C., Anton, J. L., and Besson, M. (2010). More evidence for similar cerebral networks in language, music and song perception. Neuroimage 51, 450–461.

Schön, D., Magne, C., and Besson, M. (2004). The music of speech: music facilitates pitch processing in language. Psychophysiology 41, 341–349.

Schulze, K., Gaab, N., and Schlaug, G. (2009). Perceiving pitch absolutely: comparing absolute and relative pitch possessors in a pitch memory task. BMC Neurosci. 10, 106. doi: 10.1186/1471-2202-10-106

Semal, C., Demany, L., Ueda, K., and Halle, P. A. (1996). Speech versus nonspeech in pitch memory. J. Acoust. Soc. Am. 100, 1132–1140.

Shahin, A., Bosnyak, D. J., Trainor, L. J., and Roberts, L. E. (2003). Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J. Neurosci. 23, 5545–5552.

Slevc, L. R., and Miyake, A. (2006). Individual differences in second-language proficiency: does musical ability matter? Psychol. Sci. 17, 675–681.

Spiegel, M. F., and Watson, C. S. (1984). Performance on frequency discrimination tasks by musicians and nonmusicians. J. Acoust. Soc. Am. 76, 1690–1695.

Squires, K. C., Squires, N. K., and Hillyard, S. A. (1975). Decision-related cortical potentials during an auditory signal detection task with cued observation intervals. J. Exp. Psychol. Hum. Percept. Perform. 1, 268–279.

Steinbeis, N., and Koelsch, S. (2008). Comparing the processing of music and language meaning using EEG and fMRI provides evidence for similar and distinct neural representations. PLoS One 3, e2226. doi: 10.1371/journal.pone.0002226

Strait, D. L., Kraus, N., Parbery-Clark, A., and Ashley, R. (2010). Musical experience shapes top-down auditory mechanisms: evidence from masking and auditory attention performance. Hear. Res. 261, 22–29.

Sussman, E., Kujala, T., Halmetoja, J., Lyytinen, H., Alku, P., and Näätänen, R. (2004). Automatic and controlled processing of acoustic and phonetic contrasts. Hear. Res. 190, 128–140.

Sussman, E., Winkler, I., and Wang, W. (2003). MMN and attention: competition for deviance detection. Psychophysiology 40, 430–435.

Swan, D., and Goswami, U. (1997). Phonological awareness deficits in developmental dyslexia and the phonological representations hypothesis. J. Exp. Child Psychol. 66, 18–41.

Tallal, P., and Gaab, N. (2006). Dynamic auditory processing, musical experience and language development. Trends Neurosci. 29, 382–390.

Tervaniemi, M., Castaneda, A., Knoll, M., and Uther, M. (2006). Sound processing in amateur musicians and nonmusicians: event-related potential and behavioral indices. Neuroreport 17, 1225–1228.

Tervaniemi, M., Kruck, S., De Baene, W., Schröger, E., Alter, K., and Friederici, A. D. (2009). Top-down modulation of auditory processing: effects of sound context, musical expertise and attentional focus. Eur. J. Neurosci. 30, 1636–1642.

Tierney, A., Bergeson, T. R., and Pisoni, D. B. (2008). Effects of early musical experience on auditory sequence memory. Empir. Musicol. Rev. 3, 178–186.

Trainor, L. J., Desjardins, R. N., and Rockel, C. (1999). A comparison of contour and interval processing in musicians and nonmusicians using event-related potentials. Aust. J. Psychol. 51, 147–153.

Vigneau, M., Beaucousin, V., Herve, P. Y., Duffau, H., Crivello, F., Houde, O., Mazoyer, B., and Tzourio-Mazoyer, N. (2006). Meta-analyzing left hemisphere language areas: phonology, semantics, and sentence processing. Neuroimage 30, 1414–1432.

Vuust, P., Roepstorff, A., Wallentin, M., Mouridsen, K., and Ostergaard, L. (2006). It don’t mean a thing. Keeping the rhythm during polyrhythmic tension, activates language areas (BA47). Neuroimage 31, 832–841.

Wechsler, D. (2003). WISC-IV: Technical and Interpretive Manual. San Antonio, TX: The Psychological Corporation.

Williamson, V. J., Baddeley, A. D., and Hitch, G. J. (2010). Musicians’ and nonmusicians’ short-term memory for verbal and musical sequences: comparing phonological similarity and pitch proximity. Mem. Cognit. 38, 163–175.

Woldorff, M. G., and Hillyard, S. A. (1991). Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalogr. Clin. Neurophysiol. 79, 170–191.

Wong, P. C. M., Skoe, E., Russo, N. M., Dees, T., and Kraus, N. (2007). Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat. Neurosci. 10, 420–422.

Keywords: transfer effects, music training, speech processing, passive listening, mismatch negativity, active discrimination, attention, working memory

Citation: Besson M, Chobert J and Marie C (2011) Transfer of training between music and speech: common processing, attention, and memory. Front. Psychology 2:94. doi: 10.3389/fpsyg.2011.00094

Received: 10 March 2011;

Paper pending published: 05 April 2011;

Accepted: 29 April 2011;

Published online: 12 May 2011.

Edited by: Lutz Jäncke, University of Zurich, Switzerland

Reviewed by: Psyche Loui, Beth Israel Deaconess Medical Center/Harvard Medical School, USA

Copyright: © 2011 Besson, Chobert and Marie. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Mireille Besson, Institut de Neurosciences Cognitives de la Méditerranée, CNRS, Université de la Méditerranée, 31-Chemin Joseph Aiguier, 13402 Marseille CEDEX 20, France. e-mail: mireille.besson@incm.cnrs-mrs.fr

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
