- 1 Aulin College, Northeast Forestry University, Harbin, China

- 2 College of Computer and Control Engineering, Northeast Forestry University, Harbin, China

- 3 School of Computer Science and Technology, Wuhan University of Science and Technology, Wuhan, Hubei, China

- 4 College of International Studies, National University of Defense Technology, Nanjing, China

- 5 Basic Education College, National University of Defense Technology, Changsha, China

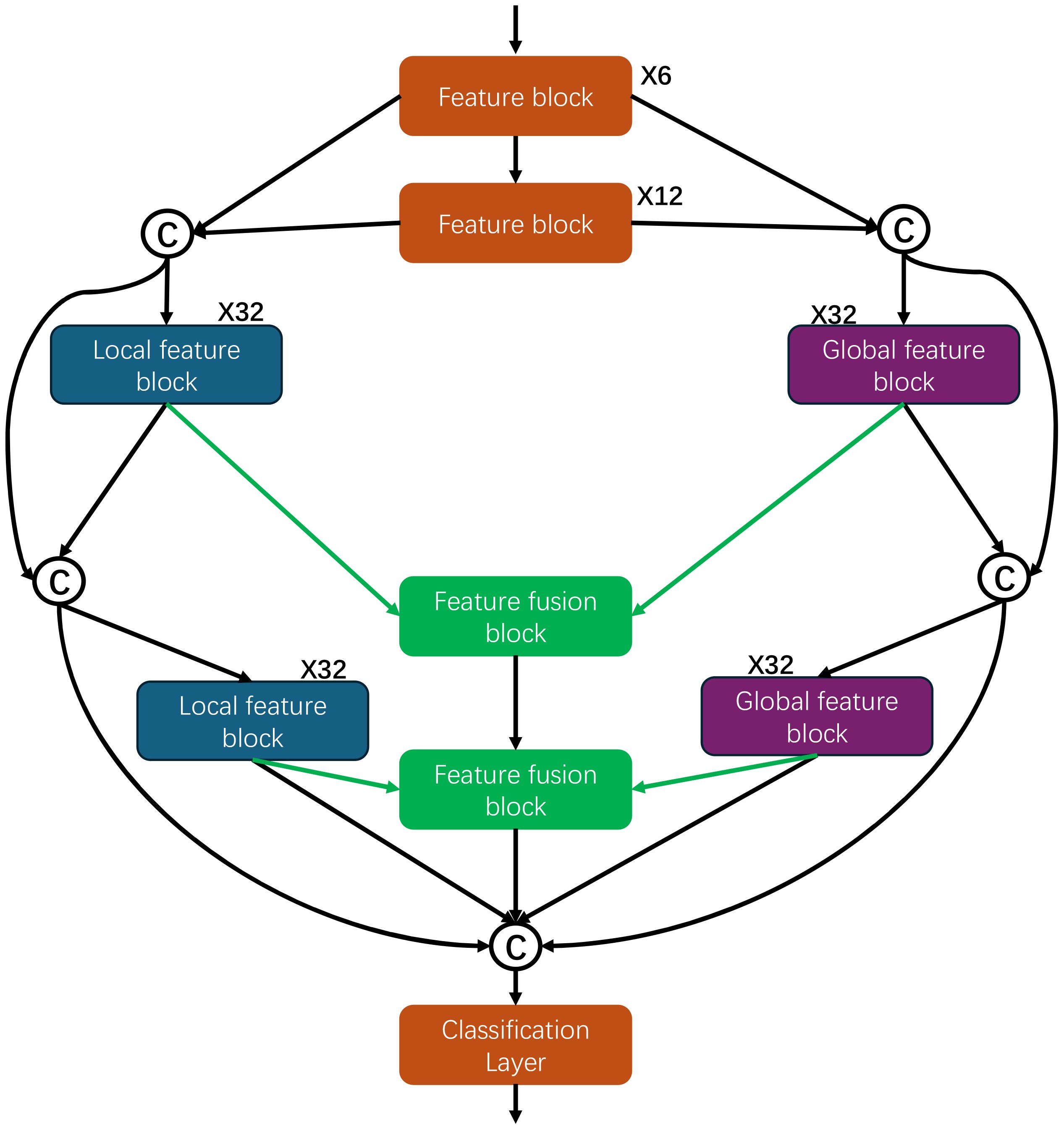

To address the insufficient multi-scale feature perception and incomplete understanding of global information of traditional convolutional neural networks in wheat leaf disease image classification, this paper proposes a global-local feature network, GLNet. GLNet adopts a global-local convolutional neural network architecture: it processes a global feature block and a local feature block in parallel and integrates the information from both through a feature fusion block, thereby capturing the multi-scale features in an image comprehensively. This design significantly enhances the model's ability to understand the features of wheat leaf disease images, and GLNet thus demonstrates excellent performance and accuracy in classifying wheat leaf disease images in real-world scenarios. The successful application of GLNet provides new ideas and effective tools for solving complex image classification problems.

Introduction

Wheat is among the most extensively cultivated food crops worldwide, supplying vital sustenance and nutrition to billions of people globally (Erenstein et al., 2022). As reported by the Food and Agriculture Organization of the United Nations, wheat constitutes over one-third of the world’s cereal production and is a crucial component of the human food supply (Dadrasi et al., 2023). However, wheat is subject to a variety of diseases during its growth, including wheat leaf rust, powdery mildew, red mold and stripe rust (Singh et al., 2023). These diseases substantially diminish both the yield and quality of wheat, leading to significant losses in the agricultural economy (Rebouh et al., 2023). For instance, in the case of wheat blast disease, this condition not only impacts yield but also results in elevated levels of toxins in the grains, thereby posing a serious threat to both human and animal health.

Hence, timely and accurate diagnosis and management of wheat diseases are crucial for ensuring food security and sustainable agricultural development (John et al., 2023). Traditional methods of wheat disease diagnosis rely on field observation and empirical judgment of agronomists, which is not only time-consuming and laborious, but also involves a certain degree of subjectivity and risk of misjudgment. In modern large-scale agricultural production, there is an urgent need for an efficient and reliable automated disease diagnostic tool to improve the diagnostic efficiency and accuracy, to realize early warning and precise prevention and control (Edan et al., 2023).

Traditional methods of wheat disease diagnosis mainly include visual inspection and laboratory testing. Visual inspection relies on the experience and knowledge of agricultural experts; although it can be carried out in the field in real time, it is inefficient and highly dependent on experts (Guerrero et al., 2013). In addition, visual inspection cannot quickly cover large planting areas and is prone to missing early disease symptoms. Traditional laboratory testing methods, while accurate, are cumbersome, time-consuming, and costly, making them impractical for large-scale monitoring and real-time diagnosis. In response to these challenges, automated and intelligent methods for diagnosing wheat diseases have emerged as a key research focus. Recent advancements in information technology and agricultural techniques have fostered the adoption of image processing technology for identifying and classifying crop diseases, making it an emerging diagnostic tool. However, traditional image processing methods rely on hand-crafted features, which adapt poorly to complex and changing field environments and diverse disease symptoms, so their classification performance is limited.

Deep learning, a leading technology in artificial intelligence, excels particularly in tasks involving image recognition and classification (Khasim et al., 2024). The convolutional neural network (CNN), an important deep learning model, mimics the processing of the human brain's visual system through a hierarchical architecture and automatically extracts multi-level features from image data, thus realizing efficient image classification and recognition (Ketkar and Moolayil, 2021). Deep learning methods have already achieved remarkable results in medical image analysis, autonomous driving, security monitoring, and other fields, and have brought new hope for agricultural image processing. In agriculture, image analysis techniques based on deep learning are gradually being applied to crop pest and disease detection and classification. By constructing large-scale image datasets and training deep learning models, automated and intelligent diagnosis of crop diseases can be realized. Deep learning methods exhibit superior accuracy and robustness compared to traditional methods and adapt well to complex and dynamic field environments.

There have been some research attempts to apply deep learning to wheat disease image classification. Studies (Lin et al., 2019) have shown that deep learning-based methods for wheat disease classification have achieved better results to some extent. For example, some studies have used convolutional neural networks to classify wheat leaf diseases and achieved high classification accuracy. However, these methods based on convolutional neural networks often neglect the extraction of global features from wheat leaf disease images. Global features play an important role in wheat leaf disease images because they not only contain information about the overall distribution and morphology of the disease, but also capture important information about the environmental background, lighting conditions, and overall leaf morphology.

Therefore, to address this issue, we propose the Global Local Feature Network (GLNet) for classifying wheat leaf disease images. GLNet initially employs a bottleneck block, comprised of small convolutional kernels, to extract features from wheat leaf disease images. Subsequently, an inverted bottleneck block utilizes a large convolutional kernel to capture global features from the images, while another inverted bottleneck block, employing a similar architecture but with small convolutional kernels, extracts local features. Furthermore, a feature fusion block effectively enhances the interaction between these global and local features. The main contributions of this paper include:

1. A convolutional neural network-based wheat leaf disease image classification network, GLNet, is designed and implemented. Building on a traditional convolutional neural network, GLNet introduces large-kernel convolution to improve its global feature perception, which improves classification accuracy.

2. An effective feature fusion block is designed to enhance the interaction between global and local features.

3. The effectiveness of the model is verified through experiments on a public dataset and through comparison with existing methods, demonstrating the advantages of GLNet in wheat leaf disease image classification and its potential in practical applications.

Related work

Convolutional neural networks for image classification

AlexNet (Krizhevsky et al., 2012), with its network architecture and technological innovations such as the ReLU activation function, Dropout regularization, data augmentation, and local response normalization, opened up new paths in the field of deep learning. By introducing deeper network layers, it extracts more levels of abstract features than earlier shallow networks and thus performs well on complex image recognition tasks. The core design idea of VGGNet (Simonyan and Zisserman, 2014) is relatively simple and intuitive: it connects multiple small convolutional kernels (usually 3x3) in series instead of using larger kernels, which reduces the number of parameters while increasing the depth of the network. This design strategy not only improves the accuracy of the model but also enhances its ability to extract image features. ResNet (He et al., 2016) addresses the issues of gradient vanishing and model degradation in deep neural networks during training by incorporating residual connections. This innovation enables the successful training of deeper neural networks than previous models allowed, resulting in significant improvements in performance across various tasks.

InceptionNet (Szegedy et al., 2015) enhances model accuracy and performance through the introduction of the Inception block. This block applies convolutional kernels of varying sizes and pooling operations at the same network level, enabling the capture of image features across multiple scales. By reducing computational demands and parameter count, this architecture extracts features efficiently while managing resource consumption. It finds extensive application in computer vision tasks such as image classification and object detection. ACNet (Ding et al., 2019) improves model accuracy and efficiency by introducing asymmetric convolution blocks. These blocks strengthen feature extraction during training while keeping the inference cost unchanged through convolution kernel fusion. The fundamental concept is to strengthen a square convolution kernel with parallel horizontal and vertical one-dimensional kernels during training and to fuse them into a single kernel for inference. This approach enhances the model's understanding and generalization of complex scenes.

EfficientNet (Tan and Le, 2019) scales network depth, width, and resolution in a unified way through compound scaling, thus significantly reducing the number of parameters and the computation of the model while maintaining high performance. MobileNet (Howard et al., 2017) is a compact convolutional neural network architecture developed by the Google team. Its primary objective is to substantially reduce model size and computational complexity while preserving model accuracy, which makes it particularly well suited for deployment on mobile devices and embedded systems. DenseNet (Huang et al., 2017) uses the output of each layer directly as the input of all subsequent layers through a dense connectivity mechanism. This greatly facilitates feature reuse and gradient propagation, mitigates the problem of gradient vanishing, and improves the training efficiency and performance of the model. DenseNet further reduces the number of parameters and improves computational efficiency by introducing Bottleneck and Transition layers to control the width and depth of the network. ShuffleNet (Zhang et al., 2018) reduces the number of parameters and the computational complexity of the model by adopting Group Convolution and Channel Shuffle.

Image classification network for wheat leaf disease

M-bCNN (Lin et al., 2019) uses a unique convolutional kernel matrix arrangement, employing parallel convolutional layers. Techniques like DropConnect, exponential linear units, and local response normalization are integrated to combat overfitting and gradient vanishing. Compared to traditional networks, M-bCNN effectively boosts data streams, neurons, and connectivity channels with a modest parameter increase, enhancing its nonlinear mapping capabilities and data characterization. Feng et al. (Xiao et al., 2021) constructed a wheat leaf disease image recognition model based on MobileNetV2 and used parameters pre-trained on the ImageNet dataset as the initial parameters of the model. Jiang et al. (2021) enhanced the VGG16 model through multi-task learning, leveraging pre-trained weights from ImageNet for transfer learning and fine-tuning to improve wheat leaf disease understanding. RFE-CNN (Xu et al., 2023) combines RCAB, FB, EML, and CNN to enhance the accuracy of convolutional neural networks in classifying wheat leaf disease images. WR-EL (Pan et al., 2022) integrates multiple CNN models using bagging, snapshot ensembling, and SGDR algorithms to boost accuracy in wheat leaf disease image classification.

Khan et al. (2022) developed an efficient machine learning framework for identifying and categorizing various wheat diseases, focusing on brown rust and yellow rust. The method involves several stages: first, gathering data from diverse fields in Pakistan while accounting for illumination and orientation parameters; next, preprocessing the data using segmentation and scaling techniques to distinguish healthy from affected areas; and lastly, training the machine learning model on the prepared dataset. Alharbi et al. (2023) proposed a wheat disease classification network using Few-Shot Learning with EfficientNet as the backbone, capable of classifying 18 wheat diseases, and introduced an attention mechanism to enhance feature selection effectiveness. Bansal et al. (2023) proposed a hybrid model for detecting and classifying wheat leaf spot diseases, combining Faster R-CNN for detection with SVM for classification. Shafi et al. (2023) utilized a pre-trained U2-Net model for background removal and extraction of rust-affected wheat leaves, and applied deep learning classifiers, specifically Xception and ResNet-50, to assess the severity of stripe rust disease. Kukreja and Kumar (2021) proposed a deep learning based method called Deep Convolutional Neural Network (DCNN) to automatically classify wheat rust infestation without human intervention. The DCNN achieved high classification performance for wheat rust disease in training and testing.

GLNet

As shown in Figure 1, GLNet mainly consists of four parts: a feature extraction block, a local feature block, a global feature block, and a multi-scale feature fusion block.

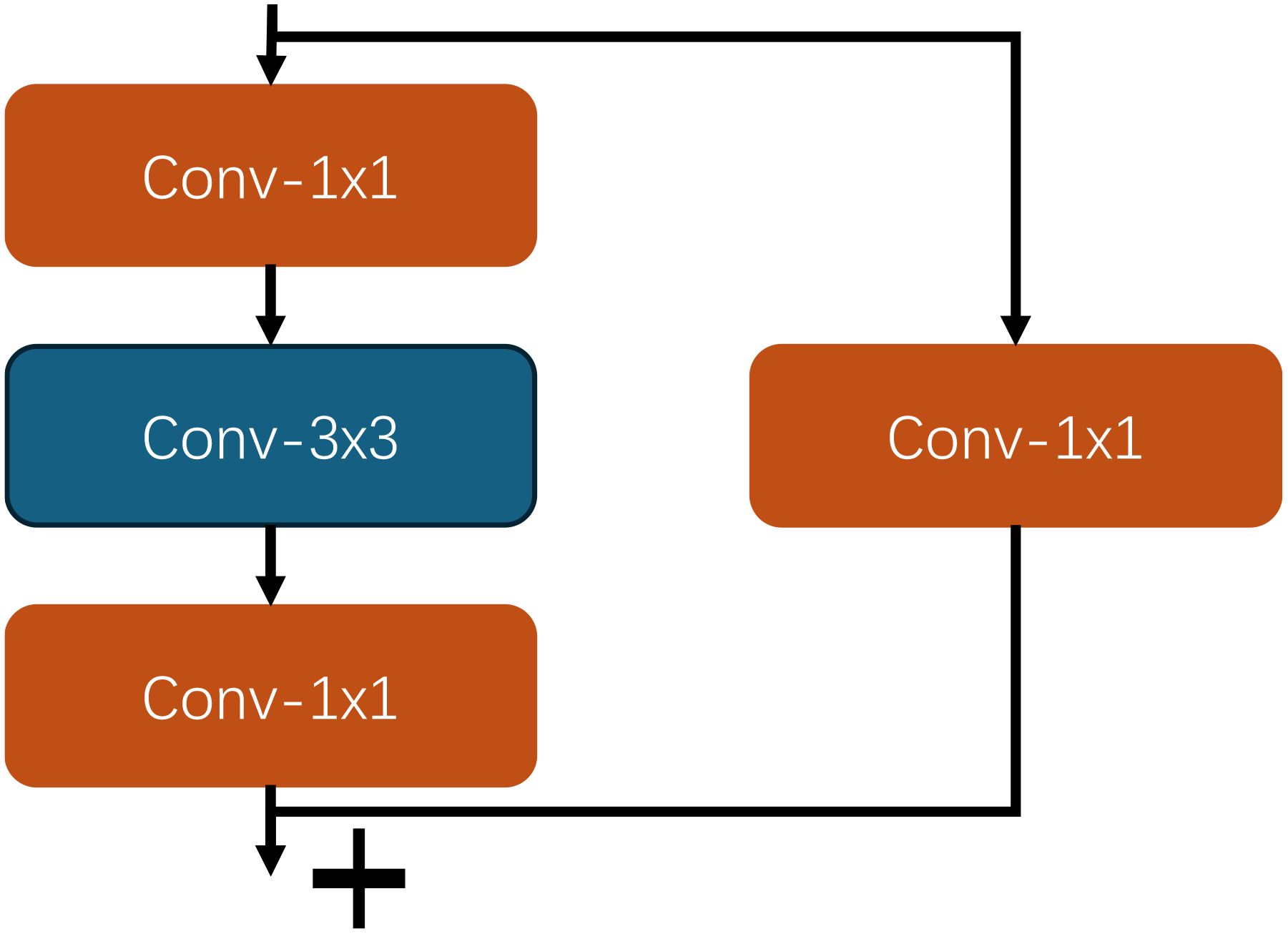

Feature extraction block

As shown in Figure 2, the feature extraction block consists of two branches. The first branch consists of two 1x1 convolutional layers and a 3x3 convolutional layer. Specifically, the first 1x1 convolutional layer reduces the dimensionality of the input features, thus reducing the computational complexity and the number of parameters. The next 3x3 convolutional layer enlarges the receptive field, thus capturing more complex spatial features. Finally, the second 1x1 convolutional layer further extracts and combines the features.

The second branch is relatively simple and consists of a 1x1 convolutional layer. This 1x1 convolutional layer is mainly used to directly extract and combine input features, providing additional nonlinear transformations.

With the combination of these two branches, the feature extraction block is able to efficiently extract multi-scale and multi-level features, thus improving the expressiveness and performance of the model.
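
The parameter saving from the 1x1 reduction in the first branch can be made concrete with a small count. The sketch below is illustrative only: the channel widths (256 in, 64 in the middle, 256 out) are assumptions chosen for the example, not values reported for GLNet, and the helper names are hypothetical.

```python
def conv_params(kernel_size, in_channels, out_channels, bias=True):
    """Number of trainable parameters in a standard 2D convolution."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def bottleneck_params(in_channels, mid_channels, out_channels):
    """Parameters of a 1x1 -> 3x3 -> 1x1 bottleneck branch."""
    return (
        conv_params(1, in_channels, mid_channels)     # 1x1: reduce channels
        + conv_params(3, mid_channels, mid_channels)  # 3x3: spatial features
        + conv_params(1, mid_channels, out_channels)  # 1x1: recombine
    )


# Assumed channel widths, for illustration only.
bottleneck = bottleneck_params(256, 64, 256)
direct_3x3 = conv_params(3, 256, 256)
print(bottleneck, direct_3x3)
```

Under these assumed widths the bottleneck branch needs roughly an eighth of the parameters of a single 3x3 convolution applied at full width, which is the effect the dimensionality-reducing 1x1 layer is meant to achieve.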

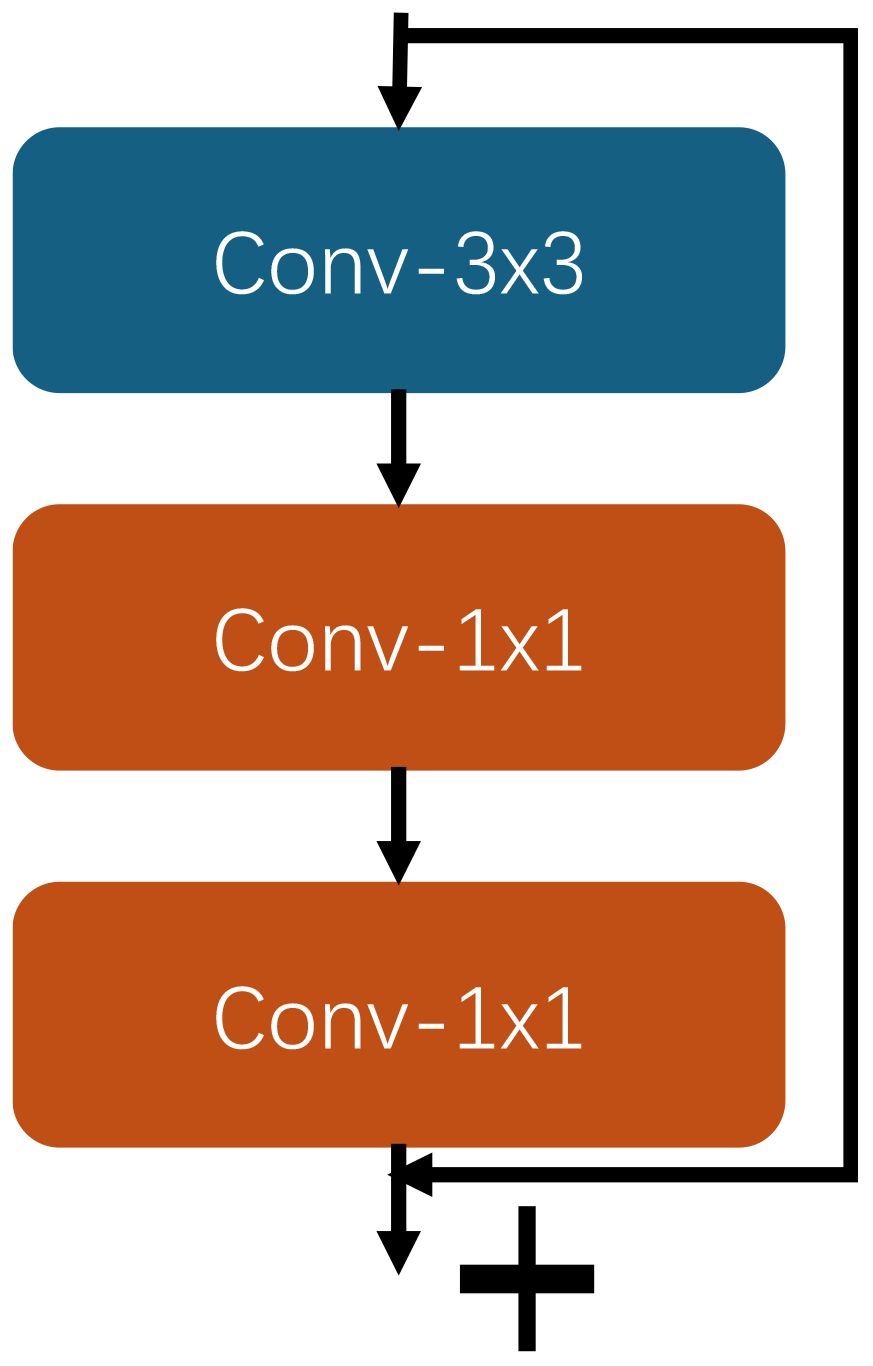

Local feature block

As shown in Figure 3, the local feature block consists of two branches. The first branch consists of a 3x3 convolutional layer and two 1x1 convolutional layers. First, the 3x3 convolutional layer enhances feature representation by extending the receptive field to capture more complex and diverse spatial features. Following this, a 1x1 convolutional layer reduces the number of feature channels, thereby decreasing the computational complexity and the number of parameters. Subsequently, another 1x1 convolutional layer further extracts and recombines features on the basis of this dimensionality reduction.

The second branch is a Residual Connection that passes the input features directly to the output, skipping the intermediate convolutional operations. This connection helps to alleviate the gradient vanishing problem and promotes the training stability and efficiency of deep neural networks.

Through the combination of these two branches, the local feature block can effectively extract multi-scale and multi-level features, while the residual connection is utilized to maintain the stability and efficiency of the model training, ensuring that the input features are combined with the convolutionally processed features, thus achieving better feature learning results.

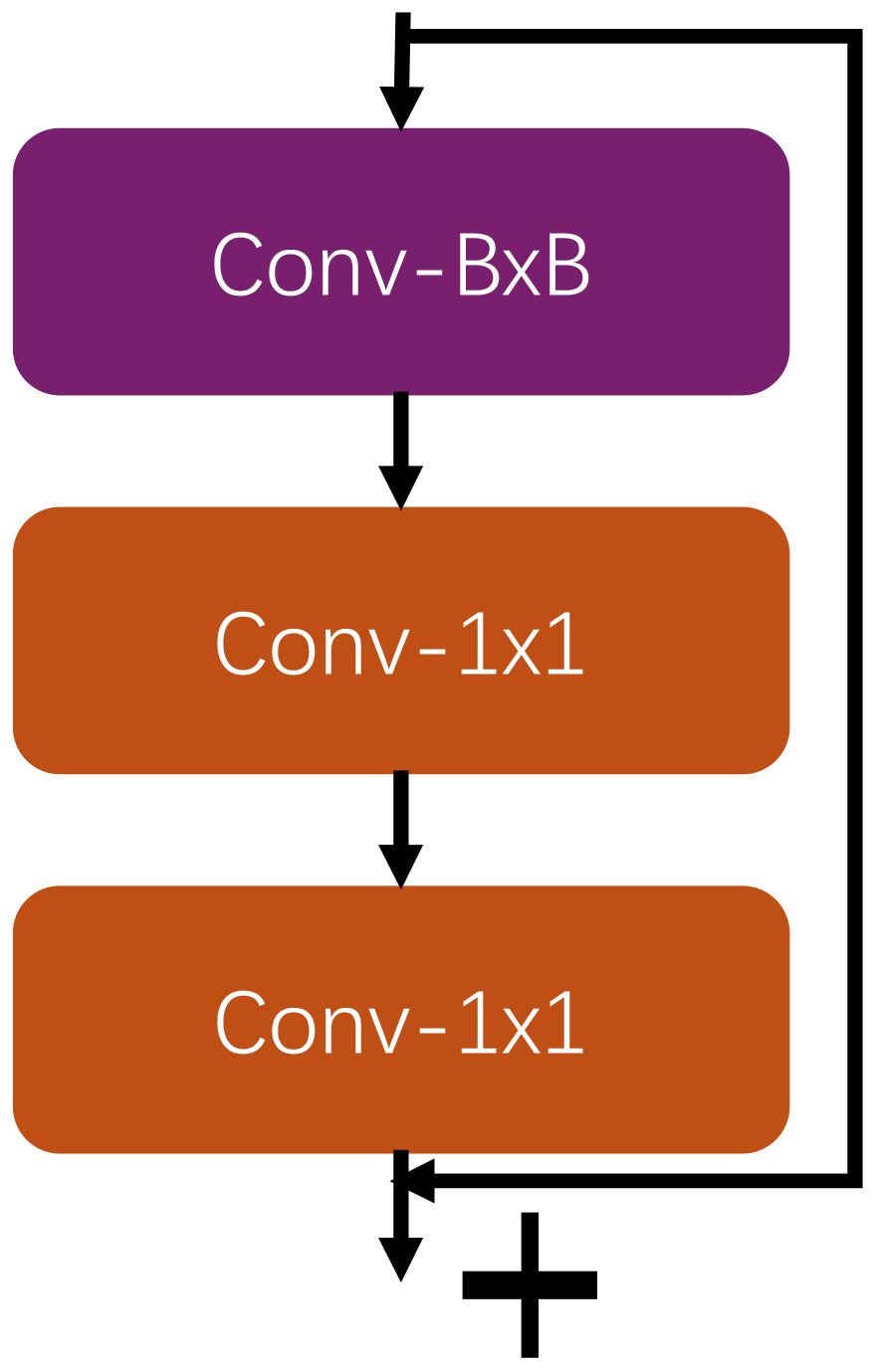

Global feature block

As shown in Figure 4, the global feature block consists of two branches. The first branch consists of a convolutional layer large enough to cover the feature map (Conv-BxB) and two 1x1 convolutional layers. First, the large convolutional layer is used for global information extraction; it captures the global features of the entire feature map and provides richer contextual information. If the feature map size is 32, the convolution kernel size for Conv-BxB is 31. If the feature map size is 16, the convolution kernel size for Conv-BxB is 15. Then, the first 1x1 convolutional layer reduces the number of feature channels, thus reducing the computational complexity and the number of parameters. Next, the second 1x1 convolutional layer further extracts and recombines features on the basis of this dimensionality reduction to enhance the feature representation.

The second branch is a Residual Connection, which passes the input features directly to the output, skipping the intermediate convolution operations. This connection can alleviate the problem of gradient vanishing and promote the stability and efficiency of deep neural network training.

Through the combination of these two branches, the global feature block can effectively extract global features, while using residual connection to maintain the stability and efficiency of model training, ensuring the combination of input features and convolutionally processed features, thus achieving better feature learning results. This design not only captures the global information, but also reduces the computational complexity through the 1x1 convolutional layer, making the model more computationally efficient while retaining efficient feature representation.
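
The two kernel sizes given for Conv-BxB (31 for a 32-wide feature map, 15 for a 16-wide one) are consistent with a kernel one pixel smaller than the feature map. The sketch below encodes that rule and counts how many input positions along one axis a single output position can see. The general rule `size - 1`, the stride of 1, and the zero "same" padding are assumptions; the text only states the two example sizes. The function names are hypothetical.

```python
def global_kernel_size(feature_map_size):
    """Kernel size for Conv-BxB, assuming the rule B = feature map size - 1."""
    if feature_map_size < 2:
        raise ValueError("feature map must be at least 2 pixels wide")
    return feature_map_size - 1


def covered_positions(feature_map_size, kernel_size, position):
    """Input positions along one axis seen by the output at `position`.

    Assumes an odd kernel, stride 1, and zero 'same' padding, so positions
    that fall outside the feature map are padding and are not counted.
    """
    half = kernel_size // 2
    first = max(0, position - half)
    last = min(feature_map_size - 1, position + half)
    return last - first + 1


size = 32
large = global_kernel_size(size)
print(covered_positions(size, large, position=16))  # large kernel, central output
print(covered_positions(size, 3, position=16))      # 3x3 kernel, same output
```

Under these assumptions a central output of the large kernel sees almost the whole axis, while a 3x3 kernel sees only three positions, which is the contrast between the global and local feature blocks.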

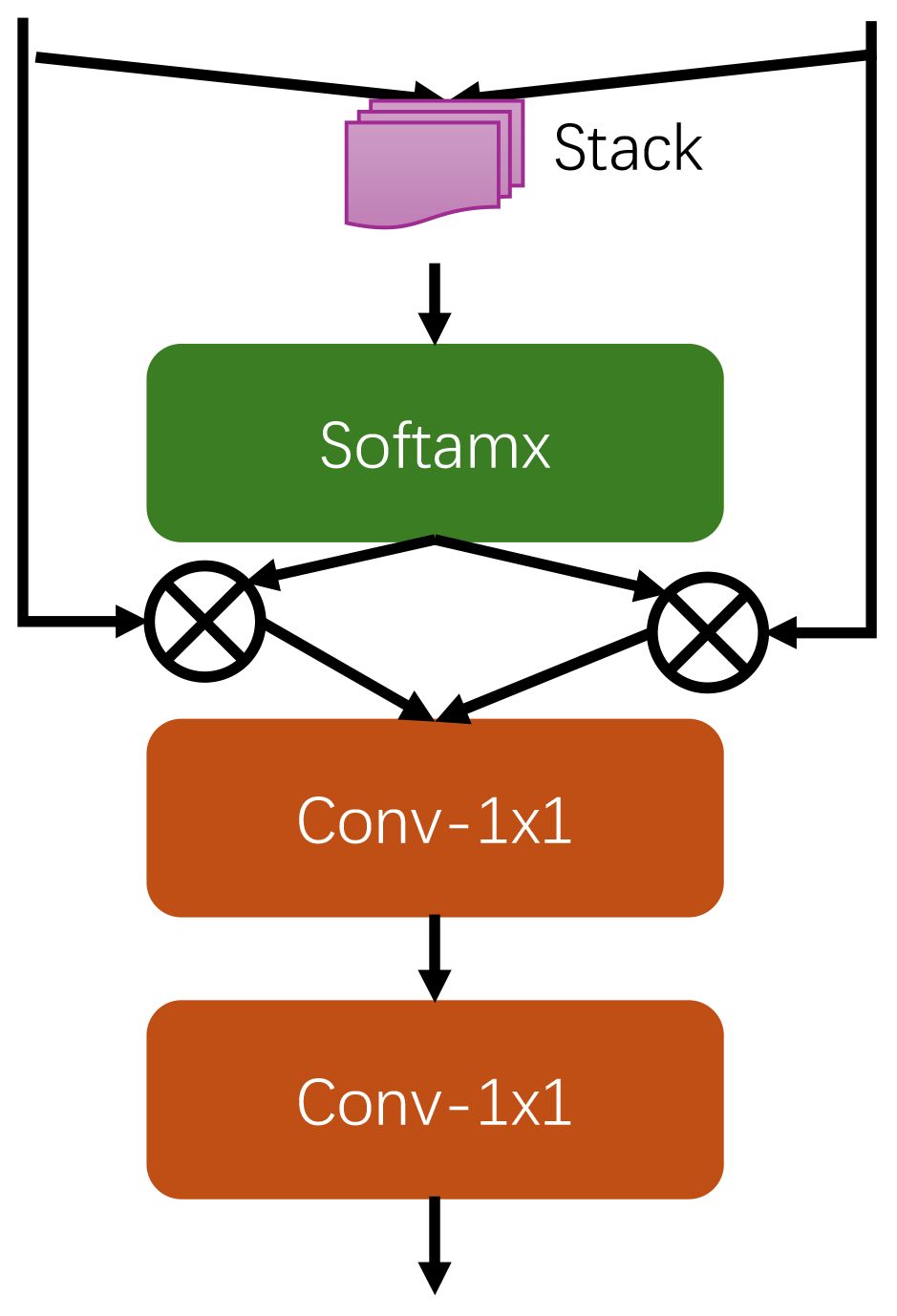

Feature fusion block

As shown in Figure 5, the feature fusion block mainly consists of two 1x1 convolutional layers and a Softmax function. The process is as follows. First, the global and local features are stacked together to form a comprehensive feature map. Then, the Softmax function is used to calculate the weights of the global and local features so as to assign appropriate weight values to the features of both scales. The computed weights are then applied to the original global and local features, thereby adjusting the importance of the respective features. The weighted global and local features are then summed element by element to form the fused features. Finally, the fused features pass through two 1x1 convolutional layers that further extract and combine features to enhance feature expression.

Through this process of feature fusion block, the global and local features can be effectively combined to make full use of multi-scale information, thus enhancing the feature learning ability and expression ability of the model. After the weight adjustment and element-by-element summing operation, the global and local features can work better together in the fusion process, and finally the feature representation is further optimized by the 1x1 convolutional layer to enhance the overall performance of the model.
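
The weighting and summation steps can be sketched on flat lists of feature values. This is a minimal illustration, not the authors' implementation: it assumes the Softmax is taken across the two stacked branches at each position and that the feature values themselves serve as the Softmax scores, which the text does not specify. The final two 1x1 convolutional layers are omitted, and `fuse` is a hypothetical name.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(global_feat, local_feat):
    """Softmax-weighted, element-wise sum of global and local features."""
    if len(global_feat) != len(local_feat):
        raise ValueError("global and local features must have the same length")
    fused = []
    for g, l in zip(global_feat, local_feat):
        # Stack the two branches at this position and normalise to weights
        # (assumption: the raw feature values act as the scores).
        w_global, w_local = softmax([g, l])
        fused.append(w_global * g + w_local * l)
    return fused


print(fuse([1.0, 0.2, 3.0], [0.0, 0.2, 1.0]))
```

Because the two weights at each position sum to one, the fused value always lies between the global and local values, leaning towards whichever branch responds more strongly.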

Classification layer

The classification layer consists of two fully connected layers. The first fully connected layer has an output dimension of 256 and maps the input features to a more compact feature space, thus capturing more discriminative features. The output dimension of the second fully connected layer is the number of categories; this layer maps the features from the previous layer to specific classification results. Each output node corresponds to a category, and the Softmax function transforms the output values of these nodes into per-category probabilities. In addition, a Dropout layer with a dropout rate of 0.5 is included between the two fully connected layers to prevent overfitting. During training, the Dropout layer randomly discards half of the neurons, making the model more robust and avoiding over-reliance on the training data.

Through this design, the classification layer can effectively extract and utilize the input features, and improve the generalization ability of the model through the Dropout layer, and finally achieve accurate classification results.
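
The behaviour of the Dropout layer at a rate of 0.5 can be illustrated with a few lines of plain Python. This is a sketch of the common "inverted dropout" formulation, in which surviving activations are scaled by 1 / (1 - rate) during training and the layer is an identity at inference; the function name and the use of Python's `random` module are choices made for this example.

```python
import random


def dropout(values, rate=0.5, training=True, rng=None):
    """Inverted dropout: zero each value with probability `rate` in training."""
    if not training or rate == 0.0:
        # At inference the layer passes activations through unchanged.
        return list(values)
    rng = rng or random.Random()
    keep_scale = 1.0 / (1.0 - rate)  # keeps the expected activation unchanged
    return [v * keep_scale if rng.random() >= rate else 0.0 for v in values]


activations = [1.0] * 8
print(dropout(activations, rate=0.5, rng=random.Random(0)))  # training mode
print(dropout(activations, training=False))                  # inference mode
```

With a rate of 0.5, about half of the 256 outputs of the first fully connected layer would be zeroed in each training step, and the rest doubled.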

Experiments

Implementation details

GLNet is implemented with TensorFlow and Keras, using a batch size of 40, 100 epochs, the Adamax optimizer, a learning rate of 1e-4, and the cross-entropy loss function. All comparison networks are reproduced with the same hyperparameters, and all experiments are performed on a Tesla P100 GPU. Training is stopped when the accuracy does not increase for more than three epochs.
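
The stopping rule can be sketched as a small function over a per-epoch accuracy history. The exact reading of the criterion is an assumption here: the sketch halts once three consecutive epochs have passed without a new best accuracy (a patience of 3), and the accuracy values are invented for illustration.

```python
def early_stop_epoch(accuracies, patience=3):
    """Return the 1-based epoch at which training halts, or None if it never does.

    Training halts once `patience` consecutive epochs fail to improve on the
    best accuracy seen so far (assumed interpretation of the stopping rule).
    """
    best = float("-inf")
    stale = 0
    for epoch, acc in enumerate(accuracies, start=1):
        if acc > best:
            best = acc
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


# Hypothetical accuracy history: the best value is reached at epoch 3.
history = [0.50, 0.60, 0.70, 0.70, 0.69, 0.70]
print(early_stop_epoch(history))
```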

To evaluate the performance of GLNet and the comparison networks, we use Accuracy (ACC), Precision (Prec), Recall, and F1 score (F1). Prec, Recall, and F1 are macro-averaged over the categories.
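
Macro averaging computes each metric per category and then takes the unweighted mean, so every category counts equally regardless of how many images it has. The sketch below shows this in plain Python; the labels are invented for illustration, and a category with no predictions or no true samples is given a score of 0, which is one common convention and an assumption here.

```python
def macro_scores(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(labels)
    return {
        "acc": sum(1 for t, p in pairs if t == p) / len(pairs),
        "prec": sum(precisions) / n,   # unweighted mean over categories
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical labels for three categories.
scores = macro_scores([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0])
print(scores)
```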

Dataset

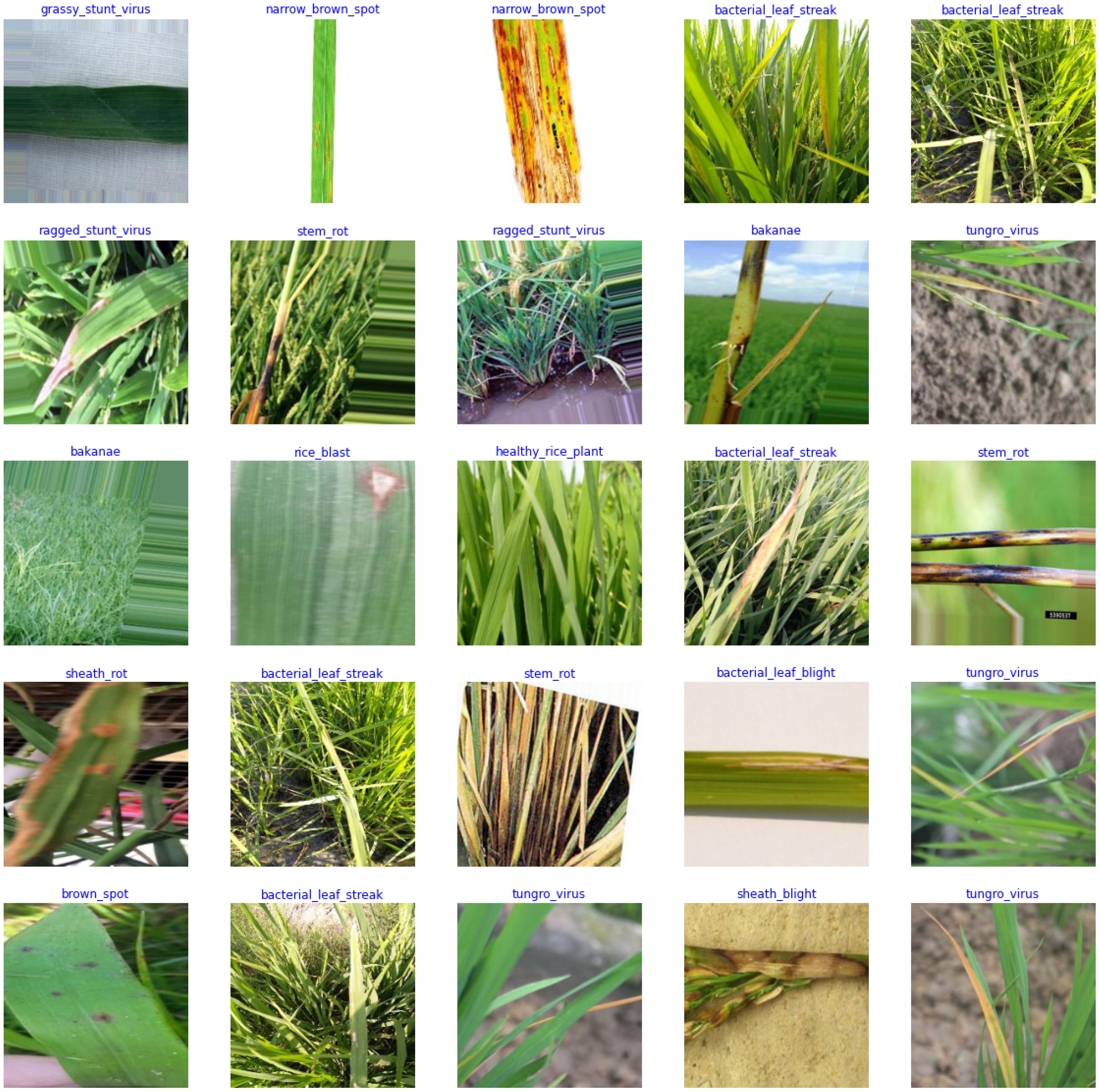

This paper validates the performance of GLNet using the Philippines Rice Diseases dataset, which has a total of 14 categories. They are Rice Blast (140 photos), Sheath Blight (98 photos), Brown Spot (150 photos), Narrow Brown Spot (98 photos), Sheath Rot (98 photos), Stem Rot (100 photos), Bakanae (100 photos), Rice False Smut (99 photos), Bacterial Leaf Blight (140 photos), Bacterial Leaf Streak (99 photos), Tungro Virus (100 photos), Ragged Stunt Virus (100 photos), and Grassy Stunt Virus (100 photos). Figure 6 shows examples of the different categories.

Comparison experiment

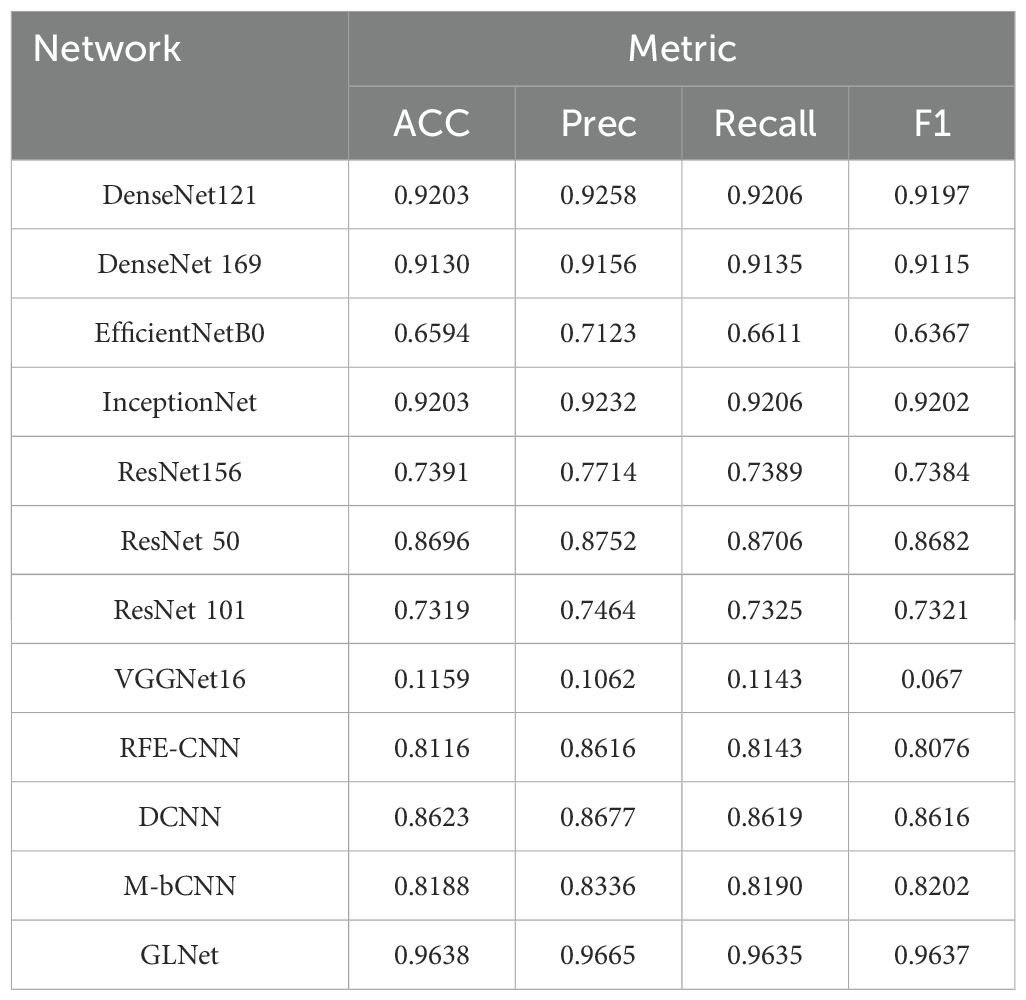

To validate the performance of GLNet, we compare it with typical image classification networks including VGGNet, ResNet, InceptionNet, DenseNet, and EfficientNetB0.

The data in Table 1 support the following conclusions.

First, the results show that traditional convolutional neural networks (CNNs) such as VGGNet16, ResNet50, ResNet101, and ResNet152 tend to excel at capturing local details but often overlook global contextual information, an issue that is particularly pronounced in wheat leaf disease image classification. Their performance, as indicated by ACC, Prec, Recall, and F1, is generally lower than that of more advanced networks.

GLNet, on the other hand, addresses this limitation by introducing a global feature block that effectively captures the overall image architecture and contextual information, thereby compensating for the traditional network’s shortcomings in global feature perception. This enhancement allows GLNet to excel in understanding and classifying wheat leaf disease images, as evidenced by its top-tier performance across all metrics, with an accuracy of 0.9638, a precision of 0.9665, a recall of 0.9635, and an F1 score of 0.9637.

Second, GLNet leverages a combination of local and global feature blocks, seamlessly integrating the information from both through a feature fusion block. The local feature blocks focus on capturing local details and texture features within the image, while the global feature blocks provide a broader context and overall architectural information. By utilizing soft weight assignment and element-wise summation, the feature fusion block ensures that the advantages of both local and global features are comprehensively utilized. This dual focus enables GLNet to analyze wheat leaf disease images from a more holistic perspective, significantly improving recognition accuracy and robustness across different disease types.

In comparison to other advanced networks like DenseNet121, DenseNet169, EfficientNetB0, InceptionNet, RFE-CNN, DCNN, and M-bCNN, GLNet demonstrates superior performance, with higher accuracy, precision, recall, and F1 scores. This highlights the effectiveness of GLNet’s architecture in capturing both local and global features, which is crucial for accurately classifying wheat leaf disease images.

In summary, the superiority of GLNet in the wheat leaf disease image classification task stems from its ability to effectively integrate local and global features and achieve a more comprehensive feature understanding and expression through the feature fusion block, which improves the classification performance and the practicality of the model.

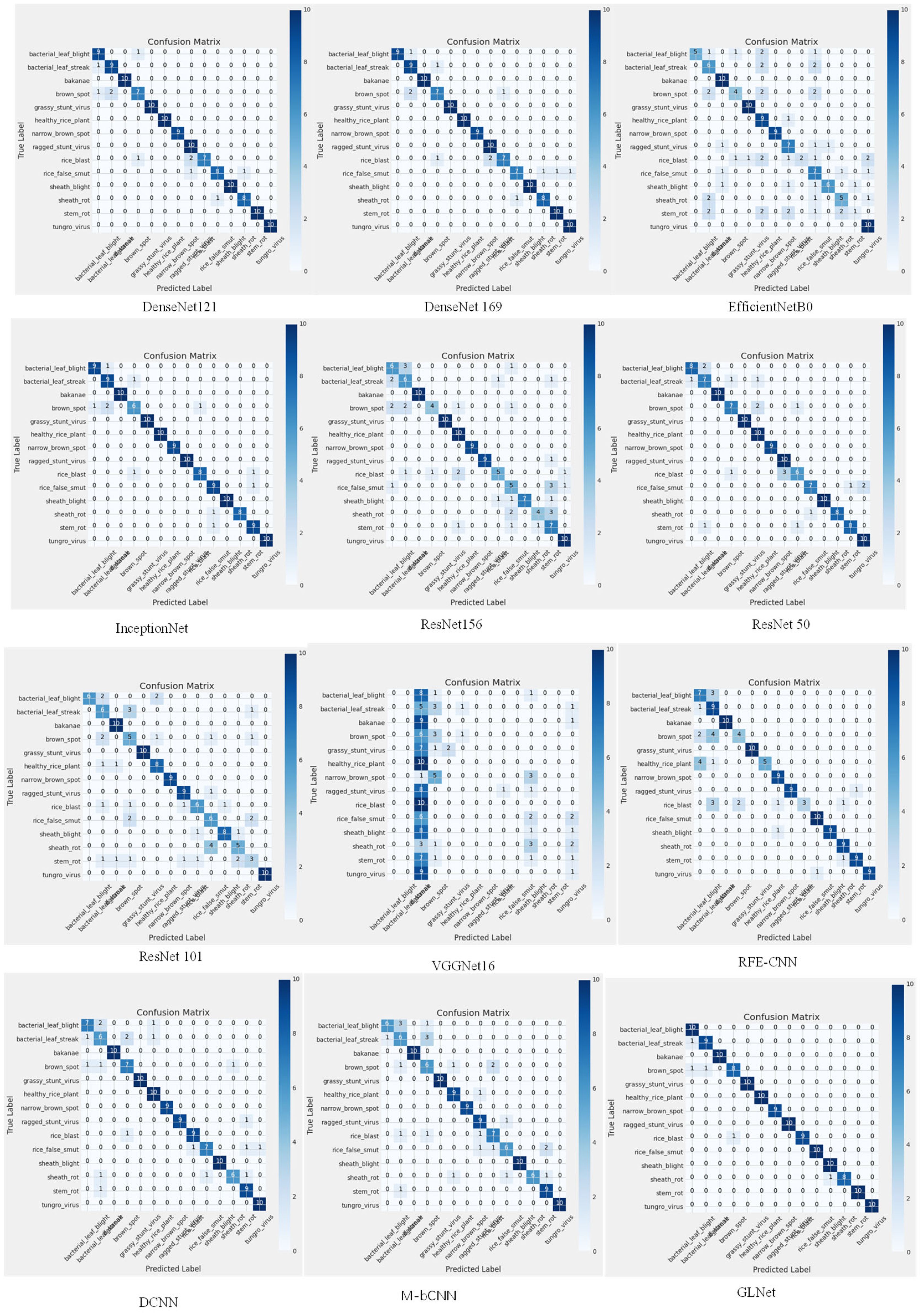

As shown in detail in Figure 7, the GLNet model exhibits excellent classification ability for each category of wheat leaf disease images, and this remarkable result strongly demonstrates the effectiveness of the global feature introduction strategy. This strategy enables the model to capture and learn the complex features of wheat leaf disease images from a more comprehensive perspective, which greatly improves the classification accuracy and generalization ability.

Ablation experiment

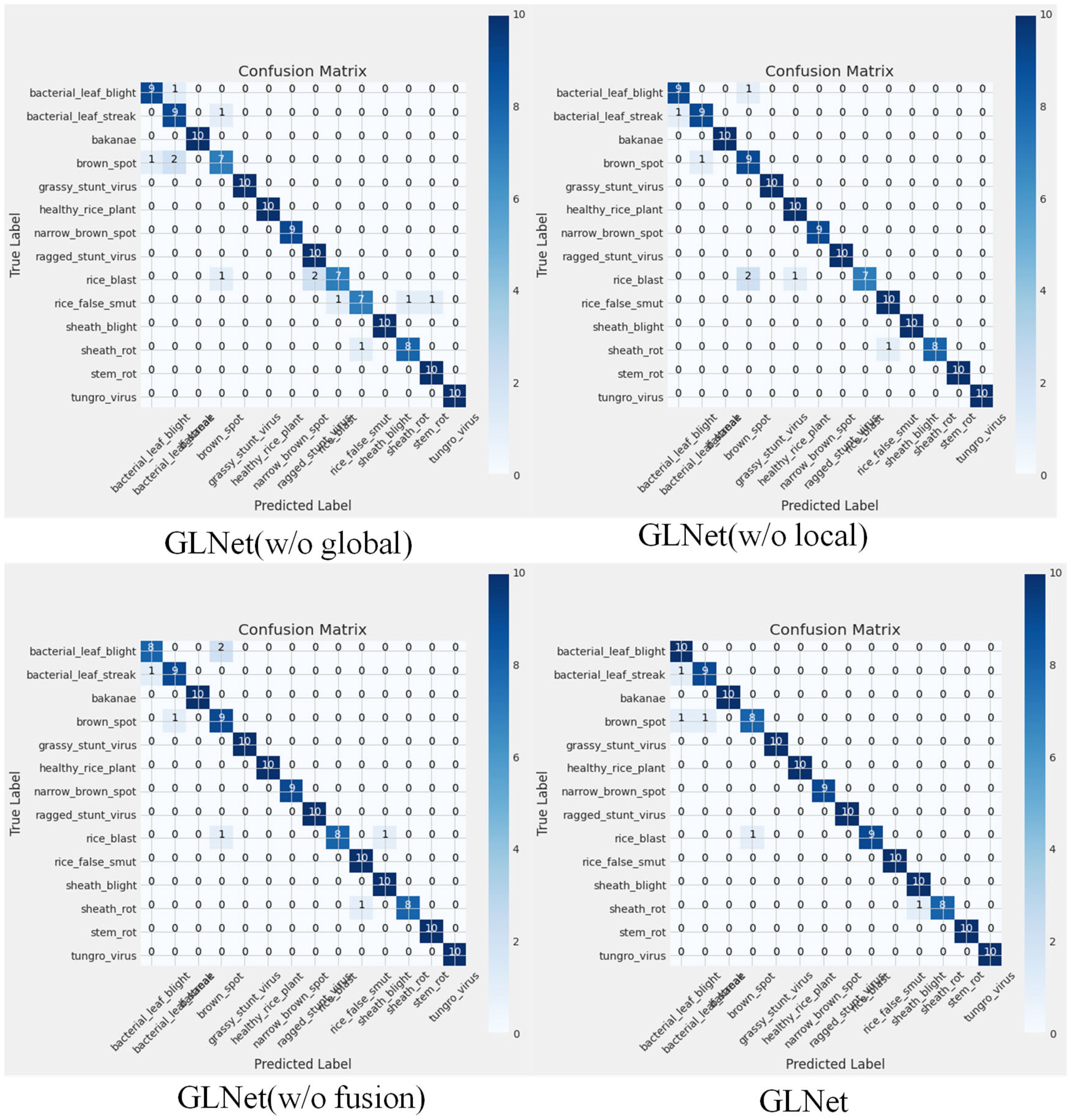

To verify the validity of the different blocks in GLNet, we designed the following variants: GLNet (w/o global) denotes GLNet without the global feature block, GLNet (w/o local) denotes GLNet without the local feature block, and GLNet (w/o fusion) denotes GLNet without the feature fusion block.

Comparing the results in Table 2 leads to the following conclusions:

First, local features play an important role in wheat leaf disease image recognition. In GLNet, the local feature block is responsible for capturing these subtleties, as the experimental results in Table 2 show: the ACC of GLNet (w/o local) is 0.9493, Prec is 0.9549, Recall is 0.9492, and F1 is 0.9491, a clear decline compared with the full GLNet. Without the local feature block, GLNet struggles to focus on detailed features such as the unique texture of localized lesions on leaves and cannot accurately differentiate this key local lesion information. The resulting drop in classification performance highlights the irreplaceable role of local features in providing the precise detail the model needs to identify different disease types.

Second, global features are also indispensable in wheat leaf disease image recognition; they capture the overall architecture of the entire leaf image as well as the background information. For the leaf blade as a whole, disease is reflected not only in local lesions but also in the overall color change, the distribution of lesions on the leaf blade, and the contrast with the surrounding healthy tissue, all of which are important clues to the overall pathological state of the leaf. In the experimental data, the results of GLNet (w/o global) are relatively poor, with an ACC of 0.9130, Prec of 0.9149, Recall of 0.9135, and F1 of 0.9115, all much lower than those of the complete GLNet. Without the guidance of global features, GLNet cannot fully understand the overall pathology of the leaf, which leads to inaccurate judgment of the overall lesion distribution and severity and thus limits classification performance.

Third, the feature fusion block plays a key role in GLNet because it allows local and global features to work together. Wheat leaf disease images contain multiple levels of information, from microscopic localized spots to the macroscopic overall state of the leaf, and this information is fully exploited only when it is effectively integrated. For example, if local texture features are combined with global features such as overall color and spot distribution, the model can judge and classify the disease more comprehensively and accurately. The data in Table 2 show a clear decrease in performance for GLNet (w/o fusion), with ACC, Prec, Recall, and F1 of 0.9493, 0.9541, 0.9492, and 0.9497, respectively, all below the results of the full GLNet.
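
The size of each ablation effect can be read off by subtracting the reported accuracies from the full model's accuracy of 0.9638. The short script below performs only that arithmetic on the figures quoted in the text; the variable and function names are chosen for this example.

```python
# Accuracies as reported in the text for the full model and the ablations.
FULL_ACC = 0.9638
ABLATION_ACC = {
    "w/o local": 0.9493,
    "w/o global": 0.9130,
    "w/o fusion": 0.9493,
}


def accuracy_drops(full_acc, ablated):
    """Accuracy lost by each ablated variant relative to the full model."""
    return {name: round(full_acc - acc, 4) for name, acc in ablated.items()}


drops = accuracy_drops(FULL_ACC, ABLATION_ACC)
largest = max(drops, key=drops.get)
print(drops, largest)
```

Removing the global feature block costs about 5.1 percentage points of accuracy, while removing either the local feature block or the fusion block costs about 1.5 points each.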

As Figure 8 shows, each building block in GLNet plays an active role in processing all types of wheat leaf disease images. It is worth noting that only when these blocks work in concert, i.e., are utilized simultaneously, does GLNet perform at its best and achieve optimal classification results. This illustrates the close cooperation and complementarity between the components of the GLNet architecture, which together promote the overall model's ability to recognize wheat leaf disease images.

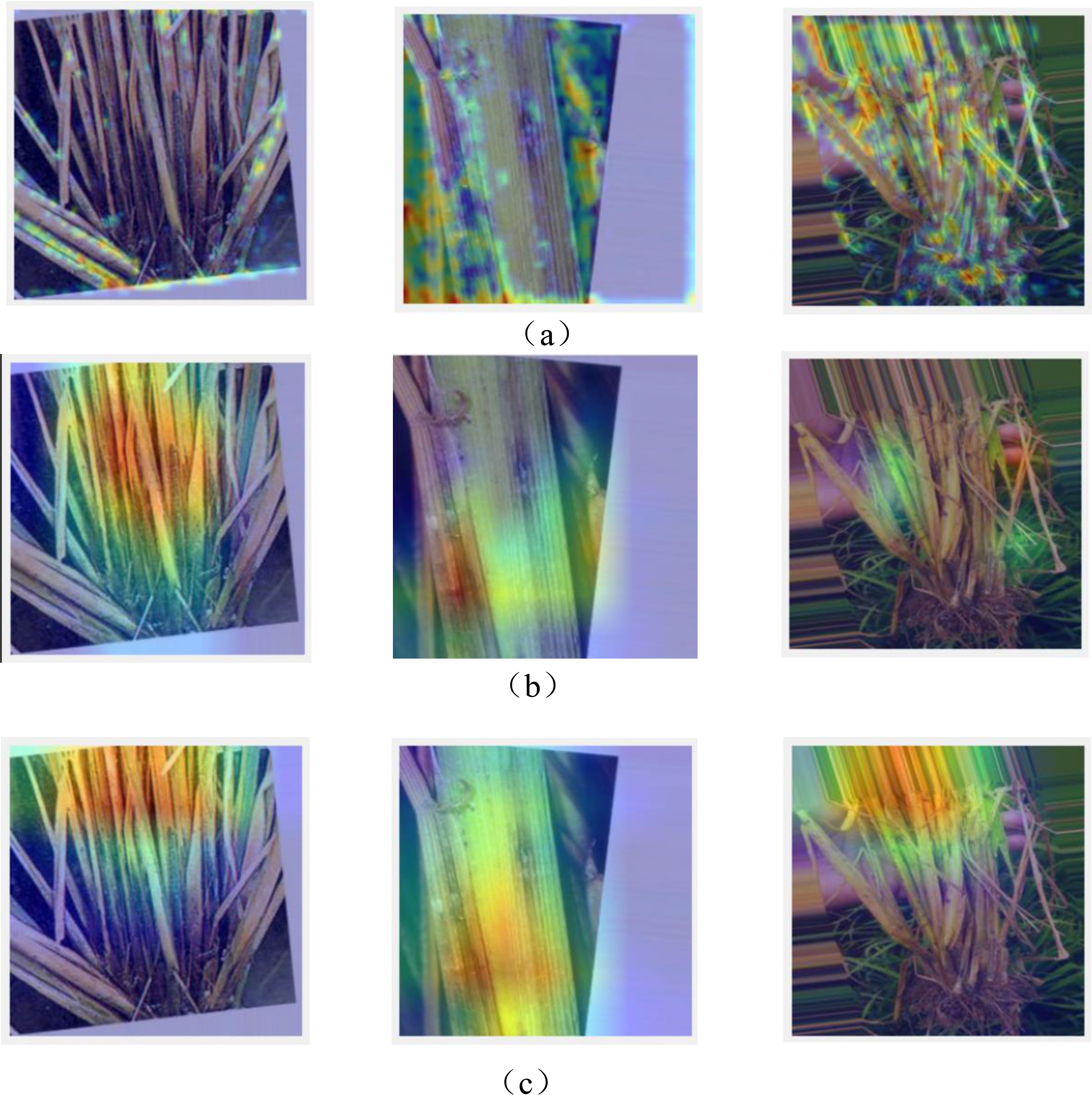

We visualized the outputs of the local feature block and the global feature block using Grad-CAM. As Figure 9 shows, the local feature block focuses on local regions of the wheat leaf disease image, while the global feature block, with its ability to learn global features, attends to more regions than the local feature block.

Figure 9. (A) Visualization of the input of the Local feature block and Global feature block. (B) Visualization of the output of the Local feature block. (C) Visualization of the output of the global feature block.

Conclusion

In the wheat leaf disease image classification task, traditional convolutional neural networks often suffer from insufficient local feature perception and incomplete understanding of global information. To overcome these shortcomings, this paper proposes GLNet as a new solution. GLNet adopts a global-local network architecture that processes global and local feature blocks in parallel and integrates their outputs through a feature fusion block. This design not only enables the model to better capture the multi-scale features of an image, but also significantly improves the performance and accuracy in the wheat leaf disease classification task.

The innovation of GLNet is its ability to simultaneously process and fuse local details and global background information at different scales. Experimental results show that the performance of GLNet significantly decreases in the absence of local features, global features, or feature fusion blocks, further validating the effectiveness and necessity of its design. This makes GLNet a powerful tool for dealing with the task of classifying wheat leaf disease images and provides new technical support and methodology for disease identification and prediction in the agricultural field.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Author contributions

SzL: Writing – original draft. SL: Writing – review & editing. MJ: Writing – review & editing. YC: Formal analysis, Data curation, Writing – original draft, Conceptualization. BY: Writing – review & editing, Writing – original draft.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the Key Research and Development Program of Heilongjiang Province of China (No. 2022ZX01A35).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alharbi, A., Khan, M. U. G., Tayyaba, B. (2023). Wheat disease classification using continual learning. IEEE Access 11, 90016–90026. doi: 10.1109/ACCESS.2023.3304358

Bansal, A., Sharma, R., Sharma, V., Jain, A. K., Kukreja, V. (2023). “A deep learning approach to detect and classify wheat leaf spot using faster R-CNN and support vector machine,” in 2023 IEEE 8th International Conference for Convergence in Technology (I2CT). 1–6 (Lonavla, India: IEEE).

Dadrasi, A., Chaichi, M., Nehbandani, A., Sheikhi, A., Salmani, F., Nemati, A. (2023). Addressing food insecurity: An exploration of wheat production expansion. PloS One 18, e0290684. doi: 10.1371/journal.pone.0290684

Ding, X., Guo, Y., Ding, G., Han, J. (2019). “Acnet: Strengthening the kernel skeletons for powerful cnn via asymmetric convolution blocks,” in Proceedings of the IEEE/CVF international conference on computer vision. 1911–1920 (Seoul, South Korea).

Erenstein, O., Jaleta, M., Mottaleb, K. A., Sonder, K., Donovan, J., Braun, H.-J. (2022). “Global trends in wheat production, consumption and trade,” in Wheat improvement: food security in a changing climate (Springer International Publishing, Cham), 47–66.

Guerrero, J. M., Guijarro, M., Montalvo, M., Romeo, J., Emmi, L., Ribeiro, A., et al. (2013). Automatic expert system based on images for accuracy crop row detection in maize fields. Expert Systems With Applications 40, 656–664. doi: 10.1016/j.eswa.2012.07.073

He, K., Zhang, X., Ren, S., Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778 (Las Vegas, Nevada, USA).

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., et al. (2017). Mobilenets: Efficient convolutional neural networks for mobile vision applications. doi: 10.1109/CVPR.2017.322

Huang, G., Liu, Z., van der Maaten, L., Weinberger, K. Q. (2017). “Densely connected convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition. 4700–4708 (Honolulu, Hawaii, USA).

Jiang, Z., Dong, Z., Jiang, W., Yang, Y. (2021). Recognition of rice leaf diseases and wheat leaf diseases based on multi-task deep transfer learning. Comput. Electron. Agric. 186, 106184. doi: 10.1016/j.compag.2021.106184

John, M. A., Bankole, I., Ajayi-Moses, O., Ijila, T., Jeje, O., Lalit, P. (2023). Relevance of advanced plant disease detection techniques in disease and Pest Management for Ensuring Food Security and Their Implication: A review. Am. J. Plant Sci. 14, 1260–1295. doi: 10.4236/ajps.2023.1411086

Khan, H., Haq, I. U., Munsif, M., Mustaqueem, Khan, S. U., Lee, M. Y. (2022). Automated wheat diseases classification framework using advanced machine learning technique. Agriculture 12, 1226. doi: 10.3390/agriculture12081226

Khasim, S., Ghosh, H., Rahat, I. S., Shaik, K., Yesubabu, M. (2024). Deciphering microorganisms through intelligent image recognition: machine learning and deep learning approaches, challenges, and advancements. EAI Endorsed Trans. Internet Things 10, 1–8. doi: 10.4108/eetiot.4484

Krizhevsky, A., Sutskever, I., Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems 25, 1–9. doi: 10.5555/2999134.2999278

Kukreja, V., Kumar, D. (2021). “Automatic classification of wheat rust diseases using deep convolutional neural networks,” in 2021 9th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO). 1–6 (Noida, India: IEEE).

Lin, Z., Mu, S., Huang, F., Mateen, K. A., Wang, M., Gao, W., et al. (2019). A unified matrix-based convolutional neural network for fine-grained image classification of wheat leaf diseases. IEEE Access 7, 11570–11590. doi: 10.1109/Access.6287639

Pan, Q., Gao, M., Wu, P., Yan, J., AbdelRahman, M. A. E. (2022). Image classification of wheat rust based on ensemble learning. Sensors 22, 6047. doi: 10.3390/s22166047

Rebouh, N. Y., Khugaev, C. V., Utkina, A. O., Isaev, K. V., Mohamed, E. S., Kucher, D. E. (2023). Contribution of eco-friendly agricultural practices in improving and stabilizing wheat crop yield: A review. Agronomy 13, 2400. doi: 10.3390/agronomy13092400

Shafi, U., Mumtaz, R., Qureshi, M. D. M., Mahmood, Z., Tanveer, S. K., Ul Haq, I., et al. (2023). Embedded AI for wheat yellow rust infection type classification. IEEE Access 11, 23726–23738. doi: 10.1109/ACCESS.2023.3254430

Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. doi: 10.48550/arXiv.1409.1556

Singh, J., Chhabra, B., Raza, A., Yang, S. H., Sandhu, K. S. (2023). Important wheat diseases in the US and their management in the 21st century. Front. Plant Sci. 13, 1010191. doi: 10.3389/fpls.2022.1010191

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., et al. (2015). “Going deeper with convolutions,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 1–9 (Boston, Massachusetts, USA).

Tan, M., Le, Q. (2019). “Efficientnet: Rethinking model scaling for convolutional neural networks,” in International conference on machine learning. 6105–6114 (USA: PMLR).

Xiao, F., Li, D., Wang, W., Zheng, G., Liu, H., Sun, Y., et al. (2021). Image recognition of wheat leaf diseases based on lightweight convolutional neural network and transfer learning. Journal of Henan Agricultural Sciences 50, 174. doi: 10.15933/j.cnki.1004-3268.2021.04.023

Xu, L., Cao, B., Zhao, F., Ning, S., Xu, P., Zhang, W., et al. (2023). Wheat leaf disease identification based on deep learning algorithms. Physiological and Molecular Plant Pathology 123, 101940. doi: 10.1016/j.pmpp.2022.101940

Keywords: convolutional neural network, wheat leaf disease, image classification, multiscale features, GLNet model

Citation: Li S, Liu S, Ji M, Cao Y and Yun B (2024) GLNet: global-local feature network for wheat leaf disease image classification. Front. Plant Sci. 15:1471705. doi: 10.3389/fpls.2024.1471705

Received: 28 July 2024; Accepted: 25 November 2024;

Published: 20 December 2024.

Edited by:

Ying Bi, Zhengzhou University, China

Copyright © 2024 Li, Liu, Ji, Cao and Yun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mingyu Ji, jimingyu@nefu.edu.cn
