- 1School of Big Data, Yunnan Agricultural University, Kunming, China

- 2Key Laboratory for Crop Production and Intelligent Agriculture of Yunnan Province, Yunnan Agricultural University, Kunming, China

- 3School of Mechanical and Electrical Engineering, Yunnan Agricultural University, Kunming, China

Deep learning models have been widely applied in the field of crop disease recognition. There are various types of crops and diseases, each potentially possessing distinct and effective features. This brings a great challenge to the generalization performance of recognition models and makes it very difficult to build a unified model capable of achieving optimal recognition performance on all kinds of crops and diseases. In order to solve this problem, we have proposed a novel ensemble learning method for crop leaf disease recognition (named ELCDR). Unlike the traditional voting strategy of ensemble learning, ELCDR assigns different weights to the models based on their feature extraction performance during ensemble learning. In ELCDR, the models’ feature extraction performance is measured by the distribution of the feature vectors of the training set. If a model could distinguish more feature differences between different categories, then it receives a higher weight during ensemble learning. We conducted experiments on the disease images of four kinds of crops. The experimental results show that in comparison to the optimal single model recognition method, ELCDR improves by as much as 1.5 (apple), 0.88 (corn), 2.25 (grape), and 1.5 (rice) percentage points in accuracy. Compared with the voting strategy of ensemble learning, ELCDR improves by as much as 1.75 (apple), 1.25 (corn), 0.75 (grape), and 7 (rice) percentage points in accuracy in each case. Additionally, ELCDR also has improvements on precision, recall, and F1 measure metrics. These experiments provide evidence of the effectiveness of ELCDR in the realm of crop leaf disease recognition.

1 Introduction

Crops face continuous threats from different diseases during planting, making disease control a long-standing and crucial challenge for farmers. Early detection of crop diseases is an essential task in agriculture (Applalanaidu and Kumaravelan, 2021). In the early stage, farmers and experts relied on their knowledge and experience to diagnose crop diseases. However, this approach is inefficient, expensive, and characterized by low accuracy. With the development of information technology, researchers began to apply machine learning (ML) and deep learning (DL) technologies for crop disease recognition. ML and DL technologies offer the potential to automate and enhance the accuracy of crop disease recognition. In recent years, DL has become the mainstream technology in the field of crop disease recognition due to its automated feature extraction, high accuracy, and user-friendliness. Many researchers have tried to apply more advanced DL models to recognize diseases in different crops. For instance, Fuentes et al. (2018) used Faster R-CNN to recognize tomato diseases, achieving a recognition rate of approximately 96%. Nachtigall et al. (2016) proposed a technique for apple disease recognition based on AlexNet, achieving an accuracy of 96.6%. Jiang et al. (2020) applied convolutional neural networks (CNNs) to recognize four different rice diseases, achieving an accuracy of 96.8%. There are also many recognition studies on other crops based on DL models, including grape (Xie et al., 2020), mango (Singh et al., 2019), millet (Coulibaly et al., 2019), olive (Cruz et al., 2017), and cucumber (Kawasaki et al., 2015). The DL models used in these studies include Xception, MCNN, VGG, LeNet, and custom CNNs. In these studies, the researchers apply different DL models in the expectation that better models will bring better recognition accuracy.

There are many kinds of crops and crop diseases. While the leaves of different crops may exhibit distinct morphological features, the symptoms caused by different diseases can often appear visually similar (Ngugi et al., 2021). This brings a great challenge to the generalization performance of the recognition model. Different DL models have their own feature extraction mechanisms, resulting in different recognition performances in different crops’ disease recognition. This variability also makes it difficult to build a common model that can achieve optimal recognition performance across all crop types and diseases. To solve this problem, we proposed a novel ensemble learning method for crop leaf disease recognition (named ELCDR), which can integrate different DL models to improve the generalization performance of the recognition method.

The contributions of this paper include the following key aspects:

1) We proposed a novel ensemble learning method for crop leaf disease recognition (named ELCDR). Compared with the crop disease recognition methods that are based on a single model, ELCDR demonstrates better recognition performance and generalization performance.

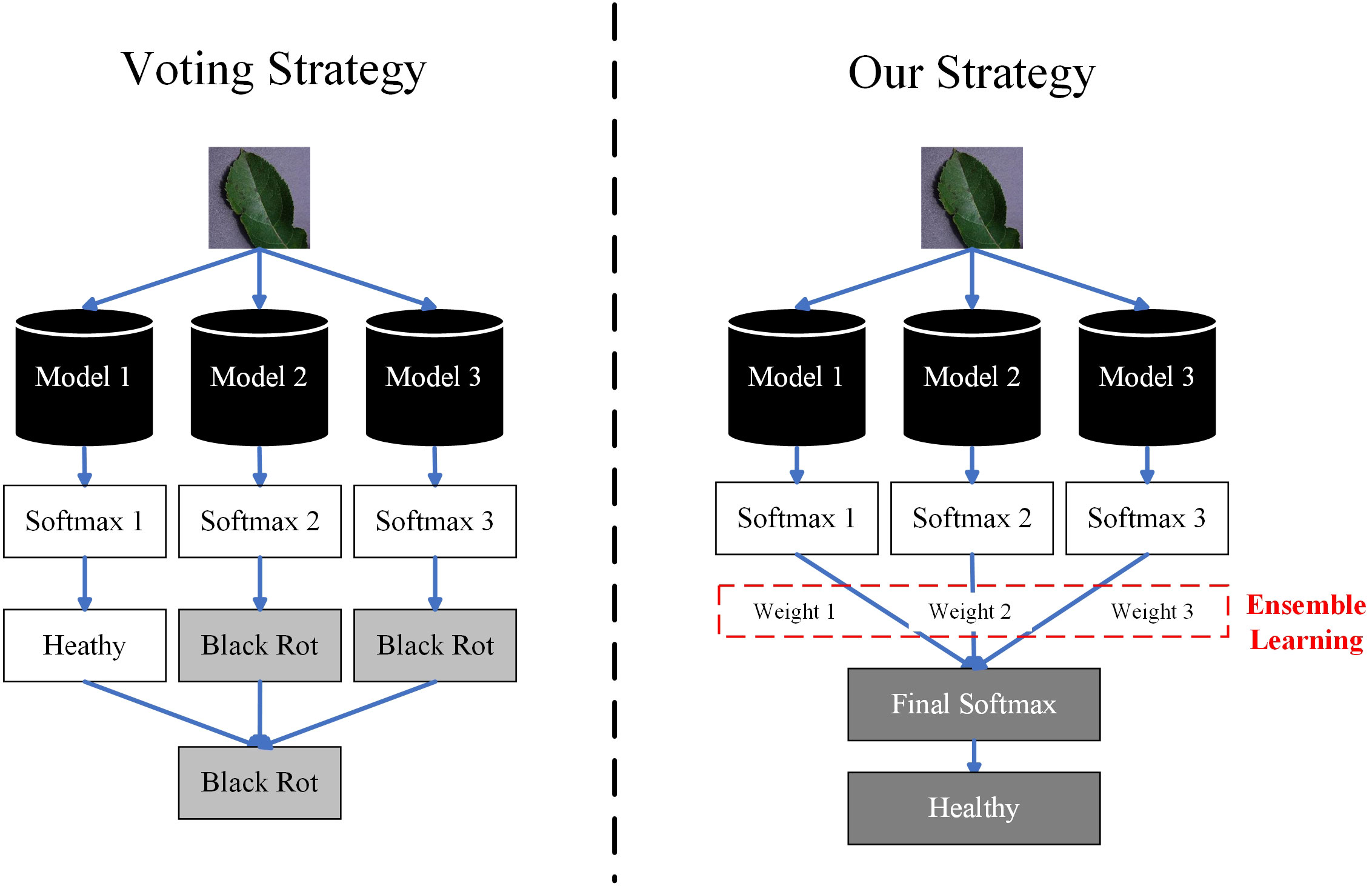

2) We proposed an innovative ensemble learning strategy and deployed it within ELCDR. Compared with the traditional voting strategy, our strategy can realize more reasonable ensemble learning. By this, ELCDR can achieve better recognition performance and generalization performance than the methods based on the voting strategy.

3) We executed experiments on the dataset that includes four different crop types, and the results showed the effectiveness of our methods.

2 Related works

The recognition of crop leaf disease images is essentially an image classification task. In the past, many researchers tried to achieve automated crop disease image recognition using traditional machine learning technologies. Support vector machine (SVM) is the most widely used machine learning algorithm in the research field of crop disease image recognition. Raza et al. (Shan-E-Ahmed et al., 2015) proposed an SVM-based method that can detect tomato powdery mildew with an accuracy of more than 90%. Islam et al. (2017) suggested an SVM-based approach for recognizing two potato diseases, with an accuracy of 95%. Additionally, Pantazi et al. (2019) proposed a multiple crop disease recognition system based on SVM, also achieving an accuracy of 95%. On the other hand, Kaur et al. (2018) applied SVM to recognize various diseases of soybean, with the highest accuracy reaching 62.53%. Furthermore, the k-nearest neighbor (KNN) (Hossain et al., 2019), k-means (Prakash et al., 2017), transductive support vector machine (TSVM) (Ahmed et al., 2019), and multiple linear regression (MLR) (Sun et al., 2018) are also traditional machine learning technologies that are widely applied in crop leaf disease recognition. All of these recognition methods based on traditional machine learning need image features to be selected manually or by other selection algorithms. The quality of feature selection significantly impacts the performance of recognition. As a result, traditional machine learning methods have a barrier to use and may perform unstably.

In recent years, due to developments in deep learning technologies, researchers have been incorporating deep learning models into the realm of crop disease image recognition. Deep learning models are proficient at automating feature selection and extraction, allowing for end-to-end deployment. This has led deep learning models to gradually become the mainstream methods in the field of crop disease image recognition. For instance, Jiang et al. (2019) achieved real-time disease recognition for apples using the VGG model and attained an accuracy of 78.8%. Additionally, some studies have applied VGG to recognize diseases in other crops (Paymode and Malode, 2021; Bhagat et al., 2023; Kundu et al., 2021). VGG is a widely applied deep learning model in crop disease recognition studies because it has a simple network structure and small convolutional kernels. Researchers also introduced other deep learning models to crop leaf disease recognition, such as ResNet (Stephen et al., 2023), MobileNet (Chen et al., 2021), AlexNet (Chen et al., 2022), and GoogLeNet (Yang et al., 2023). However, the diverse array of crops and diseases poses a great challenge to the generalization performance of recognition models. This challenge makes it very difficult to build a unified model that can achieve optimal recognition performance on all kinds of crops and diseases. When applying deep learning models to a new kind of crop or disease, researchers often need to optimize the model to adapt to the unique characteristics of that specific crop and disease (Ganesan and Chinnappan, 2022; Reddy et al., 2023). Otherwise, the models may fail to achieve their optimal recognition performance.

In order to improve the generalization performance of the recognition method, researchers have introduced ensemble learning to image-based crop disease recognition (Li et al., 2021). Ensemble learning (Ganaie et al., 2022) is an effective way to improve the generalization performance of the recognition method. Several studies (Chaudhary et al., 2020; Mathew et al., 2022; Palanisamy and Sanjana, 2023) introduced the voting strategy of ensemble learning to crop disease recognition and observed improvements in recognition accuracy in the experiments. When we apply the voting strategy to recognize an image, each of the models casts a vote for a particular category. Then, the image is assigned to the category that receives the most votes. Voting is a simple and often effective ensemble learning strategy, but it treats all models as equally important. Even if a model fails to extract effective features, its vote still carries the same weight as any other. This is unreasonable. Furthermore, if each model votes for a different category, it becomes impossible to determine which category the image should belong to, rendering the voting strategy ineffective.
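The voting strategy described above, including the case where every model votes for a different category, can be illustrated with a minimal sketch. The function name `majority_vote` and the convention of returning `None` for an invalid vote are assumptions made for illustration; they do not come from the cited studies.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_vote(predictions: Sequence[int]) -> Optional[int]:
    """Return the category with the most votes, or None when the top count is tied."""
    if not predictions:
        raise ValueError("at least one model prediction is required")
    counts = Counter(predictions).most_common()
    # A tie for first place means no unique winner: the vote is invalid.
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


# Three hypothetical models, each voting for one category index.
agreeing = majority_vote([1, 1, 2])      # two of three models agree
disagreeing = majority_vote([0, 1, 2])   # every model votes differently
print(agreeing, disagreeing)
```

Every vote counts equally here, regardless of how well the voting model separates the categories, which is the limitation the weighted strategy is meant to address.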

To solve this problem, we have proposed a novel ensemble learning method for crop leaf disease recognition, named ELCDR. Different from the traditional voting strategy, it assigns varying weights to the models during ensemble learning. These weights are determined based on the feature extraction performance of each model, which can be measured by examining the distribution of feature vectors. Using this approach, ELCDR can achieve more accurate and stable disease recognition performance across different crops. Figure 1 shows the primary differences between the voting strategy and our proposed strategy when applied in ensemble learning.

3 A novel ensemble learning method for crop leaf disease recognition

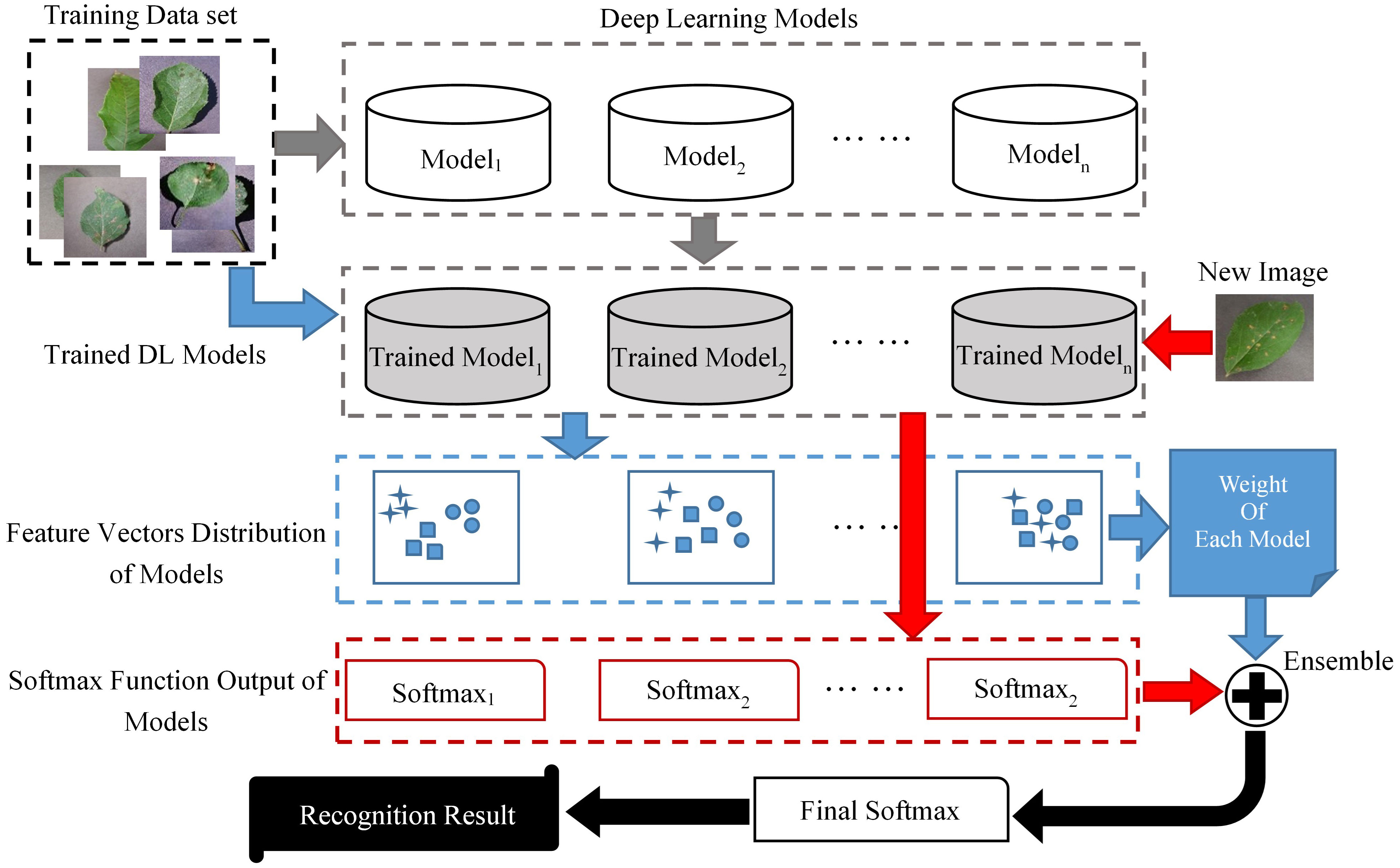

The basic flow of ELCDR is shown in Figure 2. There are four stages in ELCDR, and they are represented by arrows of different colors in the figure.

1) Stage 1 is represented by the gray arrows. We build a training set and multiple DL models at the beginning of this stage. Then, we use the training set to train each of the DL models separately. If there are n DL models, then we will get n trained models at the end of this stage.

2) Stage 2 is represented by the blue arrows. The main purpose of this stage is to measure each model’s feature extraction performance and then calculate its ensemble learning weight. We input the training set into each of the trained models to get the feature vector distribution of each trained model. Then, we measure each model’s feature extraction performance from its feature vector distribution. Finally, we calculate the ensemble learning weight of each model based on its feature extraction performance. The details of how to measure the feature extraction performance and how to calculate the ensemble learning weight are introduced in Section 3.1. If there are n trained models, then this stage yields their ensemble learning weights ω1 to ωn.

3) Stage 3 is represented by the red arrows. Once a new image is input into the trained models, we can get the softmax function output of each model. If there are n trained models, then we can extract their softmax function output as sf1 to sfn.

4) Stage 4 is represented by the black arrows. Based on the ensemble learning weights and the image’s softmax function outputs, we can calculate the final softmax function output by the following formula:

$$sf_{final} = \sum_{i=1}^{n} \omega_i \cdot sf_i \tag{1}$$

In Formula (1), n means the number of trained models, $\omega_i$ means the ensemble learning weight of the ith model, and $sf_i$ means its softmax function output. Lastly, we can get the new image’s recognition result based on the final softmax function output. The details regarding how to calculate the final softmax function output are provided in Section 3.2.

3.1 Weight calculation by measuring the feature extraction performance of the models

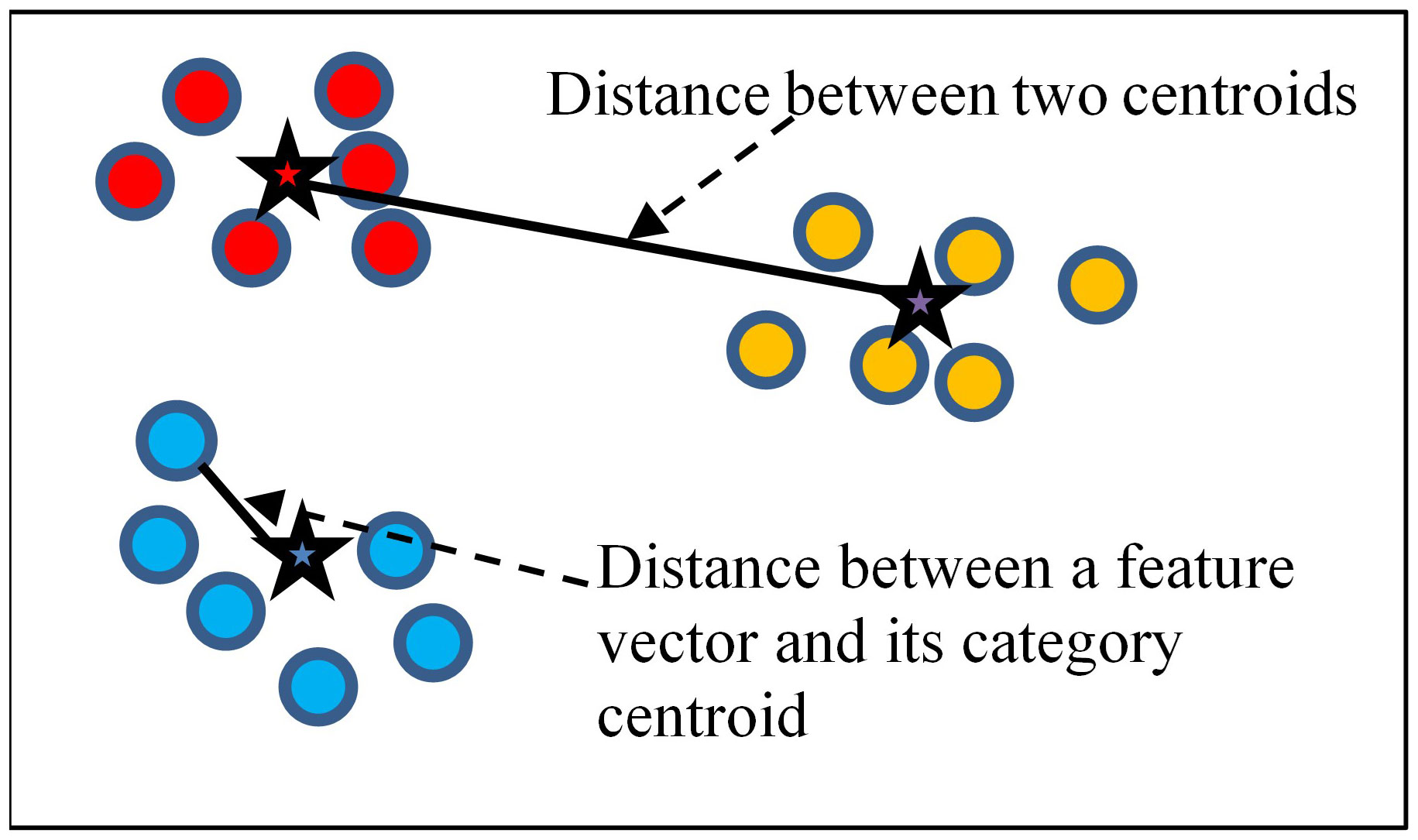

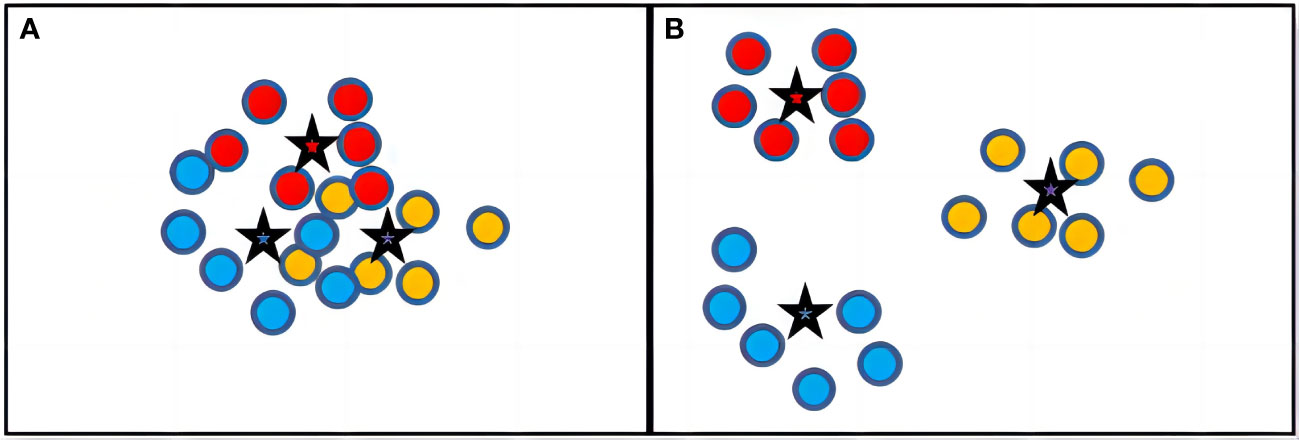

The traditional ensemble learning method generally uses a voting strategy, but we find this strategy to be unreasonable. Different DL models may exhibit different feature extraction performances on the same dataset. If a model can extract more effective features, it should be assigned more weight during the ensemble process. Conversely, a model with less effective feature extraction should be assigned less weight. Following this idea, we have introduced a novel weight calculation method that measures the feature extraction performance of the models. This method uses the distribution of the training set’s feature vectors to measure a model’s feature extraction performance. Suppose there are t images in the training set, divided into k categories. Once we input the training set into the DL model, we can get t feature vectors corresponding to the images. Figure 3 shows an example of feature vector distribution in a two-dimensional space. In this space, there are 18 feature vectors represented by spots. All of the vectors can be divided into three categories, which we use different colors to represent. Each category has a category centroid represented by a star. The category centroid is the average of all vectors in the category. For a specific category p that contains m vectors in total, its centroid $cen_p$ can be calculated by the following formula:

$$cen_p = \frac{1}{m}\sum_{i=1}^{m} v_i \tag{2}$$

In Formula (2), $v_i$ means the ith feature vector in category p.

We believe that a model’s feature extraction performance can be measured by considering the in-category distance and the between-categories distance of the training set feature vectors.

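The in-category distance in a vector space means the average distance of all vectors to their respective category centroid. We use icD to represent the in-category distance, and it can be calculated using the following formula:

$$icD = \frac{1}{t}\sum_{p=1}^{k}\sum_{i=1}^{m_p} \mathrm{dist}\left(v_{p,i},\ cen_p\right) \tag{3}$$

In Formula (3), k means the total number of categories, t means the total number of feature vectors, $m_p$ means the number of vectors in category p, $v_{p,i}$ means the ith vector in category p, and the function dist means the Euclidean distance between two vectors.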

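The between-categories distance means the average distance between all pairs of category centroids. We use bcD to represent the between-categories distance, and it can be calculated using the following formula:

$$bcD = \frac{1}{C_k^2}\sum_{p=1}^{k-1}\sum_{q=p+1}^{k} \mathrm{dist}\left(cen_p,\ cen_q\right) \tag{4}$$

In Formula (4), $C_k^2 = k(k-1)/2$ is the combination number formula, that is, the number of pairs of category centroids.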
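The two distances can be sketched in a few lines of standard-library Python. This is an illustrative sketch rather than the authors' implementation: it assumes that the in-category distance is the mean, over all training vectors, of the Euclidean distance to the vector's own category centroid, and that the between-categories distance is the mean over all unordered pairs of centroids. The function names and the toy category labels are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two vectors of equal length."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def centroid(vectors: List[Vector]) -> List[float]:
    """Component-wise mean of the vectors in one category."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def in_category_distance(groups: Dict[str, List[Vector]]) -> float:
    """Mean distance from every vector to its own category centroid (assumed form)."""
    total, count = 0.0, 0
    for vectors in groups.values():
        cen = centroid(vectors)
        total += sum(euclidean(v, cen) for v in vectors)
        count += len(vectors)
    return total / count


def between_categories_distance(groups: Dict[str, List[Vector]]) -> float:
    """Mean distance over all unordered pairs of category centroids."""
    centroids = [centroid(vectors) for vectors in groups.values()]
    pairs = list(combinations(centroids, 2))
    return sum(euclidean(a, b) for a, b in pairs) / len(pairs)


# Toy two-dimensional feature vectors for three hypothetical categories.
features = {
    "healthy": [(0.0, 0.0), (0.0, 2.0)],
    "rust": [(4.0, 0.0), (4.0, 2.0)],
    "scab": [(0.0, 4.0), (0.0, 6.0)],
}
icd = in_category_distance(features)
bcd = between_categories_distance(features)
print(icd, bcd)
```

In this toy data every vector lies exactly one unit from its centroid, while the centroids are at least four units apart, so the categories are compact and well separated.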

If a model can effectively extract the images’ features, then the feature vectors that belong to the same category should be closely distributed around their category centroid. Additionally, the centroids of different categories should be distributed far apart from each other. Figure 4 shows the feature vector spaces of two different models (ModelA and ModelB). ModelB has evidently extracted more effective features because it is very easy to determine the category of an image in the vector space of ModelB. In contrast, the vectors of ModelA are closely distributed together, making it very challenging to distinguish the category of an image. Therefore, we can conclude that ModelB has a higher feature extraction performance than ModelA. From this perspective, we should assign a higher weight to ModelB than to ModelA during ensemble learning.

Figure 4 Example of feature vector distribution of different models. (A) Feature Vectors Distribution of ModelA. (B) Feature Vectors Distribution of ModelB.

The distribution of vectors in the same category can be measured by icD, and the distribution of different categories can be measured by bcD. So, if a model has a bigger bcD and a smaller icD, then we can assume it to have better feature extraction performance. We use FEPg to represent the feature extraction performance of modelg, and it can be calculated by the following formula:

$$FEP_g = \frac{bcD_g}{icD_g} \tag{5}$$

In Formula (5), $icD_g$ and $bcD_g$ mean the in-category distance and the between-categories distance of modelg, respectively.

We should give the model that has a higher FEP more weight when performing ensemble learning. So, the weight formula is defined as follows:

$$\omega_g = \frac{FEP_g}{\sum_{i=1}^{n} FEP_i} \tag{6}$$

In this formula, $\omega_g$ means the weight assigned to modelg, and n means the total number of models used during ensemble learning.
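The step from distances to weights can be sketched as follows. Two assumptions are made here and should be read as such: the feature extraction performance is taken to be the ratio of the between-categories distance to the in-category distance, and each weight is the model's FEP divided by the sum of all FEP values so that the weights sum to 1. The model names and distance values are invented for illustration and are not results from the paper.

```python
from typing import Dict


def feature_extraction_performance(icd: float, bcd: float) -> float:
    """Assumed score: grows when categories are far apart and internally compact."""
    if icd <= 0:
        raise ValueError("in-category distance must be positive")
    return bcd / icd


def ensemble_weights(fep: Dict[str, float]) -> Dict[str, float]:
    """Normalise the FEP scores so that the weights sum to 1 (assumed form)."""
    total = sum(fep.values())
    return {name: score / total for name, score in fep.items()}


# Hypothetical (icD, bcD) pairs for three models; not values from the paper.
distances = {
    "model_a": (2.0, 4.0),
    "model_b": (1.0, 5.0),
    "model_c": (2.0, 6.0),
}
fep = {
    name: feature_extraction_performance(icd, bcd)
    for name, (icd, bcd) in distances.items()
}
weights = ensemble_weights(fep)
print(weights)
```

The model whose categories are most compact relative to their separation (`model_b` here) receives the largest share of the weight.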

In ELCDR’s stage 2, we input the training set into the trained models and then calculate their weights by measuring their feature extraction performance.

3.2 Ensemble learning strategy of ELCDR

Once a new image is input to the trained DL models, we can get a softmax function output from each model. The softmax function output is the probability distribution of this image belonging to each category. For example, if we input imgx into a trained DL model, we may get a softmax function output of [0.2, 0.6, 0.2]. It means that there are three categories in total, and imgx most probably belongs to the second category. If we input imgx into multiple trained DL models, we get one softmax function output per model. Then, we can use the weights calculated in Section 3.1 to integrate them into a final softmax function output $sf_{final}$, which is calculated as follows:

$$sf_{final} = \sum_{i=1}^{n} \omega_i \cdot sf_i \tag{7}$$

In this formula, $sf_i$ means the softmax output of the ith model, $\omega_i$ means the weight of the ith model, and there are n models in total in the ensemble. At last, we choose the category that has the highest probability in $sf_{final}$ as the final crop disease recognition result.
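A small worked example may help make the integration step concrete. The sketch below assumes that the final output is the weighted sum of the per-model softmax outputs and that the weights sum to 1. The function names, the softmax values (apart from the [0.2, 0.6, 0.2] example from the text), and the weights are hypothetical.

```python
from typing import List, Sequence, Tuple


def fuse_softmax(
    outputs: Sequence[Sequence[float]], weights: Sequence[float]
) -> List[float]:
    """Weighted sum of per-model softmax outputs, one value per category."""
    if len(outputs) != len(weights):
        raise ValueError("one weight is required per model output")
    return [sum(w * s for w, s in zip(weights, column)) for column in zip(*outputs)]


def predict(
    outputs: Sequence[Sequence[float]], weights: Sequence[float]
) -> Tuple[int, List[float]]:
    """Return the index of the most probable category and the fused distribution."""
    fused = fuse_softmax(outputs, weights)
    best = max(range(len(fused)), key=fused.__getitem__)
    return best, fused


# One row per model, one column per category (hypothetical values).
softmax_outputs = [
    [0.2, 0.6, 0.2],
    [0.7, 0.2, 0.1],
    [0.5, 0.3, 0.2],
]
weights = [0.2, 0.5, 0.3]  # assumed ensemble learning weights, summing to 1
category, fused = predict(softmax_outputs, weights)
print(category, fused)
```

Although the first model prefers the second category, the two more heavily weighted models prefer the first, so the fused distribution selects the first category (index 0). Because the weights sum to 1, the fused output remains a probability distribution.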

3.3 The basic steps of ELCDR

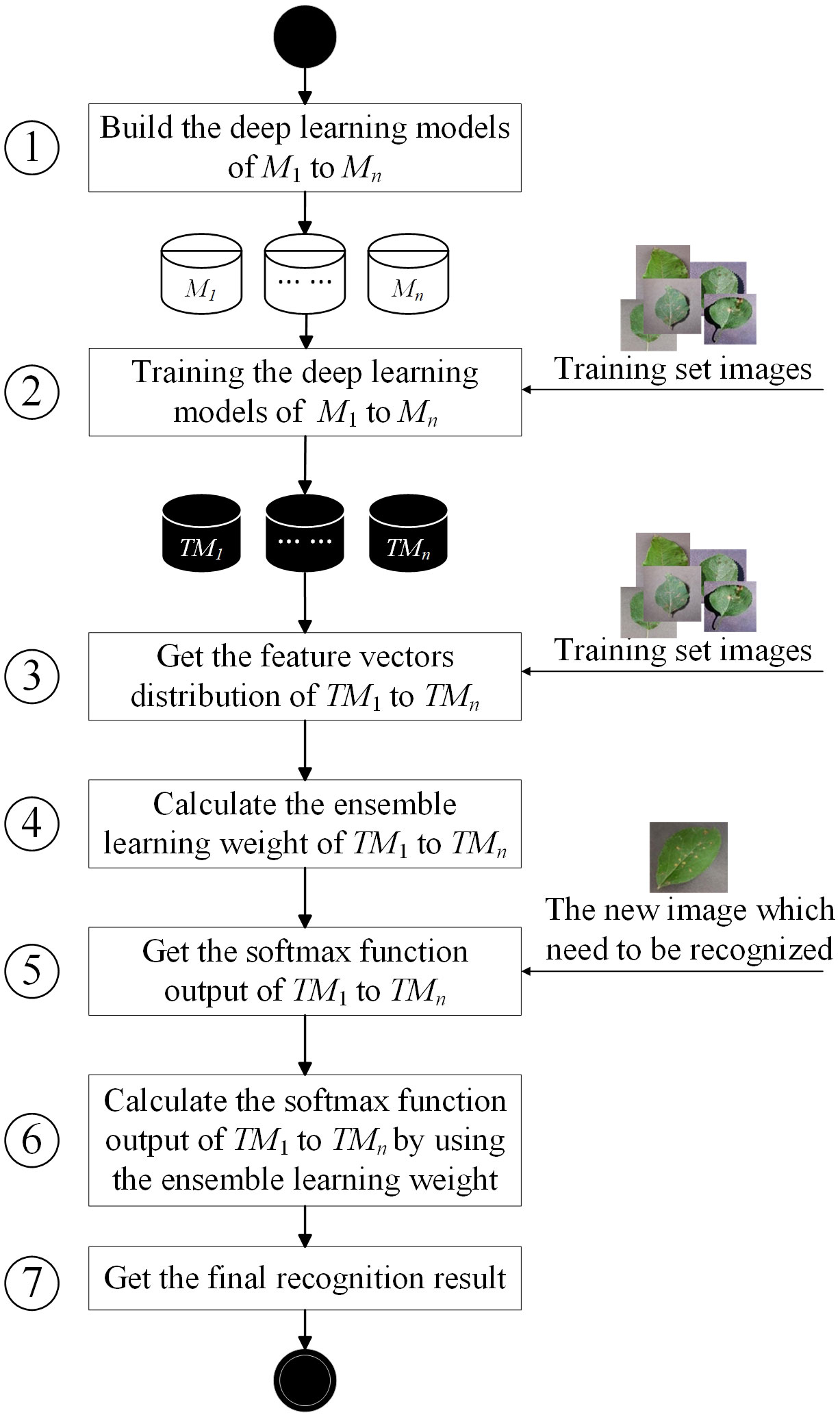

The basic flowchart of ELCDR is shown in Figure 5, comprising seven basic steps. In this section, we will introduce the details of each step.

Step 1. In this step, we need to decide how many and which deep learning models for deployment in ensemble learning are necessary. If we aim to deploy n models, then we need to build these models and set their hyperparameters. Generally, we can choose the models that are widely used in crop disease recognition to deploy, such as VGG, ResNet, and MobileNet. After completing this step, we can have n models: M1, M2,…, and Mn.

Step 2. After the step of building the model, we can use the training set images to train the models one by one. Each image in the training set is labeled to indicate its crop disease category. If there are h images in the training set, they could be divided into k crop disease categories. This means that for each imagei∈ {image1, image2,…, imageh}, it will belong to a specific categoryj∈ {category1, category2,…, categoryk}. After the training process, we can get n trained models: TM1, TM2,…, and TMn.

Step 3. In this step, we need to input the training set images to the trained models one by one and extract the feature vectors in the pooling layer of the trained models. For each imagei∈ {image1, image2,…, imageh}, if we input it to TMg, then we can extract its feature vector in the pooling layer of TMg. Since there are h images, we can get h feature vectors in the feature vector space of TMg. Then, we can calculate the feature extraction performance of TMg by Formulae (2–5) in Section 3.1. For each TMg∈ {TM1, TM2,…, TMn}, we can calculate its feature extraction performance FEPg in this way. So, we can get the feature extraction performance of all the models as {FEP1, FEP2,…, FEPn}.

Step 4. Since we have obtained the feature extraction performance of every model, we can calculate the ensemble learning weight of each model by using Formula (6). Then, we can get the ensemble learning weights of all the models as {ω1, ω2,…, ωn}.

Step 5. When we want to recognize a new crop disease image, we need to input it into the trained models one by one. Then, we can get the softmax function output of each model. After this step, we have n softmax function outputs. We use sfi to represent the softmax function output of TMi as follows:

$$sf_i = \left[s_{i,1},\ s_{i,2},\ \ldots,\ s_{i,k}\right] \tag{8}$$

In Formula (8), $s_{i,j}$ means the possibility, from the perspective of TMi, of the new image belonging to categoryj.

Step 6. After getting the softmax function output of each trained model, we need to calculate the final softmax function output $sf_{final}$ by Formula (7).

Step 7. After completing the previous step, we have the final softmax function output as follows:

$$sf_{final} = \left[s_1,\ s_2,\ \ldots,\ s_k\right] \tag{9}$$

In Formula (9), $s_j$ means the final possibility of the new image belonging to categoryj. So, we choose the category that has the maximum possibility value as the final recognition result.

4 Experiments

To verify the effectiveness of ELCDR, our experiments were performed on a dataset that includes four kinds of crops. We will mainly address three research questions (RQ) as follows:

1) RQ 1: Can ELCDR achieve better crop disease recognition performance than single model methods?

2) RQ 2: Can our weighting strategy achieve better crop disease recognition performance than the voting and average weighting strategies?

3) RQ 3: Is our feature extraction performance metric effective?

4.1 Dataset

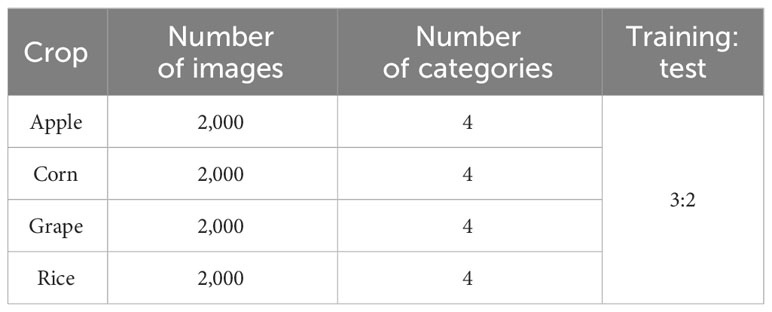

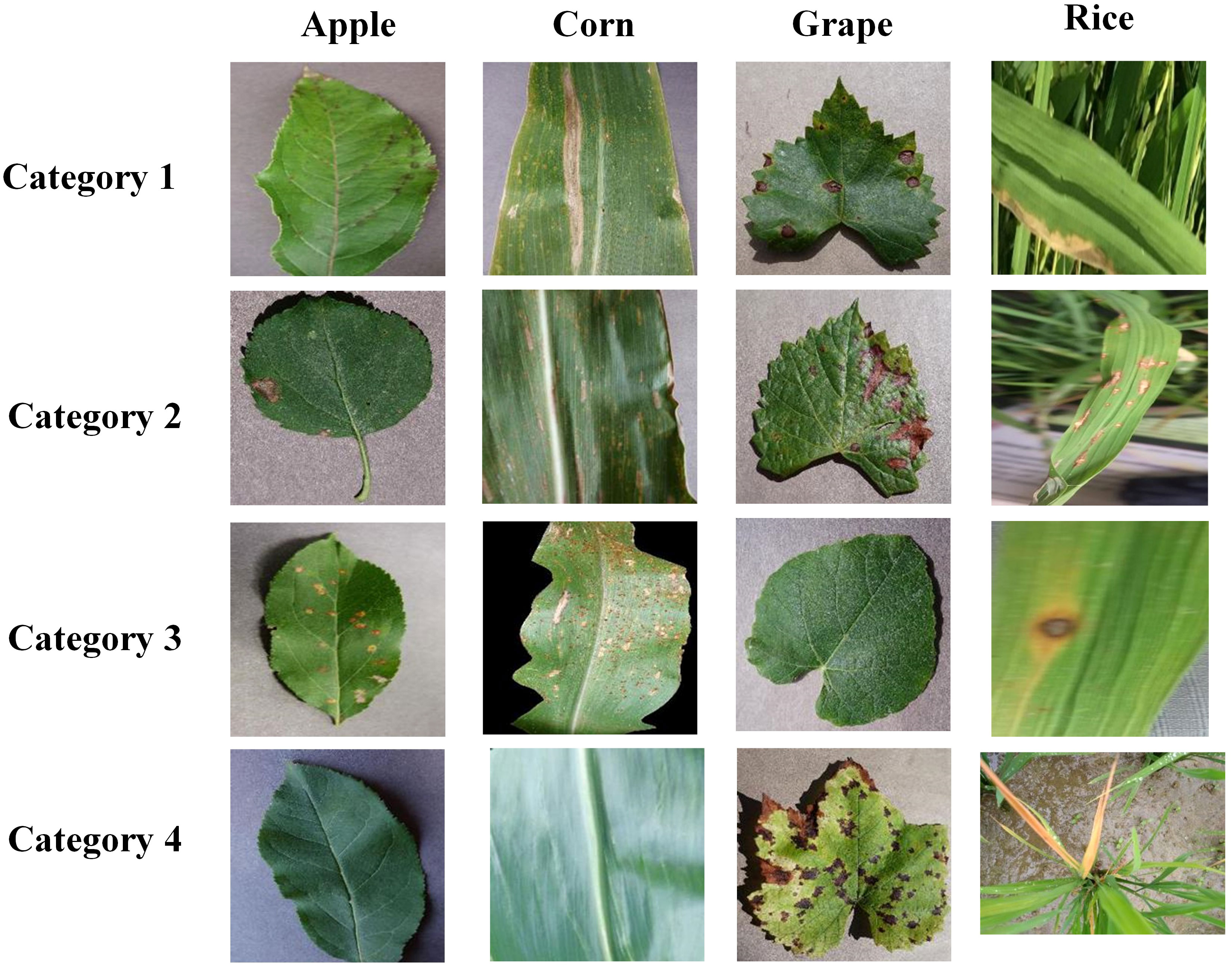

We have built a dataset that includes four kinds of crops; its details are shown in Table 1. In this dataset, each crop has four image categories. The images of apple, corn, and grape were taken from the PlantVillage dataset (Hughes and Salathe, 2015), and they were captured against a simple background. The rice leaf images were taken from the Sambalpur University’s dataset (Sethy et al., 2020), and these images were captured in a natural environment with complex backgrounds. Figure 6 shows example images from the dataset. The dataset has been uploaded to the Kaggle website and can be accessed at the following address: https://www.kaggle.com/datasets/zhangguangchuan/crop-disease-dataset.

4.2 Performance metrics

We used accuracy, precision, recall, and confusion matrix to measure the performance of crop disease recognition methods in our experiments. These metrics are the most common metrics in the research field of image recognition. For their specific definition, please refer to our previous article (He et al., 2022).
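For readers without the referenced article at hand, the sketch below shows one common way to derive these metrics from a confusion matrix. It assumes macro averaging over categories, which may differ from the exact definitions in He et al. (2022). The function name and the example matrix are hypothetical.

```python
from typing import Dict, List


def classification_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """confusion[i][j] counts images of true category i predicted as category j."""
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[c][c] for c in range(k))
    precisions, recalls, f1_scores = [], [], []
    for c in range(k):
        true_positive = confusion[c][c]
        predicted_as_c = sum(confusion[r][c] for r in range(k))  # column sum
        actually_c = sum(confusion[c])                           # row sum
        precision = true_positive / predicted_as_c if predicted_as_c else 0.0
        recall = true_positive / actually_c if actually_c else 0.0
        denominator = precision + recall
        f1 = 2 * precision * recall / denominator if denominator else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    # Macro averaging: every category contributes equally (an assumption).
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1_scores) / k,
    }


# Hypothetical two-category confusion matrix with 20 test images.
confusion = [[8, 2], [1, 9]]
metrics = classification_metrics(confusion)
print(metrics)
```

With this matrix, 17 of the 20 images lie on the diagonal, so the accuracy is 0.85.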

4.3 Experiment details

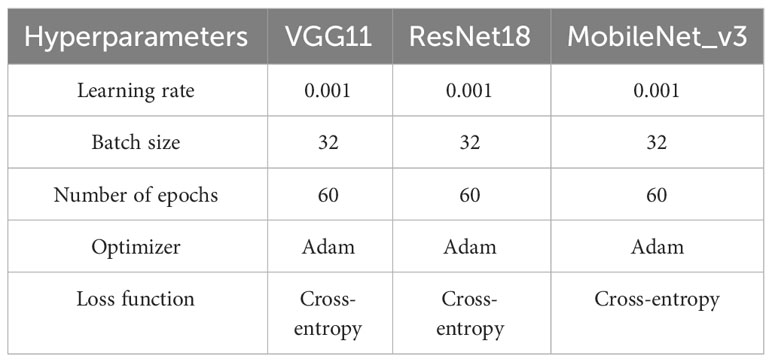

We deployed three DL models in ELCDR that are widely used in crop disease recognition methods: VGG11, ResNet18, and MobileNet_V3. Their network structure is shown in Figure 7. The main idea of the VGG model is to construct a deep network model by reusing simple foundation blocks (Simonyan and Zisserman, 2014). VGG uses small convolutional kernels and pooling layers, with deeper layers and more channels. It aims to extract more features by increasing the number of channels. VGG11 has 11 parameter layers, consisting of 8 convolutional layers and 3 fully connected layers. ResNet is a deep residual network developed by Microsoft Research Asia (He et al., 2016). It uses residual blocks and residual connections to construct the network, which allows for training deeper networks and mitigates the vanishing gradient problem. It can achieve better classification performance by continuously increasing the network depth. ResNet18 has 18 parameter layers, including 8 residual blocks. MobileNet_V3 was proposed by Google in 2019 and is the third-generation network of MobileNet (Howard et al., 2019). MobileNet_V3 is a lightweight model and can construct a very small, low-latency, and low-consumption model by only setting two hyperparameters. MobileNet_V3 mainly consists of 11 bottleneck layers. All of these models are widely applied in crop disease recognition systems and research studies. Thus, we chose them to test the effectiveness of our method in the experiment.

To answer RQ 1, we tested ELCDR using each of the crops from the dataset, and then we compared its performance against that of VGG11, ResNet18, and MobileNet_v3, respectively.

To answer RQ 2, we compared the performance of ELCDR with the voting and average weighting strategies.

To answer RQ 3, we calculated the ensemble learning weight and recognition performance for each model separately to investigate whether our weighting strategy effectively reflects the feature extraction performance of different models.

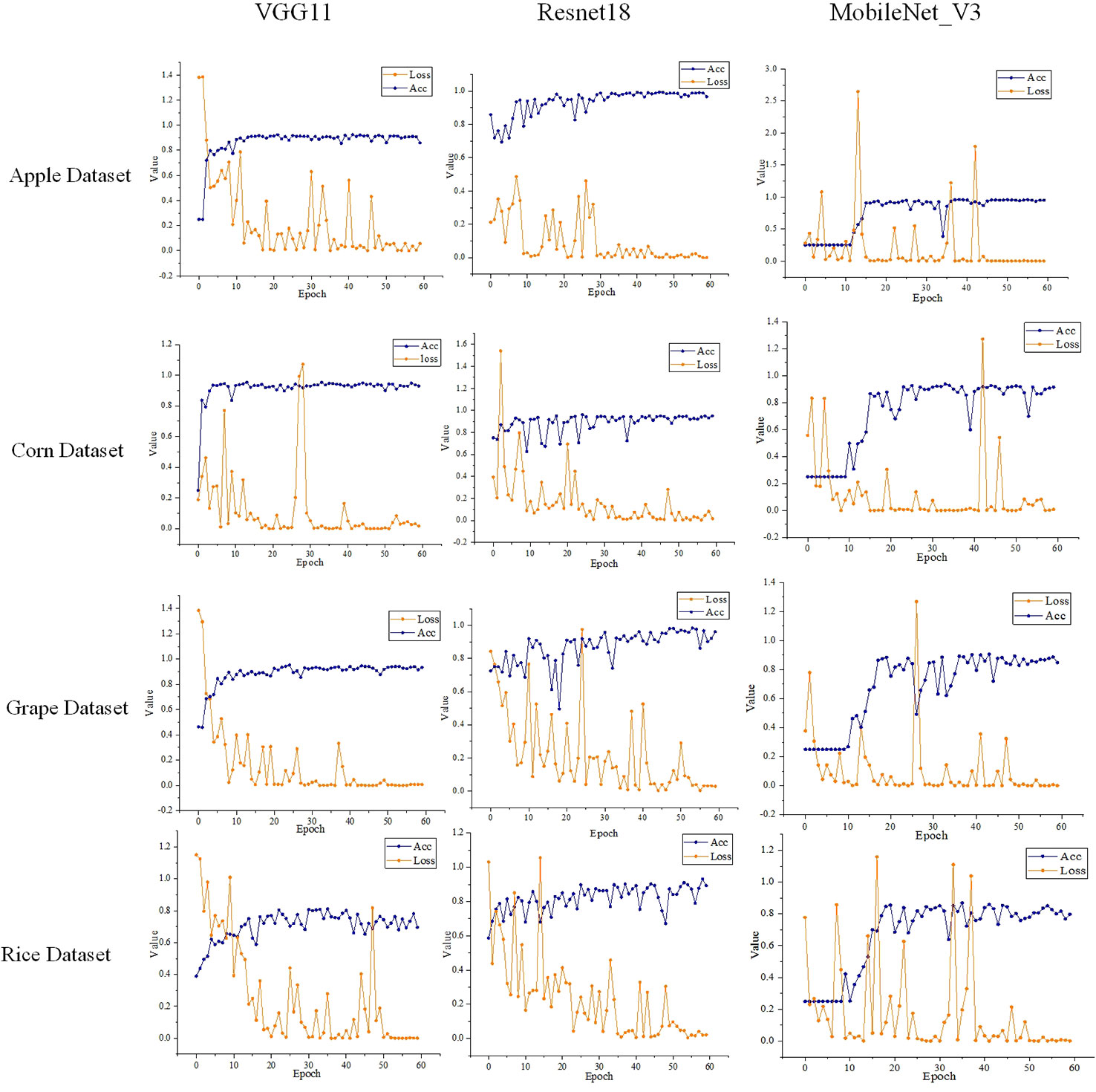

The hyperparameter settings of the models in the experiment are shown in Table 2. The number of epochs was set as 60 in the experiment, as we found that the models’ loss function had basically converged after 50 training epochs in the experiment.

The models’ training loss and accuracy in the training process on different datasets are shown in Figure 8. We can find that as the number of training epochs increases, the loss gradually decreases while the accuracy gradually improves. After over 50 training epochs, the models’ loss and accuracy basically no longer show significant changes. During the experiments, we extracted the output from the pooling layer to serve as the feature vectors when calculating the feature extraction performance of a model.

4.4 Experiment results

4.4.1 Comparison of recognition performance between ELCDR and different single models

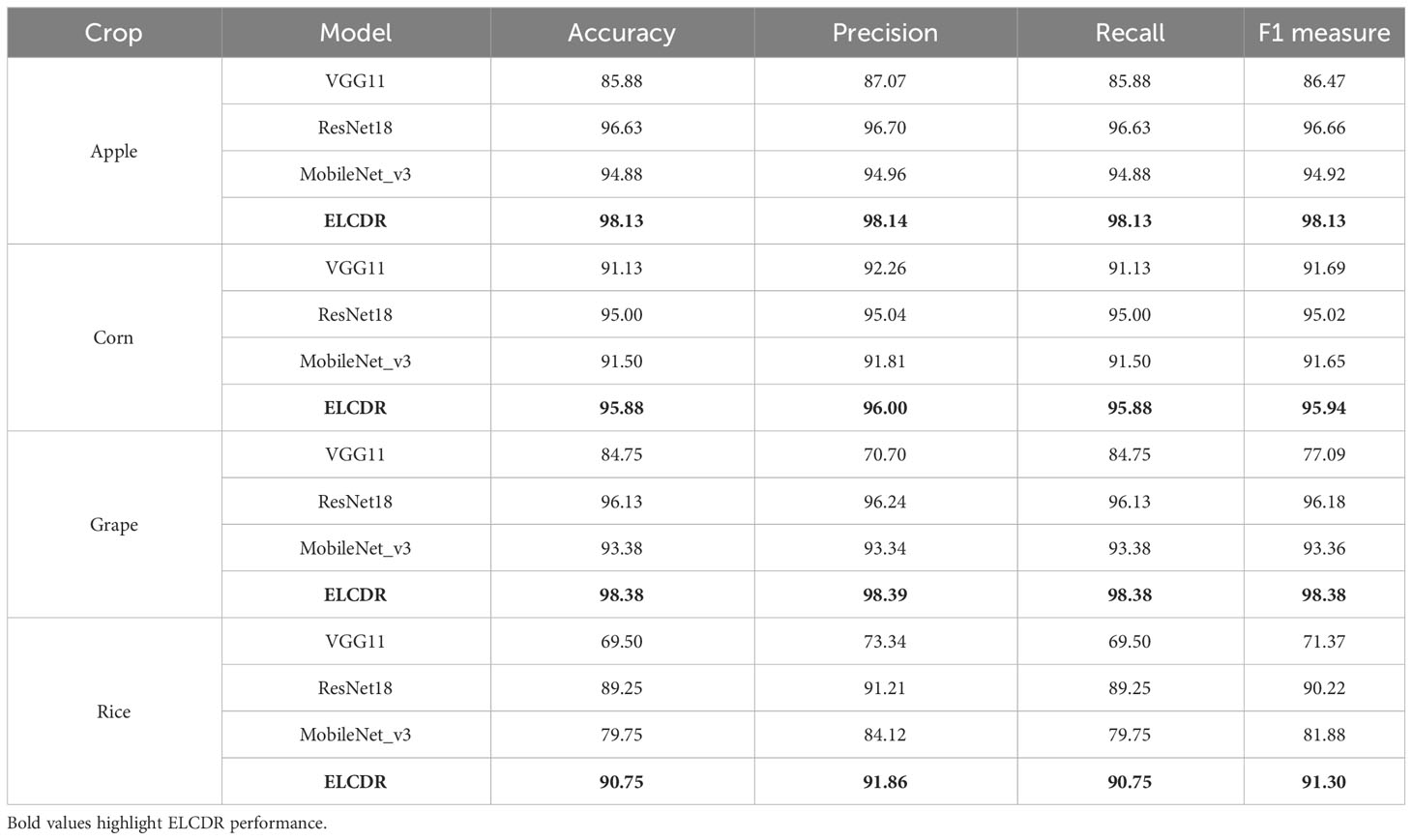

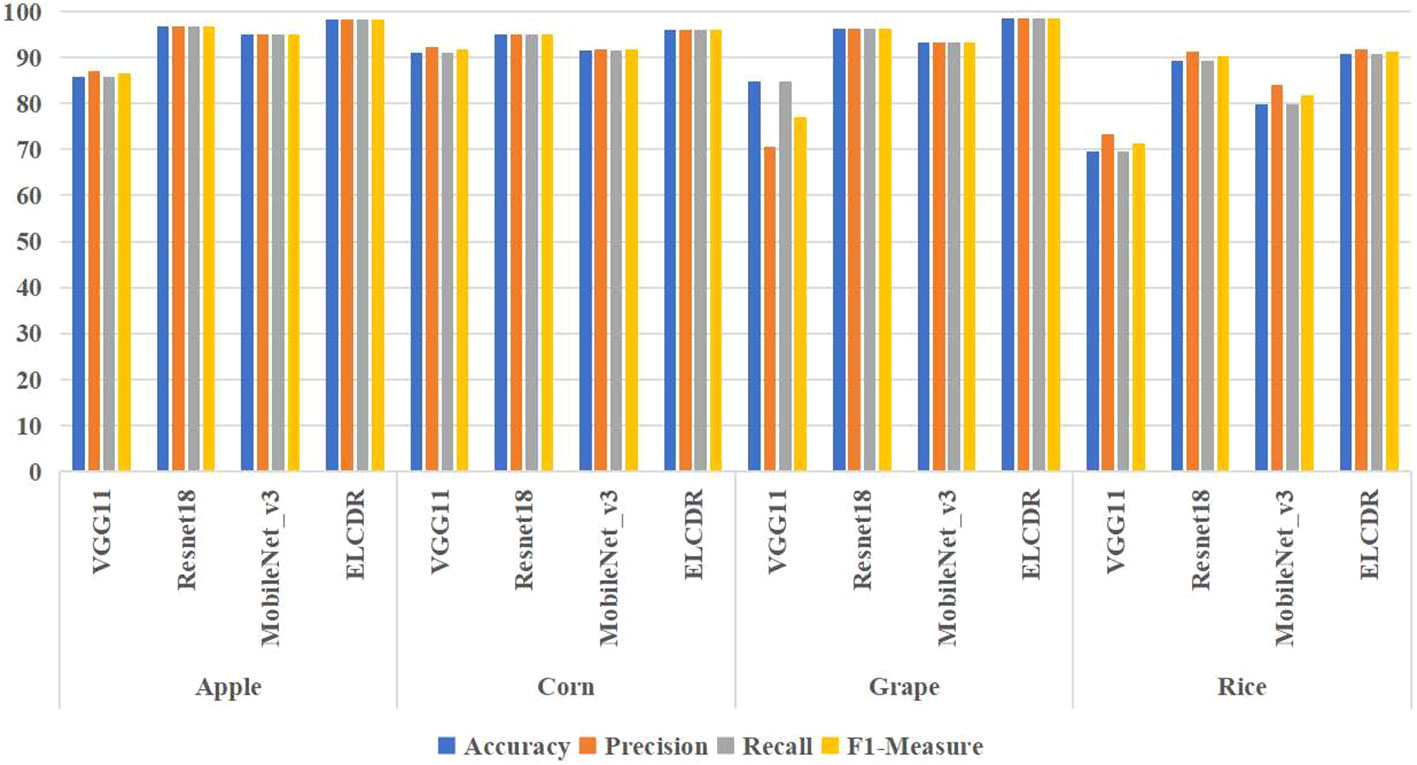

In order to answer RQ 1, we compared the recognition performance of ELCDR with VGG11, ResNet18, and MobileNet_v3. The results are shown in Table 3 and Figure 9.

From Table 3 and Figure 9, we can find that the accuracy, precision, recall, and F1 measure of ELCDR are the highest across all crop datasets. The single model that has the best recognition performance is ResNet18, while the single VGG11 model has the worst recognition performance. Compared with ResNet18, ELCDR improves by as much as 1.5 (apple), 0.88 (corn), 2.25 (grape), and 1.5 (rice) percentage points in accuracy in each case. As observed in Table 3, ELCDR also has improvements in precision, recall, and F1 measure over the single ResNet18 model.

In Figure 10, it can be found that ELCDR recognizes the greatest number of correct images on each category of the apple, corn, and rice datasets. In the case of the grape dataset, while ELCDR recognizes a smaller number of correct images than VGG11 in the category of “black rot,” it still recognizes the greatest number of correct images in total.

Based on these results, we can answer research question 1: compared with the single model methods, ELCDR can achieve better crop disease recognition performance. Table 3 and Figure 9 show that ELCDR attains higher accuracy, precision, recall, and F1 measure than the methods relying on a single deep learning model. Additionally, Figure 10 shows that ELCDR can recognize more correct images than the methods that are based on the single deep learning model. These findings have proven that the ensemble learning strategy of ELCDR is effective in achieving a better crop disease recognition performance than the single model methods.

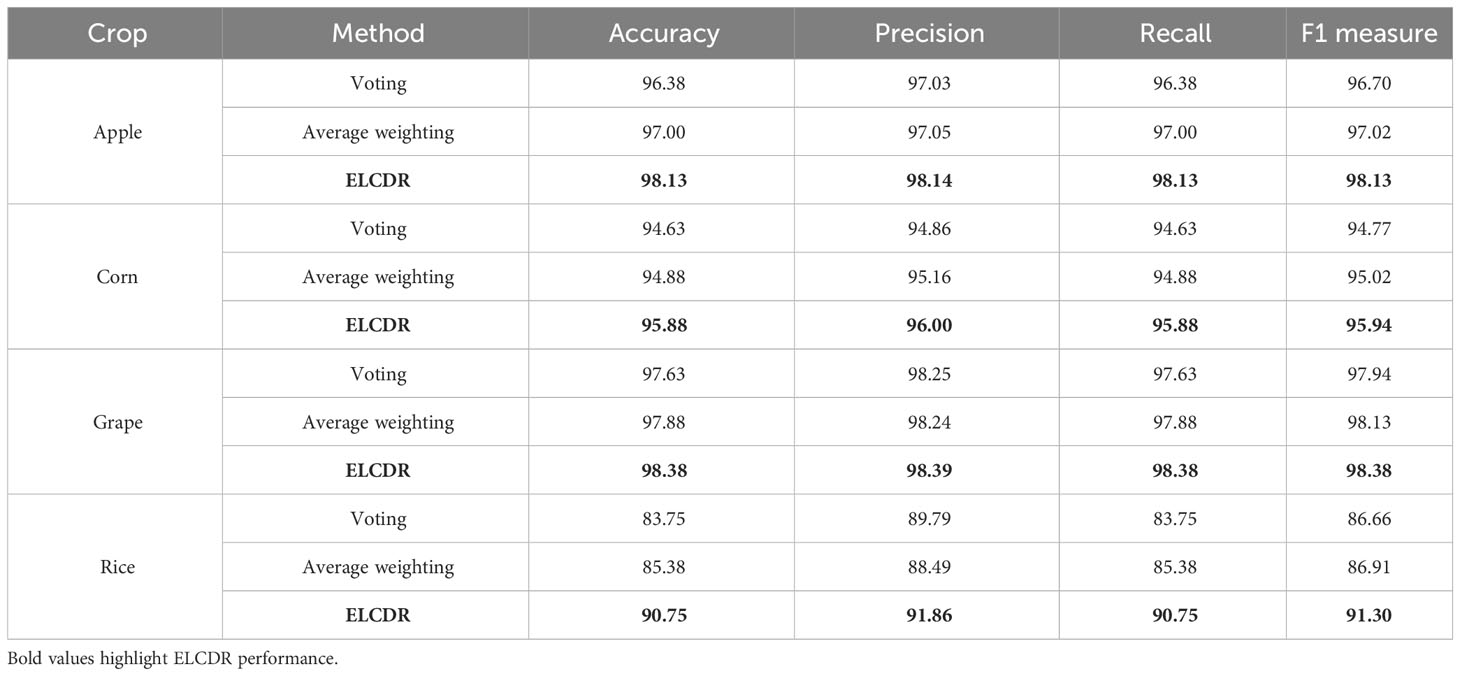

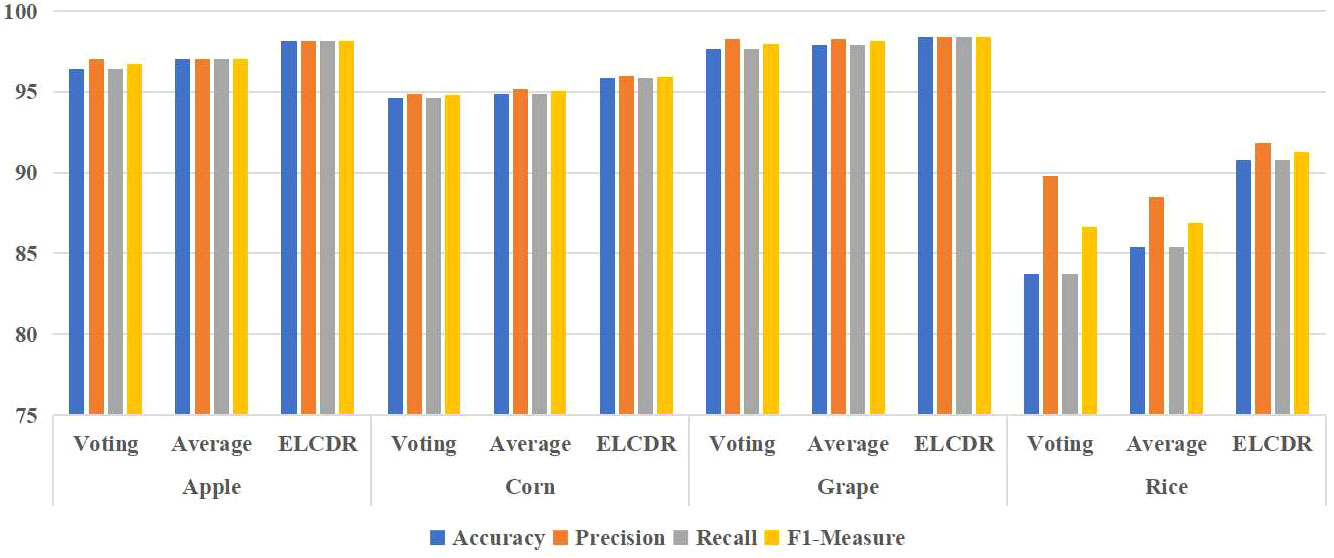

4.4.2 Comparison of the recognition performance between ELCDR and other ensemble learning strategies

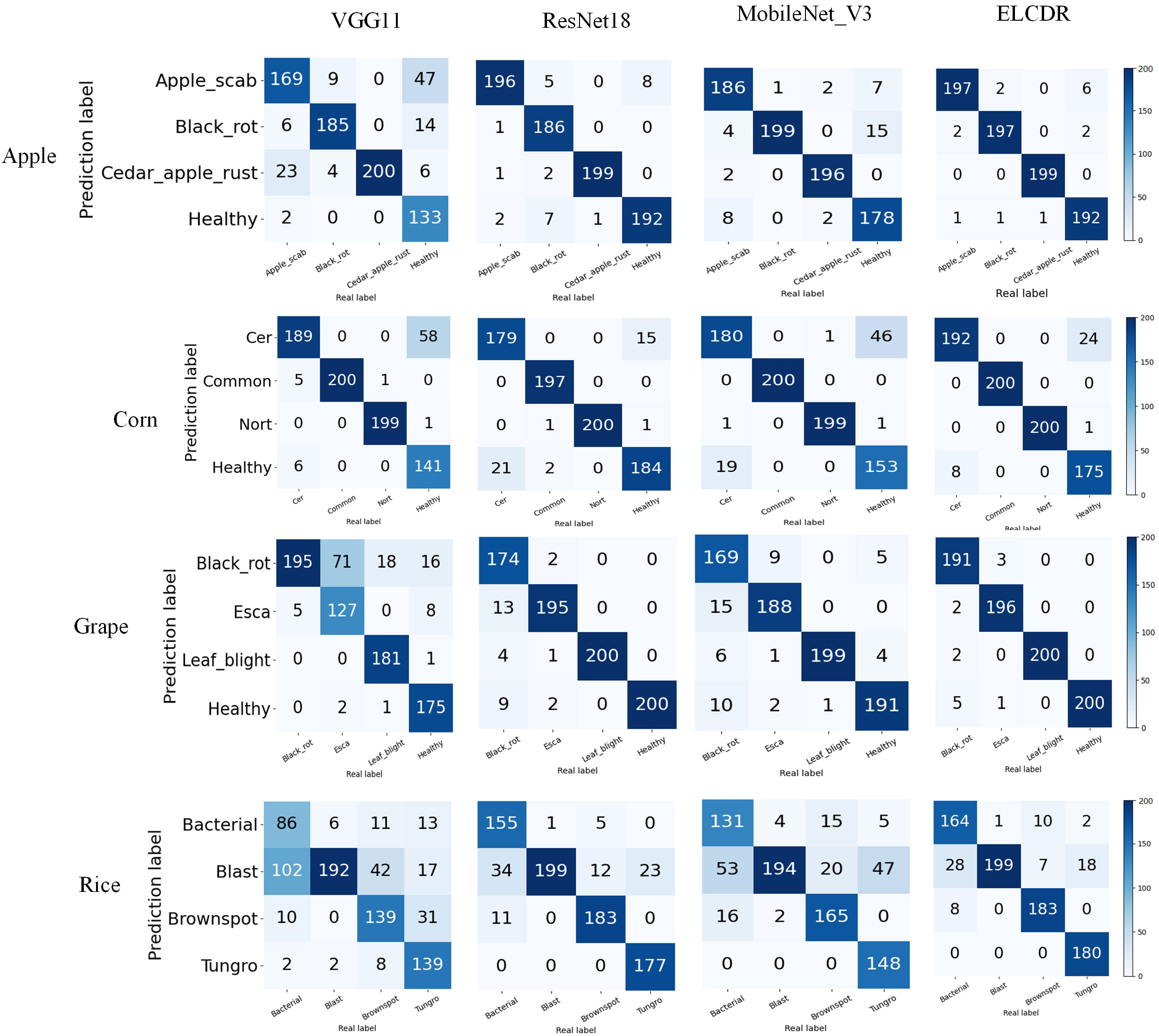

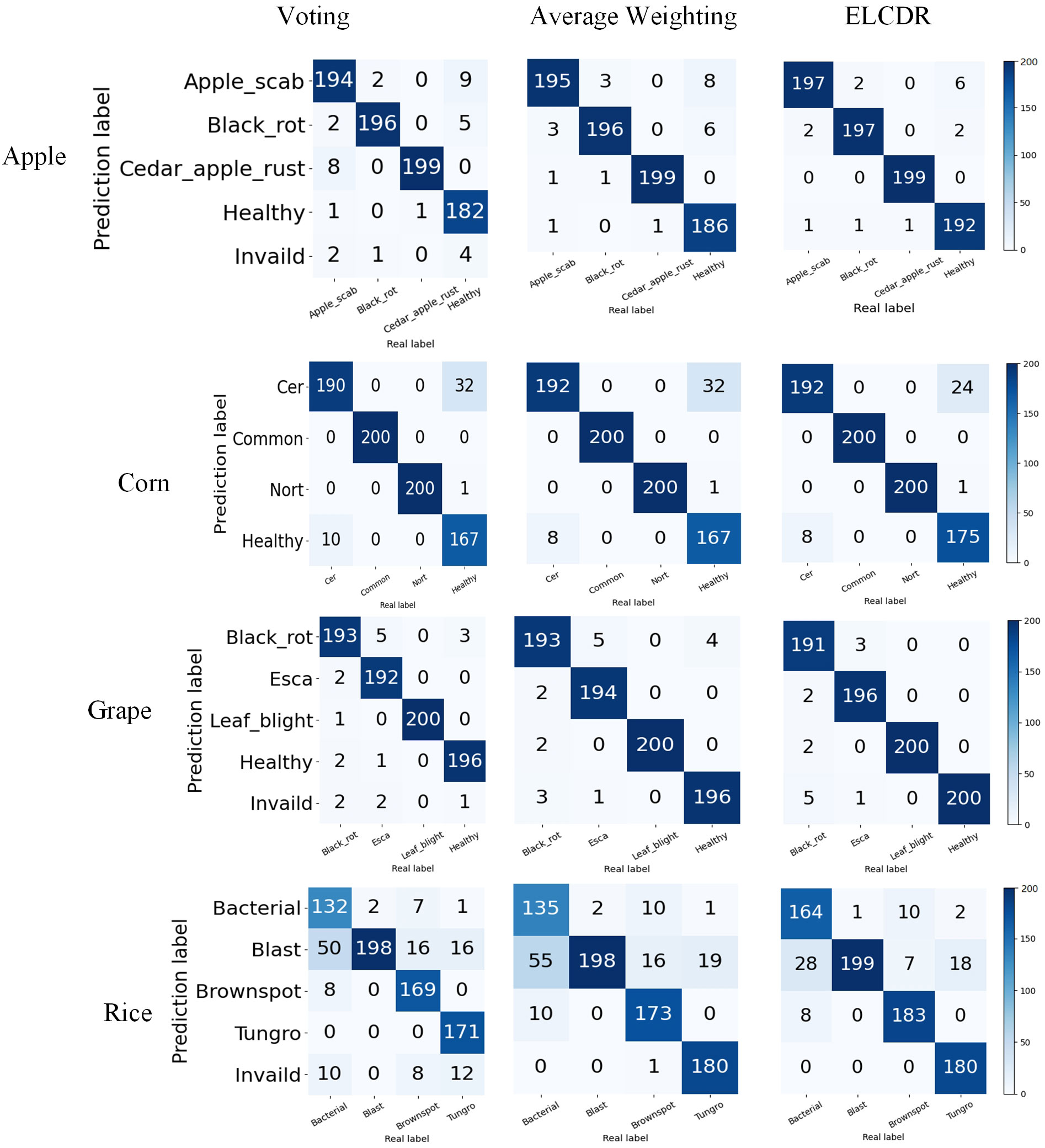

To answer research question 2, we also investigated the performance of the voting and average weighting ensemble learning strategies. The results are shown in Table 4 and Figure 11. The average weighting strategy calculates the final softmax function output as follows:

$$sf_{final} = \frac{1}{n}\sum_{i=1}^{n} sf_i \tag{10}$$

Table 4 Comparison of the recognition performance between ELCDR and other ensemble learning strategies.

Figure 11 Comparison of the recognition performance between ELCDR and other ensemble learning strategies.

In Formula (10), sfi means the softmax function output of model i, and n means the number of models. As shown in Table 4 and Figure 11, ELCDR achieves the best recognition performance on every dataset in the experiment. On the apple, corn, and grape datasets, the average weighting strategy achieves better performance than the voting strategy. On the rice dataset, the voting strategy has better performance than the average weighting strategy. Compared with the voting strategy, ELCDR improves by as much as 1.75 (apple), 1.25 (corn), 0.75 (grape), and 7 (rice) percentage points in accuracy. Compared with the average weighting strategy, ELCDR improves by as much as 1.13 (apple), 1 (corn), 0.5 (grape), and 5.37 (rice) percentage points in accuracy. On the rice dataset in particular, the voting strategy and the average weighting strategy both perform worse than the single ResNet18 model, and the performance improvement of ELCDR is most evident in this case. This might be because the images in the rice dataset have complex backgrounds, making it challenging for the models to extract effective features, while the voting strategy and the average weighting strategy cannot determine which model has extracted the most effective features. As a result, the recognition performance of the voting and average weighting strategies degrades. In contrast, ELCDR can determine which model has extracted the most effective features and assigns it more weight during ensemble learning, consistently achieving better recognition performance. We will further discuss this hypothesis in research question 3.
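The difference between average weighting and a performance-based weighting can be seen in a toy case where one model separates the categories well and two weaker models are misled. The sketch below is illustrative only: the softmax values and weights are invented, and the weighted form assumes a weighted sum with weights that sum to 1.

```python
from typing import List, Sequence


def average_softmax(outputs: Sequence[Sequence[float]]) -> List[float]:
    """Average weighting: every model contributes equally."""
    n = len(outputs)
    return [sum(column) / n for column in zip(*outputs)]


def weighted_softmax(
    outputs: Sequence[Sequence[float]], weights: Sequence[float]
) -> List[float]:
    """Weighted sum of the softmax outputs (assumed form of the weighted strategy)."""
    return [sum(w * s for w, s in zip(weights, column)) for column in zip(*outputs)]


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical case: the true category is 0; only the first model finds it.
outputs = [
    [0.9, 0.1],  # model with well-separated features
    [0.3, 0.7],  # weaker model, misled
    [0.2, 0.8],  # weaker model, misled
]
weights = [0.6, 0.2, 0.2]  # assumed weights favouring the first model

by_average = argmax(average_softmax(outputs))
by_weight = argmax(weighted_softmax(outputs, weights))
print(by_average, by_weight)
```

Average weighting follows the two weaker models and selects category 1, whereas the weighted sum follows the stronger model and selects category 0. Average weighting is the special case in which all weights equal 1/n.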

In Figure 12, we can see that ELCDR consistently recognizes the greatest number of correct images on each dataset. When recognizing an image using the voting strategy, if each model votes for a different category, the voting strategy is considered invalid for that image. Figure 12 shows that on every dataset there are some images for which the voting strategy is invalid. This is also the main reason why the voting strategy performs worse than the other ensemble learning strategies.

By this, we can answer research question 2: the weighting strategy of ELCDR achieves better recognition performance than the voting strategy and average weighting strategy. Table 4 and Figure 11 show that ELCDR can achieve better accuracy, precision, recall, and F1 measure than the voting strategy and average weighting strategy. Figure 12 shows that ELCDR can recognize more correct images than the voting strategy and average weighting strategy. These results have proven that the ensemble learning strategy of ELCDR is more effective than the voting strategy and average weighting strategy.

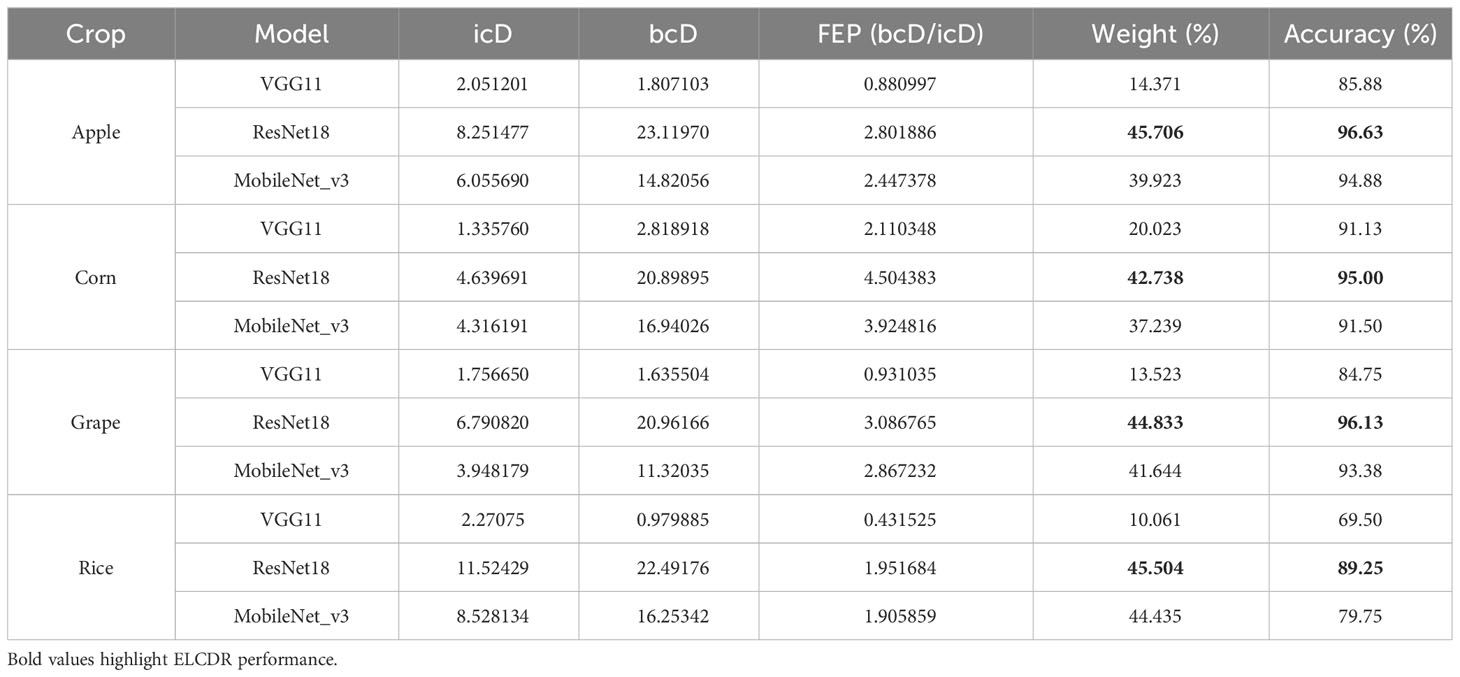

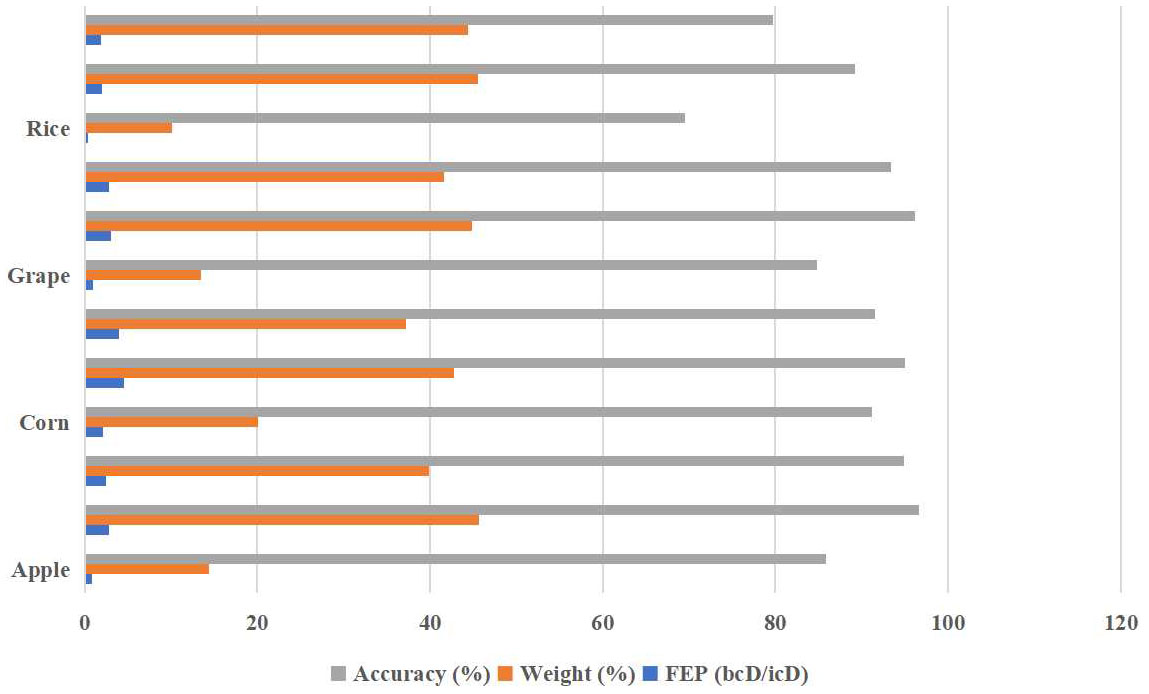

4.4.3 Comparison of the ensemble learning weights of different models

In order to answer research question 3, we calculated the in-category distance (icD), between-categories distance (bcD), feature extraction performance (FEP), weight, and accuracy performance for each model integrated into ELCDR. The results are shown in Table 5 and Figure 13. We can find that the ResNet18 model consistently achieved the best accuracy performance on each of the datasets, resulting in the highest FEP and ensemble learning weight. Conversely, the VGG11 model consistently demonstrated the worst accuracy performance on each of the datasets, resulting in the lowest FEP and ensemble learning weight. So, the FEP and weight distribution of the models in ELCDR are consistent with their recognition performance. It means that the model that has better feature extraction and recognition performance receives greater weight during ensemble learning with ELCDR, while those with lower performance receive lower weight.

By this, we can answer research question 3 that the feature extraction performance metric of ELCDR is effective. We can use it to measure the model’s feature extraction performance and calculate ensemble learning weight efficiently.

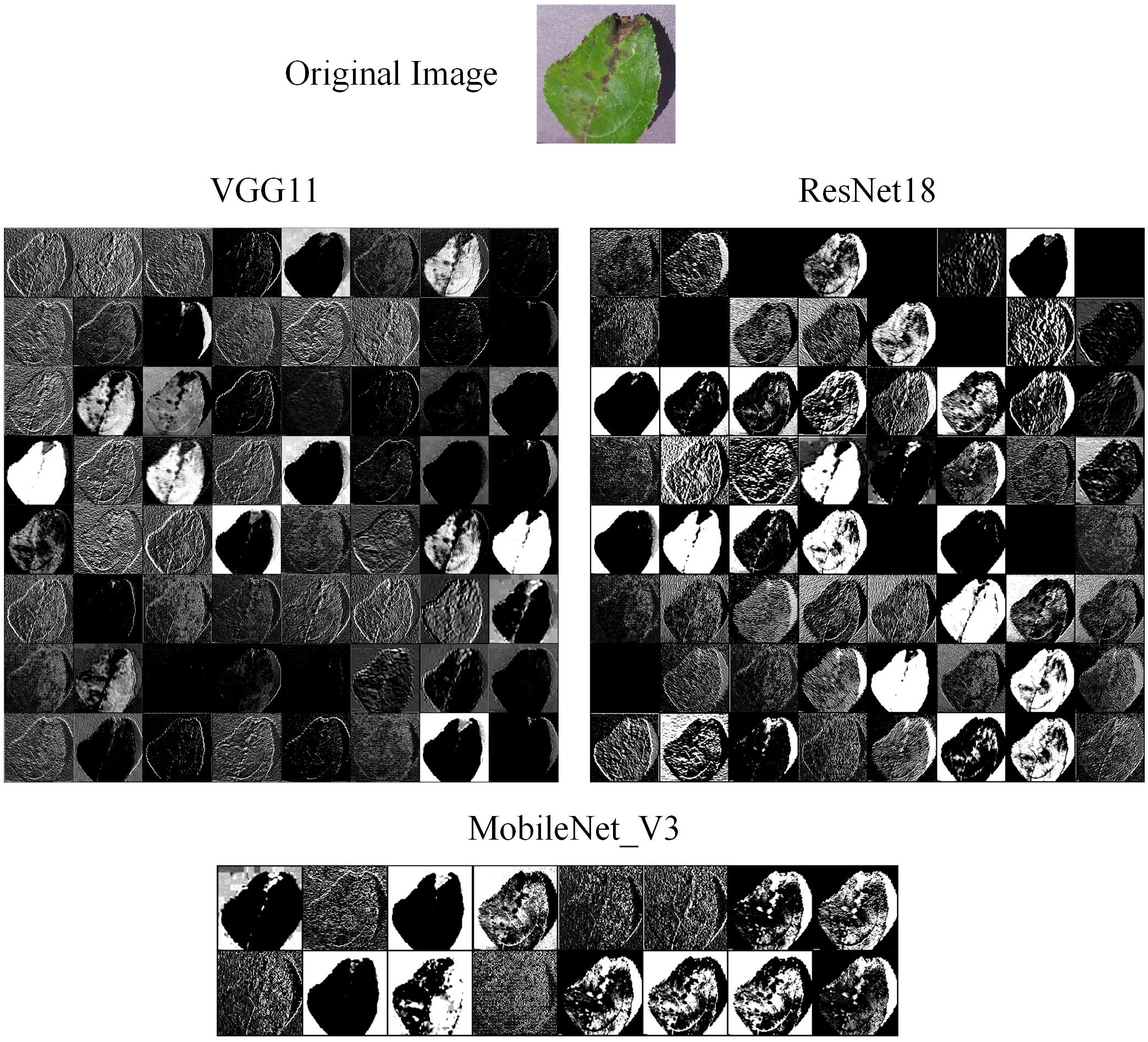

We also compared the feature maps of different models in the experiment. The original image was input into VGG11, ResNet18, and MobileNet_V3, respectively, and the feature maps were extracted from their convolutional layers. The feature maps are shown in Figure 14. The feature map of ResNet18 contains the most texture detail and lesion features, while the feature map of VGG11 is the blurriest. This suggests that ResNet18 extracted the most effective features and VGG11 the least. MobileNet_V3 is a lightweight model, so it cannot extract as many effective features as ResNet18. However, its feature map still contains more texture detail and lesion features than that of VGG11. Different models therefore have different feature extraction performance, which is the main reason why different weights are assigned to these models in ensemble learning.

4.5 Discussion

In this section, we conducted experiments to assess the crop leaf disease recognition performance of ELCDR. The experimental results show that ELCDR achieves better recognition performance than the methods based on a single model. It also achieves better recognition performance than the traditional ensemble learning methods that rely on the voting or average weighting strategies. This is because we applied a novel weight calculation method, which measures each model’s feature extraction performance through the distribution of its feature vectors. With this weight calculation method, the models with better recognition performance receive more weight when conducting ensemble learning for crop leaf disease recognition, and the models with poorer performance receive less weight. All of the experimental results support the effectiveness of ELCDR as proposed in this paper.
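
The effect of unequal weights can be seen in a small sketch. It assumes that each model outputs a vector of class probabilities and that the ensemble prediction is the class with the largest weighted sum; the function name `weighted_ensemble`, the probabilities, and the weights are illustrative values, not results from the experiments.

```python
def weighted_ensemble(probabilities, weights):
    """Combine per-model class probabilities with per-model weights.

    Returns the index of the winning class and the combined scores.
    """
    num_classes = len(probabilities[0])
    combined = [
        sum(w * p[c] for p, w in zip(probabilities, weights))
        for c in range(num_classes)
    ]
    best = max(range(num_classes), key=combined.__getitem__)
    return best, combined


# Three hypothetical models that each favor a different class.
probs = [
    [0.7, 0.2, 0.1],  # model with the strongest feature extraction
    [0.1, 0.6, 0.3],
    [0.2, 0.3, 0.5],
]

label_weighted, _ = weighted_ensemble(probs, [0.5, 0.2, 0.3])
label_average, _ = weighted_ensemble(probs, [1 / 3, 1 / 3, 1 / 3])
print(label_weighted, label_average)  # 0 1
```

In this toy case a plain vote would be invalid because the three models disagree, the average weighting picks class 1, and the unequal weights let the most trusted model decide in favor of class 0.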

5 Conclusions

Compared with the crop leaf disease recognition methods based on single models, ensemble learning methods have the advantage of integrating multiple single learning models to get more accurate, stable, and robust results. This advantage stems from the fact that different models can extract image features from various perspectives. Ensemble learning can combine these features effectively to get a more powerful integrated model.

Traditional ensemble learning methods generally use the voting or average weighting strategies, treating all integrated models as equally important. However, different models may have different feature extraction abilities. When using ensemble learning, it is essential to assign more weight to the models with stronger feature extraction ability and less weight to those with weaker feature extraction ability. To solve this problem, we have introduced a novel ensemble learning method for crop leaf disease recognition, named ELCDR. This approach measures each model’s feature extraction ability from the distribution of its feature vectors and calculates the model’s ensemble learning weight from that ability. In this way, ELCDR can integrate more effective features from different models to obtain more accurate, stable, and robust crop leaf disease recognition results. To verify the recognition performance of ELCDR, we compared it in the experiments with recognition methods based on a single model, the voting strategy, and the average weighting strategy. The experimental results show that ELCDR achieves better accuracy, recall, precision, and F1 measure than all of these methods. Compared with the VGG11 model, ELCDR improves accuracy by as much as 12.25 (apple), 4.75 (corn), 13.63 (grape), and 21.25 (rice) percentage points. Compared with the ResNet18 model, ELCDR improves accuracy by as much as 1.5 (apple), 0.88 (corn), 2.25 (grape), and 1.5 (rice) percentage points. Compared with the MobileNet_V3 model, ELCDR improves accuracy by as much as 3.25 (apple), 4.38 (corn), 5 (grape), and 11 (rice) percentage points. Compared with the voting strategy, ELCDR improves accuracy by as much as 1.75 (apple), 1.25 (corn), 0.75 (grape), and 7 (rice) percentage points. Compared with the average weighting strategy, ELCDR improves accuracy by as much as 1.13 (apple), 1 (corn), 0.5 (grape), and 5.37 (rice) percentage points. These experimental results indicate that ELCDR consistently has better recognition performance than the methods based on a single model, the voting strategy, or the average weighting strategy.

The experiments verified the effectiveness of our proposed feature extraction ability metric. However, the method has so far been evaluated only in a limited range of scenarios, and several challenges remain:

1) The effectiveness of ELCDR on more complex datasets, which may involve a greater variety of crops and harsh environmental conditions, still needs further verification.

2) It remains to be determined how the number of integrated models in ELCDR impacts the recognition performance.

3) Identifying the optimal combination of models to achieve the best recognition performance for ELCDR is another area of potential research.

In the future, we aim to compare the benefits and limitations of the existing crop leaf disease recognition methods and to explore a robust and accurate method for crop leaf disease recognition and segmentation.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

YH: Conceptualization, Writing – original draft, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software. GZ: Validation, Visualization, Writing – review & editing. QG: Conceptualization, Data curation, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported by the National Natural Science Foundation of China (No. 32101611), the Youth Project of Basic Research Program of Yunnan Province (No. 202101AU070096), the Major Project of Science and Technology of Yunnan Province (Nos. 202202AE090021 and 202302AE090020), and the Open Research Program of State Key Laboratory for Conservation and Utilization of Bio-Resource in Yunnan (No. GZKF2021009).

Acknowledgments

We thank the editor and reviewers of the journal.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ahmed, M. H., Islam, T., Ema, R. R. (2019). “A new hybrid intelligent GAACO algorithm for automatic image segmentation and plant leaf or fruit diseases identification using TSVM classifier,” in Proceedings of the 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh. (ECCE), 1–6. doi: 10.1109/ECACE.2019.8679219

Applalanaidu, M. V., Kumaravelan, G. (2021). “A review of machine learning approaches in plant leaf disease detection and classification,” in Proceedings of the 2021 Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV), Tirunelveli, India. (IEEE), 716–724. doi: 10.1109/ICICV50876.2021.9388488

Bhagat, M., Kumar, D., Kumar, S. (2023). Bell pepper leaf disease classification with LBP and VGG-16 based fused features and RF classifier. Int. J. Inf. Technol. 15 (1), 465–475. doi: 10.1007/s41870-022-01136-z

Chaudhary, A., Thakur, R., Kolhe, S., Kamal, R. (2020). A particle swarm optimization based ensemble for vegetable crop disease recognition. Comput. Electron. Agricult. 178, 105747. doi: 10.1016/j.compag.2020.105747

Chen, H.-C., Widodo, A. M., Wisnujati, A., Rahaman, M., Lin, J. C.-W., Chen, L., et al. (2022). AlexNet convolutional neural network for disease detection and classification of tomato leaf. Electronics 11, 951. doi: 10.3390/electronics11060951

Chen, J., Zhang, D., Suzauddola, M., Zeb, A. (2021). Identifying crop diseases using attention embedded MobileNet-V2 model. Appl. Soft Computing 113, 107901. doi: 10.1016/j.asoc.2021.107901

Coulibaly, S., Kamsu-Foguem, B., Kamissoko, D., Traore, D. (2019). Deep neural networks with transfer learning in millet crop images. Comput. Industry 108, 115–120. doi: 10.1016/j.compind.2019.02.003

Cruz, A. C., Luvisi, A., Bellis, L. D., Ampatzidis, Y. (2017). X-FIDO: an effective application for detecting olive quick decline syndrome with deep learning and data fusion. Front. Plant Sci. 8. doi: 10.3389/fpls.2017.01741

Fuentes, A. F., Yoon, S., Lee, J., Park, D. S. (2018). High-Performance deep neural network-Based tomato plant diseases and pests diagnosis system with refinement filter bank. Front. Plant Sci. 9. doi: 10.3389/fpls.2018.01162

Ganaie, M., Hu, M., Malik, A., Tanveer, M., Suganthan, P. (2022). Ensemble deep learning: A review. Eng. Appl. Artif. Intelligence. 115, 105151. doi: 10.1016/j.engappai.2022.105151

Ganesan, G., Chinnappan, J. (2022). Hybridization of ResNet with YOLO classifier for automated paddy leaf disease recognition: An optimized model. J. Field Robotics 39 (7), 1085–1109. doi: 10.1002/rob.22089

He, Y., Gao, Q., Ma, Z. (2022). Crop leaf disease image recognition method based on bilinear residual networks. Math. Problems Eng. 2022, 2948506. doi: 10.1155/2022/2948506

He, K., Zhang, X., Ren, S., Sun, J. (2016). “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA. (IEEE), 770–778. doi: 10.1109/CVPR.2016.90

Hossain, E., Hossain, M. F., Rahaman, M. A. (2019). “A color and texture based approach for the detection and classification of plant leaf disease using KNN classifier,” in Proceedings of the 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh. (IEEE), 1–6. doi: 10.1109/ECACE.2019.8679247

Howard, A., Sandler, M., Chen, B., Wang, W., Chen, L. C., Tan, M., et al. (2019). “Searching for mobilenetv3,” in International Conference on Computer Vision 2019 (ICCV 2019), Seoul, South Korea. (ICCV). doi: 10.48550/arXiv.1905.02244

Hughes, D. P., Salathe, M. (2015). An open access repository of images on plant health to enable the development of mobile disease diagnostics. Computer Sci. 2015, 1–13. doi: 10.48550/arXiv.1511.08060

Islam, M., Dinh, A., Wahid, K., Bhowmik, P. (2017). “Detection of potato diseases using image segmentation and multiclass support vector machine,” in Proceedings of the 2017 IEEE 30th Canadian Conference on Electrical and Computer Engineering (CCECE), Windsor, ON, Canada. (CCECE), 1–4. doi: 10.1109/CCECE.2017.7946594

Jiang, P., Chen, Y., Liu, B., He, D., Liang, C. (2019). Real-time detection of apple leaf diseases using deep learning approach based on improved convolutional neural networks. IEEE Access 7, 59069–59080. doi: 10.1109/ACCESS.2019.2914929

Jiang, F., Lu, Y., Chen, Y., Cai, D., Li, G. (2020). Image recognition of four rice leaf diseases based on deep learning and support vector machine. Comput. Electron. Agricult. 179 (2), 105824. doi: 10.1016/j.compag.2020.105824

Kaur, S., Pandey, S., Goel, S. (2018). Semi-automatic leaf disease detection and classification system for soybean culture. IET Image Processing 12 (6), 1038–1048. doi: 10.1049/iet-ipr.2017.0822

Kawasaki, Y., Uga, H., Kagiwada, S., Iyatomi, H. (2015). “Basic study of automated diagnosis of viral plant diseases using convolutional neural networks,” in Proceedings of the International Symposium on Visual Computing, ISVC 2015. Lecture Notes in Computer Science, Vol. 9475 (Springer, Cham). doi: 10.1007/978-3-319-27863-6_59

Kundu, N., Rani, G., Dhaka, V. S., Gupta, K., Nayak, S. C., Verma, S., et al. (2021). IoT and interpretable machine learning based framework for disease prediction in pearl millet. Sensors 21, 5386. doi: 10.3390/s21165386

Li, H., Jin, Y., Zhong, J., Zhao, R. (2021). A fruit tree disease diagnosis model based on stacking ensemble learning. Complexity 2021, 6868592. doi: 10.1155/2021/6868592

Mathew, A., Antony, A., Mahadeshwar, Y., Khan, T., Kulkarni, A. (2022). Plant disease detection using GLCM feature extractor and voting classification approach. Materials Today: Proc. 58, Part 1, 407–415. doi: 10.1016/j.matpr.2022.02.350

Nachtigall, L. G., Araujo, R. M., Nachtigall, G. R. (2016). “Classification of apple tree disorders using convolutional neural networks,” in Proceedings of the 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI), San Jose, CA, USA. (ICTAI), 472–476. doi: 10.1109/ICTAI.2016.0078

Ngugi, L. C., Abdelwahab, M., Abo-Zahhad, M. (2021). Recent advances in image processing techniques for automated leaf pest and disease recognition – A review. Inf. Process. Agricult. 8 (1), 27–51. doi: 10.1016/j.inpa.2020.04.004

Palanisamy, S., Sanjana, N. (2023). “Corn leaf disease detection using genetic algorithm and weighted voting,” in Proceedings of the 2023 2nd International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA), Coimbatore, India. (ICAECA), doi: 10.1109/ICAECA56562.2023.10200196

Pantazi, X. E., Moshou, D., Tamouridou, A. A. (2019). Automated leaf disease detection in different crop species through image features analysis and One Class Classifiers. Comput. Electron. Agricult. 156, 96–104. doi: 10.1016/j.compag.2018.11.005

Paymode, A. S., Malode, V. B. (2021). Transfer learning for multi-crop leaf disease image classification using convolutional neural network VGG. Artif. Intell. Agricult. 6, 23–33. doi: 10.1016/j.aiia.2021.12.002

Prakash, R. M., Saraswathy, G. P., Ramalakshmi, G., Mangaleswari, K. H., Kaviya, T. (2017). “Detection of leaf diseases and classification using digital image processing,” in Proceedings of the 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India. (ICIIECS), 1–4. doi: 10.1109/ICIIECS.2017.8275915

Reddy, S. R. G., Varma, G. P. S., Davuluri, R. L. (2023). Resnet-based modified red deer optimization with DLCNN classifier for plant disease identification and classification. Comput. Electrical Engineering 105, 108492. doi: 10.1016/j.compeleceng.2022.108492

Sethy, P. K., Barpanda, N. K., Rath, A. K., Behera, S. K. (2020). Deep feature based rice leaf disease identification using support vector machine. Comput. Electron. Agricult. 175, 105527. doi: 10.1016/j.compag.2020.105527

Shan-E-Ahmed, R., Gillian, P., Clarkson, J. P., Rajpoot, N. M. (2015). Automatic detection of diseased tomato plants using thermal and stereo visible light images. PloS One 10 (4), e0123262. doi: 10.1371/journal.pone.0123262

Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. ImageNet Large Scale Visual Recognition Challenge 2014, 1–14. doi: 10.48550/arXiv.1409.1556

Singh, U. P., Chouhan, S. S., Jain, S., Jain, S. (2019). Multilayer convolution neural network for the classification of mango leaves infected by anthracnose disease. IEEE Access 7, 43721–43729. doi: 10.1109/ACCESS.2019.2907383

Stephen, A., Punitha, A., Chandrasekar, A. (2023). Designing self attention-based ResNet architecture for rice leaf disease classification. Neural Comput. Applic. 35, 6737–6751. doi: 10.1007/s00521-022-07793-2

Sun, G., Jia, X., Geng, T. (2018). Plant diseases recognition based on image processing technology. J. Electrical Comput. Eng. 2018, 1–7. doi: 10.1155/2018/6070129

Xie, X., Ma, Y., Liu, B., He, J., Li, S., Wang, H. (2020). A deep-learning-based real-time detector for grape leaf diseases using improved convolutional neural networks. Front. Plant Sci. 11. doi: 10.3389/fpls.2020.00751

Keywords: crop disease, recognition, ensemble learning, weight, feature extraction performance

Citation: He Y, Zhang G and Gao Q (2024) A novel ensemble learning method for crop leaf disease recognition. Front. Plant Sci. 14:1280671. doi: 10.3389/fpls.2023.1280671

Received: 21 August 2023; Accepted: 28 November 2023;

Published: 08 January 2024.

Edited by:

Jucheng Yang, Tianjin University of Science and Technology, China

Reviewed by:

Guoxiong Zhou, Central South University Forestry and Technology, China

Aibin Chen, Central South University Forestry and Technology, China

Copyright © 2024 He, Zhang and Gao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Quan Gao, gaoq@ynau.edu.cn
