- 1 College of Mechanical and Electronic Engineering, Shandong Agricultural University, Tai’an, Shandong, China

- 2 Key Laboratory of Horticultural Machinery and Equipment of Shandong Province, Shandong Agricultural University, Tai’an, Shandong, China

- 3 Intelligent Engineering Laboratory of Agricultural Equipment of Shandong Province, Shandong Agricultural University, Tai’an, Shandong, China

Apple leaf diseases that are not controlled in time reduce fruit quality and yield, so intelligent detection of these diseases is especially important. This paper therefore focuses on apple leaf disease detection and proposes LALNet (High-speed apple leaf network), a machine vision model for fast apple leaf disease detection. First, an efficient stacked module for apple leaf detection, EALD (efficient apple leaf detection stacking module), was designed using a multi-branch structure and depthwise separable modules. In the backbone of LALNet, four layers of EALD modules were stacked, and an SE (Squeeze-and-Excitation) module was added in the last layer to increase the model’s attention to important features. In the inference phase, a structural reparameterization technique was used to merge the two depthwise separable convolution branches, improving the model’s running speed. On the test set, the model achieved a detection accuracy of 96.07%, a total precision of 95.79%, a total recall of 96.05%, and a total F1 score of 96.06%; the model size was 6.61 MB, and the detection time for a single image was 6.68 ms. The model thus combines high detection accuracy with fast execution, making it suitable for deployment on embedded devices and supporting precision spraying for the prevention and control of apple leaf diseases.

1 Introduction

More than 80 countries worldwide engage in large-scale apple production, and as the area under apple cultivation continues to expand (Zhang, 2021), pests and diseases affecting apples have become increasingly severe. Left untreated, apple leaf diseases pose a serious threat to the growth, development, and quality of apples. Traditional diagnosis of apple leaf diseases relies heavily on human judgment and requires experienced, highly skilled field workers. Misjudgments can lead to delayed prevention or excessive control measures, both of which are detrimental. Efficient and rapid assessment of apple leaf diseases therefore plays a critical role in improving apple quality and increasing grower profitability.

With the development of computer vision and artificial intelligence, deep learning has received increasing attention in image processing (Shun et al., 2019; Zhang and Lu, 2021), and deep learning techniques have a wide range of applications in agriculture (Kamilaris and Prenafeta-Boldú, 2018; Zheng et al., 2019; Sharma et al., 2020; Arumugam et al., 2022). In plant leaf disease classification, Aditya Karleka et al. designed SoyNet (Soybean leaf diseases classification), a deep convolutional neural network for identifying and classifying soybean plant diseases, by increasing the diversity of pooling operations and adding ReLU functions and dropout operations where appropriate. The proposed model achieved 98.14% recognition accuracy with good precision, recall, and F1 score (Guo et al., 2022). Paul Shekonya Kanda et al. proposed an intelligent deep learning method to identify nine common tomato diseases. The method used a residual neural network and evaluated five network depths to measure accuracy. In their experiments, the method obtained a highest F1 score of 99.5%, outperforming most previous methods for tomato leaf disease identification (Zhang et al., 2021). Laixiang Xu et al. proposed a deep learning model for peanut leaf disease recognition that combined an improved Xception, a partially activated feature fusion module, and two attention enhancement branches. The model obtained 99.69% accuracy on the test set, 9.67% to 23.34% higher than Inception-V4, ResNet34, and MobileNet-V3, demonstrating its feasibility (Gill and Khehra, 2022). These studies show that tailoring network parameter settings, adding residual structures, and adding attention mechanisms to convolutional neural networks for plant disease classification can achieve higher accuracy.

In apple leaf disease classification with convolutional neural networks, many researchers have worked on improving recognition accuracy and on reducing the parameters and training time of recognition networks. For example, Yong et al. used a DenseNet-121 deep convolutional network with three approaches (regression, multi-label classification, and a focal loss function) to identify apple leaf diseases. These achieved 93.51%, 93.31%, and 93.71% accuracy on the test set, respectively, outperforming a conventional multi-class method based on cross-entropy loss, which reached 92.29% (Zhong and Zhao, 2020). Lili et al. proposed a convolutional neural network based on AlexNet to classify five diseases of apple tree leaves. The model uses dilated convolution to extract coarse-grained disease features, which reduces the number of parameters while maintaining a large receptive field, and adds parallel convolution modules to extract leaf disease features at multiple scales. A series of 3 × 3 convolutional shortcut connections then allows the model to handle additional nonlinearities. The model’s final recognition accuracy was 97.36%, with a model size of 5.87 MB (Li et al., 2022). Qian et al. proposed an improved VGG16-based model for apple leaf disease identification, in which a global average pooling layer replaced the fully connected layers to reduce parameters and batch normalization layers were added to speed up convergence. A transfer learning strategy was used to shorten training. The overall accuracy of apple leaf classification with the proposed model reached 99.01%. Compared with the classical VGG16, the number of model parameters was reduced by 89%, recognition accuracy was improved by 6.3%, and training time was reduced to 0.56% of the original model (Yan et al., 2020).

Deep learning has already enabled high recognition accuracy in apple leaf disease classification, but keeping accuracy high while making the model run faster remains a research focus. This paper therefore proposes the LALNet model. Section 2 introduces the dataset and the main components of the LALNet network: the efficient leaf detection EALD module, designed with a multi-branch structure and depthwise separable modules; the stacking of EALD modules with an added SE attention module in LALNet; and the structural reparameterization used in the inference stage to improve running speed. In Section 3, the model is trained, validated, and tested on publicly available apple leaf disease datasets and compared with state-of-the-art apple leaf classification models to evaluate its reliability comprehensively. Section 4 summarizes the work and discusses the limitations of this research and future directions. The proposed LALNet model improves image recognition speed while maintaining recognition accuracy, and this research can support intelligent spray control for apple leaf diseases.

2 Tests and methods

2.1 Apple leaf data set

In this study, apple leaf disease images were collected at the apple experimental field of Shandong Agricultural University (117.12°E, 36.20°N) and at the Tianping Lake experimental demonstration base of the Shandong Fruit Tree Research Institute, National Apple Engineering Technology Research Center (117.01°E, 36.21°N). Images were collected on several occasions in July 2022 under favorable weather conditions.

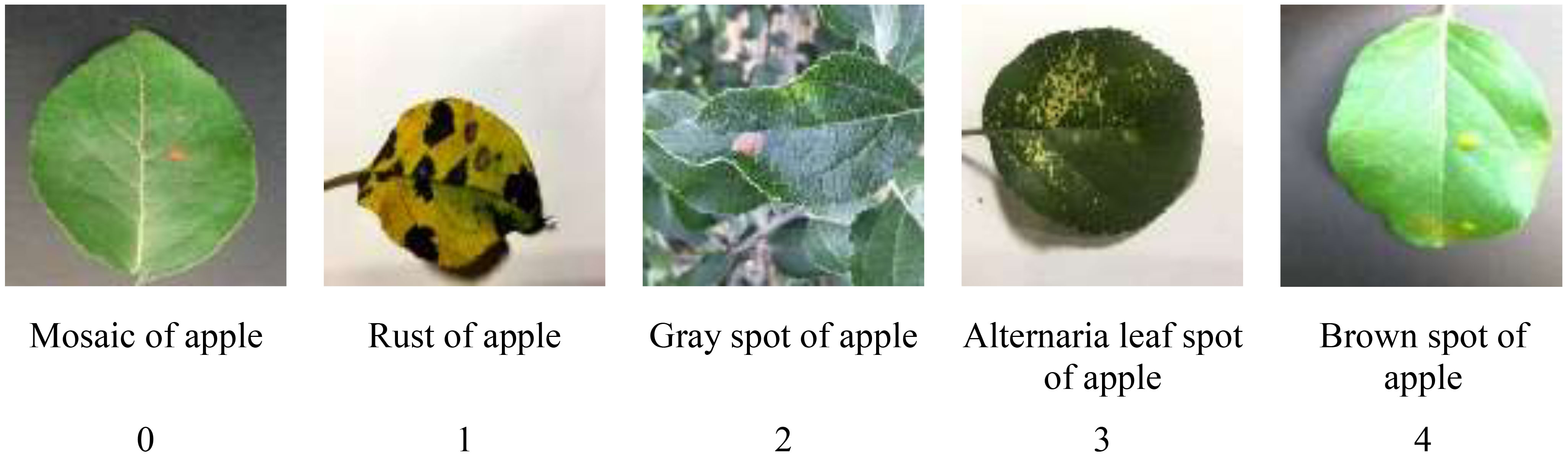

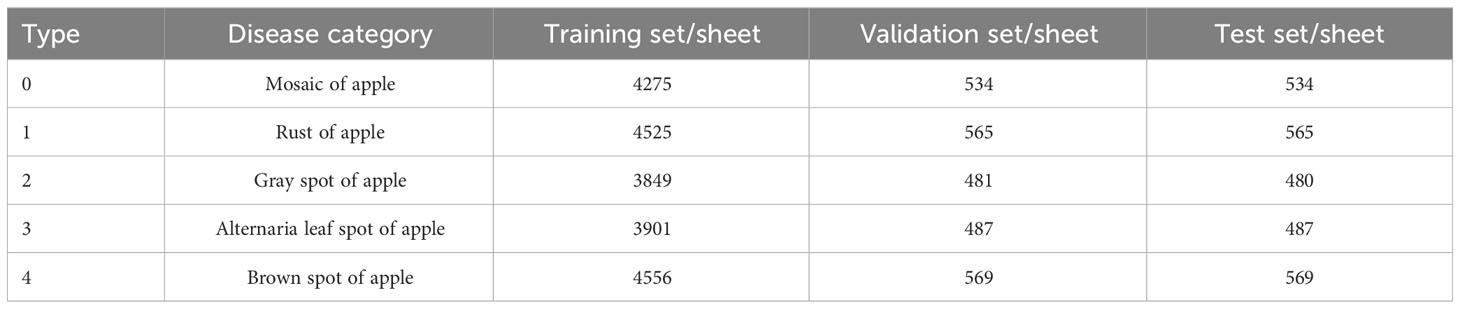

In addition, the Baidu public dataset of apple leaf pathology images (Ai Studio public datasets, 2023) was used to expand the dataset of this paper. This dataset contains five common apple leaf diseases: apple mosaic, rust, gray spot, alternaria leaf spot, and brown spot. For convenience in training, these were labeled 0, 1, 2, 3, and 4, respectively; sample images are shown in Figure 1. After augmentation by flipping, translation, and contrast enhancement, a total of 25,000 disease images of 224 × 224 pixels were obtained. The data were divided as shown in Table 1: 80% of the images were used for model training, 10% for validation, and 10% for testing.
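The 80/10/10 split described above can be sketched in plain Python as follows. This is an illustrative sketch, not the authors’ script: the class-name keys, the fixed random seed, and the unstratified shuffle are assumptions. A per-class (stratified) split would additionally keep the class proportions identical across the three subsets.

```python
import random

# Hypothetical class keys; the label numbers follow the text (0-4).
CLASS_TO_LABEL = {
    "mosaic": 0,
    "rust": 1,
    "gray_spot": 2,
    "alternaria_leaf_spot": 3,
    "brown_spot": 4,
}


def split_dataset(samples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle (path, label) pairs and cut them into train/val/test lists."""
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed -> reproducible split
    n_train = round(len(items) * train_frac)
    n_val = round(len(items) * val_frac)
    train_set = items[:n_train]
    val_set = items[n_train:n_train + n_val]
    test_set = items[n_train + n_val:]  # the remaining ~10% is held out for testing
    return train_set, val_set, test_set


# Usage with 25,000 placeholder file names cycling through the five labels.
samples = [(f"img_{i:05d}.jpg", i % 5) for i in range(25_000)]
train_set, val_set, test_set = split_dataset(samples)
print(len(train_set), len(val_set), len(test_set))  # 20000 2500 2500
```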

2.2 LALNet network model

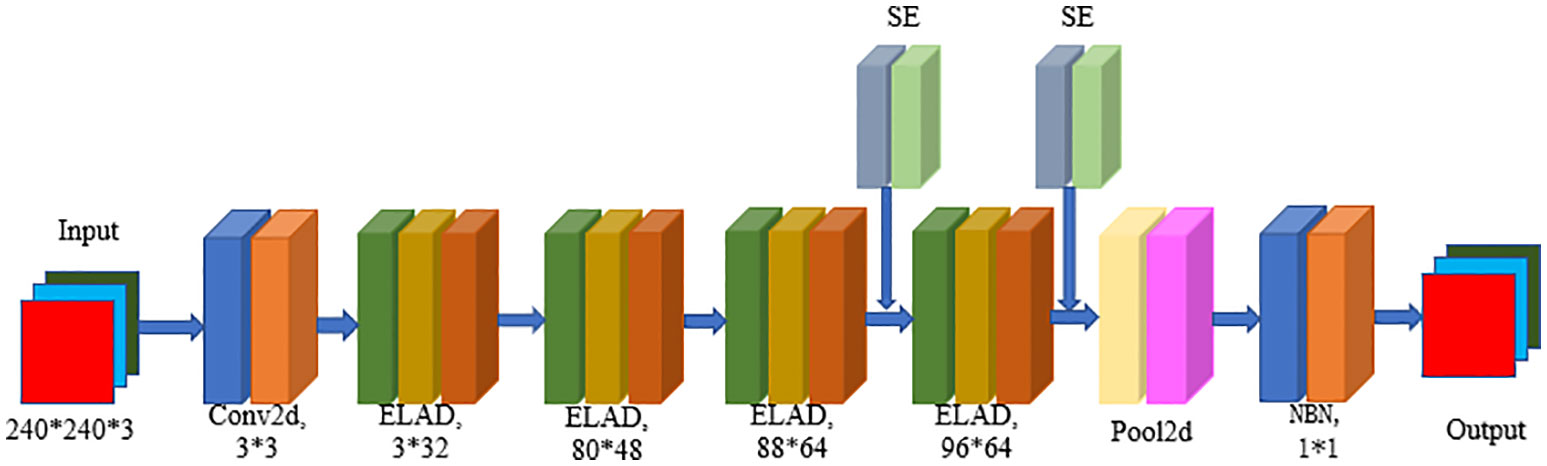

The LALNet lightweight apple leaf disease identification network was constructed mainly with reference to the typical ResNet and MobileNet architectures. It uses depthwise separable modules and a lightweight attention module to keep the number of parameters small, and it uses structural reparameterization at inference to improve inference speed. The flowchart of the LALNet network is shown in Figure 2, which depicts its main components, the EALD module and the SE attention module.

2.2.1 ResNet network

In recent years, convolutional neural networks (CNNs) have continued to evolve and have become one of the most prominent architectures in deep learning (Kawakura and Shibasaki, 2020; Deepan and Sudha, 2021). However, as network depth increases, networks become increasingly difficult to train, leading to the problem of network degradation. To address this, a team from Microsoft Research proposed ResNet (Residual Network) in 2015 (He et al., 2016), a deep learning network that introduced residual connections, which made deeper networks easier to train.

The network structure of ResNet is shown in Figure 3. It consists mainly of an input layer, convolutional layers, residual blocks, pooling layers, and a fully connected layer. The input layer receives the image data; the convolutional layers extract image features; each residual block consists of two or more convolutional layers with a residual connection; the pooling layers reduce the spatial dimensions of the feature maps; and the fully connected layer maps the pooled features to the output classes (Shifang et al., 2021). Overall, the ResNet structure has two main components: the residual blocks and the backbone network (Chunshan et al., 2020). In each residual block, the shortcut connection passes the block’s input directly to its output, where it is added to the output of the convolutional layers, so those layers only need to learn a residual. This improves information flow, keeps gradients effective in deeper layers, and thus reduces the vanishing gradient problem. ResNet builds deeper networks for more complex problems by stacking more residual blocks.
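A minimal NumPy sketch of the residual idea: the block outputs ReLU(F(x) + x), so if the residual branch F learns zero weights, the block passes its (non-negative, post-ReLU) input through unchanged. Dense matrices stand in for the convolutional layers purely to keep the example short; this is a simplification, not the ResNet implementation.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_block(x, w1, w2):
    """Return ReLU(F(x) + x), where F(x) = w2 @ ReLU(w1 @ x).

    Dense matrices stand in for the block's convolutional layers to keep
    the example short; x is a feature vector of length C.
    """
    f = w2 @ relu(w1 @ x)   # residual branch F(x)
    return relu(f + x)      # identity shortcut added before the final ReLU


rng = np.random.default_rng(0)
c = 6
x = relu(rng.standard_normal(c))        # a non-negative, post-ReLU input
zero = np.zeros((c, c))
# With an all-zero residual branch the block is exactly the identity mapping.
print(np.allclose(residual_block(x, zero, zero), x))  # True
```

One common explanation for why stacking such blocks avoids degradation is exactly this: an extra block can fall back to the identity, so a deeper network should not be worse than a shallower one.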

2.2.2 MobileNet network model

Lightweight network design differs from traditional neural network design by placing greater emphasis on a compact model structure so that networks can run on embedded devices. Google proposed MobileNet V1, a classical lightweight network that can be deployed on mobile devices (Wenjie et al., 2021); it replaces standard convolution with depthwise separable convolution to reduce network parameters while maintaining accuracy (Howard et al., 2019). Building on V1, MobileNet V2 further improves performance by introducing the inverted residual structure with linear bottlenecks, applying nonlinear activation functions only in the high-dimensional expanded space. Building on V2, MobileNet V3 (Hu et al., 2020) introduces lightweight squeeze-and-excitation attention modules (Zhou et al., 2022) that effectively suppress less informative channels, and it uses the h-swish activation function to lower the computational cost of nonlinear activations.
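The squeeze-and-excitation (SE) module that MobileNet V3 adopts, and that LALNet also uses, can be sketched in NumPy as below. The reduction ratio r = 4 and the plain sigmoid gate are assumptions for illustration (MobileNet V3 itself uses a hard-sigmoid); the text does not state the SE configuration used in LALNet.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def squeeze_excite(x, w1, b1, w2, b2):
    """Squeeze-and-excitation on a (C, H, W) feature map.

    w1: (C // r, C) reduction layer, w2: (C, C // r) expansion layer.
    Returns the re-weighted feature map and the per-channel gate.
    """
    s = x.mean(axis=(1, 2))               # squeeze: global average pool -> (C,)
    z = np.maximum(w1 @ s + b1, 0.0)      # excitation, FC + ReLU -> (C // r,)
    gate = sigmoid(w2 @ z + b2)           # excitation, FC + sigmoid -> (C,), values in (0, 1)
    return x * gate[:, None, None], gate  # scale each channel by its gate


rng = np.random.default_rng(0)
C, r = 8, 4                               # r = 4 is an assumed reduction ratio
x = rng.standard_normal((C, 5, 5))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, gate = squeeze_excite(x, w1, b1, w2, b2)
print(y.shape, gate.round(2))
```

Channels whose gate is close to 0 are suppressed and those close to 1 are kept, which is the channel-suppression behaviour described above.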

Depthwise separable convolution (DSC) is the key to lightweight network design, as shown in Figure 4. It decomposes a standard convolution into a depthwise convolution, which filters each input channel separately with its own kernel, and a pointwise (1 × 1) convolution, which combines the filtered channels to create feature vectors of a new dimension. Compared with standard convolution, depthwise separable convolution reduces the model’s parameters and computation, which improves detection speed. For example, let the input feature map size be H × W, the number of input channels M, the kernel size K × K, the number of output channels N, and the output feature map size O_T × O_T. The computational cost N_c of a standard convolution is

$$N_c = K \times K \times M \times N \times O_T \times O_T$$

The computational cost N_a of a depthwise separable convolution is

$$N_a = K \times K \times M \times O_T \times O_T + M \times N \times O_T \times O_T$$

The ratio of the computational cost of depthwise separable convolution to that of standard convolution is

$$\frac{N_a}{N_c} = \frac{1}{N} + \frac{1}{K^2}$$

This ratio shows that the reduction in computation depends on the number of output channels and the kernel size: the larger the kernel and the more output channels, the greater the reduction. For a 3 × 3 kernel, the cost falls to slightly more than one ninth of that of a standard convolution when N is large.
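The cost formulas above can be checked numerically with a short Python sketch. The layer sizes (K = 3, M = 32, N = 64, O_T = 56) are hypothetical values chosen only for illustration; the last line confirms that N_a / N_c equals 1/N + 1/K².

```python
def standard_conv_cost(k, m, n, ot):
    """N_c = K*K*M*N*O_T*O_T multiply-accumulates for a standard convolution."""
    return k * k * m * n * ot * ot


def depthwise_separable_cost(k, m, n, ot):
    """N_a = depthwise part (K*K*M*O_T*O_T) + pointwise part (M*N*O_T*O_T)."""
    return k * k * m * ot * ot + m * n * ot * ot


# Hypothetical layer: 3x3 kernel, 32 -> 64 channels, 56x56 output map.
k, m, n, ot = 3, 32, 64, 56
nc = standard_conv_cost(k, m, n, ot)
na = depthwise_separable_cost(k, m, n, ot)
ratio = na / nc
print(nc, na, round(ratio, 4))       # 57802752 7325696 0.1267
print(round(1 / n + 1 / k ** 2, 4))  # 0.1267, matching the closed form
```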

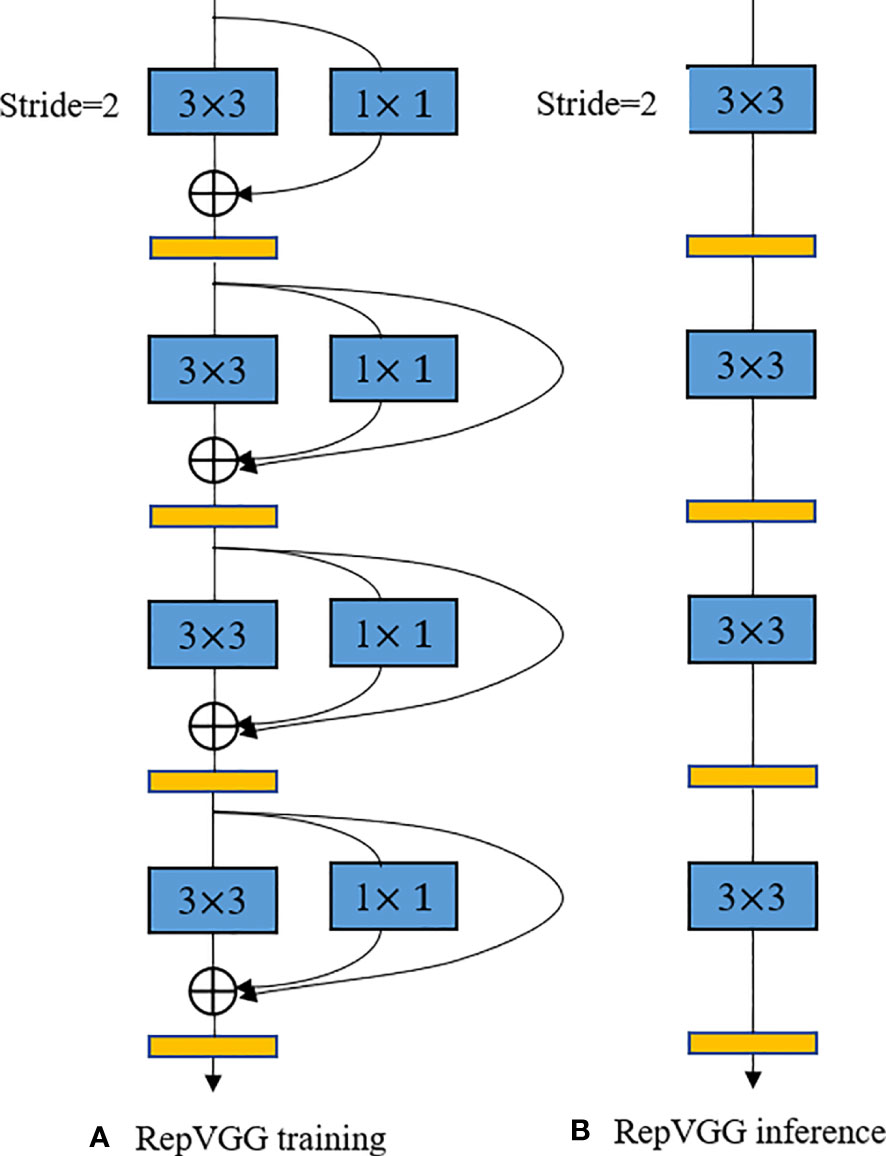

2.2.3 Structural reparameterization

Structural reparameterization is a technique for optimizing neural network models (Ding et al., 2021). It uses equivalent parameter transformations to simplify the network structure, reducing the storage and computational resources the model requires and enabling efficient training and deployment of deep learning models where computational resources are limited. As shown in Figure 5, RepVGG uses a simple architecture of stacked 3 × 3 convolutions and ReLU and decouples the training-time and inference-time structures: it uses a multi-branch structure during training and, after training, uses reparameterization to transform the multi-branch architecture equivalently into a single-path VGG-style architecture of stacked 3 × 3 convolutional layers. With this structural reparameterization, RepVGG achieves more than 80% top-1 accuracy on ImageNet while running several times faster (Transactions of the Chinese Society of Agricultural Engineering et al., 2021; Hu et al., 2022).
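The core RepVGG identity, that parallel 3 × 3, 1 × 1, and identity branches can be folded into one 3 × 3 convolution after training, can be verified with the NumPy sketch below. It is a simplified illustration under stated assumptions: batch normalization is omitted (RepVGG folds each branch’s BN into its kernel first), the stride is 1, and the input and output channel counts are equal so that the identity branch exists. The helper names are hypothetical.

```python
import numpy as np


def conv2d(x, w, b):
    """Stride-1 'same' cross-correlation (as in PyTorch's Conv2d).

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k; b: (C_out,).
    """
    k = w.shape[-1]
    p = k // 2
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding keeps H x W
    out = np.zeros((w.shape[0], h, wd))
    for i in range(k):
        for j in range(k):
            # contract over input channels for this kernel tap
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out + b[:, None, None]


def merge_branches(w3, b3, w1, b1):
    """Fold 3x3, 1x1 and identity branches into one 3x3 kernel and bias."""
    c = w3.shape[0]
    w1_as_3x3 = np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1)))  # 1x1 moved to kernel centre
    w_id = np.zeros_like(w3)
    w_id[np.arange(c), np.arange(c), 1, 1] = 1.0              # identity as a 3x3 kernel
    return w3 + w1_as_3x3 + w_id, b3 + b1                     # identity branch has no bias


rng = np.random.default_rng(0)
C = 4
x = rng.standard_normal((C, 8, 8))
w3, b3 = rng.standard_normal((C, C, 3, 3)), rng.standard_normal(C)
w1, b1 = rng.standard_normal((C, C, 1, 1)), rng.standard_normal(C)

multi_branch = conv2d(x, w3, b3) + conv2d(x, w1, b1) + x  # training-time form
w, b = merge_branches(w3, b3, w1, b1)
single_path = conv2d(x, w, b)                             # inference-time form
print(np.allclose(multi_branch, single_path))             # True
```

The merge works because convolution is linear in its kernel: three convolutions of the same input can be replaced by one convolution with the summed kernel.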

2.2.4 LALNet model construction

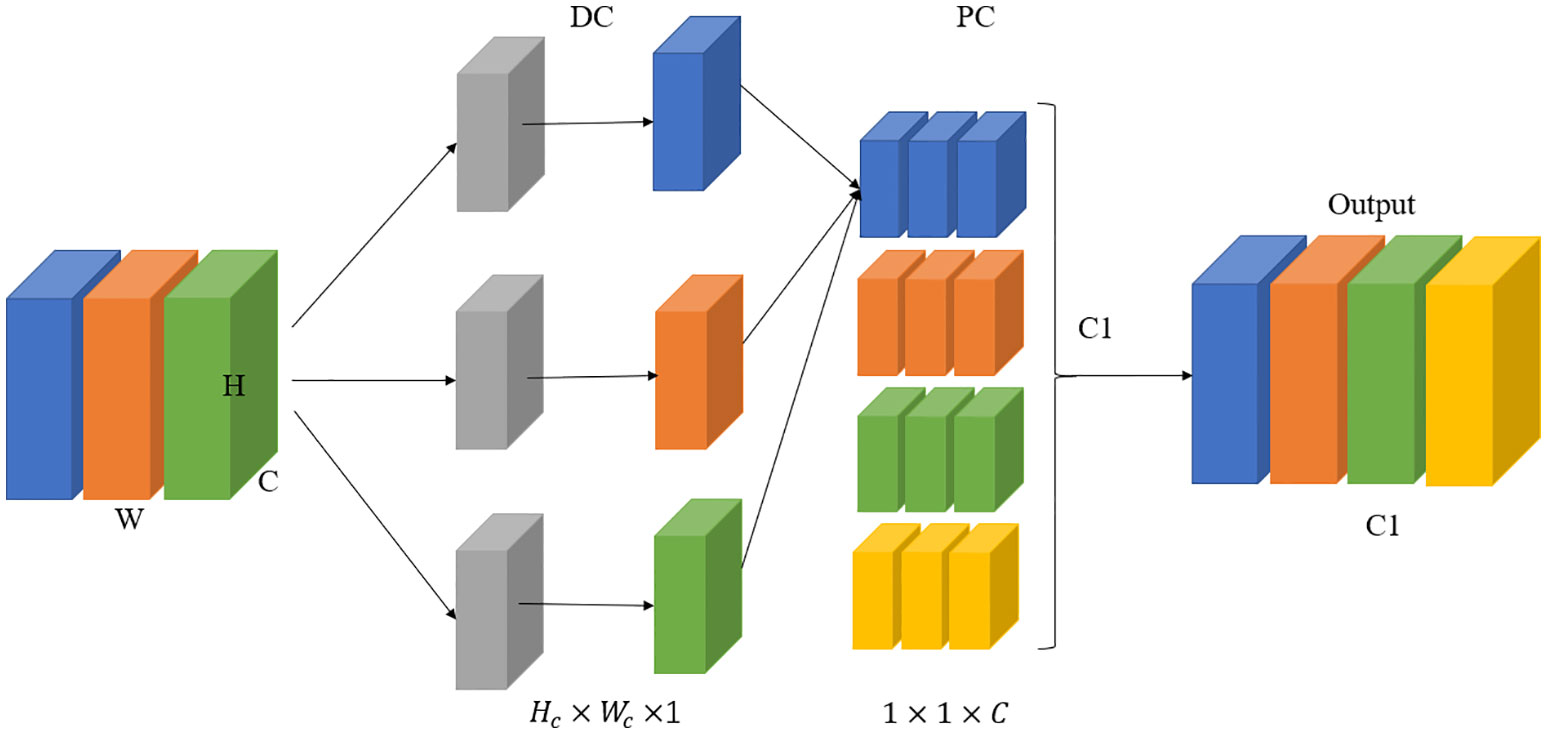

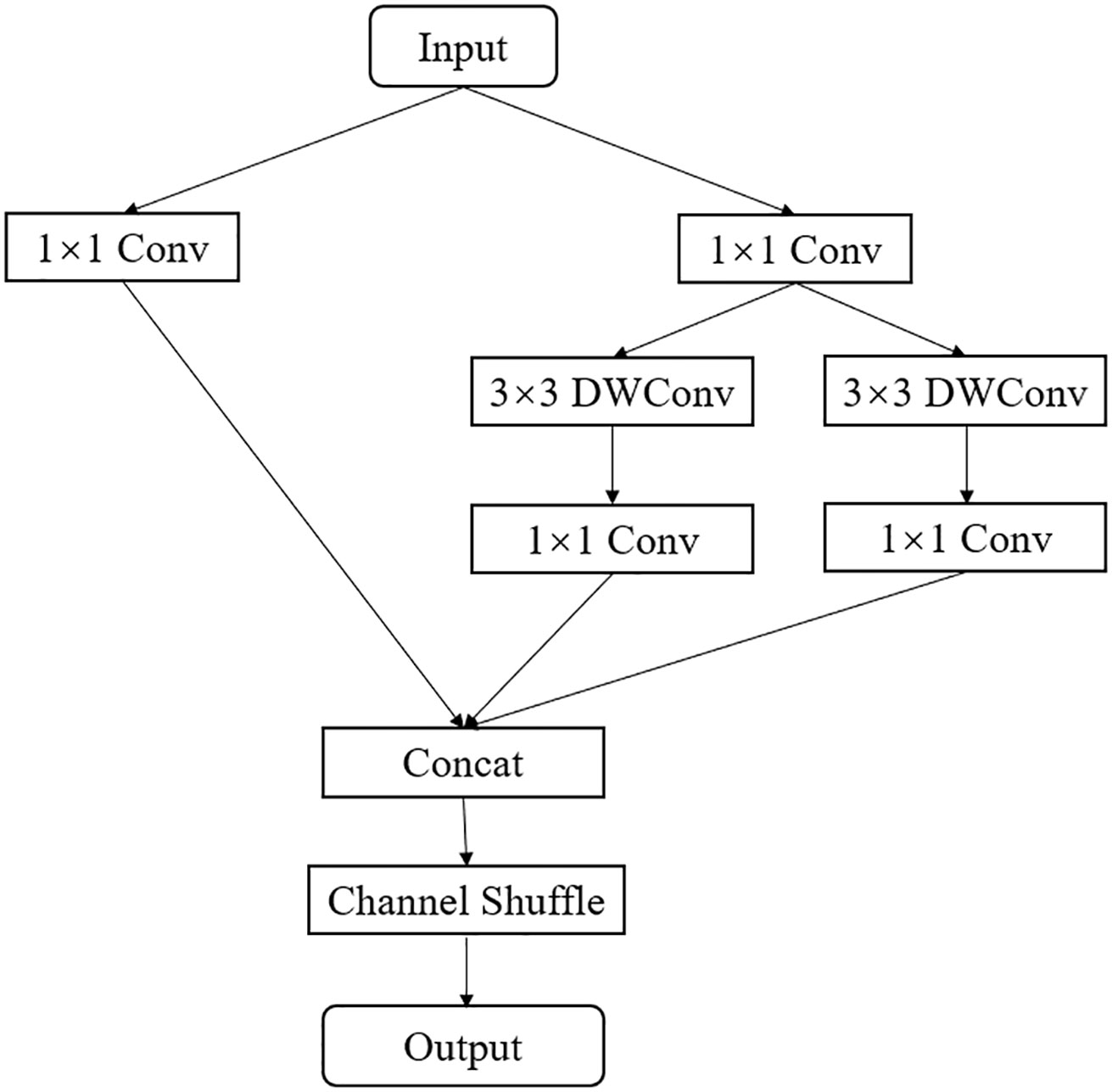

Inspired by the residual structure of ResNet and the depthwise separable convolutions of MobileNet, this paper proposes an efficient EALD module. As shown in Figure 6, the EALD module uses a multi-branch structure and depthwise separable modules to extract more feature information with fewer parameters and less computation. First, the module applies a standard 1 × 1 convolution for dimensionality reduction, followed by separate branches for feature extraction. The first and second branches use 3 × 3 depthwise separable modules to extract complex features. The third branch uses a standard 1 × 1 convolution to extract residual information and strengthen the interaction of module features. The outputs of the three branches are then summed, and the number of channels is restored with a 1 × 1 pointwise convolution. Finally, channel shuffling is performed to promote information fusion between channels, improving feature recognition capability.
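Channel shuffle, the last step of the EALD module, can be sketched in NumPy as a reshape, transpose, reshape sequence, following the ShuffleNet formulation. The number of groups is not given in the text, so groups=2 in the example is an assumption.

```python
import numpy as np


def channel_shuffle(x, groups):
    """ShuffleNet-style channel shuffle for a (C, H, W) feature map."""
    c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    x = x.reshape(groups, c // groups, h, w)  # split channels into groups
    x = x.transpose(1, 0, 2, 3)               # interleave the groups
    return x.reshape(c, h, w)


# Six 1x1 "channels" labelled 0..5 make the new order easy to read.
x = np.arange(6, dtype=float).reshape(6, 1, 1)
print(channel_shuffle(x, groups=2).ravel())  # [0. 3. 1. 4. 2. 5.]
```

After the shuffle, neighbouring channels come from different groups, so a following grouped or depthwise operation sees information from every group.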

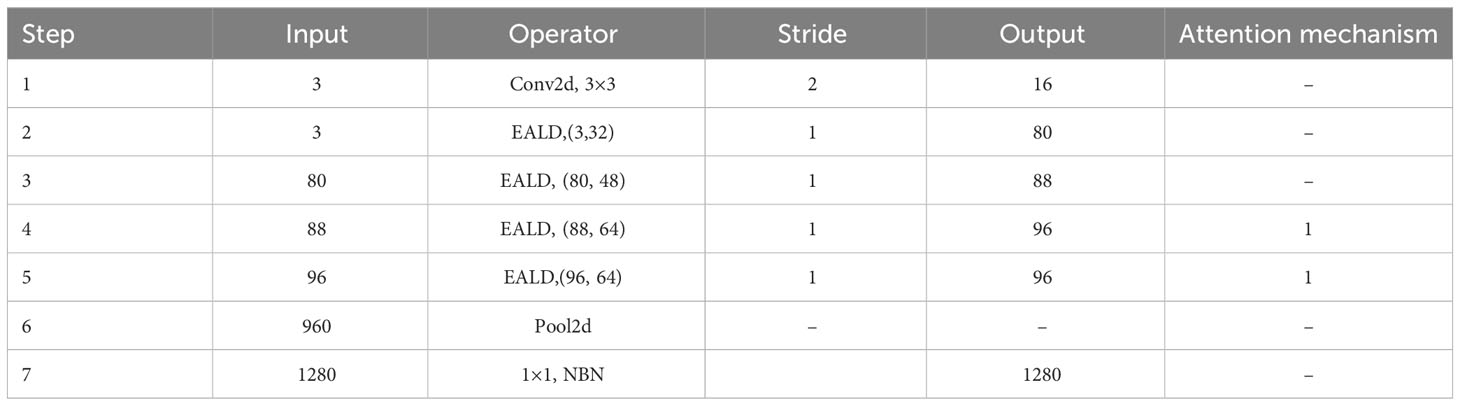

The LALNet lightweight apple leaf disease classification model is built by stacking EALD modules; its network structure is given in Table 2. In step 1, initial features are extracted from the three-channel input image with a standard 3 × 3 convolution with a stride of 2 and 16 output channels. In steps 2 to 5, EALD modules with a stride of 1 are used for feature extraction, and SE attention modules are added in steps 4 and 5 to increase feature extraction capability. In step 6, an adaptive average pooling layer is applied, followed by a linear layer with 960 input features and 1280 output features. In step 7, the output of this linear layer passes through a batch normalization layer, and a final linear layer with 1280 input features maps it to the number of output classes.
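The stem output size and the size of the classification head stated in this paragraph can be checked with simple arithmetic. The sketch assumes a padding of 1 for the stride-2 stem convolution (the text gives only the kernel size and stride) and five output classes; the helper names are hypothetical.

```python
def conv_out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def linear_params(in_features, out_features, bias=True):
    """Weights plus (optional) biases of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)


stem_size = conv_out_size(224, kernel=3, stride=2, padding=1)  # padding=1 is assumed
head_linear = linear_params(960, 1280) + linear_params(1280, 5)  # 5 disease classes
head_bn = 2 * 1280                                               # BN scale and shift only
print(stem_size, head_linear, head_bn)                           # 112 1236485 2560
```

Under these assumptions the stem yields a 112 × 112 × 16 feature map, and the two linear layers of the head hold 1,236,485 weights and biases, about 4.9 MB at 4 bytes per float32 value, which suggests the head is a large share of the reported 6.61 MB model if it is stored in float32.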

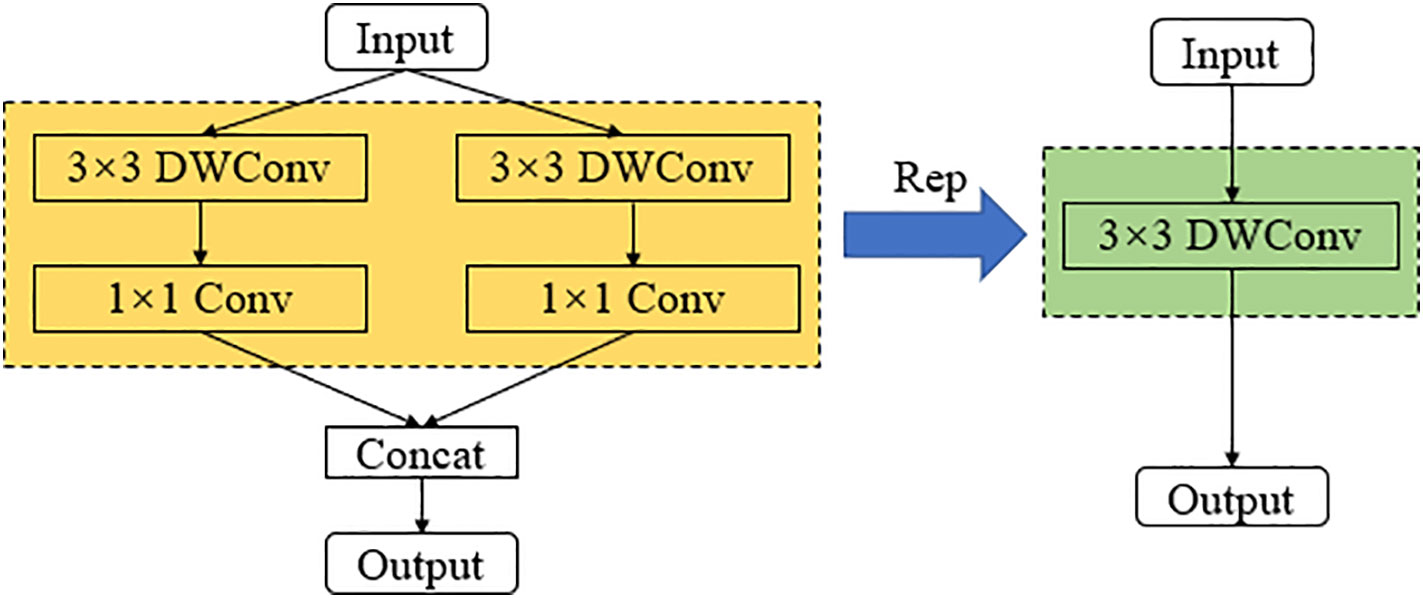

Although a multi-branch structure reduces the number of parameters, many researchers argue that too many branches can slow the model at inference. This paper therefore optimizes the model structure for recognition with a reparameterization strategy. As shown in Figure 7, the 3 × 3 depthwise separable convolutional layers in the first and second branches are fused together with their respective batch normalization (BN) layers. After fusion, a single set of 3 × 3 depthwise separable convolutions carries the combined parameters of the two branches, which improves the model’s recognition speed at inference.
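The branch fusion described above can be sketched in NumPy under one explicit assumption: each of the two branches is a 3 × 3 depthwise convolution followed by inference-mode BN, both branches see the same input, and their outputs are summed with no nonlinearity in between (a nonlinearity before the sum would prevent exact merging). BN is first folded into each kernel, and because convolution is linear the two folded kernels and biases can then be added. The function names are hypothetical; this is not the authors’ code.

```python
import numpy as np


def depthwise_conv3x3(x, w, b):
    """Stride-1 depthwise 3x3 cross-correlation, zero padding 1. x: (C,H,W), w: (C,3,3)."""
    c, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((c, h, wd))
    for i in range(3):
        for j in range(3):
            out += w[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return out + b[:, None, None]


def batch_norm(z, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BN using per-channel running statistics."""
    s = (gamma / np.sqrt(var + eps))[:, None, None]
    return s * (z - mean[:, None, None]) + beta[:, None, None]


def fold_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the preceding depthwise conv: BN(conv(x, w) + b) == conv(x, w') + b'."""
    s = gamma / np.sqrt(var + eps)
    return w * s[:, None, None], beta + (b - mean) * s


def merge_two_branches(branch_a, branch_b):
    """Each branch is (w, b, gamma, beta, mean, var); returns one fused (w, b)."""
    wa, ba = fold_bn(*branch_a)
    wb, bb = fold_bn(*branch_b)
    return wa + wb, ba + bb  # convolution is linear, so kernels and biases add


rng = np.random.default_rng(1)
C = 4
x = rng.standard_normal((C, 6, 6))


def random_branch():
    return (rng.standard_normal((C, 3, 3)), rng.standard_normal(C),
            rng.uniform(0.5, 1.5, C), rng.standard_normal(C),
            rng.standard_normal(C), rng.uniform(0.5, 2.0, C))


a, b = random_branch(), random_branch()
two_branch = (batch_norm(depthwise_conv3x3(x, a[0], a[1]), *a[2:])
              + batch_norm(depthwise_conv3x3(x, b[0], b[1]), *b[2:]))
w_fused, b_fused = merge_two_branches(a, b)
print(np.allclose(two_branch, depthwise_conv3x3(x, w_fused, b_fused)))  # True
```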

3 Results and discussion

3.1 Experiment environment

In this study, the hardware environment was a Lenovo Y9000P laptop with an Intel Core i7-12700H processor running at 3.5 GHz and a GeForce RTX 3060 6 GB GPU. The software environment consisted of the Windows 10 operating system, Python 3.8, the PyTorch 1.10.0 machine learning library, and the CUDA 10.2 parallel computing framework.

3.2 Evaluative metrics

The following metrics are commonly used when evaluating the performance of classification models:

Accuracy: the proportion of all samples that the model predicts correctly, which measures the overall correctness of its predictions.

Precision: the proportion of samples predicted as positive that are actually positive.

Recall: the proportion of actual positive samples that the model predicts as positive.

F1 value: the harmonic mean of precision and recall, which measures the overall predictive effectiveness of the model for positive samples.

In the multi-class setting, these counts are taken per class, treating that class as positive and all other classes as negative:

$$A = \frac{TP + TN}{TP + TN + FP + FN}$$

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

where A is accuracy, P is precision, R is recall, and

TP-True positive, the number of samples correctly predicted as positive;

TN-True negative, the number of negative samples predicted as negative;

FP-False positive, the number of negative samples predicted as positive;

FN-False negative, the number of positive samples predicted as negative.
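The definitions above can be applied to a multi-class confusion matrix with the plain-Python sketch below, treating each class in turn as positive. How the paper aggregates its “total” precision, recall, and F1 is not stated; the macro average shown here is one common choice and is an assumption.

```python
def per_class_metrics(cm):
    """One-vs-rest TP/FP/FN/TN, precision, recall and F1 for each class.

    cm[i][j] is the number of samples with true label i predicted as label j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # column k minus the diagonal
        fn = sum(cm[k]) - tp                       # row k minus the diagonal
        tn = total - tp - fp - fn
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        results.append({"TP": tp, "FP": fp, "FN": fn, "TN": tn, "P": p, "R": r, "F1": f1})
    return results


def overall_accuracy(cm):
    """Correct predictions (the diagonal) divided by all samples."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)


def macro_average(results, key):
    """Unweighted mean of one metric over all classes (an assumed aggregation)."""
    return sum(r[key] for r in results) / len(results)


# Hypothetical 2-class confusion matrix.
cm = [[8, 2],
      [1, 9]]
res = per_class_metrics(cm)
print(overall_accuracy(cm), round(res[0]["P"], 3), res[0]["R"])  # 0.85 0.889 0.8
print(round(macro_average(res, "F1"), 3))
```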

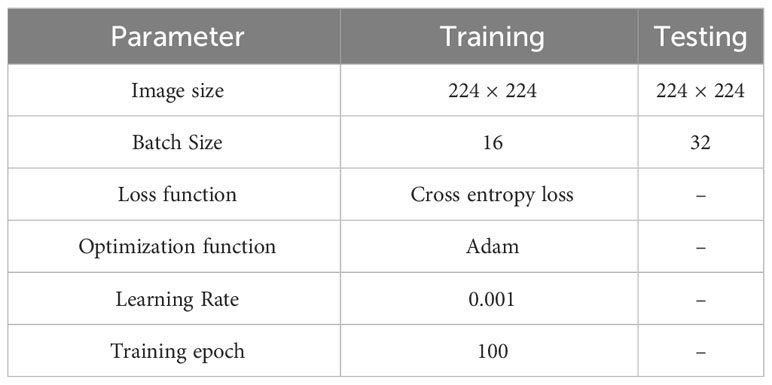

3.3 Model training, testing parameters

After several trials, the training and testing parameters of the LALNet model were chosen to suit the dataset and computing hardware of this paper, as shown in Table 3: the input image size was 224 × 224, the batch size was 16 for both training and testing, the loss function was cross-entropy loss, the optimizer was Adam, the learning rate was 0.001, and the number of training epochs was 100.
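Two quantities implied by this configuration can be sketched in plain Python: the softmax cross-entropy loss for a single sample (PyTorch’s nn.CrossEntropyLoss applies the same log-softmax plus negative log-likelihood to raw logits and averages over the batch by default) and the number of optimizer steps. The training set size of 20,000 images is derived from the 80% split of 25,000 images, and the step count assumes the last partial batch is kept.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def optimizer_steps(n_train, batch_size, epochs):
    """Parameter updates over training, counting a final partial batch as one step."""
    return math.ceil(n_train / batch_size) * epochs


print(round(cross_entropy([0.0] * 5, target=2), 4))  # 1.6094, i.e. ln 5 for uniform logits
print(optimizer_steps(20_000, 16, 100))              # 125000
```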

3.4 Accuracy of the model training

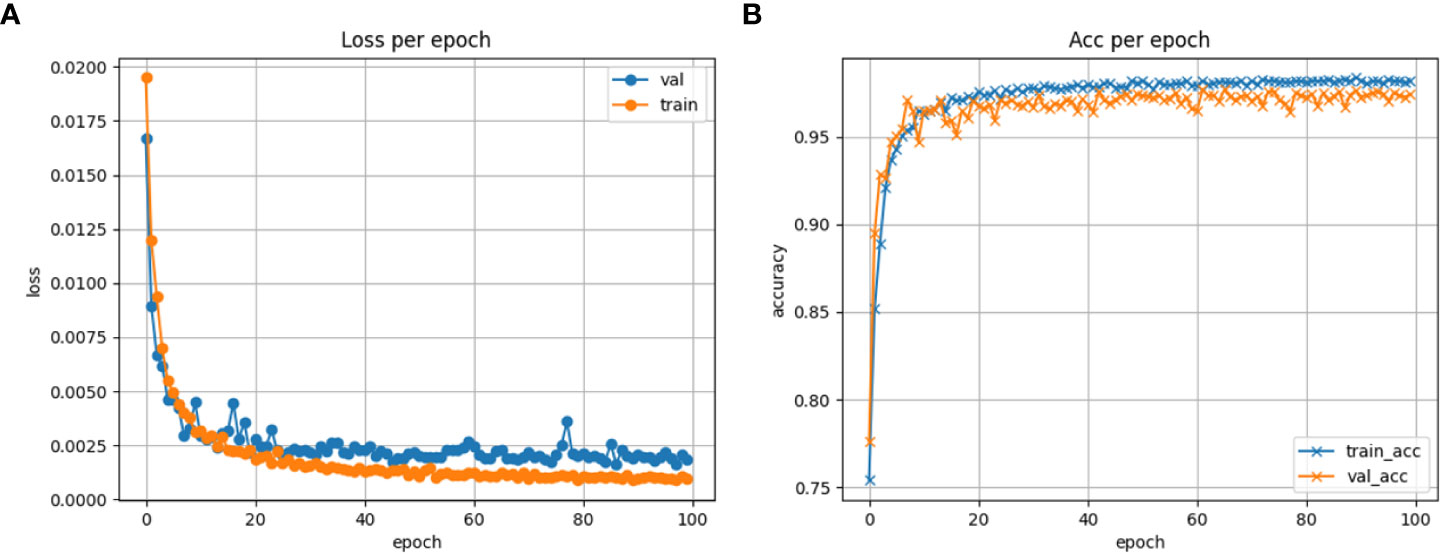

During training, the loss and accuracy on the training and validation sets are commonly monitored to assess the model’s performance on the dataset (Pang et al., 2020). In this paper, the model was trained and validated with the same parameters and number of epochs, and its loss and accuracy on both sets were recorded. Figure 8A shows the training and validation loss curves, and Figure 8B shows the training and validation accuracy curves. Initially, the model had low accuracy and high loss with significant fluctuations; as training progressed, both accuracy and loss stabilized. The curves show no obvious sign of overfitting or underfitting, indicating good training performance.

Figure 8 Model training monitoring graph. (A) Loss plot of model training. (B) Accuracy plot of model training.

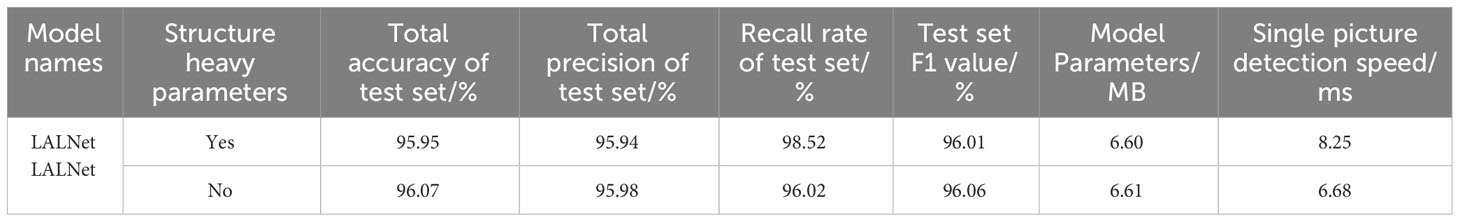

3.5 Analysis of structural re-parameterization results

In this study, structural reparameterization was applied to the EALD module in the inference phase to improve the model’s runtime speed. The recognition accuracy and single-frame recognition speed of the model with and without structural reparameterization were evaluated on the test set (Yueming et al., 2023). As shown in Table 4, the parameter size of the model remained almost unchanged after reparameterization. Although detection accuracy decreased slightly, by 1%, detection speed improved by 19.03%. The reparameterization method is therefore effective at increasing running speed while largely maintaining model performance.
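A percentage such as the 19.03% speed improvement can be defined in two ways that give different numbers for the same timings, and the text does not say which one is used. The plain-Python sketch below shows a simple latency harness and both definitions; the function names are hypothetical. When timing a PyTorch model on a GPU, torch.cuda.synchronize() should be called before reading the clock, because CUDA kernels run asynchronously.

```python
import time


def mean_latency_ms(infer, sample, warmup=10, runs=100):
    """Average wall-clock time of infer(sample) in milliseconds, after warm-up calls."""
    for _ in range(warmup):
        infer(sample)  # warm-up: caches, lazy initialisation, etc.
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    return (time.perf_counter() - start) * 1000.0 / runs


def time_reduction(t_before, t_after):
    """Fractional drop in per-image time (0.2 means 20% less time)."""
    return (t_before - t_after) / t_before


def throughput_gain(t_before, t_after):
    """Fractional rise in images per second for the same pair of timings."""
    return t_before / t_after - 1.0


print(time_reduction(10.0, 8.0), throughput_gain(10.0, 8.0))  # 0.2 0.25
print(mean_latency_ms(sorted, list(range(1000)), warmup=2, runs=20))
```

For example, a drop from 10 ms to 8 ms per image is a 20% reduction in time but a 25% increase in throughput.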

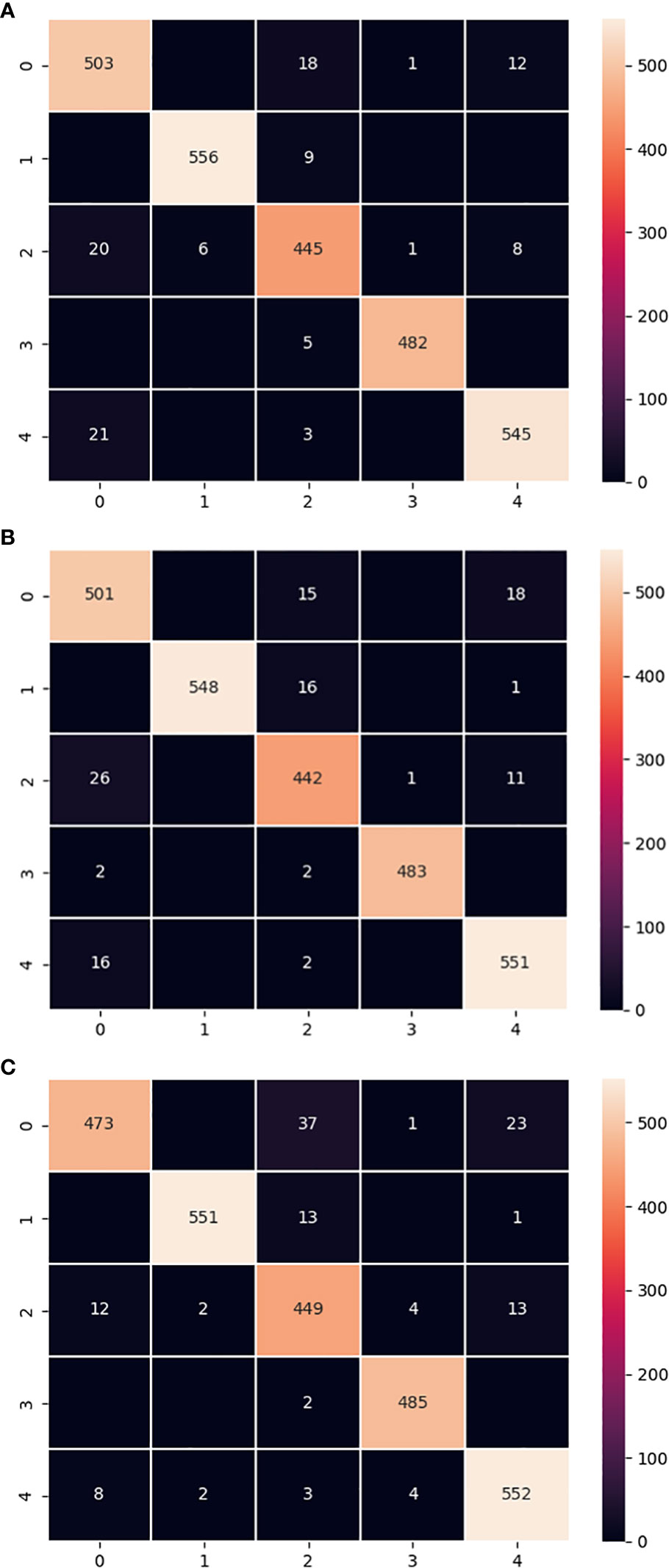

3.6 Analysis of model parameters, efficiency

The confusion matrix is a common tool for evaluating classifiers; it assesses performance by tabulating the relationship between the actual and predicted labels (Simonyan and Zisserman, 2014; Görtler et al., 2022). The confusion matrices of the LALNet, MobileNet V3-small, and ShuffleNet V2 models on the test set are shown in Figure 9. Figure 9A shows that LALNet correctly predicted 503 images of label 0, 556 of label 1, 445 of label 2, 482 of label 3, and 545 of label 4. Comparing Figures 9A, B shows that MobileNet V3-small correctly predicted more label 4 images than LALNet but slightly fewer for the remaining labels. Comparing Figures 9A, C shows that ShuffleNet V2 correctly predicted more label 2 images than LALNet but slightly fewer for the remaining labels. Across the three confusion matrices, each model confused different classes in different numbers, indicating that the models recognize apple leaf diseases differently. Overall, LALNet matched correct labels slightly better than the other two models, which supports the effectiveness of its design.

Figure 9 Confusion matrix for the 3 models. (A) LALNet model confusion matrix. (B) MobileNet V3-small model confusion matrix. (C) ShuffleNet V2 model confusion matrix.

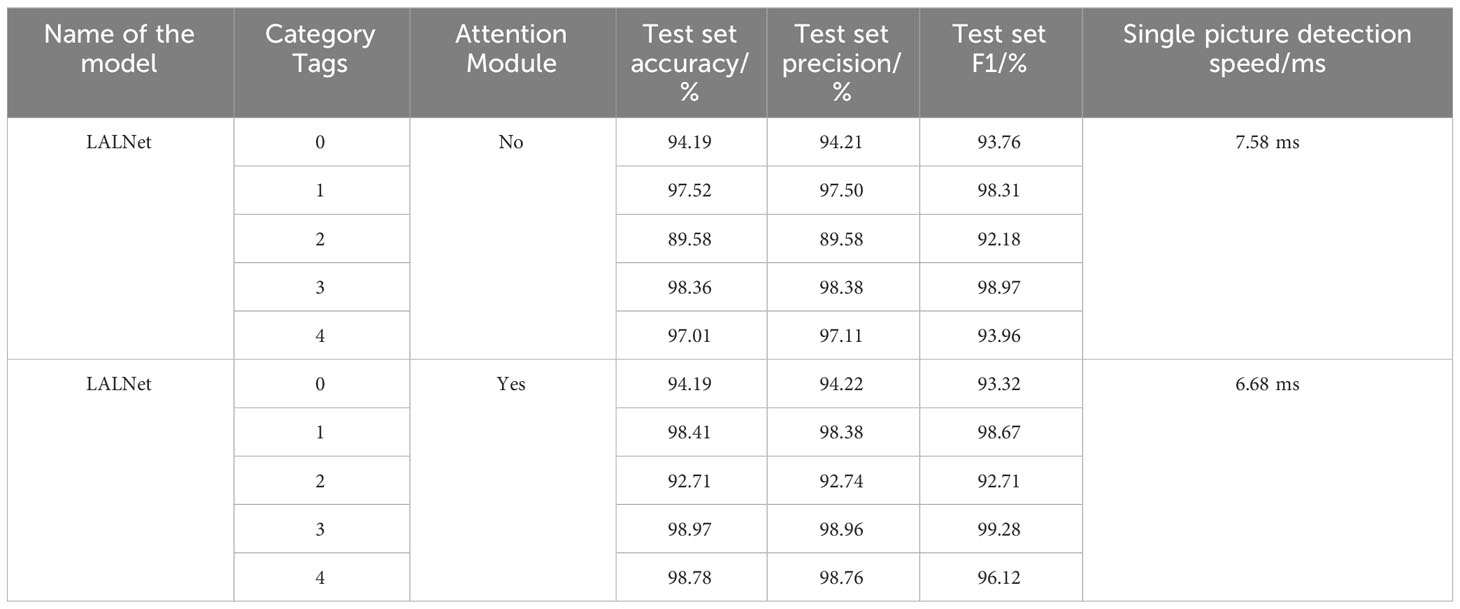

This paper also compared LALNet with and without the attention mechanism; the results are shown in Table 5. Without the attention module, LALNet achieved a total accuracy of 95.46%, a total precision of 95.79%, and a total F1 of 95.43%, with a single-image detection time of 6.68 ms. With the attention module, the total accuracy rose to 96.07% and the total F1 to 96.06%, while the single-image detection time increased slightly to 7.58 ms. The attention mechanism thus improves the model’s accuracy and robustness to a certain extent, at some cost in detection speed.

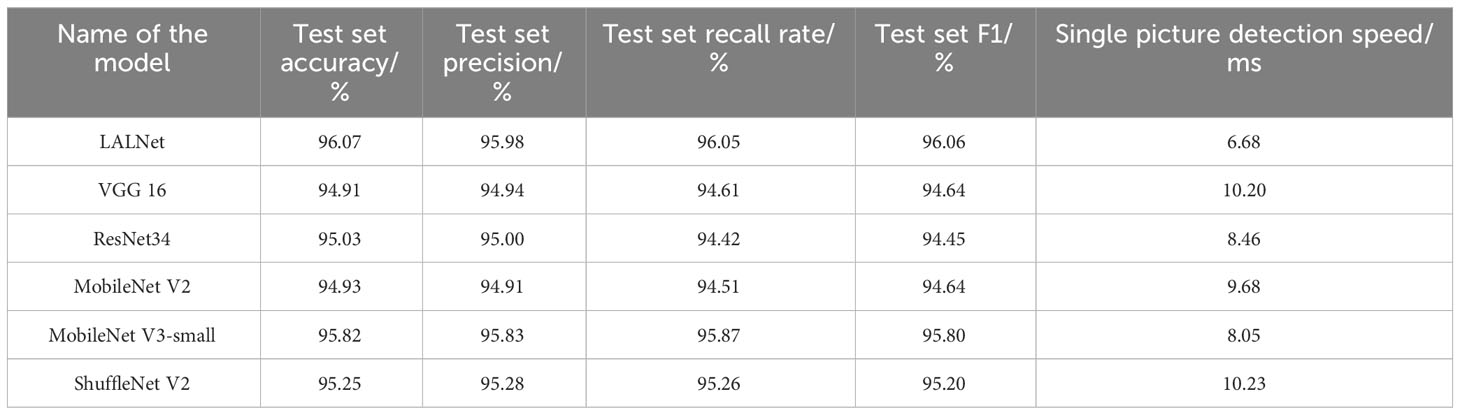

3.7 Comparative analysis of different models in the experimental study

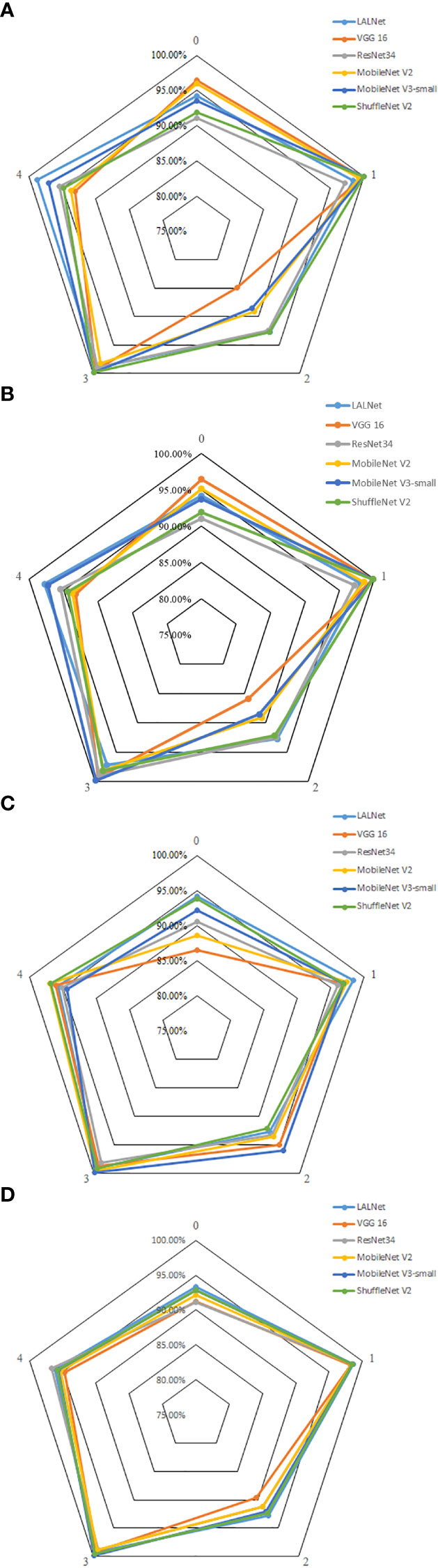

To further validate the model’s performance in classifying different apple leaf disease categories, LALNet was compared with five other network models: VGG16 (Ma et al., 2018), ResNet34, MobileNet V2, MobileNet V3-small, and ShuffleNet V2 (Zhong and Zhao, 2020). The results on the test set are shown in Table 6, and the performance metrics for the individual leaf diseases are shown in Figure 10.

Figure 10 Comparison of different apple leaf disease evaluation indexes of the 6 models. (A) Accuracy, (B) Precision, (C) Recall rate, (D) F1 values.

Table 6 shows that LALNet achieved an overall test set accuracy of 96.07%, higher than the other models. Its total precision, total recall, and total F1 values were 95.98%, 96.05%, and 96.06%, respectively, which were also better than those of the other models. In terms of detection speed, LALNet’s single-image detection time of 6.68 ms was shorter than that of the other classical models, and its single-image detection speed was 16.79% higher than that of the lightweight MobileNet V3-small, which means LALNet offers better real-time detection performance in practical applications.

Among the compared classical models, VGG16 had the lowest total test set accuracy (94.91%), the lowest total test set F1 value (94.64%), and the second-lowest total test set recall (94.61%). MobileNet V3-large had a total test set accuracy of 95.93% and a total test set recall of 95.87%. The comparison also shows that the lightweight MobileNet V3-small exceeds the detection accuracy of ResNet34 and VGG16 while maintaining detection speed, indicating the advantage of lightweight architecture design, although it still falls short of LALNet. Overall, LALNet is faster in single-image detection while maintaining detection accuracy, making it better suited to apple leaf disease detection scenarios that require a fast response.

As shown in Figure 10, the accuracy, precision, recall, and F1 values of the models varied across leaf disease categories. LALNet’s accuracy was consistent, fluctuating around 95% across disease categories, with its best accuracy on categories 1 and 4 (98.41% and 98.78%, respectively). All models had their lowest accuracy on category 2, where VGG16 reached only 84.92%. In terms of recall, LALNet performed best on categories 1 and 4, while the six models varied most on category 2, where VGG16 reached about 85% recall and ShuffleNet V2 about 94%. In terms of F1, LALNet had its best values on categories 1 and 4, while all models had F1 values above 95% on categories 0 and 2. Overall, LALNet performed consistently in accuracy, recall, and F1 and achieved good performance on every disease category. Compared with the other models, LALNet showed superior recognition accuracy on most disease categories, further supporting its reliability and effectiveness for apple leaf disease recognition.

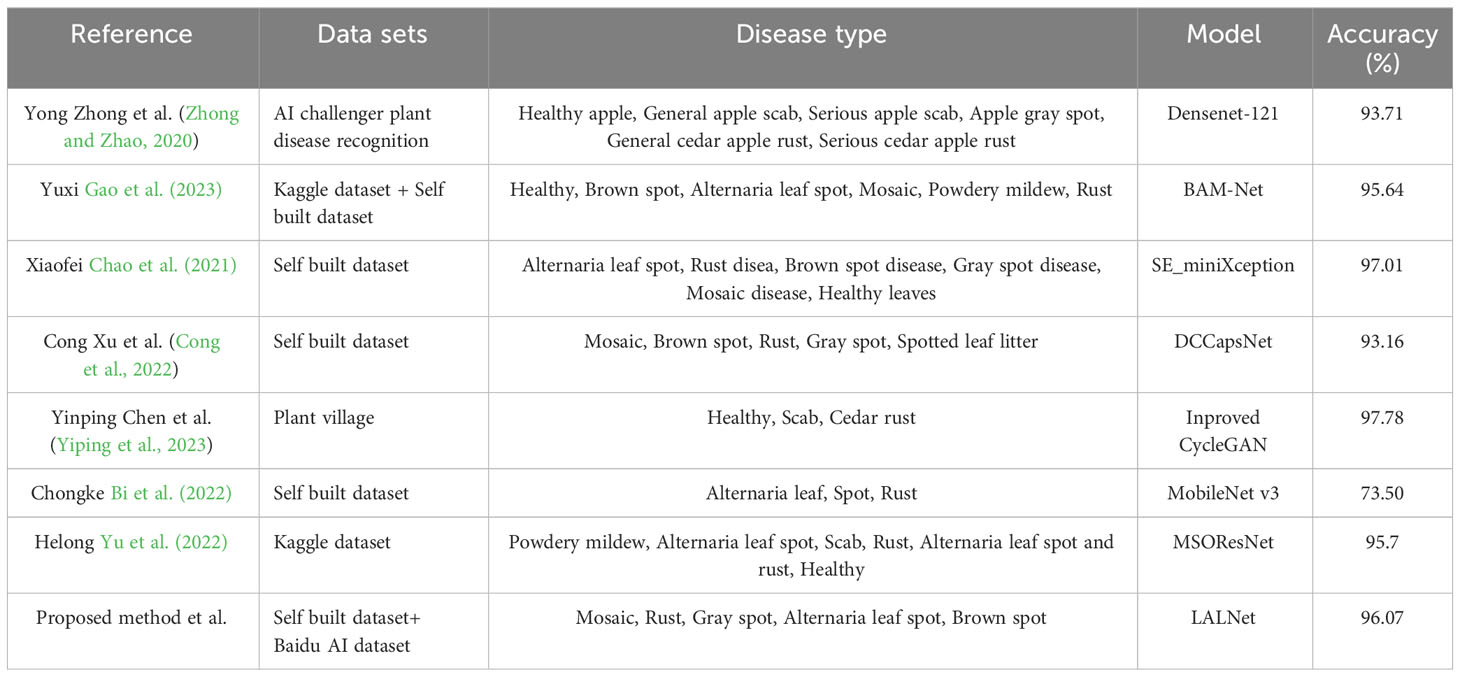

To further analyze LALNet’s performance in apple leaf disease detection, it was compared with existing state-of-the-art apple leaf disease detection methods, as shown in Table 7. LALNet achieves 96.07% accuracy on the combined self-built and Baidu AI datasets and performs well in the disease detection task compared with other methods: its detection accuracy is close to or exceeds that of several advanced studies. All seven models in the comparison exceed 90% overall detection accuracy, and Yinping Chen et al. achieved 97.78% on the PlantVillage dataset. However, such comparisons have limitations: the hardware and datasets used differ greatly between studies, which affects the results, especially the reported detection speed.

4 Conclusion and limitations

This paper proposed LALNet, a fast apple leaf disease detection model. First, an efficient stacked leaf detection module, EALD, was designed using a multi-branch structure and depthwise separable modules, which obtains more accurate identification information with fewer parameters and less computation. Next, four layers of EALD modules were stacked in LALNet, and an SE module was added in the last layer to focus the network’s attention on important features. Finally, structural reparameterization was used to merge the two depthwise separable convolution branches and improve speed in the inference phase. The proposed model achieved an overall test set accuracy of 96.07%, a precision of 95.98%, an F1 score of 96.06%, a model size of 6.61 MB, and a single-image detection time of 6.68 ms. It therefore meets detection accuracy requirements while ensuring operating speed and is suitable for use on embedded devices.

However, the limitations of this study should be acknowledged so that readers can assess it comprehensively. First, the dataset used in this research is limited in its collection methods, sample size, and range of disease types, which may affect the generalizability of the model. Second, the model’s performance in real-world applications may be affected by lighting variations, different capture angles, and variations in leaf quality. Finally, this study focused on common apple leaf diseases and does not cover all disease types that may occur in practical cultivation. Future research should collect more diverse and comprehensive datasets and further optimize the model to improve its accuracy and robustness.

In future work, we aim to further improve the performance of the LALNet model by addressing the aforementioned limitations. In addition, we plan to use the model in an intelligent tracked apple spraying robot to achieve precision spraying and reduce pesticide use.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Author contributions

RH prepared materials. ZY was responsible for the experiment design. GA and RL performed the program development. GA and HX analyzed the data, and SY was responsible for writing the manuscript. SY and RL contributed to reviewing the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the Innovation Team Fund for Fruit Industry of Modern Agricultural Technology System in Shandong Province, equipment post (SDAIT-06-12), and by the State Key Laboratory of Mechanical System and Vibration (Grant No. MSV202002).

Acknowledgments

We are very grateful to all the authors for their support and contributions to the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

AI Studio public datasets. (2023). “Pathological images of apple leaves.” Available at: https://aistudio.baidu.com/aistudio/datasetdetail/11591/0.

Arumugam, K., Swathi, Y., Sanchez, D. T., Okoronkwo, E. (2022). Towards applicability of machine learning techniques in agriculture and energy sector. Mater. Today.: Proc. 51, 2260–2263. doi: 10.1016/j.matpr.2021.11.394

Bi, C., Wang, J., Duan, Y., Fu, D., Kang, J., Shi, Y. (2022). MobileNet based apple leaf diseases identification. Mobile. Netw. Appl. 27, 172–180. doi: 10.1007/s11036-020-01640-1

Chao, X., Hu, X., Feng, J., Zhang, Z., Wang, M., He, D. (2021). Construction of apple leaf diseases identification networks based on xception fused by SE module. Appl. Sci. 11 (10), 4614. doi: 10.3390/app11104614

Chunshan, W., Ji, Z., Huarui, W., Guifa, T., Chunjiang, Z., Jiuxi, L. (2020). Identification of vegetable leaf diseases based on improved Multi-scale ResNet. Trans. Chin. Soc. Agric. Eng. (Transactions. CSAE). 36 (20), 209–217. doi: 10.11975/j.issn.1002-6819.2020.20.025

Cong, X., Xuqi, W., Shanwen, Z. (2022). Dilated convolution capsule network for apple leaf disease identification. Front. Plant Sci. 13, 1002312. doi: 10.3389/FPLS.2022.1002312

Deepan, P., Sudha, L. R. (2021). “Deep learning algorithm and its applications to IoT and computer vision,” in Manoharan, K. G., Nehru, J. A., Balasubramanian, S. (eds). Artificial intelligence and IoT. Studies in Big Data (Singapore: Springer) 85, 223–244. doi: 10.1007/978-981-33-6400-4_11

Ding, X., Zhang, X., Ma, N., Han, J. G., Ding, G. G., Sun, J. (2021). “RepVGG: Making VGG-style ConvNets great again,” in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (Nashville, TN, USA). 2021, 13733–13742. doi: 10.1109/CVPR46437.2021.01352

He, K., Zhang, X., Ren, S., Sun, J. (2016). “Deep residual learning for image recognition,” in 2016 IEEE conference on computer vision and pattern recognition (CVPR). (Las Vegas, NV, USA). 2016, 770–778. doi: 10.1109/CVPR.2016.90

Gao, Y., Cao, Z., Cai, W., Gong, G., Zhou, G., Li, L. (2023). Apple leaf disease identification in complex background based on BAM-net. Agronomy 13 (5), 1240. doi: 10.3390/agronomy13051240

Gill, H. S., Khehra, B. S. (2022). Fruit image classification using deep learning. Res. Sq. preprint, 06. doi: 10.21203/rs.3.rs-574901/v1

Görtler, J., Hohman, F., Moritz, D., Wongsuphasawat, K., Ren, D. H., Nair, R., et al. (2022). “Neo: Generalizing confusion matrix visualization to hierarchical and multi-output labels,” in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. (New York, USA: Association for Computing Machinery). 408, 1–13. doi: 10.1145/3491102.3501823

Guo, B., Li, B., Huang, Y., Hao, F. Y., Xu, B. L., Dong, Y. Y. (2022). Bruise detection and classification of strawberries based on thermal images. Food Bioprocess. Technol. 15 (5), 1133–1141. doi: 10.1007/s11947-022-02804-5

Howard, A., Sandler, M., Chen, B., Wang, W., Chen, L. C., Tan, M., et al. (2019). “Searching for MobileNetV3,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea (South), pp. 1314–1324. doi: 10.1109/ICCV.2019.00140

Hu, M., Feng, J., Hua, J., Lai, B., Huang, J. Q., Gong, X. J., et al. (2022). “Online convolutional re-parameterization,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA. 2022, 568–577. doi: 10.1109/CVPR52688.2022.00065

Hu, J., Shen, L., Sun, G. (2020). “Squeeze-and-excitation networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 7132–7141. doi: 10.48550/arXiv.1709.01507

Kamilaris, A., Prenafeta-Boldú, F. X. (2018). Deep learning in agriculture: A survey. Comput. Electron. Agric. 147, 70–90. doi: 10.1016/j.compag.2018.02.016

Kawakura, S., Shibasaki, R. (2020). Deep learning-based self-driving car: Jetbot with nvidia ai board to deliver items at agricultural workplace with object-finding and avoidance functions. Eur. J. Agric. Food Sci. 2 (3). doi: 10.24018/ejfood.2020.2.3.45

Li, L. L., Sun, S. J., Mu, Y., Hu, Y., Gong, T. L. (2022). Lightweight-convolutional neural network for apple leaf disease identification. Front. Plant Sci. 13, 831219. doi: 10.3389/fpls.2022.831219

Ma, N., Zhang, X., Zheng, H. T., Sun, J. (2018). “ShuffleNet V2: Practical guidelines for efficient CNN architecture design,” in Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds). Computer Vision – ECCV 2018. Lecture Notes in Computer Science, vol 11218 (Cham: Springer). doi: 10.1007/978-3-030-01264-9_8

Pang, L., Liu, H., Chen, Y., Miao, J. (2020). Real-time concealed object detection from passive millimeter wave images based on the YOLOv3 algorithm. Sensors 20 (6), 1678. doi: 10.3390/s20061678

Sharma, A., Jain, A., Gupta, P., Chowdary, V. (2020). Machine learning applications for precision agriculture: A comprehensive review. IEEE Access 9, 4843–4873. doi: 10.1109/ACCESS.2020.3048415

Shifang, S., Yan, Q., Yuan, R. (2021). Recognition of grape leaf diseases and mobile application based on transfer learning. Trans. Chin. Soc. Agric. Eng. (Transactions. CSAE). 37 (10), 127–134. doi: 10.11975/j.issn.1002-6819.2021.10.015

Shun, Z., Yihong, G., Jinjun, W. (2019). The development of deep convolutional neural networks and its applications on computer vision. Chin. J. Comput. 42 (3), 453–482. doi: 10.11897/SP.J.1016.2019.00453

Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. doi: 10.48550/arXiv.1409.1556

Wang, D., Wang, J. (2021). Crop disease classification with transfer learning and residual networks. Trans. Chin. Soc. Agric. Eng. (Transactions. CSAE). 37 (4), 199–207. doi: 10.11975/j.issn.1002-6819.2021.4.024

Wenjie, Q., Jin, Y., Liangqing, H., Juan, Y., Qili, L., Jianyou, M., et al. (2021). Distilled-mobilenet model of convolutional neural network simplified structure for plant disease recognition. Smart. Agric. 3 (1), 109–117. doi: 10.12133/j.smartag.2021.3.1.202009-SA004

Yan, Q., Yang, B., Wang, W., Wang, B., Chen, P., Zhang, J. (2020). Apple leaf diseases recognition based on an improved convolutional neural network. Sensors 20 (12), 3535. doi: 10.3390/s20123535

Yiping, C., Jinchao, P., Qiufeng, W. (2023). Apple leaf disease identification via improved CycleGAN and convolutional neural network. Soft Comput. 27 (14), 9773–9786. doi: 10.1007/S00500-023-07811-Y

Yu, H., Cheng, X., Chen, C., Heidari, A., Liu, J., Cai, Z., et al. (2022). Apple leaf disease recognition method with improved residual network. Multimed. Tools Appl. 81, 7759–7782. doi: 10.1007/s11042-022-11915-2

Yueming, W., Meng, Q., Deqing, Z., Hai, J. (2023). Obfuscation-resilient android malware detection based on graph convolution neural networks. J. Software. 34 (6). doi: 10.13328/j.cnki.jos.006848

Zhang, Q. Q. (2021). Study on the evolution and advantage evaluation of Apple production distribution in China (Shaanxi, China: Northwest A&F University).

Zhang, C., Lu, Y. (2021). Study on artificial intelligence: The state of the art and future prospects. J. Ind. Inf. Integration. 23, 100224. doi: 10.1016/j.jii.2021.100224

Zhang, M., Su, H., Wen, J. (2021). Classification of flower image based on attention mechanism and multi-loss attention network. Comput. Commun. 179, 307–317. doi: 10.1016/j.comcom.2021.09.001

Zheng, Y. Y., Kong, J. L., Jin, X. B., Wang, X. Y., Zou, M. (2019). CropDeep: The crop vision dataset for deep-learning-based classification and detection in precision agriculture. Sensors 19 (5), 1058. doi: 10.3390/s19051058

Zhong, Y., Zhao, M. (2020). Research on deep learning in apple leaf disease recognition. Comput. Electron. Agric. 168, 105146. doi: 10.1016/j.compag.2019.105146

Keywords: apple leaf disease, deep learning, deep separable convolution, re-parameterization, leaf detection network

Citation: Ang G, Han R, Yuepeng S, Longlong R, Yue Z and Xiang H (2023) Construction and verification of machine vision algorithm model based on apple leaf disease images. Front. Plant Sci. 14:1246065. doi: 10.3389/fpls.2023.1246065

Received: 24 June 2023; Accepted: 14 August 2023;

Published: 13 September 2023.

Edited by:

Tonghai Liu, Tianjin Agricultural University, China

Reviewed by:

Aibin Chen, Central South University of Forestry and Technology, China

Jun Liu, Weifang University of Science and Technology, China

Copyright © 2023 Ang, Han, Yuepeng, Longlong, Yue and Xiang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Song Yuepeng, uptonsong@sdau.edu.cn; Ren Longlong, renlonglong@sdau.edu.cn
