- National Key Laboratory of Crop Genetic Improvement, National Center of Plant Gene Research (Wuhan), College of Engineering, Huazhong Agricultural University, Wuhan, China

High-throughput phenotyping of yield-related traits is meaningful and necessary for rice breeding and genetic study. The conventional method for rice yield-related trait evaluation faces the problems of rice threshing difficulties, measurement process complexity, and low efficiency. To solve these problems, a novel intelligent system, which includes an integrated threshing unit, grain conveyor-imaging units, threshed panicle conveyor-imaging unit, and specialized image analysis software has been proposed to achieve rice yield trait evaluation with high throughput and high accuracy. To improve the threshed panicle detection accuracy, the Region of Interest Align, Convolution Batch normalization activation with Leaky Relu module, Squeeze-and-Excitation unit, and optimal anchor size have been adopted to optimize the Faster-RCNN architecture, termed ‘TPanicle-RCNN,’ and the new model achieved F1 score 0.929 with an increase of 0.044, which was robust to indica and japonica varieties. Additionally, AI cloud computing was adopted, which dramatically reduced the system cost and improved flexibility. To evaluate the system accuracy and efficiency, 504 panicle samples were tested, and the total spikelet measurement error decreased from 11.44 to 2.99% with threshed panicle compensation. The average measuring efficiency was approximately 40 s per sample, which was approximately twenty times more efficient than manual measurement. In this study, an automatic and intelligent system for rice yield-related trait evaluation was developed, which would provide an efficient and reliable tool for rice breeding and genetic research.

Introduction

Rice is the staple food for over half of the world’s population (Zhang, 2007; Sandhu et al., 2019), the yield of which is of great significance to human security and development (Tester and Langridge, 2010). In rice research, the evaluation of rice yield-related traits is an essential step for rice breeding (Qian et al., 2016; Yang et al., 2020) and functional gene analysis (Sakamoto and Matsuoka, 2008). Generally, rice yield is determined by crucial factors, including the number of panicles (Xing and Zhang, 2010), the number of filled spikelet’s (Borrell et al., 2014), and 1,000 grain weight (Richards, 2000). However, the traditional method for rice yield-related trait evaluation is manual and faces the problems of rice threshing difficulties, measurement process complexity, and low efficiency. Therefore, an efficient and reliable tool for rice yield-related trait evaluation is urgently needed.

With the rapid development of machine vision, a growing number of image-based technologies have been applied in agriculture (Sankaran et al., 2010; Rebolledo et al., 2016; Confalonieri et al., 2017), and several studies on rice yield-related trait evaluation have been reported. Since it is difficult to thresh all the spikelet’s in the panicle, most of the studies focus on grain traits measurement. ImageJ, a Java-based open source software, was used for isolated grain trait measurement (Igathinathane et al., 2009), and Smart Grain, an open source software, was released for grain trait measurement in complicated situations (Tanabata et al., 2012). Duan developed a yield traits scorer for automatic extraction of yield-related traits with high throughput (Duan et al., 2011). However, panicle threshing performance is still a bottleneck that some unfilled spikelet’s would remain in the threshed panicle, which would have a great negative effect on the measurement of rice yield-related traits. Some researchers have attempted to directly analyze rice panicles without threshing processes. Sandhu proposed a method for rice panicle maturity evaluation based on three-dimensional point cloud construction and analysis (Sandhu et al., 2019). Hu developed a 22 yield-related trait extraction method based on X-ray computed tomography imaging (Hu et al., 2020). P-TRAP, a commercial software program, was designed for yield spread-related trait analysis (Al-Tam et al., 2013). However, the low measurement efficiency and high cost had a negative impact on the practical application. Thus, an automatic panicle analysis system with high efficiency and high accuracy would have great application prospects.

According to existing research, there are generally two ways to obtain rice yield traits. The most common method was to investigate the grain traits by threshing the panicle manually (Yang et al., 2014), because the unfilled spikelet’s were hard to be completely taken off by the threshing machine, which limited the efficiency and accuracy of rice yield traits extraction. On the other hand, the X-ray technology was able to be used for filled and unfilled spikelet’s identification (Hu et al., 2020), but it is difficult to be widely used in practical rice yield traits extraction, because of the high cost, low efficiency, and radiation. In order to solve the problem of residual panicle spikelet’s by threshing machine, we promote a new way with threshed panicle compensation, which would identify the number of residual spikelet’s in the threshed panicle and compensate it into the evaluation of spikelet yield traits. In order to achieve it, a robust and reliable method for threshed panicle identification is needed.

In recent years, artificial intelligence technology has been significantly promoted and widely used in agriculture (Chen et al., 2018; Escamilla-Garcia et al., 2020; van Klompenburg et al., 2020; Dhaka et al., 2021; Kattenborn et al., 2021). Sun developed a soybean yield prediction model based on a convolutional neural network (Sun et al., 2019) and a long short-term memory network (Hochreiter and Schmidhuber, 1997). Zhou analyzed drone images for maize leaf coverage based on the Deeplabv3 plus model (Zhou et al., 2019). Object detection is an important research field of deep learning image processing, which is widely used in various agricultural scenes. The state-of-the-art methods can be categorized into two main types: one-stage methods and two stage-methods. One-stage methods prioritize inference speed, and example models include YOLO, SSD, and Retina (Liu et al., 2016; Redmon et al., 2016; Lin et al., 2020). Two-stage methods prioritize detection accuracy, and example models include Faster R-CNN, Mask R-CNN, and Cascade R-CNN (Ren et al., 2015; He et al., 2017; Cai et al., 2018). Faster RCNN is a classical two-stage object detector consisting of object proposal, feature extraction, and bounding box regression, which formulates detection as a coarse-to-fine process. The Deep–Fruits model was proposed for fruit identification based on Faster-RCNN (Sa et al., 2016). Faster-RCNN has also been applied for pest detection (Shen et al., 2018; He et al., 2020; Li et al., 2021) and panicle spikelet counting (Wu et al., 2019; Deng et al., 2021; Yu et al., 2021). Therefore, we adopted a two-stage detector, Faster R-CNN, as the basis for the threshed panicle detection. However, the current Faster R-CNN detector has shown weak performances with small and overlapped objects (Tong et al., 2020), and the grain size is approximately 30 × 60 pixels in the threshed panicle image of 2,048 × 4,096 pixels. Building on these preliminary observations, appropriate improvement for the Faster R-CNN architecture should be performed to achieve accurate detection of the threshed panicle.

The aim of this study is to build an automatic and intelligent system for rice yield trait evaluation. Firstly, a new deep learning architecture was proposed to achieve threshed panicle compensation on the basis of the Faster R-CNN architecture, termed ‘TPanicle-RCNN.’ Then, equipped with automatic control, machine vision, and deep learning algorithms, we developed a novel intelligent system, which includes an integrated threshing unit, grain conveyor-imaging units, and threshed panicle conveyor-imaging unit, and specialized image analysis software. Finally, the threshed panicle compensation was performed to achieve automatic and accurate acquisition of rice yield-related traits.

Materials and Methods

System Design

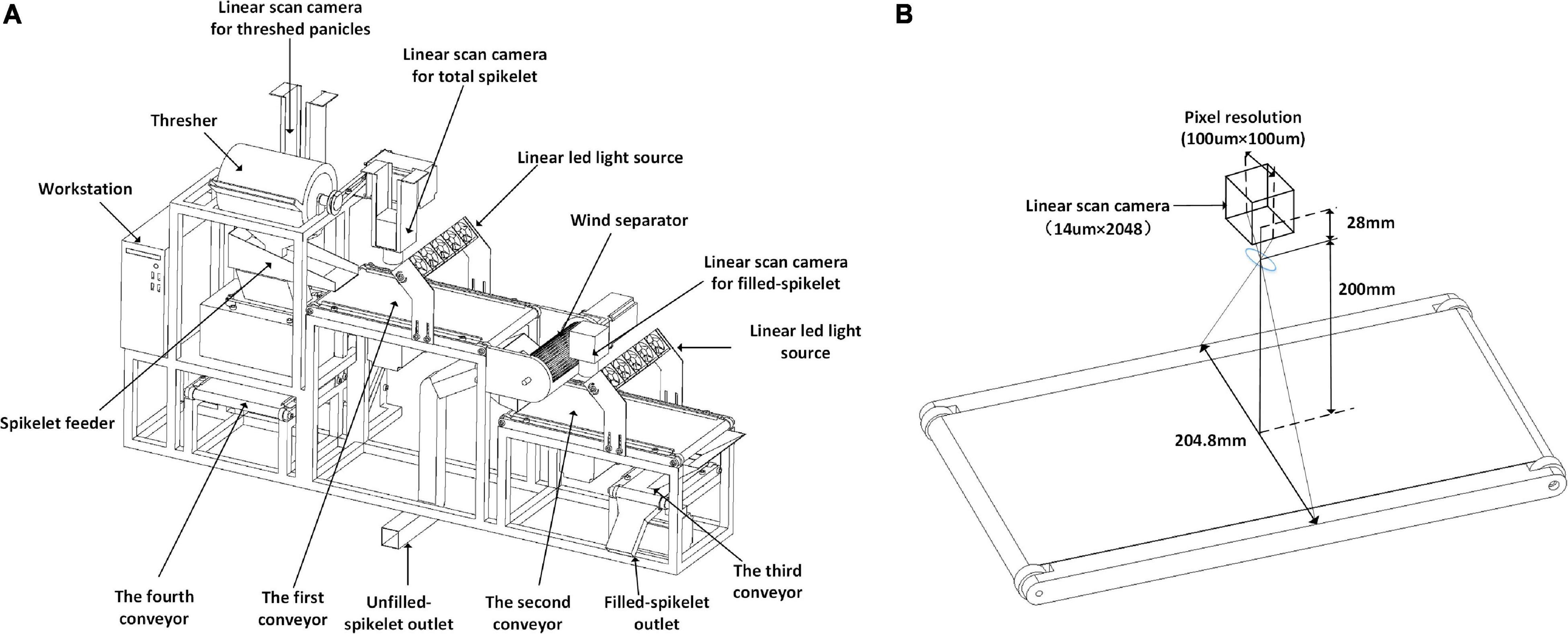

The system sketch is shown in Figure 1A, which mainly consists of the panicle threshing unit, servo air separator, and three conveyor imaging units. The threshing unit is derived from a semi-feeding drum thresher (TSL-150A, Top Cloud-AGRI, China) and is driven by a servo motor (Panasonic, Japan). The operator holds the panicle and puts it into the thresher; as a result, all the filled spikelet’s and most of the unfilled spikelet’s are threshed from the panicle. Then, the threshed spikelet’s spread out on the first conveyor by the vibration feeder. The servo air separator is designed with a cross-flow fan and is fixed between two grain conveyor lines; as a result, the filled spikelet’s are separated from the unfilled spikelet’s. The conveyor-imaging units are constructed with a panicle conveyor line and two grain conveyor lines; as a result, images of threshed panicles, total spikelet’s, and filled spikelet’s are obtained, which were grayscale images saved in PNG format. Finally, the filled spikelet’s and unfilled spikelet’s are individually collected from the specific outlets.

Figure 1. System sketch of automatic rice yield-related trait evaluation: (A) system composition details and (B) conveyor imaging unit.

The details of the conveyor imaging units are shown in Figure 1B. The linear camera (SG-11-02K40-00-R, DASLA, Canada) is equipped with a 28 mm lens (Nikon, Japan), the charge-coupled device (CCD) size is 14μm×2048, and the field of vision (FOV) is 204.8 mm with a pixel resolution of 100μm. Motion control is conducted by a programmable logic controller (PLC, Omron, Japan) programed by CX-Programmer 9.5 (Omron, Japan). The workstation m415 (Lenovo, China) is equipped with an i5-7500 CPU, 8 GB memory, and 1 T hard disk, and the Alibaba cloud (Alibabacloud) is adopted, with the configuration of a Tesla M40, 16 GB memory, and 30 GB cloud storage.

System Workflow

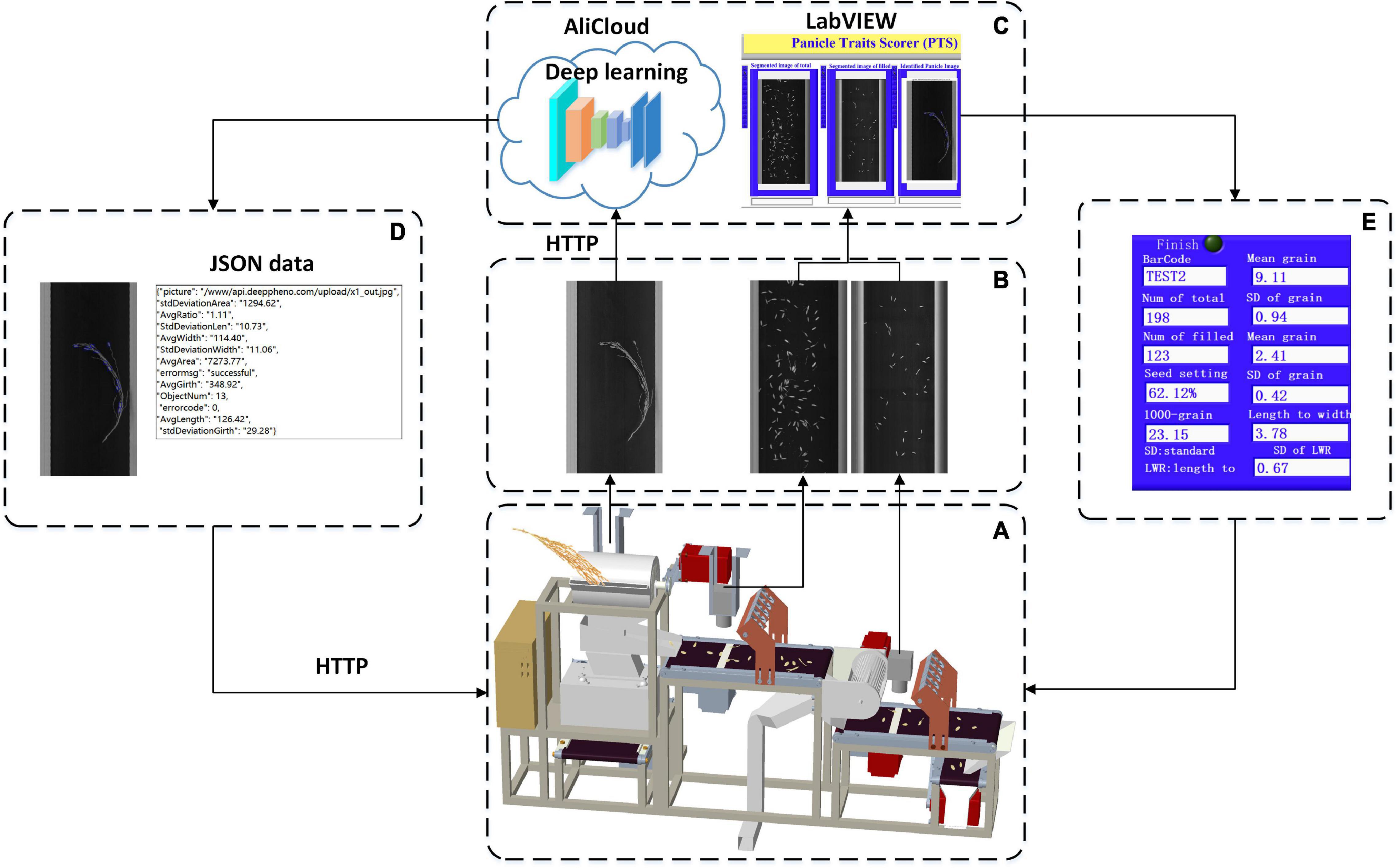

The system workflow is depicted in Figure 2: (1) First, the operator started the system and inputs the barcode. (2) Second, the panicle is held and put into the thresher after which the threshed panicle is placed on the fourth conveyor line for image acquisition, while all the threshed grains spread out onto the first conveyor line with a spikelet’s feeder for image acquisition. (3) Then, the grains go through a wind separator that blows the unfilled spikelet’s away, while the filled spikelet’s falls onto the second conveyor for image acquisition. (4) Next, all the filled spikelet’s are collected and weighed by the third conveyor and weighing device. (5) Finally, the images of threshed panicles, total spikelet’s, and filled spikelet’s are analyzed by specific local algorithms and cloud-deployed deep learning models for yield-related trait evaluation. The system workflow is shown in Supplementary Video 1.

Software Design

The software workflow in the system is shown in Figure 3 and was developed to achieve the functions of PLC communication, image acquisition, image analysis, and data storage. First, PLC communication was designed to control the panicle thresher, vibration feeder, conveyor lines, and wind separator, as shown in Figure 3A. Second, image acquisition was achieved by the NI-Vision module (National Instruments, United States) to obtain images of threshed panicles, total spikelet’s, and filled spikelet’s, as shown in Figure 3B. The image analysis algorithms were written in C + + and complied with the Dynamic Link Library (DLL), which was invoked by the user software developed by LabVIEW 8.6 (National Instruments, United States), and the threshed panicle identification model was trained in the local server and deployed in the Alibaba cloud. Finally, as shown in Figure 3E, the measurement results were displayed on the software interface, and the data were saved in one Excel file.

Figure 3. Software workflow in the system: (A) system control, (B) image acquisition, (C) image analysis, (D) cloud communication, and (E) result exhibition.

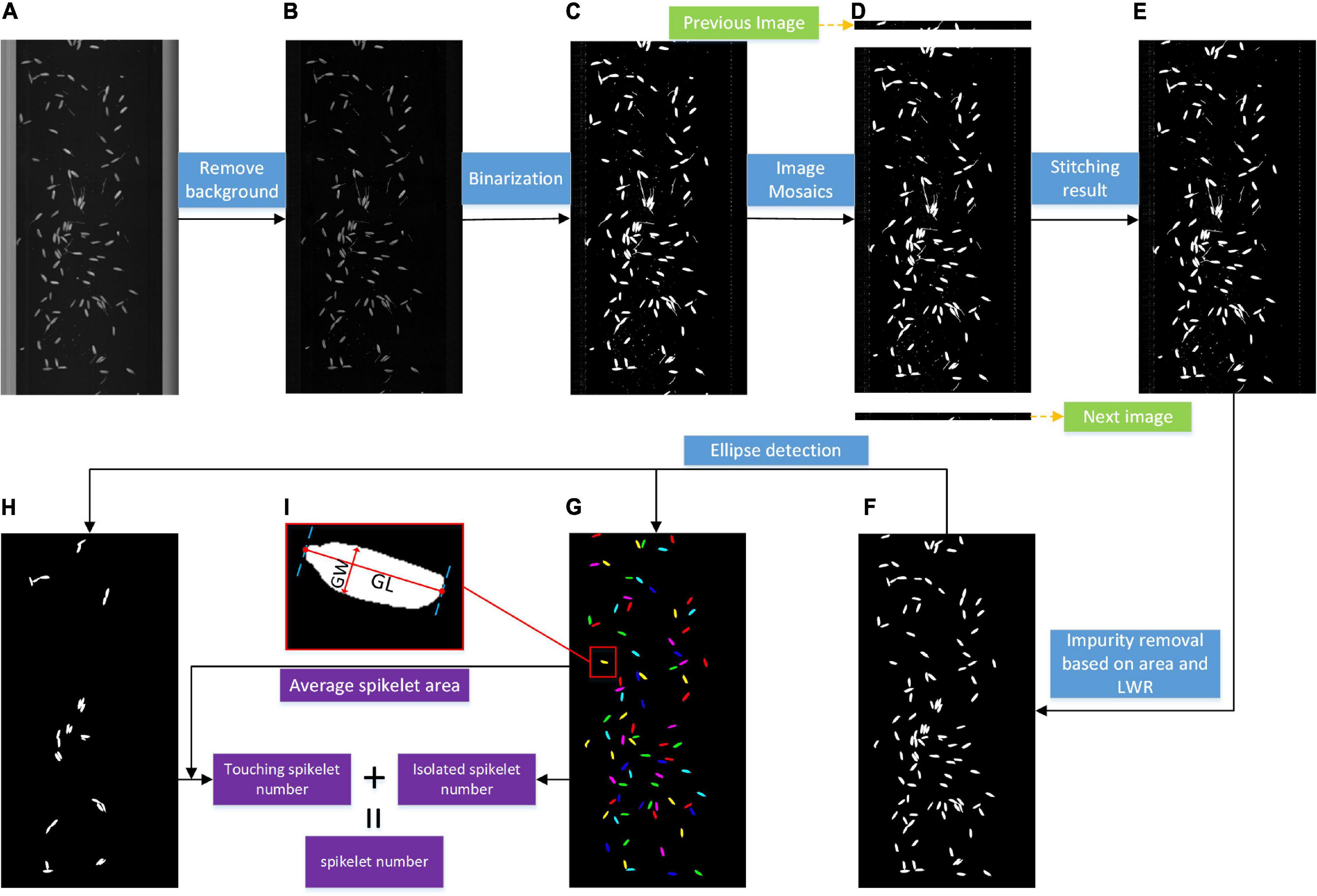

Pipeline for the Grain Image Analysis

The grain image analysis pipeline is presented in Figure 4. First, background subtraction was conducted to enhance the foreground contrast shown in Figure 4B. Second, a fixed threshold was applied to obtain the binary image shown in Figure 4C. Because of continuous linear scanning, the grain was probably distributed on two adjacent images; therefore, sequence image stitching was implemented based on the bottom connected region shown in Figure 4D, and a binary image with complete grains (Figure 4E) was obtained. Then, the impurity removal algorithm was carried out based on the optimal thresholds of the area and length width ratio (LWR), as shown in Figure 4F, which was determined by the distribution of the grain size and a large number of experiments. Next, ellipse detection was used to obtain the isolated grain image (Figure 4G) and touching grain image (Figure 4H), and the range of minor and major axis lengths was set according to grain size; the minimum match score was set to 800, while 1,000 represented a perfect match.

Based on the above image processing, the number of connected regions in the isolated grain image was taken as the isolated spikelet number, while the areas of the connected regions in the touching grain image were divided by the average area of isolated grains, and the sum of the results was regarded as the touching spikelet number. The total number was calculated by adding the isolated spikelet number and touching the spikelet number. The filled spikelet number could also be calculated. Moreover, the grain shape parameters were extracted by the following contour computation method based on the filled isolated grain image. The Euclidean distance between the two farthest points of the contour is regarded as the grain length (GL), and the maximum perpendicular distance is regarded as the grain width (GW).

Pipeline for the Threshed Panicle Analysis

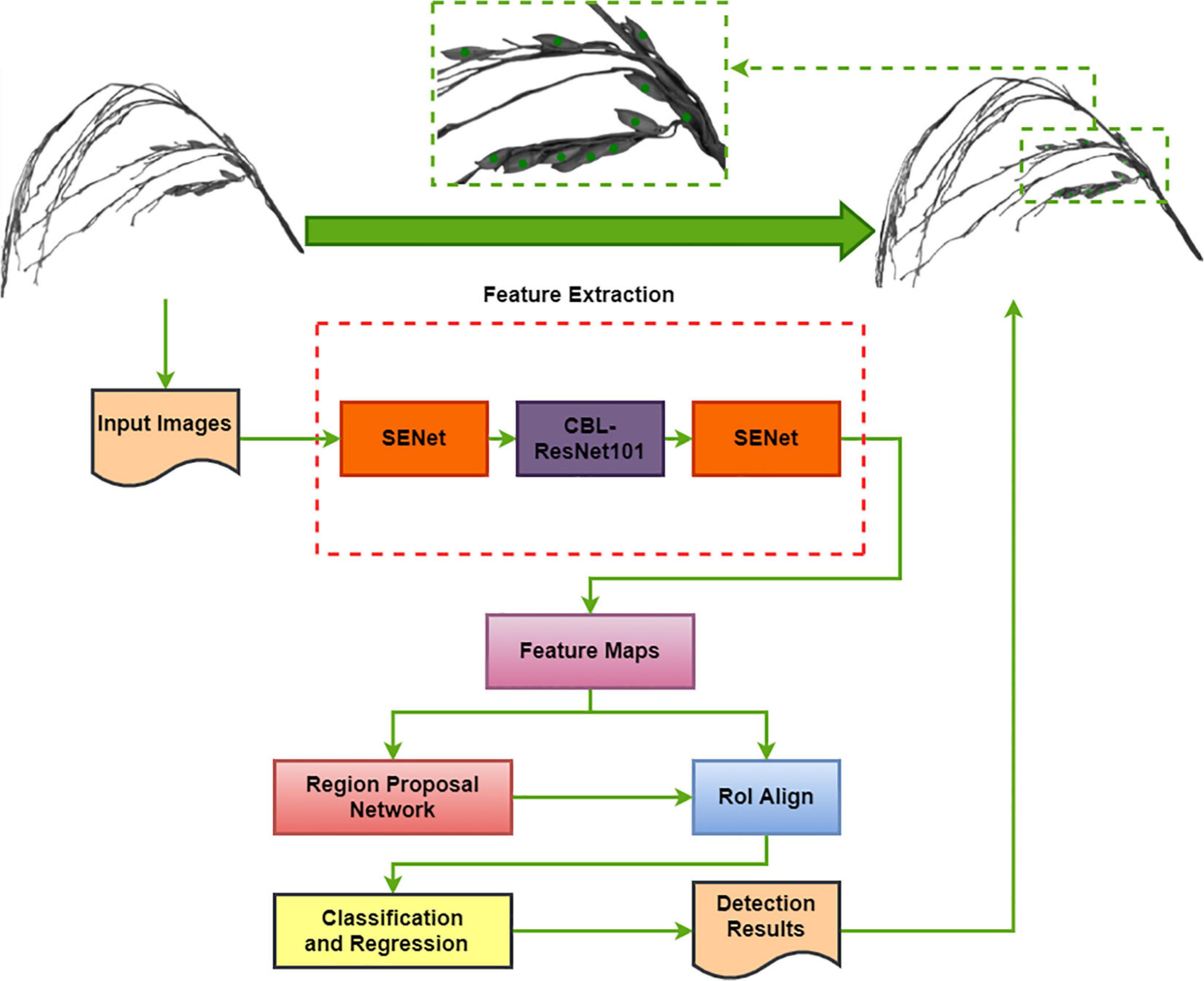

Since Faster-RCNN is a classical two-stage object detector that formulates detection as a coarse-to-fine process, the deep learning architecture for threshed panicle analysis was proposed on the basis of Faster-RCNN, termed ‘TPanicle-RCNN,’ while two feature extraction networks, VGG (Simonyan and Zisserman, 2014) and RESNET (He et al., 2016), were studied. To construct the model, 1,072 rice panicles, including 536 indica and 536 japonica panicles, were threshed by the threshing unit, a total of 1,072 threshed panicle images were captured and manually labeled with Labelimg (LabelImg.), and all the ground-truth bounding boxes and annotation files saved in the PASCAL VOC data format (Krizhevsky et al., 2012). Then the dataset was divided into a training set and a test set at a ratio of 4:1, with half indica and half japonica in each data set. Then, the datasets were augmented four times by image flipping and brightness adjustment, while 3,432 training images and 856 testing images were obtained. All the training and testing data are available at https://pan.baidu.com/s/1-XawHGseIc5bboVOP48Fkw?pwd=153w with the extraction code ‘153w’ for non-commercial research purposes.

Model Improvement

The Block diagram of the TPanicle-RCNN model is depicted in Figure 5, while the red rectangles indicate the model improvements compared with the original Faster R-CNN architecture. The RoIPool operation had adopted two quantization processes, which would result in a deviation of the ultimate box, while the location error would put a great negative effect on the threshed panicle detection, especially when the grains were close or slightly overlapped. To improve it, the Region of Interest Align (RoIAlign) designed in Mask R-CNN architecture, was applied to calculate the exact values of the candidate-box coordinates in this method. The original Faster R-CNN architecture used nine anchors to detect regional proposals consisting of three scales 1282, 2562, and 5122, and three aspect ratios, 1:1, 1:2, and 2:1, which were not suitable for the small object detection. Therefore the scale vectors were optimized to 322, 642, and 1282 based on the sizes of the grain. The integration of Convolution, Batch normalization, and Leaky Relu (CBL) was used to replace the traditional convolution and activation in the Res101 network (Wu and He, 2018), which was helpful to speed up the training efficiency and improve the accuracy. Moreover, the squeeze-and-excitation unit had been embedded into the head and tail of the feature extraction net Res101[48], and the channel attention layer had merged global average pooling and maximum pooling, which was able to enhance the effective information and improve model accuracy. In conclusion, the RoIAlign, CBL module, Squeeze-and-Excitation unit, and optimal anchor size had been adopted in the TPanicle-RCNN model.

Figure 5. Block diagram of the TPanicle-RCNN model. The dotted red rectangles indicate the model improvements.

Model Training

Pytorch 1.7.1 was adopted as a deep learning framework, and python 3.6 was applied. Since the training dataset was small, the fine-tuning training technique had been applied. The Faster-RCNN and TPanicle-RCNN models were initialized with pre-trained weights obtained by training the ImageNet dataset (Ioffe and Szegedy, 2015). And then the models were trained on the 3,432 training set to further optimize the pre-trained net. During the fine-tuning stage, the batch-size was set to four to achieve the maximum utilization of graphic processing unit GPU memory. The intersection-over-union (IoU) was set to 0.5, which means that when its IoU was ≥ 0.5, the predicted bounding box was regarded as positive. The number of region proposals was set to 600, the shortest side of the image was set to 1,000 pixels, and the longest side of the image was set to 2,000 pixels, in accordance with grain number and image size. Other parameters were configured by the default settings of the Faster R-CNN network. Finally, the optimal model was deployed on Alibaba cloud for threshed panicle analysis, and the spikelet number was returned as the total spikelet compensation.

System Evaluation

To evaluate the threshed panicle model performance, 856 testing images were tested, and the indicators of precision, recall, PR curve, F1 values, and average precision (AP) were used, the calculations of which are shown in Equations 1–4. TP represents the true positive targets, where the targets were correctly identified, FP represents the false positive targets, where the background was identified as the target, and FN represents the false negative targets, where the targets were missed.

To evaluate the system accuracy, 504 randomly selected panicle samples were tested, and the results of the system measurement were compared with manual measurements, including the threshed panicle spikelet number (TPSN), unfilled spikelet number, and filled spikelet number, and the mean value of three manual measurements was taken as the ground truth. Additionally, the indicators of R square, the mean absolute percentage error (MAPE), and the root mean square error (RMSE) were computed using Equations 5–7 to evaluate the system performance. Additionally, 200 randomly selected panicle samples were tested to evaluate the system efficiency.

where n is the total number of measurements; Xmi is the manual measurement results; Xai is the system measurement results; and is the mean of the manual measurements.

Results

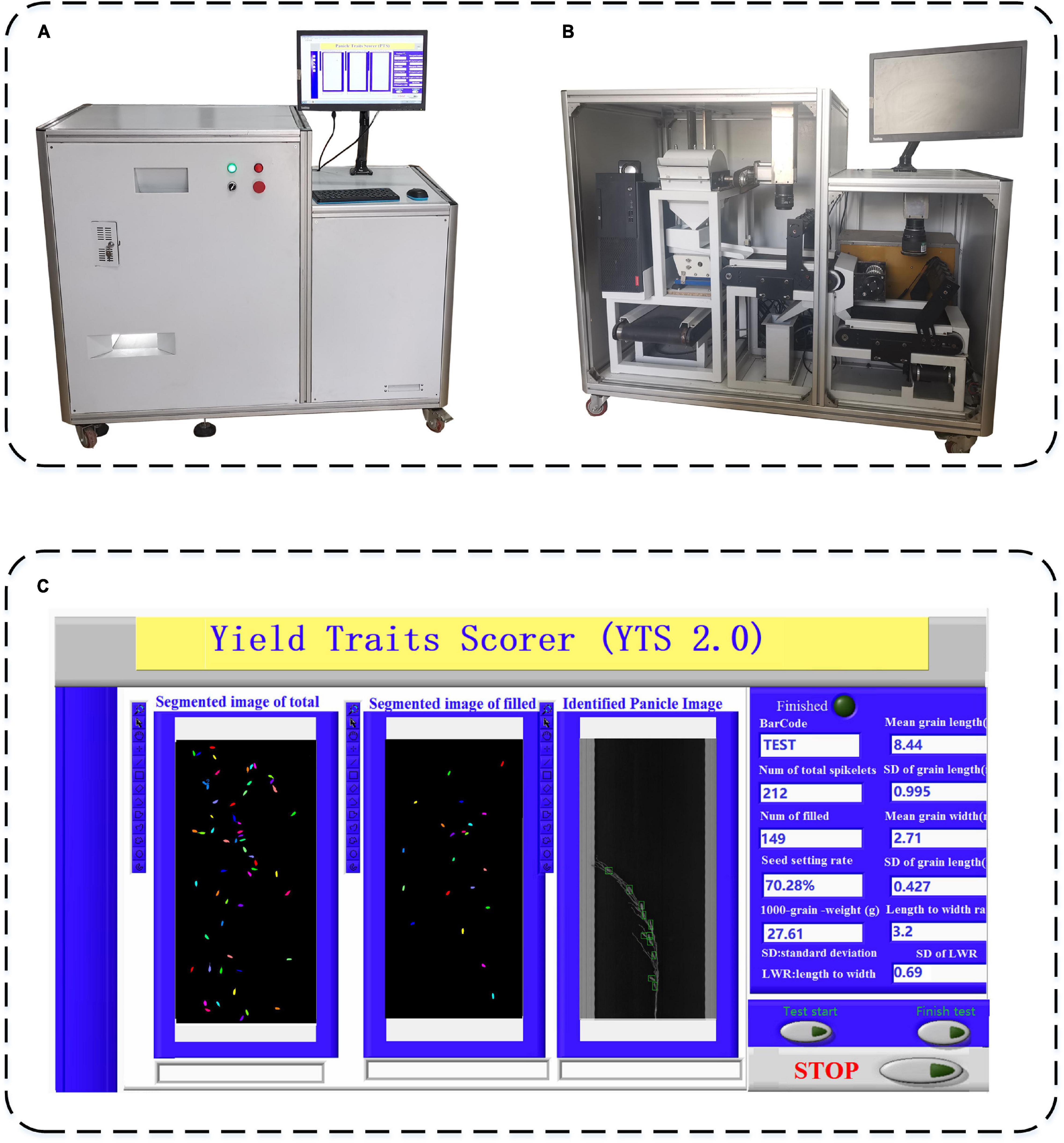

The prototype of the automatic rice yield-related trait evaluation system is shown in Figure 6. The system hardware composition is shown in Figure 6A, and the details of the system’s internal structure are depicted in Figure 6B, while the system software interface is exhibited in Figure 6C. From the results, the specific functions of system hardware and software were realized, and images of threshed panicles, total spikelet’s, and filled spikelet’s were analyzed for rice yield-related traits, including the total spikelet number, seed setting rate, grain shape, and grain weight. To demonstrate the system, the performance with different object detection models and rice varieties were studied, and furthermore, the accuracy and efficiency of the system were evaluated in detail.

Figure 6. The prototype of an automatic rice yield-related trait evaluation system, (A) system hardware composition, (B) system internal structure, and (C) system software interface.

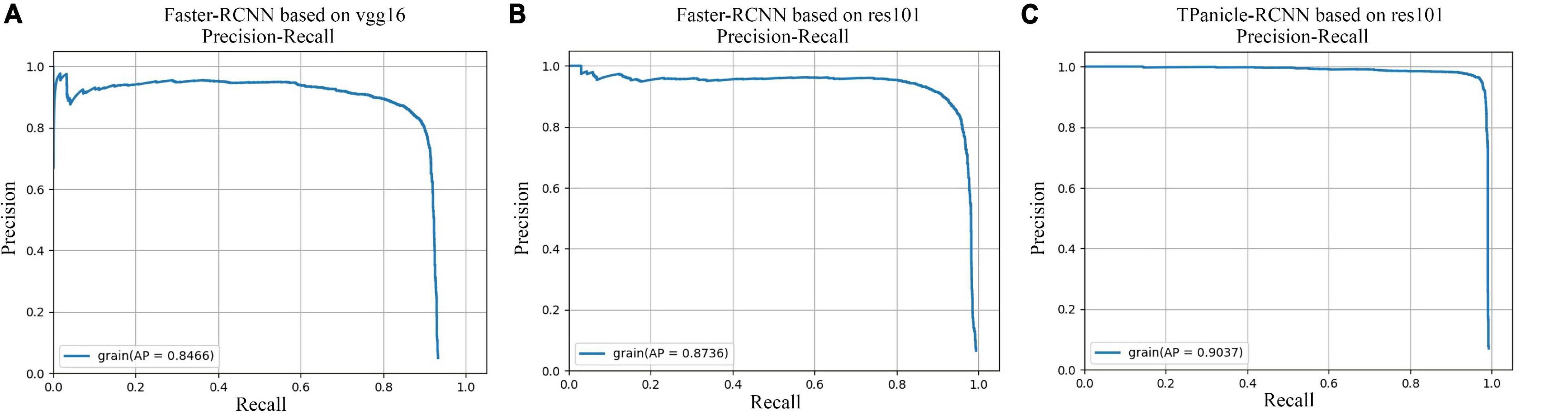

Evaluation of Models on the Testing Dataset

The models were evaluated by the 856 testing dataset, while comparisons of precision–recall curves of Faster R-CNN and TPanicle-RCNN models are shown in Figure 7, which showed that the average precision (AP) with the IoU ≥ 0.5 was 0.836, 0.873, 0.903 for the Faster-RCNN based on vgg16 (Figure 7A), resl01 (Figure 7B), and TPanicle-RCNN based on ResNet101 (Figure 7C), respectively. The results indicated that the res101 network had better performance than vgg16 in the feature extraction of small objects, therefore the Faster-RCNN improvement was based on ResNet101. Based on the advantages of RoIAlign, CBL module, Squeeze-and-Excitation unit, and optimal anchor size, the precision of TPanicle-RCNN was significantly greater than that of the original Faster R-CNN under the same recall conditions for threshed panicle, and the AP has reached 0.903, with an increase of 0.03. With the confidence threshold set as 0.5, the F1 value of improved Faster-RCNN was 0.929, an increase of 0.044.

Figure 7. Comparisons of precision–recall curves, (A) Faster-RCNN based on vgg16, (B) Faster-RCNN based on ResNet101, (C) TPanicle-RCNN based on ResNet101.

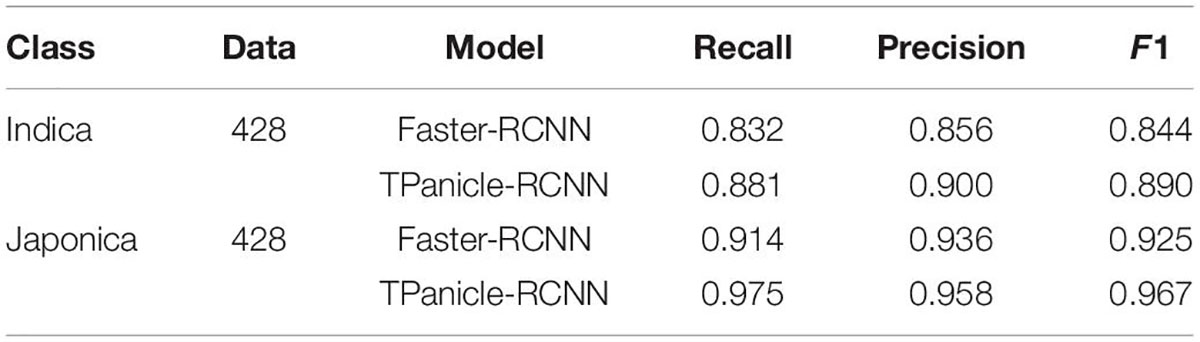

The Performance for Indica and Japonica

The threshed panicle identification performance for the indica and japonica varieties in the testing dataset was analyzed to evaluate the reliability and flexibility of the system, and the results are shown in Table 1 with 428 indica and 428 japonica samples. With the Faster-RCNN based on ResNet101, the recall, precision, and F1 scores were 0.832, 0.856, and 0.844 for indica varieties, while the recall, precision, and F1 scores were 0.914, 0.936, and 0.925 for japonica varieties, respectively. With the TPanicle-RCNN, the recall, precision, and F1 scores were 0.881, 0.900, and 0.891 for indica varieties, while the recall, precision, and F1 scores were 0.975, 0.958, and 0.967 for indica varieties, respectively. From the results, the TPanicle-RCNN yielded better performance than the original Faster-RCNN in general, the improvements in recall, and precision was 0.049, 0.044 for indica, and 0.061, 0.018 for japonica, which indicated that the TPanicle-RCNN was able to greatly improve the recall rate and recognition accuracy of the threshed panicle. Additionally, the models performed better on the japonica varieties than the indica varieties, because the spikelet number in the japonica threshed panicles was obviously less than that in the indica threshed panicles, which led to an increase in the recall rate, and in the results, the average gap between the F1 values was 0.076 for the improved Faster-RCNN.

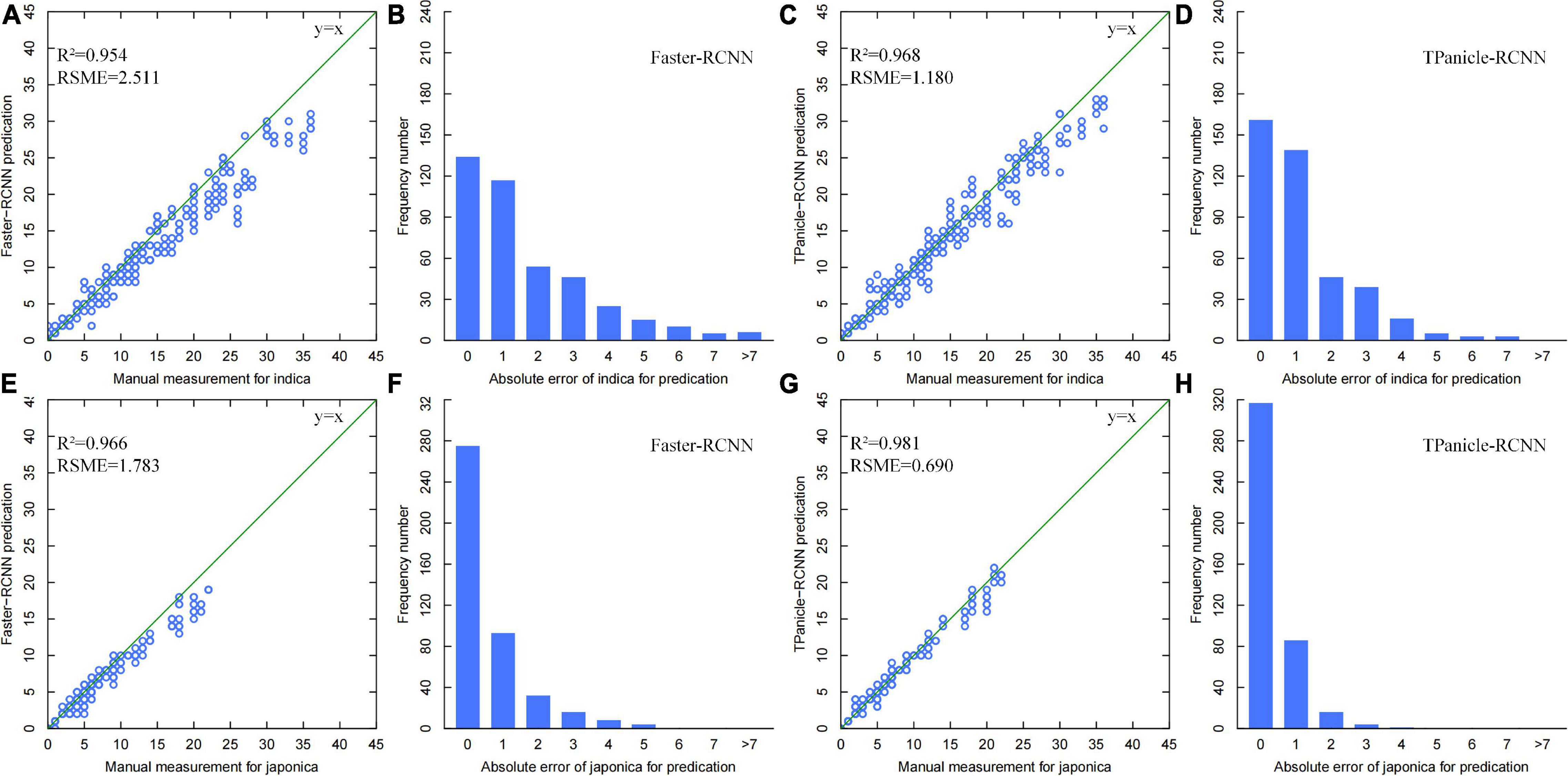

The threshed panicle spikelet number (TPSN) was measured manually and identified by the model inference, while the scatter plots of manual vs. model measurement and error distribution diagrams for the 428 indica and 428 japonica rice samples are shown in Figure 8, without positive and negative sample division based on IoU. From the results, the R2 and RMSE of the improved Faster-RCNN were 0.968 and 1.18, respectively, for the indica varieties, with an improvement of 0.014 and 53%. Meanwhile, the R2 and RMSE of the improved Faster-RCNN were 0.981 and 0.690, respectively, for the japonica varieties, with an improvement of 0.015 and 61.23%. The results demonstrated high consistency with manual measurements and a significant improvement compared with the original Faster-RCNN. The error distribution diagrams also indicated that the error distribution was normal in general, and 80% of identification errors constituted fewer than 2 spikelet’s. On the basis of these results, the error distribution of indica varieties was more discrete than that of japonica, and the improved Faster-RCNN has greatly decreased the error, which was preferred and used in the system.

Figure 8. (A,B) Threshed panicle spikelet number scatter plots of manual versus model measurement and error distribution diagrams for indica rice samples with Faster-RCNN measurement (Brichet et al., 2017), TPanicle-RCNN measurement (C,D), and japonica rice samples with Faster-RCNN measurement (E,F), TPanicle-RCNN measurement (G,H).

Accuracy of the Automatic Rice Yield-Related Trait Evaluation System

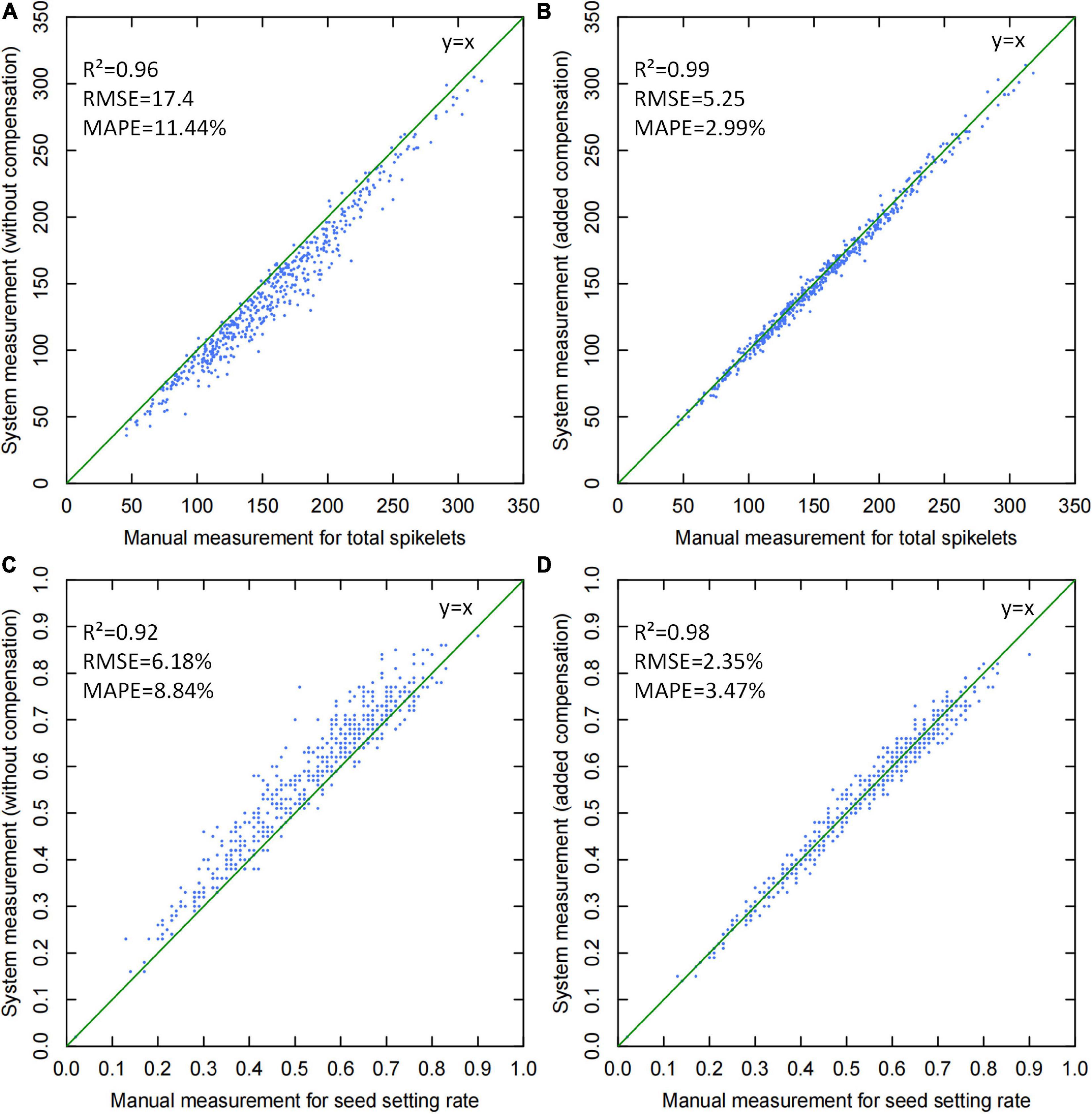

To test the system accuracy, 504 randomly selected panicle samples were measured, and the scatter plots of manual vs. system measurements are shown in Figure 9. From the results, yield-related traits, including total spikelet number and seed setting rate, were mainly analyzed to evaluate the effect of threshed panicle compensation. If threshed panicle compensation was not conducted, the R2 and MAPE of the total spikelet measurement were 0.96 and 11.44%, respectively, which were improved to 0.99 and 2.99% by threshed panicle compensation. Additionally, the R2 and MAPE of the seed setting rate measurement were 0.92 and 8.84%, respectively, which were improved to 0.98 and 3.47% by threshed panicle compensation. In conclusion, the accuracy of the entire system was significantly improved by threshed panicle compensation, enhancing the reliability of the automatic rice yield-related trait evaluation system.

Figure 9. Scatter plots of manual versus system measurements for yield-related trait evaluation: (A) total spikelet measurement without threshed panicle compensation, (B) total spikelet measurement with threshed panicle compensation, (C) seed setting rate measurement without threshed panicle compensation, and (D) seed setting rate measurement with threshed panicle compensation.

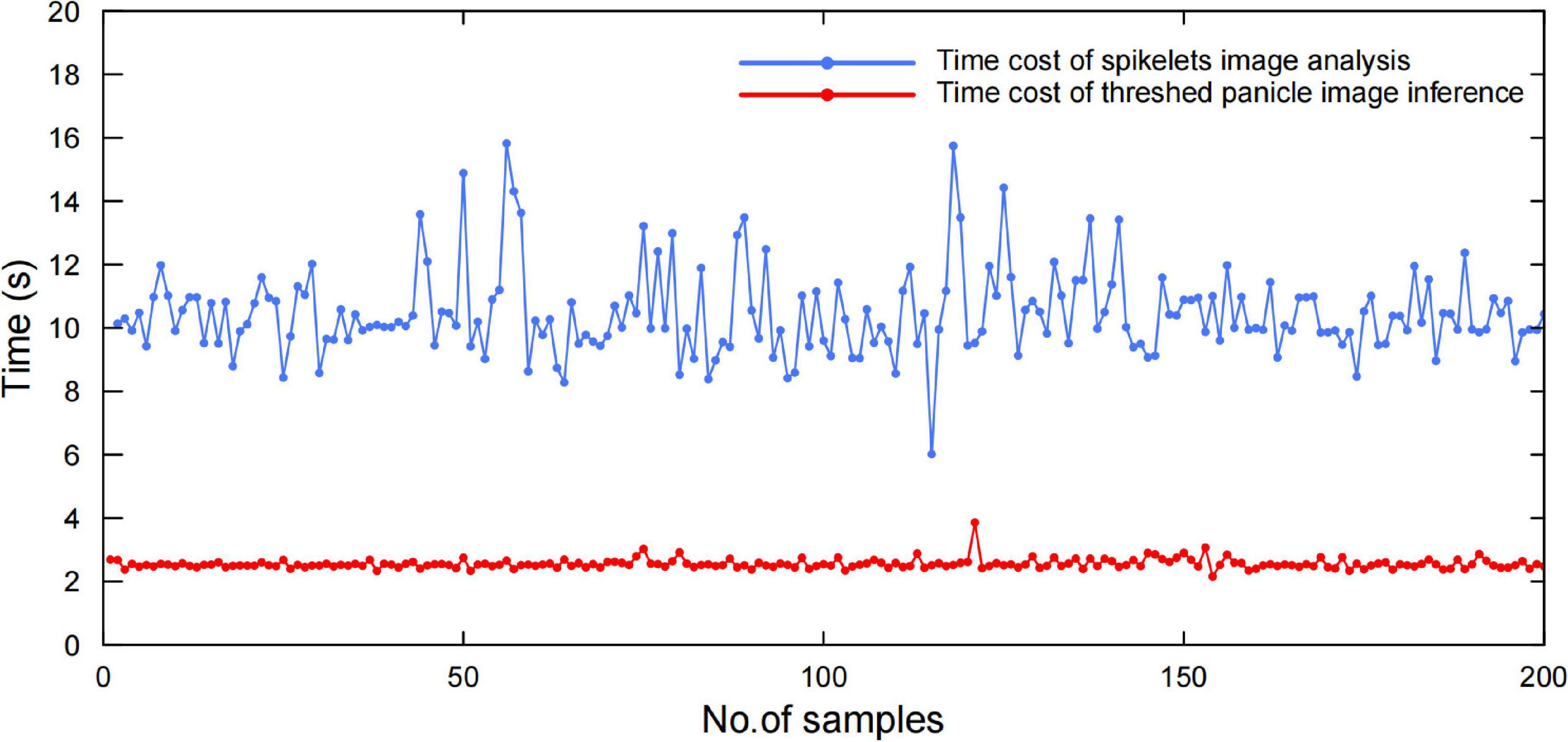

System Efficiency Evaluation

To evaluate the system efficiency, 200 randomly selected panicle samples were tested, while the time costs of panicle threshing, image acquisition, and image analysis were recorded individually. The results proved that the average efficiency was approximately 40 s, in which the time costs of panicle threshing, panicle image acquisition, and image analysis were approximately 25, 5, and 10 s, respectively, as shown in Figure 10. Additionally, the spikelet image analysis was performed in parallel with the panicle analysis, in which the time cost was approximately 4 s. Therefore, the whole system efficiency was approximately 40 sper panicle, which was approximately 20 times more efficient than manual measurement.

Discussion

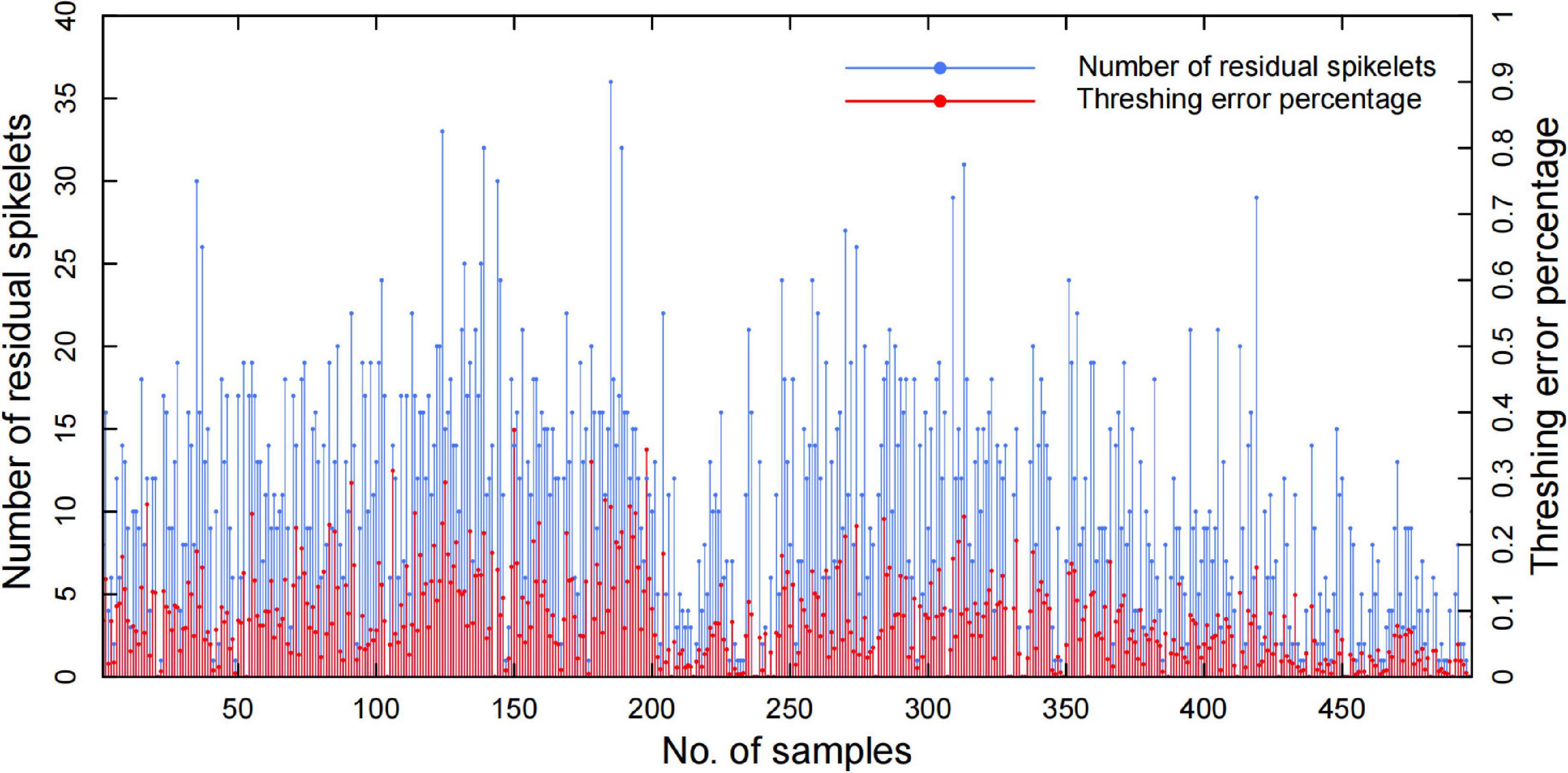

The results demonstrated that the system measurement accuracy mainly depended on the threshed panicle compensation, which had a high correlation with the threshing effect. To evaluate the threshing effect, the spikelet’s in the threshed panicle, unfilled spikelet outlet, and filled spikelet outlet were counted manually to obtain the threshing error percentage, and the results of 504 panicle samples are shown in Figure 11. The threshing results demonstrated that the filled spikelet’s were able to be threshed well but a few unfilled spikelet’s remained in the panicle, and the average spikelet number in the threshed panicle was 10.48, which led to a 9.37% average threshing error percentage. Additionally, the results showed great fluctuation in the threshing performance due to the panicle type and threshing time. In general, the threshing error contributed 81.9% of the total spikelet measurement error, while the threshed panicle compensation decreased the threshing error by 90.18%, which was of great significance to automatic yield-related trait evaluation.

Figure 11. Automatic threshing performance of the residual spikelet number and threshing error percentage.

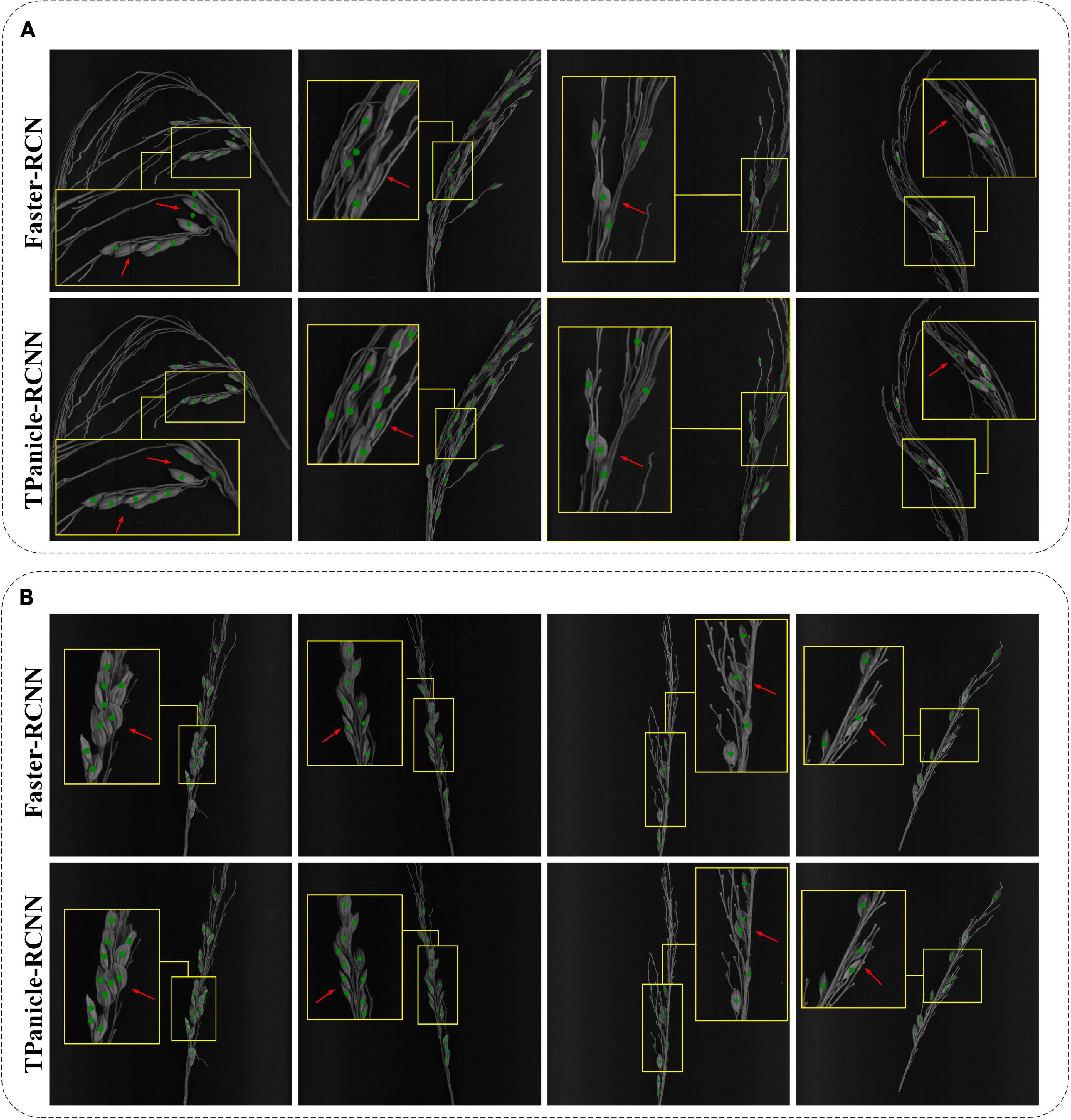

Compared with the original Faster-RCNN architecture, it was demonstrated that the TPanicle-RCNN had significantly increased the performance, and the detailed prediction cases are shown in Figure 12, including indica varieties (Figure 12A) and japonica varieties (Figure 12B). The results proved that the CBL module, Squeeze-and-Excitation unit, and optimal anchor size were able to help extract more effective features, so as to improve the model accuracy, while the RoIAlign was able to significantly improve the regression accuracy of the target box. Therefore the TPanicle-RCNN had performed a higher recall rate and grain position accuracy, which was indicated by the red arrow in Figure 12. Regarding the performance of the japonica and indica varieties, the results showed that the spikelet’s in indica threshed panicles were dense, and the threshed panicle structure was more complex, while the japonica panicles were easier to thresh. Therefore, the recall rate and precision value of indica varieties were lower than that of japonica varieties. Overall, the TPanicle-RCNN had a better performance of adaptability and reliability, regardless of panicle varieties and density.

Figure 12. Threshed panicle identification of the indica (A) and japonica (B) subspecies for the Faster-RCNN model and TPanicle-RCNN model. The red arrow indicates the comparison of the identification results by different models.

According to the time cost of each step, the system efficiency was mainly decided by the threshing time, and the share of threshed panicle image inference was approximately one in eight, which indicated that the cloud computing mode did not distinctly decrease the system efficiency. In contrast, cloud computing dramatically reduced the system cost and improved the system flexibility. Thus, this study demonstrated a novel automatic system for rice yield-related trait evaluation with high accuracy and efficiency, which was of great significance to rice breeding and genetic research.

In the past studies on rice yield traits evaluation, it was difficult to balance the accuracy, automation, and practicality. For example, using X-ray computed tomography to analyze rice panicle traits could reach an R2 of 0.98 for grain number (Hu et al., 2020). However, this method required 2 min to scan and reconstruct each panicle, while the cost and radiation risk limited the practical application. We have also tried to design threshing equipment that could improve the threshing performance, and the threshing error is about 5%. However, the complicated mechanical structure and threshing error limited its popularization (Huang et al., 2013). Therefore, the existing research and equipment are still unable to meet the needs of the practical rice yield traits evaluation with high accuracy and efficiency. In this research, we have demonstrated an intelligent method that could solve the threshing problem by threshed panicle compensation, and provide an efficient and reliable tool for rice breeding and genetic research.

Conclusion

This study developed a novel automatic system, for rice yield-related trait evaluation based on the technologies of automatic control, machine vision, and deep learning, in which the threshing problem has been skillfully solved by threshed panicle compensation. Moreover, a new deep learning architecture for threshed panicle analysis was proposed on the basis of Faster-RCNN, termed ‘TPanicle-RCNN’ and deployed in the cloud, which increased automation and improved measurement accuracy. The TPanicle-RCNN was improved by integration of the RoIAlign, CBL module, Squeeze-and-Excitation unit, and optimal anchor size, while various datasets were used to evaluate the threshed panicle identification model. The results indicated that the TPanicle-RCNN showed good performance on both japonica and indica varieties, while the F1 score was 0.929 with an increase of 0.044. To evaluate the system accuracy, 504 panicle samples were tested, and the total spikelet measurement error decreased from 11.44 to 2.99% with threshed panicle compensation. The results also proved that the system measurement was approximately 20 times more efficient than manual measurement and that cloud computing dramatically reduced the system cost and improved the system flexibility. In conclusion, this study provides a novel and powerful tool for phenotyping yield-related traits that will benefit rice breeding and genetic research in the future.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

CH and WL designed the research, performed the experiments, analyzed the data, and wrote the manuscript. ZZ, XH, JYe, JYa, LD, and XL contributed to the experiments. WY supervised the project and wrote the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by grants from the National Natural Science Foundation of China (U21A20205 and 31600287), key projects of Natural Science Foundation of Hubei Province (2021CFA059), major science and technology projects in Hubei Province, Fundamental Research Funds for the Central Universities (2662018JC004 and 2021ZKPY006), and HZAU-AGIS Cooperation Fund (SZYJY2022014).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2022.900408/full#supplementary-material

Supplementary Video 1 | The system workflow and operation procedures of YTS-2.0.

References

Al-Tam, F., Adam, H., Dos Anjos, A., Lorieux, M., Larmande, P., Ghesquiere, A., et al. (2013). P-TRAP: a Panicle Trait Phenotyping tool. BMC Plant Biol. 13:122. doi: 10.1186/1471-2229-13-122

Borrell, A. K., Mullet, J. E., George-Jaeggli, B., Van Oosterom, E. J., Hammer, G. L., Klein, P. E., et al. (2014). Drought adaptation of stay-green sorghum is associated with canopy development, leaf anatomy, root growth, and water uptake. J. Exp. Bot. 65, 6251–6263. doi: 10.1093/jxb/eru232

Brichet, N., Fournier, C., Turc, O., Strauss, O., Artzet, S., Pradal, C., et al. (2017). A robot-assisted imaging pipeline for tracking the growths of maize ear and silks in a high-throughput phenotyping platform. Plant Methods 13:96. doi: 10.1186/s13007-017-0246-7

Cai, Z., and Vasconcelos, N. IEEE (2018). Cascade R-CNN: Delving into High Quality Object Detection. Salt Lake City, UT: 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 6154–6162. doi: 10.1109/CVPR.2018.00644

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., and Yuille, A. L. (2018). DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Machine Intell. 40, 834–848. doi: 10.1109/TPAMI.2017.2699184

Confalonieri, R., Paleari, L., Foi, M., Movedi, E., Vesely, F. M., Thoelke, W., et al. (2017). PocketPlant3D: analysing canopy structure using a smartphone. Biosyst. Eng. 164, 1–12. doi: 10.1016/j.biosystemseng.2017.09.014

Deng, R., Tao, M., Huang, X., Bangura, K., Jiang, Q., Jiang, Y., et al. (2021). Automated Counting Grains on the Rice Panicle Based on Deep Learning Method. Sensors 21:281. doi: 10.3390/s21010281

Dhaka, V. S., Meena, S. V., Rani, G., Sinwar, D., Kavita., Ijaz, M. F., et al. (2021). A Survey of Deep Convolutional Neural Networks Applied for Prediction of Plant Leaf Diseases. Sensors 21:4749. doi: 10.3390/s21144749

Duan, L., Yang, W., Huang, C., and Liu, Q. (2011). A novel machine-vision-based facility for the automatic evaluation of yield-related traits in rice. Plant Methods 7:44. doi: 10.1186/1746-4811-7-44

Escamilla-Garcia, A., Soto-Zarazua, G. M., Toledano-Ayala, M., Rivas-Araiza, E., and Gastelum-Barrios, A. (2020). Applications of Artificial Neural Networks in Greenhouse Technology and Overview for Smart Agriculture Development. Appl. Sci. Basel 10:3835. doi: 10.3390/app10113835

He, K., Zhang, X., Ren, S., Sun, J., and IEEE (2016). Deep Residual Learning for Image Recognition. Las Vegas, NV: 2016 IEEE Conference on Computer Vision and Pattern Recognition. doi: 10.1109/CVPR.2016.90

He, K. M., Gkioxari, G., Dollar, P., Girshick, R., and IEEE (2017). Mask R-CNN. Venice: 16th IEEE International Conference on Computer Vision (ICCV), 2980–2988. doi: 10.1109/ICCV.2017.322

He, Y., Zhou, Z., Tian, L., Liu, Y., and Luo, X. (2020). Brown rice planthopper (Nilaparvata lugens Stal) detection based on deep learning. Precision Agricult. 21, 1385–1402. doi: 10.1007/s11119-020-09726-2

Hochreiter, S., and Schmidhuber, J. (1997). Long short-term memory. Neural Comput. 9, 1735–1780. doi: 10.1162/neco.1997.9.8.1735

Hu, W., Zhang, C., Jiang, Y., Huang, C., Liu, Q., Xiong, L., et al. (2020). Nondestructive 3D Image Analysis Pipeline to Extract Rice Grain Traits Using X-Ray Computed Tomography. Plant Phenom. 2020, 3414926–3414926. doi: 10.34133/2020/3414926

Huang, C., Duan, L., Liu, Q., and Yang, W. (2013). Development of a whole-feeding and automatic rice thresher for single plant. Mathe. Comp. Modell. 58, 684–690. doi: 10.1016/j.mcm.2011.10.033

Igathinathane, C., Pordesimo, L. O., and Batchelor, W. D. (2009). Major orthogonal dimensions measurement of food grains by machine vision using ImageJ. Food Res. Int. 42, 76–84. doi: 10.1016/j.foodres.2008.08.013

Ioffe, S., and Szegedy, C. (2015). Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. Lille: 32nd International Conference on Machine Learning, 448–456.

Kattenborn, T., Leitloff, J., Schiefer, F., and Hinz, S. (2021). Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogram. Rem. Sens. 173, 24–49. doi: 10.1016/j.isprsjprs.2020.12.010

Krizhevsky, A., Sutskever, I., and Hinton, G. (2012). ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inform. Process. Syst. 25, 1097–1105.

Li, W., Wang, D., Li, M., Gao, Y., Wu, J., and Yang, X. (2021). Field detection of tiny pests from sticky trap images using deep learning in agricultural greenhouse. Comp. Electron. Agric. 183:106048. doi: 10.1016/j.compag.2021.106048

Lin, T.-Y., Goyal, P., Girshick, R., He, K., and Dollar, P. (2020). Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Machine Intell. 42, 318–327. doi: 10.1109/TPAMI.2018.2858826

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.-Y., et al. (2016). SSD: Single Shot MultiBox Detector. Amsterdam: 14th European Conference on Computer Vision (ECCV), 21–37. doi: 10.1007/978-3-319-46448-0_2

Qian, Q., Guo, L., Smith, S. M., and Li, J. (2016). Breeding high-yield superior quality hybrid super rice by rational design. Natl. Sci. Rev. 3, 283–294. doi: 10.1038/nplants.2017.31

Rebolledo, M. C., Pena, A. L., Duitama, J., Cruz, D. F., Dingkuhn, M., Grenier, C., et al. (2016). Combining Image Analysis, Genome Wide Association Studies and Different Field Trials to Reveal Stable Genetic Regions Related to Panicle Architecture and the Number of Spikelets per Panicle in Rice. Front. Plant Sci. 7:1384. doi: 10.3389/fpls.2016.01384

Redmon, J., Divvala, S., Girshick, R., and Farhadi, A. Ieee. (2016). You Only Look Once: Unified, Real-Time Object Detection. Seattle, WA: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 779–788. doi: 10.1109/CVPR.2016.91

Ren, S., He, K., Girshick, R., and Sun, J. (2015). “Faster R-CNN: towards real-time object detection with region proposal networks,” in Proceedings of the 29th Annual Conference on Neural Information Processing Systems (NIPS), Dec 07-12, 2015, Montreal, QC. doi: 10.1109/TPAMI.2016.2577031

Richards, R. A. (2000). Selectable traits to increase crop photosynthesis and yield of grain crops. J. Exp. Bot. 51, 447–458. doi: 10.1093/jexbot/51.suppl_1.447

Sa, I., Ge, Z., Dayoub, F., Upcroft, B., Perez, T., and Mccool, C. (2016). DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors 16:1222. doi: 10.3390/s16081222

Sakamoto, T., and Matsuoka, M. (2008). Identifying and exploiting grain yield genes in rice. Curr. Opin. Plant Biol. 11, 209–214. doi: 10.1016/j.pbi.2008.01.009

Sandhu, J., Zhu, F., Paul, P., Gao, T., Dhatt, B. K., Ge, Y., et al. (2019). PI-Plat: a high-resolution image-based 3D reconstruction method to estimate growth dynamics of rice inflorescence traits. Plant Methods 15:162. doi: 10.1186/s13007-019-0545-2

Sankaran, S., Mishra, A., Ehsani, R., and Davis, C. (2010). A review of advanced techniques for detecting plant diseases. Comp. Electron. Agric. 72, 1–13.

Shen, Y., Zhou, H., Li, J., Jian, F., and Jayas, D. S. (2018). Detection of stored-grain insects using deep learning. Comp. Electron. Agric. 145, 319–325.

Simonyan, K., and Zisserman, A. (2015). “Very deep convolutional networks for large-scale image recognition,” in Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015) May 7, 2015 - May 9, 2015, San Diego, CA.

Sun, J., Di, L., Sun, Z., Shen, Y., and Lai, Z. (2019). County-Level Soybean Yield Prediction Using Deep CNN-LSTM Model. Sensors 19:4363. doi: 10.3390/s19204363

Tanabata, T., Shibaya, T., Hori, K., Ebana, K., and Yano, M. (2012). SmartGrain: High-Throughput Phenotyping Software for Measuring Seed Shape through Image Analysis. Plant Physiol. 160, 1871–1880. doi: 10.1104/pp.112.205120

Tester, M., and Langridge, P. (2010). Breeding Technologies to Increase Crop Production in a Changing World. Science 327, 818–822. doi: 10.1126/science.1183700

Tong, K., Wu, Y., and Zhou, F. (2020). Recent advances in small object detection based on deep learning: A review. Image Vision Comp. 97:103910.

van Klompenburg, T., Kassahun, A., and Catal, C. (2020). Crop yield prediction using machine learning: A systematic literature review. Comp. Electron. Agric. 177:105709.

Wu, W., Liu, T., Zhou, P., Yang, T., Li, C., Zhong, X., et al. (2019). Image analysis-based recognition and quantification of grain number per panicle in rice. Plant Methods 15:122. doi: 10.1186/s13007-019-0510-0

Wu, Y. X., and He, K. M. (2018). Group Normalization. Munich: 15th European Conference on Computer Vision (ECCV), 3–19. doi: 10.1007/978-3-030-01261-8_1

Xing, Y., and Zhang, Q. (2010). Genetic and Molecular Bases of Rice Yield. Ann. Rev. Plant Biol. 61, 421–442. doi: 10.1146/annurev-arplant-042809-112209

Yang, W., Feng, H., Zhang, X., Zhang, J., Doonan, J. H., Batchelor, W. D., et al. (2020). Crop Phenomics and High-Throughput Phenotyping: Past Decades, Current Challenges, and Future Perspectives. Mol. Plant 13, 187–214. doi: 10.1016/j.molp.2020.01.008

Yang, W., Guo, Z., Huang, C., Duan, L., Chen, G., Jiang, N., et al. (2014). Combining high-throughput phenotyping and genome-wide association studies to unlock the genetic architecture underlying the natural variation of rice. Nat. Commun. 5:5087. doi: 10.1038/ncomms6087

Yu, L., Shi, J., Huang, C., Duan, L., Wu, D., Fu, D., et al. (2021). An integrated rice panicle phenotyping method based on X-ray and RGB scanning and deep learning. Crop J. 9, 42–56. doi: 10.1016/j.cj.2020.06.009

Zhang, Q. (2007). Strategies for developing green super rice. Proc. Natl. Acad. Sci. U. S. A. 104, 16402–16409. doi: 10.1073/pnas.0708013104

Keywords: rice panicle, yield traits, high-throughput, Faster-RCNN, cloud computation

Citation: Huang C, Li W, Zhang Z, Hua X, Yang J, Ye J, Duan L, Liang X and Yang W (2022) An Intelligent Rice Yield Trait Evaluation System Based on Threshed Panicle Compensation. Front. Plant Sci. 13:900408. doi: 10.3389/fpls.2022.900408

Received: 20 March 2022; Accepted: 22 June 2022;

Published: 22 July 2022.

Edited by:

Xiuliang Jin, Key Laboratory of Crop Physiology and Ecology, Institute of Crop Sciences (CAAS), ChinaReviewed by:

Md Nashir Uddin, The University of Tokyo, JapanKang Yu, Technical University of Munich, Germany

Copyright © 2022 Huang, Li, Zhang, Hua, Yang, Ye, Duan, Liang and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wanneng Yang, eXduQG1haWwuaHphdS5lZHUuY24=

†These authors have contributed equally to this work

Chenglong Huang

Chenglong Huang Weikun Li

Weikun Li Zhongfu Zhang

Zhongfu Zhang Junya Yang

Junya Yang Lingfeng Duan

Lingfeng Duan Xiuying Liang

Xiuying Liang Wanneng Yang

Wanneng Yang