- 1Department of Agricultural and Biological Engineering, University of Florida, Gainesville, FL, United States

- 2USDA/ARS Environmental Microbial and Food Safety Laboratory, Beltsville Agricultural Research Center, Beltsville, MD, United States

- 3Department of Horticultural Sciences, University of Florida, Fort Pierce, FL, United States

Identification and segregation of citrus fruit with diseases and peel blemishes are required to preserve market value. Previously developed machine vision approaches could only distinguish cankerous from non-cankerous citrus, whereas this research focused on detecting eight different peel conditions on citrus fruit using hyperspectral imaging (HSI) and an AI-based classification algorithm. The objectives of this paper were: (i) selecting the five most discriminating bands among 92 using PCA, (ii) training and testing a custom convolutional neural network (CNN) model for classification with the selected bands, and (iii) comparing the CNN's performance using the five PCA bands with its performance using five randomly selected bands. A hyperspectral imaging system from earlier work was used to acquire reflectance images in the spectral region from 450 to 930 nm (92 spectral bands). Ruby Red grapefruit with normal, cankerous, and mixed peel conditions, as well as five other common peel conditions (greasy spot, insect damage, melanose, scab, and wind scar), were tested. A novel CNN based on the VGG-16 architecture was developed for feature extraction, with a softmax classifier for classification. The PCA-based bands were found to be 666.15, 697.54, 702.77, 849.24 and 917.25 nm, which resulted in an average accuracy, sensitivity, and specificity of 99.84%, 99.84% and 99.98%, respectively. However, 10 trials of five randomly selected bands resulted in only slightly lower performance, with accuracy, sensitivity, and specificity of 98.87%, 98.43% and 99.88%, respectively. These results demonstrate that an AI-based algorithm can successfully classify eight different peel conditions. The findings reported herein can serve as a precursor to a machine vision-based, real-time peel condition classification system for citrus processing.

Introduction

Infections and other blemishes on the peels of citrus typically lower market value and, in certain locales, can prevent import or export. Citrus Canker and Citrus Black Spot (CBS) fall into the latter category, whereas lesser peel conditions such as melanose, greasy spot, wind damage and insect damage might only affect fruit price. Citrus Canker, included in this study, is a severe disease that has negatively impacted the Florida citrus industry for over two decades. It is usually characterized by conspicuous, erumpent lesions on leaves, stems, and fruit, and can cause defoliation, blemished fruit, premature fruit drop, twig dieback, and tree decline (Schubert et al., 2001). Considered one of the most devastating diseases that threaten fresh market citrus crops, canker at best reduces visual appeal to consumers, and at worst disqualifies entire shipments of fruit for export (Dewdney et al., 2022). Detection and removal of infected citrus at or before the packinghouse is essential to minimizing losses due to canker, but manual removal of infected fruit is inefficient and expensive. Automated identification of citrus peel conditions that not only detects more severe infections but also differentiates them from superficial blemishes would help assure fruit quality and safety, while also enhancing the competitiveness and profitability of the citrus industry.

Machine vision offers great potential for quality evaluation and safety inspection of food and agricultural products. Early work to inspect citrus for defects includes Sweet and Edward's method for assessing damage due to citrus blight disease using reflectance spectra of the entire tree (Sweet and Edward, 1986). Pydipati et al. (2006) utilized various textural features extracted with the color co-occurrence method (CCM) to identify diseased and normal citrus leaves using discriminant analysis. Blasco et al. (2007) showed that different spectral regions facilitate detection of different injuries and pathogens. Contemporary machine vision work on citrus canker inspection has harnessed hyperspectral imaging (HSI) and deep learning (DL) to improve and automate feature selection.

Instead of imaging with three broad wavelength bands (red, green, and blue) as typical color cameras do, hyperspectral imaging cameras produce dozens of images in narrow bands, which can accentuate defects. HSI can also be used to evaluate the entire surface of food products and crops (provided a rotating mechanism is present), as opposed to the spot measurements of traditional visible/near-infrared spectroscopy. Food defects detected with HSI include bruises on cucumbers (Ariana et al., 2006), cracks in shell eggs (Lawrence et al., 2008), diseased poultry carcasses (Qin et al., 2008), and degradation of spinach leaves (Diezma et al., 2013). Contaminants detected with HSI include traces of nuts in wheat flour (Zhao et al., 2018), foreign objects in cut vegetables (Cho, 2021), contaminants on meat (Gorji et al., 2022) and fecal and ingesta contaminants on poultry carcasses (Park et al., 2007). Fresh fruits inspected with HSI include mandarins (Zhang et al., 2020), nectarines (Huang et al., 2021), jujubes (Pham and Liou, 2022), citrus (Gómez-Sanchis et al., 2008; Qin et al., 2013; Kim et al., 2014), tart cherries (Qin and Lu, 2005), pears (Lee et al., 2014), and mangoes (Rivera et al., 2014).

Of the many DL algorithms, convolutional neural networks (CNNs) are widely used for object detection and image classification tasks (Guo et al., 2016; Liang et al., 2019; Krishnaswamy Rangarajan and Purushothaman, 2020). CNNs can outperform traditional machine vision methods, especially when processing time is a consideration (Fan et al., 2020). Well-known CNN architectures researched for fruit inspection include ResNet (Mohinani et al., 2022), VGG-16 (Sustika et al., 2018) and AlexNet (Sustika et al., 2018; Behera et al., 2021; Ismail and Malik, 2022). With adequate training data, as many as 26 diseases across 14 crop species have been classified with 99.35% accuracy (Mohanty et al., 2016). Applications of CNNs for object detection and image classification include disease classification in eggplant by Krishnaswamy Rangarajan and Purushothaman (2020), classification of tobacco leaf pests by Swasono et al. (2019), kiwifruit defect detection by Yao et al. (2021), volunteer cotton plant detection by Yadav et al. (2022a, 2022b, 2022c, 2022d), and identification of fecal contamination on meat carcasses by Gorji et al. (2022). For citrus specifically, Syed-Ab-Rahman et al. (2022) distinguished CBS, canker, and citrus greening disease, or Huanglongbing (HLB), on citrus leaves with a two-stage CNN, achieving detection accuracies in the mid-90% range for each of the three diseases. CNNs have recently been employed to detect, inspect, and track oranges on a rolling conveyor with 93.6% accuracy (Chen et al., 2021), to grade lemons with 100% accuracy (Jahanbakhshi et al., 2020), and to detect citrus fruit diseases (Dhiman et al., 2022). CNNs are often designed to balance speed with classification performance, as Fan et al. (2020) did when creating a custom architecture for an online apple sorting system.
The ability of CNNs to extract features automatically makes classification and detection tasks easier and more autonomous.

CNNs also complement HSI, because automatic feature extraction allows spectral features to be recognized (Zhao and Du, 2016) in the inherently large datasets that HSI produces. CNNs were paired with HSI to detect anthracnose in olives (Fazari et al., 2021) with 100% accuracy for images taken 5 days after inoculation. Liu et al. (2018) combined an autoencoder with a CNN to learn features, detecting cucumber defects quickly (14 ms per sample) and accurately (91.1% accuracy). VGG16 is one widely used CNN architecture; it is structurally simple and uses successive 3×3 convolutions to improve feature extraction (Yang et al., 2021). It has been used successfully for many image classification tasks, such as eggplant disease classification at 99.4% accuracy (Krishnaswamy Rangarajan and Purushothaman, 2020).

Citrus inspection has also employed HSI. Abdulridha et al. (2019) classified cankerous trees with 100% accuracy by imaging a citrus grove with an HSI camera mounted on a UAV. Kim et al. (2014) used spectral information divergence (SID) to segment CBS from other orange peel conditions in HSI with 97% accuracy. Accurate classification of diseased citrus has also been achieved with a small number of bands: Qin et al. (2011) used four, Lorente et al. (2013) used three plus the normalized difference vegetation index (NDVI), Bulanon et al. (2013) used two, and Jie et al. (2021) used three. However, all but the last of these studies extracted features without the aid of DL, and the focus of Kim et al. (2014) was CBS detection rather than classification of the other peel conditions.

The sheer size of HSI data presents a processing challenge, which can be circumvented by reducing the number of bands from which features are extracted while still retaining important spectral features. Spectral matching algorithms are one family of methods used to segment images (Qin et al., 2009) and reduce dimensionality, and van der Meer (2006) showed that SID outperforms other classical spectral matching algorithms.

Principal Component Analysis (PCA) is another popular means of reducing dimensionality, widely employed to extract and select features (Khalid et al., 2014; Jolliffe and Cadima, 2016). Su et al. (2014) used PCA as a standard by which to evaluate the performance of other methods of selecting hyperspectral bands for display. Rodarmel and Shan (2002) and Farrell and Mersereau (2005) reduced hyperspectral imagery to fewer principal components using PCA without negatively impacting performance. PCA can also be employed to rank bands by their contribution to the principal components, instead of projecting the data into a lower-dimensional space. Li et al. (2019) used PCA loadings to select bands from hyperspectral imagery to inspect apples for decay. Li et al. (2011) used PCA first to select six bands from HSI cubes of oranges, and afterwards to extract the third principal component image; eight types of defects were distinguished from healthy fruit with 94% accuracy. The advantage of using PCA is that the principal components are mutually uncorrelated and each successive component captures the maximum remaining variance, so little information is duplicated across them (Cocianu et al., 2009).

For commercial fruit inspection applications, there are financial and performance reasons for eventually choosing a multispectral imaging (MSI) system over an HSI one. However, it is necessary to start with the full 92 HSI bands, determine the most prominent bands for classification, and then implement those bands in MSI. Reducing the original 92 HSI bands to just a few principal components is undesirable, since computing those components would still require acquiring all 92 bands. Instead, if a few salient spectral bands can be chosen from the 92, bandpass filters can capture images at only those wavelengths. PCA's ubiquity, simplicity, and proven value for feature reduction make it an appealing technique for band selection, especially since its loadings can rank the original bands by their contribution to the principal components, rather than only projecting the data into a lower-dimensional space as nonlinear techniques do. Previous efforts to use a few selected HSI bands for online citrus disease inspection largely relied on manual feature extraction methods, which do not necessarily generalize to new defect conditions or other fruit varieties. A custom CNN, on the other hand, can learn discriminative features automatically. Therefore, the combination of a reduced set of spectral bands with a CNN-based feature extractor holds great potential for fast and accurate multiclass defect inspection. Moreover, many previous approaches dealt with only a few classes, whereas this study seeks to distinguish among eight unique peel condition classes with comparable or better performance.

The overall goal of this study was to use a DL-based approach with a CNN to classify eight different peel conditions of citrus in HSI images. For this, we developed a custom CNN that performs automatic feature extraction and classification of the eight peel conditions. The specific objectives were to (i) develop a CNN model and train it to classify HSI images of citrus with eight different peel conditions using five randomly selected bands (RS-5), (ii) use the five most contributing PCA-based bands (henceforth abbreviated as PCA-5) to train the developed CNN for classification of HSI images of citrus, (iii) study the effect of the number of PCA-based bands on the performance metrics (accuracy, sensitivity and specificity) of the CNN model, and (iv) compare the results of using RS-5 bands with those of PCA-5 bands for training the CNN model and classifying the HSI images of citrus.

Materials and methods

Hyperspectral imaging system

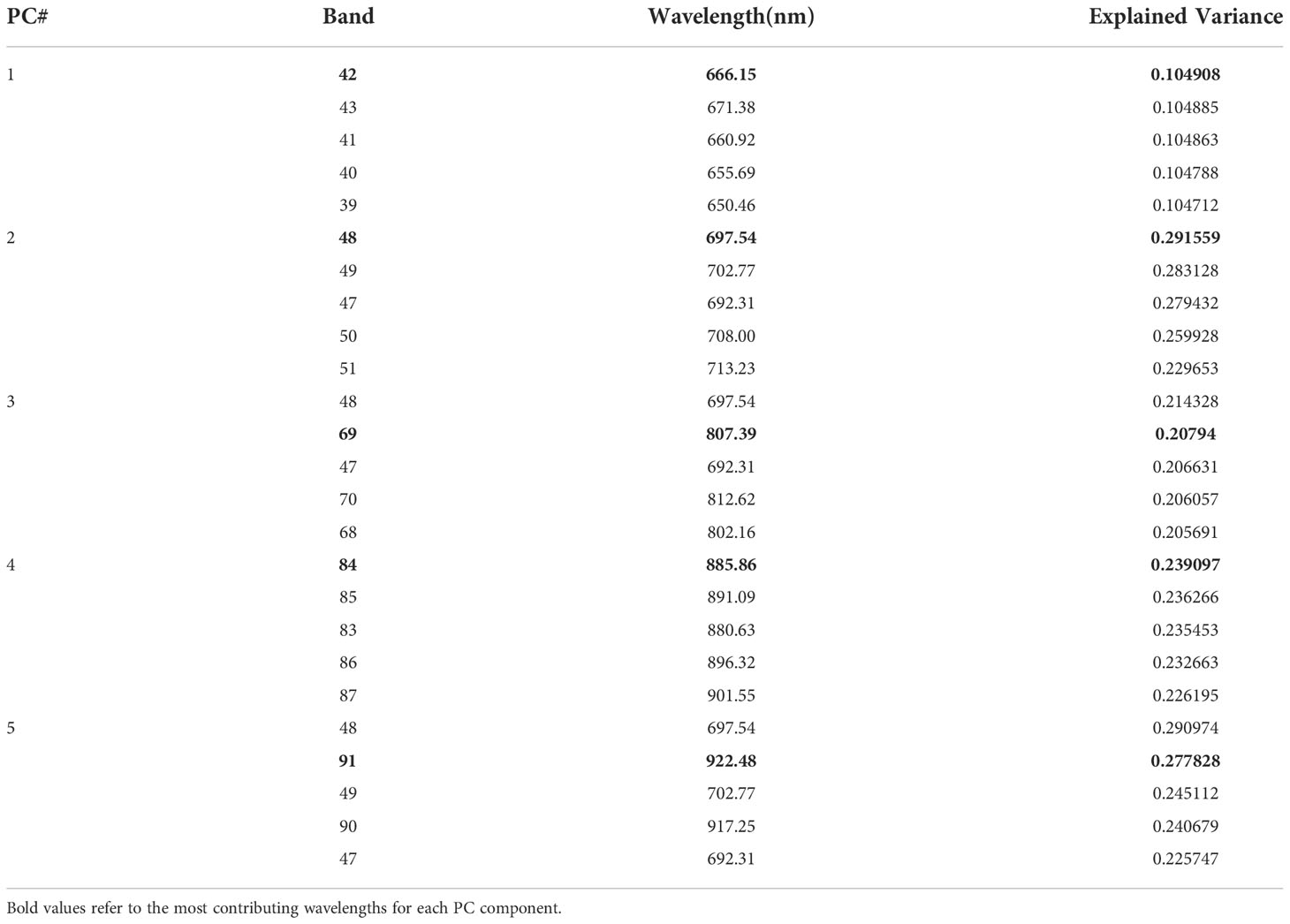

A hyperspectral imaging system for acquiring reflectance images from citrus samples was developed in previous work; its schematic diagram is shown in Figure 1 (Kim et al., 2001; Qin et al., 2008). This unit was based on the original concept by Kim et al. (2001). It is a push-broom, line-scan imaging system that uses an electron-multiplying charge-coupled device (EMCCD) imaging device (iXon, Andor Technology Inc., South Windsor, CT, USA). The EMCCD has 1004×1002 pixels and is thermoelectrically cooled to -80°C via a double-stage Peltier device. An imaging spectrograph (ImSpector V10E, Spectral Imaging Ltd., Oulu, Finland) and a C-mount lens (Rainbow CCTV H6X8, International Space Optics, S.A., Irvine, CA, USA) are mounted to the EMCCD. The instantaneous field of view (IFOV) is limited to a thin line by the spectrograph aperture slit (30 μm), and the spectral resolution of the imaging spectrograph is 2.8 nm. Light from the scanned IFOV line passes through the slit, is dispersed by a prism-grating-prism device, and is projected onto the EMCCD. Therefore, each line scan produces a two-dimensional (spatial and spectral) image, with the spatial dimension along the horizontal axis and the spectral dimension along the vertical axis of the EMCCD.

Figure 1 (A) Hyperspectral imaging system for acquiring reflectance images from citrus samples. (B) HSI single band sample image with canker peel condition on 5 fruit instances.

The reflectance light source consists of two 21 V, 150 W halogen lamps powered by a regulated DC power supply (TechniQuip, Danville, CA, USA). The light is transmitted through optical fiber bundles to line light distributors, and two line lights are arranged to illuminate the IFOV. A programmable, motorized positioning table (BiSlide-MN10, Velmex Inc., Bloomfield, NY, USA) moves the citrus samples (five per run) transversely through the IFOV line. For each run of five fruit, 1,740 line scans were performed and the 400 pixels covering the fruit in each scan were saved, generating a 3-D hyperspectral image cube with spatial dimensions of 1740×400 for each band.
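The push-broom geometry described above can be illustrated with a short NumPy sketch that stacks line-scan frames into a cube. It assumes each EMCCD frame has already been cropped to the retained spatial pixels and bands and is stored spectral-by-spatial (rows = bands, columns = pixels), matching the detector axes described earlier. The function name `assemble_cube` is hypothetical, and radiometric (dark/white reference) correction is not shown because it is not described here.

```python
import numpy as np

def assemble_cube(frames):
    """Stack push-broom line-scan frames into a hyperspectral cube.

    Each frame is a 2-D array of shape (n_bands, n_pixels): the spectral
    dimension runs along the detector's vertical axis and the spatial
    dimension along its horizontal axis. The returned cube has shape
    (n_lines, n_pixels, n_bands), so cube[:, :, b] is the image for band b.
    """
    # Transpose each frame to (n_pixels, n_bands), then stack along scan lines
    return np.stack([np.asarray(f).T for f in frames], axis=0)

# Toy example with 6 scan lines, 4 spatial pixels and 3 bands
# (the real system gives 1740 lines x 400 pixels x 92 bands).
rng = np.random.default_rng(0)
frames = [rng.random((3, 4)) for _ in range(6)]
cube = assemble_cube(frames)
band_image = cube[:, :, 1]   # one single-band image, shape (6, 4)
print(cube.shape, band_image.shape)
```

Storing the cube as (lines, pixels, bands) keeps each band image as a contiguous 2-D slice along the last axis, which is convenient when only a handful of bands are later selected.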

The parameterization and data-transfer interface software for the hyperspectral imaging system were developed using an SDK (software development kit) provided by the camera manufacturer on the Microsoft (MS) Visual Basic (Version 6.0) platform in the MS Windows operating system (Kim et al., 2001). Spectral calibration of the system was performed using an Hg-Ne spectral calibration lamp (Oriel Instruments, Stratford, CT, USA). Because of inefficiencies of the system in certain wavelength regions (e.g., low light output in the visible region below 450 nm and low quantum efficiency of the EMCCD in the NIR region beyond 930 nm), only the wavelength range between 450 nm and 930 nm (92 bands at an interval of approximately 5.2 nm) was used in this investigation.

Citrus samples

Grapefruit is among the citrus varieties most susceptible to canker, which emerged as a major Florida citrus disease in the early 2000s. Ruby Red grapefruit, a high-value citrus crop in Florida, was used in this study. Fruit samples with normal marketable, cankerous, five other common peel conditions (i.e., greasy spot, insect damage, melanose, scab, and wind scar), and mixed peel conditions were collected at the University of Florida and first reported by Qin et al. (2008). These conditions produce different symptoms on the fruit surface. Greasy spot, melanose, and scab are all caused by fungi, which generate surface blemishes formed by infection of immature fruit during the growing season. Greasy spot produces small necrotic specks, and the affected areas are brown to black and greasy in appearance. Melanose is characterized by scattered, raised, dark brown to black pustules. Scab appears as corky, raised lesions that are usually light brown. Unlike the fungal diseases, citrus canker is caused by bacteria and is characterized by conspicuous dark lesions. Canker lesions are mostly circular, vary in size, and are superficial (up to 1 mm deep) on the fruit peel (Timmer et al., 2000). The diameter of the canker lesions tested in this study ranged from approximately 2 to 9 mm. Insect damage is characterized by irregular grayish tracks on the fruit surface, which are generated by leafminer larvae that burrow under the epidermis of the fruit rind. Wind scar, caused by leaves, twigs, or thorns rubbing against the fruit, is a common physical injury on the fruit peel, and the scar tissue is generally gray.

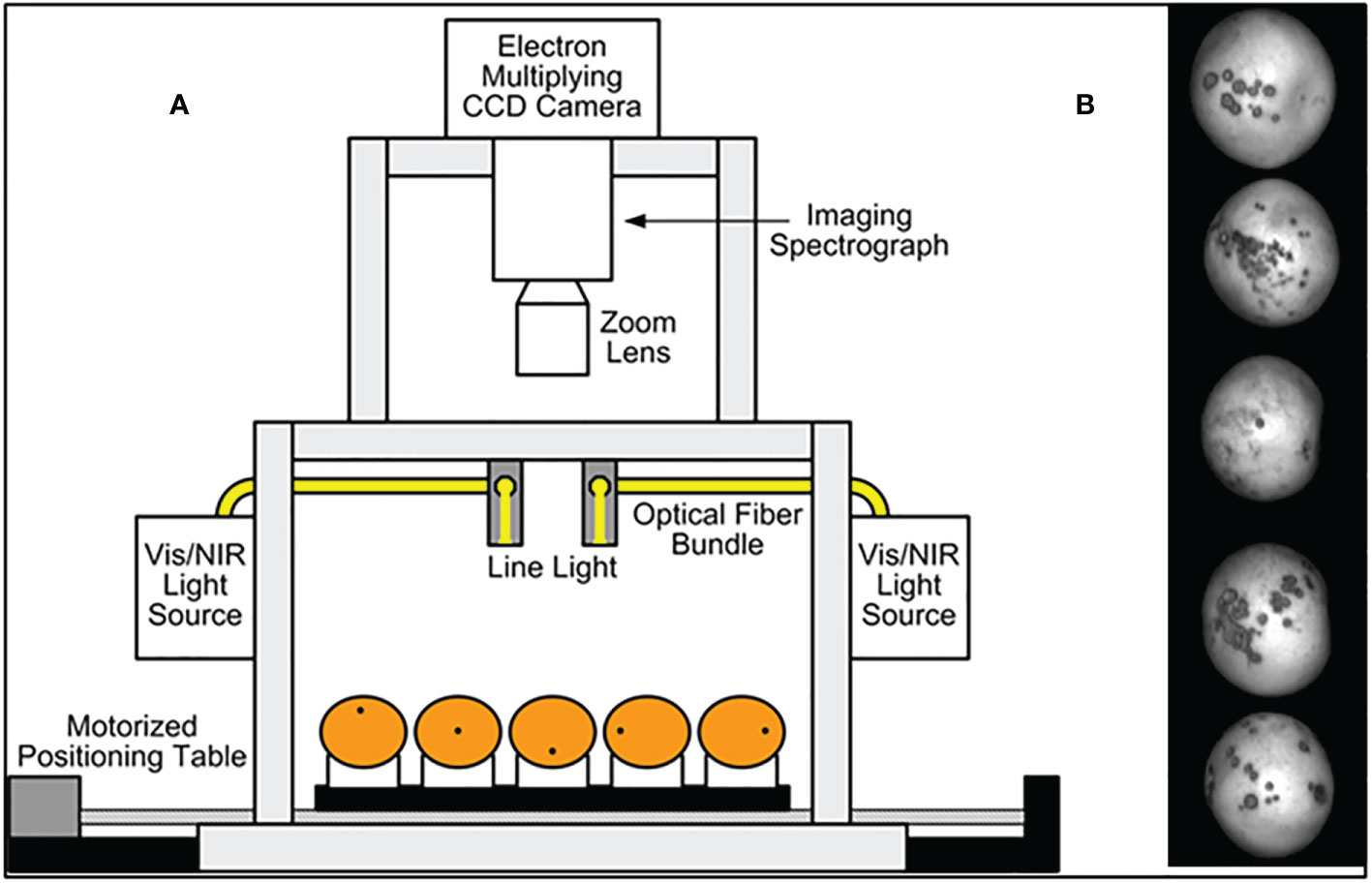

Fruit samples were handpicked from a grapefruit grove in Fort Pierce, Florida, during the spring 2008 harvest season. All the grapefruit were washed and treated with chlorine and sodium o-phenylphenate (SOPP) at the Indian River Research and Education Center of the University of Florida in Fort Pierce, Florida. The samples were then stored in an environmental control chamber maintained at 4°C and were removed from cold storage about 2 hours before imaging to allow them to reach room temperature. During image acquisition, the citrus samples were placed on rubber cups fixed on the positioning table (Figure 1), with the diseased areas on top of each fruit, as first reported in Qin et al. (2008). Each image band of the dataset is denoted by its center wavelength and is about 5.2 nm from adjacent bands. Figure 2 displays one fruit from each of the eight peel-condition classes of the dataset at selected wavelengths representing the imaged wavelength range. MATLAB (The MathWorks Inc., Natick, MA) was used to apply the same grayscale transformation to all images, so that varying levels of contrast among both fruits and defects could be compared. Being orange/yellow, the fruits themselves generally appeared brighter at longer wavelengths, but certain infections are more apparent in specific bands. Greasy spot and melanose are all but invisible at longer wavelengths but prominent at 577 nm. Scab and insect damage contrast most with healthy peel at 640 nm. These observations suggest that classifying multiple peel conditions will require multiple wavelengths.

Figure 2 Plots of images of eight different peel conditions using the 23rd fruit from each class. Each HSI image contains five fruit samples for each of the peel conditions, but just one is displayed here.

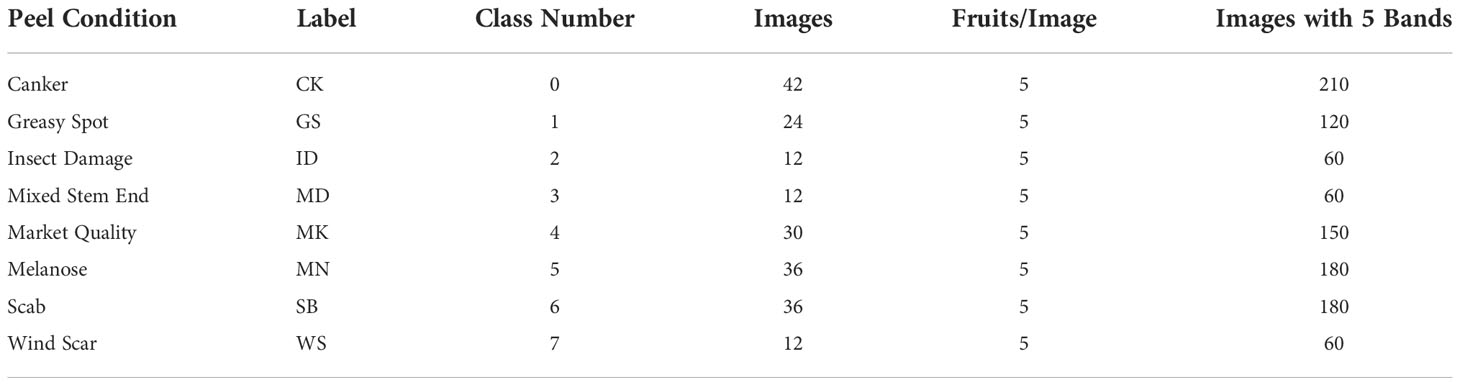

Table 1 shows the number of HSI image samples that were collected for each of the eight different peel conditions, with each image including five fruits. This resulted in 1020 HSI image samples consisting of 5100 fruit specimens.

CNN with softmax classifier

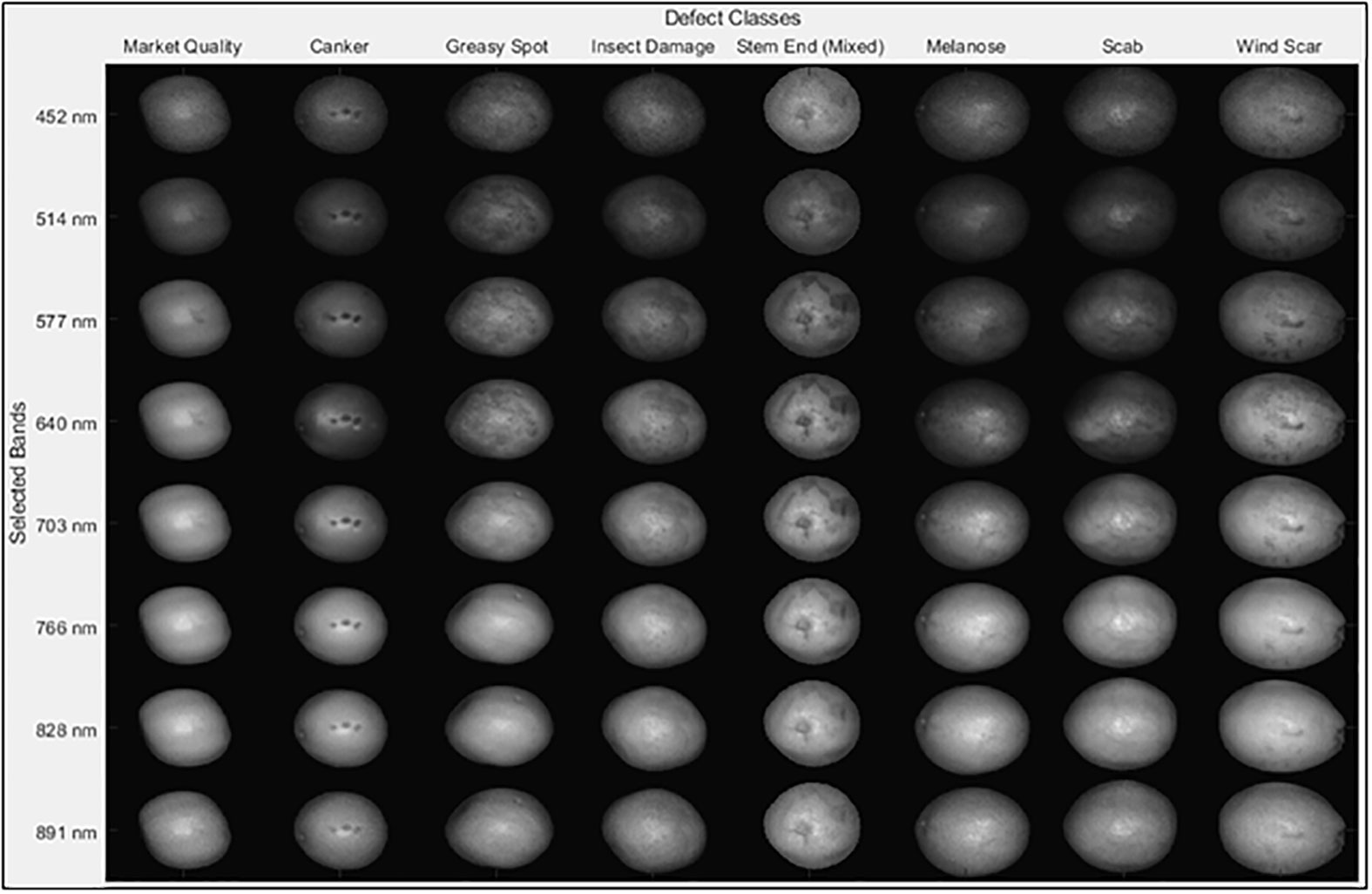

The CNN architecture that we developed for this study is a modified version of VGG16 (Simonyan and Zisserman, 2014), which was developed by the Visual Geometry Group (VGG) at Oxford University in 2014. The original VGG16 architecture consists of 13 convolution layers, 3 fully connected (FC) layers and five max-pooling layers, with softmax as the classifier. It was designed for 224×224-pixel input images, and because the size of the FC weights depends on the input size, the trained weights were not suitable for our application with 870×200-pixel images. The customized network accepts single-channel images, so all 92 spectral channels of each HSI input image were reshaped accordingly. Because roughly 90% of the parameters, and hence weights, of VGG16 are in its last three FC layers (Braga-Neto, 2020), the first two FC layers were reduced from 4,096 to 128 units each and the third to 8 units (one per class), significantly reducing the number of parameters and hence the training and computation time. “SAME” padding was used, which ensured that the convolution filters were applied at every input pixel and preserved the spatial size of each feature map. Adadelta (Zeiler, 2012) was used as the optimization algorithm for training, with a learning rate of 0.01. The network architecture is shown in Figure 3.
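To see why shrinking the FC layers matters, the sketch below counts the trainable parameters of a VGG16-style stack (3×3 convolutions, five 2×2 max-pools) for the original 224×224×3 input with 4,096/4,096/1,000 FC units, and for a single-channel 870×200 input with 128/128/8 FC units. It assumes Keras-style "valid" pooling (floor division of the spatial size) and one bias per unit; the helper names are hypothetical, and the counts are illustrative arithmetic rather than figures reported for this study.

```python
def conv_params(in_ch, out_ch, k=3):
    # k*k*in_ch*out_ch weights plus one bias per output channel
    return k * k * in_ch * out_ch + out_ch

def dense_params(n_in, n_out):
    # full weight matrix plus one bias per unit
    return n_in * n_out + n_out

# Output channels of the 13 convolution layers, grouped by pooling block
VGG16_BLOCKS = [[64, 64], [128, 128], [256, 256, 256],
                [512, 512, 512], [512, 512, 512]]

def vgg16_param_counts(height, width, channels, fc_units):
    """Return (conv_params, fc_params) for a VGG16-style network.

    Assumes 'same'-padded 3x3 convolutions (spatial size unchanged) and a
    2x2/stride-2 'valid' max-pool after each block (size halved, floored).
    """
    conv_total, in_ch = 0, channels
    h, w = height, width
    for block in VGG16_BLOCKS:
        for out_ch in block:
            conv_total += conv_params(in_ch, out_ch)
            in_ch = out_ch
        h, w = h // 2, w // 2
    n_in = h * w * in_ch          # size of the flattened feature map
    fc_total = 0
    for units in fc_units:
        fc_total += dense_params(n_in, units)
        n_in = units
    return conv_total, fc_total

orig_conv, orig_fc = vgg16_param_counts(224, 224, 3, [4096, 4096, 1000])
cust_conv, cust_fc = vgg16_param_counts(870, 200, 1, [128, 128, 8])
print(orig_conv + orig_fc, round(orig_fc / (orig_conv + orig_fc), 3))
print(cust_conv + cust_fc, round(cust_fc / (cust_conv + cust_fc), 3))
```

The convolutional weights barely change between the two configurations; almost all of the difference comes from the first FC layer, whose weight count scales with the flattened feature map times the number of units.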

Figure 3 CNN network architecture generated by Netron visualization software that was used to classify hyperspectral images of citrus peels with eight different conditions.

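The custom VGG16 network was attached to a softmax classifier, a generalization of the logistic regression function used for multi-class classification (Stanford, 2013; Yadav et al., 2022a). Mathematically, the softmax function is given as (Qi et al., 2018):

$$\sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \ldots, K \tag{1}$$

where $z_i$ is the network output (score) for class $i$ and $K$ is the number of classes ($K = 8$ in this study). The softmax transforms the input into a probability distribution: each output lies between 0 and 1, and the outputs sum to 1.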
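A minimal NumPy sketch of the softmax computation is shown below. Subtracting the maximum score before exponentiating is a standard numerical-stability trick that leaves the result unchanged; the score vector is made up for illustration and does not come from the trained model.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = np.asarray(z, dtype=float)
    # Subtracting the max does not change the result but avoids overflow in exp
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

# Hypothetical scores from the last FC layer for one image and K = 8 classes
scores = np.array([2.0, 0.5, -1.0, 0.0, 1.5, -0.5, 0.3, 4.0])
p = softmax(scores)
print(p.round(3), p.sum(), p.argmax())  # predicted class = index of largest p
```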

Principal component analysis

Principal component analysis (PCA) is a multivariate statistical technique used to analyze inter-correlated variables in data. It extracts the most important information from the data and expresses it as a set of new orthogonal variables called principal components (Rodarmel and Shan, 2002). Given a set of bands $B_1, B_2, \ldots, B_p$, the first principal component is the normalized linear combination $Z_1 = \phi_{11}B_1 + \phi_{12}B_2 + \cdots + \phi_{1p}B_p$ (with $\sum_{j=1}^{p}\phi_{1j}^{2} = 1$) that has the greatest variance. The coefficients $\phi_{11}, \phi_{12}, \ldots, \phi_{1p}$ are called the loadings of the first principal component, and $\boldsymbol{\phi}_1 = (\phi_{11}, \phi_{12}, \ldots, \phi_{1p})^{T}$ is called the loading vector. PCA is widely used for dimensionality reduction because it concentrates the variance shared by highly correlated variables into a few uncorrelated components. It is therefore a commonly used tool in HSI-based image processing for selecting the most important bands for classification (Rodarmel and Shan, 2002). A scree plot shows the variance explained by each PC and can therefore be used to choose the number of components (Holland, 2019).
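The loading-based band ranking described above can be sketched in NumPy. This is a minimal sketch under stated assumptions: pixel spectra are stacked as rows of an (n_pixels, 92) matrix, PCA is computed from the covariance matrix without standardizing the bands, band indices are zero-based, and a band already chosen for an earlier PC is skipped in favor of the next-largest loading (the rule applied to PC5 in the Results). The function names and synthetic data are hypothetical.

```python
import numpy as np

def pca_loadings(X):
    """PCA of an (n_pixels, n_bands) matrix of pixel spectra.

    Returns the explained-variance ratio of each PC (descending) and the
    loading matrix, whose column k is the unit-length loading vector phi_k.
    """
    Xc = X - X.mean(axis=0)                 # center each band
    cov = np.cov(Xc, rowvar=False)          # (n_bands, n_bands) covariance
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]       # sort PCs by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return eigvals / eigvals.sum(), eigvecs

def select_bands(loadings, n_components):
    """For each of the first n_components PCs, pick the band with the largest
    absolute loading; if an earlier PC already took it, use the next one."""
    chosen = []
    for k in range(n_components):
        for b in np.argsort(np.abs(loadings[:, k]))[::-1]:
            if int(b) not in chosen:
                chosen.append(int(b))
                break
    return chosen

# Synthetic stand-in for HSI pixel spectra: 2000 pixels x 92 bands,
# with band 42 given much larger variance than the others.
rng = np.random.default_rng(1)
X = rng.normal(size=(2000, 92))
X[:, 42] *= 50.0
explained, phi = pca_loadings(X)
bands = select_bands(phi, 5)
print(bands, np.cumsum(explained)[:5].round(4))  # cumulative values = scree data
```

Because the selection works on the loadings rather than on the projected components, the output is a list of original bands that a bandpass-filter MSI system could capture directly.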

Performance metrics

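The image dataset was divided into training and validation sets in a 4:1 ratio, and the CNN-based model was evaluated using accuracy, sensitivity, and specificity, as defined in Equations (2)-(4):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{4}$$

where TP, TN, FP, and FN represent true positives, true negatives, false positives and false negatives, respectively.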
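Equations (2)-(4) are stated for a single positive class; with eight classes they are typically applied one-vs-rest, treating each class in turn as positive. The sketch below does that from a confusion matrix. How per-class values were aggregated in this study (for example, macro versus micro averaging) is not stated, so the macro averages printed here are an assumption, and the confusion matrix is made up.

```python
import numpy as np

def one_vs_rest_metrics(cm):
    """Per-class accuracy, sensitivity and specificity from a K x K confusion
    matrix whose rows are true classes and columns are predicted classes."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp    # truly class k, predicted as another class
    fp = cm.sum(axis=0) - tp    # predicted as class k, truly another class
    tn = total - tp - fn - fp
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, sensitivity, specificity

# Hypothetical 3-class confusion matrix (not data from this study)
cm = [[5, 0, 0],
      [1, 4, 0],
      [0, 0, 5]]
acc, sens, spec = one_vs_rest_metrics(cm)
print(sens, spec, sens.mean(), spec.mean())  # per-class and macro-averaged
```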

Sensitivity and specificity are also known as the true positive rate (TPR) and true negative rate (TNR), respectively. In addition, we used the area under the receiver operating characteristic curve (AU-ROC) as a performance measure, since it has been shown to give a better measure of classifier performance than overall accuracy (Bradley, 1997). The ROC curve is plotted with TPR on the y-axis and the false positive rate, FPR (1 - specificity), on the x-axis; each point on the curve corresponds to the (FPR, TPR) pair obtained at a particular decision threshold on the predicted class probability. In other words, unlike overall accuracy, which is computed at a single threshold, the ROC considers the whole range of threshold values between 0 and 1. The AU-ROC value represents the degree to which a particular class can be separated from the others. The advantage of this metric is that it is not affected by class distribution, a priori class probabilities, or misclassification cost (Xu-hui et al., 2009).
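In practice a library routine such as scikit-learn's `roc_auc_score` would normally be used. The dependency-free sketch below instead computes a one-vs-rest AU-ROC through the equivalent rank formulation: the area under the ROC curve equals the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one, with ties counted as one half. The labels and softmax outputs are hypothetical.

```python
import numpy as np

def one_vs_rest_auc(y_true, scores, cls):
    """AU-ROC for class `cls` against all other classes.

    scores is an (n_samples, n_classes) array of class probabilities;
    column `cls` is used as the score for the positive class.
    """
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == cls, cls]
    neg = scores[y_true != cls, cls]
    diff = pos[:, None] - neg[None, :]      # every positive/negative pair
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return wins / (pos.size * neg.size)

# Hypothetical softmax outputs for four images and two classes
y = np.array([0, 0, 1, 1])
p = np.array([[0.9, 0.1], [0.6, 0.4], [0.7, 0.3], [0.2, 0.8]])
print(one_vs_rest_auc(y, p, 0), one_vs_rest_auc(y, p, 1))  # 0.75 0.75
```

The pairwise comparison is quadratic in the number of samples, which is harmless for a validation set of a few hundred images.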

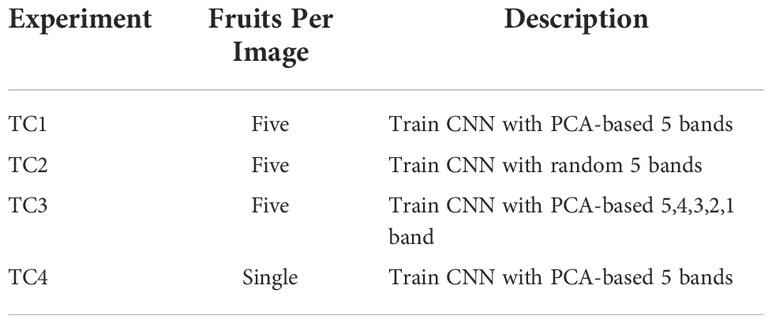

Experiment setup

In this study, we evaluated several band-reduction approaches in conjunction with a CNN classifier to reduce computational demands. The first approach identified the PCA-5 bands and then used them to train the CNN model and classify the images. This approach was then repeated using the four, three, two, and single most contributing bands to compare their classification performance with that of the PCA-5 bands. This entire process was repeated three times, after which a fixed-effect test, as explained by Borenstein et al. (2010), was performed to analyze the effect of the number of PCA-based bands on accuracy, sensitivity, and specificity. In a third approach, 10 sets of five randomly chosen bands (RS-5) were compared with the PCA-5 results. In both the PCA-based and random-band approaches, the CNN-based model was used to train and classify images, and the resulting RS-5 accuracy, sensitivity and specificity were analyzed statistically and compared with the PCA-5 results. The first three approaches were based on images of five fruit exhibiting the same peel condition, as shown in Figure 1. In the final approach, we repackaged the HSI dataset into individual-fruit HSI images, so that all training and classification were done on single fruit.
Consequently, we considered the four test cases (TC) shown in Table 2: (TC1) determine the five strongest HSI bands among the 92 bands using PCA (PCA-5) and run CNN-based training and classification to determine accuracy, sensitivity and specificity; (TC2) compare the results of TC1 with the mean results from 10 RS-5 groups drawn from the 92 original bands; (TC3) compare CNN accuracy, sensitivity and specificity using the best five, four, three, two and single PCA-selected bands (abbreviated as PCA-5, PCA-4, PCA-3, PCA-2, and PCA-1, respectively); and finally (TC4) separate the original five-fruit-per-image HSI dataset into an individual-fruit HSI dataset and compare the performance of the PCA-5 CNN classifier on individual fruit with that of the five-fruit classifier in TC1.
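The RS-5 setup in TC2 amounts to drawing several sets of distinct band indices and slicing the cube to those bands before training. The text does not state how the random bands were drawn (for example, whether overlap with the PCA-5 bands or between trials was excluded), so the sketch below simply samples without replacement within each trial; the function names, seed, and placeholder cube are hypothetical.

```python
import numpy as np

def random_band_sets(n_bands=92, k=5, n_trials=10, seed=None):
    """Draw n_trials sets of k distinct, zero-based band indices (RS-k)."""
    rng = np.random.default_rng(seed)
    return [np.sort(rng.choice(n_bands, size=k, replace=False))
            for _ in range(n_trials)]

def subset_cube(cube, bands):
    """Keep only the selected bands of an (H, W, n_bands) cube."""
    return cube[..., bands]

sets = random_band_sets(seed=0)
toy_cube = np.zeros((8, 6, 92))   # placeholder cube, not real data
print(sets[0], subset_cube(toy_cube, sets[0]).shape)  # (8, 6, 5)
```

Fixing the seed makes a given set of trials reproducible, which helps when the RS-5 results are later compared statistically with PCA-5.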

CNN model training

The Google Colab Pro+ (Google LLC, Menlo Park, CA) platform was used to train the CNN model on an NVIDIA Tesla P100-PCIE GPU (Santa Clara, CA) running Compute Unified Device Architecture (CUDA) version 11.2 and driver version 460.32.03. Training was done with “EarlyStopping” enabled, with “patience” set to 10 and “mode” set to “min”, which is used when the goal is to minimize the loss during training: training stops when the loss has not decreased for 10 consecutive iterations (epochs). Similarly, “ReduceLROnPlateau” was used with a factor of 0.1 and a patience of 7; it multiplies the learning rate by 0.1 whenever the loss fails to improve for 7 consecutive epochs. In each experiment, the CNN model was trained for 25 epochs with a batch size of 8, since the loss converged within that span in almost all cases; for TC4, training was extended to 35 epochs.
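The interplay of the two callbacks can be illustrated with a small pure-Python simulation that replays a per-epoch loss curve. It roughly mirrors `tf.keras.callbacks.EarlyStopping(monitor="loss", patience=10, mode="min")` and `tf.keras.callbacks.ReduceLROnPlateau(monitor="loss", factor=0.1, patience=7)`, but it is a simplified sketch, not the Keras implementation: it ignores `min_delta` and `cooldown`, and whether training or validation loss was monitored is not stated, so "loss" is an assumption. The function name and loss curve are hypothetical.

```python
def simulate_callbacks(losses, lr=0.01, es_patience=10, lr_patience=7,
                       factor=0.1):
    """Replay per-epoch losses through simplified early-stopping and
    reduce-on-plateau rules.

    Returns (epochs_run, rates), where rates[i] is the learning rate used
    during epoch i.
    """
    best = float("inf")
    es_wait = lr_wait = 0
    rates = []
    for epoch, loss in enumerate(losses):
        rates.append(lr)
        if loss < best:              # strict improvement resets both counters
            best = loss
            es_wait = lr_wait = 0
            continue
        es_wait += 1
        lr_wait += 1
        if lr_wait >= lr_patience:   # plateau: shrink the learning rate
            lr *= factor
            lr_wait = 0
        if es_wait >= es_patience:   # no improvement for es_patience epochs
            return epoch + 1, rates
    return len(losses), rates

# Hypothetical loss curve: improves for 3 epochs, then flattens (25 epochs max)
losses = [1.0, 0.8, 0.6] + [0.6] * 22
epochs_run, rates = simulate_callbacks(losses)
print(epochs_run, rates[9], rates[10])
```

With a patience of 7 for the learning-rate reduction and 10 for early stopping, a plateau first triggers one reduction of the learning rate and, if the loss still does not improve, stops training three epochs later.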

Results and discussion

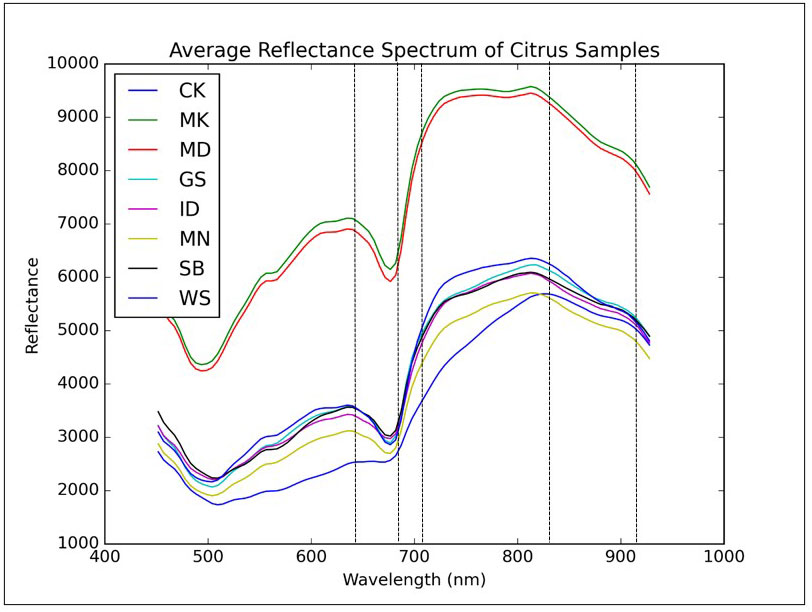

Typical reflectance spectra of grapefruit samples with normal and diseased conditions over the wavelength range between 450 nm and 930 nm are shown in Figure 4 to demonstrate the general spectral patterns. Each spectrum in Figure 4 was extracted from the hyperspectral image by averaging over a 5×5-pixel spatial window for each peel condition. A cankerous sample was also included for comparison.
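The 5×5 window averaging can be written in a few lines of NumPy, assuming the cube is stored as (rows, columns, bands). The function name is hypothetical, the window center is assumed to lie at least two pixels from the image border (no bounds checking), and the toy cube is synthetic.

```python
import numpy as np

def window_mean_spectrum(cube, row, col, half=2):
    """Mean spectrum over a (2*half+1) x (2*half+1) window centred at
    (row, col) of an (n_rows, n_cols, n_bands) cube; half=2 gives 5x5."""
    window = cube[row - half:row + half + 1, col - half:col + half + 1, :]
    return window.mean(axis=(0, 1))   # average over both spatial axes

# Toy cube whose values vary linearly with position, so the window mean
# equals the value at the window centre.
cube = np.arange(10 * 10 * 3, dtype=float).reshape(10, 10, 3)
spectrum = window_mean_spectrum(cube, 5, 5)
print(spectrum)
```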

Figure 4 Mean reflectance spectra of grapefruits with eight different peel conditions. Dashed lines represent five wavelengths (666.15, 697.54, 702.77, 849.24 and 917.25 nm) selected by principal components analysis.

All the reflectance spectra featured two local minima, around 500 nm and 675 nm, due to light absorption by carotenoids and chlorophyll a in the fruit peel, respectively. Chlorophyll is responsible for the green color of the citrus peel, while the yellow color of the peel is related to carotenoids. The carotenoid and chlorophyll absorptions for greasy spot and canker were not as prominent as for the other peel conditions. Reflectance values for the diseased peel conditions were consistently lower than those of the normal fruit surface over the entire spectral region. Canker had the lowest reflectance except in the spectral region from 450 nm to 550 nm, in which greasy spot showed reflectance similar to that of canker. In the spectral region from 450 nm to 930 nm, the relative reflectance values of canker and normal peel were in the range of 12-43% and 31-66%, respectively, while other peel conditions generally had values in between.

For canker disease, once the bacterial pathogen invades the fruit peel, the infected region continually loses moisture through the season, and the lesions on the fruit surface usually become dark. This may explain the low reflectance of canker in the visible and short-wavelength near-infrared regions. The spectral differences between canker and normal as well as other diseased peel conditions provide a basis for detecting citrus canker using hyperspectral or multispectral imaging approaches.

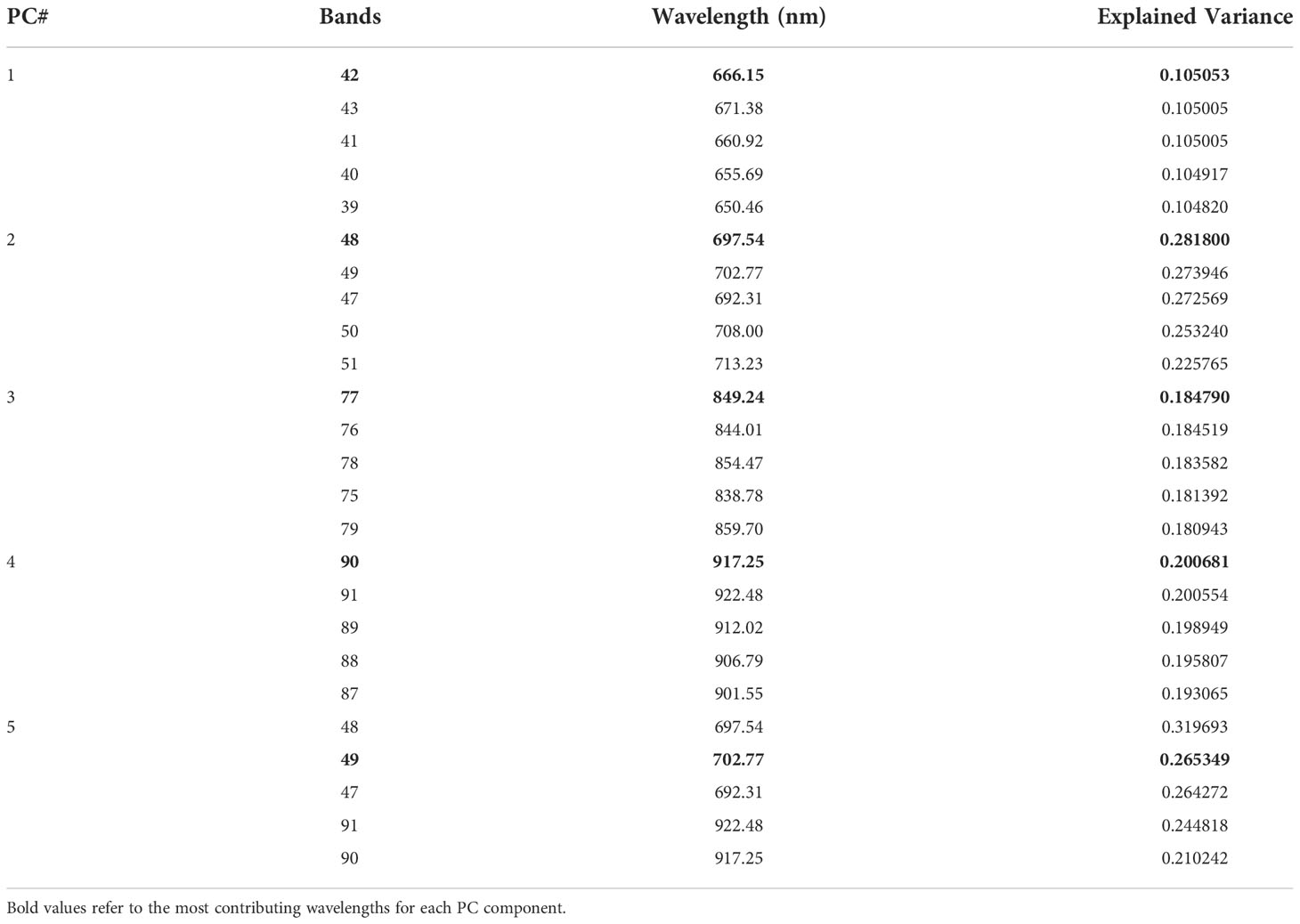

TC1: CNN classification with PCA-5 bands

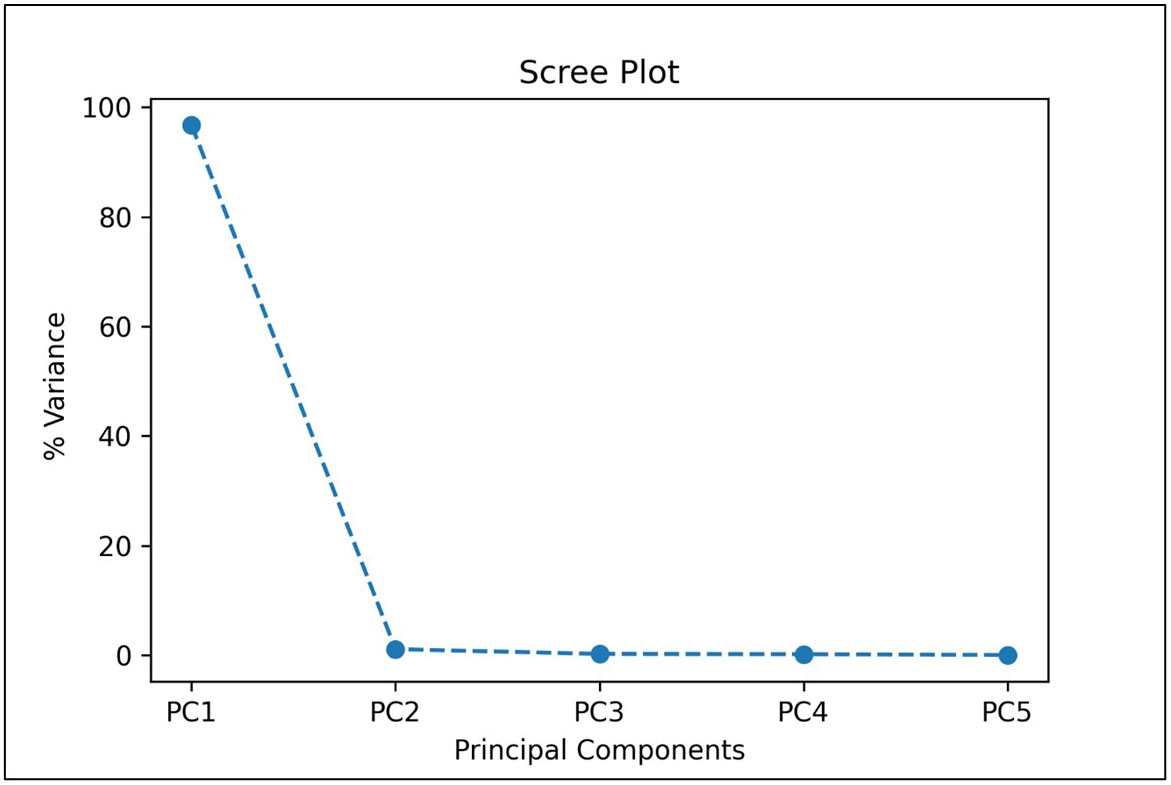

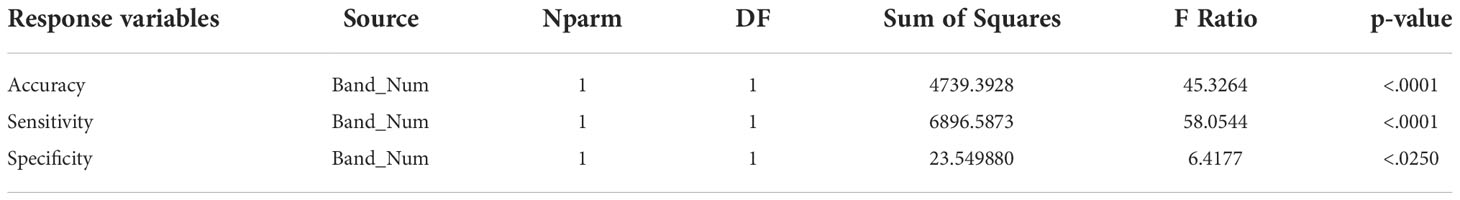

In our first approach, TC1, we trained the CNN model using the five most important bands based on PCA, which substantially reduces the computational complexity, and thus the training and validation time, compared with using all 92 bands of the HSI images. We also considered five bands to be a reasonable transition from hyperspectral analysis (five or more bands) to multispectral implementation (four or fewer bands). The scree plot (Figure 5) shows that the first five PCs accounted for a total of 98.32% of the variance, with PC1 alone accounting for 96.74%.

Figure 5 A scree plot of PCA analysis showing the percentage of variances accounted by each of the principal component.

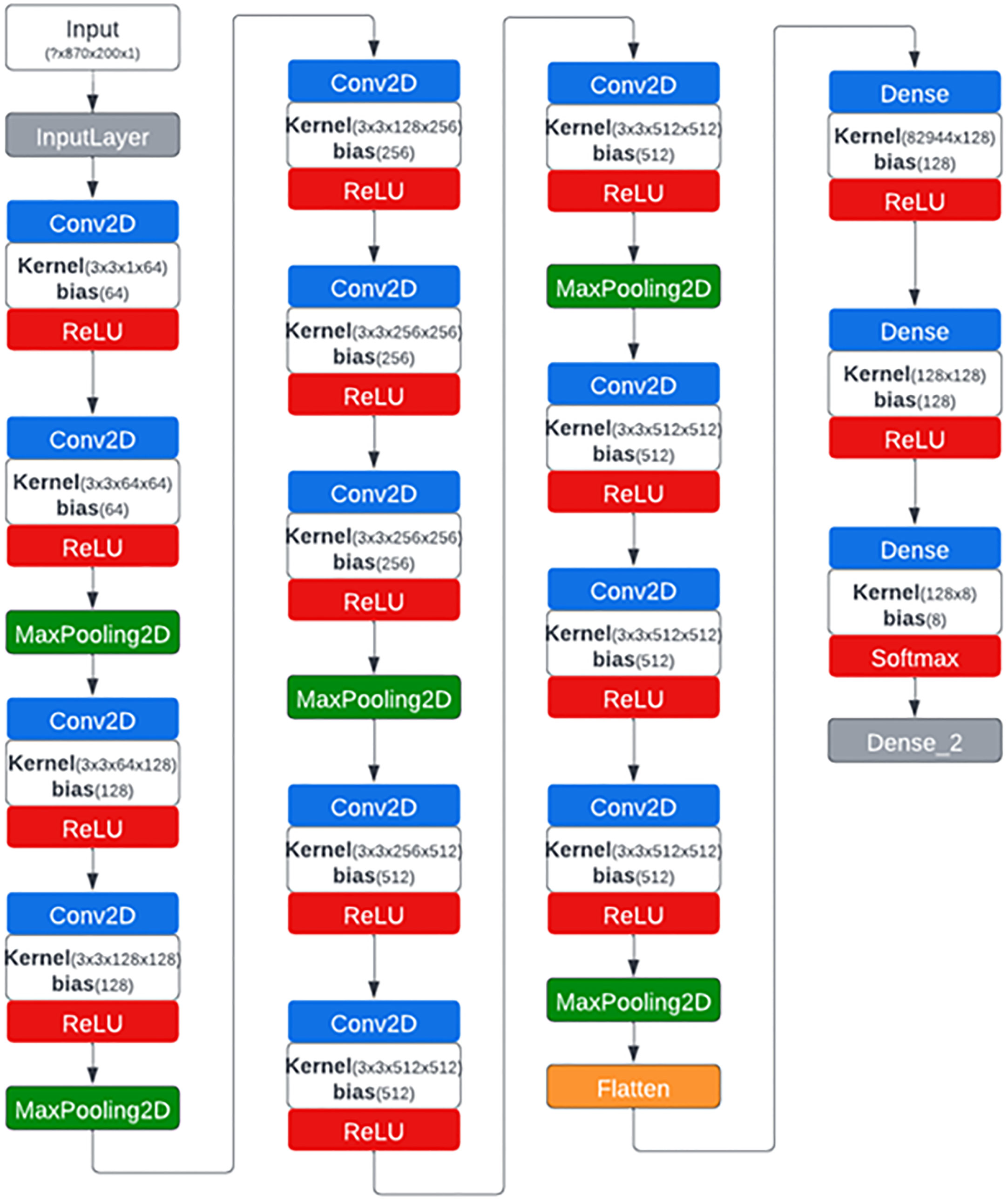

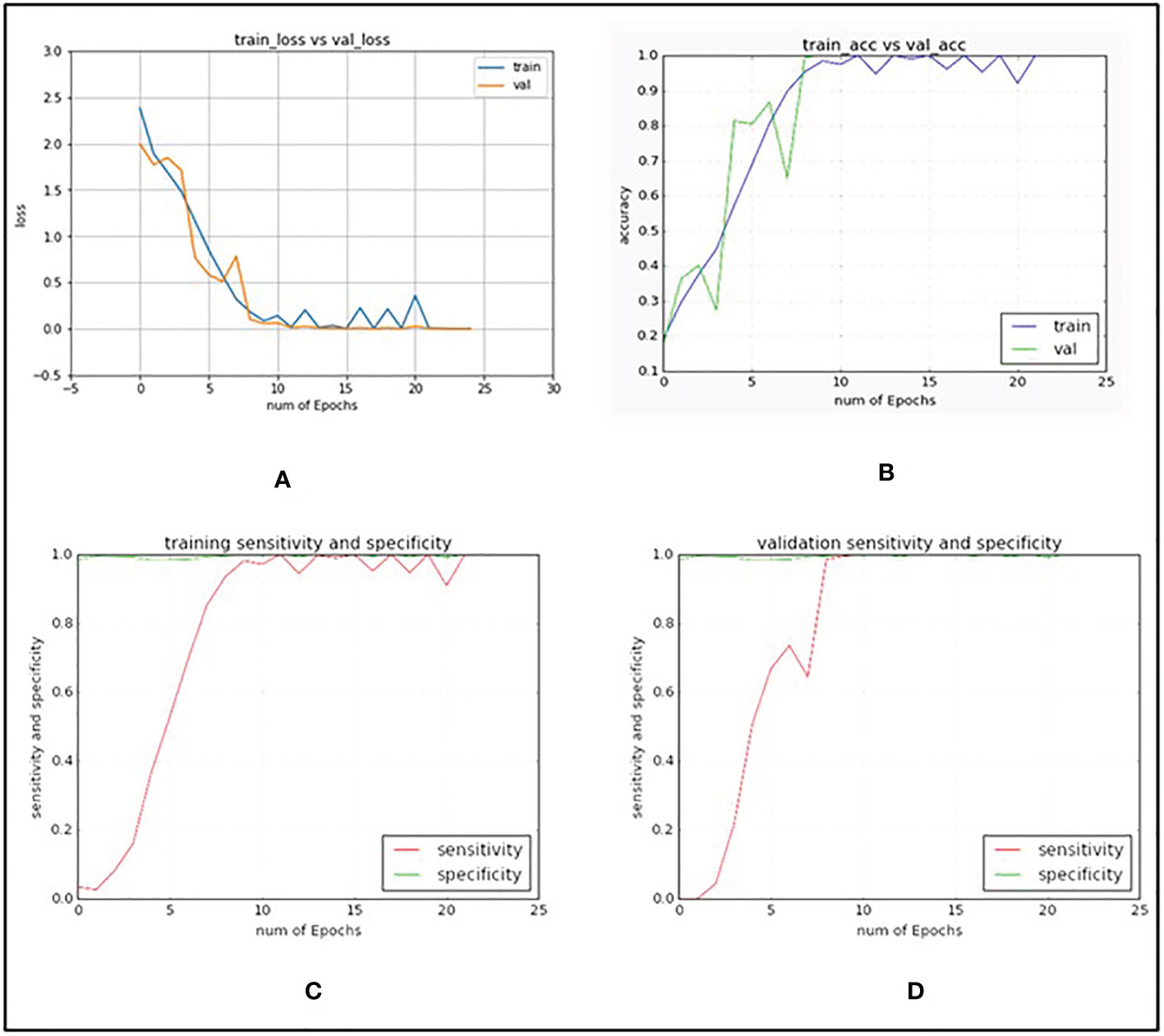

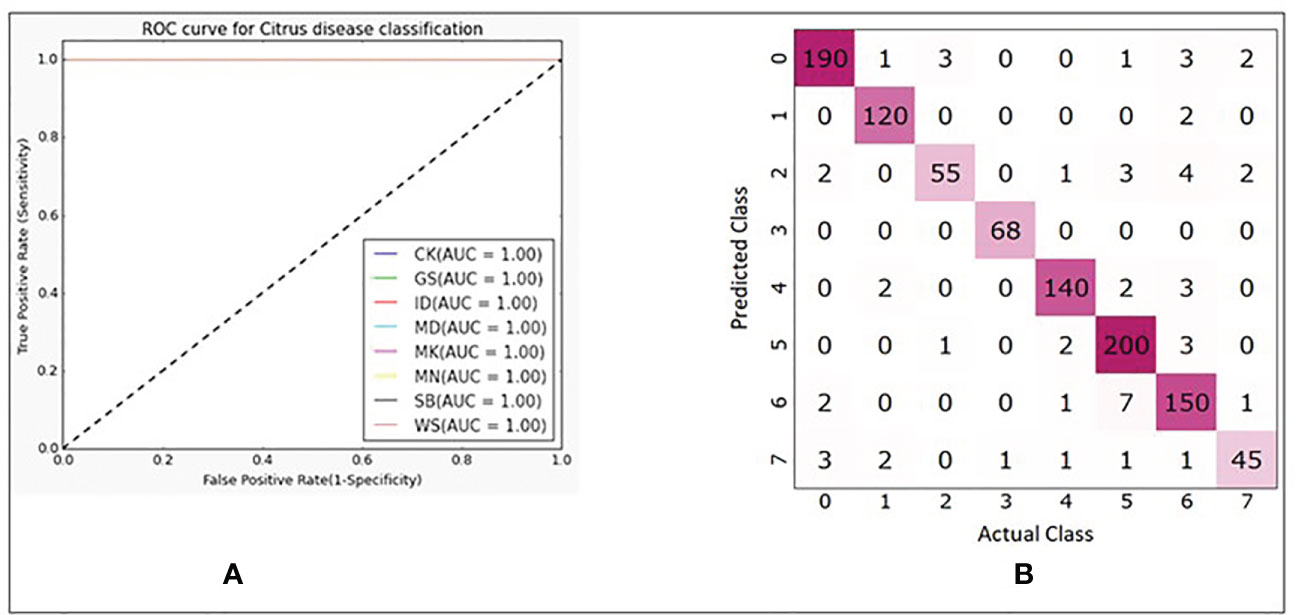

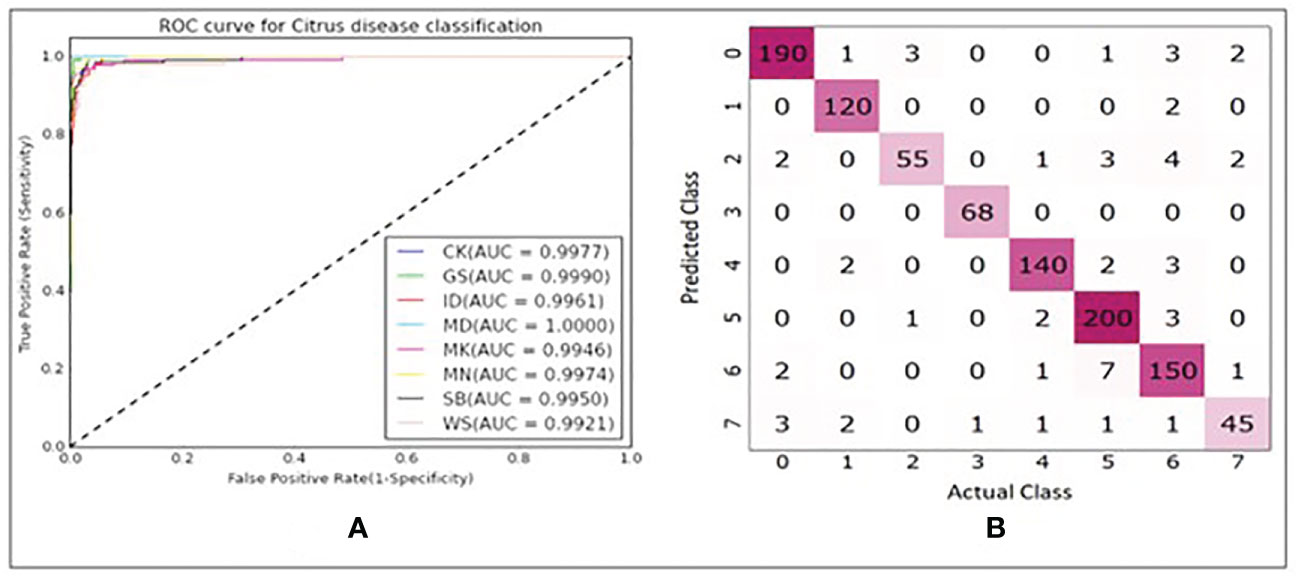

Table 3 shows the explained variance for each PC and the corresponding bands. From this, the most important bands were found to be 42, 48, 49, 77 and 90. Because band 48 was the most important band for both PC2 and PC5, the second most important band for PC5, band 49, was chosen instead. Once the PCA-5 bands were determined, the custom CNN model was trained to classify the images. Based on three trials of training and validation with the PCA-5 bands, the average accuracy, sensitivity, and specificity were 99.84%, 99.84% and 99.98%, respectively. The training and validation loss and accuracy graphs generated on the HSI dataset with five fruit per image are shown in Figure 6A, B; these graphs were generated during one of the three trials and converged within 25 iterations (epochs). Sensitivity and specificity were also tracked and plotted during training for both the training (Figure 6C) and validation datasets (Figure 6D); these graphs show that the model's TPR improved during training while its TNR remained almost constant. The AU-ROC in Figure 7A shows that the CNN model trained with PCA-5 bands discriminated all classes almost equally well on HSI images with five fruit over the full range of threshold values. The confusion matrix in Figure 7B shows the numbers of correctly classified and misclassified images in the validation dataset; in this example trial run, all 204 validation images were correctly classified.
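As a quick consistency check on the band numbering, the band numbers and center wavelengths reported for the PCA-5 bands can be fitted with a straight line. This sketch uses only those five reported pairs; a slope of about 5.2 nm per band agrees with the band interval stated for the imaging system, and it confirms that bands 48 and 49 (697.54 and 702.77 nm) are adjacent bands.

```python
import numpy as np

# Band numbers and center wavelengths (nm) of the PCA-5 bands reported above
band_no = np.array([42, 48, 49, 77, 90])
wavelength = np.array([666.15, 697.54, 702.77, 849.24, 917.25])

slope, intercept = np.polyfit(band_no, wavelength, 1)   # least-squares line
residuals = wavelength - (slope * band_no + intercept)
print(round(slope, 3), np.abs(residuals).max())  # about 5.23 nm per band
```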

Figure 6 Graphs generated when custom VGG16 network was trained and validated with PCA-5 most contributing bands and with images containing 5 fruit instances (TC3). (A) CNN training and validation loss graph. (B) CNN training and validation accuracy graph. (C) CNN sensitivity and specificity graphs on training dataset. (D) CNN sensitivity and specificity graphs on validation dataset.

Figure 7 (A) ROC plots with corresponding AUC values for each class. (B) Confusion matrix showing number of correctly and misclassified images for each class (refer to Table 1 for class numbers along x and y axis).

TC2: CNN classification with 10 replicates of RS-5

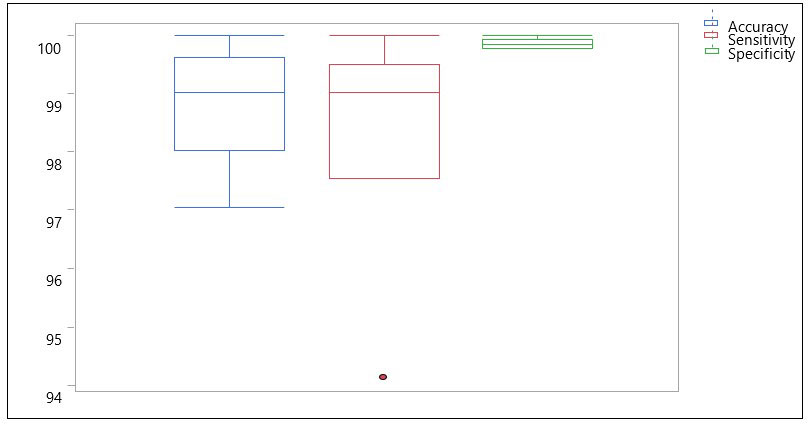

The high accuracy of TC1 raised the question of whether the CNN would perform just as well with 5 bands selected at random from the 92. Consequently, the model was trained and tested for 10 repetitions, each with a different random combination of 5 bands, and the results are shown as box plots (Figure 8). The CNN classified the HSI images with an average accuracy, sensitivity, and specificity of 98.87%, 98.43%, and 99.88%, respectively. This performance was better than that of our previous approach (spectral information divergence), which classified canker versus non-canker peel conditions with an overall accuracy of 96.2% (Qin et al., 2009). However, the performance was slightly lower than that obtained with the PCA-5 bands (TC1), which favors the TC1 approach.
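The RS-5 replicates can be generated by drawing 5 distinct band indices out of 92, ten times. This is a minimal sketch assuming uniform sampling without replacement within each subset; the seed, the constant names, and the `train_and_evaluate` step mentioned in the comment are hypothetical, and the CNN training itself is omitted.

```python
import numpy as np

N_BANDS = 92       # spectral bands per HSI image
N_SELECTED = 5     # bands per random subset (RS-5)
N_REPLICATES = 10  # independent random subsets


def random_band_sets(seed: int = 0) -> list[list[int]]:
    """Draw N_REPLICATES subsets of N_SELECTED distinct band indices."""
    rng = np.random.default_rng(seed)
    # replace=False ensures the 5 bands within one subset are all different
    return [
        sorted(rng.choice(N_BANDS, size=N_SELECTED, replace=False).tolist())
        for _ in range(N_REPLICATES)
    ]


def summarize(scores: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of one metric across replicates."""
    arr = np.asarray(scores, dtype=float)
    return float(arr.mean()), float(arr.std(ddof=1))


for bands in random_band_sets():
    # train_and_evaluate(...) is hypothetical: it would slice images[..., bands],
    # train the CNN, and return accuracy/sensitivity/specificity for this subset.
    print(bands)
```

Applying `summarize` to each metric over the 10 replicates gives a mean and spread that complement the quartiles a box plot shows.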

Figure 8 Box plots showing distribution of accuracy, sensitivity and specificity using 10 sets of RS-5 bands for the CNN model with images containing 5 fruits.

Based on the AU-ROC, images belonging to the MD class were the most separable, with a perfect AUC of 1, followed by CK (AUC = 0.99818), GS (AUC = 0.98198), MK (AUC = 0.9712), MN (AUC = 0.94564), ID (AUC = 0.93866), WS (AUC = 0.93114), and SB (AUC = 0.88222). This was an interesting result because more images were available for SB (180) than for WS (60), yet the features of SB were less discriminative than those of WS. Likewise, even though ID, MD, and WS had equal numbers of images (60 each), the features of MD were more distinctive than those of ID and WS. This is plausible because MD always has a stem-end feature along with other mixed peel conditions.
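Each per-class AUC above is a one-vs-rest quantity. As a hedged illustration, assuming the CNN's softmax output for a class is used as the ranking score, the AUC can be computed with the Mann-Whitney pairwise formulation below; the function name and toy scores are hypothetical. For real data, scikit-learn's `roc_auc_score` computes the same quantity from binary labels and scores.

```python
import numpy as np


def one_vs_rest_auc(scores: np.ndarray, labels: np.ndarray, cls: int) -> float:
    """AUC of class `cls` versus all other classes.

    scores: (n_images, n_classes) softmax outputs; labels: (n_images,) true class ids.
    The AUC equals the probability that a randomly chosen image of `cls` gets a
    higher score for `cls` than a randomly chosen image of another class
    (ties count one half).
    """
    pos = scores[labels == cls, cls]
    neg = scores[labels != cls, cls]
    # Compare every positive with every negative (fine for a few hundred images)
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (pos.size * neg.size))


# Toy softmax outputs for 4 images and 2 classes (illustrative only)
scores = np.array([[0.9, 0.1], [0.35, 0.65], [0.3, 0.7], [0.4, 0.6]])
labels = np.array([0, 0, 1, 1])
print(one_vs_rest_auc(scores, labels, 0))  # 0.75
```

An AUC of 1, as reported for MD, means every MD image outscored every non-MD image for the MD output.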

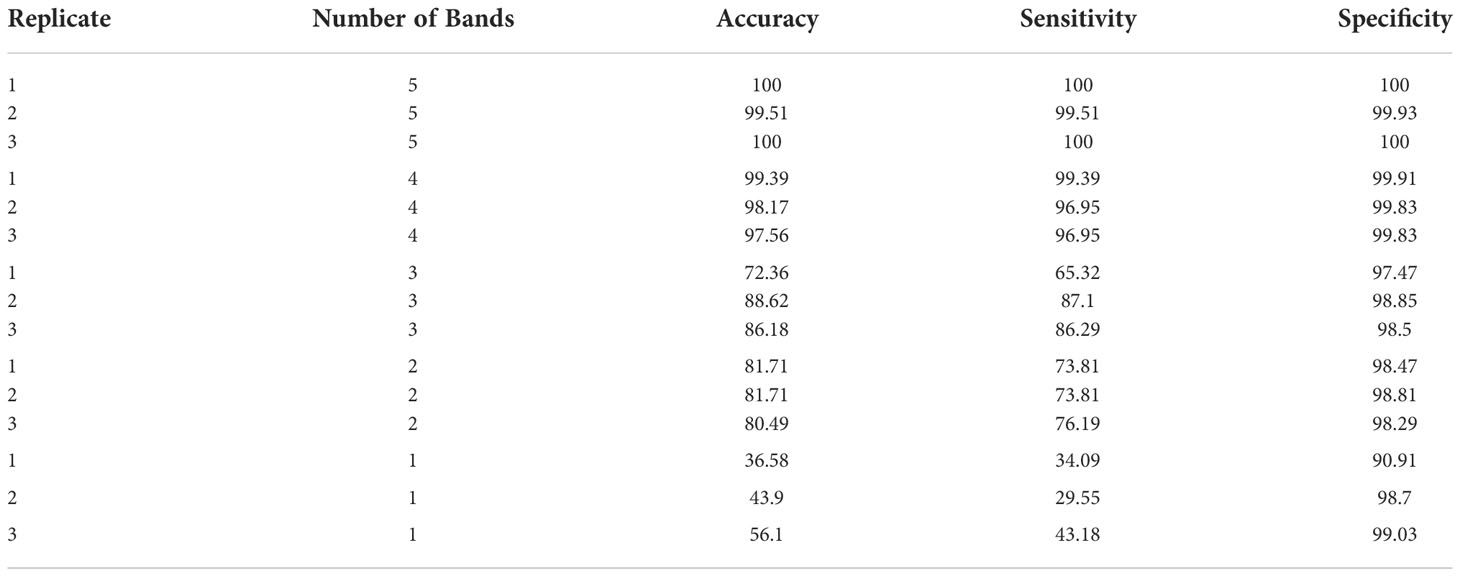

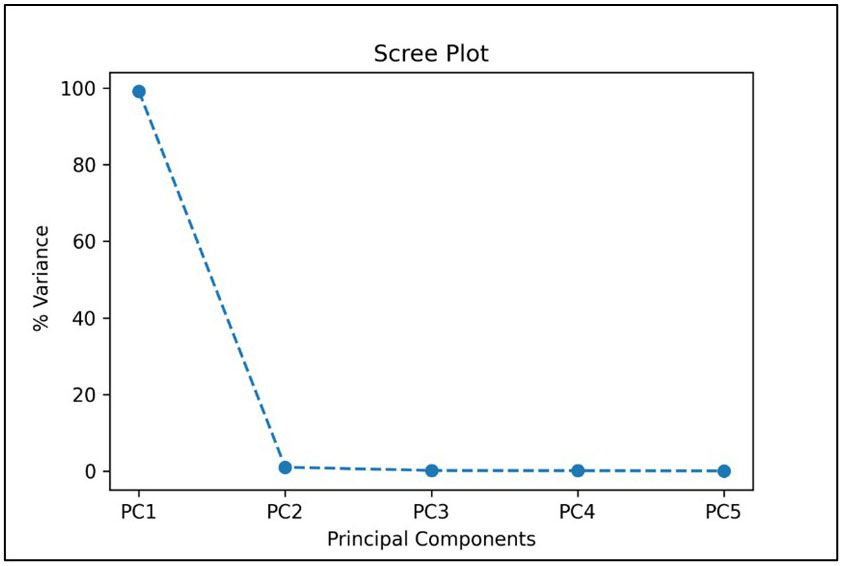

TC3: Comparing results of CNN with PCA-5, PCA-4, PCA-3, PCA-2, PCA-1 bands

As a practical matter, it is worth considering how well the classifier would work if the imaging system were implemented as a multispectral application using four or fewer bands in a custom filtered multispectral camera. Consequently, in TC3, CNN performance was evaluated using PCA-5, PCA-4, PCA-3, PCA-2, and PCA-1 band combinations (Table 4). These results were used to test the effect of the number of PCA-based bands on the performance of the CNN-based classifier using a fixed-effect model in JMP Pro version 16.1.0 (SAS Institute, North Carolina, U.S.A.). The number of PCA-based bands had significant effects on all three performance metrics (accuracy, sensitivity, and specificity) of the CNN model in classifying HSI images of eight different citrus peel conditions (Table 5), with p-values for accuracy, sensitivity, and specificity of <.0001, <.0001, and <.0250, respectively, at the 95% confidence level (α = 0.05). However, the number of PCA-based bands had a stronger effect on accuracy and sensitivity than on specificity, as reflected by their larger F-ratios (Table 5), i.e., greater variance among the group means for the former two metrics than for the latter. In practice, achieving high performance in classifying HSI images of citrus peels requires a careful choice of the number of PCA-based bands, since performance drops off significantly when going from 4 bands to 3 bands. For example, the PCA-5 bands yielded a mean accuracy, sensitivity, and specificity of 99.84%, 99.84%, and 99.98%, respectively, while 3 bands yielded 82.34%, 79.57%, and 98.27%, and a single band yielded only 45.53%, 35.61%, and 96.21%.
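For a single categorical factor such as the number of bands, a fixed-effect model reduces to a one-way ANOVA, and the F-ratio compares the variance among level means with the variance among replicates within each level. The sketch below assumes the number of bands was the only factor in the model; the function name and the toy values are hypothetical, not the Table 5 data. `scipy.stats.f_oneway` returns the same F statistic together with its p-value.

```python
import numpy as np


def one_way_anova_f(groups: list[np.ndarray]) -> tuple[float, int, int]:
    """F-ratio and degrees of freedom for a single fixed factor.

    groups: one array of replicate scores per factor level
    (e.g. accuracy from repeated trials with PCA-1 ... PCA-5 bands).
    """
    values = np.concatenate(groups)
    grand_mean = values.mean()
    k, n = len(groups), values.size
    # Between-group sum of squares: how far each level's mean sits from the grand mean
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: scatter of replicates around their own level mean
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return float(f_ratio), df_between, df_within


# Toy replicate scores for two factor levels (not the paper's Table 5 values)
f, df_b, df_w = one_way_anova_f([np.array([1.0, 2.0, 3.0]), np.array([4.0, 5.0, 6.0])])
print(f, df_b, df_w)  # 13.5 1 4
```

A larger F-ratio for accuracy than for specificity, as in Table 5, means the band count separates the accuracy group means more strongly relative to their replicate noise.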

Table 5 Fixed-effect model test of whether the number of PCA-based bands affects accuracy, sensitivity, and specificity.

TC4: Comparing results of single fruit images with PCA-5 bands to those of TC1

In TC4, we trained and validated the CNN-based classifier on HSI images containing single fruit instances using PCA-5 bands and compared the results with those of TC1. The scree plot showing the 5 PCs and their corresponding variance percentages is shown in Figure 9. The top 5 PCs accounted for a total of 100% of the variance in the dataset, and the 5 most important bands were found to be 42, 48, 69, 84, and 91. Table 6 shows the explained variances corresponding to the top 5 bands based on the top 5 PCs.

Figure 9 Scree plot showing the top 5 principal components when used with HSI images containing single fruit instances.

The training and validation plots for the CNN model using the PCA-5 bands (42, 48, 69, 84, and 91) are shown in Figures 10A–D. In the trial shown, the accuracy, sensitivity, and specificity were 98.53%, 98.53%, and 99.80%, respectively.

Figure 10 Graphs generated with PCA-5 bands on images with single fruit instances. (A) CNN training and validation loss graph. (B) CNN training and validation accuracy graph. (C) CNN sensitivity and specificity graphs on training dataset. (D) CNN sensitivity and specificity graphs on validation dataset.

Figures 10A–D show that training ran for slightly fewer than 35 iterations: the “EarlyStopping” functionality was used with a patience value of 10, so training stopped once no improvement was observed in 10 consecutive iterations. Because the loss function did not converge within 25 iterations, the CNN model was set to train for up to 50 iterations, although training stopped before the 35th iteration (Figures 10A–D). Over 3 repeated trials, the average accuracy, sensitivity, and specificity were 94.41%, 94.31%, and 99.23%, respectively. The training loss converged close to 0, while the validation loss converged around 0.4 (Figure 10A). Based on the AU-ROC, images of the MD class were the most accurately classified, while those of the WS class were the least accurately classified (Figure 11A). Sensitivity on the training dataset improved to an almost perfect value of 1 (Figure 10C), while it converged around 90% on the validation dataset (Figure 10D). Specificity remained almost perfect throughout the training process, as in TC1. The diagonal elements of the confusion matrix show the numbers of correctly classified images, and the off-diagonal elements show the numbers of misclassified images (Figure 11B). Even though the ID class had the highest number of misclassified images (12), it still performed better than SB and WS over a range of thresholds, as shown by the AU-ROC (Figure 11A). Compared with TC1, the TC4 approach resulted in lower overall performance. In other words, the CNN misclassified more images with single fruit instances than images with 5 fruit instances. We hypothesize that this may be because more features were able to propagate through the CNN when it was trained with the larger images (870 × 200 pixels) containing multiple fruit instances in TC1 than with the smaller images (174 × 200 pixels) in TC4.
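The patience rule described above can be sketched in a few lines. This is a hedged illustration assuming validation loss is the monitored quantity (the paper does not say which metric was monitored) and that any strict decrease counts as an improvement; the function name and the loss curve are hypothetical. In Keras the corresponding callback would be `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=10)`.

```python
def stopping_epoch(val_losses: list[float], patience: int = 10, max_epochs: int = 50) -> int:
    """Epoch (1-based) at which patience-based early stopping ends training."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, wait = loss, 0   # improvement: remember it and reset the counter
        else:
            wait += 1              # one more epoch without improvement
            if wait >= patience:
                return epoch       # `patience` non-improving epochs in a row: stop
    return min(len(val_losses), max_epochs)  # ran to the epoch limit


# Hypothetical loss curve: improves for 22 epochs, then plateaus.
losses = [1.0 - 0.01 * i for i in range(22)] + [0.9] * 28
print(stopping_epoch(losses))  # 32
```

With a 50-epoch cap and a patience of 10, a run whose last improvement falls in the low 20s stops in the low 30s, which is the pattern described for Figures 10A–D.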
It should be noted that classes MD, ID, and WS had considerably fewer single-fruit specimens than the other classes (60 images each, versus between 120 and 210 for the other classes). MD has very distinct features of nearly constant size due to the stem end, which likely contributed to its excellent accuracy, while ID and WS have highly variable feature sizes and shapes due to the nature of insect damage and wind scar. The lower number of ID and WS samples likely resulted in more misclassifications of single-fruit images in TC4 than of five-fruit images in TC1. In addition, the SB class is strongly similar to CK and MN, as seen in the confusion matrix in Figure 11B, where SB images were misclassified 7 times as MN and 2 times as CK. This could possibly be improved by a more balanced number of HSI images across all classes; however, this remains a subject for future study.
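The confusion-matrix counts discussed above translate into the reported metrics roughly as follows. This sketch assumes rows are true classes and columns are predicted classes, that sensitivity and specificity are computed one-vs-rest per class and then macro-averaged, and that accuracy is the fraction of all images on the diagonal; the paper does not spell out its averaging scheme, and the 3-class matrix is a toy example, not Figure 11B.

```python
import numpy as np


def confusion_metrics(cm: np.ndarray) -> dict[str, float]:
    """Accuracy plus macro-averaged one-vs-rest sensitivity and specificity."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)                 # correctly classified images per class
    fn = cm.sum(axis=1) - tp         # images of class c predicted as another class
    fp = cm.sum(axis=0) - tp         # images of other classes predicted as c
    tn = total - tp - fn - fp        # everything not involving class c
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": float(tp.sum() / total),
        "macro_sensitivity": float(sensitivity.mean()),
        "macro_specificity": float(specificity.mean()),
    }


# Toy 3-class matrix: rows = true class, columns = predicted class
cm = np.array([
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
])
print(confusion_metrics(cm))
```

Because each off-diagonal error is a false positive for only one of the eight classes, per-class specificity stays high even when sensitivity drops, consistent with the near-perfect specificity reported throughout.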

Figure 11 (A) ROC plots with corresponding AUC values for each class. (B) Confusion matrix showing number of correctly and misclassified images for each class (refer to Table 1 for class numbers along x and y axis).

Conclusion

In this paper, we showed that the developed CNN models could classify HSI images of citrus peel with eight different conditions at a higher accuracy than our previously developed methods. We also demonstrated that selecting the 5 most important bands with PCA and using them to train the CNN resulted in better performance than randomly selecting 5 bands. In addition, we demonstrated that using 5 fruit samples in each image resulted in better classification accuracy (an average of 99.84% versus 94.41%) than using images with single fruit samples. Therefore, this study recommends using PCA to select the most important bands and then using those bands to train the CNN model to classify HSI images of citrus peels (possibly containing multiple fruit samples). WS, ID, and SB had higher misclassification rates because they appear spectrally similar. It was also observed that adjusting the threshold value of the classifier may slightly decrease the misclassification rate of ID. However, it may not improve the performance on WS images, as WS had among the lowest AUC values in both cases.

A potential outcome of this study is the deployment of the trained CNN model on machine vision-based scouting systems in the field or on processing lines for real-time citrus peel condition detection at higher speed and possibly with better accuracy.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

First author: PY (methodology, software, validation, data curation, analysis, original draft preparation). Second author: TB (conceptualization, funding acquisition, methodology, investigation, review and editing). Third author: QF (data preparation, review and editing). Fourth author: JQ, MK and MR contributed equally (provided data, review and editing). All authors contributed to the article and approved the submitted version.

Acknowledgments

The authors gratefully acknowledge the financial support of Florida Fresh Packer Association and USDA Technical Assistance for Specialty Crops. The authors would also like to thank Dr. Moon Kim and Dr. Kuanglin Chao of USDA-ARS Food Safety Laboratory for helping set up the hyperspectral imaging system, and Mr. Mike Zingaro and Mr. Greg Pugh of the University of Florida for their help in designing and building the hyperspectral imaging system.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdulridha, J., Batuman, O., Ampatzidis, Y. (2019). UAV-based remote sensing technique to detect citrus canker disease utilizing hyperspectral imaging and machine learning. Remote Sens. 11 (11), 1373. doi: 10.3390/RS11111373

Ariana, D. P., Lu, R., Guyer, D. E. (2006). Near-infrared hyperspectral reflectance imaging for detection of bruises on pickling cucumbers. Comput. Electron. Agric. 53 (1), 60–70. doi: 10.1016/J.COMPAG.2006.04.001

Behera, S. K., Rath, A. K., Sethy, P. K. (2021). Maturity status classification of papaya fruits based on machine learning and transfer learning approach. Inf. Process. Agric. 8 (2), 244–250. doi: 10.1016/J.INPA.2020.05.003

Blasco, J., Aleixos, N., Gómez, J., Moltó, E. (2007). Citrus sorting by identification of the most common defects using multispectral computer vision. J. Food Eng. 83 (3), 384–393. doi: 10.1016/J.JFOODENG.2007.03.027

Borenstein, M., Hedges, L. V., Higgins, J. P., Rothstein, H. R. (2010). A basic introduction to fixed-effect and random-effects models for meta-analysis. Res. Synth. Methods 1 (2), 97–111.

Bradley, A. P. (1997). The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 30 (7), 1145–1159.

Braga-Neto, U. (2020). Fundamentals of pattern recognition and machine learning (Cham, Switzerland: Springer). doi: 10.1007/978-3-030-27656-0

Bulanon, D. M., Burks, T. F., Kim, D. G., Ritenour, M. A. (2013). Citrus black spot detection using hyperspectral image analysis. Agric. Eng. International: CIGR J. 15 (3), 171–180.

Chen, Y., An, X., Gao, S., Li, S., Kang, H. (2021). A deep learning-based vision system combining detection and tracking for fast on-line citrus sorting. Front. Plant Sci. 12. doi: 10.3389/fpls.2021.622062

Cho, B. K. (2021). Application of spectral imaging for safety inspection of fresh cut vegetables. IOP Conf. Series: Earth Environ. Sci. 686 (1), 1–7. doi: 10.1088/1755-1315/686/1/012001

Cocianu, C., State, L., Vlamos, P. (2009). Neural implementation of a class of PCA learning algorithms. Economic Comput. Economic Cybernetics Stud. Res. 3.

Dewdney, M. M., Walker, C., Roberts, P. D., Peres, N. A. (2022). 2022–2023 Florida citrus production guide: Citrus black spot. Available at: https://edis.ifas.ufl.edu/publication/CG088.

Dhiman, P., Kukreja, V., Manoharan, P., Kaur, A., Kamruzzaman, M. M., Dhaou, I. B., et al. (2022). A novel deep learning model for detection of severity level of the disease in citrus fruits. Electron. (Switzerland) 11 (3), 495. doi: 10.3390/electronics11030495

Diezma, B., Lleó, L., Roger, J. M., Herrero-Langreo, A., Lunadei, L., Ruiz-Altisent, M. (2013). Examination of the quality of spinach leaves using hyperspectral imaging. Postharvest Biol. Technol. 85, 8–17. doi: 10.1016/J.POSTHARVBIO.2013.04.017

Fan, S., Li, J., Zhang, Y., Tian, X., Wang, Q., He, X., et al. (2020). On line detection of defective apples using computer vision system combined with deep learning methods. J. Food Eng. 286, 110102. doi: 10.1016/J.JFOODENG.2020.110102

Farrell, M. D., Mersereau, R. M. (2005). On the impact of PCA dimension reduction for hyperspectral detection of difficult targets. IEEE Geosci. Remote Sens. Lett. 2 (2), 192–195. doi: 10.1109/LGRS.2005.846011

Fazari, A., Pellicer-Valero, O. J., Gómez-Sanchis, J., Bernardi, B., Cubero, S., Benalia, S., et al. (2021). Application of deep convolutional neural networks for the detection of anthracnose in olives using VIS/NIR hyperspectral images. Comput. Electron. Agric. 187, 106252. doi: 10.1016/J.COMPAG.2021.106252

Gómez-Sanchis, J., Gómez-Chova, L., Aleixos, N., Camps-Valls, G., Montesinos-Herrero, C., Moltó, E., et al. (2008). Hyperspectral system for early detection of rottenness caused by penicillium digitatum in mandarins. J. Food Eng. 89 (1), 80–86. doi: 10.1016/J.JFOODENG.2008.04.009

Gorji, H. T., Shahabi, S. M., Sharma, A., Tande, L. Q., Husarik, K., Qin, J., et al. (2022). Combining deep learning and fluorescence imaging to automatically identify fecal contamination on meat carcasses. Sci. Rep. 12 (1), 2392. doi: 10.1038/s41598-022-06379-1

Guo, Y., Liu, Y., Oerlemans, A., Lao, S., Wu, S., Lew, M. S. (2016). Deep learning for visual understanding: A review. Neurocomputing 187, 27–48. doi: 10.1016/j.neucom.2015.09.116

Huang, F. H., Liu, Y. H., Sun, X. Y., Yang, H. (2021). Quality inspection of nectarine based on hyperspectral imaging technology. Syst. Sci. Control. Eng. 9 (1), 350–357. doi: 10.1080/21642583.2021.1907260

Ismail, N., Malik, O. A. (2022). Real-time visual inspection system for grading fruits using computer vision and deep learning techniques. Inf. Process. Agric. 9 (1), 24–37. doi: 10.1016/J.INPA.2021.01.005

Jahanbakhshi, A., Momeny, M., Mahmoudi, M., Zhang, Y. D. (2020). Classification of sour lemons based on apparent defects using stochastic pooling mechanism in deep convolutional neural networks. Scientia Hortic. 263, 109133. doi: 10.1016/J.SCIENTA.2019.109133

Jie, D., Wu, S., Wang, P., Li, Y., Ye, D., Wei, X. (2021). Research on citrus grandis granulation determination based on hyperspectral imaging through deep learning. Food Anal. Methods 14 (2), 280–289. doi: 10.1007/S12161-020-01873-6

Jolliffe, I. T., Cadima, J. (2016). Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A: Mathematical Phys. Eng. Sci. 374 (2065). doi: 10.1098/rsta.2015.0202

Khalid, S., Khalil, T., Nasreen, S. (2014). A survey of feature selection and feature extraction techniques in machine learning. Proc. 2014 Sci. Inf. Conference SAI 2014, 372–378. doi: 10.1109/SAI.2014.6918213

Kim, D. G., Burks, T. F., Ritenour, M. A., Qin, J. (2014). Citrus black spot detection using hyperspectral imaging. Int. J. Agric. Biol. Eng. 7 (6), 20–27. doi: 10.25165/IJABE.V7I6.1143

Kim, M. S., Chen, Y. R., Mehl, P. M. (2001). Hyperspectral reflectance and fluorescence imaging system for food quality and safety. Trans. ASAE 44 (3), 721–729. doi: 10.13031/2013.6099

Krishnaswamy Rangarajan, A., Purushothaman, R. (2020). Disease classification in eggplant using pre-trained VGG16 and MSVM. Sci. Rep. 10 (1), 1–11. doi: 10.1038/s41598-020-59108-x

Lawrence, K. C., Yoon, S. C., Heitschmidt, G. W., Jones, D. R., Park, B. (2008). Imaging system with modified-pressure chamber for crack detection in shell eggs. Sens. Instrumentation Food Qual. Saf. 2 (2), 116–122. doi: 10.1007/S11694-008-9039-Z

Lee, W. H., Kim, M. S., Lee, H., Delwiche, S. R., Bae, H., Kim, D. Y., et al. (2014). Hyperspectral near-infrared imaging for the detection of physical damages of pear. J. Food Eng. 130, 1–7. doi: 10.1016/J.JFOODENG.2013.12.032

Liang, W.-j., Zhang, H., Zhang, G.-f., Cao, H.-x. (2019). Rice blast disease recognition using a deep convolutional neural network. Sci. Rep. 9 (1), 1–10. doi: 10.1038/s41598-019-38966-0

Li, J., Luo, W., Wang, Z., Fan, S. (2019). Early detection of decay on apples using hyperspectral reflectance imaging combining both principal component analysis and improved watershed segmentation method. Postharvest Biol. Technol. 149, 235–246. doi: 10.1016/j.postharvbio.2018.12.007

Li, J., Rao, X., Ying, Y. (2011). Detection of common defects on oranges using hyperspectral reflectance imaging. Comput. Electron. Agric. 78 (1), 38–48. doi: 10.1016/J.COMPAG.2011.05.010

Liu, Z., He, Y., Cen, H., Lu, R. (2018). Deep feature representation with stacked sparse auto-encoder and convolutional neural network for hyperspectral imaging-based detection of cucumber defects. Trans. ASABE 61 (2), 425–436. doi: 10.13031/TRANS.12214

Lorente, D., Aleixos, N., Gómez-Sanchis, J., Cubero, S., Blasco, J. (2013). Selection of optimal wavelength features for decay detection in citrus fruit using the ROC curve and neural networks. Food Bioprocess Technol. 6 (2), 530–541. doi: 10.1007/S11947-011-0737-X

Mohanty, S. P., Hughes, D. P., Salathé, M. (2016). Using deep learning for image-based plant disease detection. Front. Plant Sci. 7, 1419.

Mohinani, H., Chugh, V., Kaw, S., Yerawar, O., Dokare, I. (2022). Vegetable and fruit leaf diseases detection using ResNet. (Kolkata, India) 1–7. doi: 10.1109/irtm54583.2022.9791744

Park, B., Windham, W. R., Lawrence, K. C., Smith, D. P. (2007). Contaminant classification of poultry hyperspectral imagery using a spectral angle mapper algorithm. Biosyst. Eng. 96 (3), 323–333. doi: 10.1016/J.BIOSYSTEMSENG.2006.11.012

Pham, Q. T., Liou, N.-S. (2022). The development of on-line surface defect detection system for jujubes based on hyperspectral images. Comput. Electron. Agric. 194, 106743. doi: 10.1016/J.COMPAG.2022.106743

Pydipati, R., Burks, T. F., Lee, W. S. (2006). Identification of citrus disease using color texture features and discriminant analysis. Comput. Electron. Agric. 52 (1–2), 49–59. doi: 10.1016/J.COMPAG.2006.01.004

Qin, J., Burks, T. F., Kim, M. S., Chao, K., Ritenour, M. A. (2008). Citrus canker detection using hyperspectral reflectance imaging and PCA-based image classification method. Sens. Instrumentation Food Qual. Saf. 2 (3), 168–177. doi: 10.1007/S11694-008-9043-3

Qin, J., Burks, T. F., Ritenour, M. A., Bonn, G. W. (2009). Detection of citrus canker using hyperspectral reflectance imaging with spectral information divergence. J. Food Eng. 93 (2), 183–191. doi: 10.1016/j.jfoodeng.2009.01.014

Qin, J., Burks, T. F., Zhao, X., Niphadkar, N., Ritenour, M. A. (2011). Multispectral detection of citrus canker using hyperspectral band selection. Trans. ASABE 54 (6), 2331–2341. doi: 10.13031/2013.40643

Qin, J., Chao, K., Kim, M. S., Lu, R., Burks, T. F. (2013). Hyperspectral and multispectral imaging for evaluating food safety and quality. J. Food Eng. 118 (2), 157–171. doi: 10.1016/j.jfoodeng.2013.04.001

Qin, J., Lu, R. (2005). Detection of pits in tart cherries by hyperspectral transmission imaging. Trans. ASAE 48 (5), 1963–1970. doi: 10.13031/2013.19988

Qi, X., Wang, T., Liu, J. (2018) in Proceedings - 2017 2nd International Conference on Mechanical, Control and Computer Engineering, ICMCCE (Harbin, China). 151–155. doi: 10.1109/ICMCCE.2017.49

Rivera, N. V., Gómez-Sanchis, J., Chanona-Pérez, J., Carrasco, J. J., Millán-Giraldo, M., Lorente, D., et al. (2014). Early detection of mechanical damage in mango using NIR hyperspectral images and machine learning. Biosyst. Eng. 122, 91–98. doi: 10.1016/J.BIOSYSTEMSENG.2014.03.009

Rodarmel, C., Shan, J. (2002). “Principal component analysis for hyperspectral image classification,” in Surveying and Land Information Science (Munich, Germany), vol. 62 (2), 115–122.

Schubert, T. S., Rizvi, S. A., Sun, X., Gottwald, T. R., Graham, J. H., Dixon, W. N. (2001). Meeting the challenge of eradicating citrus canker in Florida - again. Plant Dis. 85 (4), 340–356. doi: 10.1094/PDIS.2001.85.4.340

Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. Available at: http://arxiv.org/abs/1409.1556.

Stanford (2013) Softmax regression. Available at: http://ufldl.stanford.edu/tutorial/supervised/SoftmaxRegression/.

Su, H., Du, Q., Du, P. (2014). Hyperspectral image visualization. IEEE J. Selected Topics Appl. Earth Observations Remote Sens. 7 (8), 2647–2658. doi: 10.1109/JSTARS.2013.2272654

Sustika, R., Subekti, A., Pardede, H. F., Suryawati, E., Mahendra, O., Yuwana, S. (2018). Evaluation of deep convolutional neural network architectures for strawberry quality inspection. Int. J. Eng. Technol. 7, 75–80. doi: 10.14419/ijet.v7i4.40.24080

Swasono, D. I., Tjandrasa, H., Fathicah, C. (2019). Classification of tobacco leaf pests using VGG16 transfer learning. Proc. 2019 Int. Conf. Inf. Communication Technol. Systems ICTS 2019, 176–181. doi: 10.1109/ICTS.2019.8850946

Sweet, H. C., Edwards, G. J. (1986). Citrus blight assessment using a microcomputer; quantifying damage using an apple computer to solve reflectance spectra of entire trees. Florida scientist, 48–54.

Syed-Ab-Rahman, S. F., Hesamian, M. H., Prasad, M. (2022). Citrus disease detection and classification using end-to-end anchor-based deep learning model. Appl. Intell. 52 (1), 927–938. doi: 10.1007/S10489-021-02452-W

Timmer, L. W., Garnsey, S. M., Graham, J. H. (Eds.). (2000). Compendium of citrus diseases (Vol. 1, pp. 19–20). St. Paul, MN: APS Press.

Xu-hui, W., Ping, S., Li, C., Ye, W. (2009). A ROC curve method for performance evaluation of support vector machine with optimization strategy. In 2009 International Forum on Computer Science-Technology and Applications (IEEE) Vol. 2, pp. 117–120.

van der Meer, F. (2006). The effectiveness of spectral similarity measures for the analysis of hyperspectral imagery. Int. J. Appl. Earth Observation Geoinformation 8 (1), 3–17. doi: 10.1016/J.JAG.2005.06.001

Yadav, P. K., Thomasson, J. A., Hardin, R., Searcy, S. W., Braga-neto, U., Popescu, S. C., et al. (2022a). Detecting volunteer cotton plants in a corn field with deep learning on UAV remote-sensing imagery. ArXiv Preprint 06673, 1–38. doi: 10.48550/arXiv.2207.06673

Yadav, P. K., Thomasson, J. A., Hardin, R. G., Searcy, S. W., Braga-Neto, U. M., Popescu, S. C., et al. (2022b). Volunteer cotton plant detection in corn field with deep learning. Autonomous Air Ground Sens. Syst. Agric. Optimization Phenotyping VII 12114, 1211403. doi: 10.1117/12.2623032

Yadav, P. K., Thomasson, J. A., Searcy, S. W., Hardin, R. G., Braga-Neto, U. M., Popescu, S. C., et al. (2022c). Assessing the performance of YOLOv5 algorithm for detecting volunteer cotton plants in corn fields at three different growth stages. ArXiv Preprint 00519, 1–18. doi: 10.48550/arXiv.2208.00519

Yadav, P. K., Thomasson, J. A., Searcy, S. W., Hardin, R. G., Popescu, S. C., Martin, D. E., et al. (2022d). Computer vision for volunteer cotton detection in a corn field with UAS remote sensing imagery and spot-spray applications. ArXiv Preprint 07334, 1–39. doi: 10.48550/arXiv.2207.07334

Yang, H., Ni, J., Gao, J., Han, Z., Luan, T. (2021). A novel method for peanut variety identification and classification by improved VGG16. Sci. Rep. 11 (1), 15756. doi: 10.1038/s41598-021-95240-y

Yao, J., Qi, J., Zhang, J., Shao, H., Yang, J., Li, X. (2021). A real-time detection algorithm for kiwifruit defects based on yolov5. Electron. (Switzerland) 10 (14), 1711. doi: 10.3390/electronics10141711

Zhang, H., Zhang, S., Dong, W., Luo, W., Huang, Y., Zhan, B., et al. (2020). Detection of common defects on mandarins by using visible and near infrared hyperspectral imaging. Infrared Phys. Technol. 108, 103341. doi: 10.1016/J.INFRARED.2020.103341

Zhao, W., Du, S. (2016). Spectral-spatial feature extraction for hyperspectral image classification: A dimension reduction and deep learning approach. IEEE Trans. Geosci. Remote Sens. 54 (8), 4544–4554. doi: 10.1109/TGRS.2016.2543748

Keywords: hyperspectral imaging, citrus canker, disease detection, food safety, convolution neural network (CNN), machine vision

Citation: Yadav PK, Burks T, Frederick Q, Qin J, Kim M and Ritenour MA (2022) Citrus disease detection using convolution neural network generated features and Softmax classifier on hyperspectral image data. Front. Plant Sci. 13:1043712. doi: 10.3389/fpls.2022.1043712

Received: 13 September 2022; Accepted: 18 November 2022;

Published: 07 December 2022.

Edited by:

Muhammad Fazal Ijaz, Sejong University, South Korea

Reviewed by:

Debaleena Datta, University of Engineering and Management, India

Parvathaneni Naga Srinivasu, Prasad V. Potluri Siddhartha Institute of Technology, India

Jana Shafi, Prince Sattam Bin Abdulaziz University, Saudi Arabia

Copyright © 2022 Yadav, Burks, Frederick, Qin, Kim and Ritenour. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Thomas Burks, tburks@ufl.edu
